Abstract

Retinal microsurgery is technically demanding and requires high surgical skill with very little room for manipulation error. During surgery the tool needs to be inserted into the eyeball while maintaining constant contact with the sclera. Any unexpected manipulation could cause extreme tool-sclera contact force (scleral force) and thus damage the sclera. The introduction of robotic assistance could enhance and expand the surgeon’s manipulation capabilities during surgery. However, the potential intra-operative danger from the surgeon’s misoperations remains difficult for existing robotic systems to detect and prevent. Therefore, we propose a method to predict imminent unsafe manipulation in robot-assisted retinal surgery and generate feedback to the surgeon via auditory substitution. The surgeon could then react to possible unsafe events in advance. This work specifically focuses on minimizing sclera damage using a force-sensing tool calibrated to measure small scleral forces. A recurrent neural network is designed and trained to predict the force safety status up to 500 milliseconds in the future. The system is implemented using an existing “steady hand” eye robot. A vessel following manipulation task is designed and performed on a dry eye phantom to emulate retinal surgery and to analyze the proposed method. Finally, preliminary validation experiments are performed by five users; the results indicate that the proposed early warning system could help to reduce the number of unsafe manipulation events.

I. INTRODUCTION

Retinal surgery continues to be one of the most challenging surgical tasks due to its high precision requirements, small and constrained workspace, and delicate eye tissue. Factors including physiological hand tremor, fatigue, poor kinesthetic feedback, patient movement, and the absence of manipulation force sensing could lead to misoperations by the surgeon, and subsequently to iatrogenic injury. During a general retinal surgery, the surgeon needs to insert small instruments (e.g. 25Ga, ϕ < 0.5mm) through the sclerotomy port (ϕ < 1mm) on the sclera, the white part of the eye, to perform delicate tissue manipulations in the posterior of the eye. The sclera sustains the manipulation force throughout the procedure. Any misoperation could cause extreme scleral force, leading to sclera damage. Thus, the manipulation safety of retinal surgery strongly depends on the surgeon’s level of experience and skill.

Present limitations in retinal surgery can be relieved by resorting to advanced robotic assistive technology. Continuing efforts are being devoted to the development of surgical robotic systems to enhance and expand the capabilities of surgeons during retinal surgery. Two robot-assisted retinal surgeries, retinal vein cannulation and epiretinal membrane peeling, have been performed successfully on human patients recently [1], [2], demonstrating the clinical feasibility of robotic technology for retinal microsurgery. Major enabling technologies for robot-assisted retinal surgery include teleoperative manipulation systems [3]–[6], handheld robotic devices [7], and flexible micro-manipulators [8]. In prior work we developed the Steady Hand Eye Robot (SHER) based on a cooperative control approach [9]. SHER allows the user to directly hold the tool mounted on the robot end-effector. The velocity of SHER follows the user input force applied on the tool handle, while the user’s hand tremor is damped by the robot’s stiff mechanical structure. SHER provides not only steady and precise motion but also transparent manipulation.

Smart or otherwise responsive instruments with force-sensing capability are strategic and potentially useful for safe interaction between the robot and the patient. One approach to developing smart instruments is to incorporate a micro sensor in the tool handle [10]. These prototypes, however, were not able to distinguish forces applied at the tool tip while the tool was inserted into the eye. Therefore, our group designed and developed a family of “smart” tools by separately integrating Fiber Bragg Grating (FBG) sensors into the contact segments of the tool [11], [12]. The force-sensing tool [13], which can measure the contact force between the tool tip and the retina as well as between the tool shaft and the sclerotomy port (scleral force), is further developed in this work.

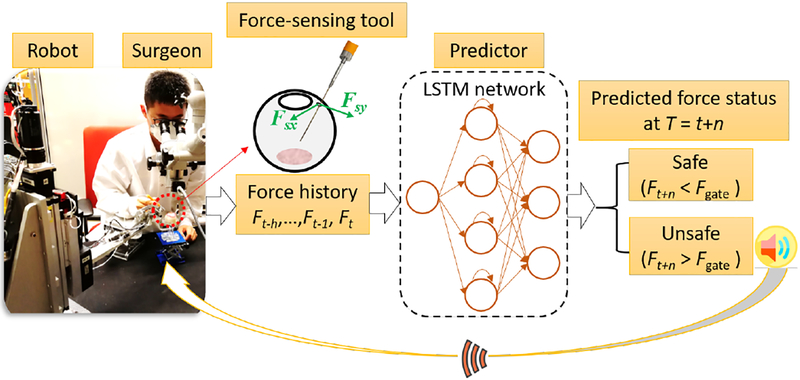

The aforementioned robotic devices can give passive support to surgeons, i.e., filtering hand tremor, improving tool positioning accuracy, and enhancing the surgeon’s tactile ability. However, the surgeon’s misoperations caused by deficient skill or fatigue cannot be actively filtered or interrupted by existing robotic systems, because these systems lack information about the surgeon’s next manipulation. Predicting the surgeon’s manipulation based on his/her operation history can potentially resolve this problem. The predicted information can be used to identify unsafe events that might occur in the near future. It could then be fed back as an early warning to the surgeon, who in turn reacts to the possible dangerous events in advance. The pipeline of the proposed method is shown in Fig. 1. The surgeon’s manipulation in a surgical task is a time series, which Recurrent Neural Networks (RNNs) can handle effectively. In particular, Long Short Term Memory (LSTM) RNNs [14], which are applied in this work, can capture long range dependencies and nonlinear dynamics and model varying-length sequential data, achieving state-of-the-art results for problems spanning clinical diagnosis [15], image segmentation [16], and language modeling [17]. Other standard sequential models, such as Kalman filters or fixed-lag smoothers and predictors, are ill-equipped to learn long-range dependencies [15].

Fig. 1:

Overview of our approach. Tool-sclera interactive force is measured using the force-sensing tool. An LSTM network is used as the predictor to classify the future force into two categories: safe and dangerous. The dangerous case triggers the auditory alarm, which is then fed back to the surgeon.

In this paper we focus on sclera safety; thus the scleral force is measured and collected, and a short history of it is used to predict a future safe/unsafe event. The LSTM is trained to predict the scleral force status (safe or unsafe, based on a preset safety force threshold). We choose auditory substitution to convey the predicted force status to the surgeon, since our previous work has shown that auditory feedback could help improve the surgeon’s awareness [18]. A mock retinal surgery task, vessel following, is designed and performed by five users to validate the proposed method.

II. ROBOT CONTROL METHOD DESIGN

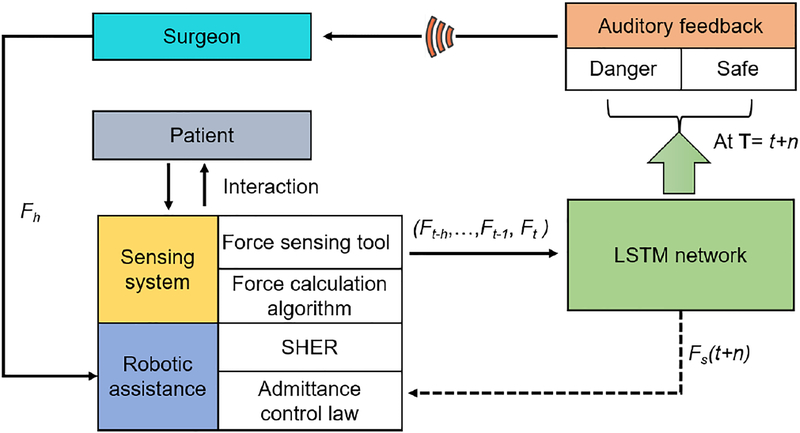

The block diagram of the proposed method is depicted in Fig. 2, where the surgeon manipulates the robotic assistant via the admittance control law. The surgeon’s operative scleral force is collected by the force-sensing tool and then fed into the LSTM neural network. The network predicts the scleral force status n timesteps ahead and provides it to the auditory feedback module. A dangerous force status triggers the warning alarm. Besides auditory substitution, the predicted information can be further used to implement multivariable admittance control [13] to enable the robot to actively rectify the user’s misoperations, e.g., by moving back toward the force-decreasing direction.

Fig. 2:

Safety-enhanced robot control scheme. The user gets auditory feedback once the predicted force status at time t+n is dangerous. The predicted force can also be fed into the robot control law, as depicted by the dashed line.

A. Admittance Control Law

During operation both the user and the robot hold the tool. The user’s manipulation force is applied on the robot handle and fed as an input into the admittance control law [9], as shown in Eqs. (1) and (2):

$$\dot{x}_{hh} = \alpha F_{hh} \tag{1}$$

$$\dot{x}_{rh} = Ad_{g_{rh}} \dot{x}_{hh} \tag{2}$$

where $\dot{x}_{hh}$ and $\dot{x}_{rh}$ are the desired robot handle velocities in the handle frame and in the robot frame, respectively, as shown in Fig. 3(a); $F_{hh}$ is the user’s manipulation force input measured in the robot handle frame; $\alpha$ is the admittance gain tuned by the robot pedal; and $Ad_{g_{rh}}$ is the adjoint transformation associated with the coordinate frame transformation $g_{rh}$. $g_{rh}$ is composed of the rotation $R_{rh}$ and translation $p_{rh}$ of the frame transformation and can be written as:

$$g_{rh} = \begin{bmatrix} R_{rh} & p_{rh} \\ 0 & 1 \end{bmatrix} \tag{3}$$

Then $Ad_{g_{rh}}$ can be denoted as:

$$Ad_{g_{rh}} = \begin{bmatrix} R_{rh} & \hat{p}_{rh} R_{rh} \\ 0 & R_{rh} \end{bmatrix} \tag{4}$$

where $\hat{p}_{rh}$ is the skew symmetric matrix that is associated with the vector $p_{rh}$.
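As a rough illustration of Eqs. (1)–(4), the sketch below maps a handle-frame force to a robot-frame handle velocity in plain Python. It assumes the usual form of the admittance law (velocity equals gain times force, then transformed by the adjoint) and twists ordered as [linear; angular], so that the adjoint has the block structure [[R, p̂R], [0, R]]. The function names, the gain, and the rotation and offset values are hypothetical and chosen only for the example.

```python
import math

def skew(p):
    """Skew-symmetric matrix p_hat such that p_hat * v equals cross(p, v)."""
    x, y, z = p
    return [[0.0, -z, y],
            [z, 0.0, -x],
            [-y, x, 0.0]]

def matmul(a, b):
    """Plain-Python matrix product of two nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def adjoint(R, p):
    """6x6 adjoint of g = (R, p), assuming twists ordered [linear; angular]."""
    pR = matmul(skew(p), R)
    top = [R[i] + pR[i] for i in range(3)]             # [R, p_hat R]
    bottom = [[0.0] * 3 + R[i] for i in range(3)]      # [0, R]
    return top + bottom

def handle_velocity_in_robot_frame(F_hh, alpha, R_rh, p_rh):
    """x_dot_hh = alpha * F_hh, then x_dot_rh = Ad(g_rh) * x_dot_hh."""
    x_dot_hh = [[alpha * f] for f in F_hh]             # 6x1 column vector
    x_dot_rh = matmul(adjoint(R_rh, p_rh), x_dot_hh)
    return [row[0] for row in x_dot_rh]

# Illustrative values only: 90 degree rotation about z, 0.1 offset along x.
c, s = math.cos(math.pi / 2), math.sin(math.pi / 2)
R_rh = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
p_rh = [0.1, 0.0, 0.0]
F_hh = [1.0, 0.0, 0.0, 0.0, 0.0, 1.0]                  # [force; torque] at the handle
v_rh = handle_velocity_in_robot_frame(F_hh, alpha=0.5, R_rh=R_rh, p_rh=p_rh)
```

With these made-up inputs, the push along the handle x axis appears along the robot y axis, and the angular component adds a linear term through the p̂R block.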

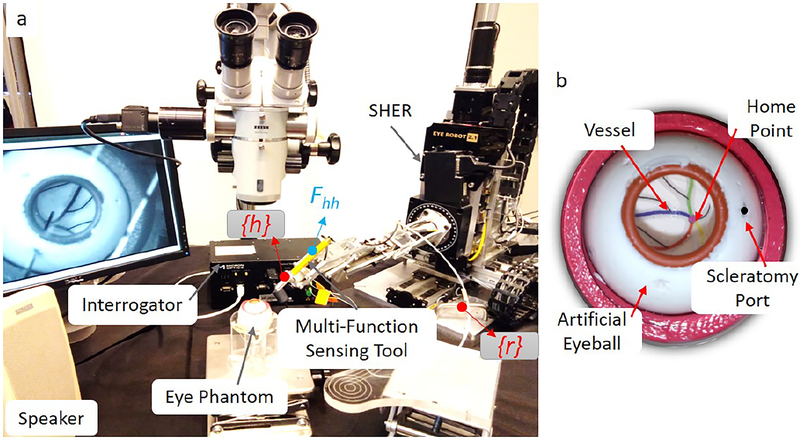

Fig. 3:

Experimental setup of the mock retinal surgery with (a) robotic assistance and (b) the dry eye model. The handle frame, denoted {h}, is located at the tool handle, and the robot frame, denoted {r}, is located at the robot base. The user applies maneuver forces, denoted Fhh, at the handle.

B. Force Calculation Algorithm

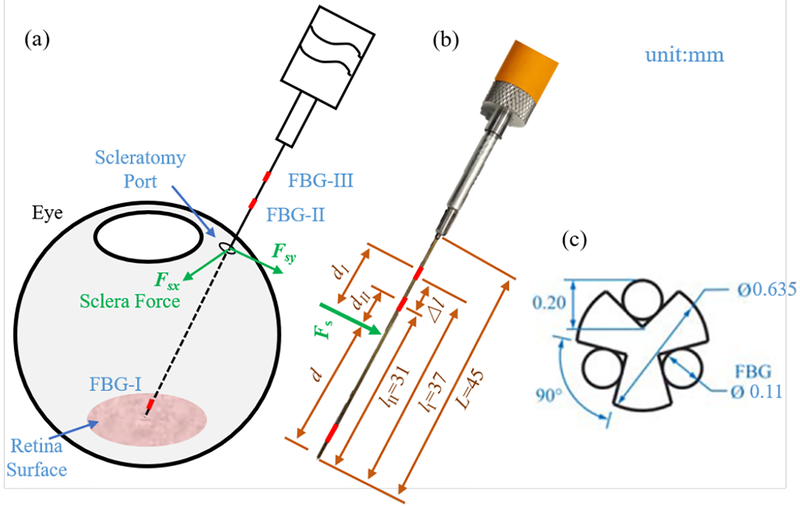

The force-sensing tool is designed and fabricated as shown in Fig. 4. The tool shaft is made of a stainless steel wire with a diameter of 0.635 mm. It is machined to contain three longitudinal V-shape grooves. Each groove is filled and glued with one optical fiber, and each fiber contains three FBG sensors (Technica S.A, Beijing, China), i.e., nine FBG sensors are embedded in the tool shaft in total.

Fig. 4:

Force-sensing tool. (a) Depiction of the sclera force, which is the contact force between the sclerotomy port and the tool shaft. (b) The tool dimensions. (c) Cross-section view of the tool shaft with three fibers.

The force-sensing tool can measure the sclera force independently. To account for ambient temperature and noise, we use the sensor reading $\Delta s_{ij}$ to calculate the force, defined as follows:

$$\Delta s_{ij} = \Delta\lambda_{ij} - \frac{1}{3}\sum_{j=1}^{3}\Delta\lambda_{ij} \tag{5}$$

where $\Delta\lambda_{ij}$ is the wavelength shift of the FBG, $i = I, II$ is the FBG segment, and $j = 1, 2, 3$ denotes the FBG sensors on the same segment. The sclera force exerted on the tool shaft generates strain in the FBG sensors, and thus contributes to the sensor reading:

$$\Delta S_i = K_i M_i = K_i F_s d_i \tag{6}$$

where $\Delta S_i = [\Delta s_{i1}, \Delta s_{i2}, \Delta s_{i3}]^T$ denotes the sensor reading of the FBG sensors in segment $i$, $F_s = [F_x, F_y]^T$ denotes the sclera force applied at the sclerotomy port, $d_i$ denotes the distance from the sclerotomy port to the FBG center at segment $i$ along the tool shaft, $M_i = [M_x, M_y]^T$ denotes the moment attributed to $F_s$ on the FBG sensors of segment $i$, and $K_i$ ($i = I, II$) are 3 × 2 constant coefficient matrices, which are obtained through the tool calibration procedure.

Then the sclera force can be calculated using Eq. (6):

$$F_s = \frac{M_{II} - M_I}{\Delta l} = \frac{K_{II}^{*}\Delta S_{II} - K_I^{*}\Delta S_I}{\Delta l} \tag{7}$$

where $\Delta l = l_{II} - l_I$ is the constant distance between the FBG sensors of segments I and II as shown in Fig. 4, and $K_i^{*}$ is the pseudo-inverse of $K_i$.
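The force calculation of Eqs. (5)–(7) can be sketched in a few lines of plain Python. This is a minimal sketch under stated assumptions: the segment mean is subtracted from each wavelength shift to remove the temperature-induced common mode, each moment is recovered with the left pseudo-inverse of the 3 × 2 calibration matrix, and the force is the moment difference divided by Δl. The calibration matrix, distances, and force values are invented for the round-trip check and are not the calibrated values of the actual tool.

```python
def common_mode_removed(d_lambda):
    """Subtract the segment mean from each FBG wavelength shift.

    Assumes temperature shifts all three FBGs of a segment equally."""
    mean = sum(d_lambda) / len(d_lambda)
    return [x - mean for x in d_lambda]

def pinv_3x2(K):
    """Left pseudo-inverse (K^T K)^-1 K^T of a full-rank 3x2 matrix."""
    a = sum(r[0] * r[0] for r in K)
    b = sum(r[0] * r[1] for r in K)
    d = sum(r[1] * r[1] for r in K)
    det = a * d - b * b
    inv = [[d / det, -b / det], [-b / det, a / det]]
    return [[inv[i][0] * r[0] + inv[i][1] * r[1] for r in K] for i in range(2)]

def moment(K, d_lambda):
    """Moment M_i = K_i^* dS_i for one FBG segment."""
    dS = common_mode_removed(d_lambda)
    Kp = pinv_3x2(K)
    return [sum(Kp[i][j] * dS[j] for j in range(3)) for i in range(2)]

def sclera_force(K_I, K_II, d_lambda_I, d_lambda_II, delta_l):
    """F_s = (M_II - M_I) / delta_l."""
    M_I = moment(K_I, d_lambda_I)
    M_II = moment(K_II, d_lambda_II)
    return [(m2 - m1) / delta_l for m1, m2 in zip(M_I, M_II)]

# Hypothetical calibration matrix whose columns sum to zero, so that removing
# the common mode leaves the force-induced readings unchanged.
K = [[1.0, 0.0], [-0.5, 0.866], [-0.5, -0.866]]
F_true, d_I, d_II, temp_shift = [0.10, -0.05], 10.0, 16.0, 0.3

def synth_reading(d):
    """Synthetic wavelength shifts: K * F * d plus a uniform temperature shift."""
    return [sum(K[j][i] * F_true[i] * d for i in range(2)) + temp_shift
            for j in range(3)]

F_est = sclera_force(K, K, synth_reading(d_I), synth_reading(d_II), d_II - d_I)
```

Because only the difference of the two moments is used, the unknown port position along the shaft cancels and only the fixed spacing Δl is needed.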

C. LSTM neural network

1). Proposed architecture:

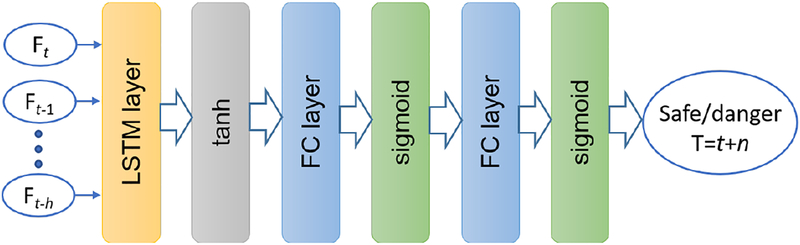

We assume that the scleral force trajectory can be captured through a history of time series of sensor measurements. An LSTM network is adopted to make future predictions based on such history. We formulate the force status prediction problem as a classification task. One LSTM layer and two fully connected layers are assembled to construct the network architecture as shown in Fig. 5. Based on our preliminary study we keep a single LSTM layer, because stacking multiple LSTM layers does not improve the prediction performance in our case. The tanh function is applied as the activation after the LSTM layer, and the sigmoid function is adopted as the activation after the fully connected layer. Binary cross-entropy is used as the loss function at the output layer, that is:

$$L = -\left[\, y \log p + (1 - y)\log(1 - p) \,\right] \tag{8}$$

where y is the label and p is the predicted probability.
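For concreteness, the standard binary cross-entropy for a single sample, L = −[y log p + (1 − y) log(1 − p)], can be written as below. The clipping constant `eps` is an addition for numerical safety and is not part of the paper.

```python
import math

def binary_cross_entropy(y, p, eps=1e-12):
    """Binary cross-entropy for one sample with label y in {0, 1}."""
    p = min(max(p, eps), 1.0 - eps)   # avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

# A confident correct prediction costs little, a confident wrong one costs a lot.
loss_good = binary_cross_entropy(1, 0.9)
loss_bad = binary_cross_entropy(1, 0.1)
```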

Fig. 5:

The proposed LSTM neural network. The network takes as input the data history over the past h timesteps, and outputs the future force status at time t+n.

2). LSTM model:

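Among the different variations of LSTMs, we use the one that was applied in [15]. The LSTM update formula for time step t is:

$$f_t = \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) \tag{9}$$

$$i_t = \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \tag{10}$$

$$\tilde{C}_t = \phi\left(W_C\,[h_{t-1}, x_t] + b_C\right) \tag{11}$$

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \tag{12}$$

$$o_t = \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) \tag{13}$$

$$h_t = o_t \odot \phi\left(C_t\right) \tag{14}$$

where $h_{t-1}$ stands for the memory cells at the previous sequence step, $x_t$ is the input at step t, σ stands for an element-wise application of the sigmoid (logistic) function, ϕ stands for an element-wise application of the tanh function, and ⊙ is the Hadamard (element-wise) product. The input, output, and forget gates are denoted by i, o, and f respectively, while C is the cell state and $\tilde{C}$ is its candidate update. W and b are the weight and bias, respectively.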
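A single LSTM update of the kind described by Eqs. (9)–(14) can be sketched in plain Python as follows. This is the textbook formulation, written with separate input and recurrent weight matrices per gate (equivalent to one matrix acting on the concatenated vector); the parameter layout and the `lstm_step` name are assumptions for illustration, not the authors' implementation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W_x, W_h, b, x, h):
    """Compute W_x x + W_h h + b, one output row at a time."""
    return [sum(wx * xi for wx, xi in zip(W_x[r], x))
            + sum(wh * hi for wh, hi in zip(W_h[r], h)) + b[r]
            for r in range(len(b))]

def lstm_step(x, h_prev, C_prev, params):
    """One LSTM update; params maps a gate name to (W_x, W_h, b)."""
    f = [sigmoid(z) for z in affine(*params["f"], x, h_prev)]          # forget gate
    i = [sigmoid(z) for z in affine(*params["i"], x, h_prev)]          # input gate
    o = [sigmoid(z) for z in affine(*params["o"], x, h_prev)]          # output gate
    C_tilde = [math.tanh(z) for z in affine(*params["C"], x, h_prev)]  # candidate state
    C = [ft * cp + it * ct for ft, cp, it, ct in zip(f, C_prev, i, C_tilde)]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, C)]
    return h, C

# Toy check with one input and one hidden unit and all-zero parameters:
# every gate is 0.5 and the candidate is 0, so the cell state is simply halved.
params = {g: ([[0.0]], [[0.0]], [0.0]) for g in "fioC"}
h, C = lstm_step([0.3], [0.0], [1.0], params)
```

In the network described above, the hidden state after the last history step would be passed to the fully connected layers to produce the safe/dangerous probability.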

3). Training data:

We design a mock retinal surgery operation, i.e., vessel following, based on feedback from the clinician, for collecting the scleral force. Vessel following incorporates the basic retinal surgery operations and consists of the following steps:

1. insert the force-sensing tool into the eyeball through the sclerotomy port;

2. adjust the eyeball’s position and orientation for best view under the microscope using the inserted tool;

3. locate the tool tip at the home point, then follow one vessel in a round trip without touching the retina surface;

4. retract the tool axially until the tip reaches the eyeball boundary.

We collect the scleral force as the training data from 50 vessel following trials, all performed by the same user. The network takes the scleral force history of 500 milliseconds as the input, and produces the future force status, i.e., dangerous or safe. Jain et al. found that human auditory reaction time is less than 250 milliseconds [19].

We choose 500 milliseconds as the prediction gap to leave enough time for the surgeon to respond to the early alarm. We create the training label by comparing the future forces F with a safety threshold Fsafe, which is set as 160 mN based on our earlier study [20]. The label is denoted as dangerous if the duration of the dangerous forces (forces greater than 160 mN) in the prediction period is more than 10% of the total time (500 milliseconds), that is:

$$\text{label}_t = \begin{cases} \text{dangerous}, & \dfrac{1}{n}\sum_{k=1}^{n} \mathbb{1}\left(F_{t+k} > F_{safe}\right) > 10\% \\[2mm] \text{safe}, & \text{otherwise} \end{cases}$$

where t is the current timestep, n is the number of timesteps in the prediction period, and $\mathbb{1}(\cdot)$ equals 1 when its argument is true and 0 otherwise. For comparison, we also train the network to predict the force status 250 milliseconds in the future.
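The labeling rule described above (a window is dangerous when more than 10% of the future samples exceed the 160 mN threshold) could be implemented roughly as follows. The horizon is expressed in samples, which assumes a fixed sampling rate; the function name and the toy force trace are hypothetical.

```python
def make_labels(forces, horizon, f_safe=160.0, ratio=0.10):
    """Label each timestep t from the forces in (t, t + horizon].

    Returns 1 (dangerous) if more than `ratio` of the future samples
    exceed f_safe, otherwise 0 (safe). Timesteps without a full future
    window are not labeled."""
    labels = []
    for t in range(len(forces) - horizon):
        window = forces[t + 1 : t + 1 + horizon]
        unsafe = sum(1 for f in window if f > f_safe)
        labels.append(1 if unsafe / horizon > ratio else 0)
    return labels

# Toy trace in mN: a short 200 mN spike inside an otherwise safe recording.
forces = [100.0] * 10 + [200.0] * 3 + [100.0] * 7
labels = make_labels(forces, horizon=10)
```

Note that a single unsafe sample in a ten-sample window is exactly 10% and therefore still labeled safe, since the rule requires strictly more than 10%.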

4). Hyper-parameters:

Successful training critically depends on the proper choice of network hyper-parameters [21]. To find a suitable set of hyper-parameters, i.e., network size and depth, and learning rate, we apply cross-validation and random search. We use the Adam optimization method [22] as the optimizer. The learning rate is set to a constant 2e-5. The LSTM layer has 100 neurons, and the fully connected layers have the same number of neurons. Note that when training the network we cannot shuffle the sequences of the dataset, because the network is learning the sequential relations between inputs and outputs. In our experience, adding dropout does not improve the network performance.

The training dataset is divided into mini-batches of sequences of size 500. We normalize the input data to zero mean and unit variance. Training is performed on a computer equipped with Nvidia Titan Black GPUs, a 20-core CPU, and 128GB RAM. Single-GPU training takes 90 minutes on the full dataset.
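Zero-mean, unit-variance normalization is typically fitted on the training data only and then reused unchanged at prediction time. A minimal sketch, with hypothetical function names and made-up force values:

```python
import statistics

def fit_normalizer(train_values):
    """Return the mean and population standard deviation of the training data."""
    mean = statistics.mean(train_values)
    std = statistics.pstdev(train_values)
    return mean, std

def normalize(values, mean, std):
    """Apply (v - mean) / std using statistics fitted on the training data."""
    return [(v - mean) / std for v in values]

train_forces = [90.0, 120.0, 150.0, 180.0]     # made-up scleral forces in mN
mean, std = fit_normalizer(train_forces)
train_norm = normalize(train_forces, mean, std)
live_norm = normalize([160.0], mean, std)      # new data reuses the same statistics
```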

III. EXPERIMENTS AND RESULTS

A. Experimental setup

The experimental setup is shown in Fig. 3(a). It includes the robot assistant, i.e., SHER, the force-sensing tool, a microscope, an FBG interrogator, an eye phantom, a monitor and a speaker.

1). Robot assistant:

SHER is used as the robot assistant to provide the “co-operation” for the user. It incorporates three linear motion stages, one rotation stage, and a parallel link mechanism. It has 5 degrees of freedom and high accuracy (the translation resolution is less than 3 μm, and the rotation resolution is 0.0005°).

2). Force-sensing tool:

The force-sensing tool is mounted on SHER with a quick release mechanism and used to collect the scleral force. It is calibrated based on Eq. (6) using a precision scale (Sartorius ED224S Extend Analytical Balance, Goettingen, Germany) with a resolution of 1 mg. The sm130-700 optical sensor interrogator (Micron Optics, Atlanta, GA) is used to monitor the FBG sensors within the spectrum from 1525 nm to 1565 nm at a 2 kHz refresh rate.

The calibration matrices are obtained as follows:

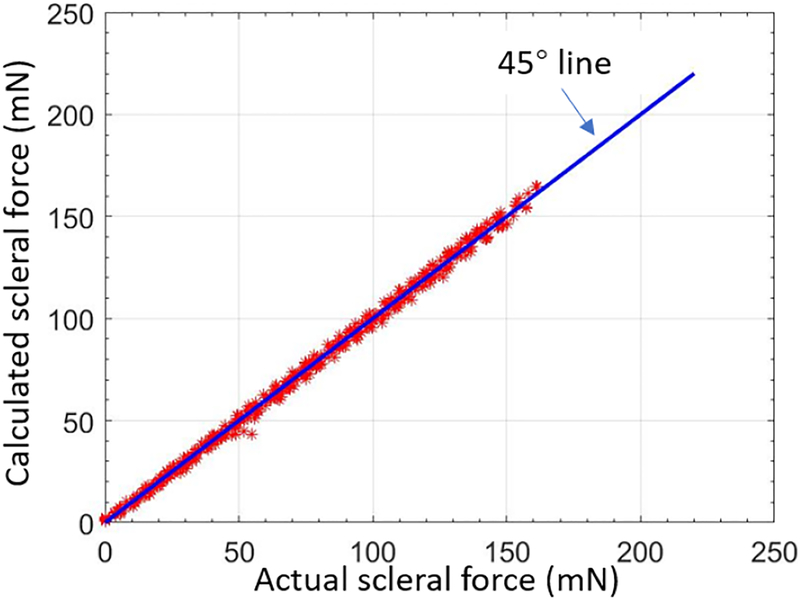

The tool validation experiment is carried out with the same scale to test the calibration results. The calculated forces are compared against the ground truth in Fig. 6, where the root mean square error is 2.3 mN.

Fig. 6:

The validation result of the force-sensing tool. The horizontal axis represents the actual forces, which are collected by the scale, and the vertical axis represents the forces calculated from the calibration matrix. The calculated points are expected to lie on or near the 45° line passing through the origin.

3). Eye phantom:

A dry eye phantom made of silicone rubber and placed into a 3D-printed socket is used for validation. The socket is lubricated with mineral oil to produce a realistic friction coefficient analogous to that of the eye. A printed paper with several curves representing the retinal vessels in different colors is glued on the eyeball surface as shown in Fig. 3(b). In addition, a microscope (ZEISS, Germany) is utilized to provide a magnified view for users to observe the inside of the eyeball.

B. Validation experiments

The IRB-approved experiments of real-time force status prediction and signaling are carried out to validate the proposed method. Five users participate in the experiments. Each user is asked to hold the force-sensing tool mounted on SHER to perform the vessel following tasks, while the network predictor predicts the future force status based on the real manipulation force history. The user receives an auditory warning once the predicted status is dangerous, and is then expected to carry on the upcoming operations with caution (for example, reducing the manipulation speed, observing the deformation of the sclerotomy port, and moving the tool toward the tissue recovery direction). The experiments are carried out under four conditions:

C0: without auditory feedback.

C1: with auditory feedback based on instantaneous scleral force, that is, the alarm is triggered once the current force exceeds the safety threshold.

C2: with auditory feedback based on predicted force status 250 milliseconds ahead.

C3: with auditory feedback based on predicted force status 500 milliseconds ahead.

These four conditions are randomly selected during the experiments, and each condition is repeated 5 times. To eliminate operation bias, the user has no prior knowledge about the experimental condition. The network predictor runs in Python at 40 Hz, while the sensing system runs in C++ at 200 Hz. A “shared memory” architecture is used to transfer the actual scleral force data and network predictions between Python and C++.
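The timing relationship between the two loops can be illustrated with a small single-process simulation. This is only a sketch: it assumes the 500 ms history is sampled at the 200 Hz sensing rate (100 samples, which the paper does not state explicitly), replaces the shared-memory transport with a plain iterable, and uses a stand-in `predict` callable in place of the trained network.

```python
from collections import deque

SENSOR_HZ, PREDICTOR_HZ, HISTORY_MS = 200, 40, 500
HISTORY_LEN = SENSOR_HZ * HISTORY_MS // 1000   # 100 samples of history
STRIDE = SENSOR_HZ // PREDICTOR_HZ             # 5 new samples per prediction

def run_warning_loop(force_stream, predict):
    """Feed sensor samples into a rolling history and query the predictor
    at the slower predictor rate. Returns the sample indices that raised an alarm."""
    history = deque(maxlen=HISTORY_LEN)
    alarms = []
    for k, force in enumerate(force_stream):
        history.append(force)
        if len(history) == HISTORY_LEN and (k + 1) % STRIDE == 0:
            if predict(list(history)):
                alarms.append(k)
    return alarms

# Stand-in predictor (NOT the LSTM): flags a window whose latest sample is high.
def stub_predict(window):
    return window[-1] > 150.0

stream = [100.0] * 100 + [155.0] * 10
alarms = run_warning_loop(stream, stub_predict)
```

In the real system the `predict` call would wrap the trained network, and a positive result would trigger the auditory alarm.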

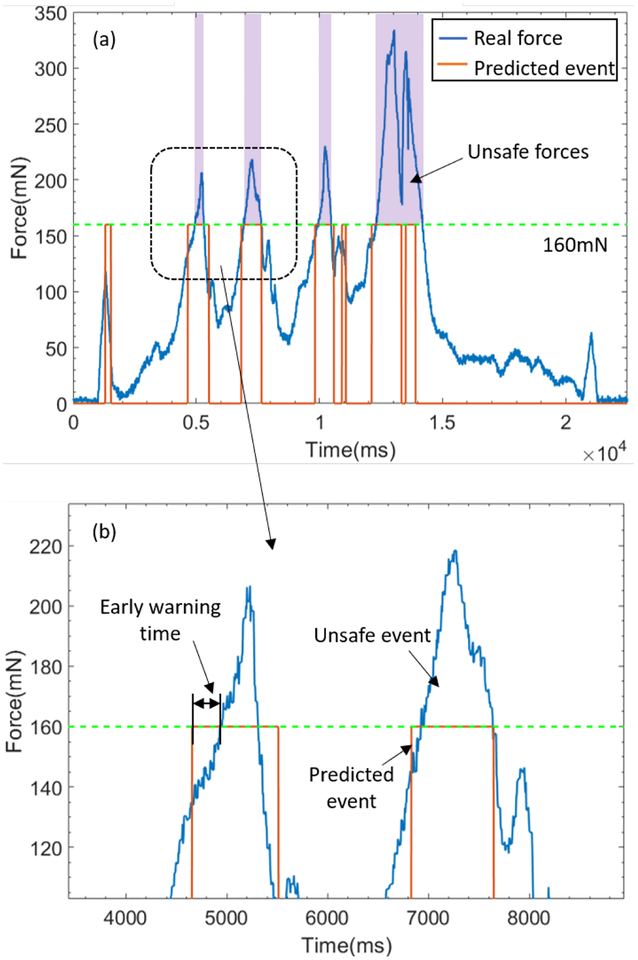

The real forces and prediction results are recorded and analyzed. One complete vessel following manipulation is shown in Fig. 7. To evaluate the feasibility of our method, we propose the three metrics below, which are also marked in Fig. 7:

Fig. 7:

Real time results. (a) Landscape of a complete experiment result. The unsafe force in metric I is denoted. (b) Magnified portion of (a). The early warning time in metric II, the unsafe event and predicted event in metric III are denoted.

Metric I: unsafe force time percentage $\alpha = T_0 / T$, where T0 is the accumulated time of the unsafe force and T is the total time of the experiment.

Metric II: successful prediction rate $\beta = N_0 / N$, where N0 is the number of predicted unsafe events and N is the total number of unsafe events in the experiments.

Metric III: early warning time t, i.e., the time difference between the predicted event and the following unsafe event.
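The three metrics can be computed from logged data along the following lines. This is a sketch under assumptions: forces are sampled at a fixed period `dt`, times are given in milliseconds, and an unsafe event counts as predicted when at least one alarm was raised within `max_lead` before it. That matching rule and the function names are illustrative choices, not the paper's stated procedure.

```python
def unsafe_time_percentage(forces, dt, f_safe=160.0):
    """Metric I: alpha = T0 / T, returned as a percentage."""
    T0 = sum(dt for f in forces if f > f_safe)
    T = dt * len(forces)
    return 100.0 * T0 / T

def prediction_metrics(event_times, alarm_times, max_lead):
    """Metrics II and III.

    Returns (beta in percent, list of early warning times). The early
    warning time of an event is measured from the earliest alarm that
    occurred within max_lead before it."""
    leads = []
    for e in event_times:
        prior = [a for a in alarm_times if 0 < e - a <= max_lead]
        if prior:
            leads.append(e - min(prior))
    beta = 100.0 * len(leads) / len(event_times)   # assumes at least one event
    return beta, leads

# Made-up log: 10 samples at 5 ms, and three unsafe events with two alarms (ms).
alpha = unsafe_time_percentage([100.0] * 8 + [170.0] * 2, dt=5)
beta, leads = prediction_metrics([1000, 2000, 3000], [900, 1850], max_lead=500)
```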

We calculated the three metrics for each user; the results are shown in Tables I, II, and III, respectively. The unsafe force percentage α under condition C3 is the lowest among the four conditions for users 1 to 4. For user 5 the lowest α occurs under condition C2, but α under condition C3 is still lower than under conditions C0 and C1. Table II shows that the network predictor captures almost all the unsafe events: 2 events are not detected among 128 events, so the overall success rate reaches 98.4%. The early warning time in Table III shows that the network is able to detect the unsafe event in advance. While this preliminary study is limited to only five users, the results suggest that longer-horizon prediction could play an important role in improving safety.

TABLE I:

The unsafe force time percentage α

| Condition | User1 | User2 | User3 | User4 | User5 |
|---|---|---|---|---|---|
| C0 | 12.2% | 12.13% | 14.29% | 25.7% | 17.97% |
| C1 | 5.4% | 7.05% | 4.02% | 12.45% | 10.35% |
| C2 | 3.5% | 6.81% | 6.77% | 16.86% | 3.53% |
| C3 | 0.45% | 3.58% | 2.11% | 2.87% | 6.96% |

TABLE II:

The successful prediction rate β

| Condition | User1 | User2 | User3 | User4 | User5 |
|---|---|---|---|---|---|
| C2 | 15/15 = 100% | 22/23 = 95.6% | 22/22 = 100% | 17/17 = 100% | 8/8 = 100% |
| C3 | 4/4 = 100% | 12/12 = 100% | 10/10 = 100% | 8/9 = 88.89% | 8/8 = 100% |

TABLE III:

The early warning time t

| Condition | User1 | User2 | User3 | User4 | User5 |
|---|---|---|---|---|---|
| C2 | 70.67 | 50.23 | 106.87 | 121.07 | 150.71 |
| C3 | 172.5 | 91.25 | 99.09 | 106.36 | 145 |

IV. DISCUSSION AND CONCLUSION

Our results are based on an LSTM network trained using only one user’s manipulation data and then applied to the prediction of other users’ behavior. Even with such simplified training, we showed that force feedback with auditory substitution can help to reduce unsafe events. Moreover, conditions C2 and C3 show better performance than C1, since the instantaneous warning for unsafe events does not provide sufficient time for the user to react. Although C2 and C3 appear to improve sclera safety, the early warning time achieved in this work may still not be long enough for the user to react in practice. In the future, we will focus on improving the network’s behavior to provide the prediction information further into the future without significant loss of accuracy. We also noticed that the experiment time in C0 is the shortest among the four conditions, since the user needs to pause and/or adjust his/her manipulation upon hearing the warning sound in the auditory feedback conditions.

Currently only the predicted sclera force is utilized and fed back directly to the user through auditory substitution. Future work will incorporate the predicted insertion depth together with the predicted sclera force to implement multivariable admittance control, thereby enabling the robotic assistant itself to react to imminent tissue damage during operation. Future work will also carry out a more in-depth analysis involving a significantly larger number of users as well as different surgical tasks. The force-sensing tool can also measure the force at the tool tip, which will be incorporated into the network training along with robot velocity data in future work to provide more information for robot control. In this paper we use a model-free approach, i.e., the LSTM network, instead of a classic prediction method such as the Kalman filter to forecast the scleral force, because the uncertainty of the users’ manipulation makes the scleral force difficult to capture and model. In addition, a simple regression method such as a fully connected RNN was not able to capture the scleral force in our prior experiments.

Retinal surgery requires delicate skill from the surgeon; any misoperation, e.g., a large sclera manipulation force, could cause tissue damage. While previous research has focused on robotic devices to assist surgeons by improving manipulation accuracy and canceling hand tremor, there is still a need to actively detect and prevent unintentional misoperations. Our proposed method of providing auditory feedback based on the predicted operative force status is a step in this direction. The technique can also be extended to other minimally invasive surgeries by providing safety-critical information to improve the surgical robot control performance.

Our research builds upon prior work across three fields: robotics, machine learning, and safe surgical micro-manipulation. This work focused on a novel way to apply LSTM-based prediction to robot-assisted retinal surgery to enhance safety. We designed and implemented the network to learn the user’s operative behavior in a mock retinal surgery, and developed a new force-sensing tool to collect the user manipulation information, i.e., the sclera force. Auditory feedback based on the sclera force status predicted by the trained network model was implemented to signal the upcoming dangerous force status. This system can potentially provide safe micromanipulation that can improve the outcome of retinal microsurgery, and in addition achieve safety-aware cooperative control in robot-assisted surgical systems. Future work will also further investigate this system in phantoms and in vivo experiments.

Acknowledgments

This work was supported by U.S. National Institutes of Health under grant number 1R01EB023943–01. The work of C. He was supported in part by the China Scholarship Council under Grant 201706020074.

REFERENCES

- [1] Edwards T, Xue K, Meenink H, Beelen M, Naus G, Simunovic M, Latasiewicz M, Farmery A, de Smet M, and MacLaren R, “First-in-human study of the safety and viability of intraocular robotic surgery,” Nature Biomedical Engineering, p. 1, 2018.

- [2] Gijbels A, Smits J, Schoevaerdts L, Willekens K, Vander Poorten EB, Stalmans P, and Reynaerts D, “In-human robot-assisted retinal vein cannulation, a world first,” Annals of Biomedical Engineering, pp. 1–10, 2018.

- [3] He C-Y, Huang L, Yang Y, Liang Q-F, and Li Y-K, “Research and realization of a master-slave robotic system for retinal vascular bypass surgery,” Chinese Journal of Mechanical Engineering, vol. 31, no. 1, p. 78, 2018.

- [4] Gijbels A, Wouters N, Stalmans P, Van Brussel H, Reynaerts D, and Vander Poorten E, “Design and realisation of a novel robotic manipulator for retinal surgery,” in Intelligent Robots and Systems (IROS), 2013 IEEE/RSJ International Conference on. IEEE, 2013, pp. 3598–3603.

- [5] de Smet MD, Meenink TC, Janssens T, Vanheukelom V, Naus GJ, Beelen MJ, Meers C, Jonckx B, and Stassen J-M, “Robotic assisted cannulation of occluded retinal veins,” PloS one, vol. 11, no. 9, p. e0162037, 2016.

- [6] Rahimy E, Wilson J, Tsao T, Schwartz S, and Hubschman J, “Robot-assisted intraocular surgery: development of the IRISS and feasibility studies in an animal model,” Eye, vol. 27, no. 8, p. 972, 2013.

- [7] MacLachlan RA, Becker BC, Tabarés JC, Podnar GW, Lobes LA Jr, and Riviere CN, “Micron: an actively stabilized handheld tool for microsurgery,” IEEE Transactions on Robotics, vol. 28, no. 1, pp. 195–212, 2012.

- [8] Hubschman J, Bourges J, Choi W, Mozayan A, Tsirbas A, Kim C, and Schwartz S, “The Microhand: a new concept of micro-forceps for ocular robotic surgery,” Eye, vol. 24, no. 2, p. 364, 2010.

- [9] Üneri A, Balicki MA, Handa J, Gehlbach P, Taylor RH, and Iordachita I, “New steady-hand eye robot with micro-force sensing for vitreoretinal surgery,” in Biomedical Robotics and Biomechatronics (BioRob), 2010 3rd IEEE RAS and EMBS International Conference on. IEEE, 2010, pp. 814–819.

- [10] Menciassi A, Eisinberg A, Scalari G, Anticoli C, Carrozza M, and Dario P, “Force feedback-based microinstrument for measuring tissue properties and pulse in microsurgery,” in Robotics and Automation, 2001. Proceedings 2001 ICRA. IEEE International Conference on, vol. 1. IEEE, 2001, pp. 626–631.

- [11] Iordachita I, Sun Z, Balicki M, Kang JU, Phee SJ, Handa J, Gehlbach P, and Taylor R, “A sub-millimetric, 0.25 mN resolution fully integrated fiber-optic force-sensing tool for retinal microsurgery,” International Journal of Computer Assisted Radiology and Surgery, vol. 4, no. 4, pp. 383–390, 2009.

- [12] He X, Handa J, Gehlbach P, Taylor R, and Iordachita I, “A submillimetric 3-DOF force sensing instrument with integrated fiber Bragg grating for retinal microsurgery,” IEEE Transactions on Biomedical Engineering, vol. 61, no. 2, pp. 522–534, 2014.

- [13] He X, Balicki M, Gehlbach P, Handa J, Taylor R, and Iordachita I, “A multi-function force sensing instrument for variable admittance robot control in retinal microsurgery,” in Robotics and Automation (ICRA), 2014 IEEE International Conference on. IEEE, 2014, pp. 1411–1418.

- [14] Hochreiter S and Schmidhuber J, “Long short-term memory,” Neural Computation, vol. 9, no. 8, pp. 1735–1780, 1997.

- [15] Lipton ZC, Kale DC, Elkan C, and Wetzel R, “Learning to diagnose with LSTM recurrent neural networks,” arXiv preprint arXiv:1511.03677, 2015.

- [16] Stollenga MF, Byeon W, Liwicki M, and Schmidhuber J, “Parallel multi-dimensional LSTM, with application to fast biomedical volumetric image segmentation,” in Advances in Neural Information Processing Systems, 2015, pp. 2998–3006.

- [17] Sundermeyer M, Schlüter R, and Ney H, “LSTM neural networks for language modeling,” in Thirteenth Annual Conference of the International Speech Communication Association, 2012.

- [18] Cutler N, Balicki M, Finkelstein M, Wang J, Gehlbach P, McGready J, Iordachita I, Taylor R, and Handa JT, “Auditory force feedback substitution improves surgical precision during simulated ophthalmic surgery,” Investigative Ophthalmology & Visual Science, vol. 54, no. 2, pp. 1316–1324, 2013.

- [19] Jain A, Bansal R, Kumar A, and Singh K, “A comparative study of visual and auditory reaction times on the basis of gender and physical activity levels of medical first year students,” International Journal of Applied and Basic Medical Research, vol. 5, no. 2, p. 124, 2015.

- [20] Horise Y, He X, Gehlbach P, Taylor R, and Iordachita I, “FBG-based sensorized light pipe for robotic intraocular illumination facilitates bimanual retinal microsurgery,” in Engineering in Medicine and Biology Society (EMBC), 2015 37th Annual International Conference of the IEEE. IEEE, 2015, pp. 13–16.

- [21] Greff K, Srivastava RK, Koutník J, Steunebrink BR, and Schmidhuber J, “LSTM: A search space odyssey,” IEEE Transactions on Neural Networks and Learning Systems, vol. 28, no. 10, pp. 2222–2232, 2017.

- [22] Kingma DP and Ba J, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.