Abstract

There are growing concerns about the generalizability of machine learning classifiers in neuroimaging. In order to evaluate this aspect across relatively large heterogeneous populations, we investigated four disorders: Autism spectrum disorder (N=988), Attention deficit hyperactivity disorder (N=930), Posttraumatic stress disorder (N=87) and Alzheimer’s disease (N=132). We applied 18 different machine learning classifiers (based on diverse principles) wherein the training/validation and the hold-out test data belonged to samples with the same diagnosis but differing in either the age range or the acquisition site. Our results indicate that overfitting can be a huge problem in heterogeneous datasets, especially with fewer samples, leading to inflated measures of accuracy that fail to generalize well to the general clinical population. Further, different classifiers tended to perform well on different datasets. In order to address this, we propose a consensus-classifier by combining the predictive power of all 18 classifiers. The consensus-classifier was less sensitive to unmatched training/validation and holdout test data. Finally, we combined feature importance scores obtained from all classifiers to infer the discriminative ability of connectivity features. The functional connectivity patterns thus identified were robust to the classification algorithm used, age and acquisition site differences, and had diagnostic predictive ability in addition to univariate statistically significant group differences between the groups. A MATLAB toolbox called Machine Learning in NeuroImaging (MALINI), which implements all the 18 different classifiers along with the consensus classifier is available from Lanka et al. (Lanka, et al., 2019) The toolbox can also be found at the following URL: https://github.com/pradlanka/malini.

Keywords: Resting-state functional MRI, Supervised machine learning, Diagnostic classification, Functional connectivity, Autism, ADHD, Alzheimer’s disease, PTSD

1. Introduction

The identification of many neurological disorders is based on subjective diagnostic criteria. The development of objective diagnostic tools is a work in progress in the promising field of neuroimaging. Univariate between-group differences in neuroimaging between healthy controls and clinical populations are not yet sufficiently predictive of disease states at the individual level. For automated disorder/disease diagnosis, a machine learning classifier is trained to model the relationship between features extracted from brain imaging data and the labels of individuals in the training dataset (the labels are typically determined via clinical assessment by a licensed clinician), and the model is then used to predict the diagnostic label of a new and unseen subject drawn from a test dataset. However, there are many challenges to this paradigm which include: (i) Lack of availability of large clinical imaging datasets, (ii) Challenges in generalizing results across study populations, (iii) Difficulty in identifying reliable image-based biomarkers which are robust to progress and maturation of the disorder, and (iv) Variability in classifier performance. Many of these issues are interrelated. In fact, the ultimate goal for machine learning based diagnostic classification is to achieve high classification accuracy, in unseen data with varying characteristics (Kelly, Biswal, Craddock, Castellanos, & Milham, 2012). So, to be useful in clinical settings, machine learning classifiers should be generalizable to the wider population. This can be achieved using larger samples that are aggregated by including data from several imaging sites (Huf, et al., 2014). To summarize, the utility of machine learning classifiers as clinical diagnostic tools, depends on them achieving high accuracies in unseen observations in samples representative of the disease populations, using reliable and valid ways to estimate classification performance, that are representative of how such diagnostic tools will be deployed in real-word scenarios.

The main reason for the failure of identification of precise neuroimaging-based biomarkers for various disorders, despite high accuracies reported in many neuroimaging studies, is that many of these studies use small, biologically homogenous samples, and therefore, generalizing their results to larger heterogeneous clinical populations is difficult (Huf, et al., 2014; Nielsen, et al., 2013; Arbabshirani, Plis, Sui, & Calhoun, 2017; Schnack & Kahn, 2016). In fact, results by Arbabshirani et al. (2017) as well as Schnack & Kahn (2016) indicate that the classification accuracy decreases even with increased sample size in multi-site data as compared to smaller single-site data sets. Single study analyses in which the training and the test data are from the same acquisition site give higher classification accuracies than when they are from distinct imaging sites (Nielsen, et al., 2013). A classifier that works well on a particular dataset might fail to discriminate the classes with good accuracy on a different dataset sharing the same clinical diagnosis (Huf, et al., 2014). These prior findings indicate that a classifier may achieve high accuracy in a given data set even with cross-validation, but the accuracy may drop significantly when the classifier is used on a more general p copulation which was not used in cross-validation, as was observed with autism (Chen, et al., 2015). Generalizability of the classifiers cannot be assessed using only a few samples from a single site, but can be shown by including data from various imaging sites. Classifiers which perform well on small training sets generalize poorly, and hidden correlations in the training and validation sets might lead to overoptimistic performance of the classifier (Foster, Koprowski, & Skufca, 2014). This is also borne out by the observation that the overall performance accuracy decreases with sample size (Schnack & Kahn, 2016; Arbabshirani, Plis, Sui, & Calhoun, 2017). Hence, investigators should be extremely cautious in interpreting over-optimistic classification performance results from small datasets.

Classification across heterogeneous populations with considerable variation in demographic and phenotypic profiles, although desirable for generalizability, is extremely challenging, particularly when neuroimaging data is pooled from multiple acquisition sites (Demirci, et al., 2008). Variance introduced in the data due to scanner hardware, imaging protocols, operator characteristics, demographics of the regions and other factors that are acquisition site specific, can affect the classification performance (Schnack & Kahn, 2016). Thus, the neuroimaging-based biomarkers identified must be reliable across imaging sites and age ranges to be useful clinically.

Given the difficulties in disorder/disease classification with multisite studies, the appropriate selection of reliable and sensitive features associated with the underlying disorder/disease is the primary motivating factor in our choice of resting-state functional connectivity (RSFC). RSFC measures the spontaneous low-frequency fluctuations between remote regions in the brain in baseline functional magnetic resonance imaging (fMRI) data, and is typically estimated using the Pearson’s correlation coefficient. This approach has been used extensively to characterize the functional architecture of the brain in both healthy and clinical populations. The reliability and validity of RSFC measures across subjects and scanning sites is of prime importance in order to be useful in disorder/disease classification for improving screening and diagnostic accuracy. RSFC has been shown to have moderate to high reliability and reproducibility across healthy (Chou, Panych, Dickey, Petrella, & Chen, 2012; Shehzad, et al., 2009; Choe, et al., 2015; Guo, et al., 2012; Wang, et al., 2011; Anderson, Ferguson, Lopez-Larson, & Yurgelun-Todd, 2011; Birn, et al., 2013; Braun, et al., 2012; Meindl, et al., 2009), clinical (Pinter, et al., 2016; Somandepalli, et al., 2015), pediatric (Somandepalli, et al., 2015), and elderly (Marchitelli, et al., 2016; Orban, et al., 2015) populations. It has also been shown to have long-term test-retest reliability (Fiecas, et al., 2013; Liang, et al., 2012; Shah, Cramer, Ferguson, Birn, & Anderson, 2016). RSFC is altered in clinical populations such as attention deficit hyperactivity disorder (ADHD), depression, autism, schizophrenia, post-traumatic stress disorder (PTSD) and Alzheimer’s disease (AD). Hence there is growing optimism in the field that modulations in RSFC can help us understand the pathogenesis behind several neurological and psychiatric disorders due to its sensitivity to changes in development, aging, and disease progression. These factors, combined with the ability to standardize protocols, have paved the way for data aggregation across multiple sites, leading to increased statistical power and the generalizability of the findings, and have catapulted RSFC into increasing prominence for diagnostic classification. Given the relatively lower prevalence of certain disorders along with the costs and time associated with aggregating large datasets, the efficiency of data pooling from multiple sites is critical. RSFC protocols are simple to run with little overhead, and hence, it has been implemented in different imaging protocols employing various clinical populations. Also, resting-state fMRI (Rs-fMRI) does not require subjects in uncooperative clinical populations to comply with task instructions, and this has given rise to considerable interest in the use of Rs-fMRI in patients with brain disorders (Horwitz & Rowe, 2011).

With the advent of big data initiatives such as autism brain imaging database (ABIDE), where a large amount of data is collected from multiple sites, there is renewed optimism for reliable and validated disorder/disease classification (Huf, et al., 2014). Generalizability of classifier performance can be increased, by avoiding overfitting, when we have large training data sizes. Another consequence of such big data initiatives and exploratory data analyses is that reliable and repeatable studies for testing novel hypotheses about the identification of relevant clinical biomarkers has taken ground (Kang, Caffo, & Liu, 2016). In the current study we used RSFC measures for diagnostic classification using 18 different classifiers in 4 clinical populations: (i) Autism brain imaging data exchange (ABIDE) for autism spectrum disorder (ASD), (ii) ADHD-200 dataset for attention deficit hyperactivity disorder (ADHD), (iii) PTSD data which were acquired at the Auburn MRI research center for post-concussion syndrome (PCS) and posttraumatic stress disorder (PTSD) and, (iv) Alzheimer’s disease neuroimaging initiative (ADNI) for mild cognitive impairment (MCI) and Alzheimer’s disease (AD). These disorders and datasets were chosen due to the the following factors. Three of the datatsets including ABIDE, ADHD-200, and ADNI are open datasets easily accessable to researchers. Consequently, there are a lot of papers on diagnostic classification using these three datasets. In addition, ABIDE and ADHD-200 datasets have more than 900 subjects which are relatively large datasets, especially in neuroimaging. Finally, the PTSD dataset was acquired in-house at a single acquisition site and each subject was scanned twice. This allowed us to contrast multi-site data sets with single site datasets. These properties of the datasets allowed us to be able to test the generalizability of classifiers under various conditions: (a) Using various disorders whose etiologies are likely different, (b) Using both smaller and larger size of datasets, (c) Using data obtained from both multiple sites as well as single-site, and finally, (d) Using both homogeneous and heterogeneous samples from the population. It should however be noted that several of these factors we plan to study in this paper may be interdependent, and it may not be possible to cleanly attribute the observed effects in classification accuracy into their constituent factors in some cases.

There are four primary goals of this paper. The first goal is to understand the generalizability of machine learning classifiers in the presence of disorder/disease and population heterogeneity, variability in disorder/disease across age, and variations in data caused by multisite acquisitions. We report an optimistic estimate of cross-validation accuracy and an unbiased estimate of performance on a completely independent and blind hold-out test dataset. The entire datasets were split into training/validation, and hold-out test data (with both splits containing both controls and clinical populations) and the cross-validation accuracy was estimated using the training/validation data by splitting it further into training data and validation data. The hold-out test datasets were constructed under three different scenarios: (i) subjects with different, non-overlapping age range compared to training/validation data, (ii) subjects drawn from different imaging sites compared to training/validation data and, (iii) training/validation and hold-out test data matched on all demographics including age as well as acquisition site. We hypothesized that testing our classifiers on homogenous populations could give us optimistic estimates of classifier performance, which might not generalize well to the real-world classification scenarios encountered in the clinic. Therefore, by comparing a holdout test data with the same disorder/disease diagnosis and matched in age and acquisition site as well as unmatched to the training/validation data, would give us a better idea of generalizability and robustness of the classifiers under more challenging classification scenarios.

The second goal is to understand how overfitting can occur in the context of machine learning applied to neuroimaging-based diagnostic classification, whether in feature selection or performance estimation. We demonstrate how smaller datasets might give unreliable estimates of classifier performance which could lead to improper model selection further leading to poor generalization across the larger population. Using ABIDE (N=988) and ADNI (N=132) datasets as examples of large and comparatively smaller datasets, we explore how large variation in the estimate of classification performance in relatively smaller datasets could affect the selection of optimal models and thereby prevent generalization.

The third goal of our paper was to combine multiple classifiers to build a consensus classifier which could transcend the inductive biases of any individual classifier and thus be robust and less sensitive to its assumptions about the underlying mapping between connectivity features and the diagnosed labels. Thus, using multiple classifiers along with consensus classifier could eliminate the possibility that accuracy differences observed between training/validation and hold-out test datasets in unmatched scenarios as an artifact of any single classifier. Using multiple classifiers helps us understand the predictive power of the features and could also help identify which classifiers or class of classifiers give consistently better performance compared to others. Since, a comparison of performance of so many classifiers, has not been done before in the context of neuroimaging, we think such a comparison could be of use to others by helping them choose the appropriate classifier for similar endeavors.

The final goal of this study is to understand how specific functional connectivity patterns encode disorder/disease states and might possess predictive ability (as opposed to conventionally reported statistical separation) to distinguish between health and disease in novel individual subjects. Unlike in some other applications where the final classification performance is more important than identifying discriminative features, in neuroimaging, the goal of identifying discriminative features is equally, if not more important than the classification performance, as it can gives us valuable insight into dysfunctional connectivity patterns in the diseased populations. We set out to identify these connectivity patterns which were not only statistically separated, but also were important for classification irrespective of age mismatch, acquisition site mismatch or the type of classifier used. These connectivity patterns must, therefore, be relatively robust to the age and acquisition site variations, and their predictive ability must not be limited to a single classifier or a particular group of classifiers. In order to accomplish this, we propose feature ranking from multiple classifiers and data splits to construct a single score for the predictive ability of the connectivity features which can potentially be useful in clinical settings.

To achieve our goals, we applied 18 machine learning classifiers based on different principles including probabilistic/Bayesian classifiers, tree-based methods, kernel based methods, a few architectures of neural networks and nearest neighbor classifiers to RSFC metrics derived from ABIDE, ADNI, ADHD-200 and PCS/PTSD datasets described above. Seven of the 18 classifiers were implemented in a feature reduction framework called Recursive cluster elimination (RCE) (Deshpande, et al., 2010). We also built a consensus classifier which leverages the classifying power of all these classifiers to give reliable and robust predictions on the hold-out test dataset. Though many of the issues raised are well known, there is a disconnect between the fact that the issues raised are well known in literature, and yet, in practice, research reports with inflated cross-validation accuracies continue to be published in neuroimaging. Therefore, we want to directly address these issues and provide an open source software such that best practices are adopted.

2. Materials and methods

2.1. Data

2.1.1. Autism spectrum disorder (ASD)

ASD in a heterogeneous neurodevelopmental disorder in children characterized by impaired social communication, repeated behaviors, and restricted interests. With a relatively high prevalence of 1 in 68 children, it is one of the most common developmental disorders in children (CDC, 2014). According to DSM-V, ASD encompasses several disorders previously considered distinct including autism and Asperge’s syndrome (American Psychiatric Association, 2013). Asperge’s syndrome is considered to be a milder form of ASD, with patients in the higher functioning end of the spectrum. Autism is associated with large scale network disruptions of brain networks (Maximo, Cadena, & Kana, 2014; Gotts, et al., 2012; Di Martino, et al., 2014), thus making these clinical groups excellent candidates for diagnostic classification using RSFC.

Resting state fMRI data from 988 individuals from the autism brain imaging data exchange (ABIDE) database (Di Martino, et al., 2014) was used for this study. The imaging data were acquired from 15 different acquisition sites and consists of 556 healthy controls, 339 subjects diagnosed with autism, and 93 with Asperge’s syndrome. The distribution of the data used in this study with the acquisition site can be found in Table 1. Each subject’s information was fully anonymized and was approved by the local Institutional Review Boards of the respective data acquisition sites. More details about the data including scanning parameters can be obtained from http://fcon_1000.proiects.nitrc.org/indi/abide/index.html.

Table 1.

The site distribution for the autism brain imaging data exchange (ABIDE) dataset used in our study

| Imaging Site | Controls | Asperger’s | Autism | Total |

|---|---|---|---|---|

| CALTECH | 19 | 0 | 13 | 33 |

| CMU | 13 | 0 | 14 | 27 |

| KKI | 33 | 11 | 11 | 55 |

| LEUVEN | 35 | 0 | 29 | 64 |

| MAX-MUN | 33 | 22 | 2 | 57 |

| NYU | 105 | 21 | 53 | 179 |

| OLIN | 36 | 0 | 0 | 36 |

| PITT | 27 | 0 | 30 | 57 |

| SBL | 15 | 7 | 2 | 24 |

| SDSU | 22 | 7 | 3 | 32 |

| TRINITY | 25 | 7 | 10 | 42 |

| UCLA | 45 | 0 | 54 | 99 |

| UM | 77 | 10 | 55 | 142 |

| USM | 43 | 0 | 57 | 100 |

| YALE | 28 | 8 | 6 | 42 |

| Total Subjects | 556 | 93 | 339 | 988 |

Note. CALTECH, California Institute of Technology; CMU, Carnegie Mellon University; NYU, NYU Langone Medical Center; KKI, Kennedy Krieger Institute; MAX-MUN, University of Ludwig Maximilians University Munich; PITT, Pittsburgh School of Medicine; SDSU, San Diego State University; OLIN, Olin Institute of Living at Hartford Hospital; UCLA, University of California, Los Angeles; LEUVEN, University of Leuven; TRINITY, Trinity Centre for Health Sciences; USM, University of Utah School of Medicine; YALE, Yale Child Study Center; UM, University of Michigan; SBL, Social Brain Lab

2.1.2. Attention deficit hyperactivity disorder (ADHD)

ADHD is one of the most common neurodevelopmental disorders in children with a childhood prevalence ratio as high as 11%, with significant increases in diagnoses every year (Visser, et al., 2014). ADHD diagnoses can be categorized into three subtypes based on the symptoms exhibited, including ADHD-I (inattention) for persistent inattention, ADHD-H (hyperactive/impulsive) for hyperactivity-impulsivity and ADHD–C (combined) for a combination of both symptoms. There has been a massive increase in research efforts for automated detection of ADHD due to the ADHD-200 competition in 2011 (Consortium, 2012). Although there are standardized approaches to diagnose subtypes of ADHD, there is evidence of multiple etiologies that, while they present as similar clinically, will have unique neurologic underpinnings (Curatolo, D’Agati, & Moavero, 2010).

Nine hundred and thirty subjects were selected from the ADHD-200 dataset, which was used for the ADHD-200 challenge (Consortium, 2012). The sample consists of 573 healthy controls, 208 subjects with ADHD-C, 13 subjects with subtype ADHD-H, and 136 subjects with ADHD-I. Imaging data for a few subjects were not included, as they did not pass the quality control thresholds. The subjects were scanned at seven different acquisition sites as shown in Table 2. The acquisition parameters and other information about the scans be obtained from http://fcon_1000.proiects.nitrc.org/indi/adhd200/

Table 2.

The site distribution for the attention deficit hyperactivity disorder-200 (ADHD-200) data across the seven imaging sites used in our study

| Imaging Site | Controls | ADHD -C | ADHD -H | ADHD-I | Total |

|---|---|---|---|---|---|

| Peking University | 143 | 38 | 1 | 63 | 245 |

| Kennedy Krieger Institute | 69 | 19 | 1 | 5 | 94 |

| NeuroIMAGE Sample | 37 | 29 | 6 | 1 | 73 |

| New York University Child Study Center | 110 | 95 | 2 | 50 | 257 |

| Oregon Health & Science University | 70 | 27 | 3 | 13 | 113 |

| University of Pittsburgh | 94 | 0 | 0 | 4 | 98 |

| Washington University | 50 | 0 | 0 | 0 | 50 |

| Total Subjects | 573 | 208 | 13 | 136 | 930 |

Note. We did not include the data from Brown University in our study since their diagnostic labels were not released; ADHD-C, attention deficit hyperactivity disorder-combined; ADHD-H, attention deficit hyperactivity disorder-hyperactive/impulsive; ADHD-I, attention deficit hyperactivity disorder-inattentive

2.1.3. Post-traumatic stress disorder (PTSD) & post-concussion syndrome (PCS)

PTSD is a debilitating condition which develops in individuals exposed to a traumatic or a life-threatening situation. The estimated lifetime prevalence of PTSD among adult Americans is 6.8% (Kessler, et al., 2005). PCS consists of a set of symptoms that occur after a concussion from a head injury. PTSD is highly prevalent in individuals who sustain a traumatic brain injury, especially combat veterans. Such subjects display overlapping symptoms of both PCS and PTSD. Head injuries and traumatic experiences in the battlefield could be the main reasons for an unusually high prevalence rate of PTSD in combat veterans with a prevalence of 12.1% in Gulf war veteran population (Kang, Natelson, Mahan, Lee, & Murphy, 2003) and 13.8% in military veterans deployed in Afghanistan and Iraq during Operation Enduring Freedom and Operation Iraqi Freedom (Tanielian, Jaycox, & eds, 2008). Unfortunately, despite the serious nature of the problem, the current methods for diagnosis of the disorders rely on subjective reporting and clinician-administered interviews. An objective assessment of these disorders using image-based biomarkers could improve diagnostic accuracy and assessment of PTSD and PCS. One limitation of this data set is that PTSD has subtypes defined by symptom clusters, and severity of symptoms can evolve temporally (e.g., months and years post-trauma). As such, there is likely to be significant heterogeneity in neurologic underpinnings within any given sample of patients with PTSD.

While the three other datasets used in this study are publicly available, PTSD/PCS dataset was acquired in-house. Eighty-seven active duty male US Army soldiers were recruited to participate in this study from Fort Benning, GA and Fort Rucker, AL, USA. In the recruited subjects, 28 were combat controls, 17 were diagnosed with only PTSD, while 42 were diagnosed with both PCS and PTSD. All subject groups were matched for age, race, education and deployment history. The subjects were diagnosed as having PTSD if they had no history of mild traumatic brain Injury (mTBI), or symptoms of PCS in the past five years, with scores > 38 on the PTSD Checlist-5 (PCL5), and <26 on Neurobehavioral Symptom Inventory (NSI). Subjects with medically documented mTBI, post-concussive symptoms, and scores ≥ 38 on PCL5 and ≥ 26 on NSI were grouped as PCS & PTSD. The procedure and the protocols in this study were approved by the Auburn University Institutional Review Board (IRB) and the Headquarters U.S. Army Medical Research and Material Command, IRB (HQ USAMRMC IRB).

The participants were scanned in a Siemens 3T MAGNETOM Verio Scanner (Siemens, Erlangen, Germany) with a 32-channel head coil at Auburn University MRI Research Center. The participants were instructed to keep their eyes open and fixated on a small white cross on a screen with a dark background. A T2* weighted multiband echo-planar imaging (EPI) sequence was used to acquire two runs of resting state data in each subject with the following sequence parameters: TR=600ms, TE=30ms, FA=55°, multiband factor=2, voxel size= 3×3×5 mm3 and 1000 time points. Brain coverage was limited to the cerebral cortex, subcortical structures, midbrain and pons, with the cerebellum excluded.

2.1.4. Mild cognitive impairment (MCI) & Alzheimer’s disease (AD)

MCI can be defined as greater than the normal cognitive decline for a given age, but it does not significantly affect the activities of daily life (Gauthier, et al., 2006). It has a prevalence ranging from 3% to 19 % in adults older than 65 years. AD on the other hand, does significantly affect daily activities of the person. It is the most common neurodegenerative disorder in adults aged 65 and older. It is characterized by cognitive decline, intellectual deficits, memory impairment and difficulty in social interactions. As a large percentage of MCI patients slowly progress to Alzheimer’s disease, the boundaries separating healthy aging from early/late MCI and AD are not very precise leading to diagnostic uncertainty in the disease status (Albert, et al., 2011). Therefore, classifying MCI from AD and healthy older controls is extremely crucial and is particularly challenging. Resting-state functional brain imaging data of 132 subjects were obtained from the Alzheimer’s disease neuroimaging initiative (ADNI) database. The sample consists of subjects in various stages of cognitive impairment and dementia, including 34 subjects with early mild cognitive impairment (EMCI), 34 with late mild cognitive impairment (LMCI), 29 with AD and finally 35 matched healthy controls. More information about the data used for this study along with the image acquisition parameters can be obtained from http://adni.loni.usc.edu/.

2.2. Processing of the Rs-fMRI data

Standard preprocessing pipeline for Rs-fMRI data was implemented using Data Processing Assistant for Resting-State fMRI Toolbox (DPARSF) (Yan & Zang, 2010). The preprocessing pipeline consisted of removal of first five volumes, slice timing correction, volume realignment to account for head motion, co-registration of the T1-weighed anatomical image to the mean functional image, nuisance variable regression which included linear detrending, mean global signal, white matter and cerebrospinal fluid signals and 6 motion parameters. After nuisance variable regression, the data were normalized to the MNI template. The blood-oxygen-level-dependent (BOLD) time series from every voxel in the brain was deconvolved by estimating the voxel-specific hemodynamic response function (HRF) using a blind deconvolution procedure to obtain the latent neural signals (Wu, et al., 2013). The data were then temporally filtered with a band pass filter of bandwidth 0.01–0.1 Hz. Mean time series were extracted from 200 functionally homogeneous brain regions as defined by the CC200 template (Craddock, James, Holtzheimer, Hu, & Mayberg, 2012). After extracting the timeseries, functional connectivity (FC) between the 200 regions was calculated as the Pearson’s correlation coefficient between all region pairs giving us a total of 19,900 FC values. These were then used as features for the classification procedure. For ADHD and PTSD datasets, we did not have whole brain coverage. Therefore, we obtained time series from just 190 regions and 125 regions, respectively. The number of FC paths were accordingly lower for these datasets.

2.3. Data splits for training/validation and hold-out test data

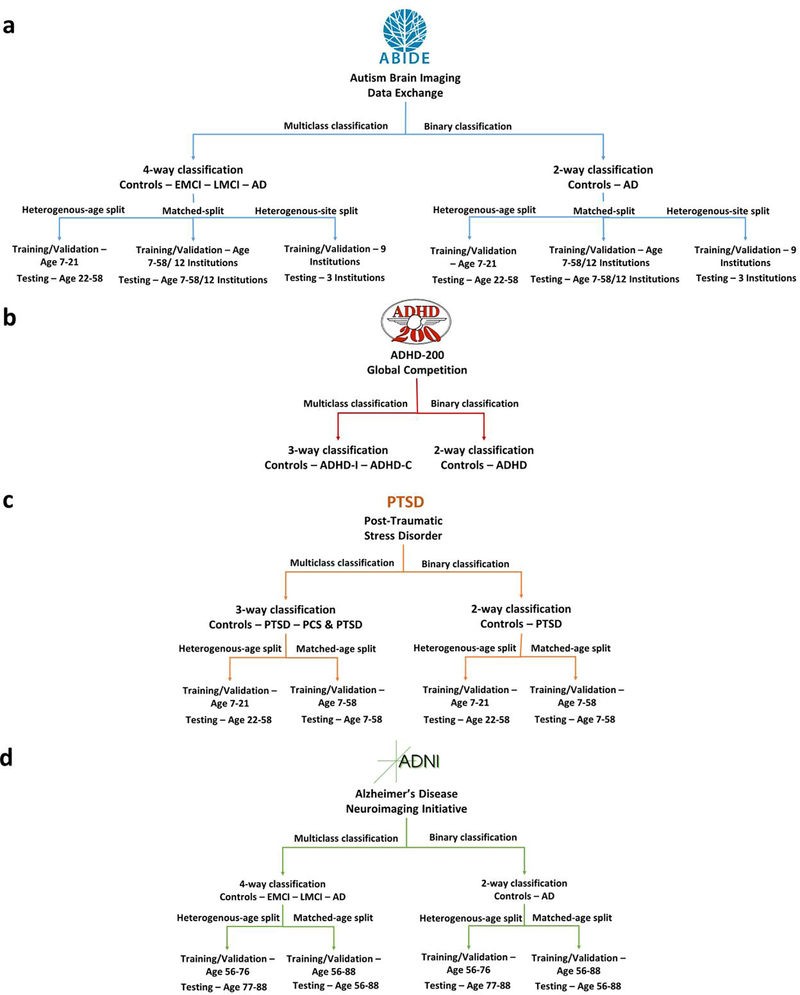

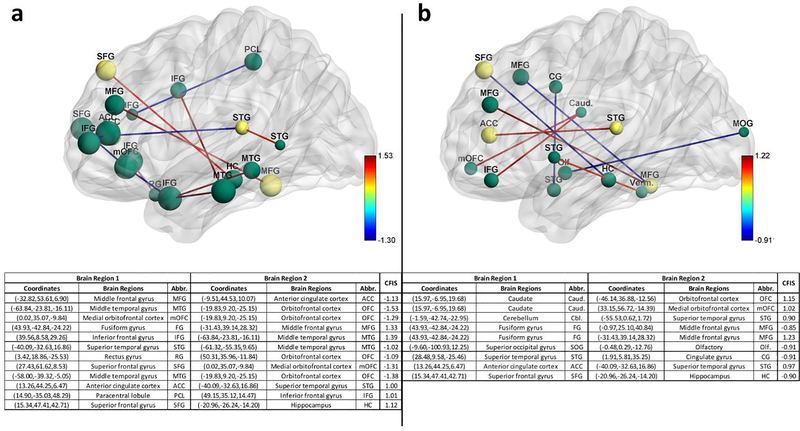

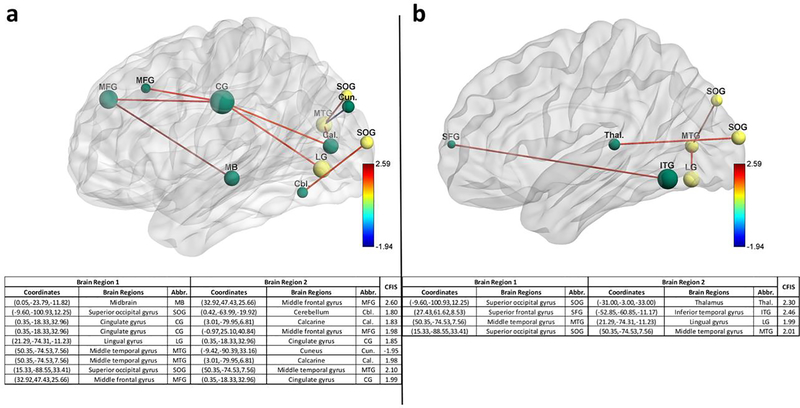

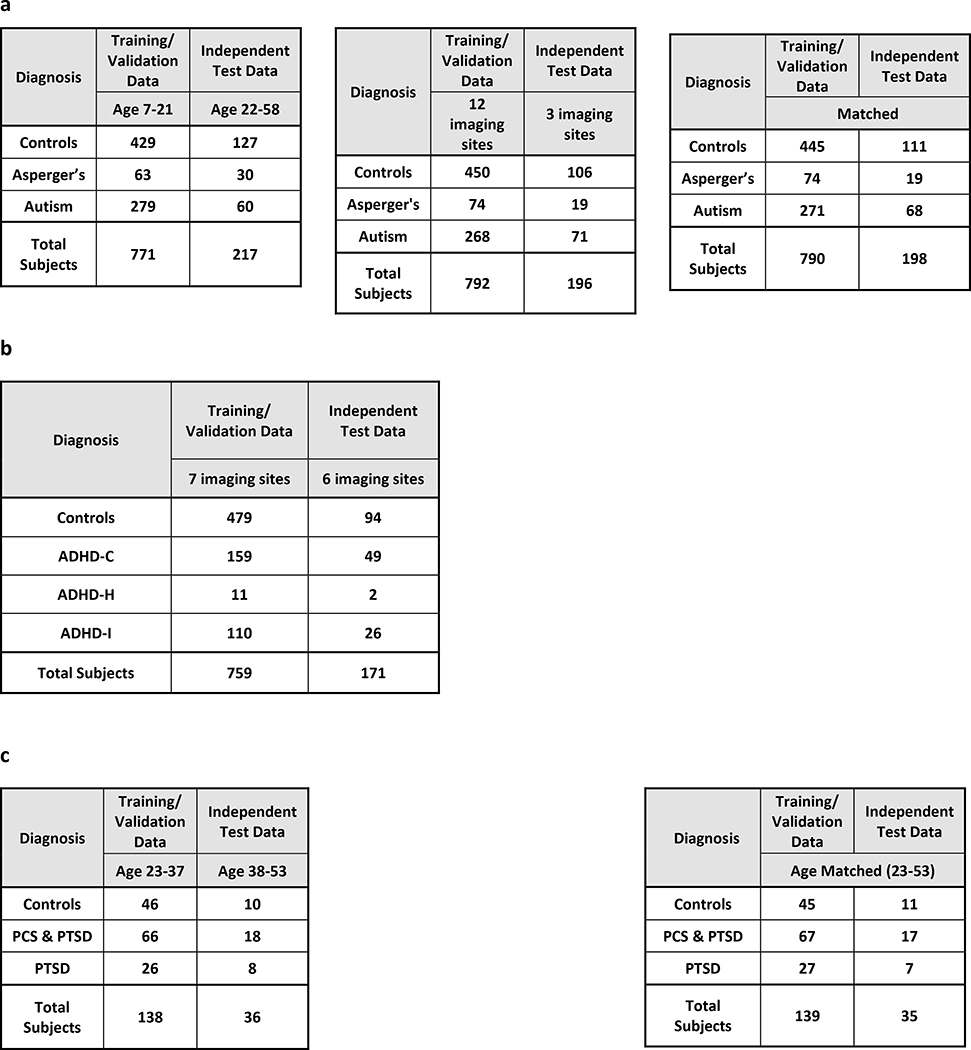

In order to test the generalizabllity of the classifier models, we split all Imaging data into two components. Approximately, 80% of the data was used for training/validation, and the remaining 20% was used as a hold-out test data set (We aimed for an 80–20 split between the training/validation and the hold-out test data. Since we are splitting based on the age and the acquisition site, the ratio is not exact). The training/validation data as a percentage of the total data ranged from 75.8% to 80.2% for various splits for various datasets. More information can be obtained from Table 3. The training/validation datasets were split even further for cross-validation in order to estimate the classifier models as we explain later. However, the hold-out test datasets were not used in cross-validation; instead, they were used only once with the classifier models obtained from cross-validation to obtain truly unbiased test accuracy on completely unseen data. In a few splits, the training/validation and test data came from homogeneous populations, i.e. they were matched for age and acquisition site. In some other splits, the training/validation and hold-out test data were not matched, i.e. they had different age range or acquisition site. With matched data, it is important to note that training/validation and the hold-out test data were matched in age, race, education and gender. In the unmatched splits, age/acquisition site was unmatched, while race, gender, education and acquisition site/age, respectively, were matched. This was done to ensure that only the factor of interest, either age or acquisition site, differed between training/validation and test data in the unmatched splits. All these splits on the four datasets are summarized in Fig. 1 and will be elaborated below. The age-splits in some cases may seem unreasonable as there is a wide difference in age-ranges between the training/validation and the hold-out test datasets considering brain plasticity and maturation as we age. But the primary purpose on such splits was that we wanted to see, whether the patterns learned by the classifier about the underlying disorder generalize to an independent sample with a different age group sharing the same diagnosis. In essence, we wanted to see which classifier could perform reliably well even under such “worst-case” extreme age-mismatch scenarios.

Table 3.

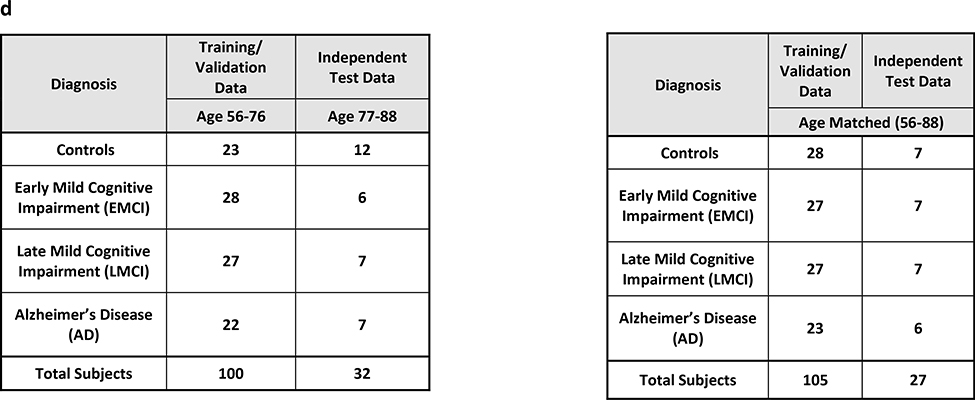

The data distributions for training/validation and hold-out test data for the age and imaging site splits for a autism brain imaging data exchange (ABIDE) dataset, b attention deficit hyperactivity disorder-200 (ADHD-200) dataset, c post-traumatic stress disorder (PTSD) dataset, and d Alzheimer’s disease neuroimaging initiative (ADNI) dataset

|

|

Note. ADHD-C, attention deficit hyperactivity disorder-combined; ADHD-H, attention deficit hyperactivity disorder-hyperactive/impulsive; ADHD-I, attention deficit hyperactivity disorder-inattentive; PCS, post-concussion syndrome

Fig. 1.

The age and imaging site split for the training/validation and the test data both for binary and multiclass classification scenarios. a For the ABIDE dataset, we had age- and site-matched splits as well as unmatched splits for both 2-way and 4-way classifications. In the first split, subjects from an age range of 23–37 years were used in training/validation data and the subjects from the age range 38–53 years formed the hold-out test data. Second, we performed an imaging site split wherein the data from the 12 imaging sites (PITT, OLIN, SDSU, TRINITY, UM, USM, CMU, LEUVEN, NYU, MAXMUN, CALTECH, SBL) were used for the training/validation data while the rest of the 3 imaging sites (Yale, KKI, UCLA) were used as a hold-out test dataset. In the third split, training/validation and hold-out test data were matched for age and acquisition site. b For ADHD we directly used the training/validation and hold-out test data provided by the ADHD-200 Consortium for binary and multiclass classification. c For binary and 3-way classification of the PTSD dataset, we followed an age split in which the training/validation data contained subjects from an age range of 23–37 years while the hold-out test data contained subjects from the age range 38–53 years. This was then compared with a matched training/validation data and hold-out test data with subjects in the age range of 23–53 years. d For both 2-way and 4-way classification of ADNI dataset, we split the entire data by age wherein the training/validation data contained subjects from an age range of 56–76 years while the hold-out test data contained subjects from the age range 77–88 years. This scenario was compared with a matched training/validation data and hold-out test data with subjects in the age range of 56–88 years

ABIDE

We split the ABIDE data into two heterogeneous sets for training/validation and testing, with differences in age group and imagining site: (i) The first heterogeneous split had the training/validation data from age range 7 −21 years while the holdout test data had both ASD and healthy controls in the age range 22–58 years. (ii) For the second split, the training validation data came from 12 imaging sites which participated in the study. The hold-out test data was drawn from the remaining three institutions. (iii) We also had a matched split with data for training/validation and testing drawn from the same age range and institutions. Since the ABIDE data has healthy controls and two subgroups of ASD in autism and Asperge’s syndrome, we performed both binary and multiclass classification with each of the three splits, giving us a total of six splits. The distribution of the subjects in each split is shown in Table 3a.

ADHD-200

The ADHD-200 global competition was structured in a way that training/validation data with diagnostic labels were first provided to the public and many groups around the world submitted their predictions on unlabeled hold-out test data dataset. The organizers of the competition assessed the performance of the classification tools on the hold-out test data set based on the predicted diagnostic labels submitted by the groups. Following the completion of the competition, the labels for hold out test dataset was also publicly released. Therefore, we used the training/validation and hold-out test datasets originally provided by the organizers of the competition and no further splits were performed on the data by age or by acquisition site, as was done for other datasets used in this study. This also helps us stay true to the spirit of the ADHD-200 Global Competition to some extent. We performed binary classification between Controls and ADHD (data from all 3 ADHD subgroups were combined) as well as a three-way classification between controls, ADHD-C, and ADHD-I. ADHD-H was left out in multiclass classification because only 11 subjects with ADHD-H were present in the data. The data distributions for the training/validation and hold i-out test data is shown in Table 3b.

PTSD

Since the imaging data for PTSD was collected solely at our research site (Auburn University MRI Research Center), we could not test the effects of the performance accuracy due to site variability. We performed binary (Controls vs. PTSD) as well as 3-way classification with Controls vs. PTSD vs. PCS & PTSD. Subjects in the age range from 23–37 years were used in training/validate data and ages 38–53 were used in the hold-out test data for the heterogeneous split. Age matched training/validation and test data were also used. These two splits were used for each of the two classification scenarios (binary and 3-way), giving us a total of four splits. It is noteworthy that we had two runs from each of the 87 subjects in this dataset and we considered each run as a separate subject. Therefore, effectively, we had 174 subjects in this dataset. The data distributions of splits are shown in Table 3c.

ADNI

ADNI data contains subjects at various stages of cognitive impairment. Therefore, we tested a 4way classification between healthy adults, EMCI, LMCI and AD. We also performed binary classification using just healthy adults and AD subjects at the extreme ends of the spectrum. We tested the effect of age heterogeneity on the classification performance with subjects from the age range 56–76 years chosen for training/validation data and 77–88 years selected for hold-out test data. We also had a homogeneous split with training /validation and hold-out test data chosen randomly from the entire dataset with the age range of 56–88 years. The data distributions of each of the classes in these splits are shown in Table 3d.

We made no effort to balance the classes with unbalanced sample sizes in the four data sets because: (i) We wanted to identify classifiers which are robust to differences in class occurrences in the training data and, (ii) The number of healthy subjects are usually far greater than the number of subjects with disorders in neuroimaging databases which are assembled retrospectively. While concerted efforts to acquire large and homogenized balanced datasets are currently underway (Miller, et al., 2016), it will be many years before they become publicly available.

2.4. Classification procedure

The number of features obtained by resting state functional connectivity metrics are usually orders of magnitude greater than the number of subjects/samples available. Due to the “curse of dimensionality,” when using high dimensional data, overfitting is a huge concern because the underlying distribution may be under-sampled (Mwangi, Tian, & Soares, 2014; Demirci, et al., 2008; Guyon & Elisseeff, 2003). Having an excess number of features compared to the number of data samples might lead to overfitting and give us poor generalization on the test data (Pereira, Mitchell, & Botvinick, 2009; Mwangi, Tian, & Soares, 2014). The most useful strategies to deal with this issue include collecting more data, adding domain knowledge about the problem to the model or reduce the number of features, while ideally preserving class-discriminative information. Therefore, feature selection is a necessary step either before classification or as a part of the classification procedure, given the sample size of current neuroimaging databases. Most existing feature selection methods can be grouped into filter and wrapper methods.

Filter methods are independent of the classification strategy. A simple univariate score such as a T-score can be used to rank the features and the top ranked features can be utilized for classification (Craddock, Holtzheimer, Hu, & Mayberg, 2009). Although computationally quick, this univariate approach does not take into consideration the relationships between different features and the classifier performance when retaining features.

A wrapper method selects subsets of features which give good classification performance and contain class-discriminative information. Hence the classification procedure is embedded with feature selection in the wrapper method framework. A combination of wrapper and filter methods have been shown to perform well with minimum resources (Deshpande, et al., 2010; Deshpande, Wang, Rangaprakash, & Wilamowski, 2015; Deshpande, Libero, Sreenivasan, Deshpande, & Kana, 2013). Therefore, we have adopted this strategy in the current study. As our filter method, we used a two-sample t-test/ANOVA and selected the features whose means were significantly different between the groups (p<0.05, FDR corrected), after controlling for confounding factors such race, gender, and education for the age unmatched splits. However, for the age-matched splits, age was also controlled for along with race, gender, and education. When selecting significant features in the age-unmatched splits, age was not included because including it in the model would have removed age-related variance from the data.

Rs-fMRI data was then divided into training/validation data and hold-out test data with approximately 80% of the data used for training and validation, and the remaining 20% of the data was used as a separate hold-out test dataset as was mentioned in the previous section on the data splits. In many cases, the training/validation data and hold-out test data differed in a few factors as mentioned previously such as age and acquisition site. As mentioned above, an initial “feature-filtering” was performed wherein only the connectivity paths that were significantly different between the groups (p<0.05, FDR corrected) in the training/validation data were retained (after controlling for head motion, age, race, and education) thereby reducing the number of features from 19,100 to around 1000. No statistical tests were performed on the independent hold-out test data to avoid introducing bias. Therefore, the features with p<0.05 (FDR corrected) in the training/validation dataset were also removed from the hold-out test dataset. Please note that the hold-out test dataset was not used in feature or model selection, and thus, can be expected to give an unbiased estimate of the generalization accuracy. This is contrary to the cross-validation accuracy estimate because using t-test filtering in reducing features on the entire training/validation data will lead to optimistic accuracy estimates given that the training data and the validation data are not completely separated. Even if a t-test was not performed on the validation data during cross-validation, cross-validation accuracy, by definition, is the average accuracy obtained from different splits. Therefore, it does not provide a conservative estimate of the classifier’s performance in a clinical diagnostic classification scenario. To further reduce the number of features while retaining discriminative information, some of the classifiers were embedded in the recursive cluster elimination (RCE) framework (Deshpande, et al., 2010) for feature section (Fig. S1). As we describe later, some of the classifiers had some form of feature selection embedded within them, and hence, such methods were implemented without the RCE framework (Fig. S2).

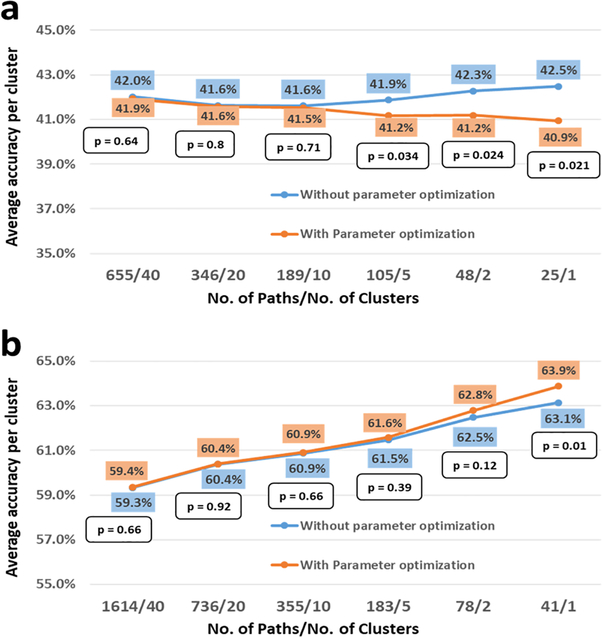

2.4.1. Recursive cluster elimination (RCE) framework

RCE is a heuristic method for identifying a subset of features that have class-discriminative information. RCE is a wrapper method that combines K-means feature clustering with a machine learning classifier to score the discriminative ability of clusters of features, helps retain only features with good discriminative power, and remove the ones without any discriminative power (Deshpande, et al., 2010; Yousef, Jung, Showe, & Showe, 2007). RCE exploits the fact that features (functional connectivity paths in our case) are often correlated with each other, and hence, their discriminative abilities can be ascertained together by clustering the feature space. This provides an order of magnitude increase in speed compared to eliminating each feature individually (Yousef, Jung, Showe, & Showe, 2007). We implemented classifiers in a nested cross-validation (CV) procedure, with the inner CV loop performing feature selection via RCE, and the outer CV loop was used for performance estimation (Fig. S1). We first started with all features after t-test filtering, and clustered these features using the k-means algorithm. The correlation coefficient was used as the distance metric while clustering. Each cluster of features was then used to train a machine learning classifier, and a score was assigned to the cluster based on the performance of the cluster on the validation data. The clusters were ranked according to their classification performance, the clusters with lowest scores were eliminated, and the features in the remaining clusters were merged. This process was iteratively repeated until any further removal of clusters decreased the classification accuracy. This ensured that the best set of feature clusters were identified. This optimal set of feature clusters for each k-fold and partitioning of data of the CV loop, and the final decision surface (or hyper-plane in higher dimension), which gave the best CV performance were saved and used for calculating the accuracy from hold-out test data. For each repetition, a different model, with distinct hyperparameters and features were selected. These models were then used to assess the CV accuracy in the outer k-fold. This ensured that separation was maintained between feature selection by RCE and performance estimation.

Using FC features from training/validation data, classification accuracy was calculated using repeated 6-fold CV. The classifier models obtained from the differences in the partitioning of the training data (repeats × folds) were saved. Test accuracy was calculated on the independent hold-out test data using the saved classifier models by a voting procedure. Each classifier would vote towards a decision on test subjects (accuracy was the percentage of correct votes). This is the voting test accuracy reported. The w/o voting accuracy refers to the mean accuracy and standard deviation for the test data obtained by each of the individual 600 classifier models obtained in each iteration during the cross-validation. To examine the validity of the classifier models, as well as the classification procedure, we first tested them using simulated data by systematically changing the separation between the groups and plotting the classification accuracy. More information on the performance of the classifier on simulated data can be found in the section-2 of Supplementary Information.

2.4.2. Classifier Models

We used a number of classifier models to address the issues in performance estimation and generalizability so that our results are not specific to any particular classifier or type of classifiers. The classifiers we implemented can be broadly divided into the following categories (i) Probabilistic/Bayesian methods: Gaussian naïve Bayes (GNB), linear discriminant analysis (LDA), quadratic discriminant Analysis (QDA), sparse logistic regression (SLR), ridge logistic regression (RLR), (ii) Kernel methods: linear and radial basis function (RBF)-kernel support vector machines (SVM), relevance vector machine (RVM), (iii) Artificial neural networks: MLP-Net (multilayer perceptron neural net), FC-Net (fully connected neural net), ELM (extreme learning machine), LVQNET (linear vector quantization net), (iv) Instance-based learning: k-nearest neighbors (KNN), (v) Decision tree based ensemble methods: bagged trees, boosted trees, boosted stumps, random forest, rotation forest. A brief introduction to the machine learning classifiers used in this paper, can be found in section-1 of Supplementary Information. For classifiers with hyper-parameters in them that needed to be optimized, we performed a grid search to estimate an optimum value. Therefore, it may be possible to further optimize these parameters using more advanced methods. However, a concern with fine-tuning the parameters and testing a large number of models in cases with limited data is that it might lead to overfitting (Rao, Fung, & Rosales, 2008). All the classifiers were implemented in MATLAB environment (Natick, MA). Also note that in this paper, the terms parameters and weights are used interchangeably and refer to values optimized during the learning process whereas the term hyperparameter refers to values that are set before the learning process begins. A toolbox implementing these classifiers to classify subjects into either controls or clinical groups can be obtained from Lanka et al. (Lanka, et al., 2019). The toolbox can also be found at the following URL: https://github.com/pradlanka/malini.

We implemented linear- &RBF-kernel SVM, GNB, LDA, QDA, KNN and ELM in the RCE framework. Many other classifiers we used, such as SLR, RLR, RVM, FC-NN and MLP-NN have built in regularization to control model complexity. Ensemble methods such as bagged trees, random forests, boosted stumps, boosted trees, and rotation forests are not as sensitive to classification problems with a large number of features. Therefore, we did not implement classifiers with built-in regularization as well as ensemble methods in the RCE-framework. KNN was implemented both within and outside the RCE-framework.

2.5. Classification performance metrics

Since many of the datasets which are used in this study are unbalanced in class labels (i.e. each class contains an unequal number of instances), it is important to investigate individual class accuracies. In such cases where one class has more observations in the dataset than the other class, the classifier reports a high accuracy even if the classifier just assigns the majority class label to all instances in the test dataset (Demirci, et al., 2008). In these cases, the overall/unbalanced accuracy is not indicative of the actual performance of the classifier. Therefore, in addition to presenting the overall/unbalanced accuracy, we also report individual class accuracies as well as the balanced accuracy. The individual class accuracies report the ratio of correctly classified instances of a particular class to the total number of instances of the class in the data. The mean of individual class accuracies obtained from both the training/validation data and the hold-out test dataset represents the balanced CV accuracy and the balanced hold-out test accuracy, respectively.

For all the classification scenarios aforementioned, we report the following: (i) The CV accuracy and its standard deviation (in parenthesis), (ii) CV class accuracies of the individual groups, (iii) the balanced CV accuracy obtained by the mean of individual CV class accuracies, (iv) hold-out test accuracy by voting (unbalanced hold-out test accuracy), (v) mean hold-out test accuracy, which is obtained by using mean of the test accuracies calculated from individual classifier models and its standard deviation (in parenthesis), (vi) individual class accuracies of the groups obtained from the hold-out test data, and (vii) the balanced hold-out test accuracy as an average of individual class hold-out test accuracies. A schematic illustrating the derivation of the classification performance metrics from a confusion matrix is shown in Fig. S3.

The evaluation of the classification performance and the diagnostic utility of the classifier must be made taking into consideration all the above performance metrics as well as the classification scenario. It should be noted that in datasets in which some classes have very few instances, classifiers can find it extremely difficult to learn those patterns. The balanced accuracy might also suffer because some disorders such as Asperge’s have a tiny number of samples compared to other groups in the ABIDE dataset, thereby making any reliable classification extremely difficult and giving a low balanced accuracy. The holdout accuracy is a pessimistic estimator of the generalization accuracy because only a portion of the data was given to the classifier for training and the holdout test dataset in our study was chosen to be from a slightly different population than training data. As we demonstrate in this study, the high accuracies commonly reported for leave-one-out cross-validation (LOOCV) and k-fold CV in neuroimaging studies (Anderson, et al., 2011; Deshpande, Wang, Rangaprakash, & Wilamowski, 2015) are misleading, especially when there is significant heterogeneity in the population. It should be noted that though classification accuracy is the most reported classifier performance metric, there are others, such as area under the curve (AUC), sensitivity, specificity, precision or positive predictive value (PPV) etc. But in this manuscript, we limit ourselves to balanced classification accuracy for assessing classification performance since it can be easily interpreted in binary and multiclass classification scenarios. Ideally, one has to evaluate the performance of a classifier using multiple metrics presented above, to assess its performance under both optimistic and pessimistic scenarios, depending on the classification objectives. However, interpreting some of the other measures in the multiclass scenario may not be straightforward.

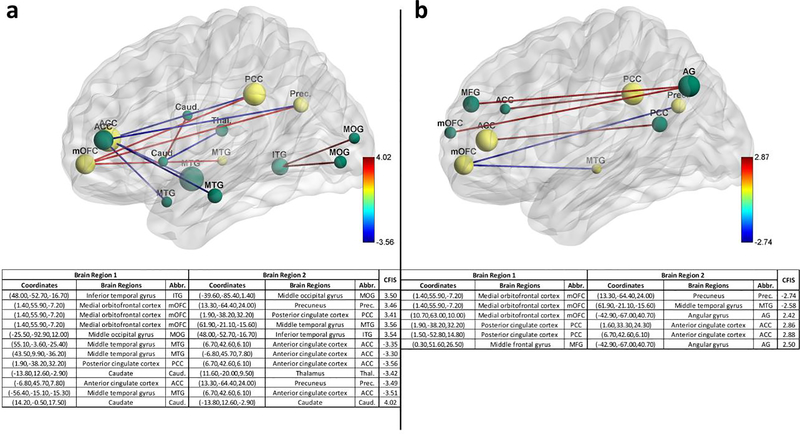

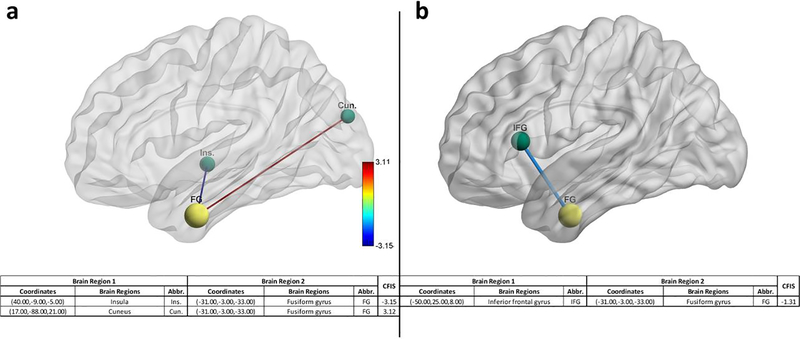

2.6. Calculation of feature importance scores (FIS)

RCE procedure provides us with a feature ranking that indicates the importance of a particular feature in discriminating between the classes. For every step of the RCE loop, we kept the count of the features retained and used the count to assign higher feature importance scores (FIS) to features that were retained by the classification procedure while assigning lower scores to features eliminated early in the feature elimination process. We repeated this for every partitioning of data in the outer k-fold, thereby obtaining the FIS for every classifier implemented in the RCE-framework. We combined the feature importance score of all the classifiers implemented in the RCE-Framework, weighted by their balanced cross-validation accuracy, to obtain a combined score of feature importance (CFIS) for the classification problem. Multiple splits of the entire data into training/validation and hold-out test data gave a slightly different ranking to most classifiers across different splits. We plotted the CFIS of the features commonly found in all the data splits as a scatter plot. We repeated this procedure separately for multiclass and binary classification problems for every dataset. To obtain features which are generalizable across age groups and data acquisition sites, we identified a subset of features in each split, which have high feature importance scores (top 100), implying that they play a significant role in class discriminative ability as well as have significantly different means between the groups (p<0.05, corrected for multiple comparisons using permutation test (Edgington, 1980) by modeling the null distribution of maximum t-scores of features by permuting the class labels of the data). The features or connectivity paths thus identified were then visualized in BrainNet Viewer (Xia, Wang, & He, 2013). Similarly, we also ranked brain regions based on the sum of the CFIS of connectivity paths associated with them. A list of the top 20 brain regions was obtained for every neurological disorder considered in this study.

2.7. Consensus classifier

We have employed 18 different classifiers in this study. Many of them are based on entirely different principles, yet they all attempt to achieve the same result of determining the decision boundary which separates the groups. When multiple classifiers are used in neuroimaging, it is customary to report and emphasize on the one which gave highest classification accuracy (Brown, et al., 2012; Sato, Hoexter, Fujita, & Luis, 2012). This might give an optimistic estimate of the accuracy, and the result might not be repeatable even for data from the same population. Alternatively, we developed an ensemble classifier wherein the performance of all 18 classifiers were combined to provide a consensus estimate, which is referred to as a consensus classifier.

For every classifier, during CV, we resampled the data 600 times (6-fold × 100 repetitions), to get 600 different classifier models for each resampling. We used these 600 models for each classifier to predict the class of the observations in the validation data, giving us a total of 600 predictions for every observation in the hold-out test data. We then calculated individual class probabilities for the hold-out test data by estimating the relative frequency of the 600 target class predictions for the hold-out test data. In this way, the relative frequency of the target class was estimated for each test observation. Then the final class probabilities of the consensus classifiers were calculated by weighing the predicted class frequencies of each classifier with its balanced CV accuracy. The test observation was assigned to the class with the highest probability. This way multiple classifiers can be averaged to provide a consensus classifier which greatly improves the reliability and robustness of inferences made from them and makes the performance estimates more stable. A schematic depicting the predictions of the consensus classifier on the hold-out test data is shown in Fig. S4.

3. Results

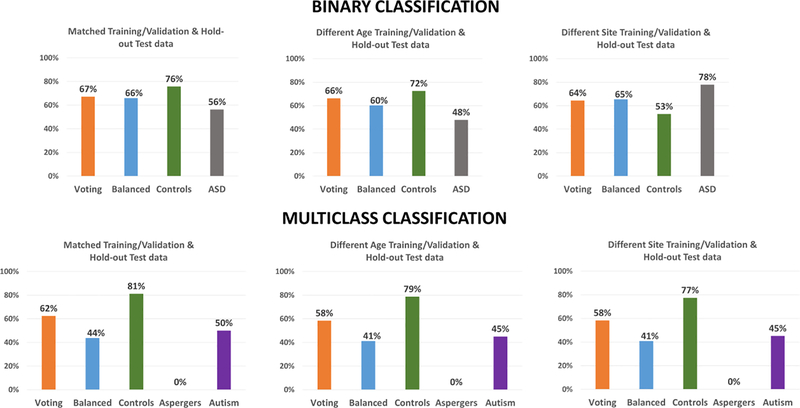

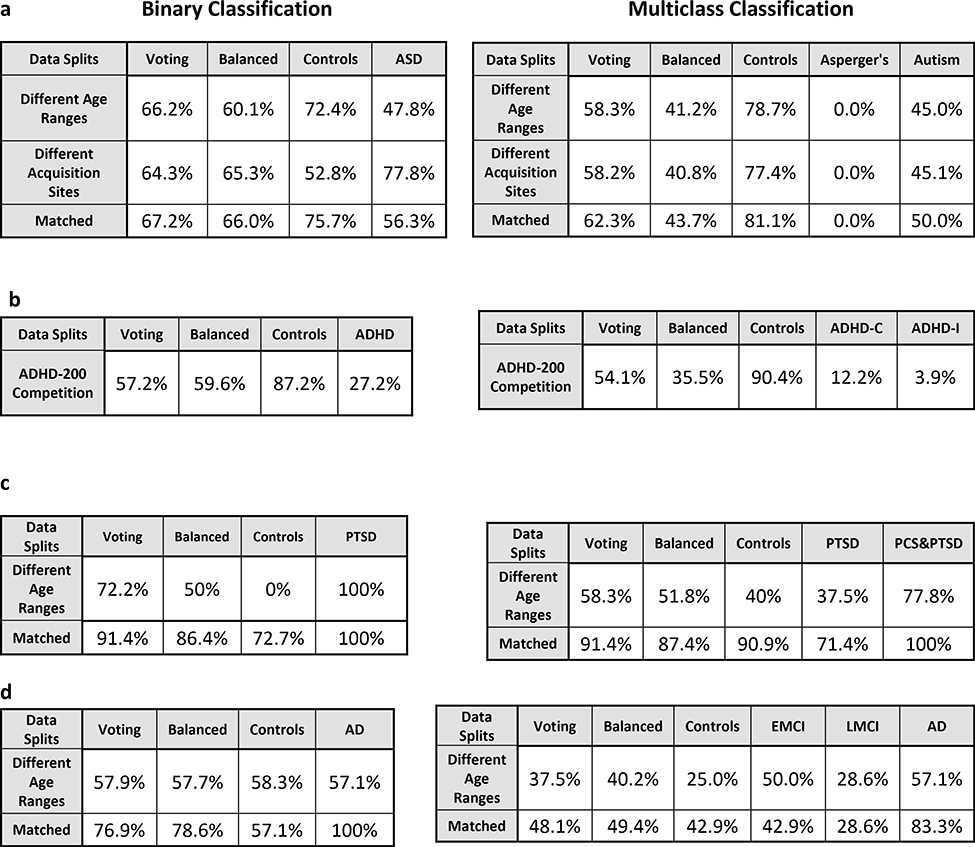

This section is organized as follows. We present classification results for all disorders grouped based on the cross-validation scheme. Accordingly, results from the matched split is first presented, followed by heterogeneous age splits for ABIDE, PTSD and ADNI datasets and the heterogenous site split for ABIDE. Since we followed the split provided by the ADHD consortium, corresponding results are presented separate. Next, results from statistical tests on the difference between classification accuracies obtained across different classifiers and cross-validation schemes are presented. This is followed by results from the consensus classifier, a visualization of the effect of age and site variability, as well as reliability of feature selection and parameter optimization. Finally, we present important connectivity features discriminating each of the disorders as identified by supervised machine learning.

3.1. Matched-split

ABIDE

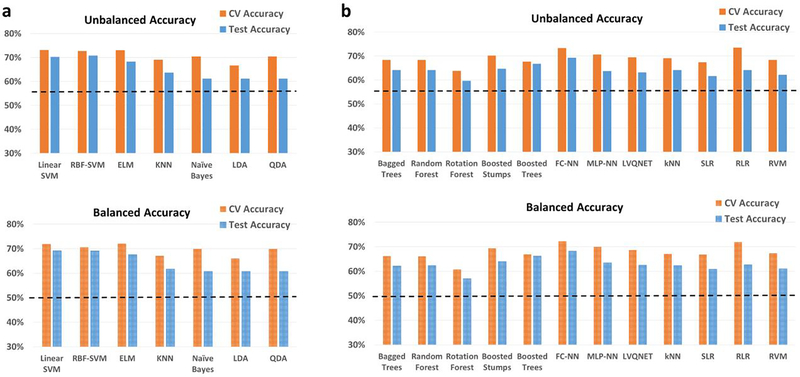

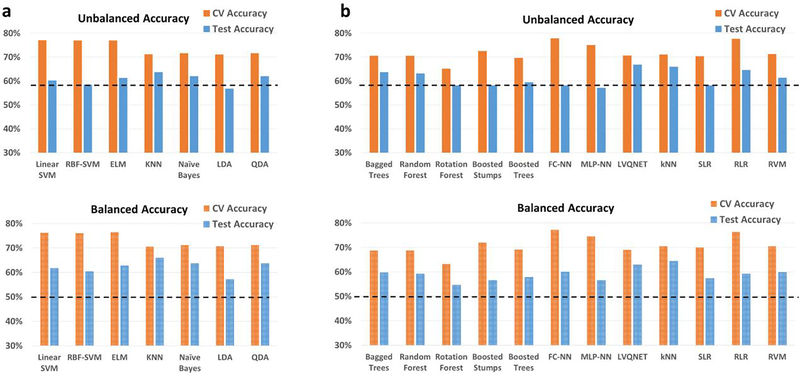

In the matched-split scenario, wherein the training/validation and the holdout test data are matched for age and imaging site, the binary classification results for the binary classification scenario between controls and ASD is shown in Fig. 2. Similarly, in the matched-split scenario, the clasification results for the 3-way multiclass classification scenaio between healthy controls, autism and Asperge’s syndrome are shown in Fig. S5 for the 18 classifiers. The corresponding tables for the binary and multiclass classification scenarios for the matched-split scenario detailing individual class accuracies is shown in Table S1 and Table S2 respectively. For the binary classification scenario, the best hold-out test accuracy at 70.7% obtained with RBF-SVM within the RCE framework while the best-balanced hold-out test accuracy was 69.2% obtained with linear-SVM implemented within the RCE framework. For the 3-way classification, the best hold-out test accuracy was 65.7% achieved with boosted trees while the best balanced hold-out test accuracy was 48.5% obtained with QDA implemented within RCE framework. In multiclass classification scenario, no classifier was able to reliably classify Asperge’s syndrome, which was the reason for lower balanced accuracy, even in this homogeneous matched-split scenario.

Fig. 2.

Unbalanced and balanced accuracy estimates for various classifiers a within the Recursive cluster elimination (RCE) framework, b outside RCE framework for autism brain imaging data exchange (ABIDE) data when the training/validation data and the hold-out test data are matched in imaging sites as well as age group for the binary classification problem between healthy controls and subjects with autism spectrum disorder (ASD). The training/validation and the hold-out test data are from all 15 imaging sites and age range of 7–58 years. The balanced accuracy was obtained by averaging the individual class accuracies. The orange bars indicate the cross-validation (CV) accuracy while the blue bars indicate the accuracy for the hold-out test data obtained by the voting procedure. The dotted line indicates the accuracy obtained when the classifier assigns the majority class to all subjects in the test data. For unbalanced accuracy, this happens to be 56% since healthy controls formed 56% of the total size of the hold-out test data. For balanced accuracy, this is exactly 50%. We chose the majority classifier as the benchmark since the accuracy obtained must be greater than that if it learns anything from the training data. The discrepancy between the biased estimates of the CV accuracy and the unbiased estimates of the hold-out accuracy is noteworthy. The best hold-out test accuracy was 70.7% obtained with RBF-support vector machine (SVM) within the RCE framework, while the best balanced hold-out test accuracy was 69.2% obtained with linear SVM implemented within the RCE framework. ELM, extreme learning machine; KNN, k-nearest neighbors; LDA, linear discriminant analysis; QDA, quadratic discriminant analysis; FC-NN, fully connected neural network; MLP-NN, multilayer perceptron neural network; LVQNET, learning vector quantization neural network; SLR, sparse logistic regression; RLR, regularized logistic regression; RVM, relevance vector machine

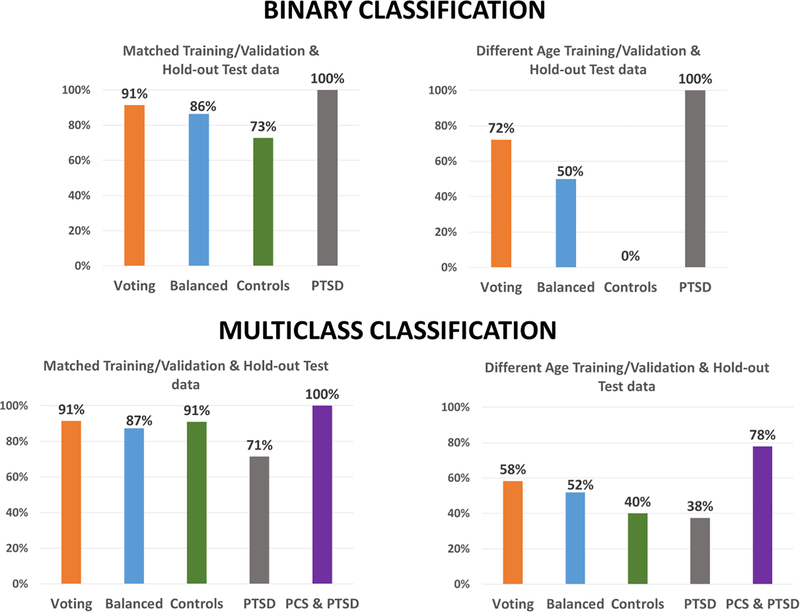

PTSD

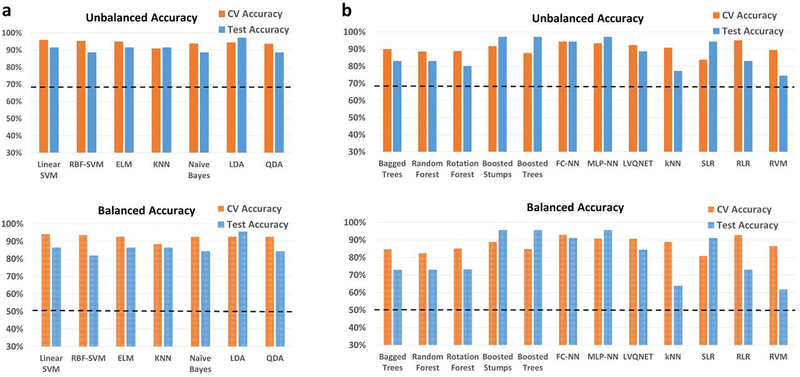

In the matched-age split, the binary classification results with healthy soldiers and PTSD, along with the 3-way multiclass classification between healthy soldiers, PTSD without PCS, and PTSD with PCS are shown in Fig. 3 and Fig. S6, respectively. Similarly, individual class accuracies for both scenarios are shown in Table S3 and Table S4. The best hold-out test accuracy for the binary case was 97.1%, whereas the best balanced hold-out test accuracy obtained was 95.5%, using boosted stumps, MLP-NN and LDA implemented within the RCE framework. For the 3-way classification, the best hold-out test accuracy was 94.3% with boosted stumps, and LDA implemented within RCE framework, while the best balanced hold-out test accuracy obtained was 93.3% with LDA implemented within RCE framework.

Fig. 3.

Unbalanced and balanced accuracy estimates for various classifiers a within recursive cluster elimination (RCE) framework, b outside RCE framework for post-traumatic stress disorder (PTSD) data when the training/validation data and the hold-out test data are from same age groups in the range for the multiclass classification between healthy controls and subjects with PTSD. The training/validation data and the hold-out test data are matched in age with subjects from age range of 23–53 years. The balanced accuracy was obtained by averaging the individual class accuracies. The orange bars indicate the cross-validation (CV) accuracy while the blue bars indicate the accuracy for the hold-out test data obtained by the voting procedure. The dotted line indicates the accuracy obtained when the classifier assigns the majority class to all subjects in the test data. For unbalanced accuracy, this happens to be 68.6% since subjects with PTSD formed 68.6% of the total size of the hold-out test data. For balanced accuracy, this is exactly 50%. We chose the majority classifier as the benchmark since the accuracy obtained must be greater than that if it learns anything from the training data. The discrepancy between the biased estimates of the CV accuracy and the unbiased estimates of the hold-out accuracy is noteworthy. The best hold-out test accuracy was 97.1%, whereas the best balanced hold-out test accuracy obtained was 95.5%, obtained by boosted stumps, boosted trees, multilayer perceptron neural network; (MLP-NN) and linear discriminant analysis (LDA) implemented within the RCE framework. ELM, extreme learning machine; KNN, k-nearest neighbors; QDA, quadratic discriminant analysis; SVM, support vector machine; FC-NN, fully connected neural network; LVQNET, learning vector quantization neural network; SLR, sparse logistic regression; RLR, regularized logistic regression; RVM, relevance vector machine

ADNI

In the homogenous matched-age split, the classification performance for the binary classification scenario between healthy adults and adults diagnosed with Alzheimer’s disease is shown in Fig. 4, with the detailed information about individual class accuracies shown in Table S5. Similarly, results for the multiclass classification scenario (Controls, EMCI, LMCI and AD) are shown in Fig. S7, and Table S6. The best hold-out test accuracy was 84.6% while the best balanced hold-out test accuracy obtained was 85.7%, by both boosted trees and stumps. For the 4-way classification across the spectrum, the best hold-out test accuracy was 51.8% while the best balanced hold-out test accuracy was 53%, obtained with RLR.

Fig. 4.

Unbalanced and balanced accuracy estimates for various classifiers a within recursive cluster elimination (RCE) framework, b outside RCE framework for Alzheimer’s disease neuroimaging initiative (ADNI) data when the training/validation data and the hold-out test data are from the same age groups in the range for the binary classification between healthy controls and subjects with Alzheimer’s disease. The training/validation data and the hold-out test data are matched in age with subjects from age range of 56–88 years. The balanced accuracy was obtained by averaging the individual class accuracies. The orange bars indicate the cross-validation (CV) accuracy while the blue bars indicate the accuracy for the hold-out test data obtained by the voting procedure. The dotted line indicates the accuracy obtained when the classifier assigns the majority class to all subjects in the test data. For unbalanced accuracy, this happens to be 53.8% since healthy controls formed 53.8% of the total size of the hold-out test data. For balanced accuracy, this is exactly 50%. We chose the majority classifier as the benchmark since the accuracy obtained must be greater than that if it learns anything from the training data. The discrepancy between the biased estimates of the CV accuracy and the unbiased estimates of the hold-out accuracy is noteworthy. The best hold-out test accuracy was 84.6% while the best balanced hold-out test accuracy obtained was 85.7%, with boosted trees and stumps. ELM, extreme learning machine; KNN, k-nearest neighbors; LDA, linear discriminant analysis; quadratic discriminant analysis; SVM, support vector machine; FC-NN, fully connected neural network; MLP-NN, multilayer perceptron neural network; LVQNET, learning vector quantization neural network; SLR, sparse logistic regression; RLR, regularized logistic regression; RVM, relevance vector machine

3.2. Heterogeneous-age split

ABIDE

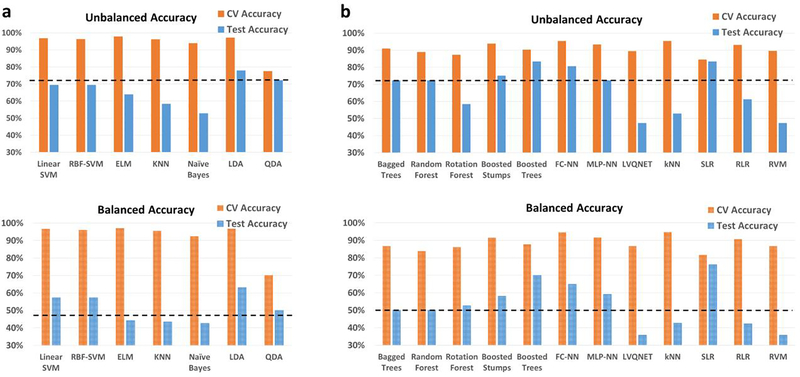

For the heterogeneous-age split, where the training/validation data and the hold-out test data belong to different age ranges, the classification results for the binary classification scenario between healthy controls and subjects with ASD using the ABIDE dataset is shown in Fig. 5. Similarly, the 3-way multiclass classification scenario between the controls, Asperge’s and autism using the ABIDE dataset is shown in Fig. S8. The tables for the binary and multi-class classification showing the detailed individual class accuracies can be found in Table S7 and Table S8, respectively. In the binary classification scenario for the split in which the training/validation and the hold-out test data belong to different age ranges, the best hold-out test accuracy was 66.8% obtained with LVQNET, while the best-balanced hold-out test accuracy was 64.4% obtained with KNN implemented outside the RCE framework. In the multiclass classification for the same split, the best hold-out test accuracy was 61.3% while the best balanced hold-out test accuracy obtained was 46.5%, obtained with LVQNET.

Fig. 5.

Unbalanced and balanced accuracy estimates for various classifiers a within recursive cluster elimination (RCE) framework, b outside RCE framework for autism brain imaging data exchange (ABIDE) data when the training/validation data and the hold-out test data are from different age groups for the binary classification between healthy controls and subjects with autism spectrum disorder. The training/validation data is from an age range of 7–21 years while the data from the age range of 22–58 years was used as a hold-out test data. The balanced accuracy was obtained by averaging the individual class accuracies. The orange bars indicate the cross-validation (CV) accuracy while the blue bars indicate the accuracy for the hold-out test data obtained by the voting procedure. The dotted line indicates the accuracy obtained when the classifier assigns the majority class to all subjects in the test data. For unbalanced accuracy, this happens to be 58.5% since healthy controls formed 58.5% of the total size of the hold-out test data. For balanced accuracy, this is exactly 50%. We chose the majority classifier as the benchmark since the accuracy obtained must be greater than that if it learns anything from the training data. The discrepancy between the biased estimates of the CV accuracy and the unbiased estimates of the hold-out accuracy is noteworthy. The best hold-out test accuracy was 66.8% obtained with learning vector quantization neural network (LVQNET) while the best balanced hold-out test accuracy was 64.4% obtained with k-nearest neighbors (KNN) implemented outside the RCE framework. ELM, extreme learning machine; LDA, linear discriminant analysis; QDA, quadratic discriminant analysis; SVM, support vector machine; FC-NN, fully connected neural network; MLP-NN, multilayer perceptron neural network; SLR, sparse logistic regression; RLR, regularized logistic regression; RVM, relevance vector machine

PTSD

The classification results in the heterogeneous-age split for the binary classification scenario between healthy soldiers and soldiers diagnosed with PTSD is shown in Fig. 6. In the multiclass classification scenario between healthy controls, soldiers diagnosed with just PTSD and soldiers diagnosed with PTSD and PCS is shown in Fig. S9. The corresponding tables for binary and multiclass scenarios can be found in Table S9 and Table S10. In the binary classification scenario for the split in which the training/validation and the hold-out test data belonged to different age ranges, the best hold-out test accuracy was 83.3% obtained by SLR and boosted trees, while the best balanced hold-out test accuracy obtained was 76.2% with SLR. In the multiclass classification for the same split, the best hold-out test accuracy was 80.6% while the best balanced hold-out test accuracy obtained was 73.3%, obtained with boosted stumps.

Fig. 6.

Unbalanced and balanced accuracy estimates for various classifiers a within recursive cluster elimination (RCE) framework, b outside RCE framework for post-traumatic stress disorder (PTSD) data when the training/validation data and the hold-out test data are from different age groups in the range for the multiclass classification between healthy controls and subjects with PTSD. The training/validation data is from an age range of 23–37 years while the data from the age range of 38–53 years was used as a hold-out test data. The balanced accuracy was obtained by averaging the individual class accuracies. The orange bars indicate the cross-validation (CV) accuracy while the blue bars indicate the accuracy for the hold-out test data obtained by the voting procedure. The dotted line indicates the accuracy obtained when the classifier assigns the majority class to all subjects in the test data. For unbalanced accuracy, this happens to be 72.2% since subjects with PTSD formed 72.2% of the total size of the hold-out test data. For balanced accuracy, this is exactly 50%. We chose the majority classifier as the benchmark since the accuracy obtained must be greater than that if it learns anything from the training data. The discrepancy between the biased estimates of the CV accuracy and the unbiased estimates of the hold-out accuracy is noteworthy. The best hold-out test accuracy was 83.3% obtained by sparse logistic regression (SLR) and boosted trees, while the best balanced hold-out test accuracy obtained was 76.2% with SLR. ELM, extreme learning machine; KNN, k-nearest neighbors; LDA, linear discriminant analysis; QDA, quadratic discriminant analysis; SVM, support vector machine; FC-NN, fully connected neural network; MLP-NN, multilayer perceptron neural network; LVQNET, learning vector quantization neural network; RLR, regularized logistic regression; RVM, relevance vector machine

ADNI

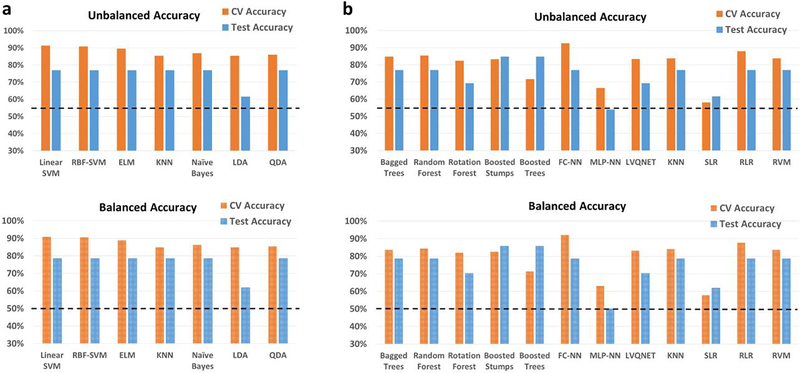

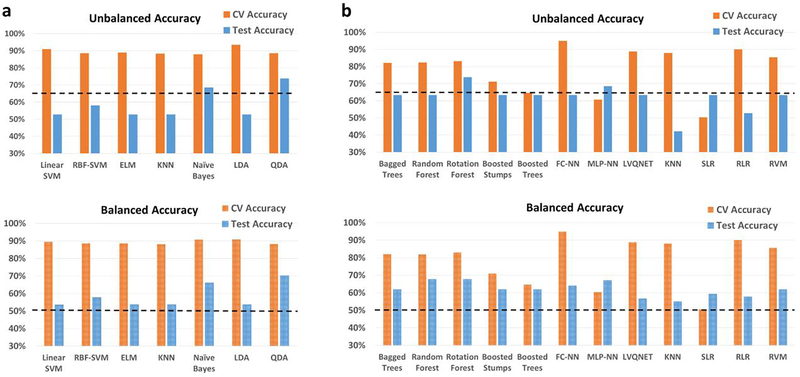

In the heterogenous-age split, the classification performance for the binary classification scenario between healthy adults and adults diagnosed with Alzheimer’s disease is shown in Fig. 7, with the detailed information about individual class accuracies shown in Table S11. Similarly, for results for results for the multiclass classification scenario (Controls, EMCI, LMCI and AD) are shown in Fig. S10, and Table S12. In the binary classification scenario between Controls and AD, the best hold-out test accuracy was 73.7% obtained with random forest, and QDA implemented within RCE framework, while the best balanced hold-out test accuracy obtained was 70.2% with QDA implemented within RCE framework. In the multiclass classification for the same split, the best hold-out test accuracy was 46.9% while the best balanced hold-out test accuracy was 47.9%, obtained with boosted trees.

Fig. 7.

Unbalanced and balanced accuracy estimates for various classifiers a within recursive cluster elimination (RCE) framework, b outside RCE framework for Alzheimer’s disease neuroimaging initiative (ADNI) data when the training/validation data and the hold-out test data are from different age groups in the range for the binary classification between healthy controls and subjects with Alzheimer’s disease. The training/validation data is from an age range of 56–76 years while the data from the age range of 77–88 years was used as a hold-out test data. The balanced accuracy was obtained by averaging the individual class accuracies. The orange bars indicate the cross-validation (CV) accuracy while the blue bars indicate the accuracy for the hold-out test data obtained by the voting procedure. The dotted line indicates the accuracy obtained when the classifier assigns the majority class to all subjects in the test data. For unbalanced accuracy, this happens to be 63.2% since healthy controls formed 63.2% of the total size of the hold-out test data. For balanced accuracy, this is exactly 50%. We chose the majority classifier as the benchmark since the accuracy obtained must be greater than that if it learns anything from the training data. The discrepancy between the biased estimates of the CV accuracy and the unbiased estimates of the hold-out accuracy is noteworthy. The best hold-out test accuracy was 73.7% obtained by Random forest, and quadratic discriminant analysis (QDA) implemented within RCE framework, while the best balanced hold-out test accuracy obtained was 70.2% with QDA implemented within RCE framework. ELM, extreme learning machine; KNN, k-nearest neighbors; LDA, linear discriminant analysis; SVM, support vector machine; FC-NN, fully connected neural network; MLP-NN, multilayer perceptron neural network; LVQNET, learning vector quantization neural network; SLR, sparse logistic regression; RLR, regularized logistic regression; RVM, relevance vector machine

3.3. Heterogeneous-site split

ABIDE

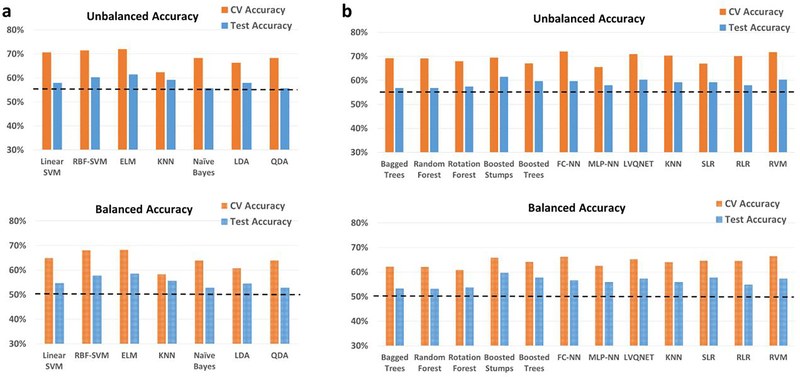

For the heterogeneous-site split, when the training/validation data is from 12 imaging sites, and the hold-out test data is from the remaining three imaging sites, the classification performance for binary and 3-way multiclass classification scenario is shown in Fig. 8 and Fig. S11, respectively. Similarly, detailed information about individual class accuracies for binary and multiclass classification scenarios are shown in Table S13 and Table S14, respectively. For the heterogeneous site split on the ABIDE data, the best accuracy on the hold-out test data was 65.8% obtained with bagged trees as well as Linear SVM implemented within SVM framework, while the best balanced hold-out test accuracy was 66.8% obtained with linear SVM implemented within the RCE framework. In the multiclass scenario between healthy controls, subjects with Asperge’s syndrome and autism, the best hold-out test accuracy was 66% obtained with RLR while the best balanced hold-out test accuracy was 66.8% obtained with ELM implemented within the RCE framework.

Fig. 8.