Abstract

Humans are adept at uncovering abstract associations in the world around them, yet the underlying mechanisms remain poorly understood. Intuitively, learning the higher-order structure of statistical relationships should involve complex mental processes. Here we propose an alternative perspective: that higher-order associations instead arise from natural errors in learning and memory. Using the free energy principle, which bridges information theory and Bayesian inference, we derive a maximum entropy model of people’s internal representations of the transitions between stimuli. Importantly, our model (i) affords a concise analytic form, (ii) qualitatively explains the effects of transition network structure on human expectations, and (iii) quantitatively predicts human reaction times in probabilistic sequential motor tasks. Together, these results suggest that mental errors influence our abstract representations of the world in significant and predictable ways, with direct implications for the study and design of optimally learnable information sources.

Subject terms: Human behaviour, Complex networks, Statistical physics

Humans can easily uncover abstract associations. Here, the authors propose that higher-order associations arise from natural errors in learning and memory. They suggest that mental errors influence humans’ representations of the world in significant and predictable ways.

Introduction

Our experience of the world is punctuated in time by discrete events, all connected by an architecture of hidden forces and causes. In order to form expectations about the future, one of the brain’s primary functions is to infer the statistical structure underlying past experiences1–3. In fact, even within the first year of life, infants reliably detect the frequency with which one phoneme follows another in spoken language4. By the time we reach adulthood, uncovering statistical relationships between items and events enables us to perform abstract reasoning5, identify visual patterns6, produce language7, develop social intuition8, and segment continuous streams of data into self-similar parcels9. Notably, each of these functions requires the brain to identify statistical regularities across a range of scales. It has long been known that people are sensitive to differences in individual transition probabilities such as those between words or concepts4,6. In addition, mounting evidence suggests that humans can also infer abstract (or higher-order) statistical structures, including hierarchical patterns within sequences of stimuli10, temporal regularities on both global and local scales11, abstract concepts within webs of semantic relationships12, and general features of sparse data13.

To study this wide range of statistical structures in a unified framework, scientists have increasingly employed the language of network science14, wherein stimuli or states are conceptualized as nodes in a graph with edges or connections representing possible transitions between them. In this way, a sequence of stimuli often reflects a random walk along an underlying transition network15–17, and we can begin to ask which network features give rise to variations in human learning and behavior. This perspective has been particularly useful, for example, in the study of artificial grammars18, wherein human subjects are tasked with inferring the grammar rules (i.e., the network of transitions between letters and words) underlying a fabricated language19. Complementary research in statistical learning has demonstrated that modules (i.e., communities of densely connected nodes) within transition networks are reflected in brain imaging data20 and give rise to stark shifts in human reaction times21. Together, these efforts have culminated in a general realization that people’s internal representations of a transition structure are strongly influenced by its higher-order organization22,23. But how does the brain learn these abstract network features? Does the inference of higher-order relationships require sophisticated hierarchical learning algorithms? Or instead, do natural errors in cognition yield a “blurry” representation, making the coarse-grained architecture readily apparent?

To answer these questions, here we propose a single driving hypothesis: that when building models of the world, the brain is finely tuned to maximize accuracy while simultaneously minimizing computational complexity. Generally, this assumption stems from a rich history exploring the trade-off between brain function and computational cost24,25, from sparse coding principles at the neuronal level26 to the competition between information integration and segregation at the whole-brain level27 to the notion of exploration versus exploitation28 and the speed-accuracy trade-off29 at the behavioral level. To formalize our hypothesis, we employ the free energy principle30, which has become increasingly utilized to investigate constraints on cognitive functioning31 and explain how biological systems maintain efficient representations of the world around them32. Despite this thorough treatment of the accuracy-complexity trade-off in neuroscience and psychology, the prevailing intuition in statistical learning maintains that the brain is either optimized to perform Bayesian inference12,13, which is inherently error free, or hierarchical learning10,11,16,18, which typically entails increased rather than decreased computational complexity.

Here, we show that the competition between accuracy and computational complexity leads to a maximum entropy (or minimum complexity) model of people’s internal representations of events30,33. As we decrease the complexity of our model, allowing mental errors to take effect, higher-order features of the transition network organically come into focus while the fine-scale structure fades away, thus providing a concise mechanism explaining how people infer abstract statistical relationships. To a broad audience, our model provides an accessible mapping from transition networks to human behaviors, with particular relevance for the study and design of optimally learnable transition structures—either between words in spoken and written language18,19,33, notes in music34, or even concepts in classroom lectures35.

Results

Network effects on human expectations

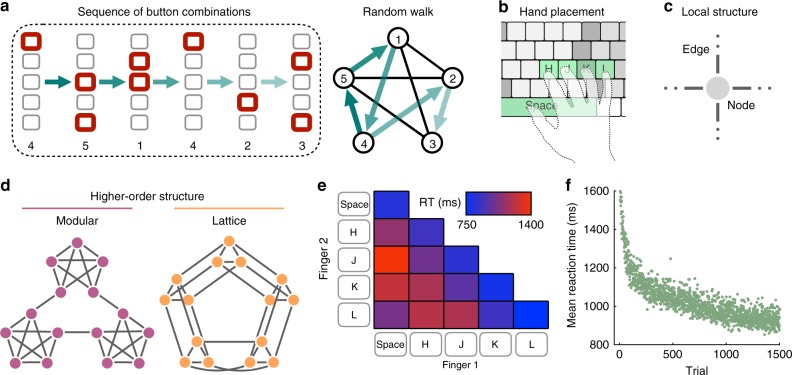

In the cognitive sciences, mounting evidence suggests that human expectations depend critically on the higher-order features of transition networks15,16. Here, we make this notion concrete with empirical evidence for higher-order network effects in a probabilistic sequential response task22. Specifically, we presented human subjects with sequences of stimuli on a computer screen, each stimulus depicting a row of five gray squares with one or two of the squares highlighted in red (Fig. 1a). In response to each stimulus, subjects were asked to press one or two computer keys mirroring the highlighted squares (Fig. 1b). Each of the 15 different stimuli represented a node in an underlying transition network, upon which a random walk stipulated the sequential order of stimuli (Fig. 1a). By measuring the speed with which a subject responded to each stimulus, we were able to infer their expectations about the transition structure: a fast reaction reflected a strongly anticipated transition, while a slow reaction reflected a weakly anticipated (or surprising) transition1,2,22,36.

Fig. 1. Subjects respond to sequences of stimuli drawn as random walks on an underlying transition graph.

a Example sequence of visual stimuli (left) representing a random walk on an underlying transition network (right). b For each stimulus, subjects are asked to respond by pressing a combination of one or two buttons on a keyboard. c Each of the 15 possible button combinations corresponds to a node in the transition network. We only consider networks with nodes of uniform degree k = 4 and edges with uniform transition probability 0.25. d Subjects were asked to respond to sequences of 1500 such nodes drawn from two different transition architectures: a modular graph (left) and a lattice graph (right). e Average reaction times for the different button combinations, where the diagonal elements represent single-button presses and the off-diagonal elements represent two-button presses. f Average reaction times as a function of trial number, characterized by a steep drop-off in the first 500 trials followed by a gradual decline in the remaining 1000 trials. In e and f, averages are taken over responses during random walks on the modular and lattice graphs. Source data are provided as a Source Data file.

While it has long been known that humans can detect differences in transition probabilities—for instance, rare transitions lead to sharp increases in reaction times4,6—more recently it has become clear that people’s expectations also reflect the higher-order architecture of transition networks20–23,37. To clearly study these higher-order effects without the confounding influence of variations in transition probabilities, here we only consider transition graphs with a uniform transition probability of 0.25 on each edge, thereby requiring nodes to have uniform degree k = 4 (Fig. 1c). Specifically, we consider two different graph topologies: a modular graph with three communities of five densely connected nodes and a lattice graph representing a 3 × 5 grid with periodic boundary conditions (Fig. 1d). As all transitions across both graphs have uniform probability, any systematic variations in behavior between different parts of a graph, or between the two graphs themselves, must stem from differences in the graphs’ higher-order modular or lattice structures.
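The two topologies described above can be sketched in a few lines. This is an illustrative construction, not the authors' code: the text only specifies three communities of five nodes, a 3 × 5 periodic grid, and uniform degree k = 4, so the particular wiring of the modular graph (two boundary nodes per community that are not linked to each other) and the names `modular_graph`, `lattice_graph`, and `random_walk` are assumptions.

```python
import numpy as np

def modular_graph():
    """Adjacency matrix with 3 communities of 5 nodes, every node of degree 4 (assumed wiring)."""
    N = 15
    G = np.zeros((N, N), dtype=int)
    for c in range(3):
        nodes = list(range(5 * c, 5 * c + 5))
        first, last = nodes[0], nodes[-1]  # boundary nodes of this community
        for i in nodes:
            for j in nodes:
                # connect all pairs inside the community except the two boundary nodes
                if i != j and {i, j} != {first, last}:
                    G[i, j] = 1
        nxt = (5 * (c + 1)) % N  # first node of the next community
        G[last, nxt] = G[nxt, last] = 1
    return G

def lattice_graph(rows=3, cols=5):
    """Adjacency matrix of a rows x cols grid with periodic boundary conditions."""
    N = rows * cols
    G = np.zeros((N, N), dtype=int)
    for r in range(rows):
        for c in range(cols):
            i = r * cols + c
            for dr, dc in [(1, 0), (-1, 0), (0, 1), (0, -1)]:
                j = ((r + dr) % rows) * cols + (c + dc) % cols
                G[i, j] = 1
    return G

def random_walk(G, length, rng):
    """Sequence of nodes in which each step moves to a uniformly chosen neighbor."""
    A = G / G.sum(axis=1, keepdims=True)  # every edge gets probability 0.25
    x = [int(rng.integers(len(G)))]
    for _ in range(length - 1):
        x.append(int(rng.choice(len(G), p=A[x[-1]])))
    return np.array(x)

rng = np.random.default_rng(0)
G_mod, G_lat = modular_graph(), lattice_graph()
walk = random_walk(G_mod, 1500, rng)
```

Because both graphs are 4-regular, the transition matrix of either one has 0.25 on every edge, so any behavioral difference between them cannot come from edge probabilities.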

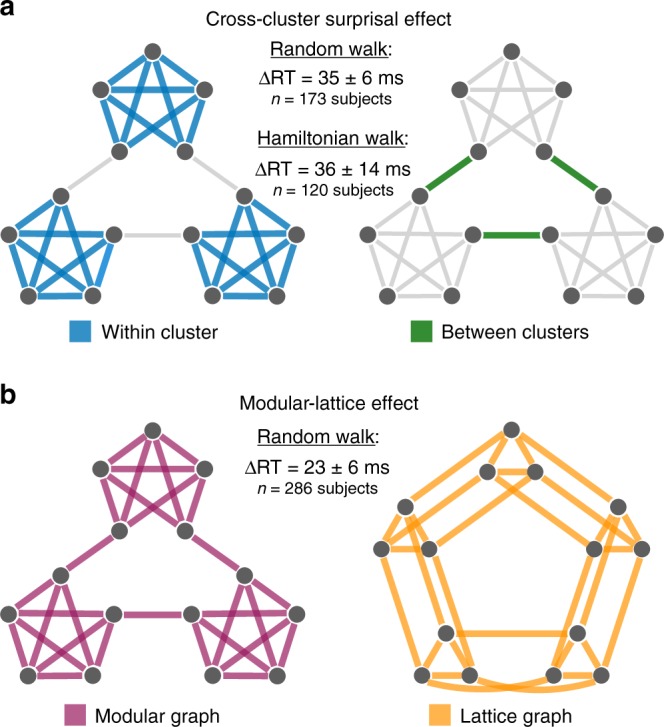

Regressing out the dependence of reaction times on the different button combinations (Fig. 1e), the natural quickening of reactions with time38 (Fig. 1f), and the impact of stimulus recency (Methods), we identify two effects of higher-order network structure on subjects’ reactions. First, in the modular graph we find that reactions corresponding to within-cluster transitions are 35 ms faster than reactions to between-cluster transitions (p < 0.001, F-test; Supplementary Table 1), an effect known as the cross-cluster surprisal22,37 (Fig. 2a). Similarly, we find that people are more likely to respond correctly for within-cluster transitions than between-cluster transitions (Supplementary Table 8). Second, across all transitions within each network, we find that reactions in the modular graph are 23 ms faster than those in the lattice graph (p < 0.001, F-test; Supplementary Table 2), a phenomenon that we coin the modular-lattice effect (Fig. 2b).

Fig. 2. The effects of higher-order network structure on human reaction times.

a Cross-cluster surprisal effect in the modular graph, defined by an average increase in reaction times for between-cluster transitions (right) relative to within-cluster transitions (left). We detect significant differences in reaction times for random walks (p < 0.001, t = 5.77, df = $1.61 \times 10^5$) and Hamiltonian walks (p = 0.010, t = 2.59, df = $1.31 \times 10^4$). For the mixed effects models used to estimate these effects, see Supplementary Tables 1 and 3. b Modular-lattice effect, characterized by an overall increase in reaction times in the lattice graph (right) relative to the modular graph (left). We detect a significant difference in reaction times for random walks (p < 0.001, t = 3.95, df = $3.33 \times 10^5$); see Supplementary Table 2 for the mixed effects model. Measurements were on independent subjects, statistical significance was computed using two-sided F-tests, and confidence intervals represent standard deviations. Source data are provided as a Source Data file.

Thus far, we have assumed that variations in human behavior stem from people’s internal expectations about the network structure. However, it is important to consider the possible confound of stimulus recency: the tendency for people to respond more quickly to stimuli that have appeared more recently39,40. To ensure that the observed network effects are not simply driven by recency, we performed a separate experiment that controlled for recency in the modular graph by presenting subjects with sequences of stimuli drawn according to Hamiltonian walks, which visit each node exactly once20. Within the Hamiltonian walks, we still detect a significant cross-cluster surprisal effect (Fig. 2a; Supplementary Tables 3–5). In addition, we controlled for recency in our initial random walk experiments by focusing on stimuli that previously appeared a specific number of trials in the past. Within these recency-controlled data, we find that both the cross-cluster surprisal and modular-lattice effects remain significant (Supplementary Figs. 2 and 3). Finally, for all of our analyses throughout the paper we regress out the dependence of reaction times on stimulus recency (Methods). Together, these results demonstrate that higher-order network effects on human behavior cannot be explained by recency alone.

In combination, our experimental observations indicate that people are sensitive to the higher-order architecture of transition networks. But how do people infer abstract features like community structure from sequences of stimuli? In what follows, we turn to the free energy principle to show that a possible answer lies in understanding the subtle role of mental errors.

Network effects reveal errors in graph learning

As humans observe a sequence of stimuli or events, they construct an internal representation $\hat{A}$ of the transition structure, where $\hat{A}_{ij}$ represents the expected probability of transitioning from node i to node j. Given a running tally $n_{ij}$ of the number of times each transition has occurred, one might naively expect that the human brain is optimized to learn the true transition structure as accurately as possible41,42. This common hypothesis is represented by the maximum likelihood estimate43, taking the simple form

$$\hat{A}^{\mathrm{MLE}}_{ij}(t) = \frac{n_{ij}(t)}{\sum_k n_{ik}(t)}. \tag{1}$$

To see that human behavior does not reflect maximum likelihood estimation, we note that Eq. (1) provides an unbiased estimate of the transition structure43; that is, the estimated transition probabilities in $\hat{A}^{\mathrm{MLE}}$ are evenly distributed about their true value 0.25, independent of the higher-order transition structure. Thus, the fact that people’s reaction times depend systematically on abstract features of the network marks a clear deviation from maximum likelihood estimation. To understand how higher-order network structure impacts people’s internal representations, we must delve deeper into the learning process itself.
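As a concrete illustration of the maximum likelihood estimate in Eq. (1), the following minimal sketch tallies observed transitions and normalizes each row. The function name `mle_estimate` and the toy sequence are hypothetical; rows for nodes that have never been left are set to zero, which is a convention chosen here rather than something the text specifies.

```python
import numpy as np

def mle_estimate(x, N):
    """Row-normalized transition counts for a sequence x of node labels in 0..N-1."""
    n = np.zeros((N, N))
    for prev, nxt in zip(x[:-1], x[1:]):
        n[prev, nxt] += 1  # running tally of observed transitions
    row = n.sum(axis=1, keepdims=True)
    # leave rows with no observations at zero instead of dividing by zero
    return np.divide(n, row, out=np.zeros_like(n), where=row > 0)

x = [0, 1, 0, 2, 0, 1]       # toy sequence on 3 nodes
A_mle = mle_estimate(x, 3)   # row 0 is [0, 2/3, 1/3]
```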

Consider a sequence of nodes $(x_1, x_2, \ldots)$, where $x_t \in \{1, \ldots, N\}$ represents the node observed at time t and N is the size of the network (here N = 15 for all graphs). To update the maximum likelihood estimate of the transition structure at time t + 1, one increments the counts $n_{ij}$ using the following recursive rule,

$$n_{ij}(t+1) = n_{ij}(t) + [i = x_t]\,[j = x_{t+1}], \tag{2}$$

where the Iverson bracket $[\cdot] = 1$ if its argument is true and 0 otherwise. Importantly, we note that at each time t + 1, a person must recall the previous node that occurred at time t; in other words, they must associate a cause $x_t$ with each effect $x_{t+1}$ that they observe. Although maximum likelihood estimation requires perfect recollection of the previous node at each step, human errors in perception and recall are inevitable44–46. A more plausible scenario is that, when attempting to recall the node at time t, a person instead remembers the node at time t − Δt with some decreasing probability P(Δt), where Δt ≥ 0. This memory distribution, in turn, generates an internal belief about which node occurred at time t,

$$B_t(i) = \sum_{\Delta t = 0}^{t-1} P(\Delta t)\,[i = x_{t-\Delta t}]. \tag{3}$$

Updating Eq. (2) accordingly, we arrive at a learning rule that accounts for natural errors in perception and recall,

$$\tilde{n}_{ij}(t+1) = \tilde{n}_{ij}(t) + B_t(i)\,[j = x_{t+1}]. \tag{4}$$

Using this revised counting rule, we can begin to form more realistic predictions about people’s internal estimates of the transition structure, $\hat{A}_{ij}(t) = \tilde{n}_{ij}(t) / \sum_k \tilde{n}_{ik}(t)$.

We remark that P(Δt) does not represent the forgetting of past stimuli altogether; instead, it reflects the local shuffling of stimuli in time. If one were to forget past stimuli at some fixed rate (a process that is important for some cognitive functions47), this would merely introduce white noise into the maximum likelihood estimate (Supplementary Discussion). By contrast, we will see that, by shuffling the order of stimuli in time, people are able to gather information about the higher-order structure of the underlying transitions.
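The revised counting rule can be sketched for an arbitrary memory distribution. In the hypothetical function `noisy_counts` below, `P[d]` is the probability of recalling the node d steps before the intended one. The weights are renormalized over the nodes actually available at each step, which is an assumption made here so that early beliefs sum to one.

```python
import numpy as np

def noisy_counts(x, N, P):
    """Transition counts in which the 'previous node' is recalled with temporal errors."""
    x = np.asarray(x)
    P = np.asarray(P, dtype=float)
    n = np.zeros((N, N))
    for t in range(len(x) - 1):
        # lags that point at nodes already observed (0 = the correct previous node)
        lags = np.arange(min(t + 1, len(P)))
        w = P[lags] / P[lags].sum()       # assumed renormalization over available lags
        B = np.zeros(N)                   # belief about which node occurred at time t
        np.add.at(B, x[t - lags], w)
        n[:, x[t + 1]] += B               # credit the observed node to every believed cause
    return n

x = [0, 1, 0, 2, 0, 1]
exact = noisy_counts(x, 3, P=[1.0])              # perfect recall: ordinary counts
blurred = noisy_counts(x, 3, P=[0.5, 0.3, 0.2])  # recall errors spread the counts
```

With perfect recall the rule reduces to ordinary transition counting. With recall errors, some weight lands on transitions that were never observed directly, such as the self-transition 0 → 0 in this toy sequence, while the total count is unchanged.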

Choosing a memory distribution: the free energy principle

In order to make predictions about people’s expectations, we must choose a particular mathematical form for the memory distribution P(Δt). To do so, we begin with a single driving hypothesis: that the brain is finely tuned to (i) minimize errors and (ii) minimize computational complexity. Formally, we define the error of a recalled stimulus to be its distance in time from the desired stimulus (i.e., Δt), such that the average error of a candidate distribution Q(Δt) is given by $E(Q) = \sum_{\Delta t} Q(\Delta t)\,\Delta t$. By contrast, it might seem difficult to formalize the computational complexity associated with a distribution Q. Intuitively, we would like the complexity of Q to increase with increasing certainty. Moreover, as a first approximation we expect the complexity to be approximately additive such that the cost of storing two independent memories equals the sum of the costs of the two memories themselves. As famously shown by Shannon, these two criteria of monotonicity and additivity are sufficient to derive a quantitative definition of complexity33: namely, the negative entropy $-S(Q) = \sum_{\Delta t} Q(\Delta t) \log Q(\Delta t)$.

Together, the total cost of a distribution Q is its free energy F(Q) = βE(Q) − S(Q), where β is the inverse temperature parameter, which quantifies the relative value that the brain places on accuracy versus efficiency31. In this way, our assumption about resource constraints in the brain leads to a particular form for P: it should be the distribution that minimizes F(Q), namely the Boltzmann distribution30

$$P(\Delta t) = \frac{1}{Z}\, e^{-\beta \Delta t}, \tag{5}$$

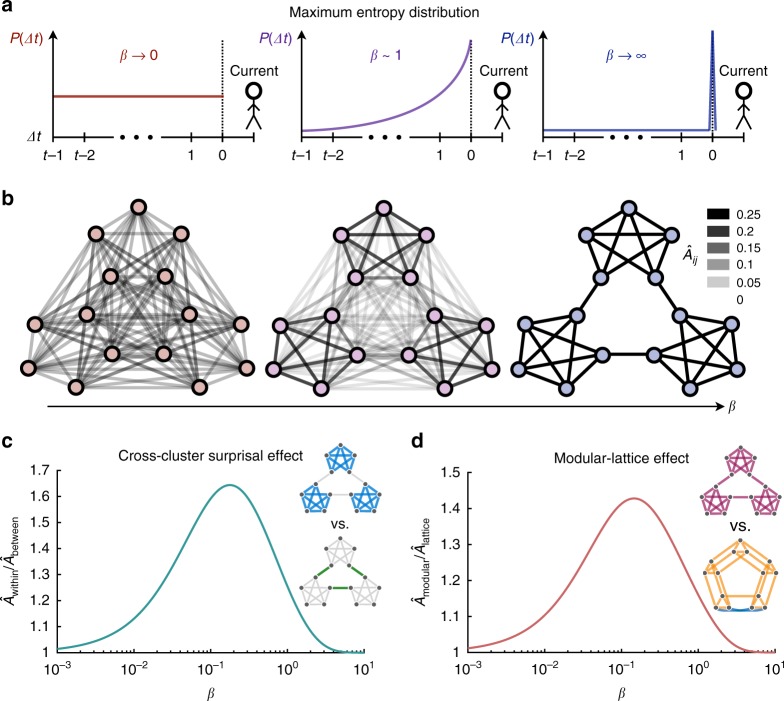

where Z is the normalizing constant (Methods). Free energy arguments similar to the one presented here have been used increasingly to formalize constraints on cognitive functions31,32, with applications from active inference48 and Bayesian learning under uncertainty32 to human action and perception with temporal or computational limitations31,49,50. Taken together, Eqs. (3)–(5) define our maximum entropy model of people’s internal transition estimates $\hat{A}$.
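For readers who want the intermediate step, the Boltzmann form follows from a standard Lagrange-multiplier argument applied to the free energy F(Q) = βE(Q) − S(Q). The sketch below assumes that Q is supported on all Δt ≥ 0 (an unbounded past); for a finite past the sum defining Z is simply truncated. The multiplier λ is introduced here for illustration only.

```latex
\begin{aligned}
\mathcal{L}(Q,\lambda) &= \beta \sum_{\Delta t} Q(\Delta t)\,\Delta t
  + \sum_{\Delta t} Q(\Delta t)\log Q(\Delta t)
  + \lambda\Big(\sum_{\Delta t} Q(\Delta t) - 1\Big),\\
0 = \frac{\partial \mathcal{L}}{\partial Q(\Delta t)} &= \beta\,\Delta t + \log Q(\Delta t) + 1 + \lambda
  \;\;\Longrightarrow\;\; Q(\Delta t) = e^{-(1+\lambda)}\, e^{-\beta \Delta t},\\
\sum_{\Delta t} Q(\Delta t) = 1 &\;\;\Longrightarrow\;\;
  P(\Delta t) = \frac{e^{-\beta \Delta t}}{Z},
  \qquad Z = \sum_{\Delta t = 0}^{\infty} e^{-\beta \Delta t} = \frac{1}{1 - e^{-\beta}}.
\end{aligned}
```

Because the error term is linear in Q and the negative entropy is convex, F is convex and this stationary point is its global minimum.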

To gain an intuition for the model, we consider the infinite-time limit, such that the transition estimates become independent of the particular random walk chosen for analysis. Given a transition matrix A, one can show that the asymptotic estimates in our model are equivalent to an average over walks of various lengths, $\hat{A} = \sum_{\Delta t = 0}^{\infty} P(\Delta t)\, A^{\Delta t + 1}$, which, in turn, can be fashioned into the following analytic expression,

$$\hat{A} = (1 - e^{-\beta})\, A\, (I - e^{-\beta} A)^{-1}, \tag{6}$$

where I is the identity matrix (Methods). The model contains a single free parameter β, which represents the precision of a person’s mental representation. In the limit β → ∞ (no mental errors), our model becomes equivalent to maximum likelihood estimation (Fig. 3a), and the asymptotic estimates converge to the true transition structure A (Fig. 3b), as expected51. Conversely, in the limit β → 0 (overwhelming mental errors), the memory distribution P(Δt) becomes uniform across all past nodes (Fig. 3a), and the mental representation loses all resemblance to the true structure A (Fig. 3b).
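The closed form can be checked numerically against the walk average it summarizes. The sketch below assumes the memory distribution is geometric over an unbounded past, P(Δt) = (1 − e^{−β}) e^{−βΔt}, and uses a small ring graph purely as a stand-in transition matrix; the function names are hypothetical.

```python
import numpy as np

def asymptotic_estimate(A, beta):
    """Closed form (1 - e^{-beta}) A (I - e^{-beta} A)^{-1}."""
    eta = np.exp(-beta)
    I = np.eye(len(A))
    return (1 - eta) * A @ np.linalg.inv(I - eta * A)

def series_estimate(A, beta, max_lag=2000):
    """Truncated average over walks: sum over dt of P(dt) * A^(dt + 1)."""
    eta = np.exp(-beta)
    total = np.zeros_like(A)
    power = A.copy()                      # holds A^(dt + 1)
    for dt in range(max_lag):
        total += (1 - eta) * eta**dt * power
        power = power @ A
    return total

# stand-in transition matrix: unbiased random walk on a ring of 6 nodes
N = 6
A = np.zeros((N, N))
for i in range(N):
    A[i, (i + 1) % N] = A[i, (i - 1) % N] = 0.5

closed = asymptotic_estimate(A, 0.3)
summed = series_estimate(A, 0.3)
precise = asymptotic_estimate(A, 20.0)    # large beta: nearly error-free memory
```

The two computations agree, each row of the estimate remains a probability distribution, and for large β the estimate is numerically indistinguishable from A.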

Fig. 3. A maximum entropy model of transition probability estimates in humans.

a Illustration of the maximum entropy distribution P(Δt) representing the probability of recalling a stimulus Δt time steps from the target stimulus (dashed line). In the limit β → 0, the distribution becomes uniform over all past stimuli (left). In the opposite limit β → ∞, the distribution becomes a delta function on the desired stimulus (right). For intermediate amounts of noise, the distribution drops off monotonically (center). b Resulting internal estimates of the transition structure. For β → 0, the estimates become all-to-all, losing any resemblance to the true structure (left), while for β → ∞, the transition estimates become exact (right). At intermediate precision, the higher-order community structure organically comes into focus (center). c, d Predictions of the cross-cluster surprisal effect (c) and the modular-lattice effect (d) as functions of the inverse temperature β.

Remarkably, for intermediate values of β, higher-order features of the transition network, such as communities of densely connected nodes, come into focus, while some of the fine-scale features, like the edges between communities, fade away (Fig. 3b). Applying Eq. (6) to the modular graph, we find that the average expected probability of within-community transitions reaches over 1.6 times the estimated probability of between-community transitions (Fig. 3c), thus explaining the cross-cluster surprisal effect22,37. Furthermore, we find that the average estimated transition probabilities in the modular graph reach over 1.4 times the estimated probabilities in the lattice graph (Fig. 3d), thereby predicting the modular-lattice effect. In addition to these higher-order effects, we find that the model also explains previously reported variations in human expectations at the level of individual nodes4,6,22 (Supplementary Fig. 1). Together, these results demonstrate that the maximum entropy model predicts the qualitative effects of network structure on human reaction times. But can we use the same ideas to quantitatively predict the behavior of particular individuals?
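The emergence of community structure at intermediate β can be explored with a short calculation. The sketch below applies the closed-form estimate to one possible modular graph (three communities of five nodes with degree 4; the exact wiring is an assumption) and compares estimated probabilities on within-community and between-community edges. It is not meant to reproduce the specific ratios reported above.

```python
import numpy as np

def modular_adjacency():
    """3 communities of 5 nodes, uniform degree 4 (assumed wiring)."""
    N = 15
    G = np.zeros((N, N), dtype=int)
    for c in range(3):
        nodes = list(range(5 * c, 5 * c + 5))
        first, last = nodes[0], nodes[-1]
        for i in nodes:
            for j in nodes:
                if i != j and {i, j} != {first, last}:
                    G[i, j] = 1
        nxt = (5 * (c + 1)) % N
        G[last, nxt] = G[nxt, last] = 1
    return G

def asymptotic_estimate(A, beta):
    """Closed form (1 - e^{-beta}) A (I - e^{-beta} A)^{-1}."""
    eta = np.exp(-beta)
    return (1 - eta) * A @ np.linalg.inv(np.eye(len(A)) - eta * A)

G = modular_adjacency()
A = G / G.sum(axis=1, keepdims=True)
community = np.arange(15) // 5
same = community[:, None] == community[None, :]   # True where i and j share a community
edge = G > 0

def within_between_ratio(beta):
    """Mean estimate on within-community edges over mean estimate on between-community edges."""
    A_hat = asymptotic_estimate(A, beta)
    return A_hat[edge & same].mean() / A_hat[edge & ~same].mean()

ratios = {beta: within_between_ratio(beta) for beta in (0.1, 0.3, 1.0, 20.0)}
```

Although every true edge has probability 0.25, the estimated within-community probabilities exceed the between-community ones at intermediate β, and the ratio returns to 1 as β grows large.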

Predicting the behavior of individual humans

To model the behavior of individual subjects, we relate the transition estimates in Eqs. (3)–(5) to predictions about people’s reaction times. Given a sequence of nodes $x_1, \ldots, x_{t-1}$, we note that the reaction to the next node $x_t$ is determined by the expected probability of transitioning from $x_{t-1}$ to $x_t$ calculated at time t − 1, which we denote by $a(t) = \hat{A}_{x_{t-1}, x_t}(t-1)$. From this internal anticipation a(t), the simplest possible prediction for a person’s reaction time is given by the linear relationship52 $\hat{r}(t) = r_0 + r_1 a(t)$, where the intercept r0 represents a person’s reaction time with zero anticipation and the slope r1 quantifies the strength of the relationship between a person’s reactions and their anticipation in our model53.

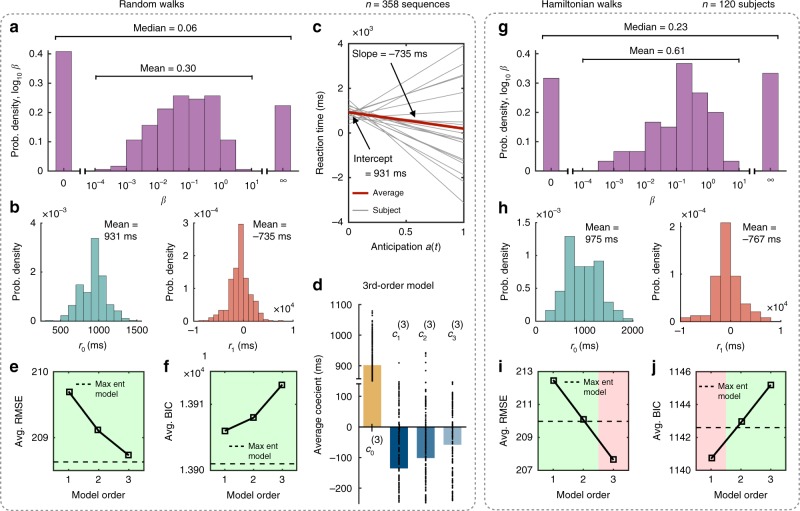

To estimate the parameters β, r0, and r1 that best describe a given individual, we minimize the root mean squared error (RMSE) between their predicted and observed reaction times after regressing out the dependencies on button combination, trial number, and recency (Fig. 1e, f; Methods). The distributions of the estimated parameters are shown in Fig. 4a–b for random walks and in Fig. 4g–h for Hamiltonian walks. Among the 358 random walk sequences in the modular and lattice graphs (across 286 subjects; Methods), 40 were best described as performing maximum likelihood estimation (β → ∞) and 73 seemed to lack any notion of the transition structure whatsoever (β → 0), while among the remaining 245 sequences, the average inverse temperature was β = 0.30. Meanwhile, among the 120 subjects that responded to Hamiltonian walk sequences, 81 appeared to have a non-trivial value of β, with an average of β = 0.61. Interestingly, these estimates of β roughly correspond to the values for which our model predicts the strongest network effects (Fig. 3c, d). In the following section, we will compare these values of β, which are estimated indirectly from people’s reaction times, with direct measurements of β in an independent memory experiment.
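The fitting procedure can be sketched as a grid search over β with an ordinary least-squares fit of r0 and r1 at each candidate value. This is an illustration under stated assumptions rather than the authors' optimization routine (which is described in Methods): the grid, the synthetic reaction times, the stand-in graph, and the convention that a transition from a never-visited node has anticipation 0 are all choices made here.

```python
import numpy as np

def anticipations(x, N, beta):
    """a(t) for each transition x[t] -> x[t+1], using only what was seen before it."""
    eta = np.exp(-beta)
    n = np.zeros((N, N))   # noisy transition counts
    b = np.zeros(N)        # unnormalized belief over the previous node
    z = 0.0                # normalizer of the belief
    a = np.zeros(len(x) - 1)
    for t in range(len(x) - 1):
        b = eta * b
        b[x[t]] += 1.0
        z = eta * z + 1.0
        row = n[x[t]].sum()
        a[t] = n[x[t], x[t + 1]] / row if row > 0 else 0.0  # assumed: 0 if no data yet
        n[:, x[t + 1]] += b / z
    return a

def fit_subject(x, rt, N, betas):
    """Return (rmse, beta, r0, r1) minimizing RMSE over the candidate betas."""
    best = None
    for beta in betas:
        a = anticipations(x, N, beta)
        X = np.column_stack([np.ones_like(a), a])
        coef, *_ = np.linalg.lstsq(X, rt, rcond=None)
        rmse = np.sqrt(np.mean((X @ coef - rt) ** 2))
        if best is None or rmse < best[0]:
            best = (rmse, beta, coef[0], coef[1])
    return best

# synthetic demonstration: walk on a 15-node ring with neighbors at distance 1 and 2
rng = np.random.default_rng(0)
N = 15
x = [0]
for _ in range(999):
    x.append(int((x[-1] + rng.choice([-2, -1, 1, 2])) % N))
x = np.array(x)
rt = 900.0 - 700.0 * anticipations(x, N, 0.3)   # hypothetical noiseless reaction times (ms)
rmse, beta_hat, r0, r1 = fit_subject(x, rt, N, betas=[0.1, 0.3, 1.0, 3.0])
```

On noiseless synthetic data the search recovers the generating parameters. With real reaction times, the residuals after regressing out button combination, trial number, and recency would take the place of `rt`.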

Fig. 4. Predicting reaction times for individual subjects.

a–f Estimated parameters and accuracy analysis for our maximum entropy model across 358 random walk sequences (across 286 subjects; Methods). a For the inverse temperature β, 40 sequences corresponded to the limit β → ∞, 73 corresponded to the limit β → 0. Among the remaining 245 sequences, the average value of β was 0.30. b Distributions of the intercept r0 (left) and slope r1 (right). c Predicted reaction time as a function of a subject’s internal anticipation. Gray lines indicate 20 randomly selected sequences, and the red line shows the average prediction over all sequences. d Linear parameters for the third-order competing model; data points represent individual sequences and bars represent averages. e, f Comparing the performance of our maximum entropy model with the hierarchy of competing models up to third-order. Root mean squared error (RMSE; e) and Bayesian information criterion (BIC; f) of our model averaged over all sequences (dashed lines) compared to the competing models (solid lines); our model provides the best description of the data across all models considered. g–j Estimated parameters and accuracy analysis for our maximum entropy model across all Hamiltonian walk sequences (120 subjects). g For the inverse temperature β, 20 subjects were best described as performing maximum likelihood estimation (β → ∞), 19 lacked any notion of the transition structure (β → 0), and the remaining 81 subjects had an average value of β = 0.61. h Distributions of the intercept r0 (left) and slope r1 (right). i Average RMSE of our model (dashed line) compared to that of the competing models (solid line); our model maintains higher accuracy than the competing hierarchy up to the second-order model. j Average BIC of the maximum entropy model (dashed line) compared to that of the competing models (solid line); our model provides a better description of the data than the second- or third-order models. Source data are provided as a Source Data file.

In addition to estimating β, we also wish to determine whether our model accurately describes individual behavior. Toward this end, we first note that the average slope r1 is large (−735 ms for random walks and −767 ms for Hamiltonian walks), suggesting that the transition estimates in our model a(t) are strongly predictive of human reaction times, and negative, confirming the intuition that increased anticipation yields decreased reaction times (Fig. 4b, h). To examine the accuracy of our model $\hat{A}$, we consider a hierarchy of competing models $\hat{A}^{(\ell)}$, which represent the hypothesis that humans learn explicit representations of the higher-order transition structure. In particular, we denote the $\ell$th-order transition matrix by $\hat{A}^{(\ell)}_{ij} = n^{(\ell)}_{ij} / \sum_k n^{(\ell)}_{ik}$, where $n^{(\ell)}_{ij}$ counts the number of observed transitions from node i to node j in $\ell$ steps. The model hierarchy takes into account increasingly higher-order transitions, such that the $\ell$th-order model contains perfect information about transitions up to length $\ell$:

$$\hat{r}^{(\ell)}(t) = c^{(\ell)}_0 + \sum_{k=1}^{\ell} c^{(\ell)}_k\, a_k(t), \tag{7}$$

where $a_k(t) = \hat{A}^{(k)}_{x_{t-k}, x_t}(t-1)$. Each model contains parameters $c^{(\ell)}_0, \ldots, c^{(\ell)}_\ell$, where $c^{(\ell)}_k$ quantifies the predictive power of the kth-order transition structure.

Intuitively, for each model $\hat{A}^{(\ell)}$, we expect the coefficients $c^{(\ell)}_1, c^{(\ell)}_2, \ldots$ to be negative, reflecting a decrease in reaction times due to increased anticipation, and decreasing in magnitude, such that higher-order transitions are progressively less predictive of people’s reaction times. Indeed, considering the third-order model as an example, we find that progressively higher-order transitions are less predictive of human reactions (Fig. 4d). However, even the largest of these coefficients is much smaller in magnitude than the slope in our maximum entropy model (r1 = −735 ms), indicating that the representation $\hat{A}$ is more strongly predictive of people’s reaction times than any of the explicit representations $\hat{A}^{(1)}, \hat{A}^{(2)}, \ldots$. Indeed, averaging over the random walk sequences, the maximum entropy model achieves higher accuracy than the first three orders of the competing model hierarchy (Fig. 4e), even though the third-order model contains one more parameter. To account for differences in the number of parameters, we additionally compare the average Bayesian information criterion (BIC) of our model with that of the competing models, finding that the maximum entropy model provides the best description of the data (Fig. 4f).
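The competing hierarchy can be sketched as a sequence of nested linear regressions on k-step transition estimates. The code below is a hedged illustration: the Gaussian-error form of the BIC, the synthetic reaction times, the stand-in graph, and the convention that trials without enough history receive anticipation 0 are assumptions, and the function names are hypothetical.

```python
import numpy as np

def kstep_anticipations(x, N, order):
    """Column k-1 holds the k-step transition estimate for each trial, from past data only."""
    T = len(x)
    counts = np.zeros((order, N, N))   # counts[k-1, i, j]: transitions i -> j in k steps
    feats = np.zeros((T, order))
    for t in range(T):
        for k in range(1, order + 1):
            if t - k >= 0:
                row = counts[k - 1, x[t - k]].sum()
                if row > 0:
                    feats[t, k - 1] = counts[k - 1, x[t - k], x[t]] / row
        for k in range(1, order + 1):  # update counts only after predicting trial t
            if t - k >= 0:
                counts[k - 1, x[t - k], x[t]] += 1
    return feats

def fit_order(feats, rt, order):
    """Least-squares fit of the order-th model; returns (coefficients, RMSE, BIC)."""
    X = np.column_stack([np.ones(len(rt)), feats[:, :order]])
    coef, *_ = np.linalg.lstsq(X, rt, rcond=None)
    rss = np.sum((X @ coef - rt) ** 2)
    n, p = len(rt), order + 1
    bic = n * np.log(rss / n) + p * np.log(n)   # assumed Gaussian-error BIC
    return coef, np.sqrt(rss / n), bic

# synthetic demonstration on a 15-node ring with neighbors at distance 1 and 2
rng = np.random.default_rng(1)
N = 15
x = [0]
for _ in range(1499):
    x.append(int((x[-1] + rng.choice([-2, -1, 1, 2])) % N))
x = np.array(x)
feats = kstep_anticipations(x, N, order=3)
rt = 900.0 - 100.0 * feats[:, 0] + rng.normal(0, 50, size=len(x))  # hypothetical data (ms)
results = [fit_order(feats, rt, k) for k in (1, 2, 3)]
```

Because the models are nested, the RMSE cannot increase with order, which is why a criterion that penalizes parameters, such as the BIC, is needed for a fair comparison.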

Similarly, averaging over the Hamiltonian walk sequences, the maximum entropy model provides more accurate predictions than the first two competing models (Fig. 4i) and provides a lower BIC than the second and third competing models (Fig. 4j). Notably, even in Hamiltonian walks, the maximum entropy model provides a better description of human reaction times than the second-order competing model, which has the same number of parameters. However, we remark that the first-order competing model has a lower BIC than the maximum entropy model (Fig. 4j), suggesting that humans may focus on first-order rather than higher-order statistics during Hamiltonian walks—an interesting direction for future research. On the whole, these results indicate that the free energy principle, and the resulting maximum entropy model, is consistently more effective at describing human reactions than the hypothesis that people learn explicit representations of the higher-order transition structure.

Directly probing the memory distribution

Throughout our discussion, we have argued that errors in memory shape human representations in predictable ways, a perspective that has received increasing attention in recent years47,54,55. Although our framework explains specific aspects of human behavior, there exist alternative perspectives that might yield similar predictions. For example, one could imagine a Bayesian learner with a non-Markov prior that “integrates” the transition structure over time, even without sustaining errors in memory or learning. In addition, Eq. (6) resembles the successor representation in reinforcement learning56,57, which assumes that, rather than shuffling the order of past stimuli, humans are instead planning their responses multiple steps in advance (Supplementary Discussion). In order to distinguish our framework from these alternatives, here we provide direct evidence for precisely the types of mental errors predicted by our model.

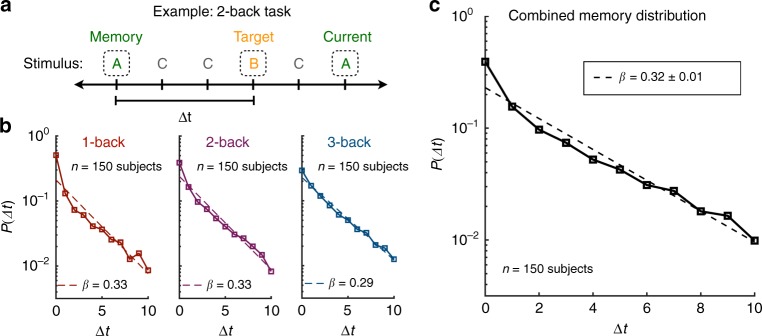

In the construction and testing of our model, we have developed a series of predictions concerning the shape of the memory distribution P(Δt), which, to recall, represents the probability of remembering the stimulus at time t − Δt instead of the target stimulus at time t. We first assumed that P(Δt) decreases monotonically. Second, to make quantitative predictions, we employed the free energy principle, leading to the prediction that P drops off exponentially quickly with Δt (Eq. (5)). Finally, when fitting the model to individual subjects, we estimated an average inverse temperature β between 0.30 for random walks and 0.61 for Hamiltonian walks.

To test these three predictions directly, we conducted a standard n-back memory experiment. Specifically, we presented subjects with sequences of letters on a screen, and they were asked to respond to each letter indicating whether or not it was the same as the letter that occurred n steps previously; for each subject, this process was repeated for the three conditions n = 1, 2, and 3. To measure the memory distribution P(Δt), we considered all trials on which a subject responded positively that the current stimulus matched the target. For each such trial, we looked back to the last time that the subject did in fact observe the current stimulus and we recorded the distance (in trials) between this observation and the target (Fig. 5a). In this way, we were able to treat each positive response as a sample from the memory distribution P(Δt).

Fig. 5. Measuring the memory distribution in an n-back experiment.

a Example of the 2-back memory task. Subjects view a sequence of stimuli (letters) and respond to each stimulus indicating whether it matches the target stimulus from two trials before. For each positive response that the current stimulus matches the target, we measure Δt by calculating the number of trials between the last instance of the current stimulus and the target. b Histograms of Δt (i.e., measurements of the memory distribution P(Δt)) across all subjects in the 1-, 2-, and 3-back tasks. Dashed lines indicate exponential fits to the observed distributions. The inverse temperature β is estimated for each task to be the negative slope of the exponential fit. c Memory distribution aggregated across the three n-back tasks. Dashed line indicates an exponential fit. We report a combined estimate of the inverse temperature β = 0.32 ± 0.01, where the standard deviation is estimated from 1000 bootstrap samples of the combined data. Measurements were on independent subjects. Source data are provided as a Source Data file.

The measurements of P for the 1-, 2-, and 3-back tasks are shown in Fig. 5b, and the combined measurement of P across all conditions is shown in Fig. 5c. Notably, the distributions decrease monotonically and maintain consistent exponential forms, even out to Δt = 10 trials from the target stimulus, thereby providing direct evidence for the Boltzmann distribution (Eq. (5)). Moreover, fitting an exponential curve to each distribution, we can directly estimate the inverse temperature β. Remarkably, the value β = 0.32 ± 0.01 estimated from the combined distribution (Fig. 5c) falls within the range of values estimated from our reaction time experiments (Fig. 4a, g), nearly matching the average value β = 0.30 for random walk sequences (Fig. 4a).

To further strengthen the link between mental errors and people’s internal representations, we then asked subjects to perform the original serial response task (Fig. 1), and for each subject, we estimated β using the two methods described above: (i) directly measuring β in the n-back experiment, and (ii) indirectly estimating β in the serial response experiment. Comparing these two estimates across subjects, we find that they are significantly related with Spearman correlation rs = 0.28 (p = 0.047, permutation test), while noting that we do not use the Pearson correlation because β is not normally distributed (Anderson–Darling test58, p < 0.001 for the serial response task and p = 0.013 for the n-back task). Together, these results demonstrate not only the existence of the particular form of mental errors predicted by our model—down to the specific value of β—but also the relationship between these mental errors and people’s internal estimates of the transition structure.

Network structure guides reactions to novel transitions

Given a model of human behavior, it is ultimately interesting to make testable predictions. Thus far, in keeping with the majority of existing research4,6,20–22,37, we have focused on static transition graphs, wherein the probability Aij of transitioning from state i to state j remains constant over time. However, the statistical structures governing human life are continually shifting59,60, and people are often forced to respond to rare or novel transitions61,62. Here we show that, when confronted with a novel transition—or a violation of the preexisting transition network—not only are people surprised, but the magnitude of their surprise depends critically on the topology of the underlying network.

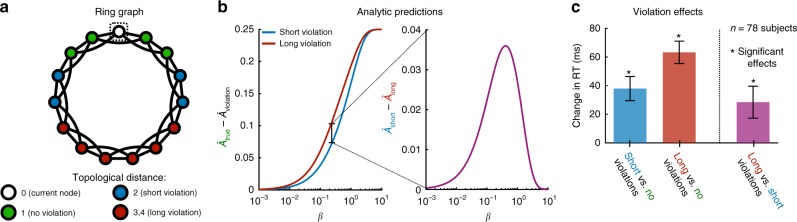

We consider a ring graph where each node is connected to its nearest and next-nearest neighbors (Fig. 6a). We asked subjects to respond to sequences of 1500 nodes drawn as random walks on the ring graph, but with 50 violations randomly interspersed. These violations were divided into two categories: short violations of topological distance two and long violations of topological distances three and four (Fig. 6a). Using maximum likelihood estimation (Eq. (1)) as a guide, one would naively expect people to be equally surprised by all violations; indeed, each violation has never been seen before. In contrast, our model predicts that surprise should depend crucially on the topological distance of a violation in the underlying graph, with topologically longer violations inducing increased surprise over short violations (Fig. 6b).
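A minimal sketch of this prediction is given below. It assumes the infinite-sequence form of the model, taken here to be Â = (1 − e^(−β)) A (I − e^(−β) A)^(−1), and uses the average random walk value β = 0.30 as an illustrative choice. The function names are hypothetical, and the topological distance is computed with a shortcut that only holds for this particular ring.

```python
import numpy as np

def ring_transition_matrix(n_nodes=15, reach=2):
    """Random-walk transition matrix for a ring in which each node links to
    its `reach` nearest neighbors on either side."""
    A = np.zeros((n_nodes, n_nodes))
    for i in range(n_nodes):
        for step in range(1, reach + 1):
            A[i, (i + step) % n_nodes] = 1.0
            A[i, (i - step) % n_nodes] = 1.0
    return A / A.sum(axis=1, keepdims=True)

def learned_representation(A, beta):
    """Assumed closed form of the learned transition estimates."""
    eta = np.exp(-beta)
    n = A.shape[0]
    return (1.0 - eta) * A @ np.linalg.inv(np.eye(n) - eta * A)

def anticipation_by_distance(beta=0.30, n_nodes=15, reach=2):
    """Mean anticipation of a transition from node 0, grouped by the
    topological distance of the destination node."""
    A_hat = learned_representation(ring_transition_matrix(n_nodes, reach), beta)
    groups = {}
    for j in range(1, n_nodes):
        ring_dist = min(j, n_nodes - j)
        topo_dist = -(-ring_dist // reach)  # ceiling division, valid on this ring
        groups.setdefault(topo_dist, []).append(A_hat[0, j])
    return {d: float(np.mean(v)) for d, v in groups.items()}
```

Calling `anticipation_by_distance()` returns one value per topological distance (1 for standard transitions, 2 for short violations, 3 and 4 for long violations). Under these assumptions the values fall as the distance grows, which is the qualitative pattern described above.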

Fig. 6. Network violations yield surprise that grows with topological distance.

a Ring graph consisting of 15 nodes, where each node is connected to its nearest neighbors and next-nearest neighbors on the ring. Starting from the boxed node, a sequence can undergo a standard transition (green), a short violation of the transition structure (blue), or a long violation (red). b Our model predicts that subjects’ anticipations of both short (blue) and long (red) violations should be weaker than their anticipations of standard transitions (left). Furthermore, we predict that subjects’ anticipations of violations should decrease with increasing topological distance (right). c Average effects of network violations across 78 subjects, estimated using a mixed effects model (Supplementary Tables 10 and 11), with error bars indicating one standard deviation from the mean. We find that standard transitions yield quicker reactions than both short violations (p < 0.001, t = 4.50, df = 7.15 × 10⁴) and long violations (p < 0.001, t = 8.07, df = 7.15 × 10⁴). Moreover, topologically shorter violations induce faster reactions than long violations (p = 0.011, t = 2.54, df = 3.44 × 10³), thus confirming the predictions of our model. Measurements were on independent subjects, and statistical significance was computed using two-sided F-tests. Source data are provided as a Source Data file.

In the data, we find that all violations give rise to sharp increases in reaction times relative to standard transitions (Fig. 6c; Supplementary Table 10), indicating that people are in fact learning the underlying transition structure. Moreover, we find that reaction times for long violations are 28 ms longer than those for short violations (p = 0.011, F-test; Fig. 6c; Supplementary Table 11). In addition, we confirm that the effects of network violations are not simply driven by stimulus recency (Supplementary Figs. 4 and 5). These observations suggest that people learn the topological distances between all nodes in the transition graph, not just those pairs for which a transition has already been observed59–62.

Discussion

Daily life is filled with sequences of items that obey an underlying network architecture, from networks of word and note transitions in natural language and music to networks of abstract relationships in classroom lectures and literature5–9. How humans infer and internally represent these complex structures are questions of fundamental interest10–13. Recent experiments in statistical learning have established that human representations depend critically on the higher-order organization of probabilistic transitions, yet the underlying mechanisms remain poorly understood20–23.

Here we show that network effects on human behavior can be understood as stemming from mental errors in people’s estimates of the transition structure, while noting that future work should focus on disambiguating the role of recency39,40. We use the free energy principle to develop a model of human expectations that explicitly accounts for the brain’s natural tendency to minimize computational complexity—that is, to maximize entropy31,32,49. Indeed, the brain must balance the benefits of making accurate predictions against the computational costs associated with such predictions24–29,50. This competition between accuracy and efficiency induces errors in people’s internal representations, which, in turn, explains with notable accuracy an array of higher-order network phenomena observed in human experiments20–23. Importantly, our model admits a concise analytic form (Eq. (6)) and can be used to predict human behavior on a person-by-person basis (Fig. 4).

This work inspires directions for future research, particularly with regard to the study and design of optimally learnable network structures. Given the notion that densely connected communities help to mitigate the effects of mental errors on people’s internal representations, we anticipate that networks with high “learnability” will possess a hierarchical community structure63. Interestingly, such hierarchical organization has already been observed in a diverse range of real world networks, from knowledge and language graphs64 to social networks and the World Wide Web65. Could it be that these networks have evolved so as to facilitate accurate representations in the minds of the humans using and observing them? Questions such as this demonstrate the importance of having simple principled models of human representations and point to the promising future of this research endeavor.

Methods

Maximum entropy model and the infinite-sequence limit

Here we provide a more thorough derivation of our maximum entropy model of human expectations, with the goal of fostering intuition. Given a matrix of erroneous transition counts $\tilde{n}_{ij}$, our estimate of the transition structure is given by $\hat{A}_{ij} = \tilde{n}_{ij} / \sum_k \tilde{n}_{ik}$. When observing a sequence of nodes x1, x2, …, in order to construct the counts $\tilde{n}_{ij}$, we assume that humans use the following recursive rule: $\tilde{n}_{ij}(t+1) = \tilde{n}_{ij}(t) + B_t(i)\,[j = x_{t+1}]$, where $[\cdot]$ equals one if its argument is true and zero otherwise, and Bt(i) denotes the belief, or perceived probability, that node i occurred at the previous time t. This belief, in turn, can be written in terms of the probability P(Δt) of accidentally recalling the node that occurred Δt time steps from the desired node at time t: $B_t(i) = \sum_{\Delta t = 0}^{t-1} P(\Delta t)\,[i = x_{t - \Delta t}]$.

In order to make quantitative predictions about people’s estimates of a transition structure, we must choose a mathematical form for P(Δt). To do so, we leverage the free energy principle31: When building mental models, the brain is finely tuned to simultaneously minimize errors and computational complexity. The average error associated with a candidate distribution Q(Δt) is assumed to be the average distance in time of the recalled node from the target node, denoted $E(Q) = \sum_{\Delta t} Q(\Delta t)\,\Delta t$. Furthermore, Shannon famously proved that the only suitable choice for the computational cost of a candidate distribution is its negative entropy33, denoted $-S(Q) = \sum_{\Delta t} Q(\Delta t) \log Q(\Delta t)$. Taken together, the total cost associated with a distribution Q(Δt) is given by the free energy F(Q) = βE(Q) − S(Q), where β, referred to as the inverse temperature, parameterizes the relative importance of minimizing errors versus computational costs. By minimizing F with respect to Q, we arrive at the Boltzmann distribution $P(\Delta t) = e^{-\beta \Delta t}/Z$, where Z is the normalizing partition function30. We emphasize that this mathematical form for P(Δt) followed directly from our free energy assumption about resource constraints in the brain.
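For readers who want the intermediate step, the minimization can be sketched as follows. The sketch assumes that the error is $E(Q) = \sum_{\Delta t} Q(\Delta t)\,\Delta t$ and the entropy is $S(Q) = -\sum_{\Delta t} Q(\Delta t) \log Q(\Delta t)$, and it introduces a Lagrange multiplier λ (notation chosen here for illustration) to enforce normalization of Q:

$$
\frac{\partial}{\partial Q(\Delta t)} \left[ \beta \sum_{\Delta t'} Q(\Delta t')\,\Delta t' + \sum_{\Delta t'} Q(\Delta t') \log Q(\Delta t') + \lambda \Big( \sum_{\Delta t'} Q(\Delta t') - 1 \Big) \right] = \beta\,\Delta t + \log Q(\Delta t) + 1 + \lambda = 0 .
$$

Solving for Q gives $Q(\Delta t) = e^{-(1+\lambda)}\, e^{-\beta \Delta t}$, and fixing λ through normalization yields

$$
Q(\Delta t) = \frac{e^{-\beta \Delta t}}{Z}, \qquad Z = \sum_{\Delta t} e^{-\beta \Delta t} .
$$

Because F is convex in Q, this stationary point is the minimum. If the sum over Δt runs from zero to infinity, the partition function reduces to $Z = 1/(1 - e^{-\beta})$.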

To gain an analytic intuition for the model without referring to a particular random walk, we consider the limit of an infinitely long sequence of nodes. To begin, we consider a sequence x1, …, xT of length T. At the end of this sequence, the counting matrix takes the form

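$$
\tilde{n}_{ij}(T) = \sum_{t=1}^{T-1} B_t(i)\,[j = x_{t+1}] = \sum_{t=1}^{T-1} \left( \sum_{\Delta t = 0}^{t-1} P(\Delta t)\,[i = x_{t-\Delta t}] \right) [j = x_{t+1}]. \tag{8}
$$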

Dividing both sides by T, the right-hand side becomes a time average, which by the ergodic theorem converges to an expectation over the transition structure in the limit T → ∞,

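$$
\lim_{T \to \infty} \frac{\tilde{n}_{ij}(T)}{T} = \sum_{\Delta t = 0}^{\infty} P(\Delta t)\, \left\langle [i = x_{t-\Delta t}]\,[j = x_{t+1}] \right\rangle_A, \tag{9}
$$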

where $\langle \cdot \rangle_A$ denotes an expectation over random walks in A. We note that the expectation of an indicator function is simply a probability, such that $\langle [i = x_{t-\Delta t}]\,[j = x_{t+1}] \rangle_A = p_i\,(A^{\Delta t + 1})_{ij}$, where pi is the long-run probability of node i appearing in the sequence and $(A^{\Delta t + 1})_{ij}$ is the probability of randomly walking from node i to node j in Δt + 1 steps. Putting these pieces together, we find that the expectation converges to a concise mathematical form,

$$
\hat{A}_{ij} = \sum_{\Delta t = 0}^{\infty} P(\Delta t)\,(A^{\Delta t + 1})_{ij}. \tag{10}
$$

Thus far, we have not appealed to our maximum entropy form for P(Δt). It turns out that doing so allows us to write down an analytic expression for the long-time expectations simply in terms of the transition structure A and the inverse temperature β. Noting that $P(\Delta t) = e^{-\beta \Delta t}/Z$ and $Z = \sum_{\Delta t = 0}^{\infty} e^{-\beta \Delta t} = 1/(1 - e^{-\beta})$, we have

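$$
\hat{A} = (1 - e^{-\beta})\, A \sum_{\Delta t = 0}^{\infty} \left( e^{-\beta} A \right)^{\Delta t} = (1 - e^{-\beta})\, A \left( I - e^{-\beta} A \right)^{-1}. \tag{11}
$$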

This simple formula for the representation is the basis for all of our analytic predictions (Figs. 3c, d and 6b) and is closely related to notions of communicability in complex network theory66,67.
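The convergence argument can be checked numerically. The sketch below compares estimates built with the recursive counting rule on a long simulated random walk against the closed form, taking Eq. (11) to read Â = (1 − e^(−β)) A (I − e^(−β) A)^(−1). The function names and the choice of test graph are illustrative. Normalizing the recall weights over the available history is an assumption about how P(Δt) is truncated near the start of a sequence.

```python
import numpy as np

def analytic_representation(A, beta):
    """Closed-form estimate in the infinite-sequence limit (assumed form)."""
    eta = np.exp(-beta)
    return (1.0 - eta) * A @ np.linalg.inv(np.eye(len(A)) - eta * A)

def simulated_representation(A, beta, n_steps, seed=0):
    """Apply the recursive counting rule to one random walk on A."""
    rng = np.random.default_rng(seed)
    n = len(A)
    eta = np.exp(-beta)
    counts = np.zeros((n, n))
    weights = np.zeros(n)  # unnormalized recall weight attached to each node
    node = rng.integers(n)
    for _ in range(n_steps):
        # Older observations decay by e^{-beta}; the current node enters at dt = 0.
        weights *= eta
        weights[node] += 1.0
        nxt = rng.choice(n, p=A[node])
        # Belief B_t(i): weights normalized over the history seen so far.
        counts[:, nxt] += weights / weights.sum()
        node = nxt
    return counts / counts.sum(axis=1, keepdims=True)
```

For a small ergodic graph, `simulated_representation` should approach `analytic_representation` as `n_steps` grows, and for large β both should approach the true transition matrix A.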

Experimental setup for serial response tasks

Subjects performed a self-paced serial reaction time task using a computer screen and keyboard. Each stimulus was presented as a horizontal row of five gray squares; all five squares were shown at all times. The squares corresponded spatially with the keys ‘Space’, ‘H’, ‘J’, ‘K’, and ‘L’, with the left square representing ‘Space’ and the right square representing ‘L’ (Fig. 1b). To indicate a target key or pair of keys for the subject to press, the corresponding squares would become outlined in red (Fig. 1a). When subjects pressed the correct key combination, the squares on the screen would immediately display the next target. If an incorrect key or pair of keys was pressed, the message ‘Error!’ was displayed on the screen below the stimuli and remained until the subject pressed the correct key(s). The order in which stimuli were presented to each subject was prescribed by either a random walk or a Hamiltonian walk on a graph of N = 15 nodes, and each sequence consisted of 1500 stimuli. For each subject, one of the 15 key combinations was randomly assigned to each node in the graph (Fig. 1a). Across all graphs, each node was connected to its four neighboring nodes with a uniform 0.25 transition probability. Importantly, given the uniform edge weights and homogeneous node degrees (k = 4), the only differences between the transition graphs lay in their higher-order structure.

In the first experiment, we presented subjects with random walk sequences drawn from two different graph topologies: a modular graph with three communities of five densely connected nodes and a lattice graph representing a 3 × 5 grid with periodic boundary conditions (Fig. 1c). The purpose of this experiment was to demonstrate the systematic dependencies of human reaction times on higher-order network structure, following similar results reported in recent literature22,37. In particular, we demonstrate two higher-order network effects: In the cross-cluster surprisal effect, average reaction times for within-cluster transitions in the modular graph are significantly faster than reaction times for between-cluster transitions (Fig. 2a); and in the modular-lattice effect, average reaction times in the modular graph are significantly faster than reaction times in the lattice graph (Fig. 2b).
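To make the two topologies concrete, the following sketch builds adjacency matrices with the stated properties (15 nodes, uniform degree k = 4). The exact wiring of the modular graph is an assumption: each community is taken to be a five-node clique with the edge between its two boundary nodes removed, and boundary nodes are linked to the neighboring communities in a cycle. The function names are hypothetical.

```python
import numpy as np

def modular_adjacency():
    """Three communities of five nodes; every node has degree 4 (assumed wiring)."""
    adj = np.zeros((15, 15), dtype=int)
    for c in range(3):
        nodes = range(5 * c, 5 * c + 5)
        for i in nodes:
            for j in nodes:
                if i != j:
                    adj[i, j] = 1
        first, last = 5 * c, 5 * c + 4
        # Remove the edge between the two boundary nodes of this community...
        adj[first, last] = adj[last, first] = 0
        # ...and connect the last node to the first node of the next community.
        nxt = 5 * ((c + 1) % 3)
        adj[last, nxt] = adj[nxt, last] = 1
    return adj

def lattice_adjacency(rows=3, cols=5):
    """Grid with periodic boundary conditions; every node has degree 4."""
    adj = np.zeros((rows * cols, rows * cols), dtype=int)
    for r in range(rows):
        for c in range(cols):
            i = r * cols + c
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                j = ((r + dr) % rows) * cols + (c + dc) % cols
                adj[i, j] = 1
    return adj

def transition_matrix(adj):
    """Uniform random-walk transition probabilities (0.25 per edge when k = 4)."""
    return adj / adj.sum(axis=1, keepdims=True)
```

Both graphs yield identical edge weights and node degrees, so any behavioral difference between them must come from how the edges are arranged.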

In the second experiment, we presented subjects with Hamiltonian walk sequences drawn from the modular graph. Specifically, each sequence consisted of 700 random walk trials (intended to allow each subject to learn the graph structure), followed by eight repeats of 85 random walk trials and 15 Hamiltonian walk trials (Supplementary Discussion)20. Importantly, we find that the cross-cluster surprisal effect remains significant within the Hamiltonian walk trials (Fig. 2a).

In the third experiment, we considered a ring graph where each node was connected to its nearest and next-nearest neighbors in the ring (Fig. 6a). In order to study the dependence of human expectations on violations to the network structure, the first 500 trials for each subject constituted a standard random walk, allowing each subject time to develop expectations about the underlying transition structure. Across the final 1000 trials, we randomly distributed 50 network violations: 20 short violations of topological distance two and 30 long violations, 20 of topological distance three and 10 of topological distance four (Fig. 6a). As predicted by our model, we found a novel violations effect, wherein violations of longer topological distance give rise to larger increases in reaction times than short, local violations (Fig. 6b, c).

Data analysis for serial response tasks

To make inferences about subjects’ internal expectations based on their reaction times, we used more stringent filtering techniques than previous experiments when pre-processing the data22. Across all experiments, we first excluded from analysis the first 500 trials, in which subjects’ reaction times varied wildly (Fig. 1e), focusing instead on the final 1000 trials (or simply on the Hamiltonian trials in the second experiment), at which point subjects had already developed internal expectations about the transition structures. We then excluded all trials in which subjects responded incorrectly. Finally, we excluded reaction times that were implausible, either more than three standard deviations from a subject’s mean reaction time or below 100 ms. Furthermore, when measuring the network effects in all three experiments (Figs. 2 and 6), we also excluded reaction times over 3500 ms for implausibility. When estimating the parameters of our model and measuring model performance in the first two experiments (Fig. 4), to avoid large fluctuations in the results based on outlier reactions, we were even more stringent, excluding all reaction times over 2000 ms. Taken together, when measuring the cross-cluster surprisal and modular-lattice effects (Fig. 2), we used an average of 931 trials per subject; when estimating and evaluating our model (Fig. 4), we used an average of 911 trials per subject; and when measuring the violation effects (Fig. 6), we used an average of 917 trials per subject. To ensure that our results are robust to particular choices in the data processing, we additionally studied all 1500 trials for each subject rather than just the final 1000, confirming that both the cross-cluster surprisal and modular-lattice effects remain significant across all trials (Supplementary Tables 6 and 7).
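The exclusion steps can be summarized in a short sketch. The function name `filter_trials` and its arguments are hypothetical. The sketch also assumes that the mean and standard deviation are computed over a subject’s correct trials after the first 500 are dropped, which the text does not specify.

```python
import numpy as np

def filter_trials(rt_ms, correct, n_burn_in=500, min_rt=100.0,
                  max_rt=3500.0, n_sd=3.0):
    """Return a boolean mask of the trials retained for one subject.

    rt_ms   : reaction times in milliseconds, one per trial
    correct : booleans, True where the first response was correct
    max_rt  : 3500 ms for the network effects, 2000 ms for model fitting
    """
    rt_ms = np.asarray(rt_ms, dtype=float)
    correct = np.asarray(correct, dtype=bool)

    keep = np.zeros(rt_ms.size, dtype=bool)
    keep[n_burn_in:] = True   # drop the initial learning period
    keep &= correct           # drop incorrect responses

    # Assumption: outlier statistics use the trials that survive so far.
    mean, sd = rt_ms[keep].mean(), rt_ms[keep].std()
    keep &= np.abs(rt_ms - mean) <= n_sd * sd
    keep &= (rt_ms >= min_rt) & (rt_ms <= max_rt)
    return keep
```

Passing `max_rt=2000.0` reproduces the stricter cutoff used when estimating model parameters.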

Measurement of network effects using mixed effects models

In order to extract the effects of higher-order network structure on subjects’ reaction times, we used linear mixed effects models, which have become prominent in human research where many measurements are made for each subject38,68. Put simply, mixed effects models generalize standard linear regression techniques to include both fixed effects, which are constant across subjects, and random effects, which vary between subjects. Compared with standard linear models, mixed effects models allow for differentiation between effects that are subject-specific and those that persist across an entire population. Here, all models were fit using the fitlme function in MATLAB (R2018a), and random effects were chosen as the maximal structure that (i) allowed model convergence and (ii) did not include effects whose 95% confidence intervals overlapped with zero69. In what follows, when defining mixed effects models, we employ the standard R notation70.

First, we considered the cross-cluster surprisal effect (Fig. 2a). As we were only interested in measuring higher-order effects of the network topology on human reaction times, it was important to regress out simple biomechanical dependencies on the target button combinations (Fig. 1d), the natural quickening of reactions with time (Fig. 1e), and the effects of recency on reaction times39,40. Also, for the first experiment, since some subjects responded to both the modular and lattice graphs (Experimental Procedures), it was important to account for changes in reaction times due to which stage of the experiment a subject was in. To measure the cross-cluster surprisal effect, we fit a mixed effects model with the formula ‘RT~log(Trial)*Stage+Target+Recency+Trans_Type+(1+log(Trial)*Stage+Recency+Trans_Type|ID)’, where RT is the reaction time, Trial is the trial number (we found that log(Trial) was far more predictive of subjects’ reaction times than the trial number itself), Stage is the stage of the experiment (either one or two), Target is the target button combination, Recency is the number of trials since the last instance of the current stimulus, Trans_Type is the type of transition (either within-cluster or between-cluster), and ID is each subject’s unique ID. Fitting this mixed effects model to the random walk data in the first experiment (Supplementary Table 1), we found a 35 ms increase in reaction times (p < 0.001, F-test) for between-cluster transitions relative to within-cluster transitions (Fig. 2a). Similarly, fitting the same mixed effects model but without the variable Stage to the Hamiltonian walk data in the second experiment (Supplementary Table 4), we found a 36 ms increase in reaction times (p < 0.001, F-test) for between- versus within-cluster transitions (Fig. 2a). We note that because reaction times are not Gaussian distributed, it is fairly standard to perform a log transformation. However, for the above result as well as those that follow, we find the same qualitative effects with or without a log transformation.

Second, we studied the modular-lattice effect (Fig. 2b). To do so, we fit a mixed effects model with the formula ‘RT~log(Trial)*Stage+Target+Recency+Graph+(1+log(Trial)*Stage+Recency+Graph|ID)’, where Graph represents the type of transition network, either modular or lattice. Fitting this mixed effects model to the data in the first experiment (Supplementary Table 2), we found a fixed 23 ms increase in reaction times (p < 0.001, F-test) in the lattice graph relative to the modular graph (Fig. 2b).

Finally, we considered the effects of violations of varying topological distance in the ring lattice (Fig. 6c). We fit a mixed effects model with the formula ‘RT~log(Trial)+Target+Recency+Top_Dist+(1+log(Trial)+Recency+Top_Dist|ID)’, where Top_Dist represents the topological distance of a transition, either one for a standard transition, two for a short violation, or three for a long violation. Fitting the model to the data in the third experiment (Supplementary Tables 10 and 11), we found a 38 ms increase in reaction times for short violations relative to standard transitions (p < 0.001, F-test), a 63 ms increase in reaction times for long violations relative to standard transitions (p < 0.001, F-test), and a 28 ms increase in reaction times for long violations relative to short violations (p = 0.011, F-test). Put simply, people are more surprised by violations to the network structure that take them further from their current position in the network, suggesting that people have an implicit understanding of the topological distances between nodes in the network.

Estimating parameters and making quantitative predictions

Given an observed sequence of nodes x1, …, xt−1, and given an inverse temperature β, our model predicts the anticipation, or expectation, of the subsequent node xt to be $a(t) = \hat{A}_{x_{t-1}, x_t}(t-1)$. In order to quantitatively describe the reactions of an individual subject, we must relate the expectations a(t) to predictions about a person’s reaction times and then calculate the model parameters that best fit the reactions of an individual subject. The simplest possible prediction is given by the linear relation $\hat{r}(t) = r_0 + r_1 a(t)$, where the intercept r0 represents a person’s reaction time with zero anticipation and the slope r1 quantifies the strength with which a person’s reaction times depend on their internal expectations.

In total, our predictions contain three parameters (β, r0, and r1), which must be estimated from the reaction time data for each subject. Before estimating these parameters, however, we first regress out the dependencies of each subject’s reaction times on the button combinations, trial number, and recency using a mixed effects model of the form ‘RT~log(Trial)*Stage+Target+Recency+(1+log(Trial)*Stage+Recency|ID)’, where all variables were defined in the previous section. Then, to estimate the model parameters that best describe an individual’s reactions, we minimize the RMS prediction error with respect to each subject’s observed reaction times, $\mathrm{RMSE} = \sqrt{\frac{1}{T} \sum_{t} \left( r(t) - \hat{r}(t) \right)^2}$, where r(t) is the observed reaction time on trial t, $\hat{r}(t)$ is the corresponding model prediction, and T is the number of trials. We note that, given a choice for the inverse temperature β, the linear parameters r0 and r1 can be calculated analytically using standard linear regression techniques. Thus, the problem of estimating the model parameters can be restated as a one-dimensional minimization problem; that is, minimizing RMSE with respect to the inverse temperature β. To find the global minimum, we began by calculating RMSE along 100 logarithmically spaced values for β between $10^{-4}$ and 10. Then, starting at the minimum value of this search, we performed gradient descent until the gradient fell below an absolute value of $10^{-6}$. For a derivation of the gradient of the RMSE with respect to the inverse temperature β, we point the reader to the Supplementary Discussion. Finally, in addition to the gradient descent procedure described above, for each subject we also manually checked the RMSE associated with the two limits β → 0 and β → ∞. The resulting model parameters are shown in Fig. 4a, b for random walk sequences and Fig. 4g, h for Hamiltonian walk sequences.
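The grid-search stage of this procedure can be sketched as follows. This is an illustration under stated assumptions, not the analysis code: the function names are hypothetical, the gradient descent refinement and the limits β → 0 and β → ∞ are omitted, recall weights are normalized over the available history, and the anticipation defaults to 1/N when a node has no recorded transitions yet.

```python
import numpy as np

def anticipations(seq, beta, n_nodes):
    """Anticipation a(t) of each observed transition x_{t-1} -> x_t, using
    only the estimates accumulated before that transition."""
    eta = np.exp(-beta)
    counts = np.zeros((n_nodes, n_nodes))
    weights = np.zeros(n_nodes)
    a = np.empty(len(seq) - 1)
    for t in range(1, len(seq)):
        prev, cur = seq[t - 1], seq[t]
        total = counts[prev].sum()
        # Assumption: uniform expectation before any counts exist for prev.
        a[t - 1] = counts[prev, cur] / total if total > 0 else 1.0 / n_nodes
        weights *= eta
        weights[prev] += 1.0
        counts[:, cur] += weights / weights.sum()
    return a

def fit_subject(seq, rts, n_nodes, betas=None):
    """Grid search over beta; r0 and r1 come from ordinary least squares.

    rts must hold one reaction time per transition, i.e. len(seq) - 1 values.
    """
    if betas is None:
        betas = np.logspace(-4, 1, 100)
    rts = np.asarray(rts, dtype=float)
    best = None
    for beta in betas:
        a = anticipations(seq, beta, n_nodes)
        X = np.column_stack([np.ones_like(a), a])
        (r0, r1), *_ = np.linalg.lstsq(X, rts, rcond=None)
        rmse = np.sqrt(np.mean((rts - (r0 + r1 * a)) ** 2))
        if best is None or rmse < best["rmse"]:
            best = {"beta": float(beta), "r0": float(r0),
                    "r1": float(r1), "rmse": float(rmse)}
    return best
```

In practice the `rts` passed in would be the residual reaction times left after the mixed effects regression, and the returned β would seed a local refinement step.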

Experimental setup for n-back memory task

Subjects performed a series of n-back memory tasks using a computer screen and keyboard. Each subject observed a random sequence of the letters ‘B’, ‘D’, ‘G’, ‘T’, and ‘V’, wherein each letter was randomly displayed in either upper or lower case. The subjects responded on each trial using the keyboard to indicate whether or not the current letter was the same as the letter that occurred n trials previously. For each subject, this task was repeated for the conditions n = 1, 2, and 3, and each condition consisted of a sequence of 100 letters. The three conditions were presented in a random order to each subject. After the n-back task, each subject then performed a serial response task (equivalent to the first experiment described above) consisting of 1500 random walk trials drawn from the modular graph.

Data analysis for n-back memory task

In order to estimate the inverse temperature β for each subject from their n-back data, we directly measured their memory distribution P(Δt). As described in the main text, we treated each positive response indicating that the current stimulus matched the target stimulus as a sample of P(Δt) by measuring the distance in trials Δt between the last instance of the current stimulus and the target (Fig. 5a). For each subject, we combined all such samples across the three conditions n = 1, 2, and 3 to arrive at a histogram for Δt. In order to generate robust estimates for the inverse temperature β, we generated 1000 bootstrap samples of the Δt histogram for each subject. For each sample, we calculated a linear fit to the distribution P(Δt) on log-linear axes within the domain 0 ≤ Δt ≤ 4 (note that we could not carry the fit out to Δt = 10 because the data are much sparser for individual subjects). To ensure that the logarithm of P(Δt) was well defined for each sample (that is, to ensure that P(Δt) > 0 for all Δt), we added one count to each value of Δt. We then estimated the inverse temperature β for each sample by calculating the negative slope of the linear fit of log P(Δt) versus Δt. To arrive at an average estimate of β for each subject, we averaged β across the 1000 bootstrap samples. Finally, we compared these estimates of β from the n-back experiment with estimates of β from subjects’ reaction times in the subsequent serial response task, as described above. We found that these two independent estimates of people’s inverse temperatures are significantly correlated (excluding subjects for which β = 0 or β → ∞), with a Spearman coefficient rs = 0.28 (p = 0.047, permutation test). We note that we do not use the Pearson correlation coefficient because the estimates for β are not normally distributed for either the reaction time task (p < 0.001) or the n-back task (p = 0.013) according to the Anderson–Darling test58. This non-normality can be clearly seen in the distributions of β in Fig. 4a, g.
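The bootstrap estimate of β for a single subject can be sketched as below. The function name `estimate_beta` and the fixed random seed are illustrative choices, and `numpy.polyfit` stands in for whichever linear fitting routine was actually used.

```python
import numpy as np

def estimate_beta(delta_ts, n_boot=1000, max_dt=4, seed=0):
    """Bootstrap estimate of the inverse temperature from delta-t samples.

    delta_ts : non-negative integer delta-t samples pooled across conditions
    Returns the mean and standard deviation of beta across bootstrap samples.
    """
    rng = np.random.default_rng(seed)
    delta_ts = np.asarray(delta_ts, dtype=int)
    x = np.arange(max_dt + 1)
    betas = np.empty(n_boot)
    for b in range(n_boot):
        resample = rng.choice(delta_ts, size=delta_ts.size, replace=True)
        # Histogram over 0..max_dt, plus one count per bin so the log is defined.
        counts = np.bincount(resample, minlength=max_dt + 1)[: max_dt + 1] + 1
        slope, _ = np.polyfit(x, np.log(counts / counts.sum()), 1)
        betas[b] = -slope  # beta is the negative slope on log-linear axes
    return betas.mean(), betas.std()
```

For samples whose counts halve with each step in Δt, the estimate should land near log 2; the added pseudo-counts bias the estimate when a subject contributes only a few samples.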

Experimental procedures

All participants provided informed consent in writing and experimental methods were approved by the Institutional Review Board of the University of Pennsylvania. In total, we recruited 634 unique participants to complete our studies on Amazon’s Mechanical Turk. For the first serial response experiment, 101 participants only responded to sequences drawn from the modular graph, 113 participants only responded to sequences drawn from the lattice graph, and 72 participants responded to sequences drawn from both the modular and lattice graphs in back-to-back (counter-balanced) sessions for a total of 173 exposures to the modular graph and 185 exposures to the lattice graph. For the second experiment, we recruited 120 subjects to respond to random walk sequences with Hamiltonian walks interspersed, as described in the Supplementary Discussion. For the third experiment, we recruited 78 participants to respond to sequences drawn from the ring graph with violations randomly interspersed. For the n-back experiment, 150 subjects performed the n-back task and, of those, 88 completed the subsequent serial response task. Worker IDs were used to exclude duplicate participants between experiments, and all participants were financially remunerated for their time. In the first experiment, subjects were paid up to $11 for up to an estimated 60 min: $3 per network for up to two networks, $2 per network for correctly responding on at least 90% of the trials, and $1 for completing the entire task. In the second and third experiments, subjects were paid up to $7.50 for an estimated 30 min: $5.50 for completing the experiment and $2 for correctly responding on at least 90% of the trials. In the n-back experiment, subjects were paid up to $8.50 for an estimated 45 min: $7 for completing the entire experiment and $1.50 for correctly responding on at least 90% of the serial response trials.

At the beginning of each experiment, subjects were provided with the following instructions: “In a few minutes, you will see five squares shown on the screen, which will light up as the experiment progresses. These squares correspond with keys on your keyboard, and your job is to watch the squares and press the corresponding key when that square lights up”. For the 72 subjects that responded to both the modular and lattice graphs in the first experiment, an additional piece of information was also provided: “This part will take around 30 minutes, followed by a similar task which will take another 30 minutes”. Before each experiment began, subjects were given a short quiz to verify that they had read and understood the instructions. If any questions were answered incorrectly, subjects were shown the instructions again and asked to repeat the quiz until they answered all questions correctly. Next, all subjects were shown a 10-trial segment that did not count towards their performance; this segment also displayed text on the screen explicitly telling the subject which keys to press on their keyboard. Subjects then began their 1500-trial experiment. For the subjects that responded to both the modular and lattice graphs, a brief reminder was presented before the second graph, but no new instructions were given. After completing each experiment, subjects were presented with performance information and their bonus earned, as well as the option to provide feedback.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Acknowledgements

We thank Pedro Ortega for discussions. D.S.B., C.W.L., and A.E.K. acknowledge support from the John D. and Catherine T. MacArthur Foundation, the Alfred P. Sloan Foundation, the ISI Foundation, the Paul Allen Foundation, the Army Research Laboratory (W911NF-10-2-0022), the Army Research Office (Bassett-W911NF-14-1-0679, Grafton-W911NF-16-1-0474, DCIST- W911NF-17-2-0181), the Office of Naval Research, the National Institute of Mental Health (2-R01-DC-009209-11, R01-MH112847, R01-MH107235, R21-M MH-106799), the National Institute of Child Health and Human Development (1R01HD086888-01), National Institute of Neurological Disorders and Stroke (R01 NS099348), and the National Science Foundation (BCS-1441502, BCS-1430087, NSF PHY-1554488 and BCS-1631550). The content is solely the responsibility of the authors and does not necessarily represent the official views of any of the funding agencies.

Author contributions

C.W.L., A.E.K., and D.S.B. conceived the project. C.W.L. designed the model and performed the analysis. C.W.L., A.E.K., and D.S.B. planned the experiments and discussed the results. C.W.L., A.E.K., and N.N. performed the experiments. C.W.L. wrote the manuscript and Supplementary Information. A.E.K., N.N., and D.S.B. edited the manuscript and Supplementary Information.

Data availability

Source data for Fig. 1 are provided in Supplementary Data File 1. Source data for Fig. 2, Supplementary Figs. 2 and 3, and Supplementary Tables 1–9 are provided in Supplementary Data File 2. Source data for Fig. 5 are provided in Supplementary Data File 3. Source data for Fig. 6, Supplementary Figs. 4 and 5, and Supplementary Tables 10 and 11 are provided in Supplementary Data File 4. Source data from Supplementary Fig. 1 are provided in Supplementary Data File 5.

Code availability

The code that supports the findings of this study is available from the corresponding author upon reasonable request.

Competing interests

The authors declare no competing interests.

Footnotes

Peer review information: Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work.

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary information is available for this paper at 10.1038/s41467-020-15146-7.

References

- 1. Hyman R. Stimulus information as a determinant of reaction time. J. Exp. Psychol. 1953;45:188. doi: 10.1037/h0056940.

- 2. Sternberg S. Memory-scanning: mental processes revealed by reaction-time experiments. Am. Sci. 1969;57:421–457.

- 3. Johnson-Laird PN. Mental models in cognitive science. Cogn. Sci. 1980;4:71–115. doi: 10.1207/s15516709cog0401_4.

- 4. Saffran JR, Aslin RN, Newport EL. Statistical learning by 8-month-old infants. Science. 1996;274:1926–1928. doi: 10.1126/science.274.5294.1926.

- 5. Bousfield WA. The occurrence of clustering in the recall of randomly arranged associates. J. Gen. Psychol. 1953;49:229–240. doi: 10.1080/00221309.1953.9710088.

- 6. Fiser J, Aslin RN. Statistical learning of higher-order temporal structure from visual shape sequences. J. Exp. Psychol. 2002;28:458. doi: 10.1037//0278-7393.28.3.458.

- 7. Friederici AD. Neurophysiological markers of early language acquisition: from syllables to sentences. Trends Cogn. Sci. 2005;9:481–488. doi: 10.1016/j.tics.2005.08.008.

- 8. Tompson SH, Kahn AE, Falk EB, Vettel JM, Bassett DS. Individual differences in learning social and non-social network structures. J. Exp. Psychol. Learn. Mem. Cogn. 2019;45:253–271.

- 9. Reynolds JR, Zacks JM, Braver TS. A computational model of event segmentation from perceptual prediction. Cogn. Sci. 2007;31:613–643. doi: 10.1080/15326900701399913.

- 10. Meyniel F, Dehaene S. Brain networks for confidence weighting and hierarchical inference during probabilistic learning. Proc. Natl Acad. Sci. USA. 2017;114:E3859–E3868.

- 11. Dehaene S, Meyniel F, Wacongne C, Wang L, Pallier C. The neural representation of sequences: From transition probabilities to algebraic patterns and linguistic trees. Neuron. 2015;88:2–19. doi: 10.1016/j.neuron.2015.09.019.

- 12. Piantadosi ST, Tenenbaum JB, Goodman ND. Bootstrapping in a language of thought: A formal model of numerical concept learning. Cognition. 2012;123:199–217. doi: 10.1016/j.cognition.2011.11.005.

- 13. Tenenbaum JB, Griffiths TL, Kemp C. Theory-based Bayesian models of inductive learning and reasoning. Trends Cogn. Sci. 2006;10:309–318. doi: 10.1016/j.tics.2006.05.009.

- 14. Newman ME. The structure and function of complex networks. SIAM Rev. 2003;45:167–256. doi: 10.1137/S003614450342480.

- 15. Gómez RL. Variability and detection of invariant structure. Psychol. Sci. 2002;13:431–436. doi: 10.1111/1467-9280.00476.

- 16. Newport EL, Aslin RN. Learning at a distance I. Statistical learning of non-adjacent dependencies. Cogn. Psychol. 2004;48:127–162. doi: 10.1016/S0010-0285(03)00128-2.

- 17. Garvert MM, Dolan RJ, Behrens TE. A map of abstract relational knowledge in the human hippocampal–entorhinal cortex. eLife. 2017;6:e17086.

- 18. Cleeremans A, McClelland JL. Learning the structure of event sequences. J. Exp. Psychol. Gen. 1991;120:235–253. doi: 10.1037/0096-3445.120.3.235.

- 19. Gomez RL, Gerken L. Artificial grammar learning by 1-year-olds leads to specific and abstract knowledge. Cognition. 1999;70:109–135. doi: 10.1016/S0010-0277(99)00003-7.

- 20. Schapiro AC, Rogers TT, Cordova NI, Turk-Browne NB, Botvinick MM. Neural representations of events arise from temporal community structure. Nat. Neurosci. 2013;16:486–492. doi: 10.1038/nn.3331.

- 21. Karuza EA, Thompson-Schill SL, Bassett DS. Local patterns to global architectures: Influences of network topology on human learning. Trends Cogn. Sci. 2016;20:629–640. doi: 10.1016/j.tics.2016.06.003.

- 22. Kahn AE, Karuza EA, Vettel JM, Bassett DS. Network constraints on learnability of probabilistic motor sequences. Nat. Hum. Behav. 2018;2:936–947. doi: 10.1038/s41562-018-0463-8.

- 23. Karuza EA, Kahn AE, Bassett DS. Human sensitivity to community structure is robust to topological variation. Complexity. 2019;2019:1–8.

- 24. Tversky A, Kahneman D. Judgment under uncertainty: Heuristics and biases. Science. 1974;185:1124–1131. doi: 10.1126/science.185.4157.1124.

- 25. de Camp Wilson T, Nisbett RE. The accuracy of verbal reports about the effects of stimuli on evaluations and behavior. Soc. Psychol. 1978;41:118–131.

- 26. Vinje WE, Gallant JL. Sparse coding and decorrelation in primary visual cortex during natural vision. Science. 2000;287:1273–1276. doi: 10.1126/science.287.5456.1273.

- 27. Tononi G, Sporns O, Edelman GM. A measure for brain complexity: Relating functional segregation and integration in the nervous system. Proc. Natl Acad. Sci. USA. 1994;91:5033–5037. doi: 10.1073/pnas.91.11.5033.

- 28. Cohen JD, McClure SM, Yu AJ. Should I stay or should I go? How the human brain manages the trade-off between exploitation and exploration. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2007;362:933–942. doi: 10.1098/rstb.2007.2098.

- 29. Wickelgren WA. Speed-accuracy tradeoff and information processing dynamics. Acta Psychol. 1977;41:67–85. doi: 10.1016/0001-6918(77)90012-9.

- 30. Jaynes ET. Information theory and statistical mechanics. Phys. Rev. 1957;106:620. doi: 10.1103/PhysRev.106.620.

- 31. Ortega PA, Braun DA. Thermodynamics as a theory of decision-making with information-processing costs. Proc. R. Soc. A. 2013;469:20120683. doi: 10.1098/rspa.2012.0683.

- 32. Friston K, Kilner J, Harrison L. A free energy principle for the brain. J. Physiol. Paris. 2006;100:70–87. doi: 10.1016/j.jphysparis.2006.10.001.

- 33. Shannon CE. A mathematical theory of communication. Bell Syst. Tech. J. 1948;27:379–423. doi: 10.1002/j.1538-7305.1948.tb01338.x.

- 34. Brown GJ, Cooke M. Computational auditory scene analysis. Comput. Speech Lang. 1994;8:297–336. doi: 10.1006/csla.1994.1016.

- 35. Lake BM, Salakhutdinov R, Tenenbaum JB. Human-level concept learning through probabilistic program induction. Science. 2015;350:1332–1338. doi: 10.1126/science.aab3050.

- 36. McCarthy G, Donchin E. A metric for thought: a comparison of p300 latency and reaction time. Science. 1981;211:77–80. doi: 10.1126/science.7444452.

- 37. Karuza EA, Kahn AE, Thompson-Schill SL, Bassett DS. Process reveals structure: How a network is traversed mediates expectations about its architecture. Sci. Rep. 2017;7:12733. doi: 10.1038/s41598-017-12876-5.

- 38. Baayen RH, Davidson DJ, Bates DM. Mixed-effects modeling with crossed random effects for subjects and items. J. Mem. Lang. 2008;59:390–412. doi: 10.1016/j.jml.2007.12.005.

- 39. Murdock BB Jr. The serial position effect of free recall. J. Exp. Psychol. 1962;64:482–488. doi: 10.1037/h0045106.

- 40. Baddeley AD, Hitch G. The recency effect: Implicit learning with explicit retrieval? Mem. Cogn. 1993;21:146–155. doi: 10.3758/BF03202726.

- 41. Stachenfeld KL, Botvinick MM, Gershman SJ. The hippocampus as a predictive map. Nat. Neurosci. 2017;20:1643–1653. doi: 10.1038/nn.4650.

- 42. Momennejad I, et al. The successor representation in human reinforcement learning. Nat. Hum. Behav. 2017;1:680–692. doi: 10.1038/s41562-017-0180-8.

- 43. Boas ML. Mathematical Methods in the Physical Sciences. Wiley; 2006.

- 44. Gregory RL. Perceptions as hypotheses. Philos. Trans. R. Soc. Lond. B. 1980;290:181–197. doi: 10.1098/rstb.1980.0090.

- 45. Howard MW, Kahana MJ. A distributed representation of temporal context. J. Math. Psychol. 2002;46:269–299. doi: 10.1006/jmps.2001.1388.

- 46. Howard MW, Kahana MJ. Contextual variability and serial position effects in free recall. J. Exp. Psychol. Learn. Mem. Cogn. 1999;25:923. doi: 10.1037/0278-7393.25.4.923.

- 47. Richards BA, Frankland PW. The persistence and transience of memory. Neuron. 2017;94:1071–1084. doi: 10.1016/j.neuron.2017.04.037.

- 48. Friston K, Samothrakis S, Montague R. Active inference and agency: Optimal control without cost functions. Biol. Cybern. 2012;106:523–541. doi: 10.1007/s00422-012-0512-8.

- 49. Ortega PA, Stocker AA. In Advances in Neural Information Processing Systems. Red Hook, NY: Curran Association; 2016. pp. 100–108.

- 50. Gershman SJ, Horvitz EJ, Tenenbaum JB. Computational rationality: A converging paradigm for intelligence in brains, minds, and machines. Science. 2015;349:273–278. doi: 10.1126/science.aac6076.

- 51. Grimmett G, Stirzaker D. Probability and Random Processes. Oxford University Press; 2001.

- 52. Neter J, Kutner MH, Nachtsheim CJ, Wasserman W. Applied Linear Statistical Models. Vol. 4. Chicago: Irwin; 1996.

- 53. Seber GA, Lee AJ. Linear Regression Analysis. Vol. 329. John Wiley & Sons; 2012.

- 54. Collins AG, Frank MJ. How much of reinforcement learning is working memory, not reinforcement learning? A behavioral, computational, and neurogenetic analysis. Eur. J. Neurosci. 2012;35:1024–1035. doi: 10.1111/j.1460-9568.2011.07980.x.

- 55. Collins AG, Frank MJ. Within- and across-trial dynamics of human EEG reveal cooperative interplay between reinforcement learning and working memory. Proc. Natl Acad. Sci. USA. 2018;115:2502–2507.

- 56. Dayan P. Improving generalization for temporal difference learning: The successor representation. Neural Comput. 1993;5:613–624. doi: 10.1162/neco.1993.5.4.613.

- 57. Gershman SJ, Moore CD, Todd MT, Norman KA, Sederberg PB. The successor representation and temporal context. Neural Comput. 2012;24:1553–1568. doi: 10.1162/NECO_a_00282.

- 58. Stephens MA. EDF statistics for goodness of fit and some comparisons. J. Am. Stat. Assoc. 1974;69:730–737. doi: 10.1080/01621459.1974.10480196.

- 59. Whitehead SD, Lin L-J. Reinforcement learning of non-Markov decision processes. Artif. Intell. 1995;73:271–306. doi: 10.1016/0004-3702(94)00012-P.

- 60. Wang X, McCallum A. Topics over time: A non-Markov continuous-time model of topical trends. In SIGKDD. ACM; 2006. pp. 424–433.

- 61. Wolfe JM, Horowitz TS, Kenner NM. Cognitive psychology: Rare items often missed in visual searches. Nature. 2005;435:439. doi: 10.1038/435439a.

- 62. Tria F, Loreto V, Servedio VDP, Strogatz SH. The dynamics of correlated novelties. Sci. Rep. 2014;4:5890. doi: 10.1038/srep05890.

- 63. Arenas A, Diaz-Guilera A, Pérez-Vicente CJ. Synchronization reveals topological scales in complex networks. Phys. Rev. Lett. 2006;96:114102. doi: 10.1103/PhysRevLett.96.114102.

- 64. Guimera R, Danon L, Diaz-Guilera A, Giralt F, Arenas A. Self-similar community structure in a network of human interactions. Phys. Rev. E. 2003;68:065103. doi: 10.1103/PhysRevE.68.065103.

- 65. Ravasz E, Barabási A-L. Hierarchical organization in complex networks. Phys. Rev. E. 2003;67:026112. doi: 10.1103/PhysRevE.67.026112.

- 66. Estrada E, Hatano N. Communicability in complex networks. Phys. Rev. E. 2008;77:036111. doi: 10.1103/PhysRevE.77.036111.

- 67. Estrada E, Hatano N, Benzi M. The physics of communicability in complex networks. Phys. Rep. 2012;514:89–119. doi: 10.1016/j.physrep.2012.01.006.

- 68. Schall R. Estimation in generalized linear models with random effects. Biometrika. 1991;78:719–727. doi: 10.1093/biomet/78.4.719.

- 69. Hox JJ, Moerbeek M, van de Schoot R. Multilevel Analysis: Techniques and Applications. Routledge; 2017.

- 70. Bates D, et al. Fitting linear mixed-effects models using lme4. J. Stat. Softw. 2015;67:1–48.
