Abstract

Introduction

There is consistent evidence that the workload in general practices is increasing substantially. The digitalisation of healthcare, including the use of artificial intelligence, has been suggested as a solution to this problem. We wanted to explore the features of intelligent online triage tools in primary care by conducting a literature review.

Method

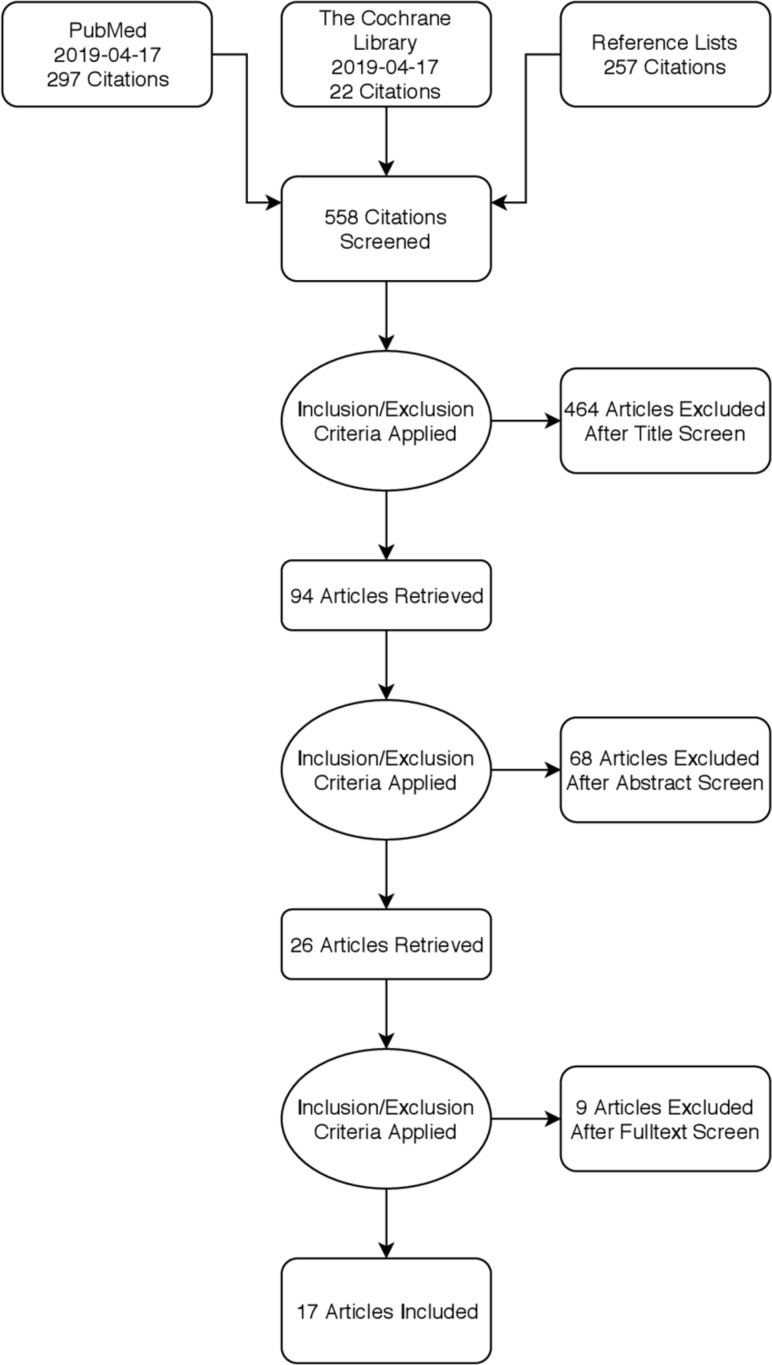

A systematic literature search strategy was formulated and conducted in the PubMed database and the Cochrane Library. Articles were selected according to inclusion/exclusion criteria. Results and data were systematically extracted and thematically analysed. Seventeen articles that reported large multimethod studies or smaller diagnostic accuracy tests on clinical vignettes were included. Reviews and expert opinions were also considered.

Results

There was limited evidence on the actual effects and performance of triage tools in primary care. Several aspects, grouped into system design, system implementation and diagnostic performance, can guide further development. The most important findings were: a need to formulate evaluation guidelines and regulations; the assumed potential of the tools has not yet been met; there is a risk of increased or redistributed workloads; and the available symptom checker systems seem overly risk averse and should be tested in real-life settings.

Conclusion

This review identified several features associated with the design and implementation of intelligent online triage tools in a primary care context, although most of the investigated systems seemed underdeveloped and offered limited benefits. Current online triage systems should not be used by individuals who have reasonable access to traditional healthcare. Systems used should be strictly evaluated and regulated like other medical products.

Keywords: primary health care, medical informatics, healthcare, information systems

Background

There is consistent evidence that workloads in general practices are increasing substantially and may reach saturation point. There is a clear trend towards increasing consultation rates, consultation durations and patient-facing clinical workloads.1 In parallel, the workforce is declining.2–4 This situation is causing major negative effects, such as decreasing patient access to general practices and decreasing patient satisfaction,5 as well as increasing stress and burn-out among doctors.6 Digitalisation has been suggested as a solution to this problem. Increased digitalisation is expected to enable more effective use of health resources and enhanced patient self-management, and is a central part of national health strategies in several countries.7 8

To date, digitalisation has mainly concerned the documentation of health visits, but it is increasingly being used for communication and consultation between patients and healthcare personnel. There are also high expectations of the benefits of self-diagnostic and self-monitoring devices.

Digitalisation includes the possible implementation of artificial intelligence (AI), which could transform clinical practice. The development and implementation of an easily accessible, online, patient-operated triage tool could help to ease the pressure on the health system.9 We wanted to study the features of intelligent online triage tools in a primary care context by conducting a literature review.

Method

This literature review explored studies of whole practice populations seeking initial healthcare contact in the context of primary healthcare. A literature search strategy was formulated after further definition of the investigated population, the intervention and the context. A broad spectrum of digital tools currently exists; for this review, the field was narrowed down to digital tools that can be accessed online and operated by the patient. Furthermore, the main function of the tools had to be triage, supporting the patient's choice between outcomes such as self-management, seeking acute medical attention or seeking a planned general practitioner (GP) consultation. In addition, the digital tools had to feature at least some level of AI, defined as the theory and development of computer systems able to perform tasks normally requiring human intelligence.10 Studies dealing with simple triage decision trees were excluded because they did not employ any level of AI, as were studies of tools with a limited scope, such as those covering only skin symptoms. It was anticipated that evaluations of the digital tools would either involve an unselected population seeking a conventional triage or health professional response, or investigate the diagnostic accuracy of the tool compared with the performance of a real-life doctor. Our aims were to identify the currently available evidence for the efficacy of digital triage tools, the types of tools that have been evaluated, the factors indicating successful use and implementation of AI-powered triage tools, and any potential problems or risk factors. Inclusion and exclusion criteria were developed and applied systematically to select articles within this overall frame.

The inclusion criteria were studies that investigated an unselected population seeking initial medical advice and using a patient-operated digital triage tool with some level of AI involvement. Studies were excluded if they: were published in a language other than English; investigated patients with specific symptoms (eg, chest pain); investigated conventional telephone or video-call triage; involved initial decisions made by health professionals; or were carried out in the context of acute or emergency departments.
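
The screening rule above can be sketched as a single predicate: an article must meet every inclusion criterion and is vetoed by any exclusion criterion. This is a minimal illustration under stated assumptions, not the procedure the review actually ran; the `Study` record and its field names are hypothetical, and each boolean stands for a judgement a reviewer makes when reading the article.

```python
from dataclasses import dataclass


@dataclass
class Study:
    """Hypothetical screening record; each field is a reviewer's judgement."""
    language: str
    unselected_population: bool   # people seeking initial medical advice
    patient_operated: bool        # the patient, not staff, operates the tool
    uses_ai: bool                 # some level of AI, not a plain decision tree
    specific_symptoms_only: bool  # eg, chest pain or skin symptoms only
    telephone_or_video: bool      # conventional telephone/video-call triage
    professional_first: bool      # initial decision made by a health professional
    emergency_setting: bool       # acute or emergency department context


def is_included(s: Study) -> bool:
    """Apply the inclusion criteria, then veto on any exclusion criterion."""
    meets_inclusion = s.unselected_population and s.patient_operated and s.uses_ai
    excluded = (
        s.language != "English"
        or s.specific_symptoms_only
        or s.telephone_or_video
        or s.professional_first
        or s.emergency_setting
    )
    return meets_inclusion and not excluded


# Example: an AI symptom checker evaluated in general practice passes;
# the same tool evaluated in an emergency department does not.
gp_study = Study("English", True, True, True, False, False, False, False)
ed_study = Study("English", True, True, True, False, False, False, True)
print(is_included(gp_study), is_included(ed_study))  # True False
```

Keeping inclusion and exclusion as separate expressions mirrors the two-step wording of the criteria and makes it easy to report which criterion removed an article.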

We conducted a systematic literature search11 to identify the current evidence on the advantages and disadvantages of designing and implementing an intelligent online triage tool in a primary care context. The search strategy is described in detail in online supplementary appendix 1, figure 1 and online supplementary table S1.

Figure 1. Flow chart of article selection.

Results

The search strategy yielded 17 included articles; these varied widely in terms of quality, type, size of study population, methods and conflicts of interest (online supplementary table S2). It was difficult to distinguish clearly between online triage and other features such as e-consultation, since systems like 'eConsult' also contain a built-in triage function in which initial decisions are taken by a combination of the user/patient, doctor/nurse and algorithms.12 The larger observational studies were all multifunctional, with the triage function being only one feature among others, such as support for the self-management of various conditions and platforms for communicating with health professionals.9 13–15 These articles were included provided that they contained some kind of digital triage tool as defined above.

Characteristics of the articles

Five articles described studies based on mixed methods that combined quantitative and qualitative data as well as retrospective and prospective data. Three articles were considered expert opinions. One article described a case report. Four articles described studies of accuracy outcomes. Two articles described observational studies. Two articles were reviews (online supplementary table S2). The articles originated from the USA (n=5), the UK (n=7), Australia (n=4), New Zealand (n=1) and The Netherlands (n=3) and were published between 2001 and 2018. Four articles described the use of clinical vignettes to test the triage tools. Six articles described studies that enrolled real patients. One article described its methods so poorly that no conclusions could be drawn; this article was considered an expert opinion. The studies enrolling real patients had very few subjects who actually used and evaluated the digital tools. One study reported only two e-consultations per 1000 patients per month.13 Another study enrolled 13 133 potential online patients, of whom only 35 completed the full follow-up and only 20 actually complied with the advice.15 In a third study that enrolled 80 546 patients, only 6.5% completed the evaluation during a 6-month period.14 At least three articles had clear conflicts of interest, since the authors had invested in the AI tool they were evaluating.16–18
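
The scale of the attrition described above is easier to see as proportions. The arithmetic below uses only the counts reported in this section; the helper name `share` is ours and purely illustrative.

```python
def share(part: float, whole: float) -> float:
    """Return `part` as a percentage of `whole`."""
    return 100 * part / whole


# Dutch web-based triage study (reference 15): 13 133 potential online patients.
completed = share(35, 13_133)
complied = share(20, 13_133)
# Scottish eConsult study (reference 14): 6.5% of 80 546 enrolled patients.
evaluated = round(80_546 * 0.065)

print(f"completed follow-up: {completed:.2f}%")  # 0.27%
print(f"complied with advice: {complied:.2f}%")  # 0.15%
print(f"completed evaluation: about {evaluated} patients")  # about 5235
# Two e-consultations per 1000 patients per month (reference 13):
print(f"monthly uptake: {share(2, 1000):.1f}%")  # 0.2%
```

In other words, fewer than 1 in 300 of the potential users in reference 15 completed follow-up, which is why the real-patient studies give little basis for generalisation.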

Categorising the data

The included articles covered the three main aspects of the overall scope: (1) how to design a digital triage tool, (2) how to implement an existing tool and (3) evaluation of diagnostic accuracy. Three articles contributed critical views on the topic.19–21

The design

Four articles explored the optimal design of an implementable digital AI triage tool.16 22–24 One article pointed out that triage tools should be evaluated in realistic situations on a broad set of randomised cases, in contrast to testing symptom checkers on clinical vignettes, which do not reflect real-life complexity or the everyday language of a typical patient. The balance between correctly identifying a disease and the risk of missing a critical diagnosis should be a particular focus of such investigations.22 Symptom checkers were thought to have great potential for improving diagnosis, quality of care and health system performance worldwide. However, poorly designed systems could put patients at risk and could even increase the load on health systems if they are too risk averse.22 Implementation of evaluation guidelines specific to each symptom checker was considered very important for facilitating the development and wide-scale use of these systems.22

Another article found that implementing the system ‘Tele-Doc’ resulted in redistribution of work from the doctors to the administrative staff and patients. There was little evidence of any efficiency gains.16 This system appeared to implement a very low level of AI.

A third article focused on the design of an AI-powered decision support system for patients. The main finding was that much thought should be put into customising the delivery of the system, through close consultation with the target users and an iterative development process, until the system is accessible and useful. The design of system content should go beyond the traditional emphasis on scientific evidence to incorporate patients' perspectives on the options.23

Other findings were that an intelligent triage system must be able to handle uncertainty and gaps in data, as well as subjective descriptions and perceptions of symptoms, since the data are entered by patients. It was stated that, for the system to work efficiently, making the correct initial interpretation is more important than setting the correct diagnosis.24

Implementation

The main focus of six of the articles was the large-scale implementation of an existing online digital tool with some level of AI-powered triage in a real-life population. The digital tools appeared to be multifunctional, with a low level of AI and with access to online GP consultation.9 13–15 25 26 These studies were mostly multimethod observational studies designed to explore multiple facets of the overall scope.

A large multimethod study on the implementation of the digital tool eConsult in 11 practices in Scotland suggested that the workload was not decreased, but that patients who used eConsult generally felt that they benefited from the service.14 Factors that would facilitate implementation included: the presence of a superuser; innovative methods for promoting appropriate use of the tool; and the engagement of staff in all areas of the practice. Barriers to implementation included: delays in system start-up; marketing not being aligned with practice expectations; challenges in integrating eConsult with existing systems; and low numbers of e-consultations. Patients' perceptions of eConsult were generally positive, particularly because it could be used at any time and offered an alternative way of communicating with their GP.14

A similar study on eConsult in 36 practices in England found that its use was actually very low, particularly at weekends, with little effect on reducing staff workload. Additionally, e-consultations may be associated with increased costs and workloads in primary care. Patterns of use suggested that the design could be improved by channelling administrative requests and revisits separately.13

Another UK study evaluated the implementation of webGP in six practices. During the evaluation period, actual use of the system was limited, and there was no noticeable impact on practice workloads. Introducing webGP appeared to be associated with shifts in responsibility and workload between practice staff, and between practices and patients. Patients using e-consultations were somewhat younger and more likely to be employed than face-to-face respondents. The motivation for using webGP mostly concerned saving time.25

A large population-level observational study from the Netherlands evaluated the effect of providing evidence-based online health information on healthcare usage. The study showed that, 2 years after the launch of an evidence-based health website, nationwide primary care usage had decreased by 12%. This effect was most prominent for phone consultations and was seen in all subgroups (by sex, socioeconomic status and age) except for the youngest age group. This suggests that eHealth can be effective in improving self-management and reducing healthcare usage in times of increasing healthcare costs.9

Another study from The Netherlands concluded that their web-based triage contributed to a more efficient primary care system, because it facilitated the gatekeeper function.15 Over a period of 15 months, 13 133 individuals used the web-based triage system and 3812 patients followed the triage process to the end. Most commonly (85%), the system advised contacting a doctor but in 15% of the cases the system provided fully automated, problem-tailored, self-care advice.15

The same author had earlier reported that less well-educated patients, elderly patients and chronic users of medication were especially motivated to use e-consultation, but that these patients also reported more barriers to using the system.26

Accuracy

In four articles, the main focus was exploring the accuracy of AI-powered digital triage tools in diagnosing disorders from clinical vignettes (not real patients) compared with the diagnoses of real doctors or the known correct diagnosis.17 18 21 27 28

The development of an Australian online symptom checker, 'Quro',29 was described in a small study that used 30 clinical vignettes. Accuracy ranged from 66.6% to 83.3%, and all vignettes requiring emergency care were appropriately identified (100% recall).17 It was concluded that such chatbots could be greatly improved by adding support for more medical features, such as location, adverse events and recognition of more commonly used medical terms.17

An article from 2016 (USA) described a direct comparison of diagnostic accuracy between 234 physicians and 23 digital symptom checkers. The physicians significantly outperformed the computer algorithms in diagnostic accuracy: 72.1% vs 34.0% (p<0.001) listed the correct diagnosis first, and 84.3% vs 51.2% (p<0.001) listed it among the top three. In particular, the physicians were more likely to list the correct diagnosis first for high-acuity and uncommon vignettes. Symptom checkers were more likely to list the correct diagnosis first for low-acuity and common vignettes.28
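
The two measures in this comparison, listing the correct diagnosis first and listing it among the top three, are top-1 and top-3 accuracy over ranked diagnosis lists. The sketch below shows how such figures are computed; the vignettes and lists are invented for illustration and are not data from the cited study, and the same function applies equally to physicians' lists.

```python
from typing import Sequence


def top_k_accuracy(ranked: Sequence[Sequence[str]], truth: Sequence[str], k: int) -> float:
    """Share of vignettes whose correct diagnosis is among the first k listed."""
    if len(ranked) != len(truth) or not truth:
        raise ValueError("need a non-empty set of vignettes with one ranked list each")
    hits = sum(1 for listed, correct in zip(ranked, truth) if correct in listed[:k])
    return hits / len(truth)


# Hypothetical output of one symptom checker on four vignettes.
ranked = [
    ["migraine", "tension headache", "sinusitis"],
    ["gastroenteritis", "appendicitis", "urinary tract infection"],
    ["asthma", "pneumonia", "bronchitis"],
    ["cellulitis", "gout", "deep vein thrombosis"],
]
truth = ["migraine", "appendicitis", "pulmonary embolism", "cellulitis"]

print(top_k_accuracy(ranked, truth, k=1))  # 0.5
print(top_k_accuracy(ranked, truth, k=3))  # 0.75
```

Top-3 accuracy can never be lower than top-1 accuracy, which is consistent with both groups scoring higher on the top-three measure.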

The same USA-based author reported in 2015 that 23 digital symptom checkers had clear deficits in both triage and diagnosis when interpreting clinical vignettes. The triage advice from the symptom checkers was generally more risk averse than necessary; users were encouraged to seek professional care for conditions where self-care was reasonable.27 The 23 symptom checkers listed the correct diagnosis first for 34% of the vignettes (95% CI 31% to 37%).27 Triage performance varied with the urgency of the condition: appropriate triage advice was provided in 80% of emergency cases, 55% of non-emergency cases and 33% of self-care cases. Performance varied widely between the algorithms.27
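
The width of a reported confidence interval depends on how many evaluations the proportion is based on, which this review does not state. As a sketch, the normal-approximation (Wald) interval for a proportion is shown below; the value n = 770 is an assumption made only for illustration, and the original study may have used a different interval method. With that assumed n, the approximation gives bounds close to the reported 31% to 37%.

```python
import math


def wald_ci(p: float, n: int, z: float = 1.96) -> tuple[float, float]:
    """Normal-approximation (Wald) confidence interval for a proportion p from n trials."""
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p - half_width, p + half_width


# p = 0.34 is reported in the text; n = 770 evaluations is an assumption.
low, high = wald_ci(0.34, 770)
print(f"{low:.0%} to {high:.0%}")  # 31% to 37%
```

The Wald interval is the simplest choice; for proportions near 0 or 1, or small n, a Wilson interval behaves better.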

Analysis and thematic synthesis

We identified several advantages and disadvantages associated with the design and implementation of intelligent online triage tools in a primary care context. The results presented above were used to identify key areas of concern.

Features of an intelligent online triage tool

When designing intelligent online triage tools, it is necessary to test them in a realistic setting and to use an iterative development process involving trial and adaptation, with the focus on customised delivery of the service.23 In order to enhance self-help and reduce the strain on the health system, the tool should not be overly risk averse.22 It would also be a major advantage if evaluation guidelines were formulated and implemented, since this would enhance the further development and evaluation of the tools.22 An intelligent triage system must be able to handle uncertainty and gaps in the data, as well as subjective descriptions and perceptions of symptoms, since the data are provided by patients. Also, for the system to work efficiently, making the correct initial interpretation is more important than making the correct diagnosis.24

Large scale implementation of existing online triage tools

The studies investigating the large-scale implementation of existing online tools found that some major expected advantages were not clearly realised. Several studies found that workloads were not decreased14 25 and that costs and workloads were sometimes increased.13 16 The online tools had limited numbers of users but, for some groups such as patients with daytime work, access to primary care was improved.25 The nationwide introduction of 'eHealth' in The Netherlands reduced primary care usage after 2 years9 and improved self-help by patients. However, 85% of users were advised to seek help from a doctor, even for common symptoms,15 which could have increased pressure on primary healthcare systems. The main hindrances to use were delays and technical integration problems, which could lead to loss of engagement. The presence of a superuser and innovative methods for promoting appropriate use would facilitate implementation of the system.14 Elderly patients and patients with a low level of education could find that a lack of internet skills is a barrier to using online systems.26

Diagnostic accuracy of the triage tool

The four studies exploring diagnostic accuracy all used clinical vignettes, thus limiting conclusions on diagnostic accuracy in a real life setting. The chatbot ‘Quro’ was more accurate in suggesting the correct response for emergency cases than in making correct diagnoses.17 The direct comparison of physicians and digital symptom checkers found that physicians outperformed algorithms in diagnostic accuracy. The symptom checkers had difficulty in interpreting the vignettes with respect to both triage and diagnosis. Triage advice from the symptom checkers was generally risk averse and inappropriate for many of the vignettes. It was suggested that physicians should be aware that patients may be using online symptom checkers and that algorithms could be improved by adding more features such as locations, adverse effects and recognition of more commonly used medical entities.17 The evidence on diagnostic accuracy was considered sparse since the studies were vulnerable to bias.19 22

Discussion

This review identified several advantages and disadvantages associated with the design and implementation of intelligent online triage tools in a primary care context, although most of the investigated systems were under-developed and offered limited benefits.

Techno-optimism?

In general, most articles were very optimistic about designing and implementing intelligent online triage tools, predicting substantial potential advantages even when the systems were performing badly. Disadvantages such as overly risk-averse systems, poor diagnostic accuracy and increased workloads were seen as obstacles to be overcome, identified in order to improve the next generation of algorithms. This may reflect a common techno-optimistic point of view, which can bias investigations. On the other hand, there is evidently consensus on many levels that ongoing technical evolution is a central part of the solution to current challenges in the healthcare system.4 26

Limited evidence

The identified articles did not provide substantial evidence of the efficacy of digital triage systems, since only a limited number evaluated real-life implementation and diagnostic accuracy. Furthermore, several weaknesses were found. The multimethod studies included very few patients who actually used and evaluated the systems, making it hard to generalise the results or to gauge the actual effects of using an integrated system. It appears that the concepts investigated were immature and that possible effects could have been underestimated. The only articles focusing on system design were expert opinions. Studies on diagnostic accuracy only included clinical vignettes and were therefore not directly comparable to the complexity of advising a real-life patient using natural language. These findings are comparable to those of other studies.20 A recent review on the topic, including patients with specific symptoms, found that research examining the accuracy of triage platforms is limited and that extensive research is needed, bearing in mind that some platforms are designed for a wide range of conditions and others are more specialised.31

Risk aversion and lack of regulations

Evaluations of triage systems should of course focus on safety but, in order to actually improve self-help and ease pressure on primary healthcare systems, it is essential that the systems are not too risk averse. Exaggerated risk aversion appears to be common among automated symptom checkers. Users were frequently asked to seek emergency care, sometimes regardless of the type of reported symptoms.18 27 It is a concern that the combination of patient confusion, cyberchondria and risk-averse triage advice might result in patients unnecessarily seeking physical care. It has been suggested that future research should focus on understanding how patients interpret and use the advice from digital tools and on the impact of digital tools on care seeking.27 Existing online symptom checkers perform poorly, with low diagnostic accuracy and overly risk-averse advice. They are therefore considered to be unsafe and to promote demand for unnecessary physical care. The results of this review highlight the current lack of regulations and evaluation guidelines, which could facilitate the development of next-level systems and enable users to choose between platforms. This is in agreement with a similar study, which suggested that the interaction of users with technology should be investigated.31
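
One way to make "overly risk averse" measurable, assumed here rather than taken from the included studies, is to place triage advice on an ordinal urgency scale and count how often the advice is more urgent than the appropriate level (over-triage) or less urgent (under-triage). A risk-averse checker keeps under-triage low at the cost of high over-triage, and it is over-triage that drives unnecessary demand for physical care. The scale labels, function name and example cases below are hypothetical.

```python
from collections import Counter

# Hypothetical ordinal urgency scale, using the outcomes named in the Method section.
URGENCY = {"self-care": 0, "planned GP": 1, "emergency": 2}


def triage_error_rates(pairs: list[tuple[str, str]]) -> tuple[float, float]:
    """Return (over-triage rate, under-triage rate) for (advised, appropriate) pairs.

    Over-triage: advice more urgent than needed (the risk-averse failure mode).
    Under-triage: advice less urgent than needed (the unsafe failure mode).
    """
    if not pairs:
        raise ValueError("at least one case is required")
    counts = Counter()
    for advised, appropriate in pairs:
        gap = URGENCY[advised] - URGENCY[appropriate]
        if gap > 0:
            counts["over"] += 1
        elif gap < 0:
            counts["under"] += 1
    return counts["over"] / len(pairs), counts["under"] / len(pairs)


cases = [
    ("emergency", "self-care"),    # sent to emergency care for a self-care problem
    ("planned GP", "self-care"),   # told to book a GP visit unnecessarily
    ("emergency", "emergency"),
    ("planned GP", "planned GP"),
    ("self-care", "planned GP"),   # reassured when a GP visit was needed
]
print(triage_error_rates(cases))  # (0.4, 0.2)
```

Reporting both rates side by side, rather than a single accuracy figure, keeps the safety question and the workload question separately visible.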

Increase in workload

There was no clear evidence that the implementation of digital triage tools had the proposed effect of decreasing workloads in the primary care setting. Instead, the results indicated that the current tools tended to increase the workload and to shift it to other staff members. A possible explanation for this outcome was the very low number of patients who actually used the systems.

There were, however, some indications of positive outcomes: some users experienced increased accessibility to advice,14 and another study9 found a substantial decrease in primary care workload at a national level, possibly resulting from the introduction of a national online advice system. This study concluded that online advice systems can be effective in improving self-management and reducing healthcare usage in times of increasing healthcare costs.

Strengths and weaknesses of this review

This review was based on a systematic search of two major databases. Data were transparently and thoroughly extracted from different kinds of studies, with the aim of providing an overview of the current evidence on the use of online triage tools. Beyond the included studies, numerous studies focused on specific medical conditions or only on patients in an emergency department context; additional information could have been gained if these studies had been included. Literature studies are limited in terms of discovering new evidence, since they mainly consider what has already been described. We might have failed to find key evidence. This paper treats primary care contexts in Western countries as homogeneous, although they might differ in many respects. Transferability should be considered when evaluating health interventions in different contexts.32

Recommendation for the use of online triage

These results indicate that current online triage systems should not be used by members of the public who have reasonable access to traditional healthcare. The risk of misdiagnosis or inadequate advice is considered too high. Current digital systems integrated in primary care practices also seem underdeveloped with respect to reducing workloads, and implementing current systems, with additional resources as required, should be regarded as only one part of the process of developing tomorrow's digital systems. Nonetheless, there is some evidence that easy online access to high-quality intelligent medical information could decrease healthcare consumption overall.

Suggestions for improvement and future research

Digital medical triage tools should be strictly evaluated and regulated like other medical products, since consumers are unable to judge the quality of the tools and the potential for harm is great. Because the evidence is sparse and could be biased by economic interests, research should be independent. Official evaluation guidelines should be formulated and used to regulate the market. Resources should be allocated for exploring and implementing new systems in order to uncover areas where AI-powered triage could improve the accessibility and quality of healthcare and decrease the associated workloads. Commitment to rigorous initial and ongoing evaluation will be critical to ensuring the safe and effective integration of AI in complex sociotechnical settings.33

The diagnostic accuracy and adequacy of advice of digital triage tools should be independently evaluated on real-life patients. Multimethod studies of real-life implementation of triage systems should be conducted in a context where the tools are actually used by the majority of patients. Reviews that include data from emergency departments and limited-range symptom checkers should also be conducted.

Conclusion

This review has identified several advantages and disadvantages of designing and implementing intelligent online triage tools in a primary care context, although most of the investigated systems appeared to be underdeveloped and to offer limited benefits. It appears that only limited evidence is available on the actual efficacy and performance of existing tools, but studies have uncovered several aspects that could guide further development. These aspects can be categorised into concepts of system design, system implementation and diagnostic performance. The most important findings included the need to formulate evaluation guidelines and regulations, the evidence that the assumed potential of the tools has not yet been met, the risk of actually increasing workloads, the apparent redistribution of existing workloads, and symptom checker systems that are overly risk averse and require testing in real-life settings.

Acknowledgments

This review was conducted as a mandatory part of the Swedish 5-year medical specialisation training. Special thanks to The eHealth Institute, Linnaeus University, Kalmar, Sweden, for facilitating the research and to the County of Kalmar, Sweden, and the primary healthcare clinic Cityläkarna in Kalmar AB, Sweden, for enabling research time.

Footnotes

Twitter: @eHalsoGoran

Contributors: Both authors have been involved in planning and performing the study, analysing data, writing and reviewing manuscript.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None declared.

Patient consent for publication: Not required.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data availability statement: All data relevant to the study are included in the article or uploaded as online supplementary information. In this review, all data are publicly available on the internet. There are no separate data files.

References

- 1.Hobbs FDR, Bankhead C, Mukhtar T, et al. Clinical workload in UK primary care: a retrospective analysis of 100 million consultations in England, 2007–14. The Lancet 2016;387:2323–30. 10.1016/S0140-6736(16)00620-6 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.plo_analyse_hver_fjerde_laege_er_over_60_aar.pdf. Available: https://www.laeger.dk/sites/default/files/plo_analyse_hver_fjerde_laege_er_over_60_aar.pdf [Accessed 21 May 2019].

- 3.Addicott Workforce planning in the NHS. Available: https://www.kingsfund.org.uk/sites/default/files/field/field_publication_file/Workforce-planning-NHS-Kings-Fund-Apr-15.pdf [Accessed 21 May 2019].

- 4.Allmän tillgång? Vårdanalys. Available: https://www.vardanalys.se/rapporter/allman-tillgang/ [Accessed 21 May 2019].

- 5.Kontopantelis E, Roland M, Reeves D. Patient experience of access to primary care: identification of predictors in a national patient survey. BMC Fam Pract 2010;11:61. 10.1186/1471-2296-11-61 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Orton P, Orton C, Pereira Gray D. Depersonalised doctors: a cross-sectional study of 564 doctors, 760 consultations and 1876 patient reports in UK general practice. BMJ Open 2012;2:e000274. 10.1136/bmjopen-2011-000274 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Strategi for digital sundhed_Pages. Available: https://sum.dk/~/media/Filer%20-%20Publikationer_i_pdf/2018/Strategi-for-digital-sundhed-januar-2018/Strategi%20for%20digital%20sundhed_Pages.pdf [Accessed 21 May 2019].

- 8.Wigzell O. Digitala vårdtjänster. p. 59. Available: https://www.socialstyrelsen.se/globalassets/sharepoint-dokument/artikelkatalog/ovrigt/2018-11-2.pdf [Accessed 21 May 2019].

- 9.Spoelman WA, Bonten TN, de Waal MWM, et al. Effect of an evidence-based website on healthcare usage: an interrupted time-series study. BMJ Open 2016;6:e013166. 10.1136/bmjopen-2016-013166 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Balkanyi L, Cornet R. The interplay of knowledge representation with various fields of artificial intelligence in medicine. Yearb Med Inform 2019;28:027–34. 10.1055/s-0039-1677899 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Dhammi IK, Haq RU. How to write systematic review or Metaanalysis. Indian J Orthop 2018;52:575–7. 10.4103/ortho.IJOrtho_557_18 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.eConsult Online triage and consultation tool for NHS GPs. Available: https://econsult.net/ [Accessed 9 Apr 2019].

- 13.Edwards HB, Marques E, Hollingworth W, et al. Use of a primary care online consultation system, by whom, when and why: evaluation of a pilot observational study in 36 general practices in South West England. BMJ Open 2017;7:e016901. 10.1136/bmjopen-2017-016901 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Cowie J, Calveley E, Bowers G, et al. Evaluation of a digital consultation and self-care advice tool in primary care: a multi-methods study. Int J Environ Res Public Health 2018;15. doi:10.3390/ijerph15050896

- 15. Nijland N, Cranen K, Boer H, et al. Patient use and compliance with medical advice delivered by a web-based triage system in primary care. J Telemed Telecare 2010;16:8–11. doi:10.1258/jtt.2009.001004

- 16. Casey M, Shaw S, Swinglehurst D. Experiences with online consultation systems in primary care: case study of one early adopter site. Br J Gen Pract 2017;67:e736–43. doi:10.3399/bjgp17X693137

- 17. Ghosh S, Bhatia S, Bhatia A. Quro: facilitating user symptom check using a personalised chatbot-oriented dialogue system. Stud Health Technol Inform 2018;252:51–6.

- 18. Razzaki S, Baker A, Perov Y, et al. A comparative study of artificial intelligence and human doctors for the purpose of triage and diagnosis. arXiv:1806.10698 [cs, stat] 2018.

- 19. Coiera E. Paper review: the Babylon chatbot [Internet]. The Guide to Health Informatics 3rd Edition, 2018. Available: https://coiera.com/2018/06/29/paper-review-the-babylon-chatbot/ [Accessed 29 May 2019].

- 20. Millenson ML, Baldwin JL, Zipperer L, et al. Beyond Dr. Google: the evidence on consumer-facing digital tools for diagnosis. Diagnosis 2018;5:95–105. doi:10.1515/dx-2018-0009

- 21. Jutel A, Lupton D. Digitizing diagnosis: a review of mobile applications in the diagnostic process. Diagnosis 2015;2:89–96. doi:10.1515/dx-2014-0068

- 22. Fraser H, Coiera E, Wong D. Safety of patient-facing digital symptom checkers. Lancet 2018;392:2263–4. doi:10.1016/S0140-6736(18)32819-8

- 23. Elwyn G, Kreuwel I, Durand MA, et al. How to develop web-based decision support interventions for patients: a process map. Patient Educ Couns 2011;82:260–5. doi:10.1016/j.pec.2010.04.034

- 24. Sadeghi S, Barzi A, Zarrin-Khameh N. Decision support system for medical triage. Stud Health Technol Inform 2001;81:440–2.

- 25. Carter M, Fletcher E, Sansom A, et al. Feasibility, acceptability and effectiveness of an online alternative to face-to-face consultation in general practice: a mixed-methods study of webGP in six Devon practices. BMJ Open 2018;8:e018688. doi:10.1136/bmjopen-2017-018688

- 26. Nijland N, van Gemert-Pijnen JEWC, Boer H, et al. Increasing the use of e-consultation in primary care: results of an online survey among non-users of e-consultation. Int J Med Inform 2009;78:688–703. doi:10.1016/j.ijmedinf.2009.06.002

- 27. Semigran HL, Linder JA, Gidengil C, et al. Evaluation of symptom checkers for self diagnosis and triage: audit study. BMJ 2015;351:h3480. doi:10.1136/bmj.h3480

- 28. Semigran HL, Levine DM, Nundy S, et al. Comparison of physician and computer diagnostic accuracy. JAMA Intern Med 2016;176:1860–1. doi:10.1001/jamainternmed.2016.6001

- 29. Quro: your personal health assistant [Internet]. Available: https://www.quro.ai [Accessed 24 May 2019].

- 30. The NHS Long Term Plan [Internet]. Available: https://www.longtermplan.nhs.uk/wp-content/uploads/2019/01/nhs-long-term-plan.pdf [Accessed 27 May 2019].

- 31. Aboueid S, Liu RH, Desta BN, et al. The use of artificially intelligent self-diagnosing digital platforms by the general public: scoping review. JMIR Med Inform 2019;7:e13445. doi:10.2196/13445

- 32. Schloemer T, Schröder-Bäck P. Criteria for evaluating transferability of health interventions: a systematic review and thematic synthesis. Implement Sci 2018;13:1–17. doi:10.1186/s13012-018-0751-8

- 33. Magrabi F, Ammenwerth E, McNair JB, et al. Artificial intelligence in clinical decision support: challenges for evaluating AI and practical implications. Yearb Med Inform 2019;28:128–34. doi:10.1055/s-0039-1677903

Supplementary Materials

bmjhci-2019-100114supp001.pdf (87.6KB, pdf)

bmjhci-2019-100114supp002.pdf (27.4KB, pdf)

bmjhci-2019-100114supp003.pdf (86.6KB, pdf)