Abstract

The critical state is assumed to be optimal for any computation in recurrent neural networks, because criticality maximizes a number of abstract computational properties. We challenge this assumption by evaluating the performance of a spiking recurrent neural network on a set of tasks of varying complexity at, and away from, critical network dynamics. To that end, we developed a plastic spiking network on a neuromorphic chip. We show that the distance to criticality can be easily adapted by changing the input strength, and then demonstrate a clear relation between criticality, task-performance and information-theoretic fingerprint. Although the information-theoretic measures all show that network capacity is maximal at criticality, only the complex tasks profit from criticality, whereas simple tasks suffer. Thereby, we challenge the general assumption that criticality would be beneficial for any task, and provide instead an understanding of how the collective network state should be tuned to task requirements.

Subject terms: Dynamical systems; Electrical and electronic engineering; Computational science; Statistical physics, thermodynamics and nonlinear dynamics

Designing efficient artificial networks able to quickly converge to optimal performance for a given task remains a challenge. Here, the authors demonstrate a relation between criticality, task-performance and information theoretic fingerprint in a spiking neuromorphic network with synaptic plasticity.

Introduction

A central challenge in the design of an artificial network is to initialize it such that it quickly reaches optimal performance for a given task. For recurrent networks, the concept of criticality presents such a guiding design principle1–7. At a critical point, typically realized as a second-order phase transition between order and chaos or stability and instability, a number of basic processing properties are maximized, including sensitivity, dynamic range, correlation length, information transfer, and susceptibility8–12. Because all these basic properties are maximized, it is widely believed that criticality is optimal for task performance1,2,4–7,9.

Tuning a system precisely to a critical point can be challenging. Thus ideally, the system self-organizes to criticality autonomously via local-learning rules. This is indeed feasible in various manners by modifying the synaptic strength depending on the pre- and postsynaptic neurons’ activity only6,9,13–19. The locality of the learning rules is key for biological and artificial networks where global information (e.g., task-performance error or activity of distant neurons) may be unavailable or costly to distribute. Recently, it has been shown that specific local-learning rules can even be harnessed more flexibly: a theoretical study suggests that recurrent networks with local, homeostatic learning rules can be tuned toward and away from criticality by simply adjusting the input strength17. This would enable one to sweep the entire range of collective dynamics from subcritical to critical to bursty, and assess the respective task performance.

Complementary to tuning collective network properties such as the distance to criticality, local-learning also enables networks to learn specific patterns or sequences20,21. For example, spike-timing dependent plasticity (STDP) shapes the connectivity, depending only on the timing of the activity of the pre- and postsynaptic neuron. STDP is central for any sequence learning—a central ingredient in language and motor learning20,21. Such learning could strongly speed up convergence, and enables a preshaping of the artificial network—akin to the shaping of biological networks during development by spontaneous activity.

Given diverse learning rules and task requirements, it may be questioned whether criticality is always optimal for processing, or whether each task may profit from a different state, as hypothesized in ref. 10. One could speculate, for example, that the long correlation time at criticality enables long memory retrieval on the one hand, but could be unfavorable on the other hand if a task requires only little memory. However, the precise relation between the collective state and specific task requirements is unknown.

When testing networks, the observed network performance is expected to depend crucially on the choice of the task. How can one then characterize performance independently of a specific task, such as classification or sequence memory? A natural framework to characterize and quantify processing of any local circuit in a task-independent manner builds on information theory22,23: classical information theory enables us to quantify the transfer of information between neurons, the information about the past input, as well as the storage of information. The storage of information can be measured within the network or as read out from one neuron. In addition, most recently classical mutual information is being generalized to more than two variables within the framework of partial information decomposition (PID)23–26. PID quantifies the unique and redundant contribution of each source variable to a target, but most importantly also enables a rigorous quantification of synergistic computation, a key contributor for any information integration23–25,27,28. Thereby information theory is a key stepping stone when linking local computation within a network, with global task performance.

Simulations of recurrent networks with plasticity become very slow with increasing size, because every membrane voltage and every synaptic strength has to be updated. Here, neuromorphic chips promise an accelerated and scalable alternative to neural network simulations29–35. To achieve an efficient implementation, the physical emulation of synapses and neurons in electrical circuitry is very promising36. In such “neuromorphic chips,” all neurons operate in parallel, and thus the speed of computation is largely independent of the system size, and is instead determined by the time constants of the underlying physical neuron and synapse models, as in the brain. Realizing such an implementation technically remains challenging, especially when using spiking neurons and flexible synaptic plasticity. The BrainScaleS 2 prototype system combines physical models of neurons and synapses37 with a general purpose processor carrying out plasticity38. In this system, the analog elements provide speedup and energy efficiency and enable scaling to very large systems, whereas the general purpose processor makes it possible to set the desired learning rules flexibly. Thus, with this neuromorphic chip, we can run the long-term learning experiments required to study the network self-organization within very short compute time.

In the following, we investigate the relation between criticality, task-performance and information-theoretic fingerprint. To that end, we show that a spiking neuromorphic network with synaptic plasticity can be tuned toward and away from criticality by adjusting the input strength. We show that criticality is beneficial for solving complex tasks, but not the simple ones, challenging the common notion that criticality in general is optimal for computation. Methods from classical information theory as well as the novel framework of PID show that our networks indeed unfold their maximum capacity in the vicinity of the critical point. Moreover, the lagged mutual information between the stimulus and the activity of neurons allows us to establish a relation between criticality (as set by the input strength) and task performance. Thereby, we provide an understanding of how basic computational properties shape task performance.

Results

Model overview

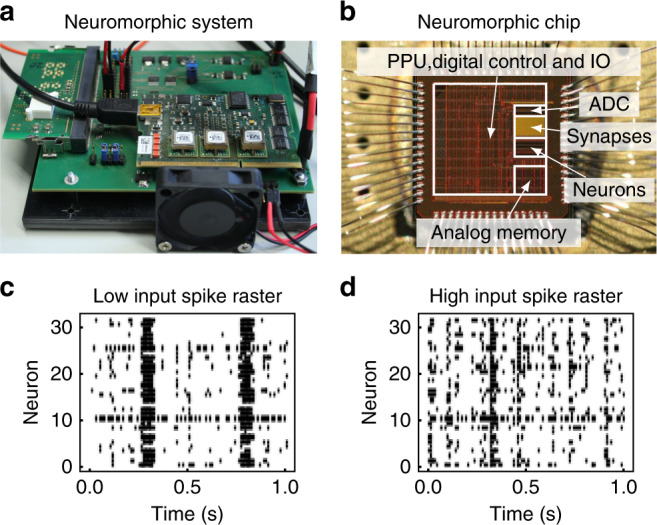

We emulate networks of leaky integrate-and-fire (LIF) neurons on the mixed-signal neuromorphic prototype system BrainScaleS 2, which has N = 32 neurons (Fig. 1 a, b). Future versions of the BrainScaleS 2 chip will feature 512 neuron circuits with adaptive-exponential LIF dynamics and intercompartmental conductances. We use the term emulation in order to clearly distinguish between the physical implementation, where each observable has a measurable counterpart on the neuromorphic chip, and standard software simulations on conventional hardware. The system features an array of 32 × 32 current-based synapses, where 20% of the synapses are programmed to be inhibitory. Synaptic plasticity acts equally on all synapses and is composed of a positive drift and a negative anticausal STDP term. In conjunction, both terms lead to homeostatic regulation and thus to a stable network activity of about 20 Hz per neuron (Supplementary Fig. 1a). Plasticity is executed by an on-chip general purpose processor alongside the analog emulation of neurons and synapses. This allows for uninterrupted and fast data acquisition. Even for the small prototype system, the advantages of neuromorphic computing in terms of speed and energy efficiency become important, as depicted in ref. 39.

Fig. 1. The degree of external input Kext shapes the collective dynamics of the network.

a The neural network is implemented on the prototype neuromorphic hardware system BrainScaleS 2. b This system features an analog-neural network core as well as an on-chip general purpose processor that allows for flexible plasticity implementation. c For a low degree of input (Kext/N = 0.25), strong recurrent connections develop, and the activity shows irregular bursts, resembling a critical state. d For a high degree of input (Kext/N = 0.56), firing becomes more irregular and asynchronous.

Neurons are potentially all-to-all connected, but Kext out of the N synapses per neuron are used to inject external Poisson or pattern input. Effectively, Kext quantifies the input strength with the extreme cases of Kext/N = 1 for a feed-forward network and Kext/N = 0 for a fully connected recurrent network, which is completely decoupled from the input. Depending on the degree of external input Kext, the network shows diverse dynamics (Fig. 1c, d). As expected17, Kext shapes the collective dynamics of the network from synchronized for low Kext to more asynchronous irregular for high Kext.
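
The split of each neuron's N synapses into Kext input synapses and N − Kext recurrent synapses can be sketched as follows. This is a minimal illustration, not the chip's configuration procedure: the function name `split_synapses` and the random choice of which synapses carry external input are assumptions made for the example.

```python
import numpy as np

def split_synapses(n_neurons=32, k_ext=8, seed=0):
    """Return a boolean mask; mask[i, j] is True if synapse j of neuron i
    carries external input (hypothetical random assignment)."""
    rng = np.random.default_rng(seed)
    mask = np.zeros((n_neurons, n_neurons), dtype=bool)
    for i in range(n_neurons):
        # Pick k_ext distinct synapses of neuron i for external input.
        ext = rng.choice(n_neurons, size=k_ext, replace=False)
        mask[i, ext] = True
    return mask

mask = split_synapses(n_neurons=32, k_ext=8)
# Degree of input Kext/N per neuron; the remaining synapses stay recurrent.
input_fraction = mask.sum(axis=1) / mask.shape[1]
```

With k_ext = 0 the mask is empty (fully recurrent network), and with k_ext = n_neurons every synapse carries input (feed-forward network), matching the two extreme cases described above.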

Critical dynamics arise under low input Kext

The transition to burstiness for low Kext suggests the emergence of critical dynamics, i.e., dynamics expected at a nonequilibrium second-order phase transition. Indeed, as detailed in the following, we find signatures of criticality in the classical avalanche distributions (Figs. 2 and 3) as well as in the branching ratio (Fig. 4a), the autocorrelation time (Fig. 4b), the susceptibility, and in trial-to-trial variations (Fig. 4d).

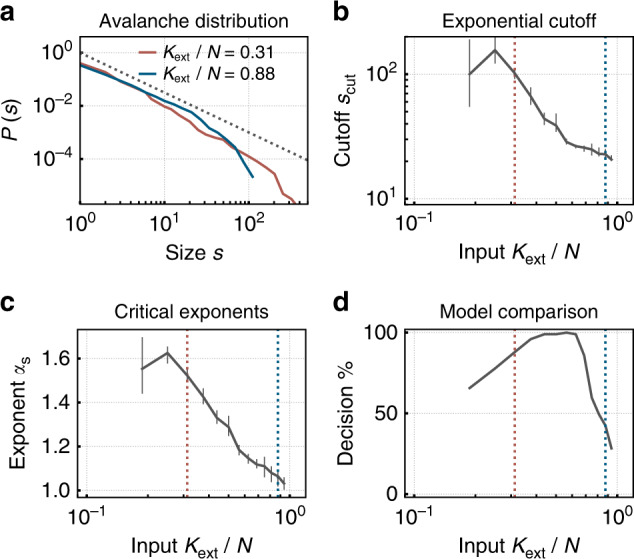

Fig. 2. Under low degree of input Kext, the network self-organizes toward a critical state, and shows long-tailed avalanche distributions.

a Distributions of avalanche sizes show power laws over two orders of magnitude for low Kext. Fitting a truncated power law, b the exponential cutoff scut peaks, and c critical exponents αs approximate 1.5, as expected for critical branching processes. d A maximum-likelihood comparison decides for a power law compared with an exponential fit in the majority of cases. Dashed vertical lines indicate the set of Kext/N values that have been selected in a. In this and all following figures, the median over runs and (if acquired) trials is shown, and the error bars show the 5–95% confidence intervals.

Fig. 3. Finite-size scaling is assessed using a software implementation with varying system size N.

a Exemplary avalanche size distributions follow a power law for any tested N (degree of input Kext/N = 1/4). b As expected for critical systems, the cutoff scut scales with the system size. The scaling exponent is 1.6 ± 0.2.

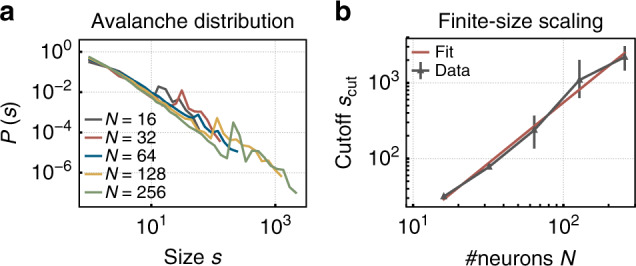

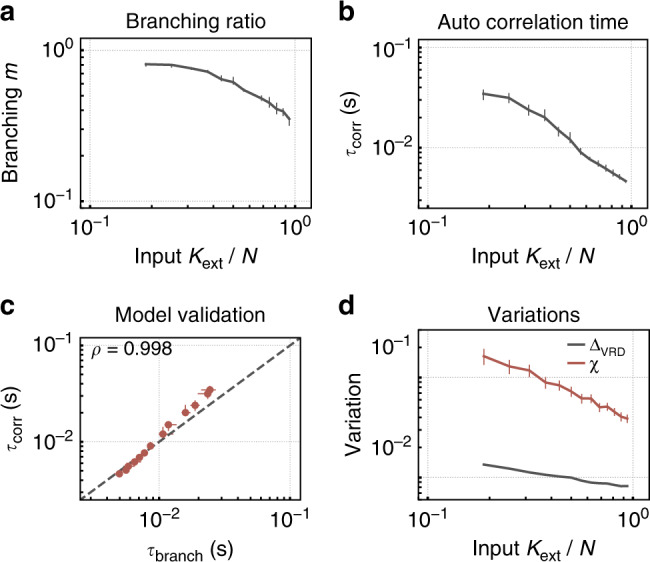

Fig. 4. For low degree of input Kext, the network shows clear signatures of criticality beyond power laws.

Only for low values of Kext, a the estimated branching ratio m tends toward unity, and b the estimated autocorrelation time τcorr peaks. c The match between τcorr and the autocorrelation time inferred from m supports the criticality hypothesis (correlation coefficient of ρ = 0.998, p < 10^−10). d Trial-to-trial ΔVRD variations as well as the susceptibility χ increase for low Kext.

To test whether the network indeed approaches criticality, we assume the established framework of a branching process8,40–42. In branching processes, a spike at time t triggers on average m postsynaptic spikes at time t + 1, where m is called the branching parameter. For m = 1 the process is critical, and the dynamics give rise to large cascades of activity, called avalanches8,43. The size s of an avalanche is the total number of spikes in a cluster and is power law distributed at criticality. The bin width for the estimation of the underlying distributions is set to the mean inter-event interval following common methods44. Our network shows power law distributed avalanche sizes s over two orders of magnitude for low Kext (Fig. 2a). For almost any Kext, the distribution is better fitted by a power law than by an exponential distribution45 (Fig. 2d). However, only for low Kext is the exponent of the avalanche distribution close to the expected one, αs ≈ 1.5 (Fig. 2c), and only there does the power law show the largest cutoff scut (Fig. 2b). For low Kext, the networks tend to become unstable due to the limited number of neurons, which explains the decline in scut (Fig. 2b) and in the maximum-likelihood comparison (Fig. 2d). Together, the quantitative assessments of the avalanches all indicate that a low degree of input Kext produces critical-like behavior.
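
The branching-process picture can be illustrated with a short simulation. The sketch below is not the analysis pipeline used on the chip data: it starts each avalanche from a single seed spike instead of binning a recorded spike train, and it assumes Poisson-distributed offspring; the function name `avalanche_sizes` and the size cap `max_size` are introduced only for this example.

```python
import numpy as np

def avalanche_sizes(m, n_avalanches=10000, max_size=10**5, seed=0):
    """Avalanche sizes of a branching process with branching parameter m.

    Every active unit triggers a Poisson(m)-distributed number of units in
    the next time step; an avalanche ends when no unit is active anymore.
    """
    rng = np.random.default_rng(seed)
    sizes = np.empty(n_avalanches, dtype=np.int64)
    for k in range(n_avalanches):
        active, size = 1, 1
        # The cap keeps critical avalanches from running for very long.
        while active > 0 and size < max_size:
            # Sum of `active` independent Poisson(m) draws.
            active = rng.poisson(m * active)
            size += active
        sizes[k] = size
    return sizes

subcritical = avalanche_sizes(0.8)
critical = avalanche_sizes(1.0)
```

For m < 1 the mean avalanche size is 1/(1 − m), so the subcritical run averages about five spikes per avalanche, whereas the critical run produces a heavy-tailed distribution whose largest avalanches are limited only by the cap.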

In a control experiment, we investigate finite-size scaling in software simulations, as the current physical system features only 32 neurons. Therefore, a network with the same topology, plasticity rules, and single-neuron dynamics (though without parameter noise and hardware constraints) is simulated for various system sizes N. The resulting avalanche distributions show power laws for any system size (Fig. 3a), and the cutoff scut scales with N as expected at criticality (Fig. 3b). The scaling exponent is 1.6 ± 0.2. Together, these numerical results confirm the hypothesis that for low degrees of input Kext, the small network that is emulated on the chip self-organizes as close to a critical state as possible.

The implementation on neuromorphic hardware promises fast emulation. Already for N = 32, the neuromorphic chip is about a factor of 100 faster than the Brian 2 simulation. To give numbers, a single plasticity experiment with a duration of 600 s biological time is simulated in 570 s on a single core of an Intel Xeon E5-2670 CPU in Brian 2, but emulated in only 6 s on the neuromorphic chip. Hence, a neuromorphic implementation is very promising, especially for the future full-size chip: when running such detailed networks as classical simulations on conventional hardware, the computational overhead scales with N² due to the all-to-all connectivity and synaptic plasticity. In contrast, for the neuromorphic system, the execution time is largely independent of the system size N, as long as the network can be implemented on the system.

The assumption that the critical state of the network corresponds to the universality class of critical branching processes is tested further by properly inferring the branching parameter m (Eq. (17)), the autocorrelation times, and the response to perturbations. First, the branching parameter m characterizes the spread of activity and is smaller (larger) than unity for subcritical (supercritical) processes. For our model, it is always in the subcritical regime, but tends toward unity for low Kext (Fig. 4a). Second, the autocorrelation time τcorr is expected to diverge at criticality, τcorr → ∞ as m → 1 (ref. 42). Indeed, τcorr as estimated directly from the autocorrelation of the population activity is maximal for low Kext (Fig. 4b). Third, the estimates of m and τcorr are in theory related via the analytical relation τcorr = −Δt/ln(m), where Δt denotes the time step of the branching process. This relation holds very precisely in the model (Fig. 4c, correlation coefficient ρ = 0.998, p < 10^−10). Fourth, toward criticality, the response to any perturbation increases. The impact of a small perturbation is quantified by a variant of the van-Rossum distance ΔVRD (Eq. (15)). It peaks for low degrees of the external input Kext (Fig. 4d). Last, one advantage of operating in the vicinity of a critical point is the ability to enhance stimulus differences by the system response. This is reflected in a divergence of the susceptibility at the critical point. The susceptibility χ (Eq. (16)), quantified here as the change in the population firing rate in response to a burst of Npert = 6 additional spikes, is highest for low Kext (Fig. 4d). Thus overall, the avalanche distributions as well as the dynamic properties of the network all indicate that it self-organizes to a critical point under low degree of input Kext.
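
The link between the branching parameter and the autocorrelation time can be checked numerically on a toy process. The sketch below is an illustration under stated assumptions: it uses a driven branching process with Poisson offspring and a naive one-step regression estimate of m, which is not necessarily the estimator of Eq. (17); the names `simulate_driven_branching`, `estimate_m`, and `tau_from_m`, as well as the drive `h`, are hypothetical.

```python
import numpy as np

def simulate_driven_branching(m, h, steps, seed=0):
    """Population activity A(t+1) ~ Poisson(m * A(t) + h) with drive h (m < 1)."""
    rng = np.random.default_rng(seed)
    activity = np.empty(steps)
    activity[0] = h / (1.0 - m)  # start at the stationary mean
    for t in range(steps - 1):
        activity[t + 1] = rng.poisson(m * activity[t] + h)
    return activity

def estimate_m(activity):
    """Naive estimate of m: slope of the linear regression of A(t+1) on A(t)."""
    a_now, a_next = activity[:-1], activity[1:]
    return np.cov(a_now, a_next)[0, 1] / np.var(a_now, ddof=1)

def tau_from_m(m, dt=1.0):
    """Autocorrelation time of a subcritical branching process, in units of dt."""
    return -dt / np.log(m)

activity = simulate_driven_branching(m=0.9, h=10.0, steps=50000)
m_hat = estimate_m(activity)
tau_hat = tau_from_m(m_hat)
```

For m = 0.9 the relation gives an autocorrelation time of roughly 9.5 time steps, and the value grows without bound as m approaches unity, which is the divergence referred to above.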

Network properties have to be tuned to task requirements

It is widely assumed that criticality optimizes task performance. However, we found that one has to phrase this statement more carefully. While certain abstract computational properties, such as the susceptibility, sensitivity, or memory time span are indeed maximal or even approaching infinity at a critical state, this is not necessary for task performance in general5,11,42,46. We find that it can even be detrimental. For every single task complexity, a different distance to criticality is optimal, as outlined in the following.

We study the performance of our recurrent neural network in the framework of reservoir computing: the performance of a recurrent neural network is quantified by the ability of linear readout neurons to separate different sequences47–49. To that end, it is often necessary to maintain information about past input for long time spans. To test performance, we specifically use an n-bit sum and an n-bit parity task and train a readout on the activity of Nread = 16 randomly chosen neurons of the reservoir. For the two given tasks, complexity increases with n: to solve the tasks, the network has to both memorize and process the input from the n past steps. As reservoirs close to a critical point have longer memory as quantified by the lagged mutual information (Iτ, Fig. 7), one expects that particularly the memory-intensive tasks profit from criticality (tasks with high n are solved better at low degrees of input Kext). In contrast, simple tasks (low n) might suffer from criticality because of the maintenance of memory about unnecessary input. Since the estimation of parity, in contrast to the sum, is fully nonlinear, their direct comparison allows us to further dissect task complexity. Thus, depending on the task complexity, there should be an ideal Kext, leading to maximal performance.
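
The targets of the two tasks can be written down compactly. The sketch below assumes, for illustration only, that the input is available as a binary stream with one bit per time step; how bits are encoded as spike input on the chip is not covered here, and the function name `nbit_targets` is hypothetical.

```python
import numpy as np

def nbit_targets(bits, n):
    """Sum and parity of the most recent n bits for every step t >= n - 1."""
    bits = np.asarray(bits, dtype=int)
    # Row k holds bits[k], ..., bits[k + n - 1].
    windows = np.lib.stride_tricks.sliding_window_view(bits, n)
    sums = windows.sum(axis=1)   # linear in the input bits
    parity = sums % 2            # fully nonlinear in the input bits
    return sums, parity

bits = [1, 0, 0, 1, 1, 1]
sums, parity = nbit_targets(bits, n=3)
# sums   -> [1, 1, 2, 3]
# parity -> [1, 1, 0, 1]
```

Both targets depend on the same n past inputs, so they require the same memory span; only the parity additionally requires a nonlinear combination, which is why the two tasks can be compared directly.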

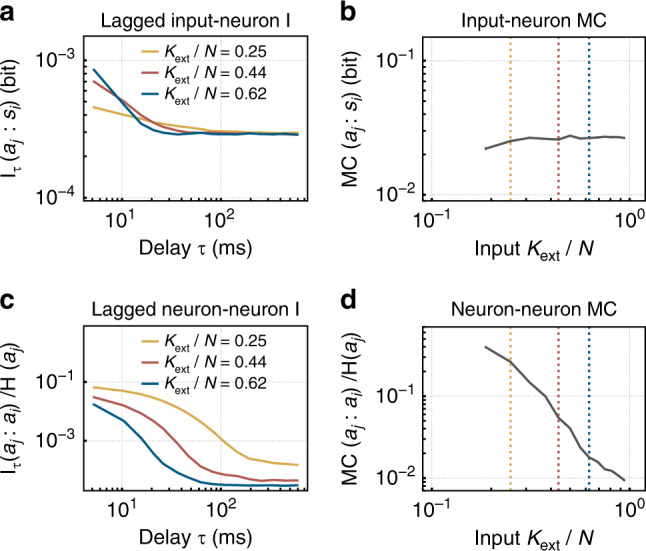

Fig. 7. Long lasting memory accompanies critical network dynamics.

a Memory about the input si as read out from neuron aj after a time lag τ is quantified by the mutual information Iτ(aj, si). Here, high degrees of the external input Kext are favorable for memory on short timescales, whereas low Kext is favorable on larger timescales. b The memory capacity (MC) stays fairly constant, despite a decreased coupling to the stimulus for low Kext. c The lagged I between the activity of pairs of neurons indicates increasing memory for decreasing Kext, also visible in the memory capacity (MC) (d). The selection of Kext/N in a and c is marked by dashed vertical lines in b and d.

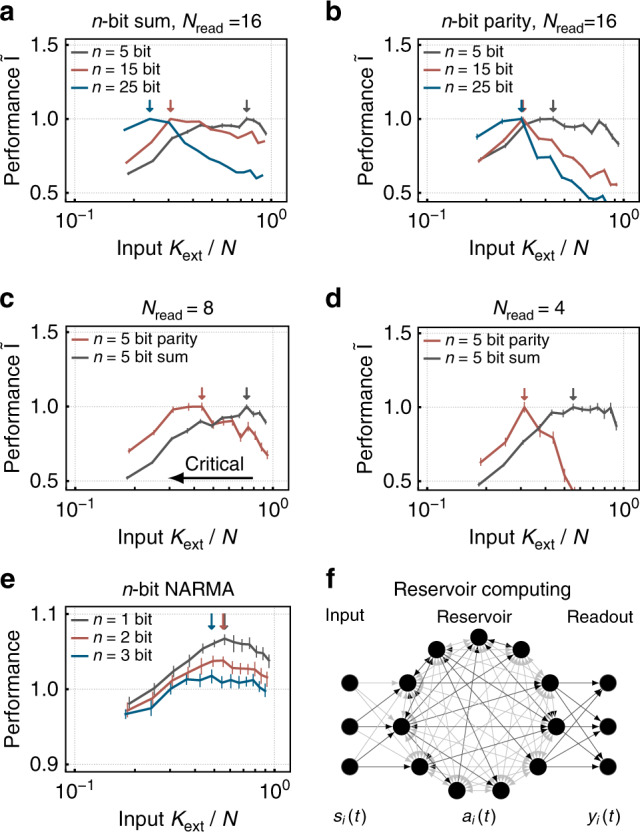

For our network, we indeed find that maximal task performance depends on both task complexity and distance to criticality: simple sum tasks (n = 5) are optimally solved away from criticality, whereas complex sum tasks (n = 25) profit from the long timescales arising at criticality (Fig. 5a). The nonlinear parity task profits even more from criticality: even for n = 5, networks closer to the critical point promote task performance (Fig. 5b). Hence, we are capable of adapting the network's computational properties to task complexity by fine-tuning the strength of the input.

Fig. 5. Computationally challenging tasks profit from critical network dynamics (small Kext), whereas simple tasks do not.

The network is used to solve (a) an n-bit sum and (b) an n-bit parity task by training a linear classifier on the activity of Nread = 16 neurons. Here, task complexity increases with n, the number of past inputs that need to be memorized and processed. For high n, task performance profits from criticality, whereas simple tasks suffer from criticality. In particular, the more complex, nonlinear parity task profits from criticality. Further, task complexity can be increased by restricting the classifier to c Nread = 8 and d Nread = 4. Again, the parity task increasingly profits from criticality with decreasing Nread. The performance is quantified by the normalized mutual information between the vote of the classifier and the parity or sum of the input. e Likewise, the peak performance moves toward criticality with increasing complexity in a NARMA task. The performance is quantified by the inverse NRMSE. Highest performance for a given task is highlighted by colored arrows. f Schematic reservoir computing setup.

Likewise, we investigated the ability of our networks to do combinations of operations by considering the classic nonlinear autoregressive moving average (NARMA) task48. The network’s peak performance again moves toward criticality with higher task complexity (Fig. 5e).
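
For orientation, a NARMA target series and the NRMSE can be generated as sketched below. The coefficients are those of the commonly used NARMA-10 benchmark, and reusing them for other orders n is an assumption of this example; the exact variant and parameters used in the experiments are not specified in this section. The function names `narma` and `nrmse` are hypothetical.

```python
import numpy as np

def narma(u, n=10):
    """NARMA series of order n driven by input u (standard NARMA-10 coefficients)."""
    y = np.zeros(len(u))
    for t in range(n - 1, len(u) - 1):
        y[t + 1] = (0.3 * y[t]
                    + 0.05 * y[t] * y[t - n + 1:t + 1].sum()  # last n outputs
                    + 1.5 * u[t - n + 1] * u[t]               # delayed input product
                    + 0.1)
    return y

def nrmse(target, prediction):
    """Root-mean-square error normalized by the standard deviation of the target."""
    return np.sqrt(np.mean((target - prediction) ** 2) / np.var(target))

rng = np.random.default_rng(0)
u = rng.uniform(0.0, 0.5, size=500)  # input range typically used for NARMA-10
y = narma(u, n=10)
baseline = nrmse(y, np.full_like(y, y.mean()))  # predicting the mean gives NRMSE = 1
```

Because each output depends on the input n − 1 steps back and on products of past outputs, increasing n raises both the memory and the nonlinearity demands. A readout that only predicts the mean of the target yields an NRMSE of one, so an inverse NRMSE above one indicates useful predictions.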

To further tune the task difficulty, we reduced the number of neurons visible to the readout Nread. We expect that in principle information about, e.g., parity could be available in a single neuron if the network is sufficiently close to criticality, because critical network dynamics are characterized not only by temporal, but also by spatial correlations. The ability to condense information about extended stimuli in the activity of few neurons can be valuable. To quantify the effect of spatial correlations on computation, we trained linear classifiers on the activity of a subset of neurons for the 5-bit sum and the parity task. When lowering Nread from 8 to 4, only the nonlinear parity task increasingly profits from critical network dynamics (Fig. 5c, d). In contrast, the information necessary to solve the linear sum task seems to be globally available in the network response even for subcritical dynamics. The ability to locally read out global information from the network is of equal importance for both large neuromorphic systems29 and living networks50,51.

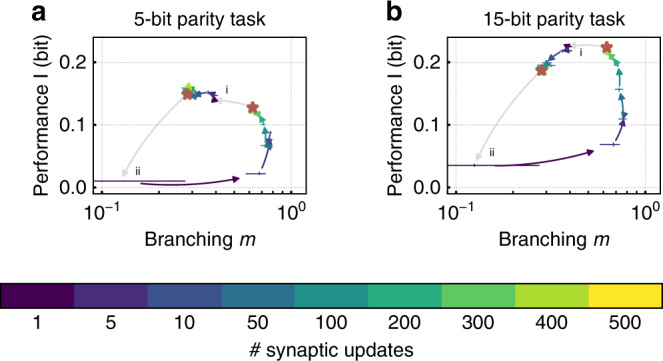

Adaptation to task by dynamic switching of input strengths

We know from the previous experiments that for high n, the n-bit parity task is solved best at criticality, whereas for low n, the subcritical regime leads to best performance. In the following, we investigate how to transition between both states. To achieve this, we take the state of a critical network and switch the degree of the input Kext to a subcritical configuration and vice versa. The performance is evaluated after various numbers of synaptic updates. This task switch generates the same working points as the previous emulations that start with synaptic weights wij = 0 ∀ i, j and have a long adaptation phase (red stars in Fig. 6).

Fig. 6. The network can be dynamically adapted by changing the degree of the input Kext.

After convergence of synaptic weights wij, Kext/N is (i) switched from critical (Kext/N = 0.3) to subcritical (Kext/N = 0.8) and (ii) vice versa. The branching ratio m and the performance I of the network on a the 5-bit and b the 15-bit parity task are evaluated after various numbers of synaptic updates. The network reaches the same performance and dynamics as when starting from wij = 0 ∀ i, j (marked by red stars). For both tasks, the transition from subcritical to critical dynamics requires more updates, as expected. Moreover, optimal performance for (a) the 5-bit task is achieved under strong input (i), whereas (b) the 15-bit task requires low input (ii). The performance is quantified by the mutual information I between the parity of the input and the vote of a linear classifier.

A fast adaptation to different input strengths is required to switch between tasks of different complexity. The transition from critical to subcritical is achieved after the application of about 50 synaptic updates corresponding to 50 s biological time, whereas going from subcritical to critical takes about 500 updates and therefore 500 s (Fig. 6). However, due to the speedup of the neuromorphic chip, the adaptation takes only about 0.5 s wall clock time and can even be lowered by decreasing the integration time over spike pairs in the synaptic update rule. As alternative strategies, one could switch between saved configurations, or run a hierarchy of networks with different working points in parallel52.

Task-independent quantification of computational properties

While task performance is the standard benchmark for any model, such benchmark tasks have two disadvantages: in many biological systems, such as higher brain areas or in vitro preparations, such tasks cannot be applied, and even if tasks can be applied, the outcome will always depend on the chosen task. To quantify computational properties in a task-independent manner, information theory offers powerful tools23. Using the Poisson noise input, we find that the lagged mutual information Iτ between the input si and the activity aj of a neuron after a time lag τ predicts the performance on the parity task. Here, at high Kext (away from criticality) information about the input is maximal for very short τ, but decays very quickly (Fig. 7a). This fast forgetting is important to eradicate past, task-irrelevant input that would interfere with novel, task-relevant input. At small Kext, the recurrence is stronger and input can be read out for much longer delays (60 ms, compared with 20 ms at high Kext). This active storage of information is required in a reservoir to solve any task that combines past and present input, and hence the more complex parity task also profits from it. However, the representation of input in every single neuron becomes less reliable (i.e., Iτ is smaller). A measure for the representation of the input in the network can be obtained by integrating Iτ over τ. Interestingly, this memory capacity (MC) stays fairly constant (Fig. 7b). Note that we only quantified the representation of the input in a single neuron, a measure very easily accessible in experiments; the readout can of course draw on the distributed memory across all neurons, which jointly provide a much better readout.
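
The lagged mutual information and the resulting memory capacity can be estimated from binned data as sketched below. This is a minimal plug-in estimator for discrete sequences without bias correction, which may differ from the estimator used for the figures; the function names `mutual_information` and `lagged_mi` are hypothetical, and the toy "neuron" simply copies the stimulus with a delay of two bins.

```python
import numpy as np

def mutual_information(x, y):
    """Plug-in estimate of I(x; y) in bits for two discrete sequences."""
    _, xi = np.unique(np.asarray(x).ravel(), return_inverse=True)
    _, yi = np.unique(np.asarray(y).ravel(), return_inverse=True)
    joint = np.zeros((xi.max() + 1, yi.max() + 1))
    np.add.at(joint, (xi, yi), 1)          # joint histogram
    joint /= joint.sum()
    px = joint.sum(axis=1, keepdims=True)  # marginal of x
    py = joint.sum(axis=0, keepdims=True)  # marginal of y
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log2(joint[nz] / (px @ py)[nz])))

def lagged_mi(stimulus, activity, max_lag):
    """I_tau between the stimulus at time t and the activity at time t + tau."""
    stimulus, activity = np.asarray(stimulus), np.asarray(activity)
    return np.array([
        mutual_information(stimulus[:len(stimulus) - tau], activity[tau:])
        for tau in range(max_lag + 1)
    ])

rng = np.random.default_rng(0)
stimulus = rng.integers(0, 2, size=5000)
activity = np.roll(stimulus, 2)           # toy neuron: copies the input 2 bins later
i_tau = lagged_mi(stimulus, activity, max_lag=5)
memory_capacity = i_tau.sum()             # discrete version of integrating over tau
```

In this toy case the lagged mutual information is close to one bit at a lag of two bins and close to zero elsewhere, so the memory capacity is about one bit. A slowly decaying Iτ with a small amplitude can yield the same integral, which is consistent with the fairly constant MC described above.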

The memory maintenance for task processing has to be realized mainly by activity propagating on the recurrent connections in the network. Therefore, it is often termed active information storage (AIS)53. The recurrent connections become stronger closer to criticality, and as a consequence we find that the lagged mutual information between pairs of neurons in the reservoir also increases (Fig. 7c). As a result, the MC of the reservoir increases over almost two orders of magnitude when approaching criticality (lower Kext, Fig. 7d). This increase in internal MC carries the performance on the more complex parity tasks.

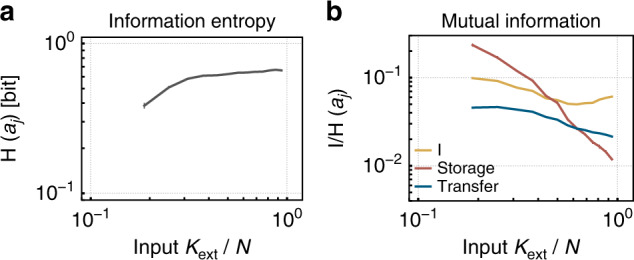

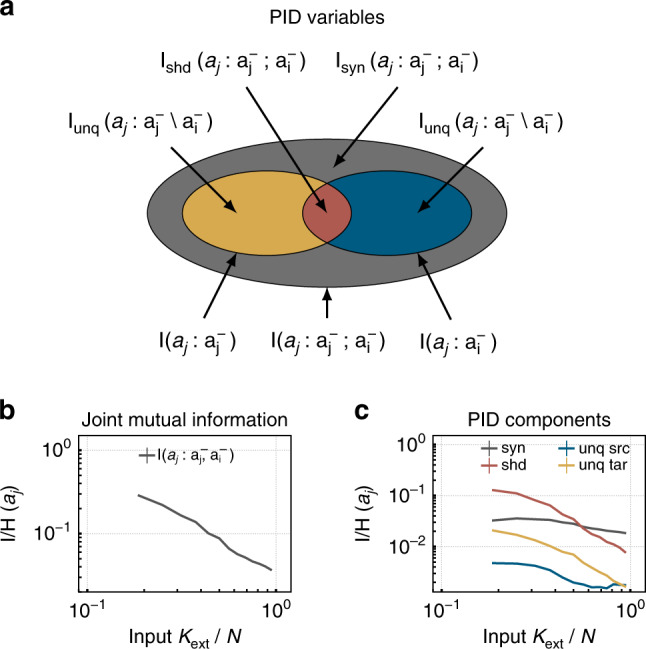

When assessing computational capacities, information theory enables us to quantify not only the entropy (H) and mutual information (I) between units, but also to disentangle transfer and storage of information, as well as unique, redundant, and synergistic contributions of different source neurons23–25,27,54. We find that all these quantities increase with approaching criticality (smaller Kext, Figs. 8b and 9c). This indicates that the overall computational capacity of the model increases, as predicted for the vicinity of the critical state1,2,7,11.

Fig. 8. The information fingerprint changes with the degree of input Kext, thus with distance to criticality.

a The entropy (H) of the spiking activity of a single neuron aj stays fairly constant, except for low Kext as a consequence of decreasing firing rates. b The mutual information (I) between the activity of two units ai, aj increases with lower Kext (i.e., closer to critical). The network-intrinsic memory also increases, as indicated by the active information storage (AIS), i.e., the mutual information between the past and the present activity of a neuron. Likewise, the information transfer within the network increases with lower Kext. Information transfer is measured as transfer entropy (TE) between pairs of neurons aj and ai, i.e., the information that the past of one neuron provides about the present activity of the other, given the past of the latter.

Fig. 9. Partial information decomposition (PID) components increase toward criticality (i.e., with smaller input Kext).

a The two input variables for PID correspond to the spiking histories of two neurons, and the output variable to the present state aj. PID enables us to quantify the unique contribution of each source to the firing of the target neuron, as well as the shared (also called redundant) and synergistic contributions. b The joint mutual information (I) increases with decreasing Kext. c All PID components increase with approaching criticality. Interestingly, the synergistic and shared contributions are always much larger than the unique contributions (note the logarithmic axis). This highlights the collective nature of processing in recurrent neural networks.

In more detail, the AIS of a neuron, as well as the I and the transfer entropy (TE) between pairs of neurons, increase with lower Kext (Fig. 8b). In our analysis, these increases reflect memory that is realized as activity propagation on the network, and not storage within a single neuron, because the bin size used for analysis is larger than the refractory period τref, the synaptic timescale τsyn, and the membrane timescale τm. Information theory here enables us to show that active transfer and storage of information within the network strongly increase toward criticality. A similar increase in I, AIS, and TE has been observed for the Ising model and reservoirs at criticality1,11, and hence supports the notion that criticality maximizes information processing capacity. Note, however, that this maximal capacity is typically not necessary; as shown here, it can even be unfavorable when solving simple tasks.
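
How AIS and TE follow from joint entropies can be sketched for binary spike trains. The example below is a simplification that uses a history length of a single bin and plug-in entropies without bias correction, which need not match the embedding and estimator used for the figures; the function names are hypothetical.

```python
import numpy as np
from collections import Counter

def entropy(*columns):
    """Plug-in joint entropy (bits) of one or more discrete sequences."""
    rows = zip(*(np.asarray(c).tolist() for c in columns))
    counts = np.array(list(Counter(rows).values()), dtype=float)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))

def active_information_storage(a):
    """AIS with history length 1: I(a_t ; a_{t-1})."""
    past, now = a[:-1], a[1:]
    return entropy(past) + entropy(now) - entropy(past, now)

def transfer_entropy(source, target):
    """TE source -> target with history length 1:
    I(target_t ; source_{t-1} | target_{t-1})."""
    src_past, tgt_past, tgt_now = source[:-1], target[:-1], target[1:]
    return (entropy(tgt_now, tgt_past) + entropy(src_past, tgt_past)
            - entropy(tgt_now, src_past, tgt_past) - entropy(tgt_past))

rng = np.random.default_rng(0)
source = rng.integers(0, 2, size=5000)
target = np.roll(source, 1)              # toy coupling: target copies source one bin later
te_forward = transfer_entropy(source, target)
te_backward = transfer_entropy(target, source)
ais_source = active_information_storage(source)
```

In this toy coupling the transfer entropy from source to target is close to one bit, the reverse direction is close to zero, and the memoryless source has an AIS close to zero. This illustrates how the measures separate information transfer from information storage.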

Very recently, it has become possible to further dissect the contributions of different neurons to processing, using PID24. PID enables us to disentangle, for a target neuron ai, how much unique information it obtains from its own past activity or from the past activity of a second neuron, and how much information is redundant or even synergistic from the two (Fig. 9a). Synergistic information is that part of information that can only be computed if both input variables are known, whereas redundant information can be obtained from one or the other.

All the PID components increase when approaching the critical point (low Kext, Fig. 9c). Quantitatively, the redundant and the synergistic information are always stronger than the unique ones, which are about ten times smaller. The shared information dominates closer to criticality, mirroring the increased network synchrony and redundancy between neurons. Further, the synergistic contribution, i.e., the contribution that relies on the past of both neurons, slightly increases, and is indeed the largest contribution for high Kext. This reflects that typically the joint activity of both neurons is required to activate a LIF neuron. Interestingly, the strong increase in shared information (i.e., redundancy) does not seem to impede the performance at criticality (small Kext). However, for even higher synchrony, as expected beyond this critical transition, the shared information might increase too much and thereby decrease performance.

Discussion

In this study, we used a neuromorphic chip to emulate a network, subject to plasticity, and showed a clear relation between criticality, task-performance and information-theoretic fingerprint. Most interestingly, simple tasks do not profit from criticality while complex ones do, showing that every task requires its own network state.

The state and hence computational properties can readily be tuned by changing the input strength, and thus a critical state can be reached without any parameter fine-tuning within the network. This robust mechanism to adapt a network to task requirements is highly promising, especially for large networks where many parameters have to be tuned and in analog neuromorphic devices that are subject to noise in parameters and dynamics.

It has been generally suggested that criticality optimizes task performance1,9. We show that this statement has to be specified: indeed, criticality maximizes a number of properties, such as the autocorrelation time (Fig. 4b), the susceptibility (Fig. 4d), as well as information-theoretic measures (Figs. 7–9). However, this maximization is apparently not at all necessary, potentially even detrimental, when dealing with simple tasks. For our simple task, high network capacity results in maintenance of task-irrelevant information, and thereby harms performance. This is underlined by our results that clearly show that all abstract computational properties are maximized at criticality, but only the complex tasks profit from criticality. Hence, every task needs its own state and therefore a specific distance to critical dynamics.

The input strength can be controlled not only by changing Kext, the number of synapses of a neuron that are coupled to the input; an equally valid choice is to change the external input rate to each neuron. In fact, we showed that changing the input rate has the same effects on criticality (Supplementary Figs. 1–3), task performance (Supplementary Fig. 4), and information measures (Supplementary Figs. 5 and 6) as changing Kext. Moreover, in this framework the lowest input rates even allow the network to cross the critical point (Supplementary Figs. 3 and 4). Thus, for both control mechanisms or a combination of them, there exists an optimal input strength at which the homeostatic mechanisms bring the network closest to criticality. This optimal input strength has been derived analytically for a mean-field network by Zierenberg et al.17, and could potentially be used to predict the optimal input strength for other networks and tasks as well.

Not only the input strength, but also the strength of inhibition can act as a control parameter. Inhibition plays a role in shaping collective dynamics and is known to generate oscillations55,56. For a specific ratio of excitation and inhibition, criticality has been observed in neural networks18,19,57,58. Likewise, our network has 20% inhibitory neurons. However, inhibition is not strictly necessary for criticality13,42. Nevertheless, the existence of more than one control parameter (degree of input, input rate, and inhibition) allows for flexible adjustment even in cases where only one of them can be freely set without perturbing input coding.

Plasticity plays a central role in self-organization of network dynamics and computational properties. In our model, the plasticity, neuron and synapse dynamics feature quite some level of biological detail (Table 1), and thus results could potentially depend on them. All synaptic weights are determined by the synaptic plasticity. Here, we showed results for homeostasis and STDP that implement the negative (anticausal) arm only. When implementing the positive (causal) arm of STDP in addition, the network destabilized, despite counteracting homeostasis. This is a well known problem59. Our implementation is still similar to full STDP, because anticausal correlations are weakened and the causal ones are indirectly strengthened by homeostasis. With its similarity to STDP and its inherent stability, our reduced implementation may be useful for future studies.

Table 1.

Overview of the model parameters.

| Parameter | Symbol | Value |
|---|---|---|
| Threshold potential | uthresh | (554 ± 21) mV |
| Leak potential | uleak | (384 ± 79) mV |
| Reset potential | ureset | (319 ± 18) mV |
| Membrane capacitance | Cm | (2.38 ± 0.02) nF |
| Membrane time constant | τmem | (1.6 ± 1.0) ms |
| Refractory period | τref | (4.9 ± 0.5) ms |
| Synaptic time constant | | (3.7 ± 0.5) ms |
| | | (2.8 ± 0.3) ms |
| Synaptic delay | dsyn | (1.9 ± 0.1) ms |
| Weight scaling | γ | (8.96 ± 0.13) μA |
| Inhibitory synapses per neuron | Ninh | 6 |
| Neurons | N | 32 |
| Degree of input | Kext | 6–32 |
| Input rate | ν | 29 Hz |
| STDP time constant | τSTDP | (6.8 ± 1.2) ms |
| STDP amplitude | η | 0.071 ± 0.023 |
| Correlation scaling | λstdp | 11/128 |
| Drift parameter | λdrift | 1/512 |
| Range of random variable | namp | 15/16 |
| Bias of random variable | 〈n〉 | 3/16 |
| Burn-in experiment duration | Tburnin | 625 s |
| Static experiment duration | | 104 s |
| Static trial experiment duration | Tstatic | 1 s |
| Training experiment duration | Ttrain | 104 s |
| Testing experiment duration | Ttest | 21 s |
| Perturbation experiment duration | Tpert | 2 s |
| Perturbation time | tpert | 1 s |
| Initial weight | | 0 μA |
| Plasticity update period | T | 1 ms |
| Embedding dimension | l | 4 |
| Delay steps | Nτ | 100 |

All time constants are given in biological time. Spike-timing dependent plasticity (STDP) amplitudes as well as time constants were measured using 20 spike pairs. The errors indicate the standard deviation.

The characterization of the network in a task-dependent as well as in a task-independent manner is essential for understanding the impact of criticality on computation. The computational properties in the vicinity of a critical point have been investigated by the classical measures AIS, I, and TE alone5,60, or by PID alone27,61. In this paper, we indeed showed that criticality maximizes capacity, but this does not necessarily translate to maximal task performance. Moreover, the lagged I between the stimulus and the activity of neurons allows us to estimate the memory timescales required to solve our tasks. This enables us to understand how task complexity and the information-theoretic fingerprint are related. Such understanding is the basis for well-founded design decisions of future artificial architectures.

The presented framework is particularly useful for analog neuromorphic devices, as analog components have inherent parameter noise as well as thermal noise, which potentially destabilize the network. Here, the synaptic plasticity plays a key role in equalizing particularly the parameter noise, as also demonstrated for short-term plasticity62, and thus makes knowledge about parameter variations, as well as specific calibration, to some extent unnecessary.

Despite the small system size (N = 32 neurons only), the network not only showed signatures of criticality, but also developed quite complex computational capabilities, reflected in both the task performance and the abstract information-theoretic quantities. We expect that a scale-up of the system size would open even richer possibilities. Such a scale-up would not even require fine-tuning of parameters, as the network self-tunes owing to the local learning rules. As soon as larger chips are available, we expect that the abilities of neuromorphic hardware can be fully exploited in terms of speed and energy efficiency, allowing for long, large-scale, and powerful emulations.

Overall, we found a clear relation between criticality, task-performance and information-theoretic fingerprint. Our result contradicts the widespread statement that criticality is optimal for information processing in general: while the distance to criticality clearly impacts performance on the reservoir task, we showed that only the complex tasks profit from criticality; for simple ones, criticality is detrimental. Mechanistically, the optimal working point for each task can be set very easily under homeostasis by adapting the mean input strength. This shows how critical phenomena can be harnessed in the design and optimization of artificial networks, and may explain why biological neural networks operate not necessarily at criticality, but in the dynamically rich vicinity of a critical point, where they can tune their computational properties to task requirements10,63.

Methods

We start with a description of the implemented network model, followed by a summary of the analysis techniques. All parameters are listed in Table 1 and all variables in the Supplementary Tables 1 and 2.

Model

The results shown in this article are acquired on the mixed-signal neuromorphic hardware system described in ref. 38 (Fig. 1b). In the following, we give a brief overview of the model, which is approximated by the physical implementation on the hardware, and of the programmed plasticity rule. Since the neuromorphic hardware system comprises analog electric circuits, transistor mismatch causes parameter fluctuations, which can be compensated by calibration37. Here, no explicit calibration on the basis of single neurons and synapses is applied. Instead, only parameters common to all neurons/synapses are set such that all parts behave according to the listed equations, especially that all parts are sensitive to input but silent in the absence of input. This choice leads to uncertainties in the model parameters as reported in Table 1.

Neurons: Implemented in analog circuitry, the neurons approximate current-based LIF neurons. The membrane potential uj of the j-th neuron obeys

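$$\tau_\mathrm{mem} \frac{\mathrm{d}u_j}{\mathrm{d}t} = -\left[u_j(t) - u_\mathrm{leak}\right] + \frac{I_j(t)}{g_\mathrm{leak}}, \tag{1}$$

with the membrane time constant τmem, the leak conductance gleak = Cm/τmem, the leak potential uleak, and the input current Ij(t). The k-th firing time of neuron j, $t_j^k$, is defined by a threshold criterion

$$u_j\left(t_j^k\right) \geq u_\mathrm{thresh}. \tag{2}$$

Immediately after $t_j^k$, the membrane potential is clamped to the reset potential, uj(t) = ureset for $t \in (t_j^k, t_j^k + \tau_\mathrm{ref}]$, with the refractory period τref. The neuromorphic hardware system comprises N = 32 neurons, operating in continuous time due to the analog implementation.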
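To make the neuron dynamics concrete, the following is a minimal sketch of a current-based LIF neuron with a threshold criterion and a refractory clamp, integrated with a forward-Euler scheme. The discretization, the step size `dt`, and the function name `simulate_lif` are assumptions made for illustration; the hardware itself operates in continuous time. Default values are the mean parameters of Table 1, and the input current is assumed to be given in nA so that I/gleak comes out in mV.

```python
def simulate_lif(input_current, dt=0.1, tau_mem=1.6, tau_ref=4.9,
                 u_leak=384.0, u_reset=319.0, u_thresh=554.0, c_mem=2.38):
    """Forward-Euler sketch of a current-based LIF neuron.

    Units: time in ms, potentials in mV, capacitance in nF,
    input_current in nA (one value per time step of length dt).
    Returns the membrane trace and the list of spike times.
    """
    g_leak = c_mem / tau_mem          # nF / ms = uS
    u = u_leak                        # start at rest
    refractory_left = 0.0
    trace, spike_times = [], []
    for step, current in enumerate(input_current):
        t = step * dt
        if refractory_left > 0.0:
            # Clamp to the reset potential during the refractory period.
            u = u_reset
            refractory_left -= dt
        else:
            # Leaky integration of the input current.
            u += (-(u - u_leak) + current / g_leak) * dt / tau_mem
            if u >= u_thresh:
                # Threshold criterion: emit a spike and reset.
                spike_times.append(t)
                u = u_reset
                refractory_left = tau_ref
        trace.append(u)
    return trace, spike_times
```

With a constant supra-threshold current the sketch fires regularly, and consecutive spikes are separated by at least τref plus the time needed to charge from ureset to uthresh.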

Synapses: Like the membrane dynamics, the synapses are implemented in electrical circuits. Each neuron features N = 32 presynaptic partners (in-degree is 32). The synaptic input currents onto the j-th neuron enter the neuronal dynamics in Eq. (1) as the sum of the input currents of all presynaptic partners i, $I_j(t) = \sum_i I_{ij}(t)$, where Iij(t) is given by

| 3 |

| 4 |

with the excitatory and the inhibitory synaptic time constants. Ninh synapses of every neuron j are randomly selected to be inhibitory. The external synaptic current depends on the l-th spike time of an external stimulus i, whereas the recurrent synaptic current depends on the k-th spike time of neuron i, each of which is transmitted to neuron j

| 5 |

| 6 |

with the synaptic delay dsyn and the weight conversion factor γ. The synaptic weights from an external spike source i to neuron j and from neuron i to neuron j are distinguished by a superscript. Every synapse transmits either external events or recurrent spikes, never both, i.e., if one of the two weights of a synapse is nonzero, the other one vanishes.

Network: The LIF neurons are potentially connected in an all-to-all fashion. A randomly selected set of Kext synapses of every neuron is chosen to be connected to the spike sources. As every synapse could either transmit recurrent or input spikes, the Kext synapses do not transmit recurrent spikes.

Plasticity: In the network, all synapses are plastic, both the recurrent ones and the ones linked to the external input. Therefore, we skip the superscript of the synaptic weight and drop the distinction between external and recurrent weights in the following description. Weights are subject to three contributions: a weight drift controlled by the parameter λdrift, a correlation-sensitive part controlled by λstdp, and positively biased noise contributions. This is very similar to STDP, however with specific depression, but unspecific potentiation. A specialized processor on the neuromorphic chip is programmed to update synaptic weights to wij(t + T) = wij(t) + Δwij according to

| 7 |

The STDP-kernel function f depends on the pre- and postsynaptic spike times in the time interval [t − T, t)

| 8 |

where only nearest-neighbor spike times are considered in the sum. ηstdp and τstdp denote the amplitude and the time constant of the STDP-kernel. The term nij(t) adds a uniformly distributed, biased random variable

| 9 |

where namp specifies the range, while 〈n〉 is the bias of the random numbers.

The parameters λstdp and λdrift are chosen such that the average combined force of the drift and the stochastic term is positive. Thus, only the negative arm of STDP is implemented.

Initialization: The synaptic weights are initialized to wij = 0 μA. Afterward, the network is stimulated by N Poisson-distributed spike trains of rate ν via the Kext synapses of every neuron. By applying Eq. (7) for the total duration Tburnin, weights wij ≠ 0 μA develop. For every Kext, the network is run 100 times, each with a different random seed. If not stated otherwise, the resulting weight matrices are used as initial conditions for experiments with frozen weights (Δwij = 0) for the static experiment duration (Table 1), on which the analysis is performed.

Simulations: To complement the hardware emulations, an idealized version of the network is implemented in Brian 264. Specifically, no parameter or temporal noise is considered, and weights are not discretized as is the case for the neuromorphic chip. For simplicity, the degree of the input is implemented probabilistically by connecting each neuron-input pair with probability Kext/N and each pair of neurons with probability (N − Kext)/N.

Evaluation

Binning: The following measures rely on an estimate of activity, therefore we apply temporal binning

| 10 |

where δt corresponds to the binwidth, and 1 is the indicator function. With this definition, we are able to define the binarized activity for a single process i

| 11 |

The variable xi(t) can represent either activity of a neuron in the network ai(t), or of a stimulus spike train si(t), and correspondingly the spike times represent spikes of network neurons or stimulus (input) spike trains.

The population activity a(t) of the network is defined as

| 12 |
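The binning steps can be sketched as follows. This is an illustrative sketch only: the helper names are hypothetical, half-open bins [t, t + δt) are assumed, and the population activity is assumed to be the sum of the per-neuron series over neurons.

```python
def bin_spikes(spike_times, t_start, t_stop, bin_width):
    """Count spikes in consecutive half-open bins [t, t + bin_width)."""
    n_bins = int(round((t_stop - t_start) / bin_width))
    counts = [0] * n_bins
    for t in spike_times:
        index = int((t - t_start) // bin_width)
        if 0 <= index < n_bins:       # ignore spikes outside the window
            counts[index] += 1
    return counts


def binarize(counts):
    """Binarized activity: 1 if at least one spike fell into the bin."""
    return [1 if c > 0 else 0 for c in counts]


def population_activity(per_neuron_activity):
    """Sum the per-neuron series bin by bin (assumed form of a(t))."""
    return [sum(column) for column in zip(*per_neuron_activity)]
```

For example, `binarize(bin_spikes(times, 0.0, 5.0, 1.0))` gives the binarized activity of one process, and passing one such list per neuron to `population_activity` gives the summed series.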

Neural avalanches: A neural avalanche is a cascade of spikes in neural networks. We extract avalanches from the population activity a(t), obtained by binning the spike data with δt corresponding to the mean inter-event interval, following established definitions. In detail, one avalanche is separated from the subsequent one by at least one empty time bin43. The size s of an avalanche is defined as the number of spikes in consecutive non-empty time bins. At criticality, the size distribution P(s) is expected to follow a power law.
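The avalanche definition above translates directly into a short routine. The sketch below is illustrative: function names are hypothetical, and counting a final avalanche that is cut off by the end of the recording is an assumption.

```python
def mean_inter_event_interval(spike_times):
    """Mean time between consecutive spikes of the pooled population."""
    ordered = sorted(spike_times)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    return sum(gaps) / len(gaps)


def avalanche_sizes(population_activity):
    """Sizes of avalanches, i.e. spike counts summed over runs of
    consecutive non-empty bins; runs are separated by empty bins."""
    sizes, current = [], 0
    for spikes_in_bin in population_activity:
        if spikes_in_bin > 0:
            current += spikes_in_bin
        elif current > 0:
            sizes.append(current)     # an empty bin ends the avalanche
            current = 0
    if current > 0:
        sizes.append(current)         # avalanche truncated by the recording end
    return sizes
```

The bin width used to build the population activity would be set to the value returned by `mean_inter_event_interval` for the pooled spike times.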

To test for criticality, we compare whether a power law or an exponential distribution fits the acquired avalanche distribution P(s) better45. For the fitting, first the best matching distribution is determined based on the fit-likelihood. The fit-range is fixed to s ∈ [4, 3 ⋅ N] as the system is of finite size. An estimation of the critical exponent αs and an exponential cutoff scut is obtained by fitting a truncated power law

$$P(s) \propto s^{-\alpha_s} \exp\left(-\frac{s}{s_\mathrm{cut}}\right) \tag{13}$$

for s ≥ 1. Power law fits are performed with the Python package powerlaw described in ref. 65.

Fano factor: The variability of the population activity is quantified by the Fano factor $F = \sigma_a^2 / \mu_a$, where $\sigma_a^2$ is the variance and μa is the mean of the population activity a(t), binned with δt = τref.
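As a small worked example, the Fano factor is the ratio of variance to mean of the binned population activity. The sketch below uses the population variance; whether the population or the sample variance was used in the analysis is not stated, so this choice is an assumption.

```python
from statistics import fmean, pvariance


def fano_factor(population_activity):
    """Variance-to-mean ratio of the binned population activity."""
    mean = fmean(population_activity)
    return pvariance(population_activity, mu=mean) / mean
```

A perfectly regular series yields a Fano factor of 0, a Poisson-like series a value near 1, and bursty activity values above 1.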

Trial-to-trial variability and susceptibility: The trial-to-trial distance ΔVRD is obtained by stimulating the same network twice with the same Poisson spike trains, leading to two different trials m and n influenced by variations caused by the physical implementation. The resulting spike times emitted by neuron i in trial m are convolved with a Gaussian

| 14 |

and likewise for trial n. The width is chosen to be σVRD = τref and the temporal resolution for the integration is chosen to be 0.1 ms. From different trials m and n the distance is calculated

| 15 |

To obtain an estimate of the network's sensitivity χ to external perturbations, a pulse of Npert additional spikes is embedded in the stimulating Poisson spike trains at time tpert

| 16 |

normalized to the number of external connections to compensate for the decoupling from external input with decreasing Kext. The population activity is estimated with binsize δt = dsyn. By evaluating χ immediately after the perturbation, only the effect of the perturbation is captured, minimizing the impact of trial-to-trial variations.

To calculate χ and ΔVRD, each weight matrix, obtained by the application of the plasticity rule, is used as initial condition for ten emulations with frozen weights and fixed seeds for the Poisson-distributed spike trains of duration Tpert and Tstatic. In addition, a perturbation of size Npert at tpert = Tpert/2 is embedded for the estimation of χ.

Autoregressive model: Mathematically, the evolution of spiking neural networks is often approximated by a first-order autoregressive representation. To assess the branching parameter m of the network in analogy to refs. 42,43, we make use of the following ansatz:

$$\langle a(t+1) \mid a(t) \rangle = m \, a(t) + h, \tag{17}$$

where the population activity in the next time step, a(t + 1), is determined by internal propagation within the network (m) and by external input h. Here, 〈⋅∣⋅〉 denotes the conditional expectation and m corresponds to the branching ratio. For m = 1, the system is critical; for m > 1, the system is supercritical and activity grows exponentially in expectation (if not limited by finite-size effects); for m < 1, the activity is stationary. The branching parameter m is linked to the autocorrelation time constant by $\tau_\mathrm{corr} = -\delta t / \ln m$. To obtain the activity a(t), the binwidth δt is set to the refractory time τref. Estimating m is straightforward here, as subsampling66,67 does not impact the estimate. Thus a classical estimator can be used, i.e., m is equal to the slope of the linear regression between a(t) and a(t + 1). For model validation purposes, the autocorrelation function ρa,a is calculated on the population activity a(t) binned with δt = τref

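$$\rho_{a,a}(t') = \frac{1}{\sigma_a^2} \left\langle \left[a(t) - \mu_a\right] \left[a(t + t') - \mu_a\right] \right\rangle_t, \tag{18}$$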

where σa is the standard deviation, and μa the mean of the population activity. Subsequently, ρa,a is fitted by an exponential to yield the time constant τcorr.
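The classical estimator described above, i.e. the slope of a linear regression of a(t + 1) on a(t), can be sketched in a few lines. The function names are hypothetical, and the conversion to a time constant uses the standard relation τcorr = −δt/ln m for a first-order autoregressive process, which is only defined for 0 < m < 1.

```python
import math


def estimate_branching_parameter(activity):
    """Least-squares fit of a(t+1) = m * a(t) + h; returns (m, h)."""
    x, y = activity[:-1], activity[1:]
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    covariance = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    variance = sum((xi - mean_x) ** 2 for xi in x)
    m = covariance / variance         # regression slope = branching parameter
    h = mean_y - m * mean_x           # intercept = external drive per step
    return m, h


def correlation_time(m, bin_width):
    """Autocorrelation time for a subcritical branching parameter."""
    if not 0.0 < m < 1.0:
        raise ValueError("correlation time is only defined for 0 < m < 1")
    return -bin_width / math.log(m)
```

As a plausibility check, the time constant obtained from `correlation_time` can be compared with the one from an exponential fit to the autocorrelation function.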

Information theory: We use notation, concepts and definitions as outlined in the review23. In brief, the time series produced by two neurons represent two stationary random processes X1 and X2, composed of random variables X1(t) and X2(t), t = 1, . . . , n, with realizations x1(t) and x2(t). The corresponding embedding vectors are given in bold font, e.g., $\mathbf{X}_1^l(t) = \{X_1(t), X_1(t-1), \ldots, X_1(t-l+1)\}$. The embedding vector is constructed such that it renders the variable X1(t + 1) conditionally independent of all random variables X1(t′) with t′ < t − l + 1, i.e., $p\left(X_1(t+1) \mid \mathbf{X}_1^l(t), X_1(t')\right) = p\left(X_1(t+1) \mid \mathbf{X}_1^l(t)\right)$. Here, (⋅∣⋅) denotes the conditional.

The entropy (H) and mutual information (I) are calculated for the random variables X1 and X2, if not denoted otherwise. This is equivalent to using l = 1 above, e.g., H(X1) and I(X1 : X2) = H(X1) − H(X1∣X2). We abbreviate the past state of spike train 1 by $\mathbf{X}_1^-$: thus $\mathbf{X}_1^- = \mathbf{X}_1^l(t-1)$. The current value of the spike train is denoted by X1. With this notation the AIS of, e.g., X1 is given by

$$\mathrm{AIS}(X_1) = I\left(X_1 : \mathbf{X}_1^-\right). \tag{19}$$

In the same way, we define the TE between source X1 and target X2

$$\mathrm{TE}(X_1 \to X_2) = I\left(X_2 : \mathbf{X}_1^- \mid \mathbf{X}_2^-\right). \tag{20}$$

The lagged mutual information for time lag τ is defined as . Integrating the lagged I defines the MC

| 21 |

with a maximal delay Nτ = 100. The I of a sufficiently large Nτ is subtracted to account for potential estimation biases.

To assess the information modification, the novel concept of PID is applied24,25,27. Intuitively, information modification in a pairwise consideration should correspond to the information about the present state of a process that is only available when considering both the own process past and the past of a source process. Therefore, the joint mutual information $I(X_1 : \mathbf{X}_1^-, \mathbf{X}_2^-)$ is decomposed by PID into the unique, shared (redundant), and synergistic contributions to the future spiking of one neuron, X1, from its own past $\mathbf{X}_1^-$ and from the past of a second neuron or an input stimulus $\mathbf{X}_2^-$. In more detail, we quantify

- The unique information that is contributed from the neuron's own past.

- The unique information that is contributed from a different spike train (neuron or stimulus).

- The shared information that describes the redundant contribution.

- The synergistic information Isyn, i.e., the information that can only be obtained when having knowledge about both past states.

Isyn is what we consider to be a suitable measure for information modification27.

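The joint mutual information as defined here is the sum of the AIS and the TE

$$I\left(X_1 : \mathbf{X}_1^-, \mathbf{X}_2^-\right) = I\left(X_1 : \mathbf{X}_1^-\right) + I\left(X_1 : \mathbf{X}_2^- \mid \mathbf{X}_1^-\right) = \mathrm{AIS}(X_1) + \mathrm{TE}(X_2 \to X_1). \tag{22}$$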

We calculated H, AIS, I, and TE with the toolbox JIDT68, whereas the PID was estimated with the BROJA-2PID estimator69. The activity is obtained by binning the spike data with δt = τref and setting l to 4 to incorporate sufficient history. I and TE as well as the PID were calculated pairwise between all possible combinations of processes. Results are typically normalized by H to compensate for potential changes in the firing rate for changing values of Kext (Supplementary Fig. 1). For the pairwise measures, H of the target neuron is used for normalization.
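To illustrate how AIS, TE, and the joint mutual information relate, the following sketch uses simple plug-in (histogram) estimators on binarized series. It is not the JIDT or BROJA-2PID implementation used for the results; the function names are hypothetical, no bias correction is applied, and the default history length l = 4 follows Table 1.

```python
from collections import Counter
from math import log2


def entropy(samples):
    """Plug-in entropy in bits of a sequence of hashable symbols."""
    total = len(samples)
    return -sum(c / total * log2(c / total) for c in Counter(samples).values())


def mutual_information(xs, ys):
    """I(X : Y) = H(X) + H(Y) - H(X, Y)."""
    return entropy(xs) + entropy(ys) - entropy(list(zip(xs, ys)))


def conditional_mutual_information(xs, ys, zs):
    """I(X : Y | Z) = H(X, Z) + H(Y, Z) - H(X, Y, Z) - H(Z)."""
    return (entropy(list(zip(xs, zs))) + entropy(list(zip(ys, zs)))
            - entropy(list(zip(xs, ys, zs))) - entropy(zs))


def storage_and_transfer(target, source, l=4):
    """Return (AIS of target, TE source -> target, joint MI)."""
    if len(target) != len(source):
        raise ValueError("target and source must have equal length")
    steps = range(l, len(target))
    present = [target[t] for t in steps]
    target_past = [tuple(target[t - l:t]) for t in steps]   # own past state
    source_past = [tuple(source[t - l:t]) for t in steps]   # source past state
    ais = mutual_information(present, target_past)
    te = conditional_mutual_information(present, source_past, target_past)
    joint = mutual_information(present, list(zip(target_past, source_past)))
    return ais, te, joint
```

Because all three quantities are computed from the same empirical distribution, the returned joint mutual information equals AIS plus TE up to floating-point error, which mirrors the chain rule behind Eq. (22).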

Reservoir computing: The performance of the neural network as a reservoir47,48 is quantified using a variant of the n-bit parity task. The network weights are frozen (i.e., plasticity is disabled) to ensure that the network state is not changed by the input.

Solving the parity task requires classifying, from the network activity aj(t), whether the last n bits of input carried an odd or even number of spikes. The network is stimulated with a single Poisson-distributed spike train of frequency ν acting equally on all external synapses, i.e., all external synapses receive identical input spike times. Spike times are binned according to Eq. (11) with binwidth δt to get a measure of the n past bits. The resulting stimulus activity s(t) is used to calculate the n-bit parity function according to

| 23 |

with the modulo-2 addition ⊕, i.e., whether an odd or even number of spikes occurred in the n past time steps of duration δt.
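The target labels of the parity task can be sketched as a sliding modulo-2 sum over the binarized stimulus. The function name is hypothetical, and the assumption that the window of n bins includes the current bin is made only for illustration.

```python
def parity_targets(stimulus, n):
    """n-bit parity of a binarized stimulus.

    Entry k belongs to time step t = k + n - 1 and is 1 if an odd number
    of the bins s(t - n + 1), ..., s(t) contain a spike, else 0.
    """
    targets = []
    for t in range(n - 1, len(stimulus)):
        window = stimulus[t - n + 1:t + 1]
        targets.append(sum(window) % 2)   # modulo-2 addition over the window
    return targets
```

Increasing n makes the task harder in two ways: the readout has to access a longer stimulus history, and the parity function stays non-linearly separable for every n > 1.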

On the activity aj(t) of a randomly selected subset of neurons with cardinality Nread a classifier is trained

| 24 |

where Θ(⋅) is the Heaviside function, and v(t) is the predicted label. The weight vector wj of the classifier is determined using linear regression on a set of training data strain of duration Ttrain

| 25 |

The network’s performance on the parity task is quantified by the mutual information I between the target pn[s(t)] and the predicted label v(t) on a test data set stest of duration Ttest. The performance I is offset-corrected by training the very same classifier on a shuffled version of pn[s(t)]. Moreover, we weighted each sample in the regression in Eq. (25) with the relative occurrence of its respective class to compensate for imbalance. Temporal binning with δt = 1 ms is applied to strain, stest, as well as aj(t).

In a second task, the stimulus activity s(t) is used to calculate the n-bit sum according to

| 26 |

i.e., how many spikes occurred in the n past time steps of duration δt. Here, the classifier described above is extended to multiple classes by adding readout units. The decision of the classifier is implemented by a winner-take-all mechanism across units.

In a third task, the readout is trained to calculate the NARMA system xn(t)

| 27 |

with α = 0.3, β = 0.05, γ = 1.5, δ = 0.1 (ref. 70), and the normalized stimulus activity

| 28 |
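For orientation, a NARMA target series can be generated as sketched below. The recursion used here is the standard n-th order NARMA benchmark, which is assumed to match Eq. (27); the function name, the zero initial condition, and δ = 0.1 (the usual offset of this benchmark) are likewise assumptions.

```python
def narma(u, n, alpha=0.3, beta=0.05, gamma=1.5, delta=0.1):
    """Standard n-th order NARMA series driven by the input u.

    x(t+1) = alpha * x(t) + beta * x(t) * sum_{i=0}^{n-1} x(t-i)
             + gamma * u(t-n+1) * u(t) + delta
    The first n entries are left at the initial value 0.
    """
    x = [0.0] * len(u)
    for t in range(n - 1, len(u) - 1):
        history = sum(x[t - i] for i in range(n))   # last n outputs
        x[t + 1] = (alpha * x[t] + beta * x[t] * history
                    + gamma * u[t - n + 1] * u[t] + delta)
    return x
```

The input `u` would be the normalized stimulus activity; the recursion can diverge for large inputs or large n, which is why the input is usually restricted to a small range.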

Again, a linear classifier is trained on the network activity

| 29 |

Here, the performance is quantified by the normalized root-mean-square error (NRMSE)

| 30 |

with the standard deviation of the vote of the linear classifier σy.

Acknowledgements

We thank M. Loidold, J. Pinheiro Neto, K. Schreiber, P. Spitzner, and J. Zierenberg for helpful comments on the manuscript. The authors express their gratitude toward S. Billaudelle, E. Müller, J. Weis, and Y. Stradmann for their support and helpful discussions. This work has received funding from the European Union Sixth Framework Programme ([FP6/2002-2006]) under grant agreement no 15879 (FACETS), the European Union Seventh Framework Programme ([FP7/2007-2013]) under grant agreement no. 604102 (HBP), 269921 (BrainScaleS), and 243914 (Brain-i-Nets), and the Horizon 2020 Framework Programme ([H2020/2014-2020]) under grant agreement no 720270 and 785907 (HBP), as well as the Manfred Stärk Foundation. V.P. was supported by the Max Planck Society. M.W. has received funding from the VolkswagenStiftung. The authors acknowledge support by the state of Baden-Württemberg through bwHPC.

Author contributions

B.C., D.S., and V.P. conceptualized the work. B.C. and V.P. wrote the manuscript. B.C. conducted the experiments. D.S. contributed to many of the experiments. M.K. contributed to the parity task. M.W. contributed to the information theory. V.P. supervised the work. J.S. is the architect and lead designer of the neuromorphic platform. J.S. and K.M. provided conceptual and scientific advice.

Data availability

Data available on request from the authors.

Code availability

Code available on request from the authors.

Competing interests

The authors declare no competing interests.

Footnotes

Peer review information Nature Communications thanks Timothée Levi and other anonymous reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Contributor Information

Benjamin Cramer, Email: benjamin.cramer@kip.uni-heidelberg.de.

Viola Priesemann, Email: viola.priesemann@ds.mpg.de.

Supplementary information

Supplementary information is available for this paper at 10.1038/s41467-020-16548-3.

References

- 1.Boedecker J, Obst O, Lizier JT, Mayer NM, Asada M. Information processing in echo state networks at the edge of chaos. Theory Biosci. 2012;131:205–213. doi: 10.1007/s12064-011-0146-8. [DOI] [PubMed] [Google Scholar]

- 2.Bertschinger N, Natschläger T. Real-time computation at the edge of chaos in recurrent neural networks. Neural Comput. 2004;16:1413–1436. doi: 10.1162/089976604323057443. [DOI] [PubMed] [Google Scholar]

- 3.Legenstein R, Maass W. Edge of chaos and prediction of computational performance for neural circuit models. Neural Netw. 2007;20:323–334. doi: 10.1016/j.neunet.2007.04.017. [DOI] [PubMed] [Google Scholar]

- 4.Kinouchi O, Copelli M. Optimal dynamical range of excitable networks at criticality. Nat. Phys. 2006;2:348–351. [Google Scholar]

- 5.Shew WL, Plenz D. The functional benefits of criticality in the cortex. Neuroscientist. 2013;19:88–100. doi: 10.1177/1073858412445487. [DOI] [PubMed] [Google Scholar]

- 6.Del Papa B, Priesemann V, Triesch J. Criticality meets learning: criticality signatures in a self-organizing recurrent neural network. PloS ONE. 2017;12:e0178683. doi: 10.1371/journal.pone.0178683. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Langton CG. Computation at the edge of chaos: phase transitions and emergent computation. Phys. D. 1990;42:12–37. [Google Scholar]

- 8.Harris, T. E. The Theory of Branching Processes (Courier Corporation, 2002).

- 9.Munoz MA. Colloquium: criticality and dynamical scaling in living systems. Rev. Mod. Phys. 2018;90:031001. [Google Scholar]

- 10.Wilting J, et al. Operating in a reverberating regime enables rapid tuning of network states to task requirements. Front. Syst. Neurosci. 2018;12:55. doi: 10.3389/fnsys.2018.00055. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Barnett L, Lizier JT, Harré M, Seth AK, Bossomaier T. Information flow in a kinetic ising model peaks in the disordered phase. Phys. Rev. Lett. 2013;111:177203. doi: 10.1103/PhysRevLett.111.177203. [DOI] [PubMed] [Google Scholar]

- 12.Tkačik G, et al. Thermodynamics and signatures of criticality in a network of neurons. PNAS. 2015;112:11508–11513. doi: 10.1073/pnas.1514188112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Levina A, Herrmann JM, Geisel T. Dynamical synapses causing self-organized criticality in neural networks. Nat. Phys. 2007;3:857–860. [Google Scholar]

- 14.Meisel C, Gross T. Adaptive self-organization in a realistic neural network model. Phys. Rev. E. 2009;80:061917. doi: 10.1103/PhysRevE.80.061917. [DOI] [PubMed] [Google Scholar]

- 15.Stepp N, Plenz D, Srinivasa N. Synaptic plasticity enables adaptive self-tuning critical networks. PLoS Comput. Biol. 2015;11:e1004043. doi: 10.1371/journal.pcbi.1004043. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Tetzlaff C, Okujeni S, Egert U, Wörgötter F, Butz M. Self-organized criticality in developing neuronal networks. PLoS Comput. Biol. 2010;6:e1001013. doi: 10.1371/journal.pcbi.1001013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Zierenberg J, Wilting J, Priesemann V. Homeostatic plasticity and external input shape neural network dynamics. Phys. Rev. X. 2018;8:031018. [Google Scholar]

- 18.Poil S-S, Hardstone R, Mansvelder HD, Linkenkaer-Hansen K. Critical-state dynamics of avalanches and oscillations jointly emerge from balanced excitation/inhibition in neuronal networks. J. Neurosci. Res. 2012;32:9817–9823. doi: 10.1523/JNEUROSCI.5990-11.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Shin C-W, Kim S. Self-organized criticality and scale-free properties in emergent functional neural networks. Phys. Rev. E. 2006;74:045101. doi: 10.1103/PhysRevE.74.045101. [DOI] [PubMed] [Google Scholar]

- 20.Bi G-q, Poo M-m. Synaptic modifications in cultured hippocampal neurons: dependence on spike timing, synaptic strength, and postsynaptic cell type. J. Neurosci. Res. 1998;18:10464–10472. doi: 10.1523/JNEUROSCI.18-24-10464.1998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Markram H, Lübke J, Frotscher M, Sakmann B. Regulation of synaptic efficacy by coincidence of postsynaptic aps and epsps. Science. 1997;275:213–215. doi: 10.1126/science.275.5297.213. [DOI] [PubMed] [Google Scholar]

- 22.Shannon CE. A mathematical theory of communication. Bell Syst. Tech. J. 1948;27:379–423. [Google Scholar]

- 23.Wibral M, Lizier JT, Priesemann V. Bits from brains for biologically inspired computing. Front. Robot. AI. 2015;2:5. [Google Scholar]

- 24.Williams, P. & Beer, R. Decomposing multivariate information. Preprint at http://arxiv.org/abs/1004.2515 (2010).

- 25.Bertschinger, N., Rauh, J., Olbrich, E. & Jost, J. Shared information-new insights and problems in decomposing information in complex systems. In Proc. of the European Conference on Complex Systems, 251–269 (Springer, 2013).

- 26.Lizier JT, Bertschinger N, Jost J, Wibral M. Information decomposition of target effects from multi-source interactions: perspectives on previous, current and future work. Entropy. 2018;20:307. doi: 10.3390/e20040307. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Wibral M, Finn C, Wollstadt P, Lizier JT, Priesemann V. Quantifying information modification in developing neural networks via partial information decomposition. Entropy. 2017;19:494. [Google Scholar]

- 28.Wibral M, Priesemann V, Kay JW, Lizier JT, Phillips WA. Partial information decomposition as a unified approach to the specification of neural goal functions. Brain Cogn. 2017;112:25–38. doi: 10.1016/j.bandc.2015.09.004. [DOI] [PubMed] [Google Scholar]

- 29.Schemmel, J. et al. A wafer-scale neuromorphic hardware system for large-scale neural modeling. In Proc. 2010 IEEE ISCAS 1947–1950 (IEEE, 2010).

- 30.Indiveri G, et al. Neuromorphic silicon neuron circuits. Front. Neurosci. 2011;5:73. doi: 10.3389/fnins.2011.00073.

- 31.Furber SB, et al. Overview of the SpiNNaker system architecture. IEEE Trans. Comput. 2012;62:2454–2467.

- 32.Merolla PA, et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science. 2014;345:668–673. doi: 10.1126/science.1254642.

- 33.Moradi S, Qiao N, Stefanini F, Indiveri G. A scalable multicore architecture with heterogeneous memory structures for dynamic neuromorphic asynchronous processors (DYNAPs). IEEE Trans. Biomed. Circuits Syst. 2017;12:106–122. doi: 10.1109/TBCAS.2017.2759700.

- 34.Davies M, et al. Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro. 2018;38:82–99.

- 35.Khoyratee F, Grassia F, Saighi S, Levi T. Optimized real-time biomimetic neural network on FPGA for bio-hybridization. Front. Neurosci. 2019;13:377. doi: 10.3389/fnins.2019.00377.

- 36.Mead C. Neuromorphic electronic systems. Proc. IEEE. 1990;78:1629–1636.

- 37.Aamir SA, et al. An accelerated LIF neuronal network array for a large-scale mixed-signal neuromorphic architecture. IEEE Trans. Circuits Syst. I, Reg. Pap. 2018;65:4299–4312.

- 38.Friedmann S, et al. Demonstrating hybrid learning in a flexible neuromorphic hardware system. IEEE Trans. Biomed. Circuits Syst. 2017;11:128–142. doi: 10.1109/TBCAS.2016.2579164.

- 39.Wunderlich T, et al. Demonstrating advantages of neuromorphic computation: a pilot study. Front. Neurosci. 2019;13:260. doi: 10.3389/fnins.2019.00260.

- 40.Zapperi S, Lauritsen KB, Stanley HE. Self-organized branching processes: mean-field theory for avalanches. Phys. Rev. Lett. 1995;75:4071. doi: 10.1103/PhysRevLett.75.4071.

- 41.Watson HW, Galton F. On the probability of the extinction of families. JRAI. 1875;4:138–144.

- 42.Wilting J, Priesemann V. Inferring collective dynamical states from widely unobserved systems. Nat. Commun. 2018;9:2325. doi: 10.1038/s41467-018-04725-4.

- 43.Beggs JM, Plenz D. Neuronal avalanches in neocortical circuits. J. Neurosci. 2003;23:11167–11177. doi: 10.1523/JNEUROSCI.23-35-11167.2003.

- 44.Priesemann V, Shriki O. Can a time varying external drive give rise to apparent criticality in neural systems? PLoS Comput. Biol. 2018;14:e1006081. doi: 10.1371/journal.pcbi.1006081.

- 45.Clauset A, Shalizi CR, Newman ME. Power-law distributions in empirical data. SIAM Rev. 2009;51:661–703.

- 46.Shew WL, Yang H, Yu S, Roy R, Plenz D. Information capacity and transmission are maximized in balanced cortical networks with neuronal avalanches. J. Neurosci. 2011;31:55–63. doi: 10.1523/JNEUROSCI.4637-10.2011.

- 47.Maass W, Natschläger T, Markram H. Real-time computing without stable states: a new framework for neural computation based on perturbations. Neural Comput. 2002;14:2531–2560. doi: 10.1162/089976602760407955.

- 48.Jaeger, H. The Echo State Approach to Analysing and Training Recurrent Neural Networks-with an Erratum Note. Technical report 148:13 (German National Research Center for Information Technology, Bonn, Germany, 2001).

- 49.Schürmann, F., Meier, K. & Schemmel, J. Edge of chaos computation in mixed-mode VLSI-a hard liquid. Advances in Neural Information Processing Systems 17, 1201–1208 (2005).

- 50.Brette, R. Is coding a relevant metaphor for the brain? Behav. Brain Sci. 42, 1–44 (2019).

- 51.Bernardi D, Lindner B. Optimal detection of a localized perturbation in random networks of integrate-and-fire neurons. Phys. Rev. Lett. 2017;118:268301. doi: 10.1103/PhysRevLett.118.268301.

- 52.Zierenberg J, Wilting J, Priesemann V, Levina A. Tailored ensembles of neural networks optimize sensitivity to stimulus statistics. Phys. Rev. Res. 2020;2:013115.

- 53.Lizier, J. T., Prokopenko, M. & Zomaya, A. Y. The information dynamics of phase transitions in random Boolean networks. In ALIFE, 374–381 (2008).

- 54.Schreiber T. Measuring information transfer. Phys. Rev. Lett. 2000;85:461. doi: 10.1103/PhysRevLett.85.461.

- 55.Whittington MA, Traub R, Kopell N, Ermentrout B, Buhl E. Inhibition-based rhythms: experimental and mathematical observations on network dynamics. Int. J. Psychophysiol. 2000;38:315–336. doi: 10.1016/s0167-8760(00)00173-2.

- 56.Buzsáki G, Wang X-J. Mechanisms of gamma oscillations. Annu. Rev. Neurosci. 2012;35:203–225. doi: 10.1146/annurev-neuro-062111-150444.

- 57.Neto, J. P., de Aguiar, M. A., Brum, J. A. & Bornholdt, S. Inhibition as a determinant of activity and criticality in dynamical networks. Preprint at http://arxiv.org/abs/1712.08816 (2017).

- 58.Hesse J, Gross T. Self-organized criticality as a fundamental property of neural systems. Front. Syst. Neurosci. 2014;8:166. doi: 10.3389/fnsys.2014.00166.

- 59.Keck T, et al. Integrating Hebbian and homeostatic plasticity: the current state of the field and future research directions. Philos. Trans. R. Soc. B. 2017;372:20160158. doi: 10.1098/rstb.2016.0158.

- 60.Mediano, P. A. & Shanahan, M. Balanced information storage and transfer in modular spiking neural networks. Preprint at http://arxiv.org/abs/1708.04392 (2017).

- 61.Tax T, Mediano PA, Shanahan M. The partial information decomposition of generative neural network models. Entropy. 2017;19:474.

- 62.Bill J, et al. Compensating inhomogeneities of neuromorphic VLSI devices via short-term synaptic plasticity. Front. Comput. Neurosci. 2010;4:129. doi: 10.3389/fncom.2010.00129.

- 63.Wilting J, Priesemann V. 25 years of criticality in neuroscience—established results, open controversies, novel concepts. Curr. Opin. Neurobiol. 2019;58:105–111. doi: 10.1016/j.conb.2019.08.002.

- 64.Goodman DF, Brette R. The Brian simulator. Front. Neurosci. 2009;3:26. doi: 10.3389/neuro.01.026.2009.

- 65.Alstott J, Bullmore E, Plenz D. powerlaw: a Python package for analysis of heavy-tailed distributions. PLoS ONE. 2014;9:e85777. doi: 10.1371/journal.pone.0085777.

- 66.Priesemann V, Munk MH, Wibral M. Subsampling effects in neuronal avalanche distributions recorded in vivo. BMC Neurosci. 2009;10:40. doi: 10.1186/1471-2202-10-40.

- 67.Priesemann, V. et al. Spike avalanches in vivo suggest a driven, slightly subcritical brain state. Front. Syst. Neurosci. 8, 188 (2014).

- 68.Lizier JT. JIDT: an information-theoretic toolkit for studying the dynamics of complex systems. Front. Robot. AI. 2014;1:11.

- 69.Makkeh A, Theis D, Vicente R. BROJA-2PID: a robust estimator for bivariate partial information decomposition. Entropy. 2018;20:271. doi: 10.3390/e20040271.

- 70.Jaeger, H. Adaptive nonlinear system identification with echo state networks. Advances in Neural Information Processing Systems 15, 609–616 (2003).

Associated Data

Data Availability Statement

Data available on request from the authors.

Code available on request from the authors.