Abstract

Objectives

To identify and describe instances of routine patient-reported shared decision-making (SDM) measurement in the USA, and to explore barriers and facilitators of routine patient-reported SDM measurement for quality improvement.

Setting

Payer and provider healthcare organisations in the USA.

Participants

Current or former adult employees of healthcare organisations with prior SDM activity that may be conducting routine SDM measurement (n=21).

Outcomes

Qualitative interview and survey data, collected through a snowball sampling recruitment strategy, to identify barriers and facilitators of routine patient-reported SDM measurement.

Results

Three participating sites routinely measured SDM from patients’ perspectives, including one payer organisation and two provider organisations—with the largest measurement effort taking place in the payer organisation. Facilitators of SDM measurement included SDM as a core organisational value or strategic priority, trialability of SDM measurement programmes, flexibility in how measures can be administered and existing momentum from payer-mandated measurement programmes. Barriers included competing organisational priorities with regard to patient-reported measurement and lack of perceived comparative advantage of patient-reported SDM measurement.

Conclusions

Payers have a unique opportunity to encourage emphasis on SDM within healthcare organisations, including routine patient-reported measurement of SDM; however, provider organisations are currently best placed to make effective use of this type of data.

Keywords: quality in health care, qualitative research, health services administration & management

Strengths and limitations of this study

Recruitment for this study involved a near-census of major shared decision-making (SDM) initiatives in the USA.

This study gathered insight from organisations on the leading edge of SDM practice.

The snowball sampling recruitment methodology identified previously unknown examples of routine patient-reported SDM measurement.

Data derived from this small but heterogeneous group of institutions did not reach thematic saturation.

The multimodal data collection approach (interviews and surveys) led to varying levels of detail available across included participants and sites.

Introduction

Policy interest in shared decision-making (SDM) is growing internationally, leading to calls for increased measurement and feedback efforts. Underlying these efforts is preliminary evidence that audit and feedback can improve the quality of healthcare, particularly related to provider behaviours,1 despite some reports that feedback is not always effective in improving clinician performance.2 3 Additional interest in measurement relates to its potential to motivate and monitor focused efforts at multiple levels, from clinic quality improvement (QI) initiatives to system-level performance incentivisation programmes.4 5 This policy interest, while drawing to some extent on academic research, is not necessarily led by clinician or researcher efforts. Additionally, time-delimited research and QI projects in healthcare settings often do not lead to sustained initiatives. Prior research on widespread use of patient experience data for QI purposes found “no single best way to collect or use [patient-reported experience] data for QI”.6

Patient-reported experience measures are questionnaires that “gather information on patients’ views of their experience [of] receiving care”.7 The Consumer Assessment of Healthcare Providers and Systems (CAHPS) surveys are in widespread use in the USA, measuring diverse aspects of the patient experience.8 However, CAHPS lacks a measure that captures the three core dimensions of SDM: (1) information provision; (2) preference elicitation and (3) preference integration.9–11

While measures of SDM have been described in detail elsewhere,12 existing studies do not adequately examine these patient-reported experience measures in the specific context of QI. We seek to identify sites at varying stages of implementing SDM measurement and feedback and gain in-depth insight into their experiences. This will allow us to learn what differentiates organisations that conduct small-scale SDM measurement projects in research and/or QI contexts from those that implement widespread SDM measurement programmes. Understanding their experiences within a US context can inform strategies at other organisations, both domestic and international, that seek to implement SDM measurement and feedback. Therefore, in this study, we differentiate routine patient-reported SDM measurement, that is, an ongoing SDM measurement programme not tied to a specific project and generally internally funded as part of routine operations, from patient-reported SDM measurement as part of research or QI projects. These research or QI projects are often time delimited, smaller in scale, and externally sponsored.

In this study, we aim to (1) identify and describe instances of routine patient-reported SDM measurement in the USA; and (2) explore barriers and facilitators of routine patient-reported SDM measurement for QI using the Greenhalgh et al diffusion of innovations theoretical framework.13 Our primary research question was: what are the barriers and facilitators of routine patient-reported SDM measurement in the USA?

Methods

Given the orientation of the study to explore how and why patient-reported SDM measurement and feedback were undertaken, we adopted a descriptive multiple case study research design.14

To describe examples of patient-reported SDM measurement, we employed a multipronged data collection approach, including a survey of representatives from leading SDM centres, and, as available, in-depth interviews of representatives from relevant sites. Participants received an information sheet describing the research study (survey participants) and/or verbally reviewed the information sheet with the interviewer (interview participants) immediately prior to participation in the survey or interview components of the study. With participants’ verbal permission, interviews were audio recorded.

Inclusion criteria

Sites included healthcare organisations in which the research team was aware of ongoing SDM research or QI efforts. Sites were identified through the research team’s professional network, drawing on prior knowledge of SDM activity in the USA.

Interview and survey participants were current or former adult employees of healthcare organisations that may be conducting routine SDM measurement. Inclusion criteria did not specify job titles of eligible individuals; instead, any staff with knowledge of a relevant SDM measurement programme were eligible for participation.

Recruitment

We adopted a snowball sampling approach to participant recruitment. This approach has the benefit of identifying previously unknown or hidden populations,15 and SDM researchers and practitioners are well placed to be aware of peers active in routine patient-reported SDM measurement. Drawing on their professional networks and more than two decades of experience in SDM research, the research team initially emailed 32 individuals from 23 US centres known to be active in either conducting research on SDM or implementing SDM, inviting them to participate in a survey or telephone interview. This initial email was followed by either an emailed link to the survey or an interview invitation, depending on participant availability and preference. At the conclusion of each interview, the interviewer (RCF) asked the participant to identify other knowledgeable individuals at his or her site or related sites for possible interview participation. Additional outreach resulting from the snowball sampling approach is described in the Results section of this article.

Data collection

One member of the research team (RCF) conducted semi-structured interviews with key informants at a sample of sites with ongoing SDM measurement programmes. In-depth interviews were conducted by Zoom teleconference (audio only). The interview guide was developed to investigate several core components of Greenhalgh’s diffusion of innovations model, namely: (1) the innovation; (2) adoption by individuals and (3) system readiness for innovation.13 (See online supplementary appendix 1 for the full interview guide.)


Where we were unable to conduct semi-structured interviews with relevant contacts, we conducted a 12-item open-ended survey hosted by Qualtrics online survey software to gain insight into routine SDM measurement efforts. Participants were asked to provide information on (1) which SDM measures were in routine use at their organisations, (2) how the measures were selected, (3) details on measurement volume, (4) what concerns are voiced in their organisations about SDM measurement and (5) how the organisations use the SDM data they collect for QI (see online supplementary appendix 2).


Participants were asked to describe patient-reported SDM measurement and feedback within their organisations, including decision-making processes to establish measurement, dedicated resources and related processes while differentiating between individual-level and system-level adoption.13 Interview questions sought to understand the purpose of SDM measurement and feedback in these organisations, as well as who initiated the work and why. Audio recordings were transcribed verbatim for analysis. Where interviews could not be audio recorded, as was the case in one interview, the interviewer (RCF) took detailed field notes.

Patient and public involvement

Patients and the public were not involved in the conduct or reporting of this research.

Analysis

A single coder (RCF) reviewed survey responses to identify instances of routine SDM measurement. Two coders (RCF and JAE) conducted thematic analysis16 17 of interview transcripts and/or field notes with specific reference to relevant domains of Greenhalgh’s diffusion of innovations model13 using Atlas.ti V.8.4.4 software. Coders reviewed the data in detail and independently generated initial codes. The coders then identified, discussed and iteratively refined themes across the coded data.16 17 Figures were generated using the R visNetwork and tidyverse software packages.18 19

Results

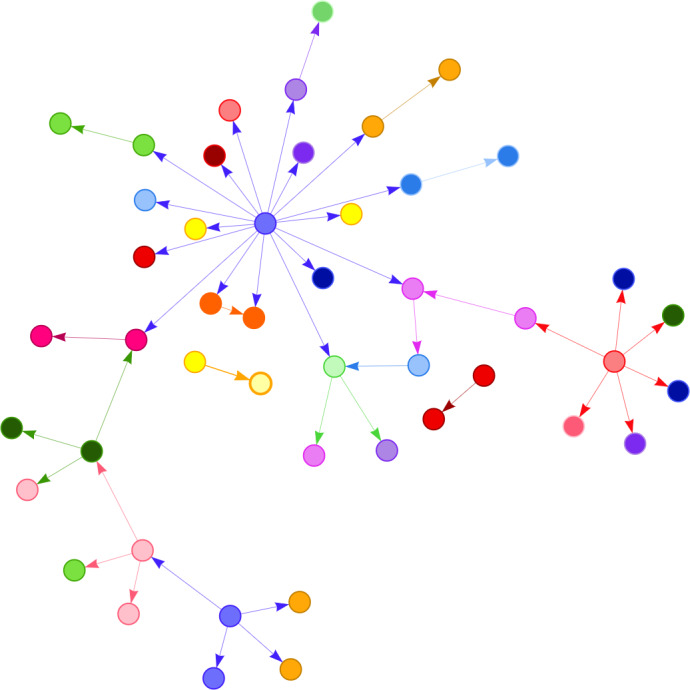

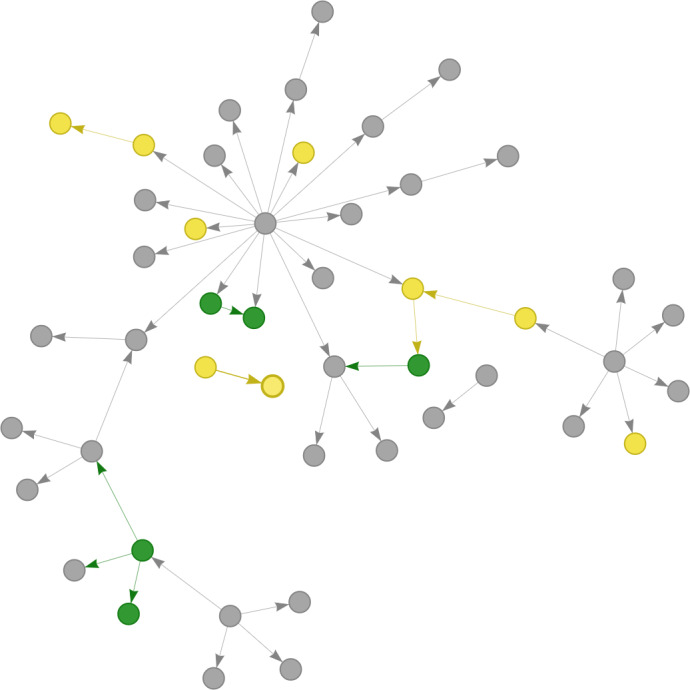

Of 42 people referred to the research team through our initial sample (32 people) and our snowball sampling approach (10 people), 36 people from 26 organisations were contacted for survey and interview recruitment. Eleven people reported no knowledge of routine patient-reported SDM measurement. Three reported only CAHPS surveys in use for routine patient-reported measurement. Three reported proxy measurement of SDM through a non-patient reported channel. Four reported additional routine patient-reported SDM measurement. Six acknowledged receipt of the email invitation but did not provide measurement details. Nine did not respond. Six participants completed semi-structured interviews, with an average interview duration of 40 min. The recruitment process and full snowball sample referral network are depicted in figures 1 and 2. Table 1 summarises SDM measurement at each included site with active SDM measurement initiatives. One health insurance company (site 1) and two provider organisations (sites 2 and 3) routinely measure SDM from patients’ perspectives.
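The recruitment figures above can be cross-checked with a short tally. The sketch below is illustrative only: it assumes the six response categories are mutually exclusive, and the dictionary labels are shorthand introduced here, not terms used by the study team.

```python
# Counts as reported in the text; the labels are illustrative shorthand.
referred = {"initial sample": 32, "snowball referrals": 10}

# Assumption: each contacted person falls into exactly one category.
contacted_outcomes = {
    "no knowledge of routine patient-reported SDM measurement": 11,
    "CAHPS surveys only": 3,
    "proxy (non-patient-reported) SDM measurement": 3,
    "additional routine patient-reported SDM measurement": 4,
    "acknowledged invitation, no measurement details": 6,
    "no response": 9,
}

total_referred = sum(referred.values())
total_contacted = sum(contacted_outcomes.values())
# People referred to the team but not contacted for recruitment.
not_contacted = total_referred - total_contacted

for outcome, n in contacted_outcomes.items():
    share = n / total_contacted
    print(f"{outcome}: {n} ({share:.0%} of those contacted)")
print(f"Referred: {total_referred}; contacted: {total_contacted}; "
      f"not contacted: {not_contacted}")
```

Under that assumption the six categories account for all 36 people contacted, leaving six of the 42 referred individuals who were not contacted.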

Figure 1.

Recruitment process and snowball sample referral network, coloured by organisation. Nodes represent individuals working within healthcare organisations; each individual’s referrals to the study team for potential participation are indicated by directed edges. Each colour represents a unique organisation.

Figure 2.

Recruitment process and snowball sample referral network, coloured by organisation’s shared decision-making (SDM) measurement status. Nodes represent individuals working within healthcare organisations; each individual’s referrals to the study team for potential participation are indicated by directed edges. Grey: organisation has no routine SDM measurement; yellow: organisation has routine non-patient-reported SDM measurement; green: organisation has routine patient-reported SDM measurement.
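The structure described in the two captions, individuals as nodes and referrals as directed edges, can be sketched in a few lines. This is a minimal illustration in Python, not the R visNetwork code used to produce the figures. The people, organisations and referrals are hypothetical placeholders; only the colour legend is taken from the Figure 2 caption.

```python
from collections import defaultdict

# Colour legend from the Figure 2 caption.
STATUS_COLOURS = {
    "none": "grey",                    # no routine SDM measurement
    "non_patient_reported": "yellow",  # routine non-patient-reported measurement
    "patient_reported": "green",       # routine patient-reported measurement
}

# Hypothetical individuals and organisations, for illustration only.
people = {
    "A": {"org": "Org 1", "status": "patient_reported"},
    "B": {"org": "Org 1", "status": "patient_reported"},
    "C": {"org": "Org 2", "status": "non_patient_reported"},
    "D": {"org": "Org 3", "status": "none"},
}

# Hypothetical directed edges: (referrer, person referred).
referrals = [("A", "B"), ("A", "C"), ("C", "D")]


def build_network(people, referrals):
    """Return coloured nodes and an adjacency list of referrals."""
    nodes = {
        pid: {"org": info["org"], "colour": STATUS_COLOURS[info["status"]]}
        for pid, info in people.items()
    }
    out_edges = defaultdict(list)
    for referrer, referred in referrals:
        if referrer not in people or referred not in people:
            raise ValueError(f"unknown person in referral {referrer}->{referred}")
        out_edges[referrer].append(referred)
    return nodes, dict(out_edges)


def snowball_recruits(referrals):
    """People reached through a referral, i.e. nodes with an incoming edge."""
    return {referred for _, referred in referrals}


nodes, edges = build_network(people, referrals)
for pid, attrs in nodes.items():
    print(pid, attrs["org"], attrs["colour"], "->", edges.get(pid, []))
```

Keeping node attributes separate from the edge list means the same referral data can be recoloured by organisation (Figure 1) or by measurement status (Figure 2) without rebuilding the edges.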

Table 1.

Participant and organisational characteristics where shared decision-making (SDM) measurement is occurring

| Site | Measurement type(s) | Organisation description | Participant profile(s) |
| --- | --- | --- | --- |
| Site 1 | Routine patient-reported SDM measurement. | A non-profit organisation providing health insurance coverage to California residents. | |
| Site 2 | Routine patient-reported SDM measurement; routine CAHPS-based communication measurement. | A large health system in northern California. | |
| Site 3 | Routine patient-reported SDM measurement. | A large not-for-profit healthcare system. | |
| Site 4 | Project-based patient-reported SDM measurement; routine CAHPS-based communication measurement. | A United States Department of Veterans Affairs medical centre. The Department of Veterans Affairs operates 172 medical centres offering services to military veterans. | |
| Site 5 | Routine CAHPS-based communication measurement. | A healthcare system affiliated with an academic institution. | |
| Site 6 | Routine CAHPS-based communication measurement. | A midwestern academic medical centre. | |
| Site 7 | Routine measurement focused on uptake of patient decision aids. | A regional integrated healthcare payer and provider organisation. | |
| Site 8 | Routine measurement focused on uptake of patient decision aids. | A regional integrated healthcare payer and provider organisation. | |

CAHPS, Consumer Assessment of Healthcare Providers and Systems.

Measurement summary: routine patient-reported SDM measurement

Site 1 collects patient-reported SDM measures in selected clinical areas including orthopaedics, gynaecology, bariatrics and cardiology. For several elective procedures, this payer organisation requires in-network healthcare providers to collect a set of patient-reported SDM measures in order for the procedures to be preauthorised for payment. Measures include collaboRATE,9 20 9-item Shared Decision Making Questionnaire (SDM-Q-9)21 and an internally developed measure asking whether patients (1) have enough information, (2) are clear about which benefits and side effects matter most to them and (3) understand the options available to them (see online supplementary appendix 3 for collaboRATE and SDM-Q-9 items). Across the organisation, approximately 10 000 patient reports are collected annually. At this site, the potential for waste reduction, that is, patients receiving only the most appropriate services for them, was the impetus behind the measurement programme.


Site 2 collects the collaboRATE patient-reported measure of SDM9 20 in orthopaedics and urology clinics from all patients making total joint replacement and prostate cancer treatment decisions. The purpose of measurement was initially to meet payer requirements for elective orthopaedic procedures, but then expanded to include other non-mandatory clinical areas.

Site 3 collects the SDM Process measure22 along with the Hip Osteoarthritis Decision Quality Instrument,23 Knee Osteoarthritis Decision Quality Instrument,23 Herniated Disc Decision Quality Instrument23 24 and the Spinal Stenosis Decision Quality Instrument from patients with relevant health conditions (see online supplementary appendix 3 for detail on included measures). The measures are administered through the health system’s electronic medical record as part of the organisation’s patient-reported outcomes measurement system, collecting approximately 1800 patient reports of SDM experience per year for benchmarking and performance improvement purposes.

Measurement summary: other measurement cases

While routine patient-reported measurement at site 4 is largely limited to CAHPS-based patient questionnaires (see table 2 for relevant CAHPS items), a pilot project within the organisation uses the collaboRATE measure9 20 to assess patients’ SDM experience in primary care settings. As part of the pilot, patient responses are collected by a researcher in the clinic setting. The purpose of SDM measurement at site 4 is to support local QI efforts. Sites 5 and 6 report only CAHPS-based routine patient-reported measurement, with no items specific to SDM.

Table 2.

Consumer Assessment of Healthcare Providers and Systems (CAHPS) items related to shared decision-making (SDM) and clinical communication

| CAHPS SDM measure | CAHPS communication measure |
| --- | --- |
| Did you and this doctor talk about the reasons you might want to take medicine? | How often did this doctor explain things in a way that was easy to understand? |
| Did you and this doctor talk about the reasons you might not want to take medicine? | How often did this doctor listen carefully to you? |
| When you and this doctor talked about starting or stopping a prescription medicine, did this doctor ask what you thought was best for you? | How often did this doctor show respect for what you had to say? |
| | How often did this doctor spend enough time with you? |

Sites 7 and 8 take a similar, non-patient-reported approach to routine SDM measurement. Rather than collecting patient reports of SDM experience, these organisations designate the use of patient decision aids, which are shared through the electronic health record and accessed digitally, as a proxy for SDM. Prevalence of patient decision aid use is then tracked through electronic health record reporting functionality. The stated aim of site 7’s SDM measurement programme is to promote SDM as an effective QI model.

Barriers and facilitators of routine patient-reported SDM measurement

Participants identified various barriers and facilitators, both in organisations that conduct routine patient-reported SDM measurement and in those that do not yet do so. Greenhalgh’s diffusion of innovations model offers a framework for these barriers and facilitators, summarised in table 3.13

Table 3.

Barrier and facilitator summary

| Attributes/themes | Specific factors/codes | Illustrative quotations |
| --- | --- | --- |
| Facilitators | | |
| The innovation | Compatibility | “SDM is seen as important component of patient engagement, which is core organizational value.” (P04, site 3) “There’s a big effort at [site] right now to change the way care is provided, take a more whole health approach, patient-centered approach to really provide care that starts with what matters most to the patients.” (P05, site 4) |
| | Complexity | “…it’s only three questions. People recoil at a long survey.” (P03, site 2) |
| | Trialability | “We had such great success with [data collection] that we extended it into other policies like, for example, hysterectomy for benign conditions… We also extended it into our bariatric surgery. We extended it into cardiovascular disease.” (P01, site 1) |
| | Observability | “I don’t know if there’s a formal protocol [for feedback of patient experience data] so much as there is keen institutional focus.” (P09, site 7) |
| | Fuzzy boundaries | “What we’re doing is we’re collecting it at point of care using our research assistant… We didn’t have a whole lot of money to do it. One of our goals, really, with the pilot is usability so we get patients to do it, how long is it going to take.” (P05, site 4) |
| Adoption by individuals | Meaning | “We recognize that things like [CAHPS] don’t do a good job of helping us understand shared decision-making.” (P09, site 7) |
| | The adoption decision | “We asked orthopedic surgeons if we should collect collaboRATE from everyone or just [from a subset of] patients [for whom SDM measurement is required by a payer]; surgeons said everyone.” (P03, site 2) |
| System readiness for innovation | Innovation-system fit | “We have an electronic [survey] platform… In the EMR, you can invite [patients] to a website [where] you can post questions for them to answer.” (P10, site 8) |
| | Support and advocacy | “I was the one that decided this needs to be done.” (P01, site 1) “Some [other clinicians] championed it within their networks [but] more it’s me trying to get people to use the tools.” (P10, site 8) |
| | Dedicated time and resources | “There are a lot of people involved in data/analytics and reporting, [both] in [clinical] departments and in units separate from departments that send data back to departments.” (P03, site 2) |
| Barriers | | |
| The innovation | Relative advantage | Interviewer: “Do you collect patient-reported measures specific to shared decision-making?” P09: “We do not, unfortunately. I’ve been trying to get collaboRATE in and I’m unsuccessful…” (P09, site 7) |
| | Observability | “And then the biggest thing is competing priorities…if you were to talk to one of the chiefs, they would say, ‘that’s fine, but [CAHPS] is what I need to focus on.’” (P09, site 7) |
| | Assessment of implications | “Operational leadership believes [that patients]…won’t be happy with them if they send long surveys.” (P03, site 2) |
| System readiness for innovation | Dedicated time and resources | “It wasn’t until recently that there were clearly very pragmatic tools for measuring patients’ perceptions of shared decision-making.” (P09, site 7) |

CAHPS, Consumer Assessment of Healthcare Providers and Systems; SDM, shared decision-making.

Facilitators

The innovation

Facilitators of SDM measurement in this sample were predominantly related to the nature of the innovation.

SDM as a core organisational value or strategic priority was mentioned multiple times as a facilitator (sites 1, 2, 3 and 7), while an organisational culture of continuous QI was mentioned once (site 7). One participant cited a broader shift in the healthcare environment toward SDM as helpful to SDM measurement efforts, explaining that 10 years ago (in 2009), “it was an uphill battle,” but “now there’s general acknowledgement and agreement that SDM is how care should be delivered” (P03, site 2).

Practical aspects of measurement were also important. The brevity of the collaboRATE measure facilitates its use (site 2). In addition, the trialability of patient-reported SDM measurement is an evident facilitator (sites 1 and 2), with measurement beginning in a single clinical context then spreading. Similarly, in one instance, the patient-reported SDM measurement is occurring within the context of a pilot project (site 4). Where routine measurement has been trialled, flexibility in how measures can be collected, that is, electronic data collection (sites 2, 3, 7 and 8) compared with paper data collection (site 4), lends itself to successful implementation. Finally, the ability for SDM outcomes to be tracked over time demonstrates the high observability of SDM measurement and facilitates its implementation (site 7).

Adoption by individuals

Adoption-related facilitators of SDM measurement focused on meaning and the adoption decision itself. This includes acknowledgement of SDM as an important addition to other ongoing patient-reported measurement—“recogniz[ing] that things like [CAHPS] don’t do a good job of helping us understand shared decision-making” (P09, site 7). At another site (site 2), initial routine patient-reported SDM measurement was mandated by a payer organisation (site 1). In debating whether or not to expand the measurement programme beyond the minimum scope required to meet payer requirements, that site actively engaged the clinicians whose performance was being measured, who supported the programme’s expansion.

System readiness for innovation

System readiness for routine patient-reported SDM measurement involved innovation-system fit, support and advocacy within the organisations, and dedicated time and resources for building and maintaining routine measurement. With regard to innovation-system fit, payers have started to require patient-reported SDM measurement for preauthorisation of payment for elective procedures (sites 1 and 3). Further, the capacity for electronic data collection was a system-level factor that fit the demands of routine SDM measurement (sites 2, 3, 7 and 8).

Pertaining to support and advocacy for routine SDM measurement, clinical and/or administrative champion involvement was critical (sites 1, 2, 4, 7 and 8). It was also important for operational leadership to recognise SDM as an important issue (P03).

Finally, the availability of material support was an important facilitator of SDM measurement, including dedicated personnel to design SDM measurement programmes and/or process SDM data (sites 1, 2, 4, 7 and 8).

Barriers

The innovation

In settings where SDM measurement relies on a proxy measure of patient decision aid use, the relative advantage of patient-reported measurement is not yet sufficient to spur adoption (sites 7 and 8). Other organisational priorities, particularly those aspects of care assessed by the CAHPS patient experience survey, resulted in less attention being available among organisational leadership for SDM performance management (site 7). The success of financial incentives for patient-reported SDM measurement at sites 1 and 2 suggests that relative advantage is associated with those activities that are rewarded by payers. Another barrier is the perceived patient burden of patient-reported SDM measurement; however, as patient-reported SDM measurement was adopted, those involved found that patients were willing and able to complete the measures without substantial burden (sites 2 and 4).

System readiness for innovation

Finally, a lack of availability of pragmatic patient-reported SDM measures was identified as a barrier to patient-reported SDM measurement, as “it wasn’t until recently that there were clearly very pragmatic tools for measuring patients’ perceptions of shared decision-making” (P09, site 7).

Use of SDM data

Among the organisations reporting routine SDM measurement, benchmarking and internal performance improvement are commonly stated uses of the data (sites 2, 3, 4 and 7). These uses take the form of routine reporting of SDM data to heads of relevant clinical departments, including graphics depicting comparative performance, with subsequent feedback to individual clinicians. Site 2 reports substantial and productive clinic-level engagement with this feedback.

One site, however, struggles to find a use for its extensive SDM data that is deemed acceptable by its community of clinicians (site 1). As a payer organisation, site 1 finds that its collection of SDM data has “created a little bit of trepidation” within the clinician community due to a perception that the payer could “weaponise this information” (P01). The participant explains:

[Low SDM scores] make the physician look bad and we, as a health plan, could frankly use that information to steer patients away from those kinds of doctors and towards the doctors that get better scores. That’s part of the problem with anything when you’re collecting data, any type of data. Whether it’s shared decision-making data or efficacy data around quality scores or even around outcomes, the perception is that health plans can use that data against them to steer patients away and send them to higher performers. That’s the concern from providers and so we have this data. We don’t intend on doing that. We don’t intend on using the scores in a way to punish or, right now, even provide benefit to those high scorers. We just want to collect the data to better understand shared decision-making. Is the process occurring? How the patients—how are they responding to it? (P01, site 1)

Site 1 aspires to “use the information to try to educate” and offer training to lower-performing clinicians (P01). However, despite a desire to “use it as a mechanism to help educate maybe the lower-scored folks vs the higher-scored folks”, site 1 “haven’t quite gone there yet” (P01) with regard to training low-scoring providers in SDM.

Discussion

Key findings

In organisations where patient-reported SDM measurement is routine, facilitators include: (1) compatibility of SDM measurement with core organisational values; (2) brevity of the collaboRATE patient-reported SDM measure; (3) trialability (and potential for subsequent expansion) of patient-reported SDM measurement within the organisation; (4) flexibility in how measures can be implemented; (5) involvement of both clinical champions and rank-and-file clinicians in the decision to measure SDM performance; (6) an environment in which payers (eg, health insurance companies) have begun to require provider organisations to measure patients’ experiences of SDM and (7) dedicated resources (ie, personnel) within the organisations to design and maintain their SDM measurement programmes. Barriers include inadequate perceived relative advantage of patient-reported SDM measurement over proxy measures, a paucity of patient-reported SDM measures that are sufficiently pragmatic for routine and widespread use, and the existence of competing priorities for organisational leadership when it comes to patient experience. The few organisations we identified with routine patient-reported SDM measurement tend to use the resulting information for internal benchmarking and QI initiatives. However, site 1, due to constraints unique to payer-only organisations, is still in the process of developing a tenable use of the extensive patient-reported SDM data it collects.

Results in context

Despite policy momentum, routine patient-reported SDM measurement is rare in the USA. While it occurs in three of the eight organisations in this rarefied sample, there remains an enormous silent denominator, most of which has yet to consider routine patient-reported SDM measurement. The study team contacted 32 individuals affiliated with US research and clinical centres known to be active in SDM; this population of active SDM sites is an extremely small subset of the more than 600 health systems and hundreds of additional standalone hospitals and private practices in the USA.25 Underlying the sparse routine use of patient-reported SDM measurement is a US context in which the SDM process is not yet widely rewarded by healthcare payers. There are a few emerging exceptions, including the Centers for Medicare and Medicaid Services requiring documentation of SDM for lung cancer screening.26 However, such initiatives tend not to differentiate distribution of patient decision aids from an SDM process in which patients and clinicians share information about potential benefits and harms, engage in dialogue about preferences and values, and jointly decide on next steps. The relative advantage of a valid and reliable SDM measure, inclusive of potential data collection costs, over low-cost proxy measures such as extent of decision aid distribution, is therefore currently absent at sites 7 and 8. In settings where the SDM process is already routine, monitoring decision aid distribution can be a helpful proxy; however, measures of the SDM process itself are needed for patient-centred culture change and SDM skill building. When routine patient-reported measurement of SDM spreads beyond the small number of organisations identified in this study, future research employing network analysis would be helpful to track patterns of diffusion.

This study is the first, to our knowledge, to examine routine patient-reported SDM measurement use cases within the USA. Organisations with routine patient-reported SDM measurement programmes use a variety of measures, including the SDM Process measure, Decision Quality Instrument, SDM-Q-9 and collaboRATE. The use of patient decision aid access data as a proxy for SDM, adopted by two organisations within this sample, is consistent with proxy measures described by Durand et al as part of recent US healthcare policy related to SDM.27 However, while “decision and conversation aids can be valuable in facilitating SDM…they are neither necessary nor sufficient for choosing an approach to address each patient’s situation”.28 Although decision aid use has been associated with improved decisional outcomes such as reduced uncertainty and higher satisfaction with the decision-making process,29 direct comparisons of proxy measures to patient-reported and observer-rated SDM in a future study would further elucidate their validity. A recent systematic review assessing the quality of SDM measurement instruments finds generally limited available information on measurement quality of SDM measurement instruments, including for the SDM Process measure, SDM-Q-9 and collaboRATE.12 More research is needed to critically appraise the psychometric properties of these instruments.

While most uses of patient-reported experience data do not broach the subject of clinician behaviour change,6 some organisations in this sample that conduct SDM measurement provide feedback directly to clinical teams with the intent to enhance clinician skills and modify behaviour. Despite systematic review evidence of a positive effect of audit and feedback on clinician performance,1 recent commentaries have called this relationship into question.3 Implementation science can inform optimal operationalisation of audit and feedback for performance improvement, including pairing feedback with clinician training in SDM, as well as structuring clinical timelines to allow healthcare professionals to address the varied priorities for which they are accountable.30

While site 1 appears to benefit from its leverage as a payer organisation to facilitate the largest and most robust patient-reported SDM measurement programme in this sample, its use of the data is constrained by its role as a payer organisation. These constraints relate to perceived distrust between provider organisations and health insurance companies, including a fear that health insurance companies may weaponise performance data to drive patients away from low-performing professionals. Among managed care health plan members, prior research has demonstrated a sense of vulnerability, worry and fear in relation to health insurance plans31—consistent with our current findings focused on healthcare providers. Overcoming this distrust is critical for health insurance companies to make effective QI use of the data they are well positioned to collect.

Strengths and limitations

Through the authors’ professional networks and a broad snowball sampling approach, recruitment for this study involved a near census of major SDM initiatives in the USA. We sought to conduct a thorough search of active SDM researchers and leading SDM practitioners, which allowed for insight into organisations on the leading edge of SDM measurement. Data derived from this small but heterogeneous group of institutions did not reach thematic saturation, though we observed several key commonalities, as described in the key findings. This study is an early exploration of routine SDM measurement, and we found a landscape that is diverse and currently without consensus. The study therefore presents the views of early adopters, which remain relevant even without thematic saturation. However, the multimodal data collection approach led to varying levels of detail across included participants and sites, which is a limitation.

Conclusion

Payers have a unique opportunity to encourage emphasis on SDM within healthcare organisations, including routine patient-reported measurement of SDM; however, provider organisations are currently best placed to make effective use of this type of data. Next steps for organisations that choose to pursue routine patient-reported SDM measurement, particularly payer organisations with potential for broad impact, include implementing data use that drives widespread SDM QI.

Footnotes

Twitter: @glynelwyn

Contributors: Conception or design of the work: RCF, MJM, AJO and GE. Acquisition, analysis or interpretation of data: RCF and JAE. Drafting the work: RCF. Critically revising the work: JAE, MJM, AJO and GE. Final approval of submitted version: all authors.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: GE has edited and published books that provide royalties on sales by the publishers: the books include Shared Decision Making (Oxford University Press) and Groups (Radcliffe Press). GE’s academic interests are focused on shared decision-making and coproduction. He owns copyright in measures of shared decision-making and care integration, namely collaboRATE, integRATE (measure of care integration), consideRATE (patient experience of care in serious illness), coopeRATE (measure of goal setting), toleRATE (clinician attitude to shared decision making), Observer OPTION-5 and Observer OPTION-12 (observer measures of shared decision-making). GE has in the past provided consultancy for organisations, including: (1) Emmi Solutions LLC who developed patient decision support tools; (2) National Quality Forum on the certification of decision support tools; (3) Washington State Health Department on the certification of decision support tools; (4) SciMentum LLC, Amsterdam (workshops for shared decision-making). GE is the Founder and Director of &think LLC, which owns the registered trademark for Option Grids™ patient decision aids, and Founder and Director of SHARPNETWORK LLC, a provider of training for shared decision making. He provides advice in the domain of shared decision-making and patient decision aids to: (1) Access Community Health Network, Chicago (Adviser to Federally Qualified Medical Centers); (2) EBSCO Health for Option Grids™ patient decision aids (Consultant); (3) Bind On Demand Health Insurance (Consultant); (4) PatientWisdom (Adviser); (5) abridge AI (Chief Clinical Research Scientist).

Patient and public involvement: Patients and/or the public were not involved in the design, or conduct, or reporting, or dissemination plans of this research.

Patient consent for publication: Not required.

Ethics approval: This study, including all consent and data collection procedures, was reviewed and approved by Dartmouth College’s Committee for the Protection of Human Subjects (CPHS #31002).

Provenance and peer review: Not commissioned; externally peer reviewed.

Data availability statement: No data are available. To protect the confidentiality of research participants, interview and survey transcripts will not be made publicly available.

References

- 1. Ivers N, Jamtvedt G, Flottorp S, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev 2012:CD000259. 10.1002/14651858.CD000259.pub3

- 2. Vingerhoets E, Wensing M, Grol R. Feedback of patients’ evaluations of general practice care: a randomised trial. Qual Health Care 2001;10:224–8. 10.1136/qhc.0100224

- 3. Navathe AS, Lee VS, Liao JM. How to Overcome Clinicians’ Resistance to Nudges. Harvard Business Review, 2019. Available: https://hbr.org/2019/05/how-to-overcome-clinicians-resistance-to-nudges [Accessed 20 Oct 2019].

- 4. Bastemeijer CM, Boosman H, van Ewijk H, et al. Patient experiences: a systematic review of quality improvement interventions in a hospital setting. Patient Relat Outcome Meas 2019;10:157–69. 10.2147/PROM.S201737

- 5. James J. Health policy brief: pay-for-performance. Health Affairs 2012.

- 6. Gleeson H, Calderon A, Swami V, et al. Systematic review of approaches to using patient experience data for quality improvement in healthcare settings. BMJ Open 2016;6:e011907. 10.1136/bmjopen-2016-011907

- 7. Kingsley C, Patel S. Patient-reported outcome measures and patient-reported experience measures. BJA Educ 2017;17:137–44. 10.1093/bjaed/mkw060

- 8. Agency for Healthcare Research & Quality. CAHPS measures of patient experience. Available: https://www.ahrq.gov/cahps/consumer-reporting/measures/index.html [Accessed 20 Oct 2019].

- 9. Elwyn G, Barr PJ, Grande SW, et al. Developing CollaboRATE: a fast and frugal patient-reported measure of shared decision making in clinical encounters. Patient Educ Couns 2013;93:102–7. 10.1016/j.pec.2013.05.009

- 10. Elwyn G, Durand MA, Song J, et al. A three-talk model for shared decision making: multistage consultation process. BMJ 2017;359:j4891. 10.1136/bmj.j4891

- 11. Forcino RC, Thygeson M, O’Malley AJ, et al. Measuring Patient-Reported Shared Decision-Making to Promote Performance Transparency and Value-Based Payment: Assessment of collaboRATE’s Group-Level Reliability. J Patient Exp 2019;14:237437351988483. 10.1177/2374373519884835

- 12. Gärtner FR, Bomhof-Roordink H, Smith IP, et al. The quality of instruments to assess the process of shared decision making: a systematic review. PLoS One 2018;13:e0191747. 10.1371/journal.pone.0191747

- 13. Greenhalgh T, Robert G, Macfarlane F, et al. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q 2004;82:581–629. 10.1111/j.0887-378X.2004.00325.x

- 14. Yin RK. Case study research. SAGE 2014.

- 15. Hogan TM, Olade TO, Carpenter CR. A profile of acute care in an aging America: Snowball sample identification and characterization of United States geriatric emergency departments in 2013. Acad Emerg Med 2014;21:337–46. 10.1111/acem.12332

- 16. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol 2006;3:77–101. 10.1191/1478088706qp063oa

- 17. Maguire M, Delahunt B. Doing a thematic analysis: a practical, step-by-step guide for learning and teaching scholars. All Ireland J Higher Educ 2017;9.

- 18. Almende BV, Thieurmel B, Robert T. visNetwork: Network Visualization using ‘vis.js’ Library, 2019.

- 19. Wickham H, Averick M, Bryan J, et al. Welcome to the Tidyverse. J Open Source Softw 2019;4:1686. 10.21105/joss.01686

- 20. Barr PJ, Thompson R, Walsh T, et al. The psychometric properties of CollaboRATE: a fast and frugal patient-reported measure of the shared decision-making process. J Med Internet Res 2014;16:e2. 10.2196/jmir.3085

- 21. Kriston L, Scholl I, Hölzel L, et al. The 9-item shared decision making questionnaire (SDM-Q-9). Development and psychometric properties in a primary care sample. Patient Educ Couns 2010;80:94–9. 10.1016/j.pec.2009.09.034

- 22. Barry MJ, Edgman-Levitan S, Sepucha K. Shared Decision-Making & Patient Decision Aids: Focusing on Ultimate Goal. NEJM Catalyst, 2018. Available: https://catalyst.nejm.org/shared-decision-making-patient-decision-aids/ [Accessed 10 Oct 2019].

- 23. Sepucha KR, Stacey D, Clay CF, et al. Decision quality instrument for treatment of hip and knee osteoarthritis: a psychometric evaluation. BMC Musculoskelet Disord 2011;12:149. 10.1186/1471-2474-12-149

- 24. Sepucha KR, Feibelmann S, Abdu WA, et al. Psychometric evaluation of a decision quality instrument for treatment of lumbar herniated disc. Spine 2012;37:1609–16. 10.1097/BRS.0b013e3182532924

- 25. Comparative Health System Performance Initiative. Snapshot of US health systems. Available: https://www.ahrq.gov/sites/default/files/wysiwyg/snapshot-of-us-health-systems-2016v2.pdf [Accessed 24 Oct 2019].

- 26. Centers for Medicare & Medicaid Services. Decision Memo for screening for lung cancer with low dose computed tomography (LDCT) (CAG-00439N). Available: https://www.cms.gov/medicare-coverage-database/details/nca-decision-memo.aspx?NCAId=274 [Accessed 14 Apr 2020].

- 27. Durand M-A, Barr PJ, Walsh T, et al. Incentivizing shared decision making in the USA--where are we now? Healthc 2015;3:97–101. 10.1016/j.hjdsi.2014.10.008

- 28. Kunneman M, Montori VM, Castaneda-Guarderas A, et al. What is shared decision making? (and what it is not). Acad Emerg Med 2016;23:1320–4. 10.1111/acem.13065

- 29. Stacey D, Légaré F, Lewis K, et al. Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst Rev 2017;4:CD001431. 10.1002/14651858.CD001431.pub5

- 30. Millstein JH. From surveys to skill sets: improving patient experience by supporting clinician well-being. J Patient Exp 2019:237437351987425. 10.1177/2374373519874254

- 31. Goold SD, Klipp G. Managed care members talk about trust. Soc Sci Med 2002;54:879–88. 10.1016/S0277-9536(01)00070-3

Supplementary Materials

bmjopen-2020-037087supp001.pdf (73.2KB, pdf)

bmjopen-2020-037087supp002.pdf (33.7KB, pdf)

bmjopen-2020-037087supp003.pdf (44.5KB, pdf)