Abstract

Beamformers are applied for estimating spatiotemporal characteristics of neuronal sources underlying measured MEG/EEG signals. Several MEG analysis toolboxes include an implementation of a linearly constrained minimum-variance (LCMV) beamformer. However, differences in implementations and in their results complicate the selection and application of beamformers and may hinder their wider adoption in research and clinical use. Additionally, combinations of different MEG sensor types (such as magnetometers and planar gradiometers) and application of preprocessing methods for interference suppression, such as signal space separation (SSS), can affect the results in different ways for different implementations. So far, a systematic evaluation of the different implementations has not been performed. Here, we compared the localization performance of the LCMV beamformer pipelines in four widely used open-source toolboxes (MNE-Python, FieldTrip, DAiSS (SPM12), and Brainstorm) using datasets both with and without SSS interference suppression.

We analyzed MEG data that were i) simulated, ii) recorded from a static and moving phantom, and iii) recorded from a healthy volunteer receiving auditory, visual, and somatosensory stimulation. We also investigated the effects of SSS and the combination of the magnetometer and gradiometer signals. We quantified how localization error and point-spread volume vary with the signal-to-noise ratio (SNR) in all four toolboxes.

When applied carefully to MEG data with a typical SNR (3–15 dB), all four toolboxes localized the sources reliably; however, they differed in their sensitivity to preprocessing parameters. As expected, localizations were highly unreliable at very low SNR, but we found high localization error also at very high SNRs for the first three toolboxes while Brainstorm showed greater robustness but with lower spatial resolution. We also found that the SNR improvement offered by SSS led to more accurate localization.

Keywords: MEG, EEG, Source modeling, Beamformers, LCMV, Open-source analysis toolboxes

Highlights

-

•

Different beamformer implementations are reported to sometimes yield differing source estimates for the same MEG data.

-

•

We compared beamformers in four major open-source MEG analysis toolboxes.

-

•

All toolboxes provide consistent and accurate results with 3–15-dB input SNR.

-

•

However, localization errors are high at very high input SNR for the tested scalar beamformers.

-

•

We discuss the critical differences between the implementations.

1. Introduction

MEG (magnetoencephalography) and EEG (electroencephalography) source imaging aims to identify the spatiotemporal characteristics of neural source currents based on the recorded signals, electromagnetic forward models and physiologically motivated assumptions about the source distribution. One well-known method for estimating a small number of focal sources is to model each of them as a current dipole with fixed location and fixed or changing orientation. The locations (optionally orientations) and time courses of the dipoles are then collectively estimated (Mosher et al., 1992; Hämäläinen et al., 1993). Such equivalent dipole models have been widely applied in basic research (see e.g. Salmelin, 2010) as well as in clinical practice (Bagic et al., 2011a, 2011b; Burgess et al., 2011). Distributed imaging estimates source currents across the whole source space, typically the cortical surface. Examples of linear methods for distributed source estimation are LORETA (low-resolution brain electromagnetic tomography; Pascual-Marqui et al., 1994) and MNE (minimum-norm estimation; Hämäläinen and Ilmoniemi, 1994). From estimated source distributions, one often computes noise-normalized estimates such as dSPM (dynamic statistical parametric mapping; Dale et al., 2000). Also, various non-linear distributed inverse methods have been proposed (Wipf et al., 2010; Gramfort et al., 2013b).

While dipole modeling and distributed source imaging estimate source distributions that reconstruct (the relevant part of) the measurement, beamforming takes an adaptive spatial-filtering approach, scanning independently each location in a predefined region of interest (ROI) within the source space without attempting to reconstruct the data. LCMV beamforming can be done in time or frequency domain; time-domain methods (Van Veen and Buckley, 1988, 1997; Spencer et al., 1992; Sekihara et al., 2006) use covariance matrices whereas frequency domain methods, such as DICS (Dynamic Imaging of Coherent Sources; Gross et al., 2001), utilize cross-spectral density matrices. There are also other variants of MEG beamformers, such as SAM (Synthetic Aperture Magnetometry; Robinson and Vrba, 1998) and SAM-based ERB (Event-related Beamformer; Cheyne et al., 2007) etc. They differ slightly in covariance computation, forward model selection, optimal orientation search, and weight normalization of the output power.

The LCMV beamformer estimates the activity for a source at a given location (typically a point source) while simultaneously suppressing the contributions from all other sources and noise captured in the data covariance matrix. For evaluation of the spatial distribution of the estimated source activity, an image is formed by scanning a set of predefined possible source locations and computing the beamformer output (often power) at each location in the scanning space. When the scanning is done in a volume grid, the beamformer output is typically presented by superimposing it onto an anatomical MRI.

There are two main categories of beamformers applied in the MEG/EEG source analysis— vector type and scalar type. Vector beamformers consider all source orientations while scalar beamformers use either a predefined source orientation or they try to find the maximum output power projection. Spatial resolution of scalar beamformers is higher than that of the vector type (Vrba and Robinson, 2000; Hillebrand and Barnes, 2003).

Beamformers have been popular in basic MEG research studies (e.g. Hillebrand and Barnes, 2005; Braca et al., 2011; Ishii et al., 2014; van Es and Schoffelen, 2019) as well as in clinical applications such as in localization of epileptic events (e.g. Mohamed et al., 2013; van Klink et al., 2017; Youssofzadeh et al., 2018; Hall et al., 2018). Many variants of beamformers are implemented in several open-source toolboxes and commercial software for MEG/EEG analysis. Presently, based on citation counts, the most used open-source toolboxes for MEG data analysis are FieldTrip (Oostenveld et al., 2011), Brainstorm (Tadel et al., 2011), MNE-Python (Gramfort et al., 2013a) and DAiSS in SPM12 (Litvak et al., 2011). These four toolboxes have an implementation of an LCMV beamformer, based on the same theoretical framework (Van Veen et al., 1997; Sekihara et al., 2006). Yet, it has been anecdotally reported that these toolboxes may yield different results for the same data. These differences may arise not only from the core of the beamformer implementation but also from the previous steps in the analysis pipeline, including data import, preprocessing, forward model computation, combination of data from different sensor types, covariance estimation, and regularization method. Beamforming results obtained from the same toolbox may also differ substantially depending on the applied preprocessing methods; for example, Signal Space Separation (SSS; Taulu and Kajola, 2005) reduces the rank of the data, which could affect beamformer output unpredictably if not appropriately considered in the implementation.

In this study, we evaluated the LCMV beamformer pipelines in the four open-source toolboxes and investigated the reasons for possible inconsistencies, which hinder the wider adoption of beamformers to research and clinical use where accurate localization of sources is required, e.g., in pre-surgical evaluation. These issues motivated us to study the conditions in which these toolboxes succeed and fail to provide systematic results for the same data and to investigate the underlying reasons.

2. Materials and methods

2.1. Datasets

To compare the beamformer implementations, we employed MEG data obtained from simulations, phantom measurements, and measurements of a healthy volunteer who received auditory, visual, and somatosensory stimuli. For all human data recordings, informed consent was obtained from all study subjects in agreement with the approval of the local ethics committee.

2.1.1. MEG systems

All MEG recordings were performed in a magnetically shielded room with a 306-channel MEG system (either Elekta Neuromag® or TRIUX™; Megin Oy, Helsinki, Finland), which samples the magnetic field distribution by 510 coils at distinct locations above the scalp. The coils are configured into 306 independent channels arranged on 102 triple-sensor elements, each housing a magnetometer and two perpendicular planar gradiometers. The location of the phantom or subject’s head relative to the MEG sensor array was determined using four or five head position indicator (HPI) coils attached to the scalp. A Polhemus Fastrak® system (Colchester, VT, USA) was used for digitizing three anatomical landmarks (nasion, left and right preauricular points) to define the head coordinate system. Additionally, the centers of the HPI coils and a set of ~50 additional points defining the scalp were also digitized. The head position in the MEG helmet was determined at the beginning of each measurement using the ‘single-shot’ HPI procedure, where the coils are activated briefly, and the coil positions are estimated from the measured signals. The location and orientation of the head with respect to the helmet can then be calculated since the coil locations were known both in the head and in the device coordinate systems. After this initial head position measurement, continuous tracking of head movements (cHPI) was engaged by keeping the HPI coils activated to track the movement continuously.

2.1.2. Simulated MEG data

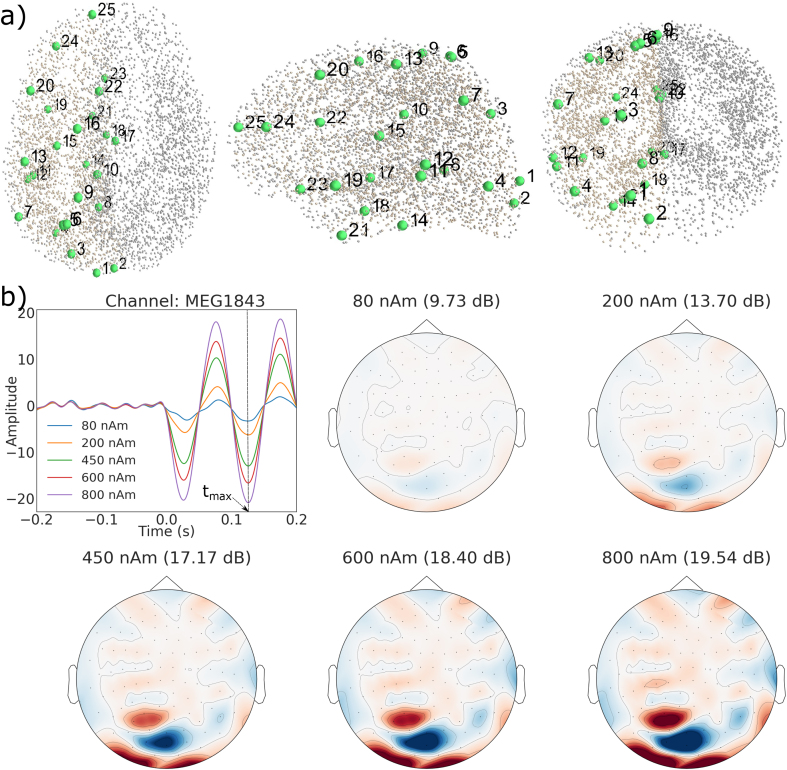

To obtain realistic MEG data with known sources, we superimposed simulated sensor signals based on forward modeling of dipolar sources onto measured resting-state MEG data utilizing a special in-house simulation software. Structural MRI images, acquired from a healthy adult volunteer using a 3-T MRI scanner (Siemens Trio, Erlangen, Germany), were segmented using the MRI Segmentation Software of Megin Oy (Helsinki, Finland) and the surface enveloping the brain compartment was tessellated with triangles (5-mm side length). Using this mesh, a realistic single-shell volume conductor model was constructed using the Boundary Element Method (BEM; Hämäläinen and Sarvas, 1989) implemented in the Source modeling software of Megin Oy. We also segmented the cortical mantle with the FreeSurfer software (Dale et al., 1999; Fischl et al., 1999; Fischl, 2012) for deriving a realistic source space. By using the “ico4” subdivision in MNE-Python, we obtained a source space comprising 2560 dipoles (average spacing 6.2 mm) in each hemisphere (Fig. 1a). Out of these, we selected 25 roughly uniformly distributed source locations in the left hemisphere for the simulations (Fig. 1a). All these points were at least 7.5 mm inwards from the surface of the volume conductor model. Using the conductor model, source locations and sensor locations from the resting-state data in MNE-Python, we simulated dipoles at each of the 25 locations – one at a time – with a 10-Hz sinusoid of 200-ms duration (2 cycles). The dipoles were simulated at eight source amplitudes: 10, 30, 80, 200, 300, 450, 600 and 800 nAm and sensor-level evoked field data were computed. Fig. 1b shows a few of the simulated evoked responses (whitened with noise) at a single dipole location but at different strengths, illustrating the changes in the signal-to-noise ratio (SNR). Here is the time point of the SNR estimate, which is defined later in Section 2.5.

Fig. 1.

Simulation of evoked responses. a) The 25 simulated dipolar sources (green dots) in the source space (grey dots), b) Simulated evoked responses of a dipolar source at five strengths and the field patterns corresponding to the peak amplitude (SNR in parenthesis). The dipole was located at (−19.2, −71.6, 57.8) mm in head coordinates.

The continuous resting-state MEG data with eyes open was recorded from the same volunteer who provided the anatomical data, using an Elekta Neuromag® MEG system (at BioMag Laboratory, Helsinki, Finland). The recording length was 2 min, the sampling rate was 1 kHz, and the acquisition frequency band was 0.1–330 Hz. This recording provided the head position for the simulations and defined their noise characteristics. MEG and MRI data were co-registered using the digitized head shape points and the outer skin surface in the segmented MRI.

The simulated sensor-level evoked fields data were superimposed on the unprocessed resting-state recording with inter-trial-interval varying between 1000 and 1200 ms resulting in ~110 trials (epochs) in each simulated dataset. The resting-state recording was used both as raw without preprocessing and after SSS interference suppression. Altogether, we obtained 400 simulated MEG datasets (25 source locations at 8 dipole amplitudes, all both with the raw and SSS-preprocessed real data). Fig. 2 illustrates the generation of simulated MEG data.

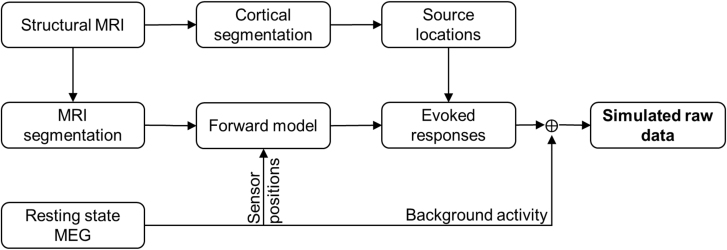

Fig. 2.

MEG data simulation workflow (details in Suppl. Fig. 1).

2.1.3. Phantom data

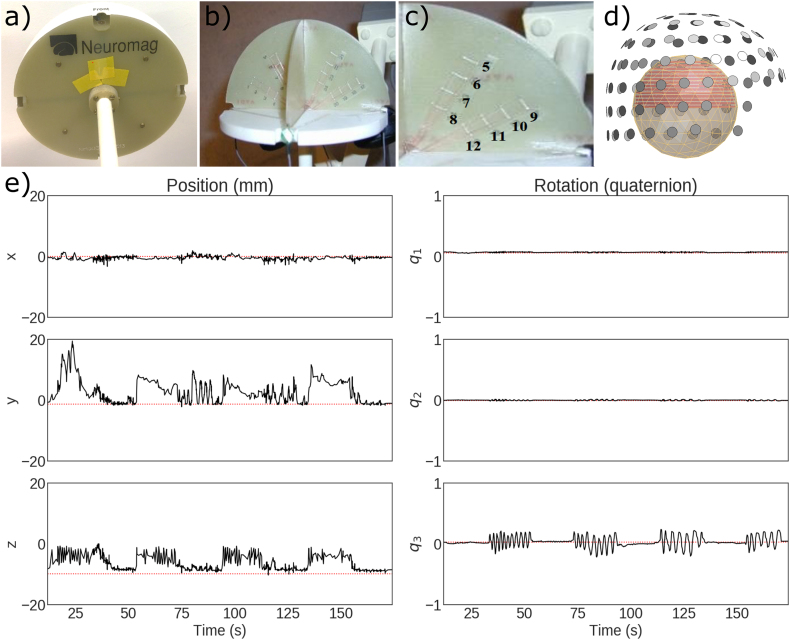

We used a commercial MEG phantom (Megin Oy, Helsinki, Finland) which contains 32 dipoles and 4 HPI coils at distinct fixed locations (see Fig. 3a–c and TRIUX™ User’s Manual, Megin Oy). The phantom is based on the triangle construction (Ilmoniemi et al., 1985): an isosceles triangular line current generates on its relatively very short side a magnetic field distribution equivalent to that of a tangential current dipole in a spherical conductor model, provided that the vertex of the triangle and the origin of the model of a conducting sphere coincide. The phantom data were recorded from 8 dipoles, excited one by one, using a 306-channel TRIUX™ system (at Aston University, Birmingham, UK). The distance from the phantom origin was 64 mm for dipoles 5 and 9 (the shallowest), 54 mm for dipoles 6 and 10, 44 mm for dipoles 7 and 11, and 34 mm for dipoles 8 and 12 (the deepest; see Fig. 3c). The phantom was first kept stationary inside the MEG helmet and continuous MEG data were recorded with 1-kHz sampling rate for three dipole amplitudes (20, 200 and 1000 nAm); one dipole at a time was excited with a 20-Hz sinusoidal current for 500 ms, followed by 500 ms of inactivity. The recordings were repeated with the 200-nAm dipole strength while moving the phantom continuously to mimic head movements inside the MEG helmet. The experimenter made sequences of continuous random rotational and translational movements by holding the phantom rod and keeping the phantom (hemispheric structure) inside the helmet, followed by periods without movement; see the movements in Fig. 3e and Suppl. Fig. 2 for all movement parameters.

Fig. 3.

The dry phantom. (a) Outer view, (b) cross-section, (c) positions of the employed dipole sources, (d) phantom position with respect to the MEG sensor helmet, and (e) position and rotation of the phantom during one of the moving-phantom measurements (Dipole 9 activated).

2.1.4. Human MEG data

We recorded MEG evoked responses from the same volunteer whose MRI and spontaneous MEG data were utilized in the simulations. These human data were recorded using a 306-channel Elekta Neuromag® system (at BioMag Laboratory, Helsinki, Finland). During the MEG acquisition, the subject was receiving a random sequence of visual (a checkerboard pattern in one of the four quadrants of the visual field), somatosensory (electric stimulation of the median nerve at the left/right wrist at the motor threshold) and auditory (1-kHz 50-ms tone pips to the left/right ear) stimuli with an interstimulus interval of ~500 ms. The Presentation software (Neurobehavioral Systems, Inc., Albany, CA, USA) was used to produce the stimuli.

2.2. Preprocessing

The datasets were analyzed in two ways: 1) omitting bad channels from the analysis, without applying SSS preprocessing, and 2) applying SSS-based preprocessing methods (SSS/tSSS) to reduce magnetic interference and perform movement compensation for moving phantom data. The SSS-based preprocessing and movement compensation were performed in MaxFilter™ software (version 2.2; Megin Oy, Helsinki, Finland). After that, the continuous data were bandpass filtered (passband indicated for each dataset later in the text) followed by the removing of the dc. Then the data were epoched to trials around each stimulus. We applied an automatic trial rejection technique based on the maximum variance across all channels, rejecting trials that had variance higher than the 98th percentile of the maximum or lower than the 2nd percentile (see Suppl. Fig. 4). This method is available as an optional preprocessing step in FieldTrip, and the same implementation was applied in the other toolboxes. For each dataset, the covariance matrices (data or noise) were calculated over each trial and normalized by the total number of samples across the trials:

| (1) |

where is the resulting data or noise covariance, is the total number of good trials after covariance-based trial rejection, is the covariance matrix of trial and is the total number of samples used in computing all matrices. Below we describe the detailed preprocessing steps for all datasets.

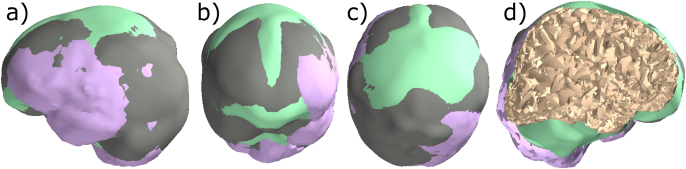

Fig. 4.

Surfaces that bound the source space used by each toolbox. a) Sagittal, b) coronal, and c) axial views of the bounding surfaces in MNE-Python (grey), FieldTrip (lavender), DAiSS (SPM12) (mint) and Brainstorm (coral). d) Transparent view of the overlap and differences of the four surfaces (color indicates the outermost surface).

2.2.1. Simulated data

In each toolbox, the raw data with just bad channels removed or SSS-preprocessed continuous data were filtered using a zero-phase filter with a passband of 2–40 Hz. The filtered data were epoched into windows from −200 to +200 ms relative to the start of the source activity. The bad epochs were removed using the variance-based automatic trial rejection technique, resulting in ~100 epochs. Then using Eq (1), the noise and data covariance matrices were estimated from these epochs for the time windows of −200 to −20 ms and 20–200 ms, respectively.

2.2.2. Phantom data

All 32 datasets (static: 3 dipole strengths and 8 dipole locations; moving: 1 dipole strength and 8 dipole locations) were analyzed both without and with SSS-preprocessing. We applied SSS on static phantom data to remove external interference. On moving-phantom data, combined temporal SSS and movement compensation (tSSS_mc) were applied for suppressing external and movement-related interference and for transforming the data from the continuously estimated positions into a static reference position (Taulu and Kajola, 2005; Nenonen et al., 2012). Then in each toolbox the continuous data were filtered to 2–40 Hz using a zero-phase bandpass filter, and the filtered data were epoched from −500 to +500 ms with respect to stimulus triggers. Bad epochs were removed using the automated method based on maximum variance, yielding ~100 epochs for each dataset. The noise and data covariance matrices were estimated using Eq (1) in each toolbox for the time windows of −500 to −50 ms and 50–500 ms, respectively.

2.2.3. Human MEG data

Both the unprocessed raw data and the data preprocessed with tSSS were filtered to 1–95 Hz using a zero-phase bandpass filter in each toolbox. The trials with somatosensory stimuli (SEF) were epoched between −100 and −10 and 10–100 ms for estimating the noise and data covariances, respectively. The corresponding time windows for the auditory-stimulus trials (AEF) were −150 to −20 and 20–150 ms, and for the visual stimulus trials (VEF) −200 to −50 and 50–200 ms, respectively. Trials contaminated by excessive eye blinks (EOG > 250 μV) or by excessive magnetic signals (MEG > 5000 fT or 3000 fT/cm) were removed with the variance-based automated trial removal technique. Before covariance computation, baseline correction by the time window before the stimulus was applied on each trial. The covariance matrices were estimated independently in each toolbox, using Eq (1).

Since the actual source locations associated with the evoked fields are not precisely known, we defined reference locations using conventional dipole fitting in the Source Modelling Software of Megin Oy (Helsinki, Finland). A single equivalent dipole was used to represent SEF and VEF sources, and one dipole per hemisphere was used for AEF (see Suppl. Fig. 3). The dipole fitting was performed at the time point of the maximum RMS value across all planar gradiometer channels (global field power) of the average response amplitude.

2.2.4. Forward model

For the beamformer scan of simulated data, we used the default or the most commonly used forward model of each toolbox: a single-compartment BEM model in MNE-Python, a single-shell corrected-sphere model (Nolte, 2003) in FieldTrip, a single-shell corrected sphere model (Nolte, 2003) through inverse normalization of template meshes (Mattout et al., 2007) in DAiSS (SPM12), and the overlapping-spheres (Huang et al., 1999) model in Brainstorm. The former three packages utilize inner skull for defining the boundary of the models. For constructing the models for the forward solutions, the segmentation of MRI images was performed in FreeSurfer for MNE-Python and Brainstorm while FieldTrip and SPM12 used the SPM segmentation procedure. In MNE-Python, FieldTrip and SPM12, a volumetric source space was represented by a rectangular grid with 5-mm resolution enclosed by the conductor models in these packages while Brainstorm uses a rectangular grid with the same resolution enclosed by the brain surface. Since each toolbox prepares a head model and source space using slightly different methods, these models may differ from each other. Fig. 4 shows the small discrepancies in the boundary of source spaces used by the three packages. These discrepancies may result in a small shift between the positions and number of the scanning points in these toolboxes. Forward solutions were computed separately in each toolbox using the head model, the volumetric grid sources, and sensor information from the MEG data.

For phantom data, a homogeneous spherical volume conductor model was defined in each toolbox with the origin at the head coordinate system origin. An equidistant rectangular source-point grid with 5-mm resolution was placed inside the upper half of a sphere covering all 32 dipoles of the phantom; see Fig. 3d. Forward solutions for these grids were computed independently in each toolbox. For human MEG data, the head models and the source space were defined in the same way as for the beamformer scanning of the simulated data.

2.3. LCMV beamformer

The linearly constrained minimum-variance (LCMV) beamformer is a spatial filter that relates the magnetic field measured outside the head to the underlying neural activities using the covariance of measured signals and models of source activity and signal transfer between the source and the sensor (Spencer et al., 1992; Van Veen et al., 1997; Robinson and Vrba, 1998). The spatial filter weights are computed for each location in the region of interest (ROI).

Let x be an signal vector of MEG data measured with sensors, and is the number of grid points in the ROI with grid locations . Then the source at any location can be estimated as weighted combination of the measurement x as

| (2) |

where the matrix is known as spatial filter for a source at location . This type of spatial filter provides a vector type beamformer by separately estimating the activity for three orthogonal source orientations, corresponding to the three columns of the matrix. According to Eqs 16–23 in Van Veen et al. (1997), the spatial filter for vector beamformer is defined as

| (3) |

Here is the local leadfield matrix that defines the contribution of a dipole source at location to the measurement x, and is the covariance matrix computed from the measured data samples. To perform source localization using LCMV, the output variance (or output source power) is estimated at each point in the source space (see Eq (24) in Van Veen et al., 1997), resulting in

| (4) |

Usually, the measured signal is contaminated by non-uniformly distributed noise and therefore the estimated signal variance is often normalized with projected noise variance calculated over some baseline data (noise). Such normalized estimate is called Neural Activity Index (NAI; Van Veen et al., 1997) and can be expressed as

| (5) |

Scanning over all the locations in the region of interest in source space transforms the MEG data from a given measurement into an NAI map.

In contrast to a vector beamformer, a scalar beamformer (Sekihara and Scholz, 1996; Robinson and Vrba, 1998) uses constant source orientation that is either pre-fixed or optimized from the input data by finding the orientation that maximizes the output source power at each target location. Besides simplifying the output, the optimal-orientation scalar beamformer enhances the output SNR compared to the vector beamformer (Robinson and Vrba, 1998; Sekihara et al., 2004). The optimal orientation , for location can be determined by generalized eigenvalue decomposition (Sekihara et al., 2004) using Rayleigh–Ritz formulation as

| (6) |

where indicates the eigenvector corresponding to the smallest generalized eigenvalue of the matrices enclosed in Eq (6) curly braces. For further details, see Eq (4.44) and Section 13.3 in Sekihara and Nagarajan (2008).

Denoting instead of , the weight matrix in Eq (3) becomes weight vector , and,

| (7) |

Using in Eq (5), we find the estimate (NAI) of a scalar LCMV beamformer as

| (8) |

When the data covariance matrix is estimated from a sufficiently large number of samples and has full rank, Eq (8) provides the maximum spatial resolution (Lin et al., 2008; Sekihara and Nagarajan, 2008). According to Van Veen et al. (1997), the number of samples for covariance estimation should be at least three times the number of sensors. Thus, sometimes, the amount of available data may be insufficient to obtain a good estimate of the covariance matrices. In addition, pre-processing methods such as signal-space projection (SSP) or signal-space separation (SSS) reduce the rank of the data, which impacts the matrix inversions in Eq (8). These problems can be mitigated using Tikhonov regularization (Tikhonov, 1963) by replacing matrix by its regularized version in Eqs (3), (4), (5), (6), (7), (8) where is called the regularization parameter.

All tested toolboxes set the with respect to the mean data variance, using ratio 0.05 as default:

If the data are not full rank, also the noise covariance matrix needs to be regularized.

2.4. Differences between the beamformer pipelines

Though all the four toolboxes evaluated here use the same theoretical framework of the LCMV beamformer, there are several implementation differences which might affect the exact outcome of a beamformer analysis pipeline. Many of these differences pertain to specific handling of the data prior to the estimation of the spatial filters, or to specific ways of (post)processing the beamformer output. Some of the toolbox-specific features reflect the characteristics of the MEG system around which the toolbox has evolved. Importantly, some of these differences are sensitive to input SNR, and they can lead to differences in the results. Table 1 lists the main characteristics and settings of the four toolboxes used in this study. We used the default settings of each toolbox (general practice) for steps before beamforming but set the actual beamforming steps as similar as possible across the toolboxes to be able to meaningfully compare the results.

Table 1.

Characteristics of the four beamforming toolboxes. The non-default settings of each toolbox are shown in bold. The toolbox version is indicated either by the version number or by the download date (yyyymmdd) from GitHub.

| MNE-Python | FieldTrip | DAiSS (SPM12) | Brainstorm | |

|---|---|---|---|---|

| Version | 0.18 | 20190922 | 20190924 | 20190926 |

| Data import functions | MNE (Python) | MNE (Matlab) | MNE (Matlab) | MNE (Matlab) |

| Internal units of MEG data | T, T/m | T, T/m | fT, fT/mm | T, T/m |

| Band-pass filter type | FIR | IIR | IIR | FIR |

| MRI segmentation | FreeSurfer | SPM8/SPM12 | SPM8/SPM12 | FreeSurfer/SPM8 |

| Head model | Single-shell BEM | Single-shell corrected sphere | Single-shell corrected sphere | Overlapping spheres |

| Source space | Rectangular grid (5 mm), inside of the inner skull | Rectangular grid (5 mm), inside of the inner skull | Rectangular grid (5 mm), inside of the inner skull | Rectangular grid (5 mm), inside of the brain volume |

| MEG–MRI coregistration | Point-cloud co-registration and manual correction | 3-point manual co-registration followed by ICP co-registration | Point-cloud co-registration using ICP | Point-cloud co-registration using ICP |

| Data covariance matrix | Sample data covariance | Sample data covariance | Sample data covariance | Sample data covariance |

| Noise normalization for NAI computation | Sample noise covariance | Sample noise covariance | Sample noise covariance | Sample noise covariance |

| Combining data from multiple sensor types | Prewhitening (full noise covariance) | No scaling or prewhitening | No scaling or prewhitening | Prewhitening (full noise covariance but cross-sensor-type terms zeroed) |

| Beamformer type | Scalar | Scalar | Scalar | Vector |

| Beamformer output | Neural activity index (NAI) | Neural activity index (NAI) | Neural activity index (NAI) | Neural activity index (NAI) |

All toolboxes import data using either Matlab or Python import functions of the MNE software (Gramfort et al., 2014) but represent the data internally either in T or fT (magnetometer) and T/m or fT/mm (gradiometer); see Suppl. Fig. 5. Default filtering approaches across toolboxes change the numeric values, so the linear correlation between the same channels across toolboxes deviates from the identity line; see Suppl. Fig. 6. The default head model is also different across toolboxes; see Section 2.2.4. The single-shell BEM and single-shell corrected sphere model (the “Nolte model”) are approximately as accurate but produce slightly different results (Stenroos et al., 2014).

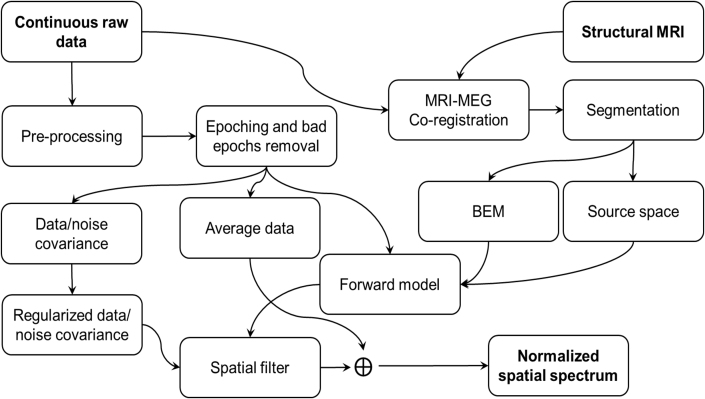

Fig. 5.

The pipeline for constructing an LCMV beamformer for MEG/EEG source estimation. A similar pipeline was employed in all four packages.

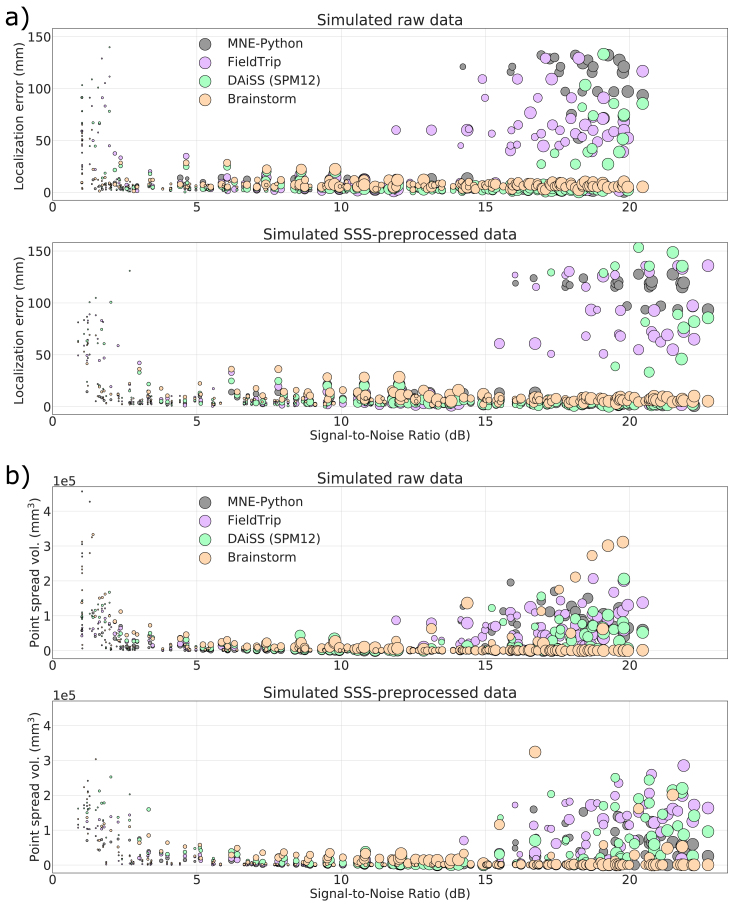

Fig. 6.

Localization error (a) and point-spread volume (b) as a function of input SNR for raw and SSS-pre-processed simulated datasets. The markers size indicates the true dipole amplitude.

For MEG–MRI co-registration, there are several approaches available across these toolboxes such as an interactive method using fiducial or/and digitization points defining the head surface, using automated point cloud registration methods e.g., the iterative closest point (ICP) algorithm. Despite using the same source-space specifications (rectangular grid with 5-mm resolution), differences in head models and/or co-registration methods change the forward model across toolboxes; see Fig. 4. Though there are several approaches to compute data and noise covariances across the four beamformer implementations, by default they all use the empirical/sample covariance. In contrast to other toolboxes, Brainstorm eliminates the cross-modality terms from the data and noise covariance matrices. Also, the regularization parameter is calculated and applied separately for gradiometers and magnetometers channel sets in Brainstorm therefore, the same amount of regularization affects differently.

The combination of two MEG sensor types in the MEGIN triple-sensor array causes additional processing differences in comparison to other MEG systems that employ only axial gradiometers or only magnetometers. Magnetometers and planar gradiometers have different dynamic ranges and measurement units, so their combination must be appropriately addressed in source analysis such as beamforming. For handling the two sensor types in the analysis, different strategies are used for bringing the channels into the same numerical range. MNE-Python and Brainstorm use pre-whitening (Engemann and Gramfort, 2015; Ilmoniemi and Sarvas, 2019) based on noise covariance while FieldTrip and SPM12 assume a single sensor type for all the MEG channels. This approach makes SPM12 to favor magnetometer data (with higher numeric values of magnetometer channels) and FieldTrip to favor gradiometer data (with higher numeric values of gradiometer channels). However, users of FieldTrip and SPM12 usually employ only one channel type of the triple-sensor array for beamforming (most commonly, the gradiometers). Due to the presence of two different sensor types in the MEGIN systems and the potential use of SSS methods, the eigenspectra of data from these systems can be idiosyncratic (see Suppl. Fig. 7) and differ from the single-sensor type MEG systems. Rank deficiency and related phenomena are potential sources of beamforming failures with data that have been cleaned with a method such as SSS. Rank deficiency affects also other MEG sensor arrays using only magnetometers or axial gradiometers when the data are pre-processed with interference suppression methods such as SSP and (t)SSS.

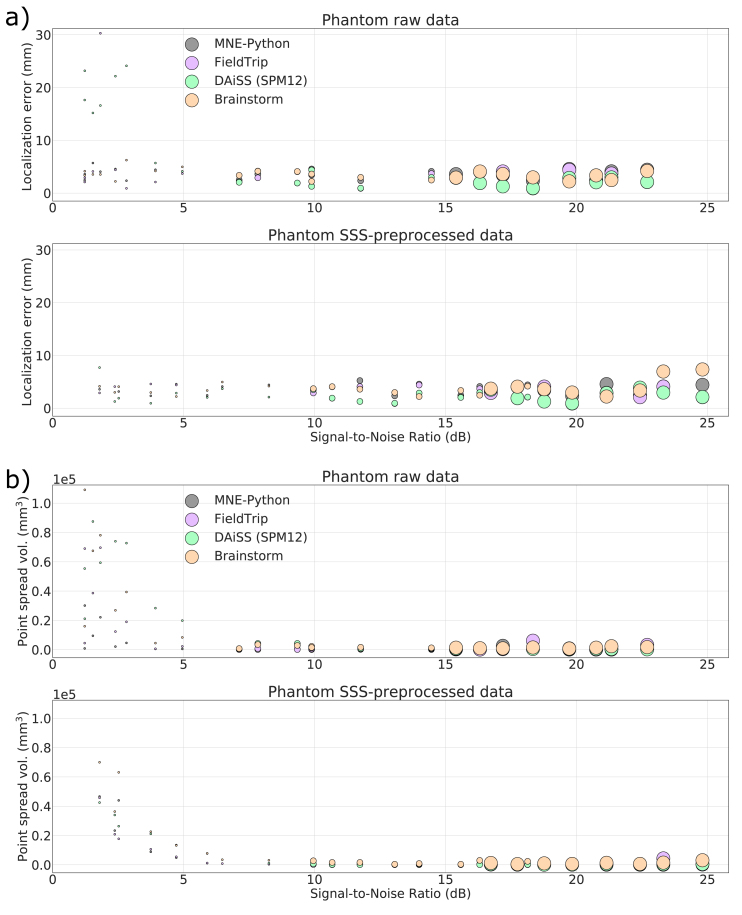

Fig. 7.

Localization error (a) and point-spread volume (b) as a function of input SNR for phantom data recording in a stable position. The markers size indicates the true dipole amplitude.

Previous studies have shown that the scalar beamformer yields twofold higher output SNR compared to the vector-type beamformer, if the source orientation for the scalar beamformer has been optimized according to Eq (6) (Vrba and Robinson, 2000; Sekihara et al., 2004). Most of the beamformer analysis toolboxes have an implementation of optimal-orientation scalar beamformer. In this study, we used the scalar beamformer in MNE-Python, FieldTrip, and SPM12 but a vector-beamformer in Brainstorm since the orientation optimization was not available. To keep the output dimensionality the same across the toolboxes, we linearly summed the three-dimensional NAI values at each source location. The general analysis pipeline used in this study is illustrated in Fig. 5.

2.5. Metrics used in comparison

In this study, a single focal source could be assumed to underlie the simulated/measured data. In such studies, accurate localization of the source is typically desired. We calculated two metrics for comparing the characteristics of the LCMV beamformer results from the four toolboxes: localization error, and point spread volume. We also analyzed their dependence on input signal-to-noise ratio.

Localization Error (LE): True source locations were known for the simulated and phantom MEG data and served as reference locations in the comparisons. Since the exact source locations for the human MEG data were unknown, we applied the location of a single current dipole as a reference location (see Section 2.1.4 “Human MEG data”). The Source Modelling Software (Megin Oy, Helsinki, Finland) was used to fit a single dipole for each evoked-response category at the time point around the peak of the average response providing the maximum goodness-of-fit value. The beamformer localization error is computed as the Euclidean distance between the estimated and reference source locations.

Point-Spread Volume (PSV): An ideal spatial filter should provide a unit response at the actual source location and zero response elsewhere. Due to noise and limited spatial selectivity, there is some filter leakage to the nearby locations, which spreads the estimated variance over a volume. The focality of the estimated source, also called focal width, depends on several factors such as the source strength, orientation, and distance from the sensors. PSV measures the focality of an estimate and is defined as the total volume occupied by the source activity above a threshold value; thus, a smaller PSV value indicates a more focal source estimate. We fixed the threshold to 50% of the highest NAI in all comparisons. In this study, the volume represented by a single source in any of the four source spaces (5-mm grid spacing) was 125 mm3. To compute PSV, we computed the number of active voxels above the threshold and multiplied by the volume of a single voxel.

Signal-to-Noise ratio (SNR): Beamformer localization error depends on the input SNR, which varies – among other factors – as a function of source strength and distance of the source from the sensor array. Therefore, we evaluated beamformer localization errors and PSV as a function of the input SNR of the evoked field data.

We estimated the SNR for each evoked field MEG dataset in MNE-Python using the estimated noise covariance by discarding the smallest near-zero eigenvalues. The data were whitened using the noise covariance, and the effective number of sensors (rank) was then calculated as

| (9) |

where is the number of all MEG channels and is the total number of near-zero eigenvalues of .

Then the input SNR was calculated as:

| (10) |

where is the signal of kth sensor from the whitened evoked field data, is the time point at maximum amplitude of whitened data across all channels and is the number of effective sensors defined in Eq (9). Since the same data were used in all toolboxes, we used the same input SNR value for all of them. Fig. 1b compares simulated evoked responses and the changes in SNR for dipoles at different strengths but at the same location.

2.6. Data and code availability

Our analysis codes are publicly available under a repository https://zenodo.org/record/3471758 (https://doi.org/10.5281/zenodo.3471758). The datasets as well as the specific versions of the four toolboxes used in the study are available at https://zenodo.org/record/3233557 (https://doi.org/10.5281/zenodo.3233557).

3. Results

We computed the source localization error (LE) and the point spread volume (PSV) for each NAI estimate across all datasets from LCMV beamformer in all four toolboxes. We plotted the LE and PSV as a function of the input SNR computed according to Eq (10). To differentiate the localization among the implementations, we followed the following color convention: MNE-Python: grey; FieldTrip: lavender; DAiSS (SPM12): mint; and Brainstorm: coral.

3.1. Simulated MEG data

Localization errors and PSV values were calculated for all simulated datasets and plotted against the corresponding input SNR. The SNR of all 200 simulated datasets ranged between 0.5 and 25 dB. Fig. 6a shows the variation of localization errors over the range of input SNR for the simulated dataset. The localization error goes high for all toolboxes for very low SNR (<3 dB) signals (e.g. < ~80-nAm or deep sources). The localization error within the input SNR range 3–12 dB is stable and mostly within 15 mm, and SSS preprocessing widens this SNR range of stable performance to 3–15 dB. Unexpectedly, we also found high localization error at high SNR (>15 dB) for the toolboxes other than Brainstorm. Fig. 6b plots PSV values against input SNR for raw and SSS-preprocessed simulated data. For the low SNR signals (usually, weak or deep sources), all the four toolboxes show high PSV values. The spatial resolution is highest for the SNR rage ~3–15 dB. For the SNR > ~15 dB (usually, strong or superficial sources) these toolboxes also show high PSV. Fig. 6a and b shows that none of the four toolboxes provides accurate localization for all SNR values and the spatial resolution of LCMV varies over the range of input SNR.

3.2. Static and moving phantom MEG data

In the case of phantom data, the background noise is very low and there is a single source underneath a measurement. Since, both the dipole simulation and beamformer analysis in case of phantom use a homogeneous sphere model that does not introduce any forward model inaccuracy, except the possible small co-registration error. All four toolboxes show high localization accuracy and high resolution for phantom data, if the input SNR is not very low (<~3 dB). Corresponding results for the static phantom data are presented in Fig. 7a and b. Fig. 7a indicates the localization error clear dependency on input SNR, it shows high localization errors at very low SNR raw data sets. The high error is because of some unfiltered artifacts in raw data which was removed by SSS. After SSS, the beamformer shows localization error under ~5 mm for all the datasets. Fig. 7b shows the beamforming resolution in terms of PSV. The PSV values show a high spatial resolution for the data with SNR >5 dB.

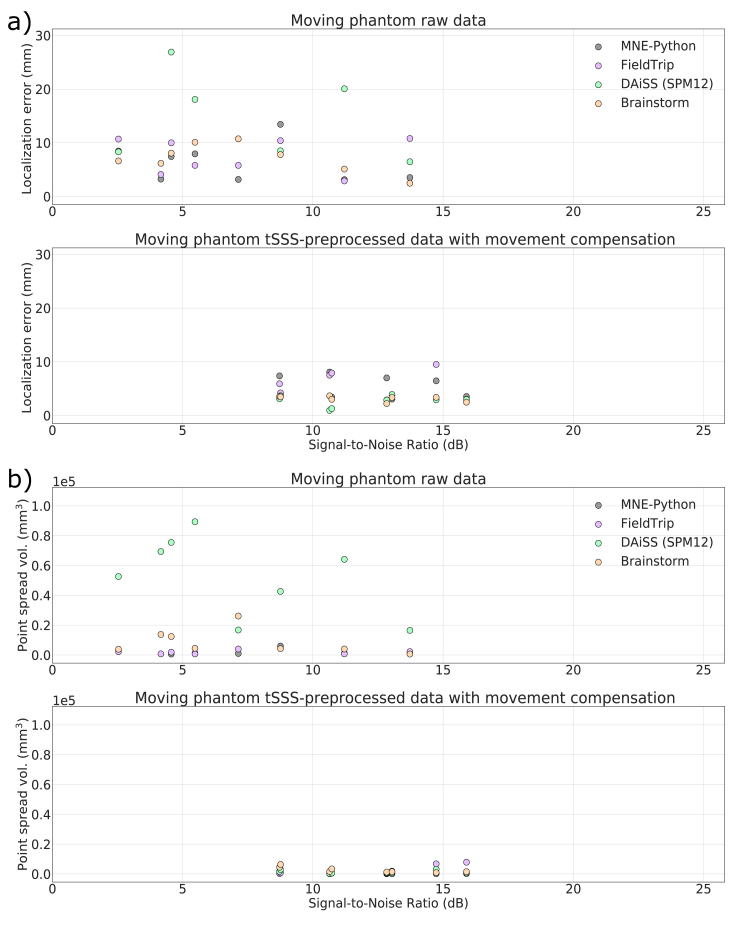

In the cases of moving phantom, Fig. 8a shows high localization errors with unprocessed raw data because of disturbances caused by the movement. The dipole excitation amplitude was 200 nAm, which is enough to provide a good SNR, but the movement artifacts lower the SNR. The most superficial dipoles (Dipoles 5 and 9 in Fig. 3c) possess higher SNR but also higher localization error since they get more significant angular displacement during movement. Because of differences in implementations and preprocessing parameters listed in Section 2.4, apparent differences among the estimated localization error can be seen. Overall, MNE-Python shows the lowest while DAiSS (SPM12) shows the highest localization error with the phantom data with movement artifact. After applying for spatiotemporal tSSS and movement compensation, the improved SNR provided significantly better localization accuracies for all the toolboxes. Fig. 8b shows the PSV for moving phantom data for raw and processed data. The plots indicate improvement in SNR and spatial resolution after tSSS with movement compensation.

Fig. 8.

Localization error (a) and point-spread volume (b) as a function of input SNR for data from the moving phantom.

Table 2 lists the mean localization error and PSV for the simulated and static phantom datasets over three ranges of SNR— 1) very low (less than 3 dB) where all the four implementations show unreliable localization and the lowest spatial resolution, 2) feasible range (3–15 dB) that covers most of the research studies where all the four implementations are reliable and robust, and 3) high SNR (above 15 dB) where the source estimation by Brainstorm is comparatively more robust.

Table 2.

Mean localization error and mean PSV for simulated and static phantom data over the three ranges of signal-to-noise ratio.

| SNR range (dB) | MNE-Python | FieldTrip | DAiSS (SPM12) | Brainstorm | |

|---|---|---|---|---|---|

| Mean loc. error for SSS-pre-processed simulated data (mm) | <3 | 24.9 | 44.9 | 49.6 | 26.3 |

| 3–15 | 6.1 | 6.3 | 5.9 | 9.9 | |

| >15 |

9.5 |

13.3 |

13.9 |

12.9 |

|

| Mean PSV for SSS-pre-processed simulated data (cm3) | <3 | 84.9 | 84.9 | 139.4 | 101.1 |

| 3–15 | 4.6 | 6.8 | 11.7 | 14.0 | |

| >15 |

19.2 |

21.0 |

34.9 |

39.9 |

|

| Mean loc. error for SSS-pre-processed phantom data (mm) | <3 | 3.6 | 2.9 | 3.6 | 3.8 |

| 3–15 | 3.3 | 3.1 | 2.2 | 3.4 | |

| >15 |

3.7 |

3.0 |

2.5 |

3.5 |

|

| Mean PSV for SSS-pre-processed phantom data (cm3) | <3 | 38.0 | 28.1 | 34.3 | 56.5 |

| 3–15 | 1.8 | 2.0 | 4.8 | 5.8 | |

| >15 | 10.1 | 8.0 | 11.6 | 17.5 |

3.3. Human MEG data

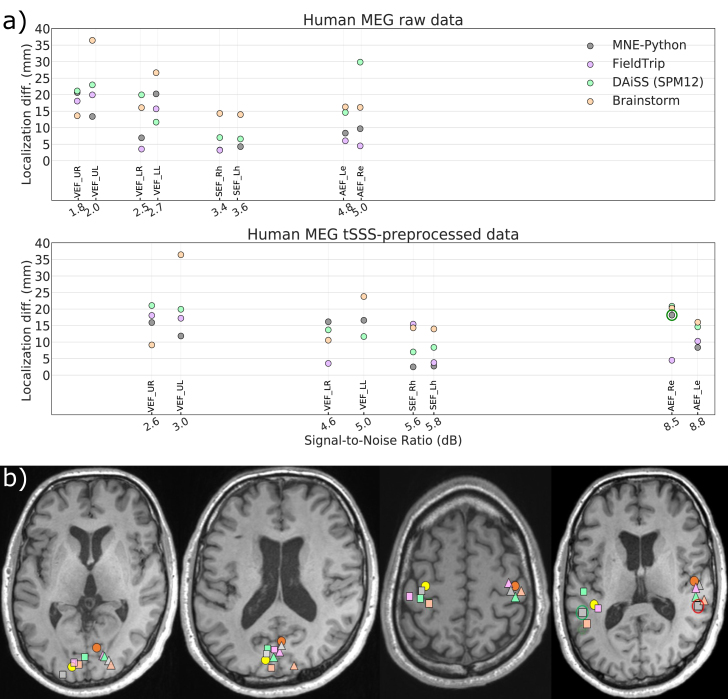

Since the correct source locations for the human evoked field datasets are unknown, we plotted the localization difference as the Cartesian distance between an LCMV-estimated source location and the corresponding reference dipole location as explained in Section 2.2.3. Fig. 9a shows the plots for the localization differences against the input SNRs computed using Eq (10) for four visual, two auditory and two somatosensory evoked-field datasets. The localization differences for both unprocessed raw and SSS preprocessed data are mostly under 20 mm in each toolbox. The higher differences compared to the phantom and simulated dataset could be because of two reasons. First, the recording might have been comprised by some head movement, which could not be corrected because of the lack of continuous HPI information. Second, the reference dipole location may not represent the very same source as estimated by the LCMV beamformer. In contrast to dipole fitting, beamforming utilizes data from the full covariance window, so some difference between the estimated localizations is to be expected. For all SSS-preprocessed evoked field datasets, Fig. 9b shows the estimated locations across the four LCMV implementation and the corresponding reference dipole locations. For simplifying the visualization, all estimated locations in a stimulus category are projected onto a single axial slice. All localizations seem to be in the correct anatomical regions, except the estimated location from right-ear auditory responses by MNE-Python after SSS-preprocessing (Fig. 9b; red circle). This could be because of high coherence between left-right auditory responses. After de-selecting the channels close to the right auditory cortex, the MNE-Python-estimated source location was correctly in the left cortex (Fig. 9b; green circle). Fig. 9a also shows the improvement in input SNR and also in the source localization in some cases after SSS pre-preprocessing. Fig. 8 in Supplementary material shows the PSV values as a function of the input SNR for the evoked-field datasets, demonstrating the spatial resolution of beamforming.

Fig. 9.

Source estimates of human MEG data. (a) Localization difference from the reference dipole location for raw and tSSS-preprocessed data. (b) Peaks of the beamformer source estimate of tSSS-preprocessed data. From left to right: visual stimuli presented to left (triangle) and right (square) upper and lower quadrant of the visual field (the two axial slices showing all sources); somatosensory stimuli to left (triangle) and right (square) wrist; auditory stimuli to the left (triangle) and right (square) ear. Reference dipole locations (yellow and orange circles).

4. Discussion

In this study, we compared four widely-used open-source toolboxes for their LCMV beamformer implementations. While the implementations share the theoretical basis, there are also differences, which could lead to differing source estimates. There are also several other beamformer variants (e.g. Huang et al., 2004; Cheyne et al., 2007; Herdman and Ribary, 2018) but an extensive comparison of all beamformer formulations would be a tedious task; however, most of our findings likely apply to other formulations such as event-related beamformers, too.

We investigated the localization accuracy and beamformer resolution as a function of the input SNR and compared the results across the LCMV implementations in the four tested toolboxes. In the absence of background noise and using perfect sphere model, the phantom data showed high localization accuracy and high spatial resolution if the input SNR >~5 dB. All implementations also showed high localization accuracy for data recording from a moving phantom after compensating the movement and applying tSSS. For the simulated datasets with realistic background noise and imperfect forward model, the localization errors across the LCMV implementations indicated that the reliability of localization in these implementations depends on the SNR of input data. Brainstorm (vector beamformer) reliably localized a single source when SNR was above ~3 dB, including very high SNRs, whereas the other three implementations (scalar beamformer) localized the source reliably within the SNR range of ~3–15 dB. Small deviations were observed in the estimated source locations across the implementations even in this SNR range, likely caused by differences in the pre-processing steps such as filter types, head models, spatial filter and performing the beamformer scan. For the human evoked-response MEG data, all implementations localized sources within about 20 mm from each other.

Our results indicate that with the default parameter settings, none of the four implementations works universally reliable for all datasets and all input SNR values. In the case of low SNR (typically less than 3 dB), the lower contrast between data and noise covariance may cause the beamformer scan to provide a flat peak in the output and so the localization error goes high. The unexpected high localization errors can be observed at some cases of high SNR signals for the three scalar-type beamformer implementations and significant localization differences between the toolboxes are notable. The PSV plots show greater spatial resolution for the SNR range ~3–15 dB whereas low spatial resolution at very low and high SNR. Brainstorm provides reliable localization above ~3 dB but it also compromises spatial resolution; see Fig. 6, Fig. 7 and Table 2. The lower spatial resolution (higher PSV) for the signal with low SNR also agrees with previous studies (Lin et al., 2008; Hillebrand and Barnes, 2003).

For our simulated data, all toolboxes had a disparity between the forward model used in data generation model and the model used in beamforming, i.e, the forward model was not perfect. The width of the source estimate peak depends on both the SNR (Van Veen et al., 1997; Vrba and Robinson, 2000; Gross et al., 2001; Hillebrand and Barnes, 2003) and also on the type of beamformer applied (scalar vs. vector). If the SNR is very high, the peak is also very narrow, and any errors introduced by the forward model will be pronounced, leading to larger localization errors of this peak. For unconstrained vector beamformers, the peak is comparatively broader (higher PSV) and there is a smaller chance of missing the peak; this is the case with Brainstorm in our study. In the following, we discuss the significant steps of the beamformer pipelines, which affect the localization accuracy and introduce discrepancies among the implementations.

4.1. Preprocessing with SSS

Due to the spatial-filter nature of the beamformer, it can reject external interference and therefore SSS-based pre-processing for interference suppression may have little effect on the results. Thus, although the SNR increases as a result of applying SSS, the localization accuracy does not necessarily improve, which is evident in the localization of the evoked responses (Fig. 9).

However, undetected artifacts, such as a large-amplitude signal jump in a single sensor, may in SSS processing spread to neighboring channels and subsequently reduce data quality. Therefore, channels with distinct artifacts should be noted and excluded from beamforming of unprocessed data or from SSS operations. In addition, trials with large artifacts should be removed based on an amplitude thresholding or by other means. Furthermore, SSS processing of extremely weak signals (SNR < ~2 dB) may not improve the SNR for producing smaller localization errors and PSV values. Hence the data quality should be carefully inspected before and after applying preprocessing methods such as SSS, and channels or trials with low-quality data (or lower contrast) should be omitted from the covariance estimation.

4.2. Effect of filtering and artifact-removal methods

All four toolboxes we tested employ either a MATLAB or Python implementation of the same MNE routines (Gramfort et al., 2014) for reading FIFF data files and thus have internally the exact same data at the very first stage (see Suppl. Fig. 6). The data import either keeps the data in SI-units (T for magnetometers and T/m for gradiometers) or rescales the data (fT and fT/mm) before further processing. The actual pre-processing steps in the pipeline may contribute to differences in the results. The filtering step is performed to remove frequency components of no interest, such as slow drifts, from the data. By default, FieldTrip and SPM use an IIR (Butterworth) filter, and MNE-Python uses FIR filters. The power spectra of these filters’ output signals show notable differences and the output data from these two filters are not identical. Significant variations can be found between MNE-Python-filtered and FieldTrip/SPM-filtered data. Although SPM12 and FieldTrip use the same filter implementation, the filtering results are not identical because of numeric differences caused by different channel units (Suppl. Fig 6). These differences affect the estimated covariance matrices, which are a crucial ingredient for the spatial-filter computation and finally may contribute to differences in beamforming results.

4.3. Effect of SNR on localization accuracy

We reduced the impact of the unknown source depth and strength to a well-defined metrics in terms of the SNR. We observed that the localization accuracy is poor for very low SNR values, i.e. below 3 dB. The weaker, as well as the deeper sources, project less power on to the sensor array and thus show lower SNR; see Eq (10). On the other hand, the LCMV beamformer may also fail to localize accurately sources that produce very high SNR, likely because the point spread of the beamformer output becomes narrower than the distance between the scanning grid points. In this case, the estimate is very focal and a small error in forward solution, introduced e.g. by inaccurate coregistration, may lead to missing the true source and obtaining nearly equal power estimates at many source grid locations, increasing the chance of mislocalization. Brainstorm produced a different outcome at high SNR than the other toolboxes, because the vector beamformer in Brainstorm has wider spatial peaks and thus the maximum NAI occurs more likely in one of the source grid locations.

Such high SNRs do not typically occur in human MEG experiments. However, pathological brain activity may produce high SNR, e.g. the strength of equivalent current dipoles (ECD) for modeling sources of interictal epileptiform discharges (IIEDs) typically ranges between 50 and 500 nAm (Bagic et al., 2011a).

4.4. Effect of the head model

Forward modelling requires MEG–MRI co-registration, segmentation of the head MRI and leadfield computation for the source space. The four beamformer implementations use different approaches, or similar approaches but with different parameters, which yields slightly different forward models. From Eqs (3), (4), (5), (6), (7), (8), it is evident that beamformers are quite sensitive to the forward model. Hillebrand and Barnes (2003) showed that the spatial resolution and the localization accuracy of a beamformer improve with accuracy of the forward model. Dalal et al. (2014) reported that co-registration errors contribute greatly to EEG localization inaccuracy, likely due to their ultimate impact on head-model quality. Chella et al. (2019) presented the dependency of beamformer-based functional connectivity estimates on MEG-MRI co-registration accuracy.

The increasing inter-toolbox localization differences towards very low and very high input SNR is also subject to the differences between the head models. Fig. 4 shows the four overlapped source space boundaries prepared from the same MRI where a slight misalignment among them can be easily seen. This misalignment affects source space. Such differences in head models and source spaces contribute differences in forward solutions which further will contribute to differences in beamforming results across the toolboxes.

4.5. Covariance matrix and regularization

The data covariance matrix is a key component of the adaptive spatial filter in LCMV beamforming, and any error in covariance estimation can cause an error in source estimation. We used 5% of the mean variance of all sensors to regularize data covariance for making its inversion stable in FieldTrip, DAiSS (SPM12) and MNE-Python. Brainstorm uses a slightly different approach and applies regularization with 5% of mean variance of gradiometer and magnetometer channel sets separately and eliminates cross-sensor-type entries from the covariance matrices. As SSS preprocessing reduces the rank of the data, usually retaining less than 80 non-zero eigenvalues, the trace of the covariance matrix decreases strongly. At very high SNRs (>15 dB), overfitting of the covariance matrix becomes more prominent; the condition number (ratio of the largest and the smallest eigenvalues) of the covariance matrix becomes very high even after the default regularization, which can deteriorate the quality of source estimates unless the covariance is appropriately regularized. Therefore, the seemingly same 5% regularization can have very different effects before and after SSS; see Suppl. Fig. 7. Thus, the commonly used way of specifying the regularization level might not be appropriate to produce a good and stable covariance model at high SNR, and this could be one of the explanations for the anecdotally reported detrimental effects of SSS on beamforming results.

5. Conclusion

We conclude that with the current versions of LCMV beamformer implementations in the four open-source toolboxes — FieldTrip, DAiSS (SPM12), Brainstorm, and MNE-Python — the localization accuracy is acceptable (within ~10 mm for a true point source) for most purposes when the input SNR is ~3–15 dB. Lower or higher SNR may compromise the localization accuracy and spatial resolution. All toolboxes apply a vector LCMV beamformer as the initial step to find the source location. FieldTrip, DAiSS (SPM12) and MNE-Python find the optimal source orientation and produce a scalar beamformer output. Brainstorm yields robust localization also for input SNR>15 dB but it slightly compromises the spatial resolution.

To extend this useable range, a properly defined scaling strategy such as pre-whitening should be implemented across the toolboxes. The default regularization is often inadequate and may yield suboptimal results. Therefore, a data-driven approach for regularization should be adopted to alleviate problems with low- and high-SNR cases. Our further work will be focusing on optimizing regularization using a more data-driven approach.

CRediT authorship contribution statement

Amit Jaiswal: Methodology, Software, Investigation, Writing - original draft, Writing - review & editing. Jukka Nenonen: Conceptualization, Data curation, Supervision, Writing - review & editing. Matti Stenroos: Methodology, Writing - review & editing. Alexandre Gramfort: Software, Methodology. Sarang S. Dalal: Conceptualization, Writing - review & editing. Britta U. Westner: Visualization, Writing - review & editing. Vladimir Litvak: Software, Writing - review & editing. John C. Mosher: Methodology, Software, Writing - review & editing. Jan-Mathijs Schoffelen: Software, Writing - review & editing. Caroline Witton: Validation, Supervision. Robert Oostenveld: Software, Methodology. Lauri Parkkonen: Conceptualization, Methodology, Writing - review & editing, Supervision.

Acknowledgment

This study has been supported by the European Union H2020 MSCA-ITN-2014-ETN program, Advancing brain research in Children’s developmental neurocognitive disorders project (ChildBrain #641652). SSD and BUW have been supported by a European Research Council Starting Grant (#640448). LP was supported by European Research Council grant (#678578).

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.neuroimage.2020.116797.

Appendix A. Supplementary data

The following are the Supplementary data to this article:

References

- Bagic A.I., Knowlton R.C., Rose D.F., Ebersole J.S., ACMEGS Clinical Practice Guideline (CPG) Committee American clinical magnetoencephalography society clinical practice guideline 1: recording and analysis of spontaneous cerebral activity. J. Clin. Neurophysiol. 2011;28(4):348–354. doi: 10.1097/WNP.0b013e3182272fed. [DOI] [PubMed] [Google Scholar]

- Bagic A.I., Knowlton R.C., Rose D.F., Ebersole J.S., ACMEGS Clinical Practice Guideline (CPG) Committee American clinical magnetoencephalography society clinical practice guideline 3: MEG–EEG reporting. J. Clin. Neurophysiol. 2011;28(4):362–365. doi: 10.1097/WNO.0b013e3181cde4ad. [DOI] [PubMed] [Google Scholar]

- Barca L., Cornelissen P., Simpson M., Urooj U., Woods W., Ellis A.W. The neural basis of the right visual field advantage in reading: an MEG analysis using virtual electrodes. Brain Lang. 2011;118(3):53–71. doi: 10.1016/j.bandl.2010.09.003. [DOI] [PubMed] [Google Scholar]

- Burgess R.C., Funke M.E., Bowyer S.M., Lewine J.D., Kirsch H.E., Bagić A.I., ACMEGS Clinical Practice Guideline (CPG) Committee American Clinical Magnetoencephalography Society Clinical Practice Guideline 2: presurgical functional brain mapping using magnetic evoked fields. J. Clin. Neurophysiol. 2011;28(4):355–361. doi: 10.1097/WNP.0b013e3182272ffe. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chella F., Marzetti L., Stenroos M., Parkkonen L., Ilmoniemi R.J., Romani G.L., Pizzella V. The impact of improved MEG–MRI co-registration on MEG connectivity analysis. Neuroimage. 2019;197:354–367. doi: 10.1016/j.neuroimage.2019.04.061. [DOI] [PubMed] [Google Scholar]

- Cheyne D., Bostan A.C., Gaetz W., Pang E.W. Event-related beamforming: a robust method for presurgical functional mapping using MEG. Clin. Neurophysiol. 2007;118:16911704. doi: 10.1016/j.clinph.2007.05.064. [DOI] [PubMed] [Google Scholar]

- Dalal S.S., Rampp S., Willomitzer F., Ettl S. Consequences of EEG electrode position error on ultimate beamformer source reconstruction performance. Front. Neurosci. 2014;8:42. doi: 10.3389/fnins.2014.00042. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dale A.M., Fischl B., Sereno M.I. Cortical surface-based analysis I: segmentation and surface reconstruction. Neuroimage. 1999;9(2):179–194. doi: 10.1006/nimg.1998.0395. [DOI] [PubMed] [Google Scholar]

- Dale A.M., Liu A.K., Fischl B.R., Buckner R.L., Belliveau J.W., Lewine J.D., Halgren E. Dynamic statistical parametric mapping: combining fMRI and MEG for high-resolution imaging of cortical activity. Neuron. 2000;26(1):55–67. doi: 10.1016/S0896-6273(00)81138-1. [DOI] [PubMed] [Google Scholar]

- Elekta Neuromag® TRIUX User’s Manual, (Megin Oy. 2018. [Google Scholar]

- Engemann D.A., Gramfort A. Automated model selection in covariance estimation and spatial whitening of MEG and EEG signals. Neuroimage. 2015;108:328–342. doi: 10.1016/j.neuroimage.2014.12.040. [DOI] [PubMed] [Google Scholar]

- Fischl B. FreeSurfer. Neuroimage. 2012;62(2):774–781. doi: 10.1016/j.neuroimage.2012.01.021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fischl B., Sereno M.I., Dale A.M. Cortical surface-based analysis II: inflation, flattening, and a surface-based coordinate system. Neuroimage. 1999;9(2):195–207. doi: 10.1006/nimg.1998.0396. [DOI] [PubMed] [Google Scholar]

- Gramfort A., Luessi M., Larson E., Engemann D.A., Strohmeier D., Brodbeck C. MEG and EEG data analysis with MNE-Python. Front. Neurosci. 2013;7:267. doi: 10.3389/fnins.2013.00267. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gramfort A., Strohmeier D., Haueisen J., Hämäläinen M.S., Kowalski M. Time-frequency mixed-norm estimates: sparse M/EEG imaging with non-stationary source activations. Neuroimage. 2013;70:410–422. doi: 10.1016/j.neuroimage.2012.12.051. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gramfort A., Luessi M., Larson E., Engemann D.A., Strohmeier D., Brodbeck C. MNE software for processing MEG and EEG data. Neuroimage. 2014;86:446–460. doi: 10.1016/j.neuroimage.2013.10.027. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gross J., Kujala J., Hämäläinen M., Timmermann L., Schnitzler A., Salmelin R. Dynamic imaging of coherent sources: studying neural interactions in the human brain. Proc. Natl. Acad. Sci. U.S.A. 2001;98(2):694–699. doi: 10.1073/pnas.98.2.694. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hall M.B., Nissen I.A., van Straaten E.C., Furlong P.L., Witton C., Foley E. An evaluation of kurtosis beamforming in magnetoencephalography to localize the epileptogenic zone in drug-resistant epilepsy patients. Clin. Neurophysiol. 2018;129(6):1221–1229. doi: 10.1016/j.clinph.2017.12.040. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hämäläinen M., Hari R., Lounasmaa O.V., Knuutila J., Ilmoniemi R.J. Magnetoencephalography-theory, instrumentation, and applications to noninvasive studies of the working human brain. Rev. Mod. Phys. 1993;65:413–497. doi: 10.1103/RevModPhys.65.413. [DOI] [Google Scholar]

- Hämäläinen M.S., Ilmoniemi R.J. Interpreting magnetic fields of the brain: minimum norm estimates. Med Biol Eng Compt. 1994;32(1):35–42. doi: 10.1007/BF02512476. [DOI] [PubMed] [Google Scholar]

- Hämäläinen M.S., Sarvas J. Realistic conductivity geometry model of the human head for interpretation of neuromagnetic data. IEEE Trans. Biomed. Eng. 1989;36(2):165–171. doi: 10.1109/10.16463. [DOI] [PubMed] [Google Scholar]

- Herdman A., Moiseev A., Ribary U. Localizing event-related potentials using multi-source minimum variance beamformers: a validation study. Brain Topogr. 2018;31(4):546–565. doi: 10.1007/s10548-018-0627-x. 2018 Jul. [DOI] [PubMed] [Google Scholar]

- Hillebrand A., Barnes G.R. The use of anatomical constraints with MEG beamformers. Neuroimage. 2003;20(4):2302–2313. doi: 10.1016/j.neuroimage.2003.07.031. [DOI] [PubMed] [Google Scholar]

- Hillebrand A., Barnes G.R. Beamformer analysis of MEG data. Int. Rev. Neurobiol. 2005;68:149–171. doi: 10.1016/S0074-7742(05)68006-3. [DOI] [PubMed] [Google Scholar]

- Huang M.X., Mosher J.C., Leahy R.M. A sensor-weighted overlapping-sphere head model and exhaustive head model comparison for MEG. Phys. Med. Biol. 1999;44(2):423–440. doi: 10.1088/0031-9155/44/2/010. [DOI] [PubMed] [Google Scholar]

- Huang M.X., Shih J.J., Lee R.R., Harrington D.L., Thoma R.J., Weisend M.P. Commonalities and differences among vectorized beamformers in electromagnetic source imaging. Brain Topogr. 2004;16(3):139–158. doi: 10.1023/B:BRAT.0000019183.92439.51. [DOI] [PubMed] [Google Scholar]

- Ilmoniemi R.J., Hämäläinen M.S., Knuutila J. The forward and inverse problems in the spherical model. In: Weinberg H., Stroink G., Katila T., editors. Biomagnetism: Applications and Theory. Pergamon Press; New York: 1985. pp. 278–282. [Google Scholar]

- Ilmoniemi R.J., Sarvas J. MIT Press; 2019. Brain Signals: Physics and Mathematics of MEG and EEG. [Google Scholar]

- Ishii R., Canuet L., Ishihara T., Aoki Y., Ikeda S., Hata M. Frontal midline theta rhythm and gamma power changes during focused attention on mental calculation: an MEG beamformer analysis. Front. Hum. Neurosci. 2014;8:406. doi: 10.3389/fnhum.2014.00406. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lin F.H., Witzel T., Zeffiro T.A., Belliveau J.W. Linear constraint minimum variance beamformer functional magnetic resonance inverse imaging. Neuroimage. 2008;43(2):297–311. doi: 10.1016/j.neuroimage.2008.06.038. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Litvak V., Mattout J., Kiebel S., Phillips C., Henson R., Kilner J. EEG and MEG data analysis in SPM8. Comput. Intell. Neurosci. 2011 doi: 10.1155/2011/852961. 2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mattout J., Henson R.N., Friston K.J. Canonical source reconstruction for MEG. Comput. Intell. Neurosci. 2007 doi: 10.1155/2007/67613. 2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mohamed I.S., Otsubo H., Ferrari P., Sharma R., Ochi A., Elliott I. Source localization of interictal spike-locked neuromagnetic oscillations in pediatric neocortical epilepsy. Clin. Neurophysiol. 2013;124(8):1517–1527. doi: 10.1016/j.clinph.2013.01.023. [DOI] [PubMed] [Google Scholar]

- Mosher J.C., Lewis P.S., Leahy R.M. Multiple dipole modeling and localization from spatio-temporal MEG data. IEEE Trans. Biomed. Eng. 1992;39(6):541–557. doi: 10.1109/10.141192. [DOI] [PubMed] [Google Scholar]

- Nenonen J., Nurminen J., Kicic D., Bikmullina R., Lioumis P., Jousmäki V., Taulu S., Parkkonen L., Putaala M., Kähkönen S. Validation of head movement correction and spatiotemporal signal space separation in magnetoencephalography. Clin. Neurophysiol. 2012;123(11):2180–2191. doi: 10.1016/j.clinph.2012.03.080. [DOI] [PubMed] [Google Scholar]

- Nolte G. The magnetic lead field theorem in the quasi-static approximation and its use for magnetoencephalography forward calculation in realistic volume conductors. Phys. Med. Biol. 2003;48(22):3637. doi: 10.1088/0031-9155/48/22/002. [DOI] [PubMed] [Google Scholar]

- Oostenveld R., Fries P., Maris E., Schoffelen J.M. FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intell. Neurosci. 2011 doi: 10.1155/2011/156869. 2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pascual-Marqui R.D., Michel C.M., Lehmann D. Low resolution electromagnetic tomography: a new method for localizing electrical activity in the brain. Int. J. Psychophysiol. 1994;18(1):49–65. doi: 10.1016/0167-8760(84)90014-X. [DOI] [PubMed] [Google Scholar]

- Robinson S.E., Vrba J. Functional neuroimaging by synthetic aperture magnetometry (SAM) In: Yoshimoto T., Kotani M., Kuriki S., Karibe H., Nakasato N., editors. Recent Advances in Biomagnetism. Tohoku University Press; Japan: 1998. pp. 302–305. [Google Scholar]

- Salmelin R. Multi-dipole modeling in MEG. In: Hansen P., Kringelbach M., Salmelin R., editors. MEG: an Introduction to Methods. Oxford university press; 2010. pp. 124–155. [DOI] [Google Scholar]

- Sekihara K., Scholz B. Generalized Wiener estimation of three-dimensional current distribution from biomagnetic measurements. IEEE Trans. Biomed. Eng. 1996;43(3):281–291. doi: 10.1109/10.486285. [DOI] [PubMed] [Google Scholar]

- Sekihara K., Hild K.E., Nagarajan S.S. A novel adaptive beamformer for MEG source reconstruction effective when large background brain activities exist. IEEE Trans. Biomed. Eng. 2006;53(9):1755–1764. doi: 10.1109/TBME.2006.878119. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sekihara K., Nagarajan S.S. Springer Science & Business Media; 2008. Adaptive Spatial Filters for Electromagnetic Brain Imaging. [DOI] [Google Scholar]

- Sekihara K., Nagarajan S.S., Poeppel D., Marantz A. Asymptotic SNR of scalar and vector minimum-variance beamformers for neuromagnetic source reconstruction. IEEE Trans. Biomed. Eng. 2004;51(10):1726–1734. doi: 10.1109/TBME.2004.827926. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Spencer M.E., Leahy R.M., Mosher J.C., Lewis P.S. Conference Record of the Twenty-Sixth Asilomar Conference on Signals. Systems & Computers; 1992. Adaptive filters for monitoring localized brain activity from surface potential time series; pp. 156–161. 1992. [DOI] [Google Scholar]

- Stenroos M., Hunold A., Haueisen J. Comparison of three-shell and simplified volume conductor models in magnetoencephalography. Neuroimage. 2014;94:337–348. doi: 10.1016/j.neuroimage.2014.01.006. [DOI] [PubMed] [Google Scholar]

- Tadel F., Baillet S., Mosher J.C., Pantazis D., Leahy R.M. Brainstorm: a user-friendly application for MEG/EEG analysis. Comput. Intell. Neurosci. 2011 doi: 10.1155/2011/879716. 2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Taulu S., Kajola M. Presentation of electromagnetic multichannel data: the signal space separation method. J. Appl. Phys. 2005;97(12):124905. doi: 10.1063/1.1935742. [DOI] [Google Scholar]

- Tikhonov A.N. Solution of incorrectly formulated problems and the regularization method. Soviet Math. 1963;4:1035–1038. [Google Scholar]

- van Es M.W., Schoffelen J.M. Stimulus-induced gamma power predicts the amplitude of the subsequent visual evoked response. Neuroimage. 2019;186:703–712. doi: 10.1016/j.neuroimage.2018.11.029. [DOI] [PubMed] [Google Scholar]

- van Klink N., van Rosmalen F., Nenonen J., Burnos S., Helle L., Taulu S. Automatic detection and visualization of MEG ripple oscillations in epilepsy. NeuroImage Clin. 2017;15:689–701. doi: 10.1016/j.nicl.2017.06.024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Van Veen B.D., Buckley K.M. Beamforming: a versatile approach to spatial filtering. IEEE ASSP Mag. 1988;5(2):4–24. doi: 10.1109/53.665. [DOI] [Google Scholar]

- Van Veen B.D., Van Drongelen W., Yuchtman M., Suzuki A. Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Trans. Biomed. Eng. 1997;44(9):867–880. doi: 10.1109/10.623056. [DOI] [PubMed] [Google Scholar]

- Vrba J., Robinson S.E. Differences between synthetic aperture magnetometry (SAM) and linear beamformers. In: Nenonen J., Ilmoniemi R.J., Katila T., editors. 12th International Conference on Biomagnetism. Helsinki Univ. of Technology; Espoo, Finland: 2000. pp. 681–684. [Google Scholar]

- Wipf D.P., Owen J.P., Attias H.T., Sekihara K., Nagarajan S.S. Robust Bayesian estimation of the location, orientation, and time course of multiple correlated neural sources using MEG. Neuroimage. 2010;49(1):641–655. doi: 10.1016/j.neuroimage.2009.06.083. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Youssofzadeh V., Agler W., Tenney J.R., Kadis D.S. Whole-brain MEG connectivity-based analyses reveals critical hubs in childhood absence epilepsy. Epilepsy Res. 2018;145:102–109. doi: 10.1016/j.eplepsyres.2018.06.001. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

Our analysis codes are publicly available under a repository https://zenodo.org/record/3471758 (https://doi.org/10.5281/zenodo.3471758). The datasets as well as the specific versions of the four toolboxes used in the study are available at https://zenodo.org/record/3233557 (https://doi.org/10.5281/zenodo.3233557).