Abstract

The Michigan Department of Corrections operates the Vocational Villages, skilled trades training programs within prisons that provide an immersive educational community using virtual reality, robotics, and other technologies to help participants develop employable trade skills. An evidence-based job interview training component could enhance the Vocational Villages. Recently, we conducted a series of randomized controlled trials, funded by the National Institute of Mental Health, to evaluate the efficacy of virtual reality job interview training (VR-JIT). The results suggested that VR-JIT use was associated with improved job interview skills and a greater likelihood of receiving job offers within 6 months. The primary goal of this paper is to report the protocol we developed for a randomized controlled trial and process evaluation of the effectiveness of VR-JIT at improving interview skills, increasing job offers, and reducing recidivism when delivered within two Vocational Villages. Our aims are to: (1) evaluate whether services-as-usual in combination with VR-JIT, compared to services-as-usual alone, enhances employment outcomes and reduces recidivism among returning citizens enrolled in the Vocational Villages; (2) evaluate mechanisms of employment outcomes and explore mechanisms of recidivism; and (3) conduct a multilevel, mixed-method process evaluation of VR-JIT implementation to assess the adoptability, acceptability, scalability, feasibility, and implementation costs of VR-JIT.

Keywords: Virtual reality, Employment, Recidivism, Returning citizens, Job interview

1. Introduction

More than 600,000 state and federal returning citizens reenter the community annually [1] and 44% are rearrested during the first year after their release [2]. Unemployment is often recognized as a critical mechanism of recidivism [3] as unemployed returning citizens are more likely to recidivate than employed returning citizens [4]. Moreover, gainful employment enables returning citizens to secure housing and pay their bills [5,6], which reduces the incentive to commit crimes [7] and helps reduce recidivism [5]. Unfortunately, only 25% of returning citizens are employed within 12 months of re-entering their communities [8]. Therefore, there is an urgent need to enhance vocational rehabilitation services for returning citizens preparing to re-enter their communities.

Approximately 50% of state prisons offer vocational services to support employment opportunities after re-entering the community [9]. Only a few vocational rehabilitation services delivered in prisons have shown promising reductions in recidivism [10], and even fewer have been rigorously evaluated with a randomized controlled design [11]. Thus, the promising services that do exist require further evaluation. A recent job acquisition framework suggests that active job-search behavior (e.g., job interviewing) is a proximal factor to employment [12]. Nearly all vocational rehabilitation services support practicing job-interview skills because hiring managers ask questions to assess a candidate's work skills [13] and social effectiveness [14,15]. Additionally, how a candidate discusses their prior conviction during the interview can influence whether they receive a job offer. Vocational rehabilitation services typically rely on instructors to train interview skills through role-plays. However, instructors are not typically trained to act like hiring managers, ask open-ended interview questions that elicit thoughtful responses during the role-plays, give feedback on clients' anxiety or confidence about interviewing, or offer feedback on clients' responses to improve their interview skills [16]. Thus, a major gap in vocational rehabilitation services is the lack of evidence-based practices for job-interview training.

Our team developed Virtual Reality Job Interview Training (VR-JIT), which enhanced interview skills and interview confidence and increased the likelihood of receiving job offers across five randomized controlled efficacy trials conducted in lab-based settings among several vulnerable populations (e.g., individuals with substance abuse histories, schizophrenia, mood disorders, or autism, and veterans with PTSD) [[17], [18], [19], [20], [21]]. As this program of research moves forward, we intend to evaluate whether the efficacy of VR-JIT translates into effectively enhancing employment outcomes within real-world settings that provide vocational rehabilitation services. For example, we designed a protocol to evaluate VR-JIT within the individual placement and support model of supported employment services for adults with serious mental illness [22]. Given the low levels of employment among returning citizens and the lack of evidence-based vocational rehabilitation services within prison settings [8,11], the current study will investigate VR-JIT as an enhancement to existing vocational services, called the Vocational Villages, implemented in two prisons in the State of Michigan. The Vocational Villages provide an immersive educational community that uses virtual reality, robotics, and other technologies to help returning citizens become employable tradespeople [23].

Consistent with the Smart Decarceration Initiative [24], the proposed study will evaluate whether VR-JIT is effective at improving employment and reducing recidivism for returning citizens preparing to re-enter their communities. First, we will evaluate the impact of VR-JIT on individual-level outcomes (e.g., interviewing ability, employment, and recidivism) and system-level outcomes (e.g., staff efficiency and VR-JIT cost-effectiveness). Second, we will evaluate the initial implementation of VR-JIT with regard to adoptability, acceptability, scalability, feasibility, and implementation costs. Lastly, we will adapt an empirical framework of job acquisition via supported employment to explore potential mechanisms of employment (i.e., interviewing anxiety, confidence, and motivation) and employment as a mechanism for reducing recidivism [12].

2. Methods

2.1. Study design

This study uses a two-arm randomized controlled trial (RCT) design in which participants aged 18–29 years who are enrolled in the Vocational Villages and identified as being at moderate-to-high risk of violent reoffending will be randomized to receive either services-as-usual (SAU) at the Vocational Villages (i.e., the control group) or SAU at the Vocational Villages in combination with VR-JIT (SAU + VR-JIT) (i.e., the intervention group). All study procedures and materials were reviewed and approved by the National Institute of Justice, the Institutional Review Board at the University of Michigan, and the Michigan Department of Corrections Office of Offender Success Administration. Overall, the study will use a Hybrid Type I (HTI) effectiveness-implementation design to conduct a confirmatory evaluation of VR-JIT effectiveness while collecting data on the delivery of VR-JIT in two prisons (one at security level I and one at security level II) [25].

The authors from the University of Michigan, Northwestern University, and the Michigan Department of Corrections collaborated to design the initial protocol, drawing on the first author's prior HTI design evaluating VR-JIT in mental health settings [22] and on prior studies describing lessons learned when conducting RCTs in prison settings [26]. The protocol was then reviewed and refined by an expert panel of researchers and stakeholders with expertise in criminal justice research and services, who serve as the remaining coauthors (see 3.3). The objectives of the current study are as follows:

Objective 1. Evaluate whether SAU + VR-JIT, compared with SAU-only, enhances employment outcomes and reduces recidivism among returning citizens.

Objective 1 Hypotheses. At the individual level, we hypothesize (H) that SAU + VR-JIT trainees, compared with SAU-only trainees, may have higher employment rates (H1) and reduced recidivism (H2) by six-month follow-up; and greater improvement in job-interview skills (H3) between pre-test and post-test assessments. At the system level, we hypothesize that SAU + VR-JIT may be more cost-effective than SAU only (H4).

Sub-objective 1. Explore whether SAU + VR-JIT trainees, compared with SAU-only trainees, show greater increases in job interviewing confidence and motivation, and greater reductions in job interviewing anxiety, between pre-test and post-test.

Sub-objective 2. Explore whether using VR-JIT increases time efficiency for SAU staff to engage in non-interview-practice-related vocational training (system level).

Objective 2. Evaluate interview skills as a mechanism of employment outcomes and explore the mechanisms of recidivism.

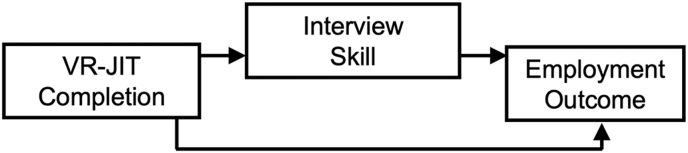

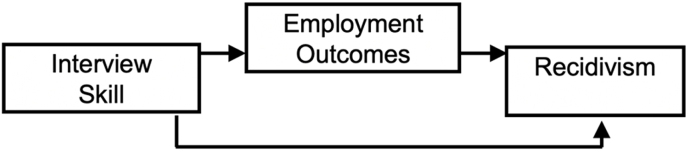

Objective 2 Hypotheses. Using a framework for job acquisition [12], we hypothesize (H) that enhanced interview skills may mediate the effect of interview training on employment outcomes (H5; Fig. 1). We will explore whether employment outcomes mediate the relationship between interview skills and recidivism at six-month follow-up (Fig. 2). Also, we will explore whether job interviewing anxiety, confidence, and motivation mediate the relationship between interviewing skills and employment outcomes.

Fig. 1.

We will test whether interview skills mediate the association between VR-JIT completion and employment.

Fig. 2.

We will test whether employment outcomes mediate the association between interview skills and recidivism.
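The mediation hypotheses in Figs. 1 and 2 are single-mediator models. A minimal Python sketch of one common way to estimate such an indirect effect, the product-of-coefficients approach with a percentile bootstrap confidence interval, is shown below. It is illustrative only and is not the study's specified analysis: it assumes ordinary least squares for both paths (a binary employment outcome would more typically call for a logistic or other generalized model), includes no covariates, and the function names are hypothetical.

```python
import random
from statistics import fmean


def _centered_cross(u, v):
    """Sum of cross-products of deviations from the mean."""
    mu, mv = fmean(u), fmean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v))


def indirect_effect(x, m, y):
    """Product-of-coefficients estimate a*b for the model X -> M -> Y.

    a: OLS slope of M regressed on X.
    b: OLS coefficient of M when Y is regressed on both X and M.
    """
    sxx = _centered_cross(x, x)
    smm = _centered_cross(m, m)
    sxm = _centered_cross(x, m)
    sxy = _centered_cross(x, y)
    smy = _centered_cross(m, y)
    det = sxx * smm - sxm ** 2
    if abs(sxx) < 1e-12 or abs(det) < 1e-12:
        raise ValueError("degenerate sample: X is constant or X and M are collinear")
    a = sxm / sxx
    b = (sxx * smy - sxm * sxy) / det
    return a * b


def bootstrap_ci(x, m, y, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample participants with replacement
        try:
            estimates.append(indirect_effect(
                [x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx]))
        except ValueError:
            continue  # skip resamples in which the model cannot be fit
    if not estimates:
        raise ValueError("no usable bootstrap resamples")
    estimates.sort()
    k = len(estimates)
    lo = estimates[int(alpha / 2 * k)]
    hi = estimates[min(k - 1, int((1 - alpha / 2) * k))]
    return lo, hi
```

For H5, `x` could be a 0/1 group indicator (1 = SAU + VR-JIT), `m` the pre-to-post change in interview skill, and `y` employment at follow-up; an interval that excludes zero would be consistent with mediation.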

Objective 3. Conduct a multilevel mixed-method evaluation of VR-JIT implementation to assess the adoptability, acceptability, scalability, feasibility, and implementation costs of VR-JIT. We will use surveys and semi-structured interviews (among returning citizens, staff, and administrators) to identify facilitators and barriers to implementing VR-JIT in a prison-based vocational service program and describe the process of implementation in this context. We will conduct a budget impact analysis to estimate the cost of implementing VR-JIT at the Vocational Villages.
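For the budget impact analysis above, and for the cost-effectiveness comparison in H4, the core arithmetic can be sketched as follows. This is a minimal illustration under assumed inputs: the cost categories, field names, and the numbers used to exercise it are hypothetical, not study data, and a full analysis would also include sensitivity analyses around each input.

```python
from dataclasses import dataclass


@dataclass
class SiteCosts:
    """Hypothetical cost inputs for one Vocational Village (illustrative only)."""
    license_cost: float        # software licensing for the study period
    hardware_cost: float       # computers, microphones, printers
    staff_hours: float         # instructor time spent supporting VR-JIT
    staff_hourly_rate: float   # loaded wage per staff hour
    n_trainees: int            # participants trained at this site


def budget_impact(sites):
    """Return (total implementation cost, cost per trainee) across sites."""
    total = sum(s.license_cost + s.hardware_cost + s.staff_hours * s.staff_hourly_rate
                for s in sites)
    trainees = sum(s.n_trainees for s in sites)
    return total, total / trainees


def icer(cost_new, cost_usual, effect_new, effect_usual):
    """Incremental cost-effectiveness ratio: extra cost per extra unit of effect
    (e.g., per additional participant employed at follow-up)."""
    d_effect = effect_new - effect_usual
    if d_effect == 0:
        raise ValueError("no difference in effect; the ICER is undefined")
    return (cost_new - cost_usual) / d_effect
```

Expressing costs per participant and effects as the proportion employed makes the ICER read as the additional cost per additional participant employed.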

2.2. Eligibility criteria

We will recruit participants from individuals enrolled in the two Vocational Villages. The inclusion criteria for returning citizens are: 1) being 18–29 years old; 2) being identified as at moderate-to-high risk of violent reoffending via an internal Michigan Department of Corrections (MDOC) assessment using the Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) [27]; 3) being within three months of their earliest release date; and 4) having a high school diploma or equivalency. The exclusion criteria for returning citizens are: 1) uncorrected hearing or visual problems that prevent use of VR-JIT; and 2) a medical illness that compromises cognition (e.g., moderate-to-severe traumatic brain injury, per medical records).
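The screening rules above can be expressed as a single check. The sketch below is hypothetical: the record fields and the COMPAS risk labels are assumptions for illustration rather than MDOC's actual data format, and the `max_age` parameter reflects the age-35 contingency described in the next subsection.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical screening record; field names are illustrative only."""
    age: int
    compas_violent_risk: str              # assumed labels: "low", "moderate", "high"
    months_to_earliest_release: float
    has_diploma_or_equivalency: bool
    uncorrected_sensory_problem: bool     # hearing/vision problem preventing VR-JIT use
    cognition_compromising_illness: bool  # e.g., moderate-to-severe TBI per records


def is_eligible(c: Candidate, max_age: int = 29) -> bool:
    """Apply the inclusion and exclusion criteria; max_age may be raised to 35
    under the recruitment-shortfall contingency."""
    included = (
        18 <= c.age <= max_age
        and c.compas_violent_risk in {"moderate", "high"}
        and 0 <= c.months_to_earliest_release <= 3
        and c.has_diploma_or_equivalency
    )
    excluded = c.uncorrected_sensory_problem or c.cognition_compromising_illness
    return included and not excluded
```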

2.3. Recruitment and screening

The target sample of 150 participants will be enrolled during a 36-month recruitment window from the two Vocational Villages. Recruitment and screening will involve 4 steps. In step 1, the Vocational Village staff will identify a cohort of 12 returning citizens with the earliest release dates, provide them with a general description of the study, and invite them to a recruitment meeting. In step 2, the research team will lead the recruitment meeting onsite at the prison to give a detailed overview of the study. In step 3, the potential participants will approach the research team to volunteer for the study. In step 4, the research team will review the consent form with a potential participant and then obtain informed consent.

Regarding the sampling frame, approximately 110 eligible individuals aged 18 to 29 attend the Vocational Villages each year. Thus, we anticipate that 150 participants can feasibly be recruited from approximately 330 eligible individuals during the 36-month recruitment window. If recruitment falls short, we will extend the upper age limit of our eligibility criteria to 35, which will increase the sampling frame to 768 individuals during the recruitment window. In addition, very few students (approximately 3%) are removed from the Vocational Villages (or transferred from the prison) for behavior or lack of progress, so such removals should have minimal impact on the sample size.
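The feasibility reasoning above can be checked with simple arithmetic using only the figures given in the text (the per-year rate for the extended frame is simply 768 divided by 3, and the last line assumes the roughly 3% removal rate also applies to enrollees):

```latex
\begin{aligned}
\text{Eligible, ages 18--29} &\approx 110\ \text{per year} \times 3\ \text{years} = 330 \\
\text{Share of eligible individuals to enroll} &= 150 / 330 \approx 45.5\% \\
\text{Eligible, ages 18--35} &= 768\ (256\ \text{per year}) \;\Rightarrow\; 150 / 768 \approx 19.5\% \\
\text{Expected removals among enrollees} &\approx 0.03 \times 150 = 4.5
\end{aligned}
```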

2.4. Randomization and blinding

The study statistician will conduct the randomization using a web-based system that generates randomization blocks of 9 participants per site [28]. Participants will be randomly assigned to the SAU + VR-JIT or SAU-only groups in a 2:1 ratio. Participants and Vocational Village educational staff (known as employment readiness instructors) will be informed of which participants are assigned to the intervention group, whereas research team assessors and the research team members conducting statistical analyses will be blinded to treatment assignment.
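The permuted-block logic behind this allocation can be sketched in Python. The study itself uses a web-based system [28]; the function below only illustrates how blocks of 9 with a 2:1 ratio yield 6 SAU + VR-JIT and 3 SAU-only assignments per block, and the site labels and seeds are hypothetical.

```python
import random


def block_randomize(n_blocks, seed, block_size=9, ratio=(2, 1),
                    arms=("SAU + VR-JIT", "SAU-only")):
    """Permuted-block allocation schedule for one site.

    Each block of `block_size` contains the arms in the given ratio
    (2:1 with block size 9 gives 6 SAU + VR-JIT and 3 SAU-only),
    shuffled independently so the order within a block is unpredictable.
    """
    unit = sum(ratio)
    if block_size % unit:
        raise ValueError("block size must be a multiple of the ratio total")
    per_unit = block_size // unit
    template = [arm for arm, r in zip(arms, ratio) for _ in range(r * per_unit)]
    rng = random.Random(seed)
    schedule = []
    for _ in range(n_blocks):
        block = template[:]
        rng.shuffle(block)
        schedule.extend(block)
    return schedule


# Separate, independently seeded schedules per site (labels and seeds are hypothetical).
site_schedules = {site: block_randomize(n_blocks=9, seed=s)
                  for site, s in (("Site A", 101), ("Site B", 202))}
```

Because each block is balanced, the 2:1 ratio holds exactly after every ninth enrollment at a site, while assignments within a block remain unpredictable.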

2.5. Participation in the Vocational Villages

Notably, VR-JIT will supplement, rather than replace, mock job interview practice for participants randomized to SAU + VR-JIT. Employment readiness instructors and participants may continue to complete mock interviews and to discuss interview skills and the participants' use of VR-JIT. This approach supports a more integrated use of VR-JIT than removing all other job interview training services would. All other services will be provided as usual.

Prisons regularly implement protocols that may affect research conducted within them. Specifically, the prisons conduct mobilizations to lock down the facility once per month as a test or practice. These mobilizations last approximately 3–4 h. Although “scheduled,” they occur at random times, and they minimally affect the Vocational Village trade programs because the programs are located in buildings on the prison campus that are separate from other prison facilities. When a mobilization occurs, MDOC staff lock down the buildings and take a count of the returning citizens located in the Vocational Village building. During a “scheduled” mobilization, research for the day will end immediately and be rescheduled, and research staff will be moved to the secure staffing rooms within the Vocational Village. In addition, each facility may conduct a mobilization 1 to 2 times per year in response to a real-life incident (e.g., a significant amount of contraband, or a health care issue requiring an ambulance to be brought inside the facility); in these cases, Vocational Village students are returned to their housing units.

2.6. Study intervention

2.6.1. Delivery of services-as-usual

The MDOC Vocational Villages are residential programs within prison settings where returning citizens live, share meals, and commute to and from the Villages together. The Vocational Villages offer 13 vocational trades, and each returning citizen chooses 1 core trade to complete. In addition to their core trade, returning citizens can earn “stackable” credentials through coursework led by trades instructors. For example, the credentials could include Fork Lift Certification, the Occupational Safety and Health Administration 30-hour certification training course for construction and general industry, and the Commercial Driver's License Certification [23].

As part of their enrollment in the Vocational Villages, returning citizens also complete a pre-employment preparation workshop (approximately 15 h) designed to enhance employability skills through lessons and activities on job searching, completing applications, writing cover letters and resumes, and interviewing. The employment readiness instructors provide each returning citizen with at least 1 mock interview session; the total number of mock interviews depends on the instructor's assessment of the returning citizen's skill. In addition, before participants attend real-life job interviews while enrolled at the Vocational Villages, the employment readiness instructors will conduct approximately 1–2 h of classroom instruction and discussion with each participant about the importance of job interviews. Employment readiness instructors will track and record the total number of role-plays completed with each participant during the study, and this count will be used as a covariate. During the consent process, all eligible individuals will be informed that there will be no consequences for declining to participate in the study and that they will continue to receive their services-as-usual.

2.6.2. Virtual reality job interview training

SIMmersion, LLC (www.simmersion.com) developed VR-JIT to support job interview training for adults with disabilities and other needs. Our efficacy evaluations of VR-JIT revealed that participants found VR-JIT engaging and that, after completing 1–2 virtual interviews, they could use the tool on their own [[17], [18], [19], [20], [21],29]. VR-JIT is an interactive simulation composed of video, speech recognition, and non-branching logic components that work in tandem to challenge trainees to navigate complex social cues and respond appropriately to realistic interpersonal exchanges. Throughout VR-JIT, trainees build their knowledge of the basics of interviewing, including how to discuss their conviction history, through electronic learning (e-learning) content. Trainees also interact with a virtual character who responds to their answers in real time. The virtual character takes on the role of a hiring manager and was developed using footage of an actor so that the character's facial expressions, intonation, and social cues are as realistic as possible. Trainees can use a microphone to “speak” directly with the virtual hiring manager rather than communicating through keystrokes, a feature that enhances the interactivity of VR-JIT and gives trainees practice navigating complex social cues. Trainees are expected to build competency in, and increase retention of, interviewing skills through repetition.

VR-JIT relies on behavioral learning principles (e.g., repetitive practice) to provide an infrastructure for trainees to improve their interview skills [30,31], as well as on principles for designing effective simulations [32] (Table 1); together, these principles help trainees sustain behavioral change [33,34]. Disclosing a prior conviction during a job interview can be distressing for any returning citizen. VR-JIT supports the Vocational Villages' work to prepare returning citizens for this situation by providing opportunities to practice different strategies for disclosing their conviction histories in a judgment-free environment.

Table 1.

Virtual reality job interview training learning strategies.


2.6.2.1. VR-JIT interface and Molly Porter

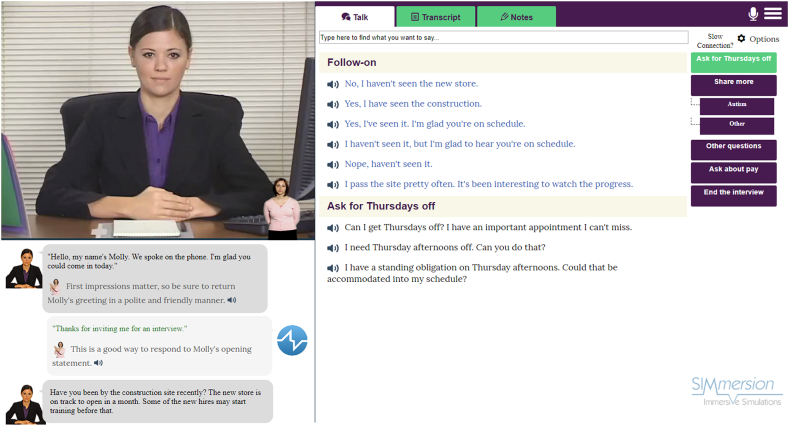

After reviewing the e-learning content, trainees will begin simulating job interviews with a virtual hiring manager named Molly Porter, who works for a fictional company named “Wondersmart.” The interview takes place in Molly's office. After asking each question, Molly pauses so that trainees can speak their responses into the microphone, using the interface displayed in Fig. 3.

Fig. 3.

VR-JIT interface.

Molly is designed to “respond” to a trainee's behavior at three distinct difficulty levels: easy (friendly), medium (direct), and hard (stern). Within a single interview, Molly's behavior and personality are also attuned to the trainee's prior responses; for example, she changes her subsequent questions and social cues in response to inappropriate answers. VR-JIT determines the conditional probability of each of Molly's possible replies from three factors: 1) the difficulty level, 2) the conversation history, and 3) Molly's interactive relationship with the trainee. Molly has demonstrated high consistency in both her responses and her emotional states within each difficulty level, even though her questions change from interview to interview. Within the VR-JIT interface, trainees can see options for responding to Molly's questions and can access a complete transcript of the interview along with feedback on their statements. See Fig. 3 for the complete layout of the VR-JIT interface, including button navigation.

2.6.2.2. Nonverbal coach

Trainees receive real-time feedback from an on-screen coach (Fig. 4) who displays nonverbal cues about the trainee's responses. Also, trainees can click “help” buttons that clarify interview questions or response options. For example, the coach shows the trainee a “thumbs down” for a problematic response. If the trainee is unclear why their response was a problem, the help button provides a detailed explanation about why the statement was problematic (e.g., “This statement focuses on a negative character trait; try focusing on your strengths”).

Fig. 4.

Nonverbal job coach.

2.6.2.3. Personalized customization

At the beginning of the VR-JIT experience, trainees select one of eight available positions at Wondersmart to apply for. After selecting a position (e.g., stock clerk, customer service representative), trainees complete a job application within VR-JIT, including questions about their work history and skills; this application resembles the online applications used by national retail stores. This component of the simulation provides valuable practice completing the kinds of internet-based applications used by many employers. Molly uses the job application data provided by trainees to generate relevant questions for the job interview. For example, the job application allows trainees to indicate that they have a prior conviction; when they do so, VR-JIT generates a range of responses for trainees to choose from that help them disclose this conviction to Molly when prompted during the interview.

2.6.2.4. VR-JIT transcripts and summary feedback

Both during and after the job interview, trainees can review transcripts of the interaction by replaying the entire conversation or individual exchanges with Molly, including a replay of the trainee's voice as captured by speech recognition. Hearing, rather than simply reading, the transcript allows trainees to reflect on tone and other vocal variations they may have missed or misjudged. Trainees can also click on interactive sections of the written transcript to receive specific feedback on how their responses affected their interview and overall score and how Molly ‘perceived’ their responses, including how those responses shaped her choice of subsequent questions. For example, Molly may ask about the nature of one's conviction, and a participant may respond, “It was for assault, but it was just a misunderstanding.” The feedback might then read, “This makes it sound like you do not think you should have been in trouble and like you have not learned from your past. Molly will worry that you may make the same mistakes again because you do not seem to be sorry.” Lastly, the transcript feedback is color-coded: green text reflects appropriate or useful responses, red text indicates inappropriate or unconstructive responses, and black text denotes neutral responses.

After completing each simulated interview with Molly, trainees receive a score and summary feedback on how to improve in eight domains of interview skills (i.e., hard worker, sounding easy to work with, sharing things in a positive way, sounding professional, sounding honest, showing interest in the position, negotiation, and overall rapport). The total score is computed by an algorithm that tracks the trainee's performance throughout the interview and ranges from 0 to 100; trainees who score 90 or above are notified “You got the job!” Notably, the summary feedback that accompanies the score helps trainees decode the subtleties of interview-based interactions. A more detailed description of VR-JIT can be found in [21].

2.6.3. Delivery of VR-JIT within the Vocational Villages

The research team will train an employment readiness instructor at each prison location to deliver VR-JIT to returning citizens in an onsite computer lab. The labs were set up specifically for this study using available classroom space and repurposed storage space within the Vocational Villages. The employment readiness instructors will complete a fidelity checklist indicating that they have instructed VR-JIT trainees on how to use the intervention; this checklist will serve as written documentation of delivery fidelity. The research team will review the fidelity checklists to verify that delivery procedures are consistently followed and will provide bimonthly supervision to monitor VR-JIT fidelity.

Based on the results of our VR-JIT efficacy research [[17], [18], [19], [20], [21]] and our existing effectiveness trial evaluating VR-JIT [22], we recommend that participants complete 15 virtual interviews within 2–3 weeks. In addition, we will provide each participant randomized to VR-JIT with a login and password in their release documents so that they can continue to access VR-JIT after their release. The research team recommends that trainees dedicate 1–2 h to VR-JIT training during each visit. This strategy is consistent with our efficacy studies, in which trainees first engaged with the e-learning component and then completed an average of 15 trials. A trial is one complete VR-JIT interview, which takes approximately 20–30 min to complete. Because the computers in the prison setting cannot access the internet, participants will print their transcripts for each session (each transcript records the participant's score and the number of minutes spent using e-learning and talking with the virtual hiring manager). Participants will use the transcripts to fill out a ‘lab log,’ which they will present to the employment readiness instructor, who will validate the data and sign the log. These sessions will also include time for participants to review their feedback and access the e-learning to improve their performance.
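Using only the durations stated above, the recommended dose implies roughly the following time commitment; these figures cover interview trials only, so e-learning and feedback review would add to them:

```latex
\begin{aligned}
\text{Interview time} &= 15\ \text{trials} \times (20\text{--}30\ \text{min}) = 300\text{--}450\ \text{min} = 5\text{--}7.5\ \text{h} \\
\text{Visits needed} &= \lceil 5 / 2 \rceil = 3 \ \ \text{to}\ \ \lceil 7.5 / 1 \rceil = 8 \\
\text{Visit frequency} &= 3 / 3 = 1 \ \ \text{to}\ \ 8 / 2 = 4\ \text{visits per week over 2--3 weeks}
\end{aligned}
```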

Although we designed VR-JIT to be self-guided, the employment readiness instructor can spend 10–15 min (if needed) reviewing the recorded transcript of each virtual interview with trainees to help them understand why particular responses may or may not be effective. In our prior studies, trainees needed this support for 2–3 trials before reviewing feedback independently.

Employment readiness instructors will inform participants when they can progress through the VR-JIT difficulty levels (i.e., from easy to medium to hard). Trainees will begin with “Friendly Molly” (easy) until they score at least 90 of 100 points or complete 5 “easy” trials; a minimum of 3 trials at the “easy” level is required before advancing. Trainees will then advance to “Direct Molly” (medium) and continue until they score at least 90 of 100 points or complete 5 “medium” trials; again, a minimum of 3 trials at the “medium” level is required before advancing. Finally, trainees will advance to “Stern Molly” (hard) and remain at that level until they complete the training.
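These progression rules can be sketched as a small function that, given a trainee's trial scores in order, returns the difficulty level used for each trial. This is a hypothetical illustration, not part of VR-JIT itself: it assumes that a score of 90 or more on any trial at the current level counts toward advancement once the 3-trial minimum is met, and that advancement takes effect on the next trial.

```python
LEVELS = ("easy", "medium", "hard")  # Friendly, Direct, and Stern Molly
TARGET_SCORE = 90  # score (out of 100) that allows advancement
MIN_TRIALS = 3     # trials required at a level before advancing
MAX_TRIALS = 5     # trials after which the trainee advances regardless of score


def assign_levels(scores):
    """Return the difficulty level used for each trial, given trial scores in order.

    Assumption: the 90-point criterion may be met on any trial at the current
    level, and advancement applies starting with the next trial.
    """
    levels = []
    level, trials_here, best_here = 0, 0, 0
    for score in scores:
        levels.append(LEVELS[level])
        trials_here += 1
        best_here = max(best_here, score)
        ready = trials_here >= MIN_TRIALS and (
            best_here >= TARGET_SCORE or trials_here >= MAX_TRIALS)
        if ready and level < len(LEVELS) - 1:  # "hard" is the final level
            level, trials_here, best_here = level + 1, 0, 0
    return levels
```

In this sketch, a trainee who scores 92 on the first easy trial still completes three easy trials before moving to medium, matching the 3-trial minimum.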

2.7. Follow-up and retention strategies

After participants return to the community, the research team will have access to all available contact information, including phone numbers, email addresses, and alternative contact information for a friend or relative. If necessary, the research team will ask the alternative contact for an updated phone number or email address for the participant. Beginning on the follow-up visit due date, the research team will attempt to contact each participant up to 6 times per week. After 6 weeks without contact, the participant will be considered lost to follow-up, and the research team will obtain follow-up employment outcomes through MDOC administrative records, as authorized in the informed consent.

To help facilitate retention, the research team will send intermittent (every 2 months) check-in correspondence to participants via mail, email, or text message between their release and six-month follow-up data collection.

2.8. Study measures

2.8.1. Background measures

All participants will complete a background survey to assess their demographic and justice-related characteristics prior to their incarceration (e.g., How many times have you been arrested? How many times have you spent time in prison? What is the total length (in months) of time spent in prison?).

2.8.2. Primary outcomes

The primary outcomes are: 1) job interview skill change between pretest and post-test; 2) employment rate at 6-month follow-up; 3) time-to-employment at 6-month follow-up; and 4) rate of recidivism at 6-month follow-up.

To assess job interview skills, we will use the Mock Interview Rating Scale, which we created and tested during our efficacy studies of VR-JIT [[17], [18], [19], [20], [21]] and which includes 9 items (i.e., comfort level, hard worker, sounding easy to work with, sharing things in a positive way, sounding professional, sounding honest, showing interest in the position, negotiation, and overall rapport) scored on a 5-point scale. The scoring anchors are described in [20]. Participants will complete one role-play assessment at the pre-test visit and one at the post-test visit. Each assessment will require the participant to complete a typical job application, select two fictional job openings (listed below), and participate in 2 job interview role-plays with 2 trained role-play interviewers. The interviews will be video recorded, and the videos will be scored by raters who are blinded to condition and time point.
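The scoring of these role-plays can be sketched as follows. The sketch assumes that each of the 9 items is rated from 1 to 5 (so one interview's total ranges from 9 to 45) and that a visit's score is the mean total across its interviews; the published anchors are in [20], and the aggregation rule here is an illustrative assumption.

```python
MIR_DOMAINS = (
    "comfort level", "hard worker", "sounding easy to work with",
    "sharing things in a positive way", "sounding professional", "sounding honest",
    "showing interest in the position", "negotiation", "overall rapport",
)


def mir_total(ratings):
    """Sum of the nine 1-5 ratings for one interview (possible range 9-45)."""
    if set(ratings) != set(MIR_DOMAINS):
        raise ValueError("ratings must cover exactly the nine domains")
    if any(not 1 <= v <= 5 for v in ratings.values()):
        raise ValueError("each rating must be on the 1-5 scale")
    return sum(ratings.values())


def visit_score(interviews):
    """Mean total across the interviews completed at one visit (assumed rule)."""
    return sum(mir_total(r) for r in interviews) / len(interviews)


def mir_change(pre_interviews, post_interviews):
    """Post-test minus pre-test score; positive values indicate improvement."""
    return visit_score(post_interviews) - visit_score(pre_interviews)
```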

Employment history will be assessed via a vocational history self-report at pre-test and 6-month follow-up (and validated at follow-up using MDOC administrative records). Additional employment data will be obtained for descriptive purposes, including types of jobs obtained, hours worked, money earned, and job terminations within the follow-up period. Job types will be coded using the Dictionary of Occupational Titles [36]. Competitive work, as defined by the Substance Abuse and Mental Health Services Administration [37], pays at least minimum wage, occurs in an integrated community setting, and is not set aside for persons with disabilities. Reasons for job termination will be classified as “satisfactory” (e.g., transfer) or “unsatisfactory” (e.g., quitting) [38]. Lastly, we will collect information about job tenure to explore whether skills gained from VR-JIT generalize to longer employment, given that social difficulties may lead to poorer vocational outcomes [39].
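Time-to-employment, one of the primary outcomes, involves censoring: for participants who are not employed by the 6-month follow-up, or who are lost to follow-up, only a lower bound on their time to employment is known. One common estimator for such data is the Kaplan–Meier product-limit estimator, sketched below in Python. This section does not specify the survival method, so the sketch is an illustration under that assumption; comparing arms (e.g., with a log-rank test or a Cox model) is not shown.

```python
def kaplan_meier(times, events):
    """Kaplan-Meier product-limit estimate of the proportion still unemployed.

    times:  days from release to employment, or to censoring (end of follow-up
            or last contact) for participants not observed to become employed.
    events: True if employment was observed at that time, False if censored.
    Returns a list of (time, S(t)) pairs at each time an employment event occurs;
    1 - S(t) is the estimated cumulative proportion employed.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    surv = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        n_events = n_censored = 0
        # Group tied times; those censored at t still count as at risk at t.
        while i < len(data) and data[i][0] == t:
            if data[i][1]:
                n_events += 1
            else:
                n_censored += 1
            i += 1
        if n_events:
            surv *= 1 - n_events / at_risk
            curve.append((t, surv))
        at_risk -= n_events + n_censored
    return curve
```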

The rate of recidivism at 6-month follow-up will be assessed via self-report during a 6-month follow-up call (and validated using MDOC administrative records). Specifically, we will evaluate whether a participant was re-arrested since their release and whether that re-arrest led to a conviction. Additional re-arrest data will be obtained for descriptive purposes, including the number of arrests, whether the participant was charged, the class of charge (misdemeanor or felony), and whether the charged crime was violent.

2.8.3. Secondary outcomes

Secondary outcomes are: 1) job interviewing confidence; 2) job interviewing motivation; and 3) job interviewing anxiety. Interviewing confidence will be measured via 9 items on a 7-point Likert-type scale validated in the VR-JIT efficacy studies [[18], [19], [20], [21]]. Job interviewing motivation will be measured by adapting the Intrinsic Motivation Inventory [40]. Job interviewing anxiety will be measured using the Personal Report of Job Interviewing Anxiety that was adapted from the Personal Report of Public Speaking Anxiety [41]. This assessment will contain 34 items reported on a 5-point Likert scale.

2.8.4. Potential mechanisms of employment

We will explore interviewing confidence, motivation, and anxiety measures as potential mechanisms of the relationship between intervention completion and employment. We will also explore age as a potential moderator. Finally, we will evaluate the attainment of employment as a potential mechanism for recidivism after the completion of VR-JIT. All measures have been previously described.

2.8.5. Implementation and process measures

This study's hybrid type 1 effectiveness-implementation trial design will use mixed methods to conduct a multilevel process evaluation of VR-JIT implementation.

Village administrators and employment readiness instructors will complete surveys to evaluate: 1) pre-implementation acceptability of VR-JIT as a tool; 2) acceptability of the training in VR-JIT delivery; 3) appropriateness of VR-JIT and expected delivery feasibility; and 4) post-implementation VR-JIT acceptability (employment readiness instructors only) and potential for sustainability beyond the study period. The survey items were adapted from the implementation research outcome taxonomy of Proctor et al. (2011) [42]. Additionally, administrators will complete a survey evaluating their prospective implementation plan, while employment readiness instructors will complete surveys evaluating the context of, and adaptations to, the implementation plan (items based on the Stirman adaptation coding taxonomy) [43,44]. Employment readiness instructors and administrators will also complete individual semi-structured interviews based on the Consolidated Framework for Implementation Research guide (www.cfirguide.org) to evaluate facilitators of and barriers to VR-JIT implementation and to inform strategies for future full-scale implementation efforts [45,46].

We will recruit returning citizens to evaluate: 1) their view of VR-JIT acceptability (items adapted from the Treatment Acceptability Rating Form [47]); and 2) the usability of VR-JIT (items adapted from the System Usability Scale [48]). The surveys will include open-ended questions to capture returning citizens' personal reflections after using VR-JIT. Specifically, the questions will ask: 1) “What was your favorite thing about VR-JIT?”; 2) “What was your least favorite thing about VR-JIT?”; and 3) “Did you use VR-JIT in a different way than you were taught? (If yes, please explain.)” A summary of the implementation evaluation measures can be found in Table 2.

Table 2.

Summary of the proposed multi-level, mixed methodology for the implementation evaluation of VR-JIT adoption.

| Process Evaluation Domain | Quantitative Data (type of method, source of data, examples) | Qualitative Data (type of method, source of data, examples) |
|---|---|---|
| Acceptability (returning citizens) | Training Experience Questionnaire (example questions: How easy was the training to use? How helpful was this training in preparing you for a job interview?) | Survey for returning citizens (example questions: What was your favorite aspect of VR-JIT? What did you like least about VR-JIT?) |
| Acceptability (employment readiness instructor) | Intervention Delivery Experience Questionnaire (example questions: How acceptable is the time required to deliver VR-JIT? How disruptive is VR-JIT to the services you provide?) | Semi-structured interviews with employment readiness instructor (example questions: Can you share your thoughts on what was appealing about teaching returning citizens how to use VR-JIT? How did VR-JIT influence your other services?) |
| Scalability (MDOC administrators) | – | Semi-structured interviews with MDOC administrators (example questions: What are some potential challenges to scaling up the delivery of VR-JIT at the Villages? What adaptations may be needed to the existing implementation strategy for VR-JIT to make it more scalable at the Villages?) |
| Feasibility (returning citizens) | We will administratively monitor: adherence to training visits; reasons for missed visits; number of completed virtual interviews | Survey for returning citizens (example questions: In what ways would you change the way you access VR-JIT to make it easier for you to practice? What difficulties did you experience when trying to use VR-JIT?) |
| Feasibility (employment readiness instructor) | We will administratively monitor: the time required to train employment readiness instructors to deliver VR-JIT; fidelity of VR-JIT delivery; fidelity checklist completion rates; employment readiness instructor time allocated to VR-JIT implementation | Semi-structured interviews with employment readiness instructor (example questions: What would need to change in your job for you to be the primary person delivering VR-JIT to your clients? What was your experience completing the fidelity checks? What are barriers to VR-JIT implementation? What factors help to successfully facilitate VR-JIT implementation?) |
| Cost (MDOC administrators) | We will monitor the time employment readiness instructors spent on their regular duties before and during VR-JIT delivery. | Semi-structured interviews with MDOC administrators (example questions: To what extent does the cost of offering VR-JIT to returning citizens affect your decision to use it? Do you expect cost to be a major factor in continuing to use VR-JIT at the Vocational Villages?) |

2.9. RCT and implementation evaluation data collection schedule

The data collection schedule for the RCT is shown in Table 3. First, participants will complete all pretest assessments during visits 1 and 2 (T1 data points). Employment readiness instructors will then deliver VR-JIT during visits 3–8, and returning citizens will complete all posttest assessments during visit 9 (T2 data points), followed by a 6-month check-in call (T3 data points). For the implementation evaluation, we will administer surveys to administrators and employment readiness instructors immediately after training on how to deliver VR-JIT, during and after VR-JIT implementation for each cohort, and at the end of the study, to capture baseline, early, and later perceptions of implementation. The semi-structured interviews with employment readiness instructors and administrators will occur after each cohort.

Table 3.

Schedule of assessments.

| Study Measures | Instrument | Collection Method | T1 | T2 | T3 |
|---|---|---|---|---|---|
| Background, clinical and cognitive measures | | | | | |
| Vocational history | Employment History Interview | Interview | X | | |
| Felony Offense Risk | Correctional Offender Management Profiling for Alternative Sanctions: Violent-Felony Offense Risk and Non-Violent Felony Offense Risk | MDOC Report | X | X | |
| Justice history | Justice History Interview | Interview | X | | |
| Primary Outcomes | | | | | |
| Employment rate | Employment History Interview | Interview | X | | X |
| Time-to-employment | Employment History Interview | Interview | X | | X |
| Job interview skill | Mock Interview Rating Scale | Interview Role-Plays | X | X | |
| Recidivism | Follow-up Interview | Interview | | | X |
| Secondary Outcomes | | | | | |
| Job-interviewing self-efficacyᵃ | Job Interview Self-Efficacy Survey | Self-Report | X | X | |
| Job-interviewing motivationᵃ | Intrinsic Motivation Inventory | Self-Report | X | X | |
| Job interview anxietyᵃ | Personal Report of Job Interview Anxiety | Self-Report | X | X | |
| Exploratory Outcomes/Mechanisms | | | | | |
| Mental health symptomsᵃ | DSM-5 Self-Rated Level 1 Cross-Cutting Symptom Measure – Adult | Self-Report | X | | X |
| Life stressᵃ | Ten-Item Index of Psychological Distress Based on the Symptom Checklist-90 (SCL-90) | Self-Report | X | | X |

T1 = pretest; T2 = posttest; T3 = follow-up.

ᵃ This measure was not an outcome in the original grant application; it was added in response to reviewers.

2.10. Data analyses with power estimates for objective 1 hypotheses

2.10.1. Hypothesis 1: SAU + VR-JIT trainees, compared to SAU-only trainees, will have higher employment rates by T3

To test H1, we will use multiple logistic regression, with a Wald chi-square test of the ratio of the coefficient to its standard error, to compare the adjusted employment proportions in the two conditions (attained a job between T1 and T3 = 1 vs. failed to attain a job or censored without a job = 0). We calculated power using SAS, accounting for small samples. With 150 participants, the trial has 90% power for a two-sided test at α = 0.05 if SAU + VR-JIT increases the employment rate from 25% to 54% [49,50]. In our earlier study, we found an OR of 8.7, effectively tripling the employment rate from 25% to 75%, and an effect of that magnitude would yield a power of 0.99; we are therefore confident that this study will have sufficient power.
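The odds ratio cited above can be checked directly from the two employment rates, and a normal-approximation power calculation for two independent proportions gives a rough cross-check on the design. This Python sketch is a simplification, not the SAS procedure the authors used: it ignores covariate adjustment and small-sample corrections, so its power figures need not match those reported.

```python
from statistics import NormalDist


def odds(p: float) -> float:
    return p / (1 - p)


def odds_ratio(p_control: float, p_treated: float) -> float:
    """Odds ratio for the treated arm relative to the control arm."""
    return odds(p_treated) / odds(p_control)


def power_two_proportions(p1: float, p2: float, n_per_arm: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-sample test of proportions with equal arms,
    using the normal approximation and ignoring the negligible opposite tail."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    p_bar = (p1 + p2) / 2
    se_null = (2 * p_bar * (1 - p_bar) / n_per_arm) ** 0.5
    se_alt = (p1 * (1 - p1) / n_per_arm + p2 * (1 - p2) / n_per_arm) ** 0.5
    return z.cdf((abs(p2 - p1) - z_crit * se_null) / se_alt)


print(round(odds_ratio(0.25, 0.75), 1))  # 9.0, close to the OR of 8.7 reported above
print(round(odds_ratio(0.25, 0.54), 2))  # 3.52
print(round(power_two_proportions(0.25, 0.54, n_per_arm=75), 2))
```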

2.10.2. Hypothesis 2: SAU + VR-JIT trainees will have greater improvement in job interview skills than SAU-only by T2

To test Hypothesis 2, we will fit a linear mixed model with pre- and post-interview scores as repeated measures and treatment group as the fixed factor. Based on our pilot data [[17], [18], [19], [20], [21]], we expect a correlation of r = 0.70 between T1 and T2 scores and an effect size of d = 0.67 between pre- and post-interview role-play scores with VR-JIT. Assuming a small-to-moderate effect within SAU-only (e.g., d = 0.25), our power estimate assumes a medium effect size contrasting SAU-only with SAU + VR-JIT (d = 0.67 − 0.25 = 0.42). Assuming d = 0.67 and using the method of Diggle et al. [51], N = 150 corresponds to over 80% power.
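A rough way to see why a pre-post correlation of r = 0.70 helps is a change-score approximation: the variance of a pre-post difference is 2σ²(1 − r), so the between-group contrast in change is estimated more precisely as r grows. The sketch below assumes equal arms of 75 and a two-sided α of 0.05; it is a simplified stand-in and not necessarily the exact Diggle et al. formula the authors applied.

```python
from statistics import NormalDist


def power_change_score(d: float, r: float, n_per_arm: int, alpha: float = 0.05) -> float:
    """Approximate power to detect a between-group difference in mean pre-post change.

    d: between-group difference in change, in units of the outcome's cross-sectional SD
    r: correlation between pre and post scores
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Var(post - pre) = 2 * sigma^2 * (1 - r); the difference of two arm means of
    # change scores therefore has standard error sigma * sqrt(4 * (1 - r) / n_per_arm).
    se = (4 * (1 - r) / n_per_arm) ** 0.5
    return z.cdf(d / se - z_crit)


for d in (0.42, 0.67):
    print(d, round(power_change_score(d, r=0.70, n_per_arm=75), 3))
```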

2.10.3. Hypothesis 3: SAU + VR-JIT trainees will have greater reductions in recidivism than SAU-only between T1 and T3

To test Hypothesis 3, we will compare recidivism percentages between SAU + VR-JIT and SAU-only at T3. Assuming that the SAU-only group will have a recidivism rate close to the national rate of 68% [2], compared with 40% in the SAU + VR-JIT group, N = 150 will provide 75% power for this comparison. Recruiting an additional 11 participants would increase power to 80%.

2.10.4. Hypothesis 4: SAU + VR-JIT will be more cost-effective (CE) than SAU-only

We will conduct a cost-effectiveness analysis (CEA) to test the hypothesis that in the short-term, SAU + VR-JIT is more cost-effective than SAU-only. We will use a societal perspective, which includes intervention costs and client costs provided by the MDOC program director [52]. Intervention costs include fixed costs (e.g., costs supporting hiring, training, and coordination) and variable costs (e.g., time spent by vocational readiness counselors). We will also assess the cost associated with time spent by clients using VR-JIT. We will apply standard approaches to identifying and assigning unit costs for each cost component [52]. We will use the proportion of clients who attain a job as our effectiveness measure. The key cost-effectiveness metric to be calculated is the incremental cost-effectiveness ratio (ICER), which is defined as the difference in total costs between the SAU + VR-JIT and SAU-only groups divided by the between-group difference in the proportion of clients who receive a job. We will use bootstrapping and Fieller's theorem to calculate confidence intervals around the ICER [53,54]. We will conduct sensitivity analysis by deriving cost-effectiveness acceptability curves that display the probability of SAU + VR-JIT being cost-effective at various threshold values [55].
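The ICER, its bootstrap distribution, and the cost-effectiveness acceptability curve can be sketched in a few lines. The Python example below uses simulated per-client costs and job-attainment indicators (all numbers are hypothetical), a percentile bootstrap interval for the ICER, and the net-benefit form of the acceptability curve. Fieller's method, which the protocol also names, is not shown, and the percentile interval is only well behaved when the effect difference stays clearly away from zero.

```python
import random
from statistics import mean


def increments(cost_t, eff_t, cost_c, eff_c):
    """Between-arm differences in mean cost and in the proportion attaining a job."""
    return mean(cost_t) - mean(cost_c), mean(eff_t) - mean(eff_c)


def icer(d_cost, d_eff):
    if d_eff == 0:
        raise ValueError("ICER undefined when the effect difference is zero")
    return d_cost / d_eff


def bootstrap_increments(cost_t, eff_t, cost_c, eff_c, n_boot=2000, seed=1):
    """Resample clients with replacement within each arm and recompute the increments."""
    rng = random.Random(seed)
    treated, control = list(zip(cost_t, eff_t)), list(zip(cost_c, eff_c))
    draws = []
    for _ in range(n_boot):
        t = rng.choices(treated, k=len(treated))
        c = rng.choices(control, k=len(control))
        draws.append(increments([x for x, _ in t], [y for _, y in t],
                                [x for x, _ in c], [y for _, y in c]))
    return draws


def percentile_interval(values, level=0.95):
    s = sorted(values)
    lo = int((1 - level) / 2 * (len(s) - 1))
    hi = int((1 + level) / 2 * (len(s) - 1))
    return s[lo], s[hi]


def ceac(draws, thresholds):
    """Share of draws with positive net monetary benefit (wtp * dE - dC) at each threshold."""
    return {w: sum(w * de - dc > 0 for dc, de in draws) / len(draws) for w in thresholds}


# Simulated example: 75 clients per arm; costs in dollars, effect = 1 if a job was attained.
rng = random.Random(0)
cost_t = [300 + rng.uniform(-50, 50) for _ in range(75)]
eff_t = [1 if rng.random() < 0.54 else 0 for _ in range(75)]
cost_c = [100 + rng.uniform(-50, 50) for _ in range(75)]
eff_c = [1 if rng.random() < 0.25 else 0 for _ in range(75)]

draws = bootstrap_increments(cost_t, eff_t, cost_c, eff_c)
print("ICER:", round(icer(*increments(cost_t, eff_t, cost_c, eff_c)), 1))
print("95% CI:", percentile_interval([dc / de for dc, de in draws if de != 0]))
print("CEAC:", ceac(draws, [0, 500, 1000, 2000]))
```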

2.10.5. System-level exploratory analyses

We will explore whether using VR-JIT, relative to SAU-only, increases the time SAU staff can devote to vocational training activities other than interview practice (system level).

2.11. Data analyses with power estimates for objective 2 hypotheses

2.11.1. Hypothesis 5: improved interviewing skills will mediate the effect of interview training on employment outcomes

To test Hypothesis 5, we will first test whether there is a significant SAU + VR-JIT effect on interview skills compared to SAU-only; we will then check for a treatment-by-mediator interaction [56] and finally test the product of the two coefficients [57] with bootstrapped confidence intervals [58]. We simulated power for this test and found 80% power when the effect size for the mediator (interview skills) is small (d = 0.20) and the odds ratio for the mediator predicting job attainment is OR = 1.8; thus, we expect to have sufficient power. These traditional mediation models are informative but incomplete, so they will be followed by computation of the causally interpretable average natural indirect effect [59].
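The product-of-coefficients step with a bootstrapped confidence interval can be sketched as follows. For brevity this Python example fits ordinary least squares for both paths, so the outcome model is a linear probability model for the binary job outcome rather than a logistic model; it also omits the treatment-by-mediator interaction check and the natural indirect effect. The data are simulated and all names are hypothetical.

```python
import random
from statistics import mean


def slope(x, y):
    """OLS slope of y on x (the a path: mediator regressed on treatment)."""
    mx, my = mean(x), mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sxx


def partial_slope_m(x, m, y):
    """OLS coefficient of m in y ~ x + m (the b path), via the centered normal equations."""
    mx, mm, my = mean(x), mean(m), mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    smm = sum((b - mm) ** 2 for b in m)
    sxm = sum((a - mx) * (b - mm) for a, b in zip(x, m))
    sxy = sum((a - mx) * (c - my) for a, c in zip(x, y))
    smy = sum((b - mm) * (c - my) for b, c in zip(m, y))
    return (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)


def indirect_effect(x, m, y):
    return slope(x, m) * partial_slope_m(x, m, y)


def bootstrap_ci(x, m, y, n_boot=2000, level=0.95, seed=1):
    """Percentile bootstrap interval for the a*b indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = rng.choices(range(n), k=n)
        estimates.append(indirect_effect([x[i] for i in idx], [m[i] for i in idx],
                                         [y[i] for i in idx]))
    estimates.sort()
    return (estimates[int((1 - level) / 2 * (n_boot - 1))],
            estimates[int((1 + level) / 2 * (n_boot - 1))])


# Simulated data: x = 1 for SAU + VR-JIT, m = interview-skill change, y = 1 if a job was attained.
rng = random.Random(42)
x = [0] * 75 + [1] * 75
m = [0.5 * xi + rng.gauss(0, 1) for xi in x]
y = [1 if rng.random() < min(max(0.3 + 0.15 * mi, 0.0), 1.0) else 0 for mi in m]
print(round(indirect_effect(x, m, y), 3), bootstrap_ci(x, m, y))
```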

2.12. Data analyses for objective 3

2.12.1. Mixed-methods quantitative analyses

We will report descriptive statistics (i.e., means, standard deviations, and ranges) for the following VR-JIT implementation measures: pre-implementation VR-JIT acceptability (administrators, employment readiness instructors); prospective delivery (administrators only); acceptability of VR-JIT delivery training (administrators, employment readiness instructors); VR-JIT appropriateness and expected delivery feasibility (administrators, employment readiness instructors); implementation context and adaptation (employment readiness instructors only); and post-implementation VR-JIT feasibility and sustainability (administrators, employment readiness instructors), acceptability (administrators, employment readiness instructors, returning citizens), and usability (returning citizens only). We will also conduct paired-sample t-tests to evaluate whether implementation context and adaptation scores differ between the delivery midpoint and endpoint. Mean scores obtained in this study will be compared with those of prior VR-JIT studies and, when appropriate, with benchmarks in the published literature (e.g., for the System Usability Scale).
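Two of the quantitative steps above are mechanical enough to sketch. The System Usability Scale uses a standard scoring rule (odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a 0–100 score), and a paired-sample t statistic compares midpoint and endpoint scores from the same instructors. The Python below uses only the standard library, so it reports the t statistic and degrees of freedom but not a p-value; the example responses are hypothetical.

```python
from statistics import mean, stdev


def sus_score(responses):
    """Standard SUS scoring for ten responses on a 1-5 scale, returning 0-100."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten responses, each an integer from 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd-numbered items are positively worded; even-numbered items are negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


def paired_t(midpoint, endpoint):
    """Paired-sample t statistic and degrees of freedom for endpoint minus midpoint.
    Raises ZeroDivisionError if every difference is identical (zero variance)."""
    if len(midpoint) != len(endpoint) or len(midpoint) < 2:
        raise ValueError("need at least two matched pairs")
    diffs = [e - m for m, e in zip(midpoint, endpoint)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / n ** 0.5), n - 1


print(sus_score([4, 2, 4, 2, 5, 1, 4, 2, 4, 2]))  # 80.0
print(paired_t([3.2, 3.8, 4.0, 3.5], [3.6, 4.1, 4.4, 3.9]))
```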

2.12.2. Mixed-methods qualitative analyses for administrators and employment readiness instructors

Open-ended semi-structured interview data from administrators and employment readiness instructors will be transcribed for analysis. We will analyze the data iteratively using thematic analysis and the constant comparative approach [60,61] to identify emergent themes regarding the acceptability, scalability, and feasibility of implementing VR-JIT in prisons, and the barriers and facilitators to doing so. Barriers and facilitators will be coded using the CFIR Codebook (http://www.cfirguide.org/CFIRCodebookTemplate10.27.2014.docx). Two research staff members will analyze the data using the Ethnograph qualitative data analysis package. They will independently analyze a subset of transcripts to iteratively develop codes, both inductively as they emerge and deductively based on topics covered in the CFIR Codebook and on the implementation outcome definitions of Proctor et al. [42]. Once a final set of codes has been established and inter-coder reliability has been achieved, the codes will be applied to all transcripts [62,63]. Framework analysis will be used to evaluate employment readiness instructors' and administrators' perceptions of the acceptability, scalability, and feasibility of VR-JIT implementation [64]. To facilitate comparison, a matrix of themes will be developed with participant type on the x-axis and barriers and facilitators on the y-axis. The matrices will identify y-axis themes common to all groups as well as features specific to particular subgroups, should they be present in the data [65]. For example, trainees' experience of VR-JIT implementation may be related to organizational context factors not evident among staff or administrators, and administrators might perceive feasibility and sustainability differently because of barriers that staff and trainees do not report.
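The protocol does not name the inter-coder reliability statistic. One common choice for two coders who each assign one code per segment is Cohen's kappa, which corrects observed agreement for the agreement expected by chance; the Python sketch below assumes that choice, and the code labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each assigned one code to the same segments."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two equal-length, non-empty code lists")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: product of the two coders' marginal proportions, summed over codes.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a.keys() | counts_b.keys()) / n ** 2
    if expected == 1:
        # Both coders used one identical code throughout: agreement is perfect but the
        # kappa denominator is zero, so return 1.0 by convention.
        return 1.0
    return (observed - expected) / (1 - expected)


a = ["barrier", "barrier", "facilitator", "facilitator"]
b = ["barrier", "facilitator", "facilitator", "facilitator"]
print(cohens_kappa(a, b))  # 0.5
```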

2.12.3. Mixed-methods qualitative analyses for returning citizens

We will evaluate the acceptability and usability using the three open-ended questions listed in section 2.8.5. To process and analyze the open-ended responses, two coders will review responses and use an open coding technique to generate themes across the three questions. Preliminary codes and themes will be shared with a third reviewer and a discussion will be held to reach agreement. Lastly, themes will be organized into a final framework which will be confirmed by the coding team.

2.12.4. Cost analyses

We will use budget impact analysis (BIA) to assess the pre-implementation costs of preparing the prisons to implement VR-JIT and the costs of actually implementing it. In addition to estimating the costs of implementing VR-JIT at the Vocational Villages, we will produce a spreadsheet model into which other prisons can enter site-specific parameters to estimate their own implementation costs. Consistent with current best practices for BIA [66], we will assess costs from the perspective of the implementing organization (the prisons). Beyond the VR-JIT cost components for the CEA described under Hypothesis 4, we will also measure the time employment readiness instructors spend in training, the time spent maintaining the VR-JIT lab, and software costs. All project staff will be provided an Excel-based template to record time spent on each VR-JIT-related activity [67]. Total costs for each arm will be aggregated and compared. We will use sensitivity analysis to vary key cost inputs (e.g., number of trials per study participant) to estimate the range of total costs for each arm.
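The spreadsheet model described above amounts to a cost function over site-specific inputs plus a one-way sensitivity loop. The Python sketch below illustrates that structure; every input field, the assumption that staff time is needed for each virtual interview trial, and the example values are hypothetical placeholders rather than MDOC figures.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SiteInputs:
    """Site-specific inputs a prison might enter (all fields hypothetical)."""
    n_participants: int
    trials_per_participant: float
    minutes_per_trial: float
    staff_hourly_cost: float
    instructor_training_hours: float
    lab_maintenance_hours: float
    software_cost: float


def implementation_cost(s: SiteInputs) -> float:
    """Total cost from the implementing organization's perspective."""
    training = s.instructor_training_hours * s.staff_hourly_cost
    maintenance = s.lab_maintenance_hours * s.staff_hourly_cost
    # Assumes staff time is required for each virtual interview trial.
    delivery_hours = s.n_participants * s.trials_per_participant * s.minutes_per_trial / 60
    return training + maintenance + delivery_hours * s.staff_hourly_cost + s.software_cost


def one_way_sensitivity(base: SiteInputs, field: str, values):
    """Recompute total cost while varying one input and holding the others at base values."""
    return {v: implementation_cost(replace(base, **{field: v})) for v in values}


base = SiteInputs(n_participants=10, trials_per_participant=6, minutes_per_trial=30,
                  staff_hourly_cost=40.0, instructor_training_hours=5,
                  lab_maintenance_hours=20, software_cost=1000.0)
print(implementation_cost(base))  # 3200.0
print(one_way_sensitivity(base, "trials_per_participant", [3, 6, 9]))
```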

2.13. Quality assurance and quality control

2.13.1. Preparation for conducting research in prisons

Before research team members can conduct study visits onsite at the prisons, they must pass a Michigan Law Enforcement Information Network (LEIN) background check. Research team members will complete a LEIN form, which each prison will process and which must be renewed annually. After research team members receive clearance to enter the facilities, they will be required to attend an orientation at one of the prisons. During the orientation, research team members will be asked to complete two forms that will be kept on file at the facility: 1) a personnel information and emergency notification form and 2) a volunteer identification form. After completing the forms, the research team members will meet with Vocational Village administrators, who will review the rules and regulations for entering and conducting research within the prison. In addition to this informational session, the Vocational Village administrators will provide a guided tour of the Vocational Village. Although orientation may occur at only one prison site, research team members will receive guided tours of both Vocational Villages so that they and the Vocational Village staff can work together to finalize the implementation settings and strategies.

2.13.2. Data management at the University of Michigan

Participants will be recruited using strategies, documents, and scripts approved by the University of Michigan Institutional Review Board (IRB). These strategies will be reviewed after each recruitment session at the Vocational Village sites to assess whether they are effective in enrolling participants or whether new strategies are needed. The study coordinator will review enrolled participants to confirm that they meet the eligibility criteria specified by the study sponsor, the National Institute of Justice. The study coordinator will also audit all study files to confirm completion of study data forms. If missing data are identified, the study staff member who completed the data collection visit will be contacted and a plan will be developed to obtain the missing data. All study data will be entered into online data entry forms created in Research Electronic Data Capture (REDCap [28]). The double data entry feature will be enabled to ensure data quality: the University of Michigan staff members who collected the data will complete the first data entry, and another University of Michigan staff member will complete the second. REDCap is a password-protected system that can be accessed via a virtual private network and is behind firewalls. To protect participant confidentiality, participants will be assigned a personal identification number (PIN). Study data will be linked to this number, not to the participant's name, within the REDCap database. A separate REDCap database will link each participant's name to their PIN, but this database will not be linked to any other study data. Paper copies and video storage devices will be stored in a locked filing cabinet in a locked office at the University of Michigan School of Social Work. Audio and video files will be encrypted before being stored electronically, and other computer data files will be stored on password-protected computers within the University of Michigan. The study coordinator and statistician will routinely review data collection procedures, data storage, data management, and data analysis to monitor the reliability and validity of the data.
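In the study, reconciliation of double-entered records will presumably happen within REDCap itself. The Python sketch below only illustrates the underlying comparison logic on two exported entry passes keyed by participant PIN; the record layout, field names, and PIN values are hypothetical, and the sketch does not use any REDCap API.

```python
def compare_entries(first, second):
    """List every field where two independent data entry passes disagree.

    first, second: dicts mapping participant PIN -> {field name: value}.
    A field missing from one pass is reported with the value None for that pass.
    """
    discrepancies = []
    for pin in sorted(first.keys() | second.keys()):
        rec1, rec2 = first.get(pin, {}), second.get(pin, {})
        for field in sorted(rec1.keys() | rec2.keys()):
            v1, v2 = rec1.get(field), rec2.get(field)
            if v1 != v2:
                discrepancies.append((pin, field, v1, v2))
    return discrepancies


entry_1 = {"P001": {"age": 34, "t1_mirs_total": 52}, "P002": {"age": 41, "t1_mirs_total": 47}}
entry_2 = {"P001": {"age": 34, "t1_mirs_total": 25}, "P002": {"age": 41, "t1_mirs_total": 47}}
print(compare_entries(entry_1, entry_2))  # [('P001', 't1_mirs_total', 52, 25)]
```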

2.13.3. Data management and arrival procedures at the Vocational Villages

All study items that research team members will bring into the Vocational Villages (i.e., video cameras, tripods, headsets, audio recorders, research data lockboxes, and all paper-based data collection materials) will be reviewed and approved by prison administrators prior to initiating the study. Once approved, all study items will be listed on the prison's Gate Manifest, which is a document identifying approved items and signed by an MDOC administrator. Every time research team members enter and exit the prisons with their study items, the Gate Manifest will be referenced. In addition to the Gate Manifest, research team members will also carry a letter from MDOC's Success Administrator, which states that any video footage obtained inside the Vocational Village (i.e., job interview role-plays) is protected data and may be removed from the prisons without review from prison officials.

Upon arrival, research team members will check in at the prison's front desk and present a picture ID to obtain a volunteer pass that allows them to enter the facility. Research team members will be required to clear security every time they enter the prison, which involves walking through a metal detector and being searched by a corrections officer. In addition, a corrections officer will check that the items being brought into the facility match those listed on the Gate Manifest. After clearing security, research team members will proceed through the gates into the prison and be escorted to the Vocational Village by prison staff. The prisons also require research team members to sign in on additional sheets inside the gates and upon arrival at the Vocational Village (a separately housed facility apart from the general population). These sign-in sheets enable the prison to maintain a count of research team members, along with non-custody staff and other volunteers inside the facility.

2.13.4. Data collection fidelity

It is critical to maintain a high level of fidelity when collecting research data in a prison setting. We will implement the following best practices for training research staff. Research staff will receive training from the study coordinator who has expertise working in the criminal justice system. The training will consist of first attending an orientation at one of the two prisons to learn the rules and regulations of working inside a prison facility. After attending an orientation, a research team member will observe the study coordinator conduct a complete mock study visit where they will consent a participant, administer surveys, and perform a job interview role-play. Next, the study team member will conduct at least three mock study visits independently with the study coordinator observing and providing feedback. These mock study visits will be conducted both at the University of Michigan as well as on-site at the Vocational Village locations. Once the study coordinator determines the research team member is ready to conduct a study visit on their own, the research team member will conduct a final visit with the PI observing. The PI will assess the research team member's performance and, if sufficient, grant him or her authorization to conduct research visits. If the PI decides the research team member requires additional training, they will conduct subsequent mock visits with the PI until their performance is deemed satisfactory. Then, the PI will authorize the research team member to work with participants.

Role-play training will be monitored by the PI, who has expertise in training and supervising study team members to carry out the job interview role-play assessments [[17], [18], [19], [20], [21]]. Study team members will read through the role-play training script, have the opportunity to ask the PI questions, and then practice administering the role-play three times with the PI. The goal for study team members is to replicate the ‘friendly’ Molly personality on the ‘easy’ difficulty level. During these practice sessions, the PI will provide feedback and then assign the study team members to conduct the role-play with another study team member. The PI will again provide feedback after observing these performances and then give final authorization for the actor to work with participants. To monitor the fidelity of study team members' performance, a rating system was developed for use when reviewing videos of their role-play performances with participants. The rating system uses a scale of 1–3 (1 = no retraining is necessary; 2 = staff member needs minor retraining; 3 = staff member needs significant retraining). Study team members are rated on the following categories: agreeableness/positive affect, evenness of tone, eye contact, assertiveness, and professionalism. If research staff receive a 2 or 3 on any category, they must meet with the PI for review before continuing to conduct role-plays with participants.
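The video-review rule is a simple threshold over five categories. A minimal Python sketch, assuming one rating per category per reviewed video (the dictionary layout is hypothetical), is:

```python
CATEGORIES = (
    "agreeableness/positive affect",
    "evenness of tone",
    "eye contact",
    "assertiveness",
    "professionalism",
)


def needs_pi_review(ratings: dict) -> bool:
    """Any rating of 2 (minor retraining) or 3 (significant retraining) on any
    category sends the staff member back to the PI for review."""
    missing = [c for c in CATEGORIES if c not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    if any(ratings[c] not in (1, 2, 3) for c in CATEGORIES):
        raise ValueError("ratings must be 1, 2, or 3")
    return any(ratings[c] >= 2 for c in CATEGORIES)


video_review = {c: 1 for c in CATEGORIES}
print(needs_pi_review(video_review))  # False
video_review["eye contact"] = 2
print(needs_pi_review(video_review))  # True
```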

2.13.5. VR-JIT delivery fidelity

First, the employment readiness instructors will attend a 1-h orientation led by the study team on how to use VR-JIT. Next, the employment readiness instructors will use the tool themselves and complete three virtual interviews while becoming more familiar with navigating the e-learning component. The PI designed a self-monitoring fidelity checklist to promote a high level of fidelity when teaching VR-JIT. The employment readiness instructors will use the fidelity checklist during the final stage of training, in which they role-play teaching how to use VR-JIT. These role-plays will be supervised by research team members, who will provide feedback on performance.

The employment readiness instructors will be provided these fidelity checklists and required to complete them while teaching study participants, to ensure that each area of VR-JIT is covered. The research team will collect and review the fidelity checklists for every cohort of participants. Orientation sessions in which employment readiness instructors teach the tool will also be audio recorded, and a random selection will be independently reviewed by research staff. If these reviews reveal that an employment readiness instructor did not attain at least 90% fidelity on the checklist during a session, that instructor will be required to undergo refresher training on the delivery process. Refresher training will consist of research staff and the employment readiness instructor meeting in person to review the delivery script and participating in another peer-to-peer orientation until 100% fidelity is attained. Once this refresher training is completed, the employment readiness instructor will be approved to lead future orientation sessions.
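Scoring a reviewed session against the 90% criterion is a short calculation. The sketch below assumes each checklist item is recorded as covered or not covered and weighted equally; the item names and the ten-item length are hypothetical, since the checklist's contents are not listed here.

```python
def fidelity_percent(checklist: dict) -> float:
    """Percentage of checklist items the instructor covered (True = covered)."""
    if not checklist:
        raise ValueError("checklist is empty")
    return 100 * sum(checklist.values()) / len(checklist)


def needs_refresher(checklist: dict, threshold: float = 90.0) -> bool:
    """Refresher training is triggered when fidelity falls below the threshold."""
    return fidelity_percent(checklist) < threshold


session = {f"item_{i}": True for i in range(1, 11)}  # ten hypothetical checklist items
session["item_4"] = False
print(fidelity_percent(session), needs_refresher(session))  # 90.0 False
```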

The research team will assess participant adherence to VR-JIT by monitoring: 1) total number of completed virtual interviews (SAU + VR-JIT group) and mock interviews (SAU-only group); 2) attendance at the VR-JIT sessions; 3) reasons for missed visits; and 4) attendance at regular SAU services. The research team will review and discuss VR-JIT adherence (and challenges to adherence) during weekly team meetings.

Lastly, because participants live, share meals, and commute together, treatment diffusion is a potential threat to internal validity: the SAU + VR-JIT group may share job interview strategies learned from VR-JIT with the SAU-only group. However, participants actively compete with each other for jobs while residing in the prison, because the Village arranges real-life job interviews with community employers while participants are completing their certificates. This competition for employment may reduce the risk that interview strategies learned via VR-JIT are shared. Moreover, a core feature of VR-JIT is the opportunity to practice interviews repeatedly, which is unavailable to the SAU-only group.

3. Discussion

The rapid emergence of technology-based interventions has transformed how prisons can deliver evidence-informed educational practices (e.g., http://apdscorporate.com). However, technology-based interventions are not yet being leveraged to extend or enhance the delivery of services shown to reduce recidivism, such as pre-employment training [3,10]. This study aims to fill that gap by evaluating the effectiveness and implementation of a technology-based intervention to enhance vocational rehabilitation services in prison settings. Further, this study will be among the first to use a hybrid type 1 effectiveness-implementation design, which evaluates an intervention's effectiveness while simultaneously enabling an in-depth process evaluation. This design is well suited to improving our initial understanding of whether a technology-based intervention such as VR-JIT: 1) can be feasibly adopted in a prison setting; 2) is acceptable to administrators, employment readiness instructors, and returning citizens in this setting; 3) is scalable; 4) is generalizable to other prison settings; 5) is cost-effective; and 6) might be sustainable long-term. A key advantage of this design is its potential to reduce the time needed to translate this innovative research into practice [25].

3.1. Potential enhancements to prison-based vocational training

Assuming that VR-JIT enhances skills and access to employment for returning citizens, the findings from this study could have a widespread impact at both the individual and system levels within prison settings. Specifically, using VR-JIT to facilitate interview training traditionally led by employment readiness instructors could free approximately 3–5 h per week per instructor. These hours could be reallocated to other important Vocational Village activities, such as job development (e.g., resume building, developing relationships with community employers), linking clients to community resources (e.g., connecting clients to the State's Division of Rehabilitation Services), or administration (e.g., writing case notes, responding to emails, scheduling appointments, administering GED exams). In addition, implementing VR-JIT within the Vocational Villages could expand the prison's capacity to provide job interview training.

3.2. Pre-funding modifications to the trial

After scientific review by the National Institute of Justice, the research team extended follow-up from 6 months to 12 months. Thus, the T3 data points will use employment and recidivism outcomes by the 12-month mark. We will also conduct exploratory analyses to evaluate the T3 outcomes using the 6-month follow-up data.

3.3. Post-funding modifications to the trial

After the study was funded, we convened an expert panel of scientists, MDOC stakeholders, and a returning citizen stakeholder to review the proposed study and recommend modifications based on the acceptability and potential feasibility of the proposed design. The expert panel included the PI and Co-Is (JJ, GC, SK, JS), MDOC's Assistant Education Manager (RM), and the Reentry Coordinator for the Washtenaw County Sheriff's Office (AS).

3.3.1. Modifications requested by the expert panel

After a full review, the expert panel made the following four recommendations. First, the panel suggested that the study include measures of mental health and life stress, both as potential covariates of participant engagement in the intervention and to monitor changes in stress and mental health after participants’ release as they relate to employment and recidivism. In response, we will ask participants to complete a 10-item version of the Symptom Checklist, a standardized assessment of psychological distress [68]. In addition, all participants will complete the DSM-5 Self-Rated Level 1 Cross-Cutting Symptom Measure to assess current self-reported mental health symptoms that may cut across diagnostic boundaries [69]. Both measures will be collected at T1 and T3 (see Table 3). We will explore the total score of each measure as a potential mediator of employment and recidivism, as well as change in each measure between study time points. These exploratory analyses will use the same statistical approaches chosen to test hypotheses 2 and 5.

Second, the panel suggested adapting the mock interview rating scale to evaluate the returning citizen's ability to disclose a prior conviction during the job interview. In response, the research team sought feedback from a returning citizen stakeholder to help develop new evaluation anchors for disclosure.

Third, the panel suggested reducing the burden on employment readiness instructors during the implementation evaluation. The original protocol asked employment readiness instructors to complete implementation context and adaptation surveys at the midpoint and end of each cohort, and a semi-structured implementation interview after each cohort of recruited participants (each prison enrolls cohorts of 9 participants at a time). The panel recommended that the implementation context and adaptation surveys be collected at the midpoint and end of the first cohort, but only at the end of each subsequent cohort. In addition, the semi-structured implementation interview will now be conducted after every fourth cohort.

Fourth, the panel suggested revising the process measures to include unstructured, open-ended interview questions in addition to the semi-structured questions derived from the CFIR. In addition, the qualitative coders will use the CFIR as a reference point for thematic analysis and then apply the constant comparison method to identify emerging themes.

3.4. Conclusion

This is a first-of-its-kind trial evaluating VR-JIT in a prison setting under real-world conditions. This effort is a critical step in the translational research pipeline, as the intervention is embedded to the greatest extent possible in the typical workflow of an existing delivery system, with a degree of unpredictability regarding how returning citizens, staff, and administrators will respond. Prior efficacy trials established that the intervention works reliably when delivered in a particular manner. An effectiveness trial now refocuses the question on how the service delivery context in which VR-JIT is used affects its effectiveness in helping returning citizens obtain employment. This also represents a shift in emphasis from internal validity to external validity. This study is powered to test the effectiveness of VR-JIT compared with pre-employment preparation delivered solely by prison staff (services as usual) in a prison setting with adult males seeking employment after community re-entry. The trial simultaneously allows for a rigorous evaluation of critical implementation factors, consistent with the aims of a type I hybrid effectiveness-implementation trial as described by Curran et al. [25]. If the intervention is deemed effective at the conclusion of the trial, the data gathered will support rapid translation and scale-up, while the implementation evaluation data could illuminate the factors most salient to the effectiveness, sustainability, and future adoption of VR-JIT. Finally, should VR-JIT prove effective and viable for broad implementation in prison settings, the effects could be wide-ranging, given the individual and societal benefits associated with gainful employment and, relatedly, reduced recidivism among people re-entering the community from prison [10,70].

Declaration of competing interest

Dr. Matthew Smith will receive royalties on sales of an adapted, unpublished (at the time of this submission) version of virtual reality job interview training (VR-JIT) that will focus on meeting the needs of transition-age youth with autism spectrum disorders. Dr. Smith's research on the effectiveness of the adapted version of VR-JIT is independent of the protocol proposed in this manuscript that will be evaluating the original version of VR-JIT. No other authors report any conflicts of interest.

Acknowledgements

This study was supported by a grant to Dr. Matthew J. Smith (2019-MU-MU-0004) from the National Institute of Justice, United States Department of Justice. The authors wish to acknowledge the Michigan Department of Corrections for their efforts to support the development of the protocol.

References

- 1. Carson E.A., Golinelli D. Office of Justice Programs, Bureau of Justice Statistics; Washington, D.C.: 2013. Prisoners in 2012: Trends in Admissions and Releases, 1991-2012.

- 2. Alper M., Durose M.R. Office of Justice Programs, Bureau of Justice Statistics; Washington, D.C.: 2018. Update on Prisoner Recidivism: A 9-Year Follow-Up Period (2005-2014).

- 3. Nally J.M., Lockwood S., Ho T., Knutson K. The post-release employment and recidivism among different types of offenders with a different level of education: a 5-year follow-up study in Indiana. Int. J. Crim. Justice Sci. 2014;9(1):16–34.

- 4. Re-entry Policy Study Commission. Indianapolis-Marion County City-County Council Re-entry Policy Study Commission Report. Indianapolis-Marion County Council; 2013.

- 5. Petersilia J. Oxford University Press; New York: 2005. When Prisoners Come Home: Parole and Prisoner Reentry.

- 6. Visher C.A., Courtney S. The Urban Institute; Washington, D.C.: 2006. Cleveland Prisoners' Experiences Returning Home.

- 7. Petersilia J., Rosenfeld R. National Research Council; Washington, D.C.: 2008. Parole, Desistance from Crime and Community Integration.

- 8. Petersilia J. Prisoner reentry: public safety and reintegration challenges. Prison J. 2001;81(3):360–375.

- 9. Harlow C. Bureau of Justice Statistics; Washington, D.C.: 2003. Education and Correctional Populations.

- 10. Newton D., Day A., Giles M., Wodak J., Graffam J., Baldry E. The impact of vocational education and training programs on recidivism: a systematic review of current experimental evidence. Int. J. Offender Ther. Comp. Criminol. 2018;62(1):187–207. doi: 10.1177/0306624X16645083.

- 11. Ellison M., Szifris K., Horan R., Fox C. A Rapid Evidence Assessment of the effectiveness of prison education in reducing recidivism and increasing employment. Probat. J. 2017;64(2):108–128.

- 12. Corbiere M., Zaniboni S., Lecomte T., Bond G., Gilles P.Y., Lesage A., Goldner E. Job acquisition for people with severe mental illness enrolled in supported employment programs: a theoretically grounded empirical study. J. Occup. Rehabil. 2011;21(3):342–354. doi: 10.1007/s10926-011-9315-3.

- 13. Huffcutt A.I. An empirical review of the employment interview construct literature. Int. J. Sel. Assess. 2011;19(1):62–81.

- 14. Hurtz G.M., Donovan J.J. Personality and job performance: the Big Five revisited. J. Appl. Psychol. 2000;85(6):869–879. doi: 10.1037/0021-9010.85.6.869.

- 15. Hunter J.E., Hunter R.F. Validity and utility of alternate predictors of job performance. Psychol. Bull. 1984;96:72–98.

- 16. Substance Abuse and Mental Health Services Administration (SAMHSA), United States Department of Health and Human Services. Center for Mental Health Services, Substance Abuse and Mental Health Services Administration; Rockville, MD: 2009. Supported employment: training frontline staff.

- 17. Smith M.J., Bell M.D., Wright M.A., Humm L., Olsen D., Fleming M.F. Virtual reality job interview training and 6-month employment outcomes for individuals with substance use disorders seeking employment. J. Vocat. Rehabil. 2016;44:323–332. doi: 10.3233/JVR-160802.

- 18. Smith M.J., Boteler Humm L., Fleming M.F., Jordan N., Wright M.A., Ginger E.J., Wright K., Olsen D., Bell M.D. Virtual reality job interview training for veterans with posttraumatic stress disorder. J. Vocat. Rehabil. 2015;42:271–279. doi: 10.3233/JVR-150748.

- 19. Smith M.J., Fleming M.F., Wright M.A., Roberts A.G., Humm L.B., Olsen D., Bell M.D. Virtual reality job interview training and 6-month employment outcomes for individuals with schizophrenia seeking employment. Schizophr. Res. 2015;166(1-3):86–91. doi: 10.1016/j.schres.2015.05.022.

- 20. Smith M.J., Ginger E.J., Wright K., Wright M.A., Taylor J.L., Humm L.B., Olsen D.E., Bell M.D., Fleming M.F. Virtual reality job interview training in adults with autism spectrum disorder. J. Autism Dev. Disord. 2014;44(10):2450–2463. doi: 10.1007/s10803-014-2113-y.

- 21. Smith M.J., Ginger E.J., Wright M., Wright K., Boteler Humm L., Olsen D., Bell M.D., Fleming M.F. Virtual reality job interview training for individuals with psychiatric disabilities. J. Nerv. Ment. Dis. 2014;202(9):659–667. doi: 10.1097/NMD.0000000000000187.

- 22. Smith M.J., Smith J.D., Fleming M.F., Jordan N., Oulvey E.A., Bell M.D., Mueser K.T., McGurk S.R., Spencer E.S., Mailey K., Razzano L.A. Enhancing individual placement and support (IPS) - supported employment: a Type 1 hybrid design randomized controlled trial to evaluate virtual reality job interview training among adults with severe mental illness. Contemp. Clin. Trials. 2019;77:86–97. doi: 10.1016/j.cct.2018.12.008.

- 23. Washington H.E. Creating offender success through education: the Michigan Department of Corrections efforts to offer a comprehensive approach to prisoner education and employment. Adv. Correct. 2018;6:130–142.

- 24. Epperson M., Pettus-Davis C. Oxford University Press; New York, NY: 2017. Smart Decarceration: Achieving Criminal Justice Transformation in the 21st Century.

- 25. Curran G.M., Bauer M., Mittman B., Pyne J.M., Stetler C. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med. Care. 2012;50(3):217–226. doi: 10.1097/MLR.0b013e3182408812.

- 26. Pettus-Davis C., Howard M.O., Dunnigan A., Scheyett A.M., Roberts-Lewis A. Using randomized controlled trials to evaluate interventions for releasing prisoners. Res. Soc. Work. Pract. 2016;26(1):35–43.

- 27. Northpointe Institute for Public Management. Traverse City, MI: 1996. Correctional Offender Management Profiling for Alternative Sanctions (COMPAS).

- 28. Harris P.A., Taylor R., Thielke R., Payne J., Gonzalez N., Conde J.G. Research electronic data capture (REDCap) - a metadata-driven methodology and workflow process for providing translational research informatics support. J. Biomed. Inf. 2009;42(2):377–381. doi: 10.1016/j.jbi.2008.08.010.

- 29. Bell M.D., Weinstein A. Simulated job interview skill training for people with psychiatric disability: feasibility and tolerability of virtual reality training. Schizophr. Bull. 2011;37(Suppl 2):S91–S97. doi: 10.1093/schbul/sbr061.

- 30. Cooper J.O. Applied behavior analysis in education. Theory Into Pract. 1982;21(2):114–118.

- 31. Cooper J.O., Heron T.E., Heward W.L. Pearson; London: 2007. Applied Behavior Analysis.

- 32. Issenberg S.B. The scope of simulation-based healthcare education. Simulat. Healthc. J. Soc. Med. Simulat. 2006;1(4):203–208. doi: 10.1097/01.SIH.0000246607.36504.5a.

- 33. Roelfsema P.R., van Ooyen A., Watanabe T. Perceptual learning rules based on reinforcers and attention. Trends Cognit. Sci. 2010;14(2):64–71. doi: 10.1016/j.tics.2009.11.005.

- 34. Vinogradov S., Fisher M., de Villers-Sidani E. Cognitive training for impaired neural systems in neuropsychiatric illness. Neuropsychopharmacology. 2012;37(1):43–76. doi: 10.1038/npp.2011.251.

- 36. United States Department of Labor, Employment and Training Administration. U.S. Employment Service; Washington, D.C.: 1991. Dictionary of Occupational Titles.