Abstract

Introduction:

The US National Institutes of Health (NIH) established the Clinical and Translational Science Award (CTSA) program in response to the challenges of translating biomedical and behavioral interventions from discovery to real-world use. To address the challenge of translating evidence-based interventions (EBIs) into practice, the field of implementation science has emerged as a distinct discipline. With the distinction between EBI effectiveness research and implementation research come differences in study design and methodology, shifting focus from clinical outcomes to the systems that support adoption and delivery of EBIs with fidelity.

Methods:

Implementation research designs share many of the foundational elements and assumptions of efficacy/effectiveness research. Designs and methods that are currently applied in implementation research include experimental, quasi-experimental, observational, hybrid effectiveness–implementation, simulation modeling, and configurational comparative methods.

Results:

Examples of specific research designs and methods illustrate their use in implementation science. We propose that the CTSA program take advantage of the momentum of the field's capacity building in three ways: 1) integrate state-of-the-science implementation methods and designs into its existing body of research; 2) position itself at the forefront of advancing implementation science by collaborating with other NIH institutes that share this goal; and 3) provide adequate training in implementation science.

Conclusions:

As implementation methodologies mature, both implementation science and the CTSA program would greatly benefit from cross-fertilizing expertise and shared infrastructures that aim to advance healthcare in the USA and around the world.

Keywords: Experimental, implementation research, quasi-experimental, trial designs

Background

Implementation Research: Definition and Aims

The US National Institutes of Health (NIH) established the Clinical and Translational Science Award (CTSA) program in response to the challenges of translating biomedical and behavioral interventions from discovery to real-world use [1]. By the time the CTSA program was established, hundreds of millions of NIH dollars had been spent on developing evidence to influence a wide swath of clinical and preventive interventions for improving patient-level outcomes (e.g., observable and patient-reported symptoms, functioning, and biological markers). This emphasis on “The 7 Ps”: pills, programs, practices, principles, products, policies, and procedures [2] resulted in little to show in terms of improved health at the population level. When the CTSA program was first created, comparative effectiveness research was viewed as an important approach for moving the results of efficacy and effectiveness studies into practice [3]. By comparing multiple evidence-based interventions (EBIs), clinicians and public health practitioners would be armed with information regarding which treatments and interventions to pursue for specific populations. However, establishing the best available EBI among multiple alternatives only closes the research-to-practice gap by a small margin. How to actually “make it work” (i.e., implementation) in an expeditious and cost-effective manner remains largely uninformed by traditional comparative effectiveness research approaches. The need for implementation research was discussed in the 2010 publication of “Training and Career Development for Comparative Effectiveness Research Workforce Development” as a necessary means of ensuring that comparative effectiveness research findings are integrated into practice [3]. This translation has not yet been fully realized within the CTSA program.

According to the NIH, implementation research is “the scientific study of the use of strategies to adopt and integrate evidence-based health interventions into clinical and community settings in order to improve patient outcomes and benefit population health. Implementation research seeks to understand the behavior of healthcare professionals and support staff, healthcare organizations, healthcare consumers and family members, and policymakers in context as key influences on the adoption, implementation and sustainability of evidence-based interventions and guidelines [4].” In contrast to effectiveness research, which seeks to assess the influence of interventions on patient outcomes, implementation research evaluates outcomes such as rates of EBI adoption, reach, acceptability, fidelity, cost, and sustainment [5]. The objective of implementation research is to identify the behaviors, strategies, and characteristics of multiple levels of the healthcare system that support the use of EBIs to improve patient and community health outcomes, to better address health disparities [6].

With the distinction between EBI effectiveness research and implementation research come differences in study design and methodology. This article describes designs and methods that are currently applied in implementation research. We begin by defining common terms, describing the goals, and presenting some overarching considerations and challenges for designing implementation research studies. We then describe experimental, quasi-experimental, observational, effectiveness–implementation “hybrid,” and simulation modeling designs and offer examples of each. We conclude with recommendations for how the CTSA program can build capacity for implementation research to advance its mission of reducing the lag from discovery to patient and population benefit [7].

Definition of Terms

In this article, we often use “implementation” as shorthand for a multitude of processes and outcomes of interest in the field: diffusion, dissemination, adoption, adaptation, tailoring, implementation, scale-up, sustainment, etc. We use the term “implementation science” to refer to the field of study and “implementation research” in reference to the act of studying implementation. We define “design” as the planned set of procedures to: (a) select subjects for study; (b) assign subjects to (or observe their natural) conditions; and (c) assess before, during, and after assignment in the conduct of the study. With many resources for measurement and evaluation of implementation research trials in the literature [8,9], we focus on the selection and assignment of subjects within the design for the purposes of drawing conclusions about the effects of implementation strategies [10,11]. The goals of implementation research are multifaceted and largely fall within two broad categories: (1) examining the implementation of EBIs in communities or service delivery systems; and (2) evaluating the impact of strategies to improve implementation. The approaches and techniques by which healthcare providers, and healthcare systems more generally, implement EBIs are called “implementation strategies.” Strategies may target one or more levels within a community or healthcare delivery system (e.g., clinicians, administrators, teams, organizations, and the external environment) and can be used individually or packaged to form multicomponent strategies. Some implementation studies are designed to test, evaluate, or observe the impact of one or more implementation strategies. Others seek to understand implementation context, determinants, barriers, and facilitators that will inform the study design [12].

Characteristics of Implementation Research Designs

Study Design

Study design refers to the overall strategy chosen for integrating different aspects of a study in a coherent and logical way to address the research questions. Implementation research designs share many of the foundational elements and assumptions of efficacy research. In many experimental and quasi-experimental implementation research studies, the independent variable of interest is an implementation strategy; in other implementation research studies, variables of interest relate to the implementation context or process. Much like an EBI in a traditional clinical trial, the construct must be well-defined, particularly when conducting an experimental study, a topic we will explore in later sections. Three broad types of study designs for implementation research are experimental/quasi-experimental, observational, and simulation. The basic difference among these types is that experimental and quasi-experimental designs feature a well-defined, investigator-manipulated, or controlled condition (often an implementation strategy) that is hypothesized to effect desired outcomes, whereas observational studies are meant to understand implementation strategies, contexts, or processes. Of note, quasi-experiments apply statistical methods to data from quasi-experimental designs to approximate what, from a scientific perspective, would ideally be achieved with random assignment. Whereas quasi-experiments attempt to predict relationships among constructs, observational studies seek to describe phenomena. Simulation may feature experimental or observational design characteristics using synthetic (not observed) data. Table 1 provides a summary of the definitions and uses of the specific research designs covered in this article, along with references to published studies illustrating their use in the implementation science literature.

Table 1.

Design types, definitions, uses, and examples from implementation science

| Design types | Definitions | Uses | Examples from implementation science |
|---|---|---|---|
| Experimental design | | | |
| Between-site design | This design compares processes and output among sites having different exposures | Allows investigators to compare processes and output among sites that have different exposures | Ayieko et al. [13] Finch et al. [14] Kilbourne et al. [15] |
| Within- and between-site design | The comparisons can be made with crossover designs where sites begin in one implementation condition and move to another | Useful when all sites will eventually receive the new implementation strategy, or when it is unethical to withhold a new implementation strategy throughout the study | Smith and Hasan [16] Fuller et al. [17] |
| Quasi-experimental design | | | |
| Within-site design | This design examines changes over time within one or more sites exposed to the same dissemination or implementation strategy | These single-site or single-unit (practitioner, clinical team, healthcare system, and community) designs are most commonly compared to their own prior performance | Smith et al. [18] Smith et al. [19] Taljaard et al. [20] Yelland et al. [21] |
| Observational | | | |
| Observational (descriptive) | Describes outcomes of interest and their antecedents in their natural context | Useful for evaluating the real-world applicability of evidence | Harrison et al. [22] Salanitro et al. [23] |
| Other designs/methods | | | |
| Configurational comparative methods | Combine within-case analysis and logic-based cross-case analysis to identify determinants of outcomes such as implementation | Useful for identifying multiple possible combinations of intervention components and implementation and context characteristics that interact to produce outcomes | Kahwati et al. [24] Breuer et al. [25] |
| Simulation studies | A method for simulating the behavior of complex systems by describing the entities of a system and the behavioral rules that guide their interactions | Offer a solution for understanding the drivers of implementation and the potential effects of implementation strategies | Zimmerman et al. [26] Jenness et al. [27] |
| Hybrid Type 1 | Tests a clinical intervention while gathering information on its delivery and/or on its potential for implementation in a real-world situation, with primary emphasis on assessing intervention effectiveness | Offers an ideal opportunity to explore implementation to plan for future implementation | Lane-Fall et al. [28] Ma et al. [29] |
| Hybrid Type 2 | Simultaneously tests a clinical intervention and an implementation intervention/strategy | Used when intervention effectiveness and the feasibility and/or potential impact of an implementation strategy receive equal emphasis | Garner et al. [30] Smith et al. [31] |
| Hybrid Type 3 | Primarily tests an implementation strategy while secondarily collecting data on the clinical intervention and related outcomes | Used when researchers aim to proceed with implementation studies without completion of the full or at times even a modest portfolio of effectiveness studies beforehand | Bauer et al. [32] Kilbourne et al. [33] |

Experimental Designs

Experimental design is regarded as the most rigorous approach to show causal relationships and is labeled as the “gold-standard” in research designs with respect to internal validity [34]. Experimental design relies on the random assignment of subjects to the condition of interest; random assignment is intended to uphold the assumption that groups (usually experimental vs. control) are probabilistically equivalent, allowing the researcher to isolate the effect of the intervention on the outcome of interest. In implementation research, the experimental condition is often a specific implementation strategy, and the control condition is most often “implementation as usual.” Brown et al. [2] described three broad categories of designs providing within-site, between-site, and within- and between-site comparisons of implementation strategies. Within-site designs are discussed in the section on quasi-experimental designs as they generally lack the replicability standard given their focus on one site or unit. It is important to acknowledge that other authors, such as Miller et al. [35] and Mazzucca et al. [36], have categorized certain designs somewhat differently than we have here.

As research advances through the translational research pipeline (efficacy to effectiveness to dissemination and implementation), study design tends to shift from valuing internal validity (in efficacy trials) to achieving a greater balance between internal and external validity in effectiveness and implementation research. Much in the same way that inclusion criteria for patients are often relaxed in an effectiveness study of an EBI to better represent real-world populations, implementation research includes delivery systems and clinicians or stakeholders that are representative of typical practices or communities that will ultimately implement an EBI. The high degree of heterogeneity in implementation determinants, barriers, and facilitators associated with diverse settings makes isolating the influence of an implementation strategy challenging and is further complicated by nesting of clinicians within practices, hospitals within healthcare systems, regions within states, etc. Thus, the implementation researcher seeks to ensure that any observed effects are attributable to the implementation strategy/ies being investigated and attempts to balance internal and external validity in the design.

Between-site designs

In between-site designs, the EBI is held constant across all units to ensure that observed differences are the result of the implementation strategy and not the EBI. Between-site designs allow investigators to compare processes and output among sites that have different exposures. Most commonly the comparison is between an implementation strategy and implementation as usual. Brown and colleagues emphasize that randomization should be at the “level of implementation” in the between-site designs to avoid cross-contamination [2]. Ayieko et al. [13] used a between-site design to examine the effect of enhanced audit and feedback (an implementation strategy) on uptake of pneumonia guidelines by clinical teams within Kenyan county hospitals. They performed restricted randomization, which involved retaining balance between treatment and control arms on key covariates including geographic location and monthly pneumonia admissions. The study used random intercept multilevel models to account for any residual imbalances in performance at baseline so that the findings could be attributed to the audit and feedback, the implementation strategy of interest [12].
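The idea behind restricted randomization can be illustrated with a minimal sketch. It is not the procedure used by Ayieko et al.; it assumes a single balancing covariate, a hypothetical tolerance (`max_diff`), and made-up hospital names and admission counts. Every possible allocation is enumerated, only those with acceptable covariate balance are kept, and one of the acceptable allocations is drawn at random.

```python
import itertools
import random

def restricted_randomization(sites, covariate, n_treat, max_diff, seed=None):
    """Pick one allocation at random from those with acceptable covariate balance.

    sites: list of site names
    covariate: dict mapping site -> numeric covariate (e.g., monthly admissions)
    n_treat: number of sites assigned to the implementation strategy
    max_diff: largest tolerated difference between arm means of the covariate
    """
    rng = random.Random(seed)
    acceptable = []
    for treated in itertools.combinations(sites, n_treat):
        control = [s for s in sites if s not in treated]
        mean_t = sum(covariate[s] for s in treated) / len(treated)
        mean_c = sum(covariate[s] for s in control) / len(control)
        if abs(mean_t - mean_c) <= max_diff:
            acceptable.append((list(treated), control))
    if not acceptable:
        raise ValueError("No allocation satisfies the balance constraint")
    return rng.choice(acceptable)

# Hypothetical hospitals and monthly pneumonia admissions (illustrative only)
admissions = {"H1": 40, "H2": 55, "H3": 62, "H4": 48, "H5": 70, "H6": 45}
treated, control = restricted_randomization(
    list(admissions), admissions, n_treat=3, max_diff=5.0, seed=1
)
print("Implementation strategy arm:", treated)
print("Implementation-as-usual arm:", control)
```

In practice, several covariates (such as geographic location) would typically be constrained at once, and any residual imbalance would still be handled in the analysis model.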

A variant between-site design is the “head-to-head” or “comparative implementation” trial in which the investigator controls two or more strategies, no strategy is implementation as usual, no site receives all strategies, and results are compared [2]. Finch et al. [14] examined the effectiveness of two implementation strategies, performance review and facilitated feedback, in increasing the implementation of healthy eating and physical activity-promoting policies and practices in childcare services in a parallel group randomized controlled trial design. At completion of the intervention period, childcare services that received implementation as usual were also offered resources to use the implementation strategies.

When achieving a large sample size is challenging, researchers may consider matched-pair randomized designs, with fewer units of randomization, or other adaptive designs for randomized trials [37] such as the factorial/fractional factorial [38] or sequential multiple assignment randomized trial (SMART) design. The SMART design allows for building time-varying adaptive implementation strategies (or stepped-care strategies) based on the order in which components are presented and the additive and combined effects of multiple strategies [15]. Kilbourne et al. assessed the effectiveness of an adaptive implementation intervention involving three implementation strategies (replicating effective programs [39], coaching, and facilitation) on cognitive behavioral therapy delivery among schools in a clustered SMART design [40]. In the first phase, eligible schools were randomized with equal probability to a single strategy vs. the same strategy combined with another implementation strategy. In subsequent phases, schools were re-randomized with different combinations of implementation strategies based on the assessment of whether potential benefit was derived from a combination of strategies. Similar to the SMART design is the full or fractional factorial design in which units are assigned a priori to different combinations of strategies, and main and lower order effects are tested to determine the additive impact of specific strategies and their interactions [41].
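As a rough illustration of a full factorial assignment, the sketch below enumerates every on/off combination of a set of strategies and spreads randomly ordered units evenly across those combinations. The function name, the school labels, and the choice of two strategies are assumptions made for the example and are not drawn from any cited trial.

```python
import itertools
import random

def factorial_assignment(units, strategies, seed=None):
    """Assign units evenly across every on/off combination of strategies."""
    rng = random.Random(seed)
    # Each condition is a tuple of booleans, one per strategy (2**k conditions)
    conditions = list(itertools.product([False, True], repeat=len(strategies)))
    shuffled = units[:]
    rng.shuffle(shuffled)  # random order, so assignment to conditions is random
    assignment = {}
    for i, unit in enumerate(shuffled):
        flags = conditions[i % len(conditions)]
        assignment[unit] = {s for s, on in zip(strategies, flags) if on}
    return assignment

# Hypothetical example: 16 schools, two strategies, four conditions
schools = [f"school_{i}" for i in range(16)]
plan = factorial_assignment(schools, ["coaching", "facilitation"], seed=7)
for school in schools[:4]:
    print(school, sorted(plan[school]) or "implementation as usual")
```

With two strategies, the four conditions are neither strategy, coaching only, facilitation only, and both, which is what allows the separate and combined contributions of the strategies to be estimated.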

Another between-site design variant, the incomplete block, is useful when two implementation strategies cannot or were not initially intended to be directly compared. The incomplete block design allows for an indirect comparison of the two strategies by drawing from two independent samples of units, one in which sites are randomized to either strategy A or implementation as usual, and the other in which sites are randomized to strategy B or implementation as usual [42]. The two samples are completely independent and can occur either in parallel or in sequence, and statistical tests are performed for indirect comparison of the impacts of the two strategies “as if” they were directly compared. This requires a single EBI to be implemented and some degree of homogeneity across both of the groups. The incomplete block design is useful when it is not possible to test both strategies in a single study, or when a prior or concurrent study can be leveraged to compare two strategies.
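One common way to carry out such an indirect comparison, shown here only as a sketch and not necessarily the test used in the cited work, is to subtract the two effect estimates that share implementation as usual as the comparator and to add their variances, which is justified when the two samples are independent. The effect sizes and standard errors below are invented for illustration.

```python
import math

def indirect_comparison(effect_a, se_a, effect_b, se_b):
    """Indirectly compare strategy A with strategy B through a shared comparator.

    effect_a, se_a: estimated effect (and standard error) of A vs. implementation as usual
    effect_b, se_b: estimated effect (and standard error) of B vs. implementation as usual
    Returns the estimated A-vs-B difference, its standard error, and a z statistic.
    """
    diff = effect_a - effect_b
    # The two samples are independent, so the variances add
    se = math.sqrt(se_a ** 2 + se_b ** 2)
    return diff, se, diff / se

# Hypothetical effect estimates on a common outcome scale
diff, se, z = indirect_comparison(0.30, 0.10, 0.18, 0.12)
print(f"A vs. B: {diff:.2f} (SE {se:.3f}, z = {z:.2f})")
```

Because the variances add, the indirect estimate is less precise than either direct estimate, which is one reason the homogeneity of the two samples matters.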

Although the examples of between-site designs are randomized at the site- and organization-level, smaller units within each organization such as the team or clinician may also be randomized to an intervention [2]. Smith, Stormshak, & Kavanagh [18] present the results of a study in which clinicians were randomized to receive training or not, and their assigned families were randomized to receive the EBI or usual services. Effectiveness (family functioning and child behaviors) and implementation outcomes (adoption and fidelity) were evaluated after the 2-year period of intervention delivery.

Within- and between-site designs

This design involves crossovers where units begin in one condition and move to another (within-site element), which is repeated across units (or clusters of units) with staggered crossover points (between-site element). This broad class of designs has been referred to as “roll-out” designs [43] and dynamic wait-list designs [44]. We use the term “roll-out” to describe within- and between-site designs. The defining characteristic of roll-out designs is the assignment of all units in the study to the time when the implementation strategy will begin (i.e., the crossover). Assignments within roll-out designs can be random, non-random, or quasi-random. In the context of implementation research, the roll-out design offers three practical and scientific advantages. First, all units in the trial will eventually receive the implementation strategy. Ensuring that all participating units receive the strategy promotes equity and enables all participants to contribute data. Second, the roll-out design allows the research team and the partner organizations to distribute resources required to administer the implementation strategy over time, rather than having to implement in all sites simultaneously as might be done in another type of multisite design. Third, the design allows researchers to account for the effect of unanticipated confounders (e.g., change in accreditation standards that requires use of the implementation strategy) that can occur during the trial period. For example, if some sites start implementation before an external event occurs, and other sites start afterwards, the impact of the event on the implementation process and resulting outcomes can be measured.

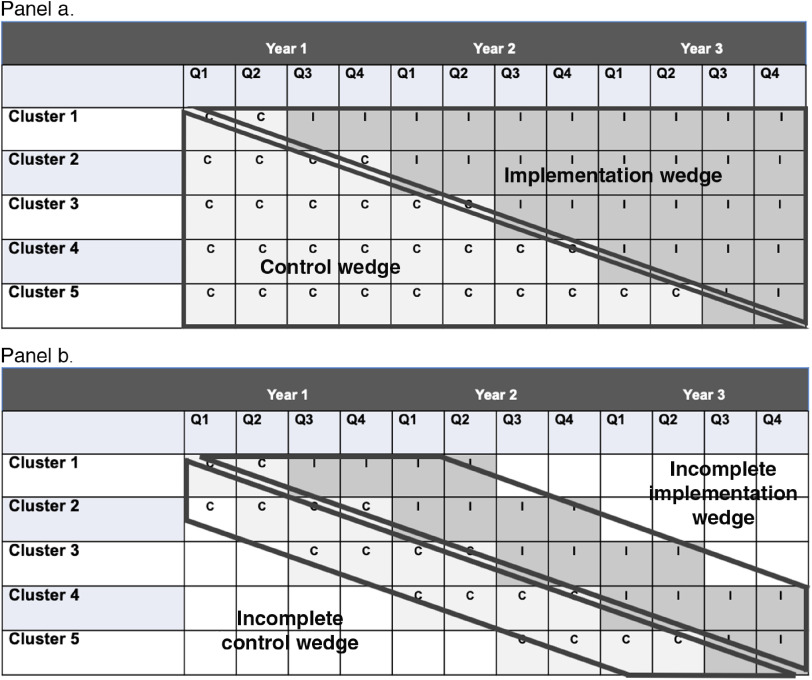

A common roll-out design is the stepped-wedge. The stepped-wedge is a specific design in which measurement of all units begins simultaneously at T0 and units cross over from one condition (e.g., implementation as usual or usual care) to the experimental implementation strategy condition following a series of “steps” at a predetermined interval (steps refer to the crossover). The result is a “wedge” below the steps of implementation as usual that can be compared to the wedge above the step representing the implementation strategy condition. The stepped-wedge is illustrated in Fig. 1 (panel a).

Fig. 1.

Roll-out designs: the stepped wedge (panel a) and incomplete wedge (panel b).

A variant of this design is the incomplete (or modified) wedge roll-out design (Fig. 1, panel b). The difference from the stepped-wedge is that pre-implementation outcomes measurement begins immediately prior (e.g., 4–6 months) to the step rather than at T0 [16]. Incomplete wedge roll-out designs might be preferred to the traditional stepped-wedge design because there is less burden on participating sites to collect data for long periods and it allows researchers the option of staged enrollment in the trial if needed to achieve the full target sample in a way that does not threaten the study protocol. In this latter situation, randomization would occur in as few stages as possible to maintain balance and a variable for stage of enrollment would be included in all analyses to account for any differences in early vs. later enrollees. Last, the unit of randomization can be single units, clusters, or repeated, matched pairs [45]. Smith and Hasan [16] provide a case example of an incomplete wedge roll-out design in a trial testing the implementation of the Collaborative Care Model for depression management in primary care practices within a large university health system. In that trial, measurement of implementation began 6 months prior to the crossover to implementing the Collaborative Care Model in each primary care practice in a multi-year roll-out.
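The two roll-out variants can be contrasted with a small scheduling sketch. It is an illustration under stated assumptions, not a description of any cited trial: units are randomly ordered and spread evenly over the steps, post-crossover measurement is assumed to continue to the end of the trial, and the function name and the `pre_periods` parameter are hypothetical.

```python
import random

def rollout_schedule(units, n_steps, pre_periods=None, seed=None):
    """Build a roll-out schedule with one row per unit and one column per period.

    Cell values: 0 = implementation as usual, 1 = implementation strategy,
    None = not measured (only occurs in the incomplete wedge).
    Periods run from T0 to T(n_steps); units cross over at steps 1..n_steps.
    pre_periods=None gives a stepped-wedge (measurement starts at T0);
    an integer gives an incomplete wedge that measures only that many
    periods immediately before each unit's crossover.
    """
    rng = random.Random(seed)
    order = units[:]
    rng.shuffle(order)  # random assignment of units to crossover time
    schedule = {}
    for i, unit in enumerate(order):
        step = 1 + i * n_steps // len(order)  # spread units evenly over steps
        row = []
        for t in range(n_steps + 1):
            if t >= step:
                row.append(1)
            elif pre_periods is None or t >= step - pre_periods:
                row.append(0)
            else:
                row.append(None)
        schedule[unit] = row
    return schedule

# Hypothetical example: six practices crossing over in three steps
practices = [f"practice_{i}" for i in range(6)]
stepped = rollout_schedule(practices, n_steps=3, seed=3)
incomplete = rollout_schedule(practices, n_steps=3, pre_periods=1, seed=3)
for name in practices:
    print(name, stepped[name], incomplete[name])
```

Printing the two schedules side by side shows the reduced measurement burden of the incomplete wedge: each unit contributes only one pre-implementation period rather than every period since T0.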

Quasi-Experimental Designs

Quasi-experimental designs share experimental design goals of assessing the effect of an intervention on outcomes of interest. Unlike experiments, however, quasi-experiments do not randomly assign participants to intervention and usual care groups. This key distinction limits the internal validity of quasi-experimental designs because differences between groups cannot be attributed exclusively to the intervention. However, when randomization is not possible or desirable for assessing the effectiveness of an implementation strategy or other intervention, quasi-experimental designs are appealing. In lieu of randomization, designs and techniques of varying strength can be used to bolster internal validity, including pre-post; interrupted time-series; non-equivalent group; propensity score matching; synthetic control; and regression-discontinuity designs [46].

In the context of implementation research, quasi-experimental designs fall under Brown and colleagues’ broad category of within-site designs. These single-site or single-unit (practitioner, clinical team, healthcare system, and community) designs are most commonly compared to their own prior performance. The simplest variant of a within-site study is the post design. This design is relevant when a site or unit has not delivered a service before, and thus, has no baseline or pre-implementation strategy data for comparison. The result of such a study is a single “post” implementation outcome that can only be compared to a criterion metric or the results of published studies. In contrast to a post design where data are only available after an implementation strategy or other intervention is introduced, a pre-post design compares outcomes following the introduction of an implementation strategy to the results from care as usual prior to introducing the implementation strategy.

To increase power and internal validity of within-site studies, interrupted time-series designs can be used [47]. Time-series designs involve multiple observations of the dependent variable (e.g., implementation) before and after the introduction of the implementation strategy, which “disrupts” the time-series data stream. Time-series designs are highly flexible and can involve multiple sites in the multiple baseline and replicated single-case series variants, which increase internal validity through replication of the effect. Examples of interrupted time-series studies exist in implementation research that exemplify their practicality for studying implementation (see Table 1). Limitations of this design in implementation research include the challenge of defining the interruption (i.e., when the implementation began) and that the effects of new implementations are unlikely to be immediate. Therefore, analysis of interrupted time-series in implementation research might favor examining changes in slope between pre-implementation and implementation phases, rather than testing immediate changes in level of the outcome after the interruption.

Observational Designs

In observational studies, the investigator does not intervene with study participants but instead describes outcomes of interest and their antecedents in their natural context [48]. As such, observational studies may be particularly useful for evaluating the real-world applicability of evidence. Observational designs may use approaches to data collection and analysis that are quantitative [16] (e.g., survey), qualitative [49] (e.g., semi-structured in-depth interviews), or mixed methods [50] (e.g., sequential, convergent analysis of quantitative and qualitative results). Quantitative, qualitative, and mixed methods can be especially helpful in observational studies for systematically assessing implementation contexts and processes.

Hybrid Designs

With the goal of more rapidly translating evidence into routine practice, Curran et al. [51,52] proposed methods for blending: 1) design components of experiments intended to test the effectiveness of clinical interventions and 2) approaches to assessing their implementation. Such hybrid designs provide benefits over pursuing these lines of research independently or sequentially, both of which slow the progress of translation. Curran and colleagues state that effectiveness–implementation hybrid designs have a dual, a priori focus on assessing clinical effectiveness and implementation [51,52]. Hybrids focus on both effectiveness and implementation but do not specify a particular trial design. That is, the aforementioned experimental and observational designs can be used for any of the hybrid types. References to hybrid studies in implementation science are provided in Table 1.

Curran et al. describe the conditions under which three different types of hybrid designs should be used, which helps researchers determine the most appropriate type based on whether evidence of effectiveness and implementation exists. Linking clinical effectiveness and implementation research designs may be challenging, as the ideal approaches for each often do not share many design features. Clinical trials typically rely on controlling/ensuring delivery of the clinical intervention (often by using experimental designs) with little attention to implementation processes likely to be relevant to translating the intervention to general practice settings. In contrast, implementation research often focuses on the adoption and uptake of clinical interventions by providers and/or systems of care [53] often with the assumption of clinical effectiveness demonstrated in previous studies. The three hybrid designs are described below.

Hybrid Type 1

Hybrid Type 1 tests a clinical intervention while gathering information on its delivery and/or potential for implementation in a real-world context, with primary emphasis on clinical effectiveness. This type of design advocates process evaluations of delivery/implementation during clinical effectiveness trials to collect information that may be valuable in subsequent implementation research studies, answering questions such as: What potential modifications to the clinical intervention could be made to maximize implementation? What are potential barriers and facilitators to implementing this intervention in the “real world”? Hybrid Type 1 designs provide the opportunity to explore implementation and plan for future implementation.

Hybrid Type 2

Hybrid Type 2 simultaneously tests a clinical intervention and an implementation intervention/strategy. In contrast to the Hybrid Type 1 design, the Hybrid Type 2 design puts equal emphasis on assessing both intervention effectiveness and the feasibility and/or potential impact of an implementation strategy. In a Hybrid Type 2 study, an implementation intervention/strategy is tested simultaneously to promote uptake of the clinical intervention under study. Type 2 hybrid designs appear less frequently than the other two types due to the resources required.

Hybrid Type 3

Hybrid Type 3 primarily tests an implementation strategy while secondarily collecting data on the clinical intervention and related outcomes. This design can be used when researchers aim to proceed with implementation studies without an existing portfolio of effectiveness studies. Examples of these conditions are when: health systems attempt implementation of a clinical intervention without comprehensive clinical effectiveness data; there is strong indirect efficacy or effectiveness data; and potential risks of the intervention are limited. National priorities (e.g., the opioid epidemic) may also drive implementation before effectiveness data are robust.

Modeling

Implementation research is, by definition, a systems science in that it simultaneously studies the influence of individuals, organizations, and the environment on implementation [54]. The field of systems science is devoted to understanding complex behaviors that are both highly variant and strongly dependent on the behaviors of other parts of the system. Systems science is a challenging field to study using traditional clinical trial methods for various reasons, most notably the complexity involved in the many interactions and dynamics of multiple levels, constant change, and interdependencies. Simulation studies offer a solution for understanding the drivers of implementation and the potential effects of implementation strategies [55]. Modeling typically involves simulating the addition or configuration of one or more specific implementation strategies to determine which path should be taken in the real world, but it can also be used to test the likely effect of implementing one or more EBIs to determine impact for specific populations.

Agent-based modeling (ABM) [56] and participatory system dynamics modeling (PSDM) [57] have both been used in implementation research to model the behavior of systems and determine the impact of moving certain implementation “levers” in the system. ABM is a method for simulating the behavior of complex systems by describing the entities (called “agents”) of a system and the behavioral rules that guide their interactions [56]. These agents, which can be any element of a system (e.g., clinicians, patients, and stakeholders), interact with each other and the environment to produce emergent, system-level outcomes [58], many of which are formal implementation outcomes. Because ABM produces a mechanistic model, researchers are able to identify the implementation drivers that should be leveraged to most effectively achieve the predicted impacts in practice. Whereas ABM has wide-ranging applications for implementation science, PSDM is an example of a method for a specific implementation challenge. Zimmerman et al. [26] used PSDM to triangulate stakeholder expertise, healthcare data, and modeling simulations to refine an implementation strategy before it was used in practice. In PSDM, clinic leadership and staff use a visual model to define and evaluate the determinants (e.g., clinician knowledge, implementation leadership, and resources) and mechanisms (e.g., self-efficacy, feasible workflow) that determine local capacity for implementation of an EBI. Given local capacity and other factors, simulations predict overall system behavior when the EBI is implemented. The process is iterative and has been used to prepare for large initiatives where testing implementation using standard trial methods was infeasible or undesirable due to the cost and time involved.
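To make the ABM logic above concrete, the following is a minimal illustrative sketch in Python rather than a model drawn from any of the cited studies. It assumes hypothetical clinician agents who each adopt an EBI with a probability that combines a baseline rate (standing in for an implementation "lever" such as facilitation) and the share of their peers who have already adopted. The function name, parameter names, and all numeric values are assumptions chosen only for illustration.

```python
import random


def simulate_adoption(n_clinicians=50, n_peers=4, base_rate=0.02,
                      peer_influence=0.3, steps=24, seed=0):
    """Return the fraction of clinician agents delivering the EBI at each step.

    All parameters are hypothetical: base_rate stands in for an implementation
    lever, and peer_influence scales the effect of adopting peers.
    """
    rng = random.Random(seed)
    # Each agent (clinician) is linked to a fixed, randomly chosen set of peers.
    peers = [rng.sample([j for j in range(n_clinicians) if j != i], n_peers)
             for i in range(n_clinicians)]
    adopted = [False] * n_clinicians
    history = [0.0]
    for _ in range(steps):
        new_state = list(adopted)
        for i in range(n_clinicians):
            if adopted[i]:
                continue  # adoption is treated as permanent in this sketch
            # Behavioral rule: adoption is more likely when more peers adopted.
            peer_share = sum(adopted[j] for j in peers[i]) / n_peers
            p_adopt = min(1.0, base_rate + peer_influence * peer_share)
            if rng.random() < p_adopt:
                new_state[i] = True
        adopted = new_state
        # The system-level outcome emerges from the individual decisions.
        history.append(sum(adopted) / n_clinicians)
    return history


if __name__ == "__main__":
    # Compare two hypothetical settings of the same lever.
    for label, rate in [("lower baseline", 0.02), ("higher baseline", 0.06)]:
        curve = simulate_adoption(base_rate=rate)
        print(f"{label}: adoption after {len(curve) - 1} steps = {curve[-1]:.2f}")
```

Running the script prints the simulated adoption level under each setting. In an actual study, the agents, rules, and parameter values would be grounded in theory and local data rather than assumed.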

Configurational Comparative Methods

Configurational comparative methods, an umbrella term for methods that include but are not limited to qualitative comparative analysis [59], combine within-case analysis and logic-based cross-case analysis to identify determinants of outcomes such as implementation. Configurational comparative methods define causal relationships by identifying INUS conditions: those that are an Insufficient but Necessary part of a condition that is itself Unnecessary but Sufficient for the outcome. Configurational comparative methods may be preferable to the standard regression analyses often used in quasi-experiments when the influence of an intervention on an outcome is not easily disentangled from how it is implemented or the context in which it is implemented – i.e., complex interventions. Complex interventions often have interdependent components whose unique contributions to a given outcome can be challenging to isolate. Furthermore, complex interventions are characterized by blurry boundaries among the intervention, its implementation, and the context in which it is implemented [60]. For example, the effectiveness of care plans for cancer survivors in improving care coordination and communication among providers likely depends upon a care plan's content, its delivery, and the functioning of the cancer program in which it is delivered [61]. Configurational comparative methods facilitate identifying multiple possible combinations of intervention components and implementation and context characteristics that interact to produce outcomes. To date, qualitative comparative analysis is the configurational comparative method that has been most frequently applied in implementation research [62]. To identify determinants of medication adherence, Kahwati et al. [24] used qualitative comparative analysis to analyze data from 60 studies included in a systematic review. Breuer et al. [25] used qualitative comparative analysis to identify determinants of mental health services utilization.
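The INUS logic can be illustrated with a small crisp-set example. The sketch below is a deliberate simplification under stated assumptions: the six clinics, the three conditions (leadership, training, resources), and the outcome are hypothetical, only the presence of conditions is considered, and a brute-force search stands in for the Boolean minimization that dedicated qualitative comparative analysis software performs.

```python
from itertools import combinations

# Hypothetical clinics: 1 = condition or outcome present, 0 = absent.
CASES = [
    {"leadership": 1, "training": 1, "resources": 0, "implemented": 1},
    {"leadership": 1, "training": 0, "resources": 0, "implemented": 0},
    {"leadership": 0, "training": 1, "resources": 0, "implemented": 0},
    {"leadership": 0, "training": 0, "resources": 1, "implemented": 1},
    {"leadership": 1, "training": 0, "resources": 1, "implemented": 1},
    {"leadership": 0, "training": 0, "resources": 0, "implemented": 0},
]
CONDITIONS = ["leadership", "training", "resources"]


def is_sufficient(combo, cases, outcome="implemented"):
    """True if some case shows every condition in combo and all such cases have the outcome."""
    matching = [c for c in cases if all(c[k] == 1 for k in combo)]
    return bool(matching) and all(c[outcome] == 1 for c in matching)


def minimal_sufficient(cases, conditions):
    """Find the smallest conjunctions of present conditions that are sufficient."""
    found = []
    for size in range(1, len(conditions) + 1):
        for combo in combinations(conditions, size):
            # Skip supersets of a conjunction already shown to be sufficient.
            if any(set(f) <= set(combo) for f in found):
                continue
            if is_sufficient(combo, cases):
                found.append(combo)
    return found


def is_necessary(condition, cases, outcome="implemented"):
    """True if every case with the outcome also shows the condition."""
    return all(c[condition] == 1 for c in cases if c[outcome] == 1)


if __name__ == "__main__":
    for combo in minimal_sufficient(CASES, CONDITIONS):
        print("sufficient:", " AND ".join(combo))
    for cond in CONDITIONS:
        print(f"{cond} necessary for outcome: {is_necessary(cond, CASES)}")
```

In these made-up data, leadership is an INUS condition: it is insufficient on its own, but it is a necessary part of the conjunction of leadership and training, which is sufficient for implementation yet unnecessary because resources alone also produce the outcome.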

Relevance and Opportunities for Application in CTSAs

In the early days of the CTSA program, resources allocated to implementation science were most frequently embedded in clinical or effectiveness research studies, and few CTSAs had robust, standalone implementation science programs [63,64]. As the National Center for Advancing Translational Sciences (NCATS) and other federal and non-federal sources have increased their investment in implementation science capacity, the field has grown dramatically. More CTSAs are developing implementation research programs and incorporating stakeholders more fully in this process, as reflected in the results of the Dolor et al. [65] environmental scan. Washington University and the University of California at Los Angeles have documented their efforts to engage practice and community partners, offer professional development opportunities, and provide consultations to investigators both in and outside the field of implementation science [66,67]. The CTSA program could take advantage of this momentum in three ways: integrate state-of-the-science implementation methods into its existing body of research; position itself at the forefront of advancing the science of implementation science by collaborating with other NIH institutes that share the goal of advancing implementation science, such as NCI and NHLBI; and provide training in implementation science.

Integrating state-of-the-science implementation methods into CTSAs’ existing bodies of research

Many CTSAs have the expertise to consult with their institution's investigators on the potential role of implementation science in their research. Implementation research consultations involve creating awareness and appropriate use of specific study designs and methods that match investigators’ needs and result in meaningful findings for real-world clinical and policy environments. As described by Glasgow and Chambers, these include rapid, adaptive, and convergent methods that consider contextual and systems perspectives and are pragmatic in their approach [68]. They state that “CTSA grantees, among others, are in a position to lead such a change in perspective and methods, and to evaluate if such changes do in fact result in more rapid, relevant solutions” to pressing public health problems. Through consultation services, CTSAs can encourage the use of implementation science early (e.g., designing for dissemination and implementation [69]) and often, positioning CTSAs – the hub for translation – to fulfill their mission by reducing the lag from discovery to patient and population benefit.

Advancing the science of implementation science

The centers funded by the CTSA program are able to conduct large-scale implementation research using the multisite U01 mechanism, which requires the involvement of three centers. Given the challenges of recruitment, generalizability, and power that are inherent in many implementation trials, the inclusion of three or more CTSAs, ideally representing diversity in region, populations, and healthcare systems, can provide the infrastructure for cutting-edge implementation science. Thus far, there are few examples of this mechanism being used for implementation research. In addition, with the charge of speeding translation of bench and clinical science discoveries to population impact, CTSAs have both the incentive and perspective to conduct implementation research early and consistently in the translational pipeline. As the hybrid design illustrates, there has been a paradigmatic shift away from the sequential translational research pipeline to more innovative methods that reduce the lag between translational steps.

Training in implementation science

NIH has funded several formal training programs in implementation science, including the Training Institute in Dissemination and Implementation in Health [70], Implementation Research Institute [71], and Mentored Training in Dissemination and Implementation Research in Cancer [72]. These training programs address the need to gain greater clarity around the implementation research designs described in this article, but the demand for training outpaces available resources. CTSAs could provide an avenue for meeting the needs of the field for training in dissemination and implementation science methods. CTSA faculty with expertise in implementation research could offer implementation research training programs for scholars on many levels using the T32, KL2, K12, TL1, R25, and other mechanisms. Chambers and colleagues have recently noted these capacity-building and training opportunities funded by the NIH [73]. Indeed, given the mission of the CTSA program, CTSAs are the ideal setting for implementation research training programs.

Conclusion

The field of implementation science has established methodologies for understanding the context, strategies, and processes needed to translate EBIs into practice. As they mature alongside one another, both implementation science and the CTSA program would greatly benefit from cross-fertilizing expertise and sharing infrastructure with the aim of advancing healthcare in the USA and around the world.

Acknowledgements

The authors wish to thank Hendricks Brown and Geoffrey Curran who provided input at different stages of developing the ideas presented in this manuscript.

Research reported in this publication was supported, in part, by the National Center for Advancing Translational Sciences, grant UL1TR001422 (Northwestern University), grant UL1TR002489 (UNC Chapel Hill), and grant UL1TR001450 (Medical University of South Carolina); by National Institute on Drug Abuse grant DA027828; and by the Implementation Research Institute (IRI) at the George Warren Brown School of Social Work, Washington University in St. Louis through grant MH080916 from the National Institute of Mental Health and the Department of Veterans Affairs, Health Services Research and Development Service, Quality Enhancement Research Initiative (QUERI) to Enola Proctor. Dr. Birken's effort was supported by the National Center for Advancing Translational Sciences, National Institutes of Health, through grant KL2TR002490. The opinions expressed herein are the views of the authors and do not necessarily reflect the official policy or position of the National Center for Advancing Translational Sciences, the National Institute on Drug Abuse, the National Institute of Mental Health, the Department of Veterans Affairs, or any other part of the US Department of Health and Human Services.

Disclosures

The authors have no conflicts of interest to declare.

References

- 1. Reis SE, et al. Reengineering the national clinical and translational research enterprise: the strategic plan of the National Clinical and Translational Science Awards Consortium. Academic Medicine 2010; 85(3): 463–469.

- 2. Brown CH, et al. An overview of research and evaluation designs for dissemination and implementation. Annual Review of Public Health 2017; 38: 1–22.

- 3. Kroenke K, et al. Training and career development for comparative effectiveness research workforce development: CTSA consortium strategic goal committee on comparative effectiveness research workgroup on workforce development. Clinical and Translational Science 2010; 3(5): 258–262.

- 4. Department of Health and Human Services. Dissemination and implementation research in health (R01) NIH funding opportunity: PAR-19-274. NIH grant funding opportunities; 2018.

- 5. Proctor E, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research 2011; 38(2): 65–76.

- 6. McNulty M, et al. Implementation research methodologies for achieving scientific equity and health equity. Ethnicity & Disease 2019; 29(Suppl 1): 83–92. doi: 10.18865/ed.29.s1.83.

- 7. Balas EA, Boren SA. Managing Clinical Knowledge for Health Care Improvement. Yearbook of Medical Informatics. Schattauer: Stuttgart, 2000.

- 8. Rabin BA, et al. Measurement resources for dissemination and implementation research in health. Implementation Science 2016; 11(1): 42.

- 9. Lewis CC, Proctor EK, Brownson RC. Measurement issues in dissemination and implementation research. In: Brownson RC, Colditz GA, Proctor EK, eds. Dissemination and Implementation Research in Health: Translating Research to Practice. 2nd ed. New York: Oxford University Press, 2017, pp. 229–244.

- 10. Powell BJ, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science 2015; 10: 21.

- 11. Powell BJ, et al. Enhancing the impact of implementation strategies in healthcare: a research agenda. Frontiers in Public Health 2019; 7: 3.

- 12. Smith JD, et al. Landscape of HIV implementation research funded by the National Institutes of Health: a mapping review of project abstracts. AIDS and Behavior 2019. doi: 10.1007/s10461-019-02764-6

- 13. Ayieko P, et al. Effect of enhancing audit and feedback on uptake of childhood pneumonia treatment policy in hospitals that are part of a clinical network: a cluster randomized trial. Implementation Science 2019; 14(1): 20.

- 14. Finch M, et al. A randomised controlled trial of performance review and facilitated feedback to increase implementation of healthy eating and physical activity-promoting policies and practices in centre-based childcare. Implementation Science 2019; 14(1): 17.

- 15. Collins LM, Murphy SA, Strecher V. The multiphase optimization strategy (MOST) and the sequential multiple assignment randomized trial (SMART): new methods for more potent eHealth interventions. American Journal of Preventive Medicine 2007; 32(5 Suppl): S112–118.

- 16. Smith JD, Hasan M. Quantitative approaches for the evaluation of implementation research studies. Psychiatry Research 2019: 112521.

- 17. Fuller C, et al. The Feedback Intervention Trial (FIT) – improving hand-hygiene compliance in UK healthcare workers: a stepped wedge cluster randomised controlled trial. PLoS One 2012; 7(10): e41617.

- 18. Smith JD, Stormshak EA, Kavanagh K. Results of a pragmatic effectiveness-implementation hybrid trial of the Family Check–Up in community mental health agencies. Administration and Policy in Mental Health and Mental Health Services Research 2015; 42(3): 265–278.

- 19. Smith MJ, et al. Enhancing individual placement and support (IPS) – Supported employment: a Type 1 hybrid design randomized controlled trial to evaluate virtual reality job interview training among adults with severe mental illness. Contemporary Clinical Trials 2019; 77: 86–97.

- 20. Taljaard M, et al. The use of segmented regression in analysing interrupted time series studies: an example in pre-hospital ambulance care. Implementation Science 2014; 9(1): 77.

- 21. Yelland J, et al. Bridging the gap: using an interrupted time series design to evaluate systems reform addressing refugee maternal and child health inequalities. Implementation Science 2015; 10(1): 62.

- 22. Harrison MB, et al. Guideline adaptation and implementation planning: a prospective observational study. Implementation Science 2013; 8(1): 49.

- 23. Salanitro AH, et al. Multiple uncontrolled conditions and blood pressure medication intensification: an observational study. Implementation Science 2010; 5(1): 55.

- 24. Kahwati L, et al. Using qualitative comparative analysis in a systematic review of a complex intervention. Systematic Reviews 2016; 5(1): 82.

- 25. Breuer E, et al. Using qualitative comparative analysis and theory of change to unravel the effects of a mental health intervention on service utilisation in Nepal. BMJ Global Health 2018; 3(6): e001023.

- 26. Zimmerman L, et al. Participatory system dynamics modeling: increasing stakeholder engagement and precision to improve implementation planning in systems. Administration and Policy in Mental Health and Mental Health Services Research 2016; 43(6): 834–849.

- 27. Jenness SM, et al. Impact of the centers for disease control's HIV preexposure prophylaxis guidelines for men who have sex with men in the United States. The Journal of Infectious Diseases 2016; 214(12): 1800–1807.

- 28. Lane-Fall MB, et al. Handoffs and transitions in critical care (HATRICC): protocol for a mixed methods study of operating room to intensive care unit handoffs. BMC Surgery 2014; 14(1): 96.

- 29. Ma J, et al. Research aimed at improving both mood and weight (RAINBOW) in primary care: a Type 1 hybrid design randomized controlled trial. Contemporary Clinical Trials 2015; 43: 260–278.

- 30. Garner BR, et al. Testing the implementation and sustainment facilitation (ISF) strategy as an effective adjunct to the Addiction Technology Transfer Center (ATTC) strategy: study protocol for a cluster randomized trial. Addiction Science & Clinical Practice 2017; 12(1): 32.

- 31. Smith JD, et al. An individually tailored family-centered intervention for pediatric obesity in primary care: study protocol of a randomized type II hybrid implementation-effectiveness trial (Raising Healthy Children study). Implementation Science 2018; 13(11): 1–15.

- 32. Bauer MS, et al. Partnering with health system operations leadership to develop a controlled implementation trial. Implementation Science 2016; 11(1): 22.

- 33. Kilbourne AM, et al. Protocol: Adaptive Implementation of Effective Programs Trial (ADEPT): cluster randomized SMART trial comparing a standard versus enhanced implementation strategy to improve outcomes of a mood disorders program. Implementation Science 2014; 9(1): 132.

- 34. Trochim WMK, Donnelly JP. The Research Methods Knowledge Base. 3rd ed. Mason, OH: Cengage Learning, 2008.

- 35. Miller CJ, Smith SN, Pugatch M. Experimental and quasi-experimental designs in implementation research. Psychiatry Research 2019; 283: 112452.

- 36. Mazzucca S, et al. Variation in research designs used to test the effectiveness of dissemination and implementation strategies: a review. Frontiers in Public Health 2018; 6: 32.

- 37. Brown CH, et al. Adaptive designs for randomized trials in public health. Annual Review of Public Health 2009; 30: 1–25.

- 38. Dziak JJ, Nahum-Shani I, Collins LM. Multilevel factorial experiments for developing behavioral interventions: power, sample size, and resource considerations. Psychological Methods 2012; 17(2): 153–175.

- 39. Kilbourne AM, et al. Implementing evidence-based interventions in health care: application of the replicating effective programs framework. Implementation Science 2007; 2(1): 42.

- 40. Kilbourne AM, et al. Adaptive School-based Implementation of CBT (ASIC): clustered-SMART for building an optimized adaptive implementation intervention to improve uptake of mental health interventions in schools. Implementation Science 2018; 13(1): 119.

- 41. Collins LM, et al. Factorial experiments: efficient tools for evaluation of intervention components. American Journal of Preventive Medicine 2014; 47(4): 498–504.

- 42. Dagne GA, et al. Testing moderation in network meta-analysis with individual participant data. Statistics in Medicine 2016; 35(15): 2485–2502.

- 43. Brown CH, et al. An overview of research and evaluation designs for dissemination and implementation. Annual Review of Public Health 2017; 38(1): 1–22.

- 44. Brown CH, et al. Dynamic wait-listed designs for randomized trials: new designs for prevention of youth suicide. Clinical Trials 2006; 3(3): 259–271.

- 45. Wyman PA, et al. Designs for testing group-based interventions with limited numbers of social units: the dynamic wait-listed and regression point displacement designs. Prevention Science 2015; 16(7): 956–966.

- 46. Newcomer KE, Hatry HP, Wholey JS. Handbook of Practical Program Evaluation. 4th ed. San Francisco, CA: Jossey-Bass, 2015.

- 47. Smith JD. Single-case experimental designs: a systematic review of published research and current standards. Psychological Methods 2012; 17(4): 510–550.

- 48. Thiese MS. Observational and interventional study design types; an overview. Biochemia Medica (Zagreb) 2014; 24(2): 199–210.

- 49. Hamilton AB, Finley EP. Qualitative methods in implementation research: an introduction. Psychiatry Research 2019; 280: 112516.

- 50. Palinkas LA, Rhoades Cooper B. Mixed methods evaluation in dissemination and implementation science. In: Brownson RC, Colditz GA, Proctor EK, eds. Dissemination and Implementation Research in Health. 2nd ed. New York, NY: Oxford University Press, 2017, pp. 335–353.

- 51. Curran GM, et al. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Medical Care 2012; 50(3): 217–226.

- 52. Landes SJ, et al. An introduction to effectiveness-implementation hybrid designs. Psychiatry Research 2019; 280: 112513.

- 53. Grol R, et al. Improving Patient Care: The Implementation of Change in Health Care. 2nd ed. Chichester: John Wiley & Sons, Ltd, 2013.

- 54. Lich KH, et al. A call to address complexity in prevention science research. Prevention Science 2013; 14(3): 279–289.

- 55. Landsverk J, et al. Design and analysis in dissemination and implementation research. In: Brownson RC, Colditz GA, Proctor EK, eds. Dissemination and Implementation Research in Health: Translating Research to Practice. 2nd ed. New York: Oxford University Press, 2017, pp. 201–227.

- 56. Wilensky U, Rand W. An Introduction to Agent-Based Modeling: Modeling Natural, Social, and Engineered Complex Systems with NetLogo. Cambridge, MA: MIT Press, 2015.

- 57. Hovmand P. Community-Based System Dynamics Modeling. New York, NY: Springer, 2014.

- 58. Maroulis S, et al. Modeling the transition to public school choice. Journal of Artificial Societies and Social Simulation 2014; 17(2): 3.

- 59. Ragin CC. Using qualitative comparative analysis to study causal complexity. Health Services Research 1999; 34(5 Pt 2): 1225–1239.

- 60. Baumgartner M. Regularity theories reassessed. Philosophia 2008; 36(3): 327–354.

- 61. Mayer DK, Birken SA, Chen RC. Avoiding implementation errors in cancer survivorship care plan effectiveness studies. Journal of Clinical Oncology 2015; 33(31): 3528–3530.

- 62. Baumgartner M, Ambühl M. Causal modeling with multi-value and fuzzy-set coincidence analysis. Political Science Research and Methods 2018. doi: 10.1017/psrm.2018.45

- 63. Gonzales R, et al. A framework for training health professionals in implementation and dissemination science. Academic Medicine 2012; 87(3): 271–278.

- 64. Mittman BS, et al. Expanding D&I science capacity and activity within NIH Clinical and Translational Science Award (CTSA) Programs: guidance and successful models from national leaders. Implementation Science 2015; 10(1): A38.

- 65. Dolor RJ, Proctor E, Stevens KR, Boone LR, Meissner P, Baldwin L-M. Dissemination and implementation science activities across the Clinical Translational Science Award (CTSA) Consortium: Report from a survey of CTSA leaders. Journal of Clinical and Translational Science 2019. doi: 10.1017/cts.2019.422

- 66. Inkelas M, et al. Enhancing dissemination, implementation, and improvement science in CTSAs through regional partnerships. Clinical and Translational Science 2015; 8(6): 800–806.

- 67. Brownson RC, et al. Building capacity for dissemination and implementation research: one university's experience. Implementation Science 2017; 12(1): 104.

- 68. Glasgow RE, Chambers D. Developing robust, sustainable, implementation systems using rigorous, rapid and relevant science. Clinical and Translational Science 2012; 5(1): 48–55.

- 69. Ramsey AT, et al. Designing for Accelerated Translation (DART) of emerging innovations in health. Journal of Clinical and Translational Science 2019; 3(2–3): 53–58.

- 70. Meissner HI, et al. The US training institute for dissemination and implementation research in health. Implementation Science 2013; 8(1): 1.

- 71. Proctor EK, et al. The implementation research institute: training mental health implementation researchers in the United States. Implementation Science 2013; 8(1): 1.

- 72. Padek M, et al. Training scholars in dissemination and implementation research for cancer prevention and control: a mentored approach. Implementation Science 2018; 13(1): 18.

- 73. Chambers DA, Pintello D, Juliano-Bult D. Capacity-building and training opportunities for implementation science in mental health. Psychiatry Research 2020; 283: 112511.