Highlights

- We analysed over 450 references from all major databases.
- We provided a comprehensive survey on multimodal data fusion in neuroimaging.
- This review encompassed current challenges and applications, strengths and limitations.
- Fundamental fusion rules and fusion quality assessment methods were reviewed.
- Atlas-based fusion segmentation, quantification, and applications were reviewed.

Keywords: Multimodal data fusion, Neuroimaging, Magnetic resonance imaging, PET, SPECT, Fusion rules, Assessment, Applications, Partial volume effect

Abstract

Multimodal fusion in neuroimaging combines data from multiple imaging modalities to overcome the fundamental limitations of individual modalities. Neuroimaging fusion can achieve higher temporal and spatial resolution, enhance contrast, correct imaging distortions, and bridge physiological and cognitive information. In this study, we analyzed over 450 references published from 1978 to 2020 in PubMed, Google Scholar, IEEE, ScienceDirect, Web of Science, and other sources. We provide a review that encompasses (1) an overview of current challenges in multimodal fusion, (2) the current medical applications of fusion for specific neurological diseases, (3) the strengths and limitations of available imaging modalities, (4) fundamental fusion rules, (5) fusion quality assessment methods, and (6) the applications of fusion for atlas-based segmentation and quantification. Overall, multimodal fusion shows significant benefits in clinical diagnosis and neuroscience research. Widespread education and further research among engineers, researchers, and clinicians will benefit the field of multimodal neuroimaging.

1. Introduction

Neuroimaging has played pivotal roles in clinical diagnosis and basic biomedical research over the past decades. As described in the following sections, the most widely used imaging modalities are magnetic resonance imaging (MRI), computerized tomography (CT), positron emission tomography (PET), and single-photon emission computed tomography (SPECT). Among them, MRI is a non-radioactive, non-invasive, and versatile technique that has given rise to many distinct imaging modalities, such as diffusion-weighted imaging, diffusion tensor imaging, susceptibility-weighted imaging, and spectroscopic imaging. PET is also versatile, as it can use different radiotracers to target different molecules or to trace different biological pathways or receptors in the body.

These individual imaging modalities (the use of one imaging modality), with their different signal sources, energy levels, spatial resolutions, and temporal resolutions, therefore provide complementary information on anatomical structure, pathophysiology, metabolism, structural connectivity, functional connectivity, etc. Over the past decades, continuous efforts have been made to develop individual modalities and improve their technical performance. Improvements have targeted both data acquisition and data processing, aiming to increase spatial and/or temporal resolution, improve the signal-to-noise ratio and contrast-to-noise ratio, and reduce scan time. In terms of applications, individual modalities have been widely used to meet clinical and scientific challenges. At the same time, the technical development and biomedical application of the concerted, integrated use of multiple neuroimaging modalities are trending up in both research and clinical institutions. The driving force of this trend is twofold. First, every individual modality has its limitations. For example, some multiple sclerosis (MS) lesions can appear normal in T1-weighted or T2-weighted MR images but show pathological changes in diffusion-weighted imaging (DWI) or susceptibility-weighted imaging (SWI) [1]. Second, a disease, disorder, or lesion may manifest itself in different forms, symptoms, or etiologies; conversely, different diseases may share some common symptoms or appearances [2, 3]. Therefore, an individual imaging modality may not reveal a complete picture of a disease, whereas multimodal imaging (the use of multiple imaging modalities) may lead to a more comprehensive understanding of the disease, help identify contributing factors, and support the development of biomarkers.

In the narrow sense, a multimodal imaging study means the use of multiple imaging devices, such as PET and MRI scanners; different imaging modes, such as structural MRI, diffusion-weighted imaging, and magnetic resonance spectroscopy; or even different contrast mechanisms, such as imaging with and without contrast agents, in a single examination or experiment of a subject. This practice has been widely used in clinical diagnosis and medical research. For example, a routine MRI protocol for a stroke patient may include T1-weighted and T2-weighted scans, high-resolution structural MRI, diffusion-weighted imaging, SWI, etc. [4, 5]. An MRI protocol for studying a psychiatric disorder may combine structural MRI, functional MRI, MR spectroscopic imaging, etc. [6, 7].

In the broad sense, a multimodal imaging study may use multimodal imaging data obtained separately, from different subjects, and/or at different clinical or research sites. This practice offers the advantage of large and diverse datasets. However, it also brings challenges: sophisticated models, complicated data normalization (including the correction of errors and variations embedded in data from different institutions), data fusion, and data integration [8, 9].

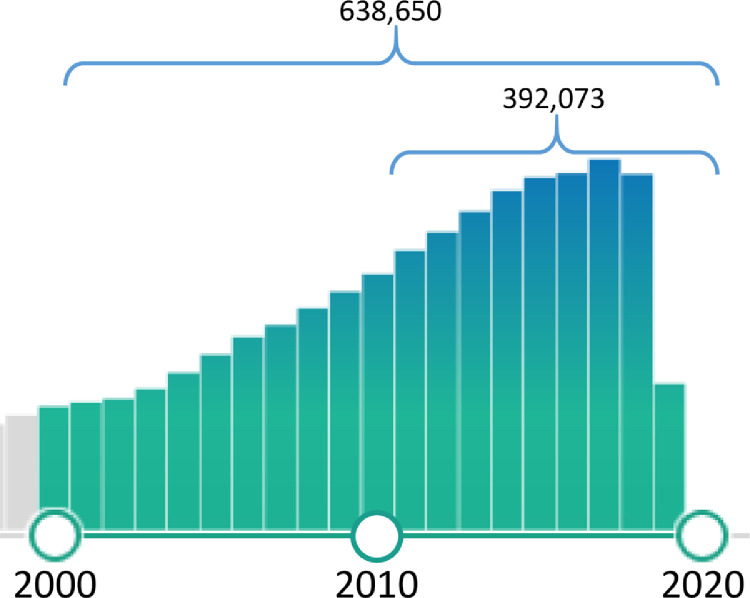

In recent years, the number of peer-reviewed journal articles on neuroimaging has increased steadily. A PubMed query for the title keywords “neuroimaging” OR “brain imaging” returned more than 39,000 articles published from 2010 to February 2020, when this paper was drafted (Fig. 1). These publications include not only applications of multimodal neuroimaging in clinical examinations and biomedical research but also methodological studies of image processing and fusion in multimodal neuroimaging.

Fig. 1.

Numbers of peer-reviewed papers with the keywords of “neuroimaging” or “brain imaging” in titles (the numbers and the bar graph were generated by PubMed in Feb 2020).

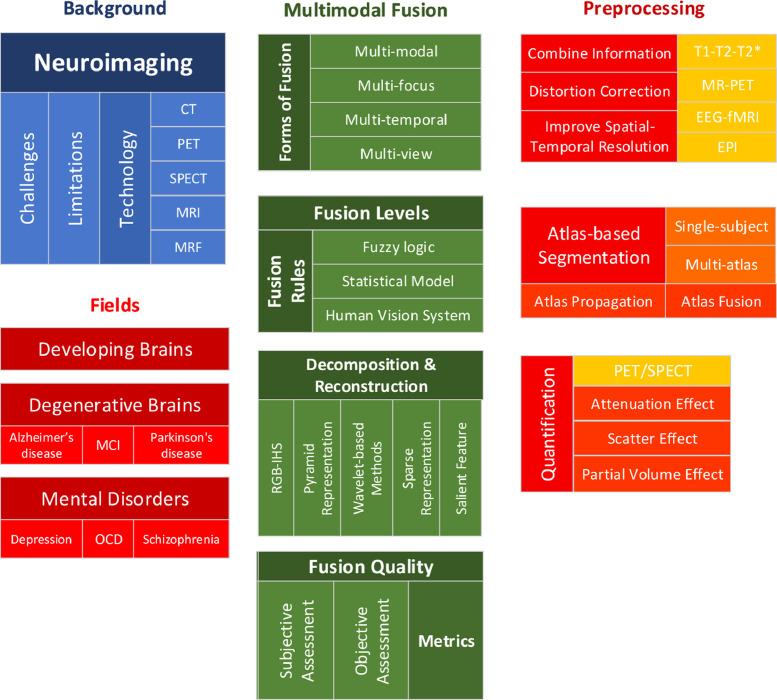

Therefore, this paper focuses on two main aspects: (1) we review recent, representative papers that illustrate the strengths and limitations of the neuroimaging modalities and the corresponding analysis methods, and in particular the need for improved image fusion methods; and (2) we review recent methodological developments in data preprocessing and data fusion in multimodal neuroimaging. Although we tried to cover all neuroimaging modalities, we inevitably paid more attention to MRI modalities. This reflects not only the broad practical applicability and versatility of MRI but also the limits of our expertise. Fig. 2 shows the taxonomy of this review.

Fig. 2.

Taxonomy of this review.

The main contents of the paper are organized as follows. Chapter 2 briefly introduces neuroimaging and the challenges that motivate multimodal imaging. Chapter 3 introduces the commonly used neuroimaging modalities, including computerized tomography, positron emission tomography, single-photon emission computed tomography, and magnetic resonance imaging, which comprises many modalities in its own right. For each modality, we concisely describe its signal source, energy level, spatial resolution, temporal resolution, and major applications. Chapter 4 describes applications of neuroimaging in three major areas: the developing brain, the degenerative brain, and mental disorders. In each part, we first briefly describe the clinical and/or biomedical problems, then review recent papers on how neuroimaging has been used to address these problems, and finally point out the unmet needs and challenges.

Chapters 5 to 9 are devoted to multimodal neuroimaging fusion and cover some important procedures in data fusion. The coverage of topics is not exhaustive, and their order of presentation does not necessarily follow the fusion processing pipeline. Chapter 5 reviews the fundamental methods, which cover types, rules, atlas-based segmentation, decomposition, reconstruction, and quantification; Chapter 6 reviews subjective and objective assessment of data fusion in multimodal neuroimaging; Chapter 7 reviews the advantages of data fusion in improving spatial/temporal resolution, correcting distortion, and enhancing contrast, as well as the benefits of these advantages for fusing structural and functional images; Chapter 8 reviews atlas-based segmentation in multimodal imaging fusion; Chapter 9 reviews quantification in multimodal neuroimaging fusion. While this part focuses on PET and SPECT, some of the approaches and principles discussed, such as partial volume correction and attenuation (relaxation), can be applied to quantitative MRI modalities, such as DTI, ASL, quantitative susceptibility mapping (QSM), etc. Chapter 10 concludes the paper.

2. Multimodal imaging data fusion: challenges in neuroimaging

In this part, we review the current challenges of neuroimaging, including limited spatial/temporal resolution, lack of quantification, and imaging distortions. These challenges impose fundamental limitations on individual neuroimaging modalities, and some of them also persist in current multimodal neuroimaging. This part mainly covers the challenges of individual neuroimaging modalities that have driven the development of, and ongoing research into, multimodal neuroimaging methods.

2.1. Individual modality imaging

Neuroimaging can be divided into structural imaging and functional imaging according to the imaging mode. Structural imaging shows the structure of the brain and aids the diagnosis of brain diseases such as brain tumors or brain trauma. Functional imaging shows brain activity or metabolism while the brain carries out certain tasks, including sensory, motor, and cognitive functions. Functional imaging is mainly used in neuroscience and psychological research, but it is gradually becoming a new tool for clinical neurological diagnosis [10].

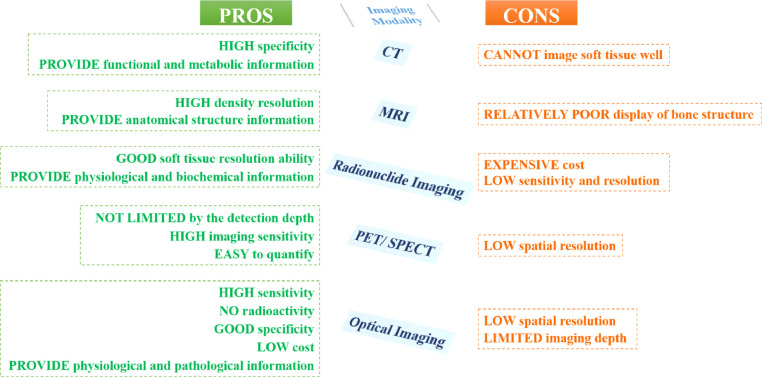

The amount of information obtainable through single-modality imaging is limited and often cannot capture the complexity of biological systems. For instance, CT can distinguish normal structures from diseased tissue according to their density and thus provides clear anatomical information, but it images soft tissue poorly. Generally speaking, MRI offers good soft-tissue contrast for most sequences, but it depicts bone structure relatively poorly. PET and SPECT are not limited by detection depth, have high sensitivity, and are easy to quantify, but their spatial resolution is low [11]. Optical imaging detects light emitted by fluorescent or bioluminescent probes. This technique has high sensitivity, good specificity, and low cost, and it involves no radioactivity. Optical imaging allows dynamic monitoring of processes such as the replication of viruses and bacteria in organisms. However, it has low spatial resolution and limited imaging depth [12, 13]. Therefore, every imaging technology has both benefits and drawbacks, as shown in Fig. 3, and it is difficult to obtain comprehensive and accurate information from any single imaging modality.

Fig. 3.

Advantages and disadvantages of various individual modality imaging.

2.2. Low spatial/temporal resolution

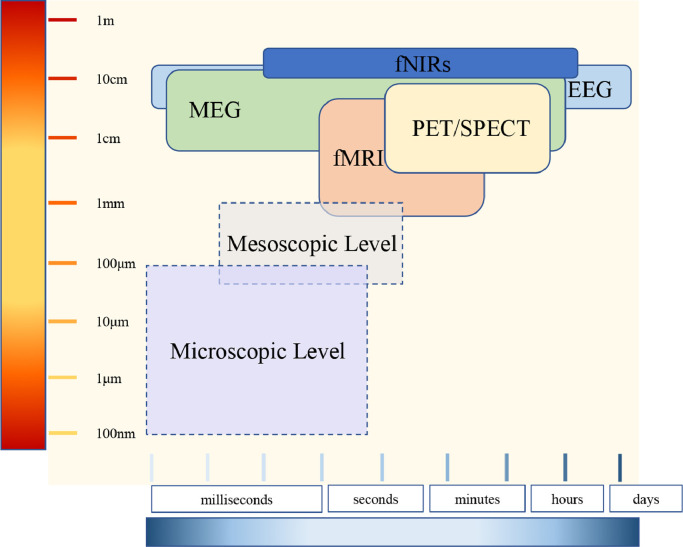

The most commonly used noninvasive functional imaging methods and their spatial and temporal ranges are illustrated in Fig. 4. Among these methods, functional MRI (fMRI) clearly reaches the highest spatial resolution. With high-field magnets, fMRI can cover the whole brain and image hemodynamic processes at the laminar and columnar levels of the human cortex, i.e., at submillimeter resolution [14]. However, its temporal resolution is relatively low for imaging neuronal population dynamics. Electroencephalography (EEG) and magnetoencephalography (MEG) can both measure electromagnetic changes on a millisecond scale, but their spatial resolution (localization uncertainty) is more than several millimeters [15, 16]. The microscopic level of neuroscience is often beyond the reach of noninvasive imaging techniques because it requires high spatial or temporal resolution.

Fig. 4.

Functional neuroimaging modalities.

2.3. Non-quantitative

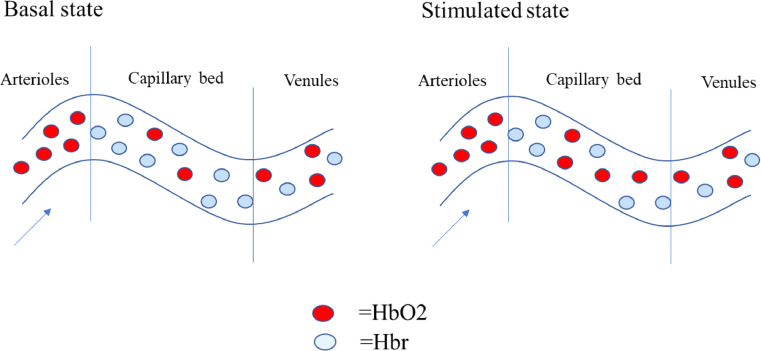

The majority of imaging modalities are non-quantitative and must obtain complementary information from other data. This additional information allows signals to be normalized, expressed in absolute units, and compared across subjects. For example, the fMRI signal measures hemodynamic changes induced by neuronal activity, which arise from a combination of complex physical and physiological processes. In different subjects or brain areas, the same level of neuronal activity can evoke different fMRI signals. As a consequence, fMRI signals can only be considered roughly proportional to neuronal activity. In 2008, Ances et al. found that cerebral blood flow (CBF) is related to fMRI signal variations across individual brain regions, age groups, and health conditions, which makes the fMRI signal highly sensitive to these factors [17]. Various approaches have been proposed to account for the resulting sensitivity differences. Among them, the so-called calibrated blood-oxygen-level-dependent (BOLD) approach, proposed and refined over the years by Blockley, Chiarelli, and Hoge, has been the most widely used [18], [19], [20]. However, arterial spin labeling (ASL), as implemented by the University of Michigan (UMICH) fMRI lab, provides more reliable absolute quantification of CBF with improved spatial and temporal resolution [21].
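As a minimal sketch of what absolute quantification means for ASL, the function below applies the single-compartment model commonly recommended for pseudo-continuous ASL (pCASL). The defaults (post-labeling delay, label duration, blood T1 at 3 T, labeling efficiency, blood-brain partition coefficient) are typical literature values assumed for illustration, not parameters taken from [21], and `pcasl_cbf` is a hypothetical helper name.

```python
import math

def pcasl_cbf(delta_m, m0, pld=1.8, tau=1.8, t1_blood=1.65, alpha=0.85, lam=0.9):
    """Estimate CBF (mL/100 g/min) from a pCASL control-minus-label difference.

    Assumed single-compartment model with typical 3 T parameters:
      delta_m  : control minus label signal (arbitrary units)
      m0       : equilibrium magnetization signal, same units as delta_m
      pld, tau : post-labeling delay and label duration (s)
      t1_blood : longitudinal relaxation time of arterial blood (s)
      alpha    : labeling efficiency
      lam      : blood-brain partition coefficient (mL/g)
    The factor 6000 converts mL/g/s to mL/100 g/min.
    """
    numerator = 6000.0 * lam * delta_m * math.exp(pld / t1_blood)
    denominator = 2.0 * alpha * t1_blood * m0 * (1.0 - math.exp(-tau / t1_blood))
    return numerator / denominator

# Illustrative input: a 1% perfusion-weighted signal difference
cbf = pcasl_cbf(delta_m=1.0, m0=100.0)
print(f"CBF = {cbf:.1f} mL/100 g/min")
```

Because the estimate scales linearly with the normalized difference ΔM/M0 and is expressed in physiological units, ASL values can be compared across subjects and sessions, which is precisely what the relative BOLD signal lacks.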

2.4. Distortion

Some neuroimaging modalities are prone to geometric distortion. Echo-planar imaging (EPI) is a fast imaging approach that can acquire a complete k-space dataset after a single excitation. Owing to its unmatched acquisition speed, EPI has revolutionized neuroimaging and serves as the standard readout module for most fMRI and diffusion MRI (dMRI) acquisitions. Nevertheless, EPI suffers from distortion and signal loss, mainly caused by magnetic field inhomogeneities, which leads to relatively poor image quality [22]. More generally, equipment imperfections may cause information loss, noise amplification, and artifacts, resulting in distorted images.
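The link between field inhomogeneity and EPI distortion can be made concrete. In EPI, the bandwidth per voxel along the phase-encoding direction is low (the reciprocal of the total readout time), so a local off-resonance frequency displaces signal by a predictable number of voxels. The sketch below assumes this simple linear model; the function name and the example values (100 Hz off-resonance, 64 phase-encoding lines, 0.5 ms echo spacing) are hypothetical and chosen for illustration.

```python
def epi_voxel_shift(delta_b0_hz, n_pe_lines, echo_spacing_s, accel=1):
    """Approximate displacement (in voxels) along the EPI phase-encoding axis.

    The bandwidth per voxel in the phase-encoding direction is
    1 / (effective echo spacing * number of phase-encoding lines).
    An off-resonance of delta_b0_hz therefore shifts signal by
    delta_b0_hz / bandwidth_per_voxel voxels. `accel` models in-plane
    parallel-imaging acceleration, which shortens the effective echo spacing.
    """
    effective_esp = echo_spacing_s / accel
    bandwidth_per_voxel = 1.0 / (effective_esp * n_pe_lines)
    return delta_b0_hz / bandwidth_per_voxel

print(epi_voxel_shift(100.0, 64, 0.5e-3))           # unaccelerated readout
print(epi_voxel_shift(100.0, 64, 0.5e-3, accel=2))  # acceleration factor 2
```

For these values the shift is about 3.2 voxels without acceleration and about 1.6 voxels with an acceleration factor of 2. This is why field-map-based corrections, which estimate the local off-resonance and undo the corresponding shift, are a standard step in fMRI and dMRI preprocessing.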

In summary, individual imaging modalities often have practical limitations, such as low sensitivity and specificity, low spatial/temporal/contrast resolution, and distortion. Because of these deficiencies, multimodal neuroimaging is needed to mitigate these shortcomings to some degree.

3. Multimodal imaging data fusion: imaging technologies

Neuroimaging, more commonly known as brain imaging, refers to a range of technologies that display the function, pathology, and structure of the nervous system. There are two main types of neuroimaging: functional imaging, which directly or indirectly visualizes information processing by the central nervous system, and structural imaging, which shows the structure of the brain. Neuroimaging is used for a more in-depth investigation when a physician finds that a patient may have a neurological disorder. Imaging modalities include magnetic resonance imaging (MRI), single-photon emission computed tomography (SPECT), computerized tomography (CT), positron emission tomography (PET), pneumoencephalography, and functional magnetic resonance imaging (fMRI). Depending on the suspected disease, patients are examined with different methods.

3.1. Computerized tomography

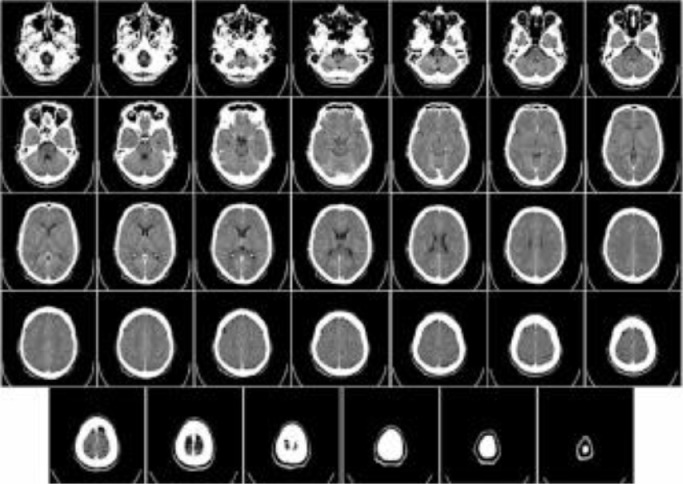

Computerized tomography (CT), also known as computerized X-ray imaging, combines a series of X-ray measurements acquired from multiple angles around the body and creates cross-sectional images by computer processing. Unlike conventional X-ray imaging [23], which uses a fixed X-ray tube, a CT scanner uses a motorized X-ray source that rotates within the gantry, a circular, donut-shaped frame. CT images therefore provide more information than conventional X-rays. CT can be recommended for diseases or injuries of various parts of the body, such as lesions or tumors of the abdomen, various types of heart disease, and injuries, tumors, or clots in the head [24], [25], [26]. Fig. 5 [27] shows an example of a CT scan of a brain.

Fig. 5.

An example of a CT brain scan.
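How CT "creates cross-sectional images by computer processing" from projections at many angles can be illustrated with a toy parallel-beam filtered backprojection in NumPy. This is a sketch under simplifying assumptions (parallel rays, nearest-neighbour detector binning, a plain ramp filter, a synthetic disk phantom, hypothetical function names); clinical scanners use fan- or cone-beam geometries and more sophisticated, often iterative, reconstruction.

```python
import numpy as np

def _detector_bins(n, theta):
    """Nearest detector bin hit by each pixel centre for view angle theta."""
    c = (n - 1) / 2.0
    rows, cols = np.mgrid[0:n, 0:n]
    x, y = cols - c, c - rows
    t = x * np.cos(theta) + y * np.sin(theta)
    return np.clip(np.round(t + c).astype(int), 0, n - 1)

def project(image, angles):
    """Crude parallel-beam forward projection: sum pixels into detector bins."""
    n = image.shape[0]
    sinogram = np.zeros((len(angles), n))
    for i, theta in enumerate(angles):
        np.add.at(sinogram[i], _detector_bins(n, theta).ravel(), image.ravel())
    return sinogram

def filtered_backprojection(sinogram, angles):
    """Ramp-filter each projection (zero-padded FFT), then smear it back along its rays."""
    n_views, n = sinogram.shape
    pad = 2 * n                                   # zero-padding avoids circular wrap-around
    ramp = np.abs(np.fft.fftfreq(pad))            # |frequency| ramp filter
    spectrum = np.fft.fft(sinogram, n=pad, axis=1) * ramp
    filtered = np.real(np.fft.ifft(spectrum, axis=1))[:, :n]
    recon = np.zeros((n, n))
    for i, theta in enumerate(angles):
        recon += filtered[i, _detector_bins(n, theta)]
    return recon * np.pi / n_views

# Synthetic phantom: a dim disk with a brighter core
n = 64
rows, cols = np.mgrid[0:n, 0:n]
r = np.hypot(rows - (n - 1) / 2.0, cols - (n - 1) / 2.0)
phantom = (r < 25).astype(float) + (r < 8).astype(float)
angles = np.linspace(0.0, np.pi, 180, endpoint=False)
recon = filtered_backprojection(project(phantom, angles), angles)
```

The reconstruction recovers the bright core, the surrounding disk, and the empty background qualitatively; exact values are not reproduced because of the nearest-neighbour interpolation.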

3.2. Positron emission tomography

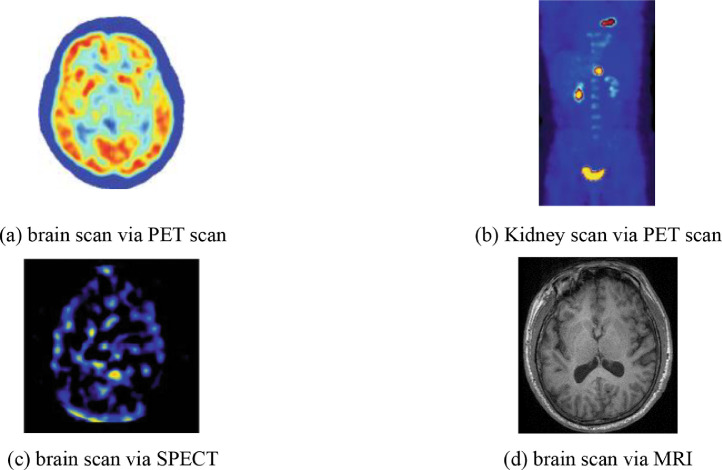

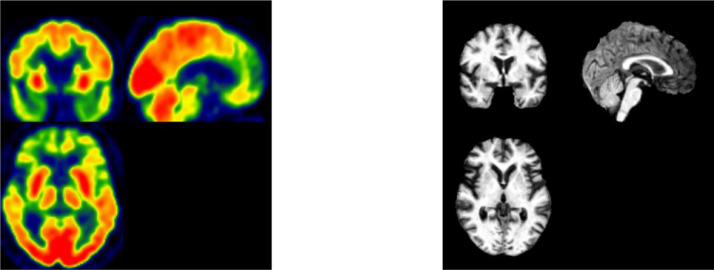

Positron emission tomography (PET) combines nuclear medicine and biochemical analysis and is mostly used to diagnose brain and heart conditions and cancer. Rather than imaging anatomy, PET tracks the distribution of a radioactive tracer to detect biochemical changes within body tissues, which reflect tissue function. These biochemical changes can reveal the onset of a disease process before other imaging methods can visualize the related anatomical changes. Only a tiny amount of radioactive substance is needed to examine the targeted tissues. PET can be used not only to detect the presence of disease or other conditions of organs or tissues but also to evaluate the function of organs such as the heart or the brain. The most common application of PET is cancer detection and treatment. Fig. 6(a) shows a PET image of the brain, and Fig. 6(b) shows a PET scan of the kidney [27].

Fig. 6.

Common scan modalities.
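PET's reputation for being easy to quantify rests largely on normalized uptake measures. A common one is the body-weight standardized uptake value (SUV): tissue activity concentration divided by the injected activity per gram of body weight, with the injected activity decay-corrected to scan time. The sketch below assumes a fluorine-18 tracer such as FDG; the helper names and the activity, weight, and timing values are hypothetical and for illustration only.

```python
import math

F18_HALF_LIFE_MIN = 109.77  # physical half-life of fluorine-18, minutes

def decayed_activity(injected_kbq, minutes_elapsed, half_life_min=F18_HALF_LIFE_MIN):
    """Remaining activity after radioactive decay: A(t) = A0 * exp(-ln 2 * t / T_half)."""
    return injected_kbq * math.exp(-math.log(2) * minutes_elapsed / half_life_min)

def suv_bw(tissue_kbq_per_ml, injected_kbq, body_weight_g, minutes_elapsed=0.0):
    """Body-weight SUV (dimensionless if tissue density is taken as 1 g/mL)."""
    dose_at_scan = decayed_activity(injected_kbq, minutes_elapsed)
    return tissue_kbq_per_ml / (dose_at_scan / body_weight_g)

# Illustrative: 370 MBq injected, 70 kg subject, 5 kBq/mL measured 60 min after injection
print(round(suv_bw(5.0, 370_000.0, 70_000.0, minutes_elapsed=60.0), 2))
```

Ignoring decay correction would underestimate SUV for the same measured concentration, which is one reason acquisition timing must be standardized when PET values are compared or fused with other modalities.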

3.3. Single-photon emission computed tomography

Single-photon emission computed tomography (SPECT) uses a gamma-emitting radioactive tracer to assess blood flow in organs or tissues. A gamma-emitting radioisotope, such as an isotope of gallium, is therefore injected into the patient's bloodstream. Gamma cameras detect the gamma rays emitted by the tracer, and a computer reconstructs them into tomographic cross-sections. SPECT is similar to traditional planar nuclear medicine imaging but provides 3D information as multiple cross-sectional slices through the patient, which can be manipulated or reformatted freely according to diagnostic or research requirements. Besides assessing blood flow, SPECT is also used for the presurgical evaluation of medically uncontrolled seizures. Fig. 6(c) [27] shows a SPECT scan of the brain.

3.4. Magnetic resonance imaging

Magnetic resonance imaging (MRI) uses a strong magnetic field, magnetic field gradients, and radio waves to generate images of the anatomy and physiological processes of the body [28]. Unlike PET or CT, MRI requires neither the injection of radioisotopes nor X-rays. Because ionizing radiation carries a risk of cancer, MRI, which does not expose the body to ionizing radiation, is often a better choice than CT and is one of the safest medical imaging procedures. MRI is widely used in hospitals and clinics for the diagnosis of conditions in different body regions, including the brain, spinal cord, bones and joints, breasts, heart and blood vessels, and other internal organs, such as the liver, womb, or prostate gland [29], [30], [31]. MRI can also be used to image non-living objects [32]. Fig. 6(d) shows an MRI brain image [33].

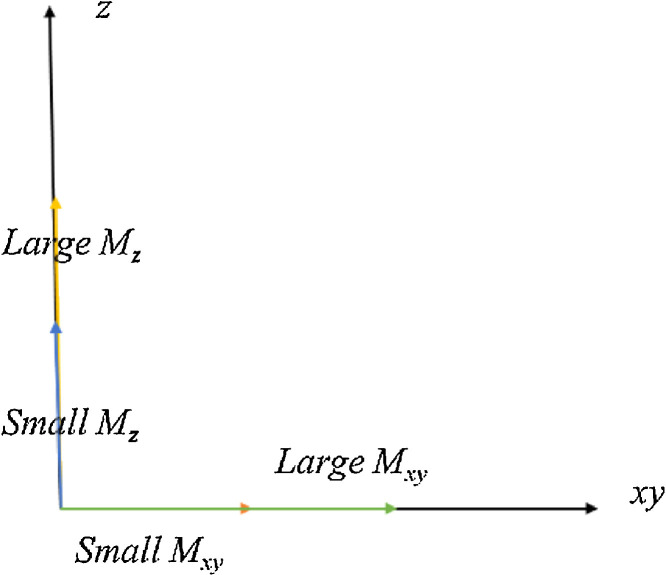

3.4.1. T1, T2, and proton density

T1-weighted, T2-weighted, and proton density (PD)-weighted images are the three basic types of MRI images. Together, the tissue parameters T1, T2, and PD determine the contrast of MR images, and the weight given to each depends on the sequence parameters [34]. By selecting pulse sequences with different timings, we can control the contrast in the imaged region. Other sequence types also exist, such as fluid-attenuated inversion recovery (FLAIR) and short tau inversion recovery (STIR); this section covers only the three main types. Fig. 7 shows the relationship between the longitudinal magnetization Mz and the transverse magnetization Mxy, where xy denotes the transverse plane [35].

Fig. 7.

The relationship between Mz and Mxy.

The larger Mz is when the 90° RF pulse is applied, the larger the resulting transverse signal (Mxy). The repetition time (TR) is the time between successive 90° RF pulses. The echo time (TE) is the time between the excitation pulse and the peak of the echo signal [26].

T1, the longitudinal relaxation time, is the time constant for the longitudinal magnetization Mz to recover from zero to about 63% of its maximum value in a static magnetic field; T1 relaxation is thus the recovery of longitudinal magnetization. T1 values of hydrogen nuclei differ across molecules and tissues. T1-weighted imaging is commonly used to detect fatty tissue, provide general morphological information, and characterize focal liver lesions.

T2, the transverse relaxation time, is the time constant for the transverse magnetization Mxy to decay to about 37% of its initial value. As with T1, hydrogen nuclei have different T2 values in different molecules and tissues. T2-weighted imaging is suitable for revealing cerebral white matter lesions and assessing edema, inflammation, and zonal anatomy in the prostate and uterus.

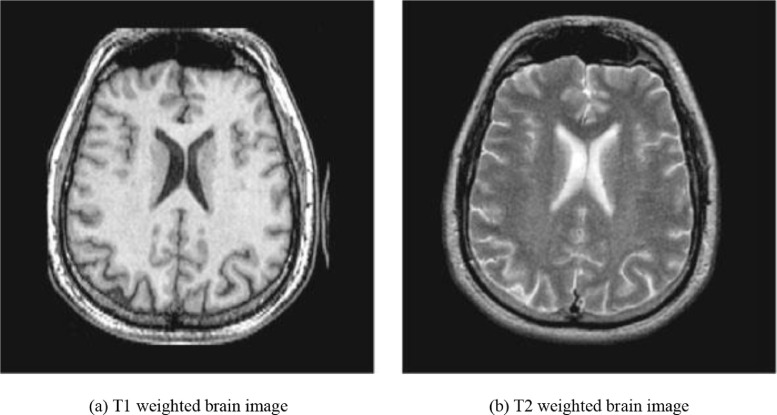

Unlike T1 and T2, which mainly reflect the magnetic relaxation properties of hydrogen nuclei, PD reflects the number of hydrogen nuclei in the imaged region. PD-weighted images are acquired with a short echo time and a long repetition time and can provide a clearer distinction between gray matter and white matter. PD-weighted imaging is particularly useful for detecting joint disease and injury. Fig. 8(a) and (b) show a T1-weighted and a T2-weighted image of the same brain, respectively [27].

Fig. 8.

Two types of weighted images of the same brain.
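The dependence of contrast on TR and TE described above can be checked with the standard simplified spin-echo signal model, S = PD·(1 − e^(−TR/T1))·e^(−TE/T2), which holds approximately when TE is much shorter than TR. The tissue values below are rough, illustrative 1.5 T figures (assumptions, not measurements), and the TR/TE pairs are typical textbook choices.

```python
import math

# Rough, illustrative 1.5 T values (assumed): relative PD, T1 (ms), T2 (ms)
TISSUES = {
    "white matter": (0.70, 600.0, 80.0),
    "gray matter": (0.80, 950.0, 100.0),
    "CSF": (1.00, 4000.0, 2000.0),
}

# TR/TE pairs (ms) that emphasize each contrast mechanism
PROTOCOLS = {
    "T1-weighted": (500.0, 15.0),    # short TR, short TE
    "T2-weighted": (4000.0, 100.0),  # long TR, long TE
    "PD-weighted": (4000.0, 15.0),   # long TR, short TE
}

def spin_echo_signal(pd, t1, t2, tr, te):
    """Simplified spin-echo signal: S = PD * (1 - exp(-TR/T1)) * exp(-TE/T2)."""
    return pd * (1.0 - math.exp(-tr / t1)) * math.exp(-te / t2)

for name, (tr, te) in PROTOCOLS.items():
    signals = {tissue: spin_echo_signal(*p, tr, te) for tissue, p in TISSUES.items()}
    print(name, {tissue: round(s, 3) for tissue, s in signals.items()})

# The 63% (T1) and 37% (T2) definitions follow from the exponentials at t = T1 or T2
print(round(1 - math.exp(-1), 3), round(math.exp(-1), 3))  # 0.632 0.368
```

With these values, white matter is brightest and CSF darkest on the T1-weighted setting, the order reverses on the T2-weighted setting, and gray matter is brighter than white matter on the PD-weighted setting.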

3.4.2. Functional magnetic resonance imaging

Functional magnetic resonance imaging, or functional MRI (fMRI), measures brain activity by detecting changes associated with blood flow. It relies on the coupling between neuronal activation and cerebral blood flow: when an area of the brain is in use, blood flow to that area increases. fMRI maps neuronal activity in the brain and spinal cord of humans or animals by visualizing changes in blood flow, which are related to the energy use of brain cells. fMRI also includes resting-state, or task-free, fMRI [36], which can provide a subject's baseline blood-oxygen-level-dependent (BOLD) signal [37].
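Because fMRI measures a delayed hemodynamic response rather than neuronal activity itself, task analyses typically predict the BOLD time course by convolving the stimulus timing with a hemodynamic response function (HRF). The sketch below assumes the widely used double-gamma HRF shape (gamma shape parameters 6 and 16, undershoot ratio 1/6, as in SPM's canonical HRF) and a hypothetical 20 s on/20 s off block design; the function names are illustrative.

```python
import math

def canonical_hrf(t, a1=6.0, a2=16.0, ratio=1.0 / 6.0):
    """Double-gamma HRF: a gamma(a1) response minus a scaled gamma(a2) undershoot."""
    if t <= 0.0:
        return 0.0
    response = t ** (a1 - 1) * math.exp(-t) / math.gamma(a1)
    undershoot = t ** (a2 - 1) * math.exp(-t) / math.gamma(a2)
    return response - ratio * undershoot

def predicted_bold(stimulus, dt, kernel_length_s=32.0):
    """Discrete convolution of a stimulus time course with the HRF (rectangle rule)."""
    kernel = [canonical_hrf(k * dt) for k in range(int(round(kernel_length_s / dt)))]
    out = []
    for i in range(len(stimulus)):
        acc = 0.0
        for k in range(min(i + 1, len(kernel))):
            acc += stimulus[i - k] * kernel[k]
        out.append(acc * dt)
    return out

dt = 0.1                                    # seconds per sample
stimulus = ([1.0] * 200 + [0.0] * 200) * 2  # 20 s on / 20 s off, repeated twice
bold = predicted_bold(stimulus, dt)
```

The predicted response rises several seconds after stimulus onset, plateaus during the "on" block, and dips below baseline after the stimulus ends; in a real analysis such regressors are fitted to the measured BOLD data, typically with a general linear model.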

3.4.3. Diffusion weighted/tensor imaging

Diffusion-weighted imaging (DWI) and diffusion tensor imaging (DTI) generate image contrast from differences in the magnitude of water diffusion within the brain. Diffusion is the random thermal (Brownian) motion of molecules, which results in net passive movement from regions of higher concentration to regions of lower concentration [38]. Diffusion within the brain is affected by many factors, such as temperature, the type of molecule under investigation, and the microstructural environment in which diffusion takes place. With diffusion-sensitized MRI sequences, image contrast can be generated from differences in diffusion rates. DWI is highly effective for the early diagnosis of ischemic tissue injury, even before the pathology becomes visible on conventional MR sequences. DWI therefore helps define the time window for tissue-salvaging interventions.
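Two standard quantities make the diffusion contrast above concrete: the apparent diffusion coefficient (ADC), obtained from the mono-exponential model S(b) = S0·e^(−b·ADC), and the fractional anisotropy (FA) of a diffusion tensor, computed from its three eigenvalues. Restricted diffusion in acute ischemia appears as a lowered ADC. The sketch below uses hypothetical helper names and illustrative signal values and eigenvalues.

```python
import math

def adc_two_point(s0, sb, b):
    """ADC from two acquisitions: S(b) = S0 * exp(-b * ADC)  =>  ADC = ln(S0/Sb) / b."""
    return math.log(s0 / sb) / b

def fractional_anisotropy(l1, l2, l3):
    """FA from diffusion-tensor eigenvalues: 0 for isotropic, approaching 1 for linear."""
    mean = (l1 + l2 + l3) / 3.0
    num = (l1 - mean) ** 2 + (l2 - mean) ** 2 + (l3 - mean) ** 2
    den = l1 ** 2 + l2 ** 2 + l3 ** 2
    return math.sqrt(1.5 * num / den)

# Illustrative: b = 1000 s/mm^2, signal falls from 1000 to 450 (ADC in mm^2/s)
print(adc_two_point(1000.0, 450.0, 1000.0))
print(fractional_anisotropy(1.7e-3, 0.3e-3, 0.3e-3))  # elongated, fiber-like tensor
print(fractional_anisotropy(0.8e-3, 0.8e-3, 0.8e-3))  # isotropic tensor, FA near 0
```

A clinical DTI pipeline would first fit the full tensor from many diffusion directions; the two-point ADC shown here is the simplest case.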

3.4.4. Perfusion and susceptibility weighted imaging

Perfusion-weighted imaging (PWI) refers to a variety of MRI techniques that provide insight into the perfusion of tissues by blood [39]. PWI can be used to evaluate ischaemic conditions, neoplasms, and neurodegenerative diseases. There are three main perfusion MRI techniques: dynamic susceptibility contrast (DSC), dynamic contrast-enhanced (DCE) imaging, and arterial spin labeling (ASL).
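For DSC, the basic processing idea is that a contrast bolus causes a transient signal drop; the signal is converted to a change in transverse relaxation rate, ΔR2*(t) = −ln(S(t)/S0)/TE, which is assumed proportional to contrast concentration, and the area under the ΔR2* curve gives a relative cerebral blood volume (rCBV). The sketch below makes those assumptions explicit and omits arterial-input-function deconvolution and leakage correction; the simulated bolus, parameter values, and function names are hypothetical.

```python
import math

def delta_r2star(signal, s0, te):
    """Relaxation-rate change from a DSC signal: dR2*(t) = -ln(S(t) / S0) / TE."""
    return [-math.log(s / s0) / te for s in signal]

def relative_cbv(signal, s0, te, dt):
    """Uncorrected relative CBV: area under the dR2* curve (rectangle rule)."""
    return sum(delta_r2star(signal, s0, te)) * dt

def simulate_dsc(peak_scale, s0=500.0, te=0.03, dt=1.0, k=2.0, n=60, arrival=10.0):
    """Hypothetical bolus passage: gamma-variate concentration C(t), S = S0 * exp(-TE * k * C)."""
    signal = []
    for i in range(n):
        t = i * dt - arrival
        conc = peak_scale * t ** 3 * math.exp(-t / 1.5) if t > 0 else 0.0
        signal.append(s0 * math.exp(-te * k * conc))
    return signal

te, dt, s0 = 0.03, 1.0, 500.0  # assumed echo time (s), sampling interval (s), baseline signal
normal = relative_cbv(simulate_dsc(1.0), s0, te, dt)
doubled = relative_cbv(simulate_dsc(2.0), s0, te, dt)
print(normal, doubled / normal)
```

Because the log transform linearizes the exponential signal drop, doubling the simulated blood volume doubles the rCBV estimate.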

Susceptibility-weighted imaging (SWI), previously known as BOLD venographic imaging, is an MRI sequence that is extremely sensitive to venous blood, hemorrhage, and iron deposition. SWI exploits susceptibility differences between tissues, which are detected in the phase image. Combining the magnitude and phase data yields a magnitude image with enhanced contrast. Because of its sensitivity to venous blood, SWI is commonly used for traumatic brain injury (TBI) and high-resolution brain venography.
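The magnitude-phase combination can be sketched with one widely used formulation: a linear negative-phase mask that equals 1 for non-negative phase and falls to 0 at −π, multiplied into the magnitude several times (commonly four). The sketch assumes the phase has already been unwrapped and high-pass filtered, and that venous blood produces negative phase; the sign convention depends on the scanner's handedness. The function names are hypothetical.

```python
import math

def negative_phase_mask(phase):
    """Linear negative-phase mask: 1 for phase >= 0, falling to 0 at phase = -pi."""
    if phase >= 0.0:
        return 1.0
    return max(0.0, 1.0 + phase / math.pi)

def swi_value(magnitude, phase, n=4):
    """SWI voxel value: magnitude multiplied by the phase mask raised to the power n."""
    return magnitude * negative_phase_mask(phase) ** n

# A vein-like voxel with negative phase is darkened; tissue with positive phase is unchanged.
print(swi_value(100.0, -math.pi / 2))  # mask 0.5, so 100 * 0.5**4
print(swi_value(100.0, 0.3))
```

Raising the mask to a power strengthens the suppression of voxels with negative phase, which is what makes small veins and microbleeds conspicuous in SWI.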

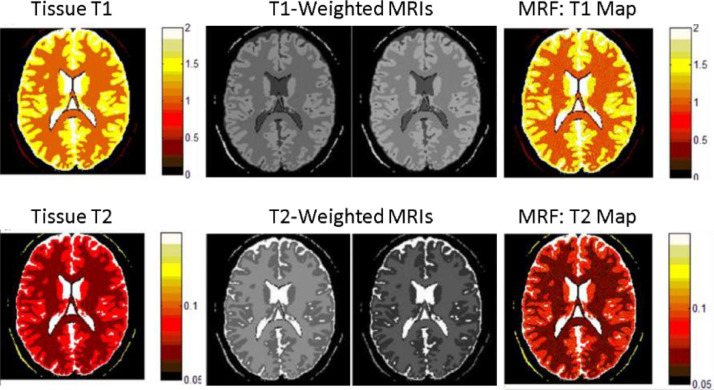

3.4.5. Magnetic resonance fingerprinting

Magnetic resonance fingerprinting (MRF) [40] is a new MRI technique that integrates MR physics and computer pattern recognition to achieve fast, simultaneous quantitative imaging of multiple parameters. The technique consists of three modules. First, fingerprint signals are excited and acquired from the subject in the MR scanner using a pulse sequence with pseudorandomly varied acquisition parameters, so that the signals reflect the properties of the tissue. Second, the evolution of the fingerprint signals for different combinations of tissue parameters is predicted by computer simulation using the Bloch equations, and a fingerprint dictionary indexed by the quantized parameters is constructed. Finally, pattern recognition is applied to find the dictionary entry that best matches each measured fingerprint, yielding the corresponding quantitative parameters. Unlike most conventional MRI modalities, which provide qualitative, contrast-based images determined not only by tissue properties but also by experimental conditions, MRF provides quantitative images of tissue properties that reflect the pathological condition of the subject. Fig. 9 shows digital phantom experiments of conventional MRI and MRF. The upper row shows a digital brain with T1 values (left), T1-weighted MRIs with different experimental parameters (middle), and the MRF-reconstructed T1 map (right); the lower row shows a digital brain with T2 values (left), T2-weighted MRIs with different experimental parameters (middle), and the MRF-reconstructed T2 map (right). The experiments demonstrate that the contrast of conventional “weighted” MRI images depends on both the tissue properties and the experimental parameters, whereas MRF reconstructs parameter maps that are independent of the experimental parameters.

Fig. 9.

Digital phantom experiments of conventional T1 weighted and T2 weighted MRIs, and MRF.

Currently, the applications of MRF have been limited to biomedical research, and to our knowledge, the fusion of MRF with other neuroimaging modalities has not been reported. Given its parametric and quantitative features, MRF is expected to play an important role not only in neuroimaging but also in the fusion of multimodal neuroimaging.
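The three MRF modules (pseudorandom acquisition, simulated dictionary, pattern matching) can be illustrated with a toy example. This is only a sketch: the closed-form signal model below is a hypothetical stand-in for the Bloch-equation simulation that real MRF uses, and the acquisition schedule, parameter grids, and function names are assumptions chosen for illustration.

```python
import math
import random

def toy_fingerprint(t1, t2, schedule):
    """Hypothetical stand-in for a Bloch simulation.

    Each time point uses a pseudorandom inversion-recovery-like delay `ti`
    and echo time `te` (ms), so different (T1, T2) pairs trace different
    signal evolutions ("fingerprints").
    """
    return [(1 - 2 * math.exp(-ti / t1)) * math.exp(-te / t2) for ti, te in schedule]

def normalise(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def build_dictionary(t1_values, t2_values, schedule):
    """Module 2: simulate and normalize a fingerprint for every (T1, T2) grid point."""
    return {(t1, t2): normalise(toy_fingerprint(t1, t2, schedule))
            for t1 in t1_values for t2 in t2_values}

def match(measured, dictionary):
    """Module 3: return the entry with the largest normalized inner product."""
    m = normalise(measured)
    return max(dictionary, key=lambda key: sum(a * b for a, b in zip(m, dictionary[key])))

# Module 1: a pseudorandom acquisition schedule (fixed seed for reproducibility)
rng = random.Random(0)
schedule = [(rng.uniform(50, 3000), rng.uniform(5, 100)) for _ in range(200)]
dictionary = build_dictionary(range(300, 2001, 100), range(20, 201, 20), schedule)

# "Measured" voxel: T1 = 800 ms, T2 = 80 ms, with an arbitrary proton-density scaling
measured = [3.7 * s for s in toy_fingerprint(800, 80, schedule)]
print(match(measured, dictionary))
```

Because matching uses normalized fingerprints, the unknown overall scaling does not affect the estimated T1 and T2; in practice, that scaling factor is what MRF uses to estimate proton density.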

3.5. Comparison of imaging methods

Table 1 lists the main advantages, disadvantages and applications of each neuroimaging technology.

Table 1.

Comparison of various imaging methods.

| Imaging methods | Advantages | Disadvantages | Applications |
|---|---|---|---|
| Computerized Tomography (CT) | Painless, noninvasive, and accurate; images bone, soft tissue, and blood vessels at the same time; fast and simple | Radiation; not recommended for pregnant women | Brain tumors; blood clots and blood vessel defects; enlarged ventricles; abnormalities in the nerves or muscles of the eye |
| Positron Emission Tomography (PET) | Double the diagnostic clarity compared to CT; easy and nondisruptive | Not recommended for pregnant women; diabetics require certain precautions | Cancer; heart disease; brain disorders |
| Single-Photon Emission Computed Tomography (SPECT) | More available and widely used; less expensive than PET | Long scan times; low resolution, prone to artifacts and attenuation | Functional brain imaging; functional cardiac imaging |
| Magnetic Resonance Imaging (MRI) | No ionizing radiation; clear, detailed images of soft-tissue structures compared with other imaging techniques | Expensive; cannot find all cancers; cannot always distinguish between malignant and benign tumors | Anomalies of the brain and spinal cord; tumors, cysts, and other anomalies in various parts of the body; breast cancer screening for women at high risk of breast cancer; injuries or abnormalities of the joints, such as the back and knee; certain types of heart conditions; diseases of the liver and other abdominal organs; evaluation of pelvic pain in women, with causes including fibroids and endometriosis; suspected uterine anomalies in women undergoing infertility evaluation |

3.6. Databases

In this section, we list some public databases, as shown in Table 2. The Cancer Imaging Archive (TCIA) is an extensive database that contains different types of medical images of cancers, including lung cancer, breast cancer, and kidney cancer. ATLAS is a public database from Harvard University that mainly contains image data of cerebrovascular, neoplastic, degenerative, and inflammatory or infectious diseases. CTisus contains numerous MRI, CT, and X-ray images of different organs and tissues. The Open Access Series of Imaging Studies (OASIS) dataset contains 2000 MR sessions, which include T1-weighted, T2-weighted, FLAIR, ASL, SWI, time-of-flight, resting-state BOLD, and DTI sequences, as well as PET images acquired with three tracers: PIB, AV45, and FDG. The Alzheimer's Disease Neuroimaging Initiative (ADNI) database contains several types of data, such as MRI and PET, from a group of volunteers and dementia patients. The Federal Interagency Traumatic Brain Injury Research (FITBIR) database shares data for traumatic brain injury (TBI) research.

Table 2.

Public datasets.

| Database Name | Web Address |
|---|---|
| TCIA | http://www.cancerimagingarchive.net/ |
| ATLAS | http://www.med.harvard.edu/aanlib/home.html |
| CTisus | http://www.ctisus.com/ |
| OASIS | https://www.oasis-brains.org/ |
| ADNI | http://adni.loni.usc.edu/ |
| FITBIR | https://fitbir.nih.gov/ |

4. Multimodal imaging data fusion: diseases

In this part, we will review recent advancements in the application of multimodal neuroimaging in some clinical and research areas such as early brain development, neurodegenerative diseases, psychiatric disorders, and neurological diseases. It is not our intention to cover all aspects or provide a complete review of these areas. Instead, we focus on the aspects related to the development and applications of the multimodal neuroimaging techniques that meet the expectations and challenges of biomedicine. As such, each of the areas will begin with a brief description of background information such as clinical features, pathology, diagnosis, treatment of the diseases; then a general introduction of the roles, applications, and current status of the medical imaging techniques to the disease; the major part will be a review of recent papers that used one or more imaging modalities and used image fusion in multiple imaging modalities.

4.1. Developing brains

Recent studies show that the human brain undergoes rapid development in the first eight years of life and continues to develop and change into adulthood. During this long period, the brain develops in size, neuroanatomy, and function. This period is significant for a person's physical and mental health, intellectual and emotional development, and success in learning, work, and life [41], [42], [43].

Many factors influence the brain development of young children and, in turn, their cognitive abilities and mental health in later life. These factors include genes, maternal stress and drug abuse, exposure to toxic environments, infectious diseases, and the socioeconomic status of the family. Approximately one-third of the genes in the human genome are expressed primarily in the brain and affect brain development. Many psychiatric and mental disorders, such as autism, ADHD, bipolar disorder, and schizophrenia, are highly heritable or have genetic risk factors. Maternal stress and drug abuse are associated with preterm birth, low birth weight, and an increased risk of neurodevelopmental and mental disorders in children [44]. A child's nutritional status, which is affected by the family's socioeconomic status, has a significant impact on neurocognitive development [45].

Neuroimaging techniques have been used to study normal and abnormal brain development, enhancing our understanding of the neuroanatomy, connectivity, and function of the brain. These techniques also reveal etiological associations between abnormal brain development and risk factors, and they contribute to the development of interventions for affected children [42]. Because young children are more sensitive to ionizing radiation than adults, the use of PET and CT in this population is limited. Thanks to the non-invasive, radiation-free nature and the versatility of MRI, not only young children but also newborn babies can be imaged, offering the opportunity to study white matter development and cognition in infants [46], [47], [48]. MRI has become the most important pediatric neuroimaging modality and has been widely used to study normal and abnormal brain development, allowing repeated longitudinal observation of the brains of the same individuals before and after birth [49]. In the following, our review focuses on the major MRI modalities in pediatric imaging: structural, functional, and diffusion tensor imaging.

Early pediatric brain MRI studies focused on anatomy, using T1-weighted and T2-weighted images. Qualitative studies described the changing patterns of gray and white matter differentiation and of myelination in the first months after birth [50] and in early childhood [51]. Quantitative studies revealed changes in water content and in T1 and T2 relaxation times in both gray and white matter, as well as age-related changes in gray matter, white matter, and CSF volumes. All of these reflect ongoing maturation and remodeling of the central nervous system [52, 53].

Compared with adult cohorts, brain MR imaging of young children is challenging for several reasons. Young children are less cooperative than adults with scanning procedures, which can be long and noisy and require lying still for a long time, so the images are often plagued by motion artifacts. The brain changes rapidly in early life after birth, it is not yet fully myelinated, and the contrast between gray and white matter is low. These factors make it difficult to optimize the acquisition protocol and to establish standard parameters or criteria for postprocessing procedures, such as segmenting the brain to determine cortical thickness [54]. As a result, the physical properties of the developing brain, such as relaxation times, water content, and diffusion coefficients, are not well characterized. Other technical challenges of scanning young children also exist [55].

Knowledge of how biophysical properties, such as T1 and T2 relaxation times and water content in gray and white matter, vary during early childhood is critically important for understanding neurodevelopment in young children and for developing diagnostic protocols for abnormal brain development. Measuring these biophysical properties is challenging because of the long scan times required. The recent development of magnetic resonance fingerprinting (MRF) allows rapid, quantitative measurement of multiple tissue properties [40]. For example, MRF can provide T1, T2, and proton density maps of the brain, rather than conventional T1-weighted, T2-weighted, or proton density-weighted images. A recent paper reported the use of MRF to study T1, T2, and myelin water fraction (MWF) in children aged 0 to 5 years [56]. This study recorded different patterns of variation in tissue biophysical parameters across age stages. MRF techniques have also been used to characterize brain tumors parametrically in children and young adults [57]. More broadly, the parametric information from MRF opens the door to studies of the correlations between brain tissue properties and brain development, impairment, and pathophysiology. Image fusion techniques can play an essential role in the processing, interpretation, and application of MRF data.
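To illustrate how MRF turns one acquisition into several parameter maps, the sketch below shows dictionary matching, the usual MRF reconstruction step: simulate a signal evolution for each (T1, T2) pair on a grid, normalize the entries, and assign each voxel the entry with the largest inner product, with proton density (PD) recovered as the scale factor. The signal model (`toy_signal`, an inversion-recovery T1 term times a T2 decay with pseudo-random timings) is a deliberately simplified assumption, not a Bloch simulation of any real MRF sequence, and all function names and parameter grids are hypothetical.

```python
import numpy as np

def toy_signal(t1, t2, ti, te):
    """Hypothetical simplified signal model (NOT a Bloch simulation of a real
    MRF sequence): inversion-recovery T1 weighting times T2 decay, per time point."""
    return (1.0 - 2.0 * np.exp(-ti / t1)) * np.exp(-te / t2)

def build_dictionary(t1_grid, t2_grid, ti, te):
    """Simulate one signal evolution per (T1, T2) pair; keep unit-norm atoms and their norms."""
    params, atoms, norms = [], [], []
    for t1 in t1_grid:
        for t2 in t2_grid:
            s = toy_signal(t1, t2, ti, te)
            n = np.linalg.norm(s)
            params.append((t1, t2))
            atoms.append(s / n)
            norms.append(n)
    return np.array(params), np.array(atoms), np.array(norms)

def match(signal, params, atoms, norms):
    """Pick the atom with the largest inner product. PD is the least-squares scale
    of the measured signal relative to the unnormalized simulated entry."""
    scores = atoms @ signal
    best = int(np.argmax(scores))
    t1, t2 = params[best]
    return float(t1), float(t2), float(scores[best] / norms[best])

rng = np.random.default_rng(0)
n_time = 200
ti = rng.uniform(20.0, 3000.0, n_time)   # ms, hypothetical pseudo-random timings
te = rng.uniform(5.0, 100.0, n_time)     # ms
t1_grid = np.arange(300.0, 3001.0, 100.0)
t2_grid = np.arange(20.0, 301.0, 10.0)
params, atoms, norms = build_dictionary(t1_grid, t2_grid, ti, te)

voxel = 0.8 * toy_signal(1200.0, 80.0, ti, te)   # synthetic voxel with PD = 0.8
t1_est, t2_est, pd_est = match(voxel, params, atoms, norms)
print(t1_est, t2_est, round(pd_est, 3))          # expected: 1200.0 80.0 0.8
```

Real MRF dictionaries are simulated with the Bloch or extended-phase-graph equations for the actual flip-angle and timing schedule, and measured signals are noisy and complex-valued; the matching logic, however, is the same.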

4.2. Degenerative brains

Degenerative brain diseases are caused by declining neuronal function and a reduction in the number of neurons in the central nervous system (CNS). Known degenerative brain diseases include mild cognitive impairment (MCI), Alzheimer's disease (AD), and Parkinson's disease (PD). Patients with these diseases suffer loss of function in memory, speech, movement, and other domains. Most of these diseases (except for some MCI subtypes) are progressive, i.e., the symptoms worsen as the brain ages. As the population ages rapidly, degenerative brain diseases pose enormous burdens on individuals, families, and society. The etiology of these diseases is still unknown, and there is currently no cure. In the following subsections, we review advances in neuroimaging of MCI, AD, and PD.

4.2.1. Mild cognitive impairment

Mild cognitive impairment (MCI) is a clinical transition state between normal aging and dementia or Alzheimer's disease (AD), in which individuals have memory or other cognitive impairments greater than expected for their age, but not severe enough to constitute dementia. Patients with MCI often have only minor difficulties in functional ability.

In studies of people older than 65 years, the prevalence of MCI is estimated at 10–20% [58], and the Mayo Clinic Study of Aging reported a prevalence of 11.1% for amnestic MCI (aMCI) and 4.9% for non-amnestic MCI (naMCI) among participants without dementia aged 70–89 years [59]. Several longitudinal studies have shown that MCI patients have a significantly higher risk of progressing to dementia than the general U.S. population, whose annual rate is 1–2% [60]: annual conversion rates are 5–15% in community samples and 10–15% in clinical samples [61], [62], [63]. The higher rates in clinical samples suggest that cognitive impairment tends to progress more rapidly in patients with more serious symptoms. Although some studies have reported that as many as 25–30% of MCI patients revert to normal cognitive function, recent studies suggest that the reversion rate may be lower. In addition, follow-up studies showed that reversion observed over a short period did not prevent subsequent disease progression.
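Annual conversion rates compound over follow-up. Assuming, for illustration only, a constant annual rate p that applies independently each year, the probability of converting within n years is 1 − (1 − p)^n. The sketch below applies this to rates quoted above; the constant-rate assumption is ours, and real cohorts need not follow it.

```python
def cumulative_conversion(annual_rate: float, years: int) -> float:
    """Probability of converting within `years`, assuming a constant and
    independent annual conversion rate (an illustrative simplification)."""
    if not 0.0 <= annual_rate <= 1.0:
        raise ValueError("annual_rate must lie in [0, 1]")
    return 1.0 - (1.0 - annual_rate) ** years

# Annual rates taken from the ranges quoted in the text.
for label, rate in [("general population, 1%", 0.01),
                    ("community MCI, 5%", 0.05),
                    ("clinical MCI, 15%", 0.15)]:
    print(f"{label}: {cumulative_conversion(rate, 5):.1%} within 5 years")
```

Under this assumption, the three rates give roughly 4.9%, 22.6%, and 55.6% over five years, which shows how modest differences in annual rates translate into large differences in cumulative risk.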

Magnetic resonance imaging

Magnetic resonance imaging (MRI) techniques have been used to identify MCI and various types of dementia clinically and to predict the progression of MCI to dementia. Brain structure can be measured on MRI using linear, area, or volume measurements. These studies showed that the regions of brain atrophy in MCI were consistent with those in AD, but the extent of atrophy was intermediate between that of normal elderly controls and AD patients [64], [65], [66], [67]. Similar results were found using voxel-based measurement and analysis, with abnormal changes in both gray and white matter [68, 69]. Earlier structural MRI diagnosis of AD was based mainly on the degree of brain atrophy, especially in the medial temporal lobe. Structural MRI studies showed atrophy along the hippocampal pathway (entorhinal cortex, hippocampus, and posterior cingulate cortex), consistent with early memory loss. As the disease progresses, the temporal, frontal, and parietal lobes shrink with neuronal loss, causing abnormalities in language, praxis, vision, and behavior [70, 71]. However, no definitive structural MRI biomarker has been identified that, on its own, distinguishes MCI from AD, stages MCI, or predicts whether MCI will convert to AD [72, 73].
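Volume measurements of this kind reduce to counting labelled voxels and multiplying by the voxel volume. To compare subjects with different head sizes, volumes are often expressed as a fraction of total intracranial volume (ICV). A minimal sketch, assuming a label image in which a hypothetical label value marks the hippocampus, a known voxel spacing in millimetres, and an assumed ICV:

```python
import numpy as np

def structure_volume_ml(labels: np.ndarray, label_value: int, spacing_mm) -> float:
    """Volume of all voxels equal to `label_value`, in millilitres (1 mL = 1000 mm^3)."""
    voxel_mm3 = float(np.prod(spacing_mm))
    return np.count_nonzero(labels == label_value) * voxel_mm3 / 1000.0

def icv_normalized(volume_ml: float, icv_ml: float) -> float:
    """Express a structure's volume as a fraction of intracranial volume."""
    return volume_ml / icv_ml

# Toy 3D label image: 0 = background, 1 = hypothetical hippocampus label.
labels = np.zeros((100, 100, 100), dtype=np.int16)
labels[40:60, 40:55, 40:50] = 1             # 20 * 15 * 10 = 3000 voxels
spacing = (1.0, 1.0, 1.0)                   # assumed 1 mm isotropic voxels
hippo_ml = structure_volume_ml(labels, 1, spacing)
print(hippo_ml)                             # 3.0
print(icv_normalized(hippo_ml, 1500.0))     # 0.002, with an assumed ICV of 1500 mL
```

In practice the label image would come from a (possibly atlas-based) segmentation of a T1-weighted scan, and the segmentation quality largely determines the reliability of the volume.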

MRI-based functional imaging has been applied to understand and discriminate between AD and MCI. These techniques include perfusion-weighted imaging (PWI), diffusion-weighted imaging (DWI), diffusion tensor imaging (DTI), and blood oxygen level-dependent (BOLD) fMRI, both task-based and resting-state [72]. These techniques can depict microstructural and functional brain changes, complementing structural MRI, which depicts the global changes of the brain in MCI. One MRI-based study that employed PWI, DTI, and proton MRS showed significant abnormalities in parameters derived from all three modalities in AD patients, whereas in MCI patients the PWI and DTI parameters showed significant but smaller abnormalities in some areas. fMRI has also been used to distinguish AD from MCI and to predict the transition from normal cognition to MCI and from MCI to AD. Recent studies show that BOLD fMRI can detect changes in brain function before MCI progresses to AD, making it an important technique for studying the neural mechanisms of MCI [74, 75].

Proton magnetic resonance spectroscopy (1H-MRS) is a noninvasive method that can detect biochemical and metabolic changes in brain tissue in vivo and quantify them. Early MRS studies showed that abnormal concentrations of N-acetylaspartate (NAA), creatine (Cr), and choline are associated with memory and cognitive impairment, and they show promise for assessing cognitive status, evaluating response to medication, and monitoring progression during treatment [76], [77], [78]. In recent years, with advances in MR hardware and pulse sequences, the roles of glutamate, the main excitatory neurotransmitter, and GABA, the main inhibitory neurotransmitter, in MCI patients have become a major focus [79], [80], [81]. For example, with an ultra-high-field 7 T MR scanner, abnormal concentrations of GABA, glutamate, NAA, glutathione, and myo-inositol (mI) were detected in different brain regions [82]. In MCI patients, 1H-MRS mainly shows a decreased NAA/Cr ratio and an increased mI/Cr ratio. Pathological findings showed neuronal loss and glial proliferation, consistent with these changes in metabolite concentration [82, 83].

Multimodal imaging

PET and SPECT provide insight into blood perfusion and metabolism in tissues and organs and can reveal functional changes. Nuclear medicine images of aMCI patients showed decreased perfusion and metabolism in the hippocampus, temporoparietal lobe, and posterior cingulate gyrus. Studies using PET, SPECT, and MRI have shown that hippocampal glucose metabolism, the glucose metabolic rate in the bilateral temporoparietal lobes, and blood perfusion are lower in patients with aMCI than in normal elderly people. These studies have also shown that low glucose metabolism in the temporoparietal lobe is a reliable indicator of conversion to AD [84], [85], [86]. Excessive deposition of β-amyloid (Aβ) peptide in the brain, and the cascade of events it triggers, are early events in AD. Therefore, early detection of Aβ in the brain can help identify patients with aMCI and monitor disease progression and treatment effects. The PET tracer 11C-PiB was found to bind to Aβ in the brain, so PET imaging could show the amount and location of Aβ deposition, making it a promising early diagnostic method for AD [87], [88], [89].
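Amyloid PET burden is commonly summarized as a standardized uptake value ratio (SUVR): mean tracer uptake in a target region divided by mean uptake in a reference region with little specific binding (cerebellar gray matter is a frequent choice for PiB). This is also a simple instance of MRI-PET fusion, because the region masks usually come from a co-registered MRI. The sketch assumes the PET image is already registered to those masks; the mask geometry and uptake values are illustrative, and no positivity threshold from the cited studies is implied.

```python
import numpy as np

def suvr(pet: np.ndarray, target_mask: np.ndarray, reference_mask: np.ndarray) -> float:
    """Mean uptake in the target region divided by mean uptake in the reference region.
    Masks are boolean arrays in the same space as `pet` (e.g. from a co-registered MRI)."""
    if not target_mask.any() or not reference_mask.any():
        raise ValueError("both masks must contain at least one voxel")
    return float(pet[target_mask].mean() / pet[reference_mask].mean())

# Toy volume: "cortex" voxels at 1.8 and "cerebellar reference" voxels at 1.2 (arbitrary units).
pet = np.ones((10, 10, 10))
cortex = np.zeros(pet.shape, dtype=bool)
cortex[:5] = True
cerebellum = np.zeros(pet.shape, dtype=bool)
cerebellum[8:] = True
pet[cortex] = 1.8
pet[cerebellum] = 1.2
print(round(suvr(pet, cortex, cerebellum), 3))   # 1.5
```

Because SUVR is a ratio within one scan, it removes the injected dose and body-size terms that an absolute SUV would need, which is one reason it is widely used in amyloid imaging.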

Multimodal imaging techniques combining MRI-based and PET-based imaging have frequently been used to predict, characterize, and classify MCI [90, 91]. To facilitate these complex tasks, image fusion methods based on artificial intelligence, neural networks, deep learning, and graph theory have been used [92], [93], [94]. Brain network studies based on multimodal MRI and graph theory analysis have found abnormal topological properties in the brain networks of patients with AD and aMCI, manifested mainly as an imbalance between functional differentiation (segregation) and integration. This approach provides a new way to reveal the topological and pathophysiological mechanisms of brain networks [93, 95]. In addition, combining graph theory analysis with classification analysis suggests that brain network topology attributes can serve as imaging markers of AD, with good prospects for clinical application.
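Functional differentiation (segregation) and integration are usually quantified with graph metrics such as the mean clustering coefficient and global efficiency. The minimal sketch below computes both on a binary, undirected graph obtained by thresholding a region-by-region connectivity matrix. The threshold, the toy matrix, and the choice of these two metrics are assumptions for illustration; the cited studies may use weighted graphs or other measures.

```python
from collections import deque
import numpy as np

def binarize(conn: np.ndarray, threshold: float) -> np.ndarray:
    """Undirected binary graph: an edge wherever |connectivity| exceeds threshold, no self-loops."""
    adj = (np.abs(conn) > threshold).astype(int)
    np.fill_diagonal(adj, 0)
    return np.maximum(adj, adj.T)

def mean_clustering(adj: np.ndarray) -> float:
    """Average local clustering coefficient (segregation)."""
    coeffs = []
    for i in range(adj.shape[0]):
        nbrs = np.flatnonzero(adj[i])
        k = len(nbrs)
        if k < 2:
            coeffs.append(0.0)
            continue
        links = adj[np.ix_(nbrs, nbrs)].sum() / 2   # edges among the neighbours of i
        coeffs.append(2.0 * links / (k * (k - 1)))
    return float(np.mean(coeffs))

def global_efficiency(adj: np.ndarray) -> float:
    """Mean inverse shortest-path length over ordered node pairs (integration).
    Unreachable pairs contribute 0. Distances come from breadth-first search."""
    n = adj.shape[0]
    total = 0.0
    for src in range(n):
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v in np.flatnonzero(adj[u]):
                v = int(v)
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(1.0 / d for node, d in dist.items() if node != src)
    return total / (n * (n - 1))

# Toy 4-region correlation matrix (hypothetical values).
corr = np.array([[1.0, 0.6, 0.5, 0.1],
                 [0.6, 1.0, 0.7, 0.2],
                 [0.5, 0.7, 1.0, 0.4],
                 [0.1, 0.2, 0.4, 1.0]])
adj = binarize(corr, threshold=0.35)
print(mean_clustering(adj), global_efficiency(adj))   # 7/12 ≈ 0.583, 10/12 ≈ 0.833
```

Here regions 0, 1, and 2 form a triangle and region 3 hangs off region 2, so clustering is high inside the triangle and efficiency is reduced only by the two-step paths to region 3.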

4.2.2. Alzheimer's disease

Alzheimer's disease (AD) is a neurodegenerative disorder and the most common cause of dementia. AD is characterized by progressive memory loss, aphasia, apraxia, agnosia, impairment of visuospatial skills, executive dysfunction, and changes in personality and behavior [96, 97]. It has become one of the major diseases that seriously threaten the health and quality of life of the elderly [98]. The onset of AD is slow and insidious, and patients and their families often cannot tell when it began. It is more common in people over 70 (mean age of 73 years in men and 75 years in women) and in women than in men (female-to-male ratio of 3:1) [99].

There is currently no cure for AD, but many novel compounds with the potential to modify the course of the disease are under development. There is a pressing need for imaging biomarkers to improve understanding of the disease and to assess the efficacy of these proposed treatments.

Magnetic resonance imaging

Structural MRI (sMRI) is the most widely used imaging modality in AD research. Techniques for analyzing sMRI can be classified into volume-based and surface-based methods [100]. Previous studies have shown that hippocampal atrophy and whole-brain atrophy independently predict the progression of AD [101]. Hippocampal damage or atrophy occurs in the early stage of AD and is an important structural basis for its clinical manifestations. Although global hippocampal atrophy in AD is well accepted, group differences have often been detected only in studies with large sample sizes [102].

De Winter et al. studied 48 elderly patients with depression and 52 healthy elderly controls, examining all subjects with sMRI and neuropsychological tests and assessing their Aβ status [103]. They found no significant difference in the rate of Aβ positivity between the depression group and the healthy control group. However, hippocampal volume in the depression group was significantly smaller than in the healthy control group. Thus, elderly patients with depression show significant hippocampal atrophy that is unrelated to Aβ, which challenges the reliability of hippocampal atrophy in the clinical diagnosis of AD: hippocampal atrophy occurs not only in AD but also in late-life depression. sMRI studies indicate that brain atrophy, as shown by brain morphology and structure, has reference value for the diagnosis of AD. However, the diagnosis of AD still needs to be confirmed by combining clinical manifestations, neuropsychological assessments, and other examination methods. These findings also indicate that patients with depression who are suspected of having AD need follow-up. Such studies show the limitations of structural MRI and the necessity of a multimodal approach in the study of AD [104].

Other MRI modalities, including functional MRI, DWI, and PWI, have also been widely used to study neurodegenerative diseases. As an example, we review recent advances in resting-state functional magnetic resonance imaging (rs-fMRI). Unlike conventional task-based fMRI, rs-fMRI does not require the subject to perform any task or to receive any external stimulation. rs-fMRI captures low-frequency oscillations in the blood oxygen level-dependent (BOLD) signal that are related to the spontaneous neural activity of the brain. Sophisticated analyses of rs-fMRI data depict the functional connectivity of the brain, and rs-fMRI has been used to reveal how functional connectivity networks are correlated with the brain functions of individuals with cognitive impairment. Zamboni et al. found that, in AD patients, the recognition task was associated with increased activation of the lateral prefrontal area, which overlapped with the area of enhanced functional connectivity indicated by rs-fMRI [105]. Zhou et al. used a computational model of the resting-state functional brain network to predict the pathological changes of AD and studied five brain regions vulnerable to neurodegenerative diseases using fMRI [106]. They found that the brain networks of AD patients may show weakened functional connectivity and a reduced capacity to transmit information. Wang et al. found that MCI patients had functional connectivity disorders of different degrees in their functional brain networks, and that evaluating a patient's overall functional brain connectivity plays an important role in the early diagnosis and treatment of AD [107]. Abnormal brain connectivity can therefore serve as a biomarker of the disease.
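Functional connectivity in rs-fMRI is most often computed as the Pearson correlation between regional BOLD time series. The sketch below assumes the time series have already been preprocessed (motion correction, filtering, nuisance regression) and averaged within regions; the synthetic data, in which two regions share a common slow fluctuation and a third is independent, stand in for real recordings.

```python
import numpy as np

def functional_connectivity(ts: np.ndarray) -> np.ndarray:
    """ts: (n_timepoints, n_regions) BOLD time series, one column per region.
    Returns the (n_regions, n_regions) Pearson correlation matrix."""
    return np.corrcoef(ts, rowvar=False)

rng = np.random.default_rng(42)
n_t = 200
shared = rng.standard_normal(n_t)                   # common fluctuation driving A and B
ts = np.column_stack([
    shared + 0.3 * rng.standard_normal(n_t),        # region A
    shared + 0.3 * rng.standard_normal(n_t),        # region B, coupled to A
    rng.standard_normal(n_t),                       # region C, independent
])
fc = functional_connectivity(ts)
print(np.round(fc, 2))   # fc[0, 1] is high; fc[0, 2] and fc[1, 2] are near zero
```

Group analyses typically apply the Fisher r-to-z transform (`np.arctanh`) to the off-diagonal entries before comparing patients with controls, and weakened connectivity of the kind reported in AD would appear as lower z values between the affected regions.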

Many neuropsychiatric diseases and dementias can alter the brain's default mode network (DMN), so identifying changes in DMN connectivity is helpful for the early recognition of AD. Jin et al. recruited 8 patients with aMCI and 8 healthy controls and analyzed their rs-fMRI data using independent component analysis (ICA) [108]. They found that in aMCI patients the functional activity of the lateral prefrontal cortex, left medial temporal lobe, left middle temporal gyrus, and right angular gyrus decreased, while the activity of the middle and medial prefrontal cortex and the left parietal cortex increased. Further analysis found that the functional activity of the left lateral prefrontal cortex, left middle temporal gyrus, and right angular gyrus was positively correlated with memory, especially delayed memory [109]. Although the two groups did not differ significantly in the degree of medial temporal lobe atrophy, functional activity in the left medial temporal lobe decreased in aMCI. This suggests that functional changes in the DMN may occur in the early stage of AD, i.e., aMCI, and may precede obvious changes in brain structure.
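ICA models observed signals as linear mixtures of statistically independent sources and estimates an unmixing matrix. In rs-fMRI, spatial ICA treats each time point as a mixture of spatially independent maps, one of which typically corresponds to the DMN. The toy below uses two 1-D signals only to show the unmixing step; it is a minimal symmetric FastICA written for illustration (function names are ours), not the pipeline used by Jin et al., and practical analyses rely on established toolboxes.

```python
import numpy as np

def _sym_decorrelate(W):
    """W <- (W W^T)^(-1/2) W, which makes the rows of W orthonormal."""
    s, U = np.linalg.eigh(W @ W.T)
    return U @ np.diag(1.0 / np.sqrt(s)) @ U.T @ W

def fastica(X, n_components, max_iter=500, tol=1e-8, seed=0):
    """Minimal symmetric FastICA with the tanh (log-cosh) nonlinearity.
    X: (n_mixtures, n_samples). Returns (n_components, n_samples) source estimates,
    recovered only up to order, sign and scale, as in any ICA."""
    X = X - X.mean(axis=1, keepdims=True)
    # PCA whitening: project onto the leading eigenvectors and rescale to unit variance.
    eigvals, eigvecs = np.linalg.eigh(X @ X.T / X.shape[1])
    order = np.argsort(eigvals)[::-1][:n_components]
    K = (eigvecs[:, order] / np.sqrt(eigvals[order])).T
    Z = K @ X
    W = _sym_decorrelate(np.random.default_rng(seed).standard_normal((n_components, n_components)))
    for _ in range(max_iter):
        G = np.tanh(W @ Z)
        # Fixed-point update for all rows: w <- E[z g(w^T z)] - E[g'(w^T z)] w.
        W_new = _sym_decorrelate(G @ Z.T / Z.shape[1] - np.mean(1.0 - G ** 2, axis=1)[:, None] * W)
        # Converged when each new row is parallel (up to sign) to the previous one.
        done = np.max(np.abs(np.abs(np.sum(W_new * W, axis=1)) - 1.0)) < tol
        W = W_new
        if done:
            break
    return W @ Z

t = np.linspace(0, 8, 2000)
sources = np.vstack([np.sin(2 * t), np.sign(np.sin(3 * t))])
mixing = np.array([[1.0, 0.5], [0.6, 1.0]])          # arbitrary mixing matrix
estimates = fastica(mixing @ sources, n_components=2)
# |correlation| between each estimate (rows) and each true source (columns).
similarity = np.abs(np.corrcoef(estimates, sources)[:2, 2:])
print(np.round(similarity, 2))   # each row should have one entry close to 1
```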

Multimodal imaging

Because symptoms and findings from individual imaging modalities overlap heavily across neurodegenerative diseases, it is difficult to identify biomarkers that could differentiate these diseases or stage their progression. Multimodal neuroimaging techniques are therefore used to overcome these challenges [110]. As pointed out in [111], MRI and EEG each lack precision for AD diagnosis and staging when used alone. By employing both modalities, with MRI measuring cortical thickness and EEG measuring rhythmic activity, the authors found joint markers that identified subjects with AD with an accuracy of 84.7%, a significant increase over either modality alone. While some multimodal studies have confirmed correlations among the findings of individual modalities, as in a study of sMRI and fMRI [112], multimodal imaging with PET and MRI can also be used to dissociate tau deposition from brain atrophy in early AD. That study found that tau load had little effect on gray matter atrophy, which might imply that tau deposition precedes and predicts brain atrophy. Multimodal imaging studies require statistical and analytical models, advanced computing algorithms, and especially novel data fusion methods [113], [114], [115], [116], which are reviewed in detail in the following sections.
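One simple way to combine features from two modalities into joint markers is feature-level (early) fusion: z-score each feature using training-set statistics, concatenate the per-subject feature vectors, and train a single classifier. The sketch below is illustrative only and is not the method of [111]; the synthetic data, feature counts, scale difference between modalities, and nearest-centroid classifier are assumptions chosen to keep the example short.

```python
import numpy as np

def zscore(features, mean=None, std=None):
    """Standardize columns; reuse training statistics when they are supplied."""
    mean = features.mean(axis=0) if mean is None else mean
    std = features.std(axis=0) if std is None else std
    return (features - mean) / std, mean, std

def fuse(*blocks):
    """Feature-level fusion: concatenate per-subject feature vectors across modalities."""
    return np.hstack(blocks)

class NearestCentroid:
    """Assign each subject to the class whose training centroid is closest."""
    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.centroids_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, X):
        d = ((X[:, None, :] - self.centroids_[None, :, :]) ** 2).sum(axis=2)
        return self.classes_[np.argmin(d, axis=1)]

rng = np.random.default_rng(0)
n = 2000
y = np.arange(n) % 2                                             # 0 = control, 1 = patient (synthetic)
mri = rng.standard_normal((n, 3)) + 0.8 * y[:, None]             # e.g. cortical-thickness features
eeg = 10.0 * (rng.standard_normal((n, 2)) + 0.8 * y[:, None])    # different units and scale
train, test = slice(0, n // 2), slice(n // 2, n)

def evaluate(X):
    Xtr, mu, sd = zscore(X[train])
    Xte, _, _ = zscore(X[test], mu, sd)
    pred = NearestCentroid().fit(Xtr, y[train]).predict(Xte)
    return float(np.mean(pred == y[test]))

acc_mri, acc_eeg, acc_fused = evaluate(mri), evaluate(eeg), evaluate(fuse(mri, eeg))
print(f"MRI {acc_mri:.2f}  EEG {acc_eeg:.2f}  fused {acc_fused:.2f}")
```

Z-scoring matters here because the EEG features are on a ten-times larger scale; without it, distances would be dominated by the EEG block. With these synthetic settings the fused features usually separate the groups better than either modality alone, but real gains depend on how complementary the modalities are.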

4.2.3. Parkinson's disease

Parkinson's disease (PD) is a chronic, progressive degenerative disease of the central nervous system that is common in elderly patients. Typical clinical manifestations of PD include resting tremor, rigidity, bradykinesia, and abnormal posture and gait [117]. As the aging population continues to grow, the incidence and disability rates of PD are also increasing year by year. Epidemiological surveys indicate that the prevalence of PD is about 1.7% in people over 65 and as high as 4% in people over 80 [118], [119], [120]. PD therefore poses a growing threat to the health of middle-aged and elderly people.

Because objective evidence and diagnostic criteria were lacking, PD was previously diagnosed clinically based mainly on symptoms. This resulted in low agreement between clinical and pathological diagnoses, and in a diagnosis that lagged significantly behind the pathological changes in brain microstructure. As the diagnosis and treatment of PD have become increasingly standardized, neuroimaging has become an indispensable part of diagnosis. Imaging-based differential diagnosis of PD can help distinguish different movement disorders, locate the anatomical sites of dysfunction, and determine the causes of lesions, thereby improving clinical evaluation and prognosis [121, 122].

Magnetic resonance imaging

Structural cranial MRI can distinguish white matter from gray matter through the choice of imaging parameters, without exposing patients to ionizing radiation. It is better than cranial CT at revealing white matter lesions, small infarcts, subacute intracerebral hemorrhage, and lesions in the brain stem, subcortical regions, and posterior fossa. On structural MRI, such as T1-weighted, T2-weighted, and fluid-attenuated inversion recovery (FLAIR) images, PD patients usually exhibit enlarged ventricles (caused by extrapyramidal atrophy) and widened sulci (diffuse cortical atrophy) [123]. Cortical atrophy can be quantified with voxel-based morphometry. When the substantia nigra pars compacta shrinks and the short-T2 signal of the substantia nigra disappears, measurements are made of the width of the pars compacta, the ratio of this width to the diameter of the midbrain, and regions of interest such as the caudate nucleus, putamen, and thalamus. When evaluating the extent of atrophy, age-related physiological changes and relevant supporting clinical evidence should be taken into account [124].

Neuromelanin-sensitive MRI is used to detect neuromelanin, a surrogate biomarker for PD. Neuromelanin is a dark pigment found in neurons of the substantia nigra pars compacta. Its concentration increases with age, but it is around 50% lower in PD patients than in age-matched non-PD subjects because of the death of substantia nigra cells. Neuromelanin-sensitive MRI allows visualization of the neuromelanin-containing neurons in the substantia nigra pars compacta. Using morphological analysis and signal intensity (contrast-to-noise ratio, CNR), the width and CNR of the lateral and central substantia nigra were found to be significantly lower in PD subjects than in controls and in an untreated essential tremor (ET) group [125, 126]. This technique could therefore serve as a biomarker to differentiate ET from the de novo tremor-dominant PD subtype. Neuromelanin levels have also been assessed quantitatively using neuromelanin-sensitive MRI together with quantitative susceptibility mapping (QSM) [127], an MRI modality that quantifies local tissue magnetic susceptibility, which reflects the concentrations of iron, calcium, and other substances [128]. Neuromelanin imaging found significantly lower neuromelanin levels in the PD group than in healthy controls (HC), in agreement with the neuromelanin-MRI-only study, while QSM values were significantly higher in the PD group than in the HC group. This result suggests that QSM is useful for detecting PD [127].
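Definitions of neuromelanin contrast vary between studies. One common form, assumed here, expresses the substantia nigra (SN) signal relative to a reference region such as the cerebral peduncle: CNR = (mean SN signal − mean reference signal) / SD of reference signal. A minimal sketch with hypothetical ROI intensities:

```python
import statistics

def neuromelanin_cnr(sn_values, ref_values):
    """Contrast-to-noise ratio of substantia nigra voxels relative to a reference ROI:
    (mean SN signal - mean reference signal) / sample SD of the reference signal.
    This is one common definition; individual studies may differ."""
    ref_mean = statistics.fmean(ref_values)
    ref_sd = statistics.stdev(ref_values)
    return (statistics.fmean(sn_values) - ref_mean) / ref_sd

sn = [130.0, 128.0, 132.0, 131.0, 129.0]    # hypothetical SN intensities
ref = [100.0, 102.0, 98.0, 101.0, 99.0]     # hypothetical reference-ROI intensities
print(round(neuromelanin_cnr(sn, ref), 2))  # 18.97
```

Loss of neuromelanin-containing neurons lowers the SN signal toward the reference level, so the CNR falls, which is the direction of change reported for PD above.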

Resting-state functional magnetic resonance imaging (rs-fMRI) records blood oxygen level-dependent signal changes while patients are awake and at rest to obtain the baseline level of brain functional activity. In recent years, rs-fMRI has been widely used in clinical studies of many movement disorders and neurodegenerative diseases, including PD [129], [130], [131]. rs-fMRI can be used to calculate a variety of brain activity attributes, such as regional homogeneity (ReHo), the amplitude of low-frequency fluctuation (ALFF), and fractional ALFF (fALFF). By examining the correlations between BOLD time series in different voxels or regions of interest, we can further evaluate the synchronization of functional activity across brain areas, i.e., functional connectivity [129, 132, 133]. In recent years, methods based on independent component analysis, Granger causality analysis, and graph theory have helped reveal complex pattern changes in the brain networks of PD patients.
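ALFF is typically computed per voxel by taking the amplitude spectrum of the detrended BOLD time series and averaging it over a low-frequency band (0.01–0.08 Hz is a common choice). fALFF divides the low-frequency amplitude by the amplitude over the whole detectable spectrum. The minimal sketch below assumes a single preprocessed time series and a known repetition time (TR). Band limits and scaling conventions differ across software packages, so absolute values here are not comparable to any particular toolbox.

```python
import numpy as np

def alff_falff(ts: np.ndarray, tr: float, band=(0.01, 0.08)):
    """ALFF: mean spectral amplitude within `band` (Hz).
    fALFF: summed amplitude within `band` divided by summed amplitude over all
    non-zero frequencies. `tr` is the repetition time in seconds."""
    ts = ts - ts.mean()                       # remove the DC component
    freqs = np.fft.rfftfreq(ts.size, d=tr)
    amp = np.abs(np.fft.rfft(ts)) / ts.size   # amplitude spectrum (one common scaling)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    alff = amp[in_band].mean()
    falff = amp[in_band].sum() / amp[freqs > 0].sum()
    return float(alff), float(falff)

tr = 2.0
t = np.arange(240) * tr                       # 8 minutes of synthetic data
slow = np.sin(2 * np.pi * 0.03 * t)           # fluctuation inside the low-frequency band
fast = np.sin(2 * np.pi * 0.2 * t)            # component outside the band
alff_slow, falff_slow = alff_falff(slow, tr)
alff_fast, falff_fast = alff_falff(fast, tr)
print(falff_slow, falff_fast)                 # high for the slow signal, ~0 for the fast one
```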

Other imaging modalities and multimodal imaging

Other imaging modalities, including PET, SPECT, EEG, and CT, have been applied to study functional and structural abnormalities in PD patients, and they provide much information complementary to the MR-based modalities mentioned earlier. PET studies have investigated cerebral glucose metabolism with and without medication and with and without brain stimulation [134], [135], [136]. Metabolic and neurochemical changes related to dopaminergic neurons in PD patients, which cannot be assessed by MRI modalities including proton magnetic resonance spectroscopy (MRS), have also been studied using SPECT [137]; by jointly applying SPECT and DTI, that study identified brain regions and connections that differentiate PD patients from healthy controls. Using a different combination of modalities, a recent study employed PET with two different tracers and rs-fMRI to investigate variations in metabolism and functional connectivity in PD patients [138], identifying correlations of motor impairments with hypometabolism and hypoconnectivity in multiple brain regions. Because these studies use different modalities toward similar aims, their results provide complementary information about the impaired regions. Their data can be integrated and analyzed using data fusion, as in [139], in which anatomical MRI, rs-fMRI, and DTI data were analyzed to obtain more accurate and reliable biomarkers of PD.

4.3. Mental disorders

Mental disorders are conditions that affect a person's thinking, mood, behavior, relationships with others, and functioning in daily life and work. Major psychiatric disorders include depressive disorders, bipolar disorders, obsessive-compulsive disorder, schizophrenia, autism spectrum disorder, and attention-deficit/hyperactivity disorder. It is estimated that nearly one-fifth of adults aged 18 or older in the United States live with a psychiatric disorder [140]. The World Health Organization estimates that mental disorders affect one-fourth of the worldwide population [141]. The high prevalence of mental disorders has a significant impact on the well-being of societies and on the world economy [142], [143], [144].

Unlike other diseases, such as cancer and diabetes, mental illness currently cannot be diagnosed with any medical test. It is diagnosed by a psychiatrist using official criteria, such as the Diagnostic and Statistical Manual of Mental Disorders, fifth edition (DSM-5), based on the patient's feelings, symptoms, and behaviors. Nevertheless, neuroimaging techniques have been used to detect, identify, differentiate, and understand the abnormalities, differences, etiologies, and biomarkers of psychiatric disorders [145], [146], [147], [148], [149].

4.3.1. Depression

Depression is a common mood disorder with a variety of causes. Its main clinical feature is a persistently low mood that is out of proportion to the patient's situation. In severe cases, suicidal thoughts and behaviors may occur. Most cases recur; most episodes remit, while some leave residual symptoms or progress to chronic depression. At least 10% of patients with clinical depression also have manic episodes and should be diagnosed with bipolar disorder [150, 151]. What is commonly called depression is clinical or major depression, which affects 16% of the population at some point in their lives [152]. Beyond its severe emotional and social costs, depression also carries enormous economic costs. According to the World Health Organization, depression has become the fourth most serious disease in the world and was projected to become the second most serious, after coronary heart disease, by 2020 [153].

So far, the etiology and pathogenesis of depression remain unclear, and there are no obvious physical signs or abnormal laboratory indicators. Although there have been many basic and clinical studies of depression, no critical breakthrough has been made on the three most important clinical problems: pathogenesis, objective diagnosis, and effective treatment. The key to progress on these issues is to find and establish stable biological markers linking genes to clinical phenotype, and then use them to study pathogenesis, establish objective diagnostic methods, and develop effective clinical therapies.

Magnetic resonance imaging

To date, in clinical research on mental illness, and on depression in particular, the most sought-after biological markers may come from neuroimaging, especially brain MRI. Brain MRI has good clinical applicability: it is non-invasive, simple to operate, widely available, and easy to repeat, with relatively stable results, although its sensitivity and specificity need to be improved. Brain MRI research has become a bridge between molecular research and clinical phenotype. Through this bridge, we can explore how genes, molecules, and proteins affect brain structure and function in patients with depression, and we can also use MRI as an objective diagnostic tool to meet the most urgent clinical needs. Over the past 20 years, the application of multimodal MRI to study the structural and functional brain characteristics of depression, especially to establish clear biomarker targets around the characteristics of emotional circuits, has become one of the major scientific frontiers in basic and clinical neuroscience research.

Many studies have found that patients with depression have abnormalities in the structure and function of emotional circuits, as well as in the neurotransmitters associated with these circuits [149, 154]. Recent MRI studies found that depressive mood is associated with three groups of brain regions: the amygdala and ventral striatum as the primary mood areas; the orbital gyrus, medial prefrontal cortex, and cingulate gyrus as the automatic emotion regulation areas; and the dorsolateral and ventrolateral prefrontal cortex as the center of active emotion regulation [154], [155], [156].

Multimodal MRI

Multimodal MRI techniques used in mental disorders seek correlated, complementary, and/or converging image features across multiple modalities and apply sophisticated analytical methods to identify robust biomarkers for types of depression.

One study employed DTI, magnetic resonance spectroscopy (MRS), rs-fMRI, and magnetoencephalography (MEG) and revealed patterns of abnormality in patients with major depressive disorder (MDD). These patterns involved neurotransmitters (glutamate concentration), white matter fibers (fractional anisotropy), and functional activation (fMRI) [157]. Another multimodal MRI study combined structural MRI and arterial spin labeling (ASL) to assess grey matter volume and regional cerebral blood flow (CBF) in MDD patients. It revealed negative correlations between the severity of depressive symptoms and CBF in the bilateral parahippocampal gyrus and in the right middle frontal cortex [158]. While some multimodal MRI studies confirmed correlations among the findings of individual modalities, others found discrepancies among the individual findings in the MDD group [159]. Further multimodal analyses that combine MRI data with clinical and neurobiological measures of the patients may resolve these disparities.
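CBF maps such as those analysed in [158] are derived from ASL control-label difference images. As a sketch, assuming a single-delay pseudo-continuous ASL (pCASL) acquisition (the cited study's exact sequence is not stated here), the single-compartment model recommended in the ISMRM perfusion study group consensus converts the difference signal into absolute CBF. The function name and the default parameters (post-labeling delay 1.8 s, label duration 1.8 s, blood T1 1.65 s at 3 T, labeling efficiency 0.85, partition coefficient 0.9 mL/g) are assumptions based on typical adult settings, not values taken from the study.

```python
import math


def pcasl_cbf(delta_m, m0, pld=1.8, tau=1.8, t1_blood=1.65,
              alpha=0.85, partition=0.9):
    """Absolute CBF (mL/100 g/min) from a single-delay pCASL measurement.

    delta_m   : control minus label signal (same units as m0)
    m0        : equilibrium (proton-density) magnetization signal
    pld, tau  : post-labeling delay and label duration, in seconds (assumed)
    t1_blood  : T1 of arterial blood in seconds (assumed ~1.65 s at 3 T)
    alpha     : labeling efficiency (assumed 0.85 for pCASL)
    partition : blood-brain partition coefficient lambda, in mL/g
    """
    if m0 <= 0:
        raise ValueError("m0 must be positive")
    # 6000 converts mL/g/s to mL/100 g/min (100 g x 60 s)
    numerator = 6000.0 * partition * delta_m * math.exp(pld / t1_blood)
    denominator = (2.0 * alpha * t1_blood * m0
                   * (1.0 - math.exp(-tau / t1_blood)))
    return numerator / denominator


# Voxel-wise use on flattened difference and M0 images (toy values)
delta_m_map = [1.0, 0.8, 0.5]
m0_map = [100.0, 100.0, 100.0]
cbf_map = [pcasl_cbf(d, m) for d, m in zip(delta_m_map, m0_map)]
```

The resulting regional CBF values are what would then be correlated with symptom scores across patients.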

Among the methods for multimodal MRI data fusion in depressive disorders, support vector machines (SVM) [160] and linked independent component analysis [161] were each recently used to identify biomarkers for the classification and symptom-load prediction of heterogeneous MDD cohorts. Both studies included imaging and neurobiological data in the fusion. In both, however, the results did not strongly support the hypotheses and provided insufficient evidence for the sought biomarkers [160, 161].
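A minimal sketch of early (feature-level) fusion followed by a linear classifier: each modality's features are standardized separately so that no modality dominates because of its units, the standardized vectors are concatenated per subject, and a linear SVM is trained on the result. The SVM here is a small Pegasos-style subgradient solver written for illustration in place of a library implementation; the function names and toy inputs are hypothetical and do not reproduce the pipelines of [160] or [161] (linked ICA in particular is a different, unsupervised fusion model).

```python
import random


def zscore_columns(rows):
    """Standardize each feature column to zero mean and unit variance."""
    n = len(rows)
    out_cols = []
    for col in zip(*rows):
        mean = sum(col) / n
        sd = (sum((v - mean) ** 2 for v in col) / n) ** 0.5 or 1.0  # guard constant columns
        out_cols.append([(v - mean) / sd for v in col])
    return [list(r) for r in zip(*out_cols)]


def fuse_features(*modalities):
    """Early fusion: z-score each modality on its own, then concatenate per subject."""
    normalized = [zscore_columns(m) for m in modalities]
    return [sum(parts, []) for parts in zip(*normalized)]


def train_linear_svm(x, y, lam=0.01, epochs=200, seed=0):
    """Pegasos subgradient descent on the L2-regularized hinge loss (labels -1/+1).

    A constant 1.0 is appended to every sample so the last weight acts as a bias.
    """
    rng = random.Random(seed)
    data = [xi + [1.0] for xi in x]
    w = [0.0] * len(data[0])
    order = list(range(len(data)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, data[i]))
            w = [(1.0 - eta * lam) * wj for wj in w]  # regularization shrink
            if margin < 1.0:  # hinge loss active: step toward the sample
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, data[i])]
    return w


def predict(w, x):
    """Sign of the linear decision function for each subject."""
    return [1 if sum(wj * xj for wj, xj in zip(w, xi + [1.0])) >= 0 else -1 for xi in x]
```

In a real study the standardization statistics should be estimated within each cross-validation training fold and applied to the held-out fold; standardizing the whole cohort at once, as this toy does, leaks information and inflates accuracy.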

4.3.2. Obsessive-compulsive disorder

Obsessive-compulsive disorder (OCD) is a group of neuropsychiatric disorders whose main clinical manifestations are obsessive thoughts and compulsive behaviors. It is characterized by the coexistence of conscious compulsion and anti-compulsion, and by the repeated intrusion into the patient's daily life of thoughts or impulses that are often meaningless and involuntary. Although patients recognize these thoughts or impulses as their own and resist them to the utmost, they are still unable to control them. The intense conflict between the two causes great anxiety and distress, which affects the patient's study, work, interpersonal communication, and even daily life.

Magnetic resonance imaging

Voxel-based morphometry (VBM) has been widely used in structural MRI (sMRI) studies of OCD. These studies compare the structure and volume of regions of interest between OCD groups and healthy control groups. OCD patients were found to have lower grey matter volumes in specific brain regions: in children with OCD, the bilateral frontal lobe, cingulate cortex, and temporo-parietal junction [162]; in adults with OCD, the left and right orbitofrontal cortex [67]. Lower white matter volumes were also seen in the cingulate and occipital cortex, the right frontal and parietal regions, and the left temporal region [162], and in a small area of the parietal cortex [163]. fMRI studies can provide information about the pathophysiology of OCD [164, 165]. However, it is not clear whether information from fMRI alone is of clinical value in diagnosing individual patients [166]. The pathophysiological features of OCD revealed by fMRI studies suggest that abnormal brain metabolites may be implicated in the disorder. Abnormalities in brain metabolite concentrations in patients with OCD have been investigated using proton MRS [167], [168], [169], [170]. Among the roughly ten detectable metabolites, glutamate, glutamine, and GABA are of particular interest because they are involved in neurotransmission. Recent studies show that, compared with healthy controls, OCD patients had an elevated GABA level and a higher GABA/glutamate ratio in the anterior cingulate cortex [171] and a lower GABA concentration in the prefrontal lobe [172]. The roles of glutamate and glutamine in OCD are a focus of research interest, but the findings lack consistency [173], [174], [175]. The heterogeneity of structural neuroimaging findings in OCD may reflect the heterogeneity of the disease itself.
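The VBM comparisons above ultimately reduce to a statistical test repeated at every voxel of spatially normalized grey matter maps. The sketch below shows only that step, under stated assumptions: segmentation, spatial normalization, modulation, and smoothing are taken as already done, each subject's map is a flat list of voxel values on a common grid, and Welch's two-sample t statistic stands in for the general linear model with covariates (for example age and total intracranial volume) that VBM software fits. Multiple-comparison correction is omitted, and the function names are hypothetical.

```python
import math


def welch_t(a, b):
    """Welch's two-sample t statistic for one voxel (mean of a minus mean of b)."""
    na, nb = len(a), len(b)
    ma, mb = sum(a) / na, sum(b) / nb
    va = sum((v - ma) ** 2 for v in a) / (na - 1)  # unbiased sample variances
    vb = sum((v - mb) ** 2 for v in b) / (nb - 1)
    return (ma - mb) / math.sqrt(va / na + vb / nb)


def voxelwise_t(patients, controls):
    """Apply the test at every voxel; each subject is a flat list of grey matter values."""
    # zip(*group) regroups the data from per-subject lists into per-voxel tuples
    return [welch_t(p_vox, c_vox) for p_vox, c_vox in zip(zip(*patients), zip(*controls))]
```

Negative values mark voxels where the patient group has lower grey matter density than the controls.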

Multimodal MRI

Multimodal MRI studies in OCD provide complementary, correlated, and/or integrated information from the findings of individual modalities [176, 177]. An early structural MRI study suggested that volume reduction of the superior temporal gyrus (STG) is associated with the pathophysiology of OCD [178]. A functional MRI study found increased low-frequency fluctuations of neural activity in the STG [179]. A combined structural MRI and fMRI study linked these findings, showing that the volume of the superior temporal sulcus is strongly correlated with functional connectivity among several brain regions that may form a neural network [177, 180]. A study combining 1H-MRS and DTI to investigate metabolic and white matter integrity alterations in OCD found that the ratio of Glx to creatine (Cr) in the anterior cingulate cortex was higher in the OCD group than in healthy controls [181]. The DTI analysis in the same study also found significantly higher FA values in the left cingulum bundle of the OCD group than in healthy controls. A limitation of this study is that it measured Glx, the combined signal of glutamate and glutamine, rather than glutamate and glutamine individually. These two structurally similar metabolites are difficult to distinguish at 3 T, and ultra-high-field (e.g., 7 T) scanners are needed to separate them.

4.3.3. Schizophrenia

Schizophrenia is a group of severe psychoses of unknown etiology, usually with an insidious or subacute onset in young and middle-aged adults [182]. Clinically, it often manifests as a syndrome with diverse symptoms, including abnormalities in sensory perception, thinking, emotion, and behavior, as well as uncoordinated mental activity [183]. Schizophrenia is a multifactorial disease [184]. Although its etiology is not yet clear, it is widely recognized that individual psychological susceptibility and adverse external social factors affect the occurrence and development of the disease. Both may lead to its onset by acting jointly with internal biological factors. The course of schizophrenia is generally protracted, with repeated relapses, exacerbation, or deterioration. Some patients eventually show decline and mental disability, whereas others achieve full or partial recovery after treatment [185].

Magnetic resonance imaging

Structural MRI has been widely used to study brain morphology and volume in schizophrenia. Studies found that the average brain volume of schizophrenia patients is smaller than that of healthy people [186, 187]. Abnormalities in white matter volume and structure usually appear before the onset of the disease and tend to remain stable as it develops [188], whereas gray matter volume changes become more evident after onset, with volume decreasing progressively over time [189]. According to a longitudinal study, gray matter loss in schizophrenia occurs mainly in the first five years [190].

As a complement to structural MRI, DTI reveals abnormalities of white matter microstructure in schizophrenia patients [191]. Some studies found decreased fractional anisotropy (FA) in white matter tracts and in various cortical and subcortical regions of schizophrenia patients, but conflicting findings were also reported [192, 193]. These inconsistencies might be attributed, in part, to small sample sizes. A large-scale DTI study involving more than 4,000 subjects found widespread white matter microstructural differences between schizophrenia patients and healthy controls [194]: FA was significantly reduced in 20 of the 25 investigated white matter regions, and mean diffusivity and radial diffusivity were significantly higher in patients than in healthy controls.
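FA, mean diffusivity (MD), and radial diffusivity (RD) are all computed from the three eigenvalues λ1 ≥ λ2 ≥ λ3 of the diffusion tensor fitted at each voxel: MD is their mean, axial diffusivity (AD) is λ1, RD is (λ2 + λ3)/2, and FA = sqrt(3/2) · sqrt(Σ(λi − MD)²) / sqrt(Σλi²). The sketch below assumes the tensor has already been fitted and diagonalized; the function name is illustrative.

```python
import math


def dti_metrics(l1, l2, l3):
    """Return (FA, MD, AD, RD) from sorted diffusion tensor eigenvalues l1 >= l2 >= l3."""
    md = (l1 + l2 + l3) / 3.0
    norm = math.sqrt(l1 ** 2 + l2 ** 2 + l3 ** 2)
    if norm == 0.0:  # no diffusion signal: define all metrics as zero
        return 0.0, 0.0, 0.0, 0.0
    spread = math.sqrt((l1 - md) ** 2 + (l2 - md) ** 2 + (l3 - md) ** 2)
    fa = math.sqrt(1.5) * spread / norm  # 0 = isotropic, 1 = diffusion along one axis
    ad = l1
    rd = (l2 + l3) / 2.0
    return fa, md, ad, rd


# Eigenvalues in mm^2/s, roughly typical of coherent white matter (illustrative)
fa, md, ad, rd = dti_metrics(1.7e-3, 0.3e-3, 0.3e-3)
```

Reduced FA with raised RD, the pattern reported in [194], is consistent with increased diffusion perpendicular to the fibers.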

Functional MRI techniques are used to detect deficits in the neural networks of patients with schizophrenia [195]. Brain network studies show altered functional connectivity of the default mode network (DMN) in schizophrenic patients. Although the results are inconsistent, most studies show enhanced DMN functional connectivity and weakened functional connectivity of the prefrontal cortex in schizophrenia [196]. In addition, the functional connections of auditory/language networks and the basal ganglia are related to auditory hallucinations and delusions. Studies of structural brain networks found fewer frontal and temporal hub nodes and a longer average shortest path length, indicating decreased global efficiency. Wang et al. constructed structural brain networks from the DTI images of 79 schizophrenia patients and 96 age-matched healthy subjects [197]. Compared with the healthy subjects, the schizophrenic group showed decreased global efficiency and decreased local efficiency of hub nodes distributed in the frontal cortex, paralimbic system, limbic system, and left putamen; in addition, the global efficiency of the network was negatively correlated with the Positive and Negative Syndrome Scale (PANSS) score. These results indicate that changes in the structural brain network begin at disease onset, and that more severe symptoms are associated with lower global or local network efficiency and slower information integration.
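Global efficiency, the graph measure behind the findings of Wang et al., is the average inverse shortest path length over all ordered node pairs, E_glob = 1/(N(N − 1)) × Σ_{i≠j} 1/d_ij; the local efficiency of a node is the global efficiency of the subgraph formed by its neighbours. The sketch below assumes a binary, undirected network stored as a dictionary mapping each node to its list of neighbours. Many DTI network studies use weighted edges (for example fiber counts or FA), with path lengths computed from inverse weights, so this is a simplified illustration with hypothetical function names.

```python
from collections import deque


def shortest_path_lengths(adj, source):
    """Breadth-first search hop counts from source in an unweighted, undirected graph."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for nbr in adj[node]:
            if nbr not in dist:
                dist[nbr] = dist[node] + 1
                queue.append(nbr)
    return dist


def global_efficiency(adj):
    """Mean inverse shortest path length over ordered node pairs (unreachable pairs add 0)."""
    nodes = list(adj)
    n = len(nodes)
    if n < 2:
        return 0.0
    total = 0.0
    for s in nodes:
        for t, d in shortest_path_lengths(adj, s).items():
            if t != s:
                total += 1.0 / d
    return total / (n * (n - 1))


def local_efficiency(adj, node):
    """Global efficiency of the subgraph induced by the node's neighbours."""
    nbrs = set(adj[node])
    sub = {v: [u for u in adj[v] if u in nbrs] for v in nbrs}
    return global_efficiency(sub)
```

Because unreachable pairs contribute zero rather than an infinite distance, efficiency stays well defined for fragmented networks, which is one reason it is preferred over the characteristic path length in clinical network studies.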

Magnetic resonance spectroscopy studies found abnormal brain metabolism in schizophrenia patients [198], [199], [200]. Levels of N-acetyl-aspartic acid (NAA) were decreased in the hippocampus, frontal lobe, temporal lobe, and thalamus of schizophrenic patients, and NAA levels in the thalamus and temporal lobe were also decreased in high-risk groups. Increased glutamate levels in the hippocampus and medial temporal lobe were also found to be related to decreased executive function.

Multimodal imaging

The heterogeneity of findings from individual imaging modalities has driven multimodal neuroimaging approaches in the search for more consistent and precise biomarkers of functional deficits and abnormalities in schizophrenia patients. The concerted use of three MRI modalities, namely resting-state fMRI, structural MRI, and diffusion MRI, revealed abnormalities in all three image types simultaneously and thereby identified the cortico-striato-thalamic circuits that might be related to cognitive impairment in schizophrenia [201]. Beyond the multimodal investigation of structural and functional brain abnormalities, proton MRS has also been combined with fMRI to investigate cognitive impairment in schizophrenia at both the neurometabolic and functional levels [202]. Combined proton MRS and fMRI is particularly useful for short-term longitudinal studies of medication effects, over which brain structure can be assumed to remain unchanged. In that study [202], the relationship between Glx/Cr levels and the BOLD response changed significantly after six weeks of medication in schizophrenia patients, although factors that confound the interpretation of the results remain. More examples of multimodal imaging studies of schizophrenia are given in recent review articles [203, 204].
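To illustrate the kind of metabolite-activation relationship examined in [202], the sketch below computes Pearson's correlation between per-subject Glx/Cr ratios and BOLD response amplitudes at two time points. Pearson's r, the variable names, and the toy values are assumptions for illustration; the cited study's statistical model may differ, and a formal test of whether the correlation changed must account for the same subjects being measured twice.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical toy values per subject: Glx/Cr and BOLD amplitude at baseline and week 6
glx_cr_baseline = [1.10, 1.25, 0.98, 1.32, 1.05]
bold_baseline = [0.42, 0.51, 0.37, 0.55, 0.40]
glx_cr_week6 = [1.08, 1.20, 1.01, 1.28, 1.02]
bold_week6 = [0.47, 0.41, 0.50, 0.39, 0.52]
r_before = pearson_r(glx_cr_baseline, bold_baseline)
r_after = pearson_r(glx_cr_week6, bold_week6)
```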

5. Multimodal imaging data fusion: methodology

Multimodal fusion has gradually moved to the center of research interest as an approach to the challenges of neuroimaging. The first main reason is the strong complementarity between imaging modalities. For instance, images obtained by positron emission tomography (PET) or single-photon emission computed tomography (SPECT) lack high-resolution three-dimensional anatomical information, whereas high-resolution structural images can be obtained with CT and/or MRI. Together, these images provide a more complete picture of the anatomy, physiology, and pathology of the target organ, so their fusion is of great significance for clinical and preclinical studies.
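The simplest way to combine such complementary images is pixel-level fusion of co-registered slices. The sketch below assumes the MRI and PET (or SPECT) images have already been registered and resampled onto the same grid and are passed as flat intensity lists; it normalizes each to [0, 1] and applies either a weighted-average rule or a maximum-selection rule. The weights and function names are illustrative choices, not a recommended protocol.

```python
def min_max_normalize(image):
    """Rescale intensities to [0, 1]; a constant image maps to all zeros."""
    lo, hi = min(image), max(image)
    span = hi - lo
    if span == 0:
        return [0.0 for _ in image]
    return [(v - lo) / span for v in image]


def weighted_average_fusion(mri, pet, mri_weight=0.5):
    """Pixel-level fusion of two co-registered images sampled on the same grid."""
    if len(mri) != len(pet):
        raise ValueError("images must be resampled to the same grid before fusion")
    a = min_max_normalize(mri)
    b = min_max_normalize(pet)
    return [mri_weight * x + (1.0 - mri_weight) * y for x, y in zip(a, b)]


def maximum_selection_fusion(mri, pet):
    """Alternative rule: keep the larger normalized intensity at each pixel."""
    return [max(x, y) for x, y in zip(min_max_normalize(mri), min_max_normalize(pet))]
```

Normalizing first matters because MRI signal intensities and PET activity concentrations have unrelated units and ranges; without it, one modality would dominate the fused image.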

Another outstanding merit of multimodal fusion is that it can enhance the spatial and temporal resolution with which brain processes are characterized. In other words, multimodal imaging may combine the high temporal resolution of one imaging modality with the high spatial resolution of another, exploiting their spatiotemporal complementarity. For example, in a 2014 study, Ke Zhang et al. measured cerebral blood flow by combining arterial spin labeling (ASL) MRI and PET [205]. In addition, combining EEG with fMRI to improve spatial and temporal resolution has been studied by many neuroscientists [206], [207], [208], [209].
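One common way to exploit EEG-fMRI complementarity, offered here as an illustration rather than as the method of the cited studies, is EEG-informed fMRI analysis: an EEG feature such as band power, resampled to one value per fMRI volume, is convolved with a haemodynamic response function (HRF) to form a regressor for the fMRI general linear model. The sketch assumes a double-gamma HRF with SPM's canonical defaults (response delay 6 s, undershoot delay 16 s, response-to-undershoot ratio 6, unit dispersion); the function names are hypothetical.

```python
import math


def gamma_pdf(t, shape):
    """Gamma probability density with unit scale, evaluated at t > 0 seconds."""
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def canonical_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=1.0 / 6.0):
    """Double-gamma haemodynamic response at time t (s), SPM-style default shapes."""
    if t <= 0:
        return 0.0
    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, undershoot_shape)


def eeg_informed_regressor(eeg_feature, tr, hrf_duration=32.0):
    """Convolve an EEG feature (one value per fMRI volume) with the sampled HRF.

    The result is a predicted BOLD time course that can enter an fMRI GLM.
    """
    kernel = [canonical_hrf(k * tr) for k in range(int(hrf_duration / tr) + 1)]
    regressor = []
    for n in range(len(eeg_feature)):
        # causal discrete convolution: past EEG samples weighted by the HRF
        total = 0.0
        for k, h in enumerate(kernel):
            if k > n:
                break
            total += h * eeg_feature[n - k]
        regressor.append(total)
    return regressor
```

Voxels whose BOLD signal fits this regressor localize the EEG-defined event in space, while the EEG supplies its millisecond-scale timing.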

It is worth mentioning that multimodal data fusion, in both its narrow and broad senses, is broadly applicable. A typical instance is the alignment of functional MRI, EEG, and fNIRS data to an anatomical coordinate system, which acts as a template for standardizing reported results. Aligning the data to a single coordinate system not only allows comparison with other studies but also allows functional and structural information to be combined [210].
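Once a registration has been estimated, mapping data into a shared coordinate system amounts to applying, and chaining, 4 × 4 affine matrices in homogeneous coordinates, as stored for example in NIfTI image headers. The sketch below covers only that step for voxel data; nonlinear warps, and the projection of EEG electrode or fNIRS optode positions onto the anatomy, need additional machinery. The MNI-style 2 mm matrix in the usage is an assumed example, and the function names are illustrative.

```python
def apply_affine(affine, ijk):
    """Map a voxel index (i, j, k) to world/template coordinates with a 4x4 affine."""
    vec = (ijk[0], ijk[1], ijk[2], 1.0)  # homogeneous coordinates
    x, y, z, _ = (sum(row[c] * vec[c] for c in range(4)) for row in affine)
    return x, y, z


def compose(a, b):
    """Matrix product a @ b of two 4x4 affines (b is applied first, then a)."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)] for r in range(4)]


# Assumed MNI-style 2 mm voxel-to-millimetre affine (x axis flipped)
vox2mni = [[-2.0, 0.0, 0.0, 90.0],
           [0.0, 2.0, 0.0, -126.0],
           [0.0, 0.0, 2.0, -72.0],
           [0.0, 0.0, 0.0, 1.0]]
origin_mm = apply_affine(vox2mni, (45, 63, 36))
```

Because compose(a, b) applies b first, a subject-to-template registration matrix R and a voxel-to-subject matrix V combine as compose(R, V).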

The PET/CT combination is a multimodal approach to absolute quantification in which CT provides structural information, including bone, which strongly attenuates the γ-rays detected in PET. This enables attenuation correction, after which the accumulation of radioactive tracer in human tissue, and hence the amount of radioactive decay, can be quantified in absolute terms [211, 212].
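CT-based attenuation correction can be sketched in two steps under simplifying assumptions: CT numbers (HU) are converted to linear attenuation coefficients at 511 keV with a bilinear mapping, and the correction factor for each line of response (LOR) is the exponential of the line integral of those coefficients. The water value of about 0.096 cm⁻¹ is a standard approximation, but the bone slope factor is a hypothetical placeholder; clinical systems use scanner- and tube-voltage-specific calibrations and resample the CT to PET resolution first.

```python
import math

MU_WATER_511_KEV = 0.096  # cm^-1, approximate linear attenuation of water at 511 keV


def hu_to_mu(hu, bone_slope_factor=0.5):
    """Bilinear conversion from CT number (HU) to 511 keV attenuation (cm^-1).

    HU <= 0 is treated as an air/water mixture (linear scaling); above 0 HU,
    bone_slope_factor is a hypothetical placeholder for the scanner- and
    kVp-specific calibration used for bone.
    """
    hu = max(hu, -1000.0)  # nothing attenuates less than air
    if hu <= 0:
        return MU_WATER_511_KEV * (1.0 + hu / 1000.0)
    return MU_WATER_511_KEV * (1.0 + bone_slope_factor * hu / 1000.0)


def attenuation_correction_factor(hu_along_lor, step_cm):
    """ACF = exp(line integral of mu) along one LOR sampled every step_cm."""
    line_integral = sum(hu_to_mu(hu) * step_cm for hu in hu_along_lor)
    return math.exp(line_integral)
```

Multiplying the measured coincidences on each LOR by its ACF restores the counts lost to attenuation; for 20 cm of water the factor is about 6.8, which shows why uncorrected PET images cannot be quantified in absolute terms.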

Multimodal imaging also has the benefit of utilizing data from one modality to improve the data quality of another modality, such as correcting the geometric distortions of EPI images by acquiring a B0 field map or obtaining EPI with different parameters [213, 214]. Another classic case is in a combined MR-PET study, where the motion information provided by high-temporal resolution MRI data was used to help the reconstruction of the PET data [10].
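Field-map-based distortion correction can be sketched in one dimension. With a B0 field map in hertz, the displacement of each voxel along the phase-encode axis is approximately the off-resonance frequency multiplied by the total readout time, and the distorted line is resampled at the displaced positions. This is a simplified illustration: it assumes the shift map is defined on the undistorted grid with a known sign convention for the phase-encode direction, uses linear interpolation, ignores the Jacobian intensity correction, and uses hypothetical function names.

```python
def voxel_shift_map(fieldmap_hz, total_readout_s):
    """Phase-encode displacement in voxels for each off-resonance value (Hz)."""
    return [f * total_readout_s for f in fieldmap_hz]


def unwarp_line(distorted, shifts):
    """Resample one phase-encode line: output voxel y takes the value at y + shift[y].

    Linear interpolation; samples falling outside the line are set to zero.
    """
    n = len(distorted)
    out = []
    for y, s in enumerate(shifts):
        pos = y + s
        if pos < 0 or pos > n - 1:
            out.append(0.0)
            continue
        lo = int(pos)
        hi = min(lo + 1, n - 1)
        frac = pos - lo
        out.append((1.0 - frac) * distorted[lo] + frac * distorted[hi])
    return out
```

A 100 Hz off-resonance with a 10 ms total readout time already displaces signal by one voxel, which is why EPI near air-tissue interfaces benefits from this correction.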

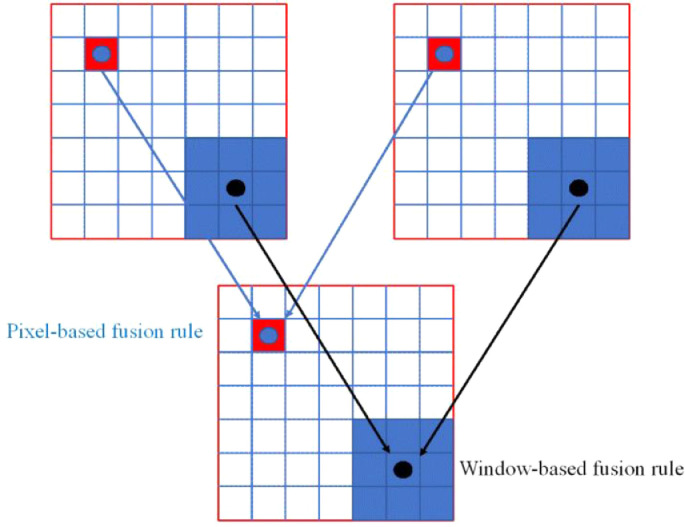

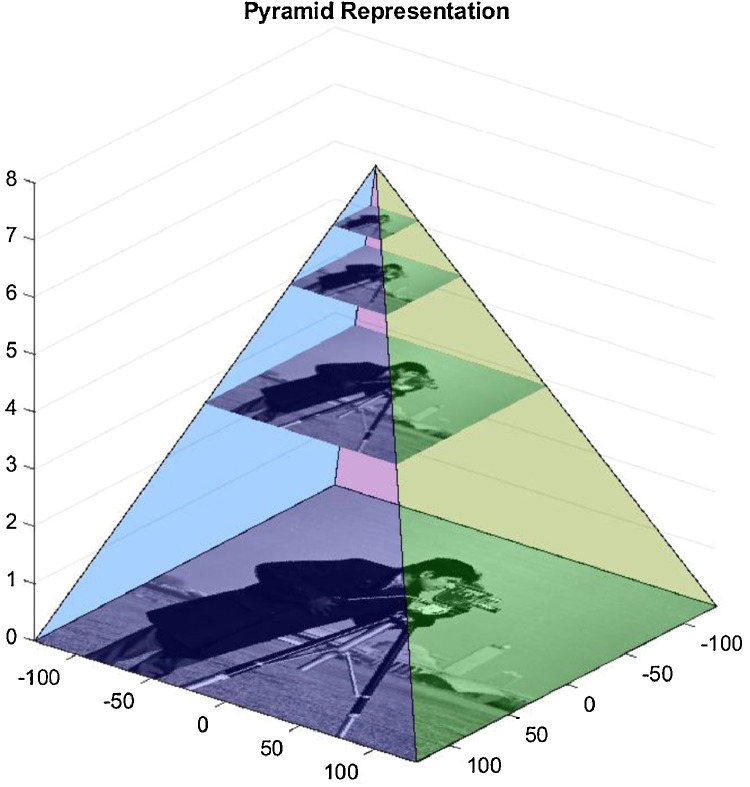

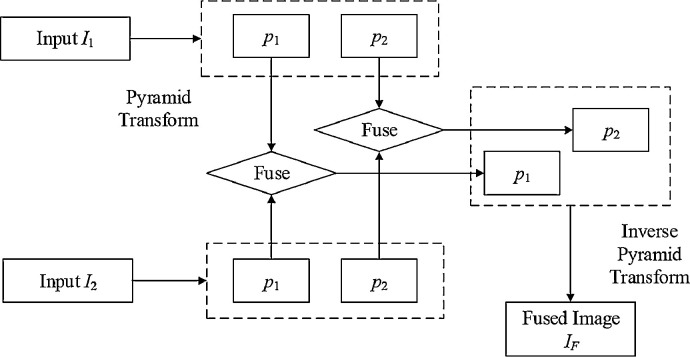

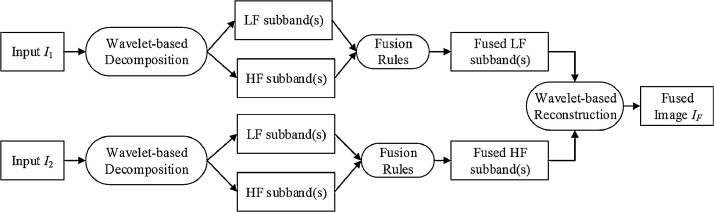

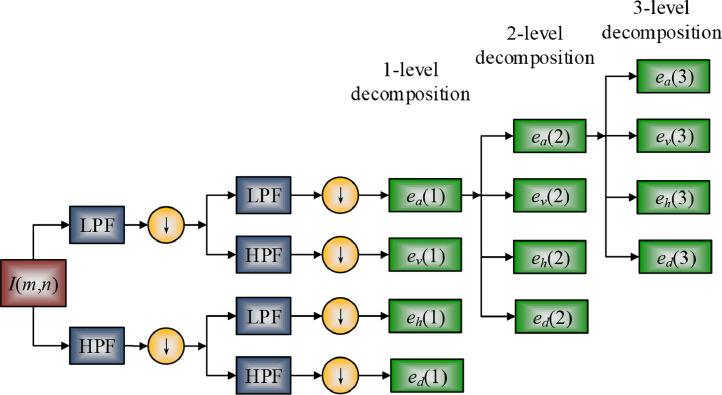

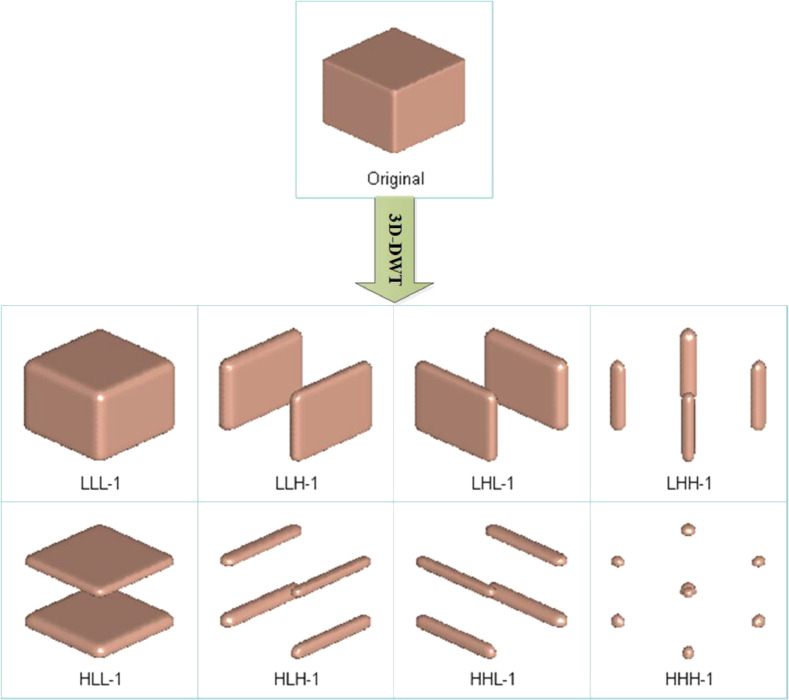

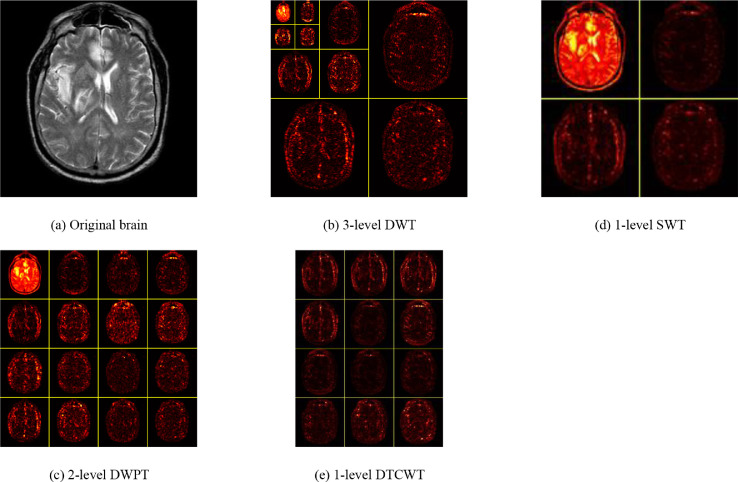

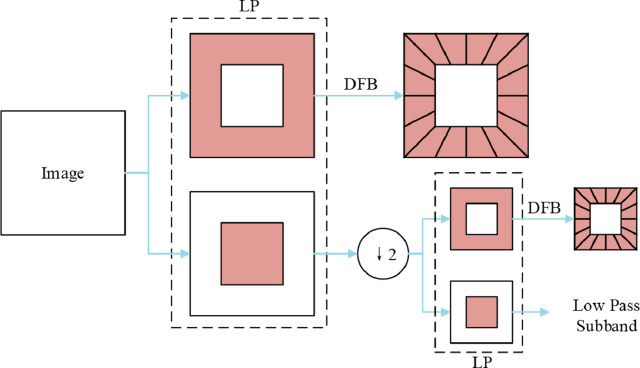

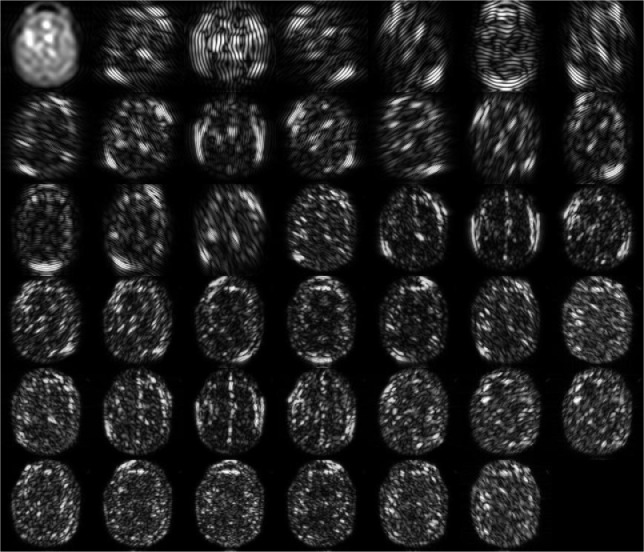

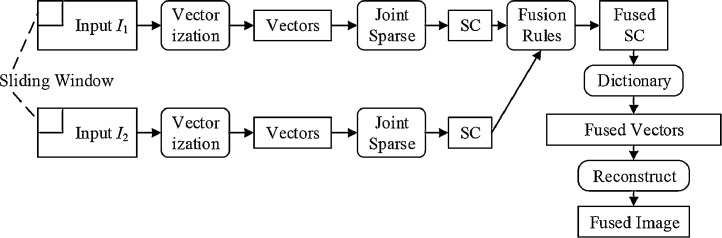

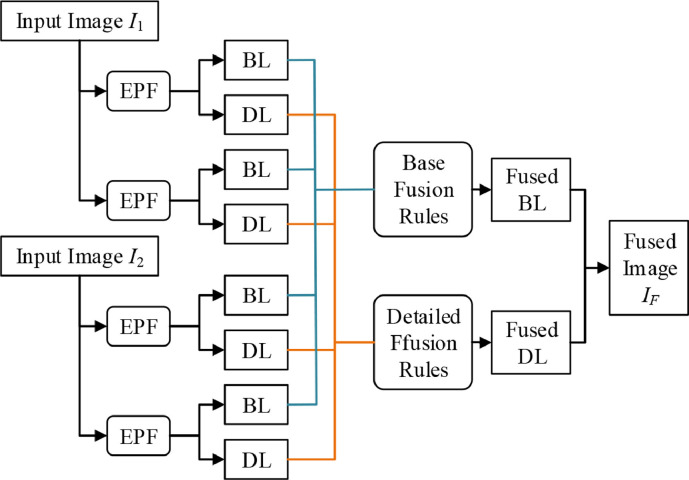

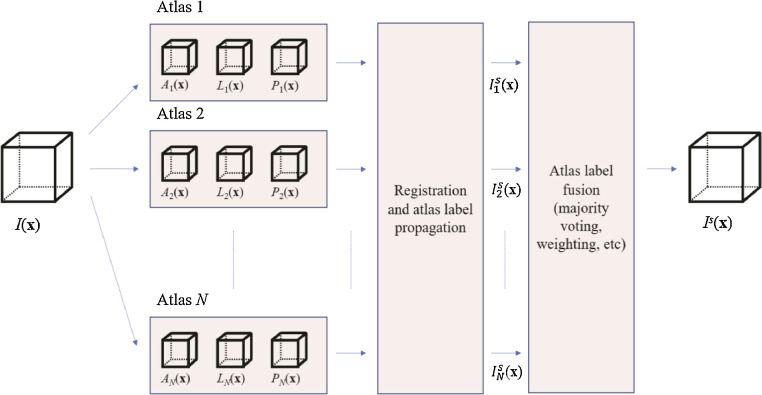

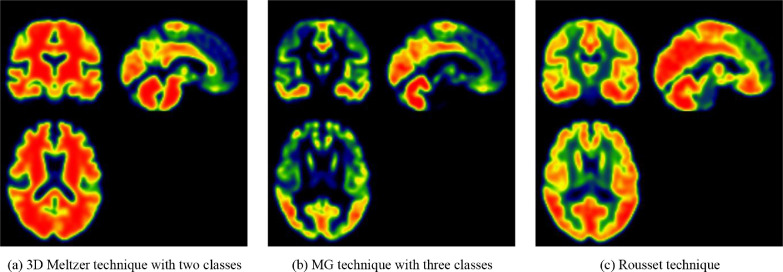

5.1. Forms of multimodality fusion