Abstract

Precision medicine for Alzheimer's disease (AD) requires personalized, reproducible, and neuroscientifically interpretable biomarkers, yet despite remarkable advances, few such biomarkers are available. In addition, the neurobiological basis and generalizability of end‐to‐end machine learning systems should be evaluated comprehensively as a top priority. For this reason, a deep learning model (3D attention network, 3DAN) is proposed that captures candidate imaging biomarkers with an attention mechanism module while advancing the diagnosis of AD based on structural magnetic resonance imaging. Generalizability and reproducibility are evaluated by cross‐validation on an in‐house multicenter database (n = 716) and a public database (n = 1116), with an accuracy of up to 92%. Significant associations between the classification output and the clinical characteristics of the AD and mild cognitive impairment (MCI, an intermediate stage between normal aging and dementia) groups provide solid neurobiological support for the 3DAN model. The effectiveness of the 3DAN model is further supported by its performance in predicting which MCI subjects progress to AD, with an accuracy of 72%. Collectively, the findings highlight the potential of structural brain imaging to provide a generalizable and neuroscientifically interpretable imaging biomarker that can support clinicians in the early diagnosis of AD.

Keywords: Alzheimer's disease, computer‐aided diagnosis, neurobiological basis, neuroscientifically interpretable biomarkers, structural magnetic resonance imaging

This study proposes a deep learning model (3D attention network) to simultaneously capture imaging biomarkers with an attention mechanism module and advance the diagnosis of Alzheimer's disease. The generalizability and reproducibility are cross‐validated on independent databases with an accuracy up to 92%. Significant associations between the classification output and clinical characteristics of patients provide solid neurobiological support for the model.

1. Introduction

Alzheimer's disease (AD) is the most prevalent cause of dementia, leading to irreversible brain damage. The disease is accompanied by memory deficits, communication difficulties, disorientation, and behavioral changes and is a leading cause of death.[ 1 ] Mild cognitive impairment (MCI), especially amnestic MCI, carries a relatively high risk of conversion to AD and may be an intermediate state between healthy aging and dementia.[ 2 , 3 ] It is essential to identify biomarkers or neuroimaging measures that enable accurate early clinical diagnosis and quantify the stage of the disease.[ 4 , 5 , 6 ] However, despite decades of research, generalizable and reproducible biomarkers have not yet emerged.

Structural magnetic resonance imaging (sMRI) analysis provides an effective way to characterize anatomic abnormalities and the progression of AD, making it possible for medical scientists to identify imaging biomarkers of early neurodegeneration.[ 7 , 8 ] Existing sMRI‐based studies have performed extensive morphometric analyses at the voxel level or region of interest (ROI) level, with the goal of quantifying the morphological characteristics of relevant regions in terms of volume, shape, and cortical thickness.[ 6 ] However, such statistical mapping methods characterize disease‐related changes from only one perspective, such as volume or shape. Moreover, these neuroimaging biomarkers compress multi‐voxel imaging data into one or a few values within a predetermined ROI, which may limit their usefulness for individual diagnosis. To address this limitation, the extraction of high‐dimensional morphometric features has attracted increasing attention.[ 9 , 10 , 11 , 12 , 13 ]

Deep learning methods, especially convolutional neural networks (CNNs), have been increasingly applied to various medical image analysis tasks.[ 14 , 15 , 16 , 17 ] A CNN can automatically learn the features that best represent the data. As an end‐to‐end architecture, a CNN can jointly optimize the feature representation and the classification performance based on a brain image. However, the black‐box nature of neural networks prevents us from obtaining valuable information about where a network focuses (i.e., the most discriminative localizations of brain abnormalities), which plays a key role in disease diagnosis. To this end, attention mechanism modules were developed to reveal where the network focuses, to refine the feature representation, and to increase its representational power.[ 18 , 19 , 20 , 21 , 22 ] Attention‐based networks have been applied successfully in fields such as natural language processing,[ 23 ] object detection,[ 22 ] and image classification and synthesis.[ 18 ]

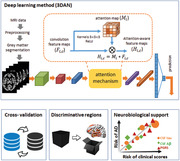

Inspired by recent advances in attention‐based networks,[ 20 , 21 , 22 ] we propose a 3D attention network (3DAN) that integrates an attention mechanism with a residual neural network (ResNet) to automatically capture the most discriminative localizations in brain images and jointly optimize feature extraction and classifier performance for AD based on structural MRI images (Figure 1 ). Because the extent to which brain structures are affected by AD varies,[ 11 , 24 , 25 , 26 ] we adopted an attention module to emphasize important atrophy localizations and suppress unimportant ones along the spatial dimension. With the attention module, the discriminative localizations and the refined feature representation were learned simultaneously in a data‐driven fashion. To test the robustness and generalizability of the imaging biomarkers for AD, cross‐validations were performed with two completely independent databases (n = 1832 in total). We also hypothesized that the classification output would have a solid neurobiological basis. To test this hypothesis, we examined the association between the attention network output and clinical measures [i.e., cognitive function measured by the Mini‐Mental State Examination (MMSE), CSF beta‐amyloid (Aβ), CSF tau, and polygenic risk scores (PGRS)] in the AD and MCI groups. Finally, we investigated whether the 3DAN could capture features that predict disease progression.

Figure 1.

Schematic of the data analysis pipeline. A) The architecture of the 3D attention network (3DAN). In the attention mechanism module, each voxel i of the H × W × D‐dimensional feature maps $F_{i,c}$ was weighted by the H × W × D‐dimensional attention map $M_i$. The trainable attention map $M_i$ was independent of the feature channel and depended only on the spatial position. B) The attention score map (left: in‐house database, right: ADNI database) generated by the attention mechanism module of the 3DAN model, indicating the discriminative power of various brain regions for AD diagnosis. C) To test the robustness and generalizability of the 3DAN model, cross‐validations were performed using two completely independent databases (an in‐house database and the ADNI database) (details can be found in Table 1). D) Investigation of the association between the classification output and clinical measures [i.e., cognitive function measured by the Mini‐Mental State Examination (MMSE), CSF beta‐amyloid (Aβ), CSF tau, and polygenic risk scores (PGRS)] in the AD and MCI groups.

2. Results

2.1. Diagnostic Performance

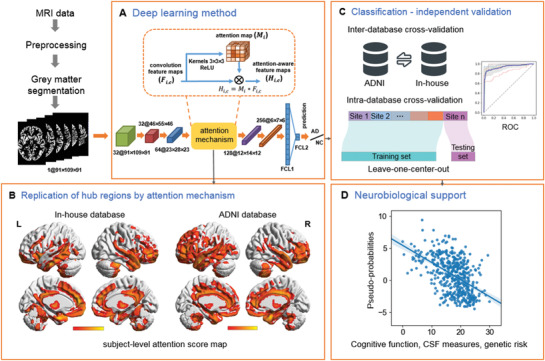

In total, 1832 subjects from our in‐house multi‐center database (n = 716) and the Alzheimer's Disease Neuroimaging Initiative (ADNI) database (n = 1116) were included in this study (Table S1, Supporting Information). For AD versus normal control (NC) classification, we conducted cross‐validations between the ADNI and the in‐house databases. In each strategy, one database was used as the training set and the other as the testing set. The classification accuracies were 86.1% (sensitivity (SEN) = 88.1%, specificity (SPE) = 84.6%, area under the curve (AUC) = 0.912) and 87.0% (SEN = 78.9%, SPE = 96.1%, AUC = 0.913) when the ADNI database and the in‐house database, respectively, served as the testing set (Figure 2A,B, Table 1 ). Next, we performed leave‐center‐out cross‐validation across the different scanners in the in‐house database. The mean classification accuracy was 90.9% (SEN = 86.9%, SPE = 95.7%, AUC = 0.940, Figure 2C, Table 1). In the ADNI database, we performed tenfold cross‐validation, and the mean classification accuracy was 92.1% (SEN = 89.0%, SPE = 94.4%, AUC = 0.941) (Figure 2D, Table 1; Figure S1, Supporting Information). For progressive MCI (pMCI) versus stable MCI (sMCI) classification, the mean classification accuracy was 71.7% (SEN = 74.0%, SPE = 70.1%, AUC = 0.721) using tenfold cross‐validation on the ADNI database (Figure 2E,F).
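The four performance measures reported above (and in Table 1) can be computed from a classifier's per‐subject outputs. The following is a minimal Python sketch, not the authors' evaluation code: it assumes AD is coded as the positive class (1), NC as 0, that the model outputs a predicted probability of AD, and a decision threshold of 0.5. The AUC uses the rank (Mann–Whitney) formulation, which equals the area under the empirical ROC curve.

```python
# Minimal sketch: AD = 1 (positive class), NC = 0; scores are predicted P(AD).
# The 0.5 decision threshold is an assumption, not taken from the paper.

def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def rank_auc(y_true, scores):
    """AUC = probability that a random positive outscores a random negative (ties count 1/2)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def diagnostic_metrics(y_true, scores, threshold=0.5):
    """Return ACC, SEN, SPE, and AUC as abbreviated in Table 1."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    return {
        "ACC": (tp + tn) / len(y_true),
        "SEN": tp / (tp + fn),  # fraction of AD subjects correctly detected
        "SPE": tn / (tn + fp),  # fraction of NC subjects correctly rejected
        "AUC": rank_auc(y_true, scores),
    }


# Hypothetical outputs for three AD and three NC subjects.
metrics = diagnostic_metrics([1, 1, 1, 0, 0, 0], [0.9, 0.8, 0.4, 0.6, 0.2, 0.1])
print(metrics)
```

Because the AUC is threshold‐free while ACC, SEN, and SPE depend on the chosen cut‐off, the two kinds of measure can rank methods differently, as seen for the VBM and ROI baselines in Table 2.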

Figure 2.

Diagnostic performance for AD/NC classification (A–D) and pMCI/sMCI classification (E,F). A) ROC curve for the classifier trained on the in‐house database and tested on the ADNI database; B) ROC curve for the classifier trained on the ADNI database and tested on the in‐house database; C) ROC curve for the classifier trained and tested on the in‐house database with leave‐center‐out cross‐validation (CV); D) ROC curve for the classifier trained and tested on the ADNI database with tenfold cross‐validation; E) ROC curve of the pMCI/sMCI classification with tenfold cross‐validation on the ADNI database; F) violin plots of the distributions of the pMCI/sMCI classification outputs.

Table 1.

Classification performance of the proposed 3DAN method in the AD and NC classification tasks

| Strategy | Training set | Testing set | ACC | SEN | SPE | AUC |
|---|---|---|---|---|---|---|
| Strategy 1 | In‐house (n = 716) | ADNI (n = 1116) | 0.861 | 0.881 | 0.846 | 0.912 |
| Strategy 2 | ADNI | In‐house | 0.870 | 0.789 | 0.961 | 0.913 |
| Strategy 3 | In‐house | In‐house (leave‐center‐out cross‐validation) | 0.909 | 0.869 | 0.957 | 0.940 |
| Strategy 4 | ADNI | ADNI (10‐fold cross‐validation) | 0.921 | 0.890 | 0.944 | 0.941 |

Abbreviations: ACC = accuracy; SEN = sensitivity; SPE = specificity; AUC = area under the curve of the receiver operating characteristic.

2.2. Important Regions Captured by the 3D Attention Network

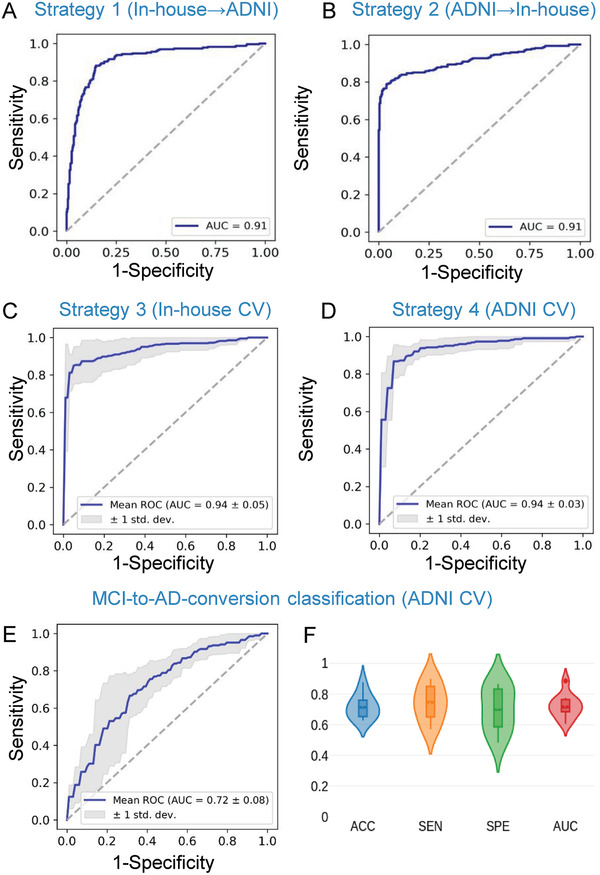

For each testing sample, the attention mechanism module yielded an attention value map indicating the discriminative power of the various brain regions for AD diagnosis (Figure 3A). The higher the value, the greater the discriminative ability of the region and the greater its potential as a biomarker. The mean attention map has a size of 23 × 28 × 23 because it is derived after two pooling operations on the original images (Figure 1A); for visualization, we resampled it to the size of the original images. The attention network highlighted brain regions mainly located in the temporal lobe, hippocampus, parahippocampal gyrus, cingulate gyrus, thalamus, precuneus, insula, amygdala, fusiform gyrus, and medial frontal cortex (Figure 3A). More importantly, the attention patterns for the in‐house and ADNI databases were significantly correlated (r = 0.59, p = 4.75e‐27, Figure 3A), which indicates that the results are reproducible across databases.
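The replication analysis compares the two attention maps through their Pearson correlation. A minimal sketch, assuming each map is summarized as a vector of regional mean attention scores (for example, the 273 Brainnetome regions used in Figure 3; whether the reported r was computed over regions or voxels is an assumption here). `pearson_r` and `r_to_t` are illustrative helpers; the t statistic with n − 2 degrees of freedom is the standard test for a nonzero Pearson correlation, and the attention values below are hypothetical.

```python
import math


def pearson_r(a, b):
    """Pearson correlation between two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def r_to_t(r, n):
    """t statistic (n - 2 degrees of freedom) for testing H0: r = 0."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# Hypothetical regional mean attention scores from the two databases.
in_house = [0.82, 0.75, 0.40, 0.31, 0.66]
adni = [0.78, 0.70, 0.45, 0.20, 0.60]
print(pearson_r(in_house, adni))

# If n = 273 regions (assumption), r = 0.59 corresponds to t of about 12.0 with 271 df.
print(r_to_t(0.59, 273))
```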

Figure 3.

A) Mean attention score maps derived from the in‐house database (left) and the ADNI database (right), and the correlation between these two attention maps (middle). Brighter colors indicate that a region is more discriminative for AD classification. Regions whose attention scores were in the top 30% (82/273) are displayed. The correlation plot indicates the replicability across the in‐house and ADNI databases. B) Correlation between the mean attention score and the t statistic of the gray matter volume difference between the NC and AD groups for the 273 ROIs of the Brainnetome Atlas in the in‐house database (left) and the ADNI database (right). C) Maps of the correlation between regional attention scores and MMSE scores (p < 0.05, FDR corrected) in the in‐house database (left) and the ADNI database (right), and the relationship between the two correlation maps (middle), which indicates the replicability across the in‐house and ADNI databases. D) Correlation between classification accuracy and the mean attention score of K groups of regions. In each scatter plot, the abscissa of each point is the mean attention score of the [273/K] brain regions in a group, and the ordinate is the classification accuracy based on the images of those [273/K] brain regions. At each K, the association of higher attention scores with higher classification accuracy reflects the effectiveness of the attention mechanism (details of the method can be found in Figure S4, Supporting Information).

2.3. Attention Score for the Network is Associated with the Atrophy Pattern and MMSE Score

To evaluate whether the attention scores were associated with brain alterations, we performed a correlation analysis between the significance of the group differences (T‐map) and the regional attention scores derived from the network. The attention values were significantly correlated with the group difference map in the in‐house database (r = 0.30, p = 4.97e‐7) and the ADNI database (r = 0.22, p = 2.66e‐4), which indicates that the proposed model captured features of the abnormal regions in AD (Figure 3B). We repeated the same correlation analyses for a linear SVM model based on the Brainnetome (BN) atlas and found that the regional weights were not significantly correlated with brain atrophy in either the in‐house database (r = 0.09, p = 0.14; Figure S2A, Supporting Information) or the ADNI database (r = 0.11, p = 0.07, Figure S2B, Supporting Information).
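The group‐difference map (T‐map) can be obtained by a two‐sample t test in each ROI and then correlated with the regional attention scores using Pearson's r. The sketch below assumes Student's t test with pooled variance and no covariates; the exact test and any covariates used in the study are not stated here, and the gray matter volumes are hypothetical.

```python
import math
from statistics import mean, variance


def two_sample_t(group_a, group_b):
    """Student's two-sample t statistic with pooled variance (group_a minus group_b)."""
    na, nb = len(group_a), len(group_b)
    pooled = ((na - 1) * variance(group_a) + (nb - 1) * variance(group_b)) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled * (1 / na + 1 / nb))


def t_map(nc_volumes, ad_volumes):
    """One t value per ROI; volumes[s][r] is the GM volume of ROI r in subject s.

    Positive values mean larger GM volume in NC than in AD, i.e., atrophy in AD.
    """
    n_rois = len(nc_volumes[0])
    return [
        two_sample_t([s[r] for s in nc_volumes], [s[r] for s in ad_volumes])
        for r in range(n_rois)
    ]


# Hypothetical data: 3 NC and 3 AD subjects, 2 ROIs (ROI 1 shows no group difference).
nc = [[3.0, 5.0], [4.0, 5.5], [5.0, 6.0]]
ad = [[1.0, 5.0], [2.0, 5.5], [3.0, 6.0]]
print(t_map(nc, ad))
```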

To evaluate whether the attention scores were associated with the patients’ cognitive abilities, regional correlation coefficients were calculated between the attention scores and the MMSE scores in the AD and MCI groups. The attention scores of 220 (220/273 = 81%) and 210 (210/273 = 77%) brain regions were significantly associated with the MMSE scores (p < 0.05, FDR corrected) in the in‐house and ADNI databases, respectively. This suggests that, to some extent, the variability in the regions with higher attention scores reflects the degree of the subjects' cognitive impairment. The two MMSE‐associated maps of the in‐house and ADNI databases were significantly correlated (r = 0.46, p = 6.17e‐16, Figure 3C), which indicates that the results replicate across sites.
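With 273 regional tests per database, a multiple‐comparison correction is required. The text specifies only "FDR correction"; the sketch below assumes the widely used Benjamini–Hochberg step‐up procedure, which sorts the m p values and rejects every hypothesis up to the largest rank k whose p value satisfies p(k) ≤ (k/m)q. The p values in the example are hypothetical.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return booleans marking the hypotheses rejected at FDR level q (step-up procedure)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest 1-based rank k whose sorted p value satisfies p_(k) <= (k / m) * q.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank
    # Reject all hypotheses ranked at or below k_max, even if their own test failed.
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= k_max:
            rejected[idx] = True
    return rejected


print(benjamini_hochberg([0.01, 0.04, 0.03, 0.20]))
print(benjamini_hochberg([0.001, 0.008, 0.039, 0.041, 0.042]))
```

The second example shows the step‐up property: the third and fourth p values fail their own thresholds, but all five are rejected because the largest p value passes at rank 5.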

In addition, we performed correlation analyses between the regional attention scores and the regional correlation coefficients (attention score vs. MMSE) to evaluate the overlap between regions with higher attention scores and regions whose attention scores were significantly correlated with the MMSE scores. The greater the attention score of a brain region, the more significant the correlation between its attention score and the MMSE (r = 0.22, p = 2.73e‐4 for the in‐house database; r = 0.30, p = 3.77e‐7 for the ADNI database; Figure S3, Supporting Information). We also calculated the Dice similarity coefficient between the regions significantly correlated with the MMSE score (regions in Figure 3C) and the top n regions ranked by attention score (n = 220 and 210 for the in‐house and ADNI databases, respectively). The regions whose attention scores correlated significantly with the MMSE largely overlapped with the regions with higher attention scores (Dice coefficients = 0.83 and 0.82 for the in‐house and ADNI databases, respectively).
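The Dice similarity coefficient between two region sets A and B is 2|A ∩ B| / (|A| + |B|). A minimal sketch with hypothetical region indices and scores; `top_n_regions` is an illustrative helper that returns the indices of the n regions with the highest attention scores.

```python
def dice(set_a, set_b):
    """Dice similarity coefficient between two collections of region indices."""
    a, b = set(set_a), set(set_b)
    return 2 * len(a & b) / (len(a) + len(b))


def top_n_regions(scores, n):
    """Indices of the n regions with the highest attention scores."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:n]


# Hypothetical example: 4 MMSE-significant regions out of 5.
mmse_significant = {0, 1, 2, 3}
attention = [0.9, 0.1, 0.8, 0.7, 0.6]
top = top_n_regions(attention, len(mmse_significant))
print(top, dice(mmse_significant, top))
```

Because n was set to the number of MMSE‐significant regions, both sets have the same size, and the Dice coefficient reduces to the fraction of regions the two sets share.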

2.4. Effectiveness of the Attention Mechanism for Key Regions Identification

To evaluate the effectiveness of the attention module at capturing the key regions, we performed a correlation analysis between the attention score of a group of regions and the classification accuracy of a model trained on those regions (Figure S4, Supporting Information). The experiments were carried out separately with the mean attention maps obtained from the models trained on the ADNI and in‐house databases. The attention score was significantly correlated with classification performance (Figure 3D), which suggests that the key regions captured by the attention mechanism may be potential biomarkers for AD diagnosis.
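The grouping step behind Figure 3D can be sketched as follows, under the assumption (not specified in the text; details are in Figure S4, Supporting Information) that regions are ranked by mean attention score and split into K contiguous groups of approximately 273/K regions. Training a classifier on each group's regions is outside the scope of this sketch; the resulting per‐group accuracies would then be correlated with the group means returned here. The function names and scores are hypothetical.

```python
def attention_groups(scores, k):
    """Rank ROIs by attention (high to low) and split them into k near-equal groups."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    n = len(order)
    # Integer boundaries guarantee exactly k non-empty groups whenever k <= n.
    return [order[(j * n) // k:((j + 1) * n) // k] for j in range(k)]


def group_mean_attention(scores, groups):
    """Mean attention score of the ROIs in each group."""
    return [sum(scores[i] for i in g) / len(g) for g in groups]


scores = [0.1, 0.9, 0.5, 0.7, 0.3, 0.8]  # hypothetical regional attention scores
groups = attention_groups(scores, 3)
print(groups, group_mean_attention(scores, groups))
```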

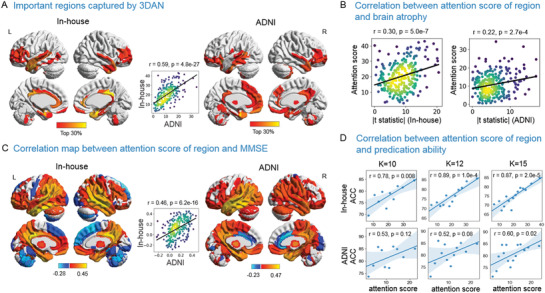

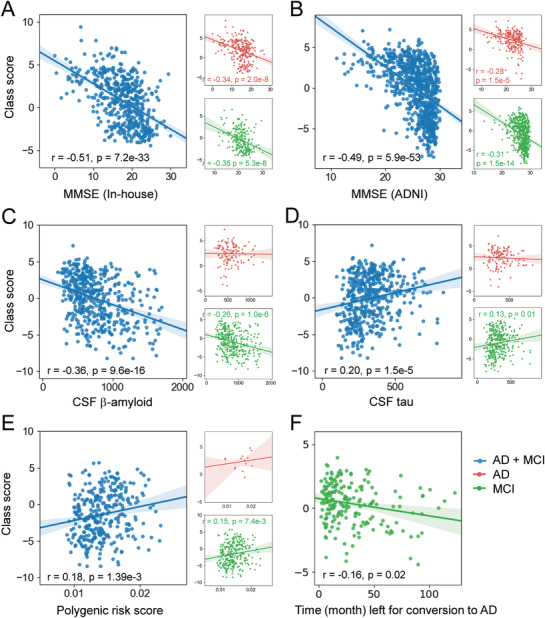

2.5. Classification Probability is Related to Clinical Measures, Cognitive Functions, and Genetic Risk

To investigate the clinical relevance of the predictions, we first performed a correlation analysis between the classification output and the MMSE scores of the AD and MCI groups (both groups together and separately) in the ADNI and in‐house databases. The probability of AD predicted by the model was significantly correlated with cognitive impairment in the AD and MCI groups, with age and gender controlled (all p < 0.001, Figure 4A,B). We then analyzed the correlations between the classification output and CSF Aβ (n = 472), tau (n = 472), and PGRS (n = 321) in the ADNI database (details can be found in the Supporting Information). The predicted probability of AD correlated significantly with these neuropathological and genetic factors (Figure 4C–E). In the MCI group, the classification output differed significantly between apolipoprotein E ε4 (APOE ε4) negative and APOE ε4 positive subjects, with age and gender controlled (t(341) = 2.56, p = 0.01; Figure S5, Supporting Information). More importantly, the more similar an MCI individual was to the AD group, the shorter the time to conversion to AD (r = −0.16, p = 0.02, Figure 4F).
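Controlling for age and gender in a correlation is commonly done with a partial correlation: both variables are regressed on the covariates, and the residuals are correlated. This is a minimal, dependency‐free sketch of that standard approach, not necessarily the exact procedure used in the study; gender is assumed to be coded 0/1, the helper names are illustrative, and all data are hypothetical.

```python
import math


def solve_linear(a, b):
    """Solve a x = b for a small square system (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def residualize(y, covariates):
    """Residuals of y after OLS on an intercept plus the covariates (e.g., age, gender)."""
    rows = [[1.0] + list(c) for c in covariates]
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = solve_linear(xtx, xty)  # normal equations: (X^T X) beta = X^T y
    return [yi - sum(bj * rj for bj, rj in zip(beta, r)) for r, yi in zip(rows, y)]


def pearson_r(a, b):
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))


def partial_corr(x, y, covariates):
    """Correlation between x and y after regressing the covariates out of both."""
    return pearson_r(residualize(x, covariates), residualize(y, covariates))


# Hypothetical subjects: (age, gender), model-predicted P(AD), and MMSE.
cov = [(60, 0), (65, 1), (70, 0), (75, 1), (80, 1), (62, 0)]
p_ad = [0.2, 0.4, 0.5, 0.7, 0.9, 0.1]
mmse = [27, 25, 22, 20, 16, 28]
print(partial_corr(p_ad, mmse, cov))
```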

Figure 4.

Correlations between the class scores and the MMSE scores in the in‐house database (A) and the ADNI database (B). Correlations between the class scores and CSF Aβ (n = 472) (C), CSF tau (n = 472) (D), and polygenic risk scores (n = 321) (E) of individual subjects in the ADNI database. F) Correlation between the classification output and the time to conversion to AD of the pMCI individuals in the ADNI database.

We also performed correlation analyses between the classification output of a linear SVM model based on the BN atlas and the MMSE score, CSF Aβ, tau, PGRS, and disease progression of the AD and MCI groups in the in‐house and ADNI databases. The classification output (i.e., the individual pseudo‐probability of AD) was represented by the distance of each sample from the discriminating hyperplane. These outputs were also significantly correlated with the neurobiological indices, MMSE scores, and disease progression (Figure S6, Supporting Information). Compared with those of the linear SVM model, the individual pseudo‐probabilities of AD from our 3DAN model were more strongly related to cognitive impairment, neurobiological changes, and genetic factors.
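For a linear SVM, the signed distance of a sample x from the hyperplane w·x + b = 0 is (w·x + b)/‖w‖. A minimal sketch with a hypothetical weight vector and bias; the sign convention (positive values on the AD side) is an assumption.

```python
import math


def signed_distance(x, w, b):
    """Signed distance of sample x from the hyperplane w.x + b = 0."""
    norm_w = math.sqrt(sum(wi * wi for wi in w))
    return (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm_w


w, b = [3.0, 4.0], -5.0  # hypothetical fitted weights and bias
print(signed_distance([3.0, 4.0], w, b))  # on the positive (assumed AD) side
print(signed_distance([0.0, 0.0], w, b))  # on the negative (assumed NC) side
```

Dividing by ‖w‖ rescales every subject by the same constant, so this normalization does not change the Pearson correlations between the pseudo‐probabilities and the clinical measures.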

2.6. Comparison with Other Methods

Table 2 shows that the proposed method generally outperformed the VBM‐based and ROI‐based methods in AD versus NC classification on both the ADNI and the in‐house testing sets. Notably, the in‐house database included subjects from different hospitals scanned with different scanners. Despite this considerable heterogeneity between data sources, the proposed method still achieved a classification accuracy of 87% on this database, whereas the VBM‐based and ROI‐based methods reached about 82% and 81%, respectively. This indicates that the proposed method generalizes well and remains robust on independent datasets, properties that are important for clinical applications. The higher accuracy could be due to the richer feature representation learned by the neural network.

Table 2.

Comparison of the classification performance of the proposed 3DAN method with other methods for AD diagnosis in Strategies 1 and 2 in Table 1

| Method | Training: In‐house, Testing: ADNI | | | | Training: ADNI, Testing: In‐house | | | |
|---|---|---|---|---|---|---|---|---|
| | ACC | SEN | SPE | AUC | ACC | SEN | SPE | AUC |
| 3DAN | 0.861 | 0.881 | 0.846 | 0.912 | 0.870 | 0.789 | 0.961 | 0.913 |
| ResNet | 0.853 | 0.863 | 0.846 | 0.907 | 0.860 | 0.759 | 0.974 | 0.910 |
| VBM | 0.712 | 0.947 | 0.538 | 0.907 | 0.821 | 0.667 | 0.996 | 0.908 |
| ROI‐AAL | 0.720 | 0.947 | 0.551 | 0.885 | 0.811 | 0.651 | 0.991 | 0.888 |
| ROI‐BNA | 0.744 | 0.960 | 0.584 | 0.901 | 0.813 | 0.651 | 0.996 | 0.894 |

Abbreviations: ACC = accuracy; SEN = sensitivity; SPE = specificity; AUC = area under the curve of the receiver operating characteristic; BNA = Brainnetome Atlas; AAL = anatomical automatic labeling; ROI = region of interest; VBM = voxel‐based morphometry.

3. Discussion

To the best of our knowledge, this is the first attention‐based network to integrate automatic detection of discriminative regions and classification into a unified framework for identifying potential imaging biomarkers and diagnosing brain disease. The simple yet effective 3D attention network achieved remarkable classification performance without handcrafted feature generation or model stacking when trained and tested on large multi‐site databases (n = 1832) spanning sites and countries, highlighting the robustness and reproducibility of the proposed 3DAN method. Notably, the 3DAN introduced an attention mechanism to capture important atrophy localizations that are particularly associated with the diagnosis of disease. The classification output showed a strong association with neurobiological indices (i.e., cerebrospinal fluid (CSF) amyloid β (Aβ), tau, and polygenic risk scores). These results may help in understanding the neurobiological architecture of this complex brain disease and represent a step toward precise early diagnosis of AD.

In terms of feature representation, typical deep learning methods for AD, MCI, and normal control (NC) classification can be roughly categorized into four classes: i) 2D slice‐level,[ 15 , 27 ] ii) 3D patch‐level,[ 11 , 28 ] iii) ROI‐level,[ 29 ] and iv) 3D subject‐level methods.[ 30 , 31 , 32 ] Unlike conventional statistical mapping methods, few of these studies have provided information about the discriminative localizations in the images. Liu and colleagues proposed a two‐stage framework in which the first stage identifies discriminative landmarks at the patch level and the second stage trains a classifier on the selected patch‐based features.[ 28 ] Lian and colleagues proposed a hierarchical fully convolutional network that integrates discriminative patch identification and classifier training into a unified framework for AD diagnosis.[ 11 ] However, learning the discriminative localizations of the brain at the 3D subject level had not been well explored. The proposed method introduces an attention module that highlights discriminative brain regions (such as the temporal lobe, hippocampus, parahippocampal gyrus, cingulate gyrus, and medial frontal cortex) at the subject level for AD diagnosis, and the post hoc analyses showed that higher attention scores contributed significantly to the classification and that the attention maps were highly replicable (Figure 3A,D). These brain regions have been confirmed to be affected by AD pathology and are correlated with cognitive impairment in AD and/or MCI.[ 33 , 34 , 35 ] As opposed to conventional ROI‐ and voxel‐level pattern analysis methods,[ 36 , 37 , 38 , 39 ] our method does not require a priori information about predefined brain regions. Instead, it integrates feature extraction with the classification task and learns discriminative features automatically in a data‐driven fashion, which benefits classification performance.

Deep learning methods have increasingly been used in the computer‐aided diagnosis of AD because they can learn optimized and robust feature representations. Against this background, the most important advance offered by the present study is that the classifiers achieved high accuracy in cross‐validation across large, independent multi‐site databases. Such validation is very important for biomarker discovery, whereas traditional leave‐one‐out or N‐fold cross‐validation on single‐site data is limited by relatively small sample sizes, often leading to poor generalization of the classifiers.[ 40 , 41 , 42 , 43 , 44 , 45 ] The proposed method achieved higher accuracy and a larger area under the curve (AUC) than traditional support vector machine (SVM) classifiers based on ROI features in inter‐site cross‐validation. The models trained here can be applied directly to new datasets; such generalizability is very important for future clinical translation.[ 40 , 41 , 43 , 46 , 47 ] In addition, using only baseline data, the classifier predicted with relatively high accuracy whether an MCI subject would convert to AD within three years, highlighting the effectiveness of the 3DAN and, therefore, its translational potential.
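The inter‐site validation discussed here differs from single‐site N‐fold cross‐validation in that no subject from the held‐out site is seen during training. A minimal sketch of leave‐center‐out splitting, assuming each subject carries a center (scanner) label, as in the six‐scanner in‐house database; `leave_center_out_splits` is a hypothetical helper name, and the labels are illustrative.

```python
from collections import defaultdict


def leave_center_out_splits(centers):
    """Yield (held_out_center, train_idx, test_idx), holding out one center at a time."""
    by_center = defaultdict(list)
    for idx, c in enumerate(centers):
        by_center[c].append(idx)
    for held_out in sorted(by_center):
        test_idx = by_center[held_out]
        train_idx = [i for i, c in enumerate(centers) if c != held_out]
        yield held_out, train_idx, test_idx


# Hypothetical center labels for five subjects.
for held_out, train_idx, test_idx in leave_center_out_splits(["A", "B", "A", "C", "B"]):
    print(held_out, train_idx, test_idx)
```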

Critically, when we evaluated the relationship between the imaging measures and clinical features, the attention scores of the identified brain regions, which replicated across sites and databases, were significantly associated with the MMSE scores in both databases. A significant positive correlation was also found between the attention scores and the pattern of gray matter atrophy (Figure 3B), which indicates that the 3DAN captured the regions with significant group differences between the AD and NC groups. Furthermore, the classification output was significantly correlated with the MMSE scores, revealing that the probability of an individual being classified as having AD was associated with the severity of the cognitive symptoms. AD is pathologically characterized by Aβ‐containing neuritic plaques and tau neurofibrillary tangles in the cerebral cortex.[ 5 , 48 ] PGRSs are used to assess the cumulative genetic risk for a disorder,[ 49 ] especially AD,[ 50 , 51 , 52 ] and previous large‐scale genome‐wide association studies have confirmed associations between the PGRSs for AD and clinical markers (cognitive abilities, clinical evaluation, brain atrophy, tau, and Aβ).[ 53 , 54 , 55 , 56 ] More importantly, for an MCI individual, the closer the classification output was to that of the AD group, the more quickly the subject converted to AD. This finding provides additional evidence of the effectiveness of the 3DAN model. Hence, the significant associations between the classification output and the Aβ, tau, and PGRS indices, as well as the time for MCI patients to convert to AD, provide a solid neurobiological basis for potential clinical applications of the 3DAN.[ 57 , 58 ] Our further analyses showed that five of these six correlations were stronger for the 3DAN model than for the SVM (Figure S6, Supporting Information), highlighting the effectiveness of the attention mechanism for key region identification and early classification.

While the experimental results suggest promising clinical applications of the proposed method for AD, our study has some limitations that warrant consideration. First, although the proposed method achieved good results in detecting important regions and diagnosing AD on two multi‐center databases, its performance and robustness should be further validated on a larger population before any actual clinical use.[ 59 ] Second, we employed only one sMRI scan per subject to explore the discriminative power of single‐modality data. Note also that in the present study, the ADNI dataset was used to show the biological meaningfulness of the class scores, whereas the in‐house dataset, which lacks other neurobiological measures, could only show alterations associated with disease severity in the patient groups. In the future, longitudinal and multimodal data, such as functional MRI, PET images, and genetic data, should be introduced to further improve classification performance and to understand the neurological basis of AD.[ 27 , 60 , 61 , 62 ] The ability to predict which subjects have a higher risk of progression, as well as to detect earlier stages of AD, is of great importance. Although we classified pMCI and sMCI subjects with an accuracy of 72%, this performance needs further improvement using a large longitudinal dataset.[ 63 ] Finally, higher‐resolution images and more advanced deep neural networks will be critical avenues for improving performance in future studies.[ 61 , 64 , 65 ]

Overall, our proposed method is a novel model that integrates the attention mechanism into a deep CNN, and we tested it in what is, to our knowledge, the largest neuroimaging analysis of AD to date. Without multi‐step feature selection and classification processes, the proposed end‐to‐end network achieved better classification performance by leveraging the attention module. The interpretability of the 3DAN may facilitate the clinical application of deep learning algorithms for AD diagnosis. Our research team plans to investigate more specific neuroanatomical traits and AD phenotypes and to explore sophisticated brain imaging measures to improve our understanding of AD and to enable earlier diagnosis and better progression prediction.

4. Experimental Section

Study Design

Two sMRI databases were employed to cross‐validate the results in this study: 1) an in‐house multi‐center database and 2) the Alzheimer's Disease Neuroimaging Initiative (ADNI) database (http://adni.loni.usc.edu). These databases contain baseline brain MR images from AD patients, MCI patients, and normal controls (NC). This study was approved by the Medical Ethics Committee of the Institute of Automation, Chinese Academy of Sciences. Demographic information about the subjects in both the in‐house and ADNI databases is presented in Table S1, Supporting Information.

In‐house

The in‐house database consisted of 716 (261 AD, 224 MCI, and 231 NC) subjects imaged by six different scanners. These studies were approved by the medical ethics committees of the local hospitals. All the subjects or their legal guardians signed written consent forms and met identical stringent methodological criteria. Comprehensive clinical details can be found elsewhere in our previous studies.[ 66 , 67 , 68 , 69 , 70 ] Detailed MRI acquisition protocol information for the different scanners is provided in the Supporting Information (Tables S1 and S2, Supporting Information).

ADNI

The data included in the present study consist of 1.5T or 3T T1‐weighted MR images acquired from a total of 1116 subjects (227 AD, 584 MCI, and 305 NC subjects). Of these subjects, 612 had cerebrospinal fluid (CSF) amyloid‐β (Aβ), tau, and apolipoprotein E ε4 (APOE ε4) genotype measurements, and 536 had polygenic risk scores (PGRS). Detailed information can be found in Tables S3 and S4, Supporting Information. In addition, MCI patients were further divided into progressive MCI (pMCI) subjects, who converted to AD, and stable MCI (sMCI) subjects, who did not. Subjects (203 pMCI and 295 sMCI) who had follow‐up data more than one year after the initial MRI and consistent diagnoses across all their longitudinal scans were used for pMCI/sMCI classification (Table S5, Supporting Information).

Image Pre‐Processing

To capture regional gray matter changes for model training, the structural MRI images were preprocessed with the standard steps of the CAT12 toolbox (http://dbm.neuro.uni-jena.de/cat/). All sMRI data were bias‐corrected; segmented into gray matter (GM), white matter (WM), and cerebrospinal fluid (CSF); and registered to Montreal Neurological Institute (MNI) space using a sequential linear (affine) transformation. The gray matter images were then resliced to a voxel size of 2 mm × 2 mm × 2 mm, yielding volumes of 91 × 109 × 91 voxels with 2 mm isotropic resolution.
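The reported grid size follows from simple arithmetic. The sketch below assumes the bounding box of the standard 2 mm MNI152 template, from (−90, −126, −72) mm to (90, 90, 108) mm; this box is an assumption, as the text does not state it. Sampling it every 2 mm, inclusive of both ends, gives 91 × 109 × 91 voxels, each with a volume of 8 mm³.

```python
def grid_shape(bb_min, bb_max, voxel_mm):
    """Voxel count per axis for an inclusive bounding box sampled every voxel_mm."""
    return tuple(int(round((hi - lo) / voxel_mm)) + 1
                 for lo, hi in zip(bb_min, bb_max))


# Assumed bounding box (in mm) of the standard 2 mm MNI152 template grid.
BB_MIN = (-90, -126, -72)
BB_MAX = (90, 90, 108)

shape = grid_shape(BB_MIN, BB_MAX, 2.0)
voxel_volume_mm3 = 2.0 ** 3  # volume of one isotropic 2 mm voxel
print(shape, voxel_volume_mm3)  # (91, 109, 91) 8.0
```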

Attention Based 3D Deep Learning Method

A simple yet effective 3D attention‐based network (3DAN) was proposed to diagnose AD and to localize discriminative brain regions.[ 71 ] Specifically, the residual network (ResNet)[ 72 ] was used as the basic architecture. The 3DAN consisted of a convolutional layer, eight residual blocks, an attention mechanism module, and a fully connected layer (Figure 1A). Each basic block contained two convolutional layers, and each convolutional layer was followed by batch normalization and a rectified linear unit (ReLU) nonlinearity.[ 73 ] Average pooling with a kernel size of 3 × 3 × 3 reduced the 3D feature maps from 91 × 109 × 91 to 46 × 55 × 46 and then to 23 × 28 × 23. In each residual block, the block output H(x) is the sum of the input x and the stacked nonlinear mapping F(x), combined directly through a “shortcut connection” that addresses the degradation problem:

$$H(x) = F(x) + x \tag{1}$$
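The feature‐map sizes above can be checked with the usual pooling output‐size rule. Only the 3 × 3 × 3 kernel is stated in the text; a stride of 2 and padding of 1 are assumptions, chosen here because they reproduce the reported sizes exactly. The `residual_output` helper is a toy illustration of Equation (1), with a ReLU standing in for the stacked mapping F.

```python
import math


def pooled_size(n, kernel=3, stride=2, padding=1):
    """Output length along one axis under the floor rule used by PyTorch pooling."""
    return math.floor((n + 2 * padding - kernel) / stride) + 1


def pooled_shape(shape, kernel=3, stride=2, padding=1):
    """Apply pooled_size to every axis of a 3D shape."""
    return tuple(pooled_size(n, kernel, stride, padding) for n in shape)


def relu(v):
    """Toy stand-in for the stacked nonlinear mapping F."""
    return [max(0.0, a) for a in v]


def residual_output(x, f):
    """Eq. (1), H(x) = F(x) + x, applied element-wise to a flat list of activations."""
    return [fx + xi for fx, xi in zip(f(x), x)]


s1 = pooled_shape((91, 109, 91))
s2 = pooled_shape(s1)
print(s1, s2)  # (46, 55, 46) (23, 28, 23)
print(residual_output([1.0, -2.0], relu))  # [2.0, -2.0]
```

With stride 1 or without padding, the sizes would not match the reported 46 × 55 × 46 and 23 × 28 × 23, which is why these settings are the plausible reading.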

The attention mechanism was implemented simply as a convolutional layer with a set of filters of 3 × 3 × 3 kernel size.[ 71 ] In the attention mechanism module, each voxel i of the H × W × D‐dimensional feature maps $F_{i,c}$ was weighted by the H × W × D‐dimensional attention map $M_i$. The trainable attention map was independent of the feature channel and depended only on the spatial position (Figure 1):

$$F'_{i,c} = M_i \, F_{i,c} \tag{2}$$

where the spatial position (x, y, z) of a voxel is indexed by i (i ∈ {1, …, H × W × D}, x ∈ {1, …, H}, y ∈ {1, …, W}, z ∈ {1, …, D}), and c ∈ {1, …, C} is the channel index. The proposed network was implemented in Python using PyTorch (version 0.3.1), and the code can be downloaded at https://github.com/YongLiuLab. The input is the normalized 3D gray matter density image, and the output is a probability for each individual obtained by a soft‐max classifier trained with the cross‐entropy loss. Notably, this end‐to‐end network requires no prior knowledge to design or select features. Generating the attention map for the 3D feature maps adds little computational overhead or network complexity. The network was optimized using the Adam algorithm with an initial learning rate of 10⁻⁶ and a batch size of 8.
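A minimal, dependency‐free sketch of the attention module and Equation (2) follows. It assumes that the attention convolution has a single output channel, so that one map M is shared by all C channels, with stride 1, zero padding of 1, and no activation applied to M. The text does not say whether M is normalized, for example by a sigmoid. This is an illustration, not the authors' PyTorch implementation, and the function names are hypothetical.

```python
from itertools import product


def attention_map(features, weights, bias=0.0):
    """Single-output 3x3x3 convolution (stride 1, zero padding 1) over C channels.

    features: nested lists indexed [c][x][y][z]; weights: nested lists [c][3][3][3].
    Returns M indexed [x][y][z]: one value per voxel, shared by all channels.
    """
    C = len(features)
    H, W, D = len(features[0]), len(features[0][0]), len(features[0][0][0])
    M = [[[0.0] * D for _ in range(W)] for _ in range(H)]
    for x, y, z in product(range(H), range(W), range(D)):
        total = bias
        for c, dx, dy, dz in product(range(C), (-1, 0, 1), (-1, 0, 1), (-1, 0, 1)):
            xx, yy, zz = x + dx, y + dy, z + dz
            if 0 <= xx < H and 0 <= yy < W and 0 <= zz < D:  # zero padding outside
                total += weights[c][dx + 1][dy + 1][dz + 1] * features[c][xx][yy][zz]
        M[x][y][z] = total
    return M


def apply_attention(features, M):
    """Eq. (2): F'_{i,c} = M_i * F_{i,c}, reusing the same spatial map for every channel."""
    return [[[[M[x][y][z] * ch[x][y][z] for z in range(len(ch[x][y]))]
              for y in range(len(ch[x]))]
             for x in range(len(ch))]
            for ch in features]


def cube(value, n=3):
    """An n x n x n nested list filled with value."""
    return [[[value] * n for _ in range(n)] for _ in range(n)]


# Toy example: two constant channels on a 3x3x3 grid and all-ones filters.
F = [cube(1.0), cube(1.0)]
Wt = [cube(1.0), cube(1.0)]
M = attention_map(F, Wt)
print(M[1][1][1], M[0][0][0])  # 54.0 16.0
```

Because M has no channel index, every channel at a given voxel is scaled by the same factor. This is what makes the map readable as a spatial localization of discriminative regions.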

Diagnostic Performance

To maximize the generalizability of the classification, classifiers providing individual‐level predictions of group status were built under four different cross‐validation strategies (Table 1). These strategies were used to evaluate the impact of pooling data across sites and of training and testing at different centers. In the first strategy, the model was trained on the in‐house database and tested on the ADNI database. In the second strategy, subjects from ADNI were used as the training set, and subjects from the in‐house database were used as an independent test set. In the third strategy, inter‐site cross‐validation was considered: leave‐center‐out cross‐validation was conducted across the different scanners of the in‐house database. In the fourth strategy, the classifiers were trained and tested within the ADNI database using tenfold cross‐validation to discriminate AD from NC (Table 1). In addition, classifiers were trained and tested for pMCI versus sMCI classification. For all analyses, the accuracy, sensitivity, specificity, and area under the receiver operating characteristic curve (AUC) were used to evaluate the performance of the model.
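The leave‐center‐out split and the four evaluation metrics can be sketched as follows. Several details here are assumptions rather than the authors' stated procedure: label 1 denotes AD (or pMCI), a 0.5 threshold is applied to the predicted probability, and the AUC is computed with the Mann–Whitney formulation, counting ties as one half. The function names are hypothetical.

```python
def leave_one_site_out(sites):
    """Yield (held_out_site, train_idx, test_idx) for leave-center-out cross-validation."""
    for site in sorted(set(sites)):
        test = [i for i, s in enumerate(sites) if s == site]
        train = [i for i, s in enumerate(sites) if s != site]
        yield site, train, test


def binary_metrics(y_true, scores, threshold=0.5):
    """Accuracy, sensitivity, specificity, and ROC AUC; label 1 = AD, 0 = NC."""
    pred = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for t, p in zip(y_true, pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, pred) if t == 1 and p == 0)
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    # AUC = probability that a random positive outscores a random negative (ties = 1/2).
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "auc": wins / (len(pos) * len(neg)),
    }
```

For example, `binary_metrics([1, 1, 0, 0], [0.9, 0.4, 0.3, 0.6])` gives 0.5 for accuracy, sensitivity, and specificity, and 0.75 for AUC. The AUC is threshold‐free, which is why it is reported alongside the thresholded metrics.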

The Link between the Network's Attention Score, Prediction Ability, and Atrophy Pattern

To further evaluate how effectively the attention module captures the key regions, the relationship between the attention score and the discriminative capacity of the brain regions involved in AD diagnosis was assessed. Specifically, the attention score of each of the 273 regions defined in the Brainnetome Atlas (http://atlas.brainnetome.org/) was first calculated. To reduce the number of retraining and classification runs, the 273 brain regions were sorted by attention score and subdivided into K groups. Groups 1 to K − 1 each contained ⌊273/K⌋ regions, and group K contained the remaining 273 − (K − 1)⌊273/K⌋ regions (see Figure S4, Supporting Information). The mean attention score of each group was calculated, so that each of the K groups had one attention score. To evaluate the classification capacity of each group, the gray matter density images of the brain regions in that group were used as the network input to retrain the classification model and recompute the accuracy of AD versus NC classification. This retraining and classification process was conducted K times. Finally, Pearson's correlation coefficient between the classification accuracies and the attention scores of the K groups was calculated. To evaluate the generalizability of this result, three different values of K were used (K = 10, 12, and 15), and the process was repeated three times (details can also be found in Figure S4, Supporting Information).
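The grouping step can be sketched as below. Descending sort order is an assumption, since the text only says that the scores were sorted. The per‐group accuracies come from retraining the network on each group's regions, which is not reproduced here. The function names are hypothetical.

```python
import math


def split_into_groups(scores, k):
    """Sort region indices by attention score and cut them into k groups.

    Groups 1..k-1 receive floor(n/k) regions each; group k receives the remainder.
    Descending order is an assumption; the text only says the scores were sorted.
    """
    order = sorted(range(len(scores)), key=lambda r: scores[r], reverse=True)
    size = len(scores) // k
    groups = [order[g * size:(g + 1) * size] for g in range(k - 1)]
    groups.append(order[(k - 1) * size:])
    return groups


def group_attention(scores, groups):
    """Mean attention score of each group of regions."""
    return [sum(scores[r] for r in g) / len(g) for g in groups]


def pearson(a, b):
    """Pearson's correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


# Group sizes for the 273 Brainnetome regions.
# Prints: 10 27 30, then 12 22 31, then 15 18 21
for k in (10, 12, 15):
    sizes = [len(g) for g in split_into_groups(list(range(273)), k)]
    print(k, sizes[0], sizes[-1])
```

Given the K accuracies from the retraining runs, `pearson(group_attention(scores, groups), accuracies)` yields the correlation described above.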

In addition, a correlation analysis was performed between the significance of the group difference, as indicated by a two‐sample t test, and the attention scores of the regions derived from the network. Specifically, the mean gray matter density of each of the 273 ROIs in the Brainnetome Atlas was calculated. A two‐sample two‐sided t test was then performed between the AD and NC groups after regressing out the effects of age, gender, and site. Finally, Pearson's correlation coefficient between the absolute t statistics and the mean attention scores of the 273 ROIs was calculated separately for the in‐house and ADNI databases.
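A sketch of this ROI‐wise analysis follows. It assumes that covariates are removed by ordinary least squares on an intercept plus covariate columns, with categorical site supplied as 0/1 dummy columns by the caller. The t statistic is Student's pooled‐variance form; the p‐value step is omitted because only |t| enters the correlation. The function names are hypothetical.

```python
import math


def residualize(y, covariates):
    """Residuals of y after OLS on an intercept plus the given covariate columns."""
    n = len(y)
    X = [[1.0] + [float(col[k]) for col in covariates] for k in range(n)]
    p = len(X[0])
    # Augmented normal equations [X^T X | X^T y], solved by Gauss-Jordan elimination.
    A = [[sum(X[k][i] * X[k][j] for k in range(n)) for j in range(p)]
         + [sum(X[k][i] * y[k] for k in range(n))]
         for i in range(p)]
    for col in range(p):
        piv = max(range(col, p), key=lambda r: abs(A[r][col]))  # partial pivoting
        A[col], A[piv] = A[piv], A[col]
        for r in range(p):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    beta = [A[i][p] / A[i][i] for i in range(p)]
    return [y[k] - sum(b * x for b, x in zip(beta, X[k])) for k in range(n)]


def two_sample_t(a, b):
    """Student's two-sample t statistic with pooled variance."""
    na, nb = len(a), len(b)
    ma, mb = sum(a) / na, sum(b) / nb
    sp2 = (sum((x - ma) ** 2 for x in a) + sum((x - mb) ** 2 for x in b)) / (na + nb - 2)
    return (ma - mb) / math.sqrt(sp2 * (1 / na + 1 / nb))


def pearson(a, b):
    """Pearson's correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))


def roi_abs_t(gm_by_roi, is_ad, covariates):
    """|t| (AD vs NC) per ROI after regressing covariates out of mean GM density."""
    out = []
    for values in gm_by_roi:
        r = residualize(values, covariates)
        ad = [v for v, g in zip(r, is_ad) if g]
        nc = [v for v, g in zip(r, is_ad) if not g]
        out.append(abs(two_sample_t(ad, nc)))
    return out
```

Per database, `pearson(roi_abs_t(gm_by_roi, is_ad, covariates), attention_by_roi)` then gives the reported coefficient.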

To test whether the importance of the brain regions identified by the attention mechanism was associated with cognitive ability, the correlation between the attention scores and the MMSE scores was also computed for each ROI of the Brainnetome Atlas in the inter‐database cross‐validation strategies (the first and second strategies). To test reproducibility, the correlation between the two resulting correlation maps, one from each database, was also calculated.
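The two‐level correlation can be sketched as follows. It assumes, since the text does not spell this out, that attention scores are available per subject for each ROI. Each database then yields one correlation map (one Pearson r per ROI), and the two maps are correlated with each other. The function names are hypothetical.

```python
import math


def pearson(a, b):
    """Pearson's correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))


def mmse_correlation_map(attention_by_roi, mmse):
    """One Pearson r per ROI between subject-level attention scores and MMSE scores."""
    return [pearson(roi_scores, mmse) for roi_scores in attention_by_roi]


def map_reproducibility(map_a, map_b):
    """Pearson r between the ROI-wise correlation maps of two databases."""
    return pearson(map_a, map_b)
```

In use, `map_reproducibility(mmse_correlation_map(att_inhouse, mmse_inhouse), mmse_correlation_map(att_adni, mmse_adni))` would return the cross‐database agreement. The variable names in this call are illustrative.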

Correlation between Prediction Performance and Clinical Measures, Cognition, and Genetic Risk

To further assess the clinical relevance of the prediction performance, the correlations between the class score (i.e., the individual pseudo‐probability of AD) and the cognitive ability, neuropathological changes, or genetic risk factors of individual subjects in the ADNI and in‐house databases were investigated. Here, the MCI subjects were included to test whether an association with disease severity exists. The un‐normalized class scores of the 3DAN model were used rather than the class posteriors returned by the soft‐max layer. Pearson correlation coefficients were calculated between the classifier output and the MMSE score, CSF Aβ, tau, or PGRS (details about the PGRS can be found in the Supporting Information) for individual subjects in the AD and MCI groups (pooled and also each group separately) after regressing out the effects of age and gender. We also evaluated the effect of the high‐risk APOE ε4 allele in the MCI group of the ADNI database with a two‐sample two‐sided t test between the APOE ε4+ and APOE ε4− subgroups (p < 0.05). In addition, to test whether an association with the rate of disease conversion could be identified, the relationship between the classifier output and the time to conversion to AD was evaluated for the pMCI individuals in the ADNI database.
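The covariate‐adjusted correlation can be sketched as a partial correlation. This rests on an assumption: "regressing out the effects of age and gender" is read as residualizing both the classifier output and the clinical measure on an intercept, age, and gender (coded 0/1), then correlating the residuals. The function names are hypothetical.

```python
import math


def residualize(y, covariates):
    """Residuals of y after OLS on an intercept plus the given covariate columns."""
    n = len(y)
    X = [[1.0] + [float(col[k]) for col in covariates] for k in range(n)]
    p = len(X[0])
    # Augmented normal equations [X^T X | X^T y], solved by Gauss-Jordan elimination.
    A = [[sum(X[k][i] * X[k][j] for k in range(n)) for j in range(p)]
         + [sum(X[k][i] * y[k] for k in range(n))]
         for i in range(p)]
    for col in range(p):
        piv = max(range(col, p), key=lambda r: abs(A[r][col]))  # partial pivoting
        A[col], A[piv] = A[piv], A[col]
        for r in range(p):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    beta = [A[i][p] / A[i][i] for i in range(p)]
    return [y[k] - sum(b * x for b, x in zip(beta, X[k])) for k in range(n)]


def pearson(a, b):
    """Pearson's correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))


def partial_corr(x, y, covariates):
    """Pearson r between x and y after regressing the covariates out of both."""
    return pearson(residualize(x, covariates), residualize(y, covariates))
```

For example, `partial_corr(class_scores, mmse, [age, gender])` would give the adjusted association for one clinical measure. The conversion‐rate analysis corresponds to `pearson` applied to the pMCI subset, with time to conversion in place of the clinical measure.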

Methods for Comparison

The proposed 3D attention‐based method was compared with four conventional classification methods: 1) the base ResNet architecture; 2) support vector machine (SVM) classifiers based on the automated anatomical labeling (AAL) atlas; 3) SVM classifiers based on the Brainnetome (BN) atlas; and 4) SVM classifiers based on voxel‐level features. 1) Base ResNet: a residual network with 18 layers (ResNet‐18), comprising a convolutional layer, eight basic ResNet blocks, and a fully connected layer, was trained for AD diagnosis. Unlike our attention‐based method, the base ResNet did not integrate the attention mechanism into the network. SVM based on ROI features: region‐level features were extracted from the gray matter images using 2) a coarse‐grained template (AAL atlas) and 3) a finer template (BN atlas). The gray matter volumes of the 90 AAL ROIs and the 273 BN ROIs, respectively, were used to train non‐linear SVM classifiers with a Gaussian radial basis function (RBF) kernel. 4) SVM based on voxel‐level features: because voxel‐based features are high‐dimensional, a statistical group comparison based on a t test was first performed to reduce the dimensionality, and a non‐linear SVM classifier was then constructed for disease classification.[ 28 ]
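The voxel‐level baseline can be sketched as follows, under assumptions the text does not state. Voxels are ranked by the absolute pooled‐variance t statistic between AD (label 1) and NC (label 0), and the top k are kept; k is a hypothetical parameter. Selection is computed on the training fold only, so that test data never inform it. The RBF kernel shown is the standard Gaussian form. Fitting the SVM itself would normally be delegated to a library, for example scikit‐learn's `SVC(kernel="rbf")`.

```python
import math


def t_stat(a, b):
    """Pooled-variance two-sample t statistic."""
    na, nb = len(a), len(b)
    ma, mb = sum(a) / na, sum(b) / nb
    sp2 = (sum((x - ma) ** 2 for x in a) + sum((x - mb) ** 2 for x in b)) / (na + nb - 2)
    if sp2 == 0:
        # Zero within-group variance: perfectly separated (infinite) or uninformative.
        return math.copysign(math.inf, ma - mb) if ma != mb else 0.0
    return (ma - mb) / math.sqrt(sp2 * (1 / na + 1 / nb))


def select_voxels(X, y, k):
    """Indices of the k voxels with the largest |t| between AD (1) and NC (0).

    Fit on the training fold only so that the test fold never informs selection.
    """
    n_feat = len(X[0])
    scores = []
    for j in range(n_feat):
        ad = [row[j] for row, label in zip(X, y) if label == 1]
        nc = [row[j] for row, label in zip(X, y) if label == 0]
        scores.append(abs(t_stat(ad, nc)))
    return sorted(range(n_feat), key=lambda j: scores[j], reverse=True)[:k]


def rbf_kernel(u, v, gamma):
    """Gaussian RBF kernel k(u, v) = exp(-gamma * ||u - v||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))
```

Ranking by |t| keeps voxels with the largest standardized group difference. This mirrors the univariate screening described above, while the non‐linear kernel lets the SVM combine the retained voxels.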

Conflict of Interest

The authors declare no conflict of interest.

Supporting information


Acknowledgements

D.J. and B.Z. contributed equally to this work. The authors express appreciation to Drs. Rhoda E. and Edmund F. Perozzi for English language and editing assistance. The authors thank Dr. Feng Shi (United Imaging Intelligence), Dr. Shandong Wu (University of Pittsburgh), and Dr. Li Su (University of Cambridge) for their critical discussions and comments. The authors gratefully acknowledge the support of NVIDIA Corporation with the donation of the Titan Xp GPU. This work was partially supported by the National Key Research and Development Program of China (Nos. 2016YFC1305904, 2018YFC2001700), the Strategic Priority Research Program (B) of the Chinese Academy of Sciences (Grant No. XDB32020200), the National Natural Science Foundation of China (Grant Nos. 81871438, 81901101, 61633018, 81571062, 81400890, 81471120, 81701781), and the Medical Big Data R&D Project of the PLA General Hospital (No. 2018MBD028). Data collection and sharing for this project were funded by the Alzheimer's Disease Neuroimaging Initiative (ADNI) (National Institutes of Health Grant U01 AG024904) and DOD ADNI (Department of Defense award number W81XWH‐12‐2‐0012). ADNI is funded by the National Institute on Aging, the National Institute of Biomedical Imaging and Bioengineering, and through generous contributions from the following: AbbVie; Alzheimer's Association; Alzheimer's Drug Discovery Foundation; Araclon Biotech; BioClinica, Inc.; Biogen; Bristol‐Myers Squibb Company; CereSpir, Inc.; Cogstate; Eisai Inc.; Elan Pharmaceuticals, Inc.; Eli Lilly and Company; EuroImmun; F. Hoffmann‐La Roche Ltd and its affiliated company Genentech, Inc.; Fujirebio; GE Healthcare; IXICO Ltd.; Janssen Alzheimer Immunotherapy Research & Development, LLC.; Johnson & Johnson Pharmaceutical Research & Development LLC.; Lumosity; Lundbeck; Merck & Co., Inc.; Meso Scale Diagnostics, LLC.; NeuroRx Research; Neurotrack Technologies; Novartis Pharmaceuticals Corporation; Pfizer Inc.; Piramal Imaging; Servier; Takeda Pharmaceutical Company; and Transition Therapeutics. The Canadian Institutes of Health Research provides funds to support ADNI clinical sites in Canada. Private sector contributions are facilitated by the Foundation for the National Institutes of Health (www.fnih.org). The grantee organization is the Northern California Institute for Research and Education, and the study is coordinated by the Alzheimer's Therapeutic Research Institute at the University of Southern California. ADNI data are disseminated by the Laboratory for Neuro Imaging at the University of Southern California.

Jin D., Zhou B., Han Y., Ren J., Han T., Liu B., Lu J., Song C., Wang P., Wang D., Xu J., Yang Z., Yao H., Yu C., Zhao K., Wintermark M., Zuo N., Zhang X., Zhou Y., Zhang X., Jiang T., Wang Q., Liu Y., Generalizable, Reproducible, and Neuroscientifically Interpretable Imaging Biomarkers for Alzheimer's Disease. Adv. Sci. 2020, 7, 2000675. 10.1002/advs.202000675

Contributor Information

Qing Wang, Email: wangqing663@163.com.

Yong Liu, Email: yliu@nlpr.ia.ac.cn.

References

- 1. Matthews K. A., Xu W., Gaglioti A. H., Holt J. B., Croft J. B., Mack D., McGuire L. C., Alzheimer's Dementia 2019, 15, 17.

- 2. Petersen R. C., Doody R., Kurz A., Mohs R. C., Morris J. C., Rabins P. V., Ritchie K., Rossor M., Thal L., Winblad B., Arch. Neurol. 2001, 58, 58.

- 3. Petersen R. C., Smith G. E., Waring S. C., Ivnik R. J., Tangalos E. G., Kokmen E., Arch. Neurol. 1999, 56, 56.

- 4. Hampel H., Bürger K., Teipel S. J., Bokde A. L., Zetterberg H., Blennow K., Alzheimer's Dementia 2008, 4, 4.

- 5. Nakamura A., Kaneko N., Villemagne V. L., Kato T., Doecke J., Dore V., Fowler C., Li Q. X., Martins R., Rowe C., Tomita T., Matsuzaki K., Ishii K., Ishii K., Arahata Y., Iwamoto S., Ito K., Tanaka K., Masters C. L., Yanagisawa K., Nature 2018, 554, 249.

- 6. Rathore S., Habes M., Iftikhar M. A., Shacklett A., Davatzikos C., NeuroImage 2017, 155, 530.

- 7. Frisoni G. B., Fox N. C., Jack C. R. Jr., Scheltens P., Thompson P. M., Nat. Rev. Neurol. 2010, 6, 67.

- 8. Albert M., Zhu Y., Moghekar A., Mori S., Miller M. I., Soldan A., Pettigrew C., Selnes O., Li S., Wang M. C., Brain 2018, 141, 877.

- 9. Davatzikos C., Fan Y., Wu X., Shen D., Resnick S. M., Neurobiol. Aging 2008, 29, 514.

- 10. Eskildsen S. F., Coupe P., Fonov V. S., Pruessner J. C., Collins D. L., Neurobiol. Aging 2015, 36, S23.

- 11. Lian C., Liu M., Zhang J., Shen D., IEEE Transact. Pattern Anal. Machine Intellig. 2020, 42, 880.

- 12. Davatzikos C., NeuroImage 2019, 197, 652.

- 13. Li H., Habes M., Wolk D. A., Fan Y., Alzheimer's Disease Neuroimaging Initiative, Australian Imaging Biomarkers and Lifestyle Study of Aging, Alzheimers Dement 2019, 15, 15.

- 14. Litjens G., Kooi T., Bejnordi B. E., Setio A. A. A., Ciompi F., Ghafoorian M., van der Laak J., van Ginneken B., Sanchez C. I., Medical Image Analysis 2017, 42, 60.

- 15. Vieira S., Pinaya W. H., Mechelli A., Neurosci. Biobehav. Rev. 2017, 74, 58.

- 16. Tang Z., Chuang K. V., DeCarli C., Jin L. W., Beckett L., Keiser M. J., Dugger B. N., Nat. Commun. 2019, 10, 10.

- 17. Hazlett H. C., Gu H., Munsell B. C., Kim S. H., Styner M., Wolff J. J., Elison J. T., Swanson M. R., Zhu H., Botteron K. N., Collins D. L., Constantino J. N., Dager S. R., Estes A. M., Evans A. C., Fonov V. S., Gerig G., Kostopoulos P., McKinstry R. C., Pandey J., Paterson S., Pruett J. R., Schultz R. T., Shaw D. W., Zwaigenbaum L., Piven J., Network I., Clinical S., Data Coordinating C., Image Processing C., Statistical A., Nature 2017, 542, 348.

- 18. Xiao T., Xu Y., Yang K., Zhang J., Peng Y., Zhang Z., 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, 2015, pp. 842–850.

- 19. Chen L., Zhang H., Xiao J., Nie L., Shao J., Liu W., Chua T.‐S., 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, 2017, pp. 6298–6306.

- 20. Wang F., Jiang M., Qian C., Yang S., Li C., Zhang H., Wang X., Tang X., 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, 2017, pp. 6450–6458.

- 21. Hu J., Shen L., Albanie S., Sun G., Wu E., IEEE Transact. Pattern Analysis Machine Intelligence 2019, 1, 10.1109/TPAMI.2019.2913372.

- 22. Woo S., Park J., Lee J.‐Y., Kweon I. S., Proc. of European Conf. on Computer Vision (ECCV), 2018.

- 23. Vaswani A., Shazeer N., Parmar N., Uszkoreit J., Jones L., Gomez A. N., Kaiser Ł., Polosukhin I., Advances in Neural Information Processing Systems, 2017, pp. 5998–6008.

- 24. Braak H., Braak E., Acta Neuropathol. 1991, 82, 239.

- 25. Frisoni G. B., Boccardi M., Barkhof F., Blennow K., Cappa S., Chiotis K., Demonet J. F., Garibotto V., Giannakopoulos P., Gietl A., Hansson O., Herholz K., Jack C. R. Jr., Nobili F., Nordberg A., Snyder H. M., Ten Kate M., Varrone A., Albanese E., Becker S., Bossuyt P., Carrillo M. C., Cerami C., Dubois B., Gallo V., Giacobini E., Gold G., Hurst S., Lonneborg A., et al., Lancet Neurol. 2017, 16, 661.

- 26. Thompson P. M., Hayashi K. M., Dutton R. A., Chiang M. C., Leow A. D., Sowell E. R., De Zubicaray G., Becker J. T., Lopez O. L., Aizenstein H. J., Toga A. W., Ann. N. Y. Acad. Sci. 2007, 1097, 183.

- 27. Ding Y., Sohn J. H., Kawczynski M. G., Trivedi H., Harnish R., Jenkins N. W., Lituiev D., Copeland T. P., Aboian M. S., Mari Aparici C., Behr S. C., Flavell R. R., Huang S. Y., Zalocusky K. A., Nardo L., Seo Y., Hawkins R. A., Hernandez Pampaloni M., Hadley D., Franc B. L., Radiology 2019, 290, 456.

- 28. Liu M., Zhang J., Adeli E., Shen D., Med. Image Anal. 2018, 43, 157.

- 29. Aderghal K., Benois‐Pineau J., Afdel K., Proceedings of the 2017 ACM on International Conference on Multimedia Retrieval, ACM, 2017, pp. 494–498.

- 30. Ge C., Qu Q., Gu I. Y.‐H., Jakola A. S., Neurocomputing 2019, 350, 60.

- 31. Qureshi M. N. I., Ryu S., Song J., Lee K. H., Lee B., Front. Aging Neurosci. 2019, 11, 11.

- 32. Wang H., Shen Y., Wang S., Xiao T., Deng L., Wang X., Zhao X., Neurocomputing 2019, 333, 333.

- 33. Devanand D. P., Pradhaban G., Liu X., Khandji A., De Santi S., Segal S., Rusinek H., Pelton G. H., Honig L. S., Mayeux R., Stern Y., Tabert M. H., de Leon M. J., Neurology 2007, 68, 828.

- 34. Jones B. F., Barnes J., Uylings H. B., Fox N. C., Frost C., Witter M. P., Scheltens P., Cereb Cortex 2006, 16, 16.

- 35. Killiany R. J., Hyman B. T., Gomez‐Isla T., Moss M. B., Kikinis R., Jolesz F., Tanzi R., Jones K., Albert M. S., Neurology 2002, 58, 1188.

- 36. Adaszewski S., Dukart J., Kherif F., Frackowiak R., Draganski B., Neurobiol. Aging 2013, 34, 2815.

- 37. Hinrichs C., Singh V., Mukherjee L., Xu G., Chung M. K., Johnson S. C., NeuroImage 2009, 48, 138.

- 38. Liu M., Zhang D., Shen D., Hum. Brain Mapp. 2015, 36, 36.

- 39. Zhan Y., Yao H., Wang P., Zhou B., Zhang Z., Guo Y., An N., Ma J., Zhang X., Liu Y., IEEE J. Select. Topics Signal Proces. 2016, 10, 1182.

- 40. Varoquaux G., NeuroImage 2018, 180, 68.

- 41. Rozycki M., Satterthwaite T. D., Koutsouleris N., Erus G., Doshi J., Wolf D. H., Fan Y., Gur R. E., Gur R. C., Meisenzahl E. M., Zhuo C., Yin H., Yan H., Yue W., Zhang D., Davatzikos C., Schizophrenia Bulletin 2018, 44, 1035.

- 42. Ma Q., Zhang T., Zanetti M. V., Shen H., Satterthwaite T. D., Wolf D. H., Gur R. E., Fan Y., Hu D., Busatto G. F., Davatzikos C., NeuroImage: Clin. 2018, 19, 476.

- 43. Woo C. W., Chang L. J., Lindquist M. A., Wager T. D., Nat. Neurosci. 2017, 20, 365.

- 44. Teipel S. J., Wohlert A., Metzger C., Grimmer T., Sorg C., Ewers M., Meisenzahl E., Kloppel S., Borchardt V., Grothe M. J., Walter M., Dyrba M., NeuroImage: Clin. 2017, 14, 183.

- 45. Xia M., Si T., Sun X., Ma Q., Liu B., Wang L., Meng J., Chang M., Huang X., Chen Z., Tang Y., Xu K., Gong Q., Wang F., Qiu J., Xie P., Li L., He Y., Group D. I.‐M. D. D. W., NeuroImage 2019, 189, 700.

- 46. Feczko E., Miranda‐Dominguez O., Marr M., Graham A. M., Nigg J. T., Fair D. A., Trends Cognit. Sci. 2019, 23, 584.

- 47. Dansereau C., Benhajali Y., Risterucci C., Pich E. M., Orban P., Arnold D., Bellec P., NeuroImage 2017, 149, 220.

- 48. Sepulcre J., Grothe M. J., d'Oleire Uquillas F., Ortiz‐Teran L., Diez I., Yang H. S., Jacobs H. I. L., Hanseeuw B. J., Li Q., El‐Fakhri G., Sperling R. A., Johnson K. A., Nat. Med. 2018, 24, 1910.

- 49. Torkamani A., Wineinger N. E., Topol E. J., Nat. Rev. Genet. 2018, 19, 581.

- 50. Gaiteri C., Mostafavi S., Honey C. J., De Jager P. L., Bennett D. A., Nat. Rev. Neurol. 2016, 12, 413.

- 51. Tan C. H., Bonham L. W., Fan C. C., Mormino E. C., Sugrue L. P., Broce I. J., Hess C. P., Yokoyama J. S., Rabinovici G. D., Miller B. L., Yaffe K., Schellenberg G. D., Kauppi K., Holland D., McEvoy L. K., Kukull W. A., Tosun D., Weiner M. W., Sperling R. A., Bennett D. A., Hyman B. T., Andreassen O. A., Dale A. M., Desikan R. S., Brain 2019, 142, 142.

- 52. Axelrud L. K., Santoro M. L., Pine D. S., Talarico F., Gadelha A., Manfro G. G., Pan P. M., Jackowski A., Picon F., Brietzke E., Grassi‐Oliveira R., Bressan R. A., Miguel E. C., Rohde L. A., Hakonarson H., Pausova Z., Belangero S., Paus T., Salum G. A., Am. J. Psychiatry 2018, 175, 555.

- 53. Mormino E. C., Sperling R. A., Holmes A. J., Buckner R. L., De Jager P. L., Smoller J. W., Sabuncu M. R., Neurology 2016, 87, 481.

- 54. Kunkle B. W., Grenier‐Boley B., Sims R., Bis J. C., Damotte V., Naj A. C., Boland A., Vronskaya M., van der Lee S. J., Amlie‐Wolf A., Bellenguez C., Frizatti A., Chouraki V., Martin E. R., Sleegers K., Badarinarayan N., Jakobsdottir J., Hamilton‐Nelson K. L., Moreno‐Grau S., Olaso R., Raybould R., Chen Y., Kuzma A. B., Hiltunen M., Morgan T., Ahmad S., Vardarajan B. N., Epelbaum J., Hoffmann P., Boada M., et al., Nat. Genet. 2019, 51, 51.

- 55. Dubois B., Epelbaum S., Nyasse F., Bakardjian H., Gagliardi G., Uspenskaya O., Houot M., Lista S., Cacciamani F., Potier M.‐C., Bertrand A., Lamari F., Benali H., Mangin J.‐F., Colliot O., Genthon R., Habert M.‐O., Hampel H., Audrain C., Auffret A., Baldacci F., Benakki I., Bertin H., Boukadida L., Cavedo E., Chiesa P., Dauphinot L., Dos Santos A., Dubois M., Durrleman S., et al., Lancet Neurol. 2018, 17, 17.

- 56. Schott J. M., Crutch S. J., Carrasquillo M. M., Uphill J., Shakespeare T. J., Ryan N. S., Yong K. X., Lehmann M., Ertekin‐Taner N., Graff‐Radford N. R., Boeve B. F., Murray M. E., Khan Q. U., Petersen R. C., Dickson D. W., Knopman D. S., Rabinovici G. D., Miller B. L., Gonzalez A. S., Gil‐Neciga E., Snowden J. S., Harris J., Pickering‐Brown S. M., Louwersheimer E., van der Flier W. M., Scheltens P., Pijnenburg Y. A., Galasko D., Sarazin M., Dubois B., et al., Alzheimer's Dementia 2016, 12, 862.

- 57. Brier M. R., Gordon B., Friedrichsen K., McCarthy J., Stern A., Christensen J., Owen C., Aldea P., Su Y., Hassenstab J., Cairns N. J., Holtzman D. M., Fagan A. M., Morris J. C., Benzinger T. L., Ances B. M., Sci. Transl. Med. 2016, 8, 338ra66.

- 58. Holtzman D. M., Goate A., Kelly J., Sperling R., Sci. Transl. Med. 2011, 3, 3ps148.

- 59. Pellegrini E., Ballerini L., Hernandez M., Chappell F. M., Gonzalez‐Castro V., Anblagan D., Danso S., Munoz‐Maniega S., Job D., Pernet C., Mair G., MacGillivray T. J., Trucco E., Wardlaw J. M., Alzheimers Dement (Amst) 2018, 10, 10.

- 60. Aoyagi A., Condello C., Stohr J., Yue W., Rivera B. M., Lee J. C., Woerman A. L., Halliday G., van Duinen S., Ingelsson M., Lannfelt L., Graff C., Bird T. D., Keene C. D., Seeley W. W., DeGrado W. F., Prusiner S. B., Sci. Transl. Med. 2019, 11, 490.

- 61. Jack C. R. Jr., Bennett D. A., Blennow K., Carrillo M. C., Dunn B., Haeberlein S. B., Holtzman D. M., Jagust W., Jessen F., Karlawish J., Liu E., Molinuevo J. L., Montine T., Phelps C., Rankin K. P., Rowe C. C., Scheltens P., Siemers E., Snyder H. M., Sperling R., Alzheimer's Dementia 2018, 14, 535.

- 62. Spasov S., Passamonti L., Duggento A., Lio P., Toschi N., NeuroImage 2019, 189, 276.

- 63. Gordon B. A., Blazey T. M., Su Y., Hari‐Raj A., Dincer A., Flores S., Christensen J., McDade E., Wang G., Xiong C., Cairns N. J., Hassenstab J., Marcus D. S., Fagan A. M., Jack C. R. Jr., Hornbeck R. C., Paumier K. L., Ances B. M., Berman S. B., Brickman A. M., Cash D. M., Chhatwal J. P., Correia S., Forster S., Fox N. C., Graff‐Radford N. R., la Fougere C., Levin J., Masters C. L., Rossor M. N., Lancet Neurol. 2018, 17, 241.

- 64. Abi‐Dargham A., Horga G., Nat. Med. 2016, 22, 1248.

- 65. Duzel E., Acosta‐Cabronero J., Berron D., Biessels G. J., Bjorkman‐Burtscher I., Bottlaender M., Bowtell R., Buchem M. V., Cardenas‐Blanco A., Boumezbeur F., Chan D., Clare S., Costagli M., de Rochefort L., Fillmer A., Gowland P., Hansson O., Hendrikse J., Kraff O., Ladd M. E., Ronen I., Petersen E., Rowe J. B., Siebner H., Stoecker T., Straub S., Tosetti M., Uludag K., Vignaud A., Zwanenburg J., Speck O., Alzheimers Dement (Amst) 2019, 11, 11.

- 66. He X., Qin W., Liu Y., Zhang X., Duan Y., Song J., Li K., Jiang T., Yu C., Human Brain Mapping 2014, 35, 3446.

- 67. Zhang Z., Liu Y., Jiang T., Zhou B., An N., Dai H., Wang P., Niu Y., Wang L., Zhang X., Neuroimage 2012, 59, 59.

- 68. Li J., Jin D., Li A., Liu B., Song C., Wang P., Wang D., Xu K., Yang H., Yao H., Zhou B., Bejanin A., Chetelat G., Han T., Lu J., Wang Q., Yu C., Zhang X., Zhou Y., Zhang X., Jiang T., Liu Y., Han Y., Sci. Bull. 2019, 64, 64.

- 69. Zhao K., Ding Y., Han Y., Fan Y., Alexander‐Bloch A. F., Han T., Jin D., Liu B., Lu J., Song C., Wang P., Wang D., Wang Q., Xu K., Yang H., Yao H., Zheng Y., Yu C., Zhou B., Zhang X., Zhou Y., Jiang T., Zhang X., Liu Y., Sci. Bull. 2020, 10.1016/j.scib.2020.04.003.

- 70. Jin D., Wang P., Zalesky A., Liu B., Song C., Wang D., Xu K., Yang H., Zhang Z., Yao H., Zhou B., Han T., Zuo N., Han Y., Lu J., Wang Q., Yu C., Zhang X., Zhang X., Jiang T., Zhou Y., Liu Y., Hum. Brain Mapp. 2020.

- 71. Jin D., Xu J., Zhao K., Hu F., Yang Z., Liu B., Jiang T., Liu Y., 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI), 2019, pp. 1047–1051.

- 72. He K., Zhang X., Ren S., Sun J., 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, 2016, pp. 770–778.

- 73. Nair V., Hinton G. E., Proceedings of the 27th International Conference on Machine Learning (ICML‐10), 2010, pp. 807–814.
