Abstract

Objectives

To make informed decisions about healthcare, patients and the public, health professionals and policymakers need information about the effects of interventions. People need information that is based on the best available evidence; that is presented in a complete and unbiased way; and that is relevant, trustworthy and easy to use and to understand. The aim of this paper is to provide guidance and a checklist to those producing and communicating evidence-based information about the effects of interventions intended to inform decisions about healthcare.

Design

To inform the development of this checklist, we identified research relevant to communicating evidence-based information about the effects of interventions. We used an iterative, informal consensus process to synthesise our recommendations. We began by discussing and agreeing on some initial recommendations, based on our own experience and research over the past 20–30 years. Subsequent revisions were informed by the literature we examined and feedback. We also compared our recommendations to those made by others. We sought structured feedback from people with relevant expertise, including people who prepare and use information about the effects of interventions for the public, health professionals or policymakers.

Results

We produced a checklist with 10 recommendations. Three recommendations focus on making it easy to quickly determine the relevance of the information and find the key messages. Five recommendations are about helping the reader understand the size of effects and how sure we are about those estimates. Two recommendations are about helping the reader put information about intervention effects in context and understand if and why the information is trustworthy.

Conclusions

These 10 recommendations summarise lessons we have learnt developing and evaluating ways of helping people to make well-informed decisions by making research evidence more understandable and useful for them. We welcome feedback on how to improve our advice.

Keywords: health policy, quality in health care, medical journalism, public health

Strengths and limitations of this study.

Our approach to preparing this checklist has been pragmatic in terms of the methods we have used.

We have provided explanations of the basis for each recommendation and references to supporting research.

We did not conduct a systematic review to inform our guidance.

We did not review non-English language literature.

We did not systematically grade the certainty of the evidence or strength of our recommendations.

Introduction

Access to healthcare information is necessary if people are to be involved in decisions regarding their own health.1 Recognising this, governments in several countries have included the right to healthcare information in patients’ charters. These charters commonly establish people’s right to access information about treatments,2 including the benefits and harms of these treatments.3 Patients’ charters also underline the need to provide this information in a way that people can understand and that is adapted to each individual’s needs.2 4

Having the right to information does not necessarily mean that this information is available, and many patients and members of the public struggle to find information that is relevant to their circumstances. At the same time, most people are bombarded with claims in the media and other aspects of day-to-day life about what they should and should not do to maintain or improve their health.

Many health claims are unreliable and conflicting.5–14 When they are purported to be based on research, this might also contribute to a lack of trust in research. For example, surveys in the UK have shown that only about one-third of the public trust evidence from medical research, while about two-thirds trust the experiences of friends and family.15

It cannot therefore be assumed that people will trust advice simply because it is based on research evidence and given by authorities. Nor should they, as the opinions of experts or authorities do not alone provide a reliable basis for judging the benefits and harms of interventions.16 17 Doctors, researchers and public health authorities, like anyone else, often disagree about the effects of interventions. This may be because their opinions are not always based on systematic reviews of fair comparisons of interventions.18 Government authorities and professional organisations host many websites that provide health advice to the public. However, these websites often provide information that is unclear, incomplete and misleading.11 We were able to find only three websites providing information about the effects of healthcare interventions that was explicitly based on systematic reviews.19 Even where information is based on systematic reviews, it may still be unclear, incomplete and misleading.

People who summarise lengthy research reports to make them more accessible are faced with many choices. This includes decisions about which evidence to present, how this evidence should be interpreted, and the format in which it should be presented. Our own experiences creating summaries based on Cochrane reviews have shown us that there are many pitfalls.20–25 A fundamental challenge is to find an appropriate balance between accuracy and simplicity. On the one hand, summaries should give a reasonably complete, nuanced and unbiased representation of the evidence. On the other hand, they should be succinct and understandable to people without research expertise.

Another challenge to making research evidence easier to use is that people with expertise in a field have been found to pay attention to, read and interpret information differently from people without expertise.26 A common publishing strategy is to accommodate these differences by creating different versions of information for experts and non-experts; for example, for health professionals and for patients. However, both health professionals and patients frequently lack research expertise.22 26–29 In terms of understanding evidence-based information about the effects of treatments, ‘experts’ are the people who have acquired the skills needed to understand and interpret results from quantitative studies and systematic reviews. Everybody else could be considered ‘non-experts’ in this area.

This does not mean that this large group of non-experts is homogeneous in its information needs. Its members may have different levels of language literacy, health literacy and numeracy, or they may need to use evidence for different kinds of decision-making tasks. However, when it comes to the specific task of understanding research evidence and using this information to weigh the trade-offs between possible benefits and harms, most users are non-experts. Consequently, most people would benefit from information about the effects of interventions that is presented in a way that recognises the needs of non-experts. This includes patients, health professionals and policymakers.

In summary, to make informed choices or decisions, people need information that is accessible, easy to find, relevant, based on the best available evidence, accurate, complete, not misleading, nuanced, unbiased, easy to understand and trustworthy.

The aim of this paper is to provide guidance and a checklist to anyone who is preparing and communicating evidence-based information on the effects of interventions (ie, information based on systematic reviews of fair comparisons) that is intended to inform decisions by patients and the public, health professionals or policymakers.

Methods

Ethical considerations

Development of this checklist was guided by ethical considerations underlying informed consent and patients’ rights. Informed consent in medical research has received a huge amount of attention.30 Informed consent in clinical and public health practice has received far less attention,31 and a double standard has existed for at least 50 years.32 Consent in clinical and public health practice is reviewed, if at all, only in retrospect. Health professionals are exhorted to obtain informed consent, but in daily practice, as opposed to in clinical trials, they often minimise uncertainties about interventions and they may feel duty bound to provide unequivocal recommendations.32

Our starting point in preparing this checklist was the belief that patients and the public have the right to be informed when making health choices, such as a personal choice about whether to adhere to advice, a decision about whether to participate in research, or taking a position on a health policy. Specifically, they should have access to the best available research evidence, including information about uncertainty, summarised in plain language. We do not assume that everyone wants this information.

Many people are not interested or prefer someone else to make healthcare decisions on their behalf. For example, a systematic review of patient preferences for decision roles found that a substantial portion of patients prefer to delegate decision-making to their physician, although in most studies most patients reported a preference for shared decision-making.33 Some patients’ rights charters take this into account; for instance, the right to waive one’s ‘right to be informed’ is specifically mentioned in the Norwegian Patient Rights legislation.4 We would argue that under most circumstances it is good clinical practice to respect patient preferences.31 People who do not want information on the effects of treatments do not need to read or listen to it, but it should be there for those who want it.

Literature review

To inform the development of this checklist, we compiled research evidence that is relevant to giving guidance on how to communicate evidence-based information about the effects of interventions. We started with our own research and then identified related research through a snowballing and citation reference method. We supplemented this with broad searches for evidence on communicating research evidence and intervention effects and specific searches for each item in the checklist. We did not conduct a systematic review. We have, however, referenced systematic reviews to support each item in the checklist when one was available. When we were not able to find a relevant systematic review, we have referenced the best available evidence that we have found. In addition, we have reviewed relevant guidance and reference lists. This included guidance for plain language summaries of research evidence,34 for reporting and using systematic reviews,35 36 for making judgements about the certainty of evidence and for going from evidence to recommendations37–39 and for risk communication.40

Synthesis

We used an iterative, informal consensus process to synthesise our recommendations. This was informed by our own experience and research spanning over three decades, our review of the literature, a comparison of our recommendations with other relevant guidance, and feedback from colleagues. We met initially to discuss our recommendations, divided up tasks, prepared drafts and then discussed these until we reached agreement on a final set of recommendations. In addition to the checklist summarising our main recommendations, we prepared a flowchart providing guidance for implementing our recommendations. After agreeing on a set of recommendations, we compared these to recommendations made by others and sent a draft report to 40 people, requesting structured feedback (online supplementary additional file 1). We received feedback from 30 of them (see Acknowledgements section).


Patient and public involvement

We did not directly involve patients in planning or executing this study.

Results

Our recommendations are summarised in a checklist with 10 items (box 1). The basis for each recommendation is provided in online supplementary additional file 2 and explanations for each of the recommendations are provided in online supplementary additional file 3. All of our recommendations could be considered ‘good practice statements’. Good practice statements are recommendations that do not warrant formal ratings of the certainty of the evidence.41 One way of recognising such recommendations is to ask whether the unstated alternative is absurd.41 Arguably, that is the case for all the recommendations in box 1.

Box 1. Checklist for communicating effects.

Make it easy for your target audience to quickly determine the relevance of the information, and to find the key messages.

1. Clearly state the problem and the options (interventions) that you address, using language that is familiar to your target audience, so that people can determine whether the information is relevant to them.

2. Present key messages up front, using language that is appropriate for your audience, and make it easy for those who are interested to dig deeper and find more detailed information.

3. Report the most important benefits and harms, including outcomes for which no evidence was found, so that there is no ambiguity about what was found for each outcome that was considered.

For each outcome, help your target audience to understand the size of the effect and how sure we can be about that; and avoid presentations that are misleading.

4. Explicitly assess and report the certainty of the evidence.

5. Use language and numerical formats that are consistent and easy to understand.

6. Present both numbers and words and consider using tables to summarise benefits and harms, for instance, Grading of Recommendations Assessment, Development and Evaluation (GRADE) summary of findings tables or similar tables.

7. Report absolute effects.

8. Avoid misleading presentations and interpretations of effects.
   - Help your audience to avoid misinterpreting continuous outcome measures.
   - Explicitly assess and report the credibility of subgroup effects.
   - Avoid confusing ‘statistically significant’ with ‘important’ or a ‘lack of evidence’ with a ‘lack of effect’.

Help your target audience to put information about the effects of interventions in context and to understand why the information is trustworthy.

9. Provide relevant background information, help people weigh the advantages against the disadvantages of interventions and provide a sufficient description of the interventions.

10. Tell your audience how the information was prepared, what it is based on, the last search date, who prepared it and whether the people who prepared the information had conflicts of interest.
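The checklist items on presenting both numbers and words and on reporting absolute effects can be illustrated with a small calculation. The sketch below is not part of our checklist and is only one way of doing this. It assumes that a relative effect (a risk ratio with its 95% CI) and an assumed risk without the intervention are available, and that the risk ratio applies unchanged to that baseline risk, which is how absolute effects are typically derived for GRADE summary of findings tables. The names `RiskRatio`, `per_1000`, `describe` and `absolute_effect`, and the example numbers, are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskRatio:
    """A relative effect (risk ratio) with the limits of its 95% CI."""
    estimate: float
    ci_low: float
    ci_high: float


def per_1000(risk: float) -> int:
    """Express a proportion (0 to 1) as a whole number of people per 1000."""
    return round(risk * 1000)


def describe(difference: int) -> str:
    """Put a difference per 1000 into words, e.g. '25 fewer' or '3 more'."""
    if difference < 0:
        return f"{-difference} fewer"
    if difference > 0:
        return f"{difference} more"
    return "no difference"


def absolute_effect(control_risk: float, rr: RiskRatio) -> str:
    """Turn a risk ratio into an absolute effect per 1000 people.

    Assumes the risk ratio applies unchanged to the assumed risk without
    the intervention. Differences are taken between the rounded per-1000
    values so that the numbers shown to readers add up.
    """
    without = per_1000(control_risk)
    est, low, high = (per_1000(control_risk * r) - without
                      for r in (rr.estimate, rr.ci_low, rr.ci_high))
    return (f"{without} per 1000 without the intervention; "
            f"{describe(est)} per 1000 with it "
            f"(95% CI: from {describe(low)} to {describe(high)})")


if __name__ == "__main__":
    # Hypothetical example: 10% risk without the intervention, RR 0.75 (0.60 to 0.95).
    print(absolute_effect(0.10, RiskRatio(0.75, 0.60, 0.95)))
```

For this hypothetical example the sketch reports 25 fewer per 1000, with a CI from 40 fewer to 5 fewer, which puts the same relative effect into numbers and words that non-experts can weigh directly. Computing differences from rounded values is a presentational choice; other approaches are possible.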


Flowchart

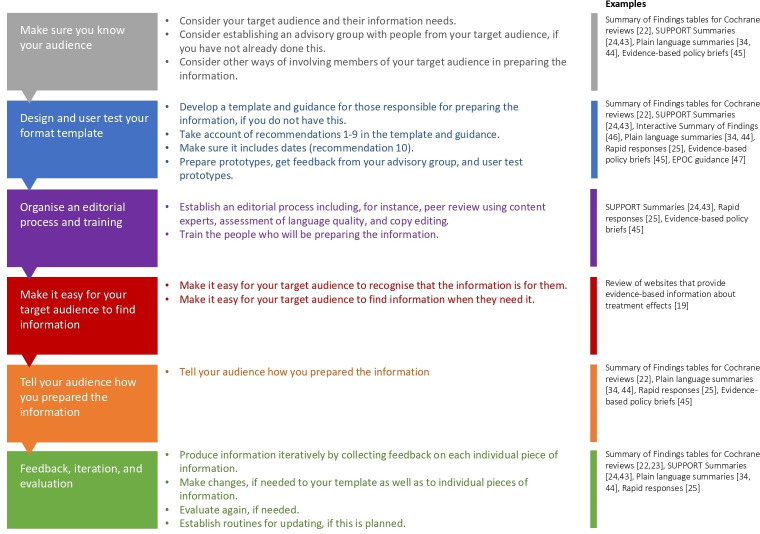

The flowchart (figure 1) outlines a process for producing evidence-based information about the effects of interventions, with examples that illustrate each step of the process.42–46 The process begins with making sure that you know your target audience. It is important to consider how members of your target audience will be involved in the process. The next steps are designing and user testing a template for the information that you will prepare; organising an editorial process and training; and considering ways of making it easy for your target audience to find your information. Although the flowchart suggests a linear process, development should be approached as an iterative, cyclical process. The last step in figure 1 is to collect feedback on each individual piece of information from people in your target audience, to make changes if needed (to your template as well as to individual pieces of information) and to evaluate again, if needed. This step also includes establishing routines for updating the information that you prepare, if updating is planned.

Figure 1.

Flow chart outlining a process for producing evidence-based information about the effects of interventions

Discussion

How our checklist compares with related checklists and guidance

Although our guidance overlaps with other guidance,38 47–54 for the most part other guidance does not specifically address the preparation of evidence-based information for decision-makers about the effects of interventions. The one exception of which we are aware is the ‘guideline for evidence-based health information’ prepared by the German Network for Evidence-based Medicine (DNEbM),55 which, as of April 2020, has been only partially translated into English. The DNEbM recommendations are consistent with our recommendations to present both numbers and words and to report absolute effects. They do not explicitly address our other recommendations. Table 1 summarises how our guidance compares with other guidance.

Table 1.

Comparison of our checklist with other guidance

| Guidance | Purpose | Comparison with our checklist |
|---|---|---|
| The Conference On Guideline Standardisation checklist for reporting clinical practice guidelines47 | The checklist is intended to minimise the quality defects that arise from failure to include essential information and to promote development of recommendation statements that are more easily implemented. | Focus is on content of a full guideline report rather than on presentation of information. It does not include guidance for how to present information about benefits and harms. It is consistent with our checklist for the items that overlap. Some of the 18 items are outside of the scope of our checklist. |
| DISCERN instrument for judging the quality of written consumer health information on treatment choices48 | To enable patients and information providers to judge the quality of written information about treatment choices and to facilitate the production of new, high-quality, evidence-based consumer health information. | There is some overlap, but the focus is on content of information for patients and the public rather than on presentation of that information; and the checklist is presented as an instrument for assessing the quality of information rather than as a guide for preparing it. |
| Ensuring Quality Information for Patients tool49 | To provide a practical measure of the presentation quality for all types of written healthcare information. | There is some overlap, but it does not address how to present evidence-based information about the effects of interventions. It includes some relevant suggestions that we have not included, which are discussed below the table. |
| Evidence-based risk communication50 | Key findings to inform best practice from a systematic review of the comparative effectiveness of methods of communicating probabilistic information to patients that maximise their cognitive and behavioural outcomes. | The findings from this systematic review are largely consistent with our recommendations for how to help people understand the size of effects. It includes some suggestions that we have not included, which are discussed below the table. |
| GRADE guidelines38 | To provide guidance for use of the GRADE* system of rating the certainty of evidence and grading the strength of recommendations in systematic reviews, health technology assessments and clinical practice guidelines. | This is a series of articles that provide detailed guidance for people preparing systematic reviews, health technology assessments or guidelines. We have helped to develop this guidance and have drawn on it. Our checklist is consistent with GRADE guidance for summary of findings tables and communicating information about uncertainty. |
| International Patient Decision Aid Standards (IPDAS) Patient Decision Aid User Checklist51 52 | To provide a set of quality criteria for patient decision aids. | Many of the items in the IPDAS checklist overlap with our checklist. It also includes items that are outside of the scope of our checklist (eg, decision aids for tests, helping users to clarify their values and evaluation of decision aids) as well as some items that are within our scope, which we have not included and which are discussed below the table. |
| Risk and uncertainty communication53 | Explores the major issues in communicating risk assessments arising from statistical analysis and concludes with a set of recommendations. | Largely consistent with our checklist. Includes a set of recommendations about visualisations, which are discussed below the table. |
| US National Standards for the Certification of Patient Decision Aids54 | To provide criteria for a potential decision aid certification process in the USA. | Although there is some overlap with our checklist, the criteria do not address how to present information about the effects of interventions other than ‘adopting risk communication principles’. |

*Grading of Recommendations Assessment, Development and Evaluation

The Ensuring Quality Information for Patients (EQIP) tool49 and the International Patient Decision Aid Standards (IPDAS) checklist51 52 include specific recommendations related to using plain language (short sentences and a reading level not exceeding a reading age of 12). We have included key principles for plain language in our detailed guidance (online supplementary additional file 3).

The EQIP tool,49 the IPDAS checklist51 52 as well as a systematic review on evidence-based risk communication by Zipkin and colleagues50 recommend using visual aids. The last two recommend using graphs to show probabilities. We agree that information for people making decisions about interventions should be visually appealing and that well-designed visualisations can help some people to understand information about the effects of interventions. The DNEbM guidelines55 recommend that ‘graphics may be used to supplement numerical presentations in texts or tables’ based on ‘low-quality’ evidence. They also recommend that ‘if graphics are used as a supplement, then either pictograms or bar charts should be used’ based on ‘moderate-quality’ evidence. Spiegelhalter53 recommends visualisations in communication about risk and uncertainty, which seems sensible. However, we do not think there currently is enough evidence to support recommendations about when to use visualisations or what type of visualisation to use.50 53 56 57

The systematic review on evidence-based risk communication50 suggests being aware that positive framing (stating benefits rather than harms) increases acceptance of therapies. The IPDAS checklist51 52 recommends presenting probabilities using both positive and negative frames (eg, showing both survival and death rates). We do not think there currently is enough evidence for either of these recommendations.58

Zipkin and colleagues50 suggest placing a patient’s risk in context by using comparative risks of other events. We do not think there is currently enough evidence to support this recommendation and question its relevance for many decisions about interventions.

The DNEbM guidelines55 suggest ‘interactive elements may be used in health information’ based on ‘moderate-quality’ evidence. Similarly, the IPDAS checklist51 52 recommends allowing patients to select a way of viewing the probabilities (eg, words, numbers, diagrams). We agree this is sensible and, in previous work, we have designed an interactive summary of findings with this in mind.45 However, there is limited evidence to support this recommendation. We attempted to test this hypothesis in a randomised trial.59 Because of technical problems (the interactive summary of findings and data collection did not work for some participants), we were not able to complete the trial. The qualitative data that we collected suggested that participants (people in Scotland with an interest in participating in randomised trials of interventions60) had mixed views about their preferences for an interactive versus a static presentation. They also had mixed views regarding which initial presentation they preferred in the interactive presentation.

Finally, the DNEbM guidelines conclude that ‘narratives cannot be recommended’ based on ‘low-quality’ evidence. In contrast, the IPDAS checklist51 52 recommends including stories of other patients’ experiences and using audio and video to help users understand the information. We agree that this may be helpful. However, it is also possible that stories that specifically describe patients’ experiences of treatment effects and side effects can have unintended consequences. For example, people’s perceptions of their own risks of experiencing a benefit or harm could be influenced by whether they identify with the person telling the story or not. We are not aware of evidence from randomised trials comparing information with and without patients’ experiences, audio or video; or comparing different types of presentations. A recent systematic review on the use of narratives to impact health policymaking did not find any trials.61

Strengths and weaknesses of our checklist

We did not conduct a systematic review to inform our guidance, review non-English language literature, assess the certainty of the evidence supporting each recommendation, grade the strength of our recommendations or use a formal consensus process. However, we have provided explanations of the basis for each recommendation and references to supporting research. Our approach to preparing this checklist has been pragmatic in terms of the methods we have used. We hope that others will find the checklist practical and helpful. To facilitate use of the checklist, we have prepared a flowchart with examples (figure 1).

Implementation of the guidance can be facilitated by developing a template, specific guidance for those charged with using the template to prepare the information and training for those people. Links to examples of these are found in the flowchart. User testing can help to ensure that people in your target audience experience the information positively and as intended. We have provided links to examples of user tests of information about the effects of interventions and to resources for user testing in the flowchart.

Implications for research

There remain many important uncertainties about how best to present evidence-based information about the effects of interventions to people making decisions about those interventions. There is a need for more primary research and more systematic reviews in this field. We have summarised key uncertainties that we identified while preparing this checklist in table 2. In addition, there is a need for a methodological review and a consensus on appropriate outcomes for studies evaluating different ways of communicating evidence-based information about the effects of interventions.62

Table 2.

Important uncertainties about how to present evidence-based information about the effects of interventions to people making decisions

| Question | What is known | Research that is needed |
|---|---|---|
| What are the effects of alternative visual displays of intervention effects on understanding and users’ experience of the information? | Not all visual displays are more intuitive than text or numbers, some visual displays can be misleading, some may require explanation in order for people to understand them and people tend to prefer simplicity and familiarity, which may not be associated with accurate quantitative judgements.50 53 56 57 63 64 | Design and user testing of ways of visualising effects of multiple outcomes; randomised trials comparing different graphs or visualisations to each other and to information (tables and text) without visualisations and a systematic review of those trials. |
| What are the effects of positive versus negative framing for different types of decisions on people’s understanding and decisions? | Low to moderate certainty evidence suggests that both attribute and goal framing may have little if any consistent effect on patients’ behaviour.58 Unexplained heterogeneity between studies suggests the possibility of a framing effect under specific conditions. | Randomised trials comparing positive to negative framing for different types of decisions and a systematic review of those trials. |
| When should CIs be reported and how should they be presented and explained? | Although CIs are more informative than p values, CIs can also be misinterpreted.43 65 66 There are pros and cons to reporting CIs and little evidence to support a recommendation either to include them or exclude them, or how to present and explain them, if they are included. Deciding whether and how to report CIs may depend on the target audience. | User testing of ways of presenting and explaining CIs; randomised trials comparing different ways of presenting and explaining CIs to other ways and to not presenting CIs and a systematic review of those trials. |
| What are the effects of interactive presentations of information about the effects of interventions compared with static presentations, on comprehension, ease of use and usefulness in decision-making for people across a broad range of target audiences? | Different people prefer different types of presentation formats, and access information for different reasons that require different amounts of detail. Instead of offering multiple tailored static formats to different audiences, an alternative solution is making multiple types of presentations available to all viewers through an interactive solution. Unpublished qualitative data from a failed trial with patients and the public59 suggest that there may be mixed preferences for an interactive versus a static presentation. There is also uncertainty about which initial presentation to use for interactive presentations. | Design and user testing of interactive presentations; randomised trials comparing interactive to static presentations in a heterogeneous group, comparing alternative initial presentations across different subgroups and a systematic review of this evidence. |
| What are the effects of including stories of patients’ experiences in patient information? | People want this information and value it.20 | Design and user testing of ways of incorporating patients’ experiences, including the use of patients’ stories to describe treatment benefits and harms or to describe the treatment or condition; randomised trials comparing information with and without patients’ experiences and a systematic review of this evidence. |
| What are the effects of audio and video presentations of information about the effects of interventions on people’s understanding, decisions and experience of the information? | Audio and video presentations are likely to be helpful for people with poor reading skills and some people may prefer these presentations either as an alternative or as a supplement to reading. | Design and user testing of audio and video presentations; randomised trials comparing information with and without audio and video presentations and a systematic review of this evidence. |

Conclusions

The checklist that we have developed, which includes 10 items, is the top layer of our recommendations for how to prepare evidence-based information on the effects of interventions that is intended to inform decisions by patients and the public, health professionals or policymakers. These 10 recommendations summarise the lessons that we have learnt from our review of relevant research. The recommendations draw on our own experience over the past 20–30 years in developing and evaluating ways of helping people to make well-informed health choices by making research evidence more understandable and useful to them. We welcome feedback and suggestions for how to improve our advice.

Supplementary Material

Acknowledgments

The following people provided feedback on an earlier version of our checklist: Angela Coulter, Anne Hilde Røsvik, Baruch Fischhoff, Christina Rolfheim-Bye, Daniella Zipkin, David Spiegelhalter, Donna Ciliska, Elie Akl, Frode Forland, Glyn Elwyn, Gord Guyatt, Hanne Hånes, Holger Schünemann, Iain Chalmers, Jessica Ancker, John Ioannidis, Knut Forr Børtnes, Magne Nylenna, Marita Sporstøl Fønhus, Mike Clarke, Mirjam Lauritzen, Nancy Santesso, Nandi Siegfried, Pablo Alonso Coello, Paul Glasziou, Per Kristian Svendsen, Ray Moynihan, Rebecca Bruu Carver, Richard Smith and Tove Skjelbakken.

Footnotes

Twitter: @RosenbaumSarah

Contributors: ADO, CG, SF, SL and AF are health service researchers. SR is a designer and researcher. The authors have worked together for over two decades studying ways to help health professionals, policymakers, patients and the public make well-informed healthcare decisions. All the authors participated in discussions about the recommendations and this report, helped review the literature and respond to external feedback on a draft report, and provided feedback on each draft of the report. ADO is the guarantor of the article.

Funding: All the authors are employed by the Norwegian Institute of Public Health and work in the Centre for Informed Health Choices.

Competing interests: None declared.

Patient and public involvement: Patients and/or the public were not involved in the design, or conduct, or reporting or dissemination plans of this research.

Patient consent for publication: Not required.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data availability statement: Data sharing not applicable as no datasets generated and/or analysed for this study.

References

- 1. Coulter A. How to provide patients with the right information to make informed decisions. Pharm J 2018;301.

- 2. Health Professions Council of South Africa. National Patients’ Rights Charter. Pretoria, 2008. Available: https://www.safmh.org.za/documents/policies-and-legislations/Patient%20Rights%20Charter.pdf [Accessed 22 Nov 2019].

- 3. NHS Scotland. Your health, your rights: the charter of patient rights and responsibilities. Edinburgh: Scottish Government, 2012. Available: https://www.gov.scot/resource/0039/00390989.pdf [Accessed 22 Nov 2019].

- 4. Norwegian Ministry of Health and Care Services. Patient and user rights act, 2018. Available: https://lovdata.no/dokument/NL/lov/1999-07-02-63 [Accessed 22 Nov 2019].

- 5. Wang MTM, Grey A, Bolland MJ. Conflicts of interest and expertise of independent commenters in news stories about medical research. CMAJ 2017;189:E553–9. doi:10.1503/cmaj.160538

- 6. Walsh-Childers K, Braddock J, Rabaza C, et al. One step forward, one step back: changes in news coverage of medical interventions. Health Commun 2018;33:174–87. doi:10.1080/10410236.2016.1250706

- 7. Sumner P, Vivian-Griffiths S, Boivin J, et al. Exaggerations and caveats in press releases and health-related science news. PLoS One 2016;11:e0168217. doi:10.1371/journal.pone.0168217

- 8. Schwitzer G. A guide to reading health care news stories. JAMA Intern Med 2014;174:1183–6. doi:10.1001/jamainternmed.2014.1359

- 9. Moorhead SA, Hazlett DE, Harrison L, et al. A new dimension of health care: systematic review of the uses, benefits, and limitations of social media for health communication. J Med Internet Res 2013;15:e85. doi:10.2196/jmir.1933

- 10. Schwartz LM, Woloshin S, Andrews A, et al. Influence of medical journal press releases on the quality of associated newspaper coverage: retrospective cohort study. BMJ 2012;344:d8164. doi:10.1136/bmj.d8164

- 11. Glenton C, Paulsen EJ, Oxman AD. Portals to Wonderland: health portals lead to confusing information about the effects of health care. BMC Med Inform Decis Mak 2005;5:7. doi:10.1186/1472-6947-5-7

- 12. Moynihan R, Bero L, Ross-Degnan D, et al. Coverage by the news media of the benefits and risks of medications. N Engl J Med 2000;342:1645–50. doi:10.1056/NEJM200006013422206

- 13. Coulter A, Entwistle V, Gilbert D. Sharing decisions with patients: is the information good enough? BMJ 1999;318:318–22. doi:10.1136/bmj.318.7179.318

- 14. Sansgiry S, Sharp WT, Sansgiry SS. Accuracy of information on printed over-the-counter drug advertisements. Health Mark Q 1999;17:7–18. doi:10.1300/J026v17n02_02

- 15. Academy of Medical Sciences. Enhancing the use of scientific evidence to judge the potential benefits and harms of medicines. London: Academy of Medical Sciences, 2017. Available: https://acmedsci.ac.uk/file-download/44970096

- 16. Oxman AD, Guyatt GH. The science of reviewing research. Ann N Y Acad Sci 1993;703:125–34. doi:10.1111/j.1749-6632.1993.tb26342.x

- 17. Oxman AD, Chalmers I, Liberati A. A field guide to experts. BMJ 2004;329:1460–3. doi:10.1136/bmj.329.7480.1460

- 18. Rada G. What is the best evidence and how to find it. BMJ Best Practice EBM toolkit. Available: https://bestpractice.bmj.com/info/toolkit/discuss-ebm/what-is-the-best-evidence-and-how-to-find-it/ [Accessed 22 Nov 2019].

- 19. Oxman AD, Paulsen EJ. Who can you trust? A review of free online sources of "trustworthy" information about treatment effects for patients and the public. BMC Med Inform Decis Mak 2019;19:35. doi:10.1186/s12911-019-0772-5

- 20. Glenton C. Developing patient-centred information for back pain sufferers. Health Expect 2002;5:319–29. doi:10.1046/j.1369-6513.2002.00196.x

- 21. Glenton C, Underland V, Kho M, et al. Summaries of findings, descriptions of interventions, and information about adverse effects would make reviews more informative. J Clin Epidemiol 2006;59:770–8. doi:10.1016/j.jclinepi.2005.12.011

- 22. Rosenbaum SE, Glenton C, Nylund HK, et al. User testing and stakeholder feedback contributed to the development of understandable and useful summary of findings tables for Cochrane reviews. J Clin Epidemiol 2010;63:607–19. doi:10.1016/j.jclinepi.2009.12.013

- 23. Rosenbaum SE, Glenton C, Oxman AD. Summary of findings tables improved understanding and rapid retrieval of key information in Cochrane reviews. J Clin Epidemiol 2010;63:620–6.

- 24. Rosenbaum SE, Glenton C, Wiysonge CS, et al. Evidence summaries tailored to health policy-makers in low- and middle-income countries. Bull World Health Organ. doi:10.2471/BLT.10.075481

- 25. Mijumbi-Deve R, Rosenbaum SE, Oxman AD, et al. Policymaker experiences with rapid response briefs to address health-system and technology questions in Uganda. Health Res Policy Syst 2017;15:37. doi:10.1186/s12961-017-0200-1

- 26. National Research Council. How people learn: brain, mind, experience, and school: expanded edition. Washington, DC: The National Academies Press, 2000.

- 27. Ancker JS, Kaufman D. Rethinking health numeracy: a multidisciplinary literature review. J Am Med Inform Assoc 2007;14:713–21. doi:10.1197/jamia.M2464

- 28. Reyna VF, Nelson WL, Han PK, et al. How numeracy influences risk comprehension and medical decision making. Psychol Bull 2009;135:943–73. doi:10.1037/a0017327

- 29. Gigerenzer G, Gaissmaier W, Kurz-Milcke E, et al. Helping doctors and patients make sense of health statistics. Psychol Sci Public Interest 2007;8:53–96. doi:10.1111/j.1539-6053.2008.00033.x

- 30. Doyal L, Tobias JS. Informed consent in medical research. London: BMJ Publications, 2000.

- 31. Oxman AD, Chalmers I, Sackett DL. A practical guide to informed consent to treatment. BMJ 2001;323:1464–6. doi:10.1136/bmj.323.7327.1464

- 32. Silverman WA. The myth of informed consent: in daily practice and in clinical trials. J Med Ethics 1989;15:6–11. doi:10.1136/jme.15.1.6

- 33. Chewning B, Bylund CL, Shah B, et al. Patient preferences for shared decisions: a systematic review. Patient Educ Couns 2012;86:9–18. doi:10.1016/j.pec.2011.02.004

- 34. Glenton C. How to write a plain language summary of a Cochrane intervention review. Cochrane Norway, 2017. Available: https://www.cochrane.no/sites/cochrane.no/files/public/uploads/how_to_write_a_cochrane_pls_27th_march_2017.pdf

- 35. Higgins JPT, Green S, eds. Cochrane Handbook for systematic reviews of interventions. Version 5.1.0. The Cochrane Collaboration, 2011. Available: www.handbook.cochrane.org

- 36. Murad MH, Montori VM, Ioannidis JPA, et al. Understanding and applying the results of a systematic review and meta-analysis. Chapter 23. In: Guyatt G, Rennie D, Meade MO, et al., eds. Users' guides to the medical literature: a manual for evidence-based clinical practice. 3rd ed. Chicago: JAMA Evidence, 2015.

- 37. Guyatt GH, Oxman AD, Vist GE, et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ 2008;336:924–6. doi:10.1136/bmj.39489.470347.AD

- 38. Guyatt G, Oxman AD, Akl EA, et al. GRADE guidelines: 1. Introduction-GRADE evidence profiles and summary of findings tables. J Clin Epidemiol 2011;64:383–94. doi:10.1016/j.jclinepi.2010.04.026

- 39. Alonso-Coello P, Schünemann HJ, Moberg J, et al. GRADE Evidence to Decision (EtD) frameworks: a systematic and transparent approach to making well informed healthcare choices. 1: Introduction. BMJ 2016;353:i2016. doi:10.1136/bmj.i2016

- 40. Fischhoff B, Brewer NT, Downs JS. Communicating Risks and Benefits: An Evidence Based User’s Guide. Silver Spring: Food and Drug Administration, 2011.

- 41. Guyatt GH, Alonso-Coello P, Schünemann HJ, et al. Guideline panels should seldom make good practice statements: guidance from the GRADE Working Group. J Clin Epidemiol 2016;80:3–7. doi:10.1016/j.jclinepi.2016.07.006

- 42. SUPPORT Summaries. Evidence of the effects of health system interventions for low- and middle-income countries. Available: https://supportsummaries.epistemonikos.org/ [Accessed 22 Nov 2019].

- 43. Glenton C, Santesso N, Rosenbaum S, et al. Presenting the results of Cochrane systematic reviews to a consumer audience: a qualitative study. Med Decis Making 2010;30:566–77. doi:10.1177/0272989X10375853

- 44. The SURE Collaboration. SURE guides for preparing and using evidence-based policy briefs. The SURE Collaboration, 2011. Available: https://www.who.int/evidence/sure/guides/en/ [Accessed 22 Nov 2019].

- 45. iSoF. GRADE/DECIDE interactive Summary of Findings. Available: https://isof.epistemonikos.org/#/ [Accessed 22 Nov 2019].

- 46. Cochrane Effective Practice and Organisation of Care (EPOC). Reporting the review. EPOC resources for review authors, 2018. Available: https://epoc.cochrane.org/resources/epoc-resources-review-authors [Accessed 22 Nov 2019].

- 47. Shiffman RN, Shekelle P, Overhage JM, et al. Standardized reporting of clinical practice guidelines: a proposal from the conference on guideline standardization. Ann Intern Med 2003;139:493–8. doi:10.7326/0003-4819-139-6-200309160-00013

- 48. Charnock D, Shepperd S, Needham G, et al. DISCERN: an instrument for judging the quality of written consumer health information on treatment choices. J Epidemiol Community Health 1999;53:105–11. doi:10.1136/jech.53.2.105

- 49. Moult B, Franck LS, Brady H. Ensuring quality information for patients: development and preliminary validation of a new instrument to improve the quality of written health care information. Health Expect 2004;7:165–75. doi:10.1111/j.1369-7625.2004.00273.x

- 50. Zipkin DA, Umscheid CA, Keating NL, et al. Evidence-based risk communication: a systematic review. Ann Intern Med 2014;161:270–80. doi:10.7326/M14-0295

- 51. Elwyn G, O'Connor A, Stacey D, et al. Developing a quality criteria framework for patient decision aids: online international Delphi consensus process. BMJ 2006;333:417. doi:10.1136/bmj.38926.629329.AE

- 52. International Patient Decision Aid Standards (IPDAS) Collaboration. Available: http://ipdas.ohri.ca/ [Accessed 22 Nov 2019].

- 53. Spiegelhalter D. Risk and uncertainty communication. Annu Rev Stat Appl 2017;4:31–60. doi:10.1146/annurev-statistics-010814-020148

- 54. Elwyn G, Burstin H, Barry MJ, et al. A proposal for the development of national certification standards for patient decision aids in the US. Health Policy 2018;122:703–6. doi:10.1016/j.healthpol.2018.04.010

- 55. German Network for Evidence-Based Medicine. Guideline for evidence-based health information, 2017. Available: https://www.leitlinie-gesundheitsinformation.de/?lang=en [Accessed 6 Apr 2020].

- 56. Zwanziger L. Practitioner perspectives. In: Fischhoff B, Brewer NT, Downs JS, eds. Communicating Risks and Benefits: An Evidence Based User’s Guide. Silver Spring: Food and Drug Administration, 2011.

- 57. Ancker JS, Senathirajah Y, Kukafka R, et al. Design features of graphs in health risk communication: a systematic review. J Am Med Inform Assoc 2006;13:608–18. doi:10.1197/jamia.M2115

- 58. Akl EA, Oxman AD, Herrin J, et al. Framing of health information messages. Cochrane Database Syst Rev 2011:CD006777. doi:10.1002/14651858.CD006777.pub2

- 59. Moberg J, Treweek S, Rada G, et al. Does an interactive Summary of Findings table improve users’ understanding of and satisfaction with information about the benefits and harms of treatments? Protocol for a randomized trial. IHC Working Paper, 2017. Available: http://www.informedhealthchoices.org/wp-content/uploads/2016/08/isof-trial-protocol_IHC-Working-Paper.pdf [Accessed 22 Nov 2019].

- 60. NHS Scotland. SHARE. Available: https://www.registerforshare.org/ [Accessed 22 Nov 2019].

- 61. Fadlallah R, El-Jardali F, Nomier M, et al. Using narratives to impact health policy-making: a systematic review. Health Res Policy Syst 2019;17:26. doi:10.1186/s12961-019-0423-4

- 62. Carling C, Kristoffersen DT, Herrin J, et al. How should the impact of different presentations of treatment effects on patient choice be evaluated? A pilot randomized trial. PLoS One 2008;3:e3693. doi:10.1371/journal.pone.0003693

- 63. Lipkus IM. Numeric, verbal, and visual formats of conveying health risks: suggested best practices and future recommendations. Med Decis Making 2007;27:696–713. doi:10.1177/0272989X07307271

- 64. Visschers VHM, Meertens RM, Passchier WWF, et al. Probability information in risk communication: a review of the research literature. Risk Anal 2009;29:267–87. doi:10.1111/j.1539-6924.2008.01137.x

- 65. Greenland S, Senn SJ, Rothman KJ, et al. Statistical tests, P values, confidence intervals, and power: a guide to misinterpretations. Eur J Epidemiol 2016;31:337–50. doi:10.1007/s10654-016-0149-3

- 66. McCormack L, Sheridan S, Lewis M, et al. Communication and dissemination strategies to facilitate the use of health-related evidence. Rockville, MD: Agency for Healthcare Research and Quality, 2013.

Supplementary Materials

bmjopen-2019-036348supp001.pdf (206.5KB, pdf)

bmjopen-2019-036348supp002.pdf (343.1KB, pdf)

bmjopen-2019-036348supp003.pdf (533.3KB, pdf)