Abstract

NAMD is a molecular dynamics program designed for high-performance simulations of very large biological objects on CPU- and GPU-based architectures. NAMD offers scalable performance on petascale parallel supercomputers consisting of hundreds of thousands of cores, as well as on inexpensive commodity clusters commonly found in academic environments. It is written in C++ and leans on Charm++ parallel objects for optimal performance on low-latency architectures. NAMD is a versatile, multipurpose code that gathers state-of-the-art algorithms to carry out simulations in apt thermodynamic ensembles, using the widely popular CHARMM, AMBER, OPLS, and GROMOS biomolecular force fields. Here, we review the main features of NAMD that allow both equilibrium and enhanced-sampling molecular dynamics simulations with numerical efficiency. We describe the underlying concepts utilized by NAMD and their implementation, most notably for handling long-range electrostatics; controlling the temperature, pressure, and pH; applying external potentials on tailored grids; leveraging massively parallel resources in multiple-copy simulations; and employing hybrid quantum-mechanical/molecular-mechanical descriptions. We detail the variety of options offered by NAMD for enhanced-sampling simulations aimed at determining free-energy differences of either alchemical or geometrical transformations and outline their applicability to specific problems. Last, we discuss the roadmap for the development of NAMD and our current efforts toward achieving optimal performance on GPU-based architectures, for pushing back the limitations that have prevented biologically realistic billion-atom objects from being fruitfully simulated, and for making large-scale simulations less expensive and easier to set up, run, and analyze. NAMD is distributed free of charge with its source code at www.ks.uiuc.edu.

I. INTRODUCTION

Grasping the function of very large biological objects, such as those of the cell machinery, necessitates at its very core not only the structural knowledge of these organized systems but also their dynamical signature. However, despite formidable advances on the experimental front, the intrinsic limitations of conventional approaches have often thwarted access to the missing microscopic detail of these complex, dynamic molecular constructs, restricting their observation to static pictures. The so-called computer revolution, which began over 40 years ago, considerably modified the perspectives, paving the road to structural biology investigations by means of numerical simulations from first principles. Such simulations form the central idea of the computational microscope,1,2 an emerging instrument for cell biology at atomic resolution, which the molecular dynamics (MD) program NAMD embodies.

A. The NAMD philosophy

The goal of NAMD development since its beginning has been to enable practical supercomputing for biomedical research. This goal of practicality is reflected first by the pursuit of affordable hardware such as workstation clusters in the 1990s, Linux clusters in the 2000s, and GPU acceleration in the 2010s—viewed as another computer revolution. Practicality is more deeply and enduringly reflected by the attitude of the NAMD development community that the target user of the program is the experimentalist or their collaborator, not the programmer or computer expert, or even the method developer.

In pursuit of this goal, NAMD has been designed to be a single program available across all platforms, preserving the knowledge of the users as their science grows from reproducing tutorials and case studies on a laptop, to production science on departmental commodity clusters, to large and multi-copy simulations on leadership-class supercomputers. NAMD is distributed free of charge for both academic and private-sector use as both the source code and pre-compiled binaries for most platforms. As cutting-edge biomolecular simulations are never truly routine, user extensions that are portable without recompilation across both platforms and NAMD releases are supported via the Tcl and Python scripting languages.

NAMD development relies on symbiotic relationships with multiple stakeholders. The oldest longstanding relationship is with the computer scientist developers of the Charm++ parallel programming system (see Sec. II), with which the NAMD developers have shared both a 2002 Gordon Bell Prize and a 2012 IEEE Fernbach Award. As the most popular Charm++ application, NAMD provides the Charm++ developers with real-world feedback from a broad community of users and drives access and support for Charm++ on leadership platforms. In return, Charm++ supplies enhancements that address performance, usability, and programmability issues faced by both NAMD users and developers.

The second indispensable relationship is between the NAMD and Charm++ developers and the high-performance computing technology providers, such as Intel, NVIDIA, AMD, IBM, Cray, and Mellanox. These corporations provide critical insights into current and upcoming technology, as well as software engineering expertise and code contributions to improve the performance of both NAMD algorithms and Charm++ high-speed network communication.

The third relationship that drives NAMD development is with computing resource providers, at both the various National Science Foundation (NSF) centers and the Department of Energy (DOE) national laboratories that lead the United States exascale program. Early development and science access to leadership platforms ensures a smooth transition to new technologies for NAMD users and secures for biomolecular applications a generous share of high-performance computing resources, which could otherwise be readily consumed by other fields of science with less potential impact on human health and well-being. In return, the centers can promote the early scientific impacts of their machines, such as the all-atom model of the HIV-1 virus capsid3 solved during the early science period of the National Center for Supercomputing Applications (NCSA) Blue Waters machine.

The final symbiotic relationship driving NAMD is with the broad community of computational scientists who are ever expanding and furthering the scope of simulation methods. The NAMD core developers in Urbana together with other NAMD contributors scattered across the world maintain a strong intellectual connection with this community. The team of core developers and contributors meet yearly to coordinate ongoing efforts, discuss new methodological advances, and plan future changes to the program. In recent years, these joint efforts have facilitated the implementation of important advanced techniques, including integration algorithms,4 polarizable force fields,5 free-energy calculations, and enhanced-sampling strategies.6,7 Nevertheless, some tensions are to be expected between performance and innovation—NAMD was never designed to serve as a virtual laboratory platform for method development, and hence, sacrifices in modularity and modifiability have been made in the interest of scalability and performance. Regardless, NAMD offers today a mature and complete set of simulation capabilities, incorporating many features that have proven of general utility and continue to be extended and improved in response to both feedback from the NAMD user community and innovation by the method developers, who appear as co-authors on this new reference paper.

B. Perspective

To this date, NAMD has been downloaded by over 110 000 registered users, over 30 000 of whom have downloaded multiple releases. The 2005 NAMD reference paper8 has been cited over 13 000 times, and the NAMD user email list has over 1000 subscribers. NAMD is also typically reported as one of the most utilized programs, if not the most utilized, at the NSF supercomputing centers. For a code developed for over two decades to enable leading-edge simulations on emerging platforms, NAMD serves a surprisingly large community of researchers. This can be attributed to the leadership of the late Klaus Schulten, who sought to share not only his scientific achievements with the world but also the tools with which they were accomplished, and who gathered a team of software and method developers that shared this vision.

Most users obtain NAMD by downloading a pre-compiled binary from www.ks.uiuc.edu. Current and multiple previous NAMD releases are available for download, along with the most recent nightly build. The download process requires creating an account by completing a brief registration form, which provides the NAMD developers with user statistics to justify future funding. The download process also asks the user to agree to a license that prohibits redistribution and requires attribution and citation of NAMD in publications resulting from its use. The standard license allows NAMD to be employed by both academic and private-sector researchers but prohibits commercialization of the program. Derived work may utilize up to 10% of the NAMD source code without restriction when combined with an equal amount of original code, allowing for substantial reuse by the biomolecular simulation community. All releases include the source code for both NAMD and the specific version of the Charm++ parallel runtime with which it was built. The NAMD and the Charm++ source code are also available via separate public Git repositories, and binaries for both releases and recent builds are maintained publicly on several NSF and DOE supercomputers. Official NAMD releases occur approximately annually and are each preceded by a series of beta releases to aid in bug discovery. The NAMD source code is intended to be of production quality at all times, and hence, bug fixes implemented after the beta period are not back-ported to the previous release.

The size of the NAMD user community both necessitates and enables a community support model. Basic training in MD simulation concepts and their application in NAMD is provided in a series of hands-on workshops, which can be attended in person or streamed at any time, and the pedagogical material, i.e., tutorials and case studies, used in the workshops is available for download. The tutorials require only a laptop, allowing them to be done without access to external resources, and possibly continued after the workshop. The pedagogical team of the workshops is formed of faculty members and teaching assistants, who are experienced NAMD users. Questions regarding the use of the program are directed to the public NAMD mailing list both because the collective experience of the user community will generally produce more useful and varied responses than the developers could and to provide a publicly searchable record of previous questions and answers. End-users are encouraged to search the mailing list archives along with the manual and other training materials before asking their questions on the mailing list. Reports of suspected bugs along with logs and ideally a reproducing input set for the latest NAMD release are regularly sent to the developer mailing list. Furthermore, high-level personal support is provided for selected driving projects that are testing or co-developing new NAMD capabilities.

NAMD software engineering practices are based on two decades of experience in ensuring correctness with minimal overhead. Both NAMD and Charm++ use Git for revision control, with Charm++ also employing formal issue tracking due to its larger numbers of both active developers and minor enhancement requests. NAMD has a smaller number of developers, with more differentiated expertise and goals, and this separation of responsibilities makes formal centralized issue tracking less beneficial. Moreover, due to the dynamic nature of development and changing priorities, schedules of planned enhancements are not reliable and are not advertised. It is better that the user make progress with the currently available version of the code than delay work based on the promise of an unproven feature. Separate developer documentation is avoided with the intent that the source code itself be legible and discoverable on its own.

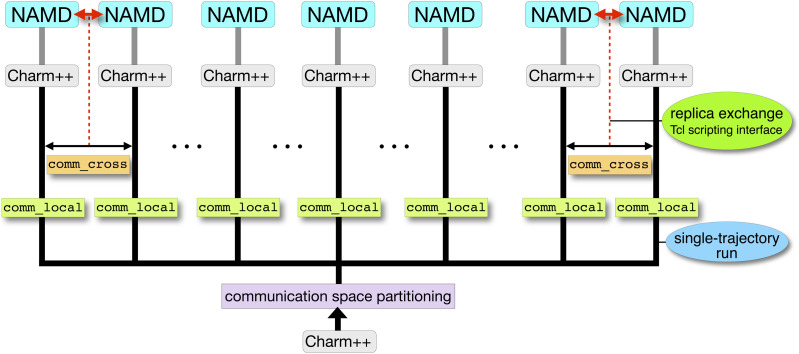

NAMD development is guided by a small set of driving projects, which are scientifically important and require enhanced NAMD capability. While the driving project provides the essential eager end-user to test and provide feedback on the implementation of the new capability, it is important to note that no single-user or single-project features are implemented in NAMD itself. Instead, the goal of the development process is either to extend an existing capability to enable the driving project or, if necessary, to implement some new general-purpose and scalable feature that will enable both the specific driving project and a larger class of related simulations. Necessary project-specific code may be segregated outside of the NAMD code base through a Tcl scripting interface. Capabilities enabled via scripting in NAMD include top-level protocols such as equilibration, annealing, and replica-exchange or multiple-copy strategies, as well as application of long-range steering forces onto small numbers of atoms and application of independent boundary forces to each atom in the molecular system. While an optional Python scripting interface is available, Tcl remains the recommended choice due to its stable interface, compact and embeddable interpreter, simple and flexible syntax, and shell-like appearance, which appeals to non-programmers.

NAMD quality assurance practices are adapted to the unique challenges of feature-rich scientific software running at scale on a wide variety of platforms. All-platform builds and installs are automated, and record both default and optional modules loaded during compilation, as well as the specific version of Charm++ utilized. Extensive testing of each build on different platforms is prohibitively complex to manage and expensive in terms of computer time. Still, the most likely sources of observed defects in reviewed and merged code are compiler bugs and rare unanticipated edge cases in new end-user input sets. For this reason, NAMD contains multiple internal consistency checks, which are active at all times, including in production runs. The goal of consistency checks is not so much to prevent crashes, which are often easily diagnosed and relatively harmless, but to avoid silently generating flawed simulation outputs, which would waste both computer and human time and leave bad science in their wake. Consistency-check failures raise fatal errors, both in order to halt the program without wasting future cycles and because, in our experience, end-users ignore warning messages—and often barely read error messages.

C. Key features

NAMD supports classical MD simulations, most commonly of full atomistic nature, in explicit solvent, with periodic boundary conditions and full particle mesh Ewald (PME) electrostatics (see Sec. V) in a variety of thermodynamic ensembles (see Sec. IV), including constant-pH (see Sec. XI), although coarse-grained models, implicit solvent, and non-periodic or semi-periodic boundary conditions can also be employed. CHARMM9 and similar academic force fields, e.g., AMBER,10 OPLS-AA,11 and GROMOS,12 are supported, including the CHARMM Drude polarizability model.5,13 A flexible interface for quantum-mechanical/molecular-mechanical (QM/MM) calculations connects NAMD to external quantum-chemistry codes (see Sec. XII), namely, ORCA14 and MOPAC,15 thereby allowing physical phenomena that are not captured by classical models to be described, e.g., chemical reactions involving bond formation and rupture. NAMD also supports alchemical free-energy calculations (see Sec. IX).16

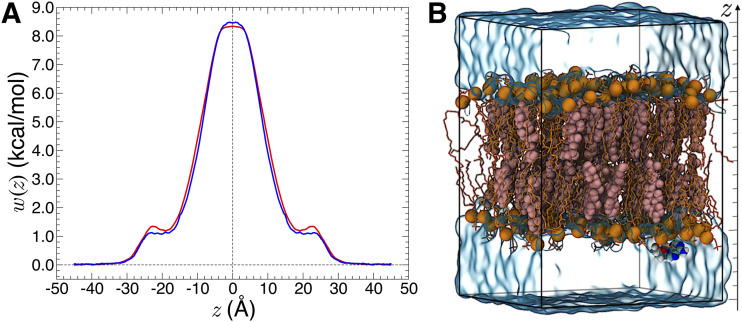

Built on this foundation are a variety of features to add external forces in the simulations, including the Colvars module,6 which allows the end-user to define collective variables (CVs) as control parameters for biased MD and free-energy calculations (see Sec. VI). Flexible fitting17 of structures to electron density maps from cryo-electron microscopy (cryo-EM) can be performed via grid potentials18 (see Sec. XIII). Methods for accelerating sampling include user-customizable multiple-copy algorithms (MCAs) (see Sec. X) for both parallel-tempering strategies and free-energy calculations of geometrical and alchemical nature.16 The workflow architecture of a NAMD simulation is summarized in Fig. 1.

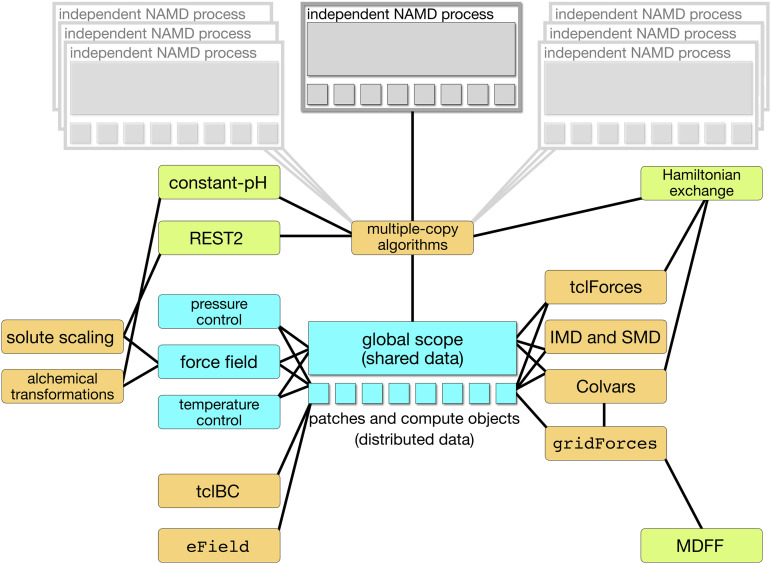

FIG. 1. Workflow architecture of a NAMD simulation. The blue boxes denote the core physical details of the simulation, the orange boxes denote the features that are embedded in the code (written in C++), and the green boxes denote the features that are fully implemented through user scripts (written in Tcl or Python).

Support for setup, analysis, and visualization of NAMD simulations is implemented in the co-developed program VMD.19 In VMD, molecular structures can be assembled either with the low-level psfgen module or via the QwikMD plugin,20 which provides a graphical interface and guides the user through the stages of the standard MD workflow (see Sec. XV). A large number of VMD plugins are available to aid in analysis tasks. Guidance on the use of NAMD and VMD is provided by a variety of tutorials and case studies available on the www.ks.uiuc.edu website.

NAMD supports most computational platforms, ranging from macOS- and Windows-operated laptops to leadership-class supercomputers. NVIDIA GPU acceleration has been implemented since 2009 (see Sec. III),21 and support for AMD and Intel GPUs is being developed as part of readiness programs for the Oak Ridge Frontier and Argonne Aurora exascale machines. Simulation sizes of up to $2 \times 10^{9}$ atoms are possible when the program is compiled in memory-optimized mode, which is recommended above $2 \times 10^{6}$ atoms.

Most users will have no need to compile NAMD from the source code. NAMD achieves cross-platform extensibility through scripting in Tcl, a language familiar to the end-users, notably from its use in VMD, allowing custom simulation protocols to be implemented without recompiling the program. Pre-compiled binaries are available for laptops, desktops, and clusters, both with and without high-speed InfiniBand networks. No MPI library is required, but the standard mpiexec program may be leveraged to simplify launching NAMD within the batch environment of the cluster.

II. THE PARALLEL, OBJECT-ORIENTED PROGRAMMING LANGUAGE CHARM++

NAMD is implemented on top of Charm++,22,23 an adaptive, asynchronous, distributed, message-driven, task-based parallel programming model using C++.

A. Underpinnings of Charm++

In Charm++, computations are partitioned into migratable objects called chares, each with its own data. Chares communicate by sending messages that invoke methods on other objects, which can be located locally or remotely, on a different node than the sender. An example of chare layout and messaging is shown in Fig. 2. Charm++ also features a powerful introspective runtime system (RTS), which measures and tunes the performance of applications at runtime. Put together, these properties allow for dynamic load balancing, in which the runtime relocates chares to different processors to evenly distribute computational load. These aspects of Charm++ allow high performance and scalability to be achieved on a wide variety of applications and large-scale computer architectures. Charm++ has also been carefully crafted to maximize portability so that applications can be executed on virtually any hardware, from laptops to supercomputers.

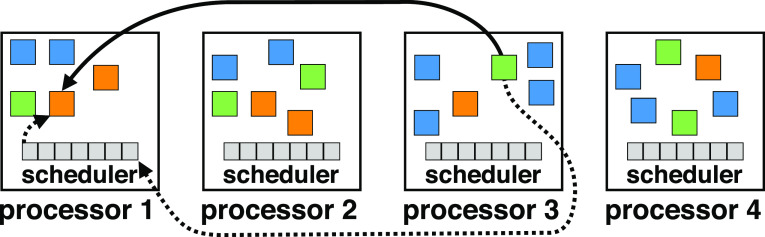

FIG. 2. Overview of a Charm++ application on four processor elements (PEs). The solid line indicates an object on PE 3 sending a message to an object on PE 1, and the dashed line shows how the message is sent via per-PE schedulers.
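The message-driven model described above can be illustrated with a toy sketch in Python. This is not the Charm++ API: the classes `Chare` and `ToyRuntime`, and all of their methods, are hypothetical names introduced purely for illustration. The sketch shows only the two ideas from the text, namely that a sender addresses an object rather than a processor, and that the runtime remains free to relocate objects.

```python
from collections import deque


class Chare:
    """Toy stand-in for a chare: an object that owns its data and reacts to messages."""

    def __init__(self, name):
        self.name = name
        self.received = []

    def receive(self, payload):
        # Method invoked when a message addressed to this object is delivered.
        self.received.append(payload)


class ToyRuntime:
    """Hypothetical runtime with one FIFO message queue (scheduler) per PE."""

    def __init__(self, num_pes):
        self.queues = [deque() for _ in range(num_pes)]
        self.location = {}  # chare name -> (PE index, chare object)

    def place(self, chare, pe):
        self.location[chare.name] = (pe, chare)

    def migrate(self, name, new_pe):
        # Relocating an object changes only the routing table.
        _, chare = self.location[name]
        self.location[name] = (new_pe, chare)

    def send(self, target_name, method, payload):
        # The sender names the target object; the runtime finds its PE.
        pe, _ = self.location[target_name]
        self.queues[pe].append((target_name, method, payload))

    def run(self):
        # Each scheduler delivers its pending messages until all queues are empty.
        delivered = 0
        while any(self.queues):
            for queue in self.queues:
                if queue:
                    name, method, payload = queue.popleft()
                    _, chare = self.location[name]
                    getattr(chare, method)(payload)
                    delivered += 1
        return delivered


rt = ToyRuntime(num_pes=4)
a, b = Chare("a"), Chare("b")
rt.place(a, pe=3)
rt.place(b, pe=1)
rt.send("b", "receive", "hello from a")   # routed to the queue of PE 1
first = rt.run()
rt.migrate("b", new_pe=2)                 # the sender's code does not change
rt.send("b", "receive", "hello again")    # now routed to the queue of PE 2
second = rt.run()
```

Because the sending code refers only to the name of the target, the same `send` call works before and after the migration, which is the property that makes runtime-driven load balancing possible.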

1. Over-decomposition

Most parallel programming paradigms partition a problem into as many pieces as there are processors executing the application. This approach tries to minimize overhead while placing the onus on the programmer to provide an even distribution of work to the processors. However, in Charm++, applications are intended to be over-decomposed, i.e., broken into many more pieces than there are processors in the system. Using this finer decomposition may increase overhead, but it also provides scope for the runtime system to perform optimizations that outweigh it, especially for dynamic, irregular applications. One virtue of this approach is communication-computation overlap. Since there are many small objects on a processor, as opposed to fewer large objects, the RTS can schedule the computation of one object while another awaits the communication it requires. In doing so, Charm++ more effectively uses both the processors and network, thereby reducing starvation of processors due to network delays.
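The benefit of communication-computation overlap can be made concrete with a deliberately simple timing model, sketched below in Python. The function name `finish_time` and all numerical values are assumptions chosen for illustration, and the model ignores the scheduling overhead that over-decomposition introduces.

```python
def finish_time(arrivals, costs):
    """Time at which a single PE finishes all of its objects.

    Each object can start only once its input message has arrived
    (arrivals[i]) and then occupies the PE for costs[i] time units.
    Objects are processed in order of message arrival, so the PE is
    idle only when no object has its data yet.
    """
    clock = 0.0
    for arrival, cost in sorted(zip(arrivals, costs)):
        clock = max(clock, arrival) + cost
    return clock


# Four small objects whose messages arrive one after another.
over_decomposed = finish_time([1.0, 2.0, 3.0, 4.0], [1.0, 1.0, 1.0, 1.0])

# One large object that needs all four messages before it can start.
monolithic = finish_time([4.0], [4.0])
```

In this hypothetical setting, the over-decomposed variant finishes at time 5 and the monolithic one at time 8, because the small objects compute while later messages are still in flight.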

2. Load balancing

Another key facet of the Charm++ model is dynamic load balancing.24 Over-decomposition creates many fine-grained objects, giving the RTS scope to finely control distribution of load via chare migration. The Charm++ RTS actively measures the per-object load at runtime, which it then feeds into one of many different, customizable algorithms to determine a new and improved distribution of chares based on these empirical data. This design implies that load balancing remains effective even for applications with load properties that are difficult to predict a priori. Native support for general load balancing in the Charm++ RTS constitutes a dramatic improvement over frameworks such as MPI, which require end-users to write application-specific load-balancing code. In general, load balancing is critical to achieve high scalability, as the likelihood of significantly overloading some processor grows as more processors are added to a job.
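As an illustration of how measured per-object loads can drive a new placement, the following Python sketch applies a generic greedy heuristic: objects are taken heaviest first and each is placed on the currently least-loaded processor. It is not presented as one of the strategies shipped with Charm++; the function name `greedy_balance` and the sample loads are hypothetical.

```python
import heapq


def greedy_balance(loads, num_pes):
    """Map objects to PEs, heaviest first, always onto the least-loaded PE.

    loads: dict mapping an object identifier to its measured load.
    Returns (assignment, pe_loads).
    """
    heap = [(0.0, pe) for pe in range(num_pes)]  # (accumulated load, PE index)
    heapq.heapify(heap)
    assignment = {}
    for obj, load in sorted(loads.items(), key=lambda kv: kv[1], reverse=True):
        pe_load, pe = heapq.heappop(heap)
        assignment[obj] = pe
        heapq.heappush(heap, (pe_load + load, pe))
    pe_loads = [0.0] * num_pes
    for obj, pe in assignment.items():
        pe_loads[pe] += loads[obj]
    return assignment, pe_loads


# Hypothetical measured loads for six migratable objects.
measured = {"c0": 5.0, "c1": 4.0, "c2": 3.0, "c3": 3.0, "c4": 2.0, "c5": 1.0}
assignment, pe_loads = greedy_balance(measured, num_pes=2)
```

With these sample loads, both processors end up with a total load of 9. A production balancer would typically also weigh communication and migration costs, which this sketch omits.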

3. Modern Charm++ features

While currently unused by NAMD, Charm++ has many other features to tune applications. Remaining within certain power and temperature budgets has become vital as supercomputers grow ever denser and larger. Charm++ includes modules that work with the processor and system controls over these properties while still retaining high performance.25 For instance, it can leverage the load balancer to migrate away chares from overheated processors to avoid slowdowns when the system underclocks them to reduce their temperatures. Charm++ features a checkpoint-restart facility, which makes applications fault tolerant.26 In the case of system-level hardware or software failures, rather than crashing, potentially wasting hours of computer time, the RTS can automatically recover and continue execution using the non-failed parts of the system. Charm++ also has a module to integrate GPU data and task management with the RTS.27 Programming models that are built on top of Charm++ also exist, such as Adaptive MPI, which allows MPI applications to execute atop Charm++ and gain several of its advantages,28 as well as charm4py, a Python interface to Charm++.29

B. Parallel structure of NAMD and its use of Charm++

The various features of Charm++ described previously have contributed to the success of NAMD in achieving high performance, scalability, and portability.30–32 NAMD and Charm++ share a long history, having been synergistically codeveloped since 1994. In fact, NAMD was the first large, driving application for Charm++, and many features and design decisions of Charm++ were inspired by the needs and challenges presented by NAMD. The architecture of NAMD maps very naturally to Charm++, allowing the structure and parallelism of the code to be easily and cleanly expressed without sacrificing speed.

MD simulations involve calculating and applying forces, bonded and nonbonded, that simulated atoms exert onto each other. NAMD takes as input a molecular structure, iteratively computes these bonded and nonbonded forces, and integrates the equations of motion to update atomic positions and velocities. NAMD implements this process using a unique hybrid approach, decoupling the distribution of data from the distribution of computation. The simulation space is spatially divided into small boxes called “patches”—objects containing simulation data, while force calculations are done by objects called “computes,” which operate on data received from patches. After the force calculation is completed by the computes, the forces are sent back to patches, which update their constituent atoms. Hydrogen atoms are stored on the same patch as the heavy atom they are bonded to, and atoms are reassigned to different patches as they move in space.
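The spatial decomposition into patches can be sketched as a binning of atoms into a regular grid whose cells are at least one cutoff wide. The Python fragment below is a minimal illustration under stated assumptions: an orthorhombic periodic box with its origin at zero, hypothetical function names `patch_grid` and `assign_to_patches`, and no attempt to keep hydrogen atoms on the patch of their parent heavy atom or to reproduce the actual patch-sizing rules of NAMD.

```python
import math
from collections import defaultdict


def patch_grid(box, cutoff):
    """Patches per axis such that every patch edge is at least `cutoff` long."""
    return tuple(max(1, math.floor(length / cutoff)) for length in box)


def assign_to_patches(positions, box, cutoff):
    """Bin atoms into patches; returns (grid dimensions, {patch index: atom ids})."""
    dims = patch_grid(box, cutoff)
    patches = defaultdict(list)
    for atom_id, pos in enumerate(positions):
        index = tuple(
            # Wrap the coordinate into the box, then scale it to a patch index.
            min(int((x % length) / length * n), n - 1)
            for x, length, n in zip(pos, box, dims)
        )
        patches[index].append(atom_id)
    return dims, patches


box = (48.0, 48.0, 48.0)   # hypothetical box edges
cutoff = 12.0              # hypothetical cutoff, same length unit as the box
positions = [(1.0, 1.0, 1.0), (13.0, 25.0, 47.9), (-1.0, 0.0, 0.0)]
dims, patches = assign_to_patches(positions, box, cutoff)
```

Reassigning atoms as they move then amounts to recomputing the index of each atom and transferring it when the index changes.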

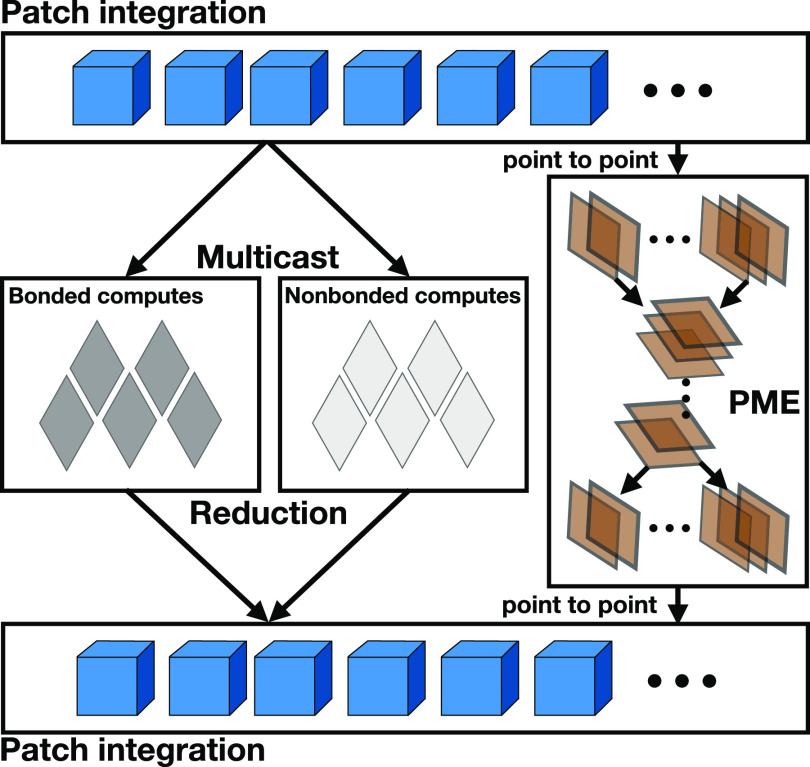

An overview of the parallel structure of NAMD is depicted in Fig. 3. There are three different kinds of computes, namely, bonded, nonbonded, and PME, respectively, responsible for bonded forces, short-range nonbonded forces, and long-range nonbonded electrostatic forces using the PME algorithm (see Sec. V). Naïve computation of all nonbonded forces would result in a computational complexity in $\mathcal{O}(N^2)$, where N stands for the number of particles, whereas the PME approach of only computing pairwise nonbonded forces explicitly for atoms within some cutoff radius and interpolating the reciprocal-space Ewald sums for more distant atoms improves the overall complexity to $\mathcal{O}(N \log N)$.33 Patches are sized such that only the 26 neighbors of the three-dimensional patch are involved in the bonded and short-range nonbonded interactions or, formally, that non-neighboring patches are separated by at least the cutoff radius. Each nonbonded compute is responsible for calculating the forces between a given pair of neighboring patches, including self-pairs, and, correspondingly, each patch sends its data to the 27 nonbonded computes that use its atoms. PME computes are done using a two-dimensional pencil decomposition of the charge grid and consist of three different subtypes, x, y, z, one for each of the three directions of the Cartesian space. This step involves performing several fast Fourier transforms (FFTs) and transpositions between the different dimensions, making PME relatively light in terms of computation but heavy in terms of communication. It is noteworthy that Charm++ supports message priorities, which are set to high in the case of PME, since communication is crucial here. Bonded computes are also relatively light computationally, since they only operate on the bonded components, so the majority of the computational load comes from the nonbonded computes.

FIG. 3. NAMD software architecture. “Computes” are objects responsible for force calculations, operating on data received from “patches,” small boxes generated by the spatial decomposition of the computational assay. Long-range electrostatic forces are handled by the PME algorithm.
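The pairing of neighboring patches into nonbonded computes can be checked by direct enumeration. The Python sketch below assumes a fully periodic grid with at least three patches along each axis, so that the 26 neighbors of a patch are all distinct; the function name `nonbonded_computes` is hypothetical, and the actual layout of computes in NAMD may differ in detail.

```python
from itertools import product


def nonbonded_computes(dims):
    """Unordered pairs of neighboring patches, self-pairs included.

    dims: number of patches along x, y, z on a periodic grid
    (assumed to be at least 3 along each axis).
    """
    computes = set()
    for patch in product(*(range(n) for n in dims)):
        for offset in product((-1, 0, 1), repeat=3):
            other = tuple((p + o) % n for p, o, n in zip(patch, offset, dims))
            # Sorting makes (a, b) and (b, a) the same compute.
            computes.add(tuple(sorted((patch, other))))
    return computes


computes = nonbonded_computes((4, 4, 4))
uses_origin = sum(1 for pair in computes if (0, 0, 0) in pair)
```

For a 4 × 4 × 4 grid, this yields 64 self-pairs plus 64 × 26 / 2 = 832 neighbor pairs, i.e., 896 computes in total, and every patch takes part in 27 of them (its self-pair and one pair per neighbor).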

At runtime, these compute objects in NAMD are distributed throughout the available processors for the job. Notably, only nonbonded computes are relocatable as they represent most of the load. Patches and the other computes are statically assigned to the available processors at startup. Loads and communication patterns of the various objects, whether relocatable or not, are empirically measured and fed to the load balancer, which redistributes the nonbonded computes to minimize communication and equalize load between processors.

Generally, the above descriptions are equally valid for the CPU-based and GPU-based versions of NAMD. However, there are a few extra considerations in the GPU version, chiefly that of aggregating data and work requests. GPUs are computationally extremely powerful, but since they are physically separated from the CPUs and, thus, have high communication latency, performing many small transfers of data, or starting many small kernels, is relatively expensive. In order to avoid this bottleneck, NAMD aggregates data and work requests for the GPU, sending the data of several patches or invoking a kernel corresponding to several computes at once. While this strategy limits in some way the ability of Charm++ to perform optimizations, it also allows NAMD to leverage the immense power of GPUs without inordinate penalties due to latency, making it a worthwhile trade-off.
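The trade-off behind aggregation can be illustrated with a simple cost model in which every GPU launch or transfer pays a fixed latency in addition to a cost proportional to the work it carries. The Python sketch below uses this model only; the function name `offload_time` and the numbers are hypothetical and are not measurements of NAMD.

```python
def offload_time(num_requests, work_per_request, launch_latency, batch_size):
    """Total time when requests are grouped into batches of `batch_size`.

    Every batch pays `launch_latency` once; the work itself takes
    `work_per_request` per request regardless of how it is grouped.
    """
    num_launches = -(-num_requests // batch_size)  # ceiling division
    return num_launches * launch_latency + num_requests * work_per_request


# Hypothetical numbers, in arbitrary time units.
one_by_one = offload_time(1000, work_per_request=1, launch_latency=10, batch_size=1)
aggregated = offload_time(1000, work_per_request=1, launch_latency=10, batch_size=100)
```

In this example, issuing the requests individually costs 11 000 units, of which 10 000 are latency, whereas batches of 100 cost 1100 units. The price of aggregation, not captured by the model, is that the runtime has fewer independent pieces of work to schedule.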

III. GPU CAPABILITIES AND PERFORMANCE

Historically, NAMD was designed for optimal performance on large arrays of computing units interconnected by low-latency, high-bandwidth networks. As use of GPUs in high-performance computing gained steam, NAMD has been adapted to novel, GPU-based architectures. In this section, we first review the performance of the code on a variety of platforms, prior to describing ongoing efforts toward more fully utilizing GPU acceleration.

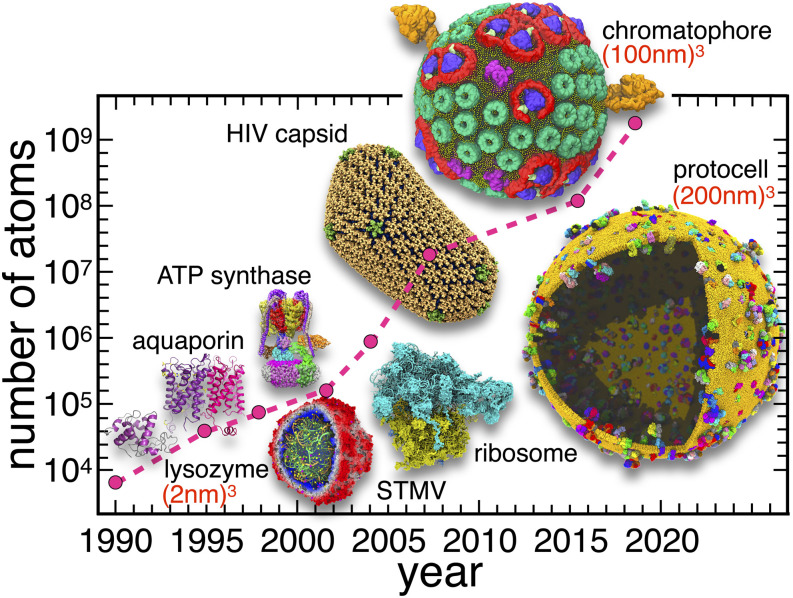

A. NAMD performance

From its inception, NAMD was designed for high-performance classical simulations of large atomic models of biomolecular systems, often comprising 100 000 atoms or more. Through pervasive use of parallel computing technologies, the system size scales and simulation timescales amenable to NAMD have grown by orders of magnitude, as the program evolved from being able to handle a few nanoseconds of simulated time on hundreds of thousands of atoms34 to a hundred nanoseconds for millions of atoms,3 finally arriving at where we stand today, namely, being able to simulate hundreds of nanoseconds on hundred-million atom systems.35

The growth in performance achieved by NAMD can be primarily attributed to its ability to harness the computational power of tens of thousands to millions of processing elements in parallel. Over the past decade, computer architectures have undergone a paradigm shift toward hardware designs that favor parallelism as the primary mechanism for improved performance, e.g., by increasing core counts from one processor generation to the next. NAMD supports diverse computing hardware architectures, including multicore and many-core CPUs with wide single-instruction-multiple-data (SIMD) vector arithmetic units and heterogeneous computing platforms containing massively parallel GPU accelerators. NAMD decomposes MD simulation algorithms into very fine-grained data-parallel operations, which can be executed by the available pool of computing resources, maximizing the use of hardware-optimized functions, or so-called “kernels,” on CPU vector units or GPU accelerators. These kernels parallelize operations on individual atoms using a hardware-specific approach, thereby maximizing arithmetic performance and simulation throughput for the target hardware.

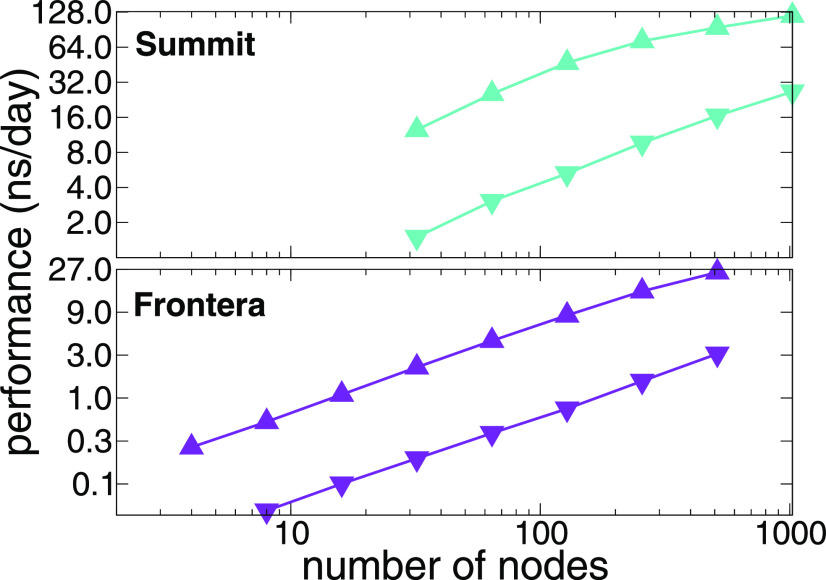

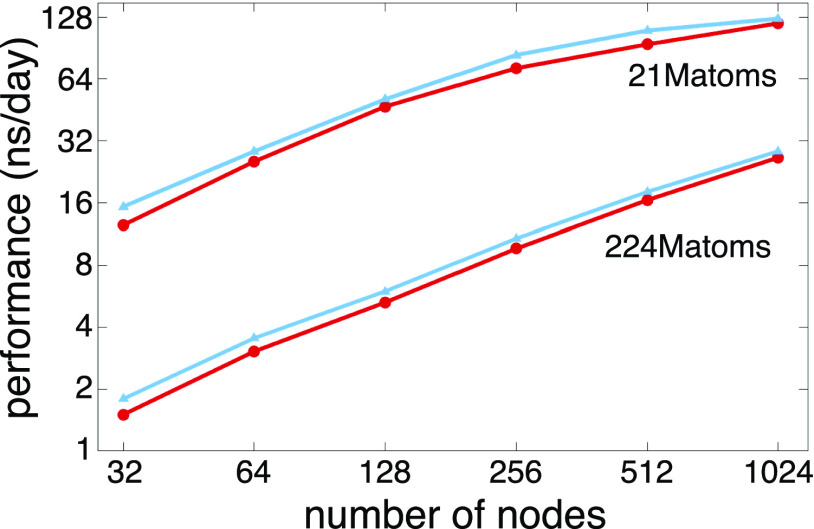

NAMD distributes work to shared memory CPU cores and distributed memory compute nodes using a three-dimensional decomposition of interactions among patches (see Sec. II), spatial subvolumes sized such that interactions with the 26 nearest neighbor patches embrace all of the bonded, van der Waals, and short-range electrostatics force contributions. Decomposition of the simulation into patch–patch interactions affords a large amount of parallelism for shared- and distributed-memory execution and enables NAMD to maintain load balance by shifting work from overloaded to underloaded cores or nodes over the course of the simulation. Building on these design points, extensive use of asynchronous message passing techniques and Charm++ runtime system features has allowed state-of-the-art NAMD simulations to run at high performance on petascale supercomputers,36–40 as well as pre-exascale supercomputers.32 Figure 4 summarizes the distributed-memory scaling performance of NAMD simulating two benchmark systems, each consisting of a tiled array of a $1 \times 10^{6}$-atom satellite tobacco mosaic virus (STMV),41 on two contemporary leadership supercomputers with CPU-based (Frontera) and GPU-accelerated (Summit) hardware platforms.

FIG. 4. NAMD distributed memory parallel scaling benchmarks performed on satellite tobacco mosaic virus (STMV) in water on leadership supercomputers, Summit, using up to 1024 nodes containing 6144 GPUs (upper panel), and Frontera, using up to 512 CPU-based nodes (lower panel). Two benchmark systems were constructed as a tiled array of a periodic 1.067M-atom system, a 5 × 2 × 2 tiling for 21M atoms (▴), and a 7 × 6 × 5 tiling for 224M atoms (▾).
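The system sizes and GPU counts quoted in the caption follow from simple arithmetic on the 1.067M-atom periodic cell, spelled out here for convenience:

```latex
\begin{align*}
5 \times 2 \times 2 &= 20 \ \text{tiles}, & 20 \times 1.067\,\mathrm{M} &\approx 21.3\,\mathrm{M} \ \text{atoms},\\
7 \times 6 \times 5 &= 210 \ \text{tiles}, & 210 \times 1.067\,\mathrm{M} &\approx 224.1\,\mathrm{M} \ \text{atoms},\\
6144 \ \text{GPUs} \,/\, 1024 \ \text{nodes} &= 6 \ \text{GPUs per node}.
\end{align*}
```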

B. GPU acceleration

One of the biggest changes to the high-performance computing ecosystem in the past decade has been the emergence of GPUs as the dominant type of accelerator for scientific applications, leading to their rapid adoption and widespread use in computational chemistry applications.42 NAMD was the first fully featured MD package to exploit GPU acceleration,21 and it was also a pioneer in supporting GPU-accelerated clusters.43,44 NAMD development has evolved to encompass two main computing approaches, namely, (i) large-scale distributed memory parallel computing (continuing past NAMD 2.x approach) and (ii) GPU-resident computing to support new and emerging platforms that provide dense, tightly coupled GPU accelerators, with shared memory among GPUs and hosts (newly added in NAMD 3.x).

The initial use of GPUs in NAMD accelerated calculation of the short-range non-bonded forces, the biggest computational workload at each time step. The GPU kernels themselves, similar to the CPU, interpolate force interactions from a table but make use of fast-texture memory lookup and automatic linear interpolation, which avoids calculation of square-root and exponential functions required for the PME algorithm33 (see Sec. V) and eliminates conditionals needed to support switching functions for van der Waals forces. Energies are similarly computed with table lookup and only when required for output. Enhancements were introduced to improve performance by streaming the force calculations back to the patches, enabling each patch to proceed asynchronously with time stepping as soon as all of its forces are computed.21 GPU acceleration was next applied to the PME long-range electrostatics calculation, specifically to the patch-based calculations involving the spreading of charges from atoms to grid points and the gathering of forces from grid points to atoms.45 Parallel scalability is improved by doubling the PME grid spacing, together with increasing the interpolation order from 4 to 8 to maintain the same accuracy, thereby reducing the communication bandwidth by a factor of 8 while increasing the intensity of arithmetic offloaded to the GPU.

Since then, NAMD has evolved to allow all force terms to be computed on the GPU. With the 2.12 release, NAMD incorporated new CUDA kernels for both short-range non-bonded forces and long-range electrostatics calculations, which yield better performance and scaling in general. The new short-range non-bonded kernels compute pairwise interactions between two sets of atoms subdivided into tiles of 32 × 32, producing tile lists that can be executed by any CUDA thread block. To further improve performance, the kernels also benefit from Newton’s third law, avoiding duplicated calculation between atom pairs and eliminating the need for synchronization between thread blocks, which allows CUDA warps to execute independently.46 This new scheme also introduced generalized Born implicit solvent (GBIS) neighbor list calculation kernels for existing GPU-accelerated GBIS functions.47 The revised implementation of PME now also offloads the reciprocal-space calculation to the GPU and uses the NVIDIA cuFFT library for calculating forward and inverse FFTs,46 although the scalability of this approach is limited to no more than four nodes. The 2.13 release of NAMD added new CUDA kernels for calculating the bonded forces and the correction for excluded interactions.32 The 2.14 release yields better performance on modern GPU architectures and contains a more stable pair list generation scheme for large domain decomposition cycles. NAMD 2.14 performance results reported in this contribution represent the outcome from runs using the traditional distributed memory NAMD design.

NAMD 3.0 maintains the traditional large-scale distributed memory computing paradigm but is the first version to pioneer the new GPU-resident computing approach. The NAMD 3.0 benchmarks reported here represent the currently achieved performance using the new GPU-resident computing scheme, applied to single-node GPU-accelerated hardware platforms. We note that since NAMD 3.0 and its new GPU-resident computing approach are still actively in development at the time of writing this article, we expect to achieve even higher performance by the time it is finalized.

Based on a Charm++ parallel object paradigm, NAMD over-decomposes the total work into small, easily migratable tasks at startup, which are subsequently distributed across the CPU resources allocated for a particular run (see Sec. II). This scheme is effective on large parallel computers with a myriad of CPU cores, as tasks are small enough to allow for dynamic load balancing at runtime and better scaling overall. However, fine-grained decompositions that are appropriate for large CPU core counts often result in task sizes that are insufficient to saturate the GPU with work, as is necessary to approach peak GPU performance. Moreover, running large batches of small tasks typically requires excessive host-device memory transfers because the host and the device have disjoint memory spaces. Thus, for launching GPU tasks efficiently, NAMD usually performs an aggregation step, whereby patches are gathered together into contiguous memory spaces, and their corresponding atoms are rearranged in order to coarsen task grain size, improve GPU utilization, and reduce overhead. It is also possible for the end-user to control which force terms are offloaded onto the GPU, as force calculations are scheduled independently, for best combined use of hybrid CPU and GPU computing resources.

The improvements to existing GPU kernels and more effective GPU offloading schemes have allowed NAMD to benefit not only from thousands of CPU cores but also from thousands of discrete GPUs. Figure 4 depicts NAMD benchmarks occupying up to a quarter of ORNL Summit, a GPU-dense supercomputer with 4600 compute nodes, each containing two IBM POWER9 CPUs and six NVIDIA Volta V100 GPUs. Two different tiled replications of the freely distributed, 1.067 × 10⁶-atom STMV computational assay were employed as benchmark systems: a 5 × 2 × 2 replication with 21 × 10⁶ atoms and a 7 × 6 × 5 replication with 224 × 10⁶ atoms. The results demonstrate that NAMD is able to benefit from the thousands of GPUs available in Summit, delivering approximately eight times improved performance compared to CPU-only runs.

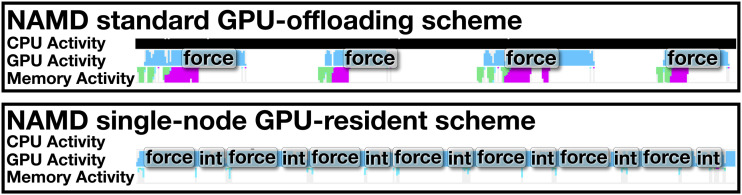

The rapid pace of performance gains on state-of-the-art computing architectures has tipped the balance of computing power even more dramatically in favor of GPUs. The traditional GPU offloading scheme in NAMD overlaps CPU and GPU work, with forces being calculated on the GPU and the integration of the Newton equations of motion being performed on the CPU as soon as the forces are processed by the GPU. However, in-depth NAMD performance analysis reveals that current high-end GPUs are idling for a large fraction of the simulation time, since integration is a critical step and must be performed before the next launch of GPU force kernels. Ongoing development efforts have begun to ameliorate this imbalance, whereby a new GPU-resident computing scheme maintains data in place on the GPU throughout force calculations and integration of atomic coordinates, with drastically reduced involvement from host CPUs and minimal host-device memory transfers. Kernels for the Verlet integration (see Sec. IV), rigid bond constraints, and Langevin dynamics have been introduced, without any need for data transfer between the CPU and GPU. Figure 5 depicts a timeline profile of a simulation of apolipoprotein A1 (ApoA1) in the microcanonical ensemble, with 92 224 atoms, on an NVIDIA Titan V GPU, demonstrating that the standard offloading scheme is not capable of fully saturating the GPU with work, as there are large gaps between the GPU force calculations while integration tasks run on the CPU.

FIG. 5.

Standard GPU offload approach compared against the new GPU-resident execution scheme for a single-node NAMD simulation of apolipoprotein A1 (ApoA1) in water, consisting of 92 224 atoms. The light blue line tracks GPU activity, while the black strip tracks CPU activity. GPU force calculations are labeled “force,” and GPU integration calculations are labeled “int.”

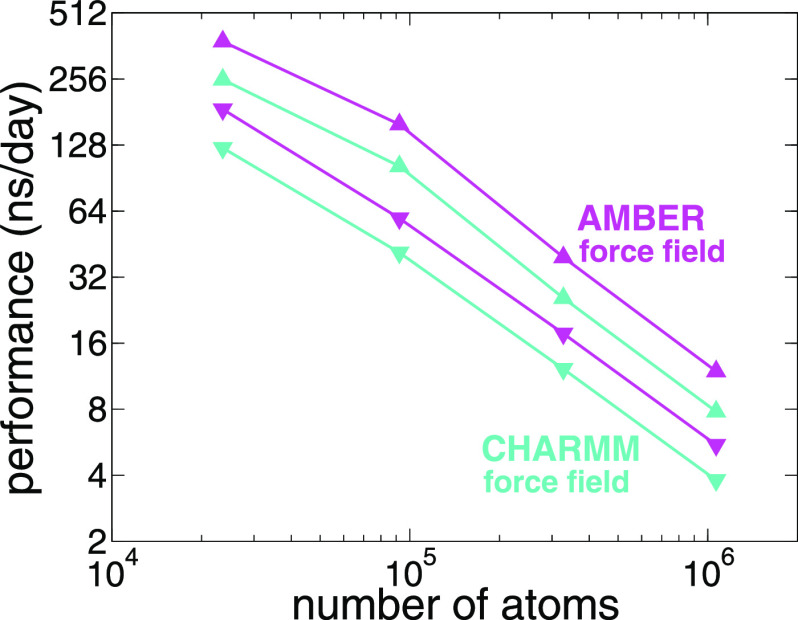

The new GPU-resident computing scheme is able to effectively saturate the GPU with work, showing almost no gaps between kernel calls. Figure 6 shows preliminary benchmarks of this GPU-resident scheme for simulations in the microcanonical ensemble, with a 2-fs time step and the standard CHARMM force-field parameters, on an NVIDIA Titan V GPU and an Intel Xeon E5-2650, for four independent computational assays of increasing size.

FIG. 6.

Comparison of NAMD GPU computing schemes for simulations with increasing atom counts, namely, (i) dihydrofolate reductase (DHFR) with 23 558 atoms, (ii) apolipoprotein A1 (ApoA1) with 92 224 atoms, (iii) F1-ATPase with 327 506 atoms, and (iv) STMV with 1 066 628 atoms, for the GPU-resident scheme in NAMD 3.0 (▴) and for the standard GPU offloading scheme in NAMD 2.14 (▾). The simulations were performed in the microcanonical ensemble, using the CHARMM9 (turquoise line) and AMBER-like10 (magenta line) cutoff schemes: 12 Å and 8 Å, respectively.

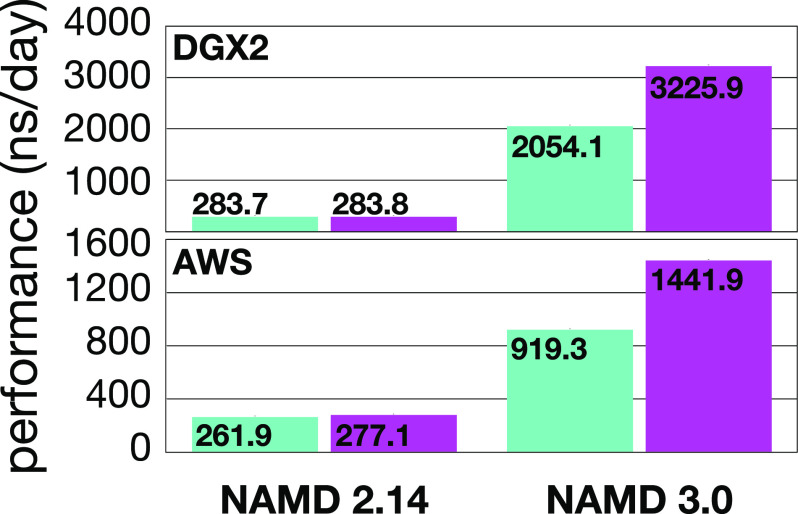

The new GPU-resident computing scheme, using only a single CPU core, outperforms the original GPU offload scheme by approximately a factor of 2, despite the original offload scheme’s use of all 16 CPU cores. In addition, since most of the computational bottlenecks have been removed from the CPU, it is possible to achieve perfectly linear scaling when running independent replica simulations on single-node multi-GPU platforms, with one replica per GPU. This approach can produce aggregate simulation times of microseconds per day, as shown in Fig. 7.

FIG. 7.

NAMD performance compared on two platforms, NVIDIA DGX-2 (upper panel) and Amazon Web Services (AWS) P3.16xlarge (lower panel), using the standard GPU offloading scheme in NAMD 2.14 and the GPU-resident scheme in NAMD 3.0. The DGX-2 and AWS P3.16xlarge platforms consisted, respectively, of 16× NVIDIA Tesla V100 GPUs and 8× NVIDIA Tesla V100 GPUs associated with a 48-core CPU. The benchmarks were conducted on replica simulations of apolipoprotein A1 (ApoA1) in water, representing 92 224 atoms, in the microcanonical ensemble, with one independent replica running on each GPU. The benchmarks were carried out with the CHARMM9 (turquoise bars) and AMBER-like10 (magenta bars) cutoff schemes: 12 Å and 8 Å, respectively.

IV. PROPAGATORS

One of the features that has made NAMD a widely popular MD engine is its ability to generate trajectories in apt thermodynamic ensembles, with minimal approximation and corner-cutting. This section reviews the numerical schemes implemented in NAMD to integrate the equations of motion, together with the algorithms utilized to maintain the temperature and the pressure constant.

A. Numerical integration

It is useful to distinguish the two ways whereby MD simulations might be carried out. For actual dynamics, the dynamical parameters, e.g., the mass and the thermostat and barostat coupling parameters, are to be given physically realistic values. For sampling, however, parameters can be chosen to reduce autocorrelation times, thereby increasing the number of independent samples. In both cases, it is, however, normally expected that a numerical integrator retains the following property of the dynamics: if an ensemble of trajectories has initial positions and velocities chosen from a given distribution, e.g., the canonical ensemble, this distribution is preserved as the trajectories evolve. This is a useful property even for sampling, in which only a single, long trajectory is generated.

1. Verlet and symplectic integrators

NAMD provides both deterministic and stochastic equations of motion. The deterministic model rests upon the Newton equations of motion, and the basic integrator is provided by the Verlet algorithm.48 This method happens to be symplectic, which ensures that numerical trajectories possess properties similar to those of the analytical Hamiltonian dynamics. In particular, Hamiltonian dynamics preserves volume in phase space and conserves the energy exactly, which, together, ensure the preservation of any distribution, such as the Boltzmann distribution, that is a function of the Hamiltonian alone. A symplectic integrator also preserves volume in phase space but conserves a so-called “shadow” energy, which differs from the actual energy by a modest $\mathcal{O}(\Delta t^{2})$, where Δt is the time step. Actually, this is not precisely correct: there is a “very small” drift in the conservation of the energy over “very long” timescales.49 The very small error shrinks dramatically, and the very long timescales expand dramatically as Δt is reduced. In a plot of the actual energy, the fluctuations indicate the error in the shadow energy, and an insignificant secular drift indicates a sound integration. In practice, for a symplectic integrator, it is adequate that the time step be not much smaller than that needed to avoid drift. For one thing, the greatest error in the shadow Hamiltonian occurs in the less important high-frequency modes. Second, temporal discretization error is typically much smaller than that due to limited sampling. Of any integrator that employs only full force evaluations, the Verlet integrator allows the largest stable time step for a given computational effort.50
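The behavior of the energy under a symplectic integrator can be illustrated with a minimal sketch of the velocity form of the Verlet algorithm applied to a one-dimensional harmonic oscillator. The test system, the reduced units, and the function names are assumptions made for illustration only and do not reflect the NAMD implementation.

```python
def velocity_verlet(x, v, force, mass, dt, n_steps):
    """Integrate one degree of freedom with the velocity form of Verlet."""
    f = force(x)
    trajectory = [(x, v)]
    for _ in range(n_steps):
        v_half = v + 0.5 * dt * f / mass      # first half kick
        x = x + dt * v_half                   # drift
        f = force(x)                          # one force evaluation per step
        v = v_half + 0.5 * dt * f / mass      # second half kick
        trajectory.append((x, v))
    return trajectory

def max_energy_error(dt, total_time=100.0, k=1.0, mass=1.0):
    """Largest deviation of the actual energy from its initial value."""
    n_steps = int(round(total_time / dt))
    traj = velocity_verlet(1.0, 0.0, lambda x: -k * x, mass, dt, n_steps)
    energy = [0.5 * mass * v * v + 0.5 * k * x * x for x, v in traj]
    return max(abs(e - energy[0]) for e in energy)
```

For this oscillator, the actual energy fluctuates within a bounded band instead of drifting, and halving the time step shrinks the band by roughly a factor of four, consistent with a shadow energy that differs from the actual energy at second order in Δt.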

2. Multiple time stepping

Attaining larger time steps with a symplectic integrator is possible if the energy function is partitioned based on timescales and the forces corresponding to shorter timescales are evaluated more frequently. This numerical scheme,51 known variously as r-RESPA52 and the impulse method, is implemented in NAMD with up to three levels of multiple time stepping, namely, bonded, short-range nonbonded, and long-range nonbonded, e.g., 1 fs, 2 fs, and 4 fs. There is a limit to the largest time step due to possible resonances between the frequencies of the long-range impulses and natural frequencies of the bonded interactions.53
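A two-level version of the impulse method can be sketched as follows. The example is a simplified illustration with hypothetical names and a toy one-dimensional system in reduced units: the slow force is applied as impulses at the boundaries of the outer step, whereas the fast force is integrated with velocity Verlet using a shorter inner step. For clarity, the slow force is recomputed at the start of each outer step rather than cached.

```python
def respa_step(x, v, fast_force, slow_force, mass, dt_outer, n_inner):
    """Advance one outer step of a two-level impulse (r-RESPA-style) scheme."""
    dt_inner = dt_outer / n_inner
    v = v + 0.5 * dt_outer * slow_force(x) / mass    # slow half impulse
    f_fast = fast_force(x)
    for _ in range(n_inner):                         # velocity Verlet on the fast force
        v = v + 0.5 * dt_inner * f_fast / mass
        x = x + dt_inner * v
        f_fast = fast_force(x)
        v = v + 0.5 * dt_inner * f_fast / mass
    v = v + 0.5 * dt_outer * slow_force(x) / mass    # slow half impulse
    return x, v

def relative_energy_spread(n_outer=2000, dt_outer=0.04, n_inner=4):
    """Worst relative energy deviation for a stiff spring plus a soft spring."""
    k_fast, k_slow, mass = 100.0, 1.0, 1.0

    def energy(x, v):
        return 0.5 * mass * v * v + 0.5 * (k_fast + k_slow) * x * x

    x, v = 1.0, 0.0
    e0 = energy(x, v)
    worst = 0.0
    for _ in range(n_outer):
        x, v = respa_step(x, v, lambda q: -k_fast * q, lambda q: -k_slow * q,
                          mass, dt_outer, n_inner)
        worst = max(worst, abs(energy(x, v) - e0) / e0)
    return worst
```

In this toy model, the outer step is kept well below half the period of the stiff spring; approaching that value is where the resonance mentioned above would be expected to appear.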

3. Constrained dynamics

For constrained dynamics, NAMD uses an extension of Verlet known as SHAKE.54 This method is dynamically equivalent to RATTLE,55 which is symplectic.56 More specifically, RATTLE performs a post-processing on velocities, which is purely cosmetic, in the sense that it affects only the output velocities, but has no effect on the trajectory.57 In the NAMD implementation, only covalent bonds to hydrogen atoms are allowed to be constrained, thereby reducing the frequencies of the fastest bonded interactions while avoiding any additional parallel communication, since each cluster of atoms to be constrained is in close enough proximity to be kept together on a single processing element. A consequence of removing these hard degrees of freedom by means of holonomic constraints is the possibility to utilize longer time steps to integrate the equations of motion. Generally, the use of SHAKE requires an iterative process to satisfy all constraints. To rigidify water molecules, the constrained equations of motion are solved analytically, employing a formulation known as SETTLE.58

4. Stochastic dynamics

Commonly used to simulate a heat bath, the Langevin dynamics implementation of NAMD is based on a second-order accurate extension of Verlet, known as the Brünger–Brooks–Karplus (BBK) scheme.59 A less computationally demanding approach incorporated in NAMD and referred to as stochastic velocity rescaling60 leans on a stochastic process to infer the rescaling parameter. NAMD also employs Langevin dynamics to control piston fluctuations for controlling pressure in the context of the Langevin piston method.61

B. Thermostats and barostats

NAMD provides mechanisms to control temperature and pressure in a way that generates the correct ensemble distribution. For isothermal simulations, thermostat control is provided by Langevin dynamics or, alternatively, by stochastic velocity rescaling.60 Langevin dynamics is advantageous for parallel scaling since no additional communication is required. Moreover, the friction term that appears in the Langevin equation tends to enhance dynamic stability. The method also lends itself to a great deal of flexibility, where different parts of the computational assay, e.g., the solute and the solvent, can be handled using different coupling coefficients defined on a per-atom basis. However, the computational cost of the integration is increased with respect to the basic Verlet implementation due to the need for a Gaussian-distributed random number for each degree of freedom and at every time step.

NAMD provides a less costly alternative in the form of the stochastic velocity rescaling method,60 which is an inexpensive stochastic extension of the weak-coupling Berendsen thermostat,62 requiring just two Gaussian-distributed random numbers for every rescaling of the velocities. The method has the virtue of being less disruptive to the underlying dynamics than Langevin dynamics, conserving both holonomic constraints and linear momentum. However, each rescaling involves a global broadcast of the rescaling parameter. Rescaling should not be done more often than the largest time step employed in the simulation and can in practice be done less often, corresponding to the update of the domain decomposition of the cell, which typically occurs every 20 time steps. The overall reduction in computational effort results in up to a 20% performance improvement for parallel, GPU-accelerated simulations, as demonstrated by the parallel scaling benchmarks on the Summit supercomputer at Oak Ridge National Laboratory depicted in Fig. 8.

FIG. 8.

NAMD scaling on the Summit supercomputer shows the performance advantage of using stochastic velocity rescaling (blue line) over the Langevin damping thermostat (red line), up to 20% faster for the same number of nodes.

Isothermal–isobaric simulations are handled in NAMD with an implementation of the Langevin piston algorithm,61 which combines the Hoover constant-pressure equations of motion63,64 with piston fluctuations controlled by Langevin dynamics.61 (Reference 64 details the difference between the Hoover formulation and the original Nosé–Andersen equations.65,66) The resulting equations of motion were independently proposed in another work, which proved that the correct ensemble is generated.67 To perform MD simulations in the isothermal–isobaric ensemble, the Langevin piston barostat must be used in conjunction with either of the aforementioned thermostats.

V. ELECTROSTATICS

Evaluation of electrostatic interactions represents a significant component of the computational effort in MD simulations. In this section, we review the algorithms implemented in NAMD to handle electrostatic forces, in particular, their long-range nature.

A. Periodic electrostatics

NAMD supports periodic boundary conditions, or PBCs, with periodicity in one, two, or three directions, as well as nonperiodic simulations. Periodicity gives rise to forces resulting from infinite sums of image charges. For periodicity in two or three directions, these forces are not well defined, the net force depending on the ordering of the terms. To have a well defined force, it is necessary to be more precise about the limiting process. The best such construction envisions a sphere of complete periodic cells and considers the limit as its radius goes to infinity. The result is the classic Ewald model68 plus a dipole term, the coefficient of which depends on the dielectric constant of the surroundings. Such a term seems inadvisable for solvated biomolecules, so a so-called “tin foil” boundary is assumed to ensure that it is equal to zero. For a system with a nonzero net charge, the limiting process remains valid—provided that a neutralizing uniform background charge is introduced, which has no effect on forces but introduces a constant correction to the electrostatic energy per periodic cell.69

B. Ewald summation

The energy and forces associated with the Ewald sum represent a significant part of the computational effort in an MD simulation. Ewald summation decomposes the Coulomb interaction kernel into a short-range part plus a long-range part, based on the error function erf, 1/r = erfc(βr)/r + erf(βr)/r, where erfc is the complementary error function. The short-ranged first term gives rise to a real-space summation between nearby pairs of atoms. The long-ranged and bounded second term gives rise to a summation of interactions between the charge densities of the unit cell and all of its periodic images, which converges rapidly in reciprocal space after applying a Fourier transformation. The parameter β is chosen to minimize the computational effort, yielding an operation count proportional to $N^{3/2}$.70
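The splitting of the Coulomb kernel can be checked numerically with a few lines of Python. The helper `beta_for_tolerance` is a hypothetical illustration of one common way to pick β, namely, requiring the short-range term to fall below a chosen tolerance at the real-space cutoff; it is an assumption of this sketch and not a statement about how NAMD selects the parameter.

```python
import math

def ewald_split(r, beta):
    """Return the short-range and long-range parts of 1/r."""
    short = math.erfc(beta * r) / r     # decays rapidly, summed in real space
    long_range = math.erf(beta * r) / r # smooth and bounded, summed in reciprocal space
    return short, long_range

def beta_for_tolerance(cutoff, tolerance):
    """Find beta such that erfc(beta * cutoff) / cutoff equals the tolerance."""
    lo, hi = 0.0, 1.0
    while math.erfc(hi * cutoff) / cutoff > tolerance:
        hi *= 2.0                       # enlarge the bracket until it contains the root
    for _ in range(60):                 # bisection on a monotonically decreasing function
        mid = 0.5 * (lo + hi)
        if math.erfc(mid * cutoff) / cutoff > tolerance:
            lo = mid
        else:
            hi = mid
    return hi
```

A larger β shortens the range of the real-space term, at the price of a slower-converging reciprocal-space sum, which is the trade-off behind the choice of β.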

1. Smoothed particle–mesh Ewald

NAMD reduces the operation count to $N \log N$ by employing the smoothed PME algorithm.33,71 PME achieves this speedup by replacing the complex exponential in the reciprocal space sum with a B-spline interpolant, which yields an approximation on a grid amenable to the use of the FFT.
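The role of the B-spline interpolant can be illustrated by spreading a single point charge onto a periodic one-dimensional grid. This is a minimal sketch with hypothetical function names, relying on the standard recursion for cardinal B-splines; a three-dimensional implementation would use the product of three such one-dimensional weights.

```python
import math

def bspline(order, u):
    """Cardinal B-spline M_order(u), nonzero on 0 < u < order."""
    if order == 2:
        return max(0.0, 1.0 - abs(u - 1.0))
    return (u * bspline(order - 1, u)
            + (order - u) * bspline(order - 1, u - 1.0)) / (order - 1)

def spread_charge_1d(charge, x, grid_size, box_length, order=4):
    """Spread one point charge onto a periodic 1D grid with B-spline weights."""
    grid = [0.0] * grid_size
    scaled = (x % box_length) / box_length * grid_size   # position in grid units
    base = math.floor(scaled)
    frac = scaled - base
    for k in range(order):
        weight = bspline(order, frac + k)                # weight of grid point base - k
        grid[(base - k) % grid_size] += charge * weight
    return grid
```

The weights sum to one, so the total charge on the grid equals the charge of the atom, and each atom touches only `order` grid points per dimension, which is why raising the interpolation order increases the arithmetic work per atom.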

2. Implementation of particle–mesh Ewald

To enhance the performance of PME, NAMD tabulates quantities used in the PME energy calculation and interpolates from these quantities, thereby avoiding the calculation of expensive transcendental functions, erfc and exp, during the simulation. The exact details, however, depend on the computer. In the CPU version of NAMD, cubic Hermite interpolation results in an energy function with continuous first derivatives, and its gradient is used to calculate forces. Conversely, the GPU version utilizes a linear interpolation of the force and an additional linear interpolation of the energy when needed for output. Having continuous forces is important for minimization and crucial for dynamics. Furthermore, having a force that is a gradient is equally crucial for Hamiltonian dynamics.
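A tabulated kernel with cubic Hermite interpolation can be sketched as follows. The table layout, the uniform spacing in r, and all names are assumptions made for this illustration; the point is only that the interpolated energy has a continuous first derivative and that the force is obtained as the exact derivative of the interpolant.

```python
import math

def screened_energy(r, beta):
    """Short-range Ewald kernel erfc(beta r) / r."""
    return math.erfc(beta * r) / r

def screened_slope(r, beta):
    """Analytical derivative of erfc(beta r) / r with respect to r."""
    gauss = 2.0 * beta / math.sqrt(math.pi) * math.exp(-(beta * r) ** 2)
    return -math.erfc(beta * r) / (r * r) - gauss / r

def build_table(func, dfunc, r_min, r_max, n_points):
    """Tabulate a function and its derivative on a uniform grid."""
    h = (r_max - r_min) / (n_points - 1)
    nodes = [r_min + i * h for i in range(n_points)]
    return {"r_min": r_min, "h": h,
            "f": [func(r) for r in nodes],
            "df": [dfunc(r) for r in nodes]}

def hermite_eval(table, r):
    """Return the interpolated value and the derivative of the interpolant at r."""
    h = table["h"]
    i = min(int((r - table["r_min"]) / h), len(table["f"]) - 2)
    t = (r - (table["r_min"] + i * h)) / h
    f0, f1 = table["f"][i], table["f"][i + 1]
    d0, d1 = table["df"][i] * h, table["df"][i + 1] * h
    # Cubic Hermite basis functions and their derivatives with respect to t.
    value = ((2*t**3 - 3*t**2 + 1) * f0 + (t**3 - 2*t**2 + t) * d0
             + (-2*t**3 + 3*t**2) * f1 + (t**3 - t**2) * d1)
    slope = ((6*t**2 - 6*t) * f0 + (3*t**2 - 4*t + 1) * d0
             + (-6*t**2 + 6*t) * f1 + (3*t**2 - 2*t) * d1) / h
    return value, slope
```

Because the slope returned by `hermite_eval` is the derivative of the very function used for the energy, the force is the gradient of the interpolated energy by construction, which is the property emphasized above for Hamiltonian dynamics.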

3. Performance of particle–mesh Ewald

The FFTs calculated by PME pose a challenge to parallel scaling due to communication requirements. A pencil decomposition of the three-dimensional FFT, which divides the grid into one-dimensional columns, improves the scaling for larger assemblies of atoms over the slab decomposition into two-dimensional planes originally supported by earlier versions of NAMD, where the FFTW library is employed to calculate the constituent FFTs on CPU cores. GPUs can be used with PME to calculate the spreading of charge from atoms to grid points and the gathering of the force from grid points to atoms. This use of GPU acceleration can outperform the CPU version when one doubles the grid spacing and increases the order of interpolation from 4 to 8, which maintains the expected accuracy and simultaneously increases the arithmetic intensity of the work offloaded to the GPU while reducing the communication bandwidth by a factor of 8. There is also a version of PME for single-node MD simulations, which implements all kernels on the GPU and employs the NVIDIA cuFFT library for the FFT calculation. The performance of PME in the context of the adaptive, asynchronous programming model Charm++ is discussed in Sec. II.

4. Conservation of momentum

The best known fast methods for electrostatics are all based on a gridded approximation72 and, therefore, compute energies that are not translation-independent. Consequently, the total linear momentum is not expected to be conserved. NAMD, however, provides a simple device73 that conserves momentum without incurring energy drift of any significance (compare with Sec. IV of Ref. 71).

C. Multilevel summation method

For simulations that are not periodic or periodic in only two directions, the use of FFT is less efficient. Additionally, FFT does not parallelize very well for very large molecular objects simulated on a large array of processors. These drawbacks are overcome by the multilevel summation method (MSM), which generalizes the basic idea of PME by decomposing the Coulomb interaction kernel into two or more parts of increasing length scale. The intermediate parts are approximated on meshes/grids of increasing coarseness, and these intermediate computations are performed in real space rather than in reciprocal space, exploiting the finite range of the intermediate parts of the kernel. The long part, of infinite range, is on a grid coarse enough for the computation to be carried out efficiently by an FFT or even directly. In practice, instead of erf(βr)/r, a softened kernel is employed,74 which has the virtue of creating short-range and intermediate-range kernels that are exactly zero beyond a given cutoff. NAMD implements MSM based on the use of piecewise polynomial interpolation having continuous first derivatives.75
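The effect of a softened kernel can be illustrated with a two-level splitting. The polynomial used below is one possible softening, chosen here as an assumption because it matches 1/ρ and its first two derivatives at ρ = 1; the softening actually employed by NAMD may differ, and the function names are hypothetical.

```python
def softened_inverse(rho):
    """Smoothed 1/rho: an even polynomial for rho < 1 and exactly 1/rho beyond."""
    if rho >= 1.0:
        return 1.0 / rho
    rho2 = rho * rho
    return 15.0 / 8.0 - 5.0 / 4.0 * rho2 + 3.0 / 8.0 * rho2 * rho2

def msm_split(r, cutoff):
    """Split 1/r into a short-range part that vanishes beyond `cutoff`
    and a smooth, bounded long-range remainder."""
    if r >= cutoff:
        return 0.0, 1.0 / r            # the short-range part is exactly zero here
    long_part = softened_inverse(r / cutoff) / cutoff
    return 1.0 / r - long_part, long_part
```

Applying the same construction again with a cutoff twice as large would yield an intermediate-range kernel that vanishes beyond the larger cutoff, which is how additional levels of increasing length scale are obtained.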

D. Treatment of induced polarization

In addition to the simpler, pairwise additive force fields with fixed electric charges featured in the program, NAMD offers an extension that models induced electronic polarization using a classical Drude-oscillator approach.5,13 Potential functions that represent electrostatic interactions in terms of fixed effective partial charges are clearly limited by their ability to provide a realistic and accurate representation of both microscopic and thermodynamic properties, most notably when induced electronic polarization is expected to play a significant role. One approach to incorporate these effects is the classical oscillator model introduced by Drude,76 which addresses induction phenomena by introducing an auxiliary charged particle attached to each polarizable atom by means of a harmonic spring.13 One noteworthy advantage of this model is that it preserves the simple particle–particle Coulomb electrostatic interaction employed in pairwise additive force fields, allowing an implementation that is computationally simple and effective. The development and parametrization of the Drude force field over the last 15 years now includes water, ions, proteins, lipids, nucleic acids, and carbohydrates.77,78 To avoid the computationally prohibitive self-consistent field (SCF) procedure, which is normally required to treat induced polarization, an extended Lagrangian with a dual Langevin thermostat scheme applied to the Drude-nucleus pairs has been developed.5,13 This approach enables the efficient generation of classical trajectories that are near the SCF limit. To achieve SCF-like dynamics, it is critical that the cold thermostat act on the atom-Drude oscillator pairs rather than on the Drude particles directly.13 The implementation in NAMD is achieved by separating the dynamics of each atom-Drude pair with coordinates in terms of the global motion of the center of mass and the relative internal motion of the oscillator. 
This implementation has made possible the efficient simulation of very large molecular systems accounting for through-space induction phenomena.5
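The separation of each atom-Drude pair into center-of-mass and relative motion can be sketched as follows. This is an illustrative example with hypothetical function names and masses, not the NAMD implementation; in a dual-thermostat scheme, one thermostat would act on the center-of-mass velocity and a second, colder one on the relative velocity.

```python
def to_com_relative(m_atom, m_drude, v_atom, v_drude):
    """Transform pair velocities to center-of-mass and relative velocities."""
    total = m_atom + m_drude
    v_com = [(m_atom * a + m_drude * d) / total for a, d in zip(v_atom, v_drude)]
    v_rel = [d - a for a, d in zip(v_atom, v_drude)]
    return v_com, v_rel

def from_com_relative(m_atom, m_drude, v_com, v_rel):
    """Inverse transformation back to the velocities of the two particles."""
    total = m_atom + m_drude
    v_atom = [c - m_drude / total * r for c, r in zip(v_com, v_rel)]
    v_drude = [c + m_atom / total * r for c, r in zip(v_com, v_rel)]
    return v_atom, v_drude

def kinetic_energies(m_atom, m_drude, v_com, v_rel):
    """Kinetic energy of the center of mass and of the internal oscillator."""
    total = m_atom + m_drude
    reduced = m_atom * m_drude / total
    ke_com = 0.5 * total * sum(c * c for c in v_com)
    ke_rel = 0.5 * reduced * sum(r * r for r in v_rel)
    return ke_com, ke_rel
```

The two kinetic energies add up to the total kinetic energy of the pair, so thermostatting them separately controls the physical temperature and the temperature of the oscillator independently.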

E. Generalized Born implicit solvation

NAMD features alternatives to an explicit description of the environment, chief among which is the generalized Born implicit solvent (GBIS) model.79 GBIS is a fast, albeit approximate, model for the calculation of electrostatic interactions within a solvent described as a dielectric continuum by means of the Poisson–Boltzmann equation. It allows large molecular objects to be simulated at a fraction of the cost of a model that would include explicit solvent molecules and is available in both the CPU and GPU versions of NAMD.47,80

VI. THE COLLECTIVE VARIABLES MODULE

The collective variables module, or Colvars, is a contributed software library, which supports enhanced-sampling methods in a space of reduced dimensionality.6 Since its introduction as part of NAMD version 2.7, Colvars has provided the computational platform for most of the enhanced-sampling methods listed in Sec. VII and other derived methods.81 It is written primarily in C++ and included in the official source code as well as precompiled NAMD builds.

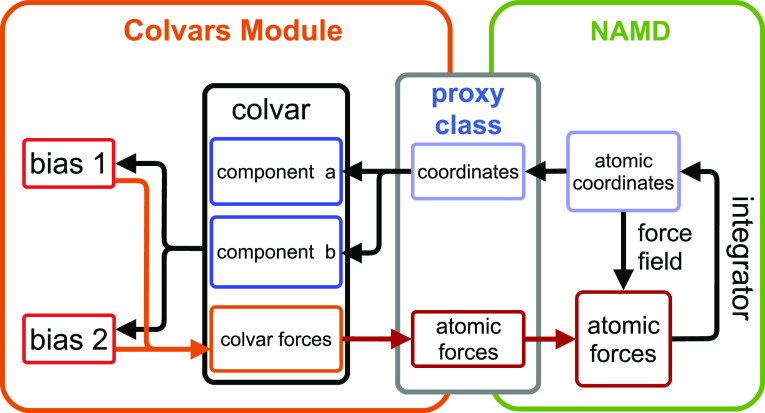

A. Principles of a biased simulation with Colvars

Simulations using methods implemented by Colvars require the end-user to choose and define two entities, namely, (i) at least one collective variable (CV) (colvar), which is a function of atomic coordinates, and (ii) a method that modifies the dynamics of the CV, i.e., a bias. There can be of course multiple variables or biases defined simultaneously. The Colvars module offers many choices of both variables and biasing methods, ranging from massively parallel compiled code to arbitrary functions chosen by the user at runtime; both are described hereafter (Fig. 9).

FIG. 9.

Graphical representation of a Colvars configuration.

B. Using VMD to prepare or analyze simulations

Setting up Colvars calculations with NAMD is now facilitated by the interactive Colvars dashboard in VMD, which provides helpers for preparing a configuration describing the CVs. The configuration can be saved to a file that is directly readable by NAMD for biased simulations. The Colvars dashboard also facilitates the analysis of NAMD simulations, regardless of whether these were carried out with Colvars. It is distributed with VMD, starting with version 1.9.4.

C. Parallel performance

When NAMD is run in parallel on multiple nodes, only one instance of the Colvars module is run on the first, master node. This requires all-to-one communication of selected atomic coordinates, or their partial contributions to the CVs, and one-to-all communication of the biasing forces on the atoms. Updating the Colvars module is executed asynchronously alongside the force calculation by NAMD, resulting in efficient latency hiding.

Two types of parallelization schemes are implemented in Colvars/NAMD, addressing, respectively, cases of CPU and communication bottlenecks. On multicore platforms that support shared-memory parallelism, calculation of multiple CV components and biases is distributed over all cores of the first node. This is helpful when several computationally expensive variables or components are defined. Separately, when CVs depend on atomic coordinates only via the centers of mass of groups of atoms, the centers of mass are calculated in parallel using NAMD functions. There, a single biasing force is computed for each group and is distributed onto the constituent atoms only within each node that carries them. This arrangement achieves parallelism without communicating the entire molecular system over all nodes, preserving the capability of NAMD to treat very large computational assays. Similarly, biasing forces can also be applied indirectly to atoms via volumetric maps, as will be detailed hereafter.
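The center-of-mass scheme can be pictured with a short sketch in which each node contributes only two small partial sums, and the resulting group force is spread locally with mass weights. The function names and the data layout are hypothetical and are meant only to illustrate the communication pattern, not the actual Colvars or NAMD code.

```python
def partial_sums(masses, positions):
    """Per-node contribution: total mass and mass-weighted position sum."""
    total = sum(masses)
    weighted = [sum(m * p[k] for m, p in zip(masses, positions)) for k in range(3)]
    return total, weighted

def center_of_mass(partials):
    """Combine the per-node partial sums into the group mass and center of mass."""
    total = sum(t for t, _ in partials)
    com = [sum(w[k] for _, w in partials) / total for k in range(3)]
    return total, com

def distribute_force(masses, total_mass, group_force):
    """Spread a force acting on the center of mass onto the local atoms."""
    return [[m / total_mass * f for f in group_force] for m in masses]
```

Only four numbers per group travel from each node and only one force vector travels back, regardless of how many atoms the group contains.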

D. Scripting interface

Colvars takes advantage of the NAMD embedded scripting interpreters to enable custom extensions without the need to write and compile C++ codes. These custom extensions may take two forms: (i) directives in the main NAMD script and (ii) callbacks to user-defined functions. Scripting directives are typically employed to control simulation workflows based on CVs, forming the basis for the implementation of numerical schemes such as the string method with swarms of trajectories82 or the adaptive multilevel splitting algorithm.83,84 The primary scripting language of Colvars in NAMD is Tcl. Colvars can also be called from a Python script indirectly through the Tcl interface. In response to a growing demand from a broad community of users, Python objects will be made available in the near future, in conjunction with improved Python support by recent NAMD builds.

Complementary to workflow control, user-defined functions, i.e., callbacks, can also be used by the Colvars library. Such scripted functions provide the framework for the implementation of custom-tailored CVs and biasing algorithms without the necessity to recompile NAMD. Compared with tclForces and tclBC, Colvars-based callbacks carry the advantage that custom variables and biases are typically calculated in low-dimensional spaces. They have, therefore, minimal performance overhead because atom-level processing is done by C++ functions of Colvars and NAMD. A simpler but slightly less flexible way to define variables as custom mathematical functions of existing components is provided using the lightweight expression parsing library Lepton written by Eastman.85 Using Lepton requires no knowledge of programming at all as new variables can be expressed in conventional mathematical notation. Gradients of such custom-tailored variables are calculated transparently using automatic differentiation. The example configuration below defines a CV based on two components, namely, the scalar distance, d, between two atom groups and the vector distance, r, between the same groups. The value of the CV is the unit vector joining the two groups, the individual scalar components of which are calculated by three custom functions.

    colvar {
      name myUnitVectorColvar
      # Uses two predefined basis functions,
      # scalar and vector distance
      distance {
        name d
        # This quantity is referred to by its
        # name 'd' in custom functions
        group1 { atomNumbers 1 2 3 4 }
        group2 { atomNumbers 5 6 7 8 }
      }
      distanceVec {
        name r
        # Scalar components of the vector r are accessed
        # as 'r1', 'r2', and 'r3'
        group1 { atomNumbers 1 2 3 4 }
        group2 { atomNumbers 5 6 7 8 }
      }
      # Together, the 3 instances of customFunction
      # define a 3-vector colvar
      customFunction r1 / d
      customFunction r2 / d
      customFunction r3 / d
    }
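To make the arithmetic behind the configuration above concrete, the following Python sketch evaluates the same unit vector outside NAMD. It is only an illustration: the function names are hypothetical, and the group centers are taken as unweighted geometric centers for simplicity, which is an assumption and may differ from the weighting applied by Colvars.

```python
import math

def center(coords):
    """Unweighted geometric center of a list of (x, y, z) tuples (assumption)."""
    n = len(coords)
    return tuple(sum(c[k] for c in coords) / n for k in range(3))

def unit_vector_cv(group1, group2):
    """Return (r1/d, r2/d, r3/d) for the vector joining two group centers."""
    c1, c2 = center(group1), center(group2)
    r = tuple(b - a for a, b in zip(c1, c2))  # vector distance components r1, r2, r3
    d = math.sqrt(sum(x * x for x in r))      # scalar distance d
    if d == 0.0:
        raise ValueError("group centers coincide; the unit vector is undefined")
    return tuple(x / d for x in r)

# Two hypothetical four-atom groups whose centers are 5 length units apart
group1 = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
group2 = [(3.0, 4.0, 0.0), (4.0, 4.0, 0.0), (3.0, 5.0, 0.0), (4.0, 5.0, 0.0)]
cv = unit_vector_cv(group1, group2)
```

In this toy geometry, the centers are separated by the vector (3, 4, 0), so the three components of the CV are 0.6, 0.8, and 0.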

E. Projecting atomic forces on collective variables

A key feature of Colvars is the projection of total atomic forces onto specific CVs, forming the basis of the classic thermodynamic integration (TI) free-energy estimator.86 This estimator is typically used in the adaptive biasing force (ABF) method.87–89 Starting with NAMD 2.13, it can also be used in combination with other methods, including steered MD (SMD),90 umbrella sampling,91 and metadynamics92 (see Sec. VII).6
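As an illustration of the TI estimator in one dimension, the Python sketch below accumulates projected total forces in bins along a CV, takes the free-energy gradient as minus the average force, and integrates it by the trapezoidal rule. The function name, the binning scheme, and the harmonic test data are assumptions made for this example and do not reproduce the Colvars implementation.

```python
import math

def ti_free_energy(samples, xi_min, xi_max, n_bins):
    """Return (bin_centers, free_energy) from (xi, force) samples.

    force is the total force projected on xi, so dA/dxi = -<force>.
    """
    width = (xi_max - xi_min) / n_bins
    counts = [0] * n_bins
    force_sums = [0.0] * n_bins
    for xi, force in samples:
        b = math.floor((xi - xi_min) / width)
        if 0 <= b < n_bins:
            counts[b] += 1
            force_sums[b] += force
    # Unsampled bins are given a zero gradient in this sketch
    gradient = [-(fs / c) if c else 0.0 for fs, c in zip(force_sums, counts)]
    centers = [xi_min + (b + 0.5) * width for b in range(n_bins)]
    free_energy = [0.0]
    for b in range(1, n_bins):
        step = 0.5 * (gradient[b - 1] + gradient[b]) * width
        free_energy.append(free_energy[-1] + step)
    return centers, free_energy

# Hypothetical noise-free data: harmonic force F = -k * xi
k = 10.0
samples = [(x, -k * x) for x in (-0.99 + 0.02 * i for i in range(100))]
centers, free_energy = ti_free_energy(samples, -1.0, 1.0, 20)
```

For these synthetic samples, the recovered profile follows k ξ²/2 up to the additive constant fixed by the first bin.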

Because the projection of total forces requires the fulfillment of certain mathematical conditions,88,93 the TI estimator cannot be used directly in some cases. However, variables can still be coupled to an extended Lagrangian system, the forces of which then approximate the true total forces of the variables for estimating the free energy, as is done, for instance, in the extended-system ABF algorithm,94 implemented in Colvars.95 The free energy can then be recovered from unbiased estimators.95,96 In two- and three-dimensional cases, the free energy is now automatically computed based on estimates of its gradients. To work around the problem that the inexact numerical estimate of the gradients is not generally the gradient of a scalar field,97 the free-energy landscape is obtained as the solution of a Poisson equation, stating that the Laplacian of the free energy is equal to the divergence of the gradient estimator, subject to appropriate boundary conditions (periodic or non-periodic depending on the CVs in use).
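The Poisson-based integration can be sketched on a small periodic two-dimensional grid. The example below is a minimal illustration under stated assumptions: gradients are stored as forward differences, the divergence uses backward differences, and the linear system is relaxed by plain Gauss-Seidel sweeps. The solver choice, grid layout, and all names are hypothetical and are not taken from Colvars. A rotational component is deliberately added to the gradient estimate to mimic a field that is not the gradient of any scalar function.

```python
import math

def integrate_gradient_periodic(gx, gy, h, sweeps=500):
    """Solve laplacian(A) = div(G) on a periodic n x n grid by Gauss-Seidel.

    gx[i][j] estimates (A[i+1][j] - A[i][j]) / h and gy[i][j] estimates
    (A[i][j+1] - A[i][j]) / h. The result is shifted so that min(A) = 0.
    """
    n = len(gx)
    # Backward-difference divergence (negative indices wrap around)
    div = [[(gx[i][j] - gx[i - 1][j] + gy[i][j] - gy[i][j - 1]) / h
            for j in range(n)] for i in range(n)]
    a = [[0.0] * n for _ in range(n)]
    for _ in range(sweeps):
        for i in range(n):
            for j in range(n):
                neighbors = (a[(i + 1) % n][j] + a[i - 1][j]
                             + a[i][(j + 1) % n] + a[i][j - 1])
                a[i][j] = (neighbors - h * h * div[i][j]) / 4.0
    lowest = min(min(row) for row in a)
    return [[v - lowest for v in row] for row in a]

n = 16
h = 2.0 * math.pi / n
# Hypothetical free-energy surface and a stream function for the rotational part
a_true = [[math.cos(i * h) + 0.5 * math.sin(j * h) for j in range(n)]
          for i in range(n)]
psi = [[0.3 * math.sin(i * h) * math.cos(j * h) for j in range(n)]
       for i in range(n)]
# Gradient estimate = true gradient + divergence-free (non-conservative) field
gx = [[(a_true[(i + 1) % n][j] - a_true[i][j]) / h
       + (psi[i][j] - psi[i][j - 1]) / h for j in range(n)] for i in range(n)]
gy = [[(a_true[i][(j + 1) % n] - a_true[i][j]) / h
       - (psi[i][j] - psi[i - 1][j]) / h for j in range(n)] for i in range(n)]
a_rec = integrate_gradient_periodic(gx, gy, h)
```

Because only the divergence of the estimator enters the equation, the rotational component added here drops out, and the surface is recovered up to an additive constant. A direct path integration of the same field would instead depend on the path chosen.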

The collection of total forces requires that Colvars computations are carried out at the end of the force calculation by NAMD, which would introduce an additional latency. To circumvent this shortcoming, total forces computed at the previous time step, rather than the current one, are utilized by Colvars. This approach is specific to NAMD and is not currently used by Colvars in other MD engines. The one-step lag is inconsequential for methods that rely on force averages over many time steps. This detail must, however, be kept in mind when the time series of total forces is important.

A second noteworthy detail is that the total forces computed by NAMD include contributions both from the force field and any externally applied forces. Colvars automatically subtracts its own biasing forces under the most typical scenarios, when the TI free-energy estimator is employed by a single enhanced-sampling method, e.g., ABF. Otherwise, it should be noted that any external restraints will be accounted for by the TI free-energy estimator,86,93 thereby potentially introducing a bias.

F. New and notable coordinates

The basis set of coordinates provided by the C++ Colvars library has been extended with spherical polar coordinates, employed, for instance,98 in a restraint scheme for standard binding free-energy calculations,99 dipole-moment magnitude and direction,100 and geometric path-based variables.101 Independently, path-collective variables102 are available in Tcl as scripted functions. The variable that calculates coordination numbers (coordNum) has quadratic complexity in the number of atoms involved, with a potentially high computational cost. It can now be computed at a tunable level of approximation using pair lists, drastically improving its performance.
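The trade-off between cost and accuracy offered by pair lists can be illustrated with a short Python sketch. The rational switching function and its exponents (6 and 12) are assumptions chosen for this example, as are the function names and the tolerance-based selection rule; the source does not specify the functional form used by coordNum.

```python
import math

def switching(r, r0, n=6, m=12):
    """Assumed switching function (1 - (r/r0)^n) / (1 - (r/r0)^m)."""
    x = r / r0
    if abs(x - 1.0) < 1e-12:
        return n / m  # limit of the ratio at r = r0
    return (1.0 - x ** n) / (1.0 - x ** m)

def coordnum_exact(g1, g2, r0):
    """Sum over all pairs: cost grows as len(g1) * len(g2)."""
    return sum(switching(math.dist(a, b), r0) for a in g1 for b in g2)

def build_pair_list(g1, g2, r0, tolerance):
    """Keep only pairs whose current contribution reaches the tolerance."""
    return [(i, j) for i, a in enumerate(g1) for j, b in enumerate(g2)
            if switching(math.dist(a, b), r0) >= tolerance]

def coordnum_pairlist(g1, g2, r0, pairs):
    """Approximate sum restricted to the stored pair list."""
    return sum(switching(math.dist(g1[i], g2[j]), r0) for i, j in pairs)

# Hypothetical coordinates: 5 x 10 = 50 candidate pairs
g1 = [(float(i), 0.0, 0.0) for i in range(5)]
g2 = [(2.0 * j, 1.0, 0.0) for j in range(10)]
r0, tolerance = 2.0, 1e-3
pairs = build_pair_list(g1, g2, r0, tolerance)
exact = coordnum_exact(g1, g2, r0)
approx = coordnum_pairlist(g1, g2, r0, pairs)
```

In a simulation, the list would be rebuilt only every few steps, so the error depends on both the tolerance and the rebuild frequency. In this static example, the error is bounded by the tolerance times the number of discarded pairs.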

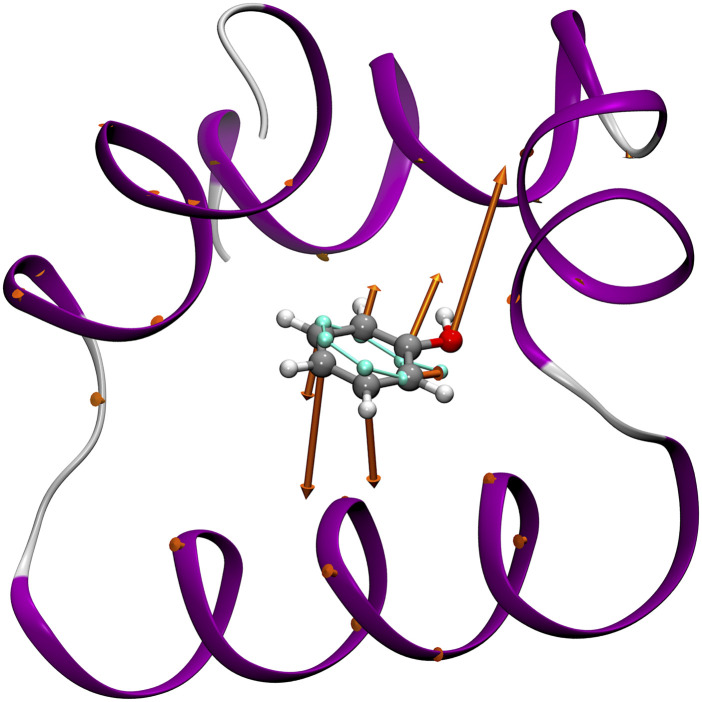

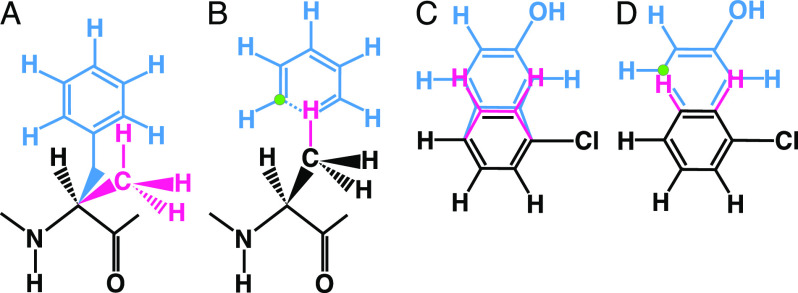

Any CV implemented by Colvars can be calculated in a moving frame of reference tied to a set of atoms in the molecular system. This way, any external degree of freedom can be turned into an internal degree of freedom of the relevant subsystem (the reader is referred to Ref. 6 for further details). This approach facilitates the description of the relative motion of molecular objects and is the foundation of the Distance to Bound Configuration (DBC) coordinate for absolute binding free-energy calculations, which measures the deviation of translational, rotational, and conformational degrees of freedom of the ligand (see Fig. 10).81

FIG. 10.

Gradients of the Distance to Bound Configuration (DBC) coordinate for a phenol molecule bound to T4 lysozyme. The reference pose of phenol heavy atoms is shown in light cyan. The arrows are proportional to the derivatives of the CV with respect to atomic Cartesian coordinates. The small gradient contributions on protein Cα carbons illustrate the purely roto-translational counter-forces exerted on the receptor when biasing forces are applied to a DBC coordinate.

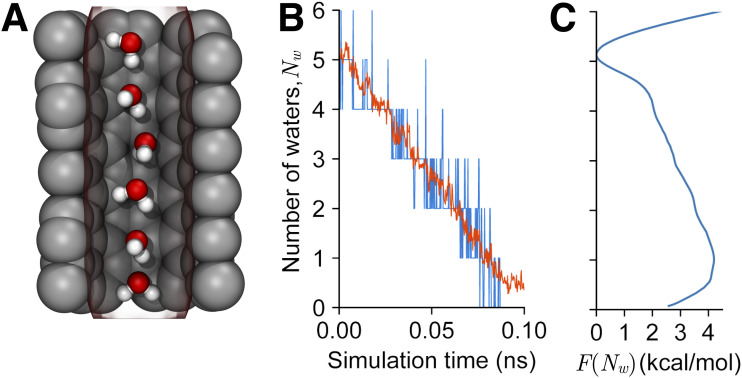

Certain phenomena, such as protein–solvent interaction or membrane dynamics, require dynamical selections of the relevant atoms. Toward this end, volumetric maps (see Sec. XIII) may be used to define CVs that, for instance, “count” the number of atoms within an arbitrary region of space. The recently introduced Multi-Map variable103 utilizes this approach to study the deformation of lipid membranes over biologically relevant scales, as well as solvent-density fluctuations in confined cavities (see Fig. 11). This functionality will also serve as the basis for new sampling methods based on electron density maps (see Sec. XIV).
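A map-based counting variable of this kind can be sketched as the sum, over all atoms, of a volumetric map interpolated at each atomic position. The Python example below assumes a cubic grid with uniform spacing, trilinear interpolation, and a value of zero outside the map. These choices and the function names are illustrative assumptions, not a description of the Multi-Map implementation.

```python
import math

def trilinear(grid, origin, spacing, pos):
    """Interpolate grid[ix][iy][iz] at a Cartesian position; 0 outside the map."""
    shape = (len(grid), len(grid[0]), len(grid[0][0]))
    cell, frac = [], []
    for p, o, n in zip(pos, origin, shape):
        u = (p - o) / spacing
        i = math.floor(u)
        if i < 0 or i >= n - 1:
            return 0.0
        cell.append(i)
        frac.append(u - i)
    value = 0.0
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                weight = 1.0
                for f, step in zip(frac, (dx, dy, dz)):
                    weight *= f if step else 1.0 - f
                value += weight * grid[cell[0] + dx][cell[1] + dy][cell[2] + dz]
    return value

def map_count(grid, origin, spacing, positions):
    """Continuous 'number of atoms' inside the region encoded by the map."""
    return sum(trilinear(grid, origin, spacing, p) for p in positions)

# Hypothetical 4 x 4 x 4 map: 1 on the inner 2 x 2 x 2 nodes, 0 on the outer shell
grid = [[[1.0 if all(k in (1, 2) for k in (ix, iy, iz)) else 0.0
          for iz in range(4)] for iy in range(4)] for ix in range(4)]
atoms = [(1.5, 1.5, 1.5), (0.5, 1.5, 1.5), (10.0, 10.0, 10.0)]
n_inside = map_count(grid, (0.0, 0.0, 0.0), 1.0, atoms)
```

The first atom sits fully inside the region, the second lies halfway across its boundary, and the third is outside the map, so the variable evaluates to 1.5. Because the interpolated map is continuous in the atomic coordinates, the variable yields well-defined forces wherever the interpolant is differentiable, which is what allows it to be biased.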

FIG. 11.

CVs for solvent reorganization based on volumetric maps. Snapshot of a hydrophobic cavity containing a varying number of water molecules (a). A volumetric map (red transparent contour) is used to define a continuous variable measuring the number of molecules in the cavity. Trajectory of a short SMD simulation on this variable (red), compared to the number of molecules counted with VMD (blue) (b). PMF of the same variable, computed over ∼400 ns. The use of gridForces maps (see Sec. XIII) in Colvars allows the development of methods for enhanced sampling of fully dynamic aggregates, such as water densities and lipid membranes103 (c).

G. New and notable biasing methods

In addition to the biasing methods originally described in the Colvars reference,6 other notable methods have been introduced more recently, particularly for incorporating experimental constraints into the simulation. Leveraging tools added to NAMD for other replica-exchange algorithms (see Sec. X), the multiple-walker (MW) version of ABF104 has been introduced for free-energy calculations encumbered by hidden barriers (see Sec. VII).105 Two new methods directly target a given probability distribution, either via harmonic restraints on the probability distribution computed over multiple copies of certain atoms106 or by the more general ensemble-biased metadynamics method.107 Alternatively, experimental restraints may be enforced following the maximum-entropy principle as a constant-force, linear restraint.108 The magnitude of the force can be learned automatically within the simulation, such as in the adaptive linear bias (ALB) method109 and in the restrained-average dynamics (RAD) method,110 which further reduces nonequilibrium effects by incorporating the experimental uncertainty in the biasing forces.

VII. ENHANCED SAMPLING METHODS

NAMD provides a large set of methods and algorithms to enhance, boost, and accelerate the natural molecular motions during MD simulations. Depending upon the context and implementation, several of these methods may be used to enhance conformational sampling while remaining consistent with a Boltzmann equilibrium distribution. A broad set of approaches, referred to as Hamiltonian tempering,111 aim at enhancing configurational sampling via a modification of the underlying potential energy function of the system. Those include accelerated MD112,113 (aMD) and its Gaussian variant114 (GaMD), which, as will be detailed in Sec. VIII, attempt to parametrically “lift” the energy floor of the potential function to make the energy wells shallower without perturbing the energy barriers. Another method is solute tempering (REST2),115 which aims at enhancing sampling by scaling the solute–environment interaction energy to lower the barriers that separate conformational states. In effect, Hamiltonian tempering methods smooth the potential energy surface in order to enhance sampling. They can generate Boltzmann-distributed configurations either via a post-hoc reweighting analysis or by running the simulation within a Hamiltonian tempering replica-exchange scheme (see Sec. X).
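The post-hoc reweighting step can be illustrated with a generic exponential estimator. The Python sketch below assumes that, for each saved frame, the difference between the modified and the original potential energy is available in the same unit as kT. The function name and the two-frame data set are hypothetical.

```python
import math

def reweighted_average(observable, boost, kT):
    """Boltzmann-reweighted mean of an observable sampled on a modified potential.

    boost[i] = V_modified - V_original for frame i, in the same unit as kT.
    Each frame is weighted by exp(boost[i] / kT); the largest exponent is
    subtracted first to avoid overflow.
    """
    shift = max(boost)
    weights = [math.exp((dv - shift) / kT) for dv in boost]
    total = sum(weights)
    return sum(w * o for w, o in zip(weights, observable)) / total

# Hypothetical two-frame example: the second frame carries three times the weight
kT = 0.6
boost = [0.0, kT * math.log(3.0)]
observable = [0.0, 1.0]
average = reweighted_average(observable, boost, kT)
```

Here the unweighted mean of the observable is 0.5, whereas the reweighted mean is 0.75. When the boost energies span many kT, a few frames dominate the sum and the estimate becomes noisy, which is one motivation for the replica-exchange alternative.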

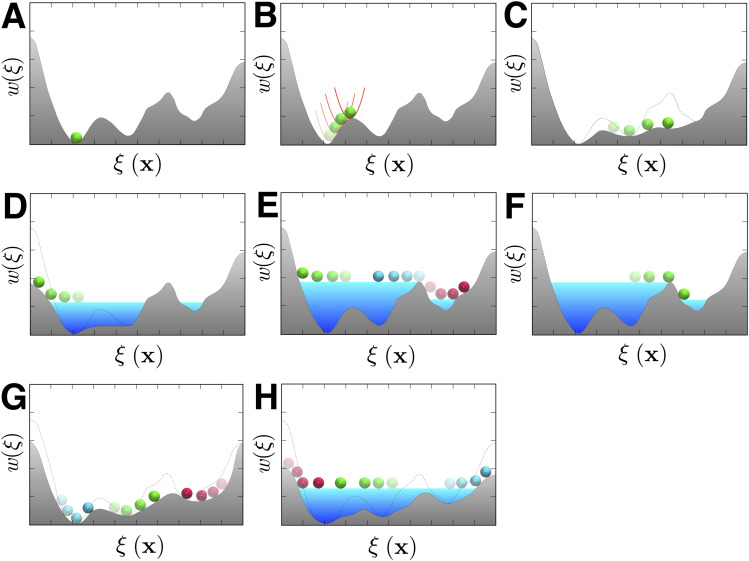

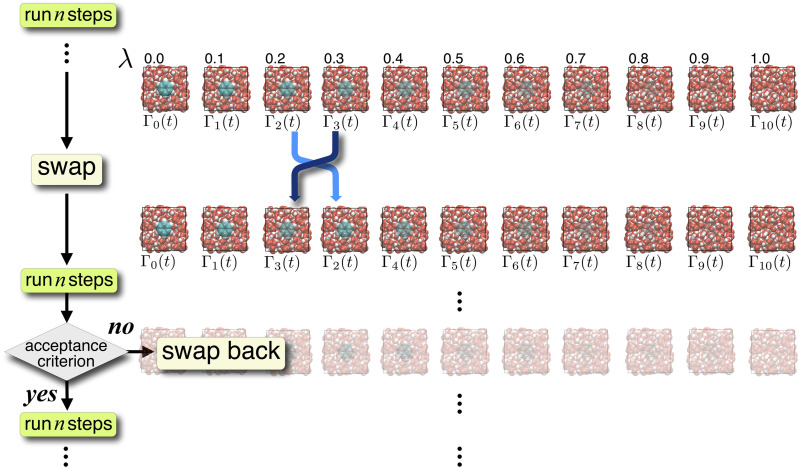

Studies of complex conformational transitions occurring on long timescales often proceed by identifying a geometric transformation associated with a general-extent parameter,116 ξ(x), often referred to as the reaction-coordinate model117 and formed by collective variables.88 Several enhanced-sampling algorithms16,118,119 aimed at encouraging the exploration of relevant regions of configurational space along such a user-chosen reaction-coordinate model are available in NAMD. One of the most direct choices to boost the motion of a system along this reaction-coordinate model consists in applying a time-dependent non-equilibrium perturbation via SMD.90 In principle, such non-equilibrium SMD trajectories can be used to determine the equilibrium free energy via post-hoc analysis based on the Jarzynski identity,120 although reaching convergence may be challenging in practice. By far, the most widely used approaches to map the free-energy landscape along the reaction-coordinate model of complex biomolecular systems rely on configurational sampling in the presence of some biasing potential, following the general statistical technique of importance sampling,16,118,119 which seeks to estimate a particular distribution by sampling from a different distribution. The Colvars module,6 described in Sec. VI, serves as a repository for these importance-sampling approaches, as well as a toolkit for the implementation of new numerical schemes. Among the widely used algorithms associated with geometric transformations that are available in Colvars are umbrella sampling91,121 (US), metadynamics92,122,123 (MtD), well-tempered metadynamics124 (WT-MtD), and ABF87,89 and its different variants95,96,125,126 (see Fig. 12).
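The post-hoc analysis based on the Jarzynski identity amounts to an exponential average of the work performed in repeated SMD pulls, ΔA = −kT ln⟨exp(−W/kT)⟩. The Python sketch below is a minimal illustration with hypothetical work values; the function name is not part of NAMD.

```python
import math

def jarzynski_free_energy(work, kT):
    """Free-energy difference from nonequilibrium work values.

    Implements dA = -kT * ln( mean( exp(-W / kT) ) ), with the smallest work
    value factored out of the exponentials for numerical stability.
    """
    w_min = min(work)
    mean_exp = sum(math.exp(-(w - w_min) / kT) for w in work) / len(work)
    return w_min - kT * math.log(mean_exp)

# Hypothetical work values (same energy unit as kT) from three SMD pulls
kT = 0.6
work = [1.0, 2.0, 4.0]
delta_a = jarzynski_free_energy(work, kT)
mean_work = sum(work) / len(work)
```

The estimate never exceeds the average work and is dominated by the rare low-work trajectories, which is why convergence can be difficult to reach when the dissipated work is large compared with kT.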

FIG. 12.

Sampling of a rugged free-energy landscape. Boltzmann sampling favors low-energy regions (a). Free-energy barriers can be overcome by depositing harmonic potentials, as is done in US (b); by applying a time-dependent bias that yields a Hamiltonian bereft of an average force along ξ(x), as is done in ABF, or its extended-Lagrangian variant, eABF (c); or by flooding valleys by means of Gaussian potentials, or hills, as is done in MtD (d). The combination of MtD and eABF, meta-eABF, concurrently shaves free-energy barriers and floods valleys (e). To enhance ergodic sampling, a multiple-walker extension of MtD (f), ABF or eABF (g), and meta-eABF (h) has been implemented.

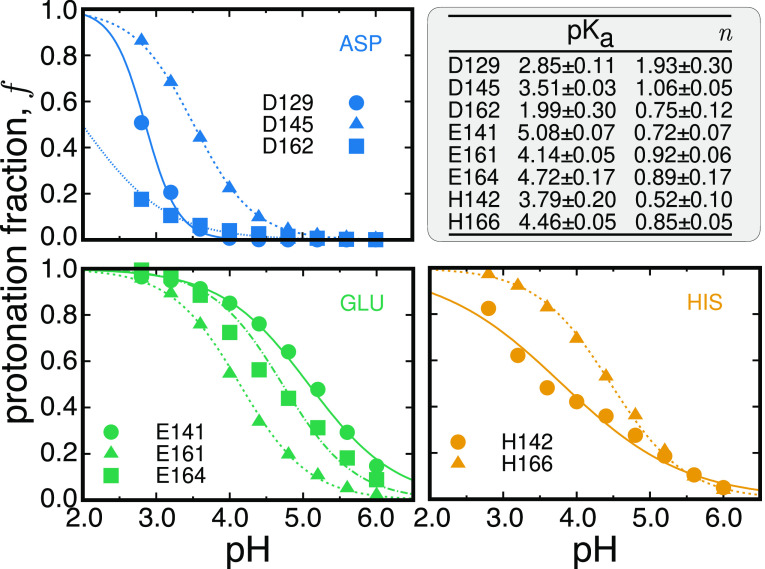

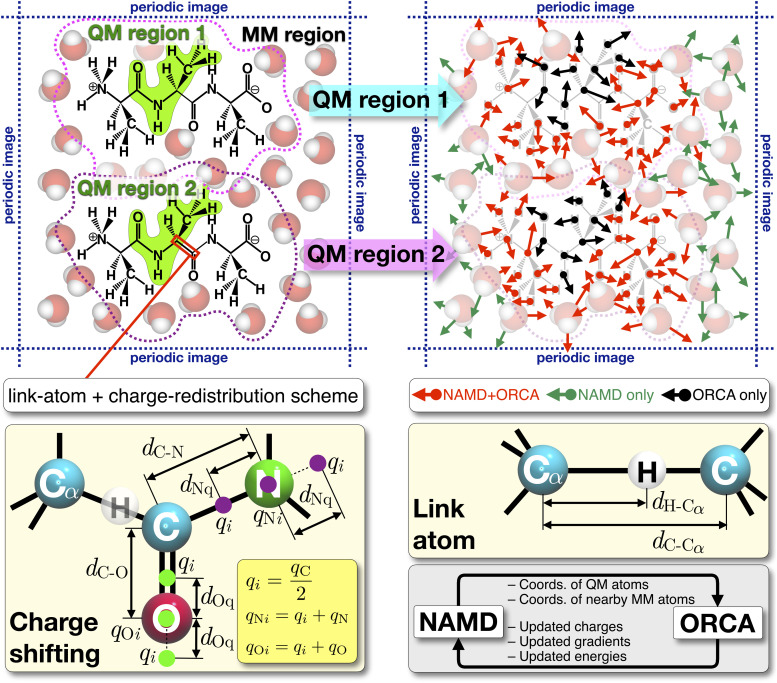

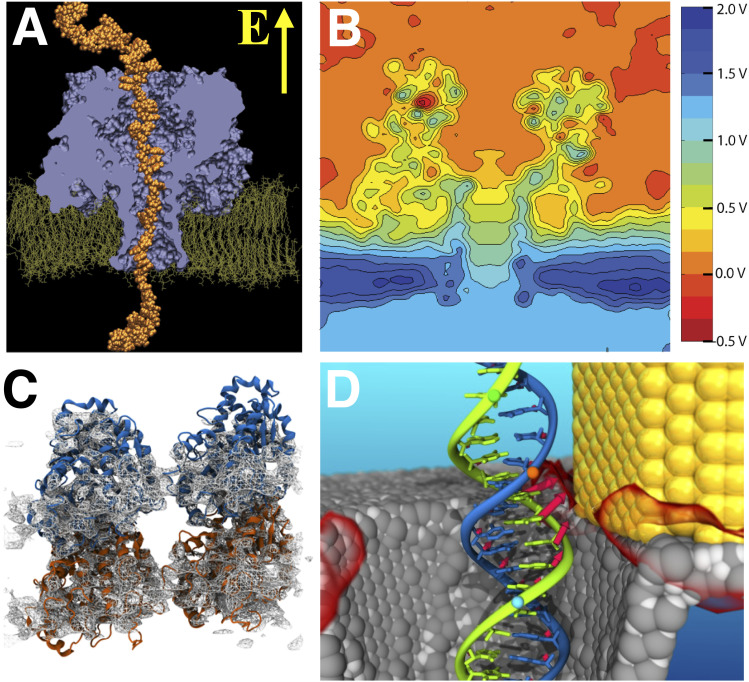

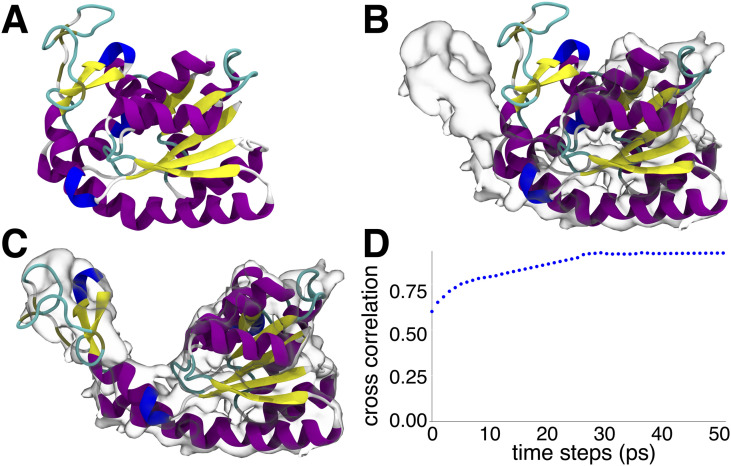

Importance sampling approaches such as US, MtD, and ABF, which promote configurational exploration by means of a bias along a chosen direction ξ(x), remain plagued by slow degrees of freedom in directions orthogonal to ξ(x).127 For instance, misrepresentation of the true conformational transformation associated with the molecular transition in terms of a naive reaction-coordinate model, ξ(x), consisting of a single collective variable, may often result in severe sampling nonuniformity.118,119 While it is never possible to formally guarantee a complete ergodic sampling, multiple-walker (MW) strategies, e.g., MW-MtD128 or MW-ABF,104,105 are available to address this issue. A common denominator is the combination of information accrued by the different walkers, namely, the Gaussian-potential weights and widths in MW-MtD, and the free-energy gradients in MW-ABF. Those algorithms, directly embedded in Colvars,6 can be brought to a higher level of sophistication by means of Tcl scripting. For instance, Darwinian selection rules clone good walkers that cover large stretches of ξ(x) while eliminating the less efficient, kinetically trapped ones.105 A number of replica-exchange strategies built on the powerful multiple-copy algorithm129 (MCA) infrastructure of NAMD may be used (see Sec. X). These strategies include multi-canonical temperature and Hamiltonian tempering replica-exchange MD111,130–132 (REMD) and bias-exchange window-swapping umbrella sampling133 (BEUS). Available MCA sampling algorithms may be extended via the Tcl scripting interface.
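The pooling of free-energy gradients among walkers can be sketched as a merge of per-bin accumulators. In the Python example below, each walker is assumed to hold, for every bin along ξ(x), a sample count and a sum of projected forces. The data layout and the function name are hypothetical and are not meant to mirror the way Colvars exchanges data between replicas.

```python
def merge_walkers(walkers):
    """Pool per-bin accumulators from several walkers.

    walkers is a list of (counts, force_sums) pairs, one per walker, with one
    entry per bin. Returns the pooled counts and the pooled free-energy
    gradient, -sum(force) / sum(count), or None for bins no walker visited.
    """
    n_bins = len(walkers[0][0])
    counts = [sum(w[0][b] for w in walkers) for b in range(n_bins)]
    force_sums = [sum(w[1][b] for w in walkers) for b in range(n_bins)]
    gradient = [-(f / c) if c else None for f, c in zip(force_sums, counts)]
    return counts, gradient

# Two hypothetical walkers over three bins
walker_a = ([2, 0, 0], [-4.0, 0.0, 0.0])
walker_b = ([2, 3, 0], [-2.0, 3.0, 0.0])
counts, gradient = merge_walkers([walker_a, walker_b])
```

The second walker supplies the only data for the middle bin, so the pooled estimate covers a wider stretch of ξ(x) than either walker alone, while the first bin benefits from the samples of both.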