Abstract

Introduction

Analyses of simulation performance taking place during postsimulation debriefings have been described as iterating through phases of unawareness of problems, identifying problems, explaining the problems and suggesting alternative strategies or solutions to manage the problems. However, little is known about the mechanisms that contribute to shifting from one such phase to the subsequent one. The aim was to study which kinds of facilitator interactions contribute to advancing the participants’ analyses during video-assisted postsimulation debriefing.

Methods

Successful facilitator behaviours were analysed by performing an Interaction-Analytic case study, a method for video analysis with roots in ethnography. Video data were collected from simulation courses involving medical and midwifery students facilitated by highly experienced facilitators (6–18 years, two paediatricians and one midwife) and analysed using the Transana software. A total of 110 successful facilitator interventions were observed in four video-assisted debriefings and 94 of these were included in the analysis. As a starting point, the participants’ discussions were first analysed using the phases of a previously described framework, uPEA (unawareness (u), problem identification (P), explanation (E) and alternative strategies/solutions (A)). Facilitator interventions immediately preceding each shift from one phase to the next were thereafter scrutinised in detail.

Results

Fifteen recurring facilitator behaviours preceding successful shifts to higher uPEA levels were identified. While there was some overlap, most of the identified facilitator interventions were observed during specific phases of the debriefings. The most salient facilitator interventions preceding shifts to subsequent uPEA levels were respectively: use of video recordings to draw attention to problems (P), questions about opinions and rationales to encourage explanations (E) and dramatising hypothetical scenarios to encourage alternative strategies (A).

Conclusions

This study contributes to the understanding of how certain facilitator behaviours can contribute to the participants’ analyses of simulation performance during specific phases of video-assisted debriefing.

Keywords: debriefing, Interaction Analysis, video-assisted feedback

Introduction

Feedback has been identified as one of the most important features of simulation-based medical education1 2 and debriefing is sometimes referred to as an ‘art’.3 Approaches to debriefing vary; for example, they may be instructor or team led, verbal or video assisted, structured or semistructured, judgemental or non-judgemental and differ in timing in relation to the simulation event.4–10 Video-assisted debriefing is growing in popularity11 and further studies exploring how discussion should be structured around the video have been called for.11–13 Different conversational techniques such as learner self-assessment, directive feedback and focused facilitation techniques including advocacy inquiry have been proposed. These techniques place more or less responsibility on learners versus instructors to analyse performance.14 Current learning theory has come to recognise learners as participants15 in professional practices or even as collaborative creators of knowledge,16 17 and Cheng and colleagues have recently presented strategies for promoting learner centredness in healthcare simulation debriefing.18 Dismukes and colleagues have previously argued for a ‘mind-shift’ among instructors to allow learners to develop skills in critically analysing their own performance.19 However, analysing performance is difficult and learners cannot be expected to develop such skills unless they know where and how improvements should be made.20 In addition, poor performers tend to be unaware of their lack of competence and overestimate their expertise.21 One crucial task for a facilitator is, therefore, to draw the participants’ attention to problematic and improvable behaviours that they may not have been aware of.

Few studies describe how debriefing contributes to reflection and learning.22 One study showed that facilitators mostly asked questions on lower (descriptive and evaluative) levels even though such questions were also able to promote reflection on a higher level.23 Another study, which investigated novice doctors’ reflection during debriefings, concluded that the relatively inexperienced participants only reached lower levels of reflection.24 The authors could not, however, identify any differences in the instructor interventions across different levels of participant reflection.

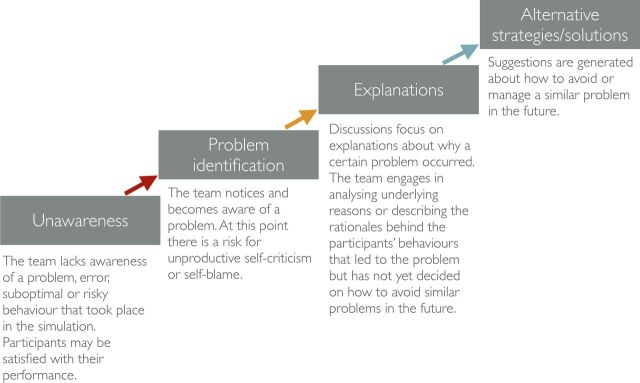

Most debriefing approaches break up the postsimulation debriefing into a series of phases3 25 26 but the number and types of phases vary.14 The participants’ perspective in debriefings can be described as progressing through certain phases (see figure 1).

Figure 1.

Previous studies indicate that analyses during debriefings progress through a number of phases.

The uPEA (unawareness (u), problem identification (P), explanation (E) and alternative strategies/solutions (A)) framework is a result of several studies on debriefings and the acronym refers to the trajectory of qualitatively different phases describing how participants analyse simulation performance during debriefings.27 28 The first phase (u) is characterised by unawareness of or overlooking a problem that took place in the simulation. Not infrequently, participants may be satisfied with their performance. The second phase (P), consequently, is noticing or becoming aware of a certain problem and at this point there is instead a risk for unproductive self-criticism or self-blame. If the analysis continues adequately, the focus will often move to explanations (E) about why the problem occurred. In the final phase, suggestions are generated about alternative strategies (A) that could prevent similar problems in the future.

Over the last couple of decades, the number of studies of detailed interpersonal communication in healthcare has been growing.29 There is an increasing interest in field research that draws on ethnomethodology but which also makes use of the opportunities provided by video recordings.30–32 The use of video recording enables a detailed scrutiny of how facilitators make use of talk and artefacts such as video recordings, whiteboards, algorithms and medical equipment.

Problem statement, aim and research questions

There is a lack of research about which facilitator interventions are productive in advancing and supporting participants’ analyses of their team’s performance and how they can be facilitated to develop such skills further. The details of when and how facilitators in practice should verbally interact33 or use the video recordings are rarely explicated in the literature. The aim was therefore to study which kinds of facilitator interactions contribute to advancing the participants’ analyses during video-assisted postsimulation debriefing. The following research question has been in focus: which kinds of facilitator interventions precede successful shifts to a subsequent uPEA phase?

Methods

Study setting

The study was carried out at the Clinical Simulation Center at South General Hospital (Södersjukhuset) in Stockholm, Sweden. The centre applies the CEPS debriefing approach, which has been in use since 1998. It is a widespread approach in Sweden for training interprofessional medical teams using high-fidelity simulation. It is based on theories about adult learning and is characterised by a thorough prebriefing with the aim of creating a safe learning environment. It also employs a distinct learner-centred teaching approach; the role of the instructor is not mainly to lecture or deliver feedback but to facilitate the teams in analysing their own performance. The term ‘facilitator’ will therefore be used in this paper. All facilitators have taken at least a 1-week course. Another characteristic feature is the use of video-assisted feedback; all simulations are video recorded and the entire recordings are analysed collaboratively by the participants and facilitators. Whenever either a participant or a facilitator so wishes, the video is paused for a discussion about the observation that was made. The debriefings highlight both adherence to medical guidelines and crisis resource management. The debriefing approach is well defined and described as non-judgemental and honest with a focus on both reinforcing good behaviours and closing performance gaps.

Each simulation course used as a data source in this study consisted of four full-scale simulations of neonatal resuscitations. The participating teams were interprofessional and consisted of six to nine medical students in their fifth year and nurses in their second year of training to become midwives. They had previous experience of simulation training but little or none of video-assisted debriefing. They were facilitated by one to three paediatricians or midwives whose experience of simulation-based education ranged from 6 to 18 years. The simulations were 8–16 min long and each was immediately followed by a 15–45 min debriefing.

Methodological approach

An Interaction Analysis was performed. This method has been described as a detailed analysis of video recordings to investigate human activities such as talk, non-verbal interaction and the use of artefacts and technologies in order to identify routine practices and problems, and the resources for solutions.31 32 34 It has its roots in ethnography and places a strong emphasis on grounding all assertions on verifiable observations. It enhances traditional field observations by making use of video recordings in order to overcome the gap between what people say they do and what they, in fact, do but also as a way of overcoming the bias of the researcher. Preconceived coding schemes are not used in Interaction Analysis and attempts are made to keep the observations free from predetermined analytic categories. A number of typical analytic foci, or simply ways of looking at the data, are however typically employed because they have turned out to be relevant again and again.34 The following are common examples: the structure of events, the temporal organisation of activity, turn-taking, participation structures, and artefacts and documents. Focus in this study was on the interactions between the facilitators and participants including talk, pauses, turn-taking, gestures, movement, postures, facial expressions and the use of various artefacts (video, whiteboards, medical equipment). In particular, the focus was on what facilitators said, to whom they turned their attention and gaze, their gestures and how they used the video recordings. This study can be described as an Interaction-Analytic case study examining effective facilitator behaviours in the specific educational setting described in the previous section. The video analysis tool Transana was used to transcribe the video recordings.35 The overall focus was identifying what the instructors said and did to advance the debriefings from one uPEA phase to the next one.
The uPEA framework27 28 was chosen as it focuses specifically on the learning and improvement aspect of debriefing and includes steps for becoming aware of performance gaps and explaining and discussing these constructively, in contrast to more general models about reflective levels. It was found particularly useful for a learner-centred18 debriefing setting because it has a focus on the learners’ perspective (their analyses) rather than on general phases of a debriefing controlled by the facilitators.

Data collection and analysis

Video data were collected from six half-day simulation courses making up a total of 9 hours and 37 min of debriefing time. All recordings were reviewed. One course was selected because it included a typical number of participants, displayed a breadth of discussed topics and was led by highly experienced facilitators (two paediatricians and one midwife); 1 hour and 7 min of debriefing from this course was analysed in detail, as described below.

The video material was first reviewed by KK and FL collaboratively during which preliminary categories of facilitator interventions were created and documented in a content log with a rough summary listing of events. This review was followed by a deductive-inductive data analysis.31 First, the uPEA categories were used to categorise the participants’ discussions during the debriefings. The categorisations were performed independently by KK and FL using the uPEA categories ‘Unawareness’ (when participants appeared unaware of a problem that the facilitator was trying to draw attention to), ‘Problem’ (discussions about a problem, difficulty, mistake, challenge, or suboptimal or risky behaviour), ‘Explanation’ (discussions about explanations of why a problem occurred, underlying reasons for it or rationales for the participants’ behaviours leading to it) and ‘Alternative strategy/solution’ (discussions about alternative strategies and solutions in order to manage or avoid the problem). The categorisation of the transcripts was reviewed collaboratively and analysed until an agreement about the categorisation was reached. In total, 107 uPEA phases were observed: unawareness (u, n=20), problems (P, n=31), explanations (E, n=18) and alternative strategies (A, n=38).

Second, each case of shifting to the following uPEA category was analysed. A total of 110 facilitator interventions preceding phase shifts to higher uPEA levels were observed. Interventions which occurred at least twice at a certain phase shift were included; those which were not repeated were excluded to lower the risk of including unintended, accidental behaviours. Fifty-seven facilitator interventions were observed immediately before Problem identification (6 excluded), 15 before Explanations (5 excluded) and 38 before Alternative strategies/solutions (5 excluded), resulting in a total of 94 facilitator interventions included in the analysis. In some cases, the participants shifted to a subsequent phase without any observable facilitator intervention; this happened eight times: three times for u->P, three times for P->E and twice for E->A.
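The inclusion arithmetic reported above can be checked with a few lines of Python. This is only an illustrative sketch: the counts are taken from the text, while the dictionary layout and variable names are assumptions made for the example and are not part of the study's analysis procedure.

```python
# Counts as reported in the text: (observed, excluded) per phase shift.
# The shift labels and the data layout are illustrative choices only.
reported = {
    "u->P": (57, 6),   # before Problem identification
    "P->E": (15, 5),   # before Explanations
    "E->A": (38, 5),   # before Alternative strategies/solutions
}

total_observed = sum(observed for observed, _ in reported.values())
total_excluded = sum(excluded for _, excluded in reported.values())
total_included = total_observed - total_excluded

# Interventions retained for analysis at each phase shift.
included_per_shift = {
    shift: observed - excluded
    for shift, (observed, excluded) in reported.items()
}

print(total_observed, total_excluded, total_included)
print(included_per_shift)
```

The totals reproduce the 110 observed and 94 included interventions stated in the text.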

The facilitator interventions were scrutinised in detail (talk, pauses, focus of attention, gaze, gestures, movements, use of tools, and so on) and the preliminary categories were refined and in some cases merged with others or discarded. As recommended by Jordan and Henderson, the analysis was done collaboratively as such an approach is powerful for neutralising preconceived notions on the part of researchers.34 To counter ‘confirmation bias’, all assertions about what was happening were grounded in the video recordings, and all observed facilitator interventions were reviewed several times; the categories were not finalised until full consensus was reached. The categories were thereafter member checked with facilitators at the training centre to ensure their credibility. Sometimes, several simultaneous facilitator behaviours were observed during a shift (eg, moving, pointing and saying something at the same time).

Results

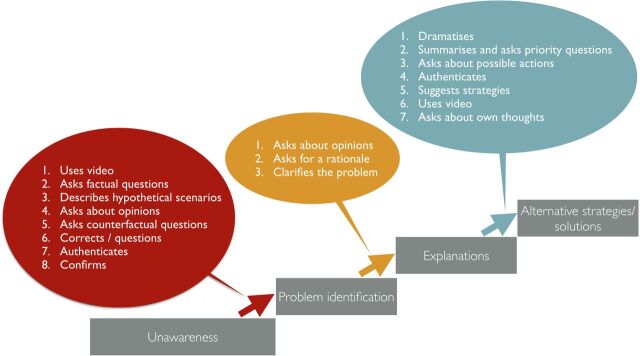

Specific facilitator interventions were observed between different uPEA phases (see figure 2 which gives an overview). Transcripts are presented to exemplify the most characteristic and common interactions for each phase shift. In the transcripts, a simplified version of Jeffersonian notation is used36 (see online supplementary appendix 1). In the examples, facilitator has been abbreviated as ‘F’ and the participants are coded as ‘P1’, ‘P2’, ‘P3’, and so on.

Figure 2.

The most frequently occurring facilitator interventions that immediately precede a successful shift to a more advanced phase, in order of frequency.


Identifying problems

Certain interventions appeared repeatedly when facilitators wanted to draw attention to a problem and support the participants in identifying it (see table 1).

Table 1.

Observed facilitator interventions immediately before the debriefings moved into the Problem identification phase, number of occurrences and descriptions of the interventions

| Facilitator intervention | Occurrence | Description |
| --- | --- | --- |
| Uses video | 10 | The facilitator makes use of the video recordings: for example, gestures towards an area on the screen or gestures to follow an activity unfolding on the screen, stops the video and pauses in silence or points at something in a frozen picture (see transcript in figure 3). |
| Asks factual questions | 9 | The facilitator asks questions about what happened in the simulation or about events shown in the video recordings, for example, questions about what a person was doing or about events that took place (eg, ‘And what is she doing?’). |
| Describes hypothetical scenarios | 5 | The facilitator describes an alternative scenario which differs from what happened in the simulation to make comparisons or to illustrate a point. In some cases, the facilitators pretended to be a person in such a situation (points and pretends to quote: ‘Sally, can you continue ventilating?’) and even enacted behaviours including walking to the neonatal resuscitation table gesturing to concretise potential problems. |
| Asks about opinions | 3 | The facilitator asks the participants about their opinions regarding behaviours and events in the simulation (eg, ‘Then the question is how did that report go?’). |
| Asks counterfactual questions | 2 | Rather than asking about what was visible on the video screen, the facilitator asks questions about aspects which did not take place (‘Is something missing here?’). |
| Corrects/questions | 2 | The facilitator corrects a misconception about the simulation. For instance, one participant incorrectly believed that Sally had forgotten to present herself and the facilitator says, ‘No, Sally said “I am Sally”.’ |
| Authenticates | 2 | The facilitator describes aspects of a similar situation in a real case, for example, the composition of teams during resuscitations (‘… you can not be certain in advance exactly who will be there, which professions, how many of each and their levels of experience…’). |
| Confirms | 2 | The facilitator confirms what the participants say (‘Yes’; ‘Okay’) or repeats what was said. |

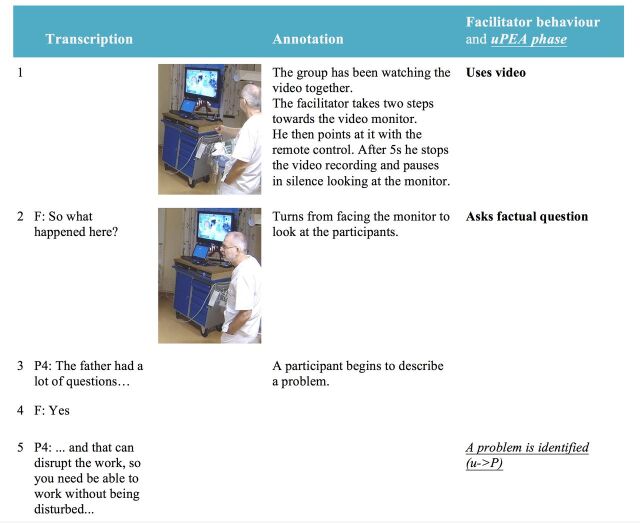

The transcript presented (figure 3) exemplifies how the video recording plays a central role in identifying a problem (u->P). The atmosphere in the debriefing had at this stage been quite cheerful; the participants had been laughing at comments made in the video and did not seem to be aware of any problems. The facilitator then takes a couple of steps towards the video monitor and points at it with the remote control (line 1), which seems to lead the participants to watch the screen more intently. The facilitator stops the video, pauses in silence looking at the video and then asks, ‘So what happened here?’ (line 2). A participant now begins describing a problem (line 3): the father of the newborn was asking a lot of questions, which was challenging as this risked disrupting the work of the team (line 5). The other participants go on to elaborate on this problem and one participant points out that she has now noticed that the ventilation frequency actually decreased during the conversation with the father. Although all team members had been watching the video, they had not reflected on the disturbance and its consequences. By drawing attention to the video and asking a question about what happened, the facilitator managed to help the team discover a problematic issue that had gone unnoticed.

Figure 3.

An example transcript illustrating how the facilitator (F) makes use of the video recording by turning to and pointing at the screen and then asks a (factual) question about the events in order to draw attention to a problem that had gone unnoticed. uPEA, unawareness (u), problem identification (P), explanation (E) and alternative strategies/solutions (A).

Encouraging explanations about the identified problems

The focus of the discussions has until this point been on identifying and discussing problems but not yet on understanding why they appeared. Facilitators will therefore strive to guide discussions towards the explanations underlying the identified problem. In comparison to the previous phase shift, other mechanisms were more successful in advancing the discussions to focus more on explanations (see table 2).

Table 2.

Observed facilitator interventions immediately before the debriefings moved into the Explanation phase, number of occurrences and descriptions of the interventions

| Facilitator intervention | Occurrence | Description |
| --- | --- | --- |
| Asks about opinions | 5 | The facilitator asks for participants’ opinions about events or behaviours in the simulations or about suggestions made by individual participants (‘Do you think it [the ventilation] was [done] too fast?’). |
| Asks for a rationale | 3 | The facilitator asks for the reasons underlying the problem or explanations as to why a problem occurred (‘Why do you have difficulties discovering that there is another person in the room, Donald?’). |
| Clarifies the problem | 2 | The facilitator clarifies a problem by summarising it or reminding the participants about it (‘you switch places … but at that point you haven’t secured the ventilation… if you change the position of the head then you may not have a free airway…’). |

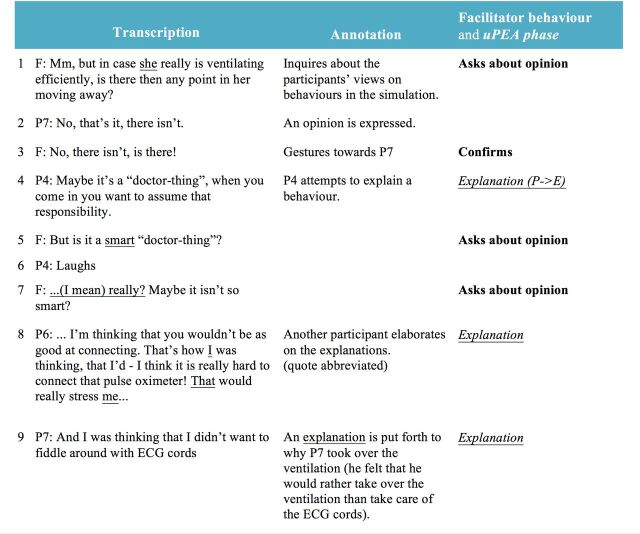

The example illustrates how the facilitator asks the team about their opinions regarding certain events and how this seems to stimulate the participants to offer explanations (figure 4). The team is discussing a problem: they handed over the task of ventilating the patient but neglected to check that the ventilation was still adequate after the shift. The facilitator asks about the participants’ opinions about this handover (lines 1 and 3). One of the participants then puts forth an explanation of their actions (line 4) which is elaborated on by two other team members (lines 8 and 9).

Figure 4.

The facilitator (F) asks the participants about opinions to encourage discussions about explanations to a suboptimal sequence of actions. uPEA, unawareness (u), problem identification (P), explanation (E) and alternative strategies/solutions (A).

Stimulating the generation of alternative strategies

Up to this point, the debriefings have dealt with identifying potential problems and explaining them. The final phase is about suggesting alternative strategies to avoid similar problems. This phase is prospective, looking towards managing future scenarios as opposed to retrospectively analysing what happened in the simulations. Other kinds of facilitator interventions advancing the debriefings were observed during this phase (see table 3).

Table 3.

Observed facilitator interventions immediately before the debriefings moved into the Alternative strategies/solutions phase, number of occurrences and descriptions of the interventions

| Facilitator intervention | Occurrence | Description |
| --- | --- | --- |
| Dramatises | 8 | The facilitator acts out possible behaviours in a hypothetical situation, often quoting something that an imagined person could have been saying and often in combination with moving around the room gesturing to imagined participants and as if they were using tools and instruments in the hypothetical situation (‘What would you do if… a father wants to know X?’, ‘Here, you can say precisely what you know: “the child’s heart is beating and we are assisting him with his breathing,” you can say that. Can you cause any harm by saying that?’). The dramatising illustrated possible behaviours and made points about how they might be experienced by others and about their possible consequences, both positive and negative. |
| Summarises and asks priority questions | 7 | The facilitator lists what the team had done, sometimes also encouraging participants to prioritise between possible alternatives. The summaries reminded the participants of the multiple events that had taken place and related the question to a specific time and situation in the simulation, and the facilitators encouraged them to prioritise between different activities (‘…and what is most important for the child then and there, that second?’). |
| Asks about possible actions | 5 | The facilitator inquires directly about how a team could or should act in a specific situation (‘…how can you ensure that a report really has been understood or not?’). |
| Authenticates | 4 | The facilitator relates to what could happen in a clinical setting by describing a similar case that they have experienced or that might be possible in a real event. |
| Suggests strategies | 4 | The facilitator suggests alternative future actions to spur discussions. The facilitators sometimes asked whether the team wanted suggestions and offered recommendations about how the team could have acted (‘May I give you a suggestion?’; ‘It’s a good thing to talk about the pulse and whether it increases or not and whether the chest is rising.’). |
| Uses video | 3 | The video is used in a similar way as described in the Problem section. However, here the video image is used to refer to a concrete situation and to create a starting point for the discussion about alternative strategies (eg, the facilitator points at the monitor and says, ‘And if we had been back in this situation here … if you are uncertain about what you should do, how should you solve that problem?’). |
| Asks about own thoughts | 2 | The facilitator checks that the participants have understood the facilitator’s line of thought, for example, after attempting to explain a problematic event (‘…And what do you think my point (of saying this) is here?’). |

In the example (see table 4), the facilitator uses the frozen image as a starting point for discussing what happened at a specific point in time (line 1). The video image has a key role in setting the scene for the discussion about what could be done: on line 2 the facilitator gestures to draw attention to the team leader’s gaze. Such pointing is easy to achieve with the video but would have been cumbersome to describe in words. The facilitator then begins dramatising to illustrate how difficult it is to ventilate the (imagined) infant and simultaneously follow the ECG and oxygen saturation readings (lines 3–5) and continues doing so until the team begins suggesting and discussing alternative behaviours (lines 6, 10, 12).

Table 4.

The facilitator (F) uses the video as a starting point and then dramatises a possible scenario and summarises the need for information to encourage the participants to develop strategies to avoid similar problems in the future

| Line | Transcription | Annotation | Facilitator behaviour and uPEA phase |
| --- | --- | --- | --- |
| 1 | F: But say that we now, if we were to redo it from here on, right here. | The facilitator puts his index finger on the TV monitor to emphasise which situation he is referring to. | Uses video |
| 2 | F: …and you look down. | Points at the team leader (P7) on the screen to clarify who he is talking about. And then makes a back and forth gesture moving between the team leader’s eyes and the infant’s head on the neonatal resuscitation table to emphasise what the team leader is looking at. | Uses video |
| 3 | F: …and say this: ‘I will concentrate on the ventilation.’ | Pretends to be the team leader himself, puts out both hands as if holding the infant’s head, while turning his gaze towards the imagined infant in front of him and makes an imaginary quote to illustrate what could be said. | Dramatises |
| 4 | F: But you want to know this and that, for example what the ECG is saying and what the saturation is. What should you do in such a case? (.) | The facilitator summarises the situation and the need for information in it. He still pretends to be the team leader and continues to look down at the imagined infant while pointing towards the ECG on the screen to exemplify. He then asks about possible actions. | Summarises and uses video |
| 5 | (.) | Continues to pretend to be the team leader holding one hand on the infant’s head and the index finger of the other hand moving up and down as if he were ventilating with the T-piece device and waits for suggestions from the participants. | Dramatises |
| 6 | P4: Ask somebody to… say… ‘What is the pulse?’, or? | Suggests a possible question that the team leader could have asked. | Alternative strategies/solutions (E->A) |
| 7 | F: Yes! ‘P4, can you please tell me the numbers up there.’ | Makes another imaginary quote in line with the suggestion accompanied by pointing towards the screen as if he were pointing in a real situation. | Dramatises |
| 8 | F: You could say that, couldn’t you? ‘Can you tell me what the cardio scope is saying, please?’ | Makes one more hypothetical quote still ventilating the imagined infant. | Dramatises |
| 9 | F: That’s an example. (.) Right? | Looks up to the participants and holds out his hands. | |
| 10 | P7: Mm but there… ‘cause I remember when we we’re taking the advanced-CPR [course]… | | |
| 11 | F: Yes. | | |
| 12 | P7: …it was sort of a rule that the person responsible in the room was not the person doing compressions. And similarly, you should be able to have a rule that the one responsible doesn’t ventilate. | P7 makes a suggestion about a possible strategy in analogy to another context. | Alternative strategies/solutions |
| 13 | F: Yes, you got it! | | |

CPR, cardiopulmonary resuscitation; uPEA, unawareness (u), problem identification (P), explanation (E) and alternative strategies/solutions (A).

Discussion

A primary goal of a facilitator is to help learners identify and close gaps in knowledge and skills22 and previous research on the relationship between facilitators’ questions and the level of reflection in postsimulation debriefing has indicated the need for understanding which facilitator questions promote reflection.23 The Interaction Analysis in this study has enabled identifying and characterising a number of recurring types of facilitator behaviours, which successfully contributed to advancing the participants’ analyses.

Timing has been singled out as an element of debriefing needing more study22 and it was clear that some facilitator behaviours were especially successful during certain phases of the debriefings rather than others. Debriefings have often been described as being organised as a series of phases such as description, analogy/analysis and application.14 25 26 While the number and type of phases may vary, the series of phases is viewed as something regulated by the facilitator.14 This study has shown that the participants in a learner-centred context may need to iterate through such phases again and again each time something problematic, unusual or suboptimal is noticed and regardless of the facilitators’ initial plans. The participants’ analyses iterated continuously through phases of unawareness, identifying problems, discussing explanations for why these took place and suggesting alternative strategies for how the problems could be avoided or handled. In many cases participants are fully aware of problems without any need for facilitation; in these cases the discussions typically move faster to possible explanations and strategies to manage the problems. Discussion points can be managed in different ways depending on the debriefing approach18 and there are two likely reasons for the highly iterative nature of the debriefings in our data. First, the focus was primarily on the gaps brought up by the participants during the debriefings and not only on those which the instructors had identified in advance. Second, the debriefings were organised around collaborative analysis of the entire video recordings (rather than just short clips), allowing the introduction of new gap analyses as the video recordings were being watched.

Moving between phases without a facilitator can be troublesome3 and the shifts in this study did not take place automatically; immediately before the phase shifts, facilitators engaged in various kinds of interventions to support and encourage the participants’ reflection and analysis. In many cases this amounted to more than simply making an insightful comment about the team’s simulation performance.

The facilitators’ use of the video recordings in various ways stood out as the primary means of drawing attention to events and behaviours which were problematic or missing. By showing the video and by gesturing and pointing out aspects of events taking place in the recordings accompanied by questions about the events, the facilitators managed to initiate discussions about the problematic behaviours or events.

When a problem had been identified, the facilitator behaviours shifted in character. The next step involved clarifying why the problem had taken place by generating explanations and clarifying the rationales behind the behaviours that took place. The importance of getting to understand the ‘internal frames’ of learners has been emphasised in some debriefing approaches as these may drive learners’ actions and in turn produce clinical results.8 At this stage, the facilitators prompted discussion by asking the participants for their opinions about, or the rationales underlying, their behaviours.

Finally, to encourage discussion about alternative strategies for avoiding or managing similar situations in the future, another set of facilitator interventions was displayed. Role playing and drama are a natural part of simulation training and are associated with enhancing the capacity to reflect and think critically.37 Less attention has been given to the fact that facilitators can also use drama during debriefings. In our study, the facilitators frequently dramatised to advance the analyses; they described hypothetical scenarios and enacted behaviours using quotes, movement, gaze and gestures in the imagined scenarios to illustrate alternative ways of managing a challenging situation. Such dramatising was also used to make a point, for example, to make the consequences of a suggested strategy concrete.

The video recordings had a key role. Basing the discussions on the video recordings rather than on recollections of what happened appeared to save valuable debriefing time, as time did not have to be spent on verbal accounts of what had happened. The recordings enabled asking specific and very detailed questions such as ‘What is he doing here in the background?’ which would have been difficult or impossible without the video. Research on video debriefing has so far not very convincingly shown beneficial effects in comparison with non-video debriefing.3 5 11–13 38 39 This study contributes to our understanding of how, and during which phases, it may be worthwhile structuring discussions around the video. As mentioned, the video recordings were helpful in creating awareness of problems that had occurred. In contrast, the video did not have a prominent role at all in the subsequent phase of encouraging explanations. When the debriefings moved on to discuss alternative strategies, the video again became more important. This is slightly surprising as this phase is by definition prospective and forward-looking. Nevertheless, the frozen screen images provided a concrete starting point for brainstorming about alternative potential actions.

It was also clear that simply watching the video was not enough. Often more was needed. When the facilitators’ interventions were in phase, they had the potential to be eye-opening in the sense that they helped participants catch sight of problems that they had been unaware of, helped them realise underlying explanations for why the problems took place and eventually helped them envision alternative ways of working in the future.

Methodological considerations

The point of this study was to identify and characterise behaviours of experienced facilitators that successfully advanced video-supported debriefing in a learner-centred setting. The described behaviours may of course reflect the practices and personal characteristics of the facilitators in this particular study setting. The number of facilitators investigated was limited, but the intention of this study was not to claim that the identified facilitator interventions are representative of or typical for all facilitators in all contexts. As is often the case in qualitative research, external validity is replaced by ‘transferability,’ the ability to transfer the findings to situations with similar parameters, populations and characteristics by providing descriptions that are thick enough.40 Readers are invited to make connections between the findings of the study and their own experience. Other behaviours can most likely be discovered in other contexts and added to the ones identified here.

The present study has focused on the analysis of team performance, but an important aspect of facilitation is also to encourage, strengthen and support participants emotionally and motivationally. Such aspects have not been in focus in this study. Also, the focus of this study may appear to be on problems and to exclude positive aspects. Many good ideas were in fact included, but these were typically good ideas about alternative behaviours and hence related to the alternative strategies/solutions phase. Moreover, although facilitators have a strong influence on the discussion, the facilitator’s interventions should not be viewed as strictly determining what happens next. In many cases participants debrief each other and contribute to advancing the debriefings without any facilitator interventions. The participants were students and it is possible that facilitators debrief more experienced participants in other ways. When asked about this, the facilitators recognised only one such aspect: the need for authenticating was considered lower with more experienced participants.

Conclusions

This study characterised the facilitator behaviours taking place just before participants managed to move to a more advanced level of analysis during video-assisted postsimulation debriefing. A number of such behaviours were identified, together with the phases at which they occurred. This study contributes to the understanding of how specific facilitator interactions may contribute to the participants’ analyses. More studies are needed to further clarify productive and less productive facilitation in different kinds of debriefing approaches, as are quantitative studies exploring such interventions in different contexts.

Acknowledgments

Special thanks to the participants and facilitators of the courses and the staff at KTC, the Clinical Training Facility, at the Södersjukhuset Hospital. We also thank the editor and the anonymous reviewers for their constructive comments which helped us improve the manuscript.

Footnotes

Contributors: The manuscript has been read and approved by all named authors and there are no other persons who satisfied the criteria for authorship but who are not listed. All authors have contributed to the design, data collection, analysis, writing, revision and approval of the manuscript. We further confirm that the order of authors listed in the manuscript has been approved by all of us.

Funding: This work was supported by the regional agreement on medical training and clinical research (ALF) between Stockholm County Council and Karolinska Institutet.

Competing interests: None declared.

Ethics approval: Approval was obtained from the regional ethical review board in Stockholm (2016/2102-31/5).

Provenance and peer review: Not commissioned; externally peer reviewed.

Data availability statement: All data relevant to the study are included in the article or uploaded as supplementary information.

References

- 1. Barry Issenberg S, McGaghie WC, Petrusa ER, et al. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach 2005;27:10–28. 10.1080/01421590500046924

- 2. Overstreet ML. The current practice of nursing clinical simulation debriefing: a multiple case study. University of Tennessee, 2009.

- 3. Fanning RM, Gaba DM. The role of debriefing in simulation-based learning. Simul Healthc 2007;2:115–25. 10.1097/SIH.0b013e3180315539

- 4. Beaubien JM, Baker DP. Post-training feedback: the relative effectiveness of team- versus instructor-led debriefs. The Human Factors and Ergonomics Society, 2003.

- 5. Chronister C, Brown D. Comparison of simulation debriefing methods. Clin Simul Nurs 2012;8:e281–8. 10.1016/j.ecns.2010.12.005

- 6. Ramani S, Krackov SK. Twelve tips for giving feedback effectively in the clinical environment. Med Teach 2012;34:787–91. 10.3109/0142159X.2012.684916

- 7. Salas E, Klein C, King H, et al. Debriefing medical teams: 12 evidence-based best practices and tips. Jt Comm J Qual Patient Saf 2008;34:518–27. 10.1016/S1553-7250(08)34066-5

- 8. Rudolph JW, Simon R, Dufresne RL, et al. There's no such thing as "nonjudgmental" debriefing: a theory and method for debriefing with good judgment. Simul Healthc 2006;1:49–55. 10.1097/01266021-200600110-00006

- 9. Eppich W, Cheng A. Promoting excellence and reflective learning in simulation (PEARLS). Simul Healthc 2015;10:106–15. 10.1097/SIH.0000000000000072

- 10. Rudolph JW, Simon R, Rivard P, et al. Debriefing with good judgment: combining rigorous feedback with genuine inquiry. Anesthesiol Clin 2007;25:361–76. 10.1016/j.anclin.2007.03.007

- 11. Cheng A, Eppich W, Grant V, et al. Debriefing for technology-enhanced simulation: a systematic review and meta-analysis. Med Educ 2014;48:657–66. 10.1111/medu.12432

- 12. Sawyer T, Sierocka-Castaneda A, Chan D, et al. The effectiveness of video-assisted debriefing versus oral debriefing alone at improving neonatal resuscitation performance: a randomized trial. Simul Healthc 2012;7:213–21. 10.1097/SIH.0b013e3182578eae

- 13. Savoldelli GL, Naik VN, Park J, et al. Value of debriefing during simulated crisis management. Anesthesiology 2006;105:279–85. 10.1097/00000542-200608000-00010

- 14. Sawyer T, Eppich W, Brett-Fleegler M, et al. More than one way to debrief: a critical review of healthcare simulation debriefing methods. Simul Healthc 2016;11:209–17.

- 15. Sfard A. On two metaphors for learning and the dangers of choosing just one. Educ Res 1998;27:4–13. 10.3102/0013189X027002004

- 16. Paavola S, Hakkarainen K. The knowledge creation metaphor – an emergent epistemological approach to learning. Sci Educ 2005;14:535–57. 10.1007/s11191-004-5157-0

- 17. Paavola S, Lipponen L, Hakkarainen K. Models of innovative knowledge communities and three metaphors of learning. Rev Educ Res 2004;74:557–76. 10.3102/00346543074004557

- 18. Cheng A, Morse KJ, Rudolph J, et al. Learner-centered debriefing for health care simulation education. Simul Healthc 2016;11:32–40. 10.1097/SIH.0000000000000136

- 19. Dismukes RK, Gaba DM, Howard SK. So many roads: facilitated debriefing in healthcare. Simul Healthc 2006;1:23–5. 10.1097/01266021-200600110-00001

- 20. Mackway-Jones K, Walker M. The pocket guide to teaching for medical instructors. London: BMJ Books, 1998.

- 21. Dunning D, Johnson K, Ehrlinger J, et al. Why people fail to recognize their own incompetence. Curr Dir Psychol Sci 2003;12:83–7. 10.1111/1467-8721.01235

- 22. Raemer D, Anderson M, Cheng A, et al. Research regarding debriefing as part of the learning process. Simul Healthc 2011;6:S52–7. 10.1097/SIH.0b013e31822724d0

- 23. Husebø SE, Dieckmann P, Rystedt H, et al. The relationship between facilitators' questions and the level of reflection in postsimulation debriefing. Simul Healthc 2013;8:135–42. 10.1097/SIH.0b013e31827cbb5c

- 24. Kihlgren P, Spanager L, Dieckmann P. Investigating novice doctors’ reflections in debriefings after simulation scenarios. Med Teach 2015;37:437–43. 10.3109/0142159X.2014.956054

- 25. Steinwachs B. How to facilitate a debriefing. Simul Gaming 1992;23:186–95. 10.1177/1046878192232006

- 26. Kessler DO, Cheng A, Mullan PC. Debriefing in the emergency department after clinical events: a practical guide. Ann Emerg Med 2015;65:690–8. 10.1016/j.annemergmed.2014.10.019

- 27. Sins P, Karlgren K. Identifying and overcoming tension in interdisciplinary teamwork in professional development. In: Baker M, Andriessen J, Järvelä S, eds. Affective learning together: social and emotional dimensions of collaborative learning. Paris: Routledge, 2013.

- 28. Karlgren K. Trialogical design principles as inspiration for designing knowledge practices for medical simulation training. In: Moen A, Mørch AI, Paavola S, eds. Collaborative knowledge creation: practices, tools, and concepts. Oslo: Sense, 2012: 163–84.

- 29. Heath C, Luff P, Svensson MS. Video and qualitative research: analysing medical practice and interaction. Med Educ 2007;41:109–16. 10.1111/j.1365-2929.2006.02641.x

- 30. Erickson F. Definition and analysis of data from videotape: some research procedures and their rationales. In: Green JL, Camilli G, Elmore PB, eds. Handbook of complementary methods in education research. Mahwah, NJ: Erlbaum, 2006: 177–205.

- 31. Derry SJ, Pea RD, Barron B, et al. Conducting video research in the learning sciences: guidance on selection, analysis, technology, and ethics. J Learn Sci;19:3–53. 10.1080/10508400903452884

- 32. Koschmann T, Stahl G, Zemel A. The video analyst's manifesto (or the implications of Garfinkel's policies for studying instructional practice in design-based research). In: Goldman R, Pea RD, Barron B, et al., eds. Video research in the learning sciences. NJ: Routledge, 2007.

- 33. Seelandt JC, Grande B, Kriech S, et al. DE-CODE: a coding scheme for assessing debriefing interactions. BMJ Stel 2018;4:51–8. 10.1136/bmjstel-2017-000233

- 34. Jordan B, Henderson A. Interaction analysis: foundations and practice. J Learn Sci 1995;4:39–103. 10.1207/s15327809jls0401_2

- 35. Woods D, Fassnacht C. Transana v2.53-Mac [program]. Madison, WI: Spurgeon Woods LLC, 2013.

- 36. Jefferson G. Glossary of transcript symbols with an introduction. In: Lerner GH, ed. Conversation analysis: studies from the first generation. Philadelphia: John Benjamins Publishing Company, 2004: 13–23.

- 37. Negri EC, Mazzo A, Martins JCA, et al. Clinical simulation with dramatization: gains perceived by students and health professionals. Rev Lat Am Enfermagem 2017;25. 10.1590/1518-8345.1807.2916

- 38. Rossignol M. Effects of video-assisted debriefing compared with standard oral debriefing. Clin Simul Nurs 2017;13:145–53. 10.1016/j.ecns.2016.12.001

- 39. Farooq O, Thorley-Dickinson VA, Dieckmann P, et al. Comparison of oral and video debriefing and its effect on knowledge acquisition following simulation-based learning. BMJ Stel 2017;3:48–53. 10.1136/bmjstel-2015-000070

- 40. Lincoln YS, Guba EG, Pilotta JJ. Naturalistic inquiry. Newbury Park, CA: Sage Publications, 1985: 438–9. 10.1016/0147-1767(85)90062-8
