Summary

Objective: The more people use clinical information systems (CIS) beyond their traditional intramural confines, the more promising the benefits and the more daunting the risks become. This review thus explores the areas of ethical debate prompted by CIS conceptualized as smart systems reaching out to patients and citizens. Furthermore, it investigates the ethical competencies and education needed to use these systems appropriately.

Methods: A literature review covering ethics topics in combination with clinical and health information systems, clinical decision support, health information exchange, and various mobile devices and media was performed by searching the MEDLINE database for articles from 2016 to 2019, with a focus on 2018 and 2019. A second search combined these keywords with education.

Results: By far, most of the discourses were dominated by privacy, confidentiality, and informed consent issues. Intertwined with confidentiality and clear boundaries, the provider-patient relationship has gained much attention. The opacity of algorithms and the lack of explicability of the results pose a further challenge. Many studies underpinned the necessity of sociotechnical ethics education and advocated education for providers and patients alike. However, only a few publications expanded on ethical competencies. In the publications found, empirical research designs were employed to capture the stakeholders’ attitudes, but not to evaluate specific implementations.

Conclusion: Despite the broad discourses, ethical values have not yet found a firm place in empirically rigorous health technology evaluation studies. Similarly, sociotechnical ethics competencies need detailed specification. These two gaps set the stage for further research at the junction of clinical information systems and ethics.

Keywords: Health care ethics, clinical information system, electronic health record, mobile patient data, education

1 Introduction

In recent years, the adoption of clinical information systems (CIS), in particular electronic health records/electronic clinical records, has increased worldwide 1 2 . The adoption rates and the speed of diffusion may vary between countries 3 4 5 , but the trend is towards more clinicians using these systems 6 , sharing data across institutional boundaries 7 , and patients having the opportunity to access their health data 8 . Clinicians, particularly in countries with high adoption rates, also experience the flaws and drawbacks of these systems in their daily work, e.g., burnout syndrome 9 10 and poor usability 11 . Generally speaking, and judging from the stance of connectivity and network theory, the more people use these systems, the more promising the benefits and the more daunting the risks become. Adoption and use may be regarded as the stepping stone towards a general belief that clinical information systems must be scrutinized not only in terms of effectiveness and efficiency but also in terms of ethical values. Such scrutiny can hint at the systems’ capability of either compromising or facilitating ethical values.

In parallel, disregarding ethical considerations might be a predictor of poor user acceptance and staunch skepticism towards new and challenging health technologies, in particular when vulnerable groups are affected by the technology. This holds all the more as a growing number of intelligent technologies must penetrate the personal sphere of patients to become effective, as is the case with smart assistive technologies and other devices that capture data directly from patients.

Against this backdrop, the present paper will undertake the quest for ethical values as studied in recently published work. In particular, it will investigate the current state of debate on ethics in clinical information systems as reflected in evaluation studies, reviews, and discussion papers. Given the breadth of the field of clinical information systems, we narrowed the window of this review by drawing on recent publications on clinical information systems in the IMIA Yearbook of Medical Informatics to identify crucial areas, trends, and frequently used terms to guide the search. In his IMIA Yearbook article of 2016, Gardner 12 found patient safety in combination with the quality of care to be one of the three major challenges for the next 25 years. Taking this one step further, it can be contended that the other two challenges mentioned by Gardner, namely evaluation for evidence-based information systems and their immersion into clinical practice, are effectively also associated with the leitmotiv of safe and high-quality care, contributing to its success and to that of the related clinical information systems. All three of Gardner’s challenges touch on ethical values, most saliently patient safety and quality of care in the sense of non-maleficence and beneficence. Another interesting source renders a complementary, not contradictory, picture. The most frequent keywords in Hackl and Hoerbst’s review 13 of the literature on clinical information systems revealed “humans” in combination with “female/male/child/adult” as the most often used terms, followed by electronic health records and health communication. Although human issues stood out within the CIS literature, ethics was not frequent enough to make it into the various cluster analyses of Hackl and Hoerbst 13 . This is in line with a recent review by Tran and colleagues 14 .

Hackl and Hoerbst concluded that big data still played a major role in clinical information systems, yet the difference compared to previous years was a shift towards the methodological approach to big data, with an emphasis on machine learning, automated processes, and data obtained directly from the patient.

The conclusions are diverse and manifold. Clinical information systems are strongly associated first and foremost with the themes of patient safety and quality of care, but also with human issues, which strongly allude to ethical inquiries. However, these inquiries are not necessarily and automatically reflected in the current literature. Thus, it seems worthwhile to take a dedicated and combined look at clinical information systems and ethics to grasp the most current propositions.

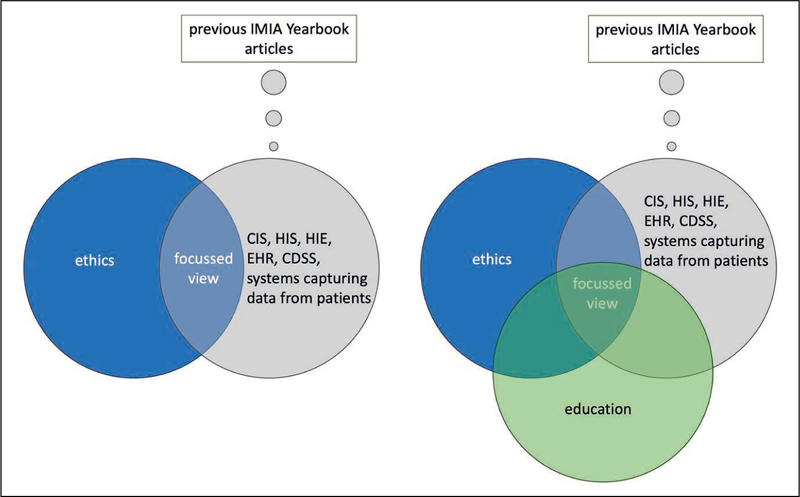

Furthermore, there is evidence that ethics is an emerging theme, and that it may have even already become an established topic in health informatics education 15 16 . This perspective moves the focus from current debates to actionable consequences, with the opportunity of changing the mindset and the attitudes that underlie an ethical use of clinical information systems. Goodman 17 asserted that information technology, which pervades professional and private everyday life in an unprecedented way today, could serve as an excellent catalyst and fulcrum to spawn ethical inquiries and to propel the two in a combined fashion into the curricula of medical and nursing schools. He posed the question of which specific ethical issues of privacy, end-of-life care, access to care, as well as informed consent and communication originated from using health information technologies instead of non-digital media. His opinion paper may motivate and validate both research questions. Figure 1 presents the rationale of this review that underlies the research questions.

Fig. 1. Rationale of this review (CIS = clinical information system, HIS = health information system, HIE = health information exchange, EHR = electronic health record, CDSS = clinical decision support system).

This review will thus explore the area of ethical values starting with the tenets of bioethics, namely autonomy, beneficence, non-maleficence, and justice as defined by Beauchamp and Childress 18 . It hereby examines the field from the perspective of innovative CIS prompting an ethical debate. Furthermore, it adopts the position of the people wishing to comply with ethical norms when using CIS and asking for appropriate competencies and education.

It is therefore guided by the two research questions:

What ethical considerations and debates are spurred through the availability and use of innovative clinical information systems?

What competencies do users, i.e., health professionals and patients, need to have to become proficient in using the technology in an ethical manner?

2 Methodology

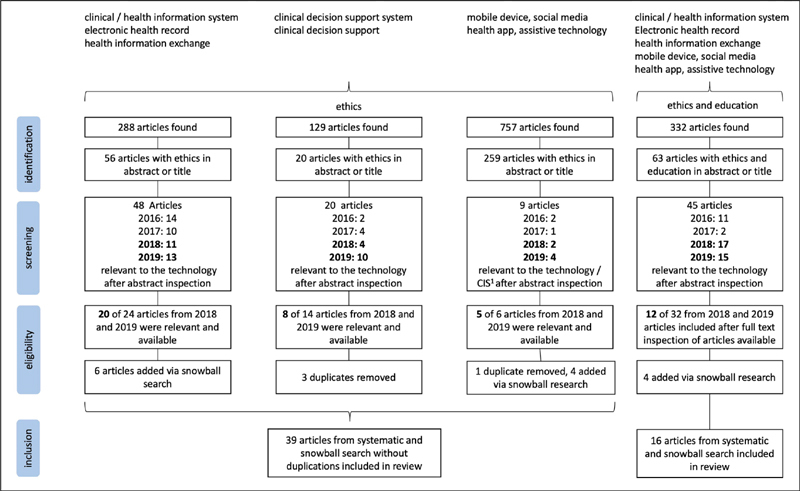

To answer these research questions, we undertook a literature review covering ethics topics in the various environments and applications of clinical information systems. To this end, MEDLINE was extensively searched via PubMed to garner as many different perspectives as possible (Figure 2). The PRISMA statement served as a guide to perform this literature review 19 .

Fig. 2. Search strategy and results. ¹Clinical information systems include health information systems, health information exchange, and electronic health records.

The first research question addresses ethics in the context of intra- and trans-institutional information systems, their data-driven applications, and systems which record patient data from outside the clinical institutions, e.g., at home. To cover socio-technical systems within and across institutions, the search terms “clinical / health information system”, “health information exchange”, or “electronic health record” were combined with “ethics”. This initial approach was extended by a separate search to address applications, in particular “clinical decision support” or “clinical decision support system”, in combination with “ethics”. Although there are many more applications implemented in clinical information systems, clinical decision support is among the most prominent ones that prompt ethical discussions, particularly if patients are active stakeholders. Furthermore, the terms “mobile device”, “health app”, “assistive technology”, and “social media” were paired with “ethics” to account for systems capturing data directly from patients. The second research question addresses the competencies recommended for both health professionals and patients to ensure an ethical use of health information technology tools. Consequently, these terms (i.e., “clinical / health information system”, “electronic health record”, “mobile device”, “health app”, “social media”, “assistive technology”) were searched together with the terms “education” and “ethics” (for all search strings, see the Appendix). Ethics and education had to be in the title or the abstract. Only articles published in the English language, between 2016 and 2019, and with an abstract available were included. The number of relevant hits in the years 2016 and 2017 served as a reference to evaluate the trend over the four years. However, only articles from the years 2018 and 2019 were included in the survey to reflect the current state of affairs.
Searches were conducted in the period from October to December 2019 and were updated on January 2nd, 2020 to catch all the articles published in 2019. The hits for 2018 and 2019 were supplemented by articles found in a snowball search. For the sake of comparability of methods, these additional articles did not enter the statistics over the four years. All three authors were involved in the process of identification, screening, and eligibility checking; at least two of them performed each task independently and discussed conflicts to resolve them.
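
The pairing of term groups with “ethics” (and “education”) described above can be illustrated with a small sketch. It is not the authors’ actual search strategy, whose strings are given in the Appendix and are not reproduced here. The function names (`any_of`, `build_query`), the grouping of terms, and the restriction of every keyword to the title/abstract field are assumptions made for illustration; the field tags (`[Title/Abstract]`, `[dp]`, `[Language]`) and the `hasabstract` filter follow common PubMed query syntax.

```python
from typing import Iterable, Sequence, Tuple

# Term groups quoted from the Methods text; the grouping itself is illustrative.
SYSTEM_TERMS = [
    "clinical information system",
    "health information system",
    "health information exchange",
    "electronic health record",
]
CDSS_TERMS = ["clinical decision support", "clinical decision support system"]
PATIENT_DATA_TERMS = ["mobile device", "health app", "assistive technology", "social media"]


def any_of(terms: Iterable[str], field: str = "Title/Abstract") -> str:
    """OR-combine quoted phrases, each restricted to one search field."""
    return "(" + " OR ".join(f'"{t}"[{field}]' for t in terms) + ")"


def build_query(
    terms: Sequence[str],
    required: Sequence[str] = ("ethics",),
    years: Tuple[int, int] = (2016, 2019),
) -> str:
    """AND the OR-group with the required keywords and the stated inclusion filters."""
    parts = [any_of(terms)]
    # Assumption: required keywords are matched in title or abstract.
    parts += [f'"{kw}"[Title/Abstract]' for kw in required]
    parts.append(f"{years[0]}:{years[1]}[dp]")  # publication date range
    parts.append("english[Language]")           # English-language articles only
    parts.append("hasabstract")                 # abstract must be available
    return " AND ".join(parts)


if __name__ == "__main__":
    # First research question: each term group paired with "ethics".
    for group in (SYSTEM_TERMS, CDSS_TERMS, PATIENT_DATA_TERMS):
        print(build_query(group))
    # Second research question: the same idea with "education" added.
    print(build_query(SYSTEM_TERMS + PATIENT_DATA_TERMS, required=("ethics", "education")))
```

Keeping the term groups as separate lists mirrors the separate searches described in the text and makes it easy to count hits per group and per year when tracing the trend from 2016 to 2019.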

3 Results

3.1 What Ethical Considerations and Debates are Spurred through the Availability and Use of Innovative Clinical Information Systems?

3.1.1 Overview

The systems and applications referred to in the included articles were primarily electronic records (some articles spoke more generally about health information technology or eHealth) as a means for documentation, clinical decision support, education, exchanging data to provide continuity of care, sharing data for research purposes, and as a source of data for any type of Artificial Intelligence (AI) and Machine Learning (ML) applications. These electronic records embraced those where data are administered by health providers, typically then called “electronic health records” (EHRs) or “medical records”, and those administered by patients, then sometimes specifically called “personal health records”. These record systems were not further detailed in terms of their technical design or architecture. They were rather regarded as a model EHR reflecting current manifestations of EHRs. A minority of studies put a technical concept forward, e.g., for data curation 20 , or described an existing infrastructure 21 22 . Two studies demonstrated how EHRs could be augmented to accommodate ethically relevant information, i.e., code status, advance directive 23 , and clinical ethics consultation 24 . In contrast, AI and ML procedures were partly described at more length in an overview style 25 26 27 . When devices were referred to in the studies, they were dealt with as a cluster of technologies, i.e., wearable sensors, smart mobile devices with health apps, and social media which delivered data. The articles did not expand on any particular technical details 28 .

Most of the papers were (systematic) literature reviews engendering recommendations, propositions, and critiques. A few yielded empirical insights through quantitative, qualitative, mixed-methods, or case study research designs. Three countrywide case studies were used to prompt ethical inquiries: two on Australia’s My Health Record 29 30 and one from Haiti 31 . As mentioned above, there were some concept and technical papers, but none of them aimed at evaluating a particular system. The depth with which ethics was discussed varied strongly, from determining the mindset of the entire paper to playing a secondary role in a single paragraph devoted to the topic. Ethically sensitive scenarios, such as mental health care including dementia, child and adolescent care, diagnosis and treatment involving genetic data, screening of breast cancer, and the end-of-life phase, underwent particular scrutiny. However, the ethical debate pervaded any type of health care, including primary care and specialist care, such as general and plastic surgery and pain research, diagnosis, and treatment. Table 1 provides an overview of the characteristics of the papers included in this review for the first research question.

Table 1. Articles included in the review for research question #1.

| Article | Country | Design | Medical / health specialty | Main ethical issues addressed |
|---|---|---|---|---|
| Ashton and Sullivan 55 | USA | Multiple case studies | Mental health care | Confidentiality |
| Baldini et al. 38 | Italy, Netherlands | Review and concept paper | Lifestyle | Privacy, data protection, psychological biases, accountability, digital divide |
| Boers et al. 36 | Netherlands, UK | Review and opinion paper | Primary care | Ethical tensions, explicability, patient-provider relationship, responsibility, autonomy, disparities, digital divide |
| Bourla et al. 45 | France | Observational mixed methods study | Mental care | Pre-emptive medical intervention, reinforcing anxiety instead of providing a feeling of safety, stigmatization, provider-patient relationship |
| Brill et al. 49 | USA | Review and opinion paper | Care | Autonomy, privacy, beneficence, ethical tension, provider-patient relationship |
| Brisson et al. 33 | USA | Concept paper | Education | Privacy |
| Carter et al. 35 | Australia | Review and opinion paper | Breast cancer care | Professional ethics, responsibility, explicability, bias in data sets, consent, privacy, confidentiality |
| Davenport and Kalakota 25 | USA | Review and opinion paper | Care | Algorithmic transparency and explicability, biases in data, accountability |
| De Riel et al. 31 | USA, Haiti | Case study | Care | Privacy, security |
| Duckett 29 | Australia | Case study | Care | Ownership of health information, threats to illegal access to health information |
| Eberlin et al. 52 | USA | Review and opinion paper | Plastic surgery | Privacy, security, identity |
| Erikainen et al. 28 | UK | Review and opinion paper | Care and lifestyle | Ethical tension, digital divide, disparities, data ownership, quality of data |
| Evans and Whicher 47 | USA | Review and opinion paper | Research and care | Explicability, data integrity, privacy, and confidentiality |
| Galvin et al. 48 | USA | Review and opinion paper | Care and research | Democratization, co-creation of knowledge |
| Gensheimer et al. 54 | USA | Guidelines | Care and research | Consent |
| Gooding 46 | Australia | Review and opinion paper | Mental care | Transparency, harm minimization, accountability, privacy, and security |
| Graham et al. 26 | USA | Review and opinion paper | Mental care | Biases in data (subjectivity), ethical problems due to poor digital literacy |
| Ho and Quick 57 | Canada, USA, UK | Review | Care | Safety, patient-provider relationship |
| Ienca et al. 56 | Switzerland, USA | Review | Care of dementia patients | Autonomy, privacy, beneficence, non-maleficence, interdependence, justice |
| Kogetsu et al. 21 | Japan | Concept paper | Research | Privacy, data protection, autonomy (own intent) |
| Kuhnel 53 | USA | Case study and review | Mental care | Privacy, confidentiality, loss of information control, provider-patient relationship (trust), integrity and validity, patient consent |
| Laurie 58 | UK | Review and case study | Research and care | Privacy, public benefit, transparency, accountability, trustworthiness |
| Lehmann et al. 23 | USA | Concept and consensus paper | Care | Informed consent |
| Loftus et al. 37 | USA | Review and opinion paper | Surgical care | Algorithmic bias, accountability in case of errors |
| Macdonald et al. 50 | Canada, UK | Observational qualitative study | Care | Provider-patient relationship |
| Mars et al. 51 | South Africa, Canada | Review and opinion paper | Dermatology care | Responsibility, provider-patient relationship, consent, confidentiality, security, professional ethics |
| McBride et al. 40 | USA | Review and opinion paper | (nursing) care | Professional ethics, ethical tension, moral distress, data integrity |
| McWilliams et al. 20 | UK | Concept paper | Research | Confidentiality, beneficence (common good) |
| Meredith et al. 30 | Australia, UK | Review and case study | Adolescents care | Consent, autonomy, privacy and confidentiality, ethical tension |
| Moscatelli et al. 22 | Italy | System description | Research | Privacy and confidentiality |
| Musher et al. 39 | USA | Case studies | Care | Fraud/data integrity, professional ethics |
| Natsiavas et al. 43 | Greece | Observational quantitative study | Care | Informed consent (autonomy), confidentiality |
| Pathak and Chou 44 | USA | Discussion paper | Adolescents care | Confidentiality, ethical tension |
| Rashidi et al. 27 | USA | Review and opinion paper | Pain research | Patient-physician relationship |
| Robichaux et al. 41 | USA | Review and opinion paper | Care | Stigmatization and biases, technomoral virtues humility (to know the limits), patient-provider relationship, justice, data integrity, moral leadership |
| Sánchez et al. 34 | Spain | Review of guidelines | Research | Consent, privacy, beneficence (common good) |
| Sanelli-Russo et al. 24 | USA | Concept paper | Care | Clinical ethics consultation |
| Stockdale et al. 42 | UK, Ireland | Systematic review | Research | Privacy, security, trust, consent |
| Wilburn 32 | USA | Case study | Education | Confidentiality, ethical tension |

Almost all of the included papers scrutinized ethical principles in patient care or research, some of them leading the way towards the learning health system (LHS) paradigm, where the boundaries between care and research are fluid. Exceptions were educational applications of EHRs 32 33 .

3.1.2 General Ethical Discourses

All papers were selected on the grounds that they distinctly prompted an ethical debate or were associated with ethical concerns. By far, most of the discourses were dominated by privacy, confidentiality, and informed consent issues, sometimes also paired with data ownership. These issues appeared in care scenarios, in research scenarios, or in a combination of both that took the LHS approach and methodology into account. These inquiries were often ignited in situations of exchanging and sharing data across professions, stakeholders (including parents and families), institutions, settings, or countries, either in a continuity of care mode or in the sense of reusing data from different locations and pooling them for research purposes. Large-scale infringement of privacy and confidentiality was discussed in the context of data leakage, for example, followed by employers and insurers gaining unwanted data access 34 , such as in the case of social security and health data 29 . The feasibility of obtaining classic informed consent and the ethically appropriate level of patient consent should be further investigated to reach robust and acceptable solutions.

Intertwined with confidentiality, trust was regarded as essential for the provider-patient relationship, which came to the fore in many papers and was extensively discussed against the backdrop of AI and ML scenarios, where machines could take over some tasks previously performed by health professionals or at least could assist with these tasks. Perceiving the patient in full empathy could not be delegated and was considered as the core of humanity in the provider-patient relationship.

AI and ML applications of EHR data brought along another ethical issue that ran through all papers on these topics like a golden thread, i.e., the opacity of algorithms and the lack of explicability of deep learning algorithms, which posed an inevitable challenge to the provider’s duty to explain the decision to the patient 35 . Alongside the algorithms, all papers on AI and ML addressed the paramount importance of the data sets on which the algorithms were trained. Low quality, inherent biases (e.g., due to under-represented groups), and subjectivity in clinical notes led to the amplification of errors on a large scale and very often to disparities and discrimination against disadvantaged groups 25 26 35 36 . In turn, clean, reliable, and valid data would put AI in a position to mitigate injustice in decision making arising from human preconceptions 37 . Justice and equality were also discussed in the context of the availability of technologies and of the competencies to use them, which could widen the digital divide even more between those who have them and those who do not 28 36 38 . This also held for digital literacy on the part of physicians and patients, an asset that could make the difference 26 .

The foundations of the ethical use of EHRs were set by the professional standards of documentation applying to both the paper and the electronic world. However, challenges have come along with electronic versions, particularly concerning data integrity issues that were raised not only in the context of AI and ML but also in the very rudimentary task of data entry. Prepopulated forms, auto-fill options, and copy-paste mechanisms in EHRs virtually invite users to provide fraudulent and corrupted data. Strong emphasis should, therefore, be put on professional integrity, in particular in cases of administrative pressure to come up with financially relevant data 39 40 .

Several papers made ethical tensions a subject of discussion about trade-offs. For example, is what is legally possible (here, sharing anonymized student data) also ethically desirable? 30 . Do the new achievements in safety due to better connectivity of providers stand in contrast to responsibility gaps in care networks? 38 . Is the opportunity of greater autonomy due to disease self-management bought at the price of the burden of measurements? Or more generally, is patient empowerment not always accompanied by “responsibilization”? 28 . The grand theme of keeping data private versus the potential of contributing to a common good, if the data become public, was also debated. Against the backdrop of conflicting ethical demands, Robichaux and colleagues 41 proposed an ethics framework for technomoral virtues grounded in polarity thinking as an antidote for “binary thinking” 41 .

3.1.3 Specific Ethical Discourses

Beyond all theoretical discourses, it is important to hear the voice of patients and citizens. The patient’s point of view was comprehensively reflected in a systematic review combining qualitative and quantitative studies on the use of EHRs in the UK and Ireland 42 . The review revealed the considerable knowledge of patients about their data stored in the GP’s EHR and a general willingness to share their data for biomedical research in the sense of contributing to the common good. Their concerns about privacy breaches were mainly associated with a lack of personal control over who accessed the data and for what purpose. Distrust was expressed in the context of poor skills to shield data from attacks and misuse and in relation to questionable motivation beyond public interests. Patients in these studies advocated committees with a well-balanced membership representing a wide range of stakeholders to decide about granting the right to access the data. Natsiavas and colleagues’ European survey on health data exchange due to cross-border medical treatment aimed at exploring the citizens’ view on informed consent and confidentiality 43 . The large majority of the respondents found that consent had to be obtained beforehand, and that care could be continued without consent only in a few exceptional cases, e.g., in emergency scenarios. In addition, a similarly high percentage of people favored sharing personal data for research purposes, if anonymization was guaranteed. Barriers to data exchange were a lack of trust in the intention of data collection and the risk that data might be linked with other, already existing personal data.

Ethical inquiries often emerged from digital applications for vulnerable groups. Meredith et al. 30 investigated the autonomy of adolescents, in particular their right to manage their own data in a personal health record, here the Australian My Health Record, and the due right of privacy and confidentiality of these data when shared with a provider. Conflicts would arise when parents in their role as guardians wished to gain access to these data or, as Pathak and Chou 44 wrote, when a provider felt the parents had to be informed. When different technologies in psychiatric care were probed, including mobile momentary assessment and adaptive testing of patients, psychiatrists felt many of them were risky also in an ethical sense, e.g., fostering pre-emptive medical interventions, despite the high reliability of the results 45 . Gooding 46 sketched a broad variety of ethically and legally critical mental health use cases involving people seeking support, mental health practitioners, managers, criminal justice systems, companies, businesses, and education providers.

Learning health systems, with their intentionally blurred boundaries between research and care, particularly in the case of EHR decision support, require a new approach to oversight. Measures towards securing enough insight into the certainty and reliability of algorithms and offering additional recommendations, e.g., from guidelines, together with monitoring data quality and preserving confidentiality, need particular oversight, to be implemented by establishing an independent body of experts with the capability to enforce the rules 47 . The AMIA working group Ethical, Legal, and Social Issues 48 addressed patient access to EHRs in a learning health system from the perspective of opportunity rather than risk. A mechanism for democratization and the sharing of data analysis plans and research findings with patients were advocated as a model for co-creating knowledge. Drawing upon a meaningful patient-provider relationship, encouraging patients to read their clinical notes was explicitly recommended for behavioral health patients to become agents in a therapeutic alliance on an equal footing. Brill and colleagues 49 boiled down the different fields flourishing from the availability of electronic records to the statement that big data, predictive analytics, and accountable organizations were transforming the patient-provider relationship, which now should become the yardstick for guiding and evaluating these innovations. The qualitative study by Macdonald et al. 50 supported the view of providers welcoming patients to become their partners, forge an alliance, and conduct a “two-way conversation” (p. 4).

There was a general demand for guidance in ethically laden situations. Sánchez et al. 34 compared two recent versions of pertinent ethical guidelines, namely the one by the Council for International Organizations of Medical Sciences (CIOMS) and the one by the World Medical Association (WMA). As the main focus of these guidelines was on data collection and storage, the authors concluded that they could not provide any meaningful advice for data sharing as was needed for global research infrastructures. Mars et al. 51 also complained about too little guidance in the case of unsolicited patient data arriving in physician offices, in this case, teledermatology images. The responsibilities of physicians and patients alike were unclear in this scenario. In the absence of guidelines, several groups came up with recommendations at the junction of ethics and the use of technologies. Eberlin et al. 52 proposed a consensus-based set of recommendations for the communication between providers and patients in plastic surgery which embraced, amongst others, proper identity management of colleagues and patients, obtaining consent before recording an electronic communication, and the duty of documenting the electronic contact. For other critical applications, such as patient-targeted googling, a practice that is found in mental health and social work, the author 53 reported a rigorous set of recommendations including self-inquiries about the motivation, obtaining consent, checking the validity and integrity of the data found in social media, and professionally documenting the search history. Moreover, Gensheimer et al. 54 presented recommendations in the form of guidelines for including patient-reported outcomes (PROs) that were developed in collaboration with two PRO-EHR Users’ Groups. The intended use (patient care, research, publication surveillance) of data determined the procedures for information sharing and informed consent.
Ashton and Sullivan 55 provided a set of best practices for psychologists, amongst others for informed consent, use of electronic health records, confidentiality, and beneficence versus harm.

More and more health data do not solely stem from EHRs but are captured directly by the patient. The systems involved comprise a large and heterogeneous family of intelligent devices and mobile software applications with the help of which the patient may produce content. A comprehensive review of intelligent assistive technologies (IATs) by Ienca et al. 56 provided an overview of ethical themes grouped as families and subfamilies. There was a preponderance of studies on independence and safety considerations. Given that the large majority of IATs for dementia were designed without ethical values in mind, the authors argued that these values should be proactively included in the design. Likewise, Baldini et al. 38 discussed the option of an in-built ethical design to respect the rights of citizens to protect their intimacy and privacy. The principle of this design was to anticipate crucial situations and allow the users to make their own choices of ethical values to counteract reduced human agency, poor awareness, and limited control of thoughts and acts.

Ho and Quick 57, focusing on safety issues concerning self-monitoring, emphasized the positive ethical values that could accrue with the use of these technologies, whereas concerns arose when the information provided in these apps was not correct or not clinically meaningful. To avoid maleficence, they spoke in favor of the educational and supervisory involvement of professional organizations to upskill patients in their interaction with these devices. The use of smart and mobile technologies and the collection of data via these devices, which were designed for use in well-being and thus outside the traditional provision of health care, could add complexity and problems, Laurie 58 argued. Similar reasoning resonated in the article by Erikainen et al. 28 on what “patienthood” really meant in a situation where the roles of patients, consumers, and participants were so inextricably intertwined. Laurie 58 contended that, as the potential re-use and analysis of these data was not predictable from the outset of the measurements, no linkage, e.g., with EHR data, or sensible interpretation was feasible without meaningful metadata mirroring the context in which these data were originally captured. Aside from these epistemological issues, the possible data access and linkage should be determined through a process of negotiation with all stakeholders, for which Laurie 58 envisioned data stewards taking the role of guides.

3.2 What Competencies Do Users, i.e., Health Professionals and Patients, Need to Have to Become Proficient in Using the Technology in an Ethical Manner?

3.2.1 Overview

Several studies empirically underpinned the necessity of ethical education when intending to use technology in care. Students’ course documents in a class that included ethics policy development in precision medicine demonstrated that they clearly identified the demand for training clinical and public health researchers and practitioners 59. Although the large majority of providers had received ethics training, many of them did not feel at ease or confident when utilizing technology in their interaction with patients, particularly when patient privacy and autonomy were at risk 60. Not all providers were aware of the dangers of infringing privacy when using social media, as Alshakhs et al. found in their survey 61. Similarly, when exposed to cases with privacy breaches, only nurses with higher education including more elaborate as well as more recent ethics training could identify the threats 62.

The need for adopting ethical principles was also expressed in publications drawing on the pertinent literature. Ethical principles needed to be respected before the technology could be used appropriately. This also applied to systems that were used for educational purposes such as social media 63 64 65 66 or electronic health records 33. Whether the users should acquire these competencies via formal education or informal learning was often not explicitly mentioned. However, hands-on recommendations that were derived from prior literature reviews 66 67 68 69 70 or from existing standards of care 71 on how to make use of technology safely were presented and could serve as guidance for educational measures of any kind.

Education for providers and patients alike was advocated in several publications. Ho and Quick 57 spoke in favor of dedicated education to ensure patient safety when using smart technologies, preferably in cooperation with patient advocacy groups and as part of medical training on devices. Reamer 71 cited the codes of ethics of various professional associations that coupled the mandate for digital intervention, therapy, supervision, and social work with appropriate prior training and education. Sussman and DeJong 72, referring to the particularly sensitive area of mental health care for adolescents and children and the ubiquitous use of social media in this age group and beyond, argued for engaging in education as an “ethical requirement”.

Education would not only contribute to the safe use of technology but could also pave the way towards the adoption and the public as well as professional acceptance of innovations. It was argued that only ethical standards assessment together with pertinent patient education laid the foundation for the realistic and sustainable adoption of innovative applications for dementia patients 68. Professional associations, such as the Canadian Association of Radiologists 70, called for public education programs to engage citizens in the medical use of AI and ultimately to prepare the ground for an open and positive attitude towards data sharing. European and North American radiological associations 67 concurred on the importance of educating physicians so that they are better prepared to make decisions on the effective use of AI. Table 2 summarizes the articles included in the review to answer research question 2.

Table 2. Articles included in the review for research question #2.

| Article | Country | Design | Medical / health specialty | Use of technology | Ethical competency areas |
|---|---|---|---|---|---|
| Alshakhs et al. 61 | Saudi Arabia | Observational quantitative study | Care | Education | n/a |
| Bittner et al. 66 | USA | Review and recommendations | Gastrointestinal and endoscopic surgery | Education | Informed consent, privacy, confidentiality, transparency |
| Bopp et al. 63 | USA | Review | Physical literacy | Education | HON code values: objective, transparent, ethical, verifiable, trustworthy content |
| Brisson et al. 33 | USA | Concept paper | Care | Education | Privacy, consent, data economy |
| Chandawarkar et al. 69 | USA | Observational quantitative study | Plastic surgery | Care | No specific competencies named but guidelines proposed to be used in educational settings |
| Demiray et al. 62 | Turkey | Observational quantitative study | Nursing care | Care | No specific competencies named |
| Estrada-Hernandez and Bahr 60 | USA | Observational quantitative study | Rehabilitation | Education | Beneficence, non-maleficence, autonomy, justice, fidelity |
| Geis et al. 67 | Europe, North America | Review and recommendations | Radiology | Care | Data ethics: informed consent, privacy and data protection, ownership, objectivity, transparency, digital divide; Ethics of algorithms: fairness, equality, explicability, transparency; Ethics of practice: automation bias, sources of liability |
| Ho and Quick 57 | Canada, USA, UK | Review | Care | Care | Safety, patient-provider relationship |
| Jaremko et al. 70 | Canada | Review and recommendations | Radiology | Care | Data value and ownership, privacy, consent |
| Le Barge and Broom 65 | USA | Review | Primary care | Education | Professional ethics, patient-provider/practice relationship |
| Modell et al. 59 | USA | Observational qualitative study | Public health / precision medicine | Public health | Assurance (access, equity, disparities); Participation (involvement, representativeness); Ethics (consent, privacy, benefit-sharing); Treatment of people (stigmatization, discrimination) |
| Reamer 71 | USA | Review and recommendations | Behavioral health care | Care | Privacy, confidentiality, consent, provider-patient relationship |
| Robillard et al. 68 | Canada, USA | Review and recommendations | Care of people suffering from dementia | Education | Evaluation of key standard ethical factors surrounding privacy, confidentiality, and informed consent |
| Sussman and DeJong 72 | USA | Review and case studies | Adolescent mental care | Care | Professional ethics: development perspective, beneficence, non-maleficence, justice, fidelity, autonomy, confidentiality, legal consideration should not replace ethical ones, patient-provider relationship |
| Zimba et al. 64 | Ukraine | Review and opinion paper | Rheumatology | Education | Patient-provider relationship, privacy and confidentiality, professional ethics |

3.2.2 Ethics Themes as Candidates for Competencies

Although many of the publications referred to ethical tenets and to the need for education, only a few expanded on ethical competencies directly. Reamer 71, citing various standards of care, summarized that providers needed to acquire competencies to balance benefits and risks, to maintain confidentiality and privacy, also in the context of ensuring professional boundaries, to confirm the identity of the patient, and to assess the patient’s necessary level of familiarity and comfort with the technology, i.e., social media and telemedicine. Other authors leaned on general professional ethics to be applied when using social media in care situations 64 65 72. Estrada-Hernandez and Bahr 60 recommended that providers should be able to conceptualize the ethical dilemma and to cope with the situation, striving for a patient-centered solution. Sussman and DeJong 72 also identified the recognition of a dilemma as the first challenge to be mastered, followed by the realization of the ethical issues at stake.

Speaking in a broader sense, all of the ethical issues that were presented and discussed could be translated into competency areas but not into concrete practical competencies. By and large, there were no particular ethical aspects to be considered depending on the type of technology, except for artificial intelligence and social media. In the case of artificial intelligence and big data analytics, ethics of algorithms, i.e., fairness, equality, explicability, and transparency, alongside ethics of data and practice were particularly emphasized 67. Many authors referring to social media regarded the maintenance of a good and appropriate patient-provider relationship as a central ethical demand that has gained new importance due to the risk of blurring the boundaries between the provider and patient in online communication 64 65 71 72.

4 Discussion

4.1 Summary

This review revealed that technology in the context of clinical and health information systems prompted ethical inquiries. Judging from the retrieved articles that were published in the past four years and passed the screening, the number of articles clearly increased from 2016-2017 to 2018-2019, which seems to reflect a growing interest in this topic. Many of the themes identified were not entirely new (e.g., 73), but they continue to pervade the scientific and practical discourses as more and more technologies come to fruition, moving from sheer concepts to daily reality.

One potential reason could be that patients and citizens are increasingly coming into contact with systems that used to be enclosed in health organizations and were meant to be utilized only by health professionals. This opening typically happens via patient portals or personal health record systems that are linked with institutional systems. Another route of connecting people with clinical and health information systems is via external smart devices and social media, which were included in this review because they harvest data from patients or allow patients to produce content that potentially goes into clinical record systems in case of a care episode. Another salient reason for the increased interest could be that patient data, now used, shared, and analyzed for research purposes outside patient care, are gaining public attention and additional importance. Thus, the virtual opening of clinical and health information systems, the fuzzy boundaries between care and research in learning health systems, and the intertwined roles of patients, customers, and participants, including the risk of an unclearly defined provider-patient relationship particularly in the case of social media, obviously called for assistance from the ethical domain.

Consequently, it was not surprising that ethical education was regarded as important and necessary in nearly all the studies, which underscored this notion either empirically or through inspecting the literature. In addition, the studies included to answer the first research question mirrored the need for education. However, competency descriptions were rather scarce and, in most cases, could only be indirectly derived from guidelines and recommendations.

Ethical topics addressed by the literature for both research questions covered a wide spectrum, with a clear thematic priority on confidentiality, informed consent, and privacy. It also became clear that in the case of AI, ML, and clinical decision support, ethical values typically cascade along the process of data acquisition and management, analysis, and utilization. For example, confidentiality, informed consent, and data quality including potential biases in the data (injustice) play a central role during data acquisition, while explicability and transparency of the algorithms and their results as well as the autonomy of the providers come into play when clinical decision support systems are utilized. Preserving privacy is a matter of both data acquisition and the choice of the right algorithms and is thus located at the interface between data acquisition, data management, and analysis. These selected examples illustrate that different ethical values pervade clinical information systems throughout.

4.2 Need to Include Ethics in Evaluation Studies

Most of the articles included in this review arrived at their findings and conclusions through literature reviews. Empirical research designs were employed to capture the opinions and attitudes of the stakeholders, but not to empirically evaluate any specific instances of technology, e.g., a system implemented at a certain hospital or used by a group of patients. Along the same line, most papers referred to the technology from a bird’s-eye view without expanding on technical features. This allows rather general propositions to be made that argue beyond the details of a specific realization. The drawback of this approach is that different types of technical designs or architectures, representations of data, information, and knowledge, user interfaces, and interaction modes were left unconsidered. Considering them would have required scrutiny at a different level of granularity. Against the background of the great and broad interest in ethical debates covering the variety of technologies and health specialties included in this review, it seems worthwhile to reconcile the perspective of technical details with the perspective of ethical values in rigorous empirical research designs. The suggestions put forward by Stockdale et al. 42 could provide a first step towards such aspirations, as the two following examples illustrate.

“Do the methods of data collection and usage in the proposal respect individual patient autonomy? (Respect for Autonomy)” and “Could granting access to the data, or granting a particular use of the data, lead to individual or collective harm? (Non-maleficence)” 42.

Here, “methods of data collection and usage” as well as “granting access” would have to be further detailed. The evaluation framework offered by Manzeschke et al. 74 provides a systematic scheme along which ethical values, i.e., care, autonomy, safety, justice, privacy, participation, and self-conception, of a technology and its use can be judged according to their degree of ethical sensitivity at the individual, organizational, and social level. This three-dimensional scheme integrates relevant ethical questions to be answered by the evaluation, which also includes the deliberation of conflicting ethical values. Developed initially for the evaluation of assisting technologies, it would have to be adapted for other sociotechnical systems. As data are moving more and more into the forefront 75 76, the mechanisms and results of data evaluations are becoming of paramount interest. These examples are far from exhaustive, and further research seems advisable.

4.3 Need for the Better Alignment of Ethics Education with Technologies

Despite the interest in ethics that pervades many application areas and technologies, sociotechnical ethics education remains vague. The hope raised by Goodman 17 to move the two, ethics and technology, into the curricula in a combined fashion seems not yet to be fulfilled. Referring students, clinicians, and researchers to professional ethics guidelines is not wrong, but it is often insufficient for three reasons. First, examples and illustrations are very often needed to show where and when ethical values are at risk when using the variety of technologies. Second, students, clinicians, and researchers cannot be left alone with these guidelines; they would certainly benefit from initial and continuing education, particularly as these technologies develop further. Finally, the need for patient and citizen involvement also entails ethical responsibilities that need to be accounted for. This is why educational concepts have been advocated in cooperation with patient organizations. While competencies surrounding all ethical issues are needed, a particular emphasis should be placed on knowledge and skills on how to solve ethical dilemmas practically. Such dilemmas often lead to a standstill in decision-making and can ultimately keep providers from applying a certain technology, which is a missed opportunity. In conclusion, this is yet another field in which research and practice need to be advanced.

4.4 Limitations

This review is limited to clinical and health information systems, electronic health records, clinical decision support, health information exchange, and devices and media used by the patient. It does not include the wide field of artificial intelligence, big data, and precision medicine as a whole but only addresses it where it touches the systems mainly targeted. Covering a field that attracts so much attention would have gone beyond the limits of this review, particularly as the field conceptualized in this review is already broad. In addition, the review was restricted to MEDLINE via PubMed, which is a further limitation.

5 Conclusion

This review demonstrates that clinical and health information systems reaching out to other organizations as well as to patients and citizens beyond their intramural confines prompt ethical debates. Many of the themes identified in previous reviews prevail and seem to be accentuated. Despite this interest, ethical values have not yet found their firm place in empirical evaluation studies. Similarly, sociotechnical ethics competencies obviously need further clarification. Both strands mirror actionable and practical consequences arising from ethical discourses and set the stage for further research at the junction of clinical information systems and ethics.

References

- 1. Evans R S. Electronic health records: then, now, and in the future. Yearb Med Inform. 2016:48–61. doi: 10.15265/IYS-2016-s006.

- 2. Zelmer J, Ronchi E, Hyppönen H, Lupiáñez-Villanueva F, Codagnone C, Nøhr C et al. International health IT benchmarking: learning from cross-country comparisons. J Am Med Inform Assoc. 2017;24(02):371–9. doi: 10.1093/jamia/ocw111.

- 3. Esdar M, Hüsers J, Weiß J P, Rauch J, Hübner U. Diffusion dynamics of electronic health records: A longitudinal observational study comparing data from hospitals in Germany and the United States. Int J Med Inform. 2019;131:103952. doi: 10.1016/j.ijmedinf.2019.103952.

- 4. Hüsers J, Hübner U, Esdar M, Ammenwerth E, Hackl W O, Naumann L et al. Innovative Power of Health Care Organisations Affects IT Adoption: A bi-National Health IT Benchmark Comparing Austria and Germany. J Med Syst. 2017;41(02):33. doi: 10.1007/s10916-016-0671-6.

- 5. Kim Y G, Jung K, Park Y T, Shin D, Cho S Y, Yoon D et al. Rate of electronic health record adoption in South Korea: A nation-wide survey. Int J Med Inform. 2017;101:100–7. doi: 10.1016/j.ijmedinf.2017.02.009.

- 6. Adler-Milstein J, Jha A K. HITECH Act Drove Large Gains In Hospital Electronic Health Record Adoption. Health Aff (Millwood). 2017;36(08):1416–22. doi: 10.1377/hlthaff.2016.1651.

- 7. Everson J, Adler-Milstein J. Sharing information electronically with other hospitals is associated with increased sharing of patients. Health Serv Res. 2002;55(01):128–35. doi: 10.1111/1475-6773.13240.

- 8. Haux R, Ammenwerth E, Koch S, Lehmann C U, Park H A, Saranto K et al. A brief survey on six basic and reduced eHealth indicators in seven countries in 2017. Appl Clin Inform. 2018;9:704–13. doi: 10.1055/s-0038-1669458.

- 9. Vehko T, Hyppönen H, Puttonen S, Kujala S, Ketola E, Tuukkanen J et al. Experienced time pressure and stress: electronic health records usability and information technology competence play a role. BMC Med Inform Decis Mak. 2019;19(01):160. doi: 10.1186/s12911-019-0891-z.

- 10. Gesner E, Gazarian P, Dykes P. The Burden and Burnout in Documenting Patient Care: An Integrative Literature Review. Stud Health Technol Inform. 2019;264:1194–8. doi: 10.3233/SHTI190415.

- 11. Kaipio J, Hyppönen H, Lääveri T. Physicians’ Experiences on EHR Usability: A Time Series from 2010, 2014 and 2017. Stud Health Technol Inform. 2019;257:194–9.

- 12. Gardner R M. Clinical Information Systems - From Yesterday to Tomorrow. Yearb Med Inform. 2016;1(Suppl 1):S62–S75.

- 13. Hackl W O, Hoerbst A. Managing Complexity. From Documentation to Knowledge Integration and Informed Decision Findings from the Clinical Information Systems Perspective for 2018. Yearb Med Inform. 2019;28(01):95–100. doi: 10.1055/s-0039-1677919.

- 14. Tran B X, Vu G T, Ha G H, Vuong Q H, Ho M T, Vuong T T et al. Global Evolution of Research in Artificial Intelligence in Health and Medicine: A Bibliometric Study. J Clin Med. 2019;8(03):360. doi: 10.3390/jcm8030360.

- 15. Hübner U, Shaw T, Thye J, Egbert N, de Fatima Marin H, Chang P et al. Technology Informatics Guiding Education Reform – TIGER. Methods Inf Med. 2018;57(Open 1):e30–e42.

- 16. Egbert N, Thye J, Hackl W O, Müller-Staub M, Ammenwerth E, Hübner U. Competencies for nursing in a digital world. Methodology, results, and use of the DACH-recommendations for nursing informatics core competency areas in Austria, Germany, and Switzerland. Inform Health Soc Care. 2018:1–25. doi: 10.1080/17538157.2018.1497635.

- 17. Goodman K W. Health Information Technology as a Universal Donor to Bioethics Education. Camb Quarterly Healthc Ethics. 2017;26:341–7. doi: 10.1017/S0963180116000943.

- 18. Beauchamp T L, Childress J F. Principles of Biomedical Ethics. 8th ed. Oxford University Press; 2019 (first published in 1977).

- 19. Moher D, Liberati A, Tetzlaff J, Altman D G; The PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6(07):e1000097.

- 20. McWilliams C, Inoue J, Wadey P, Palmer G, Santos-Rodriguez R, Bourdeaux C. Curation of an intensive care research dataset from routinely collected patient data in an NHS trust. F1000Res. 2019;8:1460. doi: 10.12688/f1000research.20193.1.

- 21. Kogetsu A, Ogishima S, Kato K. Authentication of Patients and Participants in Health Information Exchange and Consent for Medical Research: A Key Step for Privacy Protection, Respect for Autonomy, and Trustworthiness. Front Genet. 2018;9:167. doi: 10.3389/fgene.2018.00167.

- 22. Moscatelli M, Manconi A, Pessina M, Fellegara G, Rampoldi S, Milanesi L et al. An infrastructure for precision medicine through analysis of big data. BMC Bioinformatics. 2018;19(Suppl 10):351.

- 23. Lehmann C U, Petersen C, Bhatia H, Berner E S, Goodman K W. Advance Directives and Code Status Information Exchange: A Consensus Proposal for a Minimum Set of Attributes. Camb Q Healthc Ethics. 2019;28(01):178–85. doi: 10.1017/S096318011800052X.

- 24. Sanelli-Russo S, Folkers K M, Sakolsky W, Fins J J, Dubler N N. Meaningful Use of Electronic Health Records for Quality Assessment and Review of Clinical Ethics Consultation. J Clin Ethics. 2018;29(01):52–61.

- 25. Davenport T, Kalakota R. The potential for artificial intelligence in healthcare. Future Healthc J. 2019;6(02):94–8. doi: 10.7861/futurehosp.6-2-94.

- 26. Graham S, Depp C, Lee E E, Nebeker C, Tu X, Kim H C et al. Artificial Intelligence for Mental Health and Mental Illnesses: an Overview. Curr Psychiatry Rep. 2019;21(11):116. doi: 10.1007/s11920-019-1094-0.

- 27. Rashidi P, Edwards D A, Tighe P J. Primer on machine learning: utilization of large data set analyses to individualize pain management. Curr Opin Anaesthesiol. 2019;32(05):653–60. doi: 10.1097/ACO.0000000000000779.

- 28. Erikainen S, Pickersgill M, Cunningham-Burley S, Chan S. Patienthood and participation in the digital era. Digit Health. 2019 Apr 23;5:1–10.

- 29. Duckett S. Australia’s new digital health record created ethical dilemmas. Healthc Manage Forum. 2019;32(03):167–8. doi: 10.1177/0840470419827719.

- 30. Meredith J, McCarthy S, Hemsley B. Legal and Ethical Issues Surrounding the Use of Older Children’s Electronic Personal Health Records. J Law Med. 2018;25(04):1042–55.

- 31. de Riel E, Puttkammer N, Hyppolite N, Diallo J, Wagner S, Honoré J G et al. Success factors for implementing and sustaining a mature electronic medical record in a low-resource setting: a case study of iSanté in Haiti. Health Policy Plan. 2018;33(02):237–46. doi: 10.1093/heapol/czx171.

- 32. Wilburn A. Nursing Informatics: Ethical Considerations for Adopting Electronic Records. NASN Sch Nurse. 2018;33(03):150–3. doi: 10.1177/1942602X17712020.

- 33. Brisson G E, Barnard C, Tyler P D, Liebovitz D M, Neely K J. A Framework for Tracking Former Patients in the Electronic Health Record Using an Educational Registry. J Gen Intern Med. 2018;33(04):563–6. doi: 10.1007/s11606-017-4278-5.

- 34. Sánchez M C, Sarría-Santamera A. Unlocking data: Where is the key? Bioethics. 2019.

- 35. Carter S M, Rogers W, Win K T, Frazer H, Richards B, Houssami N. The ethical, legal and social implications of using artificial intelligence systems in breast cancer care. Breast. 2019;49:25–32. doi: 10.1016/j.breast.2019.10.001.

- 36. Boers S N, Jongsma K R, Lucivero F, Aardoom J, Büchner F L, de Vries M et al. SERIES: eHealth in primary care. Part 2: Exploring the ethical implications of its application in primary care practice. Eur J Gen Pract. 2019:1–7. doi: 10.1080/13814788.2019.1678958.

- 37. Loftus T J, Tighe P J, Filiberto A C, Efron P A, Brakenridge S C, Mohr A M et al. Artificial Intelligence and Surgical Decision-Making. JAMA Surg. 2019;10:1001. doi: 10.1001/jamasurg.2019.4917.

- 38. Baldini G, Botterman M, Neisse R, Tallacchini M. Ethical Design in the Internet of Things. Sci Eng Ethics. 2018;24:905–925. doi: 10.1007/s11948-016-9754-5.

- 39. Musher D M, Hayward C P, Musher B L. Physician Integrity, Templates, and the ‘F’ Word. J Emerg Med. 2019;57(02):263–5. doi: 10.1016/j.jemermed.2019.03.046.

- 40. McBride S, Tietze M, Robichaux C, Stokes L, Weber E. Identifying and Addressing Ethical Issues with Use of Electronic Health Records. Online J Issues Nurs. 2018;23(1):Manuscript 5.

- 41. Robichaux C, Tietze M, Stokes F, McBride S. Reconceptualizing the Electronic Health Record for a New Decade: A Caring Technology? ANS Adv Nurs Sci. 2019;42(03):193–205. doi: 10.1097/ANS.0000000000000282.

- 42. Stockdale J, Cassell J, Ford E. “Giving something back”: A systematic review and ethical enquiry into public views on the use of patient data for research in the United Kingdom and the Republic of Ireland. Wellcome Open Res. 2019;3:6. doi: 10.12688/wellcomeopenres.13531.1.

- 43. Natsiavas P, Kakalou C, Votis K, Tzovaras D, Koutkias V. Citizen Perspectives on Cross-Border eHealth Data Exchange: A European Survey. Stud Health Technol Inform. 2019;264:719–23. doi: 10.3233/SHTI190317.

- 44. Pathak P R, Chou A. Confidential Care for Adolescents in the U.S. Health Care System. J Patient Cent Res Rev. 2019;6(01):46–50. doi: 10.17294/2330-0698.1656.

- 45. Bourla A, Ferreri F, Ogorzelec L, Peretti C S, Guinchard C, Mouchabac S. Psychiatrists’ Attitudes Toward Disruptive New Technologies: Mixed-Methods Study. JMIR Ment Health. 2018;5(04):e10240. doi: 10.2196/10240.

- 46. Gooding P. Mapping the rise of digital mental health technologies: Emerging issues for law and society. Int J Law Psychiatry. 2019;67:101498. doi: 10.1016/j.ijlp.2019.101498.

- 47. Evans E L, Whicher D. What Should Oversight of Clinical Decision Support Systems Look Like? AMA J Ethics. 2018;20(09):E857–E863. doi: 10.1001/amajethics.2018.857.

- 48. Galvin H K, Petersen C, Subbian V, Solomonides A. Patients as Agents in Behavioral Health Research and Service Provision: Recommendations to Support the Learning Health System. Appl Clin Inform. 2019;10(05):841–8. doi: 10.1055/s-0039-1700536.

- 49. Brill S B, Moss K O, Prater L. Transformation of the Doctor-Patient Relationship: Big Data, Accountable Care, and Predictive Health Analytics. HEC Forum. 2019;31(04):261–82. doi: 10.1007/s10730-019-09377-5.

- 50. Macdonald G G, Townsend A F, Adam P, Li L C, Kerr S, McDonald M et al. eHealth Technologies, Multimorbidity, and the Office Visit: Qualitative Interview Study on the Perspectives of Physicians and Nurses. J Med Internet Res. 2018;20(01):e31. doi: 10.2196/jmir.8983.

- 51. Mars M, Morris C, Scott R E. Selfie Telemedicine - What Are the Legal and Regulatory Issues? Stud Health Technol Inform. 2018;254:53–62.

- 52. Eberlin K R, Perdikis G, Damitz L, Krochmal D J, Kalliainen L K, Bonawitz S C. Electronic Communication in Plastic Surgery: Guiding Principles from the American Society of Plastic Surgeons Health Policy Committee. Plast Reconstr Surg. 2018;141(02):500–5. doi: 10.1097/PRS.0000000000004022.

- 53. Kuhnel L. TTaPP: Together Take a Pause and Ponder: A Critical Thinking Tool for Exploring the Public/Private Lives of Patients. J Clin Ethics. 2018;29(02):102–13.

- 54. Gensheimer S G, Wu A W, Snyder C F. Oh, the Places We’ll Go: Patient-Reported Outcomes and Electronic Health Records. Patient. 2018;11(06):591–8. doi: 10.1007/s40271-018-0321-9.

- 55. Ashton K, Sullivan A. Ethics and Confidentiality for Psychologists in Academic Health Centers. J Clin Psychol Med Settings. 2018;25(03):240–9. doi: 10.1007/s10880-017-9537-4.

- 56. Ienca M, Wangmo T, Jotterand F, Kressig R W, Elger B. Ethical Design of Intelligent Technologies for Dementia: A Descriptive Review. Sci Eng Ethics. 2018;24:1035–55. doi: 10.1007/s11948-017-9976-1.

- 57. Ho A, Quick O. Leaving patients to their own devices? Smart technology, safety and therapeutic relationships. BMC Med Ethics. 2018;19(01):18. doi: 10.1186/s12910-018-0255-8.

- 58. Laurie G T. Cross-Sectoral Big Data: The Application of an Ethics Framework for Big Data in Health and Research. Asian Bioeth Rev. 2019;11(03):327–39. doi: 10.1007/s41649-019-00093-3.

- 59.Modell S M, Citrin T, Kardia S L R. Laying Anchor: Inserting Precision Health into a Public Health Genetics Policy Course. Healthcare (Basel). 2018;6(3) [DOI] [PMC free article] [PubMed]

- 60.Estrada-Hernandez N, Bahr P. Ethics and assistive technology: Potential issues for AT service providers. Assist Technol. 2019;24:1–7. doi: 10.1080/10400435.2019.1634657. [DOI] [PubMed] [Google Scholar]

- 61.Alshakhs F, Alanzi T. The evolving role of social media in health-care delivery: measuring the perception of health-care professionals in Eastern Saudi Arabia. J Multidiscip Healthc. 2018;11:473–9. doi: 10.2147/JMDH.S171538. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Demiray A, Çakar M, Açil A, Ilaslan N, Savas Yucel T. Social media use and ethics violations: Nurses’ responses to hypothetical cases. Int Nurs Rev. 2019:1–8. doi: 10.1111/inr.12563. [DOI] [PubMed] [Google Scholar]

- 63.Bopp T, Vadeboncoeur J D, Stellefson M, Weinsz M. Moving Beyond the Gym: A Content Analysis of YouTube as an Information Resource for Physical Literacy. Int J Environ Res Public Health. 2019;16(18) [DOI] [PMC free article] [PubMed]

- 64.Zimba O, Radchenko O, Strilchuk L. Social media for research, education and practice in rheumatology. Rheumatol Int. 2019 [DOI] [PubMed]

- 65.LaBarge G, Broom M. Social Media in Primary Care. Mo Med. 2019;116(02):106–10. [PMC free article] [PubMed] [Google Scholar]

- 66.Bittner J G, Logghe H J, Kane E D, Goldberg R F, Alseidi A, Aggarwal R et al. A Society of Gastrointestinal and Endoscopic Surgeons (SAGES) statement on closed social media (Facebook®) groups for clinical education and consultation: issues of informed consent, patient privacy, and surgeon protection. Surg Endosc. 2019;33(01):1–7. doi: 10.1007/s00464-018-6569-2. [DOI] [PubMed] [Google Scholar]

- 67.Geis J R, Brady A P, Wu C C, Spencer J, Ranschaert E, Jaremko J L et al. Ethics of Artificial Intelligence in Radiology: Summary of the Joint European and North American Multisociety Statement. Radiology. 2019;293(02):436–40. [DOI] [PubMed] [Google Scholar]

- 68.Robillard J M, Cleland I, Hoey J, Nugent C. Ethical adoption: A new imperative in the development of technology for dementia. Alzheimers Dement. 2018;14(09):1104–13. doi: 10.1016/j.jalz.2018.04.012. [DOI] [PubMed] [Google Scholar]

- 69.Chandawarkar A A, Gould D J, Stevens W G. Insta-grated Plastic Surgery Residencies: The Rise of Social Media Use by Trainees and Responsible Guidelines for Use. Aesthet Surg J. 2018;38(10):1145–52. doi: 10.1093/asj/sjy055. [DOI] [PubMed] [Google Scholar]

- 70.Jaremko J L, Azar M, Bromwich R, Lum A, Alicia Cheong L H, Giber M et al. Canadian Association of Radiologists White Paper on Ethical and Legal Issues Related to Artificial Intelligence in Radiology. Can Assoc Radiol J. 2019;70(02):107–18. [DOI] [PubMed] [Google Scholar]

- 71.Reamer F G. Evolving standards of care in the age of cybertechnology. Behav Sci Law. 2018;36:257–69. doi: 10.1002/bsl.2336. [DOI] [PubMed] [Google Scholar]

- 72.Sussman N, DeJong S M. Ethical Considerations for Mental Health Clinicians Working with Adolescents in the Digital Age. Curr Psychiatry Rep. 2018;20(12):113. doi: 10.1007/s11920-018-0974-z. [DOI] [PubMed] [Google Scholar]

- 73.Denecke K, Bamidis P, Bond C et al. Ethical Issues of Social Media Usage in Healthcare. Yearb Med Inform. 2015;10(01):137–47. doi: 10.15265/IY-2015-001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Manzeschke A, Weber K, Rother E, Fangerau H. Ethical questions in the area of age appropriate assisting systems. Berlin: VDI/VDE publications; 2015.

- 75.Floridi L, Taddeo M. What is data ethics? Phil Trans R Soc A. 2016;374:20160360. doi: 10.1098/rsta.2016.0360. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Cahan E M, Hernandez-Boussard T, Thadaney-Israni S, Rubin D L. Putting the data before the algorithm in big data addressing personalized healthcare. NPJ Digit Med. 2019;2:78. [DOI] [PMC free article] [PubMed] [Google Scholar]