Abstract

Background

Computerized decision support systems (CDSSs) are software programs that support the decision making of practitioners and other staff. Other reviews have analyzed the relationship between CDSSs, practitioner performance, and patient outcomes. These reviews reported positive practitioner performance in over half the articles analyzed, but very little information was found for patient outcomes.

Objective

The purpose of this review was to analyze the relationship between CDSSs, practitioner performance, and patient medical outcomes. PubMed, CINAHL, Embase, Web of Science, and Cochrane databases were queried.

Methods

Articles were chosen based on year published (last 10 years), high quality, peer-reviewed sources, and discussion of the relationship between the use of CDSS as an intervention and links to practitioner performance or patient outcomes. Reviewers used an Excel spreadsheet (Microsoft Corporation) to collect information on the relationship between CDSSs and practitioner performance or patient outcomes. Reviewers also collected observations of participants, intervention, comparison with control group, outcomes, and study design (PICOS), along with any indications of bias. Articles were analyzed by multiple reviewers following the Kruse protocol for systematic reviews. Data were organized into multiple tables for analysis and reporting.

Results

Themes were identified for both practitioner performance (n=38 theme occurrences) and medical outcomes (n=36 articles). Of the practitioner performance theme occurrences, 66% (25/38) indicated positive practitioner performance, 13% (5/38) reflected no difference in practitioner performance, and 21% (8/38) reflected articles that did not report or discuss practitioner performance. No articles reported negative practitioner performance. A total of 61% (22/36) of articles had occurrences of positive patient medical outcomes, 8% (3/36) found no statistically significant difference in medical outcomes between intervention and control groups, and 31% (11/36) did not report or discuss medical outcomes. No articles found negative patient medical outcomes attributed to using CDSSs.

Conclusions

Results of this review are commensurate with previous reviews with similar objectives, but unlike these reviews, we found a high level of reporting of positive effects on patient medical outcomes.

Keywords: CDSS, performance, outcomes

Introduction

Rationale

Computerized decision support systems (CDSSs) are software programs that support the decision making of patients, practitioners, and staff with knowledge and person-specific information. CDSSs present several tools and alerts to enhance the decision-making process within the clinical workflow [1]. Knowledge-based CDSSs were the earliest class of CDSSs; they use a data repository to draw conclusions and rely on traditional computing methods that produce programmed results. Non–knowledge-based CDSSs are the most common form used today. These systems use artificial intelligence (AI) to augment clinical decisions made at the point of care. AI-supported CDSSs use patient data to analyze relationships between symptoms, treatments, and patient outcomes to support clinical decisions. These patient data are usually derived from electronic health records (EHRs): digital forms of patient records that include information such as personal contact information, medical history, allergies, test results, and treatment plans [2]. AI, meaning software or algorithms able to perform tasks that normally require human intelligence, is integrated into CDSS processes. Data mining, a process usually assisted by AI, is often used by CDSSs to identify new patterns in large data sets (such as patient EHRs) [3]. The conclusions reached by AI used for data mining can be used by both non–knowledge-based and knowledge-based CDSSs [3]. CDSSs are integrated into technologies such as computerized physician order entry (CPOE) tools [4] and electronic medical record (EMR)/EHR databases, and they use a wide variety of drug, patient, and treatment data to provide the best recommendations for treatment. CDSS utility varies widely, drawing conclusions about different ailments, disorders, and syndromes. Future applications of this technology may also take into account patient preferences or financial capabilities.

In prior studies, CDSSs have been shown to improve practitioner performance, but their effects on patient outcomes were inconsistent and required further study. A review conducted in 1998 evaluated studies from the previous 5 years and found a benefit to physician performance in 66% of studies analyzed (n=65), but only 14 of the studies analyzed discussed outcomes, so no conclusions were made [5]. The review was repeated in 2005 with a larger sample (n=100) and found a positive impact on physician performance in 64% of studies analyzed, but, as in the 1998 review, evidence on patient outcomes was insufficient to make generalizations [6]. In 2010, a research protocol was registered to repeat the review, but no publication followed. In 2011, the review was repeated with a similar number of articles (n=91) and identified a positive effect of CDSSs on practitioner performance in 57% of articles analyzed; however, consistent with previous reviews, no conclusions could be made concerning patient outcomes [7].

Since the last publication on this topic in 2011, CDSSs have seen significant industry growth, becoming more accessible, cost-effective, and reliable and possessing greater computational power [8]. In addition to hardware improvements, the inclusion of software such as AI programs is growing rapidly in CDSSs, but these improvements have not yet been systematically reviewed to determine their impact on patient outcomes and practitioner performance.

Objective

The purpose of this systematic review is to conduct a review similar to those from 1998 and 2005 to analyze the association between CDSSs, practitioner performance, and patient outcomes. The review planned in the 2010 protocol was never published, and the methods used in the 2011 review differed substantially from those used in 1998 and 2005. The taxonomy of CDSSs has changed greatly since 1998, so the search terms used then may no longer be relevant today. CDSS employment is growing rapidly, especially with increased access to AI-supported CDSS software. Because the effects are understudied, our goal is to review the effectiveness of CDSS technologies, their employment, and their overall utility.

Methods

Protocol Registration and Eligibility Criteria

This review was not registered. The methods followed a workload-sharing technique from the Assessment of Multiple Systematic Reviews (AMSTAR) [9]. The review is formatted according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) [10]. Conceptualization of the overall review, including standardized data extraction tools, follows the Kruse protocol for writing systematic reviews in a health-related program [11]. Articles were eligible for inclusion if they were published in the English language within the last 10 years, had full text available, and reported on the elements of the objective statement: measures of effectiveness of CDSSs on practitioner performance or patient outcomes. We chose a 10-year window to keep the research current; it is also twice the 5-year window used by the 1998 and 2005 reviews. At first, we limited the search to studies in peer-reviewed journals, but because the resulting sample was too small, we expanded the search to include grey literature. However, we included only grey literature that reported results.
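The eligibility criteria above, together with the exclusion of reviews stated under Search and Study Selection, can be read as a single screening predicate. The sketch below is a hypothetical illustration only: the reviewers screened records manually in an Excel spreadsheet, and the `Record` fields and `is_eligible` helper are invented for this example. The date window is the one reported for the database filters in the Results.

```python
from dataclasses import dataclass
from datetime import date

# Date window used by the database filters (January 30, 2011, to January 30, 2020).
WINDOW_START = date(2011, 1, 30)
WINDOW_END = date(2020, 1, 30)


@dataclass
class Record:
    """Hypothetical screening record; field names are illustrative only."""
    language: str
    published: date
    full_text_available: bool
    peer_reviewed: bool
    reports_results: bool
    reports_cdss_effect: bool  # effect on practitioner performance or patient outcomes
    is_review: bool


def is_eligible(record: Record) -> bool:
    """Apply the stated inclusion and exclusion criteria as one predicate."""
    if record.language != "English" or record.is_review:
        return False
    if not WINDOW_START <= record.published <= WINDOW_END:
        return False
    if not (record.full_text_available and record.reports_cdss_effect):
        return False
    # Grey literature (not peer reviewed) was kept only when it reported results.
    if not record.peer_reviewed and not record.reports_results:
        return False
    return True
```

Encoding the rules as one function makes the order of checks explicit, although in practice each criterion was judged by the reviewers rather than computed.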

Information Sources

Five common research databases were queried: PubMed (the web-based components of MEDLINE, life science journals, and online books), CINAHL, Embase, Web of Science, and Cochrane (reviews, controlled trials, methodologies, and health technology assessments). Searches were conducted from January 29 to January 31, 2020. Databases were chosen following the National Institutes of Health recommendation to search at least three databases: PubMed, Embase, and Cochrane [12]. This choice also follows established practice in published systematic reviews [11].

Search and Study Selection

Searches in each database were identical: (“Clinical decision support systems” OR “computerized provider order entry” OR “diagnosis, computer assisted” OR “drug therapy, computer-assisted” OR “expert systems”) AND (“patient reported outcomes” OR “practitioner performance”). Embase and Web of Science do not allow Boolean searches, so an advanced search was used. Articles were eligible for inclusion if they were published in the last 10 years and discussed both CDSSs and either practitioner performance or patient-reported outcomes. We excluded reviews. In CINAHL, we excluded MEDLINE to avoid duplication with the results from PubMed.
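The query above combines two concept groups: any of five CDSS-related phrases AND any of two outcome phrases. A minimal sketch of assembling that Boolean string from term lists follows. The helper names are hypothetical, and straight double quotes are assumed because the curly quotes in the printed string are most likely typesetting; as noted above, Embase and Web of Science were searched through their advanced search forms, so the syntax entered there may have differed.

```python
# Term groups copied from the search string used in this review.
CDSS_TERMS = [
    "Clinical decision support systems",
    "computerized provider order entry",
    "diagnosis, computer assisted",
    "drug therapy, computer-assisted",
    "expert systems",
]
OUTCOME_TERMS = ["patient reported outcomes", "practitioner performance"]


def or_group(terms):
    """Quote each phrase and OR the phrases together inside parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(*groups):
    """AND the OR-groups together; each group represents one search concept."""
    return " AND ".join(or_group(group) for group in groups)


print(build_query(CDSS_TERMS, OUTCOME_TERMS))
```

Keeping each concept in its own list makes it easy to add synonyms to one group without disturbing the other.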

The search strings for the 1998 and 2005 reviews were not available, but the search string for the 2011 study was: (literature review[tiab] OR critical appraisal[tiab] OR meta analysis[pt] OR systematic review[tw] OR medline[tw]) AND (medical order entry systems[mh] OR medical order entry system*[tiab] OR computerized order entry[tiab] OR computerized prescriber order entry[tiab] OR computerized provider order entry[tiab] OR computerized physician order entry[tiab] OR electronic order entry[tiab] OR electronic prescribing[mh] OR electronic prescribing[tiab] OR cpoe[tiab] OR drug therapy, computer assisted[mh] OR computer assisted drug therapy[tiab] OR decision support systems, clinical[mh] OR decision support system*[tiab] OR reminder system*[tiab] OR decision making, computer assisted[mh] OR computer assisted decision making [tiab] OR diagnosis, computer assisted[mh] OR computer assisted diagnosis[tiab] OR therapy, computer assisted[mh] OR computer assisted therapy[tiab] OR expert systems[mh] OR expert system*[tiab]).

Note that the small set of CDSS terms in our own search also captured lesser-known entry terms indexed by PubMed’s Medical Subject Headings (MeSH): clinical decision support; clinical decision supports; decision support, clinical; support, clinical decision; supports, clinical decision; and decision support systems, clinical. Searching for CPOE also included order entry systems, medical; medication alert systems; alert system, medication; medication alert system; system, medication alert; alert systems, medication; computerized physician order entry system; CPOE; computerized provider order entry; and computerized physician order entry. Searching for diagnosis, computer assisted also included the following: computer-assisted diagnosis; computer assisted diagnosis; computer-assisted diagnoses; and diagnoses, computer assisted. Searching for drug therapy included the following: drug therapy, computer assisted; therapy, computer-assisted drug; computer-assisted drug therapies; drug therapies, computer-assisted; therapies, computer-assisted drug; therapy, computer assisted drug; computer-assisted drug therapy; computer assisted drug therapy; protocol drug therapy, computer-assisted; and protocol drug therapy, computer assisted. A search for expert systems also included expert system; system, expert; and systems, expert.

Abstracts were independently screened by each reviewer, and a consensus meeting was called to discuss disagreement. A kappa score was calculated to provide a measure of agreement between reviewers.
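The review reports a kappa statistic but does not say which variant was used. The sketch below assumes Cohen's kappa for two reviewers (the Limitations note that two reviewers screened abstracts), with each abstract labeled "include" or "exclude"; the function name and labels are illustrative assumptions, not taken from the review. Cohen's kappa is (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e is the agreement expected by chance from each reviewer's label frequencies.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labeled the same items."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items both raters labeled identically.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    labels = count_a.keys() | count_b.keys()
    p_e = sum(count_a[label] * count_b[label] for label in labels) / n**2
    if p_e == 1:
        return 1.0  # both raters used the same single label for every item
    return (p_o - p_e) / (1 - p_e)


# Hypothetical screening decisions for 20 abstracts; reviewer B differs on one.
reviewer_a = ["include"] * 10 + ["exclude"] * 10
reviewer_b = ["include"] * 9 + ["exclude"] * 11
print(round(cohens_kappa(reviewer_a, reviewer_b), 2))  # -> 0.9
```

Here raw agreement is 95%, but kappa is about 0.9 because half of the agreement would be expected by chance alone; correcting for chance is why kappa is preferred over simple percent agreement.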

Data Collection and Data Items

A standardized Excel spreadsheet (Microsoft Corporation) was used as a data extraction tool, in accordance with the Kruse protocol [11]. This tool acted as a template for reviewers to collect study design, participants, sample size, intervention, observed bias, and effect size, where applicable. A literature matrix was created to list and organize all articles, divide data extraction among multiple reviewers, and record observations for discussion in consensus meetings. Three consensus meetings were held for reviewers to discuss disagreements and share observations. This practice helped all reviewers work from a shared understanding of the data.

Risk of Bias in Individual Studies

Reviewers noted any observation of bias. We used the Johns Hopkins Nursing Evidence-Based Practice (JHNEBP) tool to assess the quality of the studies analyzed. Other forms of bias were also noted; these are described in the Risk of Bias Across Studies section.

Synthesis of Results

The Excel spreadsheet was used to synthesize our observations and data collected. The spreadsheet enabled a narrative analysis which identified themes, as is the practice in multiple disciplines. We did not combine results of studies because this was not a meta-analysis.

Risk of Bias Across Studies

Other forms of bias, such as surveillance bias, were also noted on the spreadsheet, as was selection bias arising from localized studies.

Additional Analysis

Reviewers read each article twice [11]. During the second reading, reviewers made independent notes of major themes related to the objective, using the Excel data extraction tool. After a third consensus meeting to debrief the observations and themes, detailed notes were formulated about the health policy implications of CDSSs. The frequency of occurrence of each major common theme was captured in affinity matrices for further analysis. Data and calculations are available upon request.

Results

Study Selection and Study Characteristics

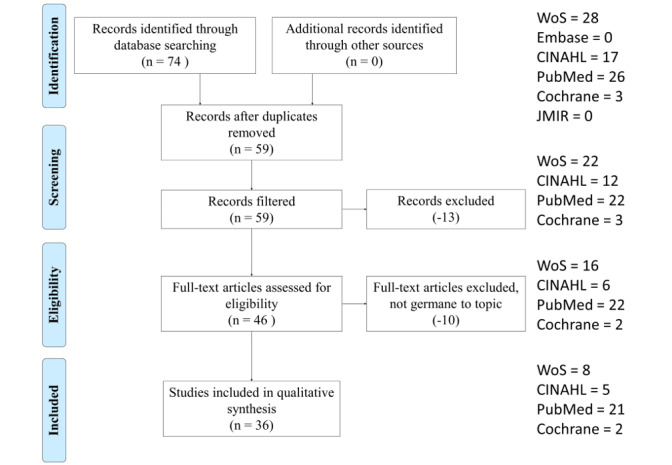

The study selection process is illustrated in Figure 1. The 74 results from the search string in five databases were placed into an Excel spreadsheet and shared among reviewers for selection and analysis. Filters were applied in each database to capture only the last 10 years (January 30, 2011, to January 30, 2020). Reviewers independently removed duplicates and screened abstracts. A statistic of agreement, kappa, was calculated; the resulting kappa score of .98 showed almost complete agreement on all reviewed articles [13,14]. The remaining 36 articles were read in full for relevance, and observations for all 36 were placed in an Excel spreadsheet for independent data analysis.

Figure 1.

Article selection process with selection criteria.

Reviewers collected standard participants, intervention, comparison, outcome, and study design (PICOS) observations, plus indications of either practitioner performance or patient medical outcomes (Multimedia Appendix 1). Bias was also noted. Following the Kruse protocol, observations were distilled into themes for further analysis. Three consensus meetings were used to discuss disagreements. A summary of all observations is listed in Table 1. Articles are listed in reverse chronological order. The details extracted were year of publication, authors, title, study design, participants, sample size, intervention, bias, and observations about practitioner performance and patient medical outcomes.

Table 1.

Summary of analysis.

| Authors | Efficiency (practitioner performance) | Efficiency themes | Effectiveness (medical outcomes) | Effectiveness themes |
| --- | --- | --- | --- | --- |
| Grout et al [15] | Practitioner performance not discussed | Not reported or discussed | Self-reporting by adolescents increased (doubled) by 19.3 percentage points | Improved screening |
| Connelly et al [16] | Number of prescriptions written for migraines increased significantly; average length of time per use of tool was 3.3 minutes | More accurate prescribing | Medical outcomes not reported or discussed | Not reported or discussed |
| Salz et al [17] | Facilitated a more comprehensive visit | Improved care plans | Provided a way for patients to chronicle other physicians who had been involved in medical decisions, enabling doctors to communicate | Improved feedback |
| Kirby et al [18] | Increased referral awareness by providers for patients with severe aortic stenosis (which is a known quality issue); increase in referral rate from 72% to 98% | Increased awareness | Medical outcomes not reported or discussed | Not reported or discussed |
| Dolan and Veazie [19] | Practitioner performance not statistically different | No difference reported | Medical outcomes not reported or discussed | Not reported or discussed |
| Jackson and De Cruz [20] | Practitioner performance not discussed | Not reported or discussed | Improvement in relapse duration, medication adherence, cost, and number of clinic visits | Improved symptoms |
| Caballero-Ruiz et al [21] | Face-to-face visits with patients reduced by 89%; time devoted by clinicians to patient evaluation was reduced by 27%; automatic detection of 100% of patients who needed insulin therapy | Improved performance | Diet prescriptions provided without clinician intervention; patients were very pleased with the tool | Improved disease management |
| Raj et al [22] | System did not improve pain intensity, therefore no significant differences in dose of opiates compared with control; had no effect on practitioner performance | No difference reported | Did not improve or worsen pain management | No difference reported |
| Mooney et al [23] | Enabled providers to follow up based on feedback from patients | Better follow-up with patients | Intervention group demonstrated fewer severe and moderate symptoms | Improved symptoms |
| Baypinar et al [24] | Correct prescribing increased from 54% to 91% (P<.01) for folic acid and 11% to 40% (P<.001) for vitamin D, and stopped orders increased from 3% to 14% (P<.002) | More accurate prescribing | Medical outcomes not reported or discussed | Not reported or discussed |
| Zini et al [25] | Practitioners improved prevention, diagnosis, and treatment | Improved care plans | Medical outcomes not reported or discussed | Not reported or discussed |
| Muro et al [26] | Practitioner performance not discussed | Not reported or discussed | Improved symptoms; decreased adverse events | Improved symptoms |
| Kistler et al [27] | Practitioner performance not discussed | Not reported or discussed | More patients agreed to screening in the intervention group than the control | Improved screening |
| Lawes and Grissinger [28] | Practitioners performed worse when CDSSa was not available or when incorrect data were entered for weight | More accurate prescribing | Adverse drug events no doubt occurred because of error, but no outcomes were discussed | Not reported or discussed |
| Kouladjian et al [29] | Average time to complete task to recognize sedative and anticholinergic medicines in practice was 7:20 (SD 1:45) minutes | Improved performance | Medical outcomes not reported or discussed | Not reported or discussed |
| Norton et al [30] | Surgeons rated the tool very useful or moderately useful (25%), neutral (47%), or moderately useless or not useful (28%) | Improved care plans | Medical outcomes not reported or discussed | Not reported or discussed |
| Pombo et al [31] | Resolving missing data | Improved documentation | Resolving missing data in daily diary improved the feedback loop to the pain manager | Improved feedback |
| Cox and Pieper [32] | Practitioner performance not discussed | Not reported or discussed | Treatment in the doxazosin arm was stopped early due to a 1.25-fold increase in the incidence of CVDb and a 2-fold increase in the incidence of heart failure compared with the diuretic arm | Improved efficacy |
| Schneider et al [33] | One CDSS scored significantly more exams as appropriate; better interface of one CDSS versus the other influenced provider willingness to use the CDS system | Improved screening; improved buy-in of CDSSs | Medical outcomes not reported or discussed | Not reported or discussed |
| Zhu and Cimino [34] | Accuracy improved: reduced inaccuracy | Improved accuracy and performance | Improved patient safety | Improved safety |
| Utidjian et al [35] | Proportions of doses administered declined during the baseline seasons (from 72% to 62%) with partial recovery to 68% during the intervention season; palivizumab-focused group improved by 19.2 percentage points in the intervention season compared with the prior baseline season (P<.001), while the comprehensive intervention group only improved 5.5 percentage points (P=.29); difference in change between study groups was significant (P=.05) | More accurate prescribing | A quality improvement initiative supported by CDS and workflow tools integrated in the EHRc improved recognition of eligibility and may have increased palivizumab administration rates; palivizumab-focused group performed significantly better than a comprehensive intervention | Improved disease management |
| Semler et al [36] | No statistically significant difference in performance (also low use of tool) | No difference reported | No statistically significant difference: mortality 14% versus 15%, ICUd-free days 17 versus 19, vasopressor-free days 22.2 versus 22.6 | No difference reported |
| Peiris et al [37] | Patients more likely to receive screening with CDSS (63% vs 53%); no improvements in prescription of recommended medications at the end of the study | Improved screening | Improved cardiovascular disease risk management; no difference in prescription rates | Improved disease management |
| Chow et al [38] | Only one-quarter of patients received antibiotics despite recommendations of CDSSs | More accurate prescribing | Patients aged <65 years had greater mortality benefit (ORe 0.45, 95% CI 0.20-1.00; P=.05) than patients >65 years (OR 1.28, 95% CI 0.91-1.82; P=.16); no effect was observed on incidence of Clostridium difficile (OR 1.02, 95% CI 0.34-3.01) and multidrug-resistant organism (OR 1.06, 95% CI 0.42-2.71) infections; no increase in infection-related readmission (OR 1.16, 95% CI 0.48-2.79) was found in survivors; receipt of CDSS-recommended antibiotics reduced mortality risk in patients aged ≤65 years and did not increase risk in older patients | No difference reported |
| Wilson et al [39] | Practitioner performance not discussed | Not reported or discussed | Improved self-efficacy and decreased fecal aversion | Improved efficacy |
| Loeb et al [40] | Training greatly improved documentation | Improved documentation | Medical outcomes not reported or discussed | Not reported or discussed |
| Mishuris et al [41] | Practitioner performance not discussed | Not reported or discussed | Patients who visited clinics missing at least one of the CDSS functions were more likely to have controlled blood pressure (86% vs 82%; OR 1.3, 95% CI 1.1-1.5) and more likely to not have adverse drug event visits (99.9% vs 99.8%; OR 3.0, 95% CI 1.3-7.3) | Improved symptoms |
| Dexheimer et al [42] | No difference in time to disposition decision; no change in hospital admission rate; no difference in EDf length of stay | No difference reported | CDSS supported communication between patient and provider | Improved feedback |
| Heisler et al [43] | Practitioner performance not discussed | Not reported or discussed | Decrease in diabetes distress, but no difference in other outcomes | Improved symptoms |
| Eckman et al [44] | Decisions are based on >0.1 QALYsg; tool identified the 50% who would benefit from this threshold | Improved performance | Significant gain in quality-adjusted life expectancy | Improved mortality |
| Zaslansky et al [45] | Audit, feedback, and benchmarking provided to practitioners to identify when their practice is not in line with data | Improved benchmarking | Provides real-time feedback on PROsh | Improved feedback |
| Lobach et al [46] | No treatment-related differences between groups | No difference reported | Among patients <18 years, those in the email group had fewer low severity (7.6 vs 10.6/100 enrollees; P<.001) and total ED encounters (18.3 vs 23.5/100 enrollees; P<.001) and lower ED ($63 vs $89, P=.002) and total medical costs ($1736 vs $2207, P=.009); patients who were ≥18 years in the latter group had greater outpatient medical costs | Improved symptoms |
| Barlow and Krassas [47] | Annual cycle of care plans increased by 12% | Improved care plans | Patients better able to meet targets for microalbumin; glycemic control well managed | Improved symptoms |
| Robbins et al [48] | A total of 90% of providers involved with the RCTi supported adopting the intervention | Improved buy-in of CDSSs | Increased CD4+ lymphocyte count and reduced suboptimal follow-up appointment | Improved symptoms |
| Chen et al [49] | New CDSS identified 70 records needing reassessment of triglyceride level | Improved screening | Medical outcomes not discussed | Not reported or discussed |
| Seow et al [50] | A total of 87% of respondents strongly agreed or somewhat agreed that the “ESASj was important to complete because it helped the health care team to know what symptoms [they] were having and how severe they were” | Improved screening | A total of 79% of respondents rated that their “pain and other symptoms have been controlled to a comfortable level” always or most of the time compared with 8% of respondents who rated this as rarely or never occurring | Improved symptoms |

aCDSS: computerized decision support system.

bCVD: cardiovascular disease.

cEHR: electronic health record.

dICU: intensive care unit.

eOR: odds ratio.

fED: emergency department.

gQALY: quality-adjusted life year.

hPRO: patient-reported outcome.

iRCT: randomized controlled trial.

jESAS: Edmonton Symptom Assessment System.

Risk of Bias Within Studies

Bias was observed in some, but not all, of the studies analyzed. A full review of the bias observed is provided in Multimedia Appendix 1. The JHNEBP tool rated no study below Level IV or quality grade C.

Results of Individual Studies

General observations and thematic analysis are listed in Table 1. Articles are listed in reverse chronological order. A table of PICOS is provided in Multimedia Appendix 1.

Risk of Bias Across Studies

Multimedia Appendix 1 provides a table of PICOS and bias. Outcomes are reported in Table 1. Bias was similar across articles reviewed: most research took place in one facility, organization, or state, which is a form of selection bias and limits the broad application of results. A sample taken from a limited geographic area is inherently limited in its ability to generalize results to the general population unless steps have been taken to ensure the sample is representative of the population.

Additional Analysis

Twelve themes were identified for practitioner performance, two of which were "no difference reported" and "not reported or discussed." These themes are listed in Table 2 in descending order of occurrence, with positive effects first, followed by no difference and not discussed.

Table 2.

Summary of themes identified for practitioner performance (n=38).

| Efficiency themes | Occurrences (references) | Incidence, n (%) |
| --- | --- | --- |
| More accurate prescribing | 16,24,28,35,38 | 5 (13) |
| Improved screening | 33,37,49,50 | 4 (11) |
| Improved performance | 21,29,34,44 | 4 (11) |
| Improved care plans | 17,25,30,47 | 4 (11) |
| Improved documentation | 31,40 | 2 (5) |
| Improved buy-in of CDSSsa | 33,48 | 2 (5) |
| Increased awareness | 18 | 1 (3) |
| Better follow-up with patients | 23 | 1 (3) |
| Improved accuracy | 34 | 1 (3) |
| Improved benchmarking | 45 | 1 (3) |
| No difference reported | 19,22,36,42,46 | 5 (13) |
| Not reported or discussed | 15,20,26,27,32,39,41,43 | 8 (21) |

aCDSS: computerized decision support system.

As Table 2 shows, 66% (25/38) of theme occurrences identified 10 positive indicators of practitioner performance [16-18,21,23-25,28-31,33-35,37,38,40,44,45,47-50]. Practitioner performance was reported as more accurate prescribing, improved screening of patients, improved overall performance, increased awareness of patient conditions, improved follow-up due to better communication with patients, improved accuracy of diagnosis, improved documentation, improved benchmarking, improved care plans, and improved buy-in of CDSSs. A total of 21% (8/38) of theme occurrences came from articles that did not discuss practitioner performance [15,20,26,27,32,39,41,43].

Practitioners using CDSSs experienced more accurate prescribing [16,24,28,35,38], improved screening [33,37,49,50], improved overall performance [21,29,34,44], improved care plans [17,25,30,47], improved documentation [31,40], overall improved buy-in for CDSSs [33,48], increased awareness of needs of patients [18], improved follow-up with patients due to enhanced communication channels enabled by the application [23], improved accuracy of diagnosis [34], and improved benchmarking [45].
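Because two articles contributed two practitioner performance themes each ([33] and [34]), the denominator of 38 in Table 2 counts theme occurrences rather than articles. The sketch below, with reference numbers transcribed from Table 2, recomputes the incidence column and shows how occurrences and distinct articles differ. It is an illustrative check, not the reviewers' own calculation, which was done in Excel; the names `THEMES` and `incidence` are hypothetical.

```python
# Reference numbers for each practitioner performance theme, transcribed from Table 2.
THEMES = {
    "More accurate prescribing": [16, 24, 28, 35, 38],
    "Improved screening": [33, 37, 49, 50],
    "Improved performance": [21, 29, 34, 44],
    "Improved care plans": [17, 25, 30, 47],
    "Improved documentation": [31, 40],
    "Improved buy-in of CDSSs": [33, 48],
    "Increased awareness": [18],
    "Better follow-up with patients": [23],
    "Improved accuracy": [34],
    "Improved benchmarking": [45],
    "No difference reported": [19, 22, 36, 42, 46],
    "Not reported or discussed": [15, 20, 26, 27, 32, 39, 41, 43],
}
NON_POSITIVE = {"No difference reported", "Not reported or discussed"}


def incidence(themes):
    """Return total occurrences and (theme, n, percent of all occurrences) rows."""
    total = sum(len(refs) for refs in themes.values())
    rows = [(name, len(refs), round(100 * len(refs) / total)) for name, refs in themes.items()]
    return total, rows


total, rows = incidence(THEMES)
positive = sum(n for name, n, _ in rows if name not in NON_POSITIVE)
articles = {ref for refs in THEMES.values() for ref in refs}
positive_articles = {ref for name, refs in THEMES.items() if name not in NON_POSITIVE for ref in refs}

print(total, positive, round(100 * positive / total))  # -> 38 25 66
print(len(articles), len(positive_articles))  # -> 36 23
```

The output reproduces the 66% (25/38) figure and shows that those 25 positive occurrences come from 23 distinct articles out of the 36 analyzed.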

Nine themes were identified for patient medical outcomes, two of which were "no difference reported" and "not reported or discussed." These themes are listed in Table 3 in descending order of occurrence, with positive effects first, followed by no difference and not discussed.

Table 3.

Summary of themes identified for patient medical outcomes (n=36).

| Effectiveness themes | Occurrences (references) | Incidence, n (%) |
| --- | --- | --- |
| Improved symptoms | 20,23,26,41,43,46-48,50 | 9 (25) |
| Improved feedback | 17,31,42,45 | 4 (11) |
| Improved disease management | 21,35,37 | 3 (8) |
| Improved efficacy | 32,39 | 2 (6) |
| Improved screening | 15,27 | 2 (6) |
| Improved safety | 34 | 1 (3) |
| Improved mortality | 44 | 1 (3) |
| No difference reported | 22,36,38 | 3 (8) |
| Not reported or discussed | 16,18,19,24,25,28-30,33,40,49 | 11 (31) |

As illustrated, 61% (22/36) of occurrences of themes identified 7 positive patient medical outcomes as a result of using CDSSs [15,17,20,21,23,26,27,31,32,34,35,37,39,41-48,50]. Patients experienced improved symptoms [20,23,26,41,43,46-48,50], improved feedback from provider [17,31,42,45], improved disease management [21,35,37], improved efficacy of treatment [32,39], improved screening [15,27], and improved safety [34], and one study even reported improved mortality [44]. Although 11 articles did not discuss patient medical outcomes [16,18,19,24,25,28-30,33,40,49], only 3 reported no statistically significant difference in outcomes between control and intervention groups [22,36,38].
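Unlike Table 2, each article falls under exactly one patient medical outcome theme, so the 36 theme occurrences in Table 3 equal the 36 articles analyzed. The sketch below, with reference numbers transcribed from Table 3, checks that the positive, no-difference, and not-reported groups are disjoint and together cover references 15 to 50, then recomputes their percentages. It is an illustrative consistency check, not part of the reviewers' method; `OUTCOME_GROUPS` and `check_partition` are hypothetical names.

```python
from collections import Counter

# Reference numbers grouped by patient medical outcome, transcribed from Table 3.
OUTCOME_GROUPS = {
    "positive": [15, 17, 20, 21, 23, 26, 27, 31, 32, 34, 35, 37, 39, *range(41, 49), 50],
    "no difference": [22, 36, 38],
    "not reported": [16, 18, 19, 24, 25, 28, 29, 30, 33, 40, 49],
}


def check_partition(groups, expected_refs):
    """Return refs listed in more than one group and expected refs missing from all groups."""
    counts = Counter(ref for refs in groups.values() for ref in refs)
    duplicates = {ref for ref, c in counts.items() if c > 1}
    missing = set(expected_refs) - set(counts)
    return duplicates, missing


duplicates, missing = check_partition(OUTCOME_GROUPS, range(15, 51))
n_articles = sum(len(refs) for refs in OUTCOME_GROUPS.values())
for name, refs in OUTCOME_GROUPS.items():
    print(name, len(refs), round(100 * len(refs) / n_articles))
# prints: "positive 22 61", "no difference 3 8", "not reported 11 31"
```

Empty `duplicates` and `missing` sets confirm that the 22 + 3 + 11 split accounts for every article exactly once.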

Discussion

Summary of Evidence

Our review methodology enabled a meticulous evaluation of the efficiency and effectiveness of CDSSs for practitioner performance and medical outcomes. A summary of the findings from the review is listed in Table 1. Of the 36 articles analyzed, 25 theme occurrences indicated positive practitioner performance and 22 articles reported positive patient outcomes; 8 articles did not report practitioner performance and 11 did not report patient medical outcomes.

Consistent with previous reviews on this topic [6,7], a majority of articles analyzed reported improvement in practitioner performance [16-18,21,23-25,28-31,33-35,37,38,40,44,45,47-50], but unlike the previous reviews, our review found articles that reported patient outcomes, and a majority of these outcomes were positive [15,17,20,21,23,26,27,31,32,34,35,37,39,41-48,50]. Although 8 articles did not discuss practitioner performance [15,20,26,27,32,39,41,43], only 5 articles reported no difference in practitioner performance [19,22,36,42,46].

The decision of whether to adopt a CDSS involves complexity and change management. Providers and administrators need to discuss the advantages and disadvantages. The organization’s infrastructure must support the application, providers must be trained to use it, and administrators must ensure that the budget and organizational dynamics can accommodate acquisition and implementation. The literature is clear about the efficacy of CDSSs, and this should help organizations gain user acceptance. Providers should carefully integrate CDSSs into their processes and clinical practice guidelines to ensure they are an asset rather than a hindrance. They should be used to augment patient care rather than to come between patients and providers.

It is interesting that previous reviews did not find results for medical outcomes. This could have been due to a limitation in search strategy, or to the general maturation of CDSSs since then: when the earlier reviews were conducted, it may simply have been too soon to see positive results in medical outcomes.

Because CDSSs present providers with knowledge-based information at the point of care, they augment decision making. CDSSs give providers timely tools that might not otherwise be available at the point of care. AI-supported CDSSs analyze symptoms, possible treatments, clinical practice guidelines, and patient outcomes to generate recommendations [1,2]. These capabilities are likely drivers of the improvements in practitioner performance and patient outcomes.
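To make the point-of-care mechanism concrete, the sketch below shows the simplest, rule-based form of knowledge-based support: a patient record is checked against a small knowledge base, and any matching rule produces a message for the provider. It does not implement the AI-supported analysis described above. `Patient`, `DRUG_CLASS`, `allergy_rule`, and `run_rules` are hypothetical names, and the two-entry drug-class table is illustrative only, not a clinical reference.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Patient:
    allergies: set[str] = field(default_factory=set)  # recorded allergy classes
    orders: list[str] = field(default_factory=list)   # drugs being ordered now


# Hypothetical, illustrative knowledge base: drug name -> drug class.
DRUG_CLASS = {"amoxicillin": "penicillin", "ibuprofen": "nsaid"}

# A rule inspects the patient record and returns zero or more messages.
Rule = Callable[[Patient], list[str]]


def allergy_rule(patient: Patient) -> list[str]:
    """Flag any ordered drug whose class matches a recorded allergy."""
    return [
        f"ALERT: {drug} ({DRUG_CLASS[drug]}) conflicts with recorded allergy"
        for drug in patient.orders
        if DRUG_CLASS.get(drug) in patient.allergies
    ]


def run_rules(patient: Patient, rules: list[Rule]) -> list[str]:
    """Evaluate every rule against the record and collect the messages."""
    messages: list[str] = []
    for rule in rules:
        messages.extend(rule(patient))
    return messages


if __name__ == "__main__":
    patient = Patient(allergies={"penicillin"}, orders=["amoxicillin", "ibuprofen"])
    print(run_rules(patient, [allergy_rule]))
    # ['ALERT: amoxicillin (penicillin) conflicts with recorded allergy']
```

Keeping each rule as a plain function means further checks, such as guideline reminders, can be added to the list passed to `run_rules` without changing the evaluation loop.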

No single CDSS appears to be a panacea for all practices, specialties, or templates, and the literature is mixed on which products are best-of-breed systems. Additional research in this valuable area of medical practice is warranted. While other industries have fully embraced the digitized environment, health care in general has been slower to adopt it, which is understandable when health is at stake. Comparing the results of this review with those of earlier reviews suggests that CDSSs are diffusing across the health care industry as the systems improve. Further research into CDSSs should aim to improve productivity and to standardize their integration into clinical practice guidelines.

Notably, none of the studies analyzed raised alert fatigue. Alert fatigue is a well-documented phenomenon [51]. It has been linked to medical errors in pharmacy and physician order entry systems, which are common components of CDSSs [52]. Even for EHR-based clinical trial alerts, alert fatigue has been shown to persist over time [53]. Because the studies analyzed did not report it, it was presumably not controlled for.
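One way a study could account for alert fatigue is to track how often clinicians act on alerts over time, treating a falling rate as one possible signal. The sketch below computes that rate per period from a hypothetical alert log of `(period, acted_on)` pairs. The function name, the log format, and the sample data are assumptions for illustration and are not taken from the studies cited [51-53].

```python
from collections import defaultdict

# Hypothetical log: one (period, acted_on) pair per alert shown; a period might be a study week.
EXAMPLE_LOG = [
    (1, True), (1, True), (1, False), (1, True),
    (2, True), (2, False), (2, False), (2, True),
    (3, False), (3, False), (3, True), (3, False),
]


def response_rate_by_period(log: list[tuple[int, bool]]) -> dict[int, float]:
    """Return the fraction of alerts acted on in each period, ordered by period."""
    shown: dict[int, int] = defaultdict(int)
    acted: dict[int, int] = defaultdict(int)
    for period, acted_on in log:
        shown[period] += 1
        acted[period] += int(acted_on)
    return {p: acted[p] / shown[p] for p in sorted(shown)}


if __name__ == "__main__":
    print(response_rate_by_period(EXAMPLE_LOG))
    # {1: 0.75, 2: 0.5, 3: 0.25}
```

A declining rate like the one in this toy log is consistent with fatigue, but in a real study the trend would also need to be separated from other causes, such as changes in case mix.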

Limitations

The small number of articles analyzed was a limitation: only 36 articles met the selection criteria. A larger sample would strengthen the external validity of the results because we could be more confident that it is representative of the population. The effects of selection bias were reduced by using multiple reviewers to screen and analyze articles [9]; however, only two reviewers screened abstracts and analyzed articles for themes, and an additional reviewer might have increased the number of observations. Publication bias was reduced by including grey literature in addition to peer-reviewed material; however, these articles were discarded if they did not include results. We considered only articles published in English, and additional observations might have been gained by expanding the search to other languages. This review is also limited by the techniques used in the studies analyzed: statistics and effect sizes could not be pooled because of the wide range of methods used in the articles. We analyzed both qualitative and quantitative studies, and effect sizes apply only to the latter. Sample sizes also varied widely between studies, ranging from 6 to 900 million, and such a wide disparity makes consolidating results difficult. We also did not analyze or compare the heuristics and algorithms used by the CDSSs within the studies. To address a limitation of a similar review from 2005, we expanded our analysis beyond randomized controlled trials to include pre-post and other designs [6].
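The point that effect sizes apply only to quantitative designs can be illustrated with one common standardized measure, Cohen's d, which divides the difference in group means by a pooled standard deviation. The review does not say which effect-size measure it would have used, so the choice of Cohen's d, the function name `cohens_d`, and the sample numbers are assumptions. The calculation needs a mean, a standard deviation, and a sample size for each group, which qualitative studies do not report.

```python
import math


def cohens_d(mean_1: float, sd_1: float, n_1: int,
             mean_2: float, sd_2: float, n_2: int) -> float:
    """Standardized mean difference (Cohen's d) using the pooled standard deviation."""
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n_1 - 1) * sd_1**2 + (n_2 - 1) * sd_2**2) / (n_1 + n_2 - 2)
    return (mean_1 - mean_2) / math.sqrt(pooled_var)


if __name__ == "__main__":
    # Hypothetical intervention vs control summary statistics.
    print(cohens_d(10.0, 2.0, 30, 8.0, 2.0, 30))  # 1.0
```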

Conclusion

Overall, the research generally supports the efficiency of CDSS technologies for practitioner performance [16-18,21,23-25,28-31,33-35,37,38,40,44,45,47-50] and their effectiveness for patient medical outcomes [15,17,20,21,23,26,27,31,32,34,35,37,39,41-48,50]; however, a further in-depth review of their effectiveness, particularly regarding the avoidance of alert fatigue and the extension of CDSS utility, is warranted. Decision-support tools extend beyond the practitioner to the patient, and some tools are based on patient-reported data rather than being purely software based [46]. The implementation of CDSSs can benefit both practitioner and patient, and these systems show great promise for the future of health care.

Abbreviations

- AI: artificial intelligence
- AMSTAR: Assessment of Multiple Systematic Reviews
- CDSS: computerized decision support system
- CPOE: computerized physician order entry
- EHR: electronic health record
- EMR: electronic medical record
- JHNEBP: Johns Hopkins Nursing Evidence-Based Practice
- PICOS: patient/participants, intervention, comparison, outcome, study design
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses

Appendix

Participants, intervention, comparison, outcome, study design table.

Footnotes

Authors' Contributions: NE drafted the initial introduction and discussion and analyzed the articles. CSK designed the review, served as senior editor, analyzed the articles, and wrote the final version of all sections. Special thanks to my assistant, Leah Frye, who helped organize the references.

Conflicts of Interest: None declared.

References

- 1. Clinical decision support. [2020-08-05]. https://www.healthit.gov/topic/safety/clinical-decision-support.

- 2. Electronic health records. Centers for Medicare and Medicaid Services. [2020-08-05]. https://www.cms.gov/Medicare/E-Health/EHealthRecords/index.html.

- 3. Han J, Kamber M. Data Mining: Concepts and Techniques. 3rd edition. New York: Elsevier; 2012.

- 4. Dixon B, Zafar M. Inpatient computerized provider order entry. Rockville: Agency for Healthcare Research and Quality; 2009. [2020-08-05]. https://digital.ahrq.gov/sites/default/files/docs/page/09-0031-EF_cpoe.pdf.

- 5. Hunt DL, Haynes RB, Hanna SE, Smith K. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA. 1998 Oct 21;280(15):1339–1346. doi: 10.1001/jama.280.15.1339.

- 6. Garg AX, Adhikari NKJ, McDonald H, Rosas-Arellano MP, Devereaux PJ, Beyene J, Sam J, Haynes RB. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005 Mar 9;293(10):1223–1238. doi: 10.1001/jama.293.10.1223.

- 7. Jaspers MW, Smeulers M, Vermeulen H, Peute LW. Effects of clinical decision-support systems on practitioner performance and patient outcomes: a synthesis of high-quality systematic review findings. J Am Med Inform Assoc. 2011 May 01;18(3):327–334. doi: 10.1136/amiajnl-2011-000094. http://europepmc.org/abstract/MED/21422100.

- 8. Sim I, Gorman P, Greenes RA, Haynes RB, Kaplan B, Lehmann H, Tang PC. Clinical decision support systems for the practice of evidence-based medicine. J Am Med Inform Assoc. 2001;8(6):527–534. doi: 10.1136/jamia.2001.0080527. http://jamia.oxfordjournals.org/cgi/pmidlookup?view=long&pmid=11687560.

- 9. Shea BJ, Reeves BC, Wells G, Thuku M, Hamel C, Moran J, Moher D, Tugwell P, Welch V, Kristjansson E, Henry DA. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ. 2017 Dec 21;358:j4008. doi: 10.1136/bmj.j4008. http://www.bmj.com/cgi/pmidlookup?view=long&pmid=28935701.

- 10. Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–269. doi: 10.7326/0003-4819-151-4-200908180-00135. http://europepmc.org/abstract/MED/19622551.

- 11. Kruse CS. Writing a systematic review for publication in a health-related degree program. JMIR Res Protoc. 2019 Oct 14;8(10):e15490. doi: 10.2196/15490. https://www.researchprotocols.org/2019/10/e15490/

- 12. Systematic review service. National Institutes of Health. [2020-08-05]. https://www.nihlibrary.nih.gov/services/systematic-review-service.

- 13. McHugh ML. Interrater reliability: the kappa statistic. Biochem Med (Zagreb). 2012;22(3):276–282. http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=3900052&tool=pmcentrez&rendertype=abstract.

- 14. Light RJ. Measures of response agreement for qualitative data: some generalizations and alternatives. Psychol Bull. 1971;76(5):365–377. doi: 10.1037/h0031643.

- 15. Grout RW, Cheng ER, Aalsma MC, Downs SM. Let them speak for themselves: improving adolescent self-report rate on pre-visit screening. Acad Pediatr. 2019 Jul;19(5):581–588. doi: 10.1016/j.acap.2019.04.010.

- 16. Connelly M, Bickel J. Primary care access to an online decision support tool is associated with improvements in some aspects of pediatric migraine care. Acad Pediatr. 2019 Dec 04. doi: 10.1016/j.acap.2019.11.017.

- 17. Salz T, Schnall RB, McCabe MS, Oeffinger KC, Corcoran S, Vickers AJ, Salner AL, Dornelas E, Raghunathan NJ, Fortier E, McKiernan J, Finitsis DJ, Chimonas S, Baxi S. Incorporating multiple perspectives into the development of an electronic survivorship platform for head and neck cancer. JCO Clin Cancer Inform. 2018 Dec;2:1–5. doi: 10.1200/CCI.17.00105. http://ascopubs.org/doi/full/10.1200/CCI.17.00105?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed.

- 18. Kirby AM, Kruger B, Jain R, OʼHair DP, Granger BB. Using clinical decision support to improve referral rates in severe symptomatic aortic stenosis: a quality improvement initiative. Comput Inform Nurs. 2018 Nov;36(11):525–529. doi: 10.1097/CIN.0000000000000471.

- 19. Dolan JG, Veazie PJ. The feasibility of sophisticated multicriteria support for clinical decisions. Med Decis Making. 2018 May;38(4):465–475. doi: 10.1177/0272989X17736769. http://europepmc.org/abstract/MED/29083251.

- 20. Jackson BD, Con D, De Cruz P. Design considerations for an eHealth decision support tool in inflammatory bowel disease self-management. Intern Med J. 2018 Jun;48(6):674–681. doi: 10.1111/imj.13677.

- 21. Caballero-Ruiz E, García-Sáez G, Rigla M, Villaplana M, Pons B, Hernando ME. A web-based clinical decision support system for gestational diabetes: automatic diet prescription and detection of insulin needs. Int J Med Inform. 2017 Dec;102:35–49. doi: 10.1016/j.ijmedinf.2017.02.014.

- 22. Raj SX, Brunelli C, Klepstad P, Kaasa S. COMBAT study—computer-based assessment and treatment: a clinical trial evaluating impact of a computerized clinical decision support tool on pain in cancer patients. Scand J Pain. 2017 Oct;17:99–106. doi: 10.1016/j.sjpain.2017.07.016.

- 23. Mooney KH, Beck SL, Wong B, Dunson W, Wujcik D, Whisenant M, Donaldson G. Automated home monitoring and management of patient-reported symptoms during chemotherapy: results of the symptom care at home RCT. Cancer Med. 2017 Mar;6(3):537–546. doi: 10.1002/cam4.1002.

- 24. Baypinar F, Kingma HJ, van der Hoeven RTM, Becker ML. Physicians' compliance with a clinical decision support system alerting during the prescribing process. J Med Syst. 2017 Jun;41(6):1–6. doi: 10.1007/s10916-017-0717-4.

- 25. Zini EM, Lanzola G, Bossi P, Quaglini S. An environment for guideline-based decision support systems for outpatients monitoring. Methods Inf Med. 2017 Aug 11;56(4):283–293. doi: 10.3414/ME16-01-0142.

- 26. Muro N, Larburu N, Bouaud J, Seroussi B. Weighting experience-based decision support on the basis of clinical outcomes' assessment. Stud Health Technol Inform. 2017;244:33–37.

- 27. Kistler CE, Golin C, Morris C, Dalton AF, Harris RP, Dolor R, Ferrari RM, Brewer NT, Lewis CL. Design of a randomized clinical trial of a colorectal cancer screening decision aid to promote appropriate screening in community-dwelling older adults. Clin Trials. 2017 Dec;14(6):648–658. doi: 10.1177/1740774517725289. http://europepmc.org/abstract/MED/29025270.

- 28. Lawes S, Grissinger M. Medication errors attributed to health information technology. Pa Patient Saf Advis. 2017 Mar;14(1):1–8.

- 29. Kouladjian L, Gnjidic D, Chen TF, Hilmer SN. Development, validation and evaluation of an electronic pharmacological tool: the Drug Burden Index Calculator©. Res Social Adm Pharm. 2016;12(6):865–875. doi: 10.1016/j.sapharm.2015.11.002.

- 30. Norton WE, Hosokawa PW, Henderson WG, Volckmann ET, Pell J, Tomeh MG, Glasgow RE, Min S, Neumayer LA, Hawn MT. Acceptability of the decision support for safer surgery tool. Am J Surg. 2015 Jun;209(6):977–984. doi: 10.1016/j.amjsurg.2014.06.037. http://europepmc.org/abstract/MED/25457241.

- 31. Pombo N, Rebelo P, Araújo P, Viana J. Combining data imputation and statistics to design a clinical decision support system for post-operative pain monitoring. Procedia Comput Sci. 2015;64:1018–1025. doi: 10.1016/j.procs.2015.08.621.

- 32. Cox JL, Pieper K. Harnessing the power of real-life data. Eur Heart J Suppl. 2015 Jul 10;17(suppl D):D9–D14. doi: 10.1093/eurheartj/suv036.

- 33. Schneider E, Zelenka S, Grooff P, Alexa D, Bullen J, Obuchowski NA. Radiology order decision support: examination-indication appropriateness assessed using 2 electronic systems. J Am Coll Radiol. 2015 Apr;12(4):349–357. doi: 10.1016/j.jacr.2014.12.005.

- 34. Zhu X, Cimino JJ. Clinicians' evaluation of computer-assisted medication summarization of electronic medical records. Comput Biol Med. 2015 Apr;59:221–231. doi: 10.1016/j.compbiomed.2013.12.006. http://europepmc.org/abstract/MED/24393492.

- 35. Utidjian LH, Hogan A, Michel J, Localio AR, Karavite D, Song L, Ramos MJ, Fiks AG, Lorch S, Grundmeier RW. Clinical decision support and palivizumab: a means to protect from respiratory syncytial virus. Appl Clin Inform. 2015;6(4):769–784. doi: 10.4338/ACI-2015-08-RA-0096. http://europepmc.org/abstract/MED/26767069.

- 36. Semler MW, Weavind L, Hooper MH, Rice TW, Gowda SS, Nadas A, Song Y, Martin JB, Bernard GR, Wheeler AP. An electronic tool for the evaluation and treatment of sepsis in the ICU: a randomized controlled trial. Crit Care Med. 2015 Aug;43(8):1595–1602. doi: 10.1097/CCM.0000000000001020. http://europepmc.org/abstract/MED/25867906.

- 37. Peiris D, Usherwood T, Panaretto K, Harris M, Hunt J, Redfern J, Zwar N, Colagiuri S, Hayman N, Lo S, Patel B, Lyford M, MacMahon S, Neal B, Sullivan D, Cass A, Jackson R, Patel A. Effect of a computer-guided, quality improvement program for cardiovascular disease risk management in primary health care: the treatment of cardiovascular risk using electronic decision support cluster-randomized trial. Circ Cardiovasc Qual Outcomes. 2015 Jan;8(1):87–95. doi: 10.1161/CIRCOUTCOMES.114.001235. http://circoutcomes.ahajournals.org/cgi/pmidlookup?view=long&pmid=25587090.

- 38. Chow ALP, Lye DC, Arah OA. Mortality benefits of antibiotic computerised decision support system: modifying effects of age. Sci Rep. 2015 Nov 30;5:17346. doi: 10.1038/srep17346.

- 39. Wilson CJ, Flight IH, Turnbull D, Gregory T, Cole SR, Young GP, Zajac IT. A randomised controlled trial of personalised decision support delivered via the internet for bowel cancer screening with a faecal occult blood test: the effects of tailoring of messages according to social cognitive variables on participation. BMC Med Inform Decis Mak. 2015 Apr 09;15(1):25. doi: 10.1186/s12911-015-0147-5. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-015-0147-5.

- 40. Loeb D, Sieja A, Corral J, Zehnder NG, Guiton G, Nease DE. Evaluation of the role of training in the implementation of a depression screening and treatment protocol in 2 academic outpatient internal medicine clinics utilizing the electronic medical record. Am J Med Qual. 2015;30(4):359–366. doi: 10.1177/1062860614532681. http://europepmc.org/abstract/MED/24829154.

- 41. Mishuris RG, Linder JA, Bates DW, Bitton A. Using electronic health record clinical decision support is associated with improved quality of care. Am J Manag Care. 2014 Oct 01;20(10):e445–e452. http://www.ajmc.com/pubMed.php?pii=85780.

- 42. Dexheimer JW, Abramo TJ, Arnold DH, Johnson K, Shyr Y, Ye F, Fan K, Patel N, Aronsky D. Implementation and evaluation of an integrated computerized asthma management system in a pediatric emergency department: a randomized clinical trial. Int J Med Inform. 2014 Nov;83(11):805–813. doi: 10.1016/j.ijmedinf.2014.07.008. http://europepmc.org/abstract/MED/25174321.

- 43. Heisler M, Choi H, Palmisano G, Mase R, Richardson C, Fagerlin A, Montori VM, Spencer M, An LC. Comparison of community health worker-led diabetes medication decision-making support for low-income Latino and African American adults with diabetes using e-health tools versus print materials: a randomized, controlled trial. Ann Intern Med. 2014 Nov 18;161(10 Suppl):S13–S22. doi: 10.7326/M13-3012. http://europepmc.org/abstract/MED/25402398.

- 44. Eckman MH, Wise RE, Speer B, Sullivan M, Walker N, Lip GYH, Kissela B, Flaherty ML, Kleindorfer D, Khan F, Kues J, Baker P, Ireton R, Hoskins D, Harnett BM, Aguilar C, Leonard A, Prakash R, Arduser L, Costea A. Integrating real-time clinical information to provide estimates of net clinical benefit of antithrombotic therapy for patients with atrial fibrillation. Circ Cardiovasc Qual Outcomes. 2014 Sep;7(5):680–686. doi: 10.1161/CIRCOUTCOMES.114.001163. http://circoutcomes.ahajournals.org/cgi/pmidlookup?view=long&pmid=25205788.

- 45. Zaslansky R, Rothaug J, Chapman RC, Backström R, Brill S, Engel C, Fletcher D, Fodor L, Funk P, Gordon D, Komann M, Konrad C, Kopf A, Leykin Y, Pogatzki-Zahn E, Puig M, Rawal N, Schwenkglenks M, Taylor RS, Ullrich K, Volk T, Yahiaoui-Doktor M, Meissner W. PAIN OUT: an international acute pain registry supporting clinicians in decision making and in quality improvement activities. J Eval Clin Pract. 2014 Dec;20(6):1090–1098. doi: 10.1111/jep.12205.

- 46. Lobach DF, Kawamoto K, Anstrom KJ, Silvey GM, Willis JM, Johnson FS, Edwards R, Simo J, Phillips P, Crosslin DR, Eisenstein EL. A randomized trial of population-based clinical decision support to manage health and resource use for Medicaid beneficiaries. J Med Syst. 2013 Feb;37(1):1–10. doi: 10.1007/s10916-012-9922-3.

- 47. Barlow J, Krassas G. Improving management of type 2 diabetes: findings of the Type2Care clinical audit. Aust Fam Physician. 2013;42(1-2):57–60. http://www.racgp.org.au/afp/2013/januaryfebruary/type2care-clinical-audit/

- 48. Robbins GK, Lester W, Johnson KL, Chang Y, Estey G, Surrao D, Zachary K, Lammert SM, Chueh HC, Meigs JB, Freedberg KA. Efficacy of a clinical decision-support system in an HIV practice: a randomized trial. Ann Intern Med. 2012 Dec 04;157(11):757–766. doi: 10.7326/0003-4819-157-11-201212040-00003. http://europepmc.org/abstract/MED/23208165.

- 49. Chen CC, Chen K, Hsu C, Li YJ. Developing guideline-based decision support systems using protégé and jess. Comput Methods Programs Biomed. 2011 Jun;102(3):288–294. doi: 10.1016/j.cmpb.2010.05.010.

- 50. Seow H, King S, Green E, Pereira J, Sawka C. Perspectives of patients on the utility of electronic patient-reported outcomes on cancer care. J Clin Oncol. 2011 Nov 01;29(31):4213–4214. doi: 10.1200/JCO.2011.37.9750.

- 51. Cash J. Alert fatigue. Am J Health Syst Pharm. 2009;66(23):2098–2101. doi: 10.2146/ajhp090181.

- 52. Kesselheim AS, Cresswell K, Phansalkar S, Bates DW, Sheikh A. Clinical decision support systems could be modified to reduce ‘alert fatigue’ while still minimizing the risk of litigation. Health Affairs. 2011 Dec;30(12):2310–2317. doi: 10.1377/hlthaff.2010.1111.

- 53. Embi PJ, Leonard AC. Evaluating alert fatigue over time to EHR-based clinical trial alerts: findings from a randomized controlled study. J Am Med Inform Assoc. 2012 Jun 01;19(e1):e145–e148. doi: 10.1136/amiajnl-2011-000743.
