Abstract

CITRUS is a supervised machine learning algorithm designed to analyze single cell data, identify cell populations, and identify changes in the frequencies or functional marker expression patterns of those populations that are significantly associated with an outcome. The algorithm is a black box that includes steps to cluster cell populations, characterize these populations, and identify the significant characteristics. This chapter describes how to optimize the use of CITRUS by combining it with upstream and downstream data analysis and visualization tools.

Keywords: CITRUS, Biomarker discovery, Supervised machine learning, viSNE

1. Introduction

Analyzing single cell data with traditional, biaxial approaches can lead investigators to focus only on cell populations that they are already aware of and might lead them to miss important discoveries in other populations [1, 2]. This is particularly important in studies that are designed to identify novel biomarkers for a specific clinical endpoint like disease status or response to therapy.

CITRUS [3] is a supervised machine learning algorithm for single cell biomarker discovery that has been used in many contexts. For example, CITRUS has been used to identify biomarkers for response and resistance to CD19 CAR-T cell therapy in chronic lymphocytic leukemia [4]; age-related epithelial subpopulations in breast tissue [5]; peripheral blood biomarkers for response to anti-CTLA-4 and anti-PD-1 checkpoint blockade [6]; and differences in immune populations between placebo-responders and controls in a mouse model [7].

The CITRUS algorithm includes steps to cluster cell populations, characterize these cell populations, and identify the characteristics of the cell populations that are statistically significant biomarkers distinguishing the outcome groups. In this way, CITRUS acts as a black box that identifies statistically significant single cell biomarkers for any discrete outcome of interest. Because the algorithm integrates these steps, it can search efficiently for the boundaries of a cell population that differs significantly between the outcome groups. The clustering step produces nested clusters, such that each “parent” cluster contains all of the cells within each of its “child” clusters. Significance testing is then performed at each of these nested levels, so clusters whose boundaries are drawn too narrowly or too broadly around the population of interest will not be identified as significant. Because CITRUS performs so many statistical tests, it uses permutations and the false discovery rate (FDR) method [8] to report only single cell biomarkers that remain significant after correction for multiple hypothesis testing. The single cell biomarkers it identifies can be either population abundances or population-specific median expression of functional markers. In addition to correlative analyses that identify all of the significant single cell biomarkers, CITRUS can build predictive models that identify a minimum set of biomarkers needed to discriminate effectively between the groups and estimate the accuracy with which these biomarkers can do so.
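The nested-cluster idea can be illustrated with a minimal sketch. It assumes Ward-linkage agglomerative clustering from SciPy (`scipy.cluster.hierarchy.linkage` and `to_tree`), toy two-marker data, and a hypothetical minimum cluster size of 5% of events. It only shows why every node of a hierarchical tree is a candidate population that contains all of its children; it is not the CITRUS or Cytobank implementation.

```python
import numpy as np
from scipy.cluster.hierarchy import linkage, to_tree

rng = np.random.default_rng(0)
# Toy data: three synthetic "populations" measured on two markers
centers_and_sizes = [((0.0, 0.0), 50), ((3.0, 0.0), 30), ((0.0, 3.0), 20)]
X = np.vstack([rng.normal(c, 0.3, size=(n, 2)) for c, n in centers_and_sizes])

# Agglomerative clustering; every node of the resulting tree is a candidate cluster
root = to_tree(linkage(X, method="ward"))


def nested_clusters(node, min_size):
    """Yield (node_id, event_indices) for every node containing >= min_size events.

    A parent node's events are the union of its children's events, so the
    clusters are nested by construction.
    """
    members = node.pre_order()  # indices of the events (leaves) under this node
    if len(members) < min_size:
        return  # children are smaller still, so stop descending
    yield node.id, members
    if not node.is_leaf():
        yield from nested_clusters(node.get_left(), min_size)
        yield from nested_clusters(node.get_right(), min_size)


min_size = int(0.05 * len(X))  # hypothetical minimum cluster size: 5% of events
clusters = dict(nested_clusters(root, min_size))
print(f"{len(clusters)} candidate clusters of at least {min_size} events")
```

Each candidate cluster would then be characterized per sample (abundance or median marker expression) and tested; the root always contains every event.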

This chapter describes our protocol for combining the CITRUS algorithm with upstream and downstream data analysis tools to take full advantage of CITRUS’s unbiased biomarker discovery. With any machine learning method, data QC and cleanup are essential to generating meaningful results. In addition, effective exploratory data analysis is essential to understanding the biology and heterogeneity that drive those results (or lack of results). Thus, our protocol includes considerations for experimental setup, data tidying and quality control, visualization and exploratory data analysis, clustering and biomarker discovery, and visualization for communication of results (Fig. 1). As a part of this pipeline, we use viSNE [9, 10], biaxial plots, and heatmaps to visualize and explore significant clusters. Together, these tools allow unbiased identification of significant single cell biomarkers and effective communication of these results.

Fig. 1.

Single cell biomarker discovery pipeline with CITRUS. Key steps of the biomarker discovery analysis pipeline: (a) experimental setup; (b) data tidying and quality control; and (c) (i) exploratory data analysis, (ii) clustering and biomarker discovery, and (iii) visualizations to communicate results. Steps (a) and (b) are required before initiating any of the components of step (c). Each part of step (c) (i–iii) may be initiated following experimental setup and data tidying/quality control.

2. Materials

CITRUS was developed in and may be run using the R programming language [3]. This chapter discusses CITRUS as implemented in Cytobank, a cloud-based informatics platform available at www.cytobank.org.

3. Methods

3.1. Considerations for Analysis Setup

Thinking through certain aspects of the analysis setup before beginning analysis will reduce time spent in later steps of the analysis.

During data acquisition, ensure that channel short names and long names are consistent across all of the files that will be analyzed together. As much as possible, include information about outcome groups, stratifying variables, and technical factors in file names.

Define the outcomes and stratifying dimensions you will compare. The outcomes should be discrete (i.e., have defined categories with groups of samples) and the samples in each group should be independent (i.e., not consist of multiple observations on the same individual, even under different conditions) (see Note 1). In addition to outcomes, there may be stratifying variables, or other dimensions in the data that you wish to compare across. These stratifying variables should also be discrete, but samples split across these stratifying variables do not have to be independent (see Note 2). An example of typical outcome, stratifying, and technical variables you might track is shown in Table 1.

Determine the number of samples you will have for each group of outcome and stratifying dimensions (see Note 3). CITRUS will be run for your outcome variable within each stratifying group. For each of these comparisons, you need a minimum of three samples per group to run CITRUS, and we recommend that you have at least eight samples per outcome group to obtain reliable results.
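The per-group thresholds above can be checked before any data are uploaded. This sketch assumes one `(stratum, outcome)` pair per independent sample; the group labels are hypothetical and mirror the design in Table 1.

```python
from collections import Counter

MIN_PER_GROUP = 3          # minimum needed to run CITRUS (stated in the text)
RECOMMENDED_PER_GROUP = 8  # recommended for reliable results (stated in the text)


def check_group_sizes(samples):
    """Count samples per (stratum, outcome) group and grade each count.

    samples: iterable of (stratum, outcome) pairs, one per independent sample.
    Groups with no samples at all do not appear in the report.
    """
    report = {}
    for (stratum, outcome), n in sorted(Counter(samples).items()):
        if n < MIN_PER_GROUP:
            status = "too few to run CITRUS"
        elif n < RECOMMENDED_PER_GROUP:
            status = "runnable, below recommended size"
        else:
            status = "ok"
        report[(stratum, outcome)] = (n, status)
    return report


# Hypothetical design mirroring Table 1: 28 responders and 12 non-responders per timepoint
design = (
    [(t, "R") for t in ("pre", "post") for _ in range(28)]
    + [(t, "NR") for t in ("pre", "post") for _ in range(12)]
)
for group, (n, status) in check_group_sizes(design).items():
    print(group, n, status)
```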

Determine whether the samples were run in multiple batches and whether control samples are available. If the samples were run in multiple batches, determine whether the outcome and stratifying variables were distributed equally across the batches and whether a technical control sample was run with each batch (see Note 4). Also identify any tracked factors related to the experimental setup, or to lab observations made while processing samples and acquiring data; these can be used later for sensitivity analyses.

Determine whether the scientific question requires a correlative or predictive analysis, or both. Correlative analyses will identify all of the significant biomarkers that can be detected in the data, while predictive analyses will identify a minimum set of markers needed to best predict an outcome (see Note 5). The choice of analysis will dictate which models to select in CITRUS (Fig. 2).

Determine which markers in the panel will be used for phenotyping and which will be used as functional readouts (see Note 6).

Complete standard preprocessing steps for the mass cytometry data, which may include normalization to correct for signal changes over acquisition time and debarcoding if barcoding was used.

Table 1.

Example of Stratifying, Outcome, and Technical Variables in an Experiment

| Stratifying, outcome, or technical variable | Number in each category |
|---|---|
| Baseline (pre) or post-therapy | Pre: 40 files; Post: 40 files |
| *Therapy response* | Responders (R): 28 individuals (2 files each); Non-responders (NR): 12 individuals (2 files each) |
| Patient ID | 40 unique patient IDs (2 files each) |
| Staining batch (1–4), labeled by date | Batch 1: 20 files; Batch 2: 20 files; Batch 3: 20 files; Batch 4: 20 files |

In this example study, patient peripheral blood mononuclear cells (PBMCs) have been acquired in batches for samples pre- and post-therapy. The study outcome variable to be used for CITRUS (highlighted in italics) is therapy response. Timepoint (pre- or post-therapy) is a stratifying variable in this study; one CITRUS run will be performed on the pre-therapy samples, and a separate CITRUS run will be performed on the post-therapy samples. Staining batch will be used to look for batch effects. Timepoint, therapy response, patient ID, and staining batch are annotated by Cytobank sample tags. The number of files expected in each category is outlined in the second column and is used to check the files present in Cytobank before starting the analysis.

Fig. 2.

Flowchart to determine correlative vs. predictive CITRUS and CITRUS models to use. The CITRUS models to select are determined by the overall analysis goals. Use the above flowchart to determine which models will address the scientific question. Regardless of the model selection, significant populations identified by CITRUS can be visualized on viSNE maps

3.2. Data Tidying and Quality Control

The goal of the data tidying and quality control (QC) steps is to identify any problems in the data that may result in the exclusion of samples, markers, or batches of samples from analysis. For issues that are identified related to batches of samples, normalization may be performed in an attempt to control for batch effects.

Upload data to Cytobank and annotate the data according to outcome groups, stratifying variables, and technical factors using Cytobank’s Sample Tags (see Note 7).

Check that the number of files in each tagged group matches what you expect according to your experimental design (Table 1) (see Note 8).
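If the sample tags are also kept in a spreadsheet (for example, the CSV file described in the notes), the count check can be scripted. This sketch assumes each file is represented as one dictionary of tag values; the tag names (`timepoint`, `response`, `batch`) are hypothetical, and the expected counts come from Table 1.

```python
from collections import Counter

# Expected files per tag value, taken from Table 1
EXPECTED = {
    "timepoint": {"pre": 40, "post": 40},
    "response": {"R": 56, "NR": 24},  # 28 and 12 individuals x 2 files each
    "batch": {"1": 20, "2": 20, "3": 20, "4": 20},
}


def count_mismatches(manifest, expected):
    """Return {(tag, value): (observed, expected)} for every count that differs."""
    mismatches = {}
    for tag, expected_counts in expected.items():
        observed = Counter(row[tag] for row in manifest)
        for value in set(expected_counts) | set(observed):
            obs, exp = observed.get(value, 0), expected_counts.get(value, 0)
            if obs != exp:
                mismatches[(tag, value)] = (obs, exp)
    return mismatches


# Hypothetical manifest: 40 patients x 2 timepoints, spread evenly over 4 batches
manifest = []
for patient in range(40):
    for timepoint in ("pre", "post"):
        manifest.append({
            "patient": patient,
            "timepoint": timepoint,
            "response": "R" if patient < 28 else "NR",
            "batch": str(len(manifest) % 4 + 1),
        })

print(count_mismatches(manifest, EXPECTED))       # {} when every count matches
print(count_mismatches(manifest[:-1], EXPECTED))  # reports the counts affected by one missing file
```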

Check that the panels are annotated as expected based on the assay(s) that were run. Each CITRUS analysis should be performed on a set of samples run on a single panel (see Note 9). Fix any discrepancies in channel names that may have happened accidentally during acquisition. Identify any channels that will need to be excluded because they differed across samples in the experiment. If there are a few samples that have extensive panel differences, you may choose to exclude these samples instead of excluding discrepant channels.
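The cross-file panel check described above can be sketched as follows. It assumes the channel short names and long names for each file have already been read into a dictionary (for example, from the FCS headers); all file and channel names are hypothetical.

```python
def channel_discrepancies(panels):
    """Find channels missing from some files and channels with inconsistent long names.

    panels: {file_name: {short_name: long_name}}.
    Returns (missing, renamed):
      missing: {short_name: [files lacking that channel]}
      renamed: {short_name: [distinct long names seen across files]}
    """
    all_channels = set().union(*(p.keys() for p in panels.values()))
    missing = {}
    renamed = {}
    for ch in sorted(all_channels):
        lacking = sorted(f for f, p in panels.items() if ch not in p)
        if lacking:
            missing[ch] = lacking
        long_names = {p[ch] for p in panels.values() if ch in p}
        if len(long_names) > 1:
            renamed[ch] = sorted(long_names)
    return missing, renamed


panels = {  # hypothetical files and channels
    "patient01_pre.fcs": {"Nd142Di": "CD19", "Nd144Di": "CD4", "Sm154Di": "CD3"},
    "patient02_pre.fcs": {"Nd142Di": "CD19", "Nd144Di": "cd4", "Sm154Di": "CD3"},
    "patient03_pre.fcs": {"Nd142Di": "CD19", "Nd144Di": "CD4"},
}
missing, renamed = channel_discrepancies(panels)
print(missing)  # {'Sm154Di': ['patient03_pre.fcs']}
print(renamed)  # {'Nd144Di': ['CD4', 'cd4']}
```

A renamed channel usually only needs its long name fixed, whereas a channel missing from some files must either be excluded or the affected files dropped.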

Identify files that are outliers for total event count and check whether there were issues acquiring data for that sample. These samples will likely need to be excluded from the analysis.
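One way to flag event-count outliers objectively is a robust z-score. This sketch assumes the modified z-score of Iglewicz and Hoaglin with a 3.5 cutoff; both the method and the cutoff are a common convention chosen for illustration, not part of CITRUS or Cytobank, and the file names and counts are hypothetical.

```python
from statistics import median


def event_count_outliers(event_counts, threshold=3.5):
    """Flag files whose total event count is far from the median.

    Uses the modified z-score 0.6745 * (x - median) / MAD, where MAD is the
    median absolute deviation. Flagged files should still be checked against
    acquisition notes before being excluded.
    """
    counts = list(event_counts.values())
    med = median(counts)
    mad = median(abs(c - med) for c in counts)
    if mad == 0:
        return []  # most files have identical counts; inspect manually instead
    return sorted(
        f for f, c in event_counts.items() if abs(0.6745 * (c - med) / mad) > threshold
    )


counts = {"s01.fcs": 310_000, "s02.fcs": 295_000, "s03.fcs": 288_000,
          "s04.fcs": 305_000, "s05.fcs": 12_000, "s06.fcs": 301_000}
print(event_count_outliers(counts))  # ['s05.fcs']
```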

Check the scales for all markers in the panel across all samples. Perform or adjust transformations as required (see Note 10). If your data are off the plot or bunched on the axis you may need to adjust your scale range settings (see Note 11).

Examine technical controls for evidence of batch effects or problems with marker staining using histograms or other basic plot types (see example in Fig. 3a, Note 12). If you find markers for which staining does not appear to have worked (either within a particular batch or across all batches), these markers should be excluded from the CITRUS analysis.

If you think that observed differences across technical control replicates are due to batch effects, run viSNE on the technical control samples from each batch to identify whether observed differences between batches will impact downstream analysis results (see example in Fig. 3b, Note 13).

If you identify batch effects that will impact your downstream analysis results, one approach is to normalize the data [11, 12] (see Note 14). After normalization, reexamine the scales, rerun viSNE on the technical controls, and look again for evidence of batch effects to assess whether the normalization was successful (see example in Fig. 3b). Alternatively, batches with large batch effects may be excluded, so that only batches without apparent differences between them are included.
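Fig. 3b uses z-score normalization by batch. A minimal NumPy sketch of that idea, assuming the data are already transformed and held as an events-by-channels array: each channel is centered and scaled within each batch. The optional `reference` mask is a hypothetical design choice that estimates the batch statistics from the technical control events only and applies them to every event in that batch; standardizing all samples together would also remove genuine biological differences if outcome groups are unevenly spread across batches. This is not the method of [11, 12].

```python
import numpy as np


def zscore_by_batch(data, batches, reference=None):
    """Standardize each channel within each batch to mean 0 and SD 1.

    data: (n_events, n_channels) array of transformed intensities.
    batches: length-n_events array of batch labels.
    reference: optional boolean mask of events (e.g., technical controls) used
        to estimate each batch's mean and SD; defaults to all events.
    """
    data = np.asarray(data, dtype=float)
    batches = np.asarray(batches)
    ref = np.ones(len(data), dtype=bool) if reference is None else np.asarray(reference, dtype=bool)
    out = np.empty_like(data)
    for b in np.unique(batches):
        in_batch = batches == b
        ref_rows = data[in_batch & ref]
        mu = ref_rows.mean(axis=0)
        sd = ref_rows.std(axis=0)
        sd[sd == 0] = 1.0  # leave constant channels centered but unscaled
        out[in_batch] = (data[in_batch] - mu) / sd
    return out


rng = np.random.default_rng(1)
batch1 = rng.normal(2.0, 0.5, size=(500, 3))
batch2 = rng.normal(2.8, 0.7, size=(500, 3))  # simulated shift in staining intensity
data = np.vstack([batch1, batch2])
batches = np.repeat([1, 2], 500)
normed = zscore_by_batch(data, batches)
print(normed[batches == 1].mean(axis=0).round(3), normed[batches == 2].mean(axis=0).round(3))
```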

Fig. 3.

Technical control evaluation. Peripheral blood from one healthy donor was collected and cryopreserved to use as a technical control in this study. A different antibody cocktail was prepared for each of the five indicated staining batches, and differences across batches were evaluated using the technical control. (a) Prenormalization data for the technical control samples. Histograms of a few measured markers are compared across batches, including the intensity of each indicated marker and the spread of its negative and positive populations. The red line indicates the middle of the plot. The data reveal staining differences. (b) viSNE maps before and after normalization, colored by channel; only CD19 expression is displayed here. The technical control samples from each batch were assessed using viSNE as an exploratory data analysis tool. Row 1 shows the data before normalization, and row 2 shows the data after z-score normalization by batch. After normalization, CD19 intensity is more uniform and the viSNE “island” shapes are more consistent across batches. The effect of normalization can be quantified by using a clustering algorithm to define cell populations and then measuring the correlation of the cell populations across the batches before and after normalization.

3.3. Pre-gating

Pre-gating to intact singlets or similar populations might be required to perform some of the investigations described above. For the rest of the CITRUS analysis, further pre-gating might be desired. This decision will depend on the cell populations the panel is designed to measure, the number of samples being compared, and the scientific questions being answered. If a large number of samples are being compared and differences in rare cell populations are being investigated, further pre-gating may be required to include enough cells per sample to detect these rare cell populations reliably.

At a minimum, pre-gate to the events the panel and scientific question are focused on. For example, gate to select CD45+ cells if leukocytes are the focus, or gate to remove cells stained with antibodies in a dump channel.

Determine the maximum number of events you can include in a single viSNE map. This is limited in Cytobank by your subscription options [13]. Care should be taken in interpreting viSNE plots of over 100,000 cells, as data crowding will start to skew population distributions.

Determine the number of samples to include in the viSNE map. At a minimum, this is the number of samples that will be included in a single CITRUS run for a set of outcomes within a given stratifying variable group (see Note 15). Exclude samples based on the conclusions from data tidying and QC.

Determine the number of events per file in the initial pre-gated population. For the exercise below, use the file with the minimum number of events in the pre-gated population. If one or more files have a very low number of events in this population, plan to exclude them and use the next lowest number.

Determine the expected frequency of the rarest cell population of interest as a fraction of your initial pre-gated population.

Use the flowchart in Fig. 4 to determine whether you need to pre-gate further (see Note 16) and whether you will use multiple pre-gated populations for your analysis (see Note 17).

For the final pre-gated population(s), check whether any samples have pre-gated event counts lower than the number of events you determined was necessary per file. These samples should be excluded from the analysis.

Fig. 4.

Determine required pre-gating using event count. For example, we use the following logic to determine how many events are needed to detect Tregs with a desired 5% CV, starting with all CD3+ T cells from 50 samples. Suppose the file with the lowest number of CD3+ T cells has 50,000 events in this population, and the maximum number of events we can include in viSNE is 1.3 M. (a) 1.3 M max events / 50 samples = 26,000 events per file, which is less than the 50,000 events available in every file. (b) We estimate that Tregs have a frequency of about 2% of CD3+ T cells. Assuming Poisson counting statistics (CV ≈ 1/√n), a 5% CV requires about 400 Treg events, so the number of events needed per file to detect Tregs is 400 / 0.02 = 20,000. Because 26,000 events is greater than the 20,000 events needed per file, (ii) no additional pre-gating is required in this example. If our samples did not meet these conditions, we could (i) reduce the number of samples being analyzed, or (c) perform further pre-gating to isolate a smaller and more specific group of cells containing the Treg cells.
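The arithmetic in Fig. 4 can be written as a small helper. This is a minimal sketch that assumes Poisson counting statistics (CV ≈ 1/√n) for the rare population; the function names and the returned fields are hypothetical, and the inputs are the example values from the figure.

```python
import math


def required_events_per_file(rare_fraction: float, target_cv: float) -> int:
    """Events per file needed to count the rare population with the target CV.

    Assumes Poisson counting: CV ~ 1/sqrt(n), so n = 1/CV**2 rare events.
    round() guards against floating-point error before rounding up.
    """
    rare_events = math.ceil(round(1 / target_cv**2, 6))  # 5% CV -> 400 events
    return math.ceil(round(rare_events / rare_fraction, 6))


def pregating_check(max_visne_events, n_samples, min_file_events, rare_fraction, target_cv):
    """Compare the events available per file with the events needed per file."""
    per_file_cap = max_visne_events // n_samples        # step (a): viSNE budget per file
    usable_per_file = min(per_file_cap, min_file_events)
    needed = required_events_per_file(rare_fraction, target_cv)  # step (b)
    return {
        "per_file_cap": per_file_cap,
        "usable_per_file": usable_per_file,
        "needed_per_file": needed,
        "further_pregating_needed": usable_per_file < needed,
    }


# Fig. 4 example: 1.3 M viSNE events, 50 samples, smallest file 50,000 CD3+ events,
# Tregs ~2% of CD3+ T cells, target CV 5%
result = pregating_check(1_300_000, 50, 50_000, 0.02, 0.05)
print(result)
```

If `further_pregating_needed` is true, the options are those in the figure: analyze fewer samples (raising the per-file budget) or pre-gate to a population in which the rare cells are more frequent.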

3.4. Visualization and Exploratory Data Analysis

Run one viSNE for each pre-gated population and set of samples that are necessary for the data and scientific questions above. Exclude any samples selected for exclusion during file QC, data QC, and pre-gating. Set the total number of events based on pre-gating event count calculations, and select equal sampling. Select the channels that were identified as phenotyping markers in Subheading 3.1 as clustering channels, but exclude any channels used during pre-gating (for a discussion on exceptions see Note 18). If including a large number of total events, increase the number of iterations and perplexity (see Note 19).
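Equal sampling draws the same number of events from every file so that each sample contributes equally to the map. A minimal NumPy sketch, assuming each file's pre-gated events are already loaded as an array (file names hypothetical); Cytobank performs this sampling internally, so the code only illustrates the idea.

```python
import numpy as np


def equal_sample(files, n_per_file, seed=None):
    """Randomly draw the same number of events (without replacement) from each file.

    files: {file_name: (n_events, n_channels) array}.
    Raises ValueError for files with fewer events than n_per_file, which
    (following the pre-gating step) should be excluded rather than undersampled.
    """
    rng = np.random.default_rng(seed)
    sampled = {}
    for name, events in files.items():
        if len(events) < n_per_file:
            raise ValueError(f"{name} has only {len(events)} events")
        idx = rng.choice(len(events), size=n_per_file, replace=False)
        sampled[name] = events[idx]
    return sampled


rng = np.random.default_rng(0)
files = {"s01.fcs": rng.normal(size=(5000, 3)), "s02.fcs": rng.normal(size=(8000, 3))}
sampled = equal_sample(files, n_per_file=2600, seed=7)
print({name: ev.shape for name, ev in sampled.items()})  # {'s01.fcs': (2600, 3), 's02.fcs': (2600, 3)}
```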

Check the quality of the resulting viSNE map to ensure that no further tuning of any of the algorithm settings is required. In Cytobank, set up a working illustration that shows the viSNE map for every file on the rows colored by every channel on the columns. If this map is not of high enough quality (see Note 20), adjust the advanced settings and rerun viSNE.

Create a concatenated viSNE map across all samples, and use the working illustration dot plots to color it by the expression of each of your clustering marker channels (see Note 21, and an example of separate viSNE maps in Fig. 5 compared to a concatenated viSNE map in Fig. 6c).

Create views of the viSNE map to help with exploration of the data. viSNE analysis, as described here, can also be used after performing CITRUS clustering (described below) to help explain the CITRUS results (see Note 22). Samples may be kept individual or concatenated in groups according to the outcome, stratifying, and technical variables you annotated with Cytobank’s Sample Tags. To visualize differences in individual samples or groups of samples, use dot plots colored by marker expression to visualize heterogeneity. When using viSNE after CITRUS clustering (as described below) dot plots colored by marker expression can be used to visualize differences between and within cell clusters identified by CITRUS, and dot plots colored by density can be used to visualize differences in abundance of cell clusters identified by CITRUS.

If using some markers as functional readouts that weren’t included in the parameters used to generate the viSNE map (such as phosphorylated antigens or cytokines) these can still be visualized on the viSNE map by creating a colored dot plot (see example Fig. 5 and Note 23).

Fig. 5.

Using viSNE for Exploratory Data Analysis. Synthetic example viSNE grid, colored by marker expression, for patients with B cell malignancies. Two individuals from each group of interest are shown: two patients from Outcome A (therapy responders) and two patients from Outcome B (therapy non-responders). PBMCs were isolated from each patient prior to therapy (baseline) and stimulated with anti-BCR to determine whether baseline stimulation responses correlate with the outcome of therapy. Expression of each marker is indicated by the color scale bar (red for high, blue for low). In this example, each column represents the expression of the indicated marker (p-S6, p-PLCγ, p-ERK) in intact live PBMCs. The B cell “island” of the viSNE map is circled in blue and reveals heterogeneity in expression of the measured markers both between groups and across patients within each group. This exploratory data analysis highlights that patients who responded to therapy (outcome group A) were able to respond to BCR stimulation prior to therapy, whereas patients from outcome group B were not. The strongest response in patients from outcome group A was in p-S6 expression.

Fig. 6.

Biomarker discovery results visualization. CITRUS using SAM with a 5% FDR was run on non-T cell subsets (i.e., CD3-negative pre-gated cells in PBMC samples) to look for differences in the abundances of non-T cells among three disease types: Group A, Group B, and Group C. (a) Display of the CITRUS-defined clusters. Highlighted clusters show cell subsets whose abundance differed significantly among the three cohorts. The parent clusters (which are “highest” up the tree), 1 and 2, are highlighted in orange and blue, respectively. (b) Boxplots show that both clusters are most abundant in Group A and least abundant in Group C. (c) viSNE plots for each sample were concatenated, and the expression of each displayed marker (e.g., CD11c, CD14) across the combined patient population is shown. The significant clusters identified by CITRUS are shown on the viSNE coordinates in the bottom row, to the right. Comparing these plots helps identify the phenotype of the significant clusters. (d) Heatmap and (e) histograms show the expression of the indicated markers on the significant clusters (1 and 2) vs. all other non-T cells.

3.5. Clustering and Correlative Biomarker Discovery Analysis

Run one CITRUS analysis to compare a set of outcome groups (such as therapy responders vs. non-responders) within one stratifying variable (such as pre-therapy, or post-therapy) that was defined above. For each CITRUS, start from the viSNE experiment that contains the appropriate samples (see Note 24) and select the pre-gated population. For each CITRUS run, choose to either look at differences in the abundances of cell populations between your groups (as a percentage of your pre-gated population) or the medians of functional marker expression in cell populations between your groups (see Note 25). Regardless of which choice you make, select the clustering channels that you chose to use for phenotyping markers in Subheading 3.1. Assign the “file groupings” based on the outcome groups you are planning to compare (see Note 26). For correlative analysis, choose the SAM model [14] and in the “Additional Settings” box, make sure that the number of events sampled per file is large enough that all of the events from your viSNE will be included in the CITRUS. Set the minimum cluster size (%) to the approximate percentage of the lowest abundance population you are interested in detecting. Set the cross-validation folds to 1 and leave the FDR set at the default (see Note 27). Select “Normalize Scales.”
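The abundance features described above are, for each sample and cluster, the percentage of that sample's pre-gated events that fall in the cluster. A minimal NumPy sketch, assuming event-level cluster memberships are available as index arrays (cluster IDs and sample labels are hypothetical); because clusters are nested, one event can count toward several clusters. The median-feature option would instead take, for each sample and cluster, the median of a functional marker over that sample's events in the cluster.

```python
import numpy as np


def cluster_abundances(sample_of_event, clusters):
    """Percentage of each sample's events that fall in each cluster.

    sample_of_event: length-n array giving the sample ID of every event.
    clusters: {cluster_id: array of event indices}; clusters may be nested.
    Returns (samples, cluster_ids, matrix), with one row per sample.
    """
    sample_of_event = np.asarray(sample_of_event)
    samples, totals = np.unique(sample_of_event, return_counts=True)
    cluster_ids = sorted(clusters)
    matrix = np.zeros((len(samples), len(cluster_ids)))
    for j, cid in enumerate(cluster_ids):
        in_cluster = sample_of_event[np.asarray(clusters[cid])]  # sample of each member event
        for i, s in enumerate(samples):
            matrix[i, j] = 100.0 * np.count_nonzero(in_cluster == s) / totals[i]
    return samples, cluster_ids, matrix


# Three hypothetical samples with four events each; cluster 2 is a child of cluster 1
sample_of_event = ["A"] * 4 + ["B"] * 4 + ["C"] * 4
clusters = {1: [0, 1, 2, 4, 8], 2: [0, 1, 4]}
samples, cluster_ids, matrix = cluster_abundances(sample_of_event, clusters)
print(samples, cluster_ids)
print(matrix)
```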

Select a false discovery rate (FDR) level at which you will assess the SAM results (see Note 28).
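SAM estimates the FDR by permutation, which is more involved than can be shown briefly. To build intuition for what choosing an FDR level does, the sketch below instead uses the Benjamini-Hochberg step-up procedure, a different and simpler FDR method, on hypothetical p-values: lowering the chosen level reports fewer features.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values (q-values) in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so the q-values are monotone in p
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Hypothetical p-values for ten cluster features
p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
q = benjamini_hochberg(p)
significant = [i for i, qv in enumerate(q) if qv <= 0.05]  # features reported at a 5% FDR
print(significant)  # [0, 1]
```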

Determine whether you have any significant results. If there are no significant biomarkers found, no model output will be returned (see Note 29).

If the model returns significant results, select the clusters to focus on. Use the “featurePlots” output from CITRUS for the chosen FDR and identify the significant nodes highlighted in red on the tree. When there are multiple significant clusters that are immediate neighbors of each other, CITRUS will draw a bubble around these neighbors. Choose the highest cluster on the tree within each bubble to focus on (see example in Fig. 6a and Note 30).
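Choosing the highest cluster in each bubble amounts to keeping the significant clusters whose parent is not significant. A minimal sketch, assuming the tree is available as a hypothetical child-to-parent mapping and that a bubble is a group of significant clusters connected by parent-child links.

```python
def top_significant_clusters(parent, significant):
    """Return the highest significant cluster in each connected group ("bubble").

    parent: {cluster_id: parent_cluster_id}; the root has no entry.
    significant: set of cluster IDs that are significant at the chosen FDR.
    A significant cluster is the top of its group when its parent is not significant.
    """
    return sorted(c for c in significant if parent.get(c) not in significant)


# Hypothetical tree: 0 is the root; 1 and 2 are its children, and so on
parent = {1: 0, 2: 0, 3: 1, 4: 1, 5: 2, 6: 3}
print(top_significant_clusters(parent, {1, 3, 4, 5, 6}))  # [1, 5]
```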

Review how these clusters differ between the outcome groups on the “features” boxplots output from CITRUS for the chosen FDR (see example in Fig. 6b and Note 31).

From the completed CITRUS page, export the clusters you selected to focus on to a new experiment along with the original files.

Map these clusters back to viSNE to understand their phenotypes (see example in Fig. 6c and Note 32). Concatenate the events separately for each selected cluster and all of the original files. Create a viSNE dot plot for all of the concatenated files with the significant cluster files overlaid on the original file (see Note 33). Compare this viSNE map showing the significant clusters to the concatenated viSNE expression maps that were created in Subheading 3.4 to help explain the phenotype of the significant CITRUS clusters.

Examine the selected clusters on the “markerPlots” (see Note 34) and the “clusters” histograms (see example in Fig. 6e and Note 35) output by CITRUS. Check that this expression matches what you see on the viSNE map in the region where the significant clusters are overlaid.

Create a heatmap in the working illustration (see example in Fig. 6d) of the exported clusters experiment with the clustering channels as the rows and the concatenated files for each significant cluster as the columns. Check that this expression matches what is seen on the viSNE map in the region where the significant clusters are overlaid (see Note 36).
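The heatmap values can be reproduced outside Cytobank as a check. The sketch below assumes the exported events for each significant cluster (and, as in Fig. 6d, all other cells for comparison) are available as transformed arrays; channel and cluster names are hypothetical, and each cell is the median intensity of one channel in one cluster.

```python
import numpy as np


def median_heatmap(cluster_events, channels):
    """Rows = clustering channels, columns = clusters; each cell is a median intensity.

    cluster_events: {cluster_name: (n_events, n_channels) array of transformed data},
    with columns in the same order as `channels`.
    """
    names = list(cluster_events)
    matrix = np.column_stack([np.median(cluster_events[n], axis=0) for n in names])
    return channels, names, matrix


channels = ["CD11c", "CD14"]
cluster_events = {
    "cluster 1": np.array([[1.0, 4.0], [3.0, 6.0], [2.0, 5.0]]),
    "all other": np.array([[0.5, 0.1], [0.7, 0.3]]),
}
rows, cols, matrix = median_heatmap(cluster_events, channels)
for channel, values in zip(rows, matrix):
    print(channel, dict(zip(cols, values.round(2))))
```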

Back-gate the significant CITRUS clusters onto biaxial plots using color overlay dot plots (see Note 37).

3.6. Clustering and Predictive Biomarker Model Development

Run one CITRUS for each predictive model you would like to build (see Note 38). Set the parameters for this CITRUS as for the correlative analysis (see Subheading 3.5), except: (1) choose the PAM model [15], and additionally choose the lasso model [16] if only two groups are being compared (see Note 39); and (2) in the “additional settings” box, set the cross-validation folds to at least 5 and no more than 10 (more will take longer) and set the FDR to the significance level at which you would like to evaluate your results. Take note of the number of samples in each CITRUS group (see Note 40).

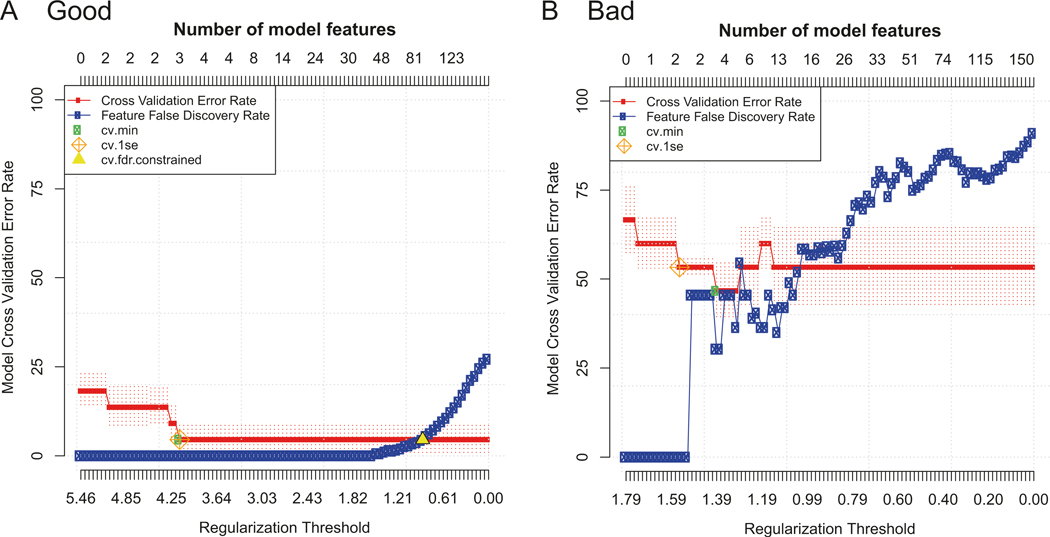

Determine whether the biomarkers included in the predictive model are able to discriminate the groups using the model error rate plot (see example Fig. 7 and Note 41). Identify the green dot representing the CV min constrained model (see Note 42), and calculate the cross-validated estimate of your model's accuracy in discriminating your outcome groups as one minus the model cross-validation error rate (y-axis) at this point. Determine whether the accuracy of your model is “good” given the context of your analysis (see Note 43).
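To make the relationship between cross-validation error and accuracy concrete, the sketch below estimates a stratified k-fold error rate and reports accuracy as one minus that error. It uses a plain nearest-centroid classifier as a simplified stand-in for PAM (PAM additionally shrinks the class centroids, which is omitted here); the data are hypothetical features such as cluster abundances.

```python
import numpy as np


def cv_error_rate(X, y, n_folds=5, seed=0):
    """Stratified k-fold cross-validated error rate of a nearest-centroid classifier."""
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    rng = np.random.default_rng(seed)
    folds = np.empty(len(y), dtype=int)
    for c in np.unique(y):  # spread each class evenly across the folds
        idx = np.flatnonzero(y == c)
        folds[idx] = rng.permutation(len(idx)) % n_folds
    n_wrong = 0
    for k in range(n_folds):
        test, train = folds == k, folds != k
        classes = np.unique(y[train])
        centroids = np.array([X[train & (y == c)].mean(axis=0) for c in classes])
        # Squared Euclidean distance from each held-out sample to each class centroid
        d = ((X[test][:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        n_wrong += np.count_nonzero(classes[d.argmin(axis=1)] != y[test])
    return n_wrong / len(y)


# Hypothetical features for 20 responders and 20 non-responders; only feature 0 is informative
rng = np.random.default_rng(1)
X = rng.normal(size=(40, 3))
y = np.array(["R"] * 20 + ["NR"] * 20)
X[y == "R", 0] += 4.0
error = cv_error_rate(X, y, n_folds=5)
accuracy = 1 - error
print(f"CV error rate = {error:.2f}, estimated accuracy = {accuracy:.2f}")
```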

Determine whether the predictive model results are also significant (when using PAM) by comparing the predictive model constrained by the minimum cross-validated error rate (the CV min model, green circle) to the model constrained by the false discovery rate (the FDR model, yellow triangle) on the model error rate plot (see Note 44). Check that the FDR constrained model contains more features than the CV min constrained model (i.e., the yellow triangle should be further to the right on the X-axis); this indicates that all of the features in the predictive model are statistically significant.

As described for the correlative analysis results in Subheading 3.5, select the clusters to focus on, review how these clusters differ between the outcome groups, and map the clusters back to the viSNE map to understand their phenotypes. Compare the results of the predictive and correlative analyses and of all of the predictive models that you build (see Note 45).

Fig. 7.

“Good” vs. “bad” example model error rate plots. Two examples of model error rate plots output from predictive CITRUS analyses using PAM and an FDR of 5% with equally balanced groups. In each plot, the cross-validation error rate (red line) and false discovery rate (blue line) are shown as the number of features in the model increases (top x-axis) according to the regularization threshold used to build the model (bottom x-axis). (a) A “good” model error rate plot. An FDR-constrained model (yellow triangle) was identified with more features than the CV-constrained models (cv.min, cv.1se). The CV min model had a CV error rate of around 5% using three features (cluster abundances in this example), which was considered excellent for this context. (b) A “bad” model error rate plot. The CV error rate never drops below 50%, meaning the model misclassifies at least half of the samples. No FDR-constrained model was identified (no yellow triangle), meaning that the features included in the CV-constrained models are not statistically significant.

3.7. Further Analyses to Validate the CITRUS Results

There are several reasons to run additional CITRUS analyses to understand how your results are affected by certain factors in your data. Generally, sensitivity analyses involve excluding certain samples or markers from the CITRUS analysis and checking that you identify the same trends as when you use all of the data. This ensures that the effects you find are consistent across your entire dataset and are not driven by outlying samples or markers (see Note 46). In addition to the sensitivity analyses, once you have identified biomarkers of interest, you should repeat your CITRUS analysis to guard against false positives due to stochasticity (see Note 47).

Rerun CITRUS excluding any samples or markers for which there are concerns about data quality. Such data quality concerns may have been identified during the data tidying and QC phase.

Rerun CITRUS excluding any batches of samples for which there are concerns about batch effects, or, if several samples per group within each batch are available, rerun CITRUS stratifying analyses by batch.

Rerun CITRUS excluding, combining or splitting samples from certain outcome groups if there are more than two (see Note 48).

If a predictive model is being built with unbalanced groups, rerun CITRUS many times, each time arbitrarily selecting subsets of the samples so that the groups used to build the model and assess accuracy are equal in size.
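The repeated balanced subsampling described above can be planned with a short script that lists which samples go into each rerun. A minimal sketch, assuming hypothetical sample IDs with the group sizes from Table 1 (28 responders, 12 non-responders); each design draws every group down to the size of the smallest group.

```python
import random


def balanced_subsamples(groups, n_repeats, seed=None):
    """Yield n_repeats balanced designs, drawing each group down to the smallest group size."""
    rng = random.Random(seed)
    n = min(len(members) for members in groups.values())
    for _ in range(n_repeats):
        yield {g: sorted(rng.sample(members, n)) for g, members in groups.items()}


groups = {
    "R": [f"R{i:02d}" for i in range(28)],   # hypothetical responder sample IDs
    "NR": [f"NR{i:02d}" for i in range(12)],  # hypothetical non-responder sample IDs
}
for design in balanced_subsamples(groups, n_repeats=3, seed=42):
    print({g: len(s) for g, s in design.items()})  # {'R': 12, 'NR': 12}
```

Each design would be run as a separate CITRUS analysis, and the spread of cross-validated error rates across designs indicates how much the result depends on which responders were included.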

Rerun the entire viSNE + CITRUS analysis pipeline described above to reproduce any results of interest. Do not set the same seed for viSNE (as rerunning with the same seed will result in an identical analysis). For correlative CITRUS analyses, significant clusters with the same phenotype in each repeat should be identifiable (see Note 49). For predictive CITRUS analyses, the cross-validated model error rate seen on the model error rate plot should be similar for each repeat (see Note 50).

Experimental replication of findings in additional samples is also an important step in any project.

Use exploratory data analysis on the viSNE map as described in Subheading 3.4 to assist in understanding why a particular CITRUS cluster is significant or not in its clinical, biological, and experimental context.


Notes

Examples of typical outcomes include different disease categories (e.g., healthy donors vs. disease or two different disease groups), different response categories to a therapy, or different disease severity categories. You can compare two or more outcomes within a single CITRUS run, but there may be additional considerations for sensitivity analyses and interpretation of results when you have more than two groups (discussed below). While other implementations of CITRUS can manage continuous outcomes, discrete outcomes are necessary for analysis in Cytobank.

Examples of typical stratifying variables include time points (e.g., samples from the same patients across time in a clinical study, or the same biological sample aliquoted and measured at various time points after stim) and experimental conditions (e.g., the same samples measured under different culture or stim conditions).

Generally, the number of samples you have in each group will be driven by the study design and (in human studies) recruitment and any loss to follow-up. When the goal is to build a predictive model with CITRUS (as opposed to running a correlative analysis), interpretation becomes more straightforward if there is an equal (or roughly equal) number of samples in each group.

If there will be multiple batches of samples, randomizing samples across batches according to outcome groups, stratifying variables and including a technical control sample that is run in every batch can help to assess and control batch effects. Ideally, the technical control sample will be the same sample aliquoted so that one aliquot is run with each batch (e.g., a pool of healthy donor peripheral blood mononuclear cells (PBMCs), if the cell populations being measuring are present in healthy PBMCs).

Even if the project goal is to build a predictive model, we often find it useful to also run a correlative analysis to identify all of the significant biomarkers in the dataset. Since predictive analyses return a minimum set of markers that can best predict the differences between the groups, there are often additional markers that are significantly different between the groups.

The markers to use for phenotyping and functional readouts can be decided based on their traditional use, but we often find it useful to include at least some markers that are not traditionally used for phenotyping as clustering markers for viSNE and CITRUS. Since these methods can define populations even when there is not a clear split between positive and negative cells, clustering on markers that would traditionally be functional readouts can help identify more nuanced cell types based on co-expression of functional markers and determine how these cell types vary between sample groups.

If you have carefully named your files to include information about all of these variables, creating sample tags will be faster, since Cytobank automatically puts files into sample tag groups as you create them if the group name is in the file name. Otherwise, you can prepare the sample tags in a spreadsheet and import them from a CSV file that matches each file name to its sample tags.
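
If you go the CSV route, the table can be generated from consistently named files rather than typed by hand. The sketch below assumes a hypothetical `<patient>_<timepoint>_<condition>.fcs` naming convention and writes generic column headers; the headers Cytobank actually expects are not reproduced here, so match them to the template Cytobank provides before importing.

```python
import csv
import io
import re

# Hypothetical naming convention: <patient>_<timepoint>_<condition>.fcs
FILENAME_PATTERN = re.compile(
    r"^(?P<patient>[^_]+)_(?P<timepoint>[^_]+)_(?P<condition>[^_]+)\.fcs$"
)
FIELDS = ["filename", "patient", "timepoint", "condition"]  # placeholder headers


def tags_from_filenames(filenames):
    """Parse each file name into a row of sample tags."""
    rows = []
    for name in filenames:
        match = FILENAME_PATTERN.match(name)
        if match is None:
            raise ValueError(f"file name does not follow the convention: {name}")
        rows.append({"filename": name, **match.groupdict()})
    return rows


def write_tag_csv(rows, handle):
    """Write the tag rows as CSV to an open text handle."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


# Usage: write to a string buffer (or an open file) for inspection.
buffer = io.StringIO()
write_tag_csv(tags_from_filenames(["P01_baseline_unstim.fcs"]), buffer)
```

Raising an error on nonconforming names surfaces naming mistakes before they become mis-tagged files.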

The experiment summary page in Cytobank can be used to quickly review the number of files in the experiment, sample tags associated with each file, and the total event count per file.

If multiple panels were run on the sample set, a separate CITRUS analysis can be performed for each panel, and the results combined for a final report or publication.

The arcsinh transformation helps accommodate the negative values that may be exported from flow cytometry instruments, while displaying the data in a log-like manner (for more information, see http://blog.cytobank.org/2012/03/30/making-beautiful-plots-data-display-basics). The arcsinh scale argument (or cofactor) should be set so that the distribution of each marker is as expected based on the known biology of that marker and all collected data are visible. The higher the cofactor, the greater the compression of low-value data toward zero. The default axis transformation for mass cytometry data in Cytobank is arcsinh with a cofactor of 5. For flow cytometry data, cofactor values up to 2000 are commonly used. As an example, peripheral blood mononuclear cells (PBMCs) should exhibit negative, intermediate, and high expression of CD4 on non-T cells, monocytes, and CD4+ helper T cells, respectively. If CD4 expression on monocytes was apparent before axis transformation but absent after it, the cofactor may be set too high.
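
The transformation itself is simple: each value x becomes asinh(x / cofactor). The sketch below (standard-library Python) shows why negative values pose no problem and how a larger cofactor pulls low values toward zero; the function name is ours, not Cytobank's.

```python
import math


def arcsinh_transform(values, cofactor=5.0):
    """Return asinh(x / cofactor) for each value.

    asinh is defined for all real numbers, so negative values stay finite;
    it is roughly linear near zero and roughly logarithmic for large values.
    """
    if cofactor <= 0:
        raise ValueError("cofactor must be positive")
    return [math.asinh(v / cofactor) for v in values]


# A raw value of 10 with the mass cytometry default and with a larger cofactor.
low_cofactor = arcsinh_transform([10.0], cofactor=5)     # about 1.44
high_cofactor = arcsinh_transform([10.0], cofactor=150)  # about 0.07
```

The same raw value of 10 lands well above zero with a cofactor of 5 but is compressed almost to zero with a cofactor of 150, which is why a cofactor that is too high can hide dim populations such as CD4 on monocytes.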

The range of the scales should be set broadly enough that you can see most of the data on the plot. In Cytobank, if the range is too narrow, the data will appear stacked next to the limits of the plot.

Create a working illustration displaying all of the technical control files on the rows and all of the markers on the columns. Either histograms or dot plots with a widely expressed marker like CD45 on the y-axis work well for these plots. Looking down the column for each marker, identify whether any technical controls appear to have either poor staining (i.e., little to no expression) for that marker or batch effects (i.e., an overall higher or lower distribution of expression of the marker compared to the other batches).
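
Visual review can be complemented by a simple numerical screen. The sketch below assumes you have exported the median expression of each marker for the technical control in each batch (on the transformed scale) and flags batches whose median sits far from the others, using a median/MAD robust z-score. The threshold of 3 and the floor on the scale are arbitrary starting points, not validated cutoffs.

```python
import statistics


def flag_control_batches(control_medians, threshold=3.0, min_scale=0.1):
    """Flag technical-control batches whose median expression stands out.

    control_medians: {marker: {batch: median expression of the technical control}},
    on the transformed (e.g., arcsinh) scale.
    Returns a list of (marker, batch, robust_z) tuples.
    """
    flagged = []
    for marker, by_batch in control_medians.items():
        values = list(by_batch.values())
        center = statistics.median(values)
        mad = statistics.median([abs(v - center) for v in values])
        # 1.4826 * MAD approximates a standard deviation for normal data; the
        # floor keeps a single deviant batch from being missed when MAD is 0.
        scale = max(1.4826 * mad, min_scale)
        for batch, value in by_batch.items():
            z = (value - center) / scale
            if abs(z) > threshold:
                flagged.append((marker, batch, round(z, 2)))
    return flagged
```

A large negative score suggests poor staining in that batch; a large positive or negative score on many markers at once points to a batch effect rather than a single staining problem.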

Run viSNE on intact singlets (or a similar pre-gated population) with equal sampling from all of the technical control samples, using the clustering markers you will use for your main CITRUS analysis. Create a working illustration displaying this viSNE map with each of the technical control samples on the rows, and view it as density dot plots, as contour plots colored by density, and colored by the expression of each clustering marker. If the distribution of the markers or the viSNE map varies across technical controls, this indicates batch effects large enough to influence analysis results.

There are several methods available for normalizing data for batch effects, and a thorough review of these is outside the scope of this chapter. Any method that returns normalized data for every cell across each channel can be applied as part of the CITRUS pipeline. One class of methods is applied to each batch separately, with the goal of allowing the batches to be combined for analysis after normalization. One method of this type that has worked well is z-score normalization (or “mean scaling”) [11]. To use this method, concatenate the events from all of the samples in a given batch. Then, normalize each value in a given channel by subtracting the mean of that channel and dividing by the standard deviation of that channel. Finally, split the data back into separate files for continued analysis. Normalization using the 5th and 95th percentiles of the data for each channel may also work well and may be less sensitive to outlier cells. A second class of methods is applied sample by sample, with the goal of identifying and aligning peaks for each marker [12]. As far as we are aware, these methods must currently be run in R; we have seen good results with them (unpublished data), but they will not be discussed in detail here. A third class of normalization methods takes cell populations into account when normalizing the data; these are typically less applicable to the CITRUS analysis pipeline because they usually return cell populations rather than normalized per-cell data [17, 18].
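
The per-batch z-score procedure described above, and the 5th/95th-percentile variant, can be sketched as follows. This is a minimal illustration assuming each batch is held in memory as nested dictionaries of per-cell values; it is not the implementation used in [11], and mapping the 5th and 95th percentiles to 0 and 1 is only one reasonable way to apply percentile scaling.

```python
import statistics


def normalize_batch(batch, method="zscore"):
    """Normalize one batch of samples channel by channel.

    batch: {sample_id: {channel: [value per cell]}}; all samples share channels.
    method: "zscore" (subtract pooled mean, divide by pooled SD) or "quantile"
    (map the pooled 5th and 95th percentiles to 0 and 1).
    Returns the same nested structure, i.e., statistics computed on the pooled
    events are applied back to each sample ("split back into files").
    """
    channels = list(next(iter(batch.values())))
    normalized = {sample_id: {} for sample_id in batch}
    for channel in channels:
        # Concatenate the events from every sample in this batch.
        pooled = [v for data in batch.values() for v in data[channel]]
        if method == "zscore":
            center = statistics.mean(pooled)
            scale = statistics.pstdev(pooled)
        elif method == "quantile":
            cuts = statistics.quantiles(pooled, n=20, method="inclusive")
            center, scale = cuts[0], cuts[-1] - cuts[0]  # 5th and 95th percentiles
        else:
            raise ValueError(f"unknown method: {method}")
        if scale == 0:
            raise ValueError(f"channel {channel} has no spread in this batch")
        for sample_id, data in batch.items():
            normalized[sample_id][channel] = [(v - center) / scale for v in data[channel]]
    return normalized
```

Run this once per batch; because every batch ends up on a common scale, the normalized batches can then be combined for viSNE and CITRUS.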

If possible (depending on the calculations made for the number of events required per sample), it can be beneficial to include samples from multiple levels of a stratifying variable in the same viSNE map, even if they will be analyzed in separate CITRUS runs.

To determine the number of events needed per file to detect rare populations with an acceptable percent CV, we recommend following the advice of [19]. For example, to detect a population that is 1% of the pre-gated population with a 5% CV, you need to include 40,000 events per file in the viSNE and CITRUS, whereas to detect a population that is 2% of the pre-gated population with the same CV, you only need to include 20,000 events per file.
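
These numbers follow from Poisson counting statistics, under which the CV of a count of r events is 1/√r. Reaching a 5% CV therefore requires r = 1/0.05² = 400 events from the rare population, and the total number of events per file is 400 divided by the population's frequency. A small helper, assuming this Poisson model:

```python
import math


def events_needed(frequency, target_cv):
    """Events per file needed so the rare population's count has the target CV.

    frequency: population size as a fraction of the pre-gated population (0.01 = 1%).
    target_cv: desired coefficient of variation (0.05 = 5%).
    Assumes Poisson counting, where CV = 1 / sqrt(rare event count).
    """
    if not 0 < frequency <= 1 or target_cv <= 0:
        raise ValueError("frequency must be in (0, 1] and target_cv positive")
    rare_events = 1.0 / target_cv ** 2   # e.g., 400 rare events for a 5% CV
    total = rare_events / frequency
    # Round first to absorb floating-point noise, then round up to whole events.
    return math.ceil(round(total, 6))
```

This reproduces the examples above: 40,000 events for a 1% population and 20,000 events for a 2% population, each at a 5% CV.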

If you find that you cannot include enough cells per file in the analysis to detect the rare populations you are interested in, but you have acquired enough cells per file to detect these populations, you can start parallel CITRUS analysis pipelines for different pre-gated populations. For example, you might pre-gate T cells and B cells separately and start a CITRUS analysis pipeline for each of these subsets.

It may be helpful to include a pre-gating marker (e.g., CD45 or CD3) as a clustering channel depending on your particular data and biology. For example, T cells exposed to a chronic inflammatory environment may downregulate expression of the ζ-chain of CD3 [20]. Therefore, if collected data include T cells from unique environments, varying expression of CD3 may indicate an important biological phenomenon that would be helpful to capture by clustering on that marker.

It is best to set iterations and perplexity according to some rough guidelines rather than simply maximizing them, because increasing either one can dramatically increase run time. A good starting point for iterations is roughly 1000 iterations for every 100k events. Perplexity is more difficult to set guidelines for because the required perplexity depends greatly on the specific data set. We recommend starting with a perplexity of 30 if the number of events in the viSNE is less than 1 M, 50 for 1 M to 1.5 M events, and 70 for more than 1.5 M events [13].
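
The rough guidelines above can be written as a starting-point helper. Rounding iterations up to the next whole 100k-event block and placing exactly 1 M and 1.5 M events in the middle perplexity band are our own assumptions; treat the output as a first guess to refine by inspecting map quality.

```python
import math


def suggest_visne_settings(n_events):
    """Return (iterations, perplexity) starting values for a viSNE run.

    Iterations: about 1000 per 100k events (rounded up to whole 100k blocks).
    Perplexity: 30 below 1 M events, 50 for 1 M to 1.5 M, 70 above 1.5 M.
    """
    if n_events <= 0:
        raise ValueError("n_events must be positive")
    iterations = 1000 * math.ceil(n_events / 100_000)
    if n_events < 1_000_000:
        perplexity = 30
    elif n_events <= 1_500_000:
        perplexity = 50
    else:
        perplexity = 70
    return iterations, perplexity
```

For example, a 500,000-event viSNE would start at 5000 iterations with a perplexity of 30.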

In a high-quality viSNE map, cells with similar expression of clustering markers will form either separate viSNE islands (if they are very different from other cell types) or separate regions within viSNE islands. A poor-quality viSNE map will have overlapping and poorly formed islands that don’t separate the expression of a single marker into distinct locations on the map; cells expressing a given marker may appear in a stringlike or spindly pattern. If the viSNE map is poorly converged, rerun viSNE with additional iterations. A viSNE map can also be converged but have islands that are not well separated; in this case, rerun viSNE with higher perplexity. Note that if you start with a distinct pre-gated population (e.g., CD4+ T cells), the viSNE map is expected to have only one island; expression of the clustering markers should define regions of the island rather than separate islands.

This is often done with the files displayed separately as part of assessing viSNE map quality, but a better visual for publication can be made from the file concatenated across all groups. This will be useful for visually explaining the phenotype of significant clusters.

We recommend making this exploratory data analysis with the viSNE map an iterative process that is used to explore the data before and after running CITRUS.

To keep track of the cell populations being examined, it may be helpful to manually gate the islands of the viSNE map and then display these on the working illustration. Do this by selecting “show gates” and using the gate label “gate name.”

One CITRUS analysis is required for each level of a stratifying variable that is included in a single viSNE. For example, if you included healthy and disease samples from two stimulation conditions in the same viSNE, start one CITRUS comparing healthy and disease samples for each of the stimulation conditions. Alternatively, depending on your event count calculations done above, these stimulation conditions may be in separate viSNEs.
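
For larger designs, it can help to list the planned comparisons before starting any runs. The sketch below groups files into one outcome comparison per stratifying-variable level and warns about levels with a single outcome group or with fewer than eight samples in a group (the minimum recommended in these Notes). The tuple layout and function name are hypothetical.

```python
from collections import defaultdict


def plan_citrus_runs(samples, min_per_group=8):
    """Group files into one CITRUS comparison per stratifying-variable level.

    samples: iterable of (file_name, outcome_group, stratifying_level).
    Returns (runs, warnings): runs maps level -> {outcome_group: [file names]};
    warnings lists levels with fewer than two groups or with small groups.
    """
    grouped = defaultdict(lambda: defaultdict(list))
    for file_name, outcome, level in samples:
        grouped[level][outcome].append(file_name)
    runs = {level: dict(groups) for level, groups in grouped.items()}

    warnings = []
    for level, groups in runs.items():
        if len(groups) < 2:
            warnings.append(f"{level}: only one outcome group, nothing to compare")
        small = [g for g, files in groups.items() if len(files) < min_per_group]
        if small:
            warnings.append(f"{level}: fewer than {min_per_group} samples in {sorted(small)}")
    return runs, warnings
```

With healthy and disease samples under two stimulation conditions, this yields two planned runs, one per condition, matching the example above.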

If both abundance and median analyses are desired, these can be done in separate CITRUS runs. If using medians, be sure to select equal sampling and specify the functional readout markers that were identified in Subheading 3.1 as the functional markers to characterize.

Since equal sampling was chosen for viSNE, all of the files listed should already have the same number of events.

Neither the cross-validation fold nor the FDR influences the SAM model.

CITRUS results are statistically significant below a FDR threshold which you select. FDR is similar to a p-value that’s adjusted for multiple hypothesis testing, but it is less conservative (i.e., it will identify more significant biomarkers than, for example, a Bonferroni correction) and therefore useful for biomarker discovery. The SAM model outputs results at FDR thresholds of 1, 5, and 10% for every run. One of these can be selected to report as “these results are statistically significant below a false discovery rate threshold of xx%,” where xx is the threshold you select.
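
To illustrate why FDR control is less conservative than a Bonferroni correction, the sketch below applies the Benjamini-Hochberg procedure [8] and a Bonferroni correction to the same made-up p-values. CITRUS's SAM analysis estimates FDR with permutations rather than with this exact procedure, so this illustrates the concept only.

```python
def benjamini_hochberg(p_values, fdr=0.05):
    """Indices of hypotheses rejected by the Benjamini-Hochberg step-up procedure."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        # Largest rank whose p-value is at or below (rank / m) * FDR.
        if p_values[idx] <= rank / m * fdr:
            cutoff_rank = rank
    return sorted(order[:cutoff_rank])


def bonferroni(p_values, alpha=0.05):
    """Indices of hypotheses rejected after a Bonferroni correction."""
    m = len(p_values)
    return [i for i, p in enumerate(p_values) if p <= alpha / m]


p = [0.001, 0.012, 0.02, 0.03, 0.5]  # hypothetical p-values for five biomarkers
bh_hits = benjamini_hochberg(p)      # [0, 1, 2, 3]
bonf_hits = bonferroni(p)            # [0]
```

At the same nominal 5% level, the FDR procedure retains four candidate biomarkers where Bonferroni retains one, which is the property that makes FDR control attractive for discovery.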

There are several reasons why CITRUS may return no model output. First, there may not be any significant difference between the outcome groups that can be measured by the panel that was used. Second, CITRUS might not find any significant biomarkers if there is an insufficient number of samples per group. Like any statistical test, whether or not a significant difference is detected depends on the magnitude of the difference in the biomarker between groups, the variation in the biomarker within each group, and the number of samples measured. We recommend running CITRUS with at least eight samples per group. Third, CITRUS might not find any significant biomarkers if too few cells were included from a population that would otherwise exhibit useful biomarkers. Including enough cells depends on collecting enough events in the experiment and on pre-gating narrowly enough that enough events from that population can be included in the analysis to detect it. Finally, CITRUS might not find any significant biomarkers if the analysis combines more than two outcome groups that interact with each other. If the outcome groups in a single CITRUS run mix a true outcome (such as disease vs. healthy) with something that might be a stratifying variable (such as young vs. old), it might be difficult for CITRUS to identify significant differences between the outcome groups if they are also affected by the stratifying variable. If this may be the case, split the samples into multiple CITRUS runs and use the viSNE map to compare results and see whether the same cell populations are significant at each level of the stratifying variable.

Since the clusters are nested, this cluster includes all of the cells within the lower clusters on the tree in this bubble.

These boxplots will show which outcome group has greater abundance or medians (whichever was used for CITRUS) for these clusters, and how spread out these values are within each group. Identifying trends in these differences across more than two outcome groups or across different significant biomarkers can be helpful to explain the biological importance of the results and build confidence in them.

Since CITRUS was started from within the viSNE experiment in Cytobank, the tSNE channels that give the viSNE map coordinates are contained within the CITRUS results files, even though they haven’t been used for clustering.

The dot size and/or plot size can be increased or decreased to make the visualization larger or smaller.

The “markerPlots” show the same CITRUS trees that were examined to find the significant nodes, colored by the expression of each marker.

The “clusters” histograms output by CITRUS for significant clusters show the expression of each clustering marker in a given significant cluster as compared to cells in all other clusters that were used in the CITRUS analysis (“background”).

This expression should match what is seen on the viSNE map in the region where the significant clusters are overlaid, but provides a summary of the median expression of each marker in each cluster.
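
The same comparison can be reproduced from exported per-cell data if you want the numbers behind the histograms. A minimal sketch, assuming you have each cell's marker values and a flag for whether it belongs to the significant cluster (background being all other cells included in the CITRUS analysis); the data layout and function name are hypothetical.

```python
import statistics


def cluster_vs_background(expression, in_cluster):
    """Median expression of each marker inside a cluster and in the background.

    expression: {marker: [value per cell]}; in_cluster: [True/False per cell].
    Returns {marker: (median in cluster, median in background)}.
    """
    summary = {}
    for marker, values in expression.items():
        if len(values) != len(in_cluster):
            raise ValueError(f"{marker}: expected one value per cell")
        inside = [v for v, member in zip(values, in_cluster) if member]
        background = [v for v, member in zip(values, in_cluster) if not member]
        summary[marker] = (statistics.median(inside), statistics.median(background))
    return summary
```

Markers with a large gap between the two medians are the ones that define the cluster's phenotype and should stand out in the corresponding region of the viSNE map.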

Back-gating the CITRUS clusters onto biaxial plots will help with understanding how the significant clusters might have been identified or overlooked in a biaxial gating hierarchy.

Typically, build one predictive model for each outcome group comparison within each stratifying variable defined above (just like for the correlative analysis). However, if a correlative analysis was run before building any predictive models and that analysis did not find any significant results, a predictive model should not be built. Furthermore, based on the results of the correlative analysis, it might be suitable to build a predictive model for the outcome groups at a single level of the stratifying variables using either abundances or medians. For example, a predictive model might be built using median signaling marker expression at baseline to predict an outcome measured at a 2-month time point, if the corresponding correlative analysis found significant differences in marker expression and if the scientific goal is to find baseline predictors of the 2-month outcome.

When building a predictive model, it is permissible to run multiple model-building algorithms (PAM and lasso) and select the one that gives the best prediction of the outcome (lowest cross-validated model error rate). If the two models perform equally well, it is permissible to choose the simpler model, with fewer biomarkers.

When assigning “file groupings” for predictive models, it is best to have balanced groups with the same number of samples per group. CITRUS uses overall accuracy predicting all groups to select the markers to use in the final model. Thus, if one group has a much larger number of samples than another group, the group with the larger number of samples will have more influence on this overall accuracy calculation.

In general, as the number of features shown across the top of the plot increases, the cross-validation error rate (red line) should decrease up to a point. If it does not, you most likely have not measured biomarkers with any ability to discriminate your outcome groups.

The CV min constrained model is the model with the minimum number of features required to achieve the best cross-validated estimation of model accuracy possible with the dataset. This model is what you might carry forward to use in a withheld validation/testing dataset.
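
Reading the model error rate plot amounts to the selection rule sketched below: among the regularization thresholds evaluated, find the lowest cross-validated error and keep the model with the fewest features that reaches it. This is an illustration with hypothetical numbers, not CITRUS's own code; the small tolerance treats error rates that differ only by floating-point noise as equal.

```python
def cv_min_constrained(path, tol=1e-9):
    """Pick the sparsest model that reaches the minimum cross-validated error.

    path: (n_features, cv_error_rate) pairs, one per regularization threshold.
    Returns the pair with the fewest features among those at the minimum error.
    """
    best_error = min(err for _, err in path)
    reaching_min = [(n, err) for n, err in path if err <= best_error + tol]
    return min(reaching_min)  # tuples compare on n_features first


# Hypothetical error curve: error falls as features are added, then plateaus.
curve = [(1, 0.45), (2, 0.30), (4, 0.20), (6, 0.20), (9, 0.25)]
chosen = cv_min_constrained(curve)  # (4, 0.2)
```

Adding the fifth and sixth features does not lower the error further, so the four-feature model is the one to carry forward.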

In a clinical context where the best previously known predictor of response has a 50% accuracy (or 50% model error rate), a 70% accuracy (or 30% model error rate) would be an outstanding improvement, but in other contexts this accuracy might be considered poor. If the outcome groups are not balanced, it may be difficult to determine whether the accuracy is good or not, since the accuracy is determined across all samples and will therefore be influenced more heavily by groups with more samples. One option to avoid this concern is to arbitrarily select the same number of samples from each group to run the analysis, and then to perform some repeated analyses with different included samples to test the effect of arbitrary sample selection.
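
Repeated balanced selection can be scripted so that the draws are random but reproducible. The sketch below downsamples every outcome group to the size of the smallest group in each repeat; the function name, number of repeats, and seed are illustrative choices.

```python
import random


def balanced_subsamples(groups, n_repeats=5, seed=0):
    """Yield repeated, equally sized random selections of samples per outcome group.

    groups: {outcome_group: [sample ids]}. Each repeat downsamples every group
    to the size of the smallest group, so each group carries equal weight in
    the overall accuracy; comparing repeats shows how much the selection matters.
    """
    rng = random.Random(seed)  # fixed seed makes the set of repeats reproducible
    k = min(len(ids) for ids in groups.values())
    for _ in range(n_repeats):
        yield {group: sorted(rng.sample(ids, k)) for group, ids in groups.items()}
```

Run one predictive model per yielded selection and compare the resulting error rates; a large spread suggests that the accuracy estimate depends on which samples were included.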

If there is no FDR constrained model shown on the model error rate plot, then none of the biomarkers in your predictive model have statistically significant differences between your outcome groups.

If you are building more than one predictive model, for example, across different levels of a stratifying variable, you might identify different biomarkers in each of the predictive models even if the same markers were present in the correlative analysis. This is expected since CITRUS will select a set of the fewest markers necessary to best predict the outcome, and there may be more than one equivalent set.

In these sensitivity analyses, the effects that you see may not be significant (particularly with fewer samples), but the trends should be the same.

Since CITRUS includes some stochastic elements, it’s important to ensure that any significant biomarkers you identify are not false positives resulting from this stochasticity. If no downsampling of events was performed during the creation of the viSNE map or CITRUS analysis, there is no need to repeat the pipeline.

For example, parallel CITRUS analyses that break a response variable into four categories (e.g., progressive disease, stable disease, partial response, complete response) or two categories (e.g., nonresponse or response) can be considered.

Since the events are downsampled to create equal sampling for the viSNE map, the clusters may not consist of identical events, but the expression pattern of markers in these clusters should be very similar. To evaluate this, it may be helpful to use all of the tools outlined above for confirming the phenotype of CITRUS clusters. In addition, it may be helpful to compare the numerical summaries (e.g., medians) of surface marker expression that accompany the heatmaps, as well as the numerical summaries of cluster abundance or marker expression differences between groups in the CITRUS results, to make sure that these are consistent.

For predictive models built with CITRUS, the included biomarkers are NOT necessarily expected to be identical between repeated CITRUS runs because there may be more than one set of equally predictive markers.

References

1. Kvistborg P, Gouttefangeas C, Aghaeepour N et al. (2015) Thinking outside the gate: single-cell assessments in multiple dimensions. Immunity 42(4):591–592. 10.1016/j.immuni.2015.04.006

2. Newell EW, Cheng Y (2016) Mass cytometry: blessed with the curse of dimensionality. Nat Immunol 17(8):890–895. 10.1038/ni.3485

3. Bruggner RV, Bodenmiller B, Dill DL et al. (2014) Automated identification of stratifying signatures in cellular subpopulations. Proc Natl Acad Sci U S A 111(26):E2770–E2777. 10.1073/pnas.1408792111

4. Fraietta JA, Lacey SF, Orlando EJ et al. (2018) Determinants of response and resistance to CD19 chimeric antigen receptor (CAR) T cell therapy of chronic lymphocytic leukemia. Nat Med 24(5):563–571. 10.1038/s41591-018-0010-1

5. Pelissier Vatter FA, Schapiro D, Chang H et al. (2018) High-dimensional phenotyping identifies age-emergent cells in human mammary epithelia. Cell Rep 23(4):1205–1219. 10.1016/j.celrep.2018.03.114

6. Subrahmanyam PB, Dong Z, Gusenleitner D et al. (2018) Distinct predictive biomarker candidates for response to anti-CTLA-4 and anti-PD-1 immunotherapy in melanoma patients. J Immunother Cancer 6(1):18. 10.1186/s40425-018-0328-8

7. Ben-Shaanan TL, Azulay-Debby H, Dubovik T et al. (2016) Activation of the reward system boosts innate and adaptive immunity. Nat Med 22(8):940–944. 10.1038/nm.4133

8. Benjamini Y, Hochberg Y (1995) Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Ser B 57(1):289–300

9. van der Maaten LJP, Hinton GE (2008) Visualizing high-dimensional data using t-SNE. J Mach Learn Res 9:2579–2605

10. Amir el AD, Davis KL, Tadmor MD et al. (2013) viSNE enables visualization of high dimensional single-cell data and reveals phenotypic heterogeneity of leukemia. Nat Biotechnol 31(6):545–552. 10.1038/nbt.2594

11. Knapp D, Kannan N, Pellacani D et al. (2017) Mass cytometric analysis reveals viable activated caspase-3(+) luminal progenitors in the normal adult human mammary gland. Cell Rep 21(4):1116–1126. 10.1016/j.celrep.2017.09.096

12. Hahne F, Khodabakhshi AH, Bashashati A et al. (2010) Per-channel basis normalization methods for flow cytometry data. Cytometry A 77(2):121–131. 10.1002/cyto.a.20823

13. Cytobank (2018) How to configure and run a viSNE analysis. https://support.cytobank.org/hc/en-us/articles/206439707-How-to-Configure-and-Run-a-viSNE-Analysis. Accessed 27 July 2018

14. Tusher VG, Tibshirani R, Chu G (2001) Significance analysis of microarrays applied to the ionizing radiation response. Proc Natl Acad Sci U S A 98(9):5116–5121. 10.1073/pnas.091062498

15. Tibshirani R, Hastie T, Narasimhan B et al. (2002) Diagnosis of multiple cancer types by shrunken centroids of gene expression. Proc Natl Acad Sci U S A 99(10):6567–6572. 10.1073/pnas.082099299

16. Tibshirani R (1996) Regression shrinkage and selection via the lasso. J R Stat Soc Ser B 58:267–288

17. Finak G, Jiang W, Krouse K et al. (2014) High-throughput flow cytometry data normalization for clinical trials. Cytometry A 85(3):277–286. 10.1002/cyto.a.22433

18. Van Gassen S, Gaudiliere B, Dhaene T et al. (2017) A cross-sample cell-type specific normalization algorithm for clinical mass cytometry datasets. Paper presented at the 32nd congress of the International Society for Advancement of Cytometry, Boston, MA

19. Hoy T (2006) Rare-event detection. In: Wulff S (ed) Guide to flow cytometry. Dako, Carpinteria, CA, pp 55–58

20. Baniyash M (2004) TCR zeta-chain downregulation: curtailing an excessive inflammatory immune response. Nat Rev Immunol 4(9):675–687. 10.1038/nri1434