Abstract

For multiple index models, it has recently been shown that sliced inverse regression (SIR) is consistent for estimating the sufficient dimension reduction (SDR) space if and only if $\rho_n = p/n \to 0$, where p is the dimension and n is the sample size. Thus, when p is of the same or a higher order than n, additional assumptions such as sparsity must be imposed to ensure the consistency of SIR. By constructing artificial response variables from the top eigenvectors of the estimated conditional covariance matrix, we introduce a simple Lasso regression method to obtain an estimate of the SDR space. The resulting algorithm, Lasso-SIR, is shown to be consistent and to achieve the optimal convergence rate under certain sparsity conditions when p is of order $o(n^2\lambda^2)$, where λ is the generalized signal-to-noise ratio. We also demonstrate the superior performance of Lasso-SIR compared with existing approaches via extensive numerical studies and several real data examples.

1. Introduction

Dimension reduction and variable selection have become indispensable steps for modern-day data analysts dealing with “big data,” where thousands or even millions of features are often available for only hundreds or thousands of samples. With such ultra-high-dimensional data, an effective modeling strategy is to assume that only a few features and/or a few linear combinations of these features carry the information that researchers are interested in. One can consider the following multiple index model [Li, 1991]:

$$y = f(\beta_1^\tau x, \beta_2^\tau x, \ldots, \beta_d^\tau x, \epsilon), \tag{1}$$

where x follows a p-dimensional elliptical distribution with mean zero and covariance matrix Σ, the βi’s are unknown projection vectors, d is unknown but is assumed to be much smaller than p, and the error ϵ is independent of x and has mean 0. When p is very large, it is reasonable to further restrict each βi to be a sparse vector.

Since the introduction of sliced inverse regression (SIR) [Li, 1991], many methods have been proposed to estimate the space spanned by (β1, ⋯, βd) with few assumptions on the link function f(·). Under the multiple index model (1), the objective of all SDR (sufficient dimension reduction; Cook [1998]) methods is to find the minimal subspace $\mathcal{S} \subseteq \mathbb{R}^p$ such that $y \perp\!\!\!\perp x \mid P_{\mathcal{S}}x$, where $P_{\mathcal{S}}$ stands for the projection operator onto the subspace $\mathcal{S}$. When the dimension of x is moderately large, SDR methods, including SIR, have been shown to be successful [Xia et al., 2002, Ni et al., 2005, Li and Nachtsheim, 2006, Li, 2007, Zhu et al., 2006]. However, these methods were previously known to work well when the sample size n grows much faster than the dimension p, an assumption that is inappropriate for many modern-day datasets, such as those from biomedical research. It is therefore important to thoroughly investigate “the behavior of these SDR estimators when n is not large relative to p”, as raised by Cook et al. [2012].

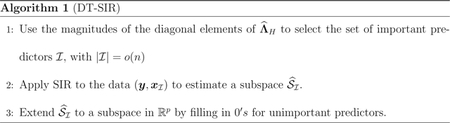

Lin et al. [2015] made an attempt to address the aforementioned challenge for SIR. They showed that, under mild conditions, the SIR estimate of the central space is consistent if and only if $\rho_n = p/n$ goes to zero as n grows. Additionally, they showed that the convergence rate of the SIR estimate of the central space (without any sparsity assumption) is ρn. When p is greater than n, certain constraints must be imposed in order for SIR to be consistent. The sparsity assumption, i.e., that the number of active variables s is an order of magnitude smaller than n and p, appears to be a reasonable one. In a follow-up work, Neykov et al. [2016a] studied the signed support recovery problem of the single index model (d = 1), suggesting that the optimal convergence rate for estimating the central space might be , a speculation that was partially confirmed in Lin et al. [2016]. They showed that, for multiple index models with bounded dimension d and the identity covariance matrix, the optimal rate for estimating the central space is , where s is the number of active covariates and λ is the smallest non-zero eigenvalue of $\mathrm{var}(E[x \mid y])$. They further showed that the Diagonal-Thresholding algorithm proposed in Lin et al. [2015] achieves the optimal rate for the single index model with the identity covariance matrix.

The main idea.

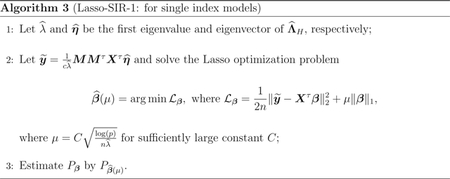

In this article, we introduce an efficient Lasso variant of SIR for the multiple index model (1) with a general covariance matrix Σ. Consider first the single index model y = f(βτx, ϵ). Let η be the eigenvector associated with the largest eigenvalue of $\Lambda = \mathrm{var}(E[x \mid y])$. Since β ∝ Σ−1η, there are two immediate ways to estimate the space spanned by β. The first approach, as discussed in Lin et al. [2015], estimates Σ−1 and η separately (see Algorithm 1). The second avoids a direct estimation of Σ−1 by solving the following penalized least squares problem: , where X is the p × n covariate matrix formed by the n samples (see Algorithm 2). However, as with most L1-penalization methods for nonlinear models, the theoretical underpinning of this approach is not well understood. Since these two approaches provide good estimates compared with earlier approaches (e.g., Li [1991], Li and Nachtsheim [2006], Li [2007]), as shown in Lin et al. [2015] and the Supplementary Materials, we use them as benchmarks for comparison.

We note that an eigenvector $\hat\eta$ of $\hat\Lambda_H$, where $\hat\Lambda_H$ is the SIR estimate [Li, 1991] of $\Lambda = \mathrm{var}(E[x \mid y])$, must be a linear combination of the column vectors of X. Thus, we can construct an artificial response vector $\tilde y \in \mathbb{R}^n$ such that $\hat\eta = \frac{1}{n}X\tilde y$, and estimate β by solving another penalized least squares problem: $\min_\beta \frac{1}{2n}\|\tilde y - X^\tau\beta\|_2^2 + \mu\|\beta\|_1$ (see Algorithm 3). We call this algorithm “Lasso-SIR”; it is computationally very efficient. In Section 3, we further show that the convergence rate of the estimator resulting from Lasso-SIR is , which is optimal if $s = O(p^{1-\delta})$ for some positive constant δ. Note that Lasso-SIR can be easily extended to other regularization and SDR methods, such as SCAD (Fan and Li [2001]), Group Lasso (Yuan and Lin [2006]), sparse Group Lasso (Simon et al. [2013]), SAVE (Cook [2000]), etc.

Connection to other work.

Estimating the central space is widely considered a generalized eigenvector problem in the literature [Li, 1991, Li and Nachtsheim, 2006, Li, 2007, Chen and Li, 1998]. Lin et al. [2016] explicitly described the similarities and differences between SIR and PCA (as first studied by Jung and Marron [2009]) under the “high dimension, low sample size (HDLSS)” scenario. However, after comparing their results with those for Lasso regression, Lin et al. [2016] argued that a more appropriate prototype of SIR (at least for the single index model) is linear regression. In the past three decades, tremendous effort has been put into the study of linear regression models y = xτβ + ϵ for HDLSS data. By imposing an L1 penalty on the regression coefficients, the Lasso [Tibshirani, 1996] produces a sparse estimator of β, which turns out to be rate optimal [Raskutti et al., 2011]. Because of the apparent limitations of linear models, there have been many attempts to build flexible and computationally friendly semi-parametric models, such as projection pursuit regression [Friedman and Stuetzle, 1981, Chen, 1991], sliced inverse regression [Li, 1991], and MAVE [Xia et al., 2002]. However, none of these methods works under the HDLSS setting. Existing theoretical results for HDLSS data mainly focus on linear regression [Raskutti et al., 2011] and submatrix detection [Butucea et al., 2013], and are not applicable to index models. In this paper, we provide a new framework for the theoretical investigation of regularized SDR methods for HDLSS data.

The rest of the paper is organized as follows. After briefly reviewing SIR, we present the Lasso-SIR algorithm in Section 2. The consistency of the Lasso-SIR estimate and its connection to the Lasso regression are presented in Section 3. Numerical simulations and real data applications are reported in Sections 4 and 5. Some potential extensions are briefly discussed in Section 6. To improve the readability, we defer all the proofs and brief reviews of some existing results to the appendix.

2. Sparse SIR for High Dimensional Data

Notations.

We adopt the following notation throughout this paper. For a matrix V, we call the space generated by its column vectors the column space and denote it by col(V). The i-th row and j-th column of V are denoted by Vi,* and V*,j, respectively. For (column) vectors x and β ∈ ℝ^p, we denote their inner product 〈x, β〉 by x(β), and the k-th entry of x by x(k). For two positive numbers a, b, we use a ∨ b and a ∧ b to denote max{a, b} and min{a, b}, respectively. We use C, C′, C1, and C2 to denote generic absolute constants, whose actual values may vary from case to case. For two sequences {an} and {bn}, we write an ≻ bn and an ≺ bn if there exist positive constants C and C′ such that an ≥ Cbn and an ≤ C′bn, respectively. We write an ≍ bn if both an ≻ bn and an ≺ bn hold. The (1, ∞) norm and (∞, ∞) norm of a matrix A are defined as and max1≤i,j≤n|Ai,j|, respectively. To simplify discussions, we assume that is sufficiently small. We emphasize again that our covariate data X is a p × n matrix instead of the traditional n × p matrix.

A brief review of Sliced Inverse Regression (SIR).

In the multiple index model (1), the matrix B formed by the vectors β1, …, βd is not identifiable. However, col(B), the space spanned by the columns of B, is uniquely defined. Given n i.i.d. samples (yi, xi), i = 1, ⋯, n, SIR [Li, 1991] first divides the data into H equal-sized slices according to the order statistics y(i), i = 1, …, n. To ease notation and arguments, we assume that n = cH and , and re-express the data as yh,j and xh,j, where h refers to the slice number and j refers to the order number of a sample in the h-th slice, i.e., $y_{h,j} = y_{(c(h-1)+j)}$ and $x_{h,j} = x_{(c(h-1)+j)}$. Here $x_{(k)}$ is the concomitant of $y_{(k)}$. Denoting the sample mean in the h-th slice by $\bar{x}_{h,\cdot} = \frac{1}{c}\sum_{j=1}^{c} x_{h,j}$, the matrix $\Lambda = \mathrm{var}(E[x \mid y])$ can be estimated by:

$$\hat\Lambda_H = \frac{1}{H}X_H X_H^\tau, \tag{2}$$

where XH is a p × H matrix formed by the H sample means, i.e., $X_H = (\bar{x}_{1,\cdot}, \ldots, \bar{x}_{H,\cdot})$. Thus, col(Λ) is estimated by the column space of the matrix formed by the top d eigenvectors of $\hat\Lambda_H$. This estimate was shown to be consistent for col(Λ) under a few technical conditions when p is fixed [Duan and Li, 1991, Hsing and Carroll, 1992, Zhu et al., 2006, Li, 1991, Lin et al., 2015], which are summarized in the online supplementary file. Recently, Lin et al. [2015, 2016] showed that it is consistent for col(Λ) if and only if $\rho_n = p/n \to 0$ as n → ∞, where the number of slices H can be chosen as a fixed integer independent of n and p when the dimension d of the central space is bounded. When the distribution of x is elliptically symmetric, Li [1991] showed that

$$\Sigma\,\mathrm{col}(B) = \mathrm{col}(\Lambda), \tag{3}$$

and thus our goal is to recover col(B) by solving the above equation. It is shown in Lin et al. [2015] that, when ρn → 0, applying $\hat\Sigma^{-1}$ to the SIR estimate of col(Λ) consistently estimates col(B), where $\hat\Sigma$ is the sample covariance matrix of X. However, this simple approach breaks down when ρn ↛ 0, especially when p ≫ n. Although stepwise methods [Zhong et al., 2012, Jiang and Liu, 2014] can work under HDLSS settings, the sparse SDR algorithms proposed in Li [2007] and Li and Nachtsheim [2006] appeared to be ineffective. Below we describe two intuitive non-stepwise methods for HDLSS scenarios, which will be used as benchmarks in our simulation studies to measure the performance of newly proposed SDR algorithms.
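The slicing estimator (2) and the eigen-decomposition step can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' R implementation; it assumes the paper's p × n layout for X, centred covariates, and n = cH exactly, and the function names `sir_kernel` and `sir_directions` are hypothetical.

```python
import numpy as np


def sir_kernel(X, y, H):
    """Estimate Lambda = var(E[x | y]) by eq. (2): (1/H) X_H X_H^T.

    X : (p, n) array whose columns are the samples (assumed centred).
    y : (n,) array of responses.
    H : number of slices; this sketch assumes n = c * H exactly.
    """
    p, n = X.shape
    c, rem = divmod(n, H)
    if rem != 0:
        raise ValueError("this sketch assumes n = c * H")
    order = np.argsort(y, kind="stable")   # order statistics y_(1) <= ... <= y_(n)
    Xs = X[:, order]                       # concomitants x_(k), still p x n
    # (1-based) column c(h-1)+j of Xs is x_{h,j}; average within each slice.
    XH = Xs.reshape(p, H, c).mean(axis=2)  # p x H matrix of slice means
    return XH @ XH.T / H


def sir_directions(X, y, H, d):
    """Return the top-d eigenvalues and eigenvectors of the SIR kernel."""
    vals, vecs = np.linalg.eigh(sir_kernel(X, y, H))  # ascending order
    top = np.argsort(vals)[::-1][:d]
    return vals[top], vecs[:, top]


# Usage: single index model with identity covariance, so eta is parallel to beta.
rng = np.random.default_rng(0)
p, n, H = 5, 2000, 20
X = rng.standard_normal((p, n))
beta = np.array([1.0, 1.0, 0.0, 0.0, 0.0]) / np.sqrt(2.0)
y = (beta @ X) ** 3 + 0.1 * rng.standard_normal(n)
lam, eta = sir_directions(X, y, H, d=1)
print(abs(eta[:, 0] @ beta))  # close to 1
```

With Σ = I the top eigenvector estimates col(β) directly; for a general Σ, relation (3) says a further multiplication by an estimate of Σ−1 is needed, which is exactly what breaks down when p ≫ n.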

Diagonal Thresholding-SIR.

When p ≫ n, the Diagonal Thresholding (DT) screening method [Lin et al., 2015] proceeds by marginally screening all the variables via the diagonal elements of $\hat\Lambda_H$ and then applying SIR to the retained variables to obtain an estimate of col(B). The procedure was shown to be consistent if the number of nonzero entries in each row of Σ is bounded.
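A rough NumPy sketch of the DT-SIR idea follows. The screening rule used here (keep the k variables with the largest diagonal entries of $\hat\Lambda_H$) is an assumption made for illustration; Lin et al. [2015] use their own thresholding rule, which is not reproduced. After screening, SIR is applied to the retained variables by multiplying the top eigenvectors by the inverse sample covariance of those variables. The function name `dt_sir` is hypothetical.

```python
import numpy as np


def dt_sir(X, y, H, k, d=1):
    """Diagonal-thresholding screening followed by SIR (illustrative sketch).

    X is p x n (columns are centred samples), y has length n = c * H,
    k <= n is the number of variables kept, d the number of directions.
    Returns the retained indices and a p x d matrix with unit-norm columns.
    """
    p, n = X.shape
    c = n // H
    if c * H != n:
        raise ValueError("this sketch assumes n = c * H")
    Xs = X[:, np.argsort(y, kind="stable")]
    XH = Xs.reshape(p, H, c).mean(axis=2)        # p x H slice means
    diag = (XH ** 2).sum(axis=1) / H             # diagonal of (1/H) X_H X_H^T
    keep = np.sort(np.argsort(diag)[::-1][:k])   # hypothetical top-k screening rule
    XHk = XH[keep]
    vals, vecs = np.linalg.eigh(XHk @ XHk.T / H)
    eta = vecs[:, np.argsort(vals)[::-1][:d]]    # top-d eigenvectors on kept variables
    Xk = X[keep]
    sigma_k = Xk @ Xk.T / n                      # sample covariance of kept variables
    B = np.zeros((p, d))
    B[keep] = np.linalg.solve(sigma_k, eta)      # beta proportional to Sigma^{-1} eta
    return keep, B / np.linalg.norm(B, axis=0)


rng = np.random.default_rng(1)
p, n, H = 50, 1000, 20
X = rng.standard_normal((p, n))
beta = np.zeros(p)
beta[:2] = 1.0 / np.sqrt(2.0)
y = (beta @ X) ** 3 + 0.5 * rng.standard_normal(n)
keep, B = dt_sir(X, y, H, k=2)
print(keep, abs(B[:, 0] @ beta))
```

The screening step only looks at marginal signals, which is why, as noted in Section 4, this benchmark is best suited to (nearly) diagonal covariance matrices.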

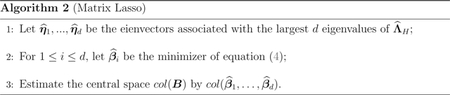

Matrix Lasso.

We can bypass the estimation and inversion of Σ by solving an L1 penalization problem. Since (3) holds at the population level, a reasonable estimate of col(B) can be obtained by solving a sample version of the equation with an appropriate regularization term to cope with the high dimensionality. Let $\hat\eta_1, \ldots, \hat\eta_d$ be the eigenvectors associated with the d largest eigenvalues of $\hat\Lambda_H$. Replacing Σ by its sample version and imposing an L1 penalty, we obtain a penalized sample version of (3):

| (4) |

for some appropriate μi’s.

This simple procedure can be easily implemented to produce sparse estimates of the βi’s. Empirically it works reasonably well, so we use it as another benchmark. Since we later observed that its numerical performance was consistently worse than that of our main algorithm, Lasso-SIR, we did not further investigate its theoretical properties.

The Lasso-SIR algorithm.

First consider the single index model

$$y = f(\beta^\tau x, \epsilon). \tag{5}$$

Without loss of generality, we assume that (xi, yi), i = 1, …, n, are arranged such that y1 ≤ y2 ≤ ⋯ ≤ yn. Construct an n × H matrix M = IH ⊗ 1c, where 1c is the c × 1 vector with all entries equal to 1. Then, according to the definition of XH, we can write XH = XM/c. Let $\hat\lambda$ be the largest eigenvalue of $\hat\Lambda_H$ and let $\hat\eta$ be the corresponding eigenvector of length 1. That is,

$$\hat\Lambda_H\hat\eta = \frac{1}{nc}XMM^\tau X^\tau\hat\eta = \hat\lambda\hat\eta.$$

Thus, by defining

$$\tilde y = \frac{1}{c\hat\lambda}MM^\tau X^\tau\hat\eta, \tag{6}$$

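we have $\hat\eta = \frac{1}{n}X\tilde y$. Note that the key to estimating the central space col(β) with SIR is the relation η ∝ Σβ. If we approximate η and Σ by $\hat\eta$ and $\hat\Sigma = \frac{1}{n}XX^\tau$, respectively, this relation can be written as $\frac{1}{n}X\tilde y = \frac{1}{n}XX^\tau\beta$, with the proportionality constant absorbed into β. To recover a sparse vector $\hat\beta$, one can consider the following optimization problem

$$\min_{\beta \in \mathbb{R}^p}\|\beta\|_1 \quad \text{subject to} \quad \Big\|\frac{1}{n}X\big(\tilde y - X^\tau\beta\big)\Big\|_\infty \le \mu,$$
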
which is known as the Dantzig selector [Candes and Tao, 2007]. A related formulation is the Lasso regression, where β is estimated by the minimizer of

$$\frac{1}{2n}\|\tilde y - X^\tau\beta\|_2^2 + \mu\|\beta\|_1. \tag{7}$$

As shown by Bickel et al. [2009], the Dantzig selector is asymptotically equivalent to the Lasso for linear regressions. We thus propose and study the Lasso-SIR algorithm in this paper.
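The identity $\hat\eta = \frac{1}{n}X\tilde y$ behind (6) can be checked numerically. The NumPy sketch below (hypothetical function name `pseudo_response`) sorts the samples by y, builds M = IH ⊗ 1c with `np.kron`, forms $\hat\Lambda_H$, and returns the pseudo response together with the sorted design so that the two stay aligned. It also makes visible that each entry of $\tilde y$ equals $\bar{x}_{h,\cdot}^\tau\hat\eta/\hat\lambda$ for the slice h containing that sample, so $\tilde y$ is constant within slices.

```python
import numpy as np


def pseudo_response(X, y, H):
    """Return (Xs, y_tilde, eta_hat, lam_hat) following eq. (6).

    X is p x n (columns are centred samples) and n = c * H.  Samples are
    sorted by y so that y_1 <= ... <= y_n, as assumed in the text; Xs is the
    correspondingly sorted design, aligned with y_tilde.
    """
    p, n = X.shape
    c = n // H
    if c * H != n:
        raise ValueError("this sketch assumes n = c * H")
    Xs = X[:, np.argsort(y, kind="stable")]
    M = np.kron(np.eye(H), np.ones((c, 1)))          # n x H, M = I_H (x) 1_c
    XH = Xs @ M / c                                  # slice means, X_H = X M / c
    vals, vecs = np.linalg.eigh(XH @ XH.T / H)       # Lambda_hat_H, ascending
    lam_hat, eta_hat = vals[-1], vecs[:, -1]         # largest eigenpair
    y_tilde = M @ (M.T @ (Xs.T @ eta_hat)) / (c * lam_hat)
    return Xs, y_tilde, eta_hat, lam_hat


rng = np.random.default_rng(2)
p, n, H = 8, 400, 10
X = rng.standard_normal((p, n))
y = np.sin(X[0]) + X[1] + 0.2 * rng.standard_normal(n)
Xs, y_tilde, eta_hat, lam_hat = pseudo_response(X, y, H)
print(np.allclose(Xs @ y_tilde / n, eta_hat))  # True: eta_hat = X y_tilde / n
```

Because the identity is exact, regressing $\tilde y$ on $X^\tau$ turns the eigenvector problem into an ordinary sparse regression, which is what makes the Lasso machinery applicable.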

There is no need to estimate the inverse of Σ in Lasso-SIR. Moreover, since the optimization problem (7) is well studied for linear regression models [Tibshirani, 1996, Efron et al., 2004, Friedman et al., 2010], we may formally “transplant” their results to the index models. Practically, we use the R package glmnet to solve the optimization problem where the tuning parameter μ is chosen using cross-validation.
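The paper solves (7) with glmnet in R and chooses μ by cross-validation. As an illustration only, the sketch below instead solves (7) for a fixed, hand-picked μ with plain cyclic coordinate descent in NumPy; the names `lasso_cd` and `lasso_sir`, the value μ = 0.1, and the stopping rule are assumptions, not part of the authors' package. Because $\frac{1}{n}X\tilde y = \hat\eta$, the smallest μ for which the all-zero vector solves (7) is $\|\hat\eta\|_\infty$, which gives a convenient scale for choosing μ.

```python
import numpy as np


def lasso_cd(A, b, mu, n_iter=1000, tol=1e-8):
    """Minimise (1/(2n)) ||b - A beta||_2^2 + mu ||beta||_1 by coordinate descent.

    A is n x p (here A = X^T), b has length n.
    """
    n, p = A.shape
    beta = np.zeros(p)
    r = np.array(b, dtype=float)                 # residual b - A beta
    col_sq = (A ** 2).sum(axis=0) / n
    for _ in range(n_iter):
        max_change = 0.0
        for j in range(p):
            if col_sq[j] == 0.0:
                continue
            old = beta[j]
            rho = A[:, j] @ r / n + col_sq[j] * old
            new = np.sign(rho) * max(abs(rho) - mu, 0.0) / col_sq[j]  # soft-threshold
            if new != old:
                r -= A[:, j] * (new - old)
                beta[j] = new
                max_change = max(max_change, abs(new - old))
        if max_change < tol:
            break
    return beta


def lasso_sir(X, y, H, mu):
    """Single-index Lasso-SIR sketch: pseudo response (6), then Lasso (7)."""
    p, n = X.shape
    c = n // H
    if c * H != n:
        raise ValueError("this sketch assumes n = c * H")
    Xs = X[:, np.argsort(y, kind="stable")]      # sort samples by y
    XH = Xs.reshape(p, H, c).mean(axis=2)        # slice means X_H
    vals, vecs = np.linalg.eigh(XH @ XH.T / H)
    lam_hat, eta_hat = vals[-1], vecs[:, -1]
    # y_tilde = M M^T X^T eta / (c lam): each sample gets (slice mean)^T eta / lam.
    y_tilde = np.repeat(XH.T @ eta_hat, c) / lam_hat
    beta = lasso_cd(Xs.T, y_tilde, mu)
    norm = np.linalg.norm(beta)
    return beta / norm if norm > 0 else beta


rng = np.random.default_rng(3)
p, n, H = 20, 1000, 10
X = rng.standard_normal((p, n))
beta0 = np.zeros(p)
beta0[:3] = 1.0 / np.sqrt(3.0)
y = (beta0 @ X) ** 3 + 0.2 * rng.standard_normal(n)
beta_hat = lasso_sir(X, y, H, mu=0.1)
print(abs(beta_hat @ beta0))
```

Only the direction of $\hat\beta$ matters for col(β), so the sketch normalizes the Lasso output to unit length.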

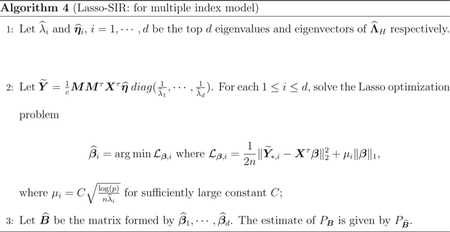

Last but not least, Lasso-SIR can be easily generalized to the multiple index model (1). Let $\hat\lambda_i$, i = 1, …, d, be the top d eigenvalues of $\hat\Lambda_H$ and $\hat\eta_1, \ldots, \hat\eta_d$ the corresponding eigenvectors. Similar to the definition of the “pseudo response variable” for the single index model, we define a multivariate pseudo response as

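$$\tilde Y = (\tilde y_1, \ldots, \tilde y_d), \qquad \tilde y_i = \frac{1}{c\hat\lambda_i}MM^\tau X^\tau\hat\eta_i, \quad i = 1, \ldots, d. \tag{8}$$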

We then apply the Lasso on each column of the pseudo response matrix to produce the corresponding estimate.
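For the multiple index model, the only change is that (8) stacks one pseudo response per retained eigenvector and the Lasso is run column by column. The NumPy sketch below assumes d is given and uses the same fixed μ for every column; `lasso_sir_multi` and its coordinate-descent helper `_lasso_cd` are hypothetical names, and glmnet with cross-validation (as used in the paper) is not reproduced. The usage lines check the identity $\frac{1}{n}X\tilde Y = (\hat\eta_1, \ldots, \hat\eta_d)$, which holds exactly by construction.

```python
import numpy as np


def _lasso_cd(A, b, mu, n_iter=1000, tol=1e-8):
    """Coordinate descent for (1/(2n)) ||b - A beta||^2 + mu ||beta||_1."""
    n, p = A.shape
    beta, r = np.zeros(p), np.array(b, dtype=float)
    col_sq = (A ** 2).sum(axis=0) / n
    for _ in range(n_iter):
        max_change = 0.0
        for j in np.flatnonzero(col_sq):
            old = beta[j]
            rho = A[:, j] @ r / n + col_sq[j] * old
            new = np.sign(rho) * max(abs(rho) - mu, 0.0) / col_sq[j]
            if new != old:
                r -= A[:, j] * (new - old)
                beta[j] = new
                max_change = max(max_change, abs(new - old))
        if max_change < tol:
            break
    return beta


def lasso_sir_multi(X, y, H, d, mu):
    """Multiple-index Lasso-SIR sketch: pseudo responses (8), one Lasso per column.

    X is p x n (centred columns), n = c * H, and d <= H is assumed known.
    Returns the sorted design Xs, the n x d pseudo responses, the eigenvectors
    and a p x d coefficient matrix whose columns estimate a basis of col(B).
    """
    p, n = X.shape
    c = n // H
    if c * H != n:
        raise ValueError("this sketch assumes n = c * H")
    Xs = X[:, np.argsort(y, kind="stable")]
    XH = Xs.reshape(p, H, c).mean(axis=2)
    vals, vecs = np.linalg.eigh(XH @ XH.T / H)
    top = np.argsort(vals)[::-1][:d]
    lam, eta = vals[top], vecs[:, top]                  # top-d eigenpairs
    Y_tilde = np.repeat(XH.T @ eta, c, axis=0) / lam    # n x d, column i is y_tilde_i
    B = np.column_stack([_lasso_cd(Xs.T, Y_tilde[:, i], mu) for i in range(d)])
    return Xs, Y_tilde, eta, B


rng = np.random.default_rng(4)
p, n, H, d = 10, 600, 10, 2
X = rng.standard_normal((p, n))
y = X[0] + 0.5 * X[1] ** 3 + 0.1 * rng.standard_normal(n)
Xs, Y_tilde, eta, B = lasso_sir_multi(X, y, H, d, mu=0.05)
print(np.allclose(Xs @ Y_tilde / n, eta))  # True by construction
```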

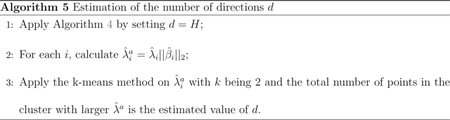

The number of directions d plays an important role when implementing Algorithm 4. A common practice is to locate the largest gap among the ordered eigenvalues of $\hat\Lambda_H$, which does not work well under HDLSS settings. In Section 3, we show that there is a gap among the adjusted eigenvalues , where $\hat\beta_i$ is the i-th output of Algorithm 4. Motivated by this, we estimate d according to the following algorithm:

Remark 1. In another paper that the authors are preparing, it is shown that the Lasso-SIR algorithm works on the joint distribution of (X, Y) and is thus not tied to the single or multiple index models. We choose the single/multiple index models to have a clear representation of the central subspace , i.e., .

Remark 2. When dealing with real data, we suggest that users apply quantile normalization to each covariate when X is not normally distributed. When p is too large, beyond our bound of , as required by our R package (see Section 7 for download information), users can first conduct variable screening based on DT-SIR, which is also included in this package.
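Remark 2 does not spell out the transformation. One common reading of quantile normalization for a single covariate is the rank-based inverse-normal (normal scores) transform, sketched below under that assumption with NumPy and the standard-library `statistics.NormalDist`. Ties are broken by order of appearance, a further simplification, and the function name `normal_scores` is hypothetical. Rows are covariates, matching the paper's p × n layout.

```python
import numpy as np
from statistics import NormalDist


def normal_scores(X):
    """Map each row (covariate) of a p x n matrix to normal scores.

    The entry with within-row rank k (0-based) becomes Phi^{-1}((k + 0.5) / n).
    """
    p, n = X.shape
    ranks = np.argsort(np.argsort(X, axis=1, kind="stable"), axis=1, kind="stable")
    to_normal = np.vectorize(NormalDist().inv_cdf)
    return to_normal((ranks + 0.5) / n)


rng = np.random.default_rng(5)
X = rng.exponential(size=(3, 200))  # skewed covariates
Z = normal_scores(X)
print(Z.mean(axis=1))               # each row is centred at (approximately) zero
```

The transform is monotone within each covariate, so it preserves the ordering of the samples while making the marginal distributions approximately Gaussian.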

3. Consistency of Lasso-SIR

For simplicity, we assume that x ~ N(0, Σ). The normality assumption can be relaxed to elliptically symmetric distributions with sub-Gaussian tail; however, this will make technical arguments unnecessarily tedious and is not the main focus of this paper. From now on, we assume that d, the dimension of the central space, is bounded; thus we can assume that H, the number of slices, is a large enough but finite integer [Lin et al., 2016, 2015]. In order to prove the consistency, we need the following technical conditions:

A1) There exist constants Cmin and Cmax such that 0 < Cmin < λmin(Σ) ≤ λmax(Σ) < Cmax;

A2) There exists a constant κ ≥ 1, such that

A3) The central curve satisfies the sliced stability condition.

Condition A1 is commonly imposed in the analyses of high-dimensional linear regression models. Condition A2 is merely a refinement of the coverage condition that is commonly imposed in the SIR literature, i.e., $\mathrm{rank}(\mathrm{var}(E[x \mid y])) = d$. For single index models, there is a more intuitive explanation of condition A2. Since , condition A2 simplifies to 0 < λ = λ1 ≤ λmax(Σ), which is a direct corollary of the total variance decomposition identity (i.e., $\Sigma = \mathrm{var}(E[x \mid y]) + E[\mathrm{var}(x \mid y)]$). We may treat λ as a generalized SNR, and A2 simply requires that the generalized SNR be non-zero. Condition A3 is a property of the central curve, or equivalently, a regularity condition on the link function f(·) and the noise ϵ, introduced in Lin et al. [2015].

Remark 3 (Generalized SNR and eigenvalue bound). Recall that the signal-to-noise ratio (SNR) for the linear model y = βτx + ϵ, where x ~ N(0, Σ) and ϵ ~ N(0,1), is defined as

where β0 = β/∥β∥2. A simple calculation shows that

and

where is the unique non-zero eigenvalue of . This leads to the following identity for the linear model:

Thus, in a multiple index model we call λ, the smallest non-zero eigenvalue of $\mathrm{var}(E[x \mid y])$, the model’s generalized SNR.

Theorem 1 (Consistency of Lasso-SIR for Single Index Models). Assume that $n\lambda = p^\alpha$ for some α > ½ and that conditions A1–A3 hold for the single index model $y = f(\beta_0^\tau x, \epsilon)$, where β0 is a unit vector. Let $\hat\beta$ be the output of Algorithm 3. Then

holds with probability at least 1 – C2 exp(−C3 log(p)) for some constants C2 and C3.

When no sparsity on η is assumed, the condition α > 1/2 is necessary. This condition can be relaxed if a certain sparsity structure is imposed on η or Σ such that Σβ becomes sparse. Next, we state the theoretical result regarding the multiple index model (1).

Theorem 2 (Consistency of Lasso-SIR). Assume that $n\lambda = p^\alpha$ for some α > ½, where λ is the smallest nonzero eigenvalue of $\mathrm{var}(E[x \mid y])$, and that conditions A1–A3 hold for the multiple index model (1). Assume further that the dimension d of the central subspace is known. Let $\hat B$ be the output of Algorithm 4. Then

holds with probability at least 1 − C2 exp(−C3 log(p)) for some constants C2 and C3.

Lin et al. [2016] have shown that the lower bound of the risk is when (i) d = 1, or (ii) d (> 1) is finite and λ > c0 > 0. This implies that if $s = O(p^{1-\delta})$ for some positive constant δ, the Lasso-SIR algorithm achieves the optimal rate; that is, we have the following corollary.

Corollary 1. Assume that conditions A1–A3 hold. If $n\lambda = p^\alpha$ for some α > 1/2 and $s = O(p^{1-\delta})$, then the Lasso-SIR estimate achieves the minimax rate when (i) d = 1, or (ii) d (> 1) is finite and λ > c0 > 0.

Remark 4. Consider the linear regression y = βτx + ϵ, where x ~ N(0, Σ) and ϵ ~ N(0,1). It is shown in Raskutti et al. [2011] that the lower bound of the minimax rate of the l2 distance between any estimator and the true β is and the convergence rate of the Lasso estimator is . Namely, the Lasso estimator is rate optimal for linear regression when $s = O(p^{1-\delta})$ for some positive constant δ. A simple calculation shows that , if ∥β∥2 is bounded. Consequently,

| (9) |

holds with high probability. In other words, if we treat Lasso as a dimension reduction method (where d = 1 and the link function is linear), the projection matrix based on Lasso is rate optimal. Lasso-SIR extends the Lasso to non-linear multiple index models. This supports the statement in Chen and Li [1998] that “SIR should be viewed as an alternative or generalization of the multiple linear regression”. The connection also supports the speculation in Lin et al. [2016] that “a more appropriate prototype of the high dimensional SIR problem should be the sparse linear regression rather than the sparse PCA and the generalized eigenvector problem”.

Determining the dimension d of the central space is a challenging problem for SDR, especially for HDLSS cases. If we want to discern signals (i.e., the true directions) from noise (i.e., the other directions) simply via the eigenvalues $\hat\lambda_i$ of $\hat\Lambda_H$, i = 1, …, H, we face the problem that all these $\hat\lambda_i$’s are of order p/n, while the gap between signal and noise is of order λ (≤ Cmax). With Lasso-SIR, we can bypass this difficulty by using the adjusted eigenvalues , i = 1, …, H. To this end, we have the following theorem.

Theorem 3. Let $\hat\beta_i$ be the output of Algorithm 4 for i = 1, …, H. Assume that $n\lambda = p^\alpha$ for some α > ½, s log(p) = o(nλ), and H > d. Then, for some constants C1, C2, C3, and C4,

hold with probability at least 1 − C5 exp(−C6 log(p)) for some constants C5 and C6.

Theorem 3 states that, if s log(p) ∨ (p log(p))½ = o(nλ), there is a clear gap between signal and noise, and the Lasso-SIR algorithm then provides a rate-optimal estimate of the central space. It can be easily verified that, up to logarithmic factors, p½ dominates s log(p) if s < p½ and s log(p) dominates p½ if s > p½. The regions s² = o(p) and p = o(s²) are often referred to as the “highly sparse” and “moderately sparse” regions [Ingster et al., 2010], respectively. These two scenarios should be treated differently in high dimensional SIR and SDR frameworks, just as has been done in high dimensional linear regression (Ingster et al. [2010]).

4. Simulation Studies

4.1. Single index models

Let β be the vector of coefficients and let be the active set; namely, . Furthermore, for each , we simulated independently βi ~ N(0,1). Let x be the design matrix with each row following N(0, Σ). We consider two types of covariance matrices: (i) Σ = (σi,j), where σi,i = 1 and $\sigma_{i,j} = \rho^{|i-j|}$; and (ii) σi,i = 1, σi,j = ρ when i, or i, , and σi,j = 0.1 when , or vice versa. The first represents an essentially sparse covariance matrix, and we choose ρ among 0, 0.3, 0.5, and 0.8. The second represents a dense covariance matrix, with ρ = 0.2. In all the simulations, we set n = 1,000 and let p vary among 100, 1,000, 2,000, and 4,000. For all settings, the random error ϵ follows N(0, In). For single index models, we consider the following model settings:

- I.
- II.
- III.
- IV.
- V.
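The first covariance structure, $\sigma_{i,j} = \rho^{|i-j|}$, and the Gaussian design can be generated as below. This NumPy sketch follows the paper's p × n layout; the second (dense) covariance type and the active set are not reproduced because their exact definitions are not recoverable here, and the function names `ar1_cov` and `sample_design` are hypothetical.

```python
import numpy as np


def ar1_cov(p, rho):
    """Covariance with sigma_ii = 1 and sigma_ij = rho ** |i - j| (identity when rho = 0)."""
    idx = np.arange(p)
    return rho ** np.abs(idx[:, None] - idx[None, :]).astype(float)


def sample_design(p, n, rho, rng):
    """Draw n samples from N(0, Sigma) and return them as a p x n matrix."""
    L = np.linalg.cholesky(ar1_cov(p, rho))  # Sigma = L L^T
    return L @ rng.standard_normal((p, n))


rng = np.random.default_rng(6)
X = sample_design(4, 50000, 0.5, rng)
S_hat = X @ X.T / X.shape[1]                 # sample covariance (mean-zero design)
print(np.round(S_hat, 2))
```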

The goal is to estimate col(β), the space spanned by β. As in Lin et al. [2015], the estimation error is defined as the distance between $\mathrm{col}(\hat\beta)$ and col(β), where the distance between two subspaces $\mathcal{M}$ and $\mathcal{N}$ is defined as the Frobenius norm of $P_{\mathcal{M}} - P_{\mathcal{N}}$, with $P_{\mathcal{M}}$ and $P_{\mathcal{N}}$ the projection matrices associated with these two spaces. We compare Lasso-SIR with DT-SIR, matrix Lasso (M-Lasso), and Lasso. The number of slices H is set to 20 in all simulation studies, and the number of directions d is chosen according to Algorithm 5. Both benchmarks (i.e., DT-SIR and M-Lasso) also require knowledge of d; to be fair, we use the estimate $\hat d$ from Algorithm 5 for both. For comparison, we also include the estimation error of Lasso-SIR when d is known. For each combination of p, n, and ρ, we replicate the above steps 100 times and report the average estimation error. The results for the first type of covariance matrix with ρ = 0.5 are given in Table 1, and those for the other settings in Tables 4–7 in the online supplementary file. The average estimated number of directions $\hat d$ is reported in the last column of these tables.
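The estimation error used throughout this section, $\|P_{\mathcal{M}} - P_{\mathcal{N}}\|_F$, can be computed from any basis matrices of the two subspaces. The NumPy sketch below (hypothetical function names `projection` and `subspace_distance`) orthonormalizes each basis with a QR decomposition, assuming full column rank, so the result does not depend on the scaling or choice of basis.

```python
import numpy as np


def projection(B):
    """Orthogonal projection onto col(B); B is p x d (or a length-p vector) of full column rank."""
    B = np.asarray(B, dtype=float).reshape(len(B), -1)
    Q, _ = np.linalg.qr(B)                 # orthonormal basis of col(B)
    return Q @ Q.T


def subspace_distance(B1, B2):
    """Frobenius norm of P_M - P_N for M = col(B1), N = col(B2)."""
    return np.linalg.norm(projection(B1) - projection(B2), "fro")


e = np.eye(3)
print(subspace_distance(e[:, 0], 2.0 * e[:, 0]))  # approximately 0: same line
print(subspace_distance(e[:, 0], e[:, 1]))        # approximately 1.414: orthogonal lines
```

For two d-dimensional subspaces, $\|P_{\mathcal{M}} - P_{\mathcal{N}}\|_F^2 = 2d - 2\|Q_{\mathcal{M}}^\tau Q_{\mathcal{N}}\|_F^2$, so the distance lies between 0 and $\sqrt{2d}$; values near $\sqrt 2$ for d = 1 indicate an estimate that is nearly orthogonal to the truth.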

Table 1:

Estimation error for the first type covariance matrix with ρ = 0.5.

| Setting | p | Lasso-SIR | DT-SIR | Lasso | M-Lasso | Lasso-SIR (known d) | Average estimated d |
|---|---|---|---|---|---|---|---|
| I | 100 | 0.12 (0.02) | 0.47 (0.11) | 0.11 (0.02) | 0.19 (0.08) | 0.12 (0.02) | 1 |
| | 1000 | 0.18 (0.02) | 0.65 (0.14) | 0.15 (0.02) | 0.26 (0.02) | 0.18 (0.02) | 1 |
| | 2000 | 0.2 (0.02) | 0.74 (0.15) | 0.16 (0.02) | 0.3 (0.03) | 0.2 (0.02) | 1 |
| | 4000 | 0.23 (0.09) | 0.9 (0.17) | 0.18 (0.01) | 0.39 (0.09) | 0.23 (0.03) | 1 |
| II | 100 | 0.07 (0.01) | 0.6 (0.1) | 0.23 (0.03) | 0.27 (0.31) | 0.07 (0.01) | 1 |
| | 1000 | 0.12 (0.02) | 0.78 (0.11) | 0.31 (0.04) | 0.17 (0.02) | 0.12 (0.02) | 1 |
| | 2000 | 0.15 (0.02) | 0.86 (0.13) | 0.34 (0.05) | 0.2 (0.03) | 0.15 (0.02) | 1 |
| | 4000 | 0.2 (0.04) | 0.99 (0.15) | 0.37 (0.05) | 0.28 (0.06) | 0.19 (0.03) | 1 |
| III | 100 | 0.21 (0.03) | 0.55 (0.12) | 1.25 (0.19) | 0.26 (0.11) | 0.21 (0.03) | 1 |
| | 1000 | 0.28 (0.04) | 0.74 (0.14) | 1.32 (0.18) | 0.51 (0.04) | 0.27 (0.04) | 1 |
| | 2000 | 0.35 (0.17) | 0.87 (0.17) | 1.34 (0.14) | 0.66 (0.14) | 0.31 (0.05) | 1.1 |
| | 4000 | 0.46 (0.28) | 1 (0.25) | 1.33 (0.16) | 0.83 (0.22) | 0.39 (0.1) | 1.1 |
| IV | 100 | 0.46 (0.05) | 0.92 (0.09) | 0.78 (0.12) | 0.58 (0.06) | 0.45 (0.04) | 1 |
| | 1000 | 0.62 (0.22) | 1.07 (0.18) | 0.87 (0.11) | 0.78 (0.22) | 0.59 (0.04) | 1.1 |
| | 2000 | 0.71 (0.34) | 1.22 (0.26) | 0.89 (0.12) | 0.94 (0.31) | 0.59 (0.04) | 1.3 |
| | 4000 | 0.71 (0.26) | 1.3 (0.18) | 0.91 (0.13) | 1 (0.22) | 0.63 (0.04) | 1.2 |
| V | 100 | 0.12 (0.02) | 0.37 (0.1) | 0.42 (0.18) | 0.15 (0.02) | 0.12 (0.02) | 1 |
| | 1000 | 0.2 (0.03) | 0.55 (0.15) | 0.55 (0.22) | 0.41 (0.05) | 0.2 (0.05) | 1 |
| | 2000 | 0.38 (0.34) | 0.8 (0.29) | 0.6 (0.24) | 0.67 (0.27) | 0.29 (0.18) | 1.2 |
| | 4000 | 0.78 (0.51) | 1.22 (0.31) | 0.77 (0.25) | 1.06 (0.41) | 0.48 (0.31) | 1.5 |

The simulation results in Table 1 show that Lasso-SIR outperformed both DT-SIR and M-Lasso under all settings. The performance of DT-SIR deteriorated as the dependence became stronger and denser, because this method is based on diagonal thresholding and is designed for diagonal covariance matrices. Overall, Algorithm 5 provided a reasonable estimate of d, especially for covariance matrices with moderate dependence. When d is assumed known, both DT-SIR and M-Lasso still perform worse than Lasso-SIR, so those results are not reported.

Under Setting I, where the true model is linear, Lasso performed best among all the methods, as expected. However, the difference between Lasso and Lasso-SIR is small, implying that Lasso-SIR sacrifices little efficiency despite not knowing that the underlying model is linear. On the other hand, when the models are not linear (Settings II-V), Lasso-SIR worked much better than Lasso. We observed that Lasso performed better than Lasso-SIR for Setting V when ρ = 0.8 (Supplementary Materials) or when the covariance matrix is dense. One explanation is that Lasso-SIR tends to overestimate d under these conditions, whereas Lasso implicitly uses the true d = 1. If d = 1 is assumed known, the estimation error of Lasso-SIR is smaller than that of Lasso.

The results for the other values of ρ, reported in the supplementary material, are similar to those for ρ = 0.5: Lasso-SIR performed best among the competing methods.

4.2. Multiple index models

Let β be the p × 2 matrix of coefficients and be the active set. Let x be simulated as in Section 4.1, and denote z = xβ. Consider the following settings:

- VI
- VII
- VIII
- IX

For the multiple index models, we compared both benchmarks (DT-SIR and M-Lasso) with Lasso-SIR. Lasso is not applicable for these cases and is thus not included. Similar to Section 4.1, we tabulated the results for the first type covariance matrix with ρ = 0.5 in Table 2 and put the results for others in Tables 8–11 in the online supplementary file.

Table 2:

Estimation error for the first type covariance matrix with ρ = 0.5.

| Setting | p | Lasso-SIR | DT-SIR | M-Lasso | Lasso-SIR (known d) | Average estimated d |
|---|---|---|---|---|---|---|
| VI | 100 | 0.26 (0.06) | 0.57 (0.15) | 0.31 (0.05) | 0.26 (0.05) | 2 |
| | 1000 | 0.33 (0.07) | 0.74 (0.17) | 0.62 (0.04) | 0.33 (0.07) | 2 |
| | 2000 | 0.36 (0.11) | 0.92 (0.18) | 0.73 (0.07) | 0.38 (0.08) | 2 |
| | 4000 | 0.44 (0.14) | 1.12 (0.25) | 0.87 (0.1) | 0.42 (0.09) | 2 |
| VII | 100 | 0.32 (0.04) | 0.67 (0.11) | 0.42 (0.04) | 0.32 (0.04) | 2 |
| | 1000 | 0.6 (0.28) | 0.93 (0.22) | 1.02 (0.2) | 0.66 (0.3) | 2.1 |
| | 2000 | 0.95 (0.44) | 1.18 (0.27) | 1.35 (0.32) | 0.83 (0.35) | 2.3 |
| | 4000 | 1.17 (0.38) | 1.43 (0.31) | 1.47 (0.33) | 1.08 (0.33) | 2.1 |
| VIII | 100 | 0.29 (0.09) | 0.61 (0.11) | 0.34 (0.08) | 0.25 (0.03) | 2 |
| | 1000 | 0.37 (0.08) | 0.82 (0.14) | 0.69 (0.13) | 0.35 (0.07) | 2 |
| | 2000 | 0.54 (0.35) | 1 (0.25) | 0.92 (0.28) | 0.47 (0.22) | 2.2 |
| | 4000 | 0.88 (0.45) | 1.37 (0.26) | 1.27 (0.31) | 0.71 (0.37) | 2.5 |
| IX | 100 | 0.43 (0.06) | 0.74 (0.12) | 0.48 (0.05) | 0.43 (0.07) | 2 |
| | 1000 | 0.47 (0.09) | 0.91 (0.15) | 0.91 (0.05) | 0.48 (0.09) | 2 |
| | 2000 | 0.58 (0.23) | 1.11 (0.23) | 1.12 (0.16) | 0.5 (0.1) | 2.1 |
| | 4000 | 0.57 (0.18) | 1.25 (0.22) | 1.23 (0.1) | 0.56 (0.11) | 2 |

For the identity covariance matrix (ρ = 0), there was little difference between performances of Lasso-SIR and DT-SIR. However, Lasso-SIR was substantially better than DT-SIR in other cases. Under all settings, Lasso-SIR worked much better than the matrix Lasso. For the dense covariance matrix Σ2, Algorithm 5 tended to underestimate d, which is worthy of further investigation.

The results, reported in the supplementary material, for the other values of ρ are similar to what we observed when ρ = 0.5. The Lasso-SIR performs the best when compared to its competitors.

There are other sparse inverse regression methods, such as the sparse SIR of Li and Nachtsheim [2006]. In Lin et al. [2015], we showed that DT-SIR outperforms this method, so we did not include it in the numerical comparison. For completeness, Section D of the online supplementary file reports a numerical comparison of Lasso-SIR and sparse SIR, showing that Lasso-SIR performs better.

4.3. Discrete responses

We consider the following simulation settings, in which the response variable Y is discrete.

- Let z = xβ where , β is a p × 2 matrix with $\beta_{1:7,1}, \beta_{8:12,2} \sim N(0,1)$ and $\beta_{i,j} = 0$ otherwise. The response yi is

where ϵi,j ~ N(0,1).

In Settings X, XI, and XII, the response variable is dichotomous, and βi ~ N(0,1) when and βi = 0 otherwise. Thus the number of slices H can only be 2. For Setting XIII, where the response variable is trichotomous, H is chosen as 3. The number of directions d is set to H − 1 in all these simulations.

Similar to the previous two sections, we calculated the average estimation errors of Lasso-SIR (Algorithm 4), DT-SIR, M-Lasso, and generalized Lasso based on 100 replications, and report the results for the first type of covariance matrix with ρ = 0.5 in Table 3 and those for the other cases in Tables 12–15 in the online supplementary file. Lasso-SIR performed better than DT-SIR and M-Lasso under all settings, often by a large margin. The generalized Lasso performed as well as Lasso-SIR for the dichotomous responses; however, it performed substantially worse for Setting XIII.

Table 3:

Estimation error for the first type covariance matrix with ρ = 0.5.

| Setting | p | Lasso-SIR | DT-SIR | M-Lasso | Generalized Lasso |
|---|---|---|---|---|---|
| X | 100 | 0.22 (0.03) | 0.66 (0.05) | 0.26 (0.03) | 0.2 (0.03) |
| | 1000 | 0.26 (0.04) | 1.21 (0.03) | 0.52 (0.03) | 0.28 (0.03) |
| | 2000 | 0.27 (0.03) | 1.33 (0.02) | 0.59 (0.02) | 0.29 (0.04) |
| | 4000 | 0.28 (0.04) | 1.39 (0.02) | 0.65 (0.03) | 0.3 (0.04) |
| XI | 100 | 0.32 (0.07) | 0.83 (0.07) | 0.6 (0.17) | 0.33 (0.07) |
| | 1000 | 0.43 (0.1) | 1.32 (0.02) | 1.07 (0.05) | 0.45 (0.09) |
| | 2000 | 0.45 (0.09) | 1.38 (0.01) | 1.15 (0.04) | 0.46 (0.09) |
| | 4000 | 0.49 (0.12) | 1.41 (0.01) | 1.2 (0.05) | 0.51 (0.12) |
| XII | 100 | 0.24 (0.03) | 0.63 (0.05) | 0.52 (0.35) | 0.22 (0.03) |
| | 1000 | 0.33 (0.03) | 1.18 (0.04) | 0.53 (0.03) | 0.32 (0.03) |
| | 2000 | 0.37 (0.05) | 1.3 (0.04) | 0.62 (0.03) | 0.35 (0.03) |
| | 4000 | 0.4 (0.04) | 1.38 (0.03) | 0.68 (0.03) | 0.39 (0.04) |
| XIII | 100 | 0.38 (0.06) | 1.09 (0.06) | 0.61 (0.05) | 1.07 (0.02) |
| | 1000 | 0.39 (0.07) | 1.79 (0.02) | 1.12 (0.05) | 1.08 (0.02) |
| | 2000 | 0.38 (0.07) | 1.91 (0.02) | 1.24 (0.04) | 1.09 (0.03) |
| | 4000 | 0.42 (0.07) | 1.98 (0.01) | 1.32 (0.03) | 1.1 (0.03) |
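The estimation error is not defined in this section. One common choice in the SDR literature, and one consistent with Table 3 (no entry exceeds √2 ≈ 1.41 in the single-direction Settings X to XII, or 2 in Setting XIII with d = 2), is the Frobenius distance between the projection matrices onto the estimated and true subspaces. A minimal NumPy sketch under that assumption; the function names are hypothetical.

```python
import numpy as np

def projector(B):
    """Orthogonal projection matrix onto the column space of B (p x d, full column rank)."""
    Q, _ = np.linalg.qr(np.asarray(B, dtype=float))   # orthonormal basis of span(B)
    return Q @ Q.T

def subspace_error(B_hat, B):
    """Frobenius norm of P(B_hat) - P(B); at most sqrt(2d) for d-dimensional subspaces."""
    return np.linalg.norm(projector(B_hat) - projector(B), ord="fro")
```

Because the measure compares projections, it is unchanged by rescaling or rotating the columns of the estimate, which suits SDR, where only the subspace is identifiable. For example, `subspace_error(B, 3 * B)` is zero.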

5. Applications to Real Data

Arcene Data Set.

We first apply the methods to a two-class classification problem that aims to distinguish cancer patients from normal subjects using their mass-spectrometric measurements. The data were obtained by the National Cancer Institute (NCI) and the Eastern Virginia Medical School (EVMS) using the SELDI technique and include samples from 44 patients with ovarian or prostate cancer and 56 normal controls. The dataset was downloaded from the UCI machine learning repository (Lichman [2013]), where a detailed description can be found; it was also used in the NIPS 2003 feature selection challenge (Guyon et al. [2004]). For each subject, there are 10,000 features, of which 7,000 are real variables and 3,000 are random probes. The validation set contains another 100 subjects.

After standardizing X, we estimated the number of directions d to be 1 using Algorithm 5. We then applied Algorithm 3 and sparse PCA to estimate the direction β and compute the corresponding components, and fitted a logistic regression model on each component. We applied the fitted models to the validation set to calculate the probability that each subject is a cancer patient. For comparison, we also fitted a Lasso logistic regression model to the training set and applied it to the validation set to calculate the corresponding probabilities.

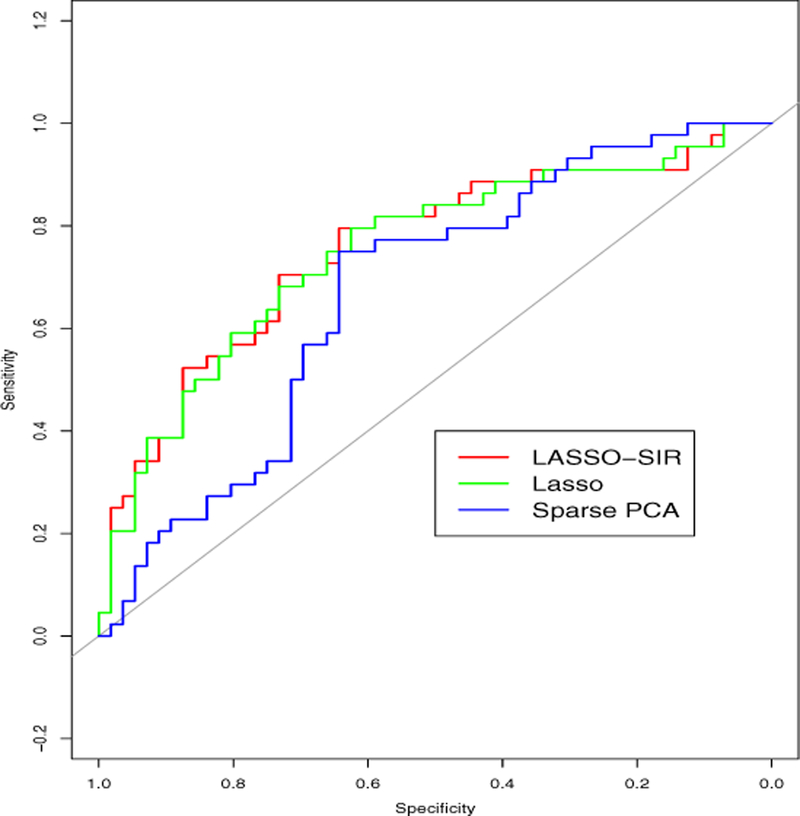

Figure 1 plots the Receiver Operating Characteristic (ROC) curves of the three methods. Lasso-SIR (red curve) performed slightly better than Lasso (green curve), although the difference is not significant, and better than sparse PCA (blue curve). The areas under these three curves are 0.754, 0.742, and 0.671, respectively.

Figure 1:

ROC curve of various methods for Arcene Data set.
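The AUC values above summarize each ROC curve. As an illustration only (the software used for Figure 1 is not stated), the AUC of the predicted probabilities on the validation set can be computed through its Mann-Whitney form: the fraction of (cancer, control) pairs in which the cancer patient receives the higher score, with ties counted as one half. `roc_auc` is a hypothetical helper name.

```python
import numpy as np

def roc_auc(scores, labels):
    """AUC = P(score of a random positive > score of a random negative).

    Ties count one half, i.e. the Mann-Whitney U statistic divided by n_pos * n_neg.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels).astype(bool)
    pos, neg = scores[labels], scores[~labels]
    diff = pos[:, None] - neg[None, :]          # every positive vs every negative
    wins = (diff > 0).sum() + 0.5 * (diff == 0).sum()
    return wins / (pos.size * neg.size)
```

For example, `roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])` gives 0.75, since three of the four (positive, negative) pairs are ordered correctly. The pairwise form costs O(n_pos · n_neg), which is negligible for 100 validation subjects.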

HapMap.

Next, we analyze a data set with a continuous response: gene expression data from 45 Japanese and 45 Chinese individuals in the international “HapMap” project (Thorisson et al. [2005], Thorgeirsson et al. [2010]). The total number of probes is 47,293. According to Thorgeirsson et al. [2010], the gene CHRNA6 is the subject of many nicotine addiction studies. Following Fan et al. [2015], we treat the mRNA expression of CHRNA6 as the response Y and the expressions of the other genes as covariates. Consequently, the dimension p is 47,292, much greater than the number of subjects n = 90.

We first applied Lasso-SIR to the data set with d = 1, as chosen by Algorithm 5; 13 variables were selected. From the estimated coefficients β and X, we calculated the first component; the scatter plot of the response Y against this component shows a moderate linear relationship. We then fitted a linear regression of Y on this component. The R² of this model is 0.5596 and the mean squared error of the fitted model is 0.045.
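The R² and mean squared error reported here come from a simple linear regression of Y on one component. A NumPy sketch of that computation, assuming rows of X are subjects and `beta` is the estimated direction; we divide the residual sum of squares by n, whereas the text does not say whether a degrees-of-freedom correction was used, and `component_fit` is our own name.

```python
import numpy as np

def component_fit(X, y, beta):
    """Regress y on the single component X @ beta; return (R^2, MSE).

    X: (n, p) predictors, y: (n,) response, beta: (p,) estimated direction.
    """
    z = np.asarray(X, dtype=float) @ np.asarray(beta, dtype=float)   # the component
    y = np.asarray(y, dtype=float)
    A = np.column_stack([np.ones_like(z), z])      # intercept + slope design
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)   # ordinary least squares
    resid = y - A @ coef
    mse = np.mean(resid ** 2)                      # residual sum of squares / n
    r2 = 1.0 - np.sum(resid ** 2) / np.sum((y - y.mean()) ** 2)
    return r2, mse
```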

We also applied Lasso to estimate the direction β, with the tuning parameter λ set to 0.1215 so that the number of selected variables is also 13. When fitting a regression model of Y on the component based on this estimated β, the R² is 0.5782 and the mean squared error is 0.044. There is no significant difference between the two approaches, which supports the observation that Lasso-SIR performs as well as Lasso when the linearity assumption is appropriate.
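Choosing the Lasso penalty so that a prescribed number of variables (here 13) enter the model amounts to a search over λ. A minimal sketch, assuming the objective (1/2n)∥y − Xb∥² + λ∥b∥₁ without an intercept (centered data), a plain coordinate-descent solver, and bisection on λ. Penalty scaling differs across packages, so this sketch is not expected to reproduce the value 0.1215; and because the number of selected variables need not be monotone in λ, the search can fail, in which case it returns None. All function names are hypothetical.

```python
import numpy as np

def soft_threshold(a, t):
    """Soft-thresholding operator S(a, t) = sign(a) * max(|a| - t, 0)."""
    return np.sign(a) * np.maximum(np.abs(a) - t, 0.0)

def lasso_cd(X, y, lam, n_iter=500, tol=1e-8):
    """Coordinate descent for (1/2n)||y - X b||_2^2 + lam * ||b||_1, no intercept."""
    X = np.asarray(X, dtype=float)
    r = np.asarray(y, dtype=float).copy()      # residual y - X b (b starts at 0)
    n, p = X.shape
    b = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n          # x_j'x_j / n
    for _ in range(n_iter):
        max_change = 0.0
        for j in range(p):
            if col_sq[j] == 0.0:
                continue
            old = b[j]
            rho = X[:, j] @ r / n + col_sq[j] * old   # x_j' (partial residual) / n
            new = soft_threshold(rho, lam) / col_sq[j]
            if new != old:
                r -= X[:, j] * (new - old)      # keep the residual up to date
                b[j] = new
                max_change = max(max_change, abs(new - old))
        if max_change < tol:
            break
    return b

def lambda_for_k(X, y, k, n_bisect=60):
    """Bisect on lam until the Lasso fit has exactly k nonzero coefficients.

    Returns None if no such lam is found.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    lo, hi = 0.0, np.max(np.abs(X.T @ y)) / X.shape[0]   # at hi, b = 0 is optimal
    for _ in range(n_bisect):
        mid = 0.5 * (lo + hi)
        nnz = np.count_nonzero(lasso_cd(X, y, mid))
        if nnz == k:
            return mid
        if nnz > k:
            lo = mid          # too many variables: increase the penalty
        else:
            hi = mid          # too few variables: decrease the penalty
    return None
```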

We also calculated a direction and the corresponding component using sparse PCA [Zou et al., 2006] and fitted a regression model. The R² is only 0.1013 and the mean squared error is 0.093, substantially worse than the above two approaches.

Classify Wine Cultivars.

We investigate the well-known wine data set, which has been used to compare various classification methods. This is a three-class classification problem. The data, available from the UCI machine learning repository (Lichman [2013]), consist of 178 wines grown in the same region of Italy but derived from three different cultivars. For each wine, a chemical analysis determined the quantities of 13 constituents: Alcohol, Malic acid, Ash, Alkalinity of ash, Magnesium, Total Phenols, Flavanoids, Non-flavanoid Phenols, Proanthocyanins, Color intensity, Hue, OD280/OD315 of diluted wines, and Proline. One goal is to use these 13 features to classify the cultivar.

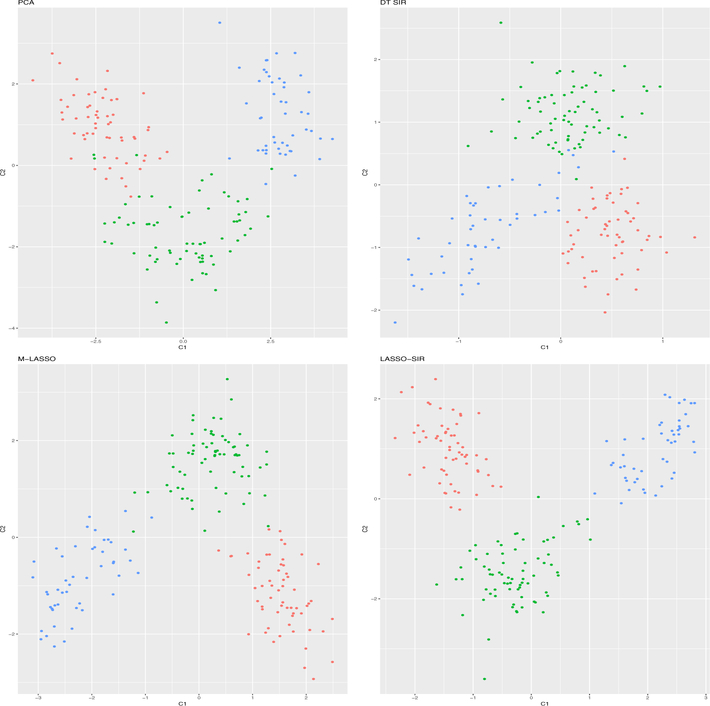

The number of directions d is chosen as 2 according to Algorithm 5. We compared PCA, DT-SIR, M-Lasso, and Lasso-SIR for estimating these two directions. Figure 2 plots the projection of the data onto the space spanned by the two components, with colors indicating the three cultivars. Lasso-SIR clearly provided the best separation of the three cultivars: using one vertical and one horizontal line to classify the three groups, only one subject would be misclassified.

Figure 2:

We plotted the second component versus the first component for all the wines, which are labeled with different colors, representing different cultivars (1–red, 2–green, 3–blue). The four methods for calculating the directions are PCA, DT-SIR, M-Lasso, and Lasso-SIR from top-left to bottom-right. It is clearly seen that Lasso-SIR offered the best separation among these three groups.

6. Discussion

Researchers have recently attempted to extend Lasso to non-linear regression models (e.g., Plan and Vershynin [2016], Neykov et al. [2016b]). However, these approaches are not efficient enough for SDR problems. In comparison, Lasso-SIR, introduced in this article, is an efficient high-dimensional variant of SIR [Li, 1991] that obtains a sparse estimate of the SDR subspace for multiple index models. We showed that Lasso-SIR is rate optimal if $n\lambda = p^{\alpha}$ for some $\alpha > 1/2$, where λ is the smallest nonzero eigenvalue of . This technical assumption on n, λ, and p is slightly disappointing from the ultra-high-dimensional perspective. We believe that it arises from an intrinsic limitation in estimating the central subspace, i.e., further sparsity assumptions on either Σ or or both are needed to show the consistency of any estimation method. We will address such extensions in future research.

Careful readers may notice that the concept of the “pseudo-response variable” is not essential for developing the theory of the Lasso-SIR algorithm. However, by reformulating the SIR method as a linear regression problem through the pseudo-response variable, we can formally study model selection consistency, regularization paths, and other properties for multiple index models. In other words, Lasso-SIR not only provides an efficient high-dimensional variant of SIR, but also extends the rich theory developed for Lasso linear regression over the past decades to semi-parametric index models.

The R-package, LassoSIR, is available on CRAN (https://cran.r-project.org/package=LassoSIR).

Supplementary Material

7. Acknowledgement

Jun S. Liu is partially supported by the NSF Grants DMS-1613035 and DMS-1713152, and NIH Grant R01 GM113242–01. Zhigen Zhao is partially supported by the NSF Grant IIS-1633283.

A Appendix: Sketch of Proofs of Theorems 1, 2 and 3

We assume that conditions A1), A2), and A3) hold throughout the rest of the paper. In particular, the sliced stability condition A3) requires that H > d be a sufficiently large but finite integer. We denote the SIR estimate of by (see e.g., (2)) and its unit eigenvector associated with the j-th eigenvalue by . To avoid unnecessary confusion, we assume that and are sufficiently small. We say that an event Ω happens with high probability if for some absolute constants C1 and C2.

A.1. Auxiliary Lemmas

A.1.1. Concentration Inequalities

Lemma 1. Let d1, …, dp be positive constants. We have the following statements:

i) For p i.i.d. standard normal random variables x1, …, xp, there exist constants C1 and C2 such that for any sufficiently small a, we have

| (10) |

ii) For 2p i.i.d. standard normal random variables x1, …, xp, y1, …, yp, there exist constants C1 and C2 such that for any sufficiently small a, we have

| (11) |

Proof. ii) is a direct corollary of i). We put the proof of i) in the supplementary materials.

A.1.2. Sine-Theta Theorem

Lemma 2 (Sine-Theta Theorem). Let A and A + E be symmetric matrices satisfying

where [F0, F1] and [G0, G1] are orthogonal matrices. If the eigenvalues of A0 are contained in an interval (a,b), and the eigenvalues of Λ1 are excluded from the interval (a − δ, b + δ) for some δ > 0, then

and
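The two displayed conclusions of Lemma 2 were lost in extraction. One standard consequence of its hypotheses is the Davis-Kahan bound ∥G1^τ F0∥ ≤ ∥G1^τ E F0∥/δ ≤ ∥E∥/δ in spectral norm, where ∥G1^τ F0∥ is the sine of the largest principal angle between the spaces spanned by F0 and G0. The NumPy sketch below checks this inequality on random examples under our reading; it is an illustration, not a proof, and `davis_kahan_check` is a hypothetical name.

```python
import numpy as np

def davis_kahan_check(seed, p=8, scale=0.2):
    """Return (sin_theta, bound) for one random symmetric perturbation E of A."""
    rng = np.random.default_rng(seed)
    top = np.array([10.0, 9.0])              # eigenvalues of the block A0
    d = top.size
    # A = F0 A0 F0' + F1 A1 F1' with eig(A1) drawn from [0, 1].
    Q, _ = np.linalg.qr(rng.standard_normal((p, p)))
    eigs = np.concatenate([top, rng.uniform(0.0, 1.0, p - d)])
    A = Q @ np.diag(eigs) @ Q.T
    F0 = Q[:, :d]
    M = rng.standard_normal((p, p))
    E = scale * (M + M.T) / 2                # small symmetric perturbation
    lam, V = np.linalg.eigh(A + E)           # ascending eigenvalues of A + E
    Lam1, G1 = lam[: p - d], V[:, : p - d]   # complementary block of A + E
    a, b = top.min(), top.max()              # eig(A0) lies in [a, b]
    delta = np.min(np.maximum(a - Lam1, Lam1 - b))   # distance of eig(Lam1) from [a, b]
    sin_theta = np.linalg.norm(G1.T @ F0, 2)          # largest sine of principal angles
    bound = np.linalg.norm(E, 2) / delta
    return sin_theta, bound
```

In each trial the returned `sin_theta` should not exceed `bound`, and both shrink as `scale` decreases or the eigen-gap grows, which is the mechanism used in (21).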

A.1.3. Restricted Eigenvalue Properties

We briefly review the restricted eigenvalue (RE) property, following Raskutti et al. [2010]. Given a set S ⊂ [p] = {1, …, p} and a positive number α, define the set $\mathcal{C}(S;\alpha)$ as

$$\mathcal{C}(S;\alpha) = \{\theta \in \mathbb{R}^p : \|\theta_{S^c}\|_1 \le \alpha\,\|\theta_S\|_1\}.$$

We say that a sample matrix XXτ/n satisfies the restricted eigenvalue condition over S with parameter (α, κ) ∈ [1, ∞) × (0, ∞) if

$$\frac{1}{\sqrt{n}}\,\|X^{\tau}\theta\|_2 \ge \kappa\,\|\theta\|_2 \quad \text{for all } \theta \in \mathcal{C}(S;\alpha). \tag{12}$$

If (12) holds uniformly for all subsets S with cardinality s, we say that XXτ/n satisfies the restricted eigenvalue condition of order s with parameter (α, κ). Similarly, we say that the covariance matrix Σ satisfies the RE condition over S with parameter (α, κ) if $\|\Sigma^{1/2}\theta\|_2 \ge \kappa\,\|\theta\|_2$ for all $\theta \in \mathcal{C}(S;\alpha)$. If, in addition, this condition holds uniformly for all subsets S with cardinality s, we say that Σ satisfies the restricted eigenvalue condition of order s with parameter (α, κ). The following corollary is borrowed from Raskutti et al. [2010].

Corollary 2. Suppose that Σ satisfies the RE condition of order s with parameter (α, κ). Let X be the p × n matrix formed by n i.i.d. samples from N(0, Σ). For some universal positive constants a1, a2, and a3, if the sample size satisfies

then the matrix satisfies the RE condition of order s with parameter with probability at least 1 − a1 exp (−a2n).

It is clear that λmin(Σ) ≥ Cmin implies that Σ satisfies the RE condition of any order s with parameter . Thus, we have the following proposition.

Proposition 1. For some universal constants a1, a2 and a3, if the sample size satisfies that n > a1s log(p), then the matrix satisfies the RE condition for any order s with parameter with probability at least 1 – a2 exp (−a3n).

A.1.4. The Sliced Approximation Lemma

Let be a sub-Gaussian random variable. For any unit vector , let x(β) = 〈x, β〉 and . To obtain the deviation properties of the statistic varH(x(β)), Lin et al. [2015] introduced the sliced stability condition, i.e., condition A3) in this paper. For the exact definition and further discussion, we refer to Lin et al. [2015].

Lemma 3. Let be a sub-Gaussian random variable. Assume that is sliced stable with respect to y. For any unit vector , let x(β) = 〈x, β〉 and ; then we have the following:

- If var(m(β)) = 0, there exist positive constants C1,C2 and C3 such that for any b and sufficiently large H, we have

- If var(m(β)) ≠ 0, there exist positive constants C1,C2 and C3 such that, for any ν > 1, we have

with probability at most

where we choose H such that Hϑ > C4ν for some sufficiently large constant C4.
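The statistic varH(x(β)) is the sliced estimate of var(E[x(β) | y]) used throughout this appendix. A minimal NumPy sketch, assuming the usual construction (sort by y, cut the sample into H contiguous slices of nearly equal size, and take the variance of the slice means); the exact definition is in Lin et al. [2015], and `sliced_variance` is a hypothetical name.

```python
import numpy as np

def sliced_variance(x_beta, y, H):
    """Sliced estimate of var(E[x(beta) | y]).

    Sort the sample by y, split it into H contiguous slices of nearly equal
    size, and return the mean squared deviation of the slice means.
    """
    order = np.argsort(y, kind="stable")
    slices = np.array_split(np.asarray(x_beta, dtype=float)[order], H)
    means = np.array([s.mean() for s in slices])
    return np.mean((means - means.mean()) ** 2)
```

When x(β) is independent of y, the slice means only reflect sampling noise and the statistic is close to zero; when x(β) tracks y, it stays bounded away from zero. This is the dichotomy that the two cases of Lemma 3 quantify.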

The following proposition is a direct corollary.

Proposition 2. There exist positive constants C1,C2 and C3, such that

| (13) |

with probability at most C1 exp .

Proof. It follows from Lemma 3 and the fact that for any . ◽

A.1.5. Properties of ‘s.

Proposition 3. Recall that is the eigenvector associated with the j-th eigenvalue of . If $n\lambda = p^{a}$ for some $a > 1/2$, there exist positive constants C1 and C2 such that

i) for j = 1, …, d, one has

| (14) |

ii) for j = d + 1, …, H, one has

| (15) |

hold with high probability.

Remark: This result might be of independent interest. To justify that the sparsity assumption is necessary in the high-dimensional setting, Lin et al. [2015] showed that, for single index models, if and only if lim . Proposition 3 states that the projection of , j = 1, …, d, onto the true direction is non-zero if $n\lambda > p^{a}$ for some $a > 1/2$.

Proof. Let x = z + w be the orthogonal decomposition with respect to and its orthogonal complement. We define two p × H matrices ZH = (z1,·, …, zH,·) and WH = (w1,·, …, wH,·), defined analogously to XH. We then have the following decomposition

| (16) |

By definition, we know that and . Let Σ1 be the covariance matrix of w, then where EH is a p × H matrix with i.i.d. standard normal entries.

For sufficiently large ν1 and a, Lemma 3 implies that

| (17) |

happens with high probability and Lemma 1 implies

| (18) |

happens with high probability.

For any ω ∈ Ω = Ω1 ∩ Ω2, we can choose a p × p orthogonal matrix T and an H × H orthogonal matrix S such that

| (19) |

where B1 is a d × d matrix, B2 is a d × d matrix, B3 is a d × (H − d) matrix and B4 is a (p − 2d) × (H − d) matrix. By the definition of the event Ω, we have

| (20) |

Proposition 3 follows from the linear algebraic lemma below:

Lemma 4. Assume that $n\lambda = p^{a}$ for some $a > 1/2$. To avoid unnecessary confusion, we also assume that is sufficiently small. Let be a p × H matrix, where B1 is a d × d matrix, B2 is a d × d matrix, B3 is a d × (H − d) matrix and B4 is a (p − 2d) × (H − d) matrix satisfying (20). Let be the eigenvector associated with the j-th eigenvalue of MMτ, j = 1, …, H. Then the length of the projection of onto its first d coordinates is at least for j = 1, …, d and at most for j = d + 1, …, H.

Proof. Let us consider the eigen-decompositions of

where D1 (resp. D2) is a d × d (resp. (H − d) × (H − d)) diagonal matrix satisfying λmin(D1) ≥ λmax(D2). (20) implies that

On the other hand, we could consider the eigen-decomposition of

where (resp. ) is a d × d (resp. (H − d) × (H − d)) diagonal matrix satisfying . (20) implies that

Thus the eigen-gap is of order (which is of order λ, since $n\lambda = p^{a}$ for some $a > 1/2$). From (20), we know that . The Sine-Theta theorem (see Lemma 2) implies that

| (21) |

i.e., . A similar argument gives .

Let η be the (unit) eigenvector associated to the non-zero eigenvalue of MMτ. Let us write where η1, and . Let where and . It is easy to verify that is the (unit) eigenvector associated to the eigenvalue of MτM and

If is among the first d eigenvalues of MτM, then is bounded below by some positive constant. Thus . If is among the last H − d eigenvalues of MτM, then . Thus . ◽

A.2. Sketch of Proof of Theorem 1

We only sketch some key points of the proof here and leave the details to the online supplementary file. Recall that for the single index model where β0 is a unit vector, we denote by the eigenvector of associated with the largest eigenvalue . Let be the minimizer of

where such that . Let η0 = Σβ0, and . Since we are interested in the distance between the directions of and β0, we consider the difference . A slight modification of the argument in Bickel et al. [2009] implies that, if we choose for a sufficiently large constant C, we have with high probability; the detailed arguments are given in the online supplementary file. Proposition 3, Condition A1) and , imply that holds with high probability for some constants C1 and C2. Thus, we have

| (22) |

holds with high probability. ◽
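As a reading aid for this proof, the sketch below builds the Lasso-SIR estimate for a single index model (d = 1) from a pseudo-response and a plain coordinate-descent Lasso. It rests on our own assumptions: equal-frequency slices, rows of X as observations, and the pseudo-response ỹi = x̄h(i)^τ η̂ / λ̂, where x̄h(i) is the mean of the slice containing observation i and (λ̂, η̂) is the top eigenpair of the sliced estimate. This normalization is our guess, and the algorithm in the main text (and the CRAN package LassoSIR) may scale differently. With it, (1/n) Σi xi ỹi equals η̂ exactly, so the population least-squares target is proportional to Σ⁻¹η0 = β0. The function names are hypothetical.

```python
import numpy as np

def soft_threshold(a, t):
    """Soft-thresholding operator S(a, t) = sign(a) * max(|a| - t, 0)."""
    return np.sign(a) * np.maximum(np.abs(a) - t, 0.0)

def lasso_cd(X, y, lam, n_iter=500, tol=1e-8):
    """Coordinate descent for (1/2n)||y - X b||_2^2 + lam * ||b||_1, no intercept."""
    r = np.asarray(y, dtype=float).copy()      # residual y - X b (b starts at 0)
    n, p = X.shape
    b = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n
    for _ in range(n_iter):
        max_change = 0.0
        for j in range(p):
            old = b[j]
            rho = X[:, j] @ r / n + col_sq[j] * old   # x_j' (partial residual) / n
            new = soft_threshold(rho, lam) / col_sq[j]
            if new != old:
                r -= X[:, j] * (new - old)
                b[j] = new
                max_change = max(max_change, abs(new - old))
        if max_change < tol:
            break
    return b

def lasso_sir_single_index(X, y, H, lam):
    """Sketch of a Lasso-SIR style estimate of the direction beta (d = 1)."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    Xc = X - X.mean(axis=0)                          # center the predictors
    groups = np.array_split(np.argsort(y, kind="stable"), H)   # slice indices
    means = [Xc[idx].mean(axis=0) for idx in groups]
    Lam = sum((len(idx) / n) * np.outer(m, m) for idx, m in zip(groups, means))
    vals, vecs = np.linalg.eigh(Lam)
    lam_hat, eta_hat = vals[-1], vecs[:, -1]         # top eigenpair
    y_tilde = np.empty(n)
    for idx, m in zip(groups, means):
        y_tilde[idx] = m @ eta_hat / lam_hat         # pseudo-response for slice h
    return lasso_cd(Xc, y_tilde, lam)                # sparse estimate, up to sign and scale
```

The estimate is identified only up to sign and scale, so in practice one compares its direction with β0, for example through the projection distance used in the simulations.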

A.3. Proof of Theorem 2

Recall that ‘s are the (unit) eigenvectors associated to the j-th eigenvalues of , j = 1, …, d. We introduce the following notations,

| (23) |

Applying the argument in Theorem 1 on these eigenvectors, we have

| (24) |

for some constant C hold with high probability. Since we assume that d is fixed, if we can prove that

I) the lengths of , j = 1, …, d, are bounded below by

II) the angles between any two vectors of , j = 1, …, d, are bounded below by some constant, hold with high probability, then the Gram-Schmidt process implies that holds with high probability from (24). It is easy to verify that I) follows from Proposition 3, Condition A1), and the definition of , j = 1, …, d. II) is a direct corollary of the following two statements.

Statement A. The angles between any two vectors in ‘s, j = 1, …, d, are nearly π/2. Since $n\lambda = p^{a}$ for some $a > 1/2$, we only need to prove that

| (25) |

holds with high probability for any i ≠ j. Recall that we have the decomposition XH = ZH + WH. It is easy to see that and is identically distributed to a matrix ε1 whose entries are i.i.d. standard normal random variables. Let us choose an orthogonal matrix T such that and where A is a d × H matrix and B is a (p − d) × H matrix. Thus, is the eigenvector of associated with the j-th eigenvalue , j = 1, …, d. If we have a) λmin(AAτ) ≥ λ, b) and c) for some scalar μ > 0, then Statement A is reduced to the following linear algebra lemma.

Lemma 5. Let A be a d × H matrix (d < H) with λmin(AAτ) = λ. Let B be a (p − d) × H matrix such that . Let be the j-th (unit) eigenvector of CCτ associated with the j-th eigenvalue where Cτ = (Aτ, Bτ) and be the projection of onto its first d-coordinates. If , then for any i ≠ j,

| (26) |

Thus, ′s are nearly orthogonal if $n\lambda = p^{a}$ for some $a > 1/2$.

Proof. Let , then and . It is easy to see that and . Since is also the (unit) eigenvector of

for any i ≠ j, we have

Since and , we have

Note that a) follows from Lemma 2, b) follows from Proposition 3, and c) follows from Lemma 1. Thus Statement A holds.

Statement B. The angles between any two vectors in ′s are bounded away from 0. Since , we only need to prove that there exists a positive constant ζ < 1 such that

| (27) |

Let , where T is a p × d orthogonal matrix. Since are nearly mutually orthogonal, we know that MτM is nearly an identity matrix. Thus, by some continuity argument, the statement is reduced to the following linear algebra lemma.

Lemma 6. Let A be a p × p positive definite matrix such that Cmin ≤ λmin(A) ≤ λmax (A) ≤ Cmax for some positive constants Cmin and Cmax. There exists constant 0 < ζ < 1 such that for any p × d orthogonal matrix B, we have

| (28) |

Proof. Since d is finite, without loss of generality we can assume that B is a p × 2 matrix. Note that the expression on the left side is invariant under orthogonal transformations of B, so we can simply assume that the last p − 2 rows of B are all zero. The result then follows from a direct calculation. ◽

A.4. Proof of Theorem 3

Recall that is the eigenvector associated with the i-th eigenvalue of , and , i = 1, …, H (see e.g., (23)). The argument in Theorem 1 implies that, for any 1 ≤ i ≤ H,

| (29) |

Proposition 1 implies that

| (30) |

The above two statements give the desired result in Theorem 3. ◽

REFERENCES

- Bickel PJ, Ritov Y, and Tsybakov AB. Simultaneous analysis of Lasso and Dantzig selector. The Annals of Statistics, 37(4):1705–1732, 2009.

- Butucea Cristina, Ingster Yuri I, et al. Detection of a sparse submatrix of a high-dimensional noisy matrix. Bernoulli, 19(5B):2652–2688, 2013.

- Candes E and Tao T. The Dantzig selector: Statistical estimation when p is much larger than n. The Annals of Statistics, 35(6):2313–2351, 2007.

- Chen CH and Li KC. Can SIR be as popular as multiple linear regression? Statistica Sinica, 8(2):289–316, 1998.

- Chen H. Estimation of a projection-pursuit type regression model. The Annals of Statistics, 19(1):142–157, 1991.

- Cook DR. Regression Graphics. Wiley Series in Probability and Statistics. John Wiley & Sons, Inc., New York, 1998.

- Cook DR. SAVE: a method for dimension reduction and graphics in regression. Communications in Statistics-Theory and Methods, 29(9–10):2109–2121, 2000.

- Cook DR, Forzani L, and Rothman AJ. Estimating sufficient reductions of the predictors in abundant high-dimensional regressions. The Annals of Statistics, 40(1):353–384, 2012.

- Duan N and Li KC. Slicing regression: a link-free regression method. The Annals of Statistics, 19(2):505–530, 1991.

- Efron B, Hastie T, Johnstone I, and Tibshirani R. Least angle regression. The Annals of Statistics, 32(2):407–499, 2004.

- Fan J and Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association, 96(456):1348–1360, 2001.

- Fan J, Shao Q, and Zhou W. Are discoveries spurious? Distributions of maximum spurious correlations and their applications. arXiv preprint arXiv:1502.04237, 2015.

- Friedman J, Hastie T, and Tibshirani R. Regularization paths for generalized linear models via coordinate descent. Journal of Statistical Software, 33(1):1, 2010.

- Friedman JH and Stuetzle W. Projection pursuit regression. Journal of the American Statistical Association, 76(376):817–823, 1981.

- Guyon I, Gunn S, Ben-Hur A, and Dror G. Result analysis of the NIPS 2003 feature selection challenge. In Advances in Neural Information Processing Systems, pages 545–552, 2004.

- Hsing T and Carroll RJ. An asymptotic theory for sliced inverse regression. The Annals of Statistics, 20(2):1040–1061, 1992.

- Ingster Yuri I, Tsybakov Alexandre B, Verzelen Nicolas, et al. Detection boundary in sparse regression. Electronic Journal of Statistics, 4:1476–1526, 2010.

- Jiang B and Liu JS. Variable selection for general index models via sliced inverse regression. The Annals of Statistics, 42(5):1751–1786, 2014.

- Jung S and Marron JS. PCA consistency in high dimension, low sample size context. The Annals of Statistics, 37(6B):4104–4130, 2009.

- Li KC. Sliced inverse regression for dimension reduction. Journal of the American Statistical Association, 86(414):316–327, 1991.

- Li L. Sparse sufficient dimension reduction. Biometrika, 94(3):603–613, 2007.

- Li L and Nachtsheim CJ. Sparse sliced inverse regression. Technometrics, 48(4):503–510, 2006.

- Lichman M. UCI machine learning repository, 2013. URL http://archive.ics.uci.edu/ml.

- Lin Q, Zhao Z, and Liu JS. On consistency and sparsity for sliced inverse regression in high dimensions. arXiv preprint arXiv:1507.03895, 2015.

- Lin Q, Li X, Dong H, and Liu JS. On optimality of sliced inverse regression in high dimensions. 2016.

- Neykov M, Lin Q, and Liu JS. Signed support recovery for single index models in high-dimensions. Annals of Mathematical Sciences and Applications, 1(2):379–426, 2016a.

- Neykov M, Cai T, and Liu JS. L1-regularized least squares for support recovery of high dimensional single index models with Gaussian designs. Journal of Machine Learning Research, 17:1–37, 2016b.

- Ni L, Cook DR, and Tsai CL. A note on shrinkage sliced inverse regression. Biometrika, 92(1):242–247, 2005.

- Plan Y and Vershynin R. The generalized lasso with non-linear observations. IEEE Transactions on Information Theory, 62(3):1528–1537, 2016.

- Raskutti G, Wainwright MJ, and Yu B. Restricted eigenvalue properties for correlated Gaussian designs. The Journal of Machine Learning Research, 11:2241–2259, 2010.

- Raskutti G, Wainwright MJ, and Yu B. Minimax rates of estimation for high-dimensional linear regression over ℓq-balls. IEEE Transactions on Information Theory, 57(10):6976–6994, 2011.

- Simon N, Friedman J, Hastie T, and Tibshirani R. A sparse-group lasso. Journal of Computational and Graphical Statistics, 22(2):231–245, 2013.

- Thorgeirsson TE, Gudbjartsson DF, and many others. Sequence variants at CHRNB3-CHRNA6 and CYP2A6 affect smoking behavior. Nature Genetics, 42(5):448–453, 2010.

- Thorisson GA, Smith AV, Krishnan L, and Stein LD. The international HapMap project web site. Genome Research, 15(11):1592–1593, 2005.

- Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society. Series B, pages 267–288, 1996.

- Xia Y, Tong H, Li WK, and Zhu LX. An adaptive estimation of dimension reduction space. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 64(3):363–410, 2002.

- Yuan M and Lin Y. Model selection and estimation in regression with grouped variables. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 68(1):49–67, 2006.

- Zhong W, Zhang T, Zhu Y, and Liu JS. Correlation pursuit: forward stepwise variable selection for index models. Journal of the Royal Statistical Society: Series B, 74(5):849–870, 2012.

- Zhu L, Miao B, and Peng H. On sliced inverse regression with high-dimensional covariates. Journal of the American Statistical Association, 101(474):640–643, 2006.

- Zou Hui, Hastie Trevor, and Tibshirani Robert. Sparse principal component analysis. Journal of Computational and Graphical Statistics, 15(2):265–286, 2006.
