Abstract

Aims

The purpose of this study is twofold: (1) to examine the feasibility of providing high-tech augmentative and alternative communication (AAC) treatment to people with chronic aphasia, with the goal of evoking changes in spoken language; and (2) to identify evidence of AAC-induced changes in brain activation.

Method & Procedures

We employed a pre- post-treatment design with a control (usual care) group to observe the impact of an AAC treatment on aphasia severity and spoken discourse. Further, we used functional magnetic resonance imaging (fMRI) to examine associated neural reorganization.

Outcomes & Results

Compared to the usual care group, the AAC intervention trended toward larger treatment effects and resulted in a higher number of responders on behavioral outcomes. Both groups demonstrated a trend toward greater leftward lateralization of language functions via fMRI. Secondary analyses of responders to treatment revealed increased activation in visual processing regions, primarily for the AAC group.

Conclusions

This study provides preliminary guidance regarding how to implement AAC treatment in a manner that simultaneously facilitates language recovery across a variety of aphasia types and severity levels while compensating for residual deficits in people with chronic aphasia. Further, this work motivates continued efforts to unveil the role of AAC-based interventions in the aphasia recovery process and provides insight regarding the neurobiological mechanisms supporting AAC-induced language changes.

Keywords: Aphasia, Augmentative and Alternative Communication, fMRI, Cortical Plasticity, Neuroimaging

In aphasia rehabilitation, usual care is focused on helping people recuperate as much of their pre-stroke language capacity as possible (Hersh, 1998; Simmons-Mackie, 1998; Weissling & Prentice, 2010). Typically, usual care is a non-standardized therapy that is tailored to the specific needs of the person with aphasia, including management of co-occurring motor speech disorder(s), when appropriate (Brady, Kelly, Godwin, Enderby, & Campbell, 2016; Godecke et al., 2016). Once a person reaches a plateau in language recovery, if augmentative and alternative communication (AAC) is implemented, the focus is on circumventing, or compensating for, the communication challenges associated with aphasia (Dietz et al., 2014; Elman, Cohen, & Silverman, 2016). However, the last decade of aphasiology treatment research has generated a plethora of data demonstrating that, with treatment, people with aphasia are capable of making improvements in linguistic function well into the chronic stages of stroke recovery (e.g., Boyle, 2004; Crosson et al., 2009; Edmonds, Nadeau, & Kiran, 2009; Fridriksson, Morrow-Odom, Moser, Fridriksson, & Baylis, 2006; Thompson, den Ouden, Bonakdarpour, Garibaldi, & Parrish, 2010). One strategy people with aphasia use to facilitate spoken language during anomic events, or communication breakdowns, is self-cueing. For example, they might spontaneously point to the first letter of the target (via a letter board or AAC device) (Dietz, Weissling, Griffith, McKelvey, & Macke, 2014; Garrett, Beukelman, & Low-Morrow, 1989). Similarly, it is also common for people with aphasia to self-cue by writing (either in the air or on paper) the initial letter, or letters, of the target (Wambaugh & Wright, 2007). There is also anecdotal and at least some empirical evidence (Farias, Davis, & Harrington, 2006) that people with aphasia can successfully use drawing to self-cue during anomic events. The literature on gestural treatment for people with aphasia is fairly substantial in terms of self-cueing to facilitate word retrieval (e.g., Raymer et al., 2006; Rose, 2013; Rose & Sussmilch, 2008). The spoken language expression improvements observed when a person self-cues can be explained in terms of intersystemic reorganization.

First described by Luria in 1972, the theory of intersystemic reorganization posits that a weak system can be restored/strengthened during intervention when it is paired with a stronger, or intact, system. Intersystemic reorganization requires a person to organize two functionally different systems into a new, related system. This theory has guided apraxia interventions for decades, whereby vibrotactile cues (Rubow, Rosenbek, Collins, & Longstreth, 1982), finger tapping (Knollman-Porter, 2008), and/or gestural (meaningful and non-meaningful) movements are used to promote improved articulatory planning (Knollman-Porter, 2008; Rubow et al., 1982). Relatively recently, improvements in spoken language recovery following gestural treatments (e.g., Raymer et al., 2006; Rose, 2013; Rose & Sussmilch, 2008) have been attributed to intersystemic reorganization. As such, it stands to reason that the aforementioned self-cueing strategies, which pair an intact system, such as movement (i.e., pointing to a letter, writing a letter, drawing, or gesturing), with the weaker spoken language system to overcome an anomic event, can also be explained by intersystemic reorganization. By extension, an AAC intervention could be exploited to augment spoken language recovery, rather than serving solely as an alternative to spoken language. That is, a person with aphasia can be instructed to use the intact visual system to enhance the weaker spoken language system. Moreover, AAC interfaces that also include text likely stimulate the existing language system and afford the person with aphasia the opportunity to self-cue language via existing networks that draw on visual input modalities (written words). Thus, there is perhaps a novel coupling of canonical language and visual processing neural networks as well as an enhancement of visual input routes to the existing language network.

Together with the theory of intersystemic reorganization, the ability of people with aphasia to recover language function well into the chronic phase of stroke recovery, and to self-cue to promote word retrieval during anomic events, offers a solution for how an AAC device could be employed as a dual-purpose tool to augment language recovery and compensate for deficits. This approach, however, requires a shift in how AAC treatment is implemented. With the goal of language recovery, treatment needs to focus on instructing people with aphasia how to use AAC systems as a mechanism for self-cueing, rather than as a tool to replace speaking. This novel approach to AAC implementation would avoid teaching people with aphasia “learned non-use” (Pulvermuller & Berthier, 2008, p. 569) of spoken language and would instead promote language recovery by coupling the canonical language and visual processing neural networks.

Neurobiological Biomarkers in Aphasia Rehabilitation

Researchers are able to capture ongoing brain activity, in vivo, with the high spatial resolution of functional MRI (fMRI) (Rutten & Ramsey, 2016). fMRI studies of language function in post-stroke aphasia have suggested that immediately post-stroke, there is a shift of language function to the right, non-dominant hemisphere, but individuals who have better language recovery in the longer term revert language function back to the left-hemisphere perilesional tissue (Saur et al., 2006; Saur et al., 2010; Szaflarski, Allendorfer, Banks, Vannest, & Holland, 2013). However, due to large left-hemisphere lesion size, it may not be possible for some people with aphasia to exploit the perilesional tissue; these individuals may benefit most from treatments designed to harness right-hemisphere strengths to improve language function (Crosson et al., 2009; Zumbansen, Peretz, & Hébert, 2014).

Rather than focusing on either a left- or a right-hemisphere theory of language recovery, perhaps post-stroke language recovery can be explained by bilateral mechanisms. For example, the ventral visual stream in the extrastriate cortex, which is responsible for recognizing and discriminating faces, objects, and words (Harel, Kravitz, & Baker, 2013a, 2013b; Peissig & Tarr, 2007; Vogel, Petersen, & Schlaggar, 2014) (commonly included in AAC systems), may also be tapped to support language recovery. Although the link to language function is not yet clear, the ventral visual pathway courses anteriorly toward the medial and inferior temporal lobe, where information is linked to the long-term memory and linguistic (semantic) system (Goodale & Milner, 1992; Milner & Goodale, 2008) (at least in the left hemisphere), respectively (Harel et al., 2013a, 2013b; Peissig & Tarr, 2007; Vogel et al., 2014). From the temporal region, these fibers project to the inferior frontal gyrus; this pathway (inferior frontooccipital fascicle) may be a crucial tract that supports the ventral semantic system (Forkel et al., 2014; Martino et al., 2013; Tremblay & Dick, 2016). den Ouden and colleagues (2009) offer fMRI data to support the notion that the visual system indeed plays a crucial role in word retrieval, a finding that seems to have relevance for AAC design. Specifically, they examined the difference between verb naming under two elicitation conditions: line-drawing pictures and a video presentation of transitive and intransitive verbs. Compared to the video presentation, the line-drawing naming condition elicited a significant increase in posterior bilateral activation (i.e., superior occipital gyrus, fusiform gyrus, middle occipital gyrus, superior parietal gyrus). Moreover, when contrasted with intransitive verbs, transitive verbs yielded greater activation intensity across temporo-parieto-occipital regions.
For these reasons, the idea that regions distal to the classical perisylvian areas can support language function is gaining momentum, and there is a subsequent push to think beyond the classic model of language neurobiology (Forkel et al., 2014; Martino et al., 2013; Tremblay & Dick, 2016). While the importance of Broca’s and Wernicke’s areas cannot be altogether dismissed, neither can the mounting functional (fMRI) (den Ouden et al., 2009; Catani et al., 2016; Tomasi & Volkow, 2012) and structural (DTI) (Forkel et al., 2014; Martino et al., 2013; Tremblay & Dick, 2016) connectivity evidence linking canonical language regions of interest (ROIs) to distal ROIs. These data

In summary, the majority of the work in aphasia treatment that exploits the tenets of Luria’s intersystemic reorganization (Luria, 1972; Rose, 2016) has focused on natural modalities, such as writing, drawing, and gesturing. However, the explosion of mobile technology has somewhat normalized the use of AAC (i.e., iPads, iPhones, etc.) (AAC-RERC, 2011; Light & McNaughton, 2014). Further, use of AAC systems for people with aphasia often includes the development of a shared communication space (Light & Drager, 2007) and results in more enjoyable and successful interactions, as reported by the communication partner (Dietz, McKelvey, Schmerbach, Weissling, & Hux, 2010; Dietz et al., 2014; Hux, Beuchter, Wallace, & Weissling, 2010). Thus, it is imperative we find a way to unify aphasia rehabilitation approaches that have ostensibly opposing methods (i.e., AAC versus usual care) into a combined approach that facilitates language recovery and supports people with aphasia during communication breakdowns/anomic events. Moreover, researchers have begun to delineate the neural mechanisms of recovery for naming (Fridriksson et al., 2006; Fridriksson, Richardson, Fillmore, & Cai, 2012) and syntactic (Thompson et al., 2010; Thompson, Riley, den Ouden, Meltzer-Asscher, & Lukic, 2013) processing in people with aphasia; however, the neurobiological markers of AAC-induced language recovery in people with aphasia have not been established. There is, nonetheless, support for unique neurobiological markers for spoken production of verb-based symbols, indicating a connection between traditional language ROIs and distal visual processing ROIs (e.g., den Ouden et al., 2009). Thus, the next logical step in bridging the gap between the fields of AAC and aphasiology is to test the feasibility of harnessing the principles of intersystemic reorganization to use an AAC device as a language recovery tool.
Likewise, to build a model that predicts response to treatment, it is critical to understand the underlying changes in neuroanatomy associated with AAC-induced language recovery. Therefore, the purpose of this study is twofold: (1) to examine the feasibility of providing high-tech AAC treatment to people with chronic aphasia, with the goal of evoking changes in spoken language; and (2) to identify evidence of AAC-induced changes in brain activation.

Method

Participants

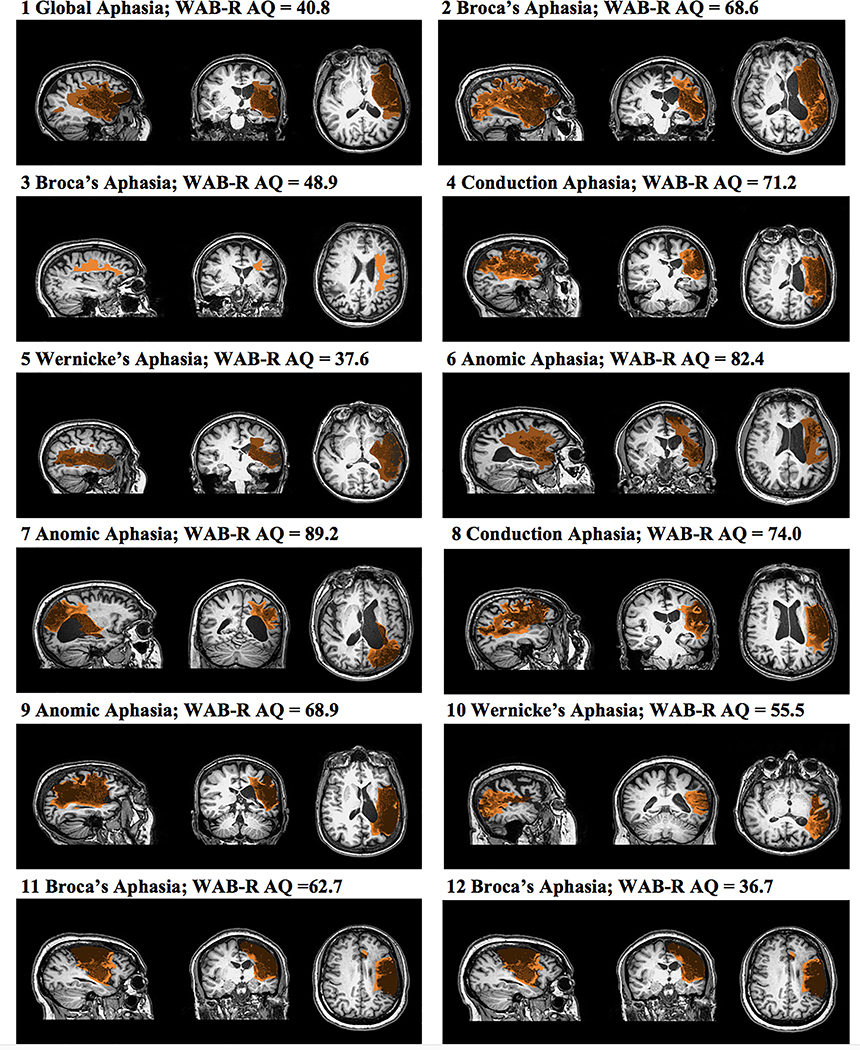

Fourteen people with post-stroke aphasia (> 12 months post-onset) were recruited through support groups and local hospitals. All participants had a single left middle cerebral artery (MCA) infarct (ischemic), were right-handed pre-stroke (except Participant 5) based on the Edinburgh Handedness Inventory (i.e., score ≥ 50) (Oldfield, 1971), had no history of major psychotic episodes or substance abuse, had at least a high school education, were not currently enrolled in speech-language therapy, and were native speakers of American English. From this pool, 12 people completed the study; Table 1 summarizes the participants’ demographic data (Figure 1 details the lesion location for each participant). Testing revealed a wide range of severity and aphasia types. All participants reported previous participation in post-stroke aphasia rehabilitation; however, none received treatment while enrolled in this study. Further, all participants passed an audiometric hearing screening at 40 dB HL in at least one ear at 1000, 2000, and 4000 Hz, reported normal or corrected vision, and passed a visual field/attention screening task (Garrett & Lasker, 2005).

Table 1.

Demographic and pre-treatment linguistic profile of participants.

| Treatment Group | ID | Gender | Ethnicity | Age | Number of lesioned voxels | MPO<sup>a</sup> | AAC<sup>b</sup> exper. | Level of education | Aphasia type<sup>c</sup> | Aphasia severity<sup>c</sup> | RCBA-2<sup>d</sup> |
|---|---|---|---|---|---|---|---|---|---|---|---|
| AAC | 1 | Female | Caucasian | 57 | 18727 | 79 | None | Some coll.<sup>e</sup> | Global | 40.8 | 72 |
| AAC | 2 | Male | Caucasian | 61 | 30570 | 91 | LT<sup>g</sup> | Bachelor’s | Broca’s<sup>f</sup> | 68.6 | 69 |
| AAC | 3<sup>f</sup> | Female | Caucasian | 47 | 14616 | 124 | LT, HT<sup>h</sup> | Bachelor’s | Broca’s<sup>f</sup> | 48.9 | 72 |
| AAC | 4 | Female | Caucasian | 63 | 17705 | 170 | LT, HT | Bachelor’s | Conduction | 71.2 | 95 |
| AAC | 5<sup>i</sup> | Female | Caucasian | 57 | 7849 | 16 | None | High school | Wernicke’s | 37.6 | 81 |
| AAC | 6 | Female | Caucasian | 39 | 18807 | 44 | None | Some coll. | Anomic | 82.4 | 86 |
| Usual Care | 7 | Female | African Amer. | 58 | 1583 | 48 | None | Bachelor’s | Anomic | 89.2 | 74 |
| Usual Care | 8 | Female | Caucasian | 60 | 12950 | 105 | None | Some coll. | Conduction | 74.0 | 86 |
| Usual Care | 9 | Male | Caucasian | 59 | 14009 | 73 | LT | Master’s | Anomic | 68.9 | 71 |
| Usual Care | 10 | Male | Caucasian | 47 | 10989 | 69 | None | High school | Wernicke’s | 55.5 | 63 |
| Usual Care | 11 | Male | Caucasian | 71 | 14066 | 69 | LT | Master’s | Broca’s<sup>f</sup> | 62.7 | 93 |
| Usual Care | 12 | Male | Caucasian | 66 | 30110 | 38 | LT, HT | Bachelor’s | Broca’s<sup>f</sup> | 36.7 | 67 |

Note. <sup>a</sup>Months post-onset. <sup>b</sup>Augmentative and Alternative Communication experience. <sup>c</sup>Western Aphasia Battery-Revised used to determine type and severity; Aphasia Quotient out of 100. <sup>d</sup>Reading Comprehension Battery for Aphasia-2nd Edition; total points available = 100. <sup>e</sup>Some college. <sup>f</sup>Apraxia of speech present based on clinical judgment. <sup>g</sup>Low-tech. <sup>h</sup>High-tech. <sup>i</sup>Left-handed (pre-stroke).

Figure 1.

A depiction of the manual lesion delineation for each participant and summary of aphasia type and severity, via the Western Aphasia Battery-Revised Aphasia Quotient (WAB-R AQ; Kertesz, 2006), at enrollment.

Materials and Equipment

AAC device and recording equipment

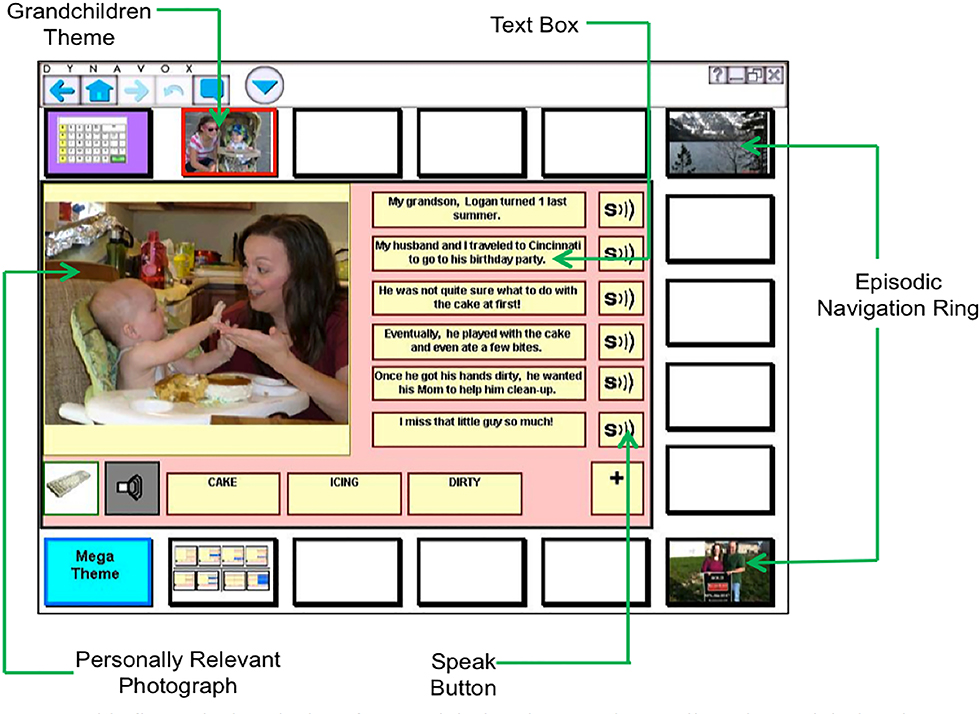

The researchers created a personalized AAC interface for each person on the DynaVox VMax™; see Figure 2 for an example. To allow accurate transcription of the narrative retell (see below), all narrative retell sessions were videotaped using three digital video camera recorders (Canon FS200) to capture the (a) faces and upper bodies of the participants, (b) screen of the DynaVox VMax, which displayed the programmed narratives, and (c) pad of paper used to record messages conveyed through writing or drawing.

Figure 2.

This figure depicts the interface used during the narrative retell session and during the AAC treatment. Above, all elements of the system are displayed. For the purposes of this study, we removed the episodic navigation ring (which allows the person to talk about different topics). © 2015 DynaVox Mayer-Johnson. All rights reserved.

Story development and AAC device programming

Each participant helped to program two personally relevant stories into the high-tech AAC device. To prepare for the device programming session, the researchers provided the participants and their caregivers with an example story and photographs the same day they were consented and enrolled in the study. At this time, they were instructed to gather pictures for six to eight stories that they would feel comfortable sharing during treatment, and with unfamiliar people. When possible, the researchers encouraged the participants to have a caregiver help to write out key ideas for each story. This was used as a starting point for what to program into the VMax. A member of the research team worked with the participant to determine which two stories included the most representative, highly-contextualized pictures and text-based content to program into the AAC device. Next, the content was programmed using previously described procedures (Dietz et al., 2014; Griffith, Dietz, & Weissling, 2014). Although no limits were placed on the topics that should be included, the themes were fairly universal and included stories about their stroke/rehabilitation, anniversary and birthday parties, and vacations. Each narrative included two relevant photographs from the participants’ personal image library and six textboxes that included sentence-level text. The speak buttons were programmed to match the content included in the textboxes (see Figure 2).

Neuroimaging parameters

MRI scans were completed on a 3.0 T Philips Achieva Whole Body MRI/MRS system. High-resolution T1-weighted anatomical images were obtained using the following parameters: TR/TE = 8.1/3.7 ms, FOV 25.6 × 25.6 × 19.2 cm, matrix 256 × 256, slice thickness = 1 mm. The fMRI scanning was performed with the following parameters: TR/TE = 2000/38 ms, FOV 24.0 × 24.0 cm, matrix 64 × 64, slice thickness = 4 mm, SENSE factor = 2. This resulted in a voxel size of 3.75 × 3.75 × 4 mm and 32 axial slices. The event-related verb generation task (see below) was presented using DirectRT and an Avotec audio-visual system.

Verb generation fMRI task

A detailed description of the verb generation task used in the current study can be found in a recent publication (Dietz et al., 2016); however, a brief summary is provided here. Prior to the fMRI, participants were instructed on the paradigm and practiced the task until they achieved success. Inside the scanner, the participant was presented with a concrete noun and given visual instructions to respond either by thinking of verbs associated with the noun (covert verb generation), saying aloud the associated verbs (overt verb generation), or repeating the noun (overt repetition). The nouns were designed to elicit transitive verbs. For example, the noun stimulus cookie typically generates verbs such as eat (the cookie) or bake (the cookie). Prior to beginning the paradigm, the participant viewed a Ready screen for 4 seconds, followed by 45 12-second trials, which included three blocks of 15 trials each for covert verb generation, overt verb generation, and overt repetition (conditions presented in the same order across all participants). MRI silence occurred during the first 6 seconds of each trial, followed by 6 seconds of fMRI data acquisition (Schmithorst & Holland, 2004).

Intervention

AAC treatment

The idea for this treatment was born out of several recent case series studies, which documented that people with aphasia (and no significant motor speech impairment) produced more on-topic comments, experienced fewer communication breakdowns, and had higher rates of repairs during breakdowns in the personalized conditions. The participants also spoke about 70% of the time when using the VMax to retell personal narratives (Dietz et al., 2014; Griffith et al., 2014) and produced a higher number of correct information units (CIUs) (Nicholas & Brookshire, 1993), or content related to the narrative, during narrative retells when the interface included personally relevant photographs and text (Collier & Dietz, 2014). This work led to the development of a novel discourse-level AAC intervention designed to harness the principles of intersystemic reorganization (Luria, 1972; Rose, Attard, Mok, Lanyon, & Foster, 2013) and thus promote language recovery. Briefly, the participants were instructed on how to locate key words associated with their personal narrative via the pictures, text, or speak buttons, while always attempting to speak target words as they used the device to supplement their spoken language. Further, during practice retells with the clinician and with naïve listeners, the participants received instruction on how to self-cue to facilitate word retrieval by using the various elements of the interface. For example, the participant may self-cue for word retrieval by pointing to the target word or a relevant aspect of the picture while attempting to say the target. The reader is referred to Appendix A in the Supplemental Materials document for a summary of the intervention steps.

Usual care

The usual care treatment involved a traditional restorative treatment regime, which did not include the use of the VMax. A single clinician (who was not a member of the research team) evaluated the language testing and designed a treatment program to strengthen the connection between the auditory system and the impaired language system using a variety of tasks. The SLP described the provided treatment as grounded in Schuell’s stimulation approach (Coelho, Sinotte, & Duffy, 2008). Although participants completed a personalized story retell pre- and post-treatment, as described in further detail below, it is important to note that storytelling was not a part of the usual care intervention for any participant. However, this was not an a priori decision; based on Schuell’s stimulation approach, the treating clinician determined that the participants required strengthening of foundational skills. Appendix B, in the Supplemental Materials document, provides a summary of the specific treatment tasks used for each participant in the usual care group. Due to the highly personalized nature of this intervention, treatment fidelity checks were not conducted.

Procedures

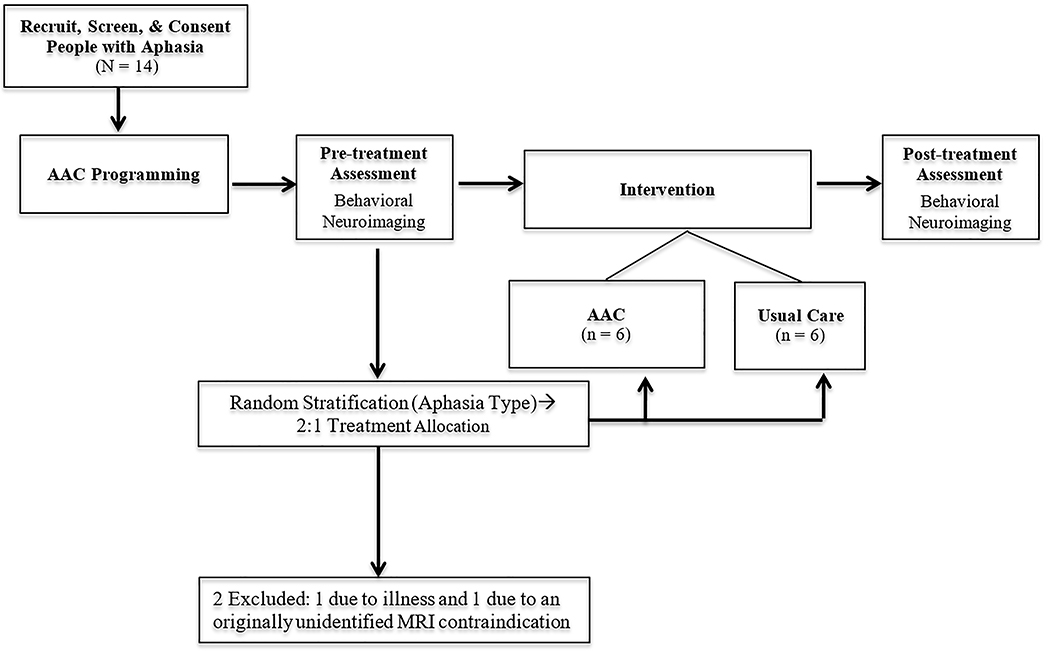

Figure 3 depicts the progression of experimental procedures described in the following sections.

Figure 3.

Depiction of study tasks and sequence.

AAC Programming

Pre-treatment, each participant attended an assessment session (2–3 hours in length, depending on their fatigue levels) to complete the initial screening/testing activities. Next, within a few days of the testing session, the participants attended one-to-two (also depending on their endurance) sessions to co-construct and program two preselected personal stories into the AAC device, as described in the materials and equipment section.

Pre-treatment story retell and MRI

Within one week of the initial assessment sessions, participants attended a second testing session to retell their stories and complete the fMRI testing. Participants in both the AAC and usual care group retold both narratives (6 minutes each) to a research team member who served as a naïve listener (JV); however, researchers randomly assigned one story to be retold with the AAC device (also the treated story for those in the AAC group), and the second to be retold without the AAC device (for both treatment groups). During the retells, the listener was instructed to begin each conversation with, “I understand you want to tell me about (insert story title).” She was also instructed to give adequate pause time after questions, ask open-ended questions, and use conversation continuers such as, “Tell me more about that” or, to verify content, “From what I understand, (insert interpretation).” Further, the listener was encouraged to use vocabulary related to the story only once the participant had introduced it, either via spoken language or by referencing the VMax in some manner. In other words, she could not point out pictures or words (or select the speak button) on the AAC device, or otherwise aid participants in word retrieval. Instead, she was encouraged to nod her head and use the conversation continuers previously described. For the neuroimaging task, all participants demonstrated the ability to complete the verb generation task outside of the scanner; the researchers monitored each response to ensure task adherence was maintained.

Intervention assignment

After day one of pre-treatment testing, the researchers informed the study statistician of the participant’s aphasia type. To balance the number of people with fluent and nonfluent aphasia in each group, a stratified randomization approach was employed. However, the balance of aphasia type was disrupted because two participants, one fluent (AAC group) and one nonfluent (usual care group), were unable to complete the study due to either personal conflicts or an illness. Regardless, the 12 remaining participants received 12 hours (three one-hour treatment sessions per week across four weeks) of either AAC therapy (n = 6) or usual care (n = 6). To maintain equivalence of treatment time/language practice, homework was not assigned for either group. As such, the participants in the AAC group only used the VMax in the clinic.

Post-treatment assessment

Within one week of completing intervention, all participants returned for two testing days; one to complete the behavioral testing, and a second to retell their stories and complete the fMRI testing.

Research Design and Data Analyses

We employed a pre- post-treatment design with a control group (i.e., usual care group).

Aphasia severity testing

Before and after intervention, aphasia type and severity (i.e., Aphasia Quotient (AQ)) were determined via the WAB-R (Kertesz, 2006).

Story retells: Dependent measures

Spoken discourse

The spoken language of the participants during the story retells (with and without the AAC device) was transcribed, verbatim, by a trained research assistant and verified by two additional researchers, who then jointly coded five spoken discourse measures: (1) counted words (Nicholas & Brookshire, 1993), (2) correct information units (CIUs) (Nicholas & Brookshire, 1993), (3) CIUs/minute, (4) mazes (Shadden, 1998), and (5) T-Units (Hunt, 1970) via the Systematic Analysis of Language Transcripts (SALT) program (Miller, Andriacchi, & Nockerts, 2011). For these analyses, only spoken language (not device output) was transcribed and coded. Although previous work (Boyle, 2015; Brookshire & Nicholas, 1994) examined the stability of a variety of discourse measures using standardized stimuli, these recommendations cannot be generalized to the personally relevant stimuli used in the current study. Therefore, pre- to post-treatment changes larger than the standard error of measurement (SEM) for each task (and group) were considered to be indicative of clinically relevant change on all behavioral measures and used to identify treatment responders.

Expressive modality units

During each retell, the researchers transcribed and coded four expressive modality units (EMUs), defined as information that can be expressed through spoken or non-spoken modalities (written, drawn, or gestural) (Dietz et al., 2014; Griffith et al., 2014). Spoken EMUs reflect a single thought or idea (adapted from Mentis & Prutting, 1993) and are not necessarily an indicator of the informativeness (i.e., CIUs) or the complexity (i.e., T-Units) of the spoken language. For example, a spoken EMU may include paraphasias, mazes, and/or be agrammatic in nature. During the retell with the VMax, researchers coded for three additional EMUs communicated via the AAC device: (1) photograph, (2) text box, and (3) speak button; non-communicative gestures and/or references to the device were not coded as EMUs. Operational definitions of all EMUs, and examples, are detailed in the supplemental materials provided by Dietz and colleagues (2014).

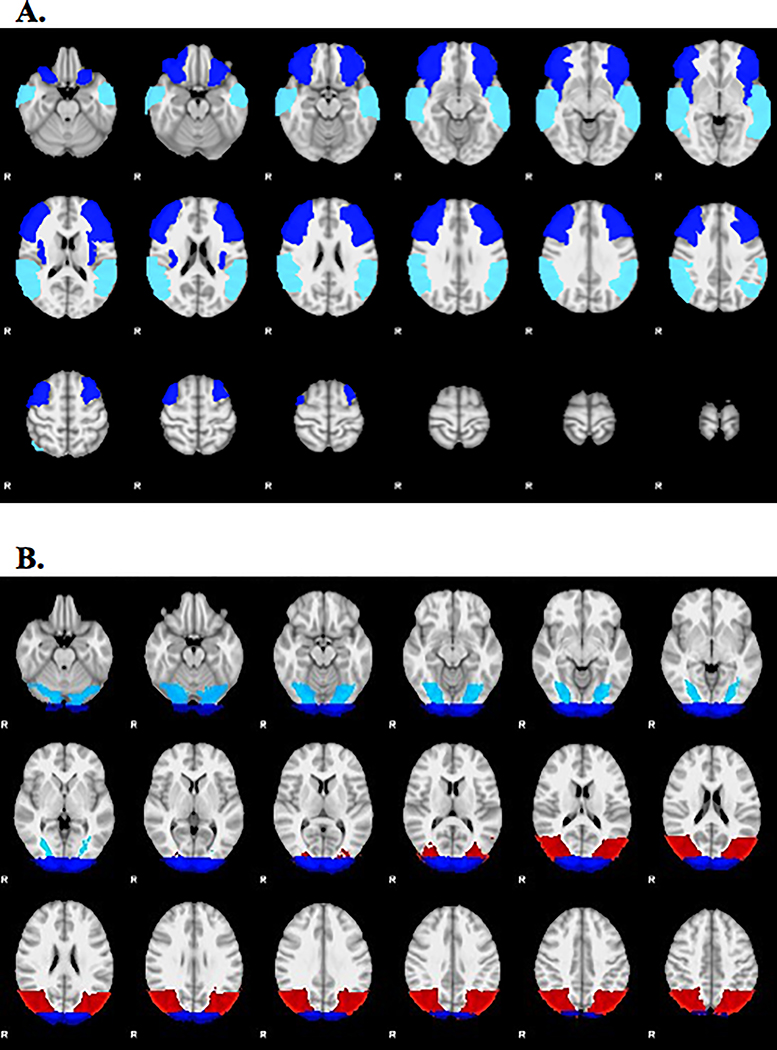

Neuroimaging

Stroke lesion masks were traced manually on each person’s anatomical MRI image by a neurologist using Analysis of Functional Neuroimages (AFNI) (Cox, 2012) (see Figure 1). Researchers used the Oxford FMRIB Software Library (FSL) to perform spatial normalization, motion correction, and spatial smoothing. For the purposes of calculating the lateralization index (LI), anatomically defined ROIs known to support the linguistic systems required to successfully complete the verb generation fMRI task were selected (Allendorfer, Kissela, Holland, & Szaflarski, 2012; Dietz et al., 2016; Szaflarski et al., 2014). Briefly, the anterior ROI included the inferior frontal gyrus and contiguous areas of the middle frontal gyrus and anterior insula, and the posterior ROI included the posterior portions of the superior and middle temporal gyri, extending into the supramarginal gyrus and angular gyrus (see Figure 4A). The left ROIs were then mirrored to the right in MNI space. A general linear model was used to determine significant activation related to overt verb generation > overt repetition. Active voxels were defined as those above the median value of positive voxels in both the left and right ROI. The LI was calculated as the difference between the number of active voxels in the left and right ROIs divided by their sum. LI values < −0.1 indicate right-lateralization and LI values > 0.1 indicate left-lateralization, whereas values of −0.1 ≤ LI ≤ 0.1 represent bilateral, or symmetric, language distribution (Dietz et al., 2016; Szaflarski et al., 2014; Szaflarski, Holland, Schmithorst, & Byars, 2006; Wilke & Lidzba, 2007).
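The LI computation described above can be illustrated with a minimal sketch. It assumes the voxel-wise statistics for the left and right ROIs are already available as numeric arrays, and it pools both ROIs when taking the median of positive voxels, which is one reading of the description. The function names and example values are hypothetical and are not taken from the study's analysis pipeline.

```python
import numpy as np

def lateralization_index(left_values, right_values):
    """LI = (L - R) / (L + R), where L and R are counts of active voxels.

    Assumption: a voxel is "active" when its value exceeds the median of
    all positive voxel values pooled across the left and right ROIs.
    """
    left = np.asarray(left_values, dtype=float).ravel()
    right = np.asarray(right_values, dtype=float).ravel()
    pooled = np.concatenate([left, right])
    positive = pooled[pooled > 0]
    if positive.size == 0:
        raise ValueError("no positive voxels in either ROI")
    threshold = np.median(positive)
    n_left = int(np.sum(left > threshold))
    n_right = int(np.sum(right > threshold))
    if n_left + n_right == 0:
        raise ValueError("no voxels exceed the threshold")
    return (n_left - n_right) / (n_left + n_right)

def classify_lateralization(li, cutoff=0.1):
    """Map an LI value to the categories used in the text."""
    if li > cutoff:
        return "left"
    if li < -cutoff:
        return "right"
    return "bilateral"

# Hypothetical voxel values, for illustration only.
example_left = [1.0, 2.0, 3.0, 4.0]
example_right = [0.5, -1.0, 1.5, 2.5]
example_li = lateralization_index(example_left, example_right)
example_label = classify_lateralization(example_li)
```

In this toy example the pooled median of positive values is 2.0, so two left voxels and one right voxel count as active.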

Figure 4.

(A) Anatomical regions of interest (ROI) used to calculate change in lateralization index (LI) and activation intensity (mean z-score) for the anterior and posterior language areas: blue = inferior frontal gyrus, middle frontal gyrus, and anterior insula, turquoise = superior temporal gyrus, middle temporal gyrus, supramarginal gyrus, and angular gyrus. (B) The anatomical ROIs used to calculate the change in z-score for three visual areas: turquoise = fusiform gyrus, red = inferior lateral occipital gyrus, blue = occipital pole.

Secondary Analyses

Responders

Responders were defined as participants who made clinically relevant improvements (i.e., ≥ SEM) on at least three spoken language dependent measures (i.e., WAB-R AQ (Kertesz, 2006) and/or counted words, CIUs, CIUs/minute, Mazes, T-Units); either with, or without the AAC device (see Supplemental Materials, Tables S1 and S2 for pre- to post-treatment group means and SEM values, as well as individual spoken discourse performance).
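As a rough illustration of this responder rule, the sketch below counts how many measures improved by at least the SEM and applies the three-measure threshold within a single retell condition. It assumes each change score has already been signed so that a positive value means improvement, since the text does not state the direction of improvement for each measure. The function names and all numeric values are hypothetical.

```python
def count_relevant_improvements(improvements, sem):
    """Count measures whose improvement is at least the SEM for that measure.

    `improvements` and `sem` are dicts keyed by measure name. Improvements are
    assumed to be signed so that positive values indicate improvement.
    """
    return sum(1 for name, delta in improvements.items() if delta >= sem[name])

def is_responder(improvements, sem, min_measures=3):
    """Apply the 'at least three measures' threshold from the text."""
    return count_relevant_improvements(improvements, sem) >= min_measures

# Hypothetical SEM values and change scores, for illustration only.
sem_values = {"WAB-R AQ": 3.0, "CIUs": 8.0, "CIUs/minute": 2.0, "T-Units": 2.0}
participant_a = {"WAB-R AQ": 5.0, "CIUs": 10.0, "CIUs/minute": 2.0, "T-Units": 1.0}
participant_b = {"WAB-R AQ": 1.0, "CIUs": 10.0, "CIUs/minute": 0.5, "T-Units": 1.0}
```

Under the definition above, the same check would be run separately for the retell with the AAC device and the retell without it.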

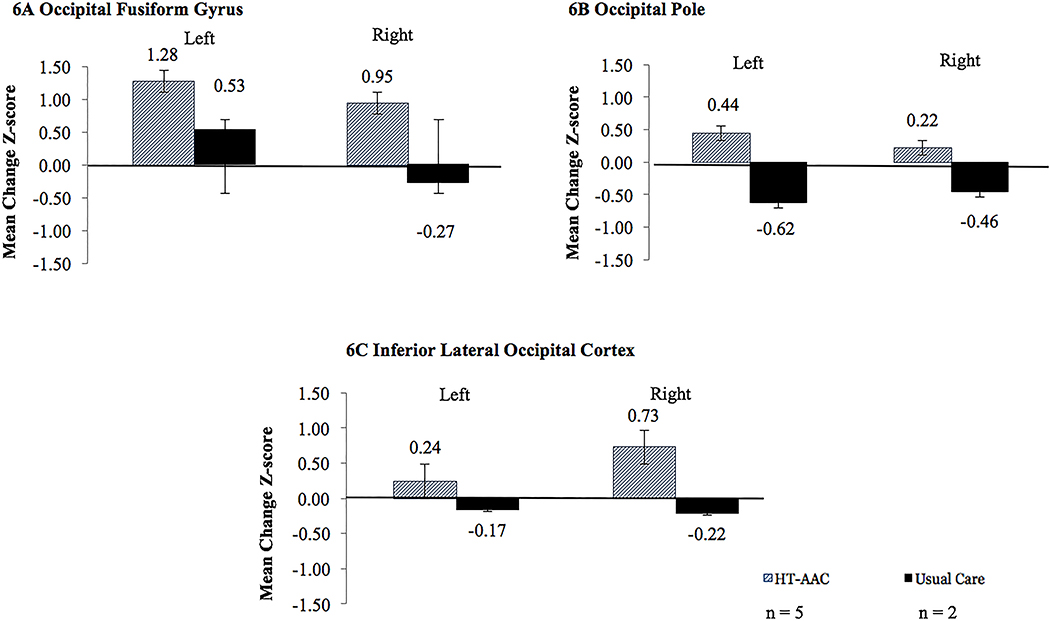

Activation intensity: Visual processing ROIs

Given the visual nature of the AAC treatment (i.e., photographs and text), we examined pre- to post-treatment changes in activation intensity (i.e., change in mean z-score) during the verb generation task (contrast = overt verb generation > overt repetition) for three bilateral ROIs from the ventral visual stream that support visual processing (i.e., recognizing and discriminating faces, objects, and words): (1) the occipital fusiform gyrus, (2) inferior occipital gyrus, and (3) occipital poles, as defined by the Harvard-Oxford atlas (Desikan et al., 2006) (see Figure 4B). Extrastriate cortex in the ventral visual stream is responsible for recognizing and discriminating faces, objects, and words (Harel et al., 2013a, 2013b; Peissig & Tarr, 2007; Vogel et al., 2014), items commonly included in AAC devices. Similar neural circuitry underlying visual processing may also be tapped to support language recovery.

Results

Coding Reliability, Fidelity, and Procedural Integrity

Aphasia severity scores

Two speech-language pathologists (SLPs) independently verified the final WAB-R AQ (Kertesz, 2006) scores for all 12 participants. All minor miscalculations were corrected and confirmed by both SLPs and aphasia classification reached 100% agreement.

Treatment Fidelity

A sampling of 25% of AAC treatment sessions, spanning all 4 steps, revealed treatment fidelity of 97.59% across 4 different clinicians. The usual care was not sampled for fidelity due to the personalization of each treatment plan.

Procedural Integrity of Listener Adherence to Story Retell Guidelines

A procedural integrity check on 30% of sessions (7 of 24 pre- and post-treatment retell sessions) revealed that the listener followed the guidelines 93.95% of the time.

Story retell coding

Jointly-coded intrarater reliability checks on roughly 30% (15 of 48) of transcripts revealed very good agreement (Cohen, 1968; Fleiss, Levin, & Paik, 2004), with a mean Cohen’s kappa of 0.97 on measures of spoken discourse (CIUs = 0.96; mazes = 0.98; T-Units = 0.97). Intrarater reliability yielded good agreement (Cohen, 1968; Fleiss et al., 2004) on expressive modality units, with a mean kappa of 0.78 (spoken = 0.85; written = 0.82; drawn = 0.89; gestural = 0.66; speak button = n/a; text box = 0.68; photograph = 0.79).
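For readers unfamiliar with the statistic, the sketch below shows how an agreement coefficient of this kind is computed from two sets of codes. It assumes an unweighted Cohen's kappa over categorical labels; the source cites Cohen (1968), so a weighted variant may have been used, and the label sequences here are invented for illustration.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(codes_1: Sequence[str], codes_2: Sequence[str]) -> float:
    """Unweighted Cohen's kappa for two equally long sequences of labels."""
    if len(codes_1) != len(codes_2) or len(codes_1) == 0:
        raise ValueError("Both code sequences must be non-empty and equal in length.")
    n = len(codes_1)
    # Observed proportion of units on which the two codings agree.
    observed = sum(a == b for a, b in zip(codes_1, codes_2)) / n
    # Agreement expected by chance, from each coding's marginal frequencies.
    freq_1, freq_2 = Counter(codes_1), Counter(codes_2)
    labels = set(freq_1) | set(freq_2)
    expected = sum(freq_1[label] * freq_2[label] for label in labels) / (n * n)
    if expected == 1.0:
        return 1.0  # both codings use a single identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical codes for ten words: 9 of 10 agree, chance agreement is 0.5.
first_pass = ["CIU"] * 5 + ["non-CIU"] * 5
second_pass = ["CIU"] * 4 + ["non-CIU"] * 6
print(round(cohens_kappa(first_pass, second_pass), 2))  # 0.8
```

In this toy case observed agreement is 0.90 and chance agreement is 0.50, giving kappa = 0.80.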

Behavioral Measures-Results

A series of t-tests revealed that there was not a statistically significant difference between the groups on behavioral measures at pre- or post-treatment. Clinically significant changes for aphasia severity, spoken discourse, as well as expressive modality units, were determined by calculating Cohen’s d (Cohen, 1992). These findings are summarized below and in Table 2.

Table 2.

Cohen’s d effect sizes comparing the pre- to post-treatment mean change between groups on spoken discourse measures.

| Retell Condition | Measure | AAC (n = 6) Mean Change, M(SD) | Usual Care (n = 6) Mean Change, M(SD) | Effect Size<sup>a</sup>, Cohen’s d |
|---|---|---|---|---|
| | WAB-R AQ<sup>b</sup> | 3.20(5.75) | 1.83(4.10) | 0.27 |
| Retell with the AAC device | Spoken Discourse | | | |
| | %Counted Words | 7.27(8.50) | 1.58(4.58) | 0.83 |
| | %CIUs<sup>c</sup> | 2.48(11.72) | −4.69(5.49) | 0.78 |
| | CIUs/Minute | −1.53(6.88) | 0.00(10.62) | −0.17 |
| | %Mazed Words<sup>d</sup> | −0.70(7.13) | 1.09(4.04) | −0.31 |
| | %T-Units<sup>e</sup> | 11.13(8.49) | 0.71(10.50) | 1.09 |
| | Expressive Modality Units | | | |
| | %Spoken<sup>e</sup> | −4.64(5.75) | 2.24(10.9) | −0.79 |
| | %Drawn | −3.67(0.92) | −0.21(0.46) | 0.11 |
| | %Gesture | −2.25(6.07) | −3.67(5.32) | −0.25 |
| | %Written | −1.07(5.38) | 0.24(1.04) | −0.34 |
| | %Photograph | 5.16(6.32) | −0.36(6.03) | 0.89 |
| | %Speak Button | 0(0) | 0(0) | NA |
| | %Text Box | 2.87(9.27) | 3.29(10.7) | 0.04 |
| Retell without the AAC device | Spoken Discourse | | | |
| | %Counted Words | −0.17(4.84) | −3.15(10.44) | 0.37 |
| | %CIUs<sup>c</sup> | −1.44(8.70) | −2.32(5.34) | 0.12 |
| | CIUs/Minute | 0.92(2.91) | −2.92(6.40) | 0.72 |
| | %Mazed Words<sup>d</sup> | 0.68(2.11) | 2.19(6.77) | −0.30 |
| | %T-Units<sup>e</sup> | 8.18(7.97) | −0.03(12.92) | 0.77 |
| | Expressive Modality Units | | | |
| | %Spoken<sup>e</sup> | 1.83(8.2) | −0.86(11.18) | 0.20 |
| | %Drawn | −1.00(2.94) | −0.69(1.68) | −0.13 |
| | %Gesture | 5.84(9.80) | −7.49(8.99) | 1.48 |
| | %Written | −5.48(5.81) | 2.77(2.27) | −1.87 |
| | %Photograph | NA | NA | NA |
| | %Speak Button | NA | NA | NA |
| | %Text Box | NA | NA | NA |

Note.

<sup>a</sup> Effect size: small effect = 0.2, medium effect = 0.5, large effect = 0.8.

<sup>b</sup> Western Aphasia Battery-Revised Aphasia Quotient.

<sup>c</sup> Correct information unit.

<sup>d</sup> A decrease in % mazed words is a positive gain.

<sup>e</sup> Spoken expressive modality units refers only to number of utterances spoken.

Aphasia severity

Both groups demonstrated a positive gain, pre- to post-treatment on the AQ score; however, the AAC group trended toward larger improvements than the usual care group. Cohen’s effect size suggests that these differences reflect a small clinical significance (d = 0.27), with the AAC group outperforming the usual care group.
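As a worked illustration of how a between-group effect size of this kind can be derived from the summary values in Table 2, the sketch below computes Cohen's d from each group's mean change and standard deviation. It assumes the pooled standard deviation for two equal-sized groups (n = 6 each); the source does not state which pooling formula was used, although this one reproduces several tabled values. The function names and the use of the benchmarks as lower cutoffs are illustrative choices.

```python
import math


def cohens_d(mean_1: float, sd_1: float, mean_2: float, sd_2: float) -> float:
    """Cohen's d for two equal-sized groups, using the pooled SD."""
    pooled_sd = math.sqrt((sd_1 ** 2 + sd_2 ** 2) / 2)
    return (mean_1 - mean_2) / pooled_sd


def effect_label(d: float) -> str:
    """Label |d| using the benchmarks in the Table 2 note as lower cutoffs."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"


# Mean change M(SD) from Table 2: AAC group first, usual care group second.
d_aq = cohens_d(3.20, 5.75, 1.83, 4.10)          # WAB-R AQ
d_words = cohens_d(7.27, 8.50, 1.58, 4.58)       # %Counted Words, with device
d_tunits = cohens_d(11.13, 8.49, 0.71, 10.50)    # %T-Units, with device

print(round(d_aq, 2), effect_label(d_aq))          # 0.27 small
print(round(d_words, 2), effect_label(d_words))    # 0.83 large
print(round(d_tunits, 2), effect_label(d_tunits))  # 1.09 large
```

For the AQ, the pooled SD is about 4.99 and the difference in mean change is 1.37, which yields d of roughly 0.27.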

Narrative retells with the AAC device (Vmax)

Spoken discourse

For measures of spoken discourse, the results indicate clinically significant findings, favoring the AAC treatment, for an increase in the percentage of counted words (d = 0.83) and CIUs (d = 0.78); a decrease in mazed words (d = −0.31); and an increase in T-Units (d = 1.09). However, the groups showed comparable change in terms of CIUs/minute (d = −0.17).

Expressive modality units

Comparing the mean pre-to-post change between the two groups yielded the following effect sizes for expressive modality units (EMUs). Compared to the AAC group, the usual care group demonstrated an increase in spoken EMUs (d = 0.79). The AAC group increased their use of photograph EMUs compared to the usual care group (d = 0.89). Use of gesture EMUs was more reduced in the usual care group compared to the AAC group (d = −0.25). For written EMUs, a small effect (d = −0.34) indicates that the AAC group reduced their use of writing more than did the usual care group. There were no clinically relevant differences in the pre- to post-treatment changes for drawn (d = 0.11) or text box EMUs (d = 0.04).

Narrative retells without the AAC device (Vmax)

Spoken discourse

Similar to the retell with the device, comparing the changes between groups suggests clinically significant findings, favoring the AAC treatment, for percentage of counted words (d = 0.37), CIUs/minute (d = 0.72), mazed words (d = −0.30), and T-Units (d = 0.77) produced. However, no clinically significant differences emerged for CIUs (d = 0.12).

Expressive modality units

Comparing the mean pre-to-post change between the two groups on expressive modality units resulted in several clinically significant effect sizes. The AAC group demonstrated increased use of spoken (d = 0.20) and gestural EMUs (d = 1.48) compared to the usual care group. The AAC group decreased their use of written EMUs, compared to the usual care group (d = −1.87). No clinically significant effects were noted for drawn EMUs (d = −0.13).

Neuroimaging Measures-Results

Language lateralization index (LI)

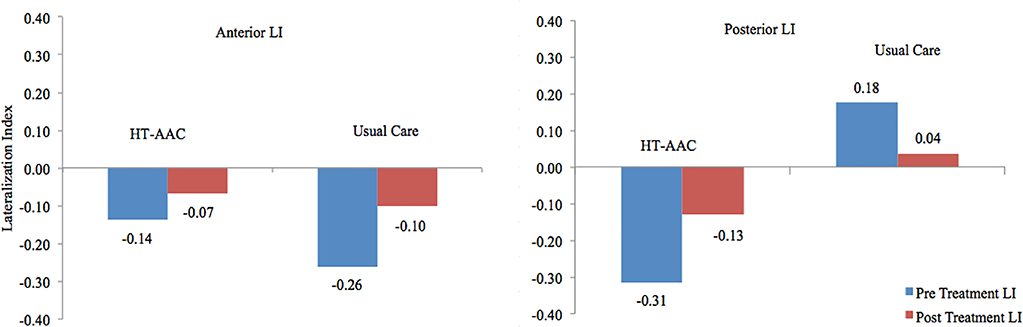

T-tests revealed no significant differences for pre- or post-treatment language lateralization (p ≥ 0.0996) values during the verb generation task (overt verb generation > overt repetition) for the anterior ROI; Figure 5 depicts the LI values, for each group.

Figure 5.

Pre- to post-treatment change in the anterior and posterior language lateralization indices for the AAC and the usual care groups. LI values < −0.1 indicate right-lateralization and LI values > 0.1 indicate left-lateralization, whereas values in the range −0.1 ≤ LI ≤ 0.1 represent bilateral, or symmetric, language distribution (Dietz et al., 2016; Szaflarski et al., 2014; Szaflarski et al., 2006; Wilke & Lidzba, 2007).

Anterior ROI

At baseline for the anterior ROI, the AAC group (M = −0.14; SD = 0.22) and the usual care group (M = −0.26; SD = 0.35) both demonstrated right lateralization; post-treatment, both groups shifted leftward, with LIs in the bilateral range (AAC: M = −0.07; SD = 0.29 and usual care: M = −0.10; SD = 0.44). In the anterior language ROI, Cohen’s d denotes a small effect indicating increased leftward lateralization for the usual care group (d = 0.33), compared to the AAC group.

Posterior ROI

In the posterior ROI, the AAC group exhibited a right-lateralized pattern at baseline (M = −0.31; SD = 0.21). In contrast, the usual care group exhibited the opposite, with pre-treatment posterior LI values indicating left lateralization (M = 0.18; SD = 0.59). Post-treatment, the AAC group exhibited a nearly bilateral LI (M = −0.13; SD = 0.25), while the usual care group demonstrated bilateral LI values (M = 0.04; SD = 0.62). For the posterior ROI, effect sizes revealed a large effect (d = 1.11), indicating more leftward shift of LI values for participants in the AAC group.
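The lateralization categories used in this section follow the ±0.1 cutoffs given in the Figure 5 caption. The sketch below applies those cutoffs to the group means reported above; it only classifies LI values and does not reproduce the LI calculation itself. The function name is hypothetical, and a value of exactly −0.1 is treated as bilateral, consistent with how the usual care group's post-treatment anterior LI is described.

```python
def classify_li(li: float, cutoff: float = 0.1) -> str:
    """Classify a lateralization index using symmetric cutoffs."""
    if li > cutoff:
        return "left"
    if li < -cutoff:
        return "right"
    return "bilateral"


# Group mean LI values reported in the text: (pre-treatment, post-treatment).
group_means = {
    "anterior, AAC": (-0.14, -0.07),
    "anterior, usual care": (-0.26, -0.10),
    "posterior, AAC": (-0.31, -0.13),
    "posterior, usual care": (0.18, 0.04),
}

for label, (pre, post) in group_means.items():
    print(f"{label}: {classify_li(pre)} -> {classify_li(post)}")
```

Note that the AAC group's post-treatment posterior mean (−0.13) still falls just outside the bilateral range under a strict cutoff, which matches its description as "nearly bilateral".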

Secondary Analyses-Results

Correlation of change in lateralization index (LI) with change in aphasia severity

Across both groups, greater change (toward left-lateralization) in the anterior LI was positively correlated with improved performance on the WAB-R AQ (Kertesz, 2006) (N = 12; r = 0.530, p = 0.038).

Responders

Five of the six people in the AAC treatment group (Participants 1, 3, 4, 5, and 6), including those with fluent and nonfluent aphasia, were identified as responders. The usual care group included two responders with fluent aphasia (Participants 7 and 9) (see Tables S1 and S2).

Activation intensity: Visual processing ROIs

The responders in the AAC treatment group (n = 5) exhibited an increase in activation for all three bilateral visual processing ROIs. In contrast, the responders in the usual care group (n = 2) exhibited decreased activation in the inferior occipital gyrus and the occipital poles, bilaterally, with increased activation in the left fusiform gyrus. Effect sizes revealed that the AAC group trended toward increased changes in activation for all three visual ROIs (compared to the usual care group): occipital fusiform gyrus (left: d = 0.78; right: d = 0.34), inferior lateral occipital cortex (left: d = 0.63; right: d = 1.59), and the occipital pole (left: d = 1.92; right: d = −0.96). In comparison, the non-responders in both groups revealed a tendency for decreased activation, bilaterally, across all ROIs. Figure 6 depicts changes in visual ROI activation intensity for responders in each treatment group.

Figure 6.

Mean pre- to post-treatment change in activation intensity (change in mean z-score) on the verb generation task (overt verb generation > overt repetition), for three ROIs (bilateral) as defined by the Harvard-Oxford atlas. Error bars represent standard errors. Cohen’s d reflects the between group effect on change (small effect = 0.2, medium effect = 0.5, large effect = 0.8).

Discussion

This study took the first necessary step in examining the feasibility of employing an AAC treatment as a dual-purpose tool that can simultaneously support language recovery and compensate for aphasia-related deficits in people with chronic post-stroke aphasia (compared to a group receiving usual care). Further, it documented evidence of AAC-induced changes in neural reorganization. These findings are contextualized within the extant literature, with an emphasis on recommended future directions to complete the bridge between the fields of AAC and aphasiology.

Language Recovery: Improvements in Spoken Discourse and Aphasia Severity

Using effect sizes (Cohen, 1992), we were able to show trends toward comparable, and often better, outcomes on the targeted behavioral measures in the AAC group. The increase in spoken EMUs exhibited by the usual care group gives the appearance that they outperformed the AAC group. However, the participants in the AAC group demonstrated higher levels of improvement on measures of informativeness and complexity (see Table 2). Additionally, the secondary analyses revealed that the AAC group (n = 5 out of 6) yielded more responders than did the usual care group (n = 2 out of 6). Also of importance is that the AAC group included people with fluent and nonfluent aphasia who responded to treatment, whereas the two responders in the usual care group were both classified as having anomic aphasia. More specifically, in the AAC group, people with fluent and nonfluent aphasia both produced more counted words (Nicholas & Brookshire, 1993); however, those with nonfluent aphasia tended to increase CIUs (Nicholas & Brookshire, 1993) and T-Units (Hunt, 1970) (smallest syntactically correct utterance). This draws important attention to the fact that when providing usual care, clinicians may have a tendency to focus on word-level treatments with people who have nonfluent aphasia, assuming that sentence-level improvements and/or treatments are beyond reach. Although the current study does not offer evidence that AAC intervention is superior, these data reveal a potential for narrative discourse improvement in people with nonfluent aphasia, even over the course of limited treatment sessions. In contrast, those with fluent aphasia decreased the mazes (Nicholas & Brookshire, 1993) they produced. These changes occurred along with improvements in compensatory use of VMax (i.e., expressive modality units); however, despite being trained to use the “speak button,” not one participant used this feature of the AAC system during the post-treatment retells.
In other words, even those who received AAC training, which explicitly instructed them to use the speak button, chose not to have the device “speak” for them during the story retell testing sessions. It is worthwhile to mention that, in the usual care group, Participants 7 and 9 were two of only three participants who received treatment similar to tasks evaluated pre- and post-treatment. Given that Participants 7 and 9 were also identified as responders, it may be important for clinicians to target narrative-level productions in people with both nonfluent and fluent aphasia, while scaffolding and providing individualized cues, regardless of treatment type (i.e., usual care versus AAC).

These findings suggest that when the tenets of intersystemic reorganization are harnessed, AAC treatment can be employed in a manner that avoids “learned non-use” (Pulvermuller & Berthier, 2008, p. 569) of spoken language. That is, AAC intervention can be implemented in a manner that simultaneously facilitates language recovery across a variety of aphasia types and severity levels and compensates for deficits. Strengthening the argument for AAC-induced language recovery is the fact that both groups demonstrated an overall decrease in aphasia severity on the WAB-R AQ (Kertesz, 2006) following treatment, with the AAC group demonstrating a trend for larger gains. Moreover, Participant 1 (AAC group) evolved from a Global aphasia to a Broca’s aphasia from pre- to post-treatment testing.

Neural Reorganization

In terms of post-treatment neural activation patterns, both groups demonstrated a leftward shift in language lateralization for the anterior ROI, favoring the usual care group; however, both groups were classified as bilateral for language function, post-treatment. Further, there was a strong correlation of positive change in WAB-R AQ scores with a leftward shift in LI values. Since recruitment of perilesional tissue appears to be central to better language recovery (Fridriksson, 2010; Fridriksson et al., 2012; Heiss & Thiel, 2006; Postman-Caucheteux et al., 2009; Szaflarski et al., 2011; Szaflarski et al., 2013; Szaflarski et al., 2014), it appears that relative to usual care, AAC treatment did not interfere with neural reorganization to the left hemisphere dominant language areas, thereby providing preliminary evidence to mitigate concerns about incorporating AAC strategies into aphasia rehabilitation.

The secondary neuroimaging analyses revealed increased activation intensity in visual processing regions, primarily for the AAC group. The ventral visual stream processing ROIs included in our secondary analyses are dedicated to recognizing and discriminating visual information in the extrastriate regions, and recognizing faces, objects, and words in adjacent regions of cortex (Harel et al., 2013a, 2013b; Peissig & Tarr, 2007; Vogel et al., 2014), which has connections in the medial (long-term memory) and inferior (semantics) temporal lobes (Goodale & Milner, 1992; Milner & Goodale, 2008), at least in the left hemisphere (Harel et al., 2013a, 2013b; Peissig & Tarr, 2007; Vogel et al., 2014). Further, there is evidence that the semantic system can be successfully altered by life experiences (Kim, Karunanayaka, Privitera, Holland, & Szaflarski, 2011; McClelland & Rogers, 2003). Moreover, there is documented involvement of temporo-parieto-occipital ROIs during spoken verb production tasks (den Ouden et al., 2009). Taken together, these data suggest that the use of the personally-relevant photographs and associated text programmed into AAC devices in this study strengthened the connections between visual ROIs and long-term memory, which subsequently created coupling between the semantic system and the frontal language regions responsible for expressive language tasks (even when the device was not present; thus, the changes observed during the verb generation task). Given the preliminary nature of the current study, this is largely speculative; additional study is required to document any structural or functional connectivity between these regions.

Limitations & Future Directions

Behavioral aspects of the study

When interpreting the spoken discourse results, it is important to note that during treatment, the AAC group used the narrative included on the device during the retell with VMax, while usual care did not involve narrative training. Because of the inherent differences between the two interventions, we cannot directly attribute the observed changes to the use of an AAC device. However, our overarching goal was not to dismiss traditional approaches and prove AAC treatment to be superior; instead, we aimed to document how a high-technology AAC device could, indeed, be used to improve spoken discourse. Additional work is required to tease apart the contribution of the device to treatment-induced language recovery.

In terms of evaluating the effect of treatment on spoken discourse, it is important that extraneous factors are better controlled for in future studies. For example, recent discussions (Dietz & Boyle, in press-a, in press-b) on the topic recommend that the psychometric properties of the discourse measures are well-established and that a stable baseline is documented prior to beginning treatment. This can be challenging when working with personally relevant narratives; however, it is not insurmountable. For example, in the current study, we identified responders only as those who improved beyond the SEM for that measure (and that group). Another way to address the inevitable variability in personalized narrative tasks is to create template narratives. Similar to the process Cherney and her colleagues (2016) employed to examine the impact of personal relevance on script training, key nouns and verbs can be personalized within a template. This approach will enable researchers to balance the creation of personally-relevant AAC interfaces while maintaining experimental control over the topic, as well as the number of linguistic elements on the interface (i.e., sentences, words, syllables, morphemes, and verbs, etc.). Balancing the content of personally-relevant images will be more challenging. However, since the content of an image can influence what people say, it will be important to track the number of people, places, and actions involved in each participant’s photographs. This would at least allow for a post-hoc analysis of pictured content units and the ability to control for this factor, if necessary, during the statistical analyses.

The secondary analysis identified a subgroup of treatment responders in each group, favoring the AAC treatment, and allowed some discrimination between how people with fluent versus nonfluent aphasia improved. The current study, however, does not provide enough data to determine, a priori, who will respond to treatment, and how. These findings nonetheless highlight the need to examine these factors more closely in a larger sample, and by combining neuroimaging with behavioral and clinical data. The ability to identify treatment responders, a priori, and to adjust the treatment as necessary for subgroups of non-responders has the potential to reduce the cost of healthcare for stroke recovery by implementing the most effective treatment possible.

Neuroimaging aspects of the study

To our knowledge, no studies have examined the effect of an AAC treatment on the neural mechanisms associated with improved linguistic functioning. Given the time and cost associated with developing and norming an fMRI paradigm, it can be useful to employ a task that taps more general language function and has documented sensitivity to a variety of treatment-related changes in linguistic functioning. This may be especially true when examining the effects of a novel intervention. Therefore, we found it prudent to use a well-studied verb-generation task, which has a robust ability to detect pre- to post-treatment improvement (Allendorfer et al., 2012; Dietz et al., 2016; Szaflarski et al., 2013; Szaflarski et al., 2014). However, for treatment-induced language recovery, it is also likely that some changes in functional and structural neural architecture are intervention-specific, thus requiring the use of fMRI paradigms that target the language mechanism central to the treatment. For example, following a course of semantic and phonologic naming treatment, Fridriksson (Fridriksson, 2010; Fridriksson et al., 2006) documented a strong correlation between the change in performance during an fMRI naming task and increased activation in several anterior and posterior regions of the left hemisphere. Thompson and colleagues (2010) documented that following a syntactic intervention, people with chronic aphasia tended to demonstrate a shift toward bilateral activation of temporoparietal regions during a sentence-level auditory verification fMRI task. In these two examples, the researchers examined changes in neural circuitry using fMRI tasks that were linked to the underlying purpose of the intervention (i.e., naming and syntactic treatment). The AAC treatment described in this paper is a discourse-based intervention; that is, the treatment is focused on improving connected speech, rather than naming of single words.
Thus, it is imperative that going forward, we use an fMRI paradigm designed to capture the unique cortical plasticity associated with changes in connected speech. Moreover, the use of discourse-based fMRI tasks, with and without the visual support of an AAC interface will be required to fully understand the neurobiological underpinnings of this particular AAC intervention.

The results revealed that for the posterior language ROI, the AAC group was right lateralized at baseline, while the usual care group was left lateralized. For these reasons, we only interpreted LI changes in terms of the anterior language ROI. However, given the role the right hemisphere plays in verb production when processing photos (e.g., den Ouden et al., 2009), it is possible that the AAC group had a predisposition to success with AAC. Future work, presumably with larger sample sizes, should control for this factor (if feasible) or use it as a covariate when building a predictive model to identify the unique neurobiological mechanism by which AAC-induced language recovery occurs. Moreover, given the metalinguistic elements included in the proposed AAC treatment, it will be important to examine post-treatment changes in frontal-executive networks, or networks associated with self-monitoring and attention, in the future. This aligns with recent work documenting that there is more to language than Broca’s and Wernicke’s areas in that a multiple domain network (Brownsett, Warren, Geranmayeh, Woodhead, Leech, & Wise, 2014; Vallila-Rohter, 2017; Vallila-Rohter & Kiran, 2017) is likely responsible for helping people with aphasia regain language functions. It may be that, in addition to learning to self-cue and link the canonical language areas with visual ROIs, the AAC intervention described in the current study stimulates attention and executive function networks that aid in language recovery.

Finally, investigation of the relationship between site of lesion and behavioral performance (Dietz et al., 2016; Fridriksson, 2010) will be crucial in identifying treatment responders. For example, voxel-based lesion symptom mapping (VLSM) (Tyler, Marslen-Wilson, & Stamatakis, 2005), a technique that allows examination of the relationship between site of lesion and behavioral performance (i.e., WAB-R AQ (Kertesz, 2006), counted words, CIUs, etc.), can help predict who will respond to this type of treatment and what brain-related changes to expect. An examination of the differences on these measures between responders and non-responders will help delineate the mechanism (i.e., multimodal domain network) underlying neural reorganization associated with successful AAC treatment. Further, these findings underscore the importance of reconsidering what constitutes the language system (Dick & Tremblay, 2012; Martino et al., 2013; Tremblay & Dick, 2016), thus necessitating the use of functional and structural connectivity to identify the structures that support language recovery.

Conclusion

Currently, it is not an accepted practice to use high-technology AAC devices as a viable treatment to induce language recovery (Weissling & Prentice, 2010). However, in this feasibility study we achieved an important first step toward learning how to use AAC as a dual-purpose treatment that simultaneously stimulates language recovery and increases communicative function in people with chronic aphasia, and we documented potential areas of associated neural reorganization. We implemented a discourse-based AAC treatment in a novel manner, one that sought to facilitate language recovery rather than to solely compensate for post-stroke aphasia deficits. We also aimed to understand, at a basic level, the neurobiological mechanism of AAC-induced language recovery changes. The current project demonstrated that participants could be recruited for a study combining AAC intervention and neuroimaging and that treatment group randomization was accepted by all participants. Further, we were able to achieve high levels of treatment fidelity, coding reliability on the targeted discourse measures, and procedural integrity. Although not definitive, these results suggest that AAC treatment should be considered a viable treatment to support language recovery for people who have post-stroke aphasia.

Supplementary Material

Acknowledgements

We appreciate the participants for their time and effort; because of them we have a better understanding of how augmentative and alternative communication (AAC) interventions can be used to stimulate post-stroke language recovery and cortical plasticity. We thank the MRI technologists at CCHMC as well as Terri Hollenkamp and Victoria (Tory) McKenna (of Rehab Resources, Inc.), Joe Collier, Devan Macke, Alexandra (Lexi) Perrault, Sarah Thaxton, Leah Baccus, Milon Volk, and Narae Hyun, for their enduring patience and assistance collecting fMRI data, providing treatment, and processing the large amounts of data, respectively. The CCTST also provided data management via Research Electronic Data Capture (REDCap) (UL1-RR026314), statistical resources, and mentoring for the lead author. We are grateful for the training data for FIRST, particularly to David Kennedy at the CMA, and also to: Christian Haselgrove, Centre for Morphometric Analysis, Harvard; Bruce Fischl, Martinos Center for Biomedical Imaging, MGH; Janis Breeze and Jean Frazier, Child and Adolescent Neuropsychiatric Research Program, Cambridge Health Alliance; Larry Seidman and Jill Goldstein, Department of Psychiatry of Harvard Medical School; Barry Kosofsky, Weill Cornell Medical Center.

Funding Details

The project described was supported by an Institutional Training Award (KL2) from the University of Cincinnati (UC) and Cincinnati Children’s Hospital Medical Center for Clinical & Translational Science & Training (CCTST) via the National Center for Research Resources and the National Center for Advancing Translational Sciences, National Institutes of Health, Grants 8 KL2 TR000078–05 and 8 UL1 TR000077–05. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH, UC, CCHMC, or the CCTST.

Footnotes

Disclosure

The authors have no conflict of interest to disclose.

References

- AAC-RERC. (2011). Mobile devices & communication apps: An AAC-RERC white paper. Retrieved from http://aac-rerc.psu.edu/index.php/pages/show/id/46

- Allendorfer JB, Kissela BM, Holland SK, & Szaflarski JP (2012). Different patterns of language activation in post-stroke aphasia are detected by overt and covert versions of the verb generation fMRI task. Med Sci Monit, 18(3), CR135–137.

- Attard MC, Rose ML, & Lanyon L (2012). The comparative effects of Multi-Modality Aphasia Therapy and Constraint-Induced Aphasia Therapy-Plus for severe chronic Broca’s aphasia: An in-depth pilot study. Aphasiology, 27(1), 80–111. doi: 10.1080/02687038.2012.725242

- Boyle M (2004). Semantic Feature Analysis Treatment for Anomia in Two Fluent Aphasia Syndromes. American Journal of Speech-Language Pathology, 13(3), 236–249. doi: 10.1044/1058-0360(2004/025)

- Boyle M (2015). Stability of Word-Retrieval Errors With the AphasiaBank Stimuli. American Journal of Speech-Language Pathology, 1–8. doi: 10.1044/2015_AJSLP-14-0152

- Brady MC, Kelly H, Godwin J, Enderby P, & Campbell P (2016). Speech and language therapy for aphasia following stroke (Publication no. 10.1002/14651858.CD000425.pub3) Cochrane Database of Systematic Reviews 2016. (CD000452). Retrieved August 1, 2016, from John Wiley & Sons, Ltd; http://onlinelibrary.wiley.com/doi/10.1002/14651858.CD000425.pub4/full

- Brookshire RH, & Nicholas LE (1994). Speech Sample Size and Test-Retest Stability of Connected Speech Measures for Adults With Aphasia. Journal of Speech, Language, and Hearing Research, 37(2), 399–407. doi: 10.1044/jshr.3702.399

- Brownsett SLE, Warren JE, Geranmayeh F, Woodhead Z, Leech R, & Wise RJS (2014). Cognitive control and its impact on recovery from aphasia stroke. Brain, 137(1), 242–254.

- Catani M, Jones DK, & ffytche DH (2005). Perisylvian language networks of the human brain. Annals of Neurology, 57(1), 8–16. doi: 10.1002/ana.20319

- Cherney LR, Kaye RC, Lee JB, & van Vuuren S (2016). Impact of Personal Relevance on Acquisition and Generalization of Script Training for Aphasia: A Preliminary Analysis. American Journal of Speech-Language Pathology, 24(4). doi: 10.1044/2015_AJSLP-14-0162 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Coelho CA, Sinotte MP, & Duffy JR (2008). Schuell’s stimulation approach to rehabilitation (5th ed.): Wolters Kluwer/Lippincott Williams & Wilkins. [Google Scholar]

- Cohen J (1968). Weighted kappa: nominal scale agreement with provision for scaled disagreement or partial credit. Psychol Bull, 70(4), 213–220. [DOI] [PubMed] [Google Scholar]

- Cohen J (1992). A power primer. Psychological Bulletin, 112(1), 155–159. doi: 10.1037/0033-2909.112.1.155 [DOI] [PubMed] [Google Scholar]

- Collier J, & Dietz A (2014). AAC interface design: The effect on spoken language in people with aphasia. Paper presented at the Clinical Aphasiology Conference, St. Simon Island, Georgia. [Google Scholar]

- Cox RW (2012). AFNI: What a long strange trip it’s been. Neuroimage, 62(2), 743–747. doi: 10.1016/j.neuroimage.2011.08.056 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Crosson B, Moore AB, McGregor KM, Chang YL, Benjamin M, Gopinath K, … White KD (2009). Regional changes in word-production laterality after a naming treatment designed to produce a rightward shift in frontal activity. Brain Lang, 111(2), 73–85. doi: 10.1016/j.bandl.2009.08.001

- Den Ouden DB, Fix SC, Parrish T, & Thompson CK (2009). Argument structure effects in action verb naming in static and dynamic conditions. Journal of Neurolinguistics, 22(2), 196–215.

- Desikan RS, Ségonne F, Fischl B, Quinn BT, Dickerson BC, Blacker D, … Killiany RJ (2006). An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage, 31(3), 12.

- Dick AS, & Tremblay P (2012). Beyond the arcuate fasciculus: consensus and controversy in the connectional anatomy of language. Brain, 135(Pt 12), 3529–3550. doi: 10.1093/brain/aws222

- Dietz A, & Boyle M (in press-a). Discourse measurement in aphasia: Consensus and caveats. Aphasiology.

- Dietz A, & Boyle M (in press-b). Discourse measurement in aphasia: Have we reached the tipping point? Aphasiology.

- Dietz A, McKelvey M, Schmerbach M, Weissling K, & Hux K (2010). Compensation for severe, chronic aphasia using augmentative and alternative communication. In Chabon SS & Cohen ER (Eds.), The Communication Disorders Casebook: Learning by Example. Allyn & Bacon/Merrill.

- Dietz A, Vannest J, Collier J, Maloney T, Altaye M, Szaflarski JP, & Holland SP (2014). AAC revolutionizes aphasia therapy: Changes in cortical plasticity and spoken language. Paper presented at the American Speech-Language-Hearing Association Annual Convention, Orlando, FL.

- Dietz A, Vannest J, Maloney T, Altaye M, Szaflarski JP, & Holland SP (2016). The calculation of language lateralization indices in post-stroke aphasia: A comparison of a standard and a lesion-adjusted formula. Frontiers in Human Neuroscience, 10(493). doi: 10.3389/fnhum.2016.00493

- Dietz A, Weissling K, Griffith J, McKelvey M, & Macke D (2014). The Impact of Interface Design During an Initial High-Technology AAC Experience: A Collective Case Study of People with Aphasia. Augmentative and Alternative Communication, 30(4), 314–328. doi: 10.3109/07434618.2014.966207

- Edmonds LA, Nadeau SE, & Kiran S (2009). Effect of Verb Network Strengthening Treatment (VNeST) on Lexical Retrieval of Content Words in Sentences in Persons with Aphasia. Aphasiology, 23(3), 402–424. doi: 10.1080/02687030802291339

- Elman RJ, Cohen A, & Silverman M (2016). Perceptions of speech-language pathology services provided to persons with aphasia: A caregiver survey. Paper presented at the Clinical Aphasiology Conference, Charlottesville, VA.

- Farias D, Davis C, & Harrington G (2006). Drawing: Its contribution to naming in aphasia. Brain and Language, 97(1), 53–63. doi: 10.1016/j.bandl.2005.07.074

- Fleiss JL, Levin B, & Paik MC (2004). The Measurement of Interrater Agreement. In Statistical Methods for Rates and Proportions (pp. 598–626): John Wiley & Sons, Inc.

- Forkel SJ, Thiebaut de Schotten M, Kawadler JM, Dell’Acqua F, Danek A, & Catani M (2014). The anatomy of fronto-occipital connections from early blunt dissections to contemporary tractography. Cortex, 56(0), 73–84. doi: 10.1016/j.cortex.2012.09.005

- Fox L, & Fried-Oken M (1996). AAC aphasiology: partnership for future research. Augmentative and Alternative Communication, 12(4), 257–271. doi: 10.1080/07434619612331277718

- Fridriksson J (2010). Preservation and modulation of specific left hemisphere regions is vital for treated recovery from anomia in stroke. J Neurosci, 30(35), 11558–11564. doi: 10.1523/JNEUROSCI.2227-10.2010

- Fridriksson J, Morrow-Odom L, Moser D, Fridriksson A, & Baylis G (2006). Neural recruitment associated with anomia treatment in aphasia. Neuroimage, 32(3), 1403–1412. doi: 10.1016/j.neuroimage.2006.04.194

- Fridriksson J, Richardson JD, Fillmore P, & Cai B (2012). Left hemisphere plasticity and aphasia recovery. Neuroimage, 60(2), 854–863. doi: 10.1016/j.neuroimage.2011.12.057

- Garrett K, Beukelman D, & Low-Morrow D (1989). A comprehensive augmentative communication system for an adult with Broca’s aphasia. Augmentative and Alternative Communication, 5(1), 55–61. doi: 10.1080/07434618912331274976

- Garrett K, & Kimelman M (2000). Cognitive-linguistic considerations in the application on conversational behaviors of a person with aphasia. In Beukelman D, Yorkston K, & Reichle J (Eds.), Augmentative and alternative communication for adults with neurogenic and neuromuscular disabilities (pp. 339–374). Baltimore, MD: Brookes.

- Garrett K, & Lasker J (2005). Scanning/Visual Field/Print Size/Attention Screening Task. Retrieved from http://aac.unl.edu/screen/wordscan.pdf

- Godecke E, Armstrong EA, Rai T, Middleton S, Ciccone N, Whitworth A, … Bernhardt J (2016). A randomized controlled trial of very early rehabilitation in speech after stroke. International Journal Of Stroke, 11(5), 586–592. doi: 10.1177/1747493016641116

- Goodale MA, & Milner AD (1992). Separate visual pathways for perception and action. Trends in Neurosciences, 15(1), 20–25. doi: 10.1016/0166-2236(92)90344-8

- Griffith J, Dietz A, & Weissling K (2014). Supporting narrative retells for people with aphasia using augmentative and alternative communication: Photographs or line drawings? Text or no text? American Journal of Speech-Language Pathology, 23(2), S213–S224. doi: 10.1044/2014_AJSLP-13-0089

- Harel A, Kravitz DJ, & Baker CI (2013a). Beyond perceptual expertise: revisiting the neural substrates of expert object recognition. Frontiers in Human Neuroscience, 7, 885. doi: 10.3389/fnhum.2013.00885

- Harel A, Kravitz DJ, & Baker CI (2013b). Deconstructing visual scenes in cortex: Gradients of object and spatial layout information. Cerebral Cortex, 23(4), 947–957. doi: 10.1093/cercor/bhs091

- Heiss WD, & Thiel A (2006). A proposed regional hierarchy in recovery of post-stroke aphasia. Brain Lang, 98(1), 118–123. doi: 10.1016/j.bandl.2006.02.002

- Hersh D (1998). Beyond the ‘plateau’: Discharge dilemmas in chronic aphasia. Aphasiology, 12(3), 207–218.

- Hunt KW (1970). Syntactic maturity of school children and adults. Monographs of the Society for Research in Child Development, 35, 78.

- Hux K, Beuchter M, Wallace S, & Weissling K (2010). Using visual scene displays to create a shared communication space for a person with aphasia. Aphasiology, 24, 643–660.

- Indefrey P, Brown CM, Hellwig F, Amunts K, Herzog H, Seitz RJ, & Hagoort P (2001). A neural correlate of syntactic encoding during speech production. Proceedings of the National Academy of Sciences of the United States of America, 98(10), 5933–5936.

- Kertesz A (2006). Western Aphasia Battery-Revised. San Antonio, TX: Psychological Corporation.

- Kim K, Karunanayaka P, Privitera M, Holland S, & Szaflarski J (2011). Semantic association investigated with functional MRI and independent component analysis. Epilepsy Behav, 20(4), 613–622. doi: 10.1016/j.yebeh.2010.11.010

- Knollman-Porter K (2008). Acquired Apraxia of Speech: A Review. Topics in Stroke Rehabilitation, 15(5), 484–493. doi: 10.1310/tsr1505-484

- Lanyon LE, & Rose ML (2009). Do the hands have it? The facilitation effects of arm and hand gesture on word retrieval in aphasia. Aphasiology, 23(7–8), 809–822. doi: 10.1080/02687030802642044

- Light J, & Drager K (2007). AAC technologies for young children with complex communication needs: State of the science and future research directions. Augmentative and Alternative Communication, 23(3), 204–216. doi: 10.1080/07434610701553635

- Light J, & McNaughton D (2014). Communicative Competence for Individuals who require Augmentative and Alternative Communication: A New Definition for a New Era of Communication? Augmentative and Alternative Communication, 30(1), 1–18. doi: 10.3109/07434618.2014.885080

- Luria A (1972). Traumatic aphasia. The Hague, Netherlands: Mouton.

- Martino J, DeWitt Hamer PC, Berger MS, Lawton MT, Arnold CM, de Lucas EM, & Duffau H (2013). Analysis of the subcomponents and cortical terminations of the perisylvian superior longitudinal fasciculus: a fiber dissection and DTI tractography study. Brain Structure and Function, 218(1), 105–121. doi: 10.1007/s00429-012-0386-5

- Mentis M, & Prutting CA (1993). Analysis of topic as illustrated in a head-injured and a normal adult. Journal of Speech and Hearing Research, 34, 583–595.

- McClelland JL, & Rogers TT (2003). The parallel distributed processing approach to semantic cognition. Nat Rev Neurosci, 4(4), 310–322. doi: 10.1038/nrn1076

- Miller JF, Andriacchi K, & Nockerts A (2011). Assessing language production using SALT software: A clinician’s guide to language sample analysis. SALT Software LLC; Middleton, Wisconsin.

- Milner AD, & Goodale MA (2008). Two visual systems re-viewed. Neuropsychologia, 46(3), 774–785. doi: 10.1016/j.neuropsychologia.2007.10.005

- Nicholas LE, & Brookshire RH (1993). A system for quantifying the informativeness and efficiency of connected speech of adults with aphasia. Journal of Speech and Hearing Research, 36, 338–350.

- Oldfield RC (1971). The assessment and analysis of handedness: The Edinburgh Inventory. Neuropsychologia, 9, 97–113.

- Peissig JJ, & Tarr MJ (2007). Visual object recognition: do we know more now than we did 20 years ago? Annu Rev Psychol, 58, 75–96. doi: 10.1146/annurev.psych.58.102904.190114

- Postman-Caucheteux WA, Birn RM, Pursley RH, Butman JA, Solomon JM, Picchioni D, … Braun AR (2009). Single-trial fMRI Shows Contralesional Activity Linked to Overt Naming Errors in Chronic Aphasic Patients. Journal of Cognitive Neuroscience, 22(6), 1299–1318. doi: 10.1162/jocn.2009.21261

- Pulvermuller F, & Berthier M (2008). Aphasia therapy on a neuroscience basis. Aphasiology, 22(6), 563–599. doi: 10.1080/02687030701612213

- Raymer AM, Singletary F, Rodriguez AMY, Ciampitti M, Heilman KM, & Rothi LJG (2006). Effects of gesture+verbal treatment for noun and verb retrieval in aphasia. Journal of the International Neuropsychological Society, 12(6), 867–882. doi: 10.1017/S1355617706061042

- Rose ML (2013). Releasing the Constraints on Aphasia Therapy: The Positive Impact of Gesture and Multimodality Treatments. American Journal of Speech-Language Pathology, 22(2), S227–S239. doi: 10.1044/1058-0360(2012/12-0091)

- Rose ML (2016). Releasing the Constraints on Aphasia Therapy: The Positive Impact of Gesture and Multimodality Treatments. American Journal of Speech-Language Pathology, 22(2). doi: 10.1044/1058-0360(2012/12-0091)

- Rose ML, Attard MC, Mok Z, Lanyon LE, & Foster AM (2013). Multi-modality aphasia therapy is as efficacious as a constraint-induced aphasia therapy for chronic aphasia: A phase 1 study. Aphasiology, 27(8), 938–971. doi: 10.1080/02687038.2013.810329

- Rose ML, & Sussmilch G (2008). The effects of semantic and gesture treatments on verb retrieval and verb use in aphasia. Aphasiology, 22(7–8), 691–706. doi: 10.1080/02687030701800800

- Rubow RT, Rosenbek J, Collins M, & Longstreth D (1982). Vibrotactile stimulation for intersystemic reorganization in the treatment of apraxia of speech. Archives of Physical Medicine, 63(4), 150–153.

- Rutten G, & Ramsey NF (2016). The Role of Functional Magnetic Resonance Imaging in Brain Surgery. Neurosurgical Focus, 28(2).

- Saur D, Lange R, Baumgaertner A, Schraknepper V, Willmes K, Rijntjes M, & Weiller C (2006). Dynamics of language reorganization after stroke. Brain, 129(Pt 6), 1371–1384.

- Saur D, Ronneberger O, Kummerer D, Mader I, Weiller C, & Kloppel S (2010). Early functional magnetic resonance imaging activations predict language outcome after stroke. Brain, 133(Pt 4), 1252–1264. doi: 10.1093/brain/awq021

- Schmithorst VJ, & Holland SK (2004). Event-related fMRI technique for auditory processing with hemodynamics unrelated to acoustic gradient noise. Magn Reson Med, 51(2), 399–402. doi: 10.1002/mrm.10706