Abstract

An emerging theoretical framework suggests that neural functions associated with stereotyping and prejudice are associated with frontal lobe networks. Using a novel neuroimaging technique, functional near-infrared spectroscopy (fNIRS), during a face-to-face live communication paradigm, we explore an extension of this model to include live dynamic interactions. Neural activations were compared for dyads of similar and dissimilar socioeconomic backgrounds. The socioeconomic status of each participant was based on education and income levels. Both groups of dyads engaged in pro-social dialectic discourse during acquisition of hemodynamic signals. Post-scan questionnaires confirmed increased anxiety and effort for high-disparity dyads. Consistent with the frontal lobe hypothesis, left dorsolateral pre-frontal cortex (DLPFC), frontopolar area and pars triangularis were more active during speech dialogue in high than in low-disparity groups. Further, frontal lobe signals were more synchronous across brains for high- than low-disparity dyads. Convergence of these behavioral, neuroimaging and neural coupling findings associate left frontal lobe processes with natural pro-social dialogue under ‘out-group’ conditions and advance both theoretical and technical approaches for further investigation.

Keywords: socioeconomic disparity, two-person neuroscience, fNIRS, cross-brain neural coherence, frontal lobe mechanisms

Introduction

Characterization of dyadic behavior is an emerging frontier in social neuroscience. However, despite accelerating interest in dyadic behavior and its relevance to understanding pro-social interactions, empirical approaches and theoretical frameworks for dyadic interactions have not advanced accordingly. This general knowledge gap is partially due to experimental limitations related to imaging two individuals during natural live and interactive exchanges. Here, this investigational obstacle is addressed with novel hyperscanning technology applied within the specific context of live interactions between dyads that differ with respect to social and economic status. In socially diverse populations, pro-social communication between individuals from different socioeconomic backgrounds is common. In societies with diverse demographics and egalitarian values, ordinary encounters with pro-social and transactional intent commonly require regulation of prejudices and stereotypes as well as well-tuned communication skills. These challenges are related to diverse ethnic, religious, gender, occupational and socioeconomic identities of individuals and are an ever-present hallmark of societal norms in daily interpersonal interactions. Although socioeconomic differences are known to influence complex social behaviors, the neurobiology associated with live interpersonal interactions between humans with socioeconomic disparities is not well understood. Here we approach this question from the point of view of the interacting dyad rather than the individual alone.

Socioeconomic status (SES) is a well-known category of social stratification that impacts attitudes and communication styles (Vaughan, 1995; McLeod and Owens, 2004) and a known factor associated with health and well-being (Sapolsky, 2005). In-group/out-group dynamics are associated with tensions resulting from bias favoring people within the same group (‘in-group’) relative to those within a different group (‘out-group’) (Tajfel, 1982; Levin et al., 2003). Although ecologically valid investigations of in-group/out-group dynamics are rare, intergroup interactions employing arbitrary groups confirm in- vs out-group social effects (Turner, 1978; Brewer, 1979; Lemyre and Smith, 1985; Otten and Wentura, 1999; Dunham et al., 2011). Neural modulation in social systems has been reported when participants interacted with out-group members prior to functional magnetic resonance imaging (fMRI) scans (Richeson et al., 2003) or viewed faces of in-group vs out-group members during scanning (Van Bavel et al., 2008). Although distinctions related to perceived class, similarity and diversity may be detected in ordinary interpersonal interactions, they are not necessarily expressed. The importance of pro-social interactions in socially diverse environments places a high priority on understanding their neurobiological and psychiatric underpinnings.

An overarching goal of this investigation is to understand the mechanisms that are naturally engaged during effective communication between individuals with different socioeconomic identities. Here, we compare the neural correlates that underlie live and spontaneous interactions between dyads that are either homogeneous (low-disparity) or heterogeneous (high-disparity) with respect to SES. In the context of the dialectical misattunement hypothesis (Bolis et al., 2017) interactions between homogeneous dyads are expected to ‘appear smoother’ than interactions between heterogeneous dyads. Other emerging models are specifically related to bias and social cues that signal diversity. One such model proposes that complex forms of pre-potent responses related to prejudice and stereotyping involve frontal neural systems that have a role in detection of biases as well as activating self-regulating behaviors to prevent bias expression (Amodio, 2014). This framework predicts that neural responses will be evident in frontal areas during non-confrontational conversations between high- and low-disparity dyads.

Two-brain (hyperscanning) investigations of real-time dynamic communication are enabled by advances in neuroimaging that acquire hemodynamic signals using functional near-infrared spectroscopy (fNIRS) during natural dyadic interactions. fNIRS is a developing technique that provides non-invasive, minimal risk, localized measurements of task-related hemodynamic brain activity. Because detectors are head-mounted and relatively insensitive to motion artifacts, superficial cortical activity can be monitored in interactive two-person settings. Hemodynamic signals associated with changes in concentrations of oxyhemoglobin (OxyHb) and deoxyhemoglobin (deOxyHb) (Villringer and Chance, 1997) are detected by differential absorption of light sensitive to oxygen levels. Both signals serve as proxies for neural activity and are similar to variations in blood-oxygen levels effected by deOxyHb in fMRI (Boas et al., 2004, 2014; Ferrari and Quaresima, 2012). Conventional functional neuroimaging methods optimized to investigate neural operations in single human brains do not interrogate systems engaged during live, spontaneous social interactions due, primarily, to confinement and isolation of single participants.

Advantages of dual-brain imaging include examination of neural coupling processes that underlie cross-brain interactions (Hasson et al., 2004; Hasson and Frith, 2016; Pinti et al., 2018, 2020). Synchrony between signals originating from two brains has been assumed to reflect coupled dynamics and proposed as a biomarker for sharing socially relevant information (Hasson et al., 2004; Hasson and Frith, 2016). Observations of neural coupling during interactive tasks support an emerging theoretical framework of dynamic cross-brain processes (Cui et al., 2012; Konvalinka and Roepstorff, 2012; Schilbach et al., 2013; Scholkmann et al., 2013a; Saito et al., 2010; Tanabe et al., 2012; Koike et al., 2016; Hirsch et al., 2017, 2018; Piva et al., 2017). Aims for this two-person neuroimaging (hyperscanning) study include exploring neural coupling during face-to-face verbal communication in dyads with high socioeconomic disparity, with the goal of advancing investigational techniques that inform processes of social interaction and models of psychiatric conditions. Given the natural affinity of humans to associate with others, understanding the neural underpinnings of this social behavior is a high priority. Development of imaging techniques, paradigms, and computational approaches for natural, dynamic interactions advances opportunities to investigate these relatively unexplored ‘online’ processes (Schilbach, 2010, 2014; Schilbach et al., 2013; Redcay and Schilbach, 2019). Here, we present an approach toward the ‘end goal’ of ‘observing the interactors’ (Bolis et al., 2017), which enables objective descriptions of neural processes during dyadic social interactions.

Materials and methods

Participants

Hemodynamic signals were acquired simultaneously on paired individuals (dyads) during structured turns of active speaking and listening. Seventy-eight healthy adults (39 pairs, 32 ± 12.8 years of age, 38% female, 95% right handed (Oldfield, 1971)) participated in the experiment. Recruitment of subjects focused on individuals from a wide range of socioeconomic backgrounds within both the ‘on-campus’ and university-affiliated population and the local ‘off-campus’ population of New Haven, CT. Invitations to participate were distributed throughout the entire metropolitan and surrounding areas. Participants were informed that the experiment was aimed at understanding the neural underpinnings of interpersonal communication, and provided informed consent prior to the investigation in accordance with the Yale University Human Investigation guidelines. Dyads were assembled in order of recruitment, and participants were either strangers prior to the experiment or casually acquainted as classmates. None were intimate partners or self-identified friends. No individual participated in more than one dyad. Participants were naïve with respect to the SES of their partner and to any experimental goals beyond the investigation of neural mechanisms for verbal communication. Any social signifiers were detected by natural and undirected processes.

The assignment of socioeconomic group (see below) occurred following the completion of each experiment and was based on confidential information supplied by the participants at the end of each session. Neither dyads nor investigators were aware of group membership at the time of the experiment because it was not determined. Any social cue that conveyed social status information between dyads was naturally communicated and was not under experimental control or manipulation. The paradigm was intended to simulate a social situation similar to that, for example, where strangers riding a city bus might happen to sit next to each other and begin a conversation or strangers standing next to each other in a queue for movie tickets begin a conversation.

Social classification

Social classifications of dyads were based on self-report information obtained at the end of the experiment, including biographical and socioeconomic information. Using this information, dyads were classified as high or low socioeconomic disparity based on two factors: highest level of education and yearly parental (household) income. Each individual was given a score based on these details. Completion of some or all of high school was 10 points, some or all of college was 20 points, and some or all of graduate school was 30 points. Parental (household) annual income below $50 000 was 10 points, between $50 000 and $100 000 was 20 points, between $100 000 and $150 000 was 30 points, and above $150 000 was 40 points. The dyad disparity score was the difference between the sum of these points. The range of disparity scores across all dyads was 0 to 50. Differences between partners greater than 25 were classified as high disparity and differences below 25 were classified as low disparity. This scoring system was intended to classify dyads into two separate groups based on an ordinal scale. There are no assumptions about equal distances between units. The purpose of the metric was to generate two categories of dyads. Of the 39 pairs, 19 were classified as high disparity and 20 as low disparity.
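The scoring rule described above can be illustrated with a short sketch. It is not the authors' code: the function and category names are hypothetical, and the treatment of incomes that fall exactly on a bracket boundary (e.g. exactly $50 000) is an assumption, since the text does not specify it.

```python
# Illustrative sketch of the dyad disparity score (names are hypothetical).
EDUCATION_POINTS = {"high_school": 10, "college": 20, "graduate": 30}


def income_points(annual_income):
    """Points for parental (household) income.

    Assumption: boundary values are assigned to the bracket shown here;
    the source text does not say how exact boundary values were handled.
    """
    if annual_income < 50_000:
        return 10
    if annual_income < 100_000:
        return 20
    if annual_income <= 150_000:
        return 30
    return 40


def ses_score(education, annual_income):
    """Sum of education points and income points for one participant."""
    return EDUCATION_POINTS[education] + income_points(annual_income)


def dyad_disparity(partner_a, partner_b):
    """Absolute difference between the two partners' summed scores."""
    return abs(ses_score(*partner_a) - ses_score(*partner_b))


def classify(disparity, cutoff=25):
    """Scores above the cutoff are 'high' disparity, otherwise 'low'."""
    return "high" if disparity > cutoff else "low"


# Example: the largest possible difference under this scheme (70 vs 20 points).
example = dyad_disparity(("graduate", 160_000), ("high_school", 40_000))
print(example, classify(example))
```

Because individual scores are multiples of 10, a dyad difference can never equal the cutoff of 25, so the two categories are exhaustive under this reading.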

Although factors such as race and gender are relevant to this investigation, they were intentionally normalized in this design in order to prioritize SES, a variable that is potentially independent of either race or gender. Variables under experimental control included the demographic constellations of the dyads and groups, which were close to equal in this study (Table 1). For example, the average ages within the two groups were approximately equal, i.e. 32 ± 11.1 (high) and 32 ± 14.5 years (low). The gender distribution between the groups was nearly equal. There were 15 females in each group and 23 males in the high group compared with 25 in the low group. Dyad gender types were also carefully matched. For example, there were four F/F dyads in the high-disparity group and three in the low-disparity group. The number of M/M dyads was equal in each group (8), and the mixed gender dyads, F/M, included seven in the high-disparity group and nine in the low-disparity group. Each group included two left-handed participants. Race and ethnicity were also approximately matched. Specifically, there were 12 self-described African American participants in the high-disparity group and 13 in the low-disparity group. There were 10 Caucasian Americans in the high-disparity group and 12 in the low-disparity group. There were six more self-defined Asian Americans in the high-disparity group than in the low-disparity group and five more Latin American participants in the low-disparity group than in the high-disparity group. Overall, the generally balanced demographic comparisons (see Tables 1 and 2) reduce the concern that variables such as race and gender may have contributed differentially to the observed neural differences.

Table 1.

Summary of participant demographics by disparity group

| Demographic summary | High-disparity group (number) | Low-disparity group (number) |
|---|---|---|
| Gender | | |
| Female | 15 | 15 |
| Male | 23 | 25 |
| Race/Ethnicity | | |
| Black/African/African-American | 12 | 13 |
| Asian/Asian-American | 10 | 4 |
| White/Caucasian-American | 10 | 12 |
| Latinx/Hispanic | 1 | 6 |
| Bi-/Multiracial | 4 | 2 |
| Native American | 0 | 1 |
| Other/No answer | 1 | 2 |
| Handedness | | |
| Right | 36 | 38 |
| Left | 2 | 2 |

Table 2.

Group disparity ratings by dyad type

| Dyad type | High disparity: n | High disparity: mean ± s.d. | Low disparity: n | Low disparity: mean ± s.d. |
|---|---|---|---|---|
| Female/Female | 4 | 38 ± 10 | 3 | 10 ± 0 |
| Female/Male | 7 | 31 ± 4 | 9 | 10 ± 5 |
| Male/Male | 8 | 34 ± 5 | 8 | 8 ± 7 |

Disparity ratings represent differences between scores of Partner 1 and Partner 2 in each dyad. Group mean rating and standard deviation (s.d.) for each dyad subtype are presented here.

Experimental design

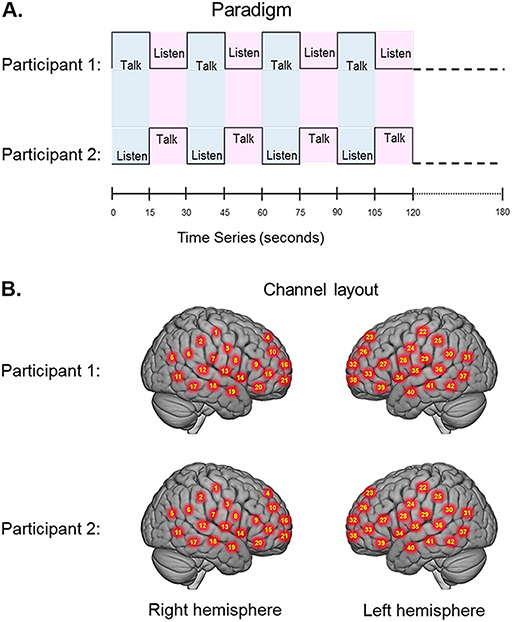

The experimental paradigm was similar to previously reported two-person interaction paradigms (Hirsch et al., 2017, 2018; Piva et al., 2017; Noah et al., 2020). Participants were positioned approximately 140 cm across a table from each other with a full view of their partner’s face. The experiment consisted of a total of four 3 min runs. The time series is shown in Figure 1A. ‘Speaker’ and ‘Listener’ roles switched every 15 s. A separate conversation topic was assigned prior to each run. Two of the four topics were autobiographical, such as ‘What did you do last summer?’, and two were objective, such as ‘How do you bake a cake?’. The order of the biographical and objective topics was randomized for each dyad. Participants were instructed to communicate their views and experiences related to the assigned topics. Discussion topics were selected from a predetermined set of 40 topics in each category. Selections were made prior to each run using a computer algorithm that generated a random number between 1 and 40 corresponding to each topic. The change in speaker/listener roles was indicated by a tone and also by small green and red lights displayed in front of the participants indicating turns for talking or listening, respectively. The first speaker was assigned by the investigator, and subsequent speaker order was alternated between runs. Participants rated ‘How anxious were you during the task?’ and ‘How much effort was required to discuss the topics with your partner?’ immediately after the experiment using a scale from 1 to 9, where 1 indicated the most negative rating and 9 indicated the most positive.

Fig. 1.

(A) Experimental paradigm. Participants alternated between talking and listening to each other every 15 s of each 180 s run (see Hirsch et al., 2018). (B) Right and left hemispheres of rendered brains illustrate average locations (red circles) for the 42 channels per participant identified by number. MNI coordinates were determined by digitizing the locations of the optodes in relation to the 10-20 system based on conventional landmarks.
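The turn-taking structure of a run can be sketched as a simple schedule. This is a minimal illustration under stated assumptions rather than the presentation software used in the study: the participant labels ‘A’ and ‘B’, the function names and the choice to alternate the first speaker on every run are hypothetical readings of the description above.

```python
import random

RUN_DURATION_S = 180   # each run lasts 3 min
TURN_DURATION_S = 15   # speaker and listener roles switch every 15 s
N_TOPICS = 40          # predetermined topics per category


def run_schedule(first_speaker="A"):
    """Return a list of (onset in seconds, speaker) for one 180 s run."""
    other = {"A": "B", "B": "A"}
    schedule = []
    speaker = first_speaker
    for onset in range(0, RUN_DURATION_S, TURN_DURATION_S):
        schedule.append((onset, speaker))
        speaker = other[speaker]
    return schedule


def pick_topic(rng):
    """Draw a random topic index between 1 and 40, inclusive."""
    return rng.randint(1, N_TOPICS)


rng = random.Random(0)  # fixed seed only so that the illustration is repeatable
# Assumption: the first speaker alternates from run to run.
for run_number, first in enumerate(["A", "B", "A", "B"], start=1):
    turns = run_schedule(first)
    print(run_number, pick_topic(rng), turns[:2], len(turns))
```

Under these settings each run contains 12 turns, so each partner speaks for six 15 s epochs and listens for six.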

Signal acquisition

Hemodynamic signals were acquired using a 64-fiber (84-channel) continuous-wave fNIRS system (LABNIRS, Shimadzu Corp., Kyoto, Japan) set up for hyperscanning of two participants. Figure 1B illustrates the spatial distribution of 42 channels over both hemispheres of each participant. Temporal resolution for signal acquisition was 27 ms. In the LABNIRS system, three wavelengths of light (780, 805 and 830 nm) were delivered by each emitter, and each detector measured the absorbance for each of these wavelengths. These wavelengths were selected by the manufacturer for differential absorbance properties related to the oxygen content of blood. The absorbance at each wavelength is converted to corresponding concentration changes for deOxyHb, OxyHb, and for the total combined deOxyHb and OxyHb. The conversion of absorbance measures to concentration has been described previously (Matcher et al., 1995).
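The general idea of converting absorbance changes at three wavelengths into two concentration changes can be sketched as a least-squares problem of the modified Beer-Lambert form. This is a generic illustration and may differ from the conversion used by the instrument or described by Matcher et al. (1995). The extinction matrix below contains placeholder numbers chosen only to make the example solvable; they are not physical coefficients, and the function name is hypothetical.

```python
import numpy as np


def absorbance_to_concentration(delta_absorbance, extinction, path_length=1.0):
    """Least-squares solution of dA = path_length * E @ dC.

    dA holds one absorbance change per wavelength and
    dC = (OxyHb change, deOxyHb change).
    """
    design = path_length * np.asarray(extinction, dtype=float)
    target = np.asarray(delta_absorbance, dtype=float)
    solution, *_ = np.linalg.lstsq(design, target, rcond=None)
    return solution


# PLACEHOLDER extinction matrix (not real coefficients).
# Rows: 780, 805 and 830 nm. Columns: (OxyHb, deOxyHb).
placeholder_E = np.array([[0.7, 1.1],
                          [0.9, 0.9],
                          [1.0, 0.7]])

# Simulate absorbance changes from known concentration changes, then recover them.
true_changes = np.array([0.5, -0.2])
delta_A = placeholder_E @ true_changes
oxy, deoxy = absorbance_to_concentration(delta_A, placeholder_E)
total = oxy + deoxy  # total hemoglobin change is the sum of the two species
print(oxy, deoxy, total)
```

With three wavelengths and two unknowns the system is overdetermined, which is why a least-squares solution is used in this sketch.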

Optode localization

The anatomical locations of optodes (head-mounted detectors and emitters) in standard three-dimensional coordinates were determined for each participant in relation to standard head landmarks including inion; nasion; top center (Cz); and left and right tragi using a Patriot 3D Digitizer (Polhemus, Colchester, VT), and linear transform techniques as previously described (Okamoto and Dan, 2005; Eggebrecht et al., 2012; Ferradal et al., 2014). Specifically, the Montreal Neurological Institute (MNI) coordinates (x, y and z) for the channels were obtained by fitting the digitized locations to a three-dimensional model of a standard brain with the head landmarks as described above using individual head geometry. These conversions are provided using the NIRS-SPM software (Ye et al., 2009) with MATLAB (Mathworks, Natick, MA). For the cross-brain coherence analyses, channels were also grouped into anatomical regions based on shared anatomy. The average number of channels in each region was 1.68 ± 0.70.

Signal processing and global component removal

Baseline drift was removed using wavelet detrending (NIRS-SPM). Channels without a valid signal were identified automatically from the root mean square of the raw data, using the criterion that the signal magnitude was more than 10 times the average signal. Approximately 4% of the channels were excluded prior to subsequent analyses based on this criterion; these exclusions were generally assumed to be due to insufficient optode contact with the scalp. Global systemic effects (e.g. blood pressure, respiration, and blood flow variation) have previously been shown to alter relative blood hemoglobin concentrations (Boas et al., 2004), and these effects are represented in fNIRS signals, raising the possible confound of inadvertently measuring hemodynamic responses that are not due to neurovascular coupling (Tachtsidis and Scholkmann, 2016). Global components were removed using a principal components analysis (PCA) spatial filter (Zhang et al., 2016, 2017) prior to general linear model (GLM) analysis. This technique exploits advantages of distributed optode coverage in order to distinguish signals that originate from local sources (assumed to be specific to the neural events under investigation) by removing signal components due to global factors that originate from systemic cardiovascular functions.
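The two preprocessing steps above can be sketched on simulated data. This is a deliberately simplified stand-in, not the published spatial filter: here the global component is approximated by removing only the first principal component, and the exclusion rule is interpreted as a channel RMS exceeding 10 times the mean RMS across channels. Both choices, as well as the function names and the simulated signals, are assumptions.

```python
import numpy as np


def flag_bad_channels(raw, factor=10.0):
    """raw has shape (n_samples, n_channels).

    Flags channels whose RMS exceeds `factor` times the mean RMS across channels
    (one possible reading of the exclusion criterion).
    """
    rms = np.sqrt(np.mean(raw ** 2, axis=0))
    return rms > factor * rms.mean()


def remove_first_component(data):
    """Remove the first principal component as a crude proxy for a global signal."""
    centered = data - data.mean(axis=0)
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    s_filtered = s.copy()
    s_filtered[0] = 0.0  # zero out the component with the largest variance
    return (u * s_filtered) @ vt


# Simulated data: one global oscillation shared by all channels plus local noise.
rng = np.random.default_rng(0)
n_samples, n_channels = 2000, 42
global_wave = np.sin(np.linspace(0, 40 * np.pi, n_samples))[:, None]
local_noise = 0.1 * rng.standard_normal((n_samples, n_channels))
signals = global_wave + local_noise
signals[:, 5] *= 100  # simulate one faulty, high-magnitude channel

bad = flag_bad_channels(signals)
cleaned = remove_first_component(signals[:, ~bad])
print(int(bad.sum()), cleaned.shape)
```

In this simulation the shared oscillation dominates the first component, so removing it leaves mostly the channel-specific noise. Real data would require the spatial criteria of the published filter to decide which components are global.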

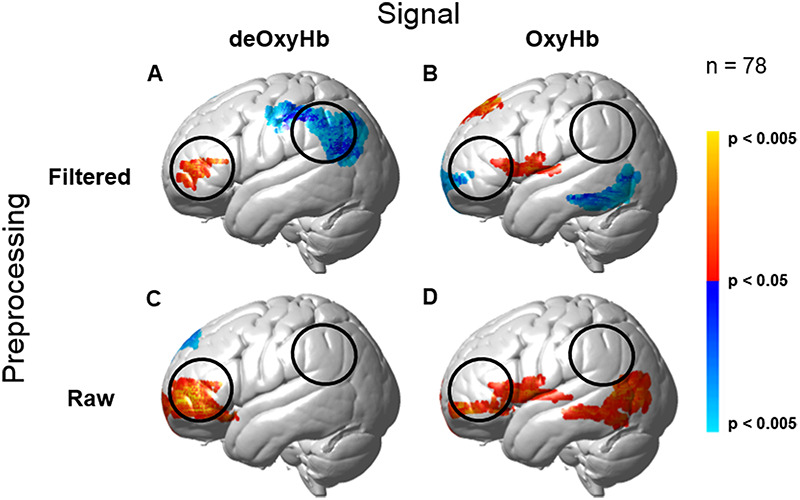

Hemodynamic signals

Both OxyHb and deOxyHb signals acquired by fNIRS provide a hemodynamic proxy of neural activity. However, the OxyHb signal has been shown to be more sensitive to non-neural global components than the deOxyHb signal due to systemic effects directly related to factors such as blood pressure, respiration and blood flow (Kirilina et al., 2012; Tachtsidis and Scholkmann, 2016; Zhang et al., 2016). The deOxyHb signal, on the other hand, is theoretically more closely related to the paramagnetic effects of deOxyHb acquired by fMRI (Ogawa et al., 1990) and is characterized by lower signal-to-noise than the OxyHb signal (Strangman et al., 2002). The choice of the deOxyHb signal for this study was empirically validated by a ‘method of fiducials’ where combination of the entire data set was employed to identify brain regions associated with talking and listening functions that occurred in all conditions. Expected fiducial regions are left hemisphere Broca’s and Wernicke’s areas, respectively, and were similarly observed in prior studies (Hirsch et al., 2018). Comparisons of these fiducial markers were made for both OxyHb and deOxyHb signals that were unprocessed (Raw) and with the global mean removed (Filtered), Figure 2. Circled clusters in the upper left panel document left hemisphere canonical language production (red) and reception (blue) fiducial regions not seen for the OxyHb signals or raw data of the other panels. Panel A of Figure 2 (deOxyHb filtered signal) shows activity associated with both speech production (red) representing [talking > listening] and speech reception (blue) representing [listening > talking] on the left hemisphere in regions that are known for these functions. This replication of known functional neural anatomy is a criterion for signal selection. 
Further support for the decision to select the deOxyHb signal for experiments using actual talking is found in recent reports that speaking during fNIRS studies produces changes in arterial CO2 that alter the OxyHb signal to a greater extent than the deOxyHb signal (Scholkmann et al., 2013b, 2013c). Thus for talking experiments, the deOxyHb signal was shown to be the most accurate representation of underlying neural processes. Similar comparisons of fNIRS studies where participants engaged in active talking have also confirmed the validity of the deOxyHb signals as opposed to the OxyHb signals (Zhang et al., 2017; Hirsch et al., 2018). The anatomical description of these regions of interest (ROIs) for talking [talk > listen] and listening [listen > talk] is presented in Table 3.

Fig. 2.

Comparison of deOxyHb (left column) and OxyHb (right column) signals with global mean removed using a spatial filter (Zhang et al., 2016, 2017) (Filtered, top row) and without global mean removal (Raw, bottom row) for the total group data. Red/yellow indicates [talking > listening], and blue/cyan indicates [listening > talking] with levels of significance indicated by the color bar on the right. The black open circles represent the canonical language ROIs, Speech Production (anterior) and Speech Reception (posterior), expected for talking and listening tasks, respectively. These regions are both observed for the deOxyHb signals following global mean removal shown in panel A, but not for the other signal processing approaches, as illustrated in panels B, C and D. Observation of known fiducial regions for speech production and reception supports the decision to use the deOxyHb signal for this study. Note that the active regions within the open circles (panel A) serve as the ROIs for this study.

Table 3.

Anatomical descriptions of regions of interest (see Panel A, Figure 2)

| Contrast | Contrast threshold | Peak voxel coordinates | t-value | P | df | Anatomical regions in cluster | BA | Probability | n of voxels |
|---|---|---|---|---|---|---|---|---|---|
| [talk > listen] | P = 0.05 | (−46, 44, 10) | 2.49 | 0.007 | 77 | Pars Triangularis | 45 | 0.56 | 161 |
| | | | | | | Dorsolateral Pre-frontal Cortex | 46 | 0.44 | |
| [listen > talk] | P = 0.05 | (−58, −56, 24) | −3.38 | 0.001 | 77 | Supramarginal Gyrus | 40 | 0.40 | 1019 |
| | | | | | | Angular Gyrus | 39 | 0.33 | |
| | | | | | | Superior Temporal Gyrus | 22 | 0.27 | |

Coordinates are based on the MNI system and (−) indicates left hemisphere.

BA, Brodmann’s area.

Statistical analysis

Contrast effects were based on comparisons of talking vs listening and determined by a voxel-wise approach as conventionally applied to fMRI and adapted for fNIRS (see Hirsch et al., 2018, for further details of this approach). Reported findings were corrected by the False Discovery Rate (FDR) method at a threshold of P < 0.05. The 42-channel fNIRS dataset for each subject was reshaped into three-dimensional volume images for the first-level GLM analysis using SPM8, where the beta values were normalized to standard MNI space using linear interpolation. All included voxels were within 1.8 cm from the brain surface. The computational mask consisted of 3753 2×2×2 mm voxels that ‘tiled’ the shell region covered by the 42 channels. In accordance with this technique, the anatomical variation across subjects was used to generate the distributed response maps. The results are presented on a normalized brain using images rendered on a standardized MNI template.

Contrast findings related to high- and low-disparity dyads are also reported for ROIs associated with talking and listening, which were empirically determined as described above (see Figure 2). These ROIs were consistent with expectations of canonical models of human language systems (Gabrieli et al., 1998; Binder et al., 2000; Price, 2012; Hagoort, 2014; Poeppel, 2014), including left hemisphere dorsolateral pre-frontal cortex (DLPFC) and pars triangularis, part of Broca’s area (generally associated with functions of speech production), along with left hemisphere superior temporal gyrus (STG), angular gyrus (AG) and supramarginal gyrus (SMG), part of Wernicke’s area (generally associated with speech comprehension). Similar methods were employed in a previous investigation of talking and listening where the task was a simple object naming and description with and without dyadic interactions (Hirsch et al., 2018). Frontal lobe regions in that study, as expected, were centered on regions known specifically for object naming tasks. Statistical comparisons of signal amplitudes for high-disparity vs low-disparity groups were based on these ROIs using a two-tailed, independent samples t-test with a decision rule to reject the null hypothesis at P < 0.05 (FDR-corrected). Additionally, the socioeconomic disparity values from each dyad were used as a GLM regressor to determine the neural correlates of the continuously varying rating. Both approaches yielded similar results.
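For readers unfamiliar with FDR correction, the decision rule can be sketched with the Benjamini-Hochberg step-up procedure. This is an assumption about the general form of the correction and is not necessarily identical to the SPM8 implementation used here. The function name and the P values in the example are illustrative and are not results from this study.

```python
import numpy as np


def benjamini_hochberg(p_values, q=0.05):
    """Return a boolean array marking which tests are rejected at FDR level q."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)                       # indices that sort P ascending
    thresholds = q * np.arange(1, m + 1) / m    # rank-dependent cutoffs
    passing = p[order] <= thresholds
    reject = np.zeros(m, dtype=bool)
    if passing.any():
        largest_rank = np.nonzero(passing)[0].max()
        # Step-up rule: reject every test up to the largest passing rank.
        reject[order[:largest_rank + 1]] = True
    return reject


# Illustrative P values only (not data from the study).
illustrative_p = [0.001, 0.008, 0.039, 0.041, 0.042,
                  0.060, 0.074, 0.205, 0.212, 0.216]
print(benjamini_hochberg(illustrative_p))
```

The step-up rule means that a test can be rejected even if its own P value exceeds its rank threshold, provided a larger P value at a higher rank passes.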

Neural coupling

Cross-brain neural coupling is defined as the correlation between the temporal oscillations of the hemodynamic signals of two brains. Coupling can be computed in two ways: (i) the task effect is included (Cui et al., 2011; Jiang et al., 2015) or (ii) the task effect is removed as is conventional for PPI analyses (Friston, 1994; Friston et al., 2003). Removal of the task effect is done by convolving the acquired signals with the hemodynamic response function over the course of the experimental time series. This yields a modeled account of signal components that are (i) responsive to the task (the task effect) and (ii) residual, i.e. not responsive to the task. Here, we remove the task effect in order to interrogate spontaneous neural processes that are not specifically task driven, a standard procedure for measures of functional connectivity between remotely located brain regions (Friston, 1994). Regions for the neural coupling analysis are based on optode clusters that segregated the full coverage of the head into 12 specific regions, thereby reducing the probability of a false positive. Although no constraints are placed on this analysis with respect to the regions, the a priori hypothesis is that coupling between cross-brain regions would be consistent with contrast effects. In this dyadic application, wavelet components from regional residual signals (Torrence and Compo, 1998) are correlated across the two partners. This ‘coherence’ provides a measure of cross-brain synchrony, i.e. time-locked neural events (Hasson and Frith, 2016) between specific regional pairs, and was employed here to compare the neural coupling between dyads of high or low SES disparity. The same analysis was also applied to shuffled (random) pairs of dyads as a control for possible effects of common processes not representative of the dyad-specific social interactions.
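The logic of residual-based coupling with a shuffled-partner control can be sketched on simulated signals. Several simplifications are assumed here and should not be read as the study's pipeline: the task effect is modeled as the 15 s turn-taking boxcar convolved with a single-gamma response function, a plain Pearson correlation replaces the wavelet coherence used in the study, and all names, sampling settings and simulated signals are hypothetical.

```python
import numpy as np


def hrf(dt, duration=30.0):
    """Single-gamma response shape peaking near 5 s (illustrative only)."""
    t = np.arange(0, duration, dt)
    h = t ** 5 * np.exp(-t)
    return h / h.sum()


def task_regressor(n_samples, dt, turn_s=15.0):
    """Boxcar for alternating 15 s turns, convolved with the response function."""
    t = np.arange(n_samples) * dt
    boxcar = ((t // turn_s) % 2 == 0).astype(float)
    return np.convolve(boxcar, hrf(dt))[:n_samples]


def residual(signal, regressor):
    """Remove the fitted task effect (plus a constant) by least squares."""
    design = np.column_stack([regressor, np.ones_like(regressor)])
    beta, *_ = np.linalg.lstsq(design, signal, rcond=None)
    return signal - design @ beta


def coupling(sig_a, sig_b, regressor):
    """Pearson correlation between the task-free residuals of two signals."""
    return np.corrcoef(residual(sig_a, regressor), residual(sig_b, regressor))[0, 1]


# Simulated 180 s run sampled every 0.5 s (sampling interval chosen for brevity).
rng = np.random.default_rng(1)
dt, n = 0.5, 360
t = np.arange(n) * dt
reg = task_regressor(n, dt)
shared = np.sin(2 * np.pi * t / 25.0)  # slow fluctuation shared by true partners

partner_a = 2.0 * reg + shared + 0.3 * rng.standard_normal(n)
partner_b = -1.0 * reg + shared + 0.3 * rng.standard_normal(n)
shuffled_partner = 2.0 * reg + 0.3 * rng.standard_normal(n)  # no shared component

print(coupling(partner_a, partner_b, reg), coupling(partner_a, shuffled_partner, reg))
```

In this toy case the true pair remains correlated after the task effect is removed, whereas the shuffled pair does not, which is the pattern a dyad-specific effect would be expected to produce.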

Results

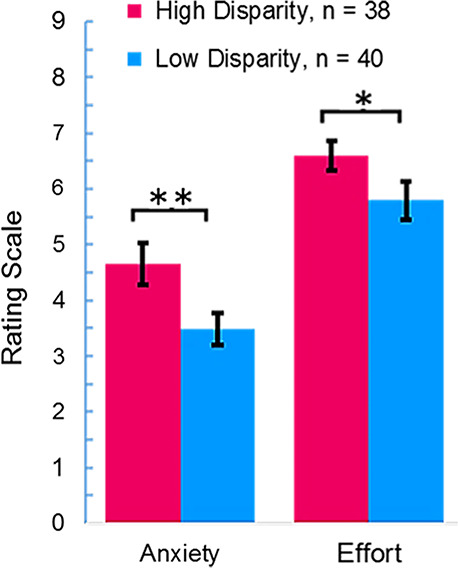

Behavior

Mean ratings for the self-report questions related to anxiety and effort following the experiment are shown in Figure 3 for the high (magenta) and low (blue) disparity groups. Two-tailed paired t-tests revealed that anxiety ratings for the high-disparity group were elevated relative to the low-disparity group (P = 0.01), and the high-disparity group reported increased effort during the task (P = 0.04). These findings document a behavioral effect between the two conditions. Comparison of the number of words spoken in each condition for the two dyad types revealed no evidence of a difference between conditions. Audio recordings of the conversations were reviewed following the experiment and confirmed that participants engaged in positive and pro-social conversations. In no case did the tone of the conversations become confrontational or argumentative. However, by its nature, a natural communication paradigm cannot control the content and experience for each dyad, and analysis of these additional variables is beyond the scope of this exploratory investigation.

Fig. 3.

Mean ratings on post-task survey questions for high-disparity (red) and low-disparity (blue) groups. Rating scale is shown on the y-axis for questions related to anxiety and effort. The high-disparity group reported higher anxiety (**P = 0.01) and increased effort (*P = 0.04) than the low-disparity group.

Contrast effects

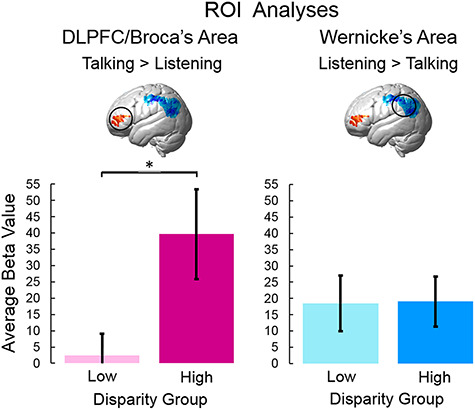

ROI contrasts.

Statistical comparisons of ROIs that were determined empirically for talking and listening (shown in Figure 2 and Table 3) are presented for the high- and low-disparity groups (Figure 4). Group averaged signal strength (beta value) is indicated on the y-axis. Light blue and light pink bars represent the average signal for the low-disparity group, and dark blue and dark red bars represent the average signal for the high-disparity group. The average high-disparity signal exceeded the average low-disparity signal (P = 0.023, t = 2.30, degrees of freedom [df] = 77) for talking in Broca’s area (left panel), and there was no evidence for a difference between the two groups during listening in the Wernicke’s ROI (right panel). These findings document a neural effect in left frontal systems often associated with speech production and control rather than speech reception and interpretation for the high-disparity dyads.

Fig. 4.

Statistical comparisons of average group signal amplitudes for low- and high-disparity groups were calculated for two ROIs: DLPFC/Broca’s area (left panel) and Wernicke’s area (right panel). Greater activation during talking is represented in red (left inset brain) and was associated with DLPFC (BA46) and the Broca’s area ROI (pars triangularis, BA45). Heightened activity during listening is represented in blue (right inset brain) and was found in the Wernicke’s area ROI (supramarginal gyrus, BA40; angular gyrus, BA39 and superior temporal gyrus, BA22). Beta values (signal strength) are indicated on the y-axis, and colored bars indicate average low-disparity (pink and light blue) and high-disparity (magenta and dark blue) ratings (±SEM). For DLPFC/Broca’s ROI, signals were increased for the high-disparity group relative to the low-disparity group (*P = 0.023, left panel). There was no evidence for a difference between the two groups in Wernicke’s ROI (right panel). (deOxyHb signals; n for low-disparity = 40; n for high-disparity = 38).

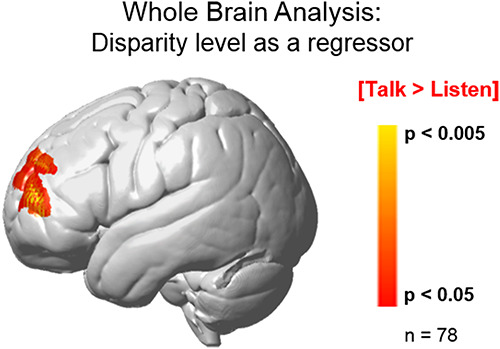

Whole-brain contrasts.

Similar to the ROI approach above, whole-brain contrast comparisons [high disparity > low disparity] also show increased activity during [talking > listening] associated with a left frontal cluster (Figure 5). In this case, a regressor indicating the actual disparity score was input as a continuous predictor of signal strength. This cluster largely overlaps the ROI cluster (see Figures 2A and 4). The peak voxel for this cluster is located in the frontopolar area (BA10) and DLPFC (BA46), P = 0.00007 (P < 0.05, FDR-corrected); peak voxel (−22, 44, 26); df = 77. These whole-brain contrast comparison findings are consistent with the findings of the ROI analyses, and further document a neural effect in left frontal systems. This finding is also consistent with a descriptive approach that showed a correlation of fNIRS signal amplitudes in this region and the disparity scores (r = 0.42).
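As a rough illustration of treating the disparity score as a continuous predictor, the sketch below fits a one-predictor least-squares line and computes the Pearson correlation between a disparity score and signal strength. The function name `fit_line`, the single-predictor simplification and the toy numbers are assumptions made for illustration; the whole-brain GLM used in the study is not reproduced here.

```python
import math


def fit_line(x, y):
    """Least-squares fit of y = intercept + slope * x; returns (slope, intercept, r)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / math.sqrt(sxx * syy)  # Pearson correlation coefficient
    return slope, intercept, r


# Hypothetical dyad disparity scores and beta values (NOT study data).
disparity = [0.0, 1.0, 2.0, 3.0, 4.0]
beta = [0.2, 0.5, 0.4, 0.9, 1.0]
slope, intercept, r = fit_line(disparity, beta)
print(f"slope = {slope:.3f}, intercept = {intercept:.3f}, r = {r:.3f}")
```

A positive slope corresponds to signal strength that increases with disparity, which is the direction of the effect described for the left frontal cluster.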

Fig. 5.

Contrast comparison of the high-disparity > low-disparity groups for the [talking > listening] comparison (red/yellow) using the GLM and the continuously varying dyad disparity score as a regressor reveals activity in the left frontopolar area (BA10). Disparity values for each dyad served as the regressor to isolate neural responses sensitive to socioeconomic status. Yellow/red clusters represent increased signal strength associated with increased disparity. The observed cluster (peak MNI coordinate: −40, 52, 14) includes the frontopolar area (BA10, 70%) and the dorsolateral pre-frontal cortex (BA46, 30%), df = 77, P < 0.0005 (FDR-corrected at P < 0.05). The alternative analysis approach using GLM contrast comparisons of the high-disparity > low-disparity groups reveals similar activity embedded within the cluster determined by the regressor method: df = 77, P = 0.0007 (FDR-corrected at P < 0.05).

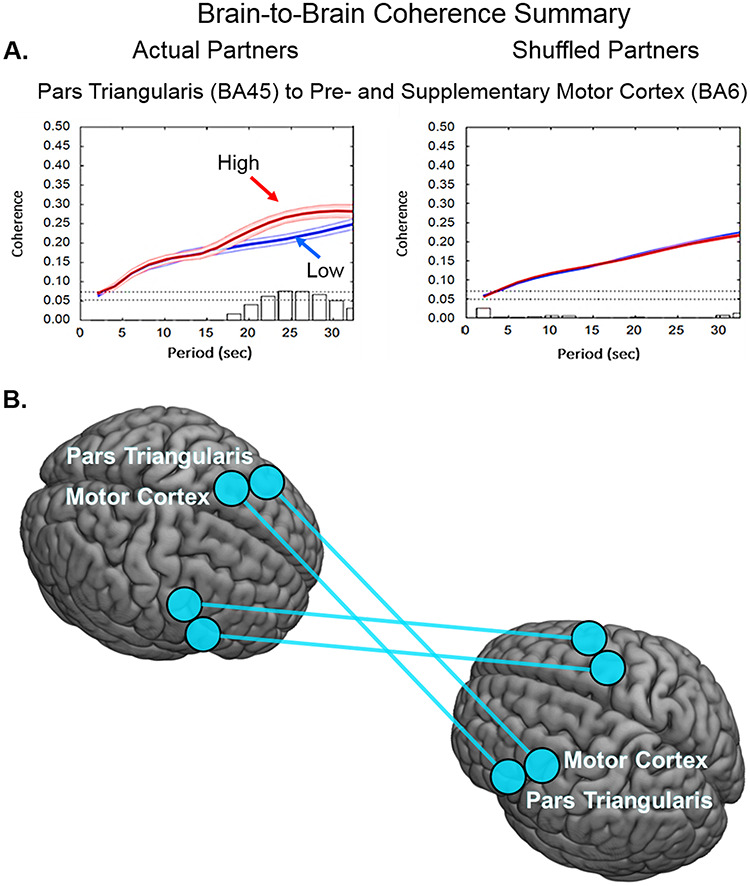

Neural coupling: wavelet analysis.

Comparison of cross-brain coherence between low (blue) and high (red) disparity groups is shown in Figure 6. Coherence (y-axis), the correlation between the simultaneous signals of partners acquired while engaged in the joint tasks of talking and listening, is plotted against signal wavelengths (x-axis) represented as periods (seconds) in Figure 6A. In the case of actual partners (left panel), coherence was greater for the high-disparity group than the low-disparity group during the 22–30 s period for a single pair of cross-brain regions, comprising the pars triangularis (part of Broca’s area) and the pre- and supplementary motor cortex (part of the speech articulation system; P = 0.006). This coherence was not observed when the partners were computationally shuffled (i.e. randomly paired with every participant except the original partner; right panel), consistent with the conclusion that the neural coupling is dyad specific. There were no other regional pairs where coherence for the high-disparity group exceeded the coherence of the low-disparity group. The anatomical regions that increase their coupling during high-disparity situations, pars triangularis and pre- and supplementary motor cortex, are illustrated in Figure 6B.
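The logic of the shuffled-partner control can be sketched as follows. Pearson correlation is used here purely as a stand-in coupling measure in place of the wavelet coherence used in the study, and the sketch assumes that shuffling pairs each participant with every participant outside their own dyad. The function names `pearson` and `actual_vs_shuffled` and the toy signals are illustrative assumptions, not the authors' code or data.

```python
import math
from itertools import combinations


def pearson(a, b):
    """Pearson correlation; a stand-in for wavelet coherence in this sketch."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    sab = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    saa = sum((x - mean_a) ** 2 for x in a)
    sbb = sum((y - mean_b) ** 2 for y in b)
    return sab / math.sqrt(saa * sbb)


def actual_vs_shuffled(dyads, coupling=pearson):
    """Mean coupling for real partners and for all non-partner pairings.

    dyads: list of (signal_a, signal_b) tuples, one tuple per real dyad.
    """
    actual = [coupling(a, b) for a, b in dyads]
    # Tag every signal with the index of the dyad it came from.
    tagged = [(idx, sig) for idx, pair in enumerate(dyads) for sig in pair]
    shuffled = [
        coupling(sig_1, sig_2)
        for (idx_1, sig_1), (idx_2, sig_2) in combinations(tagged, 2)
        if idx_1 != idx_2  # skip pairings within an original dyad
    ]
    return sum(actual) / len(actual), sum(shuffled) / len(shuffled)


# Toy signals (hypothetical): partners co-vary strongly, non-partners less so.
dyads = [
    ([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]),
    ([1.0, -1.0, 1.0, -1.0], [2.0, -2.0, 2.0, -2.0]),
]
mean_actual, mean_shuffled = actual_vs_shuffled(dyads)
print(f"actual = {mean_actual:.2f}, shuffled = {mean_shuffled:.2f}")
```

If coupling were driven only by the shared task, real and shuffled pairings would be expected to show similar values; a difference that appears only for real partners is what supports a dyad-specific interpretation.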

Fig. 6.

Coherence of brain-to-brain signals for high-disparity dyads. (A) Signal coherence between dyads (y-axis) is plotted against the period (x-axis) for the high-disparity (red) and the low-disparity (blue) conditions (shaded areas: ±1 SEM). Bar graphs indicate significance levels for the separations between the two conditions for each of the period values on the x-axis. The upper horizontal dashed line indicates P ≤ 0.01 and the lower line indicates P ≤ 0.05. Left panel shows coherence between actual partners, and right panel shows coherence between shuffled partners. Cross-brain coherence is greatest in the high-disparity group between pars triangularis (BA45) and pre- and supplementary motor cortex (BA6) (partners: P = 0.006, t = 2.87; shuffled: no significant effect). (B) Anatomical illustration of the regional pairs with increased synchrony for high-disparity dyads.

Discussion

Pro-social communication among diverse individuals is a facet of everyday life, and in many societies, considered a positive social goal. Despite implicit biases and prejudices known to impose challenges to pro-social communication, egalitarian outcomes are often achieved. Neural processes by which this implementation occurs have not been previously investigated. The neuroscience of social disparity has largely focused on racial bias and stereotyping in single participants, with particular emphasis on the amygdala (Amodio et al., 2004; Forbes et al., 2012; Amodio, 2014). Other studies have investigated context-driven disparity measures, such as social hierarchy or minimal in-group membership (Bavel et al., 2008; Farrow et al., 2011). These studies were performed in non-interactive scanning environments with tasks such as viewing and responding to pictures (Amodio et al., 2004; Bavel et al., 2008; Farrow et al., 2011; Forbes et al., 2012). Extending this framework, the paradigm for this study explored natural verbal communication between two individuals who were strangers before the experiment. Neural responses that differentiate pro-social conversation between high-disparity and low-disparity dyads were found in left frontal systems.

Our two dyad types were closely matched for disparity indicators such as race, gender and age and differed primarily in socioeconomic background (see Tables 1 and 2), suggesting that findings were largely context driven. Education level and income were selected to represent primary indicators of social (education) and economic (income) status as a compound variable. ‘Socioeconomic status is the social standing or class of an individual or group. It is often measured as a combination of education, income, and occupation’ (APA Socioeconomic Status Office, n.d.). Here, we based group membership on education and income alone without accounting for participants’ occupations due to uncertainty surrounding this factor in our population. Isolation of the impact of either variable separately was not possible within this dyadic structure as the dynamic interactions are inseparable units. Future investigations may develop alternative descriptors of dyads. The categorical classification employed here represents an exploratory approach.

The left DLPFC and frontopolar areas showed increased activity during live conversations between high-disparity dyads compared with low-disparity dyads. This raises the question of what aspect of the high-disparity condition engages these regions to a larger extent than the low-disparity condition. A recent meta-analysis of the neural correlates associated with the conjunction of emotional processing, social cognition and resting (unconstrained) cognition suggests that activity of the DLPFC may underlie aspects of all three of these functions (Schilbach et al., 2012). Findings of this investigation add the suggestion that spontaneous pro-social verbal interactions in high disparity conditions may also up-regulate these functions as possibly implemented by activity of the DLPFC. The actual cues detected by participants during the interactions in this experiment are unknowable due to the spontaneity of social cues and perceptions. Appearance, verbal style, vocabulary choice, acoustic inflections, gestural mannerisms and apparent comfort with the research environment are candidates for socioeconomic signifiers. These findings, however, are also corroborated by similar single participant neuroimaging investigations related to socioeconomic background (Raizada et al., 2008; Raizada and Kishiyama, 2010). The precise linkage between perceptions, social skills and putative roles of the neural correlates remain topics for further investigation.

The new dyadic frame of reference provides a unique computational platform for hypotheses related to models of cross-brain behavioral synchrony, and has notable similarities to methods previously applied to investigations of single-brain neural systems. For example, neural linkages between single-brain functional systems form hierarchical neural operations that underlie synchronous complex behaviors. These neural complexes are interrogated by computing psychophysiological interactions (PPI) that are based on correlations between hemodynamic signals originating from remote locations within a single brain (Friston, 1994). These computations are performed on residual components of hemodynamic signals following computational removal of the modeled task, and assume that non-task-related high-frequency oscillations have neural origins. Further, it is conventionally assumed that their correlations reveal cooperative processes.
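The residual-based logic can be sketched in a few lines. Assuming a single task regressor and ordinary least squares, the sketch removes the fitted task response from two signals and correlates what remains. It is a simplified illustration of correlating residuals, not a full PPI model (which also includes an interaction term), and the function names and toy values are hypothetical.

```python
import math


def remove_task(signal, task):
    """Residuals after regressing `signal` on `task` plus an intercept (OLS)."""
    n = len(signal)
    mean_s, mean_t = sum(signal) / n, sum(task) / n
    slope = sum((t - mean_t) * (s - mean_s) for s, t in zip(signal, task)) / sum(
        (t - mean_t) ** 2 for t in task
    )
    intercept = mean_s - slope * mean_t
    return [s - (intercept + slope * t) for s, t in zip(signal, task)]


def residual_correlation(signal_1, signal_2, task):
    """Correlation between the task-removed residuals of two signals."""
    res_1 = remove_task(signal_1, task)
    res_2 = remove_task(signal_2, task)
    # Residuals from a fit with an intercept have (numerically) zero mean,
    # so the normalized dot product equals the Pearson correlation.
    dot = sum(a * b for a, b in zip(res_1, res_2))
    norm = math.sqrt(sum(a * a for a in res_1) * sum(b * b for b in res_2))
    return dot / norm


# Toy block design and signals (hypothetical, NOT study data).
task = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
shared = [0.1, -0.2, 0.3, -0.1, 0.2, -0.3]  # fluctuation common to both regions
region_1 = [2.0 * t + f for t, f in zip(task, shared)]
region_2 = [3.0 * t + f for t, f in zip(task, shared)]
print(f"residual correlation = {residual_correlation(region_1, region_2, task):.2f}")
```

Because the two toy regions differ only in how strongly they respond to the task, their residuals are identical once the task is removed, which is the kind of shared non-task fluctuation that such correlations are assumed to capture.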

Wavelet analysis of cross-brain hemodynamic signals applied in this study is an adaptation of these computational methods employed to understand within-brain functional connectivity (Cui et al., 2011; Jiang et al., 2015; Friston et al., 2003), and represents a novel exploratory approach to investigate the dynamics of interpersonal interactions.

Dynamic neural coupling occurs when neural patterns of one brain are synchronous with those of another. An emerging theoretical framework for social neuroscience proposes that synchrony between communicating brains is a marker of communication, and that these shared processes represent the effects of dynamic exchanges of information (Hasson et al., 2004). The extent to which signals between two interacting brains are synchronized has been taken as a metric of dyadic social connectedness, although these emerging views remain exploratory and descriptive. For example, measures of neural coupling between signals across brains of speakers and listeners who separately recited narratives and subsequently listened to the passages, using both fMRI (Stephens et al., 2010) and fNIRS (Liu et al., 2017), were correlated with levels of comprehension. Cross-brain synchrony associated with other live interpersonal interactions, such as cooperative or competitive task performance (Funane et al., 2011; Dommer et al., 2012); game playing (Cui et al., 2012; Liu et al., 2016; Tang et al., 2016; Piva et al., 2017); imitation (Holper et al., 2012); dyadic or group discussions (Jiang et al., 2012, 2015); synchronization of speech rhythms (Kawasaki et al., 2013); cooperative singing or humming (Osaka et al., 2014, 2015) and gestural communication (Schippers et al., 2010), has also been demonstrated. Cross-brain connectivity between language production and reception areas increased during real eye-to-eye contact compared with simultaneous face/eye picture viewing (Hirsch et al., 2017). Verbal exchanges between dyads revealed heightened neural synchrony between STG and the sub-central area during interaction relative to monologue conditions (Hirsch et al., 2018). Cross-brain neural coherence has also been demonstrated across non-human species. For example, socially interacting mice exhibit inter-brain correlations between single unit neural activity in pre-frontal cortex depending on social interaction (Kingsbury et al., 2019), and socially interacting bats exhibit correlated electrophysiological responses during social behaviors (Zhang and Yartsev, 2019). Together, these observations of temporal cross-brain synchrony suggest a novel biological marker for reciprocal social interactions.

In this study, we observe greater cross-brain coherence between the high-disparity dyads than the low-disparity dyads for signals originating in the frontal areas. This was not predicted and suggests a topic for further investigation. However, we speculate that the findings are generally consistent with the contrast results of this study showing increased activity in left frontal regions with dyadic interactions between high disparity participants. This may represent a shared intent for pro-social outcomes in the context of a situation with increased requirements for social responsiveness. The experimental social situation represented by this interactive paradigm may mimic real complex social situations that expose cross-brain linkages between neural correlates of emotional processing and social cognition as previously associated with the DLPFC (Schilbach et al., 2012).

Cross-brain correlation approaches remain exploratory but have the potential to advance conventional investigations of single brains by highlighting dynamic interactions between two brains that reflect complex brain-behavior relationships (Redcay and Schilbach, 2019). This second-person approach proposes that social interactions are characterized by intricate and spontaneous perceptions of socially relevant information. The dynamic interplay between reciprocal social actions and reactions is exposed by these dual-brain approaches that advance the idea of dyads as working units (Schilbach et al., 2013).

The dialectical misattunement hypothesis is one approach that conceptualizes social psychopathology as dynamic interpersonal mismatches between dyads (Bolis et al., 2017). This hypothesis was proposed as an integrative method for evaluation of psychiatric conditions; it also provides a general context for understanding neural events underlying everyday social situations between dyads. Distinguishing features of live spontaneous interactions include perceptions and actions that are operational in real-time communications. Interactive ‘send and receive’ social exchanges become interconnected and unified processes. The two-person approach promotes examination of neural systems associated with these fine-grained, dynamic social behaviors that are overlooked by conventional approaches. The hypothesis predicts that interactions within heterogeneous dyads (high-disparity group) compared with homogeneous dyads (low-disparity group) will call upon neural mechanisms associated with ‘online’ conversation. These putative dynamic mechanisms have rarely been investigated and their exposure reveals an impactful view of dynamic neural processes that operate ‘on the fly’. This study compares interactions between neurotypical dyads. However, the dyadic approach to characterization of live interpersonal interactions including neural coupling and cross-brain synchrony may be applicable to diagnostic and treatment practices for psychiatric conditions that include disorders of social behavior (Bolis et al., 2017).

Pro-social communications between heterogeneous dyads are also relevant to recently proposed coding models relating theory of mind with predictive processes (Koster-Hale and Saxe, 2013). In this context, brain regions such as pre-frontal cortex and superior temporal sulcus, including the temporal parietal junction, are thought to be associated with mental state inferences and evaluations of beliefs and desires of dyadic partners. This account considers perceptual updating and rapid expression in an ongoing reciprocal loop between communicating dyads where coupling dynamics may be modeled as a predictor of interactive effects.

This two-person, natural communication paradigm and findings are a first step toward introducing scientific methods that inform neural models of live, complex real-world social behaviors related to ‘in- vs out-group’ tensions as well as psychiatric conditions. The experimental context is close to a natural, ecologically valid situation requiring coordinated, continuous complex actions and reactions between strangers either within the same socioeconomic group or not. Similar to a real-world situation, participants were strangers and given no advance notice of their partner. Social monitoring of non-verbal cues, facial expressions, body language, tonal variations, choice of vocabulary and mannerisms occurred simultaneously and naturally between participants. This interactive paradigm with natural ‘on-line’ social cues engages a complex of cooperating neural systems that are associated with face-to-face conversations between individuals with high socioeconomic disparities.

Given the relevance of pro-social behaviors to current political and cultural conditions, future studies aimed at understanding live regulation of implicit and pre-potent stereotypes and prejudices are a priority. Implicit racial biases, for example, are known to be resistant to modification (Amodio, 2014). However, focusing on mechanisms that represent cognitive assessment and self-regulation, as suggested in this study, may point to innovative strategies for achieving positive social interventions. One aim of this investigation was to contribute a foundation that could apply the advantages of two-person neuroscience to the emerging perspective that both social behaviors and psychiatric conditions may be framed as conditions of social interaction (Bolis et al., 2017). Within this dyadic frame of reference, detailed analyses that interrogate nuances of social cues and responses have the potential to expand the conventional single-person focus in psychiatry and typical behaviors toward yet undiscovered causes of social behavior (Bolis and Schilbach, 2018). A fundamental notion of this framework holds that psychological conditions may be viewed in relation to dynamical social interactions. Although appealing from a philosophical point of view, the realization of an empirical approach that supports such a discipline has been slow to materialize. This manuscript provides both a technical and a theoretical advance leading toward a two-person neuroscience where live and dynamic pro-social and communicative processes between dyads are related to underlying neurobiology. Findings of this investigation highlight a possible future direction for the investigation of behaviors and their regulation in social, cultural and psychiatric conditions.

In summary, diverse social environments challenge pro-social communication due to factors such as implicit personal biases, prejudices and social inexperience. Despite these pre-potent perceptions, pro-social communications are often achieved. Which neural mechanisms underlie dynamic pro-social exchanges between individuals of high socioeconomic disparity? We employ an innovative dual-brain (hyperscanning) neuroimaging technique (fNIRS) to acquire signals during natural verbal communications between dyads with high and low socioeconomic disparity. Findings reveal left frontal intra- and inter-brain systems that support natural pro-social communications between socioeconomically diverse individuals. These exploratory techniques and findings inform a neural basis for proactive strategies to achieve positive social interventions in live dyadic social environments and contribute a foundation for two-person neuroscience and application for clinical psychology and psychiatry.

Acknowledgements

The authors are grateful for the significant contributions of Dr Ilias Tachtsidis, Department of Medical Physics and Biomedical Engineering at University College London; Professors Antonia Hamilton and Paul Burgess, Institute for Cognitive Neuroscience at University College London and Maurice Biriotti at SHM, London, for insightful comments and guidance.

Contributor Information

Olivia Descorbeth, Undergraduates of Yale College (Descorbeth), New Haven, CT, 06511, USA.

Xian Zhang, Brain Function Laboratory, Department of Psychiatry, Yale School of Medicine, New Haven, CT, 06511, USA.

J Adam Noah, Brain Function Laboratory, Department of Psychiatry, Yale School of Medicine, New Haven, CT, 06511, USA.

Joy Hirsch, Brain Function Laboratory, Department of Psychiatry, Yale School of Medicine, New Haven, CT, 06511, USA; Department of Neuroscience, Yale School of Medicine, New Haven, CT, 06511, USA; Department of Comparative Medicine, Yale School of Medicine, New Haven, CT, 06511, USA; Haskins Laboratories, New Haven, CT, 06511, USA; Department of Medical Physics and Biomedical Engineering, University College London, WC1E 6BT, UK.

Funding

This research was partially supported by the National Institute of Mental Health of the National Institutes of Health under award numbers R01MH107513 (J.H.), R01MH119430 (J.H.) and R01MH111629 (J.H. and J. McPartland). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Author contributions

O.D. contributed to the design, data collection, analysis and manuscript preparation; J.H. supervised all aspects of the study and manuscript; J.A.N. contributed to all aspects of the study with specific responsibility for signal acquisition and X.Z. also contributed to all aspects of the study with specific responsibility for data analysis.

Data availability statement

The datasets generated and analyzed for this study can be found at fmri.org. Code is available upon request.

References

- Amodio D.M. (2014). The neuroscience of prejudice and stereotyping. Nature Reviews Neuroscience, 15, 670–82.

- Amodio D.M., Frith C.D. (2006). Meeting of minds: the medial frontal cortex and social cognition. Nature Reviews Neuroscience, 7, 268–77.

- Amodio D.M., Harmon-Jones E., Devine P.G., Curtin J.J., Hartley S.L., Covert A.E. (2004). Neural signals for the detection of unintentional race bias. Psychological Science, 15, 88–93.

- American Psychological Association Socioeconomic Status Office (n.d.). Socioeconomic status. Retrieved July 25, 2019, from https://www.apa.org/topics/socioeconomic-status/

- Bavel J.J.V., Packer D.J., Cunningham W.A. (2008). The neural substrates of in-group bias: a functional magnetic resonance imaging investigation. Psychological Science, 19, 1131–9.

- Beer J.S., Heerey E.A., Keltner D., Scabini D., Knight R.T. (2003). The regulatory function of self-conscious emotion: insights from patients with orbitofrontal damage. Journal of Personality and Social Psychology, 85, 594–604.

- Binder J.R., Frost J.A., Hammeke T.A., Bellgowan P.S., Springer J.A., Kaufman J.N., Possing E.T. (2000). Human temporal lobe activation by speech and nonspeech sounds. Cerebral Cortex, 10, 512–28.

- Boas D.A., Dale A.M., Franceschini M.A. (2004). Diffuse optical imaging of brain activation: approaches to optimizing image sensitivity, resolution, and accuracy. NeuroImage, 23(Suppl 1), S275–88.

- Boas D.A., Elwell C.E., Ferrari M., Taga G. (2014). Twenty years of functional near-infrared spectroscopy: introduction for the special issue. NeuroImage, 85(Pt 1), 1–5.

- Bolis D., Balsters J., Wenderoth N., Becchio C., Schilbach L. (2017). Beyond autism: introducing the dialectical misattunement hypothesis and a Bayesian account of intersubjectivity. Psychopathology, 50(6), 355–72.

- Bolis D., Schilbach L. (2018). Observing and participating in social interactions: action perception and action control across the autistic spectrum. Developmental Cognitive Neuroscience, 29, 168–75. doi: 10.1016/j.dcn.2017.01.009

- Brewer M.B. (1979). In-group bias in the minimal intergroup situation: a cognitive-motivational analysis. Psychological Bulletin, 86, 307.

- Cui X., Bryant D.M., Reiss A.L. (2012). NIRS-based hyperscanning reveals increased interpersonal coherence in superior frontal cortex during cooperation. NeuroImage, 59, 2430–7.

- Cui X., Bray S., Bryant D.M., Glover G.H., Reiss A.L. (2011). A quantitative comparison of NIRS and fMRI across multiple cognitive tasks. NeuroImage, 54, 2808–21.

- Dommer L., Jäger N., Scholkmann F., Wolf M., Holper L. (2012). Between-brain coherence during joint n-back task performance: a two-person functional near-infrared spectroscopy study. Behavioural Brain Research, 234, 212–22.

- Dravida S., Noah J.A., Zhang X., Hirsch J. (2017). Comparison of oxyhemoglobin and deoxyhemoglobin signal reliability with and without global mean removal for digit manipulation motor tasks. Neurophotonics, 5, 011006.

- Dunham Y., Baron A.S., Carey S. (2011). Consequences of “minimal” group affiliations in children. Child Development, 82, 793–811.

- Eggebrecht A.T., White B.R., Ferradal S.L., et al. (2012). A quantitative spatial comparison of high-density diffuse optical tomography and fMRI cortical mapping. NeuroImage, 61, 1120–8.

- Farrow T.F.D., Jones S.C., Kaylor-Hughes C.J., et al. (2011). Higher or lower? The functional anatomy of perceived allocentric social hierarchies. NeuroImage, 57, 1552–60.

- Ferradal S.L., Eggebrecht A.T., Hassanpour M., Snyder A.Z., Culver J.P. (2014). Atlas-based head modeling and spatial normalization for high-density diffuse optical tomography: in vivo validation against fMRI. NeuroImage, 85, 117–26.

- Ferrari M., Quaresima V. (2012). A brief review on the history of human functional near-infrared spectroscopy (fNIRS) development and fields of application. NeuroImage, 63, 921–35.

- Forbes C.E., Cox C.L., Schmader T., Ryan L. (2012). Negative stereotype activation alters interaction between neural correlates of arousal, inhibition and cognitive control. Social Cognitive and Affective Neuroscience, 7, 771–81.

- Friston K.J. (1994). Functional and effective connectivity in neuroimaging: a synthesis. Human Brain Mapping, 2, 56–78.

- Friston K.J., Harrison L., Penny W. (2003). Dynamic causal modelling. NeuroImage, 19, 1273–302.

- Funane T., Kiguchi M., Atsumori H., Sato H., Kubota K., Koizumi H. (2011). Synchronous activity of two people’s prefrontal cortices during a cooperative task measured by simultaneous near-infrared spectroscopy. Journal of Biomedical Optics, 16, 077011.

- Gabrieli J.D., Poldrack R.A., Desmond J.E. (1998). The role of left prefrontal cortex in language and memory. Proceedings of the National Academy of Sciences, 95, 906–13.

- Gilbert S.J., Swencionis J.K., Amodio D.M. (2012). Evaluative vs. trait representation in intergroup social judgments: distinct roles of anterior temporal lobe and prefrontal cortex. Neuropsychologia, 50, 3600–11.

- Hagoort P. (2014). Nodes and networks in the neural architecture for language: Broca’s region and beyond. Current Opinion in Neurobiology, 28, 136–41.

- Hasson U., Frith C.D. (2016). Mirroring and beyond: coupled dynamics as a generalized framework for modelling social interactions. Philosophical Transactions of the Royal Society B: Biological Sciences, 371, 20150366.

- Hasson U., Nir Y., Levy I., Fuhrmann G., Malach R. (2004). Intersubject synchronization of cortical activity during natural vision. Science, 303, 1634–40.

- Hirsch J., Zhang X., Noah J.A., Ono Y. (2017). Frontal temporal and parietal systems synchronize within and across brains during live eye-to-eye contact. NeuroImage, 157, 314–30.

- Hirsch J., Noah J.A., Zhang X., Dravida S., Ono Y. (2018). A cross-brain neural mechanism for human-to-human verbal communication. Social Cognitive and Affective Neuroscience, 13, 907–20.

- Holper L., Scholkmann F., Wolf M. (2012). Between-brain connectivity during imitation measured by fNIRS. NeuroImage, 63, 212–22.

- Jiang J., Dai B., Peng D., Zhu C., Liu L., Lu C. (2012). Neural synchronization during face-to-face communication. Journal of Neuroscience, 32, 16064–9.

- Jiang J., Chen C., Dai B., et al. (2015). Leader emergence through interpersonal neural synchronization. Proceedings of the National Academy of Sciences, 112, 4274–9.

- Kawasaki M., Yamada Y., Ushiku Y., Miyauchi E., Yamaguchi Y. (2013). Inter-brain synchronization during coordination of speech rhythm in human-to-human social interaction. Scientific Reports, 3, 1692.

- Kingsbury L., Huang S., Wang J., et al. (2019). Correlated Neural Activity and Encoding of Behavior across Brains of Socially Interacting Animals. Cell, 178, 429–46. e416.

- Kirilina E., Jelzow A., Heine A., et al. (2012). The physiological origin of task-evoked systemic artefacts in functional near infrared spectroscopy. NeuroImage, 61, 70–81.

- Koike T., Tanabe H.C., Okazaki S., et al. (2016). Neural substrates of shared attention as social memory: a hyperscanning functional magnetic resonance imaging study. NeuroImage, 125, 401–12. doi: 10.1016/j.neuroimage.2015.09.076

- Konvalinka I., Roepstorff A. (2012). The two-brain approach: how can mutually interacting brains teach us something about social interaction? Frontiers in Human Neuroscience, 6, 215.

- Koster-Hale J., Saxe R. (2013). Theory of mind: a neural prediction problem. Neuron, 79(5), 836–48. doi: 10.1016/j.neuron.2013.08.020

- Lemyre L., Smith P.M. (1985). Intergroup discrimination and self-esteem in the minimal group paradigm. Journal of Personality and Social Psychology, 49, 660.

- Levin S., Van Laar C., Sidanius J. (2003). The effects of ingroup and outgroup friendships on ethnic attitudes in college: a longitudinal study. Group Processes & Intergroup Relations, 6, 76–92.

- Liu N., Mok C., Witt E.E., Pradhan A.H., Chen J.E., Reiss A.L. (2016). NIRS-based hyperscanning reveals inter-brain neural synchronization during cooperative Jenga game with face-to-face communication. Frontiers in Human Neuroscience, 10, 11.

- Liu Y., Piazza E.A., Simony E., et al. (2017). Measuring speaker–listener neural coupling with functional near infrared spectroscopy. Scientific Reports, 7, 43293.

- Matcher S.J.E., Elwell C.E., Cooper C.E., Cope M., Delpy D.T. (1995). Performance comparison of several published tissue near-infrared spectroscopy algorithms. Analytical Biochemistry, 227, 54–68.

- McLaren D.G., Ries M.L., Xu G., Johnson S.C. (2012). A generalized form of context-dependent psychophysiological interactions (gPPI): a comparison to standard approaches. NeuroImage, 61, 1277–86.

- McLeod J.D., Owens T.J. (2004). Psychological well-being in the early life course: variations by socioeconomic status, gender, and race/ethnicity. Social Psychology Quarterly, 67, 257–78.

- Noah J.A., Dravida S., Zhang X., Yahil S., Hirsch J., Verguts T. (2017). Neural correlates of conflict between gestures and words: A domain-specific role for a temporal-parietal complex. PLoS ONE, 12, e0173525.

- Noah J.A., Zhang X., Dravida S., et al. (2020). Real-Time Eye-to-Eye Contact Is Associated With Cross-Brain Neural Coupling in Angular Gyrus. Frontiers in Human Neuroscience, 14, 19. doi: 10.3389/fnhum.2020.00019

- Ogawa S., Lee T.M., Kay A.R., Tank D.W. (1990). Brain magnetic resonance imaging with contrast dependent on blood oxygenation. Proceedings of the National Academy of Sciences, 87, 9868–72.

- Okamoto M., Dan I. (2005). Automated cortical projection of head-surface locations for transcranial functional brain mapping. NeuroImage, 26, 18–28.

- Oldfield R.C. (1971). The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia, 9, 97–113.

- Osaka N., Minamoto T., Yaoi K., Azuma M., Osaka M. (2014). Neural synchronization during cooperated humming: a hyperscanning study using fNIRS. Procedia-Social and Behavioral Sciences, 126, 241–3.

- Osaka N., Minamoto T., Yaoi K., Azuma M., Shimada Y.M., Osaka M. (2015). How two brains make one synchronized mind in the inferior frontal cortex: fNIRS-based hyperscanning during cooperative singing. Frontiers in Psychology, 6, 1–11.

- Otten S., Wentura D. (1999). About the impact of automaticity in the Minimal Group Paradigm: evidence from affective priming tasks. European Journal of Social Psychology, 29, 1049–71.

- Pinti P., Aichelburg C., Gilbert S., Hamilton A., Hirsch J., Burgess P., Tachtsidis I. (2018). A review on the use of wearable functional near-infrared spectroscopy in naturalistic environments. Japanese Psychological Research, 60(4), 347–373.

- Pinti P., Tachtsidis I., Hamilton A., Hirsch J., Aichelburg C., Gilbert S., Burgess P.W. (2020). The present and future use of functional near-infrared spectroscopy (fNIRS) for cognitive neuroscience. Annals of the New York Academy of Sciences, 1464(1), 5.

- Piva M., Zhang X., Noah A., Chang S.W.C., Hirsch J. (2017). Distributed neural activity patterns during human-to-human competition. Frontiers in Human Neuroscience, 11, 571. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Poeppel D. (2014). The neuroanatomic and neurophysiological infrastructure for speech and language. Current Opinion in Neurobiology, 28, 142–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Price C.J. (2012). A review and synthesis of the first 20 years of PET and fMRI studies of heard speech, spoken language and reading. NeuroImage, 62, 816–47. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Raizada R.D., Kishiyama M.M. (2010). Effects of socioeconomic status on brain development, and how cognitive neuroscience may contribute to levelling the playing field. Frontiers in Human Neuroscience, 4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Raizada RD, Richards T.L., Meltzoff A., Kuhl PK. (2008). Socioeconomic status predicts hemispheric specialisation of the left inferior frontal gyrus in young children. NeuroImage, 40, 1392–401. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Redcay E., Schilbach L. (2019). Using second-person neuroscience to elucidate the mechanisms of social interaction. Nature Reviews Neuroscience, 1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Richeson J.A., Baird A.A., Gordon H.L., et al. (2003). An fMRI investigation of the impact of interracial contact on executive function. Nature Neuroscience, 6, 1323–8. [DOI] [PubMed] [Google Scholar]

- Rojiani R., Zhang X., Noah A., Hirsch J. (2018). Communication of emotion via drumming: dual-brain imaging with functional near-infrared spectroscopy. Social Cognitive and Affective Neuroscience, 13(10), 1047–57. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sapolsky R. (2005). Sick of poverty. Scientific American, 293(6), 92–9. [DOI] [PubMed] [Google Scholar]

- Saito D. N., Tanabe H. C., Izuma K., Hayashi M. J., Morito Y., Komeda H., et al. (2010). “Stay tuned”: inter-individual neural synchronization during mutual gaze and joint attention. Front. Integr. Neurosci. 4: 127. doi: 10.3389/fnint.2010.00127 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sato H., Yahata N., Funane T., et al. (2013). A NIRS–fMRI investigation of prefrontal cortex activity during a working memory task. NeuroImage, 83, 158–73. [DOI] [PubMed] [Google Scholar]

- Schilbach L. (2010). A second-person approach to other minds. Nature Reviews. Neuroscience, 11, 449. [DOI] [PubMed] [Google Scholar]

- Schilbach L., Timmermans B., Reddy V., et al. (2013). Toward a second-person neuroscience. Behavioral and Brain Sciences, 36(4), 393–414. doi: 10.1017/S0140525X12000660 [DOI] [PubMed] [Google Scholar]

- Schilbach L. (2014). On the relationship of online and offline social cognition. Frontiers in Human Neuroscience, 8, 278. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schilbach L., Bzdok D., Timmermans B., et al. (2012). Introspective minds: using ALE meta-analyses to study commonalities in the neural correlates of emotional processing, social & unconstrained cognition. PLoS One, 7, 2. doi: 10.1371/journal.pone.0030920 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schippers M.B., Roebroeck A., Renken R., Nanetti L., Keysers C. (2010) Mapping the information flow from one brain to another during gestural communication. Proceedings of the National Academy of Sciences 107, 9388–93. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Scholkmann F., Holper L., Wolf U., Wolf M. (2013a). A new methodical approach in neuroscience: assessing inter-personal brain coupling using functional near-infrared imaging (fNIRI) hyperscanning. Frontiers in Human Neuroscience, 7, 813. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Scholkmann F., Gerber U., Wolf M., Wolf U. (2013b). End-tidal CO2: an important parameter for a correct interpretation in functional brain studies using speech tasks. Neuroimage, 66, 71–9. [DOI] [PubMed] [Google Scholar]

- Scholkmann F., Wolf M., Wolf U. (2013c). The Effect of Inner Speech on Arterial CO2 and Cerebral Hemodynamics and Oxygenation: A Functional NIRS Study In: Van Huffel S., Naulaers G., Caicedo A., Bruley D.F., Harrison D.K. editors, Oxygen Transport to Tissue XXXV. Advances in Experimental Medicine and Biology, Vol. 789 New York: Springer New York, 81–7. [DOI] [PubMed] [Google Scholar]

- Stephens G.J., Silbert L.J., Hasson U. (2010) Speaker–listener neural coupling underlies successful communication. Proceedings of the National Academy of Sciences 107, 14425–30. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Strangman G., Culver J.P., Thompson J.H., Boas D.A. (2002). A quantitative comparison of simultaneous BOLD fMRI and NIRS recordings during functional brain activation. NeuroImage, 17, 719–31. [PubMed] [Google Scholar]

- Tachtsidis I., Scholkmann F. (2016). False positives and false negatives in functional near-infrared spectroscopy: issues, challenges, and the way forward. Neurophotonics, 3, 031405. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tajfel H. (1982). Social psychology of intergroup relations. Annual Review of Psychology, 33, 1–39. [Google Scholar]

- Tanabe H.C., Kosaka H., Saito D.N., Koike T., Hayashi M.J., Izuma K., et al. (2012). Hard to “tune in”: neural mechanisms of live face-to-face interaction with high-functioning autistic spectrum disorder. Front. Hum. Neurosci, 6: 268. doi: 10.3389/fnhum.2012.00268 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tang H., Mai X., Wang S., Zhu C., Krueger F., Liu C. (2016). Interpersonal brain synchronization in the right temporo-parietal junction during face-to-face economic exchange. Social Cognitive and Affective Neuroscience, 11, 23–32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Torrence C., Compo G.P. (1998). A practical guide to wavelet analysis. Bulletin of the American Meteorological Society, 79, 61–78. [Google Scholar]

- Turner J.C. (1978). Social categorization and social discrimination in the minimal group paradigm. In H. Tajfel (Ed.), Differentiation between social groups. Studies in the Social Psychology of Intergroup Relations, London: Academic Press: 101–40. [Google Scholar]

- Van Bavel J.J., Packer D.J., Cunningham W.A. (2008). The neural substrates of in-group bias: a functional magnetic resonance imaging investigation. Psychological Science, 19, 1131–9. [DOI] [PubMed] [Google Scholar]

- Vaughan E. (1995). The significance of socioeconomic and ethnic diversity for the risk communication process. Risk Analysis, 15, 169–80. [Google Scholar]

- Villringer A., Chance B. (1997). Non-invasive optical spectroscopy and imaging of human brain function. Trends in Neurosciences, 20, 435–42. [DOI] [PubMed] [Google Scholar]

- Ye J.C., Tak S., Jang K.E., Jung J., Jang J. (2009). NIRS-SPM: statistical parametric mapping for near-infrared spectroscopy. NeuroImage, 44, 428–47. [DOI] [PubMed] [Google Scholar]

- Zhang X., Noah J.A., Hirsch J. (2016). Separation of the global and local components in functional near-infrared spectroscopy signals using principal component spatial filtering. Neurophotonics, 3, 015004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhang X., Noah J.A., Dravida S., Hirsch J. (2017). Signal processing of functional NIRS data acquired during overt speaking. Neurophotonics, 4, 041409. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhang W., Yartsev M.M. (2019). Correlated neural activity across the brains of socially interacting bats. Cell, 178, 413–28. doi: 10.1016/j.cell.2019.05.023 [DOI] [PMC free article] [PubMed] [Google Scholar]

Data Availability Statement

The datasets generated and analyzed for this study can be found at fmri.org. Code is available upon request.