Abstract

The database described here contains data on preterm infants' movement acquired in neonatal intensive care units (NICUs). The data consist of 16 depth videos recorded during actual clinical practice. Each video consists of 1000 frames (i.e., 100 s). The dataset was acquired at the NICU of the Salesi Hospital, Ancona (Italy). Each frame was annotated with the limb-joint locations. Twelve joints were annotated, i.e., left and right shoulder, elbow, wrist, hip, knee and ankle. The database is freely accessible at http://doi.org/10.5281/zenodo.3891404. This dataset represents a unique resource for artificial intelligence researchers who want to develop algorithms that provide healthcare professionals working in NICUs with decision support. The babyPose dataset is the first annotated dataset of depth images relevant to preterm infants' movement analysis.

Keywords: Preterm infants, Depth images, Pose estimation, Neonatal intensive care units, Artificial intelligence

Specification Table

| Item | Description |
| --- | --- |
| Subject area | Biomedical Engineering and Computer Science |
| Specific subject area | Computer vision and deep learning for neonatology applications |
| Type of data | Images and corresponding annotation sheet |
| How data were acquired | RGB-D sensor (Orbbec® camera) placed over the crib in neonatal intensive care units (NICUs) |
| Data format | Raw depth images (*.png) and corresponding annotation sheet (*.xlsx) |
| Parameters for data collection | Depth video sequences (5 minutes each) were acquired with the Orbbec® camera placed over the cribs of 16 preterm infants hospitalized in the NICU of the G. Salesi Hospital (Ancona, Italy). Data were acquired during actual clinical practice, and all the infants were breathing spontaneously. |
| Description of data collection | Data were collected from 16 spontaneously breathing preterm infants while they were hospitalized in the NICU. |
| Data source location | G. Salesi Hospital, Via Filippo Corridoni, 11, 60123 Ancona (Italy) |
| Data accessibility | http://doi.org/10.5281/zenodo.3891404 |
| Related research article | Moccia et al. 2020 [2] |

Value of the Data

- These data are useful because they provide insight into preterm infants’ movement patterns.
- The beneficiaries of these data are the healthcare professionals working in neonatal intensive care units (NICUs) and the artificial-intelligence (AI) researchers interested in the healthcare domain.
- These data can be used to: 1) study the relationship between movement patterns and short- and long-term complications of preterm birth; 2) support AI researchers who need raw data and corresponding annotations to train AI models that may significantly advance the monitoring of preterm infants’ movement from contactless measurements; and 3) provide healthcare professionals working in NICUs with decision support in the evaluation of movement patterns.
- The additional value of these data lies in the fact that the babyPose dataset is the first annotated dataset relevant to preterm infants' movement.

1. Data Description

The babyPose dataset collects frames from depth videos acquired with the Orbbec® camera placed over the cribs of 16 preterm infants hospitalized in the NICU of the G. Salesi Hospital (Ancona) (Fig. 1). The babyPose dataset consists of: (i) sixteen folders (one per patient) and (ii) two *.xlsx files, babyPose-train.xlsx and babyPose-test.xlsx. Each folder collects the extracted depth images (size = 480 × 640 pixels), saved in *.png format at both 8-bit (* 8bit.png) and 16-bit (* 16bit.png) resolution per channel.
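A quick sanity check on the extracted frames is to read the PNG header and confirm the bit depth and image size. The sketch below uses only the Python standard library and parses the IHDR chunk as laid out in the PNG specification. The function names `read_png_header` and `read_png_header_from_file` are hypothetical helpers introduced for illustration, not part of the dataset or of any released tool.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_header(data: bytes) -> dict:
    """Return width, height, bit depth and colour type from the start of a PNG."""
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    # After the 8-byte signature: 4-byte chunk length, 4-byte chunk type,
    # then the IHDR payload (width, height, bit depth, colour type, ...).
    _length, chunk_type = struct.unpack(">I4s", data[8:16])
    if chunk_type != b"IHDR":
        raise ValueError("IHDR chunk missing")
    width, height, bit_depth, color_type = struct.unpack(">IIBB", data[16:26])
    return {
        "width": width,
        "height": height,
        "bit_depth": bit_depth,
        "color_type": color_type,
    }


def read_png_header_from_file(path: str) -> dict:
    """Read only the first 26 bytes of a file, which are enough for the header."""
    with open(path, "rb") as handle:
        return read_png_header(handle.read(26))
```

Under the description above, a frame from the dataset would be expected to report a bit depth of 8 or 16, depending on the file, and an image size of 640 by 480 pixels (assuming that the stated 480 × 640 size is given as height × width).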

Fig. 1.

Depth-image acquisition setup. The depth camera (yellow boxes) is positioned 40 cm above the infant's crib and does not hinder health-operator movements. An example of an acquired depth frame is shown in the top-left corner (orange box).

The *.xlsx files contain the annotation of each limb-joint position for every frame, as shown in the kinematic model of Fig. 2. Following our previous work [1], [2], [3], we decided to keep the dataset split into training (babyPose-train.xlsx) and testing (babyPose-test.xlsx). The babyPose-train.xlsx file collects 12000 annotated frames (750 per patient), while the babyPose-test.xlsx file gathers the last 4000 annotated frames (250 per patient). This split allows deep learning-based architectures to be trained and tested while taking advantage of the generalization power of the whole dataset.
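The per-patient split described above can be sketched as follows. This is a minimal illustration, assuming that each patient's 1000 annotated frames are available as a chronologically ordered list, so that the first 750 go to training and the last 250 to testing. The function `split_patient_frames` and the placeholder patient and frame names are hypothetical.

```python
def split_patient_frames(frames, n_train=750, n_test=250):
    """Split one patient's ordered frames into a training and a testing part."""
    if len(frames) != n_train + n_test:
        raise ValueError("unexpected number of annotated frames")
    return frames[:n_train], frames[n_train:]


# Placeholder identifiers: 16 patients with 1000 annotated frames each.
patients = {
    f"patient_{p:02d}": [f"frame_{i:04d}" for i in range(1000)]
    for p in range(1, 17)
}

train, test = [], []
for name, frames in patients.items():
    train_part, test_part = split_patient_frames(frames)
    train.extend((name, frame) for frame in train_part)
    test.extend((name, frame) for frame in test_part)

print(len(train), len(test))  # 12000 4000
```

Because every patient contributes to both parts, the test set measures performance on later frames of infants already seen during training rather than on unseen infants.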

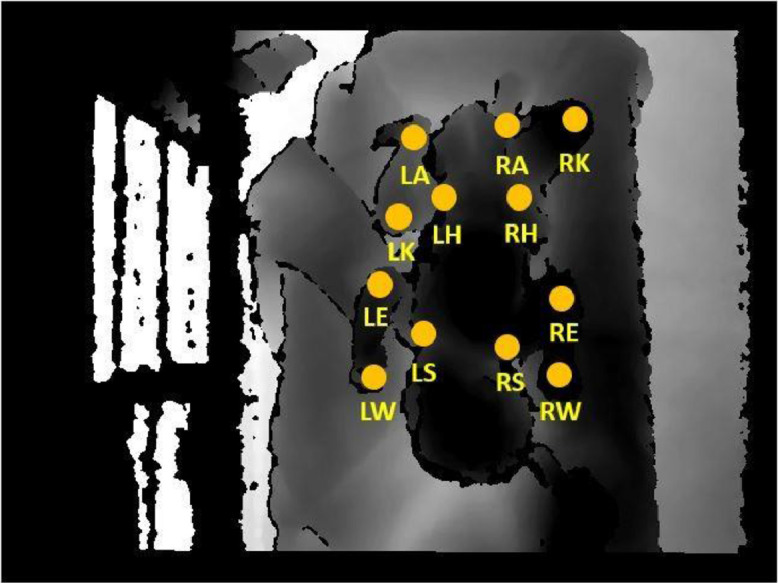

Fig. 2.

Annotated joints superimposed on a sample depth frame. LS and RS: left and right shoulder, LE and RE: left and right elbow, LW and RW: left and right wrist, LH and RH: left and right hip, LK and RK: left and right knee, LA and RA: left and right ankle.

Each *.xlsx file has a filename column containing the global path to the annotated frame (in the format “pz*/* 8bit.png”). The subsequent columns report each limb-joint coordinate pair (x and y), in pixels, in the format limb-joint coordinate. In the *.xlsx files, occluded joints (e.g., due to the presence of the operator or of a plaster) have a negative coordinate pair.
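One way to handle the occlusion convention when reading an annotation row is sketched below. It assumes that a row has already been loaded into a mapping from column name to value by a spreadsheet reader of the user's choice. The column naming used here (`LS_x`, `LS_y`, and so on), the function `parse_annotation` and the example path are assumptions made for illustration; the actual column headers should be checked in the *.xlsx files.

```python
# Joint abbreviations as listed in the caption of Fig. 2.
JOINTS = ("LS", "RS", "LE", "RE", "LW", "RW", "LH", "RH", "LK", "RK", "LA", "RA")


def parse_annotation(row, joints=JOINTS, sep="_"):
    """Map each joint to its (x, y) pixel pair, or None when it is occluded.

    The column naming (e.g. "LS_x", "LS_y") is an assumption of this sketch.
    """
    pose = {}
    for joint in joints:
        x = float(row[f"{joint}{sep}x"])
        y = float(row[f"{joint}{sep}y"])
        # Occluded joints are stored with a negative coordinate pair; here a
        # joint is treated as occluded if either coordinate is negative.
        pose[joint] = None if (x < 0 or y < 0) else (x, y)
    return pose


# Hypothetical row: every joint visible except the left wrist.
row = {"filename": "pz1/example.png"}
for index, joint in enumerate(JOINTS):
    row[f"{joint}_x"] = 100 + 10 * index
    row[f"{joint}_y"] = 200 + 5 * index
row["LW_x"], row["LW_y"] = -1, -1

pose = parse_annotation(row)
occluded = [joint for joint, xy in pose.items() if xy is None]
print(occluded)  # ['LW']
```

Representing occluded joints as `None` rather than keeping the negative values makes it harder to feed them into a loss function or an error metric by accident.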

2. Experimental Design, Materials and Methods

2.1. Dataset collection

The babyPose dataset is a collection of 16 depth videos of 16 preterm infants, acquired in the NICU of the G. Salesi Hospital in Ancona, Italy.

The preterm infants were chosen by our clinical partners among those who were breathing spontaneously and had neither bronchopulmonary disorders nor hydrocephalus.

The sixteen depth video recordings (each of which had a length of 180 s) were acquired with the acquisition set-up shown in Fig. 1, designed so as not to hinder the operators during actual clinical practice. The Astra Mini S - Orbbec® camera, with a frame rate of 30 frames per second and an image size of 640 × 480 pixels, was used to record the videos.

2.2. Data annotation

Considering the average movement rate of preterm infants [4], and in agreement with our clinical partners, we extracted 1 frame out of every 5 from each of the 16 videos (total extracted frames = 28800).
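The frame count can be checked with a short calculation. The sketch below assumes the 30 frames-per-second rate of the camera and the 5-minute recording length given in the Specification Table, which is the length that reproduces the reported total of 28800 extracted frames. The helper `sampled_frame_indices` is hypothetical and only illustrates the subsampling.

```python
def sampled_frame_indices(duration_s, fps=30, step=5):
    """Indices of the frames kept when taking 1 frame every `step` frames."""
    total_frames = duration_s * fps
    return range(0, total_frames, step)


per_video = len(sampled_frame_indices(duration_s=300))  # 5-minute recording
total = 16 * per_video        # 16 videos, one per infant
effective_fps = 30 / 5        # frame rate after subsampling

print(per_video, total, effective_fps)  # 1800 28800 6.0
```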

The limb joints (Fig. 2) in each frame were manually annotated with a custom-built annotation tool, publicly available online. For each of the 16 infants, 1000 frames were annotated (total annotated frames = 16000). The supervision of the annotation procedure by our clinical partners was crucial, especially in the presence of challenging video frames (e.g., when the operators covered part of the limb joints during actual clinical practice).

3. Ethics Statement

After the approval of the Ethics Committee of the “Ospedali Riuniti di Ancona”, Italy (ID: Prot. 2019-399) was obtained, each infant's legal guardian signed an informed consent form for participation in the study.

The study we conducted fully respects and promotes the values of freedom, autonomy, integrity and dignity of the person, social solidarity and justice, including fairness of access. The study was carried out in compliance with the principles laid down in the Declaration of Helsinki, in accordance with the Guidelines for Good Clinical Practice.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgment

This work was supported by the European Union through the grant SINC - System Improvement for Neonatal Care under the EU POR FESR 14-20 funding program.

Footnotes

Supplementary material associated with this article can be found in the online version at doi:10.1016/j.dib.2020.106329.

The BabyPose dataset has been released together with this paper.


References

- 1. Moccia S., Migliorelli L., Pietrini R., Frontoni E. Preterm infants’ limb-pose estimation from depth images using convolutional neural networks. 2019 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology. IEEE; 2019. pp. 1–7.

- 2. Moccia S., Migliorelli L., Carnielli V., Frontoni E. Preterm infants’ pose estimation with spatio-temporal features. IEEE Trans. Biomed. Eng. 2020. doi: 10.1109/TBME.2019.2961448.

- 3. Migliorelli L., Moccia S., Cannata G.P., Galli A., Ercoli I., Mandolini L., Carnielli V.P., Frontoni E. A 3D CNN for preterm-infants’ movement detection in NICUs from depth streams. 2020 VII National Congress of Bioengineering. IEEE; 2020.

- 4. Fallang B., Saugstad O.D., Grøgaard J., Hadders-Algra M. Kinematic quality of reaching movements in preterm infants. Pediatric Res. 2003;53:836. doi: 10.1203/01.PDR.0000058925.94994.BC.
