Abstract

Objectives Improving the usability of electronic health records (EHR) continues to be a focus of clinicians, vendors, researchers, and regulatory bodies. To understand the impact of usability redesign of an existing, site-configurable feature, we evaluated the user interface (UI) used to screen for depression, alcohol and drug misuse, fall risk, and the existence of advance directive information in ambulatory settings.

Methods As part of a quality improvement project, the existing UI was redesigned based on heuristic analysis. Using an iterative, user-centered design process, several usability defects were corrected. Summative usability testing was performed as part of the product development and implementation cycle. Clinical quality measures reflecting rolling 12-month rates of screening were examined over the 8 months before and the 9 months after implementation of the redesigned UI.

Results Summative usability testing demonstrated improvements in task time, error rates, and System Usability Scale scores. Interrupted time series analysis demonstrated significant improvements in all screening rates after implementation of the redesigned UI compared with the original implementation.

Conclusion User-centered redesign of an existing site-specific UI may lead to significant improvements in measures of usability and quality of patient care.

Keywords: electronic health record, interfaces, usability, quality, nurse, ambulatory setting, primary care

Background and Significance

Usability is defined as the extent to which technology helps users achieve specified goals, in a satisfying, effective, and efficient manner, in a specified context of use. 1 Poor electronic health record (EHR) usability contributes to clinician dissatisfaction, burnout, and patient harm events. 2 3 4 Research also suggests that usability redesign can reduce errors and workload and improve satisfaction. 5 6 Little is known about how a usability-focused redesign of a user interface (UI) affects measures of the quality of patient care, such as evidence-based clinical quality measures.

EHR implementations allow for considerable variation between sites in configuration and usability. 7 Multiple stakeholders, including providers, vendors, and health care organizations, share the responsibility of deploying a usable EHR. Failure to share and coordinate the work of these stakeholders is cited as a factor in the slow progress toward resolving usability and patient safety problems in EHRs. 8 Understanding the usability impact of site-specific configurations may foster development of user-centered design (UCD) processes and usability standards for site-configurable implementations.

Objectives

This report describes a portion of a quality improvement (QI) project in which a site-configurable UI was redesigned. Following an iterative UCD process, a new prototype UI was developed. Summative usability testing was performed to further evaluate and refine the prototype. After implementation of the redesigned UI, we measured the impact of the usability improvements on four evidence-based clinical quality measures: screening for fall risk, depression, and alcohol and drug misuse, and advance directive (advance care) planning. We hypothesized that correcting usability defects in a site-configurable UI used by medical assistants and licensed practical nurses in an ambulatory setting would improve both usability measures and clinical quality measures.

Methods

Usability Redesign

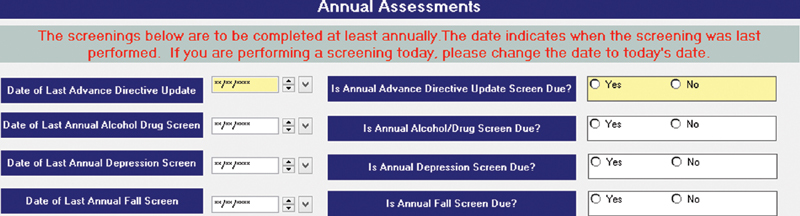

Annual assessment screens for depression, alcohol and drug misuse, advance directives, and fall risk are part of the routine intake at University of Missouri Health Care ambulatory settings. The screens allow staff and providers to identify at-risk persons and to target evidence-based interventions. 9 10 11 12 The intake assessments are documented in a Cerner UI that has been in place for several years. In the course of their clinical work, two authors (B.R.E. and R.P.P.) encountered nurses and medical assistants who described difficulty with this UI. Staff, in particular those who had worked in other systems, described the process of annual assessment screening as “not user friendly” and requiring “too many clicks.” These problems were suspected of contributing to lower-than-expected screening rates for clinically important conditions. Therefore, a QI project was undertaken in which the interface underwent a usability-focused redesign. Usability defects were first identified in the interface by heuristic analysis. Clinical informaticists (R.P.P., B.R.E., and J.L.B.) with experience in UCD reviewed the UI with attention to Nielsen's usability heuristics. 13

The following issues were noted: the interface required entry of date values twice for each assessment (flexibility and efficiency of use); by failing to validate date values, the interface allowed the entry of missing or discordant data (error prevention); the UI increased cognitive load for nurses by requiring mental date calculations to determine whether screening was due (recognition rather than recall); cognitive load was further increased because the system failed to account for patient-specific factors, such as age and medical problems, that affect the need for screening (recognition rather than recall); and the UI failed to consistently accommodate workflows in which screening was due but justifiably could not be performed because of patient, provider, or other situational factors (user control and freedom; Fig. 1).

Fig. 1.

User interface prior to usability redesign. Advance directive screening was required at every visit. The “Yes” button opened the screening instrument in a new window, in which the user had to document the date a second time. No indication was given that screening was due other than the date of the last screen.

The UI was redesigned over 8 months using a collaborative, multidisciplinary, iterative process. The health system was responsible for the redesign and implementation, and the vendor had no direct role in the process. Health system physicians and nurse informaticists oversaw the work, with input from nurses, medical assistants, administrators, and clinical QI specialists. The team also included system architects, employed by the vendor, who were responsible for the site-specific implementation.

The vendor platform on which the UI is built supports text and date fields, as well as checkbox, radio button, and read-only controls. It supports required settings, simple calculations, default values, and basic conditional application behavior. It does not natively support the complex conditional logic required to accurately prompt annual assessment screening. A novel approach was used which resolved the complex conditional logic at runtime in a separate application program, then sent a “hidden” binary variable to the UI platform, reflecting whether or not screening was due. The basic conditional application behavior native to the platform then used this variable to produce the proper UI behavior.

UI mock-ups based on the heuristic analysis were created to demonstrate proposed changes and gather input from stakeholders. The design was modified based on feedback from providers, nurses, medical assistants, and administrators: labels were modified for consistency and clarity; a “days since last screening” field was removed; and controls were added with options for documenting the reason screening could not be performed at that encounter (“deferral reasons”). In the final prototype (Fig. 2), one date field was removed and another was configured to autopopulate. The date of prior screening was added to the UI in a read-only format. Conditional logic used patient age, current problems, and date of last screen to generate an unambiguous, color-coded indication that screening was due. Options for deferral reasons were added and conditionally enabled. From the redesigned UI, users could launch modal dialogs containing the actual screening questions (e.g., the Patient Health Questionnaire-9 for depression screening) by clicking either of the Screen Now options. The fall-risk modal dialog was modified to support interventions to reduce fall-related injury. All four of these modal dialogs were modified to include the current date by default.
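The redesign split the work in two. A separate program resolves the eligibility logic (age, current problems, date of last screen) at runtime. It then passes only a binary "hidden" variable to a platform that supports just basic conditional behavior. The Python sketch below illustrates that split under stated assumptions and is not the health system's implementation:

- The age thresholds come from the measure definitions in Table 1.
- "Annual" is assumed to mean 365 or more days since the last screen.
- The problem-list exclusion, field names, and function names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional

# Minimum age per screening domain, taken from the measure definitions (Table 1).
MIN_AGE = {
    "depression": 12,
    "alcohol_drug_misuse": 18,
    "fall_risk": 65,
    "advance_directive": 65,
}
# Assumption: "annual" screening means due again after 365 days.
INTERVAL_DAYS = 365

# Hypothetical problem-list exclusions: the domain is not due if any is active.
EXCLUDING_PROBLEMS = {
    "depression": {"depression", "bipolar disorder"},
}


@dataclass
class Patient:
    age: int
    active_problems: set = field(default_factory=set)
    last_screened: dict = field(default_factory=dict)  # domain -> date


def screening_due(patient: Patient, domain: str, today: date) -> int:
    """Resolve the complex logic outside the UI platform; return a 0/1 flag."""
    if patient.age < MIN_AGE[domain]:
        return 0
    if patient.active_problems & EXCLUDING_PROBLEMS.get(domain, set()):
        return 0
    last: Optional[date] = patient.last_screened.get(domain)
    if last is None or (today - last).days >= INTERVAL_DAYS:
        return 1
    return 0


def hidden_flags(patient: Patient, today: date) -> dict:
    """The binary 'hidden' variables sent to the UI platform, one per domain."""
    return {domain: screening_due(patient, domain, today) for domain in MIN_AGE}


def platform_rule(flag: int) -> dict:
    """The only logic left to the platform: a simple test on the hidden flag."""
    return {
        "highlight": "yellow" if flag == 1 else None,
        "deferral_options_enabled": flag == 1,
    }
```

Consider a 70-year-old with the following record:

- an active depression diagnosis
- a fall-risk screen more than a year old
- a recent advance directive screen

In this sketch, that patient gets flags of 1 for alcohol and drug misuse and fall risk, and 0 for depression and advance directives. The platform rule is limited to a single comparison, which mirrors the constraint that the vendor platform supports only basic conditional behavior.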

Fig. 2.

Redesigned UI. Business logic highlights in yellow the screenings that are due. “Screen now” opens the screening instruments. The date of prior screening is displayed in a read-only format. Four options support documentation of deferral reasons. UI, user interface.

Summative Usability Testing

Summative usability testing was undertaken to further develop and evaluate the prototype. Tasks using the existing UI and the redesigned UI were configured for use with test patients, and summative usability testing was performed with 12 nurses and medical assistants. Informed consent was obtained using a form modeled on that in the Customized Common Industry Format Template for Electronic Health Record Usability Testing (NISTIR 7742). 14 Participant audio and screen actions were recorded using Morae software, and mouse clicks, cursor movement, time on task, and total errors were captured. Participants performed a set of eight tasks three times, each time on a different test patient (24 test patients in total). The tasks required identifying which screens were overdue and then performing or deferring those screens. The first round of eight tasks was performed using the existing UI, and the second and third rounds used the redesigned UI. Task-level satisfaction was assessed after each task using the Single Ease Question (SEQ). 15 Overall satisfaction with the existing and redesigned UIs was assessed at the conclusion of testing using the System Usability Scale (SUS). 16 Participants were invited to use a concurrent think-aloud protocol, and retrospective probing was used after each of the three rounds of testing. Performance on the existing UI was compared with the second round of the eight tasks performed on the redesigned UI. Questions on tobacco use, located above the annual assessments, were left unchanged in both the original and the redesigned UI, and tasks related to the documentation of tobacco use were not included in the test. The primary outcomes of interest were the SUS score and the metrics related to the identification of overdue screens, for which an error was defined as declaring a screen “due” when it was not due, or vice versa. Secondary outcomes of interest were the metrics related to performing screens and documenting deferrals.
Recruitment of participants was challenging, but engaged leadership facilitated and encouraged staff participation. Conducting testing at clinical sites minimized participant inconvenience and time away from other job duties. Paired t-tests were used for statistical comparisons.
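The SUS scores used as a primary outcome come from the standard 10-item questionnaire, with each item rated from 1 to 5. A minimal Python sketch of the standard scoring rule follows. The function name is ours, and the project's item-level responses are not reproduced here.

```python
def sus_score(responses):
    """Score one completed System Usability Scale questionnaire (0-100).

    responses: ten ratings from 1 to 5, in questionnaire order (item 1 first).
    Odd-numbered items are positively worded; even-numbered items are negatively worded.
    """
    if len(responses) != 10:
        raise ValueError("SUS has exactly 10 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items contribute (r - 1); even items contribute (5 - r).
        total += (r - 1) if item % 2 == 1 else (5 - r)
    # Rescale the 0-40 raw sum to the 0-100 SUS range.
    return total * 2.5
```

A group score such as those compared between interfaces is then the mean of the individual participants' scores.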

Patient Outcomes Following UI Redesign

The redesigned UI was deployed in the production environment in June 2019. Staff members were notified of the changes by email. Clinical nursing representatives received a live demonstration and then disseminated education on the redesigned UI to other staff. No follow-up educational measures were employed. Three of the measures (advance care planning, depression screening, and fall-risk screening) are quality measures in the Merit-based Incentive Payment System, and data were generated according to the definitions used for that program. The fourth measure, alcohol and drug misuse screening, was customized according to requirements for the state Medicaid Primary Care Health Home program (Table 1).

Table 1. Annual screening domains.

| Measure | Description | Measure steward |
|---|---|---|
| Preventive care and screening: screening for depression and follow-up plan (CMS2; PREV-12; NQF 0418) | Percentage of patients aged 12 years and older screened for depression on the date of the encounter using an age-appropriate standardized depression screening tool AND if positive, a follow-up plan is documented on the date of the positive screen. | Centers for Medicare and Medicaid Services (CMS) |
| Fall: screening for future fall risk (CMS139; CARE-2; NQF 0101) | Percentage of patients 65 years of age and older who were screened for future fall risk during the measurement period. | National Committee for Quality Assurance |
| SBIRT substance abuse screening and follow-up | Percentage of patients age 18+ who were screened for excessive drinking and drug use and follow-up was performed if indicated. | MO Healthnet Primary Care Health Home |
| Advance care plan (Quality ID #47; NQF 0326) | Percentage of patients aged 65 years and older who have an advance care plan or surrogate decision maker documented in the medical record or documentation in the medical record that an advance care plan was discussed but the patient did not wish or was not able to name a surrogate decision maker or provide an advance care plan. | National Committee for Quality Assurance, Physician Consortium for Performance Improvement |

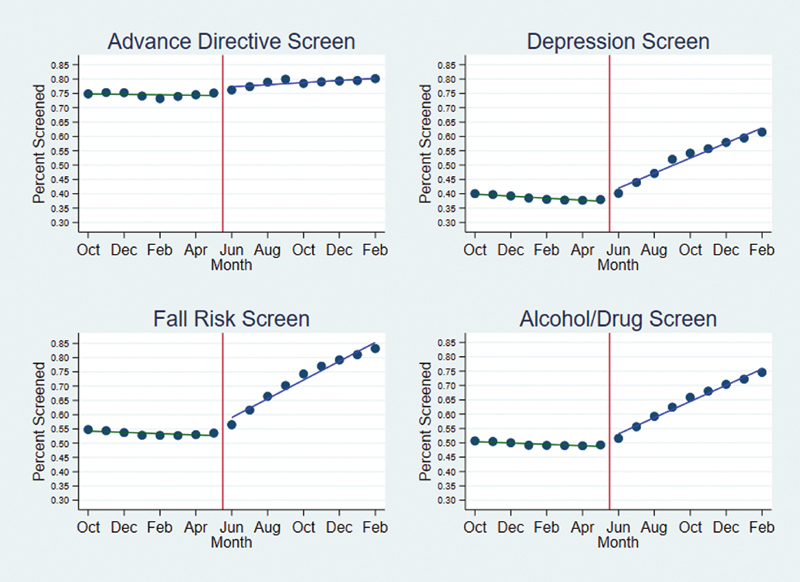

We then performed an interrupted time series (ITS) analysis of monthly quality metrics data. Each monthly value reflects a 12-month rolling average. Using segmented linear regression, two regression lines were derived for each of the four quality metrics: one from 8 months of preimplementation data and one from 9 months of postimplementation data. Regression slopes and intercepts, with standard errors and confidence intervals (CIs), were calculated. Seasonality was not considered, and no adjustments for nonstationarity were made. Student's t-test was used to compare pre- and postimplementation regression slopes and to calculate p-values. In both the usability and ITS analyses, p < 0.05 was considered statistically significant. Statistical analysis was performed using Stata/IC v16.0 (StataCorp, College Station, Texas, United States). The project details were evaluated by the health care system's institutional review board (IRB), which determined that the project was a QI activity and not human subjects research and therefore did not require additional IRB review.
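The two-line analysis can be sketched as two ordinary least-squares fits, one per period, followed by a comparison of their slopes. This Python sketch uses only the standard library and the depression screening rates from Table 3. Fitting the periods separately gives the same slope estimates as a fully interacted segmented model with level-change and slope-change terms. The unpooled standard error used for the slope comparison is our assumption, so the t statistic may differ from the Stata output. Like the original analysis, the sketch ignores autocorrelation and seasonality.

```python
import math
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b, se_b)."""
    n = len(x)
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    # Residual variance on n - 2 degrees of freedom gives the slope's standard error.
    rss = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    se_b = math.sqrt(rss / (n - 2) / sxx)
    return a, b, se_b


def compare_slopes(pre_y, post_y):
    """Fit separate lines to consecutive pre/post months and compare slopes."""
    pre_x = list(range(1, len(pre_y) + 1))
    post_x = list(range(len(pre_y) + 1, len(pre_y) + len(post_y) + 1))
    _, b_pre, se_pre = fit_line(pre_x, pre_y)
    _, b_post, se_post = fit_line(post_x, post_y)
    diff = b_post - b_pre
    # Assumption: unpooled standard error of the slope difference.
    se_diff = math.sqrt(se_pre ** 2 + se_post ** 2)
    return b_pre, b_post, diff, diff / se_diff


# Depression screening rates (%) from Table 3: Oct-May (pre), Jun-Feb (post).
pre = [40.05, 39.74, 39.25, 38.52, 38.07, 37.80, 37.73, 37.97]
post = [40.18, 43.94, 47.10, 52.01, 54.15, 55.70, 57.89, 59.46, 61.51]
b_pre, b_post, diff, t_stat = compare_slopes(pre, post)
print(f"pre {b_pre:.2f}, post {b_post:.2f}, change {diff:.2f}, t {t_stat:.1f}")
```

The three slopes printed agree with the depression row of Table 4: −0.35, 2.62, and 2.97 percentage points per month.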

Results

Summative Usability Testing

Self-reported demographics were available for 10 of the 12 participants. All were female; nine were nurses and one was a medical assistant; nine were white, one was black, and all were non-Hispanic. Their median age range was 40 to 49 years, and they reported an average of 7.7 years (range: 1–18) of experience with the EHR application. When identifying overdue screens, participants using the redesigned UI had a shorter task time (6.0 vs. 8.3 s; difference in means: −2.3 s; 95% CI [−3.6, −0.9]; p = 0.001; Table 2) and made fewer errors (0.10 vs. 0.83 errors/task; difference in means: −0.73 errors; 95% CI [−0.96, −0.50]; p < 0.0001). Postimplementation interviews with staff revealed that, in some cases, nursing and medical assistant staff had been completing extra, unnecessary screenings simply because they were uncertain which screens were due. SUS scores were higher for the redesigned UI (96.9 vs. 80.8; difference in means: 16.0; 95% CI [3.0, 29.1]; p = 0.021). The redesigned UI required a higher mean number of clicks (1.2 vs. 0.10; difference in means: 1.1; 95% CI [0.3, 1.8]; p = 0.007). Because the additional deferral fields made the redesigned UI larger, most users needed one click on the scroll bar to see its bottom, but users did not mention this during testing. We had anticipated that users of the original UI might search elsewhere in the record for the information needed to decide whether to screen, but they did not. The decision to screen was based almost entirely on the information shown in the UI, in most cases with no additional clicks at all. There were no significant differences in cursor movement or SEQ scores for tasks requiring identification of overdue screens.
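The comparisons above each report a mean paired difference with a 95% CI. A paired analysis works on each participant's own difference between the two interfaces. The sketch below uses only the Python standard library, which has no t distribution, so the two-sided critical value is passed in. For 12 participants (11 degrees of freedom) that value is about 2.201. The numbers in the usage lines are invented for illustration and are not data from this project.

```python
import math
from statistics import mean, stdev


def paired_difference(before, after, t_crit):
    """Mean paired difference (after - before), its t statistic, and CI.

    t_crit: two-sided critical value of Student's t with n - 1 degrees of freedom.
    """
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    d = [post - pre for pre, post in zip(before, after)]
    m = mean(d)
    se = stdev(d) / math.sqrt(len(d))
    t_stat = m / se
    # The interval is returned lower bound first.
    return m, t_stat, (m - t_crit * se, m + t_crit * se)


# Hypothetical task times (s) for four participants; t_crit for df = 3.
before = [10.0, 12.0, 14.0, 16.0]
after = [8.0, 11.0, 11.0, 15.0]
m, t_stat, ci = paired_difference(before, after, t_crit=3.182)
print(f"difference {m:.2f} s, t = {t_stat:.2f}, 95% CI [{ci[0]:.2f}, {ci[1]:.2f}]")
```

If SciPy is available, `scipy.stats.ttest_rel(after, before)` returns the same t statistic along with a two-sided p-value.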

Table 2. Summative usability test results.

| Task / measure | Original user interface | Redesigned user interface | p-Value |
|---|---|---|---|
| Identification of overdue screens | | | |
| Mean clicks ᵃ | 0.10 | 1.17 | 0.007 |
| Mean cursor movement (pixels) | 996 | 891 | NS |
| Mean task time (s) ᵃ | 8.3 | 6.0 | 0.001 |
| Mean error rate (#/task) ᵃ | 0.83 | 0.10 | <0.0001 |
| Single ease question | 4.91 | 4.96 | NS |
| Depression screening | | | |
| Mean clicks | 4.5 | 4.4 | NS |
| Mean cursor movement (pixels) | 4,269 | 3,097 | NS |
| Mean task time (s) ᵃ | 13.5 | 7.9 | 0.005 |
| Mean error rate (#/task) ᵃ | 0.75 | 0.17 | 0.027 |
| Single ease question | 5.00 | 5.00 | N/A |
| Alcohol and drug misuse screen | | | |
| Mean clicks | 4.0 | 3.1 | NS |
| Mean cursor movement (pixels) | 3,915 | 2,564 | NS |
| Mean task time (s) ᵃ | 13.5 | 7.5 | 0.027 |
| Mean error rate (#/task) | 0.27 | 0.18 | NS |
| Single ease question | 4.83 | 5.00 | NS |
| Fall risk screen | | | |
| Mean clicks | 3.9 | 4.3 | NS |
| Mean cursor movement (pixels) ᵃ | 3,364 | 2,231 | 0.045 |
| Mean task time (s) ᵃ | 15.6 | 9.6 | 0.0045 |
| Mean error rate (#/task) | 0.25 | 0.00 | NS |
| Single ease question | 4.75 | 5.00 | NS |
| Deferrals | | | |
| Mean clicks | 8.4 | 8.8 | NS |
| Mean cursor movement (pixels) | 7,384 | 4,746 | NS |
| Mean task time (s) ᵃ | 32.8 | 12.9 | 0.035 |
| Mean error rate (#/task) | 0.27 | 0.09 | NS |
| Single ease question ᵃ | 3.81 | 4.91 | 0.045 |
| System usability scale ᵃ | 80.8 | 96.9 | 0.021 |

Abbreviations: NS, not significant; N/A, not applicable.

Note: This table shows a comparison of the original user interface versus the redesigned prototype user interface.

ᵃ p < 0.05.

The method by which nursing and medical assistant staff documented deferral of screening in the existing system was inconsistent across the four screening domains. It also required a complex workflow that involved navigating to other areas of the EHR. Participants favored the redesigned UI for deferral tasks, with a higher SEQ score (4.91 vs. 3.81; difference in means: 1.10; 95% CI [0.03, 2.15]; p = 0.045) and a shorter task time (12.9 vs. 32.8 s; difference in means: −19.9 s; 95% CI [−38.1, −1.7]; p = 0.035; Table 2).

The redesign did include changes to the fall-risk screening modal dialog launched from the redesigned UI. The mean task time for completing fall-risk screening was lower when the screening was performed from the redesigned UI (9.6 vs. 15.6 s; difference in means: −6.0 s; 95% CI [−9.7, −2.3]; p = 0.005). The modal dialogs for depression and for alcohol and drug misuse screening did not change. Even so, the mean task time for completing these screens was reduced when they were launched from the redesigned UI (Table 2). With the redesigned UI, errors were reduced for depression screening and cursor movement was reduced for fall-risk screening. Otherwise, clicks, cursor movement, error rates, and task-level satisfaction as measured by the SEQ did not differ significantly for the individual screens. Comments elicited through the concurrent think-aloud protocol and retrospective probing aligned with the quantitative findings and did not reveal any additional usability problems with the redesigned prototype UI. No further changes were therefore made before implementation.

Patient Outcomes Following UI Redesign

The numbers of eligible patients remained generally constant across the project period, but the number of screened patients increased substantially after implementation of the redesigned UI (Table 3).

Table 3. Screening, by month.

| Screen | Value | Oct 2018 | Nov 2018 | Dec 2018 | Jan 2019 | Feb 2019 | Mar 2019 | Apr 2019 | May 2019 | Jun 2019 | Jul 2019 | Aug 2019 | Sep 2019 | Oct 2019 | Nov 2019 | Dec 2019 | Jan 2020 | Feb 2020 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Advance directives | Screened | 9,532 | 9,648 | 9,673 | 9,585 | 9,689 | 9,663 | 9,868 | 9,955 | 10,091 | 10,228 | 10,438 | 10,517 | 10,305 | 10,353 | 10,406 | 10,399 | 10,474 |
| | Eligible | 12,739 | 12,813 | 12,858 | 12,938 | 13,238 | 13,067 | 13,241 | 13,252 | 13,245 | 13,223 | 13,228 | 13,155 | 13,131 | 13,103 | 13,115 | 13,088 | 13,072 |
| | Screen rate (%) | 74.83 | 75.30 | 75.23 | 74.08 | 73.19 | 73.95 | 74.53 | 75.12 | 76.19 | 77.35 | 78.91 | 79.95 | 78.48 | 79.01 | 79.34 | 79.45 | 80.13 |
| Depression | Screened | 23,684 | 23,714 | 23,471 | 23,269 | 23,853 | 23,692 | 23,620 | 23,722 | 25,036 | 27,356 | 28,978 | 33,106 | 34,467 | 34,978 | 36,409 | 37,394 | 38,716 |
| | Eligible | 59,131 | 59,680 | 59,803 | 60,406 | 62,663 | 62,678 | 62,606 | 62,473 | 62,315 | 62,264 | 61,519 | 63,648 | 63,648 | 62,794 | 62,891 | 62,893 | 62,939 |
| | Screen rate (%) | 40.05 | 39.74 | 39.25 | 38.52 | 38.07 | 37.80 | 37.73 | 37.97 | 40.18 | 43.94 | 47.10 | 52.01 | 54.15 | 55.70 | 57.89 | 59.46 | 61.51 |
| Fall risk | Screened | 7,175 | 7,120 | 7,022 | 6,898 | 6,780 | 6,760 | 6,809 | 6,866 | 7,232 | 7,877 | 8,421 | 8,949 | 9,452 | 9,897 | 10,171 | 10,382 | 10,631 |
| | Eligible | 13,100 | 13,091 | 13,071 | 13,070 | 12,856 | 12,832 | 12,848 | 12,823 | 12,806 | 12,789 | 12,679 | 12,749 | 12,729 | 12,853 | 12,844 | 12,810 | 12,779 |
| | Screen rate (%) | 54.77 | 54.39 | 53.72 | 52.78 | 52.74 | 52.68 | 53.00 | 53.54 | 56.47 | 61.59 | 66.42 | 70.19 | 74.26 | 77.00 | 79.19 | 81.05 | 83.19 |
| Alcohol and drug misuse | Screened | 33,368 | 33,154 | 32,745 | 32,206 | 31,650 | 31,589 | 31,511 | 31,573 | 32,938 | 35,481 | 37,451 | 40,075 | 42,283 | 44,350 | 45,927 | 47,047 | 48,587 |
| | Eligible | 65,846 | 65,711 | 65,464 | 65,509 | 64,438 | 64,412 | 64,339 | 64,094 | 63,877 | 63,791 | 63,242 | 64,185 | 64,194 | 65,190 | 65,250 | 65,162 | 65,161 |
| | Screen rate (%) | 50.68 | 50.45 | 50.02 | 49.16 | 49.12 | 49.04 | 48.98 | 49.26 | 51.56 | 55.62 | 59.22 | 62.44 | 65.87 | 68.03 | 70.39 | 72.20 | 74.56 |

Note: This table shows numbers of patients eligible for screening and patients screened, by month; rates are 12-month rolling average screening rates.
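Each screen rate in Table 3 is the number screened divided by the number eligible. The preimplementation baseline rates quoted in the Results are the means of the eight preimplementation monthly rates. The short Python sketch below uses the advance directive counts to show the arithmetic. It assumes, as the table note implies, that each month's counts already cover the preceding 12 months, so no additional rolling window is applied.

```python
# Advance directive counts from Table 3, October to May (preimplementation).
screened = [9532, 9648, 9673, 9585, 9689, 9663, 9868, 9955]
eligible = [12739, 12813, 12858, 12938, 13238, 13067, 13241, 13252]


def screening_rates(screened, eligible):
    """Percentage of eligible patients who were screened, month by month."""
    if len(screened) != len(eligible):
        raise ValueError("screened and eligible must cover the same months")
    return [100 * s / e for s, e in zip(screened, eligible)]


rates = screening_rates(screened, eligible)
# Baseline: unweighted mean of the monthly preimplementation rates.
baseline = sum(rates) / len(rates)
print([round(r, 2) for r in rates])
print(round(baseline, 2))
```

The computed rates match the Screen rate (%) row for October through May, and their mean rounds to the 74.53% baseline.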

Implementation of the redesigned UI was associated with significant improvements in screening rates in all four annual screening domains (Table 4). Advance directive information had the highest mean preimplementation screening rate of the four metrics, at 74.53% (Table 3). This rate was stable over the preimplementation phase (−0.08% [95% CI: −0.35, 0.19] per month; Table 4). By the final month of the postimplementation period, the screening rate had increased to 80.13%. Relative to preimplementation, the monthly slope increased by 0.44 percentage points [95% CI: 0.10, 0.79] (p = 0.017), to 0.37% per month. Depression screening was stable at a mean of 38.64% and not increasing prior to implementation. After implementation, its monthly slope increased by 2.97 percentage points [95% CI: 2.50, 3.44] (p < 0.0001), to 2.62% per month. The slope for alcohol and drug misuse screening increased by 3.07 percentage points per month [95% CI: 2.71, 3.43] (p < 0.0001). Screening for fall risk improved the most of the four metrics, with a slope increase of 3.52 percentage points per month [95% CI: 2.92, 4.11] (p < 0.0001), to 3.29% per month (Fig. 3).

Table 4. Interrupted time-series comparisons.

| Screen | Preimplementation regression slope, percentage points/month [95% CI] | Postimplementation regression slope, percentage points/month [95% CI] | Slope change, percentage points/month [95% CI] | p-Value |
|---|---|---|---|---|
| Advance directives | −0.08 [−0.35, 0.19] | 0.37 [0.14, 0.59] | 0.44 [0.10, 0.79] | 0.017 |
| Depression | −0.35 [−0.71, 0.01] | 2.62 [2.32, 2.92] | 2.97 [2.50, 3.44] | <0.0001 |
| Fall risk | −0.22 [−0.68, 0.23] | 3.29 [2.91, 3.67] | 3.52 [2.92, 4.11] | <0.0001 |
| Alcohol and drug misuse | −0.24 [−0.52, 0.03] | 2.83 [2.60, 3.06] | 3.07 [2.71, 3.43] | <0.0001 |

Fig. 3.

Preimplementation screening rates with regression lines in dark green, and postimplementation rates with regression lines in blue. Implementation was in the middle of month 9.

Discussion

In this article, we report on a QI project focused on the user-centered redesign of a site-configurable UI used in an ambulatory setting. Using heuristic analysis, usability problems in the interface were identified. The UI was revised using an iterative UCD process, and a prototype was developed. Summative usability testing before implementation confirmed that the redesigned prototype UI improved metrics of effectiveness (error rate), efficiency (task time), and satisfaction (SUS and SEQ). Implementation of the redesigned UI was followed by significant improvements in adherence to recommended annual screening. While the solution in this project is vendor-specific, the study provides a template for usability improvements applicable to other sites and vendors.

The reduced time to complete the screenings in the unchanged modal screening dialogs for alcohol and depression screens was an unexpected positive finding. The reasons for this improvement are unclear. It is possible that the redesigned UI removed the uncertainty around whether screening was due, reduced the cognitive load, and allowed the task to be performed more quickly. The additional click required to determine the need for screening, resulting from the addition of deferral reasons, was an unexpected negative finding and presents an opportunity for further improvements in the design.

The quality measures evaluated in this study are important metrics for the care delivered in an ambulatory setting. Each is a process measure for which there is evidence that the process leads to improved outcomes for patients. The U.S. Preventive Services Task Force (USPSTF) has found value in alcohol and depression screening, giving both a B recommendation. 9 10 The USPSTF also gives a B recommendation to certain interventions to prevent falls in those identified as at risk, 11 and ambulatory screening is the most common way those persons are identified. Advance care planning, while lacking high-quality randomized trials, has been found in systematic reviews to benefit patients, families, and health care staff. 12 Improvements in the process metrics evaluated in this project are therefore likely to result in improved outcomes for patients.

A recent systematic review cited a paucity of quality published studies of EHR usability evaluations, noting only 23% reported objective data such as task time, mouse clicks, and error rates. 17 Many studies of EHR usability are limited to descriptions of UCD processes or results of usability evaluations. 18 19 20 21 Other studies of EHR usability focus on poor usability and its association with patient harm and clinician dissatisfaction. 2 3 4 6 22 23 While it is important to continue to describe the impact of poor usability, our understanding of usability is enhanced by careful descriptions of the improvements seen with corrections of usability problems. Ours is one of the first projects to demonstrate that UI redesign with the aim of correction of usability problems may be associated with improvements in quality of care as measured by clinical quality measures.

Our findings demonstrate the need for continued attention to improvement of EHR usability for nurses and ancillary staff. Staff members are often responsible for initial screening for depression, alcohol and drug misuse, and fall risk. Nurses share the same usability concerns as physicians. 24 Poor usability of the EHR has been linked to psychological distress and negative work environment among nurses. 25 26 In an international survey, nearly one-third of respondents noted problems with system usability. 27 In the present study, addressing the usability problems and reducing the associated cognitive load not only reduced task time but also subjectively increased certainty regarding which screens were needed at each particular encounter. Engaged nurses are an important part of team-based care, 28 and improving EHR effectiveness, efficiency, and satisfaction among nursing staff can be expected to result in improvements in health outcomes for patients.

The highly configurable nature of many EHRs allows for significant differences in usability and user experience between sites, even between sites using the same vendor. 7 The American Medical Informatics Association, in its 2013 recommendations for improving usability, advised clinicians to “take ownership” for leading the configuration of the system and adopting best practices based upon the evidence. 29 However, it stopped short of recommending site-specific usability testing or UCD processes in system configurations. As part of the 2014 Edition EHR Certification Criteria, the Office of the National Coordinator for Health Information Technology established requirements for UCD practices and summative usability testing for vendors. 30 Currently no such requirements or guidelines exist for site-configurable implementations. Ratwani et al recently called for a “consistent evaluation of clinician interaction with [health information technology]… across the entire lifecycle, from initial product design to local implementation followed by periodic reassessment.” 31 Our findings confirm that such a re-evaluation can yield benefit for patients and could contribute to the groundwork for future site-configurable UCD processes and usability standards.

Health care organizations, providers, and vendors share responsibility for improving EHR usability and must collaborate in finding solutions that meet the needs of patients and providers. 8 Health care systems regularly make configuration decisions that affect usability, including decisions as simple as naming laboratory tests or ordering items in dropdown lists. Some systems, particularly smaller ones with more limited resources, may find it challenging to employ UCD processes or perform the type of usability testing used in this project. Cost-effective solutions for systems with limited resources include heuristic evaluations 32 and style guides. 33 While the vendor had no direct role in this project, vendors have opportunities that extend beyond the development and certification process. Vendors may assist sites by offering sound advice on implementation and by making usability and technical expertise available to clients. They may also assure that the standardized content they offer has been developed using UCD processes.

This project also highlights the need for a shared, multistakeholder approach to usability at the time of EHR development. Our redesigned interface is built on a vendor-specific platform which, even when optimized, constrains the configurability and usability of the feature. If not required to meet certification criteria, platforms such as the one used in this project may not be subject to usability testing as part of federal certification programs. 17 Health care organizations and providers must work with vendors to continue to enhance such platforms and improve feature functionality that might otherwise constrain site-specific configurations.

This demonstration of an EHR usability QI project has some limitations. First, our summative usability testing was not powered to detect differences in measures of effectiveness, efficiency, and satisfaction, which could be important in clinical practice. The number of participants in our project was chosen according to industry standards and vendor requirements for summative testing, 34 35 reflecting the need to detect important usability defects rather than to achieve adequate statistical power. Second, it is possible that the educational component of our project is responsible for some of the postimplementation improvement in screening rates. At the time of the implementation, users were sent an email outline of the redesigned UI, and nurse representatives were given a live demonstration. However, given the nonsustained nature of the educational effort, it is unlikely that education is responsible for the sustained increase in screening rates. Third, randomized controlled trials (RCTs) are the gold standard for drawing causal conclusions regarding an intervention. However, an RCT is sometimes not feasible, and in such cases quasi-experimental designs such as ITS analyses are useful. 36 Finally, the present project used fewer data points than some authors recommend for ITS. However, recommendations regarding minimum numbers of data points exist to reduce the probability of type II errors, which is less of a concern when findings are positive, as in our project.

Conclusion

User-centered redesign of a flawed site-configurable UI resulted in measurable improvements in usability metrics. Implementation of the redesigned UI was associated with significant improvements in standard, evidence-based clinical quality measures. This project provides a roadmap for work on site-specific usability which can benefit both users and patients.

Clinical Relevance Statement

Summative usability testing of site-configurable UIs may confirm improvements in efficiency, effectiveness, and satisfaction. Redesign of flawed UIs may result in clinically significant improvements in common, evidence-based clinical quality measures.

Multiple Choice Questions

1. The Office of the National Coordinator for Health Information Technology requires which of the following to perform summative usability testing and follow user-centered design practices?

a. Health care administrators.

b. Health care organizations.

c. Individual providers.

d. Electronic health record (EHR) vendors.

Correct Answer: The correct answer is option d, EHR vendors. As part of the 2014 Edition EHR Certification Criteria, the Office of the National Coordinator for Health Information Technology established requirements for user-centered design practices and summative usability testing by vendors. Currently, no such requirements or guidelines apply to site-configurable implementations by health care organizations, administrators, or providers.

2. In the study "The effect of electronic health record usability redesign on annual screening rates in an ambulatory setting," redesign of a user interface resulted in improvement in which of the following?

a. Task times.

b. System Usability Scale scores.

c. Clinical quality metrics.

d. All of the above.

Correct Answer: The correct answer is option d, all of the above. Task times improved for the identification of overdue screens as well as for each of the individual screens. System Usability Scale (SUS) scores improved from 80.8 to 96.9. Interrupted time series analysis showed improvement in all four of the clinical quality measures examined.
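The SUS values cited above (80.8 and 96.9) are reported on the standard 0 to 100 scale described by Brooke. 16 For readers unfamiliar with how that scale is derived, the following minimal Python sketch applies the standard scoring rule to hypothetical responses. The function names and participant data are illustrative assumptions. The sketch also assumes, as is conventional, that a reported SUS value is the mean of per-participant scores.

```python
"""Sketch of standard System Usability Scale (SUS) scoring (Brooke, 1996).

Each questionnaire has 10 items answered on a 1-5 scale. Odd-numbered
items are positively worded and even-numbered items negatively worded.
"""
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Convert one participant's 10 responses to a 0-100 SUS score."""
    if len(responses) != 10:
        raise ValueError("SUS has exactly 10 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items contribute (response - 1); even items contribute (5 - response).
        total += (r - 1) if item % 2 == 1 else (5 - r)
    # The raw total ranges 0-40; multiplying by 2.5 rescales it to 0-100.
    return total * 2.5


def mean_sus(questionnaires: Sequence[Sequence[int]]) -> float:
    """Average per-participant SUS scores (assumed aggregation step)."""
    if not questionnaires:
        raise ValueError("need at least one questionnaire")
    scores = [sus_score(q) for q in questionnaires]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Hypothetical responses from three participants.
    participants = [
        [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],  # most favorable answers: 100.0
        [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],  # 75.0
        [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],  # all neutral: 50.0
    ]
    print(mean_sus(participants))  # 75.0
```

The alternating item direction is why a uniformly neutral respondent scores 50 rather than 0 or 100. On this scale, a rise from 80.8 to 96.9 approaches the maximum possible score.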

Conflict of Interest None declared.

Protection of Human and Animal Subjects

The project details were reviewed by the institutional review board, which determined that the project was a quality improvement activity rather than human subjects research and did not require additional review.

References

1. International Organization for Standardization. Ergonomics of human-system interaction — Part 210: Human-centred design for interactive systems (ISO 9241-210:2019). International Organization for Standardization; 2019. Available at: https://www.iso.org/standard/77520.html. Accessed July 30, 2020.

2. Melnick E R, Dyrbye L N, Sinsky C A. The association between perceived electronic health record usability and professional burnout among US physicians. Mayo Clin Proc. 2020;95(03):476–487. doi: 10.1016/j.mayocp.2019.09.024.

3. Committee on Patient Safety and Health Information Technology; Institute of Medicine. Health IT and Patient Safety: Building Safer Systems for Better Care. Washington, DC: National Academies Press (US); 2011.

4. Howe J L, Adams K T, Hettinger A Z, Ratwani R M. Electronic health record usability issues and potential contribution to patient harm. JAMA. 2018;319(12):1276–1278. doi: 10.1001/jama.2018.1171.

5. Russ A L, Zillich A J, Melton B L. Applying human factors principles to alert design increases efficiency and reduces prescribing errors in a scenario-based simulation. J Am Med Inform Assoc. 2014;21(e2):e287–e296.

6. Orenstein E W, Boudreaux J, Rollins M. Formative usability testing reduces severe blood product ordering errors. Appl Clin Inform. 2019;10(05):981–990. doi: 10.1055/s-0039-3402714.

7. Ratwani R M, Savage E, Will A. A usability and safety analysis of electronic health records: a multi-center study. J Am Med Inform Assoc. 2018;25(09):1197–1201. doi: 10.1093/jamia/ocy088.

8. Sittig D F, Belmont E, Singh H. Improving the safety of health information technology requires shared responsibility: it is time we all step up. Healthc (Amst). 2018;6(01):7–12. doi: 10.1016/j.hjdsi.2017.06.004.

9. US Preventive Services Task Force (USPSTF); Siu A L, Bibbins-Domingo K, Grossman D C. Screening for depression in adults: US Preventive Services Task Force recommendation statement. JAMA. 2016;315(04):380–387. doi: 10.1001/jama.2015.18392.

10. O'Connor E A, Perdue L A, Senger C A. Screening and behavioral counseling interventions to reduce unhealthy alcohol use in adolescents and adults: updated evidence report and systematic review for the US Preventive Services Task Force. JAMA. 2018;320(18):1910–1928. doi: 10.1001/jama.2018.12086.

11. US Preventive Services Task Force; Grossman D C, Curry S J, Owens D K. Interventions to prevent falls in community-dwelling older adults: US Preventive Services Task Force recommendation statement. JAMA. 2018;319(16):1696–1704. doi: 10.1001/jama.2018.3097.

12. Weathers E, O'Caoimh R, Cornally N. Advance care planning: a systematic review of randomised controlled trials conducted with older adults. Maturitas. 2016;91:101–109. doi: 10.1016/j.maturitas.2016.06.016.

13. Nielsen J. 10 usability heuristics for user interface design. Nielsen Norman Group. Published 1994. Available at: https://www.nngroup.com/articles/ten-usability-heuristics/. Accessed May 17, 2020.

14. Schumacher R M, Lowry S Z. Customized Common Industry Format Template for Electronic Health Record Usability Testing (NISTIR 7742). Washington, DC: National Institute of Standards and Technology (US Department of Commerce); 2010:1–37.

15. Sauro J, Dumas J S. Comparison of three one-question, post-task usability questionnaires. Paper presented at: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; April 4–9, 2009; Boston, Massachusetts, United States.

16. Brooke J. SUS: a "quick and dirty" usability scale. London: Taylor and Francis; 1996:189–194.

17. Ellsworth M A, Dziadzko M, O'Horo J C, Farrell A M, Zhang J, Herasevich V. An appraisal of published usability evaluations of electronic health records via systematic review. J Am Med Inform Assoc. 2017;24(01):218–226. doi: 10.1093/jamia/ocw046.

18. Wu D TY, Vennemeyer S, Brown K. Usability testing of an interactive dashboard for surgical quality improvement in a large congenital heart center. Appl Clin Inform. 2019;10(05):859–869. doi: 10.1055/s-0039-1698466.

19. Abdel-Rahman S M, Gill H, Carpenter S L. Design and usability of an electronic health record-integrated, point-of-care, clinical decision support tool for modeling and simulation of antihemophilic factors. Appl Clin Inform. 2020;11(02):253–264. doi: 10.1055/s-0040-1708050.

20. Bersani K, Fuller T E, Garabedian P. Use, perceived usability, and barriers to implementation of a patient safety dashboard integrated within a vendor EHR. Appl Clin Inform. 2020;11(01):34–45. doi: 10.1055/s-0039-3402756.

21. Brown N, Eghdam A, Koch S. Usability evaluation of visual representation formats for emergency department records. Appl Clin Inform. 2019;10(03):454–470. doi: 10.1055/s-0039-1692400.

22. Marcilly R, Schiro J, Beuscart-Zéphir M C, Magrabi F. Building usability knowledge for health information technology: a usability-oriented analysis of incident reports. Appl Clin Inform. 2019;10(03):395–408. doi: 10.1055/s-0039-1691841.

23. Fong A, Komolafe T, Adams K T, Cohen A, Howe J L, Ratwani R M. Exploration and initial development of text classification models to identify health information technology usability-related patient safety event reports. Appl Clin Inform. 2019;10(03):521–527. doi: 10.1055/s-0039-1693427.

24. Likourezos A, Chalfin D B, Murphy D G, Sommer B, Darcy K, Davidson S J. Physician and nurse satisfaction with an electronic medical record system. J Emerg Med. 2004;27(04):419–424. doi: 10.1016/j.jemermed.2004.03.019.

25. Vehko T, Hyppönen H, Puttonen S. Experienced time pressure and stress: electronic health records usability and information technology competence play a role. BMC Med Inform Decis Mak. 2019;19(01):160. doi: 10.1186/s12911-019-0891-z.

26. Kutney-Lee A, Sloane D M, Bowles K H, Burns L R, Aiken L H. Electronic health record adoption and nurse reports of usability and quality of care: the role of work environment. Appl Clin Inform. 2019;10(01):129–139. doi: 10.1055/s-0039-1678551.

27. Topaz M, Ronquillo C, Peltonen L M. Nurse informaticians report low satisfaction and multi-level concerns with electronic health records: results from an international survey. AMIA Annu Symp Proc. 2017;2016:2016–2025.

28. Schottenfeld L, Petersen D, Peikes D. Creating Patient-Centered Team-Based Primary Care. AHRQ Pub. No. 16-0002-EF. Rockville, MD: Agency for Healthcare Research and Quality; 2016.

29. American Medical Informatics Association; Middleton B, Bloomrosen M, Dente M A. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc. 2013;20(e1):e2–e8.

30. Office of the National Coordinator for Health Information Technology (ONC), Department of Health and Human Services. Health information technology: standards, implementation specifications, and certification criteria for electronic health record technology, 2014 edition; revisions to the permanent certification program for health information technology. Final rule. Fed Regist. 2012;77(171):54163–54292.

31. Ratwani R M, Reider J, Singh H. A decade of health information technology usability challenges and the path forward. JAMA. 2019;321(08):743–744. doi: 10.1001/jama.2019.0161.

32. Shaw R J, Horvath M M, Leonard D, Ferranti J M, Johnson C M. Developing a user-friendly interface for a self-service healthcare research portal: cost-effective usability testing. Health Syst (Basingstoke). 2015;4(02):151–158.

33. Belden J, Patel J, Lowrance N. Inspired EHRs: designing for clinicians. Columbia, MO: University of Missouri School of Medicine; 2014. Available at: http://inspiredEHRs.org. Accessed May 17, 2020.

34. US Food and Drug Administration. Applying human factors and usability engineering to medical devices: guidance for industry and Food and Drug Administration staff. Office of Medical Products and Tobacco, Center for Devices and Radiological Health; 2016. Available at: https://www.fda.gov/media/80481/download. Accessed July 30, 2020.

35. Lowry S Z, Quinn M T, Ramaiah M. Technical evaluation, testing, and validation of the usability of electronic health records. National Institute of Standards and Technology; 2012. Available at: https://www.nist.gov/publications/nistir-7804-technical-evaluation-testing-and-validation-usability-electronic-health. Accessed July 30, 2020.

36. Penfold R B, Zhang F. Use of interrupted time series analysis in evaluating health care quality improvements. Acad Pediatr. 2013;13(6, Suppl):S38–S44.