Abstract

Enhancers are noncoding fragments in DNA sequences, which play an important role in gene transcription and translation. However, due to their high free scattering and positional variability, the identification and classification of enhancers have a higher level of complexity than those of coding genes. In order to solve this problem, many computer studies have been carried out in this field, but there are still some deficiencies in these prediction models. In this paper, we use various feature extraction strategies, dimension reduction technology, and a comprehensive application of machine model and recurrent neural network model to achieve an accurate prediction of enhancer identification and classification with the accuracy of was 76.7% and 84.9%, respectively. The model proposed in this paper is superior to the previous methods in performance index or feature dimension, which provides inspiration for the prediction of enhancers by computer technology in the future.

1. Introduction

Enhancers are a small area of DNA that can link with protein, located upstream or downstream of the gene, and gene transcription will be enhanced after they bind with protein [1]. Because of the winding structure of chromatin, enhancers being far apart in the sequence still have the opportunity to contact each other. Therefore, they are not necessarily close to the gene to be affected, or even located on the same chromosome as the gene. Studies have shown that enhancer mutations may lead to a variety of diseases.

Owing to the significance of enhancers, the identification and classification of enhancers have always been the focus of computational biologists and experimental biologists [2, 3]. The fact is that to identify enhancers by biochemical experiments is expensive and time-consuming.

In the past few years, some bioinformatics methods have been developed to predict enhancers [4]. Liu et al. [5] proposed iEnhancer-2L, which extracts features by pseudo k-tuple nucleotide composition and achieves the enhancer identification and classification with the accuracy of 73% and 60.5%, respectively. Jia and He [6] suggested EnhancerPred, which extracts features by biprofile Bayes and pseudo k-tuple nucleotide composition to support the vector machine and achieves the accuracy of 75% and 55% for the prediction of enhancer identification and classification, respectively, Liu et al. [7] proposed iEnhancer-EL, which applies K-mer, pseudo k-tuple nucleotide composition and subsequence profile feature extraction methods and uses the ensemble classifier based on support vector machine to achieve the accuracy of 74.8% for enhancer identification and 61% for enhancer classification [8]. Nguyen et al. [9] proposed iEnhancer-ECNN, which uses a convolutional neural network to achieve the accuracy of 76.9% for enhancer identification and 67.8% for enhancer classification prediction [10]. All of the above methods emphasize the better prediction results but fail to mention the dimensional advantages of the model [11, 12]. Due to the fact that high-dimensional features may lead to an over-fitting and high-dimension disaster or an increase of redundant information, the machine learning model trained by this initial high-dimension feature is often found to be underperforming in practice [13–17].

In this paper, a low dimensional feature model is obtained by using a variety of feature extraction strategies and dimension reduction technology [18–23]. The identification and classification of enhancers have been achieved via the combination of machine learning models and artificial neural network with the accuracy rate of 76.7% and 84.9%, respectively. It also should be noted that the dimension of the feature model used to identify enhancers is only 37, which is much lower than the past methods. And this paper also got an 18-dimension feature model for enhancer identification, and its accuracy reached 76.5% after testing.

2. Materials and Methods

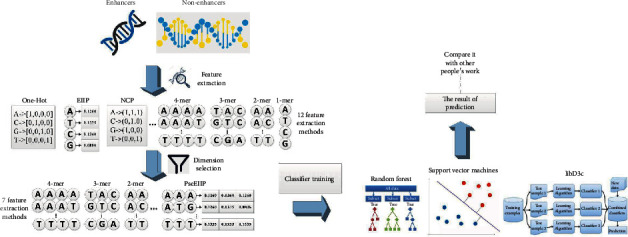

In this paper, the identification and classification of enhancers are described by Figures 1 and 2, respectively.

Figure 1.

Research process of enhancer identification.

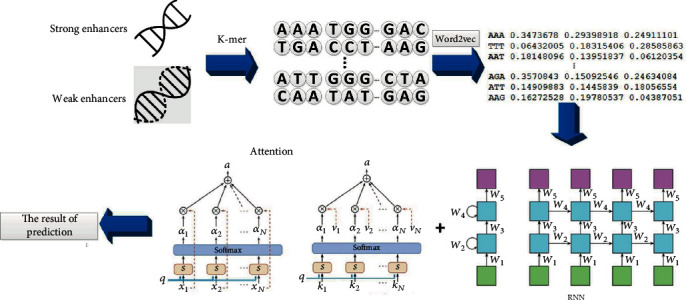

Figure 2.

Research process of enhancer classification.

2.1. Benchmark Dataset

This paper used a dataset proposed by Liu et al., which was also used in the development of iEnhizer-2L, EnhancerPred, iEnhancer-EL, and iEnhancer-ECNN. In this dataset, enhancer information was collected from 9 different cell lines, and DNA sequences of 200 bp in length were extracted. In order to avoid the deviation of the classifier, enhancers with the similarity of over 90% were deleted from the dataset through CD-HIT [24, 25]. The dataset contains 1484 enhancers and 1484 nonenhancers. Among them, 1484 enhancers include 742 strong enhancers and 742 weak enhancers.

2.2. Feature Extraction

Machine learning algorithms cannot directly perform annotations on continuous nucleotide sequences, so it is necessary to convert nucleotide sequences represented by strings into feature vectors represented by numbers [26–28]. This paper implemented feature extraction through iLearn [29].

2.2.1. K-mer

The K-mer feature extraction strategy refers to calculating the frequency of the unit in the entire sequence with k adjacent nucleotides as a unit [30, 31]. This paper uses 1-mer, 2-mer, 3-mer, and 4-mer feature extraction methods, which are stated by the following formulas:

| (1) |

N t is the length of a DNA sequence and Na, Nab, Nabc, Nabcd are the units composed of adjacent K nucleotides.

2.2.2. Reverse Compliment K-mer (RCK-mer)

Reverse Compliment K-mer is a variant of K-mer, which ignores the complementary sequences of adjacent nucleotide sequences. For example, there are 16 types of 2-mer: “AA,” “AC,” “AG,” “AT,” “CA,” “CC,” “CG,” “CT,” “GA,” “GC,” “GG,” “GT,” “TA,” “TC,” “TG,” and “TT.” Because ‘TT' is the reverse completion K-mer of “AA,” it can be left out. Therefore, there are only 10 kinds of 2-mer in this method: “AA,” “AC,” “AG,” “AT,” “CA,” “CC,” “CG,” “GA,” “GC,” and “TA.” The frequency of each K-mer was calculated in turn.

2.2.3. Enhanced Nucleic Acid Composition (ENAC)

Enhanced nucleic acid composition is the frequency of each nucleotide occurring within a fixed sequence window length, which slides continuously from the 5′ end to the 3′ end of each nucleotide sequence and usually used to encode nucleotide sequences of the same length.

2.2.4. Composition of K-Spaced Nucleic Acid Pairs (CKSNAP)

This method calculated the frequency of pairs of nucleotides separated by K nucleotides in the whole sequence. When k = 0, it is consistent with the features represented by 2-mer. It should be noted that the frequency of nucleotide pairs is calculated though, when k = 0, 1, 2, 3, 4, and 5, the length of sequences should be L-1, L-2, L-3, L-4, L-5, and L-6.

2.2.5. Nucleotide Chemical Property (NCP)

The method took into account different chemical structures and chemical properties of four nucleotides [32, 33]. “A” is presented as (1, 1, 1), “C” as (0, 1, 0), “G” as (1, 0, 0), and “T” as (0, 0, 1).

2.2.6. Accumulated Nucleotide Frequency (ANF)

This method combined the approach of nucleotide chemical properties and considers the chemical properties, the location, and the frequency of each nucleotide. For example, for a sequence “TCGTTCATGG,” “T” appears in bits 1, 4, 5, and 8, with frequencies corresponding to 1 (1/1), 0.5 (2/4), 0.6 (3/5), and 0.5 (4/8), respectively; “C” appears in bits 2 and 6, with frequencies corresponding to 0.5 (1/2) and 0.33 (2/6), respectively; “G” appears in bits 3, 9, and 10, with frequencies corresponding to 0.33 (1/3), 0.22 (2/9), and 0.3 (3/10), respectively; “A” appears in the 7th position, so its frequency was 0.14 (1/7). Therefore, the sequence can be expressed as {(0, 0, 1, 1), (0, 1, 0, 0.5), (1, 0, 0, 0.33), (0, 0, 1, 0.5), (0, 0, 1, 0.6), (0, 1, 0, 0.33), (1, 1, 1, 0.14), (0, 0, 1, 0.5), (1, 0, 0, 0.22), (1, 0, 0, 0.3)} [34, 35].

2.2.7. Electron-Ion Interaction Pseudopotentials of Trinucleotide (EIIP)

Nair and Pillai [36] proposed the Electron-Ion Interaction Pseudopotentials of Trinucleotide (EIIP) of nucleotides A, G, C, and T. The EIIP of the four nucleotides is A: 0.1260, C: 0.1340, G: 0.0806, and T: 0.1335. This method directly used the EIIP to represent the nucleotides in the DNA sequence. Therefore, the dimension of EIIP is the length of the DNA sequence.

2.2.8. Electron-Ion Interaction Pseudopotentials of Trinucleotide (PseEIIP)

In these codes, EIIPA, EIIPT, EIIPG, and EIIPC were used to represent the EIIP of nucleotides A, T, G, and C, respectively. Then, the average value of EIIP of the three nucleotides in each sample was used to construct the feature vector, which can be expressed as follows:

| (2) |

f abc, a, b, c ∈ (A, T, C, G) is the normalized frequency of a trinucleotide, and EIIPabc, a, b, c ∈ (A, T, C, G) is the sum of EIIP values of three nucleotides.

2.2.9. One-Hot

Each enhancer in the dataset is a 200 bp nucleotide sequence, which consists of four nucleotides, namely, adenine (A), guanine (G), cytosine (C) and thymine (T). Each nucleotide is represented by a set of vectors (Table 1) [37, 38].

Table 1.

One-Hot encoding.

| Nucleotides | Code |

|---|---|

| A | [1,0,0,0] |

| T | [0,0,0,1] |

| C | [0,1,0,0] |

| G | [0,0,1,0] |

2.3. Feature Selection

Feature selection is the method of selecting a subset of related features used in model construction [39, 40]. Because the dimension of features will be reduced after selection, this process is called dimension reduction.

2.3.1. MRMD2.0

This paper used MRMD2.0 [41] to achieve dimension reduction. Firstly, MRMD2.0 uses seven main feature ranking methods (ANOVA, MRMD, MIC, Lasso, mRMR, chi-square test, and RFE) to calculate the feature sets, respectively, and then uses the idea of the PageRank algorithm to comprehensively process the results of the seven feature ranking algorithms and get the final feature ranking, Then, using the positive addition strategy, the features arranged in descending order are added to the feature subset for verification, and the best feature subset is finally obtained.

2.3.2. Evolutionary Search

Evolutionary Search uses evolutionary algorithms for feature selection. An evolutionary algorithm is not a specific algorithm; it includes a variety of algorithms (genetic algorithm, memetic algorithm, and multiobjective evolutionary algorithm). The inspiration of the evolutionary algorithm draws on the evolutionary operations of living things in nature. Compared with traditional optimization algorithms such as calculus-based methods and exhaustive methods, it is a mature global with high robustness and wide applicability. The optimization method has the characteristics of self-organization, self-adaptation, and self-learning. It is not limited by the nature of the problem and can effectively handle complex problems that are difficult to solve by traditional optimization algorithms.

2.4. Classifier

2.4.1. Recurrent Neural Network

This paper also used recurrent neural networks to make predictions on the basis of the memory model. It is expected that the network can remember the previous features and infer the subsequent results according to the features; hence, the overall network structure continues in the cycle. The biggest problem with memory is that it has forgetfulness. We can always remember the recent events more clearly and forget the events that happened long ago. Recurrent neural networks also have this problem. In order to solve this problem, two variants of the network structure have emerged: one is called LSTM, and the other is called GRU. Both of these variants can well solve the problem of long-term dependence.

2.4.2. Random Forest

In this study, a random forest was applied to play a role as a classifier for prediction. Random forest is widely employed in the bioinformatics research [42–52]. This classifier concludes multiple decision trees while the output category is arranged by the mode of the category output by trees individually. This paper implemented a random forest classifier through the weka platform.

2.4.3. Support Vector Machine

As a very powerful machine learning method widely used in biological sequence prediction [53–71], the support vector machine was used for prediction in this research. It is a class of generalized linear classifiers that classify data binary in a supervised learning method, and its decision boundary is the maximum margin hyperplane that is solved for the learning sample. This paper used libSVM to implement support vector machine and adjust parameters c and g using grid to optimize the prediction results.

2.4.4. libD3C

This paper also applied the libD3C classifier [72] to test the performance of models. The classifier adopts a selective ensemble strategy, based on the hybrid ensemble pruning model combining k-means clustering and function selection cycle framework and sequential search, by training multiple candidate classifiers and then selecting a set of accurate and different classifiers to settle the problem.

2.5. Evaluation of Prediction

This paper used sensitivity (Sn), specificity (Sp), total accuracy (Acc), and Mathew (Mcc) correlation coefficients to evaluate the performance of the model [73–83].

| (3) |

TP is true positive; FN is false negative; FP is false positive; TN is true negative.

3. Results and Discussion

3.1. Identification of Enhancers

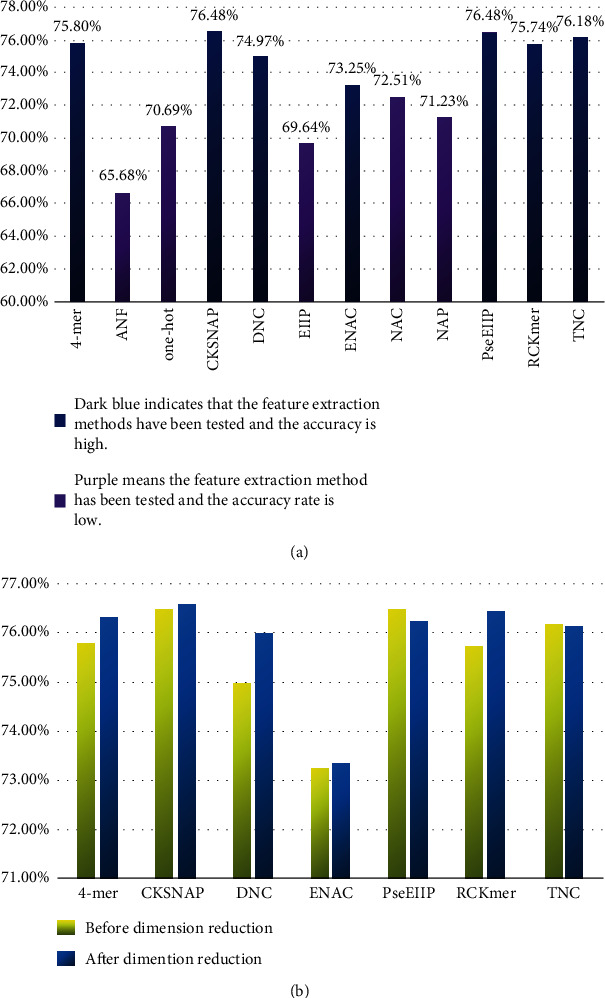

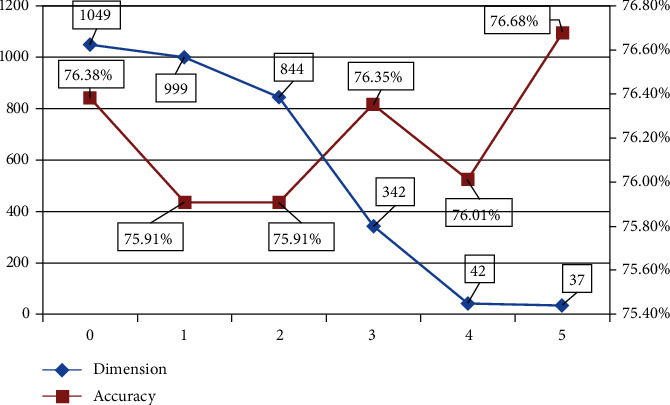

Feature vectors of enhancers and nonenhancers were obtained by K-mer, RCK-mer, ENAC, CKSNAP, NCP, ANF, EIIP, PseEIIP, and One-Hot feature extraction methods. In order to determine which feature extraction methods were suitable for the identification of enhancers, the random forest was adopted through ten-fold cross-validation for each method. After testing (Figure 3), this paper believed that 2-mer, 3-mer, 4-mer, CKSNAP, ENAC, PseEIIP, and RCK-mer, the seven feature extraction methods, were more effective. Since the dimension of the feature model obtained through the seven extraction methods was rather high, which could cause the classifier overfitting the training set and lead to a less effective performance in practical applications. This paper expected to get a low-dimension and excellent performance feature model; hence, the seven feature models were merged after individual dimension reduction through MRMD2.0; then, we found that the dimension was 1049, which was still relatively high. Therefore, the merged model went through 5 consecutive dimension reductions by MRMD2.0, and a 37-dimension feature model was achieved eventually. At this time, the dimension can no longer be reduced further (Figure 4). Using the random forest classifier, the 37-dimension feature model was tested through ten-fold cross-validation (Table 2), and the accuracy reached 76.7%; the running time of the method is 2.14 seconds.

Figure 3.

(a) The accuracy of different feature extraction methods after verification. Through analysis, this article believed that the method represented by dark blue had higher accuracy, while the method represented by purple had lower accuracy. (b) Changes in accuracy of different extraction methods before and after dimensionality reduction. Through analysis, this paper believed that accuracy has improved after dimensionality reduction.

Figure 4.

The relationship between accuracy change and dimension change. According to trends, this paper believed that dimension and accuracy are negatively correlated. Using MRMD2.0, when the dimension was 37, the accuracy reached 76.68%, and the dimension reduction continued; the accuracy cannot be improved.

Table 2.

The comparison between this paper and the previous work on enhancer identification.

| Acc | AUC | SN | SP | MCC | Dimension | |

|---|---|---|---|---|---|---|

| iEnhancer-2L | 0.730 | 0.806 | 0.710 | 0.750 | 0.460 | |

| EnhancerPred | 0.740 | 0.801 | 0.735 | 0.745 | 0.480 | |

| iEnhancer-EL | 0.748 | 0.817 | 0.710 | 0.785 | 0.496 | |

| iEnhancer-ECNN | 0.769 | 0.832 | 0.785 | 0.752 | 0.537 | 2400 |

| Our method | 0.767 | 0.837 | 0.733 | 0.801 | 0.535 | 37 |

At the same time, this paper used Evolutionary Search to reduce the dimension of the merged 1049-dimensional model to compare the differences between different dimension reduction tools. After 8-dimension reductions, an 18-dimension model was obtained in this paper, and the accuracy rate reached 76.5% after 10-fold cross-validation. Although this feature model is inferior to the model obtained by MRMD2.0 in performance, it has obvious advantages in dimension. The 18-dimensional feature model may imply that it is an important marker for distinguishing enhancers. These 18-dimension features come from 4-mer, 2-mer, CKSNAP, RCK-mer, and PseEIIP, respectively, indicating that specific dinucleotides, trinucleotides, and their electronic-ion interactions play an important role in enhancer sequences. By using two tools, we can find that Evolutionary Search has an advantage in dimension after dimension reduction, and MRMD2.0 has more advantages in terms of performance parameters after dimension reduction.

In order to further determine the stability of the feature model, this paper used support vector machine and libD3C to test the 37-dimension model at the same time (Table 2). Through the support vector machine combined with the grid search method (c 8192.0, g 0.001953125), the accuracy reached 76.5%. Using the libD3C classifier, the accuracy reached 75.5%. The prediction accuracy of the three classifiers for the feature model all exceeded 75%, indicating a very stable feature model. Meanwhile, in addition to the excellent performance of the feature model examined in this paper, it also has a very low dimension compared with a previous work (Table 2), which can effectively avoid dimensional disasters.

3.2. Classification of Enhancers

For the feature extraction of strong enhancers and weak enhancers, the same methods as enhancer identification were adopted, and then, the random forest was used through ten-fold cross-validation to examine the performance. After testing, this paper believed that also 2-mer, 3-mer, 4-mer, CKSNAP, ENAC, PseEIIP, and RCK-mer, the seven feature extraction methods, perform slightly better than other methods, but were not satisfactory. Therefore, this paper attempted to improve accuracy through dimension reduction techniques. After reducing the dimensions of the seven feature models that performed slightly better, they were merged to continue the dimension reduction. After four dimension reductions, an 82-dimension feature model was obtained. At this time, it was impossible to continue the further dimension reduction. The 82-dimension model was cross-validated with a random forest classifier, and the accuracy of 62.3% was still not ideal.

Next, this paper used the voting mechanism to output the prediction results of the 82 feature model of the three classifiers libSVM, random forest, and libD3C and retained the prediction results with the highest confidence based on the given confidence of each classifier result. After statistics, the final accuracy was 63.1%, the result was still not ideal.

As the recurrent neural network has contributed a lot in the fields of sequence problems and natural language processing with a limited capacity of memory, the variant of recurrent neural network—Long Short-Term Memory—was applied in this research to predict biological sequences. This paper used the 3-mer method to segment the sequence and then trained the word embedding through word to vector. Next, this study used the LSTM model based on the attention mechanism to predict the word segmentation file. When the model was a two-layer neuron, hidden_dim was 100, the learning rate was 0.005, and the adam optimizer was used; the accuracy of ten-fold cross-validation reached 84.9%. After comparison (Table 3), this paper has achieved ideal results in the classification of enhancers.

Table 3.

The comparison between this paper and the previous work on enhancer classification.

| Acc | SN | SP | MCC | |

|---|---|---|---|---|

| iEnhancer-2L | 0.605 | 0.470 | 0.740 | 0.218 |

| EnhancerPred | 0.550 | 0.45 | 0.65 | 0.102 |

| iEnhancer-EL | 0.61 | 0.540 | 0.68 | 0.222 |

| iEnhancer-ECNN | 0.678 | 0.791 | 0.564 | 0.368 |

| Our method | 0.849 | 0.858 | 0.84 | 0.699 |

4. Conclusions

In this paper, a 37-dimension feature model for identifying enhancers was obtained through multiple dimension reductions. After testing, the performance of the model was sound and stable. At the same time, this paper has achieved ideal results in the classification of enhancers through 3-mer methods, word to vector techniques, and RNN models. It is expected that the method proposed in this paper can provide a certain reference for the future research on enhancers in the academic world.

Acknowledgments

This work is supported by the Funding of Shenzhen Polytechnic (No. 6020320002K). This manuscript used iLearn online tool to extract features, used classifiers through Weka platform, and used MRMD2.0 and Evolutionary Search to reduce dimensions. Dongyuan Yu contributed to the language editing of this article. Dongyuan Yu is from Northeast Agricultural University.

Contributor Information

Qingyuan Li, Email: liqingyuan@webmail.hzau.edu.cn.

Lichao Zhang, Email: lczhang5354@szu.edu.cn.

Data Availability

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Authors' Contributions

Qingwen Li and Lei Xu contributed equally to this work.

References

- 1.Li Y. H., Yu C. Y., Li X. X., et al. Therapeutic target database update 2018: enriched resource for facilitating bench-to-clinic research of targeted therapeutics. Nucleic Acids Research. 2018;46(D1):D1121–D1127. doi: 10.1093/nar/gkx1076. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Li B., Tang J., Yang Q., et al. NOREVA: normalization and evaluation of MS-based metabolomics data. Nucleic Acids Research. 2017;45(W1):W162–W170. doi: 10.1093/nar/gkx449. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Fu J., Tang J., Wang Y., et al. Discovery of the consistently well-performed analysis chain for SWATH-MS based pharmacoproteomic quantification. Frontiers in Pharmacology. 2018;9:p. 681. doi: 10.3389/fphar.2018.00681. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Bu H., Hao J., Guan J., Zhou S. Predicting Enhancers from Multiple Cell Lines and Tissues across Different Developmental Stages Based On SVM Method. Current Bioinformatics. 2018;13(6):655–660. doi: 10.2174/1574893613666180726163429. [DOI] [Google Scholar]

- 5.Liu B., Fang L., Long R., Lan X., Chou K. C. iEnhancer-2L: a two-layer predictor for identifying enhancers and their strength by pseudo k-tuple nucleotide composition. Bioinformatics. 2016;32(3):362–369. doi: 10.1093/bioinformatics/btv604. [DOI] [PubMed] [Google Scholar]

- 6.Jia C., He W. EnhancerPred: a predictor for discovering enhancers based on the combination and selection of multiple features. Scientific Reports. 2016;6(1):p. 38741. doi: 10.1038/srep38741. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Liu B., Li K., Huang D. S., Chou K. C. iEnhancer-EL:identifying enhancers and their strength with ensemble learning approach. Bioinformatics. 2018;34(22):3835–3842. doi: 10.1093/bioinformatics/bty458. [DOI] [PubMed] [Google Scholar]

- 8.Tang J., Fu J., Wang Y., et al. Simultaneous improvement in the precision, accuracy, and robustness of label-free proteome quantification by optimizing data manipulation chains. Molecular & Cellular Proteomics. 2019;18(8):1683–1699. doi: 10.1074/mcp.RA118.001169. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Nguyen Q. H., Nguyen-Vo T. H., le N. Q. K., Do T. T. T., Rahardja S., Nguyen B. P. iEnhancer-ECNN: identifying enhancers and their strength using ensembles of convolutional neural networks. BMC Genomics. 2019;20(Suppl 9):p. 951. doi: 10.1186/s12864-019-6336-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Xue W., Yang F., Wang P., et al. What contributes to serotonin-norepinephrine reuptake inhibitors’ dual-targeting mechanism? The key role of transmembrane domain 6 in human serotonin and norepinephrine transporters revealed by molecular dynamics simulation. ACS Chemical Neuroscience. 2018;9(5):1128–1140. doi: 10.1021/acschemneuro.7b00490. [DOI] [PubMed] [Google Scholar]

- 11.Yang Q., Wang Y., Zhang Y., et al. NOREVA: enhanced normalization and evaluation of time-course and multi-class metabolomic data. Nucleic Acids Research. 2020;48(W1):W436–W448. doi: 10.1093/nar/gkaa258. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Yin J., Sun W., Li F., et al. VARIDT 1.0: variability of drug transporter database. Nucleic Acids Research. 2020;48(D1):D1042–D1050. doi: 10.1093/nar/gkz779. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Li Q., Zhou W., Wang D., Wang S., Li Q. Prediction of anticancer peptides using a low-dimensional feature model. Frontiers in Bioengineering and Biotechnology. 2020;8 doi: 10.3389/fbioe.2020.00892. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Cheng L. Omics data and artificial intelligence: new challenges for gene therapy. Current Gene Therapy. 2020;20(1):p. 1. doi: 10.2174/156652322001200604150041. [DOI] [PubMed] [Google Scholar]

- 15.Liu B., Gao X., Zhang H. BioSeq-Analysis2.0: an updated platform for analyzing DNA, RNA, and protein sequences at sequence level and residue level based on machine learning approaches. Nucleic Acids Research. 2019;47(20, article e127) doi: 10.1093/nar/gkz740. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Xu L., Liang G., Shi S., Liao C. SeqSVM: A Sequence-Based Support Vector Machine Method for Identifying Antioxidant Proteins. International Journal of Molecular Sciences. 2018;19(6):p. 1773. doi: 10.3390/ijms19061773. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Xu Y., Wang Y., Luo J., Zhao W., Zhou X. Deep learning of the splicing (epi) genetic code reveals a novel candidate mechanism linking histone modifications to ESC fate decision. Nucleic Acids Research. 2017;45(21):12100–12112. doi: 10.1093/nar/gkx870. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Wang Y., Zhang S., Li F., et al. Therapeutic target database 2020: enriched resource for facilitating research and early development of targeted therapeutics. Nucleic Acids Research. 2020;48(D1):D1031–D1041. doi: 10.1093/nar/gkz981. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Yu L., Xu F., Gao L. Predict new therapeutic drugs for hepatocellular carcinoma based on gene mutation and expression. Frontiers in Bioengineering and Biotechnology. 2020;8:p. 8. doi: 10.3389/fbioe.2020.00008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Liu B. BioSeq-Analysis: a platform for DNA, RNA, and protein sequence analysis based on machine learning approaches. Briefings in Bioinformatics. 2019;20(4):1280–1294. doi: 10.1093/bib/bbx165. [DOI] [PubMed] [Google Scholar]

- 21.Shao J., Yan K., Liu B. FoldRec-C2C: protein fold recognition by combining cluster-to-cluster model and protein similarity network. Briefings in Bioinformatics. 2020 doi: 10.1093/bib/bbaa144. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Zou Q., Chen L., Huang T., Zhang Z., Xu Y. Machinelearning and analytics in biomedicine. Artificial Intelligence in Medicine. 2017;83:p. 1. doi: 10.1016/j.artmed.2017.09. [DOI] [PubMed] [Google Scholar]

- 23.Zou Q., Mrozek D., Ma Q., Xu Y. Scalable data mining algorithms in computational biology and biomedicine. BioMed Research International. 2017;2017:3. doi: 10.1155/2017/5652041. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Fu L., Niu B., Zhu Z., Wu S., Li W. CD-HIT: accelerated for clustering the next-generation sequencing data. Bioinformatics. 2012;28(23):3150–3152. doi: 10.1093/bioinformatics/bts565. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Zou Q., Lin G., Jiang X., Liu X., Zeng X. Sequence clustering in bioinformatics: an empirical study. Briefings in Bioinformatics. 2020;21(1):1–10. doi: 10.1093/bib/bby090. [DOI] [PubMed] [Google Scholar]

- 26.Liu B., Li K. iPromoter-2L2.0: identifying promoters and their types by combining Smoothing Cutting Window algorithm and sequence-based features. Molecular Therapy-Nucleic Acids. 2019;18:80–87. doi: 10.1016/j.omtn.2019.08.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Yang Y. H., Ma C., Wang J. S., et al. Prediction of N7-methylguanosine sites in human RNA based on optimal sequence features. Genomics. 2020;112(6):4342–4347. doi: 10.1016/j.ygeno.2020.07.035. [DOI] [PubMed] [Google Scholar]

- 28.Liu M. L., Su W., Guan Z. X., et al. An overview on predicting protein subchloroplast localization by using machine learning methods. Current Protein & Peptide Science. 2020;21 doi: 10.2174/1389203721666200117153412. [DOI] [PubMed] [Google Scholar]

- 29.Chen Z., Zhao P., Li F., et al. iLearn: an integrated platform and meta-learner for feature engineering, machine learning analysis and modeling of DNA, RNA and protein sequence data. Briefings in Bioinformatics. 2020;21(3):1047–1057. doi: 10.1093/bib/bbz041. [DOI] [PubMed] [Google Scholar]

- 30.Yang H., Yang W., Dao F. Y., et al. A comparison and assessment of computational method for identifying recombination hotspots in Saccharomyces cerevisiae. Briefings in Bioinformatics. 2020;21(5):1568–1580. doi: 10.1093/bib/bbz123. [DOI] [PubMed] [Google Scholar]

- 31.Lai H. Y., Zhang Z. Y., Su Z. D., et al. iProEP: a computational predictor for predicting promoter. Molecular therapy. Nucleic acids. 2019;17:337–346. doi: 10.1016/j.omtn.2019.05.028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Dao F. Y., Lv H., Yang Y. H., Zulfiqar H., Gao H., Lin H. Computational identification of N6-methyladenosine sites in multiple tissues of mammals. Computational and Structural Biotechnology Journal. 2020;18:1084–1091. doi: 10.1016/j.csbj.2020.04.015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Lv H., Zhang Z. M., Li S. H., Tan J. X., Chen W., Lin H. Evaluation of different computational methods on 5-methylcytosine sites identification. Briefings in Bioinformatics. 2020;21(3):982–995. doi: 10.1093/bib/bbz048. [DOI] [PubMed] [Google Scholar]

- 34.Ding Y., Tang J., Guo F. Identification of Protein–Proteininteractions via a Matrix-Basedsequence model with acid Information. International Journal of Molecular Sciences. 2016;17(10):p. 1623. doi: 10.3390/ijms17101623. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Ding Y., Tang J., Guo F. Predicting protein-protein interactions via multivariate mutual information of protein sequences. BMC Bioinformatics. 2016;17(1):p. 398. doi: 10.1186/s12859-016-1253-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Nair A., Pillai S. A coding measure scheme employing electron-ion interaction pseudopotential (EIIP) Bioinformation. 2006;1(6):197–202. [PMC free article] [PubMed] [Google Scholar]

- 37.Lv H., Dao F. Y., Zhang D., et al. iDNA-MS: integrated tool for DNA sites in Genomes. iScience. 2020;23(4, article 100991) doi: 10.1016/j.isci.2020.100991. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Dao F. Y., Lv H., Zulfiqar H., et al. A computational platform to identify origins of replication sites in eukaryotes. Briefings in Bioinformatics. 2020 doi: 10.1093/bib/bbaa017. [DOI] [PubMed] [Google Scholar]

- 39.Zhang Z. Y., Yang Y. H., Ding H., Wang D., Chen W., Lin H. Design powerful predictor for mRNA subcellular location prediction in Homo sapiens. Briefings in Bioinformatics. 2020 doi: 10.1093/bib/bbz177. [DOI] [PubMed] [Google Scholar]

- 40.Yang W., Zhu X. J., Huang J., Ding H., Lin H. A brief survey of machine learning methods in protein sub-Golgi localization. Current Bioinformatics. 2019;14(3):234–240. doi: 10.2174/1574893613666181113131415. [DOI] [Google Scholar]

- 41.He S., Guo F., Zou Q., Ding H. MRMD2.0:a Python tool for machine learning features ranking and reduction. Current Bioinformatics. 2020;15 doi: 10.2174/1574893615999200503030350. [DOI] [Google Scholar]

- 42.Lv Z., Zhang J., Ding H., Zou Q. RF-PseU: A Random Predictor for RNA Sites. Frontiers in Bioengineering and Biotechnology. 2020;8 doi: 10.3389/fbioe.2020.00134. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Ru X. Q., Li L. H., Zou Q. Incorporating distance-based top-n-gram and random forest to identify electron transport proteins. Journal of Proteome Research. 2019;18(7):2931–2939. doi: 10.1021/acs.jproteome.9b00250. [DOI] [PubMed] [Google Scholar]

- 44.Xu L., Liang G., Liao C., Chen G. D., Chang C. C. k-Skip-n-Gram-RF: random method for Alzheimer's Identification. Frontiers in Genetics. 2019;10 doi: 10.3389/fgene.2019.00033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Lv Z., Jin S., Ding H., Zou Q. A random forest sub-Golgi protein classifier optimized via dipeptide and amino acid composition features. Frontiers in Bioengineering and Biotechnology. 2019;7:p. 215. doi: 10.3389/fbioe.2019.00215. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Cheng L., Jiang Y., Ju H., et al. InfAcrOnt: calculating cross-ontology term similarities using information flow by a random walk. BMC Genomics. 2018;19(Suppl 1):p. 919. doi: 10.1186/s12864-017-4338-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Yu L., Yao S., Gao L., Zha Y. Conserved disease modules extracted from multilayer heterogeneous disease and gene networks for understanding disease mechanisms and predicting disease treatments. Frontiers in Genetics. 2019;9 doi: 10.3389/fgene.2018.00745. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Yu L., Su R., Wang B., et al. Prediction of novel drugs for hepatocellular carcinoma based on multi-source random walk. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2017;14(4):966–977. doi: 10.1109/TCBB.2016.2550453. [DOI] [PubMed] [Google Scholar]

- 49.Liu B., Chen S., Yan K., Weng F. iRO-PsekGCC: identify DNA replication origins based on pseudo k-tuple GC composition. Frontiers in Genetics. 2019;10:p. 842. doi: 10.3389/fgene.2019.00842. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Wang M., Yue L., Cui X., et al. Prediction of extracellular matrix proteins by fusing multiple feature information, elastic net, and random forest algorithm. Mathematics. 2020;8(2):p. 169. doi: 10.3390/math8020169. [DOI] [Google Scholar]

- 51.Wang X., Yu B., Ma A., Chen C., Liu B., Ma Q. Protein–protein interaction sites prediction by ensemble random forests with synthetic minority oversampling technique. Bioinformatics. 2019;35(14):2395–2402. doi: 10.1093/bioinformatics/bty995. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Shi H., Liu S., Chen J., Li X., Ma Q., Yu B. Predicting drug-target interactions using Lasso with random forest based on evolutionary information and chemical structure. Genomics. 2019;111(6):1839–1852. doi: 10.1016/j.ygeno.2018.12.007. [DOI] [PubMed] [Google Scholar]

- 53.Meng C., Guo F., Zou Q. CWLy-SVM: a support vector machine-based tool for identifying cell wall lytic enzymes. Computational Biology and Chemistry. 2020;87:p. 107304. doi: 10.1016/j.compbiolchem.2020.107304. [DOI] [PubMed] [Google Scholar]

- 54.Wang Y., Shi F., Cao L., et al. Morphological segmentation analysis and texture-based support vector machines classification on mice liver fibrosis microscopic images. Current Bioinformatics. 2019;14(4):282–294. doi: 10.2174/1574893614666190304125221. [DOI] [Google Scholar]

- 55.Chao L., Wei L., Zou Q. SecProMTB: a SVM-based classifier for secretory proteins of Mycobacterium tuberculosis with imbalanced data set. Proteomics. 2019;19, article e1900007 doi: 10.1002/pmic.201900007. [DOI] [PubMed] [Google Scholar]

- 56.Zhang N., Sa Y., Guo Y., Lin W., Wang P., Feng Y. Discriminating Ramos and Jurkat cells with image textures from diffraction imaging flow cytometry based on a support vector machine. Current Bioinformatics. 2018;13(1):50–56. doi: 10.2174/1574893611666160608102537. [DOI] [Google Scholar]

- 57.Meng C., Jin S., Wang L., Guo F., Zou Q. AOPs-SVM: A Sequence-Based Classifier of Antioxidant Proteins Using a Support Vector Machine. Frontiers in Bioengineering and Biotechnology. 2019;7 doi: 10.3389/fbioe.2019.00224. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Yu L., Gao L. Human pathway-based disease network. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2019;16(4):1240–1249. doi: 10.1109/TCBB.2017.2774802. [DOI] [PubMed] [Google Scholar]

- 59.Liu B., Li C., Yan K. DeepSVM-fold: protein fold recognition by combining support vector machines and pairwise sequence similarity scores generated by deep learning networks. Briefings in Bioinformatics. 2020;21(5):1733–1741. doi: 10.1093/bib/bbz098. [DOI] [PubMed] [Google Scholar]

- 60.Capellini T. D., Vaccari G., Ferretti E., et al. Scapula development is governed by genetic interactions of Pbx1 with its family members and with Emx2 via their cooperative control of Alx1. Development. 2010;137(15):2559–2569. doi: 10.1242/dev.048819. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Wang H., Ding Y., Tang J., Guo F. Identification of membrane protein types via multivariate information fusion with Hilbert-Schmidt Independence Criterion. Neurocomputing. 2020;383:257–269. doi: 10.1016/j.neucom.2019.11.103. [DOI] [Google Scholar]

- 62.Shen Y., Tang J., Guo F. Identification of protein subcellular localization via integrating evolutionary and physicochemical information into Chou’s general PseAAC. Journal of Theoretical Biology. 2019;462:230–239. doi: 10.1016/j.jtbi.2018.11.012. [DOI] [PubMed] [Google Scholar]

- 63.Shen Y., Ding Y., Tang J., Zou Q., Guo F. Critical evaluation of web-based prediction tools for human protein subcellular localization. Briefings in Bioinformatics. 2020;21(5):1628–1640. doi: 10.1093/bib/bbz106. [DOI] [PubMed] [Google Scholar]

- 64.Ding Y., Tang J., Guo F. Identification of drug-target interactions via multiple information integration. Information Sciences. 2017;418:546–560. [Google Scholar]

- 65.Xu L., Liang G., Liao C., Chen G. D., Chang C. C. An efficient classifier for Alzheimer’s disease genes identification. Molecules. 2018;23(12):p. 3140. doi: 10.3390/molecules23123140. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Xu L., Liang G., Wang L., Liao C. A novel hybrid sequence-based model for identifying anticancer peptides. Genes. 2018;9(3):p. 158. doi: 10.3390/genes9030158. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Xu L., Liang G., Chen B., Tan X., Xiang H., Liao C. A computational method for the identification of endolysins and autolysins. Protein & Peptide Letters. 2019;26 doi: 10.2174/0929866526666191002104735. [DOI] [PubMed] [Google Scholar]

- 68.Zhu X., He J., Zhao S., Tao W., Xiong Y., Bi S. A comprehensive comparison and analysis of computational predictors for RNA N6-methyladenosine sites of Saccharomyces cerevisiae. Briefings in Functional Genomics. 2019;18(6):367–376. doi: 10.1093/bfgp/elz018. [DOI] [PubMed] [Google Scholar]

- 69.Xiong Y., Wang Q., Yang J., Zhu X., Wei D. Q. PredT4SE-stack: prediction of bacterial type IV secreted effectors from protein sequences using a stacked ensemble method. Frontiers in Microbiology. 2018;9:p. 2571. doi: 10.3389/fmicb.2018.02571. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.He J., Fang T., Zhang Z., Huang B., Zhu X., Xiong Y. PseUI: pseudouridine sites identification based on RNA sequence information. BMC Bioinformatics. 2018;19(1):p. 306. doi: 10.1186/s12859-018-2321-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Kang J., Fang Y., Yao P., Li N., Tang Q., Huang J. NeuroPP: A Tool for the Prediction of Neuropeptide Precursors Based on Optimal Sequence Composition. Interdisciplinary Sciences. 2019;11(1):108–114. doi: 10.1007/s12539-018-0287-2. [DOI] [PubMed] [Google Scholar]

- 72.Lin C., Chen W., Qiu C., Wu Y., Krishnan S., Zou Q. LibD3C: ensemble classifiers with a clustering and dynamic selection strategy. Neurocomputing. 2014;123:424–435. doi: 10.1016/j.neucom.2013.08.004. [DOI] [Google Scholar]

- 73.Cheng L., Zhao H., Wang P., et al. Computational methods for identifying similar diseases. Molecular therapy. Nucleic acids. 2019;18:590–604. doi: 10.1016/j.omtn.2019.09.019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Cheng L., Qi C., Zhuang H., Fu T., Zhang X. gutMDisorder: a comprehensive database for dysbiosis of the gut microbiota in disorders and interventions. Nucleic Acids Research. 2020;48(D1):D554–D560. doi: 10.1093/nar/gkz843. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Tang Y. J., Pang Y. H., Liu B. IDP-Seq2Seq: Identification of Intrinsically Disordered Regions based on Sequence to Sequence Learning. Bioinformatics. 2020 doi: 10.1093/bioinformatics/btaa667. [DOI] [PubMed] [Google Scholar]

- 76.Wang Z., He W., Tang J., Guo F. Identification of highest-affinity binding sites of yeast transcription factor families. Journal of Chemical Information and Modeling. 2020;60(3):1876–1883. doi: 10.1021/acs.jcim.9b01012. [DOI] [PubMed] [Google Scholar]

- 77.Li J., Pu Y., Tang J., Zou Q., Guo F. DeepAVP: a dual-channel deep neural network for identifying variable-length antiviral peptides. IEEE Journal of Biomedical and Health Informatics. 2020 doi: 10.1109/JBHI.2020.2977091. [DOI] [PubMed] [Google Scholar]

- 78.Shen C., Ding Y., Tang J., Jiang L., Guo F. LPI-KTASLP: prediction of lncRNA-protein interaction by semi-supervised link learning with multivariate information. IEEE Access. 2019;7:13486–13496. doi: 10.1109/ACCESS.2019.2894225. [DOI] [Google Scholar]

- 79.Ding Y., Tang J., Guo F. Identification of drug-side effect association via multiple information integration with centered kernel alignment. Neurocomputing. 2019;325:211–224. doi: 10.1016/j.neucom.2018.10.028. [DOI] [Google Scholar]

- 80.Ding Y., Tang J., Guo F. Identification of drug-target interactions via fuzzy bipartite local model. Neural Computing & Applications. 2020;32(14):10303–10319. doi: 10.1007/s00521-019-04569-z. [DOI] [Google Scholar]

- 81.Shan X., Wang X., Li C. D., et al. Prediction of CYP450 enzyme-substrate selectivity based on the network-based label space division method. Journal of Chemical Information and Modeling. 2019;59(11):4577–4586. doi: 10.1021/acs.jcim.9b00749. [DOI] [PubMed] [Google Scholar]

- 82.Chu Y., Kaushik A. C., Wang X., et al. DTI-CDF: a cascade deep forest model towards the prediction of drug-target interactions based on hybrid features. Briefings in Bioinformatics. 2019 doi: 10.1093/bib/bbz152. [DOI] [PubMed] [Google Scholar]

- 83.Al-Ajlan A., El Allali A. CNN-MGP: Convolutional Neural Networks for Gene Prediction. Interdisciplinary Sciences. 2019;11(4):628–635. doi: 10.1007/s12539-018-0313-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.