Abstract

Backpropagation (BP) has been the most successful algorithm used to train artificial neural networks. However, there are several gaps between BP and learning in biologically plausible neuronal networks of the brain (learning in the brain, or simply BL, for short). In particular, (1) it has been unclear to date whether BP can be implemented exactly via BL; (2) there is a lack of local plasticity in BP, i.e., weight updates require information that is not locally available, while BL utilizes only locally available information; and (3) there is a lack of autonomy in BP, i.e., some external control over the neural network is required (e.g., switching between prediction and learning stages requires changes to dynamics and synaptic plasticity rules), while BL works fully autonomously. Bridging such gaps, i.e., understanding how BP can be approximated by BL, has been of major interest in both neuroscience and machine learning. Despite tremendous efforts, however, no previous model has bridged the gaps to the degree of demonstrating an equivalence to BP; instead, only approximations to BP have been shown. Here, we present for the first time a framework within BL that bridges the above crucial gaps. We propose a BL model that (1) produces exactly the same updates of the neural weights as BP, while (2) employing local plasticity, i.e., all neurons perform only local computations, done simultaneously. We then modify it to an alternative BL model that (3) also works fully autonomously. Overall, our work provides important evidence for the debate on the long-disputed question of whether the brain can perform BP.

1. Introduction

Backpropagation (BP) [1–3], the main principle underlying learning in deep artificial neural networks (ANNs) [4], has long been criticized for its biological implausibility (i.e., BP’s computational procedures and principles are unrealistic for the brain to implement) [5–10]. Despite such criticisms, growing evidence demonstrates that ANNs trained with BP outperform alternative frameworks [11] and closely reproduce activity patterns observed in the cortex [12–20]. As indicated in [10, 21], since we apparently cannot find a better alternative than BP, the brain is likely to employ at least the core principles underlying BP, but perhaps implements them in a different way. Hence, bridging the gaps between BP and learning in biological neuronal networks of the brain (learning in the brain, for short, or simply BL) has been a major open question for both neuroscience and machine learning [10, 21–29]. Such gaps are reviewed in [30–33]. The most crucial and most intensively studied gaps are (1) that it has been unclear to date whether BP can be implemented exactly via BL, (2) BP’s lack of local plasticity, i.e., weight updates in BP require information that is not locally available, while in BL weights are typically modified only on the basis of the activities of the two neurons that the synapse connects, and (3) BP’s lack of autonomy, i.e., BP requires some external control over the network (e.g., to switch between prediction and learning), while BL works fully autonomously.

Tremendous research efforts have aimed at filling these gaps by trying to approximate BP in BL models. However, earlier BL models did not scale to larger and more complicated problems [8, 34–42]. More recent works show the capacity of scaling up BL to the level of BP [43–57]. However, to date, none of the earlier or recent models has bridged the gaps to the degree of demonstrating an equivalence to BP, though some of them [37, 48, 53, 58–60] demonstrate that they approximate BP, or are equivalent to BP under unrealistic restrictions, e.g., that the feedback is sufficiently weak [61, 48, 62]. The inability to fully close the gaps between BP and BL keeps the community’s concerns open and leaves the link between the power of artificial intelligence and that of biological intelligence in question.

Recently, an approach based on predictive coding networks (PCNs), a widely used framework for describing information processing in the brain [31], has partially bridged these crucial gaps. This model employed a supervised learning algorithm for PCNs to which we refer as inference learning (IL) [48]. IL is capable of approximating BP with attractive properties: local plasticity and autonomous switching between prediction and learning. However, IL has not yet fully bridged the gaps: (1) IL is only an approximation of BP, rather than an equivalent algorithm, and (2) it is not fully autonomous, as it still requires a signal controlling when to update weights.

Therefore, in this paper, we propose the first BL model that is equivalent to BP while satisfying local plasticity and full autonomy. The main contributions of this paper are briefly summarized as follows:

- To develop a BL approach that is equivalent to BP, we propose three easy-to-satisfy conditions for IL, under which IL produces exactly the same weight updates as BP. We call the proposed approach zero-divergent IL (Z-IL). In addition to being equivalent to BP, Z-IL also satisfies the properties of local plasticity and partial autonomy between prediction and learning.

- However, Z-IL is still not fully autonomous, as weight updates in Z-IL still require a triggering signal. We thus further propose a fully autonomous Z-IL (Fa-Z-IL) model, which no longer requires any control signal. Fa-Z-IL is the first BL model that not only produces exactly the same weight updates as BP, but also performs all computations locally, simultaneously, and fully autonomously.

- We prove the general result that Z-IL is equivalent to BP while satisfying local plasticity, and that Fa-Z-IL is additionally fully autonomous. Consequently, this work may bridge the crucial gaps between BP and BL. It could thus provide previously missing evidence for the debate on whether BP could describe learning in the brain, and link the power of biological and machine intelligence.

The rest of this paper is organized as follows. Section 2 recalls BP in ANNs and IL in PCNs. In Section 3, we develop Z-IL in PCNs and show its equivalence to BP and its local plasticity. Section 4 focuses on how Z-IL can be realized with full autonomy. Section 5 provides further experimental results, Section 6 reviews related work, and Section 7 gives a conclusion.

2. Preliminaries

We now briefly recall artificial neural networks (ANNs) and predictive coding networks (PCNs), which are trained with backpropagation (BP) and inference learning (IL), respectively. For both models, we describe two stages, namely, (1) the prediction stage, when no supervision signal is present, and the goal is to use the current parameters to make a prediction, and (2) the learning stage, when a supervision signal is present, and the goal is to update the current parameters. Following [48], we use a slightly different notation than in the original descriptions to highlight the correspondence between the variables in the two models. The notation is summarized in Table 1 and will be introduced in detail as the models are described. To make the dimension of variables explicit, we denote vectors with a bar (e.g., $\bar{x} = (x_1, x_2, \ldots, x_n)$ and $\bar{0} = (0, 0, \ldots, 0)$).

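Table 1. Notation for ANNs and PCNs.

| | Value-node activity | Predicted value-node activity | Error term or node | Weight | Objective function | Activation function | Layer size | Number of layers | Input signal | Supervision signal | Learning rate for weights | Integration step for inference |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ANNs | $y_i^l$ | – | $\delta_i^l$ | $w_{i,j}^l$ | $E$ | $f$ | $n^l$ | $l_{\max}+1$ | $s_i^{in}$ | $s_i^{out}$ | $\alpha$ | – |
| PCNs | $x_{i,t}^l$ | $\mu_{i,t}^l$ | $\varepsilon_{i,t}^l$ | $\theta_{i,j}^l$ | $F_t$ | $f$ | $n^l$ | $l_{\max}+1$ | $s_i^{in}$ | $s_i^{out}$ | $\alpha$ | $\gamma$ |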

2.1. ANNs trained with BP

Artificial neural networks (ANNs) [3] are organized in layers, with multiple neuron-like value nodes in each layer. Following [48], to make the link to PCNs more visible, we change the direction in which layers are numbered and index the output layer by 0 and the input layer by $l_{\max}$. We denote by $y_i^l$ the input to the i-th node in the l-th layer. Thus, the connections between adjacent layers are:

$$y_i^l = \sum_{j=1}^{n^{l+1}} w_{i,j}^{l+1} f(y_j^{l+1}), \tag{1}$$

where f is the activation function, $w_{i,j}^{l+1}$ is the weight from the j-th node in the (l + 1)-th layer to the i-th node in the l-th layer, and $n^{l+1}$ is the number of nodes in layer (l + 1). Note that, in this paper, we consider only the case where the parameters are weights, without bias values. However, all results of this paper can be easily extended to the case with bias values as additional parameters; see supplementary material.

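Prediction: Given values of the input $\bar{s}^{in} = (s_1^{in}, \ldots, s_{n^{l_{\max}}}^{in})$, every $y_i^{l_{\max}}$ in the ANN is set to the corresponding $s_i^{in}$, and then every $y_i^0$ is computed as the prediction via Eq. (1).

Learning: Given a pair $(\bar{s}^{in}, \bar{s}^{out})$ from the training set, $\bar{y}^0 = (y_1^0, \ldots, y_{n^0}^0)$ is computed via Eq. (1) from $\bar{s}^{in}$ as input and compared with $\bar{s}^{out}$ via the following objective function E:

$$E = \frac{1}{2} \sum_{i=1}^{n^0} \left(s_i^{out} - y_i^0\right)^2. \tag{2}$$

Backpropagation (BP) updates the weights of the ANN by:

$$\Delta w_{i,j}^{l+1} = -\alpha \cdot \frac{\partial E}{\partial w_{i,j}^{l+1}} = \alpha \cdot \delta_i^l \, f(y_j^{l+1}), \tag{3}$$

where α is the learning rate, and $\delta_i^l = -\partial E / \partial y_i^l$ is the error term, given as follows:

$$\delta_i^l = \begin{cases} s_i^{out} - y_i^0 & \text{if } l = 0, \\ f'(y_i^l) \sum_{k=1}^{n^{l-1}} \delta_k^{l-1} w_{k,i}^{l} & \text{if } l \in \{1, \ldots, l_{\max} - 1\}. \end{cases} \tag{4}$$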
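Eqs. (1) to (4) can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' implementation: the tanh activation, the nested-list weight layout (`W[l]` holds the weights from layer l + 1 to layer l), and the helper names `forward`, `bp_updates`, and `loss` are all assumptions made here.

```python
import math


def f(v):
    """Activation function; tanh is an assumption, the text only requires some f."""
    return math.tanh(v)


def df(v):
    """Derivative of the assumed activation function."""
    return 1.0 - math.tanh(v) ** 2


def forward(W, s_in):
    """Eq. (1): compute node inputs y[l] from the input layer l_max down to layer 0."""
    l_max = len(W)
    y = [None] * (l_max + 1)
    y[l_max] = list(s_in)  # the input layer is set to the input signal
    for l in range(l_max - 1, -1, -1):
        fy = [f(v) for v in y[l + 1]]
        y[l] = [sum(w * a for w, a in zip(row, fy)) for row in W[l]]
    return y


def loss(W, s_in, s_out):
    """Eq. (2): squared error between supervision signal and prediction."""
    y0 = forward(W, s_in)[0]
    return 0.5 * sum((so - yo) ** 2 for so, yo in zip(s_out, y0))


def bp_updates(W, s_in, s_out, alpha):
    """Eqs. (3) and (4): one weight update per layer, same layout as W."""
    l_max = len(W)
    y = forward(W, s_in)
    # Eq. (4), l = 0: output error term.
    delta = [[so - yo for so, yo in zip(s_out, y[0])]]
    # Eq. (4), l >= 1: error terms propagated towards the input layer.
    for l in range(1, l_max):
        prev = delta[l - 1]
        delta.append([
            df(y[l][i]) * sum(prev[k] * W[l - 1][k][i] for k in range(len(prev)))
            for i in range(len(y[l]))
        ])
    # Eq. (3): update for the weight from node j in layer l + 1 to node i in layer l.
    return [
        [[alpha * d * f(v) for v in y[l + 1]] for d in delta[l]]
        for l in range(l_max)
    ]
```

One way to sanity-check such a sketch is to compare every entry of `bp_updates` with a finite-difference estimate of $-\alpha \, \partial E / \partial w$ obtained from `loss`; the two should agree up to numerical error.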

2.2. PCNs trained with IL

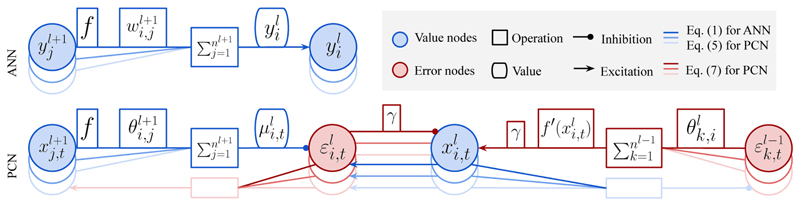

Predictive coding networks (PCNs) [31] are a widely used model of information processing in the brain, originally developed for unsupervised learning. It has recently been shown that when a PCN is used for supervised learning, it closely approximates BP [48]. As the learning algorithm in [48] involves inferring the values of hidden nodes, we call it inference learning (IL). Thus, we denote by t the time axis during inference. As shown in Fig. 1, a PCN contains value nodes (blue nodes, with the activity of $x_{i,t}^l$), which are each associated with corresponding prediction-error nodes (error nodes: red nodes, with the activity of $\varepsilon_{i,t}^l$). Differently from ANNs, which propagate the activity between value nodes directly, PCNs propagate the activity between value nodes $x_{i,t}^l$ via the error nodes $\varepsilon_{i,t}^l$:

$$\mu_{i,t}^l = \sum_{j=1}^{n^{l+1}} \theta_{i,j}^{l+1} f(x_{j,t}^{l+1}) \quad \text{and} \quad \varepsilon_{i,t}^l = x_{i,t}^l - \mu_{i,t}^l, \tag{5}$$

where the $\theta_{i,j}^{l+1}$’s are the connection weights, paralleling $w_{i,j}^{l+1}$ in the described ANN, and $\mu_{i,t}^l$ denotes the prediction of $x_{i,t}^l$ based on the value nodes $x_{j,t}^{l+1}$ in a higher layer. Thus, the error node $\varepsilon_{i,t}^l$ computes the difference between the actual and the predicted $x_{i,t}^l$. The value node $x_{i,t}^l$ is modified so that the overall energy $F_t$ in $\varepsilon_{i,t}^l$ is minimized all the time:

$$F_t = \sum_{l=0}^{l_{\max}-1} \sum_{i=1}^{n^l} \frac{1}{2} \left(\varepsilon_{i,t}^l\right)^2. \tag{6}$$

Figure 1. ANNs and PCNs trained with BP and IL, respectively.

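In this way, $x_{i,t}^l$ tends to move close to $\mu_{i,t}^l$. Such a process of minimizing $F_t$ by modifying all $x_{i,t}^l$ is called inference, and it is running during both prediction and learning. Inference minimizes $F_t$ by modifying $x_{i,t}^l$, following a unified rule for both stages:

$$\Delta x_{i,t}^l = \begin{cases} 0 & \text{if } l = l_{\max}, \\ \gamma \cdot \left(-\varepsilon_{i,t}^l + f'(x_{i,t}^l) \sum_{k=1}^{n^{l-1}} \varepsilon_{k,t}^{l-1} \theta_{k,i}^{l}\right) & \text{if } l \in \{1, \ldots, l_{\max} - 1\}, \\ \gamma \cdot \left(-\varepsilon_{i,t}^l\right) & \text{if } l = 0 \text{ during prediction}, \\ 0 & \text{if } l = 0 \text{ during learning}, \end{cases} \tag{7}$$

where $x_{i,t+1}^l = x_{i,t}^l + \Delta x_{i,t}^l$, and γ is the integration step for $x_{i,t}^l$. Here, $\Delta x_{i,t}^l$ is different between prediction and learning only for l = 0, as the output value nodes $x_{i,t}^0$ are left unconstrained during prediction and are fixed to $s_i^{out}$ during learning. $\Delta x_{i,t}^l$ is zero for $l = l_{\max}$, as $x_{i,t}^{l_{\max}}$ is fixed to $s_i^{in}$ in both stages. Eqs. (5) and (7) can be evaluated in a network of simple neurons, as illustrated in Fig. 1.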

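Prediction: Given an input $\bar{s}^{in}$, the value nodes $x_{i,t}^{l_{\max}}$ in the input layer are set to $s_i^{in}$. Then, all the error nodes $\varepsilon_{i,t}^l$ are optimized by the inference process and decay to zero as t → ∞. Thus, the value nodes $x_{i,t}^0$ converge to $\mu_{i,t}^0$, the same values as $y_i^0$ of the corresponding ANN with the same weights.

Learning: Given a pair $(\bar{s}^{in}, \bar{s}^{out})$ from the training set, the value nodes of both the input and the output layers are set to the training pair (i.e., $x_{i,t}^{l_{\max}} = s_i^{in}$ and $x_{i,t}^0 = s_i^{out}$); thus,

$$\varepsilon_{i,t}^0 = x_{i,t}^0 - \mu_{i,t}^0 = s_i^{out} - \mu_{i,t}^0. \tag{8}$$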

Optimized by the inference process, the error nodes can no longer decay to zero; instead, they converge to values as if the errors had been backpropagated. Once the inference converges to an equilibrium ($t = t_c$), where $t_c$ is a fixed large number, a weight update is performed. The weights $\theta_{i,j}^{l+1}$ are updated to minimize the same objective function $F_t$; thus,

$$\Delta \theta_{i,j}^{l+1} = -\alpha \cdot \frac{\partial F_t}{\partial \theta_{i,j}^{l+1}} = \alpha \cdot \varepsilon_{i,t}^l \, f(x_{j,t}^{l+1}), \tag{9}$$

where α is the learning rate. By Eqs. (7) and (9), all computations are local (local plasticity) in IL, and, as stated, the model can autonomously switch between prediction and learning (some autonomy), via running inference. However, a control signal is still needed to trigger the weight update at $t = t_c$; thus, full autonomy is not realized yet. The learning of IL is summarized in Algorithm 1. Note that detailed derivations of Eqs. (7) and (9) are given in the supplementary material (and in [48]).
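The inference and learning rules of IL can be illustrated with a small plain-Python sketch. It is an illustration under assumptions, not the authors' code: the tanh activation, the nested-list layout (`theta[l]` holds the weights from layer l + 1 to layer l), and the names `errors`, `energy`, `inference_step`, `predict_state`, and `il_learn` are hypothetical. The sketch also starts learning from the converged prediction state, which is the initialization the text describes for the prediction stage.

```python
import math


def f(v):
    """Activation function; tanh is an assumption."""
    return math.tanh(v)


def df(v):
    return 1.0 - math.tanh(v) ** 2


def errors(theta, x):
    """Eq. (5): eps^l = x^l - mu^l with mu^l predicted from layer l + 1."""
    eps = []
    for l in range(len(theta)):
        fx = [f(v) for v in x[l + 1]]
        mu = [sum(w * a for w, a in zip(row, fx)) for row in theta[l]]
        eps.append([xv - m for xv, m in zip(x[l], mu)])
    return eps


def energy(eps):
    """Eq. (6): sum of squared prediction errors, halved."""
    return 0.5 * sum(e * e for layer in eps for e in layer)


def inference_step(theta, x, gamma, learning):
    """One step of Eq. (7); the input layer l_max is never changed."""
    eps = errors(theta, x)
    new_x = [list(layer) for layer in x]
    if not learning:  # output nodes are free only during prediction
        new_x[0] = [xv - gamma * e for xv, e in zip(x[0], eps[0])]
    for l in range(1, len(theta)):
        for i in range(len(x[l])):
            fb = sum(eps[l - 1][k] * theta[l - 1][k][i] for k in range(len(eps[l - 1])))
            new_x[l][i] = x[l][i] + gamma * (-eps[l][i] + df(x[l][i]) * fb)
    return new_x


def predict_state(theta, s_in):
    """Converged prediction state: all errors are zero, x^l equals the ANN's y^l."""
    l_max = len(theta)
    x = [None] * (l_max + 1)
    x[l_max] = list(s_in)
    for l in range(l_max - 1, -1, -1):
        fx = [f(v) for v in x[l + 1]]
        x[l] = [sum(w * a for w, a in zip(row, fx)) for row in theta[l]]
    return x


def il_learn(theta, s_in, s_out, alpha, gamma, t_c):
    """Run inference for t_c steps with clamped output, then apply Eq. (9)."""
    x = predict_state(theta, s_in)
    x[0] = list(s_out)  # clamp the output layer to the supervision signal
    for _ in range(t_c):
        x = inference_step(theta, x, gamma, learning=True)
    eps = errors(theta, x)
    return [
        [[alpha * e * f(v) for v in x[l + 1]] for e in eps[l]]
        for l in range(len(theta))
    ]
```

The explicit `t_c` argument in `il_learn` plays the role of the external control signal discussed above: nothing inside the network decides when the weight update happens.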

3. IL with Zero Divergence from BP and with Local Plasticity

We now first describe the temporal training dynamics of BP in ANNs and IL in PCNs. Based on this, we then propose IL in PCNs with zero divergence from BP (and local plasticity), called Z-IL.

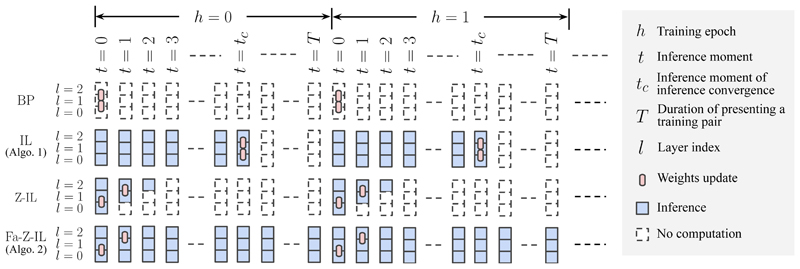

Temporal Training Dynamics. We first describe the temporal training dynamics of BP in ANNs and IL in PCNs; see Fig. 2. We assume that we train the networks on a pair (s̅ in, s̅ out) from the dataset, which is presented for a period of time T, before it is changed to another pair, moving to the next training epoch h. Within a single training epoch h, (s̅ in, s̅ out) stays unchanged, and t runs from 0. As stated before, t is the time axis during inference, which means that IL (also Z-IL and Fa-Z-IL, proposed below) in PCNs run inference starting from t = 0. In Fig. 2, squares and rounded rectangles represent nodes in one layer and connection weights between nodes in two layers of a neural network, respectively: BP (first row) only conducts weights updates in one training epoch, while IL (second row) conducts inference until it converges (t = t c) and updates weights (assuming T ≥ t c).

Algorithm 1 Learning one training pair (presented for the duration T) with IL.

Require: $x_{i,t}^{l_{\max}}$ is fixed to $s_i^{in}$, $x_{i,t}^0$ is fixed to $s_i^{out}$.

1: for t = 0 to T do // presenting a training pair

2: for each neuron i in each level l do // in parallel in the brain

3: Update $x_{i,t}^l$ to minimize $F_t$ via Eq. (7) // inference

4: if $t = t_c$ then // external control signal

5: Update each $\theta_{i,j}^{l+1}$ to minimize $F_t$ via Eq. (9)

6: return // the brain rests

7: end if

8: end for

9: end for

Figure 2. Comparison of the temporal training dynamics of BP, IL, Z-IL, and Fa-Z-IL. We assume that we train the networks on a pair (s̅ in, s̅ out) from the dataset, which is presented for a period of time T, before it is changed to another pair, moving to the next training epoch h. Within a single training epoch h, (s̅ in, s̅ out) stays unchanged, and t runs from 0. As stated before, t is the time axis during inference, which means that IL (also Z-IL and Fa-Z-IL) in PCNs runs inference starting from t = 0. The squares and rounded rectangles represent nodes in one layer and connection weights between nodes in two layers of a neural network, respectively: BP (first row) only conducts weight updates in one training epoch, while IL (second row) conducts inference until it converges (t = t c) and then updates weights (assuming T ≥ t c). Note that C1 is omitted in the figure for simplicity.

In the rest of this section and in Section 4, we introduce zero-divergent IL (Z-IL) and fully autonomous zero-divergent IL (Fa-Z-IL) in PCNs, respectively: Z-IL (third row) also conducts inference, but only until specific inference moments t = l, at which it updates the weights between the layers l and l + 1, while Fa-Z-IL (fourth row) conducts inference all the time, and the weight update is triggered autonomously at the same inference moments as in Z-IL.

Zero-divergent IL. We now present three conditions C1 to C3 under which IL in a PCN, denoted zero-divergent IL (Z-IL), produces exactly the same weights as BP in the corresponding ANN (having the same initial weights as the given PCN) applied to the same datapoints s = (s̅ in, s̅ out). In the following, we first describe the three conditions C1 to C3, and then formally state (and prove in the supplementary material) that Z-IL produces exactly the same weights as BP.

C1: Every $x_{i,t}^l$ and every $\mu_{i,t}^l$, $l \in \{1, \ldots, l_{\max} - 1\}$, and every $\mu_{i,t}^0$, at t = 0 is equal to the corresponding $y_i^l$ in the corresponding ANN with input $\bar{s}^{in}$. In particular, this also implies that $\varepsilon_{i,t}^l = 0$ at t = 0, for l ∊ {1,..., l max –1}. This condition is naturally satisfied in PCNs, if before the start of each training epoch over a training pair (s̅ in, s̅ out), the input s̅ in has been presented, and the network has converged in the prediction stage (see Section 2.2). This condition corresponds to a requirement in BP, namely that it needs one forward pass from s̅ in to compute the prediction before conducting weight updates with the supervision signal s̅ out. Note that neither the forward pass for BP nor this initialization for IL is shown in Fig. 2. Note that this condition is also applied in [48].

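Algorithm 2 Learning one training pair (presented for the duration T) with Fa-Z-IL.

Require: $x_{i,t}^{l_{\max}}$ is fixed to $s_i^{in}$, $x_{i,t}^0$ is fixed to $s_i^{out}$.

Require: $x_{i,0}^l = \mu_{i,0}^l$ for $l \in \{1, \ldots, l_{\max} - 1\}$ (C1), and γ = 1 (C3).

1: for t = 0 to T do // presenting a training pair

2: for each neuron i in each level l do // in parallel in the brain

3: Update $x_{i,t}^l$ to minimize $F_t$ via Eq. (7) // inference

4: Update each $\theta_{i,j}^{l+1}$ to minimize $F_t$ via Eq. (9) with learning rate $\alpha \cdot \phi(\varepsilon_{i,t}^l)$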

5: end for

6: end for

C2: Every weight $\theta_{i,j}^{l+1}$, l ∊ {0,...,l max – 1}, is updated at t = l, that is, at a very specific inference moment, related to the layer that the weight belongs to. This may seem quite strict, but it can actually be implemented with full autonomy (see Section 4).

C3: The integration step of inference γ is set to 1. Note that solely relaxing this condition (keeping C1 and C2 satisfied) results in BP with a different learning rate for different layers, where γ is the decay factor of this learning rate along layers (see supplementary material).
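The decay factor mentioned in C3 can be traced with a short induction. The following is a sketch rather than the proof from the supplementary material. It assumes that C1 and C2 hold and that the inference step and the weight update at a given t are computed from the same state. It writes $\varepsilon_{i,t}^l$, $x_{i,t}^l$, $\mu_{i,t}^l$, and $\theta_{i,j}^{l+1}$ for the error node, value node, prediction, and weight of the PCN, and $\delta_i^l$, $y_i^l$, and $\Delta w_{i,j}^{l+1}$ for the error term, node input, and weight update of the corresponding ANN.

$$
\begin{aligned}
&\text{Base case } (t = 0): \quad \varepsilon_{i,0}^{0} = s_i^{out} - \mu_{i,0}^{0} = \delta_i^{0}, \qquad \varepsilon_{i,0}^{l} = 0 \ \text{ for } l \geq 1. \\
&\text{Inductive step: assume } \varepsilon_{k,l-1}^{l-1} = \gamma^{l-1} \delta_k^{l-1}, \quad \varepsilon_{i,l-1}^{l} = 0, \quad x_{i,l-1}^{l} = y_i^{l}. \ \text{Then, by Eq. (7),} \\
&\qquad \Delta x_{i,l-1}^{l} = \gamma \Big( -\varepsilon_{i,l-1}^{l} + f'(x_{i,l-1}^{l}) \sum_{k} \varepsilon_{k,l-1}^{l-1} \theta_{k,i}^{l} \Big) = \gamma^{l} f'(y_i^{l}) \sum_{k} \delta_k^{l-1} w_{k,i}^{l} = \gamma^{l} \delta_i^{l}. \\
&\text{Since } \mu_{i,l}^{l} = y_i^{l} \text{ still holds at } t = l: \quad \varepsilon_{i,l}^{l} = x_{i,l}^{l} - \mu_{i,l}^{l} = \gamma^{l} \delta_i^{l}. \\
&\text{By Eq. (9) at } t = l: \quad \Delta \theta_{i,j}^{l+1} = \alpha \, \varepsilon_{i,l}^{l} \, f(x_{j,l}^{l+1}) = \alpha \gamma^{l} \, \delta_i^{l} f(y_j^{l+1}) = \gamma^{l} \, \Delta w_{i,j}^{l+1}.
\end{aligned}
$$

Two facts are used along the way: $\theta^{l}$ is only updated at t = l − 1, simultaneously with the inference step above, so it still equals the initial weight $w^{l}$ there; and $x^{l+1}$ does not move before t = l, because the errors driving it are zero until then. Under these assumptions, the effective learning rate for the weights between layers l and l + 1 is $\alpha \gamma^{l}$, which reduces to α for γ = 1.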

To prove the above equivalence statement under C1 to C3, we develop two theorems in order (proved in the supplementary material). The following first theorem formally states that the prediction error in the PCN with IL on s under C1 to C3 is equal to the error term in its ANN with BP on s.

Theorem 3.1. Let M be a PCN, M′ be its corresponding ANN (with the same initial weights as M), and let s be a datapoint. Then, every prediction error $\varepsilon_{i,t}^l$ at t = l, $l \in \{0, \ldots, l_{\max} - 1\}$, in M trained with IL on s under C1 and C3 is equal to the error term $\delta_i^l$ in M′ trained with BP on s.

We next formally state that every weights update in the PCN with IL on s under C1 to C3 is equal to the weights update in its ANN with BP on s. This then immediately implies that the final weights of the PCN with IL on s under C1 to C3 are equal to the final weights of its ANN with BP on s.

Theorem 3.2. Let M be a PCN, M′ be its corresponding ANN (with the same initial weights as M), and let s be a datapoint. Then, every update $\Delta \theta_{i,j}^{l+1}$ at t = l, $l \in \{0, \ldots, l_{\max} - 1\}$, in M trained with IL on s under C1 and C3 is equal to the update $\Delta w_{i,j}^{l+1}$ in M′ trained with BP on s.
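The statement of the two theorems can be checked numerically on a toy network. The sketch below is an illustration under assumptions, not the experimental code of the paper: it uses a tanh activation, nested-list weights (`W[l]` holds the weights from layer l + 1 to layer l), and hypothetical helper names. `zil_updates` initializes the PCN from the converged prediction (C1), updates the weights between layers l and l + 1 at t = l (C2), and takes the integration step as a parameter (C3 corresponds to `gamma=1.0`). The inference step and the weight update at each t are both computed from the same state.

```python
import math


def f(v):
    return math.tanh(v)  # assumed activation function


def df(v):
    return 1.0 - math.tanh(v) ** 2


def matvec(M, v):
    return [sum(m * a for m, a in zip(row, v)) for row in M]


def feedback(M, e, i):
    """Errors e of the layer below, fed back to node i through weights M."""
    return sum(e[k] * M[k][i] for k in range(len(e)))


def forward(W, s_in):
    """Eq. (1): node inputs of the ANN (also the converged PCN prediction)."""
    l_max = len(W)
    y = [None] * (l_max + 1)
    y[l_max] = list(s_in)
    for l in range(l_max - 1, -1, -1):
        y[l] = matvec(W[l], [f(v) for v in y[l + 1]])
    return y


def outer_update(alpha, err, pre):
    """Shared form of Eqs. (3) and (9): alpha * error * f(presynaptic value)."""
    return [[alpha * e * f(v) for v in pre] for e in err]


def bp_updates(W, s_in, s_out, alpha):
    y = forward(W, s_in)
    delta = [[so - yo for so, yo in zip(s_out, y[0])]]
    for l in range(1, len(W)):
        delta.append([df(y[l][i]) * feedback(W[l - 1], delta[l - 1], i)
                      for i in range(len(y[l]))])
    return [outer_update(alpha, delta[l], y[l + 1]) for l in range(len(W))]


def zil_updates(W, s_in, s_out, alpha, gamma=1.0):
    l_max = len(W)
    theta = [[row[:] for row in M] for M in W]  # same initial weights as the ANN
    x = forward(theta, s_in)                    # C1: converged prediction
    x[0] = list(s_out)                          # clamp output to the supervision signal
    updates = []
    for t in range(l_max):
        # Eq. (5): prediction errors of all layers at time t.
        eps = [[xv - m for xv, m in
                zip(x[l], matvec(theta[l], [f(v) for v in x[l + 1]]))]
               for l in range(l_max)]
        # C2 and Eq. (9): weights between layers t and t + 1 are updated at time t.
        upd = outer_update(alpha, eps[t], x[t + 1])
        # Eq. (7): inference step for hidden layers, using pre-update weights.
        new_x = [list(layer) for layer in x]
        for l in range(1, l_max):
            for i in range(len(x[l])):
                new_x[l][i] += gamma * (-eps[l][i]
                                        + df(x[l][i]) * feedback(theta[l - 1], eps[l - 1], i))
        theta[t] = [[w + d for w, d in zip(rw, rd)] for rw, rd in zip(theta[t], upd)]
        x = new_x
        updates.append(upd)
    return updates
```

With `gamma=1.0`, the updates returned by `zil_updates` should match those of `bp_updates` up to floating-point rounding. With another integration step, the update of layer l should be scaled by `gamma ** l`, in line with the remark in C3.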

4. Z-IL with Full Autonomy

Both IL and Z-IL in PCNs optimize the unified objective function of Eq. (6) during prediction and learning. Thus, they both enjoy some autonomy already. Specifically, they can autonomously switch between prediction and learning by running inference, depending only on whether $x_{i,t}^0$ is fixed to s̅ out or left unconstrained. However, when to update the weights still requires the control signal at t = t c and t = l for IL and Z-IL, respectively.

To remove this control signal from Z-IL, we now propose Fa-Z-IL, which realizes Z-IL with full autonomy. Specifically, we propose a function ϕ(·), which takes in $\varepsilon_{i,t}^l$ and modulates the learning rate α, producing a local learning rate for $\theta_{i,j}^{l+1}$. As can be seen from Fig. 1, $\varepsilon_{i,t}^l$ is directly connected to $\theta_{i,j}^{l+1}$, meaning that ϕ(·) works locally at a neuron scale. For each $\varepsilon_{i,t}^l$, $\phi(\varepsilon_{i,t}^l)$ produces a spike of 1 at exactly the inference moment of t = l, which amounts to triggering $\theta_{i,j}^{l+1}$ to be updated at t = l. In this way, we can let the inference and the weight update run all the time, with ϕ(·) modulating the local learning rate using local information; thus, the resulting model works fully autonomously, performs all computations locally, and updates weights at t = l, i.e., it produces exactly Z-IL/BP, as long as C1 and C3 are satisfied. Fa-Z-IL is summarized in Algorithm 2.

As the core of Fa-Z-IL, we found that quite simple functions ϕ(·) can detect the inference moment of t = l from $\varepsilon_{i,t}^l$. Specifically, from Lemma A3 in the supplementary material, we know that $\varepsilon_{i,t}^l$ under C1 diverges from its stable state at exactly t = l, i.e., $\varepsilon_{i,t}^l = 0$ for t < l and $\varepsilon_{i,t}^l \neq 0$ at t = l. Thus, $\phi(\varepsilon_{i,t}^l)$ can take in $\varepsilon_{i,t}^l$ and return 1 only if $\varepsilon_{i,t}^l \neq 0$ and $\varepsilon_{i,t'}^l = 0$ for all $t' \in \{t - t_d, \ldots, t - 1\}$, where $t_d$ is a hyperparameter. In this way, $\phi(\varepsilon_{i,t}^l)$ detects the inference moment of t = l and produces a spike of 1 at exactly t = l. In special cases where $\varepsilon_{i,t}^l = 0$ at t = l, the detection fails. However, by Eq. (9), the update $\Delta \theta_{i,j}^{l+1}$ produced at t = l is then also zero, since it is proportional to $\varepsilon_{i,t}^l$, so the failure does no harm. Since it is possible for $\varepsilon_{i,t}^l$ to cross zero, having a larger $t_d$ means a more accurate detection. In experiments, ϕ(·) with $t_d = 4$ is already capable of detecting with a 100% success rate; see Table 3. Furthermore, the function ϕ is the only additional component introduced in Fa-Z-IL to realize full autonomy, and it is highly plausible that such a computation could be performed by biological neurons, because some types of neurons are well-known to respond predominantly to changes in their input [63].

Table 3. Success rate of detecting inference moments t = l with ϕ(·) of different $t_d$.

| $t_d$ | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 16 |
|---|---|---|---|---|---|---|---|---|---|
| Success rate | 93.4% | 92.4% | 99.6% | 100.0% | 100.0% | 100.0% | 100.0% | 100.0% | 100.0% |
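The detection rule can be pictured as a small stateful function attached to a single error node. The sketch below is one possible reading of the description above, not the authors' implementation: the class name `PhiDetector`, the tolerance `tol`, and the assumption that the error was zero throughout the prediction phase before t = 0 are all introduced here. It also leaves out the condition that ϕ should react only to changes caused by feedback input. The error trace in the usage example is made up purely for illustration.

```python
from collections import deque


class PhiDetector:
    """Spike detector for one error node: returns 1.0 when the error leaves zero
    after having been zero for the previous t_d inference steps, else 0.0."""

    def __init__(self, t_d, tol=0.0):
        self.t_d = t_d
        self.tol = tol
        # Assumption: the prediction phase has converged, so the error was zero
        # for at least t_d steps before t = 0.
        self.history = deque([0.0] * t_d, maxlen=t_d)

    def __call__(self, eps):
        was_quiet = all(abs(e) <= self.tol for e in self.history)
        spike = 1.0 if (was_quiet and abs(eps) > self.tol) else 0.0
        self.history.append(eps)  # the oldest entry is dropped automatically
        return spike


# Made-up error trace of a node in layer l = 2: zero until t = 2, then nonzero,
# crossing zero once at t = 4.
trace = [0.0, 0.0, 0.3, 0.25, 0.0, -0.1]

short_memory = PhiDetector(t_d=1)
spikes_short = [short_memory(e) for e in trace]  # -> [0.0, 0.0, 1.0, 0.0, 0.0, 1.0]

long_memory = PhiDetector(t_d=4)
spikes_long = [long_memory(e) for e in trace]    # -> [0.0, 0.0, 1.0, 0.0, 0.0, 0.0]

# The local learning rate of the weights attached to this node would then be
# alpha * spike, so the weights change only at the detected moment.
```

In this toy trace, the detector with `t_d=1` fires a second, spurious spike right after the zero crossing, while the detector with `t_d=4` fires only at t = 2. This mirrors the argument that a larger $t_d$ makes the detection more accurate.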

However, Fa-Z-IL does require the input to be presented before the teacher to satisfy C1, i.e., the convergence of a prediction phase before t = 0 is still needed. We consider this to be a requirement of the learning setup. Such a requirement is also much weaker than switching computational rules (BP) or detecting the convergence of global variables (IL). We leave the study of removing this requirement or putting it inside an autonomous neural system as future research. During this prediction phase before t = 0, it is notable that the error nodes change due to feedforward input, while during learning, the error nodes change due to feedback input. In order to prevent learning during prediction, ϕ is equal to 1 only if the change in error nodes is caused by feedback input. Furthermore, classification experiments with Fa-Z-IL have been conducted, and Fa-Z-IL produces exactly the same results as Z-IL and BP; the numbers can be found in the supplementary material. It should also be noted that Fa-Z-IL loses formal equivalence to BP, but with t d ≥ 4, empirical equivalence was always retained.

All proposed models are summarized in Table 2 (schematic algorithms are given in the supplementary material), where Fa-Z-IL is the only model that is not only equivalent to BP, but also performs all computations locally and fully autonomously.

Table 2. Models and their properties.

| | Equivalence to BP | Local plasticity | Partial autonomy | Full autonomy |
|---|---|---|---|---|
| BP | ✔ | ✘ | ✘ | ✘ |
| IL | ✘ | ✔ | ✔ | ✘ |
| Z-IL | ✔ | ✔ | ✔ | ✘ |
| Fa-Z-IL | ✔ | ✔ | ✔ | ✔ |

5. Experiments

In this section, we complete the picture of this work with experimental results, providing ablation studies on MNIST and measuring the running time of the discussed approaches on ImageNet.

5.1. MNIST

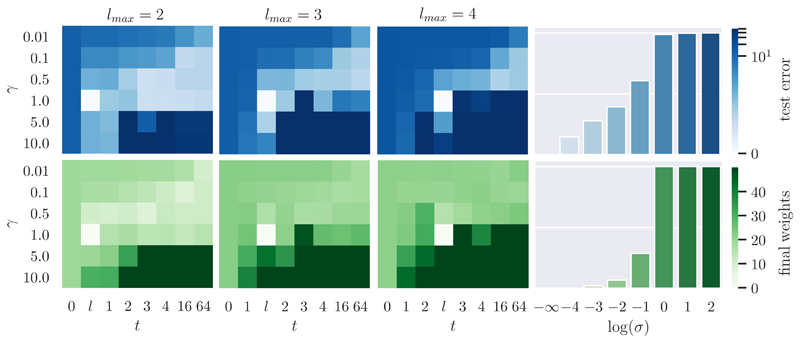

We now show that zero divergence cannot be achieved when only strictly weaker conditions than C1, C2, and C3 in Section 3 are satisfied. Specifically, with experiments on MNIST, we show the divergence of PCNs trained with ablated variants of Z-IL from ANNs trained with BP.

Setup. We use the same setup as in [48]. Specifically, we train for 64 epochs with α = 0.001, batch size 20, and logistic sigmoid as f. To remove the stochasticity of the batch sampler, all models in a group within which we measure divergence are set with the same seed. We evaluate three such groups, each set with a different randomly generated seed ({1482555873, 698841058, 2283198659}), and the final numbers are averaged over them. The divergence is measured in terms of the error percentage on the test set summed over all epochs (test error, for short), as in [48, 37, 58, 53, 59], and of the final weights, as in [59]. The divergence of the test error is the L1 distance between the corresponding test errors, averaged over 64 training iterations (the test error is evaluated after each training iteration). The divergence of the final weights is the sum of the L2 distances between the corresponding weights, after the last training iteration. We conducted experiments on MNIST (with 784 input and 10 output neurons; the other settings are as in [64]). We investigated three different network structures with 1, 2, and 3 hidden layers, respectively (i.e., l max ∊ {2,3,4}), each hidden layer containing 32 neurons.
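The two divergence measures can be written down compactly. The following sketch is one reading of the description above and not the evaluation code used for the experiments: the function names are hypothetical, test-error curves are assumed to be lists with one value per training iteration, weights are assumed to be nested lists with one matrix per layer, and the L2 distance is assumed to be taken per layer before summing.

```python
import math


def error_curve_divergence(errors_a, errors_b):
    """L1 distance between two test-error curves, averaged over training iterations."""
    if len(errors_a) != len(errors_b):
        raise ValueError("both curves must cover the same training iterations")
    return sum(abs(a - b) for a, b in zip(errors_a, errors_b)) / len(errors_a)


def final_weight_divergence(weights_a, weights_b):
    """Sum over layers of the L2 distance between corresponding weight matrices."""
    total = 0.0
    for layer_a, layer_b in zip(weights_a, weights_b):
        squared = sum((a - b) ** 2
                      for row_a, row_b in zip(layer_a, layer_b)
                      for a, b in zip(row_a, row_b))
        total += math.sqrt(squared)
    return total
```

Under this reading, two models with identical test-error curves and identical final weights have a divergence of exactly zero in both measures, which is the outcome reported for Z-IL against BP.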

Ablation of C1. Assuming C2 and C3 satisfied, to ablate C1, we consider situations when the network has not converged in the prediction stage before the training pair is presented, i.e., $x_{i,0}^l \neq \mu_{i,0}^l$. To simulate this, we sampled $x_{i,0}^l$ around the mean of $\mu_{i,0}^l$ with different standard deviations σ, where σ = 0 corresponds to satisfying C1. We sweep σ ∊ {0, 0.0001, 0.001, 0.01, 0.1, 1, 10, 100}. Fig. 3, right column, shows that zero divergence is only achieved when σ = 0, i.e., C1 is satisfied. A larger version of Fig. 3 is given in the supplementary material.

Figure 3. Ablation of C2, C3, and C1, where divergence is measured on test error and final weights.

Ablation of C2 and C3. Assuming C1 satisfied, to ablate C3, we sweep γ ∊ {0.01, 0.1, 0.5, 1, 5, 10}, and to ablate C2, we sweep t ∊ {l, 0, 1, 2, 3, 4, 16, 64}. Here, setting t to a fixed number is exactly the implementation of IL with t c set to this fixed number. We set t = l between t = l max – 2 and t = l max – 1 (as argued in the supplementary material). Fig. 3, three left columns, shows that zero divergence is only achieved when t = l and γ = 1, i.e., C2 and C3 are satisfied. Note that the settings of t ≥ 16 and γ ≤ 0.5 are typical for IL in [20].

5.2. ImageNet

We further conduct experiments on ImageNet to measure the running time of Z-IL and Fa-Z-IL on large datasets. In detail, we show that Z-IL and Fa-Z-IL create minor overheads over BP, which supports their scalability. The detailed implementation setup is given in the supplementary material.

Table 4 shows the average running time of each weight update of BP, IL, Z-IL, and Fa-Z-IL. For IL, we set t c = 20, following [48]. As can be seen, IL introduces large overheads, due to the fact that it needs at least t c inference steps before conducting a weight update. In contrast, Z-IL and Fa-Z-IL run with minor overheads compared to BP, as they require at most l max inference steps to complete one update of the weights in all layers. Comparing Fa-Z-IL to Z-IL, it is also clear that the function ϕ creates only minor overheads. These observations support the claim that Z-IL and Fa-Z-IL are indeed scalable.

Table 4. Average runtime of each weight update (in ms) of BP, IL, Z-IL, and Fa-Z-IL.

| Device | BP | IL | Z-IL | Fa-Z-IL |
|---|---|---|---|---|
| CPU | 3.1 | 19.2 | 3.6 | 3.6 |
| GPU | 3.7 | 56.3 | 4.1 | 4.2 |

6. Related Work

We now review several lines of research that approximate BP in ANNs with BL models, each of which corresponds to an argument for which BP in ANNs is considered to be biologically implausible.

One such argument is that BP in ANNs encodes error terms in a biologically implausible way, i.e., error terms are not encoded locally. It is often discussed along with the lack of local plasticity. How error terms can be alternatively encoded and propagated locally has been one of the most intensively studied topics. One promising assumption is that the error term can be represented in dendrites of the corresponding neurons [35, 65, 66]. Such efforts are unified in [21], with a broad range of works [36, 37] encoding the error term in activity differences. IL and Z-IL in PCNs also do so. In addition, Z-IL shows that the error term in BP in ANNs can be encoded with its exact value in the prediction error of IL in PCNs at very specific inference moments.

BP in ANNs is also criticized for requiring an external control (e.g., the computation is changed between prediction and learning). An important property of PCNs trained with IL, in contrast, is that they autonomously [48] switch between prediction and learning. PCNs trained with Z-IL also have this property. But IL requires an external control over when to update weights (t = t c), and Z-IL requires control to only update weights at very specific inference moments (t = l). However, Z-IL can be realized with full autonomy, leading to the proposed Fa-Z-IL.

Finally, BP in ANNs is also criticized for backward connections that are symmetric to forward connections in adjacent layers and for using unrealistic models of (non-spiking) neurons. A common way to remove the backward connections [8, 39, 67] is based on the idea of zeroth-order optimization [68]. However, the latter needs many trials depending on the number of weights [69] and can be further improved by perturbing the outputs of the neurons instead of the weights [70]. Admitting the existence of backward connections, more recent works show that asymmetric connections are able to produce a comparable performance [38, 71, 54]. In the predictive coding models (IL, Z-IL, and Fa-Z-IL), the errors are backpropagated by correct weights, because the model includes feedback connections that also learn. The weight modification rules for corresponding feedforward and feedback weights are the same, which ensures that they remain equal if initialized to equal values (see [48]). As for the models of neurons, BP has recently also been generalized to spiking neurons [72]. Our work is orthogonal to the above, i.e., we still use symmetric backward connections and do not consider spiking neurons.

Furthermore, [46] also analyzes the first steps during inference after a perturbation caused by turning on the teacher, starting from a prediction fixpoint, which may serve similar general purposes. However, the obtained learning rule differs substantially from that of our Z-IL, since the energy function is not the one which governs PCNs. A section outlining the differences of learning rules between [46] and Z-IL is included in the supplementary material.

Some features of PCNs are inconsistent with known properties of biological networks, but it has recently been shown that variants of PCNs that retain high classification performance can be developed without three of these implausible elements. The first unrealistic feature is the 1-to-1 connections between value and error nodes (Fig. 1). However, it has been proposed that errors can be represented in apical dendrites of cortical neurons [62], and the equations of predictive coding networks can be mapped onto such an architecture [10]. Alternatively, it has been demonstrated how these 1-to-1 connections can be replaced by dense connections [73]. The other two unrealistic features of PCNs are symmetric forward-backward weights and non-linear functions affecting only some outputs of the neurons. Nevertheless, it has been demonstrated that these features may be removed without significantly affecting the classification performance [73].

7. Summary and Outlook

In this paper, we have presented for the first time a framework within BL that (1) produces exactly the same updates of the neural weights as BP, while (2) employing local plasticity. Based on the above framework, we have additionally presented a BL model that (3) also works fully autonomously. This suggests a positive answer to the long-disputed question of whether the brain can perform BP.

As for future work, we believe that the proposed Fa-Z-IL model will open up many new research directions. Specifically, it has a very simple implementation (see Algorithm 2), with all computations being performed locally, simultaneously, and fully autonomously, which may lead to new architectures of neuromorphic computing.

Broader Impact

This work shows that backpropagation in artificial neural networks can be implemented in a biologically plausible way, providing previously missing evidence to the debate on whether backpropagation could describe learning in the brain. In machine learning, backpropagation drives the contemporary flourish of machine intelligence. However, it has been doubted for long that though backpropagation is indeed powerful, its computational procedure is not possible to be implemented in the brain. Our work provides strong evidence that backpropagation can be implemented in the brain, which will solidify the community’s confidence on pushing forward backpropagation-based machine intelligence. Specifically, the machine learning community may further explore if such equivalence holds for other or more complex BP-based networks.

In neuroscience, models based on backpropagation have helped to understand how information is processed in the visual system [15, 16]. However, it was not possible to fully rely on these insights, as backpropagation had so far been seen as unrealistic for the brain to implement. Our work gives strong grounds for removing such concerns, and thus could lead to a series of future works on understanding the brain with backpropagation. Specifically, the neuroscience community may now use the patterns produced by BP to verify whether such a computational model can explain learning in the brain. Also, our work may inspire researchers to look for the existence of the function φ, which would complete the biological foundation of Fa-Z-IL.

As for ethical aspects and future societal consequences, we consider our work to be an important step towards understanding biological intelligence, and we see no ethical harm arising from it. Instead, being able to understand biological intelligence potentially leads to advances in medical research, which would substantially benefit human well-being.

Acknowledgments and Disclosure of Funding

This work was supported by the China Scholarship Council under the State Scholarship Fund, by the National Natural Science Foundation of China under the grant 61906063, by the Natural Science Foundation of Tianjin City, China, under the grant 19JCQNJC00400, by the “100 Talents Plan” of Hebei Province, China, under the grant E2019050017, and by the Medical Research Council UK grant MC_UU_00003/1. This work was also supported by the Alan Turing Institute under the EPSRC grant EP/N510129/1 and by the AXA Research Fund.

Contributor Information

Yuhang Song, Email: yuhang.song@some.ox.ac.uk.

Thomas Lukasiewicz, Email: thomas.lukasiewicz@cs.ox.ac.uk.

Rafal Bogacz, Email: rafal.bogacz@ndcn.ox.ac.uk.

References

- [1] Werbos P. New tools for prediction and analysis in the behavioral sciences. Ph.D. Dissertation, Harvard University. 1974.

- [2] Parker DB. Learning-logic: Casting the cortex of the human brain in silicon. Technical report, Massachusetts Institute of Technology, Center for Computational Research in Economics and Management Science. 1985.

- [3] Rumelhart DE, Hinton GE, Williams RJ. Learning representations by back-propagating errors. Nature. 1986;323(6088):533–536.

- [4] LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521(7553):436–444. doi: 10.1038/nature14539.

- [5] Grossberg S. Competitive learning: From interactive activation to adaptive resonance. Cognitive Science. 1987;11(1):23–63.

- [6] Crick F. The recent excitement about neural networks. Nature. 1989;337(6203):129–132. doi: 10.1038/337129a0.

- [7] Abdelghani M, Lillicrap TP, Tweed DB. Sensitivity derivatives for flexible sensorimotor learning. Neural Computation. 2008;20(8):2085–2111. doi: 10.1162/neco.2008.04-07-507.

- [8] Lillicrap TP, Cownden D, Tweed DB, Akerman CJ. Random synaptic feedback weights support error backpropagation for deep learning. Nature Communications. 2016;7(1):1–10. doi: 10.1038/ncomms13276.

- [9] Roelfsema PR, Holtmaat A. Control of synaptic plasticity in deep cortical networks. Nature Reviews Neuroscience. 2018;19(3):166. doi: 10.1038/nrn.2018.6.

- [10] Whittington JC, Bogacz R. Theories of error back-propagation in the brain. Trends in Cognitive Sciences. 2019. doi: 10.1016/j.tics.2018.12.005.

- [11] Baldi P, Sadowski P. A theory of local learning, the learning channel, and the optimality of backpropagation. Neural Networks. 2016;83:51–74. doi: 10.1016/j.neunet.2016.07.006.

- [12] Zipser D, Andersen RA. A back-propagation programmed network that simulates response properties of a subset of posterior parietal neurons. Nature. 1988;331(6158):679–684. doi: 10.1038/331679a0.

- [13] Lillicrap TP, Scott SH. Preference distributions of primary motor cortex neurons reflect control solutions optimized for limb biomechanics. Neuron. 2013;77(1):168–179. doi: 10.1016/j.neuron.2012.10.041.

- [14] Cadieu CF, Hong H, Yamins DL, Pinto N, Ardila D, Solomon EA, Majaj NJ, DiCarlo JJ. Deep neural networks rival the representation of primate IT cortex for core visual object recognition. PLoS Computational Biology. 2014;10(12):e1003963. doi: 10.1371/journal.pcbi.1003963.

- [15] Yamins DL, Hong H, Cadieu CF, Solomon EA, Seibert D, DiCarlo JJ. Performance-optimized hierarchical models predict neural responses in higher visual cortex. Proceedings of the National Academy of Sciences. 2014;111(23):8619–8624. doi: 10.1073/pnas.1403112111.

- [16] Khaligh-Razavi S-M, Kriegeskorte N. Deep supervised, but not unsupervised, models may explain IT cortical representation. PLoS Computational Biology. 2014;10(11). doi: 10.1371/journal.pcbi.1003915.

- [17] Yamins DL, DiCarlo JJ. Using goal-driven deep learning models to understand sensory cortex. Nature Neuroscience. 2016;19(3):356. doi: 10.1038/nn.4244.

- [18] Kell AJ, Yamins DL, Shook EN, Norman-Haignere SV, McDermott JH. A task-optimized neural network replicates human auditory behavior, predicts brain responses, and reveals a cortical processing hierarchy. Neuron. 2018;98(3):630–644. doi: 10.1016/j.neuron.2018.03.044.

- [19] Banino A, Barry C, Uria B, Blundell C, Lillicrap T, Mirowski P, Pritzel A, Chadwick MJ, Degris T, Modayil J, et al. Vector-based navigation using grid-like representations in artificial agents. Nature. 2018;557(7705):429–433. doi: 10.1038/s41586-018-0102-6.

- [20] Whittington J, Muller T, Mark S, Barry C, Behrens T. Generalisation of structural knowledge in the hippocampal-entorhinal system. Advances in Neural Information Processing Systems. 2018:8484–8495.

- [21] Lillicrap TP, Santoro A, Marris L, Akerman CJ, Hinton G. Backpropagation and the brain. Nature Reviews Neuroscience. 2020. doi: 10.1038/s41583-020-0277-3.

- [22] Kriegeskorte N. Deep neural networks: A new framework for modeling biological vision and brain information processing. Annual Review of Vision Science. 2015;1:417–446. doi: 10.1146/annurev-vision-082114-035447.

- [23] Marblestone AH, Wayne G, Kording KP. Toward an integration of deep learning and neuroscience. Frontiers in Computational Neuroscience. 2016;10:94. doi: 10.3389/fncom.2016.00094.

- [24] Hassabis D, Kumaran D, Summerfield C, Botvinick M. Neuroscience-inspired artificial intelligence. Neuron. 2017;95(2):245–258. doi: 10.1016/j.neuron.2017.06.011.

- [25] Bartunov S, Santoro A, Richards B, Marris L, Hinton GE, Lillicrap T. Assessing the scalability of biologically-motivated deep learning algorithms and architectures. Advances in Neural Information Processing Systems. 2018:9368–9378.

- [26] Kriegeskorte N, Douglas PK. Cognitive computational neuroscience. Nature Neuroscience. 2018;21(9):1148–1160. doi: 10.1038/s41593-018-0210-5.

- [27] Kietzmann TC, McClure P, Kriegeskorte N. Deep neural networks in computational neuroscience. bioRxiv. 2018:133504. doi: 10.3389/fncom.2016.00131.

- [28] Wenliang LK, Seitz AR. Deep neural networks for modeling visual perceptual learning. Journal of Neuroscience. 2018;38(27):6028–6044. doi: 10.1523/JNEUROSCI.1620-17.2018.

- [29] Richards BA, Lillicrap TP, Beaudoin P, Bengio Y, Bogacz R, Christensen A, Clopath C, Costa RP, de Berker A, Ganguli S, et al. A deep learning framework for neuroscience. Nature Neuroscience. 2019;22(11):1761–1770. doi: 10.1038/s41593-019-0520-2.

- [30] Grossberg S. How does a brain build a cognitive code? In: Studies of Mind and Brain. Springer; 1982. pp. 1–52.

- [31] Rao RP, Ballard DH. Predictive coding in the visual cortex: A functional interpretation of some extra-classical receptive-field effects. Nature Neuroscience. 1999;2(1):79–87. doi: 10.1038/4580.

- [32] Huang Y, Rao RP. Predictive coding. Wiley Interdisciplinary Reviews: Cognitive Science. 2011;2(5):580–593. doi: 10.1002/wcs.142.

- [33] Bengio Y, Lee D-H, Bornschein J, Mesnard T, Lin Z. Towards biologically plausible deep learning. arXiv preprint arXiv:1502.04156. 2015.

- [34] O’Reilly RC. Biologically plausible error-driven learning using local activation differences: The generalized recirculation algorithm. Neural Computation. 1996;8(5):895–938.

- [35] Körding KP, König P. Supervised and unsupervised learning with two sites of synaptic integration. Journal of Computational Neuroscience. 2001;11(3):207–215. doi: 10.1023/a:1013776130161.

- [36] Bengio Y. How auto-encoders could provide credit assignment in deep networks via target propagation. arXiv preprint arXiv:1407.7906. 2014.

- [37] Lee D-H, Zhang S, Fischer A, Bengio Y. Difference target propagation. Joint European Conference on Machine Learning and Knowledge Discovery in Databases; Springer; 2015. pp. 498–515.

- [38] Nøkland A. Direct feedback alignment provides learning in deep neural networks. Advances in Neural Information Processing Systems. 2016:1037–1045.

- [39] Scellier B, Bengio Y. Equilibrium propagation: Bridging the gap between energy-based models and backpropagation. Frontiers in Computational Neuroscience. 2017;11:24. doi: 10.3389/fncom.2017.00024.

- [40] Scellier B, Goyal A, Binas J, Mesnard T, Bengio Y. Generalization of equilibrium propagation to vector field dynamics. arXiv preprint arXiv:1808.04873. 2018.

- [41] Lin T-H, Tang PTP. Dictionary learning by dynamical neural networks. arXiv preprint arXiv:1805.08952. 2018.

- [42] Illing B, Gerstner W, Brea J. Biologically plausible deep learning - but how far can we go with shallow networks? Neural Networks. 2019;118:90–101. doi: 10.1016/j.neunet.2019.06.001.

- [43] Balduzzi D, Vanchinathan H, Buhmann J. Kickback cuts backprop’s red-tape: Biologically plausible credit assignment in neural networks. Proceedings of the AAAI Conference on Artificial Intelligence; 2015.

- [44] Krotov D, Hopfield JJ. Unsupervised learning by competing hidden units. Proceedings of the National Academy of Sciences. 2019;116(16):7723–7731. doi: 10.1073/pnas.1820458116.

- [45] Kuśmierz Ł, Isomura T, Toyoizumi T. Learning with three factors: Modulating Hebbian plasticity with errors. Current Opinion in Neurobiology. 2017;46:170–177. doi: 10.1016/j.conb.2017.08.020.

- [46] Bengio Y, Mesnard T, Fischer A, Zhang S, Wu Y. STDP-compatible approximation of backpropagation in an energy-based model. Neural Computation. 2017;29(3):555–577. doi: 10.1162/NECO_a_00934.

- [47] Guerguiev J, Lillicrap TP, Richards BA. Towards deep learning with segregated dendrites. eLife. 2017;6:e22901. doi: 10.7554/eLife.22901.

- [48] Whittington JC, Bogacz R. An approximation of the error backpropagation algorithm in a predictive coding network with local Hebbian synaptic plasticity. Neural Computation. 2017;29(5):1229–1262. doi: 10.1162/NECO_a_00949.

- [49] Moskovitz TH, Litwin-Kumar A, Abbott L. Feedback alignment in deep convolutional networks. arXiv preprint arXiv:1812.06488. 2018.

- [50] Mostafa H, Ramesh V, Cauwenberghs G. Deep supervised learning using local errors. Frontiers in Neuroscience. 2018;12:608. doi: 10.3389/fnins.2018.00608.

- [51] Xiao W, Chen H, Liao Q, Poggio T. Biologically-plausible learning algorithms can scale to large datasets. arXiv preprint arXiv:1811.03567. 2018.

- [52] Obeid D, Ramambason H, Pehlevan C. Structured and deep similarity matching via structured and deep Hebbian networks. Advances in Neural Information Processing Systems. 2019:15377–15386.

- [53] Nøkland A, Eidnes LH. Training neural networks with local error signals. arXiv preprint arXiv:1901.06656. 2019.

- [54] Amit Y. Deep learning with asymmetric connections and Hebbian updates. Frontiers in Computational Neuroscience. 2019;13:18. doi: 10.3389/fncom.2019.00018.

- [55] Aljadeff J, D’amour J, Field RE, Froemke RC, Clopath C. Cortical credit assignment by Hebbian, neuromodulatory and inhibitory plasticity. arXiv preprint arXiv:1911.00307. 2019.

- [56] Akrout M, Wilson C, Humphreys PC, Lillicrap T, Tweed D. Using weight mirrors to improve feedback alignment. arXiv preprint arXiv:1904.05391. 2019.

- [57] Wang X, Lin X, Dang X. Supervised learning in spiking neural networks: A review of algorithms and evaluations. Neural Networks. 2020. doi: 10.1016/j.neunet.2020.02.011.

- [58] Ororbia AG II, Haffner P, Reitter D, Giles CL. Learning to adapt by minimizing discrepancy. arXiv preprint arXiv:1711.11542. 2017.

- [59] Ororbia AG, Mali A. Biologically motivated algorithms for propagating local target representations. Proceedings of the AAAI Conference on Artificial Intelligence; 2019. pp. 4651–4658.

- [60] Millidge B, Tschantz A, Buckley CL. Predictive coding approximates backprop along arbitrary computation graphs. arXiv preprint arXiv:2006.04182. 2020. doi: 10.1162/neco_a_01497.

- [61] Xie X, Seung HS. Equivalence of backpropagation and contrastive Hebbian learning in a layered network. Neural Computation. 2003;15(2):441–454. doi: 10.1162/089976603762552988.

- [62] Sacramento J, Costa RP, Bengio Y, Senn W. Dendritic cortical microcircuits approximate the backpropagation algorithm. Advances in Neural Information Processing Systems. 2018:8721–8732.

- [63] Frazor RA, Albrecht DG, Geisler WS, Crane AM. Visual cortex neurons of monkeys and cats: Temporal dynamics of the spatial frequency response function. Journal of Neurophysiology. 2004;91(6):2607–2627. doi: 10.1152/jn.00858.2003.

- [64] LeCun Y, Cortes C. MNIST handwritten digit database. The MNIST Database. 2010.

- [65] Körding KP, König P. Learning with two sites of synaptic integration. Network: Computation in Neural Systems. 2000;11(1):25–39.

- [66] Richards BA, Lillicrap TP. Dendritic solutions to the credit assignment problem. Current Opinion in Neurobiology. 2019;54:28–36. doi: 10.1016/j.conb.2018.08.003.

- [67] Lansdell BJ, Prakash P, Kording KP. Learning to solve the credit assignment problem. arXiv preprint arXiv:1906.00889. 2019.

- [68] Golovin D, Karro J, Kochanski G, Lee C, Song X, et al. Gradientless descent: High-dimensional zeroth-order optimization. arXiv preprint arXiv:1911.06317. 2019.

- [69] Werfel J, Xie X, Seung HS. Learning curves for stochastic gradient descent in linear feedforward networks. Advances in Neural Information Processing Systems. 2004:1197–1204. doi: 10.1162/089976605774320539.

- [70] Flower B, Jabri M. Summed weight neuron perturbation: An O(N) improvement over weight perturbation. Advances in Neural Information Processing Systems. 1993:212–219.

- [71] Liao Q, Leibo JZ, Poggio T. How important is weight symmetry in backpropagation? Proceedings of the AAAI Conference on Artificial Intelligence; 2016.

- [72] Zenke F, Ganguli S. Superspike: Supervised learning in multilayer spiking neural networks. Neural Computation. 2018;30(6):1514–1541. doi: 10.1162/neco_a_01086.

- [73] Millidge B, Tschantz A, Buckley CL. Relaxing the constraints on predictive coding models. arXiv preprint arXiv:2010.01047. 2020.
