Abstract

Malaria is a major health threat caused by Plasmodium parasites that infect the red blood cells. Two predominant types of Plasmodium parasites are Plasmodium vivax (P. vivax) and Plasmodium falciparum (P. falciparum). Diagnosis of malaria typically involves visual microscopy examination of blood smears for malaria parasites. This is a tedious, error-prone visual inspection task requiring microscopy expertise which is often lacking in resource-poor settings. To address these problems, attempts have been made in recent years to automate malaria diagnosis using machine learning approaches. Several challenges need to be met for a machine learning approach to be successful in malaria diagnosis. Microscopy images acquired at different sites often vary in color, contrast, and consistency caused by different smear preparation and staining methods. Moreover, touching and overlapping cells complicate the red blood cell detection process, which can lead to inaccurate blood cell counts and thus incorrect parasitemia calculations. In this work, we propose a red blood cell detection and extraction framework to enable processing and analysis of single cells for follow-up processes like counting infected cells or identifying parasite species in thin blood smears. This framework consists of two modules: a cell detection module and a cell extraction module. The cell detection module trains a modified Channel-wise Feature Pyramid Network for Medicine (CFPNet-M) deep learning network that takes the green channel of the image and the color-deconvolution processed image as inputs, and learns a truncated distance transform image of cell annotations. CFPNet-M is chosen due to its low resource requirements, while the distance transform allows achieving more accurate cell counts for dense cells. Once the cells are detected by the network, the cell extraction module is used to extract single cells from the original image and count the number of cells. 
Our preliminary results based on 193 patients (including 148 P. falciparum infected patients and 45 uninfected patients) show that our framework achieves a cell count accuracy of 92.2%.

Index Terms: Malaria diagnosis, Plasmodium falciparum, Plasmodium vivax, microscopy, thin blood smear, machine learning, image analysis

I. Introduction

Malaria remains a global health threat with considerable mortality rates. Diagnosis and monitoring of malaria pose ongoing challenges, particularly in resource-poor settings where experts who can analyze microscopy images are often lacking and the computational resources needed for automated analysis are limited. Light-weight automated systems that can assist health providers in the diagnosis and monitoring of malaria are critically needed to ensure rapid and accurate diagnosis and treatment. Lately, thanks to advances in computational resources and the availability of large amounts of annotated data, supervised machine learning methods have started to be used for automated malaria diagnosis from thin blood smear microscopy images [1], [2]. However, several problems still need to be addressed to develop a successful machine learning model that can segment and count the red blood cells in a thin blood smear microscopy image for malaria diagnosis. One of these problems is the large variation in appearance between blood smear images. The smear images differ in color, contrast, and consistency due to different smear preparation and staining procedures. This variety poses generalization challenges, making trained machine learning models harder to use on blood smears prepared at different locations. Another challenge is the large number of touching or overlapping cells in thin blood smear images, which leads to detection and segmentation problems. This is an important issue for malaria diagnosis and monitoring, which require accurate cell counts to calculate parasitemia (a measure of parasite load).

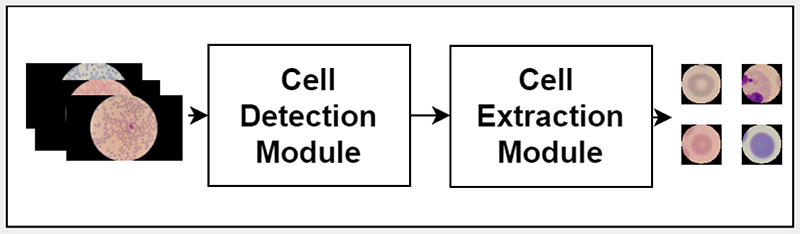

In this paper, we present a pipeline called Channel-wise Feature Pyramid Network for Medicine (CFPNet-M) [3] - Detection, Extraction and Counting (CFPNet-M-DEC), to detect, extract, and count red blood cells in thin blood smear microscopy images for automated malaria diagnosis and patient monitoring. To address the first problem, caused by the appearance variations in collected blood smear images, we propose to use a color deconvolution image [4], [5] and the green channel of the image as inputs to the network. To address the second problem of distinguishing touching cells more accurately, the model is trained as a regression model rather than a binary segmentation model. The regression model learns a processed distance transform of the binary ground truth mask. CFPNet-M [3], a light-weight network developed specifically for biomedical image segmentation, serves as the base network; its first and last layers are modified to convert it into a regression network that takes a two-channel input. Owing to characteristics such as its low memory requirement, it is suitable for resource-poor settings. The overall pipeline of the proposed red blood cell detection, extraction and monitoring system is given in Fig. 1. The original thin blood smear images are the input to the pipeline. These images are processed to obtain the color deconvolution image and the green channel, which are passed to the cell detection module. The model in the detection module infers a truncated distance transform for each image, and the inferred maps are passed to the cell extraction module. Finally, the cell extraction module uses a series of classical image processing techniques to extract each cell as a separate image and to obtain the cell count for further analysis.

Fig. 1. Flowchart of the proposed CFPNet-M-DEC framework.

II. Related Work

Automating malaria diagnosis and monitoring using thin blood smears is an active research area [2], [6], [7]. Thanks to advances in deep learning and the availability of annotated training data, recent works have started to rely on supervised deep learning techniques. Some works directly use the whole microscopy image for tasks like segmentation and detection [8]–[13], while others use extracted cell images for tasks like classification [11], [13]–[15]. Moreover, some works evaluate cell patches collected from multiple patients, whereas others evaluate the results at the patient level. Different studies focus on the detection of red blood cells for further use [16] or on the direct detection of infected cells [1], [12], [17], [18]. Some studies classify red blood cells as infected vs. uninfected, as a two-class problem [19], [20], while other studies treat the problem as a classification task with three or more classes and include classes to differentiate parasite species. Finally, there are studies that created a full pipeline for the detection and classification tasks [13], [21], [22]. On the other hand, automated systems are often needed in environments with limited computational resources or a lack of experts. In such environments, the most advanced tool on site might be a smartphone, which has been used successfully in some recent works [23]–[26].

As in many other biomedical image analysis problems, there are problem-specific issues arising from the nature of the data. In malaria diagnosis, the available data are collected from many different places, with varying image quality. Common challenges for detection and classification in biomedical images include variation in the appearance of cells of the same class, as well as touching and partially overlapping cells [27]. When accurate cell counts are important, as they are for malaria diagnosis and monitoring, touching and partially overlapping cells become an even more critical issue. To address this problem, some authors have used a distance transform as the ground truth for regression in convolutional neural networks, instead of a binary mask, to highlight the centers of the cells or particles. This can lead to a better distinction of touching cells [28]–[30].

This study proposes a processing pipeline to detect, count, and extract red blood cells from thin blood smear images to be used for identification of infected versus uninfected cells and for classification of infecting parasite species. The proposed pipeline uses a distance transform-based cell segmentation approach to increase cell count accuracy, particularly in the presence of touching and overlapping cells. The proposed pipeline also involves a color deconvolution step [4], [5] to increase the generalization capability of the trained deep learning model to better adapt to processing of images collected from different sites.

III. Methods

We propose a framework, CFPNet-M-DEC, for detecting and extracting red blood cells in thin blood smears, as shown in Fig. 1. It consists of two modules: a cell detection module and a cell extraction module. The cell detection module takes an original microscopy image as input and uses a modified CFPNet-M model to detect cells. Once the cells are detected by the network, the cell extraction module is used to extract and count single cells.

A. Detection Module

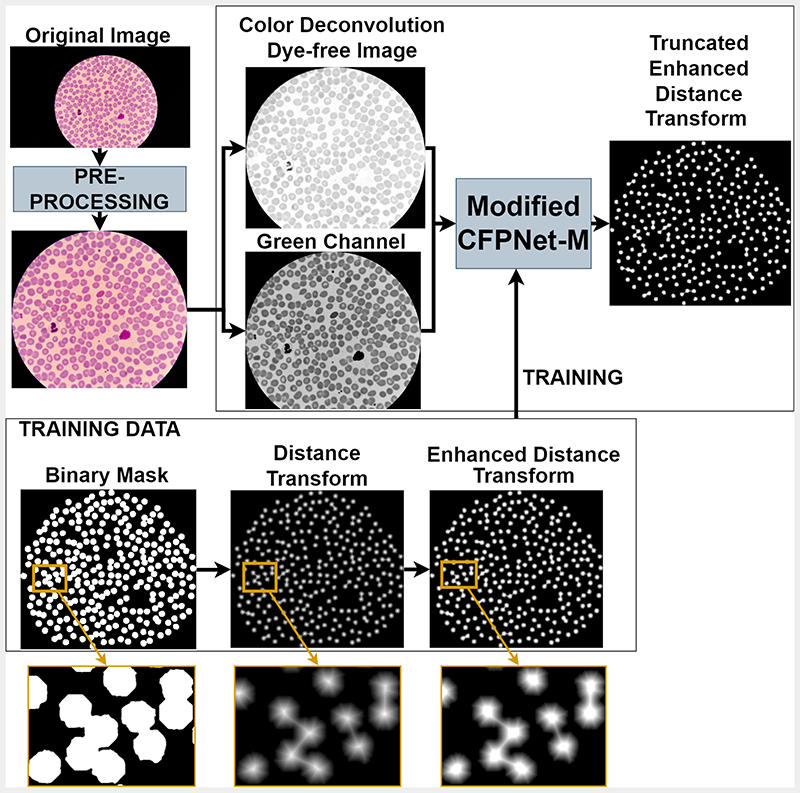

The proposed detection module, illustrated in Fig. 2, includes three main steps: (i) image pre-processing, (ii) color deconvolution, and (iii) deep learning-based detection.

Fig. 2. Different stages of the Cell Detection Module. The original image is pre-processed, then color deconvolution is done to get the dye-free image. The green channel is taken together with the dye-free image as the inputs to the modified CFPNet-M, which learns a truncated, enhanced distance transform.

1). Pre-processing

This step aims to detect and crop the circular image region seen through the microscope from the rest of the image. The original images (of size 5312 × 2988) include a dark background surrounding the blood smear image seen through the microscope. We used Otsu thresholding [31] to generate binary masks that differentiate blood smear regions from the surrounding background. Bounding boxes computed from these masks are then used to crop regions of interest from the original images. This step reduces image size to approximately 3000 × 3000. Cropped images and the corresponding ground truth segmentation masks are then resized to 800 × 800.
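The cropping step can be sketched as follows. This is a minimal NumPy illustration, not the authors' implementation: the helper names (`otsu_threshold`, `crop_field_of_view`, `resize_nearest`) are hypothetical, the grayscale conversion by channel averaging is an assumption, and nearest-neighbor resizing stands in for whatever interpolation was actually used, which the text does not specify.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Return the Otsu threshold of a uint8 grayscale image."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                   # probability of the dark class
    mu = np.cumsum(prob * np.arange(256))     # cumulative first moment
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (mu[-1] * omega - mu) ** 2 / denom
    between = np.where(denom > 0, between, 0.0)
    return int(np.argmax(between))            # maximizes between-class variance


def crop_field_of_view(rgb: np.ndarray):
    """Crop the bright microscope field of view from its dark surround."""
    gray = rgb.mean(axis=2).astype(np.uint8)  # assumption: plain channel average
    mask = gray > otsu_threshold(gray)
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:                        # nothing above threshold: keep all
        return rgb, (0, rgb.shape[0], 0, rgb.shape[1])
    box = (int(rows[0]), int(rows[-1]) + 1, int(cols[0]), int(cols[-1]) + 1)
    return rgb[box[0]:box[1], box[2]:box[3]], box


def resize_nearest(img: np.ndarray, size=(800, 800)) -> np.ndarray:
    """Nearest-neighbor resize (a stand-in for the unspecified interpolation)."""
    h, w = img.shape[:2]
    rr = np.arange(size[0]) * h // size[0]
    cc = np.arange(size[1]) * w // size[1]
    return img[rr][:, cc]
```

The same bounding box would be applied to the ground truth mask before resizing, so that image and mask stay aligned.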

Color Deconvolution: Color deconvolution is an algorithm designed to separate the contributions of different stains in RGB images [4], [5]. It can be used to extract single or multiple stains from an image. Extracting the individual dyes gives us a stain-free, common color basis for images collected from different sources. Since the microscopy images are acquired at different labs and hospitals, they vary considerably in color and consistency. To exclude the unwanted effects of this variation, the detection module uses a color deconvolution image of the original microscopy image as one of its input channels. In our experiments, the extraction is done for the Giemsa dye, but the method generalizes to other dyes and is therefore applicable to images prepared with different types of stains.
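A minimal sketch of the unmixing step, following the general optical-density formulation of color deconvolution [4], is given below. The stain vectors in `PLACEHOLDER_STAINS` are illustrative values only and are not the Giemsa vectors used in this work; the function names, the choice of stain channel, and the way the two network input channels are stacked are likewise assumptions.

```python
import numpy as np

# Hypothetical stain optical-density vectors (one stain per row, RGB columns).
# These are placeholders for illustration, NOT calibrated Giemsa vectors.
PLACEHOLDER_STAINS = np.array([
    [0.65, 0.70, 0.29],
    [0.07, 0.99, 0.11],
    [0.27, 0.57, 0.78],
])


def color_deconvolve(rgb: np.ndarray, stain_matrix: np.ndarray) -> np.ndarray:
    """Unmix an (H, W, 3) RGB image into (H, W, 3) per-stain concentrations."""
    # Normalize each stain vector to unit length.
    m = stain_matrix / np.linalg.norm(stain_matrix, axis=1, keepdims=True)
    # Beer-Lambert optical density; the +1 avoids log(0) for black pixels.
    od = -np.log10((rgb.astype(np.float64) + 1.0) / 256.0)
    # od = conc @ m, so the concentrations follow from the inverse matrix.
    conc = od.reshape(-1, 3) @ np.linalg.inv(m)
    return conc.reshape(rgb.shape)


def make_network_input(rgb: np.ndarray, stain_matrix: np.ndarray,
                       stain_index: int = 0) -> np.ndarray:
    """Stack one deconvolved channel with the green channel: (H, W, 2)."""
    stain = color_deconvolve(rgb, stain_matrix)[..., stain_index]
    green = rgb[..., 1].astype(np.float64) / 255.0
    return np.stack([stain, green], axis=-1)
```

Keeping the stain matrix as a parameter reflects the point made above: the same procedure applies to other dyes once their stain vectors are known.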

2). Training

To detect and extract individual cells for further analysis, we modified a recent light-weight segmentation network, Channel-wise Feature Pyramid Network for Medicine (CFPNet-M) [3]. We modified the first and last layers of the network to accept a two-channel input (the dye-free image and the green channel of the original image) and to perform regression rather than classification. The network is trained to map its input to a processed distance transform of the binary segmentation mask, as shown in Fig. 2. The CFPNet-M network [3] is an improved version of the classical U-Net [32] segmentation network. CFPNet-M has far fewer trainable parameters than U-Net, which reduces the resources needed for both training and inference. This property is important for malaria diagnosis in resource-poor settings. The Adam optimizer and a mean squared error loss function are used for training. The training target is generated as follows: (i) a binary cell segmentation mask is created from the manual polygon annotations of the cells in the input image; (ii) a distance transform is applied to the binary segmentation mask; (iii) contrast enhancement is applied to the distance transform output to better highlight the cell centers; (iv) the enhanced distance transform is truncated to a minimum distance to decrease the number of possible values for the regression process. The resulting truncated, enhanced distance transform map is used to train the modified CFPNet-M network.
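Steps (ii) to (iv) of the target generation can be sketched as below, using `scipy.ndimage.distance_transform_edt`. The text does not specify the contrast enhancement or the truncation rule, so the power-law enhancement (`gamma`), the global normalization, and the cut-off (`floor`) are assumptions chosen only to illustrate the idea; `make_regression_target` is a hypothetical name.

```python
import numpy as np
from scipy import ndimage


def make_regression_target(mask: np.ndarray, gamma: float = 2.0,
                           floor: float = 0.2) -> np.ndarray:
    """Turn a binary cell mask into a truncated, enhanced distance map."""
    # (ii) Euclidean distance of each foreground pixel to the background.
    dist = ndimage.distance_transform_edt(mask)
    peak = dist.max()
    if peak == 0:                       # empty mask: nothing to highlight
        return np.zeros(mask.shape, dtype=np.float64)
    # (iii) Assumed enhancement: normalize to [0, 1] and apply a power law,
    # which suppresses cell borders relative to cell centers.
    enhanced = (dist / peak) ** gamma
    # (iv) Assumed truncation: values below the floor are set to zero, so
    # only the inner part of each cell remains as a regression target.
    return np.where(enhanced >= floor, enhanced, 0.0)
```

Because the retained region of each cell is smaller than the cell itself, neighboring cells that touch in the binary mask tend to stay separated in the target.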

B. Cell Extraction Module

Once the truncated distance map of the cells is generated by the modified CFPNet-M network, post-processing steps are performed to extract individual cells for further analysis (e.g., infected vs. uninfected classification). Given the network output, single cell image patches are extracted using the following steps: (i) a binarized version of the network output is used to remove background noise from the enhanced distance map; (ii) Gaussian smoothing is applied to the enhanced distance map to reduce false detections; (iii) the extended-maxima transform [33] is applied to the smoothed distance map to detect inner cell regions; (iv) a morphological erosion operation is applied to the extended-maxima map to reduce merging of neighboring cells; (v) individual cells are identified using connected component labeling; (vi) the labeled image is resized back to the original resolution (3000 × 3000 pixels); (vii) fixed-size (200 × 200 pixels) image patches are extracted around the centroids of the connected components from the original-resolution images.
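The extraction steps can be sketched with `scipy.ndimage` as follows. Two simplifications are made and should be read as assumptions rather than the method used here: a threshold at a fraction of the global maximum stands in for the extended-maxima transform of step (iii), and centroids are rescaled to the original resolution instead of resizing the label image in step (vi). All parameter values and the name `extract_cells` are hypothetical.

```python
import numpy as np
from scipy import ndimage


def extract_cells(pred, image, bin_thresh=0.1, sigma=2.0,
                  peak_fraction=0.5, erode_iter=1, patch=200):
    """Return (patches, centers) for cells found in a predicted distance map.

    pred:  2-D network output at reduced resolution.
    image: original-resolution image, (H, W) or (H, W, C).
    """
    # (i) suppress low-valued background noise
    cleaned = np.where(pred > bin_thresh, pred, 0.0)
    # (ii) smooth to reduce spurious peaks
    smooth = ndimage.gaussian_filter(cleaned, sigma=sigma)
    if smooth.max() <= 0:
        return [], []
    # (iii) stand-in for extended maxima: keep the high inner-cell regions
    inner = smooth >= peak_fraction * smooth.max()
    # (iv) erode to reduce merging of neighboring cells
    if erode_iter > 0:
        inner = ndimage.binary_erosion(inner, iterations=erode_iter)
    # (v) connected component labeling
    labels, n = ndimage.label(inner)
    if n == 0:
        return [], []
    centroids = ndimage.center_of_mass(inner, labels, list(range(1, n + 1)))
    # (vi) map centroids to the original resolution
    sy = image.shape[0] / pred.shape[0]
    sx = image.shape[1] / pred.shape[1]
    # (vii) cut fixed-size patches; zero-pad so border cells keep full size
    half = patch // 2
    pad = [(half, half), (half, half)] + [(0, 0)] * (image.ndim - 2)
    padded = np.pad(image, pad, mode="constant")
    patches, centers = [], []
    for cy, cx in centroids:
        y, x = int(round(cy * sy)), int(round(cx * sx))
        patches.append(padded[y:y + patch, x:x + patch])
        centers.append((y, x))
    return patches, centers
```

The cell count for an image is then simply `len(patches)`.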

IV. Experimental Results and Discussion

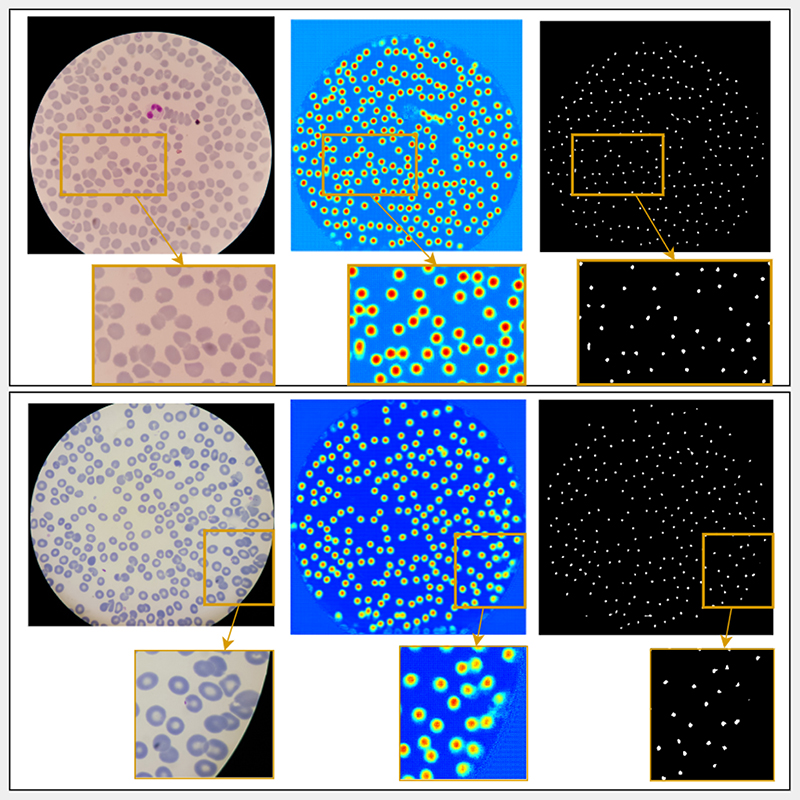

The proposed system was trained, tested, and evaluated using data from 193 patients (including 148 P. falciparum infected patients and 45 uninfected patients). Out of 955 microscopy images in total, 100 images were selected for the validation set, 100 images were selected for the test set, and the remaining 755 images were used for training. Training was done for 25 epochs using the default parameters of CFPNet-M. Sample input images, corresponding inference results, and cell center markers obtained from these outputs are shown in Fig. 3. Separate cells are extracted using the markers as described in Section III-B. The results were evaluated in terms of segmentation and detection performance and compared to a marker-controlled watershed segmentation algorithm [24].

Fig. 3. Example results from the CFPNet-M-DEC pipeline. Two example images are given in two rows, with zoomed-in parts showing touching cell examples. The first column is the original image, the second column is the model output shown in the jet colormap, and the third column shows the cell center markers found in the model output.

A. Segmentation Performance

Experimental results were first evaluated in terms of cell segmentation. Model inference results were thresholded and dilated to produce binary segmentation masks, which were compared to the manual cell segmentation in terms of the Dice similarity coefficient [34]. The Dice similarity coefficient between two binary images A and B is given in Eq. (1), where |A| represents the cardinality of image A.

$$\mathrm{DSC}(A, B) = \frac{2\,|A \cap B|}{|A| + |B|} \tag{1}$$

These results were compared to the performance of a watershed segmentation algorithm [24]. The marker-controlled watershed algorithm was applied to the gradient magnitude image, based on cell markers found using a multi-scale Laplacian of Gaussian. The Dice coefficient was calculated for each test image, and the mean Dice coefficient was obtained by averaging over all images. The mean Dice coefficient over all 100 images in the test set is given in Table I. The proposed detection model outputs have a mean Dice value of 0.5762, whereas the mean Dice coefficient of the watershed-based method is 0.7204.
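For reference, the per-image Dice similarity coefficient, 2|A ∩ B| / (|A| + |B|), and its average over a set of images can be computed as in the short sketch below. The function names are illustrative, and returning 1.0 when both masks are empty is a convention assumed here, not something stated in the text.

```python
import numpy as np


def dice_coefficient(a, b) -> float:
    """Dice similarity coefficient between two binary masks."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    total = int(a.sum()) + int(b.sum())
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * int(np.logical_and(a, b).sum()) / total


def mean_dice(predictions, ground_truths) -> float:
    """Average the per-image Dice coefficients over a set of image pairs."""
    scores = [dice_coefficient(p, g) for p, g in zip(predictions, ground_truths)]
    return float(np.mean(scores))
```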

Table I. Mean Dice Similarity Coefficient between the ground truth mask used in training and the binarized detection mask result of the Cell Detection Module of CFPNet-M-DEC, and the results of the watershed-based method.

| Method | Mean Dice Coefficient |
|---|---|
| CFPNet-M-DEC | 0.5762 |
| Watershed | 0.7204 |

The results of these experiments show that the watershed-based method is superior to the proposed detection model with respect to the mean Dice score, with a difference of about 0.14. This result is expected, since the watershed-based method directly aims to produce a segmentation mask, whereas the proposed model aims to produce a detection mask that is later used to extract and count each cell separately. Moreover, since the proposed model outputs a truncated distance transform, it highlights the centers of the cells while giving a smaller mask for each cell, which makes it easier to separate touching and overlapping cells.

B. Cell Counting Performance

Experimental results from the full processing pipeline, including the cell extraction module, were evaluated in terms of single cell detection and counting. The cell counts obtained from the extracted cells were compared to the cell counts obtained from the binary ground-truth segmentation masks and from the aforementioned watershed-based segmentation masks. All of these counts were measured against the ground-truth cell counts obtained from the manual polygon annotations. Cell count errors in the binary ground-truth segmentation masks are caused by touching or overlapping cells, which merge into a single connected region in a binary mask; these errors can therefore be used as a measure of image complexity.

Mean cell count errors and standard deviations (STD) for all 100 images of 20 patients in the test set are shown in Table II. The proposed pipeline has a mean error of 15.08 with a standard deviation of 11.52. The binary ground-truth segmentation masks have a mean error of 41.09 with a standard deviation of 19.16. Finally, the counts extracted from the watershed segmentation results have a mean error of 32.31 with a standard deviation of 40.66. The mean error values in this table are calculated by taking the difference between the computed cell count and the actual cell count in each image, and then averaging over the 100 test images.
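The error statistic described above can be sketched as follows. The text does not state whether the per-image difference is signed or absolute, or which standard deviation estimator is used; this sketch assumes the absolute difference and the population standard deviation, and `count_error_stats` is a hypothetical name.

```python
import numpy as np


def count_error_stats(computed_counts, actual_counts):
    """Mean and standard deviation of per-image cell count errors."""
    computed = np.asarray(computed_counts, dtype=np.float64)
    actual = np.asarray(actual_counts, dtype=np.float64)
    errors = np.abs(actual - computed)   # assumption: absolute difference
    # assumption: population standard deviation (NumPy default, ddof=0)
    return float(errors.mean()), float(errors.std())
```

For example, computed counts of 90, 100, and 110 against actual counts of 100 each give per-image errors of 10, 0, and 10.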

Table II. GT Mask: Mean error and STD between the cell count of the cells extracted from the ground truth mask and actual cell count. Watershed: The mean error and STD between the cell count of the cells extracted from the results of the watershed-based method and the actual cell count. CFPNet-M-DEC: The mean error with the corresponding STD between the cell count of the cells extracted from the CFPNet-M-DEC result and the actual cell count.

| Method | Mean Error | Error STD |
|---|---|---|
| Binary Ground-truth Mask | 41.09 | 19.16 |
| Watershed | 32.31 | 40.66 |
| CFPNet-M-DEC | 15.08 | 11.52 |

The cell count percentages at both the image level and the patient level are given in Table III. The cell count percentage for a single image was calculated by dividing the number of cells extracted from the image by the actual cell count in that image and multiplying the ratio by 100. The mean of these percentages was then taken over all 100 images to obtain the mean cell count percentage at the image level, and over the 5 images of each patient at the patient level. The mean count percentage of the proposed pipeline over all test images is 92.28%, compared to 79.54% for the counts extracted from the ground truth masks used in training and 84.07% for the counts extracted from the watershed-based method.
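The image-level and patient-level percentages can be illustrated with the sketch below. The record layout `(patient_id, extracted_count, actual_count)` and the function name are hypothetical, and averaging the per-patient means to obtain a single patient-level figure is an assumption about how the summary value is formed.

```python
from collections import defaultdict


def count_percentages(records):
    """Return (image_level_mean, patient_level_mean, per_patient_means).

    records: iterable of (patient_id, extracted_count, actual_count),
             one entry per image.
    """
    records = list(records)
    # Percentage of the actual cell count recovered in each image.
    per_image = [100.0 * extracted / actual for _, extracted, actual in records]
    image_level = sum(per_image) / len(per_image)

    # Group the per-image percentages by patient and average within patient.
    grouped = defaultdict(list)
    for (patient_id, _, _), pct in zip(records, per_image):
        grouped[patient_id].append(pct)
    per_patient = {pid: sum(v) / len(v) for pid, v in grouped.items()}

    # Assumption: the patient-level figure is the mean of per-patient means.
    patient_level = sum(per_patient.values()) / len(per_patient)
    return image_level, patient_level, per_patient
```

A percentage above 100 for an image indicates that more cells were extracted than annotated, as happens with over-segmentation.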

Table III. Mean count percentage (%). GT Mask: Mean percentage of the cell count of the cells extracted from the ground truth mask over the actual cell count. Watershed: Mean percentage of the cell count of the cells extracted from the watershed-based method result over the actual cell count. CFPNet-M-DEC: Mean percentage of the cell count of the cells extracted from the CFPNet-M-DEC result over the actual cell count.

| Method | Image Level (%) | Patient Level (%) |
|---|---|---|
| Binary Ground-truth Mask | 79.54 | 79.38 |
| Watershed | 84.07 | 83.61 |
| CFPNet-M-DEC | 92.28 | 92.20 |

For the patient-level analysis of 20 patients, the count percentage of our method ranges from 77.95% to 99.18%, with a mean of 92.20%. For the watershed-based method, the spread is much wider, with values between 30.32% and 101.48% (over-segmentation) and a mean of 83.61%. For the binary ground-truth segmentation masks, the values range from 56.41% to 88.70%, with a mean of 79.38%.

The experimental results show that our pipeline has the smallest mean error, with the lowest standard deviation, with respect to missed cells in the cell count. Moreover, our pipeline achieves the highest cell count accuracy, with the highest mean percentage and the smallest spread across patients. Our method reaches up to 99.18% accuracy for cell counts at the patient level. The proposed method focuses on detecting and extracting single cells rather than on producing accurate segmentation masks.

V. Conclusion

The proposed pipeline shows promising results for extraction and counting of red blood cells from thin blood smear images. The proposed deep learning network accounts for stain and color differences between images collected from different sources, and generates a truncated distance map instead of a binary segmentation mask. This distance mapping enables detection, extraction, and counting of single cells within clusters. The extracted cells can then be used to classify individual cells as uninfected or infected, or to train a 3-class classifier for uninfected cells and cells infected with P. falciparum and P. vivax. With a classification step added, the whole pipeline can be used to diagnose malaria and to monitor malaria patients during their treatment.

Our future work will involve generalization of the proposed pipeline to images collected from different sources, including P. vivax images; augmentation of the training dataset with artificial data to further improve performance on touching and overlapping cells; and precise tuning of the parameters, or creation of adaptive parameters, for cell extraction.

VI. Acknowledgment

This research was supported by the Intramural Research Program of the National Library of Medicine, National Institutes of Health. Mahidol-Oxford Tropical Medicine Research Unit is funded by the Wellcome Trust of Great Britain.

Contributor Information

Deniz Kavzak Ufuktepe, Email: deniz.kavzakufuktepe@mail.missouri.edu.

Feng Yang, Email: feng.yang2@nih.gov.

Yasmin M. Kassim, Email: ymkgz8@mail.missouri.edu.

Hang Yu, Email: hang.yu@nih.gov.

Richard J. Maude, Email: richard@tropmedres.ac.

Kannappan Palaniappan, Email: pal@missouri.edu.

Stefan Jaeger, Email: stefan.jaeger@nih.gov.

References

- [1]. Smith K, Kirby J. Image analysis and artificial intelligence in infectious disease diagnostics. Clinical Microbiology and Infection. 2020. doi: 10.1016/j.cmi.2020.03.012.

- [2]. Varo R, Balanza N, Mayor A, Bassat Q. Diagnosis of clinical malaria in endemic settings. Expert Review of Anti-infective Therapy. 2021;19(1):79–92. doi: 10.1080/14787210.2020.1807940.

- [3]. Lou A, Guan S, Loew M. Cfpnet-m: A light-weight encoder-decoder based network for multimodal biomedical image real-time segmentation. arXiv preprint. 2021:arXiv:2105.04075. doi: 10.1016/j.compbiomed.2023.106579.

- [4]. Ruifrok AC, Johnston DA. Quantification of histochemical staining by color deconvolution. Analytical and quantitative cytology and histology. 2001;23(4):291–299.

- [5]. Landini G, Martinelli G, Piccinini F. Colour deconvolution: stain unmixing in histological imaging. Bioinformatics. 2021;37(10):1485–1487. doi: 10.1093/bioinformatics/btaa847.

- [6]. Poostchi M, Silamut K, Maude RJ, Jaeger S, Thoma G. Image analysis and machine learning for detecting malaria. Translational Research. 2018;194:36–55. doi: 10.1016/j.trsl.2017.12.004.

- [7]. Rahman A, Zunair H, Reme TR, Rahman MS, Mahdy MRC. A comparative analysis of deep learning architectures on high variation malaria parasite classification dataset. Tissue and Cell. 2021;69:101473. doi: 10.1016/j.tice.2020.101473.

- [8]. Liang Z, Powell A, Ersoy I, Poostchi M, Silamut K, Palaniappan K, Guo P, Hossain MA, Sameer A, Maude RJ, Huang JX, et al. Cnn-based image analysis for malaria diagnosis; IEEE international conference on bioinformatics and biomedicine (BIBM); 2016. pp. 493–496.

- [9]. Rosado L, Da Costa JMC, Elias D, Cardoso JS. Mobile-based analysis of malaria-infected thin blood smears: automated species and life cycle stage determination. Sensors. 2017;17(10):2167. doi: 10.3390/s17102167.

- [10]. Abbas N, Saba T, Mohamad D, Rehman A, Almazyad AS, Al-Ghamdi JS. Machine aided malaria parasitemia detection in giemsa-stained thin blood smears. Neural Computing and Applications. 2018;29(3):803–818.

- [11]. Devi SS, Laskar RH, Sheikh SA. Hybrid classifier based life cycle stages analysis for malaria-infected erythrocyte using thin blood smear images. Neural Computing and Applications. 2018;29(8):217–235.

- [12]. Vijayalakshmi A, Rajesh Kanna B. Deep learning approach to detect malaria from microscopic images. Multimedia Tools and Applications. 2020;79(21):15297–15317.

- [13]. Molina A, Rodellar J, Boldú L, Acevedo A, Alférez S, Merino A. Automatic identification of malaria and other red blood cell inclusions using convolutional neural networks. Computers in Biology and Medicine. 2021;136:104680. doi: 10.1016/j.compbiomed.2021.104680.

- [14]. Sunarko B, Bottema M, Iksan N, Hudaya KA, Hanif MS. Journal of Physics: Conference Series. 1. Vol. 1444. IOP Publishing; 2020. Red blood cell classification on thin blood smear images for malaria diagnosis; p. 012036.

- [15]. Khatkar M, Atal DK, Singh S. Detection and classification of malaria parasite using python software; IEEE International Conference on Intelligent Technologies (CONIT); 2021. pp. 1–7.

- [16]. Kassim YM, Palaniappan K, Yang F, Poostchi M, Palaniappan N, Maude RJ, Antani S, Jaeger S. Clustering-based dual deep learning architecture for detecting red blood cells in malaria diagnostic smears. IEEE Journal of Biomedical and Health Informatics. 2020;25(5):1735–1746. doi: 10.1109/JBHI.2020.3034863.

- [17]. Yang F, Quizon N, Yu H, Silamut K, Maude RJ, Jaeger S, Antani S. Medical Imaging 2020: Computer-Aided Diagnosis. Vol. 11314. International Society for Optics and Photonics; 2020. Cascading yolo: automated malaria parasite detection for plasmodium vivax in thin blood smears; 113141Q.

- [18]. Sinha S, Srivastava U, Dhiman V, Akhilan P, Mishra S. Performance assessment of deep learning procedures on malaria dataset. Journal of Robotics and Control (JRC). 2021;2(1):12–18.

- [19]. Poostchi M, Ersoy I, McMenamin K, Gordon E, Palaniappan N, Pierce S, Maude RJ, Bansal A, Srinivasan P, Miller L, Palaniappan K, et al. Malaria parasite detection and cell counting for human and mouse using thin blood smear microscopy. Journal of Medical Imaging. 2018;5(4):044506. doi: 10.1117/1.JMI.5.4.044506.

- [20]. Sriporn K, Tsai C-F, Tsai C-E, Wang P. Analyzing malaria disease using effective deep learning approach. Diagnostics. 2020;10(10):744. doi: 10.3390/diagnostics10100744.

- [21]. Horning MP, Delahunt CB, Bachman CM, Luchavez J, Luna C, Hu L, Jaiswal MS, Thompson CM, Kulhare S, Janko S, Wilson BK, et al. Performance of a fully-automated system on a who malaria microscopy evaluation slide set. Malaria journal. 2021;20(1):1–11. doi: 10.1186/s12936-021-03631-3.

- [22]. Yang Z, Benhabiles H, Hammoudi K, Windal F, He R, Collard D. A generalized deep learning-based framework for assistance to the human malaria diagnosis from microscopic images. Neural Computing and Applications. 2021:1–16.

- [23]. Yang F, Yu H, Silamut K, Maude RJ, Jaeger S, Antani S. Smartphone-supported malaria diagnosis based on deep learning; International workshop on machine learning in medical imaging; Springer; 2019. pp. 73–80.

- [24]. Yu H, Yang F, Rajaraman S, Ersoy I, Moallem G, Poostchi M, Palaniappan K, Antani S, Maude RJ, Jaeger S. Malaria screener: a smartphone application for automated malaria screening. BMC Infectious Diseases. 2020;20(1):1–8. doi: 10.1186/s12879-020-05453-1.

- [25]. Fuhad K, Tuba JF, Sarker M, Ali R, Momen S, Mohammed N, Rahman T. Deep learning based automatic malaria parasite detection from blood smear and its smartphone based application. Diagnostics. 2020;10(5):329. doi: 10.3390/diagnostics10050329.

- [26]. Masud M, Alhumyani H, Alshamrani SS, Cheikhrouhou O, Ibrahim S, Muhammad G, Hossain MS, Shorfuzzaman M. Leveraging deep learning techniques for malaria parasite detection using mobile application. Wireless Communications and Mobile Computing. 2020;2020.

- [27]. Bao R, Al-Shakarji NM, Bunyak F, Palaniappan K. Dmnet: Dual-stream marker guided deep network for dense cell segmentation and lineage tracking; IEEE/CVF International Conference on Computer Vision; 2021. pp. 3361–3370.

- [28]. Hamad A, Ersoy I, Bunyak F. Improving nuclei classification performance in h&e stained tissue images using fully convolutional regression network and convolutional neural network; IEEE Applied Imagery Pattern Recognition Workshop (AIPR); 2018. pp. 1–6.

- [29]. Ma J, Wei Z, Zhang Y, Wang Y, Lv R, Zhu C, Gaoxiang C, Liu J, Peng C, Wang L, Wang Y, et al. Medical Imaging with Deep Learning. PMLR; 2020. How distance transform maps boost segmentation cnns: an empirical study; pp. 479–492.

- [30]. Nguyen NP, Ersoy I, Gotberg J, Bunyak F, White TA. DRPnet: automated particle picking in cryo-electron micrographs using deep regression. BMC Bioinformatics. 2021;22(1):1–28. doi: 10.1186/s12859-020-03948-x.

- [31]. Otsu N. A threshold selection method from gray-level histograms. IEEE transactions on systems, man, and cybernetics. 1979;9(1):62–66.

- [32]. Ronneberger O, Fischer P, Brox T. International Conference on Medical image computing and computer-assisted intervention. Springer; 2015. U-net: Convolutional networks for biomedical image segmentation; pp. 234–241.

- [33]. Soille P. Morphological image analysis: principles and applications. Springer Science & Business Media; 2013.

- [34]. Dice LR. Measures of the amount of ecologic association between species. Ecology. 1945;26(3):297–302.