Abstract

Longitudinal observational data on patients can be used to investigate causal effects of time‐varying treatments on time‐to‐event outcomes. Several methods have been developed for estimating such effects by controlling for the time‐dependent confounding that typically occurs. The most commonly used is marginal structural models (MSM) estimated using inverse probability of treatment weights (IPTW) (MSM‐IPTW). An alternative, the sequential trials approach, is increasingly popular, and involves creating a sequence of “trials” from new time origins and comparing treatment initiators and non‐initiators. Individuals are censored when they deviate from their treatment assignment at the start of each “trial” (initiator or noninitiator), which is accounted for using inverse probability of censoring weights. The analysis uses data combined across trials. We show that the sequential trials approach can estimate the parameters of a particular MSM. The causal estimand that we focus on is the marginal risk difference between the sustained treatment strategies of “always treat” vs “never treat.” We compare how the sequential trials approach and MSM‐IPTW estimate this estimand, and discuss their assumptions and how data are used differently. The performance of the two approaches is compared in a simulation study. The sequential trials approach, which tends to involve less extreme weights than MSM‐IPTW, results in greater efficiency for estimating the marginal risk difference at most follow‐up times, but this can, in certain scenarios, be reversed at later time points and relies on modelling assumptions. We apply the methods to longitudinal observational data from the UK Cystic Fibrosis Registry to estimate the effect of dornase alfa on survival.

Keywords: cystic fibrosis, inverse probability weighting, marginal structural model, registries, sequential trials, survival, target trials, time‐dependent confounding

1. INTRODUCTION

Longitudinal observational data on patients can allow for the estimation of treatment effects over long follow‐up periods and in diverse populations. A key task when aiming to estimate causal effects from observational data is to account for confounding of the treatment‐outcome association. With longitudinal data on treatment and outcome, the treatment‐outcome association may be subject to so‐called time‐dependent confounding. Such confounding is present when there are time‐dependent covariates affected by past treatment that also affect later treatment use and outcome. It presents particular challenges for estimation of the effects of longitudinal treatment regimes, such as those that involve comparing sustained treatment or no treatment over time. Over the last two decades a large statistical literature has built up on methods for estimating causal treatment effects in the presence of time‐dependent confounding, and their use in practice is becoming more widespread.

In this paper we focus on estimating the causal effects of longitudinal treatment regimes on a time‐to‐event outcome, using longitudinal observational data on treatment and covariates obtained at approximately regular visits, alongside the time‐to‐event information. When there is time‐dependent confounding, standard methods for survival analysis, such as Cox regression with adjustment for baseline or time‐updated covariates, do not generally enable estimation of such causal effects directly. Several methods have been described for estimating the causal effects of longitudinal treatment regimes on time‐to‐event outcomes. Marginal structural models (MSMs) estimated using inverse probability of treatment weighting (IPTW) were introduced by Robins et al 1 and extended to survival outcomes by Hernan et al 2 through marginal structural Cox models. A review by Clare et al in 2019 3 found this approach to be by far the most commonly used in practice, and we refer to this as the MSM‐IPTW approach. An alternative but related approach, which we refer to as the “sequential trials” approach, was described by Hernan et al in 2008 4 and Gran et al in 2010. 5 With the sequential trials approach, artificial “trials” are created from a sequence of new time origins which could, for example, be defined by study visits at which new information is recorded for each individual who remains under observation. At each time origin individuals are divided into those who have just initiated the treatment under investigation and those who have not yet initiated the treatment. Within each trial, individuals are artificially censored at the time at which their treatment status deviates from what it was at the time origin, if such deviation occurs. Inverse probability of censoring weighting is used to account for dependence of this artificial censoring on time‐dependent characteristics. 
The overall effects of sustained treatment versus no treatment, starting from each time origin, can then be estimated using, for example, weighted pooled logistic or Cox regression. The sequential trials approach was originally proposed as a simple way of making efficient use of longitudinal observational data, as it enables use of a larger sample size than if an artificial trial were formed from a single time origin. 4 Hernan et al 4 did not discuss time‐dependent confounding directly, but focused on the more general concept of emulating a sequence of hypothetical randomized trials from observational data. Their initial focus was on estimation of intention‐to‐treat effects, which does not require censoring of individuals when they deviate from their initial treatment status. However, they also considered estimation of per protocol effects, which focus on sustained treatment vs no treatment, using censoring and weighting, and they referred to these as “adherence adjusted” effects. Gran et al 5 focused on per protocol effects, and assumed that individuals who started treatment always continued it, but that noninitiators of treatment at the start of each trial could start treatment at a later time, which they addressed using censoring and weighting. In this paper we focus on per protocol effects, that is, on sustained treatment strategies.
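The construction of the sequential “trials” described above can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in the paper: the input format (one list of 0/1 treatment indicators per person, indexed by visit), the names `TrialRow` and `build_sequential_trials`, and the simplified eligibility rule (untreated at every earlier visit) are all assumptions made for the example. The censoring weights are not computed here.

```python
from typing import Dict, List, NamedTuple, Optional


class TrialRow(NamedTuple):
    person: str
    trial: int                   # visit index used as the time origin
    initiator: bool              # treatment status at the time origin
    censor_visit: Optional[int]  # first visit at which status deviates, if any


def build_sequential_trials(treatment: Dict[str, List[int]]) -> List[TrialRow]:
    """Expand per-person treatment histories into one row per person per trial.

    treatment[p][k] is 1 if person p is treated at visit k, else 0.
    A person enters trial k only if untreated at every visit before k
    (a simplified, hypothetical eligibility rule).
    """
    rows: List[TrialRow] = []
    for person, history in treatment.items():
        for k, status in enumerate(history):
            if any(history[:k]):
                break  # treated before visit k: not eligible for this or later trials
            # Artificial censoring: first later visit where status differs from visit k.
            censor = next(
                (j for j in range(k + 1, len(history)) if history[j] != status),
                None,
            )
            rows.append(TrialRow(person, k, bool(status), censor))
    return rows


trials = build_sequential_trials({"p1": [0, 0, 1, 1], "p2": [1, 1, 0]})
```

In this toy input, person `p1` contributes as a non-initiator to the trials starting at visits 0 and 1 (artificially censored at visit 2 in both) and as an initiator to the trial starting at visit 2, which shows how one individual can contribute to several trials.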

The sequential trials approach is closely related to several other methods for estimation of treatment effects using longitudinal data. Thomas et al 6 reviewed such methods, referring to them collectively as “longitudinal matching methods.” In addition to the sequential trials approach, these include the sequential stratification approaches suggested by Schaubel et al in 2006 and 2009 7 , 8 and considered in a number of subsequent papers such as that of Gong and Schaubel. 9 In their discussion, Thomas et al 6 noted that most methods they reviewed, including the sequential trials approach, had not been connected with the counterfactual outcomes framework for defining causal estimands of interest. They did not include MSM‐IPTW in their review, but implied in the discussion that the MSM‐IPTW and sequential trials approaches address different questions. In this paper, we show how the MSM‐IPTW approach and the sequential trials approach can be used to estimate the same causal estimand for sustained treatment regimes.

There have been several applications of the sequential trials approach. Danaei et al 10 applied it to electronic health records data to estimate the effect of statins on occurrence of coronary heart disease. Clark et al 11 studied whether onset of impaired sleep affects health related behavior. Bhupathiraju et al 12 investigated the effect of hormone therapy use on chronic disease risk in the Nurses' Health Study. Caniglia et al 13 estimated the effects of statin use on risk of dementia up to 10 years using data from a prospective cohort study. Both Danaei et al 10 and Caniglia et al 13 considered per protocol effects, while the other examples referenced above focused on intention‐to‐treat effects. Some papers have also used the sequential trials approach alongside other methods and compared the results empirically. Suttorp et al 14 studied the effect of high dose of a particular agent used in kidney disease patients on all‐cause mortality with both a sequential trials approach and MSM‐IPTW using data from a longitudinal cohort. In their review paper, Thomas et al 6 illustrated the sequential trials approach together with sequential stratification and time‐dependent propensity score matching to study the effect of statins on cardiovascular outcomes using the Framingham Offspring cohort. Several papers in recent years have advocated the benefits of explicitly emulating a “target trial”—the hypothetical randomized controlled trial that one would ideally like to conduct—in investigations of causal effects using observational data. 15 , 16 , 17 , 18 , 19 The use of target trials provides a structured framework that guides the steps of an investigation, with the target trial protocol including specification of eligibility criteria, time origin, and the causal estimand of interest. This framework has been used in conjunction with the sequential trials approach, for example by Caniglia et al. 13

In most applications of the sequential trials approach the target causal parameters have been informally stated using the language of emulating hypothetical randomized trials. However, a more formal description of such parameters using counterfactuals has been lacking. Gran and Aalen 20 highlighted the need for further work to establish the causal estimands that were being estimated in the sequential trials approach. This is important both to clarify the interpretation and to inform simulation studies to study the properties of the estimators. Karim et al 21 compared the sequential trials approach with MSM‐IPTW based on Cox models using simulations, but did not account for the fact that in their implementation the two analyses were used to estimate different quantities, which were therefore not directly comparable. 20 The aim of this paper is to establish connections between the MSM‐IPTW approach and the sequential trials approach, and outline where they can both be used to estimate the same causal estimand, focusing on a marginal risk difference. We show that the causal parameter estimated from the sequential trials approach can be described in terms of the parameters of a particular MSM using the counterfactual framework. We also establish more broadly what estimands the MSM‐IPTW and sequential trials approaches are suitable for identifying, what assumptions are needed, and how the data are used differently. We compare the MSM‐IPTW and sequential trials approaches in a simulation study, in which we assess their relative efficiency for estimation of the same quantities.

In most of the literature on the sequential trials approach, including the papers of Hernan et al 4 and Gran et al, 5 the primary focus has been on estimation of hazard ratios using Cox proportional hazard models, or the approximately equivalent pooled logistic regression model. This has traditionally also been the case for the MSM‐IPTW for time‐to‐event outcomes. The Aalen additive hazard models for hazard differences have also become increasingly popular, both to avoid the proportional hazards assumption and due to their useful property of being collapsible. 22 However, hazard ratios, and generally also hazard differences, have been shown not to have a straightforward causal interpretation. 23 , 24 , 25 By focusing on the marginal risk difference we avoid this issue. While other studies 4 , 13 , 18 , 26 have presented estimated survival curves under the treated and untreated regimes, and corresponding risk differences, it has not been described in detail how such survival curves have been or can be obtained, or what population they refer to. In this paper we demonstrate how our causal estimand of interest, a marginal risk difference, can be estimated under both the sequential trials and MSM‐IPTW approaches by using an empirical standardization procedure. This is similar to the standardization approach described by Young et al. 27 We consider both proportional and additive hazards models and our simulation comparison of the two approaches makes use of the properties of additive hazards models. 28

The paper is organized as follows. In Section 2 we describe a motivating example, in which the aim is to estimate the effect of long term use of the treatment dornase alfa on the composite outcome of death or transplant for people with cystic fibrosis (CF), using longitudinal data from the UK Cystic Fibrosis Registry. 29 This section also sets out the notation used throughout the paper. In Section 3 we define the causal estimand of interest, which is a marginal risk difference. The estimand is defined using counterfactual notation. In Section 4 we consider how the causal estimand can be identified using the longitudinal observational data, and outline estimation using the MSM‐IPTW approach and the sequential trials approach. We show how the sequential trials approach can also be understood as fitting a particular MSM. In Section 5 we discuss in detail the similarities and differences between the two approaches. The two approaches are then compared in a simulation study in Section 6, and applied to the motivating example in Section 7. Accompanying R code for performing the simulation is provided at https://github.com/ruthkeogh/sequential_trials. We conclude with a discussion in Section 8.

2. MOTIVATING EXAMPLE AND DATA STRUCTURE

2.1. Investigating treatment effects in CF

As a motivating example we will investigate the long‐term impacts of a treatment used in CF on the composite time‐to‐event outcome of death or transplant. CF is an inherited, chronic, progressive condition affecting around 10 500 individuals in the United Kingdom and over 100 000 worldwide. 30 , 31 , 32 , 33 Survival in CF has improved considerably over recent decades and the estimated median survival age in the UK is 51.6 for males and 45.7 for females. 30 , 34 , 35

One of the most common consequences of CF is a build up of mucus in the lungs, which leads to an increased prevalence of bacterial growth in the airways and a decline in lung function. 36 Therefore, it is common for people with CF to routinely use aerosolized mucoactive agents, which help to break down the layer of mucus in the lungs making clearance easier. Recombinant human deoxyribonuclease, commonly known as dornase alfa (DNase), is one such mucolytic treatment which was authorized for use in 1994. DNase is the most commonly used treatment for the pulmonary consequences of CF in the United Kingdom, used by 67.6% of CF patients in 2019. 30 It is a nebulized treatment, administered daily on a long term basis. The efficacy of DNase for health outcomes in CF has been studied in several randomized controlled trials. 37 Most trials had short‐term follow‐up and focused on the impact of DNase on lung function. Seven trials from the 1990s investigated mortality as a secondary outcome but follow‐up was short and findings inconclusive; a meta‐analysis based on these studies gave a mortality risk ratio estimate of 1.70 (95% CI 0.70‐4.14) comparing users vs nonusers. 37

The effect of DNase use on lung function and requirement for intravenous antibiotics has previously been investigated using observational data from the UK Cystic Fibrosis Registry. 38 , 39 We now use the UK Cystic Fibrosis Registry to investigate the impact of DNase on the composite outcome of death or transplant. The majority of transplants in people with CF are lung transplants; however, we consider any transplant here. The UK Cystic Fibrosis Registry is a national, secure database sponsored and managed by the Cystic Fibrosis Trust. 29 It was established in 1995 and records demographic data and longitudinal health data on nearly all people with CF in the United Kingdom, to date capturing data on over 12 000 individuals. Data are collected in a standardized way at designated (approximately) annual visits on over 250 variables in several domains, and have been recorded using a centralised database since 2007. In this study we use data from 2008 to 2018, plus some data from prior years to define eligibility for inclusion in the analysis according to criteria outlined below. At each annual visit it is recorded whether or not an individual had been using DNase in the past year. We identified potential confounders of the association between DNase use and death or transplant based on expert clinical input, and these include both time‐fixed and time‐dependent variables (see Section 2.2). We focus on the impact of initiating and continuing use of DNase on the risk of death or transplant up to 11 years of follow‐up compared with not using DNase.

2.2. Notation and data structure

We assume a setting in which individuals are observed at regular “visits” (data collection times) over a particular calendar period (2008‐2018 in the example), reflecting the type of data that arise in our motivating example and in similar data sources. Let $t = 0$ denote the start of follow‐up for a given patient, which we define in our context as the first time in the analysis dataset at which they are observed to meet some specified eligibility criteria and at which they could initiate a treatment strategy of interest. This is discussed further below and in Table 1. Time $t = 0$ can be at different points in calendar time for different individuals.

TABLE 1.

Summary of the protocol of a target trial for studying the effect of DNase use on survival in people with cystic fibrosis (CF), and summary of how the target trial is emulated using UK CF Registry data.

| Protocol component | Target trial | Emulation of the target trial using UK CF Registry data |
|---|---|---|
| Eligibility criteria | People with CF aged 12 or older and who have not used DNase for at least 3 years. | The target trial is emulated using data on people who were included in the UK CF Registry between 2008 and 2018. The emulation is performed using the MSM‐IPTW and sequential trials approaches. At a given annual visit from 2008 to 2017, a person is defined as meeting the trial emulation eligibility criteria if they meet the following conditions: aged 12 or older; have at least three preceding visits, at least one in the prior 18 months, and were recorded as not using DNase at each of those visits; have observed measurements of FEV1% and BMI z‐score at the current and previous visits. The first visit at which the trial emulation eligibility criteria are met in the available data is denoted $k = 0$ and subsequent visit times are $k = 1, 2, \ldots$. The maximum number of visits is 11, with the final visit corresponding to 2018. |
| Treatment strategies | (i) Do not start using DNase during follow‐up. (ii) Start using DNase and continue to use it throughout follow‐up. | The treatment strategies of interest are as in the target trial. However, in the observational data individuals do not necessarily follow either of these strategies. |
| Assignment procedures | Patients randomly assigned to either treatment strategy. Patients and their health care teams are aware of the patient's treatment status. | At each visit an individual is a DNase user or non‐user, and individuals can switch on or off treatment. Treatment use is assumed to be informed by the following covariates, which are also assumed to affect the outcome: sex, genotype class, age, FEV1%, BMI z‐score, chronic infection with Pseudomonas aeruginosa or use of nebulized antibiotics, infection with Staphylococcus aureus or MRSA, infection with Burkholderia cepacia, infection with Non‐tuberculous mycobacteria (NTM), CF‐related diabetes, pancreatic insufficiency, number of days on intravenous (IV) antibiotics at home or in hospital, other hospitalization (yes or no), use of other mucoactive treatments (hypertonic saline, mannitol or acetylcysteine), use of oxygen therapy, use of CFTR modulators (ivacaftor, lumacaftor/ivacaftor, or tezacaftor/ivacaftor), and past DNase use. Patients and their health care teams are aware of the patient's treatment status. |
| Follow‐up period | Starts at randomization and ends at death or transplant, loss to follow‐up, or 11 years of follow‐up, whichever occurs first. | MSM‐IPTW approach: Follow‐up starts at $t = 0$ and ends at death or transplant, loss to follow‐up, or the end of 2018, whichever occurs first. |
| | | Sequential trials approach: A trial is formed from each visit $k$. Trial $k$ includes individuals meeting the trial emulation eligibility criteria at visit $k$. In trial $k$, follow‐up starts at time $k$ and ends at switching away from the treatment status at visit $k$, death or transplant, loss to follow‐up, or the end of 2018, whichever occurs first. |
| Outcome | Death or transplant. | As in the target trial. |
| Causal contrasts | Per protocol treatment effect. Marginal survival curves up to 11 years under the two treatment strategies, and corresponding marginal risk differences. For the marginal probabilities, the population of interest is individuals meeting the target trial eligibility criteria in 2018. | The causal contrasts of interest are as in the target trial. Estimation is using the MSM‐IPTW approach and the sequential trials approach (see Section 4). The emulation of the target trial makes use of data from 2008 to 2018; however, for the marginal probabilities in the causal estimand, we target the population meeting the trial emulation eligibility criteria in 2018. |

Information on treatment status and other covariates is observed at visits $k = 0, 1, \ldots, K$. Without loss of generality, we assume that visit $k$ occurs at time $k$, for $k = 0, 1, \ldots, K$. We consider a binary treatment (treatment or control). At each visit $k$ we observe binary treatment status $A_k$ and a set of time‐dependent covariates $L_k$. A bar over a time‐dependent variable indicates the history, that is $\bar{A}_k = \{A_0, A_1, \ldots, A_k\}$ and $\bar{L}_k = \{L_0, L_1, \ldots, L_k\}$. An underline indicates the future, so that $\underline{A}_k$ denotes treatment status from time $k$ onwards. In a slight abuse of standard notation we let $\lfloor t \rfloor$ denote the time of the most recent visit before time $t$ (so $\lfloor t \rfloor$ is always strictly less than $t$), and $\bar{A}_{\lfloor t \rfloor}$ denotes the treatment pattern up to the most recent visit prior to $t$. Individuals are followed up from time 0 until the time of the event of interest (death or transplant) or the time of censoring, whichever occurs first. Censoring could be due to loss‐to‐follow‐up or administrative censoring at the date of data extraction.
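The “most recent visit before time $t$” convention differs from the usual floor function only when $t$ falls exactly on a visit. As a small illustration, assuming visits at integer times 0, 1, 2, ... as stated above, the following sketch (with hypothetical helper names `last_visit_before` and `history_before`) returns the visit index and the corresponding history.

```python
import math


def last_visit_before(t: float) -> int:
    """Index of the most recent visit strictly before time t (visits at 0, 1, 2, ...)."""
    if t <= 0:
        raise ValueError("no visit occurs strictly before time 0")
    return math.ceil(t) - 1


def history_before(values: list, t: float) -> list:
    """Values recorded at visits 0..last_visit_before(t), i.e. the history available just before t."""
    return values[: last_visit_before(t) + 1]
```

For example, at $t = 2$ the most recent earlier visit is visit 1, not visit 2, so the treatment recorded at visit 2 is not yet part of the history.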

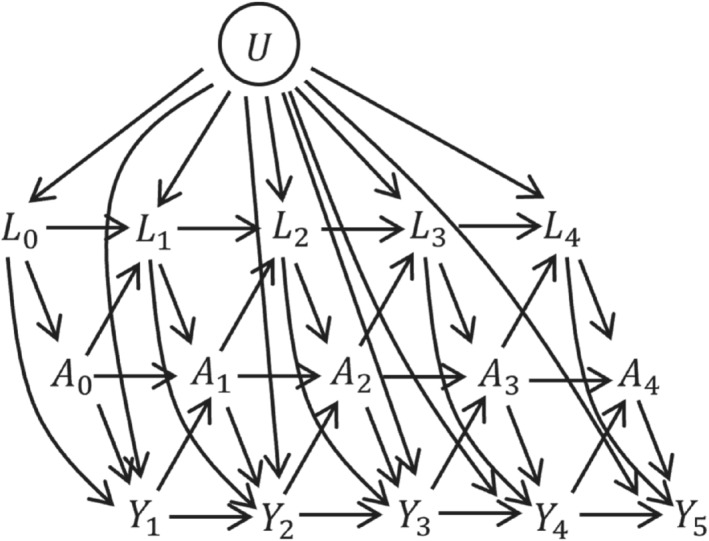

The assumed data structure is illustrated in the directed acyclic graph (DAG) in Figure 1, using a discrete‐time setting where $Y_k$ is an indicator of whether the event occurs between visits $k-1$ and $k$. One can imagine extending the DAG by adding a series of small time intervals between each visit, at which events are observed (but not $L$ or $A$, which are assumed constant between visits). As the time intervals become very small we approach the continuous time setting. From the DAG, we can see that $L_k$ are time‐dependent confounders. Time‐dependent confounding occurs when there are time‐dependent covariates that predict subsequent treatment use, are affected by earlier treatment, and affect the outcome through pathways that are not just through subsequent treatment. The DAG also includes a variable $U$, which has direct effects on $L_k$ and $Y_k$ but not on $A_k$. $U$ is an unmeasured individual frailty and we include it because it is realistic that such individual frailty effects exist in practice. Because $U$ is not a confounder of the association between $A$ and $Y$ (after controlling for the observed confounders), the fact that it is unmeasured does not affect our ability to estimate causal effects of treatments. The DAG represents the assumed data structure and informs which variables, measured at what time points, are confounders of the association between treatment at a given time point and the outcome. The DAG could be extended in various ways; for example, we could incorporate long term effects of $L$ on $A$ and vice versa by adding arrows from earlier values of $L$ to later values of $A$ and from earlier values of $A$ to later values of $L$. Long term effects of $A$ and $L$ on survival could also be added, for example by adding arrows from $A_k$ and $L_k$ to later outcome indicators. The DAG could also include time‐fixed confounders.

FIGURE 1.

Directed acyclic graph (DAG) illustrating relationships between treatment $A$, time‐dependent covariates $L$, discrete time outcome $Y$, and unmeasured covariates $U$. Time‐fixed covariates are omitted from the diagram but are assumed to potentially affect all other variables.
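The dependence structure described for the DAG can be illustrated by simulating data in which a time‐dependent covariate is affected by earlier treatment and by an unmeasured frailty, treatment depends on the covariate but not on the frailty, and the event depends on all three. The sketch below is only an illustration under assumed logistic models: every coefficient, the single scalar covariate, and the function name `simulate_person` are hypothetical and are not taken from the paper or its accompanying R code.

```python
import math
import random
from typing import List, Tuple


def expit(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def simulate_person(n_visits: int, rng: random.Random) -> Tuple[List[int], List[float], int]:
    """Simulate one person; returns (treatments, covariates, event_interval).

    event_interval is the index k of the interval following visit k in which
    the event occurs, or -1 if no event occurs. All coefficients are
    illustrative assumptions.
    """
    frailty = rng.gauss(0.0, 1.0)  # unmeasured; affects covariate and outcome only
    treatments: List[int] = []
    covariates: List[float] = []
    prev_treat = 0
    for k in range(n_visits):
        # Covariate depends on frailty and on earlier treatment.
        cov = 0.5 * frailty - 0.3 * prev_treat + rng.gauss(0.0, 1.0)
        # Treatment depends on the current covariate and earlier treatment, not on frailty.
        treat = int(rng.random() < expit(-1.0 + 0.8 * cov + 2.0 * prev_treat))
        treatments.append(treat)
        covariates.append(cov)
        # Event in the interval after visit k depends on treatment, covariate and frailty.
        p_event = expit(-3.0 - 0.5 * treat + 0.6 * cov + 0.4 * frailty)
        if rng.random() < p_event:
            return treatments, covariates, k
        prev_treat = treat
    return treatments, covariates, -1


rng = random.Random(2024)
cohort = [simulate_person(5, rng) for _ in range(1000)]
```

Because the frailty never enters the treatment model, it induces correlation between the covariate and the outcome without confounding the treatment effect, which mirrors the role the text assigns to the unmeasured variable.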

In the UK CF Registry data, DNase use ($A_k$) is recorded at each visit and refers to whether the patient has been prescribed DNase over the past year. The time‐dependent confounders ($L_k$) that we consider are: age (in years), lung function measured using forced expiratory volume in 1 second as percentage predicted (FEV1%), body mass index (BMI) z‐score, chronic infection with Pseudomonas aeruginosa or use of nebulized antibiotics, infection with Staphylococcus aureus or Methicillin‐resistant Staphylococcus aureus (MRSA), infection with Burkholderia cepacia, infection with Non‐tuberculous mycobacteria (NTM), CF‐related diabetes, pancreatic insufficiency, number of days on intravenous (IV) antibiotics at home or in hospital (categorized as 0, 1‐14, 15‐28, 29‐42, 43+), other hospitalization, use of other mucoactive treatments (hypertonic saline, mannitol or acetylcysteine), use of oxygen therapy, use of CFTR modulators (ivacaftor, lumacaftor/ivacaftor, or tezacaftor/ivacaftor). Of the time‐dependent variables, FEV1% and BMI are measured on the day of the visit, whereas all of the other variables refer to information in the time since the previous visit, that is, in the past year. We also include two time‐fixed covariates: sex (male/female) and genotype class (a marker of the severity of the CF‐causing mutation: low, high, not assigned, missing). 40 The covariates are binary yes/no variables except where indicated. For individuals who are not observed to have the event of interest (death or transplant) the censoring date was the earlier of December 31, 2018 and the date of the last visit plus 18 months.
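The censoring rule for individuals without an observed event can be written as a short function. This is a sketch under one assumption that the text does not settle: “18 months” is interpreted here as 18 calendar months, with the day of the month clamped when the target month is shorter. The helper names `add_months` and `censoring_date` are hypothetical.

```python
from datetime import date
import calendar

ADMIN_CENSOR_DATE = date(2018, 12, 31)


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the end of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def censoring_date(last_visit: date) -> date:
    """Earlier of the administrative censoring date and last visit plus 18 months."""
    return min(ADMIN_CENSOR_DATE, add_months(last_visit, 18))
```

A person last seen in March 2016 would therefore be censored in September 2017, whereas anyone last seen after the end of June 2017 would be censored administratively at the end of 2018.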

3. THE CAUSAL ESTIMAND

3.1. The general case

In this section we specify the causal estimand, meaning the quantity that we wish to estimate. We consider the estimand in general terms first, and then in the context of our example introduced in Section 2. Our interest lies in comparing outcomes under two treatment strategies: (1) to start and continue treatment (to the time horizon of interest); (2) not to start treatment up to the time horizon of interest. We refer to strategy (1) as “always treated” and strategy (2) as “never treated.” The time scale on which the causal estimand is defined is denoted $t$, with $t = 0$ denoting the time at which an individual could initiate strategy (1) or (2). Let us define $T^{\underline{a}_0 = 1}$ as the counterfactual event time had an individual received the treatment from $t = 0$ onwards (“always treated”) and $T^{\underline{a}_0 = 0}$ as the counterfactual event time had an individual not received the treatment from $t = 0$ onwards (“never treated”). We can then define our causal estimands of interest as contrasts between functions of the distributions of $T^{\underline{a}_0 = 1}$ and $T^{\underline{a}_0 = 0}$.

Effects of treatments on time‐to‐event outcomes can be quantified in a number of ways. We let $\lambda^{\underline{a}_0 = a}(t)$ denote the counterfactual hazard at time $t$ under the possibly counter‐to‐fact treatment strategy $\underline{a}_0 = a$ ($a = 0, 1$). A common estimand is the ratio of hazards between the treatment and control strategies, $\lambda^{\underline{a}_0 = 1}(t) / \lambda^{\underline{a}_0 = 0}(t)$. However, as hazard ratios do not have a straightforward causal interpretation, it is recommended to consider causal contrasts between risks. 23 , 24 , 25 In this paper we will focus on the risk difference as the primary estimand of interest:

$$\Pr\left(T^{\underline{a}_0 = 1} \le \tau\right) - \Pr\left(T^{\underline{a}_0 = 0} \le \tau\right) \tag{1}$$

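This risk difference contrasts the risk of the event by a time horizon $\tau$, under the conditions of being “always treated” vs “never treated” up to time $\tau$. There may be several time horizons of interest, that is, different values of $\tau$, and we let $\tau_{\max}$ denote the maximum time horizon for which the risk difference is to be obtained. Alternative quantities with a causal interpretation are the risk ratio $\Pr(T^{\underline{a}_0 = 1} \le \tau) / \Pr(T^{\underline{a}_0 = 0} \le \tau)$, the survival ratio $\Pr(T^{\underline{a}_0 = 1} > \tau) / \Pr(T^{\underline{a}_0 = 0} > \tau)$ and contrasts between restricted mean survival times, such as $E\{\min(T^{\underline{a}_0 = 1}, \tau)\} - E\{\min(T^{\underline{a}_0 = 0}, \tau)\}$. 41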

The causal estimand in (1) corresponds to a per protocol effect because it involves comparisons between outcomes if treatment level $a$ ($a = 0, 1$) were sustained from $t = 0$ up to time $\tau$. The estimand is expressed in terms of marginal probabilities (risks) and it is important to make clear what population the marginal probabilities refer to, for example, in terms of age and clinical characteristics. If the causal estimand were to be estimated from a randomized trial then $t = 0$ would be the time of randomization and the population to which the marginal risks refer would be the population from which the trial participants were drawn.

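The probabilities in (1) can be written as a function of the counterfactual hazard, which we make use of later for estimation:

$$\Pr\left(T^{\underline{a}_0 = a} \le \tau\right) = 1 - \exp\left\{-\int_0^{\tau} \lambda^{\underline{a}_0 = a}(t)\,dt\right\} \tag{2}$$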

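The counterfactual hazard function could also be considered as a function of the covariate history at $t = 0$, denoted $\bar{L}_0$, giving $\lambda^{\underline{a}_0 = a}(t \mid \bar{L}_0)$. In this case we can write

$$\Pr\left(T^{\underline{a}_0 = a} \le \tau\right) = 1 - E\left[\exp\left\{-\int_0^{\tau} \lambda^{\underline{a}_0 = a}(t \mid \bar{L}_0)\,dt\right\}\right] \tag{3}$$

where the expectation is over the distribution of $\bar{L}_0$ in the population of interest.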
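The link between a hazard and a marginal risk, and the averaging over the baseline covariate distribution, can be made concrete numerically. The sketch below takes the risk by a time horizon to be one minus the survival probability, with survival equal to the exponential of minus the cumulative hazard, and replaces the expectation by an average over an empirical sample of baseline covariate values. The hazard functions, the scalar covariate, the sample values and all function names are hypothetical and chosen only for illustration.

```python
import math
from typing import Callable, Sequence


def survival_from_hazard(hazard: Callable[[float], float], tau: float, steps: int = 1000) -> float:
    """exp(-cumulative hazard up to tau), using a midpoint Riemann sum."""
    width = tau / steps
    cumulative = sum(hazard((i + 0.5) * width) for i in range(steps)) * width
    return math.exp(-cumulative)


def standardized_risk(
    conditional_hazard: Callable[[float, float], float],
    baseline_covariates: Sequence[float],
    tau: float,
) -> float:
    """Average the conditional risk over an empirical sample of baseline covariates."""
    risks = [
        1.0 - survival_from_hazard(lambda t, x=x: conditional_hazard(t, x), tau)
        for x in baseline_covariates
    ]
    return sum(risks) / len(risks)


# Hypothetical hazards: constant in time, multiplicative in a scalar covariate.
def hazard_treated(t: float, x: float) -> float:
    return 0.05 * math.exp(0.3 * x)


def hazard_untreated(t: float, x: float) -> float:
    return 0.08 * math.exp(0.3 * x)


sample = [-1.0, 0.0, 0.5, 2.0]
risk_difference = standardized_risk(hazard_treated, sample, 5.0) - standardized_risk(
    hazard_untreated, sample, 5.0
)
```

Averaging the conditional risks, rather than plugging an average covariate value into the hazard, is what makes the resulting quantity marginal over the chosen population.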

3.2. Application in the UK CF Registry: causal estimand

In our motivating example, “always treated” means sustained use of DNase for the follow‐up period of interest and “never treated” means not using DNase for the follow‐up period of interest. We are interested in risk differences up to 11 years of follow‐up, standardized to the underlying population of individuals meeting some specified eligibility criteria in 2018, this being the most recent year for which data were available for this study, and therefore considered the population of most relevance to the current CF population. The characteristics of this population could be summarised by their distribution of time‐fixed covariates and their history of time‐dependent variables up to 2018.

The causal estimand can be linked to a target trial. 15 Arguably a precise specification of the causal estimand using counterfactual notation, as in (1), encompasses all elements of the target trial. However, by specifying the target trial alongside the causal estimand, we can help ensure that our aims and findings are clear and accessible to different audiences. Table 1 outlines the components of the target trial for studying the effect of DNase on survival in people with CF, and summarizes how the target trial is emulated using the available data, following examples in the literature. 13 , 18 There are differences in the way that the MSM‐IPTW and sequential trials approaches use the observed data for the trial emulation, and these are incorporated in the description of the trial emulation.

The target trial protocol specifies the population to which the causal estimand of interest refers, which is individuals meeting the target trial eligibility criteria in 2018. The eligibility criteria for the trial emulation using the UK CF Registry data (“trial emulation eligibility criteria”) extend the target trial eligibility criteria as they take into account features of the data and how the data are to be used in the analysis. The emulation of the trial makes use of data from 2008 to 2018. For the marginal probabilities in the causal estimand the population of interest is people meeting the trial emulation eligibility criteria in 2018. For the trial emulation we identify individuals meeting the trial emulation eligibility criteria at visits recorded in the UK CF Registry data between 2008 and 2017. Doing this also requires data from three visits prior to 2008 to ascertain whether the person had been taking DNase in the three previous years. Individuals who may have used DNase in the more distant past were included to increase sample size and because it was considered reasonable that the effect of restarting DNase in this group would be similar to that for individuals who had never used DNase in the past. The trial emulation eligibility criteria also include that an individual is eligible at a given visit if they have at least three preceding visits, with the patient recorded as not using DNase at each of those visits, and with at least one visit being in the prior 18 months. To be eligible at a given visit, individuals were also required to have observed measurements of FEV1% and BMI z‐score at that visit and the previous visit.

4. ESTIMATION USING LONGITUDINAL OBSERVATIONAL DATA

4.1. Assumptions and MSMs

This section focuses on the estimation of the causal estimand in (1) using longitudinal observational data of the type described in Section 2.2 and as illustrated in the DAG in Figure 1. In the observational data, individuals who are untreated initially () can subsequently start treatment (ie, for some ) and vice versa. As illustrated in the DAG in Figure 1, a change in a person's treatment status can depend on time‐dependent patient characteristics that also affect the outcome. Therefore, individuals who follow the treatment patterns of interest from time (ie, [“always treated”] and [“never treated”]), differ systematically in their characteristics at time . Furthermore, some individuals follow other patterns of treatments, for example, . To identify the causal estimand of interest from the observational data requires the four key assumptions of no interference, positivity, consistency, and conditional exchangeability (ie, no unmeasured confounding). 1 , 42 , 43 Details on these assumptions are provided in Supplementary Material (Section 1). We did not depict censoring in the DAG, but it is also assumed that any censoring of individuals in the observational data is uninformative conditional on observed time‐fixed and time‐dependent covariates and treatments, and handling of censoring is discussed further below.

The causal estimand in (1) involves marginal risks, which we expressed in (2) in terms of the counterfactual hazard function. For estimation, we assume a particular model for these hazard functions, with the model specifying how the counterfactual hazard at a given time depends on the treatment history up to that time. Such models are referred to as MSMs. In the time‐to‐event setting, the MSM for a hazard is usually assumed to take the form of a Cox proportional hazards model 44

| (4) |

where is the baseline counterfactual hazard, denotes the treatment pattern up to the most recent visit prior to , is a function of to be specified, and is a vector of log hazard ratios. However, the hazard could take various other forms and we will also consider MSMs based on Aalen's additive hazard model, 45 , 46 which have the form

| (5) |

where is the baseline counterfactual hazard and is a vector of time‐dependent coefficients. In both hazard models the function is chosen to equal zero when . In (3) we also showed that the causal estimand can be expressed in terms of a conditional hazard. For this the MSM for the conditional hazard includes conditioning on (some components of) the covariate history at time , which we denote . Proportional hazards and additive hazards forms for the conditional hazard are

| (6) |

and

| (7) |

The hazard models in (4) to (7) are generic forms for the MSM. They could be extended to include interactions between and . Below we consider specific forms for the MSM that are used in the MSM‐IPTW and sequential trials approaches, and we specify in terms of and . Both methods involve specification of an MSM for the hazard or the conditional hazard , followed by estimation using observational data under the assumptions stated above.

For the Cox MSM in (4) the survival probabilities required for the estimand in (1) can be written

| (8) |

For the Aalen MSM in (5) we have

| (9) |

Similar expressions can be used to write the conditional probabilities in terms of the conditional MSM in (6) or (7), which can then be used to estimate the marginal probabilities using (3). Further details on estimation are discussed below.

4.2. Estimation using MSM‐IPTW

4.2.1. Specifying and estimating the MSM

In the MSM‐IPTW approach, the data used for the analysis includes individuals followed‐up from the first time at which they met the trial emulation eligibility criteria, denoted (see Table 1). This is the time at which the decision to initiate the “always treated” or “never treated” strategy could have been made, and denotes follow‐up time (ie, corresponds to ). Individuals are assigned time‐dependent weights to address time‐dependent confounding and the MSM is fitted to the observed weighted data. Consider first the MSM that does not condition on any covariate history at time . In a simple version of the MSM, the hazard at time is assumed to depend only on the current level of treatment: (model (4)) or (model (5)). Other options include that the hazard depends on duration of treatment, or ; or on the history of treatment in a more general way, or . The choice of the way in which the hazard depends on the treatment history could be informed by subject matter knowledge. In practice, however, it may be difficult to elicit this information and the choice could depend to some extent on the sample size, with larger sample sizes permitting more flexible forms for the hazard without too much loss of precision. The weight at time for a given individual is the inverse of the product of conditional probabilities of them having had their observed treatment pattern up to time given their past treatment status and time‐dependent covariate history, . These weights can be large for some individuals, which induces large uncertainty in estimates from the MSM, and stabilized weights are typically used instead. Details on the weights are provided in the Supplementary Material (Section 2).

Next we consider the MSM that conditions on the covariate history at time . In this case in Equations (6) and (9) is . In this case we also need to correctly specify how impact on the hazard, including consideration of any interaction terms between and . Inclusion of interactions with may also be informed by subject matter knowledge and could in theory be assessed using statistical tests, which should be based on an appropriate method for estimating standard errors such as bootstrapping (see below). If stabilized weights are estimated by including the covariate history at time , that is, , in the model in the numerator of the stabilized weights then must also be included in the MSM, that is, the MSM should be for a conditional hazard . An MSM based on a conditional hazard can also be used in combination with unstabilized or partially stabilized weights. In that case, however, the conditioning variables are not being used to control confounding, as that is achieved via the weights.

In the data there is likely to be censoring of individuals due to loss‐to‐follow‐up or for other reasons. Censoring that depends on time‐updated covariates, or on baseline covariates that are not conditioned on in the MSM (ie, not included in the set of baseline adjustment variables in the MSM, if any), is informative, and this should be accounted for in the analysis to avoid bias. Inverse probability of censoring weights (IPCW) can be used to address this. The total weight at a given time is the product of the IPTW and IPCW at that time.
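The construction of the total weight can be illustrated with a minimal sketch. It assumes that the per‐visit probabilities have already been estimated (for example, by logistic regression); the function name `cumulative_weights`, the argument names, and the numerical values are hypothetical and are not taken from the analysis in this paper.

```python
import numpy as np

def cumulative_weights(p_denominator, p_numerator=None):
    """Time-dependent inverse probability weights for one individual.

    p_denominator[k]: estimated probability of the observed status at visit k
        (treatment received, or remaining uncensored), given past treatment
        and covariate history.
    p_numerator[k]: optional estimated probability of the observed status at
        visit k given a reduced history (used for stabilized weights).
    Returns the weight at each visit: the cumulative product over visits 0..k.
    """
    p_den = np.asarray(p_denominator, dtype=float)
    if np.any((p_den <= 0) | (p_den > 1)):
        raise ValueError("denominator probabilities must lie in (0, 1]")
    if p_numerator is None:
        p_num = np.ones_like(p_den)          # unstabilized weights
    else:
        p_num = np.asarray(p_numerator, dtype=float)
    return np.cumprod(p_num / p_den)

# Hypothetical estimated probabilities at three visits for one individual.
iptw = cumulative_weights([0.8, 0.5, 0.9])    # treatment weights
ipcw = cumulative_weights([0.95, 0.9, 0.9])   # censoring weights
total_weight = iptw * ipcw                    # product at each visit
```

Passing a numerator gives stabilized weights, which are typically less variable than unstabilized weights.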

4.2.2. Estimating the causal estimand

Having fitted an MSM using a Cox model for the marginal hazard as in (4), we use the expression in (8) to obtain estimates of the marginal probabilities of interest. In (8) the integrated (cumulative) baseline hazards can be estimated using an IPTW Breslow's estimator (see Section 2 in the Supplementary Material). Alternatively, having fitted an MSM using an Aalen additive model for the marginal hazard (5), we use the expression in (9), where the integrals are estimated as the cumulative coefficients estimated in the Aalen additive hazard model fitting process. When using an MSM for a marginal hazard the resulting marginal survival probabilities refer to the population at time zero. If using an MSM with conditioning on we can use the fitted MSM to estimate conditional survival probabilities . These conditional estimates can then be used to obtain estimates of marginal probabilities for a population of interest using empirical standardization. Here we have a choice about the population to which the marginal probabilities refer, in terms of their distribution of . In the motivating example, we let denote the set of individuals meeting the trial emulation eligibility criteria in 2018, and the number of individuals in is denoted . We let denote the observed values of the covariate history for individual in 2018. The empirical standardization formula is

| (10) |

where is the estimate of the conditional cumulative hazard at time . In practice, the empirical standardization can be done by creating two copies of each individual in (sometimes called “cloning”) and setting for one copy and for the other copy. The covariate values are the same for both copies. The estimated conditional survival probabilities are then obtained for each individual under both treatment regimes (ie, for both copies), and then the average calculated across individuals under both treatment regimes. A similar empirical or ‘plug‐in’ approach for estimating risks based on MSMs was described by Young et al. 27 See also Chen and Tsiatis 47 and Daniel et al. 48
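The cloning procedure can be sketched in a few lines. This is an illustration only, under a simplifying assumption that is not required by the methods above: a Cox MSM in which treatment and baseline covariates enter through a single time‐constant log hazard ratio each, so that the conditional cumulative hazard under "always treated" is the cumulative baseline hazard multiplied by a constant. The function and argument names are hypothetical, and the fitted quantities are taken as given.

```python
import numpy as np

def standardized_risk_difference(cum_base_hazard, beta_treat, beta_cov, covariates):
    """Marginal risk difference ("always treated" minus "never treated")
    at one time horizon, by empirical standardization.

    cum_base_hazard: estimated cumulative baseline hazard at the horizon.
    beta_treat: estimated log hazard ratio for treatment (assumed constant).
    beta_cov: estimated log hazard ratios for the baseline covariates.
    covariates: array of shape (n, p), one row per individual in the
        population to which the marginal risks refer.
    """
    L = np.asarray(covariates, dtype=float)
    lin_cov = L @ np.asarray(beta_cov, dtype=float)
    # Two "copies" of each individual: treatment set to 1 and to 0,
    # with the covariate values identical in both copies.
    surv_treated = np.exp(-cum_base_hazard * np.exp(beta_treat + lin_cov))
    surv_untreated = np.exp(-cum_base_hazard * np.exp(lin_cov))
    # Average over individuals, then take the difference in risks.
    return (1 - surv_treated.mean()) - (1 - surv_untreated.mean())

# Hypothetical fitted values and covariates for three individuals.
rd_example = standardized_risk_difference(
    cum_base_hazard=0.2,
    beta_treat=np.log(0.5),
    beta_cov=[0.3],
    covariates=[[0.0], [1.0], [2.0]],
)
```

With a more flexible MSM (for example, a treatment effect that depends on duration of treatment) the cumulative hazard under each regime would need to be accumulated over event times rather than obtained by a single multiplication.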

If it is of interest to estimate the causal risk difference in (1) for several time horizons , it is recommended to fit the MSM using all event times up to to obtain risk difference estimates for all horizons , rather than fitting separate MSMs for different time horizons. This is to guarantee that estimated survival curves are monotone.

Confidence intervals for the estimated causal risk difference can be obtained by bootstrapping. The weights models and the MSM are fitted in each bootstrap sample, so that the bootstrap confidence intervals capture the uncertainty in the estimation of the weights as well as in the MSM.

4.3. Estimation using the sequential trials approach

4.3.1. Creation of trials and use of MSMs

The sequential trials approach takes advantage of the fact that individuals can meet the trial emulation eligibility criteria at more than one visit during their follow‐up, and therefore have data consistent with initiating the “always treated” or “never treated” strategy at more than one visit (Table 1). This is the key difference between the MSM‐IPTW approach and the sequential trials approach. Another important difference is that the sequential trials approach focuses only on two sustained treatment regimes of interest—“always treated” and “never treated”—whereas the MSM‐IPTW approach permits estimation of risks under any longitudinal treatment regime. The two methods are compared in detail in Section 5. Here we focus on describing the sequential trials approach.

In the sequential trials approach the trial emulation eligibility criteria are applied at each visit to form a sequence of “trials.” An individual can contribute to a “trial” starting from any visit at which they meet the criteria. The time in a given trial denotes the time since the start of the trial. In typical applications of the sequential trials approach, individuals who have previously used the treatment are not eligible for inclusion in a given trial, and so all individuals included in the th trial have . Those included in the th trial therefore include “initiators” who start treatment at visit () and “non‐initiators” who remain untreated at visit (). Noninitiators in trial can appear as initiators in a later trial (). Individuals can appear as initiators in only one trial, but as noninitiators in several trials. In our motivating example we relax this slightly and only require individuals not to have been treated for three prior years in order to be included in a given trial according to the trial emulation eligibility criteria, that is, a person is eligible at visit if . In the example, therefore, an individual could appear as a new initiator in more than one trial, and also as a new initiator in one trial and a noninitiator in a trial more than 3 years later. To simplify the notation below we assume that individuals meeting the trial emulation eligibility criteria for the th trial have , but the details are unchanged in the slightly relaxed situation of the motivating example.
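The formation of trials in the typical application (no previous treatment use) can be sketched as follows. This is a simplified illustration rather than the data set‐up used in the motivating example: it ignores events, loss to follow‐up and the other eligibility criteria, and the function name `build_trials` and the example treatment histories are hypothetical.

```python
def build_trials(treatment_history):
    """Expand longitudinal treatment data into a sequence of trials.

    treatment_history: dict mapping an individual's id to a list of 0/1
        treatment indicators, one per visit.
    An individual is eligible for the trial starting at visit k if they
    were untreated at every visit before k.
    Returns one row per (individual, trial) with an initiator flag.
    """
    rows = []
    for pid, a in treatment_history.items():
        for k, a_k in enumerate(a):
            if any(a[:k]):
                break  # previously treated, so not eligible from here on
            rows.append({"id": pid, "trial": k, "initiator": a_k})
    return rows

# Hypothetical treatment histories over four visits.
history = {
    "p1": [0, 0, 1, 1],  # noninitiator in trials 0 and 1, initiator in trial 2
    "p2": [1, 1, 0, 0],  # initiator in trial 0 only
    "p3": [0, 0, 0, 0],  # noninitiator in all four trials
}
trials = build_trials(history)
```

Under this eligibility rule each individual appears as an initiator in at most one trial. The relaxed rule of the motivating example (no treatment in the three prior years) would replace the `any(a[:k])` check with a check on the three preceding visits only.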

Previous descriptions of the sequential trials approach have described the analysis models used but have not expressed these in terms of MSMs using the counterfactual framework. 4 , 5 Here we consider MSMs for use in the sequential trials approach, focusing on MSMs that condition on the covariate history at the start of the trial, , where corresponds to for trial . First consider the trial starting at visit . Let denote the hazard at time after visit 0 under the possibly counter‐to‐fact regime of being “always treated” () or “never treated” () from visit 0 onwards, conditional on . MSMs for this hazard using a Cox model and Aalen's additive hazard model are

| (11) |

and

| (12) |

We extend this to a trial starting at any visit and let denote the hazard at time after visit under this possibly counter‐to‐fact treatment regime, conditional on the time‐fixed covariates and the characteristics at the start of trial . We note that the subscript 0 on in the counterfactual event time refers to time (the start of a given trial) rather than to visit . MSMs for this hazard using a Cox model and Aalen's additive hazard model are therefore

| (13) |

and

| (14) |

The conditioning on is included to control for confounding by covariates at the start of the trial. Such confounding could alternatively be controlled using weights, but conditioning on covariate history at the start of the trial is a feature of the previously‐described sequential trials analysis methods, which we discuss further below. In the Cox MSM (13) the hazards in the treated and untreated could be assumed proportional, , or we could allow the hazard to depend on duration of treatment, for example, . The additive hazards MSM in (14) naturally incorporates a flexible time‐dependent effect of treatment on the hazard. Both models could be extended to include interactions between and .

4.3.2. Estimation using inverse probability of artificial‐censoring weights

The MSMs in (13) and (14) cannot be estimated directly from the observational data because not all individuals meeting the trial emulation eligibility criteria at visit are then “always treated” () or “never treated” (). In the approach as described by Hernan et al 4 (their “adherence‐adjusted” approach) and Gran et al 5 individuals are artificially censored at the visit at which they switch from their treatment group at the start of the trial. That is, in the trial starting at visit , individuals are censored at the earliest visit () such that . We refer to this as artificial censoring. It results in a modified dataset in which, in trial , all individuals have either or up to the earliest of their event time, their actual censoring time, or their artificial censoring time. Treatment switching is expected to depend on time‐dependent covariates that are also associated with the outcome, as illustrated in the DAG in Figure 1, meaning that the artificial censoring is informative. This is addressed using weights, which we refer to as inverse probability of artificial‐censoring weights (IPACW). The IPACW at times (in each trial ) is 1. The IPACW at times () is the inverse of the product of conditional probabilities that the individual's treatment status up to time remained the same as their treatment status at the start of the trial (time ), conditional on their observed covariates up to time . After estimating the IPACW, the MSMs in (13) and (14) can then be fitted using weighted regression using the time‐dependent IPACW, with in the hazard model replaced by the observed treatment status at the start of the trial, that is, in trial , noting that treatment status is forced to be the same at all times due to the artificial censoring. The conditioning on controls for confounding of the association between treatment initiation at time 0 and the hazard. 
Estimating the MSMs in this way is valid under the same assumptions of no interference, positivity, consistency and conditional exchangeability, as are required for the MSM‐IPTW analysis. Further details on the IPACW are provided in the Supplementary Material (Section 2). Hernan et al 4 used logistic regression to estimate the IPACW, whereas Gran et al 5 used Aalen's additive hazard model.
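The artificial censoring and the accumulation of the IPACW within one trial can be sketched as below. The sketch assumes that the conditional probabilities of remaining on the treatment status held at the start of the trial have already been estimated by some model; the name `ipacw_for_trial`, the input `p_stay`, and the numerical values are hypothetical.

```python
def ipacw_for_trial(treatment, p_stay, trial_start):
    """IPACW for one individual in the trial starting at visit trial_start.

    treatment: list of 0/1 treatment indicators, one per visit.
    p_stay[m]: estimated probability that treatment at visit m is the same as
        at the start of the trial, given that it has not yet changed and given
        the covariate history up to visit m (used only for m > trial_start).
    Returns a list of weights; element j applies to the interval that begins
    j visits after the start of the trial. Follow-up stops at the first visit
    at which treatment differs from that at the start of the trial.
    """
    assigned = treatment[trial_start]
    weights = [1.0]  # the weight is 1 during the first interval of the trial
    for m in range(trial_start + 1, len(treatment)):
        if treatment[m] != assigned:
            break  # artificially censored at visit m
        weights.append(weights[-1] / p_stay[m])
    return weights

# Hypothetical individual who starts treatment at the third visit.
treatment = [0, 0, 1, 1]
p_stay = [1.0, 0.9, 0.8, 0.95]  # entry 0 is unused

w_trial0 = ipacw_for_trial(treatment, p_stay, trial_start=0)
w_trial2 = ipacw_for_trial(treatment, p_stay, trial_start=2)
```

In the trial starting at visit 0 this individual is a noninitiator who is artificially censored at visit 2, so only two intervals of follow‐up are retained. In the trial starting at visit 2 the same individual is an initiator and is not artificially censored.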

4.3.3. A combined analysis across trials

The MSMs in (13) and (14) could be fitted separately in each trial starting at times . However, the strength of the sequential trials approach comes from the potential for a combined analysis across trials. Some or all parameters of the MSMs in (13) and (14) could be assumed common across trials (ie, the same for all ). The common parameters can then be estimated by fitting the hazard models using the observed data combined across trials. When the MSMs are fitted combined across trials, the MSM for each trial preferably conditions on the same amount of history of . For example, we may wish to allow the hazard for each trial to depend on and . The amount of history to condition on should be based on what is considered to be needed to control for confounding, which can be encompassed in a DAG. We let denote the extent of the history of that we wish to condition on in the MSM. If we allowed the MSMs for the conditional hazards for different trials to depend on different amounts of history of then justification for a combined model may be challenging.

It may be reasonable in many settings to assume that the impact of the treatment on the hazard at a given time post‐initiation of the treatment does not depend on the visit at which treatment was initiated, that is, in model (13) and in model (14) for all . Similarly the coefficients for and could be assumed constant across trials. Often the observation time scale will be in a sense arbitrary. In our motivating example we define time as the first time during the period 2008 to 2017 that an individual is observed in the UK CF Registry and first meets the trial emulation eligibility criteria. For many individuals this is 2008 (see Section 7). Provided any elements of time (eg, age, calendar year) that affect the hazard are included in the set of covariates , the baseline hazard may also be assumed common across trials, that is, or . The models used to obtain the IPACW could be fitted separately in each trial or combined across trials.

As in the MSM‐IPTW approach, censoring of individuals due to loss‐to‐follow‐up or for other reasons, and which depends on time‐updated covariates, can be handled using IPCW. In the sequential trials analysis these weights are distinct from the IPACW used to address the artificial censoring, and the total weight at a given time is given by the product of these two types of weight. Gran et al 5 did not distinguish between artificial censoring in data set‐up for the sequential trials approach and censoring due to drop‐out in their analysis, and used a single weight to account for both of these.

4.3.4. Estimating the causal estimand

After estimating the MSM using the sequential trials data the expression in (3) can be used to obtain estimates of conditional survival probabilities . These conditional probabilities can then be used to obtain the marginal probabilities used in the causal estimand in (1). As in the MSM‐IPTW approach when using an MSM that is conditional on , we have some choice here about the population for which the marginal probabilities are obtained. Suppose we decided to fit a Cox MSM of the form

| (15) |

and that this is fitted combined across all trials, therefore giving combined estimates of the model parameters and the baseline hazard. As in Section 4.2 we let denote the observed sample from the population of interest, that is, individuals meeting the trial emulation criteria in 2018 in our motivating example. We let denote the observed values of time‐dependent covariates for individual . Using the Cox MSM in (15) the empirical standardization formula is

| (16) |

where is the estimated cumulative baseline hazard, which is obtained using an IPACW Breslow's estimator (see Section 2 in Data S1). A similar expression can be written if the model in (15) is replaced by an additive hazards model. The standardized probability estimate in (16) targets the same probability as the corresponding expression for the MSM‐IPTW approach in (10). They both refer to a population . Both also involve a baseline hazard. The baseline hazards are themselves marginal with respect to covariates not included in the MSM for the conditional hazards, and will refer to different populations in the MSM‐IPTW and sequential trials approaches. However, provided that important aspects of “time” such as age and calendar time are included in the covariate set, we argue that these differences are likely to have negligible impact on estimates. In (15) the baseline hazard is assumed the same across trials. We could alternatively allow the baseline hazard to differ across trials, while assuming that the model coefficients are the same across trials. For this the MSM would be fitted using data combined across trials, but allowing the baseline hazard to differ by trial (ie, using a stratified baseline hazard). When allowing different baseline hazards across trials, care should be taken over which baseline hazard is then used to obtain the marginal risk estimates. In this paper we focus on a sequential trials approach in which the length of possible follow‐up decreases for trials starting at later visits. The baseline hazard corresponding to the trial starting at may be used to obtain the marginal risk difference estimates for all time horizons . The baseline hazard from the trial starting at could only be used to estimate marginal risk difference estimates for time horizons . An alternative would be to use a sequence of trials that all have equal length of follow‐up. 
In our motivating example, which uses data from 2008 to 2018, there are a maximum of 11 years of follow‐up (from 2008). If we did not use a combined analysis across trials, the parameters in (16) would be trial dependent, and one would then have to consider which set of parameters to use to obtain the marginal risk estimates.

In their descriptions of the sequential trials approach Gran et al (2010) 5 used the Cox proportional hazards model, and Hernan et al (2008) 4 used pooled logistic regression across visits, which is equivalent to a Cox regression as the times between visits get smaller. 49 Røysland et al 50 used an additive hazards model, though their aim was to estimate direct and indirect effects, which differs from our aims. Gran et al (2010) 5 assumed the hazard ratios for treatment in the Cox model to be common across the trials, but allowed a different baseline hazard in each trial. Hernan et al (2008) 4 allowed the odds ratio for treatment to differ across trials and also performed a test for heterogeneity, though it is unclear whether they allowed separate intercept parameters (analogous to our baseline hazards) across trials. If the coefficient for treatment is assumed the same across trials, it is recommended to assess this assumption using a formal test.

As in the MSM‐IPTW approach, confidence intervals for the estimated causal risk difference can be obtained by bootstrapping. The formation of the sequential trials, estimation of the weights, fitting of the conditional MSMs, and obtaining the risk difference are all repeated in each bootstrap sample.
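The bootstrap procedure can be sketched generically. The sketch resamples individuals (not rows of the expanded trial data), so that all of an individual's contributions across trials stay together, and it uses a percentile interval, which is one possible choice; the text above does not specify the type of bootstrap interval. The function `bootstrap_ci` and the toy `estimate` callable are hypothetical; in practice `estimate` would form the trials, estimate the weights, fit the MSM and return the risk difference for the resampled individuals.

```python
import numpy as np

def bootstrap_ci(ids, estimate, n_boot=200, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval, resampling individuals.

    ids: sequence of individual identifiers.
    estimate: callable taking an array of resampled ids (with repeats) and
        returning a scalar estimate; the whole analysis pipeline is rerun
        inside this callable for each bootstrap sample.
    """
    rng = np.random.default_rng(seed)
    ids = np.asarray(ids)
    stats = []
    for _ in range(n_boot):
        sample_ids = rng.choice(ids, size=len(ids), replace=True)
        stats.append(estimate(sample_ids))
    lower, upper = np.quantile(stats, [alpha / 2, 1 - alpha / 2])
    return float(lower), float(upper)

# Toy stand-in for the full analysis: the mean of a binary outcome.
outcome = {i: float(i % 2) for i in range(50)}
ci = bootstrap_ci(
    list(outcome),
    lambda sample: np.mean([outcome[int(i)] for i in sample]),
)
```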

5. COMPARING MSM‐IPTW AND THE SEQUENTIAL TRIALS APPROACH

5.1. An illustrative example using a causal tree diagram

In this section we show how the MSM‐IPTW and sequential trials approaches can estimate the same causal estimand using a simple example and non‐parametric estimates. Our aim is to provide insight into how the two approaches use the data differently to estimate the same quantity.

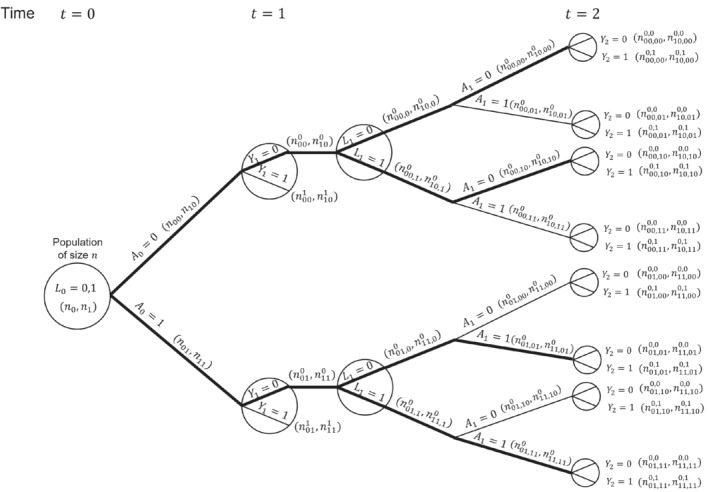

Consider a situation as depicted in the DAG in Figure 1, but with treatment and covariate information only collected at up to two visits ( measured at time 0, and measured at time 1) and survival status observed at times 1 () and 2 (). We focus on a single binary time‐dependent confounder and omit the unobserved variable and any time‐fixed covariates . Figure 2 shows a causal tree graph (see for example Richardson and Rotnitzky (2014) 51 ), representing the different possible combinations of the variables up to time 1, and then the different combinations of among individuals who survive to time 1 (). A total of individuals are observed at time 0. The numbers in brackets on the branches of the tree are the number of individuals who followed a given pathway up to that branch. The notation is as follows: denotes the number with ; the number with ; the number with ; the number with ; the number with ; and the number with .

FIGURE 2.

Causal tree diagram illustrating a study with a binary treatment and binary covariate , both measured at two time points (). is a discrete time survival outcome. Thick lines indicate branches for groups of individuals who were untreated or treated at both time points.

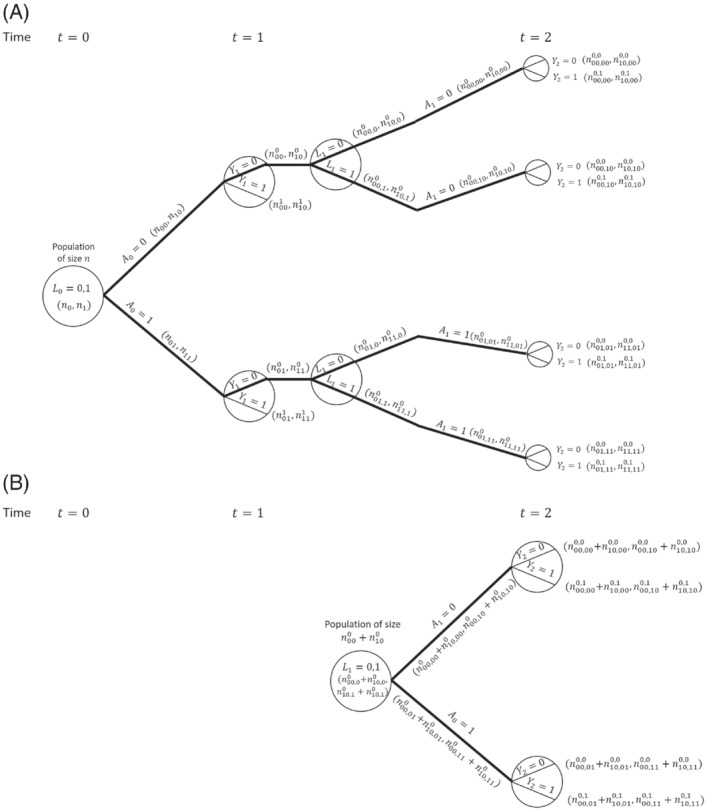

Our interest is in comparing survival probabilities under the two treatment regimes of “always treated” and “never treated.” The branches representing these two treatment regimes starting from are shown with thick lines in the causal tree diagram. The MSM‐IPTW analysis makes use of the causal tree diagram as depicted in Figure 2. Under the sequential trials approach, we create a trial starting at time 0 and a trial starting at time 1. In the trial starting at time 0 individuals who survive to time 1 are censored at time 1 if , that is, not all branches after time 1 are used. The trial starting at time 1 is restricted to individuals with and . The sections of the causal tree diagram used for these two trials are shown in Figure 3.

FIGURE 3.

Illustration of the sequential trials approach using causal tree diagram from Figure 2. (A) Trial starting at . (B) Trial starting at . (A) In the trial starting at time individuals with are censored at time 1 if . (B) The trial starting at time is restricted to individuals with (and ).

Consider the probability of survival to time 1 with treatment , . The MSM‐IPTW approach estimates this using the result that (under the conditions of consistency, positivity and conditional exchangeability)

| (17) |

Based on the tree diagram, a nonparametric estimate of this is

| (18) |

where the two contributions within the outer brackets come from individuals with and . On the other hand, the sequential trial starting at time 0 estimates . By consistency and conditional exchangeability we have . Based on the tree diagram, a non‐parametric estimate of this using the trial starting at time 0 is . Using the result that (assuming consistency and conditional exchangeability) it follows that a nonparametric estimate of the marginal probability based on the trial starting at time 0 is

| (19) |

which is the same as the estimate obtained using MSM‐IPTW (ie, Equation 18). Therefore the MSM‐IPTW and sequential trials approaches (using the trial starting at visit 0) give identical estimates of . This equivalence between inverse probability weighted estimates and standardized estimates obtained using the g‐formula in the nonparametric setting is well established (see eg, References 43 and 52). In the sequential trials approach, the trial starting at time 1 (so that corresponds to ) can be used to estimate the probability of survival for 1 year under a given treatment strategy conditional on survival to time 1 and on . By consistency and conditional exchangeability, and assuming that conditioning on is sufficient to control for confounding of the association between and , the nonparametric estimate is

| (20) |

where the two contributions come from individuals with and . The marginal probability estimate refers to a population in which , whereas the marginal probability estimate refers to a population with a different distribution of the covariate measured at the start of the trial, . The estimate from the trial starting at time 1 could alternatively be standardized to the distribution of at time 0:

| (21) |

Under the assumption that , a combined estimate of could be obtained from the two trials, for example, using an inverse‐variance‐weighted combination of the estimates from the two trials (Equations 19 and 21).

Considering again the trial starting at time , we can also show that non‐parametric estimates of from the two methods are the same. The MSM‐IPTW approach uses

| (22) |

Based on the tree diagram, a nonparametric estimate of this is

| (23) |

where the four contributions within the outer brackets come from individuals with the four possible combinations of .

The sequential trials approach estimates , which can be written

| (24) |

The term was considered above. The first term, , is estimated by IPACW, because individuals with are censored at time 1, and the remaining individuals with are weighted by . Under the assumptions of consistency, positivity and conditional exchangeability we can write

| (25) |

Based on the tree diagram, a nonparametric estimate of this is

| (26) |

Using this result along with the estimate , it can be shown that the sequential trials estimate of is the same as the MSM‐IPTW estimate in (23). By comparing (23) and (26) we can see clearly the connection between the weights used in the MSM‐IPTW approach and those used in the sequential trials approach (IPACW).
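The equality of the weighted and standardized nonparametric estimates of survival to time 1 can be checked numerically. The sketch below uses hypothetical counts for a binary baseline covariate and a binary baseline treatment (they are not the counts in Figure 2) and exact rational arithmetic, so that the two estimates can be compared without rounding error.

```python
from fractions import Fraction

# Hypothetical counts: n[l][a] individuals with baseline covariate l and
# baseline treatment a; s[l][a] of them survive to time 1.
n = {0: {0: 40, 1: 10}, 1: {0: 20, 1: 30}}
s = {0: {0: 36, 1: 9}, 1: {0: 12, 1: 24}}
total = sum(n[l][a] for l in n for a in n[l])

def iptw_estimate(a):
    """Weight each survivor with treatment a by 1 / P(treatment a | covariate)."""
    est = Fraction(0)
    for l in n:
        n_l = n[l][0] + n[l][1]
        p_a_given_l = Fraction(n[l][a], n_l)
        est += Fraction(s[l][a]) / p_a_given_l
    return est / total

def standardized_estimate(a):
    """Average the covariate-specific survival proportions over the
    marginal distribution of the covariate."""
    est = Fraction(0)
    for l in n:
        n_l = n[l][0] + n[l][1]
        est += Fraction(s[l][a], n[l][a]) * Fraction(n_l, total)
    return est

surv_treated_iptw = iptw_estimate(1)
surv_treated_std = standardized_estimate(1)
surv_untreated_iptw = iptw_estimate(0)
surv_untreated_std = standardized_estimate(0)
```

In both functions the covariate‐specific count of survivors is multiplied by the same factor, the covariate stratum size divided by the number in that stratum receiving the treatment, which is why the two estimates coincide.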

5.2. Specification and estimation of MSMs

In practice, model‐based estimates are typically required instead of nonparametric estimates, as we usually have multiple time‐dependent confounders to consider, including continuous variables. In this section we compare in more detail the forms of the MSMs used in the MSM‐IPTW and sequential trials approaches, how they are estimated using inverse probability weights, and the assumptions made.

A key difference in the MSMs used in the two approaches is that in the MSM‐IPTW approach the MSM for the hazard at time includes the history of treatment up to time , , whereas the MSM used in the sequential trials approach involves only the treatment status assigned at the start of the trial, which is equivalent to the treatment at any time after the start of the trial due to the artificial censoring. The MSM‐IPTW approach therefore requires that the relation between treatment history and the hazard is correctly specified. In the sequential trials approach, by contrast, there are limited options for the form of the MSM because only two treatment regimes are considered: after the artificial censoring, all individuals used in the analysis are either “always treated” or “never treated”. In the MSM‐IPTW approach, when covariates are excluded from the MSM, the MSM is a fully marginal model; the possibility of interaction between treatment and these covariates is not ruled out but does not have to be modelled. If the MSM used in the MSM‐IPTW approach includes covariates then any interactions that exist between treatment and must be included in the MSM and correctly specified. MSMs that condition on the covariate history at time 0 are therefore more susceptible to mis‐specification than MSMs for a marginal hazard.

The sequential trials approach uses an MSM for the hazard that conditions on the covariate history at the start of each trial ( for trial ). This approach therefore relies on correct modelling of the association between outcome and the covariate history in each trial. If there are interactions between treatment and the covariate history at the start of each trial then these must be included in the MSM and correctly specified. When using a combined analysis across trials, each trial should include the same amount of covariate history in the MSM for the conditional hazard, which we denoted in Section 4.3 by . If we adjusted for an increasing length of covariate history in successive trials then the hazard model for each trial would be different. Specifying congenial models across trials for a combined analysis would then be difficult or impossible. If we are willing to make the assumption that the effect of treatment on the hazard is the same in all trials, that is, does not depend on the time of treatment initiation, then the sequential trials approach has information about the effect of treatment initiation from several time origins. Under these assumptions, the re‐use of individuals from several time origins in the sequential trials approach could lead to gains in efficiency in the marginal risk difference estimate at some time horizons relative to the MSM‐IPTW approach. On the other hand, in the MSM‐IPTW analysis individuals who switch their treatment status during follow‐up continue to contribute information to the analysis, whereas in the sequential trials analysis individuals cease to contribute information when they deviate from their treatment group as determined at the start of a given trial. This means that in the sequential trials analysis the number of individuals with longer term follow‐up will be smaller than in the MSM‐IPTW analysis. 
To make use of individuals who switch their treatment status during follow‐up, the MSM‐IPTW approach makes modeling assumptions that borrow information across treatment regimes. The sequential trials approach instead borrows information across populations.

The sequential trials approach could use weighting in the first time period instead of adjustment for covariates measured at the start of the trial, though that is not how the method has been described or used to date. Conditioning on covariates measured at the start of the trial in the sequential trials approach enables use of empirical standardization to obtain risk difference estimates for a population with any distribution of the covariates at time 0, though care should be taken not to extrapolate beyond the observed covariate distribution. We have shown how this enables us to estimate the same causal estimand in the sequential trials approach as in the MSM‐IPTW approach.
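The empirical standardization step can be sketched as follows. This is a minimal illustration, assuming a conditional additive hazard with coefficients that are constant over time; the function name `standardized_risk`, the coefficient values, and the covariate values are all hypothetical and are not taken from the paper.

```python
import math

def standardized_risk(horizon, arm, covariates, alpha0, alpha_a, alpha_l):
    """Average the model-based risk by `horizon` over an empirical covariate sample.

    Assumes a conditional additive hazard alpha0 + alpha_a * arm + alpha_l * l
    that is constant over time (a simplification made for this sketch).
    """
    risks = []
    for l in covariates:
        cumulative_hazard = (alpha0 + alpha_a * arm + alpha_l * l) * horizon
        risks.append(1.0 - math.exp(-cumulative_hazard))
    return sum(risks) / len(risks)

# Hypothetical covariate values at time 0 and hypothetical coefficients.
covariates_at_0 = [-1.0, 0.0, 0.5, 2.0]
coefs = dict(alpha0=0.3, alpha_a=-0.1, alpha_l=0.05)

# Risk under "always treat" (arm = 1) and "never treat" (arm = 0),
# both averaged over the same covariate distribution.
risk_always = standardized_risk(3.0, 1, covariates_at_0, **coefs)
risk_never = standardized_risk(3.0, 0, covariates_at_0, **coefs)
risk_difference = risk_always - risk_never
```

Averaging both arms over the same covariate sample is what makes the resulting risk difference marginal with respect to that population, even though the fitted hazard model is conditional on the covariates.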

To fit the MSMs, both approaches require time‐updated weights, and hence the data should be formatted with multiple rows per individual: one for each visit for MSM‐IPTW, and one for each visit within each trial for the sequential trials approach. In the MSM‐IPTW approach without conditioning on the covariates at time 0, the IPTW are proportional to the IPACW used in the sequential trials approach. Because the MSM‐IPTW approach includes individuals following any treatment regime (not just “always treated” or “never treated”), we may expect to see more extreme weights with increasing follow‐up, compared with the weights used in the sequential trials approach.
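The sequential trials data layout can be sketched as below. The sketch assumes that an individual is eligible for the trial starting at a given visit only if they were untreated at all earlier visits, and that follow‐up within a trial stops at the first visit where treatment differs from the arm assigned at the trial start. The function name, the input structure, and the row fields are hypothetical.

```python
def expand_to_trials(treatment_by_id):
    """Stack one row per individual, per trial, per visit within that trial.

    treatment_by_id maps an individual's id to their list of 0/1 treatment
    indicators at visits 0, 1, 2, ...
    """
    rows = []
    for pid, treat in treatment_by_id.items():
        for k, arm in enumerate(treat):
            if any(treat[:k]):
                break  # treated before visit k: not eligible for this or later trials
            for visit in range(k, len(treat)):
                if treat[visit] != arm:
                    break  # artificial censoring at the first deviation from the arm
                rows.append({
                    "id": pid,
                    "trial": k,
                    "visit": visit,
                    "time_in_trial": visit - k,
                    "arm": arm,
                })
    return rows

# Individual 1 starts treatment at visit 2; individual 2 is never treated.
example = {1: [0, 0, 1, 1], 2: [0, 0, 0, 0]}
trial_rows = expand_to_trials(example)
```

In this toy input, individual 1 appears as a non‐initiator in the trials starting at visits 0 and 1 and as an initiator in the trial starting at visit 2, which illustrates how one person contributes rows to several trials.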

Because the two approaches use the data differently and because the MSM‐IPTW approach may involve more extreme weights, it is of interest to investigate the relative efficiency of the two approaches for estimating the causal estimand.

6. A SIMULATION STUDY FOR THE COMPARISON OF MSM‐IPTW AND THE SEQUENTIAL TRIALS APPROACH

6.1. Simulation plan

In this section we use a simulation study to evaluate and compare the performance of the MSM‐IPTW approach and the sequential trials approach. It is only appropriate to consider the relative efficiency of the two approaches when they target the same causal estimand, and we focus on the estimation of marginal risks and the marginal risk difference as in (1). In Section 4 we considered MSMs for both methods based on Cox models or on additive hazard models. To enable a fair comparison of the methods, we wish to simulate data in such a way that the MSMs used in the two approaches are correctly specified. To ensure this we simulate the time‐to‐event data under an additive hazards model. In Section 3 of the Supplementary Material we show that one can use an additive hazard model for the MSM used in the sequential trials analysis (Equation 14, with common parameters assumed across trials) and an additive hazard model for the MSM used in the MSM‐IPTW approach (Equation 5 or 7), and that both MSMs can be correctly specified. However, if we use a Cox model for the MSM for the sequential trials analysis (Equation 13) and a Cox model for the MSM used in the MSM‐IPTW approach (Equation 4 or 6), then the two MSMs cannot, in general, both be correctly specified. This is because the parameters of additive hazard models are collapsible, whereas hazard ratios in the Cox model are noncollapsible. Martinussen and Vansteelandt 22 explained the implications of collapsibility for the use of Aalen additive hazards models and Cox models in the context of estimating the causal effect of a point treatment on survival. Keogh et al 28 extended this to a longitudinal setting similar to that considered in this paper.
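The collapsibility contrast can be illustrated in the simpler point‐treatment setting. The notation below is assumed for this illustration only and is not taken from the numbered equations of the paper: $A$ is a binary treatment, $L$ is a covariate independent of $A$ (as under randomization), and $\alpha_0, \alpha_A, \alpha_L, \lambda_0, \beta, \gamma$ are model parameters.

```latex
% Conditional additive hazard model, with L independent of A:
\lambda(t \mid A = a, L = l) = \alpha_0(t) + \alpha_A(t)\,a + \alpha_L(t)\,l .

% Survival given A = a, obtained by averaging over L:
S(t \mid A = a)
  = \exp\!\left\{ -\int_0^t \bigl( \alpha_0(s) + \alpha_A(s)\,a \bigr)\, ds \right\}
    \, E\!\left[ \exp\!\left\{ -L \int_0^t \alpha_L(s)\, ds \right\} \right] .

% The hazard given A = a alone is again additive, with the same treatment coefficient:
\lambda(t \mid A = a) = -\frac{d}{dt} \log S(t \mid A = a)
  = \tilde{\alpha}_0(t) + \alpha_A(t)\,a ,
\qquad
\tilde{\alpha}_0(t) = \alpha_0(t)
  - \frac{d}{dt} \log E\!\left[ \exp\!\left\{ -L \int_0^t \alpha_L(s)\, ds \right\} \right] .

% Under a conditional Cox model the same averaging gives
\lambda(t \mid A = a, L = l) = \lambda_0(t)\, e^{\beta a + \gamma l}
\;\Longrightarrow\;
S(t \mid A = a) = E\!\left[ \exp\!\left\{ -\Lambda_0(t)\, e^{\beta a + \gamma L} \right\} \right] ,
\qquad \Lambda_0(t) = \int_0^t \lambda_0(s)\, ds .
```

In the additive case, averaging over $L$ changes only the baseline term and leaves $\alpha_A(t)$ unchanged. In the Cox case, when $\beta \neq 0$ and $\gamma \neq 0$, the ratio of the hazards implied by $S(t \mid A = 1)$ and $S(t \mid A = 0)$ is in general not equal to $e^{\beta}$ and varies with $t$, so a Cox model conditional on $L$ and a Cox model marginal over $L$ are not usually both correct.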

The simulation study uses the algorithm for simulating longitudinal and time‐to‐event data described by Keogh et al. 28 We follow the general recommendations of Morris et al 53 on the conduct of simulation studies for evaluating statistical methods. R code for replicating the simulation is provided at https://github.com/ruthkeogh/sequential_trials.

6.1.1. Aim

Our aim is to compare the MSM‐IPTW and sequential trials approaches for estimating the effect of a time‐varying treatment on survival, subject to time‐dependent confounding, from longitudinal observational data. Our focus is on a setting in which both approaches target the same causal estimand (see below), so that it is relevant to assess their relative efficiency. Both methods are expected to produce approximately unbiased estimates when the modeling assumptions are met. We hypothesize that the sequential trials analysis could be more efficient than the MSM‐IPTW analysis in certain settings, depending on the data generating mechanism and the time horizon. MSM‐IPTW is inefficient when there are extreme weights. The efficiency of the sequential trials approach is expected to depend on the proportion of individuals who are always in the treatment group or always in the control group: when many individuals switch over time, few people continue to contribute as follow‐up time increases, and there is potential for large weights.

6.1.2. Data generating mechanisms

Data are generated according to the DAG in Figure 1 for individuals. Individuals are observed at up to five visits (), with administrative censoring at time 5. We consider three simulation scenarios, starting with a “standard” scenario (scenario 1) and then investigating the performance of the methods when certain aspects of the data generating mechanism are varied. The simulation scenarios are outlined in detail in Table 2. In all scenarios, once an individual starts treatment they remain on treatment, that is, for any , there are no individuals that go from to . Also in all scenarios there is a single time‐dependent confounder , the model for conditional on , and is the same, and event times are generated according to the same conditional additive hazards model

| (27) |

In scenarios 1 and 2 the log odds ratio for the time‐dependent confounder in the logistic model for the probability of treatment initiation is 0.5, giving “moderate” dependence of treatment initiation on the confounder. In scenario 3 this log odds ratio is increased to 3, meaning that there is strong dependence of treatment initiation on the confounder.

TABLE 2. Simulation scenarios: data generating mechanisms.

| Data generating mechanism | |
|---|---|
| General data generating mechanism | |
| Scenario 1 | |
| Scenario 2 | : as in scenario 1 |
| | : as in scenario 1 |
| Scenario 3 | : as in scenario 1 |
| | : as in scenario 1 |

In scenario 1 the intercept in the logistic model for the probability of is such that 25% of individuals have . In scenario 2 the intercept in the logistic model for the probability of is reduced so that the proportion of individuals initiating treatment at a given visit is lower, with approximately 5% having .

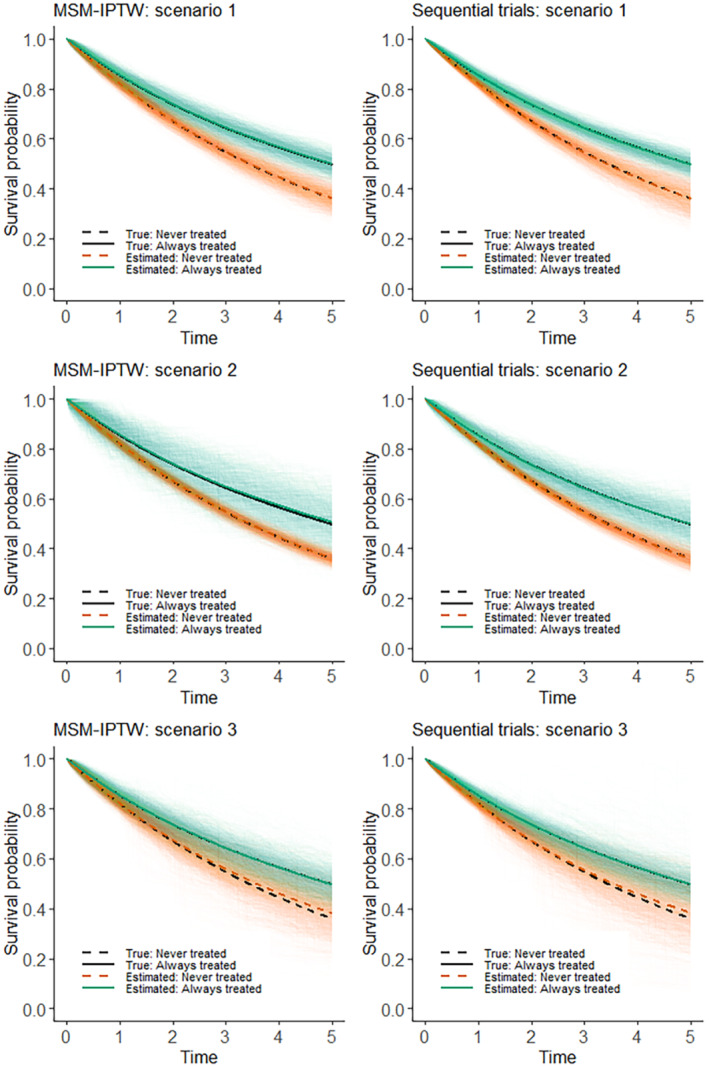

In scenarios 1, 2, and 3, respectively, approximately 57%, 62%, and 56% of individuals have the event of interest during the 5 years of follow‐up. We do not include any censoring apart from administrative censoring, though extensions to include this are straightforward. We generated 1000 simulated data sets.
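The general shape of such a data generating mechanism can be sketched as follows. This is not the authors' algorithm or their parameter values: every number below (the confounder model, the intercept of −1.1, and the hazard coefficients) is a hypothetical choice made so that the sketch runs. It only reproduces the stated features, namely a single time‐dependent confounder affected by past treatment, treatment that is never stopped once started, an additive hazard, and administrative censoring at time 5.

```python
import math
import random

def simulate_individual(rng, n_visits=5, intercept=-1.1, log_or=0.5):
    """Simulate one individual; all numeric values here are hypothetical."""
    conf = rng.gauss(0.0, 1.0)  # time-dependent confounder at visit 0
    treated = 0
    visits = []
    for k in range(n_visits):
        if k > 0:
            # Confounder depends on its previous value and on past treatment.
            conf = rng.gauss(0.8 * conf - 0.5 * treated, 1.0)
        if treated == 0:
            # Logistic model for initiating treatment; once treated, always treated.
            p_start = 1.0 / (1.0 + math.exp(-(intercept + log_or * conf)))
            treated = 1 if rng.random() < p_start else 0
        visits.append((k, conf, treated))
        # Additive hazard, held constant within the interval [k, k + 1).
        hazard = 0.2 - 0.05 * treated + 0.02 * conf
        if hazard > 0.0:  # guard for this sketch: a negative hazard is treated as zero
            gap = rng.expovariate(hazard)
            if gap < 1.0:
                return visits, k + gap, 1  # event observed
    return visits, float(n_visits), 0  # administrative censoring

rng = random.Random(2023)
cohort = [simulate_individual(rng) for _ in range(2000)]
event_fraction = sum(status for _, _, status in cohort) / len(cohort)
```

Because the hazard is constant between visits, event times within each interval can be drawn from an exponential distribution. The guard against negative hazards is a shortcut for the sketch and would need more careful handling in a real additive hazards simulation.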

6.1.3. Estimand

The estimand of interest is the marginal risk difference in (1), standardized to the underlying population at time 0 from which our sample of individuals arises. We consider time horizons on a continuous scale up to time 5.

6.1.4. Methods

The data are analysed using the MSM‐IPTW approach and the sequential trials approach. In the MSM‐IPTW approach we considered MSMs with and without conditioning on . When the conditional additive hazard model is as in (27), it can be shown 28 that the correct form for the MSM used in the MSM‐IPTW analysis using the MSM that is not conditional on is

| (28) |

and the correct form for the MSM conditional on is

| (29) |

The correct form for the MSM used in the sequential trials analysis, under our data generating mechanism, is

| (30) |

The coefficients in the fully conditional hazard model in (27) do not depend on time. As a result, the coefficients in the MSM for the sequential trials analysis are a function of time since the start of the trial but are the same across trials.

We use stabilized weights for all analyses. In the MSM‐IPTW approach using the MSM in (28) (without conditioning on ) the stabilized IPTW at time are

| (31) |

and in the MSM‐IPTW approach using the MSM in (29) (with conditioning on ) the stabilized IPTW at time are

| (32) |

In the sequential trials approach the stabilized IPACW at time (for ) after the start of the trial for the trial starting at visit are (for )

| (33) |

The IPACW are equal to 1 up to time 1 after the start of each trial.

The weights take into account that individuals do not stop treatment once they start. In MSM‐IPTW we have , and . All other probabilities used in the weights were estimated using logistic regression models fitted using all visits combined (). The probabilities were estimated using a logistic regression model for with a separate intercept for each , in the subset of individuals with . The probabilities were estimated using a logistic regression model for with as a covariate, and allowing both the intercept and the coefficients for to differ for each , fitted using the subset of individuals with . The probabilities were estimated using a logistic regression model for with as a covariate in the subset of individuals with , which is the correct model under our data generating mechanism. Similar models were used to estimate the weights for the sequential trials analysis, but with replaced by in trial for the model used to estimate the probabilities in the numerator of the weights in (33). The models were fitted across all trials combined, which was valid under our data generating mechanism.
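The way stabilized IPTW accumulate over visits when treatment cannot be stopped can be sketched as below. The sketch assumes that the initiation probabilities have already been estimated, for example from the logistic regression models described above, and are supplied as plain lists; the function name and the numeric values are hypothetical.

```python
def stabilized_iptw(treat, p_init_num, p_init_den):
    """Cumulative stabilized weights at each visit for one individual.

    treat[k] is the 0/1 treatment at visit k. p_init_num[k] and p_init_den[k]
    are estimated probabilities of starting treatment at visit k given no
    treatment so far, from the numerator and denominator models respectively.
    """
    weights = []
    weight = 1.0
    previously_treated = False
    for a, p_num, p_den in zip(treat, p_init_num, p_init_den):
        if not previously_treated:
            numerator = p_num if a == 1 else 1.0 - p_num
            denominator = p_den if a == 1 else 1.0 - p_den
            weight *= numerator / denominator
        # After initiation, staying on treatment has probability 1 in both
        # models, so the weight is carried forward unchanged.
        weights.append(weight)
        previously_treated = previously_treated or a == 1
    return weights

# Hypothetical individual who starts treatment at visit 2.
example_weights = stabilized_iptw(
    treat=[0, 0, 1, 1],
    p_init_num=[0.25, 0.25, 0.25, 0.25],
    p_init_den=[0.2, 0.3, 0.5, 0.4],
)
```

The weight stops changing once treatment has started, which reflects the point made above that the weights take into account that individuals do not stop treatment.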

6.1.5. Performance measures

We assess the performance of the methods in terms of bias and efficiency of the risk difference estimates. To obtain the bias we need to know the true values. True values for the survival probabilities and the risk difference were obtained by generating data as though from a large randomized controlled trial, as explained in Keogh et al. 28 For this, we first generated for 1 million individuals, according to the model outlined in Table 1. Two datasets were then created, one in which the 1 million individuals are set to have () (“always treated”) and another in which they are all set to have () (“never treated”). In each dataset the () were then generated sequentially using the model for in Table 1, with being generated conditional on , and . Event times were generated in each data set according to the conditional additive hazard model in (27). The true survival probabilities, and corresponding risk differences, under each treatment strategy (“always treated” and “never treated”) were then obtained using Kaplan‐Meier estimates.
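The final step, obtaining survival probabilities and a risk difference from two simulated arms using Kaplan‐Meier estimates, can be sketched as follows. The estimator below is a plain standard‐library implementation written for this illustration, and the two tiny data sets are hypothetical stand‐ins for the two large simulated datasets.

```python
def kaplan_meier(times, events, horizon):
    """Kaplan-Meier estimate of the survival probability at `horizon`.

    events[i] is 1 if individual i had the event at times[i] and 0 if censored.
    Individuals censored at a tied time are treated as at risk for events
    occurring at that time.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    i = 0
    while i < len(data) and data[i][0] <= horizon:
        t = data[i][0]
        n_events = 0
        n_leaving = 0
        while i < len(data) and data[i][0] == t:
            n_events += data[i][1]
            n_leaving += 1
            i += 1
        if n_events > 0:
            survival *= 1.0 - n_events / at_risk
        at_risk -= n_leaving
    return survival

# Hypothetical toy arms standing in for "always treated" and "never treated".
always_times, always_events = [1.0, 2.0, 2.0, 3.0, 4.0], [1, 1, 0, 1, 0]
never_times, never_events = [0.5, 1.0, 1.5, 2.5, 5.0], [1, 1, 1, 1, 0]

surv_always = kaplan_meier(always_times, always_events, horizon=3.0)
surv_never = kaplan_meier(never_times, never_events, horizon=3.0)
risk_difference = (1.0 - surv_always) - (1.0 - surv_never)
```

Here the risk difference is taken as the risk under “always treated” minus the risk under “never treated”; that sign convention is an assumption of the sketch.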

Plots are used to present survival probability estimates and bias and efficiency results for the risk differences at time horizons in the range from 0 to 5. We also present results for the survival probabilities and risk differences at time horizons in tables. Estimates are accompanied by Monte Carlo errors.
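Bias, empirical standard error, and their Monte Carlo errors can be computed from the per‐dataset estimates at a given time horizon as sketched below. The sketch assumes the standard formulas for these quantities from the simulation‐study literature (Monte Carlo standard error of the bias equal to the empirical standard error divided by the square root of the number of simulations); the function name and the example estimates are hypothetical.

```python
import math
import statistics

def performance(estimates, truth):
    """Bias and empirical standard error, each with a Monte Carlo standard error."""
    n_sim = len(estimates)
    empirical_se = statistics.stdev(estimates)  # sample SD across simulated data sets
    return {
        "bias": statistics.fmean(estimates) - truth,
        "bias_mcse": empirical_se / math.sqrt(n_sim),
        "empirical_se": empirical_se,
        "empirical_se_mcse": empirical_se / math.sqrt(2 * (n_sim - 1)),
    }

# Hypothetical risk difference estimates from five simulated data sets.
rd_estimates = [-0.12, -0.08, -0.10, -0.11, -0.09]
summary = performance(rd_estimates, truth=-0.10)
```

Relative efficiency of two methods at the same time horizon can then be judged by comparing their empirical standard errors, provided both are approximately unbiased for the same estimand.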

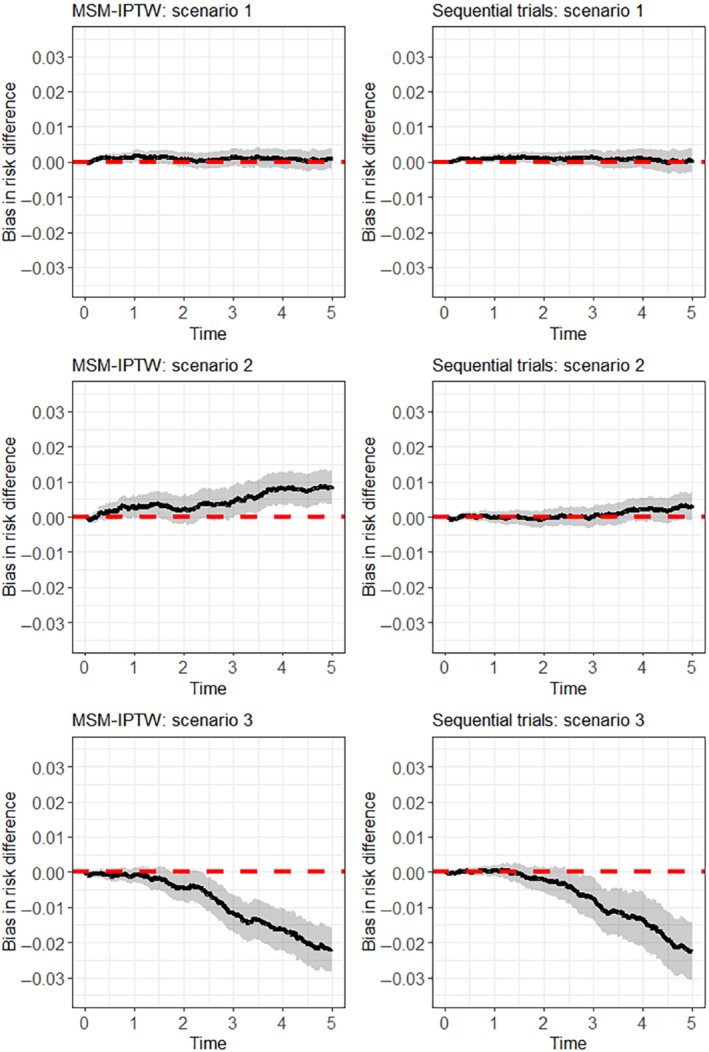

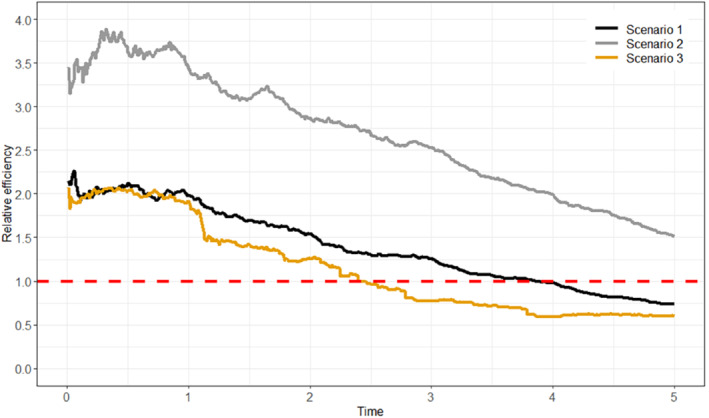

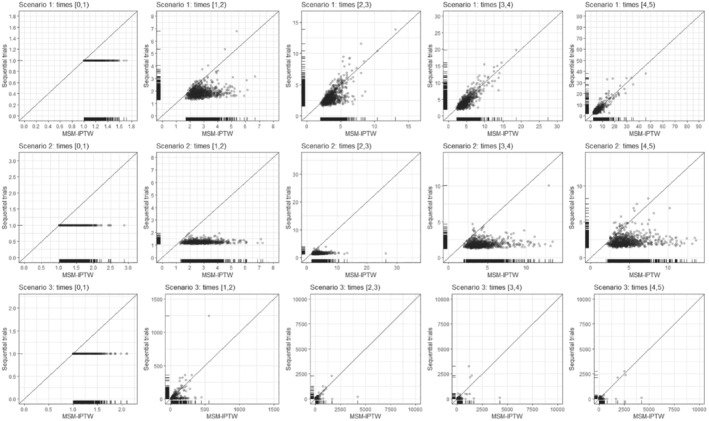

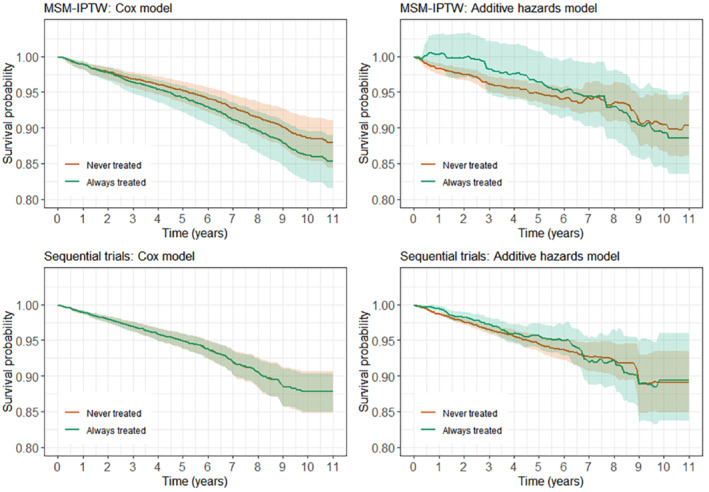

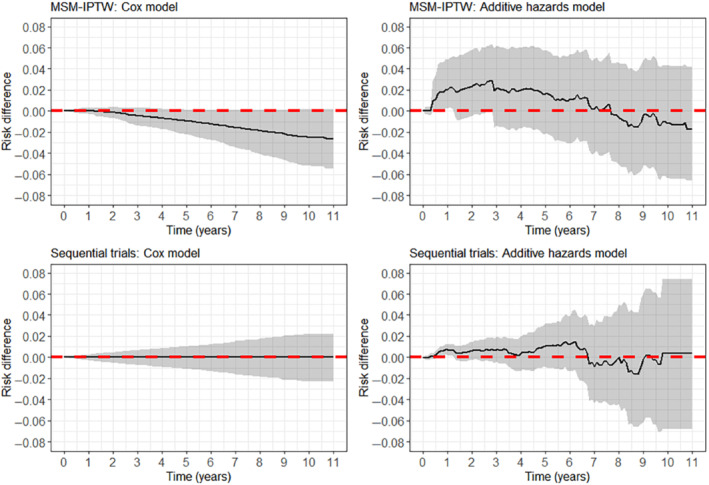

6.2. Results