Abstract

The contrast and resolution of images obtained with optical microscopes can be improved by deconvolution and computational fusion of multiple views of the same sample, but these methods are computationally expensive for large datasets. Here we describe theoretical and practical advances in algorithm and software design that result in image processing times ten to several thousand-fold faster compared with previous methods. First, we show that an ‘unmatched back projector’ accelerates deconvolution relative to the classic Richardson-Lucy algorithm by at least 10-fold. Second, 3D image–based registration with a graphics processing unit enhances processing speed 10–100-fold over CPU processing. Third, deep learning can provide further acceleration, particularly for deconvolution with spatially varying point spread functions. We illustrate our methods from the subcellular to millimeter spatial scale on diverse samples, including single cells, embryos, and cleared tissue. Finally, we show performance enhancement on recently developed microscopes that have improved spatial resolution, including dual-view cleared tissue light-sheet microscopy and reflective lattice light-sheet microscopy.

Editorial summary

Microscopy datasets are processed orders-of-magnitude faster with improved algorithms and deep learning.

Fluorescence microscopy enables imaging with submicron spatial resolution, molecular specificity and high contrast. These attributes allow direct interrogation of biological structure and function, yet intrinsic blurring and noise degrade fluorescence data, yielding an imperfect estimate of the underlying sample. Provided the imaging process can be characterized, such degradation can be partially reversed using deconvolution1,2, resulting in improved resolution and contrast. For example, given the point spread function (PSF) and data corrupted by Poisson noise (often dominant in fluorescence microscopy), the Richardson-Lucy deconvolution (RLD)3,4 procedure deblurs the estimate of the sample density with each iteration. In addition to deblurring, deconvolution can be used to combine multiple independent measurements taken on the same sample to produce an improved overall estimate of the sample5. This approach is especially useful in reconstructing super-resolution images in structured illumination microscopy6,7 or in performing joint deconvolution to improve spatial resolution in multiview light-sheet microscopy8–12.

Iterative deconvolution has been useful in these applications, but obtaining a resolution-limited result with RLD usually requires ten or more iterations. While the associated computational burden is manageable for single-view microscopes, deconvolving large multiview datasets can take days12,13, in many cases drastically exceeding the time for data acquisition.

Here we develop tools that address this problem. First, we show that in most cases the number of iterations can be reduced to 1 using an unmatched back projector, fundamentally speeding iterative deconvolution. Second, we optimize 3D image-based registration methods for efficient multiview fusion and deconvolution on graphics processing unit (GPU) cards. Finally, we show that computationally intensive deconvolution with a spatially varying PSF can be accelerated by using convolutional neural networks to ‘learn’ the relevant operations, provided that suitable training data can be assembled. These advances result in a speedup factor of ten to several thousand-fold over previous efforts. We illustrate the advantages on subcellular to macroscopic length scales, using samples that include single cells, zebrafish and nematode embryos, and mouse tissue. In addition to demonstrating improvements on super-resolution and large multiview datasets acquired with state-of-the-art microscopes, we also show that our methods enable the use of new microscopes, including dual-view, cleared tissue light-sheet microscopy and reflective lattice light-sheet microscopy.

Results

Drastically reducing the number of iterations in iterative deconvolution

Iterative deconvolution algorithms attempt to estimate the underlying sample density from noisy, blurred images. Important components of such algorithms are a ‘forward projector’, which describes the mapping from the desired image of the object to the noisy, blurred image measured by the microscope; and a ‘back projector’, which maps the measured image back onto the desired object image. For example, in RLD,

where ek is the k-th (current) estimate of the desired object image o, ek+1 is the (k+1)-th (future) estimate, i the measured image, f the forward projector, b the back projector, and * denotes convolution. The PSF is typically used for f, since f must accurately account for the blurring imparted by the band-limited microscope. b is traditionally ‘matched’ to f as its transpose (i.e. by flipping the PSF), but this is not the only possible choice. The field of radiology14 suggests that using an ‘unmatched’ back projector can accelerate this procedure. Specifically, in the unmatched variant of RLD, iterates were shown to move more rapidly toward desirable reconstructed images when the operator product of the forward projector and back projector had a flatter eigenvalue spectrum. To our knowledge this result has not been exploited in fluorescence microscopy. When the forward operator is a shift-invariant convolution, as is usually the case in microscopy, the number of iterations can be greatly reduced if b is chosen so that f * b tends toward a delta function (or equivalently, if the product of the magnitude of the Fourier Transforms (FT) of f and b approximates a constant in spatial frequency space, Fig. 1, Supplementary Notes 1, 2, 3). To study this effect, we began with images acquired with instant structured illumination microscopy (iSIM)15, a super-resolution technique. The iSIM PSF, or f, resembles a confocal PSF but with smaller spatial extent (Fig. 1a). Although b is typically chosen to be identical to f given the transpose symmetry of the iSIM PSF, we considered other choices with progressively smaller spatial extent (or equivalently, greater amplitude in the spatial frequency passband of the microscope, Fig. 1b, Methods). The last of these was a Butterworth filter designed specifically to ‘invert’ the native iSIM frequency response up to the resolution limit, resulting in a much flatter frequency response of |FT(f) × FT(b)| (Fig. 1c). Given its conceptual similarity to a Wiener filter, we termed this choice the ‘Wiener-Butterworth (WB) filter’.

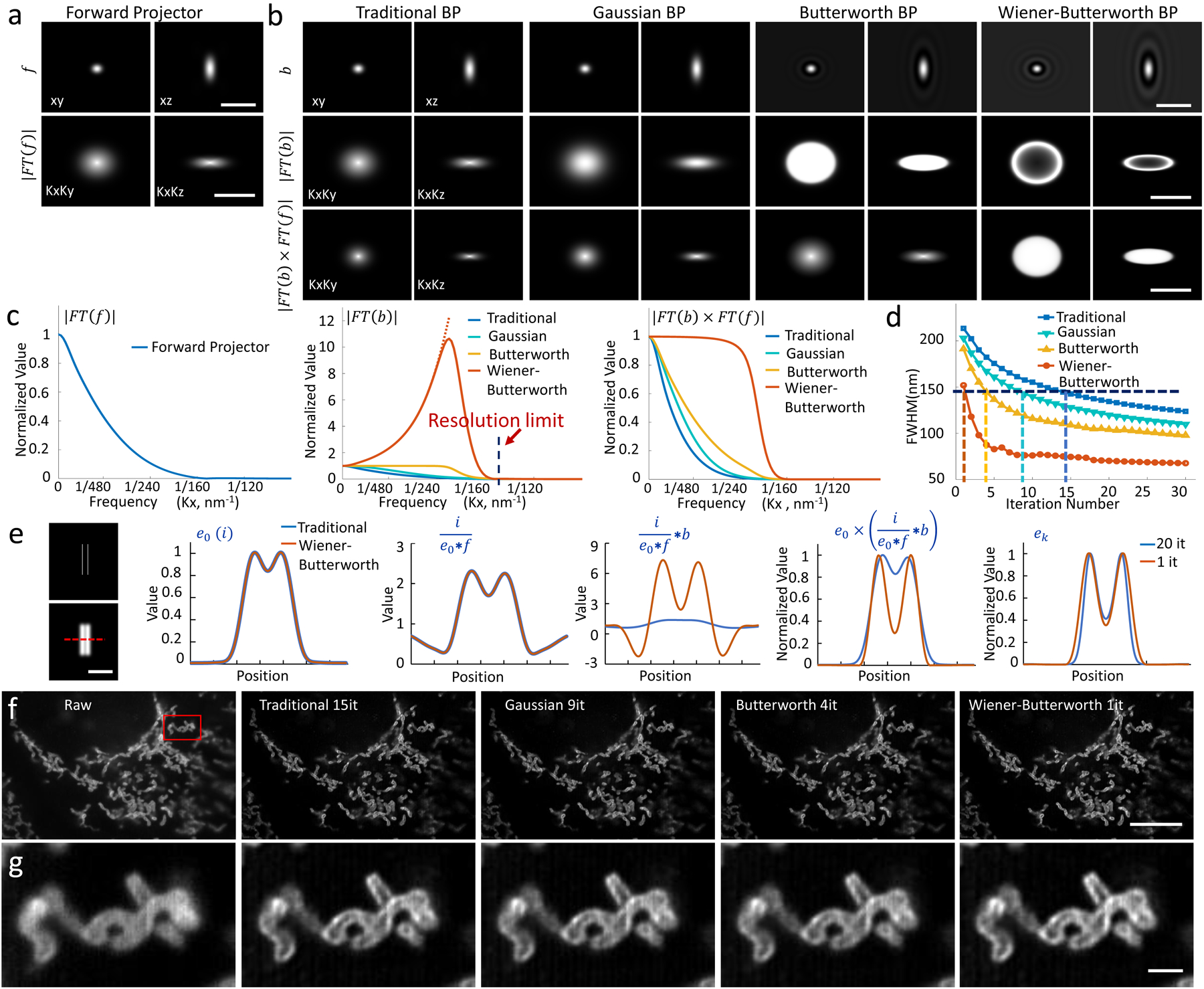

Fig. 1, An unmatched back projector reduces the number of iterations required for Richardson-Lucy deconvolution.

a) Lateral (left) and axial (right) slices through the forward projector for instant structured illumination microscopy (iSIM), shown in real space (top row; PSF) or Fourier space (bottom row, FT(f)). b) Different back projectors, including the traditional back projector (transpose PSF) usually employed in RLD, a Gaussian back projector, a Butterworth back projector, and a Wiener-Butterworth back projector. First two rows are as in a), the last row shows the product of forward and backward projectors in Fourier space. Note that the colormap for Butterworth and Wiener-Butterworth PSFs have been adjusted to show the negative values (black ringing) that result with these choices, and the colormap for the Wiener-Butterworth Fourier transforms has been adjusted to better show the increase in amplitude at high spatial frequencies. c) Line profiles through the Fourier transforms in a, b, comparing forward projector (left), back projector (middle), and product of forward and backwards projectors (right). The resolution limit of iSIM is indicated by a vertical dotted line in the middle panel. d) The apparent size of a 100 nm bead (vertical axis, average FWHM of 10 beads after deconvolution) as a function of iteration number (horizontal axis) is compared for different back projectors. The resolution limit of iSIM is indicated with a horizontal dotted line. See also Supplementary Fig. 1. e) Left: Simulated object consisting of two parallel lines in 3D space (top) and object blurred by the iSIM (bottom). For clarity only a transverse XY plane through the object is shown. Right panels: Line profiles corresponding to red dotted line at left, comparing the effect of original (blue) and Wiener-Butterworth (orange) back projectors in RL deconvolution. The estimate after 20 iterations using the original back projector and only 1 iteration using the Wiener-Butterworth filter is shown in the rightmost graph. f) U2OS cells were fixed and immunolabeled to highlight Tomm 20, imaged with iSIM, and deconvolved. Single planes from imaging stacks are shown, with iteration number (it) and back projector as indicated. g) Higher magnification views, corresponding to the red rectangular region in f). See also Supplementary Video 1. Scale bars: a, b) 1 μm in top row, 1/100 nm−1 in middle, bottom rows; e) 1 μm; f) 10 μm; g) 1 μm. Experiments were repeated on similar datasets at least 3 times for e) and f), with similar results obtained each time; representative data from a single experiment are shown.

When deconvolving images of 100 nm beads captured with a homebuilt iSIM, we found that our alternative b choices produced a resolution-limited result faster than the traditional back projector (Fig. 1d, Supplementary Fig. 1), with speedup factor correlating with the constancy of |FT(f) × FT(b)|. For example, the WB filter recovered the object’s resolution-limited size with only one iteration, whereas the traditional back projector required 15 iterations. The improved performance of the WB filter does not rely on an improved signal-to-noise ratio (SNR) in the input data (Supplementary Fig. 2), nor does it amplify noise more than other methods (Supplementary Fig. 3). We also compared the WB back projector to the classic Wiener filter employed in noniterative deconvolution. Here too we found that using the WB filter in RLD outperformed the classic Wiener filter (Supplementary Figs. 3, 4). Butterworth and WB back projectors both introduce unphysical negative values into the deconvolved reconstructions (Fig. 1b, Supplementary Fig. 5). However, since these values were small and typically located within the noise floor of each image, we set them to zero to yield reconstructions that were nearly identical to the conventional RLD results for these and other datasets presented in the paper (Supplementary Tables 1, 2).

In a simulation, we examined the relative performance of traditional and WB back projectors in resolving two lines separated by 1.6x the iSIM resolution limit (Fig. 1e, Supplementary Video 1). Using the same forward operator f affects the RLD procedure equivalently in both cases, but inspection of the term reveals that the WB filter applies a much larger ‘correction factor’ to ek, accelerating production of the final estimate. Further simulations based on more complex 3D objects confirmed this result, again finding that Gaussian, Butterworth and WB back projectors required fewer iterations to produce deconvolved images with similar (or better) image quality compared to traditional RLD (Supplementary Fig. 6, Supplementary Table 1).

Next, we applied these methods to images of fixed U2OS cells that were immunolabeled to highlight the outer mitochondrial membrane protein Tomm20 and acquired with iSIM (Fig. 1f, g). Each of the back projectors improved signal-to-background and spatial resolution relative to the raw data, better revealing interior voids within the mitochondria. As before, however, using the unmatched back projectors also substantially reduced the number of iterations needed (Supplementary Video 2), a benefit that that also extended to time-lapse iSIM (Supplementary Video 3), confocal, widefield, and single-view light-sheet data (Supplementary Fig. 7).

Accelerating multiview deconvolution and registration

The more than 10-fold improvement in processing speed obtained for single-view deconvolution prompted us to investigate whether our method could also be applied to the more computationally-intensive task of multiview deconvolution. We began by applying our method to dual-view light-sheet microscopy (diSPIM9), using the WB back projector instead of the traditional transpose PSF to perform joint deconvolution on the two registered input views (Methods). As before, the WB back projector produced nearly identical results to the more traditional method, but with only 1 iteration (Supplementary Fig. 8), a 10-fold improvement in speed.

We used our method to reconstruct neuronal dynamics in developing C. elegans embryos, obtaining clear images of a subgroup of neurons’ plasma membranes labeled by GFP in a pan-nuclear mCherry background16 (Fig. 2a, Supplementary Videos 4–5). Post deconvolution, morphologies of neurons and nuclei were sufficiently well-resolved (Fig. 2b, c) that we could perform semi-automated lineaging17 to identify neurons selectively labeled by the fmi-1 promoter in this strain. The anterior neurons OLQV(L/R) are glutamatergic sensory neurons that facilitate head foraging and withdrawal reflexes. OLQVs are born after their progenitor cells (AB prpaaappa and AB plpaaappa) undergo a terminal cell division to produce OLQV(L or R) and sister cells (AB prpaaappap and AB plpaaappap) that undergo programmed cell death18,19. The progenitor cells first elaborate broad lamellipodial extensions towards the nose of the animal, which eventually become sensory dendrites (Fig. 2d). Concomitant with the terminal cell division, the lamellipodial extensions become thinner and longer neurites consistent with the final morphological features of the dendrites. Dendrite extension then continues through what appears to be retrograde extension20. Perhaps forces generated during the terminal mitotic division help to create the morphological changes in dendrite shape. Although further experiments are needed to validate this hypothesis, the form of asymmetric division in which the mother cell does not round up during division and one daughter inherits the shape and polarity of the mother has been described previously in fish21 and in C. elegans22. Importantly, our reconstructions allowed us to identify single cells in living embryos, and contextualize the morphological changes undergone by neurons during terminal cell divisions leading to dendrite biogenesis.

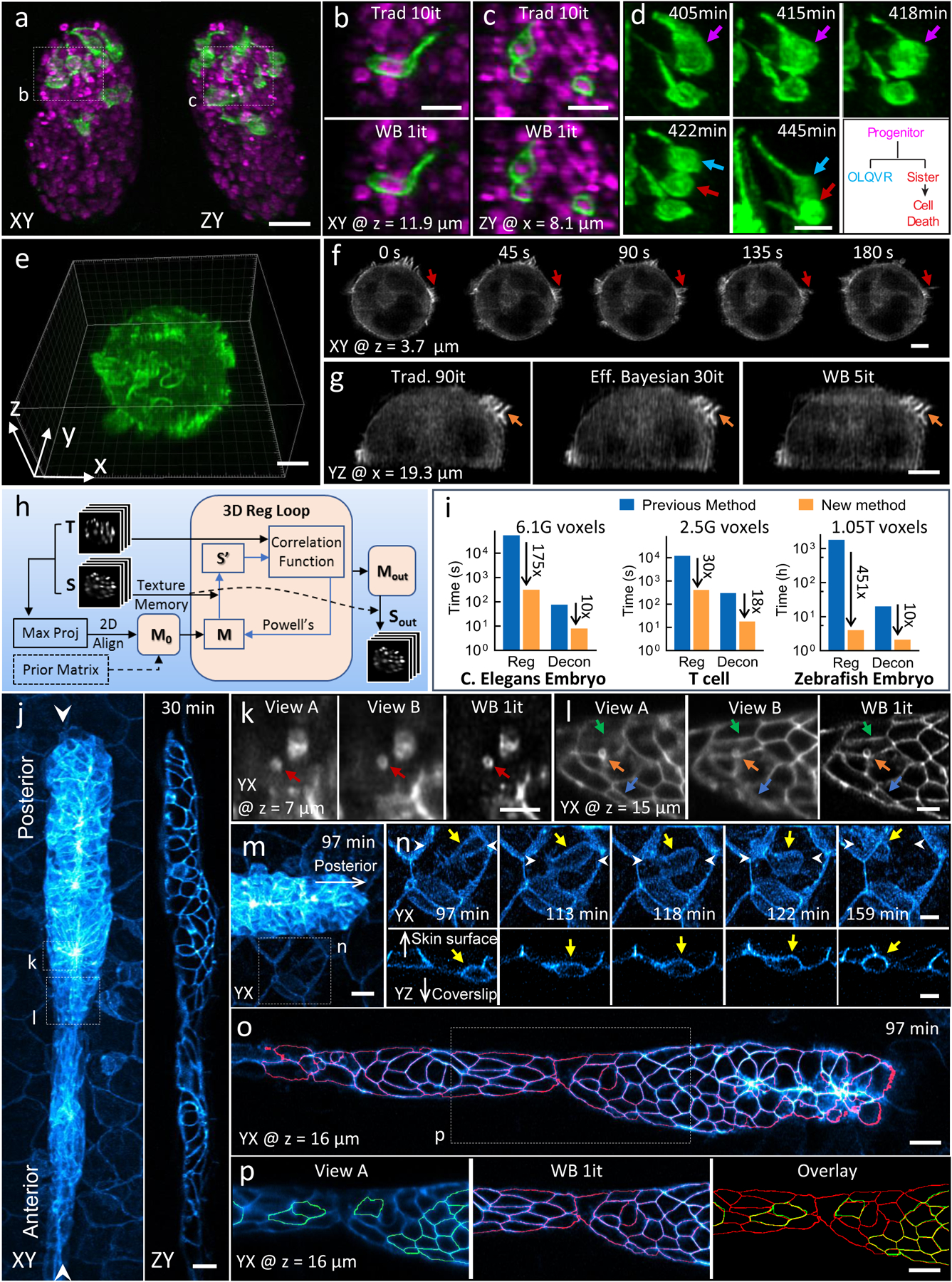

Figure 2, Improvements in deconvolution and registration accelerate the processing of multiview light-sheet datasets.

a) Lateral (left) and axial (right) maximum intensity projections demonstrate isotropic reconstructions of C. elegans embryos expressing neuronal (green, GFP-membrane marker) and pan-nuclear (magenta, mCherry-histone) markers. Images were captured with diSPIM, and deconvolution was performed using the Wiener-Butterworth (WB) filter. See also Supplementary Video 4. b, c) Higher magnification single slices from dotted rectangular regions in a), emphasizing similarity between reconstructions obtained with traditional Richardson-Lucy deconvolution (‘trad’) and WB deconvolution. Iterations (it) for each method are displayed. d) Higher magnification maximum intensity projection view of neuronal dynamics, indicating neurite extension and terminal cell division for progenitor (purple arrow), OLQVR (blue arrow) and apoptotic sister cell (red arrow). See also lower right schematic and Supplementary Video 5. e) WB reconstruction of Jurkat T cell expressing EGFP-actin, raw data captured in a quadruple-view light-sheet microscope. f) Selected slices 3.7 μm from the coverslip surface. Indicated time points display fine actin dynamics at the cell periphery. See also Supplementary Video 6. g) Axial slice through sample, indicating close similarity between traditional, efficient Bayesian, and WB deconvolution with iteration number as indicated. h) Schematic of GPU-based 3D registration used for multiview fusion. Example inputs are two 3D images, referred as the source (S, image to be registered) and target image (T, fixed image). Maximum intensity projections of the input 3D images are used for preliminary alignment and to generate an initial transformation matrix (M0). Alternatively, a transformation matrix from a prior time point is used as M0. A 3D registration loop iteratively performs affine transfomations on S (which is kept in GPU texture memory for fast interpolation), using Powell’s method for updating the transformation matrix by minimizing the correlation ratio between the transformed source (S’) and T. i) Bar graphs showing time required to process the datasets (file I/O not included) in this figure (left, middle and right columns corresponding to datasets in a, e and j, respectively, with voxel count as indicated) conventionally and via our new methods. The conventional registration method was performed using an existing MIPAV plugin (see Methods) using CPUs while the new registration method was performed using GPUs. Both deconvolution methods were performed with GPUs. Note log scale on ordinate, and that the listed times apply for the entire time series in each case (the total time for the conventional registration method on the zebrafish dataset was extrapolated from the time required to register 10 time points). j) Representative lateral (left, maximum intensity projection) and axial (right, single plane corresponding to white arrowheads in left panel) images showing 32-hour zebrafish embryo expressing Lyn-eGFP under the control of the ClaudinB promoter, marking cell boundaries within and outside the lateral line primordium. Images were captured with diSPIM, Wiener-Butterworth reconstructions are shown. Images are selected from the volume 30 minutes into the acquisition, see also Supplementary Video 7. k, l) Higher magnification views of dotted rectangles in j), emphasizing improvement in resolving vesicles (red, orange arrows) and cell boundaries (green, blue arrows) with WB deconvolution compared to raw data. Note that k, l are rotated 90 degrees relative to j. m) Higher magnification view of leading edge of lateral line, 97 minute into the acquisition. n) Higher magnification view of dotted rectangular region in m), emphasizing immune cell (yellow arrow) migration between surrounding skin cells. White arrowheads are provided to give context and the white arrows point towards skin surface and coverslip. Top row: maximum intensity projection of lateral view, bottom row: single plane, axial view. See also Supplementary Video 8. o) Lateral slice through primordium, with automatically segmented cell boundaries marked in red. See also Supplementary Video 9. p) Higher magnification view of dotted rectangle in o), showing differential segmentation with raw single-view data (green, left) vs. deconvolved data (red, middle). Overlay at right shows common segmentations (yellow) vs. segmentations found only in the deconvolved data (red). Note that ‘z’ coordinate in j-p is defined normal to the coverslip surface. Scale bars: 10 μm in a), m), o) and p); 5 μm in all other panels. Experiments were repeated on similar datasets at least 3 times for a)-d), 2 times for e)-g) and j)-p), with similar results obtained each time; representative data from a single experiment are shown.

Our methods extend to imaging configurations with more views. For example, we acquired a quadruple-view dataset on a triple-objective light-sheet microscope11 (Fig. 2e, Supplementary Fig. 9, Supplementary Video 6). Stably transfected EGFP-Actin E6–1 Jurkat T cells were plated onto coverslips coated with anti-CD3 antibodies (mimicking antigen presenting cells). After the T cells spread on the coverslip, we imaged them for 30 time points (one time point every 15 s) spanning 7.5 minutes, acquiring 4 volumetric views at each time point. After adapting our deconvolution method for this acquisition scheme (Methods), dynamic changes in membrane ruffles and cell protrusions were obvious in the reconstructions (Fig. 2f), but obscured in the raw data (Supplementary Fig. 10). Using the WB back projector reduced the number of iterations from 90 to 5 (Fig. 2g). Importantly, our method also out-performed the state-of-the-art Efficient Bayesian Multiview Deconvolution10 (EBMD) method (which required 30 iterations to produce images of similar quality), which can be explained by the flatter frequency response of |FT(f) × FT(b)| using the WB filter compared to the EBMD result (Supplementary Fig. 11).

In processing these dual- and quad-view datasets, we noticed that the time for image registration considerably exceeded the time for deconvolution, usually by 75–120-fold. One approach to faster image registration encases the sample in a labeled matrix, using the multiple feature points from many fiducials to obtain the registration among different views23. We opted instead for the less invasive option of greatly accelerating the speed of our image-based registration software. First, we rewrote our CPU-based registration code9 in CUDA so that the procedure could be run entirely on our graphics processing unit (GPU). Second, we improved the underlying registration algorithm by incorporating an initial 2D registration and progressively more complex 3D registrations which resulted in faster and more robust performance (Fig. 2h, Supplementary Fig. 12, Methods, Supplementary Software). Collectively, these advances resulted in 175- and 30-fold speedups in registration (Fig. 2i), respectively, for the modestly sized C. elegans and T cell datasets presented in Fig. 2a, 2e, which enabled total processing times on par with the acquisition time (Supplementary Table 3). We also benchmarked our improved registration method against established registration tools including elastix24 and NiftyReg25. Compared to these state-of-the-art tools, our method enabled a more than 10-fold speed improvement on large volumetric light-sheet datasets without sacrificing registration quality (Supplementary Table 4).

Our improved registration method enabled an even more dramatic speedup (451x, Fig. 2i) for an extended diSPIM acquisition spanning 900 volumes (7.5 hours, 1.05 Tvoxels, 2.1 TB), where we followed the migration of the lateral line primordium in a 32-hour zebrafish embryo expressing Lyn-eGFP under the control of the ClaudinB promoter26 (Fig. 2j, Supplementary Video 7). Following registration, joint WB deconvolution improved visualization of vesicular structures and cell boundaries compared to the raw data (Fig. 2k, l), and facilitated inspection of dynamic immune cells that appeared to migrate in between the skin and underlying somites (Fig. 2m, n, Supplementary Video 8). WB deconvolution also substantially improved automated segmentation of cells within the lateral line, as only 71/120 cells were accurately segmented in the raw data vs. 116/120 in the deconvolved data (Fig. 2o, p, Supplementary Video 9).

We also tested our methods on single-view and multiview datasets acquired with a commercial Zeiss Lightsheet Z.1 microscope (Supplementary Figs. 13, 14, Supplementary Video 10, Methods), obtaining improved resolution and contrast after deconvolution and improved speed compared to the widely used commercial Huygens deconvolution software (Supplementary Fig. 13, Supplementary Table 3, Methods).

Submicron isotropic imaging of large, cleared tissue

Other samples that benefit from improved multiview fusion and deconvolution are large volumes of cleared tissue, which can be rapidly imaged using light-sheet microscopes. To explore this possibility, we constructed a cleared tissue diSPIM (Supplementary Fig. 15), replacing our original water-immersion objectives with a pair of mixed-immersion 17.9x, 0.4 NA objectives (Methods). To estimate spatial resolution, we imaged 100 nm fluorescent beads in dibenzyl ether (Sigma, Cat. # 108014), obtaining single-view lateral full width at half maximum value (FWHM) 0.84 +/− 0.04 μm, and axial FWHM 4.6 +/− 0.4 μm (10 beads, mean+/− standard deviation, Supplementary Fig. 16). Registration and 1 iteration of WB deconvolution further improved spatial resolution, resulting in an isotropic 0.79 +/− 0.04 μm, offering a several-fold improvement in axial resolution over previous single-view experiments using the same lenses27,28. Next, we fixed, cleared, and immunolabeled mm-scale samples of mouse tissue (Fig. 3a–d, Supplementary Videos 11–15) with iDISCO+29 or iDISCO30, subsequently imaging them with the cleared-tissue diSPIM in stage-scanning mode31.

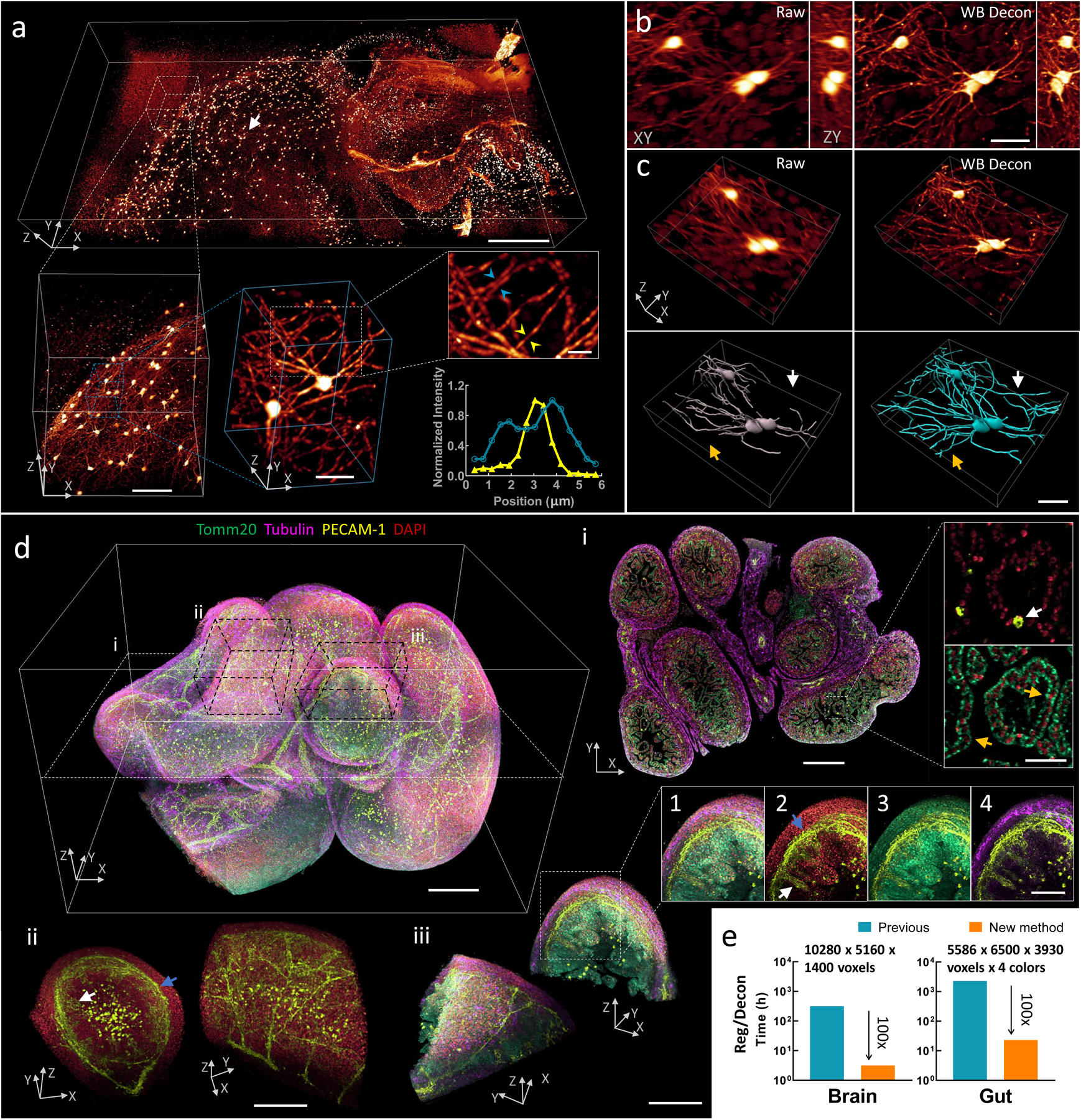

Fig. 3, Imaging mm-scale cleared tissue volumes with isotropic micron-scale spatial resolution.

a) 4 × 2 × 0.5 mm3 volume of brain from fixed and iDISCO+-cleared V1b mouse, immunolabeled with Alexa Fluor 555 secondary antibody against tdTomato primary antibody, imaged with cleared tissue diSPIM, and reconstructed after dual-view registration and Wiener-Butterworth (WB) deconvolution. Progressively higher resolution subvolumes are indicated, with line profiles indicating 1.3 μm neurite FWHM (yellow arrowheads) and 1.9 μm separation between neurites (blue arrowheads). See also Supplementary Video 11. b) Lateral/axial cross sections from region indicated with white arrow in a), emphasizing the higher resolution obtained with WB deconvolution compared to raw single-view data. c) Volume renderings of region displayed in b), again comparing raw data to deconvolution. Manually traced neurites are shown in bottom row; colored arrows indicate neurites traced in deconvolution that are obscured in raw single-view data. d) 2.1 × 2.5 × 1.5 mm3 intestinal volume from fixed and iDISCO-cleared E18.5 mouse; labeled with DAPI (red), Alexa-647 conjugated secondary antibody against Tomm20 primary antibody (green), Alexa-488 conjugated secondary antibody against PECAM-1 primary antibody (yellow), and Alexa-568 conjugated secondary antibody against α-Tubulin primary antibody (purple); imaged with cleared tissue diSPIM; and reconstructed after dual-view registration and WB deconvolution. See also Supplementary Video 12. i: Single plane demarcated by dotted white rectangular region at left, showing 4-color cross section and higher magnification dual-color views highlighting hollow blood vessel (white arrow) and mitochondria surrounding individual nuclei (orange arrows). ii: Subvolume demarcated by dotted black parallelepiped above, illustrating different perspectives of vascular plexus supplying submucosa (blue arrow) and mucosa (white arrow) of intestine. iii: Different perspectives of four-color subvolume demarcated by dotted black parallelepiped above and insets 1–4, highlighting hierarchical organization within intestine, e.g., submucosa (blue arrow) and mucosa (white arrow) (inset 2); mitochondrially-enriched regions that support the high energy demand and constant cellular renewal within the mucosa (inset 3); outer intestinal wall with dense alpha-tubulin staining (inset 4). See also Supplementary Fig. 19. e) Bar graphs showing the registration and deconvolution time required for post-processing datasets (image sizes in a) and d) as indicated), comparing previous (blue) and new (orange, 100-fold reduction in time) post-processing methods. Note that times for previous method are estimated (see Methods for further detail) and the log scale on the ordinate axes. Scale bars: a) 500 μm, 100 μm, 30 μm and 10 μm for progressively higher magnifications; b) and c) 30 μm; d) top left 300 μm, i: 300 μm and 30 μm for insets, ii: 200 μm, iii: 200 μm and 100 μm for insets. See also Supplementary Videos 13–15. Experiments were repeated on similar datasets at least 3 times, with similar results obtained each time; representative data from a single experiment are shown.

The resulting data span hundreds of gigavoxels – teravoxels, up to ~2 TB in size. This size presents a major challenge, as such whole raw views do not fit within the memory of single GPU cards and must be subdivided prior to processing. To address this challenge, we created a processing pipeline for TB-scale data: cropping the single-view data into subvolumes, registering and deconvolving the subvolumes, and finally stitching the resulting reconstructions back into a higher-resolution composite (Supplementary Figs. 17, 18).

In a first example, we imaged a 4 × 2 × 0.5 mm3 slab of brain tissue derived from a V1b transgenic mouse32, with sparse immunolabeling of neurons and neurites across the entire volume (Fig. 3a). The isotropic resolution of the deconvolved reconstruction enabled us to resolve individual neurites at the micron scale (Fig. 3a), and to observe fine detail laterally and axially that was not resolved in the raw data (Fig. 3b). Manual tracing of neurites was also significantly improved in the deconvolved data relative to the raw data (Fig. 3c). In a second example, we performed 4-color imaging on the gut of an E18.5 mouse, spanning a 2.1 × 2.5 × 1.5 mm3 volume (Fig. 3d). Our reconstruction highlights the organized and hierarchical structure of the intestine, including the interconnected vascular plexus feeding the submucosal and mucosal intestinal areas (PECAM-1, DAPI staining), mitochondrially-enriched regions within the mucosa (Tomm20, DAPI), and tubulin-dense regions within the outer intestinal wall (alpha-tubulin, PECAM-1). As with the brain sample, the isotropic submicron-scale resolution allowed us to visualize fine details that were otherwise obscured by diffraction, including hollow blood vessels and cytoplasmic mitochondria surrounding individual nuclei (Supplementary Fig. 19). Importantly, obtaining these as well as other large reconstructions of mouse intestine, stomach and ovary datasets (Supplementary Videos 13–15, Supplementary Tables 5, 6) is facilitated by our much faster post-processing methods. Collectively, the new registration (Fig. 2h) and deconvolution (Fig. 1) methods account for a 100-fold speed improvement over previous efforts, enabling post-processing in tens of hours rather than tens of days (Fig. 3e). We note that our method delivers less light dose than a recent technique with similar reported resolution33, as our technique confines the illumination to the vicinity of the focal plane.

Accelerating deconvolution with a spatially varying PSF

Finally, we developed methods for accelerating the deconvolution of fluorescence microscopy data blurred with a spatially varying PSF, acquired by imaging samples deposited on reflective coverslips (Fig. 4, Supplementary Table 7). As we previously demonstrated13, reflective diSPIM enables the collection of additional specimen views (Fig. 4a), increasing information content and boosting spatiotemporal resolution. However, the raw reflective data are contaminated by substantial epifluorescence that varies over the imaging field (Fig. 4c). To remove the epifluorescence and fuse the views for optimal resolution enhancement, registration and subsequent deconvolution with a spatially varying PSF are needed (Methods). Unfortunately, spatially varying deconvolution carries a considerable computational burden -- as applied to reflective imaging it requires calculation of the forward and backwards projectors at each axial slice instead of only once per volume13. For example, deconvolving an imaging volume spanning 340 × 310 × 340 voxels with 20 iterations of traditional RLD with a spatially varying PSF requires 340 slices × 2 views × 2 convolutions per RL update × 20 iterations = 27200 3D convolutions (14 minutes per volume with a single GPU card), instead of 2 × 2 × 20 iterations = 80 3D convolutions required with a spatially invariant PSF (only 2.5 s per volume). Unlike in our previous examples (Fig. 2, 3), deconvolution rather than registration becomes the bottleneck in post-processing the raw data.

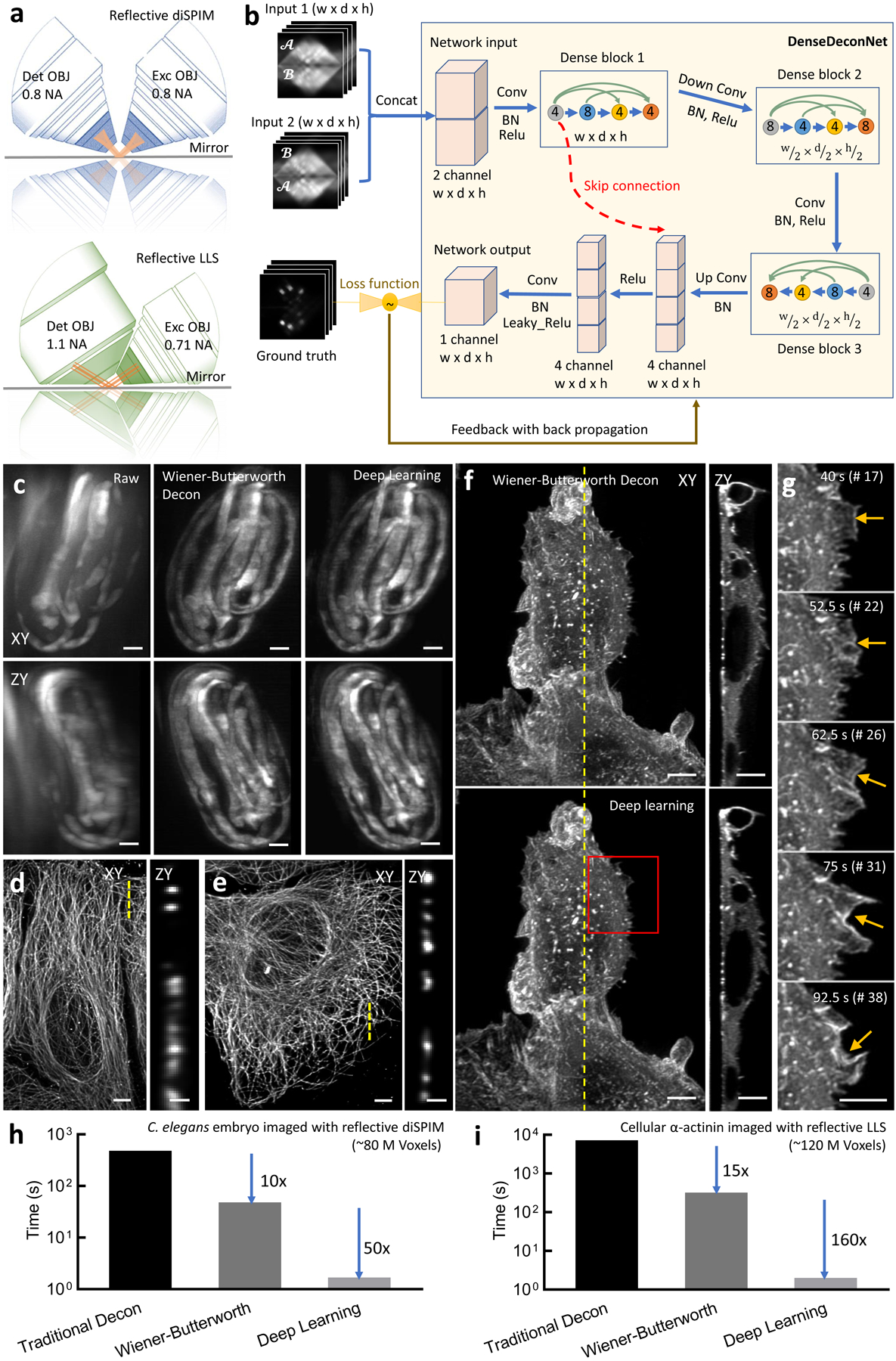

Figure 4, Deep learning massively accelerates deconvolution with a spatially varying PSF.

a) Reflective imaging geometries for diSPIM (top) and lattice light-sheet (LLS, bottom) microscope. In both cases, the sample is deposited on a reflective coverslip (mirror), which produces additional views of the specimen. b) Schematic architecture of our convolutional neural network (‘DenseDeconNet’) used for deep learning. Inputs are concatenated (‘Concat’) image volumes (each containing width (w) × depth (d) × height (h) voxels) obtained from the microscope, which may contain multiple views of the specimen. Three ‘dense blocks’ extract feature maps (circles) from the network input, eventually learning to reverse the spatially varying blurring imparted by the microscope by minimizing the difference (loss function) between the network output and the ground truth reconstruction via back propagation. Conv: convolution; BN: batch normalization; ReLu: rectified linear unit. Circles within each dense block unit show the number of feature maps after each convolutional layer, colored arrows within each dense block show the concatenation of successive layers in the network. See Supplementary Note 4 for more details on the network architecture. c) Three-fold C. elegans embryos expressing GCaMP3 from a myo-3 promoter were imaged in the reflective diSPIM (150 volumes, each acquired every 350 ms). Maximum intensity projections of raw data (left), Wiener-Butterworth deconvolution (middle), and deep learning (right) reconstruction are shown for lateral (top) and axial (bottom) views. See also Supplementary Video 16. d) U2OS cells were deposited on glass coverslips, fixed, the microtubules immunolabeled with anti-alpha tubulin conjugated with Alexa Fluor 488, and imaged with LLS microscopy. Lateral maximum intensity projection (left) and axial slice (corresponding to yellow dotted line at left) are shown. e) U2OS cells were deposited on reflective coverslips and fixed, immunolabeled, and imaged as in d). Lateral maximum intensity projection (left) and axial slice (corresponding to yellow dotted line at left) are shown. Reconstructions in d, e) were performed using traditional deconvolution with a spatially varying PSF. See also Supplementary Fig. 20. f) U2OS cells expressing mEmerald-α-Actinin were deposited on reflective coverslips and imaged (100 volumes, each acquired every 2.5 s) in the LLS microscope. Reconstructions were performed via Wiener-Butterworth deconvolution (top) and deep learning (bottom). Lateral maximum intensity projection (left) and axial slice (right, corresponding to yellow dotted line at left) are shown. See also Supplementary Video 17. g) Higher magnification view of red rectangular region, emphasizing the dynamics of α–actinin near cell boundary (yellow arrows). Bar graphs showing time required for processing a single volume using traditional deconvolution with spatially varying PSF, deconvolution via the Wiener-Butterworth filter, and deep learning for h) dataset shown in c) and i) dataset shown in f). Note log scale on ordinate. Note the time cost of file I/O is not included in h) and i). Scale bars: 5 μm in all panels except 1 μm in zy views in d, e). For c)-i), traditional and Wiener-Butterworth deconvolution experiments were repeated on similar datasets at least 2 times, with similar results obtained each time; the deep learning model was trained on one time-lapse dataset and applied to multiple datasets (N>=2), with similar results obtained for each dataset.

By modifying the spatially varying RLD update to incorporate the WB filter (Methods), we found that only 2 iterations were required to deconvolve a previously published13 dataset highlighting calcium waves (marked with GCaMP3) within muscles in 3-fold stage C. elegans embryos. As with traditional RLD, the WB modification improved contrast and resolution in the raw data (Fig. 4c, Supplementary Video 16), but with a ten-fold reduction in processing time (Fig. 4h). These gains also extended to a new form of reflective microscopy, using a higher NA lattice light-sheet (LLS) microscope instead of diSPIM (Fig. 4a, d-f, i, Methods).

LLS microscopy34 has garnered attention due to its combination of high detection NA and illumination structure; together these attributes result in a better compromise between field-of-view and light-sheet thickness than previous microscopes using pseudo non-diffracting beams. Nevertheless, the contrast and spatial resolution in raw LLS images still suffer from extraneous out-of-focus light due to illumination sidelobes, an effect that can be ameliorated with deconvolution. We found that the performance of the base LLS microscope could be further improved by imaging samples deposited on reflective coverslips (Fig. 4d–f), registering the two resulting high NA views oriented ~113 degrees apart, and deconvolving them with a spatially varying PSF. As assayed with images of immunolabeled microtubules in U2OS cells captured on glass (Fig. 4d) and reflective (Fig. 4e) coverslips, axial resolution was improved 2-fold, from 750 +/− 39 nm to 379 +/− 23 nm (Supplementary Fig. 20). Deconvolving registered images of mEmerald α-actinin in live U2OS cells acquired in the reflective LLS microscope with the WB filter instead of traditional RLD resulted in a 15-fold reduction in processing time (Fig. 4i, Supplementary Video 17).

While a 10–15x reduction in processing time is substantial, the time associated with deconvolution still far exceeds data acquisition (3.5 hours to deconvolve the 150-volume C. elegans dataset imaged with reflective diSPIM; 13.3 hours to deconvolve the 100-volume α-actinin dataset imaged with reflective LLS microscopy). To obtain further speed enhancements, we turned to deep learning (DL35), which has resurged as a promising framework for image classification36, image recognition37, image segmentation38, denoising39, super-resolution40, and deconvolution41.

We constructed a convolutional neural network, terming it ‘DenseDeconNet’, as it is based on linking together dense network blocks42 in a memory efficient manner (Fig. 4b, Supplementary Note 4, Supplementary Software). These blocks use multiple dense connections to extract features from the raw image stacks, then learn to deblur the images. Unlike previous attempts that deblur 2D image slices by comparing the data to synthetically blurred slices, and average the network output from two orthogonal views to improve resolution isotropy43, we designed our method to operate on the full volumetric data, thereby learning the requisite 3D restoration directly. This capability is especially important in reflective applications, in which a simple 2D spatially invariant blur cannot properly model the physics of the microscope.

We began by testing DenseDeconNet on nuclear and membrane-bound labels expressed in live C. elegans embryos, acquired on the diSPIM using conventional glass coverslips. We used the deconvolved dual-view data as ground truth. When using only a single view as the input to the network, DenseDeconNet provided resolution enhancement intermediate between the raw data and the deconvolved result (Supplementary Video 18). To some extent this is unsurprising; presumably only with both views is there enough information to recover the isotropic resolution provided by diSPIM. However, for highly dynamic structures, the network output with a single-view input sometimes provided more accurate reconstructions than the deconvolved ground truth (Supplementary Note 4). We suspect this result is due to the lessened effect of motion blur, which otherwise causes errors in both registration and deconvolution. Additionally, in bypassing the registration, the DenseDeconNet with single-view input provided a 5-fold reduction in total processing time compared to WB deconvolution, i.e. ~ 1 s for application of DenseDeconNet vs. 5 s for the new registration method (Fig. 2h) and 1 iteration WB deconvolution (Supplementary Note 4).

Using both registered views for network input enabled resolution enhancement very similar to the ground truth joint deconvolution on data acquired with glass coverslips (Supplementary Note 4). This result also extended to the reflective datasets. When training the network using the raw specimen views as inputs and the WB result as the ground truth, DenseDeconNet produced outputs that were nearly identical to the ground truth (based on visual inspection, Fig. 4c, f; mean square errors (MSE) of 4.8e-4 (Fig.4c) and 5.0e-5 (Fig.4f); and structural similarity (SSIM) indices of 0.923 (Fig.4c) and 0.965 (Fig.4f)), resulting in clear images of calcium dynamics in embryonic muscle (Supplementary Video 16) and α-actinin dynamics at the cell boundary (Fig. 4g, Supplementary Video 17). Importantly, the network output offered a 50x speed improvement over WB deconvolution (1.68 s/volume, or 500x over traditional RLD) when processing the C. elegans data (Fig. 4h) and 160x (2 s/volume, or 2400x over traditional RLD) when processing the α-actinin data (Fig. 4i, Supplementary Table 7).

Discussion

Our deconvolution method is inspired by RLD, but achieves high-quality reconstructions more rapidly. Although the WB filter indeed enables deconvolution with fewer iterations than a traditional back projector, the potential to introduce artifacts still exists, particularly if too many iterations are applied (Fig. 1d, Supplementary Fig. 6). We recommend a single iteration as a good rule of thumb, since this choice resulted in resolution-limited performance on the majority of datasets we examined (Table S2.1 in Supplementary Note 2). With this caveat in mind, the algorithmic improvements we describe here should accelerate image-based biological discovery, especially for the increasingly rich and large datasets that can be obtained with modern light microscopes. For raw data that fit within the memory of a single GPU card (Fig. 1, 2, 4), our methods now enable multiview registration and deconvolution on a timescale on par with, and frequently less than, image acquisition. For much larger multiview light-sheet datasets (Fig. 3), our approach drastically shortens the post-processing time necessary for image reconstruction, instead placing the bottleneck on file reading, writing, and image stitching (Supplementary Table 5). Further speed improvements are possible if these operations are optimized. Alternatively, compressing the image data or using multiple graphics cards for additional parallelization12 could further shorten post-processing time. We freely provide our software (Supplementary Software) in the hope that others may improve it, and expect that other multiview light-sheet12 (Supplementary Fig. 14) or light-field configurations44 could benefit from our work.

When performing deconvolution with a spatially varying PSF, the WB method provides a substantial speedup over traditional RLD, yet we obtained an even greater acceleration with deep learning. We note several caveats, however, when using deep learning methods. First, enough high-quality training data (for our network, ~50–100 training pairs) must be accumulated prior to application of the network, underscoring the point that deep learning augments, but does not replace, more classic deconvolution. Second, although application of the trained network takes only seconds per volume, training the network still takes days on a single graphics card. Finally, the networks are ‘brittle’; we obtained optimal results by retraining the network on each new sample (Supplementary Note 4). Designing more general neural networks remains an important area for further research.

Methods

Widefield fluorescence imaging

Widefield imaging was performed on a previously described home-built system. In these experiments, we used a 60X NA=1.42 Oil Objective (Olympus) on an Olympus IX81 inverted microscope equipped with XT 640-W (Lumen Dynamics Group Inc.) as illumination source, and an automated XY stage with an additional Z piezoelectric stage (100 μm range, Applied Scientific Instrumentation, PZ-2000). The illumination was filtered with an excitation filter (ET470/40x, Chroma) and then reflected towards the sample via a dichroic mirror (T495lpxr, Chroma). The emission was collected by the same objective, and filtered with a bandpass emission filter (ET525/50m, Chroma) prior to imaging with an electron-multiplying charge-coupled device (EMCCD) (Evolve Delta, Photometrics). An exposure time of 20 ms and EM gain of 20 were used. The imaging axial step for both beads and fixed actin samples was 150 nm.

Fixed, phalloidin labeled actin samples

U2OS cells were cultured on glass bottomed dishes (MatTek, Cat. # P35G-1.5–14C) at 37 C and 5% CO2. Prior to labeling, cells were rinsed 3 times with 1X PBS, fixed with 1 mL paraformaldehyde/glutaraldehyde (4%/2%) in 1X PBS for 20 minutes at 37C, rinsed twice in 2 mL 750 mM Tris-HCL pH 7.5 and permeabilized in 0.2% Triton-X/1X PBS for 10 minutes. Next, samples were washed 3 times in staining buffer and blocked in staining buffer containing 1% BSA for 30 minutes. Blocking buffer was removed, and the samples stained with 200 μL of 1:50 Alexa Fluor Phalloidin-488 (Thermo Fisher Scientific, Cat. # A12379):0.2% Tween-20/1X PBS for 1 hour. Cells were washed in 0.2% Tween-20/1X PBS 3 times and imaged in 1X PBS.

Bead samples

Glass bottomed dishes (MatTek, Cat. # P35G-1.5–14C) were cleaned with 100% ethanol and coated with 0.1% poly-l-lysine (PLL; Sigma-Aldrich, Cat. # P8920) for 10 minutes. 100-nm yellow-green beads (Thermo Fisher Scientific, Cat. # F8803) were diluted ~105-fold and 20 μL were added to the coverslip. After 10 minutes, the dish was washed four times with clean water prior to imaging. Bead images were used for estimating the widefield PSFs used in Supplementary Fig. 7.

Confocal imaging

Confocal imaging was performed on a Leica SP8 confocal microscope with 1.40 NA oil lens (HCX PL APO CS 63.0X1.40 OIL UV). The 488 nm argon laser power was set at 20% and the AOTF (488) was set at 5%. The sample was scanned bidirectionally with a voxel size of 48.1 nm in xy and 125.9 nm in z at 200Hz with 6x line average. The pinhole size was set to 20.1 μm (0.21 Airy units). The fluorescence signal was collected from 510 nm to 580 nm with a Leica HyD hybrid detector operating in photon counting mode (10% gain). Data were saved in 8-bit format.

Immunolabeled microtubule samples

U2OS cells were cultured on No. 1.5 coverslips (Fisherbrand, Cat. # 12-545-81) at 37 C and 5% CO2. Prior to labeling, cells were rinsed 3 times with 1X PBS, fixed with 1 mL methanol for 3 minutes at −20C, and rinsed twice in 2 mL 1X PBS. Next, samples were washed 3 times in staining buffer and blocked in staining buffer containing 1% BSA for 30 minutes. The blocking buffer was removed, and the samples stained with 200 μL of 1:100 anti-alpha Tubulin primary antibody (Thermo Fisher Scientific, 322500) for 1 hour. Cells were washed in 0.2% Tween-20/1X PBS and stained with 200 μL of 1:200 Alexa-488 conjugated Goat anti-mouse secondary antibody (Invitrogen, A11001): 0.2% Tween-20/1X PBS for 1 hour. Finally, cells were washed 3 times in 0.2% Tween-20/1X PBS and twice in distilled water before mounting in Prolong Diamond (Thermo Fisher Scientific, P36961).

Instant SIM imaging

The instant structured illumination microscopy (iSIM) system has been previously described13. For all experiments, a 60X NA=1.42 oil immersion objective (Olympus PlanApo N 60x Oil) was used, resulting in an image pixel size of 55.5 nm and a lateral resolution of ~150 nm. Fluorescence data were acquired with a pco.edge 4.2 sCMOS camera, and the exposure time was set to 40 ms per image frame. The imaging axial step for beads, immunolabeled mitochondrial samples, and transfected endoplasmic reticulum samples was set to 100 nm, 100 nm, and 500 nm, respectively.

Immunolabeled mitochondrial samples

U2OS cells were cultured on glass bottomed dishes (MatTek, Cat. # P35G-1.5–14C) at 37 C and 5% CO2. Prior to labeling, cells were rinsed 3 times with 1X PBS, fixed with 1 mL paraformaldehyde/glutaraldehyde (4%/2%) (Electron Microscopy Sciences, Cat. # 15710 and 16120) in 1X PBS for 20 minutes at 37C, rinsed twice in 2 mL 750 mM Tris-HCL pH 7.5 (Corning, Cat. # 46–030-CM), and permeabilized in 0.2% Triton-X (Sigma, Cat. # T9284)/1X PBS for 10 minutes. Next, samples were washed 3 times in staining buffer (0.2% Tween-20 (Sigma, Cat. # P9416)/1X PBS) and blocked in staining buffer containing 1% bovine serum albumin (BSA, Thermo Fisher Scientific, 37525) for 30 minutes. The blocking buffer was removed, and the samples stained with 200 μL of 1:200 anti-Tomm20 primary antibody (Abcam, Cat. # 78547): 0.2% Tween-20/1X PBS for 1 hour. Cells were washed in 0.2% Tween-20/1X PBS and stained with 200 μL of 1:200 Alexa-488 conjugated donkey anti-rabbit secondary antibody (Invitrogen, Cat. # A21206) for 1 hour. Finally, cells were washed 3 times in 0.2% Tween-20/1X PBS and imaged in the instant SIM in 1X PBS.

Transfected ER samples

U2OS cells were cultured in 1mL media using MatTek glass bottomed dish at 37 C and 5% CO2. At 80% confluency, cells were transfected with 100 μL of transfection buffer containing 2 μL of X-treme GENE, 2 μL plasmid DNA (ERmoxGFP45, Addgene Cat. # 68072, 420 ng/μL), and 96 μL of PBS. Cells were imaged 1 day after transfection.

Beads samples

Yellow-green fluorescent beads (Thermo Fisher Scientific, Cat. # F8803, 100 nm diameter) were used for experimental FWHM measurements for iSIM. Beads were diluted from the stock concentration 1:1,300 (1:100 in distilled water and 1:13 in ethanol) and spread over cleaned glass cover slips. After air-drying for 5 minutes, coverslips were washed twice in distilled water to remove unattached beads. After air-drying again, beads were mounted in oil (Cargille, Cat. # 16241) onto glass slides and sealed with nail polish.

Zeiss Lightsheet Z.1 imaging

Images were captured with a Zeiss Lightsheet Z.1 Selective Plane Illumination microscope (Carl Zeiss Microscopy, Thornwood, NY), tandem PCO.edge sCMOS cameras (PCO.Imaging, Kelheim, Germany), and Zeiss Zen imaging software. A pair of Zeiss 10x/0.2 NA dry illumination objectives were used alongside a Zeiss 20x/1.0 NA long working distance detection objective. The illumination sheet was narrowed to 2 μm and images were acquired with 10 ms exposure for both green (488 nm) and red (561 nm) channels. In single-view experiments, samples were imaged dorsally with volumes acquired every 2 minutes. In multiview experiments, two views were collected at −15 and +15 degrees from dorsal, with volumes acquired every 5 minutes.

Zebrafish embryos

Imaging of the embryonic zebrafish hindbrain used three transgenic fish lines: Tg(h2az2a:h2az2a-GFP)46, Tg(isl1-hsp70l:mRFP)ch10047, and Tg(sox10:mRFP)vu23448. Adult females carrying the nuclear label hist2h2l:GFP were crossed to males carrying either the cranial efferent marker isl1-hsp70l:mRFP (for single view experiments) or the neural crest marker sox10:mRFP (for multiview experiments). The resulting progeny were staged to 18 hpf following standard morphological criteria.49 For single-view experiments, embryos were prepared via multilayer mounting50 in Fluorostore Fractional FEP Tubing (F018153–5). For multiview experiments, embryos were mounted in 1.2% agarose (Invitrogen, UltraPure Agarose #16500) in glass capillary tubes. Specimens were immersed in embryo media with 0.2 mg/mL tricaine and incubated at 28.5°C during data collection.

Fiber-coupled diSPIM imaging

We used our original fiber-coupled diSPIM system51 in addition to another, recently described fiber-coupled diSPIM system52 to acquire volumetric time lapse datasets of zebrafish embryo lateral line and nematode embryo neurodevelopment, respectively. Data were acquired in light-sheet scan mode (scanning the light sheet through the stationary sample) with the ASI diSPIM Micromanager53,54 (http://dispim.org/software/micro-manager) plugin instead of the LabVIEW control software used previously51. For zebrafish data, the XY stage was manually moved periodically in order to ensure that the growing tip of the lateral line did not exit the field of view.

Nematode embryos

The 718 bp promoter in plasmid DACR3078 [fmi-1p(718bp)(EcoRV-EcoRV)::Syn21-GFP-CAAX::p10 3’UTR] is a bashed fragment from the 3186 bp promoter upstream of the fmi-1 start codon. To make plasmid DACR3078, EcoRV was used to digest plasmid DACR2984 [fmi-1p(3186bp)::Syn21-GFP-CAAX::p10 3’UTR] followed with subsequent religation. Transgenic strain DCR6371 was made by injecting plasmid DACR3078 at 50 ng/μL into the lineaging strain, BV514, which ubiquitously expresses the mCherry::Histone reporter constructs, pie-1p::mCherry::H2B::pie-1 3’UTR and nhr-2p::his-24::mCherry::let-858 3’UTR16. From a spontaneous integration of DACR3078 into BV514, olaIs98 was isolated. The integrated strain was designated as DCR6371. The Syn21 and p10 3’UTR is a translational enhancer system used in Drosophila to boost translational expression55. We have found that this also seems to help boost expression in the worm.

Worms were cultivated at 20°C on nematode growth medium seeded with a lawn of Escherichia coli strain OP50 using standard methods. Embryos were laid by gravid adults and picked from the plate into M9 buffer with 0.25% Methylcellulose, and then pipetted onto a poly-l-lysine-coated coverslip and imaged in M9 buffer, as previously described9. Samples were imaged every 100 s for 50 timepoints with both 561 nm and 488 nm lasers. Further details are available in ref.52.

Zebrafish embryos

For Zebrafish posterior lateral line imaging, ClaudinB:lynGFP26 embryos at 30–32 hpf were placed in embryo media (60 mg RedSea Coral Pro Salt (Drs Foster and Smith Pet Supplies) per liter ddH2O) supplemented with 600 μM MS-222 (Sigma, E10521). For diSPIM imaging, embryos were mounted in 1% low melt agarose (Cambrex, 50080), covered with embryo media, and the agarose above the posterior lateral line primordium was manually removed using forceps prior to imaging.

Quad-view light-sheet microscopy

We modified our previously described triple-view SPIM system11 to acquire 4 volumetric views. Two 40x, 0.8 NA water-immersion objectives [(OBJ A and OBJ B in Supplementary Fig. 9, Nikon Cat. # MRD07420] were used in an free-space coupled diSPIM configuration9. A 60x, 1.2 NA water-immersion objective (OBJ C in Supplementary Fig. 9, Olympus UPLSAPO60XWPSF) was mounted beneath the coverslip. Each objective was housed within a piezoelectric objective positioner (PZT, Physik Instrumente, PIFOC-P726), enabling independent axial control of each detection objective.

Four volumetric views were obtained with the three objectives in stage-scanning mode, i.e., samples were translated though the light sheet via an XY piezo stage (Physik Instrumente, P-545.2C7, 200 μm × 200 μm). When excitation was introduced from OBJ B, one top view (collected from OBJ A) and one bottom view (from OBJ C) were simultaneously acquired. Similarly, when illumination was introduced from OBJ A, another top view (collected from OBJ B) and bottom view (from OBJ C) were again simultaneously acquired. Views collected from OBJ A/B were acquired as usual in light-sheet microscopy (i.e. they are perpendicular to the illumination); views collected from OBJ C were acquired by scanning OBJ C vertically during each exposure. Thus, the top two sCMOS cameras corresponding to OBJ A/B were operated in hybrid rolling/global shutter mode, but the lower camera was operated in a virtual confocal slit mode to obtain partially confocal images during light-sheet illumination introduced from OBJ A/B.

T cells

Stably transfected EGFP-Actin E6–1 Jurkat T cells were grown in RPMI 1640 medium with L-glutamine and supplemented with 10% FBS, at 37℃ in a 5% CO2 environment. Glass coverslips (24 mm × 50 mm × 0.17 mm, VWR, Cat. # 48393241) were coated with 0.01% Poly-L-Lysine (weight/volume) (Sigma-Aldrich, St. Louis, MO) and incubated with Anti-CD3 antibody (Hit-3a, eBiosciences, San Diego, CA) at 10 μg/ml for 2 h at 37°C the same day that cells were imaged. Before imaging, 1 ml of cells was centrifuged at 250 RCF for 5 min, resuspended in the L-15 imaging buffer supplemented with 2% FBS, and plated onto the coverslips.

Cleared tissue imaging

We modified our original fiber-coupled diSPIM51 for cleared tissue imaging by incorporating elements of the commercially available Applied Scientific Instrumentation (ASI) DISPIM and the DISPIM for Cleared Tissue (CT-DISPIM). All components were designed and manufactured by ASI unless otherwise specified. The microscope body was built inside an incubator box (RAMM-Incu) on a 450 mm × 600 mm breadboard (Incu-breadboard). Samples were placed on a FTP-2000 Focusing Translation Platform to provide precise and repeatable x,y,z positioning of the sample as well as rapid stage scanning31 during cleared tissue imaging. CAD drawings of the setup are shown in Supplementary Fig. 15.

Dovetail mounts (DV-6010) were attached to the SPIM head (SPIM-DUAL-K2) lower Cube III modules and connected to angled dovetails on support arms from posts mounted to the breadboard (Camera Support Kit CAM_SUP-K4-13-5). This configuration fixes the SPIM head while the sample can be moved relative to the head using the FTP-2000, minimizing vignetting of the fluorescence emission that compromised earlier diSPIM performance on large samples.

Each camera (Hamamatsu Orca Flash 4.0) was attached to a tube lens assembly (MIM-Tube-K) which was clamped to Ø1.5” support posts (Thorlabs) from the breadboard leaving an air gap of 1–2mm between the tube lens assembly and the SPIM head. The resulting vibrational decoupling of the cameras from the SPIM head minimized image jitter caused by the camera fans. The cameras themselves were additionally supported on 45° angle brackets (Thorlabs AP45) mounted on Ø1.5” vibrationally damped posts (Thorlabs DP14A).

For cleared tissue imaging we used a pair of Special Optics 0.4 NA cleared tissue immersion objectives (ASI 54-10-12). At the refractive index of the solvent we used (dibenzyl ether), the magnification of these lenses is ~17.9. Since the back focal planes of these objectives are at different location than the Nikon 40× 0.8 NA water immersion objectives used for live work, the excitation scanners and their associated tube lenses were mounted to adjustable spacers (C60-SPACER-ADJ ASSEMBLY) to ensure 4f spacing of the light-sheet excitation path. All cleared tissue experiments used quad notch filters (Semrock StopLine Notch Filter NF03–405/488/561/635E-25) and associated dichroic mirrors (Semrock BrightLine Laser Dichroic DiO3-R405/488/561/635-t1-25×36), which together isolated the fluorescence from the excitation light (405, 488, 561, 637 nm from Coherent OBIS sources).

Data were acquired by moving the stage in a raster pattern with aid of the ASI diSPIM Micromanager53 plugin (http://dispim.org/software/micro-manager, ref.54). The number of imaging tiles/rows as well as other acquisition parameters of interest are reported in Supplementary Table 5.

Due to the volume size and speed of data acquisition during cleared tissue imaging, it was necessary to use a NVMe solid state drive (Samsung 960 PRO M.2 2TB) to write data during an acquisition. Data were transferred to a local 300 TB server after acquisition for longer term storage.

Cleared brain slab

The mouse brain sample was prepared using the iDISCO+ procedure29. Briefly, the brain from an adult vasopressin receptor 1B Cre X Ai9 (B6.Cg-Gt(ROSA)26Sortm9(CAG-tdTomato)Hze56); Cre recombinase dependent tdTomato) mouse32 (gift of W. Scott Young) was fixed by trans-cardiac perfusion with 4% paraformaldehyde. It was then cut into 2mm slabs and dehydrated through a methanol series, rehydrated, immunolabeled with an antibody that recognizes tdTomato (1:200 dilution Rabbit anti-RFP, Rockland Antibodies and Assays, Cat. # 600-401-379) and an Alexa 555 secondary antibody (Invitrogen, Cat. # A27039 used 1:100), then dehydrated with a methanol series, and dichloromethane before equilibration in dibenzyl ether (Sigma, Cat. # 108014) and imaging.

Cleared gut, stomach, and ovary

Mouse tissue stored in 4% paraformaldehyde was dissected and washed in 20 mL 1X PBS for 1 hour at room temperature. Desired organs were dehydrated and rehydrated in a serial dilution of methanol/water and bleached in 5% hydrogen peroxide/methanol mixture according to the iDISCO protocol30. After rehydration, pretreated samples were stained with 400 μL of primary antibody dilution (1:100) in a PBS buffer containing 0.5% Triton-X and 0.05% sodium azide and shaken at 37 C for 4 days. Samples were washed in 5 mL washing buffer consisting of 0.5% Triton-X/PBS and 0.05% sodium azide on a rotator for 1 day at room temperature. The next day, samples were stained with 400 μL of secondary antibody dilution (1:100) made of 0.5% Triton-X/PBS and 0.05% sodium azide in a 37 C shaker for 4 days. Samples were washed for one day before optical clearing. For some samples, 1:1000 DAPI (1mg/mL stock) stain was incorporated in the first washing step. All labels are indicated in Supplementary Table 6.

Immunolabeled samples were dehydrated in 5 mL of 20%/40%/60%/80%/90%/100% tetrahydrofuran/water mixture (30 minutes at room temperature for every step). Samples were washed in 5 mL of 100% tetrahydrofuran for another 30 minutes at room temperature and incubated in 5 mL of 100% dichloromethane until samples sank to the bottom of the tube. Samples were then incubated overnight at room temperature in another 5 mL of fresh 100% dichloromethane. The next day, samples were cleared in 5 mL of dibenzyl ether (Sigma, Cat. # 108014) twice at room temperature for 30 minutes each time. Cleared samples were mounted on glass slide with a minimal amount of Krazy Glue surrounding the bottom of the samples for imaging with the cleared tissue diSPIM.

Beads sample

No. 1.5 coverslips (VWR, 48393241) were cleaned with 100% ethanol and coated with 0.1% poly-l-lysine (PLL; Sigma-Aldrich) for 10 min. Then 100-nm yellow-green beads (Thermo Fisher Scientific; F8803) were diluted ~105-fold and 20 μL were added to the central region of the coverslip. After 10 min, the coverslip was washed four times with clean water before imaging. During imaging, the beads were immersed in dibenzyl ether (Sigma, Cat. # 108014).

Free-space coupled diSPIM, conventional and reflective imaging

The geometry of the diSPIM (0.8/0.8 NA) used for conventional and reflective imaging has been previously described13. Glass coverslips (24 mm × 50 mm × 0.17 mm, VWR, Cat. # 48393241) for conventional experiments were modified for reflective experiments by sputtering a 150-nm-thick aluminum film over their entire surface and then protecting them with a 700-nm-thick layer of SiO2 (Thin Film coating, LLC). During conventional imaging, dual views were sequentially acquired in light-sheet scanning mode via two objectives (Nikon, Cat. # MRD07420, 40x, 0.8 NA) and imaged with 200-mm tube lenses (Applied Scientific Instrumentation, C60-TUBE_B) onto two scientific-grade, complementary, metal-oxide-semiconductor (sCMOS) cameras (PCO, Edge 5.5), resulting an image pixel size of 162.5 nm. During reflective imaging, four views (direct fluorescence and mirror images) were simultaneously collected in stage scanning mode with the same detection optics. In all acquisitions, the exposure time for each plane was 5 ms.

Nematode embryos

C. elegans were maintained on nematode growth medium seeded with Escherichia coli (OP50). Embryos were dissected from gravid adults, placed on poly-l-lysine-coated coverslips and imaged in M9 buffer, as previously described9. Strain BV24 [ltIs44 [pie-1p-mCherry::PH(PLC1delta1) + unc-119(+)]; zuIs178 [(his-72 1 kb::HIS-72::GFP); unc-119(+)] V] was used for imaging nuclei in conventional mode and strain AQ2953 ljIs131[myo-3p::GCaMP3-SL2-tagRFP-T] for imaging calcium flux within three-fold embryos in reflective mode.

Lattice light-sheet microscopy, conventional and reflective imaging

The lattice light-sheet microscope (1.1/0.71 NA) for reflecting imaging was constructed as previously described34. The annular mask was set at 0.325 – 0.4 NA and a square lattice in the dithered mode was produced at the sample. The excitation power (488 nm) was measured at the back focal plane of the excitation objective at ~25μW. The 25X Nikon CFI APO LWD detection objective was paired with a 500mm achromat lens for an effective magnification of 63.7x, resulting an image pixel size of 102 nm. The exposure time for each plane was 8 ms, and the stage-scanning step size for the volumetric imaging was 0.4 μm, corresponding to 209 nm along the optical axis after deskewing. When deconvolving the data with a spatially variant PSF for resolution recovery and removal of epifluorescence contamination13, the excitation pattern was based on the measured lattice light-sheet dimensions (propagation distance of ~26.6 μm FHWM along the optical axis and a waist of 0.99 μm FWHM), and the detection PSF was simulated as a widefield PSF with 1.1 NA using the PSF generator ImageJ plugin (http://bigwww.epfl.ch/algorithms/psfgenerator/). The light-sheet dimensions were measured by sweeping the sheet axially through a 0.1 μm diameter fluosphere (ThermoFisher) while stepping the bead along the propagation length of the sheet. Conventional imaging experiments were conducted on 5 mm diameter × 0.15 mm glass coverslips (Warner Instruments, CS-5R). For reflective experiments, 5 mm diameter × 0.17 mm glass coverslips were sputtered as for the free-space diSPIM experiments with a 150-nm-thick film of aluminum followed by a 700-nm-thick layer of SiO2 (Thin Film Coating, LLC).

Microtubule and actin samples

For imaging microtubules, human osteosarcoma U2OS cells (ATCC HTB-96) were grown on uncoated coverslips, fixed with glutaraldehyde, washed with PBS in room temperature, and then immunolabeled with DM1A antibody conjugated with Alexa-488 (Sigma, T9026). For imaging alpha-actinin, U2OS cells stably transfected with alpha-actinin mEmerald (a gift from Michael Davidson, FSU) were plated onto coverslips 24 hours before imaging. Cells were imaged within 1 h after plating on the reflective coverslips.

Data processing

Pre-processing

Raw single-view and multiview images were pre-processed by subtracting a uniform background with intensity equivalent to average of 100 dark (no excitation light) background images prior to registration and/or deconvolution. For deconvolution, the measured image or the average of the measured images after the elimination of zero values (zeros replaced by a small value, 0.001) was used as the initial estimate. However, we note that the more rapid deconvolution reported in this work is robust to changes in the initial guess as we obtained similar acceleration when using a constant valued image as the initial estimate.

Dispim deconvolution

The joint RL deconvolution scheme used in diSPIM improves the overall estimate e of sample density by alternately considering each view57:

end

where iA, fA, bA and iB, fB, bB are the raw images, forward projector (PSF) and backwards projector corresponding to views A and B, respectively. Traditionally, b is taken to be the transpose of f. However, as in single-view deconvolution, we found that using unmatched back projectors (e.g. Gaussian, Butterworth, or WB filters) considerably accelerated this procedure (reducing N).

Quad-view deconvolution

In quadruple-view deconvolution, we start with the additive RLD update, finding as previously reported11 that this method yields better reconstructions than the alternating joint deconvolution update57 used for diSPIM:

end

With f, b, e, i defined as above and the subscripts A, B, C, D indicating each view. Choosing each back- projector b to be the transpose of the forwards operator f yields the traditional RL update. Choosing the back projectors as follows yields the previously-described ‘virtual-view’ update in EBMD10 (* denotes convolution and ^ the transpose), speeding up this procedure:

Finally, setting b to be the unmatched WB filter appropriate for each view provides the fastest update, as for dual-view and single-view microscopes.

Joint deconvolution for reflective light-sheet imaging

Raw image data from the four views in reflective diSPIM imaging (0.8/0.8 NA) or two views in reflective lattice light imaging (0.7/1.1 NA) are merged to produce a single volumetric view, after processing steps that include background subtraction, interpolation, transformation, fusion, registration, epifluorescence removal and joint deconvolution. The data processing steps for removing epifluorescence contamination and enhancing resolution for reflective diSPIM and reflective lattice light sheet imaging are similar to those previously described13 for the symmetric (0.8/0.8 NA) and asymmetric (0.71/1.1NA) diSPIM configurations. We represent the effect of the mirrored coverslip by reflecting the object across the coverslip to obtain an extended object consisting of real and virtual objects. During each RL update, we construct the forward model by (1) shifting the illumination pattern at each axial (z) slice in the extended object; (2) multiplying the shifted illumination pattern with the estimate of the extended object; (3) looping over z in the estimate and at each z performing 2D convolution with the detection PSF; and (4) collapsing over the z coordinate. In the backwards model, we perform back projection of the ratio image (i.e., the ratio of the raw data to the blurred estimate), convolve it with the appropriate back projector function (the flipped PSF for traditional RL deconvolution, or the unmatched back projector for the Wiener-Butterworth case), then multiply with the shifted illumination pattern. This deconvolution process is not blind, i.e. the PSFs are simulated wide-field detection PSFs based on experimentally measured PSFs. For the datasets we report here, there was no need to crop the data. When processing the reflective lattice light sheet data, the excitation profile was based on the measured dithered lattice light-sheet illumination.

In more detail, we form view U1 (that includes both conventional view and mirrored views) and a second, virtual view U2 by reflecting view U1 across the mirror as previously described13. U1 and U2 are thus blurred with complementary detection PSFs. We register the two views U1 and U2, and perform joint deconvolution on them by applying the joint Richardson-Lucy update with WB back projector for each view as follows:

Here, and are the forward operators that map the object stack to either measured conventional view stack U1 or virtually reflected view stack U2, respectively, and and are the backward operators that map from data space back to object space. Four steps are sequentially applied in obtaining each update. First, we compute or by applying the forward operator or to the current estimate of the object according to three cascaded operations at each light sheet position (or z slices), where matrix represents multiplication of the estimate by the crossed light sheets; matrix represents looping over all the z slices and performing 2D convolution with a slice of the detection PSF at each z; and matrix applies projection over all z slices. Second, divide the measured data stack U by this quantity, and denote the resulting ratio image R. Third, apply the transpose operator or to R, which involves applying the cascaded operations and then summing over all z slices. Here is a backprojection matrix, which smears the vector to which it is applied back across the image grid; represents looping over z in the object distribution and performing 2D convolution with a slice of the transposed but unmatched detection PSF (i.e., WB back projector appropriate for the particular microscope, Supplementary Note 2) at each z; is equivalent to matrix , denoting multiplication with the illumination pattern. Last, update the current estimate by multiplying by the correction image or and dividing by the normalization image V1 or V2 (i.e., or , where 1 denotes an image of ones).

GPU deconvolution with Huygens software

For the deconvolution comparison in Supplementary Fig. 13, both Wiener-Butterworth and Huygens deconvolution (Scientific Volume Imaging, Essential 19.10 version) use the same theoretical PSF, generated as the product of excitation light sheet and widefield emission PSF. When testing Huygens, we used the light sheet deconvolution module. All parameters were set at their default values. Deconvolution was performed on a Windows 10 workstation (CPU: Intel Xeon, Platinum 8168, 2 processors; RAM: 512 GB; GPU: Nvidia Quadro RTX6000 graphics card, 24 GB memory).

Conventional 3D affine registration

Some CPU-based registrations were performed in the open-source Medical Imaging Processing, Analyzing and Visualization (MIPAV) programming environment (http://mipav.cit.nih.gov/). As previously described9, we applied an affine transform with 12 degrees of freedom (DOF) to register the source image (S, image to be registered) to the target image (T, fixed image). The DOF matrix is a 12-element transformation matrix that applies the four affine image transformation operations (translation, rotation, scaling and shearing) from S to T. We used an intensity-based method to iteratively optimize the DOF matrix by minimizing a cost function via Powell’s method (http://mathfaculty.fullerton.edu/mathews/n2003/PowellMethodMod.html). We set the search angle range from −10 degrees to 10 degrees, with a coarse angle increment of 3 degrees and a fine angle increment of 1 degree. This registration function ‘Optimized Automatic Image Registration 3D’ has already been incorporated in MIPAV as a Plugin - ‘SPIM-fusion’51. With this CPU-based registration environment, we registered the data imaged with diSPIM (Fig. 2a, j) and quad-view light-sheet microscopy (Fig. 2e, see below for more detail on how we registered four views), and compared the registration outcomes and computation costs with the GPU-based registration described in the following section (Fig. 2i). To estimate the computational costs for registering large, cleared tissue volumes with the CPU-based approach (Fig. 3i), we randomly chose 10 subvolumes (each 640 × 640 × 640 pixels), calculated the time for registration, averaged the times (i.e., ~31 mins per subvolume) and then multiplied the averaged time with the total number of subvolumes (e.g., 4576 subvolumes in Fig. 3d) to estimate the total registration time (i.e., ~ 100 days).

For the comparative data shown in Supplementary Table 4, registration was also performed using the NiftyReg software package (http://cmictig.cs.ucl.ac.uk/wiki/index.php/NiftyReg) and the elastix software package (http://elastix.isi.uu.nl). For NiftyReg, the reg_aladin command was used with default settings except that the ‘-pv’ and ‘-pi’ parameters were both set as 100. In this case, the NiftyReg performed a rigid + affine based registration. For elastix, the transform type was set as “AffineTransform” to perform affine based registration; the Interpolator was set as “LinearInterpolator”; the Resampler was set as “DefaultResampler” for CPU implementation and “OpenCLResampler” for GPU implementation.

New GPU-based 3D affine registration

We developed a new registration pipeline that accelerates the registration of multiview light-sheet data via GPU programming (Supplementary Fig. 12), including data acquired with the diSPIM (Fig. 2a, j), quad-view light-sheet microscopy (Fig. 2e), reflective diSPIM (Fig. 4c) and reflective lattice light-sheet microscopy (Fig. 4f). More importantly, this GPU-based registration method also enables the registration of large, cleared tissue datasets imaged with diSPIM (Fig. 3), which is impractical if implemented in the CPU-based registration method (e.g., ~100 days with CPU-based registration time as estimated above vs. ~24 hours with GPU-based registration for data in Fig. 3d).

Our GPU-based method uses the same method (i.e., intensity-based, iterative optimization of the transformation matrix) as in the previous CPU-based MIPAV registration, but dramatically improves the registration speed and accuracy for several reasons. First, we iteratively perform affine transformations on the source image (S) which is always kept within the GPU texture memory. The main computational burden in 3D transformation is trilinear interpolation, which can be significantly lessened via the use of texture memory. Second, the correlation ratio between the intensity of the transformed source (S’) and target image (T) that is used in the cost function, can be rapidly calculated via the parallel computations enabled by the GPU. Third, when minimizing the cost function by using Powell’s method to update the 12-element transformation matrix, we don’t simultaneously optimize all 12 elements (i.e., full translation, rotation, scaling and shearing that comprise 12 DOF). Instead, the optimization is serial, successively optimizing translation; rigid body (translation and rotation, 6 DOF); translation, rotation and scaling (9 DOF); and finally the full translation, rotation, scaling and shearing operations (12 DOF). We observed that such serial optimization makes registration more accurate and robust. Finally, although the initial transformation matrix (M0) for beginning the optimization process is an identity matrix by default, we also provide an option to generate M0 by performing a 2D registration (translation and rotation) on the XY and ZY maximum intensity projections of S and T. This 2D registration is an intensity-based rigid body transformation with the same optimization routine as 3D registration, but performing registration in 2D with only translation and rotation is very rapid, only ~1% of the time required for performing full 3D registration. This additional step also guarantees a reasonable starting initialization of M0 for further 3D optimization in 3D. Alternatively, a transformation matrix from a prior time point in a time lapse 4D dataset can be used as M0 to accelerate the registration. In some cases (e.g., Fig. 2a), we observed that using a matrix from a previous time point can reduce the registration time for a new volume by as much as 65%, e.g. from ~8.8 seconds/volume to ~3.1 seconds/volume.