Abstract

This study aimed to characterize the variability in the usability and safety of EHRs from two vendors across four healthcare systems (two using Epic and two using Cerner). Twelve to 15 emergency medicine physicians from each site participated and completed six clinical scenarios. Keystroke, mouse click, and video data were collected. From the six scenarios, six tasks were analyzed: two diagnostic imaging orders, two laboratory orders, and two medication orders. There was wide variability in task completion time, clicks, and error rates. For certain tasks, there was a nine-fold difference in average time and an eight-fold difference in average clicks between sites. Error rates varied by task (X-ray: 16.7% to 35.7%; MRI: 0% to 10%; Lactate: 0% to 14.3%; Tylenol: 0% to 30%; Taper: 16.7% to 50%). The variability in time, clicks, and error rates highlights the need to improve and optimize EHR implementation. EHR implementation, in addition to vendor design and development, is critical to producing usable and safe products.

Keywords: usability, safety, electronic health records

INTRODUCTION

Background and significance

Electronic health record (EHR) usability, which is the extent to which the technology can be used efficiently, effectively, and satisfactorily by clinical users, continues to be a major concern.1,2 Usability challenges lead to inefficiencies that contribute to clinician frustration and patient dissatisfaction, and can introduce patient safety risks that may result in harm.3,4 These challenges include screen displays with confusing layouts and extraneous information, workflow sequences that are redundant and burdensome, and alerts that interrupt workflow with irrelevant information.5

The Office of the National Coordinator for Health Information Technology (ONC), part of the U.S. Department of Health and Human Services, has recently put requirements in place to promote usability.6 EHR vendors are required to use a user-centered design approach, which emphasizes the needs of the clinician end user, during design and development, and must conduct usability testing of certain EHR features near the end of the development process.

There are several reasons usability challenges persist despite these requirements. First, some vendors have not adhered to the requirements, yet their products were still certified and are in use by providers.7 Second, while EHR vendor design and development processes shape the usability of the EHR, several other factors also have an impact.8,9 For example, configuration and customization choices made by both the EHR vendor and the healthcare provider during implementation affect the layout and type of information presented on screen for that specific provider. Consequently, the product used by frontline clinicians may be very different from the one the vendor tested to meet government requirements for usability and safety.10

Because some vendors have not adhered to usability requirements, and because the EHR product may change during implementation, the variability in the usability and safety of the products used by frontline clinicians is largely unknown. Understanding this variability is important for improving EHR products and for informing policies to guide design, development, implementation, and use.

Objective

The purpose of this study was to characterize and quantify variation in EHR usability and its potential impact on safety. We studied physician-EHR interaction with two popular EHR vendor products implemented in different healthcare systems. We report between- and within-vendor differences in efficiency metrics, accuracy, and error types observed as physicians performed common clinical tasks in EHR products that were implemented independently at different hospital systems.

MATERIALS AND METHODS

Study design and setting

A between-participants usability study of EHRs in the emergency medicine environment was conducted at four healthcare systems in the United States. The study protocol was approved by the institutional review board at each site. Sites were selected through convenience sampling, with the requirement that each site use an EHR from one of the two market leaders, which collectively represent over 50% of EHRs in hospital systems. Two sites used Epic EHR products and two used Cerner EHR products; at each site, the EHR had been in use for over two years. Testing was conducted onsite in a quiet room using the site's EHR training environment, which closely mirrored the current production environment. At each site, the same fictitious patient data were preloaded to produce a realistic EHR environment and to minimize variation between sites. Six clinical scenarios (see Supplementary Appendix S1) were developed by two currently practicing, board-certified emergency physicians (AZH and RF) and then reviewed and endorsed by the emergency physician liaison at each site. Emergency physicians currently practicing at each study site were recruited using a purposive sampling strategy. Standardized instructions were given, and participants were asked to complete each scenario following the routines of their actual clinical practice, without any clinical guidance from the experimenter. User interaction data (such as mouse clicks and keystrokes) and audio data were collected.

Selection of participants

Practicing emergency physicians were recruited from each site and compensated for participation. Table 1 provides characteristics and demographics of the participants by site. For usability studies, sample sizes of 10 to 15 participants are typical and sufficient to understand usability and safety challenges.11

Table 1.

Participant demographics by site

| Characteristic | Site 1A (N = 14) | Site 2A (N = 15) | Site 3B (N = 14) | Site 4B (N = 12) |
|---|---|---|---|---|
| Role (count) | | | | |
| Attending | 5 | 15 | 8 | 7 |
| Resident | 9 | 0 | 6 | 5 |
| Experience in role (yrs) | | | | |
| 1-5 | 12 | 5 | 11 | 7 |
| 6-10 | 1 | 9 | 1 | 1 |
| 11+ | 1 | 1 | 2 | 4 |
| Experience with EHR (yrs) | | | | |
| 1-5 | 11 | 7 | 14 | 10 |
| 6-10 | 3 | 3 | 0 | 1 |
| 11+ | 0 | 5 | 0 | 1 |

Measurements

Participants gave verbal consent, were introduced to the study using a standard script, and completed a brief five-question demographic survey before starting the clinical scenarios. Participants were instructed to complete each scenario following their normal clinical practice. The study moderator presented the clinical scenarios one at a time and remained in the room while each scenario was completed, but did not provide further guidance or instructions. While participants interacted with the EHR, screen capture software (Morae Screen Recorder) recorded the session, including all mouse clicks and keystrokes. After the scenarios were completed, a brief survey and study debrief were conducted.

Outcomes

The primary outcome measures were task duration, number of clicks needed to complete each task, and accuracy. Total time on task and number of mouse clicks for each task were calculated from the screen capture recordings. A click was defined as any mouse input other than movement, including left clicks, right clicks, and scroll wheel adjustments. Because keyboard shortcuts can substitute for mouse input, each press of the Tab key was also counted as a click equivalent. Accuracy was calculated as the percentage of participants at each site who completed each task without any error. For imaging orders, errors were defined as ordering the wrong test, omitting any part of the order, or ordering for the wrong side. For laboratory orders, errors were defined as ordering the wrong test or, when a timed order was required, ordering the test for the wrong time. For medication orders, the National Coordinating Council for Medication Error Reporting and Prevention (NCC MERP) error taxonomy was used.12 Errors were identified by clinicians who reviewed the screen capture videos.
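The click-count and imaging-error definitions above can be made concrete with a short sketch. This Python is a minimal illustration under stated assumptions, not the study's tooling: the event labels (`left_click`, `tab_key`, and so on) and the `ImagingOrder` structure are hypothetical, because the paper does not describe the Morae export format, and "omitting any part of the order" is simplified here to omitting a requested body part. In the study itself, errors were judged by clinicians reviewing the videos rather than computed automatically.

```python
from dataclasses import dataclass

# Hypothetical event labels for an exported interaction log (assumption:
# the paper does not describe the recorder's export format).
CLICK_EQUIVALENT_EVENTS = {"left_click", "right_click", "scroll", "tab_key"}


def count_clicks(events):
    """Count every mouse input except movement, plus Tab presses as click equivalents."""
    return sum(1 for event in events if event in CLICK_EQUIVALENT_EVENTS)


@dataclass(frozen=True)
class ImagingOrder:
    """Hypothetical, simplified representation of a placed imaging order."""
    test: str              # e.g. "X-ray"
    components: frozenset  # body parts included in the order
    side: str              # laterality, e.g. "left"


def imaging_errors(placed, expected):
    """Apply the imaging error definitions: wrong test, omitted component, wrong side."""
    errors = []
    if placed.test != expected.test:
        errors.append("wrong test")
    if not expected.components <= placed.components:
        errors.append("omitted component")
    if placed.side != expected.side:
        errors.append("wrong side")
    return errors


def error_rate(error_lists):
    """Percentage of participants whose order contained at least one error."""
    with_errors = sum(1 for errs in error_lists if errs)
    return 100.0 * with_errors / len(error_lists)


# Usage with the X-ray scenario from Table 2 (left elbow, wrist, forearm).
expected = ImagingOrder("X-ray", frozenset({"elbow", "wrist", "forearm"}), "left")
placed = ImagingOrder("X-ray", frozenset({"elbow", "wrist", "forearm"}), "right")
print(imaging_errors(placed, expected))  # ['wrong side']
```

Accuracy as reported in the text is simply 100 minus the error rate for a given task and site.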

Analysis

To quantify usability and safety, six tasks from the clinical scenarios were analyzed: two diagnostic imaging orders (radiograph and MRI), two laboratory orders (troponin and lactic acid), and two electronic prescriptions (oral acetaminophen and an oral prednisone taper). Each screen capture video was segmented by task for analysis. Data were analyzed using descriptive statistics and analysis of variance (ANOVA) (IBM SPSS Statistics for Windows, Version 22.0). Statistical comparisons of time and click data included accurate responses only.
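A minimal sketch of this analysis logic follows, assuming per-attempt records with hypothetical `site`, metric, and `error_free` fields: inaccurate attempts are dropped before computing per-site descriptive statistics and a one-way ANOVA F statistic across sites. The study used SPSS; this plain-Python version computes only F and its degrees of freedom, not a p-value. If SciPy is available, `scipy.stats.f_oneway` returns the same F statistic together with a p-value.

```python
from statistics import mean, stdev


def accurate_values(records, metric):
    """Group a metric (e.g. seconds or clicks) by site, keeping only error-free attempts."""
    by_site = {}
    for rec in records:
        if rec["error_free"]:
            by_site.setdefault(rec["site"], []).append(rec[metric])
    return by_site


def describe(by_site):
    """Per-site (mean, SD); each site needs at least two values for stdev."""
    return {site: (mean(vals), stdev(vals)) for site, vals in by_site.items()}


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA across groups."""
    values = [x for g in groups for x in g]
    grand_mean = mean(values)
    group_means = [mean(g) for g in groups]
    k, n = len(groups), len(values)
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical records; the erroneous attempt at site 1A is excluded.
records = [
    {"site": "1A", "seconds": 60, "error_free": True},
    {"site": "1A", "seconds": 70, "error_free": True},
    {"site": "1A", "seconds": 200, "error_free": False},
    {"site": "2A", "seconds": 20, "error_free": True},
    {"site": "2A", "seconds": 30, "error_free": True},
]
by_site = accurate_values(records, "seconds")
print(describe(by_site))
print(one_way_anova_f(list(by_site.values())))
```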

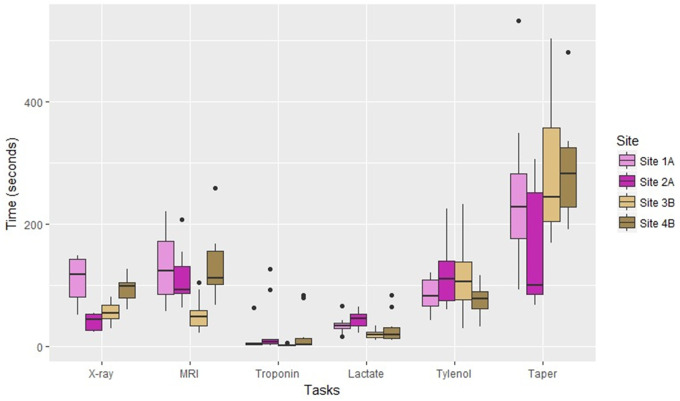

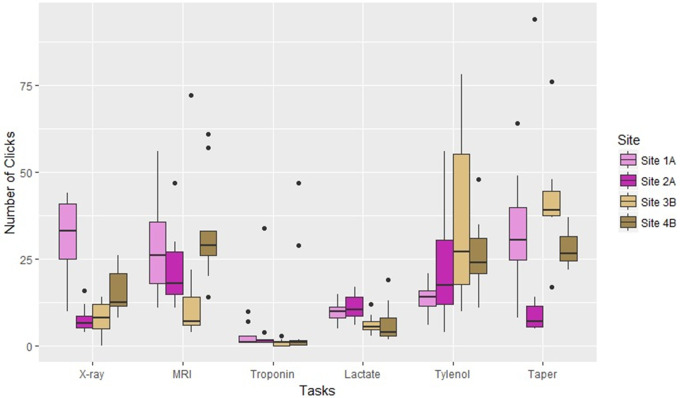

RESULTS

To prevent disclosure of the specific site and vendor, we refer to each site by a number and have assigned a letter (either A or B) to represent a specific vendor (either Epic or Cerner). Table 2 provides an overview of the results, including error rates by task. Figures 1 and 2 illustrate time and clicks by site, showing the variability among physicians within each site and across sites.

Table 2.

Summary of duration, clicks, and accuracy

| EHR function | Usability and safety metric | Site 1A | Site 2A | Site 3B | Site 4B |
|---|---|---|---|---|---|
| X-ray (left elbow, wrist, forearm) | Task duration (sec), mean (SD) | 64.1 (22.4) | 24.3 (8.5) | 33.3 (9.9) | 55.5 (13.3) |
| | Clicks, mean (SD) | 31.1 (12.6) | 7.7 (3.8) | 8.1 (4.9) | 15.5 (6.6) |
| | Error rate | 25% | 16.7% | 35.7% | 20% |
| MRI (cervical, thoracic, lumbar) | Task duration (sec), mean (SD) | 78.9 (33.4) | 66 (25.6) | 32.2 (16.1) | 79.5 (34.3) |
| | Clicks, mean (SD) | 28.9 (13.7) | 22.4 (10.5) | 14.2 (18.2) | 33.3 (15.7) |
| | Error rate | 0% | 8.3% | 7.1% | 10% |
| Troponin | Task duration (sec), mean (SD) | 5.3 (10.3) | 14.2 (24.5) | 1.5 (0.9) | 12.1 (19.7) |
| | Clicks, mean (SD) | 2.7 (2.9) | 4.3 (9.4) | 0.9 (0.9) | 8.2 (16.3) |
| | Error rate | 0% | 0% | 0% | 0% |
| Lactate (timed order) | Task duration (sec), mean (SD) | 20.4 (8) | 26.9 (7.9) | 12.1 (4.9) | 17.5 (15.1) |
| | Clicks, mean (SD) | 9.9 (3) | 11.1 (3.4) | 6 (2.5) | 6.6 (5.5) |
| | Error rate | 0% | 0% | 14.3% | 0% |
| Tylenol (500 mg PO, 4-6 hours) | Task duration (sec), mean (SD) | 51.4 (15.3) | 70.4 (32) | 69.3 (38.2) | 45.6 (15.9) |
| | Clicks, mean (SD) | 14 (4.1) | 23.5 (15.8) | 61.6 (94) | 25.8 (11.2) |
| | Error rate | 8.3% | 0% | 7.1% | 30% |
| Prednisone taper (60 mg, reduce by 10 mg every 2 days for 12 days) | Task duration (sec), mean (SD) | 148.6 (76.1) | 152.7 (163.4) | 175.1 (73) | 178.7 (62.6) |
| | Clicks, mean (SD) | 32.2 (16.6) | 20 (32.8) | 42.3 (17.6) | 28.2 (5.7) |
| | Error rate | 16.7% | 41.7% | 50% | 40% |

Figure 1.

Time on task by site and vendor.

Figure 2.

Number of clicks by site and vendor.

Imaging orders

For x-ray orders, physicians at site 2A and site 3B were faster than site 1A and site 4B (M = 24.3 sec and 33.3 vs. 64.1 and 55.5; P < .01). Site 1A required more clicks than all other sites (M = 31.1 vs. 7.7, 8.1, and 15.5, P < .01). Site 4B required more clicks than site 2A (M = 15.5 vs. 7.7; P < .05). Error rates ranged from 16.7% to 35.7%.

For MRI orders, physicians at site 3B were faster than those at the other sites (M = 32.2 sec vs. 78.9, 66, and 79.5; P < .01). Site 3B required fewer clicks than sites 1A and 4B (M = 14.2 vs. 28.9 and 33.3 clicks; P < .05). Error rates ranged from no errors to 10%.

Lab orders

There were no differences in task completion time, number of clicks, or accuracy when ordering a troponin.

When ordering a lactate, physicians at Site 3B were faster than both Site 1A and Site 2A (M = 12.1 sec vs. 20.4 and 26.9; P < .05). Site 4B was faster than Site 2A (M = 17.5 vs. 26.9; P < .05). Sites 1A and 2A required more clicks than Sites 3B and 4B (M = 9.9 and 11.1 vs. 6 and 6.6; P < .05). Site 3B had an error rate of 14.3%.

Medication orders

When ordering Tylenol, there were no differences in time to complete the order. Physicians at Site 3B made more clicks than Site 1A (M = 61.6 vs. 14, P < .01). Error rates ranged from no errors to 30%.

When ordering the prednisone taper, there were no differences in time or clicks. Error rates ranged from 16.7% to 50%.

DISCUSSION

The results of this study reveal wide variability in task duration, clicks, and accuracy when completing basic EHR functions, both across EHR products from the same vendor and between products from different vendors. Because products from the same vendor produced markedly different performance results, the findings highlight the variability that local site customization can introduce. For certain tasks there was an eight-fold difference in time and clicks from one site to another. Error rates varied widely across tasks, reaching 50% for taper orders, which are generally not well supported by the four implemented EHRs in the emergency medicine setting. Depending on the task, EHR errors can have serious patient safety consequences. Diagnostic imaging errors, such as imaging the wrong side of the body, can lead to unnecessary testing and to patient harm through excess radiation exposure. Medication errors can result in under- or overdosing and therefore an inadequate or toxic course of treatment, respectively. All vendor products required the physician to calculate the taper doses manually, which likely resulted in the high rate of dose errors for that task.
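To show the arithmetic a prescriber had to carry out by hand for the taper scenario in Table 2, here is a minimal Python sketch. It assumes a linear step-down with the dose held constant within each 2-day step, and it uses a hypothetical 10 mg tablet strength for the dispense quantity; the paper does not report tablet strength or how each EHR expected the taper to be entered, and this is not a description of any vendor's functionality.

```python
def taper_schedule(start_mg, step_mg, days_per_step, total_days):
    """Return (day, dose_mg) pairs for a linear step-down taper.

    The dose is held for `days_per_step` days, then reduced by `step_mg`;
    the schedule stops early if the dose would reach zero.
    """
    schedule = []
    for day in range(1, total_days + 1):
        step_index = (day - 1) // days_per_step
        dose = start_mg - step_index * step_mg
        if dose <= 0:
            break
        schedule.append((day, dose))
    return schedule


def total_dose(schedule):
    """Sum of all daily doses in milligrams."""
    return sum(dose for _, dose in schedule)


def tablets_needed(schedule, tablet_mg):
    """Dispense quantity, assuming every daily dose is a whole number of tablets."""
    count = 0
    for day, dose in schedule:
        if dose % tablet_mg != 0:
            raise ValueError(f"day {day}: {dose} mg is not a multiple of {tablet_mg} mg")
        count += dose // tablet_mg
    return count


# Scenario from Table 2: 60 mg, reduce by 10 mg every 2 days for 12 days.
schedule = taper_schedule(60, 10, 2, 12)
for day, dose in schedule:
    print(f"Day {day}: {dose} mg")
print("Total:", total_dose(schedule), "mg")            # Total: 420 mg
print("10 mg tablets:", tablets_needed(schedule, 10))  # 10 mg tablets: 42
```

Each of the six dose levels (60 down to 10 mg) spans two days, so even this simple taper requires the prescriber to track six dose changes and a cumulative quantity.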

The variability in time, clicks, and errors highlights critical challenges with EHR usability and safety. All of the products examined in this study were usability tested by Cerner or Epic and certified by the ONC's accrediting bodies. However, these products go through very different implementation processes, with variations in customization and configuration, physician training, and software updates. Differences in vendor testing, certification review by ONC accrediting bodies, and implementation processes, as well as other factors, likely all contribute to the variability demonstrated in this study. Improving EHR usability and safety will require a more in-depth understanding of the processes that best ensure safe and usable EHRs.

The variability in time, clicks, and accuracy raises important questions around the usability and safety testing of EHRs. Currently, there are no performance guidelines or mandated requirements for testing the usability and safety of implemented EHRs. Our results suggest that basic performance standards for all implemented EHRs should be considered in order to ensure usable and safe systems. Both EHR vendors and providers should work together to ensure that usable and safe products are implemented and used.

There are limitations to this study, including variability in the number of resident and attending physicians participating at each site, with site 2A having only attending physician participants. The EHR training environment was used for study testing purposes, and while representative of the live clinical environment, there may have been slight differences or lack of access to customized workflows used in the production environment. Finally, we tested the EHR product used in the emergency department, which is different from systems used for inpatient care.

CONCLUSIONS

The variability in time to complete tasks, number of clicks, and error rates with the most frequently used EHR products highlights the need for improved implementation processes.

FUNDING

This work was supported by a contract from the American Medical Association to Raj Ratwani, PhD, and MedStar Health.

Conflict of interest statement. The authors have no competing interests to report.

CONTRIBUTORS

RR, RJF, MH, and AZH conceived of the study. Study design was informed by all the authors. Data collection was performed by RR, AW, and ES. All of the authors contributed to the writing of the manuscript. RR takes responsibility for the manuscript.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.


REFERENCES

- 1. International Organization for Standardization. ISO 9241-210: Ergonomics of Human-System Interaction. 2010. https://www.iso.org/obp/ui/#iso:std:iso:9241:-210:ed-1:v1:en. Accessed February 2016.

- 2. Institute of Medicine. Health IT and Patient Safety: Building Safer Systems for Better Care. Washington, DC: National Academies Press; 2012.

- 3. Howe J, Adams KT, Hettinger AZ, Ratwani RM. Electronic health record usability issues and potential contribution to patient harm. JAMA 2018; 319 (12): 1276–8.

- 4. Middleton B, Bloomrosen M, Dente MA, et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc 2013; 20 (e1): e2–8.

- 5. Koppel R, Kreda DA. Healthcare IT usability and suitability for clinical needs: challenges of design, workflow, and contractual relations. Stud Health Technol Inform 2010; 157: 7–14.

- 6. Department of Health and Human Services, Office of the National Coordinator for Health Information Technology. HIT Standards Committee and HIT Policy Committee Implementation and Usability Hearing. Washington, DC: United States Department of Health and Human Services; 2013: 1–87.

- 7. Ratwani RM, Benda NC, Hettinger AZ, Fairbanks RJ. Electronic health record vendor adherence to usability certification requirements and testing standards. JAMA 2015; 314 (10): 1070–1.

- 8. Ratwani R, Fairbanks T, Savage E, et al. Mind the Gap: a systematic review to identify usability and safety challenges and practices during electronic health record implementation. Appl Clin Inform 2016; 7: 1069–87.

- 9. Meeks DW, Takian A, Sittig DF, Singh H, Barber N. Exploring the sociotechnical intersection of patient safety and electronic health record implementation. J Am Med Inform Assoc 2014; 21 (e1): e28–34.

- 10. Metzger J, Welebob E, Bates DW, Lipsitz S, Classen DC. Mixed results in the safety performance of computerized physician order entry. Health Aff 2010; 29 (4): 655–63.

- 11. Schmettow M. Sample size in usability studies. Commun ACM 2012; 55 (4): 64–70.

- 12. NCC MERP. Index for Categorizing Medication Errors. 2002: 1. https://www.nccmerp.org/sites/default/files/taxonomy2001-07-31.pdf. Accessed March 2017.
