Abstract

Marine low-level clouds continue to be poorly simulated in models despite many studies and field experiments devoted to their improvement. Here we focus on the spatial errors in the cloud decks in the Department of Energy Earth system model (the Energy Exascale Earth System Model [E3SM]) relative to the satellite climatology by calculating centroid distances, area ratios, and overlap ratios. Since model dynamics is better simulated than clouds, these errors are attributed primarily to the model physics. To gain additional insight, we performed a sensitivity run in which model winds were nudged to those of reanalysis. This results in a large change (but not necessarily an improvement) in the simulated cloud decks. These differences between simulations are mainly due to the interactions between model dynamics and physics. These results suggest that both model physics (widely recognized) and its interaction with dynamics (less recognized) are important to model improvement in simulating these low-level clouds.

1. Introduction

The U.S. Department of Energy (DOE) recently released Version 1 of its Earth system model (ESM), the Energy Exascale Earth System Model (E3SM). With improvements on both physical process representations and computational efficiency to address scientific, computational, and technological challenges relevant to the DOE missions, the model provides the research community a new opportunity to understand Earth system dynamics. In this study, we focus on evaluating marine atmospheric boundary layer (MABL) clouds, especially stratiform clouds, simulated by E3SMv1 because of their significant impact on the Earth’s climate (Wood, 2012).

The simulation of MABL clouds in global climate and ESMs has long been problematic. The uncertainty in the simulation of cloud processes has been identified as contributing to a large uncertainty in climate sensitivity in models (Bony et al., 2006). This stems from their large spread in simulated cloud feedbacks (Bony & Dufresne, 2005). In an atmosphere-ocean coupled model, the poor simulation of clouds has been blamed for causing biases in sea surface temperature (Large & Danabasoglu, 2006) and in the positive feedback loop in the strengthening of the North Atlantic subtropical high (Sánchez de Cos et al., 2016).

Recognizing model deficiencies in simulating MABL stratiform clouds, field experiments have been conducted in the past several decades (e.g., Bretherton et al., 2004; Mechoso et al., 2014; Russell et al., 2013), and a large number of previous studies were dedicated to identifying model biases and improving model performance (e.g., Caldwell et al., 2013; Koshiro et al., 2018; Lin et al., 2014; Wyant et al., 2015). Despite this, problems in simulating these clouds remain.

The evaluation of simulated MABL stratiform clouds has largely emphasized the lack of clouds in models, implying amplitude errors. Figure S1 in the supporting information shows that there are indeed amplitude errors in the low stratiform cloud decks as depicted by the generally negative biases of maximum low cloud cover (LCC) in percent cloud fraction (% CF). However, it is also recognized that, qualitatively, there are spatial errors in location, size, and shape in simulated subtropical low stratiform cloud decks as compared to satellite climatologies (Koshiro et al., 2018). Figure S2 gives an example of these spatial errors in the Pacific cloud decks in E3SMv1 and three other U.S. models, in comparison to the satellite climatology from the Cloud-Aerosol Lidar and Infrared Pathfinder Satellite Observations (CALIPSO).

Many previous studies may have unintentionally mistaken spatial errors for amplitude errors if they focused on target regions defined based on observations (e.g., the boxes defined by Klein & Hartmann, 1993, as used in Caldwell et al., 2013, and Koshiro & Shiotani, 2014) or defined by a condition (such as subsidence) from reanalyses (e.g., Dolinar et al., 2015). This is because cloud decks may be misplaced at least partially outside of such fixed regions. Here, we focus on spatial errors (i.e., those due to differences in location, size, and shape) of the subtropical low stratiform cloud decks in models by addressing two questions: (i) What are the spatial errors in the simulated cloud decks? (ii) What are the reasons for these spatial errors? To address (i), we use object-based metrics taken from numerical weather prediction assessment to quantify the spatial errors of low stratiform cloud decks for the first time. These spatial errors could be caused primarily by deficiencies in the models’ large-scale dynamics, their physical parameterizations of subgrid-scale processes, or the interactions between the two. For (ii), we identify which of these are the primary causes of the spatial errors by using a model in which the dynamics is nudged toward, or prescribed by, reanalysis.

We focus on evaluating the newly released E3SMv1 and assess its performance by comparison with three U.S. contributions to CMIP5. Model clouds are evaluated by comparison to satellite-derived and surface-based cloud climatologies. These data and the models are briefly described in section 2. The results are given in section 3, and conclusions are drawn in section 4.

2. Data and Models

2.1. Models

In this study, we focus on comparing cloud fraction (CF) simulated by E3SMv1 to satellite-derived CF. E3SMv1 is DOE’s contribution to CMIP6. We use results from the fully coupled E3SM simulation (the historical ensemble with five members) and E3SM Atmosphere Model Version 1 simulations forced by climatological sea surface temperatures with free-running dynamics (the E3SMv1 Atmospheric Model Intercomparison Project [AMIP] ensemble with three members) and with constrained meteorology (the sensitivity test; Ma et al., 2013), where the model winds are nudged toward those of the Modern Era Retrospective Analysis for Research and Applications Version 2 (MERRA-2; Gelaro et al., 2017) with a relaxation timescale of 6 hr. All of these runs are on the low-resolution grid (~100-km horizontal grid spacing).
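The nudging of model winds toward reanalysis can be illustrated with a minimal sketch of Newtonian relaxation. The text above states only that the winds are nudged toward MERRA-2 with a 6-hr relaxation timescale; the explicit update form, the function name `nudge_step`, and the 30-min time step below are assumptions made for illustration and are not taken from the E3SM code.

```python
import numpy as np

def nudge_step(u_model, u_ref, dt_s, tau_s=6 * 3600.0):
    """One explicit relaxation step: du/dt = (u_ref - u_model) / tau.

    tau_s defaults to the 6-hr timescale quoted in the text; the explicit
    Euler form and dt_s are illustrative assumptions.
    """
    u_model = np.asarray(u_model, dtype=float)
    u_ref = np.asarray(u_ref, dtype=float)
    return u_model + dt_s * (u_ref - u_model) / tau_s

# Hypothetical wind values (m/s) at two grid points.
u = np.array([10.0, -4.0])
u_reanalysis = np.array([8.0, -2.0])
u_next = nudge_step(u, u_reanalysis, dt_s=1800.0)
```

With a time step much shorter than the relaxation timescale, each step removes only a small fraction (here 1/12) of the difference between the model and reference winds, so the model physics still evolves freely while the large-scale flow stays close to the reanalysis.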

Many recent studies (e.g., Jin et al., 2017; Koshiro et al., 2018; Lin et al., 2014; Song et al., 2018a, 2018b) made use of the Cloud Feedback Model Intercomparison Project Observation Simulator Package (COSP) suite of satellite simulators (Bodas-Salcedo et al., 2011) in order to make “apples-to-apples” comparisons between models and satellite measurements. Here, we make use of the total low-level (for pressure levels >680 hPa) CFs (LCC) from the CALIPSO simulator. However, the satellite simulator CF has been shown to possibly be much less than model diagnostic CF due to deficiencies in the subcolumn generator in COSP (Song et al., 2018a). We compare the model diagnostic LCCs from the E3SMv1 runs to those from the satellite simulator in Text S1 and find that they are very similar for the conditions that we are investigating here.

We compare E3SMv1 runs with three models from other U.S. centers that participated in CMIP5 for which COSP CF is available. These include the Community Earth System Model Version 1 (CESM1) utilizing CAM5 (hereafter CESM1-CAM5 or just CAM5), the GFDL CM3, and the GISS ModelE2. CESM1-CAM5 and GFDL CM3 only provided one run, but there were two ensemble members for GISS ModelE2 of which we use the ensemble mean. Model descriptions focusing on the relevant physical parameterizations are given in Text S1. Model output from 1990–2009 is used here.

2.2. Evaluation Data

We use the monthly low CFs from the GCM-Oriented CALIPSO Cloud Product (GOCCP) (Chepfer et al., 2010). This product was developed to compare to model simulations generated from the COSP CALIPSO simulator. The CALIPSO satellite is part of the A-Train that closely follows CloudSat. We use the data at 2° × 2° horizontal resolution between 2007 and 2014. To better quantify the data uncertainty, we also utilize data from the International Satellite Cloud Climatology Project D2 product and the Extended Edited Cloud Reports Archive from ship measurements. These data are described in Text S2.

In order to differentiate between different cloud types, we also use the CloudSat team’s 2B-CLDCLASS-LIDAR product (Sassen et al., 2008), which utilizes measurements from CloudSat’s radar and CALIPSO to define the various cloud types (shallow cumulus, stratus, stratocumulus, nimbostratus, altostratus, altocumulus, cirrus, and deep convection). These data are only available between 2007 and 2009.

It is well known that cloud cover in subtropical marine stratiform cloud decks is strongly related to lower tropospheric stability (LTS; Klein & Hartmann, 1993), which is a metric for the temperature inversion at the boundary layer top. We still use this original metric rather than estimated inversion strength (EIS; Wood & Bretherton, 2006), because it provides similar results to EIS over these cloud decks (not shown). LTS is derived from the ERA-Interim monthly mean analyses on pressure surfaces at ~0.7° × 0.7° horizontal resolution as the potential temperature difference between 700 and 1,000 hPa and is regridded to the coarser resolution of the various satellite products. LTS values derived from MERRA-2 are very similar, as are the 700-hPa vertical velocities (MERRA-2 compared to ERA-Interim), which are also used here (Figure S3).
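As a sketch of the LTS definition used here (the potential temperature difference between 700 and 1,000 hPa), the calculation can be written as follows. The dry-air constants and the function names are assumptions for illustration; the text does not specify the constants used with ERA-Interim.

```python
R_D = 287.0    # gas constant for dry air, J kg-1 K-1 (approximate)
C_P = 1004.0   # specific heat of dry air at constant pressure, J kg-1 K-1 (approximate)
P_REF_HPA = 1000.0

def potential_temperature(temp_k, pressure_hpa):
    """Potential temperature (K) from temperature (K) and pressure (hPa)."""
    return temp_k * (P_REF_HPA / pressure_hpa) ** (R_D / C_P)

def lower_tropospheric_stability(t700_k, t1000_k):
    """LTS (K): theta at 700 hPa minus theta at 1,000 hPa."""
    return potential_temperature(t700_k, 700.0) - potential_temperature(t1000_k, 1000.0)

# Hypothetical monthly mean temperatures (K) at a single grid cell.
lts = lower_tropospheric_stability(t700_k=280.0, t1000_k=290.0)
```

The same functions apply elementwise to NumPy arrays, so they could be used on gridded monthly mean temperature fields before regridding to the resolution of the satellite products.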

3. Results

3.1. Definition of the LCC45+ Decks

Since the focus of this study is on evaluating just the subtropical low stratiform cloud decks, we restrict our analyses to 30° latitude × 35° longitude boxes that encapsulate all or most of the cloud decks. These regions, which we call the extended regions (as opposed to the core regions defined by Klein & Hartmann, 1993), and the acronyms of their names used herein are defined in Table S1. Inside these regions, we further define the cloud decks as those grid cells with monthly or seasonal mean LCC greater than a threshold of 45% CF; accordingly, we call them LCC45+ decks. The justification for the 45% threshold is given in Text S3.
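The deck definition above amounts to a threshold mask inside a latitude-longitude box. A minimal sketch is given below; the box bounds, grid, and LCC values are hypothetical (the actual extended regions are defined in Table S1), and the function name `lcc45_deck_mask` is introduced here for illustration only.

```python
import numpy as np

def lcc45_deck_mask(lcc_percent, lats_deg, lons_deg, lat_bounds, lon_bounds,
                    threshold=45.0):
    """Boolean mask of grid cells inside the box with mean LCC > threshold (% CF)."""
    lcc = np.asarray(lcc_percent, dtype=float)
    lats = np.asarray(lats_deg, dtype=float)
    lons = np.asarray(lons_deg, dtype=float)
    in_lat = (lats >= lat_bounds[0]) & (lats <= lat_bounds[1])
    in_lon = (lons >= lon_bounds[0]) & (lons <= lon_bounds[1])
    in_box = in_lat[:, None] & in_lon[None, :]
    # Strictly greater than the threshold, following "greater than ... 45% CF".
    return in_box & (lcc > threshold)

# Hypothetical 3 x 2 grid of seasonal mean LCC (% CF).
lats = np.array([10.0, 20.0, 30.0])
lons = np.array([230.0, 240.0])
lcc = np.array([[50.0, 40.0],
                [46.0, 45.0],
                [60.0, 70.0]])
mask = lcc45_deck_mask(lcc, lats, lons, lat_bounds=(15.0, 45.0),
                       lon_bounds=(225.0, 260.0))
```

In this example the 10° row is excluded because it lies outside the hypothetical box, and the cell with exactly 45% CF is excluded because the threshold is strict.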

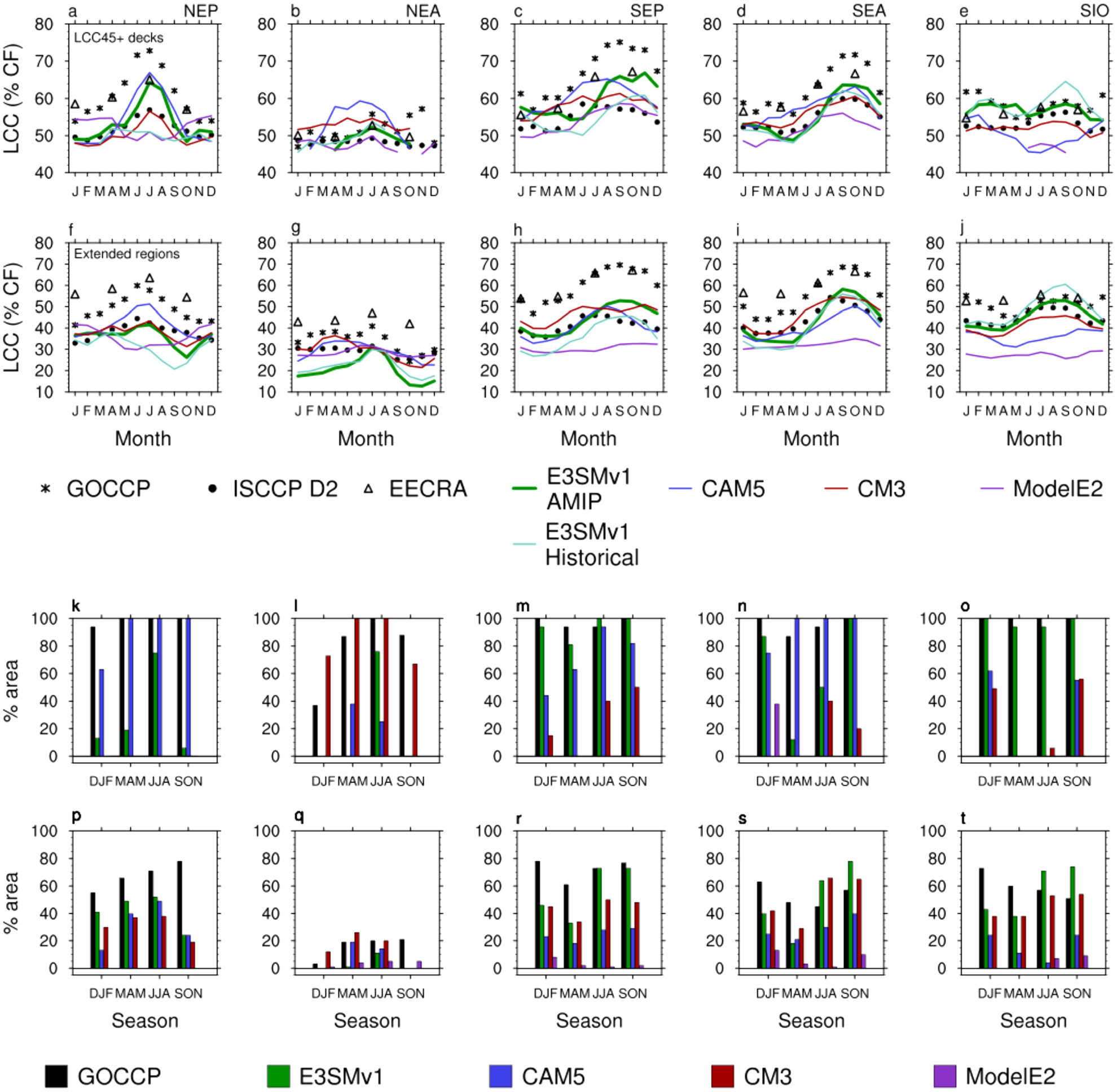

By comparing the model LCC45+ decks with those in the satellite climatology, we further improve upon the “apples-to-apples” comparison of the model satellite simulator to GOCCP by addressing the spatial errors of the simulated cloud decks. This is further elucidated in Figures 1a–1e, which compare the mean annual cycles of LCC for the LCC45+ decks from the models with the spread in observational climatologies (GOCCP, International Satellite Cloud Climatology Project D2, and Extended Edited Cloud Reports Archive). The E3SMv1-simulated LCC is more consistent with observations for the LCC45+ decks (43 of 60 instances [12 months × 5 regions] of model monthly mean LCC fall within the observational spread) than over the extended regions (37 instances; Figures 1f–1j), because the extended regions include grid cells that are outside of the cloud decks. E3SMv1 is also more consistent with observations than the other models for the LCC45+ decks (26 instances in CM3, 32 in CAM5, and 12 in ModelE2) and at least as consistent over the extended regions (32 instances in CM3, 37 in CAM5, and 12 in ModelE2). All models are less consistent with observations (24 instances in E3SMv1, 13 in CM3, 34 in CAM5, and 2 in ModelE2) in the classical core regions, because the simulated cloud decks may be completely missed or only partially within these regions.
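The instance counts quoted above can be illustrated with a short sketch. It assumes that the "observational spread" is the envelope between the minimum and maximum of the observational climatologies for each region and month; this interpretation, the function name, and the sample values are assumptions, not details given in the text.

```python
import numpy as np

def count_within_spread(model_lcc, obs_lcc):
    """Count region-month instances where the model lies within the obs envelope.

    model_lcc: array (n_regions, n_months); obs_lcc: array (n_obs, n_regions, n_months).
    """
    model = np.asarray(model_lcc, dtype=float)
    obs = np.asarray(obs_lcc, dtype=float)
    lower = obs.min(axis=0)
    upper = obs.max(axis=0)
    return int(np.sum((model >= lower) & (model <= upper)))

# Hypothetical example: one region, three months, two observational data sets.
obs = np.array([[[50.0, 60.0, 70.0]],
                [[55.0, 58.0, 75.0]]])
model = np.array([[52.0, 61.0, 72.0]])
n_within = count_within_spread(model, obs)
```

For the full comparison, the model array would have shape (5, 12), giving the 60 possible instances referred to in the text.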

Figure 1.

The mean annual cycle in low cloud cover (LCC; percent cloud fraction [% CF]) over five different regions (in five columns) in each of the LCC climatologies and the four models evaluated here for the LCC45+ decks (top row) and full extended (second row) regions, and the percentage area of the core regions (third row) and the extended regions (bottom row) in which seasonal mean LCC is greater than 45% CF in GCM-Oriented CALIPSO Cloud Product (GOCCP) and each of the four models. All models and GOCCP have been regridded to the 2.5° × 2.5° resolution of International Satellite Cloud Climatology Project (ISCCP) D2, while the coarser resolution Extended Edited Cloud Reports Archive (EECRA) data remain at their native resolution of 5° × 5°. More details are provided in the text. E3SMv1 = Energy Exascale Earth System Model Version 1; AMIP = Atmospheric Model Intercomparison Project; DJF = December–February; MAM = March–May; JJA = June–August; SON = September–November.

The importance of considering model deck spatial errors is further illustrated by the fraction of the core regions covered by LCC45+ decks (Figures 1k–1o). GOCCP LCC45+ decks completely cover nearly all core regions in every season, except in the Northeast Atlantic (NEA), where coverage ranges from 37% area in December–February (DJF) to 100% area in June–August (JJA); coverage is 88–100% area in the other core regions. On the other hand, the LCC45+ decks in E3SMv1 generally cover less of the core regions except in the Southern Indian Ocean (SIO) (in E3SMv1, 36–90% coverage in the Northeast Pacific (NEP), 0–87% in NEA, 87–100% in the Southeast Pacific (SEP), and 19–95% in the Southeast Atlantic (SEA)). E3SMv1’s decks generally cover more of the core regions than those in CM3 and ModelE2 (whose decks hardly ever exist) but less than those in CAM5.
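The percentage of a region covered by an LCC45+ deck can be sketched as an area-weighted fraction. Weighting grid cells by the cosine of latitude is an assumption appropriate for a regular latitude-longitude grid; the text does not state how areas were computed, and the function name and sample grid are hypothetical.

```python
import numpy as np

def deck_coverage_percent(deck_mask, region_mask, lats_deg):
    """Percentage of the region's area occupied by deck cells (cos-latitude weights)."""
    deck = np.asarray(deck_mask, dtype=bool)
    region = np.asarray(region_mask, dtype=bool)
    lats = np.asarray(lats_deg, dtype=float)
    weights = np.cos(np.deg2rad(lats))[:, None] * np.ones(deck.shape)
    region_area = (weights * region).sum()
    return 100.0 * (weights * (deck & region)).sum() / region_area

# Hypothetical 2 x 2 region in which only the lower-latitude row is a deck.
lats = np.array([0.0, 60.0])
region = np.ones((2, 2), dtype=bool)
deck = np.array([[True, True],
                 [False, False]])
coverage = deck_coverage_percent(deck, region, lats)
```

Here the deck occupies half of the grid cells but about two thirds of the area, because cells at 60° latitude are half as large as those at the equator.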

GOCCP LCC45+ decks cover less of the extended regions (Figures 1p–1t; as low as 6% in NEA in DJF and as high as 78% in two seasons in SEP). The fractional coverage of the LCC45+ decks of E3SMv1 and CM3 compares better to GOCCP (0–73% in E3SMv1 and 0–82% in CM3), because these regions capture all or most of the simulated LCC45+ decks. On the other hand, CAM5’s LCC45+ coastal decks cover a smaller fraction of the extended regions (only as much as 57% in NEP) than the core regions. These results suggest that all models perform well in simulating the seasonal cycle of the LCC45+ decks. The poor results averaged over the core regions and even averaged over the extended regions are largely due to model spatial errors, which are quantified next.

3.2. Quantification of Spatial Errors in Cloud Decks

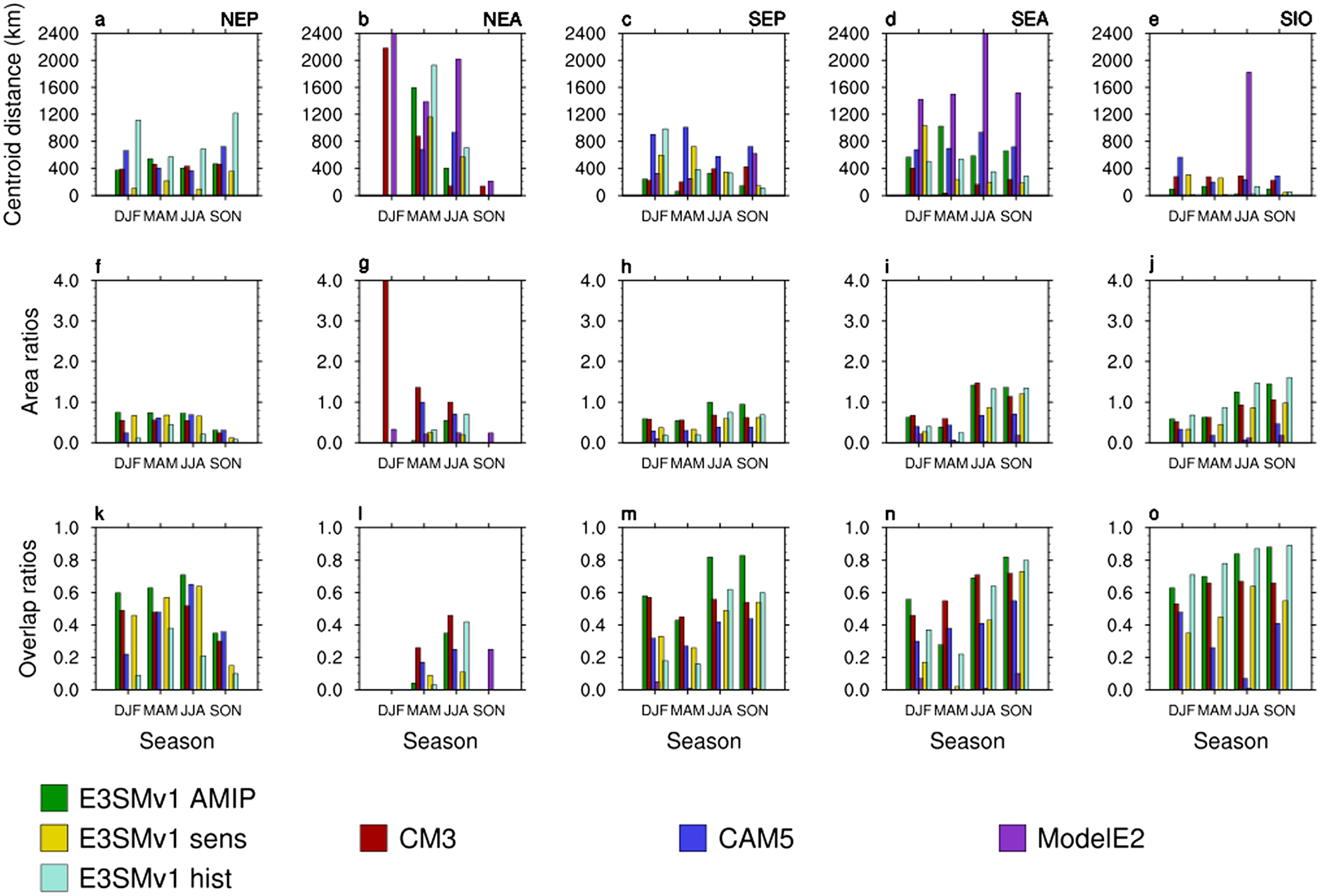

Object-based metrics have been used to evaluate simulated features such as areas of precipitation in numerical weather prediction (Gilleland et al., 2010; Mittermaier et al., 2016). Here we use three such metrics in ESM evaluations of the LCC45+ decks. First, we measure location errors of the simulated LCC45+ decks by calculating the distance between the centroid of the simulated deck and that of GOCCP (hereafter referred to as centroid distance) for each season in each region. Second, we measure model deficiency in the size of the simulated LCC45+ decks as compared to the satellite climatology by the area ratio, that is, the ratio of the model deck area to the observed (i.e., GOCCP) deck area. Third, the combined effects of location, size, and shape errors are measured by the overlap ratio, that is, the ratio of the overlap area to the area of the union of the simulated and observed decks.
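The three metrics can be sketched for a pair of boolean deck masks on a shared regular grid. Several choices below are assumptions rather than details from the text: cell areas are approximated by cosine-of-latitude weights, centroids are area-weighted means of latitude and longitude (which requires that a region does not straddle the longitude wrap point), and the centroid distance is a great-circle distance on a sphere of radius 6,371 km.

```python
import numpy as np

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius

def _weights(lats_deg, n_lon):
    # Relative cell areas on a regular lat-lon grid.
    return np.repeat(np.cos(np.deg2rad(lats_deg))[:, None], n_lon, axis=1)

def centroid(mask, lats_deg, lons_deg):
    """Area-weighted (lat, lon) centroid of the True cells in mask."""
    w = _weights(lats_deg, len(lons_deg)) * mask
    total = w.sum()
    lat_c = (w.sum(axis=1) * lats_deg).sum() / total
    lon_c = (w.sum(axis=0) * lons_deg).sum() / total
    return lat_c, lon_c

def great_circle_km(p1, p2):
    """Haversine distance (km) between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = np.deg2rad([p1[0], p1[1], p2[0], p2[1]])
    a = (np.sin((lat2 - lat1) / 2) ** 2
         + np.cos(lat1) * np.cos(lat2) * np.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * np.arcsin(np.sqrt(a))

def spatial_metrics(model_mask, obs_mask, lats_deg, lons_deg):
    """Return (centroid distance in km, area ratio, overlap ratio), or None if a deck is absent."""
    model = np.asarray(model_mask, dtype=bool)
    obs = np.asarray(obs_mask, dtype=bool)
    lats = np.asarray(lats_deg, dtype=float)
    lons = np.asarray(lons_deg, dtype=float)
    if not model.any() or not obs.any():
        return None  # no deck produced, so no metric is defined
    w = _weights(lats, len(lons))
    area_ratio = (w * model).sum() / (w * obs).sum()
    overlap_ratio = (w * (model & obs)).sum() / (w * (model | obs)).sum()
    distance = great_circle_km(centroid(model, lats, lons), centroid(obs, lats, lons))
    return distance, area_ratio, overlap_ratio

# Hypothetical decks: same size, with the model deck shifted one column east.
lats = np.array([-10.0, 10.0])
lons = np.array([0.0, 2.0, 4.0])
obs_deck = np.array([[True, True, False], [True, True, False]])
model_deck = np.array([[False, True, True], [False, True, True]])
distance_km, area_ratio, overlap_ratio = spatial_metrics(model_deck, obs_deck, lats, lons)
```

In this example the area ratio is 1 because the decks are the same size, yet the overlap ratio is only one third because of the 2° longitude shift, which illustrates why the overlap ratio captures the combined effect of location, size, and shape errors.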

Centroid distances are shown in Figures 2a–2e (top row) for each season for each of the model LCC45+ decks. Average centroid distances over all seasons in E3SMv1 range from 88 to 995 km (487 km averaged over all regions), whereas the all-region averages are 457 and 644 km for CM3 and CAM5, respectively. It is harder to define an LCC45+ deck in ModelE2, as such a deck does not even exist for some seasons in some regions. This is because ModelE2 simply does not produce enough clouds in the extended regions, primarily because of deficiencies in this model’s physical parameterizations. NEA is the most difficult region for the models to simulate, as only half of the models produce an LCC45+ deck in all seasons; E3SMv1 and CAM5 only produce LCC45+ decks in NEA in March–May (MAM) and JJA. Despite mislocation of their decks away from the coast, either E3SMv1 or CM3 has the lowest centroid distance for most regions and seasons, whereas the distances are higher for CAM5 and ModelE2. Thus, the lower centroid distances of E3SMv1 and CM3 indicate that the locations of their cloud decks are less in error than those in the other two models. In particular, CAM5’s restriction of the cloud decks to the near-coastal areas is problematic.

Figure 2.

The (a–e) centroid distances, (f–j) area ratios, and (k–o) overlap ratios of the LCC45+ decks in Energy Exascale Earth System Model Version 1 (E3SMv1) Atmospheric Model Intercomparison Project (AMIP) runs, GFDL CM3, CESM1-CAM5, GISS ModelE2, E3SMv1 sensitivity test, and E3SMv1 coupled historical runs to GCM-Oriented CALIPSO Cloud Product in each season and each region. No bar is shown whenever an LCC45+ deck is not produced by a model. LCC = low cloud cover; DJF = December–February; MAM = March–May; JJA = June–August; SON = September–November.

The sizes of the model LCC45+ decks relative to GOCCP are given by the area ratios in Figures 2f–2j. E3SMv1’s area ratios are the closest to 1 (i.e., the most similar to GOCCP’s) except in NEA and SEA. The sizes of the NEA and SEA decks produced by CAM5 and CM3, respectively, are closer to those of GOCCP (average ratios of 0.85 and 0.97, respectively). Generally, these two models produce smaller decks in the other regions with the exception of CM3 in the two Atlantic regions (NEA and SEA). As expected, ModelE2’s decks are much smaller than those of GOCCP and the other models because of the lack of LCC in the extended regions.

With the models’ deficiencies in the above two metrics, the overlap ratio of the simulated and observed decks is generally less than ~0.5 (Figures 2k–2o). E3SMv1’s decks have the most overlap in every region except NEA. The difficulty models have in simulating LCC45+ decks in the NEA is evident from the very low overlap ratios (average ratios of <0.25 for all models). ModelE2’s decks also have very little overlap in the other three regions in which it produces decks (average ratios <0.1).

The relatively better performance of E3SMv1 over the other models, especially CAM5 from which E3SM Atmosphere Model Version 1 is derived, may be due to its inclusion of the higher-order scheme, Cloud Layers Unified by Binormals (CLUBB; Golaz et al., 2002), for the representation of turbulence, shallow convection, and cloud macrophysics. CLUBB has been shown to improve the simulation of clouds, particularly in the stratocumulus-to-cumulus transition (Bogenschutz et al., 2013). However, this improvement is clearly made at the expense of the solid cloud decks near the coast, such as those that would be captured by looking at the core regions. CAM5’s LCC45+ decks cover more of these regions (Figures 1k–1o), where it is better able to capture the cloud types that form there (Kay et al., 2012). However, CM3 (which does not use a higher-order scheme) is also better at simulating the stratocumulus-to-cumulus transition, as indicated by centroid distances similar to those of E3SMv1 (Figures 2a–2e). Therefore, deficiencies in the physical parameterizations cannot be the only reason for the model biases.

3.3. Reasons for Cloud Deck Spatial Errors

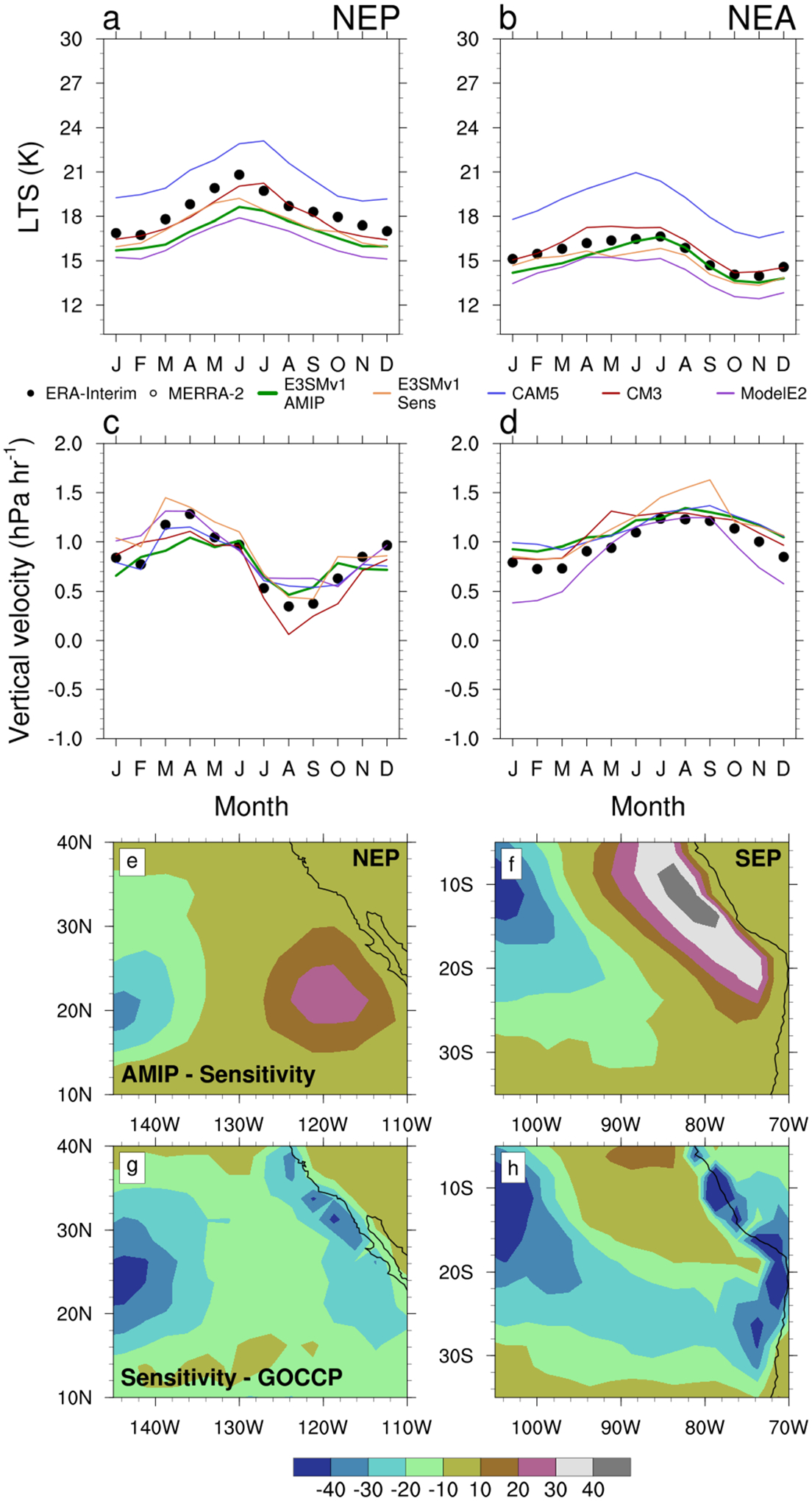

The next question is as follows: Why are there cloud deck spatial errors in these models? First, could this be due to biases in the model dynamics (e.g., large-scale subsidence)? LTS has long been used as a metric of the strength of the inversion at MABL top, which increases under more subsidence. Under greater subsidence, the MABL top is pushed down, making the low stratiform clouds spread out and increasing LCC. Similar to the good agreement of model and ERA-Interim EIS in Koshiro et al. (2018), LTS in E3SMv1 and CM3 agrees well with that from ERA-Interim in the extended regions with lower LTS offshore than nearshore (not shown). CAM5 produces higher LTS in the extended regions (Figures 3a and 3b, for example, in NEP and SEP), and the modeled 700-hPa vertical velocities are consistent with the corresponding LTS values (Figures 3c and 3d).

Figure 3.

(Top row) Mean annual cycle in lower tropospheric stability (LTS) and (second row) in 700-hPa vertical velocity in (a and c) NEP and (b and d) SEP. (Third row) June–August seasonal mean low cloud cover differences (percent cloud fraction) in (e) NEP and (f) SEP between the sensitivity run and the Atmospheric Model Intercomparison Project (AMIP) ensemble in Energy Exascale Earth System Model Version 1 (E3SMv1). (Bottom row) Biases in the sensitivity run from GCM-Oriented CALIPSO Cloud Product (GOCCP) in the same two regions.

Focusing on E3SMv1, its potential temperature profiles from the middle of the core regions and farther offshore in the misplaced model stratiform cloud decks are indeed similar to ERA-Interim with slightly lower LTS offshore than in the core regions (Figure S4). The obvious question, then, is as follows: For what ranges of LTS do the models generate LCC45+ decks? E3SMv1 generates LCC45+ cloud decks for slightly lower LTS values than ERA-Interim. CM3 does as well, whereas CAM5 generates clouds for higher LTS values (Figure S5). CAM5’s LTS range seems counterintuitive given that the highest LTS values have been observed to limit stratiform cloud cover (Myers & Norris, 2013).

The above results suggest that the model dynamics associated with large-scale subsidence is better simulated than clouds in E3SMv1 and, hence, model dynamics deficiencies are not the primary reason for the spatial errors in the modeled cloud decks. Because of this, previous studies have blamed the poor simulation of the cloud decks on model physics. For instance, Caldwell et al. (2013) generated better clouds from a simple atmospheric mixed layer model driven by the same large-scale dynamics from global models. Because their simple mixed layer model did not interact with dynamics, they concluded that the biases in simulating subtropical marine stratiform clouds in global models were due to deficiencies in physical parameterizations. However, deficiencies can also be generated from interactions between the large-scale dynamics and physical parameterizations.

To address the role of deficiencies in model physics versus those due to the interaction between the large-scale dynamics and physics in E3SMv1, we performed a different type of sensitivity test in which the model winds were nudged to those of MERRA-2 for 3 years (2007–2009) that encompass all three phases (neutral, warm, and cold) of El Niño-Southern Oscillation. In other words, the same model physics is used, but the dynamics is prescribed in the sensitivity test. We find that there is more LCC in the nudged run closer to the coasts and less LCC farther away from the coasts (Figures 3e and 3f). Similar changes between the E3SMv1 sensitivity test and the control simulation are found in liquid water path as well (not shown). Increased LCC in the nudged run is associated with slightly stronger increases or weaker decreases in subsidence depending on the region (Figure S6).

The significance of the LCC differences between the E3SMv1 sensitivity test and the control simulation for the short 3-year period is tested by comparison with the standard deviation in LCC from the CESM1 large ensemble (Kay et al., 2015). We find that the sensitivity test cloud differences, as high as >40% CF, are much higher than the latter (only as high as >2% CF; Figure S7).
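A minimal sketch of this comparison is given below. It assumes that the ensemble standard deviation is taken across members at each grid cell (sample standard deviation, `ddof=1`) and that a difference is flagged when its magnitude exceeds that value; the text does not give these details, and the function name and sample values are hypothetical.

```python
import numpy as np

def exceeds_ensemble_spread(lcc_difference, ensemble_lcc):
    """Flag cells where |LCC difference| exceeds the across-member standard deviation.

    lcc_difference: array (...); ensemble_lcc: array (n_members, ...), both in % CF.
    """
    diff = np.asarray(lcc_difference, dtype=float)
    members = np.asarray(ensemble_lcc, dtype=float)
    spread = members.std(axis=0, ddof=1)
    return np.abs(diff) > spread

# Hypothetical values at two grid cells with three ensemble members.
ensemble = np.array([[50.0, 60.0],
                     [52.0, 60.0],
                     [54.0, 60.0]])
difference = np.array([40.0, 0.0])
significant = exceeds_ensemble_spread(difference, ensemble)
```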

The apparent shift in cloud cover does not necessarily translate into an improvement in the LCC45+ decks simulated by E3SMv1. The bottom row of Figure 3 shows that the biases in the sensitivity run from GOCCP are not small. Most notably, the offshore reductions of LCC result in very large negative LCC biases in NEP and SEP in JJA.

In addition, spatial errors remain in the simulated decks in the sensitivity run. Higher centroid distances are found in some regions and seasons (the Southern Hemisphere regions in DJF, SIO in MAM, and NEA and SEP in JJA; Figures 2a–2e). These changes in centroid distances are due to the centroids being moved closer to the coast (not shown), as we would expect from the LCC differences between the two runs (Figures 3e and 3f). Area ratios in the sensitivity run are lower than in the AMIP run, indicating that the area of the simulated deck is smaller in the sensitivity run. For most regions and seasons when the AMIP run area ratios are already <1, there is a move toward even smaller decks than GOCCP in the sensitivity run, but there is an improvement made in SEP and SIO in JJA and SON from high ratios above 1 to ratios closer to 1. The smaller area ratios in the other regions are consistent with the nudging causing the model to create smaller LCC45+ decks that are restricted to the coast, closer to how CAM5 generates these decks. Finally, with the larger centroid distances and changes in size of the LCC45+ decks in the sensitivity run, all overlap ratios are smaller except in NEA in MAM (where the ratio is increased from 0.04 to 0.09 in the sensitivity run).

Therefore, the slight change in dynamics that occurs because of the nudging (Figures 3a–3d) produces dramatic changes to the clouds that do not necessarily represent an improvement of the simulated LCC45+ decks. Since dynamics is prescribed in the sensitivity test, the poorer performance of the cloud decks in that run is primarily due to errors in the model physics. As mentioned earlier, the deficiencies in cloud decks in the AMIP run are primarily due to errors in the model physics, its interaction with the dynamics, or both. We also suspect that the skewness of the subgrid vertical velocity variance simulated by CLUBB might be too high near the coast, so that the model produces less cloud cover as in the shallow cumulus regime rather than more cloud cover in the stratocumulus regime, which will be examined in future work. Additionally, the large difference in LCC between the AMIP and nudged runs is primarily due to the interaction between the model dynamics and physics, since the dynamics is not prescribed in the former, with the same physics being used in both (Figure S8).

4. Conclusions

The above results suggest that the persistent problem with simulating marine low stratiform cloud decks cannot simply be attributed to errors in the model physics alone (e.g., Bony & Dufresne, 2005; Caldwell et al., 2013). The prescribed dynamics of the nudged sensitivity test produce large LCC biases and mixed improvement to the centroid distances, area ratios, and overlap ratios that can only be due to widely acknowledged problems with the model physics, but the difference in LCC between the sensitivity test and the AMIP runs is primarily due to feedbacks between the model dynamics and physics. These differences are larger than the standard deviation in LCC from the CESM1 large ensemble. These results suggest that both model physics (widely recognized, as revealed by the nudged run biases) and its interaction with dynamics (less recognized, as revealed by the large differences between the AMIP and nudged runs) are important to model improvement in simulating these cloud decks.

Further interactions can occur when the atmosphere model is coupled to an ocean model as in the fully coupled historical simulations, which produce biases and mixed improvements as noted in Text S4. Besides dynamics-physics interactions in the atmosphere, further complications may also occur from interactions between physical parameterizations. A subsequent paper will focus on understanding these interactions by looking at how long it takes for the transition-centered cloud decks to develop in E3SMv1 after nudging is turned off, with the aim of further improving the global simulation of subtropical marine low stratiform cloud decks using conventional physical parameterizations. A promising alternative is the use of a multiscale modeling framework or superparameterization utilizing a higher-order scheme like CLUBB (Wang et al., 2015), but this is still too computationally expensive to be used for long simulations at this time.

Key Points:

Spatial errors in cloud decks as characterized by centroid distances, area ratios, and overlap ratios from four U.S. models are evaluated

The reasons for these errors are explored by performing a sensitivity test with model winds nudged to those of reanalysis

The interaction between model dynamics and physics plays an important role in the simulation of these cloud decks

Acknowledgments

We would like to thank Anton Beljaars and Vince Larson for their helpful comments. Brunke and Zeng’s work was supported by the DOE (DE-SC0016533, 70276) and NASA (NNX14AM02G). Sorooshian was also supported by NASA (80NSSC19K0442) for the ACTIVATE Earth Venture Suborbital-3 (EVS-3) investigation. Rasch and Ma were supported as part of the E3SM project by the DOE Office of Science’s Biological and Environmental Research Program. The data sets were downloaded from the following web pages: GOCCP climatology (http://climserv.ipsl.polytechnique.fr/cfmip-obs/Calipso_goccp.html), the 2B-CLDCLASS-LIDAR product (http://www.cloudsat.cira.colostate.edu), ISCCP’s D2 product (https://isccp.giss.nasa.gov), EECRA (https://atmos.washington.edu/CloudMap/), MODIS’s MOD08_M3 product (https://ladsweb.modaps.eosdis.nasa.gov), ERA-Interim (http://rda.ucar.edu), and MERRA-2 (MDISC at GES DISC). E3SMv1 simulations were performed at the National Energy Research Scientific Computing Center, a DOE Office of Science User Facility under Contract DE-AC02-05CH11231. The output from these simulations is available at NERSC and at the CMIP6 E3SM archive (at http://pcmdi9.llnl.gov). The sensitivity test results can be obtained by contacting the first author. CESM1-CAM5 output was obtained from storage on NCAR’s supercomputers. GFDL CM3 and GISS ModelE2 output was provided by PCMDI through the CMIP5 archive (http://pcmdi9.llnl.gov).

References

- Bodas-Salcedo A, Webb MJ, Bony S, Chepfer H, Dufresne J-L, Klein SA, et al. (2011). COSP: Satellite simulation software for model assessment. Bulletin of the American Meteorological Society, 92, 1023–1043. 10.1175/2011BAMS2856.1 [DOI] [Google Scholar]

- Bogenschutz PA, Gettelman A, Morrison H, Larson VE, Craig C, & Schanen DP (2013). Higher-order turbulence closure and its impact on climate simulations in the Community Atmosphere Model. Journal of Climate, 26, 9655–9676. 10.1175/JCLI-D-13-00075.1 [DOI] [Google Scholar]

- Bony S, Colman R, Kattsov VM, Allan RP, Bretherton CS, Dufresne J-L, et al. (2006). How well do we understand and evaluate climate change feedback processes? Journal of Climate, 19, 3445–3482. [Google Scholar]

- Bony S, & Dufresne J-L (2005). Marine boundary layer clouds at the heart of tropical cloud feedback uncertainties in climate models. Geophysical Research Letters, 32, L20806 10.1029/2005GL023851 [DOI] [Google Scholar]

- Bretherton CS, Uttal T, Fairall CW, Yuter SE, Weller RA, Baumgardner D, et al. (2004). The EPIC 2001 stratocumulus study. Bulletin of the American Meteorological Society, 85, 967–977. 10.1175/BAMS-85-7-967 [DOI] [Google Scholar]

- Caldwell PM, Zhang Y, & Klein SA (2013). CMIP3 subtropical stratocumulus cloud feedback interpreted through a mixed-layer model. Journal of Climate, 26, 1607–1625. [Google Scholar]

- Chepfer H, Bony S, Winker D, Cesana G, Dufresne J-L, Minnis P, et al. (2010). The GCM-Oriented CALIPSO Cloud Product (CALIPSO-GOCCP). Journal of Geophysical Research, 115, D00H16 10.1029/2009JD012251 [DOI] [Google Scholar]

- Dolinar EK, Dong X, Xi B, Jia JH, & Su H (2015). Evaluation of CMIP5 simulated clouds and TOA radiation budgets using NASA satellite observations. Climate Dynamics, 44, 2229–2247. 10.1007/s00382-014-2158-9 [DOI] [Google Scholar]

- Gelaro R, McCarty W, Suárez MJ, Todling R, Molod A, Takacs L, et al. (2017). The Modern-Era Retrospective Analysis for Research and Applications, Version 2 (MERRA-2). Journal of Climate, 30(14), 5419–5454. 10.1175/JCLI-D-16-0758.1 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gilleland E, Ahijevych DA, Brown BG, & Ebert EE (2010). Verifying forecasts spatially. Bulletin of the American Meteorological Society, 91, 1365–1373. 10.1175/2010BAMS2819.1 [DOI] [Google Scholar]

- Golaz J-C, Larson VE, & Cotton WR (2002). A PDF-based model for boundary layer clouds. Part I: Method and model description. Journal of the Atmospheric Sciences, 59, 3540–3551.

- Jin D, Oreopoulos L, & Lee D (2017). Regime-based evaluation of cloudiness in CMIP5 models. Climate Dynamics, 48(1–2), 89–112. https://doi.org/10.1007/s00382-016-3064-0

- Kay JE, Deser C, Phillips A, Mai A, Hannay C, Strand G, et al. (2015). The Community Earth System Model (CESM) Large Ensemble Project: A community resource for studying climate change in the presence of internal climate variability. Bulletin of the American Meteorological Society, 96(8), 1333–1349. https://doi.org/10.1175/BAMS-D-13-00255.1

- Kay JE, Hillman BR, Klein SA, Zhang Y, Medeiros B, Pincus R, et al. (2012). Exposing global cloud biases in the Community Atmosphere Model (CAM) using satellite observations and their corresponding instrument simulators. Journal of Climate, 25(15), 5190–5207. https://doi.org/10.1175/JCLI-D-11-00469.1

- Klein SA, & Hartmann DL (1993). The seasonal cycle of low stratiform clouds. Journal of Climate, 6, 1587–1606.

- Koshiro T, & Shiotani M (2014). Relationship between low stratiform cloud amount and estimated inversion strength in the lower troposphere over the global ocean in terms of cloud types. Journal of the Meteorological Society of Japan, 92, 107–120. https://doi.org/10.2151/jmsj.2014-108

- Koshiro T, Shiotani M, Kawai H, & Yukimoto S (2018). Cloudiness and estimated inversion strength in CMIP5 models using the satellite simulator package COSP. Sola, 14, 25–32. https://doi.org/10.2151/sola.2018-005

- Large WG, & Danabasoglu G (2006). Attribution and impacts of upper-ocean biases in CCSM3. Journal of Climate, 19, 2325–2346.

- Lin J-L, Qian T, & Shinoda T (2014). Stratocumulus clouds in Southeastern Pacific simulated by eight CMIP5-CFMIP global climate models. Journal of Climate, 27, 3000–3022.

- Ma P-L, Rasch PJ, Wang H, Zhang K, Easter RC, Tilmes S, et al. (2013). The role of circulation features on black carbon transport into the Arctic in the Community Atmosphere Model version 5 (CAM5). Journal of Geophysical Research: Atmospheres, 118, 4657–4669. https://doi.org/10.1002/jgrd.50411

- Mechoso CR, Wood R, Weller R, Bretherton CS, Clarke AD, Coe H, et al. (2014). Ocean-cloud-atmosphere-land interactions in the Southeastern Pacific. Bulletin of the American Meteorological Society, 95(3), 357–375. https://doi.org/10.1175/BAMS-D-11-00246.1

- Mittermaier M, North R, Semple A, & Bullock R (2016). Feature-based diagnostic evaluation of global NWP forecasts. Monthly Weather Review, 144, 3871–3893. https://doi.org/10.1175/MWR-D-15-0167.1

- Myers TA, & Norris JA (2013). Observational evidence that enhanced subsidence reduces subtropical marine boundary layer cloudiness. Journal of Climate, 26, 7507–7524. https://doi.org/10.1175/JCLI-D-12-00736.1

- Russell LM, Sorooshian A, Seinfeld JH, Albrecht BA, Nenes A, Ahlm L, et al. (2013). Eastern Pacific emitted aerosol cloud experiment. Bulletin of the American Meteorological Society, 94(5), 709–729. https://doi.org/10.1175/BAMS-D-12-00015.1

- Sánchez de Cos C, Sánchez-Laulhé JM, Jiménez-Alonso C, & Rodríguez-Camino E (2016). Using feedback from summer subtropical highs to evaluate climate models. Atmospheric Science Letters, 17, 230–235. https://doi.org/10.1002/asl.647

- Sassen K, Wang Z, & Liu D (2008). Global distribution of cirrus clouds from CloudSat/Cloud-Aerosol Lidar and Infrared Pathfinder Satellite Observations (CALIPSO) measurements. Journal of Geophysical Research, 113, D00A12. https://doi.org/10.1029/2008JD009972

- Song H, Zhang Z, Ma P-L, Ghan S, & Wang M (2018a). The importance of considering sub-grid cloud variability when using satellite observations to evaluate the cloud and precipitation simulations in cloud models. Geoscientific Model Development, 11, 3147–3158. https://doi.org/10.5194/gmd-11-3147-2018

- Song H, Zhang Z, Ma P-L, Ghan SJ, & Wang M (2018b). An evaluation of marine boundary layer cloud property simulations in the Community Atmosphere Model using satellite observations: Conventional subgrid parameterizations versus CLUBB. Journal of Climate, 31, 2299–2320. https://doi.org/10.1175/JCLI-D-17-0277.1

- Wang M, Larson VE, Ghan S, Ovchinnikov M, Schanen DP, Xiao H, et al. (2015). A multiscale modeling framework model (superparameterized CAM5) with a higher-order turbulence closure: Model description and low-cloud simulations. Journal of Advances in Modeling Earth Systems, 484–509. https://doi.org/10.1002/2014MS000375

- Wood R (2012). Stratocumulus clouds. Monthly Weather Review, 140, 2373–2423.

- Wood R, & Bretherton CS (2006). On the relationship between stratiform low cloud cover and lower-tropospheric stability. Journal of Climate, 19, 6425–6432.

- Wyant MC, Bretherton CS, Wood R, Carmichael GR, Clarke A, Fast J, et al. (2015). Global and regional modeling of clouds and aerosols in the marine boundary layer during VOCALS: The VOCA intercomparison. Atmospheric Chemistry and Physics, 15, 153–172. https://doi.org/10.5194/acp-15-153-2015

Associated Data
