Abstract

Background

Evidence-based programs such as mailed fecal immunochemical test (FIT) outreach can only affect health outcomes if they can be successfully implemented. However, attempts to implement programs are often limited by organizational-level factors.

Objectives

As part of the Strategies and Opportunities to Stop Colon Cancer in Priority Populations (STOP CRC) pragmatic trial, we evaluated how organizational factors impacted the extent to which health centers implemented a mailed FIT outreach program.

Design

Eight health centers participated in STOP CRC. The intervention consisted of customized electronic health record tools and clinical staff training to facilitate mailing of an introduction letter, FIT kit, and reminder letter. Health centers had flexibility in how they delivered the program.

Main Measures

We categorized the health centers’ level of implementation based on the proportion of eligible patients who were mailed a FIT kit, and applied configurational comparative methods to identify combinations of relevant organizational-level and program-level factors that distinguished among high, medium, and low implementing health centers. The factors were categorized according to the Consolidated Framework for Implementation Research model.

Key Results

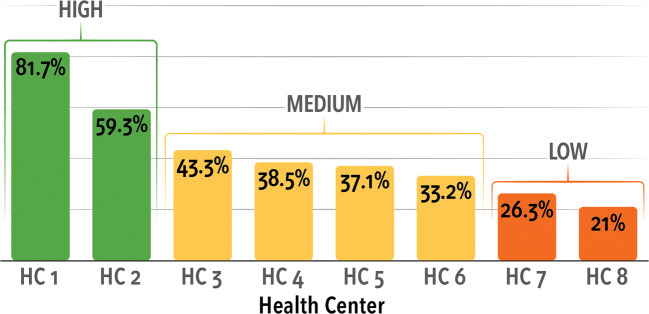

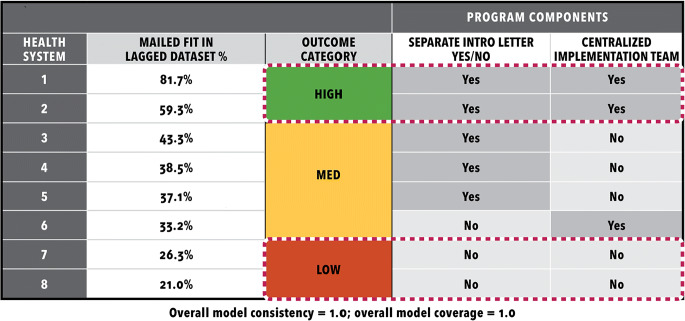

FIT kits were mailed to 21.0–81.7% of eligible patients at each health center. We identified a two-factor solution that distinguished among levels of implementation with 100% consistency and 100% coverage. The factors were having a centralized implementation team (inner setting) and mailing the introduction letter in advance of the FIT kit (intervention characteristics). Health centers with high levels of implementation had the joint presence of both factors. In health centers with medium levels of implementation, only one factor was present. Health centers with low levels of implementation had neither factor present.

Conclusions

Full implementation of the STOP CRC intervention relied on a centralized implementation team with dedicated staffing time, and the advance mailing of an introduction letter.

Trial Registration

ClinicalTrials.gov Identifier: NCT01742065. Registered 05 December 2012 (prospectively registered).

Electronic supplementary material

The online version of this article (10.1007/s11606-020-06186-2) contains supplementary material, which is available to authorized users.

KEY WORDS: colorectal cancer, screening, implementation, fecal immunochemical tests, FIT tests, configurational comparative methods, Consolidated Framework for Implementation Research

BACKGROUND

Population-based screening programs for colorectal cancer (CRC) can reduce CRC-related mortality by preventing CRC from occurring and by detecting it at earlier, more treatable stages.1 Screening outreach programs that incorporate fecal immunochemical tests (FITs) and follow-up endoscopy are effective and cost-effective screening strategies.2, 3 A recent evidence review indicates that the most effective clinic-based programs provided FIT kits through the mail (using pre-addressed stamped return envelopes), involved patient reminders, and/or distributed FITs in clinic.4 However, evidence-based interventions can only be effective if they are successfully implemented (i.e., mailings are completed), and little is known about factors that influence the implementation of such programs in clinical practice.5

Implementation of CRC screening programs is challenging in the United States, where the delivery of CRC screening varies by clinic and health system.6, 7 For example, Liss and colleagues (2016) found that a mailed FIT program was an effective way to initiate screening (18.4% more patients screened with outreach), but that current cost structures limited clinics’ ability to implement such programs.8 Health systems have little guidance on how to select and adapt interventions for their particular population and clinical setting.4 How a health center implements a full screening program depends on leadership’s prioritization of a given screening target, the expected success of a given intervention, the resources available to implement and sustain the intervention, and whether the program fits the health center’s population.5, 9, 10 These implementation decisions, and the underlying context that drives them, may have significant implications for the success of these programs.

A variety of factors, such as program components, leadership, lab arrangements, and state-based incentives for screening metrics, likely play a role in successful implementation, but organizational factors in particular seem most compelling to examine in busy health center practices. Clinics can face a variety of challenges implementing a centralized CRC screening program, including staff turnover, competing time pressures, limited funding, challenges with electronic health records (EHRs) and supporting technology, and access to colonoscopy.11–14 Case study results from a screening demonstration program15 indicated the importance of building on existing infrastructure and service delivery systems, having a multidisciplinary implementation team, collaborating with partners and a medical advisor, and allowing adequate start-up time. A study of in-clinic FIT distribution in eight Iowa physician offices (in which only 45% of 400 FITs were handed out) concluded that implementation required the support of nursing staff for planning and executing the program.16 The complexities of implementing a centralized screening outreach program and the need for staff support in doing so have been echoed in other studies of CRC screening interventions.12, 13, 17

Few studies of mailed FIT program effectiveness18–21 have analyzed factors that contributed to program implementation success. Many studies described intervention components, and some studies have outlined barriers to adapting and implementing mailed FIT outreach programs.22, 23 But none of the studies cited above specifically examined contextual factors as they relate to successful implementation. One study reported moderators of evidence-based approaches to CRC screening within 59 FQHCs.24 Of these, eight (15.7%) had implemented mailed FIT programs, but the study presented no organizational-level factors linked to implementation outcomes.24

A growing interest in assessing implementation success has led to the use of new analytic strategies to determine causal pathways to outcomes and to test theoretical frameworks against empirical data.25–27 The Strategies and Opportunities to Stop Colon Cancer in Priority Populations (STOP CRC) study provided a unique opportunity to assess factors associated with successful implementation of a mailed FIT outreach program in eight busy health centers. A cluster-randomized, pragmatic trial of a mailed FIT program, STOP CRC aimed to increase CRC screening in eight federally qualified health centers (FQHCs; 26 clinics) in Oregon and California.28–30 Health centers varied widely in implementation, specifically in the proportion of eligible patients who were mailed a FIT in lagged data (21.0–81.7%), and screening rates were strongly associated with implementation success.30 Thus, it is critical to understand the specific combination of conditions that explains the variation in implementation success of the mailed outreach across the health centers.

METHODS

STOP CRC aimed to increase CRC screening in health center clinics by providing tools and training to deliver a mailed FIT outreach program. The project created a series of EHR tools that identified patients who were eligible and due for screening. Twenty-six clinics from eight health systems participated (41,193 eligible patients in the first year) (Fig. 1). The health centers were diverse (Table 1). They were located in Oregon and California, were rural and urban, and varied in size (7548–54,850 total patients). All health systems were members of the OCHIN clinical information network and shared an EHR (Epic©).31 The program involved three major steps: clinic staff were trained to use the tools to mail a personalized introductory letter, mail a FIT kit, and then mail a personalized reminder to patients due for CRC screening. The letter and instructions directed the patient to label the completed test, place it in a pre-addressed stamped envelope, and either mail it to a designated lab or deliver it to the clinic. The lab sent results directly to the clinic EHR. The research team conducted surveys and interviews throughout the life of the project, executed improvement cycles, collected EHR data, and held trainings and meetings to support full program implementation.

Figure 1.

Implementation Outcome: % of eligible patients mailed a FIT.

Table 1.

Health Center Characteristics*

| Health center | Number of randomized clinics | CRC screening rate (%) | Total patients | Hispanic (%) | Medicaid (%) | Patients < 100% poverty level (%) | Uninsured patients (%) |
|---|---|---|---|---|---|---|---|
| HC 1 | 2 | 29.8 | 9224 | 23.2 | 55.4 | 69.6 | 21.1 |
| HC 2 | 6 | 44.8 | 71,174 | 35.4 | 71.4 | 80.1 | 16.8 |
| HC 3 | 2 | 50.2 | 43,925 | 61.1 | 62.9 | 64.0 | 23.6 |
| HC 4 | 4 | 48.1 | 54,850 | 11.1 | 42.0 | 53.6 | 14.9 |
| HC 5 | 3 | 23.9 | 25,805 | 29.2 | 51.1 | 72.5 | 32.0 |
| HC 6 | 3 | 34.2 | 7548 | 16.8 | 59.8 | 69.4 | 13.9 |
| HC 7 | 4 | 34.2 | 18,295 | 18.7 | 62.5 | 52.4 | 10.9 |
| HC 8 | 2 | 52.0 | 13,192 | 7.4 | 45.3 | 61.7 | 7.2 |

*Clinic characteristics were not used in the analysis.

We applied configurational comparative methods (CCMs) to identify the specific combinations of conditions that distinguished health centers with high, medium, or low implementation levels of mailed outreach to eligible patients. CCMs provide a formal mathematical approach to cross-case analysis that draws on Boolean algebra and set theory to identify a “minimal theory”: the set of difference-making combinations that uniquely distinguish one group of cases from another.32–36 The analytic objective of CCMs is to identify necessary and sufficient conditions, a fundamentally different search target from that of correlation-based methods; thus, CCMs do not require large sample sizes, and an often-cited strength of CCMs is their versatility in small-n studies.34, 37, 38 “CCMs” is an umbrella term for the family of configurational approaches, which includes Qualitative Comparative Analysis (QCA) and, more recently, Coincidence Analysis (CNA). Applied in political science and sociology since the 1980s, CCMs have gained traction in health services research and implementation science in recent years.
For example, a 2019 Cochrane Review of school-based interventions for asthma self-management prominently used CCMs to identify conditions aligned with successful implementation39; a 2019 review of innovative approaches in mixed-methods research devoted an entire section to CCMs38; a 2020 study applied CCMs to determine the key subset of implementation strategies directly linked to implementation success across a national sample of VA medical centers32; and the comprehensive 2020 Handbook on Implementation Science included a whole chapter dedicated solely to configurational comparative methods.40 As case-oriented approaches grounded in set theory and Boolean algebra, CCMs offer a way to analyze data that is distinct from traditional methods such as logistic regression or qualitative research.34, 35, 41
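For crisp (binary) data, the two measures used to evaluate a candidate solution are commonly defined as shown below, where X is the set of cases exhibiting a configuration of conditions and Y is the set of cases exhibiting the outcome. This is a standard textbook formulation added for illustration; multi-value and fuzzy-set variants exist but are not shown.

```latex
\[
\mathrm{con}(X \rightarrow Y) = \frac{|X \cap Y|}{|X|},
\qquad
\mathrm{cov}(X \rightarrow Y) = \frac{|X \cap Y|}{|Y|}
\]
```

A consistency of 1 means every case with X also shows Y (X is sufficient for Y in these data); a coverage of 1 means every case with Y also shows X (X is necessary for Y in these data). A solution with both values equal to 1 therefore behaves as a necessary and sufficient condition within the analyzed cases.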

We used the Consolidated Framework for Implementation Research (CFIR) model42 as our framework for identifying outreach implementation factors. CFIR identifies a comprehensive list of factors that might be associated with effective implementation.42 CFIR constructs include categories of characteristics of the intervention, inner setting, outer setting, and process. Intervention characteristics capture specific elements of the intervention that affect implementation, such as adding reminder calls or mailing reminder letters. Process characteristics capture differences in how health centers carried out implementation, such as having a clinic champion or dedicated information technology (IT) staff. Inner setting components, such as system growth or a centralized implementation team, capture structural or cultural characteristics of the system that may affect outcomes. Outer setting characteristics, such as interface issues with an external lab, reflect influences outside the health system that could affect implementation of the full program. CFIR components were drawn from both qualitative and quantitative data: clinic responses to the baseline survey, baseline interview, cost interview, year 1 project lead interview and follow-up survey, Plan-Do-Study-Act (i.e., quality improvement cycle) reports, EHR data, and research team knowledge acquired through the study13, 43 (see Supplemental Table on Health Clinic Characteristics).

Methods for the clinic surveys, interviews, and Plan-Do-Study-Act cycles have been reported previously.12, 13 In brief, clinic leaders and staff involved in executing the intervention completed a survey and in-depth interview before the implementation of STOP CRC (baseline survey and interview) and again after approximately the first 12 months of implementation (year 1 project lead interview and clinic follow-up survey). Survey questions were guided by key CFIR domains, and interview questions explored similar concepts while allowing greater focus on context-specific barriers to and facilitators of CRC screening in general and STOP CRC implementation specifically. Year 1 interviews and surveys explored largely the same questions but also addressed ongoing implementation and maintenance in the clinics. Additionally, during year 1 interviews, we solicited feedback on the motivations for and outcomes of the quality improvement (Plan-Do-Study-Act) cycles and obtained information from each clinic on the amount of time spent on tasks to implement the intervention. All interviews were audio-recorded, transcribed, coded, and content-summarized using standard qualitative analysis techniques,44–46 aided by qualitative software (Atlas.ti).

All components together represented 50 potential explanatory factors (see Supplemental Table). To reduce the number of factors, we used functions available within the Coincidence Analysis package (“cna”)47 in R; R (version 3.5.0) and RStudio (version 1.1.383) were also used to support the analysis. The multi-step configurational approach we used for data reduction has been detailed in previous studies32, 48; we present the main details here. Specifically, we identified configurations of conditions with the strongest connection to the implementation outcome of mailed outreach as the initial program step (i.e., high, medium, or low implementation levels). We set r to a maximum of 3, where r is the number of objects selected at the same time from a larger set of n objects (i.e., the 50 potential explanatory factors, each with at least two possible values). In setting r to a maximum of 3, we considered all 1-, 2-, and 3-condition configurations across the 50 factors that were instantiated within the dataset and met the cutoff threshold. We then interpreted this condition-level output in light of our research question (i.e., at least one program-related condition had to be present) as well as logic, theory, and prior knowledge to narrow the initial set of 50 potential explanatory factors to a smaller subset of candidate factors to model. As part of our selection criteria, we looked for configurations in which different values of the exact same set of factors could explain the low, medium, and high levels of implementation with 100% consistency (i.e., the solution yielded the outcome every time) and 100% coverage (i.e., every case with the outcome was explained by the solution) with no model ambiguity.49, 50 Final solutions were developed using the modeling functions in the “cna” R package.
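The data-reduction step can be illustrated with a brute-force enumeration. The sketch below is a from-scratch Python illustration, not the cna package and not the authors’ code: it scores every 1- to max_r-condition conjunction of factor values against one outcome and keeps those meeting assumed consistency and coverage thresholds. The toy factors A, B, and C, the six cases, and the function names are hypothetical, and the sketch omits the redundancy elimination (minimization) that Coincidence Analysis performs. It also counts how large the search space would be for 50 factors with r ≤ 3, assuming every factor is binary.

```python
from itertools import combinations, product
from math import comb

# Hypothetical toy data (NOT the STOP CRC dataset): binary factors A, B, C
# plus a three-level outcome for each case.
CASES = [
    {"A": 1, "B": 1, "C": 0, "outcome": "high"},
    {"A": 1, "B": 1, "C": 1, "outcome": "high"},
    {"A": 0, "B": 1, "C": 0, "outcome": "medium"},
    {"A": 1, "B": 0, "C": 1, "outcome": "medium"},
    {"A": 0, "B": 0, "C": 1, "outcome": "low"},
    {"A": 0, "B": 0, "C": 0, "outcome": "low"},
]
FACTORS = ["A", "B", "C"]


def consistency_coverage(config, outcome, cases):
    """Return (consistency, coverage) of a conjunction, or None if it is not instantiated."""
    with_x = [c for c in cases if all(c[f] == v for f, v in config.items())]
    with_y = [c for c in cases if c["outcome"] == outcome]
    if not with_x or not with_y:
        return None
    both = [c for c in with_x if c["outcome"] == outcome]
    return len(both) / len(with_x), len(both) / len(with_y)


def search(cases, factors, outcome, max_r=3, con_min=1.0, cov_min=1.0):
    """Score every 1..max_r-condition conjunction; keep those meeting both thresholds."""
    hits = []
    for r in range(1, max_r + 1):
        for subset in combinations(factors, r):
            for values in product((0, 1), repeat=r):
                config = dict(zip(subset, values))
                scores = consistency_coverage(config, outcome, cases)
                if scores and scores[0] >= con_min and scores[1] >= cov_min:
                    hits.append((config, *scores))
    return hits


# Size of the search space for 50 factors with r <= 3, assuming binary factors.
N_FACTORS = 50
factor_subsets = sum(comb(N_FACTORS, r) for r in range(1, 4))         # 20875 factor sets
binary_configs = sum(comb(N_FACTORS, r) * 2**r for r in range(1, 4))  # 161800 value configurations

print(search(CASES, FACTORS, "high"))  # → [({'A': 1, 'B': 1}, 1.0, 1.0)]
print(factor_subsets, binary_configs)  # → 20875 161800
```

Because only single conjunctions are scored, the toy “medium” cases (which share no common conjunction) return no hits; CNA builds disjunctive solutions, such as the two pathways reported for medium implementation, which this sketch does not attempt.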

Implementation Outcomes

We categorized levels of mailed outreach implementation according to the proportion of eligible patients who were mailed a FIT kit between June 2014 and February 2015. Proportions at the eight centers were 0.21, 0.26, 0.33, 0.37, 0.38, 0.43, 0.59, and 0.81. Given the tight cluster of four proportions between 0.33 and 0.43 and the greater spread of values above and below that cluster, we characterized the eight health centers as having a low (< 30% of eligible patients were sent a FIT kit), medium (30–50% were sent a FIT kit), or high (> 50% were sent a FIT kit) implementation level. Although implementation rates differed considerably within the high group, we considered it important to capture what these two centers had in common that set them apart from the other centers, given that both had implementation rates at least 16 percentage points higher than those in the medium cluster.
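The three-level classification can be written as a small function. This Python sketch applies the cut points stated above (low below 0.30, medium from 0.30 to 0.50 inclusive, high above 0.50) to the eight reported proportions and computes the gap between the highest medium and the lowest high center; the function and variable names are illustrative.

```python
# Proportions of eligible patients mailed a FIT kit, June 2014 to February 2015.
PROPORTIONS = [0.21, 0.26, 0.33, 0.37, 0.38, 0.43, 0.59, 0.81]


def implementation_level(p):
    """Map a proportion to the low / medium / high categories used in the analysis."""
    if p < 0.30:
        return "low"
    if p <= 0.50:
        return "medium"
    return "high"


levels = [implementation_level(p) for p in PROPORTIONS]
medium = [p for p, lvl in zip(PROPORTIONS, levels) if lvl == "medium"]
high = [p for p, lvl in zip(PROPORTIONS, levels) if lvl == "high"]
gap_pp = round((min(high) - max(medium)) * 100)  # gap in percentage points

print(levels)  # → ['low', 'low', 'medium', 'medium', 'medium', 'medium', 'high', 'high']
print(gap_pp)  # → 16
```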

RESULTS

Using the subset of factors identified in data reduction, our CCM analyses identified a solution with one dichotomous program-related factor and one dichotomous organization-related factor that, in combination, perfectly distinguished among high, medium, and low implementation with 100% consistency and 100% coverage. The two factors were Centralized Implementation Team (values: 1 = YES; 0 = NO) and Separate Introductory (Intro) Letter (values: 1 = YES; 0 = NO); neither factor had missing values in the dataset. Health centers with high levels of implementation had centralized implementation teams (including FIT program staff) and mailed the introductory letter separately from the FIT kit. Health centers with medium levels of implementation followed one of two solution pathways: Centralized Implementation Team = NO and Separate Intro Letter = YES, or Centralized Implementation Team = YES and Separate Intro Letter = NO. Health centers with low levels of implementation had the combination of Centralized Implementation Team = NO and Separate Intro Letter = NO.

Put differently, two dichotomous factors with possible values of 1 (YES) or 0 (NO) yield four possible combinations: 1-1, 1-0, 0-1, and 0-0. For each of the three outcomes (high, medium, or low implementation levels), these specific two-condition configurations always yielded a particular outcome (i.e., 100% consistency) as well as explained all the cases with that particular outcome (i.e., 100% coverage) (Table 2).
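The final two-factor model can be checked as a truth table. In this Python sketch, each health center is a (team, letter, level) triple. The counts are reconstructed from statements in the Discussion (three sites with centralized teams and five systems mailing a separate introductory letter), which implies one team-only and three letter-only medium centers; the centers are unlabeled because the text does not say which center has which combination. The names are illustrative, and this is not the cna output.

```python
# (centralized_team, separate_intro_letter, implementation_level) per health center.
# Counts reconstructed from the text: 2 high (both factors), 4 medium (1 team-only,
# 3 letter-only), 2 low (neither). Centers are intentionally unlabeled.
CENTERS = [
    (1, 1, "high"), (1, 1, "high"),
    (1, 0, "medium"),
    (0, 1, "medium"), (0, 1, "medium"), (0, 1, "medium"),
    (0, 0, "low"), (0, 0, "low"),
]

# Boolean solution for each outcome, written as a predicate on (team, letter).
SOLUTIONS = {
    "high": lambda team, letter: team == 1 and letter == 1,  # TEAM * LETTER
    "medium": lambda team, letter: team != letter,           # TEAM*~LETTER + ~TEAM*LETTER
    "low": lambda team, letter: team == 0 and letter == 0,   # ~TEAM * ~LETTER
}


def solution_fit(level, rule):
    """Consistency and coverage of one solution rule for one outcome level."""
    x = [c for c in CENTERS if rule(c[0], c[1])]
    y = [c for c in CENTERS if c[2] == level]
    both = [c for c in x if c[2] == level]
    return len(both) / len(x), len(both) / len(y)


for level, rule in SOLUTIONS.items():
    print(level, solution_fit(level, rule))
# → high (1.0, 1.0)
# → medium (1.0, 1.0)
# → low (1.0, 1.0)
```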

Table 2.

Final Model from Configurational Comparative Methods (CCMs) Analysis

DISCUSSION

Success in implementing the outreach mailing step of a mailed FIT CRC screening program was accounted for, with 100% consistency and coverage, by two factors: a centralized implementation team with dedicated staff for delivering intervention components, and the mailing of an introductory letter before the FIT kit. These findings suggest that having a dedicated team and delivering the program as designed can support successful implementation and, in turn, higher CRC screening rates.

A centralized implementation team may have facilitated implementation by providing protected staff time for the program. Health centers without centralized FIT program staff relied on multiple staff in diverse roles to deliver the program, which may have led to more fragmented implementation. A centralized team may also have provided the infrastructure needed for successful implementation: all three sites with centralized implementation teams had champions, IT staff, and program managers. In another example of possible facilitation, only systems with a centralized process issued reminder letters.

There are several reasons that mailing an introductory letter separate from the FIT kit may have been directly linked to an increased proportion of eligible patients being mailed a FIT test (implementation success). One possibility is that the inclusion of an introductory letter reflected the health care system’s commitment to deliver all components of the program. Another possibility is that the process of mailing the introductory letters provided a reminder to staff to complete the full workflow. Successful implementation of the mailed outreach may have been associated with the successful implementation of other STOP CRC intervention steps. All five systems that mailed the introductory letter separately also reported no lab issues; gave patients the option to drop the kit off to the clinic or return it by mail; and did not report any major IT challenges; additionally, four of these five also reported significant growth in system size. An important caveat is that it cannot be conclusively determined from these observational findings that the introductory letter was causally related to the outcome or a marker of broader program factors; more evidence would be required to establish a causal connection, such as independent replication of these results in other studies.

Additional conditions consistently linked to low implementation outcomes were IT challenges and lab issues: the two systems with low implementation were the only two to report these two problems. As published previously, in post-implementation qualitative interviews, staff from both systems described challenges with printing and formatting the introductory letter along with determining a workflow for executing this activity, leading to staff frustration.12 They also described struggling to determine the correct postage for the FIT kit mailing, which in turn created “re-dos” of work and delays in mailing.12

The two systems with low implementation outcomes also lacked champions and IT staff to troubleshoot technology challenges. The lack of a clinical champion aligns with previously reported comments made during pre- and post-implementation interviews regarding concerns that a mailed FIT approach might not be the best match for the patients served by their systems (e.g., patients prefer face-to-face conversations with providers and/or have transitory addresses).12 By contrast, interview participants at the high-implementation clinics viewed the program as an opportunity to learn about a set of tools (population-based mailing) that could eventually be applied to other areas of preventive care.12

Contrary to expectation, staffing changes, clinic growth, and attendance at training did not consistently distinguish level of implementation. We previously published results of qualitative assessments from interviews with implementation clinic leadership and staff captured at baseline and repeated 6 to 9 months after implementation.12 The most commonly reported barriers consisted of time required for staff to implement program components, inadequate EHR staffing for resolving issues related to program implementation such as batch printing, and lack of easy-to-understand and actionable reports on the mailed FIT outcome. Reported successes included use of the EHR and the opportunity to standardize and operationalize processes for population outreach. One person replied, “Once you get the hang of the process, it was pretty straightforward.” Another said the program aligned with clinic goals and culture: “We are moving to a team approach and the transition from the provider being the center to other staff outside of the exam room being able to help patients.” These previously reported themes could help to explain why the presence of centralized processes fostered success in implementing the program: centralized processes were accompanied by dedicated resources, the ability to solve EHR challenges, and a strong desire to work through challenges to eventually create workflows that made the program simpler to execute.

Centralized programs, such as those implemented by large, organized health care systems and health insurance plans, often have centralized labs and use contracted mailing vendors to reduce the burdens of mailings on clinics.51, 52 Some of the STOP CRC health centers with the most successful implementation used staff and resources to implement mailings.53, 54 Others were supported by additional significant grant funding55 (STOP CRC clinics received reimbursement for research-related activities). STOP CRC was one of the first multi-site studies of mailed FIT outreach to rely on clinic staff to deliver the intervention components.

Our study has limitations. Our analysis assessed health-system-level organizational factors as they related to implementation success of the mailed outreach and did not measure patient-related factors that might also mediate FIT completion. Details of clinic-level variation (e.g., clinic size, champions, clinic-specific workflows, and processes) may not have been captured in our health-center-level analysis. Unobserved factors may also explain why having a centralized implementation team and an introductory letter were associated with greater implementation. The center with the highest rate of implementation had a small population (n < 300), which may have made it easier to implement the program broadly among its patients and may account for some of the difference between rates in the two high-implementation health centers. We might also have missed key organization-related components linked to outcomes, such as staff burnout or financial readiness.

Our study also has strengths in that we used innovative methods (CCMs) to understand organizational- and program-level factors that directly linked to clinic staff’s ability to carry out an EHR-enabled CRC screening improvement program in busy practice settings.

CONCLUSION

Using an innovative analytic approach, we identified two key factors that in combination perfectly distinguished among high, medium, and low implementation levels of the mailed FIT outreach of a CRC screening program: a centralized implementation team (an organization-level factor) and successful mailing of an introductory letter (a program-level factor). By identifying the conditions for intervention success, these results can inform future efforts to improve the implementation of evidence-based interventions into clinical practice.

Electronic supplementary material

(DOCX 411 kb)

Abbreviations

- CCMs: configurational comparative methods
- CNA: coincidence analysis
- QCA: qualitative comparative analysis
- FIT: fecal immunochemical test
- CRC: colorectal cancer
- FQHC: federally qualified health center
- STOP CRC: Strategies and Opportunities to Stop Colon Cancer in Priority Populations
- EHR: electronic health record
- IT: information technology
- PDSA: plan-do-study-act improvement process

Authors’ Contributions

BG and GC are Co-PIs of the project. BG wrote the initial discussion section. AP coordinated the study, drafted the initial manuscript outline, drafted significant components of the methods, and finalized the manuscript. JS is the qualitative interviewer who offered interpretations of analysis and wrote about the qualitative data. EM conducted the analysis and wrote segments of the methods and results sections. JC was a clinic trainer, drafted the introduction, and provided insight into findings. SR was the clinical liaison and drafted components of the discussion. GC provided oversight and reviewed the entire manuscript. All authors read and approved the final manuscript.

Funding

Research reported in this publication was supported by the National Institutes of Health Common Fund and the National Cancer Institute under award numbers UH2AT007782 and 4UH3CA188640-02, awarded to the second and seventh authors (Green and Coronado).

Data Availability

Please contact the lead author for information regarding data.

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Competing Interests

Dr. Coronado: From November 2014 to August 2015, Dr. Coronado served as a co-investigator on an industry-funded study to evaluate patient adherence to an experimental blood test for colorectal cancer. The study was funded by EpiGenomics. From September 2017 to June 2018, Dr. Coronado served as the Principal Investigator on an industry-funded study to compare the clinical performance of an experimental FIT to an FDA-approved FIT. This study was funded by Quidel Corporation. Dr. Coronado has served as a scientific advisor for Exact Sciences and Guardant Health. All other authors declare no conflicts of interest.

Ethics Approval and Consent to Participate

The study was approved by the Institutional Review Board of Kaiser Permanente Northwest on December 6, 2013. The project received a waiver of informed consent; however, all interview participants provided verbal assent. All staff have been trained in ethical conduct of human subject research.

Consent for Publication

Not applicable.

Disclaimer

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The study sponsor had no role in study design; collection, analysis, and interpretation of data; writing the report; or the decision to submit the report for publication.

Footnotes

Contributions to the Literature

• This study identifies which clinical setting factors were linked to different levels of implementation of an evidence-based colorectal cancer screening program. High levels of implementation were directly linked to having centralized staff devoted to implementation and the ability to carry out the program as designed.

• Configurational comparative methods are well-suited to assess combinations of conditions linked to successful implementation. Understanding how combinations of conditions lead to successful implementation can inform efforts to optimize the delivery of evidence-based interventions in practice.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Stegeman I, de Wijkerslooth TR, Mallant-Hent RC, et al. Implementation of population screening for colorectal cancer by repeated Fecal Immunochemical Test (FIT): third round. BMC Gastroenterol. 2012;12:73. doi: 10.1186/1471-230X-12-73. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Brenner AT, Getrich CM, Pignone M, et al. Comparing the effect of a decision aid plus patient navigation with usual care on colorectal cancer screening completion in vulnerable populations: study protocol for a randomized controlled trial. Trials [Electronic Resource] 2014;15:275. doi: 10.1186/1745-6215-15-275. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Mehta SJ, Jensen CD, Quinn VP, et al. Race/ethnicity and adoption of a population health management approach to colorectal cancer screening in a community-based healthcare system. J Gen Intern Med. 2016;31(11):1323–1330. doi: 10.1007/s11606-016-3792-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Davis MFM, Shannon J, Coronado G, Stange K, Guise JM, Wheeler S, Buckley DI. A systematic review of clinic and community intervention to increase fecal testing for colorectal cancer in rural and low-income populations in the United States – how, what and when?. BMC Cancer In Press. 2018. [DOI] [PMC free article] [PubMed]

- 5.Brenner AT, Rhode J, Yang JY, et al. Comparative effectiveness of mailed reminders with and without fecal immunochemical tests for Medicaid beneficiaries at a large county health department: A randomized controlled trial. Cancer. 2018;124(16):3346–3354. doi: 10.1002/cncr.31566. [DOI] [PMC free article] [PubMed] [Google Scholar]

6. Atkin WS, Benson VS, Green J, et al. Improving colorectal cancer screening outcomes: proceedings of the second meeting of the International Colorectal Cancer Screening Network, a global quality initiative. J Med Screen. 2010;17(3):152–157. doi: 10.1258/jms.2010.010002.

7. Benson VS, Atkin WS, Green J, et al. Toward standardizing and reporting colorectal cancer screening indicators on an international level: The International Colorectal Cancer Screening Network. Int J Cancer. 2012;130(12):2961–2973. doi: 10.1002/ijc.26310.

8. Liss D, French D, Buchanan D, et al. Outreach for annual colorectal cancer screening: A budget impact analysis for community health centers. Am J Prev Med. 2016;50(2):54–61. doi: 10.1016/j.amepre.2015.07.003.

9. Schlichting JA, Mengeling MA, Makki NM, et al. Increasing colorectal cancer screening in an overdue population: participation and cost impacts of adding telephone calls to a FIT mailing program. J Community Health. 2014;39(2):239–247. doi: 10.1007/s10900-014-9830-1.

10. Green BB, Anderson ML, Cook AJ, et al. A centralized mailed program with stepped increases of support increases time in compliance with colorectal cancer screening guidelines over 5 years: A randomized trial. Cancer. 2017.

11. Coronado GD, Petrik AF, Spofford M, Talbot J, Do HH, Taylor VM. Clinical perspectives on colorectal cancer screening at Latino-serving federally qualified health centers. Health Educ Behav. 2015;42(1):26–31. doi: 10.1177/1090198114537061.

12. Coronado GD, Schneider JL, Petrik A, Rivelli J, Taplin S, Green BB. Implementation successes and challenges in participating in a pragmatic study to improve colon cancer screening: perspectives of health center leaders. Transl Behav Med. 2017;7(3):557–566. doi: 10.1007/s13142-016-0461-1.

13. Coury J, Schneider JL, Rivelli JS, et al. Applying the Plan-Do-Study-Act (PDSA) approach to a large pragmatic study involving safety net clinics. BMC Health Serv Res. 2017;17(1):411. doi: 10.1186/s12913-017-2364-3.

14. Weiner BJ, Rohweder CL, Scott JE, et al. Using practice facilitation to increase rates of colorectal cancer screening in community health centers, North Carolina, 2012–2013: feasibility, facilitators, and barriers. Prev Chronic Dis. 2017;14:E66. doi: 10.5888/pcd14.160454.

15. DeGroff A, Holden D, Goode Green S, Boehm J, Seeff LC, Tangka F. Start-up of the colorectal cancer screening demonstration program. Prev Chronic Dis. 2008;5(2):A38.

16. Daly JM, Levy BT, Moss CA, Bay CP. System strategies for colorectal cancer screening at federally qualified health centers. Am J Public Health. 2014.

17. Liles EG, Schneider JL, Feldstein AC, et al. Implementation challenges and successes of a population-based colorectal cancer screening program: a qualitative study of stakeholder perspectives. Implement Sci. 2015;10:41. doi: 10.1186/s13012-015-0227-z.

18. Dougherty MK, Brenner AT, Crockett SD, et al. Evaluation of interventions intended to increase colorectal cancer screening rates in the United States: a systematic review and meta-analysis. JAMA Intern Med. 2018;178(12):1645–1658. doi: 10.1001/jamainternmed.2018.4637.

19. Issaka RB, Avila P, Whitaker E, Bent S, Somsouk M. Population health interventions to improve colorectal cancer screening by fecal immunochemical tests: a systematic review. Prev Med. 2019;118:113–121. doi: 10.1016/j.ypmed.2018.10.021.

20. Tangka FKL, Subramanian S, DeGroff AS, Wong FL, Richardson LC. Identifying optimal approaches to implement colorectal cancer screening through participation in a learning laboratory. Cancer. 2018;124(21):4118–4120. doi: 10.1002/cncr.31679.

21. Kemper KE, Glaze BL, Eastman CL, et al. Effectiveness and cost of multilayered colorectal cancer screening promotion interventions at federally qualified health centers in Washington State. Cancer. 2018;124(21):4121–4129. doi: 10.1002/cncr.31693.

22. Liang S, Kegler MC, Cotter M, et al. Integrating evidence-based practices for increasing cancer screenings in safety net health systems: a multiple case study using the Consolidated Framework for Implementation Research. Implement Sci. 2016;11:109. doi: 10.1186/s13012-016-0477-4.

23. Cole AM, Esplin A, Baldwin LM. Adaptation of an evidence-based colorectal cancer screening program using the Consolidated Framework for Implementation Research. Prev Chronic Dis. 2015;12:E213. doi: 10.5888/pcd12.150300.

24. Walker TJ, Risendal B, Kegler MC, et al. Assessing levels and correlates of implementation of evidence-based approaches for colorectal cancer screening: a cross-sectional study with federally qualified health centers. Health Educ Behav. 2018;45(6):1008–1015. doi: 10.1177/1090198118778333.

25. Damschroder LJ, Reardon CM, Sperber N, Robinson CH, Fickel JJ, Oddone EZ. Implementation evaluation of the Telephone Lifestyle Coaching (TLC) program: organizational factors associated with successful implementation. Transl Behav Med. 2017;7(2):233–241. doi: 10.1007/s13142-016-0424-6.

26. Lorthios-Guilledroit A, Richard L, Filiatrault J. Factors associated with the implementation of community-based peer-led health promotion programs: a scoping review. Eval Program Plan. 2018;68:19–33. doi: 10.1016/j.evalprogplan.2018.01.008.

27. Hill LG, Cooper BR, Parker LA. Qualitative comparative analysis: a mixed-method tool for complex implementation questions. J Prim Prev. 2019;40(1):69–87. doi: 10.1007/s10935-019-00536-5.

28. Coronado GD, Burdick T, Petrik A, Kapka T, Retecki S, Green B. Using an automated data-driven, EHR-embedded program for mailing FIT kits: lessons from the STOP CRC Pilot Study. J Gen Pract (Los Angel). 2014;2.

29. Coronado GD, Petrik AF, Vollmer WM, et al. Mailed colorectal cancer screening outreach program in federally qualified health centers: the STOP CRC Cluster Randomized Pragmatic Clinical Trial. JAMA Intern Med. 2018 (submitted).

30. Coronado GD, Petrik AF, Vollmer WM, et al. Effectiveness of a mailed colorectal cancer screening outreach program in community health clinics: The STOP CRC cluster randomized clinical trial. JAMA Intern Med. 2018.

31. OCHIN. We are OCHIN. https://ochin.org/. Published 2018. Accessed July 2019.

32. Yakovchenko V, Miech EJ, Chinman MJ, et al. Strategy configurations directly linked to higher hepatitis C virus treatment starts: an applied use of configurational comparative methods. Med Care. 2020.

33. Baumgartner M. Parsimony and causality. Qual Quant. 2015;49(2):839–856.

34. Cragun D, Pal T, Vadaparampil ST, Baldwin J, Hampel H, DeBate RD. Qualitative comparative analysis: a hybrid method for identifying factors associated with program effectiveness. J Mixed Methods Res. 2016;10(3):251–272. doi: 10.1177/1558689815572023.

35. Thiem A. Conducting configurational comparative research with qualitative comparative analysis: a hands-on tutorial for applied evaluation scholars and practitioners. Am J Eval. 2017;38(3):420–433.

36. Rohlfing I, Zuber CI. Check your truth conditions! Clarifying the relationship between theories of causation and social science methods for causal inference. Sociol Methods Res. 2019. doi: 10.1177/0049124119826156.

37. Rihoux B, Ragin CC. Configurational Comparative Methods: Qualitative Comparative Analysis (QCA) and Related Techniques. SAGE Publications; 2008.

38. Palinkas LA, Mendon SJ, Hamilton AB. Innovations in mixed methods evaluations. Annu Rev Public Health. 2019;40:423–442. doi: 10.1146/annurev-publhealth-040218-044215.

39. Harris K, Kneale D, Lasserson TJ, McDonald VM, Grigg J, Thomas J. School-based self-management interventions for asthma in children and adolescents: a mixed methods systematic review. Cochrane Database Syst Rev. 2019;1:CD011651. doi: 10.1002/14651858.CD011651.pub2.

40. Cragun D. Configurational Comparative Methods. In: Nilsen P, Birken S, editors. Handbook on Implementation Science. Cheltenham: Edward Elgar Publishing; 2020.

41. Ragin CC. The Comparative Method: Moving Beyond Qualitative and Quantitative Strategies. University of California Press; 2014.

42. Kirk MA, Kelley C, Yankey N, Birken SA, Abadie B, Damschroder L. A systematic review of the use of the Consolidated Framework for Implementation Research. Implement Sci. 2016;11:72. doi: 10.1186/s13012-016-0437-z.

43. Coronado GD, Schneider JL, Sanchez JJ, Petrik AF, Green B. Reasons for non-response to a direct-mailed FIT kit program: Lessons learned from a pragmatic colorectal-cancer screening study in a federally sponsored health center. Transl Behav Med. 2015;5(1):60–67. doi: 10.1007/s13142-014-0276-x.

44. Patton MQ. Qualitative Research and Evaluation Methods. 3rd ed. Thousand Oaks: Sage Publications, Inc.; 2002.

45. Bernard HR, Ryan GW. Analyzing Qualitative Data: Systematic Approaches. Los Angeles: SAGE; 2010.

46. Strauss A, Corbin J. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. 3rd ed. Thousand Oaks: Sage Publications, Inc.; 2008.

47. Ambuehl M, Baumgartner M. cna: causal modeling with coincidence analysis. R package version 2.1.1; 2018.

48. Hickman SE, Miech EJ, Stump TE, Fowler NR, Unroe KT. Identifying the implementation conditions associated with positive outcomes in a successful nursing facility demonstration project. Gerontologist. 2020. doi: 10.1093/geront/gnaa041.

49. Baumgartner M, Thiem A. Model ambiguities in configurational comparative research. Sociol Methods Res. 2017;46(4):954–987.

50. Greckhamer T, Furnari S, Fiss PC, Aguilera RV. Studying configurations with qualitative comparative analysis: Best practices in strategy and organization research. Strateg Organ. 2018;16(4):482–495.

51. Levin TR, Corley DA, Jensen CD, et al. Effects of organized colorectal cancer screening on cancer incidence and mortality in a large community-based population. Gastroenterology. 2018;155(5):1383–1391.e5. doi: 10.1053/j.gastro.2018.07.017.

52. Coury JK, Schneider JL, Green BB, et al. Two Medicaid health plans’ models and motivations for improving colorectal cancer screening rates. Transl Behav Med. 2018.

53. Baker DW, Brown T, Buchanan DR, et al. Comparative effectiveness of a multifaceted intervention to improve adherence to annual colorectal cancer screening in community health centers: a randomized clinical trial. JAMA Intern Med. 2014;174(8):1235–1241. doi: 10.1001/jamainternmed.2014.2352.

54. Green BB, Wang CY, Anderson ML, et al. An automated intervention with stepped increases in support to increase uptake of colorectal cancer screening: a randomized trial. Ann Intern Med. 2013;158(5 Pt 1):301–311. doi: 10.7326/0003-4819-158-5-201303050-00002.

55. Riehman KS, Stephens RL, Henry-Tanner J, Brooks D. Evaluation of colorectal cancer screening in federally qualified health centers. Am J Prev Med. 2018;54(2):190–196. doi: 10.1016/j.amepre.2017.10.007.


Supplementary Materials

(DOCX 411 kb)

Data Availability Statement

Please contact the lead author for information regarding data.