Abstract

Recent advances in computational models of signal propagation and routing in the human brain have underscored the critical role of white-matter structure. A complementary approach has utilized the framework of network control theory to better understand how white matter constrains the manner in which a region or set of regions can direct or control the activity of other regions. Despite the potential for both of these approaches to enhance our understanding of the role of network structure in brain function, little work has sought to understand the relations between them. Here, we seek to explicitly bridge computational models of communication and principles of network control in a conceptual review of the current literature. By drawing comparisons between communication and control models in terms of the level of abstraction, the dynamical complexity, the dependence on network attributes, and the interplay of multiple spatiotemporal scales, we highlight the convergence of and distinctions between the two frameworks. Based on the understanding of the intertwined nature of communication and control in human brain networks, this work provides an integrative perspective for the field and outlines exciting directions for future work.

Keywords: Communication models, Brain dynamics, Spatiotemporal scales in brain, Control models for brain networks, Linear control, Time-varying control, Nonlinear control, Integrated models, System identification, Causality

Author Summary

Models of communication in brain networks have been essential in building a quantitative understanding of the relationship between structure and function. More recently, control-theoretic models have also been applied to brain networks to quantify the response of brain networks to exogenous and endogenous perturbations. Mechanistically, both of these frameworks investigate the role of interregional communication in determining the behavior and response of the brain. Theoretically, both of these frameworks share common features, indicating the possibility of combining the two approaches. Drawing on a large body of past and ongoing works, this review presents a discussion of convergence and distinctions between the two approaches, and argues for the development of integrated models at the confluence of the two frameworks, with potential applications to various topics in neuroscience.

INTRODUCTION

The propagation and transformation of signals among neuronal units that interact via structural connections can lead to emergent communication patterns at multiple spatial and temporal scales. Collectively referred to as ‘communication dynamics,’ such patterns reflect and support the computations necessary for cognition (Avena-Koenigsberger, Misic, & Sporns, 2018; Bargmann & Marder, 2013). Communication dynamics consist of two elements: (i) the dynamics that signals are subjected to, and (ii) the propagation or spread of signals from one neural unit to another. Whereas the former is determined by the biophysical processes that act on the signals, the latter is dictated by the structural connectivity of brain networks. Mathematical models of communication incorporate one or both of these elements to formalize the study of how function arises from structure. Such models have been instrumental in advancing our mechanistic understanding of observed neural dynamics in brain networks (Avena-Koenigsberger et al., 2018; Bansal, Nakuci, & Muldoon, 2018; Bargmann & Marder, 2013; Bassett, Zurn, & Gold, 2018; Cabral et al., 2014; Hermundstad et al., 2013; N. J. Kopell, Gritton, Whittington, & Kramer, 2014; Mišić et al., 2015; Shen, Hutchison, Bezgin, Everling, & McIntosh, 2015; Sporns, 2013a; Vázquez-Rodríguez et al., 2019).

Building on the descriptive models of neural dynamics, greater insight can be obtained if one can perturb the system and accurately predict how the system will respond (Bassett et al., 2018). The step from description to perturbation can be formalized by drawing on both historical and more recent advances in the field of control theory. As a particularly well-developed subfield, the theory of linear systems offers first principles of system analysis and design, both to ensure stability and to inform control (Kailath, 1980). In recent years, this theory has been applied to the human brain and to nonhuman neural circuits to ask how interregional connectivity can be utilized to navigate the system’s state space (Gu et al., 2017; Tang & Bassett, 2018; Towlson et al., 2018), to explain the mechanisms of endogenous control processes (such as cognitive control) (Cornblath et al., 2019; Gu et al., 2015), and to design exogenous intervention strategies (such as stimulation) (Khambhati et al., 2019; Stiso et al., 2019). Applicable across spatial and temporal scales of inquiry (Tang et al., 2019), the approach has proven useful for probing the functional implications of structural variation in development (Tang et al., 2017), heritability (W. H. Lee, Rodrigue, Glahn, Bassett, & Frangou, 2019; Wheelock et al., 2019), psychiatric disorders (Fisher & Velasco, 2014; Jeganathan et al., 2018), neurological conditions (Bernhardt et al., 2019), neuromodulatory systems (Shine et al., 2019), and detection of state transitions (Santaniello et al., 2011; Santaniello, Sherman, Thakor, Eskandar, & Sarma, 2012). Further research applying network control theory to brain networks can inform neuromodulation strategies (Fisher & Velasco, 2014; L. M. Li et al., 2019) and stimulation therapies (Santaniello, Gale, & Sarma, 2018).

Theoretical frameworks for communication and control share several common features. In communication models, the observed neural activity is strongly influenced by the topology of structural connections between brain regions (Avena-Koenigsberger et al., 2018; Bassett et al., 2018). In control models, the energy injected through exogenous control signals is also constrained to flow along the same structural connections. Thus, the metrics used to characterize communication and control both show strong dependence on the topology of structural brain networks. Interwoven with the topology, the dynamics of signal propagation in both the control and communication models involve some level of abstraction of the underlying processes, and dictate the behavior of the system’s states. Despite these practical similarities, communication and control models differ appreciably in their goals (Figure 1). Whereas communication models primarily seek to explain the patterns of neural signaling that can arise at rest or in response to stimuli, control theory primarily seeks principles whereby inputs can be designed to elicit desired patterns of neural signaling, under certain assumptions of system dynamics. In other words, at a conceptual level, communication models seek to understand the state transitions that arise from a given set of inputs (including the absence of inputs), whereas control models seek to design the inputs to achieve desirable state transitions.

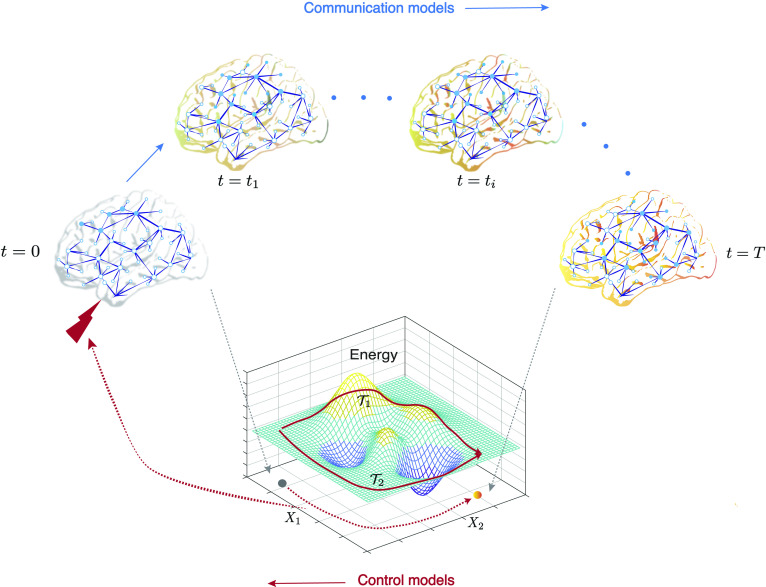

Figure 1. Goals of communication and control models share an inverse relationship. The propagation of an initial stimulus is dictated by the underlying structural connections of the brain network and results in the observed communication dynamics. Stimuli can be external (e.g., transcranial direct current stimulation, sensory stimuli, behavioral therapy, drugs) or internal (e.g., endogenous brain activity, cognitive control strategies). The primary goal of communication models is to capture the evolution of communication dynamics by using dynamical models, and to characterize the process of signal propagation by using graph-theoretic and statistical measures. In contrast, a fundamental aim in the framework of control theory is to determine the control strategies that would navigate the system from a given initial state to the desired final state. Control signals (shown by the red lightning bolt) move a controllable system along trajectories (shown as a red dotted curve on the state plane) that connect the initial and final states. Here, the cost of the trajectory is determined by the energetics of the state transition. We show two example trajectories on an example energy landscape.

While relatively simple similarities and dissimilarities are apparent between the two approaches, the optimal integration of communication and control models requires more than a superficial comparison. Here, we provide a careful investigation of relevant distinctions and a description of common ground. We aim to find the points of convergence between the two frameworks, identify outstanding challenges, and outline exciting research problems at their interface. The remainder of this review is structured as follows. First, we briefly review the fundamentals of communication models and network control theory in sections 2 and 3, respectively. In both sections, we order our discussion of models from simpler to more complex, and we place particular emphasis on each model’s spatiotemporal scale. Section 4 is devoted to a comparison between the two approaches in terms of (i) the level of abstraction, (ii) the complexity of the dynamics and observed behavior, (iii) the dependence on network attributes, and (iv) the interplay of multiple spatiotemporal scales. In section 5, we discuss future areas of research that could combine elements from the two avenues alongside outstanding challenges. Finally, we conclude by summarizing and elucidating the usefulness of combining the two approaches and the implications of such work for understanding brain and behavior.

COMMUNICATION MODELS

In a network representation of the brain, neuronal units are represented as nodes, while interunit connections are represented as edges. Such connections can be structural, in which case they are estimated from diffusion imaging (Lazar, 2010), or can be functional (Morgan, Achard, Termenon, Bullmore, & Vértes, 2018), in which case they are estimated by statistical similarities in activity from functional neuroimaging. When the state of node j at a given time t is influenced by the state of node i at previous time points, a communication channel is said to exist between the two nodes, with node i being the sender and node j being the receiver (Figure 2A). The set of all communication channels forms the substrate for communication processes. A given communication process can be multiscale in nature: communication between individual units of the network typically leads to the emergence of global patterns of communication thought to play important roles in computation and cognition (Avena-Koenigsberger et al., 2018).

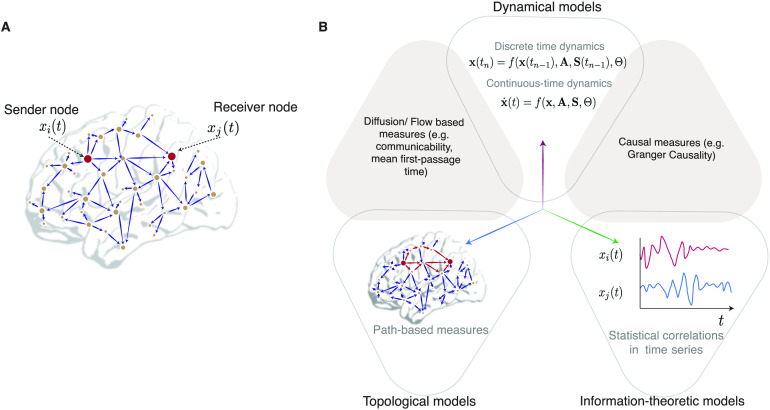

Figure 2. Models and measures of communication. (A) A communication event from sender node i to receiver node j causes dependencies in the activity xj(t) of the j-th node on the activity xi(t) of the i-th node. (B) The three classes of mathematical approaches (empty triangles) to understanding emergent communication dynamics, as well as potential areas of overlap (shaded triangles), shown along three axes. Topological models (along caerulean axis) primarily construct measures based on paths or walks (red edges) between communicating nodes. Dynamical models (along mauve axis) can be cast into differential equations (for continuous-time dynamics) or difference equations (for discrete-time dynamics) that capture dynamic processes governing the propagation of information at a given spatiotemporal scale. Information theoretic models (along green axis) propose measures to compute the degree to which xj(t) statistically (and sometimes causally) depends on xi(t).

In brain networks, the state of a given node can influence the state of another node precisely because the two are connected by a structural or effective link. This structural constraint on potential causal relations results in patterns of activity reflecting communication among units. Such activity can be measured by techniques such as functional magnetic resonance imaging (fMRI), electroencephalography (EEG), magnetoencephalography (MEG), and electrocorticography (ECoG), among others (Beauchene, Roy, Moran, Leonessa, & Abaid, 2018; Sporns, 2013b). In light of the complexity of observed activity patterns and in response to questions regarding their generative mechanisms, investigators have developed mathematical models of neuronal communication. Such models allow for inferring, relating, and predicting the dependence of measured communication dynamics on the topology of brain networks.

Communication models can be roughly classified into three types: dynamical, topological, and information theoretic. Dynamical models of communication are generative, and seek to capture the biophysical mechanisms that transform signals and transmit them along structural connections. Topological models of communication propose network attributes, such as measures of path and walk structure, to explain observed activity patterns. Information theoretic models of communication define statistical measures to quantify the interdependence of nodal activity, the direction of communication, and the causal relations between nodes. Several excellent reviews describe these three model types in great detail (Avena-Koenigsberger et al., 2018; Bassett et al., 2018; Breakspear, 2017; Deco, Jirsa, Robinson, Breakspear, & Friston, 2008). Thus here we instead provide a rather brief description of the associated approaches and measures, particularly focusing on aspects that will be relevant to our later comparisons with the framework of control theory.

Dynamic Models and Measures

Dynamical models of communication aim to capture the biophysical mechanisms underlying signal propagation between communicating neuronal units in brain networks. Such models can be defined at various levels of complexity, ranging from relatively simple linear diffusion models to highly nonlinear ones. Dynamical models also differ in terms of the spatiotemporal scales of phenomena that they seek to explain. The choice of explanatory scale impacts the precise communication dynamics that the model produces, as well as the scale of collective dynamics that can emerge.

The general form of a deterministic dynamical model at an arbitrary scale is given by (Breakspear, 2017):

$$\frac{dx}{dt} = f(x, A, u, \beta) \tag{1}$$

Here, x encodes the state variables that are used to describe the state of the network, A encodes the underlying connectivity matrix, and u encodes the input variables. The functional form of f is set by the requirements (i.e., the expected utility) of the model. For example, at the level of individual neurons communicating via synaptic connections, the conservation law for electric charges (together with model fitting for the gating variables) determines the functional form of f in the Hodgkin-Huxley model (Hodgkin & Huxley, 1952). Similarly, at the scale of neuronal ensembles, other biophysical mechanisms such as the interactions between excitatory and inhibitory populations dictate f in the Wilson-Cowan model (Wilson & Cowan, 1972). Finally, β encodes other parameters of the model, independent of the connectivity strength A. The β parameters can be phenomenological, thereby allowing for an exploration of the whole phase space of possible behaviors; alternatively, the β parameters can be determined from experiments in more data-driven models. In some limiting cases, it may also be possible to derive β parameters in a given model at a particular spatiotemporal scale from complementary models at a finer scale via the procedure of coarse-graining (Breakspear, 2017).

Fundamentally, dynamical models seek to capture communication of the sort where one unit causes a change in the activity of another unit or shares statistical features with another unit. There is, however, little consensus on precisely how to measure these causal or statistical relations. One of the most common measures is Granger causality (Granger, 1969), which estimates the statistical relation of unit xi to unit xj by the amount of predictive power that the “past” time series {xi(τ),τ < t} of xi has in predicting xj(t). While this prediction need not be linear, Granger causality has been historically measured via linear autoregression (Kamiński, Ding, Truccolo, & Bressler, 2001; Korzeniewska, Mańczak, Kamiński, Blinowska, & Kasicki, 2003); see Bressler and Seth (2011) for a review in relation to brain networks.
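As a rough illustration of the linear-autoregression route to Granger causality, the sketch below compares two least-squares fits for predicting xj(t): one using only the past of xj, and one that also uses the past of xi. The function name `granger_gain`, the model order, and the synthetic time series are illustrative assumptions rather than anything prescribed by the cited works, and the log-variance ratio is only one common way of summarizing the gain in predictive power.

```python
import numpy as np

def granger_gain(source, target, order=2):
    """Log ratio of residual variances of two linear autoregressions:
    restricted (target's own past) versus full (target's and source's past)."""
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    n = len(target)
    y = target[order:]
    # Column m holds the series delayed by m + 1 time steps.
    own = np.column_stack([target[order - m - 1 : n - m - 1] for m in range(order)])
    other = np.column_stack([source[order - m - 1 : n - m - 1] for m in range(order)])
    ones = np.ones((len(y), 1))

    def residual_variance(X):
        coef, *_ = np.linalg.lstsq(X, y, rcond=None)
        return np.var(y - X @ coef)

    restricted = residual_variance(np.hstack([ones, own]))
    full = residual_variance(np.hstack([ones, own, other]))
    return np.log(restricted / full)

# Synthetic example (assumed for illustration): xi drives xj with a one-step lag.
rng = np.random.default_rng(0)
T = 2000
xi = rng.standard_normal(T)
xj = np.zeros(T)
for t in range(1, T):
    xj[t] = 0.5 * xj[t - 1] + 0.8 * xi[t - 1] + 0.1 * rng.standard_normal()

gc_i_to_j = granger_gain(xi, xj)  # large: the past of xi helps predict xj
gc_j_to_i = granger_gain(xj, xi)  # near zero: the past of xj does not help predict xi
```

The asymmetry between the two values is what is read as a directed statistical influence; in practice one would also test the gain for statistical significance and choose the model order from the data.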

The use of temporal precedence and lead-lag relationships is also a basis for alternative definitions of causality. In Nolte et al. (2008), for instance, the authors propose the phase-slope index, which measures the direction of causal influence between two time series based on the lead-lag relationship between the two signals in the frequency domain. Notably, this relationship can be used to measure the causal effect between neural masses coupled according to the structural connectome (Stam & van Straaten, 2012). Because not all states of a complex system can often be measured, several studies have opted to first reconstruct (equivalent) state trajectories via time delay embedding (Shalizi, 2006; Takens, 1981) before measuring predictive causal effects (Harnack, Laminski, Schünemann, & Pawelzik, 2017; Sugihara et al., 2012). Finally, given the capacity to perturb the states or even parameters of the network (either experimentally or in simulations), one can observe the subsequent changes in other network states that occur, and thereby discover and measure causal effects (Smirnov, 2014, 2018).

Topological Models and Measures

The potential for communication between two brain regions, each represented as a network node, is dictated by the paths that connect them. It has been thought that long routes demand high metabolic costs and sustain marked delays in signal propagation (Bullmore & Sporns, 2012). Thus, the presence and nature of shortest paths through a network are commonly used to infer the efficiency of communication between two regions (Avena-Koenigsberger et al., 2018). If the shortest path length between node i and node j is denoted by d(i,j) (Latora & Marchiori, 2001) then the global efficiency through a network is defined as the mean of the inverse shortest path lengths (Ek, VerSchneider, & Narayan, 2016; Latora & Marchiori, 2001). Although measures based on shortest paths have been widely used, their relevance to the true system has been called into question for three reasons. First, systems that route information exclusively through shortest paths are vulnerable to targeted attack of the associated edges (Avena-Koenigsberger et al., 2018); yet, one might have expected brains to have evolved to circumvent this vulnerability, for example, by also using nonshortest paths for routing. Second, a sole reliance on shortest-path routing implies that brain networks have nonoptimally invested a large cost in building alternative routes that essentially are not used for communication. Third, the ability to route a signal by the shortest path appears to require the signal or brain regions to have biologically implausible knowledge of the global network structure. These reasons have motivated the development of alternative measures, such as the number of parallel paths or edge-disjoint paths between two regions (Avena-Koenigsberger et al., 2018); systems using such diverse routing strategies can attain greater resilience of communication processes (Avena-Koenigsberger et al., 2019). 
The resilience of interregional communication in brain networks is a particularly desired feature since fragile networks have been found to be associated with neurological disorders such as epilepsy (Ehrens, Sritharan, & Sarma, 2015; A. Li, Inati, Zaghloul, & Sarma, 2017; Sritharan & Sarma, 2014).
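To make the shortest-path measure concrete, the following minimal sketch computes all pairwise shortest path lengths d(i,j) with the Floyd-Warshall algorithm and then averages their inverses over all ordered pairs of distinct nodes to obtain the global efficiency. The function names and the toy three-node network are assumptions for illustration, and the entries of `W` are interpreted here as edge lengths (for connection weights, one would first convert weights to lengths, for example by inversion).

```python
import numpy as np

def shortest_path_lengths(W):
    """All-pairs shortest path lengths d(i, j) via Floyd-Warshall.
    W[i, j] > 0 is the length of edge i -> j; 0 means no edge."""
    W = np.asarray(W, dtype=float)
    d = np.where(W > 0, W, np.inf)
    np.fill_diagonal(d, 0.0)
    for k in range(d.shape[0]):
        # Allow node k as an intermediate stop on every route.
        d = np.minimum(d, d[:, [k]] + d[[k], :])
    return d

def global_efficiency(W):
    """Mean inverse shortest path length over ordered pairs of distinct nodes."""
    d = shortest_path_lengths(W)
    off_diagonal = ~np.eye(d.shape[0], dtype=bool)
    return np.mean(1.0 / d[off_diagonal])  # disconnected pairs contribute 0

# Toy networks (assumed): a three-node chain 0 - 1 - 2 and a fully connected triangle.
chain = np.array([[0, 1, 0],
                  [1, 0, 1],
                  [0, 1, 0]])
triangle = np.ones((3, 3)) - np.eye(3)

efficiency_chain = global_efficiency(chain)
efficiency_triangle = global_efficiency(triangle)
```

In the chain, the two end nodes are separated by a path of length 2, so the efficiency falls below that of the triangle, where every pair is directly connected.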

The assumption of information flow through all paths available between two regions leads to the notion of communicability. By denoting the adjacency matrix A, we can define the communicability between node i and node j as the weighted sum of all walks starting at node i and ending at node j (Estrada, Hatano, & Benzi, 2012):

$$G_{ji} = \sum_{k=0}^{\infty} c_k \, (A^k)_{ji} \tag{2}$$

where $A^k$ denotes the k-th power of A, and $c_k$ are appropriately selected coefficients that both ensure that the series is convergent and assign smaller weights to longer walks. If the entries of A are all nonnegative (which is the context in which communicability is mainly used), then $G_{ji}$ is also real and nonnegative. Out of several choices that can be made, a particularly insightful one is $c_k = 1/k!$. The resulting communicability, also known as the exponential communicability $G_{ji} = (e^A)_{ji}$, allows for interesting analogies to be drawn with the thermal Green’s function and correlations in physical systems (Estrada et al., 2012). Additionally, since $(A^k)_{ji}$ directly encodes the weighted walks of length k from node i to node j, one can conveniently study the dependence of communication on walk length. Exponential communicability is also similar to the impulse response of the system, a familiar notion in control theory which we further explore in section 4.
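The weighted sum over walks can be sketched directly as a truncated power series. In the example below, `communicability` accumulates the terms formed by each coefficient times the corresponding power of A, and `exponential_communicability` uses factorial weights 1/k!, which yields the matrix exponential of A. The function names, the truncation at 30 terms, and the two-node example network are assumptions for illustration.

```python
import numpy as np

def communicability(A, coeffs):
    """Truncated series G = sum_k c_k A^k for the given coefficients c_0, c_1, ..."""
    A = np.asarray(A, dtype=float)
    G = np.zeros_like(A)
    A_power = np.eye(A.shape[0])  # A^0
    for c in coeffs:
        G += c * A_power
        A_power = A_power @ A
    return G

def exponential_communicability(A, n_terms=30):
    """Communicability with factorial weights c_k = 1/k!, approximating exp(A)."""
    coeffs = [1.0]
    for k in range(1, n_terms):
        coeffs.append(coeffs[-1] / k)
    return communicability(A, coeffs)

# Two nodes joined by a single undirected edge (assumed toy network).
A = np.array([[0.0, 1.0],
              [1.0, 0.0]])
G = exponential_communicability(A)
# Walks between the two nodes have odd length and closed walks have even length,
# so G[0, 1] sums 1/k! over odd k (sinh 1) and G[0, 0] sums over even k (cosh 1).
```

Passing a different coefficient sequence to `communicability` gives other weightings of walk length, provided the series converges for the network at hand.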

Another flow-based measure of communication efficiency is the mean first-passage time, which quantifies the distance between two nodes when information is propagated by diffusion. Similar to the global efficiency, the diffusion efficiency is the average of the inverse of the mean first-passage time between all pairs of network nodes. Interestingly, systems that evolve under competing constraints for diffusion efficiency and routing efficiency can display a diverse range of network topologies (Avena-Koenigsberger et al., 2018). Note that these global measures of communication efficiency only provide an upper bound on the assumed communicative capacity of the network; in networks with significant community or modular structures (Schlesinger, Turner, Grafton, Miller, & Carlson, 2017), other architectural attributes such as the existence and interconnectivity of highly connected hubs are determinants of the integrative capacity of a network that global measures of communication efficiency fail to capture accurately (Sporns, 2013a).

Network attributes that determine an efficient propagation of externally induced or intrinsic signals may inform generative models of brain networks both in health and disease (Vértes et al., 2012). Moreover, such attributes can inform the choice of control inputs targeted to guide brain state transitions; we discuss this convergence in section 4. Further, quantifying communication channel capacity calls for the use of information theory, which we turn to now.

Information Theoretic Models and Measures

Information theory and statistical mechanics have been used to define several measures of information transfer such as transfer entropy and Granger causality. Such measures are built on the fact that the process of signal propagation through brain networks results in collective time-dependent activity patterns of brain regions that can be measured as time series. Entropic measures of communication aim to find statistical dependencies between such time series to infer the amount and direction of information transfer. The processes underlying the observed time series are typically assumed to be Markovian, and measures of statistical dependence are calculated in a manner that reflects causal dependence. For this reason, the causal measures of communication proposed in the information theoretic approach share similarities with those used in dynamical causal inference (Valdes-Sosa, Roebroeck, Daunizeau, & Friston, 2011).

A central quantity in information theory is the Shannon entropy, which measures the uncertainty in a discrete random variable I that follows the distribution p(i) and is given by $H(I) = -\sum_{i} p(i) \log p(i)$. One measure of statistical interdependency between two random variables I and J is their mutual information, $M_{IJ} = \sum_{i,j} p(i,j) \log \frac{p(i,j)}{p(i)\,p(j)}$, where p(i,j) is their joint distribution and p(i) and p(j) are its marginals. Since mutual information is symmetric, it fails to capture the direction of information flow between two processes (sequences of random variables) (Schreiber, 2000).
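A small numerical sketch can make the symmetry of mutual information tangible. The functions below compute the Shannon entropy of a discrete distribution and the mutual information of a joint distribution given as a table; the function names, the use of base-2 logarithms (so that values are in bits), and the example distributions are assumptions for illustration.

```python
import numpy as np

def shannon_entropy(p):
    """Entropy (in bits) of a discrete distribution given as probabilities p(i)."""
    p = np.asarray(p, dtype=float)
    p = p[p > 0]  # terms with p(i) = 0 contribute nothing
    return -np.sum(p * np.log2(p))

def mutual_information(p_ij):
    """Mutual information (in bits) from a joint table p_ij[i, j] = p(i, j)."""
    p_ij = np.asarray(p_ij, dtype=float)
    p_i = p_ij.sum(axis=1, keepdims=True)  # marginal of I
    p_j = p_ij.sum(axis=0, keepdims=True)  # marginal of J
    mask = p_ij > 0
    return np.sum(p_ij[mask] * np.log2(p_ij[mask] / (p_i * p_j)[mask]))

h_fair_coin = shannon_entropy([0.5, 0.5])

# Assumed example joint distributions over two binary variables.
independent = np.array([[0.25, 0.25],
                        [0.25, 0.25]])
identical = np.array([[0.5, 0.0],
                      [0.0, 0.5]])
skewed = np.array([[0.4, 0.1],
                   [0.2, 0.3]])

mi_independent = mutual_information(independent)
mi_identical = mutual_information(identical)
mi_skewed = mutual_information(skewed)
mi_skewed_swapped = mutual_information(skewed.T)  # roles of I and J exchanged
```

Exchanging the roles of the two variables (transposing the table) leaves the value unchanged, which is why mutual information alone cannot indicate the direction of information flow.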

To address this limitation, the measure of transfer entropy was proposed to capture the directionality of information exchange (Schreiber, 2000). Transfer entropy takes into account the transition probability between different states, which can be the result of a stochastic dynamic process (similar to Equation 1 but with a stochastic u) and obtained from the time series of activities of brain regions through imaging techniques. To measure the direction of information transfer between processes I and J, the notion of mutual information is generalized to the mutual information rate. The transfer entropy from process J to process I is given by (Schreiber, 2000):

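$$T_{J \to I} = \sum p\left(i_{n+1}, i_n^{(k)}, j_n^{(l)}\right) \log \frac{p\left(i_{n+1} \mid i_n^{(k)}, j_n^{(l)}\right)}{p\left(i_{n+1} \mid i_n^{(k)}\right)} \tag{3}$$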

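where processes I and J are assumed to be stationary Markov processes of order k and l, respectively. The quantity $i_n$ ($j_n$) denotes the state of process I (J) at time n, with $i_n^{(k)} = (i_n, \ldots, i_{n-k+1})$ and $j_n^{(l)} = (j_n, \ldots, j_{n-l+1})$ collecting the most recent k and l states, while $p(i_{n+1} \mid i_n^{(k)})$ denotes the transition probability to state $i_{n+1}$ at time n + 1, given knowledge of the previous k states. The quantity $p(i_{n+1} \mid i_n^{(k)}, j_n^{(l)})$ is the same as $p(i_{n+1} \mid i_n^{(k)})$ if the process J does not influence the process I, in which case the transfer entropy vanishes.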
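The definition can be illustrated with a simple plug-in estimator for discrete time series in the lowest-order case k = l = 1, where transition probabilities are replaced by observed frequencies. The function name `transfer_entropy`, the base-2 logarithm, and the synthetic binary series (in which process I copies process J with a one-step delay) are assumptions for illustration; practical estimators for neural data typically need bias correction and careful choices of k and l.

```python
import numpy as np
from collections import Counter

def transfer_entropy(source, target):
    """Plug-in estimate (in bits) of the transfer entropy source -> target
    for discrete-valued series with history lengths k = l = 1."""
    source = [int(s) for s in source]
    target = [int(v) for v in target]
    # Count occurrences of (i_{n+1}, i_n, j_n), with I = target and J = source.
    triples = Counter(zip(target[1:], target[:-1], source[:-1]))
    total = sum(triples.values())
    count_now = Counter()        # counts of (i_n, j_n)
    count_own = Counter()        # counts of (i_{n+1}, i_n)
    count_state = Counter()      # counts of i_n
    for (i_next, i_now, j_now), c in triples.items():
        count_now[(i_now, j_now)] += c
        count_own[(i_next, i_now)] += c
        count_state[i_now] += c
    te = 0.0
    for (i_next, i_now, j_now), c in triples.items():
        p_full = c / count_now[(i_now, j_now)]                   # p(i_{n+1} | i_n, j_n)
        p_own = count_own[(i_next, i_now)] / count_state[i_now]  # p(i_{n+1} | i_n)
        te += (c / total) * np.log2(p_full / p_own)
    return te

# Synthetic example (assumed): J is a sequence of fair coin flips, I copies J one step later.
rng = np.random.default_rng(1)
j_series = rng.integers(0, 2, size=5000)
i_series = np.roll(j_series, 1)
i_series[0] = 0

te_j_to_i = transfer_entropy(j_series, i_series)  # close to 1 bit
te_i_to_j = transfer_entropy(i_series, j_series)  # close to 0 bits
```

Unlike mutual information, the two directions give different values here, reflecting that knowledge of J removes the uncertainty about the next state of I but not the other way around.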

Similar to Granger causality, transfer entropy has been extensively used to compute the statistical interdependence of dynamic processes and to infer the directionality of information exchange. Later studies have sought to combine these two measures into a single framework by defining the multi-information. This approach takes into account the statistical structure of the whole system and of each subsystem, as well as the structure of the interdependence between them (Chicharro & Ledberg, 2012). Such methods complement the topological and dynamical models to provide a unique perspective on communication, by quantifying information content and transformation.

Communication Models Across Spatiotemporal Scales

Whether considering models that are dynamical, topological, or information theoretic, we must choose the identity of the neural unit that is performing the communication. Individual neurons form basic units of computation in the brain, which communicate with other neurons via synapses. One particularly common model of communication at this cellular scale is the Hodgkin-Huxley model, which identifies the membrane potential as the state variable whose evolution is determined by the conservation law for electric charge (Hodgkin & Huxley, 1952). Simplifications and dimensional reductions of the Hodgkin-Huxley model have led to related models such as the FitzHugh-Nagumo model, which is particularly useful for studying the resulting phase space (Abbott & Kepler, 1990; FitzHugh, 1961). Further simplifications of the neuronal states to binary variables have facilitated detailed accounts of network-based interactions such as those provided by the Hopfield model (Abbott & Kepler, 1990; Bassett et al., 2018). Collectively, despite all capturing the state of an individual neuron, these models differ from one another in the biophysical realism of the chosen state variables: the on/off states in the Hopfield model are arguably less realistic than the membrane potential state in the Hodgkin-Huxley model.

When considering a large population of neurons, a set of simplified dynamics can be derived from those of a single neuron by using the formalism and tools from statistical mechanics (Abbott & Kepler, 1990; Breakspear, 2017; Deco et al., 2008). The approximations prescribed by the laws of statistical mechanics—such as, for example, the diffusion approximation in the limit of uncorrelated spikes in neuronal ensembles—have led to the Fokker-Planck equations for the probability distribution of neuronal activities. From the evolution of such probability distributions, one can derive the dynamics of the moments, such as the mean firing rate and variance (Breakspear, 2017; Deco et al., 2008). Several models of neuronal ensembles exist that exhibit rich collective behavior such as synchrony (Palmigiano, Geisel, Wolf, & Battaglia, 2017; Vuksanović & Hövel, 2015), oscillations (Fries, 2005; N. Kopell, Börgers, Pervouchine, Malerba, & Tort, 2010), waves (Muller, Chavane, Reynolds, & Sejnowski, 2018; Roberts et al., 2019), and avalanches (J. M. Beggs & Plenz, 2003), each supporting different modes of communication. In the limit where the variance of neuronal activity over the ensemble can be assumed to be constant (e.g., in the case of strong coherence), the Fokker-Planck equation leads to neural mass models (Breakspear, 2017; Coombes & Byrne, 2019). Relatedly, the Wilson-Cowan model is a mean-field model for interacting excitatory and inhibitory populations of neurons (Wilson & Cowan, 1972), and has significantly influenced the subsequent development of theoretical models for brain regions (Destexhe & Sejnowski, 2009; Kameneva, Ying, Guo, & Freestone, 2017). 
At scales larger than that of neuronal ensembles, brain dynamics can be modeled by coupling neural masses, Wilson-Cowan oscillators, or Kuramoto oscillators according to the topology of structural connectivity (Breakspear, 2017; Muller et al., 2018; Palmigiano et al., 2017; Roberts et al., 2019; Sanz-Leon, Knock, Spiegler, & Jirsa, 2015). Collectively, these models provide a powerful way to theoretically and computationally generate the large-scale temporal patterns of brain activity that can be explained by the theory of dynamical systems.

When changing models to different spatiotemporal scales, we must also change how we think about communication. While communication might involve induced spiking at the neuronal scale, it may also involve phase lags at the population scale. Dynamical systems theory provides a powerful and flexible framework to determine the emergent behavior in dynamic models of communication. As we saw in Equation 1, the evolution of the system is represented by a trajectory in the phase space constructed from the system’s state variables. A critical notion from this theory has been that of attractors, namely, stable patterns in this phase space to which phase trajectories converge. The range of emergent behavior exhibited by the dynamical system, such as steady states, oscillations, and chaos, is thus determined by the nature of its attractors, which can be stable fixed points, limit cycles, quasi-periodic attractors, or chaotic attractors. Oscillations, synchronization, and spiral or traveling wave solutions that result from dynamical models match the patterns observed in brain networks, and have been proposed as the mechanisms contributing to cross-regional communication in the brain (Buehlmann & Deco, 2010; Roberts et al., 2019; Rubino, Robbins, & Hatsopoulos, 2006).

The class of communication models that generate oscillatory solutions holds an important place in models of brain dynamics (Davison, Aminzare, Dey, & Ehrich Leonard, n.d.; Breakspear, Heitmann, & Daffertshofer, 2010). Numerous classes of nonlinear models at both the micro- and macroscale exhibit oscillatory solutions, and they can be broadly classified into periodic (limit cycle), quasi-periodic (tori), and chaotic (Breakspear, 2017). Synchronization in the activity of spiking neurons is an emergent feature of neural systems that appears to be particularly important for a variety of cognitive functions (Bennett & Zukin, 2004). This fact has motivated efforts to model brain regions as interacting oscillatory units, whose dynamics are described by, for example, the Kuramoto model for phase oscillators. In its original form, the equation for the phase variable θi(t) of the i-th Kuramoto oscillator is given by (Acebrón, Bonilla, Vicente, Ritort, & Spigler, 2005; Kuramoto, 2003)

$$\frac{d\theta_i}{dt} = \omega_i + \sum_{j} A_{ij} \sin(\theta_j - \theta_i) \tag{4}$$

where ωi denotes the natural frequency of oscillator i, which depends on its local dynamics and parameters, and Aij denotes the net connection strength of oscillator j to oscillator i. Phase oscillators generally and the Kuramoto model specifically have been widely used to model neuronal dynamics (Breakspear et al., 2010). The representation of each oscillator by its phase (which critically depends on the weak coupling assumption; Ermentrout & Kopell, 1990) makes it particularly tractable to study synchronization phenomena (Boccaletti, Latora, Moreno, Chavez, & Hwang, 2006; Börgers & Kopell, 2003; Chopra & Spong, 2009; Davison et al., n.d.; Vuksanović & Hövel, 2015). Generalized variants of the Kuramoto model have also been proposed and studied in the context of neuronal networks (Cumin & Unsworth, 2007).
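The emergence of synchronization in coupled phase oscillators can be sketched with a few lines of numerical integration. The example below advances the phases with a forward-Euler step, in which each oscillator is driven by its natural frequency plus the coupling-weighted sines of its phase differences to the other oscillators, and summarizes phase coherence with the magnitude of the mean of the unit phasors. The function names, step size, all-to-all coupling matrix, and initial phases are assumptions for illustration, and forward Euler is chosen only for brevity.

```python
import numpy as np

def simulate_kuramoto(A, omega, theta0, dt=0.01, n_steps=5000):
    """Forward-Euler integration of coupled phase oscillators.
    A[i, j] is the connection strength of oscillator j onto oscillator i."""
    theta = np.array(theta0, dtype=float)
    for _ in range(n_steps):
        phase_diff = theta[None, :] - theta[:, None]  # phase_diff[i, j] = theta_j - theta_i
        theta = theta + dt * (omega + np.sum(A * np.sin(phase_diff), axis=1))
    return theta

def order_parameter(theta):
    """Phase coherence r in [0, 1]; r = 1 means all phases coincide."""
    return np.abs(np.mean(np.exp(1j * theta)))

# Assumed toy setup: 10 identical oscillators with phases spread over less than half a circle.
N = 10
omega = np.ones(N)
theta0 = np.linspace(0.0, 0.9 * np.pi, N)
coupled = (2.0 / N) * (np.ones((N, N)) - np.eye(N))  # all-to-all coupling
uncoupled = np.zeros((N, N))

r_initial = order_parameter(theta0)
r_coupled = order_parameter(simulate_kuramoto(coupled, omega, theta0))
r_uncoupled = order_parameter(simulate_kuramoto(uncoupled, omega, theta0))
```

With coupling, the phases collapse onto a common value and the coherence approaches 1, whereas without coupling the identical oscillators simply rotate together and the coherence stays at its initial value. Replacing `coupled` with a structural connectivity matrix gives the network-coupled setting described in the text.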

CONTROL MODELS

While the study of communication in neural systems has developed hand-in-hand with our understanding of the brain, the study of control dynamics in (and on) the brain is rather young and still in the early stages of development. In this section we review some of the basic elements of control theory that will allow us in later sections to elucidate the relationships between communication and control in brain networks.

The Theory of Linear Systems

The simplicity and tractability of linear time-invariant (LTI) models have sparked significant interest in the application of linear control theory to neuroscience (Kailath, 1980; Tang & Bassett, 2018). LTI systems are most commonly studied in state space, and their simplest form is finite dimensional, deterministic, without delays, and without instantaneous effects of the input on the output. Such a continuous-time LTI system is described by the algebraic-differential equation

$$\frac{dx(t)}{dt} = A\,x(t) + B\,u(t) \tag{5a}$$

$$y(t) = C\,x(t) \tag{5b}$$

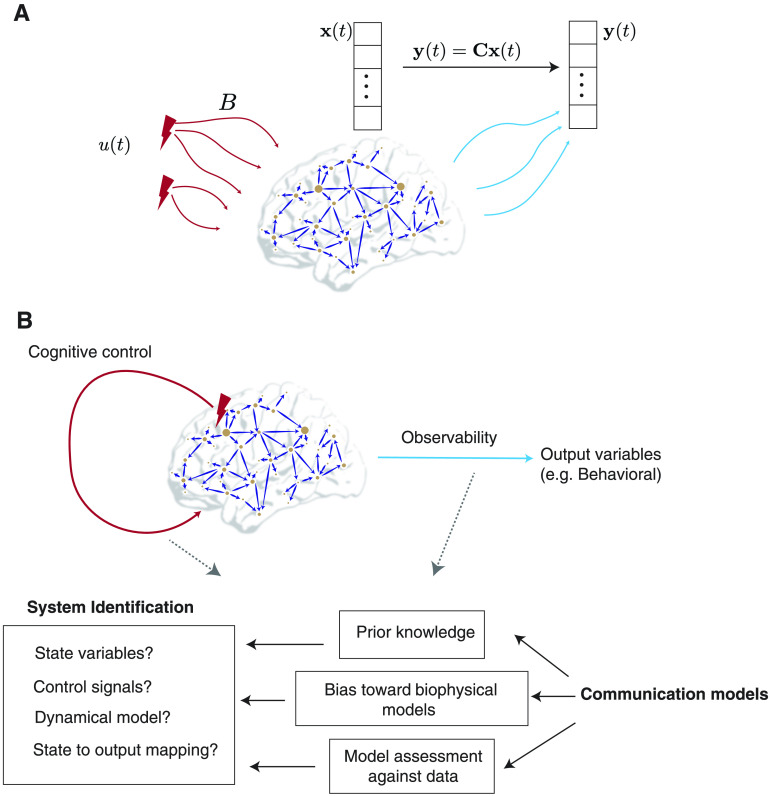

Here, Equation 5a is a special case of Equation 1 (with the input matrix B corresponding to β), while the output vector y now allows for a distinction between the internal, latent state variables x and the external signals that can be measured, say, via neuroimaging. In the context of brain networks, the matrix A is most often chosen to be the structural connectivity matrix obtained from the imaging of white-matter tracts (Gu et al., 2015; Stiso et al., 2019). More recently, effective connectivity matrices have also been encoded as A (Scheid et al., 2020; Stiso et al., 2020), as have functional connectivity matrices inferred from system identification methods (Deng & Gu, 2020). It is insightful to point out that in continuous-time LTI systems, the entries of matrix A have the unit of inverse time or a rate, implying that the eigenvalues of the matrix A represent the response rates of associated modes as they are excited by the stimuli u. The stimuli u represent exogenous control signals (e.g., strategies of neuromodulation such as deep brain stimulation, direct electrical stimulation, and transcranial magnetic stimulation) or endogenous control (such as the mechanisms of cognitive control) and are injected into the brain networks via a control configuration specified by the input matrix B (Figure 3). Then, Equation 5b specifies the mapping between latent state variables x and the observable output vectors y measured via neuroimaging. Each element Cij of the matrix C thus describes the loading of the i-th measured signal on the activity level of the j-th brain region (or the j-th state in general, if states do not correspond to brain regions). Note that the number of states, inputs, and outputs need not be the same, in which case B and C are not square matrices.

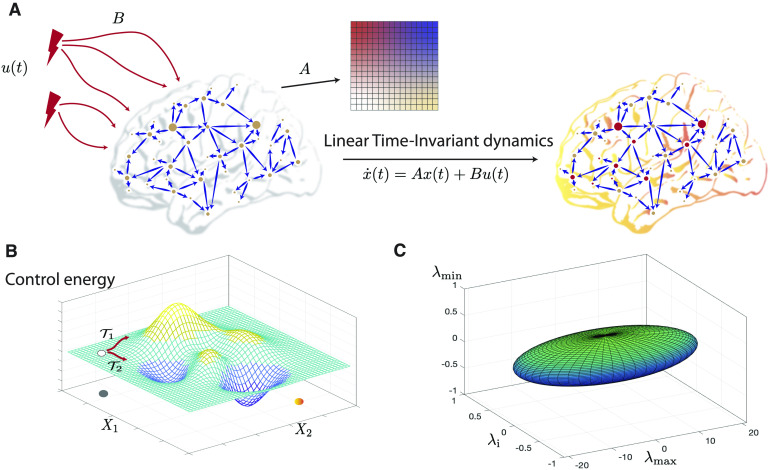

Figure 3.

Control theory applied to brain networks. Control theory seeks to determine and quantify the controllability and observability properties of a given system. A system is controllable when a control input u(t) is guaranteed to exist to navigate the system from a given initial state to a desired final state in a specified span of time. (A) We begin by encoding a brain network in the adjacency matrix denoted by A. Then, control signals u(t) act on the network via the input matrix B, leading to the evolution of the system’s state to a desired final state according to some dynamics. The most common dynamics studied in this context are linear time-invariant dynamics. Whether the system can be navigated between two arbitrary states in a given time period is determined by a full-rank condition on the controllability matrix. (B) The control energy landscape dictates the availability and difficulty of transitions between distinct system states. For a controllable system, several trajectories can exist which connect the initial and final states. An optimum trajectory is then determined using the notion of optimal control. (C) The eigenvalues of the inverse Gramian matrix quantify the ease of moving the system along eigen directions that span the state space and form an N-ellipsoid whose surface reflects the control energy to make unit changes in the state of the system along the corresponding eigen direction. Here we show an ellipsoid constructed from the maximum, the minimum, and an intermediate eigenvalue of the Gramian for an example regular graph with N = 400 nodes and degree l = 40. The initial state has been taken to be at the origin, and the final state is a random vector of length N with unit norm. Commonly used metrics of controllability, such as the average controllability, can be constructed from the eigenvalues of the Gramian.

At the macroscale where linear models are most widely used, the state vector x often contains as many elements as the number of brain (sub)regions of interest, with each element xi(t) representing the activity level of the corresponding region at time t, for example, corresponding to the mean firing rate or local field potential. The elements of the vector u are often more abstract and can model either internal or external sources. An example of an internal source would be a cognitive control signal from frontal cortex, whereas an example of an external source would be neurostimulation (Cornblath et al., 2019; Ehrens et al., 2015; Gu et al., 2015; Sritharan & Sarma, 2014). While a formal link between these internal or external sources and the model vector u is currently lacking, it is standard to let the squared norm of u represent the net energy (cf. Equation 8). The matrix B is often binary, with one nonzero entry per column, and encodes the spatial distribution of the input channels to brain regions.

Owing to the tractability of LTI systems, the state response of an LTI system (i.e., x(t)) to a given stimulus u(t) can be analytically obtained as:

$$x(t) = e^{At}x(0) + \int_0^t e^{A(t-\tau)} B\,u(\tau)\,d\tau \tag{6}$$

In this expression, the matrix exponential $e^{At}$ has a special significance. If x(0) = 0, if ui(t) is an impulse (i.e., a Dirac delta function) for some i, and if the remaining input channels are kept at zero, then Equation 6 simplifies to the system’s impulse response

$$x(t) = e^{At} b_i \tag{7}$$

where bi is the i-th column of B. Clearly, the impulse response has close ties to the communicability property of the network introduced in section 2. We discuss this relation further in section 4, where we directly compare communication and control.
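As an illustration, the sketch below evaluates the LTI state response, x(t) = e^{At} x(0) + ∫ e^{A(t−τ)} B u(τ) dτ, and the impulse response, x(t) = e^{At} bi, for a small example. The three-region matrix A, the input matrix B, the trapezoidal quadrature, and the helper names `expm_sym` and `state_response` are assumptions made for illustration; in particular, `expm_sym` relies on A being symmetric.

```python
import numpy as np

def expm_sym(A, t):
    """e^{At} for a symmetric matrix A, via its eigendecomposition."""
    eigvals, eigvecs = np.linalg.eigh(A)
    return (eigvecs * np.exp(eigvals * t)) @ eigvecs.T

def state_response(A, B, x0, u, t, steps=2000):
    """State response of the LTI system, with the convolution integral
    approximated by the trapezoidal rule on a uniform grid."""
    taus = np.linspace(0.0, t, steps + 1)
    vals = np.stack([expm_sym(A, t - tau) @ (B @ u(tau)) for tau in taus])
    h = taus[1] - taus[0]
    integral = h * (0.5 * vals[0] + vals[1:-1].sum(axis=0) + 0.5 * vals[-1])
    return expm_sym(A, t) @ x0 + integral

# Hypothetical 3-region chain with self-decay (values chosen for illustration).
A = np.array([[-1.0, 0.5, 0.0],
              [0.5, -1.0, 0.5],
              [0.0, 0.5, -1.0]])
B = np.array([[1.0], [0.0], [0.0]])   # one input channel acting on region 0

# Impulse response: x(t) = e^{At} b_i, with b_i the i-th column of B.
t = 2.0
x_impulse = expm_sym(A, t) @ B[:, 0]

# Response to a constant unit input from a nonzero initial state.
x0 = np.array([0.2, -0.1, 0.0])
x_step = state_response(A, B, x0, lambda tau: np.array([1.0]), t)
print("impulse response at t = 2:", x_impulse)
print("step response at t = 2:   ", x_step)
```

For a constant input the integral also has the closed form A⁻¹(e^{At} − I)B, which offers a simple check on the quadrature.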

Controllability and Observability in Principle

One of the most successful applications of linear control theory to neuroscience lies in the evaluation of controllability. If the input-state dynamics (Equation 5a) is controllable, it is possible to design a control signal u(t), t ≥ 0 such that x(0) = x0 and x(T) = xf for any initial state x0, final state xf, and control horizon T > 0. In other words, a (continuous-time LTI) system is controllable if it can be controlled from any initial state to any final state in a given amount of time; notice that controllability is independent of the system’s output. Using standard control-theoretic tools, it can be shown that the system Equation 5a is controllable if and only if the controllability matrix $\mathcal{C} = [B,\ AB,\ A^{2}B,\ \ldots,\ A^{n-1}B]$ has full rank n, where n denotes the dimension of the state (Kailath, 1980).
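The rank condition can be checked numerically in a few lines. The sketch below builds the controllability matrix [B, AB, ..., A^{n−1}B] and tests its rank for a hypothetical three-node path graph; the example network and the function names are assumptions for illustration. Note that numerical rank tests of this kind become unreliable for large networks, where the controllability matrix is typically ill-conditioned.

```python
import numpy as np

def controllability_matrix(A, B):
    """Stack [B, AB, A^2 B, ..., A^(n-1) B] column-wise."""
    n = A.shape[0]
    blocks = [B]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    return np.hstack(blocks)

def is_controllable(A, B):
    """Kalman rank test: controllable iff the matrix above has rank n."""
    rank = np.linalg.matrix_rank(controllability_matrix(A, B))
    return bool(rank == A.shape[0])

# Hypothetical 3-node path graph: 0 -- 1 -- 2
A = np.array([[0.0, 1.0, 0.0],
              [1.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]])
B_end = np.array([[1.0], [0.0], [0.0]])   # input at an end node
B_mid = np.array([[0.0], [1.0], [0.0]])   # input at the middle node

print("input at end node:   ", is_controllable(A, B_end))
print("input at middle node:", is_controllable(A, B_mid))
```

In this example the middle node fails the test because of the network's mirror symmetry: an input there drives the two end nodes identically and so cannot move them apart.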

The notion of full-state controllability discussed above can at times be a strong requirement, particularly as the size of the network (and therefore the dimension of the state space) grows. If it happens that a system is not full-state controllable, the control input u(t) can still be designed to steer the state in certain directions, despite the fact that not every state transition is achievable. In fact, we can precisely determine the directions in which the state can and cannot be steered using the input u(t). The former, called the controllable subspace, is given by the range space of the controllability matrix, that is, all directions that can be written as a linear combination of its columns. It can be shown that the state can be arbitrarily steered within the controllable subspace, similar to a full-state controllable system (C.-T. Chen, 1998, §6.4). Recall, however, that the rank of the controllability matrix is necessarily less than n for an uncontrollable system, and so is the dimension of the controllable subspace. If this rank is r < n, we then have an n − r dimensional subspace, called the uncontrollable subspace, which is orthogonal to the controllable one. In contrast to our full control over the controllable subspace, the evolution of the system is completely autonomous and independent of u(t) in the uncontrollable subspace (Kailath, 1980).
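One way to obtain explicit bases for the two subspaces is through a singular value decomposition of the controllability matrix, as in the sketch below. The use of the SVD, the tolerance, the example network, and the function name `controllable_subspace` are assumptions for illustration, not a procedure taken from the cited texts.

```python
import numpy as np

def controllable_subspace(A, B, tol=1e-10):
    """Orthonormal bases of the controllable subspace (range of the
    controllability matrix) and of its orthogonal complement."""
    n = A.shape[0]
    blocks = [B]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    ctrb = np.hstack(blocks)
    U, s, _ = np.linalg.svd(ctrb)              # U is n x n
    r = int(np.sum(s > tol * s[0]))            # numerical rank
    return U[:, :r], U[:, r:]

# Hypothetical 3-node path graph driven at its middle node.
A = np.array([[0.0, 1.0, 0.0],
              [1.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]])
B = np.array([[0.0], [1.0], [0.0]])

ctrl, unctrl = controllable_subspace(A, B)
print("dimension of controllable subspace:  ", ctrl.shape[1])
print("dimension of uncontrollable subspace:", unctrl.shape[1])
print("uncontrollable direction (up to sign):", unctrl[:, 0])
```

Here the uncontrollable direction is the antisymmetric pattern in which the two end nodes have opposite activity; the input never enters this direction, and the dynamics do not couple it to the controllable subspace.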

Dual to the notion of controllability is that of observability, which has been explored to a lesser degree in the context of brain networks. Whereas an output can be directly computed when the input and initial state are specified (Equation 5), the converse is not necessarily true; it is not always possible to solve for the state from input-output measurements. The property that characterizes and quantifies the possibility of determining the state from input-output measurements is termed observability, and can be understood as the possibility to invert the state-to-output map (Equation 5b), albeit over time. Interestingly, the input signal u(t) and matrix B are irrelevant for observability. Moreover, the system Equation 5 is observable if and only if its dual system $dx(t)/dt = A^{\mathsf{T}}x(t) + C^{\mathsf{T}}u(t)$ is controllable (here, the superscript T denotes the transpose). This duality allows us to, for instance, easily determine the observability of Equation 5 by checking whether the observability matrix $\mathcal{O} = [C^{\mathsf{T}},\ A^{\mathsf{T}}C^{\mathsf{T}},\ \ldots,\ (A^{\mathsf{T}})^{n-1}C^{\mathsf{T}}]^{\mathsf{T}}$ has full rank. The notion of observability may be particularly relevant to the measurement of neural systems, and we discuss this topic further in sections 4 and 5.
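The duality can be verified directly: the rank of the observability matrix, formed by stacking C, CA, ..., CA^{n−1}, equals the rank of the controllability matrix of the dual pair (Aᵀ, Cᵀ). The following sketch checks both for a hypothetical three-node path graph with a single measured node; the network, the measurement matrices, and the function names are illustrative assumptions.

```python
import numpy as np

def observability_matrix(A, C):
    """Stack [C; CA; ...; CA^(n-1)] row-wise."""
    n = A.shape[0]
    blocks = [C]
    for _ in range(n - 1):
        blocks.append(blocks[-1] @ A)
    return np.vstack(blocks)

def is_observable(A, C):
    rank = np.linalg.matrix_rank(observability_matrix(A, C))
    return bool(rank == A.shape[0])

def is_observable_by_duality(A, C):
    """Controllability test applied to the dual pair (A^T, C^T)."""
    n = A.shape[0]
    blocks = [C.T]
    for _ in range(n - 1):
        blocks.append(A.T @ blocks[-1])
    return bool(np.linalg.matrix_rank(np.hstack(blocks)) == n)

# Hypothetical 3-node path graph with one recorded node.
A = np.array([[0.0, 1.0, 0.0],
              [1.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]])
C_end = np.array([[1.0, 0.0, 0.0]])   # record an end node
C_mid = np.array([[0.0, 1.0, 0.0]])   # record the middle node

for name, C in [("end", C_end), ("middle", C_mid)]:
    print(name, is_observable(A, C), is_observable_by_duality(A, C))
```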

Controllability in Practice

Once a system is determined to be controllable in principle, the next natural question is how to design a control signal u(t) that can move the system between two states. Although the existence of at least one such signal is guaranteed by controllability, this control signal and the resulting system trajectory may not be unique; for instance, an arbitrary intermediate point can be reached in T/2 time and then the final state can be reached in the remaining time (both due to controllability). This nonuniqueness of control strategies leads to the problem of optimal control; that is, designing the best control signals that achieve a desired state transition, according to some criterion of optimality. The simplest and most commonly used criterion is the control energy defined as

$$E = \int_0^T \lVert u(t) \rVert^{2}\,dt \tag{8}$$

where ∥⋅∥ denotes the Euclidean norm. The corresponding control signal that minimizes (8) is thus referred to as the minimum energy control. Owing to the tractability of LTI systems, this control signal and its total energy can be found analytically (Kirk, 2004).

While certainly useful, the minimum energy criterion (Equation 8) has a number of limitations. In particular, the energies of all the control channels are weighted equally. Further, the state is allowed to become arbitrarily large between the initial and final times. These limitations have motivated the more general linear-quadratic regulator (LQR) criterion

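$$J = \int_0^T \Big( \sum_j R_j\,u_j(t)^{2} + \sum_i Q_i\,x_i(t)^{2} \Big)\,dt = \int_0^T \Big( u(t)^{\mathsf{T}} R\,u(t) + x(t)^{\mathsf{T}} Q\,x(t) \Big)\,dt \tag{9}$$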

where Rj and Qi are positive weights forming the diagonal entries of the matrices R and Q, respectively, and T denotes the transpose operator. Whereas the first term in Equation 9 expresses the cost of control as in Equation 8, the second term introduces a cost on the trajectory in state-space. This general form poses a trade-off between the two costs, and is particularly relevant in cases where some regions of state space are more preferred than others. By selecting the entries of Q to be large relative to R, for instance, the resulting control will ensure that the state remains close to 0. The second term in Equation 9 can further be generalized to introduce a preferred trajectory in the state space by replacing x(t) by x(t) −x*(t) where x*(t) denotes the preferred trajectory. An analytical solution can also be found for the control signals minimizing the above generalized energy. Notably, the cost function Equation 9 has recently proven fruitful in the study of brain network architecture and development (Gu et al., 2017; Tang et al., 2017).

Another central quantity of interest in characterizing the controllability properties of an LTI system is the Gramian matrix which, for continuous-time dynamics, is given as

$$W_T = \int_0^T e^{A\tau} B B^{\mathsf{T}} e^{A^{\mathsf{T}}\tau}\,d\tau \tag{10}$$

The invertibility of the Gramian matrix, which is equivalent to the full-rank condition on the controllability matrix, ensures that the system is controllable. Further, the eigen directions (eigenvectors) of the Gramian corresponding to its nonzero (positive) eigenvalues form a basis of the state subspace that is reachable by the system (Figure 3C) (Lewis, Vrabie, & Syrmos, 2012; Y.-Y. Liu, Slotine, & Barabási, 2011), even when the Gramian is not invertible (note the relation with the controllable and uncontrollable subspaces discussed above). Intuitively then, the eigenvalues of the Gramian matrix quantify the ease of moving the system along the corresponding eigen directions. Various efforts have thus been made to condense the n eigenvalues of the Gramian into a single, scalar controllability metric, such as the average controllability and control energy (see below) (Gu et al., 2017; Kailath, 1980; Pasqualetti, Zampieri, & Bullo, 2014; Tang & Bassett, 2018).

Using the controllability Gramian, it can in fact be shown that the energy (Equation 8) of the minimum-energy control is given by (assuming x(0) = 0 for simplicity)

$$E_{\min} = x_f^{\mathsf{T}}\, W_T^{-1}\, x_f \tag{11}$$

where xf denotes the final state. The framework of minimum energy control and the associated controllability metrics have recently been applied to brain networks (see, e.g., Gu et al., 2017, 2015; Tang & Bassett, 2018; Tang et al., 2017). This framework further opens up interesting questions about its implications for control and the response of brain networks to stimuli; specifically, one might wish to determine the physical interpretation of controllability metrics in brain networks and how they can inform optimal intervention strategies. We revisit this point while discussing the utility of communication models in addressing some of these questions in section 4-B.
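The relation between the Gramian and the minimum energy can be checked numerically. The sketch below assumes the standard forms W_T = ∫₀ᵀ e^{Aτ} B Bᵀ e^{Aᵀτ} dτ for the Gramian and u*(t) = Bᵀ e^{Aᵀ(T−t)} W_T⁻¹ xf for the minimum-energy input from x(0) = 0, and then simulates the system under u*(t) to confirm that it reaches xf with energy xfᵀ W_T⁻¹ xf. The example network, the horizon, the Gauss-Legendre quadrature, the RK4 integrator, and all function names are illustrative assumptions; `expm_sym` requires a symmetric A.

```python
import numpy as np

def expm_sym(A, t):
    """e^{At} for a symmetric matrix A, via its eigendecomposition."""
    eigvals, eigvecs = np.linalg.eigh(A)
    return (eigvecs * np.exp(eigvals * t)) @ eigvecs.T

def gramian(A, B, T, order=40):
    """Finite-horizon controllability Gramian by Gauss-Legendre quadrature."""
    nodes, weights = np.polynomial.legendre.leggauss(order)
    taus = 0.5 * T * (nodes + 1.0)        # map [-1, 1] onto [0, T]
    W = np.zeros_like(A, dtype=float)
    for tau, w in zip(taus, 0.5 * T * weights):
        M = expm_sym(A, tau) @ B
        W += w * (M @ M.T)
    return W

# Hypothetical stable 3-region chain with one input on region 0.
A = np.array([[-1.5, 1.0, 0.0],
              [1.0, -1.5, 1.0],
              [0.0, 1.0, -1.5]])
B = np.array([[1.0], [0.0], [0.0]])
T = 2.0
xf = np.array([0.0, 0.0, 1.0])            # target state; x(0) = 0

W = gramian(A, B, T)
lam = np.linalg.solve(W, xf)
energy_formula = float(xf @ lam)          # x_f^T W_T^{-1} x_f

def u_star(t):
    # A is symmetric here, so e^{A^T s} = e^{A s}.
    return B.T @ expm_sym(A, T - t) @ lam

def simulate(steps=2000):
    """RK4 integration of dx/dt = Ax + Bu*(t); the energy integral is
    accumulated with Simpson's rule on the same grid."""
    h = T / steps
    x = np.zeros(A.shape[0])
    energy = 0.0
    for k in range(steps):
        t = k * h
        u0, u1, u2 = u_star(t), u_star(t + h / 2), u_star(t + h)
        k1 = A @ x + B @ u0
        k2 = A @ (x + h / 2 * k1) + B @ u1
        k3 = A @ (x + h / 2 * k2) + B @ u1
        k4 = A @ (x + h * k3) + B @ u2
        x = x + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        energy += h / 6 * (u0 @ u0 + 4 * (u1 @ u1) + u2 @ u2)
    return x, float(energy)

x_T, energy_numeric = simulate()
print("state reached at time T:", x_T)
print("energy from formula:", energy_formula, "from simulation:", energy_numeric)
```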

Generalizations to Time-Varying and Nonlinear Systems

Used most often due to its simplicity and analytical tractability, the LTI model of a system’s dynamics limits the temporal behavior that can be exhibited by the system to the following three types: exponential growth, exponential decay, and sinusoidal oscillations. In contrast, the brain exhibits a rich set of dynamics encompassing many other types of behaviors. Numerical simulation studies have sought to understand how such rich dynamics, occurring atop a complex network, respond to perturbative signals such as stimulation (Muldoon et al., 2016; Papadopoulos, Lynn, Battaglia, & Bassett, 2020). Yet, to more formally bring control-theoretic models closer to such dynamics and associated responses, the framework must be generalized to include nonlinearity and/or time dependence. The first step in such a generalization is the linear time-varying (LTV) system:

$$\frac{dx(t)}{dt} = A(t)\,x(t) + B(t)\,u(t) \tag{12a}$$

$$y(t) = C(t)\,x(t) \tag{12b}$$

Notably, a generalization of the optimal control problem (Equation 9) to LTV systems is fairly straightforward (Kirk, 2004). But, unlike LTI systems (Equation 6), it is generically not possible to solve for the state trajectory of an LTV system analytically. However, if the state trajectory can be found for n linearly independent initial states, then it can be found for any other initial state due to the property of linearity. In this case, moreover, many of the properties of LTI systems can be extended to LTV systems (C.-T. Chen, 1998), including the simple rank conditions of controllability and observability (Silverman & Meadows, 1967).
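The linearity argument can be made concrete by propagating the n unit basis vectors at once, which yields the state transition matrix Φ(t); the unforced response from any initial state is then Φ(t) x(0). The sketch below does this with a classical RK4 integrator. The rotation-type A(t) is a hypothetical example, chosen because its transition matrix happens to be known in closed form (a rotation by the angle t²/2) and so can be used as a check; generic LTV systems do not admit such a closed form.

```python
import numpy as np

def transition_matrix(A_of_t, n, T, steps=2000):
    """Integrate dPhi/dt = A(t) Phi, Phi(0) = I, with classical RK4.
    Column k of Phi(T) is the state reached from the k-th basis vector."""
    h = T / steps
    Phi = np.eye(n)
    for k in range(steps):
        t = k * h
        k1 = A_of_t(t) @ Phi
        k2 = A_of_t(t + h / 2) @ (Phi + h / 2 * k1)
        k3 = A_of_t(t + h / 2) @ (Phi + h / 2 * k2)
        k4 = A_of_t(t + h) @ (Phi + h * k3)
        Phi = Phi + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    return Phi

# Hypothetical time-varying system: a rotation whose rate grows linearly in time.
J = np.array([[0.0, 1.0],
              [-1.0, 0.0]])

def A_of_t(t):
    return t * J

T = 2.0
Phi_T = transition_matrix(A_of_t, n=2, T=T)

# By linearity, the unforced response from any initial state is Phi(T) @ x0.
x0 = np.array([0.3, -1.2])
x_T = Phi_T @ x0
print("Phi(T) =\n", Phi_T)
print("x(T) from x0:", x_T)
```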

Moving beyond the time dependence addressed in LTV systems, one can also consider the many nonlinearities present in real-world systems. In fact, the second common generalization of LTI systems (Equation 5) is to nonlinear control systems which, in continuous time, have the general state space representation:

$$\frac{dx(t)}{dt} = f\big(x(t), u(t), t\big) \tag{13a}$$

$$y(t) = h\big(x(t), t\big) \tag{13b}$$

The time dependence in f and h may be either explicit or implicit via the time dependence of x and u, resulting in a time-varying or time-invariant nonlinear system, respectively.

Before proceeding to truly nonlinear aspects of Equation 13, it is instructive to consider the relationship between these dynamics and the linear models described above (Equations 5 and 12). Assume that for a given input signal u0(t), the solution to Equation 13 is given by x0(t) and y0(t). As long as the input u(t) to the system remains close to u0(t) for all time, then x(t) and y(t) also remain close to x0(t) and y0(t), respectively. Therefore, one can study the dynamics of small perturbations δx(t) = x(t) − x0(t), δu(t) = u(t) − u0(t), and δy(t) = y(t) − y0(t) instead of the original state, input, and output. Using a first-order Taylor expansion, it can immediately be seen that these signals approximately satisfy

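$$\frac{d\,\delta x(t)}{dt} = A(t)\,\delta x(t) + B(t)\,\delta u(t) \tag{14a}$$

$$\delta y(t) = C(t)\,\delta x(t) \tag{14b}$$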

which is an LTV system of the form given in Equation 12. In these equations, $A(t) = \partial f/\partial x$, $B(t) = \partial f/\partial u$, and $C(t) = \partial h/\partial x$, each evaluated along the nominal trajectory $(x_0(t), u_0(t))$. Furthermore, A, B, and C are all known matrices that solely depend on the nominal trajectories u0(t), x0(t), y0(t). It is then clear that if the nonlinear system is time-invariant, and if u0(t) ≡ u0 is constant, and if x0(t) ≡ x0 is a fixed point, then Equation 14 will take the LTI form (Equation 5). In either case, it is important to remember that this linearization is a valid approximation only locally (in the vicinity of the nominal system), and the original nonlinear system must be studied whenever the system leaves this vicinity.
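When analytical derivatives are inconvenient, the matrices of the linearization can be estimated by finite differences. The sketch below does so for a hypothetical two-unit rate model, dx/dt = −x + tanh(Wx) + B u, around its fixed point at the origin, where the Jacobians are known exactly (∂f/∂x = −I + W and ∂f/∂u = B) and serve as a check. The model, its parameters, the step size, and the function name `jacobians` are assumptions for illustration only.

```python
import numpy as np

def jacobians(f, x0, u0, eps=1e-5):
    """Central finite-difference estimates of A = df/dx and B = df/du,
    evaluated at the nominal point (x0, u0)."""
    n, m = len(x0), len(u0)
    A = np.zeros((n, n))
    B = np.zeros((n, m))
    for j in range(n):
        dx = np.zeros(n)
        dx[j] = eps
        A[:, j] = (f(x0 + dx, u0) - f(x0 - dx, u0)) / (2 * eps)
    for j in range(m):
        du = np.zeros(m)
        du[j] = eps
        B[:, j] = (f(x0, u0 + du) - f(x0, u0 - du)) / (2 * eps)
    return A, B

# Hypothetical time-invariant rate model with a saturating nonlinearity.
W = np.array([[0.0, 0.8],
              [0.4, 0.0]])
B_in = np.array([[1.0],
                 [0.0]])

def f(x, u):
    return -x + np.tanh(W @ x) + B_in @ u

x_fixed = np.zeros(2)     # f(0, 0) = 0, so the origin is a fixed point
u_fixed = np.zeros(1)
A_lin, B_lin = jacobians(f, x_fixed, u_fixed)
print("A =\n", A_lin)
print("B =\n", B_lin)
```

Because the nominal point is a fixed point of a time-invariant system, the resulting pair (A, B) is constant and can be passed to any of the LTI tools discussed earlier.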

Leaving the simplicity of linear systems significantly complicates the controllability, observability, and optimal control problems. Fortunately, if the linearization in Equation 14 is controllable (observable), then the nonlinear system is also locally controllable (observable) (Sontag, 2013) (see the topic of linearization validity discussed above). Notably, the converse is not true; the linearization of a controllable (observable) nonlinear system need not be controllable (observable). In such a case, one can take advantage of advanced generalizations of the linear rank condition for nonlinear systems (Sontag, 2013), although these tend to be too involved for practical use in large-scale neuronal network models. Interestingly, obtaining optimality conditions for the optimal control of nonlinear systems is not significantly more difficult than that of linear systems. However, solving these optimality conditions (which can be done analytically for linear systems with quadratic cost functions, as mentioned above) leads to nonconvex optimization problems that lend themselves to no more than numerical solutions (Kirk, 2004).

MODELS OF CONTROL AND COMMUNICATION: AREAS OF DISTINCTION, POINTS OF CONVERGENCE

In this section, we build on the descriptions of communication and control provided in Sections 2 and 3 by seeking areas of distinction and points of convergence. We crystallize our discussion around four main topic areas: abstraction versus biophysical realism, linear versus nonlinear models, dependence on network attributes, and the interplay across different spatial or temporal scales. Our consideration of these topics will motivate a discussion of the outstanding challenges and directions for future research, which we provide in section 5.

Abstraction Versus Biophysical Realism

Across scientific cultures and domains of inquiry, the requirements of simplicity and tractability place strong constraints on the formulation of theoretical models. Depending on the behavior that the theory aims to capture, the models can capture detailed realistic elements of the system with the inputs from experiments (Bansal et al., 2019; Bansal, Medaglia, Bassett, Vettel, & Muldoon, 2018), or the models can be more phenomenological in nature with a pragmatic intent to make predictions and guide experimental designs. An example of a detailed realistic model in the context of neuronal dynamics is the Hodgkin-Huxley model, which takes into account the experimental results from detailed measurements of time-dependent voltage and membrane current (Abbott & Kepler, 1990). A corresponding example of a more phenomenological model is the Hopfield model, which encodes neuronal states in binary variables.

Communication Models.

Communication models similarly range from the biophysically realistic to the highly phenomenological. Dynamical models informed by empirically measured natural frequencies, empirically measured time delays, and/or empirically measured strengths of structural connections place a premium on biophysical realism (Chaudhuri, Knoblauch, Gariel, Kennedy, & Wang, 2015a; Murphy, Bertolero, Papadopoulos, Lydon-Staley, & Bassett, 2020; Schirner, McIntosh, Jirsa, Deco, & Ritter, 2018). In contrast, Kuramoto oscillator models for communication through coherence can be viewed as less biophysically realistic and more phenomenological (Breakspear et al., 2010). Communication models also capture the state of a system differently, whether by discrete variables such as on/off states of units, or by continuous variables such as the phases of oscillating units. The diversity present in the current set of communication models allows theoreticians to make contact with experimental neuroscience at many levels (Bassett et al., 2018; N. J. Kopell et al., 2014; Ritter, Schirner, McIntosh, & Jirsa, 2013; Sanz-Leon et al., 2015).

Alongside this diversity, communication models also share several common features. For instance, the state variables chosen to describe the dynamics of the system are motivated by neuronal observations and thus represent the system’s biological, chemical, or physical states. The dynamics that state variables follow are also typically motivated by our understanding of the underlying processes, or approximations thereto. In building communication models, the experimental observations and intuition typically precede the mathematical formulation of the model, which in turn serves to generate predictions that help guide future experiments. A particularly good example of this experiment-led theory is the Human Neocortical Neurosolver, whose core is a neocortical circuit model that accounts for biophysical origins of electrical currents generating MEG/EEG signals (Neymotin et al., 2020). Having been concurrently developed with experimental neuroscience, theoretical models of communication are intricately tied to currently available measurements.

The closeness to biophysical mechanisms is a feature that is also typically shown in other types of communication models. One might think that topological measures devoid of a dynamical model tend to place a premium on phenomenology. But in fact, the cost functions that brain networks optimize typically reflect metabolic costs, routing efficiency, diffusion efficiency, or geometrical constraints (Avena-Koenigsberger et al., 2018, 2017; Laughlin & Sejnowski, 2003; Zhou, Lyn, et al., 2020). Minimization of metabolic costs has been shown to be a major factor determining the organization of brain networks (Laughlin & Sejnowski, 2003). Further, such constraints on metabolism also place limits on signal propagation and information processing.

Control Models.

Are these features of communication models shared by control models? Control models have their origin in Maxwell’s analysis of the centrifugal governor that stabilized the velocity of windmills against disturbances caused by the motions of internal components (Maxwell, 1868). The field of control theory was later further formalized for the stability of motion in linearized systems (Routh, 1877). Today, control theory is a framework in engineering used to design systems and to develop strategies to influence the state of a system in a desired manner (Tang & Bassett, 2018). More recently, the framework of control theory has been applied to neural systems in order to quantify how controllable brain networks are, and to determine the optimal strategies or regions that are best to exert control on other regions (Gu et al., 2017; Tang & Bassett, 2018; Tang et al., 2017). Although initial efforts have proven quite successful, control theory and more generally, the theory of linear systems, has traditionally concerned itself with finding the mathematical principles behind the design and control of linear systems (Kailath, 1980), and is applicable to a wide variety of problems in many disciplines of science and engineering. Because the application of control theory to brain networks has been a much more recent effort, identification of appropriate state variables that are best posed to provide insights on control in brain networks is a potential area of future research.

Applied to brain networks, control theoretical approaches have mostly utilized detailed knowledge of structural connections while assuming the linear dynamics formulated in Equation 5a. This simplifying abstraction implies that the influence of a system’s state at a given time propagates along the paths of the structural network encoded in A of Equation 5a to affect the system’s state at the next time point. The type of influence studied here is most consistent with the diffusion-based propagation of signals in communication models, and intuitively leads to the expected close relationship between diffusion-based communication measures and control metrics. Indeed such a relationship exists between the impulse response (outlined in the previous section) and the network communicability. We elaborate further on this relationship in the next subsection.

Some metrics that are commonly used to characterize the control properties of the brain are average controllability, modal controllability, and boundary controllability. These statistical quantities can be calculated directly from the spectra of the controllability Gramian $W_T$ and the adjacency matrix A (Pasqualetti et al., 2014). A related and important quantity of interest here is the minimum control energy defined as Equation 8 with u(t) denoting the control signals. While this quadratic dependence of ‘energy’ on input signals is appropriate for a linearized description of the system around a steady state, the actual cost of exogenous control signals must depend on several details such as the cost of generating control signals and the cost of coupling them to the system. In this sense, the control energy is a relatively abstract concept whose interpretation has yet to be linked to the physical costs of control in brain networks. This observation loops back to the fact that control theory developed largely as an abstract mathematical framework, which was then borrowed by several fields and modified by context. We discuss possible ways of reconciling the cost of control with actual biophysical costs known from communication models in section 5.

Linear Versus Nonlinear Models

In models of communication and dynamics, a recurring motif is the propagation of signals along connections. Graph measures such as small-worldness, global efficiency, and communicability assume that strong and direct connections between two neural units facilitate communication (Avena-Koenigsberger et al., 2018; Estrada et al., 2012; Muldoon, Bridgeford, & Bassett, 2016; Watts & Strogatz, 1998). While these measures capture an intuitive concept and have been useful in predicting behavior, they themselves do not explicitly quantify the mechanism of communication or the form of the information. Dynamical models overcome the former limitation by quantitatively defining the neural states of a system, and encoding the mechanism of communication in the differential or difference equations (Breakspear, 2017; Estrada et al., 2012). However, they only partially address the latter limitation, as it is unclear how a system might change its dynamics to communicate different information.

There is, of course, no single spatial and temporal scale at which neural systems encode information. At the level of single neurons, neural spikes encode visual (Hubel & Wiesel, 1959) and spatial (Moser, Kropff, & Moser, 2008) features. At the level of neuronal populations in electroencephalography, changes in oscillation power and synchrony reflect cognitive and memory performance (Klimesch, 1999). At the level of the whole brain, abnormal spatiotemporal patterns in functional magnetic resonance imaging reflect neurological dysfunction (Broyd et al., 2009; Morgan, White, Bullmore, & Vertes, 2018; Thomason, n.d.). To accommodate this wide range of spatial and temporal scales of representation, we put forth control models as a rigorous yet flexible framework to study how a neural system might modify its dynamics to communicate.

Linear Models: Level of Pairwise Nodal Interactions.

The most immediate relationship between dynamical models and information is through the system’s states. From this perspective, the activity or state of a single neural unit is the information to send, and communication occurs diffusively when the states of other neural units change as a result. There is an exact mathematical equivalence between communicability using factorial weights in Equation 2, and the impulse response of a linear dynamical system in Equation 7 through the matrix exponential. Specifically, we realize that the matrix exponential in the impulse response, $e^{At}$, can be written as communicability with factorial weights, such that

$$e^{At} = \sum_{k=0}^{\infty} \frac{(At)^{k}}{k!} = \sum_{k=0}^{\infty} \frac{t^{k}}{k!}\,A^{k}.$$

This realization provides an explicit link between connectivity, dynamics, and communication (Estrada et al., 2012). From the perspective of connectivity, the element in the i-th row and j-th column of the matrix exponential, $[e^{A}]_{ij}$, is the total strength of connections from node j to node i through paths of all lengths. From a dynamic perspective, $[e^{At}]_{ij}$ is the change in the activity of node i after t time units as a direct result of node j having unit activity. Hence, the matrix exponential explicitly links a structural path-based feature to causal changes in activity under linear dynamics.
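The equivalence between the factorially weighted sum over powers of A and the matrix exponential can be confirmed numerically. The sketch below compares a truncated series Σk (At)^k / k! with the exponential computed from an eigendecomposition, for a hypothetical binary three-node path graph; the truncation length, the example graph, and the function names are assumptions for illustration.

```python
import numpy as np

def communicability_series(A, t=1.0, terms=30):
    """Truncated factorial-weighted sum over walks: sum_k (At)^k / k!."""
    n = A.shape[0]
    total = np.zeros((n, n))
    term = np.eye(n)                       # (At)^0 / 0!
    for k in range(terms):
        total += term
        term = term @ (A * t) / (k + 1)    # next term: (At)^(k+1) / (k+1)!
    return total

def expm_sym(A, t=1.0):
    """e^{At} for a symmetric matrix A, via its eigendecomposition."""
    eigvals, eigvecs = np.linalg.eigh(A)
    return (eigvecs * np.exp(eigvals * t)) @ eigvecs.T

# Hypothetical binary 3-node path graph: 0 -- 1 -- 2
A = np.array([[0.0, 1.0, 0.0],
              [1.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]])

G_series = communicability_series(A)
G_expm = expm_sym(A)

# Entry [2, 0]: influence of node 0 on node 2, which are not directly
# connected; the first nonzero contribution is the walk of length 2.
walks_len2 = np.linalg.matrix_power(A, 2)[2, 0]
print("communicability between nodes 0 and 2:", G_series[2, 0])
print("number of length-2 walks from 0 to 2: ", walks_len2)
```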

Linear Models: Level of Network-Wide Interactions.

Increasingly, the field is realizing that the activity of neural systems is inherently distributed at both the neuronal (Steinmetz, Zatka-Haas, Carandini, & Harris, 2019; Yaffe et al., 2014) and areal (Tavor et al., 2016) levels. Hence, information is not represented as the activity of a single neural unit, but as the pattern of activity, or state, of many neural units. As a result, we must broaden our concept of communication to encompass the transfer of the system of neural units from an initial state x(0) to a final state x(t). This perspective introduces a rich interplay between the underlying structural features of interunit interactions, and the dynamics supported by the structure to achieve a desired state transition.

A crucial question in this distributed perspective of communication is the following: given that a subset of neural units are responsible for communication, what are the possible states that can be reached? For example, it seems extremely difficult for a single neuron in the human brain to transition the whole brain to any desired state. This question has a clear and exact answer in the theory of dynamical systems and control through the controllability matrix. Specifically, given a subset of neural units K called the control set that are responsible for communication (either of their current state or the external stimuli applied to them) to the rest of the network, the space of possible state transitions is given by weighted sums of the columns of the controllability matrix, that is, the controllable subspace (cf. the subsection Controllability and Observability in Principle). Many studies in control theory are therefore directly relevant for communication, such as determining whether or not a particular control set can transition the system to any state given the underlying connectivity (Lin, 1974), or whether reducing the controllable subspace by removing neurons reduces the range of motion in vivo (Yan et al., 2017).

Linear Models: Accounting for Biophysical Costs.

While determining the theoretical ability to perform a state transition is important, the neural units responsible for control may have to exert a biophysically infeasible amount of effort to perform the transition. Such a constraint is known to be present in many forms such as metabolic cost (Laughlin & Sejnowski, 2003; Liang, Zou, He, & Yang, 2013) and firing rate capacity (Sengupta, Laughlin, & Niven, 2013). These constraints are explicitly taken into account in control theory through minimum energy control, and by extension, optimal control. As detailed in Section 8, the minimum energy control places a homogeneous quadratic cost (control energy) on the amount of effort that the controlling neural units must exert to perform a state transition (Equation 8) while the general LQR optimal control additionally includes the level of activity of the neural units as a cost to penalize infeasibly large states (Equation 9).

Within this framework of capturing distributed communication and biophysical constraints, there remains the outstanding question of how structural connectivity contributes to communication. What features of connectivity enable a set of neural units to better transition the system than another set of units? To this end, many summary statistics have been put forth, mostly in terms of the controllability Gramian (Equation 10) due to its crucial role in determining the cost of control (Equation 11). Among them are the trace of the inverse of the Gramian, which quantifies the average energy needed to reach all states on the unit hypersphere (Figure 3), and the square root of the determinant of the Gramian (or its logarithm), which is proportional to the volume of states that can be reached with unit input (Müller & Weber, 1972). Other studies summarize the contribution of connectivity from individual nodes (Gu et al., 2015; Simon & Mitter, 1968) or multiple nodes (Kim et al., 2018; Pasqualetti et al., 2014), leading to potential candidates for new measures of communication.
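These Gramian-based statistics can be illustrated with a short numerical sketch. For convenience it uses the infinite-horizon Gramian of a stable continuous-time system, obtained from the Lyapunov equation AW + WA^T + BB^T = 0; this is an assumption of the example and may differ from the exact form of Equations 10 and 11. The 3-node matrix, the function names, and the choice of one input per node are likewise hypothetical.

```python
import numpy as np
from scipy.linalg import solve_continuous_lyapunov

def controllability_gramian(A, B):
    """Infinite-horizon Gramian W solving A W + W A^T + B B^T = 0 (A stable)."""
    return solve_continuous_lyapunov(A, -B @ B.T)

def minimum_energy(W, x_target):
    """Minimum input energy to steer the state from the origin to x_target."""
    return float(x_target @ np.linalg.solve(W, x_target))

# Hypothetical stable 3-node system; every node receives its own input.
A = np.array([
    [-1.0, 0.5, 0.0],
    [0.5, -1.0, 0.5],
    [0.0, 0.5, -1.0],
])
B = np.eye(3)

W = controllability_gramian(A, B)

# trace(W^{-1}) / n is the mean minimum energy over unit-norm target states.
trace_inv = np.trace(np.linalg.inv(W))

# 0.5 * logdet is, up to an additive constant, the log-volume of the
# ellipsoid of states reachable with at most unit energy.
sign, logdet = np.linalg.slogdet(W)

energy_e0 = minimum_energy(W, np.array([1.0, 0.0, 0.0]))
```

Because this toy A is symmetric and B is the identity, the Gramian reduces to W = -A^{-1}/2, so the values can be verified by hand: reaching unit activity in node 0 alone costs 2 units of energy, and the trace of the inverse Gramian is 6.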

Nonlinear Models: Oscillators and Phases.

When faced with the task of studying complex communication dynamics in neural systems, it is evident that the richness of neural behavior extends beyond linear dynamics. Indeed, a typical analysis of neural data involves studying the power of the signals at various frequency bands for behaviors ranging from memory (Klimesch, 1999) to spatial representations (Moser et al., 2008), underscoring the importance of nonlinear oscillations. These oscillations are captured by the earliest neuron models of Hodgkin and Huxley (Hodgkin & Huxley, 1952), by the subsequent simplified Izhikevich (Izhikevich, 2003) and FitzHugh-Nagumo (FitzHugh, 1961) neuron models, and by population-averaged systems (Wilson & Cowan, 1972), all of which contain nonlinear interactions that can generate oscillatory behavior. In such systems, how do we quantify information and communication? Further, how would such a system change the flow of communication?

Some prior work has focused on lead-lag relationships between the signal phases (Nolte et al., 2008; Palmigiano et al., 2017; Stam & van Straaten, 2012), where the relation implies that communication occurs by the leading unit transmitting information to the lagging unit. A fundamental and ubiquitous equation to model this type of system is the Kuramoto equation (Equation 4), where each neural unit has a phase θi(t) that evolves forward in time according to the natural frequency ωi and a sinusoidal coupling with the phases of the other units θj, weighted by the coupling strength Aij (Acebrón et al., 2005; Kuramoto, 2003). This model has a vast theoretical and numerical foundation with myriad applications in control systems (Dörfler & Bullo, 2014).
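A minimal simulation can make the lead-lag picture concrete. The sketch below integrates the commonly used Kuramoto form dθ_i/dt = ω_i + Σ_j A_ij sin(θ_j − θ_i) with a forward-Euler scheme; this form is assumed here and may differ from Equation 4 in normalization or in a global coupling constant. The network, frequencies, initial phases, and function names are all hypothetical.

```python
import numpy as np

def phase_velocity(A, omega, theta):
    """Right-hand side: omega_i + sum_j A_ij * sin(theta_j - theta_i)."""
    diff = theta[None, :] - theta[:, None]   # diff[i, j] = theta_j - theta_i
    return omega + (A * np.sin(diff)).sum(axis=1)

def simulate_kuramoto(A, omega, theta0, dt=0.01, steps=20000):
    """Forward-Euler integration of the phase dynamics."""
    theta = np.asarray(theta0, dtype=float).copy()
    for _ in range(steps):
        theta = theta + dt * phase_velocity(A, omega, theta)
    return theta

# Hypothetical 3-oscillator network with symmetric all-to-all coupling.
A = 2.0 * (np.ones((3, 3)) - np.eye(3))
omega = np.array([0.8, 1.0, 1.2])    # non-identical natural frequencies
theta0 = np.array([0.0, 0.5, 1.0])

theta = simulate_kuramoto(A, omega, theta0)
freqs = phase_velocity(A, omega, theta)  # instantaneous frequencies at the end
lags = theta - theta[0]                  # phase of each unit relative to unit 0
```

With coupling this strong relative to the spread of natural frequencies, all three units settle onto a common frequency (the mean of `omega`, because the coupling is symmetric), while their relative phases stay fixed and ordered by natural frequency, so the fastest unit leads and the slowest lags.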

Given an oscillator system with fixed parameters, how can the system establish and alter its lead-lag relationships? In the regime of frequency synchronization where the natural frequencies are not identical, the oscillators converge to a common synchronization frequency ωsync. As a result, the relative phases with respect to this frequency remain fixed at θsync (Dörfler & Bullo, 2014), thereby establishing a lead-lag relationship. In this regime, the nonlinear oscillator dynamics can be linearized about ωsync, to generate a new set of dynamics

where L is the network Laplacian matrix of the coupling matrix A. In Skardal and Arenas (2015), the authors begin with an unstable general oscillator network that is not synchronized (i.e., does not have a true θsync) and perform state-feedback to stabilize an unstable set of phases θ*, thereby inducing frequency synchronization with the corresponding lead-lag relationships. The core concept behind this state-feedback is to designate a subset of oscillators as “driven nodes,” and add an additional term that modulates the phases of these oscillators according to

Subsequent work focuses on expanding the form of the control input (Skardal & Arenas, 2016), and modifications to the coupling strength to a single node (Fan, Wang, Yang, & Wang, 2019). Hence, we observe that targeted modification to the dynamics of subsets of oscillators can indeed set their lead-lag relationships.

Generally, oscillator systems are not inherently phase oscillators. For example, the Wilson-Cowan (Wilson & Cowan, 1972), Izhikevich (Izhikevich, 2003), and FitzHugh-Nagumo (FitzHugh, 1961) models are all oscillators with two state variables coupled through a set of nonlinear differential equations. The transformation of these state variables and equations into a phase oscillator form is the subject of weakly coupled oscillator theory (Dörfler & Bullo, 2014; Schuster & Wagner, 1990). If the oscillators are not weakly coupled, controlling the dynamics and phase relations begins to fall under the purview of linear time-varying systems (Equation 12) and nonlinear control (Khalil, 2002; Sontag, 2013).

Dependence on Network Attributes

In network neuroscience, recent studies have begun to characterize how network attributes influence communication and control in neuronal and regional circuits. In neuronal circuits, the spatiotemporal scale of communication has been studied from the perspective of statistical mechanics in the context of neuronal avalanches (J. M. Beggs & Plenz, 2003). Such studies show that activity propagates in a critical (J. Beggs & Timme, 2012), or at least slightly subcritical (Priesemann et al., 2014; Wilting & Priesemann, 2018), regime. In a critical regime, the network connections are tuned to optimally propagate information throughout the network (J. M. Beggs & Plenz, 2003). Studies of microcircuits also show more explicitly that certain network topologies can play precise roles in communication. Hubs, which are neural units with many connections, often serve to transmit information within the network (Timme et al., n.d.). Groups of such hubs are called rich-clubs (Colizza, Flammini, Serrano, & Vespignani, 2006; Towlson, Vértes, Ahnert, Schafer, & Bullmore, 2013), which have been observed in a wide range of organisms (Faber, Timme, Beggs, & Newman, 2019; Shimono & Beggs, 2014), and they dominate information transmission and processing in networks (Faber et al., 2019).

Cortical network topologies have highly nonrandom features (Song, Sjöström, Reigl, Nelson, & Chklovskii, 2005), which may support more complex routing of communication (Avena-Koenigsberger et al., 2019). In studies of neuronal gating, one group of neurons, such as the mediodorsal thalamus, can either facilitate or inhibit pathways of communication, such as that from the hippocampus to the prefrontal cortex (Floresco & Grace, 2003). Such complex routing of communication requires nonlinear dynamics, such as shunting inhibition (Borg-Graham, Monier, & Frégnac, 1998). Some models simulate inhibitory dynamics on cortical network topologies to study how those topologies may support the complex communication dynamics that occur in visual processing, such as visual attention (Olshausen, Anderson, & Van Essen, 1993).

Points of convergence between communication and control have been observed in regional brain networks. For example, hubs are studied in functional covariance networks and structural networks. Structural hubs are thought to act as sinks of early messaging accelerated by shortest-path structures and sources of transmission to the rest of the brain (Mišić et al., 2015). The connections of highly connected hubs may support both the average controllability of the brain and the brain’s robustness to lesions of a fraction of the connections (B. Lee, Kang, Chang, & Cho, 2019). An area of distinction between control and communication in brain networks may depend on the hub topology. While communication may depend on the average controllability of hubs to steer the brain to new activity patterns, the brain regions that steer network dynamics to difficult-to-reach states tend not to be hubs (Gu et al., 2015). Hubs also tend to be absent from the set of driver nodes that together confer full control of the network (Y.-Y. Liu et al., 2011). A point of convergence between communication and control is the consideration of how the brain network broadcasts control signals. Whereas the high degree of hubs may efficiently broadcast integrated control signals across the brain network in order to steer the brain to new patterns of activity, the brain regions with lower degree may receive a greater rate of control signals that are then transmitted to steer the brain to difficult-to-reach patterns of activity (Zhou, Lyn, et al., 2020).

To strike a balance between efficiency, robustness, and diverse dynamics, brain networks may have evolved toward optimizing brain network structures supporting and constraining the propagation of information. Brain networks reach a compromise between routing and diffusion of information compared to random networks optimized for either routing or diffusion (Avena-Koenigsberger et al., 2018). Brain networks also appear optimized for controllability and diverse dynamics compared to random networks (Tang et al., 2017). To understand how the brain can circumvent trade-offs between objectives like efficiency, robustness, and diverse dynamics, future studies could further investigate the network properties of the spectrum of random networks optimized toward these objectives. Existing studies focus on the trade-off between two objectives, such as network structure supporting information routing or diffusion, or average versus modal controllability. However, multiobjective optimization allows for further investigation of Pareto-optimal brain network evolution toward an arbitrarily large set of objective functions (Avena-Koenigsberger, Goni, Sole, & Sporns, 2015; Avena-Koenigsberger et al., 2014).

The convergence between communication and control exists largely via the network topologies with which they are related. Given the importance of ‘rich-club hubs’ and similar topological attributes in integration and processing of information, it is natural to ask if similar properties also contribute to controllability or observability properties in brain networks. More specifically, given a region in the brain network with specific topological properties such as high degree, betweenness centrality, closeness centrality, or location between different modules, what is the relationship between its role in information integration or processing and its role in controllability and observability? The tri-faceted interface of communication, control, and network topology holds great possibilities for future work, and some recent efforts have begun to relate the three (Ju, Kim, & Bassett, 2018).

Interplay of Multiple Spatiotemporal Scales

Most complex systems exhibit phenomena at one spatiotemporal scale that depend on phenomena occurring at another spatiotemporal scale. This interplay of scales is evident, for example, in the hierarchical energy cascade from lower modes (larger length scales) to higher modes (smaller length scales) in turbulent fluids (Frisch, 1995), multiscaled models of morphogenesis (Manning, Foty, Steinberg, & Schoetz, 2010; Mao & Baum, 2015), and multiscaled models of cancer (Szymańska, Cytowski, Mitchell, Macnamara, & Chaplain, 2018). A convenient way to study such an interplay is to transform the variables of mathematical models to their corresponding Fourier conjugate variables. This approach serves to map the larger length scales to Fourier modes of smaller wavenumbers (longer wavelengths), and to map the longer timescales to lower frequency bands. In most complex systems, current research efforts seek a quantitative understanding of the interwoven nature of different spatiotemporal scales, which in turn can lead to an understanding of the system’s emergent behavior.
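The mapping from timescales to frequencies can be seen in a toy example. The sketch below superimposes a slow and a fast sinusoid and recovers the two timescales as separate peaks in the Fourier spectrum; the sampling rate, durations, amplitudes, and component frequencies are arbitrary assumptions chosen so that both components fall exactly on frequency bins.

```python
import numpy as np

fs = 200.0                       # assumed sampling rate in Hz
t = np.arange(2000) / fs         # 10 s of samples

slow = np.sin(2 * np.pi * 0.5 * t)         # period 2 s: long timescale
fast = 0.5 * np.sin(2 * np.pi * 20.0 * t)  # period 0.05 s: short timescale
signal = slow + fast

spectrum = np.abs(np.fft.rfft(signal))           # magnitude per frequency bin
freqs = np.fft.rfftfreq(signal.size, d=1 / fs)   # bin centers in Hz

# Frequencies of the two largest spectral peaks, in ascending order.
top_two = np.sort(freqs[np.argsort(spectrum)[-2:]])
```

The component with the long period appears at the low-frequency end of the spectrum (0.5 Hz) and the component with the short period at the high-frequency end (20 Hz), so processes that overlap in time become separable once expressed in the conjugate variable.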

Communication Models.

As one of the most complex systems, the brain naturally exhibits a rich cross-talk between different spatiotemporal scales. A key example of interplay among spatial scales is provided by recent evidence that activity propagates in a slightly subcritical regime, in which activity “reverberates” within smaller groups of neurons while still maintaining communication across those groups (Wilting & Priesemann, 2018). That cross-talk is structurally facilitated by topological features characteristic of each spatial scale: from neurons to neuronal ensembles to regions to circuits and systems (Bansal et al., 2019; N. J. Kopell et al., 2014; Shimono & Beggs, 2014). A key example of interplay among temporal scales is cross-frequency coupling (Canolty & Knight, 2010), which first builds on the observation that the brain exhibits oscillations in different frequency bands thought to support information integration in cognitive processes from attention to learning and memory (Başar, 2004; Breakspear et al., 2010; Cannon et al., 2014; N. Kopell, Borgers, et al., 2010; N. Kopell, Kramer, Malerba, & Whittington, 2010). Cross-frequency coupling can occur between region i in one frequency band and region j in another frequency band, and can be measured statistically (Tort, Komorowski, Eichenbaum, & Kopell, 2010). The phenomenon is thought to play a role in integrating information across multiple spatiotemporal scales (Aru et al., 2015). For example, directional coupling between hippocampal γ oscillations and the neocortical α/β oscillations occurs in the context of episodic memory (Griffiths et al., 2019). Interestingly, anomalies of oscillatory activity and cross-frequency coupling can serve as biomarkers of neuropsychiatric disease (Başar, 2013).