Summary

Electromagnetic (EM) sensing is a widespread contactless examination technique with applications in areas such as health care and the internet of things. Most conventional sensing systems lack intelligence, which not only results in expensive hardware and complicated computational algorithms but also poses important challenges for real-time in situ sensing. To address this shortcoming, we propose the concept of intelligent sensing by designing a programmable metasurface for data-driven learnable data acquisition and integrating it into a data-driven learnable data-processing pipeline. Thereby, a measurement strategy can be learned jointly with a matching data post-processing scheme, optimally tailored to the specific sensing hardware, task, and scene, allowing us to perform high-quality imaging and high-accuracy recognition with a remarkably reduced number of measurements. We report the first experimental demonstration of “learned sensing” applied to microwave imaging and gesture recognition. Our results pave the way for learned EM sensing with low latency and computational burden.

Keywords: programmable metamaterials, artificial neural network, intelligent electromagnetic

Graphical Abstract

Highlights

- First demonstration of “learned sensing” in electromagnetics

- Low-latency imaging and gesture recognition with learned illumination patterns

- Data acquisition and processing integrated into unique machine-learning pipeline

- Selection of task-relevant information already during measurements

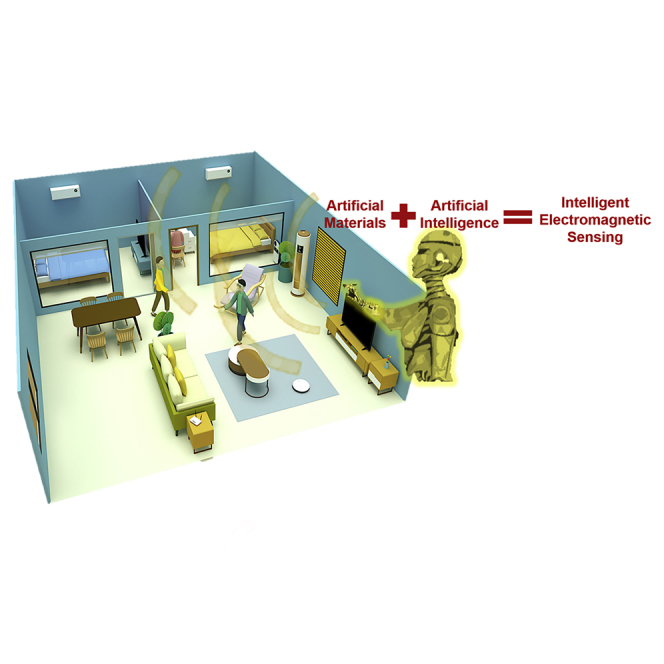

The Bigger Picture

Many futuristic “intelligent” concepts that will affect our society, from ambient-assisted health care and autonomous vehicles to touchless human-computer interaction, require sensors that can monitor a device's surroundings quickly and without extensive computational effort. To date, sensors indiscriminately acquire all information and select relevant details only during data processing, thereby wasting time, energy, and computational resources. We demonstrate intelligent electromagnetic sensing that uses learned illumination patterns to select relevant details already during the measurement process. Our experiments use a home-made programmable metasurface to generate the learned microwave patterns, which enable a remarkable reduction in the number of necessary measurements. Our demonstration addresses a widespread need for high-quality contactless electromagnetic sensing under strict time, energy, and computation constraints.

“Smart” devices must “see” and “recognize” objects and gestures in their surroundings as quickly as possible. We consider a contactless sensor that illuminates its surroundings with microwave illumination shaped by a programmable metasurface. By integrating the measurement process directly into the machine-learning pipeline that processes the data, we learn optimal illumination patterns that efficiently extract task-relevant information. Our experimental demonstration of low-latency intelligent electromagnetic sensing will influence human-computer interaction, health care, automotive radar, and security screening.

Introduction

Electromagnetic (EM) sensing is a widely used contactless examination technique because it relies on harmless nonionizing radiation that can penetrate optically opaque materials. Consequently, EM sensing has emerged as a promising tool in applications ranging from health care1, 2, 3, 4 and security screening5, 6, 7 to planetary exploration.8, 9, 10 However, cost efficiency and speed remain two major challenges to fully exploiting the application potential of EM sensing. Traditional EM hardware relies on mechanically or electronically scanned beams, which suffer from slow acquisition or high cost, respectively. Recently, novel hardware solutions were proposed that shift the cost from the hardware to the software level: rather than acquiring the information in the spatial domain, the information can be multiplexed across independent frequencies or configurations of a complex system such as a chaotic cavity or a metasurface aperture.11, 12, 13, 14 The computationally intensive challenge is then to recover the spatial information from the multiplexed measurements. Given the typical sparsity of scenes, compressive sensing schemes are usually employed to recover the information from an underdetermined set of measurements.15 Especially when analytical models are lacking, machine-learning (ML) techniques have recently been proposed to solve the electromagnetic inverse scattering problem.16, 17, 18

However, these EM sensing schemes based on generic (random) scene illuminations still lack “intelligence”: they indiscriminately acquire all information, ignoring available knowledge about the scene, the sensing task, and hardware constraints. Yet using this a priori knowledge is critical to limit data acquisition to relevant information, which is the crucial conceptual improvement necessary to reduce latency and computational burden. A first step toward adding intelligence consisted in adapting the scene illuminations to the expected scenes via a principal component analysis (PCA) of the expected scenes.19,20 Although it yields significant performance improvements, this technique still treats acquisition and processing separately, and hence fails to use task-specific measurement settings that highlight salient features for the processing layer.

To fully reap the benefits of ML techniques in EM sensing, acquisition and processing must be jointly learned in a unique pipeline. Recently, inspired by pioneering work in optical microscopy,21 the idea of learned EM sensing with programmable metasurface hardware was introduced.22 Thereby, a model of the programmable measurement process is directly integrated into the ML pipeline used to process the data, enabling the joint learning of optimal measurement and processing settings for the given hardware, task, and expected scene. We note that there are a number of related works in optics,23, 24, 25, 26, 27 and a similar concept has recently also been studied in the context of ultrasonic imaging.28 Importantly, in most EM sensing applications the specific task can be executed without first reconstructing a visual image of the scene. Current EM sensing systems are designed to first generate high-resolution images as a checkpoint for subsequent ML-aided recognition tasks, but the resulting acquisition of irrelevant information and the huge flux of data to be processed cause significant inefficiencies. Hence, for tasks such as object recognition, it is most efficient to skip the intermediate imaging step and directly process the raw data, as done in previous studies.20,22

Here, we report the first experimental demonstration of learned EM sensing. We consider the tasks of (1) imaging and (2) gesture recognition, two examples with immediate real-life applications in security screening and human-machine interaction. Our intelligent hardware layer relies on a programmable metasurface29, 30, 31 to shape the waves illuminating the scene. Unlike del Hougne et al.,22 we do not use an analytical description of the measurement process but train a three-port deep artificial neural network (ANN), called the measurement ANN (m-ANN), to capture the link between scene, metasurface coding pattern, and microwave measurement. In contrast to the study by Li et al.,32 where only the data processing is handled by an ANN, here we jointly optimize the control coding patterns of the metasurface together with the weights of another ANN, called the reconstruction ANN (r-ANN), which extracts the desired information from the raw measurements. To this end, we interpret measurement and reconstruction as, respectively, encoding and decoding the relevant scene information (an image or a gesture classification) in/from a measurement space. Thus, we can employ a variational autoencoder (VAE) framework33, 34, 35 to jointly learn optimal measurement and processing settings with a standard supervised-learning procedure. This strategy drastically reduces the number of necessary measurements, which substantially improves critical metrics such as speed, processing burden, and energy consumption.

Results

Operation Principle

Learned sensing requires integrating a model of the reconfigurable measurement process into the pipeline used for data processing, so that the learnable physical and digital weights can be jointly optimized, as illustrated in Figure 1. Because the measurement process is difficult to model accurately in an analytical manner, for instance due to inaccuracies in the metasurface fabrication or multipath effects in the indoor environment, we instead propose a data-driven model of the measurement process. We introduce a three-port deep ANN, the measurement ANN (m-ANN), that links two inputs (the scene $\mathbf{x}$ and the metasurface configuration $\mathbf{C}$) to one output (the raw microwave measurements $\mathbf{y}$). First, we learn the trainable weights of the m-ANN from a labeled training dataset of $N$ triplets $(\mathbf{x}, \mathbf{C}, \mathbf{y})$ with a standard supervised-learning procedure, as detailed in Experimental Procedures and Note S4.

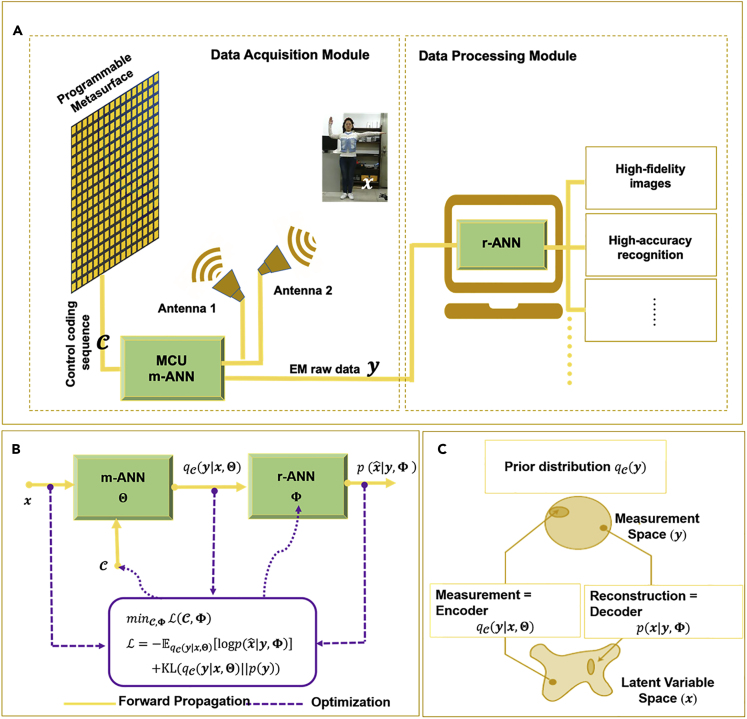

Figure 1.

Setup and Working Principle of the Learned EM Sensing System

(A) The intelligent sensing system consists of two data-driven learnable modules: the m-ANN-driven data acquisition module and the r-ANN-driven data processing module. The m-ANN models the measurement process involving a pair of horn antennas and a coding metasurface programmed with a microcontroller unit. Antenna 1 emits plane microwave fronts, which are shaped by the programmable metasurface and then impinge on the scene. The waves scattered by the subject of interest are received by Antenna 2. The received raw microwave data are directly processed by the r-ANN, producing the desired imaging or recognition results.

(B) The m-ANN-based model of data acquisition, acting as a trainable physical network, is fully integrated with the r-ANN-driven data-processing pipeline into a unique sensing chain. The m-ANN has three ports: the scene of interest and the metasurface coding pattern that shapes the scene illumination are its inputs, and the raw EM data are its output. First, the weights of the m-ANN are determined using a data-driven supervised-learning protocol and then fixed. Second, optimal values of the physical and digital parameters of the sensing chain, $\mathbf{C}$ and $\Phi$, respectively, are jointly learned using a standard supervised-learning procedure (error back-propagation) in a VAE framework. These optimal settings ensure that only task-relevant information is captured already at the hardware level and that a matching processing layer extracts the relevant information from the measurements.

(C) Our interpretation of the entire sensing process in the VAE framework: the latent variable space, $\mathcal{X}$, is encoded by the analog measurements in a measurement space, $\mathcal{Y}$; the digital reconstruction decodes the measurements to return to the latent variable space.

Once the weights of the m-ANN are fixed, we can proceed with the integration of the m-ANN into the unique sensing pipeline. Following Vedula et al.,28 we view the entire sensing process (data acquisition and processing) as a user-controlled end-to-end process. Given a scene $\mathbf{x}$ (an image or a gesture class), a set of complex-valued measurements $\mathbf{y}$ is generated by sampling from the $\mathbf{C}$-controllable conditional distribution $P_{\mathbf{C}}(\mathbf{y}\mid\mathbf{x})$. In other words, the latent scene variable of interest, $\mathbf{x}$, is encoded in a measurement space via a distribution controlled by the metasurface configuration $\mathbf{C}$. The goal of the processing is then to find an estimator $\hat{\mathbf{x}}$ that retrieves the relevant scene information from the measurements $\mathbf{y}$. This estimator inverts the action of the measurement process; in other words, it decodes the information of interest to return from the measurement space to the latent variable space. Using the VAE framework,33, 34, 35 the digital decoder can be modeled as sampling the measurement space with a conditional distribution $P_{\Phi}(\mathbf{x}\mid\mathbf{y})$, controlled by its digital weights $\Phi$, to generate estimates of the latent variable of interest. The decoding is implemented with a deep ANN, called r-ANN, whose trainable weights can hence be identified as $\Phi$.

To jointly learn optimal analog and digital weights, i.e., the metasurface control coding pattern $\mathbf{C}$ and the r-ANN weights $\Phi$, respectively, we minimize the following objective function:35

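$$\min_{\mathbf{C},\,\Phi}\; \mathbb{E}_{\mathbf{x}\sim P(\mathbf{x})}\,\mathbb{E}_{\mathbf{y}\sim P_{\mathbf{C}}(\mathbf{y}\mid\mathbf{x})}\Big[-\log P_{\Phi}(\mathbf{x}\mid\mathbf{y}) + \mathrm{KL}\big(P_{\Phi}(\mathbf{x}\mid\mathbf{y})\,\big\|\,P(\mathbf{x})\big)\Big] \qquad \text{(Equation 1)}$$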

The first term in Equation 1, $-\log P_{\Phi}(\mathbf{x}\mid\mathbf{y})$, can be interpreted as the “reconstruction error” of our VAE: it is the negative log likelihood of the true latent data given the inferred latent data. The second term, $\mathrm{KL}\big(P_{\Phi}(\mathbf{x}\mid\mathbf{y})\,\|\,P(\mathbf{x})\big)$, acts as a regularizer and encourages the distribution of the decoder to be close to a chosen prior distribution $P(\mathbf{x})$. In our work, both the analog encoder and the digital decoder are treated with deep ANNs, namely m-ANN and r-ANN, as detailed in Experimental Procedures and Notes S1 and S4.
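To make the two terms of Equation 1 concrete, the sketch below evaluates them for a single draw of $(\mathbf{x}, \mathbf{y})$ under simplifying assumptions that are ours, not the paper's: the decoder output is a diagonal Gaussian with mean `mu` and log-variance `log_var` (hypothetical names), and the prior $P(\mathbf{x})$ is a standard normal, so the KL term has a closed form. For the gesture-classification task, a categorical decoder would replace the Gaussian.

```python
import numpy as np

def gaussian_nll(x_true, mu, log_var):
    """Negative log-likelihood of x_true under a diagonal Gaussian N(mu, exp(log_var))."""
    var = np.exp(log_var)
    return 0.5 * np.sum(np.log(2.0 * np.pi) + log_var + (x_true - mu) ** 2 / var)

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, exp(log_var)) || N(0, I) ) for a diagonal Gaussian."""
    return 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var)

def vae_objective(x_true, mu, log_var):
    """Reconstruction term plus KL regularizer for one (x, y) draw (assumed Gaussian decoder)."""
    return gaussian_nll(x_true, mu, log_var) + kl_to_standard_normal(mu, log_var)

# Toy usage with hypothetical decoder outputs for a 4-pixel scene.
x = np.array([1.0, 0.0, 1.0, 0.0])     # binarized ground-truth pixels
mu = np.array([0.9, 0.1, 0.8, 0.2])    # decoder mean (would come from the r-ANN)
log_var = np.full(4, -2.0)             # decoder log-variance
print(vae_objective(x, mu, log_var))
```

In a full pipeline, `mu` and `log_var` would be produced by the r-ANN from measurements simulated by the m-ANN, and the objective would be averaged over a minibatch before back-propagation.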

To determine the optimal settings of $\mathbf{C}$ and $\Phi$, we apply a so-called alternating iterative approach. Starting from initial values of $\mathbf{C}$ and $\Phi$, we calculate $\Phi$ ($\mathbf{C}$) for the $\mathbf{C}$ ($\Phi$) updated in the last iteration step, followed by calculating $\mathbf{C}$ ($\Phi$) based on the newly obtained $\Phi$ ($\mathbf{C}$). This procedure is repeated until a stopping criterion is fulfilled. Such an optimization can be implemented using error-backpropagation36 routines for continuous variables such as $\Phi$. However, the entries of $\mathbf{C}$ are subject to a binary constraint (0 or 1), which turns the optimization over $\mathbf{C}$ into an NP-hard combinatorial problem. While del Hougne et al.22 addressed this binary constraint with a temperature parameter trick,23 in this work we use a randomized simultaneous perturbation stochastic approximation (r-SPSA) algorithm, which was originally developed for the problem of optimal well placement and control in petroleum engineering.37 More details about Equation 1 and the implementation of the optimization algorithm are provided in Figures S2 and S3 and Note S2.
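The r-SPSA variant itself is not spelled out in this section. To illustrate only the generic idea of SPSA on binary variables, the sketch below runs a plain SPSA update on a continuous relaxation `theta` of the coding pattern and thresholds it at 0.5 to obtain 0/1 codes. The relaxation, the thresholding rule, the gain schedules (Spall's common exponents 0.602 and 0.101), and all names are our assumptions rather than the authors' r-SPSA, and the toy Hamming-distance loss merely stands in for Equation 1 evaluated through the m-ANN and r-ANN. The appeal of SPSA here is that each iteration costs two loss evaluations, independent of the number of meta-atoms.

```python
import numpy as np

def binary_spsa(loss, n_bits, n_iter=300, a=0.5, c=0.25, seed=0):
    """Minimize loss(bits) over bits in {0,1}^n_bits with an SPSA-style search (hypothetical sketch).

    SPSA runs on a continuous relaxation theta in [0, 1]^n_bits; every evaluated
    coding pattern is obtained by thresholding theta at 0.5.
    """
    rng = np.random.default_rng(seed)
    theta = rng.uniform(0.0, 1.0, n_bits)

    def to_bits(t):
        return (np.clip(t, 0.0, 1.0) > 0.5).astype(int)

    best = to_bits(theta)
    best_loss = loss(best)
    history = [best_loss]
    for k in range(n_iter):
        a_k = a / (k + 1) ** 0.602                    # decaying step size
        c_k = c / (k + 1) ** 0.101                    # decaying perturbation size
        delta = rng.choice([-1.0, 1.0], size=n_bits)  # perturb all bits simultaneously
        l_plus = loss(to_bits(theta + c_k * delta))
        l_minus = loss(to_bits(theta - c_k * delta))
        grad_est = (l_plus - l_minus) / (2.0 * c_k) * delta  # 1/delta_i == delta_i for +-1
        theta = np.clip(theta - a_k * grad_est, 0.0, 1.0)
        candidate = to_bits(theta)
        cand_loss = loss(candidate)
        if cand_loss < best_loss:                     # keep the best pattern seen so far
            best, best_loss = candidate, cand_loss
        history.append(best_loss)
    return best, best_loss, history

# Toy usage: search for a hidden 8-bit pattern under a Hamming-distance loss.
target = np.array([1, 0, 1, 1, 0, 0, 1, 0])

def hamming(bits):
    return float(np.sum(bits != target))

code, value, hist = binary_spsa(hamming, n_bits=8)
print(code, value)
```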

In Situ Imaging of the Human Body

First, we apply our learned EM sensing system to the task of in situ high-resolution imaging of a human body in our laboratory environment. As outlined previously, we integrate the m-ANN for data acquisition and the r-ANN for smart data processing into a unique data-driven learnable sensing chain. To this end, we need to jointly optimize the coding patterns of the m-ANN together with the weights of the r-ANN for the specific task of human body imaging. The integrated ANN, containing a multitude of nonlinear ML layers, can be trained with a standard supervised-learning procedure in TensorFlow. Following Vedula et al.,28 we adopt a two-stage training procedure to illustrate how much the proposed learned sensing strategy improves the image quality over conventional learning-based sensing methods. During the first stage, the coding patterns of the m-ANN and the digital weights of the r-ANN are optimized separately, as in Li et al.20 The coding patterns of the m-ANN are assigned following the two most common state-of-the-art approaches, which correspond to random or PCA-based scene illuminations. During the second stage, the m-ANN and r-ANN are jointly trained to achieve the overall optimal sensing performance. With this two-stage training, the benefit of the proposed sensing strategy over the conventional methods can be clearly demonstrated. In our study, we use several people (referred to as training persons) to train our intelligent microwave sensing system and a different person (the test person) to test it. In addition, we use 1,000 random codes and 1,000 PCA-based codes (200 standard PCA-based codes and their 800 perturbations) as training samples for training the m-ANN. The details of the training persons are provided by Li et al.32 The ground truth is defined using binarized optical images of the scene.
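The PCA-based codes and their perturbations can be illustrated with a minimal sketch under a strong simplifying assumption of ours: each meta-atom is mapped to one scene pixel, so the sign of a principal component of the training scenes is used directly as a 0/1 coding pattern. The real mapping from coding pattern to scene illumination is set by the metasurface physics and is not modeled here. Creating four bit-flipped copies per code is one simple way to obtain a 200 + 800 split like the one above; the function names and the flip probability are hypothetical.

```python
import numpy as np

def pca_codes(scenes, n_codes):
    """Binarize the leading principal components of a set of scenes into 0/1 coding patterns.

    scenes: array (n_samples, n_pixels). Assumes one meta-atom per pixel (hypothetical).
    """
    centered = scenes - scenes.mean(axis=0)
    # Rows of vt are principal directions, sorted by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return (vt[:n_codes] > 0).astype(int)

def perturb_codes(codes, n_copies, flip_prob, seed=0):
    """Create n_copies randomly bit-flipped variants of every code."""
    rng = np.random.default_rng(seed)
    repeated = np.repeat(codes, n_copies, axis=0)
    flips = rng.random(repeated.shape) < flip_prob
    return np.where(flips, 1 - repeated, repeated)

# Toy usage with random stand-in "scenes" (hypothetical data): 10 PCA codes, 4 perturbed copies each.
rng = np.random.default_rng(0)
scenes = rng.random((50, 64))
codes = pca_codes(scenes, n_codes=10)
perturbed = perturb_codes(codes, n_copies=4, flip_prob=0.1)
print(codes.shape, perturbed.shape)
```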

In general, depending on the difficulty of the sensing task and the signal-to-noise ratio,38 a measurement with a single coding pattern cannot be expected to capture sufficient relevant information. Figure 2 displays the cross-validation errors over the course of the training iterations for different numbers M of coding patterns of the metasurface (3, 9, 15, and 20). The two stages of the aforementioned training protocol can be clearly distinguished. To assess the image quality quantitatively, we use the structural similarity index measure (SSIM), computed with the MATLAB function ssim. Figure S4 displays SSIM histograms for different values of M and different sensing methods. Since the trainable physical ($\mathbf{C}$) and digital ($\Phi$) parameters are initialized randomly before training (except for PCA-based $\mathbf{C}$), we have conducted 500 realizations to average out the sensitivity to the random initializations of the m-ANN and r-ANN. Examples of the corresponding optimized coding patterns of the metasurface are displayed in Figure S5.
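The SSIM values reported here come from MATLAB's ssim, which averages a locally weighted index over the image. As a rough, non-equivalent illustration of what the metric measures, the sketch below computes the single-window (global) form of the Wang et al. SSIM formula with the usual constants K1 = 0.01 and K2 = 0.03; it will not reproduce MATLAB's values exactly, and the function name is ours.

```python
import numpy as np

def global_ssim(img_a, img_b, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two equally sized grayscale images."""
    a = np.asarray(img_a, dtype=float)
    b = np.asarray(img_b, dtype=float)
    c1 = (k1 * data_range) ** 2   # stabilizes the luminance comparison
    c2 = (k2 * data_range) ** 2   # stabilizes the contrast/structure comparison
    mu_a, mu_b = a.mean(), b.mean()
    var_a, var_b = a.var(), b.var()
    cov_ab = ((a - mu_a) * (b - mu_b)).mean()
    num = (2 * mu_a * mu_b + c1) * (2 * cov_ab + c2)
    den = (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    return num / den

# Toy usage on a random binarized image (hypothetical data).
rng = np.random.default_rng(0)
img = (rng.random((32, 32)) > 0.5).astype(float)
print(global_ssim(img, img))        # identical images
print(global_ssim(img, 1.0 - img))  # inverted image
```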

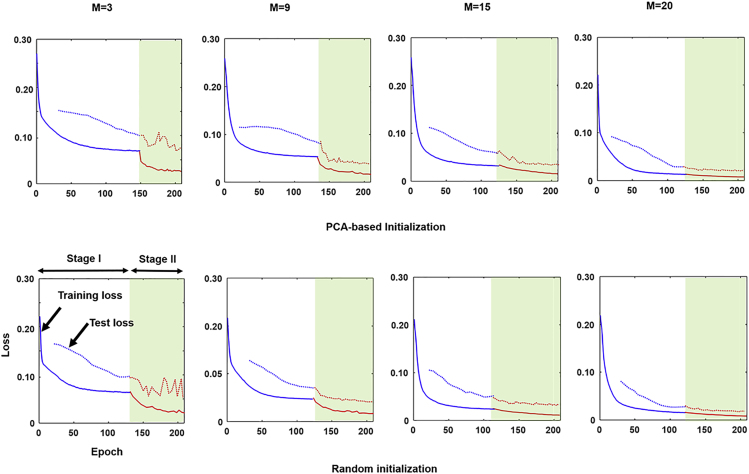

Figure 2.

Training Dynamics for Learned EM Sensing Applied to an Imaging Task

The training and test losses are shown as functions of the training epoch for different numbers of coding patterns M, i.e., M = 3, 9, 15, and 20. The continuous lines indicate the training loss and the dashed lines indicate the test loss. The control coding patterns of the metasurface are initialized randomly (top) or PCA-based (bottom). During stage I, only the digital decoder weights $\Phi$ are optimized. Then, during stage II, both the physical weights $\mathbf{C}$ and the digital weights $\Phi$ are jointly optimized. The presented results show a remarkable improvement of the image quality achieved by the joint optimization of $\mathbf{C}$ and $\Phi$ during stage II, compared with solely optimizing $\Phi$ (i.e., the end of stage I). The effect is especially striking when the number of measurements is very limited.

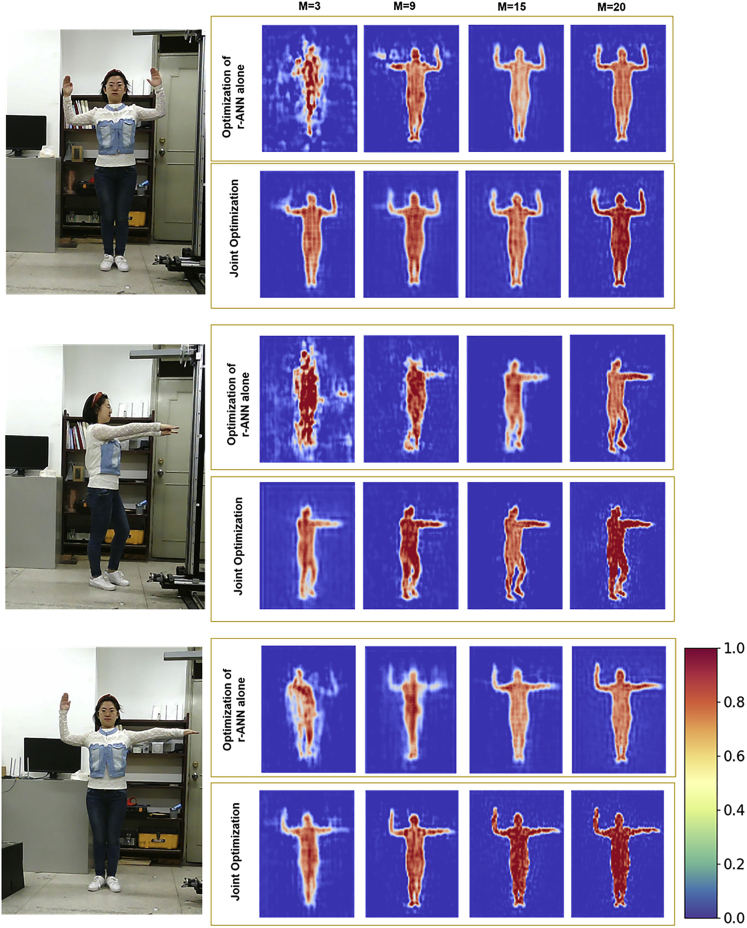

Figure 3 reports several selected image reconstruction results of the test person with different body gestures, obtained with the aforementioned sensing methods. The corresponding coding patterns of the metasurface are displayed in Figure S5. In line with del Hougne et al.,22 we observe that the sensing quality (here, image quality) achieved by jointly optimizing the physical ($\mathbf{C}$) and digital ($\Phi$) parameters is significantly better than if only $\Phi$ is optimized. This is intuitively expected, since more trainable parameters are available and all a priori knowledge is used in the learned sensing scheme. Unlike Vedula et al.,28 we do not observe a significant dependence of the performance on the initialization of the m-ANN (PCA-based or random) in this work.

Figure 3.

Experimental Results for Learned EM Sensing Applied to an Imaging Task

For three different poses, we display the images reconstructed with different numbers M of metasurface coding patterns, for the case of only optimizing $\Phi$ (first row) or jointly optimizing $\mathbf{C}$ and $\Phi$ (second row). Remarkable improvements of the image quality are achieved by the joint optimization of $\mathbf{C}$ and $\Phi$, compared with only optimizing $\Phi$, when the number of coding patterns of the metasurface is less than 9. In this set of experiments, the random initialization is used.

Our experimental results demonstrate, in line with del Hougne et al.,22 that simultaneous learning of measurement and reconstruction settings is remarkably superior to the conventional sensing strategies whereby measurement and/or reconstruction are optimized separately (if optimized at all). The benefits of learned sensing are especially strong when the number of measurements is highly limited such that learned sensing enables a remarkable dimensionality reduction. Ultimately, these superior characteristics are enabled by training a unique integrated sensing chain, making use of all available a priori knowledge about the probed scene, task, and constraints on measurement setting and processing pipeline.

In Situ Recognition of Body Gestures

Finally, we consider the task of recognizing body gestures from raw measurement data, i.e., without an intermediate imaging step. As before, the m-ANN and r-ANN are merged into a unique sensing chain, which is simultaneously trained using a standard supervised-learning technique, this time to maximize the entire system's gesture classification accuracy. We use the same training and test dataset of four different body gestures as well as the same two-stage training procedure as before. The coding pattern $\mathbf{C}$ is initialized randomly.

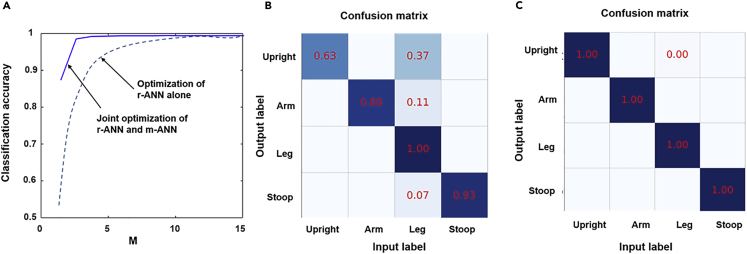

Figure 4A reports the average classification accuracy as a function of the number of measurements M. The presented results have been obtained over four different body gestures, each with 100 test samples. Additionally, the corresponding confusion matrices for different sensing methods are reported in Figures 4B and 4C. A confusion matrix shows how often a given body gesture is mistakenly classified as one of the other gesture classes. Note that the strong diagonal elements (corresponding to correctly identified body gestures) reflect the recognition accuracy of almost 100% on average achieved with the learned sensing method. The performance of the learned sensing scheme saturates at 98% around M = 5, meaning that only a few coding patterns of the metasurface suffice to extract essentially all task-relevant information. For M ≤ 5, our scheme yields accuracy gains on the order of 5%–35%, a substantial improvement in the context of classification tasks. The reduction in the number of necessary measurements afforded by the learned sensing scheme reduces the time needed to acquire and process data, as well as the sensor's energy consumption.
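The confusion matrices and average accuracies discussed above can be computed from predicted and true gesture labels as sketched below. The labels in the usage example are hypothetical placeholders for the four gesture classes; in the experiment, each class would contribute 100 test samples.

```python
import numpy as np

def confusion_matrix(true_labels, pred_labels, n_classes):
    """Rows index the true gesture, columns the predicted gesture; entries are counts."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(true_labels), np.asarray(pred_labels)), 1)
    return cm

def row_normalized(cm):
    """Convert counts to per-class rates so that each row sums to 1."""
    return cm / cm.sum(axis=1, keepdims=True)

def accuracy(cm):
    """Fraction of samples on the diagonal, i.e., correctly classified."""
    return np.trace(cm) / cm.sum()

# Toy usage with hypothetical labels for four gesture classes (0-3).
true = np.array([0, 0, 1, 1, 2, 2, 3, 3])
pred = np.array([0, 1, 1, 1, 2, 2, 3, 0])
cm = confusion_matrix(true, pred, n_classes=4)
print(cm)
print(accuracy(cm))
```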

Figure 4.

Experimental Results for Learned EM Sensing Applied to a Gesture-Recognition Task

Rather than imaging the scene, we now directly process the raw data with the r-ANN in order to classify the gesture displayed in the scene. The metasurface coding patterns are initialized randomly.

(A) Dependence of the classification accuracy on the number of coding patterns of the programmable metasurface.

(B) The classification confusion matrix for M = 3 if only $\Phi$ is optimized.

(C) The classification confusion matrix for M = 3 for jointly optimized $\mathbf{C}$ and $\Phi$.

Discussion

We have reported an experimental implementation of the recently proposed learned EM sensing paradigm applied to two real-life tasks: imaging and gesture recognition. We leveraged a programmable metasurface on the physical layer to shape scene illuminations. Our integrated sensing chain is composed of two dedicated deep ANNs, the m-ANN for smart data acquisition and the r-ANN for instant data processing. Using a VAE formalism, we jointly trained the learnable weights of the measurement process and those of the processing layer to learn an optimal sensing strategy. Thereby, we make use of all available a priori knowledge about the probed scene, the specific sensing task, and the constraints on the measurement setting and processing pipeline. As a result, the learned sensing strategy with simultaneous learning of measurement and reconstruction procedures yields a superior performance compared with conventional sensing strategies that optimize measurement and processing separately (or not at all). The performance improvements are particularly large when the number of measurements is very limited, as we have experimentally demonstrated. To summarize, we have reported experimental results with immediate practical impact showing how to merge artificial materials and artificial intelligence in the design of a learned EM sensing architecture. Our work paves the way toward low-latency microwave sensing for security screening, human-computer interaction, health care, and automotive radar.

Experimental Procedures

Design of Programmable Coding Metasurface

The programmable metasurface29, 30, 31 is an ultrathin planar array of electronically reconfigurable meta-atoms. Thanks to its unique capabilities to manipulate EM wave fields in a reprogrammable manner, it has elicited many exciting physical phenomena39,40 and versatile functional devices,41 such as computational imagers and sensors,11, 12, 13,20,22,32,42 programmable holography,43 wireless communication in programmable environments,44,45 and even analog wave-based computing.46

The designed programmable metasurface consists of 32 × 24 meta-atoms operating around 2.4 GHz, as shown in Figure S1, which details the 54 × 54 mm meta-atom. The meta-atom is composed of two substrate layers: the top layer is F4B with a relative permittivity of 2.55 and a loss tangent of 0.0019; the bottom layer is FR4 with dimensions of 0.54 × 0.54 mm². The top square metal patch of size 0.37 × 0.37 mm² contains a PIN diode (SMP1345-079LF) that controls the EM reflection phase of the meta-atom. In addition, a Murata LQW04AN10NH00 inductor with an inductance of 33 nH is used to achieve good isolation between the radiofrequency and direct-current signals. In our design, the entire programmable metasurface is composed of 3 × 3 metasurface panels, and each panel is composed of 8 × 8 electronically controllable digital meta-atoms. Each metasurface panel is equipped with eight 8-bit shift registers (SN74LV595APW), each of which sequentially controls eight PIN diodes. The adopted clock (CLK) rate is 50 MHz, and the ideal switching time of the PIN diodes is 10 μs. In our implementation, the switching time between coding patterns of the metasurface is set to 20 μs; thus, the data-acquisition time is on the order of M × 20 μs, where M is the total number of coding patterns.
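The timing budget above can be worked out with two one-line estimates. The first follows directly from the stated 20 μs per coding pattern. The second assumes, which the text does not state, that the 64 control bits of one 8 × 8 panel are shifted in serially at one bit per 50 MHz clock cycle; under that assumption, loading a pattern takes well under the 20 μs switching budget. Function names are hypothetical.

```python
def acquisition_time_us(n_patterns, switch_time_us=20.0):
    """Data-acquisition time estimate: one metasurface reconfiguration per coding pattern."""
    return n_patterns * switch_time_us

def serial_load_time_us(n_bits=64, clock_hz=50e6):
    """Time to clock n_bits into a shift-register chain at one bit per clock cycle (assumed)."""
    return n_bits / clock_hz * 1e6

for m in (3, 5, 9, 20):
    print(f"M = {m:2d}: about {acquisition_time_us(m):.0f} us")
print(f"Serial load of one 8 x 8 panel: {serial_load_time_us():.2f} us")
```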

Model of the m-ANN

Rather than attempting to write down an analytical forward model of the measurement process as done by del Hougne et al.,22 we opt for learning a forward model, the m-ANN, that automatically accounts for metasurface fabrication inaccuracies and stray reflections. Note that this approach could also readily be applied to alternative hardware capable of generating programmable scene illuminations, such as dynamic metasurface antennas47 or even traditional antenna arrays.48 Unlike conventional end-to-end deep ANNs that have two ports, our m-ANN has three ports: one inputs the latent variable $\mathbf{x}$ of the probed scene (in our work an image or a gesture class), one inputs the metasurface coding pattern $\mathbf{C}$, and the third one outputs the raw measurement $\mathbf{y}$ (in our work the raw microwave data), as illustrated in Figure 1. From a deterministic standpoint, we can describe the three-port m-ANN with a pair of coupled nonlinear equations:

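$$\mathbf{y} = \mathcal{F}\left(\mathbf{x};\, \Theta_{\mathbf{C}}\right) + \mathbf{n}_1 \qquad \text{(Equation 2)}$$

$$\Theta_{\mathbf{C}} = \mathcal{G}\left(\mathbf{C}\right) + \mathbf{n}_2 \qquad \text{(Equation 3)}$$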

The variables $\mathbf{n}_1$ and $\mathbf{n}_2$ in Equations 2 and 3 represent the modeling errors, and their entries are considered to be independent and identically distributed complex-valued Gaussian random numbers. In Equation 2, $\Theta_{\mathbf{C}}$ encapsulates all learnable weights of an end-to-end ANN relating the input $\mathbf{x}$ to the desired measurement $\mathbf{y}$. The subscript $\mathbf{C}$ on $\Theta$ highlights the fundamental fact that these trainable network weights depend on the coding pattern of the metasurface through Equation 3. In our implementation, the two nonlinear mappings $\mathcal{F}$ and $\mathcal{G}$ are each modeled with a deep convolutional neural network (CNN), with trainable weights $\Omega_{\mathcal{F}}$ and $\Omega_{\mathcal{G}}$, respectively. In this work, we adopt the deep residual CNN architectures developed by Li et al.,32 but with eight layers for $\mathcal{F}$ and three layers for $\mathcal{G}$. Both $\Omega_{\mathcal{F}}$ and $\Omega_{\mathcal{G}}$ can be readily learned with a standard supervised-learning procedure from triplet training samples $(\mathbf{x}^{(n)}, \mathbf{C}^{(n)}, \mathbf{y}^{(n)})$, where the superscript $(n)$ denotes the index of training samples. More details can be found in Note S4.
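The three-port structure of Equations 2 and 3 can be illustrated with a deliberately simple surrogate in which both mappings are linear and noise-free: $\mathcal{G}$ maps the ±1 version of the coding pattern to a complex illumination vector over the scene pixels, and $\mathcal{F}$ is the inner product of that illumination with the scene. This bilinear stand-in is our assumption and replaces the residual CNNs used in the paper; it is meant only to show how such a forward model can be fitted from $(\mathbf{x}, \mathbf{C}, \mathbf{y})$ triplets, here by least squares. Names such as `A_true` and `fit_bilinear` are hypothetical.

```python
import numpy as np

def illumination(code, A):
    """Equation 3 analogue: Theta_C = G(C), here a linear map of s = 2C - 1 (noise-free)."""
    s = 2.0 * code - 1.0
    return A @ s                      # one complex weight per scene pixel

def measure(scene, code, A):
    """Equation 2 analogue: y = F(x; Theta_C), here the inner product of illumination and scene."""
    return illumination(code, A) @ scene

def fit_bilinear(scenes, codes, ys):
    """Recover A from triplets: y_n = sum_ij A_ij x_i s_j is linear in the entries of A."""
    s = 2.0 * codes - 1.0
    features = np.einsum("ni,nj->nij", scenes, s).reshape(len(ys), -1).astype(complex)
    a_flat, *_ = np.linalg.lstsq(features, ys, rcond=None)
    return a_flat.reshape(scenes.shape[1], codes.shape[1])

# Toy usage: synthesize triplets from a hidden model, then fit it back.
rng = np.random.default_rng(1)
n_pix, n_atoms, n_samples = 6, 4, 200
A_true = rng.normal(size=(n_pix, n_atoms)) + 1j * rng.normal(size=(n_pix, n_atoms))
scenes = rng.random((n_samples, n_pix))
codes = rng.integers(0, 2, size=(n_samples, n_atoms))
ys = np.array([measure(x, c, A_true) for x, c in zip(scenes, codes)])
A_hat = fit_bilinear(scenes, codes, ys)
print(np.max(np.abs(A_hat - A_true)))
```

A deep network is needed in practice because fabrication errors and multipath make the true mapping nonlinear; the linear surrogate simply makes the roles of the three ports explicit.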

Training of m-ANN and r-ANN

The complex-valued weights of the m-ANN and r-ANN are trained with the Adam optimization method.49 For the r-ANN, we adopt the deep residual CNN architectures developed by Li et al.32 The complex-valued weights are initialized with random values drawn from a zero-mean Gaussian distribution with a standard deviation of 10−3. Training is performed on a workstation with an Intel Xeon E5-1620v2 central processing unit, an NVIDIA GeForce GTX 1080Ti graphics card, and 128 GB of random-access memory. The ML platform TensorFlow50 is used to design and train the networks in the learned EM sensing system. Training the whole learnable sensing pipeline, including the m-ANN and r-ANN, takes about 267 h. Once the r-ANN is trained, a single inference pass takes less than 0.6 ms.
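One simple way to apply Adam to complex-valued weights, assumed here rather than taken from the paper, is to treat real and imaginary parts as independent real parameters. The NumPy sketch below draws initial weights from a zero-mean Gaussian with standard deviation 10−3 (applied, by our assumption, to the real and imaginary parts separately) and applies textbook Adam updates with the default β1 = 0.9, β2 = 0.999, and ε = 10−8. The toy quadratic loss and all names are illustrative only.

```python
import numpy as np

def init_complex(shape, std=1e-3, seed=0):
    """Zero-mean Gaussian init; std applied to real and imaginary parts separately (assumed)."""
    rng = np.random.default_rng(seed)
    return std * (rng.standard_normal(shape) + 1j * rng.standard_normal(shape))

class ComplexAdam:
    """Adam on complex weights, treating real and imaginary parts as independent real parameters."""

    def __init__(self, lr=1e-2, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = self.v = None
        self.t = 0

    def step(self, w, grad):
        # grad = dL/dRe(w) + 1j * dL/dIm(w); stack both parts as one real array.
        g = np.stack([grad.real, grad.imag])
        if self.m is None:
            self.m, self.v = np.zeros_like(g), np.zeros_like(g)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * g       # first-moment estimate
        self.v = self.beta2 * self.v + (1 - self.beta2) * g ** 2  # second-moment estimate
        m_hat = self.m / (1 - self.beta1 ** self.t)               # bias corrections
        v_hat = self.v / (1 - self.beta2 ** self.t)
        update = self.lr * m_hat / (np.sqrt(v_hat) + self.eps)
        return w - (update[0] + 1j * update[1])

# Toy usage: fit w to a target under L = sum |w - target|^2.
target = np.array([1.0 + 2.0j, -0.5 + 0.3j])
w = init_complex(target.shape)
opt = ComplexAdam(lr=1e-2)
for _ in range(3000):
    grad = 2.0 * (w - target)   # dL/dRe(w) + 1j * dL/dIm(w) for this loss
    w = opt.step(w, grad)
print(w)
```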

Configuration of Proof-of-Concept Sensing System

The experimental setup, sketched in Figure 1A, consists of a transmitting (TX) horn antenna, a receiving (RX) horn antenna, a large-aperture programmable metasurface, and a vector network analyzer (VNA; Agilent E5071C). The two horn antennas are connected to two ports of the VNA via two 4-m-long 50-Ω coaxial cables, and the VNA acquires the response data by measuring the transmission coefficient (S21). To suppress measurement noise, the number of averages and the IF (filter) bandwidth of the VNA are set to 10 and 10 kHz, respectively. More details can be found in Note S1.
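As a back-of-the-envelope check of what averaging contributes, assume (our assumption) that the noise in the N = 10 averaged sweeps is zero-mean and uncorrelated between sweeps. The noise standard deviation then drops by a factor of √N, and the power signal-to-noise ratio improves by 10 log10 N:

$$\sigma_{\text{avg}} = \frac{\sigma}{\sqrt{N}}, \qquad \Delta\mathrm{SNR} = 10\log_{10} N = 10\log_{10} 10 = 10\ \text{dB} \quad (N = 10).$$

Correlated disturbances, such as stray reflections from the static environment, are not reduced by averaging.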

Acknowledgments

This work was supported in part by the National Key Research and Development Program of China (2017YFA0700201, 2017YFA0700202, and 2017YFA0700203) and in part by the National Natural Science Foundation of China (61471006, 61631007, and 61571117). P.d.H. acknowledges fruitful discussions with the other authors of del Hougne et al.22

Author Contributions

L.L. conceived the idea, conducted the theoretical analysis, and wrote the manuscript. L.L. and P.d.H. contributed to conceptualization and write-up of the project. H.-Y.L. conducted experiments and data processing. All authors participated in the experiments and data analysis, and read the manuscript.

Declaration of Interests

The authors declare no competing financial interests.

Published: April 10, 2020

Footnotes

Supplemental Information can be found online at https://doi.org/10.1016/j.patter.2020.100006.

Contributor Information

Tie Jun Cui, Email: tjcui@seu.edu.cn.

Philipp del Hougne, Email: philipp.delhougne@gmail.com.

Lianlin Li, Email: lianlin.li@pku.edu.cn.

Supplemental Information

References

- 1.Semenov S., Kellam J., Nair B., Williams B., Quinn M., Sizov Y., Nazarov A., Pavlovsky A. Microwave tomography of extremities: 2. Functional fused imaging of flow reduction and simulated compartment syndrome. Phys. Med. Biol. 2011;56:2019–2030. doi: 10.1088/0031-9155/56/7/007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Poplack S.P., Tosteson T.D., Wells W.A., Pogue B.W., Paulsen K.D. Electromagnetic breast imaging: results of a pilot study in women with abnormal mammograms. Radiology. 2007;243:350–359. doi: 10.1148/radiol.2432060286. [DOI] [PubMed] [Google Scholar]

- 3.Hassan A.M., El-Shenawee M. Review of electromagnetic techniques for breast cancer detection. IEEE Rev. Biomed. Eng. 2011;4:103–118. doi: 10.1109/RBME.2011.2169780. [DOI] [PubMed] [Google Scholar]

- 4.Mercuri M., Lorato I.R., Liu Y., Wieringa F., Hoof C.V., Torfs T. Vital-sign monitoring and spatial tracking of multiple people using a contactless radar-based sensor. Nat. Electron. 2019;2:252–262. [Google Scholar]

- 5.Accardo J., Chaudhry M.A. Radiation exposure and privacy concerns surrounding full-body scanners in airports. J. Radiat. Res. Appl. Sci. 2014;7:198–200. [Google Scholar]

- 6.Gonzalez-Valdes B., Alvarez Y., Mantzavinos S., Rappaport C.M., Las-Heras F., Martinez-Lorenzo J.A. Improving security screening: a comparison of multistatic radar configurations for human body imaging. IEEE Antennas Propag. Mag. 2016;58:35–47. [Google Scholar]

- 7.Nan H., Liu S., Buckmaster J.G., Arbabian A. Beamforming microwave-induced thermoacoustic imaging for screening applications. IEEE Trans. Microw. Theory Tech. 2019;67:464–474. [Google Scholar]

- 8.Li C., Wang C., Wei Y., Lin Y. China’s present and future lunar exploration program. Science. 2019;365:238–239. doi: 10.1126/science.aax9908. [DOI] [PubMed] [Google Scholar]

- 9.Orosei R., Lauro S.E., Pettinelli E., Cicchetti A., Cordini M., Cosciotti B., Di Paolo F., Flamini E., Mattei E., Pajola M. Radar evidence of subglacial liquid water on Mars. Science. 2018;361:490–493. doi: 10.1126/science.aar7268. [DOI] [PubMed] [Google Scholar]

- 10. Picardi G. Radar soundings of the subsurface of Mars. Science. 2005;310:1925–1928. doi: 10.1126/science.1122165.

- 11. Hunt J., Driscoll T., Mrozack A., Lipworth G., Reynolds M., Brady D., Smith D.R. Metamaterial apertures for computational imaging. Science. 2013;339:310–313. doi: 10.1126/science.1230054.

- 12. Fromenteze T., Yurduseven O., Imani M.F., Gollub J., Decroze C., Carsenat D., Smith D. Computational imaging using a mode-mixing cavity at microwave frequencies. Appl. Phys. Lett. 2015;106:194104.

- 13. Sleasman T., Imani M.F., Gollub J.N., Smith D.R. Dynamic metamaterial aperture for microwave imaging. Appl. Phys. Lett. 2015;107:204104.

- 14. Sleasman T., Imani M.F., Gollub J.N., Smith D.R. Microwave imaging using a disordered cavity with a dynamically tunable impedance surface. Phys. Rev. Appl. 2016;6:054019.

- 15. Donoho D.L. Compressed sensing. IEEE Trans. Inf. Theory. 2006;52:1289–1306.

- 16. Jin K.H., McCann M.T., Froustey E., Unser M. Deep convolutional neural network for inverse problems in imaging. IEEE Trans. Image Process. 2017;26:4509–4522. doi: 10.1109/TIP.2017.2713099.

- 17. Li L., Wang L.G., Teixeira F.L., Liu C., Nehorai A., Cui T.J. DeepNIS: deep neural network for nonlinear electromagnetic inverse scattering. IEEE Trans. Antennas Propag. 2019;67:1819–1825.

- 18. Li L., Wang L.G., Teixeira F.L. Performance analysis and dynamic evolution of deep convolutional neural network for electromagnetic inverse scattering. IEEE Antennas Wirel. Propag. Lett. 2019;18:2259–2263.

- 19. Liang M., Li Y., Meng H., Neifeld M.A., Xin H. Reconfigurable array design to realize principal component analysis (PCA)-based microwave compressive sensing imaging system. IEEE Antennas Wirel. Propag. Lett. 2015;14:1039–1042.

- 20. Li L., Ruan H., Liu C., Li Y., Shuang Y., Alù A., Qiu C., Cui T.J. Machine-learning reprogrammable metasurface imager. Nat. Commun. 2019;10:1082. doi: 10.1038/s41467-019-09103-2.

- 21. Horstmeyer R., Chen R.Y., Kappes B., Judkewitz B. Convolutional neural networks that teach microscopes how to image. 2017. https://arxiv.org/abs/1709.07223

- 22. del Hougne P., Imani M.F., Diebold A.V., Horstmeyer R., Smith D.R. Learned integrated sensing pipeline: reconfigurable metasurface transceivers as trainable physical layer in an artificial neural network. Adv. Sci. 2019:1901913. doi: 10.1002/advs.201901913.

- 23. Chakrabarti A. Learning sensor multiplexing design through back-propagation. In: Lee D.D., Sugiyama M., Luxburg U.V., Guyon I., Garnett R., editors. Proceedings of the 30th International Conference on Neural Information Processing Systems. Curran Associates; 2016. pp. 3081–3089.

- 24. Kellman M.R., Bostan E., Repina N.A., Waller L. Physics-based learned design: optimized coded-illumination for quantitative phase imaging. IEEE Trans. Comput. Imaging. 2019;5:344–353.

- 25. Sitzmann V., Diamond S., Peng Y., Dun X., Boyd S., Heidrich W., Heide F., Wetzstein G. End-to-end optimization of optics and image processing for achromatic extended depth of field and super-resolution imaging. ACM Trans. Graph. 2018;37:1–13.

- 26. Chang J., Sitzmann V., Dun X., Heidrich W., Wetzstein G. Hybrid optical-electronic convolutional neural networks with optimized diffractive optics for image classification. Sci. Rep. 2018;8:12324. doi: 10.1038/s41598-018-30619-y.

- 27. Muthumbi A., Chaware A., Kim K., Zhou K.C., Konda P.C., Chen R., Judkewitz B., Erdmann A., Kappes B., Horstmeyer R. Learned sensing: jointly optimized microscope hardware for accurate image classification. Biomed. Opt. Express. 2019;10:6351–6369. doi: 10.1364/BOE.10.006351.

- 28. Vedula S., Senouf O., Zurakhov G., Bronstein A., Michailovich O., Zibulevsky M. Learning beamforming in ultrasound imaging. Proc. Mach. Learn. Res. 2019;102:493–511.

- 29. Sievenpiper D., Schaffner J., Loo R., Tangonan G., Ontiveros S., Harold R. A tunable impedance surface performing as a reconfigurable beam steering reflector. IEEE Trans. Antennas Propag. 2002;50:384–390.

- 30. Cui T.J., Qi M.Q., Wan X., Zhao J., Cheng Q. Coding metamaterials, digital metamaterials and programmable metamaterials. Light Sci. Appl. 2014;3:e218.

- 31. Li L., Cui T.J. Information metamaterials—from effective media to real-time information processing systems. Nanophotonics. 2019;8:703–724.

- 32. Li L., Shuang Y., Ma Q., Li H., Zhao H., Wei M., Liu C., Hao C., Qiu C., Cui T.J. Intelligent metasurface imager and recognizer. Light Sci. Appl. 2019;8:97. doi: 10.1038/s41377-019-0209-z.

- 33. Kingma D.P., Welling M. Auto-encoding variational Bayes. 2014. https://arxiv.org/abs/1312.6114

- 34. Doersch C. Tutorial on variational autoencoders. 2016. https://arxiv.org/abs/1606.05908

- 35. Mehta P., Bukov M., Wang C.H., Day A., Richardson C., Fisher C., Schwab D.J. A high-bias, low-variance introduction to machine learning for physicists. Phys. Rep. 2019;810:1–124. doi: 10.1016/j.physrep.2019.03.001.

- 36. LeCun Y., Bottou L., Bengio Y., Haffner P. Gradient-based learning applied to document recognition. Proc. IEEE. 1998;86:2278–2324.

- 37. Li L., Jafarpour B., Mohammad-Khaninezhad M.R. A simultaneous perturbation stochastic approximation algorithm for coupled well placement and control optimization under geologic uncertainty. Comput. Geosci. 2013;17:167–188.

- 38. del Hougne P., Imani M.F., Fink M., Smith D.R., Lerosey G. Precise localization of multiple noncooperative objects in a disordered cavity by wave front shaping. Phys. Rev. Lett. 2018;121:063901. doi: 10.1103/PhysRevLett.121.063901.

- 39. Zhang L., Chen X.Q., Liu S., Zhang Q., Zhao J., Dai J.Y., Bai G.D., Wan X., Cheng Q., Castaldi G. Space-time-coding digital metasurfaces. Nat. Commun. 2018;9:4334. doi: 10.1038/s41467-018-06802-0.

- 40. Zhang L., Chen X.Q., Shao R.W., Dai J.Y., Cui T.J. Breaking reciprocity with space-time-coding digital metasurfaces. Adv. Mater. 2019;31:1904069. doi: 10.1002/adma.201904069.

- 41. Yang H., Cao X., Yang F., Gao J., Xu S., Li M., Chen X., Zhao Y., Zheng Y., Li S. A programmable metasurface with dynamic polarization, scattering and focusing control. Sci. Rep. 2016;6:35692. doi: 10.1038/srep35692.

- 42. Gollub J.N., Yurduseven O., Trofatter K.P., Arnitz D., Imani M.F., Sleasman T., Boyarsky M., Rose A., Pedross-Engel A. Large metasurface aperture for millimeter wave computational imaging at the human-scale. Sci. Rep. 2017;7:42650. doi: 10.1038/srep42650.

- 43. Li L., Cui T.J., Ji W., Liu S., Ding J., Wan X., Li Y.B., Jiang M., Qiu C., Zhang S. Electromagnetic reprogrammable coding-metasurface holograms. Nat. Commun. 2017;8:197. doi: 10.1038/s41467-017-00164-9.

- 44. del Hougne P., Fink M., Lerosey G. Optimally diverse communication channels in disordered environments with tuned randomness. Nat. Electron. 2019;2:36–41.

- 45. Zhao J., Yang X., Dai J.Y., Cheng Q., Li X., Qi N.H., Ke J.C., Bai G.D., Liu S., Jin S. Programmable time-domain digital-coding metasurface for non-linear harmonic manipulation and new wireless communication systems. Natl. Sci. Rev. 2019;6:231–238. doi: 10.1093/nsr/nwy135.

- 46. del Hougne P., Lerosey G. Leveraging chaos for wave-based analog computation: demonstration with indoor wireless communication signals. Phys. Rev. X. 2018;8:041037.

- 47. Sleasman T., Imani M.F., Diebold A.V., Boyarsky M., Trofatter K.P., Smith D.R. Implementation and characterization of a two-dimensional printed circuit dynamic metasurface aperture for computational microwave imaging. 2019. https://arxiv.org/abs/1911.08952

- 48. Fenn A.J., Temme D.H., Delaney W.P., Courtney W.E. The development of phased-array radar technology. Lincoln Lab. J. 2000;12:20.

- 49. Kingma D.P., Ba J.L. Adam: a method for stochastic optimization. 2014. https://arxiv.org/abs/1412.6980

- 50. Abadi M., Barham P., Chen J., Chen Z., Davis A., Dean J., Devin M., Ghemawat S., Irving G., Isard M., et al. TensorFlow: a system for large-scale machine learning. In: 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16). 2016. pp. 265–283.
