Abstract

To achieve visual space constancy, our brain remaps eye-centered projections of visual objects across saccades. Here, we measured saccade trajectory curvature following the presentation of visual, auditory, and audiovisual distractors in a double-step saccade task to investigate if this stability mechanism also accounts for localized sounds. We found that saccade trajectories systematically curved away from the position at which either a light or a sound was presented, suggesting that both modalities are represented in eye-centered oculomotor centers. Importantly, the same effect was observed when the distractor preceded the execution of the first saccade. These results suggest that oculomotor centers keep track of visual, auditory and audiovisual objects by remapping their eye-centered representations across saccades. Furthermore, they argue for the existence of a supra-modal map which keeps track of multi-sensory object locations across our movements to create an impression of space constancy.

Subject terms: Perception, Auditory system, Motor control, Oculomotor system, Visual system

Introduction

The location of sounds cannot be directly determined from the auditory input received at the ears, but must instead be derived from a series of complex computational steps1. Indeed, sounds are localized from the integration of binaural (interaural time difference and interaural level difference) and spectral cues within a network of midbrain2,3 and cortical structures4, resulting in head-centered encoding of auditory space. However, it has been shown that the representation of sounds gradually shifts from a head-centered to an eye-centered reference frame between the inferior colliculus—IC—and the superior colliculus—SC5–10. Furthermore, sound location is also encoded in eye-centered coordinates within several other cortical structures (e.g. Lateral Intraparietal area—LIP11–13, or the Frontal Eye Fields—FEF14). Such a transformation suggests a role for eye-centered representation in auditory localization. In line with this idea, Doyle and Walker15 showed that, even when instructed to saccade to visual targets and ignore auditory distractors, saccade trajectories still curve away from the location of auditory distractors presented before movement initiation. Accordingly, to induce this systematic effect on the saccade trajectory, the location of the auditory distractor must be represented within the eye-centered oculomotor centers used for saccade targeting. It is believed that the simultaneous representation of the auditory distractor and the visual saccade target locations within the same oculomotor map leads to competition during the elaboration of the motor plan16. This competition then results in systematic changes in the curvature of the saccade trajectory as a function of the distractor location. However, if auditory distractors are indeed represented within eye-centered oculomotor centers, then what would happen when the mapping between head-centered and eye-centered encoding is changed because the eyes have moved but the head (and ears) have remained stable?

When the eyes move, even an object that is stationary in the external world will project onto a different part of the retina after the movement. Thus, despite the subjective experience of a stable visual world, every single eye movement induces a shift of the retinal image, which effectively challenges the notion of visual stability. For visual objects, different studies have shown that both cortical and sub-cortical structures remap (or update) their visual neuron receptive fields before the movement of the eyes begins17–19. Visual neurons, which after a saccade will receive an object within their receptive field, show a predictive remapping activity that allows them to keep track of visual objects across eye movements20. This predictive remapping is thought to facilitate stable visual perception as it establishes an effective mechanism by which the brain can compensate for impending retinal image shifts induced by movements of the eyes. Until now, however, remapping effects have only been reported for visually defined objects.

Nevertheless, auditory cells within the SC are modulated by the position of the eyes, with reduced or abolished activity observed when a change in eye direction brings the sound source outside of the eye-centered receptive field of the recorded neuron8,21. Additionally, it has been shown that humans can accurately shift their gaze toward the origin of an auditory target presented before a movement, even after making a double-step eye22 or combined eye and head movement23,24. The ability to correctly perform such a task suggests that the memorized location of the auditory stimulus was accurately updated, or remapped, across the first saccade such that the second saccade could be precisely targeted. However, these results do not exclude the possibility that the auditory location was represented solely within head-centered auditory maps and simply relayed to eye-centered oculomotor centers (before the onset of the first movement) to form a memorized, eye-centered motor plan. As such, earlier studies leave open the question of the mechanism used to process sound location across saccades.

Here we aimed to determine the mechanism used to represent visual, auditory, and audiovisual distractors across saccades. To do so, we built a custom screen (Fig. 1A) that allowed us to accurately record eye movements while auditory or visual distractors were presented at different locations. We instructed participants to move their eyes following a double-step sequence consisting of a first (left- or rightward) saccade to the screen center followed by a second (up- or downward) saccade along the screen vertical meridian. Distractors were presented (Fig. 1B) either before (pre-saccadic distractor trials) or after the first horizontal saccade (inter-saccadic distractor trials). For these two types of trials, we analyzed the second vertical saccade as a function of the distractor position and sensory modality. We predicted that if a distractor was represented within eye-centered oculomotor centers, its representation would compete with the representation of the visual saccade target and influence the trajectory of the second saccade16,25. For inter-saccadic trials, in which screen (or head-centered) and eye-centered representations of the distractor are equivalent, we simply predicted that the saccade trajectory would curve away from the distractor. As expected, in these inter-saccadic distractor trials for which both eye- and head-centered representations of the distractor were aligned, we found that the trajectory of the second saccade systematically curved away from the distractor screen location irrespective of whether the distractor was visual, auditory or audiovisual.

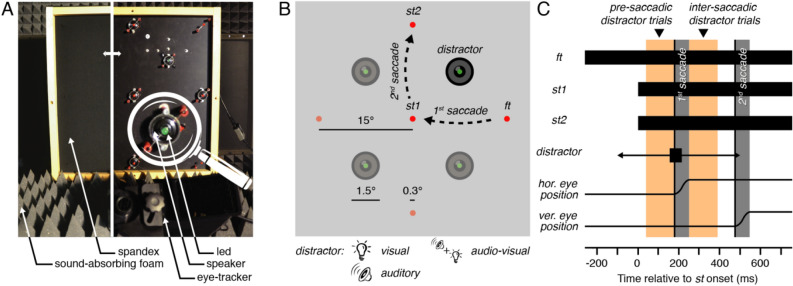

Figure 1.

Apparatus and stimulus timing. (A) We built a combined eye-tracking and audiovisual screen consisting of LEDs and sound speakers (the left side of the panel shows the setup covered with spandex, transparent to sounds and lights, while the right side shows the equipment below) in a cabin covered with sound-absorbing foam. (B) The presentation of the fixation target (ft) was followed by the simultaneous appearance of the saccade targets (st1 and st2). Participants (n = 8 in Exp. 1; n = 7 in Exp. 2) executed a sequence of two saccades while ignoring the onset of a distractor, a brief (50 ms) visual, auditory or audiovisual stimulus presented at a location either clockwise or counterclockwise relative to the second saccade direction. (C) Pre-saccadic and inter-saccadic trials (orange bars) were defined according to the presentation time of the distractor: the period preceding (pre-saccadic) or following (inter-saccadic) the first saccade. The curvature of the second saccade of pre-saccadic and inter-saccadic distractor trials was analyzed as a function of the distractor position and sensory modality (visual, auditory or audiovisual).

For pre-saccadic trials, for which the distractor occurred before the first saccade, we could formulate two separate hypotheses about the curvature of the second saccade. If the eye-centered (or retinotopic) distractor representation is not being remapped across the first eye movement, we would predict that the second saccade curves toward the distractor screen position (because this corresponds to curvature away from the retinal position of the distractor before the first saccade). On the contrary, if the eye-centered distractor representation is being remapped across the first eye movement, we would predict that the second saccade curves away from the distractor screen position. Supporting the second hypothesis, we found that vertical saccades recorded in pre-saccadic trials systematically curved away from the distractor screen location irrespective of its sensory modality. Together, these results demonstrate that both auditory and visual objects are registered within eye-centered oculomotor centers. Moreover, they show that when the eye position changes, eye-centered stimulus representations are remapped, ensuring that stimuli from different modalities can all maintain their alignment to the external world.

Results

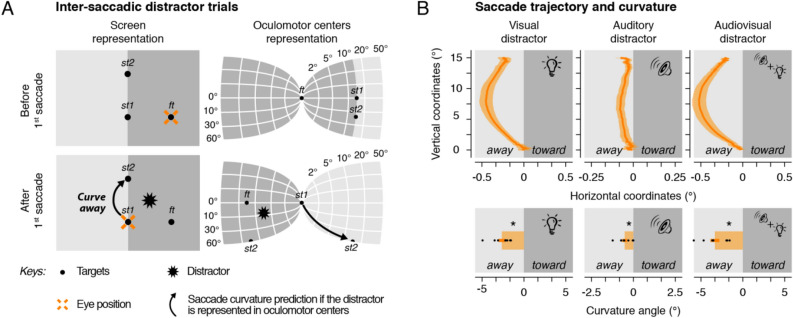

We first focused on inter-saccadic distractor trials (Fig. 2 and Supplementary Figure S1). In these trials the distractor occurred after the first saccade but before the onset of the second saccade. Analysis of saccade curvature as a function of the distractor position can therefore tell us whether a brief visual and/or auditory stimulus was represented within eye-centered oculomotor centers (Fig. 2A). Figure 2B (Exp. 1) and Supplementary Figure S1 (Exp. 2) show the averaged second saccade trajectory and curvature angle normalized relative to trials without distractors as a function of the distractor sensory modality (see “Methods”). We found that vertical saccades curved away from the screen position of the visual distractor when it was presented during the inter-saccadic interval. This effect was consistent across trials, as made evident by the comparison between the normalized curvature angle observed for visual inter-saccadic distractor trials (Exp. 1: Fig. 2B left panels: − 2.65 ± 0.41°, mean ± SEM; Exp. 2: Supplementary Figure S1 left panels: − 3.79 ± 0.97°) and trials without distractor (Exp. 1: 0.30 ± 0.11°, p < 0.0001; Exp. 2: 0.49 ± 0.32°, p < 0.0001). Interestingly, auditory distractors produced similar but smaller effects. Saccade trajectories systematically curved away from the screen location at which inter-saccadic sounds were played (Exp. 1: Fig. 2B middle panels: − 0.52 ± 0.14°, p < 0.0001; Exp. 2: Supplementary Figure S1 right panels: − 0.16 ± 0.23°, p = 0.0198). Finally, audiovisual inter-saccadic distractors had similar effects on the curvature of the second saccade and resulted in systematic curvature away from the screen position at which they were presented (Fig. 2B right panels: − 3.34 ± 0.53°, p < 0.0001). Altogether, these results suggest that visual and/or auditory stimuli are represented within eye-centered oculomotor centers and compete with visual saccade targets, resulting in a systematic bias of saccade trajectory curvature.

Figure 2.

Inter-saccadic distractor trials. (A) Screen (left panels) and oculomotor centers (right panels) representation of the targets (black dots), the eye position (cross), the distractor (star) and the saccade curvature prediction (arrow) before (top panels) and after (bottom panels) the first horizontal saccade. If the distractor is represented in oculomotor centers, we predicted that the second saccade would curve away from the distractor screen position. (B) Averaged normalized second saccade trajectory (top panels) and curvature angle (bottom panels) observed following the presentation of a visual (left panels), an auditory (center panels), or an audiovisual (right panels) inter-saccadic distractor in Exp. 1. Saccade trajectories are rotated in order to have upward saccades and negative x-values representing coordinates away from the screen position of the distractor. Areas around the averaged saccade trajectory and error bars represent SEM. Black dots show individual participants. Asterisks indicate significant effects (p < 0.05; ns: non-significant). Note that we illustrated two known features of oculomotor centers: cortical magnification factor (see logarithmic scale) and the double visual inversion (left–right and top–bottom) relative to the screen representation.
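The rotation convention described in this caption can be illustrated with a short sketch. It is only an illustration under stated assumptions: the curvature measure used here (the median signed angle between the straight start-to-end path and each gaze sample) is one possible definition and is not taken from the Methods, the normalization relative to distractor-absent trials is not covered, and the function names `rotate_upward` and `curvature_angle` are hypothetical. Unlike the figure, the sketch assigns positive x-values to the distractor side, so negative angles mean curvature away from the distractor.

```python
import math
from statistics import median


def rotate_upward(samples, distractor):
    """Rotate a saccade so it starts at (0, 0) and ends on the +y axis.

    samples: list of (x, y) gaze positions in degrees (first = saccade start,
    last = saccade end). distractor: (x, y) screen position of the distractor.
    After rotation, x is mirrored if needed so that the distractor lies at
    positive x (an assumed convention for this sketch).
    """
    x0, y0 = samples[0]
    x1, y1 = samples[-1]
    # Counterclockwise angle that brings the start-to-end vector onto +y.
    theta = math.pi / 2 - math.atan2(y1 - y0, x1 - x0)
    c, s = math.cos(theta), math.sin(theta)

    def rot(p):
        dx, dy = p[0] - x0, p[1] - y0
        return (c * dx - s * dy, s * dx + c * dy)

    flip = -1.0 if rot(distractor)[0] < 0 else 1.0
    return [(flip * x, y) for x, y in map(rot, samples)]


def curvature_angle(rotated):
    """Median signed deviation (deg) from the straight upward path.

    Assumed definition, not the paper's: negative values mean curvature away
    from the distractor, positive values mean curvature toward it.
    Needs at least one intermediate sample with y > 0.
    """
    angles = [math.degrees(math.atan2(x, y)) for x, y in rotated[1:-1] if y > 0]
    return median(angles)
```

For instance, an upward saccade from (0, 0) to (0, 10) passing through (-1, 5), with a distractor on the right at (5, 5), yields a negative angle (curvature away), whereas the same geometry mirrored into a downward saccade bowing toward the distractor yields a positive angle.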

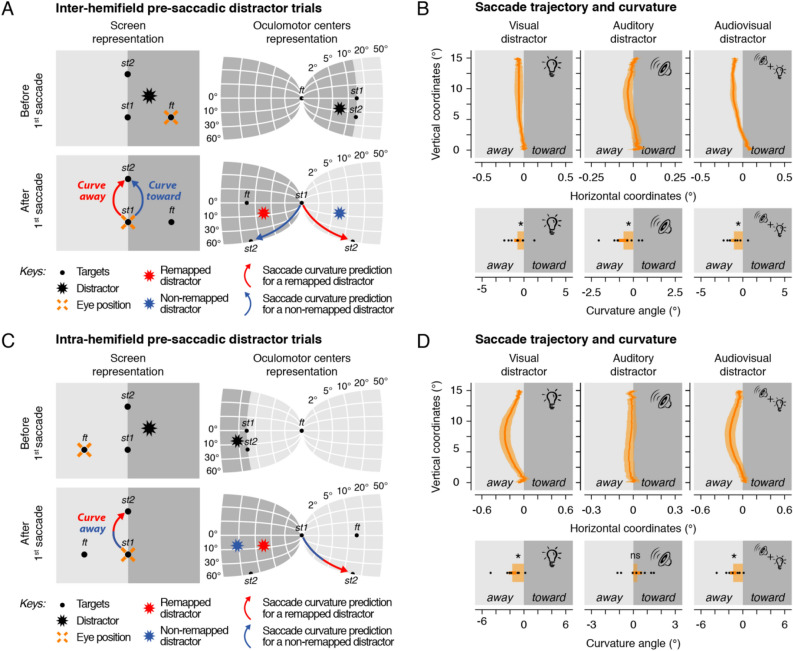

Next, we focused on trials in which the distractor preceded the two saccades. Depending on the position of the targets and of the distractor on the screen, we labeled these trials as inter-hemifield or intra-hemifield pre-saccadic trials.

Inter-hemifield pre-saccadic trials are characterized by distractors horizontally presented at a position lying between the fixation and the first saccade target (Fig. 3A and Supplementary Figure S2). For these trials the first saccade shifts the distractor's eye-centered representation into the opposite visual hemifield relative to where it was originally presented (i.e. inter-hemifield). Thus, these trials allow us to determine if oculomotor centers, while processing the second saccade, take the execution of the first saccade into account when representing visual, auditory and audiovisual distractors. If the displacement from the first horizontal saccade is not taken into account, then we would predict that vertical saccades curve toward the distractor screen position. On the contrary, if the movement of the first saccade is considered, such that the distractor representation is being remapped across the first saccade, we would predict that the second saccade curves away from the distractor screen position.

Figure 3.

Inter- and intra-hemifield pre-saccadic distractor trials. (A, C) Screen and oculomotor center representations before and after the first saccade. For inter-hemifield pre-saccadic trials (A), the distractor is presented near the initial fixation, located in the same hemifield. Here, if oculomotor centers remap the distractor across saccades, we predicted that vertical saccades would curve away from the distractor screen position (red arrow); otherwise they would curve toward it (blue arrow). In intra-hemifield pre-saccadic trials (C), the distractor is presented further from the initial fixation position, located in the other hemifield. We predicted that vertical saccades would curve away from the distractor screen position irrespective of whether oculomotor centers remap (red arrow) the distractor across saccades or not (blue arrow). (B, D) Averaged normalized second saccade trajectory (top panels) and normalized curvature angle (bottom panels) observed following the presentation of a visual (left panels), an auditory (center panels) or an audiovisual (right panels) inter-hemifield (B) and intra-hemifield (D) pre-saccadic distractor in Exp. 1. Conventions are as in Fig. 2.

We found that the presentation of inter-hemifield pre-saccadic distractors resulted in saccade curvature away from the distractor screen position. This effect was found for visual (Exp. 1: Fig. 3B left panels: − 0.76 ± 0.40°, p = 0.0130; Exp. 2: Supplementary Figure S2 left panels: − 3.79 ± 0.97°, p < 0.0001), auditory (Exp. 1: Fig. 3B middle panels: − 0.58 ± 0.30°, p = 0.0026; Exp. 2: Supplementary Figure S2 right panels: − 1.24 ± 0.40°, p < 0.0001) and audiovisual distractors (Fig. 3B right panels: − 1.05 ± 0.33°, p < 0.0001). These results strongly suggest that auditory and visual representations within eye-centered oculomotor centers are remapped across saccades to compensate for the effects of the first horizontal eye movement.

Next, we analyzed intra-hemifield pre-saccadic trials. These trials are characterized by distractors presented at locations lying further away from the fixation and the saccade targets (Fig. 3C). For these trials the first horizontal saccade shifts the distractor's eye-centered representation within the same visual hemifield relative to where it was originally presented (i.e. intra-hemifield). Contrary to inter-hemifield pre-saccadic trials, these trials do not allow us to determine if oculomotor centers consider the movement of the first saccade when processing the second saccade. Indeed, we predicted that vertical saccades would curve away from the distractor screen position irrespective of whether the distractor representation was remapped. We found that the presentation of intra-hemifield pre-saccadic distractors resulted in saccade curvature away from the distractor screen position. This effect was, however, only observed systematically across participants for visual (Fig. 3D left panels: − 1.38 ± 0.45°, p < 0.0001) and audiovisual distractors (Fig. 3D right panels: − 1.15 ± 0.37°, p < 0.0001). For auditory distractors, although the averaged saccade trajectory curved away from the distractor screen position, the curvature angle observed across participants did not significantly differ from trials without distractors (Fig. 3D middle panels: 0.24 ± 0.28°, p = 0.8308). Interestingly, as sounds were presented at the same screen position in inter- and intra-hemifield pre-saccadic trials, this latter effect suggests that the localized sound representations within oculomotor centers were modulated by the eccentricity between the position of the eyes and the sound source.
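The predictions for the two pre-saccadic trial types can be made concrete with a small worked sketch. It assumes a simplified, purely horizontal layout that is not the actual screen geometry: fixation at x = -16°, first saccade target at the screen center (x = 0°), and a distractor at x = -5° (inter-hemifield) or x = +5° (intra-hemifield). These values are hypothetical and were chosen only so that the pre-saccadic eccentricities are roughly 11° and 21°. The function names are likewise illustrative.

```python
def eye_centered(screen_x, eye_x):
    """Horizontal position of a screen location relative to gaze (deg)."""
    return screen_x - eye_x


def predicted_curvature(distractor_x, fixation_x, center_x=0.0, remapped=True):
    """Predict second-saccade curvature relative to the distractor screen position.

    If the representation is remapped, it is expressed relative to the eye
    position after the first saccade (center_x). If not, it keeps the
    eye-centered coordinates it had during initial fixation (fixation_x).
    The saccade is assumed to curve away from the represented side.
    """
    reference_eye_x = center_x if remapped else fixation_x
    represented = eye_centered(distractor_x, reference_eye_x)
    # Side on which the distractor actually lies once the eyes are at center.
    actual = eye_centered(distractor_x, center_x)
    same_side = (represented > 0) == (actual > 0)
    return "away" if same_side else "toward"


# Hypothetical layout: fixation on the left, distractor left or right of center.
for label, distractor_x in (("inter-hemifield", -5.0), ("intra-hemifield", 5.0)):
    for remapped in (True, False):
        outcome = predicted_curvature(distractor_x, -16.0, remapped=remapped)
        print(label, "remapped" if remapped else "not remapped", "->", outcome)
```

Under these assumed coordinates, only the inter-hemifield case without remapping predicts curvature toward the distractor screen position, which is why inter-hemifield trials can dissociate the two hypotheses while intra-hemifield trials cannot.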

Altogether, we found that vertical saccades curved away more when a visual distractor was presented after the first saccade (inter-saccadic trials) rather than before the first saccade (pre-saccadic trials) for both inter-hemifield (p < 0.0001) and intra-hemifield trials (p = 0.0004). The same effects were found for audiovisual distractors (ps < 0.0001) and for auditory intra-hemifield distractors (p = 0.0084). However, auditory inter-hemifield pre-saccadic distractor trials did not differ from auditory inter-hemifield inter-saccadic distractor trials (p = 0.8206). As pre-saccadic distractors were necessarily presented earlier than inter-saccadic distractors relative to the onset of the second saccade, the reduced influence of pre-saccadic distractors on the second saccade trajectory most likely reflects the effect of time between the distractor presentation and the execution of the movement. Such an influence of time on the strength of the curvature, previously shown for visual distractors26, might be less visible for less well localized objects such as the auditory distractor we used here.

Finally, to test the speed at which the transfer of the distractor representation occurred, we analyzed pre-saccadic distractor trials as a function of the latency of the first horizontal saccade. To do so, we performed a median split on the first saccade latency data for each participant so as to assign trials to an “early” or a “late” saccade group. We found that the inter-hemifield pre-saccadic trial effects reported above relied mostly on “late” saccade trials. Indeed, for “early” saccade trials (visual: 141.01 ± 5.86 ms, auditory: 119.71 ± 6.31 ms and audiovisual: 122.36 ± 5.43 ms), we found that the second vertical saccade did not curve away significantly from the visual (− 0.58 ± 0.54°, p = 0.1514), the auditory (− 0.47 ± 0.49°, p = 0.1676), and the audiovisual distractor (− 0.91 ± 0.56°, p = 0.0522) when compared to the distractor absent trials (0.29 ± 0.15°). These effects changed for “late” saccade trials (visual: 202.39 ± 7.41 ms, auditory: 194.55 ± 7.71 ms and audiovisual: 197.24 ± 6.53 ms), with second saccades significantly curving away from the visual (− 0.86 ± 0.40°, p = 0.0036), the auditory (− 0.55 ± 0.23°, p < 0.0001), and the audiovisual distractor (− 1.03 ± 0.23°, p < 0.0001) when compared to the distractor absent trials (0.24 ± 0.13°). Next, contrary to inter-hemifield trials, the previously described effects of intra-hemifield distractor trials did not differ between “early” (visual: 139.70 ± 6.16 ms, auditory: 114.74 ± 7.89 ms and audiovisual: 116.90 ± 5.05 ms) and “late” saccade trials (visual: 202.28 ± 7.03 ms, auditory: 192.88 ± 6.57 ms and audiovisual: 197.48 ± 7.28 ms). Thus, we found that irrespective of the first saccade latency, the second saccade significantly curved away from the visual (early: − 1.77 ± 0.66°, p < 0.0001; late: − 0.94 ± 0.38°, p = 0.0044) and audiovisual distractor (early: − 0.97 ± 0.41°, p < 0.0001; late: − 1.28 ± 0.42°, p < 0.0001). Moreover, for auditory distractors, second saccade curvature angles observed across participants did not significantly differ from trials without distractors (early: 0.38 ± 0.41°, p = 0.7522; late: 0.01 ± 0.26°, p = 0.2020).
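The per-participant median split on first saccade latency described above could be sketched as follows. This is a minimal illustration, not the analysis code: the trial structure (dictionaries with `participant` and `latency_ms` keys), the function name `median_split`, and the handling of ties (latencies equal to the median are assigned to the “early” group) are all assumptions.

```python
from statistics import median


def median_split(trials):
    """Label each trial 'early' or 'late' relative to its participant's median.

    trials: list of dicts with (assumed) keys 'participant' and 'latency_ms'.
    Returns new dicts with an added 'group' key; the input is not modified.
    """
    by_participant = {}
    for trial in trials:
        by_participant.setdefault(trial["participant"], []).append(trial)

    labelled = []
    for participant_trials in by_participant.values():
        # The cut-off is computed separately for every participant.
        cut = median([t["latency_ms"] for t in participant_trials])
        for t in participant_trials:
            # Assumed tie rule: a latency equal to the median counts as early.
            group = "early" if t["latency_ms"] <= cut else "late"
            labelled.append({**t, "group": group})
    return labelled
```

Because the cut-off is participant-specific, a 300 ms latency can fall in the “early” group for a slow participant even though it would be “late” for a faster one, which keeps both groups populated for every participant.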

Discussion

We behaviorally probed the neural representation of visual, auditory and audiovisual objects using objective measures of saccade trajectory. Our custom-made screen and paradigm allowed us to determine the reference frame in which these different stimulus modalities were represented using a double-step saccade task. We found that the curvature of vertical saccades was systematically biased by visual, auditory and audiovisual distractors briefly presented before movement onset. Moreover, the trajectory of the second, vertical saccade was found to curve away from the screen position of the distractor when the execution of the first saccade shifted its eye-centered representation into the opposite visual hemifield. This systematic influence of the distractors on the saccade trajectory curvature suggests that both auditory and visual distractor representations competed with the visual saccade targets in eye-centered oculomotor centers. Importantly, our results indicate that oculomotor centers keep track of both sound and light positions by remapping their eye-centered representations across saccades.

Saccade trajectory has previously been shown to reflect not only individual idiosyncrasies, but also the spatial layout of the scene over which the eyes move27. Here, we were able to reveal changes in the saccade trajectory as a function of the distractor interval, position, and sensory modality. Previous studies indicated that the presence of a salient visual or auditory distractor influences saccade trajectories by causing systematic deviations and curvature15,25,28,29. While curvature away from the distractor reflects an inhibition of the memorized distractor location30, the occurrence of this systematic effect by itself provides evidence for the registration of corresponding stimuli within oculomotor centers16. As oculomotor centers responsible for saccade planning and execution are known to be organized in an eye-centered reference frame31,32, our results suggest that both visual and auditory distractor stimuli were processed by eye-centered multi-sensory neurons within these areas.

Such multimodal neurons have been documented in awake macaques8,9, especially within the deeper layers of the SC, a site where both modalities have been found to converge to form a common motor map2,33. Interestingly, the response of collicular neurons depends both on the position of the sound source and on the position of the eyes in the orbit8,9. In other words, although sounds are initially encoded in a head-centered reference frame2,3, collicular neurons respond differently as a function of the gaze direction, suggesting the existence of eye-centered auditory receptive fields8,9. These converging neurophysiological studies provide further evidence for the encoding of multimodal stimuli within eye-centered oculomotor centers.

We propose that the collicular or cortical eye-centered registration of the auditory and visual distractors, and the remapping of their eye-centered representations across the saccade could explain the effects observed here. Indeed, if sounds are represented within a common, eye-centered reference frame, their neural representations should be remapped across saccades. Accordingly, when programming the movement of the second saccade, the competition occurs between the saccade target and the remapped distractor location. Thus, because the shift in the eye position due to the saccade is considered, the trajectory of the saccade will deviate away from the screen (i.e. external) location of the distractor.

Such neural remapping of stimulus-related activity has been observed for visual neurons in several different oculomotor centers17–19. Remapping consists of a predictive increase in the spiking activity of neurons, which, after the completion of a given eye movement, receive either a salient object or its memory trace in their receptive field34. Moreover, the activity of neurons, which will no longer receive the salient object in their receptive field after completion of the saccade, has been found to predictively return to a baseline level17,35–37. However, no study published to date has reported such neurophysiological evidence for a remapping of auditory objects occurring across saccades.

In a series of experiments recording single cells in the superior colliculus of awake macaques, Jay and Sparks8,9 demonstrated that eye-centered auditory receptive fields, when activated by a specific external sound source, reduce their firing after a change in eye coordinates. While these studies demonstrate that the activity of auditory neurons is modulated by the gaze direction, the authors did not record activity before a saccade and thus could not demonstrate a potential remapping of eye-centered auditory neurons.

In our experiment, we found systematic saccade curvature away from the sound distractor when the first saccade shifted the sound distractor into the opposite visual hemifield (inter-hemifield). We argue that these results are compatible with the view that sounds are encoded at least partially within eye-centered oculomotor centers and remapped across the first saccade. This effect was, however, less systematic when the first saccade shifted the sound distractor within the same visual hemifield (intra-hemifield). One crucial difference between these two conditions is the eccentricity between the fixation target and the distractor position before the first saccade. During intra-hemifield trials the distractor occurred on the opposite side of the display relative to the current gaze position. Thus, the eccentricity of the distractor from the eye was about twice as large in intra-hemifield trials (distance between fixation target and distractor: ~ 21°) relative to inter-hemifield trials (~ 11°). Nevertheless, distractors of both trial types were presented at the same screen position (and as such the sounds were equally eccentric relative to the position of the ears). As only the eye-centered eccentricity differed, we argue that the difference we observed here shows that sounds are represented in eye-centered coordinates.

Interestingly, when measuring collicular receptive field shifts as a function of the eye direction, Jay and Sparks8,9 found that the spatial shift was less precise for auditory neurons than for visual neurons. This effect could be due to a difference in saliency between the stimuli38 or due to an intrinsic difference in localization capacity between visually and auditorily defined objects. In our experiment sound sources were separated on the screen by 15°, a distance far above auditory localization thresholds observed, for example, when participants had to discriminate two sounds39 or to saccade toward them22. Nevertheless, the lower precision with which auditory stimuli are localized relative to visual stimuli would be expected to reduce the strength of competition between the auditory distractor and the visual saccade target. In addition to auditory stimuli being localized less precisely than visual ones, the absolute localization of auditory stimuli is also known to be biased toward the current gaze location40,41, with the magnitude of the bias increasing with eccentricity. While providing further support for interactions between auditory representations and gaze direction, this effect may also have resulted in an inwards localization bias. If such a bias occurs in the intra-hemifield pre-saccadic condition, the condition in which the eyes start further away from the distractor, the remapped representation of the sound would lie along the trajectory of the second saccade, rather than to one side of it. One would predict that, overall, this condition would be associated with an absence of systematic curvature away from or toward the distractor screen position, as observed in our experiment.

Although the difference between sensory modalities may reflect different localization accuracy, only the remapping of an eye-centered representation of the sound across the saccade can fully explain the observed effects. It has been shown that humans can accurately execute double-step eye movements or eye and head movements toward memorized auditory targets22–24. Contrary to our results, these earlier findings, as well as previous studies measuring the effect of sound on saccade curvature using a single eye movement task15,28, could be explained by a mechanism in which auditory targets are initially processed in head-centered auditory maps before being transferred into an eye-centered oculomotor map at a later point in time. In contrast, our effect was observed in a double-step saccade task with distractors presented during saccade preparation. Our results therefore argue for the existence of a single eye-centered reference frame in which distractors were encoded, regardless of their sensory modality. Future studies combining multiple distractors presented before and after the first saccade could be used to more directly test the existence of a single reference frame and to further examine integration across modalities.

The location-dependent effect of auditory distractor stimuli on the curvature of eye-movements indicates that some representation of auditory stimuli must necessarily be encoded within eye-centered oculomotor maps in order to provide the competition that biases the saccade trajectory. We argue that this eye-centered auditory information is present from the initial encoding of the stimulus, with its eye-centered representation being remapped across the first saccade to account for the displacement of the eyes. However, an alternative account is that the auditory information is represented in head-centered spatiotopic maps42,43, with the representation converted into an eye-centered reference frame “on-demand”7,44,45 immediately prior to the second saccade. Under this alternative hypothesis, the representation of the auditory distractor stimulus would not be stored within eye-centered coordinates and thus would not be subject to remapping across the saccade. We believe this alternative is unlikely for two reasons. First, the curvature elicited by localized auditory distractors depended on their eccentricity relative to the eyes, even though the sounds occupied the same screen positions in inter- and intra-hemifield trials. This demonstrates that ocular information has a role in determining the degree of curvature, which is difficult to explain if the auditory distractors were represented solely in a head-centered reference frame. Second, given that the auditory stimuli represent a task-irrelevant distractor that individuals are explicitly instructed to ignore, it is unclear why they would actively convert head-centered information into an eye-centered oculomotor representation after the first saccade. Instead, if the representations are separate, it would be far more advantageous to not perform this transformation, rendering the saccade unaffected by the irrelevant auditory distractor.
In summary, while only the direct recording of oculomotor multi-sensory neurons, for example within the SC, could confirm the existence of neural remapping of sounds in eye-centered maps, the pattern of data we observed with auditory, visual and audiovisual distractors best supports a model in which different modalities are tracked in a single eye-centered visual reference frame2,33.

Such a supra-modal topological map could allow multi-sensory space constancy via predictive remapping of locations as a function of their ability to attract spatial attention46–48 rather than as a function of their modality. This proposal and our results agree with a recent study showing evidence for a trans-saccadic updating of spatial attention to visual and auditory stimuli49. Interestingly, we found that the remapping of a light or a sound across hemispheres was mostly visible in the curvature of the second saccade when participants took more time to initiate their first eye movement. Given that these trials made more time available for the visual system to process the corresponding distractor, this result suggests that the hemispheric transfer of a visual or an auditory stimulus takes time. Moreover, the observed temporal modulation is compatible with previous studies showing that attentional benefits and neural firing enhancements associated with remapping take time to develop48,50, and further supports the hypothesis that both visual and auditory stability rely on slow attentional processes.

As an alternative, one could propose that multiple reference frames are simultaneously maintained. In this framework, auditory representations would be held in eye-centered areas but would also be kept in a head-centered reference frame within other cortical areas51. Indeed, we found a stronger bias for inter-saccadic than pre-saccadic distractor trials. As the head- and eye-centered representations are aligned only in inter-saccadic trials, this effect is in line with the idea that the two reference frames exist separately and converge in oculomotor centers to affect the saccade path. Such an integration, which might enhance the distractor signal and consequently the curvature bias, was previously described at the electrophysiological52 and the behavioral level53 in experiments without saccades or sounds.

Finally, our results show that saccade trajectory curvature is affected differently by the two modalities. Nevertheless, inference based on the comparison of saccade trajectory curvature effects across modalities is somewhat limited as we kept stimulus intensity constant, confounding arousal and sensory modality factors. Future work systematically varying the level of the two modalities would better test multi-sensory integration54.

In conclusion, we found that localized visual and auditory objects are represented on a supra-modal eye-centered oculomotor map and are maintained in space across saccades via a remapping mechanism. Thus, apart from its contribution to visual stability, predictive remapping appears to facilitate coherent multi-sensory integration despite continuous movements of the eyes, and thus plays a more general role in perceptual stability.

Material and methods

Ethics statement

This experiment was approved by the Ethics Committee of the Faculty for Psychology and Pedagogics of the Ludwig-Maximilians-Universität München (approval number 13_b_2015) and conducted in accordance with the Declaration of Helsinki. All participants gave written informed consent.

Participants

Ten students and staff members from the Ludwig-Maximilians-Universität München participated in the experiment (Exp. 1: eight participants, age 24–30, three females, two authors; Exp. 2: seven participants, age 24–29, five females, no author; five participants took part in both Exp. 1 and Exp. 2) for a compensation of 10 Euros per hour of testing. All participants except the authors were naive as to the purpose of the study and all had normal or corrected-to-normal vision and audition. All files are available from the OSF database URL: https://osf.io/h3qdf/.

Setup

Participants sat in a dimly lit, sound-attenuated cabin, gazing toward the screen center with their head held steady by a chin and forehead rest. The cabin’s walls, floor, ceiling and all other large objects in the room were covered with Flexolan 5 cm-pyramidal sound-absorbing foam (Diedorf, Germany) to eliminate acoustic reflections. The experiment was controlled by a Hewlett-Packard Intel Core i7 PC (Palo Alto, CA, USA) located outside the cabin. The dominant eye’s gaze position was recorded and available online using an EyeLink 1000 Desktop Mount (SR Research, Osgoode, Ontario, Canada) at a sampling rate of 1 kHz and operated with a 940 nm infrared illuminator. The experimental software controlling the display, the response collection, as well as eye tracking was implemented in Matlab (MathWorks, Natick, MA, USA), using the Psychophysics and EyeLink toolboxes55,56. Stimuli were presented at a viewing distance of 76.5 cm, on a custom-made audiovisual screen (Fig. 1A). Auditory distractors were played from 4 loudspeakers (0.75° radius) arranged at the four corners of a virtual square (15° side) centered on the screen midpoint, that is, at ± 7.5° horizontally and vertically from it. Sounds were played via a multiple channel MOTU sound card (Cambridge, MA, USA), amplified by a PowerPlay Pro Behringer amplifier (Willich, Germany), and digitized at 48 kHz. Visual distractors were presented via 4 green light emitting diodes (LEDs; 0.15°-radius). Fixation and saccade targets were presented via 5 other red LEDs (0.15°-radius). All LEDs were controlled at a rate of 1 kHz by an Arduino Due electronic board (Turin, Italy). The visual distractors (4 green LEDs) were mounted on top of the four speakers. The fixation and saccade targets were located at the center of the screen and at a distance of 15° from the screen center at the four cardinal locations (right, up, left and down).
A spandex screen (transparent to sound and light) covered the setup, ensuring that participants remained unaware of stimulus locations before their onset. A custom-made calibration was implemented using the five red and four green LEDs presented in random sequences. Instructions were recorded and played to participants during training blocks and repeated before each experimental session.
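To make the setup geometry concrete, the angular positions above can be converted into physical offsets on the screen. The following is a minimal sketch, assuming a flat screen and eccentricities measured from the screen center along the screen plane; the function name `deg_to_cm` is introduced here for illustration and is not taken from the authors' code.

```python
import math

VIEWING_DISTANCE_CM = 76.5  # viewing distance reported in the Setup section


def deg_to_cm(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """Offset from the screen center (cm) for a given eccentricity (deg).

    Assumes a flat screen perpendicular to the line of sight (tangent projection).
    """
    return distance_cm * math.tan(math.radians(angle_deg))


# Horizontal and vertical offset of each loudspeaker / green LED (7.5 deg).
speaker_offset_cm = deg_to_cm(7.5)
# Eccentricity of the four peripheral red LEDs (15 deg).
target_offset_cm = deg_to_cm(15.0)

print(f"speaker offset: {speaker_offset_cm:.2f} cm")
print(f"target offset:  {target_offset_cm:.2f} cm")
```

Under these assumptions the loudspeakers sit roughly 10 cm and the peripheral targets roughly 20.5 cm from the screen center along each axis.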

Procedure

Experiment 1

This experiment was composed of three different types of blocks in which we presented visual, auditory or audiovisual distractors, respectively. All blocks were run in a random order across all participants and completed in 3–4 experimental sessions (on different days) of about 60–90 min each (including breaks). All participants, except one author, initially completed three training blocks in which they were familiarized with the task (three short blocks, one for each distractor type, ~ 5 min each) followed by 12 blocks of the main experiment (three visual, three auditory and three audiovisual distractor blocks, ~ 25 min each).

Each trial began with participants fixating a fixation target (red LED) located randomly 15° to the right or left of the screen center (Fig. 1B). When the participant’s gaze was detected within a 3.5°-radius virtual circle centered on the fixation target for 200 ms, the trial began with an initial fixation period (varying randomly between 1000 and 1300 ms in steps of 50 ms) followed by the simultaneous presentation of two saccade targets for a duration of 1000 ms (while the fixation target stayed on). The first saccade target always appeared in the center of the screen while the second could randomly appear above or below the screen center. Participants were instructed to make two sequential saccades, the first one toward the screen center, and the second from the screen center to the saccade target. Consequently, participants randomly executed one out of four possible double-step saccade sequences: left-up, left-down, right-up or right-down saccades. Each trial ended with a 500 ms inter-trial period during which no stimulus was presented.
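The randomization described above can be sketched as follows. This is an illustrative sketch only: the dictionary keys and the function name `sample_trial` are hypothetical, and the actual experiment was implemented in Matlab.

```python
import random


def sample_trial(rng):
    """Draw the randomized parameters of one double-step trial.

    Key names are hypothetical; the values follow the Procedure text.
    """
    return {
        # Fixation target 15 deg to the left or right of the screen center.
        "fixation_side": rng.choice(["left", "right"]),
        # Second saccade target above or below the screen center.
        "second_target": rng.choice(["up", "down"]),
        # Initial fixation period: 1000 to 1300 ms in steps of 50 ms.
        "fixation_ms": rng.randrange(1000, 1301, 50),
        # Saccade targets stay on for 1000 ms, followed by a 500 ms interval.
        "target_duration_ms": 1000,
        "inter_trial_ms": 500,
    }


rng = random.Random(1)
trials = [sample_trial(rng) for _ in range(200)]
sequences = {(t["fixation_side"], t["second_target"]) for t in trials}
print(sorted(sequences))
```

Crossing the two fixation sides with the two second-target positions yields the four double-step sequences mentioned in the text.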

In 3/4 of the trials, a distractor was presented clockwise or counterclockwise relative to the second saccade target position (e.g. in a right-up saccade trial, a clockwise distractor was presented in the upper-right quadrant). The onset of the distractor stimulus occurred randomly at a time between 100 ms before and 300 ms after (in steps of 1 ms) the appearance of the saccade targets. This ensured that the distractors reliably occurred either before the first saccade (1st saccade mean latency with visual: 172.02 ± 6.18 ms, auditory: 160.50 ± 6.64 ms, audiovisual: 163.88 ± 5.98 ms or without distractor: 179.44 ± 4.64 ms) or in between the two saccades (2nd saccade mean latency with visual: 497.28 ± 16.21 ms, auditory: 462.92 ± 20.33 ms, audiovisual: 473.86 ± 19.52 ms or without distractor: 480.99 ± 18.84 ms). The distractor modality remained constant within a block (visual, auditory or audiovisual). A visual distractor was a 50 ms illumination of a green LED, an auditory distractor was a 50 ms broadband Gaussian white noise (with 5 ms raised-cosine onset and offset ramps) and an audiovisual distractor consisted of synchronized visual and auditory distractors originating from the same spatial position. The sound level and frequencies of the speakers were adjusted to be identical (75 dBA SPL) based on recordings made with a Behringer microphone (Willich, Germany) placed at the head position. In 1/4 of the trials, no distractor was presented to avoid any difference in saccade preparation. These distractor-absent trials were randomly interleaved with distractor-present trials. Distractor-absent trials were used to normalize the saccade trajectory and thereby account for individual saccade trajectory idiosyncrasies (see “Data analysis”).
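The auditory distractor waveform described above can be sketched as a short noise burst with raised-cosine ramps. This is a minimal sketch, assuming unit-variance Gaussian samples and a ramp that starts at zero gain; it does not reproduce the 75 dBA calibration, and the function name `noise_burst` is hypothetical.

```python
import math
import random

SAMPLE_RATE_HZ = 48_000  # digitization rate reported in the Setup section


def noise_burst(duration_ms=50.0, ramp_ms=5.0, rng=None):
    """Gaussian white noise with raised-cosine onset and offset ramps.

    Amplitude is in arbitrary units; level calibration is not modeled here.
    """
    rng = rng or random.Random()
    n_samples = round(SAMPLE_RATE_HZ * duration_ms / 1000)
    n_ramp = round(SAMPLE_RATE_HZ * ramp_ms / 1000)
    samples = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    for i in range(n_ramp):
        # Raised-cosine gain rising smoothly from 0 toward 1 across the ramp.
        gain = 0.5 * (1.0 - math.cos(math.pi * i / n_ramp))
        samples[i] *= gain                   # onset ramp
        samples[n_samples - 1 - i] *= gain   # offset ramp (mirror image)
    return samples


burst = noise_burst(rng=random.Random(0))
print(len(burst))  # 50 ms at 48 kHz
```

The ramps avoid the broadband click that an abrupt onset or offset would otherwise add to such a short sound.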

Participants were instructed to execute the saccades accurately and to avoid looking at the distractor location. To reduce the frequency of saccades made directly from the fixation target to the second saccade target (diagonal saccades), we instructed participants to make the requested double-step saccade sequence without strong time pressure. However, a trial was aborted and participants were given auditory feedback if they took more than 400/700 ms to initiate their first/second saccade relative to the onset of the saccade targets. Each participant completed between 3691 and 3887 trials. Correct fixation as well as correct saccade landing within a 3.5° radius virtual circle centered on the first and second saccade target were monitored online. Trials with fixation breaks or incorrect saccades (inaccurate or too slow) were discarded and repeated at the end of a block in a randomized order (participants repeated between 91 and 287 trials). In total we included 26,293 trials (91.30% of the online selected trials, 87.02% of all trials played) in the data analysis.
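The online criteria described above (landing windows and latency deadlines) can be expressed as a simple check. This is a hedged sketch: the function names and argument layout are hypothetical, coordinates are assumed to be in degrees relative to the screen center, and latencies are assumed to be measured from saccade target onset.

```python
import math

WINDOW_RADIUS_DEG = 3.5   # radius of the virtual circle around each target
FIRST_DEADLINE_MS = 400   # latest allowed onset of the first saccade
SECOND_DEADLINE_MS = 700  # latest allowed onset of the second saccade


def within_window(gaze_xy, target_xy, radius_deg=WINDOW_RADIUS_DEG):
    """True if the gaze position lies inside the circle around the target."""
    dx = gaze_xy[0] - target_xy[0]
    dy = gaze_xy[1] - target_xy[1]
    return math.hypot(dx, dy) <= radius_deg


def trial_is_valid(first_latency_ms, second_latency_ms,
                   first_landing, second_landing,
                   first_target, second_target):
    """Accept a trial only if both saccades were timely and accurate."""
    timely = (first_latency_ms <= FIRST_DEADLINE_MS
              and second_latency_ms <= SECOND_DEADLINE_MS)
    accurate = (within_window(first_landing, first_target)
                and within_window(second_landing, second_target))
    return timely and accurate


# Example: right-to-center then center-to-up sequence (illustrative values).
ok = trial_is_valid(180, 480, (0.5, -0.3), (0.2, 14.6), (0.0, 0.0), (0.0, 15.0))
too_slow = trial_is_valid(450, 690, (0.5, -0.3), (0.2, 14.6), (0.0, 0.0), (0.0, 15.0))
print(ok, too_slow)
```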

Experiment 2

This experiment was designed to replicate the main findings of the first experiment. The experimental design was identical to Exp. 1 with the exception of a few parameters.

First, contrary to Exp. 1 in which the distractor modality was fixed within a block, blocks of trials were randomly composed of visual or auditory distractor trials (no audiovisual trials were presented) in Exp. 2. Participants completed 2 experimental sessions (on different days) of about 60–90 min each (including breaks). Two participants who did not participate in the first experiment were familiarized with the task (two short blocks, ~ 5 min each) followed by seven blocks of the main experiment (~ 25 min each).

Second, across trials, pre-saccadic distractors were presented clockwise or counterclockwise relative to the second saccade target position in order to only have inter-hemifield trials (no pre-saccadic intra-hemifield trials). As in Exp. 1, the onset of the distractor stimulus occurred randomly at a time between 100 ms before and 300 ms (in steps of 1 ms) after the appearance of the saccade targets. This ensured that the distractors reliably occurred either before the first saccade (1st saccade latency with visual: 194.93 ± 7.85 ms, auditory: 185.76 ± 7.56 ms, and without distractor: 190.48 ± 9.04 ms) or in between the two saccades (2nd saccade latency with visual: 493.63 ± 15.21 ms, auditory: 477.76 ± 16.95 ms, and without distractor: 479.45 ± 18.02 ms).

Each participant completed between 1722 and 1848 trials. Correct fixation and saccade execution were monitored online as in Exp. 1. Trials with fixation breaks or incorrect saccades were discarded and repeated at the end of a block in a randomized order (participants repeated between 42 and 168 trials). In total we included 10,765 trials (91.54% of the online selected trials, 86.65% of all trials played) in the data analysis.

Data pre-processing

We first scanned the recorded eye-position data offline and detected saccades based on their velocity distribution57, using a moving average over twenty subsequent eye position samples. Saccade onset was detected when the velocity exceeded the median of the moving average by 3 SDs for at least 20 ms. We included trials if a correct fixation was maintained within a 3.5° radius centered on the fixation target before the onset of the saccade targets, if a first correct saccade started at the fixation target and landed within a 3.5° radius centered on the first saccade target, if a second correct saccade started at the first saccade target and landed within a 3.5° radius centered on the second saccade target, and if no blink occurred during the trial.
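A velocity-threshold detector of the kind described above could be sketched as follows. This is a simplified sketch, not the authors' implementation: it uses scalar gaze speed, a trailing moving average, and the plain standard deviation of the smoothed speed, whereas the cited algorithm57 defines its threshold differently. All names are hypothetical.

```python
import math
import statistics


def detect_saccade_onset(x, y, sample_rate_hz=1000, window=20,
                         n_sd=3.0, min_duration_ms=20):
    """Return the index of the first detected saccade onset, or None.

    x, y: gaze position samples in degrees, recorded at sample_rate_hz.
    """
    # Sample-to-sample speed in deg/s.
    speed = [math.hypot(x[i + 1] - x[i], y[i + 1] - y[i]) * sample_rate_hz
             for i in range(len(x) - 1)]
    # Trailing moving average over `window` samples.
    smoothed = []
    for i in range(len(speed)):
        chunk = speed[max(0, i - window + 1):i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    # Threshold: median of the smoothed speed plus n_sd standard deviations.
    threshold = statistics.median(smoothed) + n_sd * statistics.pstdev(smoothed)
    min_samples = round(min_duration_ms * sample_rate_hz / 1000)
    run = 0
    for i, value in enumerate(smoothed):
        run = run + 1 if value > threshold else 0
        if run >= min_samples:
            return i - run + 1  # first sample of the supra-threshold run
    return None


# Synthetic trace: 300 ms fixation, a 15 deg saccade lasting 50 ms, 300 ms fixation.
xs = [0.0] * 300 + [15.0 * k / 50 for k in range(1, 51)] + [15.0] * 300
ys = [0.0] * len(xs)
onset = detect_saccade_onset(xs, ys)
print(onset)
```

Because the smoothing window is trailing, the detected onset lags the true onset by a few samples in this sketch; a centered window would reduce that lag.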

Data analysis

We first determined the eye position coordinates of the second saccades for each correct trial. These coordinates were then rotated so as to direct all saccades upward. Subsequently, we determined the mean eye position on the main direction axis (i.e. mean vertical coordinates across horizontal coordinates) for each second saccade in order to end up with only monotonic eye sample values in the saccade direction. Next, we split data as a function of the direction of the saccade sequence and, for each of these groups, subtracted the mean coordinates of the specific saccade sequence from the mean coordinates of the distractor-absent saccade sequence. This normalization ensured that any deviation of the saccade trajectory in response to a distractor was not due to the individual idiosyncrasy of the saccade trajectory of a participant. It also allowed us to average saccades from different double-step saccade sequences together more accurately. With these normalized coordinates we then determined the saccade curvature angle26,58 for each trial, that is, the median of the angular deviations of each sample point from a straight line connecting the starting and ending point of the saccade. Finally, raw coordinates of the main saccade axis direction were inverted for trials in which distractors were played counterclockwise relative to the second saccade direction. This way, positive and negative values represent coordinates and curvature angles that were directed either toward or away from the distractor’s head-centered position, respectively. To study the effects of distractors presented before the first saccade, we included trials in which the distractor ended in the last 150 ms preceding the first saccade (pre-saccadic distractor trials, Fig. 1C).
To study the effects of distractors after the first saccade we included trials in which the distractor started after the first saccade offset and ended at least 100 ms before the second saccade onset (inter-saccadic distractor trials, Fig. 1C). Note that the excluded late-occurring distractors (within the last 100 ms prior to the onset of the second saccade) are not expected to be registered early enough by the oculomotor system to influence the second saccade. These time windows were determined following an earlier study on the time course of similar effects26. In Exp. 1, per participant, we analyzed 118.38 ± 8.05, 151.12 ± 4.79 and 140.75 ± 4.32 inter-hemifield pre-saccadic, 119.25 ± 6.74, 145.88 ± 4.39 and 138.88 ± 5.62 intra-hemifield pre-saccadic, and 155.00 ± 22.72, 127.75 ± 26.82 and 136.38 ± 26.18 inter-saccadic visual, auditory and audiovisual distractor trials, respectively, and 853.12 ± 21.70 trials without distractor. In Exp. 2, per participant, we analyzed 230.86 ± 11.53 and 236.43 ± 10.37 inter-hemifield pre-saccadic, and 149.86 ± 23.90 and 143.29 ± 26.54 inter-saccadic visual and auditory distractor trials, respectively, and 229.14 ± 7.87 trials without distractor.
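The curvature measure described in this section (median angular deviation of the sample points from the straight start-to-end line, signed relative to the distractor side) can be sketched as follows. This is an illustrative sketch only: it takes already rotated and normalized coordinates as input, skips the normalization against distractor-absent trials, and applies the sign flip to the final angle rather than to the raw coordinates. The function name and the `"cw"`/`"ccw"` labels are hypothetical.

```python
import math
import statistics


def curvature_angle_deg(xs, ys, distractor_side="cw"):
    """Median angular deviation (deg) of saccade samples from the straight path.

    Positive values point toward the distractor, negative values away from it.
    distractor_side: "cw" (clockwise) or "ccw" relative to the saccade direction.
    """
    x0, y0 = xs[0], ys[0]
    ux, uy = xs[-1] - x0, ys[-1] - y0  # straight start-to-end vector
    angles = []
    for x, y in zip(xs[1:-1], ys[1:-1]):
        vx, vy = x - x0, y - y0
        dot = ux * vx + uy * vy
        # Positive when the sample lies clockwise of the straight path.
        cw_cross = uy * vx - ux * vy
        angles.append(math.degrees(math.atan2(cw_cross, dot)))
    angle = statistics.median(angles)
    # Invert the sign for counterclockwise distractors.
    return angle if distractor_side == "cw" else -angle


# Upward saccade that bows to the right (clockwise), illustrative samples.
ys = [float(v) for v in range(11)]
xs = [0.04 * v * (10 - v) for v in range(11)]
toward = curvature_angle_deg(xs, ys, "cw")   # distractor on the right
away = curvature_angle_deg(xs, ys, "ccw")    # distractor on the left
print(round(toward, 2), round(away, 2))
```

In this toy example the same rightward-bowing trajectory counts as curving toward a clockwise distractor and away from a counterclockwise one.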

Statistical comparisons of normalized saccade curvature angles were based on drawing with replacement 10,000 bootstrap samples from the original values (keeping the participant and condition structure) and computing 10,000 means, respectively. By using the bootstrap method, i.e. resampling with replacement from the collected sample, we can form a fair estimate of the parent population. We determined statistical significance by deriving two-tailed p values for the comparison of the bootstrapped distributions obtained in a given distractor-present condition to the distributions observed without a distractor. Finally, to compare performance between two distractor-present conditions, we subtracted the bootstrapped values of the first condition from the second and derived two-tailed p-values from the distribution of these differences.
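The bootstrap comparison between two conditions can be sketched as below. This is a minimal sketch under stated assumptions: it resamples participants with replacement (keeping each participant's values paired across conditions) and computes the two-tailed p value as twice the smaller tail proportion of the bootstrapped mean differences. The exact resampling level and p-value formula used by the authors are not specified in the text, and the curvature values in the example are invented for illustration.

```python
import random


def bootstrap_p_value(cond_a, cond_b, n_boot=10_000, seed=0):
    """Two-tailed bootstrap p value for the mean difference cond_a - cond_b.

    cond_a, cond_b: per-participant mean curvature angles, in the same order.
    """
    rng = random.Random(seed)
    n = len(cond_a)
    diffs = []
    for _ in range(n_boot):
        # Resample participants with replacement, keeping the pairing.
        idx = [rng.randrange(n) for _ in range(n)]
        diffs.append(sum(cond_a[i] - cond_b[i] for i in idx) / n)
    below = sum(d <= 0 for d in diffs) / n_boot
    above = sum(d >= 0 for d in diffs) / n_boot
    return min(1.0, 2 * min(below, above))


# Hypothetical per-participant curvature angles (deg), for illustration only.
with_distractor = [-2.1, -1.8, -2.5, -1.2, -2.0, -1.6, -2.3, -1.9]
without_distractor = [0.1, -0.2, 0.0, 0.3, -0.1, 0.2, 0.0, -0.3]
p = bootstrap_p_value(with_distractor, without_distractor)
print(p)
```

With 10,000 resamples the smallest non-zero p value this procedure can return is 0.0002, so a result of zero is best reported as p < 0.0001 (one-tailed proportion) rather than as an exact value.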

All analysis codes are available online: https://github.com/mszinte/curvature_av.

Acknowledgements

We are grateful to the members of the Deubel laboratory in Munich for helpful comments and discussions and to Elodie Parison, Alice and Clémence Szinte for their support.

Author contributions

M.S., D.A.-M., D.J., and H.D. designed the experiment. M.S. performed the experiments and analyzed the data. M.S., D.A.-M., D.J., L.W. and H.D. discussed the results and wrote the paper.

Funding

This research was supported by a Deutsche Forschungsgemeinschaft (DFG) temporary position for principal investigator SZ343/1 (to M.S.), a DFG Open Research Area grant DE336/3-1 (to H.D.) and a Marie Sklodowska-Curie Action Individual Fellowship to M.S. (704537).

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-020-78163-y.

References

- 1. King AJ, Schnupp JWH, Doubell TP. The shape of ears to come: Dynamic coding of auditory space. Trends Cognit. Sci. 2001;5:261–270. doi: 10.1016/S1364-6613(00)01660-0.

- 2. King AJ. The superior colliculus. Curr. Biol. 2004;14:R335–R338. doi: 10.1016/j.cub.2004.04.018.

- 3. Sparks DL, Nelson IS. Sensory and motor maps in the mammalian superior colliculus. Trends Neurosci. 1987;10:312–317. doi: 10.1016/0166-2236(87)90085-3.

- 4. Arnott SR, Alain C. The auditory dorsal pathway: Orienting vision. Neurosci. Biobehav. Rev. 2011;35:2162–2173. doi: 10.1016/j.neubiorev.2011.04.005.

- 5. Bulkin DA, Groh JM. Distribution of eye position information in the monkey inferior colliculus. J. Neurophysiol. 2012;107:785–795. doi: 10.1152/jn.00662.2011.

- 6. Groh JM, Trause AS, Underhill AM, Clark KR, Inati S. Eye position influences auditory responses in primate inferior colliculus. Neuron. 2001;29:509–518. doi: 10.1016/S0896-6273(01)00222-7.

- 7. Lee J, Groh JM. Auditory signals evolve from hybrid- to eye-centered coordinates in the primate superior colliculus. J. Neurophysiol. 2012;108:227–242. doi: 10.1152/jn.00706.2011.

- 8. Jay MF, Sparks DL. Auditory receptive fields in primate superior colliculus shift with changes in eye position. Nature. 1984;309:345–347. doi: 10.1038/309345a0.

- 9. Jay MF, Sparks DL. Sensorimotor integration in the primate superior colliculus. II. Coordinates of auditory signals. J. Neurophysiol. 1987;57:35–55. doi: 10.1152/jn.1987.57.1.35.

- 10. King AJ, Hutchings ME, Moore DR, Blakemore C. Developmental plasticity in the visual and auditory representations in the mammalian superior colliculus. Nature. 1988;332:73–76. doi: 10.1038/332073a0.

- 11. Linden JF, Grunewald A, Andersen RA. Responses to auditory stimuli in macaque lateral intraparietal area II. Behavioral modulation. J. Neurophysiol. 1999;82:343–358. doi: 10.1152/jn.1999.82.1.343.

- 12. Grunewald A, Linden JF, Andersen RA. Responses to auditory stimuli in macaque lateral intraparietal area. I. Effects of training. J. Neurophysiol. 1999;82:330–342. doi: 10.1152/jn.1999.82.1.330.

- 13. Stricanne B, Andersen RA, Mazzoni P. Eye-centered, head-centered, and intermediate coding of remembered sound locations in area LIP. J. Neurophysiol. 1996;76:2071–2076. doi: 10.1152/jn.1996.76.3.2071.

- 14. Russo GS, Bruce CJ. Frontal eye field activity preceding aurally guided saccades. J. Neurophysiol. 1994;71:1250–1253. doi: 10.1152/jn.1994.71.3.1250.

- 15. Doyle M, Walker R. Multisensory interactions in saccade target selection: Curved saccade trajectories. Exp. Brain Res. 2002;142:116–130. doi: 10.1007/s00221-001-0919-2.

- 16. Kruijne W, der Stigchel SV, Meeter M. A model of curved saccade trajectories: Spike rate adaptation in the brainstem as the cause of deviation away. Brain Cogn. 2014;85:259–270. doi: 10.1016/j.bandc.2014.01.005.

- 17. Duhamel JR, Colby CL, Goldberg ME. The updating of the representation of visual space in parietal cortex by intended eye-movements. Science. 1992;255:90–92. doi: 10.1126/science.1553535.

- 18. Walker MF, Fitzgibbon EJ, Goldberg ME. Neurons in the monkey superior colliculus predict the visual result of impending saccadic eye movements. J. Neurophysiol. 1995;73:1988–2003. doi: 10.1152/jn.1995.73.5.1988.

- 19. Sommer MA, Wurtz RH. Influence of the thalamus on spatial visual processing in frontal cortex. Nature. 2006;444:374–377. doi: 10.1038/nature05279.

- 20. Wurtz RH. Neuronal mechanisms of visual stability. Vision. Res. 2008;48:2070–2089. doi: 10.1016/j.visres.2008.03.021.

- 21. Populin LC, Tollin DJ, Yin TCT. Effect of eye position on saccades and neuronal responses to acoustic stimuli in the superior colliculus of the behaving cat. J. Neurophysiol. 2004;92:2151–2167. doi: 10.1152/jn.00453.2004.

- 22. Goossens HH, Opstal AJV. Influence of head position on the spatial representation of acoustic targets. J. Neurophysiol. 1999;81:2720–2736. doi: 10.1152/jn.1999.81.6.2720.

- 23. Grootel TJV, Wanrooij MMV, Opstal AJV. Influence of static eye and head position on tone-evoked gaze shifts. J. Neurosci. 2011;31:17496–17504. doi: 10.1523/JNEUROSCI.5030-10.2011.

- 24. Vliegen J, Grootel TJV, Opstal AJV. Dynamic sound localization during rapid eye-head gaze shifts. J. Neurosci. 2004;24:9291–9302. doi: 10.1523/JNEUROSCI.2671-04.2004.

- 25. Sheliga BM, Riggio L, Rizzolatti G. Orienting of attention and eye movements. Exp. Brain Res. 1994;98:507–522. doi: 10.1007/BF00233988.

- 26. Jonikaitis D, Belopolsky AV. Target-distractor competition in the oculomotor system is spatiotopic. J. Neurosci. 2014;34:6687–6691. doi: 10.1523/JNEUROSCI.4453-13.2014.

- 27. der Stigchel SV, Meeter M, Theeuwes J. Eye movement trajectories and what they tell us. Neurosci. Biobehav. Rev. 2006;30:666–679. doi: 10.1016/j.neubiorev.2005.12.001.

- 28. Heeman J, Nijboer TCW, der Stoep NV, Theeuwes J, der Stigchel SV. Oculomotor interference of bimodal distractors. Vision Res. 2016;123:46–55. doi: 10.1016/j.visres.2016.04.002.

- 29. Frens MA, Opstal AJV. A quantitative study of auditory-evoked saccadic eye movements in two dimensions. Exp. Brain Res. 1995;107:103–117. doi: 10.1007/BF00228022.

- 30. Theeuwes J, Olivers CNL, Chizk CL. Remembering a location makes the eyes curve away. Psychol. Sci. 2005;16:196–199. doi: 10.1111/j.0956-7976.2005.00803.x.

- 31. Robinson DA. Eye movements evoked by collicular stimulation in the alert monkey. Vision. Res. 1972;12:1795–1808. doi: 10.1016/0042-6989(72)90070-3.

- 32. Robinson DA, Fuchs AF. Eye movements evoked by stimulation of frontal eye fields. J. Neurophysiol. 1969;32:637–648. doi: 10.1152/jn.1969.32.5.637.

- 33. Frens MA, Opstal AJV, Willigen RFVD. Spatial and temporal factors determine auditory-visual interactions in human saccadic eye movements. Percept. Psychophys. 1995;57:802–816. doi: 10.3758/BF03206796.

- 34. Gottlieb JP, Kusunoki M, Goldberg ME. The representation of visual salience in monkey parietal cortex. Nature. 1998;391:481–484. doi: 10.1038/35135.

- 35. Colby CL, Duhamel JR, Goldberg ME. Visual, presaccadic, and cognitive activation of single neurons in monkey lateral intraparietal area. J. Neurophysiol. 1996;76:2841–2852. doi: 10.1152/jn.1996.76.5.2841.

- 36. Kusunoki M, Goldberg ME. The time course of perisaccadic receptive field shifts in the lateral intraparietal area of the monkey. J. Neurophysiol. 2003;89:1519–1527. doi: 10.1152/jn.00519.2002.

- 37. Nakamura K, Colby CL. Updating of the visual representation in monkey striate and extrastriate cortex during saccades. Proc. Natl. Acad. Sci. 2002;99:4026–4031. doi: 10.1073/pnas.052379899.

- 38. Alais D, Burr D. The ventriloquist effect results from near-optimal bimodal integration. Curr. Biol. 2004;14:257–262. doi: 10.1016/j.cub.2004.01.029.

- 39. Maddox RK, Pospisil DA, Stecker GC, Lee AKC. Directing eye gaze enhances auditory spatial cue discrimination. Curr. Biol. 2014;24:748–752. doi: 10.1016/j.cub.2014.02.021.

- 40. Krüger HM, Collins T, Englitz B, Cavanagh P. Saccades create similar mislocalizations in visual and auditory space. J. Neurophysiol. 2016;115:2237–2245. doi: 10.1152/jn.00853.2014.

- 41. Pavani F, Husain M, Driver J. Eye-movements intervening between two successive sounds disrupt comparisons of auditory location. Exp. Brain Res. 2008;189:435–449. doi: 10.1007/s00221-008-1440-7.

- 42. Andersen RA, Essick GK, Siegel RM. Encoding of spatial location by posterior parietal neurons. Science. 1985;230:456–458. doi: 10.1126/science.4048942.

- 43. Melcher D, Colby CL. Trans-saccadic perception. Trends Cognit. Sci. 2008;12:466–473. doi: 10.1016/j.tics.2008.09.003.

- 44. Klier EM, Wang H, Crawford JD. The superior colliculus encodes gaze commands in retinal coordinates. Nat. Neurosci. 2001;4:627–632. doi: 10.1038/88450.

- 45. Henriques DYP, Klier EM, Smith MA, Lowy D, Crawford JD. Gaze-centered remapping of remembered visual space in an open-loop pointing task. J. Neurosci. 1998;18:1583–1594. doi: 10.1523/JNEUROSCI.18-04-01583.1998.

- 46. Rolfs M, Szinte M. Remapping attention pointers: Linking physiology and behavior. Trends Cognit. Sci. 2016;20:399–401. doi: 10.1016/j.tics.2016.04.003.

- 47. Cavanagh P, Hunt AR, Afraz A, Rolfs M. Visual stability based on remapping of attention pointers. Trends Cognit. Sci. 2010;14:147–153. doi: 10.1016/j.tics.2010.01.007.

- 48. Szinte M, Jonikaitis D, Rangelov D, Deubel H. Pre-saccadic remapping relies on dynamics of spatial attention. eLife. 2018;7:e37598. doi: 10.7554/eLife.37598.

- 49. Schut MJ, der Stoep NV, der Stigchel SV. Auditory spatial attention is encoded in a retinotopic reference frame across eye-movements. PLoS ONE. 2018;13:e0202414. doi: 10.1371/journal.pone.0202414.

- 50. Neupane S, Guitton D, Pack CC. Two distinct types of remapping in primate cortical area V4. Nat. Commun. 2016;7:10402. doi: 10.1038/ncomms10402.

- 51. Collins T, Heed T, Röder B. Eye-movement-driven changes in the perception of auditory space. Attent. Percept. Psychophys. 2010;72:736–746. doi: 10.3758/APP.72.3.736.

- 52. Chen X, DeAngelis GC, Angelaki DE. Diverse spatial reference frames of vestibular signals in parietal cortex. Neuron. 2013;80:1310–1321. doi: 10.1016/j.neuron.2013.09.006.

- 53. Badde S, Heed T. Towards explaining spatial touch perception: Weighted integration of multiple location codes. Cognit. Neuropsychol. 2016;33:26–47. doi: 10.1080/02643294.2016.1168791.

- 54. Godfroy-Cooper M, Sandor PMB, Miller JD, Welch RB. The interaction of vision and audition in two-dimensional space. Front. Neurosci. 2015;9:70. doi: 10.3389/fnins.2015.00311.

- 55. Cornelissen FW, Peters EM, Palmer J. The eyelink toolbox: Eye tracking with MATLAB and the psychophysics toolbox. Behav. Res. Methods Instrum. Comput. 2002;34:613–617. doi: 10.3758/BF03195489.

- 56. Brainard DH. The psychophysics toolbox. Spat. Vis. 1997;10:433–436. doi: 10.1163/156856897X00357.

- 57. Engbert R, Mergenthaler K. Microsaccades are triggered by low retinal image slip. Proc. Natl. Acad. Sci. USA. 2006;103:7192–7197. doi: 10.1073/pnas.0509557103.

- 58. Belopolsky AV, der Stigchel SV. Saccades curve away from previously inhibited locations: Evidence for the role of priming in oculomotor competition. J. Neurophysiol. 2013;110:2370–2377. doi: 10.1152/jn.00293.2013.
