Abstract

There is growing interest in using observational data to assess the safety, effectiveness, and cost effectiveness of medical technologies, but operational, technical, and methodological challenges limit its more widespread use. Common data models and federated data networks offer a potential solution to many of these problems. The open-source Observational Medical Outcomes Partnership (OMOP) common data model standardises the structure, format, and terminologies of otherwise disparate datasets, enabling the execution of common analytical code across a federated data network in which only code and aggregate results are shared. While common data models are increasingly used in regulatory decision making, relatively little attention has been given to their use in health technology assessment (HTA). We show that the common data model has the potential to facilitate access to relevant data, enable multidatabase studies to enhance statistical power and transfer results across populations and settings to meet the needs of local HTA decision makers, and validate findings. The use of open-source and standardised analytics improves transparency and reduces coding errors, thereby increasing confidence in the results. Further engagement from the HTA community is required to inform the appropriate standards for mapping data to the common data model and to design tools that can support evidence generation and decision making.

Key Points for Decision Makers

| The Observational Medical Outcomes Partnership (OMOP) common data model standardises the structure and coding systems of otherwise disparate datasets, enabling the application of standardised and validated analytical code across a federated data network without the need to share patient data. |

| Common data models have the potential to overcome some of the key operational, methodological, and technical challenges of using observational data in health technology assessment (HTA), particularly by enhancing the interoperability of data and the transparency of analyses. |

| To ensure the usefulness of the OMOP common data model to HTA, it is imperative that the HTA community engages with this work to develop tools and processes to support reliable, timely, and transparent evidence generation in HTA. |

Introduction

There is growing interest in the use of observational data (or ‘real-world data’) to assess the safety, effectiveness, and cost effectiveness of medical technologies [1, 2]. However, several barriers limit its more widespread use, including challenges in identifying and accessing relevant data, in ensuring the quality and representativeness of data, and in handling differences between datasets in their structure, content, and coding systems [1, 3, 4].

Common data models and distributed data networks offer a possible solution to these problems [4–9]. A common data model standardises the structure, and sometimes also the coding systems, of otherwise disparate datasets, enabling the application of standardised and validated analytical code across datasets. Datasets conforming to a common data model can be accessed through federated data networks, in which all data reside locally in the secure environment of the data custodian(s). Analytical code is then brought to the data and executed locally, with only aggregated results returned. This puts the data custodian in full control and avoids the need to share patient-level data, thereby at least partially addressing data privacy and governance concerns. In so doing, it may also increase the availability of data for healthcare research. Common data models can enhance the transparency and reliability of medical research and ensure efficient and timely generation of evidence for decision making.

Several common data models are in widespread use [9], including the US FDA Sentinel, which is used predominantly for post-marketing drug safety surveillance but increasingly also for effectiveness research [4, 10], and the open-source Observational Medical Outcomes Partnership (OMOP) common data model, which has been used to study treatment pathways, comparative effectiveness, safety, and patient-level prediction [8, 11–15]. The European Medicines Agency (EMA) will use the OMOP common data model to conduct multicentre cohort studies on the use of medicines in patients with coronavirus disease 2019 (COVID-19) [16]. The EMA is also looking to establish a data network for the proactive monitoring of benefit-risk profiles of new medicines over their life cycles, which could use a common data model approach [6, 17, 18]. To date, relatively little attention has been given to the usefulness of these models and data networks for supporting health technology assessment (HTA).

Here, we discuss the potential value of the OMOP common data model for use in HTA, for both evidence generation and healthcare decision making, and identify priority areas for further development to ensure its potential is realised.

The Use of Observational Data in Health Technology Assessment (HTA)

HTA is used to inform clinical practice and the reimbursement, coverage, and/or pricing of medical technologies, including drugs. While the exact methods and uses of HTA differ between healthcare systems, substantial commonalities exist [19–21]. Most HTA bodies require a relative effectiveness assessment of one or more technologies compared with standard of care and prefer data on final clinical endpoints (such as survival) and patient-reported outcomes (such as health-related quality of life) [21, 22]. Often estimates of relative effectiveness need to be provided over the long term (e.g. patients’ lifetime), and this may necessitate economic modelling. Some HTA bodies also require evidence on (long-term) cost effectiveness, which requires an assessment of the additional cost of achieving additional benefits, and budget impact analysis, i.e. the gross or net budgetary impact of implementing a technology in a health system. Most HTA bodies and payers prefer data pertaining directly to their jurisdiction.

The potential uses of observational data in HTA are numerous. There is wide acceptance of its use for assessing safety, particularly for rare outcomes and over longer time periods, for describing patient characteristics and treatment patterns in clinical practice, and for estimating epidemiological parameters, including disease incidence, event rates, overall survival, healthcare utilisation and costs, and health-related quality of life [23, 24]. It could also be used to validate modelling decisions, e.g. extrapolation of overall survival or from surrogate to final clinical endpoints, but its use here, so far, is limited [25]. To inform local reimbursement and pricing decisions, it is important that such data reflect the local populations and healthcare settings.

The role of non-randomised data in establishing comparative effectiveness is more controversial [23, 26, 27]. In principle, it could be used to support decisions in the absence of reliable or sufficient randomised controlled trial (RCT) data [28–32] or to supplement RCTs with evidence from routine clinical practice on long-term outcomes or outcomes with immature data from trials to validate findings or translate results to different populations and settings [23, 25, 33–36]. Increasingly, such data are used as part of managed entry arrangements, including commissioning through evaluation and outcomes-based contracting [37, 38]. However, despite growing calls for increased use of observational data in decision making, its role remains limited [23]. We follow the OPTIMAL framework in categorising barriers to the wider use of such data into operational, technical, and methodological challenges [39], supplemented by additional considerations where necessary [1, 3].

Operational challenges to the use of observational data include issues of feasibility, governance, and sustainability, which complicate access to, and the use of, data. A limited number of European datasets are of sufficient quality for use in decision making, particularly in Eastern and Southern Europe [40]. Furthermore, it can be difficult to identify datasets containing relevant information or to understand the quality of the data with respect to a planned application [41]. When relevant, high-quality data are identified, they may not be accessible because of governance restrictions on data sharing, lack of patient consent, or prohibitively high access costs. Beyond the direct costs of data acquisition, substantial investments may be needed in staff and infrastructure to manage, analyse, and interpret such data [42]. These challenges limit the opportunity to generate robust, relevant, and timely information to support local decision making. Finally, transparency is often lacking in the conduct of studies using observational data, which limits the acceptability of results for decision making [43, 44].

Technical constraints relate to the contents and quality of data and impair the ability to generate robust and valid results. Most observational datasets are not designed for research purposes but rather to support clinical care or healthcare administration. The quality of observational data varies, including in the extent of missing data, measurement reliability, coding accuracy, misclassification of exposures and outcomes, or insufficient numbers of patients [1, 45]. Certain types of data are routinely missing from observational databases, including drugs dispensed in secondary care or over the counter [40] and patient-reported outcomes [46]. A further complication is data fragmentation, where information about a patient’s care pathway is stored across disparate datasets. Data linkage is essential to adequately address many research questions, but operational constraints due to varying governance processes may limit the ability to link datasets in a timely fashion.

A final major technical constraint is the substantial variation between datasets in terms of their structure, contents, and the coding systems used to represent clinical and health system data. Datasets can differ in several ways, including in their structure (e.g. single data frame vs. relational database design), contents (i.e. what data are included), and in the representation of data (i.e. how data are coded). For instance, numerous competing coding and classification systems are used to represent clinical diagnoses (e.g. Systematized Nomenclature of Medicine [SNOMED], Medical Dictionary for Regulatory Activities [MedDRA], International Classification of Diseases [ICD], Read), pharmaceuticals (e.g. British National Formulary, RxNorm, Anatomical Therapeutic Chemical [ATC] classification), procedures (e.g. OPCS, ICD-10-PCS), and other types of clinical and health system data. Conventions for any given vocabulary are also subject to change over time. These differences impose a burden on analysts who are required to understand the idiosyncrasies of each dataset and coding system, and their developments over time, which limits the opportunity to validate analyses in different datasets or to translate results to different populations or settings. It also complicates the interpretation of the results for those who use the evidence, including regulators, HTA bodies, payers, patients, and clinicians.

Finally, methodological challenges arise from the inherent limitations of observational databases, which are not designed to support causal inference [47]. Biases may arise because of poor-quality data or patient selection, whereby the associations observed among those in a database do not apply to the wider population of interest, and because of confounding, whereby patients are allocated differently to exposures based on unobserved or poorly measured characteristics [47, 48]. Detailed consideration must be given to these potential biases in study design and, where appropriate, advanced methodologies must be used to address them.

The OMOP Common Data Model

What is a Common Data Model?

The main purpose of common data models is to address problems caused by poor data interoperability. They do this by imposing some level of standardisation on otherwise disparate data sources. Several open-source common data models are in use that differ in a number of important respects, including the extent of the standardisation, for instance, whether they standardise just the structure (FDA Sentinel) or also the semantic representation of data (OMOP); the coverage of the standardisation, whether only for selected types of clinical data (FDA Sentinel) or an attempt to be comprehensive, including all clinical and health system data (OMOP); and in their applications [9, 11–14]. These differences may impact on the timeliness with which high-quality multidatabase studies can be conducted, the transparency of analyses, and the adaptability of the analysis to specific research questions [4].

An alternative approach to multidatabase studies is to allow local data extraction and analysis following a common protocol [49, 50]. While this has been shown to produce reliable results in some applications [50], differences between datasets may arise because of differences in data curation, implementation of the analysis, and coding errors. Alternatively, aggregated or patient-level datasets can be pooled following a study-specific common data model, e.g. as developed in the European Union Adverse Drug Reaction project [5]. This allows the sharing of common analytics, reducing between-dataset variation in study conduct, but may restrict the analytical choices (e.g. large-scale propensity score matching). In some cases, data pooling will be prohibited by data governance and privacy concerns.

The OHDSI Community

The OMOP common data model has its origins in a programme of work by the OMOP, designed to develop methods to inform the FDA’s active safety surveillance activities [8, 51, 52]. Since 2014, the OMOP common data model has been maintained by the open-science Observational Health Data Sciences and Informatics (OHDSI, pronounced ‘Odyssey’) community (https://www.ohdsi.org). OHDSI also develops open-source software to support high-quality research, engages in methodological work to establish best practices, and performs many multidatabase studies across its network. The common data model and open-source tools are shared on OHDSI’s GitHub account (https://github.com/OHDSI/), and discussions take place on a dedicated open forum (https://forums.ohdsi.org/). For more information about OHDSI and the analytical pipelines see The Book of OHDSI [8].

OMOP Common Data Model

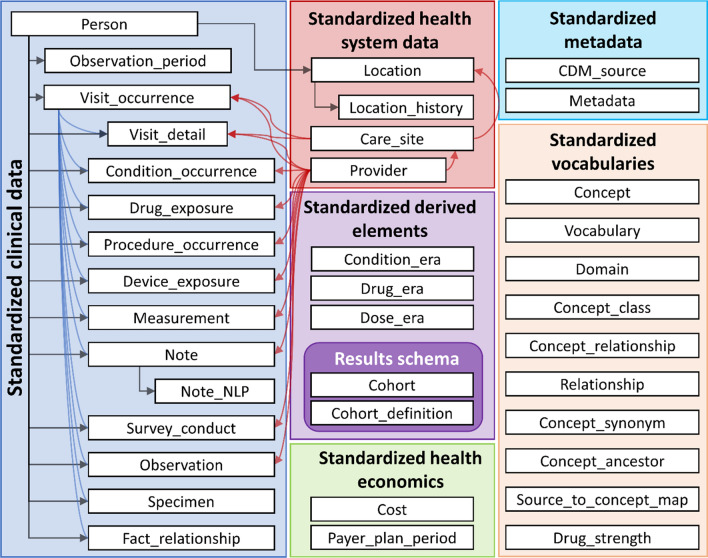

The OMOP common data model has a ‘person-centric relational database’ design similar to many electronic healthcare record systems. This means that clinical (e.g. signs, symptoms, and diagnoses, drugs, procedures, devices, measurements, and health surveys) and health system data (e.g. healthcare provider, care site, and costs) are organised into pre-defined tables, which are linked, either directly or indirectly, to patients (Fig. 1) [8]. Each table stores ‘events’ (i.e. clinical or health system data) with defined content, format, and representation. The OMOP common data model contains two standardised health economic tables. The first contains information about a patient’s health insurance arrangements, and the second contains data on costs, charges, or expenditures related to specific episodes of care (e.g. inpatient stay, ambulatory visit, drug prescription). This structure reflects the origins of the common data model in the USA, with a largely insurance-based system of healthcare. The OMOP common data model is designed to be as comprehensive as possible to allow a wide variety of research questions to be addressed.

Fig. 1.

Overview of the OMOP common data model version 6.0 [8]. The tables relating to standardised vocabularies provide comprehensive information on mappings between source and standard concepts and on hierarchies for standard concepts (e.g. concept_ancestor). CDM common data model, NLP natural language processing
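The person-centric relational design can be illustrated with a minimal sketch. The example below builds two OMOP-style tables in an in-memory SQLite database and counts condition records per person. The table and column names follow OMOP conventions, but only a small subset of columns is shown, and all rows and concept identifiers are invented placeholders rather than real OMOP concepts.

```python
import sqlite3

# Toy in-memory database with two OMOP-style tables.
# Only a subset of columns is shown; all rows and concept IDs are invented.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE person (
    person_id         INTEGER PRIMARY KEY,
    year_of_birth     INTEGER,
    gender_concept_id INTEGER
);
CREATE TABLE condition_occurrence (
    condition_occurrence_id INTEGER PRIMARY KEY,
    person_id               INTEGER REFERENCES person(person_id),
    condition_concept_id    INTEGER,
    condition_start_date    TEXT
);
""")
conn.executemany(
    "INSERT INTO person VALUES (?, ?, ?)",
    [(1, 1950, 9101), (2, 1962, 9102)],  # hypothetical gender concept IDs
)
conn.executemany(
    "INSERT INTO condition_occurrence VALUES (?, ?, ?, ?)",
    [
        (10, 1, 9001, "2015-03-01"),  # hypothetical condition concept IDs
        (11, 1, 9001, "2016-07-12"),
        (12, 2, 9002, "2017-01-20"),
    ],
)

# Every clinical event links back to a person through person_id.
rows = conn.execute("""
SELECT p.person_id, COUNT(c.condition_occurrence_id) AS n_conditions
FROM person AS p
LEFT JOIN condition_occurrence AS c ON c.person_id = p.person_id
GROUP BY p.person_id
ORDER BY p.person_id
""").fetchall()

print(rows)
```

Because every mapped dataset exposes the same tables and columns, the same query could in principle be run unchanged against any of them.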

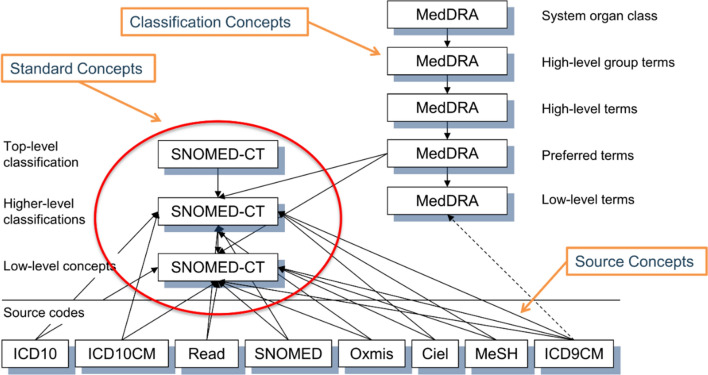

Standard vocabularies are used to normalise the meaning of data within the common data model and are defined separately for different types of data (i.e. data residing in different tables). The SNOMED system is used to represent clinical data, RxNorm to represent drugs, and Logical Observation Identifiers Names and Codes (LOINC) to represent clinical measurements. RxNorm has been extended within OMOP to include all authorised drugs in Europe using the Article 57 database. Other types of data, including procedures, devices, and health surveys, have more than one standard vocabulary because of the absence of a comprehensive standard. These standard vocabularies have hierarchies, which allows users to select a single concept and any descendants of that concept in defining a cohort or outcome set. Some standard vocabularies can also be linked to hierarchical classification systems such as MedDRA for clinical conditions and ATC for drugs. The codes used in the original data are also retained within the common data model and can be used by analysts. Figure 2 provides a visual illustration of the vocabularies for the condition domain.

Fig. 2.

A visual representation of vocabularies and their relationships in the condition domain of the OMOP common data model [8]. ICD International Classification of Diseases, ICD-9 ICD, Ninth Revision, ICD-10-CM ICD, Tenth Revision, Clinical Modification, MedDRA Medical Dictionary for Regulatory Activities, MeSH Medical Subject Headings, SNOMED-CT Systematized Nomenclature of Medicine Clinical Terms
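The use of vocabulary hierarchies to define a cohort can be sketched as follows. The example selects everyone with a record for a chosen concept or any of its descendants by joining against a concept_ancestor-style table. The hierarchy, the concept identifiers, and the helper function name are hypothetical, and the toy table assumes that each concept is listed as its own ancestor.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE concept_ancestor (
    ancestor_concept_id   INTEGER,
    descendant_concept_id INTEGER
);
CREATE TABLE condition_occurrence (
    person_id            INTEGER,
    condition_concept_id INTEGER
);
""")

# Hypothetical hierarchy: 100 -> {101, 102}, 102 -> 103; 200 is unrelated.
# Assumption: every concept also appears as its own ancestor.
conn.executemany(
    "INSERT INTO concept_ancestor VALUES (?, ?)",
    [
        (100, 100), (100, 101), (100, 102), (100, 103),
        (101, 101), (102, 102), (102, 103), (103, 103),
        (200, 200),
    ],
)
conn.executemany(
    "INSERT INTO condition_occurrence VALUES (?, ?)",
    [(1, 101), (2, 103), (3, 200), (4, 100)],
)


def persons_with_concept_or_descendants(conn, concept_id):
    """Return sorted person IDs with a record for the concept or a descendant."""
    rows = conn.execute(
        """
        SELECT DISTINCT co.person_id
        FROM condition_occurrence AS co
        JOIN concept_ancestor AS ca
          ON ca.descendant_concept_id = co.condition_concept_id
        WHERE ca.ancestor_concept_id = ?
        ORDER BY co.person_id
        """,
        (concept_id,),
    ).fetchall()
    return [r[0] for r in rows]


cohort = persons_with_concept_or_descendants(conn, 100)
print(cohort)
```

Person 3 is excluded because concept 200 sits outside the selected branch of the hierarchy.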

Mapping to OMOP is performed by a multidisciplinary team involving mapping and vocabulary experts, local data experts, and clinicians who together use open-source tools to construct an ‘extract, transform, and load’ (ETL) procedure. The mapping of a given dataset to the common data model is intended to be separated from any particular analysis. Bespoke mapping tables may need to be created or updated to represent data in local vocabularies [53–55]. It is essential that the ETL is maintained over time, for instance, to respond to changes in the source data, coding errors in the ETL, or the release of new OMOP vocabularies. This requires highly developed and robust quality assurance processes [56]. Finally, it should be noted that OMOP has been predominantly used for claims databases and electronic health records. Further work is ongoing to better support the representation of other data types (including patient registries), specific diseases (including oncology), and health data (including genetic and biomarker data).
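As a rough illustration of the transform step of an ETL, the sketch below maps local source codes to standard concepts through a bespoke mapping table, retains the original code, and collects codes that have no mapping so that they can be reviewed. The local vocabulary, codes, concept identifiers, and function name are all hypothetical. Assigning concept 0 to unmapped codes is assumed here as the convention for flagging them; this is not how the OHDSI mapping tools are implemented.

```python
from dataclasses import dataclass

# Hypothetical bespoke mapping table: (source vocabulary, source code) -> standard concept ID.
SOURCE_TO_STANDARD = {
    ("LOCAL", "COPD_01"): 9001,
    ("LOCAL", "DM2_07"): 9002,
}


@dataclass
class ConditionRecord:
    person_id: int
    condition_concept_id: int    # standard concept, 0 when no mapping exists
    condition_source_value: str  # original code, retained for analysts


def transform(source_rows, mapping):
    """Map source rows to standardised records and report unmapped codes."""
    records = []
    unmapped = set()
    for person_id, vocabulary, code in source_rows:
        concept_id = mapping.get((vocabulary, code), 0)
        if concept_id == 0:
            unmapped.add((vocabulary, code))
        records.append(ConditionRecord(person_id, concept_id, code))
    return records, unmapped


source_rows = [
    (1, "LOCAL", "COPD_01"),
    (2, "LOCAL", "UNKNOWN_99"),  # no entry in the mapping table
]
records, unmapped = transform(source_rows, SOURCE_TO_STANDARD)
print(records)
print("Codes needing review:", unmapped)
```

Keeping the list of unmapped codes makes it easier to judge how much information is lost in mapping and where the mapping table needs to be extended.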

Standardised Analytical Tools

The OHDSI collaborative has developed a number of open-source applications and tools that support the mapping of datasets to the OMOP common data model, data quality assessment, data analysis, and the conduct of multidatabase studies across a federated data network [8].

The data quality dashboard is designed to enable evaluation of the data quality of any given observational dataset. It does this by running a series of prespecified data quality checks against data in the OMOP common data model following the framework by Kahn et al. [57]. In this framework, data quality is defined in relation to its conformance (including value, relational, and computational conformance), completeness, and plausibility (including uniqueness, atemporal, and temporal plausibility). These are assessed by verifying against organisational data or validating against an accepted gold standard.
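To make the three categories concrete, the sketch below computes one toy check of each kind: completeness (share of non-missing values), value conformance (share of values within an allowed set), and temporal plausibility (share of events that do not precede birth). It is not the data quality dashboard itself; the field names, allowed values, and records are invented for illustration.

```python
from datetime import date

# Invented records; field names are illustrative, not the OMOP schema.
persons = [
    {"person_id": 1, "gender": "F", "birth_date": date(1950, 1, 1)},
    {"person_id": 2, "gender": "X?", "birth_date": date(1962, 5, 3)},
    {"person_id": 3, "gender": None, "birth_date": date(1980, 9, 9)},
]
events = [
    {"person_id": 1, "start_date": date(2015, 3, 1)},
    {"person_id": 2, "start_date": date(1950, 1, 1)},  # precedes birth
]


def completeness(rows, field):
    """Proportion of rows with a non-missing value for the field."""
    return sum(r[field] is not None for r in rows) / len(rows)


def value_conformance(rows, field, allowed):
    """Proportion of non-missing values that fall within the allowed set."""
    present = [r[field] for r in rows if r[field] is not None]
    return sum(v in allowed for v in present) / len(present)


def temporal_plausibility(events, persons):
    """Proportion of events that start on or after the person's birth date."""
    birth = {p["person_id"]: p["birth_date"] for p in persons}
    plausible = sum(e["start_date"] >= birth[e["person_id"]] for e in events)
    return plausible / len(events)


gender_completeness = completeness(persons, "gender")
gender_conformance = value_conformance(persons, "gender", {"F", "M"})
event_plausibility = temporal_plausibility(events, persons)
print(gender_completeness, gender_conformance, event_plausibility)
```

In practice each check would be compared against a prespecified threshold, which is a design decision not shown here.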

Data exploration and analyses can be conducted using the ATLAS user interface (https://atlas.ohdsi.org/) and/or open-source software such as R. Standardised tools have been created to support the characterisation of cohorts in terms of baseline characteristics and treatment and disease pathways; patient-level prediction, e.g. for estimating the risk of an adverse event or patient stratification; and population-level estimation, e.g. for safety surveillance and comparative-effectiveness estimation. Where standardised tools are used, interactive dashboards are available to display key results and outputs of various diagnostic checks. While the tools allow for considerable flexibility in user specifications, they also impose some constraints on the analyst in line with community-defined standards of best practice. Analyses can also be performed without utilising these tools by writing bespoke analysis code and using existing R packages.

The common data model and analytical tools are not fixed but are developed in collaboration with the OHDSI community and according to the priorities of its members. Where developments and new tools are made, these will be available to all users.

Data Networks

A common data model is most useful when it is part of a large data network. In federated (or distributed) data networks, data ownership is retained by the data custodian (or licensed data holders), and analysis code can be run against the data in the local environment, subject to standard data access approvals, with only aggregated results returned to the analysts [9]. This recognises the governance and infrastructural constraints that limit the ability of external institutions to access individual patient data. This stands in contrast to pooled data networks, where individual patient-level data are collated centrally and made available for analysis.
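The federated pattern can be sketched in a few lines. The same analysis function is run separately on each site's data, and only aggregate counts leave the site, where a coordinator combines them. The site data, function names, and the simple pooling of counts are assumptions for illustration; real networks add access approvals, result review, and more careful methods for combining estimates.

```python
def local_analysis(patients):
    """Run inside the data custodian's environment; return aggregates only."""
    return {
        "n": len(patients),
        "events": sum(1 for p in patients if p["had_event"]),
    }


def combine(site_results):
    """Coordinator step: pool aggregate counts from all sites."""
    total_n = sum(r["n"] for r in site_results)
    total_events = sum(r["events"] for r in site_results)
    return {"n": total_n, "events": total_events, "risk": total_events / total_n}


# Invented patient-level data that never leave each site.
site_a = [{"had_event": True}, {"had_event": False}, {"had_event": False}]
site_b = [
    {"had_event": True},
    {"had_event": True},
    {"had_event": False},
    {"had_event": False},
    {"had_event": False},
]

site_results = [local_analysis(site) for site in (site_a, site_b)]
pooled = combine(site_results)
print(site_results)
print(pooled)
```

Only the two small dictionaries of counts cross the site boundary; the patient-level lists are never seen by the coordinator.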

The OMOP common data model is used (and maintained) by the OHDSI network [51]. As of 2019, the OHDSI network had mapped over 100 datasets to the OMOP common data model, encompassing more than 1 billion patients [8]. There is growing interest in the use of the OMOP common data model, particularly for regulatory purposes [6, 17]. In response to this, the Innovative Medicines Initiative has funded the European Health Data and Evidence Network (EHDEN, https://www.ehden.eu) public–private partnership, which aims to establish a federated network of healthcare datasets across Europe conforming to the OMOP common data model [58]. The EMA has formed a partnership, including EHDEN consortium members, to use OMOP to conduct multicentre cohort studies on the use of medicines in patients with COVID-19 [16]. The OHDSI network and tools are built in alignment with ‘findability, accessibility, interoperability, and reusability’ (FAIR) principles, designed to support good scientific data management and stewardship [59].

What is the Role for the OMOP Common Data Model in HTA?

We discuss the role of the OMOP common data model and its associated data networks in overcoming the challenges identified in the OPTIMAL framework and for evidence generation in HTA.

Operational Constraints

A large and diverse data network facilitates the identification of relevant data sources and allows for an understanding of their quality [60]. To support the timely generation of high-quality and relevant evidence, a well-maintained, high-quality register with detailed and substantial meta-data about each dataset is essential [41].

A key benefit of a federated data network is that it obviates the need for patient-level data to be shared across organisations, which may not be possible because of data governance constraints, cost, or limited infrastructural or technical capacity. This should work to increase the data available for analysis, ensure that the most appropriate dataset(s) are used, enable multidatabase studies and the translation of evidence across jurisdictions to meet the needs of local decision makers, and increase the efficiency and timeliness of evidence generation.

The open-science nature of the OHDSI community means there is an emphasis on transparency in all aspects of study conduct. A comprehensive and computer-readable record of the ETL process used to map source data to the OMOP common data model and of data preparation and analysis for all applications is available. Tools are available to help understand data quality and check the validity of methodological choices (e.g. covariate balance after propensity score matching). The use of standardised analytics reduces the risk of coding errors and imposes community-agreed standards of methodological best practice and reporting. Transparency in study conduct and reporting increases the confidence in the results by HTA bodies and independent reviewers [61]. However, this alone is not sufficient to ensure transparency: it should be combined with other approaches, including pre-registration of study protocols and the use of standard reporting tools [44].

Technical Constraints

Perhaps the main benefit of common data models is in overcoming problems caused by limited data interoperability due to the diversity in data structures, formats, and terminologies. The common data model allows analysts to develop code on a single mapped dataset, or even synthetic dataset, and then execute that code on other data. The involvement of the data custodian in the study design and execution is still necessary, but the standardisation reduces the extent to which analysts need to be familiar with the idiosyncrasies of many different datasets. It enables a community to collaboratively develop and validate analytical pipelines.

The usefulness of any mapped dataset largely depends on the quality and contents of the source data from which it was derived. The mapping process itself cannot overcome problems due to missing data items or observations, data fragmentation, misclassification of exposures or outcomes, or selection bias. The OHDSI collaborative does, however, have tools that help characterise such problems, which can guide decisions about database selection and support critical appraisal of evidence.

There is also potential for information loss during the process of mapping from the source data to the common data model and standardised vocabularies [62]. Several validation studies have been published describing both successes and challenges in mapping data to OMOP [18, 53–55, 62–65]. For common data elements such as drugs and conditions, mapping can usually be performed with high fidelity. Most challenges were related to the absence of mapping tables for local vocabularies or of relevant standard concepts, which are more common for other types of healthcare and health system data [53–55]. This can lead to a loss of information in some instances [62, 65]. The extent and implications of any information loss will depend on numerous factors, including the quality of the source data, the source vocabulary, and the clinical application of interest. It is important to understand the likely impact of any information loss in each analysis. However, source data concepts are retained within the common data model and can be used in analyses as required. Where needed, vocabularies can be extended by the OHDSI community. Of course, a preferred long-term solution is for high-quality data to be collected at source using global standards.

Methodological Constraints

The role of the common data model in supporting multidatabase studies across a large data network has numerous benefits. It supports the translation of evidence across populations, time, and setting to support the needs of local HTA decision making, improving the efficiency and relevance of evidence generation for market access across Europe [66]. The opportunity it affords to enhance statistical power is likely to be particularly valuable in rare diseases where data may otherwise be insufficient to understand patient characteristics, health outcomes, treatment pathways, or comparative effectiveness [67]. It may also enable the extension of immature evidence on clinical outcomes from RCTs, allow exploration of heterogeneity, and support validation. The ability to produce reliable evidence at speed across a data network has been demonstrated in several applications, including in understanding the safety profile of hydroxychloroquine in the early stages of the COVID-19 pandemic [8, 11, 14, 15].

However, the risks of bias due to poor-quality data, selection bias, and residual confounding cannot be eradicated by the common data model, although best practice tools for causal estimation are available. It can help characterise some of these problems, and the ability to replicate results in different datasets may increase confidence in using the results in decision making [30, 68].

The mapping of source data to the OMOP common data model is performed independently of any analysis. While this allows faster development of analytical code, an understanding of the source data, and its strengths and limitations, overall and in relation to specific applications, remains vital. Others have argued that this separation adds a layer of complexity and may impede transparency in the absence of detailed reporting [4].

Types of Evidence and Analytical Challenges

The OMOP common data model and the standardised analytical tools have been largely built with regulatory uses in mind, particularly drug utilisation and comparative safety studies. The models for population-level estimation are currently limited to logistic, Poisson, and Cox proportional hazards modelling and propensity score matching and stratification. This covers only a limited range of potential applications in HTA. For example, models for continuous outcomes (e.g. generalised linear models for healthcare utilisation, costs, or quality of life) and parametric survival models (e.g. for extrapolation of survival) are widely used in HTA. Furthermore, the focus of tool development has been on big data analytics rather than smaller curated datasets. Analysts can of course always develop bespoke code to run against the common data model and utilise existing R packages for analyses.

The OMOP common data model includes two standardised health economic tables (see Sect. 3). The structure and contents reflect US claims data and are most useful in this setting. In the European setting, many healthcare datasets will not contain information on costs directly, but rather costs must be constructed from measures of healthcare utilisation. Unit costs can be attached to measures of utilisation extracted from the common data model, or, where costs depend on multiple parameters, this can be done prior to mapping with appropriate involvement of health economic experts. Some vocabularies will need to be extended to support HTA applications, for instance, visit concepts should reflect the differences in the delivery of healthcare in different settings, and mapping must appropriately reflect the uses of these data in the HTA context. Work is ongoing to further develop the common data model and vocabularies to better represent oncology treatments and outcomes, genetic and biomarker data, and patient-reported outcomes, all of which are important to HTA.
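Attaching unit costs to measures of utilisation can be sketched as below. Visit counts per person and visit type, as might be extracted from the common data model, are multiplied by a unit cost for each visit type and summed per person. The visit types, unit costs, and function name are hypothetical, and a missing unit cost raises an error rather than being silently treated as zero.

```python
from collections import defaultdict

# Hypothetical unit costs per visit type (currency units per visit).
UNIT_COSTS = {"gp_visit": 40.0, "nurse_visit": 15.0}

# Invented utilisation extract: (person_id, visit type, number of visits).
utilisation = [
    (1, "gp_visit", 3),
    (1, "nurse_visit", 2),
    (2, "gp_visit", 1),
]


def cost_per_person(utilisation, unit_costs):
    """Sum visit counts multiplied by unit costs for each person."""
    totals = defaultdict(float)
    for person_id, visit_type, n_visits in utilisation:
        if visit_type not in unit_costs:
            raise KeyError(f"No unit cost defined for visit type: {visit_type}")
        totals[person_id] += n_visits * unit_costs[visit_type]
    return dict(totals)


costs = cost_per_person(utilisation, UNIT_COSTS)
print(costs)
```

This only works if visit concepts are mapped at a level of detail that matches the unit cost schedule, which is one reason the mapping of utilisation data matters for HTA.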

Numerous studies have shown the value of the OMOP common data model in undertaking drug utilisation and characterisation studies [13] and in estimating comparative safety and even effectiveness [11, 14, 15], all of which are important components of HTA. We undertook an additional case study to further understand some of the additional challenges in the context of HTA. Our objective was to estimate annual measures of primary care visits among patients with chronic obstructive pulmonary disease by disease severity (defined with spirometry measurements) using data in the UK (Clinical Practice Research Datalink) and the Netherlands (Integrated Primary Care Information) using a single script. While this analysis is possible using the common data model framework, we faced several challenges in its implementation. These included inappropriate mapping of source visit concepts to standard concepts, differences in mapping of measurements and observations in the two databases, and the absence of standard analytical tools directly applicable to this use case. All these challenges can be overcome by improved ETL processes and tool development. See Box A for a fuller description.

Conclusion

The OMOP common data model and its federated data networks have the potential to improve the efficiency, relevance, robustness, and timeliness of evidence generation for HTA. They support the identification of and access to data, the conduct of multidatabase studies, and the translation of evidence across populations and settings according to local HTA needs. The use of open-source standardised analytics and the possibility for greater model validation and replication should improve confidence in the results and their acceptability for decision making.

To realise this potential, it is essential that the future development of the common data model, vocabularies, and tools support the needs of HTA. We therefore call for the HTA community to engage with OHDSI and EHDEN to undertake use cases to identify development needs, drive priorities, and collaborate to build new tools, for example to support extrapolation and modelling of healthcare utilisation, costs, and quality of life. Finally, we urge those mapping source data to the common data model to collaborate with HTA experts to ensure that the mapping, particularly of healthcare utilisation and cost data, reflects the needs of the HTA community.

Box A Modelling Healthcare Utilisation across Countries Using OMOP: A Case Study

Objectives and Methods

We undertook a case study to explore the challenges of generating evidence for health technology assessment (HTA) from the Observational and Medical Outcomes Partnerships (OMOP) common data model across geographical settings and to identify recommendations to better support such work in the future.

Our aim was to estimate annual rates of primary care visits by staff role (general practitioner [GP], practice nurse) and location (clinic, home, telephone) among individuals with chronic obstructive pulmonary disease (COPD) by disease severity (using Global Initiative for Chronic Obstructive Lung Disease [GOLD] stage based on percent predicted forced expiratory volume in 1 second [FEV1%] measurements) in the UK and the Netherlands using a single reproducible script. We used versions of the Clinical Practice Research Datalink (CPRD GOLD; see note 1) in the UK and the Integrated Primary Care Information (IPCI; see note 2) database in the Netherlands, both of which have been mapped to the OMOP common data model.
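The intended calculation can be sketched as follows. This is a minimal illustration rather than the script used in the case study: the severity cut-offs are the commonly cited GOLD airflow-limitation grades (80%, 50%, and 30% of predicted FEV1, assuming a COPD diagnosis has already been established), and the record layout and function names are assumptions made for the example.

```python
def gold_stage(fev1_pct_predicted):
    """Classify airflow limitation by FEV1 % predicted.

    Assumed cut-offs (commonly cited GOLD grades):
    1: >= 80, 2: 50 to < 80, 3: 30 to < 50, 4: < 30.
    """
    if fev1_pct_predicted < 0:
        raise ValueError("FEV1 % predicted cannot be negative")
    if fev1_pct_predicted >= 80:
        return 1
    if fev1_pct_predicted >= 50:
        return 2
    if fev1_pct_predicted >= 30:
        return 3
    return 4


def annual_visit_rates(records):
    """Return visits per person-year for each GOLD stage.

    records: iterable of (fev1_pct_predicted, n_visits, person_years),
    a hypothetical per-patient summary.
    """
    totals = {}
    for fev1_pct, n_visits, person_years in records:
        stage = gold_stage(fev1_pct)
        visits, time_at_risk = totals.get(stage, (0, 0.0))
        totals[stage] = (visits + n_visits, time_at_risk + person_years)
    return {s: v / t for s, (v, t) in totals.items() if t > 0}


# Toy data: (FEV1 % predicted, primary care visits, years of follow-up).
records = [(85.0, 4, 2.0), (62.0, 9, 1.5), (55.0, 3, 0.5), (25.0, 12, 1.0)]
rates = annual_visit_rates(records)
```

In a single-script design, the same functions would be run unchanged against each database, so any difference in results reflects the data and their mapping rather than the code.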

Challenges

The key challenges faced related to the process by which databases were mapped to the OMOP common data model (i.e. the extract, transform, and load [ETL] process), the standard vocabularies for representing healthcare visits, and the analytical tools available.

The two databases differed in the extent of data pre-processing before they were mapped, with IPCI data restricted to visits with GPs (in primary care) and CPRD including all patient contacts (including non-clinical functions). In CPRD, all contacts were mapped to a single standard OMOP visit concept, namely ‘outpatient visit’. In IPCI, primary care contacts were mapped to this same concept or ‘home visit’. For IPCI, no further distinguishing information was given on the provider or the location of care. In CPRD, the location of care was defined erroneously as ‘public health clinic’, whereas staff role concepts from CPRD were mapped to 25 standard concepts across four distinct vocabularies, with GP contacts combined with non-clinical functions in the ‘unknown physician speciality’ concept in the provider table.

It was simple to identify patients with COPD in both databases and, within IPCI, to categorise people by disease severity based on reported FEV1% measurements, which were mapped to Logical Observation Identifiers Names and Codes (LOINC) codes. In CPRD source data, measurements are recorded using Read codes version 2 and entity codes. In the implementation used in this analysis, only entity codes were mapped to the OMOP common data model (namely to Systematized Nomenclature of Medicine [SNOMED] codes). As a result, it was not possible to extract data on FEV1% from CPRD, which prevented the main analysis.

Finally, the analytical tools in Observational Health Data Sciences and Informatics (OHDSI) do not currently easily support the estimation of count or continuous outcome measures (such as costs) or the use of panel data models. Instead, data were collated in R, and standard packages were used for estimation.
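To indicate the kind of count-outcome estimation referred to here, the sketch below computes a visit rate with an approximate 95% confidence interval under a Poisson assumption, using a Wald interval on the log scale. It is written in Python for illustration only and is not the R code used in the case study; the function name and the example numbers are hypothetical.

```python
import math


def poisson_rate_ci(events, person_years, z=1.96):
    """Return (rate, lower, upper) for an event rate per person-year.

    Assumes events follow a Poisson distribution. The interval is a Wald
    interval on the log scale, with SE(log rate) = 1 / sqrt(events).
    """
    if events <= 0 or person_years <= 0:
        raise ValueError("events and person_years must be positive")
    rate = events / person_years
    se_log = 1.0 / math.sqrt(events)
    lower = rate * math.exp(-z * se_log)
    upper = rate * math.exp(z * se_log)
    return rate, lower, upper


# Toy example: 120 GP visits observed over 40 person-years of follow-up.
rate, lower, upper = poisson_rate_ci(events=120, person_years=40.0)
```

A full analysis of utilisation or costs would typically need regression models that adjust for covariates and repeated observations per patient, which this sketch does not attempt.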

Recommendations

We faced several challenges in implementing a reproducible script to estimate healthcare utilisation in two separate databases. None of these were inherent to the common data model approach but rather reflected the mapping processes adopted, the standard vocabularies, and the available tools in OHDSI. We offer recommendations to better support these and other HTA use cases in the future.

First, it is essential that the uses of data for HTA are reflected in the data processing and mapping processes and that HTA experts are involved in the ETL development and validation. Second, visits must be mapped in a way that reflects the specificity of healthcare delivery in different settings (e.g. distinguishing between primary care or GP visits and other outpatient [i.e. secondary care] visits) while allowing for cross-country comparisons. This will likely require some extensions to the OMOP vocabularies, including definition of appropriate concept hierarchies but also agreements on common standards for mapping visit data and other data types. Finally, it is important that further analytical tools are developed to support the types of analyses common in HTA. This includes models for continuous (or count) outcomes and time-to-event data.
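The role of a concept hierarchy in the second recommendation can be sketched as follows. The concept labels and parent links are hypothetical and are not OMOP standard concepts; the point is only that detailed, setting-specific visit types can be retained while still rolling up to a shared ancestor for cross-country comparison.

```python
# Hypothetical child -> parent links; labels are illustrative only.
PARENT = {
    "gp_clinic_visit": "primary_care_visit",
    "gp_home_visit": "primary_care_visit",
    "gp_telephone_contact": "primary_care_visit",
    "primary_care_visit": "ambulatory_visit",
    "specialist_outpatient_visit": "ambulatory_visit",
}


def ancestors(concept, parent=PARENT):
    """Return the chain of ancestors of a concept, nearest first."""
    chain = []
    seen = {concept}
    while concept in parent:
        concept = parent[concept]
        if concept in seen:
            raise ValueError("cycle in concept hierarchy")
        seen.add(concept)
        chain.append(concept)
    return chain


def roll_up(concept, level, parent=PARENT):
    """Return `level` if it is the concept itself or one of its ancestors, else None."""
    if concept == level or level in ancestors(concept, parent):
        return level
    return None


visits = ["gp_clinic_visit", "gp_home_visit", "specialist_outpatient_visit"]
# Select primary care contacts without losing the detailed visit type.
primary = [v for v in visits if roll_up(v, "primary_care_visit")]
```

Mapping every contact directly to a single broad concept, as happened for CPRD in this case study, corresponds to discarding the lower levels of such a hierarchy.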

Notes:

1. We used the ETL created by Janssen and deployed by the University of Oxford. The ETL documentation can be found at https://ohdsi.github.io/ETL-LambdaBuilder/docs/CPRD.

2. The ETL documentation is available on request from Erasmus Medical Centre.

Author contribution

SK developed the scope for the manuscript and led its development. All co-authors contributed to the scope of the manuscript and provided detailed comments on prepared versions.

Declarations

Funding

The European Health Data & Evidence Network has received funding from the Innovative Medicines Initiative 2 Joint Undertaking (JU) under grant agreement No 806968. The JU receives support from the European Union’s Horizon 2020 research and innovation programme and EFPIA.

Conflicts of interest

JTØ is an employee of Pfizer, an international pharmaceutical company that develops and markets drugs in a number of therapeutic areas. NH is an employee and shareholder at the pharmaceutical company J&J. PR received unconditional grants from Janssen Research & Development and the Innovative Medicines Initiative during the conduct of this work. All other authors report no conflicts of interest.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Availability of data and material

Not applicable.

Code availability

Not applicable.

References

- 1.HTAi Global Policy Forum. Real-world evidence in the context of health technology assessment processes – from theory to action. 2018. https://htai.org/wp-content/uploads/2019/02/HTAiGlobalPolicyForum2019_BackgroundPaper.pdf

- 2.Franklin JM, Glynn RJ, Martin D, et al. Evaluating the use of nonrandomized real-world data analyses for regulatory decision making. Clin Pharmacol Ther. 2019;105:867–877. doi: 10.1002/cpt.1351.

- 3.Asche CV, Seal B, Kahler KH, et al. Evaluation of healthcare interventions and big data: review of associated data issues. Pharmacoeconomics. 2017;35:759–765. doi: 10.1007/s40273-017-0513-5.

- 4.Schneeweiss S, Brown JS, Bate A, et al. Choosing among common data models for real-world data analyses fit for making decisions about the effectiveness of medical products. Clin Pharmacol Ther. 2019 doi: 10.1002/cpt.1577.

- 5.Trifirò G, Coloma PM, Rijnbeek PR, et al. Combining multiple healthcare databases for postmarketing drug and vaccine safety surveillance: Why and how? J. Intern. Med. 2014;275:551–561. doi: 10.1111/joim.12159.

- 6.European Medicines Agency. A Common Data Model for Europe? - Why? Which? How? 2018. https://www.ema.europa.eu/en/documents/report/common-data-model-europe-why-which-how-workshop-report_en.pdf

- 7.Brown JS, Holmes JH, Shah K, et al. Distributed health data networks: a practical and preferred approach to multi-institutional evaluations of comparative effectiveness, safety, and quality of care. Med Care. 2010 doi: 10.1097/MLR.0b013e3181d9919f.

- 8.Observational Health Data Sciences and Informatics. The Book of OHDSI. 2019. https://ohdsi.github.io/TheBookOfOhdsi/

- 9.Weeks J, Pardee R. Learning to share health care data: a brief timeline of influential common data models and distributed health data networks in US health care research. eGEMs Generat Evid Methods Improv Patient Outcomes. 2019;7:4. doi: 10.5334/egems.279.

- 10.Food and Drug Administration. Sentinel system: five-year strategy 2019-2023. 2019. https://www.fda.gov/media/120333/download

- 11.Suchard MA, Schuemie MJ, Krumholz HM, et al. Comprehensive comparative effectiveness and safety of first-line antihypertensive drug classes: a systematic, multinational, large-scale analysis. Lancet. 2019;394:1816–1826. doi: 10.1016/S0140-6736(19)32317-7.

- 12.Reps JM, Rijnbeek PR, Ryan PB. Identifying the DEAD: Development And Validation Of A Patient-Level Model To Predict Death Status In Population-Level Claims Data. Drug Saf. 2019;42:1377–1386. doi: 10.1007/s40264-019-00827-0.

- 13.Hripcsak G, Ryan PB, Duke JD, et al. Characterizing treatment pathways at scale using the OHDSI network. Proc Natl Acad Sci U S A. 2016;113:7329–7336. doi: 10.1073/pnas.1510502113.

- 14.Burn E, Weaver J, Morales D, et al. Opioid use, postoperative complications, and implant survival after unicompartmental versus total knee replacement: a population-based network study. Lancet Rheumatol. 2019;1:E229–E236. doi: 10.1016/S2665-9913(19)30075-X.

- 15.Lane JCE, Weaver J, Kostka K, et al. Risk of hydroxychloroquine alone and in combination with azithromycin in the treatment of rheumatoid arthritis: a multinational, retrospective study. Lancet Rheumatol. 2020;2:E698–711. doi: 10.1016/S2665-9913(20)30276-9.

- 16.European Medicines Agency. COVID-19: EMA sets up infrastructure for real-world monitoring of treatments and vaccines. 2020. https://www.ema.europa.eu/en/news/covid-19-ema-sets-infrastructure-real-world-monitoring-treatments-vaccines

- 17.Head of Medicines Agencies and European Medicines Agency. HMA-EMA Joint Big Data Taskforce Phase II report: ‘Evolving Data-Driven Regulation’. 2020. https://www.ema.europa.eu/en/documents/other/hma-ema-joint-big-data-taskforce-phase-ii-report-evolving-data-driven-regulation_en.pdf

- 18.Candore G, Hedenmalm K, Slattery J, et al. Can we rely on results from IQVIA medical research data UK converted to the observational medical outcome partnership common data model? Clin Pharmacol Ther. 2020;107:915–925. doi: 10.1002/cpt.1785.

- 19.Kleijnen S, George E, Goulden S, et al. Relative effectiveness assessment of pharmaceuticals: similarities and differences in 29 jurisdictions. Value Heal. 2012;15:954–960. doi: 10.1016/j.jval.2012.04.010.

- 20.EUnetHTA. Methods for health economic evaluations - A guideline based on current practices in Europe. 2015. https://www.eunethta.eu/wp-content/uploads/2018/03/Methods_for_health_economic_evaluations.pdf

- 21.Angelis A, Lange A, Kanavos P. Using health technology assessment to assess the value of new medicines: results of a systematic review and expert consultation across eight European countries. Eur J Heal Econ. 2018;19:123–152. doi: 10.1007/s10198-017-0871-0.

- 22.Grigore B, Ciani O, Dams F, et al. Surrogate endpoints in health technology assessment: an international review of methodological guidelines. Pharmacoeconomics. 2020;38:1055–1070. doi: 10.1007/s40273-020-00935-1.

- 23.Makady A, van Veelen A, Jonsson P, et al. Using real-world data in health technology assessment (HTA) practice: a comparative study of five HTA agencies. Pharmacoeconomics. 2018;36:359–368. doi: 10.1007/s40273-017-0596-z.

- 24.Bullement A, Podkonjak T, Robinson MJ, et al. Real-world evidence use in assessments of cancer drugs by NICE. Int J Technol Assess Health Care. 2020;36:388–394. doi: 10.1017/S0266462320000434.

- 25.Latimer NR. Survival analysis for economic evaluations alongside clinical trials—extrapolation with patient-level data. Med Decis Mak. 2013;33:743–754. doi: 10.1177/0272989x12472398.

- 26.Collins R, Bowman L, Landray M, et al. The magic of randomization versus the myth of real-world evidence. N Engl J Med. 2020;382:674–678. doi: 10.1056/NEJMsb1901642.

- 27.Schneeweiss S. Real-world evidence of treatment effects: the useful and the misleading. Clin. Pharmacol. Ther. 2019;106:43–44. doi: 10.1002/cpt.1405.

- 28.Hatswell AJ, Baio G, Berlin JA, et al. Regulatory approval of pharmaceuticals without a randomised controlled study: analysis of EMA and FDA approvals 1999–2014. BMJ Open. 2016;6:e011666. doi: 10.1136/bmjopen-2016-011666.

- 29.Anderson M, Naci H, Morrison D, et al. A review of NICE appraisals of pharmaceuticals 2000–2016 found variation in establishing comparative clinical effectiveness. J. Clin. Epidemiol. 2019;105:50–59. doi: 10.1016/j.jclinepi.2018.09.003.

- 30.Eichler HG, Koenig F, Arlett P, et al. Are novel, nonrandomized analytic methods fit for decision making? The need for prospective, controlled, and transparent validation. Clin Pharmacol Ther. 2019;107:773–779. doi: 10.1002/cpt.1638.

- 31.Crispi F, Naci H, Barkauskaite E, et al. Assessment of devices, diagnostics and digital technologies: a review of NICE medical technologies guidance. Appl Health Econ Health Policy. 2019;17:189–211. doi: 10.1007/s40258-018-0438-y.

- 32.Cameron C, Fireman B, Hutton B, et al. Network meta-analysis incorporating randomized controlled trials and non-randomized comparative cohort studies for assessing the safety and effectiveness of medical treatments: challenges and opportunities. Syst Rev. 2015 doi: 10.1186/s13643-015-0133-0.

- 33.Sculpher MJ, Claxton K, Drummond M, et al. Whither trial-based economic evaluation for health care decision making? Health Econ. 2006;15:677–687. doi: 10.1002/hec.1093.

- 34.Petrou S, Gray A. Economic evaluation using decision analytical modelling: design, conduct, analysis, and reporting. BMJ. 2011 doi: 10.1136/bmj.d1766.

- 35.Hernandez-Villafuerte K, Fischer A, Latimer N. Challenges and methodologies in using progression free survival as a surrogate for overall survival in oncology. Int J Technol Assess Health Care. 2018;34:300–316. doi: 10.1017/S0266462318000338.

- 36.Kurz X, Perez-Gutthann S. Strengthening standards, transparency, and collaboration to support medicine evaluation: ten years of the European Network of Centres for Pharmacoepidemiology and Pharmacovigilance (ENCePP) Pharmacoepidemiol Drug Saf. 2018;27:245–252. doi: 10.1002/pds.4381.

- 37.Bouvy JC, Sapede C, Garner S. Managed entry agreements for pharmaceuticals in the context of adaptive pathways in Europe. Front Pharmacol. 2018;9:280. doi: 10.3389/fphar.2018.00280.

- 38.Hampson G, Towse A, Dreitlein WB, et al. Real-world evidence for coverage decisions: opportunities and challenges. J Comp Eff Res. 2018;7:1133–1143. doi: 10.2217/cer-2018-0066.

- 39.Cave A, Kurz X, Arlett P. Real-world data for regulatory decision making: challenges and possible solutions for Europe. Clin Pharmacol Ther. 2019;106:36–39. doi: 10.1002/cpt.1426.

- 40.Pacurariu A, Plueschke K, McGettigan P, et al. Electronic healthcare databases in Europe: descriptive analysis of characteristics and potential for use in medicines regulation. BMJ Open. 2018 doi: 10.1136/bmjopen-2018-023090.

- 41.Lovestone S. The European medical information framework: a novel ecosystem for sharing healthcare data across Europe. Learn Heal Syst. 2020;4:e10214. doi: 10.1002/lrh2.10214.

- 42.Bell H, Wailoo AJ, Hernandez M, Grieve R, Faria R, Gibson L, Grimm S. The use of real world data for the estimation of treatment effects in NICE decision making. 2016. https://nicedsu.org.uk/wp-content/uploads/2018/05/RWD-DSU-REPORT-Updated-DECEMBER-2016.pdf

- 43.Berger ML, Sox H, Willke RJ, et al. Good practices for real-world data studies of treatment and/or comparative effectiveness: recommendations from the joint ISPOR-ISPE special task force on real-world evidence in health care decision making. Value Heal. 2017;20:1003–1008. doi: 10.1016/j.jval.2017.08.3019.

- 44.Orsini LS, Berger M, Crown W, et al. Improving transparency to build trust in real-world secondary data studies for hypothesis testing-why, what, and how: recommendations and a road map from the real-world evidence transparency initiative. Value Heal. 2020;23:1128–1136. doi: 10.1016/j.jval.2020.04.002.

- 45.Bowrin K, Briere JB, Levy P, et al. Cost-effectiveness analyses using real-world data: an overview of the literature. J Med Econ. 2019;22:545–553. doi: 10.1080/13696998.2019.1588737.

- 46.Gutacker N, Street A. Calls for routine collection of patient-reported outcome measures are getting louder. J Heal Serv Res Policy. 2018;24:1–2. doi: 10.1177/1355819618812239.

- 47.Grimes DA, Schulz KF. Bias and causal associations in observational research. Lancet. 2002;359:248–252. doi: 10.1016/S0140-6736(02)07451-2.

- 48.Sterne JA, Hernán MA, Reeves BC, et al. ROBINS-I: A tool for assessing risk of bias in non-randomised studies of interventions. BMJ. 2016;355:i4919. doi: 10.1136/bmj.i4919.

- 49.De Groot MCH, Schlienger R, Reynolds R, et al. Improving consistency in findings from pharmacoepidemiological studies: the IMI-PROTECT project. Pharmacoepidemiol Drug Saf. 2013;25:1–165. doi: 10.1002/pds.3512.

- 50.Klungel OH, Kurz X, de Groot MCH, et al. Multi-centre, multi-database studies with common protocols: lessons learnt from the IMI PROTECT project. Pharmacoepidemiol Drug Saf. 2016;25:156–165. doi: 10.1002/pds.3968.

- 51.Stang PE, Ryan PB, Racoosin JA, et al. Advancing the science for active surveillance: rationale and design for the observational medical outcomes partnership. Ann Intern Med. 2010;153:600–606. doi: 10.7326/0003-4819-153-9-201011020-00010.

- 52.Marc Overhage J, Ryan PB, Reich CG, et al. Validation of a common data model for active safety surveillance research. J Am Med Inform Assoc. 2012;19:54–60. doi: 10.1136/amiajnl-2011-000376.

- 53.Haberson A, Rinner C, Schöberl A, et al. Feasibility of mapping austrian health claims data to the OMOP common data model. J Med Syst. 2019 doi: 10.1007/s10916-019-1436-9.

- 54.Lai ECC, Ryan P, Zhang Y, et al. Applying a common data model to asian databases for multinational pharmacoepidemiologic studies: opportunities and challenges. Clin Epidemiol. 2018 doi: 10.2147/CLEP.S149961.

- 55.Maier C, Lang L, Storf H, et al. Towards IMPLEMENTAtion of OMOP in a German university hospital consortium. Appl Clin Inform. 2018;9:54–61. doi: 10.1055/s-0037-1617452.

- 56.Lynch KE, Deppen SA, Duvall SL, et al. Incrementally transforming electronic medical records into the observational medical outcomes partnership common data model: a multidimensional quality assurance approach. Appl Clin Inform. 2019;10:794–803. doi: 10.1055/s-0039-1697598.

- 57.Kahn MG, Callahan TJ, Barnard J, et al. A harmonized data quality assessment terminology and framework for the secondary use of electronic health record data. eGEMs (Generating Evid Methods Improv Patient Outcomes) 2016 doi: 10.13063/2327-9214.1244.

- 58.European Health Data & Evidence Network. https://ehden.eu/

- 59.Wilkinson MD, Dumontier M, Aalbersberg J, et al. The FAIR guiding principles for scientific data management and stewardship. Sci Data. 2016 doi: 10.1038/sdata.2016.18.

- 60.Kahn MG, Brown JS, Chun AT, et al. Transparent reporting of data quality in distributed data networks. eGEMs (Generating Evid Methods to Improv patient outcomes) 2015;3:7. doi: 10.13063/2327-9214.1052.

- 61.ISPOR. Improving Transparency in Non-Interventional Research for Hypothesis Testing—WHY, WHAT, and HOW: Considerations from The Real-World Evidence Transparency Initiative (draft White Paper). 2019. https://www.ispor.org/docs/default-source/strategic-initiatives/improving-transparency-in-non-interventional-research-for-hypothesis-testing_final.pdf?sfvrsn=77fb4e97_6

- 62.Rijnbeek PR. Converting to a common data model: what is lost in translation? Drug Saf. 2014;37:893–896. doi: 10.1007/s40264-014-0221-4.

- 63.Matcho A, Ryan P, Fife D, et al. Fidelity assessment of a clinical practice research datalink conversion to the OMOP common data model. Drug Saf. 2014;37:945–959. doi: 10.1007/s40264-014-0214-3.

- 64.Lima DM, Rodrigues-Jr JF, Traina AJM, et al. Transforming two decades of EPR data to OMOP CDM for clinical research. Stud Health Technol Inform. 2019;264:233–237. doi: 10.3233/SHTI190218.

- 65.Zhou X, Murugesan S, Bhullar H, et al. An evaluation of the THIN database in the OMOP common data model for active drug safety surveillance. Drug Saf. 2013;36:119–134. doi: 10.1007/s40264-012-0009-3.

- 66.EUnetHTA. Analysis of HTA and reimbursement procedures in EUnetHTA partner countries. 2017. https://www.eunethta.eu/national-implementation/analysis-hta-reimbursement-procedures-eunethta-partner-countries/ (accessed 22 Jul 2019).

- 67.Facey K, Rannanheimo P, Batchelor L, et al. Real-world evidence to support Payer/HTA decisions about highly innovative technologies in the EU—actions for stakeholders. Int J Technol Assess Health Care. 2020;36:459–468. doi: 10.1017/S026646232000063X.

- 68.Berntgen M, Gourvil A, Pavlovic M, et al. Improving the contribution of regulatory assessment reports to health technology assessments—A collaboration between the european medicines agency and the european network for health technology assessment. Value Heal. 2014;17:634–641. doi: 10.1016/j.jval.2014.04.006.