Abstract

Individuals with Parkinson’s disease present with a complex clinical phenotype, encompassing sleep, motor, cognitive, and affective disturbances. However, characterizations of PD are typically made for the “average” patient, ignoring patient heterogeneity and obscuring important individual differences. Modern large-scale data sharing efforts provide a unique opportunity to precisely investigate individual patient characteristics, but there exists no analytic framework for comprehensively integrating data modalities. Here we apply an unsupervised learning method—similarity network fusion—to objectively integrate MRI morphometry, dopamine active transporter binding, protein assays, and clinical measurements from n = 186 individuals with de novo Parkinson’s disease from the Parkinson’s Progression Markers Initiative. We show that multimodal fusion captures inter-dependencies among data modalities that would otherwise be overlooked by field standard techniques like data concatenation. We then examine how patient subgroups derived from the fused data map onto clinical phenotypes, and how neuroimaging data is critical to this delineation. Finally, we identify a compact set of phenotypic axes that span the patient population, demonstrating that this continuous, low-dimensional projection of individual patients presents a more parsimonious representation of heterogeneity in the sample compared to discrete biotypes. Altogether, these findings showcase the potential of similarity network fusion for combining multimodal data in heterogeneous patient populations.

Subject terms: Neuroscience, Parkinson's disease

Introduction

Individuals with Parkinson’s disease (PD) present with a range of symptoms, including sleep, motor, cognitive, and affective disturbances1. This heterogeneity is further complicated by individual differences in the age of disease onset and the rate of pathological progression2,3. Most attempts to resolve heterogeneity in PD rely on clustering or subtyping of patients based solely on clinical-behavioral assessments. While these efforts have shown that it is possible to stratify patients into clinically meaningful categories with considerable predictive utility4,5; cf.6, clinical measures do not directly measure the underlying pathophysiology of PD7. Given the prominence of synucleinopathy8,9, dopamine neuron dysfunction10, and distributed grey matter atrophy in PD11–13, it is increasingly necessary to develop biologically informed biotypes by integrating multiple sources of evidence in addition to clinical assessments.

Modern technological advances and data-sharing efforts increasingly permit deep phenotyping in large samples of patients, making simultaneous behavioral assessments, physiological measurements, genetic assays, and brain imaging available at unprecedented scales14. A principal challenge is to parsimoniously integrate these multi-view data in order to take full advantage of each source of information15. How to account for multiple sources of data to characterize heterogeneous patient samples is a topic of significant interest in computational medicine16,17, with important applications for oncology18,19, psychiatry20–22, and neurology7. Indeed, recent advances in techniques like multiple kernel learning have yielded promising results for integrating disparate data modalities in the context of supervised and unsupervised problems23,24. More broadly, there exist many families of techniques for investigating multi-view data, including multiple kernel learning, matrix factorization, and deep learning, that have been increasingly used in recent years to tackle issues of data integration25. Yet, the most commonly employed technique—to simply concatenate data modalities—ignores the structure inherent in individual modalities, potentially yielding biased estimates19,25.

In the present report, we seek to generate a comprehensive, multimodal characterization of PD. We apply an unsupervised learning technique, similarity network fusion (SNF19), to integrate data from four data modalities in n = 186 individuals with de novo PD from the Parkinson’s Progression Markers Initiative database14. Using structural T1-weighted magnetic resonance imaging (MRI), clinical-behavioral assessments, cerebrospinal fluid assays, and single-photon emission computed tomography (SPECT) data we generate patient similarity networks and combine them via an iterative, non-linear fusion process. We demonstrate that SNF yields a more balanced representation of multimodal patient data than standard techniques like data concatenation. We use the patient network generated by SNF to reveal putative PD biotypes, and examine how neuroimaging data contributes to cluster definition and patient discriminability. Finally, we explore how a continuous low-dimensional representation of the fused patient network yields individual estimates of PD patient pathology.

Results

Analytic overview

Complete data were obtained for n = 186 patients from the Parkinson’s Progression Markers Initiative database14. Data sources included (1) cortical thickness, (2) subcortical volume, (3) clinical-behavioral assessments, (4) dopamine activate transporter (DAT) binding scans, and (5) cerebrospinal fluid assays. Although cortical thickness and subcortical volume are both derived from the same data modality (i.e., anatomical, T1-weighted MRI scans), they are estimated using different algorithms so we retain them as separate sources. For more detailed information on data collection and estimation of derivatives please see “Methods”.

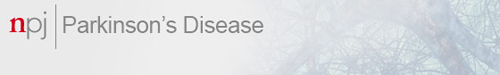

We combine these multimodal data using SNF, which first constructs patient similarity networks for each modality and then iteratively fuses the networks together (Fig. 1a). Both the construction of patient similarity networks and the fusion process of SNF are governed by two free hyperparameters. The first parameter, K, controls the size of the patient neighborhoods to consider when generating the similarity networks: smaller values of K will result in more sparsely connected networks, while larger values will generate denser networks. The second parameter, μ, determines the weighting of edges between patients in the similarity network: small values of μ will keep only the strongest edges in the patient networks, while larger values will retain a wider distribution of edge weights. We use the fused networks to generate categorical representations of patient data via spectral clustering and continuous representations via diffusion map embedding (Fig. 1b–d). The remainder of the reported results examines the utility of these representations in estimating patient pathology and disease severity.

Fig. 1. Similarity network fusion analysis pipeline.

Toy example demonstrating the processing steps employed in the reported analyses; refer to Methods: Similarity network fusion for more detailed information. Patients are represented as nodes (circles) and the similarity between their disease phenotype is expressed as connecting edges. a Similarity network fusion generates patient similarity networks independently for each data type and then iteratively fuses these networks together. The resulting network represents patient information and relationships balanced across all input data types. b We perform an exhaustive parameter search for 10,000 combinations of SNF’s two hyperparameters (K and μ). The resulting fused patient networks are subjected to (1) spectral clustering and (2) diffusion map embedding to derive categorical and continuous representations of patient data, respectively. c We assess the local similarity of patient cluster assignments in parameter space using the z-Rand index80. The z-Rand index is calculated for all pairs of cluster solutions neighboring a given parameter combination [] and then averaged to generate a single “cluster similarity” metric. Clustering solutions from regions of parameter space with an average cluster similarity exceeding the 95th percentile are retained and combined via a consensus analysis to generate final patient clusters (see “Methods: Consensus clustering”)30,31. d Diffusion map embedding yields phenotypic “dimensions” of patient pathology38. Embeddings from stable regions of parameter space chosen in (c) are aligned via rotations and reflections using a generalized Procrustes analysis and averaged to generate a final set of disease dimensions.

Similarity network fusion provides a viable alternative to data concatenation

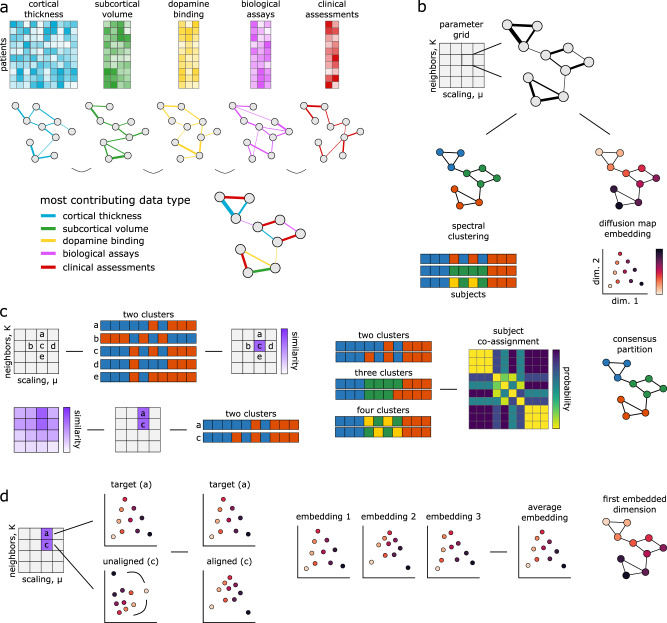

As the current field standard, data concatenation seems an intuitively appealing method for examining multimodal patient data. In a concatenation framework, features from all data modalities are joined together to create a single patient by feature matrix which is then converted into a patient similarity network using an affinity kernel (e.g., cosine similarity, a radial basis function). This approach is particularly convenient in that it is almost entirely data-driven, with no free parameters beyond the choice of affinity kernel. However, data concatenation tends to suffer from the curse of dimensionality26. That is, modalities with many features—regardless of their relative importance—tend to dominate the resulting patient network, obscuring information in modalities with fewer features (Fig. 2a). This is of particular concern when integrating metrics derived from MRI images, which often have tens or hundreds of times more features than lower-dimensionality data sources.

Fig. 2. Comparison of data concatenation and SNF for integrating data.

a Toy diagram depicting generation of a patient similarity network from data concatenation in contrast to SNF. The highest dimensionality data—in this case, cortical thickness—tends to be over-represented in the similarity patterns of the patient network generated with data concatenation, whereas SNF yields a more balanced representation. b An exhaustive parameter search was performed for 10,000 combinations of SNF’s two hyperparameters (K and μ). We subjected the resulting fused patient network for each combination to spectral clustering for a two-, three-, and four-cluster solution. Clustering solutions were combined via a “consensus” clustering approach (see “Methods: Consensus clustering”, Fig. 1c)30,31. c The impact of cortical thickness feature dimensionality on normalized mutual information (NMI) and modularity for different data modalities in data concatenation compared to SNF. NMI estimates are computed by comparing the clustering solutions generated from the concatenated/SNF-derived patient network with the solutions from each single-modality patient network. Modularity estimates are computed by applying the clustering solutions from the concatenated/SNF-derived patient network to the single-modality patient network. CT = cortical thickness; SV = subcortical volume; DB = DAT binding; CSF = CSF assays; CLIN = clinical-behavioral assessments.

Similarity network fusion, on the other hand, does not necessarily suffer from such issues. In an SNF framework, patient similarity networks are generated separately for each data modality and then iteratively fused together to create a single, multimodal patient network (Fig. 2a). Converting to patient networks prior to fusing across sources reduces the likelihood of biasing results towards higher-dimensionality data, potentially yielding more balanced representations of the input data19. However, SNF is governed by two free hyperparameters, K and μ, demanding greater computational complexity to avoid arbitrary selection.

To investigate the extent to which SNF is a plausible alternative to data concatenation we generated patient networks using both techniques, varying the dimensionality of cortical thickness data across five increasingly high-resolution subdivisons of the Desikan–Killiany parcellation (ranging from 68 to 1000 features; see “Methods: Cortical thickness”)27.

Rather than selecting a single combination of parameters to combine data modalities in SNF we conducted an exhaustive parameter search, performing the fusion for 10,000 combinations of K and μ (100 values for each parameter; Fig. 2b). We subjected the resulting networks to spectral clustering with a two-, three-, and four-cluster solution28,29. We then integrated the resulting 30,000 clustering assignments using an adapted consensus clustering approach, previously described in refs. 30 and31 (see Fig. 1c or “Methods: Consensus clustering for more details”). Briefly, we assessed the stability of the clustering solutions in parameter space, retaining only those solutions above the 95th percentile of stability, and used the resulting solutions (n = 1262) to create a co-assignment probability matrix which was thresholded and partitioned to generate a set of “consensus” assignments30,32.

To assess the relative contribution of the individual data modalities to the concatenation- and SNF-derived clustering assignments we also clustered unimodal patient networks, created separately for each data source (n = 5). We compared the similarity between uni- and multimodal clustering assignments using normalized mutual information scores (NMI33), and the goodness-of-fit of clustering assignments to patient networks using modularity34. To ensure that the results of the two data integration techniques were more directly comparable, we only used the clustering assignments from concatenated data that had the same number of clusters as those derived via the consensus SNF approach.

We find that at even the lowest dimensionality (i.e., 68 features) patient networks generated using concatenation are dominated by information from cortical thickness data (Fig. 2b). That is, NMI scores show high overlap between clustering assignments derived from only cortical thickness data and assignments generated using concatenated data (average NMI = 0.77 ± 0.25 [0.40–1.00]). Indeed, at the highest cortical thickness dimensionality (1000 features) the clustering assignments for the cortical thickness and concatenated data are identical, suggesting the concatenated patient networks are discarding information from all other lower-dimensional data sources. Estimates of modularity are slightly higher for patient networks derived from only cortical thickness data than from other datatypes, but remain low for all data modalities (average modularity = 0.02 ± 0.02 [0.00–0.06]).

On the other hand, SNF appears much more stable to changes in data dimensionality, with NMI scores and modularity estimates more evenly distributed across data modalities (NMI = 0.15 ± 0.18 [0.01–0.50]; modularity = 0.05 ± 0.06 [0.00–0.17]). While this does not necessarily imply that the generated clustering assignments are meaningful, it does suggest that SNF provides a more balanced representation of the input data than simple concatenation, opening the door for a more holistic assessment of their clinical relevance to patient pathology. Moreover, SNF achieves good discriminability between healthy controls and PD patients (Supplementary Fig. 1), suggesting relevant clinical utility. We chose to examine the fused patient networks generated from the highest (i.e., 1000 node) resolution cortical thickness data for further analyses.

Derived patient biotypes are clinically discriminable across modalities

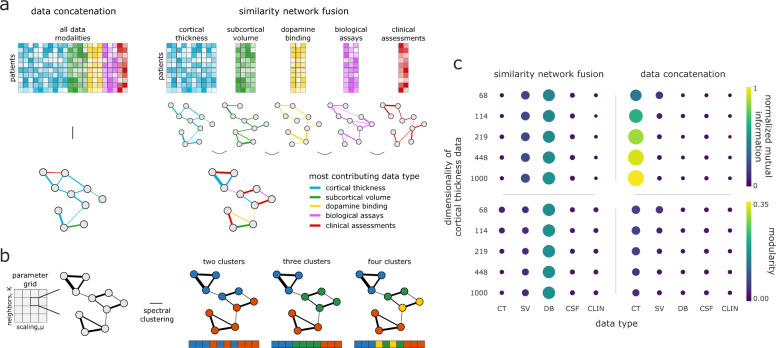

Consensus clustering of the multimodal SNF-derived patient networks yielded three clusters—or “biotypes”—of n = 72, 69, and 45 individuals (Fig. 3a–c) with strong goodness-of-fit (modularity = 0.494, p < 0.001 by permutation). There were no significant inter-group differences for sex (p = 0.32), age (p = 0.30), education (p = 0.77), symptom duration (p = 0.83), recruitment site (p = 0.20), or MRI scanner strength (p = 0.38) (see Supplementary Table 1 for summary demographics of each cluster).

Fig. 3. Patient biotypes discriminate multimodal disease severity.

a Local similarity of clustering solutions for examined SNF parameter space, shown here for the three-cluster solution only. b Patient assignments for all of the two-, three-, and four-cluster solutions extracted from stable regions of the SNF parameter space in (a). The three-cluster solution is ostensibly the most consistent across different partitions. c Patient co-assignment probability matrix, indicating the likelihood of two patients being assigned to the same cluster across all assignments in (b). The probability matrix is thresholded based on a permutation-based null model and clustered using an iterative Louvain algorithm to generate consensus assignments (see “Methods: Consensus clustering”). d Patient biotype differences for the most discriminating feature of each data modalities at baseline. X-axis scales for all plots represent z-scores relative to the sample mean and standard deviation. Note that all ANOVAs with the exception of tremor scores are significant after FDR-correction (q < 0.05). Higher cortical thickness, subcortical volume, and DAT binding scores are generally indicative of clinical phenotype; refer to Supplementary Table 2 for guidelines on how biological assays and clinical-behavioral assessments coincide with PD phenotype. e Patient biotype differences for two relevant clinical-behavioral assessments over time; shaded bands in (e) indicate standard error. Linear mixed effect models were used to examine the differential impact of biotype designation on longitudinal progression of clinical scores; refer to Supplementary Table 4 for model estimates.

To assess the clinical relevance of these biotypes we performed a series of univariate one-way ANOVAs for all 1050 input features (see Supplementary Table 2 for a complete list). We found 31 features that significantly distinguished patient groups from one another (false discovery rate [FDR] corrected, q < 0.05; the most discriminating feature from each data modality are shown in Fig. 3d and a full list is available in Supplementary Table 3). Though biotypes showed limited differences in clinical-behavioral assessments using baseline data, longitudinal analyses of subgroup affiliation revealed differentiation over time in clinical measurements frequently used for PD prognosis (Fig. 3e; refer to Supplementary Table 4 for model estimates). Longitudinal differences were less pronounced when biotypes were defined with only baseline clinical data or concatenated data (Supplementary Fig. 2; Supplementary Table 5). Note that while increases in both tremor and postural instability/gait difficulty (PIGD) scores are indicative of clinical severity, higher PIGD (and lower tremor) scores are often found to be related to a more rapidly progressing and severe manifestation of PD35.

Broad examination of the DAT binding, CSF, and clinical assessment data suggests that the three biotypes may separate along a single dimension of PD severity, where group one represents a more severe, group two an intermediate, and group three a more mild phenotype; however, differences in the neuroimaging data reveal this delineation is less straightforward. For instance, individuals in group two—the ostensible “intermediate” clinical biotype—also tend to have greater subcortical volume than individuals in the “mild” clinical group, especially in brain regions typically prone to degeneration in early PD such as the substantia nigra (F(2, 183) = 40.22, p = 8.70 x 10−13; Fig. 3d). That is, the assignment of cluster labels indicative of disease severity are incapable of capturing all phenotypic aspects of PD.

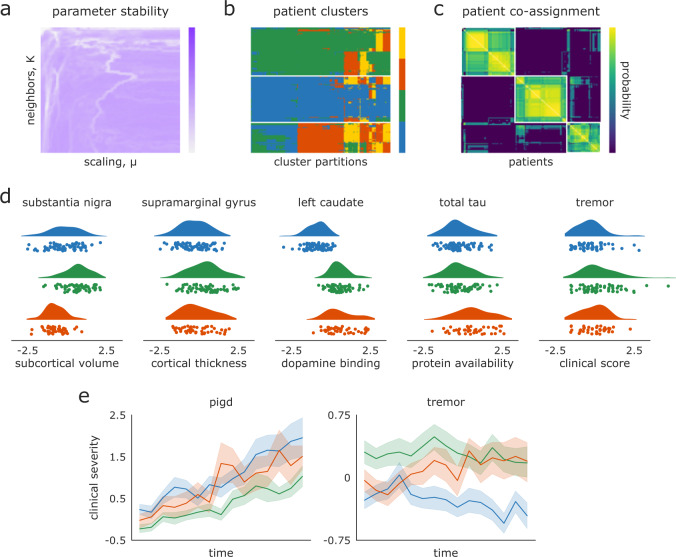

To further investigate the discrepancy between the clinical and neuroimaging data, we calculated patient scores for a putative neuroimaging biomarker—the “PD-ICA atrophy network”—recently shown to have relevance to both diagnostic PD disease severity and longitudinal prognosis36 (Fig. 4c). While the PD-ICA biomarker was generated from the same dataset examined in the current study, it was derived via metrics which we excluded from our SNF analyses (i.e., whole-brain deformation-based morphometry [DBM] estimates). In line with results from the neuroimaging data included in SNF, we found significant differentiation of PD-ICA atrophy scores between biotypes (F(2, 183) = 5.70, p = 0.004), largely driven by lower atrophy in the “intermediate” compared to the “mild” group (post-hoc Tukey test, p < 0.05). These observed discrepancies between MRI-derived metrics and other modalities raise the question: how important is MRI brain imaging to characterizing PD pathology?

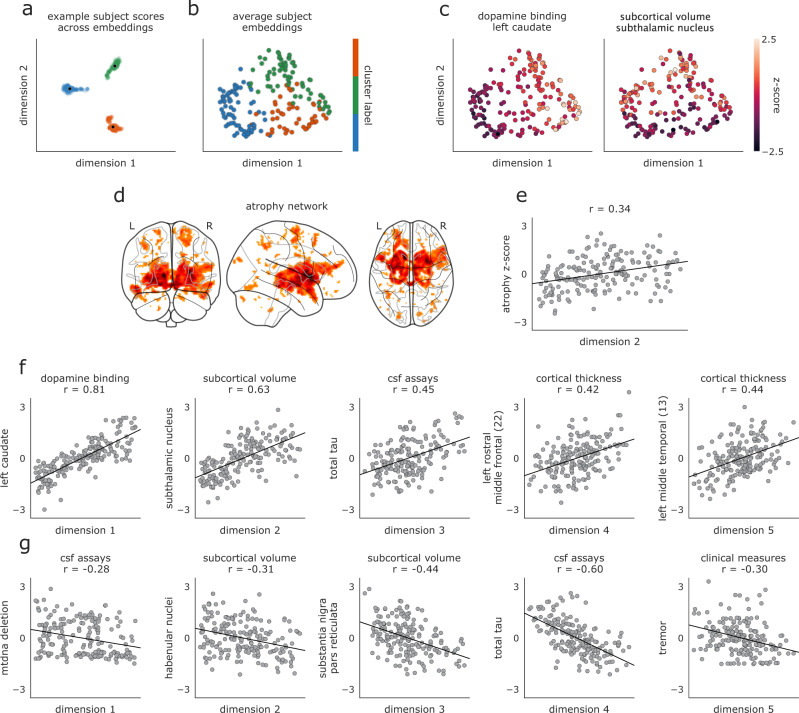

Fig. 4. Embedded patient dimensions capture relevant clinical information.

a Scores of three example patients (one from each biotype) along the first two embedding dimensions generated from all 1262 hyperparameter combinations retained in analyses; black dots represent average scores across all parameter combinations, which are shown for every patient in (b). b Scores of all patients along first two dimensions of average embeddings. Colors reflect the same biotype affiliation as shown in Fig. 3. c Same as (b) but colored by patients scores of the feature most strongly correlated with the first and second embedding dimensions. Left: colors represent DAT binding scores from the left caudate (r = 0.81 with dimension one). Right panel: colors represent subcortical volume of the subthalamic nucleus (r = 0.63 with dimension two). d PD-ICA atrophy network from11, showing brain regions with greater tissue loss in PD patients than in age-matched healthy individuals. e PD atrophy scores, calculated for each patient using the mask shown in (e), correlated with subject scores along the second embedded dimension shown in (a–c). f, g Features with the most positive (f) and negative (g) associations to the five embedding dimensions. Y-axes are presented as z-scores; x-axes are dimensionless units derived from the diffusion embedding procedure.

Neuroimaging data is critical to patient biotype characterization

Although one benefit of SNF is the ability to seamlessly integrate neuroimaging data with clinical assessments, high-resolution MRI scans can be both costly and inconvenient for PD patients. Most previous biotyping and patient classification studies in PD have focused solely on clinical-behavioral assessments4,5, raising the possibility that an adequate solution can be identified without MRI scans. Thus, we investigated whether a similar patient characterization can be identified using a reduced dataset excluding MRI data.

Data from clinical-behavioral assessments, cerebrospinal fluid assays, and subcortical DAT binding scans were used to generate fused patient networks following the same procedures described above (see “Similarity network fusion provides a viable alternative to data concatenation”). Consensus clustering of the networks yielded three subgroups of n = 83, 59, and 44 individuals, showing moderate overlap with biotypes defined on the full dataset (NMI = 0.43).

Despite this similarity, it is possible these “no-MRI” biotypes would result in a different characterization of PD disease severity than the previously described biotypes. To assess this, we applied the same univariate one-way ANOVA framework to examine which of the original 1050 features were discriminable between the subgroups generated without MRI data. This revealed only five features (from two modalities) that significantly distinguished these patient subgroups (FDR-corrected, q < 0.05): DAT binding in bilateral caudate and putamen and clinical scores on the the Unified PD rating scale, part II (UPDRS-II37). Comparing these features with the complementary set derived from all the data (Supplementary Table 3) highlights the reduced discriminability of these “no-MRI” subgroups.

Given that the reduced dataset contains relatively few (i.e., 34) features, it is possible that SNF may be “over-engineering” a solution that could be better achieved with alternative clustering techniques. To address this possibility, we reproduce a previously published clustering solution of PD patients that does not include features derived from MRI data and compare the results of this technique to SNF (see “Supplementary Results: Comparing clustering techniques”). We find that, as above, this clustering solution fails to yield subgroups with meaningful differences for any neuroimaging metrics.

Taken together, these results support the notion that excluding MRI data from PD clustering yields patient subgroups that fail to capture significant differences in PD pathophysiology. Nevertheless, the value of neuroimaging data in defining patient subgroups does not explain the inconsistencies previously observed between neuroimaging metrics and clinical data in the patient biotypes. In the early stages of PD, pathophysiology may not perfectly align with clinical symptomatology; that is, hard partitioning into a small number of discrete biotypes may be an over-simplification of the disease.

Patient biotypes separate along continuous dimensions of severity

To examine the possibility that PD pathology is multidimensional, we generated a continuous low-dimensional representation of our fused patient networks with diffusion embedding38,39 (see Supplementary Fig. 3 for a comparison with PCA on concatenated data). Diffusion embedding attempts to find dimensions, often referred to as gradients or components, of a network that encode the dominant differences in patient similarity—akin to principal components analysis (PCA) or multidimensional scaling (MDS). The resulting components are unitless and represent the primary axes of inter-subject similarity. Critically, diffusion embedding is sensitive to complex, non-linear relationships and is relatively robust to noise perturbations compared to other dimensionality reduction methods38,39.

Patient networks for all SNF hyperparameter combinations used in the consensus clustering (n = 1262 networks) were decomposed with diffusion embedding and realigned using a generalized orthogonal Procrustes analysis. The resulting aligned embeddings were then averaged to generate a single, embedded space (Fig. 1d). Examining the extent to which patient clusters differentiated in embedded space revealed limited overlap in cluster affiliation among the first two dimensions (Fig. 4a, b).

The first two dimensions of the embedded space correlated most strongly with patient variability in DAT binding of the left caudate (dimension one: r = 0.81; Fig. 4c, left panel) and volume of the subthalamic nucleus (dimension two: r = 0.63; Fig. 4c, right panel). Examining further dimensions revealed significant relationships with features from all data modalities (Fig. 4f, g). We also investigated the extent to which patient PD-ICA atrophy scores related to variation along estimated diffusion dimensions11, finding significant associations between atrophy scores and dimensions two and four (r = 0.33 and −0.26, FDR-corrected, q < 0.05; Fig. 4d, e).

To examine whether this dimensional framework provided out-of-sample predictive utility we re-ran the SNF grid search excluding two variables frequently used in clinical settings to assess disease severity: the tremor dominant score and the postural instability/gait difficulty score (PIGD40). We regenerated the embedded dimensions following the procedure depicted in Fig. 1d and used five-fold cross-validation to assess the extent to which embeddings predicted patient scores on the held-out clinical variables. A simple linear regression was performed using the first five embedding dimensions as predictors of each clinical score; betas were estimated from 80% of the data and applied to predict scores for the remaining 20%. Out-of-sample scores were predicted moderately well (average Pearson correlation between real and predicted scores: rtremor = 0.20 [SD = 0.22] ; rpigd = 0.22 [SD = 0.22]).

Discussion

The present report demonstrates how diverse modalities can be integrated to comprehensively characterize a range of patient characteristics. Using information about behavior, DAT binding, cerebrospinal fluid, cortical thickness, and subcortical tissue volume, we find evidence for distinct biological dimensions or phenotypic axes that span the patient sample. These biologically-informed dimensions provide a more nuanced interpretation of pathology than is permitted from discrete subgroups or biotypes, and hold potential for greater precision in diagnosis.

The present study demonstrates that even in a well-controlled sample of de novo PD patients, there exists considerable heterogeneity. This finding contributes to a rich literature on the diversity of PD symptoms5,7,41–45. Despite the fact that the putative biotypes are based on the first patient visit, there are several important features where the biotypes are not initially different from each other but progressively diverge over time (e.g., tremor scores).

Does the observed heterogeneity imply the existence of fundamentally different diseases, or simply different rates of progression? Recent evidence from animal models suggests that PD originates from misfolding and trans-synaptic spreading of the endogenous protein α-synuclein9, with convergent results from human neuroimaging13,46–48. The large-scale atrophy patterns and associated neurological deficits are thought to be mediated by the spread of these pathogenic protein aggregates49, penetrating the cerebral hemispheres via the brain stem and subcortex50. The heterogeneity of clinical symptoms may simply suggest that patients may differ in the rate and extent of neurodegeneration, leading to diverse symptoms. In other words, individual variation in neurological manifestations of the disease may depend on the spreading pattern and the affected networks51, as well as factors not directly related to PD pathophysiology (e.g., age, sex, comorbidities).

Hard partitioning methods yield non-overlapping clusters by definition, but is this the best way to characterize heterogeneity in PD? The patient biotypes we initially identified could largely be differentiated from each other on the basis of disease severity, leading us to pursue a dimensional approach. We find that much of the phenotypic variability in the patient sample could be parsimoniously captured by a smaller number of latent dimensions or phenotypic axes that relate to distributed patterns of grey matter atrophy, DAT binding, etc. This finding is reminiscent of previous reports, where even hard clustering solutions often sorted patients into broad disease severity categories5,41–44. Indeed, recent studies have taken an explicitly dimensional approach, attempting to find low-dimensional projections of clinical-behavioral and neuroimaging data45,52–54. In clinical practice, methods like SNF situate individual patients in a biologically-comprehensive feature space that can then guide more objective clinical decisions about diagnosis and prognosis. Our study is the first step in better understanding which measures are necessary and informative of PD severity, but more work needs to be done to continue to investigate how this will translate to clinical practice.

The notion that PD can be characterized by a smaller number of latent dimensions opens the question of where PD is situated relative to other diseases55. Given the natural functional dependencies among the molecular components of a cell, distinct pathological perturbations (e.g. mutations) may affect overlapping gene modules, cell types, tissues, and organs, ultimately manifesting in similar phenotypes. For instance, do the present latent dimensions trace out a transdiagnostic continuum along which we can place other neurodegenerative diseases (e.g. Alzheimer’s disease, tauopathies). How PD fits into a global “disease-ome” remains an exciting question for future research.

More broadly, the present work builds on recent efforts to draw insight from multiple modalities. Modern technological advances and data sharing initiatives permit access to large patient samples with increasing detail and depth14. How best to integrate information from these multi-view datasets remains a fundamental question in computational medicine15,19,25. Though there is an active discussion regarding what stage of analysis is most appropriate for data integration25, our results demonstrate that important insights about PD patient heterogeneity can be drawn only by simultaneously considering multiple data modalities. Covariance among clinical, morphometric, and physiological measurements synergistically reveals dominant axes of variance that are not apparent in the individual data modalities. Moreover, we show that simple concatenation induces overfitting to the modality with the greatest dimensionality and autocorrelation, motivating further research on integrating diverse sources of information.

A corollary of the present work is that it is also possible to identify modalities that do not make a significant contribution towards differentiating patients and could potentially be excluded from future data collection efforts. This is an important concern because many modern biological assays—such as brain imaging—are expensive and difficult to administer for some types of clinical populations. As a result, samples may be biased and data may be incomplete for many individuals, limiting the application and utility of the subsequent statistical models. The present analysis can thus help to streamline future data collection efforts. By helping to reduce the feature set, the present analytic approach can also help increase the potential for overlap and interoperability among existing datasets.

Although we took steps to ensure that the reported results are robust to multiple methodological choices, there are several important limitations to consider. First, the present results are only demonstrated in a single patient sample; despite consistency with several other recent studies45, formal replication in new longitudinal cohorts is necessary.

Second, the present statistical model leaves out two widely-available and potentially important data modalities: genetic variation and daily movement. Genetic variation is a recognized contributor to the clinical manifestations of PD56, and previous work has shown that genetic risk can be used to meaningfully stratify patients45. Likewise, objective measurements of daily movement with wearable sensors and smart devices are increasingly prevalent and add a fundamentally different source of information about individual patients57. Whether and to what extent the present phenotypic axes reflect the underlying genetic determinants or movement characteristics of PD is an exciting question for future research.

Finally, there has been recent work highlighting the potential of diffusion-weighted imaging (DWI) as an alternative measure to structural, T1w images for predicting PD prognosis58; however, the limited availability of DWI in longitudinal cohorts like those used in the current study restricts its more widespread use.

In summary, we report a flexible method to objectively integrate a diverse array of morphometric, molecular, and clinical information to characterize heterogeneity in PD. We find evidence for three biotypes, but show that the sample can alternatively be characterized in terms of continuous phenotypic dimensions. These phenotypic dimensions bring into focus complementary information from multiple modalities and lay the foundation for a more comprehensive understanding of PD.

Methods

Ethical compliance

Data used in this study were obtained from the Parkinson’s Progression Markers Initiative (PPMI) database (https://www.ppmi-info.org), accessed in March of 2018. Informed consent was obtained from all individuals prior to enrollment in the PPMI study by the participating sites. Formal approval for data re-use was issued by the Research Ethics Board at McGill University, Montreal, QC.

Neuroimaging data processing

Raw neuroimages were directly converted from DICOM to BIDS format59 using heudiconv (https://github.com/nipy/heudiconv). Structural images for each subject were then independently processed with the Advanced Normalization Tools’ (ANTs) longitudinal cortical thickness pipeline60.

Briefly, T1-weighted structural images were corrected for signal intensity non-uniformity with N4 bias correction61. Corrected images were then combined with other available neuroimages (T2-weighted, proton-density, and FLAIR images) across all timepoints to create a temporally unbiased, subject-specific template62. A standard template was non-linearly registered to the subject template and used to remove non-brain tissue; to maintain consistency with previous work on the PPMI dataset11,13 we used the Montreal Neurological Institute (MNI) ICBM-152 2009c template for this purpose (https://www.bic.mni.mcgill.ca/ServicesAtlases/ICBM152NLin200963–65). The remaining subject template brain tissue was segmented into six classes (cerebrospinal fluid, cortical gray matter, white matter, subcortical gray matter, brain stem, and cerebellum) using ANTs joint label fusion66 with a group of fifteen expertly annotated and labeled atlases from the OASIS dataset67. Finally, the segmented subject template was used to performing the same registration, brain extraction, and segmentation procedures on the T1w images from each timepoint.

Three metrics were derived from the pre-processed neuroimaging data: subcortical volume, cortical thickness, and whole-brain deformation-based morphometry values.

Subcortical volume measures the absolute volume, in cubic millimeters, for pre-defined regions of interest. Here, we used the regions of interest from the high-resolution subcortical atlas generated by Pauli et al.68. Analyses reported in the main text used the deterministic version of the atlas; however, using the probabilistic version returned comparable results. Where applicable the probabilistic atlas was thresholded at 40%; thresholding was performed after registration to subjects’ T1w MRIs.

Cortical thickness measures the distance, in millimeters, between the pial surface and the gray-white matter boundary of the brain. The ANTs diffeomorphic registration-based cortical thickness estimation (DiReCT) algorithm was used to measure cortical thickness in the volumetric space of each T1w image69. The diffeomorphic constraint of this procedure ensures that the white matter topology cannot change during thickness estimations, permitting accurate recovery of cortical depth even in sulcal grooves. Previous work has found that this procedure compares favorably to surface-based approaches70.

To account for subject-level neuroanatomical variance, we averaged cortical thickness values in each region of the five multi-scale parcellations (68, 114, 219, 448, and 1000 regions) generated by Cammoun et al.71 for every timepoint for every subject. The parcellations were transformed to the T1w MRI of each timepoint before averaging within regions to minimize bias.

Deformation-based morphometry (DBM) provides an approximate measure of local changes in brain tissue volume for a subject relative to a standard template72. DBM values are derived from the deformation maps generated during the non-linear registration process aligning each subject brain to the template space. In the present study, deformation maps were created by concatenating the non-linear warps described in Neuroimaging data processing that (1) mapped the T1w image for each timepoint to the relevant subject-specific template and (2) mapped the subject-specific template to the MNI152-2009c template. Local changes in tissue volume were then estimated from the derivative of these deformation maps, calculated as the determinant of the Jacobian matrix of displacement. To aid interpretability of these changes we calculated the natural log of the Jacobian determinant, such that a value of zero indicates no volume change compared to the MNI template, negative values indicate tissue expansion relative to the MNI template, and positive values indicate tissue loss relative to the MNI template.

Zeighami and colleagues initially used DBM values from the PPMI subjects to find an ICA component map, which they refer to as a PD-ICA map, highlighting regions of the brain with greater relative tissue loss in Parkinson’s patients than in age-matched healthy controls11. Using this map, they generated an “atrophy score” for each patient, which they related to measures of PD disease severity and prognosis11,36. Unfortunately, a precise calculation of this atrophy score on a different subset of subjects from the PPMI would require the original ICA component table; in order to approximate this score without the component table we employed an alternative procedure originally used in36.

We downloaded the PD-ICA component map from NeuroVault (collection 860, image ID 12551, https://identifiers.org/neurovault.collection:86073) and used the map as a weighted mask on the DBM images estimated for each subject, multiplying DBM values by the component weights in the map and then summing the resulting values to generate a single score for each time point for each subject. Reported atrophy scores are all normalized (i.e., zero mean and unit variance) with respect to the n = 186 patient sample.

Neuroimaging quality control

Neuroimaging data were visually inspected by two authors (RDM and CT) using tools adapted from niworkflows (https://github.com/poldracklab/niworkflows). Processed data from twenty individuals were jointly selected by both raters as anchors and used to guide independent rating of the remaining T1w structural images for all subjects74. Quality of brain extraction, brain segmentation, and registration to the MNI152-2009c template was assessed and rated on a scale of 0–2, where 0 indicates a processing failure, 1 indicates a conditional pass, and 2 indicates a full pass. Discrepancies where one rater assigned a score of 0 and the other rater assigned a passing score (n = 11 instances) were jointly reconciled and new scores assigned (n = 9 revised to a score of 0, n = 2 revised to a score of 1).

Cohen’s kappa coefficient (κ) was calculated to compare the inter-rater reliability of the final quality control scores, yielding 84.8% agreement for segmentation and 84.5% agreement for registration ratings75. Assessing scores for only the subject-specific template of each subject yielded comparable agreement (85.8% for segmentation, 85.5% for registration). The current study used subjects for whom both segmentation and registration scores were ≥1 across both raters.

Non-neuroimaging data processing

In addition to longitudinal neuroimages, the PPMI provides clinical-behavioral assessments, biospecimen analyses, and single-photon emission computed tomography (SPECT) dopamine active transporter (DAT) binding data for all of its participants. Item-level measures for clinical-behavioral assessments were combined into raw composite scores following instructions in the “Derived Variable Definitions and Score Calculations” guide supplied by the PPMI using the pypmi software package (https://github.com/netneurolab/pypmi). Biospecimen data and pre-computed region of interest metrics for SPECT data were used as provided.

Data cleaning

The current study used five data sources: (1) MRI cortical thickness, (2) MRI subcortical volume, (3) SPECT DAT binding ratios, (4) biological and cerebrospinal fluid assays, and (5) clinical-behavioral assessment scores. Prior to combining data sources we performed (i) data cleaning, (ii) outlier removal, (iii) missing data imputation, (iv-a) batch correction, (iv-b) covariate residualization, and (v) normalization for each modality.

Any features for which ≥20% of individuals were missing data were discarded; subsequently, individuals missing ≥20% of the remaining features were discarded. Putative outlier individuals were then identified using a median absolute deviation method and discarded76. The remaining missing data values were imputed, substituting the median value across individuals for each feature. This procedure yielded n = 186 individuals with PD and n = 87 healthy individuals who had data from all five sources. A full list of the 1050 features retained for each data source after preprocessing can be found in Supplementary Table 2.

We used ComBat to correct for site differences in neuroimaging data modalities (i.e., cortical thickness and subcortical volume measurements; neurocombat, https://github.com/ncullen93/neurocombat)77,78. Patient diagnostic status, family history of PD, sex, race, handedness, and education were included in the ComBat correction procedure.

We residualized the pre-processed data against age, sex, and age × sex interactions in PD patients based on the relationships estimated from healthy individuals; estimated total intracranial volume was also included in the residualization process for cortical thickness and subcortical volume features12. Finally, PD patient data were z-scored (centered to zero mean and standardized to unit variance). Only PD patient data were used to estimate means and standard deviation for z-scoring.

Data concatenation

Concatenation provides a data-driven, parameter-free approach for integrating multimodal data, where data features from all sources are horizontally stacked into a single sample by feature matrix. Here, we joined patient data for all features from all data sources and converted the resulting matrix into a patient similarity network by applying a cosine similarity function from scikit-learn79. As spectral clustering cannot handle negative values we scaled the values of the similarity networks between zero and two. The scaled patient networks were subjected to spectral clustering28,29 for a two-, three-, and four-cluster solution, and the solutions were compared to clustering assignments generated from SNF, described below.

Similarity network fusion

Similarity network fusion is a method for combining disparate data sources from a group of samples into a single graph, representing the strength of relationships between samples19. SNF constructs independent similarity networks from each data source using a K-nearest neighbors weighted kernel and then iteratively combines them via a non-linear message passing protocol. The final network contains sample relationships representing information from all data sources and can be subjected to clustering or other graph techniques (see Fig. 1a).

SNF belongs to a broader family of techniques of multi-view learning algorithms (e.g., multiple kernel learning, multi-table matrix factorization). We opted to use SNF because (1) it is an unsupervised learning technique, (2) it is explicitly optimized to control for differing dimensionalities amongst input data modalities19, and (3) it has been shown to be effective at disentangling heterogeneity in psychiatric populations21,22.

We constructed similarity networks for each data source using a Python implementation of the methods described in19 (snfpy; https://github.com/netneurolab/snfpy). A brief description of the main steps in SNF follows, adapted from its original presentation in19.

First, distance matrices are created from each feature matrix. We selected squared Euclidean distance for the analyses in the main text; however, other distance measures return comparable results (see Supplementary Results: Alternative distance metrics”). Next, distance matrices are converted to similarity networks using a scaled exponential kernel:

| 1 |

where ρ(xi, xj) is the Euclidean distance (or other distance metric, as appropriate) between patients xi and xj. The value σ is calculated with:

| 2 |

where represents the average Euclidean distance between xi and its neighbors N1..K. Both K, controlling the number of neighbors, and μ, the scaling factor, are hyperparameters that must be pre-selected, where and .

In order to fuse the supplied similarity networks each must first be normalized. A traditional normalization performed on a similarity matrix would be unstable due to the self-similarity along the diagonal; thus, a modified normalization is used:

| 3 |

Under the assumption that local similarities are more important or reliable than distant ones, a more sparse weight matrix is calculated:

| 4 |

The two weight matrices P and S thus provide information about a given patient’s similarity to all other patients and the patient’s K most similar neighbors, respectively.

The similarity networks are then iteratively fused. At each iteration, the matrices are made more similar to each other via:

| 5 |

After each iteration, the resulting matrices are re-normalized via the above equations. Fusion stops when the matrices have converged or after a pre-specified number of iterations (by default, 20).

Variation in SNF’s two parameters, K and μ, can highlight different aspects of the input data, yielding significantly different fused networks. In order to avoid biasing our results by selecting any specific hyperparameter combination we opted to perform SNF with different combinations of K and μ, using 100 unique values for each parameter (K = 5–105; μ = 0.3–10, logspace). The resulting set of 10,000 fused networks was subjected to consensus clustering and diffusion map embedding to generate both categorical and continuous representations of patient pathology.

For a more in-depth examination of how variation in hyperparameters impacted patient networks refer to “Supplementary Results: Hyperparameter variation”.

In order to find a single clustering solution from the 10,000 fused networks generated by SNF we employed a consensus approach inspired by Bassett et al.30 and Lancichinetti and Fortunato31. First, each of the 10,000 networks was subjected to spectral clustering28,29. As spectral clustering requires pre-specifying the desired number of clusters to be estimated, we chose to generated separate solutions for two, three, and four clusters each. Though it is possible there are more clusters in the dataset, previous work on PD patient data supports 2–4 clusters as a reasonable choice4,5.

We wanted to consider regions of the parameter space that generated “stable” networks—that is, where small perturbations in either of the two hyperparameters did not appreciably change the topology or resulting clustering of the patient networks. To quantify this we assessed the pairwise z-Rand similarity index80 of the clustering solution for each point in hyperparameter space and its four neighbors; local z-Rand values were calculated separately for two-, three-, and four-cluster solutions (Fig. 1c). The resulting cluster similarity matrices were thresholded at the 95th percentile of the values in all three matrices; clustering solutions from regions of parameter space surviving this threshold in any of the three matrices were retained for further analysis, resulting in 1262 × 3 = 3786 assignments. These solutions were used to generate a subject co-assignment matrix representing the normalized probability that two subjects were placed in the same cluster across all assignments. This “co-assignment” matrix was thresholded by generating an average probability from a permutation-based null model, as in30. Briefly, we permuted the assignments for each clustering solution and regenerated the co-assignment probability matrix; the average probability of this permuted matrix was used as the threshold for the original. We clustered the resulting thresholded matrix using a modularity maximization procedure to generate a final “consensus” clustering partition, which was used in all subgroup analyses32.

Diffusion map embedding

While clustering is appealing for its intuitive clinical applications, recent work has shown that continuous representations of clinical dysfunction may provide a more accurate representation of the underlying diseases12,81 As an alternative to clustering, we applied diffusion embedding to the fused PD patient network.

Diffusion map embedding is a non-linear dimensionality reduction technique that finds a low-dimensional representation of graph structures38,39. Though closely related to other manifold learning techniques including e.g., Laplacian-based spectral embedding, diffusion map embedding typically uses a different normalization process that approximates a Fokker–Planck diffusion equation instead of traditional laplacian normalization82. Moreover, diffusion map embedding attempts to model a multi-scale view of the diffusion process via a diffusion time parameter, t, that allow for more nuanced investigations of the geometry of the input data than are achievable via comparable techniques like spectral embedding. In the current manuscript we used diffusion time t = 0, which reveals the most global relationships of the input dataset38.

Prior to embedding we thresholded our fused graphs, removing edges below the 90th percentile of weights for each individual, and computed the cosine similarity of the resulting network83. This network was decomposed using mapalign (https://github.com/satra/mapalign) to generate an embedded space for each of the 10,000 patient networks.

For our analyses we only considered embeddings for those networks in regions of parameter space deemed “stable” via the z-Rand thresholding procedure described in Consensus clustering, resulting in 1262 embeddings. These embeddings were aligned using a generalized orthogonal Procrustes analysis (rotations and reflections only) and then averaged to generate a single patient embedding which was carried forward to all analyses (Fig. 1d). We only considered the first N components yielding a cumulative variance explained of ≥10%.

To examine the out-of-sample predictive utility of the dimensional embedding framework we re-ran the SNF grid search excluding two variables: the tremor dominant score (tremor) and the postural instability/gait difficulty score (PIGD). Embedded dimensions were regenerated following the same procedures depicted in Fig. 1d. We used a five-fold cross-validation framework to assess the extent to which embeddings predicted patient scores on the held-out clinical variables. A simple linear regression was performed using the first five embedding dimensions as predictors of each clinical score; betas were estimated from 80% of the patients and applied to predict scores for the remaining 20% of the patients. Predicted scores were correlated with actual scores, and the average correlations across all folds were reported.

Statistical assessments

Quantitative assessments of the similarity between clustering assignments were calculated using normalized mutual information (NMI33), a measure ranging from 0 to 1 where higher values indicate increased overlap between assignments. Alternative measures (e.g., adjusted mutual information) that correct for differing cluster numbers were not used as NMI was only calculated between assignments with the same number of clusters.

Modularity was used to measure the “goodness-of-fit” of clustering assignments, and was calculated by

| 6 |

where δ() is the Kronecker delta function which returns 1 when patients i and j belong to the same cluster and 0 otherwise. Here, Bij is a modularity matrix whose elements are given by Bij = Aij − Pij, where Aij and Pij are the observed and expected similarity between patients i and j. Modularity ranges between −1 and 1, where higher values indicate increasingly well-defined assortative clustering assignments34.

To assess whether identified clusters were discriminable we performed separate one-way ANOVAs across groups for all data features provided to SNF using scipy’s statistical computing module84 (model: score ~ biotype). Reported results were FDR-corrected (q < 0.05) using the Benjamini–Hochberg procedure85 from the statsmodels modules86. Although longitudinal data were limited for many features, clinical-behavioral assessments were available for the majority of subjects up to 5 years post-baseline. Thus, we analyzed the impact of cluster affiliation on longitudinal feature scores using linear mixed effects models, including age, education, and sex as additional covariates.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Supplementary information

Acknowledgements

This research was undertaken thanks in part to funding from the Canada First Research Excellence Fund, awarded to McGill University for the Healthy Brains for Healthy Lives initiative. BM acknowledges support from the Canadian Insititutes of Health Research (CIHR-PJT) and from the Fonds de recherche du Québec - Santé (Chercheur Boursier). RDM acknowledges support from the Healthy Brains for Healthy Lives (HBHL) initiative at McGill University and the Fonds de recherche du Québec—Nature et technologies (FRQNT). Data used in the preparation of this article were obtained from the Parkinson’s Progression Markers Initiative (PPMI) database (https://www.ppmi-info.org/data). For up-to-date information on the study, visit https://www.ppmi-info.org. The PPMI—a public-private partnership—is funded by the Michael J. Fox Foundation for Parkinson’s Research and funding partners, including AbbVie, Avid Radiopharmaceuticals, Biogen, BioLegend, Bristol-Myers Squibb, GE Healthcare, Genentech, GlaxoSmithKline (GSK), Eli Lilly and Company, Lundbeck, Merck, Meso Scale Discovery (MSD), Pfizer, Piramal Imaging, Roche, Sanofi Genzyme, Servier, Takeda, Teva, and UCB (https://www.ppmi-info.org/fundingpartners).

Author contributions

R.D.M. and B.M. conceived the project. R.D.M. designed and performed all analyses with input from G.S. and C.T. R.D.M. and B.M. wrote the manuscript. A.D. and R.B.P. provided input on all analyses and edited the manuscript.

Data availability

Data used in this study were obtained from the Parkinson’s Progression Markers Initiative (PPMI) database (https://www.ppmi-info.org), accessed in March of 2018. PPMI data are freely accessible to researchers by signing a Data Use Agreement on the PPMI website.

Code availability

All code used for data processing, analysis, and figure generation is available on GitHub (https://github.com/netneurolab/markello_ppmisnf) and relies on the following open-source Python packages: h5py87, IPython88, Jupyter89, Matplotlib90, NiBabel91, Nilearn92, Numba93, NumPy94,95, Pandas96, scikit-learn79, SciPy84, StatsModels86, Seaborn97, BCTPy98, and mapalign99. Code can also be accessed at 10.5281/zenodo.3731251.

Competing interests

The authors declare no competing interests. Full financial disclosure is provided below: RBP reports grants and personal fees from Fonds de la Recherche en Sante, the Canadian Institute of Health Research, Parkinson Canada, the Weston-Garfield Foundation, the Michael J. Fox Foundation, the Webster Foundation, and personal fees from Takeda, Roche/Prothena, Teva Neurosciences, Novartis, Biogen, Boehringer Ingelheim, Theranexus, GE HealthCare, Jazz Pharmaceuticals, Abbvie, Jannsen, Curasen and Otsuko, outside the submitted work.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Ross D. Markello, Email: ross.markello@mail.mcgill.ca

Bratislav Misic, Email: bratislav.misic@mcgill.ca.

Supplementary information

Supplementary information is available for this paper at 10.1038/s41531-020-00144-9.

References

- 1.Postuma RB, et al. Mds clinical diagnostic criteria for Parkinson’s disease. Mov. Disord. 2015;30:1591–1601. doi: 10.1002/mds.26424. [DOI] [PubMed] [Google Scholar]

- 2.Kalia LV, Lang AE. Parkinson’s disease. Lancet. 2015;386:896–912. doi: 10.1016/S0140-6736(14)61393-3. [DOI] [PubMed] [Google Scholar]

- 3.Tysnes O-B, Storstein A. Epidemiology of Parkinson’s disease. J. Neural Transmission. 2017;124:901–905. doi: 10.1007/s00702-017-1686-y. [DOI] [PubMed] [Google Scholar]

- 4.Faghri, F. et al. Predicting onset, progression, and clinical subtypes of Parkinson disease using machine learning. bioRxiv.10.1101/338913 (2018).

- 5.Fereshtehnejad S-M, Zeighami Y, Dagher A, Postuma RB. Clinical criteria for subtyping Parkinson’s disease: biomarkers and longitudinal progression. Brain. 2017;140:1959–1976. doi: 10.1093/brain/awx118. [DOI] [PubMed] [Google Scholar]

- 6.Simuni T, et al. How stable are Parkinson’s disease subtypes in de novo patients: analysis of the PPMI cohort? Parkinsonism Related Disord. 2016;28:62–67. doi: 10.1016/j.parkreldis.2016.04.027. [DOI] [PubMed] [Google Scholar]

- 7.Espay AJ, et al. Biomarker-driven phenotyping in Parkinson’s disease: a translational missing link in disease-modifying clinical trials. Mov. Disord. 2017;32:319–324. doi: 10.1002/mds.26913. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Fujiwara H, et al. α-synuclein is phosphorylated in synucleinopathy lesions. Nature Cell Biol. 2002;4:160. doi: 10.1038/ncb748. [DOI] [PubMed] [Google Scholar]

- 9.Luk KC, et al. Pathological α-synuclein transmission initiates parkinson-like neurodegeneration in nontransgenic mice. Science. 2012;338:949–953. doi: 10.1126/science.1227157. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Fearnley JM, Lees AJ. Ageing and Parkinson’s disease: substantia nigra regional selectivity. Brain. 1991;114:2283–2301. doi: 10.1093/brain/114.5.2283. [DOI] [PubMed] [Google Scholar]

- 11.Zeighami Y, et al. Network structure of brain atrophy in de novo Parkinson’s disease. Elife. 2015;4:e08440. doi: 10.7554/eLife.08440. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Zeighami Y, et al. A clinical-anatomical signature of Parkinsona’s disease identified with partial least squares and magnetic resonance imaging. Neuroimage. 2019;190:69–78. doi: 10.1016/j.neuroimage.2017.12.050. [DOI] [PubMed] [Google Scholar]

- 13.Yau YH, et al. Network connectivity determines cortical thinning in early Parkinson’s disease progression. Nat. Commun. 2018;9:12. doi: 10.1038/s41467-017-02416-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Marek K, et al. The Parkinson Progression Marker Initiative (PPMI) Prog. Neurobiol. 2011;95:629–635. doi: 10.1016/j.pneurobio.2011.09.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Mišić B, Sporns O. From regions to connections and networks: new bridges between brain and behavior. Curr. Opin. Neurobiol. 2016;40:1–7. doi: 10.1016/j.conb.2016.05.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Kirk P, Griffin JE, Savage RS, Ghahramani Z, Wild DL. Bayesian correlated clustering to integrate multiple datasets. Bioinformatics. 2012;28:3290–3297. doi: 10.1093/bioinformatics/bts595. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Monti S, Tamayo P, Mesirov J, Golub T. Consensus clustering: a resampling-based method for class discovery and visualization of gene expression microarray data. Mach. Learning. 2003;52:91–118. doi: 10.1023/A:1023949509487. [DOI] [Google Scholar]

- 18.Nigro JM, et al. Integrated array-comparative genomic hybridization and expression array profiles identify clinically relevant molecular subtypes of glioblastoma. Cancer Res. 2005;65:1678–1686. doi: 10.1158/0008-5472.CAN-04-2921. [DOI] [PubMed] [Google Scholar]

- 19.Wang B, et al. Similarity network fusion for aggregating data types on a genomic scale. Nat. Methods. 2014;11:333. doi: 10.1038/nmeth.2810. [DOI] [PubMed] [Google Scholar]

- 20.Marquand AF, Rezek I, Buitelaar J, Beckmann CF. Understanding heterogeneity in clinical cohorts using normative models: beyond case-control studies. Biol. Psychiatry. 2016;80:552–561. doi: 10.1016/j.biopsych.2015.12.023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Stefanik L, et al. Brain-behavior participant similarity networks among youth and emerging adults with schizophrenia spectrum, autism spectrum, or bipolar disorder and matched controls. Neuropsychopharmacology. 2018;43:1180. doi: 10.1038/npp.2017.274. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Jacobs, G. R. et al. Integration of brain and behavior measures for identification of data-driven groups cutting across children with ASD, ADHD, or OCD. bioRxiv.10.1101/2020.02.11.944744 (2020). [DOI] [PMC free article] [PubMed]

- 23.Zhuang, J., Wang, J., Hoi, S. C. & Lan, X. Unsupervised multiple kernel learning. In JMLR: Workshop and Conference Proceedings: 3rd Asian Conference on Machine Learning 2011 (eds Hsu, C.-N. & Lee, W.S.) (Research Collection School of Information Systems, 2011).

- 24.Donini M, et al. Combining heterogeneous data sources for neuroimaging based diagnosis: re-weighting and selecting what is important. NeuroImage. 2019;195:215–231. doi: 10.1016/j.neuroimage.2019.01.053. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Zitnik M, et al. Machine learning for integrating data in biology and medicine: principles, practice, and opportunities. Information Fusion. 2019;50:71–91. doi: 10.1016/j.inffus.2018.09.012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Beyer, K., Goldstein, J., Ramakrishnan, R. & Shaft, U. When is “nearest neighbor” meaningful? In International Conference on Database Theory (eds Berri, C. & Buneman, P.) 217–235 (Springer, 1999).

- 27.Desikan RS, et al. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. NeuroImage. 2006;31:968–980. doi: 10.1016/j.neuroimage.2006.01.021. [DOI] [PubMed] [Google Scholar]

- 28.Shi J, Malik J. Normalized cuts and image segmentation. IEEE Trans Pattern Anal. Mach. Intell. 2000;22:888–905. doi: 10.1109/34.868688. [DOI] [Google Scholar]

- 29.Yu, S. X. & Shi, J. Multiclass spectral clustering. In Proc. 9th IEEE International Conference on Computer Vision (2003).

- 30.Bassett DS, et al. Robust detection of dynamic community structure in networks. Chaos: Interdisciplinary J. Nonlinear Sci. 2013;23:013142. doi: 10.1063/1.4790830. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Lancichinetti A, Fortunato S. Consensus clustering in complex networks. Sci. Rep. 2012;2:336. doi: 10.1038/srep00336. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Blondel VD, Guillaume J-L, Lambiotte R, Lefebvre E. Fast unfolding of communities in large networks. J. Statistical Mech.: Theory Exp. 2008;2008:P10008. doi: 10.1088/1742-5468/2008/10/P10008. [DOI] [Google Scholar]

- 33.Strehl A, Ghosh J. Cluster ensembles—a knowledge reuse framework for combining multiple partitions. J. Mach. Learning Res. 2002;3:583–617. [Google Scholar]

- 34.Newman ME. Modularity and community structure in networks. Proc. Natl Acad. Sci. 2006;103:8577–8582. doi: 10.1073/pnas.0601602103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Jankovic J, Kapadia AS. Functional decline in Parkinson disease. Arch. Neurol. 2001;58:1611–1615. doi: 10.1001/archneur.58.10.1611. [DOI] [PubMed] [Google Scholar]

- 36.Zeighami Y, et al. Assessment of a prognostic MRI biomarker in early de novo Parkinson’s disease. NeuroImage: Clinical. 2019;24:101986. doi: 10.1016/j.nicl.2019.101986. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Goetz CG, et al. Movement disorder society-sponsored revision of the unified Parkinson’s disease rating scale (MDS-UPDRS): scale presentation and clinimetric testing results. Mov. Disord. 2008;23:2129–2170. doi: 10.1002/mds.22340. [DOI] [PubMed] [Google Scholar]

- 38.Coifman RR, et al. Geometric diffusions as a tool for harmonic analysis and structure definition of data: diffusion maps. Proc. Natl Acad Sci. 2005;102:7426–7431. doi: 10.1073/pnas.0500334102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Lafon S, Lee AB. Diffusion maps and coarse-graining: a unified framework for dimensionality reduction, graph partitioning, and data set parameterization. IEEE Trans. Pattern Anal. Mach. Intell. 2006;28:1393–1403. doi: 10.1109/TPAMI.2006.184. [DOI] [PubMed] [Google Scholar]

- 40.Stebbins GT, et al. How to identify tremor dominant and postural instability/gait difficulty groups with the movement disorder society unified Parkinson’s disease rating scale: comparison with the unified Parkinson’s disease rating scale. Mov. Disord. 2013;28:668–670. doi: 10.1002/mds.25383. [DOI] [PubMed] [Google Scholar]

- 41.Thenganatt MA, Jankovic J. Parkinson disease subtypes. JAMA Neurol. 2014;71:499–504. doi: 10.1001/jamaneurol.2013.6233. [DOI] [PubMed] [Google Scholar]

- 42.Erro R, et al. Clinical clusters and dopaminergic dysfunction in de-novo parkinson disease. Parkinsonism Relat. Disord. 2016;28:137–140. doi: 10.1016/j.parkreldis.2016.04.026. [DOI] [PubMed] [Google Scholar]

- 43.Lawton M, et al. Parkinson’s disease subtypes in the oxford parkinson disease centre (opdc) discovery cohort. J. Parkinson’s Dis. 2015;5:269–279. doi: 10.3233/JPD-140523. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Lawton M, et al. Developing and validating Parkinson’s disease subtypes and their motor and cognitive progression. J. Neurol. Neurosurg. Psychiatry. 2018;89:1279–1287. doi: 10.1136/jnnp-2018-318337. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Sandor, C. et al. Universal continuous severity traits underlying hundreds of Parkinson’s disease clinical features. bioRxiv.10.1101/655217 (2019).

- 46.Freeze B, Pandya S, Zeighami Y, Raj A. Regional transcriptional architecture of Parkinson’s disease pathogenesis and network spread. Brain. 2019;142:3072–3085. doi: 10.1093/brain/awz223. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Pandya S, et al. Predictive model of spread of Parkinson’s pathology using network diffusion. NeuroImage. 2019;192:178–194. doi: 10.1016/j.neuroimage.2019.03.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Zheng, Y.-Q. et al. Local vulnerability and global connectivity jointly shape neurodegenerative disease propagation. PLoS Biol.17, e3000495 (2019). [DOI] [PMC free article] [PubMed]

- 49.Weickenmeier J, Kuhl E, Goriely A. Multiphysics of prionlike diseases: progression and atrophy. Phys. Rev. Lett. 2018;121:158101. doi: 10.1103/PhysRevLett.121.158101. [DOI] [PubMed] [Google Scholar]

- 50.Warren JD, et al. Molecular nexopathies: a new paradigm of neurodegenerative disease. Trends Neurosci. 2013;36:561–569. doi: 10.1016/j.tins.2013.06.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Maia, P. D. et al. Origins of atrophy in Parkinson linked to early onset and local transcription patterns. Brain Commun.2, facaa065 (2020). [DOI] [PMC free article] [PubMed]

- 52.Kebets V, et al. Somatosensory-motor dysconnectivity spans multiple transdiagnostic dimensions of psychopathology. Biol. Psychiatry. 2019;86:779–791. doi: 10.1016/j.biopsych.2019.06.013. [DOI] [PubMed] [Google Scholar]

- 53.Tang, S. et al. Reconciling dimensional and categorical models of autism heterogeneity: a brain connectomics & behavioral study. BioRxiv.10.1101/692772 (2019). [DOI] [PubMed]

- 54.Kirschner M, et al. Latent clinical-anatomical dimensions of schizophrenia. Schizophrenia Bull. 2020;46:1426–1438. doi: 10.1093/schbul/sbaa097. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Barabási A-L, Gulbahce N, Loscalzo J. Network medicine: a network-based approach to human disease. Nat. Rev. Genetics. 2011;12:56. doi: 10.1038/nrg2918. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Nalls MA, et al. Large-scale meta-analysis of genome-wide association data identifies six new risk loci for Parkinson’s disease. Nat. Genetics. 2014;46:989. doi: 10.1038/ng.3043. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Maetzler W, Domingos J, Srulijes K, Ferreira JJ, Bloem BR. Quantitative wearable sensors for objective assessment of Parkinson’s disease. Mov. Disord. 2013;28:1628–1637. doi: 10.1002/mds.25628. [DOI] [PubMed] [Google Scholar]

- 58.Abbasi N, et al. Predicting severity and prognosis in Parkinsona’s disease from brain microstructure and connectivity. Neuroimage: Clin. 2019;25:102111. doi: 10.1016/j.nicl.2019.102111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Gorgolewski KJ, et al. The Brain Imaging Data Structure, a format for organizing and describing outputs of neuroimaging experiments. Sci. Data. 2016;3:160044. doi: 10.1038/sdata.2016.44. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Tustison, N. J. et al. The ANTs longitudinal cortical thickness pipeline. bioRxiv.10.1101/170209 (2018).

- 61.Tustison NJ, et al. N4ITK: improved N3 bias correction. IEEE Trans. Med. Imaging. 2010;29:1310–1320. doi: 10.1109/TMI.2010.2046908. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Avants BB, et al. The optimal template effect in hippocampus studies of diseased populations. Neuroimage. 2010;49:2457–2466. doi: 10.1016/j.neuroimage.2009.09.062. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Fonov VS, Evans AC, McKinstry RC, Almli C, Collins D. Unbiased nonlinear average age-appropriate brain templates from birth to adulthood. Neuroimage. 2009;47:S102. doi: 10.1016/S1053-8119(09)70884-5. [DOI] [Google Scholar]

- 64.Fonov V, et al. Unbiased average age-appropriate atlases for pediatric studies. Neuroimage. 2011;54:313–327. doi: 10.1016/j.neuroimage.2010.07.033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Collins, D. L., Zijdenbos, A. P., Baaré, W. F. & Evans, A. C. ANIMAL+INSECT: improved cortical structure segmentation. In Biennial International Conference on Information Processing in Medical Imaging (eds Kuba, A., Šáamal, M. & Todd-Pokropek, A.) 210–223 (Springer, 1999).

- 66.Wang H, et al. Multi-atlas segmentation with joint label fusion. IEEE Trans. Pattern Anal. Mach. Intell. 2013;35:611–623. doi: 10.1109/TPAMI.2012.143. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Klein A, Tourville J. 101 labeled brain images and a consistent human cortical labeling protocol. Frontiers in Neuroscience. 2012;6:171. doi: 10.3389/fnins.2012.00171. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Pauli WM, Nili AN, Tyszka JM. A high-resolution probabilistic in vivo atlas of human subcortical brain nuclei. Sci. Data. 2018;5:180063. doi: 10.1038/sdata.2018.63. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Das SR, Avants BB, Grossman M, Gee JC. Registration based cortical thickness measurement. Neuroimage. 2009;45:867–879. doi: 10.1016/j.neuroimage.2008.12.016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Tustison NJ, et al. Large-scale evaluation of ANTs and FreeSurfer cortical thickness measurements. Neuroimage. 2014;99:166–179. doi: 10.1016/j.neuroimage.2014.05.044. [DOI] [PubMed] [Google Scholar]

- 71.Cammoun L, et al. Mapping the human connectome at multiple scales with diffusion spectrum MRI. J. Neurosci. Methods. 2012;203:386–397. doi: 10.1016/j.jneumeth.2011.09.031. [DOI] [PubMed] [Google Scholar]

- 72.Ashburner J, et al. Identifying global anatomical differences: Deformation-based morphometry. Human Brain Mapping. 1998;6:348–357. doi: 10.1002/(SICI)1097-0193(1998)6:5/6<348::AID-HBM4>3.0.CO;2-P. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Gorgolewski KJ, et al. NeuroVault.org: a web-based repository for collecting and sharing unthresholded statistical maps of the human brain. Front. Neuroinform. 2015;9:8. doi: 10.3389/fninf.2015.00008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Rosen AF, et al. Quantitative assessment of structural image quality. NeuroImage. 2018;169:407–418. doi: 10.1016/j.neuroimage.2017.12.059. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Cohen J. A coefficient of agreement for nominal scales. Educational Psychol. Meas. 1960;20:37–46. doi: 10.1177/001316446002000104. [DOI] [Google Scholar]

- 76.Iglewicz, B. & Hoaglin, D. C. How to Detect and Handle Outliers Vol. 16 (ASQ Press, 1993).

- 77.Johnson WE, Li C, Rabinovic A. Adjusting batch effects in microarray expression data using empirical Bayes methods. Biostatistics. 2007;8:118–127. doi: 10.1093/biostatistics/kxj037. [DOI] [PubMed] [Google Scholar]

- 78.Fortin J-P, et al. Harmonization of cortical thickness measurements across scanners and sites. NeuroImage. 2018;167:104–120. doi: 10.1016/j.neuroimage.2017.11.024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 79.Pedregosa F, et al. Scikit-learn: machine learning in Python. J. Mach. Learning Res. 2011;12:2825–2830. [Google Scholar]

- 80.Traud AL, Kelsic ED, Mucha PJ, Porter MA. Comparing community structure to characteristics in online collegiate social networks. SIAM Rev. 2011;53:526–543. doi: 10.1137/080734315. [DOI] [Google Scholar]

- 81.Xia, C. H. et al. Linked dimensions of psychopathology and connectivity in functional brain networks. Nat. Commun.9, 3003 (2018). [DOI] [PMC free article] [PubMed]

- 82.Nadler, B., Lafon, S., Kevrekidis, I. & Coifman, R. R. in Advances in Neural Information Processing Systems (eds Weiss, Y., Schölkopf, B., Platt, J.) 955–962 (MIT Press, Cambridge, 2006).

- 83.Margulies DS, et al. Situating the default-mode network along a principal gradient of macroscale cortical organization. Proc. Natl Acad. Sci. 2016;113:12574–12579. doi: 10.1073/pnas.1608282113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Virtanen P, et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods. 2020;17:261–272. doi: 10.1038/s41592-019-0686-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 85.Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Statistical Soc.: Ser. B (Methodological) 1995;57:289–300. [Google Scholar]

- 86.Seabold, S. & Perktold, J. Statsmodels: econometric and statistical modeling with python. In 9th Python in Science Conference (eds van der Walt, S. & Millman, J.) (2010).

- 87.Collette, A. Python and HDF5: Unlocking Scientific Data (O’Reilly, 2013).

- 88.Pérez F, Granger BE. IPython: a system for interactive scientific computing. Comput. Sci. Eng. 2007;9:21–29. doi: 10.1109/MCSE.2007.53. [DOI] [Google Scholar]

- 89.Kluyver, T. et al. In Positioning and Power in Academic Publishing: Players, Agents and Agendas (eds Loizides, F. & Scmidt, B.) 87–90 (IOS Press, 2016).

- 90.Hunter JD. Matplotlib: A 2D graphics environment. Comput. Sci. Eng. 2007;9:90–95. doi: 10.1109/MCSE.2007.55. [DOI] [Google Scholar]

- 91.Brett, M. et al. nipy/nibabel. Zenodo. 10.5281/zenodo.591597 (2019).

- 92.Abraham A, et al. Machine learning for neuroimaging with scikit-learn. Front. Neuroinform. 2014;8:14. doi: 10.3389/fninf.2014.00014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 93.Lam, S. K., Pitrou, A. & Seibert, S. Numba: A LLVM-based python JIT compiler. In Proc. 2nd Workshop on the LLVM Compiler Infrastructure in HPC 7 (ed Finkel, H.) (ACM, 2015).

- 94.Oliphant, T. E. A Guide to NumPy Vol. 1 (Trelgol Publishing USA, 2006).