Abstract

Little is known about how patient-reported experience relates to quality of care and to visit attendance in the primary care setting. In a cross-sectional analysis of 8355 primary care patients from 22 primary care practices, we examined the associations between visit-triggered patient-reported experience measures and both (1) quality of care measures and (2) the number of missed primary care appointments (no-shows). Our independent variables included overall patient experience and its subdomains. Our outcomes were smoking cessation discussion, diabetes eye examination referral, mammography, colonoscopy screening, current smoking status (nonsmoker vs smoker), diabetes control (hemoglobin A1c [HbA1c] < 8), blood pressure control, cholesterol control (low-density lipoprotein [LDL] < 100) among patients with diabetes, and visit no-shows 2 and 5 years after the index visit that triggered the completed patient experience survey. We found that patient experience, while an important stand-alone metric of care quality, may not relate to clinical outcomes or process measures in the outpatient setting. However, patients’ reported experiences with their primary care provider appear to influence their future visit attendance.

Keywords: patient experience, primary care redesign, quality improvement, patient-centered care, doctor–patient relationship

Introduction

Over the past decade, there has been increasing emphasis on the use of patient-reported experience data in the performance evaluations of primary care practices and providers (1). Despite this, little is known about how patient-reported experience relates to quality of care and to utilization, specifically visit attendance, in the primary care setting. Prior work has examined the relationship between patient experience and inpatient clinical outcomes, with mixed results (1–7). To our knowledge, no prior studies have examined how patient experience relates to ambulatory quality of care metrics and visit attendance. The relationship between patient experience and outcomes may differ between the outpatient and inpatient settings, given their distinct care environments and quality of care indicators. In addition, how patient experience relates to uptake of elective primary care services, specifically appointment attendance, is understudied. Therefore, the objective of our study was to evaluate the associations between patient-reported experiences with care, visit attendance, and quality of care process and outcome measures in the outpatient primary care setting.

Methods

Data Sources

To estimate the relationships between patient-reported experiences with care and measures of quality and uptake of ambulatory care services, we examined data from a 34-item visit-triggered patient experience survey. The survey was mailed, with a prepaid return envelope, to all patients after an ambulatory care visit; we used surveys collected between January 2012 and July 2014 from primary care patients at 22 eligible primary care sites. Eligible sites were health system-affiliated sites that provided primary care services to adults (age 18 and older) and had completed the process for National Committee for Quality Assurance recognition as a patient-centered medical home (8, 9). Of 27 affiliated health system primary care clinics, 22 met these criteria. These sites were a diverse group of urban and suburban primary care practices in New Jersey and Pennsylvania. For patients who completed more than 1 visit survey during this period (n = 959), we randomly selected one, for a final analytic sample of 8355 unique patients. We also retrieved and analyzed data on ambulatory quality of care process and outcome measures for all study sites from Penn Medicine’s Clinical Data Warehouse for the same period. Lastly, we retrieved and analyzed the number of missed scheduled appointments up to 5 years after the index ambulatory visit that triggered the completed patient experience survey. The institutional review board of the University of Pennsylvania approved this study.

Patient Experience Measures

Our independent variables were measures of overall patient experience and its subdomains from a 34-item visit-triggered survey that assessed patient-reported experiences in the following subdomains: access, moving through the medical encounter (visit), ancillary staff (nurse, medical assistant), the care provider, and patient safety and privacy (no reported issues with safety or privacy). The access subdomain captures patient perceptions of their ability to reach practice personnel by phone, the ease of scheduling appointments, the convenience of the practice’s office hours, and the approachability and courtesy of registration/front desk staff. The visit subdomain evaluates patient experiences moving through the medical encounter, such as wait times and whether patients are notified of any delays. The ancillary staff subdomain assesses patient perceptions of care by nurses and/or medical assistants. The care provider subdomain asks patients whether their care provider is courteous, gives clear and concise explanations, shows concern for their worries or questions, includes them in treatment decisions, and ensures they understand their medications and treatment plans. It also elicits the patient’s assessment of the amount of time the provider spent with them and their overall confidence in the care provider. The last subdomain (no reported issues with safety or privacy) evaluates patient perceptions of overall cleanliness, practice staff responsiveness, and adherence to hygiene and safety practices. Each question is answered on a 5-point Likert scale and scored from 0 to 100 as follows: very poor (0), poor (25), fair (50), good (75), and very good (100). The scores for all questions within a subdomain are averaged to generate the subdomain mean score, and overall experience with care is calculated as the equally weighted mean of the five subdomain scores (10).
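The scoring rules above can be sketched in a few lines of Python. This is a minimal illustration, assuming each patient’s answers are already grouped by subdomain and that every listed item was answered; the subdomain labels, item counts, and the `subdomain_score`/`overall_score` helpers are hypothetical, and the survey’s handling of skipped items is not described here.

```python
from statistics import mean

# Item scoring from the 5-point Likert scale described in the text
LIKERT_SCORES = {
    "very poor": 0,
    "poor": 25,
    "fair": 50,
    "good": 75,
    "very good": 100,
}


def subdomain_score(responses):
    """Mean 0-100 score across the items answered in one subdomain."""
    return mean(LIKERT_SCORES[r.lower()] for r in responses)


def overall_score(answers_by_subdomain):
    """Equally weighted mean of the subdomain scores."""
    return mean(subdomain_score(r) for r in answers_by_subdomain.values())


# Hypothetical respondent; item counts per subdomain are illustrative only
patient = {
    "access": ["good", "very good", "very good"],
    "visit": ["fair", "good"],
    "ancillary staff": ["very good"],
    "care provider": ["very good", "very good", "good"],
    "safety and privacy": ["good", "very good"],
}

print(round(overall_score(patient), 1))  # 86.7
```

Because the five subdomains are weighted equally, a subdomain with a single item counts as much toward the overall score as one with many items.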
Prior psychometric analyses of these subdomains reveal reliability estimates that range from a Cronbach α of 0.81 to 0.97. Additional methodological details of the survey are described elsewhere (11).
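Cronbach’s α summarizes internal consistency as α = k/(k − 1) · (1 − Σσᵢ²/σ_X²), where k is the number of items, σᵢ² is the variance of item i, and σ_X² is the variance of the summed score. The sketch below computes it on toy data; it is not the psychometric analysis cited above, and the function name is hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(rows):
    """Cronbach's alpha for a k-item scale.

    rows: one list of k item scores per respondent.
    Population variances are used throughout; the ratio is the same
    as with sample variances because the n/(n-1) factor cancels.
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    items = list(zip(*rows))  # transpose: one tuple of scores per item
    item_variance_sum = sum(pvariance(item) for item in items)
    total_variance = pvariance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Two items that always agree: alpha is 1
print(cronbach_alpha([[0, 0], [50, 50], [100, 100]]))
# Two items that vary independently: alpha is 0
print(cronbach_alpha([[0, 100], [100, 0], [0, 0], [100, 100]]))
```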

Ambulatory Process and Outcome Quality of Care Measures

We selected all quality metrics that our study practices routinely collected and tracked during the study period for external reporting and/or pay-for-performance incentives (12, 13). These Centers for Medicare & Medicaid Services outpatient quality measures were consistent with those that other primary care practices nationwide measure and report. We examined the following binary process measures (yes vs no): smoking cessation discussed among identified tobacco users, diabetes eye examination referral among patients with diabetes, and breast cancer and colorectal cancer screening among eligible patients. We also examined the following binary clinical outcomes: current smoking status (nonsmoker vs smoker), diabetes control (HbA1c < 8), blood pressure control, and cholesterol control (LDL < 100) among patients with diabetes. Table 1 defines the specific metrics we assessed and their respective eligible populations.

Table 1.

Ambulatory Quality of Care Metrics.

| Metric | Description | Sample size | Achieved, n (%) |
|---|---|---|---|
| Process measures | | | |
| Smoking cessation | Percentage of patients aged 18 years and older who received cessation counseling intervention if identified as a tobacco user | 980 | 683 (69.7) |
| Diabetes eye exam referral | Percentage of patients 18-75 years of age with diabetes who had a retinal or dilated eye exam by an eye care professional during the measurement period or a negative retinal or dilated eye exam (no evidence of retinopathy) in the 12 months prior to the measurement period | 1221 | 688 (56.3) |
| Mammogram screening | Percentage of women 50-74 years of age who had a mammogram to screen for breast cancer | 3394 | 2868 (84.5) |
| Colorectal cancer screening | Percentage of patients 50-75 years of age who had appropriate screening for colorectal cancer | 4670 | 3912 (83.8) |
| Outcome measures | | | |
| HbA1c < 8 | Percentage of patients 18-75 years of age with diabetes who had hemoglobin A1c < 8.0% during the measurement period | 1207 | 956 (79.2) |
| Nonsmoker | Percentage of patients aged 18 years and older that screened negative for tobacco use | 8271 | 7291 (88.2) |
| Controlled hypertension | Percentage of patients 18-85 years of age who had a diagnosis of hypertension and whose blood pressure was adequately controlled (<140/90 mm Hg) during the measurement period | 3638 | 3498 (96.2) |
| LDL control (<100) | Percentage of patients 18-75 years of age with diabetes whose LDL-C was adequately controlled (<100 mg/dL) during the measurement period | 1221 | 840 (68.3) |

No Show Outcome

We used scheduling data from the practices’ electronic medical records to determine this outcome. Following the definition the study practices use for routine monitoring, we classified a no-show appointment as a scheduled visit that the patient did not attend and for which the practice received no prior notification from the patient or family to reschedule or cancel. Because our patient experience survey data are visit-triggered, every patient in our study has an index visit between 2012 and 2014 that they attended and for which they afterward completed a survey capturing their visit experience. We measured the number of no-show visits over 2 time intervals: the 2 years and the 5 years after the index visit that triggered the completed patient experience survey.
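Counting no-shows in a fixed window after the index visit can be sketched as below. This is an illustration only, assuming the window starts the day after the index visit and includes its end date; the status codes (`"no_show"`, `"attended"`, `"cancelled"`) and helper names are hypothetical, since the practices’ actual scheduling fields are not described.

```python
from datetime import date


def add_years(d, years):
    """Same calendar date `years` later; Feb 29 maps to Feb 28."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


def count_no_shows(index_visit, appointments, years):
    """Number of no-shows in the `years`-year window after the index visit.

    appointments: (appointment_date, status) pairs. The status codes are
    hypothetical; "no_show" stands for a scheduled visit that was neither
    attended nor cancelled/rescheduled with prior notice.
    """
    window_end = add_years(index_visit, years)
    return sum(
        1
        for appt_date, status in appointments
        if status == "no_show" and index_visit < appt_date <= window_end
    )


index_visit = date(2013, 3, 1)
appointments = [
    (date(2012, 5, 1), "no_show"),     # before the index visit: ignored
    (date(2013, 6, 1), "no_show"),
    (date(2014, 1, 15), "attended"),
    (date(2014, 8, 1), "cancelled"),   # prior notice given: not a no-show
    (date(2015, 2, 1), "no_show"),
    (date(2016, 9, 1), "no_show"),
    (date(2018, 4, 1), "no_show"),     # after the 5-year window
]

print(count_no_shows(index_visit, appointments, 2))  # 2
print(count_no_shows(index_visit, appointments, 5))  # 3
```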

Other covariates

In addition to self-reported patient experience measures, we examined the following patient and practice characteristics: race/ethnicity, age, gender, insurance type, place of residence, clinical comorbidities (Charlson Comorbidity Index [CCI]), practice location, number of providers, and patient volume.

Statistical analysis

We first described the characteristics of patients in our sample and then computed descriptive statistics for our patient experience measures and outcomes of interest. We used generalized estimating equations with an exchangeable correlation structure to account for clustering of patients by provider and practice. In multivariable logistic regression models, we estimated the relationship between our patient experience measures and the binary ambulatory quality of care metrics, adjusting for the covariates listed above. In multivariable negative binomial models, we estimated the relationship between our patient experience measures and visit no-show counts, adjusting for the same covariates and accounting for clustering of patients by provider and practice. To account for multiple hypothesis testing, we also generated adjusted P values using false discovery rate methods (14). Two-tailed P values and 95% CIs are reported for all statistical tests, with P < .05 considered statistically significant.
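Reference 14 concerns false discovery rate control; assuming the standard Benjamini–Hochberg step-up procedure was the adjustment applied, the calculation can be sketched as follows. The P values in the example are illustrative, not the study’s results, and the function name is hypothetical.

```python
def benjamini_hochberg(p_values):
    """Benjamini-Hochberg adjusted p-values, returned in the input order.

    The adjusted value for the p-value of rank r (1 = smallest) among m
    tests is min over ranks j >= r of p_(j) * m / j, capped at 1.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so adjusted values stay monotone
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Illustrative raw P values, not the study's results
raw = [0.01, 0.04, 0.03, 0.20]
print([round(q, 4) for q in benjamini_hochberg(raw)])  # [0.04, 0.0533, 0.0533, 0.2]
```

In practice, `statsmodels.stats.multitest.multipletests(pvals, method="fdr_bh")` returns the same Benjamini–Hochberg adjustment.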

Results

Of 28 201 patients, 8355 (22.8%) completed a survey. Of the 8355 patients in our study, 64% were female, 24% were Black, 5.3% had Medicaid, 21.2% had Medicare, and 5.3% had multiple chronic conditions (CCI > 2). The mean (SD) age was 57 (17.5) years (Table 2). The mean (SD) patient experience scores for the 5 domains were 85.8 (16.0) for access, 81.6 (18.4) for visit experience, 88.5 (16.7) for nurse/staff experience, 88.3 (15.0) for patient safety and privacy, and 91.5 (14.8) for care provider experience. The mean (SD) overall patient experience score was 87.4 (13.6).

Table 2.

Baseline Characteristics of Patients.

| Patient characteristics (n = 8355) | Value |
|---|---|
| Mean (SD) age in years | 57.1 (17.5) |
| Mean (SD) Charlson score (range 0-11; higher scores indicate greater comorbidity) | 0.5 (1.1) |
| Charlson group, n (%) | |
| 0 (Charlson score = 0) | 5937 (71.8) |
| 1 (Charlson score = 1) | 1343 (16.2) |
| 2 (Charlson score = 2) | 555 (6.7) |
| 3 (Charlson score > 2) | 436 (5.3) |
| Gender, n (%) | |
| Male | 3014 (36.1) |
| Female | 5341 (63.9) |
| Race/ethnicity, n (%) | |
| White | 5593 (66.9) |
| Black | 2023 (24.2) |
| Other | 739 (8.9) |
| Low SES zip code, n (%) | |
| Yes | 1286 (15.4) |
| No | 7069 (84.6) |
| Insurance type, n (%) | |
| Private | 6062 (72.6) |
| Medicare | 1774 (21.2) |
| Medicaid | 445 (5.3) |
| Self-pay | 74 (0.9) |

Performance on quality of care metrics varied by measure. About 56% of patients with diabetes received a necessary eye examination referral, and about 68% had achieved lipid control (LDL < 100). Table 1 details the process and outcome measures along with the number and percentage of patients who achieved them. About 15% of patients in our sample missed one or more appointments within 2 years of their index visit, and close to 27% had 1 or more no-shows within 5 years of their index visit. Two years after the index visit, the median, 25th percentile, and 75th percentile of the no-show count (the number of scheduled visits missed) were all zero; the maximum was 15, and the mean (SD) was 0.28 (0.88). Five years after the index visit, the median and 25th percentile were zero and the 75th percentile was one; the maximum was 29, and the mean (SD) was 0.63 (1.7).

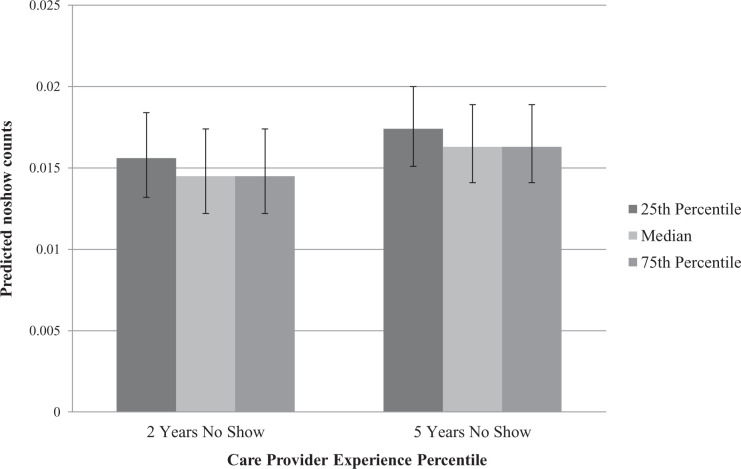

We found no discernible pattern of associations between our patient experience measures and our clinical process and outcome measures (Table 3). However, in adjusted models, we found a significant association between patient-reported experiences with their care provider and no-show counts 2 and 5 years after the index visit. These associations remained significant after accounting for multiple hypothesis testing. Two years after the index visit, holding other variables at their reference level or mean, going from a care provider score of 87.5 (25th percentile) to 100 (median and 75th percentile) decreased the predicted no-show count from 1.6 to 1.4, or by 7% (Figure 1). Similarly, 5 years after the index visit, holding other variables at their reference level or mean, the same change in care provider score decreased the predicted no-show count from 1.7 to 1.6, or by 6% (Figure 1). Supplemental Appendix A has the complete results of this analysis with adjusted P values.
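With a log link, as in a negative binomial model, the ratio of predicted no-show counts at two care provider scores depends only on the score coefficient β, because the other covariate terms cancel: μ(100)/μ(87.5) = exp(12.5β). The sketch below illustrates that relationship; β is a hypothetical coefficient backed out from the reported 7% decrease, not a value reported in the text, and the function names are illustrative.

```python
import math


def percent_change_in_count(beta, score_from, score_to):
    """Percent change in the predicted count when the score moves.

    Under log(mu) = b0 + beta * score + (other covariate terms), the other
    terms cancel in mu(score_to) / mu(score_from).
    """
    return 100.0 * (math.exp(beta * (score_to - score_from)) - 1.0)


def implied_beta(percent_change, score_from, score_to):
    """Score coefficient implied by a given percent change in the count."""
    return math.log(1.0 + percent_change / 100.0) / (score_to - score_from)


# Hypothetical coefficient matching a 7% decrease from 87.5 to 100
beta = implied_beta(-7.0, 87.5, 100.0)
print(round(beta, 5))                                        # -0.00581
print(round(percent_change_in_count(beta, 87.5, 100.0), 2))  # -7.0
```

The absolute predicted counts depend on the reference values chosen for the other covariates; under a log link, only the proportional change is invariant to those choices.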

Table 3.

Patient-Reported Experiences With Care and Ambulatory Process and Clinical Outcome Measures.

Process measures: columns 2-5. Clinical outcomes: columns 6-9.

| Patient Reported Measures of Experiences with Care | Smoking cessation counseling | Diabetes eye referral | Mammogram screening** | Colonoscopy screening** | HbA1c < 8 | Nonsmoker | Controlled hypertension | LDL control (<100) |
|---|---|---|---|---|---|---|---|---|
| Access | 1.01 (0.99, 1.02) P = .40 | 1.00 (0.99, 1.01) P = .80 | 0.99 (0.99, 1.00) P = .12 | 1.00 (0.99, 1.01) P = .53 | 1.01 (1.00, 1.02) P = .10 | 1.00 (0.99, 1.01) P = .85 | 1.01 (0.99, 1.03) P = .33 | 0.99 (0.98, 1.01) P = .25 |
| Visit Experience | 1.01 (1.00, 1.02) P = .19 | 1.00 (0.99, 1.01) P = .40 | 1.00 (0.99, 1.01) P = 1.0 | 1.00 (0.99, 1.01) P = .77 | 0.99 (0.98, 1.00) P = .13 | 1.00 (0.99, 1.00) P = .62 | 1.02 (1.00, 1.03) P = .03* | 1.01 (1.00, 1.02) P = .06 |
| Nurse/Staff Experience | 0.99 (0.98, 1.00) P = .16 | 1.01 (1.00, 1.02) P = .29 | 0.99 (0.99, 1.00) P = .24 | 0.99 (0.99, 1.00) P = .14 | 1.00 (0.99, 1.02) P = .46 | 1.00 (0.99, 1.00) P = .35 | 0.99 (0.98, 1.01) P = .35 | 0.99 (0.98, 1.00) P = .19 |
| Care Provider Experience | 1.00 (0.98, 1.01) P = .53 | 1.01 (1.00, 1.02) P = .21 | 1.00 (0.99, 1.01) P = .55 | 1.00 (0.99, 1.01) P = .92 | 0.98 (0.97, 1.00) P = .03* | 1.00 (0.99, 1.01) P = .92 | 1.00 (0.98, 1.02) P = .91 | 0.99 (0.98, 1.01) P = .47 |
| Personal Issues | 1.01 (0.99, 1.02) P = .46 | 0.99 (0.98, 1.01) P = .34 | 1.01 (1.00, 1.02) P = .07 | 1.01 (1.00, 1.02) P = .05 | 1.01 (0.99, 1.03) P = .40 | 1.00 (0.99, 1.01) P = .87 | 0.98 (0.96, 1.00) P = .11 | 1.01 (1.00, 1.03) P = .08 |
| Overall Assessment | 1.01 (1.00, 1.02) P = .08 | 1.00 (0.99, 1.01) P = .81 | 1.00 (0.99, 1.00) P = .52 | 1.00 (0.99, 1.01) P = .60 | 1.00 (0.99, 1.01) P = .90 | 1.00 (0.99, 1.00) P = .15 | 1.00 (0.99, 1.02) P = .58 | 1.00 (1.00, 1.01) P = .36 |

**,*P value < 0.05.

Figure 1.

Adjusted Associations between care provider experience score and no show counts at 2 years and 5 years post index visit.

Discussion

We analyzed patient experience data over a 2-year period across 22 primary care practice sites and did not find consistent significant relationships between patient-reported experiences with care and either patient-level quality of care process measures or clinical outcomes.

We did find a significant association between patient-reported experiences with their care provider and the number of scheduled appointments they missed (appointment no-shows). This association was significant at both time intervals we examined, indicating a sustained association up to 5 years after the visit at which patients reported their care provider experiences. Prior evidence has shown appointment no-shows to be an independent predictor of suboptimal primary care outcomes and acute care utilization (15). Prior estimates of the cost of one no-show to a primary care visit range from US$125 to US$274, depending on the study, and with a no-show rate similar to the national average of 18%, monthly losses may exceed US$60 000 (16).
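The cost figures above imply a simple back-of-the-envelope relationship: expected monthly loss equals scheduled visits × no-show rate × cost per no-show. The sketch below applies it to the cited cost range; the monthly visit volume is a hypothetical input, the helper names are illustrative, and this is not necessarily how reference 16 derived its estimate.

```python
def monthly_no_show_loss(scheduled_visits, no_show_rate, cost_per_no_show):
    """Expected monthly loss in US$ from missed visits."""
    return scheduled_visits * no_show_rate * cost_per_no_show


def visits_for_loss(monthly_loss, no_show_rate, cost_per_no_show):
    """Monthly scheduled visits at which the expected loss reaches monthly_loss."""
    return monthly_loss / (no_show_rate * cost_per_no_show)


# Cost range (US$125-US$274) and 18% rate from the text; 2000 visits is hypothetical
for cost in (125, 274):
    print(cost,
          monthly_no_show_loss(2000, 0.18, cost),
          round(visits_for_loss(60_000, 0.18, cost)))
```

At an 18% no-show rate, expected losses reach US$60 000 per month at roughly 1200 to 2700 scheduled visits, depending on where in the cost range a practice falls.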

Our findings from outpatient settings did not mirror prior evidence from inpatient settings that demonstrates significant associations between patient-reported experiences with care and process and clinical outcomes (6, 7). Key differences between these settings may explain why inpatient associations between care processes and patient experience do not translate to similar outpatient associations. Patient-reported experience measures from an inpatient encounter are isolated to one discrete care episode that encompasses a linear set of care processes within a controlled setting (17). In contrast, primary care outpatient settings are complex adaptive, nonlinear systems, where patient-reported visit experience does not necessarily reflect all the inputs, including those outside a health care provider’s control, required to effectively provide preventive care and manage chronic disease (17–20). Our findings also did not mirror prior evidence from a national study that found an association between higher patient satisfaction and greater inpatient use, higher overall health care and prescription drug expenditures, and increased mortality (5). Although the relationship between patient satisfaction and quality of care may be tenuous, prior evidence that satisfied patients are more likely to adhere to their providers’ recommended treatments (21) is consistent with our finding of a significant association between patient satisfaction with their providers and visit attendance.

Previous studies have highlighted the critical role of providers as drivers of overall patient experiences with care as well as care quality (8, 9, 22, 23). Building on those findings, this study suggests that the experience patients have with their providers may also influence subsequent uptake of and adherence to ambulatory services. Miscommunication has been identified as one of 2 key contributors to appointment no-shows, the other being forgetfulness (24). Initiatives to reduce no-show rates have so far centered on appointment reminders, with variable success (16, 24). Few studies have evaluated the role of patient experience in predicting no-shows. Goldman et al found that a negative visit experience strongly predicted future no-shows (25). There is also prior evidence that patients’ overall visit experience is driven by their experience with their primary care provider (8, 9). Future work should consider developing and implementing initiatives that improve provider–patient relationships and communication and evaluating their effects on appointment no-shows.

The authors acknowledge our study’s limitations. We cannot infer causality, given our cross-sectional design. These findings may lack generalizability, as we conducted this study in one network of practices. However, University of Pennsylvania Health System (UPHS) primary care practices are a diverse group of suburban and urban practices in New Jersey and Pennsylvania. While our response rate was slightly higher than national averages for patient experience surveys (26), this study is likely subject to nonresponse bias (27). The reported experiences of patients who took the time to complete the survey likely reflect a more engaged patient population. Given this, we hypothesize that the significant relationship we found between patient experience measures and appointment no-show counts may underestimate the influence of care experiences on no-show rates in the broader patient population. Nonresponse bias may also contribute to the lack of significant associations between patient experience scores and quality of care outcomes: patients who respond to visit-triggered surveys may be more engaged in their health and have better outcomes.

Our study found that patient experience, while an important stand-alone metric of care quality, may not necessarily relate to clinical outcomes or process measures in the outpatient setting. The relationship between patient experience and quality of care in the inpatient setting has been used as a key rationale for measuring patient experience. Yet, in the primary care setting, the value of patient experience as a stand-alone measure of quality may be rationale enough. Prior work supports the need for the patient voice and feedback on organizational processes in primary care redesign efforts (8, 9). Moreover, we found that patients’ experiences with their primary care provider did appear to influence no-show rates. Practices should make efforts to minimize appointment no-shows, which have been shown to result in significant financial costs for practices and decreased value of care for patients.

Conclusion

Our findings reveal that practices may benefit from a focus on improving the provider–patient relationship as a potential method to improve visit attendance and that ongoing measurement of patient experience in the ambulatory setting is critical to such efforts.

Supplemental Material

Supplemental Material, Appendix_JPX for The Relationships Between Patient Experience and Quality and Utilization of Primary Care Services by Jaya Aysola, Chang Xu, Hairong Huo and Rachel M Werner in Journal of Patient Experience

Acknowledgments

The authors would like to thank Sarah Lamar for her assistance in acquiring the data on missed scheduled visits.

Author Biographies

Jaya Aysola is the Executive Director of Penn Medicine Center for Health Equity Advancement, Assistant Dean of Inclusion and Diversity and Assistant Professor of Medicine and Assistant Professor of Pediatrics at the Perelman School of Medicine.

Chang Xu is a Statistical Analyst within the Division of General Internal Medicine.

Hairong Huo was a statistical analyst within the Division of General Internal Medicine.

Rachel M Werner is the Executive Director of the Leonard Davis Institute of Health Economics. She is Professor of Medicine at the University of Pennsylvania Perelman School of Medicine as well as the Robert D. Eilers Professor of Health Care Management at the Wharton School and a practicing physician at the Philadelphia VA.

Authors’ Note: The Institutional Review Board of the University of Pennsylvania approved this study. An abstract of this study was an oral presentation at the Society of General Internal Medicine on May 14, 2016, in Hollywood, FL, USA.

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: Jaya Aysola was funded by the AHRQ PCOR Institutional Award (K12 HS021706-01) for the implementation and analysis of the results of this study. Rachel Werner is funded in part by K24-AG047908 from the National Institute on Aging.

ORCID iD: Jaya Aysola  https://orcid.org/0000-0002-8474-3682


Supplemental Material: Supplemental material for this article is available online.

References

- 1. Anhang Price R, Elliott MN, Zaslavsky AM, Hays RD, Lehrman WG, Rybowski L, et al. Examining the role of patient experience surveys in measuring health care quality. Med Care Res Rev. 2014;71:522–54. doi:10.1177/1077558714541480

- 2. Wang DE, Tsugawa Y, Figueroa JF, Jha AK. Association between the Centers for Medicare and Medicaid Services hospital star rating and patient outcomes. JAMA Intern Med. 2016;176:848–50.

- 3. Boulding W, Glickman SW, Manary MP, Schulman KA, Staelin R. Relationship between patient satisfaction with inpatient care and hospital readmission within 30 days. Am J Manag Care. 2011;17:41–8.

- 4. Manary MP, Boulding W, Staelin R, Glickman SW. The patient experience and health outcomes. N Engl J Med. 2013;368:201–3. doi:10.1056/NEJMp1211775

- 5. Fenton JJ, Jerant AF, Bertakis KD, Franks P. The cost of satisfaction: a national study of patient satisfaction, health care utilization, expenditures, and mortality. Arch Intern Med. 2012;172:405–11. doi:10.1001/archinternmed.2011.1662

- 6. Glickman SW, Boulding W, Manary M, Staelin R, Roe MT, Wolosin RJ, et al. Patient satisfaction and its relationship with clinical quality and inpatient mortality in acute myocardial infarction. Circ Cardiovasc Qual Outcomes. 2010;3:188–95. doi:10.1161/circoutcomes.109.900597

- 7. Isaac T, Zaslavsky AM, Cleary PD, Landon BE. The relationship between patients’ perception of care and measures of hospital quality and safety. Health Serv Res. 2010;45:1024–40. doi:10.1111/j.1475-6773.2010.01122.x

- 8. Aysola J, Schapira MM, Huo H, Werner RM. Organizational processes and patient experiences in the patient-centered medical home. Med Care. 2018;56:497–504. doi:10.1097/mlr.0000000000000910

- 9. Aysola J, Werner RM, Keddem S, SoRelle R, Shea JA. Asking the patient about patient-centered medical homes: a qualitative analysis. J Gen Intern Med. 2015;30:1461–67. doi:10.1007/s11606-015-3312-8

- 10. Presson AP, Zhang C, Abtahi AM, Kean J, Hung M, Tyser AR. Psychometric properties of the Press Ganey® Outpatient Medical Practice Survey. Health Qual Life Outcomes. 2017;15:32. doi:10.1186/s12955-017-0610-3

- 11. Press Ganey and Associates. Medical Practice Survey Psychometrics Report. Press Ganey and Associates; 2011.

- 12. Dowd B, Li CH, Swenson T, Coulam R, Levy J. Medicare’s physician quality reporting system (PQRS): quality measurement and beneficiary attribution. Medicare Medicaid Res Rev. 2014;4:10. doi:10.5600/mmrr.004.02.a04

- 13. Stange KC, Etz RS, Gullett H, Sweeney SA, Miller WL, Jaén CR, et al. Metrics for assessing improvements in primary health care. Annu Rev Public Health. 2014;35:423–42. doi:10.1146/annurev-publhealth-032013-182438

- 14. Benjamini Y, Drai D, Elmer G, Kafkafi N, Golani I. Controlling the false discovery rate in behavior genetics research. Behav Brain Res. 2001;125:279–84.

- 15. Hwang AS, Atlas SJ, Cronin P, Ashburner JM, Shah SJ, He W, et al. Appointment “no-shows” are an independent predictor of subsequent quality of care and resource utilization outcomes. J Gen Intern Med. 2015;30:1426–33. doi:10.1007/s11606-015-3252-3

- 16. Kheirkhah P, Feng Q, Travis LM, Tavakoli-Tabasi S, Sharafkhaneh A. Prevalence, predictors and economic consequences of no-shows. BMC Health Serv Res. 2016;16:13. doi:10.1186/s12913-015-1243-z

- 17. Young RA, Roberts RG, Holden RJ. The challenges of measuring, improving, and reporting quality in primary care. Ann Fam Med. 2017;15:175–82. doi:10.1370/afm.2014

- 18. Sturmberg JP, Martin CM, Katerndahl DA. Systems and complexity thinking in the general practice literature: an integrative, historical narrative review. Ann Fam Med. 2014;12:66–74. doi:10.1370/afm.1593

- 19. Katerndahl DA, Wood R, Jaén CR. A method for estimating relative complexity of ambulatory care. Ann Fam Med. 2010;8:341–47. doi:10.1370/afm.1157

- 20. Holman GT, Beasley JW, Karsh BT, Stone JA, Smith PD, Wetterneck TB. The myth of standardized workflow in primary care. J Am Med Inform Assoc. 2015;23:29–37.

- 21. Zolnierek KB, DiMatteo MR. Physician communication and patient adherence to treatment: a meta-analysis. Med Care. 2009;47:826–34. doi:10.1097/MLR.0b013e31819a5acc

- 22. Reddy A, Pollack CE, Asch DA, Canamucio A, Werner RM. The effect of primary care provider turnover on patient experience of care and ambulatory quality of care. JAMA Intern Med. 2015;175:1157–62. doi:10.1001/jamainternmed.2015.1853

- 23. Atlas SJ, Grant RW, Ferris TG, Chang Y, Barry MJ. Patient-physician connectedness and quality of primary care. Ann Intern Med. 2009;150:325–35.

- 24. Kaplan-Lewis E, Percac-Lima S. No-show to primary care appointments: why patients do not come. J Prim Care Community Health. 2013;4:251–55. doi:10.1177/2150131913498513

- 25. Goldman L, Freidin R, Cook EF, Eigner J, Grich P. A multivariate approach to the prediction of no-show behavior in a primary care center. Arch Intern Med. 1982;142:563–67.

- 26. Presson AP, Zhang C, Abtahi AM, Kean J, Hung M, Tyser AR. Psychometric properties of the Press Ganey® Outpatient Medical Practice Survey. Health Qual Life Outcomes. 2017;15:32. doi:10.1186/s12955-017-0610-3

- 27. Tyser AR, Abtahi AM, McFadden M, et al. Evidence of non-response bias in the Press-Ganey patient satisfaction survey. BMC Health Serv Res. 2016;16:350. doi:10.1186/s12913-016-1595-z
