Abstract

Machine learning potentials have become an important tool for atomistic simulations in many fields, from chemistry via molecular biology to materials science. Most of the established methods, however, rely on local properties and are thus unable to take global changes in the electronic structure into account, which result from long-range charge transfer or different charge states. In this work we overcome this limitation by introducing a fourth-generation high-dimensional neural network potential that combines a charge equilibration scheme employing environment-dependent atomic electronegativities with accurate atomic energies. The method, which is able to correctly describe global charge distributions in arbitrary systems, yields much improved energies and substantially extends the applicability of modern machine learning potentials. This is demonstrated for a series of systems representing typical scenarios in chemistry and materials science that are incorrectly described by current methods, while the fourth-generation neural network potential is in excellent agreement with electronic structure calculations.

Subject terms: Density functional theory, Method development, Molecular dynamics, Computational methods

Machine learning potentials do not account for long-range charge transfer. Here the authors introduce a fourth-generation high-dimensional neural network potential including non-local information of charge populations that is able to provide forces, charges and energies in excellent agreement with DFT data.

Introduction

Computer simulations have become an important tool in many fields of science, such as chemistry, molecular biology, physics, and materials science. The quality, and thus the predictive power, of the results obtained in these simulations crucially depends on an accurate description of the atomic interactions. While electronic structure methods like density functional theory (DFT) provide a reliable description of many types of systems, the high computational costs of DFT restrict its application in molecular dynamics (MD)1 and Monte Carlo2 simulations to a few hundred atoms, preventing the investigation of many interesting phenomena. Larger systems can be studied with more efficient atomistic potentials, which avoid solving the electronic structure problem on the fly and instead provide a direct functional relation between the atomic positions and the potential energy. Atomistic potential energy surfaces (PESs) have been developed for many types of systems, and most of these potentials are based on physical approximations, which necessarily limit the accuracy of the obtained results.

With the advent of machine learning (ML) potentials3–7 in recent years, an alternative approach to the construction of PESs has emerged, which allows one to combine the accuracy of quantum mechanical electronic structure calculations with the efficiency of simple empirical potentials. Many types of ML potentials have been proposed to date, like neural network potentials8–12, Gaussian approximation potentials (GAPs)13, moment tensor potentials (MTPs)14, spectral neighbor analysis potentials (SNAPs)15, and many others16,17.

ML potentials can be classified into four different generations. Starting with the work of Doren and coworkers published in 19958, the first generation (1G) of ML potentials18,19 was applicable to low-dimensional systems depending on the positions of a few atoms only. This restriction was overcome by high-dimensional neural network potentials (HDNNPs), proposed by Behler and Parrinello in 20079, which represented the first ML potential of the second generation (2G). In this generation, which employs the concept of nearsightedness20, the total energy of the system is constructed as a sum of atomic energies, which depend on the local chemical environment up to a cutoff radius and, in the case of HDNNPs, are computed by individual atomic neural networks. Most modern ML potentials making use of different ML algorithms, like HDNNPs, GAPs, MTPs, and SNAPs, belong to this second generation, and as standard methods for atomistic simulations they have been successfully applied to a wide range of systems.

A limitation of 2G ML potentials, which are applicable to tens of thousands of atoms, is the neglect of long-range interactions: electrostatic interactions beyond the cutoff radius are neglected, and dispersion interactions, which may accumulate substantially in condensed systems, are often truncated as well. This possible source of error, in particular for ionic systems, was recognized early, and electrostatic corrections based on fixed charges have been proposed13,21. In more flexible third-generation (3G) ML potentials, long-range electrostatic interactions are included by constructing environment-dependent atomic charges, which in the case of 3G-HDNNPs are expressed by a second set of atomic neural networks22,23. These charges can then be used in standard algorithms like the Ewald sum to compute the full long-range electrostatic energy. Owing to the additional effort of constructing and using 3G ML potentials, most applications have been reported for molecular systems12,24,25, while they are rarely used in simulations of condensed systems, in which long-range electrostatic interactions are often efficiently screened.

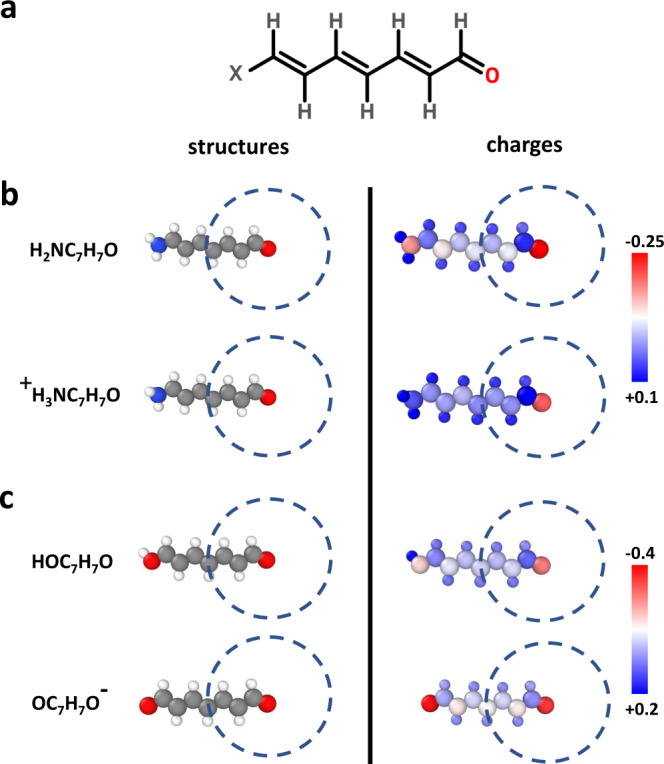

A remaining limitation of 3G ML potentials is their inability to describe long-range charge transfer and different charge states of a system, since the atomic partial charges are expressed as a function of the local chemical environment only. Neglecting non-local charge transfer and changes in the global charge distribution, which can be important in many systems26,27, can result in qualitative failures, as illustrated in Fig. 1 for the molecular model system XC7H7O displayed in panel a. Depending on the choice of the functional group X in b, such as an amino group NH2 or its protonated form NH3+, different partial charges are obtained, as shown in the plots of the DFT Hirshfeld charges on the right-hand side; in this work we use these charges as a qualitative fingerprint of the electronic structure. In particular, the charge of the right oxygen atom depends on the choice of X, although X is far outside its local atomic environment, displayed as a dashed circle. As a consequence, ML potentials relying on a local description, like 2G- and 3G-HDNNPs, cannot distinguish these systems and assign the same charge to the right oxygen in both molecules, which is chemically incorrect. A second case is illustrated in Fig. 1c. Here the OH group on the left is deprotonated, resulting in a negative ion in which two oxygen atoms almost equally share the negative charge. This charge is very different from the charge of the carbonyl oxygen in the neutral molecule. Again, however, the local environment of the carbonyl oxygen atom is identical, which is why 2G and 3G ML potentials cannot be applied to multiple charge states.

Fig. 1. Illustration of long-range charge transfer in a molecular system.

a The investigated molecule XC7H7O, with X representing different functional groups. b The protonation of the NH2 group yields a positive ion and results in different charges of the oxygen atom, as can be seen in the plot of the DFT atomic partial charges on the right side. In both cases, the local chemical environments of the oxygen atoms are identical within the cutoff spheres shown as dashed circles. c The deprotonation of the OH group yields a negative ion, and both oxygen atoms become chemically equivalent with nearly the same negative partial charge. Also in this case, the chemical environment of the right oxygen atom is identical to that in the neutral molecule, although the charge distribution differs. None of these cases can be correctly described by local methods like 2G and 3G ML potentials. The structure visualization for non-periodic systems was carried out using Ovito66.

This limitation of local atomistic potentials in the description of long-range charge transfer and of systems in different charge states was recognized some time ago, and different solutions have been proposed for simple empirical force fields28–31. In the context of ML potentials, the first method proposed to address this problem is the charge equilibration via neural network technique (CENT)32–34. In this method, a charge equilibration28 scheme is applied, which allows for a global redistribution of the charge over the full system to minimize a charge-dependent total energy expression. The charges are based on atomic electronegativities, which are determined as a function of the local chemical environment and expressed by atomic neural networks similar to the charges in 3G-HDNNPs. This method has enabled the inclusion of long-range charge transfer in an ML framework for the first time, but due to the employed energy expression it is primarily applicable to ionic systems35–37, and its overall accuracy is still lower than that of other state-of-the-art ML potentials. Recently, another promising method has been proposed by Xie, Persson and Small38, aiming for a correct description of systems with different charge states. In this method, atomic neural networks are used that depend not only on the local structure but also on atomic populations, which are determined in a self-consistent process. The training data for different populations were generated using constrained DFT calculations, and a first application to LinHn clusters has been reported. Furthermore, an extension of the AIMNet method has been proposed39, which can be used to predict energies and atomic charges for systems with non-zero total charge. Here, the interaction range between atoms is increased through iterative updates during which information is passed between nearby atoms.
Although the resulting charges are not used to calculate explicit Coulomb interactions, many related quantities, such as electronegativities, ionization potentials or condensed Fukui functions can be derived.

In the present work, we propose a general solution for the limitations of current ML potentials by introducing a fourth-generation (4G) HDNNP, which is applicable to long-range charge transfer and multiple charge states. It consists of highly accurate short-range atomic energies similar to those used in 2G-HDNNPs and charges determined from a charge equilibration method relying on electronegativities in the spirit of the CENT approach. Both the short-range atomic energies and the electronegativities are expressed by atomic neural networks as a function of the chemical environments. The capabilities of the method are illustrated for a series of model systems showcasing typical scenarios in chemistry and materials science that cannot be correctly described by conventional ML potentials. For all these systems we demonstrate that 4G-HDNNPs trained to DFT data are able to provide reliable energies, forces and charges in excellent agreement with electronic structure calculations. At the beginning of the following section, the methodology of 4G-HDNNPs is introduced, and the relation to other generations of HDNNPs and to the CENT method is discussed. After that, the results for a series of periodic and non-periodic benchmark systems are presented, including a detailed comparison to the performance of 2G- and 3G-HDNNPs. We show that previous generations of HDNNPs, which are unable to take distant structural changes into account, yield inaccurate energies and forces and can even miss distinct local minima of the PES, which are correctly resolved by the 4G-HDNNP. These results are general and equally apply to other types of 2G ML potentials.

Results

4G-HDNNP

The overall structure of the 4G-HDNNP is shown schematically in Fig. 2 for an arbitrary binary system. Like in 3G-HDNNPs the total energy consists of a short-range part, which, as we will see below, requires in addition non-local information, and an electrostatic long-range part, which is not truncated,

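$$E_\mathrm{tot}(\mathbf{R},\mathbf{Q}) = E_\mathrm{elec}(\mathbf{R},\mathbf{Q}) + E_\mathrm{short}(\mathbf{R},\mathbf{Q}) \tag{1}$$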

The electrostatic part Eelec(R, Q) depends on a set of atomic charges Q = {Qi}, which are trained to reference charges obtained in DFT calculations, and on the positions of the atoms R = {Ri}. An important difference from 3G-HDNNPs is that these charges are not directly expressed by atomic neural networks as a function of the local atomic environments, but are obtained indirectly from a charge equilibration scheme based on atomic electronegativities {χi}, which are adjusted to yield charges in agreement with the DFT reference charges. Here we choose these reference charges to be Hirshfeld charges40, but many other choices are in principle possible.

Fig. 2. Schematic structure of a 4G-HDNNP for a binary system.

For a binary system containing Na atoms of element a and Nb atoms of element b, the total energy consists of a short-range energy Eshort, which is a sum of atomic energies Ei, and a long-range electrostatic energy Eelec computed from atomic charges Qi. The atomic charges are determined by a charge equilibration method using environment-dependent atomic electronegativities χi expressed by atomic neural networks (red). These charges are then used to calculate the electrostatic energy and in addition serve as non-local input for the short-range atomic neural networks (blue) yielding the Ei. The geometric atomic environments are described by atom-centered symmetry function vectors Gi, which depend on the Cartesian coordinates Ri of the atoms and serve as inputs for the atomic neural networks.

Like in the CENT approach the atomic electronegativities are local properties defined as a function of the atomic environments using atomic neural networks. As in 2G- and 3G-HDNNPs there is one type of atomic neural network with a fixed architecture per element in the system making all atoms of the same type chemically equivalent, while the specific values of the electronegativities depend on the positions of all neighboring atoms inside a cutoff sphere of radius Rc. The positions of the neighboring atoms inside this sphere are specified by a vector Gi of atom-centered symmetry functions41, which ensures the translational, rotational and permutational invariance of the electronegativities.
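As an illustration of how a vector Gi can be built, the sketch below implements radial atom-centered symmetry functions of the widely used form Gi = Σj exp(−η(Rij − Rs)²) fc(Rij) with a cosine cutoff function. This is an assumption for illustration only: the specific symmetry functions and parameters (η, Rs) used for the 4G-HDNNPs are not given in this section, angular terms are omitted, and the function names are hypothetical.

```python
import numpy as np


def cutoff_cos(r, r_c):
    """Cosine cutoff f_c(r) = 0.5 * (cos(pi * r / r_c) + 1) for r < r_c, else 0."""
    return np.where(r < r_c, 0.5 * (np.cos(np.pi * r / r_c) + 1.0), 0.0)


def radial_sf(positions, i, eta, r_s, r_c):
    """Radial symmetry function of atom i, summed over all neighbors j != i."""
    positions = np.asarray(positions, dtype=float)
    r_ij = np.linalg.norm(positions - positions[i], axis=1)
    r_ij = np.delete(r_ij, i)  # exclude the central atom itself
    return float(np.sum(np.exp(-eta * (r_ij - r_s) ** 2) * cutoff_cos(r_ij, r_c)))


def sf_vector(positions, i, params, r_c):
    """Vector G_i built from several (eta, r_s) parameter pairs."""
    return np.array([radial_sf(positions, i, eta, r_s, r_c) for eta, r_s in params])


rng = np.random.default_rng(0)
pos = rng.uniform(-2.0, 2.0, size=(6, 3))       # hypothetical atomic positions
params = [(0.5, 0.0), (1.0, 1.0), (2.0, 2.0)]   # hypothetical (eta, r_s) pairs
g = sf_vector(pos, 0, params, r_c=4.23)

# Rotating plus translating the structure, or relabeling the neighbors, leaves G_0 unchanged.
theta = 0.7
rot = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                [np.sin(theta), np.cos(theta), 0.0],
                [0.0, 0.0, 1.0]])
g_rot = sf_vector(pos @ rot.T + np.array([1.0, -3.0, 0.5]), 0, params, r_c=4.23)
perm = np.concatenate(([0], 1 + rng.permutation(5)))  # keep the central atom at index 0
g_perm = sf_vector(pos[perm], 0, params, r_c=4.23)
```

Because only interatomic distances enter, the invariances hold by construction, and the cosine cutoff makes each term and its first derivative vanish smoothly at Rc.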

To predict the atomic charges, which are represented by Gaussian charge densities of width σi taken from the covalent radii of the respective elements, a charge equilibration scheme42 is used. In this scheme, the charge is distributed among the atoms in an optimal way to minimize the energy expression

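$$E_\mathrm{Qeq} = E_\mathrm{elec} + \sum_{i=1}^{N_\mathrm{at}} \left( \chi_i Q_i + \frac{1}{2} J_i Q_i^2 \right) \tag{2}$$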

with Eelec being the electrostatic energy of the Gaussian charges and Ji the element-specific hardness. The Ji do not depend on the chemical environment and are constant for each element. While they are manually chosen in the CENT method, we optimize them during training. They are hence treated as free parameters like the weights and biases of the neural networks. For the electrostatic energy we then obtain

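$$E_\mathrm{elec} = \sum_{i=1}^{N_\mathrm{at}} \sum_{j>i}^{N_\mathrm{at}} \frac{\operatorname{erf}\left( \frac{|\mathbf{R}_i - \mathbf{R}_j|}{\sqrt{2}\,\gamma_{ij}} \right)}{|\mathbf{R}_i - \mathbf{R}_j|} Q_i Q_j + \sum_{i=1}^{N_\mathrm{at}} \frac{Q_i^2}{2 \sigma_i \sqrt{\pi}} \tag{3}$$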

with

$$\gamma_{ij} = \sqrt{\sigma_i^2 + \sigma_j^2} \tag{4}$$
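Equations (2) to (4) translate directly into code. The sketch below evaluates Eelec and EQeq for a small non-periodic system. It assumes atomic units (Coulomb constant of one) and uses a plain double loop instead of an Ewald sum, so it is only meant for isolated clusters; the function names are hypothetical.

```python
import math

import numpy as np


def e_elec(R, Q, sigma):
    """Eq. (3): electrostatic energy of Gaussian charges (atomic units, no periodicity)."""
    R = np.asarray(R, dtype=float)
    Q = np.asarray(Q, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    # Self-interaction of each Gaussian charge distribution.
    energy = float(np.sum(Q**2 / (2.0 * sigma * math.sqrt(math.pi))))
    n = len(Q)
    for i in range(n):
        for j in range(i + 1, n):
            r = float(np.linalg.norm(R[i] - R[j]))
            gamma = math.sqrt(sigma[i] ** 2 + sigma[j] ** 2)  # Eq. (4)
            energy += float(math.erf(r / (math.sqrt(2.0) * gamma)) / r * Q[i] * Q[j])
    return energy


def e_qeq(R, Q, sigma, chi, J):
    """Eq. (2): charge-equilibration energy with electronegativities chi and hardness J."""
    Q = np.asarray(Q, dtype=float)
    onsite = np.asarray(chi, dtype=float) * Q + 0.5 * np.asarray(J, dtype=float) * Q**2
    return e_elec(R, Q, sigma) + float(np.sum(onsite))
```

At large separations the error function approaches one and the pair term reduces to the point-charge Coulomb energy, while at short distances the Gaussian smearing keeps it finite.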

To solve this minimization problem the derivatives of EQeq with respect to the charges Qi are calculated and set to zero,

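$$\frac{\partial E_\mathrm{Qeq}}{\partial Q_i} = 0 \quad \forall\, i = 1, \dots, N_\mathrm{at} \quad \Rightarrow \quad \sum_{j=1}^{N_\mathrm{at}} A_{ij} Q_j + \chi_i = 0 \tag{5}$$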

where the elements of the matrix A are given by

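$$A_{ij} = \begin{cases} J_i + \dfrac{1}{\sigma_i \sqrt{\pi}} & \text{if } i = j \\[1ex] \dfrac{\operatorname{erf}\left( \frac{|\mathbf{R}_i - \mathbf{R}_j|}{\sqrt{2}\,\gamma_{ij}} \right)}{|\mathbf{R}_i - \mathbf{R}_j|} & \text{otherwise} \end{cases} \tag{6}$$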

Considering the constraint that the sum of all charges must equal the total charge Qtot of the system, which is included via a Lagrange multiplier λ, the following set of linear equations is solved:

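$$\begin{pmatrix} A_{11} & \cdots & A_{1 N_\mathrm{at}} & 1 \\ \vdots & \ddots & \vdots & \vdots \\ A_{N_\mathrm{at} 1} & \cdots & A_{N_\mathrm{at} N_\mathrm{at}} & 1 \\ 1 & \cdots & 1 & 0 \end{pmatrix} \begin{pmatrix} Q_1 \\ \vdots \\ Q_{N_\mathrm{at}} \\ \lambda \end{pmatrix} = \begin{pmatrix} -\chi_1 \\ \vdots \\ -\chi_{N_\mathrm{at}} \\ Q_\mathrm{tot} \end{pmatrix} \tag{7}$$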
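A minimal sketch of the charge equilibration step, assuming atomic units and a non-periodic system: it assembles the matrix A of Eq. (6), borders it with the total-charge constraint as in Eq. (7), and solves the system with a dense direct solver (numpy.linalg.solve). Names and parameter values are illustrative and are not taken from the authors' implementation.

```python
import math

import numpy as np


def build_A(R, sigma, J):
    """Eq. (6): matrix A for Gaussian charges (atomic units, non-periodic)."""
    R = np.asarray(R, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    n = len(sigma)
    A = np.empty((n, n))
    for i in range(n):
        A[i, i] = J[i] + 1.0 / (sigma[i] * math.sqrt(math.pi))  # hardness + self term
        for j in range(i + 1, n):
            r = float(np.linalg.norm(R[i] - R[j]))
            gamma = math.sqrt(sigma[i] ** 2 + sigma[j] ** 2)      # Eq. (4)
            A[i, j] = A[j, i] = math.erf(r / (math.sqrt(2.0) * gamma)) / r
    return A


def solve_qeq(R, sigma, chi, J, q_tot):
    """Eq. (7): solve the bordered system for the charges Q and the multiplier lambda."""
    A = build_A(R, sigma, J)
    n = A.shape[0]
    M = np.zeros((n + 1, n + 1))
    M[:n, :n] = A
    M[:n, n] = 1.0   # Lagrange multiplier column
    M[n, :n] = 1.0   # total charge constraint row
    rhs = np.concatenate([-np.asarray(chi, dtype=float), [q_tot]])
    solution = np.linalg.solve(M, rhs)
    return solution[:n], solution[n]


rng = np.random.default_rng(1)
R = rng.uniform(0.0, 6.0, size=(5, 3))   # hypothetical positions (bohr)
sigma = np.full(5, 1.2)                  # hypothetical Gaussian widths
J = np.full(5, 0.5)                      # hypothetical hardness values
chi = rng.normal(size=5)                 # stand-in for NN electronegativities
Q, lam = solve_qeq(R, sigma, chi, J, q_tot=1.0)
```

Because EQeq is quadratic in the charges, a single linear solve gives the exact constrained stationary point, which is the minimum when A is positive definite.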

Highly optimized algorithms are available for systems of linear equations, which can be solved efficiently for small and medium-sized systems containing up to about ten thousand atoms in a few seconds on modern hardware. For larger systems, the cubic scaling of the standard algorithms can become a bottleneck. In that case, one could resort to iterative solvers, in which the most expensive part of each iteration is a matrix-vector multiplication involving the matrix A. This corresponds to the evaluation of the electrostatic potential at each atom's position, for which numerous low-complexity algorithms, such as fast multipole methods, are known. In this way, it is possible to reduce the effort from cubic to nearly linear scaling, providing access to very large systems.
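To illustrate the iterative route, the sketch below avoids the bordered, indefinite matrix of Eq. (7). Assuming A is symmetric positive definite (which holds, for instance, when all Ji are non-negative), it solves A x = −χ and A y = 1 with the conjugate gradient method and then chooses λ such that Q = x − λy satisfies the total-charge constraint. The solver accesses A only through a matrix-vector product, which is where a fast multipole or similar method would be plugged in; here a dense product stands in for it. This two-solve construction is our illustration, not necessarily the scheme used by the authors.

```python
import numpy as np


def conjugate_gradient(matvec, b, tol=1e-10, max_iter=1000):
    """Plain CG for a symmetric positive-definite operator given only as a matvec."""
    x = np.zeros_like(b)
    r = b - matvec(x)
    p = r.copy()
    rs = r @ r
    for _ in range(max_iter):
        if np.sqrt(rs) < tol:
            break
        Ap = matvec(p)
        alpha = rs / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x


def solve_qeq_iterative(matvec, chi, q_tot):
    """Charges from two CG solves: A x = -chi and A y = 1, then Q = x - lambda * y."""
    chi = np.asarray(chi, dtype=float)
    x = conjugate_gradient(matvec, -chi)
    y = conjugate_gradient(matvec, np.ones_like(chi))
    lam = (x.sum() - q_tot) / y.sum()  # enforces sum(Q) = q_tot
    return x - lam * y, lam


rng = np.random.default_rng(2)
B = rng.normal(size=(6, 6))
A = B @ B.T + 6.0 * np.eye(6)        # hypothetical SPD stand-in for Eq. (6)
chi = rng.normal(size=6)
Q, lam = solve_qeq_iterative(lambda v: A @ v, chi, -1.0)
```

Each CG iteration costs one matrix-vector product, so the overall scaling is set by how fast the electrostatic potential can be evaluated.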

Overall, this process is similar to that in CENT32; the main difference lies in the training process. In CENT, only the error with respect to the DFT energies is minimized, and the atomic charges obtained during the charge equilibration process serve merely as intermediate quantities without a strict physical meaning. In the 4G-HDNNP proposed in this work, the charges are trained directly to reproduce reference charges from DFT and are therefore qualitatively meaningful, although one should be aware that atomic partial charges are not physical observables and different partitioning schemes can yield different numerical values43.

Once the atomic electronegativities have been learned, a functional relation between the atomic structure and the atomic partial charges is available. The intermediate global charge equilibration step ensures that these charges depend on the atomic positions, chemical composition and total charge of the entire system, and thus in contrast to 3G-HDNNPs non-local charge transfer is naturally included.

In a second step, the local atomic energy contributions yielding the short-range energy according to

$$E_\mathrm{short} = \sum_{i=1}^{N_\mathrm{at}} E_i \tag{8}$$

have to be determined. As in 2G-HDNNPs, the short-range atomic energies are provided by individual atomic neural networks based on information about the chemical environments. An important difference from 2G-HDNNPs is that the atomic energies in addition depend on non-local information, which is provided to the short-range atomic neural networks by using as input not only the atom-centered symmetry function values describing the positions of the neighboring atoms inside the cutoff spheres, but also the atomic partial charges determined in the first step (see Fig. 2). This information is required to take into account changes in the local electronic structure resulting from possible long-range charge transfer, which has an immediate effect on the local many-body interactions.
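The input layout described above can be sketched with a toy element-specific feed-forward network whose input is the symmetry function vector Gi extended by the charge Qi; the short-range energy is the sum of the atomic outputs as in Eq. (8). Network size, activation function and the random (untrained) weights are placeholders, and the class and function names are hypothetical.

```python
import numpy as np


class AtomicNN:
    """Toy element-specific network: input [G_i..., Q_i] -> atomic energy E_i (untrained)."""

    def __init__(self, n_sf, n_hidden, rng):
        self.W1 = rng.normal(scale=0.5, size=(n_sf + 1, n_hidden))  # +1 input for Q_i
        self.b1 = np.zeros(n_hidden)
        self.w2 = rng.normal(scale=0.5, size=n_hidden)
        self.b2 = 0.0

    def __call__(self, G_i, Q_i):
        x = np.append(G_i, Q_i)               # local geometry plus non-local charge
        h = np.tanh(x @ self.W1 + self.b1)
        return float(h @ self.w2 + self.b2)


def e_short(G, Q, elements, nets):
    """Eq. (8): sum of atomic energies, using one network per element."""
    return sum(nets[el](G[i], Q[i]) for i, el in enumerate(elements))


rng = np.random.default_rng(3)
nets = {"C": AtomicNN(4, 8, rng), "H": AtomicNN(4, 8, rng)}
G = rng.uniform(size=(3, 4))              # hypothetical symmetry function vectors
Q = np.array([-0.1, 0.05, 0.05])          # charges from the charge equilibration step
elements = ["C", "H", "H"]
e0 = e_short(G, Q, elements, nets)
```

With identical Gi but different Qi, the same atom receives a different energy, which is the additional freedom a purely local 2G network lacks; swapping two atoms of the same element leaves the total unchanged.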

The short-range atomic neural networks are then trained to express the remaining part of the total energy Eref according to

$$E_\mathrm{ref} - E_\mathrm{elec} = E_\mathrm{short} = \sum_{i=1}^{N_\mathrm{at}} E_i \tag{9}$$

where the electrostatic energy is determined based on the partial charges resulting from the fitted atomic electronegativities. Thus, by construction the goal of the short-range part is to represent all energy contributions that are not covered by the electrostatic energy such that double counting is avoided. In addition to the energies, also the forces are used for determining the parameters of the short-range atomic neural networks. We note that since the short-range energy depends on the atomic charges, which in turn are functions of all atomic coordinates, the derivatives ∂Eshort/∂Qi as well as ∂Qi/∂R have to be considered in the computation of the forces. Details on how these contributions can be efficiently computed, as well as many other details of the 4G-HDNNP method, can be found in the supplementary methods.
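Why the charge derivatives matter can be checked on a deliberately simple, hypothetical one-dimensional model: two atoms on a line, a charge transfer q(d) that depends on their distance d, and a short-range energy that depends on both d and the charges. The force from the chain rule, −(∂E/∂x + Σi ∂E/∂Qi ∂Qi/∂x), agrees with a finite-difference derivative of the composed energy, whereas dropping the charge term does not. The functional forms and parameters are arbitrary choices for illustration, not the 4G-HDNNP expressions.

```python
import numpy as np

D_EQ, K_Q = 1.2, 0.8       # hypothetical parameters of the toy energy
Q_MAX, D_MID = 0.3, 1.0    # hypothetical parameters of the toy charge model


def charges(x):
    """Toy charge model Q = (q(d), -q(d)) with q(d) = Q_MAX * tanh(d - D_MID)."""
    d = x[1] - x[0]
    q = Q_MAX * np.tanh(d - D_MID)
    return np.array([q, -q])


def e_short(x, Q):
    """Toy short-range energy with explicit dependence on positions and charges."""
    d = x[1] - x[0]
    return 0.5 * (d - D_EQ) ** 2 + K_Q * Q[0] * d


def forces(x, include_charge_term=True):
    """F_k = -(dE/dx_k at fixed Q + sum_i dE/dQ_i * dQ_i/dx_k)."""
    d = x[1] - x[0]
    Q = charges(x)
    dd_dx = np.array([-1.0, 1.0])
    total = ((d - D_EQ) + K_Q * Q[0]) * dd_dx      # explicit position dependence
    if include_charge_term:
        dE_dQ = np.array([K_Q * d, 0.0])           # partial derivatives dE/dQ_i
        dq_dd = Q_MAX / np.cosh(d - D_MID) ** 2
        dQ_dx = np.outer([dq_dd, -dq_dd], dd_dx)   # Jacobian dQ_i/dx_k
        total = total + dE_dQ @ dQ_dx
    return -total


def forces_fd(x, h=1e-6):
    """Central finite differences of the composed energy E(x, Q(x))."""
    f = np.zeros_like(x)
    for k in range(len(x)):
        step = np.zeros_like(x)
        step[k] = h
        e_plus = e_short(x + step, charges(x + step))
        e_minus = e_short(x - step, charges(x - step))
        f[k] = -(e_plus - e_minus) / (2.0 * h)
    return f


x = np.array([0.0, 1.5])
```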

In summary, in contrast to the CENT method, the short-range interactions are not described through the charges resulting from the charge equilibration process but are described by separate short-range neural networks, which enables a more accurate description of the total energy.

Overview of test systems

In the following subsections we demonstrate the limitations of ML potentials based on local properties only and show how they can be overcome by the 4G-HDNNP. For this purpose we use a set of non-periodic and periodic systems, which cover a wide range of typical situations in chemistry and materials science. The non-periodic systems consist of a covalent organic molecule, a small metal cluster and a cluster of an ionic material covering very different types of atomic interactions. These examples demonstrate the simultaneous applicability of a single 4G-HDNNP to systems of different total charges and the correct description of long-range charge transfer and the associated electrostatic energy. As a periodic system we have chosen a small gold cluster adsorbed on a MgO(001) slab, which is a prototypical example for heterogeneous catalysis. We show that in contrast to established ML potentials, the 4G-HDNNP is able to reproduce the change in adsorption geometry of the cluster if dopant atoms are introduced in the slab far away from the cluster. In all cases, the 4G-HDNNP PES is very close to the results obtained from DFT.

While in these examples we do not explicitly investigate the transferability of the potentials to different systems, we expect that the 4G-HDNNP in general provides an improved transferability compared to 2G and 3G ML potentials due to the underlying physical description of the global charge distribution and the resulting electrostatic energy. This expectation is supported by the fact that even traditional charge equilibration schemes with constant electronegativities are known to work well across different systems44. Furthermore, for the related CENT approach a broad transferability has already been demonstrated for different atomic environments33.

A benchmark for organic molecules

The first model system we study is a linear organic molecule consisting of a chain of ten sp-hybridized carbon atoms terminated by two hydrogen atoms as shown in Fig. 3a. Molecules of this type have been studied before in electronic structure calculations45–47. For this molecule we will now demonstrate the applicability of 4G-HDNNPs to systems with long-range charge transfer induced by protonation, which changes the total charge and the local structure in a part of the system. Since the majority of existing machine learning potentials rely on local structural information only without explicit information about the global charge distribution and total charge, they are not simultaneously applicable to both neutral and charged systems.

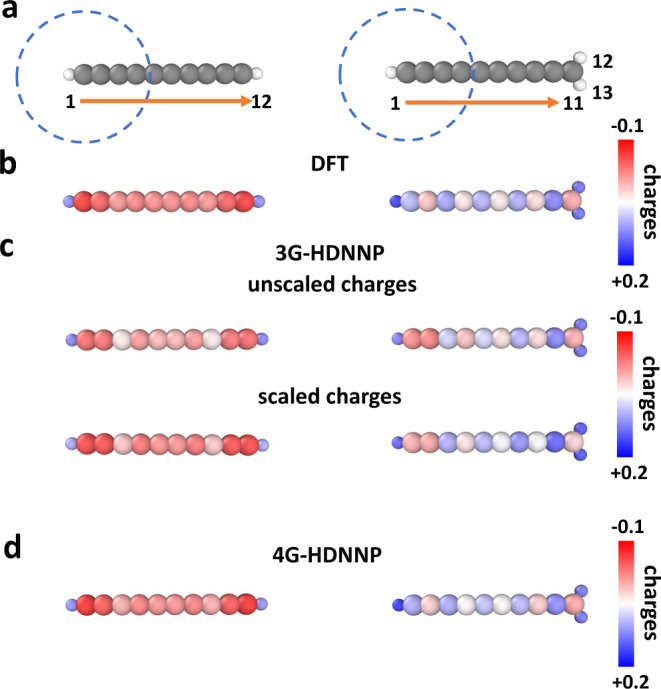

Fig. 3. Charge redistribution in organic molecules.

a DFT-optimized structures of C10H2 (left) and C10H3+ (right) with atom IDs. Carbon and hydrogen atoms are colored in gray and white, respectively. The dashed circle shows the cutoff radius of the left carbon atom defining its chemical environment. b shows the atomic partial charges obtained from DFT. The unscaled and scaled 3G-HDNNP charges are displayed in c, while the 4G-HDNNP charges are shown in d.

This is different for 4G-HDNNPs, which naturally include the correct long-range electrostatic energy for any global charge present in the training set. Because of the protonation of the terminal carbon atom, its hybridization state changes to sp2, and the electronic structure of the resulting C10H3+ cation is modified even at very large distances along the whole molecule. This is reflected in the differences between the DFT charges of the two molecules in Fig. 3b, both of which have been structurally optimized by DFT. The geometries of both molecules are given in the supplementary tables.

Using a data set containing both molecules, we have constructed 2G-, 3G-, and 4G-HDNNPs using a cutoff radius Rc = 4.23 Å as illustrated by the circle in Fig. 3a for the example of the left carbon atom. In Fig. 3c we show the atomic partial charges obtained with the 3G-HDNNP in two forms: first as unscaled charges directly obtained from the atomic neural network fits without any constraint for the correct total charge of the system, and second rescaled to ensure total charges of zero or one, respectively. It can be seen that the scaling process does not significantly improve the 3G-HDNNP charges.
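The text does not specify how the 3G-HDNNP charges were rescaled. One simple possibility, assumed here purely for illustration, is to distribute the deviation from the target total charge uniformly over all atoms. Such a correction fixes the total charge but, since it adds the same constant to every atom, it leaves all charge differences between atoms, and hence any wrong charge pattern, unchanged. The function name and charge values are hypothetical.

```python
import numpy as np


def shift_to_total_charge(Q, q_tot):
    """Shift all charges by the same amount so that they sum to q_tot (assumed scheme)."""
    Q = np.asarray(Q, dtype=float)
    return Q - (Q.sum() - q_tot) / len(Q)


q_raw = np.array([-0.12, 0.03, 0.05, 0.08])   # hypothetical unconstrained NN charges
q_neutral = shift_to_total_charge(q_raw, 0.0)
q_cation = shift_to_total_charge(q_raw, 1.0)
```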

The atoms in the left half of the molecule are far from the added proton, such that their atomic environments differ only slightly, owing to the DFT geometry optimization. In addition, the training set contains many nearly identical environments with different atomic charges for these atoms, which results in high fitting errors due to the contradictory information. As a consequence, the neural networks assign averaged charges to these atoms, which differ qualitatively from the DFT reference charges of both systems. For instance, the 3G-HDNNP partial charges on atom 2, i.e., the left carbon atom, are almost identical in both molecules, although they are very different in DFT. Note that the predicted charges of atoms 1-6 in C10H2 and C10H3+ would even be exactly identical if the latter molecule had not been relaxed after protonation. The charges obtained with the 4G-HDNNP, shown in Fig. 3d, on the other hand, match the DFT charges very accurately for both molecules, since this method can distinguish the two molecules.

The inaccurate charges obtained with the 3G-HDNNP lead to a poor quality of the potential energy surface, and the same is observed for the short-range-only 2G-HDNNP. In Table 1 we compare the errors of the total energies as well as the mean errors of the atomic charges and forces of all HDNNP generations for the DFT-optimized structures. The errors of all quantities obtained with the 4G-HDNNP are much lower than those of the 2G- and 3G-HDNNPs, with the single exception of the energy of C10H3+ obtained with unscaled 3G-HDNNP charges. Further, we note that in several cases the energies obtained with the 3G-HDNNP are even worse than those of the 2G-HDNNP, as the unphysical charge distribution to some extent prevents an accurate representation of the energy.

Table 1.

Energy and charge errors obtained for the organic molecules. Energy error (meV/atom) and mean errors of the atomic charges (10−3 e) and forces (eV/Å) of C10H2 and C10H3+ with respect to DFT, obtained with the different HDNNP generations for the DFT-optimized structures. For the 3G-HDNNP, the results for scaled and unscaled charges are given.

| System | Potential | Energy (meV/atom) | Charges (10−3 e) | Forces (eV/Å) |
|---|---|---|---|---|
| C10H2 | 2G-HDNNP | 0.684 | n/a | 0.095 |
| C10H2 | 3G-HDNNP (unscaled) | 1.255 | 19.72 | 0.430 |
| C10H2 | 3G-HDNNP (scaled) | 2.193 | 10.76 | 0.138 |
| C10H2 | 4G-HDNNP | 0.463 | 4.820 | 0.032 |
| C10H3+ | 2G-HDNNP | 0.922 | n/a | 0.127 |
| C10H3+ | 3G-HDNNP (unscaled) | 0.046 | 17.82 | 0.658 |
| C10H3+ | 3G-HDNNP (scaled) | 1.425 | 17.72 | 0.259 |
| C10H3+ | 4G-HDNNP | 0.176 | 5.048 | 0.042 |

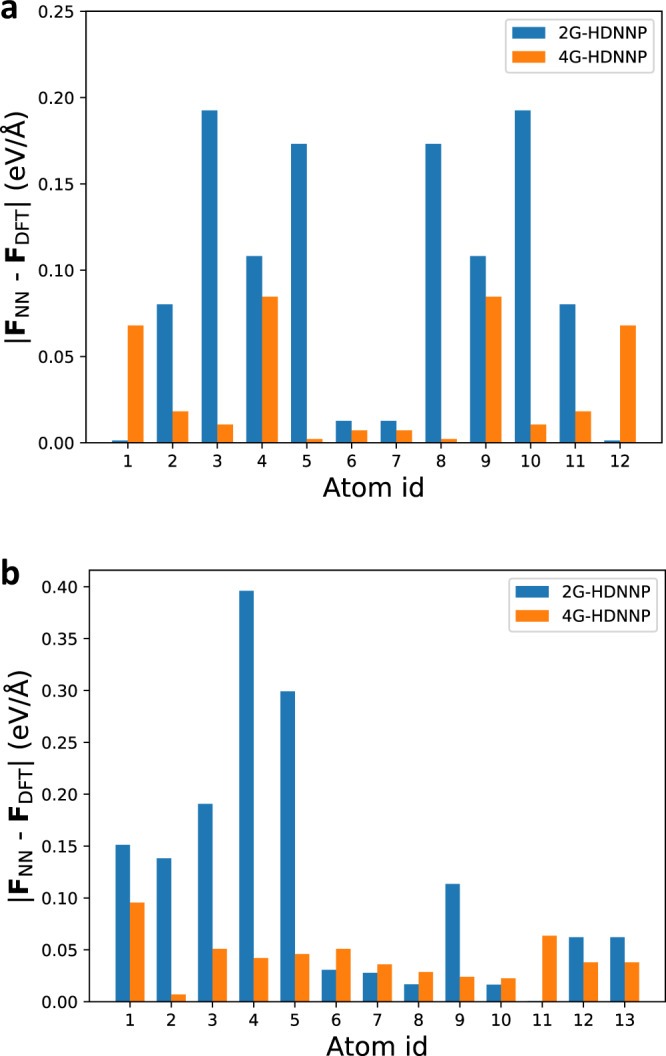

To investigate the forces in more detail, in Fig. 4 we plot the individual atomic forces in both molecules obtained with the 2G-HDNNP and the 4G-HDNNP for the DFT-optimized structures. For all atoms in both molecules, the 4G-HDNNP yields very low force errors, with an average error of only 0.037 eV/Å, underlining the quality of this PES. For the 2G-HDNNP, however, the force errors are significantly larger for the atoms in the left half of C10H3+ and for all atoms in C10H2. The reason is again that the 2G-HDNNP cannot distinguish both molecules for these atoms, and the force errors are only low close to the extra proton in C10H3+, which can be recognized as a distinct local structural feature in the atomic environments of the right half of this molecule.

Fig. 4. Force errors of the HDNNPs for the organic molecules.

2G- and 4G-HDNNP forces for the atoms in the DFT-optimized structures of C10H2 and C10H3+ (shown in a and b, respectively).

Interestingly, the relatively high errors of the 2G-HDNNP forces are not matched by high energy errors, which instead are surprisingly low, below 1 meV/atom for both molecules. This suggests that the total energy predicted by 2G-HDNNPs may benefit from error compensation, in that the atomic energies in the right half of C10H3+ are adjusted to compensate for the deficiencies of the atomic energies in the left half of the molecule.

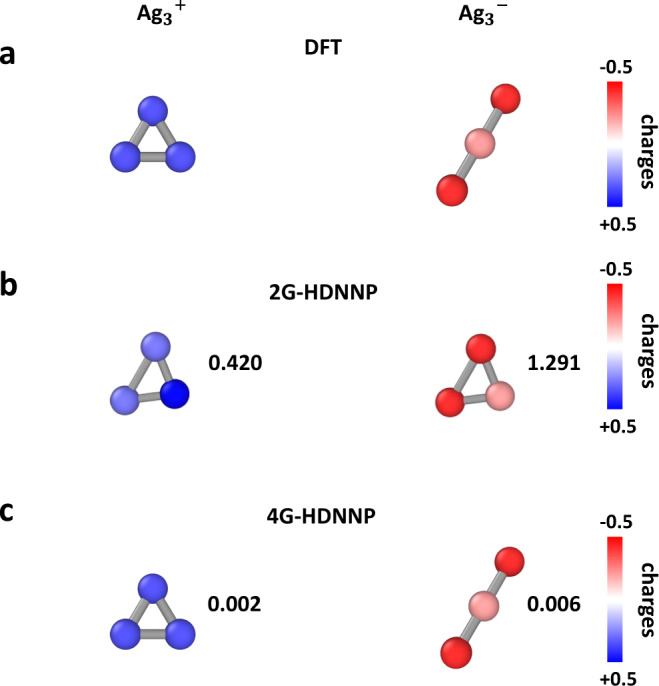

Metal clusters: Ag3

In this example, we investigate a small metal cluster, Ag3, in two different charge states. The potential energy surface of small clusters is strongly influenced by the ionization state of the cluster, and the ground state can differ as a function of the total charge of the cluster48–51. Owing to the small system size, there are no long-range effects, and the full system is included in each atomic environment. Therefore, in principle 2G-HDNNPs should be perfectly suited to describe the PES of Ag3, but this is only true as long as the total charge of the system does not change, since for a combination of data with different total charges in the training set, like Ag3+ and Ag3−, the unique relation between atomic positions and energy is lost. The minimum-energy structures of both cluster ions obtained from DFT are shown in Fig. 5a along with the atomic partial charges. After training a 2G-HDNNP and a 4G-HDNNP to data containing both types of clusters, we have reoptimized the geometries with the respective HDNNP generation. As expected, the minima obtained with the 2G-HDNNP (Fig. 5b) are identical for both charge states but do not agree with any of the DFT structures. The 4G-HDNNP, on the other hand, which in addition to the structural information also takes the total charge and the resulting partial charges into account, is able to predict the minima and also the atomic partial charges of both systems with very high accuracy (Fig. 5c). In this case, the inability of the 2G-HDNNP to distinguish between the two clusters is also apparent from the energy errors with respect to DFT. While the energy errors for Ag3+ and Ag3− obtained from the 4G-HDNNP are only about 1.166 meV/atom and 0.320 meV/atom, respectively, the errors of the 2G-HDNNP are 0.605 and 2.017 eV/atom and thus several orders of magnitude larger. The 3G-HDNNP using scaled charges performs even worse, with errors of 0.713 and 5.721 eV/atom.
This is due to the non-physical electrostatic contribution calculated from the incorrectly predicted charges.

Fig. 5. Optimized geometry and atomic charges of Ag clusters.

Structures and atomic partial charges of Ag3+ and Ag3− optimized with DFT in a, the 2G-HDNNP in b and the 4G-HDNNP in c. The numbers give the root mean squared displacement (RMSD) in Å with respect to the corresponding DFT minima. The partial charges in b are shown for illustration purposes only and have been obtained from a scaled 3G-HDNNP.
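The RMSD values quoted in the caption compare two structures of the same cluster. A common way to compute such a value, assumed here since the alignment procedure is not stated, is to remove the relative translation and the optimal rotation (Kabsch algorithm) before averaging the squared atomic displacements. The coordinates below are hypothetical and do not correspond to the clusters in Fig. 5.

```python
import numpy as np


def kabsch_rmsd(P, Q):
    """RMSD between two structures after optimal translation and rotation (Kabsch)."""
    P = np.asarray(P, dtype=float) - np.mean(P, axis=0)
    Q = np.asarray(Q, dtype=float) - np.mean(Q, axis=0)
    H = P.T @ Q                                 # 3x3 covariance matrix
    U, S, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))      # exclude improper rotations (reflections)
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T     # rotation mapping P onto Q
    diff = P @ R.T - Q
    return float(np.sqrt(np.mean(np.sum(diff**2, axis=1))))


tri = np.array([[0.0, 0.0, 0.0], [2.6, 0.0, 0.0], [1.3, 2.25, 0.0]])  # hypothetical triangle
lin = np.array([[0.0, 0.0, 0.0], [2.6, 0.0, 0.0], [5.2, 0.0, 0.0]])   # hypothetical chain
a, b = 0.9, 0.4
rot_x = np.array([[1.0, 0.0, 0.0], [0.0, np.cos(a), -np.sin(a)], [0.0, np.sin(a), np.cos(a)]])
rot_z = np.array([[np.cos(b), -np.sin(b), 0.0], [np.sin(b), np.cos(b), 0.0], [0.0, 0.0, 1.0]])
tri_moved = tri @ (rot_x @ rot_z).T + np.array([1.0, -2.0, 0.5])
```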

NaCl cluster ions

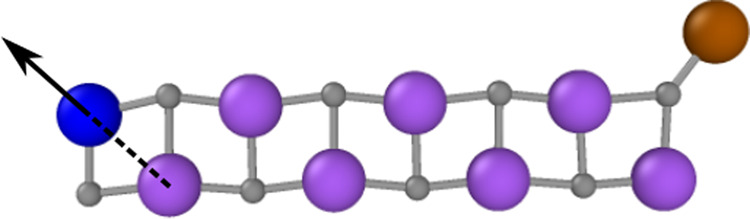

As the last non-periodic example, we select a system with mainly ionic bonding, a positively charged Na9Cl8+ cluster, and analyze the changes of the PES when a neutral sodium atom is removed. The initial structure of the cluster ion has been obtained from a DFT geometry optimization and is shown in Fig. 6. The sodium atoms are shown in purple, blue, and brown, while the chlorine atoms are displayed in gray. We then construct a second system by removing the brown sodium atom from the cluster while keeping the positions of the remaining atoms fixed. Since the overall positive charge of the cluster is maintained, the charge is redistributed throughout the new Na8Cl8+ cluster ion.

Fig. 6. Optimized structure of the Na9Cl8+ cluster.

Sodium atoms are shown in purple, blue and brown, chlorine atoms in gray. The arrow indicates the direction along which the blue sodium atom is moved for the energy and force plots in Fig. 7a and 7b. The position of this atom is defined by the Na–Na distance indicated as dashed line.

To investigate the consequences of this change in the electronic structure on the PES, we compute and compare the energies and forces when moving the blue sodium atom along a one-dimensional path indicated by the arrow in Fig. 6 for both cluster ions. The distance to the closest neighboring sodium atom highlighted as dashed line is used to define the structure.

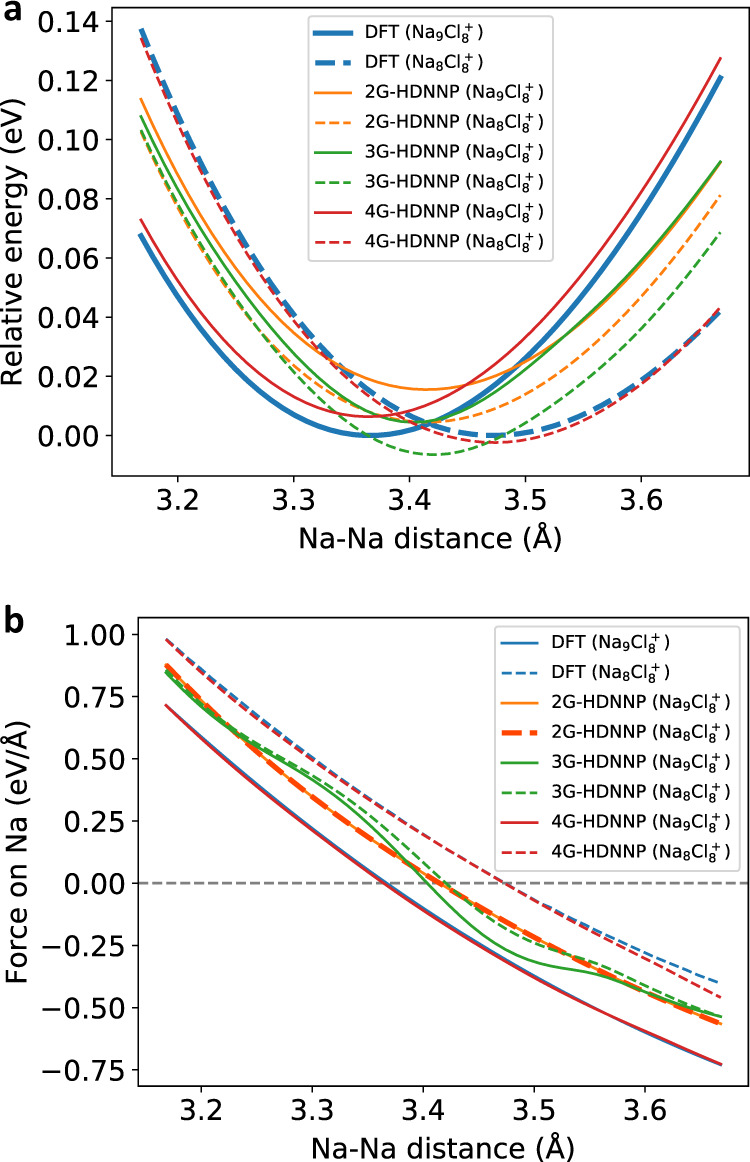

Figure 7 shows the energies for both systems obtained with DFT, as well as the 2G-, 3G- and 4G-HDNNPs. All energies are given as relative energies to the minimum DFT energy of the respective cluster ion and refer to the full systems. First, we note that the positions of the DFT minima differ by more than 0.1 Å, i.e., depending on the presence of the very distant brown atom, the blue atom adopts different equilibrium positions. The 2G-HDNNP, however, is unable to distinguish these minima, and instead the same local minimum Na–Na distance is found for both systems, which is approximately the average of the two DFT minima. We note that the 2G-HDNNP energy curves of the two systems are not identical but differ by an energy offset, as some of the atomic environments in the right part of the systems differ, yielding different atomic energies. Since these environments do not change when moving the blue atom, this offset is constant. For the 3G-HDNNP the same qualitative behavior is observed, and two very similar but not identical minima are found for both systems. Still, in the case of the 3G-HDNNP the energy offset between both systems is no longer constant, as the long-range electrostatic interactions between the blue and the brown atom in Na9Cl8+ are position-dependent. We note that in spite of these qualitative differences with respect to DFT, the 2G- and 3G-HDNNP curves deviate by only about 1 meV per atom from the DFT curves. This is very small and of the typical order of magnitude of state-of-the-art ML potentials, and in the present case this apparently high accuracy hides the qualitatively wrong minima. Finally, the 4G-HDNNP energies for both systems are very accurate, and the energy curves match the corresponding DFT curves very closely. Both distinct local minima are correctly identified and at the right positions.

Fig. 7. Relative energies and forces of the NaCl clusters.

a Relative energies of all potentials with respect to the DFT minima of the Na8Cl and the Na9Cl clusters as a function of the Na–Na distance, and b forces acting on the blue sodium atom along the path shown in Fig. 6. For the 3G-HDNNP, unscaled charges have been used in this plot.

Next, we turn to the forces shown in Fig. 7b, which are fully consistent with the energy curves. The DFT forces acting on the displaced atom differ between the two cluster ions, and this difference is well reproduced by the 4G-HDNNP. The 2G-HDNNP forces of both systems are exactly identical, because the two energy curves differ only by a constant offset (Fig. 7a), whereas the 3G-HDNNP forces of the two systems differ slightly due to the additionally included long-range electrostatics.
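The statement that a constant energy offset leaves the forces unchanged follows directly from F = −dE/dx. The minimal sketch below checks this numerically on a hypothetical one-dimensional toy curve; the Morse-like function, its parameters and all helper names are illustrative assumptions, not the NaCl PES. A constant shift (the 2G-like case) gives identical forces, whereas an assumed position-dependent long-range term (the 3G-like case) changes them.

```python
import math


def toy_energy(x):
    """Hypothetical Morse-like energy curve (eV) versus Na-Na distance x (Å).

    The parameters are illustrative only and are not fitted to the NaCl data.
    """
    depth, width, x0 = 0.5, 1.2, 3.4
    return depth * (1.0 - math.exp(-width * (x - x0))) ** 2


def force(energy, x, h=1e-5):
    """Force F = -dE/dx evaluated with a central finite difference."""
    return -(energy(x + h) - energy(x - h)) / (2.0 * h)


def constant_offset(x):
    """2G-like case: distant, unchanged environments add a constant energy."""
    return toy_energy(x) + 0.37


def position_dependent(x):
    """3G-like case: a hypothetical long-range term that depends on x."""
    return toy_energy(x) + 0.1 / (x + 10.0)


for x in (3.0, 3.4, 3.8):
    print(f"x = {x:.1f} Å: F = {force(toy_energy, x):+.6f}, "
          f"constant offset: {force(constant_offset, x):+.6f}, "
          f"position-dependent: {force(position_dependent, x):+.6f} eV/Å")
```

The first two force columns agree to numerical precision, while the third differs by the derivative of the added term.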

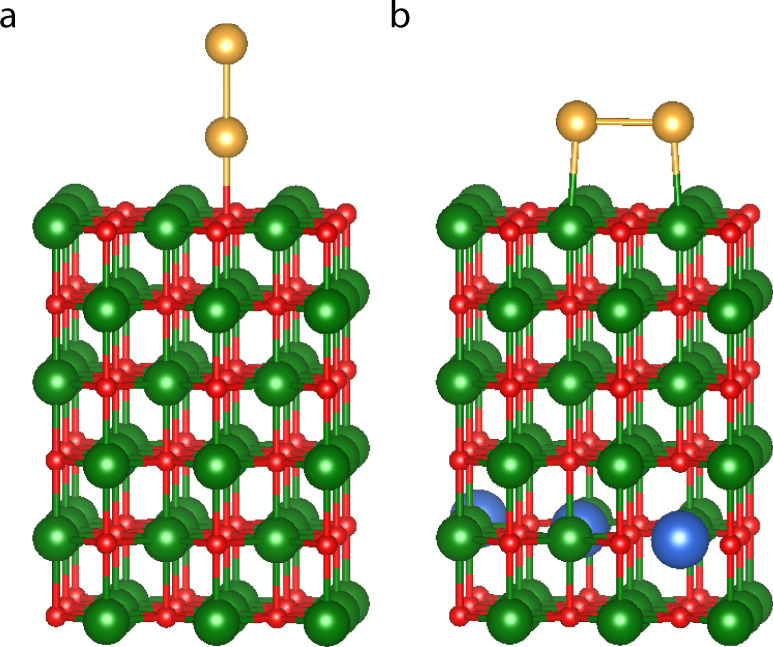

Au2 cluster on MgO(001)

As an example of a periodic system, we choose a diatomic gold cluster supported on the MgO(001) surface. Similar systems have attracted attention because of their catalytic activity for reactions such as carbon monoxide oxidation, propylene epoxidation, the water-gas shift reaction, and the hydrogenation of unsaturated hydrocarbons52. Theoretical53,54 as well as experimental studies55 have shown that the geometry of these clusters can be modified by introducing dopant atoms into the oxide substrate. This ability to control the cluster morphology is of great interest, as it can enhance the catalytic activity of the system54. 2G-HDNNPs have been used before to study supported metal clusters56–58, but systems as complex as doped substrates have so far remained inaccessible, since a physically correct description of these systems requires long-range charge transfer between the dopant and the gold atoms.

For Au2 on MgO(001) there are two main adsorption geometries: an upright “non-wetting” orientation, in which the dimer is attached to a surface oxygen atom, and a “wetting” configuration parallel to the surface, in which the two Au atoms reside on two Mg atoms. DFT optimizations of the positions of the gold atoms on fixed doped and undoped substrates reveal that the presence of the dopant atoms changes the relative stability of the two structures. On the pure MgO support (Fig. 8a) the minimum-energy structure is “non-wetting”, while a flat “wetting” geometry is more stable if the MgO is doped with three aluminum atoms (Fig. 8b), corresponding to 2.86% of the slab. The Al dopant atoms were introduced into the 5th layer, at a distance of more than 10 Å from the gold atoms. Despite this large separation, we find that doping reduces the charge on the Au2 cluster (it becomes more negative) by about 0.2 e compared to the same geometry on the undoped surface. This change in the electronic structure not only reverses the energetic order of the two geometries but also changes the bond length between the gold atoms and the substrate.

Fig. 8. Geometry of Au2 clusters on undoped and doped MgO(001) surface.

Au2 cluster in the non-wetting geometry on the undoped a and the wetting geometry on Al-doped b MgO(001) surface represented by a periodic (3 × 3) supercell. Au atoms are shown in yellow, O in red, Mg in green and Al in blue. The configuration of the gold cluster has been optimized by DFT for a fixed substrate. The structure visualization for periodic systems was carried out using VESTA67.

The energy differences (Ewetting − Enon-wetting) between the wetting and non-wetting configurations on the doped substrate are −2.7 meV for DFT, 375 meV for the 2G-HDNNP and −41 meV for the 4G-HDNNP. On the undoped substrate we obtain 929 meV for DFT, 375 meV for the 2G-HDNNP and 975 meV for the 4G-HDNNP. These numbers were obtained after optimizing the positions of the gold atoms. For the 2G-HDNNP, the optimizations on both substrates yield the same structure, and the energy differences for the doped and undoped systems are exactly the same, because the dopant atoms lie outside the local chemical environments of the gold atoms. Thus, the 2G-HDNNP cannot account for the change of the PES upon doping. The DFT and 4G-HDNNP results agree in that there is a slight preference for the wetting configuration on the doped surface, while on the undoped surface the non-wetting configuration is clearly more stable.
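To make the comparison explicit, the reported energy differences can be tabulated and the doping-induced change of Ewetting − Enon-wetting computed for each method. This short sketch uses only the numbers quoted above; the dictionary layout and the helper names are illustrative.

```python
# Reported energy differences E_wetting - E_non-wetting in meV.
delta_e = {
    "DFT": {"doped": -2.7, "undoped": 929.0},
    "2G-HDNNP": {"doped": 375.0, "undoped": 375.0},
    "4G-HDNNP": {"doped": -41.0, "undoped": 975.0},
}


def doping_shift(method):
    """Change of the wetting/non-wetting energy difference caused by doping (meV)."""
    d = delta_e[method]
    return d["doped"] - d["undoped"]


def preferred(value):
    """A negative E_wetting - E_non-wetting means the wetting geometry is lower in energy."""
    return "wetting" if value < 0 else "non-wetting"


for method, d in delta_e.items():
    print(f"{method:9s} doping shift = {doping_shift(method):+8.1f} meV, "
          f"doped: {preferred(d['doped'])}, undoped: {preferred(d['undoped'])}")
```

The 2G-HDNNP shows a shift of exactly zero and therefore predicts the non-wetting geometry on both substrates, whereas DFT and the 4G-HDNNP both show a shift of roughly −1 eV that reverses the preference.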

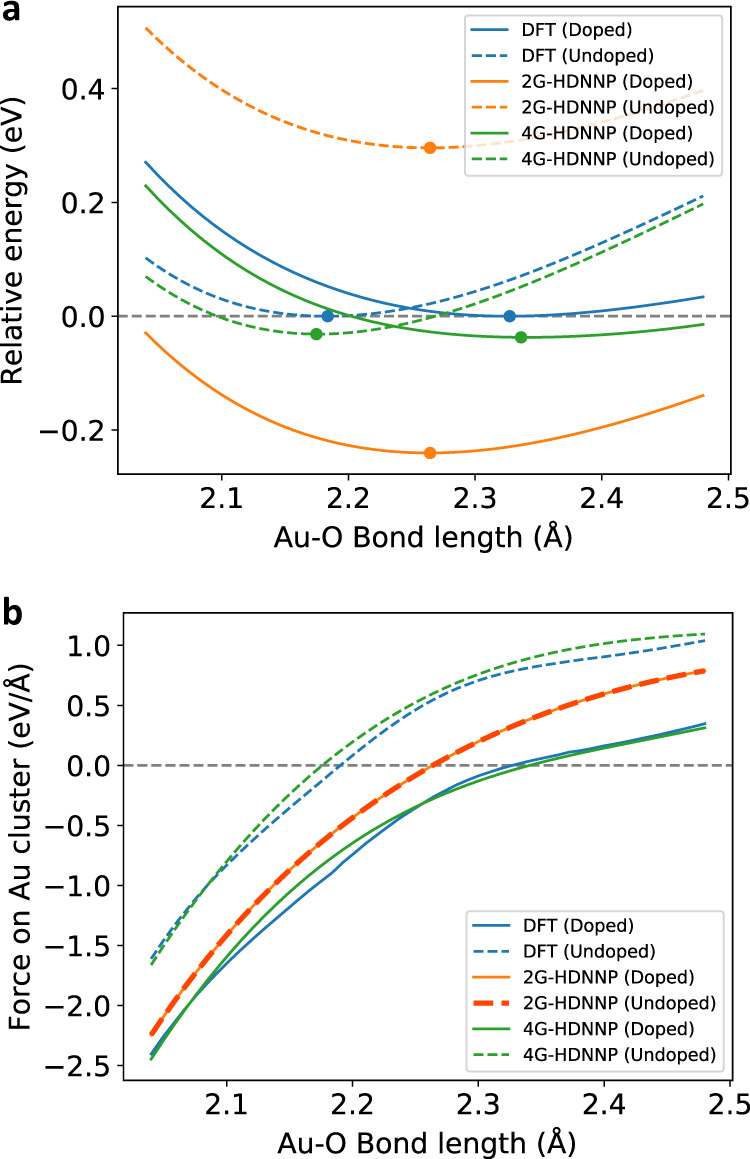

Fig. 9 analyzes the PES of the non-wetting geometry on the doped and undoped slabs. It shows the energies relative to the minimum DFT energy of the respective system as a function of the distance between the bottom Au atom and its neighboring oxygen atom for DFT, the 2G-HDNNP and the 4G-HDNNP. The 4G-HDNNP and DFT energy curves are very similar, and both resolve the different equilibrium bond lengths on the doped (4G-HDNNP: 2.342 Å; DFT: 2.332 Å) and undoped (4G-HDNNP: 2.177 Å; DFT: 2.190 Å) substrates. The 2G-HDNNP yields the same adsorption geometry, with a bond length of 2.256 Å, in both cases, and its energies differ substantially from the DFT values. The main effect of the dopant in the 2G-HDNNP is a constant energy shift between the two substrates, similar to what we observed for the NaCl cluster with and without the additional sodium atom.

Fig. 9. Energies and forces for the gold cluster.

a Relative energy and b sum of forces acting on the Au2 cluster for the cluster adsorbed at the MgO(001) substrate for the non-wetting geometry for the Al-doped and undoped cases. The local minima of the energy curves are marked with a dot. The Au–O bond-length refers to the distance between the Au closest to the surface and its neighboring oxygen atom.

Discussion

In this work, we developed a fourth-generation high-dimensional neural network potential with accurate long-range electrostatic interactions, which can take into account long-range charge transfer as well as multiple charge states of a system. The new method is thus applicable to chemical problems that are incorrectly described by current machine learning potentials relying only on a local description of the atomic environments.

The 4G-HDNNP combines the advantages of the CENT approach and of conventional second- and third-generation high-dimensional neural network potentials: it is generally applicable to all types of systems and provides a very high accuracy. A charge equilibration method employing environment-dependent atomic electronegativities, which are expressed by atomic neural networks, determines the global charge distribution in the system. The resulting charges are then used to compute the long-range electrostatic energy and to include information about the global electronic structure in the short-range atomic energy contributions, which are represented by a second set of atomic neural networks.
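The charge equilibration step can be sketched as a single linear solve. Assuming a CENT-type energy with Gaussian charge densities of width σi (Eq. (4)), atomic hardness Ji, electronegativities χi and γij = √(σi² + σj²), minimizing the charge-equilibration energy under the constraint ΣQi = Qtot gives a linear system with one Lagrange multiplier. The numpy sketch below covers only a non-periodic system in atomic units; in the 4G-HDNNP the χi come from atomic neural networks, whereas here they are hypothetical numbers, and the Ewald summation needed for periodic systems is omitted. The function name `qeq_charges` is illustrative.

```python
import math

import numpy as np


def qeq_charges(positions, chi, hardness, sigma, total_charge=0.0):
    """Charges minimizing a Gaussian-charge Qeq energy for a non-periodic system.

    All quantities are in atomic units (Bohr, Hartree, e).
    positions: (N, 3) array; chi, hardness, sigma: length-N sequences.
    """
    positions = np.asarray(positions, dtype=float)
    n = len(chi)
    a = np.zeros((n + 1, n + 1))
    for i in range(n):
        # Diagonal: atomic hardness plus Gaussian self-interaction 1/(sigma_i sqrt(pi)).
        a[i, i] = hardness[i] + 1.0 / (sigma[i] * math.sqrt(math.pi))
        for j in range(i + 1, n):
            r = np.linalg.norm(positions[i] - positions[j])
            gamma = math.sqrt(sigma[i] ** 2 + sigma[j] ** 2)
            # Off-diagonal: Coulomb interaction between two Gaussian charges.
            a[i, j] = a[j, i] = math.erf(r / (math.sqrt(2.0) * gamma)) / r
    # Last row and column enforce the total-charge constraint via a Lagrange multiplier.
    a[:n, n] = 1.0
    a[n, :n] = 1.0
    rhs = np.append(-np.asarray(chi, dtype=float), total_charge)
    solution = np.linalg.solve(a, rhs)
    return solution[:n]  # discard the Lagrange multiplier


# Hypothetical three-atom chain; in a 4G-HDNNP chi would be predicted by atomic networks.
pos = [[0.0, 0.0, 0.0], [4.0, 0.0, 0.0], [8.0, 0.0, 0.0]]
chi = [0.10, -0.05, 0.10]
hardness = [1.0, 1.0, 1.0]
sigma = [1.5, 1.5, 1.5]
for q_tot in (0.0, -1.0):
    q = qeq_charges(pos, chi, hardness, sigma, total_charge=q_tot)
    print(q_tot, np.round(q, 4), round(float(q.sum()), 6))
```

Because every charge depends on all positions through the matrix, moving one atom can change charges anywhere in the system, which is what allows the non-local charge transfer discussed above. The same routine handles different total charges, e.g. neutral and anionic clusters.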

The superiority of the 4G-HDNNP potential energy surface with respect to established 2G- and 3G-HDNNPs has been demonstrated for a series of systems, where conventional methods give qualitatively wrong results. In addition to the qualitatively correct description, we also obtained a clearly improved quantitative agreement of energies, forces and atomic charges with the underlying DFT data, and we could demonstrate that local minimum structures that are missed by the previous generations of HDNNPs are correctly identified by the new method.

The results obtained in this work are general and equally valid for other types of machine learning potentials that rely only on environment-dependent atomic energies. Thus, the 4G-HDNNP is a vital step in the further development of next-generation ML potentials that provide a correct description of the PES based on a global charge distribution.

Methods

Neural network potentials

The HDNNPs reported in this work have been constructed using the program RuNNer59–61. Atom-centered symmetry functions41 have been used to describe the atomic environments within a spatial cutoff radius of 8–10 Bohr, depending on the system. For a given system, the same symmetry function parameters and the same atomic neural network architectures have been used for all generations of HDNNPs being compared; the parameters and cutoff radii for all systems can be found in the supplementary tables. The functional forms of the symmetry functions are given in ref. 41. In all examples, the atomic neural networks consist of an input layer with 12 to 54 symmetry functions, depending on the specific element and system, two hidden layers with 15 neurons each, and an output layer with one neuron providing either the atomic short-range energy or the atomic electronegativity. The activation functions in the hidden layers and the output layer were the hyperbolic tangent and the linear function, respectively. Forces have been obtained as analytic derivatives of the energy.
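The atomic neural network architecture described above can be illustrated with a minimal numpy sketch. It assumes the standard radial symmetry functions with a cosine cutoff from ref. 41, uses a single element and no angular terms for brevity, and draws random, untrained weights. The function and class names are hypothetical and do not reflect RuNNer's implementation. In a 4G-HDNNP the short-range networks would additionally receive charge information as input, which is omitted here.

```python
import numpy as np


def cutoff(r, r_c):
    """Cosine cutoff f_c(r) = 0.5 * (cos(pi r / r_c) + 1) for r <= r_c, else 0."""
    return np.where(r <= r_c, 0.5 * (np.cos(np.pi * r / r_c) + 1.0), 0.0)


def radial_symmetry_functions(positions, i, etas, r_s=0.0, r_c=10.0):
    """Radial functions G_i = sum_j exp(-eta (r_ij - r_s)^2) f_c(r_ij), one per eta.

    Single-element simplification: all neighbors within r_c (Bohr) contribute alike.
    """
    positions = np.asarray(positions, dtype=float)
    neighbors = np.delete(positions, i, axis=0)
    r_ij = np.linalg.norm(neighbors - positions[i], axis=1)
    f_c = cutoff(r_ij, r_c)
    return np.array([np.sum(np.exp(-eta * (r_ij - r_s) ** 2) * f_c) for eta in etas])


class AtomicNN:
    """Untrained atomic network: inputs -> 15 tanh -> 15 tanh -> 1 linear output."""

    def __init__(self, n_inputs, n_hidden=15, seed=0):
        rng = np.random.default_rng(seed)
        self.w1 = rng.normal(scale=1.0 / np.sqrt(n_inputs), size=(n_inputs, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.w2 = rng.normal(scale=1.0 / np.sqrt(n_hidden), size=(n_hidden, n_hidden))
        self.b2 = np.zeros(n_hidden)
        self.w3 = rng.normal(scale=1.0 / np.sqrt(n_hidden), size=n_hidden)
        self.b3 = 0.0

    def __call__(self, g):
        h1 = np.tanh(g @ self.w1 + self.b1)
        h2 = np.tanh(h1 @ self.w2 + self.b2)
        return float(h2 @ self.w3 + self.b3)  # atomic energy or electronegativity


etas = np.geomspace(0.001, 0.5, 12)  # 12 inputs, the smallest size quoted in the text
net = AtomicNN(n_inputs=len(etas))
structure = np.array([[0.0, 0.0, 0.0], [4.5, 0.0, 0.0], [0.0, 4.5, 0.0]])
atomic_outputs = [net(radial_symmetry_functions(structure, i, etas))
                  for i in range(len(structure))]
print("sum of atomic outputs:", sum(atomic_outputs))
```

Summing the atomic outputs mirrors how the short-range energy is assembled from atomic contributions; an atom beyond the cutoff radius contributes nothing to a neighbor's descriptor.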

In all cases, 90% of the available reference data were used for training the HDNNPs, while the remaining 10% served as an independent test set to confirm the reliability of the PESs and to detect possible overfitting. Energies and forces were used for training the short-range atomic neural networks.
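A random 90/10 split and the root-mean-square error used to compare training and test performance can be sketched as follows. The function names and the random seed are illustrative assumptions; the text does not state how the test structures were selected.

```python
import numpy as np


def split_indices(n_structures, test_fraction=0.1, seed=0):
    """Random, disjoint train/test index sets (90/10 by default)."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_structures)
    n_test = int(round(test_fraction * n_structures))
    return order[n_test:], order[:n_test]


def rmse(predicted, reference):
    """Root-mean-square error, e.g. of energies per atom or force components."""
    diff = np.asarray(predicted, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


train, test = split_indices(5000)
print(len(train), len(test))  # 4500 500
```

A test-set RMSE much larger than the training-set RMSE would indicate overfitting.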

Moreover, the short-range part of the Coulomb electrostatic interaction was screened to facilitate fitting the short-range energies and forces obtained from Eq. (9)23. The inner and outer cutoff radii of the screening were set to 1.69–2.54 Å and to the cutoff radius of the symmetry functions, respectively. The widths of the Gaussian charge densities in Eq. (4) have been set to the covalent radii of the elements. All details of the training process and of the validation strategies for HDNNPs in general can be found in recent reviews60,61.
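The screening can be sketched with a smooth switching function that is zero below the inner radius and one beyond the outer radius. The cosine form used here is an assumption for illustration (ref. 23 gives the function actually used). It is applied to the Gaussian-charge Coulomb pair energy with γij = √(σi² + σj²), so the electrostatic contribution vanishes at short distances. The names are hypothetical, and the units are Bohr and Hartree.

```python
import math

BOHR_PER_ANGSTROM = 1.0 / 0.529177210903


def screening(r, r_inner, r_outer):
    """Assumed cosine switch: 0 for r <= r_inner, 1 for r >= r_outer, smooth in between."""
    if r <= r_inner:
        return 0.0
    if r >= r_outer:
        return 1.0
    x = (r - r_inner) / (r_outer - r_inner)
    return 1.0 - 0.5 * (math.cos(math.pi * x) + 1.0)


def screened_pair_energy(q_i, q_j, r, sigma_i, sigma_j, r_inner, r_outer):
    """Gaussian-charge Coulomb energy of one atom pair (Hartree), scaled by the switch."""
    gamma = math.sqrt(sigma_i ** 2 + sigma_j ** 2)
    coulomb = q_i * q_j * math.erf(r / (math.sqrt(2.0) * gamma)) / r
    return coulomb * screening(r, r_inner, r_outer)


r_in = 1.69 * BOHR_PER_ANGSTROM  # lower end of the quoted inner radius, in Bohr (about 3.19)
r_out = 10.0                      # example symmetry-function cutoff, in Bohr
for r in (2.0, 5.0, 8.0, 12.0):
    print(r, screening(r, r_in, r_out),
          screened_pair_energy(0.5, -0.5, r, 1.4, 1.4, r_in, r_out))
```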

The HDNNP-based geometry optimizations were performed using simple gradient descent algorithms with a force convergence threshold of 10−4 Ha/Bohr ≈ 0.005 eV/Å, the same criterion as in the DFT calculations used to validate the HDNNP results.
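A minimal steepest-descent loop with the force criterion quoted above can be sketched as follows. It assumes forces in eV/Å and uses a boolean mask to keep selected atoms fixed, as for the MgO substrate. The harmonic toy forces and all names are hypothetical stand-ins for the HDNNP forces. The constant `F_TOL` also verifies the unit conversion 10−4 Ha/Bohr ≈ 0.0051 eV/Å.

```python
import numpy as np

HARTREE_IN_EV = 27.211386245988
BOHR_IN_ANGSTROM = 0.529177210903
F_TOL = 1e-4 * HARTREE_IN_EV / BOHR_IN_ANGSTROM  # 1e-4 Ha/Bohr in eV/Å (about 0.00514)


def steepest_descent(positions, forces_fn, movable, step=0.05, f_tol=F_TOL, max_steps=10_000):
    """Move the free atoms along the forces until the largest force is below f_tol.

    positions: (N, 3) in Å; forces_fn returns (N, 3) forces in eV/Å;
    movable: (N,) booleans, e.g. True for Au atoms and False for the fixed substrate.
    """
    x = np.array(positions, dtype=float)
    mask = np.asarray(movable, dtype=float)[:, None]
    for n_steps in range(max_steps):
        f = forces_fn(x) * mask  # forces on fixed atoms are ignored
        if np.max(np.linalg.norm(f, axis=1)) < f_tol:
            return x, n_steps
        x += step * f  # step size in Å^2/eV
    raise RuntimeError("geometry optimization did not converge")


# Hypothetical harmonic toy model: each atom is pulled toward its own reference site.
reference = np.array([[0.0, 0.0, 0.0], [0.0, 0.0, 2.3]])
k = 2.0  # eV/Å^2


def toy_forces(x):
    return -k * (x - reference)


start = reference + np.array([[0.1, 0.0, 0.0], [0.0, 0.0, 0.3]])
final, n = steepest_descent(start, toy_forces, movable=[False, True])
print(n, final)
```

The fixed first atom stays at its displaced starting position, while the free atom relaxes until its residual force is below the threshold.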

DFT calculations

The DFT reference data have been generated using the all-electron code FHI-aims62 with the Perdew–Burke–Ernzerhof63 (PBE) exchange-correlation functional and “light” settings. For all systems except Au2-MgO, the total energy, the sum of eigenvalues, and the charge density were converged to 10−5 eV, 10−2 eV, and 10−4 e, respectively. For the Au2-MgO systems, stricter settings were applied by multiplying each criterion by a factor of 0.1, in combination with a 3 × 3 × 1 k-point grid. Spin-polarized calculations have been carried out for the Au2-MgO, NaCl and Ag3 systems. Reference atomic charges were calculated using Hirshfeld population analysis40. In principle, any other charge partitioning scheme could be used in the same way.

The data sets of the C10H2/C10H molecules and the Ag3 clusters have been constructed by performing Born-Oppenheimer molecular dynamics64 simulations of each system at 300 K for 5000 steps with a time step of 0.5 fs. A Nosé-Hoover thermostat65 was applied to sample the canonical (NVT) ensemble, with the effective mass set to 1700 cm−1. In addition, the trajectories of geometry relaxations converged to forces of 0.001 eV/Å were added to the data sets to ensure sufficient sampling close to the equilibrium structures. For the Ag3 system, the geometry optimization was terminated once the forces fell below 0.0015 eV/Å.

For the NaCl cluster and for the Au2 cluster on the MgO surface, the reference data set consists of two structurally different types of systems, and half of the data set was dedicated to each of the two cases. We performed a random sampling along the trajectories depicted in Figs. 7 and 9 and added Gaussian-distributed displacements to ensure sufficient sampling of the PES in the vicinity of the structures of interest. For the NaCl cluster we used Gaussian displacements with a standard deviation of 0.05 Å. For the Au2-MgO system, we only investigated changes in the geometry of the Au2 cluster, while the MgO substrate remained fixed during all geometry relaxations. We therefore used smaller Gaussian displacements for the substrate than for the cluster, with standard deviations of 0.02 Å and 0.1 Å, respectively. Half of the data set consists of structures with an undoped substrate, and the other half of structures with a doped substrate. For each substrate, half of the samples were generated with the Au2 cluster in its wetting configuration and the other half with the cluster in its non-wetting configuration. The total number of reference data points is 5000 for the NaCl cluster and for the Au2-MgO slab, and 10,019 and 11,013 for the Ag3 clusters and the organic molecule, respectively.
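Generating displaced copies with per-atom standard deviations, as described for the Au2-MgO data set, can be sketched with numpy broadcasting. The slab coordinates below are random placeholders, and the function name is hypothetical. Only the standard deviations (0.02 Å for the substrate, 0.1 Å for the gold atoms) are taken from the text.

```python
import numpy as np


def gaussian_displacements(positions, sigmas, n_copies, seed=0):
    """Copies of a structure with independent Gaussian displacements of every coordinate.

    positions: (N, 3) in Å; sigmas: (N,) per-atom standard deviations in Å.
    Returns an array of shape (n_copies, N, 3).
    """
    rng = np.random.default_rng(seed)
    positions = np.asarray(positions, dtype=float)
    sigmas = np.asarray(sigmas, dtype=float)[None, :, None]  # broadcast over copies and x, y, z
    noise = rng.normal(size=(n_copies,) + positions.shape)
    return positions[None, :, :] + noise * sigmas


# Hypothetical slab of 10 substrate atoms plus an Au2 dimer (coordinates arbitrary).
rng = np.random.default_rng(1)
slab = rng.uniform(0.0, 8.0, size=(10, 3))
dimer = np.array([[4.0, 4.0, 10.0], [4.0, 4.0, 12.5]])
structure = np.vstack([slab, dimer])
sigmas = [0.02] * len(slab) + [0.1] * len(dimer)  # standard deviations quoted in the text
samples = gaussian_displacements(structure, sigmas, n_copies=2000)
print(samples.shape)  # (2000, 12, 3)
```

For the NaCl cluster, the same function applies with a uniform standard deviation of 0.05 Å for all atoms.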

Acknowledgements

We are grateful for financial support from the Deutsche Forschungsgemeinschaft (DFG) (BE3264/13-1, project number 411538199) and the Swiss National Science Foundation (SNF) (project number 182877 and NCCR MARVEL). Calculations were performed in Göttingen (DFG INST186/1294-1 FUGG, project number 405832858), at the sciCORE scientific computing center of the University of Basel (http://scicore.unibas.ch/), and at the Swiss National Supercomputing Centre (CSCS) under project s963D/C03N05.

Author contributions

Both research groups contributed equally to this project. J.B. and S.G. conceived the 4G-HDNNP approach and initiated the research project. T.W.K. and J.A.F. worked out the practical algorithms for the approach and implemented it in the RuNNer software written by J.B. All calculations were performed by T.W.K. and J.A.F. All authors contributed ideas to the project and jointly analyzed the results. T.W.K. and J.A.F. wrote the initial version of the manuscript and prepared the figures; all authors jointly edited the manuscript. T.W.K. and J.A.F. contributed equally to this paper.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Data availability

The datasets used to train the NNPs presented in this paper have been published online68. All data that support the findings of this study are available in the Supplementary information file or from the corresponding author upon reasonable request.

Code availability

All DFT calculations were performed using FHI-aims (version 171221_1). The HDNNPs have been constructed using the program RuNNer, which is freely available under the GPL3 license at https://www.uni-goettingen.de/de/software/616512.html.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Tsz Wai Ko, Email: tko@chemie.uni-goettingen.de

Jonas A. Finkler, Email: jonas.finkler@unibas.ch

Supplementary information

Supplementary information is available for this paper at 10.1038/s41467-020-20427-2.

References

- 1. McCammon JA, Gelin BR, Karplus M. Dynamics of folded proteins. Nature. 1977;267:585–590. doi: 10.1038/267585a0.

- 2. Jorgensen WL, Ravimohan C. Monte Carlo simulation of differences in free energies of hydration. J. Chem. Phys. 1985;83:3050–3054. doi: 10.1063/1.449208.

- 3. Behler J. Perspective: machine learning potentials for atomistic simulations. J. Chem. Phys. 2016;145:170901. doi: 10.1063/1.4966192.

- 4. Botu V, Batra R, Chapman J, Ramprasad R. Machine learning force fields: construction, validation, and outlook. J. Phys. Chem. C. 2017;121:511–522. doi: 10.1021/acs.jpcc.6b10908.

- 5. Deringer VL, Caro MA, Csányi G. Machine learning interatomic potentials as emerging tools for materials science. Adv. Mater. 2019;31:1902765. doi: 10.1002/adma.201902765.

- 6. Brockherde F, et al. Bypassing the Kohn-Sham equations with machine learning. Nat. Commun. 2017;8:872. doi: 10.1038/s41467-017-00839-3.

- 7. Noé F, Tkatchenko A, Müller K-R, Clementi C. Machine learning for molecular simulation. Annu. Rev. Phys. Chem. 2020;71:361–390. doi: 10.1146/annurev-physchem-042018-052331.

- 8. Blank TB, Brown SD, Calhoun AW, Doren DJ. Neural network models of potential energy surfaces. J. Chem. Phys. 1995;103:4129–4137. doi: 10.1063/1.469597.

- 9. Behler J, Parrinello M. Generalized neural-network representation of high-dimensional potential-energy surfaces. Phys. Rev. Lett. 2007;98:146401. doi: 10.1103/PhysRevLett.98.146401.

- 10. Schütt KT, Sauceda HE, Kindermans P-J, Tkatchenko A, Müller K-R. SchNet – A deep learning architecture for molecules and materials. J. Chem. Phys. 2018;148:241722. doi: 10.1063/1.5019779.

- 11. Unke OT, Meuwly M. PhysNet: a neural network for predicting energies, forces, dipole moments, and partial charges. J. Chem. Theory Comput. 2019;15:3678–3693. doi: 10.1021/acs.jctc.9b00181.

- 12. Smith JS, Isayev O, Roitberg AE. ANI-1: an extensible neural network potential with DFT accuracy at force field computational cost. Chem. Sci. 2017;8:3192–3203. doi: 10.1039/C6SC05720A.

- 13. Bartók AP, Payne MC, Kondor R, Csányi G. Gaussian approximation potentials: the accuracy of quantum mechanics, without the electrons. Phys. Rev. Lett. 2010;104:136403. doi: 10.1103/PhysRevLett.104.136403.

- 14. Shapeev AV. Moment tensor potentials: a class of systematically improvable interatomic potentials. Multiscale Model. Simul. 2016;14:1153–1173. doi: 10.1137/15M1054183.

- 15. Thompson AP, Swiler LP, Trott CR, Foiles SM, Tucker GJ. Spectral neighbor analysis method for automated generation of quantum-accurate interatomic potentials. J. Comput. Phys. 2015;285:316–330.

- 16. Drautz R. Atomic cluster expansion for accurate and transferable interatomic potentials. Phys. Rev. B. 2019;99:014104. doi: 10.1103/PhysRevB.99.014104.

- 17. Balabin RM, Lomakina EI. Support vector machine regression (LS-SVM) – an alternative to artificial neural networks (ANNs) for the analysis of quantum chemistry data? Phys. Chem. Chem. Phys. 2011;13:11710. doi: 10.1039/c1cp00051a.

- 18. Behler J. Neural network potential-energy surfaces in chemistry: a tool for large-scale simulations. Phys. Chem. Chem. Phys. 2011;13:17930–17955. doi: 10.1039/c1cp21668f.

- 19. Handley CM, Popelier PL. Potential energy surfaces fitted by artificial neural networks. J. Phys. Chem. A. 2010;114:3371–3383. doi: 10.1021/jp9105585.

- 20. Prodan E, Kohn W. Nearsightedness of electronic matter. Proc. Natl. Acad. Sci. 2005;102:11635–11638. doi: 10.1073/pnas.0505436102.

- 21. Deng Z, Chen C, Li X-G, Ong SP. An electrostatic spectral neighbor analysis potential for lithium nitride. NPJ Comput. Mater. 2019;5:75. doi: 10.1038/s41524-019-0212-1.

- 22. Artrith N, Morawietz T, Behler J. High-dimensional neural-network potentials for multicomponent systems: applications to zinc oxide. Phys. Rev. B. 2011;83:153101. doi: 10.1103/PhysRevB.83.153101.

- 23. Morawietz T, Sharma V, Behler J. A neural network potential-energy surface for the water dimer based on environment-dependent atomic energies and charges. J. Chem. Phys. 2012;136:064103. doi: 10.1063/1.3682557.

- 24. Yao K, Herr JE, Toth DW, Mckintyre R, Parkhill J. The TensorMol-0.1 model chemistry: a neural network augmented with long-range physics. Chem. Sci. 2018;9:2261–2269. doi: 10.1039/C7SC04934J.

- 25. Bereau T, Andrienko D, Von Lilienfeld OA. Transferable atomic multipole machine learning models for small organic molecules. J. Chem. Theory Comput. 2015;11:3225–3233. doi: 10.1021/acs.jctc.5b00301.

- 26. Hoshino T, et al. First-principles calculations for vacancy formation energies in Cu and Al; non-local effect beyond the LSDA and lattice distortion. Comp. Mat. Sci. 1999;14:56. doi: 10.1016/S0927-0256(98)00072-X.

- 27. Parsaeifard B, Finkler JA, Goedecker S. Detecting non-local effects in the electronic structure of a simple covalent system with machine learning methods. arXiv:2008.11277 (2020).

- 28. Rappe AK, Goddard WA. Charge equilibration for molecular dynamics simulations. J. Phys. Chem. 1991;95:3358. doi: 10.1021/j100161a070.

- 29. van Duin ACT, Dasgupta S, Lorant F, Goddard WA. ReaxFF: a reactive force field for hydrocarbons. J. Phys. Chem. A. 2001;105:9396–9409. doi: 10.1021/jp004368u.

- 30. Zhou XW, Wadley HNG. A charge transfer ionic–embedded atom method potential for the O–Al–Ni–Co–Fe system. J. Phys.: Condens. Matter. 2005;17:3619.

- 31. Gasteiger J, Marsili M. Iterative partial equalization of orbital electronegativity – a rapid access to atomic charges. Tetrahedron. 1980;36:3219–3228. doi: 10.1016/0040-4020(80)80168-2.

- 32. Ghasemi SA, Hofstetter A, Saha S, Goedecker S. Interatomic potentials for ionic systems with density functional accuracy based on charge densities obtained by a neural network. Phys. Rev. B. 2015;92:045131. doi: 10.1103/PhysRevB.92.045131.

- 33. Faraji S, et al. High accuracy and transferability of a neural network potential through charge equilibration for calcium fluoride. Phys. Rev. B. 2017;95:104105. doi: 10.1103/PhysRevB.95.104105.

- 34. Amsler M, et al. FLAME: a library of atomistic modeling environments. Comput. Phys. Commun. 2020;256:107415.

- 35. Hafizi R, Ghasemi SA, Hashemifar SJ, Akbarzadeh H. A neural-network potential through charge equilibration for WS2: From clusters to sheets. J. Chem. Phys. 2017;147:234306. doi: 10.1063/1.5003904.

- 36. Faraji S, Ghasemi SA, Parsaeifard B, Goedecker S. Surface reconstructions and premelting of the (100) CaF2 surface. Phys. Chem. Chem. Phys. 2019;21:16270–16281. doi: 10.1039/C9CP02213A.

- 37. Rasoulkhani R, et al. Energy landscape of ZnO clusters and low-density polymorphs. Phys. Rev. B. 2017;96:064108. doi: 10.1103/PhysRevB.96.064108.

- 38. Xie X, Persson KA, Small DW. Incorporating electronic information into machine learning potential energy surfaces via approaching the ground-state electronic energy as a function of atom-based electronic populations. J. Chem. Theory Comput. 2020;16:4256–4270. doi: 10.1021/acs.jctc.0c00217.

- 39. Zubatyuk R, Smith J, Nebgen BT, Tretiak S, Isayev O. Teaching a neural network to attach and detach electrons from molecules. ChemRxiv 12725276.v1 (2020).

- 40. Hirshfeld FL. Bonded-atom fragments for describing molecular charge densities. Theor. Chim. Acta. 1977;44:129–138. doi: 10.1007/BF00549096.

- 41. Behler J. Atom-centered symmetry functions for constructing high-dimensional neural network potentials. J. Chem. Phys. 2011;134:074106. doi: 10.1063/1.3553717.

- 42. Rappe AK, Goddard III WA. Charge equilibration for molecular dynamics simulations. J. Phys. Chem. 1991;95:3358–3363. doi: 10.1021/j100161a070.

- 43. Sifain AE, et al. Discovering a transferable charge assignment model using machine learning. J. Phys. Chem. Lett. 2018;9:4495–4501. doi: 10.1021/acs.jpclett.8b01939.

- 44. Ma Y, Lockwood GK, Garofalini SH. Development of a transferable variable charge potential for the study of energy conversion materials FeF2 and FeF3. J. Phys. Chem. C. 2011;115:24198–24205. doi: 10.1021/jp207181s.

- 45. Fan Q, Pfeiffer GV. Theoretical study of linear Cn (n = 6–10) and HCnH (n = 2–10) molecules. Chem. Phys. Lett. 1989;162:472–478. doi: 10.1016/0009-2614(89)87010-1.

- 46. Horný L, Petraco NDK, Schaefer HF. Odd carbon long linear chains HC2n+1H (n = 4–11): properties of the neutrals and radical anions. J. Am. Chem. Soc. 2002;124:14716–14720. doi: 10.1021/ja0210190.

- 47. Pan L, Rao BK, Gupta AK, Das GP, Ayyub P. H-substituted anionic carbon clusters CnH− (n ≤ 10): density functional studies and experimental observations. J. Chem. Phys. 2003;119:7705–7713. doi: 10.1063/1.1609400.

- 48. Duanmu K, et al. Geometries, binding energies, ionization potentials, and electron affinities of metal clusters: Mgn, n = 1–7. J. Phys. Chem. C. 2016;120:13275–13286. doi: 10.1021/acs.jpcc.6b03080.

- 49. Goel N, Gautam S, Dharamvir K. Density functional studies of LiN and Li(N = 2–30) clusters: Structure, binding and charge distribution. Int. J. Quant. Chem. 2012;112:575–586. doi: 10.1002/qua.23022.

- 50. Fournier R. Trends in energies and geometric structures of neutral and charged aluminum clusters. J. Chem. Theory Comput. 2007;3:921–929. doi: 10.1021/ct6003752.

- 51. De S, et al. The effect of ionization on the global minima of small and medium sized silicon and magnesium clusters. J. Chem. Phys. 2011;134:124302. doi: 10.1063/1.3569564.

- 52. Haruta M, Daté M. Advances in the catalysis of Au nanoparticles. Appl. Catal. A. 2001;222:427–437. doi: 10.1016/S0926-860X(01)00847-X.

- 53. Mammen N, Narasimhan S, de Gironcoli S. Tuning the morphology of gold clusters by substrate doping. J. Am. Chem. Soc. 2011;133:2801–2803. doi: 10.1021/ja109663g.

- 54. Mammen N, Narasimhan S. Inducing wetting morphologies and increased reactivities of small Au clusters on doped oxide supports. J. Chem. Phys. 2018;149:174701. doi: 10.1063/1.5053968.

- 55. Shao X, et al. Tailoring the shape of metal Ad-particles by doping the oxide support. Angew. Chem. Int. Ed. 2011;50:11525–11527. doi: 10.1002/anie.201105355.

- 56. Artrith N, Hiller B, Behler J. Neural network potentials for metals and oxides – First applications to copper clusters at zinc oxide. Phys. Status Solidi B. 2013;250:1191–1203. doi: 10.1002/pssb.201248370.

- 57. Elias JS, et al. Elucidating the nature of the active phase in copper/ceria catalysts for CO oxidation. ACS Catal. 2016;6:1675–1679. doi: 10.1021/acscatal.5b02666.

- 58. Paleico ML, Behler J. Global optimization of copper clusters at the ZnO() surface using a DFT-based neural network potential and genetic algorithms. J. Chem. Phys. 2020;153:054704. doi: 10.1063/5.0014876.

- 59. Behler J. RuNNer – A Program for Constructing High-dimensional Neural Network Potentials. Universität Göttingen; 2020.

- 60. Behler J. Constructing high-dimensional neural network potentials: A tutorial review. Int. J. Quant. Chem. 2015;115:1032–1050. doi: 10.1002/qua.24890.

- 61. Behler J. First principles neural network potentials for reactive simulations of large molecular and condensed systems. Angew. Chem. Int. Ed. 2017;56:12828–12840. doi: 10.1002/anie.201703114.

- 62. Blum V, et al. Ab initio molecular simulations with numeric atom-centered orbitals. Comput. Phys. Commun. 2009;180:2175–2196. doi: 10.1016/j.cpc.2009.06.022.

- 63. Perdew JP, Burke K, Ernzerhof M. Generalized gradient approximation made simple. Phys. Rev. Lett. 1996;77:3865. doi: 10.1103/PhysRevLett.77.3865.

- 64. Barnett RN, Landman U. Born-Oppenheimer molecular-dynamics simulations of finite systems: Structure and dynamics of (H2O)2. Phys. Rev. B. 1993;48:2081. doi: 10.1103/PhysRevB.48.2081.

- 65. Nosé S. A unified formulation of the constant temperature molecular dynamics methods. J. Chem. Phys. 1984;81:511–519. doi: 10.1063/1.447334.

- 66. Stukowski A. Visualization and analysis of atomistic simulation data with OVITO – the Open Visualization Tool. Modell. Simul. Mater. Sci. Eng. 2010;18:015012. doi: 10.1088/0965-0393/18/1/015012.

- 67. Momma K, Izumi F. VESTA 3 for three-dimensional visualization of crystal, volumetric and morphology data. J. Appl. Crystallogr. 2011;44:1272–1276. doi: 10.1107/S0021889811038970.

- 68. Ko TW, Finkler JA, Goedecker S, Behler J. A fourth-generation high-dimensional neural network potential with accurate electrostatics including non-local charge transfer. Materials Cloud Archive 2020.X. doi: 10.24435/materialscloud:f3-yh (2020).
