Significance

Does a picture of an apple taste sweet? Previous studies have shown that viewing food pictures activates brain regions involved in taste perception. However, it is unclear if this response is actually specific to the taste of depicted foods. Using ultrahigh-resolution functional magnetic resonance imaging and multivoxel pattern analysis, we decoded specific tastes delivered during scanning, as well as the dominant tastes associated with food pictures within primary taste cortex. Thus, merely viewing pictures of food evokes an automatic retrieval of information about the taste of those foods. These results show how higher-order information from one sensory modality (i.e., vision) can be represented in brain regions thought to represent only low-level information from a different modality (i.e., taste).

Keywords: multimodal, taste, insula, fMRI, MVPA

Abstract

Previous studies have shown that the conceptual representation of food involves brain regions associated with taste perception. The specificity of this response, however, is unknown. Does viewing pictures of food produce a general, nonspecific response in taste-sensitive regions of the brain? Or is the response specific to how a particular food tastes? Building on recent findings that specific tastes can be decoded from taste-sensitive regions of insular cortex, we asked whether the taste category of pictured foods (e.g., sweet, salty, or sour) can also be decoded from these same regions and, if so, whether the patterns of neural activity elicited by the pictures and by their associated tastes are similar. Using ultrahigh-resolution functional magnetic resonance imaging at high magnetic field strength (7-Tesla), we were able to decode specific tastes delivered during scanning, as well as the specific taste category associated with food pictures, within the dorsal mid-insula, a primary taste-responsive region of the brain. Thus, merely viewing food pictures triggers an automatic retrieval of specific taste quality information associated with the depicted foods, within gustatory cortex. However, the patterns of activity elicited by pictures and their associated tastes were unrelated, suggesting a clear neural distinction between inferred and directly experienced sensory events. These data show how higher-order inferences derived from stimuli in one modality (i.e., vision) can be represented in brain regions typically thought to represent only low-level information about a different modality (i.e., taste).

Throughout the course of their lives, individuals have endless experiences with different types of food. Through these experiences, we grow to learn the predictable associations between how foods look, smell, and taste, as well as how nourishing they are. Based upon numerous individual examples, we learn that ice cream tastes sweet, pretzels taste salty, and lemons taste sour. These sight-taste associations allow us to form a richly detailed conceptual model of the foods we experience, which we can utilize to predict how a novel instance of this food will taste. The continued popularity and utility of visual advertisements for driving food sales attests to the power of these associations to motivate consumptive behavior.

Grounded theories of cognition, supported by decades of human neuroimaging evidence, claim that object concepts are represented, in part, within the neural substrates involved in perceiving and interacting with those objects (1, 2). Within this view, the conceptual representation of food should likewise involve the brain regions associated with taste perception and reward. This possibility has been borne out by human neuroimaging studies that have shown that viewing food pictures elicits activity in taste-responsive brain regions such as the insula, amygdala, and orbitofrontal cortex (OFC) (3–6) (for metaanalyses, see refs. 7, 8). Within these studies, activation of the bilateral mid-insular cortex when viewing food pictures is of particular interest because of its central role in taste perception (4, 9–12) and putative role as human primary gustatory cortex (13–15). Indeed, there is some evidence that viewing food pictures can even modulate taste-evoked neural responses within the mid-insular cortex (16). Taken together, these studies suggest that viewing food pictures triggers the automatic and implicit retrieval of taste property information from gustatory cortex. However, it is unclear whether these insular activations to food pictures represent a general taste-related response or whether they represent the retrieval of specific taste property information, such as whether a food tastes predominantly sweet, salty, or sour, as no study has investigated whether food pictures activate gustatory cortex in a taste quality-specific manner.

Indeed, this question might prove nearly intractable using standard neuroimaging analyses and relatively low-resolution functional imaging methods. Recent studies using multivariate pattern analysis (MVPA) applied to high-resolution functional magnetic resonance imaging (fMRI) data, however, suggest that taste quality is represented by distributed patterns of activation within taste-responsive regions of the brain, rather than topographically (12, 17, 18). These findings raised the possibility that inferred taste qualities evoked by viewing food pictures might also be discernible in gustatory cortex using a similar approach. If so, an important related question is whether the distributed activity patterns that represent the inferred taste qualities of food pictures are the same as, or reliably similar to, the activation patterns that represent experienced tastes.

To address these questions, we scanned subjects with high-resolution 7-T fMRI while they viewed pictures of foods that varied in their dominant taste quality (sweet, salty, or sour), as well as pictures of nonfood objects. During the same scan session, participants performed a separate task in which they received sweet, salty, sour, and neutral tastant solutions. We used both univariate and multivariate analysis techniques to compare the hemodynamic responses to food pictures and to direct taste stimulation.

Results

Behavioral Results.

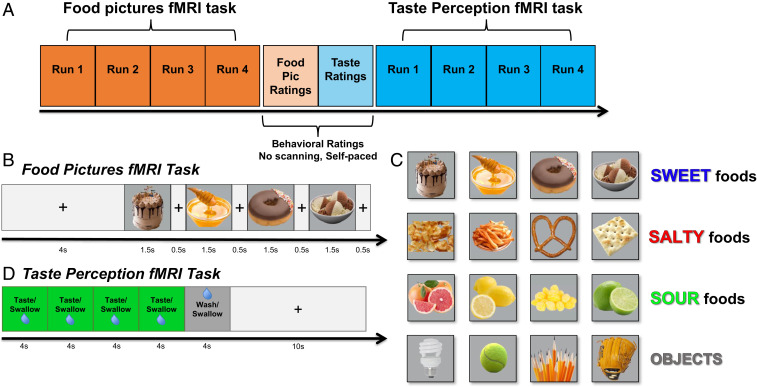

During the food pictures imaging scans, in which subjects saw pictures of a variety of sweet, sour, and salty foods as well as nonfood objects (Fig. 1 and Materials and Methods), subjects performed a picture repetition detection task with an average detection accuracy of 87.5%. Subjects also rated the pleasantness of those pictures in a separate nonscanning task. Analysis of ratings from this food pleasantness rating task revealed a main effect of taste category (F = 11.1; P < 0.001). Sweet and salty foods were rated as significantly more pleasant than sour foods (P < 0.05), but there was no difference between sweet and salty foods (P = 0.59). During the nonscanning taste assessment task that followed, subjects rated the identity, pleasantness, and intensity of tastants delivered by our MR-compatible gustometer. There was a significant effect of tastant type on pleasantness ratings (F = 41.7; P < 0.001), with sweet rated as significantly more pleasant than salty or sour (sour, P < 0.02; salty, P < 0.001) and sour rated more pleasant than salty (P < 0.001) (SI Appendix, Table S1). There was also a main effect of tastant type on intensity ratings, with all tastants rated as more intense than neutral (P < 0.001). Subjects identified tastants during this task with an average accuracy of 97%.

Fig. 1.

Experimental design. (A) An overview of the experimental session, during which participants performed food pictures and taste perception fMRI tasks, separated by two nonscanning behavioral rating tasks. (B and C) During the food pictures fMRI task, subjects viewed pictures of a variety of food and nonfood objects within randomly ordered presentation blocks during scanning. Foods were categorized into predominantly sweet, sour, and salty foods, as well as nonfood familiar objects, selected on the basis of a series of prior experiments with a large online sample of participants. (D) During the taste perception fMRI task, participants received sweet, sour, salty, and neutral tastant solutions, delivered in randomly ordered stimulus blocks, during scanning.

Imaging Results: Univariate.

Taste perception task.

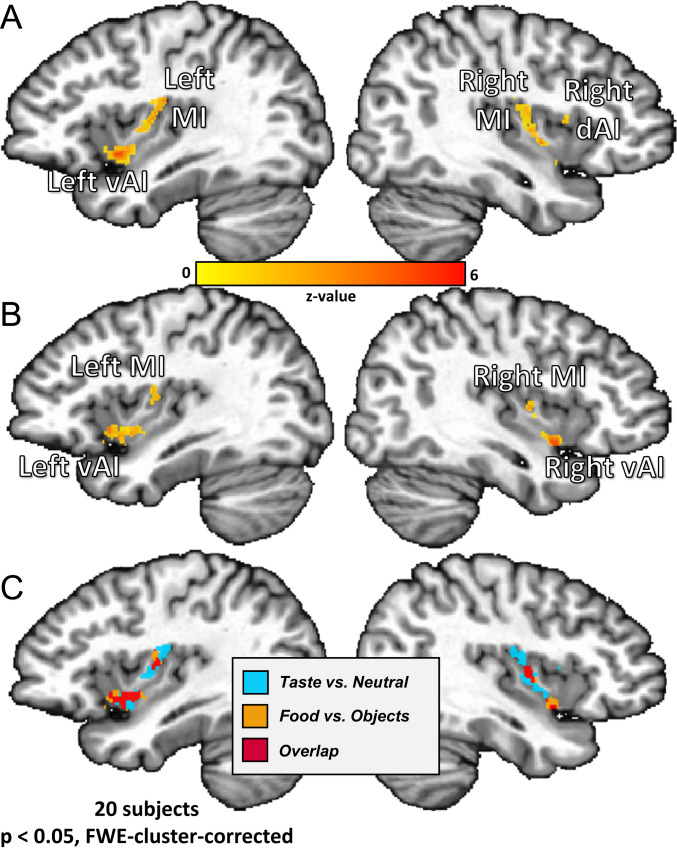

Consistent with previous results obtained with an identical tastant-delivery paradigm during fMRI scanning (12), multiple clusters within the insular cortex responded to all tastants (sweet, sour, and salty) vs. the neutral solution. These clusters were located bilaterally in the dorsal mid-insula, ventral anterior insula, and dorsal anterior insula (Fig. 2A and Table 1). Importantly, the dorsal anterior insula cluster was located caudal to the most anterior areas of the insula, which have been shown to play a more domain-general role in task-oriented focal attention (19, 20). Beyond the insula, bilateral regions of the ventral thalamus, postcentral gyrus, cerebellum, and piriform cortex, as well as a region of the right putamen, responded more to tastants than to the neutral solution (Table 1).

Fig. 2.

Bilateral regions of the ventral anterior and dorsal mid-insula (MI) are responsive to taste perception and viewing pictures of food. Univariate analysis results from both imaging tasks. (A) All tastes (sweet, sour, and salty) vs. the neutral tastant activated bilateral regions of the dorsal MI, as well as left ventral anterior insula (vAI) and right dorsal anterior insula (dAI). (B) All food vs. object pictures activated bilateral MI and ventral anterior insula, as well as left OFC (not pictured). (C) An overlap of the maps from A and B revealed bilateral ventral anterior and MI regions coactivated by food pictures and taste.

Table 1.

Brain regions exhibiting a significant response to food vs. object pictures and to tastes vs. the neutral tastant

| Anatomical location | Peak x | Peak y | Peak z | Peak Z-score | Cluster P | Volume, mm³ |
|---|---|---|---|---|---|---|
| **Taste perception task (all tastes vs. neutral)** | | | | | | |
| R cerebellum (VI) | −15 | 57 | −17 | 5.58 | <0.001 | 1,272 |
| L dorsal mid-insula | 31.8 | 10 | 16.6 | 5.7 | <0.001 | 1,061 |
| R thalamus | −11.4 | 17.4 | 2.2 | 5.93 | <0.001 | 949 |
| L cerebellum (VI) | 9 | 54.6 | −15.8 | 5.72 | <0.001 | 8,445 |
| R mid-insula | −37.8 | 6.6 | 4.6 | 5.32 | <0.001 | 835 |
| L thalamus | 7.8 | 18.6 | −0.2 | 5.41 | <0.001 | 779 |
| R postcentral gyrus | −57 | 15 | 21.4 | 4.58 | <0.001 | 603 |
| L ventral anterior insula | 36.6 | −7.8 | −5 | 4.48 | <0.001 | 353 |
| L postcentral gyrus | 55.8 | 10.2 | 17.8 | 4.6 | <0.01 | 252 |
| R putamen | −24.6 | −1.8 | 8.2 | 4.37 | <0.02 | 133 |
| R lingual gyrus | −7.8 | 59.4 | 4.6 | 4.65 | <0.02 | 131 |
| R precentral gyrus | −59.4 | −0.6 | 17.8 | 4.48 | <0.02 | 123 |
| R piriform cortex/amygdala | −24.6 | 6 | −11 | 4.49 | <0.03 | 98 |
| L cuneus | 3 | 61.8 | 10.6 | 3.98 | <0.05 | 85 |
| R dorsal anterior insula | −36.6 | −9 | 9.4 | 4.01 | <0.07 | 71 |
| **Food pictures task (all food vs. object pictures)** | | | | | | |
| Visual cortex–calcarine gyrus | −5.4 | 81 | −7.4 | 5.2 | <0.001 | 3,166 |
| L ventral anterior insula | 34.2 | −13.8 | −6.2 | 5.82 | <0.001 | 422 |
| L area V3B | 23.4 | 78.6 | 14.2 | 4.6 | <0.001 | 204 |
| R ventral anterior insula | −37.8 | −5.4 | −8.6 | 5.23 | <0.01 | 192 |
| L OFC (BA11m) | 25.8 | −3.4 | −9.8 | 5 | <0.01 | 175 |
| L dorsal mid-insula | 33 | 7.8 | 13 | 4.56 | <0.03 | 135 |
| R area V3CD | −28.2 | 79.8 | 16.6 | 4.12 | <0.04 | 114 |
| R mid-insula | −37.8 | 5.4 | 7 | 6.65 | <0.10 | 60 |

Food pictures task.

A significant response to all food vs. object pictures was observed in bilateral regions of the mid-insula and ventral anterior insula, as well as in the left lateral OFC (area BA11m) (Fig. 2B). These results are consistent with previous neuroimaging studies and metaanalyses of food picture presentation (3, 5, 7, 8). Relative to object pictures, viewing pictures of food also elicited activity in multiple areas of visual cortex (V1 and V3; Table 1).

Conjunction analysis.

A conjunction of the corrected contrast maps generated for the food pictures and taste perception tasks identified bilateral clusters within the mid-insula and ventral anterior insula that exhibited overlapping activation for all tastes (vs. neutral) and all food pictures (vs. object pictures) (Fig. 2C).
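A conjunction of this kind can be sketched as a minimum-statistic intersection: a voxel counts as coactivated only if it survives the threshold in both maps. This sketch assumes each input is already a corrected voxelwise statistic map (cluster-level correction is not reproduced here); the function name `conjunction_mask`, the toy Z values, and the threshold of 3.1 are illustrative assumptions, not values from this study.

```python
import numpy as np


def conjunction_mask(stat_a, stat_b, threshold):
    """Boolean mask of voxels that exceed `threshold` in BOTH statistic maps.

    Testing the voxelwise minimum of the two maps against the threshold is
    the minimum-statistic form of a conjunction (a logical AND).
    """
    return np.minimum(stat_a, stat_b) > threshold


# Toy five-voxel maps (hypothetical Z values)
taste_z = np.array([4.1, 0.5, 3.6, 2.0, 5.2])  # all tastes vs. neutral
food_z = np.array([3.9, 4.4, 1.2, 3.3, 4.8])   # all food vs. object pictures
overlap = conjunction_mask(taste_z, food_z, threshold=3.1)
print(overlap.tolist())  # [True, False, False, False, True]
```

Only voxels 1 and 5 pass in both maps; voxels that are strong in just one task are excluded, which is what distinguishes a conjunction from a union of the two maps.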

Imaging Results: Multivariate.

Previous studies have shown that MVPAs can be used to distinguish between the distributed activity patterns by which distinct tastes are represented within the insular cortex and the wider brain (12, 17, 18). Our next analyses sought to answer these questions: 1) Does MVPA allow us to reliably decode the taste category of food pictures within taste-responsive regions of the brain? 2) In which regions of the brain can we reliably decode both taste quality and food picture category? 3) Within those overlapping regions, can we cross-classify food picture categories by training on experienced taste quality?

Insula region-of-interest analyses.

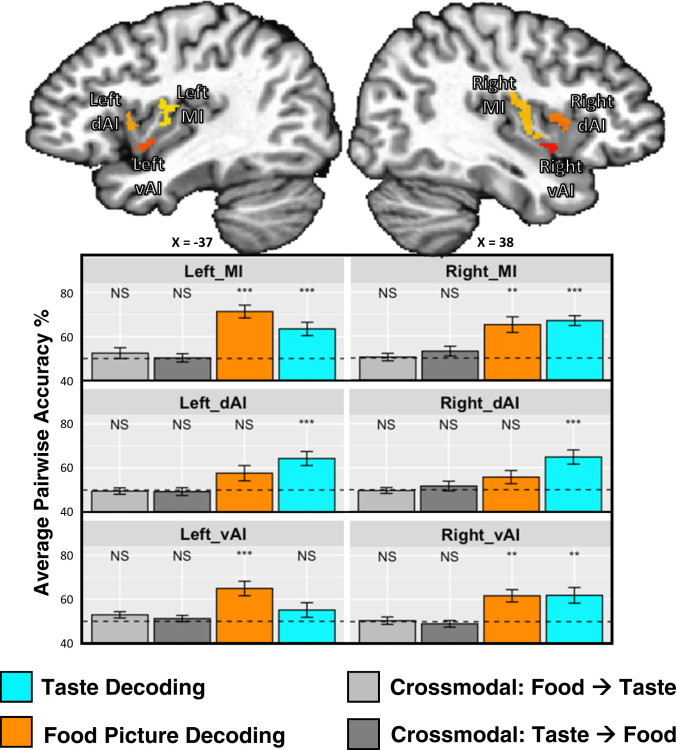

Within the bilateral mid-insula clusters, MVPA revealed reliable classification between sweet, salty, and sour tastants (left, accuracy = 63%, P = 0.002; right, accuracy = 67%, P < 0.001; chance level = 50%; Fig. 3 and SI Appendix, Table S2) and between pictures of sweet, salty, and sour foods (left, accuracy = 71%, P < 0.001; right, accuracy = 65%, P = 0.002). Within the anterior insula clusters, we observed a task-specific dissociation between the regions of interest (ROIs): the dorsal anterior insula clusters discriminated between tastes (left, accuracy = 64%, P < 0.001; right, accuracy = 65%, P < 0.001) but not food pictures (left, accuracy = 57%, corrected P = 0.09; right, accuracy = 56%, corrected P = 0.14), whereas the left ventral anterior insula cluster discriminated between food pictures (accuracy = 65%, P < 0.001) but not tastes (accuracy = 55%, corrected P = 0.25; SI Appendix, Table S2). We tested this dissociation across our six ROIs using a permutation test-based ANOVA model that included task, the laterality of each ROI (LR), and whether it was located dorsally or ventrally (DV). We observed significant task × DV (P = 0.025) and task × LR (P = 0.012) interactions, with no main effect of task (P > 0.99), DV (P = 0.11), or LR (P > 0.99) and no task × LR × DV interaction (P = 0.58).
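The ROI decoding and its permutation test can be illustrated with a minimal sketch. Because chance is stated as 50%, we assume pairwise (two-class) decoding, such as sweet vs. salty, with accuracies averaged over pairs; the sketch shows a single pair. It swaps in a correlation-based nearest-centroid classifier for the linear SVM used in the study, uses leave-one-run-out cross-validation, and builds a null distribution by shuffling labels within runs. All function names, run counts, and the synthetic data are hypothetical.

```python
import numpy as np


def correlation_classify(train_X, train_y, test_X):
    """Assign each test pattern to the class whose mean training pattern it
    correlates with most strongly (a stand-in for the study's linear SVM)."""
    classes = np.unique(train_y)
    centroids = np.array([train_X[train_y == c].mean(axis=0) for c in classes])

    def zscore_rows(A):
        return (A - A.mean(axis=1, keepdims=True)) / A.std(axis=1, keepdims=True)

    # Mean of products of row-wise z-scores = Pearson r for every (test, centroid) pair
    r = zscore_rows(test_X) @ zscore_rows(centroids).T / test_X.shape[1]
    return classes[np.argmax(r, axis=1)]


def loro_accuracy(X, y, runs):
    """Leave-one-run-out cross-validated classification accuracy."""
    correct = 0
    for run in np.unique(runs):
        test = runs == run
        pred = correlation_classify(X[~test], y[~test], X[test])
        correct += np.sum(pred == y[test])
    return correct / len(y)


def permutation_p(X, y, runs, n_perm=1000, seed=0):
    """Observed accuracy and a p-value from a null built by shuffling labels within runs."""
    rng = np.random.default_rng(seed)
    observed = loro_accuracy(X, y, runs)
    null = np.empty(n_perm)
    for i in range(n_perm):
        y_perm = y.copy()
        for run in np.unique(runs):
            idx = np.flatnonzero(runs == run)
            y_perm[idx] = rng.permutation(y[idx])
        null[i] = loro_accuracy(X, y_perm, runs)
    # Add-one correction keeps the p-value strictly above zero
    p = (np.sum(null >= observed) + 1) / (n_perm + 1)
    return observed, p


# Synthetic ROI data for one pair (e.g., sweet = 0 vs. salty = 1)
rng = np.random.default_rng(1)
n_runs, n_blocks, n_vox = 6, 4, 50
true_patterns = rng.normal(size=(2, n_vox))
X, y, runs = [], [], []
for run in range(n_runs):
    for label in (0, 1):
        for _ in range(n_blocks):
            X.append(true_patterns[label] + rng.normal(scale=2.0, size=n_vox))
            y.append(label)
            runs.append(run)
X, y, runs = np.array(X), np.array(y), np.array(runs)

acc, p = permutation_p(X, y, runs, n_perm=200)
print(f"accuracy = {acc:.2f}, permutation p = {p:.3f}")
```

Shuffling within runs, rather than across the whole dataset, preserves the run structure that leave-one-run-out cross-validation depends on, so the null reflects only the loss of label information.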

Fig. 3.

MVPAs reliably classify taste quality and food picture category within bilateral regions of the dorsal MI. Taste-responsive regions of the bilateral insula, which were identified using the same taste paradigm within an independent dataset (12), were used as regions of interest for multivariate classification analyses performed on both imaging tasks. Within the bilateral dorsal MI, we were able to reliably classify both taste quality and food picture category, whereas we could classify only tastes within dAI. We could not reliably cross-classify food pictures by training on tastes, or vice versa.

Searchlight analyses.

The multivariate searchlight analysis of the taste perception data largely replicated the results of our previous study (12). Multiple bilateral regions of the brain, including the mid-insula, dorsal anterior insula, somatosensory cortex, and piriform cortex, exhibited significantly above-chance classification accuracy for discriminating between distinct tastes (see Table 2 for a comprehensive list of clusters).
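A searchlight analysis repeats a decoding step within a small sphere centered on every voxel and writes the resulting score back to the sphere's center, producing a whole-brain map. In the sketch below, the sphere radius, the toy volume, and the placeholder score (the root-mean-square difference between class-mean patterns, standing in for cross-validated classification accuracy) are all assumptions; the function names are hypothetical.

```python
import numpy as np


def sphere_offsets(radius):
    """Integer (dx, dy, dz) offsets lying within `radius` voxels of a center."""
    r = int(np.floor(radius))
    grid = np.mgrid[-r:r + 1, -r:r + 1, -r:r + 1].reshape(3, -1).T
    return grid[np.sum(grid ** 2, axis=1) <= radius ** 2]


def searchlight(data, mask, radius, score_fn):
    """Apply score_fn to the sphere around every in-mask voxel.

    data: (n_samples, X, Y, Z) response patterns
    mask: (X, Y, Z) boolean brain mask
    score_fn: maps an (n_samples, n_sphere_voxels) array to a scalar
    Returns an (X, Y, Z) map with each score stored at its sphere center.
    """
    offsets = sphere_offsets(radius)
    shape = np.array(mask.shape)
    out = np.full(mask.shape, np.nan)
    for center in np.argwhere(mask):
        coords = center + offsets
        # Drop neighbors outside the volume, then neighbors outside the mask
        coords = coords[np.all((coords >= 0) & (coords < shape), axis=1)]
        coords = coords[mask[tuple(coords.T)]]
        sphere = data[:, coords[:, 0], coords[:, 1], coords[:, 2]]
        out[tuple(center)] = score_fn(sphere)
    return out


# Toy volume: the two classes differ only in the 2x2x2 corner at the origin
rng = np.random.default_rng(0)
labels = np.repeat([0, 1], 10)
data = rng.normal(size=(20, 5, 5, 5))
data[labels == 1, :2, :2, :2] += 3.0
mask = np.ones((5, 5, 5), dtype=bool)


def class_mean_distance(patterns):
    """Placeholder score: RMS difference between the two class-mean patterns."""
    diff = patterns[labels == 1].mean(axis=0) - patterns[labels == 0].mean(axis=0)
    return np.sqrt(np.mean(diff ** 2))


score_map = searchlight(data, mask, radius=1.5, score_fn=class_mean_distance)
print(round(score_map[0, 0, 0], 2), round(score_map[4, 4, 4], 2))
```

Spheres centered in the informative corner score high and distant spheres score near the noise floor; in a real analysis, `score_fn` would run a cross-validated classifier and the resulting map would be tested against chance at the group level.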

Table 2.

Brain regions where multivoxel patterns reliably discriminate between task categories

| Anatomical location | Peak x (TLRC) | Peak y (TLRC) | Peak z (TLRC) | Peak Z-score | Cluster P | Volume, mm³ |
|---|---|---|---|---|---|---|
| **Searchlight decoding: taste perception task** | | | | | | |
| L inferior frontal/postcentral gyrus/insular cortex | 51 | −9 | 16.6 | 12.6 | <0.001 | 10,064 |
| R anterior and mid insula/postcentral gyrus | −33 | −9 | 9.4 | 13.6 | <0.001 | 9,215 |
| R cerebellum/pons/parahippocampal gyrus/L fusiform gyrus | −7.8 | 41.4 | −20.6 | 9.1 | <0.001 | 6,304 |
| R medial thalamus | −9 | 16.2 | −1.4 | 9.5 | <0.02 | 1,472 |
| R hippocampus/parahippocampal gyrus/amygdala/cerebellum | −29.4 | 24.6 | −11 | 10.5 | <0.03 | 1,346 |
| R lateral OFC (BA47) | −42.6 | −41.4 | −8.6 | 11.9 | <0.03 | 1,120 |
| R superior temporal sulcus | −43.8 | 6.6 | −15.8 | 9.5 | <0.03 | 921 |
| L middle frontal gyrus | 35.4 | −43.8 | 15.4 | 9.9 | <0.03 | 871 |
| L middle temporal gyrus | 42.6 | 6.6 | −20.6 | 9.4 | <0.03 | 840 |
| R piriform cortex | −40.2 | −1.8 | −21.8 | 9.6 | <0.03 | 835 |
| R middle frontal gyrus | −43.8 | −37.8 | 15.4 | 10.8 | <0.05 | 634 |
| R cerebellum | −17.4 | 55.8 | −18.2 | 9.5 | <0.05 | 591 |
| **Searchlight decoding: food pictures task** | | | | | | |
| Bilateral visual/ventral temporal cortex | 24.6 | 52.2 | −12.2 | 27.2 | <0.001 | 128,259 |
| L mid-insula | 37.8 | 9 | 11.8 | 12.5 | <0.001 | 2,053 |
| L postcentral gyrus | 55.8 | 25.8 | 27.4 | 13.2 | <0.001 | 1,954 |
| L OFC (BA11m) | 24.6 | −30.6 | −8.6 | 15.2 | <0.01 | 1,555 |
| R OFC (BA11m) | −25.8 | −31.8 | −9.8 | 14.8 | <0.01 | 1,453 |
| R mid-insula/ventral anterior insula | −37.8 | 5.4 | 7 | 11.9 | <0.01 | 1,203 |
| R postcentral gyrus | −57 | 18.6 | 22.6 | 12.4 | <0.01 | 1,092 |
| R inferior frontal gyrus | −42.6 | −28.2 | 16.6 | 11.9 | <0.01 | 869 |
| L inferior frontal gyrus | 41.4 | −27 | 19 | 12.3 | <0.02 | 674 |
| L ventral anterior insula | 36.6 | −7.8 | −2.6 | 9.8 | <0.04 | 411 |
| L amygdala | 19.8 | 4.2 | −13.4 | 9 | <0.05 | 335 |
| **Conjunction: food pictures and taste decoding** | | | | | | |
| L fusiform gyrus/parahippocampal gyrus | −37 | −46 | −24 | 3 | — | 1,448 |
| R parahippocampal gyrus | 21 | −31 | −18 | 3 | — | 817 |
| L postcentral gyrus | −57 | −15 | 14 | 3 | — | 508 |
| L dorsal mid-insula | −36 | −3 | 3 | 3 | — | 499 |
| R dorsal mid-insula | 40 | −7 | −0 | 3 | — | 219 |
| R fusiform gyrus | 28 | −52 | −22 | 3 | — | 195 |
| R fusiform gyrus | 34 | −40 | −24 | 3 | — | 161 |
| R postcentral gyrus | 58 | −14 | 20 | 3 | — | 133 |

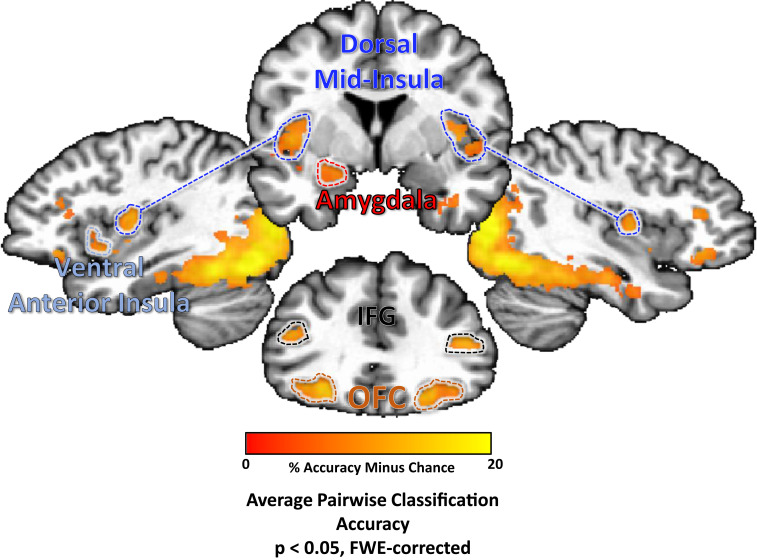

The searchlight analysis performed on the food pictures task also identified multiple regions, including bilateral regions of the dorsal mid-insula, ventral anterior insula, postcentral gyrus (approximately located in the oral somatosensory strip), OFC, and the left amygdala. Significant classification accuracy was also observed bilaterally in the inferior frontal gyrus and widespread regions of occipital cortex, stretching into both dorsal and ventral processing streams, including the parahippocampal gyrus, bilaterally (Fig. 4 and Table 2).

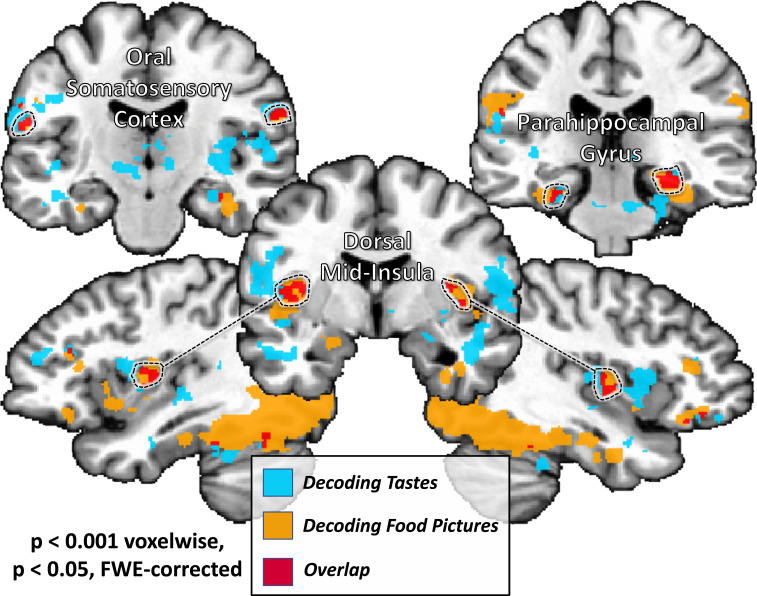

Fig. 4.

MVPAs classify food pictures according to taste category within brain regions involved in taste perception, arousal, and reward. Several regions of the brain were identified using a multivariate searchlight analysis trained to discriminate between pictures of sweet, salty, and sour foods. This included regions involved in processing the sensory and affective components of food, including bilateral regions of the dorsal mid-insula and ventral anterior insula, as well as the bilateral postcentral gyrus, the bilateral OFC, inferior frontal gyrus (IFG), and the left amygdala.

A conjunction of the searchlight classification accuracy maps for both tasks identified bilateral regions of dorsal mid-insular cortex, the postcentral gyrus, the fusiform gyrus, and parahippocampal gyrus (Fig. 5 and Table 2).

Fig. 5.

A common set of brain regions supporting information about taste quality and food picture category. A conjunction of multivariate searchlight maps performed on both taste perception and food pictures tasks identifies a set of regions which reliably discriminate between both taste quality and food picture category. This set of regions included sensory cortical areas within the mid-insular cortex and somatosensory cortex, as well as regions of the ventral occipitotemporal object processing stream.

Object pictures analyses.

Using a whole-brain multivariate searchlight analysis, we were able to reliably classify the nonfood object pictures within widespread areas of the occipitotemporal cortex, including primary visual cortex and much of the ventral visual processing stream (SI Appendix, Fig. S2A). Critically, the effects were limited to these visual processing regions of the brain and did not include any of the other areas involved in classifying food pictures, such as the insula, OFC, or amygdala. To confirm these results, we also ran an ROI analysis within our six insula ROIs and determined that the object picture classification accuracy was no greater than chance (SI Appendix, Fig. S2B).

Cross-modal decoding.

An SVM decoder was used to classify food picture categories using taste categories from the taste perception task as training data and vice versa. This analysis failed to identify any evidence of cross-classification within the insula ROIs, although the right mid-insula ROI did exhibit a nonsignificant trend (accuracy, 56%; false discovery rate [FDR]-corrected P = 0.11) when training on taste and testing on food pictures (Fig. 3 and SI Appendix, Table S2). A follow-up permutation test-based ANOVA within the insula ROIs identified a significant effect of modality (P < 0.001), which indicates that decoding models trained and tested within modality were significantly more accurate than decoding models trained and tested across modality.
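Cross-modal decoding differs from within-modality decoding only in where the training and test data come from: the classifier is fit on one task and evaluated on the other. The sketch below uses a correlation-based nearest-centroid classifier in place of the SVM and entirely synthetic data to contrast a case in which taste and picture patterns share a category code with one in which they do not; only the shared code supports above-chance transfer. All names and parameters are hypothetical.

```python
import numpy as np


def fit_centroid_classifier(train_X, train_y):
    """Return a predict function assigning each pattern to the class mean it
    correlates with most strongly (a stand-in for the study's SVM)."""
    classes = np.unique(train_y)

    def zscore_rows(A):
        return (A - A.mean(axis=1, keepdims=True)) / A.std(axis=1, keepdims=True)

    centroids = zscore_rows(np.array([train_X[train_y == c].mean(axis=0) for c in classes]))

    def predict(X):
        return classes[np.argmax(zscore_rows(X) @ centroids.T, axis=1)]

    return predict


def cross_modal_accuracy(X_a, y_a, X_b, y_b):
    """Accuracy training on modality A and testing on B, and vice versa."""
    acc_ab = np.mean(fit_centroid_classifier(X_a, y_a)(X_b) == y_b)
    acc_ba = np.mean(fit_centroid_classifier(X_b, y_b)(X_a) == y_a)
    return acc_ab, acc_ba


rng = np.random.default_rng(2)
n_rep, n_vox = 30, 60
labels = np.repeat([0, 1], n_rep)           # e.g., sweet vs. salty
taste_code = rng.normal(size=(2, n_vox))    # pattern per taste category
picture_code = rng.normal(size=(2, n_vox))  # independent code for pictures


def noisy(code):
    """Draw one noisy pattern per trial from a category code."""
    return code[labels] + rng.normal(scale=1.5, size=(len(labels), n_vox))


taste_X = noisy(taste_code)
independent = cross_modal_accuracy(taste_X, labels, noisy(picture_code), labels)
shared = cross_modal_accuracy(taste_X, labels, noisy(taste_code), labels)
print("independent codes:", independent)  # varies around chance across random seeds
print("shared code:", shared)
```

With independent codes, a single draw can land somewhat above or below 50% by chance, which is why the study assessed cross-modal accuracy statistically (with FDR correction across ROIs) rather than by inspection.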

Following this, we examined the similarity of multivariate patterns produced by food pictures and tastes in the mid-insula, both within and between modalities. Using split-half correlations of food picture and taste data, we determined that the patterns produced by each task had high within-modality similarity (SI Appendix, Fig. S3). However, the patterns produced by the two tasks were unrelated to each other: between-modality similarity did not differ from zero and was significantly lower than within-modality similarity (ANOVA modality effect, P < 0.001; SI Appendix, Fig. S3).
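The split-half similarity comparison can be sketched as follows, assuming one mean pattern per taste category for each half of the data (for example, odd vs. even runs). Within-modality similarity correlates matching categories across the two halves of the same task; between-modality similarity correlates a taste pattern with the picture pattern of the same category. Subtracting the mean pattern across categories within each half, and averaging Fisher z-transformed correlations, are our assumptions rather than details given in the text; names and data are hypothetical.

```python
import numpy as np


def split_half_similarity(taste_h1, taste_h2, pic_h1, pic_h2):
    """Within- and between-modality pattern similarity (mean Fisher z).

    Each argument is an (n_categories, n_voxels) array of mean patterns from
    one half of the data, with rows in the same category order
    (e.g., sweet, salty, sour).
    """
    def center(A):
        # Remove the pattern shared by all categories in this half (assumption)
        return A - A.mean(axis=0)

    def matched_z(A, B):
        # Correlate row i of A with row i of B, Fisher-transform, and average
        A, B = center(A), center(B)
        r = [np.corrcoef(a, b)[0, 1] for a, b in zip(A, B)]
        return float(np.mean(np.arctanh(r)))

    within_taste = matched_z(taste_h1, taste_h2)
    within_pic = matched_z(pic_h1, pic_h2)
    between = (matched_z(taste_h1, pic_h2) + matched_z(pic_h1, taste_h2)) / 2
    return within_taste, within_pic, between


rng = np.random.default_rng(4)
n_cat, n_vox = 3, 80
taste_code = rng.normal(size=(n_cat, n_vox))
pic_code = rng.normal(size=(n_cat, n_vox))  # unrelated to taste_code by construction


def half(code):
    """One half of the data: the category code plus independent noise."""
    return code + rng.normal(scale=0.5, size=code.shape)


wt, wp, bt = split_half_similarity(half(taste_code), half(taste_code),
                                   half(pic_code), half(pic_code))
print(f"within taste {wt:.2f}, within pictures {wp:.2f}, between {bt:.2f}")
```

Centering matters here: without it, a response shared by all categories (for example, overall mid-insula activation in both tasks) could inflate between-modality correlations even when the category-specific patterns are unrelated.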

We also examined the distribution of voxel weights generated by our SVM models to separately classify tastes and food pictures within our mid-insula ROIs. These SVM weights were transformed using a backward-to-forward model transformation procedure, which makes these parameters more readily interpretable in terms of the brain processes under study (21). The transformed parameters can then be used to identify the voxels within each ROI that are most informative for predicting a particular taste or food picture category. We calculated the spatial correlation of these voxel weights on a subject-by-subject basis, both within modality (split-half) and between modality, and examined the average correlations at the group level. Again, we observed significant within-modality correlations, but the between-modality correlations did not differ from zero and were significantly lower than the within-modality correlations (ANOVA modality effect, P < 0.001; SI Appendix, Fig. S4).
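The backward-to-forward transformation can be illustrated for a single linear decoder. Assuming the cited procedure is the one described by Haufe and colleagues, the forward activation pattern equals the data covariance multiplied by the weight vector, scaled by the variance of the decoder output: a = Cov(X) w / Var(Xw). The toy example shows why the transformation matters: a voxel that carries only noise can receive a large decoder weight because subtracting it cancels noise elsewhere, yet its activation-pattern value is near zero. The function name and data are hypothetical.

```python
import numpy as np


def activation_pattern(X, w):
    """Forward-model activation pattern for one linear decoder.

    X: (n_samples, n_voxels) data; w: (n_voxels,) backward-model weights.
    Computes a = Cov(X) w / Var(X w).
    """
    Xc = X - X.mean(axis=0)
    s_hat = Xc @ w                             # decoder output
    cov_X = Xc.T @ Xc / (X.shape[0] - 1)       # voxel-by-voxel covariance
    return cov_X @ w / s_hat.var(ddof=1)


rng = np.random.default_rng(3)
signal = rng.normal(size=5000)   # e.g., a taste-category signal
noise = rng.normal(size=5000)    # e.g., physiological noise shared by both voxels
X = np.column_stack([signal + noise, noise])  # voxel 2 carries noise only
w = np.array([1.0, -1.0])        # subtracting voxel 2 cancels the noise in voxel 1

pattern = activation_pattern(X, w)
print(np.round(pattern, 2))      # close to [1, 0]: voxel 2 has no signal despite w[1] = -1
```

Raw weights would mark both voxels as equally important (|w| = 1 for each); the activation pattern correctly attributes the signal to voxel 1 alone, which is why transformed weights are the appropriate input for the spatial correlations described above.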

We also performed cross-modal classification analyses within a whole-volume searchlight and observed no regions with significant cross-classification accuracy, whether training on tastes or training on pictures.

Pleasantness Analyses.

To identify the effect of self-reported pleasantness ratings on the hemodynamic response to tastants and pictures, we used both a whole-volume t test of parametrically modulated hemodynamic response functions and a whole-volume linear mixed-effects regression model. After correction for multiple comparisons, neither approach identified any brain region exhibiting a reliable relationship between pleasantness ratings and the hemodynamic response in either task. At the ROI level, we examined the effect of pleasantness ratings on the response to food pictures within the taste-responsive clusters of the insula. We identified an effect of taste (F = 3.3; P = 0.04) and an effect of region (F = 5.1; P = 0.002) but no effect of pleasantness ratings (P = 0.18).
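A parametric-modulation analysis adds, alongside the usual stimulus regressor, a second regressor whose block amplitudes are the mean-centered pleasantness ratings; a voxel whose response scales with pleasantness loads on this second regressor. The sketch below assumes an SPM-style double-gamma hemodynamic response function, block onsets and durations in seconds, and a fixed TR. The source does not state these choices, and every name and value here is hypothetical.

```python
import math

import numpy as np


def double_gamma_hrf(tr, length=32.0):
    """SPM-style canonical HRF (gamma shapes 6 and 16, undershoot ratio 1/6), assumed."""
    t = np.arange(0.0, length, tr)
    peak = t ** 5 * np.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)
    h = peak - undershoot / 6.0
    return h / h.sum()


def parametric_regressors(onsets, durations, ratings, n_scans, tr):
    """Return the unmodulated and pleasantness-modulated block regressors.

    Ratings are mean-centered, so before convolution the modulated regressor
    is orthogonal to the unmodulated one whenever all blocks have equal length.
    """
    main = np.zeros(n_scans)
    modulated = np.zeros(n_scans)
    centered = np.asarray(ratings, dtype=float) - np.mean(ratings)
    for onset, duration, amplitude in zip(onsets, durations, centered):
        start = int(round(onset / tr))
        stop = int(round((onset + duration) / tr))
        main[start:stop] = 1.0
        modulated[start:stop] = amplitude
    hrf = double_gamma_hrf(tr)
    # Full convolution truncated to the scan count gives a causal response
    return np.convolve(main, hrf)[:n_scans], np.convolve(modulated, hrf)[:n_scans]


# Hypothetical run: four 16-s picture blocks, TR = 2 s, ratings on an arbitrary scale
main_reg, pleasant_reg = parametric_regressors(
    onsets=[10, 50, 90, 130], durations=[16, 16, 16, 16],
    ratings=[2, 5, 3, 6], n_scans=100, tr=2.0)
print(main_reg.shape, pleasant_reg.shape)
```

Both regressors would enter the same first-level model; the group-level t test then asks whether the pleasantness coefficient differs from zero, which is the test that yielded no surviving regions here.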

Discussion

The objective of this study was to determine whether taste-responsive regions of the brain represent the inferred taste quality of visually presented foods. The subjects within this study viewed a variety of food pictures which varied categorically according to their dominant taste: sweet, sour, or salty. While viewing these pictures, the subjects performed a picture-repetition detection task, a task which was orthogonal to the condition of interest in this study. In a separate task, those same subjects also directly experienced sweet, sour, and salty tastes, delivered during scanning. Viewing food pictures and directly experiencing tastes activated overlapping regions of the dorsal mid-insula and ventral anterior insula. This finding is in agreement with neuroimaging metaanalyses of taste and of food picture representation, which have implicated both regions in these separate functional domains (7, 8, 22, 23).

Multivariate Patterns Representing Tastes and Food Pictures.

Based upon previous evidence that the insula represents taste quality by distributed patterns of activation within taste-responsive brain regions, rather than topographically (12, 17, 18), we sought to identify whether this region uses a similar distributed activation pattern to represent the taste quality of visually presented foods. Using MVPA, we were able to build upon those previous findings by demonstrating that the taste categories associated with food pictures could be reliably discriminated in taste-responsive regions of the ventral anterior and dorsal mid-insula. Moreover, within the bilateral dorsal mid-insula specifically, we were able to reliably classify both the taste quality of tastants and the taste category of food pictures. These results suggest that viewing pictures of food does indeed trigger an automatic retrieval of taste property information within taste-responsive regions of the brain, and most importantly, this retrieved information is detailed enough to represent the specific taste qualities associated with visually presented foods.

These results demonstrate how higher-order inferences derived from stimuli in one modality (in this case vision) can be represented in brain regions typically thought to represent only low-level information about a different modality (in this case taste). Broadly, these results echo previous neuroimaging findings on multisensory processing within vision and audition, which demonstrate that early sensory cortical areas are able to represent the inferred sensory properties of stimuli presented via another sensory channel (24, 25). Taken together, these findings are consistent with claims that both higher-order and presumptively unimodal areas of neocortex are fundamentally multisensory in nature (26).

Using a multivariate searchlight approach, we were also able to identify a broad network of regions, outside of the insular cortex, within which we were able to reliably classify the taste quality of visually presented foods. Those regions included the left amygdala and bilateral OFC, regions previously observed in food picture neuroimaging studies (5, 7, 8) and typically associated with food-related affect and reward (27–29). We also observed significant classification accuracy for food picture categories within the bilateral postcentral gyrus, inferior frontal gyrus, and widespread regions of the visual cortex. A control searchlight analysis, run on the pictures of nonfood objects from this task, was able to classify the object pictures within these same areas of visual cortex, suggesting that these regions were carrying information about low-level visual features of the picture stimuli. Critically, though, the effects did not include any of the other areas involved in classifying food pictures, such as the insula, OFC, or amygdala. Only when decoding pictures of food could we reliably classify within brain regions involved in the experience of taste.

Within the human object recognition pathway, lower-level visual cortex regions pass on information to ventral temporal areas associated with object recognition, which then send that information forward to the OFC, ventral striatum, and amygdala (30, 31). Importantly, the amygdala and OFC also sit directly downstream of the insula in the taste pathway (31, 32) and play a role in appetitive and aversive behavioral responses to taste, such as conditioned taste aversion (33). The OFC has been demonstrated to represent the reward value of food cues (27–29). The OFC, in concert with the amygdala and mediodorsal thalamus, is thought to represent the dynamic value of environmental stimuli and sensory experiences, informed by the body’s current state (34). Recent rodent studies have shown that the amygdala directly signals gustatory regions of the insula in response to food-predictive cues, in a manner that is specifically gated by hypothalamic signaling pathways and is thus differentially responsive to states like hunger or thirst (35). Thus, the amygdala is positioned to serve as a neural relay linking the taste system with the object recognition system, which may allow us to infer the homeostatically relevant properties of visually perceived food stimuli within our environment.

We ran a comparable searchlight analysis of data from the taste task and performed a conjunction of the classification maps from the two tasks. Several regions of this network reliably discriminated both between the taste categories of food pictures and between tastes. This set of regions included sensory cortical areas such as the bilateral dorsal mid-insula and bilateral regions of the postcentral gyrus at the approximate location of oral somatosensory cortex. It also included bilateral regions of the parahippocampal gyrus and fusiform gyrus, regions of the ventral visual stream that represent high-level object properties. Interestingly, previous neuroimaging studies have also identified odor-evoked effects in higher-order visual regions, such as the fusiform gyrus (36, 37), suggesting that olfactory regions directly exchange information about stimulus identity with this region of extended visual cortex. Another recent study also identified a specific region of the fusiform gyrus associated with accuracy at estimating the energy density of visually depicted foods (38). The observation that this set of regions supports category-specific patterns of activity for both tastes and food pictures lends further support to the idea that the fusiform gyrus plays a role in representing higher-order information about food, which may be used to guide value-based decision making (38).

Cross-Modal Decoding.

We also examined the possibility that the food picture-evoked activity patterns within taste-responsive insula regions were similar to the taste-evoked patterns, using a cross-modal classification analysis. Our results suggest that this is not the case, as we were unable to reliably discriminate between food picture categories after training on the corresponding taste categories from the taste task. Further analysis indicated that not only were the multivariate patterns produced by tastes and pictures unrelated, but so were the decoding model weights generated for classifying food pictures and tastes. These results are partly in keeping with previous studies that have obtained similar null results when attempting cross-modal classification within primary sensory cortices (24, 25).

There are several possible explanations for our failure to cross-decode inferred and experienced tastes. One possibility is that inferred and experienced tastes activate different neural populations within the mid-insula, even when the inferred and experienced tastes represent the same basic information. In that view, inferring a taste (e.g., salty) and experiencing it may both activate the same region but with a different response pattern. This could provide a vital mechanism by which inferred and experienced sensations are kept distinct at the neural level, in order to prevent inappropriate physiological or behavioral responses to inferred sensations.

A related possibility is that the neural signals conveying taste and picture information are relayed to gustatory cortex via different pathways and thus may terminate in different cortical layers of the insula. At present, even ultrahigh-resolution fMRI, such as that employed in this study, cannot distinguish distinct populations of intermingled neurons at subvoxel resolution. However, future neuroimaging studies employing more indirect paradigms such as fMRI adaptation (39, 40), in combination with emerging techniques for laminar-level fMRI (41, 42), might be able to discriminate these subvoxel-level responses. Along these lines, if the inferred tastes of food pictures were shown to selectively adapt the response to directly experienced tastes, this would indicate a shared population of neurons activated by both modalities.

Another possibility is that the dissimilarity of these patterns reflects the experiential distance between actually consuming a taste and merely viewing a stimulus that predicts consumption. Because of the limitations of presenting picture stimuli during scanning, we were unable to replicate many salient features of real-world foods that are present during our everyday interactions with them, such as their relative size and graspability. Previous studies have demonstrated that the physical presence of a food, compared with viewing a picture of it, increases subjects’ willingness to pay for that food and the satiety they expect from consuming it (43, 44). Additionally, cephalic phase responses such as insulin release and salivation increase greatly with the degree of sensory exposure to a food, from sight and smell all the way to initial digestion (45). These studies suggest that the format in which a food is presented affects both our valuation of it and our conceptual representation of its sensory properties. A greater degree of sensory exposure to foods might therefore be reflected in greater multivariate pattern similarity within gustatory cortical regions.

Alternatively, the inferences generated by the depicted foods may represent a more complex set of properties than taste alone, including overall flavor (i.e., the combination of taste and smell), texture, and fat content/appetitiveness. Indeed, previous neuroimaging studies have demonstrated the involvement of the middle and ventral anterior insula in representing flavor, fat content, and the viscosity of orally delivered solutions (46–48). Future studies applying techniques such as representational similarity analysis to an appropriately designed stimulus set could determine to what degree these various food-related properties contribute to the multivariate patterns within the taste-responsive regions of the insula.

Functional Specialization within the Insula.

Interestingly, our multivariate analyses revealed a task-related functional dissociation within the anterior insular cortex, in that the dorsal anterior insula discriminated between basic tastes but not between food pictures. This suggests that taste-related information might be relayed within the insula along separate dorsal and ventral routes, from the dorsal mid-insula to the respective regions of anterior insula, which in turn transmit this information to their associated functional networks. Meta-analyses of multiple neuroimaging studies have indicated that the dorsal and ventral regions of the anterior insula are associated with distinctly different domains of cognition (20, 49). The dorsal anterior insula has high connectivity with frontoparietal regions and is associated with cognitive and goal-directed attentional processing (19, 20, 49). In contrast, the ventral anterior insula is highly connected with limbic and default-mode network regions and is much more strongly implicated in social and emotional processing (20, 49). The ventral anterior insula is also thought to link the gustatory and olfactory systems, integrating taste and smell to produce flavor (13, 46). The results of the present study also strongly link the ventral anterior insula with the ventral occipitotemporal pathway involved in object recognition. This dorsal/ventral division of the anterior insula may thus mirror the action/stimulus division of the dorsal and ventral striatum (50). We also observed a modest effect of laterality within the insula, with slightly greater accuracy for taste decoding in the right than in the left insula. This finding is consistent with prior evidence that the right insula is specialized for processing taste concentration (51).

Food and Taste Cues as Interoceptive Predictions.

In addition to its role in gustatory perception, the insula also serves as primary interoceptive cortex, receiving primary visceral afferents from peripheral vagal and spinothalamic neural pathways (52). The involvement of the insula, and the dorsal mid-insula in particular, in interoceptive awareness has been well established in human neuroimaging studies (9, 53). Previous neuroimaging studies have provided evidence that the dorsal mid-insula serves as a convergence zone for gustatory and interoceptive processing (9, 10), evidence strongly supported by studies of homologous regions of rodent insular cortex (54, 55). Moreover, the response of the dorsal mid-insula to food images appears acutely sensitive to internal homeostatic signals of energy availability (4, 56). According to recent optical imaging studies in rodents, food-predictive cues transiently modify the spontaneous firing rates of insular neurons, pushing their activity toward a predicted state of satiety (57). Thus, the automatic retrieval of taste information for food pictures can be understood within an interoceptive predictive-coding framework (58), in which viewing pictures of homeostatically relevant stimuli modifies the population-level activity of gustatory/interoceptive regions of the insula in a manner specific to that food’s predicted impact upon the body.

The Role of Pleasantness.

We also attempted to minimize and account for the role of pleasantness in the present study, so as to focus specifically on taste quality within both tasks rather than on the perceived pleasantness of the tastants or food pictures. To this end, we used mild concentrations of our sweet, sour, and salty tastants, as in our previous study (12). We also used sets of food pictures that had all been rated as highly pleasant by an online sample (SI Appendix). Nevertheless, subjects reported that tastes and food pictures did differ in pleasantness. We examined the effect of pleasantness on responses to tastes and food pictures using two separate approaches: one in which participants’ pleasantness ratings were used to account for trial-by-trial variance (i.e., amplitude modulation regression), and one in which ratings were used to account for any remaining variance at the group level. Consistent with our previous study (12), neither approach showed an effect of pleasantness on the hemodynamic response to tastes or food pictures, at either the whole-volume or the ROI level. Importantly, within those insula ROIs, we were able to reliably discriminate between pictures of salty and sweet foods (SI Appendix, Fig. S2), which did not differ in pleasantness, suggesting that pleasantness did not account for the observed results.

Conclusion

The goal of this study was to determine whether taste-responsive regions of the insula also represent the specific inferred taste qualities of visually presented foods and whether they do so using reliably similar patterns of activation. We were able to reliably classify the taste category of food pictures within multiple regions of the brain involved in taste perception and food reward. We additionally identified several regions of the brain, including the bilateral dorsal mid-insular cortex, in which we were able to decode both food picture category and the taste quality of tastants delivered during another task. However, we were unable to reliably cross-decode food picture category by training on the corresponding taste quality within any of these regions. This suggests that while these regions are able to represent both inferred and experienced taste quality, they do so using distinct patterns of activation.

Materials and Methods

Participants.

We recruited 20 healthy subjects (12 female) between the ages of 21 and 45 (mean [SD], 26 [7] years). Ethics approval for this study was granted by the NIH Combined Neuroscience Institutional Review Board under protocol number 93-M-0170. The institutional review board of the NIH approved all procedures, and written informed consent was obtained from all subjects. Participants were excluded from taking part in the study if they had any history of neurological injury, known genetic or medical disorders that may impact the results of neuroimaging, prenatal drug exposure, severely premature birth or birth trauma, current usage of psychotropic medications, or any exclusion criteria for MRI.

Experimental Design.

All fMRI scanning and behavioral data were collected at the NIH Clinical Center in Bethesda, MD. Each scanning session began with a high-resolution anatomical reference scan, followed by an fMRI scan during which subjects performed our food pictures task (Fig. 1). This scan was followed by a short nonscanning task in which participants rated the pleasantness of several of the food pictures they had viewed during the previous task. Next, participants performed a nonscanning taste assessment task, during which they identified each tastant and rated its pleasantness and intensity. Finally, participants performed our taste perception task during fMRI scanning. The methods used for the taste perception task and taste assessment were nearly identical to those used in our previous study (12). Importantly, all tasks requiring tastant delivery were performed after the food picture tasks, to avoid any carryover or priming effects of the tastants on the response to the food pictures.

Food Pictures fMRI Task.

During this task, participants viewed images of various foods and nonfood objects. Pictures were presented sequentially, four per presentation block. Each block consisted of four pictures of either sweet, sour, or salty foods or of specific types of familiar nonfood objects. Pictures were presented at the center of the display screen against a gray background. Within a presentation block, each picture was presented for 1,500 ms, followed by a 500-ms interstimulus interval (ISI) during which a fixation cross appeared on the screen. Another 4-s ISI followed each presentation block (Fig. 1). Blocks were presented in a pseudorandom order by picture category, with no picture category presented twice in a row.
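The block timing and ordering constraint described above can be sketched as follows. This is a hypothetical illustration, not the authors' PsychoPy code: the helper names are invented, and reshuffling until no category repeats back to back (rejection sampling) is simply one easy way, assumed here, to satisfy the pseudorandom-order constraint.

```python
import random

CATEGORIES = ("sweet", "sour", "salty", "objects")
PICTURES_PER_BLOCK = 4
PICTURE_S = 1.5      # picture on screen
PICTURE_ISI_S = 0.5  # fixation cross after each picture
BLOCK_ISI_S = 4.0    # interval after each presentation block


def block_order(blocks_per_category=8, seed=None):
    """Return a shuffled block order in which no category occurs twice in a row.

    Hypothetical helper: plain rejection sampling (reshuffle until the constraint
    holds), which is simple but not the most efficient approach.
    """
    rng = random.Random(seed)
    blocks = [c for c in CATEGORIES for _ in range(blocks_per_category)]
    while True:
        rng.shuffle(blocks)
        if all(a != b for a, b in zip(blocks, blocks[1:])):
            return list(blocks)


def block_duration_s():
    """Four pictures, each followed by a fixation ISI, then the inter-block ISI."""
    return PICTURES_PER_BLOCK * (PICTURE_S + PICTURE_ISI_S) + BLOCK_ISI_S


order = block_order(seed=1)
print(len(order), block_duration_s(), len(order) * block_duration_s())
# 32 12.0 384.0
```

Each block therefore lasts 12 s, and the 32 blocks of a run account for 384 s; the text does not itemize how the remaining 4 s of the reported 388-s run were allocated. Rejection sampling may need many hundreds or thousands of reshuffles at this block count, which is still fast; a backtracking or constructive shuffle would scale better to longer sequences.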

The food types presented during this task were 12 foods selected and rated as predominantly sweet (cake, honey, donuts, and ice cream), sour (grapefruit, lemons, lemon candy, and limes), or salty (chips, fries, pretzels, and crackers) by groups of online participants recruited through Amazon Mechanical Turk (for details, see SI Appendix, Online Experiments; also see SI Appendix, Fig. S1). The selection criteria for these foods were that they be clearly recognizable, pleasant, and strongly characteristic of their respective taste quality (SI Appendix, Fig. S1). Nonfood objects were familiar objects (basketballs, tennis balls, lightbulbs [fluorescent and incandescent], baseball gloves, flotation tubes, pencils, and marbles) that roughly matched the shape and color of the pictured foods. In total, participants in the fMRI study viewed 28 unique exemplars of each of the 12 food types (336 total) and 14 unique exemplars of each nonfood object type (112 total).

During half of the presentation blocks, one of the food or object pictures was repeated, and participants were instructed to press a button on a handheld fiber optic response box whenever they identified a repeated picture. Blocks with repetition events were evenly distributed across picture categories (sweet, salty, sour, and object pictures), such that half the blocks of each category contained a repetition. Repetition blocks were also evenly distributed across food and object types, such that each food type was used in a repetition event four times and each object type twice. Eight presentation blocks for each picture category (sweet, salty, sour, and objects) were presented during each run of the imaging task (32 blocks per run). Each of the four imaging runs lasted 388 s (6 min, 28 s). For the MVPA analyses, each run was split into two segments, yielding a total of eight run segments.
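The repetition bookkeeping can be checked with a few lines of arithmetic. The sketch assumes the stated counts refer to the whole session (four runs) rather than to a single run, since with only four repetition blocks per category per run the four foods of a category could not each be repeated four times. It also assumes the two kinds of lightbulb count as separate object types, which is what makes 14 exemplars per type add up to the reported 112 object pictures.

```python
RUNS = 4
BLOCKS_PER_CATEGORY_PER_RUN = 8
FOOD_TYPES = {
    "sweet": ["cake", "honey", "donuts", "ice cream"],
    "sour": ["grapefruit", "lemons", "lemon candy", "limes"],
    "salty": ["chips", "fries", "pretzels", "crackers"],
}
# Assumption: the two kinds of lightbulb count as separate object types (8 in all).
OBJECT_TYPES = ["basketballs", "tennis balls", "fluorescent lightbulbs",
                "incandescent lightbulbs", "baseball gloves", "flotation tubes",
                "pencils", "marbles"]


def repetitions_per_type():
    """Repetition events per food/object type across the whole session (assumed scope)."""
    blocks_per_category = RUNS * BLOCKS_PER_CATEGORY_PER_RUN  # 32 blocks
    repeat_blocks = blocks_per_category // 2                   # half contain a repeat
    counts = {}
    for foods in FOOD_TYPES.values():
        for food in foods:
            counts[food] = repeat_blocks // len(foods)          # 16 / 4 foods
    for obj in OBJECT_TYPES:
        counts[obj] = repeat_blocks // len(OBJECT_TYPES)        # 16 / 8 objects
    return counts


counts = repetitions_per_type()
n_food_types = sum(len(v) for v in FOOD_TYPES.values())
print(counts["cake"], counts["marbles"])          # 4 2
print(28 * n_food_types, 14 * len(OBJECT_TYPES))  # 336 112
```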

Food Pleasantness Rating Task.

Following the fMRI food pictures task, participants performed a separate task in which they rated the pleasantness of the food pictures they had seen during the imaging task. Three exemplars of each type of food picture (36 total) were presented in random order against a gray background. Using the handheld response box, participants indicated on a scale from 0 (not pleasant at all) to 10 (extremely pleasant) how pleasant it would be to eat the depicted food at that moment. These rating periods were self-paced, and no imaging data were collected during this task.

Taste Assessment.

Participants next completed a taste assessment task, during which they received 0.5 mL of a tastant solution delivered directly onto the tongue by an MR-compatible tastant delivery device. Four types of tastant solutions were delivered during the taste assessment: sweet (0.6 M sucrose), sour (0.01 M citric acid), salty (0.20 M NaCl), and neutral (2.5 mM NaHCO3 + 25 mM KCl). Subjects were then prompted to use the handheld response box to indicate the identity of the tastant they received, as well as its pleasantness and intensity. Following these self-paced rating periods, the word “wash” appeared on the screen, and subjects received 1.0 mL of the neutral tastant to rinse out the preceding taste. This was followed by a 2-s prompt to swallow. A 4-s fixation period separated successive blocks of the taste assessment task. Each tastant was presented five times (20 blocks total) in random order. Altogether, this session lasted between 5 and 7 min. All tastants were prepared by the NIH Clinical Center Pharmacy using sterile laboratory techniques and United States Pharmacopeia (USP)-grade ingredients.

Gustometer Description.

A custom-built, pneumatically driven, MRI-compatible system delivered tastants during fMRI scanning (4, 9–12). Tastant solutions were kept at room temperature in pressurized syringes, and fluid delivery was controlled by pneumatically driven pinch valves that released the solutions into polyurethane tubing running to a plastic gustatory manifold attached to the head coil. The tip of the polyethylene mouthpiece was small enough to be comfortably positioned between the subject’s teeth, which ensured that the tastants were always delivered similarly into the mouth. The pinch valves that released the fluids into the manifold were opened and closed by pneumatic valves located in the scan room, which were themselves connected to a stimulus delivery computer that controlled the precise timing and quantity of tastants dispensed to the subject during the scan. Visual stimuli for behavioral and fMRI tasks were projected onto a screen inside the scanner bore and viewed through a mirror system mounted on the head coil. Both visual stimulus presentation and tastant delivery were controlled and synchronized via a custom program developed in the PsychoPy2 environment.

Taste Perception Task.

During the taste perception fMRI task, the word “taste” appeared on the screen for 2 s, and subjects received 0.5 mL of either a sweet, sour, salty, or neutral tastant. Next, the word “swallow” appeared on the screen for 2 s, prompting subjects to swallow. These taste and swallow periods occurred four times in a row, with the identical tastant delivered each time. Following these four periods, the word “wash” appeared on the screen, and subjects received 1.0 mL of the neutral tastant to rinse out the preceding tastes. This was followed by another 2-s prompt to swallow. In total, each taste delivery block lasted 20 s and was followed by a 10-s ISI, during which a fixation cross was presented at the center of the screen. Four blocks each of sweet, salty, sour, and neutral tastants (16 total) were presented in random order throughout each run of this task. Each run contained a 4-s initial fixation period and another 6-s fixation period halfway through the run, for a total of 490 s per run (8 min, 10 s). Participants completed four runs of the taste perception task during one scan session. As with the food pictures task, each run was split into two segments, yielding a total of eight run segments for subsequent MVPA analyses.
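The taste block and run timing can be reconciled in the same way. The 2-s wash duration is an assumption: it is not stated explicitly, but it is the value implied by the reported 20-s block length.

```python
TASTE_S = 2.0            # "taste" prompt while 0.5 mL is delivered
SWALLOW_S = 2.0          # "swallow" prompt
DELIVERIES_PER_BLOCK = 4
WASH_S = 2.0             # assumption: implied by the stated 20-s block length
ISI_S = 10.0             # fixation after each block
BLOCKS_PER_RUN = 16      # four each of sweet, salty, sour, and neutral
INITIAL_FIX_S, MIDRUN_FIX_S = 4.0, 6.0
TR_S = 2.0


def taste_block_s():
    """Four taste/swallow periods, then the wash and its swallow prompt."""
    return DELIVERIES_PER_BLOCK * (TASTE_S + SWALLOW_S) + WASH_S + SWALLOW_S


def run_length_s():
    """All blocks with their ISIs, plus the initial and mid-run fixation periods."""
    return BLOCKS_PER_RUN * (taste_block_s() + ISI_S) + INITIAL_FIX_S + MIDRUN_FIX_S


print(taste_block_s(), run_length_s(), run_length_s() / TR_S)  # 20.0 490.0 245.0
```

The four taste/swallow periods (16 s) also match the 16-s block regressors used for each tastant in the regression models.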

Imaging Methods.

fMRI data were collected at the National Institute of Mental Health (NIMH) fMRI core facility at the NIH Clinical Center using a Siemens 7T-830/AS Magnetom scanner and a 32-channel head coil. Each echo-planar imaging (EPI) volume consisted of 58 1.2-mm axial slices (echo time [TE] = 23 ms, repetition time [TR] = 2,000 ms, flip angle = 56°, voxel size = 1.2 × 1.2 × 1.2 mm³). A multiband factor of 2 was used to acquire data from two slices simultaneously. A GRAPPA (GeneRalized Autocalibrating Partial Parallel Acquisition) factor of 2 was used for in-plane acceleration, along with 6/8 partial Fourier k-space sampling. Each slice was oriented in the axial plane, with an anterior-to-posterior phase encoding direction. Prior to task scans, a 1-min EPI scan was acquired with the opposite phase encoding direction (posterior-to-anterior), which was used to correct spatial distortion artifacts during preprocessing (Image Preprocessing). An ultrahigh-resolution MP2RAGE sequence was used to provide an anatomical reference for the fMRI analysis (TE = 3.02 ms, TR = 6,000 ms, flip angle = 5°, voxel size = 0.70 × 0.70 × 0.70 mm³).

Image Preprocessing.

All fMRI preprocessing was performed in Analysis of Functional NeuroImages (AFNI) software (https://afni.nimh.nih.gov/afni). The FreeSurfer software package (http://surfer.nmr.mgh.harvard.edu/) was additionally used for skull-stripping the anatomical scans. A despiking interpolation algorithm (AFNI’s 3dDespike) was used to remove transient signal spikes from the EPI data, and a slice timing correction was then applied to the volumes of each EPI scan. The EPI scan acquired in the opposite (P-A) phase encoding direction was used to calculate a nonlinear transformation matrix, which was used to correct for spatial distortion artifacts. All EPI volumes were registered to the very first EPI volume of the food pictures task using a six-parameter (three translations, three rotations) motion correction algorithm, and the motion estimates were saved for use as regressors in the subsequent statistical analyses. Volume registration and spatial distortion correction were implemented in the same nonlinear transformation step. A Gaussian smoothing kernel with a 2.4-mm (2-voxel) full width at half maximum (FWHM) was then applied to the volume-registered EPI data. Finally, the signal intensity for each EPI volume was normalized to reflect percent signal change from each voxel’s mean intensity across the time course. Anatomical scans were first coregistered to the first EPI volume of the food pictures task and were then spatially normalized to Talairach space via an affine spatial transformation. Subject-level EPI data were only moved to standard space after subject-level regression analyses. All EPI data were left at the original spatial resolution (1.2 × 1.2 × 1.2 mm³).
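The intensity normalization and smoothing kernel can be made concrete with a short NumPy sketch (an illustration, not AFNI's implementation). It converts the 2.4-mm FWHM to a Gaussian standard deviation and expresses each voxel's time series as percent change from its own mean. AFNI pipelines often instead scale each voxel so that its mean equals 100; because the regression models include a constant baseline term, the two conventions yield the same condition betas.

```python
import math

import numpy as np


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian kernel's FWHM to its standard deviation (same units)."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def percent_signal_change(ts):
    """Express each voxel's time series as % change from its own temporal mean.

    ts: array of shape (n_voxels, n_timepoints) with positive means.
    """
    mean = ts.mean(axis=1, keepdims=True)
    return 100.0 * (ts - mean) / mean


print(round(fwhm_to_sigma(2.4), 3))  # 1.019 (mm), i.e. about 0.85 voxel at 1.2 mm
print(percent_signal_change(np.array([[100.0, 110.0, 90.0]])))
```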

The EPI data collected during both tasks were analyzed separately at the subject level using multiple linear regression models in AFNI’s 3dDeconvolve. For the univariate analyses of the food pictures task, the model included one regressor for each picture category (sweet, sour, salty, and objects). These regressors were constructed by convolving a gamma-variate hemodynamic response function with an 8-s boxcar function beginning at the onset of each presentation block. For the univariate analyses of the taste perception task, the model included one 16-s block regressor for each tastant type (sweet, sour, salty, and neutral) and one 4-s block regressor for wash/swallow events. The regression models also included regressors of noninterest to account for each run’s mean, linear, quadratic, and cubic signal trends, as well as the six normalized motion parameters (three translations and three rotations) computed during volume registration.
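A minimal NumPy sketch of such a block regressor and polynomial baseline is shown below; it is not AFNI's implementation. The gamma-variate parameters (p = 8.6, q = 0.547) are illustrative assumptions, the motion regressors are omitted, and Legendre polynomials stand in for the mean, linear, quadratic, and cubic trend terms.

```python
import numpy as np

TR_S = 2.0


def gamma_variate(t, p=8.6, q=0.547):
    """Gamma-variate HRF normalized to a peak of 1 at t = p*q (about 4.7 s).

    The p and q values are illustrative assumptions, not taken from the text.
    """
    t = np.asarray(t, dtype=float)
    h = np.zeros_like(t)
    pos = t > 0
    h[pos] = (t[pos] / (p * q)) ** p * np.exp(p - t[pos] / q)
    return h


def block_regressor(onsets_s, duration_s, n_vols, tr=TR_S, dt=0.1):
    """Boxcar of width duration_s at each onset, convolved with the HRF, sampled per TR."""
    step = int(round(tr / dt))
    t = np.arange(n_vols * step) * dt           # fine time grid
    box = np.zeros_like(t)
    for onset in onsets_s:
        box[(t >= onset) & (t < onset + duration_s)] = 1.0
    hrf = gamma_variate(np.arange(0.0, 30.0, dt))
    return (np.convolve(box, hrf)[: t.size] * dt)[::step]


def legendre_baseline(n_vols, order=3):
    """Constant, linear, quadratic, and cubic drift terms for one run."""
    x = np.linspace(-1.0, 1.0, n_vols)
    return np.column_stack(
        [np.polynomial.legendre.Legendre.basis(k)(x) for k in range(order + 1)]
    )


def fit_glm(y, X):
    """Ordinary least-squares betas for a voxel time series y (n_vols,) and design X."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


# Hypothetical single run: 8-s blocks for one picture category at made-up onsets.
n_vols = 194                                    # 388-s run at a 2-s TR
reg = block_regressor([10.0, 58.0, 106.0], 8.0, n_vols)
X = np.column_stack([reg, legendre_baseline(n_vols)])
```

For the taste perception task, the same helper would be called with a 16-s duration for each tastant regressor and a 4-s duration for the wash/swallow events; in a multirun model, each run would get its own baseline columns.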

We additionally generated subject-level regression coefficient maps for use in the multivariate ROI and searchlight analyses. For both tasks, we generated a new subject-level regression model, which modeled each run segment (eight total; see task design above) separately, so that all conditions of both tasks would have eight beta coefficient maps for the purposes of model training and testing.
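One way to organize the run-segment model is to label each block by the half of its run in which it begins and give every (condition, segment) pair its own regressor. The midpoint split and the helper names are assumptions for illustration; the text states only that each run was divided into two segments.

```python
from collections import defaultdict


def run_segment(run_index, onset_s, run_length_s):
    """Segment label 0..7: first or second half of each of four runs.

    Assumption: runs are split at their temporal midpoint, by block onset.
    """
    return 2 * run_index + (0 if onset_s < run_length_s / 2.0 else 1)


def segment_onsets(blocks, run_length_s):
    """Group block onsets by (condition, run segment).

    blocks: iterable of (run_index, onset_s, condition). Each key would receive its
    own regressor, giving one beta map per condition per run segment.
    """
    groups = defaultdict(list)
    for run_index, onset_s, condition in blocks:
        groups[(condition, run_segment(run_index, onset_s, run_length_s))].append(onset_s)
    return dict(groups)


blocks = [(0, 10.0, "sweet"), (0, 250.0, "sweet"), (3, 30.0, "sour")]
print(segment_onsets(blocks, 388.0))
# {('sweet', 0): [10.0], ('sweet', 1): [250.0], ('sour', 6): [30.0]}
```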

Analyses.

Imaging analyses: Univariate.

We generated statistical contrast maps at the group level to identify brain regions that exhibited shared activation for the sight of food pictures and the actual perception of taste. For this analysis, we used the subject-level univariate beta-coefficient maps to perform group-level random effects analyses, using the AFNI program 3dttest++. For the food pictures task, we used the contrast of all food pictures (sweet, sour, and salty) versus object pictures. For the taste perception task, we used the respective contrast of all tastants (sweet, sour, and salty) versus the neutral tastant. Both contrast maps were separately corrected for multiple comparisons across the whole volume with a cluster-size family-wise error correction based on nonparametric permutation tests (see Permutation testing for multiple-comparison correction). We then performed a conjunction of the two independent contrast maps to identify brain voxels significantly activated by both tasks.
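The conjunction step can be illustrated with NumPy. The sketch assumes each input is a group t-map that has already been zeroed outside the clusters surviving correction, and it restricts the conjunction to positive effects (food > objects and taste > neutral); reporting the smaller of the two t values is an illustrative choice, not something the text specifies.

```python
import numpy as np


def conjunction(t_food, t_taste):
    """Voxels positive in both corrected maps, reported with the smaller t value.

    Assumes both inputs are corrected group t-maps (zero outside surviving clusters).
    """
    both = (t_food > 0) & (t_taste > 0)
    return np.where(both, np.minimum(t_food, t_taste), 0.0)


print(conjunction(np.array([0.0, 4.0, 5.0]), np.array([3.0, 0.0, 6.0])))
```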

Imaging analyses: Multivariate.

These analyses used a linear support vector machine (SVM) classification approach, implemented in The Decoding Toolbox (59), to classify tastants and food picture blocks based on their category labels. These SVM decoders were trained and tested on subject-level regression coefficients obtained from the food pictures and taste perception tasks, using leave-one-run-segment-out cross-validation. For this approach, we defined an independent set of ROIs using data from a previous study by our laboratory that used the same gustatory imaging paradigm at the same voxel resolution but a different group of subjects (12). The contrast used to produce these ROIs was the all taste vs. neutral taste contrast (figure 3 in ref. 12), which generated six distinct insula clusters (bilateral mid-insula, bilateral anterior insula, and bilateral ventral anterior insula; Fig. 3). Within these ROIs, we compared the average pairwise classification accuracy vs. chance (50%) using one-sample signed permutation tests. This procedure generates an empirical distribution of parameter averages by randomly flipping the sign of individual parameter values within a sample 10,000 times. The P value is the proportion of the empirical distribution above the average parameter (accuracy) value. These P values were then FDR corrected for multiple comparisons. Main effects and interactions within these ROIs were tested with a permutation-based ANOVA, implemented in the aovp function in the R library lmPerm (https://cran.r-project.org/web/packages/lmPerm/lmPerm.pdf).
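The ROI-level significance test and the multiple-comparison step can be sketched in plain Python. The sign-flip test follows the procedure described above, applied to each subject's accuracy minus chance; counting permuted means at or above the observed mean is a slightly conservative reading of "above". The FDR correction is assumed here to be the Benjamini-Hochberg procedure, since the text does not name the variant, and the SVM training itself is not shown.

```python
import random


def sign_flip_p(accuracies, chance=0.5, n_perm=10_000, seed=0):
    """One-sample sign-flip permutation test of mean accuracy against chance.

    Each permutation randomly flips the sign of every subject's (accuracy - chance)
    value; p is the fraction of permuted means at or above the observed mean.
    """
    rng = random.Random(seed)
    diffs = [a - chance for a in accuracies]
    observed = sum(diffs) / len(diffs)
    hits = 0
    for _ in range(n_perm):
        perm_mean = sum(d if rng.random() < 0.5 else -d for d in diffs) / len(diffs)
        if perm_mean >= observed:
            hits += 1
    return hits / n_perm


def fdr_bh(pvals):
    """Benjamini-Hochberg adjusted p values (assumed FDR procedure)."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # walk from the largest p value downward
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min
    return adjusted
```

In use, one p value per ROI from `sign_flip_p` would be passed together to `fdr_bh`, and ROIs with adjusted values below 0.05 would be reported.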

The whole-volume MVPA searchlight analyses (60) allowed us to identify the average classification accuracy within a multivoxel searchlight, defined as a sphere with a four-voxel radius centered on each voxel in the brain (251 voxels/433 mm³ total). For every subject, we performed separate searchlight analyses for both imaging tasks. The outputs of these searchlight analyses were voxel-wise maps of average pairwise classification accuracy versus chance (50%). To evaluate the classification results at the group level, we warped the resulting classification maps to Talairach atlas space and applied a small amount of spatial smoothing (2.4 mm FWHM) to normalize the distribution of scores across the dataset. We then performed group-level random-effects analyses using the AFNI program 3dttest++ and applied a nonparametric permutation test to correct for multiple comparisons (see Permutation testing for multiple-comparison correction for details). Through this procedure, we generated group-level classification accuracy maps for both the food pictures and taste perception tasks. We then created a conjunction of the two corrected classification maps, to identify shared brain regions present within both maps.
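The reported searchlight size can be checked by counting grid offsets. Counting offsets strictly inside a 4-voxel radius gives 251 voxels, matching the text, whereas including offsets that lie exactly on the boundary gives 257; which rule The Decoding Toolbox applies internally is an assumption here, inferred only from that match. At 1.728 mm³ per voxel, 251 voxels correspond to about 433.7 mm³.

```python
import itertools


def sphere_offsets(radius_vox, strict=True):
    """Integer (dx, dy, dz) offsets within radius_vox of the centre voxel.

    strict=True keeps offsets with squared distance < radius**2 (boundary excluded);
    strict=False also keeps offsets lying exactly on the boundary.
    """
    r = int(radius_vox)
    r2 = radius_vox ** 2
    offsets = []
    for dx, dy, dz in itertools.product(range(-r, r + 1), repeat=3):
        d2 = dx * dx + dy * dy + dz * dz
        if d2 < r2 or (not strict and d2 == r2):
            offsets.append((dx, dy, dz))
    return offsets


VOXEL_MM3 = 1.2 ** 3  # 1.728 mm^3 per isotropic 1.2-mm voxel
n_strict = len(sphere_offsets(4))
n_inclusive = len(sphere_offsets(4, strict=False))
print(n_strict, n_inclusive)           # 251 257
print(round(n_strict * VOXEL_MM3, 1))  # 433.7
```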

For the cross-modal decoding analyses, we trained the SVM decoder using the beta coefficients of the distinct tastes (sweet, sour, and salty) from the taste perception task and tested whether it could correctly predict the taste category of food picture blocks presented during the food pictures task and vice versa. We performed this analysis within the insula ROIs described above, and we corrected for multiple comparisons using an FDR correction. We also performed cross-modal decoding analyses using a multivariate searchlight approach, as described above.
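The train-on-one-task, test-on-the-other logic can be sketched with NumPy. To keep the example self-contained, a nearest-centroid correlation classifier is swapped in for the linear SVM used in the study, so this illustrates the cross-modal scheme rather than reproducing the decoder. Pairwise accuracies are averaged over class pairs, and the two training directions are averaged as well (an assumption about how "and vice versa" is summarized). Because training and test data come from different tasks, the sketch needs no cross-validation loop.

```python
import itertools

import numpy as np


def _zscore_rows(A):
    """Z-score each row so that a dot product divided by n_voxels is Pearson r."""
    return (A - A.mean(axis=1, keepdims=True)) / A.std(axis=1, keepdims=True)


def train_centroids(X, y):
    """Nearest-centroid (correlation) classifier: a stand-in for the linear SVM."""
    classes = sorted(set(y.tolist()))
    centroids = np.array([X[y == c].mean(axis=0) for c in classes])
    return classes, _zscore_rows(centroids)


def predict(model, X):
    classes, cz = model
    scores = _zscore_rows(X) @ cz.T  # proportional to Pearson r with each centroid
    return np.array([classes[i] for i in scores.argmax(axis=1)])


def pairwise_accuracy(train_X, train_y, test_X, test_y):
    """Mean accuracy over all class pairs, training on one dataset and testing on another."""
    accs = []
    for a, b in itertools.combinations(sorted(set(train_y.tolist())), 2):
        tr, te = np.isin(train_y, [a, b]), np.isin(test_y, [a, b])
        model = train_centroids(train_X[tr], train_y[tr])
        accs.append(np.mean(predict(model, test_X[te]) == test_y[te]))
    return float(np.mean(accs))


def cross_modal_accuracy(taste_X, taste_y, pic_X, pic_y):
    """Average of taste -> pictures and pictures -> taste decoding."""
    return 0.5 * (pairwise_accuracy(taste_X, taste_y, pic_X, pic_y)
                  + pairwise_accuracy(pic_X, pic_y, taste_X, taste_y))
```

Here `taste_X` and `pic_X` would be (run segments × voxels) beta arrays from an ROI, with matching string labels; accuracies near 0.5 correspond to the null cross-modal result described above.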

Pattern similarity analyses.

We performed similarity analyses of the multivoxel patterns for tastes and food pictures within our mid-insula ROIs. For within-modality analyses, we extracted the beta coefficients (using AFNI’s 3dMaskdump) for all voxels within our insula ROIs separately for odd and even runs, and then computed voxel-wise correlations between odd and even runs for each task condition (sweet, salty, and sour). For between-modality analyses, we computed voxel-wise correlations between food picture and taste beta coefficients for each taste (sweet, salty, and sour), using beta coefficients estimated from the full dataset. We Fisher-transformed the r values and tested for an effect of modality (within vs. between) using a group-level ANOVA.
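The split-half and between-modality correlations, with the Fisher transform, can be written as a few NumPy helpers. The dictionary layout (condition name mapped to a 1-D voxel pattern) and the clipping of r just inside ±1 before the arctanh are assumptions for illustration; the group-level ANOVA is not shown.

```python
import numpy as np


def fisher_z(r):
    """Fisher r-to-z transform; r is clipped just inside +/-1 to keep z finite."""
    return np.arctanh(np.clip(r, -0.999999, 0.999999))


def corr(a, b):
    """Pearson correlation between two voxel patterns."""
    return float(np.corrcoef(a, b)[0, 1])


def within_modality_z(odd, even):
    """Mean Fisher z of odd-run vs even-run patterns for each condition (one modality)."""
    return float(np.mean([fisher_z(corr(odd[c], even[c])) for c in odd]))


def between_modality_z(taste, pictures):
    """Mean Fisher z of taste vs food-picture patterns for matching taste categories."""
    return float(np.mean([fisher_z(corr(taste[c], pictures[c])) for c in taste]))
```

Per subject, one within-modality z per task and one between-modality z would then enter the group-level ANOVA on modality.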

Voxel weight analyses.

We examined the distribution of voxel weights generated by the SVM models to classify tastes and pictures within our mid-insula ROIs. The SVM weights were transformed using the procedure described in ref. 21, which makes SVM weights more clearly interpretable in the context of neuroimaging analyses. As with the pattern similarity analyses above, we computed split-half correlations of the voxel weights for within-modality analyses and correlations of the full-dataset weights for between-modality analyses, for each taste (sweet, salty, and sour). We again Fisher-transformed the r values and tested for an effect of modality (within vs. between) using a group-level ANOVA.
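Ref. 21 is not part of this excerpt. Assuming it describes the covariance-based forward-model transformation that converts linear-decoder weights into activation patterns (as proposed by Haufe and colleagues), the core step for a single binary decoder looks like this. The function names and the normalization by the variance of the decoder output are assumptions of this sketch.

```python
import numpy as np


def haufe_pattern(X, w):
    """Activation pattern for a linear decoder with weights w (assumed method).

    X: (n_samples, n_voxels) training patterns; w: (n_voxels,) decoder weights.
    Returns cov(X) @ w / var(X @ w), the forward-model pattern.
    """
    Xc = X - X.mean(axis=0)
    cov = Xc.T @ Xc / (X.shape[0] - 1)
    decoder_output = Xc @ w
    return cov @ w / decoder_output.var(ddof=1)


def split_half_r(pattern_a, pattern_b):
    """Pearson correlation between two voxel-weight (or activation) maps."""
    return float(np.corrcoef(pattern_a, pattern_b)[0, 1])
```

The transformed patterns from each data half (or each modality) would then be correlated with `split_half_r` and Fisher-transformed as in the pattern similarity analyses.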

Object pictures analyses.

To test whether images of nonfoods could be distinguished in the same areas of the brain as images of foods, we ran a set of supplemental decoding analyses at the ROI and whole-brain level. For this analysis, we generated run-level beta coefficients for each participant, using gamma-variate rather than block regressors, for a subset of the items presented in the nonfood blocks of the food pictures task: lightbulbs, marbles, gloves, and inner tubes. We then ran multivariate decoding analyses on the object data within the insula ROIs and within a whole-brain searchlight, as we had for the food picture data, to determine the average accuracy for classifying these object pictures.

Pleasantness analyses.

We performed a series of analyses to examine the effect of subjects’ self-reported pleasantness ratings for both food pictures and tastants on activation during the respective imaging tasks. At the whole-volume level, we used separate subject-level amplitude-modulation regression analyses to identify whether the hemodynamic response to food pictures or tastants was modulated, from trial to trial, by participants’ self-reported ratings of the pleasantness of the food pictures or tastes. In a second approach, we used a linear mixed-effects analysis (AFNI program 3dLME) to identify the variance explained at the group level by participants’ pleasantness ratings for both tastants and food pictures. At the ROI level, we examined the effect of pleasantness ratings on the response to food pictures within the taste-responsive clusters of the insula described above.
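The amplitude-modulation idea can be illustrated by building, for one condition, an unmodulated boxcar and a second boxcar scaled by mean-centered ratings; both would then be convolved with the HRF and entered into the regression. This construction, including the mean-centering, is an assumed common convention rather than a description of the authors' exact AFNI options.

```python
import numpy as np


def am_boxcars(onsets_s, duration_s, ratings, n_vols, tr=2.0):
    """Unmodulated and rating-modulated boxcars for one condition, sampled per TR.

    The modulated boxcar uses mean-centered ratings (assumed convention), so its
    beta reflects trial-to-trial variation around the condition's average response.
    """
    centered = np.asarray(ratings, dtype=float) - np.mean(ratings)
    t = np.arange(n_vols) * tr
    unmodulated = np.zeros(n_vols)
    modulated = np.zeros(n_vols)
    for onset, amp in zip(onsets_s, centered):
        on = (t >= onset) & (t < onset + duration_s)
        unmodulated[on] = 1.0
        modulated[on] = amp
    return unmodulated, modulated
```

When every trial spans the same number of samples, mean-centering makes the two boxcars orthogonal, so the rating effect is estimated separately from the average response.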

Permutation testing for multiple-comparison correction.

Multiple-comparison correction was performed using AFNI’s 3dClustSim within a whole-volume temporal signal-to-noise ratio (TSNR) mask. This mask was constructed from the intersection of the EPI scan windows for all subjects, for both tasks (after transformation to Talairach space), with a brain mask in atlas space (Fig. 2C). The mask was then subjected to a TSNR threshold, such that all remaining voxels within the mask had an average unsmoothed TSNR of 10 or greater. For one-sample t tests, the sign of individual datasets within a sample was randomly flipped 10,000 times. This process generates an empirical distribution of cluster sizes at the desired cluster-defining threshold (in this case, P < 0.001). Clusters that survived correction were those larger than 95% of the clusters within this empirical cluster-size distribution.
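A generic version of this cluster-size correction is sketched below with NumPy and the standard library. It is not 3dClustSim: the TSNR mask, face-connected clustering, and whole-map sign flipping illustrate the idea, and the voxelwise cutoff `t_crit` must be supplied by the caller (for P < 0.001 it depends on the degrees of freedom and on whether the test is one- or two-sided). Reading "larger than 95% of the clusters" as the 95th percentile of the maximum cluster size per permutation is an assumption.

```python
from collections import deque

import numpy as np

NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def tsnr_mask(ts, threshold=10.0):
    """ts: (x, y, z, time). True where temporal mean / temporal SD >= threshold."""
    sd = ts.std(axis=-1)
    with np.errstate(divide="ignore", invalid="ignore"):
        tsnr = np.where(sd > 0, ts.mean(axis=-1) / sd, 0.0)
    return tsnr >= threshold


def max_cluster_size(supra):
    """Size of the largest face-connected cluster in a boolean 3D array."""
    seen = np.zeros(supra.shape, dtype=bool)
    best = 0
    for start in zip(*np.nonzero(supra)):
        if seen[start]:
            continue
        seen[start] = True
        queue, size = deque([start]), 0
        while queue:  # breadth-first flood fill of one cluster
            x, y, z = queue.popleft()
            size += 1
            for dx, dy, dz in NEIGHBOURS:
                nb = (x + dx, y + dy, z + dz)
                inside = all(0 <= nb[k] < supra.shape[k] for k in range(3))
                if inside and supra[nb] and not seen[nb]:
                    seen[nb] = True
                    queue.append(nb)
        best = max(best, size)
    return best


def cluster_size_threshold(data, mask, t_crit, n_perm=1000, alpha=0.05, seed=0):
    """Sign-flip null distribution of the maximum cluster size (one-sample t test).

    data: (n_subjects, x, y, z). Returns the (1 - alpha) quantile of the
    permuted maximum suprathreshold cluster sizes.
    """
    rng = np.random.default_rng(seed)
    n = data.shape[0]
    max_sizes = []
    for _ in range(n_perm):
        signs = rng.choice([-1.0, 1.0], size=n)[:, None, None, None]
        flipped = data * signs
        with np.errstate(divide="ignore", invalid="ignore"):
            t = flipped.mean(axis=0) / (flipped.std(axis=0, ddof=1) / np.sqrt(n))
        max_sizes.append(max_cluster_size((t > t_crit) & mask))
    return float(np.quantile(max_sizes, 1.0 - alpha))
```

Observed clusters larger than the returned size would be retained as surviving correction.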

Supplementary Material

Acknowledgments

We thank Sean Marrett, Martin Hebart, Cameron Riddell, the NIMH Section on Instrumentation, and the NIH Clinical Center pharmacy for their assistance with various aspects of the design and execution of this study. This study was supported by the Intramural Research Program of NIMH, NIH, and it was conducted under NIH Clinical Study Protocol 93-M-0170 (ZIA MH002920). The ClinicalTrials.gov ID is NCT00001360.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2010932118/-/DCSupplemental.

Data and Code Availability.

Anonymized anatomical and functional fMRI data and analysis code have been deposited in OpenNeuro.org (https://openneuro.org/datasets/ds003340/versions/1.0.2) (61).

References

1. Martin A., GRAPES-Grounding representations in action, perception, and emotion systems: How object properties and categories are represented in the human brain. Psychon. Bull. Rev. 23, 979–990 (2016).

2. Barsalou L. W., Kyle Simmons W., Barbey A. K., Wilson C. D., Grounding conceptual knowledge in modality-specific systems. Trends Cogn. Sci. 7, 84–91 (2003).

3. Simmons W. K., Martin A., Barsalou L. W., Pictures of appetizing foods activate gustatory cortices for taste and reward. Cereb. Cortex 15, 1602–1608 (2005).

4. Simmons W. K., et al., Category-specific integration of homeostatic signals in caudal but not rostral human insula. Nat. Neurosci. 16, 1551–1552 (2013).

5. Simmons W. K., et al., Depression-related increases and decreases in appetite: Dissociable patterns of aberrant activity in reward and interoceptive neurocircuitry. Am. J. Psychiatry 173, 418–428 (2016).

6. Tiedemann L. J., et al., Central insulin modulates food valuation via mesolimbic pathways. Nat. Commun. 8, 16052 (2017).

7. van der Laan L. N., de Ridder D. T. D., Viergever M. A., Smeets P. A. M., The first taste is always with the eyes: A meta-analysis on the neural correlates of processing visual food cues. Neuroimage 55, 296–303 (2011).

8. Tang D. W., Fellows L. K., Small D. M., Dagher A., Food and drug cues activate similar brain regions: A meta-analysis of functional MRI studies. Physiol. Behav. 106, 317–324 (2012).

9. Avery J. A., et al., A common gustatory and interoceptive representation in the human mid-insula. Hum. Brain Mapp. 36, 2996–3006 (2015).

10. Avery J. A., et al., Convergent gustatory and viscerosensory processing in the human dorsal mid-insula. Hum. Brain Mapp. 38, 2150–2164 (2017).

11. Avery J. A., et al., Neural correlates of taste reactivity in autism spectrum disorder. Neuroimage Clin. 19, 38–46 (2018).

12. Avery J. A., et al., Taste quality representation in the human brain. J. Neurosci. 40, 1042–1052 (2020).

13. Small D. M., Taste representation in the human insula. Brain Struct. Funct. 214, 551–561 (2010).

14. Kobayakawa T., et al., Location of the primary gustatory area in humans and its properties, studied by magnetoencephalography. Chem. Senses 30, i226–i227 (2005).

15. Iannilli E., Noennig N., Hummel T., Schoenfeld A. M., Spatio-temporal correlates of taste processing in the human primary gustatory cortex. Neuroscience 273, 92–99 (2014).

16. Ohla K., Toepel U., le Coutre J., Hudry J., Visual-gustatory interaction: Orbitofrontal and insular cortices mediate the effect of high-calorie visual food cues on taste pleasantness. PLoS One 7, e32434 (2012).

17. Chikazoe J., Lee D. H., Kriegeskorte N., Anderson A. K., Distinct representations of basic taste qualities in human gustatory cortex. Nat. Commun. 10, 1048 (2019).

18. Porcu E., et al., Macroscopic information-based taste representations in insular cortex are shaped by stimulus concentration. Proc. Natl. Acad. Sci. U.S.A. 117, 7409–7417 (2020).

19. Nelson S. M., et al., Role of the anterior insula in task-level control and focal attention. Brain Struct. Funct. 214, 669–680 (2010).

20. Kurth F., Zilles K., Fox P. T., Laird A. R., Eickhoff S. B., A link between the systems: Functional differentiation and integration within the human insula revealed by meta-analysis. Brain Struct. Funct. 214, 519–534 (2010).

21. Haufe S., et al., On the interpretation of weight vectors of linear models in multivariate neuroimaging. Neuroimage 87, 96–110 (2014).

22. Veldhuizen M. G., et al., Identification of human gustatory cortex by activation likelihood estimation. Hum. Brain Mapp. 32, 2256–2266 (2011).

23. Yeung A. W. K., Goto T. K., Leung W. K., Basic taste processing recruits bilateral anteroventral and middle dorsal insulae: An activation likelihood estimation meta-analysis of fMRI studies. Brain Behav. 7, e00655 (2017).

24. Meyer K., et al., Predicting visual stimuli on the basis of activity in auditory cortices. Nat. Neurosci. 13, 667–668 (2010).

25. Jung Y., Larsen B., Walther D. B., Modality-independent coding of scene categories in prefrontal cortex. J. Neurosci. 38, 5969–5981 (2018).

26. Ghazanfar A. A., Schroeder C. E., Is neocortex essentially multisensory? Trends Cogn. Sci. 10, 278–285 (2006).

27. Noonan M. P., et al., Separate value comparison and learning mechanisms in macaque medial and lateral orbitofrontal cortex. Proc. Natl. Acad. Sci. U.S.A. 107, 20547–20552 (2010).

28. Rushworth M. F., Noonan M. P., Boorman E. D., Walton M. E., Behrens T. E., Frontal cortex and reward-guided learning and decision-making. Neuron 70, 1054–1069 (2011).

29. Simmons W. K., et al., The ventral pallidum and orbitofrontal cortex support food pleasantness inferences. Brain Struct. Funct. 219, 473–483 (2014).

30. Cavada C., Compañy T., Tejedor J., Cruz-Rizzolo R. J., Reinoso-Suárez F., The anatomical connections of the macaque monkey orbitofrontal cortex. A review. Cereb. Cortex 10, 220–242 (2000).

31. Rolls E. T., Functions of the anterior insula in taste, autonomic, and related functions. Brain Cogn. 110, 4–19 (2016).

32. Scott T. R., Plata-Salamán C. R., Taste in the monkey cortex. Physiol. Behav. 67, 489–511 (1999).

33. Reilly S., Bornovalova M. A., Conditioned taste aversion and amygdala lesions in the rat: A critical review. Neurosci. Biobehav. Rev. 29, 1067–1088 (2005).

34. Rudebeck P. H., Murray E. A., The orbitofrontal oracle: Cortical mechanisms for the prediction and evaluation of specific behavioral outcomes. Neuron 84, 1143–1156 (2014).

35. Livneh Y., et al., Homeostatic circuits selectively gate food cue responses in insular cortex. Nature 546, 611–616 (2017).

36. Cerf-Ducastel B., Murphy C., Neural substrates of cross-modal olfactory recognition memory: An fMRI study. Neuroimage 31, 386–396 (2006).

37. Lundström J. N., Regenbogen C., Ohla K., Seubert J., Prefrontal control over occipital responses to crossmodal overlap varies across the congruency spectrum. Cereb. Cortex 29, 3023–3033 (2019).

38. DiFeliceantonio A. G., et al., Supra-additive effects of combining fat and carbohydrate on food reward. Cell Metab. 28, 33–44.e3 (2018).

39. Grill-Spector K., Henson R., Martin A., Repetition and the brain: Neural models of stimulus-specific effects. Trends Cogn. Sci. 10, 14–23 (2006).

40. Gotts S. J., Milleville S. C., Bellgowan P. S. F., Martin A., Broad and narrow conceptual tuning in the human frontal lobes. Cereb. Cortex 21, 477–491 (2011).

41. Huber L., et al., High-resolution CBV-fMRI allows mapping of laminar activity and connectivity of cortical input and output in human M1. Neuron 96, 1253–1263.e7 (2017).

42. Persichetti A. S., Avery J. A., Huber L., Merriam E. P., Martin A., Layer-specific contributions to imagined and executed hand movements in human primary motor cortex. Curr. Biol. 30, 1721–1725.e3 (2020).

43. Bushong B., King L. M., Camerer C. F., Rangel A., Pavlovian processes in consumer choice: The physical presence of a good increases willingness-to-pay. Am. Econ. Rev. 100, 1556–1571 (2010).

44. Romero C. A., Compton M. T., Yang Y., Snow J. C., The real deal: Willingness-to-pay and satiety expectations are greater for real foods versus their images. Cortex 107, 78–91 (2018).

45. Smeets P. A., Erkner A., de Graaf C., Cephalic phase responses and appetite. Nutr. Rev. 68, 643–655 (2010).

46. Small D. M., Prescott J., Odor/taste integration and the perception of flavor. Exp. Brain Res. 166, 345–357 (2005).

47. de Araujo I. E. T., Rolls E. T., Kringelbach M. L., McGlone F., Phillips N., Taste-olfactory convergence, and the representation of the pleasantness of flavour, in the human brain. Eur. J. Neurosci. 18, 2059–2068 (2003).

48. de Araujo I. E., Rolls E. T., Representation in the human brain of food texture and oral fat. J. Neurosci. 24, 3086–3093 (2004).

49. Kelly C., et al., A convergent functional architecture of the insula emerges across imaging modalities. Neuroimage 61, 1129–1142 (2012).

50. O’Doherty J., et al., Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science 304, 452–454 (2004).

51. Dalenberg J. R., Hoogeveen H. R., Renken R. J., Langers D. R. M., ter Horst G. J., Functional specialization of the male insula during taste perception. Neuroimage 119, 210–220 (2015).

52. Craig A. D., How do you feel? Interoception: The sense of the physiological condition of the body. Nat. Rev. Neurosci. 3, 655–666 (2002).

53. Simmons W. K., et al., Keeping the body in mind: Insula functional organization and functional connectivity integrate interoceptive, exteroceptive, and emotional awareness. Hum. Brain Mapp. 34, 2944–2958 (2013).

54. Ogawa H., Wang X.-D., Neurons in the cortical taste area receive nociceptive inputs from the whole body as well as the oral cavity in the rat. Neurosci. Lett. 322, 87–90 (2002).

55. Hanamori T., Kunitake T., Kato K., Kannan H., Neurons in the posterior insular cortex are responsive to gustatory stimulation of the pharyngolarynx, baroreceptor and chemoreceptor stimulation, and tail pinch in rats. Brain Res. 785, 97–106 (1998).

56. de Araujo I. E., Lin T., Veldhuizen M. G., Small D. M., Metabolic regulation of brain response to food cues. Curr. Biol. 23, 878–883 (2013).

57. Livneh Y., et al., Estimation of current and future physiological states in insular cortex. Neuron 105, 1094–1111.e10 (2020).

58. Barrett L. F., Simmons W. K., Interoceptive predictions in the brain. Nat. Rev. Neurosci. 16, 419–429 (2015).

59. Hebart M. N., Görgen K., Haynes J.-D., The Decoding Toolbox (TDT): A versatile software package for multivariate analyses of functional imaging data. Front. Neuroinform. 8, 88 (2015).

60. Kriegeskorte N., Goebel R., Bandettini P., Information-based functional brain mapping. Proc. Natl. Acad. Sci. U.S.A. 103, 3863–3868 (2006).

61. Avery J. A., Liu A. G., Ingeholm J. E., Gotts S. J., Martin A., Tasting pictures: Viewing images of foods evokes taste-quality-specific activity in gustatory insular cortex. OpenNeuro. https://openneuro.org/datasets/ds003340/versions/1.0.2. Deposited 10 October 2020.
