Significance

Science and engineering have benefited greatly from the ability of finite element methods (FEMs) to simulate nonlinear, time-dependent complex systems. The recent advent of extensive data collection from such complex systems now raises the question of how to systematically incorporate these data into finite element models, consistently updating the solution when the mathematical model is misspecified relative to physical reality. This article describes a general and widely applicable methodology for the coherent synthesis of data with FEM models, providing a data-driven probability distribution that captures all sources of uncertainty in the pairing of FEM with measurements.

Keywords: Bayesian calibration, finite element methods, model discrepancy

Abstract

We present a statistical finite element method for nonlinear, time-dependent phenomena, illustrated in the context of nonlinear internal waves (solitons). We take a Bayesian approach and leverage the finite element method to cast the statistical problem as a nonlinear Gaussian state–space model, updating the solution, in receipt of data, in a filtering framework. The method is applicable to problems across science and engineering for which finite element methods are appropriate. The Korteweg–de Vries equation for solitons is presented because it reflects the necessary complexity while being suitably familiar and succinct for pedagogical purposes. We present two algorithms to implement this method, based on the extended and ensemble Kalman filters, and demonstrate their effectiveness with a simulation study and a case study with experimental data. The generality of our approach is demonstrated in SI Appendix, where we present examples from additional nonlinear, time-dependent partial differential equations (Burgers equation, Kuramoto–Sivashinsky equation).

The central role of physically derived, nonlinear, time-dependent partial differential equations (PDEs) in scientific and engineering research is undisputed, as is the need for numerical intervention in order to understand their behavior. The finite element method (FEM) has emerged as the foremost strategy for this numerical intervention, yet when these discretized solutions are compared with empirical evidence, they reveal model mismatch that requires statistical formalisms to be dealt with appropriately (1–3). To address this problem of model misspecification, in this paper we introduce stochastic forcing inside the PDE and update the FEM discretized PDE solution with data in a filtering context.

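Stochastic forcing is introduced through a random function $\xi$ within the governing equations. This represents an unknown process, which may have been omitted in the formulation of the physical model. For an elliptic linear PDE with coefficients $\Lambda$, this can be expressed as

$$\mathcal{L}_\Lambda u = f + \xi, \qquad \xi \sim \mathcal{GP}\big(0, k_\theta(x, x')\big).$$

The push forward of the Gaussian random field $\xi$, with covariance parameters $\theta$, induces a probability measure over the space of admissible solutions to the above. To embed this into a finite element model, we start with the weak form

$$\mathcal{A}_\Lambda(u, v) = \langle f, v \rangle + \langle \xi, v \rangle,$$

where $\mathcal{A}_\Lambda$ is the bilinear form generated from $\mathcal{L}_\Lambda$ and $\langle \cdot, \cdot \rangle$ is the appropriate Hilbert space inner product. Discretizing with finite elements, $u(x) = \sum_{i=1}^{M} u_i\,\phi_i(x)$, yields the Gaussian measure over the solution FEM coefficients $\mathbf{u} = (u_1, \ldots, u_M)^\top$:

$$p_\Lambda(\mathbf{u} \mid \theta) = \mathcal{N}\big(\mathbf{A}^{-1}\mathbf{b},\; \mathbf{A}^{-1}\mathbf{G}\mathbf{A}^{-\top}\big),$$

where $A_{ij} = \mathcal{A}_\Lambda(\phi_i, \phi_j)$, $b_j = \langle f, \phi_j \rangle$, and $G_{ij} = \langle \phi_i, \langle k_\theta(\cdot, \cdot), \phi_j \rangle \rangle$. This defines a (finite-dimensional) prior distribution over the FEM model, which represents all assumed knowledge before observing data. The mean is the standard Galerkin solution, and the covariance results from the action of the discretized PDE operator on the covariance $\mathbf{G}$; further details are contained in SI Appendix, section 1. This was first developed in ref. 4, and we demonstrate the generality of such an approach by extending it to nonlinear, time-dependent PDEs.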
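To make the construction concrete, here is a minimal sketch under assumptions of our own choosing (not the paper's setup): the 1D Poisson problem $-u'' = f + \xi$ on $[0, 1]$ with homogeneous Dirichlet conditions, piecewise-linear elements on a uniform mesh, constant $f$, a squared-exponential kernel, and nodal (lumped) quadrature for the forcing covariance, $\mathbf{G} \approx h^2 \mathbf{K}$. The function names are illustrative.

```python
import numpy as np

def se_kernel(x, rho, ell):
    """Squared-exponential kernel k(x, x') = rho^2 exp(-(x - x')^2 / (2 ell^2))."""
    d = x[:, None] - x[None, :]
    return rho**2 * np.exp(-0.5 * (d / ell) ** 2)

def statfem_prior_poisson(n_el=32, f=1.0, rho=1.0, ell=0.1):
    """Prior N(A^{-1} b, A^{-1} G A^{-T}) over interior P1 coefficients for
    -u'' = f + xi on [0, 1], u(0) = u(1) = 0, xi ~ GP(0, k)."""
    h = 1.0 / n_el
    x = np.linspace(0.0, 1.0, n_el + 1)[1:-1]          # interior nodes
    m = x.size
    # Stiffness matrix A_ij = int phi_i' phi_j' dx (tridiagonal for P1 elements).
    A = (2.0 * np.eye(m) - np.eye(m, k=1) - np.eye(m, k=-1)) / h
    # Load vector b_j = <f, phi_j>, exact for a constant source f.
    b = f * h * np.ones(m)
    # G_ij = <phi_i, <k, phi_j>>, approximated by nodal quadrature: G ~= h^2 K.
    G = h**2 * se_kernel(x, rho, ell)
    mean = np.linalg.solve(A, b)                        # standard Galerkin solution
    cov = np.linalg.solve(A, np.linalg.solve(A, G).T)   # A^{-1} G A^{-T}
    return x, mean, cov
```

For example, `x, mean, cov = statfem_prior_poisson()` returns a mean that matches the exact nodal values $x(1 - x)/2$ for $f = 1$ (to round-off), because piecewise-linear Galerkin is nodally exact for this 1D problem; the covariance is the push forward of the forcing through $\mathbf{A}^{-1}$.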

An area in which nonlinear and time-dependent problems are ubiquitous is ocean dynamic processes, where essentially all problems stem from a governing system of nonlinear, time-dependent equations (e.g., the Navier–Stokes equations). The ocean dynamics community has grown increasingly cognizant of the importance of accurate uncertainty quantification (5, 6), with many possible applications [e.g., rogue waves (7), turbulent flow (8)] for our proposed methodology.

An example process is nonlinear internal waves (solitons), which are observed as waves of depression or elevation along a pycnocline in a density-stratified fluid and are of broad interest to both the scientific and engineering communities (9–13). The classical mathematical model for solitons is the Korteweg–de Vries (KdV) equation (14):

$$u_t + \alpha u u_x + \beta u_{xxx} + c u_x = 0, \qquad (1)$$

where $u := u(x, t)$ is the pycnocline displacement. Coefficients $\alpha$, $\beta$, and $c$ are determined by physical parameters. Eq. 1 is readily interpretable: waves propagate at wave speed $c$, nonlinear steepening results from $\alpha u u_x$, and dispersion is due to $\beta u_{xxx}$. Relative coefficient values determine the dominant regime, and waves can vary from quasilinear to highly nonlinear.
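As a quick check on this interpretation, the standard single-soliton solution of Eq. 1, $u(x, t) = A\,\mathrm{sech}^2\big(\kappa(x - Vt)\big)$ with $V = c + \alpha A / 3$ and $\kappa = \sqrt{\alpha A / (12\beta)}$ (valid when $\alpha A / \beta > 0$), travels faster than the linear speed $c$ by an amount set by its amplitude, with a width fixed by the balance of steepening and dispersion. The sketch below evaluates the residual of Eq. 1 for this solution using periodic Fourier derivatives; the coefficient values are illustrative assumptions, not taken from this study.

```python
import numpy as np

def kdv_soliton(x, t, A, alpha, beta, c):
    """Single soliton of u_t + alpha u u_x + beta u_xxx + c u_x = 0 (needs alpha * A / beta > 0)."""
    V = c + alpha * A / 3.0                      # speed grows with amplitude
    kappa = np.sqrt(alpha * A / (12.0 * beta))   # inverse width
    return A / np.cosh(kappa * (x - V * t)) ** 2

def spectral_dx(u, L, order=1):
    """Periodic Fourier derivative of the given order on a domain of length L."""
    k = 2.0 * np.pi * np.fft.fftfreq(u.size, d=L / u.size)
    return np.real(np.fft.ifft((1j * k) ** order * np.fft.fft(u)))

# Illustrative coefficients on a periodic domain wide enough for the
# soliton tails to be negligible at the boundary.
L, n = 40.0, 512
x = np.linspace(-L / 2, L / 2, n, endpoint=False)
A, alpha, beta, c = 1.0, 6.0, 1.0, 0.5
dt = 1e-5

u0 = kdv_soliton(x, 0.0, A, alpha, beta, c)
# Central difference in time, spectral derivatives in space.
u_t = (kdv_soliton(x, dt, A, alpha, beta, c) - kdv_soliton(x, -dt, A, alpha, beta, c)) / (2 * dt)
u_x = spectral_dx(u0, L)
residual = u_t + alpha * u0 * u_x + beta * spectral_dx(u0, L, 3) + c * u_x
```

The residual is at round-off level; increasing `A` raises the excess speed $\alpha A / 3$ and narrows the wave, which is the amplitude dependence that distinguishes solitons from linear waves travelling at $c$.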

Despite KdV being well studied (15) and widely applied (16–18), its relative simplicity makes it prone to model mismatch. To compensate for this mismatch, we update the FEM discretized solution with observations in a filtering context. The resulting statistical FEM (statFEM) is shown using simulated and experimental data to

i) Approximate the data-generating process with a statistically coherent uncertainty quantification.

ii) Synthesize physics and data to give an interpretable posterior distribution.

iii) Utilize sparsely observed data to reconstruct observed phenomena.

iv) Enable the application of simpler physical models, updated with observations.

For practitioners faced with data, we believe these benefits are of importance, and we demonstrate the generality of our method in SI Appendix with further examples. Code to replicate the analysis is freely available online.*

A Nonlinear, Time-Evolving Statistical FEM

A Gaussian process (GP), $\xi$, is introduced inside the governing equations; it represents an unknown forcing process in space and time, with time-varying parameters $\theta_t$. For a general nonlinear PDE, this is given by

$$\partial_t u + \mathcal{L}_\Lambda u + \mathcal{F}_\Lambda(u) = \xi, \qquad (2)$$

where $\mathcal{L}_\Lambda$ and $\mathcal{F}_\Lambda$ represent linear and nonlinear differential operators, respectively, with coefficients $\Lambda$. The push forward of the Gaussian measure induces a probability measure over the space of admissible solutions to Eq. 2 and characterizes our prior belief in the model based on modeling assumptions. The kernel of the covariance operator is given by

$$\mathbb{E}\big[\xi(x, t)\,\xi(x', t')\big] = \delta(t - t')\,k_{\theta_t}(x, x').$$

The exact form of this covariance can be decided upon by domain experts so that the induced uncertainty is physically motivated. For example, $k_\theta$ can be chosen to be a Matérn covariance function to reflect the unknown forcing having derivatives up to a known order. We assume a white noise process in time to facilitate the application of standard Kalman methods to solve the filtering problem; this is also the convention for stochastic differential equations (19). When computing the prior defined by Eq. 2, we use fixed parameters $\theta_t = \theta$. When conditioning on data, we take an empirical Bayes approach and estimate $\theta_t$ through the log-marginal posterior.
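The Matérn choice can be sketched for the half-integer smoothness values $\nu \in \{1/2, 3/2, 5/2\}$, which have closed forms. A GP with Matérn-$\nu$ covariance is mean-square differentiable $k$ times exactly when $\nu > k$, which is how the kernel encodes a known order of differentiability. The function name and the (scale $\rho$, length $\ell$) parameterization below are our own illustrative choices.

```python
import numpy as np

def matern(x, y, rho, ell, nu):
    """Matérn covariance between 1D point sets x and y for nu in {0.5, 1.5, 2.5}.
    rho is the scale (marginal standard deviation) and ell the length scale."""
    r = np.abs(x[:, None] - y[None, :]) / ell
    if nu == 0.5:
        g = np.exp(-r)
    elif nu == 1.5:
        s = np.sqrt(3.0) * r
        g = (1.0 + s) * np.exp(-s)
    elif nu == 2.5:
        s = np.sqrt(5.0) * r
        g = (1.0 + s + s**2 / 3.0) * np.exp(-s)
    else:
        raise ValueError("only the closed forms nu = 0.5, 1.5, 2.5 are implemented")
    return rho**2 * g

# Draw one sample of a nu = 3/2 forcing field (mean-square differentiable once).
x = np.linspace(0.0, 1.0, 200)
K = matern(x, x, rho=1.0, ell=0.2, nu=1.5)
rng = np.random.default_rng(0)
sample = np.linalg.cholesky(K + 1e-10 * np.eye(x.size)) @ rng.standard_normal(x.size)
```

The small diagonal jitter is a common numerical safeguard for the Cholesky factorization, not part of the model.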

Coefficients $\Lambda$ of Eq. 2 are assumed to be known, and we choose to update the numerical solution to the model, acknowledging that estimating $\Lambda$ [using, e.g., maximum likelihood methods (20), Markov chain Monte Carlo (21), or inversion methods in general (22)] is also of utmost interest.

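We discretize Eq. 2 using finite elements in space and an implicit or explicit Euler method in time† and denote by $\mathbf{u}_n$ the FEM coefficients at time $t_n = n\Delta t$, for time step $\Delta t$. Further analysis of this discretization will be the focus of future work. For a potentially nonlinear system of equations $\mathcal{M}$, the resultant system can be expressed as (full construction is given in SI Appendix, sections 2 and 3)

$$\mathcal{M}(\mathbf{u}_n, \mathbf{u}_{n-1}) + \mathbf{e}_{n-1} = 0, \qquad \mathbf{e}_{n-1} \sim \mathcal{N}\big(0, \Delta t\,\mathbf{G}(\theta_n)\big).$$

The vector $\mathbf{e}_{n-1}$ represents Galerkin discretized increments of a Brownian motion process in time, with $G(\theta_n)_{ij} = \langle \phi_i, \langle k_{\theta_n}(\cdot, \cdot), \phi_j \rangle \rangle$ as in the elliptic case.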
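To illustrate the structure of one stochastic time step, the following sketch uses a hypothetical toy problem of our own, $u_t - u_{xx} + u^3 = \xi$ on $[0, 1]$ with homogeneous Dirichlet conditions. It assumes implicit Euler in time, piecewise-linear elements, a lumped mass matrix (also used for the cubic term), and the nodal-quadrature approximation $\mathbf{G} \approx h^2\mathbf{K}$; the nonlinear system $\mathcal{M}(\mathbf{u}_n, \mathbf{u}_{n-1}) + \mathbf{e}_{n-1} = 0$ is solved with Newton's method. None of these choices is the paper's KdV discretization.

```python
import numpy as np

def newton_solve(residual, jacobian, u0, tol=1e-10, max_iter=50):
    """Solve residual(u) = 0 with Newton's method, starting from u0."""
    u = u0.copy()
    for _ in range(max_iter):
        du = np.linalg.solve(jacobian(u), -residual(u))
        u += du
        if np.linalg.norm(du) < tol:
            return u
    raise RuntimeError("Newton iteration did not converge")

def stochastic_step(u_prev, e, mass, stiff, dt):
    """Implicit Euler step M(u_n, u_{n-1}) + e_{n-1} = 0 for the toy problem
    u_t - u_xx + u^3 = xi (lumped mass, P1 stiffness)."""
    def residual(u):
        return mass @ (u - u_prev) + dt * (stiff @ u + mass @ u**3) + e

    def jacobian(u):
        return mass + dt * (stiff + mass @ np.diag(3.0 * u**2))

    return newton_solve(residual, jacobian, u_prev)

# Uniform P1 mesh on [0, 1]; the unknowns are the interior nodes.
n_el, dt = 32, 0.01
h = 1.0 / n_el
x = np.linspace(0.0, 1.0, n_el + 1)[1:-1]
m = x.size
mass = h * np.eye(m)
stiff = (2.0 * np.eye(m) - np.eye(m, k=1) - np.eye(m, k=-1)) / h
# Nodal-quadrature forcing covariance G ~= h^2 K, squared-exponential K with length 0.1.
G = h**2 * np.exp(-0.5 * ((x[:, None] - x[None, :]) / 0.1) ** 2)

rng = np.random.default_rng(1)
u_prev = np.sin(np.pi * x)
e = rng.multivariate_normal(np.zeros(m), dt * G)   # e_{n-1} ~ N(0, dt G)
u_next = stochastic_step(u_prev, e, mass, stiff, dt)
```

Repeating this step with fresh draws of `e` produces prior sample paths; setting `e` to zero recovers the deterministic FEM solution.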

Unlike the elliptic example in the Introduction, for Eq. 2 the induced probability measure on the FEM coefficients is not available in closed form, and we present two approximations, based on the extended Kalman filter (EKF) and the ensemble Kalman filter (EnKF). The first linearizes the nonlinear discretized system about the current solution, using its Jacobian (evaluated at the current solution), to give a Gaussian approximation of the measure on the FEM coefficients. The second uses an ensemble: at each time step, a perturbed system is solved for each member, with perturbations drawn from the distribution of the discretized stochastic forcing. Summary statistics (e.g., mean, covariance) are then computed from this ensemble.

For the prior, the deterministic FEM solution is identically equal to the mean in the EKF approach. However, we have found in numerical experiments that, when large time steps are used, the EKF method (owing to its use of the Jacobian) inflates the covariance at points of high gradient and reduces it at points where the gradient is near zero. This does not occur with the EnKF approach.

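| Algorithm 1: EKF algorithm |
|---|
| for $n = 1, \ldots, N$ do |
| (Prediction step) |
| Solve $\mathcal{M}(\hat{\mathbf{m}}_n, \mathbf{m}_{n-1}) = 0$ for $\hat{\mathbf{m}}_n$. |
| Estimate: |
| $\mathbf{J}_n = \partial\mathcal{M}/\partial\mathbf{u}_n$ and $\mathbf{J}_{n-1} = \partial\mathcal{M}/\partial\mathbf{u}_{n-1}$, evaluated at $(\hat{\mathbf{m}}_n, \mathbf{m}_{n-1})$. |
| $\theta_n$, by maximizing the log-marginal posterior. |
| $\hat{\mathbf{C}}_n = \mathbf{J}_n^{-1}\big(\mathbf{J}_{n-1}\mathbf{C}_{n-1}\mathbf{J}_{n-1}^\top + \Delta t\,\mathbf{G}(\theta_n)\big)\mathbf{J}_n^{-\top}$. |
| (Analysis step) |
| $\mathbf{m}_n = \hat{\mathbf{m}}_n + \hat{\mathbf{C}}_n\mathbf{H}^\top(\mathbf{H}\hat{\mathbf{C}}_n\mathbf{H}^\top + \sigma^2\mathbf{I})^{-1}(\mathbf{y}_n - \mathbf{H}\hat{\mathbf{m}}_n)$. |
| $\mathbf{C}_n = \hat{\mathbf{C}}_n - \hat{\mathbf{C}}_n\mathbf{H}^\top(\mathbf{H}\hat{\mathbf{C}}_n\mathbf{H}^\top + \sigma^2\mathbf{I})^{-1}\mathbf{H}\hat{\mathbf{C}}_n$. |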
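One EKF iteration can be sketched in NumPy as follows. This is a minimal sketch, assuming the caller has already solved the deterministic system for $\hat{\mathbf{m}}_n$, evaluated the two Jacobians of $\mathcal{M}$, and estimated $\theta_n$ (so `G` is $\mathbf{G}(\theta_n)$); the function name `ekf_step` is hypothetical.

```python
import numpy as np

def ekf_step(C_prev, m_hat, J_n, J_prev, G, dt, H, y, sigma):
    """One EKF iteration for M(u_n, u_{n-1}) + e_{n-1} = 0, e_{n-1} ~ N(0, dt G).

    m_hat solves the deterministic system M(m_hat, m_prev) = 0; J_n and J_prev are the
    Jacobians of M with respect to u_n and u_{n-1}, evaluated at (m_hat, m_prev)."""
    # Prediction: linearizing gives du_n = -J_n^{-1} (J_prev du_{n-1} + e_{n-1}).
    P = J_prev @ C_prev @ J_prev.T + dt * G
    C_hat = np.linalg.solve(J_n, np.linalg.solve(J_n, P).T)   # J_n^{-1} P J_n^{-T}
    C_hat = 0.5 * (C_hat + C_hat.T)                             # remove round-off asymmetry
    # Analysis: Kalman update with observation noise sigma^2 I.
    S = H @ C_hat @ H.T + sigma**2 * np.eye(y.size)
    K = np.linalg.solve(S, H @ C_hat).T                         # C_hat H^T S^{-1}
    m = m_hat + K @ (y - H @ m_hat)
    C = C_hat - K @ S @ K.T
    return m, 0.5 * (C + C.T)
```

For a linear model $\mathcal{M}(\mathbf{u}_n, \mathbf{u}_{n-1}) = \mathbf{A}\mathbf{u}_n - \mathbf{B}\mathbf{u}_{n-1}$, passing `J_n = A` and `J_prev = -B` recovers the standard Kalman filter.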

Conditioning on Data.

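Data $\mathbf{y}_n$ are observed at time $t_n$ on the observation grid. These data are corrupted with noise independent of the model, giving the data-generating process $\mathbf{y}_n = \mathbf{H}\mathbf{u}_n + \mathbf{r}_n$, $\mathbf{r}_n \sim \mathcal{N}(0, \sigma^2\mathbf{I})$, where the linear observation operator $\mathbf{H}$ maps from the computed solution grid to the observation grid, using the FEM interpolant.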
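For piecewise-linear elements in one dimension, the interpolating observation operator is a sparse matrix with at most two nonzeros per row: each observation point takes a convex combination of the two nodal values of the element containing it. The sketch below assumes sorted nodes and observation points inside the mesh; the function name is illustrative.

```python
import numpy as np

def p1_observation_operator(nodes, x_obs):
    """Matrix H with (H u)_k = u_h(x_obs[k]) for piecewise-linear FEM on sorted nodes."""
    H = np.zeros((x_obs.size, nodes.size))
    # Index j of the element [nodes[j], nodes[j + 1]] containing each observation point.
    j = np.clip(np.searchsorted(nodes, x_obs, side="right") - 1, 0, nodes.size - 2)
    w = (x_obs - nodes[j]) / (nodes[j + 1] - nodes[j])   # local coordinate in [0, 1]
    rows = np.arange(x_obs.size)
    H[rows, j] = 1.0 - w
    H[rows, j + 1] = w
    return H
```

Because the map is linear, sums of interpolated values (as used later for the reflected subdomains) are again rows of a linear operator.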

The filtered distribution $p(\mathbf{u}_n \mid \mathbf{y}_{1:n})$, where $\mathbf{y}_{1:n} = \{\mathbf{y}_1, \ldots, \mathbf{y}_n\}$, is our primary object of interest.‡ We take a Bayesian interpretation and refer to this as the filtered posterior distribution, or just the posterior when the context is clear. However, as this is a filtering problem, non-Bayesian methods are perfectly valid. We assume that all distributions are Gaussian, so the posterior can be computed with standard methods in data assimilation (23); we use the EKF (24) and the EnKF (stochastic form) (25).

The initial conditions are known (i.e., they are given a Dirac measure). At time $t_n$, we make a tentative prediction step according to the discretized PDE model, propagating uncertainty from the previous time step [described by $p(\mathbf{u}_{n-1} \mid \mathbf{y}_{1:n-1})$], to give a tentative prediction measure. The parameters $\theta_n$ are then estimated, and the full prediction step is completed to estimate $p(\mathbf{u}_n \mid \theta_n, \mathbf{y}_{1:n-1})$. Data observed at time $t_n$ are then conditioned on to give the updated filtering distribution $p(\mathbf{u}_n \mid \theta_n, \mathbf{y}_{1:n})$.

We assume the parameters are independent across time [i.e., $p(\theta_{1:N}) = \prod_{n=1}^{N} p(\theta_n)$]. Parameters may also be time constant, which is discussed in the maximum likelihood setting in ref. 26 and in the hierarchical Bayesian setting in ref. 27. SI Appendix also contains a possible modification of the method that accounts for time-invariant parameters. The following procedure provides an overview of the method, and Algorithms 1 and 2 give pseudocode versions.

Conditioning procedure.

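At time $t_n$, assume that the measure at the previous time step is described by

$$p(\mathbf{u}_{n-1} \mid \mathbf{y}_{1:n-1}) = \mathcal{N}(\mathbf{m}_{n-1}, \mathbf{C}_{n-1}).$$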

Then, proceed as follows:

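i) Compute the tentative prediction step, propagating the previous filtering distribution through the discretized model before the forcing parameters $\theta_n$ are known; this tentative prediction measure is approximated as Gaussian.

ii) Maximize the EKF log-marginal posterior to estimate the parameters, $\theta_n = \arg\max_{\theta}\big[\log p(\mathbf{y}_n \mid \theta, \mathbf{y}_{1:n-1}) + \log p(\theta)\big]$, where the marginal likelihood $p(\mathbf{y}_n \mid \theta, \mathbf{y}_{1:n-1})$ is Gaussian.

iii) Compute the full prediction step, $p(\mathbf{u}_n \mid \theta_n, \mathbf{y}_{1:n-1})$, including the stochastic forcing with the estimated $\theta_n$.

iv) Complete the analysis step, conditioning on $\mathbf{y}_n$ to give $p(\mathbf{u}_n \mid \theta_n, \mathbf{y}_{1:n})$.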

The prior is recovered if only the full prediction step (step 3) is completed at each iteration; completing the full sequence gives the posterior. Optimization of the log-marginal posterior is done using the limited-memory Broyden–Fletcher–Goldfarb–Shanno algorithm (28) with starting points set to the previous estimates. This log-marginal posterior is calculable due to the Gaussian assumption made in step 1. Prior information on hyperparameters is incorporated through and , which regularizes the optimization problem.
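The hyperparameter step can be sketched with SciPy's L-BFGS-B implementation. This is a minimal sketch under assumptions of ours: a squared-exponential kernel with nodal-quadrature $\mathbf{G}(\theta) \approx h^2\mathbf{K}$, the tentative prediction mean and covariance taken as given, $\mathbf{J}_n = \mathbf{I}$ for brevity (in the EKF the forcing contribution would instead be $\Delta t\,\mathbf{J}_n^{-1}\mathbf{G}(\theta)\mathbf{J}_n^{-\top}$), and hypothetical Gaussian priors whose truncation is imposed through the optimizer's bounds. Function names and prior values are illustrative.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.stats import multivariate_normal, norm

def se_kernel(x, rho, ell):
    """Squared-exponential kernel rho^2 exp(-(x - x')^2 / (2 ell^2))."""
    d = x[:, None] - x[None, :]
    return rho**2 * np.exp(-0.5 * (d / ell) ** 2)

def neg_log_marginal_posterior(params, y, H, m_hat, C_hat, x, dt):
    """-[log N(y | H m_hat, H (C_hat + dt G(theta)) H^T + sigma^2 I) + log p(theta, sigma)],
    assuming J_n = I so the forcing adds dt * G(theta) to the tentative covariance."""
    rho, ell, sigma = params
    h = x[1] - x[0]
    G = h**2 * se_kernel(x, rho, ell)                  # nodal-quadrature G(theta)
    S = H @ (C_hat + dt * G) @ H.T + sigma**2 * np.eye(y.size)
    log_lik = multivariate_normal(mean=H @ m_hat, cov=S).logpdf(y)
    # Hypothetical weakly informative Gaussian priors (truncated via the bounds below).
    log_prior = (norm(1.0, 1.0).logpdf(rho) + norm(0.1, 0.1).logpdf(ell)
                 + norm(0.05, 0.05).logpdf(sigma))
    return -(log_lik + log_prior)

def estimate_hyperparameters(y, H, m_hat, C_hat, x, dt, params0):
    """L-BFGS-B started from the previous estimates params0 = (rho, ell, sigma)."""
    bounds = [(1e-3, 10.0), (1e-3, 1.0), (1e-4, 1.0)]
    return minimize(neg_log_marginal_posterior, params0,
                    args=(y, H, m_hat, C_hat, x, dt), method="L-BFGS-B", bounds=bounds)
```

Warm-starting `params0` from the previous time step's estimates mirrors the strategy described above; gradients are approximated by finite differences here, which is adequate for three hyperparameters.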

Simulation Study.

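We condition on data generated from an extended Korteweg–de Vries (eKdV) equation with a cubic nonlinear term:

setting , , and . The misspecified KdV model

$$u_t + \alpha u u_x + \beta u_{xxx} + c u_x = \xi, \qquad (3)$$

has the same coefficient values, with initial conditions set to a wave of depression on the space–time grid . Boundary conditions are periodic. Gaussian random forcing $\xi$ has spatial covariance $k_\theta(x, x') = \rho^2 \exp\big(-(x - x')^2 / (2\ell^2)\big)$ (we refer to $\rho$ as the scale parameter and $\ell$ as the length parameter).

| Algorithm 2: EnKF algorithm |
|---|
| for $n = 1, \ldots, N$ do |
| (Prediction step) |
| for $s = 1, \ldots, S$ do |
| Solve $\mathcal{M}(\hat{\mathbf{u}}_n^{(s)}, \mathbf{u}_{n-1}^{(s)}) = 0$. |
| Compute $\hat{\mathbf{m}}_n$ and $\hat{\mathbf{C}}_n$ from $\{\hat{\mathbf{u}}_n^{(s)}\}_{s=1}^{S}$. |
| Estimate $\theta_n$ by maximizing the log-marginal posterior. |
| for $s = 1, \ldots, S$ do |
| Solve $\mathcal{M}(\hat{\mathbf{u}}_n^{(s)}, \mathbf{u}_{n-1}^{(s)}) + \mathbf{e}_{n-1}^{(s)} = 0$, with $\mathbf{e}_{n-1}^{(s)} \sim \mathcal{N}(0, \Delta t\,\mathbf{G}(\theta_n))$. |
| Compute $\hat{\mathbf{m}}_n$ and $\hat{\mathbf{C}}_n$ from $\{\hat{\mathbf{u}}_n^{(s)}\}_{s=1}^{S}$. |
| $\mathbf{K}_n = \hat{\mathbf{C}}_n\mathbf{H}^\top(\mathbf{H}\hat{\mathbf{C}}_n\mathbf{H}^\top + \sigma^2\mathbf{I})^{-1}$. |
| (Analysis step) |
| for $s = 1, \ldots, S$ do |
| $\mathbf{u}_n^{(s)} = \hat{\mathbf{u}}_n^{(s)} + \mathbf{K}_n(\mathbf{y}_n + \mathbf{r}_n^{(s)} - \mathbf{H}\hat{\mathbf{u}}_n^{(s)})$, with $\mathbf{r}_n^{(s)} \sim \mathcal{N}(0, \sigma^2\mathbf{I})$. |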
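The analysis loop of the stochastic (perturbed-observation) EnKF can be sketched in NumPy as follows. It assumes the predicted ensemble is stored column-wise in a `(d, S)` array and that the noise level $\sigma$ is known; the function name is illustrative.

```python
import numpy as np

def enkf_analysis(U_hat, y, H, sigma, rng):
    """Stochastic (perturbed-observation) EnKF update.

    U_hat: (d, S) array whose columns are the predicted ensemble members."""
    d, S = U_hat.shape
    A = U_hat - U_hat.mean(axis=1, keepdims=True)         # ensemble anomalies
    C_hat = A @ A.T / (S - 1)                             # ensemble covariance
    R = sigma**2 * np.eye(y.size)
    # Kalman gain C_hat H^T (H C_hat H^T + R)^{-1}, via a linear solve.
    K = np.linalg.solve(H @ C_hat @ H.T + R, H @ C_hat).T
    # Each member assimilates its own perturbed copy of the data.
    Y = y[:, None] + sigma * rng.standard_normal((y.size, S))
    return U_hat + K @ (Y - H @ U_hat)
```

Perturbing the data for each member is what makes the analysis ensemble spread consistent with the Kalman posterior covariance, rather than collapsing it.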

KdV is discretized following ref. 29, using trial functions and testing functions, with a Crank–Nicolson method in time. The data-generating process is simulated using Dedalus (30) with 1,024 grid points in space. This is then downsampled to 20 grid points and jittered with synthetic Gaussian observational noise (mean 0, variance ) to give the simulated dataset.

We assume $\mathbf{y}_n = \mathbf{H}\mathbf{u}_n + \mathbf{r}_n$, where $\mathbf{u}_n$ is the Galerkin discretized solution to Eq. 3 and $\mathbf{r}_n \sim \mathcal{N}(0, \sigma^2\mathbf{I})$. Hyperparameters $\theta_n$ and the noise level $\sigma$ are estimated at each step by maximizing the log-marginal posterior, with the weakly informative truncated Gaussian priors , , and . The EnKF is used in this section, with , , and . Results are presented in Fig. 1.

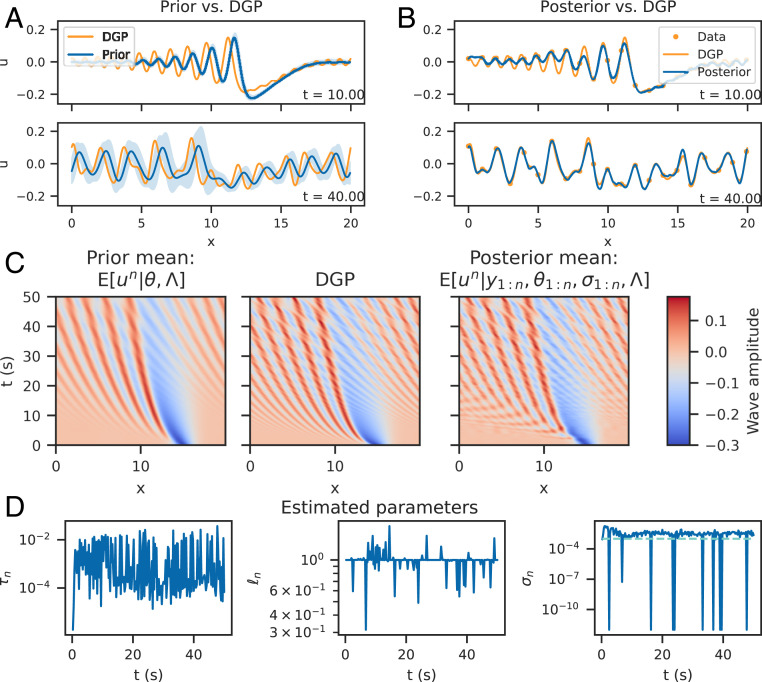

Fig. 1.

Simulation study results (using EnKF). A shows a prior solution with fixed hyperparameters with the data-generating process (DGP; as labeled) for two times with accompanying credible intervals. Note the degree of model mismatch between the two profiles. B shows the DGP and the posterior mean and credible intervals for the same times as the prior. Given the data, the model is highly certain about the updated mean, for which the model mismatch has been corrected. This is now using estimated hyperparameters for a fully data-driven approach. C shows the prior mean, DGP, and posterior mean over the entire simulation grid in space and time, and D shows the estimated parameters , across time, with the true value for shown as a dashed turquoise line.

For a fixed set of hyperparameters , for all , the data-generating process and estimated prior are shown in Fig. 1A, appearing visually mismatched in the mean via phase shift, increased oscillations, and increased wave interactions. Note that the stochastic forcing induces an uncertainty about the PDE solution, represented by the credible intervals shown. Note also that the data-generating process is approximately contained within the credible intervals.

Fig. 1B shows that the posterior mean approximates the data-generating process, and the posterior uncertainty bounds have shrunk as a result of conditioning, indicating high certainty about the posterior mean values. Model discrepancy between the data and the statFEM solution has been corrected for. The space–time view of the posterior, shown in Fig. 1C, shows that the posterior has incorporated the complex soliton interactions in the data, not present in the prior.

Parameter estimates (Fig. 1D) indicate that the length and noise parameters are both stable, with the noise being slightly overestimated (i.e., ). Times at which the noise is not identified result in it being set to the lower bound. The scale parameter quantifies the accuracy of the model prediction step at each time step. In this case, model predictions vary in their accuracy and appear approximately bounded to within .

Case Study: Experimental Data

We now apply the method to the experimental data collected in ref. 31. Experiments were conducted to study weakly nonlinear models for internal waves in lakes and consisted of generating internal waves in a two-layer stratified system inside a clear acrylic tank of dimensions . The tank contained an upper layer of fresh water and a lower layer of saline water, with a density gradient of . The tank could be rotated to establish the initial conditions, an inclined plane of angle . This initial condition mimics the shear induced by strong winds in lakes. At time , the tank was rotated to restore it to the horizontal.

Data were recorded at three spatially equidistant locations in the tank using ultrasonic wave gauges (Fig. 2), taking measurements approximately every , up to ; we use data up to . Data are measured in voltages and are postprocessed to give pycnocline displacements in meters. These data are plotted in Fig. 3, where the small measurement error is visually apparent. Transient behavior is observed before steepening, and a soliton wave train forms; three such steepening events are observed in the data we analyze. As , dissipation results in the wave profile approaching a flat steady-state profile.

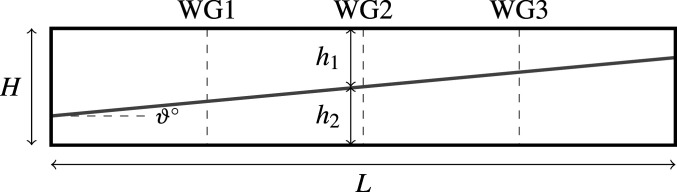

Fig. 2.

Schematic diagram of the experimental apparatus. Wave gauges (WGs) are labeled WG1, WG2, and WG3, and the initial conditions are shown as a gray line, labeled with initial angle .

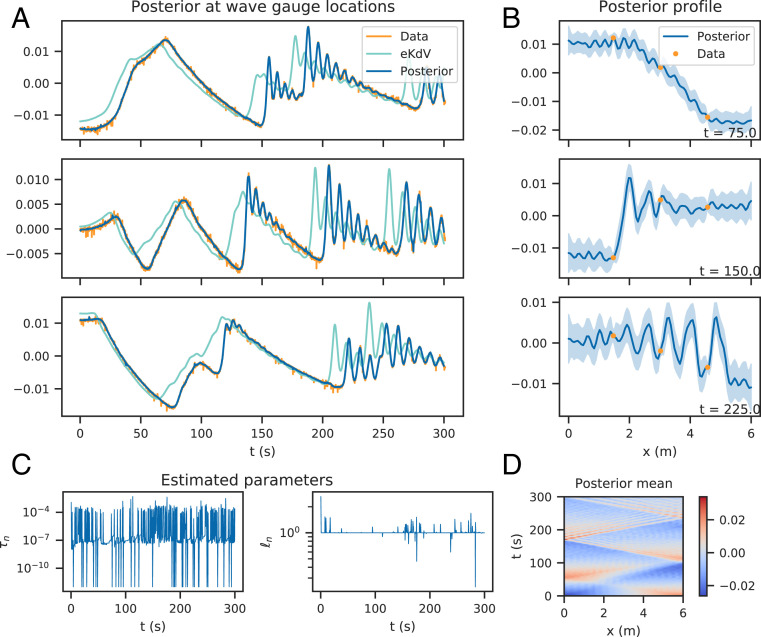

Fig. 3.

Experimental data results; posterior computed by the EnKF. A shows the observed data, the deterministic solution to the misspecified model, and the posterior mean at the wave gauges with 95% credible intervals up to time . Model mismatch between the model and data is visually apparent and has been offset in the posterior mean. B shows the posterior mean wave profile inside of the tank, with 95% credible intervals and the data, for three times. The wave profile has been reconstructed by conditioning on the observed data. C shows the estimated hyperparameters , and D shows the estimated posterior mean over the space–time grid.

Our physical model is an eKdV equation with, for computational simplicity, a linear dissipation term. We acknowledge that for laminar boundaries, other methods are preferred (31, 32). Including some form of dissipation is important because, otherwise, the model becomes impractically mismatched by the end of the simulation. The eKdV is given by

| [4] |

for , , , and with coefficients

We set as a GP as described previously, with spatial covariance kernel set to a squared exponential with scale and length hyperparameters and . For the experiment under consideration, we have , , , and . The dissipation coefficient is an inverse timescale, which is set to .

Incorporation of reflective boundary conditions is done by solving the eKdV equation across the extended domain with periodic boundary conditions and summing solutions in the (reflected) subdomains , :

Details on the derivation are in ref. 31. Solutions to the deterministic version of Eq. 4 at the locations of the wave gauges are shown in Fig. 3. We show the deterministic solution instead of the prior due to accumulation of errors for large simulation times.

The eKdV model does not capture the observed behavior exactly. The model waves travel faster than the observed waves, and model amplitudes are slightly larger than observed amplitudes. It is conjectured that this is due to misparameterization of dissipation, but in any case, the model is misspecified. Rather than estimating the eKdV parameters using inversion techniques, we sequentially update the model with observations to give the posterior $p(\mathbf{u}_n \mid \mathbf{y}_{1:n})$.

As before, we assume with known noise . As we solve on the extended domain and sum solutions, the observation operator is taken to be the sum of the appropriate function values given by our FEM interpolant (a linear operation). The observation points are unchanging, as is the solution mesh, so the observation operator is constant in time. The hyperparameters of $\xi$, $\theta_n$, must be estimated at each iteration by maximizing the log-marginal posterior. Because the data are sparse in space (three observations at each time step), we use a projection method to estimate the hyperparameters. This linearly projects the predicted mean forward, estimated from the data points: . Parameters are estimated to give the best least squares linear projection from the prediction to the data. This gives a projected dataset, , using the linear shift: . The estimated hyperparameters are then given by , in which the observed data are replaced with the projected data . We project to a grid of 100 points uniformly spaced across the solution grid. Note that this is only for the parameter estimation step; we do not use the projected data in the analysis step.
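One possible reading of the projection step is an affine least-squares fit, $\mathbf{y}_n \approx a + b\,\mathbf{H}\hat{\mathbf{m}}_n$, at the gauges, followed by applying the fitted map to the predicted mean on the dense grid. The affine form with intercept $a$ and slope $b$ is our assumption, since the exact projection formulas are not reproduced here; the function name is illustrative.

```python
import numpy as np

def projected_data(y, Hm_hat, m_hat_grid):
    """Fit y ~ a + b * (H m_hat) by least squares at the observation points, then
    apply the fitted affine map to the predicted mean on a denser grid."""
    X = np.column_stack([np.ones_like(Hm_hat), Hm_hat])
    (a, b), *_ = np.linalg.lstsq(X, y, rcond=None)
    return a + b * m_hat_grid, (a, b)
```

The projected values would then replace the three observations only inside the log-marginal posterior used for hyperparameter estimation, leaving the analysis step to use the raw data.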

We set weakly informative priors: and . The posterior is computed using the ensemble method with , , and . Results are shown in Fig. 3. The posterior mean values of $u$ at the wave gauges are shown in Fig. 3A and fit the data closely in comparison with the eKdV solution. The credible intervals contract tightly about the data (compare Fig. 3B) and so are not visible in Fig. 3A.

Posterior wave profiles are shown in Fig. 3B and demonstrate that, given the data, the method yields a sensible estimate of the underlying wave profile and can hence reconstruct the wave profile from sparse observations in space (Fig. 3D). Furthermore, the provided uncertainty quantification is physically sensible, with bounds contracting about the data and expanding near the boundaries.

The hyperparameters $\theta_n$ are shown in Fig. 3C. The scale parameter is seen to vary between two distinct levels, indicating that the model predictions vary in their accuracy. The amplitude of these mismatch scales shows that, in this case, model mismatch is a cumulative effect that takes some time to become apparent (Fig. 3A, eKdV solution). Repeated conditioning on data helps to mitigate these long-timescale effects through continual updating. A space–time view of the posterior mean wave profile is shown in Fig. 3D, demonstrating that the general behavior of the flow (e.g., reflective boundary conditions, dissipation, wave train formation) is indeed captured.

Conclusions

We present a data-driven approach to the FEM that assimilates observations into nonlinear, time-dependent PDEs by embedding model misspecification uncertainty in the governing equations and sequentially updating the discretized equations with observations in a filtering context. Examples presented using the KdV equation (and the additional systems studied in SI Appendix) demonstrate that the method can approximate the data-generating process to give an interpretable posterior distribution, which reconstructs the observed phenomena.

This work sets the foundation for future studies of embedding data within FEM models. The underlying Kalman framework allows ideas from high-dimensional data assimilation to be drawn upon, although these were not needed for the systems studied here. Techniques from Bayesian inversion can also be used to provide uncertainty quantification for physical quantities of interest, which will also allow for more accurate prediction. Finally, we believe that developing similar methodology for alternative discretizations (e.g., spectral methods) could also be of great benefit, allowing for even broader application.

Supplementary Material

Acknowledgments

We thank the two anonymous referees whose suggestions greatly improved the manuscript. C.D. was supported by a Bruce and Betty Green Postgraduate Research Scholarship and an Australian Government Research Training Program Scholarship at the University of Western Australia. C.D. and E.C. were supported by Australian Research Council Industrial Transformation Research Hub Grant IH140100012. E.C. was supported by Australian Research Council Industrial Transformation Training Centre Grant IC190100031. M.G. was supported by Engineering and Physical Sciences Research Council Grants EP/R034710/1, EP/R018413/1, EP/R004889/1, and EP/P020720/1 and a Royal Academy of Engineering Research Chair.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

*It is available at https://github.com/connor-duffin/statkdv-paper.

†Crank–Nicolson may also be used to ensure stability.

‡From here on, we implicitly condition on the PDE coefficients $\Lambda$.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2015006118/-/DCSupplemental.

Data Availability.

Data and code have been deposited on GitHub (https://github.com/connor-duffin/statkdv-paper).

References

1. Kennedy M. C., O’Hagan A., Bayesian calibration of computer models. J. Roy. Stat. Soc. B 63, 425–464 (2001).
2. Judd K., Smith L. A., Indistinguishable states II: The imperfect model scenario. Phys. Nonlinear Phenom. 196, 224–242 (2004).
3. Berger J. O., Smith L. A., On the statistical formalism of uncertainty quantification. Annu. Rev. Stat. Appl. 6, 433–460 (2019).
4. Girolami M., Febrianto E., Yin G., Cirak F., The statistical finite element method (statFEM) for coherent synthesis of observation data and model predictions. Comput. Methods Appl. Mech. Eng., 10.17863/CAM.59639 (2020).
5. Lermusiaux P. F. J., Uncertainty estimation and prediction for interdisciplinary ocean dynamics. J. Comput. Phys. 217, 176–199 (2006).
6. Fringer O. B., Dawson C. N., He R., Ralston D. K., Zhang Y. J., The future of coastal and estuarine modeling: Findings from a workshop. Ocean Model. 143, 101458 (2019).
7. Alam M. R., Predictability horizon of oceanic rogue waves. Geophys. Res. Lett. 41, 8477–8485 (2014).
8. Majda A. J., Branicki M., Lessons in uncertainty quantification for turbulent dynamical systems. Discrete Contin. Dyn. Syst. Ser. A 32, 3133–3221 (2012).
9. Osborne A. R., Burch T. L., Internal solitons in the Andaman Sea. Science 208, 451–460 (1980).
10. Boegman L., Stastna M., Sediment resuspension and transport by internal solitary waves. Annu. Rev. Fluid Mech. 51, 129–154 (2019).
11. Cacchione D., Pratson L. F., Ogston A., The shaping of continental slopes by internal tides. Science 296, 724–727 (2002).
12. Wang Y. H., Dai C. F., Chen Y. Y., Physical and ecological processes of internal waves on an isolated reef ecosystem in the South China Sea. Geophys. Res. Lett. 34, L18609 (2007).
13. Huang X., et al., An extreme internal solitary wave event observed in the northern South China Sea. Sci. Rep. 6, 30041 (2016).
14. Korteweg D. J., de Vries G., On the change of form of long waves advancing in a rectangular canal, and on a new type of long stationary waves. Lond. Edinb. Dubl. Phil. Mag. J. Sci. 39, 422–443 (1895).
15. Drazin P. G., Johnson R. S., Solitons: An Introduction (Cambridge University Press, 1989).
16. Lamb K. G., Yan L., The evolution of internal wave undular bores: Comparisons of a fully nonlinear numerical model with weakly nonlinear theory. J. Phys. Oceanogr. 26, 2712–2734 (1996).
17. Holloway P. E., Pelinovsky E., Talipova T., A generalized Korteweg-de Vries model of internal tide transformation in the coastal zone. J. Geophys. Res. Oceans 104, 18333–18350 (1999).
18. Helfrich K. R., Melville W. K., Long nonlinear internal waves. Annu. Rev. Fluid Mech. 38, 395–425 (2006).
19. Øksendal B., Stochastic Differential Equations (Springer, 2003).
20. Ionides E. L., Bretó C., King A. A., Inference for nonlinear dynamical systems. Proc. Natl. Acad. Sci. U.S.A. 103, 18438–18443 (2006).
21. Girolami M., Bayesian inference for differential equations. Theor. Comput. Sci. 408, 4–16 (2008).
22. Stuart A. M., Inverse problems: A Bayesian perspective. Acta Numer. 19, 451–559 (2010).
23. Law K., Stuart A., Zygalakis K., Data Assimilation (Springer, Cham, Switzerland, 2015).
24. Jazwinski A. H., Stochastic Processes and Filtering Theory (Courier Corporation, 2007).
25. Evensen G., The ensemble Kalman filter: Theoretical formulation and practical implementation. Ocean Dynam. 53, 343–367 (2003).
26. Shumway R. H., Stoffer D. S., Time Series Analysis and Its Applications: With R Examples (Springer Texts in Statistics, Springer International Publishing, ed. 4, 2017).
27. Katzfuss M., Stroud J. R., Wikle C. K., Ensemble Kalman methods for high-dimensional hierarchical dynamic space-time models. J. Am. Stat. Assoc., 1–43 (2019).
28. Nocedal J., Wright S., Numerical Optimization (Springer Science & Business Media, 2006).
29. Debussche A., Printems J., Numerical simulation of the stochastic Korteweg–de Vries equation. Phys. Nonlinear Phenom. 134, 200–226 (1999).
30. Burns K. J., Vasil G. M., Oishi J. S., Lecoanet D., Brown B. P., Dedalus: A flexible framework for numerical simulations with spectral methods. Phys. Rev. Res. 2, 023068 (2020).
31. Horn D., Imberger J., Ivey G., Redekopp L., A weakly nonlinear model of long internal waves in closed basins. J. Fluid Mech. 467, 269–287 (2002).
32. Grimshaw R., Pelinovsky E., Talipova T., Damping of large-amplitude solitary waves. Wave Motion 37, 351–364 (2003).
