Supplemental Digital Content is available in the text.

Background:

Publications on evidence-based medicine have increased. Previous articles have examined evidence-based plastic surgery, but the latest was published in 2013. The aim of this study was to examine the trend in the number of high-evidence publications over two 5-year periods across 3 main plastic surgery journals. Further, this study aimed to quality-assess randomized controlled trials (RCTs) published in the latter period.

Methods:

All articles were identified using PubMed Search Tools and Single Citation Matcher. Three journals were manually screened from May 15, 2009, to May 15, 2014, and from May 16, 2014, to May 16, 2019. The reporting of RCTs was assessed using a modified Consolidated Standards of Reporting Trials (CONSORT) checklist.

Results:

Of a total of 17,334 publications, 6 were meta-analyses of RCTs, 120 were other meta-analyses, and 247 were initially identified as RCTs. Although a significant increase in the number of higher-evidence publications was observed, these represent 2.09% (n = 363) of the total. A total of 86 RCTs were eligible for quality-assessment, with the most popular sub-specialty being breast surgery (n = 30). The most highly reported criteria were inclusion/exclusion criteria and blinding (both n = 67; 77.91%), and the least reported criterion was allocation concealment (n = 21; 24.42%).

Conclusions:

This study observes a positive trend in high-evidence publications. The number of RCTs published has increased significantly over a breadth of sub-specialties. The reporting of several CONSORT criteria in RCTs remains poor. Adherence to standard reporting guidelines is advocated to improve the quality of reporting.

INTRODUCTION

Plastic surgery is a rapidly evolving, unique specialty that restores form, function, and quality of life. It remains a “general” surgical specialty: it is not bound by anatomical location and involves operating on patients of all ages with a wide spectrum of pathology. Evidence-based medicine refers to the practice of medicine according to clinician expertise together with the latest research findings, to achieve the highest standard of patient care.1–3 Its importance within plastic surgery is demonstrated by a growing literature on this topic.1,4–6 Research is graded according to its strength of evidence, delineated by the “Levels of Evidence Pyramid,” with high-strength designs appearing at the top.1–3,7 Randomized controlled trials (RCTs) and meta-analyses are examples of such designs; collectively, these methodologies represent the highest form of evidence, having the greatest relevance to clinical practice and setting the standard for assessing new interventions.8–11

There has been a substantial increase in the publication of high-quality evidence over the previous decade. While previous publications have examined evidence-based plastic surgery, the latest, to our knowledge, was published in 2013 and examined only one specialty journal.4 The aim of this study was to examine the trend in the number of high-evidence publications over 2 consecutive 5-year periods to assess progress across 3 major plastic surgery journals and to quality-assess RCTs published in the latter 5-year period.

METHODS

Targeted Journals

Three plastic surgery specialty journals were included in the present study: Plastic and Reconstructive Surgery (PRS); Journal of Plastic, Reconstructive, and Aesthetic Surgery (JPRAS); and Annals of Plastic Surgery (APS). These journals were chosen for their well-established reputations within plastic surgery, with impact factors of 4.209, 2.390, and 1.354, respectively.12 Furthermore, these journals are not restricted to a single sub-specialty but rather cover the wide breadth of plastic surgery sub-specialisms.

Data Extraction

All articles were identified using PubMed search tools and the Single Citation Matcher function. Every issue of all 3 journals was reviewed over a 10-year period in 2 distinct, consecutive time periods: first from May 15, 2009 to May 15, 2014, and then from May 16, 2014 to May 16, 2019. The study design of each publication was recorded for each period and organized into the following designs: meta-analysis of RCTs, other meta-analyses, RCTs, practice guidelines, systematic reviews, observational studies, case reports, editorials, letters, and non-systematic reviews. These were chosen according to available PubMed search criteria and in accordance with the aforementioned levels of evidence hierarchy. Inclusion of each article was confirmed according to its title or abstract (or both). Where it was unclear which design a study employed, full-text review was undertaken. All other publications in all issues of all 3 journals over the 2 periods were placed in a separate category of “other.” The total number of publications in each journal in each time period was also recorded. As meta-analyses and RCTs represent the highest forms of evidence, these were further analyzed for trends over time.

Previous studies have quality-assessed RCTs in plastic surgery against criteria such as the CONsolidated Standards of Reporting Trials (CONSORT) criteria. To avoid overlap with their time periods, this study quality-assessed RCTs only in the second time period included in this article (May 16, 2014 to May 16, 2019). This is because there have been no published articles quality-assessing RCTs against set criteria (eg, CONSORT criteria) in plastic surgery journals that have included articles up to 2019.13 All RCTs were included for analysis unless they met our exclusion criteria. Publications filtered as RCTs using Single Citation Matcher were excluded from sub-analysis if the publication’s title did not identify it as a randomized trial, if the study was a pilot RCT, or if the RCT was an animal study. Furthermore, the RCTs must have compared the effects of an intervention rather than an aspect of the intervention (eg, 1 study was excluded because it compared the cost of an intervention rather than the effects of the intervention itself).
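The exclusion rules above can be expressed as a simple filter. The following is a minimal sketch only: the record type, its field names, and the example records are hypothetical and are not taken from the study's screening sheet.

```python
from dataclasses import dataclass


@dataclass
class CandidateRCT:
    """One publication returned by the RCT filter (all field names are hypothetical)."""
    title_identifies_randomized_trial: bool
    is_pilot: bool
    is_animal_study: bool
    compares_intervention_effects: bool


def is_eligible(pub: CandidateRCT) -> bool:
    """Apply the four exclusion rules described in the Data Extraction section."""
    return (
        pub.title_identifies_randomized_trial
        and not pub.is_pilot
        and not pub.is_animal_study
        and pub.compares_intervention_effects
    )


# Made-up records, one per exclusion reason plus one eligible trial.
candidates = [
    CandidateRCT(True, False, False, True),   # eligible
    CandidateRCT(False, False, False, True),  # title does not identify an RCT
    CandidateRCT(True, True, False, True),    # pilot RCT
    CandidateRCT(True, False, True, True),    # animal study
    CandidateRCT(True, False, False, False),  # e.g. compares cost, not effect
]

eligible = [pub for pub in candidates if is_eligible(pub)]
print(f"{len(eligible)} of {len(candidates)} candidates eligible")
```

Keeping each rule as a separate boolean field makes it possible to report how many publications were excluded for each reason.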

Data Analysis

Results for each study design across all 3 journals were entered manually into a table. RCTs were assessed using a modified CONSORT checklist, including sample size, reference to inclusion and exclusion criteria, randomization method, power calculations, blinding, allocation concealment, and a discussion of study limitation.14 These criteria were noted in a table for each RCT. This information was collated using a Microsoft Excel spreadsheet.

Regarding the modified CONSORT criteria, certain requirements were specified to fulfil each criterion. Firstly, each criterion must have been reported explicitly using the CONSORT terminology directly (eg, in reporting allocation concealment, reference should be made as to how this was satisfied using the term “allocation concealment” explicitly rather than a vague description of it). Regarding withdrawals of participants from RCTs, where withdrawals occurred, reasons for withdrawal must have also been provided in accordance with best, transparent practice. There was no discrimination between single- and double-blinded studies (see “discussion” section) with regard to blinding. Finally, in the interest of the power and, thus, ability to draw conclusive evidence from a given RCT, the number of participants in a study was recorded as the final number after accounting for withdrawals.
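As an illustration of how one row of such a checklist might be recorded, the sketch below encodes the rules described above: the final sample size is counted after withdrawals, and the withdrawals item is satisfied either when there were no withdrawals or when reasons were given. The class and field names are assumptions made for this example; the study itself used a Microsoft Excel spreadsheet.

```python
from dataclasses import dataclass


@dataclass
class RCTAssessment:
    """Modified CONSORT checklist entry for one RCT (hypothetical field names)."""
    enrolled: int
    withdrawals: int
    withdrawal_reasons_given: bool
    inclusion_exclusion_criteria: bool
    randomization_method: bool
    power_calculation: bool
    allocation_concealment: bool  # only True if the term is used explicitly
    blinding: bool                # single- or double-blinded, not distinguished
    limitations_discussed: bool

    @property
    def final_sample_size(self) -> int:
        # Sample size is recorded after accounting for withdrawals.
        return self.enrolled - self.withdrawals

    @property
    def withdrawals_item_met(self) -> bool:
        # Met when nobody withdrew, or when withdrawals are explained.
        return self.withdrawals == 0 or self.withdrawal_reasons_given


# Made-up example trial, not one of the 86 assessed RCTs.
example = RCTAssessment(
    enrolled=120,
    withdrawals=8,
    withdrawal_reasons_given=True,
    inclusion_exclusion_criteria=True,
    randomization_method=True,
    power_calculation=False,
    allocation_concealment=False,
    blinding=True,
    limitations_discussed=True,
)
print(example.final_sample_size, example.withdrawals_item_met)
```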

RESULTS

Overall Trends from 15/05/2009 to 15/05/2014

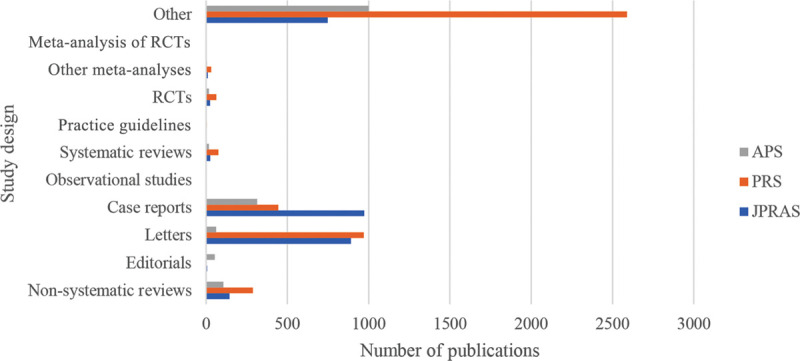

Table 1.

Publications of Different Study Designs across the 3 Main Plastic Surgery Specialty Journals, from 15/05/2009 to 15/05/2014

| Study Design | JPRAS, n (%) | PRS, n (%) | APS, n (%) |
|---|---|---|---|
| Meta-analysis of RCTs | 1 (0.035) | 2 (0.045) | 1 (0.063) |
| Other meta-analyses | 10 (0.35) | 31 (0.69) | 5 (0.32) |
| RCTs | 24 (0.85) | 63 (1.41) | 18 (1.14) |
| Practice guidelines | 2 (0.071) | 3 (0.067) | 0 (0) |
| Systematic reviews | 25 (0.88) | 75 (1.68) | 17 (1.08) |
| Observational studies | 2 (0.071) | 1 (0.022) | 0 (0) |
| Case reports | 972 (34.41) | 444 (9.94) | 314 (19.92) |
| Letters | 891 (31.54) | 970 (21.71) | 61 (3.87) |
| Editorials | 6 (0.21) | 0 (0) | 53 (3.36) |
| Non-systematic reviews | 144 (5.1) | 288 (6.45) | 106 (6.73) |
| Other | 748 (26.48) | 2590 (57.98) | 1001 (63.51) |
| Total | 2825 | 4467 | 1576 |

Fig. 1.

Chart showing the number of different study designs across the 3 specialty journals, from 15/05/2009 to 15/05/2014.

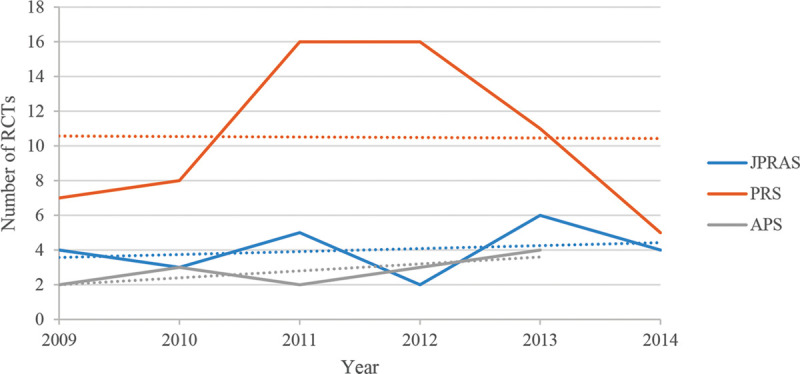

Sub-analysis of Meta-analyses (including Meta-analysis of RCTs) and RCTs from 15/05/2009 to 15/05/2014 in JPRAS

In JPRAS, no meta-analyses were published in 2009, 1 in 2010, 2 in 2011, 3 in 2012, 3 in 2013, and 2 in 2014 (up to 15/05/2014). Only 1 of these was a meta-analysis of RCTs. In total, 24 RCTs were published: 4 in 2009, 3 in 2010, 5 in 2011, 2 in 2012, 6 in 2013, and 4 in 2014 (up to 15/05/2014).

Sub-analysis of Meta-analyses (including Meta-analysis of RCTs) and RCTs from 15/05/2009 to 15/05/2014 in PRS

In PRS, 2 of the meta-analyses were published in 2009, 4 in 2010, 5 in 2011, 4 in 2012, 12 in 2013, and 6 in 2014 (up to 15/05/2014). Of these, only 2 were meta-analyses of RCTs. Of the RCTs published, 7 were published in 2009, 8 in 2010, 16 in 2011, 16 in 2012, 11 in 2013, and 5 in 2014 (up to 15/05/2014).

Sub-analysis of Meta-analyses (including Meta-analysis of RCTs) and RCTs from 15/05/2009 to 15/05/2014 in APS

In APS, 3 of the meta-analyses were published in 2009, 0 in both 2010 and 2011, 1 in 2012, 0 in 2013, and 2 in 2014 (up to 15/05/2014). Of these, only 1 was a meta-analysis of RCTs. In total, 18 RCTs were published: 2 in 2009, 3 in 2010, 2 in 2011, 3 in 2012, 4 in 2013, and 4 in 2014 (up to 15/05/2014) (Table 2) (Fig. 2).

Table 2.

Meta-analyses and Randomized Controlled Trials Published from 15/05/2009 to 15/05/2014 across the 3 Specialty Journals

| Journal | Study Design | 2009 (from 15/05/2009) | 2010 | 2011 | 2012 | 2013 | 2014 (up to 15/05/2014) |
|---|---|---|---|---|---|---|---|
| JPRAS | Meta-analyses | 0 | 1 | 2 | 3 | 3 | 2 |
| JPRAS | RCTs | 4 | 3 | 5 | 2 | 6 | 4 |
| PRS | Meta-analyses | 2 | 4 | 5 | 4 | 12 | 6 |
| PRS | RCTs | 7 | 8 | 16 | 16 | 11 | 5 |
| APS | Meta-analyses | 3 | 0 | 0 | 1 | 0 | 2 |
| APS | RCTs | 2 | 3 | 2 | 3 | 4 | 4 |

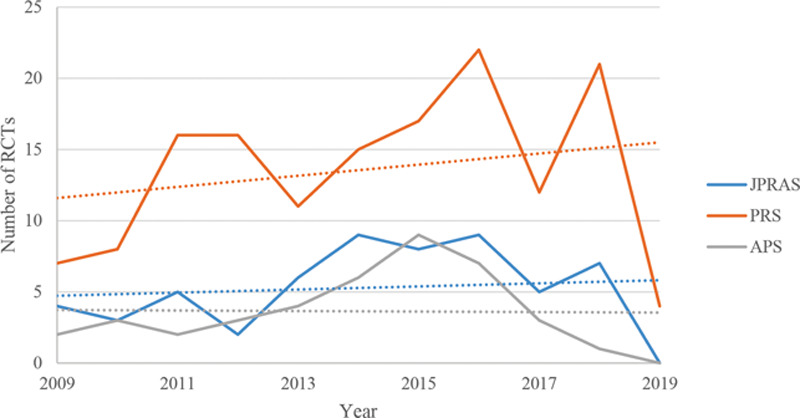

Fig. 2.

Graph displaying the trend in publications across the 3 main specialty journals of RCTs, from 15/05/2009 to 15/05/2014.

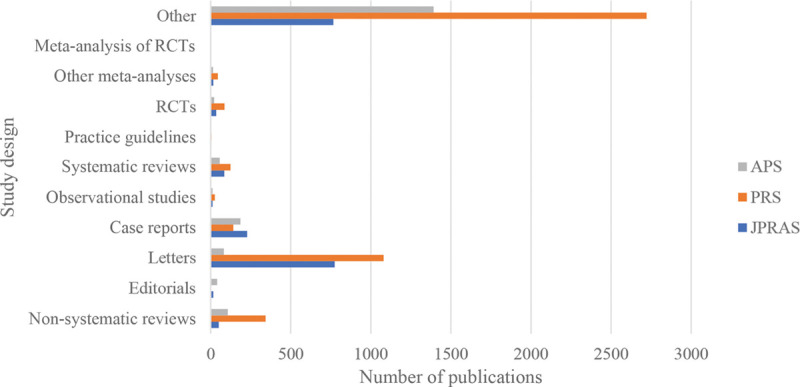

Overall Trends from 16/05/2014 to 16/05/2019 (Table 3) (Fig. 3)

Table 3.

Publications of Different Study Designs across the 3 Main Plastic Surgery Specialty Journals, from 16/05/2014 to 16/05/2019

| Study Design | JPRAS, n (%) | PRS, n (%) | APS, n (%) |
|---|---|---|---|
| Meta-analysis of RCTs | 1 (0.050) | 0 (0) | 1 (0.052) |
| Other meta-analyses | 16 (0.81) | 44 (0.97) | 14 (0.73) |
| RCTs | 34 (1.71) | 86 (1.90) | 22 (1.15) |
| Practice guidelines | 3 (0.15) | 4 (0.088) | 0 (0) |
| Systematic reviews | 87 (4.39) | 122 (2.69) | 56 (2.93) |
| Observational studies | 12 (0.61) | 26 (0.57) | 12 (0.63) |
| Case reports | 227 (11.45) | 141 (3.12) | 186 (9.73) |
| Letters | 774 (39.03) | 1080 (23.81) | 82 (4.29) |
| Editorials | 16 (0.81) | 0 (0) | 40 (2.09) |
| Non-systematic reviews | 51 (2.57) | 343 (7.56) | 106 (5.55) |
| Other | 766 (38.63) | 2722 (60.02) | 1392 (72.84) |
| Total | 1987 | 4568 | 1911 |

Fig. 3.

Chart exhibiting the number of different study designs across the 3 specialty journals, from 16/05/2014 to 16/05/2019.

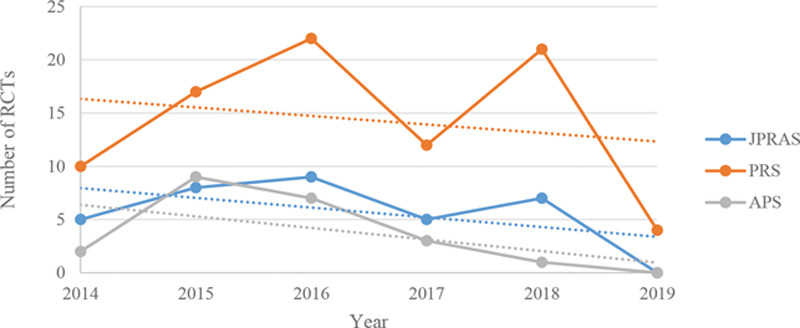

Sub-analysis of Meta-analyses (including Meta-analysis of RCTs) and RCTs from 16/05/2014 to 16/05/2019 in JPRAS

In JPRAS, 1 meta-analysis was published in 2014, 2 in 2015, 0 in 2016, 5 in 2017, 9 in 2018, and 0 in 2019 (up to 16/05/2019). In total, 34 RCTs were published, of which 5 were published in 2014 (from 16/05/2014), 8 in 2015, 9 in 2016, 5 in 2017, 7 in 2018, and 0 in 2019 (up to 16/05/2019).

Sub-analysis of Meta-analyses (including Meta-analysis of RCTs) and RCTs from 16/05/2014 to 16/05/2019 in PRS

In PRS, 2 of the meta-analyses were published in 2014, 10 in 2015, 14 in 2016, 1 in 2017, 7 in 2018, and 10 in 2019 (up to 16/05/2019). In total, 86 RCTs were published, of which 10 were published in 2014 (from 16/05/2014), 17 in 2015, 22 in 2016, 12 in 2017, 21 in 2018, and 4 in 2019 (up to 16/05/2019).

Sub-analysis of Meta-analyses (including Meta-analysis of RCTs) and RCTs from 16/05/2014 to 16/05/2019 in APS

In APS, 1 meta-analysis was published in 2014, 1 in 2015, 5 in 2016, 7 in 2017, 1 in 2018, and 0 in 2019 (up to 16/05/2019). In total, 22 RCTs were published, of which 2 were published in 2014 (from 16/05/2014), 9 in 2015, 7 in 2016, 3 in 2017, 1 in 2018, and 0 in 2019 (up to 16/05/2019) (Tables 4 and 5) (Figs. 4–6).

Table 4.

Summary of Meta-analyses and Randomized Controlled Trials Published from 16/05/2014 to 16/05/2019 across the 3 Specialty Journals

| Journal | Study Design | 2014 (from 16/05/2014) | 2015 | 2016 | 2017 | 2018 | 2019 (up to 16/05/2019) |
|---|---|---|---|---|---|---|---|
| JPRAS | Meta-analyses | 1 | 2 | 0 | 5 | 9 | 0 |
| JPRAS | RCTs | 5 | 8 | 9 | 5 | 7 | 0 |
| PRS | Meta-analyses | 2 | 10 | 14 | 1 | 7 | 10 |
| PRS | RCTs | 10 | 17 | 22 | 12 | 21 | 4 |
| APS | Meta-analyses | 1 | 1 | 5 | 7 | 1 | 0 |
| APS | RCTs | 2 | 9 | 7 | 3 | 1 | 0 |

Table 5.

Total Number of Article Types in the 3 Main Plastic Surgery Specialty Journals, from 15/05/2009 to 16/05/2019

| Study Design | n (%) |
|---|---|
| Meta-analysis of RCTs | 6 (0.035) |
| Other meta-analyses | 120 (0.69) |
| RCTs | 247 (1.43) |
| Practice guidelines | 12 (0.069) |
| Systematic reviews | 382 (2.20) |
| Observational studies | 53 (0.31) |
| Case reports | 2284 (13.18) |
| Letters | 3858 (22.26) |
| Editorials | 115 (0.66) |
| Non-systematic reviews | 1038 (5.99) |
| Other | 9219 (53.19) |
| Total | 17334 |
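The per-period share of high-evidence publications can be recomputed from the counts in Tables 1 and 3. The sketch below is illustrative only: the dictionary layout and function name are assumptions, and the counts are transcribed from the tables in the order JPRAS, PRS, APS. Note that the three tabulated high-evidence rows sum to 373 across both periods, whereas the text quotes n = 363; the sketch reports the tabulated sums and does not attempt to explain the difference.

```python
# Counts transcribed from Tables 1 and 3, in the order (JPRAS, PRS, APS).
HIGH_EVIDENCE = {
    "period_1": {
        "Meta-analysis of RCTs": (1, 2, 1),
        "Other meta-analyses": (10, 31, 5),
        "RCTs": (24, 63, 18),
    },
    "period_2": {
        "Meta-analysis of RCTs": (1, 0, 1),
        "Other meta-analyses": (16, 44, 14),
        "RCTs": (34, 86, 22),
    },
}
TOTAL_PUBLICATIONS = {
    "period_1": (2825, 4467, 1576),
    "period_2": (1987, 4568, 1911),
}


def high_evidence_share(period: str) -> tuple[int, int, float]:
    """Return (high-evidence count, all publications, percentage) for a period."""
    high = sum(sum(counts) for counts in HIGH_EVIDENCE[period].values())
    total = sum(TOTAL_PUBLICATIONS[period])
    return high, total, 100 * high / total


for period in ("period_1", "period_2"):
    high, total, pct = high_evidence_share(period)
    print(f"{period}: {high} of {total} publications ({pct:.2f}%)")

rcts_overall = sum(sum(HIGH_EVIDENCE[p]["RCTs"]) for p in HIGH_EVIDENCE)
print("RCTs over both periods:", rcts_overall)
```

Expressing each period as a share of its own total matters here because the number of publications differs between the two periods.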

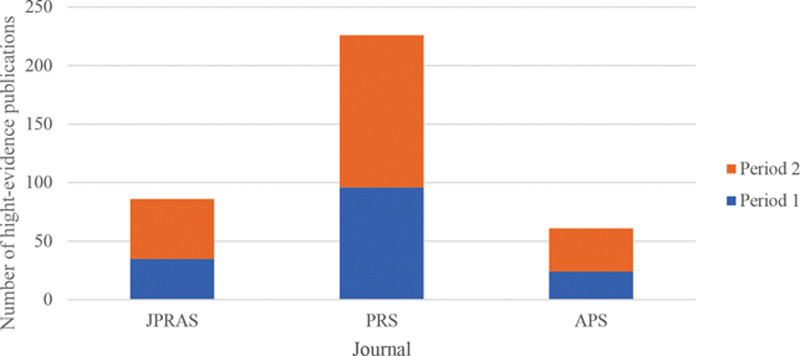

Fig. 4.

Graph showing the trend in publications across the 3 main specialty journals of RCTs, from 16/05/2014 to 16/05/2019.

Fig. 6.

Chart showing an amalgamation of the total number of high-level-evidence publications in each of the 3 specialty journals at both time periods. Period 1 = 15/05/2009 to 15/05/2014; period 2 = 16/05/2014 to 16/05/2019. “High-Evidence Publications” refers to an amalgamate of meta-analyses, meta-analyses of RCTs, and RCTs. This chart illustrates an overview of the total number of such publications respective to each journal over the 10-year period from 15/05/2009 to 16/05/2019.

Fig. 5.

Graph displaying the overall trend in publications across the 3 main specialty journals of RCTs, from 15/05/2009 to 16/05/2019.

Randomized Controlled Trials Quality Assessment

A total of 142 articles were reviewed, and 56 were excluded for not explicitly identifying as an RCT in the article title, being an animal study, or not directly assessing the effect of an intervention. In total, 86 RCTs were thus quality-assessed using the modified CONSORT checklist.

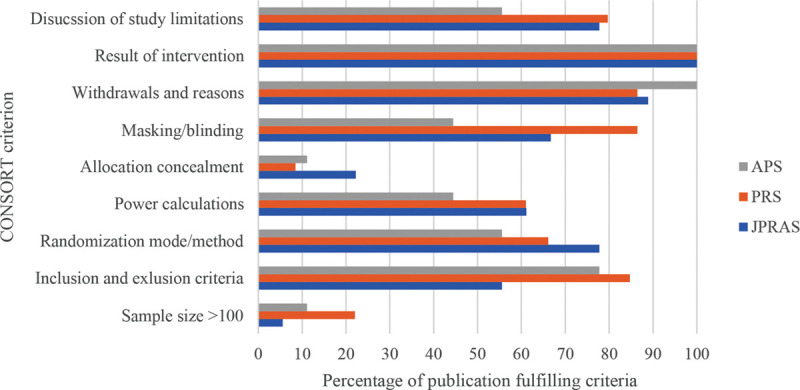

Results from the RCT Quality Assessment across the 3 Specialty Journals

Figure 7 illustrates the percentage of published RCTs clearly fulfilling each CONSORT criterion across each of the 3 specialty journals. In Figure 7, the percentage shown for “withdrawals and reasons” combines the publications that stated reasons for withdrawal and those that had no withdrawals. Please refer to Table 6 for a further sub-analysis of withdrawals and reasons. For a comprehensive list of all articles analyzed, please see Supplemental Digital Contents 1–3 from JPRAS, PRS, and APS, respectively. (See document, Supplemental Digital Content 1, which displays the list of RCTs sub-analyzed from JPRAS. http://links.lww.com/PRSGO/B530.) (See document, Supplemental Digital Content 2, which displays the list of RCTs sub-analyzed from PRS. http://links.lww.com/PRSGO/B531.) (See document, Supplemental Digital Content 3, which displays the list of RCTs sub-analyzed from APS. http://links.lww.com/PRSGO/B532.)

Fig. 7.

Chart illustrating the number of RCTs that included the following CONSORT criteria across all 3 specialty journals, expressed as a percentage, from 16/05/2014 to 16/05/2019.

Table 6.

RCTs across all 3 Plastic Surgery Specialty Journals Reporting of Quality Criteria, from 16/05/2014 to 16/05/2019

| Quality Criterion | No. RCTs (%) |
|---|---|
| Sample size > 100 | 15 (17.44%) |
| Inclusion/exclusion criteria | 67 (77.91%) |
| Randomization method | 58 (67.44%) |
| Power calculation | 51 (59.30%) |
| Allocation concealment | 21 (24.42%) |
| Blinding | 67 (77.91%) |
| Withdrawals and reasons | 42 (48.84%)/34 (39.53%)* |
| Study limitations | 66 (76.74%) |

*Withdrawals with reasons/no withdrawals.

Although all included RCTs clearly stated their sample size, only 17.44% (n = 15) had a sample size of ≥100. Of the RCTs, 77.91% (n = 67) explicitly mentioned their inclusion and exclusion criteria, 67.44% (n = 58) described their randomization mode, 59.30% (n = 51) performed power calculations, and only 24.42% (n = 21) explicitly reported allocation concealment. A total of 48.84% (n = 42) reported withdrawals and reasons, whereas 39.53% (n = 34) had no withdrawals; the remainder gave no reasons for participant withdrawals. In total, 77.91% (n = 67) performed blinding of some level. Although 100% of RCTs presented their results, only 76.74% (n = 66) mentioned the limitations of their studies (Table 7).
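Each percentage in Table 6 is the criterion count divided by the 86 assessed RCTs. A short sketch of that arithmetic follows; the dictionary keys and the helper name are chosen for this example only.

```python
N_ASSESSED = 86  # RCTs quality-assessed in the second period

# Counts transcribed from Table 6.
TABLE_6_COUNTS = {
    "Sample size threshold met": 15,
    "Inclusion/exclusion criteria": 67,
    "Randomization method": 58,
    "Power calculation": 51,
    "Allocation concealment": 21,
    "Blinding": 67,
    "Withdrawals with reasons": 42,
    "No withdrawals": 34,
    "Study limitations": 66,
}


def percent_of_assessed(count: int, denominator: int = N_ASSESSED) -> float:
    """Percentage of assessed RCTs, rounded to 2 decimal places as in Table 6."""
    return round(100 * count / denominator, 2)


for criterion, count in TABLE_6_COUNTS.items():
    print(f"{criterion}: {count} ({percent_of_assessed(count)}%)")

# The combined withdrawals figure adds trials that explained their
# withdrawals to trials that had none.
combined = TABLE_6_COUNTS["Withdrawals with reasons"] + TABLE_6_COUNTS["No withdrawals"]
print("Withdrawals item satisfied:", combined, f"({percent_of_assessed(combined)}%)")
```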

Table 7.

RCTs Published in Different Sub-specialties across All 3 Plastic Surgery Journals

| Plastic Surgery Subspecialty | n = 86 |
|---|---|
| Breast | 30 |
| Scar healing | 10 |
| Hand | 9 |
| Aesthetics | 9 |
| Oculoplastics | 6 |
| Cleft lip and palate | 4 |
| Burns | 3 |
| Wound healing | 2 |
| Lower limb reconstruction | 2 |
| Other | 11 |

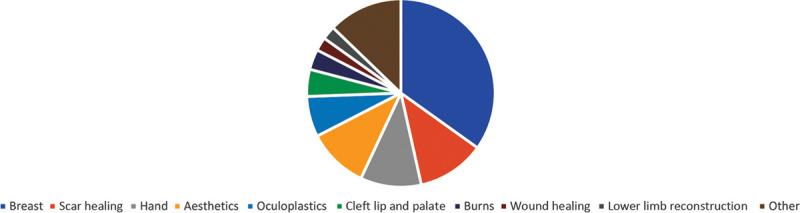

Of the 86 RCTs manually identified across all 3 specialty journals, the most popular sub-specialty in which RCTs were published was breast surgery (n = 30; 34.88%), followed by scar healing (n = 10; 11.63%) and surgery of the hand (n = 9; 10.47%) (Fig. 8).

Fig. 8.

Pie chart exhibiting RCTs published in different plastic surgery sub-specialties across the 3 specialty journals, from 16/05/2014 to 16/05/2019

DISCUSSION

Review of the Data Obtained and Analyzed

The aim of this study was to investigate the comparative trend in the number of high-evidence studies across 2 consecutive time periods and to quality-assess RCTs published in the latter time period in Journal of Plastic, Reconstructive and Aesthetic Surgery, Plastic and Reconstructive Surgery, and Annals of Plastic Surgery. It is crucial that the surgical community continues such analysis and adheres to evidence-based principles to improve practice.15

Over a 10-year period, from 15/05/2009 to 16/05/2019, there has been an increase in the number of published RCTs. The line of best fit in Figure 4 shows a negative trend from 16/05/2014 to 16/05/2019; this is, in part, explained by the fact that less than half of 2019 was accounted for. Nevertheless, when compared with Figure 2, the data demonstrate that more RCTs were published in the latter time period than in the former. The overall trend is thus reassuring.
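The effect of the partial 2019 year on a fitted trend can be illustrated with an ordinary least-squares slope on the yearly RCT counts from Table 4. This is a sketch under stated assumptions: pooling the 3 journals and fitting one straight line are choices made for this example, and the fit behind Figure 4 is not reproduced here.

```python
YEARS = [2014, 2015, 2016, 2017, 2018, 2019]

# Yearly RCT counts transcribed from Table 4 (2014 and 2019 are partial years).
RCTS_BY_JOURNAL = {
    "JPRAS": [5, 8, 9, 5, 7, 0],
    "PRS": [10, 17, 22, 12, 21, 4],
    "APS": [2, 9, 7, 3, 1, 0],
}


def ols_slope(xs: list[float], ys: list[float]) -> float:
    """Slope of the least-squares line through the points (xs, ys)."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


# Pool the 3 journals into one count per year.
pooled = [sum(counts[i] for counts in RCTS_BY_JOURNAL.values())
          for i in range(len(YEARS))]

slope_all_years = ols_slope(YEARS, pooled)
slope_without_2019 = ols_slope(YEARS[:-1], pooled[:-1])

print("Pooled RCTs per year:", dict(zip(YEARS, pooled)))
print(f"Slope, 2014 to 2019: {slope_all_years:.2f} RCTs per year")
print(f"Slope, 2014 to 2018: {slope_without_2019:.2f} RCTs per year")
```

With these counts the pooled slope is about -2.8 RCTs per year when 2019 is included and about +1.0 when it is left out, which is consistent with the partial final year pulling the fitted line downward. The 2014 count is also partial (from 16/05/2014), so neither slope should be read as a precise rate.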

However, higher forms of evidence (meta-analyses of RCTs, other meta-analyses, and RCTs) represented a small minority of publications across all 3 journals: these constituted 2.09% (n = 363) of the total number of articles published over the 10-year period. Of a total of 17,334 publications, 6 were meta-analyses of RCTs, 120 were other meta-analyses, and 247 were initially identified as RCTs. A large proportion of publications were in the form of editorials, letters, and case reports. Thus, there remains a comparative lack of high-quality-methodology publications in the existing literature.

For clinicians to implement higher evidence studies, such as RCTs, and to incorporate these into guidelines and/or best practice, studies must be robust, being performed and reported against strict criteria. Previous studies that have not adequately reported such criteria (such as those of CONSORT for assessing RCTs) have been shown to produce larger treatment effects, underpinning the results with poor design.16 For clinicians to assess the quality and reliability of RCTs, these criteria ought to be explicitly reported. Although multiple criteria have been developed, the present study evaluated RCTs according to modified CONSORT criteria.17

In the present study, 67.44% (n = 58) of RCTs specified randomization mode, but 100% reported that randomization occurred, with or without reference to the method, which is reassuring given the importance of randomization in reducing selection bias.18 Overall, the explicit reporting of withdrawals was high (88.37%; n = 76): 48.84% (n = 42) reported withdrawals with reasons, and 39.53% (n = 34) had no withdrawals. However, the remaining RCTs provided no reasons for withdrawal. Consequently, in several of the RCTs analyzed, one is unable to deduce whether participants withdrew because they came to harm or for a more innocuous reason, such as a change of mind about participating. This is consistent with other studies that have found poor reporting of withdrawal and harm.19 Furthermore, several RCTs lacked a diagram illustrating participant flow: it is encouraged that all RCTs include such a chart to show readers participant flow throughout the trial, with clear indication of withdrawals. In total, 77.91% of RCTs (n = 67) reported using a degree of blinding, which is important in reducing detection and performance bias.20 Although blinding reduces such bias, without explicit reporting using appropriate terminology (eg, “single” or “double blinded”) and mention of the level at which blinding occurred (participant, assessor, etc), the process is ambiguous and difficult for clinicians to interpret.21 However, it is important to note that 77.91% is still reasonable, given the intrinsic limitation in surgical trials that the surgeon cannot be blinded.22

Several criteria were inadequately reported across all RCTs. Firstly, it was difficult to ascertain whether allocation concealment had been incorporated into a given RCT because this was seldom explicitly reported (n = 21; 24.42%). An important conclusion from the present study is that allocation concealment ought to be reported more explicitly, using this terminology directly: this will allow readers to determine whether or not it has occurred. This is vital in aiding true randomization, and this poor reporting of allocation concealment amongst RCTs in plastic surgery is consistent with the findings of other articles.23 Only 17.44% (n = 15) of studies had a sample size of >100, and only 59.30% (n = 51) reported a power calculation, which is disconcerting, given the importance of such a calculation in determining a clinically significant difference in findings. Although the proportion of RCTs mentioning their own study limitations (76.74%; n = 66) initially appears high, it is imperative that 100% of studies discuss their limitations so that the validity and credibility of the conclusions drawn can be interpreted.24 Limitations, thus, ought to be better discussed across RCTs in general. The several poorly reported criteria in the present study are consistent with the quality of RCT reporting across other surgical specialties, and adherence to good reporting appears higher in general medical journals.25,26

Limitations of the Present Study

There are limitations to this study. Firstly, this article covered only 3 plastic surgery journals; hence, meta-analyses and RCTs published in other journals are unaccounted for in assessing quality and trends over time. This may be why certain sub-specialisms are under-represented by the relative number of RCTs in this study (eg, burns), and hence, conclusions on the representation by high-quality publications of different plastic surgery sub-specialties are limited.5 Sub-specialisms, such as burns and wounds, often have their own journals, which were not accounted for here.5,27 Furthermore, this study did not consider whether RCTs were university/institution-funded or otherwise, thereby not acknowledging another potential source of bias. However, this limitation may be offset by the fact that for publication into one of these 3 prestigious journals, all conflicts of interest and funding sources must be declared and, where significant bias is apparent, these trials are now often rejected at submission, overcoming this previously underreported source of bias.28

Recommendations to Institutions, Researchers, and Journals

The present study points toward poor reporting of trial designs, randomization, recruitment, and numbers analyzed as some of the problem areas. Authors of many trial reports neglect to provide complete, clear, and transparent information on the methodology and findings of their RCT report.15 Focusing on the development of reporting guidelines to help researchers improve the completeness and transparency of their research reports, and limiting the number of poorly reported studies submitted to journals would be expected to have the greatest impact on adherence in the short and medium term. However, reporting guidelines can also be used by peer reviewers and editors to strengthen manuscript review. It is suggested that the best solution for academic institutions, researchers, and journals is to hard-wire compliance with CONSORT by making the checklist a mandatory item for submission if a manuscript is submitted as an RCT. This mandatory checklist should then be published as a supplementary item online, allowing for greater transparency and scrutiny by readers. Editors and peer reviewers need to be knowledgeable and recommend CONSORT adherence. In addition, peer reviewers, with editorial oversight, should evaluate the clarity and completeness of the study and judge whether the conclusions and recommendations are justified by the data reported.

Implementation in Plastic Surgery and Its Sub-specialties

In the modern environment of quality surveillance, rapidly evolving surgical techniques, and health care industry restrictions, there is rising pressure on plastic surgeons to provide credible evidence on the effectiveness and efficiency of their surgical practices.15 This was and still is true, especially for breast augmentation, reconstruction, and reduction surgeries. Breast implants had been sold since the early 1960s, but there were limited published epidemiological studies or clinical trials. In 1991, the FDA required the manufacturers of silicone gel breast implants to submit safety studies. In addition, in Europe and North America, it remains difficult for patients to get access to reduction and reconstruction procedures. To satisfy the demand of the regulatory bodies, and to advocate for these patients, breast plastic surgeons were required to provide scientifically rigorous, clinically meaningful data to demonstrate the positive impact of breast surgery on patient quality of life, not only for the regulator but for patients and health insurance companies as well. Consequently, plastic surgeons and medical associations made an effort to establish a strong evidence base in breast surgery and to develop tools to measure outcomes. Attention to pay for performance and quality metrics in health care is increasing, and health care providers are increasingly expected to demonstrate the success of their patient outcomes in a meaningful fashion to health care payers.5,15 Ultimately, those providers who can demonstrate better patient outcomes with reliable and valid data may receive higher reimbursements. Therefore, clinical researchers in breast surgery have assumed an increased leadership role in producing high-quality evidence on the efficacy of surgical interventions in plastic surgery.

Challenges of Implementing These Types of Studies in Other Subspecialties

Although the goal is to improve the overall level of evidence in plastic surgery, this does not mean that all lower level evidence should be discarded.15 Case series and case reports are essentially exploratory and are important for hypothesis generation, which can lead to more controlled studies.5 Additionally, in the face of overwhelming evidence to support a treatment, such as the use of antibiotics for acute wound infections, there is no need for an RCT. Moreover, observational studies serve a wide range of purposes, on a continuum from the discovery of new findings to the confirmation or refutation of previous findings.

RCTs evaluating surgical interventions, when compared with medical interventions, present unique challenges that contribute to the relative paucity of plastic surgical trials. The authors support better education of plastic surgeons at all levels in clinical research methods, evidence-based medicine, and improved funding/support of plastic surgical RCTs. RCTs in other plastic surgery subspecialties are uncommon for several reasons, including ethical issues, patient and surgeon preferences, irreversibility of surgical treatment, and expense and follow-up time. In addition, difficulties surrounding the surgical learning curve, allocation concealment, blinding, subjectivity of outcomes, and others are described.15 Another unique problem of RCTs in plastic surgery is the difficulty in the measurement of the outcome variable. Most plastic surgery operations are intended to improve quality of life or restore function. There are limited but increasing numbers of valid benefit assessment tools of those operations because they are more subjective and more difficult to quantify. These “soft outcomes” are not as black and white as mortality or 5-year survival rates. Subsequently, most plastic surgeons have traditionally relied less on quantitative evidence and more on experience. Barriers to conducting successful RCTs also include the relative infrequency of the disease state under consideration, lack of community equipoise regarding standards of care, limited availability of diagnostic tools, and the challenges of enrolling children in RCTs.15 Uncommon diseases cannot be studied at a single centre, but multicentre trials are expensive and complicated to conduct. Clinical equipoise, or genuine uncertainty about the best treatment, is a necessary criterion for randomizing subjects to treatment.15 Because of the deficit of RCTs, plastic surgeons tend to rely on anecdotal data for making treatment decisions, thus creating a bias against the presumption of clinical equipoise. 
The data from biased studies may prematurely eliminate or falsely alter assumptions of clinical equipoise, interfering with the conduction of more scientifically rigorous research.15 Meticulous planning, involvement of a trial methodologist and biostatistician, and compliance with CONSORT in the eventual article are key for those conducting and reporting RCTs. Sound science encompasses adequate reporting, and the conduct of ethical trials rests on the footing of sound science.15 Journal editors and peer reviewers, as guardians of the plastic surgical literature, have an important gatekeeper role here.

As we move away from the retrospective reporting of cases and nonrandomized studies, toward prospective randomized trials, the culture of clinical research in plastic surgery will be enhanced. As pressure on resources increases, decision-makers in health care are increasingly seeking high-quality scientific evidence to support clinical and health policy choices.5,15 Ultimately, legislators are looking to develop performance measures based on evidence, rather than on consensus or commonality of practice. Concomitantly, in the spirit of evidence-based surgery, individual clinicians now seek high-level evidence to base their decisions for individual patients. We are thus ever more reliant on clinical research to provide high-level evidence to facilitate clinical decision-making, as well as policy negotiations and advocacy.

CONCLUSIONS

Adherence to standard reporting guidelines is advocated to improve the quality of reporting. This study provides an update on the progress of evidence-based plastic surgery: it examined the trend in the number of high-evidence studies and quality-assessed RCTs published in a defined time period across 3 main plastic surgery journals. The number of RCTs published has increased significantly over time, a reflection of the profession’s acknowledgment of the importance of high-quality research in furthering our field. This study proposes that authors continue to improve the quality of reporting so that all criteria are explicitly detailed. Continued pursuit of the highest-quality reporting will help improve the credibility of our results, their potential for clinical application, and the positive advancement of plastic surgery.

Footnotes

Published online 28 January 2021.

Disclosure: The authors have no financial interest to declare in relation to the content of this article.

Related Digital Media are available in the full-text version of the article on www.PRSGlobalOpen.com.

REFERENCES

- 1. Al-Benna S. A discourse on the contributions of evidence-based medicine to wound care. Ostomy Wound Manage. 2010;56:48–54.

- 2. Al-Benna S. The paradigm of burn expertise: scientia est lux lucis. Burns. 2014;40:1235–1239.

- 3. Al-Benna S, O’Boyle C. Burn care experts and burn expertise. Burns. 2014;40:200–203.

- 4. Becker A, Blümle A, Momeni A. Evidence-based Plastic and Reconstructive Surgery: developments over two decades. Plast Reconstr Surg. 2013;132:657e–663e.

- 5. Al-Benna S, Alzoubaidi D, Al-Ajam Y. Evidence-based burn care–an assessment of the methodological quality of research published in burn care journals from 1982 to 2008. Burns. 2010;36:1190–1195.

- 6. Momeni A, Becker A, Antes G, et al. Evidence-based plastic surgery: controlled trials in three plastic surgical journals (1990–2005). Ann Plast Surg. 2008;61:221–225.

- 7. Murad MH, Asi N, Alsawas M, et al. New evidence pyramid. Evid Based Med. 2016;21:125–127.

- 8. Sibbald B, Roland M. Understanding controlled trials. Why are randomised controlled trials important? BMJ. 1998;316:201.

- 9. Haidich AB. Meta-analysis in medical research. Hippokratia. 2010;14:29–37.

- 10. Shorten A, Shorten B. What is meta-analysis? Evid Based Nurs. 2013;16:3–4.

- 11. Egger M, Smith GD. Meta-analysis. Potentials and promise. BMJ. 1997;315:1371–1374.

- 12. Al-Benna S, Clover J. The role of the journal impact factor: choosing the optimal source of peer-reviewed plastic surgery information. Plast Reconstr Surg. 2007;119:755–756.

- 13. McCarthy JE, Chatterjee A, McKelvey TG, et al. A detailed analysis of level I evidence (randomized controlled trials and meta-analyses) in five plastic surgery journals to date: 1978 to 2009. Plast Reconstr Surg. 2010;126:1774–1778.

- 14. Schulz KF, Altman DG, Moher D; CONSORT Group. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. BMJ. 2010;340:c332.

- 15. Al-Benna S. Construction and use of wound care guidelines: an overview. Ostomy Wound Manage. 2012;58:37–47.

- 16. Schulz KF, Chalmers I, Hayes RJ, et al. Empirical evidence of bias. Dimensions of methodological quality associated with estimates of treatment effects in controlled trials. JAMA. 1995;273:408–412.

- 17. Moher D, Jadad AR, Nichol G, et al. Assessing the quality of randomized controlled trials: an annotated bibliography of scales and checklists. Control Clin Trials. 1995;16:62–73.

- 18. Ioannidis JP, Haidich AB, Pappa M, et al. Comparison of evidence of treatment effects in randomized and nonrandomized studies. JAMA. 2001;286:821–830.

- 19. Hodkinson A, Kirkham JJ, Tudur-Smith C, et al. Reporting of harms data in RCTs: a systematic review of empirical assessments against the CONSORT harms extension. BMJ Open. 2013;3:e003436.

- 20. Probst P, Grummich K, Heger P, et al. Blinding in randomized controlled trials in general and abdominal surgery: protocol for a systematic review and empirical study. Syst Rev. 2016;5:48.

- 21. Devereaux PJ, Manns BJ, Ghali WA, et al. Physician interpretations and textbook definitions of blinding terminology in randomized controlled trials. JAMA. 2001;285:2000–2003.

- 22. Solheim O. Randomized controlled trials in surgery and the glass ceiling effect. Acta Neurochir (Wien). 2019;161:623–625.

- 23. Momeni A, Becker A, Antes G, et al. Evidence-based plastic surgery: controlled trials in three plastic surgical journals (1990 to 2005). Ann Plast Surg. 2009;62:293–296.

- 24. Ioannidis JP. Limitations are not properly acknowledged in the scientific literature. J Clin Epidemiol. 2007;60:324–329.

- 25. Nagendran M, Harding D, Teo W, et al. Poor adherence of randomised trials in surgery to CONSORT guidelines for non-pharmacological treatments (NPT): a cross-sectional study. BMJ Open. 2013;3:e003898.

- 26. Curry JI, Reeves B, Stringer MD. Randomized controlled trials in pediatric surgery: could we do better? J Pediatr Surg. 2003;38:556–559.

- 27. Al-Benna S, Tariq G. Wound care education in the developing world. Wounds Middle East. 2017;4:6–7.

- 28. Momeni A, Becker A, Bannasch H, et al. Association between research sponsorship and study outcome in plastic surgery literature. Ann Plast Surg. 2009;63:661–664.
