Abstract

Computed tomography (CT) has been widely used for medical diagnosis, assessment, and therapy planning and guidance. In practice, CT images can be severely degraded by metallic objects, which cause strong metal artifacts that may affect clinical diagnosis or dose calculation in radiation therapy. In this paper, we propose a generalizable framework for metal artifact reduction (MAR) that simultaneously leverages the advantages of image-domain and sinogram-domain MAR techniques. We formulate our framework as a sinogram completion problem and train a neural network (SinoNet) to restore the metal-affected projections. To improve the continuity of the completed projections at the boundary of the metal trace, and thus alleviate new artifacts in the reconstructed CT images, we train another neural network (PriorNet) to generate a good prior image to guide sinogram learning, and further design a novel residual sinogram learning strategy to effectively utilize the prior image information for better sinogram completion. The two networks are jointly trained in an end-to-end fashion with a differentiable forward projection (FP) operation, so that the prior image generation and deep sinogram completion procedures can benefit from each other. Finally, the artifact-reduced CT images are reconstructed from the completed sinogram using filtered back projection (FBP). Extensive experiments on simulated and real artifact data demonstrate that our method produces superior artifact-reduced results while preserving anatomical structures, and outperforms other MAR methods.

Keywords: Metal artifact reduction, Sinogram completion, Prior image, Residual learning, Deep learning

I. Introduction

COMPUTED tomography (CT) systems have become an important tool for medical diagnosis, assessment, and therapy planning and guidance. However, metallic implants within patients, e.g., dental fillings and hip prostheses, lead to missing data in X-ray projections and cause strong star-shaped or streak artifacts in the reconstructed CT images [1]. These metal artifacts not only degrade the visual quality of CT images and thereby influence diagnosis, but also make dose calculation in radiation therapy problematic [2], [3]. With the increasing use of metallic implants, reducing metal artifacts has become an important problem in CT imaging [4].

Numerous metal artifact reduction (MAR) methods have been proposed in the past decades, but there is still no standard solution in clinical practice [3], [5], [6]. Since metal artifacts are structured and non-local in the reconstructed CT images, earlier MAR approaches mainly addressed the problem in the X-ray projections (sinogram). The metal-affected regions in the sinogram domain were corrected by modeling the underlying physical effects of imaging [7]–[10]. For example, Park et al. [10] proposed a method to correct beam hardening artifacts caused by the presence of metal in polychromatic X-ray CT. However, in the presence of metals with a high atomic number, the metal trace regions in the sinogram are often severely corrupted, and the above methods have difficulty achieving satisfactory results [11]. Therefore, other MAR methods regard the metal-affected regions as missing areas and fill them with estimated values [2], [12]. The early linear interpolation (LI) approach [2] fills the missing regions of each projection view by linearly interpolating the neighboring unaffected data. As interpolation cannot completely recover the information within the metal trace, the inconsistency between the interpolated values and the unaffected values often results in strong new artifacts in the reconstructed images. To improve the quality of sinogram interpolation, more recent methods use the forward projection of a prior image to complete the sinogram [13]–[17]. These methods first estimate a prior image with various tissue information from the uncorrected image and then forward project the prior image to complete the sinogram. For example, Meyer et al. [15] improved the LI approach by generating a prior image with tissue processing and normalizing the projections with the forward projection of the prior image before interpolation.
Because an inaccurate prior image leads to unfaithful structures in the reconstructed image, a key factor for prior-image-based approaches is to generate a good prior image that provides an accurate surrogate for the missing data in the sinogram. Other researchers have focused on designing iterative reconstruction algorithms that reconstruct artifact-free images from the unaffected or corrected projections [18]–[21]. For example, Zhang et al. [21] proposed an iterative metal artifact reduction algorithm based on constrained optimization. However, these iterative reconstruction methods often suffer from heavy computation and require properly hand-crafted regularization.

With the development of deep learning in medical image reconstruction and analysis [22]–[25], recent progress in MAR has featured neural networks [4], [11], [26]. Park et al. [4] employed a U-Net [25] in the sinogram domain to correct beam-hardening-related artifacts in polychromatic CT. Gjesteby et al. [26] used deep learning to refine the result of NMAR [15] for additional correction in critical image regions. Zhang et al. [11] proposed to generate a reduced-artifact prior image with a CNN to help correct the metal-corrupted regions in the sinogram. Although these methods show reasonable MAR results, they are limited in handling the new artifacts that remain in the reconstructed CT images.

To improve the quality of the reconstructed CT images, and inspired by the success of deep learning in solving ill-posed inverse problems in natural image processing [27]–[29], very recent works formulated MAR as an image restoration problem and reduced metal artifacts with image-to-image translation networks [26], [30]–[36]. Gjesteby et al. [32] employed a deep neural network with a perceptual loss to reduce the new artifacts remaining after NMAR. RL-ARCNN [37] introduced deep residual learning to reduce metal artifacts in cervical CT images, and Wang et al. [38] proposed to use a conditional generative adversarial network (cGAN) [39] to reduce metal artifacts in CT images. Very recently, Lin et al. [35] developed a dual-domain learning method, DuDoNet, that improves image-restoration-based MAR by adding sinogram enhancement as an intermediate procedure. Owing to the powerful representation capability of deep neural networks, these image-restoration-based methods demonstrated good performance on their experimental datasets. However, in our experiments, we find that these methods tend to degrade on data from other anatomical sites, as the training samples can hardly cover unseen artifact patterns. Although DuDoNet [35] introduces sinogram enhancement to improve network performance, it still directly adopts the output of the image-domain refinement network as the final reconstructed image. Because no geometric (physical) constraints regularize the neural networks, tiny anatomical structures may be altered in the output image (see Fig. 6 for an example), which limits the use of image-domain methods in real clinical scenarios.

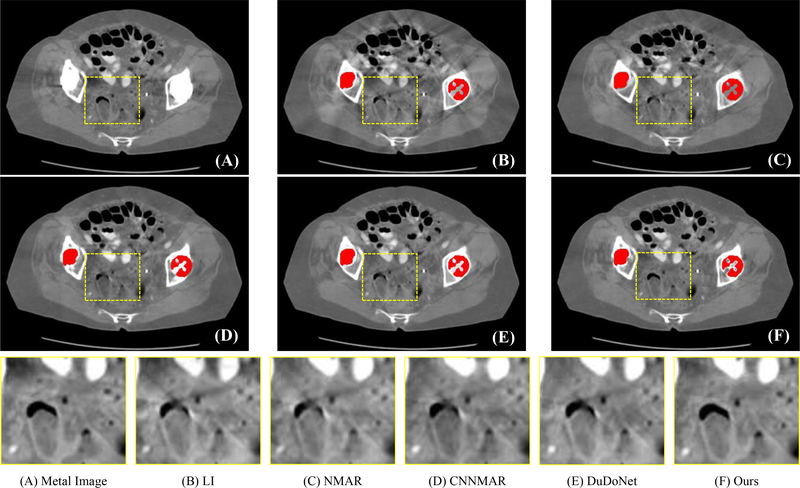

Fig. 6:

Visual comparison on CT images with real metal artifacts. The segmented metals are colored in red for better visualization. Our method effectively reduces metal artifacts and preserves the fine-grained anatomical structures. The display window of the whole images is [−480 560] HU and that of the cropped patches is [−400 300] HU.

In this work, we present a novel image and sinogram domain joint learning framework for generalizable metal artifact reduction. Different from previous image-restoration-based solutions, we formulate MAR as a deep-learning-based sinogram completion task and train a deep neural network, i.e., SinoNet, to restore the unreliable projections within the metal trace region. To ease SinoNet learning and improve completion quality, we simultaneously train another neural network, i.e., PriorNet, to generate a good prior image with fewer metal artifacts, and guide SinoNet learning with the forward projection of this prior image; see a sinogram completion result in Fig. 1. Moreover, we design a novel residual sinogram learning strategy that fully utilizes the prior sinogram guidance to improve the continuity of the completed sinogram and thus alleviate new artifacts in the reconstructed CT images. The final CT image is then reconstructed from the completed sinogram with the conventional FBP algorithm. Unlike previous prior-image-based MAR approaches, the whole framework is trained end-to-end, so that prior image generation and deep sinogram completion are learned collaboratively and benefit from each other. We extensively evaluate our framework on CT images with simulated and real metal artifacts, demonstrating that our method produces superior artifact-reduced results and outperforms other MAR methods.

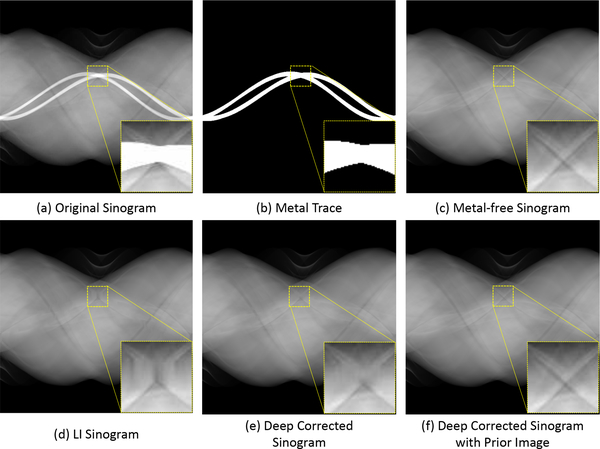

Fig. 1:

The qualitative comparison of sinogram completion. An ROI is enlarged and shown with a narrower window to better visualize the difference. The linear interpolation (LI) [2] produces a poor estimation of the missing projections (d), while the deep network can generate a relatively good corrected sinogram (e). With the guidance of prior image, our method predicts more accurate projections (f), which are very close to the metal-free one.

Our main contributions are summarized as follows.

We present a novel image and sinogram domain joint learning framework for metal artifact reduction by simultaneously leveraging the advantages of image domain and sinogram domain-based MAR techniques. The proposed framework achieves superior performance on CT images with simulated and real metal artifacts.

We propose to train a deep prior image network to provide a good estimation of missing projections and thus enhance sinogram completion network learning. The two networks are trained in an end-to-end manner and can benefit from each other.

We design a novel residual sinogram learning scheme to facilitate sinogram completion. The scheme fully utilizes prior image information and alleviates new artifacts in the reconstructed CT image.

The remainder of this paper is organized as follows. We elaborate on our framework in Section II. The experiments and results are presented in Section III. We further discuss the key issues of our method in Section IV and draw conclusions in Section V.

II. Methodology

A. Overview

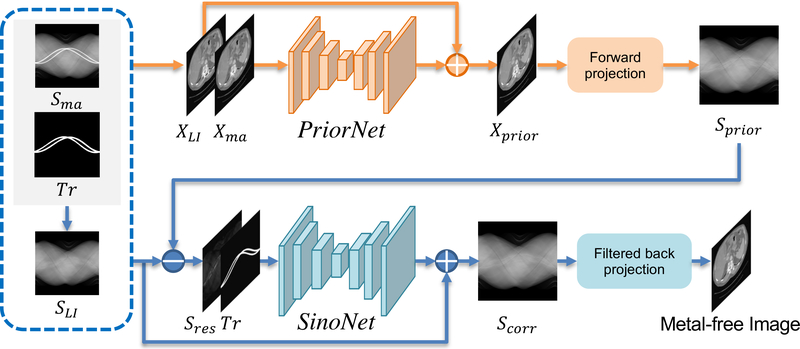

Fig. 2 depicts the overview of our proposed image and sinogram domain joint learning framework for metal artifact reduction in CT images. The framework integrates image domain learning (prior image generation) and sinogram domain learning (sinogram completion). Given the original metal-corrupted sinogram Sma and the metal trace mask Tr ∈ {0, 1}^{H×W}, we first apply linear interpolation [2] to produce an initial estimate of the projections within the metal trace region and obtain the LI corrected sinogram SLI for the following procedures. To ease sinogram completion, we train an image domain network, i.e., PriorNet, to produce a good prior image Xprior with fewer metal artifacts, and obtain the prior sinogram Sprior by forward projecting Xprior to guide sinogram domain learning. We simultaneously train another deep neural network, i.e., SinoNet, which takes the LI corrected sinogram SLI, the prior sinogram Sprior, and the metal trace mask Tr as input and restores the metal-affected projections to obtain the corrected sinogram Scorr. In particular, we design a novel residual learning strategy in which SinoNet refines the residual sinogram map between SLI and Sprior. The final metal-free CT image is then reconstructed from the corrected sinogram Scorr with the conventional FBP algorithm. The whole framework is trained end-to-end, so that the prior image generation and sinogram completion procedures can benefit from each other.
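The LI step that produces SLI can be illustrated as a per-view 1-D interpolation across the metal trace. The following is a minimal NumPy sketch, not the authors' code: it assumes the sinogram is stored as (detector bins, views), matching the 641 × 640 size given in Section III-A, and the function name `linear_interpolate_sinogram` is hypothetical.

```python
import numpy as np


def linear_interpolate_sinogram(sino: np.ndarray, trace: np.ndarray) -> np.ndarray:
    """Fill metal-trace bins of every view by linear interpolation (LI).

    sino  : (num_detectors, num_views) array; this layout is an assumption.
    trace : boolean array of the same shape, True inside the metal trace Tr.
    """
    s_li = sino.astype(float)  # astype returns a copy, so the input is untouched
    det = np.arange(sino.shape[0])
    for v in range(sino.shape[1]):
        inside = trace[:, v]
        if inside.any() and (~inside).any():
            # interpolate from the unaffected bins of this view only;
            # np.interp holds the edge value constant beyond the known range
            s_li[inside, v] = np.interp(det[inside], det[~inside], sino[~inside, v])
    return s_li


if __name__ == "__main__":
    clean = np.tile(np.arange(10, dtype=float)[:, None], (1, 3))
    trace = np.zeros(clean.shape, dtype=bool)
    trace[3:6, :] = True
    corrupted = clean.copy()
    corrupted[trace] = 100.0  # pretend the metal trace is unreliable
    print(np.allclose(linear_interpolate_sinogram(corrupted, trace), clean))  # True
```

Because each view is treated independently, the filled values ignore consistency across views, which is the source of the new artifacts that the prior sinogram and SinoNet are meant to correct.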

Fig. 2:

Schematic diagram of our proposed image and sinogram domain joint learning framework for metal artifact reduction. Given the metal-affected sinogram Sma and metal trace mask Tr, we use linear interpolation to obtain the LI corrected sinogram SLI. We jointly train a prior image generation network, i.e., PriorNet, to generate a good prior image Xprior, and a sinogram completion network, i.e., SinoNet, to restore the metal-affected sinogram with the guidance of the prior sinogram Sprior, which is the forward projection of Xprior. Sres is the residual sinogram map between SLI and Sprior. The final metal-free image is reconstructed from the corrected sinogram Scorr with the FBP algorithm.

B. Deep Prior Image Generation

In this step, we generate a prior image with a deep neural network to facilitate sinogram completion, as a metal-free prior image provides a good estimate of the missing projections in the original sinogram. A straightforward solution is to take the original CT image with metal artifacts as input and train a neural network to generate a prior image with fewer metal artifacts. However, when the metal objects are relatively large, the metal artifacts in the original CT image are very strong, which makes it difficult for the network to reduce them. Therefore, besides the original CT image, we also feed the LI corrected image into the prior image generation procedure and employ a neural network, i.e., PriorNet, to refine the LI corrected image by residual learning. Specifically, we first reconstruct the original metal-corrupted CT image Xma and the LI corrected image XLI from the original metal-affected sinogram Sma and the linearly interpolated sinogram SLI, respectively. The artifact-reduced prior image is then represented as

$$X_{prior} = f_P([X_{ma}, X_{LI}]) + X_{LI} \tag{1}$$

where fP denotes the prior image generation network and [a, b] represents the concatenation of images a and b.

The PriorNet is based on the U-Net [25] architecture, but we halve the channel number to reduce the total number of parameters. To optimize the network, we employ the L1 loss to minimize the difference between the network output and the ground truth CT image Xgt without metal artifacts

$$\mathcal{L}_P = \lVert X_{prior} - X_{gt} \rVert_1 \tag{2}$$
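Eqs. (1) and (2) amount to a residual connection around the network plus an L1 penalty. The sketch below is a minimal NumPy illustration under stated assumptions: `prior_net` is a hypothetical stand-in callable in place of the halved-channel U-Net, and the L1 loss is assumed to be mean-reduced; the real PriorNet is trained in PyTorch.

```python
import numpy as np


def prior_image(x_ma: np.ndarray, x_li: np.ndarray, prior_net) -> np.ndarray:
    """Eq. (1): X_prior = f_P([X_ma, X_LI]) + X_LI."""
    stacked = np.stack([x_ma, x_li], axis=0)  # two-channel input [X_ma, X_LI]
    return prior_net(stacked) + x_li          # the network only predicts a correction


def l1_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Eq. (2) with mean reduction (the reduction is an assumption)."""
    return float(np.mean(np.abs(pred - target)))


def zero_residual_net(stacked: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for f_P that predicts no correction."""
    return np.zeros_like(stacked[1])


if __name__ == "__main__":
    x_ma = np.random.default_rng(0).normal(size=(4, 4))
    x_li = np.ones((4, 4))
    x_prior = prior_image(x_ma, x_li, zero_residual_net)
    print(l1_loss(x_prior, 1.5 * np.ones((4, 4))))  # 0.5
```

With a network that predicts a zero residual, the prior image falls back to XLI, so the network starts from the LI corrected image rather than from scratch.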

We further acquire the prior sinogram Sprior by performing forward projection on the generated prior image Xprior

$$S_{prior} = \mathcal{P}(X_{prior}) \tag{3}$$

where $\mathcal{P}$ denotes the forward projection operator. The prior sinogram Sprior is then used to guide the network to complete the missing projections in the sinogram domain.

C. Deep Sinogram Completion

With the guidance of prior sinogram Sprior, we train another neural network, i.e., SinoNet, to restore the projections within the metal trace region Tr in the sinogram domain. Specifically, the SinoNet takes the LI corrected sinogram SLI, the prior sinogram Sprior, and the metal trace Tr as input, and outputs the missing projections in metal trace region Tr by utilizing the contextual information of the sinogram. To improve the continuity of the completed projections at the boundary of the metal trace region, we design a residual sinogram learning strategy and make the SinoNet refine the residual sinogram between SLI and Sprior. Particularly, we calculate the residual sinogram map Sres, which can be treated as a smooth transition between the prior sinogram Sprior and the LI corrected sinogram SLI, and then we employ the SinoNet to refine the residual projections within the metal trace region Tr. The corrected sinogram can be written as:

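$$S_{res} = S_{LI} - S_{prior}, \qquad \hat{S}_{corr} = f_S(S_{res}, \mathit{Tr}) + S_{prior} \tag{4}$$

where fS represents the sinogram completion network and $\hat{S}_{corr}$ is the corrected sinogram before composition. As the network estimates residual values instead of absolute projection values, it can alleviate the discontinuity at the boundary of the metal trace [17]. Considering that the metals only affect projection data within the metal trace region, we further composite the output of SinoNet and SLI with respect to Tr to get the final corrected result:

$$S_{corr} = \mathit{Tr} \odot \hat{S}_{corr} + (1 - \mathit{Tr}) \odot S_{LI} \tag{5}$$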

where ⊙ denotes element-wise multiplication.
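Eqs. (4) and (5) can be traced with a stand-in network. In this minimal sketch, `sino_net` is assumed to be any callable taking the residual sinogram and the trace mask (hypothetical, in place of the mask pyramid U-Net); the toy network below zeroes the residual inside the trace, which reduces the output there to the prior sinogram.

```python
import numpy as np


def complete_sinogram(s_li, s_prior, trace, sino_net):
    """Residual sinogram learning (Eq. 4) followed by composition (Eq. 5)."""
    s_res = s_li - s_prior                    # residual sinogram map S_res
    s_pre = sino_net(s_res, trace) + s_prior  # pre-composited sinogram
    tr = trace.astype(float)
    s_corr = tr * s_pre + (1.0 - tr) * s_li   # keep unaffected bins from S_LI
    return s_pre, s_corr


def zero_in_trace_net(s_res, trace):
    """Hypothetical stand-in for SinoNet: predicts zero residual in the trace."""
    return np.where(trace, 0.0, s_res)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    s_li, s_prior = rng.random((6, 5)), rng.random((6, 5))
    trace = np.zeros((6, 5), dtype=bool)
    trace[2:4, :] = True
    _, s_corr = complete_sinogram(s_li, s_prior, trace, zero_in_trace_net)
    print(np.allclose(s_corr[trace], s_prior[trace]),
          np.allclose(s_corr[~trace], s_li[~trace]))  # True True
```

With this formulation, the output inside the trace is the prior sinogram plus a learned correction, while bins outside the trace are copied from SLI by construction.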

To estimate the residual projections within the metal trace region, the network should be well aware of the metal trace information. However, metal masks and metal trace regions are usually small and occupy only a small portion of the whole sinogram, so directly concatenating the residual sinogram map Sres and Tr as the network input would weaken the metal trace information through the down-sampling operations of the network. Therefore, we employ the mask pyramid U-Net [40] to explicitly retain the metal trace information in each layer, so that the network can extract more discriminative features for restoring the missing information in the metal trace region.
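The concern about down-sampling can be checked numerically: average pooling halves the strength of a one-bin-wide trace at every level, whereas a mask pyramid built by max pooling keeps it at full strength. This sketch assumes 2 × 2 pooling and per-level concatenation of the resized mask with the feature maps; exactly how [40] resizes and injects the mask is not specified here, and all function names are hypothetical.

```python
import numpy as np


def pool2(x: np.ndarray, reduce) -> np.ndarray:
    """2x2 pooling with the given reduction (odd trailing rows/cols dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    return reduce(x[:h, :w].reshape(h // 2, 2, w // 2, 2), axis=(1, 3))


def mask_pyramid(trace: np.ndarray, levels: int) -> list:
    """Resize the metal trace to every encoder resolution with max pooling."""
    pyramid = [trace.astype(float)]
    for _ in range(levels - 1):
        pyramid.append(pool2(pyramid[-1], np.max))
    return pyramid


def inject_mask(features: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Concatenate the resized mask to (channels, H, W) features as one channel."""
    return np.concatenate([features, mask[None]], axis=0)


if __name__ == "__main__":
    trace = np.zeros((16, 16))
    trace[5, :] = 1.0  # a one-bin-wide metal trace
    avg = trace
    for _ in range(3):
        avg = pool2(avg, np.mean)
    pyr = mask_pyramid(trace, 4)
    print(avg.max(), pyr[-1].max())  # 0.125 1.0
    print(inject_mask(np.zeros((8, 2, 2)), pyr[-1]).shape)  # (9, 2, 2)
```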

To optimize SinoNet, we adopt the L1 loss to minimize the difference between the corrected sinogram and the ground truth sinogram Sgt without metals. However, outside the metal trace region the composited sinogram Scorr is copied from SLI rather than produced by the network, so directly minimizing the difference between Scorr and Sgt supervises the network output only within the metal trace region, which, as mentioned above, occupies only a small portion of the whole sinogram. To improve training efficiency, we also encourage the pre-composited sinogram $\hat{S}_{corr}$ to be close to Sgt, so that the loss also supervises the network outputs outside the metal trace region. The total objective of deep sinogram completion can be written as

$$\mathcal{L}_S = \lVert S_{corr} - S_{gt} \rVert_1 + \beta \lVert \hat{S}_{corr} - S_{gt} \rVert_1 \tag{6}$$

where β is a hyper-parameter that controls the trade-off between the two terms. We find that the performance is not sensitive to β and empirically set it to 0.1 in our experiments.
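The effect of the second term in Eq. (6) can be seen directly: changing the pre-composited output outside the trace leaves the composited term unchanged, so only the β-weighted term supervises those bins. This is a minimal sketch assuming mean-reduced L1 losses; `sinogram_loss` is a hypothetical helper.

```python
import numpy as np


def sinogram_loss(s_pre, s_li, s_gt, trace, beta=0.1):
    """Eq. (6): L1 on the composited sinogram plus beta * L1 on S_hat_corr.

    Returns (composited term, pre-composited term, total).
    """
    tr = trace.astype(float)
    s_corr = tr * s_pre + (1.0 - tr) * s_li          # composition, Eq. (5)
    comp_term = float(np.mean(np.abs(s_corr - s_gt)))
    pre_term = float(np.mean(np.abs(s_pre - s_gt)))
    return comp_term, pre_term, comp_term + beta * pre_term


if __name__ == "__main__":
    s_gt = np.zeros((4, 4))
    s_li = np.zeros((4, 4))
    trace = np.zeros((4, 4), dtype=bool)
    trace[0, :] = True
    s_pre = np.ones((4, 4))
    print(sinogram_loss(s_pre, s_li, s_gt, trace))
    s_pre_moved = s_pre + 5.0 * ~trace   # perturb only bins outside the trace
    print(sinogram_loss(s_pre_moved, s_li, s_gt, trace))
```

In the two printed tuples the first (composited) term is identical, while the second term grows; in an autodiff framework this means the composited term has zero gradient with respect to the network outputs outside the trace.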

D. Overall Objective Function and Technical Details

The above L1 loss for SinoNet only penalizes the error of each individual projection value in the sinogram domain, without considering the geometric consistency of the completed values or penalizing new artifacts in the reconstructed CT images. Therefore, we further design a filtered back projection (FBP) loss to alleviate new artifacts in the reconstructed CT image:

$$\mathcal{L}_{FBP} = \lVert (1 - M) \odot (\mathcal{R}(S_{corr}) - X_{gt}) \rVert_1 \tag{7}$$

where $\mathcal{R}$ represents the FBP operator and M is the metal mask. Here we adopt a masked L1 loss that penalizes the intensity difference only in non-metal regions, as it is difficult to accurately reconstruct the original image at the metal positions. Note that the FBP operation is differentiable, so the gradient of $\mathcal{L}_{FBP}$ can back-propagate to SinoNet, encouraging it to generate geometry-consistent completion results. We jointly train PriorNet and SinoNet in an end-to-end manner, and the total objective function is

$$\mathcal{L}_{total} = \mathcal{L}_S + \alpha_1 \mathcal{L}_P + \alpha_2 \mathcal{L}_{FBP} \tag{8}$$

where α1 and α2 are hyperparameters to balance the weight of different loss items. We empirically set them as 1.0 in our experiments.
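Given the FBP reconstruction of Scorr, Eqs. (7) and (8) reduce to a masked L1 term and a weighted sum. This minimal sketch assumes the reconstruction is already computed (the FBP operator itself is omitted), that the masked loss is averaged over non-metal pixels, and that α1 weights the prior image loss and α2 the FBP loss; the helper names are hypothetical.

```python
import numpy as np


def fbp_loss(recon: np.ndarray, x_gt: np.ndarray, metal_mask: np.ndarray) -> float:
    """Eq. (7) on a given reconstruction: L1 over non-metal pixels only."""
    keep = ~metal_mask.astype(bool)   # (1 - M) selects non-metal pixels
    return float(np.mean(np.abs(recon[keep] - x_gt[keep])))


def total_loss(l_s: float, l_p: float, l_fbp: float,
               alpha1: float = 1.0, alpha2: float = 1.0) -> float:
    """Eq. (8), assuming alpha1 weights L_P and alpha2 weights L_FBP."""
    return l_s + alpha1 * l_p + alpha2 * l_fbp


if __name__ == "__main__":
    x_gt = np.zeros((4, 4))
    metal = np.zeros((4, 4), dtype=bool)
    metal[1, 1] = True
    recon = x_gt + 1.0
    recon[metal] = 1000.0              # a large error at the metal is ignored
    l_fbp = fbp_loss(recon, x_gt, metal)
    print(l_fbp, total_loss(0.5, 0.25, l_fbp))  # 1.0 1.75
```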

Our framework takes the original metal-affected sinogram and the metal trace as input. In the training phase, the whole framework is trained on simulated data, for which the metal trace mask Tr is obtained by forward projecting the simulated metal mask M. In the testing phase, given the metal-affected sinogram Sma, we segment the metal mask M from the reconstructed metal-corrupted CT image Xma with a simple thresholding method or a more advanced metal segmentation algorithm, and then apply the same forward projection to obtain the metal trace mask Tr.
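The test-time route from a reconstructed image to Tr can be sketched as thresholding followed by forward projection, marking every bin with a positive metal projection. The 2000 HU threshold is the value used for the clinical images in Section III-E; the parallel-beam projector is a toy stand-in for the fan-beam geometry of the paper, and `toy_forward_project` and `metal_trace` are hypothetical names.

```python
import numpy as np


def toy_forward_project(image, angles_deg, step=0.5):
    """Toy parallel-beam projector (nearest-neighbour ray sampling)."""
    n = image.shape[0]
    c = (n - 1) / 2.0
    det = np.arange(n) - c
    t = np.arange(-n, n, step)
    sino = np.zeros((n, len(angles_deg)))
    for k, a in enumerate(np.deg2rad(angles_deg)):
        x = c - det[:, None] * np.sin(a) + t[None, :] * np.cos(a)
        y = c + det[:, None] * np.cos(a) + t[None, :] * np.sin(a)
        xi, yi = np.rint(x).astype(int), np.rint(y).astype(int)
        ok = (xi >= 0) & (xi < n) & (yi >= 0) & (yi < n)
        vals = image[yi.clip(0, n - 1), xi.clip(0, n - 1)]
        sino[:, k] = np.where(ok, vals, 0.0).sum(axis=1) * step
    return sino


def metal_trace(ct_hu, angles_deg, threshold_hu=2000.0):
    """Threshold the image into a metal mask M, then project it to get Tr."""
    metal_mask = ct_hu > threshold_hu
    metal_proj = toy_forward_project(metal_mask.astype(float), angles_deg)
    return metal_mask, metal_proj > 0  # any ray touching metal is in Tr


if __name__ == "__main__":
    ct = np.full((32, 32), -1000.0)   # air background in HU
    ct[16, 16] = 3000.0               # a single "metal" pixel
    mask, tr = metal_trace(ct, [0.0, 90.0])
    print(mask.sum(), tr.sum(axis=0))
```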

III. Experiments

A. Dataset and Simulation

We evaluated our method on CT images with simulated metal artifacts and on real CT images with metal artifacts. For the simulated data, we randomly selected a subset of CT images from the recently released DeepLesion dataset [41] to synthesize metal artifacts. For the simulated metal masks, we employed the metal mask collection of [11], which contains 100 manually segmented metal implants of different shapes and sizes. Specifically, we randomly chose 1000 CT images and 90 metal masks to synthesize the training data. The remaining 10 metal masks were paired with an additional 200 CT images from 12 patients, yielding 2000 combinations for evaluation.

We followed the procedure in [11], [35] to simulate metal-corrupted sinograms and CT images by inserting metallic implants into clean CT images, where beam hardening and Poisson noise are simulated. We employed a polychromatic X-ray source and assumed an incident X-ray beam of 2 × 10^7 photons. The partial volume effect was also considered during the simulation. A fan-beam geometry was adopted, and 640 projection views were uniformly sampled between 0 and 360 degrees. Before the simulation, the CT images were resized to 416 × 416, resulting in sinograms of size 641 × 640.
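The Poisson noise part of this simulation can be sketched with the Beer-Lambert law: convert line integrals to expected photon counts, draw Poisson counts, and take the negative log again. This sketch is monochromatic, so it does not model beam hardening or the partial volume effect, and it assumes the 2 × 10^7 incident photons apply to each detector bin; `add_poisson_noise` is a hypothetical name.

```python
import numpy as np


def add_poisson_noise(line_integrals: np.ndarray, photons: float = 2e7,
                      rng=None) -> np.ndarray:
    """Return noisy line integrals -log(N / N0) with N ~ Poisson(N0 * exp(-p))."""
    rng = np.random.default_rng() if rng is None else rng
    expected = photons * np.exp(-line_integrals)   # Beer-Lambert law
    counts = rng.poisson(expected).astype(float)
    counts = np.maximum(counts, 1.0)               # avoid log(0) for photon starvation
    return -np.log(counts / photons)


if __name__ == "__main__":
    clean = np.full((641, 640), 2.0)              # toy sinogram of line integrals
    noisy = add_poisson_noise(clean, rng=np.random.default_rng(0))
    print(noisy.shape, float(np.abs(noisy - clean).max()))
```

Lowering `photons` increases the relative noise, roughly in proportion to one over the square root of the expected count per bin.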

B. Implementation Details

The framework was implemented in Python with the PyTorch [42] deep learning library. We trained PriorNet and SinoNet in an end-to-end manner with differentiable forward projection (FP) and filtered back projection (FBP) operations provided by the ODL library. In network training, all images had a size of 416 × 416 and the sinograms had a size of 641 × 640. The Adam optimizer [43] was used to optimize the whole framework with parameters (β1, β2) = (0.5, 0.999). We trained for 400 epochs in total with a mini-batch size of 8 on one Nvidia 1080Ti GPU, and the learning rate was set to 1e−4. In each training iteration, for each CT image we randomly chose one of its synthesized metal-artifact versions from the pool of 90 metal masks, and different CT images were combined into one mini-batch to compute the total objective function.

C. Experimental Results on DeepLesion Data

1). Quantitative comparisons with state-of-the-art methods:

We compare our method with conventional interpolation-based methods, linear interpolation (LI) [2] and normalized metal artifact reduction (NMAR) [15], which are widely employed MAR approaches. We also compare our method with the recent deep-learning-based methods CNNMAR [11] and cGANMAR [38], and the state-of-the-art method DuDoNet [35]. CNNMAR adopts a CNN to generate a reduced-artifact prior image and then uses traditional interpolation to correct the metal-corrupted regions in the sinogram. Both cGANMAR and DuDoNet employ an image domain network to generate the final results: cGANMAR directly uses an image-to-image translation network to reduce artifacts on the original metal images, while DuDoNet further incorporates sinogram enhancement to ease image domain learning. For CNNMAR, we used the publicly released code and model. We re-implemented DuDoNet [35] and cGANMAR [38], since there are no public implementations.

Table I shows the quantitative comparison of our method and other methods on the DeepLesion dataset. The prior-image-based interpolation method NMAR outperforms LI on both root mean square error (RMSE) and structural similarity index (SSIM), as the prior image information improves the accuracy of interpolating the missing projection values. The deep-learning-based methods CNNMAR and cGANMAR achieve much lower RMSE and higher SSIM values than the conventional MAR methods, showing the advantage of data-driven deep neural networks for metal artifact reduction. DuDoNet achieves better RMSE and SSIM than cGANMAR, as it integrates sinogram enhancement to reduce artifacts before the image refinement procedure. Compared with DuDoNet, our method further reduces RMSE by 6.85 HU and achieves slightly better SSIM values. Overall, our framework attains the best RMSE and SSIM among the compared methods, showing the effectiveness of our method for metal artifact reduction.
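For reference, the two metrics can be sketched as follows. RMSE is computed directly in HU. The SSIM shown here is a simplified single-window version computed from whole-image statistics, whereas standard SSIM averages the same expression over local (usually Gaussian) windows, so its values will differ from those in Table I. The `data_range` argument and the function names are assumptions.

```python
import numpy as np


def rmse(x: np.ndarray, y: np.ndarray) -> float:
    """Root mean square error, in the units of the inputs (HU here)."""
    return float(np.sqrt(np.mean((x - y) ** 2)))


def global_ssim(x: np.ndarray, y: np.ndarray, data_range: float) -> float:
    """SSIM from whole-image statistics (a single window)."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ref = rng.uniform(-1000.0, 1000.0, size=(64, 64))
    noisy = ref + rng.normal(0.0, 50.0, size=ref.shape)
    print(rmse(ref, noisy), global_ssim(ref, noisy, data_range=2000.0))
```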

TABLE I:

Quantitative comparison of different methods on DeepLesion dataset.

2). Qualitative analysis:

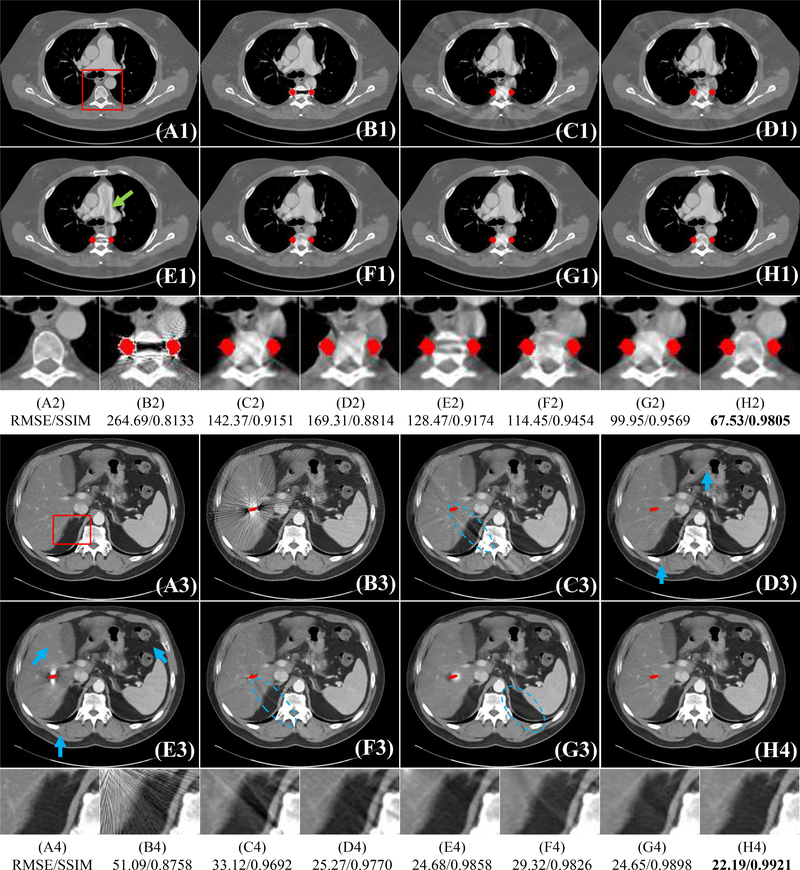

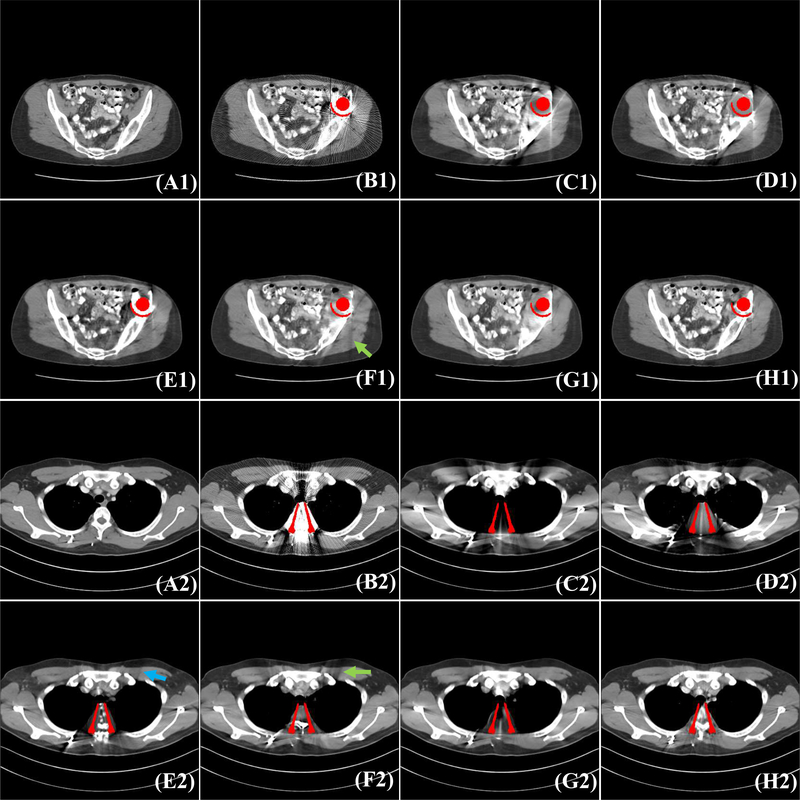

Fig. 3 and Fig. 4 show visual comparisons of our method and other methods on the DeepLesion simulation data. We show the reference metal-free CT images, the simulated metal artifact images (Metal Image), and the metal artifact reduction results of different methods. The simulated metal masks are colored in red for better visualization. Severe streaking artifacts appear in the original metal images, and a dark strip exists between two metal implants (see Fig. 3(B1)). Generally, the deep-learning-based methods CNNMAR, cGANMAR, and DuDoNet reduce more artifacts than the conventional LI and NMAR approaches. When the metal implants are small (Fig. 3), DuDoNet and cGANMAR achieve better visual results than CNNMAR, although some mild artifacts remain in the DuDoNet and cGANMAR results; see the dashed blue ovals in Fig. 3(F3&G3). Compared with these methods, our method effectively reduces most artifacts and retains the fine details of the structures. Fig. 4 shows the results when the metal implants are large. The conventional interpolation methods LI and NMAR and the image domain methods cGANMAR and DuDoNet cannot preserve the details of the original structures, and there are some new secondary artifacts in the cGANMAR results; see the green arrows in Fig. 4(F1&F2). Compared to CNNMAR, our method preserves structural details better, showing the effectiveness of the deep sinogram completion mechanism. We also calculate the ROI RMSE and ROI SSIM for the red-box patches in Fig. 3 to quantitatively compare different methods. As shown in Fig. 3(A2-H2&A4-H4), our method achieves the lowest RMSE and highest SSIM values among the compared methods.

Fig. 3:

Visual comparison with different methods on the DeepLesion dataset. The simulated metal masks are colored in red for better visualization. (A1-A4) are reference images and (B1-B4) are the simulated metal images. We show the MAR results of LI [2] (C1-C4), NMAR [15] (D1-D4), CNNMAR [11] (E1-E4), cGANMAR [38] (F1-F4), DuDoNet [35] (G1-G4), and our method (H1-H4). The display windows of the first and second samples are [−480 560] and [−175 275] HU, respectively. We also report ROI RMSE and ROI SSIM for a better quantitative comparison.

Fig. 4:

Visual comparison with different methods on the DeepLesion dataset. The simulated metal masks are colored in red for better visualization. (A1-A2) are reference images and (B1-B2) are the simulated metal images. We show the MAR results of LI [2] (C1-C2), NMAR [15] (D1-D2), CNNMAR [11] (E1-E2), cGANMAR [38] (F1-F2), DuDoNet [35] (G1-G2), and our method (H1-H2). The display window is [−175 275] HU.

Fig. 5:

Visual results on head CT images with different numbers of simulated dental fillings. The simulated dental fillings are colored in red for better visualization. The display window is [−1000 1600] HU.

D. Generalization to Different Site Data

The CT images selected from the DeepLesion dataset are abdominal and thoracic samples. To show the feasibility of applying our method to data from a different anatomical site, we directly evaluate the model trained on DeepLesion data on head CT images, collected from an online website, with simulated metal artifacts. Fig. 5 shows the metal artifact reduction results of our method on head CT images with simulated dental fillings, together with the results of the conventional LI and NMAR methods and the deep-learning-based CNNMAR and DuDoNet. LI and NMAR introduce several secondary artifacts and may change the anatomical structures of the teeth; see the blue arrows in Fig. 5(d). DuDoNet further reduces the artifacts, but several shading artifacts remain in its output images; see the green arrows in Fig. 5(f). Although it was not trained on head CT images, our method effectively reduces the artifacts, indicating that the proposed method has the potential to handle data from different sites. Notably, the MAR result of our method is even comparable to that of CNNMAR, whose training data include head CT images.

E. Experiments on CT Images with Real Metal Artifacts

1). Results on CT images with real metal artifacts:

Since original sinogram data with metal artifacts are difficult to access in real clinical scenarios, we follow the previous work [35] to evaluate our method on clinical CT images with metal artifacts. Specifically, we collected clinical CT images with metal artifacts and segmented the metal mask from each image with a simple thresholding method (a threshold of 2000 HU in our experiments). The metal masks were forward projected to generate the metal projections, and the bins with a projection value greater than zero were regarded as the metal trace region Tr. We also forward projected the clinical CT image with the same imaging geometry as in the above simulation to obtain the metal-corrupted sinogram Sma. The LI corrected sinogram SLI was then generated from Sma and Tr with linear interpolation. Finally, we fed Sma, SLI, and Tr into our framework to obtain the metal artifact reduction images. Fig. 6 presents the visual results of different methods. Our method effectively reduces metal artifacts compared with the original metal image. The yellow zoomed patches show that the other methods change some tiny anatomical structures of the original image, while our method preserves the fine-grained anatomical structures.

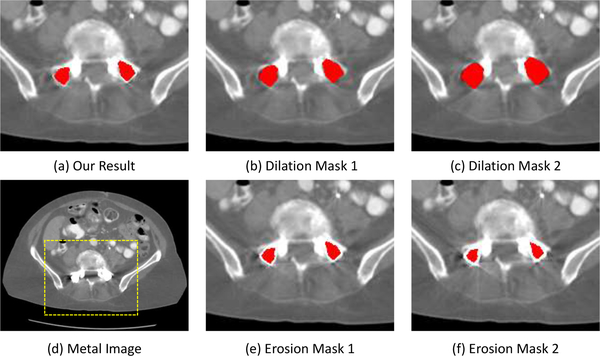

2). The influence of metal mask segmentation:

An accurate metal trace mask (or, equivalently, metal mask) is vital for the performance of metal artifact reduction in our framework. In practice, the metals can be segmented manually or with automatic metal segmentation methods (e.g., thresholding). To investigate the influence of metal mask segmentation on the final MAR results, we use different metal masks to obtain the metal traces and then apply our method with these different metal traces as input. Fig. 7 shows the MAR results of our method with the original thresholding-based metal mask (a), dilated metal masks (b & c), and eroded metal masks (e & f) as input. In general, our method achieves reasonable MAR results under slight metal segmentation errors. Over-segmented metal masks lead to relatively large metal traces, so our method needs to complete more projection values. As the network has been trained to complete different metal traces, it can estimate the missing projection values without introducing strong new artifacts in the reconstructed CT images, although additional shading artifacts appear. In the under-segmented case, the corresponding metal trace is narrower than the original one. The sinogram completion network then completes only part of the original metal trace region and reuses some unreliable projection data. Therefore, our method reduces only part of the metal artifacts, and some residual streaking artifacts remain in the reconstructed CT image.
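Over- and under-segmented masks like those in Fig. 7 can be produced with binary morphology. This is a minimal NumPy sketch, assuming a 3 × 3 square structuring element and zero padding at the border (the exact element and number of iterations used for Fig. 7 are not stated); `dilate` and `erode` are hypothetical helpers, and the resulting masks would then be forward projected to metal traces as in the testing phase.

```python
import numpy as np


def _neighbourhoods(mask: np.ndarray) -> np.ndarray:
    """Stack the 3x3 neighbourhood of every pixel as 9 shifted copies."""
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    h, w = mask.shape
    return np.stack([padded[i:i + h, j:j + w] for i in range(3) for j in range(3)])


def dilate(mask: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Over-segment: a pixel becomes metal if any neighbour is metal."""
    for _ in range(iterations):
        mask = _neighbourhoods(mask).any(axis=0)
    return mask


def erode(mask: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Under-segment: a pixel stays metal only if all neighbours are metal."""
    for _ in range(iterations):
        mask = _neighbourhoods(mask).all(axis=0)
    return mask


if __name__ == "__main__":
    metal = np.zeros((9, 9), dtype=bool)
    metal[3:6, 3:6] = True                        # a 3 x 3 "implant"
    print(metal.sum(), dilate(metal).sum(), erode(metal).sum())  # 9 25 1
```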

Fig. 7:

The results of our method with different segmented metal masks as input. We show the MAR results with thresholding-based metal segmentation (a); we also dilate the metal mask to get over-segmented masks (b & c) and erode the metal mask to get under-segmented masks (e & f). The display window is [−480 560] HU.

F. Analytical Studies

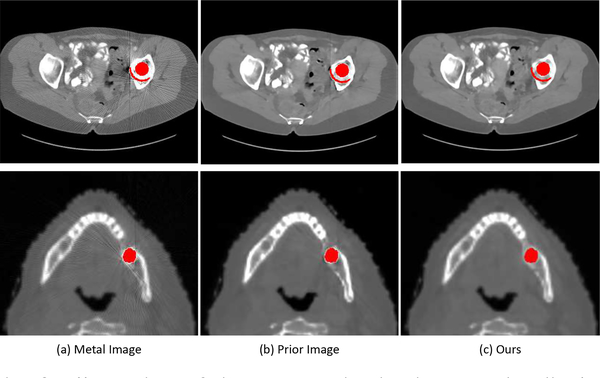

1). Effectiveness of prior image generation:

In our framework, we train PriorNet to generate a good prior image that eases sinogram completion. To show the effectiveness of this procedure, we directly train a neural network to complete the sinogram without taking the prior sinogram as input. The quantitative results of this variant on the simulated DeepLesion dataset are shown in Table II. This variant (Deep sinogram completion) yields much higher RMSE and lower SSIM values than our method, verifying the effectiveness of the prior image generation procedure. In Fig. 8, we show some generated prior images and final metal artifact reduction images on DeepLesion and head CT data. The prior images (Fig. 8(b)) contain fewer artifacts than the original metal images (Fig. 8(a)), and our final results (Fig. 8(c)) further reduce the artifacts compared with the prior images. We also train our framework with only the original metal image as PriorNet input. As shown in Table II, this variant (Only metal image) produces slightly worse results than our method on the simulated dataset, indicating that using the LI corrected image as an additional input facilitates prior image generation.

TABLE II:

Quantitative analysis of our methods on simulated DeepLesion dataset.

| Method | RMSE (HU) | SSIM |
|---|---|---|
| Deep sinogram completion | 43.65±17.61 | 0.9720±0.0082 |
| With tissue processing | 35.64±7.91 | 0.9768±0.0047 |
| w/o residual sinogram learning | 31.86±4.69 | 0.9781±0.0048 |
| Only metal image | 31.60±4.95 | 0.9778±0.0048 |
| Ours | 31.15±5.81 | 0.9784±0.0048 |
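For reference, the RMSE (HU) column of Table II can be computed from paired images with a sketch like the following. Whether the paper computes RMSE over the whole image or excludes the metal region, and whether its standard deviation uses `ddof=0`, are not stated here, so the optional `roi` argument and the population standard deviation are assumptions; `rmse_hu` and `mean_std` are hypothetical names. SSIM is usually computed with a windowed implementation such as `skimage.metrics.structural_similarity` (with `data_range` set explicitly) rather than by hand.

```python
import numpy as np

def rmse_hu(pred_hu, target_hu, roi=None):
    """Root-mean-square error in HU, optionally restricted to a boolean region of interest."""
    diff = np.asarray(pred_hu, dtype=float) - np.asarray(target_hu, dtype=float)
    if roi is not None:
        diff = diff[roi]
    return float(np.sqrt(np.mean(diff ** 2)))

def mean_std(values):
    """Summarize per-image scores as (mean, std); population std (ddof=0) is an assumption."""
    v = np.asarray(values, dtype=float)
    return float(v.mean()), float(v.std())
```

Per-image RMSE values collected over the test set would then be summarized with `mean_std` into the mean±std form used in the table.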

Fig. 8:

Illustration of the generated prior images. The display windows of the first and second rows are [−480 560] HU and [−1000 1600] HU, respectively.

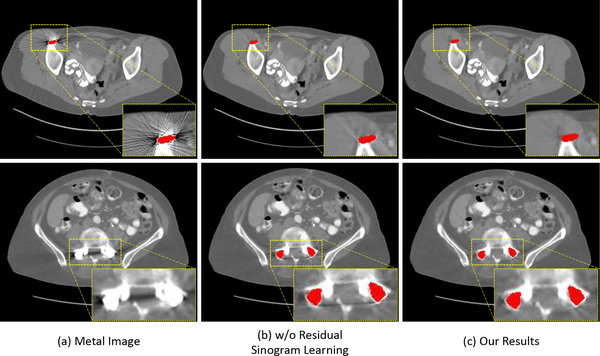

2). Effectiveness of residual sinogram learning:

Fig. 9 shows the qualitative MAR results of our method with and without the residual sinogram learning strategy. The first row shows results on a simulated metal artifact image, and the second row shows results on a real clinical CT image with metal artifacts. In the experiment without residual sinogram learning, the SinoNet directly takes the prior sinogram and the metal trace mask as input and outputs the refined projections within the metal trace region. The visual comparison in Fig. 9 shows that adopting the residual sinogram learning strategy further reduces metal artifacts on both simulated and real samples. The quantitative results without residual sinogram learning (w/o residual sinogram learning) are also reported in Table II: residual sinogram learning yields lower RMSE and higher SSIM values (31.15 vs. 31.86 HU and 0.9784 vs. 0.9781, respectively).
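The composition step of residual sinogram learning can be sketched as follows. This is one plausible formulation, not necessarily the paper's exact one: the network input is assumed to be the residual between the metal-affected and prior sinograms with the unreliable trace values masked out, and `residual_net` and `zero_residual_net` are hypothetical stand-ins for SinoNet. The sketch also makes explicit that only projections inside the metal trace are modified.

```python
import numpy as np

def complete_with_residual(s_ma, s_prior, trace, residual_net):
    """Compose a completed sinogram from a prior sinogram and a predicted residual.

    Assumed formulation (illustrative only): the network sees the residual between the
    metal-affected and prior sinograms outside the trace and predicts a residual inside it.
    """
    residual_in = np.where(trace, 0.0, s_ma - s_prior)  # mask out unreliable trace values
    residual_out = residual_net(residual_in, trace)     # stand-in for the learned SinoNet
    # Only projections inside the metal trace change; all others keep their measured values.
    return np.where(trace, s_prior + residual_out, s_ma)

def zero_residual_net(residual, trace):
    """Trivial placeholder: predicts no correction, so the trace is filled by the prior."""
    return np.zeros_like(residual)

# Usage with random toy data.
rng = np.random.default_rng(0)
s_ma = rng.normal(size=(8, 16))
s_prior = s_ma + rng.normal(scale=0.1, size=(8, 16))
trace = np.zeros((8, 16), dtype=bool)
trace[:, 5:9] = True
completed = complete_with_residual(s_ma, s_prior, trace, zero_residual_net)
```

Replacing `zero_residual_net` with a trained network is what would let the completion deviate from the prior sinogram inside the trace.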

Fig. 9:

The results of our method with (b) and without (c) the residual sinogram learning strategy. The first row shows a simulated metal artifact image and the second row a real clinical CT image. The display window is [−480 560] HU.

3). Comparison with tissue processing:

Tissue processing is often used to obtain the prior image in previous MAR methods. To investigate the effect of this strategy, we conduct another experiment in which we apply the tissue processing and metal trace replacement steps [17], [23] to the generated prior image Xprior of our method and then obtain the final metal-free image with FBP reconstruction. The quantitative result of this variant (With tissue processing) is shown in Table II. With tissue processing achieves satisfactory results on the simulated DeepLesion dataset, but its performance is still inferior to that of our end-to-end deep sinogram completion strategy (RMSE of 35.64 vs. 31.15 HU), indicating that the deep sinogram network can automatically learn to reduce the mild artifacts in the prior image.
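For comparison, a simplified sketch of the tissue processing and metal trace replacement baseline is shown below. The HU thresholds and fill values are hypothetical, the forward projection of the processed prior image (which would produce `s_prior`) is omitted, and refinements used in published methods such as [17] are not reproduced; `tissue_process` and `replace_metal_trace` are illustrative names.

```python
import numpy as np

def tissue_process(prior_hu, air_max=-500.0, bone_min=300.0,
                   air_value=-1000.0, soft_value=0.0):
    """Tissue processing of a prior image (thresholds and fill values are hypothetical).

    Air and soft tissue are flattened to constant values, which suppresses mild streaks
    in those classes; bone-like pixels keep their original HU values.
    """
    prior_hu = np.asarray(prior_hu, dtype=float)
    out = prior_hu.copy()
    out[prior_hu < air_max] = air_value
    soft = (prior_hu >= air_max) & (prior_hu < bone_min)
    out[soft] = soft_value
    return out

def replace_metal_trace(s_ma, s_prior, trace):
    """Metal trace replacement: prior projections inside the trace, measured ones elsewhere."""
    return np.where(trace, s_prior, s_ma)
```

Unlike the end-to-end SinoNet, this hand-crafted step cannot correct classification errors of the thresholds, so any artifact that survives in the flattened prior is copied directly into the trace.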

IV. Discussion

MAR is a long-standing problem in CT imaging. In this work, we aim to design a data-driven framework that addresses this problem by utilizing a large amount of training data. Previous deep-learning-based methods usually formulate MAR as an image restoration problem. In contrast, we borrow the spirit of conventional MAR approaches and formulate MAR as a deep sinogram completion problem, aiming to improve the generalization and robustness of the framework. Since directly regressing accurate missing projection data is difficult, we incorporate a deep prior image generation procedure and adopt a residual sinogram completion strategy. This design improves the continuity of the projection values at the boundary of metal traces and alleviates new artifacts, which are common drawbacks of sinogram-completion-based MAR methods. In this way, our framework can better utilize the advantages of deep learning techniques while alleviating the risk of overfitting to specific training data.
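The continuity argument can be illustrated with a toy 1-D view. The profile, the trace position, and the constant bias added to the prior sinogram are all hypothetical, the measured data outside the trace are assumed ideal, and linear interpolation of the residual stands in for the learned SinoNet. Directly copying biased prior values into the trace produces a step at both trace edges, whereas completing the residual and adding it back to the prior removes that step.

```python
import numpy as np

def boundary_jump(row, inside):
    """Mean absolute step between neighbouring detector bins where the trace starts or ends."""
    edges = np.nonzero(inside[1:] != inside[:-1])[0]
    return float(np.mean(np.abs(row[edges + 1] - row[edges])))

det = np.arange(64, dtype=float)
truth = 5.0 + 0.5 * np.sin(det / 20.0)   # smooth metal-free projection profile of one view
inside = (det >= 25) & (det < 35)        # metal trace on this view
prior = truth + 0.3                      # prior sinogram with a hypothetical constant bias

# (a) Direct replacement: copy prior values into the trace.
direct = np.where(inside, prior, truth)

# (b) Residual completion: interpolate (measured - prior) across the trace and add it back;
#     linear interpolation stands in for the learned network here.
residual = truth - prior
res_filled = np.interp(det, det[~inside], residual[~inside])
residual_based = np.where(inside, prior + res_filled, truth)

print(boundary_jump(direct, inside), boundary_jump(residual_based, inside))
```

The residual is much smoother than the raw projections, so even a simple completion of it stays continuous at the trace boundary; a learned network can additionally correct residuals that are not smooth.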

We solve MAR in both the sinogram and image domains, sharing this strategy with DuDoNet [35] and DuDoNet++ [36]. However, our framework differs from them in a few important aspects. DuDoNet and DuDoNet++ directly adopt the output of the image-domain refinement as the final MAR image, whereas our final MAR image is FBP-reconstructed directly from the completed sinogram. As there are no geometric (physical) constraints to regularize the neural networks, subtle changes to anatomical structures may appear in CNN-output images. Our FBP-reconstructed image preserves the anatomical structures of the original image and avoids resolution loss, as we only modify the values within the metal trace region of the sinogram; see comparisons in Figs. 3 and 4. More importantly, we design a novel residual learning strategy for the sinogram enhancement network to refine the residual projections within the metal trace region, and both quantitative and qualitative results show the effectiveness of this strategy.

It is clinically impractical to acquire paired metal-free and metal-inserted CT data for network training. We thus simulate metal artifacts on clinical metal-free CT images to synthesize training pairs. In this case, the quality of the simulated data largely influences network performance. Currently, we simulate the metal artifacts without carefully designing the simulated metal masks. In the future, we will investigate how to create a better simulated dataset to further improve the network performance on real clinical CT images.
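The paper's simulation protocol is not described in this section. Purely to illustrate why projections through metal become unreliable, the sketch below applies a monochromatic Beer-Lambert model with Poisson noise; the photon count `i0`, the line-integral values, and the function name `simulate_metal_projection` are hypothetical, and effects such as beam hardening and scatter are ignored.

```python
import numpy as np

def simulate_metal_projection(p_tissue, p_metal, i0=1e5, seed=0):
    """Toy monochromatic simulation of metal-affected projections (hypothetical parameters).

    p_tissue, p_metal: line integrals (attenuation x path length) through tissue and metal.
    Poisson noise on the transmitted photon count mimics photon starvation behind metal.
    """
    rng = np.random.default_rng(seed)
    expected = i0 * np.exp(-(p_tissue + p_metal))  # Beer-Lambert transmitted intensity
    counts = rng.poisson(expected).astype(float)
    counts = np.maximum(counts, 1.0)               # avoid log(0) when no photons arrive
    return -np.log(counts / i0)

# Usage: 4 views x 8 detector bins, with metal in bins 3 and 4.
p_tissue = np.full((4, 8), 2.0)
p_metal = np.zeros((4, 8))
p_metal[:, 3:5] = 20.0
measured = simulate_metal_projection(p_tissue, p_metal)
```

With these values, the expected photon count behind the metal is far below one, so the measured line integrals saturate well below their true value of 22; this is the kind of corrupted trace that sinogram completion must replace.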

Previous prior-image-based MAR methods typically use tissue processing to post-process the generated prior image. In contrast, our method directly employs the CNN output as the prior image to guide the sinogram completion network. This allows us to jointly train the PriorNet and SinoNet, so that the prior image generation and sinogram completion procedures benefit from each other. Although some mild artifacts may remain in the generated prior image, the sinogram completion network automatically learns how to complete the sinogram from the prior sinogram, so that these mild artifacts can be removed in our final output.

We have trained and evaluated our method on simulated datasets, but, as shown in the experiments, our framework has strong potential for CT images with real metal artifacts. Since no public real projection data are available and acquiring such data requires cooperation with CT device vendors, in the current study we use forward projection to simulate the projection data. This is a limitation of our current work, and we will evaluate the effectiveness of our method on real projection data in the future. When applying the framework to real clinical data, one important practical issue is how to acquire accurate metal traces and metal masks. Although our framework is relatively robust to metal mask segmentation errors, an accurate metal segmentation would further ensure the stability of the MAR results. Deep learning has achieved promising results in various medical image segmentation problems [24], [25], [44]. Incorporating deep-learning-based metal segmentation or advanced metal identification algorithms [3] into our framework would further improve the robustness of our method. Recent works have studied how to determine the shape and location of metal objects directly from the metal-corrupted sinogram [3], [45]. These binary reconstruction methods can also be integrated into our framework for better metal artifact reduction. Moreover, it would be interesting to investigate how to conduct metal mask identification and metal artifact reduction simultaneously in a collaborative manner.

V. Conclusion

We present a generalizable image and sinogram domain joint learning framework for metal artifact reduction in CT imaging, which integrates the merits of deep learning and conventional MAR methods. Our framework follows the prior-image-based sinogram completion strategy and employs two networks to conduct prior image generation and sinogram completion. The whole framework is trained in an end-to-end manner so that the two networks benefit from each other during learning. Although our framework is trained with simulated metal artifact data, the experimental results show its strong potential for handling CT images with real artifacts. Future work includes investigating how to conduct metal mask identification and metal artifact reduction simultaneously, as well as how to perform the procedure in an unsupervised manner.

Acknowledgments

This work was partially supported by NIH (1 R01CA227713), Varian Medical Systems, and a Faculty Research Award from Google Inc.

References

- [1] De Man B, Nuyts J, Dupont P, Marchal G, and Suetens P, “Metal streak artifacts in X-ray computed tomography: a simulation study,” in IEEE Nuclear Science Symposium and Medical Imaging Conference, vol. 3. IEEE, 1998, pp. 1860–1865.

- [2] Kalender WA, Hebel R, and Ebersberger J, “Reduction of CT artifacts caused by metallic implants,” Radiology, vol. 164, no. 2, pp. 576–577, 1987.

- [3] Meng B, Wang J, and Xing L, “Sinogram preprocessing and binary reconstruction for determination of the shape and location of metal objects in computed tomography (CT),” Medical Physics, vol. 37, no. 11, pp. 5867–5875, 2010.

- [4] Park HS, Lee SM, Kim HP, Seo JK, and Chung YE, “CT sinogram-consistency learning for metal-induced beam hardening correction,” Medical Physics, vol. 45, no. 12, pp. 5376–5384, 2018.

- [5] Huang JY, Kerns JR, Nute JL, Liu X, Balter PA, Stingo FC, Followill DS, Mirkovic D, Howell RM, and Kry SF, “An evaluation of three commercially available metal artifact reduction methods for CT imaging,” Physics in Medicine & Biology, vol. 60, no. 3, p. 1047, 2015.

- [6] Gjesteby L, De Man B, Jin Y, Paganetti H, Verburg J, Giantsoudi D, and Wang G, “Metal artifact reduction in CT: where are we after four decades?” IEEE Access, vol. 4, pp. 5826–5849, 2016.

- [7] Hsieh J, Molthen RC, Dawson CA, and Johnson RH, “An iterative approach to the beam hardening correction in cone beam CT,” Medical Physics, vol. 27, no. 1, pp. 23–29, 2000.

- [8] Kachelriess M, Watzke O, and Kalender WA, “Generalized multi-dimensional adaptive filtering for conventional and spiral single-slice, multi-slice, and cone-beam CT,” Medical Physics, vol. 28, no. 4, pp. 475–490, 2001.

- [9] Meyer E, Maaß C, Baer M, Raupach R, Schmidt B, and Kachelrieß M, “Empirical scatter correction (ESC): A new CT scatter correction method and its application to metal artifact reduction,” in IEEE Nuclear Science Symposium & Medical Imaging Conference. IEEE, pp. 2036–2041.

- [10] Park HS, Hwang D, and Seo JK, “Metal artifact reduction for polychromatic X-ray CT based on a beam-hardening corrector,” IEEE Transactions on Medical Imaging, vol. 35, no. 2, pp. 480–487, 2015.

- [11] Zhang Y and Yu H, “Convolutional neural network based metal artifact reduction in X-ray computed tomography,” IEEE Transactions on Medical Imaging, vol. 37, no. 6, pp. 1370–1381, 2018.

- [12] Mehranian A, Ay MR, Rahmim A, and Zaidi H, “X-ray CT metal artifact reduction using wavelet domain L0 sparse regularization,” IEEE Transactions on Medical Imaging, vol. 32, no. 9, pp. 1707–1722, 2013.

- [13] Müller J and Buzug TM, “Spurious structures created by interpolation-based CT metal artifact reduction,” in Medical Imaging 2009: Physics of Medical Imaging, vol. 7258. International Society for Optics and Photonics, 2009, p. 72581Y.

- [14] Prell D, Kyriakou Y, Beister M, and Kalender WA, “A novel forward projection-based metal artifact reduction method for flat-detector computed tomography,” Physics in Medicine & Biology, vol. 54, no. 21, p. 6575, 2009.

- [15] Meyer E, Raupach R, Lell M, Schmidt B, and Kachelrieß M, “Normalized metal artifact reduction (NMAR) in computed tomography,” Medical Physics, vol. 37, no. 10, pp. 5482–5493, 2010.

- [16] Wang J, Wang S, Chen Y, Wu J, Coatrieux J-L, and Luo L, “Metal artifact reduction in CT using fusion based prior image,” Medical Physics, vol. 40, no. 8, p. 081903, 2013.

- [17] Zhang Y, Yan H, Jia X, Yang J, Jiang SB, and Mou X, “A hybrid metal artifact reduction algorithm for X-ray CT,” Medical Physics, vol. 40, no. 4, p. 041910, 2013.

- [18] Wang G, Snyder DL, O’Sullivan JA, and Vannier MW, “Iterative deblurring for CT metal artifact reduction,” IEEE Transactions on Medical Imaging, vol. 15, no. 5, pp. 657–664, 1996.

- [19] Wang G, Vannier MW, and Cheng P-C, “Iterative X-ray cone-beam tomography for metal artifact reduction and local region reconstruction,” Microscopy and Microanalysis, vol. 5, no. 1, pp. 58–65, 1999.

- [20] Lemmens C, Faul D, and Nuyts J, “Suppression of metal artifacts in CT using a reconstruction procedure that combines MAP and projection completion,” IEEE Transactions on Medical Imaging, vol. 28, no. 2, pp. 250–260, 2008.

- [21] Zhang X, Wang J, and Xing L, “Metal artifact reduction in X-ray computed tomography (CT) by constrained optimization,” Medical Physics, vol. 38, no. 2, pp. 701–711, 2011.

- [22] Wang G, Ye JC, Mueller K, and Fessler JA, “Image reconstruction is a new frontier of machine learning,” IEEE Transactions on Medical Imaging, vol. 37, no. 6, pp. 1289–1296, 2018.

- [23] Zhang Z, Liang X, Dong X, Xie Y, and Cao G, “A sparse-view CT reconstruction method based on combination of DenseNet and deconvolution,” IEEE Transactions on Medical Imaging, vol. 37, no. 6, pp. 1407–1417, 2018.

- [24] Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M, Van Der Laak JA, Van Ginneken B, and Sánchez CI, “A survey on deep learning in medical image analysis,” Medical Image Analysis, vol. 42, pp. 60–88, 2017.

- [25] Ronneberger O, Fischer P, and Brox T, “U-Net: Convolutional networks for biomedical image segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2015, pp. 234–241.

- [26] Gjesteby L, Yang Q, Xi Y, Zhou Y, Zhang J, and Wang G, “Deep learning methods to guide CT image reconstruction and reduce metal artifacts,” in Medical Imaging 2017: Physics of Medical Imaging, vol. 10132. International Society for Optics and Photonics, 2017, p. 101322W.

- [27] Ledig C, Theis L, Huszár F, Caballero J, Cunningham A, Acosta A, Aitken A, Tejani A, Totz J, Wang Z et al., “Photo-realistic single image super-resolution using a generative adversarial network,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 4681–4690.

- [28] Ulyanov D, Vedaldi A, and Lempitsky V, “Deep image prior,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 9446–9454.

- [29] Lehtinen J, Munkberg J, Hasselgren J, Laine S, Karras T, Aittala M, and Aila T, “Noise2Noise: Learning image restoration without clean data,” ICML, 2018.

- [30] Park HS, Lee SM, Kim HP, and Seo JK, “Machine-learning-based nonlinear decomposition of CT images for metal artifact reduction,” arXiv preprint arXiv:1708.00244, 2017.

- [31] Gjesteby L, Yang Q, Xi Y, Claus B, Jin Y, De Man B, and Wang G, “Reducing metal streak artifacts in CT images via deep learning: Pilot results,” in The 14th International Meeting on Fully Three-Dimensional Image Reconstruction in Radiology and Nuclear Medicine, 2017, pp. 611–614.

- [32] Gjesteby L, Shan H, Yang Q, Xi Y, Claus B, Jin Y, De Man B, and Wang G, “Deep neural network for CT metal artifact reduction with a perceptual loss function,” in Proceedings of The Fifth International Conference on Image Formation in X-ray Computed Tomography, 2018.

- [33] Liang K, Zhang L, Yang H, Yang Y, Chen Z, and Xing Y, “Metal artifact reduction for practical dental computed tomography by improving interpolation-based reconstruction with deep learning,” Medical Physics, vol. 46, no. 12, pp. e823–e834, 2019.

- [34] Liao H, Lin W-A, Zhou SK, and Luo J, “ADN: Artifact disentanglement network for unsupervised metal artifact reduction,” IEEE Transactions on Medical Imaging, 2019.

- [35] Lin W-A, Liao H, Peng C, Sun X, Zhang J, Luo J, Chellappa R, and Zhou SK, “DuDoNet: Dual domain network for CT metal artifact reduction,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 10512–10521.

- [36] Lyu Y, Lin W-A, Lu J, and Zhou SK, “DuDoNet++: Encoding mask projection to reduce CT metal artifacts,” arXiv preprint arXiv:2001.00340, 2020.

- [37] Huang X, Wang J, Tang F, Zhong T, and Zhang Y, “Metal artifact reduction on cervical CT images by deep residual learning,” Biomedical Engineering Online, vol. 17, no. 1, p. 175, 2018.

- [38] Wang J, Zhao Y, Noble JH, and Dawant BM, “Conditional generative adversarial networks for metal artifact reduction in CT images of the ear,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2018, pp. 3–11.

- [39] Isola P, Zhu J-Y, Zhou T, and Efros AA, “Image-to-image translation with conditional adversarial networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 1125–1134.

- [40] Liao H, Lin W-A, Huo Z, Vogelsang L, Sehnert WJ, Zhou SK, and Luo J, “Generative mask pyramid network for CT/CBCT metal artifact reduction with joint projection-sinogram correction,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2019, pp. 77–85.

- [41] Yan K, Wang X, Lu L, Zhang L, Harrison AP, Bagheri M, and Summers RM, “Deep lesion graphs in the wild: relationship learning and organization of significant radiology image findings in a diverse large-scale lesion database,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 9261–9270.

- [42] Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, Killeen T, Lin Z, Gimelshein N, Antiga L et al., “PyTorch: An imperative style, high-performance deep learning library,” in NIPS.

- [43] Kingma DP and Ba J, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [44] Yu L, Yang X, Chen H, Qin J, and Heng PA, “Volumetric ConvNets with mixed residual connections for automated prostate segmentation from 3D MR images,” in Thirty-First AAAI Conference on Artificial Intelligence, 2017.

- [45] Wang J and Xing L, “A binary image reconstruction technique for accurate determination of the shape and location of metal objects in X-ray computed tomography,” Journal of X-ray Science and Technology, vol. 18, no. 4, pp. 403–414, 2010.