Abstract

Assessment-based instruction can increase the efficacy and efficiency of skill acquisition by using learner data to select an intervention procedure from a comparison of potential interventions. Although there are many published examples of assessments that guide the selection of skill-acquisition procedures, there are limited resources available to practitioners to guide the development of assessments for use in practice. This article describes a sequence of steps that Board Certified Behavior Analysts can follow to design and use assessment-based instruction in practice. These steps include (a) pick a topic to evaluate, (b) identify interventions to include in the assessment, (c) identify target behavior, (d) select an experimental design, (e) select a skill and targets, (f) equate noncritical procedures across conditions, (g) design templates for data collection, (h) conduct the assessment, and (i) use assessment results to guide practice. Included in these steps are examples and materials for how to conduct components of assessment-based instruction.

Keywords: Assessments, Assessment-based instruction, Autism interventions, Instructional design, Skill acquisition

Behavior analysts are expected to conduct assessments to guide the development of their behavior-change programs (Behavior Analyst Certification Board [BACB], 2014, Code 3.0). Nevertheless, assessments may be more likely to occur for some intervention goals than for others. For example, if a behavior analyst initiated services with three clients who engaged in aggressive behavior, it is likely that a functional analysis would be conducted to identify the function(s) of each client’s aggressive behavior prior to designing individualized, function-based treatments (Tiger et al., 2008). In contrast, if a behavior analyst initiated services with three clients who displayed prompt-dependent behavior during skill-acquisition programming, it is less likely that an assessment would be conducted to identify the variables that may contribute to prompt dependence and to develop individualized interventions for each client (Gorgan & Kodak, 2019).

The discrepancy in the use of assessments to guide intervention selection for problem behavior versus skill acquisition is concerning, because the literature is replete with demonstrations of the benefits of assessment-based instruction to select individualized interventions that will enhance the efficacy and efficiency of instruction (e.g., Carroll et al., 2018; Eckert et al., 2002; Everett et al., 2016; McComas et al., 2009; McCurdy et al., 2016; McGhan & Lerman, 2013; Mellott & Ardoin, 2019). Assessment-based instruction involves comparing a learner’s response to several interventions and using learner data to guide the selection of an intervention that is shown to be efficacious for the learner (e.g., Carroll et al., 2018; Kodak et al., 2011; McComas et al., 2009). Studies on assessment-based instruction have demonstrated that learners frequently have idiosyncratic responses to interventions, suggesting that interventions need to be individualized and that assessments are necessary to identify effective instructional arrangements. For example, McGhan and Lerman (2013) conducted an assessment to evaluate the differential efficacy of error-correction procedures for five children with autism spectrum disorder (ASD). Their results showed that participants responded differentially to commonly used error-correction procedures and that conducting an assessment assisted in the identification of the least intrusive procedure during discrimination training for each participant.

Although assessment-based instruction can assist Board Certified Behavior Analysts (BCBAs) in selecting efficacious and efficient interventions for clients, these assessments have costs for BCBAs because they require time and effort to design and to train others to implement. Thus, the benefits of assessment-based instruction should be weighed against the costs of conducting these assessments. One benefit of conducting assessments to guide intervention practices is that they allow BCBAs to identify variables that may contribute to delayed acquisition or barriers to learning. Sundberg (2008) developed a Barriers Assessment, included in the Verbal Behavior Milestones Assessment and Placement Program (VB-MAPP), to identify barriers to learning that may need to be treated before or during instruction. For example, the Barriers Assessment might identify that a child displays prompt-dependent behavior, and that many previously trained skills remain under the control of prompts. Nevertheless, the Barriers Assessment does not identify the variables contributing to the learning barrier, nor does it suggest specific interventions to address the barrier.

One variable that may contribute to prompt-dependent behavior is a skill deficit. The client may not have mastered the skill during previous training or may require additional training (including prompts) to relearn it. In this case, the frequent use of prompts to teach skills that are not yet mastered is expected during instruction. In comparison, the client’s prompt-dependent behavior may be the result of a performance deficit; the client can engage in the behavior in the absence of prompts, but the contingencies in the training environment are not sufficient to establish and maintain independent responding. Assessment-based instruction can assist in identifying variables (e.g., a performance deficit) contributing to the client’s prompt-dependent behavior by comparing the client’s response to interventions composed of different instructional components (Gorgan & Kodak, 2019). Without an assessment to guide the selection of interventions for behavior identified in the Barriers Assessment, BCBAs may arbitrarily select an intervention that may or may not address the client’s prompt-dependent behavior.

Assessment-based instruction is also beneficial in identifying individualized interventions based on the client’s unique response to the interventions evaluated in the assessment. Previous research has shown that children respond differently to components of instruction (Coon & Miguel, 2012; Cubicciotti et al., 2019; Daly III et al., 1999; Libby et al., 2008; McCurdy et al., 2016; Petursdottir et al., 2009; Seaver & Bourret, 2014). For example, some clients may learn to answer questions more quickly when an echoic prompt is provided during instruction, whereas other clients may learn more quickly from tact prompts (i.e., presentation of a picture to which the client engages in a relevant vocal response; Ingvarsson & Hollobaugh, 2011; Ingvarsson & Le, 2011). Therefore, if BCBAs arbitrarily select an instructional strategy for a client, it is possible that the intervention will be ineffective or inefficient (e.g., Kodak et al., 2011). Rather than exposing a client to an ineffective intervention that could delay acquisition and potentially produce error patterns or faulty stimulus control that must be addressed thereafter (Grow et al., 2011), practitioners could conduct an assessment to guide their selection of an intervention for the client.

A unique contribution of the assessments used to guide instruction in behavior-analytic practice is the acquisition of skills while conducting the assessment. Other assessments frequently conducted with clients with ASD assist in diagnosis (e.g., Autism Diagnostic Interview–Revised, Rutter et al., 2003; Autism Diagnostic Observation Schedule, Lord et al., 1999; Gilliam Autism Rating Scale, Third Edition, Gilliam, 2014; Wechsler Preschool and Primary Scale of Intelligence, Fourth Edition, Wechsler, 2012) or compare an individual’s performance in a skill area to a standardized sample of their peers (Peabody Picture Vocabulary Test, Fourth Edition, Dunn & Dunn, 2007; Woodcock–Johnson Psychoeducational Battery Achievement Tests, Woodcock, 1977). Those assessments are not used to identify a specific intervention for a client, nor does the client learn new skills while completing the assessment. In comparison, assessment-based instruction (also referred to as a brief experimental analysis in the school psychology literature; Peacock et al., 2009) includes the teaching of skills within the assessment, because the assessment is designed to identify interventions that will result in acquisition. For example, Boudreau et al. (2015) taught tacts to three children with ASD while conducting an assessment to identify efficacious and efficient differential reinforcement procedures for each participant. The participants acquired at least 15 tacts by the completion of the assessment. Therefore, conducting the assessment provides the benefit of identifying an individualized intervention for the client while also teaching the client novel skills.

Despite these benefits and the considerable empirical support for the inclusion of assessments when designing instructional strategies for learners with ASD, there remains a research-to-practice gap. One reason for this gap is that the literature on assessment-based instruction provides many exemplars of the use of assessments to guide the selection of instructional strategies, yet the specific methods for designing these assessments have not yet been widely disseminated. The purpose of this article is to provide a tutorial on how BCBAs can design assessments to identify instructional strategies for clients. This tutorial includes a task analysis of the nine steps that are necessary to design and use assessment-based instruction in practice, as well as detailed information, examples, and materials related to these steps.

Designing an Assessment

BCBAs (hereafter referred to as practitioners) can design assessments that assist in selecting many types of instructional components and interventions. Although the design of an assessment requires some initial planning and time, once an assessment is designed, it can be used with all clients whose intervention goals it addresses. Thus, large organizations and small companies alike can benefit from the design and use of assessment-based instruction in practice. There are nine steps involved in designing and conducting assessment-based instruction. Refer to Table 1 for a list of those nine steps.

Table 1.

Task analysis and examples of steps for designing an assessment

| # | Step | Example(s) |

|---|---|---|

| 1 | Pick a topic to evaluate. | Select based on the client’s treatment goals. |

| 2 | Identify interventions to include in the assessment. | Select an empirically validated intervention, an intervention recommended by the treatment team, and/or commonly used interventions. |

| 3 | Identify target behavior. | Align target behavior with a topic such as measuring independent correct responses and the number of trials or duration of instruction for multiple error-correction procedures. |

| 4 | Select an experimental design. | Use an adapted alternating-treatments design. |

| 5 | Select a skill and targets. | Include socially valid targets and targets based on the client’s current and future treatment goals. |

| 6 | Equate noncritical procedures across conditions. | Use similar reinforcement schedules, arrange a similar number of instructional trials, and use same the mastery criterion across conditions. |

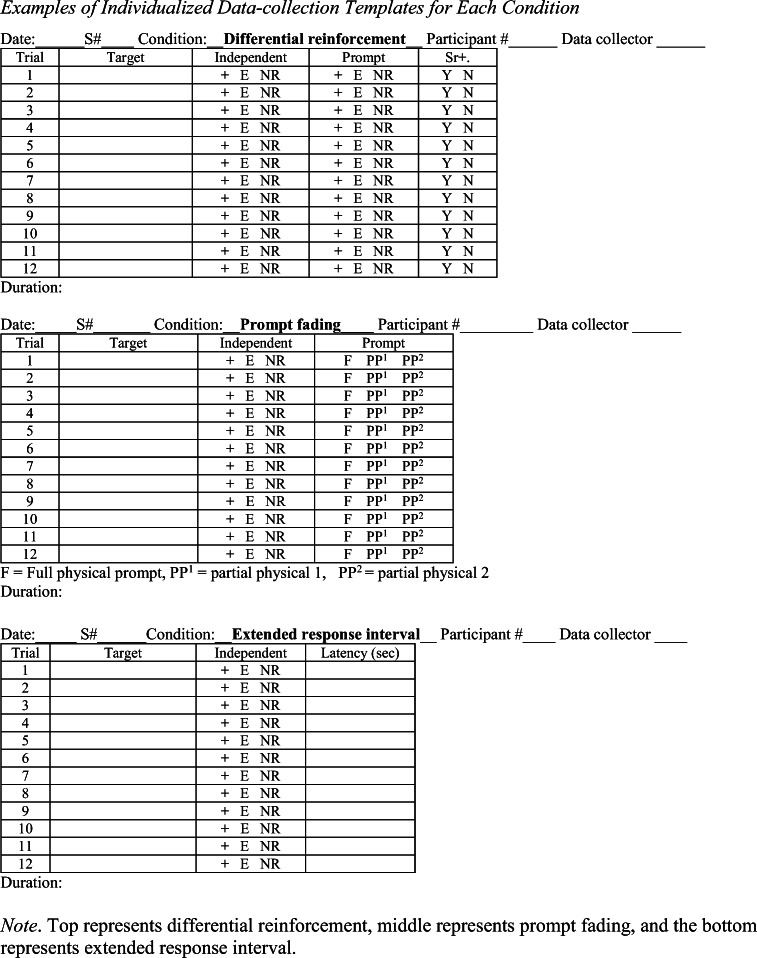

| 7 | Design templates for data collection. | Use standard or enhanced data-collection sheets; refer to Fig. 2 for an example. |

| 8 | Conduct the assessment. | Conduct a comparison at least once, semirandomly rotate conditions, and consider the use of a discontinuation criterion. |

| 9 | Use assessment results to guide practice. | Apply results to some or all current and future treatment goals. |

Step 1: Pick a Topic to Evaluate

There are many instructional strategies used to teach skills targeted within the delivery of comprehensive behavioral intervention, such as prompts and prompt-fading procedures, error-correction procedures, reinforcement parameters (e.g., quality, magnitude) and schedules, stimulus arrangements, and formats of instruction, among others. The specific topic selected by the behavior analyst to evaluate within an assessment will likely vary based on when these assessments are conducted with clients. For example, an assessment of prompts and prompt-fading procedures (e.g., Schnell et al., 2019; Seaver & Bourret, 2014) may be conducted with clients at the start of their admission, because the results of these assessments can guide the use of prompt-fading procedures to teach many of the clients’ intervention goals. Seaver and Bourret (2014) compared three prompt-fading procedures (i.e., least-to-most prompting, most-to-least prompting, and a progressive prompt delay) to teach behavior chains. They applied the results of their assessment to instruction on subsequent activities of daily living (e.g., setting the table, making trail mix) and found that the prompt-fading procedure identified by the assessment produced rapid acquisition of daily living skills. Importantly, prompt-fading procedures that were identified as ineffective by the assessment were also unsuccessful for teaching daily living skills to their participants. Therefore, the results of the assessment were necessary to guide the identification of an effective prompt-fading procedure for each participant.

If a client has received services for some time and already has an effective prompt-fading procedure, practitioners may choose to conduct an assessment to evaluate other instructional strategies to further maximize learning. For example, practitioners may evaluate efficacious and efficient error-correction procedures (e.g., Carroll et al., 2015; Kodak et al., 2016; McGhan & Lerman, 2013; Worsdell et al., 2005) or differential reinforcement arrangements (e.g., Boudreau et al., 2015; Johnson et al., 2017; Karsten & Carr, 2009). Carroll et al. (2015) conducted an assessment to compare the effects of four error-correction procedures on skill acquisition for three children with ASD. The most efficient error-correction procedure was learner specific, demonstrating the benefit of conducting an assessment to guide the selection of individualized instructional strategies.

Practitioners also may choose to assess intervention strategies based on overlapping client treatment goals. For example, if an organization has multiple clients who display prompt-dependent behavior during activities of daily living, practitioners in the organization could develop an assessment to assist in the identification of individualized interventions to address prompt dependence (e.g., Gorgan & Kodak, 2019). Another novel and practical use of assessment-based instruction is to evaluate the effects of new interventions on a target behavior, particularly if there are limited or no empirical studies that intervene on the target behavior. For example, assessment-based instruction could be applied to the selection of an intervention to decrease faulty echoic control during intraverbal training (e.g., repeating all or part of the question before providing an answer; Kodak et al., 2012). Practitioners may be using novel strategies in practice that have not yet been published, and assessment-based instruction can help provide evidence for the efficacy of such an intervention for the client’s behavior in comparison to other novel interventions or those with limited empirical support.

Ongoing assessments of common intervention procedures could be conducted with clients to verify that the interventions being used in practice remain efficacious and efficient. Although the exact schedule for reassessment has not been identified or discussed in previous research, practitioners might repeat an assessment of intervention practices on a schedule similar to that of other reassessments. For example, practitioners who conduct the VB-MAPP two times per year to measure progress over time could also conduct assessments of key interventions on the same schedule. Repeating assessments at specified times will allow practitioners to evaluate whether to continue with the current instructional practices or make modifications to those practices based on a change in the client’s response to intervention. In addition, practitioners could repeat the assessment if they become aware of another efficacious instructional practice not previously included in the assessment.

Step 2: Identify Interventions to Include in the Assessment

Interventions to evaluate in an assessment should be selected thoughtfully, based on the specific intervention components hypothesized to be relevant to the targeted skill. Practitioners could begin by considering the variables that may influence the target behavior of interest and select interventions from the literature that permit an examination of those variables (e.g., error patterns, response effort, restricted stimulus control, motivating operations, reinforcement contingencies). For example, designing an assessment that includes interventions for prompt dependence would involve the consideration of the variables that may influence prompt-dependent behavior, such as reinforcement contingencies, the inclusion of a prompt, and types of prompts.

Gorgan and Kodak (2019) compared intervention conditions during an assessment of prompt-dependent behavior related to three hypothesized variables. First, the authors hypothesized that participants who had previously mastered the targeted or related skills were engaging in prompt-dependent behavior because reinforcement contingencies were not arranged to occasion independent responding. Differential reinforcement is an empirically supported intervention shown to prevent the development of prompt-dependent behavior by arranging reinforcers for independent responding and exposing prompted responses to extinction (e.g., Hausman et al., 2014), lean reinforcement schedules (e.g., Touchette & Howard, 1984), or lower quality reinforcers (e.g., Campanaro et al., 2019; Karsten & Carr, 2009). Thus, differential reinforcement was selected for inclusion in the assessment. Second, the authors hypothesized that clients who could respond independently were likely to engage in prompt-dependent behavior if prompts remained available during the task. They evaluated an extended response interval condition in which the instructor provided more time for the participant to complete a task. Prompts were no longer provided during this condition, and the only way the participant could obtain a reinforcer was to engage in an independent correct response. Third, the authors hypothesized participants were engaging in prompt-dependent behavior because the skill was no longer in their repertoire and prompts were necessary to produce a response in the presence of relevant antecedent stimuli. An intervention that included a prompt-fading procedure to transfer prompted responses to the control of a discriminative stimulus (Bourret et al., 2004) also was included in the assessment. These three assessment conditions based on hypothesized variables affecting prompt-dependent behavior were evaluated by Gorgan and Kodak, and the assessment identified an effective intervention for each participant.

Practitioners could make a table to assist in selecting interventions from the literature that include components of interventions that align with variables hypothesized to affect client responding. Refer to Table 2 for an example of hypothesized intervention components for treating prompt-dependent behavior. Gorgan and Kodak (2019) were interested in evaluating interventions that differed according to reinforcement contingencies and the delivery of prompts for independent and prompted correct responses. Table 2 shows that each intervention selected from the literature aligned in a unique way with the authors’ variables of interest. That is, no two intervention conditions included identical variables. If interventions overlap in an identical manner across important variables, practitioners could exclude one of those interventions from the assessment.

Table 2.

Hypothesized intervention components for treating prompt-dependent behavior

| Intervention | Delivery of prompts | Reinforcer after prompt |
|---|---|---|
| Differential reinforcement | ✓ | X |
| Prompt fading | ✓ | ✓ |
| Extended response interval | X | X |

Note. Checkmarks represent the inclusion of the component, whereas X’s represent the omission of the component.
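The rule illustrated by Table 2 (no two conditions should share an identical profile on the variables of interest) can be checked mechanically once the table is written down. The Python sketch below is only an illustration: the component profiles are transcribed from Table 2, the helper name `redundant_groups` is hypothetical, and "Candidate X" is an invented condition used solely to show what a redundant pair looks like.

```python
from collections import defaultdict

# Component order: (delivery of prompts, reinforcer after prompt).
# True = component included (checkmark), False = omitted (X), as in Table 2.
TABLE_2 = {
    "Differential reinforcement": (True, False),
    "Prompt fading": (True, True),
    "Extended response interval": (False, False),
}


def redundant_groups(profiles):
    """Return lists of interventions that share an identical component profile."""
    by_profile = defaultdict(list)
    for name, profile in profiles.items():
        by_profile[tuple(profile)].append(name)
    # Only profiles shared by two or more interventions are redundant.
    return [names for names in by_profile.values() if len(names) > 1]


print(redundant_groups(TABLE_2))  # [] : every condition in Table 2 is unique

# Hypothetical extra candidate, added only to illustrate a redundancy.
candidates = dict(TABLE_2, **{"Candidate X": (True, True)})
print(redundant_groups(candidates))  # [['Prompt fading', 'Candidate X']]
```

When a group is returned, practitioners could keep one member of the group and drop the others from the assessment.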

Including empirically validated interventions in the assessment will increase the likelihood that at least one intervention will be efficacious. However, behavior analysts often collaborate with other service providers or are part of a multidisciplinary treatment team. Other members of the treatment team may recommend an intervention that has limited or no empirical support (e.g., weighted blankets, swivel chairs), although the intervention may appear socially valid and be preferred by members of the team. In this case, the intervention recommended by the team could be included as a condition in the assessment to permit a comparison of the effects of the team’s intervention to those of other interventions in the literature. For example, Kodak et al. (2016) included an assessment condition that aligned with the instructional practices used in participants’ classrooms, and that condition was compared to four other intervention conditions validated in the literature. The results showed one or more of the interventions from the extant literature resulted in the acquisition of targeted skills, whereas the intervention used in the participants’ classrooms did not produce mastery-level responding. Careful analysis of the effects of each assessment condition on the client’s target behavior will permit a data-based decision for intervention selection and is consistent with behavior-analytic practice guidelines for assessing client behavior (BACB, 2014).

Practitioners also could include one or more conditions in an assessment to compare interventions commonly used during the client’s service delivery to other interventions drawn from the literature. For example, practitioners may seek to compare whether a prompt-delay procedure frequently used with clients is more effective and efficient than other prompt-fading strategies (e.g., least to most, most to least; Schnell et al., 2019). Although some studies suggest that prior exposure to a procedure may improve the efficacy and efficiency of that procedure during the treatment comparison (Coon & Miguel, 2012; Kay et al., 2019; Roncati et al., 2019), other studies on assessment-based instruction do not support this conclusion (Bergmann et al., 2020; Kodak et al., 2013; Kodak et al., 2016). Assessment results that show a novel intervention from the literature to be more efficacious or efficient than the practitioner’s frequently used intervention should be considered when designing future instructional programming for the client.

A control condition could be included in the assessment to compare the effects of the interventions of interest to a condition that does not include any intervention strategies. In a functional analysis, which assesses environmental variables that maintain problem behavior, a toy-play condition can serve as a control by arranging abolishing operations for all variables of interest and omitting consequences for target behavior (Beavers et al., 2013; Iwata et al., 1982/1994). The rate of target behavior in the test conditions in the functional analysis (e.g., attention, demand, and tangible conditions) is compared to the rate of behavior in the control condition to identify the function(s) of an individual’s problem behavior. Similarly, the control condition in assessment-based instruction often includes few or no components of intervention (e.g., no prompts or reinforcers); thus, levels of target behavior in the control condition can be compared to levels of behavior in the intervention conditions. Further, a control condition that excludes the independent variable and shows that behavior remains at baseline levels throughout the treatment comparison will allow practitioners to demonstrate that the independent variable included in the intervention conditions is responsible for changes in target behavior. Practitioners should only consider using intervention conditions for which the outcomes are superior to those in the control condition.

Depending on the number of potential conditions identified for inclusion in the assessment, practitioners may need to exclude any intervention conditions that appear redundant. Most assessments include at least two but no more than five conditions in the comparison to decrease the length of time needed to complete the assessment. Refer to Table 3 for a task analysis of selecting intervention conditions.

Table 3.

Task analysis for selecting intervention conditions in an assessment

| Check-off | Component |
|---|---|
| □ | Consider variables that influence the behavior of interest (e.g., reinforcement contingencies, types of prompts, establishing operations). |
| □ | Make a table of variables hypothesized to affect client responding. See the example on treating prompt dependence (Table 2). |
| □ | Review published studies. Select interventions that include one or more variables hypothesized to affect behavior. |
| □ | Consider the multidisciplinary treatment team’s input. |
| □ | Select one to four treatment conditions. |
| □ | Consider the inclusion of a control condition. |

Step 3: Identify Target Behavior

Assessment-based instruction allows practitioners to determine the efficacy and efficiency of intervention conditions for selected target behavior. The efficacy of an intervention relates to the extent to which the condition results in a socially significant improvement in the target behavior(s) of interest. Therefore, practitioners should select target behaviors (i.e., dependent variables) to measure during the assessment that permit an examination of the efficacy of each intervention.

Assessments conducted in the literature often examine the effects of an intervention on mastery-level responding for some targeted skill. Mastery-level responding is often defined as engaging in some predetermined level of independent correct responses across several observations (e.g., Carroll et al., 2015; Schnell et al., 2019). Thus, independent correct responses are often measured during an assessment. However, the behavior selected for measurement should depend on what that measure contributes to answering the assessment question, and measures that provide useful information about distinctions between assessment conditions should be included. For example, a comparison of errorless prompting procedures would likely include a measure of the frequency of errors in each condition, whereas an assessment of prompt dependence would likely include a measure of prompted correct responses. Practitioners can consider measuring any number of target behaviors in the assessment, although simultaneously collecting data on too many measures could reduce the feasibility of in vivo data collection.
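As a minimal sketch of how trial-by-trial data could be summarized for several measures at once and checked against a mastery criterion, consider the Python example below. The trial codes ("I", "P", "E") and the example criterion (at least 90% independent correct responses across two consecutive sessions) are assumptions chosen for illustration, not values taken from the studies cited above; practitioners would substitute their own predetermined criterion.

```python
from collections import Counter

# Assumed trial codes: "I" = independent correct, "P" = prompted correct, "E" = error.
CODES = ("I", "P", "E")


def summarize_session(trials):
    """Return the percentage of trials in a session scored with each code."""
    counts = Counter(trials)
    return {code: 100 * counts[code] / len(trials) for code in CODES}


def meets_mastery(independent_pcts, threshold=90.0, consecutive=2):
    """True if each of the last `consecutive` sessions reached `threshold` percent
    independent correct responses (an example criterion, not a standard)."""
    if len(independent_pcts) < consecutive:
        return False
    return all(p >= threshold for p in independent_pcts[-consecutive:])


# Hypothetical 10-trial sessions for one condition, in the order conducted.
sessions = ["PPEIPIPPEI", "IIPIIIEIII", "IIIIIIIIIP", "IIIIIIIIII"]
independent = []
for number, trials in enumerate(sessions, start=1):
    summary = summarize_session(trials)
    independent.append(summary["I"])
    print(number, summary, "mastered" if meets_mastery(independent) else "")
```

With these made-up data, the criterion is first met in Session 4, and the prompted-correct and error percentages remain available for comparisons such as an assessment of prompt dependence.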

The efficiency of assessment conditions is also an important variable to measure and calculate. Efficiency is typically defined as a reduced duration of training or acquisition of more targets during a specified instructional period (e.g., Forbes et al., 2013; Knutson et al., 2019; Kodak et al., 2016; Yaw et al., 2014). A common efficiency measure for assessment-based instruction is sessions to mastery (e.g., Carroll et al., 2015; Kodak et al., 2016; McGhan & Lerman, 2013), which can be calculated by summing the number of training sessions or trial blocks conducted in each condition. Conditions with fewer sessions to mastery may be considered more efficient than conditions requiring more sessions to mastery. Although the number of sessions to mastery is a relatively easy measure to obtain from graphical depictions of data, it may not be a sensitive measure of efficiency (Kodak et al., 2016). The sensitivity of this measure may depend on the components of intervention included in each assessment condition. For example, an assessment condition that includes a re-present until independent error-correction procedure may require repeated exposures to the same instructional target until the participant engages in an independent correct response (Carroll et al., 2015). Trial re-presentations likely require additional instructional time. In comparison, an assessment condition without any error correction would not include additional presentations of the same target, and each trial could be quite brief. Comparing sessions to mastery across these two assessment conditions would not adequately capture differences in exposures to stimuli or the duration of sessions. For this reason, practitioners could select different efficiency measures to include in an assessment.

Trials, exposures, and minutes to mastery are other efficiency measures that have been used to compare the efficiency of intervention conditions included in assessment-based instruction (e.g., Cariveau et al., 2016; Carroll et al., 2015; Nottingham et al., 2017). More than one calculation of efficiency could be conducted if practitioners determine that each measure may be relevant to the intervention conditions included in the assessment (e.g., exposures and minutes to mastery during error correction; Carroll et al., 2015; Kodak et al., 2016).
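To make the point about sensitivity concrete, the Python sketch below totals sessions, trials, exposures, and minutes to mastery for each condition from a session log. All numbers are hypothetical, the `Session` record and `to_mastery` helper are illustrative names, and the `mastered` flag is a simplification that assumes the practitioner has already scored whether each session completed the mastery criterion.

```python
from dataclasses import dataclass


@dataclass
class Session:
    condition: str
    trials: int      # planned instructional trials
    exposures: int   # target presentations, including error-correction re-presentations
    minutes: float   # session duration
    mastered: bool   # True if this session completed the mastery criterion


def to_mastery(log, condition):
    """Total sessions, trials, exposures, and minutes for one condition up to and
    including the session in which mastery was reached; None if never reached."""
    totals = {"sessions": 0, "trials": 0, "exposures": 0, "minutes": 0.0}
    for s in log:
        if s.condition != condition:
            continue
        totals["sessions"] += 1
        totals["trials"] += s.trials
        totals["exposures"] += s.exposures
        totals["minutes"] += s.minutes
        if s.mastered:
            return totals
    return None


# Hypothetical alternating log: "RUI" = re-present until independent, "No EC" = no error correction.
log = [
    Session("RUI", 10, 18, 6.0, False),
    Session("No EC", 10, 10, 3.0, False),
    Session("No EC", 10, 10, 3.0, False),
    Session("RUI", 10, 15, 5.0, False),
    Session("RUI", 10, 12, 4.0, False),
    Session("No EC", 10, 10, 3.0, False),
    Session("No EC", 10, 10, 3.0, False),
    Session("RUI", 10, 11, 4.0, True),
    Session("No EC", 10, 10, 3.0, True),
]

for condition in ("RUI", "No EC"):
    print(condition, to_mastery(log, condition))
```

In this made-up log, the re-present-until-independent condition reaches mastery in fewer sessions (4 vs. 5) but requires more exposures (56 vs. 50) and more minutes (19 vs. 15), so sessions to mastery and the other measures would rank the two conditions differently.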

Step 4: Select an Experimental Design

Single-subject experimental designs are ideal for evaluating the learner-specific effects of intervention conditions on target behavior. Each client serves as their own control in the assessment, and the assessment can demonstrate functional relationships between independent and dependent variables. Although it may be less common to use experimental designs in practice, the most common experimental design used in assessment-based instruction is well suited to clinical practice.

An adapted alternating-treatments design (AATD) is frequently used in studies on assessment-based instruction (e.g., Eckert et al., 2000; Kodak et al., 2016; McGhan & Lerman, 2013). The AATD is often used in skill-acquisition research, whereas the alternating-treatments design (also referred to as a multielement design) is commonly used in research on the comparison of treatments for problem behavior (e.g., Hanley et al., 2005; Vollmer et al., 1993). Practitioners may not be familiar with the distinction between these designs, although this discrimination is important when designing assessments. The alternating-treatments design can evaluate the effects of several interventions on the rate of problem behavior. For example, Vollmer et al. (1993) compared the effects of differential reinforcement of other behavior and noncontingent reinforcement on rates of problem behavior. The intervention that produced the lowest rates of problem behavior was identified as most effective for each participant. In contrast, it is not possible to compare the effects of two interventions on the exact same behavior when evaluating skill acquisition. If a practitioner alternated between two intervention conditions across days to teach a client to tact a set of six picture targets, it would not be possible to identify which of those interventions was responsible for producing mastery-level responding to those targets. Thus, the AATD was developed for skill-acquisition studies and arranges comparisons of intervention conditions while measuring responding to different sets of targets. That is, each intervention condition is assigned a unique set of instructional targets, and intervention sessions with those condition-specific targets are conducted in an alternating order.

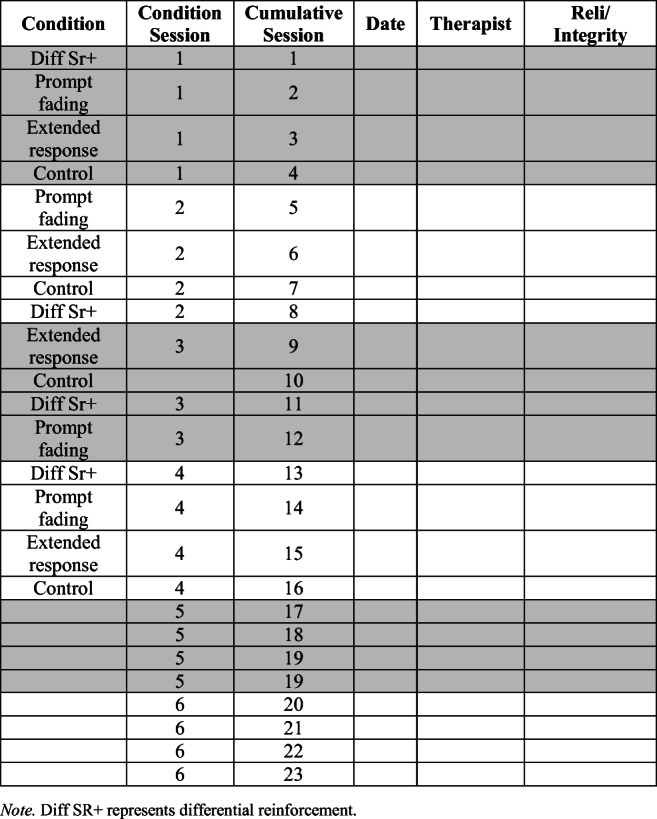

The AATD involves alternating sessions of one intervention condition assigned one set of targets with sessions of one or more intervention conditions assigned additional sets of targets. Consistent alternation of intervention conditions is critical to the assessment because it prevents more sessions being conducted in one condition than in another. When multiple behavioral technicians will conduct an assessment with a client, or when an assessment is conducted across days, it can be helpful to create an assessment log to keep track of sessions. Figure 1 shows an example of an assessment log. The first column shows the order of the assessment sessions that will be conducted with the client. The second column shows the number of sessions conducted in each condition. Each assessment condition should be conducted once before any condition is conducted a second time, and the order of conditions is randomized within each round of sessions. This alternation of intervention conditions continues until responding meets a predetermined mastery criterion. Once responding in a condition meets the mastery criterion, the remaining conditions continue to be alternated until responding in all conditions meets the mastery criterion or a discontinuation criterion is met. The intervention condition that produces mastery-level responding first is considered the most efficient intervention, and any condition that produces mastery-level responding to its set of targets is designated efficacious.
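To illustrate how an assessment log like the one in Figure 1 might be generated, the following is a minimal sketch that reads the alternation rule as block randomization: every condition appears exactly once, in a shuffled order, within each block of sessions. The function name `build_assessment_log`, the block structure, and the condition labels are assumptions for illustration rather than part of a published procedure.

```python
import random
from typing import List, Optional, Tuple


def build_assessment_log(conditions: List[str], n_blocks: int,
                         seed: Optional[int] = None) -> List[Tuple[int, str, int]]:
    """Return rows of (overall session number, condition, session number within condition).

    Hypothetical helper: each block contains every condition once in a random
    order, so no condition can get more than one session ahead of another.
    """
    rng = random.Random(seed)
    counts = {condition: 0 for condition in conditions}
    log = []
    session = 0
    for _ in range(n_blocks):
        block = list(conditions)
        rng.shuffle(block)  # randomize the order within this block only
        for condition in block:
            session += 1
            counts[condition] += 1
            log.append((session, condition, counts[condition]))
    return log


for row in build_assessment_log(["Diff Sr+", "Prompt fading", "ERI", "Control"],
                                n_blocks=2, seed=1):
    print(*row, sep="\t")
```

Printing the rows yields the two columns described above (session order and session count per condition), which could be copied onto a paper log before sessions begin.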

Fig. 1.

Example of an assessment log to keep track of sessions in each condition. Note. Diff Sr+ = differential reinforcement

There are some extraneous variables that can affect the adequacy of the assessment results, such as unanticipated differences in responding to the targets assigned to specific conditions, conducting certain conditions more often than others, and unplanned absences or breaks between intervention sessions, among others. To prevent extraneous variables from influencing assessment results and the selection of interventions for clients, practitioners could conduct the assessment two or more times with additional sets of targets and verify the results of the assessment within or across skill areas. For example, McGhan and Lerman (2013) assessed four error-correction procedures. The first assessment included a comparison of all four procedures. Thereafter, the authors verified the results of the initial assessment by comparing three of the four procedures two additional times (one participant) or three additional times (four participants). These validation tests confirmed the results of the initial assessment in 11 of the 14 comparisons (79%).
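The 14 validation comparisons in the McGhan and Lerman (2013) example follow from summing the additional comparisons per participant (2 + 3 + 3 + 3 + 3). The hypothetical helper below is a minimal sketch of that tally; it uses only the totals reported above and does not reconstruct which individual comparisons agreed.

```python
from typing import List, Tuple


def validation_summary(replications_per_participant: List[int],
                       confirmed: int) -> Tuple[int, float]:
    """Return (total validation comparisons, percentage confirming the initial result).

    replications_per_participant: additional comparisons run for each participant.
    confirmed: how many of those comparisons matched the initial assessment.
    """
    total = sum(replications_per_participant)
    if not 0 <= confirmed <= total:
        raise ValueError("confirmed must be between 0 and the total number of comparisons")
    return total, 100 * confirmed / total


# One participant with two additional comparisons, four with three each.
total, percent = validation_summary([2, 3, 3, 3, 3], confirmed=11)
print(total, round(percent))  # 14 79
```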

Step 5: Select a Skill and Targets

The assessment entails rapid alternation between intervention conditions to which unique sets of targets are assigned, although the specific skill being taught during the assessment should be consistent across all conditions. For example, tact training may be targeted during a comparison of prompt-fading procedures. If the targeted skill differed across conditions, practitioners would not know whether the intervention condition identified as most efficient was a result of some aspect of the intervention or was due to the difficulty of the targeted skill taught within that condition. However, the specific skill taught during the assessment can vary across clients even if the same intervention conditions are included in each client’s assessment. For example, an assessment of error-correction procedures conducted with one child could include tact training, whereas an assessment of the same error-correction procedures conducted with another child could include match-to-sample training.

The specific targets assigned to each condition in the assessment will vary. Therefore, steps must be taken to equate sets of targets so that the assessment outcomes are not unduly affected by target selection and assignment. A logical analysis method is frequently used in published studies on assessment-based instruction (Wolery et al., 2014). This method considers several factors that should influence target assignment to sets, including the number of syllables, initial consonants, overlapping sounds or visual similarity, the participant’s knowledge of stimuli, and participant articulation (if the response will be spoken by the participant).

Sets of targets should include a similar number of syllables, particularly if the participant has poor auditory discrimination skills or deficits in articulation (Grow & LeBlanc, 2013). For example, sets that include three one-syllable responses (e.g., Set 1: cat, bird, frog; Set 2: dog, fish, bear) will be more evenly matched than sets that include highly discrepant numbers of syllables (e.g., Set 1: cat, bird, dog; Set 2: hippopotamus, rhinoceros, anteater). Practitioners could generate a pool of potential targets and carefully assign targets across conditions to balance the total number of syllables in each condition (e.g., each condition includes a set of targets composed of eight total syllables).
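One way to balance syllables across conditions is a simple greedy pass: place the longest words first, each into the set that currently has the fewest total syllables and still has room. The sketch below assumes the practitioner supplies the syllable count for each target (it does not count syllables itself), and the function name `assign_balanced_sets` is hypothetical. A greedy heuristic does not guarantee perfectly equal totals for every pool, and the resulting sets should still be screened for the phonetic and visual overlaps described in the following paragraphs.

```python
from typing import Dict, List


def assign_balanced_sets(syllables: Dict[str, int], n_sets: int) -> List[List[str]]:
    """Split a target pool into equal-sized sets with similar syllable totals (greedy)."""
    if len(syllables) % n_sets != 0:
        raise ValueError("pool size must be a multiple of the number of sets")
    set_size = len(syllables) // n_sets
    sets: List[List[str]] = [[] for _ in range(n_sets)]
    totals = [0] * n_sets
    # Longer words go first so the short words placed last can even out the totals.
    for word in sorted(syllables, key=lambda w: (-syllables[w], w)):
        open_sets = [i for i in range(n_sets) if len(sets[i]) < set_size]
        target = min(open_sets, key=lambda i: (totals[i], i))
        sets[target].append(word)
        totals[target] += syllables[word]
    return sets


pool = {"banana": 3, "elephant": 3, "kangaroo": 3, "monkey": 2, "tiger": 2,
        "zebra": 2, "cat": 1, "dog": 1, "fish": 1}
for i, targets in enumerate(assign_balanced_sets(pool, n_sets=3), start=1):
    print(f"Set {i}: {targets} ({sum(pool[t] for t in targets)} syllables)")
```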

Targets with the same or similar initial consonants should be assigned to different conditions. For example, “hat” and “hand” both begin with the same consonant and should be assigned to different conditions. Similarly, any targets with overlapping sounds should be assigned to different conditions. For example, “cat” and “hat” should not be assigned to the same condition because they rhyme. One of the participants in Fisher et al. (2019) had the targets “neck” and “desk” assigned to the same condition. The authors noted that the participant’s echoic responses to these two auditory stimuli sounded nearly identical; the intervention was modified to elongate the “sk” sound in “desk” to help the participant discriminate these auditory stimuli. Following that modification, the participant acquired the targeted skill. Because both targets contained a similar vowel sound (i.e., “eh”), they could have been assigned to different sets to prevent this discrimination error from delaying acquisition.
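Screening candidate sets for these conflicts can be partly automated. The following hypothetical sketch flags pairs within a set that share a first letter, used here as a rough proxy for a shared initial consonant (an assumption: spelling misses pairs such as “cat” and “kite” and wrongly flags pairs such as “city” and “cat”). Rhymes and other overlapping sounds, such as “neck” and “desk,” cannot be detected from spelling alone, so the practitioner supplies them as a list of pairs.

```python
from itertools import combinations
from typing import Iterable, List, Tuple


def find_conflicts(sets: List[List[str]],
                   similar_pairs: Iterable[Tuple[str, str]]) -> List[Tuple[int, str, str, str]]:
    """Return (set index, target, target, reason) for each conflicting pair within a set."""
    flagged = {frozenset(pair) for pair in similar_pairs}
    conflicts = []
    for index, targets in enumerate(sets):
        for a, b in combinations(targets, 2):
            if a[0].lower() == b[0].lower():  # first letter as a proxy for initial consonant
                conflicts.append((index, a, b, "same initial letter"))
            elif frozenset((a, b)) in flagged:
                conflicts.append((index, a, b, "flagged as sounding similar"))
    return conflicts


sets = [["hat", "hand", "dog"], ["neck", "desk", "fish"], ["cat", "bird", "frog"]]
for conflict in find_conflicts(sets, similar_pairs=[("neck", "desk"), ("cat", "hat")]):
    print(conflict)
```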

Stimuli that contain similar or overlapping visual components should be assigned to different conditions. For example, a picture of broccoli and a picture of an apple tree should not be placed in the same set because both pictures contain green stimuli that have a rounded top portion and a stalk or trunk underneath. Instead, broccoli could be assigned to a condition that contains a brown dog and a red shirt to arrange visually distinct targets within the same set.

Clients have unique instructional histories prior to participating in assessments, and their familiarity with targets will likely vary. Although an AATD does not require a baseline prior to conducting the comparison (Sindelar et al., 1985), a baseline phase will help determine whether the participant has similar levels of responding across sets of targets prior to conducting the assessment. For example, a client who engages in no correct responses to targets assigned to two conditions and two correct responses to targets assigned to a third condition should have a new set of targets identified for the third condition. Baseline levels of responding should appear similar and stable across conditions before initiating the intervention comparison.
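Whether baseline levels are “similar and stable” is ultimately a visual-analysis judgment, but a rough numeric screen can prompt a closer look. In the sketch below, the 10-percentage-point tolerance and the three-session stability window are hypothetical values chosen for illustration, not criteria from the literature, and the function names are likewise hypothetical.

```python
from statistics import mean
from typing import Dict, List


def flag_unequal_baselines(baseline: Dict[str, List[float]],
                           tolerance: float = 10.0) -> List[str]:
    """List conditions whose mean baseline percentage correct exceeds the
    lowest condition mean by more than `tolerance` percentage points."""
    means = {condition: mean(scores) for condition, scores in baseline.items()}
    lowest = min(means.values())
    return [condition for condition, m in means.items() if m - lowest > tolerance]


def is_stable(scores: List[float], window: int = 3, tolerance: float = 10.0) -> bool:
    """True if the last `window` sessions span no more than `tolerance` points."""
    if len(scores) < window:
        return False
    recent = scores[-window:]
    return max(recent) - min(recent) <= tolerance


# Hypothetical baseline data (percentage correct per session).
baseline = {
    "Differential reinforcement": [0, 0, 0],
    "Prompt fading": [0, 0, 0],
    "Extended response interval": [0, 33.3, 33.3],
}
print(flag_unequal_baselines(baseline))  # ['Extended response interval']
```

A flagged condition would be a candidate for a new set of targets, as in the example above.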

The participant’s articulation is also a consideration when assigning targets to conditions. An echoic assessment could be conducted prior to baseline to evaluate whether the participant can echo each target (e.g., Carp et al., 2012; Carroll et al., 2018). If deficits in articulation are noted during the echoic assessment, practitioners could identify approximations that will be permitted during baseline and the intervention comparison. Further, any targets producing echoics that sound similar should be assigned to different conditions.

Step 6: Equate Noncritical Procedures Across Conditions

The assessment must permit an evaluation of the specific intervention conditions selected for inclusion without the influence of extraneous or unrelated variables. When nonspecific components of intervention conditions vary, unrelated variables become more likely to be responsible for the assessment results. For example, varying the mastery criterion across intervention conditions could lead to more rapid mastery of targets assigned to conditions with more lenient mastery criteria. However, evaluating the effects of the mastery criterion on training outcomes is not the purpose of an assessment of the client’s response to intervention conditions. Thus, holding procedural arrangements, the number of targets per set, reinforcement contingencies, the mastery criterion, and all other unrelated variables constant during training will prevent the assessment from biasing the results in favor of one or more intervention conditions. In other words, all nonspecific aspects of training (e.g., the mastery criterion, reinforcers, number of targets) should be identical across conditions within the assessment.
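Before an assessment begins, the written setup for each condition can be checked for noncritical parameters that were accidentally allowed to differ. The sketch below is a hypothetical illustration: the `ConditionSetup` fields are an assumed, incomplete list of noncritical parameters, and only the condition name and the procedure under evaluation are treated as allowed to vary.

```python
from dataclasses import dataclass, fields
from typing import Dict, List, Set


@dataclass(frozen=True)
class ConditionSetup:
    name: str
    procedure: str            # the independent variable; expected to differ
    targets_per_set: int
    trials_per_session: int
    mastery_criterion: str
    reinforcer: str


# Fields allowed to differ across conditions. A component would be added here
# only when varying it is the question the assessment is designed to answer.
CRITICAL_FIELDS = {"name", "procedure"}


def unequated_parameters(conditions: List[ConditionSetup]) -> Dict[str, Set[object]]:
    """Map each noncritical field that differs across conditions to its distinct values."""
    mismatches: Dict[str, Set[object]] = {}
    for spec in fields(ConditionSetup):
        if spec.name in CRITICAL_FIELDS:
            continue
        values = {getattr(condition, spec.name) for condition in conditions}
        if len(values) > 1:
            mismatches[spec.name] = values
    return mismatches


a = ConditionSetup("A", "Prompt fading", 3, 9, "2 sessions >= 90%", "edible")
b = ConditionSetup("B", "Differential reinforcement", 3, 9, "1 session >= 80%", "edible")
print(unequated_parameters([a, b]))  # only the mastery criterion differs
```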

Some assessments will compare intervention conditions that strategically differ by one or more components. For example, an assessment of reading interventions may evaluate the effects of intervention, intervention plus contingent reinforcement, and intervention plus contingent reinforcement and performance feedback on the number of words read correctly per minute (e.g., Eckert et al., 2000). Although these conditions differ in their intervention components, the purpose of these variations is to examine the isolated and additive effects of variables on the targeted behavior. Thus, although many aspects of interventions should be held constant across conditions in assessments, there are exceptions to this recommendation when variations in the procedures allow practitioners to answer specific questions.

Step 7: Design Templates for Data Collection

Measurement systems to collect data during assessment-based instruction are often paper-and-pencil based, although advances in technology may permit computer or tablet data collection in some instances. When collecting paper-and-pencil data for each intervention condition, careful consideration should be given to the design of data-collection templates.

Some practitioners may design a single data-collection template that is used to measure target behavior in every condition. If certain target behaviors are not measured in every intervention condition, the practitioner can leave the irrelevant sections of the template blank. For example, a universal data-collection template in an assessment of prompt-dependent behavior may include measures of independent correct responses, prompted correct responses, prompt types, errors, no responses, and latency to engage in a response. In certain conditions, such as an extended response interval, data on prompted correct responses and prompt types are not collected because no prompts are delivered (e.g., Gorgan & Kodak, 2019). Thus, the practitioner collecting data during the extended response interval condition would leave those sections of the template blank.

Alternatively, practitioners might consider individualizing data-collection templates for each condition. Individualized templates would only contain measures of target behavior that are relevant to the condition. For example, in the extended response interval condition of a prompt-dependence assessment, only independent correct responses, errors, no responses, and latency to respond would be collected during sessions. In contrast, the template for the prompt-fading condition would contain almost all measures from the extended response interval condition, as well as data on prompted responses and prompt types due to the unique components of this intervention condition. Figure 2 shows examples of individualized data-collection templates for a prompt-dependence assessment. The three intervention conditions in the assessment have unique features that are highlighted by changes to the template.
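Where data sheets are printed from a spreadsheet or generated electronically, individualized templates can be expressed as a mapping from condition to columns. The sketch below is a hypothetical illustration using Python's standard `csv` module: the column names are assumptions, the prompt-fading template is approximated by the full universal column set, and the differential reinforcement template (top panel of Fig. 2) is not specified in the text, so that condition falls back to the universal template here.

```python
import csv
import io
from typing import Dict, List

# Hypothetical column names covering every measure listed in the text.
UNIVERSAL = ["trial", "target", "independent_correct", "prompted_correct",
             "prompt_type", "error", "no_response", "latency_s"]

INDIVIDUALIZED: Dict[str, List[str]] = {
    # No prompts are delivered, so prompt-related columns are omitted.
    "extended_response_interval": ["trial", "target", "independent_correct",
                                   "error", "no_response", "latency_s"],
    # Prompt fading adds prompted responses and prompt types (approximated by UNIVERSAL).
    "prompt_fading": UNIVERSAL,
}


def blank_sheet(condition: str, session: int, n_trials: int) -> str:
    """Return a CSV data sheet: a label row, a column header, and one empty row per trial."""
    columns = INDIVIDUALIZED.get(condition, UNIVERSAL)
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["condition", condition, "session", session])
    writer.writerow(columns)
    for trial in range(1, n_trials + 1):
        writer.writerow([trial] + [""] * (len(columns) - 1))
    return buffer.getvalue()


print(blank_sheet("extended_response_interval", session=1, n_trials=3))
```

Pre-filling the session number in the label row mirrors the suggestion, in Step 8, of writing session numbers on data sheets in advance.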

Fig. 2.

Examples of individualized data-collection templates for each condition. Note. The top panel represents differential reinforcement, the middle panel represents prompt fading, and the bottom panel represents an extended response interval

Although a single template would likely require less time to design and permits greater flexibility in use across intervention conditions, there may be advantages to individualizing the data-collection templates for an assessment. Individualizing the data sheet to include measures that are specific to the condition may improve the accuracy of implementation of unique aspects of the procedures (LeBlanc et al., 2019). For example, LeBlanc et al. (2019) showed that behavior therapists who used an enhanced data-collection sheet were more likely to accurately implement recommended practices for conditional discrimination (e.g., counterbalancing comparison stimuli across positions in the array and rotating targets across trials) than behavior therapists who used a standard data-collection sheet. Thus, using enhanced data-collection sheets that are unique to conditions could improve procedural fidelity, although more research is warranted on this topic.

Step 8: Conduct the Assessment

After completing the aforementioned steps in designing an assessment, practitioners will be ready to use their assessment to assist in the identification of individualized instructional strategies. The assessment log, data-collection template(s), targets, and other assessment materials should be organized and placed in a convenient location that will permit the practitioner to conduct the assessment with relevant clients. For example, the assessment log could be located at the front of a binder, the data sheets for each condition could be placed in separate tabs in the binder, and a protocol for each intervention condition could be placed at the front of each binder tab for quick reference. Session numbers for the first several sessions could be written on the data sheets for each condition in advance so that the practitioner who conducts the assessment with the client does not have to complete this step. Sets of targets that are unique to each condition could be placed in an indexed box or labeled envelopes or bags. All the materials could be placed in a bin that is labeled with the assessment name for easy access during the client’s appointments.

The assessment will begin with the collection of baseline data in each assessment condition. A similar number of baseline sessions should be conducted in each condition. Refer to Fig. 3 for an example of baseline data collection alternated across assessment conditions. Baseline data collection will continue across conditions until levels of target behavior are similar across conditions and stable trends are obtained. The assessment log should be closely monitored by the lead therapist or supervisor to ensure that sessions of conditions are being conducted in the predetermined order. The practitioner will conduct a specified number of sessions per day, which should be determined based on the duration of the client’s appointment and consideration of other variables (e.g., client behavior, competing program goals or assessments). It is ideal to conduct at least one session of each intervention condition per appointment to distribute the sessions across days (Haq et al., 2015). The practitioner will rotate between sessions of conditions until responding in at least one condition meets the mastery criterion. Thereafter, the practitioner will continue to rotate between conducting the remaining conditions until responding meets mastery in those conditions or a discontinuation criterion is met.

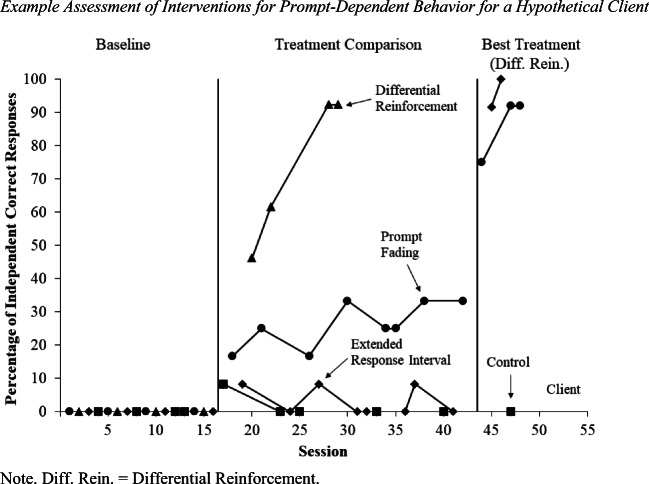

Fig. 3.

Example assessment of interventions for prompt-dependent behavior for a hypothetical client. Note. Diff. Rein. = differential reinforcement

Figure 3 shows a sample assessment conducted with a hypothetical client to compare interventions for prompt-dependent behavior (the interventions included in the figure are described in Gorgan & Kodak, 2019). The figure shows the client’s percentage of independent correct responses in three interventions (differential reinforcement, an extended response interval, and prompt fading) in comparison to a control condition (i.e., no intervention). The first phase of the figure is baseline, in which the interventions were not yet introduced and the client did not engage in any independent correct responses. The second phase shows the comparison of the three interventions. In the differential reinforcement condition, the client’s independent correct responding immediately increased and reached the mastery criterion of two consecutive sessions with independent correct responses at or above 90%. In comparison, levels of independent correct responses remained low in the control and extended response interval conditions. Prompt fading gradually increased independent correct responses, although correct responding remained below 50% and reached a discontinuation criterion (i.e., double the number of treatment sessions required to produce mastery-level responding in another condition).
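The mastery criterion used in this example (two consecutive sessions with independent correct responses at or above 90%) can be stated precisely as a running count of qualifying sessions. The following is a minimal sketch; the function name and the session data are hypothetical and are not the values plotted in Figure 3.

```python
from typing import List, Optional


def session_of_mastery(percent_correct: List[float], threshold: float = 90.0,
                       consecutive: int = 2) -> Optional[int]:
    """Return the 1-based session at which the mastery criterion is first met, or None."""
    run = 0
    for session, score in enumerate(percent_correct, start=1):
        # Extend the run of qualifying sessions, or reset it after a lower score.
        run = run + 1 if score >= threshold else 0
        if run == consecutive:
            return session
    return None


# Hypothetical treatment-phase data for two conditions.
print(session_of_mastery([40, 70, 90, 100]))  # 4
print(session_of_mastery([10, 20, 30, 45]))   # None
```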

A discontinuation criterion should be selected before the assessment begins to prevent extended exposure of the client to ineffective intervention conditions. In the assessment-based instruction literature, researchers have used a variety of discontinuation criteria, such as conducting five or more sessions of each remaining condition once mastery-level responding occurs in at least one condition (Johnson et al., 2017; McGhan & Lerman, 2013), continuing the remaining conditions for 25% more time than the first mastered condition required to reach mastery (Schnell et al., 2019), or conducting double the number of sessions in remaining conditions once responding reaches the mastery criterion in another condition (Gorgan & Kodak, 2019; Kodak et al., 2016). If many training sessions are necessary to produce responding that reaches the mastery criterion in one condition, conducting double the number of sessions in the remaining conditions may be prohibitive. Thus, practitioners could base their selection of a discontinuation criterion on the levels and trends in the data, as well as the overall duration of the assessment.
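The three kinds of discontinuation criteria differ in what they count: further sessions, a multiple of sessions, or training time. The sketch below expresses each as a hypothetical yes/no rule; these are simplified readings of the cited criteria, and the exact operational definitions in the original studies may differ.

```python
def after_extra_sessions(sessions_since_first_mastery: int, extra: int = 5) -> bool:
    """Five or more further sessions of a remaining condition once any condition is mastered."""
    return sessions_since_first_mastery >= extra


def at_session_multiple(sessions_in_condition: int, first_mastery_sessions: int,
                        multiple: float = 2.0) -> bool:
    """Double the number of sessions the first mastered condition required."""
    return sessions_in_condition >= multiple * first_mastery_sessions


def at_extra_time(minutes_in_condition: float, first_mastery_minutes: float,
                  extra_fraction: float = 0.25) -> bool:
    """25% more training time than the first mastered condition's time to mastery."""
    return minutes_in_condition >= (1 + extra_fraction) * first_mastery_minutes


# If the first condition took 6 sessions (hypothetical), doubling allows 12;
# if it took 20, the same rule would require 40 sessions per remaining condition.
print(at_session_multiple(11, 6), at_session_multiple(12, 6))  # False True
print(at_extra_time(49.5, 40), at_extra_time(50, 40))          # False True
```

The comment on the doubling rule illustrates why that criterion can become prohibitive when the first mastered condition itself required many sessions.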

Practitioners may be concerned about discontinuing intervention conditions before the targets assigned to those conditions have reached the mastery criterion, because they have selected a skill they would like to teach during the assessment. When the discontinuation criterion is applied to one or more conditions, the remaining untaught targets could be exposed to a best treatment phase (e.g., Gorgan & Kodak, 2019; Valentino et al., 2019). During this final phase, the intervention condition that the assessment identified as most efficacious and efficient is used to teach the remaining targets. That is, the interventions previously used with those targets are removed, and the intervention that produced mastery-level responding in another condition is used instead. For example, a best treatment phase is shown in Fig. 3 (last panel). When the extended response interval and prompt-fading procedures were discontinued and the targets in those conditions were exposed to the best treatment (i.e., differential reinforcement), the client’s independent correct responses immediately increased and reached the mastery criterion for both sets of targets. A best treatment phase allows practitioners to finish the assessment with all targets trained and to replicate the effects of the most efficacious and efficient intervention condition with additional targets. Therefore, the best treatment phase provides some evidence that treatment effects replicate across one or more sets of targets. Practitioners could then decide whether to conduct the assessment again to verify the results across sets of targets from the same skill, validate the results with a second skill area, or conclude the assessment process and apply the assessment results to the client’s other instructional programming.

Practitioners should perform a cost–benefit analysis of conducting additional replications of the assessment. Their decision to immediately replicate the results may be based on how many times the assessment has been conducted with the client and the length of time until another comparison will be conducted. For example, practitioners could replicate initial assessments to verify that the results are accurate before basing instructional practices across programs on the results of the initial assessment (e.g., McGhan & Lerman, 2013). Subsequent assessments of the same conditions may not require replication, particularly if the results are identical to the previous assessment(s). In addition, if practitioners plan to use the results of the initial assessment to guide instructional practices for a relatively brief time period (e.g., 3 months) until the assessment will be conducted again, an immediate replication of the assessment may not be necessary. However, if the assessment will be used to guide instructional practices for longer periods (e.g., 6 months to 1 year), practitioners should consider conducting a replication of the assessment or comparing the intervention conditions across skill areas.

If repeated assessments do not show consistent results, practitioners should consider variables that may have affected the comparison. For example, the inclusion of targets that are not properly equated can influence the outcomes of the comparison and may bias the results in favor of one or more conditions (Cariveau et al., 2020). Practitioners could reconduct the assessment once additional steps are taken to equate stimuli across conditions (refer to Cariveau et al., 2020, for guidance), or consider using one or more interventions identified as efficacious and efficient in both assessments for a time period until the assessment is repeated later.

It remains unclear whether assessment results obtained from a comparison of interventions for one skill will generalize to other skills. Although one study on assessment-based instruction showed that the most efficient differential reinforcement procedure differed across types of skills (Johnson et al., 2017), no other studies have verified this outcome. In contrast, Gorgan and Kodak (2019) used the results of assessments conducted with one type of skill to successfully reduce participants’ prompt-dependent behavior with other types of skills during their clinical practice. Until additional data are published on the generalization of assessment outcomes across types of skills, practitioners have several courses of action regarding the use of assessment results. They could verify the results of the assessment across skills prior to using the intervention condition in practice. Alternatively, they could apply the assessment results to training across skills for some specified time period before conducting the assessment again to evaluate the stability of outcomes over time. If the intervention is applied to the training of multiple skills but is not effective or appears inefficient during instruction, practitioners might reconsider whether to apply the assessment results across skills.

Although assessment results that do not generalize across skills could be considered a limitation of these assessments, there are certain skills targeted during comprehensive behavioral intervention that will be the focus of extensive instruction. For example, tacts, mands, conditional discrimination, and intraverbals, among other skills, are often the target of instruction across extended authorization periods (e.g., 1 or more years; Lovaas, 2003; Sundberg, 2008). Thus, identifying effective and efficient instructional practices for each of these targeted skills would be a worthwhile endeavor even if the assessment results were specific to a skill area.

Step 9: Use Assessment Results to Guide Practice

Once the assessment and any replications are completed, the results of the assessment should be used to guide the client’s future instructional programming. The results may be applied to some or all of the client’s intervention goals. In some cases, assessment outcomes will be applied to all intervention goals, such as when the assessment is used to identify an efficacious and efficient prompt-fading procedure. Because prompts and prompt fading are commonly used to address nearly all the client’s treatment goals, the results of an assessment of prompt-fading procedures will be highly relevant to programming. In contrast, other assessments may provide results that are relevant to a portion of the client’s treatment goals. For example, identifying an effective intervention to address prompt-dependent behavior will permit the application of the identified intervention to skills for which prompt-dependent behavior is observed.

Summary

Research on assessment-based instruction demonstrates the necessity of conducting assessments to identify individualized interventions, because clients do not all respond similarly to the same intervention (e.g., Gorgan & Kodak, 2019; Ingvarsson & Hollobaugh, 2011; Johnson et al., 2017; Kodak et al., 2013; Seaver & Bourret, 2014). For example, Gorgan and Kodak (2019) assessed intervention conditions to treat preexisting prompt-dependent behavior with three individuals with autism spectrum disorder. Although all three individuals displayed prompt-dependent behavior and responded similarly to types of prompts, the variables contributing to the maintenance of prompt-dependent behavior were not assumed to be identical. The assessment results showed that intervention conditions that were efficacious and efficient for one participant were not efficacious for other participants. Therefore, the selection of one intervention to use with all participants would have resulted in a failure to effectively treat prompt dependence for one or more participants.

Assessment-based instruction can enhance the quality of behavior-analytic service delivery by identifying individualized and efficient instructional strategies to use with clients and by teaching clinically meaningful skills while completing the assessment. Practitioners can use the steps described previously to develop assessments to identify efficacious and efficient instructional strategies for each client. Once the assessments are designed, they can be used across clients or modified to investigate different intervention conditions for specific clients. Ultimately, the use of assessments within skill-acquisition programming assists in identifying the most efficient intervention condition for each client, that is, the condition in which the skill is trained in the least time or with the fewest training trials or sessions.

Interventions that differ in efficiency may appear somewhat comparable during a single assessment, because each condition may include only one set of targets. However, relatively small differences in efficiency compound over time. For example, if the duration of instruction in the most efficient intervention condition is half the duration of another efficacious condition (e.g., 1 min to master each target in comparison to 2 min per target, respectively), the use of more efficient practices could result in socially meaningful differences in acquisition over time. That is, the most efficient intervention could result in the mastery of double the number of instructional targets in a specified time period in comparison to another effective but less efficient intervention. Services that are arranged to teach a client double the number of targets per intervention period will better help the client make rapid gains in targeted skill areas and can help reduce the gap between the skills of the client and those of their typically developing peers. Therefore, an assessment that differentiates efficacious and efficient instructional strategies will help behavior analysts engage in assessment practices that are consistent with professional guidelines and can improve the outcomes of the clients and families who receive interventions based on applied behavior analysis.
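The arithmetic behind this example can be made explicit. The sketch below assumes a constant time to mastery per target, which is a simplification (real acquisition rates vary across targets), and the 600-min instructional period and the function name are hypothetical.

```python
def targets_mastered(minutes_available: float, minutes_per_target: float) -> int:
    """Whole targets mastered in the available time at a constant rate (simplifying assumption)."""
    return int(minutes_available // minutes_per_target)


# Hypothetical 600 min of instruction on one skill.
efficient = targets_mastered(600, minutes_per_target=1)
less_efficient = targets_mastered(600, minutes_per_target=2)
print(efficient, less_efficient)  # 600 300
```

Under this assumption, the gap between the two interventions grows linearly with instructional time, which is the sense in which small differences in efficiency compound.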

Author Note

We thank Desiree Dawson for her feedback on an outline related to components of this tutorial.

Compliance with Ethical Standards

Conflict of Interest

Tiffany Kodak received financial compensation for consulting with an organization on the use of assessment-based instruction in practice and an honorarium for presenting on the design and use of assessment-based instruction. The authors have no other potential conflicts of interest to report.

Ethical Approval

No data on human participants or animals were included in this tutorial.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Beavers GA, Iwata BA, Lerman DC. Thirty years of research on the functional analysis of problem behavior. Journal of Applied Behavior Analysis. 2013;46:1–21. doi: 10.1002/jaba.30. [DOI] [PubMed] [Google Scholar]

- Behavior Analyst Certification Board. (2014). Professional and ethical compliance code for behavior analysts. http://bacb.com/wp-content/uploads/2016/03/160321-compliance-code-english.pdf

- Bergmann, S., Turner, M., Kodak, T., Grow, L. L., Meyerhofer, C., Niland, H. S., & Edmonds, K. (Early View, 2020). A replication of stimulus presentation orders on auditory-visual conditional discrimination training with individuals with autism spectrum disorder. Journal of Applied Behavior Analysis. 10.1002/jaba.797.

- Boudreau BA, Vladescu JC, Kodak TM, Argott PJ, Kisamore AN. A comparison of differential reinforcement procedures with children with autism. Journal of Applied Behavior Analysis. 2015;48:918–923. doi: 10.1002/jaba.232. [DOI] [PubMed] [Google Scholar]

- Bourret J, Vollmer TR, Rapp JT. Evaluation of a vocal mand assessment and vocal mand training procedures. Journal of Applied Behavior Analysis. 2004;37:129–144. doi: 10.1901/jaba.2004.37-129. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Campanaro, A. M., Vladescu, J. C., Kodak, T., DeBar, R. M., & Nippes, K. C. (2019). Comparing skill acquisition under varying onsets of differential reinforcement: A preliminary analysis. Journal of Applied Behavior Analysis. Advance online publication. 10.1002/jaba.615 [DOI] [PubMed]

- Cariveau, T., Batchelder, S., Ball, S., & La Cruz Montilla, A. (2020). Review of methods to equate target sets in the adapted alternating treatments design. Behavior Modification. Advance online publication. 10.1177/0145445520903049 [DOI] [PubMed]

- Cariveau T, Kodak T, Campbell V. The effects of intertrial interval and instructional format on skill acquisition and maintenance for children with autism. Journal of Applied Behavior Analysis. 2016;49:809–825. doi: 10.1002/jaba.322. [DOI] [PubMed] [Google Scholar]

- Carp CL, Peterson SP, Arkel AJ, Petursdottir AI, Ingvarsson ET. A further evaluation of picture prompts during auditory-visual conditional discrimination training. Journal of Applied Behavior Analysis. 2012;45:737–751. doi: 10.1901/jaba.2012.45-737. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carroll RA, Joachim BT, St. Peter CC, Robinson N. A comparison of error-correction procedures on skill acquisition during discrete-trial instruction. Journal of Applied Behavior Analysis. 2015;48:257–273. doi: 10.1002/jaba.205. [DOI] [PubMed] [Google Scholar]

- Carroll RA, Owsiany J, Cheatham JM. Using an abbreviated assessment to identify effective error-correction procedures for individual learners during discrete-trial instruction. Journal of Applied Behavior Analysis. 2018;51:482–501. doi: 10.1002/jaba.460. [DOI] [PubMed] [Google Scholar]

- Coon JT, Miguel CF. The role of increased exposure to transfer-of-stimulus-control procedures on the acquisition of intraverbal behavior. Journal of Applied Behavior Analysis. 2012;45:657–666. doi: 10.1901/jaba.2012.45-657. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cubicciotti JE, Vladescu JC, Reeve KF, Carroll RA, Schnell LK. Effects of stimulus presentation order during auditory-visual conditional discrimination training for children with autism spectrum disorder. Journal of Applied Behavior Analysis. 2019;52:541–556. doi: 10.1002/jaba.530.

- Daly EJ III, Martens BK, Hamler KR, Dool EJ, Eckert TL. A brief experimental analysis for identifying instructional components needed to improve oral reading fluency. Journal of Applied Behavior Analysis. 1999;32:83–94. doi: 10.1901/jaba.1999.32-83.

- Dunn LM, Dunn DM. Peabody Picture Vocabulary Test. 4th ed. Bloomington, MN: Pearson Assessments; 2007.

- Eckert TL, Ardoin SP, Daisey DM, Scarola MD. Empirically evaluating the effectiveness of reading interventions: The use of brief experimental analysis and single case designs. Psychology in the Schools. 2000;37:463–473. doi: 10.1002/1520-6807(200009)37:5<463::AID-PITS6>3.0.CO;2-X.

- Eckert TL, Ardoin SP, Daly EJ III, Martens BK. Improving oral reading fluency: A brief experimental analysis of combining an antecedent intervention with consequences. Journal of Applied Behavior Analysis. 2002;35:271–281. doi: 10.1901/jaba.2002.35-271.

- Everett GE, Swift HS, McKenney ELW, Jewell JD. Analyzing math-to-mastery through brief experimental analysis. Psychology in the Schools. 2016;53:971–983. doi: 10.1002/pits.21959.

- Fisher WW, Retzlaff BJ, Akers JS, DeSouza AA, Kaminski AJ, Machado MA. Establishing initial auditory-visual conditional discriminations and emergence of initial tacts in young children with autism spectrum disorder. Journal of Applied Behavior Analysis. 2019;52:1089–1106. doi: 10.1002/jaba.586.

- Forbes BE, Skinner CH, Black MP, Yaw J, Booher J, Delisle J. Learning rates and known-to-unknown flash-card ratios: Comparing effectiveness while holding instructional time constant. Journal of Applied Behavior Analysis. 2013;46:832–837. doi: 10.1002/jaba.74.

- Gilliam JE. Gilliam Autism Rating Scale. 3rd ed. Austin, TX: Pro-Ed; 2014.

- Gorgan EM, Kodak T. Comparison of interventions to treat prompt dependence for children with developmental disabilities. Journal of Applied Behavior Analysis. 2019;52:1049–1063. doi: 10.1002/jaba.638.

- Grow LL, Carr JE, Kodak TM, Jostad CM, Kisamore AN. A comparison of methods for teaching receptive labeling to children with autism spectrum disorders. Journal of Applied Behavior Analysis. 2011;44:475–498. doi: 10.1901/jaba.2011.44-475.

- Grow LL, LeBlanc LA. Teaching receptive language skills: Recommendations for instructors. Behavior Analysis in Practice. 2013;6:56–75. doi: 10.1007/BF03391791.

- Hanley GP, Piazza CC, Fisher WW, Maglieri KA. On the effectiveness of and preference for punishment and extinction components of function-based interventions. Journal of Applied Behavior Analysis. 2005;38:51–65. doi: 10.1901/jaba.2005.6-04.

- Haq SS, Kodak T, Kurtz-Nelson E, Porritt M, Rush K, Cariveau T. Comparing the effects of massed and distributed practice on skill acquisition for children with autism. Journal of Applied Behavior Analysis. 2015;48:454–459. doi: 10.1002/jaba.213.

- Hausman NL, Ingvarsson ET, Kahng S. A comparison of reinforcement schedules to increase independent responding in individuals with intellectual disabilities. Journal of Applied Behavior Analysis. 2014;47:155–159. doi: 10.1002/jaba.85.

- Ingvarsson ET, Hollobaugh T. A comparison of prompting tactics to establish intraverbals in children with autism. Journal of Applied Behavior Analysis. 2011;44:659–664. doi: 10.1901/jaba.2011.44-659.

- Ingvarsson ET, Le DD. Further evaluation of prompting tactics for establishing intraverbal responding in children with autism. The Analysis of Verbal Behavior. 2011;27:75–93. doi: 10.1007/BF03393093.

- Iwata BA, Dorsey MF, Slifer KJ, Bauman KE, Richman GS. Toward a functional analysis of self-injury. Journal of Applied Behavior Analysis. 1994;27:197–209. doi: 10.1901/jaba.1994.27-197.

- Johnson KA, Vladescu JC, Kodak T, Sidener TM. An assessment of differential reinforcement procedures for learners with autism spectrum disorder. Journal of Applied Behavior Analysis. 2017;50:290–303. doi: 10.1002/jaba.372.

- Karsten AM, Carr JE. The effects of differential reinforcement of unprompted responding on the skill acquisition of children with autism. Journal of Applied Behavior Analysis. 2009;42:327–334. doi: 10.1901/jaba.2009.42-327.

- Kay JC, Kisamore AN, Vladescu JC, Sidener TM, Reeve KF, Taylor SC, Pantano NA. Effects of exposure to prompts on the acquisition of intraverbals in children with autism spectrum disorder. Journal of Applied Behavior Analysis. 2019. Advance online publication. doi: 10.1002/jaba.606.

- Knutson S, Kodak T, Costello DR, Cliett T. Comparison of task interspersal ratios on skill acquisition and problem behavior for children with autism spectrum disorder. Journal of Applied Behavior Analysis. 2019;52:355–369. doi: 10.1002/jaba.527.

- Kodak T, Campbell V, Bergmann S, LeBlanc B, Kurtz-Nelson E, Cariveau T, Haq S, Zemantic P, Mahon J. Examination of efficacious, efficient, and socially valid error-correction procedures to teach sight words and prepositions to children with autism spectrum disorder. Journal of Applied Behavior Analysis. 2016;49:532–547. doi: 10.1002/jaba.310.

- Kodak T, Clements A, LeBlanc B. A rapid assessment of instructional strategies to teach auditory-visual conditional discriminations to children with autism. Research in Autism Spectrum Disorders. 2013;7:801–807. doi: 10.1016/j.rasd.2013.02.007.

- Kodak T, Fisher WW, Clements A, Paden AR, Dickes NR. Functional assessment of instructional variables: Linking assessment and treatment. Research in Autism Spectrum Disorders. 2011;5:1059–1077. doi: 10.1016/j.rasd.2010.11.012.

- Kodak T, Fuchtman R, Paden A. A comparison of intraverbal training procedures for children with autism. Journal of Applied Behavior Analysis. 2012;45:155–160. doi: 10.1901/jaba.2012.45-155.

- LeBlanc LA, Sump LA, Leaf JB, Cihon J. The effects of standard and enhanced data sheets and brief video training on implementation of conditional discrimination training. Behavior Analysis in Practice. 2019. Advance online publication. doi: 10.1007/s40617-019-00338-5.

- Libby ME, Weiss JS, Bancroft S, Ahearn WH. A comparison of most-to-least and least-to-most prompting on the acquisition of solitary play skills. Behavior Analysis in Practice. 2008;1:37–43. doi: 10.1007/BF03391719.

- Lord C, Rutter M, DiLavore P, Risi S. Autism Diagnostic Observation Schedule: Manual. Torrance, CA: Western Psychological Services; 1999.

- Lovaas OI. Teaching individuals with developmental delays: Basic intervention techniques. Austin, TX: Pro-Ed; 2003.

- McComas JJ, Wagner D, Chaffin MC, Holton E, McDonnell M, Monn E. Prescriptive analysis: Further individualization of hypothesis testing in brief experimental analysis of reading fluency. Journal of Behavioral Education. 2009;18:56–70. doi: 10.1007/s10864-009-9080-y.

- McCurdy M, Clure LF, Bleck AA, Schmitz SL. Identifying effective spelling interventions using a brief experimental analysis and extended analysis. Journal of Applied School Psychology. 2016;32:46–65. doi: 10.1080/15377903.2015.1121193.

- McGhan AC, Lerman DC. An assessment of error-correction procedures for learners with autism. Journal of Applied Behavior Analysis. 2013;46:626–639. doi: 10.1002/jaba.65.

- Mellott JA, Ardoin SP. Using brief experimental analysis to identify the right math intervention at the right time. Journal of Behavioral Education. 2019. Advance online publication. doi: 10.1007/s10864-019-09324-x.

- Nottingham CL, Vladescu JC, Kodak T, Kisamore A. The effects of embedding multiple secondary targets into learning trials for individuals with autism spectrum disorder. Journal of Applied Behavior Analysis. 2017;50:653–661. doi: 10.1002/jaba.396.

- Peacock GG, Ervin RA, Daly EJ, Merrell KW, editors. Practical handbook of school psychology: Effective practices for the 21st century. New York, NY: Guilford Press; 2009.

- Petursdottir AL, McMaster K, McComas JJ, Bradfield T, Braganza V, Koch-McDonald J, Rodriguez R, Scharf H. Brief experimental analysis of early reading interventions. Journal of School Psychology. 2009;47:215–243. doi: 10.1016/j.jsp.2009.02.003.

- Roncati AL, Souza AC, Miguel CF. Exposure to a specific prompt topography predicts its relative efficiency when teaching intraverbal behavior to children with autism spectrum disorder. Journal of Applied Behavior Analysis. 2019;52:739–745. doi: 10.1002/jaba.568.

- Rutter M, LeCouteur A, Lord C. Autism Diagnostic Interview: Revised Manual. Los Angeles, CA: Western Psychological Services; 2003.

- Schnell LK, Vladescu JC, Kisamore AN, DeBar RM, Kahng S, Marano K. Assessment to identify learner-specific prompt and prompt-fading procedures for children with autism spectrum disorder. Journal of Applied Behavior Analysis. 2019. Advance online publication. doi: 10.1002/jaba.623.

- Seaver JL, Bourret JC. An evaluation of response prompts for teaching behavior chains. Journal of Applied Behavior Analysis. 2014;47:777–792. doi: 10.1002/jaba.159.

- Sindelar PT, Rosenberg MS, Wilson RJ. An adapted alternating treatments design for instructional research. Education and Treatment of Children. 1985;8:67–76.

- Sundberg ML. VB-MAPP: Verbal Behavior Milestones Assessment and Placement Program: A language and social skills assessment program for children with autism or other developmental disabilities: Guide. Concord, CA: AVB Press; 2008.

- Tiger JH, Hanley GP, Bruzek J. Functional communication training: A review and practical guide. Behavior Analysis in Practice. 2008;1:16–23. doi: 10.1007/BF03391716.

- Touchette PE, Howard JS. Errorless learning: Reinforcement contingencies and stimulus control transfer in delayed prompting. Journal of Applied Behavior Analysis. 1984;17:175–188. doi: 10.1901/jaba.1984.17-175.

- Valentino AL, LeBlanc LA, Veazey SE, Weaver LA, Raetz PB. Using a prerequisite skills assessment to identify optimal modalities for mand training. Behavior Analysis in Practice. 2019;12:22–32. doi: 10.1007/s40617-018-0256-6.

- Vollmer TR, Iwata BA, Zarcone JR, Smith RG, Mazaleski JL. The role of attention in the treatment of attention-maintained self-injurious behavior: Noncontingent reinforcement and differential reinforcement of other behavior. Journal of Applied Behavior Analysis. 1993;26:9–21. doi: 10.1901/jaba.1993.26-9.

- Wechsler D. Wechsler Preschool and Primary Scale of Intelligence: Administration and Scoring Manual. 4th ed. San Antonio, TX: The Psychological Corporation; 2012.

- Wolery M, Gast DL, Ledford JR. Comparative designs. In: Ledford JR, Gast DL, editors. Single case research methodology. London: Routledge; 2014. pp. 283–334.

- Woodcock RW. Woodcock–Johnson Psychoeducational Battery. Technical Report; 1977.

- Worsdell AS, Iwata BA, Dozier CL, Johnson AD, Neidert PL, Thomason JL. Analysis of response repetition as an error-correction strategy during sight-word reading. Journal of Applied Behavior Analysis. 2005;38:511–527. doi: 10.1901/jaba.2005.115-04.

- Yaw J, Skinner CH, Delisle J, Skinner AL, Maurer K, Cihak D, Wilhoit B, Booher J. Measurement scale influences in the evaluation of sight-word reading interventions. Journal of Applied Behavior Analysis. 2014;47:360–379. doi: 10.1002/jaba.126.