Significance

Partisan biases in processing political information contribute to rising divisions in society. How do such biases arise in the brain? We measured the neural activity of participants watching videos related to immigration policy. Despite watching the same videos, conservative and liberal participants exhibited divergent neural responses. This “neural polarization” between groups occurred in a brain area associated with the interpretation of narrative content and intensified in response to language associated with risk, emotion, and morality. Furthermore, polarized neural responses predicted attitude change in response to the videos. These findings suggest that biased processing in the brain drives divergent interpretations of political information and subsequent attitude polarization.

Keywords: political polarization, cognitive neuroscience, computational methods, language

Abstract

People tend to interpret political information in a manner that confirms their prior beliefs, a cognitive bias that contributes to rising political polarization. In this study, we combined functional magnetic resonance imaging with semantic content analyses to investigate the neural mechanisms that underlie the biased processing of real-world political content. We scanned American participants with conservative-leaning or liberal-leaning immigration attitudes while they watched news clips, campaign ads, and public speeches related to immigration policy. We searched for evidence of “neural polarization”: activity in the brain that diverges between people who hold liberal versus conservative political attitudes. Neural polarization was observed in the dorsomedial prefrontal cortex (DMPFC), a brain region associated with the interpretation of narrative content. Neural polarization in the DMPFC intensified during moments in the videos that included risk-related and moral-emotional language, highlighting content features most likely to drive divergent interpretations between conservatives and liberals. Finally, participants whose DMPFC activity closely matched that of the average conservative or the average liberal participant were more likely to change their attitudes in the direction of that group’s position. Our work introduces a multimethod approach for studying the neural basis of political cognition in naturalistic settings. Using this approach, we characterize how political attitudes bias information processing in the brain, the language most likely to drive polarized neural responses, and the consequences of biased processing for attitude change. Together, these results shed light on the psychological and neural underpinnings of how identical information is interpreted differently by conservatives and liberals.

Political polarization is a growing concern in societies across the world (1). In the United States, Democrats and Republicans have grown more ideologically divided in recent years, threatening both social harmony and effective governance (2, 3). Motivated political reasoning is a robust phenomenon thought to contribute to political polarization (4, 5). When presented with identical information, individuals with opposing political attitudes often become more entrenched in their original positions (6–9). The biased assimilation of political information impedes efforts to persuade partisans toward positions of consensus and compromise.

Why does the same information trigger divergent responses across individuals? One possibility is that motivation biases sensory attention (10, 11), such that people attend more to information that supports their beliefs. For example, when watching news footage about a protest, detractors of the protest might focus on aspects of the video suggesting that protestors are behaving in a threatening manner so as to discredit their cause. Consistent with this view, previous work suggests that political attitudes bias processing as early as sensory perception (12, 13). Alternatively, motivation might affect how people interpret the same sensory input. That is, the same actions can be interpreted as threatening or not threatening depending on one’s prior attitudes.

Neuroscience can offer insights into the fundamental cognitive processes that give rise to motivated political reasoning (14, 15). By assessing when biases in information processing emerge in the brain (e.g., “early” sensory cortices versus “late” association cortices), we can better understand how political attitudes affect different levels of information processing. However, real-world political content (e.g., news clips, televised debates) is often dynamic and complex and thus incompatible with typical neuroscientific analytical approaches that require averaging over short, repeatable “trials.” This presents a challenge to researchers studying the neural mechanisms underlying the biased processing of political information. To our knowledge, no study has examined how the brain processes naturalistic audiovisual political content.

In the current study, we draw on advances in the analysis of functional magnetic resonance imaging (fMRI) data to examine how political attitudes bias the processing of naturalistic political content. We scanned conservative-leaning or liberal-leaning participants while they watched 24 videos related to immigration policy, a polarized and politically significant topic in the United States (16) and in many countries around the world (17). The videos were 1 to 2 min long, were selected to represent both liberal and conservative viewpoints, and included news clips, campaign ads, and speeches by prominent politicians. Our analytical approach relies on intersubject correlation (ISC), which computes the correlation in activity between brains as a measure of shared processing. ISC has previously been used to examine how the brain processes naturalistic stimuli such as spoken narratives or films and is well suited to studying how real-world political content is processed (18).

The first goal of our study was to examine whether and how political attitudes modulate neural responses at different levels of information processing in the brain. Using ISC, we searched for evidence of neural polarization—activity that is shared between individuals with similar political attitudes but not between individuals with dissimilar political attitudes. Neural polarization measures the extent to which processing in a particular brain area diverges between conservative-leaning and liberal-leaning participants. If political attitudes bias sensory attention, we would expect neural polarization to emerge early in the processing stream (e.g., primary visual or auditory cortices) (19). Alternatively, if political attitudes bias the interpretation of the videos without altering sensory processing, we would expect neural polarization to emerge only in “higher-order” brain areas such as the dorsomedial prefrontal cortex (DMPFC), posterior medial cortex (PMC), or middle temporal gyrus (MTG). These brain regions have previously been shown to track the interpretation of narrative content (20, 21).

The second goal of our study was to characterize the content features in political information that were most likely to drive neural polarization. The literature on political psychology suggests that differences in political attitudes are associated with differences in moral values (22–24) and that emotional content enhances the polarizing effects of political messages (25, 26). We therefore hypothesized that participants with different political attitudes would interpret moral and emotional content differently, making such content the most likely to drive polarized neural responses. To test this hypothesis, we first examined whether moral and emotional content in the videos was associated with greater neural polarization. In a second analysis, we took advantage of the richness and complexity of our videos to test which other content features were likely to drive neural polarization. We broke down the content of the videos into 50 semantic categories (e.g., words related to risk, social affiliation, and religion) and assessed the extent to which each category was associated with greater neural polarization. This allowed us to identify, in a data-driven manner, content that contributed to polarized neural responses.

The third goal of our study was to examine the relationship between polarized neural responses and attitude change. If shared neural responses reflect shared interpretation of a video, we would expect the degree of neural similarity to be associated with attitude change after viewing the video. In particular, the degree to which a participant’s neural response resembled that of conservative or liberal participants should predict attitude change toward more conservative or more liberal positions on immigration, respectively. We tested this hypothesis by assessing whether neural similarity to the average conservative or average liberal participant while watching each video was associated with self-reported ratings of how much the video changed participants’ attitudes on the relevant immigration policy.

Our study introduces an approach for investigating the neural processes underlying political cognition. By adapting fMRI paradigms to real-world political content, we studied the biased processing of political information in a setting where we can be more confident of ecological validity. Using this approach, we identified a neural signature of biased processing of political information. The richness of naturalistic stimuli also allowed for data-driven analyses that generate hypotheses to be examined in future experiments. By examining how the brain processes political content, our study advances our understanding of the partisan brain and how it gives rise to the polarization afflicting societies today.

Results

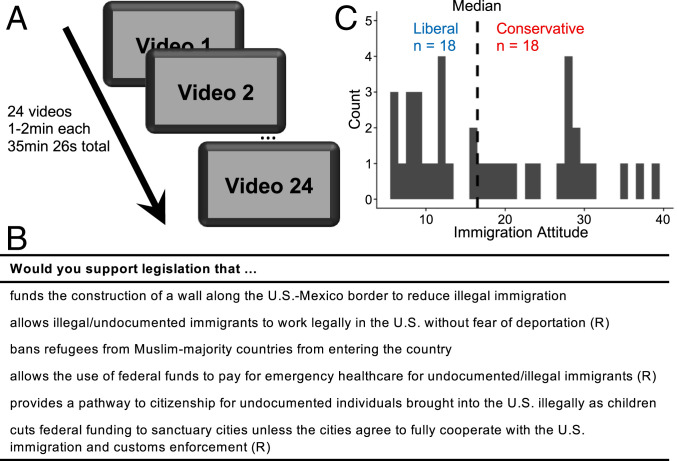

Thirty-eight participants were scanned using fMRI as they watched 24 videos on six immigration policies (total duration: 35 min 26 s, divided into four runs; Fig. 1 A and B). An online pretest with a larger sample indicated that conservatives and liberals in America held opposing attitudes on these policies (SI Appendix, Fig. S1). Prior to the experiment, participants indicated their support for each of the six policies. Their responses were recoded such that lower ratings reflect stronger support for liberal positions while higher ratings reflect stronger support for conservative positions. We then summed each participant’s responses to compute an “Immigration Attitude” score and performed a median split to identify participants with conservative-leaning immigration attitudes and participants with liberal-leaning immigration attitudes (Fig. 1C). The two groups did not differ significantly in age, sex, income, education, or amount of head motion in the scanner (SI Appendix, Table S1).
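The scoring step can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes ratings on the seven-point (1 to 7) scale described in Fig. 1B, treats the positions of the three reverse-coded items (`REVERSED_ITEMS`) as hypothetical, and assumes that a participant whose score equals the median is assigned to the liberal-leaning group, a tie rule the source does not specify.

```python
import numpy as np

# Hypothetical positions of the three reverse-coded (R) items among the six
# policy questions; the source does not say which columns these are.
REVERSED_ITEMS = [1, 3, 5]
SCALE_MAX = 7  # seven-point support scale (1..7)


def immigration_attitude_scores(ratings, reversed_items=REVERSED_ITEMS):
    """Sum the six policy ratings after reverse-coding selected items.

    ratings: array of shape (n_participants, 6) with values in 1..7.
    Returns one score per participant; higher means more conservative.
    """
    r = np.asarray(ratings, dtype=float).copy()
    # On a 1..7 scale, reverse-coding maps x -> 8 - x (1<->7, 2<->6, ...).
    r[:, reversed_items] = (SCALE_MAX + 1) - r[:, reversed_items]
    return r.sum(axis=1)


def median_split(scores):
    """Label scores above the median as conservative-leaning.

    Assumption: ties at the median go to the liberal-leaning group.
    """
    scores = np.asarray(scores, dtype=float)
    return np.where(scores > np.median(scores), "conservative", "liberal")


ratings = [[7, 1, 7, 1, 7, 1],   # consistently conservative answers
           [1, 7, 1, 7, 1, 7],   # consistently liberal answers
           [4, 4, 4, 4, 4, 4]]   # midpoint on every item
scores = immigration_attitude_scores(ratings)
labels = median_split(scores)
```

Here the three toy participants receive scores of 42, 6, and 24, and the midpoint participant falls on the liberal side only because of the assumed tie rule.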

Fig. 1.

Experimental design. (A) Participants watched 24 videos on six immigration policies while undergoing fMRI. URLs to a subset of the videos are listed in SI Appendix, Table S2. (B) Prior to the experiment, participants indicated their support for each policy on a seven-point scale. Three of the questions, indicated here with (R), were reverse-coded such that higher ratings indicate stronger support for the conservative position while lower ratings indicate stronger support for the liberal position. (C) We tallied participants’ responses to compute their Immigration Attitude score and performed a median split to identify liberal-leaning and conservative-leaning participants.

Political Content Elicits Shared Neural Responses across Participants.

We first examined the extent to which viewing political videos elicited similar neural responses across participants. For each participant, we z-scored the activity time course for each video and concatenated the neural data such that the videos were ordered in the same sequence. For each voxel in a participant’s brain, we calculated the ISC as the correlation between that voxel’s time course of activity and the average time course of all other participants at the same voxel (18). This correlation was computed across the entire duration of the 24 videos. The resulting r values were then averaged across participants to obtain a map of average r values, which shows the extent to which activity at a given voxel was similar across participants. Statistical significance was assessed using a permutation procedure where the sign of each individual participant’s ISC values was randomly flipped to generate a null distribution for each voxel (Materials and Methods).
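The leave-one-out ISC and sign-flip test described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions rather than the authors' pipeline: the array layout, the function names, the one-sided test, and the choice to draw one random sign per participant (shared across voxels) are our assumptions, and common refinements such as Fisher z-transforming the r values before averaging are omitted.

```python
import numpy as np


def _zscore_time(x):
    """z-score along the first (time) axis using the population SD."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean(axis=0)) / x.std(axis=0)


def leave_one_out_isc(data):
    """Leave-one-out ISC.

    data: (n_subjects, n_timepoints, n_voxels), each video already z-scored
    and the videos concatenated in the same order for every participant.
    Returns (n_subjects, n_voxels): Pearson r between each participant and
    the average of all other participants at the same voxel.
    """
    data = np.asarray(data, dtype=float)
    n_subj = data.shape[0]
    total = data.sum(axis=0)
    isc = np.empty((n_subj, data.shape[2]))
    for s in range(n_subj):
        others = (total - data[s]) / (n_subj - 1)
        # The mean product of z-scores (ddof=0) equals the Pearson correlation.
        isc[s] = (_zscore_time(data[s]) * _zscore_time(others)).mean(axis=0)
    return isc


def sign_flip_test(isc, n_perm=2000, seed=0):
    """One-sided test of mean ISC > 0 at each voxel.

    Each permutation randomly flips the sign of every participant's ISC
    values (one sign per participant, shared across voxels: an assumption).
    """
    rng = np.random.default_rng(seed)
    observed = isc.mean(axis=0)
    exceed = np.zeros_like(observed)
    for _ in range(n_perm):
        signs = rng.choice([-1.0, 1.0], size=(isc.shape[0], 1))
        exceed += (signs * isc).mean(axis=0) >= observed
    return observed, (exceed + 1) / (n_perm + 1)


rng = np.random.default_rng(1)
shared = rng.standard_normal(200)
# Voxel 0 carries a stimulus-driven signal; voxel 1 is idiosyncratic noise.
data = np.stack([np.column_stack([shared + 0.5 * rng.standard_normal(200),
                                  rng.standard_normal(200)])
                 for _ in range(10)])
isc = leave_one_out_isc(data)
mean_isc, p = sign_flip_test(isc)
```

In this synthetic example only the stimulus-driven voxel shows high, significant ISC, mirroring the contrast between shared and idiosyncratic responses.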

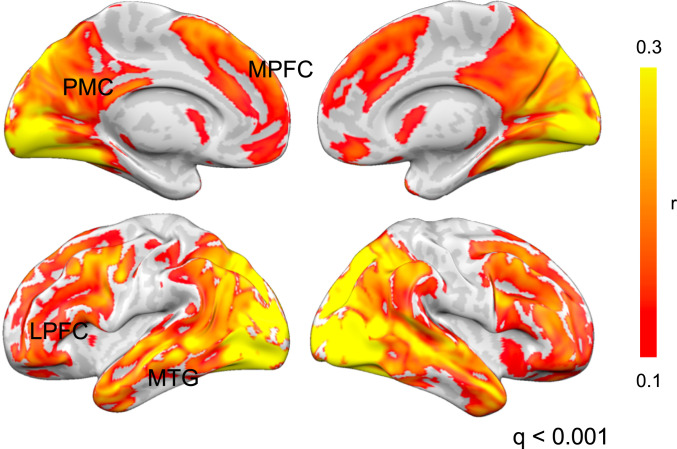

Consistent with earlier studies using audiovisual stimuli (27, 28), we observed high ISC in the primary auditory and visual cortices and low ISC in the motor and somatosensory cortices (Fig. 2). Similar to these studies, we also found widespread ISC in the medial and lateral prefrontal cortices (MPFC, LPFC), PMC, and MTG, areas which have been previously associated with the processing and comprehension of narrative stimuli (20, 21). These results indicate that the political videos evoked reliable neural responses that are shared across participants, irrespective of their political attitudes.

Fig. 2.

Shared neural response elicited by videos across participants. The videos elicited reliable shared responses across participants irrespective of their political attitudes. ISC was highest in sensory regions, including the primary visual and auditory cortices. There was also moderate ISC in higher-order regions such as the MPFC and LPFC, PMC, and MTG. Statistical maps were thresholded at an FDR of q < 0.001. An unthresholded map is available at https://neurovault.org/collections/PKFXOYLX/images/319401/.

DMPFC Response Diverged between Conservative-Leaning and Liberal-Leaning Participants.

Our next analysis focused on identifying brain areas that exhibited evidence of neural polarization. For each participant, we computed a “within-group ISC” as their voxelwise ISC with the average time course of all other participants with similar political attitudes (i.e., liberal vs. average liberal and conservative vs. average conservative), and a “between-group ISC” as the ISC with the average time course of all participants with dissimilar political attitudes (i.e., liberal vs. average conservative; conservative vs. average liberal). The difference between within-group and between-group ISC measures the degree to which neural activity was shared between participants with similar political attitudes but not between participants with dissimilar political attitudes. We thus searched the brain for voxels where within-group ISC was greater than between-group ISC to identify brain areas where the processing diverged between the two groups.
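A minimal NumPy sketch of the within-group versus between-group contrast is shown below. The array layout and function names are hypothetical; following the description above, each participant is correlated with the average of the other members of their own group and with the average of the opposite group, and the per-participant difference is the quantity that would then be tested voxel by voxel (the permutation and cluster-correction steps are not shown).

```python
import numpy as np


def _corr_columns(x, y):
    """Pearson r between matching columns of two (n_timepoints, n_voxels) arrays."""
    zx = (x - x.mean(axis=0)) / x.std(axis=0)
    zy = (y - y.mean(axis=0)) / y.std(axis=0)
    return (zx * zy).mean(axis=0)


def within_between_isc(data, labels):
    """data: (n_subjects, n_timepoints, n_voxels); labels: group per subject.

    Returns (within, between), each (n_subjects, n_voxels). The within-group
    average excludes the participant being correlated.
    """
    data = np.asarray(data, dtype=float)
    labels = np.asarray(labels)
    within = np.empty((data.shape[0], data.shape[2]))
    between = np.empty_like(within)
    for s in range(data.shape[0]):
        same = labels == labels[s]
        same[s] = False  # leave the participant out of their own group mean
        other = labels != labels[s]
        within[s] = _corr_columns(data[s], data[same].mean(axis=0))
        between[s] = _corr_columns(data[s], data[other].mean(axis=0))
    return within, between


rng = np.random.default_rng(2)
group_signal = {"liberal": rng.standard_normal(150),
                "conservative": rng.standard_normal(150)}
common = rng.standard_normal(150)
labels = ["liberal"] * 5 + ["conservative"] * 5
# Voxel 0 follows a group-specific signal; voxel 1 a signal shared by everyone.
data = np.stack([np.column_stack([group_signal[g] + 0.5 * rng.standard_normal(150),
                                  common + 0.5 * rng.standard_normal(150)])
                 for g in labels])
within, between = within_between_isc(data, labels)
polarization = (within - between).mean(axis=0)
```

Only the voxel driven by a group-specific signal shows within-group ISC exceeding between-group ISC; the voxel with a shared signal has high ISC under both comparisons.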

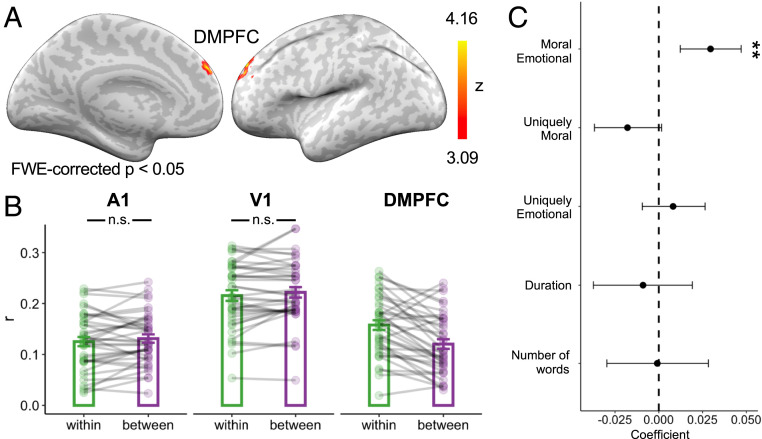

If differences between groups were due to discrepancies in low-level visual or auditory attention, we would expect differences in ISC to emerge early in the processing stream (e.g., primary visual or auditory cortex). In contrast, if the differences were related to interpretation and evaluation of the same audiovisual input, we would expect to see differences emerge in the “higher-order” association cortex. Consistent with the latter hypothesis, within-group ISC was greater than between-group ISC only in the left DMPFC (Fig. 3A). Previous work using ambiguous stimuli has found that activity in this region tracks the interpretation of narratives and is more similar between participants with similar interpretations of the same narrative (20, 21, 29). Within-group ISC in the DMPFC was higher than between-group ISC in both conservative and liberal participants, indicating that the results were not driven by one of the two groups (SI Appendix, Fig. S2). In contrast, within-group ISC did not differ from between-group ISC in primary sensory regions (Fig. 3B). Within-group ISC in the DMPFC also did not differ from between-group ISC when participants were divided into groups based on sex or a median split by age, suggesting that the polarized neural responses were specifically related to differences in political attitudes rather than to differences in sex or age (SI Appendix, Fig. S3).

Fig. 3.

DMPFC time course diverges between conservatives and liberals. (A) Within-group ISC was higher than between-group ISC in the left DMPFC. P values were computed by comparing the observed ISC difference to a null distribution generated using a nonparametric permutation procedure (Materials and Methods). We imposed an FWE cluster-correction threshold of P < 0.05 with a cluster-forming threshold of P < 0.001. An unthresholded map is available at https://neurovault.org/collections/PKFXOYLX/images/319402/. (B) Within-group ISC was not significantly different from between-group ISC in the primary auditory cortex (A1: t[37] = −1.26, P = 0.215) or the primary visual cortex (V1: t[37] = −1.55, P = 0.130). For comparison, we display the ISC values for the DMPFC, but no additional inferences should be drawn from these plots because the statistical contrast used to identify the DMPFC guarantees a significant difference. Data points denote individual participants, and error bars denote between-participant SEM. (C) The use of moral-emotional language was associated with greater neural polarization in the DMPFC. Data points indicate regression coefficients with 95% confidence intervals estimated from a linear mixed-effects model. **Holm–Bonferroni-adjusted: P < 0.01. n.s.: not significant.

In the above analyses, we divided participants into conservatives and liberals via a median split on their immigration attitude scores and examined if within-group neural similarity was greater than between-group neural similarity. An alternative approach is to examine the relationship between neural responses and immigration attitudes in a pairwise fashion and test if participants who are closer in immigration scores have more similar neural responses [i.e., a representational similarity analysis (RSA) (30)]. We ran an exploratory whole-brain RSA and found no significant clusters at familywise error rate (FWE)-corrected P < 0.05 with a cluster-forming threshold of P < 0.001. We discuss this null finding in SI Appendix, Supplementary Text.
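The source does not describe how its RSA was implemented, so the sketch below is only one common way to set up such an intersubject RSA, under our own assumptions: neural similarity is the Pearson correlation between two participants' time courses in one voxel or region, attitude similarity is the negative absolute difference in Immigration Attitude scores, the two matrices are compared with a Pearson correlation over unique participant pairs (Spearman is a frequent alternative), and significance comes from a Mantel-style permutation of participants.

```python
import numpy as np


def attitude_similarity(scores):
    """Model matrix: participants with closer scores are more similar."""
    scores = np.asarray(scores, dtype=float)
    return -np.abs(scores[:, None] - scores[None, :])


def isrsa_mantel(timecourses, scores, n_perm=2000, seed=0):
    """Correlate pairwise neural similarity with attitude similarity.

    timecourses: (n_subjects, n_timepoints) for one voxel or region.
    Returns the observed correlation between the upper triangles and a
    one-sided Mantel permutation P value (participants' scores shuffled).
    """
    scores = np.asarray(scores, dtype=float)
    neural = np.corrcoef(timecourses)           # subject x subject Pearson r
    iu = np.triu_indices(len(scores), k=1)      # unique pairs only
    observed = np.corrcoef(neural[iu], attitude_similarity(scores)[iu])[0, 1]
    rng = np.random.default_rng(seed)
    null = np.empty(n_perm)
    for i in range(n_perm):
        # Shuffling scores permutes rows and columns of the model together.
        shuffled = scores[rng.permutation(len(scores))]
        null[i] = np.corrcoef(neural[iu], attitude_similarity(shuffled)[iu])[0, 1]
    return observed, (np.sum(null >= observed) + 1) / (n_perm + 1)


rng = np.random.default_rng(3)
a, b = rng.standard_normal(300), rng.standard_normal(300)
scores = np.linspace(0.0, 1.0, 12)
# Each synthetic participant's response is a score-weighted blend of two signals.
timecourses = np.stack([w * a + (1 - w) * b + 0.3 * rng.standard_normal(300)
                        for w in scores])
r, p = isrsa_mantel(timecourses, scores)
```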

Neural Polarization in DMPFC Is Associated with Use of Moral-Emotional Language.

To examine the content features that drive neural polarization in the DMPFC, we segmented the 24 videos into 86 shorter “segments” (average duration: 24.7 s, SD = 5.56 s). We averaged DMPFC activity in each segment separately for liberal- and conservative-leaning participants to obtain an average liberal time course and average conservative time course (SI Appendix, Fig. S4A). We then computed the absolute difference between the two time courses as a continuous measure of neural polarization in the DMPFC (SI Appendix, Fig. S4B).
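The segment-level polarization measure can be sketched as follows. The source's wording leaves the order of operations slightly open; this version, an assumption on our part, averages each group's time course within a segment and then takes the absolute difference of the two segment means (averaging the time-point-wise absolute difference within each segment would be another reading). Array shapes and names are hypothetical.

```python
import numpy as np


def segment_polarization(roi, labels, segment_ids):
    """Absolute liberal-conservative difference in mean DMPFC activity per segment.

    roi: (n_subjects, n_timepoints) region-averaged time courses.
    labels: 'liberal' or 'conservative' per subject.
    segment_ids: (n_timepoints,) segment index for every time point.
    """
    roi = np.asarray(roi, dtype=float)
    labels = np.asarray(labels)
    seg = np.asarray(segment_ids)
    lib = roi[labels == "liberal"].mean(axis=0)        # average liberal time course
    con = roi[labels == "conservative"].mean(axis=0)   # average conservative time course
    segments = np.unique(seg)
    lib_seg = np.array([lib[seg == k].mean() for k in segments])
    con_seg = np.array([con[seg == k].mean() for k in segments])
    return segments, np.abs(lib_seg - con_seg)


roi = [[1, 1, 1, 0, 0, 0],
       [1, 1, 1, 0, 0, 0],
       [0, 0, 0, 0, 0, 0],
       [0, 0, 0, 0, 0, 0]]
labels = ["liberal", "liberal", "conservative", "conservative"]
segments, polarization = segment_polarization(roi, labels, [0, 0, 0, 1, 1, 1])
# polarization -> array([1., 0.]): the groups differ only in segment 0
```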

We first assessed if moral and emotional content was associated with greater neural polarization in the DMPFC. Using the Moral Emotional Dictionary in ref. 31, we identified words that relate to both morality and emotions (moral-emotional words: e.g., “compassionate,” “violate”), words that relate to morality but not emotions (uniquely moral words: e.g., “ethics,” “principles”), and words that relate to emotions but not morality (uniquely emotional words: e.g., “rewarding,” “fear”).
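The three-way split of the dictionary amounts to simple set operations, after which each segment's transcript can be scored as a percentage of its words. In this sketch the word lists contain only the example words quoted above and are placeholders for the full dictionary in ref. 31; the tokenizer (a lowercase regular expression) is also our assumption. The returned token count corresponds to the number-of-words covariate.

```python
import re

# Toy word lists built only from the example words quoted above; the real
# dictionary in ref. 31 is much larger and is not reproduced here.
MORAL = {"compassionate", "violate", "ethics", "principles"}
EMOTIONAL = {"compassionate", "violate", "rewarding", "fear"}

CATEGORIES = {
    "moral_emotional": MORAL & EMOTIONAL,      # in both lists
    "uniquely_moral": MORAL - EMOTIONAL,       # moral but not emotional
    "uniquely_emotional": EMOTIONAL - MORAL,   # emotional but not moral
}


def category_percentages(text, categories=CATEGORIES):
    """Return (percent of tokens in each category, total token count)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    n = len(tokens)
    pct = {name: (100.0 * sum(t in words for t in tokens) / n if n else 0.0)
           for name, words in categories.items()}
    return pct, n


pct, n_words = category_percentages("We must not violate our principles out of fear")
```

The example sentence has nine tokens, one from each category, so every category scores 100/9 percent.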

For each segment, we calculated the percentage of moral-emotional, uniquely moral, and uniquely emotional words. We then entered these percentages as predictor variables in a single linear mixed-effects model predicting neural polarization in the DMPFC, with the number of words and the duration of the segment as additional covariates and a random intercept for each video. Moral-emotional words were associated with greater neural polarization (b = 0.030, 95% CI [0.012, 0.047], t[75] = 3.27, P = 0.002, Holm–Bonferroni-adjusted P = 0.005), whereas uniquely moral words (b = −0.018, 95% CI [−0.037, 0.002], t[79] = −1.85, P = 0.067, Holm–Bonferroni-adjusted P = 0.135) and uniquely emotional words (b = 0.008, 95% CI [−0.009, 0.026], t[76] = 0.888, P = 0.377, Holm–Bonferroni-adjusted P = 0.377) were not (Fig. 3C). These results suggest that moral-emotional language in particular was associated with greater polarization of neural responses.
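The Holm–Bonferroni step-down adjustment used above can be written in a few lines of NumPy. This is a generic implementation of the procedure, not the authors' code. Applied to the rounded P values reported above (0.002, 0.067, 0.377), it returns 0.006, 0.134, and 0.377; the small differences from the reported adjusted values (0.005 and 0.135) are presumably due to rounding of the raw P values.

```python
import numpy as np


def holm_bonferroni(pvals):
    """Holm step-down adjusted P values, returned in the input order."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    # The k-th smallest P value (0-based k) is multiplied by (m - k), capped at 1.
    stepped = np.minimum(1.0, (m - np.arange(m)) * p[order])
    # Enforce monotonicity: an adjusted P value never drops below earlier ones.
    stepped = np.maximum.accumulate(stepped)
    adjusted = np.empty(m)
    adjusted[order] = stepped
    return adjusted


adjusted = holm_bonferroni([0.002, 0.067, 0.377])
```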

Data-Driven Linguistic Analysis of Content Features Associated with Neural Polarization.

Next, we assessed the relationship between neural polarization and a broader set of content features. We calculated the percentage of words in each segment that fell into each of the 47 semantic categories included in the Linguistic Inquiry and Word Count software (LIWC) (32). This allowed us to quantify the extent to which each segment contained words related to a variety of semantic content. For each semantic category, we fit a linear mixed-effects model predicting neural polarization in the DMPFC from the percentage of words in that category, with a random intercept for each video and the number of words and duration of the segment as covariates of no interest. For comparison, we also ran the same analysis with the three categories from the Moral Emotional Dictionary in ref. 31, yielding a total of 50 tests.
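LIWC dictionaries include stems ending in an asterisk, which match any word beginning with that stem. The sketch below shows how per-segment category percentages could be computed with that matching rule. The `toy_dictionary` entries are hypothetical stand-ins, not the actual LIWC risk category, the regular-expression tokenizer is our assumption, and the mixed-effects model fit to each resulting column is not shown.

```python
import re


def make_matcher(entries):
    """Entries ending in '*' match any word that starts with the stem."""
    exact = {e for e in entries if not e.endswith("*")}
    stems = tuple(e[:-1] for e in entries if e.endswith("*"))
    return lambda word: word in exact or word.startswith(stems)


def dictionary_percentages(text, dictionary):
    """Percent of tokens in `text` matched by each category in `dictionary`."""
    tokens = re.findall(r"[a-z']+", text.lower())
    n = len(tokens)
    result = {}
    for name, entries in dictionary.items():
        match = make_matcher(entries)
        hits = sum(1 for t in tokens if match(t))
        result[name] = 100.0 * hits / n if n else 0.0
    return result


# Hypothetical entries for illustration only; not the LIWC risk dictionary.
toy_dictionary = {"risk": ["threat*", "secur*", "danger"]}
pct = dictionary_percentages(
    "Border security is a threat, they say: threatening danger.", toy_dictionary)
```

Four of the nine tokens ("security", "threat", "threatening", "danger") match, so the toy risk category covers 44.4% of the segment.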

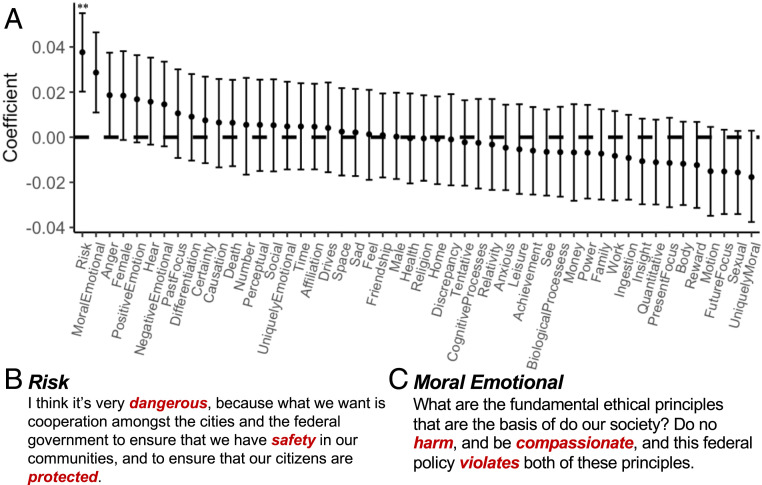

Fig. 4A shows the regression coefficient for each variable estimated from the model (numerical values reported in SI Appendix, Table S3). Only risk-related words (e.g., “threat,” “security”) remained significantly associated with neural polarization in the DMPFC after correction for 50 comparisons (b = 0.037, t[81] = 4.20, 95% CI [0.020, 0.055], P < 0.001, Holm–Bonferroni-adjusted P = 0.003). Moral-emotional words were the next strongest predictor of neural polarization in the DMPFC, and the only other predictor significant at an uncorrected P < 0.05, although this association would not survive correction for 50 comparisons (b = 0.029, t[78] = 3.13, 95% CI [0.011, 0.046], P = 0.002, Holm–Bonferroni-adjusted P = 0.120). Similar results were obtained when all variables were included in a ridge regression model (SI Appendix, Fig. S5, and Supplementary Text). Example segments containing risk-related and moral-emotional words are shown in Fig. 4 B and C, respectively.
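The ridge regression check can be illustrated with the closed-form estimator β = (XᵀX + λI)⁻¹Xᵀy on standardized predictors. This is a minimal sketch under our own assumptions: the source does not say how its ridge model handled the video grouping or the word-count and duration covariates, or how λ was chosen (cross-validation is typical), so those steps are omitted and the λ value and synthetic data here are arbitrary.

```python
import numpy as np


def ridge_coefficients(X, y, lam):
    """Closed-form ridge regression on standardized predictors.

    X: (n_segments, n_features) category percentages; y: (n_segments,)
    neural polarization. Returns one shrunken coefficient per feature.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)   # put features on one scale
    yc = y - y.mean()                           # centering removes the intercept
    p = Xs.shape[1]
    # beta = (X'X + lambda * I)^-1 X'y
    return np.linalg.solve(Xs.T @ Xs + lam * np.eye(p), Xs.T @ yc)


rng = np.random.default_rng(4)
X = rng.standard_normal((86, 5))
y = X @ np.array([0.5, 0.0, 0.2, 0.0, -0.3]) + 0.1 * rng.standard_normal(86)
beta_ols = ridge_coefficients(X, y, lam=0.0)    # lambda = 0 reduces to OLS
beta_ridge = ridge_coefficients(X, y, lam=50.0)
```

Because the penalty shrinks all coefficients jointly, ridge estimates are more stable than separate or unpenalized fits when word categories are correlated, which is why it is a useful complement to the category-by-category models.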

Fig. 4.

Relationship between semantic content and neural polarization in DMPFC. (A) Regression coefficients estimated using linear mixed-effects models. Error bars indicate 95% confidence intervals. (B) Red font indicates examples of risk-related words. (C) Red font indicates examples of moral-emotional words. **Holm–Bonferroni-corrected: P < 0.01.

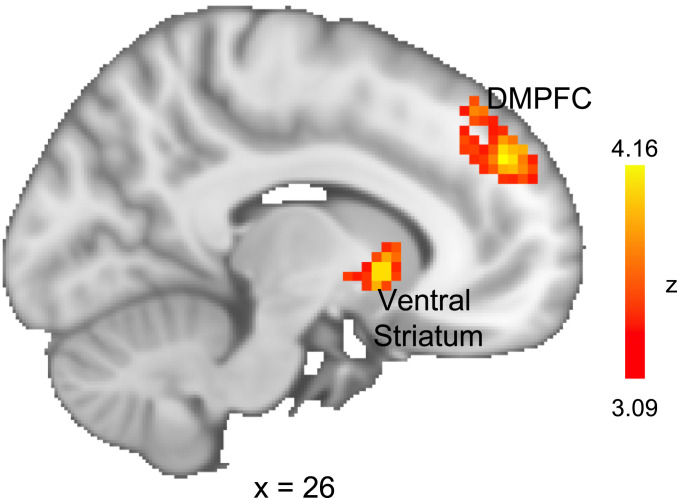

Political Differences Are Associated with Divergent Frontostriatal Connectivity.

How might the neural polarization in the DMPFC arise? One possibility is that inputs to the DMPFC are modulated by one’s preexisting political attitudes. That is, even if neural responses in other brain areas were similar between the two groups, differential connectivity to the DMPFC could drive different DMPFC responses to the videos. We ran intersubject functional connectivity (ISFC) analyses to test if connectivity to the DMPFC was modulated by political attitudes.

Functional connectivity (FC) between brain regions is thought to reflect interregional communication (33). While conventional FC analyses compute FC as the interregional correlation within each participant’s brain, ISFC analyses compute the interregional correlation between brains. In doing so, the ISFC approach filters out within-participant correlations unrelated to stimulus processing (34). ISFC does not imply that there is communication between brains; the technique merely uses a second brain as a model of neural responses to the stimulus from which to compute stimulus-driven correlations between brain regions.

We computed the correlation between each participant’s DMPFC time course and 1) the time course of each voxel averaged over all other participants in the same political group (within-group ISFC) and 2) the time course of each voxel averaged over all participants in the other political group (between-group ISFC). The correlation between the ventral striatum and the DMPFC was stronger when computed between participants with similar political attitudes than when computed between participants with dissimilar political attitudes (within-group ISFC > between-group ISFC) (Fig. 5), suggesting that covariation between the ventral striatum and the DMPFC was modulated by political attitudes.
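A minimal sketch of the seed-based within- and between-group ISFC follows. It correlates one participant's DMPFC seed time course with voxel time courses averaged over other participants, so the participant's own voxel data never enter the correlation. Function names, array layout, and the synthetic example are our assumptions, and the permutation and cluster-correction steps are not shown.

```python
import numpy as np


def _corr_seed(seed_tc, voxel_tcs):
    """Pearson r between one (n_timepoints,) seed and each voxel column."""
    zs = (seed_tc - seed_tc.mean()) / seed_tc.std()
    zv = (voxel_tcs - voxel_tcs.mean(axis=0)) / voxel_tcs.std(axis=0)
    return (zs[:, None] * zv).mean(axis=0)


def seed_isfc(seed, voxels, labels):
    """Within- and between-group intersubject functional connectivity.

    seed: (n_subjects, n_timepoints) DMPFC time course of each participant.
    voxels: (n_subjects, n_timepoints, n_voxels) whole-brain data.
    Returns (within, between), each (n_subjects, n_voxels).
    """
    seed = np.asarray(seed, dtype=float)
    voxels = np.asarray(voxels, dtype=float)
    labels = np.asarray(labels)
    within = np.empty((seed.shape[0], voxels.shape[2]))
    between = np.empty_like(within)
    for s in range(seed.shape[0]):
        same = labels == labels[s]
        same[s] = False  # the participant's own voxels are never used
        other = labels != labels[s]
        within[s] = _corr_seed(seed[s], voxels[same].mean(axis=0))
        between[s] = _corr_seed(seed[s], voxels[other].mean(axis=0))
    return within, between


rng = np.random.default_rng(5)
T = 200
drive = {"liberal": rng.standard_normal(T), "conservative": rng.standard_normal(T)}
common = rng.standard_normal(T)
labels = ["liberal"] * 5 + ["conservative"] * 5
seed = np.stack([drive[g] + 0.5 * rng.standard_normal(T) for g in labels])
# Voxel 0 covaries with the group-specific seed drive; voxel 1 does not.
voxels = np.stack([np.column_stack([drive[g] + 0.5 * rng.standard_normal(T),
                                    common + 0.5 * rng.standard_normal(T)])
                   for g in labels])
within, between = seed_isfc(seed, voxels, labels)
```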

Fig. 5.

Intersubject functional connectivity between the ventral striatum and DMPFC was stronger when computed between participants with similar political attitudes. DMPFC was used as a seed region to compute within- and between-group ISFC. Statistical map shows voxels where within-group ISFC was higher than between-group ISFC. This analysis also reproduced our earlier result where DMPFC was more correlated within-group than between-group. We imposed an FWE cluster-correction threshold of P < 0.05 with a cluster-forming threshold of P < 0.001. An unthresholded map is available at https://neurovault.org/collections/PKFXOYLX/images/319403/.

Neural Similarity to Partisan Average Time Courses Predicts Video-Specific Attitude Change.

After watching each video, participants rated the extent to which the video made them more or less likely to support the relevant policy on a five-point scale. The responses were recoded such that higher ratings denote attitude change toward the conservative position (e.g., more likely to support the construction of a border wall) while lower ratings denote attitude change toward the liberal position (e.g., less likely to support the construction of a border wall). On average, ratings were higher for conservative participants than liberal participants (conservative: M = 3.16, SE = 0.154; liberal: M = 1.79, SE = 0.105; t[30.5] = 5.23, P < 0.001), indicating that participants were more likely to change their attitudes toward the positions held by their respective groups, although there was considerable variability across participants (SI Appendix, Fig. S6) and videos (SI Appendix, Fig. S7).
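The fractional degrees of freedom in t[30.5] are characteristic of Welch's unequal-variance t test, although the source does not name the test; the sketch below assumes that test and shows how its statistic and Welch–Satterthwaite degrees of freedom are computed. The `recode_change` helper, which flips ratings with 6 − x on the 1 to 5 scale, is hypothetical, as is the assumption about which policies need flipping.

```python
import numpy as np


def recode_change(rating, support_is_conservative):
    """Hypothetical helper: map a 1..5 'less/more likely to support' rating so
    that higher values always mean change toward the conservative position."""
    return rating if support_is_conservative else 6 - rating


def welch_t(a, b):
    """Welch's unequal-variance t statistic and Welch-Satterthwaite df."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    va = a.var(ddof=1) / a.size   # squared standard error of mean(a)
    vb = b.var(ddof=1) / b.size   # squared standard error of mean(b)
    t = (a.mean() - b.mean()) / np.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (a.size - 1) + vb ** 2 / (b.size - 1))
    return t, df


t, df = welch_t([1, 2, 3, 4], [2, 4, 6, 8, 10])
```

With unequal group variances the degrees of freedom fall below the pooled value (here about 5.5 rather than 7), which is how a non-integer df such as 30.5 arises with two groups of 19.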

We hypothesized that processing a video in a manner that is similar to a particular political group would predict attitude change toward positions held by that group. For each video, we calculated the correlation between each participant’s DMPFC time course and 1) the average conservative DMPFC time course and 2) the average liberal DMPFC time course. The average time courses were calculated while excluding that participant’s data. We then computed the difference between the two correlations as a measure of whether a participant’s brain activity was more similar to that of an average conservative (positive values) or to that of an average liberal (negative values).
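The video-specific similarity score can be sketched as below for a single video. Names and shapes are hypothetical; as described above, the participant is left out of both group averages, and positive values indicate greater similarity to the conservative average. The mixed-effects model that relates these scores to attitude change is not shown.

```python
import numpy as np


def partisan_similarity(roi, labels):
    """r(participant, average conservative) - r(participant, average liberal).

    roi: (n_subjects, n_timepoints) DMPFC time courses for ONE video.
    The participant is excluded from both group averages. Positive values
    mean the participant's response resembled the conservative average.
    """
    roi = np.asarray(roi, dtype=float)
    labels = np.asarray(labels)
    n = roi.shape[0]
    scores = np.empty(n)
    for s in range(n):
        keep = np.arange(n) != s
        con = roi[keep & (labels == "conservative")].mean(axis=0)
        lib = roi[keep & (labels == "liberal")].mean(axis=0)
        scores[s] = np.corrcoef(roi[s], con)[0, 1] - np.corrcoef(roi[s], lib)[0, 1]
    return scores


rng = np.random.default_rng(6)
con_sig, lib_sig = rng.standard_normal(120), rng.standard_normal(120)
labels = np.array(["conservative"] * 4 + ["liberal"] * 4)
roi = np.stack([(con_sig if g == "conservative" else lib_sig)
                + 0.5 * rng.standard_normal(120) for g in labels])
scores = partisan_similarity(roi, labels)
```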

For a given video, participants whose DMPFC time course was more similar to the average conservative or the average liberal participant were more likely to change their attitude toward the conservative position or the liberal position, respectively (b = 0.29, SE = 0.12, t[876] = 2.43, P = 0.015). This analysis was conducted using a linear mixed-effects model and controlled for each participant’s initial attitude toward the policy (Materials and Methods). Similar effects were not observed with participants’ ratings of agreeableness (b = 0.07, SE = 0.136, t[867] = 0.51, P = 0.614) and credibility (b = −0.07, SE = 0.136, t[880] = −0.55, P = 0.584) of the videos.

Discussion

Preexisting attitudes powerfully influence how individuals respond to political information. In the current work, we combined fMRI and text analysis to study why conservatives and liberals respond differently to the same political content. Activity in the DMPFC diverged between conservative-leaning and liberal-leaning participants watching the same video clips related to immigration policy. This “neural polarization” between the two groups increased with the use of risk-related and moral-emotional language in the videos, highlighting the type of language likely to drive divergent interpretations between the two groups. Neural polarization also tracked subsequent attitude polarization. For each video, participants with DMPFC activity time courses more similar to that of conservative-leaning participants became more likely to support the conservative position. Conversely, those with DMPFC activity time courses more similar to that of liberal-leaning participants became more likely to support the liberal position. These results suggest that divergent interpretations of the same information are associated with increased attitude polarization. Together, our findings describe a neural basis for partisan biases in processing political information and their effects on attitude change.

Our approach builds on earlier findings showing that the neural similarity between individuals watching or listening to a stimulus reflects similarity in how the stimulus is processed by a particular brain region (18, 35, 36). In our dataset, neural responses in the primary sensory cortices were not significantly different between conservative-leaning and liberal-leaning participants, suggesting that political attitudes did not alter sensory processing. Neural responses between the two groups differed only in the DMPFC, a brain region that has been associated with the interpretation of narrative stimuli (20, 21, 29). For example, a previous study found that neural responses in the DMPFC diverged between participants manipulated to have different interpretations prior to listening to an ambiguous story (21). Here, we did not manipulate participants to have different interpretations. Instead, participants’ political attitudes served as intrinsic “priors” that biased how they interpreted the content of the videos.

The DMPFC has been implicated in a broad range of complex cognitive functions, including episodic memory retrieval, impression formation, and reasoning about other people’s mental states (37–39). One account that integrates these disparate findings is that the DMPFC is involved in the construction of situation models—mental representations of the actors, actions, and objects in an event as well as their spatial, temporal, and causal relationships (40–42). The divergence in DMPFC activity between conservative-leaning and liberal-leaning participants might thus reflect the two groups constructing different situation models of the events depicted in the videos. To better understand how conservative-leaning and liberal-leaning participants interpreted the videos differently, we analyzed the content of the videos to find semantic features that would be associated with greater neural polarization in the DMPFC.

Risk-related and moral-emotional words were most likely to drive neural polarization in the DMPFC. These results are consistent with two major lines of work in political psychology. First, several prominent theories have proposed that conservatives and liberals exhibit different levels of threat sensitivity (43–45). Risk-related words are often used to describe potential threats, which may trigger different responses in the two groups. Second, conservatives and liberals are thought to adopt different moral frameworks and thus see the world through distinct “moral lenses” (22–24). As such, what conservatives consider a moral transgression may seem perfectly acceptable to liberals, and vice versa. Taken together, these theories predict that conservatives and liberals would differ in what they interpret as a threat and in what they consider morally praiseworthy or blameworthy. This could explain why neural polarization in the DMPFC was greater in parts of the videos with more risk-related and moral-emotional words.

Participants’ interpretation of the videos would likely modulate whether and how the videos changed their attitudes. To examine the relationship between video interpretation and attitude change, we used DMPFC activity as a neural model of how participants interpreted each video. Specifically, we averaged the DMPFC time course separately for conservative-leaning and liberal-leaning participants. These average time courses reflect how the typical conservative-leaning or liberal-leaning participant processed each video. We then computed the similarity between each participant’s DMPFC time course and the two average time courses to obtain a neural metric of whether a participant’s interpretation was more similar to the conservative interpretation or the liberal interpretation. For a given video, neural similarity to a particular group was associated with attitude change toward the positions held by that group. This finding suggests that adopting the liberal interpretation of a video biased participants toward the liberal position, while adopting the conservative interpretation biased participants toward the conservative position.

How might the differential response in the DMPFC arise in the brain? One possibility is that inputs to the DMPFC were modulated by political attitudes. Consistent with this hypothesis, we found that intersubject functional connectivity between the ventral striatum and the DMPFC was stronger between individuals with similar political attitudes. The ventral striatum is commonly associated with the processing of affective valence (i.e., whether an experience is positive or negative) (46, 47). Our results suggest that the propagation of valence information from the ventral striatum to the DMPFC was modulated by one’s political attitudes, which could bias the DMPFC response and give rise to the divergence in DMPFC activity between participants with dissimilar political attitudes. However, the temporal resolution of fMRI data does not allow us to make claims about the directionality of influence. As such, this interpretation is speculative, and future studies will be needed to clarify the role of frontostriatal connectivity in modulating DMPFC responses.

Control analyses indicated that neural responses in the DMPFC did not diverge between female and male participants or between older and younger participants, suggesting that sex- and age-related differences in how the videos were viewed did not polarize activity in the DMPFC. The size and composition of our sample did not allow us to test for effects of race. We also note that demographic variables, including sex, age, race, and religion, might moderate the effects of political attitudes on neural activity. For example, the neural response of a female liberal participant of one race might be more similar to that of another female liberal participant of the same race than to that of a male liberal participant of a different race. Given that demographics are strongly associated with attitudes toward immigration (48), as well as with political attitudes more broadly (49), this is a plausible hypothesis. Testing for these effects will require future work with a larger sample size and oversampling of participants from minority groups to ensure an equal number of participants in each group.

It will also be important to test whether our results generalize to other polarizing issues, such as abortion, gun control, and climate change. Given that political differences on these issues are also associated with differences in threat perception and moral values (50, 51), we predict that they would. Future studies should also examine the extent to which our results apply to polarized groups outside the American political context. Recent work found that political messages with more moral-emotional words were more likely to spread on online social networks (31, 52). This spread, however, was contained within networks of individuals who shared similar political attitudes, which may contribute to further attitude polarization. The polarized neural responses observed in our experiment suggest a possible neural precursor to the biased diffusion of political information. In particular, messages that induce greater neural polarization between groups might also be the messages most likely to spread in a polarized manner. This hypothesis can be tested by measuring the neural responses of participants reading messages and analyzing whether, and with whom, the messages are subsequently shared.

Our work introduces a multimethod approach to study the political brain under naturalistic conditions. Using this approach, we identified a neural correlate of the biased processing of political information, as well as the content features most likely to be processed in a biased manner. Divergent interpretations, as indexed by neural activity, were in turn associated with attitude change in response to the videos. Future work can combine neuroimaging data with machine learning methods in natural language processing to build semantic models of how political content is interpreted and inform interventions aimed at aligning interpretations between conservatives and liberals.

Materials and Methods

Participants.

Forty participants were recruited from the Stanford community using an online human participant management platform (SONA systems). Participants interested in the study first indicated their age and sex, as well as their support for the six immigration policies (Experimental Task) on an online questionnaire. We made an effort to recruit participants with varying immigration attitudes. As the pool of participants leaned liberal, this required oversampling of participants with conservative-leaning attitudes. Participants received $50 for participating in the 2-h experiment. Data from two participants were discarded because of excessive head motion (>3 mm) during one or more scanning sessions, yielding an effective sample size of 38 participants (23 male, 15 female, ages 19 to 57, mean age: 31.3). All participants provided written, informed consent prior to the start of the study. Experimental procedures were approved by the Stanford Institutional Review Board.

Experimental Task.

Participants were scanned using fMRI as they watched 24 videos (total duration: 35 min 26 s, divided into four runs) on six immigration policies: 1) border wall: the construction of a wall along the United States–Mexico border to reduce illegal immigration; 2) work authorization: allowing illegal/undocumented immigrants to work legally in the United States without fear of deportation; 3) refugee ban: banning refugees from majority-Muslim countries from entering the United States; 4) healthcare provision: allowing the use of federal funds to pay for emergency healthcare for undocumented/illegal immigrants; 5) Dream Act: providing a pathway to citizenship for undocumented individuals brought into the United States illegally as children; 6) sanctuary cities: cutting federal funding to sanctuary cities unless the cities agree to fully cooperate with US Immigration and Customs Enforcement. Each fMRI run contained one video on each policy; the order of the videos was otherwise randomized across participants. All videos were obtained from youtube.com and were selected to represent both liberal and conservative viewpoints. We provide the URL and a one-sentence description of each video in SI Appendix, Table S2.

Participants were instructed to watch the videos as they would watch television at home. At the end of each video, participants were asked to rate on a five-point scale how much they agreed with the general message of the video (agreeableness), how credible the information presented in the video was (credibility), and the extent to which the video made them more or less likely to support the policy in question (change).

Pre- and Postexperimental Measures.

Prior to being scanned, participants first completed a questionnaire on which they indicated their support for the six immigration policies (seven-point scale from “Strongly Not Support” to “Strongly Support”), their political orientation (seven-point scale from “Extremely Liberal” to “Extremely Conservative”), and political affiliation (“Strong Democrat,” “Moderate Democrat,” “Independent,” “Moderate Republican,” “Strong Republican”). At the end of the scanning session, participants completed the same questionnaire and also provided information about their annual household income (in $10,000 increments from $0 to $100,000; $100,000 to $150,000; and more than $150,000) and education level (less than high school, high school/GED, some college, 2-y college degree (associates), 4-y college degree (BA, BS), master’s degree, and doctorate or professional degree).

fMRI Data Acquisition and Preprocessing.

MRI data were collected using a 3T General Electric MRI scanner. Functional images were acquired in interleaved order using a T2*-weighted echo planar imaging pulse sequence (46 transverse slices; repetition time [TR]: 2 s; echo time [TE]: 25 ms; flip angle: 77°; voxel size: 2.9 mm³). Anatomical images were acquired at the start of the session with a T1-weighted pulse sequence (TR: 7.2 ms; TE: 2.8 ms; flip angle: 12°; voxel size: 1 mm³). Image volumes were preprocessed using FSL/FEAT v.5.98 (FMRIB Software Library, FMRIB, Oxford, UK). Preprocessing included motion correction, slice-timing correction, removal of low-frequency drifts using a temporal high-pass filter (100-s cutoff), and spatial smoothing (4-mm full width at half maximum). Functional volumes were first registered to each participant’s anatomical image (rigid-body transformation with 6 degrees of freedom) and then to a template brain in Montreal Neurological Institute space (affine transformation with 12 degrees of freedom).

The preprocessed data were then loaded into MATLAB (Mathworks) using the NIfTI toolbox. Each fMRI run was first normalized by z-scoring across time to remove baseline differences in the magnetic resonance signal. The resulting z-value is dependent on the mean activity in each run, which is in turn affected by the videos in the run. As the video order was randomized, the z-value of a particular video may be different between participants due to each participant having a different combination of videos in each run. To minimize the influence of other videos on mean video activity, we z-scored the time course within each video separately.
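The within-video normalization can be sketched in Python with NumPy. This is a minimal illustration, not the authors’ MATLAB code: it assumes one voxel’s run is a 1-D time course and that each video’s onset and offset are available as hypothetical (start, stop) TR indices. Samples outside the listed windows are left unchanged.

```python
import numpy as np

def zscore_within_videos(timecourse, video_bounds):
    """Z-score a 1-D time course separately within each video window.

    timecourse:   BOLD samples for one run (one value per TR).
    video_bounds: hypothetical list of (start, stop) TR indices, stop exclusive.
    """
    out = np.asarray(timecourse, dtype=float).copy()
    for start, stop in video_bounds:
        seg = out[start:stop]
        # Remove the video's own mean and scale by its own SD, so that other
        # videos in the same run cannot shift this video's z-values.
        out[start:stop] = (seg - seg.mean()) / seg.std()
    return out
```

Because each window is normalized on its own, the same video receives the same treatment regardless of which other videos shared its run.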

Intersubject Correlation Analyses.

We reordered and concatenated the neural data such that the 24 videos were ordered in the same sequence for all participants. We computed the one-to-average intersubject correlation across the entire sample. As the time courses were z-scored separately for each video, the correlation would not be driven by differences in mean activity between videos, but instead reflect similarity in the time course within each video. For each participant, we computed the Pearson correlation between the activity time course of a voxel and the activity time course at the same voxel averaged across all other participants. This procedure was repeated for all voxels and averaged over participants to obtain a map of average r-values.
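A minimal sketch of the one-to-average (leave-one-out) ISC for a single voxel, assuming the data are stored as a hypothetical (participants × time points) NumPy array with the videos already in a common order. The whole-brain map would repeat this for every voxel and average the r-values over participants.

```python
import numpy as np

def pearson_rows(a, b):
    """Row-wise Pearson correlation between two (n, T) arrays."""
    a = a - a.mean(axis=1, keepdims=True)
    b = b - b.mean(axis=1, keepdims=True)
    return (a * b).sum(axis=1) / np.sqrt((a ** 2).sum(axis=1) * (b ** 2).sum(axis=1))

def leave_one_out_isc(data):
    """One r-value per participant: own time course vs. mean of all others."""
    data = np.asarray(data, dtype=float)
    n = data.shape[0]
    total = data.sum(axis=0)
    others_mean = (total[None, :] - data) / (n - 1)  # row i excludes participant i
    return pearson_rows(data, others_mean)
```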

We assessed statistical significance using a nonparametric permutation test. For each voxel, we computed the t-statistic testing whether the average r-value was greater than zero. To generate a null distribution, we flipped the sign of the r-values for a random subset of participants and recomputed the t-statistic. This procedure was repeated 10,000 times. The P value was computed as the proportion of the null distribution that was more positive than the observed t-statistic. We then thresholded the statistical map to retain voxels that survived correction for multiple comparisons, controlling the false discovery rate (FDR) with the two-stage Benjamini, Krieger, and Yekutieli procedure (53) (q < 0.001).
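The sign-flip permutation test can be sketched as follows, assuming per-participant r-values are stored as a hypothetical (participants × voxels) array. P is computed as described, as the fraction of null t-statistics strictly more positive than the observed one; the function names are illustrative.

```python
import numpy as np

def one_sample_t(x):
    """Column-wise one-sample t-statistic against zero."""
    n = x.shape[0]
    return x.mean(axis=0) / (x.std(axis=0, ddof=1) / np.sqrt(n))

def sign_flip_test(r, n_perm=10_000, seed=0):
    """r: (n_participants, n_voxels). Returns observed t and one-sided P values."""
    rng = np.random.default_rng(seed)
    t_obs = one_sample_t(r)
    exceed = np.zeros_like(t_obs)
    for _ in range(n_perm):
        # Flip the sign of a random subset of participants (each with prob. 0.5).
        signs = rng.choice([-1.0, 1.0], size=(r.shape[0], 1))
        exceed += one_sample_t(signs * r) > t_obs
    return t_obs, exceed / n_perm
```

The same function applies unchanged to the per-participant differences in r used in the within-group vs. between-group and ISFC contrasts. For the FDR step, statsmodels’ `multipletests` offers the two-stage Benjamini, Krieger, and Yekutieli procedure via `method="fdr_tsbky"`.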

Within-Group vs. Between-Group Analyses.

For each participant, we calculated an immigration attitude score by summing their support ratings for the six immigration policies. The responses were coded such that higher ratings corresponded to more conservative-leaning attitudes. We then performed a median split to categorize participants as conservative-leaning or liberal-leaning. We ran two-sample t tests to test for group differences in age, head motion (framewise displacement), education, and household income, and a χ² test to test for group differences in sex. We also computed the Spearman correlation between the continuous immigration attitude scores and age, head motion, education, and household income.
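The attitude score and median split can be sketched as below. The mapping of which policies count as conservative-leaning when supported (border wall, refugee ban, and cutting funding to sanctuary cities) is our reading of the policy descriptions, not a coding stated by the authors, and assigning participants exactly at the median to the liberal-leaning group is an arbitrary choice.

```python
import numpy as np

# Assumed coding, policy order: border wall, work authorization, refugee ban,
# healthcare provision, Dream Act, sanctuary cities.
CONSERVATIVE_IF_SUPPORTED = np.array([True, False, True, False, False, True])

def attitude_scores(ratings, scale_max=7):
    """ratings: (n_participants, 6) support ratings on a 1..scale_max scale."""
    ratings = np.asarray(ratings, dtype=float)
    # Reverse-code policies where support is the liberal-leaning position,
    # so higher values always mean more conservative-leaning.
    coded = np.where(CONSERVATIVE_IF_SUPPORTED, ratings, scale_max + 1 - ratings)
    return coded.sum(axis=1)

def median_split(scores):
    """True = conservative-leaning (strictly above the median; tie rule assumed)."""
    return scores > np.median(scores)
```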

We searched for voxels where the time course of activity was more similar within-group than between-group. For each participant, we computed the within-group ISC as the voxel-wise correlation with the average time course of all other participants in the group (i.e., correlation between the activity of a liberal participant and the average activity of all other liberal participants; correlation between the activity of a conservative participant and the average activity of all other conservative participants). Conversely, we computed the between-group ISC as the voxel-wise ISC with the average time course of participants in the other group (i.e., correlation between the activity of a liberal participant and the average activity of conservative participants; correlation between the activity of a conservative participant and the average activity of liberal participants). For each participant and each voxel, we computed the difference between the within-group ISC and between-group ISC. This difference was then averaged across all participants to obtain a map of average difference in r-values, which reflects the extent to which the activity time course was more similar within groups than between groups.
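A sketch of the per-participant within-group minus between-group ISC for one voxel, assuming a (participants × time points) array and a Boolean label per participant (True for conservative-leaning). As described above, each participant is excluded from their own group’s average.

```python
import numpy as np

def within_minus_between_isc(data, is_conservative):
    """Return within-group ISC minus between-group ISC for each participant."""
    data = np.asarray(data, dtype=float)
    groups = np.asarray(is_conservative, dtype=bool)
    diff = np.empty(data.shape[0])
    for i in range(data.shape[0]):
        same = groups == groups[i]
        same[i] = False                  # other members of participant i's group
        other = groups != groups[i]      # all members of the opposite group
        r_within = np.corrcoef(data[i], data[same].mean(axis=0))[0, 1]
        r_between = np.corrcoef(data[i], data[other].mean(axis=0))[0, 1]
        diff[i] = r_within - r_between
    return diff
```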

We used a nonparametric permutation test to assess statistical significance of the difference map. For each voxel, we computed the t-statistic testing if the average difference in r was greater than zero. To generate a null distribution, we flipped the sign of the difference in r for a random subset of participants and recomputed the t-statistic. This procedure was repeated 10,000 times. The P value was computed as the proportion of the null distribution that was more positive than the observed t-statistic. We imposed a family-wise error cluster-correction threshold of P < 0.05 using Gaussian Random Field theory with a cluster-forming threshold of P < 0.001.

Intersubject Functional Connectivity Analyses.

A DMPFC region of interest (ROI) was defined as the voxels that survived correction in the within-group ISC > between-group ISC contrast. For each participant, we extracted the average time course in this ROI. For each voxel, we then computed the correlation between the average DMPFC time course and the voxel-wise time course averaged over all other participants in the same political group (within-group ISFC) and that averaged over all participants in the other political group (between-group ISFC). For each participant and each voxel, we computed the difference between the within-group ISFC and between-group ISFC. This difference was then averaged across all participants to obtain a map of average difference in r, which reflects the extent to which a participant’s DMPFC time course was more correlated with activity in that voxel among participants with similar immigration attitudes than among participants with opposite immigration attitudes.
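The ISFC contrast differs from the ISC contrast only in that each participant’s own time course comes from the DMPFC ROI, while the group averages come from the target voxel. A minimal NumPy sketch under the same hypothetical array layout:

```python
import numpy as np

def within_minus_between_isfc(seed, voxel, is_conservative):
    """seed:  (n_participants, T) average DMPFC ROI time course per participant.
    voxel: (n_participants, T) time course at one target voxel.
    Returns within-group ISFC minus between-group ISFC for each participant."""
    seed = np.asarray(seed, dtype=float)
    voxel = np.asarray(voxel, dtype=float)
    groups = np.asarray(is_conservative, dtype=bool)
    diff = np.empty(seed.shape[0])
    for i in range(seed.shape[0]):
        same = groups == groups[i]
        same[i] = False                  # exclude participant i from the group mean
        other = groups != groups[i]
        r_within = np.corrcoef(seed[i], voxel[same].mean(axis=0))[0, 1]
        r_between = np.corrcoef(seed[i], voxel[other].mean(axis=0))[0, 1]
        diff[i] = r_within - r_between
    return diff
```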

We used a nonparametric permutation test to assess statistical significance of the difference map. For each voxel, we computed the t-statistic testing if the average difference in r was greater than zero. To generate a null distribution, we flipped the sign of the difference in r for a random subset of participants and recomputed the t-statistic. This procedure was repeated 10,000 times. The P value was computed as the proportion of the null distribution that was more positive than the observed t-statistic. We imposed a family-wise error cluster-correction threshold of P < 0.05 using Gaussian Random Field theory with a cluster-forming threshold of P < 0.001.

Semantic Content Analyses.

To examine how the content of the videos relates to the differences in neural processing between liberals and conservatives, we transcribed the audio for all videos and segmented the videos into smaller segments based on the following criteria: 1) each segment is to be between 10 and 40 s; 2) a segment ends when there is a change in speaker (news interview) or a change in scene (animated ads) unless less than 10 s have passed; 3) if more than 40 s have passed since the end of the previous segment, the end of the current segment will be marked as the most recent pause in speech; 4) segments are rounded to the closest 2 s to match the repetition time of the functional scans. This process yielded 86 segments from 24 videos (range: 12 to 38 s; average duration: 24.7 s; SD: 5.56 s).
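The segmentation rules can be expressed as a greedy procedure. The sketch below is one possible reading of rules 1 to 4, not the authors’ procedure: speaker/scene changes and speech pauses are assumed to be given as lists of times in seconds, a change is skipped if it would leave a final segment shorter than 10 s, and if no pause is available the segment is cut at the latest admissible time.

```python
def segment_video(duration, changes, pauses, min_len=10.0, max_len=40.0, tr=2.0):
    """Return segment boundaries (s) for one video, rounded to the nearest TR.

    changes: times of speaker or scene changes (rule 2).
    pauses:  times of pauses in speech (rule 3).
    """
    bounds, start = [0.0], 0.0
    while True:
        lo = start + min_len                           # rule 1: at least min_len
        hi = min(start + max_len, duration - min_len)  # at most max_len; keep a full last segment
        candidates = sorted(c for c in changes if lo <= c <= hi)
        if candidates:
            end = candidates[0]                        # rule 2: cut at the first change
        elif duration - start <= max_len:
            break                                      # remainder fits in one segment
        else:
            recent = [p for p in pauses if lo <= p <= hi]
            end = max(recent) if recent else hi        # rule 3: most recent pause
        bounds.append(end)
        start = end
    bounds.append(duration)
    # Rule 4: snap to the TR grid (Python's round() sends exact ties to even).
    return [tr * round(b / tr) for b in bounds]
```

For example, a 70-s clip with changes at 5, 18, and 62 s and pauses at 33.4 and 51.7 s is cut at 18 s (first change after 10 s) and at the 51.7-s pause, which rounds to 52 s.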

For each segment, we used the LIWC (31) to count the proportion of words that fall into three distinct word categories: moral-emotional words, uniquely moral words, and uniquely emotional words. Moral-emotional words are words that appear both in the Moral Foundations Dictionary in ref. 24 and in the Affect Dictionary included with the LIWC software. Uniquely moral words are words that appear in the Moral Foundations Dictionary but not in the Affect Dictionary, while uniquely emotional words are words that appear in the Affect Dictionary but not in the Moral Foundations Dictionary. Previous work has shown that this procedure generates three categories of words with high discriminant validity: moral-emotional words are rated as more moral than uniquely emotional words and more emotional than uniquely moral words (31).
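The three word categories reduce to set operations on the two dictionaries. This sketch uses toy, hypothetical word lists and whitespace tokens; the real LIWC and Moral Foundations dictionaries include wildcard stems (entries ending in an asterisk), which a faithful implementation would need to match.

```python
def build_categories(moral_words, affect_words):
    """Split two dictionaries into three non-overlapping categories."""
    moral, affect = set(moral_words), set(affect_words)
    return {
        "moral_emotional": moral & affect,     # in both dictionaries
        "uniquely_moral": moral - affect,      # moral dictionary only
        "uniquely_emotional": affect - moral,  # affect dictionary only
    }

def category_percentages(tokens, categories):
    """Percentage of a segment's tokens that fall into each category."""
    n = len(tokens)
    return {name: 100.0 * sum(tok in words for tok in tokens) / n
            for name, words in categories.items()}
```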

We defined a DMPFC ROI as the cluster of voxels where activity was more similar within groups than between groups. For each segment, we computed the average DMPFC activity separately for liberal and conservative participants. We then obtained the absolute difference between the average activity of the two groups as a measure of neural polarization. Using a linear mixed-effects model, we tested if the percentage of moral-emotional words, uniquely moral words, and uniquely emotional words in a segment would predict the magnitude of neural polarization, with a random intercept included for each video and controlling for the number of words and the duration of the segment. With 86 segments and 24 videos, we have too few observations to parameterize a model with a “full random effects structure” (i.e., including random slopes) (54). Instead, we include random intercepts to specify the clustering of our data into videos to partially account for the dependency between segments from the same video. Next, we examined the relationship between neural polarization in the DMPFC and a broader set of semantic categories. For each segment, we calculated the percentage of words in the 47 semantic categories included as part of the LIWC software and the three categories from the Moral Emotional Dictionary. For each semantic category, we fit a separate linear mixed-effects model to model neural polarization in the DMPFC as a function of the percentage of words in that category, with a random intercept included for each video, and the number of words and duration of the segment added as covariates of no interest.
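The per-segment neural polarization measure can be sketched as the absolute difference between group-averaged DMPFC activity within each segment, assuming a (participants × TRs) ROI array and hypothetical (start, stop) TR indices for each segment. These values would then serve as the outcome variable of the mixed-effects models, which the authors fit with lme4 in R.

```python
import numpy as np

def neural_polarization(roi, is_conservative, segments):
    """|mean conservative - mean liberal| DMPFC activity for each segment."""
    roi = np.asarray(roi, dtype=float)
    groups = np.asarray(is_conservative, dtype=bool)
    cons = roi[groups].mean(axis=0)    # group-average time course, conservative-leaning
    lib = roi[~groups].mean(axis=0)    # group-average time course, liberal-leaning
    return np.array([abs(cons[s:e].mean() - lib[s:e].mean()) for s, e in segments])
```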

All models were estimated using the lmer function in the lme4 package in R (55), with P values computed from t tests with Satterthwaite approximation for the degrees of freedom as implemented in the lmerTest package (56) and corrected for multiple comparisons using the Holm–Bonferroni procedure. All predictor variables were z-scored prior to being entered into the model to facilitate the comparison of the resulting regression coefficients on a common scale.
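For reference, the Holm–Bonferroni adjustment and the predictor standardization can be written in a few lines of NumPy. The z-score uses the sample standard deviation, matching R’s `scale()`; this is an illustrative sketch, not the lmerTest pipeline itself.

```python
import numpy as np

def zscore(x):
    """Standardize a predictor using the sample SD (ddof=1)."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std(ddof=1)

def holm_adjust(pvals):
    """Holm-Bonferroni adjusted P values, returned in the original order."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    scaled = (m - np.arange(m)) * p[order]  # k-th smallest P times (m - k), k from 0
    # Enforce monotonicity, then cap at 1.
    adjusted_sorted = np.minimum(np.maximum.accumulate(scaled), 1.0)
    adjusted = np.empty(m)
    adjusted[order] = adjusted_sorted
    return adjusted
```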

Predicting Video-Specific Attitude Change.

For each video, we computed the correlation between each participant’s DMPFC time course and the average conservative and average liberal DMPFC time courses. The average time courses were computed while excluding that participant’s data. We then computed the difference between the two correlations as a measure of whether a participant’s brain activity was more similar to that of an average conservative or an average liberal participant. Positive values indicate greater similarity to the average conservative time course, while negative values indicate greater similarity to the average liberal time course.
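A sketch of the conservative-vs.-liberal similarity score for one video, assuming a (participants × TRs) DMPFC array and Boolean group labels (True for conservative-leaning). As in the text, the participant is removed from both group averages before correlating.

```python
import numpy as np

def conservative_minus_liberal_similarity(roi, is_conservative):
    """r(participant, conservative mean) - r(participant, liberal mean)."""
    roi = np.asarray(roi, dtype=float)
    groups = np.asarray(is_conservative, dtype=bool)
    n = roi.shape[0]
    out = np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i                    # leave participant i out
        cons = roi[keep & groups].mean(axis=0)
        lib = roi[keep & ~groups].mean(axis=0)
        out[i] = np.corrcoef(roi[i], cons)[0, 1] - np.corrcoef(roi[i], lib)[0, 1]
    return out  # > 0: closer to conservatives; < 0: closer to liberals
```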

We ran three separate linear mixed-effects models to predict video-specific ratings of agreeableness, credibility, and change (Experimental Task) from neural similarity to conservative vs. liberal participants. Participants’ initial attitude toward the policy addressed in the video was included as a regressor of no interest. All models included a random intercept for each video and each participant and were estimated using the lmer function in the lme4 package in R (55), with P values computed from t tests with Satterthwaite approximation for the degrees of freedom as implemented in the lmerTest package (56).

Supplementary Material

Acknowledgments

We thank Courtney Gao, Yiyu Wang, and Gloria Wong for assistance with stimuli creation, video transcription, and data collection, and all members of the Stanford Social Neuroscience Laboratory for helpful discussion. This research was supported by Army Research Office Grant W911NF1610172 (to J.Z.).

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.


This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2008530117/-/DCSupplemental.

Data Availability.

Anonymized data have been deposited in OpenNeuro (https://openneuro.org/) under the accession no. ds003095. Analysis scripts are available in Github at https://github.com/ycleong/Polarization/.

References

- 1. Somer M., McCoy J., Déjà vu? Polarization and endangered democracies in the 21st century. Am. Behav. Sci. 62, 3–15 (2018).

- 2. Pew Research Center, Political polarization in the American public. https://www.pewresearch.org/politics/2014/06/12/political-polarization-in-the-american-public/. Accessed 29 September 2020.

- 3. McCoy J., Rahman T., Somer M., Polarization and the global crisis of democracy: Common patterns, dynamics, and pernicious consequences for democratic polities. Am. Behav. Sci. 62, 16–42 (2018).

- 4. Bolsen T., Druckman J. N., Cook F. L., The influence of partisan motivated reasoning on public opinion. Polit. Behav. 36, 235–262 (2014).

- 5. Bail C. A., et al., Exposure to opposing views on social media can increase political polarization. Proc. Natl. Acad. Sci. U.S.A. 115, 9216–9221 (2018).

- 6. Lord C. G., Ross L., Lepper M. R., Biased assimilation and attitude polarization: The effects of prior theories on subsequently considered evidence. J. Pers. Soc. Psychol. 37, 2098–2109 (1979).

- 7. Taber C. S., Lodge M., Motivated skepticism in the evaluation of political beliefs. Am. J. Pol. Sci. 50, 755–769 (2006).

- 8. Kraft P. W., Lodge M., Taber C. S., Why people “don’t trust the evidence”: Motivated reasoning and scientific beliefs. Ann. Am. Acad. Pol. Soc. Sci. 658, 121–133 (2015).

- 9. Claassen R. L., Ensley M. J., Motivated reasoning and yard-sign-stealing partisans: Mine is a likable rogue, yours is a degenerate criminal. Polit. Behav. 38, 317–335 (2016).

- 10. Leong Y. C., Hughes B. L., Wang Y., Zaki J., Neurocomputational mechanisms underlying motivated seeing. Nat. Hum. Behav. 3, 962–973 (2019).

- 11. Balcetis E., Dunning D., See what you want to see: Motivational influences on visual perception. J. Pers. Soc. Psychol. 91, 612–625 (2006).

- 12. Caruso E. M., Mead N. L., Balcetis E., Political partisanship influences perception of biracial candidates’ skin tone. Proc. Natl. Acad. Sci. U.S.A. 106, 20168–20173 (2009).

- 13. Granot Y., Balcetis E., Schneider K. E., Tyler T. R., Justice is not blind: Visual attention exaggerates effects of group identification on legal punishment. J. Exp. Psychol. Gen. 143, 2196–2208 (2014).

- 14. Jost J. T., Nam H. H., Amodio D. M., Bavel J. J. V., Political neuroscience: The beginning of a beautiful friendship. Polit. Psychol. 35, 3–42 (2014).

- 15. Van Bavel J. J., Pereira A., The partisan brain: An identity-based model of political belief. Trends Cogn. Sci. 22, 213–224 (2018).

- 16. Pew Research Center, How Americans see illegal immigration, the border wall and political compromise. https://www.pewresearch.org/fact-tank/2019/01/16/how-americans-see-illegal-immigration-the-border-wall-and-political-compromise/. Accessed 29 September 2020.

- 17. Miller B., Exploring the economic determinants of immigration attitudes. Poverty Public Policy 4, 1–19 (2012).

- 18. Nastase S. A., Gazzola V., Hasson U., Keysers C., Measuring shared responses across subjects using intersubject correlation. Soc. Cogn. Affect. Neurosci. 14, 667–685 (2019).

- 19. Regev M., et al., Propagation of information along the cortical hierarchy as a function of attention while reading and listening to stories. Cereb. Cortex 29, 4017–4034 (2019).

- 20. Nguyen M., Vanderwal T., Hasson U., Shared understanding of narratives is correlated with shared neural responses. Neuroimage 184, 161–170 (2019).

- 21. Yeshurun Y., et al., Same story, different story. Psychol. Sci. 28, 307–319 (2017).

- 22. Lakoff G., Moral Politics: How Liberals and Conservatives Think, (University of Chicago Press, ed. 2, 2010).

- 23. Skitka L. J., Bauman C. W., Moral conviction and political engagement. Polit. Psychol. 29, 29–54 (2008).

- 24. Graham J., Haidt J., Nosek B. A., Liberals and conservatives rely on different sets of moral foundations. J. Pers. Soc. Psychol. 96, 1029–1046 (2009).

- 25. Brady W. J., Crockett M. J., Van Bavel J. J., The MAD Model of Moral Contagion: The role of motivation, attention and design in the spread of moralized content online. Perspect. Psychol. Sci. 15, 978–1010 (2020).

- 26. Clifford S., How emotional frames moralize and polarize political attitudes. Polit. Psychol. 40, 75–91 (2019).

- 27. Hasson U., Nir Y., Levy I., Fuhrmann G., Malach R., Intersubject synchronization of cortical activity during natural vision. Science 303, 1634–1640 (2004).

- 28. Parkinson C., Kleinbaum A. M., Wheatley T., Similar neural responses predict friendship. Nat. Commun. 9, 332 (2018).

- 29. Finn E. S., Corlett P. R., Chen G., Bandettini P. A., Constable R. T., Trait paranoia shapes inter-subject synchrony in brain activity during an ambiguous social narrative. Nat. Commun. 9, 2043 (2018).

- 30. Kriegeskorte N., Mur M., Bandettini P., Representational similarity analysis: Connecting the branches of systems neuroscience. Front. Syst. Neurosci. 2, 4 (2008).

- 31. Brady W. J., Wills J. A., Jost J. T., Tucker J. A., Van Bavel J. J., Emotion shapes the diffusion of moralized content in social networks. Proc. Natl. Acad. Sci. U.S.A. 114, 7313–7318 (2017).

- 32. Pennebaker J. W., Boyd R. L., Jordan K., Blackburn K., The Development and Psychometric Properties of LIWC2015, (University of Texas at Austin, Austin, TX, 2015).

- 33. Goñi J., et al., Resting-brain functional connectivity predicted by analytic measures of network communication. Proc. Natl. Acad. Sci. U.S.A. 111, 833–838 (2014).

- 34. Simony E., et al., Dynamic reconfiguration of the default mode network during narrative comprehension. Nat. Commun. 7, 12141 (2016).

- 35. Lerner Y., Honey C. J., Silbert L. J., Hasson U., Topographic mapping of a hierarchy of temporal receptive windows using a narrated story. J. Neurosci. 31, 2906–2915 (2011).

- 36. Honey C. J., Thompson C. R., Lerner Y., Hasson U., Not lost in translation: Neural responses shared across languages. J. Neurosci. 32, 15277–15283 (2012).

- 37. Spreng R. N., Mar R. A., Kim A. S. N., The common neural basis of autobiographical memory, prospection, navigation, theory of mind, and the default mode: A quantitative meta-analysis. J. Cogn. Neurosci. 21, 489–510 (2009).

- 38. Van Overwalle F., Social cognition and the brain: A meta-analysis. Hum. Brain Mapp. 30, 829–858 (2009).

- 39. Mende-Siedlecki P., Cai Y., Todorov A., The neural dynamics of updating person impressions. Soc. Cogn. Affect. Neurosci. 8, 623–631 (2013).

- 40. Yeshurun Y., Nguyen M., Hasson U., Amplification of local changes along the timescale processing hierarchy. Proc. Natl. Acad. Sci. U.S.A. 114, 9475–9480 (2017).

- 41. Zwaan R. A., Radvansky G. A., Situation models in language comprehension and memory. Psychol. Bull. 123, 162–185 (1998).

- 42. Yarkoni T., Speer N. K., Zacks J. M., Neural substrates of narrative comprehension and memory. Neuroimage 41, 1408–1425 (2008).

- 43. Jost J. T., Stern C., Rule N. O., Sterling J., The politics of fear: Is there an ideological asymmetry in existential motivation? Soc. Cogn. 35, 324–353 (2017).

- 44. Jost J. T., Glaser J., Kruglanski A. W., Sulloway F. J., Political conservatism as motivated social cognition. Psychol. Bull. 129, 339–375 (2003).

- 45. Hibbing J. R., Smith K. B., Alford J. R., Differences in negativity bias underlie variations in political ideology. Behav. Brain Sci. 37, 297–307 (2014).

- 46. Delgado M. R., Reward-related responses in the human striatum. Ann. N. Y. Acad. Sci. 1104, 70–88 (2007).

- 47. Knutson B., Greer S. M., Anticipatory affect: Neural correlates and consequences for choice. Philos. Trans. R. Soc. Lond. B Biol. Sci. 363, 3771–3786 (2008).

- 48. Hainmueller J., Hopkins D. J., Public attitudes toward immigration. Annu. Rev. Polit. Sci. 17, 225–249 (2014).

- 49. Pew Research Center, Similarities and differences between urban, suburban and rural communities in America. https://www.pewsocialtrends.org/2018/05/22/what-unites-and-divides-urban-suburban-and-rural-communities/. Accessed 29 September 2018.

- 50. Koleva S. P., Graham J., Iyer R., Ditto P. H., Haidt J., Tracing the threads: How five moral concerns (especially Purity) help explain culture war attitudes. J. Res. Pers. 46, 184–194 (2012).

- 51. Kahan D., Gastil J., Braman D., Cohen G., Slovic P., The second national risk and culture study: Making sense of—and making progress in—the American culture war of fact. GWU Leg. Stud. Res. Pap. 370, 8–26 (2007).

- 52. Brady W. J., Wills J. A., Burkart D., Jost J. T., Van Bavel J. J., An ideological asymmetry in the diffusion of moralized content on social media among political leaders. J. Exp. Psychol. Gen. 148, 1802–1813 (2018).

- 53. Benjamini Y., Krieger A. M., Yekutieli D., Adaptive linear step-up procedures that control the false discovery rate. Biometrika 93, 491–507 (2006).

- 54. Barr D. J., Levy R., Scheepers C., Tily H. J., Random effects structure for confirmatory hypothesis testing: Keep it maximal. J. Mem. Lang. 68, 255–278 (2013).

- 55. Bates D., et al., lme4: Linear mixed-effects models using “Eigen” and S4. R package version 1.1.18.1. https://CRAN.R-project.org/package=lme4. Accessed 6 October 2020.

- 56. Kuznetsova A., Brockhoff P. B., Christensen R. H. B., lmerTest: Tests in linear mixed effects models. R package version 3.0.1. https://CRAN.R-project.org/package=lmerTest. Accessed 6 October 2020.
