Abstract

We present the Amsterdam Open MRI Collection (AOMIC): three datasets with multimodal (3 T) MRI data including structural (T1-weighted), diffusion-weighted, and (resting-state and task-based) functional BOLD MRI data, as well as detailed demographics and psychometric variables from a large set of healthy participants (N = 928, N = 226, and N = 216). Notably, task-based fMRI was collected during various robust paradigms (targeting naturalistic vision, emotion perception, working memory, face perception, cognitive conflict and control, and response inhibition) for which extensively annotated event-files are available. For each dataset and data modality, we provide the data in both raw and preprocessed form (both compliant with the Brain Imaging Data Structure), which were subjected to extensive (automated and manual) quality control. All data is publicly available from the OpenNeuro data sharing platform.

Subject terms: Cognitive neuroscience, Human behaviour, Social neuroscience

| Measurement(s) | T2*-weighted images • T1-weighted images • diffusion-weighted images • Respiration • cardiac trace |

| Technology Type(s) | Gradient Echo MRI • Magnetization-Prepared Rapid Gradient Echo MRI • Diffusion Weighted Imaging • breathing belt • Pulse Oximetry |

| Sample Characteristic - Organism | Homo sapiens |

Machine-accessible metadata file describing the reported data: 10.6084/m9.figshare.13606946

Background & Summary

It is becoming increasingly clear that robust effects in neuroimaging studies require very large sample sizes1,2, especially when investigating between-subject effects3. With this in mind, we have run several large-scale “population imaging” MRI projects over the past decade at the University of Amsterdam, with the aim to reliably estimate the (absence of) structural and functional correlates of human behavior and mental processes. After publishing several articles using these datasets4–8, we believe that making the data from these projects publicly available will benefit the neuroimaging community most. To this end, we present the Amsterdam Open MRI Collection (AOMIC) — three large-scale datasets with high-quality, multimodal 3 T MRI data and detailed demographic and psychometric data, which are publicly available from the OpenNeuro data sharing platform. In this article, we describe the characteristics and contents of these three datasets in a manner that complies with the guidelines of the COBIDAS MRI reporting framework9.

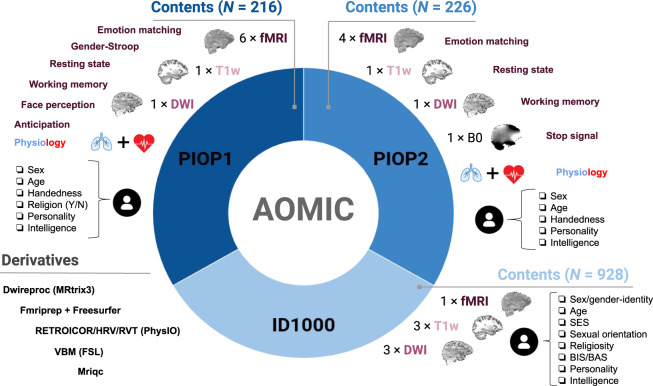

We believe that AOMIC represents a useful contribution to the growing collection of publicly available population imaging MRI datasets10–13. AOMIC contains a large representative dataset of the general population, “ID1000” (N = 928), and two large datasets with data from university students, “PIOP1” (N = 216) and “PIOP2” (N = 226; Population Imaging of Psychology). Each dataset contains MRI data from multiple modalities (structural, diffusion, and functional MRI), concurrently measured physiological (respiratory and cardiac) data, and a variety of well-annotated demographics (age, sex, handedness, educational level, etc.), psychometric measures (intelligence, personality), and behavioral information related to the task-based fMRI runs (see Fig. 1 and Table 1 for an overview). Furthermore, AOMIC offers, in addition to the raw data, also preprocessed data from well-established preprocessing and quality control pipelines, all consistently formatted according to the Brain Imaging Data Structure14. As such, researchers can quickly and easily prototype and implement novel secondary analyses without having to worry about quality control and preprocessing themselves.

Fig. 1.

General overview of AOMIC’s contents. Each dataset (ID1000, PIOP1, PIOP2) contains multimodal MRI data, physiology (concurrent with fMRI acquisition), demographic and psychometric data, as well as a large set of “derivatives”, i.e., data derived from the original “raw” data through state-of-the-art preprocessing pipelines.

Table 1.

Overview of the number of subjects per dataset and tasks.

| ID1000 | PIOP1 | PIOP2 | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| N subj. | 928 | 216 | 226 | ||||||||

| T1w | 928 | 216 | 226 | ||||||||

| DWI | 925 | 211 | 226 | ||||||||

| Fieldmap | n/a | n/a | 226 | ||||||||

| Mov | RSMB | Emo | G-str | FPMB | WM | Antic | RS | Emo | WM | Stop | |

| fMRI | 881 | 210 | 208 | 208 | 203 | 207 | 203 | 214 | 222 | 224 | 226 |

| Physiology | 790 | 198 | 194 | 194 | 189 | 194 | 188 | 216 | 216 | 211 | 217 |

Mov: movie watching, RS: resting-state, Emo: emotion matching, G-str: gender-stroop, FP: face perception, WM: working memory, Antic: anticipation, Stop: stop-signal. MB subscript indicates that multiband acceleration (also known as simultaneous slice excitation) was used. Tasks without this subscript were acquired without multiband acceleration (i.e., “sequential” acquisition).

Due to the size and variety of the data in AOMIC, there are many ways in which it can be used for secondary analysis. One promising direction is to use the data for the development of generative and discriminative machine learning-based algorithms, which often need large datasets to train models on. Another, but related, use of AOMIC’s data is to use it as a validation dataset (rather than train-set) for already developed (machine learning) algorithms to assess the algorithm’s ability to generalize to different acquisition sites or protocols. Lastly, due to the rich set of confound variables shipped with each dataset (including physiology-derived noise regressors), AOMIC can be used to develop, test, or validate (novel) denoising methods.

Methods

In this section, we describe the details of the data acquisition for each dataset in AOMIC. We start with a common description of the MRI scanner used to collect the data. The next two sections describe the participant characteristics, data collection protocols, experimental paradigms (for functional MRI), and previous analyses separately for the ID1000 study and the PIOP studies. Then, two sections describe the recorded subject-specific variables (such as educational level, background socio-economic status, age, etc.) and psychometric measures from questionnaires and tasks (such as intelligence and personality). Finally, we describe how we standardized and preprocessed the data, yielding an extensive set of “derivatives” (i.e., data derived from the original raw data).

Scanner details and general scanning protocol (all datasets)

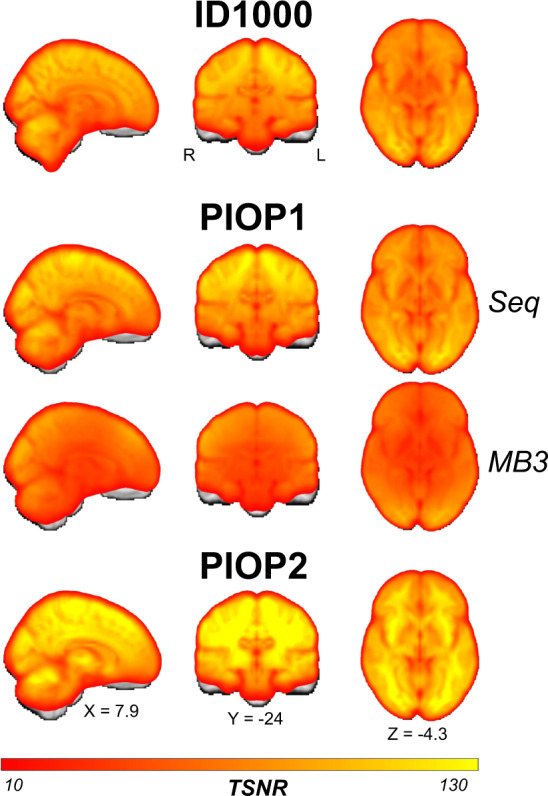

Data from all three datasets were acquired on the same Philips 3 T scanner (Philips, Best, the Netherlands), but underwent several upgrades in between the three studies. The ID1000 dataset was scanned on the “Intera” version, after which the scanner was upgraded to the “Achieva” version (converting a part of the signal acquisition pathway from analog to digital) on which the PIOP1 dataset was scanned. After finishing the PIOP1 study, the scanner was upgraded to the “Achieva dStream” version (with even earlier digitalization of the MR signal resulting in less noise interference), on which the PIOP2 study was scanned. All studies were scanned with a 32-channel head coil (though the head coil was upgraded at the same time as the dStream upgrade). Although not part of AOMIC, a separate dataset (dStreamUpgrade) with raw and preprocessed data (DWI, T1-weighted, and both resting-state and task-based functional MRI scans) acquired before and after the dStream upgrade using the same sequences and participants in both sessions is available on OpenNeuro15. In addition, unthresholded group-level temporal signal-to-noise ratio (tSNR) maps and group-level GLM maps (for both the pre-upgrade and post-upgrade data as well as the post-pre difference) are available on NeuroVault16.

At the start of each scan session, a low resolution survey scan was made, which was used to determine the location of the field-of-view. For all structural (T1-weighted), fieldmap (phase-difference based B0 map), and diffusion (DWI) scans, the slice stack was not angled. This was also the case for the functional MRI scans of ID1000 and PIOP1, but for PIOP2 the slice stack for functional MRI scans was angled such that the eyes were excluded as much as possible in order to reduce signal dropout in the orbitofrontal cortex. While the set of scans acquired for each study is relatively consistent (i.e., at least one T1-weighted anatomical scan, at least one diffusion-weighted scan, and at least one functional BOLD MRI scan), the parameters for a given scan vary slightly between the three studies. Notably, the functional MRI data from the resting-state and faces tasks from PIOP1 were acquired with multi-slice acceleration (factor 3; referred to in the current article as “multiband” acquisition), while all other functional MRI scans, including those from ID1000 and PIOP2, were acquired without multi-slice acceleration (referred to in the current article as “sequential” acquisition). The reason for reverting to sequential (instead of multiband) acquisition of the resting-state scan in PIOP2 is that we did not think the reduction in tSNR observed in multiband scans outweighs the increase in number of volumes. In Online-only Table 1, the parameters for the different types of scans across all three studies are listed.

Online-only Table 1.

Acquisition parameters for the different scans acquired across all three datasets.

| ID1000 | PIOP1 | PIOP2 | ||

|---|---|---|---|---|

| T1-weighted images | ||||

| Number of scans | 3 | 1 | 1 | |

| Scan technique | 3D MPRAGE | 3D MPRAGE | 3D MPRAGE | |

| Number of signals (repetitions) | 1 | 2 | 2 | |

| Fat suppression | Yes | Yes | Yes | |

| Coverage | Whole-brain* | Whole-brain* | Whole-brain* | |

| FOV (RL/AP/FH; mm) | 160 × 256 × 256 | 188 × 240 × 220 | 188 × 240 × 220 | |

| Voxel size (mm) | 1 × 1 × 1 | 1 × 1 × 1 | 1 × 1 × 1 | |

| TR/TE (ms) | 8.1/3.7 | 8.5/3.9 | 8.5/3.9 | |

| Water-fat shift (pix) | 2.268 | 2.268 | 2.268 | |

| Bandwidth (Hz/pix) | 191.5 | 191.5 | 191.5 | |

| Flip angle (deg) | 8 | 8 | 8 | |

| Phase accell. factor (SENSE) | 1.5 (RL) | 2.5 (RL)/2 (FH) | 2.5 (RL)/2 (FH) | |

| Acquisition direction | Sagittal | Axial | Axial | |

| Duration | 5 min 58 sec | 6 min 3 sec | 6 min 3 | |

| Phase-difference fieldmap | ||||

| Scan technique | n/a | n/a | 3D MPRAGE | |

| Fat suppression | n/a | n/a | No | |

| Coverage | n/a | n/a | Whole-brain | |

| FOV (RL/AP/FH; mm) | n/a | n/a | 208 × 256 × 256 | |

| Voxel size (mm) | n/a | n/a | 2 × 2 × 2 | |

| TR/TE (ms) | n/a | n/a | 11/3 | |

| Delta TE (ms) | n/a | n/a | 5 | |

| Water-fat shift (pix) | n/a | n/a | 1.134 | |

| Bandwidth (Hz/pix) | n/a | n/a | 383.0 | |

| Flip angle (deg) | n/a | n/a | 8 | |

| Phase accell. factor (SENSE) | n/a | n/a | 2.5 (RL)/2 (FH) | |

| Acquisition direction | n/a | n/a | Axial | |

| Duration | n/a | n/a | 1 min 44 | |

| Functional (BOLD) MRI | MB scans** | Seq. scans** | ||

| Number of scans | 1 | 2 | 3 | 4 |

| Scan technique | GE-EPI | GE-EPI | GE-EPI | GE-EPI |

| Coverage | Whole-brain | Whole-brain | Whole-brain | |

| FOV (RL/AP/FH) | 138 × 192 × 192 | 240 × 240 × 118 | 240 × 240 × 122 | 240 × 240 × 122 |

| Voxel size (mm.) | 3 × 3 × 3 | 3 × 3 × 3 | 3 × 3 × 3 | 3 × 3 × 3 |

| Matrix size | 64 × 64 | 80 × 80 | 80 × 80 | 80 × 80 |

| Nr. of slices | 40 | 36 | 37 | 37 |

| Slice gap (mm.) | 0.3 | 0.3 | 0.3 | 0.3 |

| TR/TE (ms.) | 2200/28 | 750/28 | 2000 | 28 |

| Water-fat shift (pix.) | 11.575 | 11.001 | 11.502 | 11.502 |

| Bandwidth (Hz/Pix) | 34.6 | 39.5 | 37.8 | 37.8 |

| Flip angle (deg.) | 90 | 60 | 76.1 | 76.1 |

| Phase accell. factor (SENSE) | 0 | 2 (AP) | 2 (AP) | 2 (AP) |

| Phase encoding direction | P>> A | P>> A | P>> A | P>> A |

| Multiband acceleration factor | n/a | 3 | n/a | n/a |

| Multiband FOV shift | n/a | None | n/a | n/a |

| Slice encoding direction | L>> R (sequential) | F>> H (sequential) | F>> H (sequential) | F>> H (sequential) |

| Nr. of dummy scans | 2 | 2 | 2 | 2 |

| Dynamic stabilization | none | enhanced | enhanced | enhanced |

| Diffusion-weighted images | ||||

| Number of scans | 3 | 1 | 1 | |

| Scan technique | SE-DWI | SE-DWI | SE-DWI | |

| Shell | Single | Single | Single | |

| Nr. of b0 images | 1 | 1 | 1 | |

| Nr. of diffusion-weighted dirs. | 32 | 32 | 32 | |

| Sampling scheme | Half sphere | Half sphere | Half sphere | |

| DWI b-value | 1000 s/mm2 | 1000 s/mm2 | 1000 s/mm2 | |

| Coverage | Whole-brain (partial cerebellum) | Whole-brain (partial cerebellum) | Whole-brain (partial cerebellum) | |

| FOV (RL/AP/FH) | 224 × 224 × 120 | 224 × 224 × 120 | 224 × 224 × 120 | |

| Voxel size (mm.) | 2 × 2 × 2 | 2 × 2 × 2 | 2 × 2 × 2 | |

| Matrix size | 112 × 112 | 112 × 112 | 112 × 112 | |

| Nr. of slices | 60 | 60 | 60 | |

| Slice gap (mm.) | 0 | 0 | 0 | |

| TR/TE (ms.) | 6312***/74 | 7387***/86 | 7387***/86 | |

| Water-fat shift (pix.) | 13.539 | 18.926 | 18.926 | |

| Bandwidth (Hz/pix.) | 33.8 | 22.9 | 22.9 | |

| Flip angle (deg.) | 90 | 90 | 90 | |

| Phase accell. (SENSE) | 3 (AP) | 2 (AP) | 2 (AP) | |

| Phase encoding dir. | P>> A | P>> A | P>> A | |

| Slice encoding direction | F>> H | F>> H | F>> H | |

| Duration | 4 min 49 sec | 5 min 27 sec | 5 min 27 sec | |

MPRAGE: Magnetization Prepared Rapid Gradient Echo, SE-DWI: Spin-Echo Diffusion-Weighted Imaging, FOV: field-of-view, RL: right-left, AP: anterior-posterior, FH: feet-head, GE-EPI: gradient echo-echo-planar imaging, TR: time to repetition, TE: time to echo, n/a: not applicable, Seq.: sequential (non-multiband), MB: multiband (i.e., simultaneous slice excitation). * Whole-brain coverage included the brain-stem and cerebellum, unless otherwise stated. ** MB scans were acquired for the face perception and resting-state paradigms of PIOP2. Sequential scans were acquired for the movie watching (ID1000), working memory, emotion matching, gender stroop, and anticipation, stop signal paradigms (PIOP1 and PIOP2). *** DWI scans were acquired with the shortest possible TR (“shortest” setting on Philips scanners), causing the actual TR values to vary from scan to scan. The TRs reported in this table reflect the median TR across all DWI scans per dataset (see the Raw data standardization section for more information).

During functional MRI scans, additional physiological data was recorded. Respiratory traces were recorded using a respiratory belt (air filled cushion) bound on top of the subject’s diaphragm using a velcro band. Cardiac traces were recorded using a plethysmograph attached to the subject’s left ring finger. Data was transferred to the scanner PC as plain-text files (Philips “SCANPHYSLOG” files) using a wireless recorder with a sampling frequency of 496 Hz.

Experimental paradigms for the functional MRI runs were shown on a 61 × 36 cm screen using a DLP projector with a 60 Hz refresh rate (ID1000 and PIOP1) or on a Cambridge Electronics BOLDscreen 32 IPS LCD screen with a 120 Hz refresh rate (PIOP2), both placed at 113 cm distance from the mirror mounted on top of the head coil. Sound was presented via a MRConfon sound system. Experimental tasks were programmed using Neurobs Presentation (Neurobehavioral Systems Inc, Berkeley, U.S.A.) and run on a Windows computer with a dedicated graphics card. To allow subject responses in experimental tasks (PIOP1 and PIOP2 only), participants used MRI-compatible fibre optic response pads with four buttons for each hand (Cambridge Research Systems, Rochester, United Kingdom).

ID1000 specifics

In this section, we describe the subject recruitment, subject characteristics, data collection protocol, functional MRI paradigm, and previous analyses of the ID1000 study.

Subjects

The data from the ID1000 sample was collected between 2010 and 2012. The faculty’s ethical committee approved this study before data collection started (EC number: 2010-BC-1345). Potential subjects were a priori excluded from participation if they did not pass a safety checklist with MRI contraindications. We recorded data from 992 subjects of which 928 are included in the dataset (see “Technical validation” for details on the post-hoc exclusion procedure). Subjects were recruited through a recruitment agency (Motivaction International B.V.) in an effort to get a sample that was representative of the general Dutch population in terms of educational level (as defined by the Dutch government17), but drawn from only a limited age range (19 to 26). We chose this limited age range to minimize the effect of aging on any brain-related covariates. A more detailed description of educational level and other demographic variables can be found in the section “Subject variables”.

Data collection protocol

Prior to the experiment, subjects were informed about the goal and scope of the research, the MRI procedure, safety measures, general experimental procedures, privacy and data sharing concerns, and voluntary nature of the project (i.e., subjects were told that they could stop with the experiment at any time, without giving a reason for it). Before coming to the scan center, subjects also completed a questionnaire on background information (to determine educational level, which was used to draw a representative sample). If participants were invited they provided informed consent and completed an MRI screening checklist. Subjects then completed an extensive set of questionnaires and tests, including a general demographics questionnaire, the Intelligence Structure Test18,19, the “trait” part of the State-Trait Anxiety Inventory (STAI)20, a behavioral avoidance/inhibition questionnaire (BIS/BAS)21,22, multiple personality questionnaires — amongst them the MPQ23 and the NEO-FFI24,25 and several behavioral tasks. The psychometric variables of the tests included in the current dataset are described in the section “Psychometric variables”.

Testing took place from 9 AM until 4 PM and on each day two subjects were tested. One subject began with the IST intelligence test, while the other subject started with the imaging part of the experiment. For the MRI part, we recorded three T1-weighted scans, three diffusion-weighted scans, and one functional (BOLD) MRI scan (in that order). The scanning session lasted approximately 60 minutes. Afterwards, the subjects switched and completed the other part. After these initial tasks, the subjects participated in additional experimental tasks, some of which have been reported in other publications26,27 and are not included in this dataset.

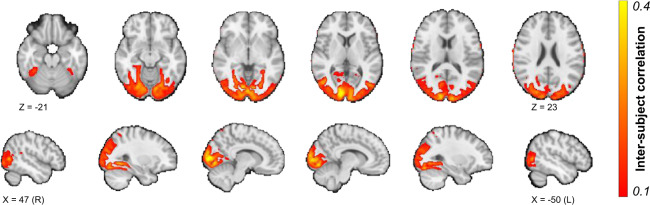

Functional MRI paradigm

During functional MRI acquisition, subjects viewed a movie clip consisting of a (continuous) compilation of 22 natural scenes taken from the movie Koyaanisqatsi28 with music composed by Philip Glass. The scenes were selected because they broadly sample a set of visual parameters (textures and objects with different sizes and different rates of movement). Importantly, the focus on variation of visual parameters means, in this case, that the movie lacks a narrative and thus may be inappropriate to investigate semantic or other high-level processes.

The scenes varied in length from approximately 5 to 40 seconds with “cross dissolve” transitions between scenes. The movie clip extended 16 degrees visual angle (resolution 720 × 576, movie frame rate of 25 Hz). The onset of the movie clip was triggered by the first volume of the fMRI acquisition and had a duration of 11 minutes (which is slightly longer than the fMRI scan, i.e., 10 minutes and 38 seconds). The movie clip is available in the “stimuli” subdirectory of the ID1000 dataset (with the filename task-moviewatching_desc-koyaanisqatsi_movie.mp4).

Previous analyses

The MRI data of ID1000 has been analyzed previously by two studies. One study4 analyzed the functional MRI data of a subset of 20 subjects by relating features from computational models applied to the movie data to the voxelwise time series using representational similarity analysis. Another study5 analyzed the relationship between autistic traits and voxel-based morphometry (VBM, derived from the T1-weighted scans) as well as fractional anisotropy (FA, derived from the DWI scans) in a subset of 508 subjects.

PIOP1 and PIOP2 specifics

In this section, we describe the subject recruitment, subject characteristics, data collection protocol, functional MRI paradigms, and previous analyses of the PIOP1 and PIOP2 studies. These two studies are described in a common section because their data collection protocols were very similar. Information provided in this section (including sample characteristics, sample procedure, data acquisition procedure and scan sequences) can be assumed to apply to both PIOP1 and PIOP2, unless explicitly stated otherwise. As such, these datasets can be used as train/test (or validation) partitions. The last subsection (Differences between PIOP1 and PIOP2) describes the main differences between the two PIOP datasets.

Subjects

Data from the PIOP1 dataset were collected between May 2015 and April 2016 and data from the PIOP2 dataset between March 2017 and July 2017. The faculty’s ethical committee approved these studies before data collection started (PIOP1 EC number: 2015-EXT-4366, PIOP2 EC number: 2017-EXT-7568). Potential subjects were a priori excluded from participation if they did not pass a safety checklist with MRI contraindications. Data was recorded from 248 subjects (PIOP1) and 242 subjects (PIOP2), of which 216 (PIOP1) and 226 (PIOP2) are included in AOMIC (see “Technical validation” for details on the post-hoc exclusion procedure). Subjects from both PIOP1 and PIOP2 were all university students (from the Amsterdam University of Applied Sciences or the University of Amsterdam) recruited through the University websites, posters placed around the university grounds, and Facebook. A description of demographic and other subject-specific variables can be found in the section “Subject variables”.

Data collection protocol

Prior to the research, subjects were informed about the goal of the study, the MRI procedure and safety, general experimental procedure, privacy and data sharing issues, and the voluntary nature of participation through an information letter. Each testing day (which took place from 8.30 AM until 1 PM), four subjects were tested. First, all subjects filled in an informed consent form and completed an MRI screening checklist. Then, two subjects started with the MRI part of the experiment, while the other two completed the demographic and psychometric questionnaires (described below) as well as several tasks that are not included in AOMIC.

The MRI session included a survey scan, followed by a T1-weighted anatomical scan. Then, several functional MRI runs (described below) and a single diffusion-weighted scan were recorded. Details about the scan parameters can be found in Online-only Table 1. The scan session lasted approximately 60 minutes. The scans were always recorded in the same order. For PIOP1, this was the following: faces (fMRI), gender-stroop (fMRI), T1-weighted scan, emotion matching (fMRI), resting-state (fMRI), phase-difference fieldmap (B0) scan, DWI scan, working memory (fMRI), emotion anticipation (fMRI). For PIOP2, this was the following: T1-weighted scan, working memory (fMRI), resting-state (fMRI), DWI scan, stop signal (fMRI), emotion matching (fMRI).

Functional MRI paradigms

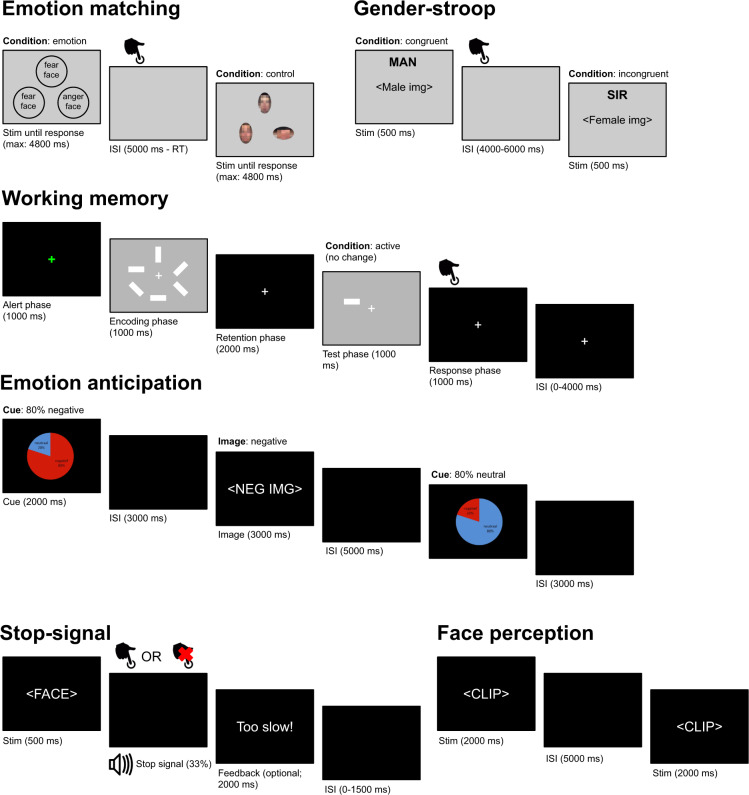

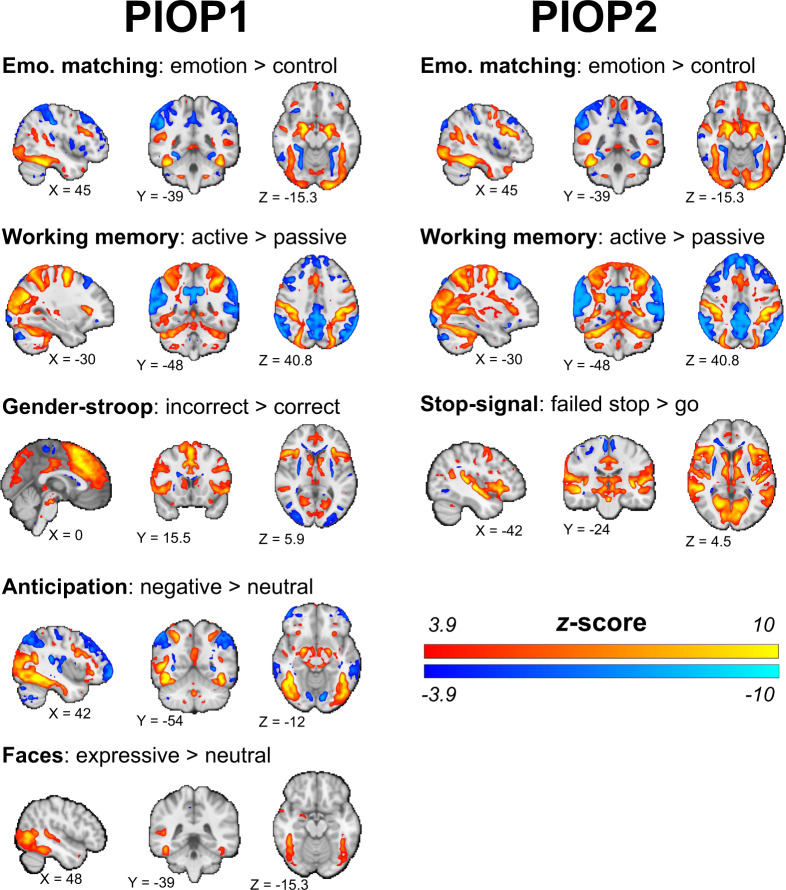

In this section, we will describe the experimental paradigms used during fMRI acquisition. See Fig. 2 for a visual representation of each paradigm. None of the designs were optimized for efficiency, except for the emotion anticipation task (which was optimized for efficiency to estimate the effect of each condition). All paradigms during functional MRI were started and synced to the functional MRI acquisition using a transistor-transistor logic (TTL) pulse sent to the stimulus presentation computer.

Fig. 2.

A visual representation of all experimental paradigms during task-based fMRI. ISI: inter-stimulus interval.

Emotion matching (PIOP1 + 2).

The goal of the “emotion matching” task is to measure processes related to (facial) emotion processing. The paradigm we used was based on ref. 29. Trials were presented using a blocked design. In each trial, subjects were presented with either color images of an emotional target face (top) and two emotional probe faces (bottom left and bottom right; “emotion” condition) or a target oval (top) and two probe ovals (bottom left and bottom right; “control” condition) on top of a gray background (RGB: 248, 248, 248) and were instructed to either match the emotional expression of the target face (“emotion” condition) or the orientation or the target oval (“control” condition) as quickly as possible by pushing a button with the index finger of their left or right hand. The target and probes disappeared when the subject responded (or after 4.8 seconds). A new trial always appeared 5 seconds after the onset of each trial. In between the subject’s response and the new trial, a blank screen was shown. Trials were presented in alternating “control” and “emotion” blocks consisting of six stimuli of 5 seconds each (four blocks each, i.e., 48 stimuli in total). Stimuli always belonged to the same block, but the order of stimuli within blocks was randomized across participants. The task took 270 seconds in total (i.e., 135 volumes with a 2 second TR).

The faces always displayed either stereotypical anger or fear. Within trials, always exactly two faces portrayed the same expression. Both male and female faces and white, black, and Asian faces were used, but within a single trial, faces were always of the same sex and ethnicity category (white or Asian/black). Face pictures were derived from the NimStim Face Stimulus set30. The oval stimuli were created by pixelating the face stimuli and were approximately the same area as the face stimuli (making them color and size matched to the face stimuli) and were either presented horizontally (i.e., the long side was horizontally aligned) or vertically (i.e., the long side was vertically aligned). Within trials, always exactly two ovals were aligned in the same way.

The fMRI “event files” (with the identifier _events) associated with this task contain information of the trial onset (the moment the faces/ovals appeared on screen, in seconds), duration (how long the faces/ovals were presented, in seconds), trial type (either “control” or “emotion”), response time (how long it took the subject to respond, logged “n/a” in case of no response), response hand (either “left”, “right”, or “n/a” in case of no response), response accuracy (either “correct”, “incorrect”, or “miss”), orientation to match (either “horizontal”, “vertical”, or “n/a” in case of emotion trials), emotion match (either “fear”, “anger”, or “n/a” in case of control trials), gender of the faces (either “male”, “female”, of “n/a” in case of control trials), and ethnicity of the target and probe faces (either “caucasian”, “asian”, “black”, or “n/a” in case of control trials).

Working memory task (PIOP1 + 2).

The goal of the working memory task was to measure processes related to visual working memory. The paradigm we used was based on ref. 31. Trials were presented using a fixed event-related design, in which trial order was the same for each subject. Trials belonged to one of three conditions: “active (change)”, “active (no change)”, or “passive”. Each trial consisted of six phases: an alert phase (1 second), an encoding phase (1 second), a retention phase (2 seconds), a test phase (1 second), a response phase (1 second), and an inter-stimulus interval (0–4 seconds). Subjects were instructed to keep focusing on the fixation target, which was shown throughout the entire trial, and completed a set of practice trials before the start of the actual task. The task took 324 seconds in total (i.e., 162 volumes with a 2 second TR).

In all trial types, trials started with an alert phase: a change of color of the fixation sign (a white plus sign changing to green, RGB [0, 255, 0]), lasting for 1 second. In the encoding phase, for “active” trials, an array of six white bars with a size of 2 degrees visual angle with a random orientation (either 0, 45, 90, or 135 degrees) arranged in a circle was presented for 1 second. This phase of the trial coincided with a change in background luminance from black (RGB: [0, 0, 0]) to gray (RGB: [120, 120, 120]). For “passive” trials, only the background luminance changed (but no bars appeared) in the encoding phase. In the subsequent retention phase, for all trial types, a fixation cross was shown and the background changed back to black, lasting 2 seconds. In the test phase, one single randomly chosen bar appeared (at one of the six locations from the encoding phase) which either matched the original orientation (for “active (no change)” trials) or did not match the original orientation (for “active (change)” trials), lasting for 1 second on a gray background. For “passive” trials, the background luminance changed and, instead of a bar, the cue “respond left” or “respond right” was shown in the test phase. In the response phase, lasting 1 second, the background changed back to black and, for “active” trials, subjects had to respond whether the array changed (button press with right index finger) or did not change (button press with left index finger). For “passive” trials, subjects had to respond with the hand that was cued in the test phase. In the inter-stimulus interval (which varied from 0 to 4 seconds), only a black background with a fixation sign was shown.

In total, there were 8 “passive” trials, 16 “active (change)” and “active (no change)” trials, in addition to 20 “null” trials of 6 seconds (which are equivalent to an additional inter-stimulus interval of 6 seconds). The sequence trials was, in terms of conditions (active, passive, null) exactly the same for all participations in both PIOP1 and PIOP2, but which bar or cue was shown in the test phase was chosen randomly.

The fMRI event files associated with this task contain information of the trial onset (the moment when the alert phase started, in seconds), duration (from the alert phase up to and including the response phase, i.e., always 6 seconds), trial type (either “active (change)”, “active (no change)”, or “passive”; “null” trials were not logged), response time (how long it took the subject to respond, “n/a” in case of no response), response hand (either “left”, “right”, or “n/a” in case of no response), and response accuracy (either “correct”, “incorrect”, or “miss”). Note that, in order to model the response to one or more phases of the trial, the onsets and durations should be adjusted accordingly (e.g., to model the response to the retention phase, add 2 seconds to all onsets and change the duration to 2 seconds).

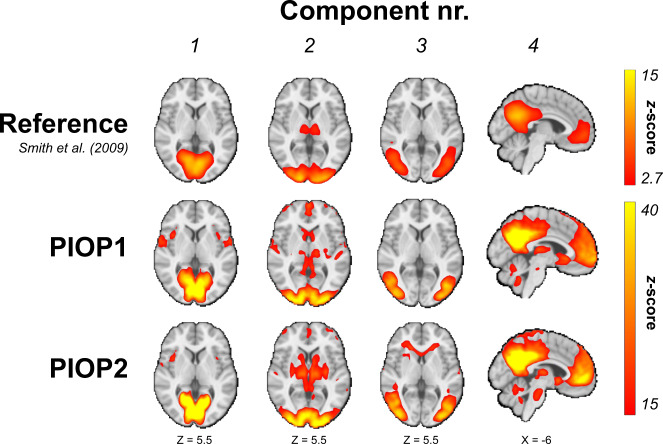

Resting state (PIOP1 + 2).

During the resting state scans, participants were instructed to keep their gaze fixated on a fixation cross in the middle of the screen with a gray background (RGB: [150, 150, 150]) and to let their thoughts run freely. Eyetracking data was recorded during this scan but is not included in this dataset. The resting state scans lasted 6 minutes (PIOP1; i.e., 480 volumes with a 0.75 second TR) and 8 minutes (PIOP2; i.e., 240 volumes with a 2 second TR).

Face perception (PIOP1).

The face perception task was included to measure processes related to (emotional) facial expression perception. Trials were presented using an event-related design, in which trial order was randomized per subject. In each trial, subjects passively viewed dynamic facial expressions (i.e., short video clips) taken from the Amsterdam Facial Expression Set (ADFES)32, which displayed either anger, contempt, joy, or pride, or no expression (“neutral”). Each clip depicted a facial movement from rest to a full expression corresponding to one of the four emotions, except for “neutral” faces, which depicted no facial movement. All clips lasted 2 seconds and contained either North-European or Mediterranean models, all of whom were female. After each video, a fixed inter-stimulus interval of 5 seconds followed. Each emotional facial expression (including “neutral”) was shown 6 times (with different people showing the expression each time), except for one, which was shown 9 times. Which emotional expression (or “neutral”) was shown an extra three times was determined randomly for each subject. Importantly, the three extra presentations always contained the same actor and were always presented as the first three trials. This was done in order to make it possible to evaluate the possible effects of stimulus repetition. The task took 247.5 seconds in total (i.e., 330 volumes with a 0.75 second TR).

The fMRI event files associated with this task contain information of the trial onset (the moment when the clip appeared on screen, in seconds), duration (of the clip, i.e., always 2 seconds), trial type (either “anger”, “joy”, “contempt”, “pride”, or “neutral”), sex of the model (all “female”), ethnicity of the model (either “North-European” or “Mediterranean”), and the ADFES ID of the model.

Gender-stroop task (PIOP1 only).

The goal of the gender-stroop task was to measure processes related to cognitive conflict and control33 (see also ref. 8 for an investigation of these processes using the PIOP1 gender-stroop data). We used the face-gender variant of the Stroop task (which was adapted from ref. 34), often referred to as the “gender-stroop” task. In this task, pictures of twelve different male and twelve different female faces are paired with the corresponding (i.e., congruent) or opposite (i.e., incongruent) label. For the labels, we used the Dutch words for “man”, “sir”, “woman”, and “lady” using either lower or upper case letters. The labels were located just above the head of the face. Trials were presented using an event-related design, in which trial order was randomized per subject. The task took 490 seconds in total (i.e., 245 volumes with a 2 second TR).

On each trial, subjects were shown a face-label composite on top of a gray background (RGB: [105, 105, 105]) for 0.5 seconds, which was either “congruent” (same face and label gender) or “incongruent” (different face and label gender). Stimulus presentation was followed by an inter-stimulus interval ranging between 4 and 6 seconds (in steps of 0.5 seconds). Subjects were always instructed to respond to the gender of the pictured face, ignoring the distractor word, as fast as possible using their left index finger (for male faces) or right index finger (for female faces). There were 48 stimuli for each condition (“congruent” and “incongruent”).

The fMRI event files associated with this task contain information of the trial onset (the moment when the face-label composite appeared on screen, in seconds), duration (of the face-label composite, i.e., always 0.5 seconds), trial type (either “incongruent” or “congruent”), gender of the face (either “male or “female”), gender of the word (either “male” or “female”), response time (in seconds), response hand (either “left”, “right”, or “n/a” in case of no response), response accuracy (either “correct”, “incorrect”, or “miss”).

Emotion anticipation task (PIOP1 only).

We included the emotion anticipation task to measure processes related to (emotional) anticipation and curiosity. The paradigm was based on paradigms previously used to investigate (morbid) curiosity35,36. Trials were presented using an event-related design, in which trial order was randomized per subject. In this task, subjects viewed a series of trials containing a cue and an image. The cue could either signal an 80% chance of being followed by a negatively valenced image (and a 20% chance of a neutral image) or an 80% chance of being followed by a neutral image (and a 20% change of a negatively valenced image). The cue was shown on top of a black background for 2 seconds, followed by a fixed interval of 3 seconds. After this interval, either a negative or neutral image was shown for 3 seconds, with a frequency that corresponds to the previously shown cue. In other words, for all trials with, for example, a cue signalling an 80% chance of being followed by a neutral image, it was in fact followed by a neutral image in 80% of the times. After the image, a fixed inter-stimulus interval of five seconds followed. In total, 15 unique negative images and 15 unique neutral images were shown, of which 80% (i.e., 12 trials) was preceded by a “valid” cue. Which stimuli were paired with valid or invalid cues was determined randomly for each subject. The order of the trials, given the four possible combinations (valid cue + negative image, invalid cue + negative image, valid cue + neutral image, invalid cue + neutral image), was drawn randomly from one of four possible sequences, which were generated using OptSeq (https://surfer.nmr.mgh.harvard.edu/optseq/) to optimize the chance of finding a significant interaction between cue type and image valence. The task took 400 seconds in total (i.e., 200 volumes with a 2 second TR).

The cue was implemented as a pie chart with the probability of a neutral image in blue and the probability of the negative image in red with the corresponding labels and probabilities (e.g., “negative 20%”) superimposed for clarity. The images were selected from the IAPS database37 and contained images of mutilation, violence, and death (negative condition) and of people in neutral situations (neutral condition).

The fMRI event files associated with this task contain information on the trial onset (the moment when either the cue or image appeared on the screen, in seconds), duration (of either the cue or image), and trial type. Trial type was logged separately for the cues (“negative”, indicating 80% probability of a negative image, and “neutral”, indicating 80% probability of a neutral image) and images (“negative” or “neutral”).

Stop-signal task (PIOP2 only).

The stop-signal task was included to measure processes related to response inhibition. This specific implementation of the stop-signal paradigm was based on ref. 38. Trials were presented using an event-related design, in which trial order was randomized per subject. Subjects were presented with trials (n = 100) in which an image of either a female or male face (chosen from 9 exemplars) was shown for 500 ms on a black background. Subjects had to respond whether the face was female (right index finger) or male (left index finger) as quickly and accurately as possible, except when an auditory stop signal (a tone at 450 Hz for 0.5 seconds) was presented (on average 33% of the trials). The delay in presentation of the stop signal (i.e., the “stop signal delay”) was at start of the experiment 250 milliseconds, but was shortened with 50 ms if stop performance, up to that point, was better than 50% accuracy and shortened with 50 ms if it was worse. Each trial had a duration of 4000 ms and was preceded by a jitter interval (0, 500, 1000 or 1500 ms). If subjects responded too slow, or failed to respond an additional feedback trial of 2000 ms was presented. Additionally 10% (on average) null trials with a duration of 4000 ms were presented randomly. Note that due to this additional feedback trial and the fact that subjects differed in how many feedback trials they received, the fMRI runs associated with this task differ in length across subjects (i.e., the scan was manually stopped after 100 trials; minimum: 210 volumes, maximum: 250 volumes, median: 224 volumes, corresponding to a duration of 448 seconds with a 2 second TR).

The fMRI event files associated with this task contain information on the trial onset (the moment when a face was presented, in seconds), duration (always 0.5083 seconds), trial type (go, succesful_stop, unsuccesful_stop), if and when a stop signal was given (in seconds after stimulus onset), if and when a response time was given (in seconds after stimulus onset), response of the subject (left, right), and the sex of the image (male, female).

Previous analyses

The PIOP1 data has been previously analyzed by three studies. One methodological study6 used the T1-weighted data (VBM, specifically) and DWI data (tract-based spatial statistics, TBSS, specifically) and the self-reported biological sex of subjects to empirically test an approach to correct for confounds in decoding analyses of neuroimaging data. Two other studies analyzed the participants’ religiosity scores. One study7 performed a voxelwise VBM analysis on the religiosity scores while the other study8 related the religiosity data to the incongruent - congruent activity differences from the gender-stroop functional MRI data. Notably, both studies were pre-registered. There have been no (published) analyses on the PIOP2 data.

Differences between PIOP1 and PIOP2

One important difference between PIOP1 and PIOP2 is that they are not the same in terms of task-based functional MRI they acquired. Specifically, the “emotion anticipation”, “faces”, and “gender stroop” tasks were only acquired in PIOP1 and the “stop signal” task was only acquired in PIOP2 (see Table 1). In terms of scan sequences, the only notable difference between PIOP1 and PIOP2 is that the resting-state functional MRI scan in PIOP1 was acquired with multiband factor 3 (resulting in a TR of 750 ms) while the resting-state functional MRI scan in PIOP2 was acquired without multiband (i.e., with a sequential acquisition, resulting in a TR of 2000 ms; see Online-only Table 1). Additionally, because PIOP1 was acquired before the dStream upgrade and PIOP2 after the upgrade, the PIOP1 data generally has a lower tSNR but this does not seem to lead to substantial differences in effects (see “Technical Validation”).

Subject variables (all datasets)

In AOMIC, several demographic and other subject-specific variables are included per dataset. Below, we describe all variables in turn. Importantly, all subject variables and psychometric variables are stored in the participants.tsv file in each study’s data repository. Note that missing data in this file is coded as “n/a”. In Online-only Table 2, all variables and associated descriptions are listed for convenience.

Online-only Table 2.

Description of the subject variables and psychometric variables contained in AOMIC.

| Variable name | Description | Levels/range | Units | Included in |

|---|---|---|---|---|

| age | Subject age at day of measurement | — | Quantiles | All |

| sex | (Biological) sex | male, female | — | All |

| gender_identity_F | To what degree the subject felt female | 1–7 | A.U. | ID1000 |

| gender_identity_M | To what degree the subject felt male | 1–7 | A.U. | ID1000 |

| sexual_attraction_M | To what degree the subject was attracted to males | 1–7 | A.U. | ID1000 |

| sexual_attraction_F | To what degree the subject was attracted to females | 1–7 | A.U. | ID1000 |

| BMI | Body-mass-index | — | kg/m2 | All |

| handedness | Dominant hand | left, right | ID1000 | |

| handedness | Dominant hand | left, right, ambidextrous | — | PIOP1&2 |

| background_SES | Social economical status of family in which the subject was raised. | 2–6 | A.U. | ID1000 |

| educational_level | Highest achieved (or current) educational level | low, medium, high | — | ID1000 |

| educational_category | Highest achieved (or current) education type | applied, academic | — | PIOP1&2 |

| religious | Whether the subject is religious or not | no, yes | — | PIOP1 |

| religious_upbringing | Whether the subject was raised religiously or not | no, yes | — | ID1000 |

| religious_now | Whether the subject is religious now | no, yes | — | ID1000 |

| religious_importance | To what degree religion plays a role in the subject’s daily life | 1–5 | A.U. | ID1000 |

| IST_fluid | IST fluid intelligence subscale | — | A.U. | ID1000 |

| IST_memory | IST memory subscale | — | A.U. | ID1000 |

| IST_crystallised | IST crystallised intelligence subscale | — | A.U. | ID1000 |

| IST_total_intelligence | IST total intelligence scale | — | A.U. | ID1000 |

| BAS_drive | BAS drive scale | — | A.U. | ID1000 |

| BAS_fun | BAS fun scale | — | A.U. | ID1000 |

| BAS_reward | BAS reward scale | — | A.U. | ID1000 |

| BIS | BIS scale | — | A.U. | ID1000 |

| NEO_N | Neuroticism scale (sum score) | — | A.U. | All |

| NEO_E | Extraversion scale (sum score) | — | A.U. | All |

| NEO_O | Openness scale (sum score) | — | A.U. | All |

| NEO_A | Agreeableness scale (sum score) | — | A.U. | All |

| NEO_C | Conscientiousness scale (sum score) | — | A.U. | All |

| STAI_T | Trait anxiety (from the STAI) (sum score) | — | A.U. | ID1000 |

The “variable name” coincides with the column name for that variable in the participants.tsv file. Note that missing values in this file are coded with “n/a”. A.U.: arbitrary units.

Age

We asked subjects for their date of birth at the time they participated. From this, we computed their age rounded to the nearest quartile (for privacy reasons). See Table 2 for descriptive statistics of this variable.

Table 2.

Descriptive statistics for biological sex, age, and education level for all three datasets.

| % by biological sex | Mean age (sd; range) | % per education level/category | |

|---|---|---|---|

| ID1000 | M: 47%, F: 52% | 22.85 (1.71; 19–26) | Low: 10%, Medium: 43%, High: 43% |

| PIOP1 | M: 41.2%, F: 55.6% | 22.18 (1.80; 18.25–26.25) | Applied: 56.5%, Academic: 43.5% |

| PIOP2 | M: 42.5%, F: 57.0% | 21.96 (1.79; 18.25–25.75) | Applied: 53%, Academic: 46% |

Biological sex and gender identity

In all three studies, we asked subjects for their biological sex (of which the options were either male or female). For the ID1000 dataset, after the first 400 subjects, we additionally asked to what degree subjects felt male and to what degree they felt female (i.e., gender identity; separate questions, 7 point likert scale, 1 = not at all, 7 = strong). The exact question in Dutch was: ‘ik voel mij een man’, ‘ik voel mij een vrouw’. This resulted in 0.3% of subjects scoring opposite on gender identity compared to their biological sex and 92% of females and 90% of males scoring conformable with their sex.

Sexual orientation

For the ID1000 dataset, after the first 400 subjects, we additionally asked to what degree subjects were attracted to men and women (both on a 7 point likert scale, 1 = not at all, 7 = strong). The exact question in Dutch was: ‘ik val op mannen’ and ‘ik val op vrouwen’.

Of the 278 subjects with a male sex 7.6% indicated to be attracted to men (score of 4 or higher), of the 276 subjects with a female sex 7.2% indicated to be attracted to women (score of 4 or higher). Of the 554 subjects who completed these questions 0.4% indicated not be attracted to either men or women and 0.9% indicated to be strongly attracted to both men and women.

BMI

Subjects were asked for (PIOP1 and PIOP2) or we measured (ID1000) subjects’ height and weight on the day of testing, from which we calculated their body-mass-index (BMI), which we rounded to the nearest integer. Note that height and weight are not included in AOMIC (for privacy reasons), but BMI is.

Handedness

Subjects were asked for their dominant hand. In ID1000, the options were “left” and “right” (left: 102, right: 826). For PIOP1 and PIOP2 we also included the option “both” (PIOP1, left: 24, right: 180, ambidextrous: 5, n/a: 7; PIOP2, left: 22, right: 201, ambidextrous: 1, n/a: 2).

Educational level/category

Information about subjects’ educational background is recorded differently for ID1000 and the PIOP datasets, so they are discussed separately. Importantly, while we included data on educational level for the ID1000 dataset, we only include data on educational category for the PIOP datasets because they contain little variance in terms of educational level.

Educational level (ID1000).

As mentioned, for the ID1000 dataset we selected subjects based on their educational level in order to achieve a representative sample of the Dutch population (on that variable). We did this by asking for their highest completed educational level. In AOMIC, however, we report the educational level (three point scale: low, medium, high) on the basis of the completed or current level of education (which included the scenario in which the subject was still a student), which we believe reflects educational level for our relatively young (19–26 year old) population better. Note that this difference in criterion causes a substantial skew towards a higher educational level in our sample relative to the distribution of educational level in the Dutch population (see Table 3).

Table 3.

Distribution of educational level in the Dutch population (in 2010) and in our ID1000 sample.

| Educational level | Population | Subjects | Father | Mother |

|---|---|---|---|---|

| Low | 35% | 10% | 36% | 32% |

| Medium | 39% | 43% | 27% | 24% |

| High | 26% | 47% | 37% | 44% |

The data from the education level of subjects’ parents was used to compute background socio-economic status.

Educational category (PIOP1+2).

Relative to ID1000, there is much less variance in educational level within the PIOP datasets as these datasets only contain data from university students. As such, we only report whether subjects were, at the time of testing, studying at the Amsterdam University of Applied Sciences (category: “applied”) or at the University of Amsterdam (category: “academic”).

Background socio-economic status (SES).

In addition to reporting their own educational level, subjects also reported the educational level (see Table 3) of their parents and the family income in their primary household. Based on this information, we determined subjects’ background social economical status (SES) by adding the household income — defined on a three point scale (below modal income, 25%: 1, between modal and 2 × modal income, 57%: 2, above 2 × modal income, 18%: 3) — with the average educational level of the parents — defined on a three point scale (low: 1, medium: 2, high: 3). This revealed that, while the educational level of the subjects is somewhat skewed towards “high”, SES is well distributed across the entire spectrum (see Table 4).

Table 4.

Distribution of background SES.

| Background SES | % of subjects |

|---|---|

| 2–3 | 16% |

| 3–4 | 26% |

| 4–5 | 28% |

| 5–6 | 19% |

| >6 | 11% |

Religion (PIOP1 and ID1000 only)

For both the PIOP1 and ID1000 datasets, we asked subjects whether they considered themselves religious, which we include as a variable for these datasets (recoded into the levels “yes” and “no”). Of the subjects that participated in the PIOP1 study 18.0% indicated to be religious, for the subjects in the ID1000 projects this was 21.2%. For the ID1000 dataset we also asked subjects if they were raised religiously (N = 319, 34.1%) and to what degree religion played a daily role in their lives (in Dutch, “Ik ben dagelijks met mijn geloof bezig”, 5 point likert scale, 1 = not at all applicable, 5 = very applicable).

Psychometric variables (all datasets)

BIS/BAS (ID1000 only).

The BIS/BAS scales are based on the idea that there are two general motivational systems underlying behavior and affect: a behavioral activation system (BAS) and a behavioral inhibition system (BIS). The scales of the BIS/BAS attempt to measure these systems21. The BAS is believed to measure a system that generates positive feedback while the BIS is activated by conditioned stimuli associated with punishment.

The BIS/BAS questionnaire consists of 20 items (4 point scale). The BIS scale consists of 7 items. The BAS scale consists of 13 items and contains three subscales, related to impulsivity (BAS-Fun, 4 items), reward responsiveness (BAS-Reward, 5 items) and the pursuit of rewarding goals (BAS-Drive, 4 items).

STAI-T (ID1000).

We used the STAI20,25 to measure trait anxiety (STAI-T). The questionnaire consists of two scales (20 questions each) that aim to measure the degree to which anxiety and fear are a trait and part of the current state of the subject; subjects only completed the trait part of the questionnaire, which we include in the ID1000 dataset.

NEO-FFI (all datasets).

The NEO-FFI is a Big 5 personality questionnaire that consists of 60 items (12 per scale)24,39. It measures neuroticism (“NEO-N”), extraversion (“NEO-E”), openness to experience (“NEO-O”), agreeableness (“NEO-A”), and conscientiousness (“NEO-C”). Neuroticism is the opposite of emotional stability, central to this construct is nervousness and negative emotionality. Extraversion is the opposite of introversion, central to this construct is sociability — the enjoyment of others’ company. Openness to experience is defined by having original, broad interests, and being open to ideas and values. Agreeableness is the opposite of antagonism. Central to this construct are trust, cooperation and dominance. Conscientiousness is the opposite of un-directedness. Adjectives associated with this construct are thorough, hard-working and energetic.

IST (ID1000 only).

The Intelligence Structure Test (IST)18,19 is an intelligence test measuring crystallized intelligence, fluid intelligence, and memory, through tests using verbal, numerical, and figural information. The test consists of 590 items. The three measures (crystallized intelligence, fluid intelligence, and memory) are strongly positively correlated (between r = 0.58 and r = 0.68) and the sum score of these values form the variable “total intelligence”.

Raven’s matrices (PIOP1+2).

As a proxy for intelligence, subjects performed the 36 item version (set II) of the Raven’s Advanced Progressive Matrices Test40,41. We included the sum-score (with a maximum score of 36) in the PIOP datasets.

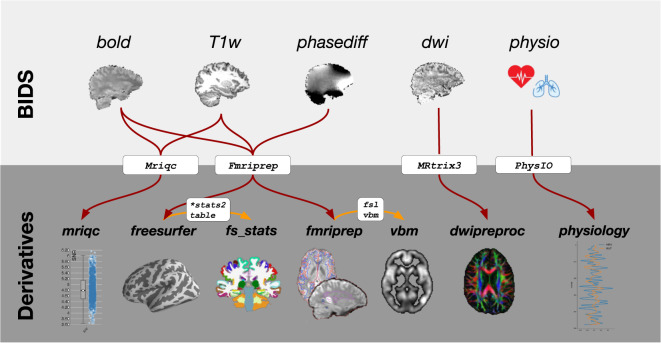

Data standardization, preprocessing, and derivatives

In this section, we describe the data curation and standardization process as well as the preprocessing applied to the standardized data and the resulting “derivatives” (see Fig. 3 for a schematic overview). This section does not describe this process separately for each dataset, because they are largely identically standardized and (pre)processed. Exceptions to this will be explicitly mentioned. In this standardization process, we adhered to the guidelines outlined in the Brain Imaging Data Structure (BIDS, v1.2.2)14, both for the “raw” data as well as the derivatives (whenever BIDS guidelines exist for that data or derivative modality).

Fig. 3.

Overview of the types of data and “derivatives” included in AOMIC and the software packages used to preprocess and analyze them.

Raw data standardization

Before subjecting the data to any preprocessing pipeline, we converted the data to BIDS using the in-house developed package bidsify (see “Code availability” section for more details about the software used in the standardization process). The “BIDSification” process includes renaming of files according to BIDS convention, conversion from Philips PAR/REC format to compressed nifti, removal of facial characteristics from anatomical scans (“defacing”), and extraction of relevant metadata into JSON files.

The results from the standardization process were checked using the bids-validator software package, which revealed no validation errors. However, the validation process raised several warnings about possible issues with the datasets (as shown in the “BIDS validation” section in their OpenNeuro repositories), which we explain in turn.

First, all three datasets contain subjects with incomplete data (i.e., missing MRI or physiology data), which causes the “Not all subjects contain the same files” warning. Second, the bids-validator issues a warning that “[n]ot all subjects/sessions/runs have the same scanning parameters”. One cause for this warning (in PIOP1 and PIOP2) is that the time to repetition (TR) parameter of some DWI scans varies slightly (PIOP1: min = 7382 ms, max = 7700, median = 7390; PIOP2: min = 7382, max = 7519, median = 7387), which is caused by acquiring the DWI data with the shortest possible TR (i.e., TR = “shortest” setting on Philips scanners; see Online-only Table 1). Because the shortest possible TR depends on the exact angle of the slice box, the actual TR varies slightly from scan to scan. Notably, this warning is absent for the ID1000 dataset, because the TR value in the nifti file header is incorrect (i.e., it is set to 1 for each scan). Like the DWI scans in PIOP1 and PIOP2, the DWI scans from ID1000 were acquired with the shortest possible TR, resulting in slightly different TRs from scan to scan (min = 6307, max = 6838, median = 6312). The correct TR values in seconds for each DWI scan in ID1000 was added to the dataset’s participants.tsv file (with the column names “DWI_TR_run1”, “DWI_TR_run2”, and “DWI_TR_run3”).

In addition, the functional MRI scans from ID1000 were cropped in the axial and coronal direction by conservatively removing axial slices with low signal intensity in order to save disk space, causing slightly different dimensions across participants. Finally, in PIOP2, the functional MRI scans from the stop-signal task differ in the exact number of volumes (min = 210, max = 250, median = 224) because the scan was stopped manually after the participant completed the task (which depended on their response times).

Anatomical and functional MRI preprocessing

Results included in this manuscript come from preprocessing performed using Fmriprep version 1.4.1 (RRID:SCR_016216)42,43, a Nipype based tool (RRID:SCR_002502)44,45. Each T1w (T1-weighted) volume was corrected for INU (intensity non-uniformity) using N4BiasFieldCorrection v2.1.046 and skull-stripped using antsBrainExtraction.sh v2.1.0 (using the OASIS template). Brain surfaces were reconstructed using recon-all from FreeSurfer v6.0.1 (RRID:SCR_001847)47, and the brain mask estimated previously was refined with a custom variation of the method to reconcile ANTs-derived and FreeSurfer-derived segmentations of the cortical gray-matter of Mindboggle (RRID:SCR_002438)48. Spatial normalization to the ICBM 152 Nonlinear Asymmetrical template version 2009c (RRID:SCR_008796)49 was performed through nonlinear registration with the antsRegistration tool of ANTs v2.1.0 (RRID:SCR_004757)50, using brain-extracted versions of both T1w volume and template. Brain tissue segmentation of cerebrospinal fluid (CSF), white-matter (WM) and gray-matter (GM) was performed on the brain-extracted T1w using fast (FSL v5.0.9, RRID:SCR_002823)51.

Functional data was not slice-time corrected. Functional data was motion corrected using mcflirt (FSL v5.0.9)52 using the average volume after a first-pass motion correction procedure as the reference volume and normalized correlation as the image similarity cost function. “Fieldmap-less” distortion correction was performed by co-registering the functional image to the same-subject T1w image with intensity inverted53,54 constrained with an average fieldmap template55, implemented with antsRegistration (ANTs). Note that this fieldmap-less method was used even for PIOP2, which contained a phase-difference (B0) fieldmap, because we observed that Fmriprep’s fieldmap-based method led to notably less accurate unwarping than its fieldmap-less method.

Distortion-correction was followed by co-registration to the corresponding T1w using boundary-based registration56 with 6 degrees of freedom, using bbregister (FreeSurfer v6.0.1). Motion correcting transformations, field distortion correcting warp, BOLD-to-T1w transformation and T1w-to-template (MNI) warp were concatenated and applied in a single step using antsApplyTransforms (ANTs v2.1.0) using Lanczos interpolation.

Physiological noise regressors were extracted by applying CompCor57. Principal components were estimated for the two CompCor variants: temporal (tCompCor) and anatomical (aCompCor). A mask to exclude signal with cortical origin was obtained by eroding the brain mask, ensuring it only contained subcortical structures. Six tCompCor components were then calculated including only the top 5% variable voxels within that subcortical mask. For aCompCor, six components were calculated within the intersection of the subcortical mask and the union of CSF and WM masks calculated in T1w space, after their projection to the native space of each functional run. Framewise displacement58 was calculated for each functional run using the implementation of Nipype.

Many internal operations of Fmriprep use Nilearn (RRID:SCR_001362)59, principally within the BOLD-processing workflow. For more details of the pipeline see https://fmriprep.readthedocs.io/en/1.4.1/workflows.html.

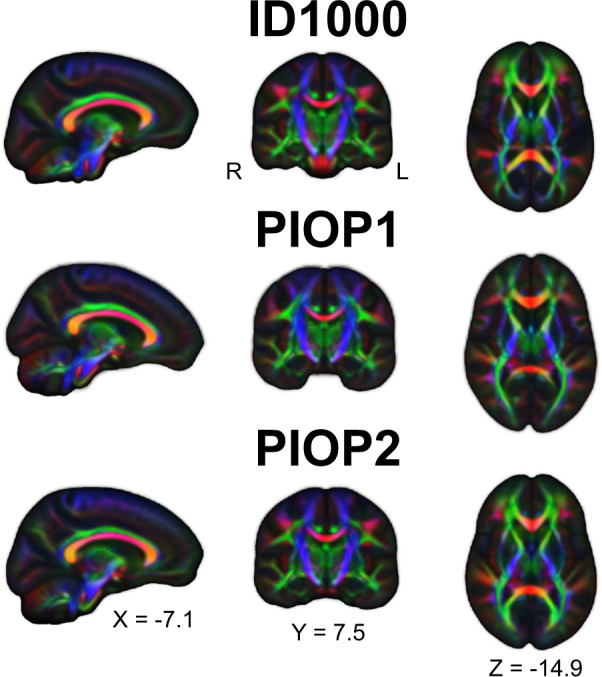

Diffusion MRI (pre)processing

DWI scans were preprocessed using a custom pipeline combining tools from MRtrix360 and FSL. Because we acquired multiple DWI scans per participant in the ID1000 study (but not in PIOP1 and PIOP2), we concatenated these files as well as the diffusion gradient table (bvecs) and b-value information (bvals) prior to preprocessing. Using MRtrix3, we denoised the diffusion-weighted data using dwidenoise61,62, removed Gibbs ringing artifacts using mrdegibbs63, and performed eddy current and motion correction using dwipreproc. Notably, dwipreproc is a wrapper around the GPU-accelerated (CUDA v9.1) FSL tool eddy64. Within eddy, we used a quadratic first-level (--flm = quadratic) and linear second-level model (--slm = linear) and outlier replacement65 with default parameters (--repol). Then, we performed bias correction using dwibiascorrect (which is based on ANTs; v2.3.1), extracted a brain mask using dwi2mask66, and corrected possible issues with the diffusion gradient table using dwigradcheck67.

After preprocessing, using MRtrix3 tools, we fit a diffusion tensor model on the preprocessed diffusion-weighted data using weighted linear least squares (with 2 iterations) as implemented in dwi2tensor68. From the estimated tensor image, a fractional anisotropy (FA) image was computed and a map with the first eigenvectors was extracted using tensor2metric. Finally, a population FA template was computed using population_template (using an affine and an additional non-linear registration).

The following files are included in the DWI derivatives: a binary brain mask, the preprocessed DWI data as well as preprocessed gradient table (bvec) and b-value (bval) files, outputs from the eddy correction procedure (for quality control purposes; see Technical validation section), the estimated parameters from the diffusion tensor model, the eigenvectors from the diffusion tensor model, and a fractional anisotropy scalar map computed from the eigenvectors. All files are named according to BIDS Extension Proposal 16 (BEP016: diffusion weighted imaging derivatives).

Freesurfer morphological statistics

In addition to the complete Freesurfer directories containing the full surface reconstruction per participant, we provide a set of tab-separated values (TSV) files per participant with several morphological statistics per brain region for four different anatomical parcellations/segmentations. For cortical brain regions, we used two atlases shipped with Freesurfer: the Desikan-Killiany (aparc in Freesurfer terms)69 and Destrieux (aparc.a2009 in Freesurfer terms)70 atlases. For these parcellations, the included morphological statistics are volume in mm3, area in mm2, thickness in mm, and integrated rectified mean curvature in mm−1. For subcortical and white matter brain regions, we used the results from the subcortical segmentation (aseg in Freesurfer terms) and white matter segmentation (wmparc in Freesurfer terms) done by Freesurfer. For these parcellations, the included morphological statistics are volume in mm3 and average signal intensity (arbitrary units). The statistics were extracted from the Freesurfer output directories using the Freesufer functions asegstats2table and aparcstats2table and further formatted using custom Python code. The TSV files (and accompanying JSON metadata files) are formatted according to BIDS Extension Proposal 11 (BEP011: structural preprocessing derivatives).

Voxel-based morphology (VBM)

In addition to the Fmriprep-preprocessed anatomical T1-weighted scans, we also provide voxelwise gray matter volume maps estimated using voxel-based morphometry (VBM). We used a modified version of the FSL VBM pipeline (http://fsl.fmrib.ox.ac.uk/fsl/fslwiki/FSLVBM)71, an optimised VBM protocol72 carried out with FSL tools73. We skipped the initial brain-extraction stage (fslvbm_1_bet) and segmentation stage (first part of fslvbm_3_proc) and instead used the probabilistic gray matter segmentation file (in native space) from Fmriprep (i.e., *label-GM_probseg.nii.gz files) directly. These files were registered to the MNI152 standard space using non-linear registration74. The resulting images were averaged and flipped along the x-axis to create a left-right symmetric, study-specific grey matter template. Second, all native grey matter images were non-linearly registered to this study-specific template and “modulated” to correct for local expansion (or contraction) due to the non-linear component of the spatial transformation.

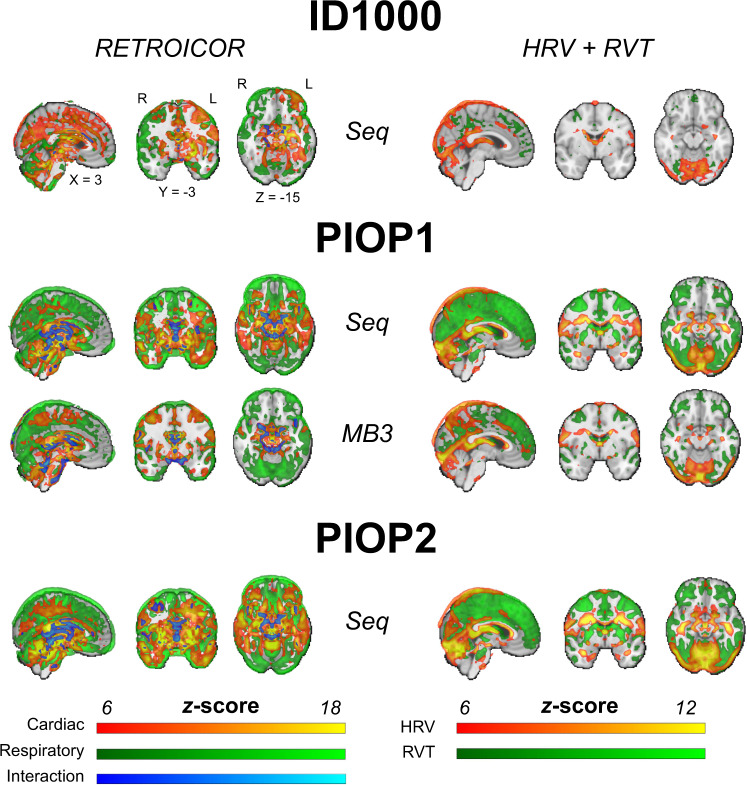

Physiological noise processing

Physiology files were converted to BIDS-compatible compressed TSV files using the scanphyslog2bids package (see Code availability). Each TSV file contains three columns: the first contains the cardiac trace, the second contains the respiratory trace, and the third contains the volume onset triggers (binary, where 1 represents a volume onset). Each TSV file is accompanied by a JSON metadata file with the same name, which contains information about the start time of the physiology recording relative to the onset of the first volume. Because the physiology recording always starts before the fMRI scan starts, the start time is always negative (e.g., a start time of −42.01 means that the physiology recording started 42.01 seconds before the onset of the first volume). After conversion to BIDS, the estimated volume triggers and physiology traces were plotted, visually inspected for quality, and excluded if either of the physiology traces had missing data for more than ten seconds or if the volume triggers could not be estimated.

The physiology data was subsequently used to estimate fMRI-appropriate nuisance regressors using the TAPAS PhysIO package75 (see Code availability). Using this package, we specifically estimated 18 “RETROICOR” regressors76 based on a Fourier expansion of cardiac (order: 2) and respiratory (order: 3) phase and their first-order multiplicative terms (as defined in ref. 77). In addition, we estimated a heart-rate variability (HRV) regressor by convolving the cardiac trace with a cardiac response function78 and a respiratory volume by time (RVT) regressor by convolving the respiratory trace with a respiration response function79.

Data Records

Data formats and types

In AOMIC, the majority of the data is stored in one of four formats. First, all volumetric (i.e., 3D or 4D) MRI data is stored in compressed “NIfTI” files (NIfTI-1 version; extension:.nii.gz). NIfTI files contain both the data and metadata (stored in the header) and can be loaded into all major neuroimaging analysis packages and programming languages using, e.g., the nibabel package for Python (https://nipy.org/nibabel), the oro.nifti package in R (https://cran.r-project.org/web/packages/oro.nifti), and natively in Matlab (version R2017b and higher). Second, surface (i.e., vertex-wise) MRI data is stored in “Gifti” files (https://www.nitrc.org/projects/gifti; extension:.gii). Like NIfTI files, Gifti files contain both data and metadata and can be loaded in several major neuroimaging software packages (including Freesurfer, FSL, AFNI, SPM, and Brain Voyager) and programming languages using, e.g., the nibabel package for Python and the gifti package for R (https://cran.rstudio.com/web/packages/gifti).

Third, data organized as tables (i.e., observations in rows and properties in columns), such as physiological data and task-fMRI event log files, are stored in tab-separated values (TSV) files, which contain column names as the first row. TSV files can be opened using spreadsheet software (such as Microsoft Excel or Libreoffice Calc) and read using most major programming languages. Fourth, (additional) metadata is stored as key-value pairs in plain-text JSON files. A small minority of data in AOMIC is stored using different file formats (such as hdf5 for composite transforms of MRI data and some Freesurfer files), but these are unlikely to be relevant for most users.

Apart from data formats, we can distinguish different data types within AOMIC. Following BIDS convention, data types are distinguished based on an “identifier” at the end of the file name (before the extension). For example, T1-weighted files (e.g., sub-0001_T1w.nii.gz) are distinguished by the _T1w identifier and event log files for task-based functional MRI data (e.g., sub-001_task-workingmemory_acq-seq_events.tsv) are distinguished by the _events identifier. All data types and associated identifiers within AOMIC are listed in Online-only Table 3.

Online-only Table 3.

All data types with associated identifiers, descriptions, and modalities.

| Raw data | ||

|---|---|---|

| Identifier (ext.) | Description | Modality |

| _T1w (nii.gz) | T1-weighted scan | Anatomical MRI |

| _bold (nii.gz) | Functional (BOLD) MRI scan | Functional MRI |

| _magnitude1 (nii.gz) | Average magnitude image (across two echoes) of B0 fieldmap scan (PIOP2 only) | Fieldmap |

| _phasediff (nii.gz) | Difference between two phase images of B0 fieldmap scan | Fieldmap |

| _dwi (.nii.gz) | Diffusion-weighted scan | DWI |

| _dwi (.bvec) | Diffusion gradient table (plain-text file) | DWI |

| _dwi (.bval) | Diffusion b-value sequence (plain-text file) | DWI |

| _physio (tsv.gz) | Physiology (cardiac/respiratory traces + volume onsets) | Physiology |

| _events (tsv) | Onsets, durations, and other relevant properties of events during task fMRI | Behavior |

| * (.json) | Metadata associated with a specific file, acquisition type, or file type | All modalities |

| Derivatives | ||

| Fmriprep derivatives | ||

| Identifier (ext.) | Description | Modality |

| _T1w (nii.gz) | Preprocessed T1-weighted scan | Functional MRI |

| _mask (nii.gz) | Binary brain mask (0: not brain, 1: brain) | Functional/ |

| anatomical/ | ||

| diffusion MRI | ||

| _dseg (nii.gz) | Discrete segmentation (0: background, 1: gray matter, 2: white matter, 3: cerebrospinal fluid) | Anatomical MRI |

| _probseg (nii.gz) | Probabilistic segmentation (1st volume: gray matter, 2nd volume: white matter | |

| .surf (.gii) | Reconstructed surfaces (e.g., inflated, midthickness, pial, and smoothwm) | Anatomical MRI |

| _xfm (.h5) | Linear + non-linear transformation parameters between two spaces (orig, T1w, MNI152NLin2009cAsym, and fsaverage5) | Functional/ |

| anatomical MRI | ||

| _xfm (.txt) | Linear transformation parameters between two spaces (orig, T1w, MNI152NLin2009cAsym, and fsaverage5) | Functional/ |

| anatomical MRI | ||

| _bold (nii.gz) | Preprocessed BOLD-MRI scan | Functional MRI |

| _boldref (nii.gz) | Reference volume used for computing transformation parameters | Functional MRI |

| _regressors (tsv) | Table with confound regressors for BOLD-MRI data | Functional MRI |

| * (.json) | Metadata from file with the same name (excluding extension) | All modalities |

| DWI derivatives | ||

| _dwi (.nii.gz) | Preprocessed diffusion-weighted scan | DWI |

| _dwi (.bvec) | Preprocessed gradient table | DWI |

| _dwi (.bval) | Preprocessed b-value sequence | DWI |

| _mask (.nii.gz) | Binary brain mask (0: not brain, 1: brain) | DWI |

| _diffmodel | Estimated parameters from the diffusion tensor model | DWI |

| _EVECS | Eigenvectors from the diffusion tensor model | DWI |

| _FA | Fractional anisotropy map | DWI |

| VBM derivatives | ||

| GMvolume | Estimated voxelwise gray matter volume map | Anatomical MRI |

| Freesurfer morphological statistics derivatives | ||

| _stats | Morphological statistics per anatomical region from a particular atlas | Anatomical MRI |

| Physiology derivatives | ||

| _regressors | Physiology-derived RETROICOR, HRV, and RVT regressors for BOLD-MRI data | Physiology |

Data repositories used

Data from AOMIC can be subdivided into two broad categories. The first category encompasses all subject-level data, both raw data and derivatives. The second category encompasses group-level aggregates of data, such as an average (across subjects) tSNR map or group-level task fMRI activation maps. Data from these two categories are stored in separate, dedicated repositories: subject-level data is stored on OpenNeuro (https://openneuro.org)15 and the subject-aggregated data is stored on NeuroVault (https://neurovault.org)16. Data from each dataset — PIOP1, PIOP2, and ID1000 — are stored in separate repositories on OpenNeuro80–82 and NeuroVault83–85. URLs to these repositories for all datasets can be found in Table 5. Apart from the option to download data using a web browser, we provide instructions to download the data programmatically on https://nilab-uva.github.io/AOMIC.github.io.

Table 5.

Data repositories for subject data (OpenNeuro) and group-level data (NeuroVault).

Data anonymization

In curating this collection, we took several steps in ensuring the anonymity of participants. All measures were discussed with the data protection officer of the University of Amsterdam and the data steward of the department of psychology, who deemed the anonymized data to be in accordance with the European General Data Protection Regulation (GDPR).

First, all personally identifiable information (such as subjects’ name, date of birth, and contact information) in all datasets were irreversibly destroyed. Second, using the pydeface software package86, we removed facial characteristics (mouth and nose) from all anatomical scans, i.e., the T1-weighted anatomical scans and (in PIOP2) magnitude and phase-difference images from the B0 fieldmap. The resulting defaced images were checked visually to confirm that the defacing succeeded. Third, the data files were checked for timestamps and removed when present. Lastly, we randomized the subject identifiers (sub-xxxx) for all files. In case participants might have remembered their subject number, they will not be able to look up their own data within our collection.

Technical Validation

In this section, we describe the measures taken for quality control of the data. This is described per data type (e.g., anatomical T1-weighted images, DWI images, physiology, etc.), rather than per dataset, as the procedure for quality control per data type was largely identical across the datasets. Importantly, we take a conservative approach towards exclusion of data, i.e., we generally did not exclude data unless (1) it was corrupted by scanner-related incorrigible artifacts, such as reconstruction errors, (2) when preprocessing fails due to insufficient data quality (e.g., in case of strong spatial inhomogeneity of structural T1-weighted scans, preventing accurate segmentation), (3) an absence of a usable T1-weighted scan (which is necessary for most preprocessing pipelines), or (4) incidental findings. This way, the data from AOMIC can also be used to evaluate artifact-correction methods and other preprocessing techniques aimed to post-hoc improve data quality and, importantly, this places the responsibility for inclusion and exclusion of data in the hands of the users of the datasets.

Researchers not interested in using AOMIC data for artifact-correction or preprocessing techniques may still want to exclude data that do not meet their quality standards. As such, we include, for each modality (T1-weighted, BOLD, and DWI) separately, a file with several quality control metrics across subjects. The quality control metrics for the T1-weighted and functional (BOLD) MRI scans were computed by the Mriqc package87 and are stored in the group_T1w.tsv and group_T1w.tsv files in the mriqc derivative folder. The quality control metrics for the DWI scans were derived from the output of FSL’s eddy algorithm and are stored in the group_dwi.tsv file in the dwipreproc derivative folder. Using these precomputed quality control metrics, researchers can decide which data to include based on their own quality criteria.

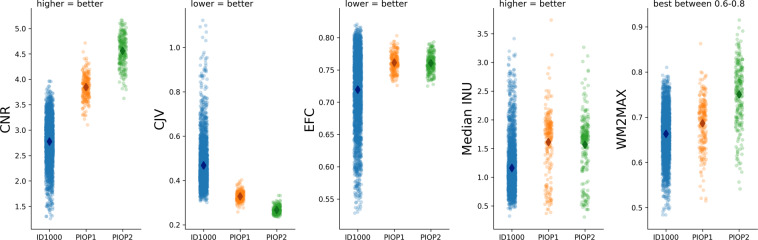

T1-weighted scans

All T1-weighted scans were run through the Mriqc pipeline, which outputs several quality control metrics as well as a report with visualizations of different aspects of the data. All individual subject reports were visually checked for artifacts including reconstruction errors, failure of defacing, normalization issues, and segmentation issues (and the corresponding data excluded when appropriate). In Fig. 4, we visualize several quality control metrics related to the T1-weighted scans across all three datasets. In general, data quality appears to increase over time (with ID1000 being the oldest dataset, followed by PIOP1 and PIOP2), presumably due to improvements in hardware (see “Scanner details and general scanning protocol”). All quality control metrics related to the T1-weighted scans, including those visualized in Fig. 4, are stored in the group_T1w.tsv file in the mriqc derivatives folder.

Fig. 4.

Quality control metrics related to the T1-weighted scans. CNR: contrast-to-noise ratio;101 CJV: coefficient of joint variation102, an index reflecting head motion and spatial inhomogeneity; EFC: entropy-focused criterion103, an index reflecting head motion and ghosting; INU: intensity non-uniformity, an index of spatial inhomogeneity; WM2MAX: ratio of median white-matter intensity to the 95% percentile of all signal intensities; low values may lead to problems with tissue segmentation.

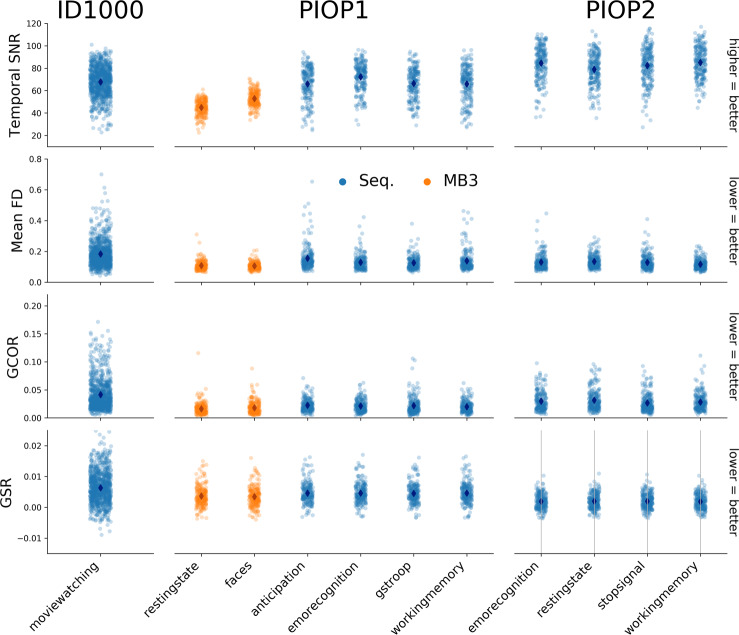

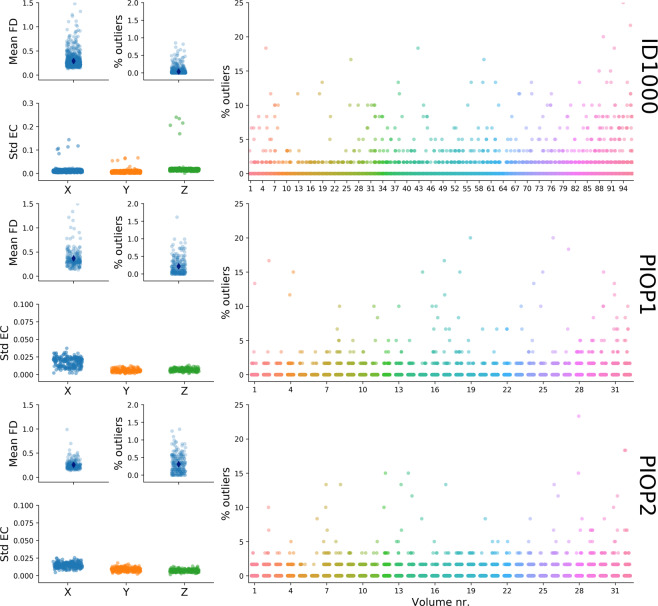

Functional (BOLD) scans

Like the T1-weighted images, the functional (BOLD) scans were run through the Mriqc pipeline. The resulting reports were visually checked for artifacts including reconstruction errors, registration issues, and incorrect brain masks.