Abstract

Purpose of Review

Cost-effectiveness analysis (CEA) can help identify the trade-offs decision makers face when confronted with alternative courses of action for the implementation of public health strategies. Application of CEA alongside implementation scientific studies remains limited. We aimed to identify areas for future development in order to enhance the uptake and impact of model-based CEA in implementation scientific research.

Recent Findings

Important questions remain about how to broadly implement evidence-based public health interventions in routine practice. Establishing population-level implementation strategy components and distinct implementation phases, including planning for implementation, the time required to scale up programs, and the sustainment efforts required to maintain them, can help determine the data needed to quantify each of these elements. Model-based CEA can use these data to determine the added value associated with each of these elements across systems, settings, population subgroups, and levels of implementation to provide tailored guidance for evidence-based public health action. There is a need to integrate implementation science explicitly into CEA to adequately capture diverse real-world delivery contexts and make detailed, informed recommendations on the aspects of the implementation process that provide good value.

Summary

We describe examples of how model-based CEA can integrate implementation scientific concepts and evidence to help tailor evaluations to local context. We also propose six distinct domains for methodological advancement in order to enhance the uptake and impact of model-based cost-effectiveness analysis in implementation scientific research.

Keywords: Cost-effectiveness analysis, Health economic evaluation, Simulation modeling, Effectiveness research, Implementation strategies, Public health

Introduction

Now more than ever we need to carefully evaluate the implementation of public health programs, to ensure not only their effectiveness but also the effectiveness of the program’s implementation and ultimately its value, relative to other competing interests. Economic evaluation, and cost-effectiveness analysis (CEA) specifically, can help identify the trade-offs, or opportunity costs, decision makers face when confronted with alternative courses of action for the implementation of public health strategies [1]. By determining the value provided by successful program implementation, CEA can not only help inform resource allocation decisions but also provide evidence for determining optimal scalability and sustainability of implementation strategies addressing the health needs of diverse populations [2]. Furthermore, CEA can help determine the maximum returns on investment that can be obtained from improving implementation activities [3–6]; however, realizing the full public health benefit of systematically incorporating evidence-based interventions (EBIs) into routine practice requires generalizable evidence of how these have been broadly implemented and sustained in practice [3, 7, 8].

For instance, HIV testing provides outstanding value and can even be cost-saving in the long term in high-prevalence populations and settings [9]. Nonetheless, real-world evidence on the scale of implementation of HIV-testing programs is sparse [9]. Levels of implementation documented in the public domain fall short of what would be required, in combination with a wide range of EBIs to treat and protect against infection, to reach the targets of the US ‘Ending the HIV Epidemic’ initiative [10]. Similarly, medication for opioid-use disorder can also provide exceptional value in addition to substantial health benefits [11]. However, there remains a pressing need for data to guide evidence-based decision-making in addressing the North American opioid crisis, particularly for assessing the impact of public health strategies over different timescales, for different populations or settings, and in combination with multiple interventions [12]. Of course, the global COVID-19 pandemic poses the most significant and immediate challenge in public health implementation. A sound understanding of what may limit the efficiency of large-scale implementation of testing and contact tracing programs across different contexts could have helped guide the effective delivery of COVID-19 vaccines [13].

While CEA has a 40-year history in population health research [14], its application alongside implementation scientific studies has been limited [1, 15, 16]. A recent systematic review of economic evaluations in the implementation science literature revealed only 14 articles focused on implementation [17], with only four CEAs using quality-adjusted life years (QALYs) [18]. While the review found improvements in the quality of CEAs over time [17, 19], best-practice recommendations, such as reporting the perspective from which the analysis is conducted, investigating parameter uncertainty, or reporting cost calculations, were not adhered to in most studies [14, 20–22]. Likewise, another recent systematic review found a dearth of economic evaluations of interventions designed to improve the implementation of public health initiatives, also noting the mixed quality of the evidence from these studies [23].

The use of model-based economic evaluation has been suggested to help make CEA the standard practice in implementation science [1]. Using mathematical relationships, models such as dynamic transmission models, agent-based models, state-transition cohort models, or individual-based microsimulation models can provide a flexible framework to compare alternative courses of action. As the COVID-19 pandemic has revealed, simulation modeling is playing a greater role than ever in public-health decision-making [24–26]. The context in which healthcare services are delivered has been shown to influence the cost-effectiveness of interventions [9, 27]. Simulation models that capture the heterogeneity across settings are uniquely positioned to offer guidance on contextually efficient strategies to implement [12, 28, 29].

Fundamentally, public-health programs aim to expand the implementation of evidence-based practices by increasing their reach, adoption, and sustainment to achieve better health outcomes. We outline areas for future development in CEA that would most complement the field of implementation science, in the interest of providing decision makers with pragmatic and context-specific information on the value of implementation and sustainment strategies. Specifically, in order to enhance the uptake and impact of model-based CEA in implementation scientific research, we suggest the need for advancement in (1) determining the reach of EBIs at the population level and within subgroups; (2) reporting adoption of EBIs at a system level and across settings; (3) improving the documentation of pre-implementation planning activities; (4) generating evidence on scaling up EBIs to the population level; (5) measuring the sustainment of EBIs over time; and (6) generating precise and generalizable costs of each of the above components.

Implementation Science Theories, Study Designs, and Their Application in Cost-Effectiveness Analysis

Increasing the impact of a public health program depends not only on the effectiveness of its individual components but also on the extent and quality of its implementation. While implementation scientists have developed frameworks to characterize implementation theory and practice [30], recommendations on how these may be incorporated into CEA are scarce. Applying these frameworks in CEA can ensure we fully capture the design features of a given EBI and the relevant costs for each of the implementation components. This can provide a foundation from which to improve the evaluation of implementation strategies and population health. While this article is not intended as a complete review of all individual approaches available, we describe examples of how model-based CEA can integrate implementation science to help tailor evaluations to local context and focus decision makers on implementation strategies that may provide the greatest public health impact and value for money.

The RE-AIM Framework

The Reach Effectiveness Adoption Implementation Maintenance (RE-AIM) framework has been broadly applied to evaluate health policies and EBIs since it was initially published more than two decades ago [31, 32]. The framework’s domains are recognized as essential components in evaluating population-level effects [30]. We applied the RE-AIM framework in a recent model-based CEA evaluating combinations of EBIs in HIV/AIDS [33]. We used RE-AIM to define the scale of delivery, the periods over which the implementation of EBIs is scaled up and sustained, and the costs of pre-implementation, implementation, delivery, and sustainment of each intervention [9]. Table 1 describes the definitions and assumptions we used for explicitly integrating intervention and implementation components in simulation modeling.

Table 1.

RE-AIM framework definitions and assumptions used for the implementation of EBIs in model-based CEA

| Domain | Definition | Assumptions for interventions | Assumptions for implementation |
|---|---|---|---|
| Reach | Participation rate in the interventions | (i) Individuals must accept to participate; (ii) participation rates are specific to each intervention. | (i) Individuals must access services in the setting(s) in which the interventions are delivered; (ii) reach remains constant over the delivery period. |
| Effectiveness | Effect of the interventions | (i) The effect is equivalent in all population subgroups unless there is evidence to the contrary. | (i) The effectiveness of each intervention is specific to the setting(s) in which it is delivered. |
| Adoption | Delivery of the interventions | (i) Staff accept to deliver interventions as implemented. | (i) The adoption rate is specific to the setting(s) and population(s) in which the interventions are delivered; (ii) adoption remains constant over the delivery period. |
| Implementation | Consistent delivery of the interventions | (i) Delivery costs are a function of the scale of delivery of the interventions. | (i) The interventions are adapted to ensure fidelity; (ii) there is a period for scaling up the intervention; (iii) implementation costs are a function of the setting(s) and scale of delivery of the interventions. |
| Maintenance | Sustainment of the interventions | (i) The effects of the interventions remain constant over time. | (i) Costs for sustainment activities (e.g., retraining) are a function of the scale of delivery of the interventions; (ii) duration of the sustainment period is assumed to be fixed for each intervention. |

RE-AIM, Reach Effectiveness Adoption Implementation Maintenance; EBIs, evidence-based interventions; CEA, cost-effectiveness analysis

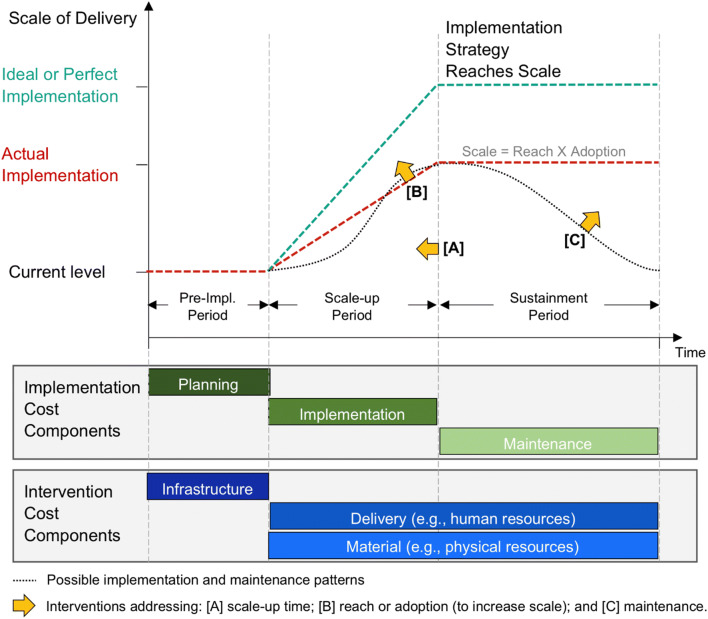

The scale of delivery refers to the extent to which an EBI ultimately reaches its intended population. In a prior application, we defined scale of delivery as the product of reach and adoption ($\text{Scale}_{ij} = \text{Reach}_{ijk} \times \text{Adoption}_{ik}$) for each intervention $i$, intended population $j$, and delivery setting $k$. We used the best evidence available in the public domain for each healthcare setting in which an EBI would be delivered, using similar evidence specific to each population subgroup for which an EBI was intended. Reach was defined as the participation rate in a given intervention, conditional on both the probability that an individual would access services in setting $k$ and the probability that they would accept the intervention. Adoption was defined as the proportion of a delivery setting actually implementing the intervention. Consequently, the population-level impact for each intervention was the product of the resulting scale of delivery and its effectiveness ($\text{Population-based Impact}_{i} = \text{Scale}_{ij} \times \text{Effectiveness}_{i}$). We incorporated a scale-up period to account for the time required to get to the defined scale of delivery, followed by a sustainment period necessary for maintaining the scale of delivery.
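The definitions above can be illustrated with a minimal Python sketch. It is not the model used in the cited analyses: the function names, the monthly time step, and all input values are hypothetical, and the linear scale-up followed by a constant sustainment period is a simplifying assumption made only for illustration.

```python
def scale_of_delivery(reach: float, adoption: float) -> float:
    """Scale = Reach x Adoption, with both inputs expressed as proportions in [0, 1]."""
    if not (0.0 <= reach <= 1.0 and 0.0 <= adoption <= 1.0):
        raise ValueError("reach and adoption must be proportions in [0, 1]")
    return reach * adoption


def population_impact(scale: float, effectiveness: float) -> float:
    """Population-based impact = Scale x Effectiveness."""
    return scale * effectiveness


def linear_scale_up(target_scale: float, scale_up_months: int,
                    horizon_months: int) -> list[float]:
    """Scale at the end of each month: a linear rise to the target over the
    scale-up period, then held constant over the sustainment period.
    (Assumed functional form, for illustration only.)"""
    if scale_up_months < 1:
        raise ValueError("scale_up_months must be at least 1")
    return [
        target_scale * min(month, scale_up_months) / scale_up_months
        for month in range(1, horizon_months + 1)
    ]


# Hypothetical inputs for one intervention, population subgroup, and setting.
reach, adoption, effectiveness = 0.5, 0.4, 0.25
scale = scale_of_delivery(reach, adoption)
print("scale of delivery:", scale)
print("population-based impact:", population_impact(scale, effectiveness))
print("monthly scale:", linear_scale_up(scale, scale_up_months=4, horizon_months=6))
```

Keeping reach and adoption as separate inputs, rather than a single coverage parameter, allows each to be informed by its own setting-specific and subgroup-specific evidence.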

While we strove to use the best publicly available data to inform each of the RE-AIM components, evidence for population-level implementation of interventions, scale-up, adoption, and sustainment was very limited. Furthermore, as has been previously noted [6, 32], data on costs associated with implementation components other than those specific to the delivery of the intervention itself are mostly lacking. Consequently, our analysis required numerous assumptions for deriving costs, particularly for representative patient volumes across healthcare settings, physician practices, or HIV clinics. Nonetheless, it is encouraging that the Standards for Reporting Implementation Studies (StaRI) [34], a checklist for the transparent reporting of studies evaluating implementation strategies, distinguishes between costs specific to the interventions and to the implementation strategy, suggesting more evidence on costs specific to implementation may soon become available.

Hybrid Study Designs

Hybrid designs combine evaluation of both the implementation process and the intervention. The typology proposed by Curran et al. outlines different considerations for choosing the appropriate hybrid design, with the Hybrid Type 2 simultaneously evaluating the effectiveness of an intervention and its implementation [35]. Similar to the StaRI reporting guidelines, which also differentiate cost data for the implementation strategy and the intervention, the recommendations for costs were general and did not distinguish between the various elements of the implementation process (e.g., planning, scaling up, and sustainment). CEA conducted alongside Hybrid Type 2 designs provides an opportunity to also evaluate the different components of implementation strategies most relevant and useful for decision makers and implementers [35, 36].

Mirroring the recommended conditions for use of a Hybrid Type 2 design [35], CEA-specific considerations may include (1) accounting for the costs of pre-implementation activities that ensure the face validity of the implementation strategies being proposed; (2) emphasizing the need for evidence of individual implementation components (e.g., varying levels of reach and adoption) and their costs, supporting applicability to new settings and populations; (3) considering the potential risk for displacement of other services that may result in indirect costs not captured by an evaluation restricted to the intervention(s) of interest; (4) demonstrating effectiveness (and value) of large-scale implementation to support momentum of adoption in routine care; (5) investigating hypothetical implementation dynamics to iteratively support the development of adaptive implementation strategies; and (6) conducting value of information analysis to estimate the additional value the study may produce by reducing uncertainty in decision-making.

Simulation modeling and CEA can enhance the relevance of implementation science by measuring the effects of different, sometimes hypothetical, implementation components (e.g., reach, adoption, and sustainment—which are ultimately implementation outcomes themselves), while taking into consideration the impact of context and accounting for variation in costs, ultimately providing value-based recommendations to improve public health.

Setting an Agenda for Methodological Development

The evidence base supporting many public-health interventions is strong and diverse, but important questions remain about how to broadly implement them in routine practice to achieve greater population-level impact [1]. From a methodological standpoint, there is a need to integrate implementation science explicitly into CEA to adequately capture diverse real-world delivery contexts. CEA can produce detailed, informed recommendations on the aspects of the implementation process that provide good value. An effective use of model-based CEA for decision-making thus requires considering the feasibility and costs of individual implementation components, including planning for implementation, the time required to scale up programs, reach, adoption, and sustainment (Fig. 1). We identify areas of advancement needed for model-based CEA to further support public health action by estimating the potential impact of these implementation components, discussing resulting implications for cost considerations and further methodological development (Table 2).

Fig. 1.

Cost components, timing, and scale of implementation strategies

Table 2.

Areas of advancement to enhance the impact of CEA in implementation scientific research

| RE-AIM domain | Implementation-process data needs for CEA | Implementation-cost data needs for CEA | Examples of approaches for answering data needs |
|---|---|---|---|
| Intended populations (reach) | ● Integrating surveillance and reporting systems to derive population-level reach within and across settings ● Emphasizing granular data to distinguish access to services by population subgroups | ● Costs as a function of population reach specific to delivery settings and key population subgroups ● Functional form of the cost function capturing economies of scale (or decreasing returns as reach increases) | ● Publicly available surveillance data to determine baseline service utilization levels and feasible population reach ● Reporting of costs for implementation strategies increasing reach across different systems, settings, population subgroups, and levels of implementation |
| Service delivery (adoption) | ● Existing levels of implementation for EBIs, specific to delivery settings (e.g., formal healthcare sector, community based, schools) and by payer ● Impact of interventions improving system-level adoption | ● Costs as a function of increasing adoption accounting for heterogeneity across settings and geographical location ● Functional form of the cost function to capture economies of scale and scope | ● Estimate system capacity and public-health-department intended targets for adoption levels, see MISII for measurement framework example [76] ● Increased reporting (and development) of quantitative measures of determinants of adoption and other implementation phases [77] |
| Planning (effectiveness) | ● Human resources needed for pre-implementation planning ● Real-world effectiveness of EBIs, overall and within key subgroups | ● Costs of pre-implementation planning ● Direct and indirect costs attributable to different funding agencies | ● Increased use of hybrid effectiveness-implementation (type 2) study designs [35] to determine the real-world scalability and generalizability of EBIs ● Systematic and harmonized reporting of human resource (e.g., FTEs), see Cidav et al. [52] and Saldana et al. [54] for pragmatic approaches |
| Scaling up (implementation) | ● Timing of adoption across delivery settings ● Evidence on changing population characteristics at increasing scales of delivery | ● Flexible cost functions accounting for scale and scope when implementation timing changes ● Budget impact of speeding up implementation to determine if feasible or affordable under current budget constraints | ● Establish reporting guidelines and standardized instruments for quantitative population-level measures of different implementation phases [78] ● Estimate statistical models (e.g., multiple linear regression) to determine cost functions accounting for system and implementation components [70] |
| Sustaining impact (maintenance) | ● Longitudinal measurement of scale of delivery ● Funding mechanisms for EBIs over time ● Impact of interventions improving population-level sustainment | ● Costs for the maintenance of EBI implementation over period of sustainment ● Costs for retraining to maintain impact of EBIs | ● Establish a standardized and explicit definition of maintenance/sustainment implementation phase for generalizability of reporting [62] ● Yearly reporting of reach and adoption levels ● Tracking of increasing or decreasing delivery costs |

RE-AIM, Reach Effectiveness Adoption Implementation Maintenance; EBIs, evidence-based interventions; CEA, cost-effectiveness analysis; MISII, measure of innovation-specific implementation intentions; FTE, full-time equivalency

Scale of Implementation: Intended Populations and Service Delivery

In our prior work evaluating combinations of EBIs in HIV/AIDS, we derived the scale for individual interventions by determining their potential reach based on how population-level utilization of different healthcare settings (e.g., primary care, emergency departments) varied by geographic region, racial/ethnic group, and sex [9, 33]. For example, to determine the potential reach of an HIV testing intervention in primary care [9], we used regional US estimates of persons having seen a doctor in the past year (e.g., 59.3% of Hispanic/Latino men in the West, 93.9% for Black/African American women in the Northeast) [37]. CEAs can thus provide a realistic assessment of the potential value of implementing public-health programs by explicitly accounting for underlying structural barriers to healthcare access. As adaptation of EBIs can increase population reach within settings, helping to achieve more equitable service provision [38], model-based CEA can also allow for determining the additional value (and health benefits) that can result from efforts to reduce existing disparities [39]. Implementation scientists have argued for health equity to serve as a guiding principle for the discipline [40]. Simulation models can help us understand the extent and deployment of resources required to overcome these disparities [39, 40]. The recent extension of the RE-AIM framework now incorporates health-equity considerations for each of its domains [41]. Specifically, it suggests the importance of determining reach in terms of population characteristics and social determinants of health [41]. However, reporting of real-world implementation data such as these is very limited [9, 42]. 
The emerging ‘Distributional Cost-Effectiveness Analysis’ framework is designed to make explicit the trade-offs in strategies designed to reduce health inequities [43], as opposed to simply maximizing total health, though explicit evidence to this end must be available to execute such analyses [40, 44, 45].

Capturing the population-level impact of EBIs requires information on adoption rates within service-delivery settings, as well as across delivery settings and geographic areas [9]. For example, we estimated the potential adoption in primary care of an HIV testing intervention using the proportion of physicians who offer routine HIV tests (e.g., 31.7% of physicians accepting new patients following Affordable Care Act expansion in California, 54.0% of physicians responding to the United States Centers for Disease Control and Prevention’s Medical Monitoring Project Provider Survey) [9, 46, 47]. Consequently, information on current levels of implementation (when the objective is to improve access to existing EBIs) or on feasible levels of implementation (for EBIs not previously implemented) is essential. Recently, Aarons et al. proposed a ranking of the evidence required to evaluate the transportability of EBIs [7]. Extensions to the value of a perfect implementation framework have similarly explored the influence of relaxing assumptions of immediate and perfect adoption [4, 5] to determine whether it is worthwhile to collect more data to reduce decision uncertainty. Another recent extension to this framework has investigated the influence of heterogeneity in adoption across geographical areas [48]. Overly optimistic expectations of the transportability of findings from one setting to another are one of the major causes of unsuccessful policy implementation [49].

Model-based CEA can inform the design of implementation strategies by determining the long-term benefits from increasing adoption, reach, or both (Fig. 1 [A]). This can be particularly informative when promoting uptake outside of formal healthcare settings to reach different populations via adoption of EBIs in low-barrier, community-based settings. Addressing determinants of health such as income and employment, housing, geography and availability of transportation, stigma or discrimination, and health literacy offers great public health promise by delivering services to people who might not seek treatment through formal care settings or to historically underserved populations. While the Consolidated Framework for Implementation Research can help identify contextual determinants for increasing reach and adoption [50], adapting interventions to different delivery settings or target populations will be more complex and prolonged. Model-based CEA can help estimate the costs and benefits of prevention interventions that promote long-term improvements in public health, which are more difficult to measure than effects from short-term clinical interventions.

Planning for Implementation, Scaling Up, and Sustaining Impact

While calls to explicitly account for the costs attributable to implementation strategies are not novel [51], reporting remains uncommon [23]. Proposals of pragmatic approaches are still recent [52, 53], particularly when considering pre-implementation activities that may have a direct impact on scale of implementation [54] or program feasibility and sustainability [55]. In estimating costs for implementing combinations of EBIs in HIV/AIDS for six US cities, we derived public health department costs attributable to planning activities to coordinate implementation in local healthcare facilities [9]. We derived personnel needs and used full-time equivalent salaries based on work we have done with local health departments in determining programmatic costs associated with planning for the adoption of EBIs [56]. Additionally, we scaled personnel needs according to population size in each city to account for the number of healthcare facilities that would need to be targeted by such an initiative [9]. We propose these to be distinct from costs explicitly attributable to implementation activities such as EBI adaptation or ensuring implementation fidelity [6]. Accounting for planning costs in CEA is essential when considering the efforts required to improve adoption of EBIs across different service-delivery settings (e.g., HIV testing in clinical settings versus sexual health clinics or community-based organizations) [51]. By working in close collaboration with decision makers and forecasting the long-term costs and benefits of alternative implementation strategies, CEA can provide valuable information for making implementation decisions, thus helping determine the best fit for a given context.

Scale-up Period

Model-based CEA can also extrapolate the effects of shortening the scale-up period to reach a targeted level of implementation. Using dynamic transmission models to capture the impact of achieving herd immunity levels from mass vaccination serves as an example [57]. While constant, linear scale-up over the implementation period can be used as a simplifying assumption [9, 57–59], model-based CEA can also provide implementation scientists with a sense of the relative value of rapid or staggered patterns of scale-up (Fig. 1 [B]). For instance, implementing physician alerts in electronic medical records to promote adherence to HIV antiretroviral therapy can be rapidly scaled up within a healthcare system, whereas a multi-component intervention requiring training for medical personnel and changes to existing processes may require a more time-consuming sequential implementation if there are constraints on human resources [9]. If available, evidence on the cost and effectiveness of interventions designed to improve implementation could also be incorporated explicitly [5]. Recent retrospective work by Johannesen et al. has explored the relative gains of eliminating slow or delayed scaling up of prevention in cardiovascular disease [48]. Furthermore, evidence on the uptake of specific health technologies based on diffusion theory could provide a methodological foundation to prospectively model the impact on value provided by different patterns of adoption (and therefore scale-up) in the implementation of EBIs [60].
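The contrast between linear, rapid, and staggered scale-up can be sketched in a few lines of Python. The three curve shapes, the `rate` and `waves` parameters, and the input values below are hypothetical choices made for illustration; they are not taken from the cited studies, and a full analysis would feed such trajectories into a simulation model rather than simply averaging them.

```python
import math


def linear(t: float, duration: float) -> float:
    """Fraction of the target scale reached at time t under constant, linear scale-up."""
    return min(t / duration, 1.0)


def rapid(t: float, duration: float, rate: float = 5.0) -> float:
    """Front-loaded scale-up: fast early gains that level off at the target.
    The exponential form and the `rate` value are assumptions."""
    if t >= duration:
        return 1.0
    return (1.0 - math.exp(-rate * t / duration)) / (1.0 - math.exp(-rate))


def staggered(t: float, duration: float, waves: int = 3) -> float:
    """Sequential scale-up: delivery settings come on board in equal-sized waves."""
    if t >= duration:
        return 1.0
    return math.floor(t * waves / duration) / waves


def mean_scale(pattern, target_scale: float, duration: int, horizon: int) -> float:
    """Average scale of delivery over months 1..horizon for a given pattern."""
    total = sum(target_scale * pattern(month, duration)
                for month in range(1, horizon + 1))
    return total / horizon


# Hypothetical comparison: same target scale and scale-up period, different patterns.
for name, pattern in [("linear", linear), ("rapid", rapid), ("staggered", staggered)]:
    print(name, round(mean_scale(pattern, target_scale=0.2, duration=12, horizon=24), 4))
```

Under these assumptions all three patterns reach the same target scale, so any difference in average scale over the horizon comes from timing alone, which is the quantity a CEA would weigh against the cost of accelerating implementation.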

Sustaining Impact Over Time

Determining the costs and health benefits of improved sustainment over time can allow decision makers to plan for the necessary effort levels for maintenance of an implementation strategy (Fig. 1 [C]). The concept of sustainment, however, lacks a consensus definition in implementation science, and there is limited research on how best to sustain an effective intervention after implementation [61, 62]. There is also a need for better reporting of strategies facilitating sustainment to reduce this large research-to-practice gap [62, 63]. There has been a growing focus on long-term evaluation for the sustainment of EBIs [41], with the recognition that ongoing interventions may be required to sustain the impact of EBIs [63]. CEA can be used to assess dynamic scenarios, including the timing and frequency of retraining or other sustainment activities, ultimately determining how much it may be worth investing to sustain implementation strategies.
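As a rough illustration of such a dynamic scenario, the Python sketch below compares retraining frequencies. It rests on strong assumptions that are ours, not the source's: the scale of delivery is assumed to erode by a fixed proportion each month without retraining, each retraining round is assumed to restore the target scale in full, and the decay rate and cost per round are hypothetical.

```python
def sustained_scale(target_scale: float, monthly_decay: float,
                    retrain_every: int, horizon_months: int) -> list[float]:
    """Scale of delivery at the end of each month.

    Assumes geometric erosion between retraining rounds and a full reset to
    the target at each round; retrain_every=0 means no retraining at all.
    """
    scale = target_scale
    path = []
    for month in range(1, horizon_months + 1):
        if retrain_every and month % retrain_every == 0:
            scale = target_scale          # retraining restores the target scale
        else:
            scale *= (1.0 - monthly_decay)  # erosion without sustainment effort
        path.append(scale)
    return path


def retraining_cost(cost_per_round: float, retrain_every: int,
                    horizon_months: int) -> float:
    """Total undiscounted retraining cost over the horizon."""
    if not retrain_every:
        return 0.0
    return cost_per_round * (horizon_months // retrain_every)


# Hypothetical scenarios: no retraining, yearly retraining, twice-yearly retraining.
for every in (0, 12, 6):
    path = sustained_scale(0.2, monthly_decay=0.03, retrain_every=every,
                           horizon_months=24)
    average = sum(path) / len(path)
    cost = retraining_cost(5000.0, every, 24)
    print(f"retrain_every={every}: mean scale={average:.4f}, cost={cost:.0f}")
```

The trade-off a CEA would then quantify is whether the health gains from the higher average scale justify the additional retraining cost.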

Interactions between Implementation Components

Of course, capturing the interaction between each of the above-noted implementation components is another advantage provided by simulation modeling. Working in close collaboration with decision makers, simulation models can be utilized to assess the long-term impact and the value of strategies varying any combination of the populations reached; adoption within and across settings; planning capacity; the scale-up period; and the duration of sustainment (Table 3). Such collaborations can happen during planning for implementation or simultaneously during any stage of executing an implementation strategy, supporting adaptive implementation strategies in near real time.

Table 3.

Examples of how model-based CEA can support implementation scientific research

| Domain | Application |
|---|---|
| Intended populations | ● Determining the value of expanding reach of EBIs in the intended population and among different populations or subgroups ● Determining the impact of implementation strategies that aim to reduce health inequities |
| Service delivery | ● Determining the value of expanding adoption in the target delivery setting or across different delivery settings ● Determining the impact of implementing EBIs outside of formal healthcare settings to deliver services to underserved individuals |
| Planning | ● Working with decision makers during pre-implementation to project costs and benefits of different implementation strategies ● Prospectively determining the potential budgetary impact of implementation strategy alternatives |
| Scaling up | ● Determining the impact and value of speeding up implementation within and across delivery settings ● Exploring uncertainty resulting from different patterns in the timing of implementation |
| Sustaining impact | ● Determining the impact of imperfect sustainment and how much it may be worth investing in sustainment efforts, both in terms of required frequency and the extent of required effort ● Exploring the impact for the de-implementation of existing EBIs |

EBIs, evidence-based interventions; CEA, cost-effectiveness analysis

Costs of Implementation Components

Uncertainty surrounding the costs of implementation is a fundamental challenge to the broad implementation of EBIs, particularly in public health [64]. While there is a breadth of frameworks to guide adaptation, there has been little emphasis on how best to estimate the costs of implementation strategies, according to the scale of delivery, and in diverse settings offering a different scope of services. For instance, the Consolidated Framework for Implementation Research can help identify barriers to implementation and includes cost considerations for EBIs [50]; however, evidence on the costs of implementation components is necessary for CEA to be further incorporated into implementation scientific research (Table 2) [1]. Consistency in adherence to best practices and reporting guidelines such as the Consolidated Health Economic Evaluation Reporting Standards (CHEERS) [65] has been important in helping establish model-based CEA as standard practice for decision-making in healthcare [14]. Similar guidelines for reporting implementation costs could further help promote the use of implementation science with CEA. There are a number of high-quality microcosting examples in both the public health [66] and the implementation science literature [54]. Similarly, some studies have proposed approaches for the standardized reporting of pre-implementation and implementation component costs [52, 54, 55, 67] but these have not yet been used widely or applied to population-level EBIs. Moreover, CEA typically uses assumptions of constant average costs (i.e., linear cost functions) yet the functional form of costs can matter, particularly for widespread implementation of EBIs [68].

Assessing economies of scale and scope in delivering individual interventions and combinations of interventions will no doubt be an area of intense inquiry and scrutiny in the coming years as health systems adapt to our new reality. A study using a regression-based approach to investigate factors determining costs for a large HIV prevention program conducted through non-government organizations in India incorporated a diverse set of population and setting characteristics and found decreasing average costs as scale of delivery increased [69]. A similar approach in local public health agencies across the state of Colorado found evidence suggesting economies of scale in the surveillance of communicable diseases, with average costs one-third lower for high-volume agencies as compared to low-volume agencies [70]. Economies of scope offer the potential for improving the impact of public health programs [71–73]. The costs of implementation components for delivering more than one intervention at once may differ from those of delivering each intervention separately and require careful consideration [71]. Determining the value of integrated programs targeting multiple disease areas with CEA can be enhanced by incorporating budget-impact analysis to also help build consensus for financing arrangements across different sectors of the health system [74, 75].
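One common way to examine economies of scale with cost data from multiple sites is a log-log regression of total cost on scale of delivery, where a slope below 1 suggests that average costs fall as scale rises. The sketch below fits that slope by ordinary least squares on synthetic data. It is not the specification used in the studies cited above, it omits the population and setting covariates such analyses would typically include, and the site volumes, cost values, and function names are assumptions for illustration only.

```python
import math


def fit_scale_elasticity(scales, total_costs):
    """OLS fit of log(total cost) = intercept + slope * log(scale).

    Returns (slope, intercept). A slope below 1 means total cost grows less
    than proportionally with scale, i.e. average cost declines.
    """
    if len(scales) != len(total_costs) or len(scales) < 2:
        raise ValueError("need at least two paired observations")
    xs = [math.log(s) for s in scales]
    ys = [math.log(c) for c in total_costs]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("scales must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic, hypothetical data: people served per site and total annual cost,
# generated from cost = 2000 * scale ** 0.8 so the true elasticity is 0.8.
SITE_SCALES = [100, 250, 500, 1_000, 2_500, 5_000]
SITE_COSTS = [2000.0 * s ** 0.8 for s in SITE_SCALES]


if __name__ == "__main__":
    slope, intercept = fit_scale_elasticity(SITE_SCALES, SITE_COSTS)
    print(f"estimated scale elasticity: {slope:.3f}")
    for s, c in zip(SITE_SCALES, SITE_COSTS):
        print(f"scale={s:>5}  average cost={c / s:8.2f}")
```

With real data the fit would be noisy, and the slope would need a confidence interval before drawing any conclusion about economies of scale.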

As the paradigm for CEA in implementation science shifts to systematically account for the costs of different components necessary for achieving population-level implementation of EBIs, the potential for model-based CEA to support and complement implementation scientific research in public health will continue to increase.

Conclusion

We believe in the promotion of implementation science and cost-effectiveness analysis as vital tools that should be applied in evaluating every meaningful public health initiative. As the current pandemic has made painfully clear, it is imperative for public health scientists to advance a range of new approaches to determine which implementation strategies may provide the greatest public health value and thus promote sustainable and efficient use of limited resources. We conclude by acknowledging that our proposed agenda will require health researchers to continue strengthening partnerships with decision makers to advance how implementation science methods can be incorporated in CEA. Decision makers continuously need to account for courses of action that are shaped by social, political, and economic considerations. Model-based CEA is ideally positioned to play a central role in providing a sound understanding of how well diverse implementation strategies could work, and how we can strive to further advance public health.

Funding

This study was funded by the National Institute on Drug Abuse (NIDA grant no. R01DA041747).

Declarations

Conflict of Interest

The authors declare no competing interests.

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by any of the authors.

Footnotes

This article is part of the Topical Collection on Implementation Science

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Hoomans T, Severens JL. Economic evaluation of implementation strategies in health care. Implement Sci. 2014;9:168. doi: 10.1186/s13012-014-0168-y.

- 2. Frieden TR. Six components necessary for effective public health program implementation. Am J Public Health. 2014;104(1):17–22. doi: 10.2105/AJPH.2013.301608.

- 3. Fenwick E, Claxton K, Sculpher M. The value of implementation and the value of information: combined and uneven development. Med Dec Making. 2008;28(1):21–32. doi: 10.1177/0272989X07308751.

- 4. Andronis L, Barton PM. Adjusting estimates of the expected value of information for implementation: theoretical framework and practical application. Med Dec Making. 2016;36(3):296–307. doi: 10.1177/0272989X15614814.

- 5. Grimm SE, Dixon S, Stevens JW. Assessing the expected value of research studies in reducing uncertainty and improving implementation dynamics. Med Dec Making. 2017;37(5):523–533. doi: 10.1177/0272989X16686766.

- 6. Whyte S, Dixon S, Faria R, Walker S, Palmer S, Sculpher M, Radford S. Estimating the cost-effectiveness of implementation: is sufficient evidence available? Value Health. 2016;19(2):138–144. doi: 10.1016/j.jval.2015.12.009.

- 7. Aarons GA, Sklar M, Mustanski B, Benbow N, Brown CH. “Scaling-out” evidence-based interventions to new populations or new health care delivery systems. Implement Sci. 2017;12(1):111. doi: 10.1186/s13012-017-0640-6.

- 8. Scudder AT, Taber-Thomas SM, Schaffner K, Pemberton JR, Hunter L, Herschell AD. A mixed-methods study of system-level sustainability of evidence-based practices in 12 large-scale implementation initiatives. Health Res Policy Syst. 2017;15(1):102. doi: 10.1186/s12961-017-0230-8.

- 9. Krebs E, Zang X, Enns B, Behrends C, Del Rio C, Dombrowski J, et al. The impact of localized implementation: determining the cost-effectiveness of HIV prevention and care interventions across six U.S. cities. AIDS. 2020;34(3):447–458. doi: 10.1097/QAD.0000000000002455.

- 10. Nosyk B, Zang X, Krebs E, Enns B, Min JE, Behrends CN, del Rio C, Dombrowski JC, Feaster DJ, Golden M, Marshall BDL, Mehta SH, Metsch LR, Pandya A, Schackman BR, Shoptaw S, Strathdee SA. Ending the HIV epidemic in the USA: an economic modelling study in six cities. Lancet HIV. 2020;7(7):e491–e503. doi: 10.1016/S2352-3018(20)30033-3.

- 11. Krebs E, Enns B, Evans E, Urada D, Anglin MD, Rawson RA, Hser YI, Nosyk B. Cost-effectiveness of publicly funded treatment of opioid use disorder in California. Ann Intern Med. 2018;168(1):10–19. doi: 10.7326/M17-0611.

- 12. The Opioid Use Disorder Modeling Writing Group. How simulation modeling can support the public health response to the opioid crisis in North America: Setting priorities and assessing value. Int J Drug Policy. 2020;In Press. doi: 10.1016/j.drugpo.2020.102726.

- 13. Schaffer DeRoo S, Pudalov NJ, Fu LY. Planning for a COVID-19 Vaccination Program. JAMA. 2020;323:2458–2459. doi: 10.1001/jama.2020.8711.

- 14. Sanders GD, Neumann PJ, Basu A, Brock DW, Feeny D, Krahn M, Kuntz KM, Meltzer DO, Owens DK, Prosser LA, Salomon JA, Sculpher MJ, Trikalinos TA, Russell LB, Siegel JE, Ganiats TG. Recommendations for conduct, methodological practices, and reporting of cost-effectiveness analyses: second panel on cost-effectiveness in health and medicine. JAMA. 2016;316(10):1093–1103. doi: 10.1001/jama.2016.12195.

- 15. Foy R, Sales A, Wensing M, Aarons GA, Flottorp S, Kent B, et al. Implementation science: a reappraisal of our journal mission and scope. BioMed Central. 2015.

- 16. Powell BJ, Fernandez ME, Williams NJ, Aarons GA, Beidas RS, Lewis CC, McHugh SM, Weiner BJ. Enhancing the impact of implementation strategies in healthcare: a research agenda. Front Public Health. 2019;7:3. doi: 10.3389/fpubh.2019.00003.

- 17. Roberts SLE, Healey A, Sevdalis N. Use of health economic evaluation in the implementation and improvement science fields—a systematic literature review. Implement Sci. 2019;14(1):72. doi: 10.1186/s13012-019-0901-7.

- 18. Weinstein MC, Torrance G, McGuire A. QALYs: the basics. Value Health. 2009;12(s1):S5–S9. doi: 10.1111/j.1524-4733.2009.00515.x.

- 19. Hoomans T, Evers SM, Ament AJ, Hübben MW, Van Der Weijden T, Grimshaw JM, et al. The methodological quality of economic evaluations of guideline implementation into clinical practice: a systematic review of empiric studies. Value Health. 2007;10(4):305–316. doi: 10.1111/j.1524-4733.2007.00175.x.

- 20. Briggs AH, Weinstein MC, Fenwick EAL, Karnon J, Sculpher MJ, Paltiel AD. Model parameter estimation and uncertainty analysis: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force Working Group–6. Med Dec Making. 2012;32(5):722–732. doi: 10.1177/0272989X12458348.

- 21. Caro JJ, Briggs AH, Siebert U, Kuntz KM. Modeling good research practices—overview: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force–1. Med Dec Making. 2012;32(5):667–677. doi: 10.1177/0272989X12454577.

- 22. Husereau D, Drummond M, Petrou S, Carswell C, Moher D, Greenberg D, Augustovski F, Briggs AH, Mauskopf J, Loder E, ISPOR Health Economic Evaluation Publication Guidelines-CHEERS Good Reporting Practices Task Force. Consolidated Health Economic Evaluation Reporting Standards (CHEERS)—explanation and elaboration: a report of the ISPOR Health Economic Evaluation Publication Guidelines Good Reporting Practices Task Force. Value Health. 2013;16(2):231–250. doi: 10.1016/j.jval.2013.02.002.

- 23. Reeves P, Edmunds K, Searles A, Wiggers J. Economic evaluations of public health implementation-interventions: a systematic review and guideline for practice. Public Health. 2019;169:101–113. doi: 10.1016/j.puhe.2019.01.012.

- 24. Wynants L, Van Calster B, Bonten MM, Collins GS, Debray TP, De Vos M, et al. Prediction models for diagnosis and prognosis of covid-19 infection: systematic review and critical appraisal. BMJ. 2020;369.

- 25. Enserink M, Kupferschmidt K. With COVID-19, modeling takes on life and death importance. Science. 2020;367(6485):1414. doi: 10.1126/science.367.6485.1414-b.

- 26. Nosyk B, Weiner J, Krebs E, Zang X, Enns B, Behrends CN, Feaster DJ, Jalal H, Marshall BDL, Pandya A, Schackman BR, Meisel ZF. Dissemination science to advance the use of simulation modeling: Our obligation moving forward. Med Dec Making. 2020;40(6):718–721. doi: 10.1177/0272989X20945308.

- 27. Mishra S, Mountain E, Pickles M, Vickerman P, Shastri S, Gilks C, Dhingra NK, Washington R, Becker ML, Blanchard JF, Alary M, Boily MC. Exploring the population-level impact of antiretroviral treatment: the influence of baseline intervention context. AIDS. 2014;28:S61–S72. doi: 10.1097/QAD.0000000000000109.

- 28. Schackman BR. Implementation science for the prevention and treatment of HIV/AIDS. J Acquir Immune Defic Syndr. 2010;55(Suppl 1):S27. doi: 10.1097/QAI.0b013e3181f9c1da.

- 29. Dopp AR, Mundey P, Beasley LO, Silovsky JF, Eisenberg D. Mixed-method approaches to strengthen economic evaluations in implementation research. Implement Sci. 2019;14(1):2. doi: 10.1186/s13012-018-0850-6.

- 30. Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10(1):53. doi: 10.1186/s13012-015-0242-0.

- 31. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–1327. doi: 10.2105/AJPH.89.9.1322.

- 32. Gaglio B, Shoup JA, Glasgow RE. The RE-AIM framework: a systematic review of use over time. Am J Public Health. 2013;103(6):e38–e46. doi: 10.2105/AJPH.2013.301299.

- 33. Nosyk B, Zang X, Krebs E, Enns B, Min J, Behrends C, et al. What will it take to ‘End the HIV epidemic’ in the US? An economic modeling study in 6 cities. Lancet HIV. 2020; In Press.

- 34. Pinnock H, Barwick M, Carpenter CR, Eldridge S, Grandes G, Griffiths CJ, et al. Standards for reporting implementation studies (StaRI) statement. BMJ. 2017;356.

- 35. Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50(3):217–226. doi: 10.1097/MLR.0b013e3182408812.

- 36. Geng EH, Peiris D, Kruk ME. Implementation science: relevance in the real world without sacrificing rigor. PLoS Med. 2017;14(4).

- 37. National Center for Health Statistics. National Health Interview Survey, 2015. Public-use data file and documentation. Available from URL: http://www.cdc.gov/nchs/nhis/quest_data_related_1997_forward.htm [accessed June 19, 2018]. 2016.

- 38. Edwards N, Barker PM. The importance of context in implementation research. JAIDS J Acquir Immune Defic Syndr. 2014;67:S157–S162. doi: 10.1097/QAI.0000000000000322.

- 39. Nosyk B, Krebs E, Zang X, Piske M, Enns B, Min JE, et al. ‘Ending the Epidemic’ will not happen without addressing racial/ethnic disparities in the US HIV epidemic. Clin Infect Dis. 2020.

- 40. McNulty M, Smith J, Villamar J, Burnett-Zeigler I, Vermeer W, Benbow N, et al. Implementation research methodologies for achieving scientific equity and health equity. Ethnicity Dis. 2019;29(Suppl 1):83–92. doi: 10.18865/ed.29.S1.83.

- 41. Shelton RC, Chambers DA, Glasgow RE. An extension of RE-AIM to enhance sustainability: addressing dynamic context and promoting health equity over time. Front Public Health. 2020;8:134. doi: 10.3389/fpubh.2020.00134.

- 42. Glasgow RE, Eckstein ET, ElZarrad MK. Implementation science perspectives and opportunities for HIV/AIDS research: integrating science, practice, and policy. JAIDS J Acquir Immune Defic Syndr. 2013;63:S26–S31. doi: 10.1097/QAI.0b013e3182920286.

- 43. Cookson R, Mirelman AJ, Griffin S, Asaria M, Dawkins B, Norheim OF, et al. Using cost-effectiveness analysis to address health equity concerns. Value Health. 2017;20(2):206–212. doi: 10.1016/j.jval.2016.11.027.

- 44. Engelgau MM, Zhang P, Jan S, Mahal A. Economic dimensions of health inequities: the role of implementation research. Ethnicity Dis. 2019;29(Suppl 1):103–112. doi: 10.18865/ed.29.S1.103.

- 45. Fiscella K, Franks P, Gold MR, Clancy CM. Inequality in quality: addressing socioeconomic, racial, and ethnic disparities in health care. JAMA. 2000;283(19):2579–2584. doi: 10.1001/jama.283.19.2579.

- 46. Leibowitz AA, Garcia-Aguilar AT, Farrell K. Initial health assessments and HIV screening under the Affordable Care Act. PLoS One. 2015;10(9):e0139361. doi: 10.1371/journal.pone.0139361.

- 47. McNaghten A, Valverde EE, Blair JM, Johnson CH, Freedman MS, Sullivan PS. Routine HIV testing among providers of HIV care in the United States, 2009. PLoS One. 2013;8(1):e51231. doi: 10.1371/journal.pone.0051231.

- 48. Johannesen K, Janzon M, Jernberg T, Henriksson M. Subcategorizing the expected value of perfect implementation to identify when and where to invest in implementation initiatives. Med Dec Making. 2020:0272989X20907353.

- 49. Hudson B, Hunter D, Peckham S. Policy failure and the policy-implementation gap: can policy support programs help? Policy Design Practice. 2019;2(1):1–14. doi: 10.1080/25741292.2018.1540378.

- 50. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4(1):1–15. doi: 10.1186/1748-5908-4-50.

- 51. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, Hensley M. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Mental Health Mental Health Services Res. 2011;38(2):65–76. doi: 10.1007/s10488-010-0319-7.

- 52. Cidav Z, Mandell D, Pyne J, Beidas R, Curran G, Marcus S. A pragmatic method for costing implementation strategies using time-driven activity-based costing. Implement Sci. 2020;15:1–15. doi: 10.1186/s13012-020-00993-1.

- 53. Rabarison KM, Marcelin MRA, Bish CL, Chandra MG, Massoudi MS, Greenlund KJ. Cost analysis of prevention research centers: instrument development. J Public Health Manag Practice: JPHMP. 2018;24(5):440–443. doi: 10.1097/PHH.0000000000000706.

- 54. Saldana L, Chamberlain P, Bradford WD, Campbell M, Landsverk J. The cost of implementing new strategies (COINS): a method for mapping implementation resources using the stages of implementation completion. Child Youth Serv Rev. 2014;39:177–182. doi: 10.1016/j.childyouth.2013.10.006.

- 55. Sohn H, Tucker A, Ferguson O, Gomes I, Dowdy D. Costing the implementation of public health interventions in resource-limited settings: a conceptual framework. Implement Sci. 2020;15(1):1–8. doi: 10.1186/s13012-020-01047-2.

- 56. Nosyk B, Min JE, Krebs E, Zang X, Compton M, Gustafson R, et al. The cost-effectiveness of human immunodeficiency virus testing and treatment engagement initiatives in British Columbia, Canada: 2011–2013. Clin Infect Dis. 2017;66(5):765–777. doi: 10.1093/cid/cix832.

- 57. Ibuka Y, Paltiel AD, Galvani AP. Impact of program scale and indirect effects on the cost-effectiveness of vaccination programs. Med Dec Making. 2012;32(3):442–446. doi: 10.1177/0272989X12441397.

- 58. Martin EG, MacDonald RH, Smith LC, Gordon DE, Tesoriero JM, Laufer FN, et al. Mandating the offer of HIV testing in New York: simulating the epidemic impact and resource needs. JAIDS J Acquir Immune Defic Syndr. 2015;68:S59–S67. doi: 10.1097/QAI.0000000000000395.

- 59. Mamaril CBC, Mays GP, Branham DK, Bekemeier B, Marlowe J, Timsina L. Estimating the cost of providing foundational public health services. Health Services Res. 2018;53:2803–2820. doi: 10.1111/1475-6773.12816.

- 60. Grimm SE, Stevens JW, Dixon S. Estimating future health technology diffusion using expert beliefs calibrated to an established diffusion model. Value Health. 2018;21(8):944–950. doi: 10.1016/j.jval.2018.01.010.

- 61. Shelton RC, Lee M. Sustaining evidence-based interventions and policies: recent innovations and future directions in implementation science: Am Public Health Assoc; 2019.

- 62. Hailemariam M, Bustos T, Montgomery B, Barajas R, Evans LB, Drahota A. Evidence-based intervention sustainability strategies: a systematic review. Implement Sci. 2019;14(1):57. doi: 10.1186/s13012-019-0910-6.

- 63. Glasgow RE, Harden SM, Gaglio B, Rabin B, Smith ML, Porter GC, et al. RE-AIM Planning and Evaluation Framework: adapting to new science and practice with a 20-year review. Front Public Health. 2019;7(64).

- 64. Institute of Medicine (Committee on Public Health Strategies to Improve Health). For the public's health: investing in a healthier future: National Academies Press; 2012.

- 65. Husereau D, Drummond M, Petrou S, Carswell C, Moher D, Greenberg D, Augustovski F, Briggs AH, Mauskopf J, Loder E, ISPOR Health Economic Evaluation Publication Guidelines-CHEERS Good Reporting Practices Task Force. Consolidated Health Economic Evaluation Reporting Standards (CHEERS)—explanation and elaboration: a report of the ISPOR Health Economic Evaluation Publication Guidelines Good Reporting Practices Task Force. Value Health. 2013;16:231–250. doi: 10.1016/j.jval.2013.02.002.

- 66. Schackman BR, Eggman AA, Leff JA, Braunlin M, Felsen UR, Fitzpatrick L, et al. Costs of expanded rapid HIV testing in four emergency departments. Public Health Rep. 2016;1:71–81. doi: 10.1177/00333549161310S109.

- 67. Lang JM, Connell CM. Measuring costs to community-based agencies for implementation of an evidence-based practice. J Behav Health Serv Res. 2017;44(1):122–134. doi: 10.1007/s11414-016-9541-8.

- 68. Brandeau ML, Zaric GS, De Angelis V. Improved allocation of HIV prevention resources: using information about prevention program production functions. Health Care Manag Sci. 2005;8(1):19–28. doi: 10.1007/s10729-005-5213-6.

- 69. Lépine A, Chandrashekar S, Shetty G, Vickerman P, Bradley J, Alary M, Moses S, CHARME India Group, Vassall A. What determines HIV prevention costs at scale? Evidence from the Avahan programme in India. Health Econ. 2016;25:67–82. doi: 10.1002/hec.3296.

- 70. Atherly A, Whittington M, VanRaemdonck L, Lampe S. The economic cost of communicable disease surveillance in local public health agencies. Health Serv Res. 2017;52:2343–2356. doi: 10.1111/1475-6773.12791.

- 71. Turner HC, Toor J, Hollingsworth TD, Anderson RM. Economic evaluations of mass drug administration: the importance of economies of scale and scope. Clin Infect Dis. 2018;66(8):1298–1303. doi: 10.1093/cid/cix1001.

- 72. Nosyk B, Armstrong WS, del Rio C. Contact tracing for COVID-19: An opportunity to reduce health disparities and end the HIV/AIDS epidemic in the US. Clin Infect Dis. 2020;71:2259–2261. doi: 10.1093/cid/ciaa501.

- 73. Zang X, Krebs E, Chen S, Piske M, Armstrong WS, Behrends CN, et al. The potential epidemiological impact of COVID-19 on the HIV/AIDS epidemic and the cost-effectiveness of linked, opt-out HIV testing: a modeling study in six US cities. Clin Infect Dis. 2020:ciaa1547.

- 74. Stuart RM, Wilson DP. Sharing the costs of structural interventions: what can models tell us? Int J Drug Policy. 2020;102702.

- 75. Sullivan SD, Mauskopf JA, Augustovski F, Caro JJ, Lee KM, Minchin M, et al. Budget impact analysis—principles of good practice: report of the ISPOR 2012 Budget Impact Analysis Good Practice II Task Force. Value Health. 2014;17(1):5–14. doi: 10.1016/j.jval.2013.08.2291.

- 76. Moullin JC, Ehrhart MG, Aarons GA. Development and testing of the Measure of Innovation-Specific Implementation Intentions (MISII) using Rasch measurement theory. Implement Sci. 2018;13(1):1–10. doi: 10.1186/s13012-018-0782-1.

- 77. Allen P, Pilar M, Walsh-Bailey C, Hooley C, Mazzucca S, Lewis CC, et al. Quantitative measures of health policy implementation determinants and outcomes: a systematic review. Implement Sci. 2020;15(1):1–17. doi: 10.1186/s13012-020-01007-w.

- 78. Khadjesari Z, Boufkhed S, Vitoratou S, Schatte L, Ziemann A, Daskalopoulou C, et al. Implementation outcome instruments for use in physical healthcare settings: a systematic review. Implement Sci. 2020;15(1):1–16. doi: 10.1186/s13012-020-01027-6.