Abstract

In hospitals, improvers and implementers use quality improvement science (QIS) and, less frequently, implementation research (IR) to improve health care and health outcomes. Narrowly defined, quality improvement (QI) guided by QIS focuses on transforming systems of care to improve health care quality and delivery, whereas IR focuses on developing approaches to close the gap between what is known (research findings) and what is practiced (by clinicians). However, QI regularly involves implementing evidence, and IR consistently addresses organizational and setting-level factors. The disciplines share a common end goal, namely, to improve health outcomes, and work to understand and change the same actors in the same settings, often encountering and addressing the same challenges. QIS has its origins in industry and IR in behavioral science and health services research. Despite overlap in purpose, the 2 sciences have evolved separately. Thought leaders in QIS and IR have argued for improved collaboration between the disciplines. The Veterans Health Administration’s Quality Enhancement Research Initiative has successfully employed QIS methods to implement evidence-based practices more rapidly into clinical practice, but similar formal collaborations between QIS and IR are not widespread in other health care systems. Acute care teams are well positioned to improve care delivery and implement the latest evidence. We provide an overview of QIS and IR; examine the key characteristics of QIS and IR, including strengths and limitations of each discipline; and present specific recommendations for integration and collaboration between the 2 approaches to improve the impact of QI and implementation efforts in the hospital setting.

Whether improving the discharge process or implementing care pathways, hospital-based teams regularly employ quality improvement science (QIS) and less often implementation research (IR) methods to achieve better outcomes for hospitalized patients.* QIS and IR diverge with regard to origin, usual scope and scale, stated goals, starting and end points, and perspective. Many of the differences in the methodology used in QIS and IR are inherent to these differences. However, although QIS and IR are often presented as 2 contrasting approaches, in practice, the process of quality improvement (QI) and implementation and the tangible outcomes they yield often cannot readily be distinguished from each other.3,4

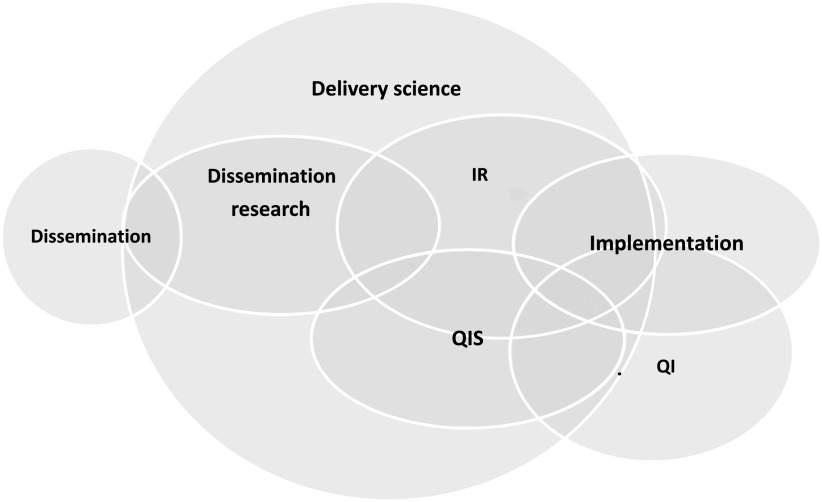

Although the sciences that inform them may differ, QI and implementation studies often employ similar strategies and aim for similar outcomes. Yet the terminology used to describe the work differs. In a review of 20 highly cited self-identified QI and implementation science studies in cancer, Check et al3 found that although the studies shared goals and approaches, the authors used different terminology for the same concepts and emphasized different aspects in reporting. This difference in terminology is a significant roadblock to integrating the 2 sciences. Koczwara et al5 and others argue the need for shared language and highlight the need for agreed-on publication standards, collaboration in the design and conduct of studies, collaboration between academics and clinicians, and the need to educate professionals in both fields.6–8 We propose that both QIS and IR fall under the larger umbrella of delivery science (Fig 1). We make distinctions between QIS and IR and to a lesser extent between the QI and implementation they inform to offer high-level introductions to QIS and IR as they are typically defined, discuss the overlap between and distinguishing features of QIS and IR, and offer concrete ways that hospital-based improvers and implementers can integrate concepts and methods from the 2 sciences to achieve improved health outcomes for patients.

FIGURE 1.

Venn diagram depicts the overlap among different sectors of delivery science. This diagram reflects how QIS and IR inform the practice of QI and implementation in the acute health care setting.

We acknowledge that in health care settings, “implementers” are often indistinguishable from “improvers” and that compared with IR methods, QIS methods are more broadly recognized and employed to both improve systems of care and implement evidence-based practices (EBPs). The tendency for improvers and implementers to use QIS is multifactorial and likely relates to available training and resources as well as to IR methods themselves, which are newer and generally more academic than pragmatic and may be less accessible to users than QIS methods. There are few examples of active integration between QIS and IR in the literature.5,9–14 In a recently published scoping review of studies published between 1999 and 2018, researchers found only 7 studies that unambiguously combined the use of an IR framework with QIS tools and strategies such as process mapping.11

In this article, we explore concrete examples of how IR can enhance QI and how QIS can advance implementation, with a focus on the active integration of tools, frameworks, processes, and methods from the 2 sciences. We begin with brief introductions to the 2 sciences, including areas in which they may excel and fall short, and end with a discussion of opportunities for focused forward-thinking collaboration between QI and implementation scientists particularly in the area of sustainability.

What Is QIS?

Improvers assert that every system is perfectly designed for the results it achieves.15 QI focuses on identifying system issues driving outcomes. QIS7,16 approaches (eg, Lean and Six Sigma) and tools (eg, key driver diagrams17 and cause-and-effect diagrams18) help piece together a puzzle by explaining why an outcome or problem occurs in a particular health care setting or system and hinting at possible solutions to be tested. There are several approaches to QI in health care. Lean, for example, focuses on reducing waste, and Six Sigma focuses on decreasing variation.19 In this article, we will focus on the most widely used QIS framework, namely, the Model for Improvement,17 which asks 3 questions: “What are we trying to accomplish?” “How will we know that a change is an improvement?” and “What changes can we make that will result in improvement?” As described in The Improvement Guide: A Practical Approach to Enhancing Organizational Performance, change ideas in QIS are the union of data analyzed over time (by using statistical process control charts),20–24 awareness of the complex system,23 ideas about what drives the actions of people in the system, and subject matter knowledge about the problem. Together, this information is used to hypothesize what is wrong and what will fix it. Despite the amount of data and thought that go into developing a change idea, it is not implemented without first testing it to see if it works as planned. Small, rapid tests of change called plan-do-study-act (PDSA) cycles are used to assess the feasibility and effectiveness of change ideas leading to increased confidence that the change or changes, which are often modified along the way, will result in improvement. Changes are tested on larger and larger scales, and the end result is implementation of a change that works to improve outcomes and/or processes with an emphasis on local impact.
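To make the role of statistical process control in this workflow more concrete, the following is a minimal Python sketch of one common chart type, the p-chart, applied to a process measure such as bundle compliance. It is offered as an illustration only: the weekly counts, the function names (`p_chart_limits`, `special_cause_points`), and the use of a single rule (a point outside the 3-sigma limits) are assumptions made for this example and are not taken from The Improvement Guide or from any study cited here. In practice, improvers typically apply several run and control chart rules and often compute limits from a baseline period.

```python
from math import sqrt


def p_chart_limits(events, sample_sizes):
    """Return the centre line and per-period 3-sigma limits for a p-chart.

    events[i] is the number of patients meeting the measure in period i,
    and sample_sizes[i] is the number of eligible patients in that period.
    """
    if len(events) != len(sample_sizes) or not events:
        raise ValueError("events and sample_sizes must be non-empty and equal in length")
    if any(n <= 0 for n in sample_sizes):
        raise ValueError("every period needs at least one eligible patient")

    # Centre line: overall proportion across all periods.
    p_bar = sum(events) / sum(sample_sizes)

    limits = []
    for n in sample_sizes:
        sigma = sqrt(p_bar * (1 - p_bar) / n)
        lower = max(0.0, p_bar - 3 * sigma)  # a proportion cannot fall below 0
        upper = min(1.0, p_bar + 3 * sigma)  # or rise above 1
        limits.append((lower, upper))
    return p_bar, limits


def special_cause_points(events, sample_sizes):
    """Return the indices of periods whose proportion lies outside the limits."""
    _, limits = p_chart_limits(events, sample_sizes)
    flagged = []
    for i, (x, n) in enumerate(zip(events, sample_sizes)):
        lower, upper = limits[i]
        if not lower <= x / n <= upper:
            flagged.append(i)
    return flagged


# Hypothetical data: weekly counts of bundle-compliant patients out of 30
# eligible patients. A change idea is tested in the seventh week (index 6).
compliant = [12, 14, 11, 13, 12, 13, 27, 12]
eligible = [30] * 8

print(special_cause_points(compliant, eligible))
```

With these made-up counts, only the week in which the change was tested falls outside the limits, which is the kind of signal a team might examine in the study step of a PDSA cycle before deciding whether to adopt, adapt, or abandon the change.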

What Is IR?

The National Institutes of Health defines IR as “the scientific study of the use of strategies to adopt and integrate evidence-based health interventions into clinical and community settings to improve individual outcomes and benefit population health.”25 IR, as illustrated in Fig 1, is a subset of the broader field of implementation or delivery science. IR begins with the assumption that previous research has identified an evidence-based intervention or EBP that improves a problem or outcome and an understanding that evidence alone does not change behaviors or systems.26 IR is focused on developing generalizable knowledge about how to implement EBPs by considering factors that influence implementation (at multiple individual and system levels) and choosing implementation and behavior change strategies to address those factors. IR is also used to evaluate how the EBP itself may need to be adapted to produce the same outcomes across varied settings and contexts.27,28 Conceptual frameworks such as Reach, Effectiveness, Adoption, Implementation, Maintenance (RE-AIM)29,30; the Practical, Robust Implementation and Sustainability Model (PRISM)31,32; Exploration, Preparation, Implementation, Sustainment (EPIS)33; and the Consolidated Framework for Implementation Research34 are used to guide detailed and comprehensive implementation planning and evaluation in an attempt to produce generalizable knowledge.35 In a scoping review, researchers found 157 frameworks,36 and Rabin’s Web-based tool to aid selection and use of IR frameworks and theories includes >100 frameworks (http://dissemination-implementation.org). Using these frameworks, IR is focused not only on successful implementation in a given study but on systematically advancing the collective understanding of what works and why to advance the science of implementation. 
Formal assessment of IR outcomes, such as fidelity (adherence to the EBP versus adaptations made to it) and cost of implementation, expands beyond the process measures typically used in QIS to measure implementation (eg, % bundle compliance), helps explain why implementation efforts succeed or fail, and distinguishes between bad change ideas and failed implementation.37

Advantages of QIS and QI

QI is an inside job. Improvers work in the systems they attempt to change and thus understand key local factors related to change (eg, opinion leaders for and against change ideas, the organization’s readiness for change,38 past successes and failures with similar changes, and the feasibility of the change given local resources). Also, potential adopters (hospital staff and clinicians) of the change may harbor less skepticism about changes that are home grown by colleagues.

QIS approaches are generally much faster than IR methods. Many health care issues need immediate attention, and it is acceptable to learn as you go in QI. Improvers can get started with small tests of change taking advantage of early adopters and change agents while making sure that laggards and naysayers feel heard and give input as change ideas progress. Using PDSA cycles, improvers make systematic, data-driven modifications to change ideas. As such, incorrect hypotheses about what change(s) will lead to improvement are less costly to the system because failed ideas are either adapted or abandoned before they are implemented or spread.

Systems and contexts are not static: they are constantly changing.28 As improvements spread, systems change, and evidence grows, so must changes evolve to continue to be effective.39 QIS is inherently designed to be rapid and iterative and therefore perfectly designed to address the challenge of adapting to fluid systems.

Downsides of QIS and QI

Starting with small tests of change can be advantageous, but the rapid nature of QIS can also have unintended consequences. As improvers decide whether to adopt, modify, or abandon change ideas during PDSA cycles, data about what worked (or did not work) and, importantly, the hypothesized reasons why are not always well documented and/or widely shared. Thus, there is a missed opportunity to spread knowledge to guide adaptations and address barriers in other settings. Effective changes in one microsystem or setting often cannot be replicated or spread. Reinventing the wheel in each organization or setting, rather than adapting what has already worked on the basis of existing evidence, relevant theory, or past PDSA cycles, wastes resources. Another potential downside of QIS is that aggregate results at the system level often neglect important outcomes, especially those related to health equity (eg, representativeness of the patients reached by the intervention).

Advantages of IR

Although subject matter knowledge, a well-rounded team, and engaged stakeholders go a long way in measuring and applying context to change ideas, IR conceptual frameworks help ensure key contextual determinants imperative to successful implementation are not missed. IR conceptual frameworks (eg, Consolidated Framework for Implementation Research)34 that compile factors known to influence implementation success into a single user-friendly form increase the reliability with which issues related to implementation are identified and addressed. Other frameworks such as RE-AIM30 encourage comprehensive yet pragmatic planning of interventions and evaluation of outcomes to ensure implementation has the greatest possible population impact and that interventions are designed for dissemination and sustainability from the outset (Table 1). For example, reach is a measure of whether the change will reach (did reach) the intended patients and/or the most vulnerable or at-risk patients. RE-AIM (www.RE-AIM.org) places emphasis on both internal and external validity and details a set of key factors necessary for sustained implementation. The PRISM32 framework expands on RE-AIM to specify key multilevel (eg, clinician, staff, patient, and external environment) contextual factors relevant to achieving the RE-AIM outcomes, such as funding, current organizational priorities, existing workflows, and management-level support for change.

TABLE 1.

RE-AIM Implementation Science Framework

| RE-AIM Dimension | Key Pragmatic Priorities to Consider and Answer |
|---|---|
| Reach | Who is (was) intended to benefit and who actually participates or is exposed to the intervention? |
| Effectiveness | What is (was) the most important benefit you are trying to achieve and what is (was) the likelihood of negative outcomes? |
| Adoption | Where is (was) the program or policy applied? Who applied it? |
| Implementation | How consistently is (was) the program or policy delivered? How will (was) it be adapted? How much will (did) it cost? Why will (did) the results come about? |
| Maintenance | When will (was) the initiative become operational? How long will (was) it be sustained (setting level)? And how long are the results sustained (individual level)? |

Adapted with permission from Glasgow RE, Estabrooks PE. Pragmatic applications of RE-AIM for health care initiatives in community and clinical settings. Prev Chronic Dis. 2018; 15:E02.

Downsides of IR

Relative to QIS methods, IR methods are usually much slower. It is not always clear how much evidence is enough evidence to meet implementation requirements, and relevant evidence is often not available for the most complex and pressing problems. Without incremental tests of change, there is tremendous pressure to make sure the change or EBP (and the strategies chosen to implement the EBP) is perfect before implementation, which can slow progress. Although there is movement within IR to guide iterative adjustments to interventions and implementation strategies, these efforts are relatively recent and still not rapid by QIS standards.

IR frameworks are valued for their comprehensiveness, but not all factors influencing implementation can be reasonably or practically addressed. Most frameworks are challenging for nonacademics to apply or fall short of recommending specific strategies for prioritizing which factors to address, thus reducing overall implementation efficiency. Finally, whereas some large health systems such as the Veterans Health Administration and large health maintenance organizations have embedded implementation researchers,40 in general, there are few implementation researchers employed in most health care settings. Bringing in an outside IR expert who does not know the setting is often costly and time consuming.

Similarities and Differences

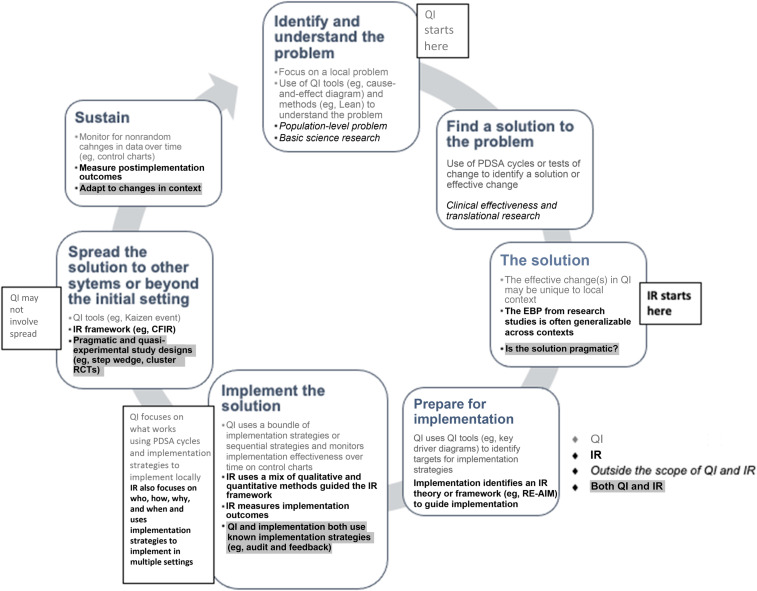

Figure 2 illustrates some principal differences between QIS and IR which include starting and end points, scale, stated goals, and perspective. Consider the example of a clinical practice guideline that compiles evidence and assessments of evidence quality to make recommendations for patient care. A QI leader in an organization may benchmark their system’s alignment with the guideline against similar health care systems to identify specific areas that need improvement. They would likely charter an improvement team, identify and involve local stakeholders and opinion leaders, hypothesize modifiable drivers of the current practice on the basis of a thorough assessment of the problem in their setting, and begin the process of developing and testing changes to align care delivery in their setting with the evidence.

FIGURE 2.

Principal differences and overlap between QI and IR.

An IR researcher working to implement the EBPs endorsed by the guideline might start by using ≥1 IR framework to inform a mix of qualitative and quantitative research methods to identify barriers and facilitators to evidence adoption across varied settings, contexts, and multiple stakeholders. After identifying a set of generalizable implementation strategies, they may design a multisite study to test these strategies to improve and sustain outcomes.

Although these examples have different starting points and reference frames, use different methods, take different amounts of time and resources, and appear to have different goals, the end point and ultimate outcome are the same.

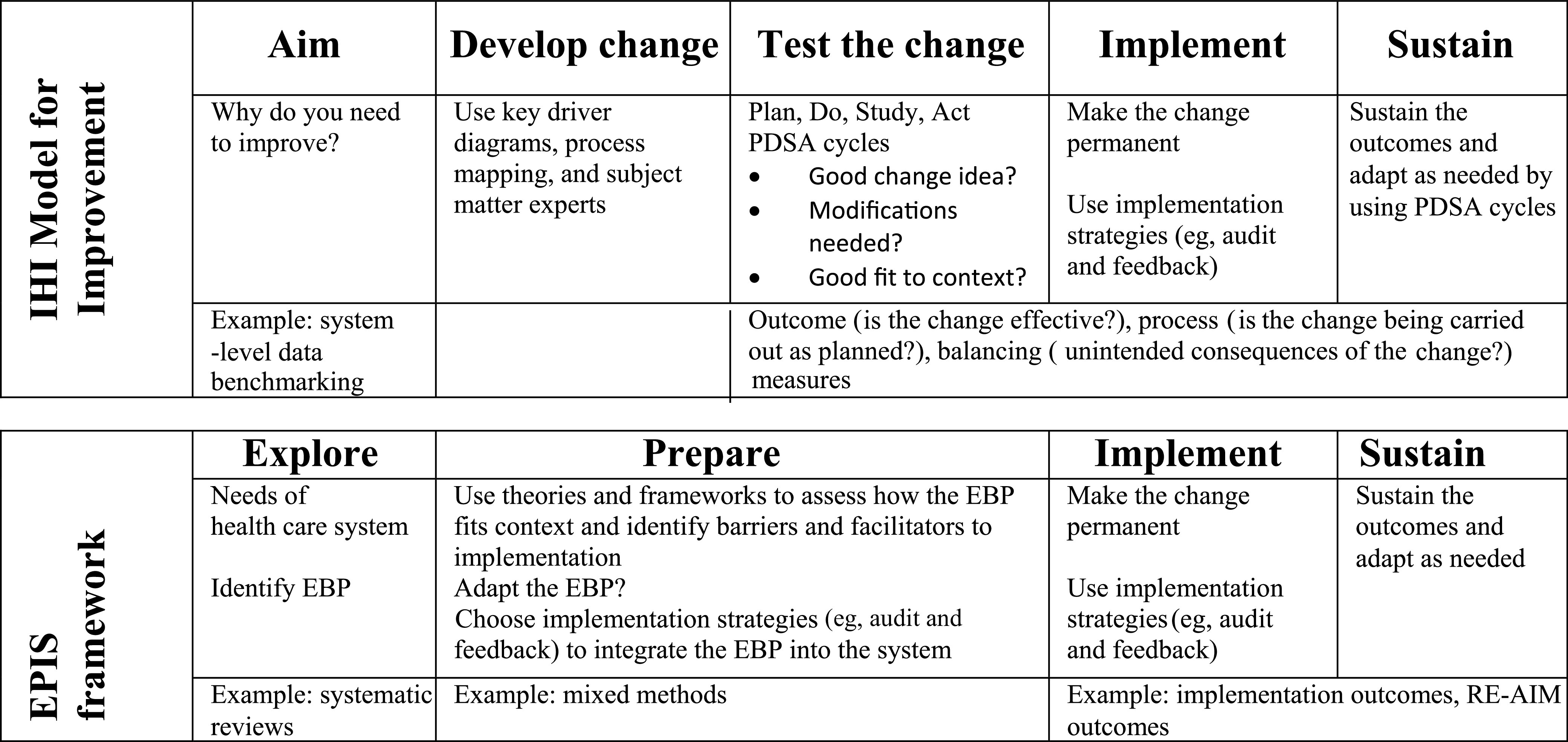

Figure 3 further illustrates the overlap between the 2 disciplines by comparing 2 commonly used frameworks: the Institute for Healthcare Improvement Model for Improvement QIS framework versus the EPIS IR framework.17,33 Both the Model for Improvement and the EPIS framework start with understanding the needs or problems of a health care system followed by identifying a solution, adapting the solution to fit the system, implementing, and then sustaining the solution. Given the parallel flow of tasks, individuals familiar with the Model for Improvement could easily use EPIS and vice versa depending on the scale of the project.

FIGURE 3.

Institute for Healthcare Improvement (IHI) Model for Improvement versus EPIS implementation science framework.

Opportunities to Integrate

When implementation researchers need to rapidly but systematically measure and address context to focus implementation strategies, IR meets QIS. When improvers are ready to spread a change to new settings, QIS meets IR. QIS may lack the frameworks and measurement necessary to effectively spread change, and IR often struggles to efficiently measure context, adapt changes, and tailor strategies to individual settings. Below, we outline examples of possible collaborations.

Using Tests of Change Versus Hybrid Trials

Hybrid implementation-effectiveness trials are used in IR to study both change effectiveness and implementation outcomes simultaneously.41,42 Often, hybrid trials are employed when some evidence, but perhaps not conclusive evidence, exists for an evidence-anchored change. However, assuming little risk or downside to the change, a more rapid and cost-effective way to build confidence in an evidence-anchored change before investing in full-scale implementation may be to do QIS tests of change across various health care settings. Tests of change coupled with outcome, process, and balancing measures would allow investigators to more quickly build evidence for a change’s effectiveness while simultaneously uncovering effective implementation strategies if and/or when the change is implemented.

Using the Model for Improvement to Adapt EBPs and Implementation Strategies

Historically, IR has been focused on implementation fidelity, or the extent to which an EBP is implemented as originally studied. However, there is growing recognition that the focus should instead be on how to implement not with fidelity but with purposeful, measured adaptation that maintains the change’s function and efficacy while improving effectiveness in each setting.39,43–45 Using small tests of change could help implementation researchers understand which adaptations improve function. Why do we want to make a change to the EBP or implementation strategies? What do we hypothesize will happen when we make changes? How will we measure the adaptation to know if it is an improvement? One study in the Veterans Health Administration used the RE-AIM IR framework not only to guide pre- and postimplementation evaluation but also as a midcourse evaluation process to assist teams in adjusting implementation strategies.44 With QI team knowledge, as well as systematic, rapid tests of change, investigators could more rapidly and systematically adapt EBPs and implementation strategies to the local context and over time. Careful study of what changes are made and why will augment both successful adaptations to varied contexts and sustainability.

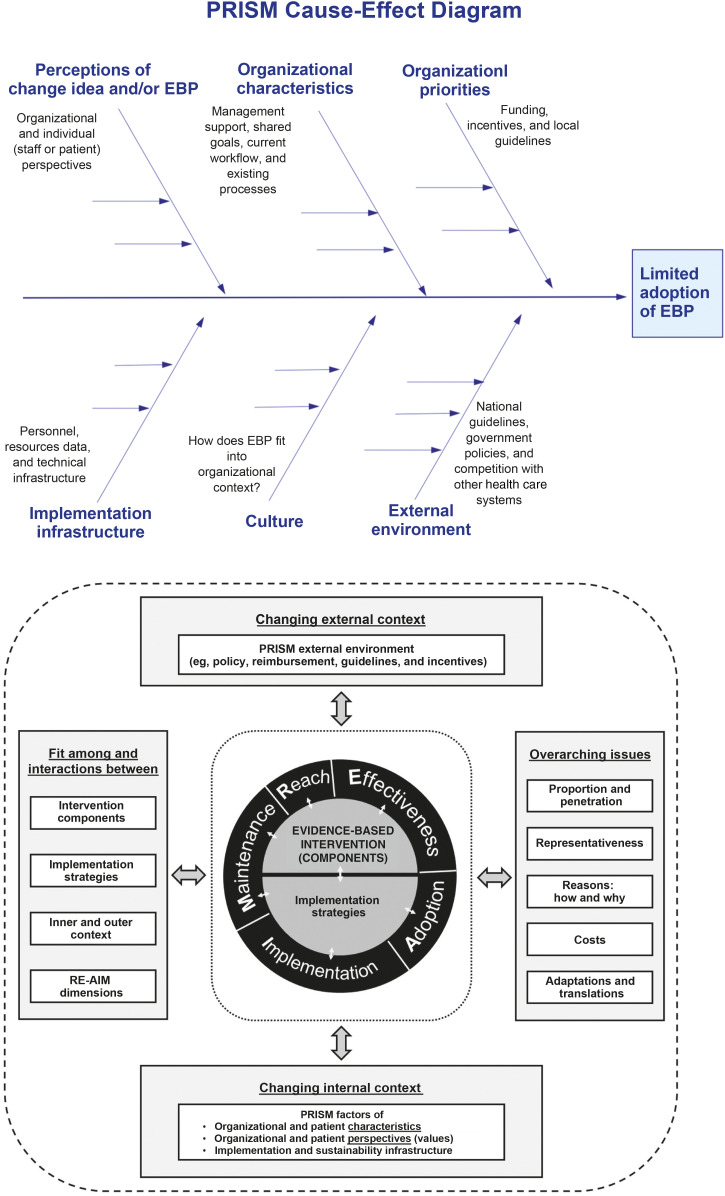

Measuring and Addressing Factors Influencing Implementation

IR frameworks are tremendous for outlining factors that need to be considered during implementation, but the research methods often used to evaluate the factors (eg, conducting qualitative interviews) are cumbersome and often not timely. QIS tools (eg, cause-and-effect diagrams or key driver diagrams) could be coupled with IR frameworks to more rapidly understand which factors are most relevant to implementation success in a given setting and hypothesize about ways to address them. In Fig 4, we illustrate how a common QIS tool, namely, the cause-and-effect diagram, can be merged with the PRISM IR framework. Using an IR framework to guide the categories of a QIS cause-and-effect diagram would allow implementation and improvement teams to engage stakeholders and knowledge experts to more quickly identify factors influencing change in a given setting.

FIGURE 4.

Using the PRISM IR framework to guide the categories of a QI cause-and-effect diagram. The PRISM figure has been reprinted with permission from the re-aim.org Web site developers and host.

The “S” in PDSA stands for study and is used to evaluate the effectiveness of a test of change. Tests of change systematically measure whether change ideas are working, but sets of important implementation outcomes such as feasibility and acceptability, which are formally evaluated in IR, are often more informally assessed during PDSA cycles.30,37 Using pragmatic, validated IR measures of implementation issues such as end-user comfort (acceptability) and perceptions of workflow compatibility (appropriateness) would afford QI teams a deeper understanding of cycle results to make decisions about adoption versus abandonment of change ideas.

Balasubramanian et al46 have described the development and application of the Learning Evaluation approach to formally combine IR and QIS to measure and address factors influencing implementation. At multiple time points, QI-style, real-time, within-organization quantitative process and outcome data (generated from and used to inform PDSA cycles) are overlaid with across-organization qualitative data. This approach allows comparison of barriers and/or facilitators, tests of change, and outcomes across sites so that each site’s PDSA cycles inform the larger study’s generalizable findings.

Measuring Change Outcomes

QIS uses a combination of measures to know if a change is being conducted (process), if the change is effective (outcome), and whether there are unintended consequences (balancing). Because of funding and time constraints, improvers most often use data that can be easily collected in real time or data already collected by health care systems for other purposes. Using these types of data allows for rapid improvement without added expense to the system. Using similar data sources would improve the pace and cost-effectiveness of IR measures.

QIS changes are evaluated at the aggregate level, which may mask important outcomes. Using IR frameworks like RE-AIM,29,47 improvers could more comprehensively measure outcomes related to equity and representativeness, such as reach, that are not commonly considered in QIS. Such measures help identify important gaps such as disparate outcomes between insured and uninsured patients.
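As a small illustration of how an aggregate measure can mask a gap in reach, the following Python sketch computes reach overall and by subgroup. It is a sketch under stated assumptions rather than a RE-AIM specification: the patient records, the field names (`eligible`, `received`, `insurance`), and the function name `reach_by_group` are hypothetical, and reach is simplified here to the proportion of eligible patients who actually received the change.

```python
from collections import defaultdict


def reach_by_group(patients, group_key):
    """Return (overall reach, reach per subgroup).

    Reach is simplified to: eligible patients who received the change
    divided by all eligible patients.
    """
    eligible = defaultdict(int)
    reached = defaultdict(int)
    for patient in patients:
        if not patient["eligible"]:
            continue  # ineligible patients are outside the denominator
        group = patient[group_key]
        eligible[group] += 1
        if patient["received"]:
            reached[group] += 1

    total_eligible = sum(eligible.values())
    if total_eligible == 0:
        raise ValueError("no eligible patients to evaluate")

    overall = sum(reached.values()) / total_eligible
    per_group = {group: reached[group] / eligible[group] for group in eligible}
    return overall, per_group


def record(received, insurance):
    """Build one hypothetical eligible-patient record."""
    return {"eligible": True, "received": received, "insurance": insurance}


# Hypothetical cohort: 8 insured patients (7 reached) and
# 4 uninsured patients (1 reached).
patients = (
    [record(True, "insured")] * 7
    + [record(False, "insured")]
    + [record(True, "uninsured")]
    + [record(False, "uninsured")] * 3
)

overall, per_group = reach_by_group(patients, "insurance")
print(f"overall reach: {overall:.2f}")
for group, value in per_group.items():
    print(f"{group}: {value:.2f}")
```

In this made-up cohort, the aggregate figure alone would suggest that the change reached about two-thirds of eligible patients, whereas stratifying by insurance status shows that most uninsured patients were not reached.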

Sustaining Improvements

Both QIS and IR face challenges with sustaining change.48 Both improvers and implementers may go too far in assuming that changes are made permanent and sustained. In articles, researchers describe improved outcomes after implementation studies, and published quality reports demonstrate improvement on run charts or control charts, but what is next? Whether improvements are spread and sustained is rarely reported. What happens after the improvement team moves to the next project, when grant funding ends, or when time and resource allocation changes? There is both a dearth of publications that report on sustained change and a paucity of evidence around what creates lasting change. What fosters a culture change of “this is how we do it here” versus a return to the old way?

Both QIS and IR recognize the importance and challenge of sustaining change and embrace the concept of learning health systems, that is, a continuous cycle of data examination and adjustment to constantly improve within a hospital or health system.49,50 Thought leaders in IR have proposed the Dynamic Sustainability Framework, which might serve as a bridge between IR and QIS to structure continuous or ongoing improvement as a route to sustainment.39 Refining changes and implementation strategies with sustainability in mind and developing methods to measure51 and promote sustainment52 are important areas of study that would benefit from a forward-thinking partnership between QIS and IR.

Conclusions

Quality improvers focused on developing changes that improve care in their setting stress that not every change is an improvement,17 whereas implementation researchers focused on influencing behavior change across multiple settings tout that context is everything.53 Although both fields are vast topics beyond the scope of this article, in the hospital setting, implementation and QI have a lot in common. Undoubtedly, cross-pollinating ideas and tools would advance both fields and benefit patients.7 Going forward, both disciplines must focus on what worked and why it worked. What about the context made the change work? What about the context required adaptation? Greater integration of QIS and IR will improve the speed, effectiveness, and ultimate impact of both improvement and implementation.

Footnotes

Dr Tyler conceptualized the manuscript and drafted the initial manuscript; Dr Glasgow critically reviewed and revised the manuscript; and all authors approved the final manuscript as submitted.

FINANCIAL DISCLOSURE: The authors have indicated they have no financial relationships relevant to this article to disclose.

FUNDING: Supported by grant numbers K08HS026512 from the Agency for Healthcare Research and Quality and HL 137862-012 from the National Institutes of Health Heart, Lung, and Blood Institute. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality or National Institutes of Health. The funders/sponsors did not participate in the work. Funded by the National Institutes of Health (NIH).

POTENTIAL CONFLICT OF INTEREST: The authors have indicated they have no potential conflicts of interest to disclose.

Definitions of Terms Used in this Article

- Change: A different way of doing something.
- Effective Change: A change that results in measurable improvement.
- Improvement: Moving from current state to a better state. In healthcare, often defined as better health and healthcare that is more efficient, effective, safe, patient-centered, and equitable.1
- Quality Improvement Science: An applied team science used to systematically develop, test, and implement a change that results in measurable improvement.
- Quality Improvement: The process of developing, testing, and implementing effective changes.
- Improver: Person(s) within a healthcare setting responsible for improving healthcare delivery and health outcomes.
- Evidence-Based Practice: A practice, program or intervention(s) that has been shown through research to improve outcomes.
- Implementation: Putting an evidence-based change into practice or making a change permanent.
- Implementation Research: The study of strategies, techniques, and factors associated with successfully translating an evidence-based practice into diverse settings.
- Implementer: Person(s) within a healthcare setting responsible for putting an evidence-based change into practice.
- Spread: Implementing an evidence-based practice in settings beyond the setting where it was initially developed, tested, and implemented.
- Adaptation: The intentional modification of an evidence-based practice or implementation strategy to improve results and/or compatibility with the context of a healthcare setting.
- Context: Factors that influence or define the social and organizational factors of a setting (eg, social norms, policies, team interactions).2

References

- 1.Institute of Medicine Committee on Quality of Health Care in America. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academies Press; 2001

- 2.Brownson R, Colditz G, Proctor E. Dissemination and Implementation Research in Health: Translating Science to Practice. 2nd ed. New York, NY: Oxford University Press; 2018

- 3.Check D, Zullig L, Davis M, et al. Quality improvement and implementation science in cancer care: Identifying areas of synergy and opportunities for further integration. J Clin Oncol. 2019;37(27):29.

- 4.Mitchell SA, Chambers DA. Leveraging implementation science to improve cancer care delivery and patient outcomes. J Oncol Pract. 2017;13(8):523–529

- 5.Koczwara B, Stover AM, Davies L, et al. Harnessing the synergy between improvement science and implementation science in cancer: a call to action. J Oncol Pract. 2018;14(6):335–340

- 6.Margolis P, Provost LP, Schoettker PJ, Britto MT. Quality improvement, clinical research, and quality improvement research--opportunities for integration. Pediatr Clin North Am. 2009;56(4):831–841

- 7.Berwick DM. The science of improvement. JAMA. 2008;299(10):1182–1184

- 8.Shojania KG, Grimshaw JM. Evidence-based quality improvement: the state of the science. Health Aff (Millwood). 2005;24(1):138–150

- 9.Granger BB. Science of improvement versus science of implementation: integrating both into clinical inquiry. AACN Adv Crit Care. 2018;29(2):208–212

- 10.Miltner R, Newsom J, Mittman B. The future of quality improvement research. Implement Sci. 2013;8(suppl 1):S9.

- 11.Standiford T, Conte ML, Billi JE, Sales A, Barnes GD. Integrating lean thinking and implementation science determinants checklists for quality improvement: a scoping review. Am J Med Qual. 2020;35(4):330–340

- 12.Stetler CB, McQueen L, Demakis J, Mittman BS. An organizational framework and strategic implementation for system-level change to enhance research-based practice: QUERI Series. Implement Sci. 2008;3:30.

- 13.Yano EM. The role of organizational research in implementing evidence-based practice: QUERI Series. Implement Sci. 2008;3:29.

- 14.Kilbourne AM, Elwy AR, Sales AE, Atkins D. Accelerating research impact in a learning health care system: VA’s quality enhancement research initiative in the choice act era. Med Care. 2017;55(7 suppl 1):S4–S12

- 15.Patient Safety & Quality Healthcare. Proctor L. Editor's Notebook: A Quotation with a Life of Its Own. 2008. Available at: http://psqh.com/editor-s-notebook-a-quotation-with-a-life-of-its-own. Accessed March 25, 2021

- 16.Perla RJ, Provost LP, Parry GJ. Seven propositions of the science of improvement: exploring foundations. Qual Manag Health Care. 2013;22(3):170–186

- 17.Langley GJ, Moen RD, Nolan KM, Nolan TW, Norman CL, Provost L. The Improvement Guide: A Practical Approach to Enhancing Organizational Performance. 2nd ed. San Francisco, CA: Jossey-Bass; 1996

- 18.American Society for Quality. Learn About Quality: Fishbone Diagram. Available at: https://asq.org/quality-resources/fishbone. Accessed December 10, 2020

- 19.Agency for Healthcare Research and Quality. Section 4: ways to approach the quality improvement process (page 2 of 2). 2020. Available at: https://www.ahrq.gov/cahps/quality-improvement/improvement-guide/4-approach-qi-process/sect4part2.html. Accessed November 22, 2020

- 20.Benneyan JC, Lloyd RC, Plsek PE. Statistical process control as a tool for research and healthcare improvement. Qual Saf Health Care. 2003;12(6):458–464

- 21.Provost LP. Analytical studies: a framework for quality improvement design and analysis. BMJ Qual Saf. 2011;20(suppl 1):i92–i96

- 22.Shewhart W. The Economic Control of Quality of Manufactured Product. New York: D Van Nostrand; 1931

- 23.Deming WE. Out of the Crisis. Cambridge, MA: Massachusetts Institute of Technology Center for Advanced Engineering Studies; 1986

- 24.Provost LP, Murray S. The Health Care Data Guide: Learning from Data for Improvement. Hoboken, NJ: Wiley; 2011

- 25.Department of Health and Human Services. PAR-19-274: Dissemination and Implementation Research in Health (R01 clinical trial optional). 2019. Available at: https://grants.nih.gov/grants/guide/pa-files/PAR-19-274.html. Accessed November 11, 2020

- 26.Bauer MS, Damschroder L, Hagedorn H, Smith J, Kilbourne AM. An introduction to implementation science for the non-specialist. BMC Psychol. 2015;3(1):32

- 27.Stirman SW, Baumann AA, Miller CJ. The FRAME: an expanded framework for reporting adaptations and modifications to evidence-based interventions. Implement Sci. 2019;14(1):1–10

- 28.Chambers DA, Norton WE. The adaptome: advancing the science of intervention adaptation. Am J Prev Med. 2016;51(4 suppl 2):S124–S131

- 29.Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–1327

- 30.Glasgow RE, Harden SM, Gaglio B, et al. RE-AIM planning and evaluation framework: adapting to new science and practice with a 20-year review. Front Public Health. 2019;7:64

- 31.McCreight MS, Rabin BA, Glasgow RE, et al. Using the Practical, Robust Implementation and Sustainability Model (PRISM) to qualitatively assess multilevel contextual factors to help plan, implement, evaluate, and disseminate health services programs. Transl Behav Med. 2019;9(6):1002–1011

- 32.Feldstein AC, Glasgow RE. A Practical, Robust Implementation and Sustainability Model (PRISM) for integrating research findings into practice. Jt Comm J Qual Patient Saf. 2008;34(4):228–243

- 33.Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm Policy Ment Health. 2011;38(1):4–23

- 34.Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50

- 35.Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53

- 36.Strifler L, Cardoso R, McGowan J, et al. Scoping review identifies significant number of knowledge translation theories, models, and frameworks with limited use. J Clin Epidemiol. 2018;100:92–102

- 37.Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65–76

- 38.Helfrich CD, Li Y-F, Sharp ND, Sales AE. Organizational Readiness to Change Assessment (ORCA): development of an instrument based on the Promoting Action on Research in Health Services (PARIHS) framework. Implement Sci. 2009;4(1):38

- 39.Chambers DA, Glasgow RE, Stange KC. The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implement Sci. 2013;8:117

- 40.Frakt AB, Prentice JC, Pizer SD, et al. Overcoming challenges to evidence-based policy development in a large, integrated delivery system. Health Serv Res. 2018;53(6):4789–4807

- 41.Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50(3):217–226

- 42.Kemp CG, Wagenaar BH, Haroz EE. Expanding hybrid studies for implementation research: intervention, implementation strategy, and context. Front Public Health. 2019;7:325

- 43.Perez Jolles M, Lengnick-Hall R, Mittman BS. Core functions and forms of complex health interventions: a patient-centered medical home illustration. J Gen Intern Med. 2019;34(6):1032–1038

- 44.Glasgow RE, Battaglia C, McCreight M, Ayele RA, Rabin BA. Making implementation science more rapid: use of the RE-AIM framework for mid-course adaptations across five health services research projects in the Veterans Health Administration. Front Public Health. 2020;8:194

- 45.Ovretveit J, Dolan-Branton L, Marx M, Reid A, Reed J, Agins B. Adapting improvements to context: when, why and how? Int J Qual Health Care. 2018;30(suppl 1):20–23

- 46.Balasubramanian BA, Cohen DJ, Davis MM, et al. Learning Evaluation: blending quality improvement and implementation research methods to study healthcare innovations. Implement Sci. 2015;10:31

- 47.Glasgow RE, Estabrooks PE. Pragmatic applications of RE-AIM for health care initiatives in community and clinical settings. Prev Chronic Dis. 2018;15:E02

- 48.Wiltsey Stirman S, Kimberly J, Cook N, Calloway A, Castro F, Charns M. The sustainability of new programs and innovations: a review of the empirical literature and recommendations for future research. Implement Sci. 2012;7:17

- 49.Etheredge LM. A rapid-learning health system. Health Aff (Millwood). 2007;26(2):w107–w118

- 50.Lessard L, Michalowski W, Fung-Kee-Fung M, Jones L, Grudniewicz A. Architectural frameworks: defining the structures for implementing learning health systems. Implement Sci. 2017;12(1):78

- 51.Moore JE, Mascarenhas A, Bain J, Straus SE. Developing a comprehensive definition of sustainability. Implement Sci. 2017;12(1):110

- 52.Shelton RC, Chambers DA, Glasgow RE. An extension of RE-AIM to enhance sustainability: addressing dynamic context and promoting health equity over time. Front Public Health. 2020;8:134

- 53.Nilsen P, Bernhardsson S. Context matters in implementation science: a scoping review of determinant frameworks that describe contextual determinants for implementation outcomes. BMC Health Serv Res. 2019;19(1):189