Abstract

The Advanced Normalization Tools ecosystem, known as ANTsX, consists of multiple open-source software libraries that house top-performing algorithms used worldwide by scientific and research communities for processing and analyzing biological and medical imaging data. The base software library, ANTs, is built upon, and contributes to, the NIH-sponsored Insight Toolkit. Founded in 2008 with the highly regarded Symmetric Normalization image registration framework, the ANTs library has since grown to include additional functionality. Recent enhancements include statistical, visualization, and deep learning capabilities through interfacing with both the R statistical project (ANTsR) and Python (ANTsPy). Additionally, the corresponding deep learning extensions ANTsRNet and ANTsPyNet (built on the popular TensorFlow/Keras libraries) contain several popular network architectures and trained models for specific applications. One such comprehensive application is a deep learning analog for generating cortical thickness data from structural T1-weighted brain MRI, both cross-sectionally and longitudinally. These pipelines significantly improve computational efficiency and provide comparable-to-superior accuracy over multiple criteria relative to the existing ANTs workflows, and they simultaneously illustrate the importance of the comprehensive ANTsX approach as a framework for medical image analysis.

Subject terms: Computational neuroscience, Biomarkers

The ANTsX ecosystem: a brief overview

Image registration origins

The Advanced Normalization Tools (ANTs) is a state-of-the-art, open-source software toolkit for image registration, segmentation, and other functionality for comprehensive biological and medical image analysis. Historically, ANTs is rooted in advanced image registration techniques which have been at the forefront of the field due to seminal contributions that date back to the original elastic matching method of Bajcsy and co-investigators1,2,3. Various independent platforms have been used to evaluate ANTs tools since their early development. In a landmark paper4, the authors reported an extensive evaluation using multiple neuroimaging datasets analyzed by fourteen different registration tools, including the Symmetric Normalization (SyN) algorithm5, and found that “ART, SyN, IRTK, and SPM’s DARTEL Toolbox gave the best results according to overlap and distance measures, with ART and SyN delivering the most consistently high accuracy across subjects and label sets.” Participation in other independent competitions6,7 provided additional evidence of the utility of ANTs registration and other tools8,9,10. Despite the considerable potential of deep learning for image registration algorithmic development11, ANTs registration tools continue to find application in the various biomedical imaging research communities.

Current developments

Since its inception, though, ANTs has expanded significantly beyond its image registration origins. Other core contributions include template building12, segmentation13, image preprocessing (e.g., bias correction14 and denoising15), joint label fusion16,17, and brain cortical thickness estimation18,19 (cf Table 1). Additionally, ANTs has been integrated into multiple publicly available workflows such as fMRIprep20 and the Spinal Cord Toolbox21. Frequently used ANTs pipelines, such as cortical thickness estimation19, have been integrated into Docker containers and packaged as Brain Imaging Data Structure (BIDS)22 and FlyWheel applications (i.e., “gears”). ANTs has also been independently ported to various platforms including Neurodebian23 (Debian OS), Neuroconductor24 (the R statistical project), and Nipype25 (Python). Other widely used software, such as FreeSurfer26, has incorporated well-performing and complementary ANTs components14,15 into its own libraries. According to GitHub, recent unique “clones” have averaged 34 per day, with the total number of clones being approximately twice that many. Fifty unique contributors to the ANTs library have made a total of over 4500 commits. Additional insights into usage can be viewed at the ANTs GitHub website.

Table 1.

The significance of core ANTs tools in terms of their number of citations (as of October 17, 2020).

Over the course of its development, ANTs has been extended to complementary frameworks, resulting in the Python- and R-based ANTsPy and ANTsR toolkits, respectively. These ANTs-based packages interface with extremely popular, high-level, open-source programming platforms, significantly increasing the user base of ANTs. The rapidly rising popularity of deep learning motivated further recent enhancement of ANTs and its extensions. Despite the abundance of online innovation and code for deep learning algorithms, much of it is disorganized and lacks the uniformity in structure and external data interfaces that would facilitate greater uptake. With this in mind, ANTsR spawned the deep learning ANTsRNet package35, which is a growing Keras/TensorFlow-based library of popular deep learning architectures and applications specifically geared towards medical imaging. Analogously, ANTsPyNet is an additional ANTsX complement to ANTsPy. The two packages, which we collectively refer to as “ANTsXNet”, are co-developed to ensure cross-compatibility such that training performed in one library is readily accessible by the other. In addition to a variety of popular network architectures (implemented in both 2-D and 3-D), ANTsXNet contains a host of functionality for medical image analysis that has been developed in-house or collected from other open-source projects. For example, a widely used ANTsXNet application is a multi-modal brain extraction tool that uses different variants of the popular U-net36 architecture for segmenting the brain in multiple modalities. These modalities include conventional T1-weighted structural MRI as well as T2-weighted MRI, FLAIR, fractional anisotropy, and BOLD data. Demographic specialization also includes infant T1-weighted and/or T2-weighted MRI.
Additionally, we have included other models and weights into our libraries such as a recent BrainAGE estimation model37, based on individuals; HippMapp3r38, a hippocampal segmentation tool; the winning entry of the MICCAI 2017 white matter hyperintensity segmentation competition39; MRI super resolution using deep back-projection networks40; and NoBrainer, a T1-weighted brain extraction approach based on FreeSurfer (see Fig. 1).

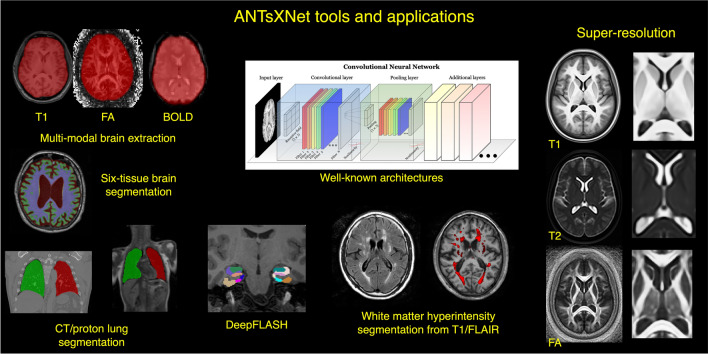

Figure 1.

An illustration of the tools and applications available as part of the ANTsRNet and ANTsPyNet deep learning toolkits. Both libraries take advantage of ANTs functionality through their respective language interfaces, ANTsR (R) and ANTsPy (Python). Building on the Keras/TensorFlow libraries, both libraries standardize popular network architectures within the ANTs ecosystem and are cross-compatible. These networks are used to train models and weights for applications such as brain extraction, which are then disseminated to the public.

The ANTsXNet cortical thickness pipeline

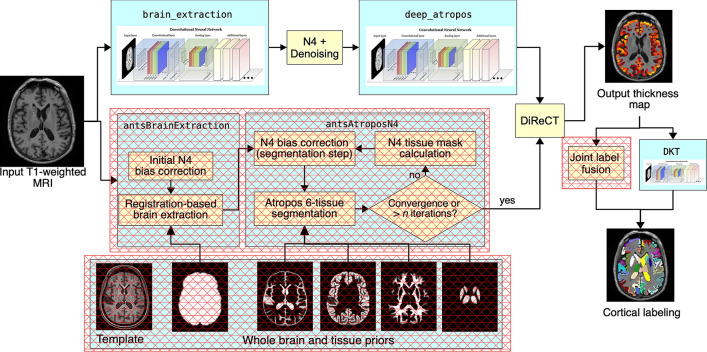

The most recent ANTsX innovation involves the development of deep learning analogs of our popular ANTs cortical thickness cross-sectional19 and longitudinal41 pipelines within the ANTsXNet framework. Figure 2, adapted from our previous work19, illustrates some of the major changes associated with the single-subject, cross-sectional pipeline. The resulting improvement in efficiency derives primarily from eliminating deformable image registration from the pipeline. Registration has historically been used to propagate prior, population-based information (e.g., tissue maps) to individual subjects for tasks such as brain extraction42 and tissue segmentation13; this prior information is now encoded within the neural networks and their trained weights.

Figure 2.

Illustration of the ANTsXNet cortical thickness pipeline and the relationship to its traditional ANTs analog. The hash-designated sections denote pipeline steps which have been obviated by the deep learning approach. These include template-based brain extraction, template-based n-tissue segmentation, and joint label fusion for cortical labeling. In our prior work, execution time of the thickness pipeline was dominated by registration. In the deep version of the pipeline, it is dominated by DiReCT. However, we note that registration and DiReCT execute much more quickly than in the past in part due to major improvements in the underlying ITK multi-threading strategy.

These structural MRI processing pipelines are currently available as open-source within the ANTsXNet libraries. Evaluations using both cross-sectional and longitudinal data are described in subsequent sections and couched within the context of our previous publications19,41. Related work has been recently reported by external groups43,44 and provides a context for comparison to motivate the utility of the ANTsX ecosystem.

Results

Cross-sectional performance evaluation

Due to the absence of ground truth, we utilize the evaluation strategy from our previous work19, where we used cross-validation to build and compare age prediction models from data derived from both the proposed ANTsXNet pipeline and the established ANTs pipeline. Specifically, we use “age” as a well-known and widely available demographic correlate of cortical thickness45 and quantify the predictive capabilities of corresponding random forest regression models34 of the form:

$$\mathrm{AGE} \sim \mathrm{VOLUME} + \mathrm{GENDER} + \sum_{i=1}^{62} T(\mathrm{DKT}_i) \tag{1}$$

with covariates $\mathrm{GENDER}$ and $\mathrm{VOLUME}$ (i.e., total intracranial volume). $T(\mathrm{DKT}_i)$ is the average thickness value in the $i$-th Desikan-Killiany-Tourville (DKT) region46 (cf Table 2). The root mean square error (RMSE) between the actual and predicted ages is the quantity used for comparative evaluation. As we have explained previously19, we find these evaluation measures to be much more useful than other commonly applied criteria, as they come closer to assessing the actual utility of these thickness measurements as biomarkers for disease47 or growth. In recent work44, the authors employ correlation with FreeSurfer thickness values as the primary evaluation for assessing relative performance with ANTs cortical thickness19. This evaluation, unfortunately, is fundamentally flawed in that it is a prime example of a type of circular analysis48, whereby data selection is driven by the same criteria used to evaluate performance. Specifically, the underlying DeepSCAN network used for the tissue segmentation step is trained on FreeSurfer results, which directly influences thickness values, as thickness and segmentation are highly correlated and vary characteristically between software packages. Relative performance with ANTs thickness (which does not use FreeSurfer for training) is then assessed by determining correlations with FreeSurfer thickness values. Almost as problematic is their use of repeatability, which they confusingly label as “robustness,” as an additional ranking criterion. Repeatability evaluations should be contextualized within considerations such as the bias-variance tradeoff and quantified using relevant metrics, such as the intra-class correlation coefficient, which takes into account both inter- and intra-observer variability.
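The evaluation criterion above, RMSE of age predictions over repeated random training/testing splits (500 permutations with an 80/20 split, as described with Fig. 3), can be sketched in plain Python. This is a minimal illustration, not the authors' R scripts: the `fit` argument is a hypothetical stand-in for the random forest model of Eq. (1), the placeholder `fit_mean_age` simply predicts the training mean, and all function names are our own.

```python
import math
import random
from typing import Callable, List, Sequence

Features = Sequence[float]
Model = Callable[[Features], float]


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean square error between actual and predicted ages."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def repeated_holdout_rmse(
    features: Sequence[Features],
    ages: Sequence[float],
    fit: Callable[[List[Features], List[float]], Model],
    n_permutations: int = 500,
    train_fraction: float = 0.8,
    seed: int = 0,
) -> List[float]:
    """Return one test-set RMSE per random training/testing split."""
    rng = random.Random(seed)
    n = len(ages)
    n_train = int(round(train_fraction * n))
    if not 0 < n_train < n:
        raise ValueError("split leaves an empty training or testing set")
    scores = []
    for _ in range(n_permutations):
        order = list(range(n))
        rng.shuffle(order)  # a fresh random permutation for each split
        train, test = order[:n_train], order[n_train:]
        model = fit([features[i] for i in train], [ages[i] for i in train])
        predicted = [model(features[i]) for i in test]
        scores.append(rmse([ages[i] for i in test], predicted))
    return scores


def fit_mean_age(train_x: List[Features], train_y: List[float]) -> Model:
    """Hypothetical placeholder model: always predicts the training mean age."""
    mean_age = sum(train_y) / len(train_y)
    return lambda _features: mean_age
```

For example, `rmse([30, 40, 50], [33, 36, 50])` is the square root of 25/3, about 2.89 years. With real data, `fit` would wrap a random forest regressor trained on the covariates and the 62 regional thickness values, and the summary statistic would be the mean of the returned scores.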

Table 2.

The 31 cortical labels (per hemisphere) of the Desikan–Killiany–Tourville atlas.

| Labels 1–16 | Labels 17–31 |
|---|---|
| (1) Caudal anterior cingulate (cACC) | (17) Pars orbitalis (pORB) |
| (2) Caudal middle frontal (cMFG) | (18) Pars triangularis (pTRI) |
| (3) Cuneus (CUN) | (19) Pericalcarine (periCAL) |
| (4) Entorhinal (ENT) | (20) Postcentral (postC) |
| (5) Fusiform (FUS) | (21) Posterior cingulate (PCC) |
| (6) Inferior parietal (IPL) | (22) Precentral (preC) |
| (7) Inferior temporal (ITG) | (23) Precuneus (PCUN) |
| (8) Isthmus cingulate (iCC) | (24) Rostral anterior cingulate (rACC) |
| (9) Lateral occipital (LOG) | (25) Rostral middle frontal (rMFG) |
| (10) Lateral orbitofrontal (LOF) | (26) Superior frontal (SFG) |
| (11) Lingual (LING) | (27) Superior parietal (SPL) |
| (12) Medial orbitofrontal (MOF) | (28) Superior temporal (STG) |
| (13) Middle temporal (MTG) | (29) Supramarginal (SMAR) |
| (14) Parahippocampal (PARH) | (30) Transverse temporal (TT) |
| (15) Paracentral (paraC) | (31) Insula (INS) |
| (16) Pars opercularis (pOPER) | |

The ROI abbreviations from the R brainGraph package are given in parentheses and used in later figures.

In addition to the training data sets (IXI, MMRR, NKI, and OASIS; see Methods), we also compared performance using the SRPB data set32, comprising over 1600 participants from 12 sites, to assess generalizability. We recognize that a portion of the evaluation data is processed through components of the proposed deep learning-based pipeline that were trained on those same data. Although this does not provide evidence for generalizability (which is why we include the much larger SRPB data set), it is still interesting to examine the results since, in this case, the deep learning training can be considered a type of noise reduction on the final results. Note that network training did not use age prediction (or any other evaluation or related measure) as a criterion to be optimized, which would constitute circular analysis48.

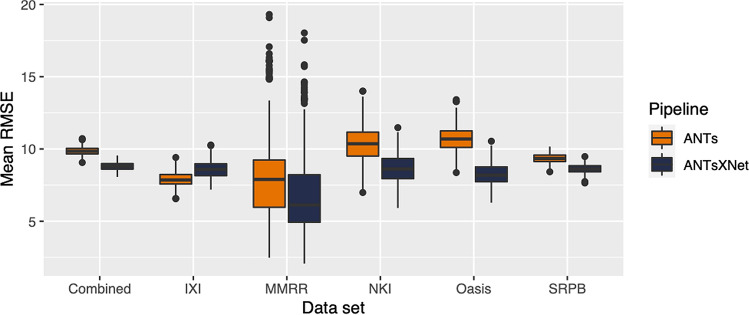

The results are shown in Fig. 3 where we used cross-validation with 500 permutations per model per data set (including a “combined” set) and an 80/20 training/testing split. The ANTsXNet deep learning pipeline outperformed the classical pipeline19 in terms of age prediction in all data sets except for IXI. This also includes the cross-validation iteration where all data sets were combined. Additionally, repeatability assessment on the regional cortical thickness values of the MMRR data set yielded ICC values (“average random rater”) of 0.99 for both pipelines.
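The ICC values above are labeled “average random rater”. We assume this denotes ICC(2,k) in the Shrout and Fleiss convention (two-way random effects, absolute agreement, reliability of the mean of k measurements), which is how, for example, the R psych package labels it. A minimal sketch of that computation from two-way ANOVA mean squares follows; the function name and the targets-by-sessions table layout are our own illustration, not the authors' script.

```python
from typing import Sequence


def icc_2k(ratings: Sequence[Sequence[float]]) -> float:
    """ICC(2,k): two-way random effects, absolute agreement, mean of k raters.

    ratings[i][j] is the measurement of target i (e.g., a subject/region pair)
    by rater j (e.g., a repeated scan session).
    """
    n = len(ratings)
    if n < 2:
        raise ValueError("need at least 2 targets")
    k = len(ratings[0])
    if k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a rectangular table with at least 2 raters")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares: targets (rows), raters (columns), residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for row in ratings for v in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (ms_cols - ms_error) / n)
```

For example, `icc_2k([[1, 2], [2, 3], [3, 4]])` gives 0.8 even though the two sessions are perfectly correlated, because the constant offset between them counts against absolute agreement.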

Figure 3.

Distribution of mean RMSE values (500 permutations) for age prediction across the different data sets between the traditional ANTs and deep learning-based ANTsXNet pipelines. Total mean values are as follows: Combined—9.3 years (ANTs) and 8.2 years (ANTsXNet); IXI—7.9 years (ANTs) and 8.6 years (ANTsXNet); MMRR—7.9 years (ANTs) and 7.6 years (ANTsXNet); NKI—8.7 years (ANTs) and 7.9 years (ANTsXNet); OASIS—9.2 years (ANTs) and 8.0 years (ANTsXNet); and SRPB—9.2 years (ANTs) and 8.1 years (ANTsXNet).

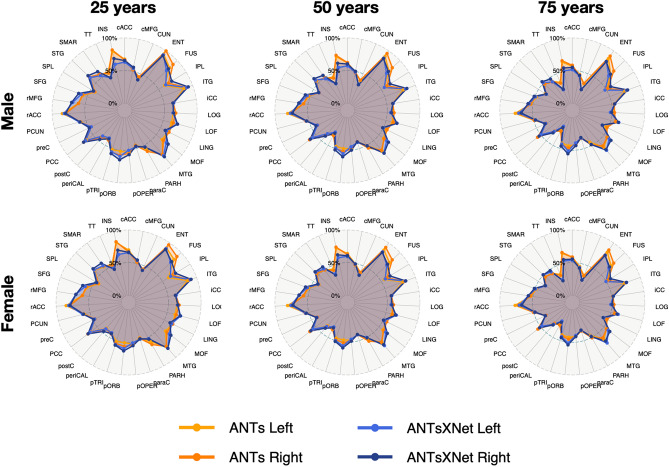

A comparative illustration of regional thickness measurements between the ANTs and ANTsXNet pipelines is provided in Fig. 4 for three different ages spanning the lifespan. Linear models of the form

$$T(\mathrm{DKT}_i) \sim \mathrm{AGE} + \mathrm{GENDER} \tag{2}$$

were created for each of the 62 DKT regions for each pipeline. These models were then used to predict thickness values for each gender at ages of 25, 50, and 75 years, and the predictions were plotted relative to the absolute maximum predicted thickness value (ANTs: right entorhinal cortex at 25 years, male). Although there appear to be systematic differences between some regional predicted thickness values, a pairwise t-test found no statistically significant difference between the predicted thickness values of the two pipelines.
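The per-region linear models and the scaling used for the radar plots in Fig. 4 can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' R code: ordinary least squares is solved through the normal equations, gender is dummy-coded with 1 = male (our assumed coding), and all function names are hypothetical.

```python
from typing import Dict, List, Sequence


def design_row(age: float, male: bool) -> List[float]:
    """Intercept, AGE, and a 0/1 dummy for GENDER (1 = male, an assumed coding)."""
    return [1.0, float(age), 1.0 if male else 0.0]


def fit_ols(rows: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Solve the normal equations (X^T X) b = X^T y by Gauss-Jordan elimination."""
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    aug = [xtx[i] + [xty[i]] for i in range(p)]  # augmented matrix [X^T X | X^T y]
    for col in range(p):
        # Partial pivoting for numerical stability.
        pivot = max(range(col, p), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("design matrix is rank deficient")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(p):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][p] for i in range(p)]


def predict(coef: Sequence[float], age: float, male: bool) -> float:
    """Predicted regional thickness for one age/gender combination."""
    return sum(c * x for c, x in zip(coef, design_row(age, male)))


def relative_to_max(predictions: Dict[str, float]) -> Dict[str, float]:
    """Divide every predicted thickness by the largest absolute prediction."""
    peak = max(abs(v) for v in predictions.values())
    return {name: v / peak for name, v in predictions.items()}
```

For each pipeline one would fit 62 such models, predict at ages 25, 50, and 75 for each gender, and pass all predictions to `relative_to_max`, mirroring the normalization by the largest predicted value described above. In practice a linear-algebra or statistics library would replace the hand-written solver.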

Figure 4.

Radar plots enabling comparison of relative thickness values between the ANTs and ANTsXNet cortical thickness pipelines at three different ages sampling the life span. See Table 2 for region abbreviations.

Longitudinal performance evaluation

Given the strong performance and superior computational efficiency of the proposed ANTsXNet pipeline for cross-sectional data, we evaluated its performance on longitudinal data using the longitudinally specific evaluation strategy and data we employed when introducing the longitudinal version of the ANTs cortical thickness pipeline41. We also evaluated an ANTsXNet-based pipeline tailored specifically for longitudinal data. In this variant, a single-subject template (SST) is generated and processed using the previously described ANTsXNet cross-sectional pipeline, which yields tissue spatial priors. These spatial priors are then used in our traditional brain segmentation approach13 for each time point. The computational efficiency of this variant is also significantly improved, in part due to the elimination of the costly SST prior generation, which uses multiple registrations combined with joint label fusion16.

The ADNI-1 data used for our longitudinal performance evaluation41 consist of over 600 subjects (197 cognitively normal [CN], 324 late mild cognitive impairment [LMCI], and 142 Alzheimer’s disease [AD] subjects) with one or more follow-up image acquisition sessions every 6 months (up to 36 months), for a total of over 2500 images. In addition to the ANTsXNet pipelines (“ANTsXNetCross” and “ANTsXNetLong”) of the current evaluation, our previous work included the FreeSurfer26 cross-sectional (“FSCross”) and longitudinal (“FSLong”) streams, the ANTs cross-sectional pipeline (“ANTsCross”), and two longitudinal ANTs-based variants (“ANTsNative” and “ANTsSST”). Two evaluation measurements, one unsupervised and one supervised, were used to assess comparative performance among all seven pipelines. We add the results of the ANTsXNet cross-sectional and longitudinal evaluations to those of these other pipelines to provide a comprehensive overview of relative performance.

First, linear mixed-effects (LME)31 modeling was used to quantify between-subject and residual variabilities, the ratio of which provides an estimate of the effectiveness of a given biomarker for distinguishing between subpopulations. To assess this criterion while accounting for changes that may occur with the passage of time, we used the following Bayesian LME model:

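$$
\begin{aligned}
Y_{ij}^k &\sim N\left(\alpha_i^k + \beta^k t, \sigma_k^2\right) \\
\alpha_i^k &\sim N\left(\alpha_0^k, \tau_k^2\right) \\
\alpha_0^k, \beta^k &\sim N(0, 10) \\
\sigma_k, \tau_k &\sim \mathrm{Cauchy}^+(0, 5)
\end{aligned} \tag{3}
$$

where $Y_{ij}^k$ denotes the $i$-th individual’s cortical thickness measurement corresponding to the $k$-th region of interest at the time point indexed by $j$, $t$ is the time of that visit, and the specification of the variance priors as half-Cauchy distributions ($\mathrm{Cauchy}^+$) reflects commonly accepted best practice in the context of hierarchical models49. The ratio of interest, $r^k$, per region of the between-subject variability, $\tau_k$, and the residual variability, $\sigma_k$, is

$$r^k = \frac{\tau_k}{\sigma_k}, \quad k = 1, \ldots, 62 \tag{4}$$

where the posterior distribution of $r^k$ was summarized via the posterior median.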
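The variance-ratio summary operates on posterior samples. Assuming paired draws of tau_k and sigma_k from a sampler fit to the Bayesian LME model (the draws here are plain lists, and the function name is hypothetical), the posterior median of the ratio and an equal-tailed 95% interval, one common way to produce interval summaries like those in Fig. 5(a), can be computed as follows.

```python
import statistics
from typing import Sequence, Tuple


def variance_ratio_summary(
    tau_draws: Sequence[float], sigma_draws: Sequence[float]
) -> Tuple[float, float, float]:
    """Return (posterior median, 2.5% quantile, 97.5% quantile) of r = tau / sigma.

    The ratio is computed draw by draw so that its full posterior is summarized,
    rather than dividing two separately summarized posteriors.
    """
    if len(tau_draws) != len(sigma_draws) or len(tau_draws) < 2:
        raise ValueError("need at least two paired posterior draws")
    ratios = [t / s for t, s in zip(tau_draws, sigma_draws)]
    # n=40 yields 39 cut points; the first is the 2.5% and the last the 97.5% quantile.
    cuts = statistics.quantiles(ratios, n=40, method="inclusive")
    return statistics.median(ratios), cuts[0], cuts[-1]
```

Computing the ratio draw by draw matters: the median of tau/sigma is generally not the ratio of the separate medians.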

Second, the supervised evaluation employed Tukey post-hoc analyses with false discovery rate (FDR) adjustment to test the significance of the LMCI-CN, AD-LMCI, and AD-CN diagnostic contrasts. This is provided by the following LME model

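$$\Delta Y^k \sim Y_{bl}^k + \mathrm{AGE}_{bl} + \mathrm{ICV}_{bl} + \mathrm{APOE} + \mathrm{GENDER} + \mathrm{DIAGNOSIS}_{bl} \times \mathrm{VISIT} + (1 \mid \mathrm{ID}) + (1 \mid \mathrm{SITE}) \tag{5}$$

Here, $\Delta Y^k$ is the change in thickness of the $k$-th DKT region from the baseline (bl) thickness $Y_{bl}^k$, with random intercepts for both the individual subject ($\mathrm{ID}$) and the acquisition site ($\mathrm{SITE}$). The subject-specific covariates $\mathrm{AGE}_{bl}$, $\mathrm{APOE}$ status, $\mathrm{GENDER}$, $\mathrm{DIAGNOSIS}_{bl}$, $\mathrm{ICV}_{bl}$, and $\mathrm{VISIT}$ were taken directly from the ADNIMERGE package.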
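The Tukey post-hoc contrasts produce one p-value per region and contrast, which are then FDR-adjusted. The text does not name the FDR procedure; assuming the common Benjamini–Hochberg step-up procedure, the adjustment can be sketched as below (function name ours).

```python
from typing import List, Sequence


def benjamini_hochberg(p_values: Sequence[float]) -> List[float]:
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # ascending p-values
    adjusted = [0.0] * m
    running_min = 1.0  # also caps adjusted values at 1
    # Walk from the largest p-value down, enforcing monotonicity of the adjustment.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted
```

For example, `benjamini_hochberg([0.01, 0.04, 0.03, 0.005])` returns values equal, up to floating-point rounding, to `[0.02, 0.04, 0.04, 0.02]`.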

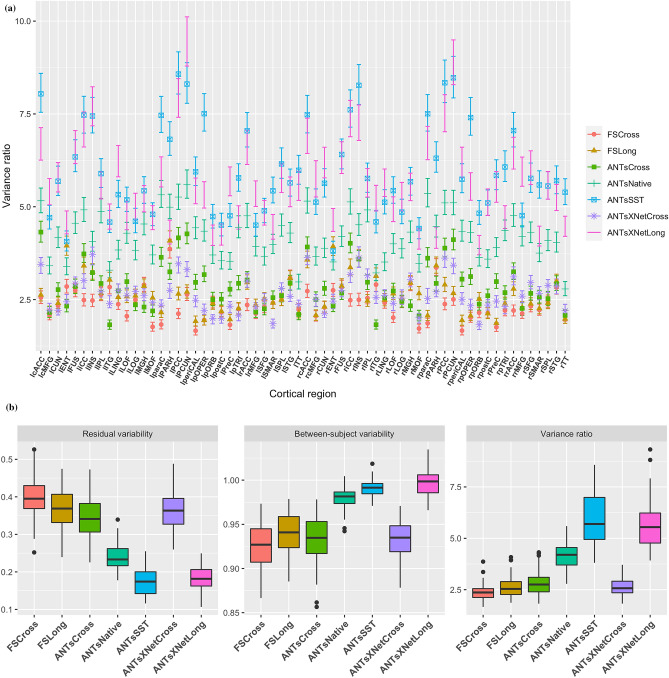

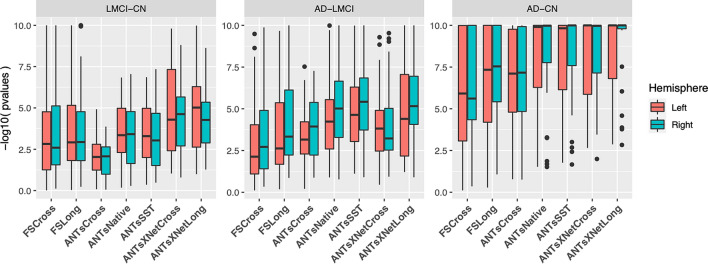

Results for all pipelines with respect to the longitudinal evaluation criteria are shown in Figs. 5 and 6. Figure 5(a) provides the 95% confidence intervals of the variance ratio for all 62 regions of the DKT cortical labeling, where ANTsSST consistently performs best, with ANTsXNetLong also performing well. These quantities are summarized in Fig. 5(b). The second evaluation criterion compares diagnostic differentiation via LMEs. Log p-values are provided in Fig. 6, which demonstrate excellent LMCI-CN and AD-CN differentiation for both deep learning pipelines.

Figure 5.

Performance over longitudinal data as determined by the variance ratio. (a) Region-specific 95% confidence intervals of the variance ratio showing the superior performance of the longitudinally tailored ANTsX-based pipelines, including ANTsSST and ANTsXNetLong. (b) Residual variability, between-subject variability, and variance ratio values per pipeline over all DKT regions.

Figure 6.

Measures for the supervised evaluation strategy where log p-values for diagnostic differentiation of LMCI-CN, AD-LMCI, and AD-CN subjects are plotted for all pipelines over all DKT regions.

Discussion

The ANTsX software ecosystem provides a comprehensive framework for quantitative biological and medical imaging. Although ANTs, the original core of ANTsX, is still at the forefront of image registration technology, it has moved significantly beyond its image registration origins. This expansion is not confined to technical contributions (of which there are many) but also consists of facilitating access for a wide range of users, who can use ANTsX tools (whether through bash, Python, or R scripting) to construct tailored pipelines for their own studies or take advantage of our pre-fabricated pipelines. Moreover, given the open-source licensing of the ANTsX software, usage is not limited to, for example, non-commercial use, a common constraint of other packages such as the FMRIB Software Library (https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/Licence).

One of our most widely used pipelines is the estimation of cortical thickness from neuroimaging. This is understandable given the widespread usage of regional cortical thickness as a biomarker for developmental or pathological trajectories of the brain. In this work, we used this well-vetted ANTs tool to provide training data for producing alternative variants, which leverage deep learning for improved computational efficiency and also provide comparable-to-superior performance with respect to previously proposed evaluation measures for both cross-sectional19 and longitudinal scenarios41. In addition to providing the tools which generated the original training data for the proposed ANTsXNet pipeline, the ANTsX ecosystem provides a full-featured platform for the additional steps, such as preprocessing (ANTsR/ANTsPy); data augmentation (ANTsR/ANTsPy); network construction and training (ANTsRNet/ANTsPyNet); and visualization and statistical analysis of the results (ANTsR/ANTsPy).

Using ANTsX, the various steps in the deep learning training process (e.g., data augmentation, preprocessing) can all be performed within the same ecosystem, where such important details as header information for image geometry are treated consistently. In contrast, related work44 described and evaluated a similar thickness measurement pipeline. However, due to the lack of a complete processing and analysis framework, training data were generated using the FreeSurfer stream, deep learning-based brain segmentation employed DeepSCAN50 (in-house software), and cortical thickness was estimated18 using the ANTs toolkit. The interested researcher must therefore ensure the consistency of the input/output interface between packages (a task with which the Nipype development team is quite familiar).

Although potentially advantageous in terms of computational efficiency and other performance measures, the ANTsXNet pipeline has a number of limitations that should be mentioned, both to guide potential users and possibly to motivate future related research. As is the case with many deep learning models, usage is restricted by the training data. For example, much of the publicly available brain data has been anonymized through various defacing protocols. That is certainly the case with the training data used for the ANTsXNet pipeline, which has consequences specific to the brain extraction step and could lead to poor performance on data that have not been defaced. We are aware of this issue and have provided a temporary workaround while resuming training on whole-head data to mitigate it. Also, although the ANTsXNet pipeline performs relatively well across lifespan data, performance might be hampered for specific age ranges (e.g., neonates), whereas the traditional ANTs cortical thickness pipeline is more flexible and might provide better age-targeted performance. This is the subject of ongoing research. Additionally, application of the ANTsXNet pipeline would be limited for high-resolution acquisitions. Due to the heavy memory requirements associated with deep learning training, any resolution finer than ~1 mm isotropic would not be leveraged by the existing pipeline. However, a potential pipeline variation (akin to the longitudinal variant) worth exploring is one in which Deep Atropos is used only to provide the priors for a subsequent traditional Atropos segmentation on high-resolution data. Direct evaluation by the principal co-authors of the ANTs toolkit, the similarity in resulting cortical thickness values (Fig. 4), and considerations of the training data origins all strongly suggest similarity between Atropos and Deep Atropos output; nevertheless, further evaluation is certainly warranted and would benefit other potential applications.
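The resolution limitation above is easy to quantify. Assuming a 256 mm cubic field of view and a single float32 channel (both illustrative assumptions, not parameters of the pipeline), the arithmetic below shows that halving the voxel spacing multiplies the voxel count, and therefore the memory of every intermediate feature map in a fully convolutional network such as a U-net, by eight.

```python
def volume_memory_mib(fov_mm: float, spacing_mm: float, bytes_per_voxel: int = 4) -> float:
    """Memory (MiB) of one cubic, single-channel volume at isotropic spacing."""
    voxels_per_side = round(fov_mm / spacing_mm)
    return voxels_per_side ** 3 * bytes_per_voxel / 2 ** 20


at_1mm = volume_memory_mib(256, 1.0)    # 256^3 voxels
at_05mm = volume_memory_mib(256, 0.5)   # 512^3 voxels
print(at_1mm, at_05mm, at_05mm / at_1mm)
```

This prints `64.0 512.0 8.0`: a single input channel grows from 64 MiB to 512 MiB, and each of the many feature maps inside the network grows by the same factor.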

In terms of future work, the recent surge in the utility of deep learning in medical image analysis has significantly guided the areas of active ANTsX development. As demonstrated in this work with our widely used cortical thickness pipelines, there are many potential benefits of deep learning analogs to existing ANTs tools, as well as of the development of new ones. Performance is mostly comparable-to-superior relative to existing pipelines, depending on the evaluation metric. Specifically, the ANTsXNet cross-sectional pipeline does well in the age prediction performance framework and in terms of the ICC. Additionally, this pipeline performs relatively well on longitudinal ADNI data for disease differentiation but less well in terms of the generic variance ratio criterion. However, for such longitudinal-specific studies, the ANTsXNet longitudinal variant performs well for both performance measures. We see possible additional longitudinal extensions incorporating subject ID and months as additional network inputs.

Methods

The original ANTs cortical thickness pipeline

The original ANTs cortical thickness pipeline19 consists of the following steps:

- Brain extraction42;
- Brain segmentation with spatial tissue priors13 comprising the
  - Cerebrospinal fluid (CSF),
  - Gray matter (GM),
  - White matter (WM),
  - Deep gray matter,
  - Cerebellum, and
  - Brain stem; and
- Cortical thickness estimation18.

Our recent longitudinal variant41 incorporates an additional step involving the construction of a single subject template (SST)12 coupled with the generation of tissue spatial priors of the SST for use with the processing of the individual time points as described above.

Although the resulting thickness maps are conducive to voxel-based52 and related analyses53, here we employ the well-known Desikan-Killiany-Tourville (DKT)46 labeling protocol (31 labels per hemisphere) to parcellate the cortex for averaging thickness values regionally (cf Table 2). This allows us to 1) be consistent in our evaluation strategy for comparison with our previous work19,41 and 2) leverage an additional deep learning-based substitution within the proposed pipeline.

Overview of cortical thickness via ANTsXNet

The entire analysis/evaluation framework, from preprocessing to statistical analysis, is made possible through the ANTsX ecosystem and simplified through the open-source R and Python platforms. Preprocessing, image registration, and cortical thickness estimation are all available through the ANTsPy and ANTsR libraries whereas the deep learning steps are performed through networks constructed and trained via ANTsRNet/ANTsPyNet with data augmentation strategies and other utilities built from ANTsR/ANTsPy functionality.

The brain extraction, brain segmentation, and DKT parcellation deep learning components were trained using data derived from our previous work19. Specifically, the IXI30, MMRR54, NKI55, and OASIS28 data sets, and the corresponding derived data, comprising over 1200 subjects from age 4 to 94, were used for network training. Brain extraction employs a traditional 3-D U-net network36 with whole brain, template-based data augmentation35 whereas brain segmentation and DKT parcellation are processed via 3-D U-net networks with attention gating56 on image octant-based batches. Additional network architecture details are given below. We emphasize that a single model (as opposed to ensemble approaches where multiple models are used to produce the final solution)39 was created for each of these steps and was used for all the experiments described below.
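The octant-based batches mentioned above can be illustrated by splitting a volume into eight sub-volumes. This is a sketch under our own assumption that octants are non-overlapping halves along each axis; the actual ANTsXNet batch generators may use overlap, padding, or resampling, and the function name is hypothetical.

```python
from typing import List, Sequence

Volume = List[List[List[float]]]


def split_into_octants(volume: Sequence[Sequence[Sequence[float]]]) -> List[Volume]:
    """Split a volume stored as volume[z][y][x] into eight sub-volumes.

    Each axis is cut at its midpoint; for odd sizes the second half is one
    voxel larger. Octants are returned in z-major, then y, then x order.
    """
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    hz, hy, hx = nz // 2, ny // 2, nx // 2
    octants: List[Volume] = []
    for z0, z1 in ((0, hz), (hz, nz)):
        for y0, y1 in ((0, hy), (hy, ny)):
            for x0, x1 in ((0, hx), (hx, nx)):
                octants.append(
                    [[list(volume[z][y][x0:x1]) for y in range(y0, y1)] for z in range(z0, z1)]
                )
    return octants
```

Network predictions on the eight octants would then be reassembled in the same order to form the full-volume output.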

Implementation

Software, average DKT regional thickness values for all data sets, and the scripts to perform both the analysis and obtain thickness values for a single subject (cross-sectionally or longitudinally) are provided as open-source. Specifically, all the ANTsX libraries are hosted on GitHub (https://github.com/ANTsX). The cross-sectional data and analysis code are available as .csv files and R scripts at the GitHub repository dedicated to this paper (https://github.com/ntustison/PaperANTsX), whereas the longitudinal data and evaluation scripts are organized in the repository associated with our previous work41 (https://github.com/ntustison/CrossLong).

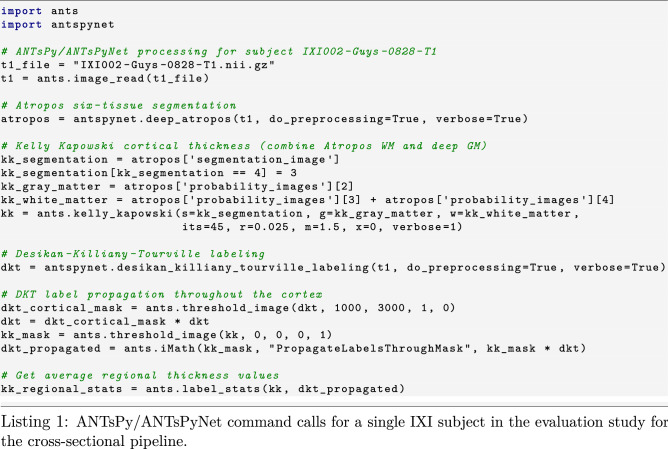

In Listing 1, we show the ANTsPy/ANTsPyNet code snippet for cross-sectional processing of a single subject. It starts with reading the T1-weighted MRI input image and proceeds through the generation of the Atropos-style six-tissue segmentation and probability images, application of ants.kelly_kapowski (i.e., DiReCT), DKT cortical parcellation, subsequent label propagation through the cortex, and, finally, regional cortical thickness tabulation. The cross-sectional and longitudinal pipelines are encapsulated in the ANTsPyNet functions antspynet.cortical_thickness and antspynet.longitudinal_cortical_thickness, respectively. Note that precise, line-by-line R-based analogs are available through ANTsR/ANTsRNet.

Both the antspynet.deep_atropos and antspynet.desikan_killiany_tourville_labeling functions perform brain extraction using the antspynet.brain_extraction function. Internally, antspynet.brain_extraction contains the requisite code to build the network and assign the appropriate hyperparameters. The model weights are automatically downloaded from the online hosting site https://figshare.com (see the function get_pretrained_network in ANTsPyNet or getPretrainedNetwork in ANTsRNet for links to all models and weights) and loaded into the constructed network. antspynet.brain_extraction performs a quick translation transformation to a specific template (also downloaded automatically) using the centers of intensity mass, a common alignment initialization strategy, to ensure proper gross orientation. Following brain extraction, preprocessing for the other two deep learning components includes ants.denoise_image, ants.n4_bias_field_correction, and an affine-based reorientation to a version of the MNI template57.
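The center-of-intensity-mass initialization described above can be sketched in a few lines. This is a simplified illustration, not the antspynet.brain_extraction code: it works in voxel indices of nested Python lists (`image[z][y][x]`) and ignores the spacing, origin, and direction stored in real image headers, and both function names are hypothetical.

```python
from typing import Sequence, Tuple

Image3D = Sequence[Sequence[Sequence[float]]]


def center_of_intensity_mass(image: Image3D) -> Tuple[float, float, float]:
    """Intensity-weighted centroid (z, y, x) in voxel index units."""
    total = sz = sy = sx = 0.0
    for z, plane in enumerate(image):
        for y, row in enumerate(plane):
            for x, value in enumerate(row):
                total += value
                sz += z * value
                sy += y * value
                sx += x * value
    if total == 0:
        raise ValueError("image has zero total intensity")
    return sz / total, sy / total, sx / total


def initial_translation(moving: Image3D, fixed: Image3D) -> Tuple[float, float, float]:
    """Translation that moves the moving image's centroid onto the fixed image's."""
    cm = center_of_intensity_mass(moving)
    cf = center_of_intensity_mass(fixed)
    return (cf[0] - cm[0], cf[1] - cm[1], cf[2] - cm[2])
```

For example, a single bright voxel at index (0, 1, 2) in the moving image and at (1, 3, 3) in the fixed image yields the translation (1.0, 2.0, 1.0). A production implementation would compute the centroids in physical coordinates.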

We recognize the presence of some redundancy due to the repeated application of certain preprocessing steps. Thus, each function has a do_preprocessing option to eliminate this redundancy for knowledgeable users; for simplicity of presentation, however, we do not provide this modified pipeline here, and the time difference is minimal compared with the longer time required by ants.kelly_kapowski. antspynet.deep_atropos returns the segmentation image as well as the posterior probability maps for each tissue type listed previously. antspynet.desikan_killiany_tourville_labeling returns only the segmentation label image, which includes not only the 62 cortical labels but the remaining labels as well. The label numbers and corresponding structure names are given in the program description/help. Because the DKT parcellation will, in general, not exactly coincide with the non-zero voxels of the resulting cortical thickness maps, we perform a label propagation step to ensure that the entire cortex, and only the non-zero thickness values in the cortex, are included in the tabulated regional values.
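The label propagation and tabulation steps can be illustrated on a small 2-D grid (3-D works the same way with six neighbors). This is a minimal sketch under our own assumptions, not the ANTs implementation: the cortical mask is taken to be the non-zero thickness pixels, DKT labels outside that mask are discarded, and the remaining labels are grown breadth-first into unlabeled mask pixels before regional averaging. Function names are hypothetical.

```python
from collections import deque
from statistics import mean
from typing import Dict, List, Sequence


def propagate_labels_through_mask(
    labels: Sequence[Sequence[int]], mask: Sequence[Sequence[bool]]
) -> List[List[int]]:
    """Restrict labels to the mask, then grow them into unlabeled mask pixels (4-connectivity)."""
    rows, cols = len(labels), len(labels[0])
    out = [[labels[r][c] if mask[r][c] else 0 for c in range(cols)] for r in range(rows)]
    # Breadth-first growth: each unlabeled mask pixel takes the label that reaches
    # it first, i.e., a nearest label measured in steps within the mask.
    queue = deque((r, c) for r in range(rows) for c in range(cols) if out[r][c] != 0)
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] and out[nr][nc] == 0:
                out[nr][nc] = out[r][c]
                queue.append((nr, nc))
    return out


def regional_mean_thickness(
    thickness: Sequence[Sequence[float]], labels: Sequence[Sequence[int]]
) -> Dict[int, float]:
    """Average the non-zero thickness values within each non-zero label."""
    values: Dict[int, List[float]] = {}
    for t_row, l_row in zip(thickness, labels):
        for t, label in zip(t_row, l_row):
            if label != 0 and t > 0:
                values.setdefault(label, []).append(t)
    return {label: mean(v) for label, v in sorted(values.items())}
```

For example, with thickness `[[2.0, 2.5, 0.0], [3.0, 0.0, 0.0]]` and labels `[[1, 0, 2], [0, 0, 0]]`, label 1 grows to cover all three non-zero pixels (mean thickness 2.5), while label 2, which lies outside the mask, is dropped.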

As mentioned previously, the longitudinal version, antspynet.longitudinal_cortical_thickness, adds an SST generation step; the SST can either be provided as a program input or be constructed from spatial normalization of all time points to a specified template. antspynet.deep_atropos is applied to the SST, yielding spatial tissue priors, which are then used as input to ants.atropos for each time point. ants.kelly_kapowski is applied to the result to generate the desired cortical thickness maps.
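The role of the SST-derived priors can be illustrated at a single voxel: each tissue class's intensity likelihood is reweighted by its spatial prior and the result is normalized. This is a deliberately simplified sketch under our own assumptions (Gaussian class models with known parameters, no Markov random field term, no iterative parameter re-estimation); it is not the Atropos update rule, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Normal density used here as a per-class intensity likelihood."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


def prior_weighted_posterior(
    intensity: float,
    priors: Sequence[float],
    class_params: Sequence[Tuple[float, float]],
) -> List[float]:
    """Per-voxel tissue posteriors: spatial prior x intensity likelihood, normalized."""
    unnormalized = [
        prior * gaussian_pdf(intensity, mean, std)
        for prior, (mean, std) in zip(priors, class_params)
    ]
    total = sum(unnormalized)
    if total == 0.0:
        raise ValueError("all classes have zero posterior mass at this voxel")
    return [u / total for u in unnormalized]
```

For example, an intensity of 1.0 lying midway between two classes with means 0 and 2 (standard deviation 1) has equal likelihoods, so priors of [0.2, 0.8] pass through unchanged; away from the midpoint, the intensity evidence shifts the balance.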

Computational time on a CPU-only platform is approximately 1 hour, primarily due to ants.kelly_kapowski processing. The other preprocessing steps, i.e., bias correction and denoising, take on the order of a couple of minutes. This total time should be compared with hours using the traditional pipeline employing the quick registration option, or hours with the more comprehensive registration parameters. As mentioned previously, elimination of the registration-based propagation of prior probability images to individual subjects is the principal source of reduced computational time. For ROI-based analyses, this is in addition to the elimination of the optional generation of a population-specific template. Additionally, the use of antspynet.desikan_killiany_tourville_labeling for cortical labeling (which completes in less than five minutes) eliminates the need for joint label fusion, which requires multiple pairwise registrations for each subject in addition to the fusion algorithm itself.

Training details

Training differed slightly between models, so we provide details for each of these components below. For all training, we used ANTsRNet scripts and custom batch generators. Although the network construction and other functionality are available in both ANTsPyNet and ANTsRNet (as is model weight compatibility), we have not yet written such custom batch generators for ANTsPyNet (although this is on our to-do list). In terms of hardware, all training was done on a DGX (GPUs: 4X Tesla V100, system memory: 256 GB LRDIMM DDR4).

T1-weighted brain extraction

A whole-image 3-D U-net model36 was used in conjunction with multiple training sessions employing a Dice loss function followed by categorical cross entropy. Training data was derived from the same multi-site data described previously, processed through our registration-based approach42. A center-of-mass-based transformation to a standard template was used to standardize parameters such as orientation and voxel size. However, to account for possible differences in the header orientation of input data, a template-based data augmentation scheme was used35 whereby forward and inverse transforms are used to randomly warp batch images between members of the training population (followed by reorientation to the standard template). A random coin flip determining whether histogram matching58 was applied between source and target images further increased data augmentation. The output of the network is a probabilistic mask of the brain. The architecture consists of four encoding/decoding layers with eight filters at the base layer, with the number of filters doubling at every layer. Although not detailed here, training for brain extraction in other modalities was performed similarly.
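
Two details of this paragraph lend themselves to a small worked example: the filter schedule (eight base filters doubling over four encoding levels) and a soft Dice loss on a probabilistic mask. The sketch below is plain Python over flattened voxel lists; the smoothing constant and function names are assumptions, and the actual training used ANTsRNet/Keras code rather than these functions.

```python
from typing import List, Sequence


def unet_filter_counts(base_filters: int, levels: int) -> List[int]:
    """Filters per encoding level when the count doubles at every level."""
    return [base_filters * 2 ** level for level in range(levels)]


def soft_dice_loss(truth: Sequence[float], prob: Sequence[float], smooth: float = 1.0) -> float:
    """1 - soft Dice between a binary mask and a probabilistic prediction.

    Both inputs are flattened voxel lists; `smooth` (an assumed value) keeps the
    ratio defined when both masks are empty.
    """
    if len(truth) != len(prob):
        raise ValueError("truth and prediction must have the same number of voxels")
    intersection = sum(t * p for t, p in zip(truth, prob))
    denominator = sum(truth) + sum(prob)
    return 1.0 - (2.0 * intersection + smooth) / (denominator + smooth)
```

With 16 base filters, as used for Deep Atropos, the same schedule gives 16, 32, 64, and 128 filters.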

Deep Atropos

Dealing with 3-D data presents barriers to training that are often specific to medical imaging. Various strategies are employed, such as minimizing the number of layers and/or the number of filters at the base layer of the U-net architecture (as we do for brain extraction). However, we found this to be too limiting for capturing certain brain structures such as the cortex. 2-D and 2.5-D approaches are often used with varying levels of success, but we found better performance using full 3-D information. This led us to try randomly selected 3-D patches of various sizes. However, for both the six-tissue segmentations and DKT parcellations, we found that an octant-based patch strategy yielded the desired results. Specifically, after brain extraction and affine normalization to the MNI template, the normalized image is cropped to a size of [160, 190, 160]. Overlapping octant patches of size [112, 112, 112] were extracted from each image, and training used a batch size of 12 such octant patches with weighted categorical cross entropy as the loss function. The architecture consists of four encoding/decoding layers with 16 filters at the base layer, with the number of filters doubling at every layer.
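
The octant layout can be made concrete: along each axis a [112, 112, 112] patch is anchored either at index 0 or at the far edge of the [160, 190, 160] crop, giving 8 overlapping patches. The sketch below assumes exactly this corner-anchored placement (an interpretation of "overlapping octant patches" rather than code taken from ANTsPyNet) and checks that the patches cover the whole crop.

```python
from itertools import product
from typing import List, Sequence, Tuple


def octant_patch_origins(image_shape: Sequence[int], patch_shape: Sequence[int]) -> List[Tuple[int, ...]]:
    """Corner-anchored origins: along each axis a patch starts at 0 or at (dim - patch)."""
    starts_per_axis = []
    for dim, patch in zip(image_shape, patch_shape):
        if patch > dim:
            raise ValueError("patch larger than image")
        starts_per_axis.append(sorted({0, dim - patch}))
    # Cartesian product of per-axis starts: 2**ndim origins (8 in 3-D).
    return list(product(*starts_per_axis))


def covers_image(image_shape: Sequence[int], patch_shape: Sequence[int],
                 origins: Sequence[Tuple[int, ...]]) -> bool:
    """True if the patches cover every index along every axis.

    Because the origins form a Cartesian product, per-axis coverage implies
    coverage of the full volume.
    """
    for axis, dim in enumerate(image_shape):
        covered = set()
        for origin in origins:
            covered.update(range(origin[axis], origin[axis] + patch_shape[axis]))
        if covered != set(range(dim)):
            return False
    return True
```

For the stated sizes the origins are 0 or 48 along the first and third axes and 0 or 78 along the second, so adjacent patches overlap by 64 voxels along the first and third axes and by 34 along the second.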

As we point out in our earlier work19, obtaining proper brain segmentation is perhaps the most critical step to estimating thickness values that have the greatest utility as a potential biomarker. In fact, the first and last authors (NT and BA, respectively) spent much time during the original ANTs pipeline development19 trying to get the segmentation correct, which required manually inspecting many images and adjusting parameters where necessary. This fine-tuning is often omitted or not considered when other groups44,59,60 use components of our cortical thickness pipeline, which can be problematic61. Fine-tuning for this particular workflow was also performed by the first and last authors through manual variation of the weights in the weighted categorical cross entropy. Specifically, the weight of each tissue type was altered to produce segmentations which most resemble the traditional Atropos segmentations. Ultimately, we settled on a weight vector of for the CSF, GM, WM, Deep GM, brain stem, and cerebellum, respectively. Other hyperparameters can be directly inferred from explicit specification in the actual code. As mentioned previously, training data was derived from application of the ANTs Atropos segmentation13 during the course of our previous work19. Data augmentation included small affine and deformable perturbations using antspynet.randomly_transform_image_data and random contralateral flips.
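
The weighted categorical cross entropy used for this tuning can be written as the mean over voxels of -Σ_c w_c y_c log p_c, where y is the one-hot label, p the predicted class probabilities, and w the per-class weights. The sketch below implements that formula in plain Python; any weights passed to it are placeholders, since the weight vector actually chosen is not reproduced in this text, and Keras-based implementations may normalize the sum differently.

```python
import math
from typing import Sequence


def weighted_categorical_cross_entropy(
    one_hot: Sequence[Sequence[float]],
    probs: Sequence[Sequence[float]],
    class_weights: Sequence[float],
    eps: float = 1e-7,
) -> float:
    """Mean over voxels of -sum_c w_c * y_c * log(p_c).

    `one_hot[v]` and `probs[v]` are the label and prediction vectors for voxel v;
    probabilities are clipped at `eps` to avoid log(0).
    """
    total = 0.0
    for y, p in zip(one_hot, probs):
        total -= sum(w * yc * math.log(max(pc, eps)) for w, yc, pc in zip(class_weights, y, p))
    return total / len(one_hot)
```

Raising a class weight increases the penalty for misclassifying voxels of that class, which is the lever adjusted manually to bring the network output closer to the Atropos segmentations.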

Desikan-Killiany-Tourville parcellation

Preprocessing for the DKT parcellation training was similar to the Deep Atropos training. However, the number of labels and the complexity of the parcellation required deviation from other training steps. First, labeling was split into an inner set and an outer set, and training was performed separately for each set. For the cortical labels, a set of corresponding input prior probability maps was constructed from the training data (these are also available and automatically downloaded, when needed, from https://figshare.com). Training occurred over multiple sessions where categorical cross entropy was used initially and the result was subsequently refined using a Dice loss function. Whole-brain training was performed on a brain-cropped template size of [96, 112, 96]. Inner label training was performed similarly to our brain extraction training, where the number of filters at the base layer was reduced to eight. This training also occurred over multiple sessions, initially using categorical cross entropy and subsequently refining with a Dice loss function. Other hyperparameters can be directly inferred from explicit specification in the actual code. Training data was derived from application of joint label fusion17 during the course of our previous work19. When calling antspynet.desikan_killiany_tourville_labeling, inner labels are estimated first, followed by the outer cortical labels.
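
Supplying prior probability maps as network input amounts to adding them as extra channels alongside the intensity image. A minimal sketch, assuming flattened voxel lists and a channel-last layout (both assumptions for illustration; the function name is hypothetical), is:

```python
from typing import List, Sequence


def stack_channels(image: Sequence[float], priors: Sequence[Sequence[float]]) -> List[List[float]]:
    """Build channel-last input: one [intensity, prior_1, ..., prior_K] vector per voxel."""
    for prior in priors:
        if len(prior) != len(image):
            raise ValueError("each prior map must have one value per voxel")
    return [[value] + [prior[v] for prior in priors] for v, value in enumerate(image)]
```

With K prior maps the network sees K + 1 input channels, so the first convolutional layer must be built for that channel count.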

Other software

Several R62 packages were used in preparation of this manuscript, including R Markdown33,63,64, lme465, RStan29, ggplot266, and ggradar267. Other software used includes Apple Pages68, ITK-SNAP69, LibreOffice70, and diagrams.net27.

Acknowledgements

Support for the research reported in this work includes funding from the National Heart, Lung, and Blood Institute of the National Institutes of Health (R01HL133889) and a combined grant from Cohen Veterans Bioscience (CVB-461) and the Office of Naval Research (N00014-18-1-2440). Data used in preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (http://adni.loni.usc.edu). As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in analysis or writing of this report. A complete listing of ADNI investigators can be found at: http://adni.loni.usc.edu/wp-content/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf. Data collection and sharing for this project was funded by the Alzheimer’s Disease Neuroimaging Initiative (ADNI) (National Institutes of Health Grant U01 AG024904) and DOD ADNI (Department of Defense award number W81XWH-12-2-0012). ADNI is funded by the National Institute on Aging, the National Institute of Biomedical Imaging and Bioengineering, and through generous contributions from the following: AbbVie, Alzheimer’s Association; Alzheimer’s Drug Discovery Foundation; Araclon Biotech; BioClinica, Inc.; Biogen; Bristol-Myers Squibb Company; CereSpir, Inc.; Cogstate; Eisai Inc.; Elan Pharmaceuticals, Inc.; Eli Lilly and Company; EuroImmun; F. Hoffmann-La Roche Ltd and its affiliated company Genentech, Inc.; Fujirebio; GE Healthcare; IXICO Ltd.; Janssen Alzheimer Immunotherapy Research & Development, LLC.; Johnson & Johnson Pharmaceutical Research & Development LLC.; Lumosity; Lundbeck; Merck & Co., Inc.; Meso Scale Diagnostics, LLC.; NeuroRx Research; Neurotrack Technologies; Novartis Pharmaceuticals Corporation; Pfizer Inc.; Piramal Imaging; Servier; Takeda Pharmaceutical Company; and Transition Therapeutics. The Canadian Institutes of Health Research is providing funds to support ADNI clinical sites in Canada. 
Private sector contributions are facilitated by the Foundation for the National Institutes of Health (www.fnih.org). The grantee organization is the Northern California Institute for Research and Education, and the study is coordinated by the Alzheimer’s Therapeutic Research Institute at the University of Southern California. ADNI data are disseminated by the Laboratory for Neuro Imaging at the University of Southern California.

Author contributions

Conception and design N.T., A.H., M.Y., J.S., B.A. Analysis and interpretation N.T., A.H., D.G., M.Y., J.S., B.A. Creation of new software N.T., P.C., H.J., J.M., G.D., J.D., S.D., N.C., J.G., B.A. Drafting of manuscript N.T., A.H., P.C., H.J., J.M., G.D., J.G., B.A.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Bajcsy R, Kovacic S. Multiresolution elastic matching. Comput. Vis. Graph. Image Process. 1989;46:1–21. doi: 10.1016/S0734-189X(89)80014-3.

- 2. Bajcsy, R. & Broit, C. Matching of deformed images. in Sixth International Conference on Pattern Recognition (ICPR’82) 351–353 (1982).

- 3. Gee J, Sundaram T, Hasegawa I, Uematsu H, Hatabu H. Characterization of regional pulmonary mechanics from serial magnetic resonance imaging data. Acad. Radiol. 2003;10:1147–52. doi: 10.1016/S1076-6332(03)00329-5.

- 4. Klein A, et al. Evaluation of 14 nonlinear deformation algorithms applied to human brain MRI registration. Neuroimage. 2009;46:786–802. doi: 10.1016/j.neuroimage.2008.12.037.

- 5. Avants BB, Epstein CL, Grossman M, Gee JC. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Med. Image Anal. 2008;12:26–41. doi: 10.1016/j.media.2007.06.004.

- 6. Menze B, Reyes M, Van Leemput K. The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Trans. Med. Imaging. 2014. doi: 10.1109/TMI.2014.2377694.

- 7. Murphy K, et al. Evaluation of registration methods on thoracic CT: the EMPIRE10 challenge. IEEE Trans. Med. Imaging. 2011;30:1901–20. doi: 10.1109/TMI.2011.2158349.

- 8. Fu Y, et al. DeepReg: a deep learning toolkit for medical image registration. J. Open Sour. Softw. 2020;5:2705. doi: 10.21105/joss.02705.

- 9. de Vos BD, et al. A deep learning framework for unsupervised affine and deformable image registration. Med. Image Anal. 2019;52:128–143. doi: 10.1016/j.media.2018.11.010.

- 10. Balakrishnan G, Zhao A, Sabuncu MR, Guttag J, Dalca AV. VoxelMorph: a learning framework for deformable medical image registration. IEEE Trans. Med. Imaging. 2019. doi: 10.1109/TMI.2019.2897538.

- 11. Tustison NJ, Avants BB, Gee JC. Learning image-based spatial transformations via convolutional neural networks: a review. Magn. Reson. Imaging. 2019;64:142–153. doi: 10.1016/j.mri.2019.05.037.

- 12. Avants BB, et al. The optimal template effect in hippocampus studies of diseased populations. Neuroimage. 2010;49:2457–66. doi: 10.1016/j.neuroimage.2009.09.062.

- 13. Avants BB, Tustison NJ, Wu J, Cook PA, Gee JC. An open source multivariate framework for n-tissue segmentation with evaluation on public data. Neuroinformatics. 2011;9:381–400. doi: 10.1007/s12021-011-9109-y.

- 14. Tustison, N. J. & Gee, J. C. N4ITK: Nick’s N3 ITK implementation for MRI bias field correction. The Insight Journal (2009).

- 15. Manjón JV, Coupé P, Martí-Bonmatí L, Collins DL, Robles M. Adaptive non-local means denoising of MR images with spatially varying noise levels. J. Magn. Reson. Imaging. 2010;31:192–203. doi: 10.1002/jmri.22003.

- 16. Wang H, et al. Multi-atlas segmentation with joint label fusion. IEEE Trans. Pattern Anal. Mach. Intell. 2013;35:611–23. doi: 10.1109/TPAMI.2012.143.

- 17. Wang H, Yushkevich PA. Multi-atlas segmentation with joint label fusion and corrective learning-an open source implementation. Front. Neuroinform. 2013;7:27. doi: 10.3389/fninf.2013.00027.

- 18. Das SR, Avants BB, Grossman M, Gee JC. Registration based cortical thickness measurement. Neuroimage. 2009;45:867–79. doi: 10.1016/j.neuroimage.2008.12.016.

- 19. Tustison NJ, et al. Large-scale evaluation of ANTs and FreeSurfer cortical thickness measurements. Neuroimage. 2014;99:166–79. doi: 10.1016/j.neuroimage.2014.05.044.

- 20. Esteban O, et al. fMRIPrep: a robust preprocessing pipeline for functional MRI. Nat. Methods. 2019;16:111–116. doi: 10.1038/s41592-018-0235-4.

- 21. De Leener B, et al. SCT: spinal cord toolbox, an open-source software for processing spinal cord MRI data. Neuroimage. 2017;145:24–43. doi: 10.1016/j.neuroimage.2016.10.009.

- 22. Gorgolewski KJ, et al. The brain imaging data structure, a format for organizing and describing outputs of neuroimaging experiments. Sci. Data. 2016;3:160044. doi: 10.1038/sdata.2016.44.

- 23. Halchenko YO, Hanke M. Open is not enough: let’s take the next step: an integrated, community-driven computing platform for neuroscience. Front. Neuroinform. 2012;6:22. doi: 10.3389/fninf.2012.00022.

- 24. Muschelli J, et al. Neuroconductor: an R platform for medical imaging analysis. Biostatistics. 2019;20:218–239. doi: 10.1093/biostatistics/kxx068.

- 25. Gorgolewski K, et al. Nipype: a flexible, lightweight and extensible neuroimaging data processing framework in python. Front. Neuroinform. 2011;5:13. doi: 10.3389/fninf.2011.00013.

- 26. Fischl B. FreeSurfer. Neuroimage. 2012;62:774–81. doi: 10.1016/j.neuroimage.2012.01.021.

- 27. https://app.diagrams.net.

- 28. https://www.oasis-brains.org.

- 29. Stan Development Team. RStan: The R interface to Stan. (2020).

- 30. https://brain-development.org/ixi-dataset/.

- 31. Verbeke, G. Linear mixed models for longitudinal data. in Linear mixed models in practice 63–153 (Springer, 1997).

- 32. https://bicr-resource.atr.jp/srpbs1600/.

- 33. Xie, Y., Dervieux, C. & Riederer, E. R Markdown Cookbook. (Chapman & Hall/CRC, 2020).

- 34. Breiman L. Random forests. Mach. Learn. 2001;45:5–32. doi: 10.1023/A:1010933404324.

- 35. Tustison NJ, et al. Convolutional neural networks with template-based data augmentation for functional lung image quantification. Acad. Radiol. 2019;26:412–423. doi: 10.1016/j.acra.2018.08.003.

- 36. Falk T, et al. U-net: deep learning for cell counting, detection, and morphometry. Nat. Methods. 2019;16:67–70. doi: 10.1038/s41592-018-0261-2.

- 37. Bashyam VM, et al. MRI signatures of brain age and disease over the lifespan based on a deep brain network and 14,468 individuals worldwide. Brain. 2020;143:2312–2324. doi: 10.1093/brain/awaa160.

- 38. Goubran M, et al. Hippocampal segmentation for brains with extensive atrophy using three-dimensional convolutional neural networks. Hum. Brain Mapp. 2020;41:291–308. doi: 10.1002/hbm.24811.

- 39. Li H, et al. Fully convolutional network ensembles for white matter hyperintensities segmentation in MR images. Neuroimage. 2018;183:650–665. doi: 10.1016/j.neuroimage.2018.07.005.

- 40. Haris, M., Shakhnarovich, G. & Ukita, N. Deep back-projection networks for super-resolution. in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition 1664–1673 (2018). doi: 10.1109/CVPR.2018.00179.

- 41. Tustison NJ, et al. Longitudinal mapping of cortical thickness measurements: an Alzheimer’s Disease Neuroimaging Initiative-based evaluation study. J. Alzheimers Dis. 2019. doi: 10.3233/JAD-190283.

- 42. Avants, B. B., Klein, A., Tustison, N. J., Woo, J. & Gee, J. C. Evaluation of open-access, automated brain extraction methods on multi-site multi-disorder data. in 16th annual meeting for the organization of human brain mapping (2010).

- 43. Henschel L, et al. FastSurfer: a fast and accurate deep learning based neuroimaging pipeline. Neuroimage. 2020;219:117012. doi: 10.1016/j.neuroimage.2020.117012.

- 44. Rebsamen M, Rummel C, Reyes M, Wiest R, McKinley R. Direct cortical thickness estimation using deep learning-based anatomy segmentation and cortex parcellation. Hum. Brain Mapp. 2020. doi: 10.1002/hbm.25159.

- 45. Lemaitre H, et al. Normal age-related brain morphometric changes: nonuniformity across cortical thickness, surface area and gray matter volume? Neurobiol. Aging. 2012;33:617.e1–9. doi: 10.1016/j.neurobiolaging.2010.07.013.

- 46. Klein A, Tourville J. 101 labeled brain images and a consistent human cortical labeling protocol. Front. Neurosci. 2012;6:171. doi: 10.3389/fnins.2012.00171.

- 47. Holbrook AJ, et al. Anterolateral entorhinal cortex thickness as a new biomarker for early detection of Alzheimer’s disease. Alzheimer’s Dementia Diagn. Assess. Dis. Monit. 2020;12:e12068. doi: 10.1002/dad2.12068.

- 48. Kriegeskorte N, Simmons WK, Bellgowan PSF, Baker CI. Circular analysis in systems neuroscience: the dangers of double dipping. Nat. Neurosci. 2009;12:535–40. doi: 10.1038/nn.2303.

- 49. Gelman A, et al. Prior distributions for variance parameters in hierarchical models (comment on article by Browne and Draper). Bayesian Anal. 2006;1:515–534.

- 50. McKinley, R. et al. Few-shot brain segmentation from weakly labeled data with deep heteroscedastic multi-task networks. CoRR abs/1904.02436 (2019).

- 51. Tustison NJ, et al. N4ITK: improved N3 bias correction. IEEE Trans. Med. Imaging. 2010;29:1310–20. doi: 10.1109/TMI.2010.2046908.

- 52. Ashburner J, Friston KJ. Voxel-based morphometry-the methods. Neuroimage. 2000;11:805–21. doi: 10.1006/nimg.2000.0582.

- 53. Avants B, et al. Eigenanatomy improves detection power for longitudinal cortical change. Med. Image Comput. Comput. Assis. Int. 2012;15:206–13. doi: 10.1007/978-3-642-33454-2_26.

- 54. Landman BA, et al. Multi-parametric neuroimaging reproducibility: A 3-T resource study. Neuroimage. 2011;54:2854–66. doi: 10.1016/j.neuroimage.2010.11.047.

- 55. http://fcon_1000.projects.nitrc.org/indi/pro/nki.html.

- 56. Schlemper J, et al. Attention gated networks: learning to leverage salient regions in medical images. Med. Image Anal. 2019;53:197–207. doi: 10.1016/j.media.2019.01.012.

- 57. Fonov, V. S., Evans, A. C., McKinstry, R. C., Almli, C. & Collins, D. L. Unbiased nonlinear average age-appropriate brain templates from birth to adulthood. NeuroImage S102 (2009).

- 58. Nyúl LG, Udupa JK. On standardizing the MR image intensity scale. Magn. Reson. Med. 1999;42:1072–81. doi: 10.1002/(SICI)1522-2594(199912)42:6<1072::AID-MRM11>3.0.CO;2-M.

- 59. Clarkson MJ, et al. A comparison of voxel and surface based cortical thickness estimation methods. Neuroimage. 2011;57:856–65. doi: 10.1016/j.neuroimage.2011.05.053.

- 60. Schwarz CG, et al. A large-scale comparison of cortical thickness and volume methods for measuring Alzheimer’s disease severity. Neuroimage Clin. 2016;11:802–812. doi: 10.1016/j.nicl.2016.05.017.

- 61. Tustison NJ, et al. Instrumentation bias in the use and evaluation of scientific software: recommendations for reproducible practices in the computational sciences. Front. Neurosci. 2013;7:162. doi: 10.3389/fnins.2013.00162.

- 62. R Core Team. R: A language and environment for statistical computing. (R Foundation for Statistical Computing, 2020).

- 63. Allaire, J. et al. rmarkdown: Dynamic documents for R. (2021).

- 64. Xie, Y., Allaire, J. J. & Grolemund, G. R Markdown: The Definitive Guide. (Chapman & Hall/CRC, 2018).

- 65. Bates D, Mächler M, Bolker B, Walker S. Fitting linear mixed-effects models using lme4. J. Stat. Softw. 2015;67:1–48. doi: 10.18637/jss.v067.i01.

- 66. Wickham, H. ggplot2: Elegant Graphics for Data Analysis (Springer-Verlag, 2016).

- 67. https://github.com/xl0418/ggradar2.

- 68. https://www.apple.com/pages/.

- 69. Yushkevich PA, et al. User-guided 3D active contour segmentation of anatomical structures: significantly improved efficiency and reliability. Neuroimage. 2006;31:1116–1128. doi: 10.1016/j.neuroimage.2006.01.015.

- 70. https://www.libreoffice.org/.