Abstract

Percutaneous renal access is the critical initial step in many medical settings. In order to obtain the best surgical outcome with minimum patient morbidity, an improved method for access to the renal calyx is needed. In our study, we built a forward-view optical coherence tomography (OCT) endoscopic system for percutaneous nephrostomy (PCN) guidance. Porcine kidneys were imaged in our experiment to demonstrate the feasibility of the imaging system. Three tissue types of porcine kidneys (renal cortex, medulla, and calyx) can be clearly distinguished due to the morphological and tissue differences from the OCT endoscopic images. To further improve the guidance efficacy and reduce the learning burden of the clinical doctors, a deep-learning-based computer aided diagnosis platform was developed to automatically classify the OCT images by the renal tissue types. Convolutional neural networks (CNN) were developed with labeled OCT images based on the ResNet34, MobileNetv2 and ResNet50 architectures. Nested cross-validation and testing was used to benchmark the classification performance with uncertainty quantification over 10 kidneys, which demonstrated robust performance over substantial biological variability among kidneys. ResNet50-based CNN models achieved an average classification accuracy of 82.6%±3.0%. The classification precisions were 79%±4% for cortex, 85%±6% for medulla, and 91%±5% for calyx and the classification recalls were 68%±11% for cortex, 91%±4% for medulla, and 89%±3% for calyx. Interpretation of the CNN predictions showed the discriminative characteristics in the OCT images of the three renal tissue types. The results validated the technical feasibility of using this novel imaging platform to automatically recognize the images of renal tissue structures ahead of the PCN needle in PCN surgery.

1. Introduction

Percutaneous nephrostomy (PCN) was first described in 1955 as a minimally invasive, x-ray guided procedure in patients with hydronephrosis [1]. PCN needle placement has since become a valuable medical resource for minimally invasive access to the renal collecting system for drainage, urine diversion, the first step for percutaneous nephrolithotomy (PCNL) surgery and other therapeutic intervention, especially when the transurethral access of surgical tools into the urological system is difficult or impossible [1–7]. Despite being a common urological procedure, it remains technically challenging to insert the PCN needle correctly in the right place. During PCN, a needle penetrates the cortex and medulla of the kidney to reach the renal pelvis. Conventional imaging modalities have been used in PCN puncture. Ultrasound technique, as a commonly used medical diagnostic imaging method, has been utilized in PCN surgery for decades [8–11]. Additionally, fluoroscopy and computed tomography (CT) are also employed in PCN guidance and sometimes they are used with ultrasonography simultaneously [10–14]. However, due to the limited spatial resolution, these standard imaging modalities have proven to be inadequate for accurately locating the needle tip position [15,16]. The failure rate of PCN needle placement is up to 18%, especially in nondilated systems or for complex stone diseases [17,18]. Failure of inserting the needle into the targeted location in the kidney through a suitable route might result in severe complications [19–21]. Moreover, fluoroscopy has no soft tissue contrast and, therefore, cannot differentiate critical tissues, such as blood vessels, which are important to avoid during the needle insertion. Rupture of renal blood vessels by needle penetrations can cause bleeding. Temporary bleeding after PCN placement occurs in ∼95% of cases [2,17]. Retroperitoneal hematomas have been found in 13% [17]. 
When PCN is followed by PCNL, hemorrhage requiring transfusion rises to 12–14% of patients [22]. Additionally, needle punctures during PCN can lead to infectious complications such as fever or sepsis, thoracic complications such as pneumothorax and hydrothorax, and other complications such as urine leak or rupture of the pelvicalyceal system [23,24].

Therefore, the selection of the puncture position and route is important in PCN needle placement. It is recommended to insert the needle into the renal calyx through the calyx papilla because fewer blood vessels are distributed along this route, which lowers the risk of vascular injury [23,24]. Nevertheless, it is difficult to precisely identify this preferred insertion route in complicated clinical settings, even for experienced urologists [25]. If the PCN puncture is repeated multiple times, the likelihood of renal injury increases and the operating time lengthens, resulting in higher risks of complications [26].

To better guide PCN needle placement, substantial research has been done to improve current guidance practice [9,17,27–30]. Ultrasound with various technical improvements has been utilized. For instance, contrast-enhanced ultrasound has been shown to be a potential modality for guiding PCN puncture [31,32]. Tracked ultrasonography snapshot is also a promising method to improve needle guidance [32]. To address bleeding during needle puncture, combined B-mode and color Doppler ultrasonography has been applied in PCN surgeries and has shown promise in decreasing the incidence of major hemorrhage [33]. Moreover, other techniques such as cone-beam CT [34], retrograde ureteroscopy [35] and magnetic field-based navigation devices [36] have been developed to improve the guidance of PCN needle access. In addition, an endoscope can be assembled within the PCN needle [37,38]. It can effectively improve the precision of PCN needle punctures, resulting in lower risks of complications and fewer insertions [38]. However, most conventional endoscopic techniques, which rely on charge-coupled device (CCD) cameras, provide only two-dimensional information and cannot detect subsurface tissue (in other words, they cannot visualize the tissue before the needle tip damages it) [39]. Thus, there is a critical need to develop new guidance techniques with depth-resolved capability for PCN. Optical coherence tomography (OCT) is a well-established non-invasive biomedical imaging modality that can image subsurface tissue with a penetration depth of several millimeters [40–44]. By detecting and processing the interference of infrared light returning from the reference arm and the sample arm, OCT can provide two-dimensional (2D) cross-sectional images with high axial resolution (∼10 µm), which is 10–100 times finer than that of conventional medical imaging modalities (e.g., CT and MRI) [45–47].
Owing to the high speed of laser scanning and data processing, three-dimensional (3D) images of the sample, formed from numerous cross-sectional images, can be obtained in real time [46,48]. Because of the differences in tissue structure among renal cortex, medulla and calyx, OCT has the potential to distinguish different renal tissue types. Due to the limited penetration depth in biological tissues (1–2 mm), OCT studies of the kidney have mainly focused on the renal cortex [44,45,49–51]. OCT can be integrated with fiber-optic catheters and endoscopes for internal imaging applications [52–54]. For example, endoscopic OCT imaging has been demonstrated in the human GI tract [55–58] to detect Barrett’s esophagus (BE) [59,60], dysplasia [61] and colon cancer [62–64]. In a previous study, our lab developed a portable hand-held forward-imaging endoscopic OCT needle device for real-time epidural anesthesia surgery guidance [65]. This endoscopic OCT setup holds promise for PCN guidance.

Given the enormous accumulation of images and the inter- and intra-observer variation arising from subjective interpretation, computer-aided automatic methods have been utilized to classify these data accurately and efficiently [66,67]. In automated OCT image analysis, convolutional neural networks (CNN) [66,68,69] have been demonstrated to be promising in various applications, such as hemorrhage detection of retina versus cerebrum and tumor tissue segmentation [67,68,70–73].

Herein we demonstrated a forward OCT endoscope system to image the kidney tissues lying ahead of the PCN needle during PCN surgery. Images of renal cortex, medulla and calyx were obtained from ten porcine kidneys using our system. A tissue type classifier was developed using the ResNet34, ResNet50, and MobileNetv2 CNN architectures. Nested cross-validation and testing [74–76] was used for model selection and performance benchmarking to account for the large biological variability among kidneys through uncertainty quantification. The predictions from the CNN models were interpreted to identify the regions in representative OCT images that the CNN relied on for the classification.

2. Materials and methods

2.1. Experimental setup

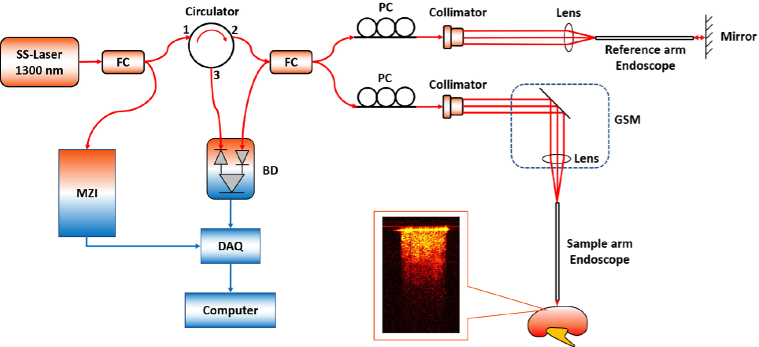

In our project, the OCT endoscope was built based on the swept-source OCT (SS-OCT) system. Figure 1 shows the schematic of our forward endoscopic OCT system. The light source of the SS-OCT system has the center wavelength of 1300 nm and bandwidth of 100 nm [39]. The wavelength-swept frequency (A-scan) rate is 200 kHz with ∼25 mW output power. As demonstrated in Fig. 1, laser provided by the light source was first split by a fiber coupler (FC) into two paths with 3% and 97% of the whole laser power, respectively. The 3% of the laser power was delivered into the Mach-Zehnder interferometer (MZI), which generated a frequency-clock signal for triggering the OCT sampling procedure and provided it to data acquisition (DAQ) board. The remaining 97% laser transmitted through a circulator in which the light runs only in one direction. Therefore, the light entering port 1 only emitted out from port 2, and then it was evenly split to the reference arm and sample arm of a fiber-based Michelson interferometer. Backscattered light from both arms formed interference fringes at the FC and transmitted to the balanced detector (BD). The interference fringes from different depths received by the BD were encoded with different frequencies. The output signal from BD was further transmitted to the DAQ board and computer for processing. Cross-sectional information can be obtained through Fourier transform of interference fringes [65].
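The statement that reflectors at different depths are encoded as different fringe frequencies, and that a Fourier transform recovers the depth profile, can be illustrated with a minimal pure-Python sketch. It is a toy model, not the authors' processing code: it assumes an ideal single reflector, fringes sampled uniformly in wavenumber, and no noise or dispersion. The names `simulate_fringe` and `a_scan` are hypothetical.

```python
import cmath
import math


def simulate_fringe(depth_bin, n_samples=256):
    """Ideal interference fringe of one reflector, sampled uniformly in wavenumber.

    A reflector located at `depth_bin` produces a cosine whose frequency
    is proportional to its depth.
    """
    return [math.cos(2 * math.pi * depth_bin * k / n_samples)
            for k in range(n_samples)]


def a_scan(fringe):
    """Depth profile: magnitude of the discrete Fourier transform of the fringe.

    Only the first half of the spectrum is kept because the transform of a
    real-valued fringe is symmetric.
    """
    n = len(fringe)
    profile = []
    for m in range(n // 2):
        acc = sum(fringe[k] * cmath.exp(-2j * math.pi * m * k / n)
                  for k in range(n))
        profile.append(abs(acc))
    return profile


shallow = a_scan(simulate_fringe(depth_bin=20))
deep = a_scan(simulate_fringe(depth_bin=60))

# The peak of each depth profile sits at the depth bin of its reflector.
print(shallow.index(max(shallow)), deep.index(max(deep)))  # 20 60
```

A practical implementation would use a fast Fourier transform and add steps such as background subtraction and spectral windowing, which are omitted here.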

Fig. 1.

Schematic of endoscopic OCT system. MZI: Mach-Zehnder interferometer; FC: fiber coupler; PC: polarization controller; GSM: galvanometer scanning mirror; BD: balanced detector; DAQ: data acquisition board.

A gradient-index (GRIN) rod lens with a diameter of 1.3 mm was stabilized in front of the galvanometer scanning mirror (GSM). The endoscope has a diameter of 1.3 mm, a length of 138.0 mm and a view angle of 11.0°. The total outer diameter (O.D.), including the GRIN rod lens and the protective steel tubing, is around 1.65 mm. The proximal GRIN lens entrance of the endoscope was placed close to the focal plane of the objective lens. The GRIN lens preserves the spatial relationship between the entrance and the output (distal end) and further to the sample. Therefore, one- or two-directional scanning can be readily performed on the proximal GRIN lens surface to create 2D or 3D images. In addition, an identical GRIN rod lens was placed in the light path of the reference arm to compensate for light dispersion and extend the length of the reference arm. Polarization controllers (PCs) were placed in both arms to decrease the background noise. The system had an axial resolution of ∼11 µm and a lateral resolution of ∼20 µm in tissue. The lateral imaging field-of-view (FOV) was around 1.25 mm. The sensitivity of the system was optimized to 92 dB, calculated using a silver mirror with a calibrated attenuator.

2.2. Data acquisition

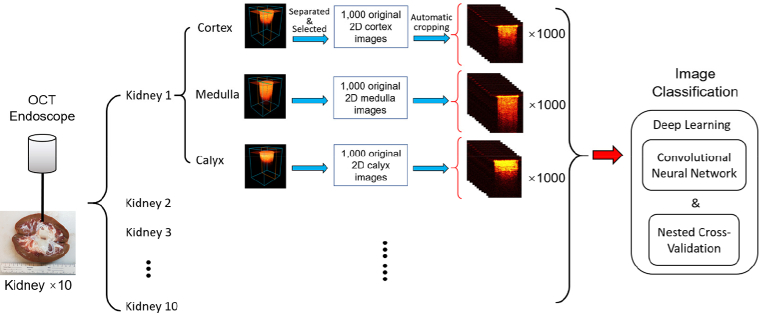

Ten fresh porcine kidneys were obtained from a local slaughterhouse. The cortex, medulla and calyx of the porcine kidneys were exposed and imaged in the experiment. Renal tissue types can be identified from their anatomic appearance. The OCT endoscope was placed against different renal tissues for image acquisition. To mimic the clinical situation, we applied some force while imaging the ex-vivo kidney tissues to generate tissue compression. The 3D images of 320×320×480 pixels on the X, Y and Z axes (Z represents the depth direction) were obtained with a pixel size of 6.25 µm on all three axes. Therefore, the size of the original 3D images is 2.00 mm×2.00 mm×3.00 mm. For every kidney sample, we obtained at least 30 original 3D OCT images for each tissue type, and each 3D tissue scan took no more than 2 seconds. Afterwards, the original 3D images were separated into 2D cross-sectional images as shown in Fig. 2. Since the GRIN lens is cylindrical, the 3D OCT images obtained were also cylindrical in shape. Therefore, not all the 2D cross-sectional images contained the same structural signal of the kidney. Only the 2D images with sufficient tissue structural information (cross-sectional images that are close to the center of the 3D cylindrical structures) were subsequently selected and utilized for image preprocessing. At the end of imaging, tissues of cortex, medulla and calyx of the porcine kidneys were excised and processed for histology to compare with the corresponding OCT results. The tissues were fixed with 10% formalin, embedded in paraffin, sectioned (4 µm thick) and stained with hematoxylin and eosin (H & E) for histological analysis. Images were taken with a Keyence Microscope BZ-X800. Sectioning and H & E staining were carried out by the Tissue Pathology Shared Resource, Stephenson Cancer Center (SCC), University of Oklahoma Health Sciences Center.
The Hematoxylin (cat#3801571) and Eosin (cat# 3801616) were purchased from Leica biosystems and the staining was performed utilizing Leica ST5020 Automated Multistainer following the HE staining protocol at the SCC Tissue Pathology core.

Fig. 2.

Illustration of data acquisition and processing steps

Although the three tissue types showed different imaging features for visual recognition, it will take time and expertise for doctors to differentiate them during surgeries. In order to improve the efficiency, we developed deep learning methods for automatic tissue classification based on the imaging data. Figure 2 shows the overall process of the data acquisition and processing. In total, ten porcine kidneys were imaged in this study. For each kidney, 1,000 2D cross-sectional images were obtained for cortex, medulla, and calyx, respectively. For the purpose of convenient analysis and increasing the speed of deep-learning process of the OCT images, a custom MATLAB algorithm was designed to recognize the surface of the kidney tissue on the 2D cross-sectional images. It automatically cropped the images from the size of 320×480 to 235×301. Therefore, all the 2D cross-sectional images have the same dimensions and cover the same FOV before deep-learning processing.
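The surface-based cropping described above can be sketched as follows. The paper does not describe the MATLAB algorithm in detail, so this is only one plausible approach under stated assumptions: the tissue surface is taken as the first depth row whose mean intensity exceeds a threshold, the crop is centered laterally, and each B-scan is stored as 480 depth rows by 320 lateral columns. The functions `find_surface_row` and `crop_below_surface` and the threshold value are hypothetical.

```python
def find_surface_row(image, threshold):
    """Return the index of the first row whose mean intensity exceeds threshold."""
    for row_index, row in enumerate(image):
        if sum(row) / len(row) > threshold:
            return row_index
    raise ValueError("no tissue surface found above the threshold")


def crop_below_surface(image, threshold, out_rows, out_cols):
    """Crop a fixed-size window starting at the detected surface.

    The window spans `out_rows` depth rows below the surface and `out_cols`
    columns centered laterally, so every cropped image has the same size.
    """
    top = find_surface_row(image, threshold)
    if top + out_rows > len(image):
        raise ValueError("not enough rows below the surface for the crop")
    left = (len(image[0]) - out_cols) // 2
    return [row[left:left + out_cols] for row in image[top:top + out_rows]]


# Synthetic B-scan: 100 dark rows above the surface, 380 brighter tissue rows.
b_scan = [[0] * 320 for _ in range(100)] + [[50] * 320 for _ in range(380)]

cropped = crop_below_surface(b_scan, threshold=10, out_rows=301, out_cols=235)
print(len(cropped), len(cropped[0]))  # 301 235
```

Real OCT data are noisy, so a robust version would likely smooth the image or detect the surface column by column before cropping.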

2.3. Training of the convolutional neural networks

CNN was used to classify the images of renal cortex, medulla, and calyx. Three CNN architectures were tested, including Residual Network 34 (ResNet34) [77], Residual Network 50 (ResNet50) [77] and MobileNetv2 [78], using Tensorflow 2.3 [79] in open-ce version 0.1.

Pre-trained ResNet50 and MobileNetv2 models on the ImageNet dataset [80] were imported from the Keras library [81]. The output layer of the models was changed to one containing 3 softmax output neurons for cortex, medulla, and calyx. The input images were preprocessed by resizing to the 224 × 224 resolution, replicating the input channel to 3 channels, and scaling the pixel intensities to [-1, 1]. Model fine-tuning was conducted in two stages as described in [82]. First, the output layer was trained with all the other layers frozen. The stochastic gradient descent (SGD) optimizer was used with a learning rate of 0.2, a momentum of 0.3, and a decay of 0.01. Then, the entire model was unfrozen and trained. The SGD optimizer with Nesterov momentum was used with a learning rate of 0.01, a momentum of 0.9, and a decay of 0.001. Early stopping with a patience of 10 and a maximum of 50 epochs was used for the pre-trained ResNet50. Early stopping with a patience of 20 and a maximum of 100 epochs was used for MobileNetv2.
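The three preprocessing steps (resizing, channel replication, and intensity scaling) can be sketched in plain Python. This is an illustration rather than the authors' Keras pipeline: nearest-neighbor resizing stands in for whatever interpolation was actually used, and the mapping x/127.5 − 1 assumes 8-bit input intensities in [0, 255]. The names `resize_nearest` and `preprocess` are hypothetical.

```python
def resize_nearest(image, out_rows, out_cols):
    """Resize a 2D image (list of rows) with nearest-neighbor sampling."""
    in_rows, in_cols = len(image), len(image[0])
    return [
        [image[r * in_rows // out_rows][c * in_cols // out_cols]
         for c in range(out_cols)]
        for r in range(out_rows)
    ]


def preprocess(image, size=224):
    """Resize to size x size, scale [0, 255] to [-1, 1], replicate to 3 channels."""
    resized = resize_nearest(image, size, size)
    return [[[pixel / 127.5 - 1.0] * 3 for pixel in row] for row in resized]


# Synthetic single-channel image with the cropped size of 301 rows x 235 columns.
example = [[(r + c) % 256 for c in range(235)] for r in range(301)]
tensor = preprocess(example)
print(len(tensor), len(tensor[0]), len(tensor[0][0]))  # 224 224 3
```

Replicating the grayscale channel lets an ImageNet-pretrained network, which expects three-channel input, be reused without changing its first convolutional layer.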

The ResNet34 and ResNet50 architectures were also trained using randomly initialized weights. ResNet34 [77] was obtained from [82]. The mean pixel in the training dataset was used to center the training, validation, and test datasets. The input layer was modified to accept only one input channel in the OCT images and the output layer was changed for the classification of the three tissue types. For ResNet50, the optimizer SGD with Nesterov momentum with learning rate 0.01, momentum 0.9 and decay 0.01 was used. ResNet50 was trained with a maximum of 50 epochs, early stopping with a patience of 10, and a batch size of 32. For ResNet34, the Adam optimizer was used with learning rate 0.001, beta1 0.9, beta2 0.9999 and epsilon 1E-7. ResNet34 was trained with a maximum of 200 epochs, early stopping with a patience of 10, and a batch size of 512.
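The early-stopping rule used in both training setups can be sketched as below. The paper does not state which quantity was monitored, so this sketch assumes validation loss; it also assumes the common convention that training stops once `patience` consecutive epochs pass without improvement. The function name `early_stopping_epoch` and the loss values are hypothetical.

```python
def early_stopping_epoch(val_losses, patience, max_epochs):
    """Return (epochs_run, best_epoch) for a sequence of validation losses.

    Training stops when the loss has not improved for `patience` consecutive
    epochs, or when `max_epochs` is reached, whichever comes first.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses[:max_epochs]):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch + 1, best_epoch
    return min(len(val_losses), max_epochs), best_epoch


# Made-up loss curve: improves for three epochs, then plateaus.
losses = [1.0, 0.8, 0.7] + [0.75] * 30

print(early_stopping_epoch(losses, patience=10, max_epochs=50))  # (13, 2)
```

With a patience of 10, the plateau after epoch index 2 is tolerated for ten epochs, so training halts after 13 epochs instead of running to the 50-epoch limit.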

2.4. Nested cross-validation and testing

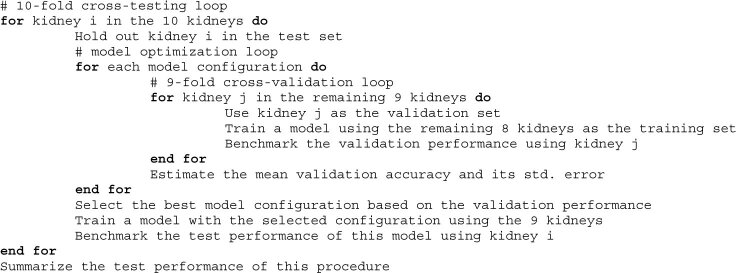

A nested cross-validation and testing procedure [74,76,83] was used to estimate the validation performance and the test performance of the models across the 10 kidneys with uncertainty quantification. The pseudo-code of the nested cross-validation and testing is shown below.

In the 10-fold cross-testing, one kidney was selected in turn as the test set. In the 9-fold cross-validation, the remaining nine kidneys were partitioned 8:1 between the training set and the validation set. Each kidney contained a total of 3000 images, including 1000 images for each tissue type. The validation performance of a model was tracked based on its classification accuracy on the validation kidney. The classification accuracy is the percentage of correctly labeled images out of all 3000 images of a kidney.

The 9-fold cross-validation loop was used to compare the performance of ResNet34, ResNet50, and MobileNetv2, and optimize the key hyperparameters of these models, such as pre-trained versus randomly initialized weights, learning rates, and number of epochs. The model configuration with the highest average validation accuracy was selected for the cross-testing loop. The cross-testing loop enabled iterative benchmarking of the selected model across all 10 kidneys, giving a better estimation of generalization error with uncertainty quantification.
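The kidney-wise partitioning described above can be sketched in a few lines. This is a minimal illustration of the split structure only, with training and evaluation omitted. The names `nested_splits` and `select_best`, and the accuracy values, are hypothetical.

```python
def nested_splits(kidneys):
    """Yield (test_kidney, validation_kidney, training_kidneys) triples.

    Outer loop: each kidney is held out once as the test set (cross-testing).
    Inner loop: each remaining kidney is used once as the validation set
    (cross-validation), and the other eight form the training set.
    """
    for test_kidney in kidneys:
        remaining = [k for k in kidneys if k != test_kidney]
        for val_kidney in remaining:
            train = [k for k in remaining if k != val_kidney]
            yield test_kidney, val_kidney, train


def select_best(validation_accuracy):
    """Pick the configuration with the highest mean validation accuracy."""
    return max(
        validation_accuracy,
        key=lambda name: sum(validation_accuracy[name]) / len(validation_accuracy[name]),
    )


kidneys = [f"K{i}" for i in range(1, 11)]
splits = list(nested_splits(kidneys))
print(len(splits))  # 90

# Made-up validation accuracies for two configurations in one testing fold.
print(select_best({"config_a": [0.80, 0.90], "config_b": [0.70, 0.95]}))  # config_a
```

Because the test kidney never appears in any training or validation set of its own fold, the test accuracy reflects performance on a kidney that played no part in model selection.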

Gradient-weighted Class Activation Mapping (Grad-CAM) [84] was used to explain the predictions of a selected CNN model by highlighting the important regions in the image for the prediction outcome. The interpretation implementation on ResNet50 was based on [85].
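For reference, the Grad-CAM heatmap for a class $c$ is computed from the feature maps $A^k$ of a chosen convolutional layer as follows. The notation is that of the Grad-CAM paper [84], not of this study: $y^c$ is the class score before the softmax, $Z$ is the number of spatial positions in a feature map, and $(i, j)$ indexes those positions.

$$
\alpha_k^c = \frac{1}{Z}\sum_{i}\sum_{j}\frac{\partial y^c}{\partial A^k_{ij}},
\qquad
L^c_{\text{Grad-CAM}} = \mathrm{ReLU}\!\left(\sum_k \alpha_k^c A^k\right)
$$

The weights $\alpha_k^c$ measure how strongly each feature map influences the class score, and the ReLU keeps only the regions that contribute positively to class $c$.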

All the model development was performed on the Schooner supercomputer at the University of Oklahoma and the Summit supercomputer at Oak Ridge National Laboratory. The computation on Schooner used five computational nodes, each of which had 40 CPU cores (Intel Xeon Cascade Lake) and 200 GB of RAM. The computation on Summit used up to 15 nodes, each of which had 2 IBM POWER9 processors and 6 NVIDIA Volta GPUs. The source code of the model training is available at https://github.com/thepanlab/FOCT_kidney.

3. Results

3.1. Forward OCT imaging of different renal tissues

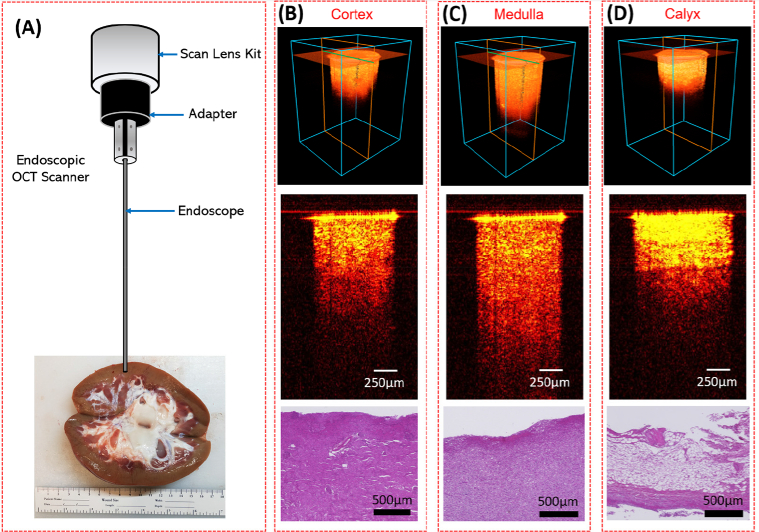

The imaging setup of our OCT endoscope is shown in Fig. 3(A). An adapter was used to stabilize the endoscope in front of the OCT scan lens kit. In the kidney sample shown in Fig. 3(A), the different tissue types can be distinguished visually. The renal cortex was the brown tissue on the edge of the whole kidney; the medulla can be recognized by its red renal pyramid structures distributed on the inner side of the cortex; the calyx was characterized by its distinct white structure in the center of the kidney. The three tissue types were each imaged following the procedure described in Section 2.2.

Fig. 3.

(A) Sample kidney used for endoscopic OCT imaging; (B-D) Representative 3D OCT images, cross-sectional OCT images and the histology results of renal cortex (B), medulla (C) and calyx (D).

Figures 3(B)–3(D) show representative 3D OCT images, 2D cross-sectional images and the histology results of the three renal tissues. The tissues differed in imaging depth and brightness. The renal calyx had the shallowest imaging depth, but the tissue close to the surface showed the highest brightness and density. Cortex and medulla both presented relatively homogeneous tissue structures in OCT images, and the imaging depth of medulla was larger than that of cortex. Furthermore, compared to cortex and medulla, calyx was characterized by horizontal stripes and a layered structure. The transitional epithelium and fibrous tissue in the calyx may explain the stripe-like structures and significantly higher brightness in comparison to the other two renal tissues. This is the significant part for PCN insertion since the goal of PCN is to reach the calyx precisely. These imaging results demonstrated the feasibility of distinguishing renal cortex, medulla, and calyx with the endoscopic OCT system.

3.2. CNN development and benchmarking results

Table 1 shows the average validation accuracies and their standard errors for the pre-trained (PT) or randomly initialized (RI) model architectures after hyperparameter optimization. RI MobileNetv2 frequently failed to learn, so only the PT MobileNetv2 model was used here. The PT ResNet50 models outperformed the RI ResNet50 models in 6 of the 10 testing folds, which indicated only a small boost by the pre-training on ImageNet. For all the 10 testing folds, the validation accuracies of the ResNet50 models were significantly higher than those of the MobileNetv2 and ResNet34 models. Thus, the characteristic patterns of the three kidney tissues may require a deep CNN architecture to recognize. The detailed results from the 9-fold cross validation of RI ResNet34, PT MobileNetv2, RI ResNet50, and PT ResNet50 can be found in Supplementary Table 1-Table 4, respectively.

Table 1. The averages and standard errors of the validation accuracies from the nested cross-validation and testing procedure. The architecture with the highest validation performance in each testing fold is indicated in bold.

| Testing folds | PT MobileNetv2 | RI ResNet34 | RI ResNet50 | PT ResNet50 |
|---|---|---|---|---|
| K1 | 84.1%±3.5% | 83.8%±3.7% | 87.1%±2.9% | **89.2%±2.4%** |
| K2 | 82.1%±3.9% | 84.6%±2.8% | 87.0%±3.0% | **89.8%±2.4%** |
| K3 | 83.9%±4.2% | 84.5%±3.5% | **88.5%±2.4%** | 86.6%±2.4% |
| K4 | 78.0%±6.3% | 83.5%±2.6% | 85.0%±2.4% | **87.8%±2.3%** |
| K5 | 83.3%±2.7% | 82.8%±4.2% | **87.8%±1.9%** | 87.7%±2.3% |
| K6 | 86.2%±2.7% | 84.5%±3.6% | **88.4%±2.1%** | 88.1%±2.4% |
| K7 | 86.0%±3.8% | 82.8%±3.0% | **88.6%±1.7%** | 86.6%±2.4% |
| K8 | 76.1%±6.0% | 82.1%±3.9% | 87.7%±2.6% | **88.0%±2.5%** |
| K9 | 77.7%±4.8% | 79.4%±4.3% | 82.1%±3.6% | **85.1%±2.3%** |
| K10 | 81.8%±4.6% | 86.4%±2.7% | 87.5%±3.2% | **87.7%±2.1%** |

Table 2 shows the test accuracy of the best-performing model in each of the 10 testing folds. The output layer of the CNN models estimated three softmax scores that summed to 1.0 for the three tissue types. When the category with the highest softmax score was selected for an image (i.e., a softmax score threshold of 0.333 to make a prediction), the CNN model made a prediction for every image (100% coverage) at a mean test accuracy of 82.6%. This was substantially lower than the mean validation accuracy of 87.3%, which suggested overfitting to the validation set through hyperparameter tuning and early stopping. The classification accuracy can be increased at the expense of lower coverage by raising the softmax score threshold, which allows the CNN model to make only confident classifications. When the softmax score threshold was raised to 0.5, 89.9% of the images on average were classified to a tissue type and the mean classification accuracy increased to 85.6%±3.0%. For the uncovered images, doctors can make a prediction with the help of other imaging modalities and their clinical experience.
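The trade-off between accuracy and coverage can be made concrete with a short sketch. It assumes that an image is left unclassified when its highest softmax score falls below the threshold; the paper does not say whether the comparison is strict, and the function names, scores and labels here are made up for illustration.

```python
def classify_with_threshold(scores, threshold):
    """Return the index of the top class, or None if its score is below threshold."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return best if scores[best] >= threshold else None


def accuracy_and_coverage(all_scores, labels, threshold):
    """Accuracy over the classified images and the fraction of images classified."""
    predictions = [classify_with_threshold(s, threshold) for s in all_scores]
    covered = [(p, y) for p, y in zip(predictions, labels) if p is not None]
    coverage = len(covered) / len(labels)
    accuracy = sum(p == y for p, y in covered) / len(covered) if covered else float("nan")
    return accuracy, coverage


# Made-up softmax scores (cortex, medulla, calyx) and true labels for four images.
scores = [
    [0.70, 0.20, 0.10],
    [0.40, 0.35, 0.25],
    [0.10, 0.30, 0.60],
    [0.40, 0.55, 0.05],
]
labels = [0, 1, 2, 1]

print(accuracy_and_coverage(scores, labels, threshold=0.333))  # (0.75, 1.0)
print(accuracy_and_coverage(scores, labels, threshold=0.5))    # (1.0, 0.75)
```

With three classes, the top score is always at least 1/3, so a threshold of 0.333 classifies every image. In this toy example, raising the threshold to 0.5 drops the one low-confidence image, which also happens to be the misclassified one.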

Table 2. Results from the 10-fold cross-testing. Average and standard error of accuracy are shown for two thresholds.

| Testing folds | Accuracy (threshold = 0.333) | Coverage (threshold = 0.333) | Accuracy (threshold = 0.5) | Coverage (threshold = 0.5) |
|---|---|---|---|---|
| K1 | 75.4% | 100% | 79.0% | 89.3% |
| K2 | 87.9% | 100% | 90.0% | 93.3% |
| K3 | 71.4% | 100% | 76.8% | 79.3% |
| K4 | 92.1% | 100% | 95.9% | 88.4% |
| K5 | 93.2% | 100% | 95.6% | 93.7% |
| K6 | 67.7% | 100% | 68.9% | 94.2% |
| K7 | 83.5% | 100% | 86.4% | 91.8% |
| K8 | 88.2% | 100% | 92.3% | 85.0% |
| K9 | 92.2% | 100% | 94.8% | 94.2% |
| K10 | 74.1% | 100% | 76.6% | 90.2% |
| Average | 82.6%±3.0% | 100% | 85.6%±3.0% | 89.9%±1.5% |
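The average and standard error in the last row can be reproduced from the per-kidney values. The sketch below does this for the accuracy at a threshold of 0.333, assuming the standard error is the sample standard deviation (n − 1 denominator) divided by the square root of the number of kidneys. The helper name `mean_and_standard_error` is hypothetical.

```python
import math
import statistics

# Test accuracies (%) of K1 to K10 at a softmax score threshold of 0.333 (Table 2).
accuracy_at_0333 = [75.4, 87.9, 71.4, 92.1, 93.2, 67.7, 83.5, 88.2, 92.2, 74.1]


def mean_and_standard_error(values):
    """Return the mean and the standard error of the mean."""
    mean = statistics.mean(values)
    sem = statistics.stdev(values) / math.sqrt(len(values))
    return mean, sem


mean, sem = mean_and_standard_error(accuracy_at_0333)
print(f"{mean:.1f}% ± {sem:.1f}%")  # 82.6% ± 3.0%
```

The same calculation applied to the other two columns should give the remaining entries of the average row.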

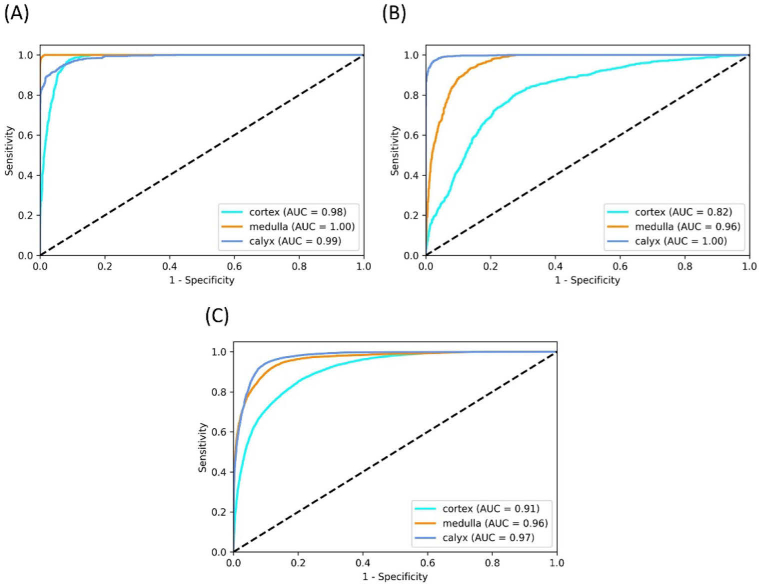

There was substantial variability in the test accuracy among different kidneys. While three kidneys had test accuracies higher than 92% (softmax score threshold of 0.333), the kidney in the sixth fold had the lowest test accuracy of 67.7%. Therefore, the current challenge in the image classification mainly comes from the anatomic differences among the samples. For instance, Figs. 4(A) and 4(B) show the receiver operating characteristic (ROC) curves of the prediction results from kidney No. 5 and No. 10 (ROC curves of all the 10 kidneys in the 10-fold cross-testing can be found in the Supplementary data). It is clear that the prediction for kidney 5 is much more accurate than that for kidney 10. Our nested cross-validation and testing procedure was designed to simulate the real clinical setting in which CNN models trained on one set of kidneys need to perform well on a new kidney unseen by the CNN models until the operation. When a CNN model was trained on a subset of images from all kidneys and validated on a separate subset of images from all kidneys in cross-validation, as opposed to partitioning by kidneys, it achieved accuracies over 99%. This suggested that the challenge of image classification mainly stemmed from the biological differences between kidneys. The generalization of the CNN models across kidneys can be improved by expanding our dataset with kidneys of different ages or physical conditions to represent different structural and morphological features.

Fig. 4.

ROC multi-class testing curves of (A) kidney 5, (B) kidney 10 and (C) the average of all kidneys.

Figure 4(C) shows the average ROC curves for the three tissue types. The Area Under the ROC Curve (AUC) values were 0.91 for cortex, 0.96 for medulla, and 0.97 for calyx. Table 3 shows the average confusion matrix for the 10 kidneys in the 10-fold cross-testing with a score threshold of 0.333 and the average recall and precision for each type of tissue. The confusion matrix from the 10-fold cross-testing for each of the 10 kidneys is shown in the Supplementary data. Cortex was the most challenging tissue type to classify correctly and was often mixed up with medulla. From the original images we found that the penetration depth in medulla was much larger than in cortex in seven of the ten imaged kidneys, whereas in the other three samples the difference was insignificant. This may explain the challenging classification between cortex and medulla.

Table 3. Confusion matrix for classification of the three tissue types.

| True tissue type | Predicted cortex | Predicted medulla | Predicted calyx | Recall |
|---|---|---|---|---|
| Cortex | 677 ± 109 | 204 ± 93 | 118 ± 93 | 68% ± 11% |
| Medulla | 75 ± 33 | 908 ± 41 | 17 ± 15 | 91% ± 4% |
| Calyx | 95 ± 30 | 14 ± 9 | 892 ± 32 | 89% ± 3% |
| Precision | 79% ± 4% | 85% ± 6% | 91% ± 5% | |
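As a worked example of how recall and precision relate to the confusion matrix, the sketch below computes both from the mean counts in Table 3 (rows are true tissue types, columns are predicted types). The function names are hypothetical. The recalls match the reported values after rounding. The precisions computed this way (about 80%, 81% and 87%) differ from the reported ones, presumably because the paper averages per-kidney precisions rather than computing precision from the averaged matrix.

```python
TISSUES = ["cortex", "medulla", "calyx"]

# Mean confusion matrix from Table 3: rows = true type, columns = predicted type.
MEAN_CONFUSION = [
    [677, 204, 118],
    [75, 908, 17],
    [95, 14, 892],
]


def recall_per_class(matrix):
    """Recall: correct predictions divided by all images of the true class (row sum)."""
    return [matrix[i][i] / sum(matrix[i]) for i in range(len(matrix))]


def precision_per_class(matrix):
    """Precision: correct predictions divided by all predictions of the class (column sum)."""
    return [matrix[j][j] / sum(row[j] for row in matrix) for j in range(len(matrix))]


recalls = recall_per_class(MEAN_CONFUSION)
precisions = precision_per_class(MEAN_CONFUSION)
for tissue, rec, prec in zip(TISSUES, recalls, precisions):
    print(f"{tissue}: recall {rec:.2f}, precision {prec:.2f}")
```

The off-diagonal entries show where errors concentrate: 204 of roughly 1000 cortex images per kidney are predicted as medulla on average, which dominates the low cortex recall.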

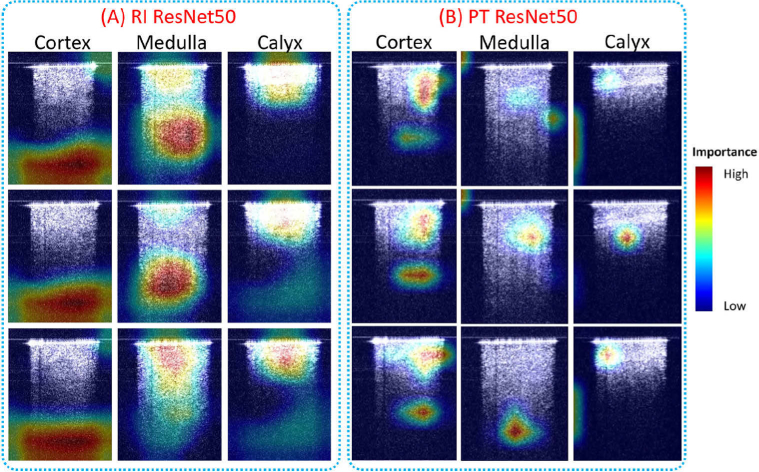

To better understand how a CNN model classified different renal tissue types, class activation maps were generated to visualize heatmaps of class activation over input images. Figure 5 shows the class activation heatmaps for 3 representative images of each tissue type from the RI ResNet50 model and the PT ResNet50 model. The models and the representative images were selected from the fifth testing fold. The RI ResNet50 model performed the classification by paying more attention to the lower part of the cortex images, to both the lower part and near the upper part of the medulla images, and to the high-intensity area near the needle tip in the calyx images. The PT ResNet50 model focused on both the upper part and lower part of the cortex images, on the middle part and/or lower part of the medulla images, and on the region close to the needle tip in the calyx images. Compared to the RI ResNet50 model, the PT ResNet50 model shifted its attention closer to the signal regions. The class activation heatmaps provided an intuitive explanation of the classification basis for the two CNN models.

Fig. 5.

Class activation heatmaps of (A) RI ResNet50, and (B) PT ResNet50 for three representative images in each tissue type. The class activation heatmaps used jet colormap and were superimposed on the input images.

4. Discussion

We investigated the feasibility of an OCT endoscopic system for PCN surgery guidance. Three porcine kidney tissue types (cortex, medulla and calyx) were imaged. These three kidney tissues show different structural features, which can be further used for tissue type recognition. To increase the image recognition efficiency and reduce the learning burden of the clinical doctors, we developed and evaluated CNN methods for image classification and recognition. ResNet50 had the best performance compared to ResNet34 and PT MobileNetv2 and achieved an average classification accuracy of 82.6%±3.0%.

In the current study, the porcine kidney samples were obtained from a local slaughterhouse without controlling the sample preservation and time after death. Biological changes may have occurred in the ex-vivo kidneys, including collapse of some structures of nephrons such as the renal tubules. This may make the tissue recognition more difficult, especially the classification between cortex and medulla. Characteristic renal structures in the cortex can be clearly imaged by OCT in both well-preserved ex-vivo human kidneys and living kidneys, as previously reported [44,50,86] and verified in an ongoing study in our lab using well-preserved human kidneys. Additionally, the nephron structures distributed in renal cortex and medulla are different [87]. These additional features in renal cortex and medulla are expected to improve the recognition of these two tissue types and increase the classification accuracy of our future CNN models when imaging in-vivo samples or well-preserved ex-vivo samples. The current study established the feasibility of automatic tissue recognition using CNN and provided information for the model selection and hyper-parameter optimization in future CNN model development using in-vivo pig kidneys and well-preserved ex-vivo human kidneys.

For translating the proposed OCT probe into the clinic, we will assemble an endoscope with appropriate diameter and length into the clinically used PCN needle. In current PCN puncture, a trocar needle is inserted into the kidney. Since the trocar has a hollow structure, we can fix the endoscope within the trocar needle. Then our OCT endoscope can be inserted into the kidney together with the trocar needle. After the trocar needle tip arrives at the destination (such as the kidney pelvis), we will withdraw the OCT endoscope from the trocar needle and other surgical processes can be continued. During the whole puncture, no extra invasiveness will be caused. Since the needle will keep moving during the puncture, there will be tight contact between the needle tip and the tissue. Therefore, blood (if any) will not accumulate in front of the needle tip. From our previous experience in the in-vivo pig experiment guiding epidural anesthesia with our OCT endoscope, the presence of blood is not a major issue [65]. The diameter of the GRIN rod lens used in this study is 1.3 mm. In a future study, we will further improve the current setup with a smaller GRIN rod lens that fits inside the 18-gauge PCN needle clinically used in PCN puncture [88]. Furthermore, we will miniaturize the GSM device based on microelectromechanical system (MEMS) technology, which will enable ease of operation and is important for translating the OCT endoscope to clinical applications. Because the OCT system currently employed has a scanning speed of up to 200 kHz, 2D tissue images in front of the PCN needle can be provided to surgeons in real time. With an ultra-high-speed laser scanning and data processing system, 3D images of the detected sample can be obtained in real time [46,48]. In the next step, we will acquire 3D images that may further improve our classification accuracy because of their added information content. For example, Kruthika et al.
detected Alzheimer's disease from MRI images and showed improved performance of 3D Capsule Network (CapsNet) and 3D CNN over previous 2D approaches [89].
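As a rough illustration of what the 200 kHz scanning speed implies for real-time display, the following sketch converts a line rate into 2D frame and 3D volume rates. It assumes that the 200 kHz figure is the A-line (depth scan) rate; the numbers of A-lines per frame and frames per volume are hypothetical values chosen for illustration and are not taken from this study.

```python
def b_scan_rate(a_line_rate_hz: float, a_lines_per_frame: int) -> float:
    """Return the number of 2D frames (B-scans) per second.

    Assumes every A-line contributes to a frame (no scanner fly-back time).
    """
    if a_lines_per_frame <= 0:
        raise ValueError("a_lines_per_frame must be positive")
    return a_line_rate_hz / a_lines_per_frame


def volume_rate(a_line_rate_hz: float, a_lines_per_frame: int,
                frames_per_volume: int) -> float:
    """Return the number of 3D volumes per second."""
    if frames_per_volume <= 0:
        raise ValueError("frames_per_volume must be positive")
    return b_scan_rate(a_line_rate_hz, a_lines_per_frame) / frames_per_volume


A_LINE_RATE_HZ = 200_000  # "up to 200 kHz", read here as the A-line rate (assumption)

if __name__ == "__main__":
    # Hypothetical frame sizes; the actual scan pattern is not specified here.
    for n_lines in (500, 1000, 2000):
        fps = b_scan_rate(A_LINE_RATE_HZ, n_lines)
        print(f"{n_lines} A-lines per frame: {fps:.0f} frames per second")
    # Hypothetical volume of 200 frames with 1000 A-lines each.
    print(f"volumes per second: {volume_rate(A_LINE_RATE_HZ, 1000, 200):.1f}")
```

Under these assumptions, 2D frames are available at well above video rate, whereas a full 3D volume at the same line rate refreshes about once per second, which is why faster scanning and processing are cited for real-time 3D imaging.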

The CNN model training in this study required substantial computational power. Each fold of the cross-validation took ∼25 minutes for RI ResNet50 and ∼45 minutes for PT ResNet50 using one NVIDIA Volta GPU. The 90 folds of the nested cross-validation for each model configuration were run in parallel across multiple compute nodes on the Summit supercomputer. Inference, by contrast, was computationally efficient. It took ∼50 seconds using one NVIDIA Volta GPU to perform inference on 1000 images (i.e., ∼0.05 seconds per image on average), including model loading, image preprocessing, and the ResNet50 classification. The ResNet50 classification itself took ∼16 seconds for 1000 images, or ∼0.016 seconds per image. In the future, inference can be further accelerated through algorithm optimization and parallelization to make it more practical for surgical applications.
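The fold count and timings above can be checked with a short sketch. It assumes that the 90 folds arise from holding out each of the 10 kidneys in turn for testing and, within each outer split, each of the remaining 9 kidneys in turn for validation; this scheme is consistent with the reported count but is an assumption of the sketch. The function and variable names are hypothetical.

```python
from itertools import permutations


def nested_folds(kidney_ids):
    """Yield (test, validation, train) splits at the kidney level.

    Assumed scheme: one kidney held out for testing and a different kidney
    held out for validation; all remaining kidneys are used for training.
    """
    for test_id, val_id in permutations(kidney_ids, 2):
        train_ids = [k for k in kidney_ids if k not in (test_id, val_id)]
        yield test_id, val_id, train_ids


kidneys = list(range(1, 11))          # 10 kidneys, as in the study
folds = list(nested_folds(kidneys))
n_folds = len(folds)                  # 10 test kidneys x 9 validation kidneys

# Approximate training time per fold on one GPU, from the text (minutes).
MINUTES_PER_FOLD = {"RI ResNet50": 25, "PT ResNet50": 45}
gpu_hours = {name: n_folds * minutes / 60
             for name, minutes in MINUTES_PER_FOLD.items()}

# Inference timings from the text: seconds per 1000 images.
N_IMAGES = 1000
end_to_end_s = 50 / N_IMAGES          # loading + preprocessing + classification
classify_only_s = 16 / N_IMAGES       # ResNet50 classification alone

if __name__ == "__main__":
    print(f"folds per model configuration: {n_folds}")
    for name, hours in gpu_hours.items():
        print(f"{name}: about {hours:.1f} GPU-hours if run serially")
    print(f"end-to-end: {end_to_end_s:.3f} s per image "
          f"({1 / end_to_end_s:.1f} images per second)")
    print(f"classification only: {classify_only_s:.3f} s per image "
          f"({1 / classify_only_s:.1f} images per second)")
```

Splitting at the kidney level keeps all images from one kidney in the same partition, so the test estimate reflects performance on a kidney the model has not seen. Run serially, each configuration would need tens of GPU-hours, which is why the folds were distributed across compute nodes.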

Acknowledgments

Qinggong Tang would like to acknowledge support from the Faculty Investment Program and the Junior Faculty Fellowship of the University of Oklahoma, and from the Oklahoma Health Research Program (HR19-062) of the Oklahoma Center for the Advancement of Science and Technology. This research used resources of the Oak Ridge Leadership Computing Facility at the Oak Ridge National Laboratory, which is supported by the Office of Science of the U.S. Department of Energy under Contract No. DE-AC05-00OR22725. Histology service provided by the Tissue Pathology Shared Resource was supported in part by the National Institute of General Medical Sciences Grant P20GM103639 and National Cancer Institute Grant P30CA225520 of the National Institutes of Health.

Funding

National Cancer Institute [10.13039/100000054] (P30CA225520); National Institute of General Medical Sciences [10.13039/100000057] (P20GM103639); Office of Science [10.13039/100006132] (DE-AC05-00OR22725); University of Oklahoma [10.13039/100007926] (2020 Junior Faculty Fellowship, 2020 Faculty Investment Program); Oklahoma Center for the Advancement of Science and Technology [10.13039/100008569] (HR19-062).

Disclosures

The authors declare no conflicts of interest related to this article.

Supplemental document

See Supplement 1 for supporting content.

References

- 1. Goodwin W. E., Casey W. C., Woolf W., “Percutaneous trocar (needle) nephrostomy in hydronephrosis,” J. Am. Med. Assoc. 157(11), 891–894 (1955). 10.1001/jama.1955.02950280015005

- 2. Young M., Leslie S. W., “Percutaneous nephrostomy,” in StatPearls (Treasure Island (FL), 2019).

- 3. Bigongiari L. R., Lee K. R., Weigel J., Foret J., “Percutaneous nephrostomy. A non-operative temporary or long-term urinary diversion procedure,” J. Kans Med. Soc. 79, 104–106 (1978).

- 4. Hellsten S., Hildell J., Link D., Ulmsten U., “Percutaneous nephrostomy. Aspects on applications and technique,” Eur Urol. 4(4), 282–287 (1978). 10.1159/000473972

- 5. Perinetti E., Catalona W. J., Manley C. B., Geise G., Fair W. R., “Percutaneous nephrostomy: indications, complications and clinical usefulness,” J. Urol. 120(2), 156–158 (1978). 10.1016/S0022-5347(17)57085-8

- 6. Stables D. P., Ginsberg N. J., Johnson M. L., “Percutaneous nephrostomy: a series and review of the literature,” Am. J. Roentgenol. 130(1), 75–82 (1978). 10.2214/ajr.130.1.75

- 7. Lee W. J., Patel U., Patel S., Pillari G. P., “Emergency percutaneous nephrostomy: results and complications,” J. Vasc. Interv. Radiol. 5(1), 135–139 (1994). 10.1016/S1051-0443(94)71470-6

- 8. Pedersen J. F., “Percutaneous nephrostomy guided by ultrasound,” J Urol 112(2), 157–159 (1974). 10.1016/S0022-5347(17)59669-X

- 9. Efesoy O., Saylam B., Bozlu M., Cayan S., Akbay E., “The results of ultrasound-guided percutaneous nephrostomy tube placement for obstructive uropathy: A single-centre 10-year experience,” Turk J. Urol. 44, 329–334 (2018). 10.5152/tud.2018.25205

- 10. LeMaitre L., Mestdagh P., Marecaux-Delomez J., Valtille P., Dubrulle F., Biserte J., “Percutaneous nephrostomy: placement under laser guidance and real-time CT fluoroscopy,” Eur Radiol. 10(6), 892–895 (2000). 10.1007/s003300051030

- 11. Zegel H. G., Pollack H. M., Banner M. C., Goldberg B. B., Arger P. H., Mulhern C., Kurtz A., Dubbins P., Coleman B., Koolpe H., “Percutaneous nephrostomy: comparison of sonographic and fluoroscopic guidance,” Am. J. Roentgenol. 137(5), 925–927 (1981). 10.2214/ajr.137.5.925

- 12. Miller N. L., Matlaga B. R., Lingeman J. E., “Techniques for fluoroscopic percutaneous renal access,” J. Urol. 178(1), 15–23 (2007). 10.1016/j.juro.2007.03.014

- 13. Barbaric Z. L., Hall T., Cochran S. T., Heitz D. R., Schwartz R. A., Krasny R. M., Deseran M. W., “Percutaneous nephrostomy: placement under CT and fluoroscopy guidance,” Am. J. Roentgenol. 169(1), 151–155 (1997). 10.2214/ajr.169.1.9207516

- 14. Hacker A., Wendt-Nordahl G., Honeck P., Michel M. S., Alken P., Knoll T., “A biological model to teach percutaneous nephrolithotomy technique with ultrasound- and fluoroscopy-guided access,” J. Endourol. 21(5), 545–550 (2007). 10.1089/end.2006.0327

- 15. de Sousa Morais N., Pereira J. P., Mota P., Carvalho-Dias E., Torres J. N., Lima E., “Percutaneous nephrostomy vs ureteral stent for hydronephrosis secondary to ureteric calculi: impact on spontaneous stone passage and health-related quality of life-a prospective study,” Urolithiasis 47(6), 567–573 (2019). 10.1007/s00240-018-1078-2

- 16. Gupta S., Gulati M., Suri S., “Ultrasound-guided percutaneous nephrostomy in non-dilated pelvicaliceal system,” J. Clin. Ultrasound 26(3), 177–179 (1998).

- 17. Ramchandani P., Cardella J. F., Grassi C. J., Roberts A. C., Sacks D., Schwartzberg M. S., Lewis C. A., and Society of Interventional Radiology Standards of Practice Committee, “Quality improvement guidelines for percutaneous nephrostomy,” J. Vasc. Interv. Radiol. 14, S277–S281 (2003). 10.1016/j.jvir.2015.11.045

- 18. Montvilas P., Solvig J., Johansen T. E. B., “Single-centre review of radiologically guided percutaneous nephrostomy using “mixed” technique: Success and complication rates,” Eur. J. Radiol. 80(2), 553–558 (2011). 10.1016/j.ejrad.2011.01.109

- 19. Ozbek O., Kaya H. E., Nayman A., Saritas T. B., Guler I., Koc O., Karakus H., “Rapid percutaneous nephrostomy catheter placement in neonates with the trocar technique,” Diagn. Interv. Imaging 98(4), 315–319 (2017). 10.1016/j.diii.2016.08.010

- 20. Vignali C., Lonzi S., Bargellini I., Cioni R., Petruzzi P., Caramella D., Bartolozzi C., “Vascular injuries after percutaneous renal procedures: treatment by transcatheter embolization,” Eur Radiol. 14(4), 723–729 (2004). 10.1007/s00330-003-2009-2

- 21. Dagli M., Ramchandani P., “Percutaneous nephrostomy: technical aspects and indications,” Semin Intervent Radiol. 28(04), 424–437 (2011). 10.1055/s-0031-1296085

- 22. Lee W. J., Smith A. D., Cubelli V., Badlani G. H., Lewin B., Vernace F., Cantos E., “Complications of percutaneous nephrolithotomy,” Am. J. Roentgenol. 148(1), 177–180 (1987). 10.2214/ajr.148.1.177

- 23. Hausegger K. A., Portugaller H. R., “Percutaneous nephrostomy and antegrade ureteral stenting: technique-indications-complications,” Eur Radiol. 16(9), 2016–2030 (2006). 10.1007/s00330-005-0136-7

- 24. Kyriazis I., Panagopoulos V., Kallidonis P., Ozsoy M., Vasilas M., Liatsikos E., “Complications in percutaneous nephrolithotomy,” World J. Urol. 33(8), 1069–1077 (2015). 10.1007/s00345-014-1400-8

- 25. Radecka E., Magnusson A., “Complications associated with percutaneous nephrostomies. A retrospective study,” Acta Radiol. 45(2), 184–188 (2004). 10.1080/02841850410003671

- 26. Egilmez H., Oztoprak I., Atalar M., Cetin A., Gumus C., Gultekin Y., Bulut S., Arslan M., Solak O., “The place of computed tomography as a guidance modality in percutaneous nephrostomy: analysis of a 10-year single-center experience,” Acta Radiol. 48(7), 806–813 (2007). 10.1080/02841850701416528

- 27. Sonawane P., Ganpule A., Sudharsan B., Singh A., Sabnis R., Desai M., “A modified Malecot catheter design to prevent complications during difficult percutaneous nephrostomy,” Arab J. Urol. 17(4), 330–334 (2019). 10.1080/2090598X.2019.1626587

- 28. Alken P., Hutschenreiter G., Gunther R., Marberger M., “Percutaneous stone manipulation,” J. Urol. 125(4), 463–466 (1981). 10.1016/s0022-5347(17)55073-9

- 29. Liu Q., Zhou L., Cai X., Jin T., Wang K., “Fluoroscopy versus ultrasound for image guidance during percutaneous nephrolithotomy: a systematic review and meta-analysis,” Urolithiasis 45(5), 481–487 (2017). 10.1007/s00240-016-0934-1

- 30. Liu B. X., Huang G. L., Xie X. H., Zhuang B. W., Xie X. Y., Lu M. D., “Contrast-enhanced US-assisted percutaneous nephrostomy: a technique to increase success rate for Patients with nondilated renal collecting system,” Radiology 285(1), 293–301 (2017). 10.1148/radiol.2017161604

- 31. Cui X. W., Ignee A., Maros T., Straub B., Wen J. G., Dietrich C. F., “Feasibility and usefulness of intra-cavitary contrast-enhanced ultrasound in percutaneous nephrostomy,” Ultrasound Med. Biol. 42(9), 2180–2188 (2016). 10.1016/j.ultrasmedbio.2016.04.015

- 32. Ungi T., Beiko D., Fuoco M., King F., Holden M. S., Fichtinger G., Siemens D. R., “Tracked ultrasonography snapshots enhance needle guidance for percutaneous renal access: a pilot study,” J. Endourol. 28(9), 1040–1045 (2014). 10.1089/end.2014.0011

- 33. Lu M. H., Pu X. Y., Gao X., Zhou X. F., Qiu J. G., Si-Tu J., “A comparative study of clinical value of single B-mode ultrasound guidance and B-mode combined with color doppler ultrasound guidance in mini-invasive percutaneous nephrolithotomy to decrease hemorrhagic complications,” Urology 76(4), 815–820 (2010). 10.1016/j.urology.2009.08.091

- 34. Hawkins C. M., Kukreja K., Singewald T., Minevich E., Johnson N. D., Reddy P., Racadio J. M., “Use of cone-beam CT and live 3-D needle guidance to facilitate percutaneous nephrostomy and nephrolithotripsy access in children and adolescents,” Pediatr. Radiol. 46(4), 570–574 (2016). 10.1007/s00247-015-3499-1

- 35. Uribe C. A., Osorio H., Benavides J., Martinez C. H., Valley Z. A., Kaler K. S., “A New Technique for Percutaneous Nephrolithotomy Using Retrograde Ureteroscopy and Laser Fiber to Achieve Percutaneous Nephrostomy Access: The Initial Case Report,” J. Endourol. Case Rep. 5(3), 131–136 (2019). 10.1089/cren.2018.0079

- 36. Krombach G. A., Mahnken A., Tacke J., Staatz G., Haller S., Nolte-Ernsting C. C. A., Meyer J., Haage P., Gunther R. W., “US-guided nephrostomy with the aid of a magnetic field-based navigation device in the porcine pelvicaliceal system,” J. Vasc. Interventional Radiol. 12(5), 623–628 (2001). 10.1016/S1051-0443(07)61488-2

- 37. Miller R. A., Wickham J. E., “Percutaneous nephrolithotomy: advances in equipment and endoscopic techniques,” Urology 23(5), 2–6 (1984). 10.1016/0090-4295(84)90234-6

- 38. Isac W., Rizkala E., Liu X., Noble M., Monga M., “Endoscopic-guided versus fluoroscopic-guided renal access for percutaneous nephrolithotomy: a comparative analysis,” Urology 81(2), 251–256 (2013). 10.1016/j.urology.2012.10.004

- 39. Tang Q. G., Wang J. T., Frank A., Lin J., Li Z. F., Chen C. W., Jin L., Wu T. T., Greenwald B. D., Mashimo H., Chen Y., “Depth-resolved imaging of colon tumor using optical coherence tomography and fluorescence laminar optical tomography,” Biomed. Opt. Express 7(12), 5218–5232 (2016). 10.1364/BOE.7.005218

- 40. Huang D., Swanson E. A., Lin C. P., Schuman J. S., Stinson W. G., Chang W., Hee M. R., Flotte T., Gregory K., Puliafito C. A., Fujimoto J. G., “Optical coherence tomography,” Science 254(5035), 1178–1181 (1991). 10.1126/science.1957169

- 41. Fercher A. F., Drexler W., Hitzenberger C. K., Lasser T., “Optical coherence tomography - principles and applications,” Rep. Prog. Phys. 66(2), 239–303 (2003). 10.1088/0034-4885/66/2/204

- 42. Andrews P. M., Chen Y., Onozato M. L., Huang S. W., Adler D. C., Huber R. A., Jiang J., Barry S. E., Cable A. E., Fujimoto J. G., “High-resolution optical coherence tomography imaging of the living kidney,” Lab Invest. 88(4), 441–449 (2008). 10.1038/labinvest.2008.4

- 43. Fujimoto J. G., Brezinski M. E., Tearney G. J., Boppart S. A., Bouma B., Hee M. R., Southern J. F., Swanson E. A., “Optical Biopsy and Imaging Using Optical Coherence Tomography,” Nat. Med. 1(9), 970–972 (1995). 10.1038/nm0995-970

- 44. Andrews P. M., Wang H. W., Wierwille J., Gong W., Verbesey J., Cooper M., Chen Y., “Optical coherence tomography of the living human kidney,” J. Innov. Opt. Health Sci. 07(2), 1350064 (2014). 10.1142/S1793545813500648

- 45. Li Q., Onozato M. L., Andrews P. M., Chen C. W., Paek A., Naphas R., Yuan S. A., Jiang J., Cable A., Chen Y., “Automated quantification of microstructural dimensions of the human kidney using optical coherence tomography (OCT),” Opt. Express 17(18), 16000–16016 (2009). 10.1364/OE.17.016000

- 46. Israelsen N. M., Petersen C. R., Barh A., Jain D., Jensen M., Hannesschlager G., Tidemand-Lichtenberg P., Pedersen C., Podoleanu A., Bang O., “Real-time high-resolution mid-infrared optical coherence tomography,” Light: Sci. Appl. 8(1), 11 (2019). 10.1038/s41377-019-0122-5

- 47. Fujimoto J., Swanson E., “The Development, Commercialization, and Impact of Optical Coherence Tomography,” Invest. Ophthalmol. Vis. Sci. 57(9), OCT1–OCT13 (2016). 10.1167/iovs.16-19963

- 48. Wieser W., Draxinger W., Klein T., Karpf S., Pfeiffer T., Huber R., “High definition live 3D-OCT in vivo: design and evaluation of a 4D OCT engine with 1 GVoxel/s,” Biomed. Opt. Express 5(9), 2963–2977 (2014). 10.1364/BOE.5.002963

- 49. Wang H. W., Chen Y., “Clinical applications of optical coherence tomography in urology,” Intravital 3(1), e28770 (2014). 10.4161/intv.28770

- 50. Konkel B., Lavin C., Wu T. T., Anderson E., Iwamoto A., Rashid H., Gaitian B., Boone J., Cooper M., Abrams P., Gilbert A., Tang Q., Levi M., Fujimoto J. G., Andrews P., Chen Y., “Fully automated analysis of OCT imaging of human kidneys for prediction of post-transplant function,” Biomed. Opt. Express 10(4), 1794–1821 (2019). 10.1364/BOE.10.001794

- 51. Ding Z., Jin L., Wang H.-W., Tang Q., Guo H., Chen Y., “Multi-modality Optical Imaging of Rat Kidney Dysfunction: In Vivo Response to Various Ischemia Times,” in Oxygen Transport to Tissue XXXVIII (Springer, 2016), pp. 345–350.

- 52. Tearney G. J., Brezinski M. E., Bouma B. E., Boppart S. A., Pitris C., Southern J. F., Fujimoto J. G., “In vivo endoscopic optical biopsy with optical coherence tomography,” Science 276(5321), 2037–2039 (1997). 10.1126/science.276.5321.2037

- 53. Xi J., Zhang A., Liu Z., Liang W., Lin L. Y., Yu S., Li X., “Diffractive catheter for ultrahigh-resolution spectral-domain volumetric OCT imaging,” Opt. Lett. 39(7), 2016–2019 (2014). 10.1364/OL.39.002016

- 54. Sharma G. K., Ahuja G. S., Wiedmann M., Osann K. E., Su E., Heidari A. E., Jing J. C., Qu Y. Q., Lazarow F., Wang A., Chou L., Uy C. C., Dhar V., Cleary J. P., Pham N., Huoh K., Chen Z. P., Wong B. J. F., “Long-Range Optical Coherence Tomography of the Neonatal Upper Airway for Early Diagnosis of Intubation-related Subglottic Injury,” Am. J. Respir. Crit. Care Med. 192(12), 1504–1513 (2015). 10.1164/rccm.201501-0053OC

- 55. Chen Y., Aguirre A. D., Hsiung P. L., Desai S., Herz P. R., Pedrosa M., Huang Q., Figueiredo M., Huang S. W., Koski A., Schmitt J. M., Fujimoto J. G., Mashimo H., “Ultrahigh resolution optical coherence tomography of Barrett's esophagus: preliminary descriptive clinical study correlating images with histology,” Endoscopy 39(07), 599–605 (2007). 10.1055/s-2007-966648

- 56. Sergeev A. M., Gelikonov V. M., Gelikonov G. V., Feldchtein F. I., Kuranov R. V., Gladkova N. D., Shakhova N. M., Snopova L. B., Shakhov A. V., Kuznetzova I. A., Denisenko A. N., Pochinko V. V., Chumakov Y. P., Streltzova O. S., “In vivo endoscopic OCT imaging of precancer and cancer states of human mucosa,” Opt. Express 1(13), 432–440 (1997). 10.1364/OE.1.000432

- 57. Bouma B. E., Tearney G. J., Compton C. C., Nishioka N. S., “High-resolution imaging of the human esophagus and stomach in vivo using optical coherence tomography,” Gastrointest Endosc 51(4), 467–474 (2000). 10.1016/S0016-5107(00)70449-4

- 58. Gora M. J., Sauk J. S., Carruth R. W., Gallagher K. A., Suter M. J., Nishioka N. S., Kava L. E., Rosenberg M., Bouma B. E., Tearney G. J., “Tethered capsule endomicroscopy enables less invasive imaging of gastrointestinal tract microstructure,” Nat Med 19(2), 238–240 (2013). 10.1038/nm.3052

- 59. Poneros J. M., Brand S., Bouma B. E., Tearney G. J., Compton C. C., Nishioka N. S., “Diagnosis of specialized intestinal metaplasia by optical coherence tomography,” Gastroenterology 120(1), 7–12 (2001). 10.1053/gast.2001.20911

- 60. Liang K., Traverso G., Lee H. C., Ahsen O. O., Wang Z., Potsaid B., Giacomelli M., Jayaraman V., Barman R., Cable A., Mashimo H., Langer R., Fujimoto J. G., “Ultrahigh speed en face OCT capsule for endoscopic imaging,” Biomed. Opt. Express 6(4), 1146–1163 (2015). 10.1364/BOE.6.001146

- 61. Isenberg G., Sivak M. V., Chak A., Wong R. C. K., Willis J. E., Wolf B., Rowland D. Y., Das A., Rollins A., “Accuracy of endoscopic optical coherence tomography in the detection of dysplasia in Barrett's esophagus: a prospective, double-blinded study,” Gastrointest. Endosc. 62(6), 825–831 (2005). 10.1016/j.gie.2005.07.048

- 62. Winkler A. M., Rice P. F., Drezek R. A., Barton J. K., “Quantitative tool for rapid disease mapping using optical coherence tomography images of azoxymethane-treated mouse colon,” J. Biomed. Opt. 15(4), 041512 (2010). 10.1117/1.3446674

- 63. Jäckle S., Gladkova N., Feldchtein F., Terentieva A., Brand B., Gelikonov G., Gelikonov V., Sergeev A., Fritscher-Ravens A., Freund J., “In vivo endoscopic optical coherence tomography of the human gastrointestinal tract-toward optical biopsy,” Endoscopy 32(10), 743–749 (2000). 10.1055/s-2000-7711

- 64. Pfau P. R., Sivak M. V., Chak A., Kinnard M., Wong R. C. K., Isenberg G. A., Izatt J. A., Rollins A., Westphal V., “Criteria for the diagnosis of dysplasia by endoscopic optical coherence tomography,” Gastrointest. Endosc. 58(2), 196–202 (2003). 10.1067/mge.2003.344

- 65. Tang Q. G., Liang C.-P., Wu K., Sandler A., Chen Y., “Real-time epidural anesthesia guidance using optical coherence tomography needle probe,” Quant. Imaging Med. Surg. 5(1), 118–124 (2015). 10.3978/j.issn.2223-4292.2014.11.28

- 66. Wang C., Gan M., Zhang M., Li D. Y., “Adversarial convolutional network for esophageal tissue segmentation on OCT images,” Biomed. Opt. Express 11(6), 3095–3110 (2020). 10.1364/BOE.394715

- 67. Schmidt-Erfurth U., Sadeghipour A., Gerendas B. S., Waldstein S. M., Bogunovic H., “Artificial intelligence in retina,” Prog. Retin. Eye Res. 67, 1–29 (2018). 10.1016/j.preteyeres.2018.07.004

- 68. Fang L. Y., Wang C., Li S. T., Rabbani H., Chen X. D., Liu Z. M., “Attention to lesion: lesion-aware convolutional neural network for retinal optical coherence tomography image classification,” IEEE Trans. Med. Imaging 38(8), 1959–1970 (2019). 10.1109/TMI.2019.2898414

- 69. Fang L. Y., He N. J., Li S. T., Ghamisi P., Benediktsson J. A., “Extinction profiles fusion for hyperspectral images classification,” IEEE Trans. Geosci. Remote Sensing 56(3), 1803–1815 (2018). 10.1109/TGRS.2017.2768479

- 70. Date R. C., Jesudasen S. J., Weng C. Y., “Applications of deep learning and artificial intelligence in retina,” Int. Ophthalmol. Clin. 59(1), 39–57 (2019). 10.1097/IIO.0000000000000246

- 71. Dou Q., Chen H., Yu L. Q., Zhao L., Qin J., Wang D. F., Mok V. C. T., Shi L., Heng P. A., “Automatic detection of cerebral microbleeds from MR images via 3D convolutional neural networks,” IEEE Trans. Med. Imaging 35(5), 1182–1195 (2016). 10.1109/TMI.2016.2528129

- 72. Pereira S., Pinto A., Alves V., Silva C. A., “Brain tumor segmentation using convolutional neural networks in MRI images,” IEEE Trans. Med. Imaging 35(5), 1240–1251 (2016). 10.1109/TMI.2016.2538465

- 73. van Grinsven M. J. J. P., van Ginneken B., Hoyng C. B., Theelen T., Sanchez C. I., “Fast convolutional neural network training using selective data sampling: application to hemorrhage detection in color fundus images,” IEEE Trans. Med. Imaging 35(5), 1273–1284 (2016). 10.1109/TMI.2016.2526689

- 74. Raschka S., “Model evaluation, model selection, and algorithm selection in machine learning,” arXiv preprint arXiv:1811.12808 (2018).

- 75. Varma S., Simon R., “Bias in error estimation when using cross-validation for model selection,” BMC Bioinformatics 7(1), 91 (2006). 10.1186/1471-2105-7-91

- 76. Iizuka N., Oka M., Yamada-Okabe H., Nishida M., Maeda Y., Mori N., Takao T., Tamesa T., Tangoku A., Tabuchi H., Hamada K., Nakayama H., Ishitsuka H., Miyamoto T., Hirabayashi A., Uchimura S., Hamamoto Y., “Oligonucleotide microarray for prediction of early intrahepatic recurrence of hepatocellular carcinoma after curative resection,” The Lancet 361(9361), 923–929 (2003). 10.1016/S0140-6736(03)12775-4

- 77. He K., Zhang X., Ren S., Sun J., “Deep residual learning for image recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2016), pp. 770–778.

- 78. Sandler M., Howard A., Zhu M., Zhmoginov A., Chen L.-C., “Mobilenetv2: Inverted residuals and linear bottlenecks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2018), pp. 4510–4520.

- 79. Abadi M., Barham P., Chen J., Chen Z., Davis A., Dean J., Devin M., Ghemawat S., Irving G., Isard M., “Tensorflow: A system for large-scale machine learning,” in 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16) (2016), pp. 265–283.

- 80. Deng J., Dong W., Socher R., Li L., Kai L., Li F.-F., “ImageNet: A large-scale hierarchical image database,” in 2009 IEEE Conference on Computer Vision and Pattern Recognition (2009), pp. 248–255.

- 81. Chollet F., Keras, 2015.

- 82. Géron A., Hands-on Machine Learning with Scikit-learn, Keras, and Tensorflow: Concepts, Tools, and Techniques to Build Intelligent Systems (O'Reilly Media, 2019).

- 83. Krstajic D., Buturovic L. J., Leahy D. E., Thomas S., “Cross-validation pitfalls when selecting and assessing regression and classification models,” J. Cheminform. 6(1), 10 (2014). 10.1186/1758-2946-6-10

- 84. Selvaraju R. R., Cogswell M., Das A., Vedantam R., Parikh D., Batra D., “Grad-cam: Visual explanations from deep networks via gradient-based localization,” in Proceedings of the IEEE International Conference on Computer Vision (2017), pp. 618–626.

- 85. Chollet F., Deep Learning with Python (Manning, 2018), Vol. 361.

- 86. Chen Y., Andrews P. M., Aguirre A. D., Schmitt J. M., Fujimoto J. G., “High-resolution three-dimensional optical coherence tomography imaging of kidney microanatomy ex vivo,” J. Biomed. Opt. 12(3), 034008 (2007). 10.1117/1.2736421

- 87. Lote C. J., Principles of Renal Physiology, 4th ed. (Kluwer Academic Publishers, 2000), x, 203 pp.

- 88. Dyer R. B., Regan J. D., Kavanagh P. V., Khatod E. G., Chen M. Y., Zagoria R. J., “Percutaneous nephrostomy with extensions of the technique: Step by step,” Radiographics 22(3), 503–525 (2002). 10.1148/radiographics.22.3.g02ma19503

- 89. Kruthika K. R., Rajeswari, Maheshappa H. D., “CBIR system using Capsule Networks and 3D CNN for Alzheimer's disease diagnosis,” Inf. Med. Unlocked 14, 59–68 (2019). 10.1016/j.imu.2018.12.001