Abstract

The early detection of COVID-19 is challenging because the disease spreads rapidly and people fear being tested. Speech-based detection is one of the safest tools for this purpose because the voice of a suspected patient can be recorded easily. Mel Frequency Cepstral Coefficient (MFCC) analysis is one of the oldest yet still effective tools for analyzing speech signals. Its performance depends mainly on the conversion between the linear frequency scale and the perceptual frequency scale, and on the frequency range of the filters used. Traditionally, in speech recognition, these values are fixed. However, the characteristics of speech signals vary from disease to disease. COVID-19 detection relies mainly on cough sounds, whose bandwidth and properties differ considerably from those of complete speech. Exploiting these properties can improve the efficiency of COVID-19 detection. To this end, the frequency range and the frequency conversion scale are suitably optimized. To further improve detection accuracy, speech enhancement is carried out before feature extraction. By combining these two concepts, a new feature called the COVID-19 Coefficient (C-19CC) is developed in this paper. Finally, the performance of these features is compared.

Keywords: Bio-inspired computing, COVID19, Speech signal

1. Introduction

Coronavirus disease 2019 (COVID-19), which causes an acute respiratory syndrome, is a deadly viral infection. It was first reported in Wuhan, China, in 2019 and has since affected the whole world [1]. According to the World Health Organization, more than a hundred million people had been infected by 7 March 2021, and more than 2.5 million deaths had been reported [2]. Social distancing of 1.6 m to 3 m is recommended to control the rapid spread of COVID-19 [3]. Medical practitioners have observed that the fear of stigma, rather than the deadly nature of the virus, keeps people from visiting medical laboratories for testing [4]. Under such circumstances, developing an appropriate method for the early detection of this disease has become a major challenge. Speech-based detection of COVID-19 is a simpler and safer approach for this purpose [5].

1.1. Literature review

In this section, the literature review is carried out in two parts: speech-based COVID-19 detection and speech recognition using MFCC features.

1.1.1. Review on COVID-19 detection using speech signals

Speech analysis is an important method for the detection of Parkinson's disease [6], Alzheimer's disease, and asthma [7]. In the recent past, attempts have been made at speech-based COVID-19 analysis and diagnosis. These works are summarized here in terms of databases, feature extraction methods, and performance. A crowdsourced dataset of respiratory sounds has been prepared using cough and breathing sounds for detecting COVID-19. Several audio features, such as speech time duration, onset, tempo, period, RMS energy, spectral centroid, roll-off frequency, zero-crossing, and MFCC, have been used as inputs to classification methods such as Logistic Regression, Gradient Boosting Trees, and Support Vector Machines (SVM). A maximum Receiver Operating Characteristic Area Under Curve (AUC) of 80% is reported [8].

A review of Artificial Intelligence based methods used for COVID-19 detection is presented in [9]. It describes a multi-modal approach that uses audio, text, and images to achieve better detection results. The log Mel spectra of a speech signal are mapped to the respiratory sensor readings to train neural network-based models. A sensitivity of 91.2% for breathing-based detection and a mean absolute error of 1.01 breaths per minute have been reported using the proposed methods. In another paper, the health condition of COVID-19 patients is categorized into four types with respect to the severity of illness, sleep quality, fatigue, and anxiety [10]. The audio dataset was collected from twenty female and thirty-two male COVID-19 patients at two hospitals in Wuhan, China, during March 20 to 26, 2020. Two acoustic feature sets, from the computational paralinguistics challenge and the extended Geneva minimalistic acoustic parameter set, have been used as inputs to an SVM to achieve an average classification accuracy of 69%.

1.1.2. Review on optimization in MFCC features

Cepstral analysis is one of the oldest and most popular signal analysis methods, with applications in speech signals and mechanical systems [11]. MFCC-based features are very popular and effective for speech recognition, music information retrieval, speech evaluation, etc. In [12], MFCC features are optimized by reducing the feature space using Linear Discriminant Analysis Fisher's F-ratio. The use of these features achieves faster convergence of the ANN model and improved recognition accuracy at different SNR levels. Source cell-phone recognition is carried out by optimizing different cepstral coefficients based on the Mel, linear, and Bark frequency scales [13]. Using the minimum and maximum frequencies of MFCC together with cepstral variance normalization has raised the identification rate to 96.85%. With the objective of minimizing the dissimilarity between the perceptual and feature domain distortions, modified MFCC-based features are proposed in [14]. These simple feature vectors improve speech recognition performance in noisy as well as clean conditions. In another paper, the mean and standard deviation of the feature space, including MFCC, are optimized using a genetic algorithm and differential evolution [15]. These improved features are used for Punjabi-language speaker recognition.

Different frequency bands have been analyzed using the F-ratio method for speech unit classification. The analysis shows that the 1 kHz to 3 kHz frequency range should be given more emphasis, and an optimization of features using the F-ratio scale is accordingly proposed in [16]. A significant reduction in the sentence error rate is reported with this feature optimization technique. The central and side frequency parameters of MFCC filter banks have been optimized using particle swarm optimization and a genetic algorithm [17]. These optimized features are then applied to Hindi vowel recognition using Hidden Markov models and multilayer perceptrons under different noise conditions. A hybrid approach is proposed in [18] that combines gammatone and mel frequency cepstral coefficients with PCA and the multi-taper method, using differential evolution and Hidden Markov model (HMM) based classifiers for robust recognition of Punjabi speech under different noise conditions. The frequency range of the MFCC filter banks is optimized for emotion recognition using two databases [19]. This method has improved the speaker-independent emotion recognition accuracy by 15% for the Assamese database and 25% for the Berlin database.

1.2. Motivation

The literature review shows that early detection of COVID-19 from speech data is a challenging and timely research area [20], [21], [22]. For remote, online COVID-19 detection from speech, patients have to use mobile applications in noisy real-life environments. This direction has not been fully explored, particularly with regard to selecting proper audio features of COVID-19 patients for detection. The signals used for COVID-19 analysis are mainly breathing and cough sounds, which differ considerably from speech comprising complete sentences. MFCC features have been used for COVID-19 detection in [23], but they are used directly, without any modification or improvement, and hence the detection performance is poor. Thus, in the present COVID-19 scenario, there is a pressing need for a more effective tool for detection from a safe distance using remotely recorded speech. Hence, improved features that are expected to raise the detection accuracy of the classifier need to be identified. The focus of the current investigation is to develop such features from the speech data so that the classifier yields higher detection accuracy. In addition, speech enhancement is required for the extraction of proper audio features [24]. It is further observed that the speech data available on online platforms exhibit a large class imbalance [8], [23]. This class imbalance affects the overall training and testing performance of classifiers and hence needs to be addressed. These problems have been identified during the literature review and are taken up in this paper.

Different techniques are used to achieve higher accuracy in classification and prediction tasks. Among them, deep learning (DL) based techniques are preferred if the data size is large and feature extraction and selection are difficult [25], [26]. Machine learning (ML) based methods use extracted features, whereas a CNN extracts the appropriate features through a series of convolution operations. Further, DL methods require more time for classification [27], [28], [29]. In the present problem, two speech datasets [8], [23] with comparatively little data are available, and feature optimization is the target. Although cepstral analysis is an old method, it remains popular and effective, and many recent articles still employ it [30], [31]. Therefore, for the present problem, optimized cepstral features are obtained by a bio-inspired technique and used for classification.

1.3. Research objectives

Based on the motivation arising from the literature review, the research objectives of the paper are:

1. To analyze the cepstral features used in speech recognition and to suitably optimize the conversion scale in the frequency domain and the frequency range of the filter banks using a bio-inspired technique to achieve better COVID-19 detection.
2. To identify the best possible sound patterns during coughing, breathing, and voiced sounds to detect COVID-19.
3. To employ the adaptive synthetic sampling approach for achieving efficient training for class imbalance in the database and to facilitate proper classification using SVM.
4. To compare and analyze the detection performance of the proposed cepstral feature-based classifier with that obtained from other reported results.

1.4. Organization of the paper

Based on the research objectives, the paper is organized as follows. The introduction, literature review, motivation, and research objectives are presented in Section 1. In Section 2, a detailed review of the related work on the theme of the problem is presented. The salient characteristics of the data obtained from the standard COVID-19 databases are provided in Section 3. In Section 4, the proposed methodology is presented in detail. The simulation-based experiments using two standard data sets, the discussion of results, and the contribution of the paper are presented in Section 5. Finally, the conclusion of the paper and the scope for future work are dealt with in Section 6.

2. Related works

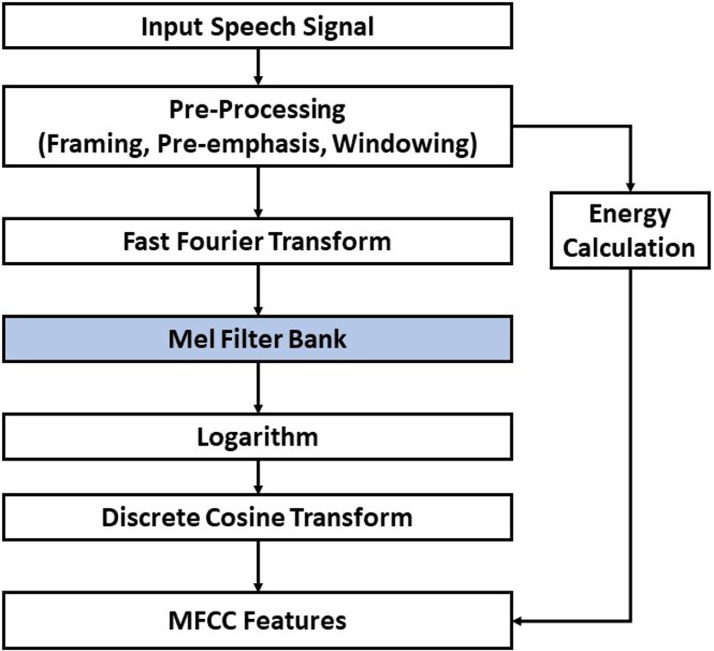

Cepstral analysis is one of the oldest and most popular signal analysis methods, used in applications such as speech signal processing, mechanical engineering problems, and the analysis of multiple-input multiple-output systems [11]. MFCCs are very popular and effective features in speech recognition, music information retrieval, and speech evaluation. They are normally calculated using the following steps [32].

- Step-1: Apply pre-processing such as framing and windowing to the input signal.
- Step-2: Calculate the energy of the frame.
- Step-3: Find the Discrete Fourier Transform using the FFT method.
- Step-4: Apply the Mel filter bank by mapping the power spectrum onto the mel scale using triangular overlapping windows.
- Step-5: Find the logarithm of the output of Step-4.
- Step-6: Apply the Discrete Cosine Transform.
- Step-7: Combine the energy and the other features from Step-6 to get the MFCC features.
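The seven steps can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' implementation: the frame length, hop size, Hamming window, number of coefficients, and the function names (`frame_signal`, `dct_ii`, `cepstral_features`) are all assumptions. The filter bank matrix `fbank` is passed in so that any perceptual scale can be plugged into Step-4.

```python
import numpy as np

def frame_signal(x, frame_len, hop):
    """Step-1: split x into overlapping frames and apply a Hamming window."""
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return x[idx] * np.hamming(frame_len)

def dct_ii(v, n_out):
    """Step-6: unnormalised DCT-II along the last axis, first n_out terms."""
    n = v.shape[-1]
    k = np.arange(n_out)[:, None]
    basis = np.cos(np.pi * k * (2 * np.arange(n)[None, :] + 1) / (2 * n))
    return v @ basis.T

def cepstral_features(x, fbank, frame_len=400, hop=160, n_ceps=12):
    """Return one row per frame: [log-energy, c1, ..., c_n_ceps]."""
    frames = frame_signal(np.asarray(x, dtype=float), frame_len, hop)
    log_energy = np.log(np.sum(frames ** 2, axis=1) + 1e-12)    # Step-2
    n_fft = 2 * (fbank.shape[1] - 1)                            # FFT size implied by fbank
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2   # Step-3
    band_energy = power @ fbank.T                               # Step-4
    log_band = np.log(band_energy + 1e-12)                      # Step-5
    ceps = dct_ii(log_band, n_ceps + 1)[:, 1:]                  # Step-6 (c0 dropped)
    return np.hstack([log_energy[:, None], ceps])               # Step-7

# Usage with stand-in data (both inputs are placeholders, not real speech or filters)
rng = np.random.default_rng(0)
x = rng.standard_normal(16000)            # 1 s of noise at 16 kHz
fbank = rng.random((26, 257))             # 26 filters for a 512-point FFT
features = cepstral_features(x, fbank)    # shape: (number of frames, 13)
```

Dropping the zeroth cepstral coefficient and replacing it with the frame log-energy is one common convention; other toolkits keep c0 instead.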

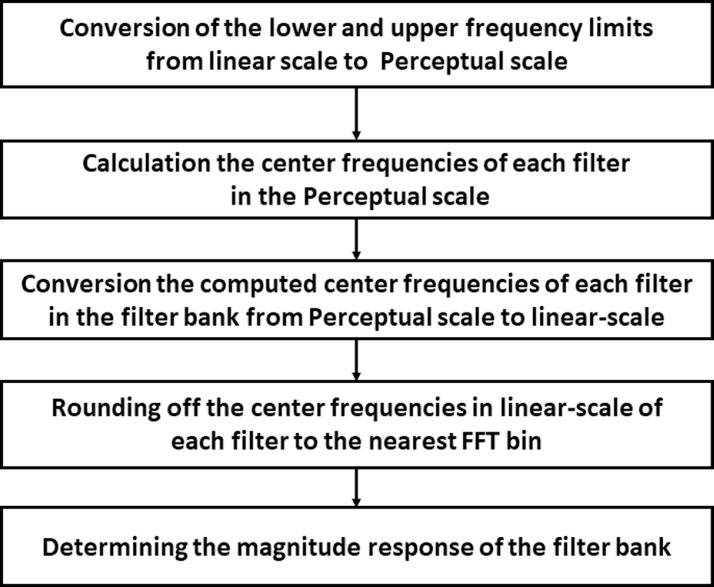

These steps are also shown in Fig. 1. Step-4 deals with the use of the Mel filter bank. Several variations of this filter bank are reported in the speech processing literature, such as triangular filter banks using the mel scale, human factor scale, Bark scale, and ERB scale, and the Gammatone filter bank. The cepstral coefficients are named according to the filter bank used. The basic steps of the filter bank design are shown in Fig. 2 [33]. Here, the perceptual scale means a frequency scale based on the human perception of sound, such as the mel scale. The four types of features are discussed in detail below.

Fig. 1. Steps for the MFCC feature extraction.

Fig. 2. Steps for the Filter bank computation.

2.1. Triangular filter bank using mel-scale (TFBCC-M features)

The steps involved in the design of the filter bank for extraction of TFBCC-M features [33] are explained in this section. The steps are as follows:

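- Convert the linear-scale frequency of the DFT ($f$) to the mel scale ($f_{mel}$) using Equation (1).

$$f_{mel} = 2595 \log_{10}\left(1 + \frac{f}{700}\right) \tag{1}$$

- The linear-scale lower and higher cut-off frequencies ($f_l$ and $f_h$) are converted into the mel scale ($fm_l$ and $fm_h$), respectively. The center frequencies of the filters are then calculated using Equation (2).

| (2) |

where p = 1, 2, 3,..., P-1. P is the number of Mel filters.

- The center frequency of the pth filter band is converted back to the linear scale using Equation (3).

$$f_c(p) = 700\left(10^{fm_c(p)/2595} - 1\right) \tag{3}$$

where $fm_c(p)$ is the center frequency of the pth filter in the mel scale and $f_c(p)$ is the same frequency in the linear scale.

- The magnitude response of each filter in the mel filter bank is calculated using Equation (4).

$$H_p(k) = \begin{cases} 0, & k < \hat{f}(p-1) \\ \dfrac{k - \hat{f}(p-1)}{\hat{f}(p) - \hat{f}(p-1)}, & \hat{f}(p-1) \le k \le \hat{f}(p) \\ \dfrac{\hat{f}(p+1) - k}{\hat{f}(p+1) - \hat{f}(p)}, & \hat{f}(p) < k \le \hat{f}(p+1) \\ 0, & k > \hat{f}(p+1) \end{cases} \tag{4}$$

where $k$ is the frequency-domain index of the FFT, $\hat{f}(p)$ is the rounded center frequency of the pth filter, calculated using Equation (5), and $f_s$ is the sampling frequency of the speech signal.

| (5) |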
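A triangular mel filter bank following these steps can be sketched as below. The exact forms of the center-frequency spacing and the rounding rule are assumptions borrowed from common MFCC implementations: the sketch spaces P + 2 points equally on the mel scale between the two cut-off frequencies and maps each to an FFT bin with `floor((N + 1) * f / fs)`. The function and parameter names are illustrative.

```python
import numpy as np

def hz_to_mel(f):
    """Linear frequency (Hz) to mel, standard 2595*log10(1 + f/700) form."""
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filter_bank(n_filters, n_fft, fs, f_low, f_high):
    """Return an (n_filters, n_fft // 2 + 1) matrix of triangular filters."""
    # Assumed spacing: band edges plus n_filters centres, equally spaced in mel
    mel_pts = np.linspace(hz_to_mel(f_low), hz_to_mel(f_high), n_filters + 2)
    hz_pts = mel_to_hz(mel_pts)
    # Assumed rounding rule from Hz to FFT bin index
    bins = np.floor((n_fft + 1) * hz_pts / fs).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for p in range(1, n_filters + 1):
        left, centre, right = bins[p - 1], bins[p], bins[p + 1]
        for k in range(left, centre):        # rising edge
            fbank[p - 1, k] = (k - left) / (centre - left)
        for k in range(centre, right):       # peak and falling edge
            fbank[p - 1, k] = (right - k) / (right - centre)
    return fbank

# Usage: 26 filters covering 0 Hz to 8 kHz for 16 kHz audio and a 512-point FFT
fbank = mel_filter_bank(26, 512, 16000, 0.0, 8000.0)
```

Changing `f_low` and `f_high` moves every filter, which is why the cut-off frequencies are natural candidates for optimization.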

2.2. Triangular filter bank using bark scale (TFBCC-B features)

The calculation of TFBCC-B features is similar to that of the TFBCC-M features, with only one difference [33]. Instead of converting between the linear-scale frequency of the DFT and the mel scale (Equations (1) and (3)), the conversion is between the linear-scale frequency of the DFT ($f$) and the Bark scale ($f_{bark}$), as given in Equations (6) and (7).

| (6) |

| (7) |

where is the center frequency in the Bark scale.

2.3. Triangular filter bank using human factor scale (TFBCC-H features)

The design of the filter bank for TFBCC-H features differs from that for TFBCC-M features in the calculation of the critical bandwidth, which uses the ERB approximation [34].

- The center frequencies of the first (p=1) and last (p=P) filters are computed as mentioned in Equation (8)

| (8) |

where p is the index of filter and P is the maximum number of filters. For the first filter

The is obtained using Equation (9) and Table 1.

| (9) |

| (10) |

- After obtaining the values of these are converted from linear-scale to mel-scale as mentioned in Equation (11), where and are the center frequencies of the first and last filter in the mel-scale computed using Equation (1).

| (11) |

- Convert the computed center frequencies of all the filters from mel-scale to linear-scale using Equation (3).
- Calculate the lower and upper limit of the frequency of each of the filters in the filter bank using Equations (12), (13) and (14).

| (12) |

| (13) |

| (14) |

- Round off the center frequencies and calculate the magnitude response of each filter, as is done for TFBCC-M features, using Equations (4) and (5).

Table 1. Variables used in TFBCC-H features calculation.

| parameter | value |

|---|---|

| 0.00000623 | |

| 0.09339 | |

| 28.52 | |

| 0 (for the first filter) | |

| 1 (for the Pth filter) | |

| f | f(for the first filter) |

| f | f (for the Pth filter) |

2.4. Triangular filter bank using ERB scale (TFBCC-E features)

The calculation of TFBCC-E features is similar to that of TFBCC-H features, with only one change [33]. Instead of converting between the linear-scale frequency of the DFT and the mel scale (Equations (11), (1) and (3)), the conversion is made between the linear-scale frequency of the DFT ($f$) and the ERB scale ($f_{erb}$), as given in Equations (15) and (16).

| (15) |

| (16) |

2.5. Parameters for optimization

The detailed review of the existing cepstral coefficients shows that two parameters, the conversion of frequency from the linear scale to the perceptual scale and the selection of the cut-off frequencies, are the determining factors for the performance of cepstral analysis. This issue is addressed in the literature [13], [14], [15], [16], [17], [18], [19]. It is investigated further in Section 5.2.

3. Database preparation

Two speech databases are used in the simulation-based experiments carried out in Section 5. They are briefly described in this section.

3.1. Coswara database

The Coswara database (DB-1) was developed by the Indian Institute of Science, India, in 2020 [23]. A web application is used to collect audio samples for diagnosing COVID-19 from breath, cough, and voice sounds. The audio files are grouped by respiratory indicator: shallow and deep breathing, shallow and heavy cough, sustained pronunciation of the vowels /a/, /e/, and /o/, and normal and fast counting. The database also contains additional information on age, gender, demography, existing health history, and chronic health preconditions. Each volunteer has contributed multiple sound clips for multiple segments. The maximum duration of a sound clip for an individual segment is approximately 15 seconds, with a sampling frequency of 44.1 kHz or 48 kHz. The data set covers 570 participants, each of whom has contributed 9 audio files in the various categories. In total, it comprises 3470 clean and 1055 noisy sound samples; the remaining samples are highly degraded.

3.2. Crowdsourced respiratory sound data

The Crowdsourced Respiratory Sound Data (DB-2) was developed by the University of Cambridge, UK, in 2020 [8]. An Android application and a web-based application are used to capture audio samples for detecting COVID-19 from breath and cough sounds. To capture the required sounds, volunteers are prompted to cough and breathe a couple of times and to read phrases. Finally, the users are asked whether they have clinically tested positive for COVID-19. Since the project uses two applications to collect the data, the words 'web' and 'android' are used to distinguish between the recorded audio samples. In the audio file names, 'no cough' and 'with cough' designate the volunteer's self-reported cough condition (dry or wet cough), while 'nosymp' means the volunteer showed no symptoms at that time. The selected .wav audio files are grouped under the categories cough, breath, and asthma. The duration of the sound clips recorded by the Android application varies from approximately 8 seconds to 20 seconds, with a maximum sampling frequency of 48 kHz. Similarly, the duration of the sound clips recorded by the web application varies from approximately 11 seconds to 24 seconds, with a maximum sampling frequency of 48 kHz. The dataset consists of 4352 unique users from the web app and 2261 unique users from the Android app. Of these, a total of 235 users are declared COVID-19 positive.

In the two databases, the signals are recorded at sampling rates of 44.1 kHz and 48 kHz. According to the literature [35], most of the latent features lie within an 8 kHz bandwidth. Hence, the speech signals are pre-processed by downsampling them to 16 kHz.
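The downsampling step can be illustrated with a Fourier-domain resampler. The paper does not state which resampling routine is used, so this method and the name `resample_fourier` are assumptions for illustration only. The spectrum is truncated at the new Nyquist frequency (8 kHz for a 16 kHz target), which also removes the content that would otherwise alias.

```python
import numpy as np

def resample_fourier(x, fs_in, fs_out=16000):
    """Downsample x from fs_in to fs_out by truncating its spectrum."""
    x = np.asarray(x, dtype=float)
    n_in = len(x)
    n_out = int(round(n_in * fs_out / fs_in))
    spectrum = np.fft.rfft(x)
    kept = spectrum[: n_out // 2 + 1]          # bins up to the new Nyquist frequency
    # irfft normalises by n_out, so rescale to preserve the signal amplitude
    return np.fft.irfft(kept, n=n_out) * (n_out / n_in)

# Usage: a 1 s, 1 kHz tone recorded at 44.1 kHz becomes 16000 samples at 16 kHz
t = np.arange(44100) / 44100.0
tone_16k = resample_fourier(np.sin(2 * np.pi * 1000.0 * t), 44100)
```

This approach treats the clip as periodic, so a polyphase filter may be preferable for long recordings or streaming use.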

4. Proposed methodology

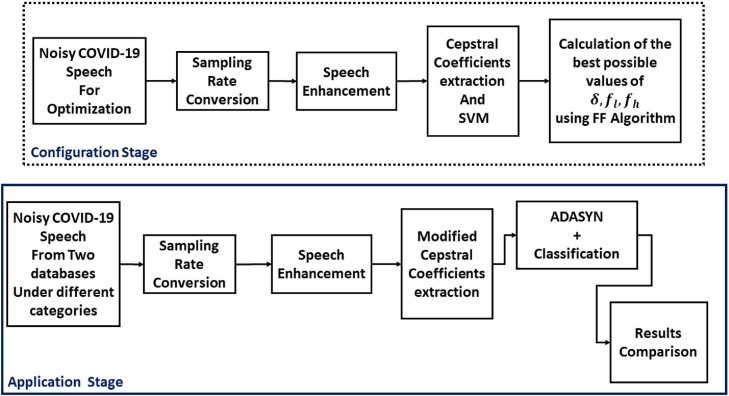

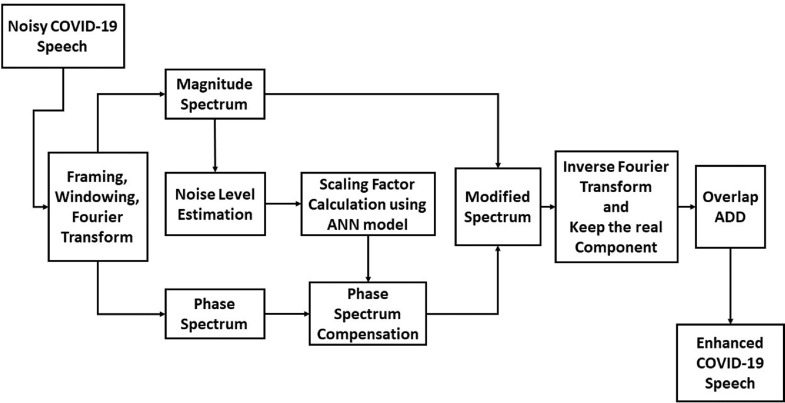

The details of the proposed method are discussed in this section. The methodology is depicted as a block diagram (Fig. 3), and the need for and operation of each block are explained. The algorithm is divided into two parts: configuration and application [36]. The configuration part deals with the preparation of a clean, balanced dataset, and the application part covers the extraction and use of the proposed C-19CC features. In the configuration stage, the two available datasets are analysed and converted into a labeled, balanced dataset using the Adaptive Synthetic Sampling Approach for Imbalanced Learning [37]. A sampling rate conversion is applied to bring the dataset to a single, uniform sampling rate. Subsequently, the speech enhancement algorithm is employed to reduce the noise present in the data. Also in the configuration stage, the best possible values of the cepstral feature parameters are calculated from the pre-processed dataset. Finally, the previously configured set of C-19CC features is extracted from the speech databases, and the classifier is trained using these features to identify the appropriate class.

Fig. 3. Block Diagram of Proposed detection model of COVID-19.

4.1. Fire-Fly optimization algorithm

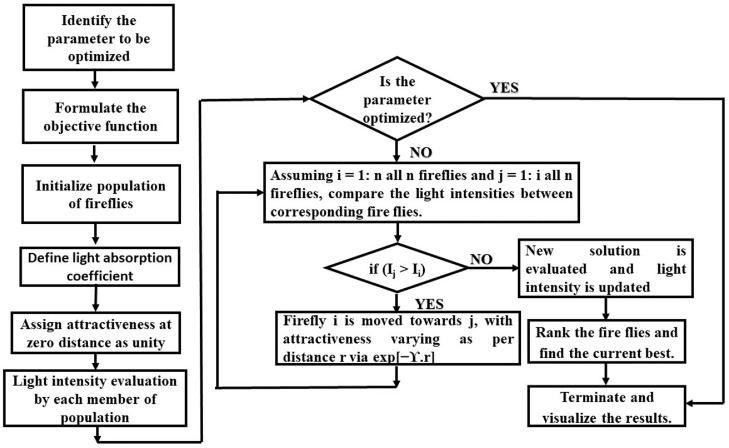

Based on the flashing pattern of fireflies, an efficient optimization algorithm known as the Firefly (FF) algorithm has been developed in the last decade. It shares the advantages of swarm intelligence and has additional advantages over standard swarm intelligence based algorithms owing to its automatic subdivision and its ability to deal with multi-modality [38]. This section briefly discusses the operation and implementation of the FF algorithm.

The basic concept of the FF algorithm is based on the attraction and attacking principle of the firefly species. Fireflies produce short, rhythmic flashes, and the attractiveness of a firefly is determined by its brightness (light intensity). This principle is modeled as the objective function. The attractiveness is governed by the variation of light intensity with distance and by the absorption coefficient, as expressed in Equation (17).

$$I(r) = \frac{I_s}{r^2}, \qquad I = I_0 e^{-\gamma r} \tag{17}$$

The parameters used in the FF algorithm are explained in Table 2.

Table 2. Parameters used in the FF Algorithm.

| Name of Parameter | Details |
|---|---|
| $I$ | light intensity at any particular instant |
| $I_s$ | light intensity at the source |
| $I_0$ | original light intensity used in the calculation of absorption effect |
| $\gamma$ | light absorption coefficient |
| $r$ | distance |
| $\beta$ | attractiveness |
| $\beta_0$ | attractiveness at distance r = 0 |
| $\alpha$ | randomization parameter |
| $\epsilon_i$ | vector of uniformly distributed zero-mean random numbers in the range of -0.5 to 0.5 |

By combining the two factors of Equation (17), the instantaneous intensity can be expressed as Equation (18).

$$I(r) = I_0 e^{-\gamma r^2} \tag{18}$$

Similarly, the attractiveness ($\beta$) is represented as Equation (19).

$$\beta = \beta_0 e^{-\gamma r^2} \tag{19}$$

The distance between any two fireflies $i$ and $j$ at positions $x_i$ and $x_j$ is computed as the Cartesian distance given in Equation (20).

$$r_{ij} = \lVert x_i - x_j \rVert = \sqrt{\sum_{k=1}^{D} (x_{i,k} - x_{j,k})^2} \tag{20}$$

where $x_{i,k}$ is the kth component of the spatial coordinate $x_i$ of the ith firefly and $D$ is the number of dimensions. When the ith firefly is attracted by a brighter (more attractive) firefly $j$, its movement is expressed as in Equation (21), where the second term denotes the attraction and the third term inserts randomization.

$$x_i = x_i + \beta_0 e^{-\gamma r_{ij}^2} (x_j - x_i) + \alpha\,\epsilon_i \tag{21}$$

For most cases, $\beta_0 = 1$ and $\alpha \in [0, 1]$. The speed of convergence and the overall effectiveness of the FF algorithm depend on the parameter $\gamma$, which characterizes the variation of the attractiveness. The value of $\gamma$ varies from 0.1 to 10.

The flow chart of the FF algorithm is shown in Fig. 4. In the FF algorithm, the whole population can easily be subdivided into subgroups based on the attraction principle. These subgroups help to find the local optimum solutions and, correspondingly, the global optimum. This subdivision allows the fireflies to find the optima simultaneously if the population size is sufficiently large [39]. It has also been shown that the FF algorithm works better than the traditional Particle Swarm Optimization and Genetic Algorithm in terms of both efficiency and success rate [39]. Recently, several speech processing based optimizations have been implemented successfully using FF algorithms [40]. Hence, the FF algorithm has been chosen for finding the best possible cepstral features to be used for the COVID-19 classification.

Fig. 4. Flow Chart of Fire Fly Algorithm.
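A compact sketch of the firefly update loop is given below for a generic cost function that is to be minimized, so "brighter" means lower cost. It is not the authors' MATLAB code. The population size, iteration count, default values of `beta0`, `gamma` and `alpha`, and the clipping of positions to the search box are assumptions chosen for illustration.

```python
import numpy as np

def firefly_minimize(cost, lower, upper, n_fireflies=15, n_iter=50,
                     beta0=1.0, gamma=1.0, alpha=0.2, seed=0):
    """Minimise `cost` over a box; returns (best_x, best_cost, history)."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size
    x = lower + (upper - lower) * rng.random((n_fireflies, dim))
    f = np.array([cost(xi) for xi in x])
    best = int(np.argmin(f))
    best_x, best_f = x[best].copy(), float(f[best])
    history = [best_f]                       # best cost found so far, per iteration
    for _ in range(n_iter):
        for i in range(n_fireflies):
            for j in range(n_fireflies):
                if f[j] < f[i]:              # firefly j is brighter than i
                    r2 = np.sum((x[i] - x[j]) ** 2)        # squared distance r_ij^2
                    beta = beta0 * np.exp(-gamma * r2)     # attractiveness
                    eps = rng.random(dim) - 0.5            # uniform in [-0.5, 0.5)
                    moved = x[i] + beta * (x[j] - x[i]) + alpha * eps
                    x[i] = np.clip(moved, lower, upper)    # assumed bound handling
                    f[i] = cost(x[i])
                    if f[i] < best_f:
                        best_x, best_f = x[i].copy(), float(f[i])
        history.append(best_f)
    return best_x, best_f, history

# Usage on a toy problem: minimise the sum of squares in two dimensions
best_x, best_f, history = firefly_minimize(lambda v: float(np.sum(v ** 2)),
                                           [-5.0, -5.0], [5.0, 5.0])
```

Tracking the best position separately guards against a good solution being lost when a firefly moves away from it.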

4.2. Classification

Classification is an important task in the detection of COVID-19. Support Vector Machines (SVM) are simple yet effective classifiers that have lower computational complexity and provide higher classification accuracy than other non-linear classifiers [41]. The literature further shows that, for several speech-based classification tasks, the SVM with a Gaussian kernel is one of the most effective classifiers because of its good overall performance and the small number of parameters (penalty and kernel parameters) that need to be optimized [42]. Cross-validation is used to calculate the accuracy of the classifier. The input data is divided into two parts, training and testing, and the validation accuracy is calculated from the unseen testing part. In the present case, 80% of the data is used for training and the remaining 20% for testing, and a five-fold cross-validation scheme is also used. The simulation study is carried out on the MATLAB platform, and the validation accuracy is calculated using Equation (22).

| (22) |

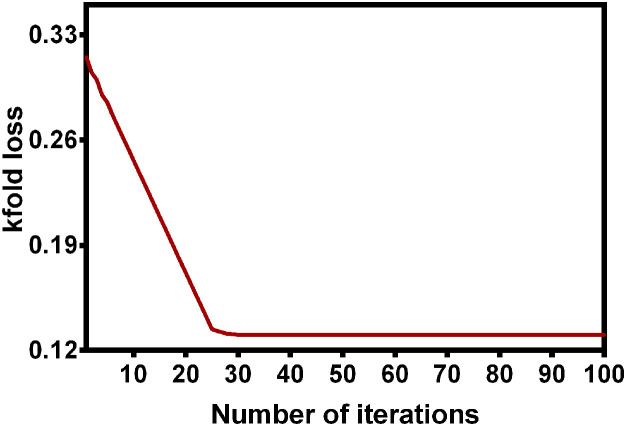

In the optimization process, the cost function is treated as a minimization problem, with the k-fold loss as the objective function. The k-fold loss ranges from 0 to 1, and the lower the value, the better the overall performance of the classifier.
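The k-fold loss can be sketched as the misclassification rate averaged over the folds. With five folds, each fold trains on 80% of the data and tests on the remaining 20%. To keep the example self-contained, a nearest-centroid classifier stands in for the Gaussian-kernel SVM; that substitution and the function names `kfold_loss` and `nearest_centroid` are assumptions of this illustration.

```python
import numpy as np

def kfold_loss(fit_predict, X, y, k=5, seed=0):
    """Mean misclassification rate over k folds (0 = perfect, 1 = all wrong)."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), k)
    errors = []
    for i in range(k):
        test_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        y_pred = fit_predict(X[train_idx], y[train_idx], X[test_idx])
        errors.append(np.mean(y_pred != y[test_idx]))
    return float(np.mean(errors))

def nearest_centroid(X_train, y_train, X_test):
    """Stand-in classifier: assign each test point to the closest class mean."""
    classes = np.unique(y_train)
    centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in classes])
    dist = np.linalg.norm(X_test[:, None, :] - centroids[None, :, :], axis=2)
    return classes[np.argmin(dist, axis=1)]

# Usage on two well-separated synthetic classes
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 0.1, (50, 2)), rng.normal(5.0, 0.1, (50, 2))])
y = np.r_[np.zeros(50, dtype=int), np.ones(50, dtype=int)]
loss = kfold_loss(nearest_centroid, X, y, k=5)
```

Any classifier exposing the same `fit_predict(X_train, y_train, X_test)` interface, including an SVM wrapper, can be passed in without changing `kfold_loss`.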

4.3. Problem formulation

The problem addressed in this paper is to calculate the optimum values of the linear-to-mel conversion factor and of the higher and lower cut-off frequencies of the filters in Equations (1), (2) and (3). These equations are rewritten here with the variables that need to be optimized. The conversion of the linear-scale frequency of the DFT ($f$) to the mel scale ($f_{mel}$) is written with a variable factor $\delta$ in Equation (23). This idea of optimizing for classification is inspired by a similar optimization in automatic speech recognition [14]. In normal MFCC calculations, the value of $\delta$ is taken as 7.

$$f_{mel} = 2595 \log_{10}\left(1 + \frac{f}{100\,\delta}\right) \tag{23}$$

Correspondingly, the conversion from the mel scale to the linear scale is also written with the variable $\delta$.

$$f = 100\,\delta\left(10^{f_{mel}/2595} - 1\right) \tag{24}$$

It is also observed from the literature that the linear-scale lower and higher cut-off frequencies ($f_l$ and $f_h$), and correspondingly the mel-scale lower and higher cut-off frequencies ($fm_l$ and $fm_h$) in Equation (2), can be chosen optimally to improve the overall classification accuracy [13].
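The effect of the variable factor on the frequency warping can be illustrated with a short sketch. It assumes that the factor replaces the usual 700 Hz constant as 100·δ, which reproduces the standard mel formula at δ = 7. This parameterization and the function names are assumptions and may differ from the exact form used by the authors.

```python
import math

def hz_to_mel(f_hz, delta=7.0):
    """Linear to mel with a tunable factor; delta = 7 gives the standard scale (assumed form)."""
    return 2595.0 * math.log10(1.0 + f_hz / (100.0 * delta))

def mel_to_hz(f_mel, delta=7.0):
    """Inverse conversion using the same factor."""
    return 100.0 * delta * (10.0 ** (f_mel / 2595.0) - 1.0)

# Usage: the same 2 kHz component lands at different warped positions as delta changes
for delta in (5.0, 7.0, 9.0):
    print(delta, round(hz_to_mel(2000.0, delta), 1))
```

A smaller factor bends the scale earlier, giving more resolution to low frequencies, while a larger one keeps the scale closer to linear over a wider range.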

4.4. Need for finding optimum values of δ, fl and fh using FF algorithm

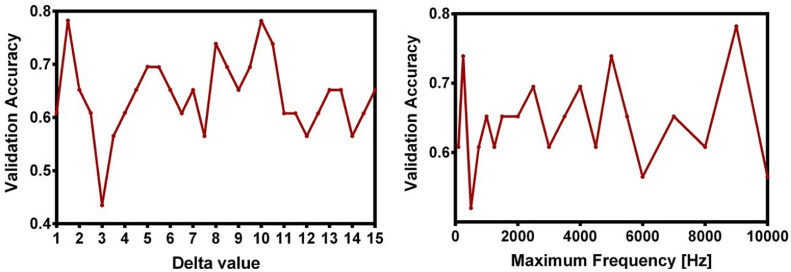

The effect of changing the magnitude of the parameters on the classification accuracy is examined in this section. Fig. 5 shows the effects of changing the value of $\delta$ and the maximum frequency of the speech signal on the validation accuracy. These two plots show that selecting the best value of $\delta$ to achieve the highest validation accuracy is not straightforward and hence cannot be done empirically. Because of the irregular pattern of these graphs, a bio-inspired optimization technique is employed to select the parameters that maximize the validation accuracy. The FF algorithm is chosen for this purpose in this paper.

Fig. 5. Effect of change in the Delta value and maximum frequency on the Validation Accuracy.

The objective cost function is to minimize the k-fold loss. It is defined as

| (25) |

The k-fold loss of the classifier depends on the values of $\delta$, $f_l$ and $f_h$. Hence, these three variables ($\delta$, $f_l$ and $f_h$) are chosen for optimization, and the k-fold loss is minimized by tuning them with the nature-inspired technique. The k-fold loss lies between 0 and 1. The goal is to find the values of these variables that give the lowest possible k-fold loss at the output of the SVM classifier.
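One way to expose this cost to an optimizer is to wrap feature extraction and classifier evaluation in a single function of the three variables. The sketch below is illustrative only: `make_objective`, `extract_features`, and `kfold_loss_of` are hypothetical names. Returning the worst loss (1.0) for infeasible settings is an assumed way of handling the constraint that the lower cut-off must stay below the higher cut-off and the Nyquist frequency.

```python
def make_objective(extract_features, kfold_loss_of, fs=16000.0):
    """Build cost(position) for position = [delta, f_low, f_high]."""
    def objective(position):
        delta, f_low, f_high = (float(v) for v in position)
        feasible = delta > 0.0 and 0.0 <= f_low < f_high <= fs / 2.0
        if not feasible:
            return 1.0      # worst possible k-fold loss as a penalty (assumption)
        features = extract_features(delta, f_low, f_high)
        return float(kfold_loss_of(features))
    return objective

# Usage with dummy stand-ins for the feature extractor and the classifier evaluation
objective = make_objective(lambda d, lo, hi: (d, lo, hi), lambda feats: 0.25)
print(objective([7.0, 0.0, 8000.0]))      # feasible point, returns the dummy loss
print(objective([7.0, 5000.0, 1000.0]))   # infeasible point, returns the penalty
```

Keeping the penalty inside the valid range of the k-fold loss means the optimizer needs no special handling for constraint violations.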

4.5. Speech enhancement

Speech enhancement is a process used to denoise a noisy speech signal and to improve the overall quality and intelligibility of the denoised speech. It is widely used in hearing aids, speech communications, and speech recognition tasks. Recently, phase spectrum compensation based speech enhancement has been proposed [43], and its performance has been shown to improve with bio-inspired and ANN techniques [40]. This algorithm, which is based on the use of a proper scaling factor in the phase spectrum compensation, is employed for speech enhancement in this paper. Its flow chart is shown in Fig. 6.

Fig. 6. Flow Chart of Improved phase aware speech enhancement Scheme.
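The core idea of phase spectrum compensation can be sketched for a single frame. This is a simplified illustration of the commonly described form of the technique, not the improved scheme used in the paper. The noise magnitude estimate `noise_mag` is assumed to be given, the scaling factor `lam` is left as a free parameter, and the function name is hypothetical.

```python
import numpy as np

def phase_spectrum_compensation(frame, noise_mag, lam):
    """Enhance one frame by altering only the phase of its spectrum."""
    frame = np.asarray(frame, dtype=float)
    n = len(frame)
    X = np.fft.fft(frame)
    # Antisymmetric weighting: +1 for positive frequencies, -1 for negative ones,
    # 0 at DC (and at the Nyquist bin when n is even)
    psi = np.zeros(n)
    psi[1:(n + 1) // 2] = 1.0
    psi[n // 2 + 1:] = -1.0
    compensated = X + lam * psi * noise_mag       # offset scaled by the noise estimate
    phase = np.angle(compensated)                 # compensated phase
    S = np.abs(X) * np.exp(1j * phase)            # noisy magnitude, new phase
    # The spectrum is no longer conjugate-symmetric; keeping the real part
    # attenuates components where the noise estimate dominates
    return np.real(np.fft.ifft(S))

# Usage with stand-in data: white noise frame and a flat noise magnitude estimate
rng = np.random.default_rng(0)
frame = rng.standard_normal(64)
enhanced = phase_spectrum_compensation(frame, np.full(64, 0.5), lam=3.0)
```

With `lam = 0` the frame is returned unchanged, so the scaling factor directly controls how aggressive the suppression is.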

4.6. Adaptive synthetic sampling approach for imbalanced learning

Classification with imbalanced data is a challenging task in machine learning and data mining. One effective approach to this problem is the Adaptive Synthetic Sampling Approach for Imbalanced Learning (ADASYN). It addresses imbalanced classification by generating new (synthetic) data for the minority class. This reduces the bias introduced by the class imbalance and gradually shifts the classification decision boundary [37].

The ADASYN algorithm is used in the proposed COVID-19 detection problem because of its encouraging performance in vowel recognition in [37], where the ratio of minority to majority samples is 90:900. Similarly, in the present case, the ratio of minority to majority class in Database-1 and Database-2 is above 30% to 70%, so both are imbalanced cases.

The steps of the ADASYN algorithm are as follows.

- Step-1: Calculate the degree of class imbalance ($d$) from the given training data.
- Step-2: Calculate the number of new data samples (synthetic data, $G$) to be generated for the minority class.
- Step-3: For each minority sample $x_i$ (the feature vector of the ith sample), calculate $r_i = \Delta_i / K$ from its $K$ nearest neighbors, where $\Delta_i$ is the number of examples among the $K$ nearest neighbors of $x_i$ that belong to the majority class, and normalize it.
- Step-4: Calculate the number of synthetic data samples ($g_i$) that need to be generated for each minority sample.
- Step-5: Generate the synthetic data using Equation (26) in a loop running from 1 to $g_i$.

$$s_i = x_i + (x_{zi} - x_i)\,\lambda \tag{26}$$

where $g_i$ is the number of synthetic data that need to be generated for each minority sample, $\lambda$ is a random number between 0 and 1, and $x_{zi}$ is one minority data sample selected at random from the K nearest neighbors of $x_i$. The important property that makes the ADASYN algorithm better than other similar techniques [44] is its use of a density distribution, which automatically determines the number of synthetic samples that need to be generated for each minority data sample.
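The five steps can be sketched in NumPy as follows. This is a simplified illustration, not a reference implementation. The balance level `beta`, the imbalance threshold `d_th`, the even fallback when no minority sample has majority neighbors, and the brute-force neighbor search are assumptions made to keep the example short.

```python
import numpy as np

def adasyn(X_min, X_maj, k=5, beta=1.0, d_th=1.0, seed=0):
    """Return synthetic minority samples generated by an ADASYN-style procedure."""
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    X_maj = np.asarray(X_maj, dtype=float)
    n_min, n_maj = len(X_min), len(X_maj)

    d = n_min / n_maj                                  # Step-1: degree of imbalance
    if d >= d_th:
        return np.empty((0, X_min.shape[1]))           # balanced enough, nothing to add
    G = (n_maj - n_min) * beta                         # Step-2: total synthetic samples

    # Step-3: share of majority points among the k nearest neighbours, normalised
    X_all = np.vstack([X_min, X_maj])
    is_majority = np.arange(len(X_all)) >= n_min
    dist_all = np.linalg.norm(X_min[:, None, :] - X_all[None, :, :], axis=2)
    nn_all = np.argsort(dist_all, axis=1)[:, 1:k + 1]  # column 0 is the point itself
    r = is_majority[nn_all].mean(axis=1)
    r_hat = r / r.sum() if r.sum() > 0 else np.full(n_min, 1.0 / n_min)

    g = np.rint(r_hat * G).astype(int)                 # Step-4: samples per minority point

    # Step-5: interpolate towards randomly chosen minority neighbours
    dist_min = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    nn_min = np.argsort(dist_min, axis=1)[:, 1:k + 1]
    synthetic = [
        X_min[i] + (X_min[rng.choice(nn_min[i])] - X_min[i]) * rng.random()
        for i in range(n_min) for _ in range(g[i])
    ]
    return np.array(synthetic).reshape(-1, X_min.shape[1])

# Usage: 10 minority and 40 majority points in two dimensions
rng = np.random.default_rng(0)
new_points = adasyn(rng.normal(0.0, 1.0, (10, 2)), rng.normal(1.0, 1.0, (40, 2)))
```

Minority samples surrounded by more majority neighbors receive more synthetic points, which concentrates the new data near the decision boundary.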

5. Experiment

This section gives the details of the simulation-based experiments carried out and the results obtained. The different steps of the simulation study are listed below, and the corresponding flowchart is shown in Fig. 3.

Step-1 — A balanced labeled data set is prepared with 200 speech samples.

Step-2 — The sampling rate is converted to 16 kHz for all the speech samples.

Step-3 — A speech enhancement technique is applied to remove the unwanted noise components from the data set.

Step-4 — The best possible values of fl and fh in the MFCC implementation are obtained, that is, the values which yield the lowest k-fold loss of the SVM classifier on the enhanced speech data set.

Step-5 — The optimized values of fl and fh obtained in Step-4 are applied, and the corresponding modified cepstral features of the remaining speech samples of database-1 and database-2 are obtained.

Step-6 - The various performance measures of classification using the optimized features are obtained from the SVM classifier.
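The quantity minimized in Step-4 is a k-fold loss, which can be illustrated as follows. This is a sketch under stated assumptions: the function names are hypothetical, the folds are simply interleaved, and a nearest-centroid rule is used as a stand-in so that the example stays dependency-free. It is not the SVM classifier used in this work; any classifier with the same `fit_predict` signature could be plugged in.

```python
import math


def nearest_centroid(train_X, train_y, test_X):
    """Stand-in classifier (NOT the SVM of this work): pick the closest class mean."""
    centroids = {}
    for label in set(train_y):
        rows = [x for x, y in zip(train_X, train_y) if y == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]
    return [min(centroids, key=lambda c: math.dist(x, centroids[c]))
            for x in test_X]


def k_fold_loss(X, y, k=5, fit_predict=nearest_centroid):
    """Fraction of samples misclassified when each fold is held out once."""
    errors = 0
    for fold in range(k):
        test_idx = set(range(fold, len(X), k))  # interleaved folds
        train_X = [x for i, x in enumerate(X) if i not in test_idx]
        train_y = [t for i, t in enumerate(y) if i not in test_idx]
        test_X = [X[i] for i in sorted(test_idx)]
        test_y = [y[i] for i in sorted(test_idx)]
        predicted = fit_predict(train_X, train_y, test_X)
        errors += sum(p != t for p, t in zip(predicted, test_y))
    return errors / len(X)
```

In the optimization of Step-4, each candidate pair (fl, fh) would produce a different feature matrix `X`, and the pair giving the smallest returned loss would be retained.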

5.1. Performance evaluation measures

5.1.1. Sensitivity

The performance of a classifier is commonly evaluated using either a numeric metric (such as accuracy) or a graphical representation (such as the receiver operating characteristic (ROC) curve). The confusion matrix, a commonly used classification tool, is built from four values: TP (True Positive), FP (False Positive), FN (False Negative), and TN (True Negative) [45]. In medical diagnosis, the FN plays a crucial role because it counts the patients who suffer from COVID-19 but whom the classifier has falsely predicted to be healthy [46]. The FP is less significant in COVID-19 detection, as the patient can go for a second round of tests to confirm, whereas COVID-19 positive patients should not be misinterpreted as negative. To capture the effect of the FNs, the Recall (Sensitivity) value is used. It is calculated as

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{27}$$

5.1.2. F-Score

The F-Score is another traditional measure of classification performance. The β value indicates whether more importance in the evaluation is to be given to FP or FN. It is known from the medical field that the identification of FN is more significant than that of FP, and hence the β value is taken as 2 for the evaluation of classification accuracy [47]. The F-Score and F-2 Score are computed as

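$$F_\beta = \frac{(1+\beta^2)\,TP}{(1+\beta^2)\,TP + \beta^2\,FN + FP}, \qquad F_2 = \frac{5\,TP}{5\,TP + 4\,FN + FP} \tag{28}$$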
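A short worked example may help to show how the two measures react to false negatives. The functions below use the standard count-based definitions of sensitivity and the F-β score, and the confusion-matrix counts are hypothetical values chosen only for illustration.

```python
def sensitivity(tp, fn):
    """Recall: fraction of actual positives that were detected."""
    return tp / (tp + fn)


def f_beta(tp, fp, fn, beta=1.0):
    """F-beta score from confusion-matrix counts; beta > 1 penalises FN more."""
    b2 = beta * beta
    return (1 + b2) * tp / ((1 + b2) * tp + b2 * fn + fp)


# Hypothetical counts, not taken from the experiments.
tp, fp, fn = 40, 10, 5

print(round(sensitivity(tp, fn), 3))        # 40/45   -> 0.889
print(round(f_beta(tp, fp, fn, beta=1), 3)) # 80/95   -> 0.842
print(round(f_beta(tp, fp, fn, beta=2), 3)) # 200/230 -> 0.87
```

Swapping the FP and FN counts (10 false negatives, 5 false positives) leaves the F-1 score unchanged but lowers the F-2 score to 200/245, which is why the F-2 score is preferred when missed positive cases are the costlier error.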

5.1.3. Kruskal-Wallis tests

Kruskal-Wallis tests are widely used to check the suitability of input features for a classification task. The test is non-parametric, and no assumption is made about the prior distribution of the input data [6]. It is based on the statistical parameter H defined in Equation (29), where M is the total number of samples including all the classes, $n_j$ is the number of samples in the jth class, $R_j$ is the sum of ranks of the jth class, and N is the number of independent groups.

$$H = \frac{12}{M(M+1)} \sum_{j=1}^{N} \frac{R_j^2}{n_j} - 3(M+1) \tag{29}$$
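The computation of H can be sketched as below, using the standard textbook form of the Kruskal-Wallis statistic. The function names are hypothetical, tied values receive their average rank, and no tie-correction factor is applied. For the two-class case (COVID-19 positive versus negative), H is referred to a chi-square distribution with one degree of freedom, for which the p-value has the closed form used in `p_value_two_groups`.

```python
import math


def kruskal_wallis_h(groups):
    """H statistic for a list of groups, each a list of feature values."""
    pooled = sorted(v for g in groups for v in g)
    M = len(pooled)

    # Average 1-based rank of every distinct value in the pooled sample.
    rank = {}
    i = 0
    while i < M:
        j = i
        while j < M and pooled[j] == pooled[i]:
            j += 1
        rank[pooled[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j

    # Sum over groups of (rank sum)^2 / (group size).
    total = sum(sum(rank[v] for v in g) ** 2 / len(g) for g in groups)
    return 12 / (M * (M + 1)) * total - 3 * (M + 1)


def p_value_two_groups(h):
    """Chi-square upper tail with 1 degree of freedom (two groups only)."""
    return math.erfc(math.sqrt(h / 2))
```

For example, `kruskal_wallis_h([[1, 2, 3], [4, 5, 6]])` gives rank sums of 6 and 15 and hence H = 27/7, while two groups with identical values give H = 0.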

5.2. Performance evaluation using TFBCC-M features

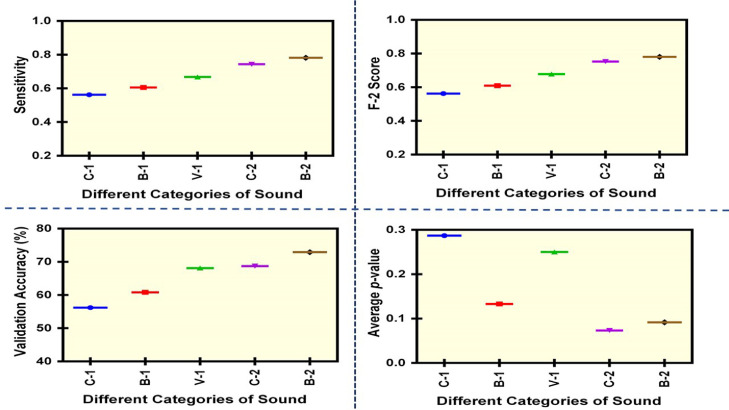

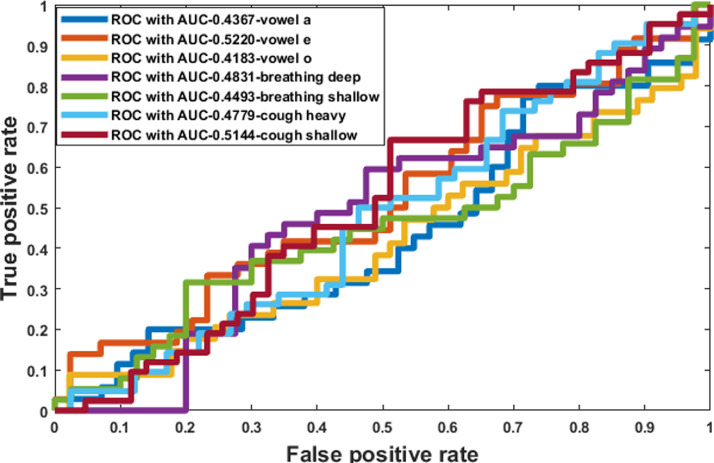

In this section, the performance analysis using the existing MFCC features (TFBCC-M features) is carried out. For this purpose, 50 samples are selected uniformly at random from the COVID-19 positive and negative subjects in each of the cough (C-1), breathing (B-1), and voiced (V-1) categories from database-1 and the cough (C-2) and breathing (B-2) categories from database-2. The 13 MFCC features are extracted and classified using the SVM classifier with five-fold cross validation. The performance is evaluated in terms of the validation accuracy, sensitivity, F-2 score, and Kruskal-Wallis tests using the two databases. The results are plotted in Fig. 7. The Receiver Operating Characteristic (ROC) curves and the Areas Under the Curve (AUC) are obtained and plotted in Fig. 8.

Fig. 7.

Performance analysis of the TFBCC-M features.

Fig. 8.

Comparison of the performance of standard MFCC features for database-1.

It has been observed that the overall performance of the TFBCC-M features is not satisfactory in the detection of COVID-19. It is also noticed that the cough sounds provide more consistent performance than the other categories of sounds. Therefore, to improve on the TFBCC-M features, the cough sounds are used for determining the optimum values of fl and fh by using the FF algorithm.

5.3. Finding the optimum values of fl and fh using FF algorithm

The relationship between the best cost (k-fold loss) and the number of iterations obtained from the simulation study is shown in Fig. 9. The parameters of the FF algorithm and the population size, which is assumed to be 50, have been chosen by trial and error so as to provide the least k-fold loss at the output of the classifier. The objective function to be minimized is given in Equation (25). The three attributes to be optimized, which include fl and fh, together form a member of the population of the FF algorithm. The light intensity of a member is tied to the k-fold loss obtained for its range of fl and fh. The attractiveness is governed by the variation of light intensity with distance and by the absorption coefficient, as expressed in Equation (18). The minimization of the k-fold loss continues using the FF algorithm until it attains the best possible minimum value. After satisfactory convergence is obtained, the best member of the population provides the optimized values of fl and fh.

Fig. 9.

The learning characteristics obtained during the optimization of FF algorithm.
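The search described in this subsection can be illustrated with a compact firefly loop. This is a sketch of the standard firefly update from [38], not the exact configuration used in the simulation study: the values of `beta0`, `gamma`, and `alpha`, the decay factor, the search bounds, and the normalization of distances by the search range are all assumptions made for the example. The function `toy_loss` is a hypothetical smooth stand-in for the k-fold loss, with an arbitrary minimum, so that the example runs without audio data.

```python
import math
import random


def firefly_minimize(cost, bounds, n_fireflies=50, n_iter=100,
                     beta0=1.0, gamma=1.0, alpha=0.2, seed=0):
    """Minimise cost(x) over box bounds; lower cost means a brighter firefly."""
    rng = random.Random(seed)
    swarm = [[rng.uniform(lo, hi) for lo, hi in bounds]
             for _ in range(n_fireflies)]
    costs = [cost(x) for x in swarm]
    for _ in range(n_iter):
        for i in range(n_fireflies):
            for j in range(n_fireflies):
                if costs[j] < costs[i]:  # firefly j is brighter than i
                    # Squared distance in coordinates scaled to [0, 1].
                    r2 = sum(((a - b) / (hi - lo)) ** 2
                             for a, b, (lo, hi)
                             in zip(swarm[i], swarm[j], bounds))
                    beta = beta0 * math.exp(-gamma * r2)  # attractiveness
                    # Move i toward j, add a random step, clip to the bounds.
                    swarm[i] = [
                        min(max(a + beta * (b - a)
                                + alpha * (rng.random() - 0.5) * (hi - lo),
                                lo), hi)
                        for a, b, (lo, hi) in zip(swarm[i], swarm[j], bounds)]
                    costs[i] = cost(swarm[i])
        alpha *= 0.97  # gradually shrink the random step
    best = min(range(n_fireflies), key=costs.__getitem__)
    return swarm[best], costs[best]


# Hypothetical search ranges (Hz) for fl and fh, and a toy objective.
BOUNDS = [(0.0, 1000.0), (1000.0, 8000.0)]


def toy_loss(x):
    return ((x[0] - 100.0) / 1000.0) ** 2 + ((x[1] - 3000.0) / 1000.0) ** 2


best, loss = firefly_minimize(toy_loss, BOUNDS, n_fireflies=15, n_iter=30)
print(best, loss)
```

In this variant the brightest firefly never moves, so the best cost cannot increase from one iteration to the next, which gives a monotone learning curve of the kind plotted against the iteration count.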

5.4. Discussion

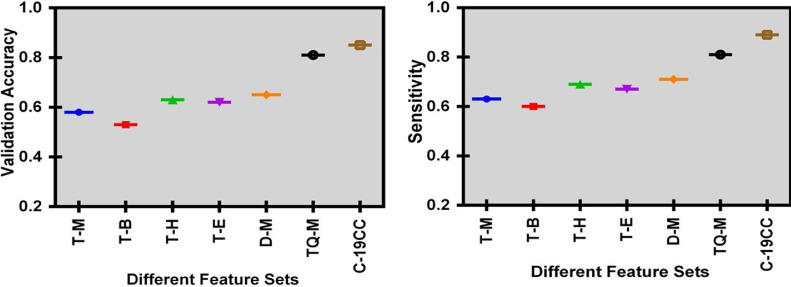

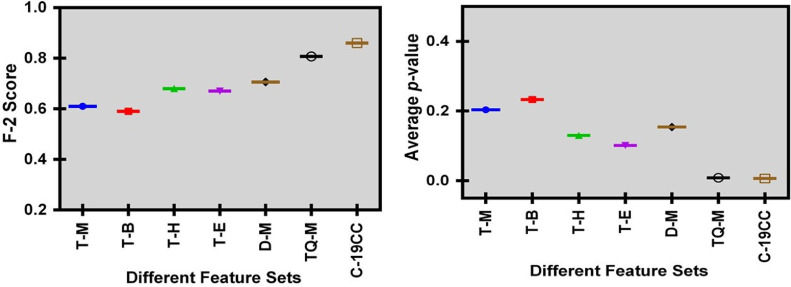

In this section, the detection performance using the proposed Cepstral Coefficients (C-19CC) is compared with seven other types of audio features for cough sounds: TFBCC-M features (T-M), TFBCC-B features (T-B), TFBCC-H features (T-H), TFBCC-E features (T-E) [33], DWT based MFCC features (D-M) [48], TQWT based MFCC features (TQ-M) [49], and temporal and spectral acoustic features (T-S) [23]. The effectiveness of the proposed C-19CC features is illustrated using different measures such as validation accuracy, sensitivity, F-2 score and Kruskal-Wallis tests for the two databases.

The better classification performance demonstrates the efficacy of the proposed method. The optimization of MFCC features has previously been used in spoken language recognition [13], speaker-independent emotion recognition [18], and source recognition of cell-phones [19]. In the current work, better-optimized MFCC features are obtained and used for efficient detection of COVID-19. An important advantage of the C-19CC features is that the problem of feature selection or reduction is avoided. In traditional speech recognition tasks, several spectral, cepstral, temporal, and wavelet features are obtained and combined to form the desired feature vector, which is a tedious and time-consuming process. The proposed C-19CC features, on the other hand, are easy to generate and perform well.

In Figs. 10 and 11, the various performance measures obtained with the different feature sets are compared for the combined cough category of sounds using the relevant data of the two databases. It is observed that the sensitivity is high because of the lower FN values, which is very important in medical data classification. The same is also evident from the plots of the F-2 score and validation accuracy. To further justify the effectiveness of the proposed technique, a comparative p-value analysis is carried out with the standard MFCC and C-19CC features as inputs. The average p-values are presented in Fig. 11. The lower the p-value, the more effective the feature. The average p-values obtained for all the 13 coefficients of C-19CC are quite low, and these coefficients are thus suitable features for COVID-19 classification. The validation accuracy of the different audio features is plotted in Fig. 10. It is noticed that the C-19CC features-based model outperforms the models based on the other input features, which justifies the effectiveness of the suggested features. The F-2 scores and accuracies of the individual categories of sounds in the two databases are listed in Table 3.

Fig. 10.

Comparison of the validation accuracy and sensitivity using different feature sets.

Fig. 11.

Comparison of the F-2 score and average p-value using different feature sets.

Table 3.

Performance Comparison of the different categories of sounds using 8 different feature sets.

| Category of Sound | Evaluation Measure | T-M | T-B | T-H | T-E | D-M | TQ-M | T-S | C-19CC |
|---|---|---|---|---|---|---|---|---|---|
| Vowel-/o/ (DB-1) | F-2 Score | 0.547 | 0.527 | 0.604 | 0.515 | 0.577 | 0.685 | 0.674 | 0.619 |
| Vowel-/o/ (DB-1) | Accuracy | 0.590 | 0.485 | 0.611 | 0.532 | 0.552 | 0.692 | 0.656 | 0.626 |
| Vowel-/e/ (DB-1) | F-2 Score | 0.587 | 0.543 | 0.535 | 0.528 | 0.597 | 0.704 | 0.645 | 0.645 |
| Vowel-/e/ (DB-1) | Accuracy | 0.596 | 0.577 | 0.559 | 0.515 | 0.656 | 0.682 | 0.674 | 0.663 |
| Vowel-/a/ (DB-1) | F-2 Score | 0.543 | 0.523 | 0.523 | 0.423 | 0.603 | 0.595 | 0.598 | 0.595 |
| Vowel-/a/ (DB-1) | Accuracy | 0.509 | 0.469 | 0.502 | 0.414 | 0.582 | 0.593 | 0.554 | 0.575 |
| Counting-Fast (DB-1) | F-2 Score | 0.681 | 0.641 | 0.671 | 0.611 | 0.691 | 0.701 | 0.728 | 0.708 |
| Counting-Fast (DB-1) | Accuracy | 0.636 | 0.652 | 0.616 | 0.61 | 0.684 | 0.721 | 0.735 | 0.699 |
| Counting-Normal (DB-1) | F-2 Score | 0.543 | 0.525 | 0.532 | 0.456 | 0.564 | 0.597 | 0.713 | 0.713 |
| Counting-Normal (DB-1) | Accuracy | 0.537 | 0.567 | 0.519 | 0.468 | 0.555 | 0.558 | 0.725 | 0.714 |
| Cough-Shallow (DB-1) | F-2 Score | 0.608 | 0.546 | 0.535 | 0.454 | 0.583 | 0.612 | 0.701 | 0.729 |
| Cough-Shallow (DB-1) | Accuracy | 0.592 | 0.567 | 0.574 | 0.502 | 0.576 | 0.614 | 0.685 | 0.741 |
| Cough-Heavy (DB-1) | F-2 Score | 0.612 | 0.532 | 0.562 | 0.462 | 0.632 | 0.662 | 0.698 | 0.711 |
| Cough-Heavy (DB-1) | Accuracy | 0.586 | 0.496 | 0.492 | 0.499 | 0.592 | 0.666 | 0.659 | 0.723 |
| Breathing-Deep (DB-1) | F-2 Score | 0.594 | 0.529 | 0.502 | 0.466 | 0.576 | 0.546 | 0.577 | 0.557 |
| Breathing-Deep (DB-1) | Accuracy | 0.623 | 0.529 | 0.506 | 0.439 | 0.586 | 0.564 | 0.562 | 0.607 |
| Breathing-Shallow (DB-1) | F-2 Score | 0.611 | 0.481 | 0.475 | 0.402 | 0.615 | 0.561 | 0.556 | 0.536 |
| Breathing-Shallow (DB-1) | Accuracy | 0.586 | 0.497 | 0.486 | 0.496 | 0.622 | 0.601 | 0.563 | 0.534 |
| Cough (DB-2) | F-2 Score | 0.735 | 0.691 | 0.715 | 0.695 | 0.742 | 0.776 | 0.751 | 0.851 |
| Cough (DB-2) | Accuracy | 0.717 | 0.651 | 0.718 | 0.701 | 0.759 | 0.772 | 0.792 | 0.857 |
| Breathing (DB-2) | F-2 Score | 0.751 | 0.746 | 0.722 | 0.719 | 0.697 | 0.742 | 0.806 | 0.732 |
| Breathing (DB-2) | Accuracy | 0.794 | 0.788 | 0.685 | 0.711 | 0.694 | 0.728 | 0.815 | 0.736 |

It is observed from Table 3 that in the category of cough sounds, the proposed C-19CC provides the highest F-2 scores of 0.851 and 0.729 for database-2 and database-1 respectively. In the vowel category, the TQ-M features perform the best, and the /e/ vowel provides superior performance compared to /o/ and /a/. Similarly, for the counting case, the T-S features exhibit the best performance, and in the breathing category, the T-M and D-M features are better than the others. The validation accuracy is also found to be the highest for the cough category using the proposed C-19CC features. Considering all the categories and databases, the cough sound is found to be the best one, providing the highest detection accuracy as well as the highest F-2 score with the C-19CC features. The acoustic properties of the coughing signal differ from those of the speech signal in terms of bandwidth and the way the sound is perceived. The additional speech enhancement block has further improved the detection performance of the proposed model. Additionally, the use of the ADASYN tool for removing the class imbalance in both the databases has improved the classification performance because it helps in identifying the better features.

6. Conclusion

The detection of COVID-19 using speech signals can serve as an important cost-effective tool as it does not involve any complicated medical test. This approach can provide a preliminary diagnosis of a patient's condition even without a hospital visit and without the help of any medical staff, as it serves as an automatic detection tool. In this paper, a new audio feature called C-19CC is proposed and used for the detection of COVID-19, and the performance of the method is tested using two standard speech databases. The proposed model has been demonstrated to be superior to other existing speech based COVID-19 detection models reported in the literature. However, it is suggested that the detection accuracy needs to be ascertained by appropriate medical experts. The performance can be further increased by combining the new C-19CC with other temporal and statistical features. The proposed method and the combination of features can also be applied to the detection of other speech related diseases.

The proposed C-19CC features are based on the selection of the best possible conversion scale and frequency range of the cepstral filter bank by using a bio-inspired technique. This is achieved by identifying the appropriate sound patterns to efficiently detect COVID-19 and by applying speech enhancement schemes to improve the classification performance. In this paper, a simple SVM based classifier is used for detection. However, the classification accuracy can be further improved by using deep learning-based techniques.

The attributes given in the dataset are breath, cough and voiced vowel sounds. The analysis can be extended to study the phonetic relevance and the identification of phonemic groupings in speech based COVID-19 detection. Such a study requires the preparation of a phonetically balanced COVID-19 dataset. The optimization method of the filter bank parameters can also be extended to different mechanical applications of cepstral analysis, where the properties of the input signal are quite different from those of standard human speech signals. There is scope for further work to reduce the computational complexity associated with this method so that it may be suitable for real-life applications using FPGA [8].

Declaration of Competing Interest

All authors declare that there is no conflict of interest in this work.

Acknowledgment

The authors express their gratitude to Professor Cecilia Mascolo, Department of Computer Science and Technology and Chancellor, Master and Scholar of the University of Cambridge of the Old Schools Trinity Lane, Cambridge CB2 1TN, UK for sharing the speech database of COVID19 sound App of the paper published in ACM KDD [8].

Biographies

Dr. Tusar Kanti Dash received his Ph.D. from Birla Institute of Technology, Mesra, India in 2020 in the area of Speech Processing. He received his B.E. degree in Electronics & Telecommunication Engineering from Utkal University, India in 2003, and his M.Tech. degree in Electronics & Communications Engineering from IIT Kharagpur, India in 2013. He is working at the Department of Electronics & Telecommunications Engineering, C.V. Raman Global University, Bhubaneswar, India as an Assistant Professor. His research interests include Audio Signal Processing and Evolutionary Computing.

Ms. Soumya Mishra received her B.Tech. and M.Tech. degrees in Electronics and Communications Engineering from Biju Patnaik University of Technology, India in 2008 and 2011 respectively. She is working at C.V. Raman Global University, Bhubaneswar, India as an Assistant Professor in the Department of Electronics and Telecom Engineering. She is pursuing a Ph.D. at C.V. Raman Global University, India under the guidance of Dr. T. K. Dash and Dr. G. Panda. Her research interests include Speech & Audio Signal Processing and Wireless Communication.

Dr. Ganapati Panda did his postdoctoral research work at the University of Edinburgh, UK (1984–1986) and received his Ph.D. from IIT Kharagpur in 1981 in the area of Electronics and Communication Engineering. He has already guided 42 Ph.D.s in the field of Signal Processing, Communication, and Machine Learning. Currently, he is working as Professor and Research Advisor at C.V. Raman Global University, Bhubaneswar, India. Prof. Panda is also a Professorial Fellow at the Indian Institute of Technology Bhubaneswar. Prior to this, he was working as Professor, Dean, and Deputy Director in the School of Electrical Sciences of IIT Bhubaneswar.

Dr. Suresh Chandra Satapathy received his Ph.D. in Computer Science Engineering from JNTUH, Hyderabad, and his M.Tech. in Computer Science from NIT, Rourkela. Presently he is a Professor of Computer Science at KIIT Deemed to be University, Bhubaneswar, Odisha, India. He has over 30 years of teaching experience. His areas of research include Machine Learning and Swarm Intelligence. He has published more than 150 research articles in various reputed journals and conferences. SGO and SLEO are two evolutionary optimization algorithms developed by him and his collaborators.

References

- 1. Shereen M.A., Khan S., Kazmi A., Bashir N., Siddique R. COVID-19 Infection: origin, transmission, and characteristics of human coronaviruses. J. Adv. Res. 2020. doi: 10.1016/j.jare.2020.03.005.

- 2. WHO Coronavirus Disease (COVID-19) Dashboard Data, https://covid19.who.int/.

- 3. Sun C., Zhai Z. The efficacy of social distance and ventilation effectiveness in preventing COVID-19 transmission. Sustainable cities and society. 2020;62:102390. doi: 10.1016/j.scs.2020.102390.

- 4. More than virus, fear of stigma is stopping people from getting tested: Doctors, 2020, web edition, https://www.newindianexpress.com/states/karnataka/2020/aug/06/more-than-virus-fear-of-stigma-is-stopping-people-from-getting-tested-doctors-2179656.html.

- 5. Han J., Qian K., Song M., Yang Z., Ren Z., Liu S., Liu J., Zheng H., Ji W., Koike T. An early study on intelligent analysis of speech under COVID-19: severity, sleep quality, fatigue, and anxiety. arXiv preprint arXiv:2005.00096. 2020.

- 6. Karan B., Sahu S.S., Orozco-Arroyave J.R., Mahto K. Hilbert spectrum analysis for automatic detection and evaluation of Parkinson’s speech. Biomed. Signal Process. Control. 2020;61:102050.

- 7. König A., Satt A., Sorin A., Hoory R., Toledo-Ronen O., Derreumaux A., Manera V., Verhey F., Aalten P., Robert P.H. Automatic speech analysis for the assessment of patients with predementia and Alzheimer’s disease. Alzheimer’s & Dementia: Diagnosis, Assessment & Disease Monitoring. 2015;1(1):112–124. doi: 10.1016/j.dadm.2014.11.012.

- 8. Brown C., Chauhan J., Grammenos A., Han J., Hasthanasombat A., Spathis D., Xia T., Cicuta P., Mascolo C. Exploring automatic diagnosis of COVID-19 from crowdsourced respiratory sound data. arXiv preprint arXiv:2006.05919. 2020.

- 9. Deshpande G., Schuller B. An overview on audio, signal, speech, & language processing for COVID-19. arXiv preprint arXiv:2005.08579. 2020.

- 10. Han J., Qian K., Song M., Yang Z., Ren Z., Liu S., Liu J., Zheng H., Ji W., Koike T. An early study on intelligent analysis of speech under COVID-19: severity, sleep quality, fatigue, and anxiety. arXiv preprint arXiv:2005.00096. 2020.

- 11. Oppenheim A.V., Schafer R.W. From frequency to quefrency: a history of the cepstrum. IEEE Signal Process. Mag. 2004;21(5):95–106.

- 12. Sheela K.A., Prasad K.S. Linear discriminant analysis F-Ratio for optimization of TESPAR & MFCC features for speaker recognition. J. Multimed. 2007;2(6).

- 13. Hanilçi C., Ertas F. Proceedings of the first ACM workshop on Information hiding and multimedia security. 2013. Optimizing acoustic features for source cell-phone recognition using speech signals; pp. 141–148.

- 14. Chatterjee S., Kleijn W.B. Auditory model-based design and optimization of feature vectors for automatic speech recognition. IEEE Trans. Audio Speech Lang. Process. 2010;19(6):1813–1825.

- 15. Kadyan V., Mantri A., Aggarwal R.K. A heterogeneous speech feature vectors generation approach with hybrid hmm classifiers. Int. J. Speech Technol. 2017;20(4):761–769.

- 16. Sun Y., Zhou Y., Zhao Q., Yan Y. Acoustic feature optimization based on F-ratio for robust speech recognition. IEICE Trans. Inf. Syst. 2010;93(9):2417–2430.

- 17. Aggarwal R.K., Dave M. Filterbank optimization for robust ASR using GA and PSO. Int. J. Speech Technol. 2012;15(2):191–201.

- 18. Kadyan V., Mantri A., Aggarwal R.K. Improved filter bank on multitaper framework for robust punjabi-ASR system. Int. J. Speech Technol. 2020;23(1):87–100.

- 19. Kou H., Shang W., Lane I., Chong J. 2013 IEEE International Conference on Acoustics, Speech and Signal Processing. 2013. Optimized MFCC feature extraction on GPU; pp. 7130–7134.

- 20. Wang Z., Xiao Y., Li Y., Zhang J., Lu F., Hou M., Liu X. Automatically discriminating and localizing COVID-19 from community-acquired pneumonia on chest X-rays. Pattern Recognit. 2021;110:107613. doi: 10.1016/j.patcog.2020.107613.

- 21. Oulefki A., Agaian S., Trongtirakul T., Laouar A.K. Automatic COVID-19 lung infected region segmentation and measurement using CT-scans images. Pattern Recognit. 2020:107747. doi: 10.1016/j.patcog.2020.107747.

- 22. Dey N., Rajinikanth V., Fong S.J., Kaiser M.S., Mahmud M. Social group optimization-assisted Kapur’s entropy and morphological segmentation for automated detection of COVID-19 infection from computed tomography images. Cognit. Comput. 2020;12(5):1011–1023. doi: 10.1007/s12559-020-09751-3.

- 23. Sharma N., Krishnan P., Kumar R., Ramoji S., Chetupalli S.R., Ghosh P.K., Ganapathy S. Coswara-A database of breathing, cough, and voice sounds for COVID-19 diagnosis. arXiv preprint arXiv:2005.10548. 2020.

- 24. You C.H., Bin M.A. Spectral-domain speech enhancement for speech recognition. Speech Commun. 2017;94:30–41.

- 25. Wang L., Zhang C., Liu J. 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC) 2020. Deep Learning Defense Method Against Adversarial Attacks; pp. 3667–3672.

- 26. Hu Z., Tang J., Wang Z., Zhang K., Zhang L., Sun Q. Deep learning for image-based cancer detection and diagnosis- A survey. Pattern Recognit. 2018;83:134–149.

- 27. Zhong G., Wang L.-N., Ling X., Dong J. An overview on data representation learning: from traditional feature learning to recent deep learning. The Journal of Finance and Data Science. 2016;2(4):265–278.

- 28. Zhang Y.-D., Satapathy S.C., Liu S., Li G.-R. A five-layer deep convolutional neural network with stochastic pooling for chest CT-based COVID-19 diagnosis. Mach. Vis. Appl. 2021;32(1):1–13. doi: 10.1007/s00138-020-01128-8.

- 29. Ahuja S., Panigrahi B.K., Dey N., Rajinikanth V., Gandhi T.K. Deep transfer learning-based automated detection of COVID-19 from lung CT scan slices. Applied Intelligence. 2021;51(1):571–585. doi: 10.1007/s10489-020-01826-w.

- 30. Sujitha P.S., Pebbili G.K. Cepstral analysis of voice in young adults. Journal of Voice. 2020. doi: 10.1016/j.jvoice.2020.03.010.

- 31. Benmalek E., Elmhamdi J., Jilbab A. Multiclass classification of Parkinson’s disease using cepstral analysis. Int. J. Speech Technol. 2018;21(1):39–49.

- 32. Doc E.S. Speech processing, transmission and quality aspects (STQ); distributed speech recognition; advanced front-end feature extraction algorithm; compression algorithms. ETSI ES. 2002;202(050):v1.

- 33. Sugan N., Srinivas N.S.S., Kumar L.S., Nath M.K., Kanhe A. Speech emotion recognition using cepstral features extracted with novel triangular filter banks based on bark and ERB frequency scales. Digit. Signal Process. 2020:102763.

- 34. Sugan N., Srinivas N.S., Kar N., Kumar L.S., Nath M.K., Kanhe A. 2018 International CET Conference on Control, Communication, and Computing (IC4) 2018. Performance comparison of different cepstral features for speech emotion recognition; pp. 266–271.

- 35. Karan B., Sahu S.S., Mahto K. Parkinson disease prediction using intrinsic mode function based features from speech signal. Biocybernetics and Biomedical Engineering. 2020;40(1):249–264.

- 36. Strisciuglio N., Vento M., Petkov N. Learning representations of sound using trainable COPE feature extractors. Pattern Recognit. 2019;92:25–36.

- 37. He H., Bai Y., Garcia E.A., Li S. 2008 IEEE international joint conference on neural networks (IEEE world congress on computational intelligence) 2008. ADASYN: Adaptive synthetic sampling approach for imbalanced learning; pp. 1322–1328.

- 38. Yang X.-S. International symposium on stochastic algorithms. 2009. Firefly algorithms for multimodal optimization; pp. 169–178.

- 39. Yang X.-S., He X. Firefly algorithm: recent advances and applications. International journal of swarm intelligence. 2013;1(1):36–50.

- 40. Dash T.K., Solanki S.S., Panda G. Improved phase aware speech enhancement using bio-inspired and ANN techniques. Analog Integr. Circuits Signal Process. 2019:1–13.

- 41. Auria L., Moro R.A. Support vector machines (SVM) as a technique for solvency analysis. 2008.

- 42. Karan B., Sahu S.S., Orozco-Arroyave J.R., Mahto K. Hilbert spectrum analysis for automatic detection and evaluation of Parkinson’s speech. Biomed. Signal Process. Control. 2020;61:102050.

- 43. Stark A.P., Wójcicki K.K., Lyons J.G., Paliwal K.K. Ninth annual conference of the international speech communication association. 2008. Noise driven short-time phase spectrum compensation procedure for speech enhancement.

- 44. He H., Garcia E.A. Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 2009;21(9):1263–1284.

- 45. Lever J., Krzywinski M., Altman N. Points of significance: classification evaluation. 2016.

- 46. Hardwick S.A., Deveson I.W., Mercer T.R. Reference standards for next-generation sequencing. Nat. Rev. Genet. 2017;18(8):473. doi: 10.1038/nrg.2017.44.

- 47. Devarriya D., Gulati C., Mansharamani V., Sakalle A., Bhardwaj A. Unbalanced breast cancer data classification using novel fitness functions in genetic programming. Expert Syst. Appl. 2020;140:112866.

- 48. Soumaya Z., Taoufiq B.D., Nsiri B., Abdelkrim A. 2019 4th World Conference on Complex Systems (WCCS) 2019. Diagnosis of Parkinson disease using the wavelet transform and MFCC and SVM classifier; pp. 1–6.

- 49. Sakar C.O., Serbes G., Gunduz A., Tunc H.C., Nizam H., Sakar B.E., Tutuncu M., Aydin T., Isenkul M.E., Apaydin H. A comparative analysis of speech signal processing algorithms for Parkinson’s disease classification and the use of the tunable Q-factor wavelet transform. Appl. Soft Comput. 2019;74:255–263.