Abstract

Acquiring electrocardiographic (ECG) signals and performing arrhythmia classification in mobile device scenarios have the advantages of short response time, almost no network bandwidth consumption, and human resource savings. In recent years, deep neural networks have become a popular method to efficiently and accurately simulate nonlinear patterns of ECG data in a data-driven manner but require more resources. Therefore, it is crucial to design deep learning (DL) algorithms that are more suitable for resource-constrained mobile devices. In this paper, KecNet, a lightweight neural network construction scheme based on domain knowledge, is proposed to model ECG data by effectively leveraging signal analysis and medical knowledge. To evaluate the performance of KecNet, we use the Association for the Advancement of Medical Instrumentation (AAMI) protocol and the MIT-BIH arrhythmia database to classify five arrhythmia categories. The result shows that the ACC, SEN, and PRE achieve 99.31%, 99.45%, and 98.78%, respectively. In addition, it also possesses high robustness to noisy environments, low memory usage, and physical interpretability advantages. Benefiting from these advantages, KecNet can be applied in practice, especially wearable and lightweight mobile devices for arrhythmia classification.

1. Introduction

Arrhythmias are the most common cardiovascular disease and the leading cause of stroke and sudden cardiac death. Electrocardiogram (ECG) is a common tool for detecting arrhythmias because of its noninvasive and easy-to-perform nature. Because of the randomness of arrhythmia onset, it is necessary for patients to be monitored for a long period, causing the difficulty of processing the resulting large number of ECG data. A computer-aided arrhythmia diagnosis system running on mobile devices can reduce labor and responding time, thereby improving the efficiency of daily arrhythmia classification [1, 2].

Most of the existing algorithms for automatic ECG recognition of arrhythmia are based on the assessment of morphological features of single or fewer heartbeats. Due to individual differences, the methods based on short-term features are prone to errors. In addition, numerous clinical studies have demonstrated the importance of long-term rhythm features for detecting arrhythmia associated with many diseases such as tachycardia, atrial fibrillation, and premature beats. This motivated us to study a new solution to arrhythmia classification based on a long period of continuous ECG signals.

In recent years, ECG classification algorithms based on deep neural networks (DNNs) have demonstrated excellent performance. DNNs use raw ECG data as input to build an end-to-end model for feature learning and classification to achieve comparable accuracy for both noise-free data and noisy data. Among them, convolutional neural networks (CNNs) [3–5] and recurrent neural networks (RNNs) [6–8] are the two most frequently used classes of neural nets.

One of the major problems with DNNs is that the performance of the algorithm is highly dependent on the network's scale. Most of the current mobile devices are still limited in both computation power and memory capacity and thus unfit for existing DNN approaches. Therefore, it is crucial to building lightweight networks that can operate in resource-constrained environments. Currently, there exist two main approaches to build lightweight networks. (1) The first approach uses network pruning techniques [9] or knowledge distillation [10] to achieve model compression and inference acceleration by removing redundant structures and parameters. Because the accuracy-focused models contain strategies that help overcome various problems encountered during training, such as overfitting, it is difficult to scale such a model down sufficiently without sacrificing accuracy. (2) The second approach designs models specifically for resource-constrained environments. Such efficiency-focused models include MobileNet [11], ShuffleNet [12], and LiteNet [13]. This approach sacrifices model performance to achieve a more efficient network structure [14].

On the other hand, DNNs fail to take advantage of useful features established by cardiologists in the past decades; therefore, we need an immense amount of high-quality labeled data to learn the potential causal relations between ECG data and diseases. Such data are often difficult to obtain and expensive. Therefore, only limited data sets can be used for model training. Because of the inevitable contamination by various noises in practical application, models built on limited data sets may be at risk of low robustness. Therefore, it is still a challenge to design a lightweight and robust DL algorithm for resource-constrained mobile devices.

Recent studies have shown that neural networks approximate the mapping between input and output data. It is believed that the mapping can be simplified by using domain knowledge or explicit features of the raw data, and the network presentation capability and scale can be optimized accordingly [15]. In ECG signals, the information reflecting diseases corresponds to a certain frequency range. Based on these findings, a lightweight DL architecture named KecNet is implemented to recognize 10-second ECG signal fragments in this study. 10 seconds is the typical duration of the central rhythm strip acquisition on a routine 12-lead ECG [16]. The developed scheme includes the following features. Firstly, a CNN network containing a customized filter bank is designed based on digital signal processing to precisely fit each application. By imposing constraints on filter shapes, it effectively extracts components in a specific frequency range from complex signals. Because the filter design is incorporated with the knowledge of digital signal processing, the proposed approach performs more physical interpretability than the conventional CNN [17]. It is also much more robust against noisy environments and less consuming in terms of implementation cost. Secondly, the long-duration correlation features of the ECG fragments are represented symbolically based on the clinical knowledge, which is used as an additional parameter to further constrain the decision-making process and improve the performance of the model. We evaluate the performance of the proposed KecNet on the MIT-BIH arrhythmia database. According to the Association for the Advancement of Medical Instrumentation (AAMI) protocol [18], heartbeat types in the MIT-BIH database [19] can be sorted into 5 main classes: normal beat (N), supraventricular ectopic beat (S), ventricular ectopic beat (V), fusion beat (F), and unknown (Q). Experimental results show that the proposed method has better performance and robustness for the arrhythmia classification task.

2. Problem Definition

Most deep learning-based approaches to arrhythmias adopt the form of supervised learning to learn mapping functions. Mathematically, we denote an ECG data set containing N samples as X={(x(i), y(i))|i ∈ N}, where x(i) is the ith sample and y(i) is the label. The procedure for the neural network learning about data can be viewed as a parameter optimization problem, as formulated in

| (1) |

where f(∗, θf) is the function that we will design to simulate the mapping between data and labels and θf is the parameters associated with the mapping f(∗). L(∗) is a loss function describing the loss of assigning a prediction category for a sample x(i) with label y(i).

Due to the complexity of arrhythmia pathology, the conventional approach for improving the performance of classification models is to increase the number of layers of the network and enhance the representation capability of the model by adding nonlinear operations. However, this poses three problems. Firstly, the increase in the number of layers causes the increase of parameters in the network, which intensifies the storage difficulty and computational complexity of the model. Secondly, the increase in model depth may cause the risk of vanishing gradient [20], resulting in the inability to effectively update the parameters of the shallow convolution kernel. Thirdly, more training data is needed to prevent overfitting of the model [21].

Based on the above analysis, our goal is to achieve better classification performance using shallow networks without increasing the model complexity and training data. To achieve this goal, we try to incorporate relevant domain knowledge into the design process of convolutional neural networks, mainly including using the amplitude-frequency characteristics of bandpass filters to filter the noise in ECG signals to improve the model's ability to grasp valid information, and extracting dominant features of ECG data as additional parameters to prevent the loss of temporal information due to the pooling mechanism of CNN.

3. Methods

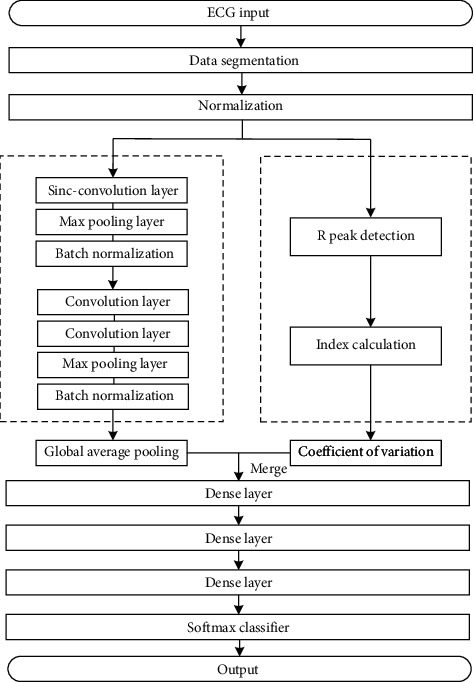

The workflow of the proposed method is shown in Figure 1. First, the data is segmented and normalized. The segmented data are fed into the KecNet model, which contains a CNN structure with a customized convolutional layer and a symbolic parameter extraction structure in the feature extraction part of the model. Finally, the fused feature vectors are fed into the softmax classifier for classification.

Figure 1.

Workflow of the proposed method.

3.1. Sinc-Convolution Layer

The ECG signal is a mixture of electrical activity from various parts of the myocardium. Depending on the quality of the data, it may also include multiple types of noise, such as baseline drift, motion artifacts, and electromyographic interference [22]. The filter information learned by traditional CNNs usually contains a mixture of noise and multiband modes, which reduces the representation capability and readability of the ECG signal [17].

In order to overcome this defect, it is very important to optimize the first convolution layer of CNN because this layer directly processes the original ECG samples containing rich underlying information and helps the subsequent convolution layer to perform complex nonlinear representation of data. This study introduces the Sinc-convolution layer, a structure based on the interpretable CNN developed by Ravanelli and Bengio for speech recognition [17]. The core idea of this structure is based on parameterized cardinal-sine (Sinc) functions for bandpass filter design. While the conventional CNN learns all the parameters of the filters, the Sinc-convolution defines a tunable filter bank g with clear physical explanation in advance to replace the filter in traditional CNN, as formulated in

| (2) |

where θg is the parameter to be learned. For ECG signal analysis, the bandpass filter is an effective choice for designing tunable filter banks [23], because the time domain signal is divided into different subspaces by frequency band transformation so that the filter can be activated by the information of specific frequency band to achieve more effective and reliable filtering.

In the frequency domain, the amplitude of a universal bandpass filter can be written as the difference between two rectangular filters. After returning to the time domain using the Fourier transform [23], the function g becomes as

| (3) |

where fL and fH are the learned low and high cut-off frequencies, and sin c(x)=sin(x)/x.

To mitigate the spectral leakage, a popular solution is windowing by multiplying the truncated function g with a window function:

| (4) |

In this work, we use the Hamming [15] window as follows:

| (5) |

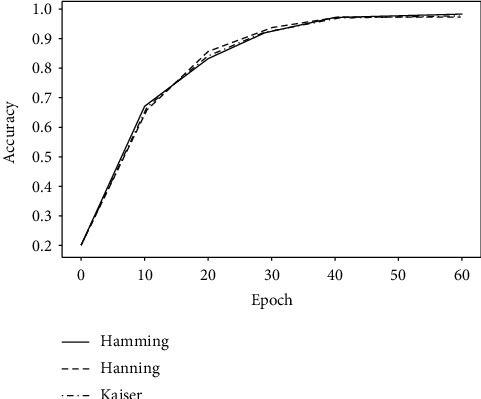

In fact, as shown in Figure 2, similar results are obtained when training arrhythmia classification models, regardless of whether the window function type is Hamming, Hanning [16], or Kaiser [22]. In addition, since filter g is symmetric, the computational efficiency can be improved with one side of the filter inheriting the results from the other side.

Figure 2.

Comparison of different window functions.

The experimental results in Section 5.3 show that the Sinc-convolution layer is more selective in frequency response compared to the CNN. Because the filter effectively extracts components from complex signals over a specific frequency range, it improves the model's robustness and readability [24]. After the Sinc filter self-adaptively classifies the frequency band of the raw ECG data, the standard CNN structure is used to extract the time domain features.

3.2. Symbolic Representation of Rhythmic Features

When analyzing the discrete-time series of the data, converting sequences into symbols with practical meaning is a common method to simplify the analysis process [25]. In fact, some quantitative features based on clinical knowledge (e.g., heart rate variability [26], RR interval [27], etc.) are considered to be more relevant to the underlying pathological mechanisms. Among them, the coefficient of variation (CV) describes the degree of dispersion of the RR interval and is commonly used to measure the regularity of the RR interval [26]. Since the R-peak is the most obvious waveform in the ECG, features based on the RR interval have stronger noise immunity and are one of the most important features for analyzing the rhythm variation [28].

The model is optimized based on the above findings. In addition to the CNN structure designed to extract spatial morphological features, the CVs were added to the network as symbolic representations of rhythm features. ECG fragments with anomalous rhythms are identified more easily. The computational procedure is as follows/

Step 1 . —

Detect the R-peak of ECG fragment x(i) based on the Pan-Tompkins algorithm [29] to obtain the sequence of R-peak positions R(i)={r1(i), r2(i),…, rj(i)}.

Step 2 . —

Calculate the RR interval series T(i)={t1(i), t2(i),…, tn(i)}.

Step 3 . —

Calculate the mean value and standard deviation of the sequence T(i).

Step 4 . —

Calculate the coefficient of variation .

3.3. The Architecture of the Network

The proposed KecNet is based on a 1D convolutional neural network, including one Sinc-convolution layer, two standard convolution layers, three pooling layers, two batch standardization layers, three dropout layers, one global average pooling layer, and three dense layers. Table 1 summarizes the basic structure of KecNet. The key characteristics of different layers in basic KecNet are detailed as follows:

Sinc-convolution layer: The length of the filter significantly affects the classification accuracy. With the increase of filter length, the accuracy is improved. Through experiments, the length of convolution kernel L = 251 is selected. It should be noted that since a Sinc filter has only two parameters, no matter how long the filter length is selected, the parameters will not increase

Standard convolution layer: Two 1D convolution layers are added after the Sinc-convolution layer and convolve with a filter size of 5. All convolution layers (including Sinc-convolution layer) adopt ReLU activation function [30]

Max-pooling layer: KecNet performs a max-pooling operation after the Sinc-convolution layer and two standard convolution layers. The max-pooling operation reduces the computation cost between the convolution layers while achieving translation invariance of the neural network

Dropout [31] layer: The dropout layer reduces the complex coadaptation relationships between neurons by randomly dropping a fraction of the network nodes and overcomes the overfitting problem at the same time. We build the dropout layer after each of the two groups of pooling layers, with a ratio of 0.2. We also add a dropout layer after the first full connection layer, with a ratio of 0.3

Batch Normalization (BN) [32] layer: The BN technique ensures the validity of the gradient by adjusting the distribution of the output data, smoothing the loss plane, and speeding up the convergence of the network. We apply the BN layer after the two dropout layers

Global Average Pooling (GAP) [33] layer: GAP averages the values of all elements of the feature map to reduce the number of parameters. We use the GAP layer before the dense layer. Also, the rhythm feature notation described in Section 3.2 is added to the GAP layer to maximize the impact of this factor on the network

Dense layer: Two fully connected layers are used in the basic KecNet. The first layer consists of 16 units. The second layer consists of 8 units. After dense layers, the softmax function is used as a classifier to predict five classes

Table 1.

Model parameters.

| Layer | Feature map element no. | Kernel size | Stride | |

|---|---|---|---|---|

| Sinc module | Sinc-Conv1D | 32 | 251 | 1 |

| Max-pooling | — | 2 | 2 | |

| Dropout | — | — | — | |

| BN | — | — | — | |

|

| ||||

| Conv module | Conv1D | 16 | 5 | 1 |

| 16 | 5 | 1 | ||

| Max-pooling | — | 2 | 2 | |

| Dropout | — | — | — | |

| BN | — | — | — | |

|

| ||||

| GAP | 16 + 1 | — | — | — |

| Dense | 16 | — | — | — |

| Dropout | — | — | — | — |

| Dense | 8 | — | — | |

| Softmax | 5 | — | — | — |

4. Experimental Setup

4.1. Materials and Preprocessing

We used the MIT-BIH arrhythmia database to evaluate the performance of the proposed method. The database contains 48 dynamic ECG recordings, each with 30-minute long, 360 Hz sampling rate, 11-bit resolution, and ±10 mv dynamic range. Each recording contains two lead configurations (usually MLII and V1). Lead II is commonly used for wearable single-lead ECG sensors. According to the AAMI protocol, we merged the original 18 categories of heartbeat types in the MIT-BIH database into 5 major categories. The heartbeat classes' mapping between the AAMI protocol and the MIT-BIH database is shown in Table 2.

Table 2.

Heartbeat classes' mapping between the AAMI protocol and the MIT-BIH database.

| AAMI classes | MIT-BIH classes |

|---|---|

| Normal beat (N) | NOR, NE, AE, LBBB, RBBB |

| Supraventricular ectopic beat (S) | AP, APB, APC, NP, SP |

| Ventricular ectopic beat (V) | VF, VE, PVC |

| Fusion beat (F) | F |

| Unknown (Q) | UN, FPN, P |

After data merging, a sliding window with length M = 3600 is set to segment the data. Because the data sampling rate is 360 Hz, it is equivalent to using about 10 s of data as input. To overcome the data imbalance problem, the data were synthesized by translating the start point for small-size data. After the enhancement, each category has 55000 samples. Because each signal in the MIT-BIH dataset is labeled with a disease class accurate to the second, the class with the largest percentage is used as the label for that ECG segment. Finally, the ECG segments are normalized by Z-score to solve the problems of amplitude scaling and eliminate the offset effect.

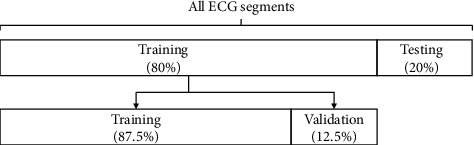

The data set is divided into two mutually exclusive sets: training set (80%) and testing set (20%), and 12.5% of the data in the training set is used as the validation set. To ensure the consistency of the data distribution, each class is randomly sampled separately according to the proportion of the data set. The data set division is shown in Figure 3.

Figure 3.

The percentage of ECG segments used for training and test.

4.2. Evaluation Criteria

The following indicators are used to evaluate the proposed method:

-

(i)Accuracy (ACC): ACC is an overall measure of the correctness of arrhythmia classification results relative to the entire sample.

(6) -

(ii)Sensitivity (SEN): SEN indicates the proportion of samples that were correctly predicted in all the samples that were truly positive. For disease classification, sensitivity is a very important criterion, with the higher sensitivity indicating the lower miss rate of the model.

(7) -

(iii)Precision (PRE): PRE indicates the proportion of samples that were true positive in all the predicted positive samples.

(8) -

(iv)

Parameter Count (PC): In DL, PC represents the model size and the number of unit connections between layers (computational cost). It is an important factor that affects the computation complexity of DL algorithms. The lower the PC is, the lower the computation cost is and the less memory the model needs.

4.3. Hyperparameters Setting

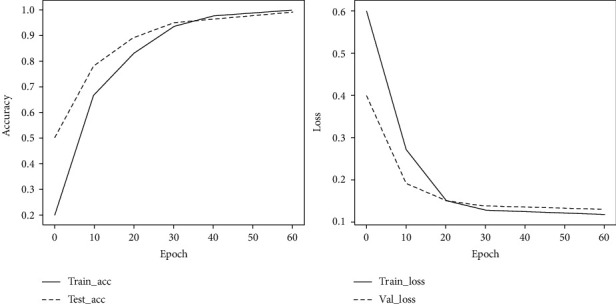

Figure 4 shows the accuracy and loss of the training and validation set of KecNet at each training phase. These curves indicate that the accuracy and loss of the model are stable, and the network basically converges after 60 epochs of training. Considering the model's validity and training efficiency, the epoch for the training model was set to 60. The hyperparameters are set by optimizing the model through trial and error, as shown in Table 3.

Figure 4.

Changes in loss value and accuracy as epoch increases.

Table 3.

Hyperparameters of KecNet.

| Batch size | Epochs | Optimizer | Beta_1 | Beta_2 | Lr |

|---|---|---|---|---|---|

| 128 | 60 | Adam | 0.9 | 0.999 | 0.0003 |

5. Results and Discussion

5.1. Analysis of Experimental Results of Model Performance

Table 4 shows the performance of the proposed method on the data set. A standard CNN architecture is used as the baseline model, and the length of the first layer convolution kernel is set to 32. It can be seen that the performance of Sinc convolution is better than that of standard convolution. Moreover, the parameters are reduced by about 80% compared with the same structure of CNN.

Table 4.

Performance comparison between standard convolution and Sinc-convolution.

| Convolution type | ACC (%) | SEN (%) | PRE (%) | PC | Test (ms) |

|---|---|---|---|---|---|

| Standard | 96.34 | 95.78 | 96.53 | 1226 | 73.2 |

| Sinc | 98.64 | 98.23 | 97.98 | 266 | 49.4 |

Table 5 shows the effect of the coefficients of rhythm variation on the model performance. The performance of the model is improved by 1–1.5%. However, the increase in time is negligible.

Table 5.

Comparison of models performance with the coefficient of variation.

| CV | ACC (%) | SEN (%) | PRE (%) | PC | Test (ms) |

| Without | 98.64 | 98.23 | 97.98 | 266 | 49.4 |

| With | 99.31 | 99.45 | 98.78 | 267 | 50.1 |

5.2. Implementation PC Reduction

For wearables and mobile devices, improving the accuracy of deep learning algorithms is not the only problem. Most devices have difficulties in deploying complex, high-performance models due to limited computing and storage capacity. Therefore, it is equally crucial to reduce the memory footprint of the model. PC represents the spatial complexity of the model, which is an important indicator of the model size and memory usage.

Having a low PC with guaranteed classification accuracy is an advantage of KecNet, because the Sinc-convolution structure greatly reduces the number of parameters in the first convolution layer. Since the low and high cut-off frequencies are the only parameters of the filter learned from ECG data, the number of parameters is only dependent on the number of filters. For example, for a layer with F filters of length L, a standard CNN contains F × L parameters, while a Sinc-convolution layer only requires F × 2. The parameter gain of the Sinc-convolution layer compared to CNN is shown in

| (9) |

where PC is the number of parameters. PC of the CNN increases with the increase of L, but the Sinc-convolution layer always remains the same. Accordingly, the longer the L is, the more gain the KecNet has in reducing PC in comparison with the standard CNN. For instance, the PC of KecNet is reduced by 80% compared to the standard CNN in Table 4.

In addition, we compare the classification performance of KecNet with the classical CNNs: GoogleNet [34], MobileNet, and SqueezeNet. The hyperparameters are set as in Table 3. Notably, MobileNet and SqueezeNet are also lightweight models and have been successfully deployed on resource-constrained mobile devices, such as cell phones, robots, and self-driving cars [35]. The experimental results are shown in Table 6. In terms of classification effect, KecNet outperforms SqueezeNet and MobileNets and is slightly lower than GoogleNet. However, in terms of PC, KecNet reduces the amount of PC by about 50% compared to SqueezeNet and MobileNet and by about 80% compared to GoogleNet.

Table 6.

Comparison of model performance.

| Model | ACC (%) | SEN (%) | PRE (%) | PC |

|---|---|---|---|---|

| GoogleNet | 99.42 | 99.51 | 99.07 | 1326 |

| MobileNet | 97.57 | 97.29 | 97.00 | 580 |

| SqueezeNet | 97.69 | 97.23 | 97.13 | 562 |

| KecNet | 99.31 | 99.45 | 98.78 | 267 |

5.3. Filter Analysis

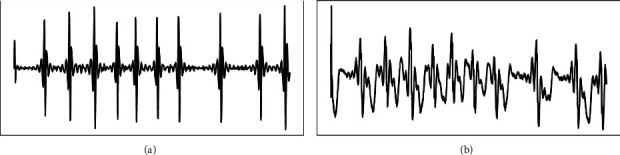

For the feature mapping obtained from the first convolutional layer, KecNet has some advantages over the standard CNN in terms of interpretability and readability. This is mainly because the Sinc-convolutional structure used in the model is functionally equivalent to a bandpass filter, which learns parameters with a clear physical meaning, i.e., the high and low cut-off frequencies of the ECG signal [24]. Figure 5 shows examples of filters learned by KecNet (Figure 5(a)) and standard CNN (Figure 5(b)) using the MIT-BIH arrhythmia dataset. From the figure, it can be seen that the filtering result of standard CNN is noisy and not very readable. In contrast, the processing result of KecNet for ECG signal is significantly better than CNN and more regular.

Figure 5.

Examples of KecNet and CNN filters. (a) Temporal of KecNet filters. (b) Temporal of CNN filters.

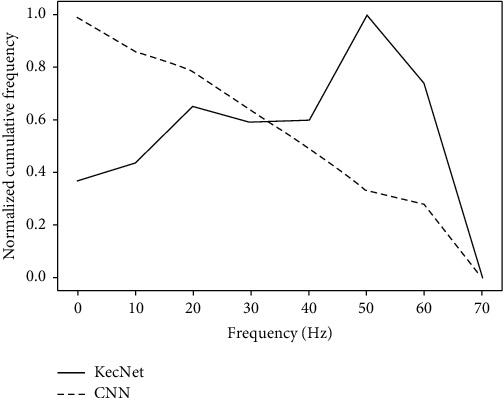

In addition to comparing the effects, it is necessary to analyze which bands are covered by the filters. Figure 6 shows the cumulative frequency response of the filters learned by KecNet and CNN on the arrhythmia classification task. The cumulative frequency response is obtained by normalizing the accumulation of all the filters in the convolution layer. By analyzing the cumulative frequency response, the importance of frequency-specific information for the arrhythmia classification task can be found, since the frequency bands with small normalized cumulative values are less important. It can be seen that the KecNet plot has two distinct peaks. The first one mainly concentrated in the 0 Hz–15 Hz range, which corresponds to the frequency domain range of the P and T waves in the ECG fragment. The second peak corresponds to the QRS wave in the ECG segment, with a frequency range of 20 Hz to 50 Hz. This result shows that KecNet has successfully adapted its characteristics to classify ECG signals. In contrast, the standard CNN does not exhibit a similarly meaningful pattern: its frequency response curve does not clearly represent the frequency band peaks of the corresponding bands. That is, the filters learned by KecNet are, on average, more selective than CNNs and thus can better capture the valid information in ECG signals. Table 7 shows several examples of frequency bandwidths extracted with KecNet. It is worth mentioning that the ability to acquire the principal frequency component of the ECG signal enhances the robustness of the network.

Figure 6.

Cumulative frequency response comparison between KecNet and standard CNN.

Table 7.

Examples of the frequency bandwidths extracted by KecNet.

| f L | 0.7 | 1.2 | 4.4 | 10.7 | 10.1 | 23.5 |

|

| ||||||

| f H | 8.5 | 7.4 | 11.7 | 51.4 | 43 | 52.1 |

5.4. Generalization and Robustness in Noisy Environment

Generally speaking, the data sets used to prove the effectiveness of the proposed method are usually collected under the same conditions. However, there are usually various interferences in real applications. In this case, the effectiveness of the automatic classification method will be greatly reduced since it is difficult to collect labeled data for the training purpose in all environments. In order to overcome this shortcoming, a robust method is needed. We discuss the robustness of KecNet in various environments by adding white noise to the data. All the models are trained with the original data sets. Then, they are tested with noisy signals. Table 8 shows the variation in the accuracy of the models with the signal-to-noise ratio (SNR) from 0 to 60 dB.

Table 8.

Comparison of model performance under different SNRs.

| (dB) | CNN | KecNet | KecNet + CV |

|---|---|---|---|

| 10 | 78.56 | 97.33 | 98.39 |

| 20 | 79.88 | 97.72 | 98.26 |

| 30 | 85.78 | 98.12 | 98.64 |

| 40 | 89.21 | 98.98 | 98.77 |

| 60 | 96.22 | 98.53 | 99.26 |

It can be seen that the accuracy of all models is higher at higher SNR. However, as the SNR decreases, the accuracy (%) of the standard CNN model decreases significantly. The accuracy is below 80% when SNR is 20 dB or less. In contrast, the accuracy of KecNet is still higher than 98% and is stable. It is demonstrated that the proposed method is more robust than traditional CNN. The main reason for this result is due to the fact that the proposed method has a clear filter with a well-defined spectral shape at the first convolution layer, whereas the filter learned by the traditional CNN model is highly correlated with the training data and susceptible to noise interference.

6. Conclusion

In this work, we propose a lightweight end-to-end solution for resource-constrained mobile devices that leverages domain knowledge to optimize neural networks for arrhythmia classification. Firstly, we introduce a physically interpretable Sinc-convolutional layer to learn customized filters with clear bandwidths as features to improve the feature extraction ability of CNNs and reduce the number of parameters. Secondly, the rhythm variation coefficient is added to the network as a symbolic representation of time series to compensate for the difficulty of grasping long-duration correlation features in shallow CNNs and to improve network performance and clinical usability. We trained and tested on the MIT-BIH arrhythmia data set using raw ECG data. The ACC, SEN, and PRE reached as high as 99.31%, 99.45%, and 98.78%, respectively. In addition, neural network size is reduced, and robustness to noise was increased.

In the future, we will collect and annotate ECG recordings from real patients and study the classification of more different types of diseases. In addition, multilead ECG recordings will be used for training models. On the clinical side, we will develop an ECG system that can be deployed on wearable medical devices and low-cost ECG devices, test, and improve its performance.

Acknowledgments

This research was partly supported by the National Key R&D Program of China: application demonstration of medical and nursing service model and standard (Grant No. 2020YFC2006100), the major increases and decreases at the central government, China (Grant No.2060302), the Key Scientific Research Project Plans of Higher Education Institutions of Henan Province (Grant No. 18A520011), the Key Science and Technology Project of Xinjiang Production and Construction Corps (Grant No. 2018AB017), National Natural Science Foundation of China (81774444), Key Special Project of Traditional Chinese Medicine Research of Henan Province (20-21ZY1072), Science and Technology Program of Henan Province (182102310403), and Natural Science Foundation of Henan Province (162300410326).

Contributor Information

Peng Lu, Email: lupeng@zzu.edu.cn.

Chao Gao, Email: gaochao996@sina.com.

Data Availability

All data sets used to support the findings of this study are included within the article. All data sets used to support the findings of this study were supplied by the publicly available MIT-BIH database from the Massachusetts Institute of Technology. The URL to access the data is https://www.physionet.org/content/mitdb/1.0.0/

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- 1.Lin C. T., Chang K. C., Lin C. L., et al. An intelligent telecardiology system using a wearable and wireless ECG to detect atrial fibrillation. IEEE Transactions on Information Technology in Biomedicine. 2010;14(3):726–733. doi: 10.1109/TITB.2010.2047401. [DOI] [PubMed] [Google Scholar]

- 2.Saadatnejad S., Oveisi M., Hashemi M. LSTM-based ECG classification for continuous monitoring on personal wearable devices. IEEE Journal of Biomedical and Health Informatics. 2020;24(2):515–523. doi: 10.1109/jbhi.2019.2911367. [DOI] [PubMed] [Google Scholar]

- 3.Yin W., Yang X., Zhang L., Oki E. ECG monitoring system integrated with IR-UWB radar based on CNN. IEEE Access. 2016;4 doi: 10.1109/access.2016.2608777. [DOI] [Google Scholar]

- 4.Xiao R., Xu Y., Pelter M. M., Mortara D. W., Hu X. A deep learning approach to examine ischemic ST changes in ambulatory ECG recordings. AMIA Joint Summits Translation Science Proceedings. 2018;2017:256–262. [PMC free article] [PubMed] [Google Scholar]

- 5.Zhai X., Tin C. Automated ECG classification using dual heartbeat coupling based on convolutional neural network. IEEE Access. 2018;6:p. 1. doi: 10.1109/ACCESS.2018.2833841. [DOI] [Google Scholar]

- 6.Yildirim ÖC. A novel wavelet sequence based on deep bidirectional LSTM network model for ECG signal classification. Computers in Biology and Medicine. 2018;96:189–202. doi: 10.1016/j.compbiomed.2018.03.016. [DOI] [PubMed] [Google Scholar]

- 7.Xiong Z., Nash M. P., Cheng E., Fedorov V. V., Stiles M. K., Zhao J. ECG signal classification for the detection of cardiac arrhythmias using a convolutional recurrent neural network. Physiological Measurement. 2018;39(9) doi: 10.1088/1361-6579/aad9ed.094006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Wang E. K., Zhang X., Pan L. Automatic classification of CAD ECG signals with SDAE and bidirectional long short-term network. IEEE Access. 2019;7:182873–182880. doi: 10.1109/access.2019.2936525. [DOI] [Google Scholar]

- 9.Wang Y., Zhang X., Xie L., et al. Pruning from scratch. Proceedings of the AAAI Conference on Artificial Intelligence; February 2020; New York, NY, USA. Association for the Advancement of Artificial Intelligence; pp. 12273–12280. [DOI] [Google Scholar]

- 10.Hinton G., Vinyals O., Dean J. Distilling the knowledge in a neural network. 2015. https://arxiv.org/abs/1503.02531.

- 11.Sandler M., Howard A., Zhu M., Zhmoginov A., Chen L.-C. MobileNetV2: inverted residuals and linear bottlenecks. Proceedings of the The IEEE Conference on Computer Vision and Pattern Recognition; June 2018; Salt Lake City, UT, USA. [DOI] [Google Scholar]

- 12.Zhang X., Zhou X., Lin M., Sun J. ShuffleNet: an extremely efficient convolutional neural network for mobile devices. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; June 2018; Salt Lake City, UT, USA. [DOI] [Google Scholar]

- 13.He Z., Zhang X., Cao Y., Liu Z., Zhang B., Wang X. LiteNet: lightweight neural network for detecting arrhythmias at resource-constrained mobile devices. Sensors. 2018;18(4):p. 1229. doi: 10.3390/s18041229. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Lawrence T., Zhang L. IoTNet: an efficient and accurate convolutional neural network for IoT devices. Sensors. 2019;19(24):p. 5541. doi: 10.3390/s19245541. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Snaiki R., Wu T. Knowledge-enhanced deep learning for simulation of tropical cyclone boundary-layer winds. Journal of Wind Engineering and Industrial Aerodynamics. 2019;194 doi: 10.1016/j.jweia.2019.103983. [DOI] [Google Scholar]

- 16.Yıldırım Ö., Pławiak P., Tan R. S., Acharya U. R. Arrhythmia detection using deep convolutional neural network with long duration ECG signals. Computers in Biology and Medicine. 2018;102:411–420. doi: 10.1016/j.compbiomed.2018.09.009. [DOI] [PubMed] [Google Scholar]

- 17.Ravanelli M., Bengio Y. Speaker recognition from raw waveform with SincNet. Proceedings of the 2018 IEEE Spoken Language Technology Workshop (SLT); 2018 December; Athens, Greece. [DOI] [Google Scholar]

- 18.Jiang J., Zhang H., Pi D., Dai C. A novel multi-module neural network system for imbalanced heartbeats classification. Expert Systems with Applications: X. 2019;1 doi: 10.1016/j.eswax.2019.100003.100003 [DOI] [Google Scholar]

- 19.Moody G. B., Mark R. G. The impact of the MIT-BIH arrhythmia database. IEEE Engineering in Medicine and Biology Magazine. 2001;20(3):45–50. doi: 10.1109/51.932724. [DOI] [PubMed] [Google Scholar]

- 20.Roodschild M., Gotay Sardiñas J., Will A. A new approach for the vanishing gradient problem on sigmoid activation. Progress in Artificial Intelligence. 2020;9(4):351–360. doi: 10.1007/s13748-020-00218-y. [DOI] [Google Scholar]

- 21.Yu I. J., Ahn W., Nam S. H., Lee H. K. BitMix: data augmentation for image steganalysis. Electronics Letters. 2020;56(24):1311–1314. doi: 10.1049/el.2020.1951. [DOI] [Google Scholar]

- 22.Hong S., Xiao C., Ma T., Li H., Sun J. MINA: multilevel knowledge-guided attention for modeling electrocardiography signals. Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence (IJCAI-19); August 2019; Macao, China. [DOI] [Google Scholar]

- 23.Rabiner R., Schafer R. Theory and Application of Digital Speech Processing by L. Bergen County, NJ, USA: Prentice Hall Press; 2021. [Google Scholar]

- 24.Abid F. B., Sallem M., Braham A. Robust interpretable deep learning for intelligent fault diagnosis of induction motors. IEEE Transactions on Instrumentation and Measurement. 2020;69(6):3506–3515. doi: 10.1109/tim.2019.2932162. [DOI] [Google Scholar]

- 25.Krivenko S., Pulavskyi A., Krivenko S. Identification of diabetic patients using the nonlinear analysis of short-term heart rate time series. Proceedings of the 2018 IEEE 38th International Conference on Electronics and Nanotechnology (ELNANO); April 2018; Kyiv, UKraine. [DOI] [Google Scholar]

- 26.Zaidi S., Imran A., Majeed S., Zaidi T., Khalid M. S. U. Nonlinear characterization of heart rate variability in normal sinus rhythm, atrial fibrillation and congestive heart failure. Proceedings of the ASME 2016 International Mechanical Engineering Congress & Exposition; November 2016; Phoenix, AZ, USA. [DOI] [Google Scholar]

- 27.Chazal P., O’Dwyer M., Reilly R. Automatic classification of heartbeats using ECG morphology and heartbeat interval features. IEEE Transactions on Bio-Medical Engineering. 2004;51:1196–1206. doi: 10.1109/TBME.2004.827359. [DOI] [PubMed] [Google Scholar]

- 28.Hundewale N. The application of methods of nonlinear dynamics for ECG in normal sinus rhythm. International Journal of Computer Science Issues. 2012;9 [Google Scholar]

- 29.Pan J., Tompkins W. J. A real-time QRS detection algorithm. IEEE Transactions on Biomedical Engineering. 1985;BME-32(3):230–236. doi: 10.1109/tbme.1985.325532. [DOI] [PubMed] [Google Scholar]

- 30.Krizhevsky A., Sutskever I., Hinton G. ImageNet classification with deep convolutional neural networks. Neural Information Processing Systems. 2012;25 [Google Scholar]

- 31.Hinton G., Srivastava N., Krizhevsky A., Sutskever I., Salakhutdinov R. R. Improving neural networks by preventing co-adaptation of feature detectors. 2012. https://arxiv.org/abs/1207.0580.

- 32.Ioffe S., Szegedy C. Batch normalization: accelerating deep network training by reducing internal covariate shift. Proceedings of the 32nd International Conference on International Conference on Machine Learning; July 2015; Lille, France. pp. 448–456. [Google Scholar]

- 33.Lin M., Chen Q., Yan S. Network in network. Proceedings of the 2014 International Conference on Learning Representations (ICLR 2014); April 2014; Banff, Canada. [Google Scholar]

- 34.Szegedy C., Vanhoucke V., Ioffe S., Shlens J., Wojna Z. B. Rethinking the inception architecture for computer vision. Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition; June 2016; Las Vegas, NV, USA. CVPR; pp. 2818–2826. [DOI] [Google Scholar]

- 35.Ahmed S., Bons M. Edge computed NILM: a phone-based implementation using MobileNet compressed by tensorflow lite. Proceedings of the 5th International Workshop on Non-intrusive Load Monitoring; November 2020; New York NY, USA. Association for Computing Machinery; pp. 44–48. [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

All data sets used to support the findings of this study are included within the article. All data sets used to support the findings of this study were supplied by the publicly available MIT-BIH database from the Massachusetts Institute of Technology. The URL to access the data is https://www.physionet.org/content/mitdb/1.0.0/